How to write a good scientific review article

Affiliation.

- 1 The FEBS Journal Editorial Office, Cambridge, UK.

- PMID: 35792782

- DOI: 10.1111/febs.16565

Literature reviews are valuable resources for the scientific community. With research accelerating at an unprecedented speed in recent years and more and more original papers being published, review articles have become increasingly important as a means to keep up to date with developments in a particular area of research. A good review article provides readers with an in-depth understanding of a field and highlights key gaps and challenges to address with future research. Writing a review article also helps to expand the writer's knowledge of their specialist area and to develop their analytical and communication skills, amongst other benefits. Thus, the importance of building review-writing into a scientific career cannot be overstated. In this instalment of The FEBS Journal's Words of Advice series, I provide detailed guidance on planning and writing an informative and engaging literature review.

© 2022 Federation of European Biochemical Societies.

Publication types

- Review Literature as Topic*

- Research Process

Writing a good review article


As a young researcher, you might wonder how to start writing your first review article, and the extent of the information that it should contain. A review article is a comprehensive summary of the current understanding of a specific research topic and is based on previously published research. Unlike research papers, it does not contain new results, but can propose new inferences based on the combined findings of previous research.

Types of review articles

Review articles are typically of three types: literature reviews, systematic reviews, and meta-analyses.

A literature review is a general survey of the research topic and aims to provide a reliable and unbiased account of the current understanding of the topic.

A systematic review, in contrast, is more specific and attempts to address a highly focused research question. Its presentation is more detailed, with information on the search strategy used, the eligibility criteria for inclusion of studies, the methods utilized to review the collected information, and more.

A meta-analysis is similar to a systematic review in that both are systematically conducted with a properly defined research question. However, unlike the latter, a meta-analysis compares and evaluates a defined number of similar studies. It is quantitative in nature and can help assess contrasting study findings.
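To make the quantitative side of a meta-analysis concrete, here is a minimal sketch of one common way to combine results from similar studies: fixed-effect, inverse-variance pooling. The article does not prescribe this method. The function name `pooled_effect` and the three effect sizes and standard errors are hypothetical, chosen only for illustration.

```python
import math


def pooled_effect(effects, std_errors):
    """Fixed-effect (inverse-variance) pooled estimate and its standard error.

    Each study is weighted by 1 / SE^2, so more precise studies count more.
    """
    weights = [1.0 / se ** 2 for se in std_errors]
    total_weight = sum(weights)
    estimate = sum(w * e for w, e in zip(weights, effects)) / total_weight
    return estimate, math.sqrt(1.0 / total_weight)


# Hypothetical inputs: three studies reporting the same kind of effect size.
effects = [0.30, 0.10, 0.25]
std_errors = [0.10, 0.20, 0.10]

estimate, se = pooled_effect(effects, std_errors)
print(f"pooled effect = {estimate:.3f}, standard error = {se:.3f}")
```

The imprecise second study (standard error 0.20) receives a quarter of the weight of the other two, which is how pooling can help weigh contrasting findings. A real meta-analysis would also assess heterogeneity between studies and might use a random-effects model instead.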

Tips for writing a good review article

Here are a few practices that can make the time-consuming process of writing a review article easier:

- Define your question: Take your time to identify the research question and carefully articulate the topic of your review paper. A good review should also add something new to the field in terms of a hypothesis, inference, or conclusion. A carefully defined scientific question will give you more clarity in determining the novelty of your inferences.

- Identify credible sources: Identify relevant as well as credible studies that you can base your review on, with the help of multiple databases or search engines. It is also a good idea to conduct another search once you have finished your article to avoid missing relevant studies published during the course of your writing.

- Take notes: A literature search involves extensive reading, which can make it difficult to recall relevant information subsequently. Therefore, make notes while conducting the literature search and note down the source references. This will ensure that you have sufficient information to start with when you finally get to writing.

- Draft the title, abstract, and introduction: A good way to begin structuring your review is to draft the title, abstract, and introduction. Explicitly writing down what your review aims to address in the field will help shape the rest of your article.

- Be unbiased and critical: Evaluate every piece of evidence in a critical but unbiased manner. This will help you present a proper assessment and a critical discussion in your article.

- Include a good summary: End by stating the take-home message and identifying the limitations of existing studies that need to be addressed through future studies.

- Ask for feedback: Ask a colleague to provide feedback on both the content and the language or tone of your article before you submit it.

- Check your journal’s guidelines: Some journals only publish reviews, while some only publish research articles. Further, all journals clearly indicate their aims and scope. Therefore, make sure to check the appropriateness of a journal before submitting your article.

Writing review articles, especially systematic reviews or meta-analyses, can seem like a daunting task. However, Elsevier Author Services can guide you by providing useful tips on how to write an impressive review article that stands out and gets published!


Reviewing review articles

A review article is written to summarize the current state of understanding on a topic, and peer reviewing these types of articles requires a slightly different set of criteria compared with empirical articles. Unless it is a systematic review or meta-analysis, methods are not important or reported. The quality of a review article can be judged on aspects such as timeliness, the breadth and accuracy of the discussion, and whether it indicates the best avenues for future research. The review article should present an unbiased summary of the current understanding of the topic, and therefore the peer reviewer must assess the selection of studies that are cited by the paper. As a review article contains a large amount of detailed information, its structure and flow are also important.


How to Write an Article Review (With Examples)

Last Updated: April 24, 2024


This article was co-authored by Jake Adams. Jake Adams is an academic tutor and the owner of Simplifi EDU, a Santa Monica, California-based online tutoring business offering learning resources and online tutors for academic subjects K-College, SAT & ACT prep, and college admissions applications. With over 14 years of professional tutoring experience, Jake is dedicated to providing his clients the very best online tutoring experience and access to a network of excellent undergraduate and graduate-level tutors from top colleges all over the nation. Jake holds a BS in International Business and Marketing from Pepperdine University. There are 12 references cited in this article, which can be found at the bottom of the page. This article has been fact-checked, ensuring the accuracy of any cited facts and confirming the authority of its sources. This article has been viewed 3,101,737 times.

An article review is both a summary and an evaluation of another writer's article. Teachers often assign article reviews to introduce students to the work of experts in the field. Experts also are often asked to review the work of other professionals. Understanding the main points and arguments of the article is essential for an accurate summation. Logical evaluation of the article's main theme, supporting arguments, and implications for further research is an important element of a review. Here are a few guidelines for writing an article review.

Education specialist Alexander Peterman recommends: "In the case of a review, your objective should be to reflect on the effectiveness of what has already been written, rather than writing to inform your audience about a subject."

Article Review 101

- Read the article very closely, and then take time to reflect on your evaluation. Consider whether the article effectively achieves what it set out to.

- Write out a full article review by completing your intro, summary, evaluation, and conclusion. Don't forget to add a title, too!

- Proofread your review for mistakes (like grammar and usage), while also cutting down on needless information.

- Article reviews present more than just an opinion. You will engage with the text to create a response to the scholarly writer's ideas. You will respond to and use ideas, theories, and research from your studies. Your critique of the article will be based on proof and your own thoughtful reasoning.

- An article review only responds to the author's research. It typically does not provide any new research. However, if you are correcting misleading or otherwise incorrect points, some new data may be presented.

- An article review both summarizes and evaluates the article.

- Summarize the article. Focus on the important points, claims, and information.

- Discuss the positive aspects of the article. Think about what the author does well, good points she makes, and insightful observations.

- Identify contradictions, gaps, and inconsistencies in the text. Determine if there is enough data or research included to support the author's claims. Find any unanswered questions left in the article.

- Make note of words or issues you don't understand and questions you have.

- Look up terms or concepts you are unfamiliar with, so you can fully understand the article. Read about concepts in-depth to make sure you understand their full context.

- Pay careful attention to the meaning of the article. Make sure you fully understand the article. The only way to write a good article review is to understand the article.

- With either method, make an outline of the main points made in the article and the supporting research or arguments. It is strictly a restatement of the main points of the article and does not include your opinions.

- After putting the article in your own words, decide which parts of the article you want to discuss in your review. You can focus on the theoretical approach, the content, the presentation or interpretation of evidence, or the style. You will always discuss the main issues of the article, but you can sometimes also focus on certain aspects. This comes in handy if you want to focus the review towards the content of a course.

- Review the summary outline to eliminate unnecessary items. Erase or cross out the less important arguments or supplemental information. Your revised summary can serve as the basis for the summary you provide at the beginning of your review.

- What does the article set out to do?

- What is the theoretical framework or assumptions?

- Are the central concepts clearly defined?

- How adequate is the evidence?

- How does the article fit into the literature and field?

- Does it advance the knowledge of the subject?

- How clear is the author's writing?

Don't: include superficial opinions or your personal reaction.

Do: pay attention to your biases, so you can overcome them.

- For example, in MLA, a citation may look like: Duvall, John N. "The (Super)Marketplace of Images: Television as Unmediated Mediation in DeLillo's White Noise." Arizona Quarterly 50.3 (1994): 127-53. Print. [9]

- For example: The article, "Condom use will increase the spread of AIDS," was written by Anthony Zimmerman, a Catholic priest.

- Your introduction should only be 10-25% of your review.

- End the introduction with your thesis. Your thesis should address the above issues. For example: Although the author has some good points, his article is biased and contains some misinterpretation of data from others’ analysis of the effectiveness of the condom.

- Use direct quotes from the author sparingly.

- Review the summary you have written. Read over your summary many times to ensure that your words are an accurate description of the author's article.

- Support your critique with evidence from the article or other texts.

- The summary portion is very important for your critique. You must make the author's argument clear in the summary section for your evaluation to make sense.

- Remember, this is not where you say if you liked the article or not. You are assessing the significance and relevance of the article.

- Use a topic sentence and supportive arguments for each opinion. For example, you might address a particular strength in the first sentence of the opinion section, followed by several sentences elaborating on the significance of the point.

- This should only be about 10% of your overall essay.

- For example: This critical review has evaluated the article "Condom use will increase the spread of AIDS" by Anthony Zimmerman. The arguments in the article show the presence of bias, prejudice, argumentative writing without supporting details, and misinformation. These points weaken the author’s arguments and reduce his credibility.

- Make sure you have identified and discussed the 3-4 key issues in the article.


References

- https://libguides.cmich.edu/writinghelp/articlereview

- https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4548566/

- Jake Adams. Academic Tutor & Test Prep Specialist. Expert Interview. 24 July 2020.

- https://guides.library.queensu.ca/introduction-research/writing/critical

- https://www.iup.edu/writingcenter/writing-resources/organization-and-structure/creating-an-outline.html

- https://writing.umn.edu/sws/assets/pdf/quicktips/titles.pdf

- https://owl.purdue.edu/owl/research_and_citation/mla_style/mla_formatting_and_style_guide/mla_works_cited_periodicals.html

- https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4548565/

- https://writingcenter.uconn.edu/wp-content/uploads/sites/593/2014/06/How_to_Summarize_a_Research_Article1.pdf

- https://www.uis.edu/learning-hub/writing-resources/handouts/learning-hub/how-to-review-a-journal-article

- https://writingcenter.unc.edu/tips-and-tools/editing-and-proofreading/

About This Article

If you have to write an article review, read through the original article closely, taking notes and highlighting important sections as you read. Next, rewrite the article in your own words, either in a long paragraph or as an outline. Open your article review by citing the article, then write an introduction which states the article’s thesis. Next, summarize the article, followed by your opinion about whether the article was clear, thorough, and useful. Finish with a paragraph that summarizes the main points of the article and your opinions. To learn more about what to include in your personal critique of the article, keep reading the article!




How AI Skews Our Sense of Responsibility

Research shows how using an AI-augmented system may affect humans’ perception of their own agency and responsibility.


As artificial intelligence plays an ever-larger role in automated systems and decision-making processes, the question of how it affects humans’ sense of their own agency is becoming less theoretical — and more urgent. It’s no surprise that humans often defer to automated decision recommendations, with exhortations to “trust the AI!” spurring user adoption in corporate settings. However, there’s growing evidence that AI diminishes users’ sense of responsibility for the consequences of those decisions.

This question is largely overlooked in current discussions about responsible AI. In reality, such practices are intended to manage legal and reputational risk — a limited view of responsibility, if we draw on German philosopher Hans Jonas’s useful conceptualization. He defined three types of responsibility, but AI practice appears concerned with only two. The first is legal responsibility, wherein an individual or corporate entity is held responsible for repairing damage or compensating for losses, typically via civil law, and the second is moral responsibility, wherein individuals are held accountable via punishment, as in criminal law.


What we’re most concerned about here is the third type, what Jonas called the sense of responsibility. It’s what we mean when we speak admiringly of someone “acting responsibly.” It entails critical thinking and predictive reflection on the purpose and possible consequences of one’s actions, not only for oneself but for others. It’s this sense of responsibility that AI and automated systems can alter.

To gain insight into how AI affects users’ perceptions of their own responsibility and agency, we conducted several studies. Two studies examined what influences a driver’s decision to regain control of a self-driving vehicle when the autonomous driving system is activated. In the first study, we found that the more individuals trust the autonomous system, the less likely they are to maintain situational awareness that would enable them to regain control of the vehicle in the event of a problem or incident. Even though respondents overall said they accepted responsibility when operating an autonomous vehicle, their sense of agency had no significant influence on their intention to regain control of the vehicle in the event of a problem or incident. On the basis of these findings, we might expect to find that a sizable proportion of users feel encouraged, in the presence of an automated system, to shun responsibility to intervene.

About the Author

Ryad Titah is associate professor and chair of the Academic Department of Information Technologies at HEC Montréal. The research in progress described in this article is being conducted with Zoubeir Tkiouat, Pierre-Majorique Léger, Nicolas Saunier, Philippe Doyon-Poulin, Sylvain Sénécal, and Chaïma Merbouh.


MIT Technology Review


Google DeepMind’s new AlphaFold can model a much larger slice of biological life

AlphaFold 3 can predict how DNA, RNA, and other molecules interact, further cementing its leading role in drug discovery and research. Who will benefit?

- James O'Donnell

Google DeepMind has released an improved version of its biology prediction tool, AlphaFold, that can predict the structures not only of proteins but of nearly all the elements of biological life.

It’s a development that could help accelerate drug discovery and other scientific research. The tool is currently being used to experiment with identifying everything from resilient crops to new vaccines.

While the previous model, released in 2020, amazed the research community with its ability to predict protein structures, researchers have been clamoring for the tool to handle more than just proteins.

Now, DeepMind says, AlphaFold 3 can predict the structures of DNA, RNA, and molecules like ligands, which are essential to drug discovery. DeepMind says the tool provides a more nuanced and dynamic portrait of molecule interactions than anything previously available.

“Biology is a dynamic system,” DeepMind CEO Demis Hassabis told reporters on a call. “Properties of biology emerge through the interactions between different molecules in the cell, and you can think about AlphaFold 3 as our first big sort of step toward [modeling] that.”

AlphaFold 2 helped us better map the human heart, model antimicrobial resistance, and identify the eggs of extinct birds, but we don’t yet know what advances AlphaFold 3 will bring.

Mohammed AlQuraishi, an assistant professor of systems biology at Columbia University who is unaffiliated with DeepMind, thinks the new version of the model will be even better for drug discovery. “The AlphaFold 2 system only knew about amino acids, so it was of very limited utility for biopharma,” he says. “But now, the system can in principle predict where a drug binds a protein.”

Isomorphic Labs, a drug discovery spinoff of DeepMind, is already using the model for exactly that purpose, collaborating with pharmaceutical companies to try to develop new treatments for diseases, according to DeepMind.

AlQuraishi says the release marks a big leap forward. But there are caveats.

“It makes the system much more general, and in particular for drug discovery purposes (in early-stage research), it’s far more useful now than AlphaFold 2,” he says. But as with most models, the impact of AlphaFold will depend on how accurate its predictions are. For some uses, AlphaFold 3 has double the success rate of similar leading models like RoseTTAFold. But for others, like protein-RNA interactions, AlQuraishi says it’s still very inaccurate.

DeepMind says that depending on the interaction being modeled, accuracy can range from 40% to over 80%, and the model will let researchers know how confident it is in its prediction. With less accurate predictions, researchers have to use AlphaFold merely as a starting point before pursuing other methods. Regardless of these ranges in accuracy, if researchers are trying to take the first steps toward answering a question like which enzymes have the potential to break down the plastic in water bottles, it’s vastly more efficient to use a tool like AlphaFold than experimental techniques such as x-ray crystallography.

A revamped model

AlphaFold 3’s larger library of molecules and higher level of complexity required improvements to the underlying model architecture. So DeepMind turned to diffusion techniques, which AI researchers have been steadily improving in recent years and which now power image and video generators like OpenAI’s DALL-E 2 and Sora. The approach works by training a model to start with a noisy image and then reduce that noise bit by bit until an accurate prediction emerges. That method allows AlphaFold 3 to handle a much larger set of inputs.

That marked “a big evolution from the previous model,” says John Jumper, director at Google DeepMind. “It really simplified the whole process of getting all these different atoms to work together.”

It also presented new risks. As the AlphaFold 3 paper details, the use of diffusion techniques made it possible for the model to hallucinate, or generate structures that look plausible but in reality could not exist. Researchers reduced that risk by adding more training data to the areas most prone to hallucination, though that doesn’t eliminate the problem completely.

Restricted access

Part of AlphaFold 3’s impact will depend on how DeepMind divvies up access to the model. For AlphaFold 2, the company released the open-source code, allowing researchers to look under the hood to gain a better understanding of how it worked. It was also available for all purposes, including commercial use by drugmakers. For AlphaFold 3, Hassabis said, there are no current plans to release the full code. The company is instead releasing a public interface for the model called the AlphaFold Server, which imposes limitations on which molecules can be experimented with and can only be used for noncommercial purposes. DeepMind says the interface will lower the technical barrier and broaden the use of the tool to biologists who are less knowledgeable about this technology.



The Global Governance of Artificial Intelligence: Next Steps for Empirical and Normative Research


Jonas Tallberg, Eva Erman, Markus Furendal, Johannes Geith, Mark Klamberg, Magnus Lundgren, The Global Governance of Artificial Intelligence: Next Steps for Empirical and Normative Research, International Studies Review, Volume 25, Issue 3, September 2023, viad040, https://doi.org/10.1093/isr/viad040


Artificial intelligence (AI) represents a technological upheaval with the potential to change human society. Because of its transformative potential, AI is increasingly becoming subject to regulatory initiatives at the global level. Yet, so far, scholarship in political science and international relations has focused more on AI applications than on the emerging architecture of global AI regulation. The purpose of this article is to outline an agenda for research into the global governance of AI. The article distinguishes between two broad perspectives: an empirical approach, aimed at mapping and explaining global AI governance; and a normative approach, aimed at developing and applying standards for appropriate global AI governance. The two approaches offer questions, concepts, and theories that are helpful in gaining an understanding of the emerging global governance of AI. Conversely, exploring AI as a regulatory issue offers a critical opportunity to refine existing general approaches to the study of global governance.


Artificial intelligence (AI) represents a technological upheaval with the potential to transform human society. It is increasingly viewed by states, non-state actors, and international organizations (IOs) as an area of strategic importance, economic competition, and risk management. While AI development is concentrated in a handful of corporations in the United States, China, and Europe, the long-term consequences of AI implementation will be global. Autonomous weapons will have consequences for armed conflicts and power balances; automation will drive changes in job markets and global supply chains; generative AI will affect content production and challenge copyright systems; and competition around the scarce hardware needed to train AI systems will shape relations among both states and businesses. While the technology is still only lightly regulated, state and non-state actors are beginning to negotiate global rules and norms to harness and spread AI’s benefits while limiting its negative consequences. For example, in the past few years, the United Nations Educational, Scientific and Cultural Organization (UNESCO) adopted recommendations on the ethics of AI, the European Union (EU) negotiated comprehensive AI legislation, and the Group of Seven (G7) called for developing global technical standards on AI.

Our purpose in this article is to outline an agenda for research into the global governance of AI. Advancing research on the global regulation of AI is imperative. The rules and arrangements that are currently being developed to regulate AI will have a considerable impact on power differentials, the distribution of economic value, and the political legitimacy of AI governance for years to come. Yet there is currently little systematic knowledge on the nature of global AI regulation, the interests influential in this process, and the extent to which emerging arrangements can manage AI’s consequences in a just and democratic manner. While poised for rapid expansion, research on the global governance of AI remains in its early stages (but see Maas 2021; Schmitt 2021).

This article complements earlier calls for research on AI governance in general ( Dafoe 2018 ; Butcher and Beridze 2019 ; Taeihagh 2021 ; Büthe et al. 2022 ) by focusing specifically on the need for systematic research into the global governance of AI. It submits that global efforts to regulate AI have reached a stage where it is necessary to start asking fundamental questions about the characteristics, sources, and consequences of these governance arrangements.

We distinguish between two broad approaches for studying the global governance of AI: an empirical perspective, informed by a positive ambition to map and explain AI governance arrangements; and a normative perspective, informed by philosophical standards for evaluating the appropriateness of AI governance arrangements. Both perspectives build on established traditions of research in political science, international relations (IR), and political philosophy, and offer questions, concepts, and theories that are helpful as we try to better understand new types of governance in world politics.

We argue that empirical and normative perspectives together offer a comprehensive agenda of research on the global governance of AI. Pursuing this agenda will help us to better understand characteristics, sources, and consequences of the global regulation of AI, with potential implications for policymaking. Conversely, exploring AI as a regulatory issue offers a critical opportunity to further develop concepts and theories of global governance as they confront the particularities of regulatory dynamics in this important area.

We advance this argument in three steps. First, we argue that AI, because of its economic, political, and social consequences, presents a range of governance challenges. While these challenges initially were taken up mainly by national authorities, recent years have seen a dramatic increase in governance initiatives by IOs. These efforts to regulate AI at global and regional levels are likely driven by several considerations, among them AI applications creating cross-border externalities that demand international cooperation and AI development taking place through transnational processes requiring transboundary regulation. Yet, so far, existing scholarship on the global governance of AI has been mainly descriptive or policy-oriented, rather than focused on theory-driven positive and normative questions.

Second, we argue that an empirical perspective can help to shed light on key questions about characteristics and sources of the global governance of AI. Based on existing concepts, the emerging governance architecture for AI can be described as a regime complex—a structure of partially overlapping and diverse governance arrangements without a clearly defined central institution or hierarchy. IR theories are useful in directing our attention to the role of power, interests, ideas, and non-state actors in the construction of this regime complex. At the same time, the specific conditions of AI governance suggest ways in which global governance theories may be usefully developed.

Third, we argue that a normative perspective raises crucial questions regarding the nature and implications of global AI governance. These questions pertain both to procedure (the process for developing rules) and to outcome (the implications of those rules). A normative perspective suggests that procedures and outcomes in global AI governance need to be evaluated in terms of how they meet relevant normative ideals, such as democracy and justice. How could the global governance of AI be organized to live up to these ideals? To what extent are emerging arrangements minimally democratic and fair in their procedures and outcomes? Conversely, the global governance of AI raises novel questions for normative theorizing, for instance, by invoking aims for AI to be “trustworthy,” “value aligned,” and “human centered.”

Advancing this agenda of research is important for several reasons. First, making more systematic use of social science concepts and theories will help us to gain a better understanding of various dimensions of the global governance of AI. Second, as a novel case of governance involving unique features, AI raises questions that will require us to further refine existing concepts and theories of global governance. Third, findings from this research agenda will be of importance for policymakers, by providing them with evidence on international regulatory gaps, the interests that have influenced current arrangements, and the normative issues at stake when developing this regime complex going forward.

The remainder of this article is structured in three substantive sections. The first section explains why AI has become a concern of global governance. The second section suggests that an empirical perspective can help to shed light on the characteristics and drivers of the global governance of AI. The third section discusses the normative challenges posed by global AI governance, focusing specifically on concerns related to democracy and justice. The article ends with a conclusion that summarizes our proposed agenda for future research on the global governance of AI.

Why does AI pose a global governance challenge? In this section, we answer this question in three steps. We begin by briefly describing the spread of AI technology in society, then illustrate the attempts to regulate AI at various levels of governance, and finally explain why global regulatory initiatives are becoming increasingly common. We argue that the growth of global governance initiatives in this area stems from AI applications creating cross-border externalities that demand international cooperation and from AI development taking place through transnational processes requiring transboundary regulation.

Due to its amorphous nature, AI escapes easy definition. Instead, the definition of AI tends to depend on the purposes and audiences of the research ( Russell and Norvig 2020 ). In the most basic sense, machines are considered intelligent when they can perform tasks that would require intelligence if done by humans ( McCarthy et al. 1955 ). This could happen through the guiding hand of humans, in “expert systems” that follow complex decision trees. It could also happen through “machine learning,” where AI systems are trained to categorize texts, images, sounds, and other data, using such categorizations to make autonomous decisions when confronted with new data. More specific definitions require that machines display a level of autonomy and capacity for learning that enables rational action. For instance, the EU’s High-Level Expert Group on AI has defined AI as “systems that display intelligent behaviour by analysing their environment and taking actions—with some degree of autonomy—to achieve specific goals” (2019, 1). Yet, illustrating the potential for conceptual controversy, this definition has been criticized for denoting both too many and too few technologies as AI ( Heikkilä 2022a ).

AI technology is already implemented in a wide variety of areas in everyday life and the economy at large. For instance, the conversational chatbot ChatGPT is estimated to have reached 100 million users just two months after its launch at the end of 2022 ( Hu 2023 ). AI applications enable new automation technologies, with subsequent positive or negative effects on the demand for labor, employment, and economic equality ( Acemoglu and Restrepo 2020 ). Military AI is integral to lethal autonomous weapons systems (LAWS), whereby machines take autonomous decisions in warfare and battlefield targeting ( Rosert and Sauer 2018 ). Many governments and public agencies have already implemented AI in their daily operations in order to more efficiently evaluate welfare eligibility, flag potential fraud, profile suspects, make risk assessments, and engage in mass surveillance ( Saif et al. 2017 ; Powers and Ganascia 2020 ; Berk 2021 ; Misuraca and van Noordt 2022 , 38).

Societies face significant governance challenges in relation to the implementation of AI. One type of challenge arises when AI systems function poorly, such as when applications involving some degree of autonomous decision-making produce technical failures with real-world implications. The “Robodebt” scheme in Australia, for instance, was designed to detect mistaken social security payments, but the Australian government ultimately had to rescind 400,000 wrongfully issued welfare debts ( Henriques-Gomes 2020 ). Similarly, Dutch authorities recently implemented an algorithm that pushed tens of thousands of families into poverty after mistakenly requiring them to repay child benefits, ultimately forcing the government to resign ( Heikkilä 2022b ).

Another type of governance challenge arises when AI systems function as intended but produce impacts whose consequences may be regarded as problematic. For instance, the inherent opacity of AI decision-making challenges expectations on transparency and accountability in public decision-making in liberal democracies ( Burrell 2016 ; Erman and Furendal 2022a ). Autonomous weapons raise critical ethical and legal issues ( Rosert and Sauer 2019 ). AI applications for surveillance in law enforcement give rise to concerns of individual privacy and human rights ( Rademacher 2019 ). AI-driven automation involves changes in labor markets that are painful for parts of the population ( Acemoglu and Restrepo 2020 ). Generative AI upends conventional ways of producing creative content and raises new copyright and data security issues ( Metz 2022 ).

More broadly, AI presents a governance challenge due to its effects on economic competitiveness, military security, and personal integrity, with consequences for states and societies. In this respect, AI may not be radically different from earlier general-purpose technologies, such as the steam engine, electricity, nuclear power, and the internet ( Frey 2019 ). From this perspective, it is not the novelty of AI technology that makes it a pressing issue to regulate but rather the anticipation that AI will lead to large-scale changes and become a source of power for state and societal actors.

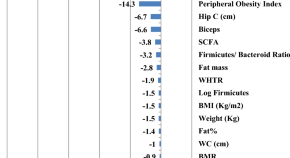

Challenges such as these have led to a rapid expansion in recent years of efforts to regulate AI at different levels of governance. The OECD AI Policy Observatory records more than 700 national AI policy initiatives from 60 countries and territories ( OECD 2021 ). Earlier research into the governance of AI has therefore naturally focused mostly on the national level ( Radu 2021 ; Roberts et al. 2021 ; Taeihagh 2021 ). However, a large number of governance initiatives have also been undertaken at the global level, and many more are underway. According to an ongoing inventory of AI regulatory initiatives by the Council of Europe, IOs overtook national authorities as the main source of such initiatives in 2020 ( Council of Europe 2023 ). Figure 1 visualizes this trend.

Figure 1. Origins of AI governance initiatives, 2015–2022. Source: Council of Europe (2023).

According to this source, national authorities launched 170 initiatives from 2015 to 2022, while IOs put in place 210 initiatives during the same period. Over time, the share of regulatory initiatives emanating from IOs has thus grown to surpass the share resulting from national authorities. Examples of the former include the OECD Principles on Artificial Intelligence agreed in 2019, the UNESCO Recommendation on Ethics of AI adopted in 2021, and the EU’s ongoing negotiations on the EU AI Act. In addition, several governance initiatives emanate from the private sector, civil society, and multistakeholder partnerships. In the next section, we will provide a more developed characterization of these global regulatory initiatives.
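The shares implied by the totals reported above (170 national and 210 IO initiatives) can be computed directly. The following is a minimal sketch in Python; it uses only those two totals and leaves aside initiatives from the private sector, civil society, and multistakeholder partnerships, for which no counts are given here. The variable names are illustrative.

```python
# Totals for 2015-2022 as reported in the text (Council of Europe 2023).
national_initiatives = 170
io_initiatives = 210

# Shares are taken over these two sources only; other sources are not counted.
total = national_initiatives + io_initiatives
national_share = national_initiatives / total
io_share = io_initiatives / total

print(f"National authorities: {national_share:.1%}")
print(f"International organizations: {io_share:.1%}")
```

On these totals, IOs account for roughly 55 percent of the initiatives launched by the two types of actors over the period.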

Two concerns likely explain why AI increasingly is becoming subject to governance at the global level. First, AI creates externalities that do not follow national borders and whose regulation requires international cooperation. China’s Artificial Intelligence Development Plan, for instance, clearly states that the country is using AI as a leapfrog technology in order to enhance national competitiveness ( Roberts et al. 2021 ). Since states with less regulation might gain a competitive edge when developing certain AI applications, there is a risk that such strategies create a regulatory race to the bottom. International cooperation that creates a level playing field could thus be said to be in the interest of all parties.

Second, the development of AI technology is a cross-border process carried out by transnational actors—multinational firms in particular. Big tech corporations, such as Google, Meta, or the Chinese drone maker DJI, are investing vast sums into AI development. The innovations of hardware manufacturers like Nvidia enable breakthroughs but depend on complex global supply chains, and international research labs such as DeepMind regularly present cutting-edge AI applications. Since the private actors that develop AI can operate across multiple national jurisdictions, the efforts to regulate AI development and deployment also need to be transboundary. Only by introducing common rules can states ensure that AI businesses encounter similar regulatory environments, which both facilitates transboundary AI development and reduces incentives for companies to shift to countries with laxer regulation.

Successful global governance of AI could help realize many of the potential benefits of the technology while mitigating its negative consequences. For AI to contribute to increased economic productivity, for instance, there needs to be predictable and clear regulation as well as global coordination around standards that prevent competition between parallel technical systems. Conversely, a failure to provide suitable global governance could lead to substantial risks. The intentional misuse of AI technology may undermine trust in institutions, and if left unchecked, the positive and negative externalities created by automation technologies might fall unevenly across different groups. Race dynamics similar to those that arose around nuclear technology in the twentieth century, where technological leadership created large benefits, might lead international actors and private firms to overlook safety issues and create potentially dangerous AI applications (Dafoe 2018; Future of Life Institute 2023). Hence, policymakers face the task of disentangling beneficial from malicious consequences and then fostering the former while regulating the latter. Given the speed at which AI is developed and implemented, governance also risks constantly being one step behind the technological frontier.

A prime example of how AI presents a global governance challenge is the effort to regulate military AI, in particular autonomous weapons capable of identifying and eliminating a target without the involvement of a remote human operator (Hernandez 2021). Both the development and the deployment of military applications with autonomous capabilities transcend national borders. Multinational defense companies are at the forefront of developing autonomous weapons systems. Reports suggest that such autonomous weapons are now beginning to be used in armed conflicts (Trager and Luca 2022). The development and deployment of autonomous weapons involve the types of competitive dynamics and transboundary consequences identified above. In addition, they raise specific concerns with respect to accountability and dehumanization (Sparrow 2007; Stop Killer Robots 2023). For these reasons, states have begun to explore the potential for joint global regulation of autonomous weapons systems. The principal forum is the Group of Governmental Experts (GGE) within the Convention on Certain Conventional Weapons (CCW). Yet progress in these negotiations is slow as the major powers approach this issue with competing interests in mind, illustrating the challenges involved in developing joint global rules.

The example of autonomous weapons further illustrates how the global governance of AI raises urgent empirical and normative questions for research. On the empirical side, these developments invite researchers to map emerging regulatory initiatives, such as those within the CCW, and to explain why these particular frameworks become dominant. What are the principal characteristics of global regulatory initiatives in the area of autonomous weapons, and how do power differentials, interest constellations, and principled ideas influence those rules? On the normative side, these developments invite researchers to address key normative questions raised by the development and deployment of autonomous weapons. What are the key normative issues at stake in the regulation of autonomous weapons, both with respect to the process through which such rules are developed and with respect to the consequences of these frameworks? To what extent are existing normative ideals and frameworks, such as just war theory, applicable to the governing of military AI ( Roach and Eckert 2020 )? Despite the global governance challenge of AI development and use, research on this topic is still in its infancy (but see Maas 2021 ; Schmitt 2021 ). In the remainder of this article, we therefore present an agenda for research into the global governance of AI. We begin by outlining an agenda for positive empirical research on the global governance of AI and then suggest an agenda for normative philosophical research.

An empirical perspective on the global governance of AI suggests two main questions: How may we describe the emerging global governance of AI? And how may we explain the emerging global governance of AI? In this section, we argue that concepts and theories drawn from the general study of global governance will be helpful as we address these questions, but also that AI, conversely, raises novel issues that point to the need for new or refined theories. Specifically, we show how global AI governance may be mapped along several conceptual dimensions and submit that theories invoking power dynamics, interests, ideas, and non-state actors have explanatory promise.

Mapping AI Governance

A key priority for empirical research on the global governance of AI is descriptive: Where and how are new regulatory arrangements emerging at the global level? What features characterize the emergent regulatory landscape? In answering such questions, researchers can draw on scholarship on international law and IR, which have conceptualized mechanisms of regulatory change and drawn up analytical dimensions to map and categorize the resulting regulatory arrangements.

Any mapping exercise must consider the many different ways in which global AI regulation may emerge and evolve. Previous research suggests that legal development may take place in at least three distinct ways. To begin with, existing rules could be reinterpreted to also cover AI (Maas 2021). For example, the principles of distinction, proportionality, and precaution in international humanitarian law could be extended, via reinterpretation, to apply to LAWS, without changing the legal source. Another manner in which new AI regulation may appear is via “add-ons” to existing rules. For example, in the area of global regulation of autonomous vehicles, AI-related provisions were added to the 1968 Vienna Road Traffic Convention through an amendment in 2015 (Kunz and Ó hÉigeartaigh 2020). Finally, AI regulation may appear as a completely new framework, either through new state behavior that results in customary international law or through a new legal act or treaty (Maas 2021, 96). Here, one example of regulating AI through a new framework is the aforementioned EU AI Act, which would take the form of a new EU regulation.

Once researchers have mapped emerging regulatory arrangements, a central task will be to categorize them. Prior scholarship suggests that regulatory arrangements may be fruitfully analyzed in terms of five key dimensions (cf. Koremenos et al. 2001; Wahlgren 2022, 346–347). A first dimension is whether regulation is horizontal or vertical. A horizontal regulation covers several policy areas, whereas a vertical regulation is a delimited legal framework covering one specific policy area or application. In the field of AI, emergent governance appears to populate both ends of this spectrum. For example, the proposed EU AI Act (2021), the UNESCO Recommendation on the Ethics of AI (2021), and the OECD Principles on AI (2019), which are not specific to any particular AI application or field, would qualify as attempts at horizontal regulation. When it comes to vertical regulation, there are fewer existing examples, but discussions on a new protocol on LAWS within the CCW signal that this type of regulation is likely to become more important in the future (Maas 2019a).

A second dimension runs from centralization to decentralization. Governance is centralized if there is a single, authoritative institution at the heart of a regime, such as in trade, where the World Trade Organization (WTO) fulfills this role. In contrast, decentralized arrangements are marked by parallel and partly overlapping institutions, such as in the governance of the environment, the internet, or genetic resources (cf. Raustiala and Victor 2004). While some IOs with universal membership, such as UNESCO, have taken initiatives relating to AI governance, no institution has assumed the role of the core regulatory body at the global level. Rather, the proliferation of parallel initiatives, across levels and regions, lends weight to the conclusion that contemporary arrangements for the global governance of AI are strongly decentralized (Cihon et al. 2020a).

A third dimension is the continuum from hard law to soft law. While domestic statutes and treaties may be described as hard law, soft law is associated with guidelines of conduct, recommendations, resolutions, standards, opinions, ethical principles, declarations, guidelines, board decisions, codes of conduct, negotiated agreements, and a large number of additional normative mechanisms (Abbott and Snidal 2000; Wahlgren 2022). Even though such soft documents may initially have been drafted as non-legal texts, they may in actual practice acquire considerable strength in structuring international relations (Orakhelashvili 2019). While some initiatives to regulate AI classify as hard law, including the EU’s AI Act, Burri (2017) suggests that AI governance is likely to be dominated by “supersoft law,” noting that there are currently numerous processes underway creating global standards outside traditional international law-making fora. In a phenomenon that might be described as “bottom-up law-making” (Koven Levit 2017), states and IOs are bypassed, creating norms that defy traditional categories of international law (Burri 2017).

A fourth dimension concerns private versus public regulation. The concept of private regulation overlaps partly with what is understood as soft law, to the extent that private actors develop non-binding guidelines (Wahlgren 2022). Significant harmonization of standards may be developed by private standardization bodies, such as the IEEE (Ebers 2022). Public authorities may regulate the responsibility of manufacturers through tort law and product liability law (Greenstein 2022). Even though contracts are originally matters between private parties, some contractual matters may still be regulated and enforced by law (Ubena 2022).

A fifth dimension relates to the division between military and non-military regulation. Several policymakers and scholars describe how military AI is about to escalate into a strategic arms race between major powers such as the United States and China, similar to the nuclear arms race during the Cold War (cf. Petman 2017; Thompson and Bremmer 2018; Maas 2019a). The process in the CCW Group of Governmental Experts on the regulation of LAWS is probably the largest single negotiation on AI (Maas 2019b) next to the negotiations on the EU AI Act. The zero-sum logic that appears to exist between states in the area of national security, prompting a military AI arms race, may not be applicable to the same extent to non-military applications of AI, potentially enabling a clearer focus on realizing positive-sum gains through regulation.

These five dimensions can provide guidance as researchers take up the task of mapping and categorizing global AI regulation. While the evidence is preliminary, in its present form, the global governance of AI must be understood as combining horizontal and vertical elements, predominantly leaning toward soft law, being heavily decentralized, primarily public in nature, and mixing military and non-military regulation. This multi-faceted and non-hierarchical nature of global AI governance suggests that it is best characterized as a regime complex, or a “larger web of international rules and regimes” (Alter and Meunier 2009, 13; Keohane and Victor 2011) rather than as a single, discrete regime.
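For researchers who wish to turn the dimensions above into a coding scheme, one possible starting point is sketched below in Python. This is an illustration under stated assumptions, not an established instrument: the class and field names are hypothetical, only codings explicitly made in the text are filled in, and all other values are left uncoded. Centralization is omitted from the per-arrangement record on the assumption that it describes the governance architecture as a whole rather than any single arrangement.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Scope(Enum):
    HORIZONTAL = "horizontal"
    VERTICAL = "vertical"


class Legality(Enum):
    HARD = "hard law"
    SOFT = "soft law"


class Source(Enum):
    PUBLIC = "public"
    PRIVATE = "private"


class Domain(Enum):
    MILITARY = "military"
    NON_MILITARY = "non-military"


@dataclass(frozen=True)
class Arrangement:
    """One regulatory arrangement; None means 'not coded in the text'."""
    name: str
    scope: Optional[Scope] = None
    legality: Optional[Legality] = None
    source: Optional[Source] = None
    domain: Optional[Domain] = None


# Hypothetical mini-dataset: only values stated in the article are coded.
ARRANGEMENTS = [
    Arrangement("EU AI Act (proposed)", scope=Scope.HORIZONTAL, legality=Legality.HARD),
    Arrangement("UNESCO Recommendation on the Ethics of AI", scope=Scope.HORIZONTAL),
    Arrangement("OECD Principles on AI", scope=Scope.HORIZONTAL),
    Arrangement("CCW protocol discussions on LAWS", scope=Scope.VERTICAL, domain=Domain.MILITARY),
    Arrangement("IEEE standards", source=Source.PRIVATE),
]


def tally(arrangements, dimension):
    """Count coded values on one dimension, skipping uncoded (None) entries."""
    values = (getattr(a, dimension) for a in arrangements)
    return Counter(v.value for v in values if v is not None)


print(tally(ARRANGEMENTS, "scope"))
```

Leaving uncoded values as `None` rather than guessing keeps the gaps in the mapping visible, which matters while the evidence remains preliminary.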

If global AI governance can be understood as a regime complex, which some researchers already claim ( Cihon et al. 2020a ), future scholarship should look for theoretical and methodological inspiration in research on regime complexity in other policy fields. This research has found that regime complexes are characterized by path dependence, as existing rules shape the formulation of new rules; venue shopping, as actors seek to steer regulatory efforts to the fora most advantageous to their interests; and legal inconsistencies, as rules emerge from fractious and overlapping negotiations in parallel processes ( Raustiala and Victor 2004 ). Scholars have also considered the design of regime complexes ( Eilstrup-Sangiovanni and Westerwinter 2021 ), institutional overlap among bodies in regime complexes ( Haftel and Lenz 2021 ), and actors’ forum-shopping within regime complexes ( Verdier 2022 ). Establishing whether these patterns and dynamics are key features also of the AI regime complex stands out as an important priority in future research.

Explaining AI Governance

As our understanding of the empirical patterns of global AI governance grows, a natural next step is to turn to explanatory questions. How may we explain the emerging global governance of AI? What accounts for variation in governance arrangements and how do they compare with those in other policy fields, such as environment, security, or trade? Political science and IR offer a plethora of useful theoretical tools that can provide insights into the global governance of AI. However, at the same time, the novelty of AI as a governance challenge raises new questions that may require novel or refined theories. Thus far, existing research on the global governance of AI has been primarily concerned with descriptive tasks and largely fallen short in engaging with explanatory questions.

We illustrate the potential of general theories to help explain global AI governance by pointing to three broad explanatory perspectives in IR ( Martin and Simmons 2012 )—power, interests, and ideas—which have served as primary sources of theorizing on global governance arrangements in other policy fields. These perspectives have conventionally been associated with the paradigmatic theories of realism, liberalism, and constructivism, respectively, but like much of the contemporary IR discipline, we prefer to formulate them as non-paradigmatic sources for mid-level theorizing of more specific phenomena (cf. Lake 2013 ). We focus our discussion on how accounts privileging power, interests, and ideas have explained the origins and designs of IOs and how they may help us explain wider patterns of global AI governance. We then discuss how theories of non-state actors and regime complexity, in particular, offer promising avenues for future research into the global governance of AI. Research fields like science and technology studies (e.g., Jasanoff 2016 ) or the political economy of international cooperation (e.g., Gilpin 1987 ) can provide additional theoretical insights, but these literatures are not discussed in detail here.

A first broad explanatory perspective is provided by power-centric theories, privileging the role of major states, capability differentials, and distributive concerns. While conventional realism emphasizes how states’ concern for relative gains impedes substantive international cooperation, viewing IOs as epiphenomenal reflections of underlying power relations ( Mearsheimer 1994 ), developed power-oriented theories have highlighted how powerful states seek to design regulatory contexts that favor their preferred outcomes ( Gruber 2000 ) or shape the direction of IOs using informal influence ( Stone 2011 ; Dreher et al. 2022 ).

In research on global AI governance, power-oriented perspectives are likely to prove particularly fruitful in investigating how great-power contestation shapes where and how the technology will be regulated. Focusing on the major AI powerhouses, scholars have started to analyze the contrasting regulatory strategies and policies of the United States, China, and the EU, often emphasizing issues of strategic competition, military balance, and rivalry ( Kania 2017 ; Horowitz et al. 2018 ; Payne 2018 , 2021 ; Johnson 2019 ; Jensen et al. 2020 ). Here, power-centric theories could help understand the apparent emphasis on military AI in both the United States and China, as witnessed by the recent establishment of a US National Security Commission on AI and China’s ambitious plans of integrating AI into its military forces ( Ding 2018 ). The EU, for its part, is negotiating the comprehensive AI Act, seeking to use its market power to set a European standard for AI that subsequently can become the global standard, as it previously did with its GDPR law on data protection and privacy ( Schmitt 2021 ). Given the primacy of these three actors in AI development, their preferences and outlook regarding regulatory solutions will remain a key research priority.

Power-based accounts are also likely to provide theoretical inspiration for research on AI governance in the domain of security and military competition. Some scholars are seeking to assess the implications of AI for strategic rivalries, and their possible regulation, by drawing on historical analogies ( Leung 2019 ; see also Drezner 2019 ). Observing that, from a strategic standpoint, military AI exhibits some similarities to the problems posed by nuclear weapons, researchers have examined whether lessons from nuclear arms control have applicability in the domain of AI governance. For example, Maas (2019a ) argues that historical experience suggests that the proliferation of military AI can potentially be slowed down via institutionalization, while Zaidi and Dafoe (2021 ), in a study of the Baruch Plan for Nuclear Weapons, contend that fundamental strategic obstacles—including mistrust and fear of exploitation by other states—need to be overcome to make regulation viable. This line of investigation can be extended by assessing other historical analogies, such as the negotiations that led to the Strategic Arms Limitation Talks (SALT) in 1972 or more recent efforts to contain the spread of nuclear weapons, where power-oriented factors have shown continued analytical relevance (e.g., Ruzicka 2018 ).

A second major explanatory approach is provided by the family of theoretical accounts that highlight how international cooperation is shaped by shared interests and functional needs ( Keohane 1984 ; Martin 1992 ). A key argument in rational functionalist scholarship is that states are likely to establish IOs to overcome barriers to cooperation—such as information asymmetries, commitment problems, and transaction costs—and that the design of these institutions will reflect the underlying problem structure, including the degree of uncertainty and the number of involved actors (e.g., Koremenos et al. 2001 ; Hawkins et al. 2006 ; Koremenos 2016 ).

Applied to the domain of AI, these approaches would bring attention to how the functional characteristics of AI as a governance problem shape the regulatory response. They would also emphasize the investigation of the distribution of interests and the possibility of efficiency gains from cooperation around AI governance. The contemporary proliferation of partnerships and initiatives on AI governance points to the suitability of this theoretical approach, and research has taken some preliminary steps, surveying state interests and their alignment (e.g., Campbell 2019 ; Radu 2021 ). However, a systematic assessment of how the distribution of interests would explain the nature of emerging governance arrangements, both in the aggregate and at the constituent level, has yet to be undertaken.

A third broad explanatory perspective is provided by theories emphasizing the role of history, norms, and ideas in shaping global governance arrangements. In contrast to accounts based on power and interests, this line of scholarship, often drawing on sociological assumptions and theory, focuses on how institutional arrangements are embedded in a wider ideational context, which itself is subject to change. This perspective has generated powerful analyses of how societal norms influence states’ international behavior (e.g., Acharya and Johnston 2007 ), how norm entrepreneurs play an active role in shaping the origins and diffusion of specific norms (e.g., Finnemore and Sikkink 1998 ), and how IOs socialize states and other actors into specific norms and behaviors (e.g., Checkel 2005 ).

Examining the extent to which domestic and societal norms shape discussions on global governance arrangements stands out as a particularly promising area of inquiry. Comparative research on national ethical standards for AI has already indicated significant cross-country convergence, pointing to a cluster of normative principles that are likely to inspire governance frameworks in many parts of the world (e.g., Jobin et al. 2019 ). A closely related research agenda concerns norm entrepreneurship in AI governance. Here, preliminary findings suggest that civil society organizations have played a role in advocating norms relating to fundamental rights in the formulation of EU AI policy and other processes ( Ulnicane 2021 ). Finally, once AI governance structures have solidified further, scholars can begin to draw on norms-oriented scholarship to design strategies for the analysis of how those governance arrangements may play a role in socialization.

In light of the particularities of AI and its political landscape, we expect that global governance scholars will be motivated to refine and adapt these broad theoretical perspectives to address new questions and conditions. For example, considering China’s AI sector-specific resources and expertise, power-oriented theories will need to grapple with questions of institutional creation and modification occurring under a distribution of power that differs significantly from the Western-centric processes that underpin most existing studies. Similarly, rational functionalist scholars will need to adapt their tools to address questions of how the highly asymmetric distribution of AI capabilities—in particular between producers, which are few, concentrated, and highly resourced, and users and subjects, which are many, dispersed, and less resourced—affects the formation of state interests and bargaining around institutional solutions. For their part, norm-oriented theories may need to be refined to capture the role of previously understudied sources of normative and ideational content, such as formal and informal networks of computer programmers, which, on account of their expertise, have been influential in setting the direction of norms surrounding several AI technologies.

We expect that these broad theoretical perspectives will continue to inspire research on the global governance of AI, in particular for tailored, mid-level theorizing in response to new questions. However, a fully developed research agenda will gain from complementing these theories, which emphasize particular independent variables (power, interests, and norms), with theories and approaches that focus on particular issues, actors, and phenomena. There is an abundance of theoretical perspectives that can be helpful in this regard, including research on the relationship between science and politics ( Haas 1992 ; Jasanoff 2016 ), the political economy of international cooperation ( Gilpin 1987 ; Frieden et al. 2017 ), the complexity of global governance ( Raustiala and Victor 2004 ; Eilstrup-Sangiovanni and Westerwinter 2021 ), and the role of non-state actors ( Risse 2012 ; Tallberg et al. 2013 ). We focus here on the latter two: theories of regime complexity, which have grown to become a mainstream approach in global governance scholarship, as well as theories of non-state actors, which provide powerful tools for understanding how private organizations influence regulatory processes. Both literatures hold considerable promise in advancing scholarship on global AI governance beyond its current state.

As concluded above, the current structure of global AI governance fits the description of a regime complex. Thus, approaching AI governance through this theoretical lens, understanding it as a larger web of rules and regulations, can open new avenues of research (see Maas 2021 for a pioneering effort). One priority is to analyze the AI regime complex in terms of core dimensions, such as scale, diversity, and density ( Eilstrup-Sangiovanni and Westerwinter 2021 ). Pointing to the density of this regime complex, existing studies have suggested that global AI governance is characterized by a high degree of fragmentation ( Schmitt 2021 ), which has motivated assessments of the possibility of greater centralization ( Cihon et al. 2020b ). Another area of research is to examine the emergence of legal inconsistencies and tensions, which are likely to arise because of the diverging preferences of major AI players and the tendency of self-interested actors to forum-shop when engaging within a regime complex. Finally, given that the AI regime complex is still at a very early stage, it provides researchers with an excellent opportunity to trace the origins and evolution of this form of governance structure from the outset, thus providing a good case for both theory development and novel empirical applications.

If theories of regime complexity can shine a light on macro-level properties of AI governance, other theoretical approaches can guide research into micro-level dynamics and influences. Recognizing that non-state actors are central in both AI development and its emergent regulation, researchers should find inspiration in theories and tools developed to study the role and influence of non-state actors in global governance (for overviews, see Risse 2012 ; Jönsson and Tallberg forthcoming ). Drawing on such work will enable researchers to assess to what extent non-state actor involvement in the AI regime complex differs from previous experiences in other international regimes. It is clear that large tech companies, like Google, Meta, and Microsoft, have formed regulatory preferences and that their monetary resources and technological expertise enable them to promote these interests in legislative and bureaucratic processes. For example, the Partnership on AI (PAI), a multistakeholder organization with more than 50 members, includes American tech companies at the forefront of AI development and fosters research on issues of AI ethics and governance ( Schmitt 2021 ). Other non-state actors, including civil society watchdog organizations, like the Civil Liberties Union for Europe, have been vocal in the negotiations of the EU AI Act, further underlining the relevance of this strand of research.