An Introduction to Statistics: Understanding Hypothesis Testing and Statistical Errors

Priya Ranganathan and CS Pramesh

1 Department of Anesthesiology, Critical Care and Pain, Tata Memorial Hospital, Mumbai, Maharashtra, India

2 Department of Surgical Oncology, Tata Memorial Centre, Mumbai, Maharashtra, India

The second article in this series on biostatistics covers the concepts of sample, population, research hypotheses and statistical errors.

How to cite this article

Ranganathan P, Pramesh CS. An Introduction to Statistics: Understanding Hypothesis Testing and Statistical Errors. Indian J Crit Care Med 2019;23(Suppl 3):S230–S231.

Two papers quoted in this issue of the Indian Journal of Critical Care Medicine report the results of studies that aimed to prove that a new intervention is better than (superior to) an existing treatment. In the ABLE study, the investigators wanted to show that transfusion of fresh red blood cells would be superior to standard-issue red cells in reducing 90-day mortality in ICU patients (1). The PROPPR study was designed to prove that transfusion of a lower ratio of plasma and platelets to red cells would be superior to a higher ratio in decreasing 24-hour and 30-day mortality in critically ill patients (2). These studies are known as superiority studies (as opposed to noninferiority or equivalence studies, which will be discussed in a subsequent article).

SAMPLE VERSUS POPULATION

A sample represents a group of participants selected from the entire population. Since studies cannot be carried out on entire populations, researchers choose samples, which are representative of the population. This is similar to walking into a grocery store and examining a few grains of rice or wheat before purchasing an entire bag; we assume that the few grains that we select (the sample) are representative of the entire sack of grains (the population).

The results of the study are then extrapolated to generate inferences about the population, using a process known as hypothesis testing. Because a sample is only a part of the population, the results of the study may not always be identical to the results we would expect to find in the population; i.e., there is the possibility that the study results may be erroneous.

HYPOTHESIS TESTING

A clinical trial begins with an assumption or belief and then proceeds to either prove or disprove it. In statistical terms, this belief or assumption is known as a hypothesis. Counterintuitively, what the researcher believes in (or is trying to prove) is called the “alternate” hypothesis, and the opposite is called the “null” hypothesis; every study has both. For superiority studies, the alternate hypothesis states that one treatment (usually the new or experimental treatment) is superior to the other; the null hypothesis states that there is no difference between the treatments (the treatments are equal). For example, in the ABLE study, we start by stating the null hypothesis: there is no difference in mortality between groups receiving fresh RBCs and standard-issue RBCs. We then state the alternate hypothesis: there is a difference in mortality between groups receiving fresh RBCs and standard-issue RBCs. Note that we have stated that the groups are different without specifying which group will do better. This is known as a two-tailed hypothesis, and it allows us to test for a difference in either direction (using a two-sided test). We do this because, when we start a study, we cannot be 100% certain that the new treatment can only be better than the standard treatment; it could be worse, and if so, the study should pick that up as well. One-tailed hypotheses and one-sided statistical tests are used for noninferiority studies, which will be discussed in a subsequent paper in this series.
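As a rough illustration of why a two-sided test can pick up a difference in either direction, the sketch below converts a standardized test statistic into an upper-tail (one-sided) and a two-sided p-value under a standard normal approximation. The function name `p_values` and the example statistics of +2.5 and -2.5 are assumptions chosen for illustration; they are not taken from the ABLE or PROPPR analyses.

```python
from statistics import NormalDist

def p_values(z):
    """Return (one-sided upper-tail, two-sided) p-values for a z statistic."""
    std_normal = NormalDist()  # mean 0, standard deviation 1
    one_sided_upper = 1 - std_normal.cdf(z)
    two_sided = 2 * (1 - std_normal.cdf(abs(z)))
    return one_sided_upper, two_sided

# Hypothetical statistics: same size of effect, opposite directions.
for z in (2.5, -2.5):
    one_sided, two_sided = p_values(z)
    print(f"z = {z:+.1f}: one-sided p = {one_sided:.4f}, two-sided p = {two_sided:.4f}")
```

For statistics of equal size but opposite sign, the two-sided p-value is identical, whereas the upper-tail p-value becomes large when the effect runs in the unexpected direction. A one-sided test in that situation would not flag a new treatment that is worse than the standard one.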

STATISTICAL ERRORS

There are two types of error to consider when interpreting the results of a superiority study. The first possibility is that there is truly no difference between the treatments but the study finds that they are different. This is called a type 1 error, false-positive error, or alpha error. It means falsely rejecting the null hypothesis.

The second possibility is that there is a difference between the treatments and the study does not pick up this difference. This is called a type 2 error, false-negative error, or beta error. It means failing to reject the null hypothesis when it is false (often described as falsely accepting the null hypothesis).

The power of the study is its ability to detect a difference between groups when one truly exists, and it is the complement of the beta error; i.e., power = 1 − beta error. Alpha and beta errors are fixed when the protocol is written and form the basis for the sample size calculation for the study. In an ideal world, we would not like any error in the results of our study; however, we would need to study the entire population (an infinite sample size) to achieve 0% alpha and beta error. Accepting small, prespecified alpha and beta errors enables us to do studies with realistic sample sizes, with the compromise that there is a small possibility that the results may not reflect the truth. The basis for this will be discussed in a subsequent paper in this series dealing with sample size calculation.

Conventionally, type 1 or alpha error is set at 5%. This means that, if there is truly no difference between groups, we accept only a 5% probability that the study will find a difference by chance (false positive). Type 2 or beta error is usually set between 10% and 20%; therefore, the power of the study is between 90% and 80%. This means that if there is truly a difference between groups, we want the study to have an 80% (or 90%) probability of detecting that difference. For example, in the ABLE study, the sample size was calculated with a type 1 error of 5% (two-sided) and a power of 90% (type 2 error of 10%) (1).
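The meaning of alpha and power can be checked with a small simulation. The sketch below repeatedly simulates a two-group trial and counts how often a two-sided test at the 5% level declares a difference. With identical mortality in both groups, the rejection rate estimates the type 1 error; with different mortality, it estimates the power. The mortality risks (30% and 20%), the group size of 200, the pooled two-proportion z-test, and all function names are assumptions made for illustration and do not come from the ABLE or PROPPR protocols.

```python
import random
from statistics import NormalDist

def two_sided_p_value(deaths_a, deaths_b, n_per_group):
    """Two-sided p-value from a pooled two-proportion z-test (equal group sizes)."""
    p_a = deaths_a / n_per_group
    p_b = deaths_b / n_per_group
    pooled = (deaths_a + deaths_b) / (2 * n_per_group)
    se = (pooled * (1 - pooled) * 2 / n_per_group) ** 0.5
    if se == 0:
        return 1.0  # no deaths (or only deaths) in both groups: no evidence of a difference
    z = (p_a - p_b) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))

def rejection_rate(risk_a, risk_b, n_per_group, alpha=0.05, n_trials=2000, seed=1):
    """Fraction of simulated trials in which the null hypothesis is rejected."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(n_trials):
        deaths_a = sum(rng.random() < risk_a for _ in range(n_per_group))
        deaths_b = sum(rng.random() < risk_b for _ in range(n_per_group))
        if two_sided_p_value(deaths_a, deaths_b, n_per_group) < alpha:
            rejections += 1
    return rejections / n_trials

# Null hypothesis true (hypothetical 30% mortality in both groups): estimates alpha.
type_1_rate = rejection_rate(0.30, 0.30, n_per_group=200)
# Alternate hypothesis true (hypothetical 30% vs 20% mortality): estimates power.
power = rejection_rate(0.30, 0.20, n_per_group=200)
print(f"estimated type 1 error: {type_1_rate:.3f}")
print(f"estimated power: {power:.3f}, estimated type 2 error: {1 - power:.3f}")
```

Increasing `n_per_group` in this sketch raises the estimated power while the type 1 error stays near the chosen alpha, which is the trade-off that sample size calculation formalizes.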

Table 1 gives a summary of the two types of statistical errors with an example.

Table 1: Statistical errors

In the next article in this series, we will look at the meaning and interpretation of the ‘p’ value and confidence intervals for hypothesis testing.

Source of support: Nil

Conflict of interest: None

How to Write a Great Hypothesis

Hypothesis Definition, Format, Examples, and Tips

Kendra Cherry, MS, is a psychosocial rehabilitation specialist, psychology educator, and author of the "Everything Psychology Book."

Amy Morin, LCSW, is a psychotherapist and international bestselling author. Her books, including "13 Things Mentally Strong People Don't Do," have been translated into more than 40 languages. Her TEDx talk, "The Secret of Becoming Mentally Strong," is one of the most viewed talks of all time.

A hypothesis is a tentative statement about the relationship between two or more variables. It is a specific, testable prediction about what you expect to happen in a study. It is a preliminary answer to your question that helps guide the research process.

Consider a study designed to examine the relationship between sleep deprivation and test performance. The hypothesis might be: "This study is designed to assess the hypothesis that sleep-deprived people will perform worse on a test than individuals who are not sleep-deprived."

At a Glance

A hypothesis is crucial to scientific research because it offers a clear direction for what the researchers are looking to find. This allows them to design experiments to test their predictions and add to our scientific knowledge about the world. This article explores how a hypothesis is used in psychology research, how to write a good hypothesis, and the different types of hypotheses you might use.

The Hypothesis in the Scientific Method

In the scientific method, whether it involves research in psychology, biology, or some other area, a hypothesis represents what the researchers think will happen in an experiment. The scientific method involves the following steps:

- Forming a question

- Performing background research

- Creating a hypothesis

- Designing an experiment

- Collecting data

- Analyzing the results

- Drawing conclusions

- Communicating the results

The hypothesis is a prediction, but it involves more than a guess. Most of the time, the hypothesis begins with a question which is then explored through background research. At this point, researchers then begin to develop a testable hypothesis.

Unless you are creating an exploratory study, your hypothesis should always explain what you expect to happen.

In a study exploring the effects of a particular drug, the hypothesis might be that researchers expect the drug to have some type of effect on the symptoms of a specific illness. In psychology, the hypothesis might focus on how a certain aspect of the environment might influence a particular behavior.

Remember, a hypothesis does not have to be correct. While the hypothesis predicts what the researchers expect to see, the goal of the research is to determine whether this guess is right or wrong. When conducting an experiment, researchers might explore numerous factors to determine which ones might contribute to the ultimate outcome.

In many cases, researchers may find that the results of an experiment do not support the original hypothesis. When writing up these results, the researchers might suggest other options that should be explored in future studies.

In many cases, researchers might draw a hypothesis from a specific theory or build on previous research. For example, prior research has shown that stress can impact the immune system. So a researcher might hypothesize: "People with high-stress levels will be more likely to contract a common cold after being exposed to the virus than people who have low-stress levels."

In other instances, researchers might look at commonly held beliefs or folk wisdom. "Birds of a feather flock together" is one example of a folk adage that a psychologist might try to investigate. The researcher might pose a specific hypothesis that "People tend to select romantic partners who are similar to them in interests and educational level."

Elements of a Good Hypothesis

So how do you write a good hypothesis? When trying to come up with a hypothesis for your research or experiments, ask yourself the following questions:

- Is your hypothesis based on your research on a topic?

- Can your hypothesis be tested?

- Does your hypothesis include independent and dependent variables?

Before you come up with a specific hypothesis, spend some time doing background research. Once you have completed a literature review, start thinking about potential questions you still have. Pay attention to the discussion section in the journal articles you read. Many authors will suggest questions that still need to be explored.

How to Formulate a Good Hypothesis

To form a hypothesis, you should take these steps:

- Collect as many observations about a topic or problem as you can.

- Evaluate these observations and look for possible causes of the problem.

- Create a list of possible explanations that you might want to explore.

- After you have developed some possible hypotheses, think of ways that you could confirm or disprove each hypothesis through experimentation. This is known as falsifiability.

Falsifiability of a Hypothesis

In the scientific method, falsifiability is an important part of any valid hypothesis. To test a claim scientifically, it must be possible for the claim to be proven false.

Students sometimes mistake falsifiability for the idea that something is false, which is not the case. Falsifiability means that if a claim were false, it would be possible to demonstrate that it is false.

One of the hallmarks of pseudoscience is that it makes claims that cannot be refuted or proven false.

The Importance of Operational Definitions

A variable is a factor or element that can be changed and manipulated in ways that are observable and measurable. However, the researcher must also define how the variable will be manipulated and measured in the study.

Operational definitions are specific definitions for all relevant factors in a study. This process helps make vague or ambiguous concepts detailed and measurable.

For example, a researcher might operationally define the variable "test anxiety" as the results of a self-report measure of anxiety experienced during an exam. A "study habits" variable might be defined by the amount of studying that actually occurs as measured by time.

These precise descriptions are important because many things can be measured in various ways. Clearly defining these variables and how they are measured helps ensure that other researchers can replicate your results.

Replicability

One of the basic principles of any type of scientific research is that the results must be replicable.

Replication means repeating an experiment in the same way to produce the same results. By clearly detailing the specifics of how the variables were measured and manipulated, other researchers can better understand the results and repeat the study if needed.

Some variables are more difficult than others to define. For example, how would you operationally define a variable such as aggression? For obvious ethical reasons, researchers cannot create a situation in which a person behaves aggressively toward others.

To measure this variable, the researcher must devise a measurement that assesses aggressive behavior without harming others. The researcher might utilize a simulated task to measure aggressiveness in this situation.

Hypothesis Checklist

- Does your hypothesis focus on something that you can actually test?

- Does your hypothesis include both an independent and dependent variable?

- Can you manipulate the variables?

- Can your hypothesis be tested without violating ethical standards?

Hypothesis Types

The hypothesis you use will depend on what you are investigating and hoping to find. Some of the main types of hypotheses that you might use include:

- Simple hypothesis: This type of hypothesis suggests there is a relationship between one independent variable and one dependent variable.

- Complex hypothesis: This type suggests a relationship between three or more variables, such as two independent variables and one dependent variable.

- Null hypothesis: This hypothesis suggests no relationship exists between two or more variables.

- Alternative hypothesis: This hypothesis states the opposite of the null hypothesis.

- Statistical hypothesis: This hypothesis uses statistical analysis to evaluate a representative population sample and then generalizes the findings to the larger group.

- Logical hypothesis: This hypothesis assumes a relationship between variables without collecting data or evidence.

Hypotheses Examples

A hypothesis often follows a basic format of "If {this happens} then {this will happen}." One way to structure your hypothesis is to describe what will happen to the dependent variable if you change the independent variable.

The basic format might be: "If {these changes are made to a certain independent variable}, then we will observe {a change in a specific dependent variable}."

A few examples of simple hypotheses:

- "Students who eat breakfast will perform better on a math exam than students who do not eat breakfast."

- "Students who experience test anxiety before an English exam will get lower scores than students who do not experience test anxiety."

- "Motorists who talk on the phone while driving will be more likely to make errors on a driving course than those who do not talk on the phone."

- "Children who receive a new reading intervention will have higher reading scores than children who do not receive the intervention."

Examples of a complex hypothesis include:

- "People with high-sugar diets and sedentary activity levels are more likely to develop depression."

- "Younger people who are regularly exposed to green, outdoor areas have better subjective well-being than older adults who have limited exposure to green spaces."

Examples of a null hypothesis include:

- "There is no difference in anxiety levels between people who take St. John's wort supplements and those who do not."

- "There is no difference in scores on a memory recall task between children and adults."

- "There is no difference in aggression levels between children who play first-person shooter games and those who do not."

Examples of an alternative hypothesis:

- "People who take St. John's wort supplements will have less anxiety than those who do not."

- "Adults will perform better on a memory task than children."

- "Children who play first-person shooter games will show higher levels of aggression than children who do not."
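To show how a null and an alternative hypothesis like the memory-task pair above might be confronted with data, here is a minimal sketch of a permutation test. The recall scores are made up purely for illustration, and the function name `permutation_p_value`, the number of permutations, and the choice of a permutation test itself are assumptions; the source does not prescribe a particular test.

```python
import random

def permutation_p_value(group_a, group_b, n_permutations=5000, seed=0):
    """Estimate a two-sided p-value for a difference in means by shuffling group labels."""
    rng = random.Random(seed)
    n_a = len(group_a)

    def mean_difference(values):
        first, second = values[:n_a], values[n_a:]
        return abs(sum(first) / len(first) - sum(second) / len(second))

    pooled = list(group_a) + list(group_b)
    observed = mean_difference(pooled)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # under the null hypothesis, group labels are interchangeable
        if mean_difference(pooled) >= observed:
            at_least_as_extreme += 1
    return at_least_as_extreme / n_permutations

# Made-up recall scores (items remembered), for illustration only.
adult_scores = [14, 15, 13, 16, 15, 17, 14, 16]
child_scores = [9, 10, 8, 11, 10, 9, 12, 10]

p_value = permutation_p_value(adult_scores, child_scores)
print(f"estimated p-value: {p_value:.4f}")
```

A small p-value would count as evidence against the null hypothesis of "no difference"; it would not by itself show which explanation for the difference is correct.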

Collecting Data on Your Hypothesis

Once a researcher has formed a testable hypothesis, the next step is to select a research design and start collecting data. The research method depends largely on exactly what they are studying. There are two basic types of research methods: descriptive research and experimental research.

Descriptive Research Methods

Descriptive research methods, such as case studies, naturalistic observations, and surveys, are often used when conducting an experiment is difficult or impossible. These methods are best used to describe different aspects of a behavior or psychological phenomenon.

Once a researcher has collected data using descriptive methods, a correlational study can examine how the variables are related. This research method might be used to investigate a hypothesis that is difficult to test experimentally.

Experimental Research Methods

Experimental methods are used to demonstrate causal relationships between variables. In an experiment, the researcher systematically manipulates a variable of interest (known as the independent variable) and measures the effect on another variable (known as the dependent variable).

Unlike correlational studies, which can only be used to determine if there is a relationship between two variables, experimental methods can be used to determine the actual nature of the relationship—whether changes in one variable actually cause another to change.

The hypothesis is a critical part of any scientific exploration. It represents what researchers expect to find in a study or experiment. In situations where the hypothesis is unsupported by the research, the research still has value. Such research helps us better understand how different aspects of the natural world relate to one another. It also helps us develop new hypotheses that can then be tested in the future.

1.1 Definitions of Statistics, Probability, and Key Terms

The science of statistics deals with the collection, analysis, interpretation, and presentation of data. We see and use data in our everyday lives.

Collaborative Exercise

In your classroom, try this exercise. Have class members write down the average time—in hours, to the nearest half-hour—they sleep per night. Your instructor will record the data. Then create a simple graph, called a dot plot, of the data. A dot plot consists of a number line and dots, or points, positioned above the number line. For example, consider the following data:

5, 5.5, 6, 6, 6, 6.5, 6.5, 6.5, 6.5, 7, 7, 8, 8, 9.

The dot plot for this data would be as follows:
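Because the figure is not reproduced here, the following sketch draws a plain-text version of the dot plot for the same data. The function name `text_dot_plot`, the half-hour step, and the column width are assumptions made for illustration.

```python
from collections import Counter

def text_dot_plot(values, step=0.5, width=5):
    """Return the lines of a text dot plot: one 'o' per observation, stacked above its value."""
    counts = Counter(values)
    low, high = min(values), max(values)
    n_ticks = round((high - low) / step) + 1
    ticks = [low + i * step for i in range(n_ticks)]
    lines = []
    # Draw from the tallest stack down to the number line.
    for level in range(max(counts.values()), 0, -1):
        row = "".join(("o" if counts[t] >= level else " ").center(width) for t in ticks)
        lines.append(row.rstrip())
    # The last line is the number line itself.
    lines.append("".join(f"{t:g}".center(width) for t in ticks).rstrip())
    return lines

sleep_hours = [5, 5.5, 6, 6, 6, 6.5, 6.5, 6.5, 6.5, 7, 7, 8, 8, 9]
print("\n".join(text_dot_plot(sleep_hours)))
```

The tallest stack sits above 6.5 hours, which is where these data cluster.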

Does your dot plot look the same as or different from the example? Why? If you did the same example in an English class with the same number of students, do you think the results would be the same? Why or why not?

Where do your data appear to cluster? How might you interpret the clustering?

The questions above ask you to analyze and interpret your data. With this example, you have begun your study of statistics.

In this course, you will learn how to organize and summarize data. Organizing and summarizing data is called descriptive statistics. Two ways to summarize data are by graphing and by using numbers, for example, finding an average. After you have studied probability and probability distributions, you will use formal methods for drawing conclusions from good data. The formal methods are called inferential statistics. Statistical inference uses probability to determine how confident we can be that our conclusions are correct.

Effective interpretation of data, or inference, is based on good procedures for producing data and thoughtful examination of the data. You will encounter what will seem to be too many mathematical formulas for interpreting data. The goal of statistics is not to perform numerous calculations using the formulas, but to gain an understanding of your data. The calculations can be done using a calculator or a computer. The understanding must come from you. If you can thoroughly grasp the basics of statistics, you can be more confident in the decisions you make in life.

Statistical Models

Statistics, like all other branches of mathematics, uses mathematical models to describe phenomena that occur in the real world. Some mathematical models are deterministic. These models can be used when one value is precisely determined from another value. Examples of deterministic models are the quadratic equations that describe the acceleration of a car from rest or the differential equations that describe the transfer of heat from a stove to a pot. These models are quite accurate and can be used to answer questions and make predictions with a high degree of precision. Space agencies, for example, use deterministic models to predict the exact amount of thrust that a rocket needs to break away from Earth’s gravity and achieve orbit.

However, life is not always precise. While scientists can predict to the minute the time that the sun will rise, they cannot say precisely where a hurricane will make landfall. Statistical models can be used to predict life’s more uncertain situations. These special forms of mathematical models or functions are based on the idea that one value affects another value. In some statistical models, one set of values predicts another set of values fairly precisely; in others, a set of values does not precisely determine the other values. Statistical models are very useful because they can describe the probability or likelihood of an event occurring and provide alternative outcomes if the event does not occur. Weather forecasts, for example, are based on statistical models. Meteorologists cannot predict tomorrow’s weather with certainty. However, they often use statistical models to tell you how likely it is to rain at any given time, and you can prepare yourself based on this probability.

Probability

Probability is a mathematical tool used to study randomness. It deals with the chance of an event occurring. For example, if you toss a fair coin four times, the outcomes may not be two heads and two tails. However, if you toss the same coin 4,000 times, the outcomes will be close to half heads and half tails. The expected theoretical probability of heads in any one toss is 1/2, or .5. Even though the outcomes of a few repetitions are uncertain, there is a regular pattern of outcomes when there are many repetitions. After reading about the English statistician Karl Pearson, who tossed a coin 24,000 times with a result of 12,012 heads, one of the authors tossed a coin 2,000 times. The results were 996 heads. The fraction 996/2,000 is equal to .498, which is very close to .5, the expected probability.
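The pattern described above can be explored with a short simulation. This is a minimal sketch that uses a pseudorandom number generator in place of a physical coin; the function name `proportion_of_heads` and the seed are arbitrary choices made for illustration.

```python
import random

def proportion_of_heads(n_tosses, rng):
    """Simulate n_tosses of a fair coin and return the fraction that came up heads."""
    heads = sum(rng.random() < 0.5 for _ in range(n_tosses))
    return heads / n_tosses

rng = random.Random(2024)  # fixed seed so the run is repeatable
for n_tosses in (4, 40, 4000, 24000):
    print(f"{n_tosses:>6} tosses: proportion of heads = {proportion_of_heads(n_tosses, rng):.3f}")
```

With only a few tosses the proportion can be far from .5, while with thousands of tosses it tends to settle close to .5.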

The theory of probability began with the study of games of chance such as poker. Predictions take the form of probabilities. To predict the likelihood of an earthquake, of rain, or whether you will get an A in this course, we use probabilities. Doctors use probability to determine the chance of a vaccination causing the disease the vaccination is supposed to prevent. A stockbroker uses probability to determine the rate of return on a client's investments.

In statistics, we generally want to study a population. You can think of a population as a collection of persons, things, or objects under study. To study the population, we select a sample. The idea of sampling is to select a portion, or subset, of the larger population and study that portion (the sample) to gain information about the population. Data are the result of sampling from a population.

Because it takes a lot of time and money to examine an entire population, sampling is a very practical technique. If you wished to compute the overall grade point average at your school, it would make sense to select a sample of students who attend the school. The data collected from the sample would be the students' grade point averages. In presidential elections, opinion poll samples of 1,000–2,000 people are taken. The opinion poll is supposed to represent the views of the people in the entire country. Manufacturers of canned carbonated drinks take samples to determine if a 16-ounce can contains 16 ounces of carbonated drink.

From the sample data, we can calculate a statistic. A statistic is a number that represents a property of the sample. For example, if we consider one math class as a sample of the population of all math classes, then the average number of points earned by students in that one math class at the end of the term is an example of a statistic. Since we do not have the data for all math classes, that statistic is our best estimate of the average for the entire population of math classes. If we happen to have data for all math classes, we can find the population parameter. A parameter is a numerical characteristic of the whole population that can be estimated by a statistic. Since we considered all math classes to be the population, then the average number of points earned per student over all the math classes is an example of a parameter.

One of the main concerns in the field of statistics is how accurately a statistic estimates a parameter. For a sample to give accurate estimates, it must reflect the characteristics of the population; such a sample is called a representative sample. We are interested in both the sample statistic and the population parameter in inferential statistics. In a later chapter, we will use the sample statistic to test the validity of the established population parameter.
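To make the distinction between a statistic and a parameter concrete, the sketch below builds a hypothetical population of point totals, draws a simple random sample, and compares the sample mean with the population mean. The population size, the distribution used to generate it, and the sample size are all assumptions made for illustration.

```python
import random
from statistics import mean

rng = random.Random(42)  # fixed seed so the run is repeatable

# Hypothetical population: end-of-term point totals (0 to 100) for 5,000 students.
population = [min(100, max(0, rng.gauss(75, 10))) for _ in range(5000)]

# Simple random sample of 100 students, drawn without replacement.
sample = rng.sample(population, 100)

parameter = mean(population)  # usually unknown in practice
statistic = mean(sample)      # what we can actually compute

print(f"population mean (parameter): {parameter:.2f}")
print(f"sample mean (statistic):     {statistic:.2f}")
```

In practice only the sample is available, so the statistic serves as the estimate of the parameter; the population is generated here only so that the two can be compared.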

A variable, usually denoted by capital letters such as X and Y, is a characteristic or measurement that can be determined for each member of a population. Variables may describe values like weight in pounds or favorite subject in school. Numerical variables take on values with equal units, such as weight in pounds and time in hours. Categorical variables place the person or thing into a category. If we let X equal the number of points earned by one math student at the end of a term, then X is a numerical variable. If we let Y be a person's party affiliation, then some examples of Y include Republican, Democrat, and Independent. Y is a categorical variable. We could do some math with values of X (calculate the average number of points earned, for example), but it makes no sense to do math with values of Y; an average party affiliation is meaningless.

Data are the actual values of the variable. They may be numbers or they may be words. Datum is a single value.

Two words that come up often in statistics are mean and proportion. If you were to take three exams in your math class and obtain scores of 86, 75, and 92, you would calculate your mean score by adding the three exam scores and dividing by three. Your mean score would be 84.3 to one decimal place. If, in your math class, there are 40 students, of whom 22 are men and 18 are women, then the proportion of men is 22/40 and the proportion of women is 18/40. Mean and proportion are discussed in more detail in later chapters.
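The arithmetic in this paragraph can be reproduced in a few lines. This is a small sketch using Python's standard library; the variable names are arbitrary.

```python
from fractions import Fraction
from statistics import mean

exam_scores = [86, 75, 92]
mean_score = mean(exam_scores)  # (86 + 75 + 92) / 3
print(round(mean_score, 1))     # 84.3

n_students, n_men, n_women = 40, 22, 18
proportion_men = Fraction(n_men, n_students)      # 22/40, reduced to 11/20
proportion_women = Fraction(n_women, n_students)  # 18/40, reduced to 9/20
print(float(proportion_men), float(proportion_women))  # 0.55 0.45
```

The two proportions add up to 1 because every student in the class falls into exactly one of the two groups.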

The words mean and average are often used interchangeably. In this book, we use the term arithmetic mean for mean.

Example 1.1

Determine what the key terms (population, sample, parameter, statistic, variable, and data) refer to in the following study.

We want to know the mean number of extracurricular activities in which high school students participate. We randomly surveyed 100 high school students. Three of those students were in 2, 5, and 7 extracurricular activities, respectively.

The population is all high school students.

The sample is the 100 high school students surveyed.

The parameter is the mean number of extracurricular activities in which all high school students participate.

The statistic is the mean number of extracurricular activities in which the sample of high school students participate.

The variable could be the number of extracurricular activities in which one high school student participates. Let X = the number of extracurricular activities in which one high school student participates.

The data are the numbers of extracurricular activities in which the high school students participate. Examples of the data are 2, 5, 7.

Find an article online or in a newspaper or magazine that refers to a statistical study or poll. Identify what each of the key terms—population, sample, parameter, statistic, variable, and data—refers to in the study mentioned in the article. Does the article use the key terms correctly?

Example 1.2

Determine what the key terms refer to in the following study.

A study was conducted at a local high school to analyze the average cumulative GPAs of students who graduated last year. Fill in the letter of the phrase that best describes each of the items below.

1. Population ____ 2. Statistic ____ 3. Parameter ____ 4. Sample ____ 5. Variable ____ 6. Data ____

- a) all students who attended the high school last year

- b) the cumulative GPA of one student who graduated from the high school last year

- c) 3.65, 2.80, 1.50, 3.90

- d) a group of students who graduated from the high school last year, randomly selected

- e) the average cumulative GPA of students who graduated from the high school last year

- f) all students who graduated from the high school last year

- g) the average cumulative GPA of students in the study who graduated from the high school last year

1. f ; 2. g ; 3. e ; 4. d ; 5. b ; 6. c

Example 1.3

As part of a study designed to test the safety of automobiles, the National Transportation Safety Board collected and reviewed data about the effects of an automobile crash on test dummies (The Data and Story Library, n.d.). Here is the criterion they used.

Cars with dummies in the front seats were crashed into a wall at a speed of 35 miles per hour. We want to know the proportion of dummies in the driver’s seat that would have had head injuries, if they had been actual drivers. We start with a simple random sample of 75 cars.

The population is all cars containing dummies in the front seat.

The sample is the 75 cars, selected by a simple random sample.

The parameter is the proportion of driver dummies—if they had been real people—who would have suffered head injuries in the population.

The statistic is the proportion of driver dummies—if they had been real people—who would have suffered head injuries in the sample.

The variable X = whether driver dummies—if they had been real people—would have suffered head injuries.

The data are either: yes, had head injury, or no, did not.

Example 1.4

An insurance company would like to determine the proportion of all medical doctors who have been involved in one or more malpractice lawsuits. The company selects 500 doctors at random from a professional directory and determines the number in the sample who have been involved in a malpractice lawsuit.

The population is all medical doctors listed in the professional directory.

The parameter is the proportion of medical doctors who have been involved in one or more malpractice suits in the population.

The sample is the 500 doctors selected at random from the professional directory.

The statistic is the proportion of medical doctors who have been involved in one or more malpractice suits in the sample.

The variable X records whether a doctor has or has not been involved in a malpractice suit.

The data are either: yes, was involved in one or more malpractice lawsuits; or no, was not.

Do the following exercise collaboratively with up to four people per group. Find a population, a sample, the parameter, the statistic, a variable, and data for the following study: You want to determine the average—mean—number of glasses of milk college students drink per day. Suppose yesterday, in your English class, you asked five students how many glasses of milk they drank the day before. The answers were 1, 0, 1, 3, and 4 glasses of milk.


This book may not be used in the training of large language models or otherwise be ingested into large language models or generative AI offerings without OpenStax's permission.

Want to cite, share, or modify this book? This book uses the Creative Commons Attribution License and you must attribute Texas Education Agency (TEA). The original material is available at: https://www.texasgateway.org/book/tea-statistics . Changes were made to the original material, including updates to art, structure, and other content updates.

Access for free at https://openstax.org/books/statistics/pages/1-introduction

- Authors: Barbara Illowsky, Susan Dean

- Publisher/website: OpenStax

- Book title: Statistics

- Publication date: Mar 27, 2020

- Location: Houston, Texas

- Book URL: https://openstax.org/books/statistics/pages/1-introduction

- Section URL: https://openstax.org/books/statistics/pages/1-1-definitions-of-statistics-probability-and-key-terms

© Jan 23, 2024 Texas Education Agency (TEA). The OpenStax name, OpenStax logo, OpenStax book covers, OpenStax CNX name, and OpenStax CNX logo are not subject to the Creative Commons license and may not be reproduced without the prior and express written consent of Rice University.


1.1: Basic Definitions and Concepts


Learning Objectives

- To learn the basic definitions used in statistics and some of its key concepts.

We begin with a simple example. There are millions of passenger automobiles in the United States. What is their average value? It is obviously impractical to attempt to solve this problem directly by assessing the value of every single car in the country, adding up all those values, then dividing by the number of values, one for each car. In practice the best we can do would be to estimate the average value. A natural way to do so would be to randomly select some of the cars, say \(200\) of them, ascertain the value of each of those cars, and find the average of those \(200\) values. The set of all those millions of vehicles is called the population of interest, and the number attached to each one, its value, is a measurement. The average value is a parameter: a number that describes a characteristic of the population, in this case monetary worth. The set of \(200\) cars selected from the population is called a sample, and the \(200\) numbers, the monetary values of the cars we selected, are the sample data. The average of the data is called a statistic: a number calculated from the sample data. This example illustrates the meaning of the following definitions.

Definitions: populations and samples

A population is any specific collection of objects of interest. A sample is any subset or subcollection of the population, including the case that the sample consists of the whole population, in which case it is termed a census.

Definitions: measurements and Sample Data

A measurement is a number or attribute computed for each member of a population or of a sample. The measurements of sample elements are collectively called the sample data .

Definitions: parameters and statistics

A parameter is a number that summarizes some aspect of the population as a whole. A statistic is a number computed from the sample data.

Continuing with our example, if the average value of the cars in our sample was \($8,357\), then it seems reasonable to conclude that the average value of all cars is about \($8,357\). In reasoning this way we have drawn an inference about the population based on information obtained from the sample . In general, statistics is a study of data: describing properties of the data, which is called descriptive statistics , and drawing conclusions about a population of interest from information extracted from a sample, which is called inferential statistics . Computing the single number \($8,357\) to summarize the data was an operation of descriptive statistics; using it to make a statement about the population was an operation of inferential statistics.
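The distinction between a parameter and a statistic can be made concrete with a small simulation. The sketch below is not part of the original example: the population of car values is entirely made up (a clipped normal distribution with an arbitrary mean and spread), and the seed is fixed only so the run is reproducible.

```python
import random

random.seed(0)  # fixed seed so the sketch is reproducible

# Hypothetical population: 100,000 car values in dollars (made-up distribution).
population = [max(500.0, random.gauss(8000, 3000)) for _ in range(100_000)]

# Parameter: the population mean, which is normally unknown in practice.
population_mean = sum(population) / len(population)

# Sample of 200 cars chosen at random, and the statistic computed from it.
sample = random.sample(population, 200)
sample_mean = sum(sample) / len(sample)

print(f"parameter (population mean): {population_mean:.0f}")
print(f"statistic (sample mean):     {sample_mean:.0f}")
```

Computing the sample mean is descriptive statistics; using it as an estimate of the population mean is inferential statistics. In a real study only the sample would be available, so the first printed number could not be computed.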

Definition: Statistics

Statistics is a collection of methods for collecting, displaying, analyzing, and drawing conclusions from data.

Definition: Descriptive statistics

Descriptive statistics is the branch of statistics that involves organizing, displaying, and describing data.

Definition: Inferential statistics

Inferential statistics is the branch of statistics that involves drawing conclusions about a population based on information contained in a sample taken from that population.

Definition: Qualitative data

Qualitative data are measurements for which there is no natural numerical scale, but which consist of attributes, labels, or other non-numerical characteristics.

Definition: Quantitative data

Quantitative data are numerical measurements that arise from a natural numerical scale.

Qualitative data can generate numerical sample statistics. In the automobile example, for instance, we might be interested in the proportion of all cars that are less than six years old. In our same sample of \(200\) cars we could note for each car whether it is less than six years old or not, which is a qualitative measurement. If \(172\) cars in the sample are less than six years old, which is \(0.86\) or \(86\% \), then we would estimate the parameter of interest, the population proportion, to be about the same as the sample statistic, the sample proportion, that is, about \(0.86\).

The relationship between a population of interest and a sample drawn from that population is perhaps the most important concept in statistics, since everything else rests on it. This relationship is illustrated graphically in Figure \(\PageIndex{1}\). The circles in the large box represent elements of the population. In the figure there was room for only a small number of them but in actual situations, like our automobile example, they could very well number in the millions. The solid black circles represent the elements of the population that are selected at random and that together form the sample. For each element of the sample there is a measurement of interest, denoted by a lower case \(x\) (which we have indexed as \(x_1 , \ldots, x_n\) to tell them apart); these measurements collectively form the sample data set. From the data we may calculate various statistics. To anticipate the notation that will be used later, we might compute the sample mean \(\bar{x}\) and the sample proportion \(\hat{p}\), and take them as approximations to the population mean \(\mu\) (this is the lower case Greek letter mu, the traditional symbol for this parameter) and the population proportion \(p\), respectively. The other symbols in the figure stand for other parameters and statistics that we will encounter.

Key Takeaway

- Statistics is a study of data: describing properties of data (descriptive statistics) and drawing conclusions about a population based on information in a sample (inferential statistics).

- The distinction between a population together with its parameters and a sample together with its statistics is a fundamental concept in inferential statistics.

- Information in a sample is used to make inferences about the population from which the sample was drawn.


Definition Statistical hypothesis testing

Statistical hypothesis testing (also 'confirmatory data analysis') is used in inferential statistics to either confirm or falsify a hypothesis based on empirical observations .

An example: It is assumed that people in the US are, on average, getting older over time. In this case, the hypothesis to be confirmed is: 'the average age of people in the US is rising'. This is called the alternative hypothesis, whereas the current opinion 'the average age of people in the US stays the same' is called the null hypothesis. The goal of a statistical test would be to either verify or falsify the alternative hypothesis.

In hypothesis testing, we differentiate between parametric and non-parametric tests. Parametric tests assume that the data follow a particular probability distribution (often the normal distribution) and compare parameters such as location and dispersion between samples. Examples of parametric tests are the t-test and the F-test. Non-parametric tests, on the other hand, make no assumptions about the probability distribution of the population being assessed. Examples are the Kolmogorov-Smirnov test, the chi-square (χ2) test and the Shapiro-Wilk test.

Performing hypothesis tests: In order to perform statistical hypothesis testing, we first have to collect the relevant empirical data (for example: age reached by 100 people born in 1900 and in 1920, respectively). Depending on the hypothesis and the resulting test procedure, a mathematically defined test statistic (F-statistic, t-statistic, …) is derived from the observed data. Based on this value, we can determine whether the null hypothesis can be rejected or not, accounting for a specified level of reliability (1 - error probability). The null hypothesis should only be rejected if the probability of error is very low (conventionally p ≤ 5%). Since errors when verifying or falsifying hypotheses cannot be excluded entirely, errors of the first kind (a true null hypothesis is incorrectly rejected, also: type I error) and errors of the second kind (a true alternative hypothesis is incorrectly rejected, also: type II error) are usually explicitly specified.

Please note that the definitions in our statistics encyclopedia are simplified explanations of terms. Our goal is to make the definitions accessible for a broad audience; thus it is possible that some definitions do not adhere entirely to scientific standards.


What Is Hypothesis Testing in Statistics? Types and Examples

Lesson 10 of 24 By Avijeet Biswal


In today’s data-driven world, decisions are based on data all the time. Hypothesis testing plays a crucial role in that process, whether in business decisions, the health sector, academia, or quality improvement. Without hypotheses and hypothesis tests, you risk drawing the wrong conclusions and making bad decisions. In this tutorial, you will look at Hypothesis Testing in Statistics.

What Is Hypothesis Testing in Statistics?

Hypothesis Testing is a type of statistical analysis in which you put your assumptions about a population parameter to the test. It is often used to assess whether a relationship between two statistical variables is statistically significant.

Let's discuss a few examples of statistical hypotheses from real life:

- A teacher assumes that 60% of his college's students come from lower-middle-class families.

- A doctor believes that 3D (Diet, Dose, and Discipline) is 90% effective for diabetic patients.

Now that you know what hypothesis testing is, look at the formula for a common test statistic.

Hypothesis Testing Formula

Z = ( x̅ – μ0 ) / (σ /√n)

- Here, x̅ is the sample mean,

- μ0 is the hypothesized population mean (the value stated in the null hypothesis),

- σ is the population standard deviation,

- n is the sample size.
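The formula above translates directly into a short function. The sketch below is only an illustration: the function name `z_statistic` and the numbers passed to it are hypothetical and do not come from the tutorial.

```python
import math

def z_statistic(sample_mean, mu0, sigma, n):
    """Return Z = (sample_mean - mu0) / (sigma / sqrt(n)) for a one-sample z-test."""
    if sigma <= 0 or n <= 0:
        raise ValueError("sigma and n must be positive")
    standard_error = sigma / math.sqrt(n)  # spread of the sample mean
    return (sample_mean - mu0) / standard_error

# Hypothetical numbers, chosen only for illustration.
z = z_statistic(sample_mean=52.0, mu0=50.0, sigma=8.0, n=64)
print(z)
```

The denominator σ/√n is the standard error of the mean, so Z measures how many standard errors the sample mean lies from the hypothesized value.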

How Does Hypothesis Testing Work?

An analyst performs hypothesis testing on a statistical sample to present evidence of the plausibility of the null hypothesis. Measurements and analyses are conducted on a random sample of the population to test a theory. Analysts use a random population sample to test two hypotheses: the null and alternative hypotheses.

The null hypothesis is typically an equality hypothesis between population parameters; for example, a null hypothesis may claim that the population mean return equals zero. The alternate hypothesis is essentially the inverse of the null hypothesis (e.g., the population mean return is not equal to zero). As a result, they are mutually exclusive, and only one can be correct. One of the two possibilities, however, will always be correct.


Null Hypothesis and Alternate Hypothesis

The Null Hypothesis is the default assumption that there is no effect, no difference, or that the event of interest will not occur. It is retained unless the data provide enough evidence to reject it.

H0 is the symbol for it, and it is pronounced H-naught.

The Alternate Hypothesis is the logical opposite of the null hypothesis. The acceptance of the alternative hypothesis follows the rejection of the null hypothesis. H1 is the symbol for it.

Let's understand this with an example.

A sanitizer manufacturer claims that its product kills 95 percent of germs on average.

To put this company's claim to the test, create a null and alternate hypothesis.

H0 (Null Hypothesis): The average is equal to 95%.

H1 (Alternative Hypothesis): The average is less than 95%.

Another straightforward example to understand this concept is determining whether or not a coin is fair and balanced. The null hypothesis states that the probability of heads is equal to the probability of tails. In contrast, the alternate hypothesis states that the probabilities of heads and tails are not equal.
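The coin example can be turned into a small exact test. The sketch below is an illustration rather than the tutorial's own method: it uses an exact binomial calculation, and the outcome of 60 heads in 100 flips is a hypothetical data set.

```python
from math import comb

def binomial_two_sided_p(heads, flips, p_heads=0.5):
    """Exact two-sided p-value for H0: P(heads) = p_heads.

    Sums the probabilities of all outcomes that are no more likely
    than the observed one under H0.
    """
    def pmf(k):
        return comb(flips, k) * p_heads**k * (1 - p_heads)**(flips - k)

    observed = pmf(heads)
    # Small relative tolerance guards against floating-point ties.
    return sum(pmf(k) for k in range(flips + 1) if pmf(k) <= observed * (1 + 1e-9))

# Hypothetical experiment: 60 heads in 100 flips.
p_value = binomial_two_sided_p(heads=60, flips=100)
print(p_value)
```

A small p-value would count as evidence against the null hypothesis that the coin is fair; a large one means the observed count is unremarkable for a fair coin.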


Hypothesis Testing Calculation With Examples

Let's consider a hypothesis test for the average height of women in the United States. Suppose our null hypothesis is that the average height is 5'4" (64 inches). We gather a sample of 100 women and determine that their average height is 5'5" (65 inches). The population standard deviation is 2 inches.

To calculate the z-score, we would use the following formula:

z = ( x̅ – μ0 ) / (σ /√n)

z = (65 - 64) / (2 / √100)

z = 1 / 0.2 = 5

We will reject the null hypothesis, as a z-score of 5 is very large, and conclude that there is evidence to suggest that the average height of women in the US is greater than 5'4".
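To see how a z-score becomes a decision, it helps to convert it into a p-value. The sketch below works in inches (64 for the hypothesized mean, 65 for the sample mean) and uses the standard normal upper tail. The helper name `upper_tail_p` and the significance level of 0.05 are assumptions made for illustration.

```python
import math

def upper_tail_p(z):
    """P(Z >= z) for a standard normal Z, via the complementary error function."""
    return 0.5 * math.erfc(z / math.sqrt(2))

# Values from the height example, converted to inches.
sample_mean, mu0, sigma, n = 65.0, 64.0, 2.0, 100
z = (sample_mean - mu0) / (sigma / math.sqrt(n))
p_one_sided = upper_tail_p(z)

alpha = 0.05  # conventional significance level, assumed here
print(z, p_one_sided, p_one_sided < alpha)
```

The p-value is far below 0.05, which is why the null hypothesis of an average height of 5'4" is rejected in this example.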

Steps of Hypothesis Testing

Step 1: Specify Your Null and Alternate Hypotheses

It is critical to rephrase your original research hypothesis (the prediction that you wish to study) as a null (H0) and alternative (Ha) hypothesis so that you can test it quantitatively. Your first hypothesis, which predicts a link between variables, is generally your alternate hypothesis. The null hypothesis predicts no link between the variables of interest.

Step 2: Gather Data

For a statistical test to be legitimate, sampling and data collection must be done in a way that is meant to test your hypothesis. You cannot draw statistical conclusions about the population you are interested in if your data is not representative.

Step 3: Conduct a Statistical Test

A variety of statistical tests are available, but many of them compare within-group variance (how spread out the data are inside a category) against between-group variance (how different the categories are from one another). If the between-group variance is big enough that there is little or no overlap between groups, your statistical test will display a low p-value to represent this. This suggests that the differences between these groups are unlikely to have occurred by chance. Alternatively, if there is a large within-group variance and a low between-group variance, your statistical test will show a high p-value. Any difference you find across groups is most likely attributable to chance. The number and types of variables and the level of measurement of your data will influence your choice of statistical test.
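The comparison of between-group and within-group variance can be sketched as the F statistic of a one-way analysis of variance. The code below is a minimal illustration, not a full test (it does not compute a p-value), and both data sets are invented.

```python
def f_statistic(groups):
    """One-way ANOVA F = between-group mean square / within-group mean square."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(x for g in groups for x in g) / n_total
    group_means = [sum(g) / len(g) for g in groups]

    # How far each group mean lies from the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # How far each observation lies from its own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)

    return (ss_between / (k - 1)) / (ss_within / (n_total - k))

# Hypothetical data: groups with no overlap, then groups that overlap heavily.
separated = [[1, 2, 3], [11, 12, 13], [21, 22, 23]]
overlapping = [[1, 12, 23], [2, 11, 22], [3, 13, 21]]

print(f_statistic(separated))
print(f_statistic(overlapping))
```

A large F corresponds to the low p-value case described above (groups clearly apart), while an F near or below 1 corresponds to the high p-value case.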

Step 4: Determine Rejection Of Your Null Hypothesis

Your statistical test results determine whether your null hypothesis should be rejected or not. In most circumstances, you will base your judgment on the p-value provided by the statistical test, and your preset level of significance for rejecting the null hypothesis will be 0.05 - that is, you reject when there is less than a 5% probability of seeing data at least this extreme if the null hypothesis were true. In other circumstances, researchers use a lower level of significance, such as 0.01 (1%). This reduces the possibility of wrongly rejecting the null hypothesis.

Step 5: Present Your Results

The findings of hypothesis testing will be discussed in the results and discussion portions of your research paper, dissertation, or thesis. You should include a concise overview of the data and a summary of the findings of your statistical test in the results section. In the discussion section, you can talk about whether your results confirmed your initial hypothesis or not. "Rejecting" or "failing to reject" the null hypothesis is the formal language used in hypothesis testing, and it is likely to be required in your statistics assignments.

Types of Hypothesis Testing

Z-Test

A z-test is used to determine whether a finding or relationship is statistically significant. It usually checks whether two means are the same (the null hypothesis). A z-test can be applied only when the population standard deviation is known and the sample size is 30 data points or more.

T-Test

A t-test is a statistical test used to compare the means of two groups. It is frequently used in hypothesis testing to determine whether two groups differ or whether a procedure or treatment affects the population of interest.
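As a rough sketch of how a two-group comparison is computed, the code below implements Welch's version of the t-test, which does not assume equal variances in the two groups. This variant is chosen here for illustration and is not specified by the tutorial; the measurements are hypothetical. Turning the statistic into a p-value additionally requires the t distribution, which is left out.

```python
import math
from statistics import mean, variance

def welch_t(sample_a, sample_b):
    """Return (t, degrees_of_freedom) for Welch's two-sample t-test."""
    na, nb = len(sample_a), len(sample_b)
    va, vb = variance(sample_a), variance(sample_b)  # sample variances (n - 1)
    se2 = va / na + vb / nb                          # squared standard error
    t = (mean(sample_a) - mean(sample_b)) / math.sqrt(se2)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = se2 ** 2 / ((va / na) ** 2 / (na - 1) + (vb / nb) ** 2 / (nb - 1))
    return t, df

# Hypothetical measurements for a treated and a control group.
treated = [5.1, 5.8, 6.2, 5.9, 6.4, 5.5]
control = [4.8, 5.0, 5.3, 4.6, 5.2, 4.9]
t, df = welch_t(treated, control)
print(t, df)
```

The sign of t shows which group has the larger mean, and its magnitude shows how large the difference is relative to the variability within the groups.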

Chi-Square

You utilize a Chi-square test for hypothesis testing concerning whether your data is as predicted. To determine if the expected and observed results are well-fitted, the Chi-square test analyzes the differences between categorical variables from a random sample. The test's fundamental premise is that the observed values in your data should be compared to the predicted values that would be present if the null hypothesis were true.
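The comparison of observed and expected values can be sketched as a goodness-of-fit statistic. The die-rolling counts below are hypothetical, and the critical value 11.070 is the standard 5% cutoff for a chi-square distribution with 5 degrees of freedom.

```python
def chi_square_statistic(observed, expected):
    """Sum of (observed - expected)^2 / expected over all categories."""
    if len(observed) != len(expected):
        raise ValueError("observed and expected must have the same length")
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))

# Hypothetical data: 60 rolls of a die; H0 says the die is fair (10 per face).
observed = [8, 9, 12, 11, 6, 14]
expected = [10] * 6

chi2 = chi_square_statistic(observed, expected)
critical_value = 11.070  # 5% critical value for 6 - 1 = 5 degrees of freedom

print(chi2, chi2 > critical_value)
```

Because the statistic is below the critical value, these made-up counts would not lead to rejecting the null hypothesis of a fair die.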

Hypothesis Testing and Confidence Intervals

Both confidence intervals and hypothesis tests are inferential techniques that depend on approximating the sampling distribution. Confidence intervals use data from a sample to estimate a population parameter. Hypothesis tests use data from a sample to examine a given hypothesis. We must have a hypothesized parameter value to conduct hypothesis testing.

Bootstrap distributions and randomization distributions are created using comparable simulation techniques. The observed sample statistic is the focal point of a bootstrap distribution, whereas the null hypothesis value is the focal point of a randomization distribution.

A confidence interval contains a range of plausible estimates of the population parameter. In this lesson, we created just two-tailed confidence intervals. There is a direct connection between these two-tailed confidence intervals and two-tailed hypothesis tests: they typically lead to the same conclusion. In other words, a hypothesis test at the 0.05 level will virtually always fail to reject the null hypothesis if the 95% confidence interval contains the hypothesized value. A hypothesis test at the 0.05 level will nearly certainly reject the null hypothesis if the 95% confidence interval does not include the hypothesized value.
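The connection between a 95% confidence interval and a two-tailed test at the 0.05 level can be checked numerically. The sketch below assumes a z-based interval with a known population standard deviation; the function names and the numbers are illustrative assumptions, not part of the lesson.

```python
import math
from statistics import NormalDist

def z_confidence_interval(sample_mean, sigma, n, confidence=0.95):
    """Two-sided confidence interval for the mean when sigma is known."""
    z_crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    margin = z_crit * sigma / math.sqrt(n)
    return sample_mean - margin, sample_mean + margin

def two_sided_z_test_rejects(sample_mean, mu0, sigma, n, alpha=0.05):
    """True if a two-tailed z-test rejects H0: mean = mu0 at level alpha."""
    z = (sample_mean - mu0) / (sigma / math.sqrt(n))
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return p_value < alpha

# Hypothetical sample: mean 65, known sigma 2, n = 100.
low, high = z_confidence_interval(65.0, 2.0, 100)
for mu0 in (64.0, 65.3):
    inside = low <= mu0 <= high
    print(mu0, inside, two_sided_z_test_rejects(65.0, mu0, 2.0, 100))
```

For each hypothesized value, the test rejects exactly when the value lies outside the interval, which is the agreement described above.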

Simple and Composite Hypothesis Testing

Depending on how completely it specifies the population parameter, a statistical hypothesis can be classified into two types.

Simple Hypothesis: A simple hypothesis specifies an exact value for the parameter.

Composite Hypothesis: A composite hypothesis specifies a range of values.

A company is claiming that their average sales for this quarter are 1000 units. This is an example of a simple hypothesis.

Suppose the company claims that the sales are in the range of 900 to 1000 units. Then this is a case of a composite hypothesis.

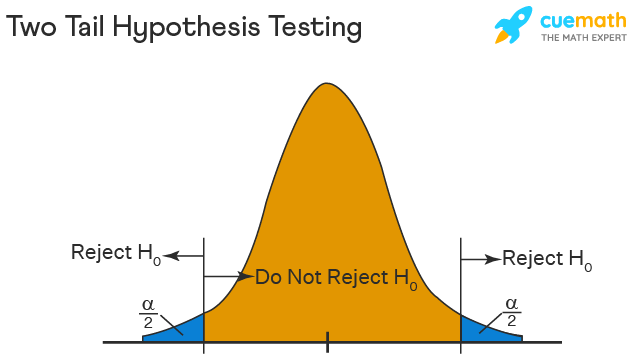

One-Tailed and Two-Tailed Hypothesis Testing

The one-tailed test, also called a directional test, uses a critical region on one side of the sampling distribution. If the test statistic falls into this region, the null hypothesis is rejected in favor of the alternate hypothesis.

In a one-tailed test, the critical region is one-sided: the test checks whether the test statistic is either greater than or less than a specific value, but not both.

In a two-tailed test, the critical region is two-sided: the test checks whether the test statistic falls above or below a range of values.

If the test statistic falls outside this range, that is, inside the critical region, the null hypothesis is rejected and the alternate hypothesis is accepted.
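The difference between one-sided and two-sided critical regions can be illustrated for a z-test. The sketch below assumes a standard normal test statistic and a significance level of 0.05; the function `rejects` and the example statistic of 1.8 are hypothetical.

```python
from statistics import NormalDist

alpha = 0.05  # assumed significance level
std_normal = NormalDist()

right_tail_cut = std_normal.inv_cdf(1 - alpha)     # reject if z > this
left_tail_cut = std_normal.inv_cdf(alpha)          # reject if z < this
two_tail_cut = std_normal.inv_cdf(1 - alpha / 2)   # reject if |z| > this

def rejects(z, tail):
    """Return True if z falls in the critical region for the given tail."""
    if tail == "right":
        return z > right_tail_cut
    if tail == "left":
        return z < left_tail_cut
    if tail == "two":
        return abs(z) > two_tail_cut
    raise ValueError("tail must be 'right', 'left', or 'two'")

z = 1.8  # hypothetical test statistic
print(rejects(z, "right"), rejects(z, "left"), rejects(z, "two"))
```

The same statistic can fall inside the one-sided critical region but outside the two-sided one, because the two-tailed test splits alpha between both tails and so needs a more extreme value to reject.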


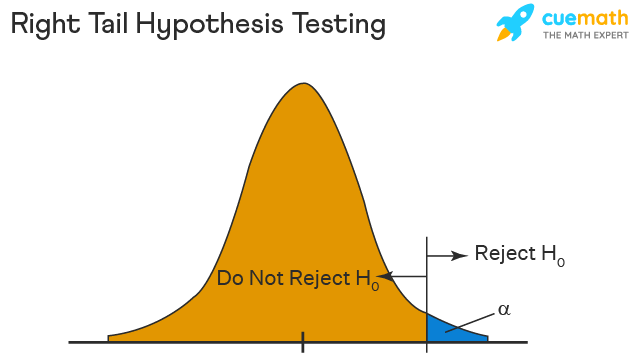

Right Tailed Hypothesis Testing

If the greater-than (>) sign appears in your alternate hypothesis, you are using a right-tailed test, also known as an upper-tailed test. In other words, the rejection region lies in the right tail of the distribution. For instance, you can compare battery life before and after a change in production. If you want to know whether the battery life is longer than the original (let's say 90 hours), your hypothesis statements can be the following:

- The null hypothesis is that battery life has not increased (H0: mean <= 90).

- The alternate hypothesis is that battery life has risen (H1: mean > 90).

The crucial point in this situation is that the alternate hypothesis (H1), not the null hypothesis, decides whether you get a right-tailed test.

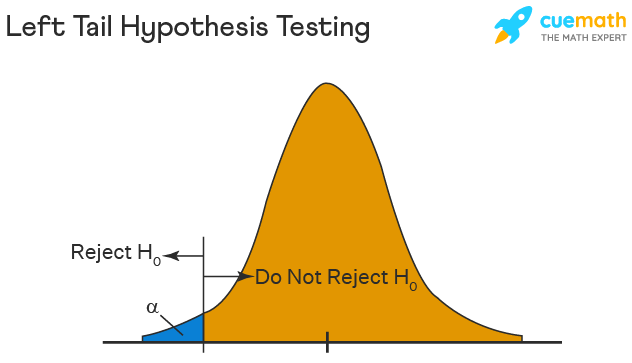

Left Tailed Hypothesis Testing

Alternative hypotheses that assert the true value of a parameter is lower than the value stated in the null hypothesis are tested with a left-tailed test; they are indicated by the less-than sign "<".

Suppose H0: mean = 50 and H1: mean not equal to 50

According to the H1, the mean can be greater than or less than 50. This is an example of a Two-tailed test.

In a similar manner, if H0: mean >=50, then H1: mean <50

Here H1 states that the mean is less than 50, so this is a one-tailed (left-tailed) test.

Type 1 and Type 2 Error

A hypothesis test can result in two types of errors.

Type 1 Error: A Type-I error occurs when sample results lead to rejecting the null hypothesis even though it is true.

Type 2 Error: A Type-II error occurs when the null hypothesis is not rejected when it is false, unlike a Type-I error.

Suppose a teacher evaluates the examination paper to decide whether a student passes or fails.

H0: Student has passed

H1: Student has failed

Type I error will be the teacher failing the student [rejects H0] although the student scored the passing marks [H0 was true].

Type II error will be the case where the teacher passes the student [do not reject H0] although the student did not score the passing marks [H1 is true].

Level of Significance

The alpha value is a criterion for determining whether a test statistic is statistically significant. In a statistical test, Alpha represents an acceptable probability of a Type I error. Because alpha is a probability, it can be anywhere between 0 and 1. In practice, the most commonly used alpha values are 0.01, 0.05, and 0.1, which represent a 1%, 5%, and 10% chance of a Type I error, respectively (i.e. rejecting the null hypothesis when it is in fact correct).
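The meaning of alpha as a Type I error rate can be illustrated with a simulation: if the null hypothesis is true and many samples are tested, roughly a fraction alpha of them should be rejected by chance. The sketch below uses a two-tailed z-test on simulated normal data; the sample size, number of trials, and seed are arbitrary assumptions.

```python
import math
import random
from statistics import NormalDist

def type_one_error_rate(alpha=0.05, n=30, trials=5000, seed=1):
    """Fraction of true-null samples that a two-tailed z-test rejects."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    mu0, sigma = 0.0, 1.0  # H0 is true: data really come from N(mu0, sigma)
    rejections = 0
    for _ in range(trials):
        sample = [rng.gauss(mu0, sigma) for _ in range(n)]
        z = (sum(sample) / n - mu0) / (sigma / math.sqrt(n))
        if abs(z) > z_crit:
            rejections += 1  # a Type I error: H0 rejected although true
    return rejections / trials

rate = type_one_error_rate()
print(rate)
```

The simulated rejection rate should land close to the chosen alpha, and lowering alpha lowers the rate of false rejections accordingly.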


A p-value is the probability of obtaining a difference at least as large as the one observed if the null hypothesis were true, that is, by chance alone. As the p-value decreases, the statistical significance of the observed difference increases. If the p-value is below your chosen level of significance, you reject the null hypothesis.

Consider an example in which you are testing whether a new advertising campaign has increased the product's sales. The null hypothesis states that there is no change in sales due to the new advertising campaign. The p-value is the probability of observing a change in sales at least as large as the one in your data if that null hypothesis were true; it is not the probability that the null hypothesis itself is true. If the p-value is 0.30, a change this large would appear about 30% of the time even if the campaign had no effect. If the p-value is 0.03, it would appear only about 3% of the time. As you can see, the lower the p-value, the stronger the evidence against the null hypothesis and in favor of the alternate hypothesis that the new advertising campaign changes sales.
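One way to compute a p-value for the advertising example without any distributional formula is an exact permutation test: if the campaign had no effect, the "before" and "after" labels would be interchangeable, so we can count how many relabelings give a difference at least as large as the one observed. The weekly sales figures below are invented, and the permutation approach is an illustrative choice rather than the tutorial's method.

```python
from itertools import combinations

def permutation_p_value(before, after):
    """Exact one-sided permutation p-value for mean(after) - mean(before)."""
    pooled = list(before) + list(after)
    n_after = len(after)
    n_before = len(pooled) - n_after
    total = sum(pooled)
    observed = sum(after) / n_after - sum(before) / n_before

    at_least_as_large = 0
    n_splits = 0
    # Try every way of labeling n_after of the pooled values as "after".
    for idx in combinations(range(len(pooled)), n_after):
        after_sum = sum(pooled[i] for i in idx)
        diff = after_sum / n_after - (total - after_sum) / n_before
        n_splits += 1
        if diff >= observed - 1e-12:
            at_least_as_large += 1
    return at_least_as_large / n_splits

# Hypothetical weekly sales before and after the campaign.
before = [20, 22, 19, 21, 20]
after = [25, 27, 26, 24, 28]
print(permutation_p_value(before, after))
```

With these made-up figures every "after" week outsells every "before" week, so only the actual labeling out of all 252 possible ones produces a difference this large, and the p-value is correspondingly small.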

Why is Hypothesis Testing Important in Research Methodology?

Hypothesis testing is crucial in research methodology for several reasons:

- Provides evidence-based conclusions: It allows researchers to make objective conclusions based on empirical data, providing evidence to support or refute their research hypotheses.

- Supports decision-making: It helps make informed decisions, such as accepting or rejecting a new treatment, implementing policy changes, or adopting new practices.

- Adds rigor and validity: It adds scientific rigor to research using statistical methods to analyze data, ensuring that conclusions are based on sound statistical evidence.

- Contributes to the advancement of knowledge: By testing hypotheses, researchers contribute to the growth of knowledge in their respective fields by confirming existing theories or discovering new patterns and relationships.

Limitations of Hypothesis Testing

Hypothesis testing has some limitations that researchers should be aware of:

- It cannot prove or establish the truth: Hypothesis testing provides evidence to support or reject a hypothesis, but it cannot confirm the absolute truth of the research question.

- Results are sample-specific: Hypothesis testing is based on analyzing a sample from a population, and the conclusions drawn are specific to that particular sample.

- Possible errors: During hypothesis testing, there is a chance of committing type I error (rejecting a true null hypothesis) or type II error (failing to reject a false null hypothesis).

- Assumptions and requirements: Different tests have specific assumptions and requirements that must be met to accurately interpret results.

After reading this tutorial, you should have a much better understanding of hypothesis testing, one of the most important concepts in the field of Data Science. The majority of hypotheses are based on speculation about observed behavior, natural phenomena, or established theories.

1. What is hypothesis testing in statistics with example?

Hypothesis testing is a statistical method used to determine if there is enough evidence in sample data to draw conclusions about a population. It involves formulating two competing hypotheses, the null hypothesis (H0) and the alternative hypothesis (Ha), and then collecting data to assess the evidence. An example: testing whether a new drug improves patient recovery compared to the standard treatment (Ha) against the null hypothesis that it makes no difference (H0), based on collected patient data.

2. What is hypothesis testing and its types?

Hypothesis testing is a statistical method used to make inferences about a population based on sample data. It involves formulating two hypotheses: the null hypothesis (H0), which represents the default assumption, and the alternative hypothesis (Ha), which contradicts H0. The goal is to assess the evidence and determine whether it is strong enough to reject the null hypothesis in favor of the alternative hypothesis.

Types of hypothesis testing:

- One-sample test: Used to compare a sample to a known value or a hypothesized value.

- Two-sample test: Compares two independent samples to assess if there is a significant difference between their means or distributions.

- Paired-sample test: Compares two related samples, such as pre-test and post-test data, to evaluate changes within the same subjects over time or under different conditions.

- Chi-square test: Used to analyze categorical data and determine if there is a significant association between variables.

- ANOVA (Analysis of Variance): Compares means across multiple groups to check if there is a significant difference between them.

3. What are the steps of hypothesis testing?

The steps of hypothesis testing are as follows:

- Formulate the hypotheses: State the null hypothesis (H0) and the alternative hypothesis (Ha) based on the research question.

- Set the significance level: Determine the acceptable level of error (alpha) for making a decision.

- Collect and analyze data: Gather and process the sample data.

- Compute the test statistic: Calculate the test statistic appropriate to the chosen test in order to assess the evidence.

- Make a decision: Compare the test statistic with the critical value, or the p-value with alpha, and determine whether or not to reject H0 in favor of Ha.

- Draw conclusions: Interpret the results and communicate the findings in the context of the research question.

4. What are the 2 types of hypothesis testing?

- One-tailed (or one-sided) test: Tests for the significance of an effect in only one direction, either positive or negative.

- Two-tailed (or two-sided) test: Tests for the significance of an effect in both directions, allowing for the possibility of a positive or negative effect.

The choice between one-tailed and two-tailed tests depends on the specific research question and the directionality of the expected effect.

5. What are the 3 major types of hypothesis?

The three major types of hypotheses are:

- Null Hypothesis (H0): Represents the default assumption, stating that there is no significant effect or relationship in the data.

- Alternative Hypothesis (Ha): Contradicts the null hypothesis and proposes a specific effect or relationship that researchers want to investigate.

- Nondirectional Hypothesis: An alternative hypothesis that doesn't specify the direction of the effect, leaving it open for both positive and negative possibilities.

About the author.

Avijeet is a Senior Research Analyst at Simplilearn. Passionate about Data Analytics, Machine Learning, and Deep Learning, Avijeet is also interested in politics, cricket, and football.

Hypothesis Testing

Hypothesis testing is a tool for making statistical inferences about the population data. It is an analysis tool that tests assumptions and determines how likely something is within a given standard of accuracy. Hypothesis testing provides a way to verify whether the results of an experiment are valid.

A null hypothesis and an alternative hypothesis are set up before performing the hypothesis testing. This helps to arrive at a conclusion regarding the sample obtained from the population. In this article, we will learn more about hypothesis testing, its types, steps to perform the testing, and associated examples.

What is Hypothesis Testing in Statistics?

Hypothesis testing uses sample data from the population to draw useful conclusions regarding the population probability distribution. It tests an assumption made about the data using different types of hypothesis testing methodologies. Hypothesis testing results in either rejecting or not rejecting the null hypothesis.

Hypothesis Testing Definition

Hypothesis testing can be defined as a statistical tool that is used to identify if the results of an experiment are meaningful or not. It involves setting up a null hypothesis and an alternative hypothesis. These two hypotheses will always be mutually exclusive. This means that if the null hypothesis is true then the alternative hypothesis is false and vice versa. An example of hypothesis testing is setting up a test to check if a new medicine works on a disease in a more efficient manner.

Null Hypothesis

The null hypothesis is a concise mathematical statement that is used to indicate that there is no difference between two possibilities. In other words, there is no difference between certain characteristics of data. This hypothesis assumes that the outcomes of an experiment are based on chance alone. It is denoted as \(H_{0}\). Hypothesis testing is used to conclude if the null hypothesis can be rejected or not. Suppose an experiment is conducted to check if girls are shorter than boys at the age of 5. The null hypothesis will say that they are the same height.

Alternative Hypothesis

The alternative hypothesis is the competing statement to the null hypothesis. It is used to show that the observations of an experiment are due to some real effect. It states that there is a real difference or relationship between the quantities being compared and can be denoted as \(H_{1}\) or \(H_{a}\). For the above-mentioned example, the alternative hypothesis would be that girls are shorter than boys at the age of 5.

Hypothesis Testing P Value

In hypothesis testing, the p value is used to decide whether the results obtained after conducting a test are statistically significant or not. It is the probability, computed assuming the null hypothesis is true, of obtaining a test statistic at least as extreme as the one observed. This value is always a number between 0 and 1. The p value is compared to the significance level, or alpha level, \(\alpha\): if the p value is less than or equal to \(\alpha\), the null hypothesis is rejected. The alpha level can be defined as the acceptable risk of incorrectly rejecting a true null hypothesis (a type I error). The alpha level is usually chosen between 1% and 5%.

Hypothesis Testing Critical region

The critical region is the set of all values of the test statistic that lead to rejecting the null hypothesis. The value that separates the critical region from the non-critical region is known as the critical value.

Hypothesis Testing Formula

Depending upon the type of data available and the sample size, different types of hypothesis testing are used to determine whether the null hypothesis can be rejected or not. The formulas for some important test statistics are given below:

- z = \(\frac{\overline{x}-\mu}{\frac{\sigma}{\sqrt{n}}}\). \(\overline{x}\) is the sample mean, \(\mu\) is the population mean, \(\sigma\) is the population standard deviation and n is the size of the sample.

- t = \(\frac{\overline{x}-\mu}{\frac{s}{\sqrt{n}}}\). s is the sample standard deviation.

- \(\chi ^{2} = \sum \frac{(O_{i}-E_{i})^{2}}{E_{i}}\). \(O_{i}\) is the observed value and \(E_{i}\) is the expected value.

We will learn more about these test statistics in the upcoming section.

Types of Hypothesis Testing

Selecting the correct test for performing hypothesis testing can be confusing. These tests are used to determine a test statistic on the basis of which the null hypothesis can either be rejected or not rejected. Some of the important tests used for hypothesis testing are given below.

Hypothesis Testing Z Test

A z test is a method of hypothesis testing that is used for a large sample size (n ≥ 30) when the population standard deviation is known. It is used to determine whether there is a difference between the population mean and the sample mean, and it can also be used to compare the means of two samples. The formulas for the z test statistic are given as follows:

- One sample: z = \(\frac{\overline{x}-\mu}{\frac{\sigma}{\sqrt{n}}}\).

- Two samples: z = \(\frac{(\overline{x_{1}}-\overline{x_{2}})-(\mu_{1}-\mu_{2})}{\sqrt{\frac{\sigma_{1}^{2}}{n_{1}}+\frac{\sigma_{2}^{2}}{n_{2}}}}\).
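
As a minimal sketch of how the two z formulas above could be evaluated, the following uses only Python's standard library. The function names and all numeric inputs are hypothetical, chosen for illustration; `delta0` stands for the hypothesized difference \(\mu_{1}-\mu_{2}\), which is 0 when the null hypothesis says the two population means are equal.

```python
from math import sqrt
from statistics import NormalDist

def one_sample_z(x_bar, mu, sigma, n):
    """z = (x_bar - mu) / (sigma / sqrt(n)), with sigma the known population SD."""
    return (x_bar - mu) / (sigma / sqrt(n))

def two_sample_z(x_bar1, x_bar2, sigma1, sigma2, n1, n2, delta0=0.0):
    """z for the difference of two means; delta0 is mu1 - mu2 under H0."""
    standard_error = sqrt(sigma1 ** 2 / n1 + sigma2 ** 2 / n2)
    return ((x_bar1 - x_bar2) - delta0) / standard_error

def two_sided_p_value(z):
    """Probability of a standard normal value at least as extreme as z."""
    return 2 * (1 - NormalDist().cdf(abs(z)))

# Hypothetical numbers: sample mean 52 against a hypothesized mean of 50.
z1 = one_sample_z(x_bar=52, mu=50, sigma=6, n=36)
p1 = two_sided_p_value(z1)

# Hypothetical numbers: two groups of 50 with known SD 10 each.
z2 = two_sample_z(x_bar1=105, x_bar2=100, sigma1=10, sigma2=10, n1=50, n2=50)

print(f"one-sample z = {z1:.2f}, two-sided p = {p1:.4f}")
print(f"two-sample z = {z2:.2f}")
```

With these inputs the one-sample statistic is 2.0 and the two-sample statistic is 2.5; the p value for the first is a little under 0.05.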

Hypothesis Testing t Test

The t test is another method of hypothesis testing that is used for a small sample size (n < 30). It is also used to compare the sample mean and population mean. However, the population standard deviation is not known, so the sample standard deviation is used in its place. The means of two samples can also be compared using the t test. The formulas are given as follows:

- One sample: t = \(\frac{\overline{x}-\mu}{\frac{s}{\sqrt{n}}}\).

- Two samples: t = \(\frac{(\overline{x_{1}}-\overline{x_{2}})-(\mu_{1}-\mu_{2})}{\sqrt{\frac{s_{1}^{2}}{n_{1}}+\frac{s_{2}^{2}}{n_{2}}}}\).
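
The t formulas above can be sketched in the same way. The data below are made up for illustration, and the function names are hypothetical. The two-sample function follows the formula as written above, with each sample's own variance in the denominator; `delta0` is the hypothesized difference in means under the null hypothesis. Because the standard library has no t distribution, the sketch compares the one-sample statistic with a critical value taken from a standard t table (2.365 for 7 degrees of freedom, two-tailed, \(\alpha\) = 0.05).

```python
from math import sqrt
from statistics import mean, stdev

def one_sample_t(sample, mu):
    """t = (x_bar - mu) / (s / sqrt(n)), with s the sample standard deviation."""
    n = len(sample)
    return (mean(sample) - mu) / (stdev(sample) / sqrt(n))

def two_sample_t(sample1, sample2, delta0=0.0):
    """t for the difference of two sample means, using each sample's variance."""
    standard_error = sqrt(
        stdev(sample1) ** 2 / len(sample1) + stdev(sample2) ** 2 / len(sample2)
    )
    return ((mean(sample1) - mean(sample2)) - delta0) / standard_error

# Hypothetical measurements (n = 8, so 7 degrees of freedom for one sample).
group_a = [2, 4, 4, 4, 5, 5, 7, 9]
group_b = [1, 3, 3, 3, 4, 4, 6, 8]

t_one = one_sample_t(group_a, mu=3)
t_two = two_sample_t(group_a, group_b)

T_CRITICAL_DF7 = 2.365  # table value: two-tailed, alpha = 0.05, 7 degrees of freedom
print(f"one-sample t = {t_one:.4f}, reject H0: {abs(t_one) > T_CRITICAL_DF7}")
print(f"two-sample t = {t_two:.4f}")
```

Here the one-sample statistic exceeds the table value, so the null hypothesis that the mean is 3 would be rejected at the 5% level for this made-up sample.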

Hypothesis Testing Chi Square

The Chi square test is a hypothesis testing method that is used to check whether the variables in a population are independent or not. It is used when the test statistic is chi-squared distributed.
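
A small sketch of the chi-square test of independence follows. It computes \(\chi ^{2} = \sum \frac{(O_{i}-E_{i})^{2}}{E_{i}}\) for a table of observed counts, where each expected count is (row total × column total) / grand total. The 2×2 table of counts and the function name are hypothetical; the critical value 3.841 is the standard table value for 1 degree of freedom at \(\alpha\) = 0.05.

```python
def chi_square_independence(observed):
    """Return (chi-square statistic, degrees of freedom) for a table of counts."""
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    grand_total = sum(row_totals)

    statistic = 0.0
    for i, row in enumerate(observed):
        for j, observed_count in enumerate(row):
            # Expected count if the row and column variables were independent.
            expected = row_totals[i] * col_totals[j] / grand_total
            statistic += (observed_count - expected) ** 2 / expected

    dof = (len(row_totals) - 1) * (len(col_totals) - 1)
    return statistic, dof

# Hypothetical counts: rows are two groups, columns are two outcomes.
table = [[30, 20],
         [20, 30]]
chi2, dof = chi_square_independence(table)

CHI2_CRITICAL_DF1 = 3.841  # table value: alpha = 0.05, 1 degree of freedom
print(f"chi-square = {chi2:.3f}, dof = {dof}, reject H0: {chi2 > CHI2_CRITICAL_DF1}")
```

Every expected count in this table is 25, so the statistic is 4.0, which is just above the critical value; independence would be rejected at the 5% level for these made-up counts.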

One Tailed Hypothesis Testing

One tailed hypothesis testing is done when the rejection region is only in one direction. It can also be known as directional hypothesis testing because the effects can be tested in one direction only. This type of testing is further classified into the right tailed test and left tailed test.

Right Tailed Hypothesis Testing

The right tail test is also known as the upper tail test. This test is used to check whether the population parameter is greater than some value. The null and alternative hypotheses for this test are given as follows:

\(H_{0}\): The population parameter is ≤ some value

\(H_{1}\): The population parameter is > some value.

If the test statistic has a greater value than the critical value, then the null hypothesis is rejected.

Left Tailed Hypothesis Testing

The left tail test is also known as the lower tail test. It is used to check whether the population parameter is less than some value. The hypotheses for this hypothesis testing can be written as follows:

\(H_{0}\): The population parameter is ≥ some value

\(H_{1}\): The population parameter is < some value.

The null hypothesis is rejected if the test statistic has a value less than the critical value.

Two Tailed Hypothesis Testing

In this hypothesis testing method, the critical region lies on both sides of the sampling distribution. It is also known as a non-directional hypothesis testing method. The two-tailed test is used when the goal is to determine whether the population parameter differs from some value. The hypotheses can be set up as follows:

\(H_{0}\): the population parameter = some value

\(H_{1}\): the population parameter ≠ some value

The null hypothesis is rejected if the test statistic falls in either tail of the critical region, that is, if its absolute value is greater than the critical value.
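
The rejection rules for right-tailed, left-tailed, and two-tailed tests can be sketched for a z statistic as follows. This is a minimal illustration, not a general-purpose routine: the function name and the `tail` labels are hypothetical, and the critical values come from the standard normal distribution, so it applies only when a z test is appropriate. Note that a two-tailed test splits \(\alpha\) across both tails, so its critical value is larger than the one-tailed value at the same \(\alpha\).

```python
from statistics import NormalDist

def reject_null(z, alpha=0.05, tail="two"):
    """Decide whether a z statistic falls in the critical region."""
    std_normal = NormalDist()
    if tail == "right":
        # Critical region: the upper alpha fraction of the distribution.
        return z > std_normal.inv_cdf(1 - alpha)
    if tail == "left":
        # Critical region: the lower alpha fraction of the distribution.
        return z < std_normal.inv_cdf(alpha)
    if tail == "two":
        # Critical region: alpha / 2 in each tail, so compare |z|.
        return abs(z) > std_normal.inv_cdf(1 - alpha / 2)
    raise ValueError("tail must be 'right', 'left', or 'two'")

z = 1.8  # hypothetical test statistic
for tail in ("right", "left", "two"):
    print(f"{tail}-tailed test: reject H0 = {reject_null(z, tail=tail)}")
```

For z = 1.8 at \(\alpha\) = 0.05, only the right-tailed test rejects the null hypothesis: 1.8 exceeds the one-tailed critical value of about 1.645 but not the two-tailed value of about 1.96.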

Hypothesis Testing Steps

Hypothesis testing can be easily performed in five simple steps. The most important step is to correctly set up the hypotheses and identify the right method for hypothesis testing. The basic steps to perform hypothesis testing are as follows:

- Step 1: Set up the null hypothesis by correctly identifying whether it is the left-tailed, right-tailed, or two-tailed hypothesis testing.

- Step 2: Set up the alternative hypothesis.

- Step 3: Choose the correct significance level, \(\alpha\), and find the critical value.

- Step 4: Calculate the correct test statistic (z, t or \(\chi^{2}\)) and p-value.

- Step 5: Compare the test statistic with the critical value or compare the p-value with \(\alpha\) to arrive at a conclusion. In other words, decide if the null hypothesis is to be rejected or not.

Hypothesis Testing Example