
Null Hypothesis Examples

The null hypothesis ($H_0$) is the hypothesis that states there is no statistical difference between two groups. In other words, it assumes the independent variable does not have an effect on the dependent variable in a scientific experiment.

The null hypothesis is central to the scientific method because it is the easiest hypothesis to test with statistics at a high confidence level. If the null hypothesis is not rejected, any observed differences between the two experimental groups are consistent with random chance. If the null hypothesis is rejected, that is evidence of a true difference between the test sets, or evidence that the independent variable affects the dependent variable.

- The null hypothesis is a nullifiable hypothesis. A researcher seeks to reject it because rejection indicates the observed differences are unlikely to be due to chance alone.

- The null hypothesis may be rejected or not rejected, but it is never proven. Every outcome carries a level of confidence, never certainty.

What Is the Null Hypothesis?

The null hypothesis is written as $H_0$, which is read as H-zero, H-nought, or H-null. It is paired with another hypothesis, called the alternate or alternative hypothesis, $H_A$ or $H_1$. When the null and alternate hypotheses are written mathematically, together they cover all possible outcomes of an experiment.

An experimenter tests the null hypothesis with a statistical analysis called a significance test. The test measures how likely results at least as extreme as the observed ones would be if chance alone were at work, that is, if the null hypothesis were true. Usually, a researcher uses a confidence level of 95% or 99%, which corresponds to a significance level (α) of 0.05 or 0.01 that the p-value is compared against. But even when the confidence level is high, there is always a small chance the conclusion is incorrect. This means you can’t prove a null hypothesis. It’s also a good reason why it’s important to repeat experiments.
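As a rough illustration of what a significance test computes, the sketch below runs a two-sided permutation test of $H_0: \mu_1 = \mu_2$ in Python. This is one common test among many, not necessarily the one the text has in mind. The function name `permutation_test` and the data are hypothetical. The test repeatedly shuffles the group labels and estimates the p-value as the share of shuffles whose difference in means is at least as large as the observed one.

```python
import random
from statistics import mean


def permutation_test(group_a, group_b, n_resamples=10_000, seed=0):
    """Two-sided permutation test of H0: the two population means are equal.

    Returns a Monte Carlo estimate of the p-value: the share of random
    relabelings whose absolute difference in means is at least as large
    as the observed difference.
    """
    rng = random.Random(seed)
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)  # under H0 the group labels are exchangeable
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if diff >= observed:
            extreme += 1
    # Add-one correction keeps the estimate above zero.
    return (extreme + 1) / (n_resamples + 1)


# Hypothetical measurements for two experimental groups.
control = [21.0, 19.5, 22.3, 20.1, 18.7, 21.4]
treated = [24.2, 23.1, 25.0, 22.8, 24.7, 23.9]
print(f"p ≈ {permutation_test(control, treated):.4f}")
```

A small p-value says the observed gap would be rare if the labels did not matter. The estimate is random, so a different `seed` gives a slightly different value.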

Exact and Inexact Null Hypothesis

The most common type of null hypothesis assumes no difference between two samples or groups, or no measurable effect of a treatment. This is the exact hypothesis. If you’re asked to state a null hypothesis for a science class, this is the one to write. It is the easiest type of hypothesis to test and is the only one accepted for certain types of analysis. Examples include:

There is no difference between two groups: $H_0: \mu_1 = \mu_2$ (where $H_0$ is the null hypothesis, $\mu_1$ is the mean of population 1, and $\mu_2$ is the mean of population 2)

The population mean equals 100 (or any other specified value): $H_0: \mu = 100$

However, sometimes a researcher may test an inexact hypothesis. This type of hypothesis specifies ranges or intervals. Examples include:

Recovery time with a treatment is the same as or longer than with a placebo: $H_0: \mu \ge \mu_{\text{placebo}}$

The mean lies within 5% of a reference value of 100: $H_0: 95 \le \mu \le 105$

An inexact hypothesis offers “directionality” about a phenomenon. For example, an exact hypothesis can indicate whether or not a treatment has an effect, while an inexact hypothesis can tell whether an effect is positive or negative. However, an inexact hypothesis may be harder to test, and some scientists and statisticians disagree about whether it’s a true null hypothesis.

How to State the Null Hypothesis

To state the null hypothesis, first state what you expect the experiment to show. Then, rephrase the statement in a form that assumes there is no relationship between the variables or that a treatment has no effect.

Example: A researcher tests whether a new drug speeds recovery time from a certain disease. The average recovery time without treatment is 3 weeks.

- State the goal of the experiment: “I hope the average recovery time with the new drug will be less than 3 weeks.”

- Rephrase the hypothesis to assume the treatment has no effect: “If the drug doesn’t shorten recovery time, then the average time will be 3 weeks or longer.” Mathematically: $H_0: \mu \ge 3$

This null hypothesis (an inexact hypothesis) covers both the scenario in which the drug has no effect and the one in which the drug makes the recovery time longer. The alternate hypothesis is that average recovery time will be less than three weeks:

$H_A: \mu < 3$

Of course, the researcher could test the no-effect hypothesis (exact null hypothesis): $H_0: \mu = 3$

The danger of testing this hypothesis is that rejecting it only implies the drug affected recovery time (not whether it made it better or worse). This is because the alternate hypothesis is:

$H_A: \mu \ne 3$ (which includes $\mu < 3$ and $\mu > 3$)

Even though the no-effect null hypothesis yields less information, it’s used because it’s easier to test using statistics. Basically, testing whether something is unchanged/changed is easier than trying to quantify the nature of the change.
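To make the difference between the two null hypotheses concrete, here is a sketch of a one-sample z-test in Python. It assumes the population standard deviation is known, which real studies rarely have, so a t-test is more common in practice. The patient numbers are invented for illustration. For the inexact null $\mu \ge 3$, the p-value is computed at the boundary value $\mu = 3$, which is the usual convention for this kind of null.

```python
from math import sqrt
from statistics import NormalDist


def z_test(sample_mean, mu0, sigma, n):
    """One-sample z-test, assuming the population SD `sigma` is known.

    Returns the z statistic and the p-value for each form of H_A:
      "less":      H_A: mu < mu0   (paired with H0: mu >= mu0)
      "greater":   H_A: mu > mu0   (paired with H0: mu <= mu0)
      "two-sided": H_A: mu != mu0  (paired with H0: mu == mu0)
    """
    z = (sample_mean - mu0) / (sigma / sqrt(n))
    p_less = NormalDist().cdf(z)
    p_greater = 1 - p_less
    return {
        "z": z,
        "less": p_less,
        "greater": p_greater,
        "two-sided": 2 * min(p_less, p_greater),
    }


# Hypothetical study: 25 patients, mean recovery 2.65 weeks, sigma = 1 week.
result = z_test(sample_mean=2.65, mu0=3, sigma=1, n=25)
print(result)
```

With these made-up numbers, z is -1.75. The one-sided p-value (about 0.04) falls below 0.05, but the two-sided p-value (about 0.08) does not. For that reason, the direction of a one-sided test should be fixed before the data are collected.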

Remember, a researcher hopes to reject the null hypothesis because this supports the alternate hypothesis. Also, be sure the null and alternate hypotheses cover all outcomes. Finally, remember that a simple true/false, equal/unequal, yes/no exact hypothesis is easier to test than a more complex inexact hypothesis.



Null and Alternative Hypotheses | Definitions & Examples

Published on 5 October 2022 by Shaun Turney. Revised on 6 December 2022.

The null and alternative hypotheses are two competing claims that researchers weigh evidence for and against using a statistical test:

- Null hypothesis ($H_0$): There’s no effect in the population.

- Alternative hypothesis ($H_A$): There’s an effect in the population.

The effect is usually the effect of the independent variable on the dependent variable.


The null and alternative hypotheses offer competing answers to your research question. When the research question asks “Does the independent variable affect the dependent variable?”, the null hypothesis ($H_0$) answers “No, there’s no effect in the population.” On the other hand, the alternative hypothesis ($H_A$) answers “Yes, there is an effect in the population.”

The null and alternative are always claims about the population. That’s because the goal of hypothesis testing is to make inferences about a population based on a sample. Often, we infer whether there’s an effect in the population by looking at differences between groups or relationships between variables in the sample.

You can use a statistical test to decide whether the evidence favors the null or alternative hypothesis. Each type of statistical test comes with a specific way of phrasing the null and alternative hypothesis. However, the hypotheses can also be phrased in a general way that applies to any test.

The null hypothesis is the claim that there’s no effect in the population.

If the sample provides enough evidence against the claim that there’s no effect in the population ($p \le \alpha$), then we can reject the null hypothesis. Otherwise, we fail to reject the null hypothesis.

Although “fail to reject” may sound awkward, it’s the only wording that statisticians accept. Be careful not to say you “prove” or “accept” the null hypothesis.
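Here is a minimal Python sketch of that decision rule. It assumes some test has already produced the p-value. The function name `decide` and the default α = 0.05 are illustrative choices, not part of any library.

```python
def decide(p_value, alpha=0.05):
    """Translate a p-value into the conventional wording of the decision."""
    if not 0.0 <= p_value <= 1.0:
        raise ValueError("p_value must lie between 0 and 1")
    if p_value <= alpha:
        return "reject the null hypothesis"
    # Never "accept" or "prove" H0: the data were simply not strong enough.
    return "fail to reject the null hypothesis"


print(decide(0.03))              # significant at alpha = 0.05
print(decide(0.03, alpha=0.01))  # not significant at the stricter level
```

The same p-value can lead to different decisions depending on the α chosen, which is why α should be set before looking at the data.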

Null hypotheses often include phrases such as “no effect”, “no difference”, or “no relationship”. When written in mathematical terms, they always include an equality (usually =, but sometimes ≥ or ≤).

Examples of null hypotheses

The table below gives examples of research questions and null hypotheses. There’s always more than one way to answer a research question, but these null hypotheses can help you get started.

*Note that some researchers prefer to always write the null hypothesis in terms of “no effect” and “=”. It would be fine to say that daily meditation has no effect on the incidence of depression and $p_1 = p_2$.

The alternative hypothesis ($H_A$) is the other answer to your research question. It claims that there’s an effect in the population.

Often, your alternative hypothesis is the same as your research hypothesis. In other words, it’s the claim that you expect or hope will be true.

The alternative hypothesis is the complement to the null hypothesis. Null and alternative hypotheses are exhaustive, meaning that together they cover every possible outcome. They are also mutually exclusive, meaning that only one can be true at a time.

Alternative hypotheses often include phrases such as “an effect”, “a difference”, or “a relationship”. When alternative hypotheses are written in mathematical terms, they always include an inequality (usually ≠, but sometimes > or <). As with null hypotheses, there are many acceptable ways to phrase an alternative hypothesis.

Examples of alternative hypotheses

The table below gives examples of research questions and alternative hypotheses to help you get started with formulating your own.

Null and alternative hypotheses are similar in some ways:

- They’re both answers to the research question

- They both make claims about the population

- They’re both evaluated by statistical tests.

However, there are important differences between the two types of hypotheses, summarized in the following table.

To help you write your hypotheses, you can use the template sentences below. If you know which statistical test you’re going to use, you can use the test-specific template sentences. Otherwise, you can use the general template sentences.

The only things you need to know to use these general template sentences are your dependent and independent variables. To write your research question, null hypothesis, and alternative hypothesis, fill in the following sentences with your variables:

Does independent variable affect dependent variable?

- Null hypothesis ($H_0$): Independent variable does not affect dependent variable.

- Alternative hypothesis ($H_A$): Independent variable affects dependent variable.
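The general templates are simple enough to fill in programmatically. The sketch below is a hypothetical helper; `general_hypotheses` is an illustrative name, not an existing tool. It does plain string substitution only and does not check whether the variables make sense. The example variables come from a meditation and depression study.

```python
def general_hypotheses(independent, dependent):
    """Fill the general template sentences with a study's variables."""
    subject = independent[:1].upper() + independent[1:]  # capitalize first letter only
    return {
        "research question": f"Does {independent} affect {dependent}?",
        "H0": f"{subject} does not affect {dependent}.",
        "HA": f"{subject} affects {dependent}.",
    }


for label, sentence in general_hypotheses(
    "daily meditation", "the incidence of depression"
).items():
    print(f"{label}: {sentence}")
```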

Test-specific

Once you know the statistical test you’ll be using, you can write your hypotheses in a more precise and mathematical way specific to the test you chose. The table below provides template sentences for common statistical tests.

Note: The template sentences above assume that you’re performing two-tailed tests. Two-tailed tests are appropriate for most studies.

The null hypothesis is often abbreviated as $H_0$. When the null hypothesis is written using mathematical symbols, it always includes an equality symbol (usually =, but sometimes ≥ or ≤).

The alternative hypothesis is often abbreviated as $H_A$ or $H_1$. When the alternative hypothesis is written using mathematical symbols, it always includes an inequality symbol (usually ≠, but sometimes < or >).

A research hypothesis is your proposed answer to your research question. The research hypothesis usually includes an explanation (‘x affects y because …’).

A statistical hypothesis, on the other hand, is a mathematical statement about a population parameter. Statistical hypotheses always come in pairs: the null and alternative hypotheses. In a well-designed study, the statistical hypotheses correspond logically to the research hypothesis.

Cite this Scribbr article


Turney, S. (2022, December 06). Null and Alternative Hypotheses | Definitions & Examples. Scribbr. Retrieved 14 May 2024, from https://www.scribbr.co.uk/stats/null-and-alternative-hypothesis/


Null hypothesis

Null hypothesis n., plural: null hypotheses [nʌl haɪˈpɒθɪsɪs] Definition: a hypothesis that is valid or presumed true until invalidated by a statistical test


Null Hypothesis Definition

A null hypothesis is defined as “the commonly accepted fact (such as the sky is blue) that researchers aim to reject or nullify”.

More formally, we can define a null hypothesis as “a statistical theory suggesting that no statistical relationship exists between given observed variables”.

In biology, the null hypothesis is used to nullify or reject a common belief. The researcher carries out research aimed at rejecting the commonly accepted belief.

What Is a Null Hypothesis?

A hypothesis is defined as a theory or an assumption that is based on inadequate evidence. It requires further experiments and testing before it can be confirmed. This further testing may show the hypothesis to be false, or it may support it (Blackwelder, 1982).

For example, Susie assumes that mineral water helps plants grow and stay better nourished than distilled water does. To test this hypothesis, she runs an experiment for almost a month, watering some plants with mineral water and others with distilled water.

A hypothesis stating that there is no statistically significant relationship between two variables is called a null hypothesis. The investigator usually tries to disprove such a hypothesis. In the plant example above, the null hypothesis is:

There is no relationship between the type of water given to plants and their growth and nourishment.

Usually, an investigator tries to reject the null hypothesis and to demonstrate a relationship or association between the two variables.

The opposite of the null hypothesis is known as the alternate hypothesis. In the plant example, the alternate hypothesis is:

There is a relationship between the type of water given to plants and their growth and nourishment.

The example below shows the difference between null and alternative hypotheses:

- Alternate Hypothesis: The world is round.

- Null Hypothesis: The world is not round.

Scientists who argued that the world is round had to show that the null hypothesis was wrong. Through experiments and testing, they convinced people that the alternate hypothesis is correct. Had they not shown experimentally that the null hypothesis was wrong, people would not have believed them or accepted the alternate hypothesis.

The alternative and null hypotheses for Susie’s assumption are:

- Null Hypothesis: If one plant is watered with distilled water and the other with mineral water, then there is no difference in the growth and nourishment of these two plants.

- Alternative Hypothesis: If one plant is watered with distilled water and the other with mineral water, then the plant with mineral water shows better growth and nourishment.

The null hypothesis states that there is no statistically significant relationship. The relationship in question can involve a single set of variables or two sets of variables.

By default, the null hypothesis is assumed to be true. Scientists perform experiments and research to gather enough evidence to reject, or nullify, it. For this purpose, they formulate an alternate hypothesis that they think is correct. The null hypothesis symbol is $H_0$ (read as H-null or H-zero).

Why is it named the “Null”?

The name “null” reflects the fact that scientists work to show that the hypothesis is false, that is, to nullify it. The name sometimes confuses readers, who may think the statement is empty or says nothing. It is not empty. It would be more accurate to call it a nullifiable hypothesis rather than a null hypothesis.

Why do we need to assess it? Why not just verify an alternate one?

Science relies on the scientific method, a series of steps that scientists follow to test whether a hypothesis is false or true. Testing also reveals any limitations or inadequacies in a new hypothesis. Experiments are designed around both the alternative and the null hypothesis, which makes the research more rigorous. Leaving the null hypothesis out of a study harms the research: it suggests the researcher is not testing the claim seriously and simply wants to present their results as correct.

Development of the Null

In statistics, the first step is to formulate the alternate and null hypotheses from the given problem. Splitting the problem into small steps makes the path to the solution easier and less challenging.

How to Write a Null Hypothesis

Writing a null hypothesis consists of two steps:

- First, start by asking a question.

- Second, restate the question so that it assumes there is no relationship between the variables.

In other words, state it so that the treatment is assumed to have no effect.

Example: The usual recovery time after knee surgery is about 8 weeks.

A researcher thinks that the recovery period may be longer if patients attend rehabilitation with a physiotherapist twice per week instead of three times per week. In other words, recovery is shorter with three sessions per week than with two.

Step 1: Identify the hypothesis in the problem. The hypothesis can be expressed in words or as a statement. In the example above, the hypothesis is:

“The expected recovery period in knee rehabilitation is more than 8 weeks”

Step 2: Turn the hypothesis into a mathematical statement. The average is represented by μ, so the hypothesis becomes:

$H_1: \mu > 8$

In the above equation, the hypothesis is labeled $H_1$, the average is denoted by μ, and > indicates that the average is greater than eight.

Step 3: State what happens if the hypothesis is not correct, that is, if the rehabilitation period does not exceed 8 weeks.

In that case, the recovery time is less than or equal to 8 weeks:

$H_0: \mu \le 8$

In the above equation, the null hypothesis is labeled $H_0$, the average is denoted by μ, and ≤ indicates that the average is less than or equal to eight.

What if the researcher does not know the direction of the outcome?

Problem: An investigator studies the post-operative effect of radical exercise on patients who have had knee surgery. The exercise may either improve recovery or make it worse. The usual recovery time is 8 weeks.

Step 1: State the null hypothesis, that is, the exercise has no effect and the recovery time remains about 8 weeks.

$H_0: \mu = 8$

In the above equation, the null hypothesis is labeled $H_0$, the average is denoted by μ, and the equal sign (=) shows that the average is equal to eight.

Step 2: State the alternate hypothesis, which is the reverse of the null hypothesis: what happens if the treatment (exercise) has an effect?

$H_1: \mu \ne 8$

In the above equation, the alternate hypothesis is labeled $H_1$, the average is denoted by μ, and the not-equal sign (≠) indicates that the average is not equal to eight.

Significance Tests

A significance test is performed to interpret data statistically. The null hypothesis is not data. It is a statement about the numerical value of a population parameter. The parameter can take different forms, such as a mean or a proportion, a difference between means or proportions, or an odds ratio.

The following table will explain the symbols:

The p-value is the main result of a significance test of the null hypothesis.

- $p\text{-value} = \Pr(\text{data or more extreme data} \mid H_0 \text{ true})$

- $\mid$ = “given”

- $\Pr$ = probability

- $H_0$ = the null hypothesis

The first stage of Null Hypothesis Significance Testing (NHST) is to state the alternate and null hypotheses. Doing so summarizes the research question.

- Null Hypothesis = no treatment effect, no difference, no association

- Alternative Hypothesis = treatment effect, difference, association

When to reject the null hypothesis?

Researchers reject the null hypothesis when the experimental data provide sufficient evidence against it. Until then, the null hypothesis is assumed to be true, while researchers try to gather evidence for the alternate hypothesis. The steps below illustrate this with a binomial test performed on a sample (Frick, 1995).

Step 1: Read the research question carefully and state the null hypothesis. Check that the sample data fit a binomial model (a fixed number of independent trials, each ending in success or failure). Then determine the value the binomial parameter would take if there were no difference.

Show the null hypothesis as:

$H_0: p = p_0$, where $p_0$ is the value of p if $H_0$ is true

Calculate the sample proportion to see how far the observed data depart from the value stated in the null hypothesis.

Step 2: Determine the distribution of the test statistic under the null hypothesis. For the binomial test, the test statistic is the number of successes X, and the test uses exact binomial probabilities, given by the probability mass function (pmf) that applies when the null hypothesis is true:

When $H_0$ is true, $X \sim b(n, p_0)$

- n = sample size

- $p_0$ = value of p assumed if $H_0$ is true

Step 3: Find the p-value, the probability of data at least as extreme as the observed data, assuming $H_0$ is true. With x = the observed number of successes:

- If the alternative is an increase ($H_A: p > p_0$): p-value = $\Pr(X \ge x)$

- If the alternative is a decrease ($H_A: p < p_0$): p-value = $\Pr(X \le x)$

Step 4: Report the findings in detail, including:

- Sample proportion

- The direction of difference (either increases or decreases)
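The steps above can be sketched in Python as an exact binomial test. This is a minimal illustration built on `math.comb`; the function names and the example counts are hypothetical. It returns the sample proportion along with both one-sided p-values, so the reader can pick the one that matches the stated direction of the alternative.

```python
from math import comb


def binomial_pmf(k, n, p):
    """Pr(X = k) when X ~ b(n, p)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def binomial_test(x, n, p0):
    """Exact one-sided binomial test of H0: p = p0, where X ~ b(n, p0) under H0.

    x is the observed number of successes in n trials.
    """
    p_upper = sum(binomial_pmf(k, n, p0) for k in range(x, n + 1))  # Pr(X >= x)
    p_lower = sum(binomial_pmf(k, n, p0) for k in range(0, x + 1))  # Pr(X <= x)
    return {
        "sample_proportion": x / n,
        "p_value_increase": p_upper,  # H_A: p > p0
        "p_value_decrease": p_lower,  # H_A: p < p0
    }


# Hypothetical data: 8 successes in 10 trials, H0: p = 0.5.
print(binomial_test(8, 10, 0.5))
```

Here $\Pr(X \ge 8) = 56/1024 \approx 0.055$, so at α = 0.05 these invented data would not lead to rejecting $H_0$ in favor of an increase.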

Perceived Problems With the Null Hypothesis

Variable or model selection, and limited information in some cases, are major issues affecting null hypothesis testing. Statistical tests of the null hypothesis are relatively weak, and the threshold for significance is arbitrary (Gill, 1999). A central criticism is that point null hypotheses are almost always false to begin with, because two populations rarely have exactly identical parameters.

There is another problem with the α-level. This problem is well known but often ignored. The value of α has no theoretical basis, so the conventional values, most commonly 0.1, 0.05, or 0.01, are arbitrary. Using a fixed α splits results into two categories, significant and non-significant. This arbitrary reject/do-not-reject split is a problem when the practical question is how strong the evidence is for a scientific claim.

The p-value is the most important quantity in null hypothesis testing, but it has problems as an inferential tool and in interpretation. The p-value is the probability of getting a test statistic at least as extreme as the observed one, assuming the null hypothesis is true.

The key point of this definition is that the p-value is not based on the observed result alone: it also includes more extreme results that were not observed.

As a result, the evidence against the null hypothesis can be overstated because of these unobserved results. A p-value is meant to be more than a verbal statement; it is supposed to be a precise statement about the evidence in the observed data. For these reasons, some researchers find p-values objectionable and prefer not to use null hypothesis testing. The p-value is also computed entirely under the null hypothesis and the sampling distribution assumed for it. In carefully controlled experiments, that assumed distribution can closely match the actual sampling distribution, but this is often not possible in observational studies.

Some researchers point out that the p-value depends on the sample size. Even when the true difference is very small, a large enough sample will lead to rejecting the null hypothesis. This highlights the difference between biological importance and statistical significance (Killeen, 2005).
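The sample-size effect is easy to see with a small calculation. The sketch below assumes a one-proportion z-test (normal approximation) and a hypothetical observed proportion of 0.11 against a null value of 0.10. Only n changes between runs.

```python
from math import sqrt
from statistics import NormalDist


def proportion_z_p_value(p_hat, p0, n):
    """Two-sided normal-approximation p-value for H0: p = p0."""
    se = sqrt(p0 * (1 - p0) / n)  # standard error under H0
    z = (p_hat - p0) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Same hypothetical observed difference (11% vs. 10%) at growing sample sizes.
for n in (100, 1_000, 10_000):
    print(n, round(proportion_z_p_value(0.11, 0.10, n), 4))
```

The difference stays at one percentage point, yet the p-value drops from roughly 0.74 at n = 100 to below 0.001 at n = 10,000. Whether one percentage point matters biologically is a separate question that the p-value cannot answer.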

Another issue is the fixed α-level, for example 0.1. Whether the null hypothesis is rejected for a large sample depends on this arbitrary cutoff. Even if the sample size grows without limit and the null hypothesis is true, the probability of a Type I error stays at α. That is why this approach is not considered consistent. Another problem is that a significance test does not reveal the size of the estimated effect or how precisely it was estimated. The recommended solution is to report the effect size together with its precision.
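A quick simulation shows why the Type I error rate does not shrink with sample size. This is a sketch under simple assumptions: every simulated study draws from a normal population where $H_0: \mu = 0$ is true, σ = 1 is known, and a two-sided z-test is used. The function name and settings are illustrative.

```python
import random
from math import sqrt
from statistics import NormalDist


def type_i_error_rate(n, alpha=0.05, trials=2_000, seed=1):
    """Share of simulated studies that reject a TRUE H0: mu = 0.

    Each study draws n values from N(0, 1) and runs a two-sided z-test
    with known sigma = 1 at significance level alpha.
    """
    rng = random.Random(seed)
    critical = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    rejections = 0
    for _ in range(trials):
        sample_mean = sum(rng.gauss(0, 1) for _ in range(n)) / n
        z = sample_mean / (1 / sqrt(n))
        if abs(z) > critical:
            rejections += 1
    return rejections / trials


for n in (10, 100, 500):
    print(n, type_i_error_rate(n))
```

Each printed rate should hover around α = 0.05 no matter how large n gets, apart from simulation noise.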

Null Hypothesis Examples

Here are some examples:

Example 1: Hypotheses with One Sample of One Categorical Variable

About 10% of the human population is left-handed. Suppose a researcher at Penn State claims that students at the College of Arts and Architecture are more likely to be left-handed than people in the general population. In this case, there is only one sample, and its population proportion is compared with a known population value.

- Research Question: Are artists more likely to be left-handed than people in the general population?

- Response Variable: Each student is classified into one of two categories, left-handed or right-handed.

- Null Hypothesis: Students at the College of Arts and Architecture are no more likely to be left-handed than people in the general population (the proportion of left-handed students in the college population is 10%, or $p = 0.10$).

Example 2: Hypotheses with One Sample of One Measurement Variable

A generic brand of the antihistamine diphenhydramine is sold as capsules with a 50 mg dose. The manufacturer is concerned that the machine has come out of calibration and is no longer producing capsules with the correct dose.

- Research Question: Do the data suggest that the population mean dosage differs from 50 mg?

- Response Variable: Amount of active ingredient per capsule, measured by chemical assay.

- Null Hypothesis: On average, capsules of this brand contain 50 mg (population mean dosage $\mu = 50$ mg).

Example 3: Hypotheses with Two Samples of One Categorical Variable

Some people choose vegetarian meals on a daily basis. A researcher suspects that women choose vegetarian meals more often than men.

- Research Question: Do the data suggest that women eat vegetarian meals regularly more often than men?

- Response Variable: Each person is classified as vegetarian or non-vegetarian.

- Grouping Variable: Gender

- Null Hypothesis: Gender is not related to choosing vegetarian meals (the population percentage of women who eat vegetarian meals regularly equals the population percentage of men who do, or $p_{\text{women}} = p_{\text{men}}$).
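One common way to test $p_{\text{women}} = p_{\text{men}}$ is a two-proportion z-test with a pooled proportion. The sketch below uses that approach. The counts are invented, and the normal approximation assumes reasonably large samples.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(x1, n1, x2, n2):
    """Two-sided z-test of H0: p1 = p2 using the pooled proportion.

    x1/n1 and x2/n2 are successes / sample size in groups 1 and 2.
    """
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)  # estimate of the common p under H0
    se = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: 45 of 100 women and 30 of 100 men eat vegetarian meals regularly.
z, p = two_proportion_z_test(45, 100, 30, 100)
print(f"z = {z:.2f}, p = {p:.4f}")
```

The research question is directional (women more than men), so a one-sided test could be pre-specified instead. This sketch reports the two-sided p-value, which corresponds to $H_A: p_{\text{women}} \ne p_{\text{men}}$.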

Example 4: Hypotheses with Two Samples of One Measurement Variable

Obesity and being overweight are major and dangerous health issues. A study is performed to test whether a low-carbohydrate diet leads to faster weight loss than a low-fat diet.

- Research Question: Do the data suggest that, on average, a low-carbohydrate diet leads to faster weight loss than a low-fat diet?

- Response Variable: Weight loss (pounds)

- Explanatory Variable: Type of diet (low-carbohydrate or low-fat)

- Null Hypothesis: There is no difference between the mean weight loss of people on a low-carbohydrate diet and that of people on a low-fat diet (population mean weight loss on a low-carbohydrate diet = population mean weight loss on a low-fat diet).

Example 5: Hypotheses about the relationship between Two Categorical Variables

A case-control study was performed among nonsmokers, comparing stroke patients with controls of the same age and occupation. Each subject was asked whether someone in their home or close surroundings smokes.

- Research Question: Does second-hand smoke increase the chances of stroke?

- Variables: There are two categorical variables: stroke status (stroke patient or control) and exposure (whether a smoker lives in the same house). The research hypothesis is that living with a smoker increases the chances of having a stroke.

- Null Hypothesis: There is no relationship between passive smoking and stroke (the odds ratio between stroke and passive smoking equals 1).
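To connect "odds ratio = 1" to data, the sketch below computes an odds ratio from a 2×2 case-control table. It adds an approximate 95% confidence interval on the log scale (Woolf's method), which is one standard choice among several. The cell counts are hypothetical, and this simple version requires all four cells to be positive.

```python
from math import exp, log, sqrt


def odds_ratio_with_ci(a, b, c, d, z=1.96):
    """Odds ratio and approximate 95% Woolf (log-scale) confidence interval.

    2x2 case-control table:
                     exposed   unexposed
        cases           a          b
        controls        c          d

    H0 (no association) corresponds to an odds ratio of 1.
    """
    if min(a, b, c, d) <= 0:
        raise ValueError("all four cell counts must be positive")
    ratio = (a * d) / (b * c)
    se_log = sqrt(1 / a + 1 / b + 1 / c + 1 / d)  # SE of log(odds ratio)
    lower = exp(log(ratio) - z * se_log)
    upper = exp(log(ratio) + z * se_log)
    return ratio, (lower, upper)


# Hypothetical counts: 30 of 100 stroke patients and 15 of 100 controls live with a smoker.
ratio, (lo, hi) = odds_ratio_with_ci(a=30, b=70, c=15, d=85)
print(f"OR = {ratio:.2f}, 95% CI = ({lo:.2f}, {hi:.2f})")
```

If the interval excludes 1, the null hypothesis of no association would be rejected at roughly the 5% level.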

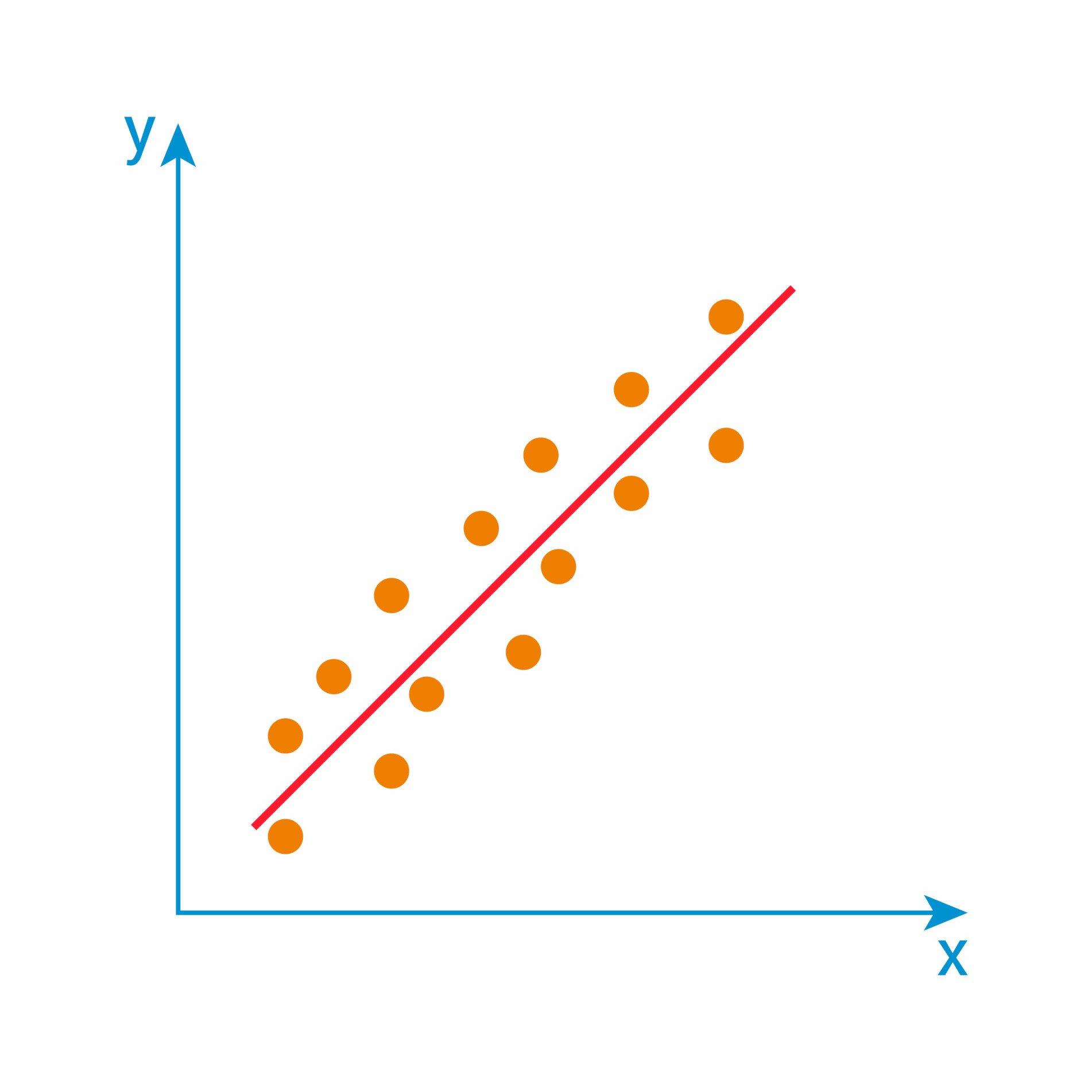

Example 6: Hypotheses about the relationship between Two Measurement Variables

A financial analyst suspects a positive relationship between the daily change in stock price and the amount of stock bought by non-management employees.

- Response Variable: Daily change in stock price

- Explanatory Variable: Stock bought by non-management employees

- Null Hypothesis: The correlation between the daily stock price change ($) and the daily stock purchases by non-management employees ($) is 0.

Example 7: Hypotheses about comparing the relationship between Two Measurement Variables in Two Samples

- Research Question: Is the relationship between the bill paid in a restaurant and the tip given to the server linear? Is this relationship different for dining and family restaurants?

- Explanatory Variable: Total bill amount

- Response Variable: Amount of the tip

- Null Hypothesis: The relationship between the total bill amount and the tip is the same at family restaurants and dining restaurants.


- Blackwelder, W. C. (1982). “Proving the null hypothesis” in clinical trials. Controlled Clinical Trials, 3(4), 345–353.

- Frick, R. W. (1995). Accepting the null hypothesis. Memory & Cognition, 23(1), 132–138.

- Gill, J. (1999). The insignificance of null hypothesis significance testing. Political Research Quarterly, 52(3), 647–674.

- Killeen, P. R. (2005). An alternative to null-hypothesis significance tests. Psychological Science, 16(5), 345–353.

©BiologyOnline.com. Content provided and moderated by Biology Online Editors.

Last updated on June 16th, 2022


What is The Null Hypothesis & When Do You Reject The Null Hypothesis

Julia Simkus

Editor at Simply Psychology

BA (Hons) Psychology, Princeton University

Julia Simkus is a graduate of Princeton University with a Bachelor of Arts in Psychology. She is studying for a Master's Degree in Counseling for Mental Health and Wellness, starting in September 2023. Julia's research has been published in peer-reviewed journals.


Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.


A null hypothesis is a statistical concept suggesting no significant difference or relationship between measured variables. It’s the default assumption unless empirical evidence indicates otherwise.

The null hypothesis states no relationship exists between the two variables being studied (i.e., one variable does not affect the other).

The null hypothesis is the statement that a researcher or an investigator wants to reject.

Testing the null hypothesis can tell you whether your results are likely due to the effect of manipulating the independent variable or due to random chance.

How to Write a Null Hypothesis

Null hypotheses (H0) start as research questions that the investigator rephrases as statements indicating no effect or relationship between the independent and dependent variables.

It is the default position that your research aims to challenge.

For example, if studying the impact of exercise on weight loss, your null hypothesis might be:

There is no significant difference in weight loss between individuals who exercise daily and those who do not.
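One common way to test this null hypothesis is to compare the mean weight loss of the two groups using Welch's t statistic. The sketch below simulates hypothetical data. To stay within Python's standard library, it approximates the p-value with the standard normal distribution instead of the t distribution. That shortcut is an assumption, and it only holds up for fairly large samples.

```python
import random
from statistics import NormalDist, mean, variance


def welch_statistic(group_1, group_2):
    """Welch's t statistic for H0: the two population means are equal.

    The p-value uses a standard normal approximation instead of the
    t distribution, which is only reasonable for fairly large samples.
    """
    se = (variance(group_1) / len(group_1) + variance(group_2) / len(group_2)) ** 0.5
    t = (mean(group_1) - mean(group_2)) / se
    p_two_sided = 2 * (1 - NormalDist().cdf(abs(t)))
    return t, p_two_sided


# Simulated (made-up) weight loss in kg for two groups of 60 people
rng = random.Random(42)
exercise = [rng.gauss(2.5, 1.5) for _ in range(60)]
no_exercise = [rng.gauss(1.5, 1.5) for _ in range(60)]
t, p = welch_statistic(exercise, no_exercise)
print(f"t = {t:.2f}, approximate p = {p:.4f}")
```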

Examples of Null Hypotheses

When Do We Reject the Null Hypothesis?

We reject the null hypothesis when the data provide strong enough evidence to conclude that it is likely incorrect. This often occurs when the p-value (probability of observing the data given the null hypothesis is true) is below a predetermined significance level.

If the collected data are inconsistent with what the null hypothesis predicts, a researcher can conclude that the null hypothesis is unlikely to be true and reject it.

Rejecting the null hypothesis means the data provide evidence that a relationship exists between the variables and that the effect is statistically significant (for example, p ≤ 0.05).

If the results from the random sample are not statistically significant, the researchers fail to reject the null hypothesis. They cannot conclude that a relationship exists between the variables, although this does not prove that there is none.

You need to perform a statistical test on your data to evaluate how consistent it is with the null hypothesis. The p-value is one statistical measure used to assess how compatible the observed data are with the null hypothesis.

Calculating the p-value is a critical part of null-hypothesis significance testing because it quantifies how strongly the sample data contradicts the null hypothesis.

Statistical significance is often expressed as a p-value, which is a number between 0 and 1. The smaller the p-value, the stronger the evidence that you should reject the null hypothesis.

Researchers commonly use a significance level (α) of 0.05 or 0.01 as a general guideline for deciding whether to reject the null hypothesis. These levels correspond to confidence levels of 95% and 99%.

When your p-value is less than or equal to your significance level, you reject the null hypothesis.

In other words, smaller p-values are taken as stronger evidence against the null hypothesis. Conversely, when the p-value is greater than your significance level, you fail to reject the null hypothesis.

In this case, the sample provides insufficient evidence to conclude that the effect exists in the population.
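The decision rule described in the preceding paragraphs fits in a few lines. This is a minimal sketch. `decide` is a hypothetical helper, and its return values deliberately use "reject" and "fail to reject" rather than "accept."

```python
def decide(p_value: float, alpha: float = 0.05) -> str:
    """Apply the usual decision rule of a significance test."""
    if not 0.0 <= p_value <= 1.0:
        raise ValueError("p_value must be between 0 and 1")
    if p_value <= alpha:
        return "reject H0"
    # A large p-value is not evidence that H0 is true, only a lack of evidence against it
    return "fail to reject H0"


for p in (0.003, 0.05, 0.20):
    print(p, decide(p), decide(p, alpha=0.01))
```

The same p-value of 0.05 leads to rejection at α = 0.05 but not at α = 0.01. For this reason, the significance level should be chosen before the data are analyzed.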

Because you can never know with complete certainty whether there is an effect in the population, your inferences about a population will sometimes be incorrect.

When you incorrectly reject the null hypothesis, it’s called a type I error. When you incorrectly fail to reject it, it’s called a type II error.
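A short simulation makes the meaning of α concrete. When the null hypothesis is true, a test at α = 0.05 should wrongly reject it in roughly 5% of repeated studies. The sketch assumes normally distributed data with a known standard deviation of 1 so that a simple z-test applies. The function name and parameters are illustrative.

```python
import random
from statistics import NormalDist


def type_i_error_rate(n=30, alpha=0.05, trials=4_000, seed=7):
    """Estimate how often a two-sided z-test rejects a TRUE null hypothesis.

    Data come from N(0, 1), so H0: mu = 0 holds by construction and
    every rejection is a type I error. sigma = 1 is treated as known.
    """
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    rejections = 0
    for _ in range(trials):
        sample_mean = sum(rng.gauss(0.0, 1.0) for _ in range(n)) / n
        z = sample_mean * n ** 0.5  # (mean - 0) / (1 / sqrt(n))
        if abs(z) > z_crit:
            rejections += 1
    return rejections / trials


print(f"estimated type I error rate: {type_i_error_rate():.3f}")
```

The estimate lands near 0.05 but not exactly on it, because it is based on a finite number of simulated studies.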

Why Do We Never Accept The Null Hypothesis?

We do not say “accept the null” because we begin by assuming the null hypothesis is true and then conduct a study to see whether there is evidence against it. Even if we don’t find evidence against it, the null hypothesis is not accepted.

A lack of evidence only means that you haven’t proven that something exists. It does not prove that something doesn’t exist.

It is risky to conclude that the null hypothesis is true merely because we did not find evidence to reject it. Another study, for example one with a larger sample, might still find such evidence. So we cannot accept the null as true. Instead, we state that we failed to reject it.

One can either reject the null hypothesis, or fail to reject it, but can never accept it.
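Simulating studies in which the effect is real shows why a non-significant result is not proof of no effect. This sketch assumes normal data, a known σ = 1, and a true mean of 0.5 tested against H0: μ = 0. All names are hypothetical. Small studies often fail to reject this false null, while large ones almost always reject it.

```python
import random
from statistics import NormalDist


def rejection_rate(true_mean, n, alpha=0.05, trials=2_000, seed=11):
    """Fraction of simulated studies whose two-sided z-test rejects H0: mu = 0.

    Each study draws n values from N(true_mean, 1); sigma = 1 is treated as known.
    """
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    rejections = 0
    for _ in range(trials):
        sample_mean = sum(rng.gauss(true_mean, 1.0) for _ in range(n)) / n
        z = sample_mean * n ** 0.5  # (mean - 0) / (1 / sqrt(n))
        if abs(z) > z_crit:
            rejections += 1
    return rejections / trials


# The effect is real (true mean 0.5), yet small studies often miss it
for n in (10, 50, 200):
    print(f"n = {n:>3}: H0 rejected in {rejection_rate(0.5, n):.0%} of studies")
```

The rejection rate here is the test's power. One minus the power is the type II error rate, which is the chance of failing to reject a false null.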

Why Do We Use The Null Hypothesis?

We can never prove with 100% certainty that a hypothesis is true; we can only collect evidence that supports a theory. However, testing a hypothesis lets us decide, at a chosen confidence level, whether to reject it.

The null hypothesis is useful because it can tell us whether the results of our study are due to random chance or the manipulation of a variable (with a certain level of confidence).

A null hypothesis is rejected if the measured data would be very unlikely to occur were the null true. We fail to reject it if the observed outcome is consistent with the position held by the null hypothesis.

Rejecting the null hypothesis sets the stage for further experimentation to see if a relationship between two variables exists.

Hypothesis testing is a critical part of the scientific method as it helps decide whether the results of a research study support a particular theory about a given population. Hypothesis testing is a systematic way of backing up researchers’ predictions with statistical analysis.

It provides a framework for weighing statistical evidence for or against a hypothesis about a population parameter.

Purpose of a Null Hypothesis

- The primary purpose of the null hypothesis is to provide a default assumption that the data can be tested against and, if the evidence is strong enough, rejected.

- Whether or not it is rejected, the null hypothesis can help advance a theory in many scientific cases.

- A null hypothesis can be used to ascertain how consistent the outcomes of multiple studies are.

Do you always need both a Null Hypothesis and an Alternative Hypothesis?

The null (H0) and alternative (Ha or H1) hypotheses are two competing claims that describe the effect of the independent variable on the dependent variable. They are mutually exclusive, which means that only one of the two hypotheses can be true.

The null hypothesis states that there is no effect in the population. The alternative hypothesis states that there is an effect or relationship between the variables.

The goal of hypothesis testing is to make inferences about a population based on a sample. In order to undertake hypothesis testing, you must express your research hypothesis as a null and alternative hypothesis. Both hypotheses are required to cover every possible outcome of the study.
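The three standard null and alternative pairs for a population mean show how a pair of hypotheses covers every outcome. Here μ₀ denotes the hypothesized value, which is notation assumed for this sketch. Each row is labelled by the form of its alternative. In every row, each possible value of μ satisfies exactly one of the two hypotheses. Some texts write the directional nulls simply as H₀: μ = μ₀, because the test is carried out at that boundary value.

```latex
\begin{aligned}
&\text{Non-directional:}     && H_0\colon \mu = \mu_0   && H_1\colon \mu \neq \mu_0 \\
&\text{Directional (upper):} && H_0\colon \mu \le \mu_0 && H_1\colon \mu > \mu_0 \\
&\text{Directional (lower):} && H_0\colon \mu \ge \mu_0 && H_1\colon \mu < \mu_0
\end{aligned}
```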

What is the difference between a null hypothesis and an alternative hypothesis?

The alternative hypothesis is the complement to the null hypothesis. The null hypothesis states that there is no effect or no relationship between variables, while the alternative hypothesis claims that there is an effect or relationship in the population.

It is the claim that you expect or hope will be true. The null hypothesis and the alternative hypothesis are always mutually exclusive, meaning that only one can be true at a time.

What are some problems with the null hypothesis?

One major problem with the null hypothesis is that researchers often assume that failing to reject the null means the experiment failed. However, either outcome is informative. Even if the null is not rejected, the researchers still learn something new.

Why can a null hypothesis not be accepted?

We can either reject or fail to reject a null hypothesis, but never accept it. If your test fails to detect an effect, this is not proof that the effect doesn’t exist. It just means that your sample did not have enough evidence to conclude that it exists.

We can’t accept a null hypothesis because a lack of evidence does not prove that something does not exist. Instead, we fail to reject it.

Failing to reject the null indicates that the sample did not provide sufficient evidence to conclude that an effect exists.

If the p-value is greater than the significance level, then you fail to reject the null hypothesis.

Is a null hypothesis directional or non-directional?

The alternative hypothesis in a test can be either directional or non-directional. A directional hypothesis is one that contains the less-than (“<”) or greater-than (“>”) sign.

A nondirectional hypothesis contains the not equal sign (“≠”). However, a null hypothesis is neither directional nor non-directional.

A null hypothesis is a prediction that there will be no change, relationship, or difference between two variables.

The directional hypothesis or nondirectional hypothesis would then be considered alternative hypotheses to the null hypothesis.
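The choice of alternative changes the p-value computed from the same test statistic. The sketch below shows this for a z statistic. The dictionary keys are labels chosen for this example and do not come from any particular library.

```python
from statistics import NormalDist


def p_values(z):
    """p-values for the same z statistic under each form of the alternative hypothesis."""
    cdf = NormalDist().cdf
    return {
        "greater": 1 - cdf(z),                # directional, H1: mu > mu0
        "less": cdf(z),                       # directional, H1: mu < mu0
        "two-sided": 2 * (1 - cdf(abs(z))),   # non-directional, H1: mu != mu0
    }


for alternative, p in p_values(1.8).items():
    print(f"{alternative:>9}: p = {p:.4f}")
```

With z = 1.8, the upper-tailed p-value is about 0.036 and the two-sided p-value is about 0.072. At α = 0.05, the directional test therefore rejects H0 while the non-directional one does not. For this reason, the direction must be fixed before the data are seen.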

Gill, J. (1999). The insignificance of null hypothesis significance testing. Political Research Quarterly, 52(3), 647–674.

Krueger, J. (2001). Null hypothesis significance testing: On the survival of a flawed method. American Psychologist, 56(1), 16.

Masson, M. E. (2011). A tutorial on a practical Bayesian alternative to null-hypothesis significance testing. Behavior Research Methods, 43, 679–690.

Nickerson, R. S. (2000). Null hypothesis significance testing: A review of an old and continuing controversy. Psychological Methods, 5(2), 241.

Rozeboom, W. W. (1960). The fallacy of the null-hypothesis significance test. Psychological Bulletin, 57(5), 416.



Examples of null and alternative hypotheses


7.3: The Research Hypothesis and the Null Hypothesis


- Michelle Oja

- Taft College


Hypotheses are predictions of expected findings.

The Research Hypothesis

A research hypothesis is a mathematical way of stating a research question. A research hypothesis names the groups (we'll start with a sample and a population), what was measured, and which we think will have a higher mean. The last one gives the research hypothesis a direction. In other words, a research hypothesis should include:

- The name of the groups being compared. This is sometimes considered the IV.

- What was measured. This is the DV.

- Which group we predict will have the higher mean.

There are two types of research hypotheses related to sample means and population means: Directional Research Hypotheses and Non-Directional Research Hypotheses.

Directional Research Hypothesis

If we expect our obtained sample mean to be above or below the other group's mean (the population mean, for example), we have a directional hypothesis. There are two options:

- Symbol: \( \displaystyle \bar{X} > \mu \)

- (The mean of the sample is greater than the mean of the population.)

- Symbol: \( \displaystyle \bar{X} < \mu \)

- (The mean of the sample is less than the mean of the population.)

Example 1

A study by Blackwell, Trzesniewski, and Dweck (2007) measured growth mindset and how long the junior high student participants spent on their math homework. What’s a directional hypothesis for how scoring higher on growth mindset (compared to the population of junior high students) would be related to how long students spent on their homework? Write this out in words and symbols.

Answer in Words: Students who scored high on growth mindset would spend more time on their homework than the population of junior high students.

Answer in Symbols: \( \displaystyle \bar{X} > \mu \)

Non-Directional Research Hypothesis

A non-directional hypothesis states that the means will be different but does not specify which will be higher. In reality, there is rarely a situation in which we don't predict that one group will be higher than the other, so we will focus on directional research hypotheses. There is only one option for a non-directional research hypothesis: "The sample mean differs from the population mean." This type of research hypothesis doesn't give a direction; it doesn't say which mean will be higher or lower.

A non-directional research hypothesis in symbols should look like this: \( \displaystyle \bar{X} \neq \mu \) (The mean of the sample is not equal to the mean of the population).

Exercise 1

What’s a non-directional hypothesis for how scoring higher on growth mindset (compared to the population of junior high students) would be related to how long students spent on their homework (Blackwell, Trzesniewski, & Dweck, 2007)? Write this out in words and symbols.

Answer in Words: Students who scored high on growth mindset would spend a different amount of time on their homework than the population of junior high students.

Answer in Symbols: \( \displaystyle \bar{X} \neq \mu \)

See how a non-directional research hypothesis doesn't really make sense? The big issue is not if the two groups differ, but if one group seems to improve what was measured (if having a growth mindset leads to more time spent on math homework). This textbook will only use directional research hypotheses because researchers almost always have a predicted direction (meaning that we almost always know which group we think will score higher).

The Null Hypothesis

The hypothesis that an apparent effect is due to chance is called the null hypothesis, written \(H_0\) (“H-naught”). We usually test this by comparing an experimental group to a comparison (control) group. This null hypothesis can be written as:

\[\mathrm{H}_{0}: \bar{X} = \mu \nonumber \]

For most of this textbook, the null hypothesis is that the means of the two groups are similar. Much later, the null hypothesis will be that there is no relationship between two variables. Either way, remember that a null hypothesis always says that nothing is different.

This is where descriptive statistics diverge from inferential statistics. We know what the value of \(\overline{\mathrm{X}}\) is – it’s not a mystery or a question, it is what we observed from the sample. We use inferential statistics to infer whether this sample's descriptive statistics probably represent the population's descriptive statistics. This is the null hypothesis: that the two groups are similar.

Keep in mind that the null hypothesis is typically the opposite of the research hypothesis. A research hypothesis for the ESP example is that those in my sample who say that they have ESP would get more answers correct than the population would. The null hypothesis is that the average number correct for the two groups will be similar.

In general, the null hypothesis is the idea that nothing is going on: there is no effect of our treatment, no relation between our variables, and no difference in our sample mean from what we expected about the population mean. This is always our baseline starting assumption, and it is what we seek to reject. If we are trying to treat depression, we want to find a difference in average symptoms between our treatment and control groups. If we are trying to predict job performance, we want to find a relation between conscientiousness and evaluation scores. However, until we have evidence against it, we must use the null hypothesis as our starting point.

In sum, the null hypothesis is always: There is no difference between the groups’ means OR There is no relationship between the variables.

In the next chapter, the null hypothesis is that there’s no difference between the sample mean and population mean. In other words:

- There is no mean difference between the sample and population.

- The mean of the sample is the same as the mean of a specific population.

- \(\mathrm{H}_{0}: \bar{X} = \mu\)

- We expect our sample’s mean to be the same as the population mean.
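For the one-sample case described above, a minimal sketch is a z-test of the sample mean against the population mean. It assumes that the population standard deviation σ is known. The homework minutes, μ = 30, and σ = 9 are invented for illustration and are not figures from Blackwell et al. (2007). The p-value is upper-tailed to match the directional research hypothesis \( \bar{X} > \mu \).

```python
from statistics import NormalDist, mean


def one_sample_z(sample, mu, sigma):
    """z statistic comparing a sample mean with a known population mean.

    Assumes the population standard deviation sigma is known. Returns z and
    the upper-tailed p-value for the directional hypothesis X-bar > mu.
    """
    n = len(sample)
    z = (mean(sample) - mu) / (sigma / n ** 0.5)
    p_upper = 1 - NormalDist().cdf(z)
    return z, p_upper


# Hypothetical minutes of math homework for nine high growth-mindset students;
# mu = 30 and sigma = 9 are made-up population values
minutes = [38, 42, 29, 35, 40, 33, 31, 44, 36]
z, p = one_sample_z(minutes, mu=30, sigma=9)
print(f"z = {z:.2f}, upper-tailed p = {p:.4f}")
```

If p is at or below the chosen significance level, the null hypothesis \(\mathrm{H}_{0}: \bar{X} = \mu\) is rejected in favor of the directional research hypothesis.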

Exercise 2

A study by Blackwell, Trzesniewski, and Dweck (2007) measured growth mindset and how long the junior high student participants spent on their math homework. What’s the null hypothesis for scoring higher on growth mindset (compared to the population of junior high students) and how long students spent on their homework? Write this out in words and symbols.

Answer in Words: Students who scored high on growth mindset would spend a similar amount of time on their homework as the population of junior high students.

Answer in Symbols: \( \bar{X} = \mu \)

Contributors and Attributions

Foster et al. (University of Missouri-St. Louis, Rice University, & University of Houston, Downtown Campus)

Dr. MO ( Taft College )

What Is a Hypothesis? (Science)


- Ph.D., Biomedical Sciences, University of Tennessee at Knoxville

- B.A., Physics and Mathematics, Hastings College

A hypothesis (plural hypotheses) is a proposed explanation for an observation. The definition depends on the subject.

In science, a hypothesis is part of the scientific method. It is a prediction or explanation that is tested by an experiment. Observations and experiments may disprove a scientific hypothesis, but can never entirely prove one.

In the study of logic, a hypothesis is an if-then proposition, typically written in the form, "If X , then Y ."

In common usage, a hypothesis is simply a proposed explanation or prediction, which may or may not be tested.

Writing a Hypothesis

Most scientific hypotheses are proposed in the if-then format because it's easy to design an experiment to see whether or not a cause-and-effect relationship exists between the independent variable and the dependent variable. The hypothesis is written as a prediction of the outcome of the experiment.

Null Hypothesis and Alternative Hypothesis

Statistically, it's easier to test the assumption that there is no relationship between two variables than to support a specific connection between them. So, scientists often propose the null hypothesis. The null hypothesis assumes changing the independent variable will have no effect on the dependent variable.

In contrast, the alternative hypothesis suggests changing the independent variable will have an effect on the dependent variable. Designing an experiment to test this hypothesis can be trickier because there are many ways to state an alternative hypothesis.

For example, consider a possible relationship between getting a good night's sleep and getting good grades. The null hypothesis might be stated: "The number of hours of sleep students get is unrelated to their grades" or "There is no correlation between hours of sleep and grades."

An experiment to test this hypothesis might involve collecting data by recording each student's average hours of sleep and grades. If students who get eight hours of sleep generally do better than students who get four hours or 10 hours of sleep, the null hypothesis might be rejected.
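The two phrasings of the null hypothesis given above are not equivalent. Pearson's correlation measures only linear association, so a pattern where a middle amount of sleep is best can exist even when the correlation is zero. The sketch below illustrates this with hypothetical grades that peak at 8 hours. The data and helper name are invented for illustration.

```python
def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


# Hypothetical data: grades peak at 8 hours of sleep and drop off on either side
hours = [4, 5, 6, 7, 8, 9, 10, 11, 12]
grades = [90 - 2 * (h - 8) ** 2 for h in hours]
print(f"r = {pearson_r(hours, grades):.3f}")
```

Sleep clearly matters in this made-up data, yet r is essentially zero. A test of "no correlation" would therefore not reject its null, even though the statement "hours of sleep are unrelated to grades" is false here.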

But the alternative hypothesis is harder to propose and test. The most general statement would be: "The amount of sleep students get affects their grades." The hypothesis might also be stated as "If you get more sleep, your grades will improve" or "Students who get nine hours of sleep have better grades than those who get more or less sleep."

In an experiment, you can collect the same data, but the statistical analysis is less likely to give you a high confidence level.

Usually, a scientist starts out with the null hypothesis. From there, it may be possible to propose and test an alternative hypothesis, to narrow down the relationship between the variables.

Example of a Hypothesis

Examples of a hypothesis include:

- If you drop a rock and a feather, (then) they will fall at the same rate.

- Plants need sunlight in order to live. (if sunlight, then life)

- Eating sugar gives you energy. (if sugar, then energy)

- White, Jay D. Research in Public Administration. Conn., 1998.

- Schick, Theodore, and Lewis Vaughn. How to Think about Weird Things: Critical Thinking for a New Age. McGraw-Hill Higher Education, 2002.


Best Practices in Science

The Null Hypothesis


Publications

- Journals and Blogs

The null hypothesis, as described by Anthony Greenwald in ‘Consequences of Prejudice Against the Null Hypothesis,’ is the hypothesis of no difference between treatment effects or of no association between variables. Unfortunately, in academia the ‘null’ is often associated with ‘insignificant,’ ‘no value,’ or ‘invalid.’ This association stems from journals' bias against papers that fail to reject the null hypothesis. Journals' tendency to accept only papers that show ‘significant’ results (that is, results that reject the null hypothesis) puts added pressure on those working in academia, especially since their standing and salaries often depend on publications. This pressure may also be correlated with increased scientific misconduct, a topic covered elsewhere on this website. For publications, journal articles, and blogs about the null hypothesis, views on rejecting and accepting the null, and journal bias against the null hypothesis, see the resources listed below.

Most scientific journals are prejudiced against papers that demonstrate support for null hypotheses and are unlikely to publish such papers and articles. This phenomenon leads to selective publishing of papers and ensures that the portion of articles that do get published is unrepresentative of the total research in the field.

Anderson, D. R., Burnham, K. P., & Thompson, W. L. (2000). Null hypothesis testing: Problems, prevalence, and an alternative. The Journal of Wildlife Management, 912–923.

Benjamini, Y., & Hochberg, Y. (1995). Controlling the false discovery rate: A practical and powerful approach to multiple testing. Journal of the Royal Statistical Society. Series B (Methodological), 289–300.

Berger, J. O., & Sellke, T. (1987). Testing a point null hypothesis: The irreconcilability of p values and evidence. Journal of the American Statistical Association, 82(397), 112–122.

Blackwelder, W. C. (1982). “Proving the null hypothesis” in clinical trials. Controlled Clinical Trials, 3(4), 345–353.

Dirnagl, U. (2010). Fighting publication bias: Introducing the Negative Results section. Journal of Cerebral Blood Flow and Metabolism: Official Journal of the International Society of Cerebral Blood Flow and Metabolism, 30(7), 1263.

Dickersin, K., Chan, S. S., Chalmers, T. C., Sacks, H. S., & Smith, H. (1987). Publication bias and clinical trials. Controlled Clinical Trials, 8(4), 343–353.

Efron, B. (2004). Large-scale simultaneous hypothesis testing: The choice of a null hypothesis. Journal of the American Statistical Association, 99(465), 96–104.

Fanelli, D. (2010). Do pressures to publish increase scientists’ bias? An empirical support from US States Data. PLoS ONE, 5(4), e10271.

Fanelli, D. (2011). Negative results are disappearing from most disciplines and countries. Scientometrics, 90(3), 891–904.

Greenwald, A. G. (1975). Consequences of Prejudice Against the Null Hypothesis. Psychological Bulletin, 82(1).

Hubbard, R., & Armstrong, J. S. (1997). Publication bias against null results. Psychological Reports, 80(1), 337–338.

I’ve Got Your Impact Factor Right Here (Science, February 24, 2012)

Johnson, R. T., & Dickersin, K. (2007). Publication bias against negative results from clinical trials: Three of the seven deadly sins. Nature Clinical Practice Neurology, 3(11), 590–591.

Keep negativity out of politics. We need more of it in journals (STAT, October 14, 2016)

Knight, J. (2003). Negative results: Null and void. Nature, 422(6932), 554–555.

Koren, G., & Klein, N. (1991). Bias against negative studies in newspaper reports of medical research. JAMA, 266(13), 1824–1826.

Koren, G., Shear, H., Graham, K., & Einarson, T. (1989). Bias against the null hypothesis: The reproductive hazards of cocaine. The Lancet, 334(8677), 1440–1442.

Krantz, D. (2012). The Null Hypothesis Testing Controversy in Psychology. Journal of the American Statistical Association.

Lash, T. (2017). The Harm Done to Reproducibility by the Culture of Null Hypothesis Significance Testing. American Journal of Epidemiology.

Mahoney, M. J. (1977). Publication prejudices: An experimental study of confirmatory bias in the peer review system. Cognitive Therapy and Research, 1(2), 161–175.

Matosin, N., Frank, E., Engel, M., Lum, J. S., & Newell, K. A. (2014). Negativity towards negative results: A discussion of the disconnect between scientific worth and scientific culture.

Nickerson, R. S. (2000). Null hypothesis significance testing: A review of an old and continuing controversy. Psychological Methods, 5(2), 241.

No result is worthless: the value of negative results in science (BioMed Central, October 10, 2012)

Negative Results: The Dark Matter of Research (American Journal Experts)

Neil Malhotra: Why No News Is Still Important News in Research (Stanford Graduate School of Business, October 27, 2014)

Null Hypothesis Definition and Example (Statistics How To, November 5, 2012)

Null Hypothesis Glossary Definition (Statlect Digital Textbook)

Opinion: Publish Negative Results (The Scientist, January 15, 2013)

Positives in negative results: when finding ‘nothing’ means something (The Conversation, September 24, 2014)

Rouder, J. N., Speckman, P. L., Sun, D., Morey, R. D., & Iverson, G. (2009). Bayesian t tests for accepting and rejecting the null hypothesis. Psychonomic Bulletin & Review, 16(2), 225–237.

Unknown Unknowns: The War on Null and Negative Results (Social Science Space, September 19, 2014)

Valuing Null and Negative Results in Scientific Publishing (Scholastica, November 4, 2015)

Vasilev, M. R. (2013). Negative results in European psychology journals. Europe’s Journal of Psychology, 9(4), 717–730.

Where have all the negative results gone? (bioethics.net, December 4, 2013)

Where to publish negative results (BitesizeBio, November 27, 2013)

Why it’s time to publish research “failures” (Elsevier, May 5, 2015)

Woolson, R. F., & Kleinman, J. C. (1989). Perspectives on statistical significance testing. Annual Review of Public Health, 10(1), 423–440.

Would you publish your negative results? If no, why? (ResearchGate, October 26, 2012)


null hypothesis


Word History

First known use: 1935.


Cite this Entry

“Null hypothesis.” Merriam-Webster.com Dictionary, Merriam-Webster, https://www.merriam-webster.com/dictionary/null%20hypothesis. Accessed 17 May 2024.



- PMC5635437.1; 2015 Aug 25

- PMC5635437.2; 2016 Jul 13

- PMC5635437.3; 2016 Oct 10 (current version)

Null hypothesis significance testing: a short tutorial

Cyril Pernet

Centre for Clinical Brain Sciences (CCBS), Neuroimaging Sciences, The University of Edinburgh, Edinburgh, UK

Version Changes

Revised. Amendments from version 2.

This v3 includes minor changes that reflect the third reviewer's comments, in particular regarding the theoretical vs. practical difference between Fisher and Neyman-Pearson. Additional information and a reference are also included regarding the interpretation of the p-value in low-powered studies.

Abstract

Although thoroughly criticized, null hypothesis significance testing (NHST) remains the statistical method of choice for providing evidence of an effect in the biological, biomedical and social sciences. In this short tutorial, I first summarize the concepts behind the method, distinguishing the test of significance (Fisher) from the test of acceptance (Neyman-Pearson), and point to common interpretation errors regarding the p-value. I then present the related concept of confidence intervals and again point to common interpretation errors. Finally, I discuss what should be reported in which context. The goal is to clarify concepts to avoid interpretation errors and to propose reporting practices.

The Null Hypothesis Significance Testing framework

NHST is a method of statistical inference by which an experimental factor is tested against a hypothesis of no effect or no relationship based on a given observation. The method is a combination of the concepts of significance testing developed by Fisher in 1925 and of acceptance based on critical rejection regions developed by Neyman & Pearson in 1928. In the following, I first present each approach, highlighting the key differences and the common misconceptions that result from their combination into the NHST framework (for a more mathematical comparison, along with the Bayesian method, see Christensen, 2005). I next present the related concept of confidence intervals. I finish by discussing practical aspects of using NHST and reporting practice.

Fisher, significance testing, and the p-value

The method developed by Fisher (Fisher, 1934; Fisher, 1955; Fisher, 1959) allows one to compute the probability of observing a result at least as extreme as a test statistic (e.g. a t value), assuming the null hypothesis of no effect is true. This probability, the p-value, (1) is the conditional probability of obtaining the observed outcome or a larger one, p(Obs≥t|H0), and (2) is therefore a cumulative probability rather than a point estimate. It is equal to the area under the null probability distribution curve from the observed test statistic to the tail of the null distribution (Turkheimer et al., 2004). The approach is one of ‘proof by contradiction’ (Christensen, 2005): we pose the null model and test whether the data conform to it.
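To make "the area under the null distribution from the observed statistic to the tail" concrete, here is a minimal sketch. It assumes a one-sided test whose statistic follows Student's t under H0. It integrates the t density numerically with Simpson's rule and uses only the standard library, so it is an illustration rather than a replacement for a statistics package. The function names are hypothetical helpers, not taken from the tutorial.

```python
import math

# Hypothetical illustrative helpers (not from the tutorial).


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))


def simpson(f, a, b, n=2000):
    """Composite Simpson's rule for the integral of f over [a, b]; n must be even."""
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3


def p_value_upper(t_obs, df):
    """One-sided p-value p(Obs >= t | H0): null t area from t_obs to +infinity."""
    # The t density is symmetric around 0, so P(T >= t) = 0.5 - sign(t) * area(0, |t|).
    area_0_to_t = simpson(lambda x: t_pdf(x, df), 0.0, abs(t_obs))
    return 0.5 - math.copysign(area_0_to_t, t_obs)


if __name__ == "__main__":
    # Hypothetical observation: t = 2.1 with 31 degrees of freedom (e.g. n = 32).
    print(p_value_upper(2.1, 31))
```

A larger observed t leaves a smaller tail area and therefore a smaller p-value. The p-value is always a statement about data at least this extreme under H0, never about the effect itself.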

In practice, it is recommended to set a level of significance (a theoretical p-value) that acts as a reference point to identify significant results, that is, results that differ from the null hypothesis of no effect. Fisher recommended using p=0.05 to judge whether an effect is significant or not, as it corresponds to roughly two standard deviations from the mean of the normal distribution (Fisher, 1934, page 45: ‘The value for which p=.05, or 1 in 20, is 1.96 or nearly 2; it is convenient to take this point as a limit in judging whether a deviation is to be considered significant or not’). A key aspect of Fisher’s theory is that only the null hypothesis is tested, and therefore p-values are meant to be used in a graded manner to decide whether the evidence is worth additional investigation and/or replication (Fisher, 1971, page 13: ‘it is open to the experimenter to be more or less exacting in respect of the smallness of the probability he would require […]’ and ‘no isolated experiment, however significant in itself, can suffice for the experimental demonstration of any natural phenomenon’). How small the level of significance should be is thus left to the researcher.
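Fisher's "1.96 or nearly 2" can be checked directly. The sketch below assumes a two-sided test on a standard normal statistic and uses Python's `statistics.NormalDist`. It converts between a chosen level of significance and the corresponding critical |z|. The helper names are hypothetical.

```python
from statistics import NormalDist

# Hypothetical illustrative helpers (not from the tutorial).
STANDARD_NORMAL = NormalDist(mu=0.0, sigma=1.0)


def two_sided_p(z):
    """Two-sided p-value of a standard normal test statistic z."""
    return 2 * (1 - STANDARD_NORMAL.cdf(abs(z)))


def critical_z(alpha):
    """|z| beyond which a two-sided result counts as significant at level alpha."""
    return STANDARD_NORMAL.inv_cdf(1 - alpha / 2)


# Fisher's convention: p = 0.05 corresponds to |z| of about 1.96 ("nearly 2").
print(round(critical_z(0.05), 2))    # 1.96
print(round(two_sided_p(1.96), 3))   # 0.05
# A more exacting researcher might instead require p <= 0.01.
print(round(critical_z(0.01), 2))    # 2.58
```

Choosing a stricter level simply moves the reference point further into the tail. Nothing in the calculation fixes 0.05 as special.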

What is not a p-value? Common mistakes

The p-value is not an indication of the strength or magnitude of an effect. Any interpretation of the p-value in relation to the effect under study (strength, reliability, probability) is wrong, since p-values are conditioned on H0. In addition, while p-values are uniformly distributed between 0 and 1 when there is no effect (if all the assumptions of the test are met), their distribution otherwise depends on both the population effect size and the number of participants, making it impossible to infer the strength of an effect from them.
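A small simulation makes both points visible. It assumes normally distributed data with a known standard deviation of 1, so that a one-sample z-test can stand in for the t-test. Under H0, about 5% of p-values fall below 0.05 and the median is near 0.5. With the same true effect (0.2), the typical p-value changes drastically with sample size. The function names, seed and effect size are hypothetical choices for illustration.

```python
import math
import random
import statistics

# Hypothetical illustrative helpers (not from the tutorial).


def z_test_p(sample, sigma=1.0):
    """Two-sided one-sample z-test of H0: mean = 0, assuming a known SD sigma."""
    z = statistics.fmean(sample) * math.sqrt(len(sample)) / sigma
    return math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))


def simulate_p_values(effect, n, n_sims=2000, seed=0):
    """p-values from n_sims simulated studies of size n drawn from N(effect, 1)."""
    rng = random.Random(seed)
    return [z_test_p([rng.gauss(effect, 1.0) for _ in range(n)])
            for _ in range(n_sims)]


if __name__ == "__main__":
    null_ps = simulate_p_values(effect=0.0, n=20)
    # Under H0 the p-values spread evenly over [0, 1]: about 5% fall below 0.05.
    print(sum(p < 0.05 for p in null_ps) / len(null_ps))
    # Same true effect, different sample sizes: the typical p-value differs a lot.
    for n in (20, 200):
        print(n, statistics.median(simulate_p_values(effect=0.2, n=n)))
```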

Similarly, 1-p is not the probability of replicating an effect. A small p-value is often taken to mean a high likelihood of getting the same result on another try, but again this cannot be inferred, because the p-value is not informative about the effect itself (Miller, 2009). Because the p-value depends on the number of subjects, it can only be used to interpret results in high-powered studies. In low-powered studies (typically those with a small number of subjects), the p-value has a large variance across repeated samples, making it an unreliable estimate of replicability (Halsey et al., 2015).
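One way to see why 1-p is not a replication probability is a short calculation. It makes an optimistic, hypothetical assumption: the true standardized effect exactly equals the one observed, and the replication uses the same design and a two-sided z-test. Under this assumption, an original result of p = 0.05 is followed by a significant replication only about half the time, not 95% of the time. The helper name is hypothetical.

```python
from statistics import NormalDist

# Hypothetical illustrative helper (not from the tutorial).
STANDARD_NORMAL = NormalDist()


def replication_probability(p_observed, alpha=0.05):
    """Chance that an identical replication yields two-sided p <= alpha, ASSUMING
    the true standardized effect equals the one observed in the original study."""
    z_obs = STANDARD_NORMAL.inv_cdf(1 - p_observed / 2)  # z that produced p_observed
    z_crit = STANDARD_NORMAL.inv_cdf(1 - alpha / 2)
    # The replication's z-statistic is ~ N(z_obs, 1); significant beyond +/- z_crit.
    return (1 - STANDARD_NORMAL.cdf(z_crit - z_obs)) + STANDARD_NORMAL.cdf(-z_crit - z_obs)


for p in (0.05, 0.01, 0.005):
    print(f"p = {p}: 1 - p = {1 - p:.3f}, "
          f"replication probability = {replication_probability(p):.2f}")
```

Relaxing the optimistic assumption, for example by allowing the observed effect to be an overestimate, only lowers these probabilities further.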

A (small) p-value is not an indication favouring a given hypothesis. Because a low p-value only indicates a misfit of the null hypothesis to the data, it cannot be taken as evidence in favour of a specific alternative hypothesis any more than of other possible explanations, such as measurement error or selection bias (Gelman, 2013). Some authors have even argued that the more (a priori) implausible the alternative hypothesis, the greater the chance that a finding is a false alarm (Krzywinski & Altman, 2013; Nuzzo, 2014).

The p-value is not the probability that the null hypothesis is true, p(H0) (Krzywinski & Altman, 2013). This common misconception arises from a confusion between the probability of an observation given the null, p(Obs≥t|H0), and the probability of the null given an observation, p(H0|Obs≥t), which is then taken as an indication of p(H0) (see Nickerson, 2000).
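The gap between p(Obs|H0) and p(H0|Obs) can be illustrated with Bayes' rule applied to many hypothetical studies. This is a sketch under stated assumptions. It conditions on "the result was significant" (p ≤ α) rather than on the exact observation, and the prior fraction of true effects and the power are made-up inputs. With α = 0.05 fixed, the probability that H0 is true given a significant result ranges from small to large depending on how plausible the alternative was a priori. This echoes the false-alarm argument above. The function name is hypothetical.

```python
# Hypothetical illustrative helper (not from the tutorial).


def prob_null_given_significant(prior_h1, alpha=0.05, power=0.8):
    """Bayes' rule: P(H0 | p <= alpha) across many hypothetical studies.

    prior_h1 -- ASSUMED fraction of tested hypotheses where a real effect exists
    power    -- ASSUMED probability of p <= alpha when the effect is real (1 - beta)
    """
    false_alarms = alpha * (1 - prior_h1)  # H0 true, yet significant
    true_hits = power * prior_h1           # H1 true and significant
    return false_alarms / (false_alarms + true_hits)


for prior in (0.5, 0.1, 0.01):
    print(prior, round(prob_null_given_significant(prior), 2))
```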

Neyman-Pearson, hypothesis testing, and the α-value

Neyman & Pearson (1933) proposed a framework of statistical inference for applied decision making and quality control. In this framework, two hypotheses are proposed, the null hypothesis of no effect and the alternative hypothesis of an effect, along with a control of the long-run probabilities of making errors. The first key concept in this approach is the establishment of an alternative hypothesis along with an a priori effect size. This differs markedly from Fisher, who proposed a general approach for scientific inference conditioned on the null hypothesis only. The second key concept is the control of error rates. Neyman & Pearson (1928) introduced the notion of critical intervals, thereby dichotomizing the space of possible observations into correct vs. incorrect zones. This dichotomization allows distinguishing correct results (rejecting H0 when there is an effect and not rejecting H0 when there is no effect) from errors (rejecting H0 when there is no effect, the Type I error, and not rejecting H0 when there is an effect, the Type II error). In this context, alpha (α) is the probability of committing a Type I error in the long run. Likewise, beta (β) is the probability of committing a Type II error in the long run.
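The "long run" reading of α and β can be checked by repetition. The sketch below assumes a one-sided one-sample z-test with known SD 1. The effect size 0.5, the sample size 25 and the number of simulated studies are hypothetical choices. It runs many studies where H0 is true and many where H1 is true, then counts wrong decisions. The observed frequencies approach α and the analytic β = Φ(z_crit - d√n). The function names are hypothetical.

```python
import math
import random
from statistics import NormalDist

# Hypothetical illustrative helpers (not from the tutorial).


def reject_h0(sample, z_crit):
    """One-sided z-test of H0: mean = 0 vs H1: mean > 0, with known SD of 1."""
    z = sum(sample) / math.sqrt(len(sample))  # mean * sqrt(n) / sigma, sigma = 1
    return z >= z_crit


def long_run_error_rates(effect, n, alpha=0.05, n_sims=5000, seed=1):
    """Simulated Type I and Type II error frequencies over many repeated studies."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha)
    type_1 = sum(reject_h0([rng.gauss(0.0, 1.0) for _ in range(n)], z_crit)
                 for _ in range(n_sims))
    type_2 = sum(not reject_h0([rng.gauss(effect, 1.0) for _ in range(n)], z_crit)
                 for _ in range(n_sims))
    return type_1 / n_sims, type_2 / n_sims


def analytic_beta(effect, n, alpha=0.05):
    """Type II error rate of the same z-test: Phi(z_crit - effect * sqrt(n))."""
    z_crit = NormalDist().inv_cdf(1 - alpha)
    return NormalDist().cdf(z_crit - effect * math.sqrt(n))


if __name__ == "__main__":
    print(long_run_error_rates(effect=0.5, n=25), analytic_beta(0.5, 25))
```

A single study does not have an error rate of its own. α and β describe how often the decision procedure errs across repetitions.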

The (theoretical) difference in terms of hypothesis testing between Fisher and Neyman-Pearson is illustrated in Figure 1. In the first case, we choose a level of significance for the observed data of 5% and compute the p-value. If the p-value is below the level of significance, it is used to reject H0. In the second case, we set a critical interval based on the a priori effect size and error rates. If an observed statistic value is below or above the critical values (the bounds of the confidence region), it is deemed significantly different from H0. In the NHST framework, the level of significance is (in practice) equated with the alpha level, which appears as a simple decision rule: if the p-value is less than or equal to alpha, the null is rejected. It is, however, a common mistake to conflate these two concepts. The level of significance set for a given sample is not the same as the frequency of acceptance alpha found on repeated sampling, because alpha (a point estimate) is meant to reflect the long-run probability, whilst the p-value (a cumulative estimate) reflects the current probability (Fisher, 1955; Hubbard & Bayarri, 2003).
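The two procedures can be written side by side to show why they are so easily conflated. This is a sketch assuming a one-sided z statistic. Fisher's procedure reports a graded p-value. Neyman-Pearson's procedure fixes α in advance, derives a critical value, and returns only a decision. Numerically, "p ≤ α" and "statistic ≥ critical value" give the same decision, even though the two quantities mean different things. The function names are hypothetical.

```python
from statistics import NormalDist

# Hypothetical illustrative helpers (not from the tutorial).
STANDARD_NORMAL = NormalDist()


def fisher_p_value(z):
    """Fisher: the one-sided p-value p(Obs >= z | H0), read as graded evidence."""
    return 1 - STANDARD_NORMAL.cdf(z)


def neyman_pearson_reject(z, alpha=0.05):
    """Neyman-Pearson: alpha fixed in advance; only a reject / do-not-reject decision."""
    z_crit = STANDARD_NORMAL.inv_cdf(1 - alpha)
    return z >= z_crit


# The NHST hybrid uses "p <= alpha" as its decision rule, which coincides with
# comparing the statistic against the Neyman-Pearson critical value.
for z in (1.0, 1.6, 1.7, 2.5):
    p = fisher_p_value(z)
    print(f"z = {z}: p = {p:.3f}, p <= 0.05: {p <= 0.05}, "
          f"NP rejects: {neyman_pearson_reject(z)}")
```

The agreement is purely numerical. The p-value describes the current sample, while α is a property of the procedure over repeated sampling.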

Figure 1 was prepared with G*Power for a one-sided one-sample t-test, with a sample size of 32 subjects, an effect size of 0.45, and error rates alpha = 0.049 and beta = 0.20 (i.e. a power, 1 - beta, of 0.80). In Fisher’s procedure, only the nil hypothesis is posed, and the observed p-value is compared to an a priori level of significance. If the observed p-value is below this level (here p=0.05), one rejects H0. In Neyman-Pearson’s procedure, the null and alternative hypotheses are specified along with an a priori level of acceptance. If the observed statistic value falls outside the acceptance region (here [-∞, +1.69]), that is, within the critical region, one rejects H0.
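The figure's design settings can be roughly cross-checked. The sketch below uses a normal approximation to the power of a one-sided one-sample test: the statistic is centred on d√n under H1. G*Power uses the exact noncentral t distribution instead, so its numbers differ slightly. The approximation lands near 0.8. That value is the power (1 - β) for these settings, so β is about 0.2. The function name is hypothetical.

```python
import math
from statistics import NormalDist

# Hypothetical illustrative helper (not from the tutorial or from G*Power).
STANDARD_NORMAL = NormalDist()


def approx_power_one_sample(effect_size, n, alpha):
    """Normal approximation to the power (1 - beta) of a one-sided one-sample test.

    effect_size is Cohen's d; under H1 the statistic is centred on d * sqrt(n).
    """
    z_crit = STANDARD_NORMAL.inv_cdf(1 - alpha)
    return 1 - STANDARD_NORMAL.cdf(z_crit - effect_size * math.sqrt(n))


power = approx_power_one_sample(effect_size=0.45, n=32, alpha=0.049)
print(f"power ~ {power:.2f}, beta ~ {1 - power:.2f}")
```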

Acceptance or rejection of H0?

The acceptance level α can also be viewed as the maximum probability that a test statistic falls into the rejection region when the null hypothesis is true (Johnson, 2013). Therefore, one can either reject the null hypothesis, if the test statistic falls into the critical region(s), or fail to reject it. In the latter case, all we can say is that no significant effect was observed; one cannot conclude that the null hypothesis is true. This is another common mistake in using NHST: there is a profound difference between accepting the null hypothesis and simply failing to reject it (Killeen, 2005). By failing to reject, we simply continue to assume that H0 is true, which implies that one cannot argue against a theory from a non-significant result (absence of evidence is not evidence of absence). To accept the null hypothesis, tests of equivalence (Walker & Nowacki, 2011) or Bayesian approaches (Dienes, 2014; Kruschke, 2011) must be used.
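As one example of an equivalence test, the sketch below implements the "two one-sided tests" (TOST) procedure in a simplified z form. It assumes a known standard error and a hypothetical equivalence margin of ±0.2 chosen by the researcher. TOST is only one of several equivalence approaches and is not necessarily the one discussed by Walker & Nowacki (2011). Both hypothetical studies are non-significant on the standard test. Only the precise one also supports equivalence, which is the difference between failing to reject H0 and accepting it. The function names are hypothetical.

```python
from statistics import NormalDist

# Hypothetical illustrative helpers (not from the tutorial).
STANDARD_NORMAL = NormalDist()


def standard_test_p(mean, se):
    """Two-sided p-value for H0: mu = 0 (z-test with a known standard error)."""
    return 2 * (1 - STANDARD_NORMAL.cdf(abs(mean / se)))


def tost_p(mean, se, margin):
    """Two one-sided tests for equivalence within +/- margin (z version).

    Null hypothesis: |mu| >= margin, i.e. the effect is NOT negligible.
    Equivalence is claimed when both one-sided nulls are rejected, so the
    overall p-value is the larger of the two.
    """
    p_lower = 1 - STANDARD_NORMAL.cdf((mean + margin) / se)  # H0: mu <= -margin
    p_upper = STANDARD_NORMAL.cdf((mean - margin) / se)      # H0: mu >= +margin
    return max(p_lower, p_upper)


# Two hypothetical studies, both non-significant on the standard test.
for label, mean, se in (("precise", 0.01, 0.05), ("noisy", 0.0, 0.2)):
    print(label, round(standard_test_p(mean, se), 2),
          round(tost_p(mean, se, margin=0.2), 4))
```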

Confidence intervals