Guidelines for performing systematic literature reviews in software engineering


- Kitchenham, B (Author)

- Charters, S (Author)


Systematic Literature Reviews

- First Online: 01 January 2012

- Claes Wohlin

- Per Runeson

- Martin Höst

- Magnus C. Ohlsson

- Björn Regnell

- Anders Wesslén

Systematic literature reviews are conducted to “identify, analyse and interpret all available evidence related to a specific research question” [96]. Because a review aims to give a complete, comprehensive and valid picture of the existing evidence, the identification, analysis and interpretation must all be conducted in a scientific and rigorous way. To this end, Kitchenham and Charters adapted guidelines for systematic literature reviews, primarily from medicine, evaluated them [24], and updated them accordingly [96]. These guidelines, structured as a three-step process of planning, conducting and reporting the review, are summarized below.

Anastas, J.W., MacDonald, M.L.: Research Design for Social Work and the Human Services, 2nd edn. Columbia University Press, New York (2000)

Andersson, C., Runeson, P.: A spiral process model for case studies on software quality monitoring – method and metrics. Softw. Process: Improv. Pract. 12 (2), 125–140 (2007). doi: 10.1002/spip.311

Andrews, A.A., Pradhan, A.S.: Ethical issues in empirical software engineering: the limits of policy. Empir. Softw. Eng. 6 (2), 105–110 (2001)

American Psychological Association: Ethical principles of psychologists and code of conduct. Am. Psychol. 47 , 1597–1611 (1992)

Avison, D., Baskerville, R., Myers, M.: Controlling action research projects. Inf. Technol. People 14 (1), 28–45 (2001). doi: 10.1108/09593840110384762 http://www.emeraldinsight.com/10.1108/09593840110384762

Babbie, E.R.: Survey Research Methods. Wadsworth, Belmont (1990)

Basili, V.R.: Quantitative evaluation of software engineering methodology. In: Proceedings of the First Pan Pacific Computer Conference, vol. 1, pp. 379–398. Australian Computer Society, Melbourne (1985)

Basili, V.R.: Software development: a paradigm for the future. In: Proceedings of the 13th Annual International Computer Software and Applications Conference, COMPSAC’89, Orlando, pp. 471–485. IEEE Computer Society Press, Washington (1989)

Basili, V.R.: The experimental paradigm in software engineering. In: H.D. Rombach, V.R. Basili, R.W. Selby (eds.) Experimental Software Engineering Issues: Critical Assessment and Future Directives. Lecture Notes in Computer Science, vol. 706. Springer, Berlin Heidelberg (1993)

Basili, V.R.: Evolving and packaging reading technologies. J. Syst. Softw. 38 (1), 3–12 (1997)

Basili, V.R., Weiss, D.M.: A methodology for collecting valid software engineering data. IEEE Trans. Softw. Eng. 10 (6), 728–737 (1984)

Basili, V.R., Selby, R.W.: Comparing the effectiveness of software testing strategies. IEEE Trans. Softw. Eng. 13 (12), 1278–1298 (1987)

Basili, V.R., Rombach, H.D.: The TAME project: towards improvement-oriented software environments. IEEE Trans. Softw. Eng. 14 (6), 758–773 (1988)

Basili, V.R., Green, S.: Software process evaluation at the SEL. IEEE Softw. 11 (4), 58–66 (1994)

Basili, V.R., Selby, R.W., Hutchens, D.H.: Experimentation in software engineering. IEEE Trans. Softw. Eng. 12 (7), 733–743 (1986)

Basili, V.R., Caldiera, G., Rombach, H.D.: Experience factory. In: J.J. Marciniak (ed.) Encyclopedia of Software Engineering, pp. 469–476. Wiley, New York (1994)

Basili, V.R., Caldiera, G., Rombach, H.D.: Goal Question Metrics paradigm. In: J.J. Marciniak (ed.) Encyclopedia of Software Engineering, pp. 528–532. Wiley (1994)

Basili, V.R., Green, S., Laitenberger, O., Lanubile, F., Shull, F., Sørumgård, S., Zelkowitz, M.V.: The empirical investigation of perspective-based reading. Empir. Softw. Eng. 1 (2), 133–164 (1996)

Basili, V.R., Green, S., Laitenberger, O., Lanubile, F., Shull, F., Sørumgård, S., Zelkowitz, M.V.: Lab package for the empirical investigation of perspective-based reading. Technical report, University of Maryland (1998). http://www.cs.umd.edu/projects/SoftEng/ESEG/manual/pbr_package/manual.html

Basili, V.R., Shull, F., Lanubile, F.: Building knowledge through families of experiments. IEEE Trans. Softw. Eng. 25 (4), 456–473 (1999)

Baskerville, R.L., Wood-Harper, A.T.: A critical perspective on action research as a method for information systems research. J. Inf. Technol. 11 (3), 235–246 (1996). doi: 10.1080/026839696345289

Benbasat, I., Goldstein, D.K., Mead, M.: The case research strategy in studies of information systems. MIS Q. 11 (3), 369 (1987). doi: 10.2307/248684

Bergman, B., Klefsjö, B.: Quality from Customer Needs to Customer Satisfaction. Studentlitteratur, Lund (2010)

Brereton, P., Kitchenham, B.A., Budgen, D., Turner, M., Khalil, M.: Lessons from applying the systematic literature review process within the software engineering domain. J. Syst. Softw. 80 (4), 571–583 (2007). doi: 10.1016/j.jss.2006.07.009

Brereton, P., Kitchenham, B.A., Budgen, D.: Using a protocol template for case study planning. In: Proceedings of the 12th International Conference on Evaluation and Assessment in Software Engineering. University of Bari, Italy (2008)

Briand, L.C., Differding, C.M., Rombach, H.D.: Practical guidelines for measurement-based process improvement. Softw. Process: Improv. Pract. 2 (4), 253–280 (1996)

Briand, L.C., El Emam, K., Morasca, S.: On the application of measurement theory in software engineering. Empir. Softw. Eng. 1 (1), 61–88 (1996)

Briand, L.C., Bunse, C., Daly, J.W.: A controlled experiment for evaluating quality guidelines on the maintainability of object-oriented designs. IEEE Trans. Softw. Eng. 27 (6), 513–530 (2001)

British Psychological Society: Ethical principles for conducting research with human participants. Psychologist 6 (1), 33–35 (1993)

Budgen, D., Kitchenham, B.A., Charters, S., Turner, M., Brereton, P., Linkman, S.: Presenting software engineering results using structured abstracts: a randomised experiment. Empir. Softw. Eng. 13 , 435–468 (2008). doi: 10.1007/s10664-008-9075-7

Budgen, D., Burn, A.J., Kitchenham, B.A.: Reporting computing projects through structured abstracts: a quasi-experiment. Empir. Softw. Eng. 16 (2), 244–277 (2011). doi: 10.1007/s10664-010-9139-3

Campbell, D.T., Stanley, J.C.: Experimental and Quasi-experimental Designs for Research. Houghton Mifflin Company, Boston (1963)

Chrissis, M.B., Konrad, M., Shrum, S.: CMMI(R): Guidelines for process integration and product improvement. Technical report, SEI (2003)

Ciolkowski, M., Differding, C.M., Laitenberger, O., Münch, J.: Empirical investigation of perspective-based reading: A replicated experiment. Technical report, 97-13, ISERN (1997)

Coad, P., Yourdon, E.: Object-Oriented Design, 1st edn. Prentice-Hall, Englewood (1991)

Cohen, J.: Weighted kappa: nominal scale agreement with provision for scaled disagreement or partial credit. Psychol. Bull. 70 , 213–220 (1968)

Cook, T.D., Campbell, D.T.: Quasi-experimentation – Design and Analysis Issues for Field Settings. Houghton Mifflin Company, Boston (1979)

Corbin, J., Strauss, A.: Basics of Qualitative Research, 3rd edn. SAGE, Los Angeles (2008)

Cruzes, D.S., Dybå, T.: Research synthesis in software engineering: a tertiary study. Inf. Softw. Technol. 53 (5), 440–455 (2011). doi: 10.1016/j.infsof.2011.01.004

Dalkey, N., Helmer, O.: An experimental application of the delphi method to the use of experts. Manag. Sci. 9 (3), 458–467 (1963)

DeMarco, T.: Controlling Software Projects. Yourdon Press, New York (1982)

Deming, W.E.: Out of the Crisis. MIT Centre for Advanced Engineering Study, MIT Press, Cambridge, MA (1986)

Dieste, O., Grimán, A., Juristo, N.: Developing search strategies for detecting relevant experiments. Empir. Softw. Eng. 14 , 513–539 (2009). http://dx.doi.org/10.1007/s10664-008-9091-7

Dittrich, Y., Rönkkö, K., Eriksson, J., Hansson, C., Lindeberg, O.: Cooperative method development. Empir. Softw. Eng. 13 (3), 231–260 (2007). doi: 10.1007/s10664-007-9057-1

Doolan, E.P.: Experiences with Fagan’s inspection method. Softw. Pract. Exp. 22 (2), 173–182 (1992)

Dybå, T., Dingsøyr, T.: Empirical studies of agile software development: a systematic review. Inf. Softw. Technol. 50 (9–10), 833–859 (2008). doi: 10.1016/j.infsof.2008.01.006

Dybå, T., Dingsøyr, T.: Strength of evidence in systematic reviews in software engineering. In: Proceedings of the 2nd ACM-IEEE International Symposium on Empirical Software Engineering and Measurement, ESEM ’08, Kaiserslautern, pp. 178–187. ACM, New York (2008). doi: http://doi.acm.org/10.1145/1414004.1414034

Dybå, T., Kitchenham, B.A., Jørgensen, M.: Evidence-based software engineering for practitioners. IEEE Softw. 22 , 58–65 (2005). doi: http://doi.ieeecomputersociety.org/10.1109/MS.2005.6

Dybå, T., Kampenes, V.B., Sjøberg, D.I.K.: A systematic review of statistical power in software engineering experiments. Inf. Softw. Technol. 48 (8), 745–755 (2006). doi: 10.1016/j.infsof.2005.08.009

Easterbrook, S., Singer, J., Storey, M.-A., Damian, D.: Selecting empirical methods for software engineering research. In: F. Shull, J. Singer, D.I. Sjøberg (eds.) Guide to Advanced Empirical Software Engineering. Springer, London (2008)

Eick, S.G., Loader, C.R., Long, M.D., Votta, L.G., Vander Wiel, S.A.: Estimating software fault content before coding. In: Proceedings of the 14th International Conference on Software Engineering, Melbourne, pp. 59–65. ACM Press, New York (1992)

Eisenhardt, K.M.: Building theories from case study research. Acad. Manag. Rev. 14 (4), 532 (1989). doi: 10.2307/258557

Endres, A., Rombach, H.D.: A Handbook of Software and Systems Engineering – Empirical Observations, Laws and Theories. Pearson Addison-Wesley, Harlow/New York (2003)

Fagan, M.E.: Design and code inspections to reduce errors in program development. IBM Syst. J. 15 (3), 182–211 (1976)

Fenton, N.: Software measurement: A necessary scientific basis. IEEE Trans. Softw. Eng. 20 (3), 199–206 (1994)

Fenton, N., Pfleeger, S.L.: Software Metrics: A Rigorous and Practical Approach, 2nd edn. International Thomson Computer Press, London (1996)

Fenton, N., Pfleeger, S.L., Glass, R.: Science and substance: A challenge to software engineers. IEEE Softw. 11 , 86–95 (1994)

Fink, A.: The Survey Handbook, 2nd edn. SAGE, Thousand Oaks/London (2003)

Flyvbjerg, B.: Five misunderstandings about case-study research. In: Qualitative Research Practice, concise paperback edn., pp. 390–404. SAGE, London (2007)

Frigge, M., Hoaglin, D.C., Iglewicz, B.: Some implementations of the boxplot. Am. Stat. 43 (1), 50–54 (1989)

Fusaro, P., Lanubile, F., Visaggio, G.: A replicated experiment to assess requirements inspection techniques. Empir. Softw. Eng. 2 (1), 39–57 (1997)

Glass, R.L.: The software research crisis. IEEE Softw. 11 , 42–47 (1994)

Glass, R.L., Vessey, I., Ramesh, V.: Research in software engineering: An analysis of the literature. Inf. Softw. Technol. 44 (8), 491–506 (2002). doi: 10.1016/S0950-5849(02)00049-6

Gómez, O.S., Juristo, N., Vegas, S.: Replication types in experimental disciplines. In: Proceedings of the 4th ACM-IEEE International Symposium on Empirical Software Engineering and Measurement, Bolzano-Bozen (2010)

Gorschek, T., Wohlin, C.: Requirements abstraction model. Requir. Eng. 11 , 79–101 (2006). doi: 10.1007/s00766-005-0020-7

Gorschek, T., Garre, P., Larsson, S., Wohlin, C.: A model for technology transfer in practice. IEEE Softw. 23 (6), 88–95 (2006)

Gorschek, T., Garre, P., Larsson, S., Wohlin, C.: Industry evaluation of the requirements abstraction model. Requir. Eng. 12 , 163–190 (2007). doi: 10.1007/s00766-007-0047-z

Grady, R.B., Caswell, D.L.: Software Metrics: Establishing a Company-Wide Program. Prentice-Hall, Englewood (1994)

Grant, E.E., Sackman, H.: An exploratory investigation of programmer performance under on-line and off-line conditions. IEEE Trans. Human Factor Electron. HFE-8 (1), 33–48 (1967)

Gregor, S.: The nature of theory in information systems. MIS Q. 30 (3), 491–506 (2006)

Hall, T., Flynn, V.: Ethical issues in software engineering research: a survey of current practice. Empir. Softw. Eng. 6 , 305–317 (2001)

Hannay, J.E., Sjøberg, D.I.K., Dybå, T.: A systematic review of theory use in software engineering experiments. IEEE Trans. Softw. Eng. 33 (2), 87–107 (2007). doi: 10.1109/TSE.2007.12

Hannay, J.E., Dybå, T., Arisholm, E., Sjøberg, D.I.K.: The effectiveness of pair programming: a meta-analysis. Inf. Softw. Technol. 51 (7), 1110–1122 (2009). doi: 10.1016/j.infsof.2009.02.001

Hayes, W.: Research synthesis in software engineering: a case for meta-analysis. In: Proceedings of the 6th International Software Metrics Symposium, Boca Raton, pp. 143–151 (1999)

Hetzel, B.: Making Software Measurement Work: Building an Effective Measurement Program. Wiley, New York (1993)

Hevner, A.R., March, S.T., Park, J., Ram, S.: Design science in information systems research. MIS Q. 28 (1), 75–105 (2004)

Höst, M., Regnell, B., Wohlin, C.: Using students as subjects – a comparative study of students and professionals in lead-time impact assessment. Empir. Softw. Eng. 5 (3), 201–214 (2000)

Höst, M., Wohlin, C., Thelin, T.: Experimental context classification: Incentives and experience of subjects. In: Proceedings of the 27th International Conference on Software Engineering, St. Louis, pp. 470–478 (2005)

Höst, M., Runeson, P.: Checklists for software engineering case study research. In: Proceedings of the 1st International Symposium on Empirical Software Engineering and Measurement, Madrid, pp. 479–481 (2007)

Hove, S.E., Anda, B.: Experiences from conducting semi-structured interviews in empirical software engineering research. In: Proceedings of the 11th IEEE International Software Metrics Symposium, pp. 1–10. IEEE Computer Society Press, Los Alamitos (2005)

Humphrey, W.S.: Managing the Software Process. Addison-Wesley, Reading (1989)

Humphrey, W.S.: A Discipline for Software Engineering. Addison Wesley, Reading (1995)

Humphrey, W.S.: Introduction to the Personal Software Process. Addison Wesley, Reading (1997)

IEEE: IEEE standard glossary of software engineering terminology. Technical Report, IEEE Std 610.12-1990, IEEE (1990)

Iversen, J.H., Mathiassen, L., Nielsen, P.A.: Managing risk in software process improvement: an action research approach. MIS Q. 28 (3), 395–433 (2004)

Jedlitschka, A., Pfahl, D.: Reporting guidelines for controlled experiments in software engineering. In: Proceedings of the 4th International Symposium on Empirical Software Engineering, Noosa Heads, pp. 95–104 (2005)

Johnson, P.M., Tjahjono, D.: Does every inspection really need a meeting? Empir. Softw. Eng. 3 (1), 9–35 (1998)

Juristo, N., Moreno, A.M.: Basics of Software Engineering Experimentation. Springer, Kluwer Academic Publishers, Boston (2001)

Juristo, N., Vegas, S.: The role of non-exact replications in software engineering experiments. Empir. Softw. Eng. 16 , 295–324 (2011). doi: 10.1007/s10664-010-9141-9

Kachigan, S.K.: Statistical Analysis: An Interdisciplinary Introduction to Univariate and Multivariate Methods. Radius Press, New York (1986)

Kachigan, S.K.: Multivariate Statistical Analysis: A Conceptual Introduction, 2nd edn. Radius Press, New York (1991)

Kampenes, V.B., Dybå, T., Hannay, J.E., Sjøberg, D.I.K.: A systematic review of effect size in software engineering experiments. Inf. Softw. Technol. 49 (11–12), 1073–1086 (2007). doi: 10.1016/j.infsof.2007.02.015

Karahasanović, A., Anda, B., Arisholm, E., Hove, S.E., Jørgensen, M., Sjøberg, D., Welland, R.: Collecting feedback during software engineering experiments. Empir. Softw. Eng. 10 (2), 113–147 (2005). doi: 10.1007/s10664-004-6189-4. http://www.springerlink.com/index/10.1007/s10664-004-6189-4

Karlström, D., Runeson, P., Wohlin, C.: Aggregating viewpoints for strategic software process improvement. IEE Proc. Softw. 149 (5), 143–152 (2002). doi: 10.1049/ip-sen:20020696

Kitchenham, B.A.: The role of replications in empirical software engineering – a word of warning. Empir. Softw. Eng. 13 , 219–221 (2008). doi: 10.1007/s10664-008-9061-0

Kitchenham, B.A., Charters, S.: Guidelines for performing systematic literature reviews in software engineering (version 2.3). Technical Report, EBSE Technical Report EBSE-2007-01, Keele University and Durham University (2007)

Kitchenham, B.A., Pickard, L.M., Pfleeger, S.L.: Case studies for method and tool evaluation. IEEE Softw. 12 (4), 52–62 (1995)

Kitchenham, B.A., Pfleeger, S.L., Pickard, L.M., Jones, P.W., Hoaglin, D.C., El Emam, K., Rosenberg, J.: Preliminary guidelines for empirical research in software engineering. IEEE Trans. Softw. Eng. 28 (8), 721–734 (2002). doi: 10.1109/TSE.2002.1027796. http://ieeexplore.ieee.org/lpdocs/epic03/wrapper.htm?arnumber=1027796

Kitchenham, B., Fry, J., Linkman, S.G.: The case against cross-over designs in software engineering. In: Proceedings of the 11th International Workshop on Software Technology and Engineering Practice, Amsterdam, pp. 65–67. IEEE Computer Society, Los Alamitos (2003)

Kitchenham, B.A., Dybå, T., Jørgensen, M.: Evidence-based software engineering. In: Proceedings of the 26th International Conference on Software Engineering, Edinburgh, pp. 273–281 (2004)

Kitchenham, B.A., Al-Khilidar, H., Babar, M.A., Berry, M., Cox, K., Keung, J., Kurniawati, F., Staples, M., Zhang, H., Zhu, L.: Evaluating guidelines for reporting empirical software engineering studies. Empir. Softw. Eng. 13 (1), 97–121 (2007). doi: 10.1007/s10664-007-9053-5. http://www.springerlink.com/index/10.1007/s10664-007-9053-5

Kitchenham, B.A., Jeffery, D.R., Connaughton, C.: Misleading metrics and unsound analyses. IEEE Softw. 24 , 73–78 (2007). doi: 10.1109/MS.2007.49

Kitchenham, B.A., Brereton, P., Budgen, D., Turner, M., Bailey, J., Linkman, S.G.: Systematic literature reviews in software engineering – a systematic literature review. Inf. Softw. Technol. 51 (1), 7–15 (2009). doi: 10.1016/j.infsof.2008.09.009. http://www.dx.doi.org/10.1016/j.infsof.2008.09.009

Kitchenham, B.A., Pretorius, R., Budgen, D., Brereton, P., Turner, M., Niazi, M., Linkman, S.: Systematic literature reviews in software engineering – a tertiary study. Inf. Softw. Technol. 52 (8), 792–805 (2010). doi: 10.1016/j.infsof.2010.03.006

Kitchenham, B.A., Sjøberg, D.I.K., Brereton, P., Budgen, D., Dybå, T., Höst, M., Pfahl, D., Runeson, P.: Can we evaluate the quality of software engineering experiments? In: Proceedings of the 4th ACM-IEEE International Symposium on Empirical Software Engineering and Measurement. ACM, Bolzano/Bozen (2010)

Kitchenham, B.A., Budgen, D., Brereton, P.: Using mapping studies as the basis for further research – a participant-observer case study. Inf. Softw. Technol. 53 (6), 638–651 (2011). doi: 10.1016/j.infsof.2010.12.011

Laitenberger, O., Atkinson, C., Schlich, M., El Emam, K.: An experimental comparison of reading techniques for defect detection in UML design documents. J. Syst. Softw. 53 (2), 183–204 (2000)

Larsson, R.: Case survey methodology: quantitative analysis of patterns across case studies. Acad. Manag. J. 36 (6), 1515–1546 (1993)

Lee, A.S.: A scientific methodology for MIS case studies. MIS Q. 13 (1), 33 (1989). doi: 10.2307/248698. http://www.jstor.org/stable/248698?origin=crossref

Lehman, M.M.: Program, life-cycles and the laws of software evolution. Proc. IEEE 68 (9), 1060–1076 (1980)

Lethbridge, T.C., Sim, S.E., Singer, J.: Studying software engineers: data collection techniques for software field studies. Empir. Softw. Eng. 10 , 311–341 (2005)

Linger, R.: Cleanroom process model. IEEE Softw. pp. 50–58 (1994)

Linkman, S., Rombach, H.D.: Experimentation as a vehicle for software technology transfer – a family of software reading techniques. Inf. Softw. Technol. 39 (11), 777–780 (1997)

Lucas, W.A.: The case survey method: aggregating case experience. Technical Report, R-1515-RC, The RAND Corporation, Santa Monica (1974)

Lucas, H.C., Kaplan, R.B.: A structured programming experiment. Comput. J. 19 (2), 136–138 (1976)

Lyu, M.R. (ed.): Handbook of Software Reliability Engineering. McGraw-Hill, New York (1996)

Maldonado, J.C., Carver, J., Shull, F., Fabbri, S., Dória, E., Martimiano, L., Mendonça, M., Basili, V.: Perspective-based reading: a replicated experiment focused on individual reviewer effectiveness. Empir. Softw. Eng. 11 , 119–142 (2006). doi: 10.1007/s10664-006-5967-6

Manly, B.F.J.: Multivariate Statistical Methods: A Primer, 2nd edn. Chapman and Hall, London (1994)

Marascuilo, L.A., Serlin, R.C.: Statistical Methods for the Social and Behavioral Sciences. W. H. Freeman and Company, New York (1988)

Miller, J.: Estimating the number of remaining defects after inspection. Softw. Test. Verif. Reliab. 9 (4), 167–189 (1999)

Miller, J.: Applying meta-analytical procedures to software engineering experiments. J. Syst. Softw. 54 (1), 29–39 (2000)

Miller, J.: Statistical significance testing: a panacea for software technology experiments? J. Syst. Softw. 73 , 183–192 (2004). doi: http://dx.doi.org/10.1016/j.jss.2003.12.019

Miller, J.: Replicating software engineering experiments: a poisoned chalice or the holy grail. Inf. Softw. Technol. 47 (4), 233–244 (2005)

Miller, J., Wood, M., Roper, M.: Further experiences with scenarios and checklists. Empir. Softw. Eng. 3 (1), 37–64 (1998)

Montgomery, D.C.: Design and Analysis of Experiments, 5th edn. Wiley, New York (2000)

Myers, G.J.: A controlled experiment in program testing and code walkthroughs/inspections. Commun. ACM 21 , 760–768 (1978). doi: http://doi.acm.org/10.1145/359588.359602

Noblit, G.W., Hare, R.D.: Meta-Ethnography: Synthesizing Qualitative Studies. Sage Publications, Newbury Park (1988)

Ohlsson, M.C., Wohlin, C.: A project effort estimation study. Inf. Softw. Technol. 40 (14), 831–839 (1998)

Owen, S., Brereton, P., Budgen, D.: Protocol analysis: a neglected practice. Commun. ACM 49 (2), 117–122 (2006). doi: 10.1145/1113034.1113039

Paulk, M.C., Curtis, B., Chrissis, M.B., Weber, C.V.: Capability maturity model for software. Technical Report, CMU/SEI-93-TR-24, Software Engineering Institute, Pittsburgh (1993)

Petersen, K., Feldt, R., Mujtaba, S., Mattsson, M.: Systematic mapping studies in software engineering. In: Proceedings of the 12th International Conference on Evaluation and Assessment in Software Engineering, Electronic Workshops in Computing (eWIC). BCS, University of Bari, Italy (2008)

Petersen, K., Wohlin, C.: Context in industrial software engineering research. In: Proceedings of the 3rd ACM-IEEE International Symposium on Empirical Software Engineering and Measurement, Lake Buena Vista, pp. 401–404 (2009)

Pfleeger, S.L.: Experimental design and analysis in software engineering part 1–5. ACM Sigsoft, Softw. Eng. Notes, 19 (4), 16–20; 20 (1), 22–26; 20 (2), 14–16; 20 (3), 13–15; 20 , (1994)

Pfleeger, S.L., Atlee, J.M.: Software Engineering: Theory and Practice, 4th edn. Pearson Prentice-Hall, Upper Saddle River (2009)

Pickard, L.M., Kitchenham, B.A., Jones, P.W.: Combining empirical results in software engineering. Inf. Softw. Technol. 40 (14), 811–821 (1998). doi: 10.1016/S0950-5849(98)00101-3

Porter, A.A., Votta, L.G.: An experiment to assess different defect detection methods for software requirements inspections. In: Proceedings of the 16th International Conference on Software Engineering, Sorrento, pp. 103–112 (1994)

Porter, A.A., Votta, L.G.: Comparing detection methods for software requirements inspection: a replicated experiment. IEEE Trans. Softw. Eng. 21 (6), 563–575 (1995)

Porter, A.A., Votta, L.G.: Comparing detection methods for software requirements inspection: a replication using professional subjects. Empir. Softw. Eng. 3 (4), 355–380 (1998)

Porter, A.A., Siy, H.P., Toman, C.A., Votta, L.G.: An experiment to assess the cost-benefits of code inspections in large scale software development. IEEE Trans. Softw. Eng. 23 (6), 329–346 (1997)

Potts, C.: Software engineering research revisited. IEEE Softw. pp. 19–28 (1993)

Rainer, A.W.: The longitudinal, chronological case study research strategy: a definition, and an example from IBM Hursley Park. Inf. Softw. Technol. 53 (7), 730–746 (2011)

Robinson, H., Segal, J., Sharp, H.: Ethnographically-informed empirical studies of software practice. Inf. Softw. Technol. 49 (6), 540–551 (2007). doi: 10.1016/j.infsof.2007.02.007

Robson, C.: Real World Research: A Resource for Social Scientists and Practitioners-Researchers, 1st edn. Blackwell, Oxford/Cambridge (1993)

Robson, C.: Real World Research: A Resource for Social Scientists and Practitioners-Researchers, 2nd edn. Blackwell, Oxford/Malden (2002)

Runeson, P., Skoglund, M.: Reference-based search strategies in systematic reviews. In: Proceedings of the 13th International Conference on Empirical Assessment and Evaluation in Software Engineering. Electronic Workshops in Computing (eWIC). BCS, Durham University, UK (2009)

Runeson, P., Höst, M., Rainer, A.W., Regnell, B.: Case Study Research in Software Engineering. Guidelines and Examples. Wiley, Hoboken (2012)

Sandahl, K., Blomkvist, O., Karlsson, J., Krysander, C., Lindvall, M., Ohlsson, N.: An extended replication of an experiment for assessing methods for software requirements. Empir. Softw. Eng. 3 (4), 381–406 (1998)

Seaman, C.B.: Qualitative methods in empirical studies of software engineering. IEEE Trans. Softw. Eng. 25 (4), 557–572 (1999)

Selby, R.W., Basili, V.R., Baker, F.T.: Cleanroom software development: An empirical evaluation. IEEE Trans. Softw. Eng. 13 (9), 1027–1037 (1987)

Shepperd, M.: Foundations of Software Measurement. Prentice-Hall, London/New York (1995)

Shneiderman, B., Mayer, R., McKay, D., Heller, P.: Experimental investigations of the utility of detailed flowcharts in programming. Commun. ACM 20 , 373–381 (1977). doi: 10.1145/359605.359610

Shull, F.: Developing techniques for using software documents: a series of empirical studies. Ph.D. thesis, Computer Science Department, University of Maryland, USA (1998)

Shull, F., Basili, V.R., Carver, J., Maldonado, J.C., Travassos, G.H., Mendonça, M.G., Fabbri, S.: Replicating software engineering experiments: addressing the tacit knowledge problem. In: Proceedings of the 1st International Symposium on Empirical Software Engineering, Nara, pp. 7–16 (2002)

Shull, F., Mendonça, M.G., Basili, V.R., Carver, J., Maldonado, J.C., Fabbri, S., Travassos, G.H., Ferreira, M.C.: Knowledge-sharing issues in experimental software engineering. Empir. Softw. Eng. 9 , 111–137 (2004). doi: 10.1023/B:EMSE.0000013516.80487.33

Shull, F., Carver, J., Vegas, S., Juristo, N.: The role of replications in empirical software engineering. Empir. Softw. Eng. 13 , 211–218 (2008). doi: 10.1007/s10664-008-9060-1

Sieber, J.E.: Protecting research subjects, employees and researchers: implications for software engineering. Empir. Softw. Eng. 6 (4), 329–341 (2001)

Siegel, S., Castellan, J.: Nonparametric Statistics for the Behavioral Sciences, 2nd edn. McGraw-Hill International Editions, New York (1988)

Singer, J., Vinson, N.G.: Why and how research ethics matters to you. Yes, you! Empir. Softw. Eng. 6 , 287–290 (2001). doi: 10.1023/A:1011998412776

Singer, J., Vinson, N.G.: Ethical issues in empirical studies of software engineering. IEEE Trans. Softw. Eng. 28 (12), 1171–1180 (2002). doi: 10.1109/TSE.2002.1158289. http://ieeexplore.ieee.org/lpdocs/epic03/wrapper.htm?arnumber=1158289

Singh, S.: Fermat’s Last Theorem. Fourth Estate, London (1997)

Sjøberg, D.I.K., Hannay, J.E., Hansen, O., Kampenes, V.B., Karahasanovic, A., Liborg, N.-K., Rekdal, A.C.: A survey of controlled experiments in software engineering. IEEE Trans. Softw. Eng. 31 (9), 733–753 (2005). doi: 10.1109/TSE.2005.97. http://ieeexplore.ieee.org/lpdocs/epic03/wrapper.htm?arnumber=1514443

Sjøberg, D.I.K., Dybå, T., Anda, B., Hannay, J.E.: Building theories in software engineering. In: Shull, F., Singer, J., Sjøberg D. (eds.) Guide to Advanced Empirical Software Engineering. Springer, London (2008)

Sommerville, I.: Software Engineering, 9th edn. Addison-Wesley, Wokingham, England/Reading (2010)

Sørumgård, S.: Verification of process conformance in empirical studies of software development. Ph.D. thesis, The Norwegian University of Science and Technology, Department of Computer and Information Science, Norway (1997)

Stake, R.E.: The Art of Case Study Research. SAGE Publications, Thousand Oaks (1995)

Staples, M., Niazi, M.: Experiences using systematic review guidelines. J. Syst. Softw. 80 (9), 1425–1437 (2007). doi: 10.1016/j.jss.2006.09.046

Thelin, T., Runeson, P.: Capture-recapture estimations for perspective-based reading – a simulated experiment. In: Proceedings of the 1st International Conference on Product Focused Software Process Improvement (PROFES), Oulu, pp. 182–200 (1999)

Thelin, T., Runeson, P., Wohlin, C.: An experimental comparison of usage-based and checklist-based reading. IEEE Trans. Softw. Eng. 29 (8), 687–704 (2003). doi: 10.1109/TSE.2003.1223644

Tichy, W.F.: Should computer scientists experiment more? IEEE Comput. 31 (5), 32–39 (1998)

Tichy, W.F., Lukowicz, P., Prechelt, L., Heinz, E.A.: Experimental evaluation in computer science: a quantitative study. J. Syst. Softw. 28 (1), 9–18 (1995)

Trochim, W.M.K.: The Research Methods Knowledge Base, 2nd edn. Cornell Custom Publishing, Cornell University, Ithaca (1999)

van Solingen, R., Berghout, E.: The Goal/Question/Metric Method: A Practical Guide for Quality Improvement and Software Development. McGraw-Hill International, London/Chicago (1999)

Verner, J.M., Sampson, J., Tosic, V., Abu Bakar, N.A., Kitchenham, B.A.: Guidelines for industrially-based multiple case studies in software engineering. In: Third International Conference on Research Challenges in Information Science, Fez, pp. 313–324 (2009)

Vinson, N.G., Singer, J.: A practical guide to ethical research involving humans. In: Shull, F., Singer, J., Sjøberg, D. (eds.) Guide to Advanced Empirical Software Engineering. Springer, London (2008)

Votta, L.G.: Does every inspection need a meeting? In: Proceedings of the ACM SIGSOFT Symposium on Foundations of Software Engineering, ACM Software Engineering Notes, vol. 18, pp. 107–114. ACM Press, New York (1993)

Wallace, C., Cook, C., Summet, J., Burnett, M.: Human centric computing languages and environments. In: Proceedings of Symposia on Human Centric Computing Languages and Environments, Arlington, pp. 63–65 (2002)

Wohlin, C., Gustavsson, A., Höst, M., Mattsson, C.: A framework for technology introduction in software organizations. In: Proceedings of the Conference on Software Process Improvement, Brighton, pp. 167–176 (1996)

Wohlin, C., Runeson, P., Höst, M., Ohlsson, M.C., Regnell, B., Wesslén, A.: Experimentation in Software Engineering: An Introduction. Kluwer, Boston (2000)

Wohlin, C., Aurum, A., Angelis, L., Phillips, L., Dittrich, Y., Gorschek, T., Grahn, H., Henningsson, K., Kågström, S., Low, G., Rovegård, P., Tomaszewski, P., van Toorn, C., Winter, J.: Success factors powering industry-academia collaboration in software research. IEEE Softw. (PrePrints) (2011). doi: 10.1109/MS.2011.92

Yin, R.K.: Case Study Research Design and Methods, 4th edn. Sage Publications, Beverly Hills (2009)

Zelkowitz, M.V., Wallace, D.R.: Experimental models for validating technology. IEEE Comput. 31 (5), 23–31 (1998)

Zendler, A.: A preliminary software engineering theory as investigated by published experiments. Empir. Softw. Eng. 6 , 161–180 (2001). doi: http://dx.doi.org/10.1023/A:1011489321999

Author information

Authors and affiliations.

School of Computing Blekinge Institute of Technology, Karlskrona, Sweden

Claes Wohlin

Department of Computer Science, Lund University, Lund, Sweden

Per Runeson, Martin Höst & Björn Regnell

System Verification Sweden AB, Malmö, Sweden

Magnus C. Ohlsson

ST-Ericsson AB, Lund, Sweden

Anders Wesslén

Copyright information

© 2012 Springer-Verlag Berlin Heidelberg

About this chapter

Wohlin, C., Runeson, P., Höst, M., Ohlsson, M.C., Regnell, B., Wesslén, A. (2012). Systematic Literature Reviews. In: Experimentation in Software Engineering. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-29044-2_4

DOI : https://doi.org/10.1007/978-3-642-29044-2_4

Published : 02 May 2012

Publisher Name : Springer, Berlin, Heidelberg

Print ISBN : 978-3-642-29043-5

Online ISBN : 978-3-642-29044-2

eBook Packages : Computer Science Computer Science (R0)

Original research article: A systematic literature review on the impact of AI models on the security of code generation

- 1 Security and Trust, University of Luxembourg, Luxembourg, Luxembourg

- 2 École Normale Supérieure, Paris, France

- 3 Faculty of Humanities, Education, and Social Sciences, University of Luxembourg, Luxembourg, Luxembourg

Introduction: Artificial Intelligence (AI) is increasingly used as a helper to develop computing programs. While it can boost software development and improve coding proficiency, this practice offers no guarantee of security. On the contrary, recent research shows that some AI models produce software with vulnerabilities. This situation leads to the question: How serious and widespread are the security flaws in code generated using AI models?

Methods: Through a systematic literature review, this work reviews the state of the art on how AI models impact software security. It systematizes the knowledge about the risks of using AI in coding security-critical software.

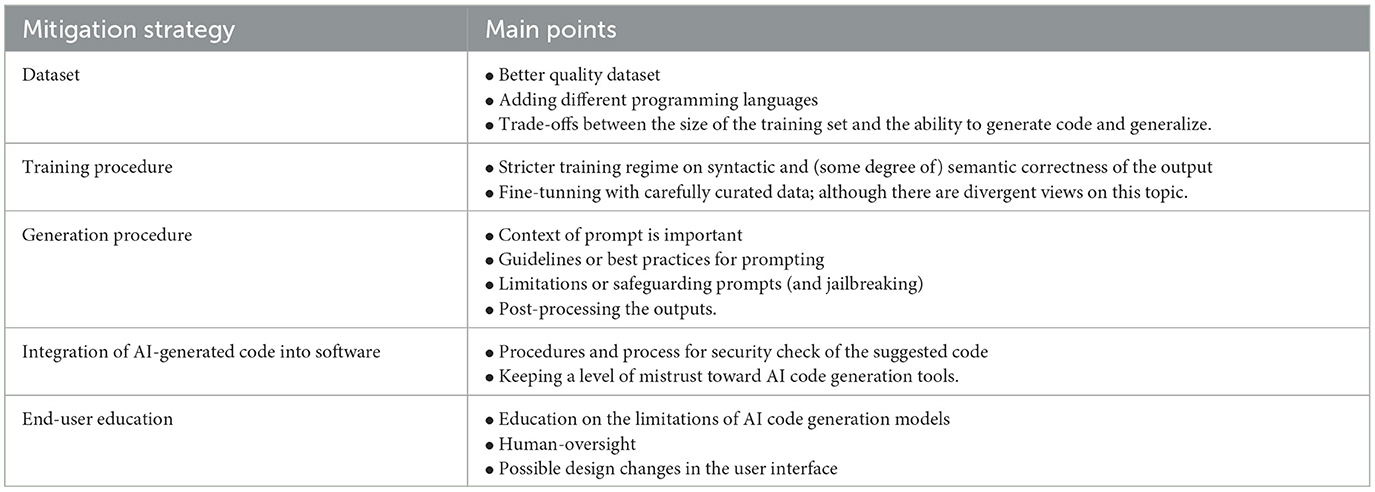

Results: It reviews what security flaws of well-known vulnerabilities (e.g., the MITRE CWE Top 25 Most Dangerous Software Weaknesses) are commonly hidden in AI-generated code. It also reviews works that discuss how vulnerabilities in AI-generated code can be exploited to compromise security and lists the attempts to improve the security of such AI-generated code.

Discussion: Overall, this work provides a comprehensive and systematic overview of the impact of AI in secure coding. This topic has sparked interest and concern within the software security engineering community. It highlights the importance of setting up security measures and processes, such as code verification, and that such practices could be customized for AI-aided code production.

1 Introduction

Despite initial concerns, a growing number of organizations rely on artificial intelligence (AI) to enhance the operational workflows in their software development life cycle and to support writing software artifacts. One of the most well-known tools is GitHub Copilot. It is created by Microsoft, relies on OpenAI's Codex model, and is trained on open-source code publicly available on GitHub ( Chen et al., 2021 ). Like many similar tools (such as CodeParrot, PolyCoder, and StarCoder), Copilot is built atop a large language model (LLM) that has been trained on programming languages. Using LLMs for such tasks is an idea that dates back at least to the public release of OpenAI's ChatGPT.

However, using automation and AI in software development is a double-edged sword. While it can improve code proficiency, the quality of AI-generated code is problematic. Some models introduce well-known vulnerabilities, such as those documented in MITRE's Common Weakness Enumeration (CWE) list of the top 25 “most dangerous software weaknesses.” Others generate so-called “stupid bugs,” naïve single-line mistakes that developers would qualify as “stupid” upon review ( Karampatsis and Sutton, 2020 ).

This behavior was identified early on and is supported to varying degrees by academic research. Pearce et al. (2022) concluded that 40% of the code suggested by Copilot had vulnerabilities. Yet research also shows that users trust AI-generated code more than their own ( Perry et al., 2023 ). These findings imply that new processes, mitigation strategies, and methodologies should be implemented to reduce or control the risks associated with the participation of generative AI in the software development life cycle.

It is, however, difficult to clearly attribute the blame: the tooling landscape evolves, different training strategies and prompt engineering are used to alter LLMs' behavior, and there is conflicting, if anecdotal, evidence that human-generated code could be just as bad as AI-generated code.

This systematic literature review (SLR) aims to critically examine how the code generated by AI models impacts software and system security. Following the categorization of SLR research questions provided by Kitchenham and Charters (2007) , this work has a twofold objective: analyzing the impact and systematizing the knowledge produced so far. Our main question is:

“ How does the code generation from AI models impact the cybersecurity of the software process? ”

This paper discusses the risks and reviews the current state-of-the-art research on this still actively-researched question.

Our analysis shows specific trends and gaps in the literature. Overall, there is a high-level agreement that AI models do not produce safe code and do introduce vulnerabilities, despite mitigations. Particular vulnerabilities appear more frequently and prove to be more problematic than others ( Pearce et al., 2022 ; He and Vechev, 2023 ). Some domains (e.g., hardware design) seem more at risk than others, and there is clearly an imbalance in the efforts deployed to address these risks.

This work stresses the importance of relying on dedicated security measures in current software production processes to mitigate the risks introduced by AI-generated code and highlights the limitations of AI-based tools to perform this mitigation themselves.

The article is organized as follows: we first introduce the reader to AI models and code generation in Section 2 and then explain our research method in Section 3. We present our results in Section 4. In Section 5 we discuss the results, taking into consideration AI models, exploits, programming languages, mitigation strategies, and future research. We close the paper by addressing threats to validity in Section 6 and concluding in Section 7.

2 Background and previous work

2.1 AI models

The sub-branch of AI models that is relevant to our discussion is that of generative models, especially large language models (LLMs) that developed out of the attention-based transformer architecture ( Vaswani et al., 2017 ), made widely known and available through pre-trained models (such as OpenAI's GPT series and Codex, Google's PaLM, Meta's LLaMA, or Mistral's Mixtral).

In a transformer architecture, inputs (e.g., text) are converted to tokens 1 which are then mapped to an abstract latent space, a process known as encoding ( Vaswani et al., 2017 ). Mapping back from the latent space to tokens is accordingly called decoding , and the model's parameters are adjusted so that encoding and decoding work properly. This is achieved by feeding the model with human-generated input, from which it can learn latent space representations that match the input's distribution and identify correlations between tokens.

Pre-training amortizes the cost of training, which has become prohibitive for LLMs. It consists in determining a reasonable set of weights for the model, usually through autocompletion tasks, either autoregressive (ChatGPT) or masked (BERT) for natural language, during which the model is faced with an incomplete input and must correctly predict the missing parts or the next token. This training happens once, is based on public corpora, and results in an initial set of weights that serves as a baseline ( Tan et al., 2018 ). Most “open-source” models today follow this approach. 2

It is possible to fine-tune parameters to handle specific tasks from a pre-trained model, assuming they remain within a small perimeter of what the model was trained to do. This final training often requires human feedback and correction ( Tan et al., 2018 ).

The output of a decoder is not directly tokens, however, but a probability distribution over tokens. The temperature hyperparameter of LLMs controls how much the likelihood of less probable tokens is amplified: a high temperature allows less probable tokens to be selected more often, resulting in a less predictable output. This is often combined with nucleus sampling ( Holtzman et al., 2020 ), i.e., sampling only from the smallest set of most probable tokens whose cumulative probability is large enough, and with various penalty mechanisms to avoid repetition.
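The interplay between temperature and nucleus sampling can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the decoding code of any particular model: the function names, the default `top_p=0.9`, and the toy scores are all hypothetical.

```python
import math
import random


def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def nucleus_filter(probs, top_p=0.9):
    """Keep the smallest set of most probable tokens whose mass reaches top_p."""
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, mass = [], 0.0
    for i in ranked:
        kept.append(i)
        mass += probs[i]
        if mass >= top_p:
            break
    filtered = [0.0] * len(probs)
    for i in kept:
        filtered[i] = probs[i] / mass  # renormalise over the nucleus
    return filtered


def sample_token(logits, temperature=1.0, top_p=0.9, rng=random):
    """Draw one token index from the temperature-scaled, nucleus-filtered distribution."""
    probs = nucleus_filter(softmax_with_temperature(logits, temperature), top_p)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


toy_logits = [2.0, 1.0, 0.1]  # hypothetical scores for a three-token vocabulary
print(softmax_with_temperature(toy_logits, temperature=1.0))
print(softmax_with_temperature(toy_logits, temperature=2.0))
print(sample_token(toy_logits, temperature=0.8, top_p=0.9))
```

Raising the temperature lowers the probability of the top-ranked token, while the nucleus filter removes the low-probability tail before sampling.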

Finally, before being presented to the user, an output may undergo one or several rounds of (possibly non-LLM) filtering, including for instance the detection of foul language.

2.2 Code generation with AI models

With the rise of generative AI, there has also been a rise in the development of AI models for code generation. Multiple examples exist, such as Codex, Polycoder, CodeGen, CodeBERT, and StarCoder, to name a few (337, Xu, Li). These new tools should help developers of different domains be more efficient when writing code, or are at least expected to ( Chen et al., 2021 ).

The use of LLMs for code generation is a domain-specific application of generative methods that greatly benefits from the narrower context. Contrary to natural language, programming languages follow a well-defined syntax using a reduced set of keywords, and multiple clues can be gathered (e.g., filenames, other parts of a code base) to help nudge the LLM in the right direction. Furthermore, so-called boilerplate code is not project-specific and can be readily reused across different code bases with minor adaptations, meaning that LLM-powered code assistants can already go a long way simply by providing commonly-used code snippets at the right time.

By design, LLMs generate code based on their training set ( Chen et al., 2021 ). 3 In doing so, there is a risk that sensitive, incorrect, or dangerous code is uncritically copied verbatim from the training set or that the “minor adaptations” necessary to transfer code from one project to another introduce mistakes ( Chen et al., 2021 ; Pearce et al., 2022 ; Niu et al., 2023 ). Therefore, generated code may include security issues, such as well-documented bugs, malpractices, or legacy issues found in the training data. A parallel issue often brought up is the copyright status of works produced by such tools, a still-open problem that is not the topic of this paper.

Other challenges and concerns have been highlighted by academic research. From an educational point of view, one concern is that using AI code generation models may lead novice programmers or students to acquire bad security habits ( Becker et al., 2023 ). However, the usage of such models can also help lower the entry barrier to the field ( Becker et al., 2023 ). Similarly, cite337 has suggested that AI code generation models do not output secure code all the time, as they are non-deterministic, and that future research on mitigation is required ( Pearce et al., 2022 ). Pearce et al. (2022) was one of the first to research this subject.

There are further claims that these models may be used by cyber criminals ( Chen et al., 2021 ; Natella et al., 2024 ). In popular media, there are affirmations that ChatGPT and other LLMs will be “useful” for criminal activities, for example Burgess (2023) . However, these tools can also be used defensively in cyber security, as in ethical hacking ( Chen et al., 2021 ; Natella et al., 2024 ).

3 Research method

This research aims to systematically gather and analyze publications that answer our main question: “ How does the code generation of AI models impact the cybersecurity of the software process? ” Following the classification of SLR questions by Kitchenham and Charters (2007) , our research falls into two question types, both applied to security: “Identifying the impact of technologies” and “Identifying cost and risk factors associated with a technology.”

To carry out this research, we have followed different SLR guidelines, most notably Wieringa et al. (2006) , Kitchenham and Charters (2007) , Wohlin (2014) , and Petersen et al. (2015) . Each of these guidelines was used for different elements of the research. Below we list, at a high level, which guidelines were used for each element; we discuss them further in the subsections of this article.

• For the general structure and guideline on how to carry out the SLR, we used Kitchenham and Charters (2007) . This included exclusion and inclusion criteria, explained in Section 3.2 ;

• The identification of the Population, Intervention, Comparison, and Outcome (PICO) is based on both Kitchenham and Charters (2007) and Petersen et al. (2015) , as a framework to create our search string. We present and discuss this framework in Section 3.1 ;

• For the questions and quality check of the sample, we used the research done by Kitchenham et al. (2010) , which we describe in further detail in Section 3.4 ;

• The taxonomy of type of research is from Wieringa et al. (2006) as a strategy to identify if a paper falls under our exclusion criteria. We present and discuss this taxonomy in Section 3.2. Although their taxonomy focuses on requirements engineering, it is broad enough to be used in other areas as recognized by Wohlin et al. (2013) ;

• For the snowballing technique, we used the method presented in Wohlin (2014) , which we discuss in Section 3.3 ;

• Mitigation strategies from Wohlin et al. (2013) are used, aiming to increase the reliability and validity of this study. We further analyze the threats to validity of our research in Section 6.

In the following subsections, we explain our approach to the SLR in more detail. The results are presented in Section 4.

3.1 Search planning and string

To answer our question systematically, we need to create a search string that reflects the critical elements of our questions. To achieve this, we thus need to frame the question in a way that allows us to (1) identify keywords, (2) identify synonyms, (3) define exclusion and inclusion criteria, and (4) answer the research question. One common strategy is the PICO (population, intervention, comparison, outcome) approach ( Petersen et al., 2015 ). Originally from medical sciences, it has been adapted for computer science and software engineering ( Kitchenham and Charters, 2007 ; Petersen et al., 2015 ).

To frame our work with the PICO approach, we follow the methodologies outlined in Kitchenham and Charters (2007) and Petersen et al. (2015) . Identifying the four PICO elements lets us derive the set of keywords and their synonyms. The elements are explained in detail in the following bullet points.

• Population: Cybersecurity.

• Following Kitchenham and Charters (2007) , a population can be an area or domain of technology. Population can be very specific.

• Intervention: AI models.

• Following Kitchenham and Charters (2007) “The intervention is the software methodology/tool/technology, such as the requirement elicitation technique.”

• Comparison: We compare the security issues identified in the generated code across the research articles. In the words of Kitchenham and Charters (2007) , “This is the software engineering methodology/tool/technology/procedure with which the intervention is being compared. When the comparison technology is the conventional or commonly-used technology, it is often referred to as the ‘control' treatment.”

• Outcomes: A systematic list of security issues of using AI models for code generation and possible mitigation strategies.

• Context: Although not mandatory (per Kitchenham and Charters, 2007 ) in general we consider code generation.

With the PICO elements done, it is possible to determine specific keywords to generate our search string. We have identified three specific sets: security, AI, and code generation. Consequently, we need to include synonyms of these three sets for generating the search string, taking a similar approach as Petersen et al. (2015) . The importance of including different synonyms arises from different research papers referring to the same phenomena differently. If synonyms are not included, essential papers may be missed from the final sample. The three groups are explained in more detail:

• Set 1: search elements related to security and insecurity due to our population of interest and comparison.

• Set 2: AI-related elements based on our intervention. This set should include LLMs, generative AI, and other approximations.

• Set 3: the research should focus on code generation.

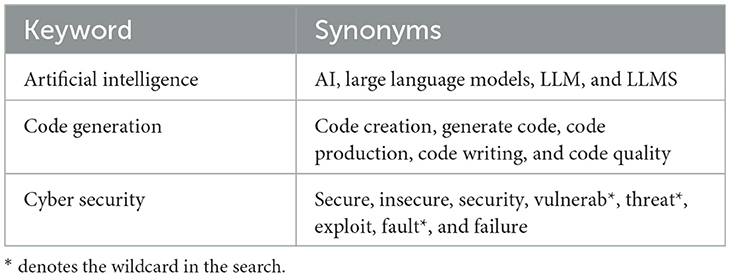

With these three sets of critical elements that our research focuses on, a search string is created. We constructed the search string by including synonyms based on the three sets (as seen in Table 1 ), building the string at the same time as we identified the synonyms. Through different iterations, we aimed at achieving the “golden” string, following a test-retest approach by Kitchenham et al. (2010) . In every iteration, we checked if the vital papers of our study were in the sample. A new synonym was kept in the final string only if it added meaningful results. For example, one of the iterations included “ hard* ,” which did not add any extra article. Hence, it was excluded. Due to space constraints, the different iterations are available in the public repository of this research. The final string, with the unique query per database, is presented in Table 2 .
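The construction described here (synonyms joined by OR within a set, sets joined by AND, then a check that the vital papers are retrieved) can be sketched as follows. The synonym lists and paper titles are hypothetical placeholders for illustration only; the actual keywords are those of Table 1, and each database has its own query syntax.

```python
def format_term(term):
    """Quote multi-word phrases so a database treats them as one unit."""
    return f'"{term}"' if " " in term else term


def build_search_string(*keyword_sets):
    """OR the synonyms inside each set, then AND the sets together."""
    groups = [
        "(" + " OR ".join(format_term(t) for t in terms) + ")"
        for terms in keyword_sets
    ]
    return " AND ".join(groups)


def missing_vital_papers(retrieved_titles, vital_titles):
    """Vital papers that a candidate search string failed to retrieve."""
    return sorted(set(vital_titles) - set(retrieved_titles))


# Hypothetical synonyms, one list per set (security, AI, code generation).
security = ["security", "vulnerab*"]
ai_models = ["artificial intelligence", "large language model"]
code_generation = ["code generation"]

query = build_search_string(security, ai_models, code_generation)
print(query)

# Test-retest style check with made-up titles: an empty list means that
# the candidate string retrieved every vital paper.
print(missing_vital_papers(["paper A", "paper B"], ["paper A"]))
```

A synonym such as “ hard* ” would be tried by appending it to one of the lists and comparing the retrieved sample before and after.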

Table 1 . Keywords and synonyms.

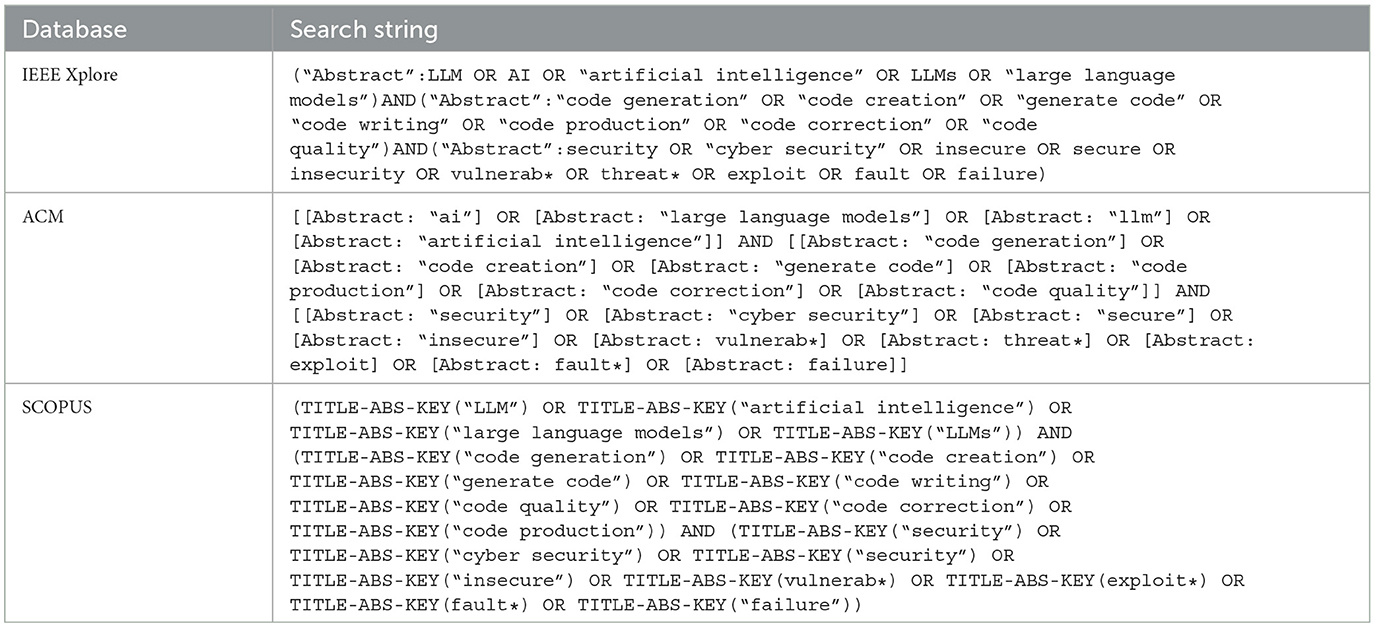

Table 2 . Search string per database.

For this research, we selected the following databases to gather our sample: IEEE Xplore, ACM, and Scopus (which includes Springer and ScienceDirect). The databases were selected based on their relevance for computer science research, publication of peer-reviewed research, and alignment with this research objective. Although databases from other domains could have been selected, the ones chosen are well known in computer science.

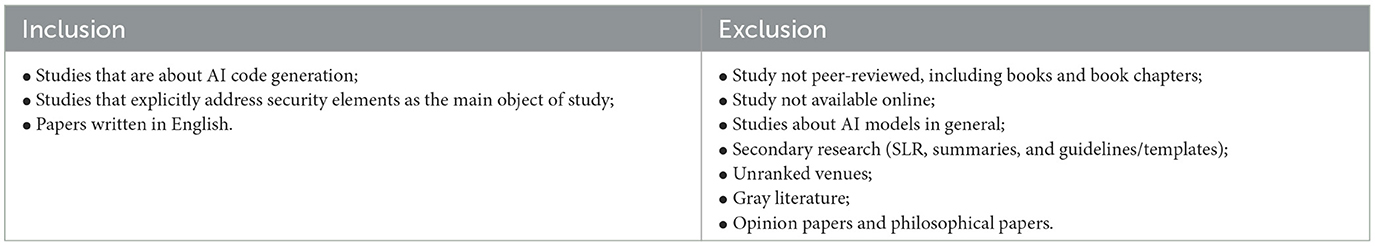

3.2 Exclusion and inclusion criteria

The exclusion and inclusion criteria were chosen to align with our research objectives. We exclude unranked venues to avoid literature that is not peer-reviewed and to act as a first quality check. This decision also applies to gray literature and book chapters. Finally, we excluded opinion and philosophical papers, as they do not carry out primary research. Table 3 shows our inclusion and exclusion criteria.

Table 3 . Inclusion and exclusion criteria.

We have excluded articles that address AI models or AI technology in general, as our interest—based on PICO—is on the security issue of AI models in code generation. So although such research is interesting, it does not align with our main objective.

For identifying the secondary research, opinion, and philosophical papers—which are all part of our exclusion criteria in Table 3 —we follow the taxonomy provided by Wieringa et al. (2006) . Although this classification was written for the requirements engineering domain, it can be generalized to other domains ( Wieringa et al., 2006 ). In addition, apart from helping us identify if a paper falls under our exclusion criteria, this taxonomy also allows us to identify how complete the research might be. The classification is as follows:

• Solution proposal: Proposes a solution to a problem ( Wieringa et al., 2006 ). “The solution can be novel or a significant extension of an existing technique ( Petersen et al., 2015 ).”

• Evaluation research: “This is the investigation of a problem in RE practice or an implementation of an RE technique in practice [...] novelty of the knowledge claim made by the paper is a relevant criterion, as is the soundness of the research method used ( Petersen et al., 2015 ).”

• Validation research: “This paper investigates the properties of a solution proposal that has not yet been implemented... ( Wieringa et al., 2006 ).”

• Philosophical papers: “These papers sketch a new way of looking at things, a new conceptual framework ( Wieringa et al., 2006 ).”

• Experience papers: The authors publish their experience on a matter. “In these papers, the emphasis is on what and not on why ( Wieringa et al., 2006 ; Petersen et al., 2015 ).”

• Opinion papers: “These papers contain the author's opinion about what is wrong or good about something, how we should do something, etc. ( Wieringa et al., 2006 ).”

3.3 Snowballing

Furthermore, to increase the reliability and validity of this research, we applied a forward snowballing technique ( Wohlin et al., 2013 ; Wohlin, 2014 ). Once the first sample (start set) had passed the exclusion and inclusion criteria based on the title, abstract, and keywords, we forward snowballed the whole start set ( Wohlin et al., 2013 ). That is to say, we checked which papers were citing the papers from our start set, as suggested by Wohlin (2014) . For this step, we used Google Scholar.

In the snowballing phase, we analyzed the title, abstract, and key words of each possible candidate ( Wohlin, 2014 ). In addition, we did an inclusion/exclusion analysis based on the title, abstract, and publication venue. If there was insufficient information, we analyzed the full text to make a decision, following the recommendations by Wohlin (2014) .
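One pass of forward snowballing over a start set can be sketched as below. This is an illustrative sketch only: the `cited_by` mapping stands in for a manual Google Scholar "cited by" lookup, and `is_relevant` stands in for the human screening of title, abstract, and venue; both names and the toy data are hypothetical.

```python
def forward_snowball(start_set, cited_by, is_relevant):
    """Return the new papers found by one pass of forward snowballing.

    start_set   -- identifiers of the papers already in the sample
    cited_by    -- dict mapping a paper to the papers that cite it
    is_relevant -- screening predicate applied to each candidate
    """
    start = set(start_set)
    candidates = set()
    for paper in start:
        candidates.update(cited_by.get(paper, ()))
    candidates -= start  # ignore papers that are already in the sample
    return {paper for paper in candidates if is_relevant(paper)}


# Toy citation data: P1 is cited by P2 and P3, P2 is cited by P4.
toy_cited_by = {"P1": ["P2", "P3"], "P2": ["P4"]}
out_of_scope = {"P3"}

added = forward_snowball(["P1", "P2"], toy_cited_by, lambda p: p not in out_of_scope)
print(sorted(added))
```

In this toy run, P2 is skipped because it is already in the start set, P3 is screened out, and only P4 is added to the sample.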

Our objective with the snowballing is to increase reliability and validity. Furthermore, some articles found through the snowballing had been accepted at different peer-reviewed venues but had not yet been published in the corresponding database. We address this situation in Section 6.

3.4 Quality analysis

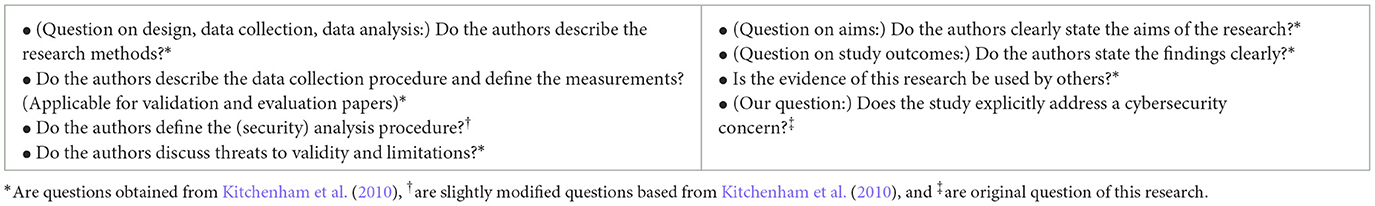

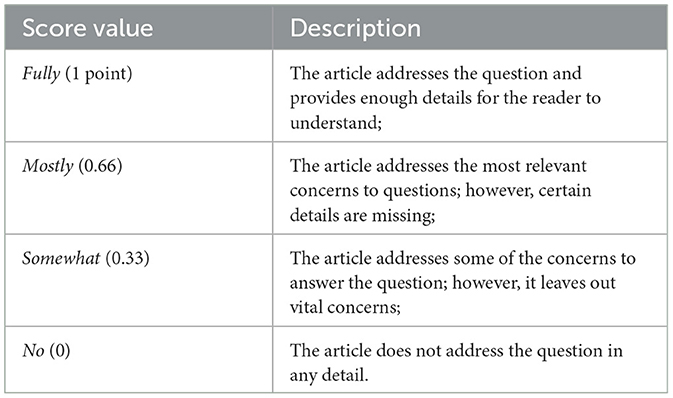

Once the final sample of papers is collected, we proceed with the quality check, following the procedure of Kitchenham and Charters (2007) and Kitchenham et al. (2010) . The objective behind a quality checklist is twofold: “to provide still more detailed inclusion/exclusion criteria” and act “as a means of weighting the importance of individual studies when results are being synthesized ( Kitchenham and Charters, 2007 ).” We followed the approach taken by Kitchenham et al. (2010) for the quality check, taking their questions and categorization. In addition, to further adapt the questionnaire to our objectives, we added one question on security and adapted another one. The questionnaire is described in Table 4 . Each question was scored according to the scoring scale defined in Table 5 .

Table 4 . Quality criteria questionnaire.

Table 5 . Quality criteria assessment.

The quality analysis is done by at least two authors of this research, for reliability and validity purposes ( Wohlin et al., 2013 ).

3.5 Data extraction

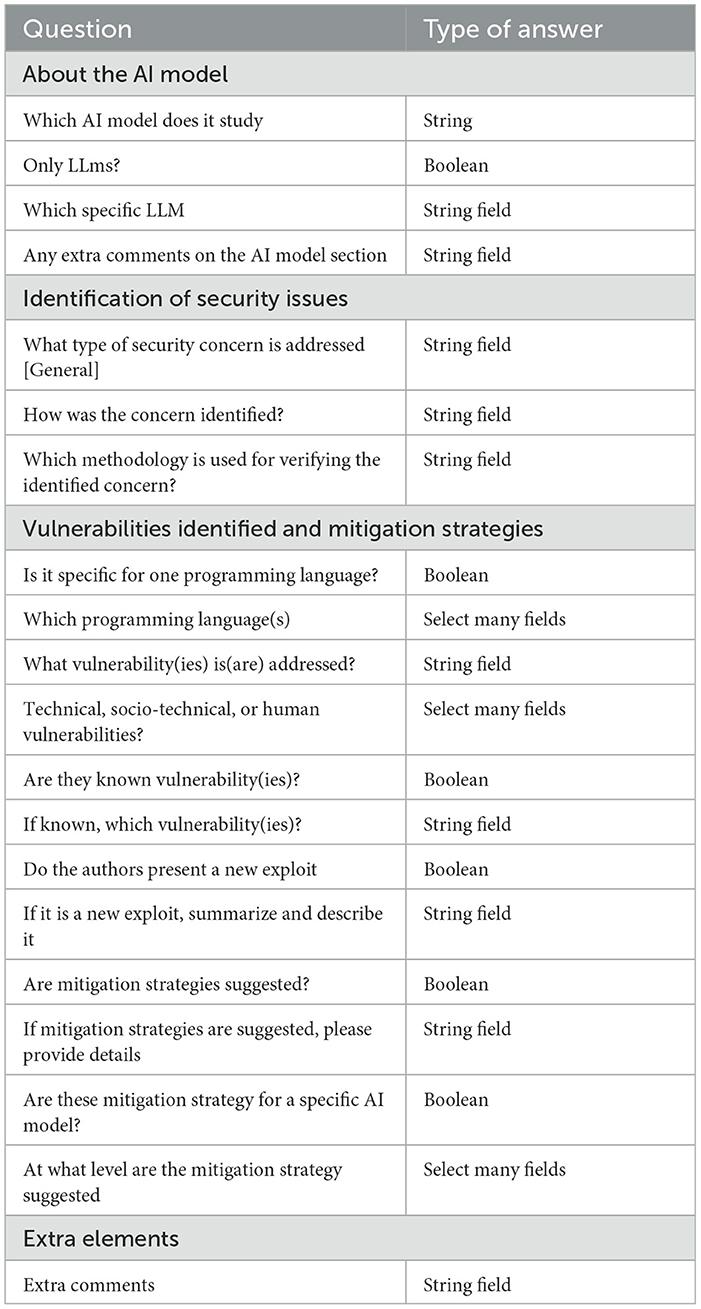

To answer the main question and extract the data, we subdivided the main question into smaller questions. This allows us to extract information and summarize it systematically. We created an extraction form in line with Kitchenham and Charters (2007) and Carrera-Rivera et al. (2022) . The data extraction form is presented in Table 6 .

Table 6 . Data extraction form and type of answer.

The data extraction was done by at least two researchers per article. Afterward, the results are compared, and if there are “disagreements, [they must be] resolved either by consensus among researchers or arbitration by an additional independent researcher ( Kitchenham and Charters, 2007 ).”
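The comparison step between two reviewers can be sketched as a field-by-field diff of their extraction forms. The field names and values below are hypothetical and do not reproduce the form in Table 6; the function only flags disagreements, which are then resolved by consensus or arbitration as described above.

```python
def find_disagreements(form_a, form_b):
    """Return the fields on which two reviewers' extraction forms differ."""
    fields = sorted(set(form_a) | set(form_b))
    return {
        field: (form_a.get(field), form_b.get(field))
        for field in fields
        if form_a.get(field) != form_b.get(field)
    }


# Hypothetical extraction forms filled in by two reviewers for one article.
reviewer_1 = {"ai_model": "Codex", "language": "C", "mitigation": "yes"}
reviewer_2 = {"ai_model": "Codex", "language": "Python", "mitigation": "yes"}

for field, (a, b) in find_disagreements(reviewer_1, reviewer_2).items():
    print(f"{field}: reviewer 1 = {a!r}, reviewer 2 = {b!r}")
```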

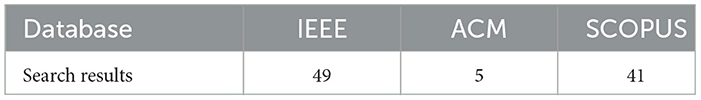

4.1 Search results

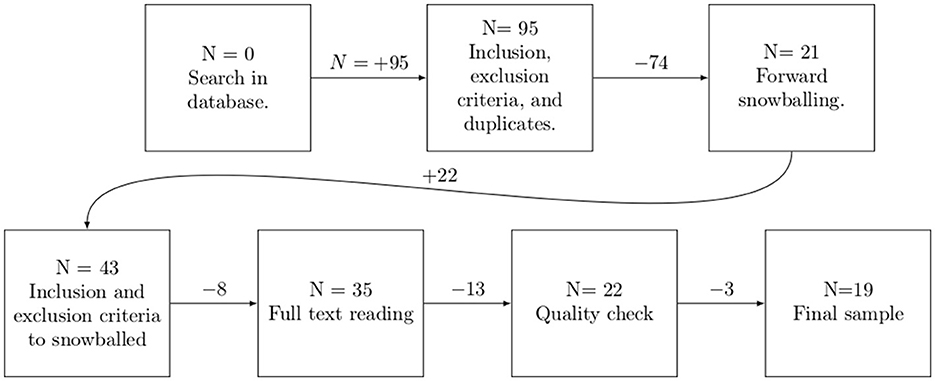

The search and collection of papers were done during the last week of November 2023. Table 7 shows the total number of articles gathered per database. The selection process for our final sample is illustrated in Figure 1 .

Table 7 . Search results per database.

Figure 1 . Selection of sample papers for this SLR.

The total number of articles in our first round, among all the databases, was 95. We then identified duplicates and applied our inclusion and exclusion criteria for the first round of selected papers. This process left us with a sample of 21 articles.

These first 21 articles are our starting set, from which we proceeded with forward snowballing. We snowballed each paper of the starting set by searching Google Scholar to find where it had been cited. The papers selected at this phase were chosen based on the title and abstract, following Wohlin (2014) . From this step, 22 more articles were added to the sample, leaving 43 articles. We then applied inclusion and exclusion criteria to the new snowballed papers, which left us with 35 papers. We discuss this high number of snowballed papers in Section 6.

At this point, we read all the articles to analyze if they should pass to the final phase. In this phase, we discarded 12 articles deemed out of scope for this research (for example, because they did not focus on cybersecurity, code generation, or the usage of AI models for code generation), leaving us with 23 articles for the quality check.

At this phase, three particular articles (counted among the eight articles previously discarded) sparked discussion between the first and fourth authors regarding whether they were within the scope of this research. We defined AI code generation as artifacts that suggest or produce code. Hence, those artifacts that use AI to check and/or verify code, and vulnerability detection without suggesting new code are not within scope. In addition, the article's main focus should be on code generation and not other areas, such as code verification. So, although an article might discuss code generation, the paper was not accepted as it was not the main topic. As a result, two of the three discussion articles were accepted, and one was rejected.

4.2 Quality evaluation

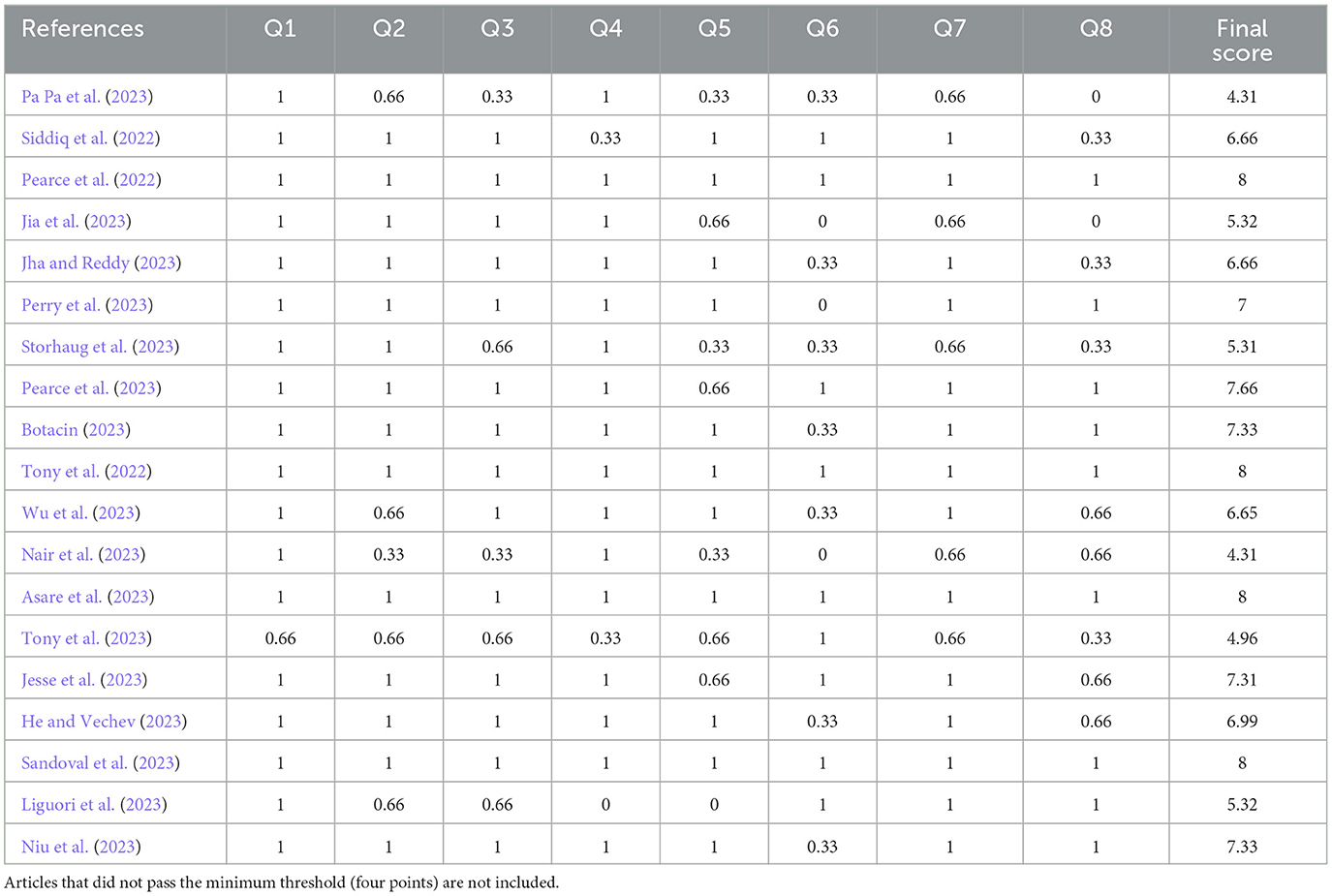

We carried out a quality check for our preliminary sample of papers ( N = 23) as detailed in Section 3.4. Based on the indicated scoring system, we discarded articles that did not pass 50% of the total possible score (four points). If there were disagreements in the scoring, these were discussed and resolved between authors. Each paper's score details are provided in Table 8 , for transparency purposes ( Carrera-Rivera et al., 2022 ). Quality scores guide us on where to place more weight and on which articles to focus ( Kitchenham and Charters, 2007 ). The final sample is of N = 19.
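The threshold rule can be sketched as follows. Several details here are assumptions rather than facts from the study: the answer scale (yes = 1, partly = 0.5, no = 0) is a placeholder for the scale in Table 5, the eight example answers are invented, and "passing" is read as scoring at least four points.

```python
# Assumed answer scale; the scale actually used is defined in Table 5.
SCALE = {"yes": 1.0, "partly": 0.5, "no": 0.0}


def quality_score(answers):
    """Sum the per-question scores of one paper."""
    return sum(SCALE[answer] for answer in answers)


def passes_quality_check(answers, threshold=4.0):
    """Keep a paper only if it reaches the threshold (assumed: at least 4 points)."""
    return quality_score(answers) >= threshold


# Invented answers for two hypothetical papers, eight questions each.
paper_a = ["yes", "yes", "partly", "yes", "no", "partly", "yes", "no"]
paper_b = ["no", "partly", "no", "yes", "no", "partly", "no", "no"]

print(quality_score(paper_a), passes_quality_check(paper_a))
print(quality_score(paper_b), passes_quality_check(paper_b))
```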

Table 8 . Quality scores of the final sample.

4.3 Final sample

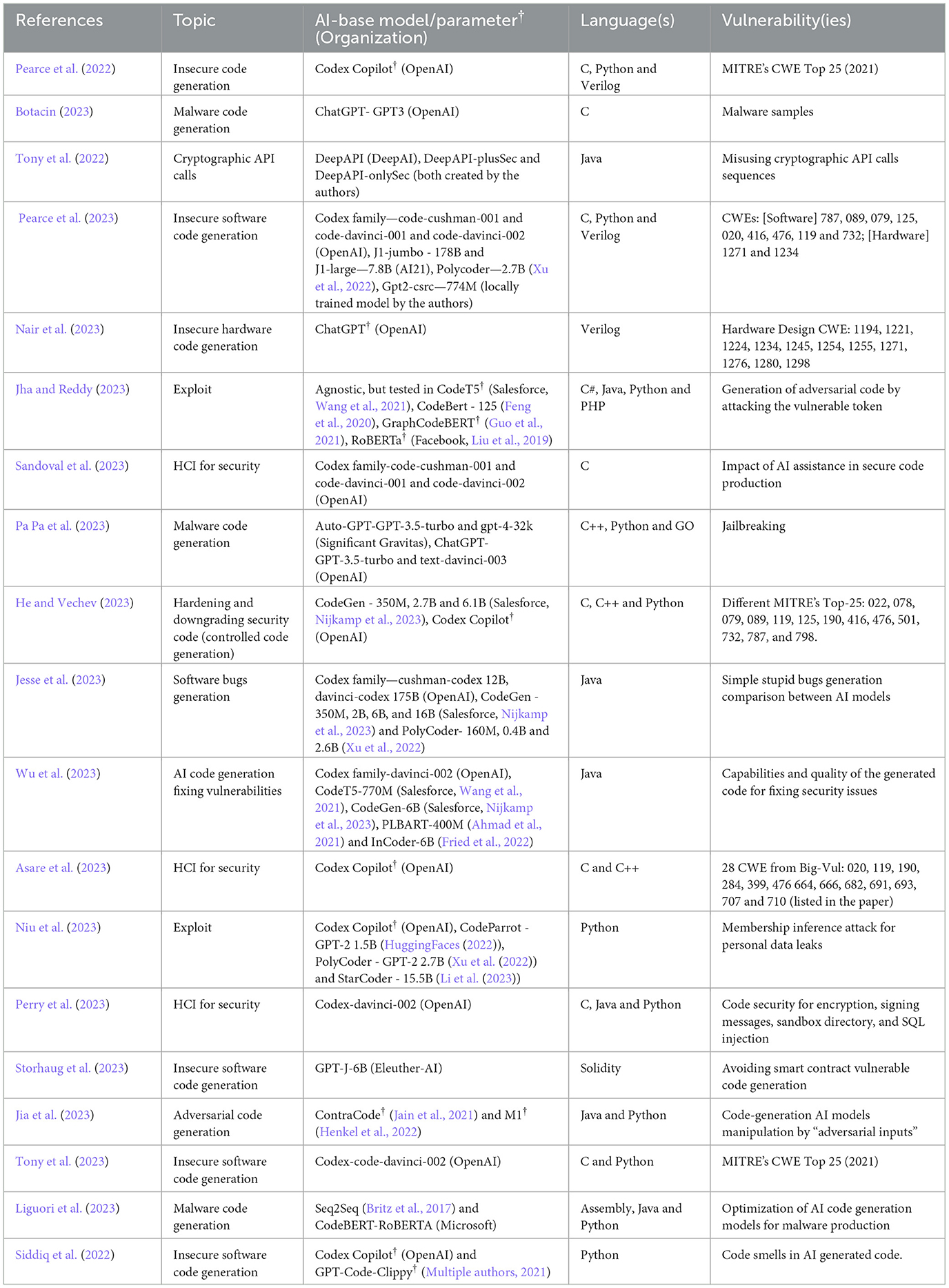

The quality check discarded three papers, which left us with 19 as a final sample, as seen in Table 9 . The first article in this sample was published in 2022, and the number of publications has been increasing every year. This situation is not surprising, as generative AI rose in popularity in 2020 and became widely known with the release of ChatGPT 3.5.

Table 9 . Sample of papers, with the main information of interest ( † means no parameter or base model was specified in the article).

5 Discussion

5.1 About AI models comparisons and methods for investigation

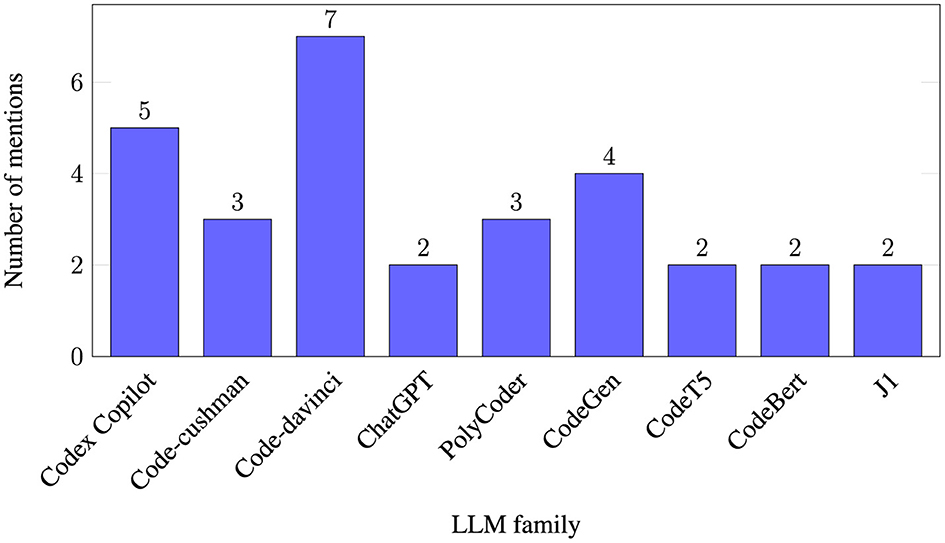

A clear majority of the papers (14 papers, 73%) research at least one OpenAI model, Codex being the most popular option. OpenAI owns ChatGPT, which was adopted massively by the general public. Hence, it is not surprising that most articles focus on OpenAI models. However, AI models from other organizations are also studied; Salesforce's CodeGen and CodeT5, both open-source, are prime examples. Similarly, the Polycoder model of Xu et al. (2022) was a popular selection in the sample. Finally, different authors benchmarked in-house AI models against popular models, for example Tony et al. (2022) with DeepAPI-plusSec and DeepAPI-onlySec and Pearce et al. (2023) with gpt2-csrc. Figure 3 shows the LLM instances researched by two or more articles grouped by family.

As the different papers researched different vulnerabilities, it remains difficult to compare the results. Some articles researched specific CWEs, others the MITRE Top 25, the impact of AI on code, the quality of the generated code, or malware generation, among others. It was also challenging to find the same methodological approach for comparing results, and therefore we can only infer certain tendencies. For this reason, future research could focus on developing a standardized approach for analyzing vulnerabilities and the security quality of generated code. Furthermore, it would be interesting to have more comparative analysis between open-source and proprietary models.

Having stated this, two articles with similar approaches, topics, and vulnerabilities are Pearce et al. (2022 , 2023) . Both papers share authors, which can help explain the similarity in the approach. Both reach similar conclusions on the security of the output of different OpenAI models: they can generate functional and safe code, but the proportion of such code varies by CWE and programming language ( Pearce et al., 2022 , 2023 ). In both papers, the security of the code generated in C was inferior to that in Python ( Pearce et al., 2022 , 2023 ). For example, Pearce et al. (2022) indicates that 39% of the suggested code is vulnerable for Python and 50% for C. Pearce et al. (2023) highlights that the models they studied struggled with fixes for certain CWEs, such as CWE-787 in C. So even though they compared different models of the OpenAI family, they produced similar results (albeit some models performed better than others).

Based on the work of Pearce et al. (2023) , when comparing OpenAI's models to others (such as the AI21 family, Polycoder, and gpt2-csrc) in C and Python with CWE vulnerabilities, OpenAI's models would perform better than the rest. In the majority of the cases, code-davinci-002 would outperform the rest. Furthermore, when applying the AI models to other programming languages, such as Verilog, not all models (namely Polycoder and gpt2-csrc) supported it ( Pearce et al., 2023 ). We cannot fully compare these results with other research articles, as they focused on different CWEs, but we can identify tendencies. The differences are as follows:

• He and Vechev (2023) studies mainly CodeGen and mentions that Copilot can help with CWE-089,022 and 798. They do not compare the two AI models but compare CodeGen with SVEN. They use scenarios to evaluate CWE, adopting the method from Pearce et al. (2022) . CodeGen does seem to provide similar tendencies as Pearce et al. (2022) : certain CWE appeared more recurrently than others. For example, comparing with Pearce et al. (2022) and He and Vechev (2023) , CWE-787, 089, 079, and 125 in Python and C appeared in most scenarios at a similar rate. 4

• This data shows that even OpenAI's and CodeGen models have similar outputs. When He and Vechev (2023) present the “overall security rate” of CodeGen at different temperatures, the results are comparable: 42% of the suggested code was vulnerable in He and Vechev (2023) vs. 39% in Python and 50% in C in Pearce et al. (2022).

• Nair et al. (2023) also study CWE vulnerabilities in Verilog code. Pearce et al. (2022, 2023) also analyze Verilog with OpenAI's models, but with very different research methods. Furthermore, their objectives are different: Nair et al. (2023) focus on prompting and how to modify prompts for a secure output. What can be compared is that both Nair et al. (2023) and Pearce et al. (2023) highlight the importance of prompting.

• Finally, Asare et al. (2023) also study OpenAI's models, but from a very different perspective: human-computer interaction (HCI). Therefore, we cannot compare the study results of Asare et al. (2023) with those of Pearce et al. (2022, 2023).

Regarding malware code generation, both Botacin (2023) and Pa Pa et al. (2023) study OpenAI's models, but different base models. Both conclude that AI models can help generate malware, but to different degrees. Botacin (2023) indicates that ChatGPT cannot create malware from scratch but can create snippets and help less-skilled malicious actors with the learning curve. Pa Pa et al. (2023) experiment with different jailbreaks and suggest that the different models can create malware of up to 400 lines of code. In contrast, Liguori et al. (2023) research Seq2Seq and CodeBERT and highlight how important it is for malicious actors that AI models output correct code; otherwise, their attack fails. Therefore, human review is still necessary to fulfill the goals of malicious actors (Liguori et al., 2023). Future work could benefit from comparing these results with other AI code generation models to understand whether they have similar outputs and how to jailbreak them.

The last element we can compare is the HCI aspects, specifically Asare et al. (2023), Perry et al. (2023), and Sandoval et al. (2023), who all researched C. Both Asare et al. (2023) and Sandoval et al. (2023) agree that AI code generation models do not seem to be worse than human developers, and are possibly equivalent, in generating insecure code and introducing vulnerabilities. In contrast, Perry et al. (2023) conclude that developers who used AI assistants generated more insecure code (although this is inconclusive for the C language), even while these developers believed they had written more secure code. Perry et al. (2023) suggest that there is a relationship between how much a developer trusts the AI model and the security of the code. All three agree that AI assistant tools should be used carefully, particularly by non-experts (Asare et al., 2023; Perry et al., 2023; Sandoval et al., 2023).

5.2 New exploits

Firstly, Niu et al. (2023) hand-crafted prompts that seemed likely to leak personal data, which yielded 200 prompts. Then, they queried the model with each of these prompts, obtaining five responses per prompt, for a total of 1,000 responses. Two authors then looked through the outputs to identify which prompts had leaked personal data. The authors then refined the prompt set based on the prompts identified. For the final data set, they tweaked elements such as context, prefixing, or the natural language (English and Chinese), and meta-variables such as prompt programming language style.

With the final set of prompts, the model was queried for privacy leaks. Before querying the model, the authors also tuned specific parameters, such as temperature. “Using the BlindMI attack allowed filtering out 20% of the outputs, with the high recall ensuring that most of the leakages are classified correctly and not discarded ( Niu et al., 2023 ).” Once the outputs had been labeled as members, a human checked if they contained “sensitive data” ( Niu et al., 2023 ). The human could categorize such information as targeted leak, indirect leak, or uncategorized leak.
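The overall shape of this pipeline (query each prompt several times, filter the outputs with a membership test, hand the remainder to a human) can be sketched as follows. This is only an illustration under stated assumptions: `fake_model` and `fake_membership_test` are hypothetical stand-ins, not the code-generation model or the BlindMI attack used by Niu et al. (2023), and the two prompts are invented for the example.

```python
Pair = tuple[str, str]  # (prompt, response)


def collect_responses(prompts, query_model, samples_per_prompt=5):
    """Query the model several times per prompt; keep every (prompt, response) pair."""
    return [(p, query_model(p)) for p in prompts for _ in range(samples_per_prompt)]


def keep_likely_members(pairs, looks_like_member):
    """Keep only the responses that the membership test flags for manual review."""
    return [pair for pair in pairs if looks_like_member(pair[1])]


# Hypothetical stand-ins (assumptions, not the tools used in the study).
def fake_model(prompt: str) -> str:
    return "completion of: " + prompt


def fake_membership_test(response: str) -> bool:
    return "email" in response


prompts = ["# contact email:", "# shipping address:"]
pairs = collect_responses(prompts, fake_model)
for_manual_review = keep_likely_members(pairs, fake_membership_test)
print(len(pairs), len(for_manual_review))
```

With 200 prompts and five samples per prompt, `collect_responses` would return the 1,000 responses mentioned above; the manual categorization step is deliberately left outside the sketch.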

Applying the exploit to Codex Copilot and verifying with GitHub shows that information is indeed leaked (Niu et al., 2023). Of the outputs, 2.82% contained identifiable information such as address, email, and date of birth; 0.78% contained private information such as medical records or identities; and 0.64% contained secret information such as private keys, biometric authentication, or passwords (Niu et al., 2023). The rate at which data was leaked varied; specific categories, such as bank statements, had much lower leak rates than passwords, for example (Niu et al., 2023). Furthermore, most of the leaks tended to be indirect rather than direct. This finding implies that “the model has a tendency to generate information pertaining to individuals other than the subject of the prompt, thereby breaching privacy principles such as contextual agreement ( Niu et al., 2023 ).”

Their research proposes a scalable and semi-automatic manner to leak personal data from the training data in a code-generation AI model. The authors do note that the outputs are not verbatim or memorized data.

To achieve this, He and Vechev (2023) curated a dataset of vulnerabilities from CrossVul (Nikitopoulos et al., 2021) and Big-Vul (Fan et al., 2020), which focus on C/C++, and VUDENC (Wartschinski et al., 2022) for Python. In addition, they included data from GitHub commits, taking special care that these were true commits, so that SVEN does not learn “undesirable behavior.” In the end, they target 9 CWEs from the MITRE Top 25.

Through benchmarking, they evaluate the security (and functional correctness) of SVEN's output against CodeGen (350M, 2.7B, and 6.1B). They follow a scenario-based approach “that reflect[s] real-world coding ( He and Vechev, 2023 ),” with each scenario targeting one CWE. They measure the security rate, which is defined as “the percentage of secure programs among valid programs ( He and Vechev, 2023 ).” They set the temperature at 0.4 for the samples.
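To make the metric concrete, the quoted definition of the security rate can be written as a short function. This is a minimal sketch: how a program is judged valid or secure is outside its scope, and the sample counts below are invented for illustration rather than taken from He and Vechev (2023).

```python
def security_rate(samples):
    """Percentage of secure programs among valid programs.

    `samples` is an iterable of (is_valid, is_secure) pairs, one per
    generated program. Invalid programs are excluded from the denominator.
    """
    secure_flags = [is_secure for is_valid, is_secure in samples if is_valid]
    if not secure_flags:
        raise ValueError("no valid programs to evaluate")
    return 100.0 * sum(secure_flags) / len(secure_flags)


# Invented example: 25 generated programs, 20 of them valid, 12 of those secure.
samples = (
    [(True, True)] * 12      # valid and secure
    + [(True, False)] * 8    # valid but vulnerable
    + [(False, False)] * 5   # invalid, ignored by the metric
)
print(security_rate(samples))  # 60.0
```

Because invalid programs are dropped before the ratio is taken, a model that produces many unusable samples can still report a high security rate, which is why functional correctness is evaluated separately.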

Their results show that SVEN can significantly increase or decrease (depending on the controlled generation output) the code security score. “CodeGen LMs have a security rate of ≈60%, which matches the security level of other LMs [...] SVENsec significantly improves the security rate to >85%. The best-performing case is 2.7B, where SVENsec increases the security rate from 59.1 to 92.3% ( He and Vechev, 2023 ).” Similar results are obtained for SVENvul, which reduces the “security rate greatly by 23.5% for 350M, 22.3% for 2.7B, and 25.3% for 6.1B ( He and Vechev, 2023 )”. 5 When analyzed per CWE, in almost all cases (except CWE-416 in C) SVENsec increases the security rate. Finally, even when tested on 4 CWEs that were not included in the original training set of 9, SVEN had positive results.

Although the authors aim at evaluating and validating SVEN as an artifact for cybersecurity, they also recognize its potential use as a malicious tool. They suggest that SVEN can be inserted in open-source projects and distributed (He and Vechev, 2023). Future work could focus on how SVEN, or similar approaches, could be integrated as plug-ins into AI code generators to lower the security of the generated code. Furthermore, replication of this approach could raise security alarms. Other research can focus on seeking ways to lower the security score while keeping the functionality, and on how such an attack can be distributed across targeted actors.

They benchmark CodeAttack against TextFooler and BERT-Attack, two other adversarial attacks, on three tasks: code translation (translating code between programming languages, in this case between C# and Java), code repair (fixing bugs in Java code), and code summarization (summarizing code in natural language). The authors also applied the benchmark to different AI models (CodeT5, CodeBERT, GraphCodeBERT, and RoBERTa) and different programming languages (C#, Java, Python, and PHP). In the majority of the tests, CodeAttack had the best results.

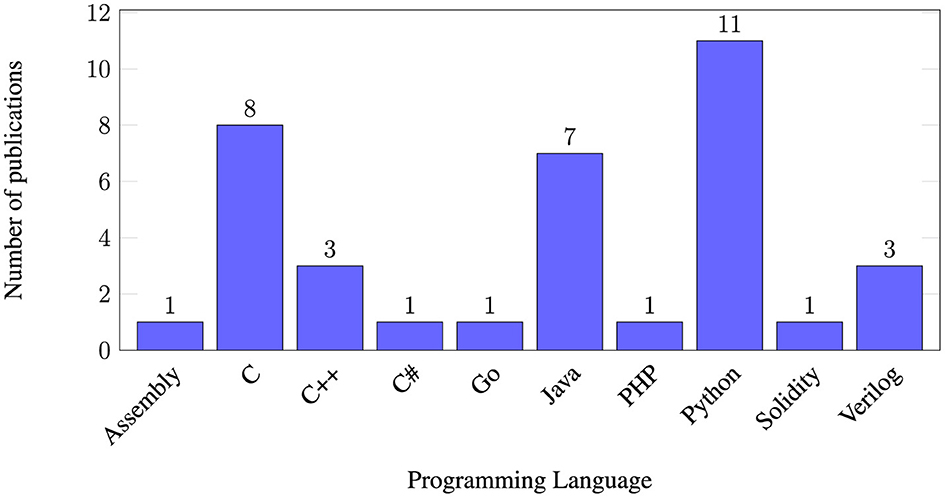

5.3 Performance per programming language

Different programming languages are studied. Python and the C family (C, C++, and C#) are the most common. To a lesser extent, Java and Verilog are tested. Finally, individual articles study less common programming languages, such as Solidity, Go, or PHP. Figure 2 offers a graphical representation of the distribution of the programming languages.

Figure 2 . Number of articles that research specific programming languages. An article may research 2 or more programming languages.

Figure 3 . Number of times each LLM instance was researched by two or more articles, grouped by family. One paper might study several instances of the same family (e.g., Code-davinci-001 and Code-davinci-002), therefore counting twice. Table 9 offers details on exactly which AI models are studied per article.

5.3.1 Python

Python is the second most used programming language 6 as of today. As a result, most publicly available training corpora include Python, and it is therefore reasonable to assume that AI models can more easily be tuned to handle this language (Pearce et al., 2022, 2023; Niu et al., 2023; Perry et al., 2023). Being a rather high-level, interpreted language, Python should also expose a smaller attack surface. As a result, AI-generated Python code has fewer avenues to cause issues to begin with, and this is indeed backed up by evidence (Pearce et al., 2022, 2023; Perry et al., 2023).

In spite of this, issues still occur: Pearce et al. (2022) experimented with 29 scenarios, producing 571 Python programs. Out of these, 219 (38.35%) presented some kind of Top-25 MITRE (2021) vulnerability, with 11 (37.92%) scenarios having a top-vulnerable score. Not accounted for in these statistics are the situations where generated programs fail to achieve functional correctness (Pearce et al., 2023), which could yield different conclusions. 7

Pearce et al. (2023), building on Pearce et al. (2022), study to what extent post-processing can automatically detect and fix bugs introduced during code generation. For instance, on CWE-089 (SQL injection) they found that “29.6% [3197] of the 10,796 valid programs for the CWE-089 scenario were repaired” by an appropriately tuned LLM (Pearce et al., 2023). In addition, they claim that AI models can generate bug-free programs without “additional context ( Pearce et al., 2023 ).”
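For readers unfamiliar with CWE-089, the following sketch contrasts a vulnerable query with a repaired one, using Python's standard `sqlite3` module. It is an illustrative example only: the table, function names, and payload are assumptions made for this sketch and are not taken from the scenarios of Pearce et al. (2022, 2023).

```python
import sqlite3


def find_user_vulnerable(conn, username):
    # CWE-089: user input is concatenated directly into the SQL text,
    # so a crafted value can change the structure of the query.
    query = "SELECT name FROM users WHERE name = '" + username + "'"
    return conn.execute(query).fetchall()


def find_user_repaired(conn, username):
    # Parameterized query: the value is bound by the driver and is
    # never parsed as SQL.
    return conn.execute(
        "SELECT name FROM users WHERE name = ?", (username,)
    ).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT)")
conn.executemany("INSERT INTO users VALUES (?)", [("alice",), ("bob",)])

payload = "x' OR '1'='1"
print(len(find_user_vulnerable(conn, payload)))  # 2: every row is returned
print(len(find_user_repaired(conn, payload)))    # 0: no user has that name
```

The two functions behave identically on benign input, which is part of why such flaws are easy to miss when only functional correctness is checked.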

It is however difficult to support such claims, which need to be nuanced. Depending on the class of vulnerability, AI models varied in their ability to produce secure Python code (Pearce et al., 2022; He and Vechev, 2023; Perry et al., 2023; Tony et al., 2023). Tony et al. (2023) experimented with code generation from natural language prompts, finding that Codex output did indeed include vulnerabilities. In another study, Copilot produced only rare occurrences of CWE-079 or CWE-020, but common occurrences of CWE-798 and CWE-089 (Pearce et al., 2022). Pearce et al. (2022) report a 75% vulnerable score for scenario 1, 48% for scenario 2, and 65% for scenario 3 with regard to the CWE-089 vulnerability. In February 2023, Copilot launched a prevention system for CWEs 089, 022, and 798 (He and Vechev, 2023), the exact mechanism of which is unclear. At the time of writing, it falls behind other approaches such as SVEN (He and Vechev, 2023).

Perhaps surprisingly, there is not much variability across different AI models: CodeGen-2.7B has comparable vulnerability rates ( He and Vechev, 2023 ), with CWE-089 still on top. CodeGen-2.7B also produced code that exhibited CWE-078, 476, 079, or 787, which are considered more critical.

One may think that using AI as an assistant to a human programmer could alleviate some of these issues. Yet evidence points to the opposite: when using AI models as pair programmers, developers consistently deliver more insecure code for Python (Perry et al., 2023). Perry et al. (2023) led a user-oriented study on how the usage of AI models for programming affects the security and functionality of code, focusing on Python, C, and SQL. For Python, they asked participants to write functions that performed basic cryptographic operations (encryption, signature) and file manipulation. 8 They show a statistically significant difference between subjects that used AI models (experimental group) and those that did not (control group), with the experimental group consistently producing less secure code (Perry et al., 2023). For instance, for task 1 (encryption and decryption), 21% of the responses of the experimental group were secure and correct vs. 43% of those of the control group (Perry et al., 2023). In comparison, 36% of the experimental group provided insecure but correct code, compared to 14% of the control group.

Even if AI models occasionally produce bug-free and secure code, the evidence indicates that this cannot be guaranteed. In this light, both Pearce et al. (2022, 2023) recommend deploying additional security-aware tools and methodologies whenever using AI models. Moreover, Perry et al. (2023) suggest a relationship between security awareness and trust in AI models on the one hand, and the security of the AI-(co)generated code on the other.