Frequently Asked Questions

What are reliability and validity in research?

Reliability in research refers to the consistency and stability of measurements or findings. Validity relates to the accuracy and truthfulness of results, that is, whether the study measures what it intends to measure. Both are crucial for trustworthy and credible research outcomes.

What is validity?

Validity in research refers to the extent to which a study accurately measures what it intends to measure. It ensures that the results truly represent the phenomena under investigation. Without validity, research findings may be irrelevant, misleading, or incorrect, which limits their applicability and credibility.

What is reliability?

Reliability in research refers to the consistency and stability of measurements over time. If a study is reliable, repeating the experiment or test under the same conditions should produce similar results. Without reliability, findings become unpredictable and lack dependability, potentially undermining the study’s credibility and generalisability.

What is reliability in psychology?

In psychology, reliability refers to the consistency of a measurement tool or test. A reliable psychological assessment produces stable and consistent results across different times, situations, or raters. It indicates that an instrument’s scores are not dominated by random error, making the findings dependable and reproducible under similar conditions.

What is test-retest reliability?

Test-retest reliability assesses the consistency of a test's measurements over time. It involves administering the same test to the same participants at two different points in time and comparing the results. A high correlation between the two sets of scores indicates that the test produces stable and consistent results over time.

How can you improve the reliability of an experiment?

- Standardise procedures and instructions.

- Use consistent and precise measurement tools.

- Train observers or raters to reduce subjective judgments.

- Increase sample size to reduce random errors.

- Conduct pilot studies to refine methods.

- Repeat measurements or use multiple methods.

- Address potential sources of variability.
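Test-retest reliability is usually summarised as the correlation between the two administrations, and the advice to repeat measurements can be quantified with the Spearman-Brown prophecy formula (a standard classical test theory result that the text above does not mention). The Python sketch below is illustrative only: the scores are hypothetical, `pearson_r` and `spearman_brown` are helper names chosen for this example, and Spearman-Brown assumes the repeated measurements are parallel (equally reliable and measuring the same thing).

```python
import math
from statistics import mean


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least two scores each")
    mx, my = mean(x), mean(y)
    # Sum of cross-products of deviations from each mean (covariance numerator).
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    # Square roots of the sums of squared deviations (standard-deviation numerators).
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def spearman_brown(r: float, k: float) -> float:
    """Predicted reliability of the average of k parallel measurements,
    each with reliability r (Spearman-Brown prophecy formula)."""
    return k * r / (1 + (k - 1) * r)


# Hypothetical scores for the same five participants, tested on two occasions.
time1 = [12, 15, 9, 20, 17]
time2 = [13, 14, 10, 19, 18]

r_tt = pearson_r(time1, time2)
print(f"test-retest r = {r_tt:.2f}")
# Effect of averaging two such administrations instead of relying on one.
print(f"predicted reliability of a 2-occasion average = {spearman_brown(r_tt, 2):.2f}")
```

On Python 3.10 or later, `statistics.correlation` computes the same Pearson coefficient; the explicit version is shown so that the arithmetic is visible.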

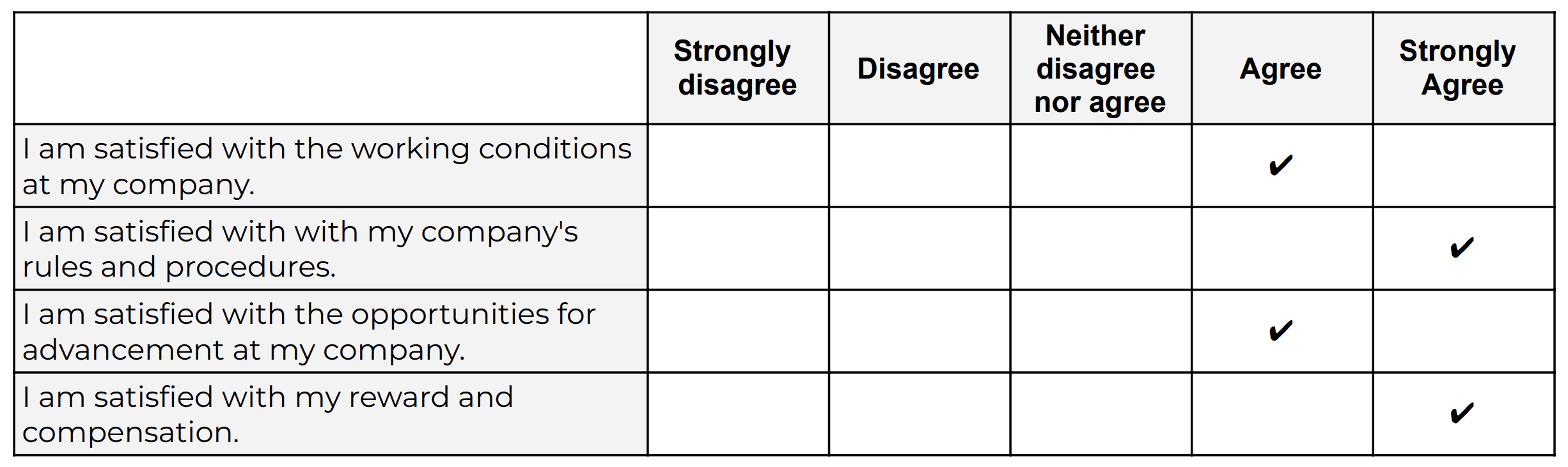

What is the difference between reliability and validity?

Reliability refers to the consistency and repeatability of measurements, ensuring results are stable over time. Validity indicates how well an instrument measures what it is intended to measure, ensuring accuracy and relevance. A test can be reliable without being valid, but a valid test must also be reliable. Both are essential for credible research.

Are interviews reliable and valid?

Interviews can be both reliable and valid, but they are susceptible to bias. Their reliability and validity depend on the design, structure, and execution of the interview. Structured interviews with standardised questions improve reliability. Validity is enhanced when questions accurately capture the intended construct and when interviewer bias is minimised.

Are IQ tests valid and reliable?

IQ tests are generally considered reliable, producing consistent scores over time. Their validity, however, is a subject of debate. While they effectively measure certain cognitive skills, whether they capture the entirety of “intelligence” or predict success in all areas of life is contested. Cultural bias and over-reliance on test scores are also concerns.

Are questionnaires reliable and valid?

Questionnaires can be both reliable and valid if they are well designed. Reliability is achieved when they produce consistent results over time or across similar populations. Validity is supported when questions accurately measure the intended construct. However, poorly phrased questions, respondent bias, and a lack of standardisation can compromise their reliability and validity.
First Online: 14 May 2021

Authors: Cecil R. Reynolds, Robert A. Altmann & Daniel N. Allen

Validity is a fundamental psychometric property of psychological tests. For any given test, the term validity refers to evidence that supports interpretation of test results as reflecting the psychological construct(s) the test was designed to measure. Validity is threatened when the test does not measure important aspects of the construct of interest, or when the test measures characteristics, content, or skills that are unrelated to the test construct. A test must produce reliable test scores to produce valid interpretations, but even highly reliable tests may produce invalid interpretations. This chapter considers these matters in depth, including various types of validity and validity evidence, sources of validity evidence, and integration of validity evidence across different sources to support a validity argument for the test. The chapter ends with a practical discussion of how validity evidence is reported in test manuals, using as an example the Reynolds Intellectual Assessment Scales, second edition.

Validity refers to the degree to which evidence and theory support interpretations of test scores for the proposed uses of the test. Validity is, therefore, the most fundamental consideration in developing and evaluating tests.
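The point that reliable scores are necessary, but not sufficient, for valid interpretations has a standard quantitative counterpart in classical test theory, which follows from the correction for attenuation (added here for illustration; it is not quoted from the chapter). With $r_{XY}$ the correlation between test $X$ and criterion $Y$, and $r_{XX'}$ and $r_{YY'}$ their reliabilities:

$$
r_{XY} \le \sqrt{r_{XX'}\, r_{YY'}} \le \sqrt{r_{XX'}}
$$

For example, a test with a reliability of $0.81$ cannot correlate more than $\sqrt{0.81} = 0.90$ with any criterion. The bound runs in one direction only: a test with a reliability of $0.95$ can still correlate close to zero with a criterion if it measures a different construct.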

References

American Educational Research Association, American Psychological Association, & National Council on Measurement in Education. (2014). Standards for educational and psychological testing. Washington, DC: American Educational Research Association.

American Educational Research Association, American Psychological Association, & National Council on Measurement in Education. (1999). Standards for educational and psychological testing. Washington, DC: American Educational Research Association.

American Psychological Association. (1954). Technical recommendations for psychological tests and diagnostic techniques. Psychological Bulletin, 51(2, Pt. 2), 1–28.

American Psychological Association. (1966). Standards for educational and psychological tests and manuals. Washington, DC: Author.

American Psychological Association, American Educational Research Association, & National Council on Measurement in Education. (1974). Standards for educational and psychological testing. Washington, DC: Author.

American Psychological Association, American Educational Research Association, & National Council on Measurement in Education. (1985). Standards for educational and psychological testing. Washington, DC: Author.

Anastasi, A., & Urbina, S. (1997). Psychological testing (7th ed.). Upper Saddle River, NJ: Prentice Hall.

Campbell, D. T., & Fiske, D. W. (1959). Convergent and discriminant validation by the multitrait-multimethod matrix. Psychological Bulletin, 56(2), 81–105.

Carroll, J. B. (1993). Human cognitive abilities: A survey of factor-analytic studies. New York, NY: Cambridge University Press.

Cattell, R. (1966). Handbook of multivariate experimental psychology. Chicago, IL: Rand McNally.

Chan, D., Schmitt, N., DeShon, R. P., Clause, C. S., & Delbridge, K. (1997). Reaction to cognitive ability tests: The relationship between race, test performance, face validity, and test-taking motivation. Journal of Applied Psychology, 82, 300–310.

Cronbach, L. J. (1990). Essentials of psychological testing (5th ed.). New York, NY: HarperCollins.

Cronbach, L. J., & Gleser, G. C. (1965). Psychological tests and personnel decisions (2nd ed.). Champaign, IL: University of Illinois Press.

Hunter, J. E., Schmidt, F. L., & Rauschenberger, J. (1984). Methodological, statistical, and ethical issues in the study of bias in psychological tests. In C. R. Reynolds & R. T. Brown (Eds.), Perspectives on bias in mental testing (pp. 41–100). New York, NY: Plenum Press.

Lawshe, C. H. (1975). A quantitative approach to content validity. Personnel Psychology, 28, 563–575.

Lee, D., Reynolds, C., & Willson, V. (2003). Standardized test administration: Why bother? Journal of Forensic Neuropsychology, 3(3), 55–81.

Linn, R. L., & Gronlund, N. E. (2000). Measurement and assessment in teaching (8th ed.). Upper Saddle River, NJ: Prentice Hall.

McFall, R. M., & Treat, T. T. (1999). Quantifying the information value of clinical assessments with signal detection theory. Annual Review of Psychology, 50, 215–241.

Messick, S. (1989). Validity. In R. L. Linn (Ed.), Educational measurement (3rd ed., pp. 13–103). Upper Saddle River, NJ: Merrill Prentice Hall.

Messick, S. (1994). The interplay of evidence and consequences in the validation of performance assessments. Educational Researcher, 23, 13–23.

Meyer, G. J., Finn, S. E., Eyde, L. D., Kay, G. G., Moreland, K. L., Dies, R. R., … Reed, G. M. (2001). Psychological testing and psychological assessment: A review of evidence and issues. American Psychologist, 56, 128–165.

Pearson. (2009). WIAT-III: Examiner's manual. San Antonio, TX: Author.

Popham, W. J. (2000). Modern educational measurement: Practical guidelines for educational leaders. Boston, MA: Allyn & Bacon.

Reynolds, C. R. (1998). Fundamentals of measurement and assessment in psychology. In A. Bellack & M. Hersen (Eds.), Comprehensive clinical psychology (pp. 33–55). New York, NY: Elsevier.

Reynolds, C. R., & Kamphaus, R. W. (2003). Reynolds Intellectual Assessment Scales. Lutz, FL: Psychological Assessment Resources.

Reynolds, C. R., & Kamphaus, R. W. (2015). Reynolds Intellectual Assessment Scales (2nd ed.). Lutz, FL: Psychological Assessment Resources.

Schmidt, F. L., & Hunter, J. E. (1998). The validity and utility of selection methods in personnel psychology: Practical and theoretical implications of 85 years of research findings. Psychological Bulletin, 124(2), 262–274.

Sireci, S. G. (1998). Gathering and analyzing content validity data. Educational Assessment, 5, 299–321.

Wechsler, D. (2014). Wechsler intelligence scale for children (5th ed.). Bloomington, MN: NCS Pearson.

Recommended Reading

American Educational Research Association, American Psychological Association, & National Council on Measurement in Education. (1999). Standards for educational and psychological testing. Washington, DC: American Educational Research Association. Chapter 1 is a must read for those wanting to gain a thorough understanding of validity.

Cronbach, L. J., & Gleser, G. C. (1965). Psychological tests and personnel decisions (2nd ed.). Champaign, IL: University of Illinois Press. A classic, particularly with regard to validity evidence based on relations to external variables!

Gorsuch, R. L. (1983). Factor analysis (2nd ed.). Hillsdale, NJ: Erlbaum. A classic for those really interested in understanding factor analysis.

Hunter, J. E., Schmidt, F. L., & Rauschenberger, J. (1984). Methodological, statistical, and ethical issues in the study of bias in psychological tests. In C. R. Reynolds & R. T. Brown (Eds.), Perspectives on bias in mental testing (pp. 41–100). New York, NY: Plenum.

Lee, D., Reynolds, C. R., & Willson, V. L. (2003). Standardized test administration: Why bother? Journal of Forensic Neuropsychology, 3, 55–81.

McFall, R. M., & Treat, T. T. (1999). Quantifying the information value of clinical assessments with signal detection theory. Annual Review of Psychology, 50, 215–241.

Messick, S. (1989). Validity. In R. L. Linn (Ed.), Educational measurement (3rd ed., pp. 13–103). Upper Saddle River, NJ: Merrill/Prentice Hall. A little technical at times, but very influential.

Schmidt, F. L., & Hunter, J. E. (1998). The validity and utility of selection methods in personnel psychology: Practical and theoretical implications of 85 years of research findings. Psychological Bulletin, 124, 262–274. A must read on personnel selection!

Sireci, S. G. (1998). Gathering and analyzing content validity data. Educational Assessment, 5, 299–321. This article provides a good review of approaches to collecting validity evidence based on test content, including some of the newer quantitative approaches.

Tabachnick, B. G., & Fidell, L. S. (1996). Using multivariate statistics (3rd ed.). New York, NY: HarperCollins. A great chapter on factor analysis that is less technical than Gorsuch (1983).

Author information

Cecil R. Reynolds: Austin, TX, USA

Robert A. Altmann: Minneapolis, MN, USA

Daniel N. Allen: Department of Psychology, University of Nevada, Las Vegas, Las Vegas, NV, USA

5.1 Electronic Supplementary Material

Supplementary file 5.1 (PPTX 258 kb)

Copyright information

© 2021 Springer Nature Switzerland AG

About this chapter

Reynolds, C. R., Altmann, R. A., & Allen, D. N. (2021). Validity. In Mastering Modern Psychological Testing. Springer, Cham. https://doi.org/10.1007/978-3-030-59455-8_5

- DOI: https://doi.org/10.1007/978-3-030-59455-8_5
- Published: 14 May 2021
- Publisher name: Springer, Cham
- Print ISBN: 978-3-030-59454-1
- Online ISBN: 978-3-030-59455-8
- eBook packages: Behavioral Science and Psychology; Behavioral Science and Psychology (R0)

Content Validity in Research: Definition & Examples

Charlotte Nickerson, Research Assistant at Harvard University; Undergraduate at Harvard University. Charlotte Nickerson is a student at Harvard University obsessed with the intersection of mental health, productivity, and design.

Saul McLeod, PhD, Editor-in-Chief for Simply Psychology; BSc (Hons) Psychology, MRes, PhD, University of Manchester. Saul McLeod, PhD, is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc, Associate Editor for Simply Psychology; BSc (Hons) Psychology, MSc Psychology of Education. Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in the healthcare and educational sectors.

- Content validity is a type of validity that demonstrates how well a measure covers the construct it is meant to represent.

- It is important for researchers to establish content validity in order to ensure that their study is measuring what it intends to measure.

- There are several ways to establish content validity, including expert opinion, focus groups, and surveys.

What Is Content Validity?

Content validity is the degree to which elements of an assessment instrument are relevant to and representative of the targeted construct for a particular assessment purpose. This encompasses the appropriateness of the items, tasks, or questions for the specific domain being measured, and whether the instrument covers a broad enough range of content to support conclusions about the targeted construct (Rossiter, 2008).

One example of an assessment with high content validity is the Iowa Test of Basic Skills (ITBS). The ITBS is a standardized test that has been used since 1935 to assess the academic achievement of students in grades 3-8. The test covers a wide range of academic skills, including reading, math, language arts, and social studies. Its items are carefully developed and reviewed by a panel of experts to ensure that they are fair and representative of the skills being tested. As a result, the ITBS has high content validity and is widely used by schools and districts to measure student achievement.

By contrast, most driving tests have low content validity, because their questions are often not representative of the skills needed to drive safely. For example, many driving permit tests do not include questions about how to parallel park or change lanes, and driving license tests often do not assess drivers in non-ideal conditions, such as rain or snow. As a result, these tests do not provide an accurate measure of a person’s ability to drive safely.

The higher the content validity of an assessment, the more accurately it can measure what it is intended to measure: the target construct (Rossiter, 2008).

Why is content validity important in research?

Content validity is important in research because it provides confidence that an instrument is measuring what it is supposed to measure.
This is particularly relevant when developing new measures or adapting existing ones for use with different populations. It also has implications for the interpretation of results, as findings can only be accurately applied to groups for which the content validity of the measure has been established.

Step-by-step guide: How to measure content validity?

Haynes et al. (1995) emphasized the importance of content validity and gave an overview of ways to assess it.

One of the first ways of measuring content validity was the Delphi method, which was developed at the RAND Corporation in the 1950s as a way of systematically creating technical forecasts. The method involves a group of experts who make predictions about the future and then work toward a consensus about those predictions. Today, the Delphi method is most commonly used in medicine.

In a content validity study using the Delphi method, a panel of experts is asked to rate the items of an assessment instrument on a scale, and the experts may also add comments about the items. After all ratings have been collected, the average rating for each item is calculated. In the second round, the experts receive a summary of the first-round results and can comment further and revise their first-round answers. This back-and-forth continues until some homogeneity criterion, that is, a required level of agreement among the experts' ratings, is achieved (Koller et al., 2017).

Lawshe (1975) and Lynn (1986) created numerical methods to assess content validity. Both of these methods require the development of a content validity index (CVI). A content validity index is a statistical measure of the degree to which an assessment instrument covers the content domain of interest. There are two steps in calculating a content validity index:

- Determining the number of items that should be included in the assessment instrument;

- Determining the percentage of items that actually are included in the assessment instrument.
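As Lawshe's (1975) and Lynn's (1986) indices are usually presented in the measurement literature, they are computed from expert judgments of individual items. Lawshe's content validity ratio (CVR) for an item is (n_e - N/2) / (N/2), where n_e is the number of panelists who rate the item "essential" and N is the panel size; Lynn's item-level CVI is the proportion of experts who rate the item 3 or 4 on a 4-point relevance scale. The Python sketch below assumes those conventions. The function names and ratings are hypothetical, and averaging item-level CVIs into a scale-level value (often written S-CVI/Ave) is one common convention rather than the only one.

```python
def content_validity_ratio(n_essential: int, n_panelists: int) -> float:
    """Lawshe's CVR for one item: (n_e - N/2) / (N/2), ranging from -1 to +1."""
    half = n_panelists / 2
    return (n_essential - half) / half


def item_cvi(ratings: list[int]) -> float:
    """Item-level CVI: share of experts rating the item 3 or 4 on a 4-point relevance scale."""
    return sum(1 for r in ratings if r >= 3) / len(ratings)


def scale_cvi_average(item_ratings: dict[str, list[int]]) -> float:
    """Scale-level CVI taken as the mean of the item-level CVIs (S-CVI/Ave convention)."""
    values = [item_cvi(r) for r in item_ratings.values()]
    return sum(values) / len(values)


# Hypothetical relevance ratings from five experts
# (1 = not relevant, 2 = somewhat relevant, 3 = quite relevant, 4 = highly relevant).
ratings = {
    "item_1": [4, 4, 3, 4, 3],
    "item_2": [2, 3, 4, 2, 3],
    "item_3": [1, 2, 2, 3, 1],
}

for name, item_ratings in ratings.items():
    print(f"{name}: I-CVI = {item_cvi(item_ratings):.2f}")
print(f"S-CVI/Ave = {scale_cvi_average(ratings):.2f}")

# Lawshe: suppose 8 of 10 panelists rate an item "essential".
print(f"CVR = {content_validity_ratio(8, 10):.2f}")
```

Items with a low I-CVI or CVR (here, `item_3`) are the natural candidates for revision or removal before the instrument is used.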

The first step, determining the number of items that should be included in an assessment instrument, can be done using one of two approaches: item sampling or expert consensus.

Item sampling involves selecting a sample of items from a larger set of items that cover the content domain. The number of items in the sample is then used to estimate the total number of items needed to cover the content domain. This approach has the advantage of being quick and easy, but it can be biased if the sample of items is not representative of the larger set (Koller et al., 2017).

The second approach, expert consensus, involves asking a group of experts how many items should be included in an assessment instrument to adequately cover the content domain. This approach has the advantage of being more objective, but it can be time-consuming and expensive. Experts can also assign items to the dimensions of the construct being measured and rate each item's relevance to decide whether it is a strong measure of the construct.

Although various attempts to quantify the process of measuring content validity exist, there is no systematic procedure that could serve as a general guideline for evaluating content validity (Newman et al., 2013).

When is content validity used?

Educational assessment

In the context of educational assessment, validity is the extent to which an assessment instrument accurately measures what it is intended to measure. Validity concerns anyone who makes inferences and decisions about a learner based on data, and it can have deep implications for students’ education and future. For instance, a test that poorly measures students’ abilities can lead to placement in an unsuitable course and, ultimately, to the student’s failure (Obilor & Miwari, 2022).
There are a number of factors that specifically affect the validity of assessments given to students, such as the following (Obilor, 2018):

- Unclear Directions: If directions do not clearly indicate to the respondent how to respond to the tool’s items, the validity of the tool is reduced.

- Vocabulary: If the respondent's vocabulary is poor and they do not understand the items, the validity of the instrument is affected.

- Poorly Constructed Test Items: If items are constructed in such a way that they have different meanings for different respondents, validity is affected.

- Difficulty Level of Items: In an achievement test, too easy or too difficult test items would not discriminate among students, thereby lowering the validity of the test.

- Influence of Extraneous Factors: Extraneous factors like the style of expression, legibility, mechanics of grammar (spelling, punctuation), handwriting, and length of the tool, amongst others, influence the validity of a tool.

- Inappropriate Time Limit: In a speed test, if the time limit is too generous, the result will be invalidated as a measure of speed. In a power test, an inappropriate time limit will lower the validity of the test.
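Item difficulty, raised in the list above, is commonly quantified in classical test theory as the proportion of test-takers who answer an item correctly, and an item's ability to discriminate as the difference in that proportion between high and low scorers. The Python sketch below assumes dichotomously scored items (1 = correct, 0 = incorrect) and the common convention of comparing the top and bottom 27% of total scores; the function names and response data are hypothetical.

```python
def item_difficulty(responses: list[list[int]]) -> list[float]:
    """Proportion of test-takers answering each item correctly (1 = correct, 0 = incorrect)."""
    n_people = len(responses)
    n_items = len(responses[0])
    return [sum(person[i] for person in responses) / n_people for i in range(n_items)]


def item_discrimination(responses: list[list[int]], fraction: float = 0.27) -> list[float]:
    """Upper-lower discrimination index D = p(upper group) - p(lower group) for each item."""
    ranked = sorted(responses, key=sum, reverse=True)  # highest total scores first
    group_size = max(1, round(len(ranked) * fraction))
    upper, lower = ranked[:group_size], ranked[-group_size:]
    return [u - l for u, l in zip(item_difficulty(upper), item_difficulty(lower))]


# Hypothetical answers from six students to three items:
# item 1 is answered correctly by everyone, item 3 by no one.
responses = [
    [1, 1, 0],
    [1, 1, 0],
    [1, 1, 0],
    [1, 0, 0],
    [1, 0, 0],
    [1, 0, 0],
]

print("difficulty:", item_difficulty(responses))
print("discrimination:", item_discrimination(responses))
```

Items that everyone answers correctly (difficulty 1.0) or no one does (0.0) necessarily have a discrimination index of 0, which is why too-easy and too-hard items cannot separate stronger from weaker students.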

Interviews

There are a few reasons why interviews may lack content validity. First, interviewers may ask different questions or place different emphases on certain topics across different candidates, which makes it difficult to compare candidates on a level playing field. Second, interviewers may have personal biases that come into play when making judgments about candidates. Finally, the interview format itself may be flawed. For example, many companies ask potential programmers to complete brain teasers, such as calculating the number of plumbers in Chicago, or coding tasks that rely heavily on theoretical knowledge of data structures, even if this knowledge would rarely or never be used on the job.

Questionnaires

Questionnaires rely on respondents’ ability to recall information accurately and report it honestly. Additionally, the way in which questions are worded can influence responses. To increase content validity when designing a questionnaire, careful consideration must be given to the types of questions that will be asked. Open-ended questions are typically less biased than closed-ended questions, but they can be more difficult to analyze. It is also important to avoid leading or loaded questions that might push respondents’ answers in a particular direction. Questions should be worded clearly and concisely to avoid confusion (Koller et al., 2017).

Is content validity internal or external?

Most experts agree that content validity is primarily an internal issue. This means that the concepts and items included in a test should be based on a thorough analysis of the specific content area being measured. The items should also represent the range of difficulty levels within that content area. External factors, such as the opinions of experts or the general public, can influence content validity, but they are not necessarily the primary determinant.
In some cases, such as when developing a test for licensure or certification, external stakeholders may have a strong say in what is included in the test (Koller et al., 2017).

How can content validity be improved?

There are a few ways to increase content validity. One is to create items that are more representative of the targeted construct. Another is to increase the number of items on the assessment so that it covers a greater range of content. Finally, experts can review the items on the assessment to ensure that they are fair and representative of the skills being tested (Koller et al., 2017).

How do you test the content validity of a questionnaire?

There are a few ways to test the content validity of a questionnaire. One is to ask experts in the field to review the questions and give feedback on whether the questions are relevant and cover all important topics. Another is to administer the questionnaire to a small group of people and analyze the results for any patterns or themes emerging from the responses. Finally, statistical methods can also be used to test content validity, although this approach is more complex and usually requires access to specialized software (Koller et al., 2017).

How can you tell if an instrument is content-valid?

There are a few ways to tell if an instrument is content-valid. The first is to look at two closely related forms of validity: face validity and construct validity. Face validity is whether the items on the test appear to measure what they claim to measure; it is highly subjective but convenient to assess. Construct validity is whether the items on the test actually measure the construct they are supposed to measure. Finally, you can also look at criterion-related validity, which is whether scores on the test predict or correlate with an external criterion, such as future performance.
What is the difference between content and criterion validity?

Content validity is a measure of how well a test covers the content it is supposed to cover. Criterion validity, meanwhile, is an index of how well a test correlates with an established standard of comparison, or criterion. For example, if a measure of criminal behavior is criterion-valid, then it should be possible to use it to predict whether an individual will be arrested in the future for a criminal violation, is currently breaking the law, and has a previous criminal record (American Psychological Association, n.d.).

Are content validity and construct validity the same?

Content validity is not the same as construct validity. Content validity is a method of assessing the degree to which a measure covers the range of content that it purports to measure. In contrast, construct validity is a method of assessing the degree to which a measure reflects the underlying construct that it purports to measure. The two are related but distinct: a measure can have strong content validity yet weak construct validity. Content validity is a necessary but not sufficient condition for construct validity; that is, a measure cannot be construct-valid if it does not first have content validity (Koller et al., 2017). For example, a math achievement test may have content validity if it contains questions from all areas of math a student is expected to have learned before the test, but it may lack construct validity if its scores do not relate as expected to tests of similar and different constructs.

How many experts are needed for content validity?

There is no definitive answer to this question, as it depends on a number of factors, including the nature of the instrument being validated and the purpose of the validation exercise.
However, in general, a minimum of three experts should be used to ensure that the content validity of an instrument is adequately established (Koller et al., 2017).

References

American Psychological Association. (n.d.). Content validity. In APA Dictionary of Psychology.

Haynes, S. N., Richard, D., & Kubany, E. S. (1995). Content validity in psychological assessment: A functional approach to concepts and methods. Psychological Assessment, 7(3), 238.

Koller, I., Levenson, M. R., & Glück, J. (2017). What do you think you are measuring? A mixed-methods procedure for assessing the content validity of test items and theory-based scaling. Frontiers in Psychology, 8, 126.

Lawshe, C. H. (1975). A quantitative approach to content validity. Personnel Psychology, 28(4), 563–575.

Lynn, M. R. (1986). Determination and quantification of content validity. Nursing Research, 35(6), 382–385.

Newman, I., Lim, J., & Pineda, F. (2013). Content validity using a mixed methods approach: Its application and development through the use of a table of specifications methodology. Journal of Mixed Methods Research, 7(3), 243–260.

Obilor, E. I. (2018). Fundamentals of research methods and statistics in education and social sciences. Port Harcourt: SABCOS Printers & Publishers.

Obilor, E. I. P., & Miwari, G. U. P. (2022). Content validity in educational assessment.

Rossiter, J. R. (2008). Content validity of measures of abstract constructs in management and organizational research. British Journal of Management, 19(4), 380–388.

Imbalance between Employees and the Organisational Context: A Catalyst for Workplace Bullying Behaviours in Both Targets and Perpetrators


| Baseline Characteristics | n | % | | n | % |
|---|---|---|---|---|---|
| Gender | | | Supervisor | | |
| Males | 404 | 38.7 | Not a supervisor | 872 | 83.5 |
| Females | 640 | 61.3 | Supervisor | 172 | 16.5 |
| Sector | | | Civil Status | | |
| Education | 257 | 24.6 | Single | 431 | 41.3 |
| Industry | 96 | 9.2 | Married/Living together | 523 | 50.1 |
| Trade | 151 | 14.5 | Separated/divorced | 80 | 7.7 |
| Services | 540 | 51.7 | Widowed | 10 | 1.0 |
| Educational level | | | Income | | |
| No studies | 14 | 1.3 | €10,000 or less | 221 | 21.2 |
| Basic | 123 | 11.8 | €10,001–€20,000 | 338 | 32.4 |
| Secondary | 409 | 39.2 | €20,001–€30,000 | 303 | 29.0 |
| Diploma | 211 | 20.2 | €30,001–€40,000 | 117 | 11.2 |
| Undergraduate | 219 | 21.0 | €40,001–€50,000 | 32 | 3.1 |
| Postgraduate | 68 | 6.5 | More than €50,000 | 33 | 3.2 |
| Contract | | | | | |
| No permanent contract | 304 | 29.1 | | | |
| Permanent contract | 740 | 70.9 | | | |

| Organisational Dimensions | Continuum | Example Adjectives |
|---|---|---|
| 1. Organisational Focus | Task-Focused | Exploitative, obsolete, statistical |
| | Balanced Focus | Organised, participative, supportive |
| | Employee-Focused | Unstructured, disorganised, chaotic |
| 2. Organisational Atmosphere | Hostile or Negative | Controlling, manipulative, inhumane |
| | Balanced or Positive | Amiable, respectful, empathetic |
| | Too Informal | Overwhelmed, unmotivated, suffocating |
| 3. Organisational Hierarchy | Too Much | Authoritarian, inefficient, dictatorial |
| | Balanced Hierarchy | Hierarchical, cheerful, coherent |
| | Too Little | Uncoordinated, little prepared, unclear |

| | Variables | Mean | SD | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|---|---|---|
| 1 | Age | 35.43 | 10.91 | - | | | | |
| 2 | Gender | 1.61 | 0.49 | 0.06 | - | | | |
| 3 | Supervisor | 1.16 | 0.37 | 0.10 ** | −0.08 * | - | | |
| 4 | WB Target Score | 0.24 | 0.41 | −0.07 * | −0.04 | 0.01 | - | |
| 5 | WB Perpetration Score | 0.22 | 0.39 | −0.06 | −0.08 * | −0.00 | 0.52 ** | - |

| WB Target Level | No WBP (n) | Low WBP (n) | Medium WBP (n) | High WBP (n) | Total (n) | No WBP (%) | Low WBP (%) | Medium WBP (%) | High WBP (%) | Total (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| No WBT | 295 | 131 | 7 | 12 | 445 | 28.3 | 12.5 | 0.7 | 1.1 | 42.6 |
| Low WBT | 173 | 228 | 19 | 15 | 435 | 16.6 | 21.8 | 1.8 | 1.4 | 41.7 |
| Medium WBT | 12 | 43 | 17 | 4 | 76 | 1.1 | 4.1 | 1.6 | 0.4 | 7.3 |
| High WBT | 26 | 28 | 21 | 13 | 88 | 2.5 | 2.7 | 2.0 | 1.2 | 8.4 |
| Total | 506 | 430 | 64 | 44 | 1044 | 48.5 | 41.2 | 6.1 | 4.2 | 100.0 |

| Categories | N | % |
|---|---|---|
| Target not a perpetrator | 211 | 20.2 |
| Target perpetrator | 388 | 37.2 |
| Perpetrator not a target | 150 | 14.4 |
| Uninvolved | 295 | 28.3 |
| Total | 1044 | 100.0 |

| WB Categories | Task Focus (n) | Balanced Focus (n) | Employee Focus (n) | Total (n) | Task Focus (%) | Balanced Focus (%) | Employee Focus (%) | Total (%) |
|---|---|---|---|---|---|---|---|---|
| Target not a perpetrator | 55 | 66 | 29 | 150 | 7.3 | 8.8 | 3.9 | 20.0 |
| Target perpetrator | 112 | 107 | 75 | 294 | 15.0 | 14.3 | 10.0 | 39.3 |
| Perpetrator not a target | 30 | 54 | 17 | 101 | 4.0 | 7.2 | 2.3 | 13.5 |
| Uninvolved | 52 | 125 | 27 | 204 | 6.9 | 16.7 | 3.6 | 27.2 |
| Total | 249 | 352 | 148 | 749 | 33.2 | 47.0 | 19.8 | 100.0 |

| WB Categories | Negative Atmosphere (n) | Balanced Atmosphere (n) | Too Informal Atmosphere (n) | Total (n) | Negative Atmosphere (%) | Balanced Atmosphere (%) | Too Informal Atmosphere (%) | Total (%) |
|---|---|---|---|---|---|---|---|---|
| Target not a perpetrator | 40 | 78 | 28 | 146 | 5.5 | 10.6 | 3.8 | 19.9 |
| Target perpetrator | 98 | 132 | 60 | 290 | 13.4 | 18.0 | 8.2 | 39.6 |
| Perpetrator not a target | 17 | 65 | 16 | 98 | 2.3 | 8.9 | 2.2 | 13.4 |
| Uninvolved | 24 | 153 | 22 | 199 | 3.3 | 20.9 | 3.0 | 27.1 |
| Total | 179 | 428 | 126 | 733 | 24.4 | 58.4 | 17.2 | 100.0 |

| WB Categories | Too Little Hierarchy (n) | Balanced Hierarchy (n) | Too High Hierarchy (n) | Total (n) | Too Little Hierarchy (%) | Balanced Hierarchy (%) | Too High Hierarchy (%) | Total (%) |
|---|---|---|---|---|---|---|---|---|
| Target not a perpetrator | 25 | 79 | 44 | 148 | 3.4 | 10.8 | 6.0 | 20.2 |
| Target perpetrator | 68 | 124 | 96 | 288 | 9.3 | 16.9 | 13.1 | 39.3 |
| Perpetrator not a target | 16 | 61 | 22 | 99 | 2.2 | 8.3 | 3.0 | 13.5 |
| Uninvolved | 17 | 143 | 38 | 198 | 2.3 | 19.5 | 5.2 | 27.0 |
| Total | 126 | 407 | 200 | 733 | 17.2 | 55.5 | 27.3 | 100.0 |
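The four involvement categories line up exactly with the target-by-perpetration cross-tabulation if the "No" level is read as not involved on that axis and any Low, Medium, or High level as involved. The source does not state this rule, so treat it as an assumption; the function name and data layout below are hypothetical. A minimal sketch of that collapse:

```python
# Hypothetical sketch: collapse the target-by-perpetration cross-tabulation
# into four involvement groups. ASSUMPTION (not stated in the source): the
# "No" level means uninvolved on that axis; Low, Medium, or High means involved.

# Counts from the cross-tabulation: rows are WB target levels and columns are
# WB perpetration levels, both ordered No, Low, Medium, High.
CROSSTAB = [
    [295, 131, 7, 12],   # No WBT
    [173, 228, 19, 15],  # Low WBT
    [12, 43, 17, 4],     # Medium WBT
    [26, 28, 21, 13],    # High WBT
]


def involvement_groups(crosstab):
    """Sum cross-tab cells into the four target/perpetrator categories."""
    groups = {
        "Target not a perpetrator": 0,
        "Target perpetrator": 0,
        "Perpetrator not a target": 0,
        "Uninvolved": 0,
    }
    for t, row in enumerate(crosstab):
        for p, count in enumerate(row):
            is_target = t > 0       # index 0 is the "No WBT" row
            is_perpetrator = p > 0  # index 0 is the "No WBP" column
            if is_target and is_perpetrator:
                groups["Target perpetrator"] += count
            elif is_target:
                groups["Target not a perpetrator"] += count
            elif is_perpetrator:
                groups["Perpetrator not a target"] += count
            else:
                groups["Uninvolved"] += count
    return groups


groups = involvement_groups(CROSSTAB)
total = sum(groups.values())
for name, n in groups.items():
    print(f"{name}: {n} ({100 * n / total:.1f}%)")
```

Run as-is, it prints 211 (20.2%), 388 (37.2%), 150 (14.4%), and 295 (28.3%) out of 1,044, matching the category table. This agreement is consistent with the assumed rule but does not prove it was the authors' procedure.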

Özer, G.; Escartín, J. Imbalance between Employees and the Organisational Context: A Catalyst for Workplace Bullying Behaviours in Both Targets and Perpetrators. Behav. Sci. 2024, 14, 751. https://doi.org/10.3390/bs14090751


CBE Life Sciences Education, 15(1), Spring 2016

Contemporary Test Validity in Theory and Practice: A Primer for Discipline-Based Education Researchers

Todd D. Reeves* and Gili Marbach-Ad†

*Educational Technology, Research and Assessment, Northern Illinois University, DeKalb, IL 60115
†College of Computer, Mathematical and Natural Sciences, University of Maryland, College Park, MD 20742

Most discipline-based education researchers (DBERs) were formally trained in the methods of scientific disciplines such as biology, chemistry, and physics, rather than social science disciplines such as psychology and education. As a result, DBERs may never have taken specific courses in the social science research methodology, whether quantitative or qualitative, on which their scholarship often relies so heavily. One particular aspect of (quantitative) social science research that differs markedly from disciplines such as biology and chemistry is the instrumentation used to quantify phenomena. In response, this Research Methods essay offers a contemporary social science perspective on test validity and the validation process. The instructional piece explores the concepts of test validity, the validation process, validity evidence, and key threats to validity. The essay also includes an in-depth example of a validity argument and validation approach for a test of student argument analysis.
In addition to DBERs, this essay should benefit practitioners (e.g., lab directors, faculty members) in the development, evaluation, and/or selection of instruments for their work assessing students or evaluating pedagogical innovations.

INTRODUCTION

The field of discipline-based education research (Singer et al., 2012) has emerged in response to long-standing calls to advance the status of U.S. science education at the postsecondary level (e.g., Boyer Commission on Educating Undergraduates in the Research University, 1998; National Research Council, 2003; American Association for the Advancement of Science, 2011). Discipline-based education research applies scientific principles to the systematic study of postsecondary science education processes and outcomes, with the aim of improving the scientific enterprise. In particular, this field has made significant progress in the study of 1) active-learning pedagogies (e.g., Freeman et al., 2014); 2) interventions to support those pedagogies among both faculty (e.g., Brownell and Tanner, 2012) and graduate teaching assistants (e.g., Schussler et al., 2015); and 3) undergraduate research experiences (e.g., Auchincloss et al., 2014).

Most discipline-based education researchers (DBERs) were formally trained in the methods of scientific disciplines such as biology, chemistry, and physics, rather than social science disciplines such as psychology and education. As a result, DBERs may never have taken specific courses in the social science research methodology, whether quantitative or qualitative, on which their scholarship often relies so heavily (Singer et al., 2012). While the same principles of science ground all these fields, the specific methods used and some criteria for methodological and scientific rigor differ along disciplinary lines.
One particular aspect of (quantitative) social science research that differs markedly from research in disciplines such as biology and chemistry is the instrumentation used to quantify phenomena. Instrumentation is a critical aspect of research methodology, because it provides the raw input to statistical analyses and thus serves as a basis for credible conclusions and research-based educational practice (Opfer et al., 2012; Campbell and Nehm, 2013). A notable feature of social science instrumentation is that it generally targets latent variables, that is, variables that are not directly observable but must instead be inferred from observable behavior (Bollen, 2002). For example, to elicit evidence of cognitive beliefs, which cannot be observed directly, respondents are asked to report their level of agreement (e.g., “strongly disagree,” “disagree,” “agree,” “strongly agree”) with textually presented statements (e.g., “I like science,” “Science is fun,” and “I look forward to science class”). Even a multiple-choice final examination does not directly observe the phenomenon of interest (e.g., student knowledge). As such, compared with work in traditional scientific disciplines, the social sciences often require more of an inferential leap between the derivation of a score and its intended interpretation (Opfer et al., 2012).

Instruments designed to elicit evidence of variables of interest to DBERs have proliferated in recent years. Well-known examples include the Experimental Design Ability Test (EDAT; Sirum and Humburg, 2011); the Genetics Concept Assessment (Smith et al., 2008); the Classroom Undergraduate Research Experience survey (Denofrio et al., 2007); and the Classroom Observation Protocol for Undergraduate STEM (Smith et al., 2013). However, available instruments vary widely in their quality and nuance (Opfer et al., 2012; Singer et al., 2012; Campbell and Nehm, 2013), so DBERs need to understand how to evaluate instruments for use in their research. Practitioners, too, should know how to evaluate and select high-quality instruments for program evaluation and/or assessment purposes. Where high-quality instruments do not already exist for one’s context, which is common (Opfer et al., 2012), they must be developed, and corresponding empirical validity evidence must be gathered in accordance with contemporary standards.

In response, this Research Methods essay offers a contemporary social science perspective on test validity and the validation process. It is intended as a primer for DBERs who may not have received formal training on the subject. Using examples from discipline-based education research, the instructional piece explores the concepts of test validity, the validation process, validity evidence, and key threats to validity. The essay also includes an in-depth example of a validity argument and validation approach for a test of student argument analysis. In addition to DBERs, this essay should benefit practitioners (e.g., lab directors, faculty members) in the development, evaluation, and/or selection of instruments for their work assessing students or evaluating pedagogical innovations.

TEST VALIDITY AND THE TEST VALIDATION PROCESS

A test is a sample of behavior gathered in order to draw an inference about some domain or construct within a particular population (American Educational Research Association, American Psychological Association, and National Council on Measurement in Education [AERA, APA, and NCME], 2014).¹ In the social sciences, the domain about which an inference is desired is typically a latent (unobservable) variable. For example, the STEM GTA-Teaching Self-Efficacy Scale (DeChenne et al., 2012) was developed to support inferences about the degree to which a graduate teaching assistant believes he or she is capable of 1) cultivating an effective learning environment and 2) implementing particular instructional strategies. As another example, the inference drawn from an introductory biology final exam is typically about the degree to which a student understands content covered over some extensive unit of instruction. While beliefs and conceptual knowledge are not directly accessible, what can be observed is the sample of behavior the test elicits, such as test-taker responses to questions or to rating scales.

Diverse forms of instrumentation are used in discipline-based education research (Singer et al., 2012). Notable subcategories include self-report instruments (e.g., attitudinal and belief scales) and more objective measures (e.g., concept inventories, standardized observation protocols, and final exams). By the definition of “test” above, any of these instrument types can be conceived of as a test, though the focus here is only on instruments that yield quantitative data, that is, scores.

The paramount consideration in evaluating any test’s quality is validity: “the degree to which evidence and theory support the interpretations of test scores for proposed uses of tests” (Angoff, 1988; AERA, APA, and NCME, 2014, p. 11).²˒³ In evaluating test validity, the focus is not on the test itself, but rather on the proposed inference(s) drawn from the test’s score(s). Noteworthy in the definition above is that validity is a matter of degree (“the inferences supported by this test have a high or low degree of validity”) rather than a dichotomous property (e.g., “the inferences supported by this test are or are not valid”).
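The gap between an observed score and the inference drawn from it can be made concrete with a small sketch. It assumes, hypothetically, that the four agreement options quoted earlier are coded 1 to 4 and that the scale score is the mean across the three example statements. The coding, the function name `scale_score`, and the sample responses are illustrative assumptions, not an instrument from the essay.

```python
# Hypothetical 4-point coding of the agreement options quoted in the text.
AGREEMENT_CODES = {
    "strongly disagree": 1,
    "disagree": 2,
    "agree": 3,
    "strongly agree": 4,
}

# Example attitude statements quoted in the text.
ITEMS = ["I like science", "Science is fun", "I look forward to science class"]


def scale_score(responses):
    """Return the mean item code for one respondent.

    `responses` maps each item to the chosen agreement label (case-insensitive).
    Raises KeyError if an item is missing or a label is not one of the options.
    """
    codes = [AGREEMENT_CODES[responses[item].lower()] for item in ITEMS]
    return sum(codes) / len(codes)


respondent = {
    "I like science": "agree",
    "Science is fun": "strongly agree",
    "I look forward to science class": "disagree",
}
print(scale_score(respondent))  # prints 3.0
```

The returned number is only the observed score. Whether a 3.0 supports an interpretation such as "this student holds positive beliefs about science" is exactly the claim that validity evidence has to back.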
Assessment validation is theorized as an iterative process in which the test developer constructs an evidence-based argument for the intended test-based score interpretations in a particular population (Kane, 1992; Messick, 1995). An example validity argument claim is that the test’s content (e.g., questions, items) is representative of the domain targeted by the test (e.g., a body of knowledge/skills). With this argument-based approach, claims within the validity argument are substantiated with various forms of relevant evidence. Altogether, the goal of test validation is to accumulate over time a comprehensive body of relevant evidence to support each intended score interpretation within a particular population (i.e., evidence on whether the scores should in fact be interpreted to mean what the developer intends them to mean).

CATEGORIES OF TEST VALIDITY EVIDENCE

Historically, test validity theory in the social sciences recognized several categorically different “types” of validity (e.g., “content validity,” “criterion validity”). However, contemporary validity theory posits that test validity is a unitary (single) concept. Rather than providing evidence of each “type” of validity, the charge for test developers is to construct a cohesive argument for the validity of test score–based inferences that integrates different forms of validity evidence. The categories of validity evidence include evidence based on test content, evidence based on response processes, evidence based on internal structure, evidence based on relations with other variables, and evidence based on the consequences of testing (AERA, APA, and NCME, 2014). Figure 1 provides a graphical representation of the categories and subcategories of validity evidence.

Figure 1. Categories of evidence used to argue for the validity of test score interpretations and uses (AERA, APA, and NCME, 2014).
Validity evidence based on test content concerns “the relationship between the content of a test and the construct it is intended to measure” (AERA, APA, and NCME, 2014, p. 14). Such evidence concerns the match between the domain purportedly measured by the test (e.g., diagnostic microscopy skills) and the content of the test (e.g., the specific slides examined by the test taker). For example, if a test is intended to elicit evidence of students’ understanding of the key principles of evolution by means of natural selection (e.g., variation, heredity, differential fitness), the test should fully represent those principles in the sample of behavior it elicits. As a concrete example from the literature, in developing the Host-Pathogen Interaction (HPI) concept inventory, Marbach-Ad et al. (2009) explicitly mapped each test item to one of 13 HPI concepts the instrument was intended to assess. Content validity evidence alone is insufficient for establishing a high degree of validity; it should be combined with other forms of evidence to yield a strong evidence-based validity argument marked by relevancy, accuracy, and sufficiency.

In practice, providing validity evidence based on test content involves evaluating and documenting content representativeness. One standard approach to collecting evidence of content representativeness is to submit the test to systematic external review by subject matter experts (e.g., biology faculty) and to document such reviews (as well as any revisions made on their basis). External reviews focus on how adequately the test’s overall sample of behavior represents the domain assessed and any corresponding subdomains, as well as on the relevance or irrelevance of particular questions/items to the domain. We refer the reader to Webb (2006) for a comprehensive and sophisticated framework for evaluating different dimensions of alignment between domain and test content.
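Expert reviews like these are often summarized numerically. The essay does not prescribe an index, so the sketch below swaps in two common ones from the content validity references listed earlier in this document. The first is Lawshe's (1975) content validity ratio, CVR = (n_e - N/2) / (N/2), where n_e of N panelists rate an item "essential." The second is an item-level index in the spirit of Lynn (1986): the share of experts who rate an item 3 or 4 on a 4-point relevance scale. The function names and the five-expert panel are hypothetical, and no acceptance cutoffs are implied.

```python
def content_validity_ratio(n_essential, n_experts):
    """Lawshe's (1975) content validity ratio for one item.

    Ranges from -1 (no expert rates the item essential) to +1 (all do);
    0 means exactly half of the panel rates it essential.
    """
    if n_experts <= 0 or not 0 <= n_essential <= n_experts:
        raise ValueError("need n_experts > 0 and 0 <= n_essential <= n_experts")
    half = n_experts / 2
    return (n_essential - half) / half


def item_cvi(relevance_ratings):
    """Share of experts rating an item 3 or 4 on a 4-point relevance scale."""
    if not relevance_ratings:
        raise ValueError("need at least one rating")
    return sum(1 for r in relevance_ratings if r >= 3) / len(relevance_ratings)


# Hypothetical panel of five experts reviewing a single item.
print(content_validity_ratio(n_essential=4, n_experts=5))
print(item_cvi([4, 3, 4, 2, 4]))
```

Both indices summarize only the judged relevance of individual items. As the text stresses, they are one strand of content evidence and do not establish validity on their own.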
Another approach to designing a test so as to support and document construct representativeness is to employ a “table of specifications” (e.g., Fives and DiDonato-Barnes, 2013). A table of specifications (or test blueprint) is a design tool that classifies test content along two dimensions: a content dimension and a cognitive dimension. The content dimension pertains to the different aspects of the construct one intends to measure. In a classroom setting, these aspects are typically defined by behavioral/instructional objectives (e.g., students will analyze phylogenetic trees). The cognitive dimension represents the level of cognitive processing or thinking called for by test components (e.g., knowledge, comprehension, analysis). Within a table of specifications, one indicates the number/percent of test questions or items for each aspect of the construct at each cognitive level. Often, one also provides summary totals: the number of items pertaining to each content area (regardless of cognitive demand) and at each cognitive level (regardless of content). Instead of, or in addition to, the number of items, one can also indicate the number/percent of available points for each content area and cognitive level. Because a table of specifications indicates how test components represent the construct one intends to measure, it serves as one source of validity evidence based on test content. Table 1 presents an example table of specifications for a test concerning the principles of evolution by means of natural selection.

Table 1. Example table of specifications for a test on evolution by means of natural selection, showing the number of test items pertaining to each content area at each cognitive level and the total number of items per content area and per cognitive level. The Comprehension, Application, and Analysis columns are the cognitive processes.

| Content (behavioral objective) | Comprehension | Application | Analysis | Total |
|---|---|---|---|---|
| 1. Students will define evolution by means of natural selection. | 1 | | | 1 |
| 2. Students will define key principles of evolution by means of natural selection (e.g., heredity, differential fitness). | 5 | | | 5 |
| 3. Students will compute measures of absolute and relative fitness. | | 5 | | 5 |
| 4. Students will compare evolution by means of natural selection with earlier evolution theories. | | | 3 | 3 |
| 5. Students will analyze phylogenetic trees. | | | 4 | 4 |
| Total | 6 | 5 | 7 | 18 |
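Because the marginal totals of a table of specifications are simple sums, they are easy to recompute whenever the blueprint is revised. The sketch below encodes the item counts from Table 1 as a dictionary (the names `SPEC`, `LEVELS`, and `blueprint_totals` are our own, not part of any established tool) and derives the row totals, column totals, and the percentage of items at each cognitive level.

```python
# Item counts from Table 1: objective number -> {cognitive level: number of items}
LEVELS = ("Comprehension", "Application", "Analysis")
SPEC = {
    1: {"Comprehension": 1},
    2: {"Comprehension": 5},
    3: {"Application": 5},
    4: {"Analysis": 3},
    5: {"Analysis": 4},
}


def blueprint_totals(spec, levels):
    """Return row totals, column totals, grand total, and percent of items per level."""
    row_totals = {obj: sum(cells.values()) for obj, cells in spec.items()}
    col_totals = {lvl: sum(cells.get(lvl, 0) for cells in spec.values()) for lvl in levels}
    grand_total = sum(row_totals.values())
    # Both margins must add up to the same grand total
    if grand_total != sum(col_totals.values()):
        raise ValueError("cells use a cognitive level not listed in levels")
    col_percent = {lvl: 100 * n / grand_total for lvl, n in col_totals.items()}
    return row_totals, col_totals, grand_total, col_percent


if __name__ == "__main__":
    rows, cols, total, pct = blueprint_totals(SPEC, LEVELS)
    print(cols, total)  # {'Comprehension': 6, 'Application': 5, 'Analysis': 7} 18
    print({k: round(v, 1) for k, v in pct.items()})
    # {'Comprehension': 33.3, 'Application': 27.8, 'Analysis': 38.9}
```

The same function works unchanged if the cell values are available points rather than item counts, which covers the points-based variant of the blueprint described above.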

Evidence of validity based on response processes concerns “the fit between the construct and the detailed nature of the performance or response actually engaged in by test takers” (AERA, APA, and NCME, 2014, p. 15). For example, if a test purportedly elicits evidence of undergraduate students’ critical evaluative thinking about evidence-based scientific arguments, then during the test students should be engaged in examining argument claims, evidence, and warrants, and in judging the relevance, accuracy, and sufficiency of the evidence. Such evidence is most often gathered through respondent think-aloud procedures, in which respondents verbally explain and rationalize their thought processes and responses while completing the test. One method commonly used by professional test vendors to gather response process–based validity evidence is the cognitive lab, which involves both concurrent and retrospective verbal reporting by respondents (Willis, 1999; Zucker et al., 2004). As an example from the literature, developers of the HPI concept inventory asked respondents to provide open-ended responses to ensure that their reasons for selecting a particular response option (e.g., “B”) were consistent with the developers’ intentions, that is, that the student indeed held the particular alternative conception presented in response option B (Marbach-Ad et al., 2009). Think alouds are formalized via structured protocols, and the elicited think-aloud data are recorded, transcribed, analyzed, and interpreted to shed light on validity.

Evidence based on internal structure concerns “the degree to which the relationships among test items and test components conform to the construct on which the proposed test score interpretations are based” (AERA, APA, and NCME, 2014, p. 16).
For instance, suppose a professor plans to teach one topic (eukaryotes) using small-group active-learning instruction and another topic (prokaryotes) through lecture instruction, and wants to compare the effectiveness of these methods within the class. As an outcome measure, a test may be designed to support inferences about these two specific aspects of biology content (e.g., characteristics of prokaryotic and eukaryotic cells). Collecting evidence based on internal structure seeks to confirm empirically whether the scores reflect the (in this case, two) distinct domains targeted by the test (Messick, 1995). In practice, one can formally establish the fidelity of test scores to their theorized internal structure through methodological techniques such as factor analysis, item response theory, and Rasch modeling (Harman, 1960; Rasch, 1960; Embretson and Reise, 2013). With factor analysis, for example, item intercorrelations are analyzed to determine whether particular item responses cluster together (i.e., whether scores from test components related to one aspect of the domain [e.g., questions about prokaryotes] are more interrelated with one another than with scores from other components of the test [e.g., questions about eukaryotes]). Item response theory and Rasch models hypothesize that the probability of a particular response to a test item is a function of the respondent’s ability (in terms of what is being measured) and characteristics of the item (e.g., difficulty, discrimination, pseudo-guessing). Examining internal structure with such models involves checking whether the model-based predictions bear out in the observed data. A variety of such models exist for test questions with different response formats (or combinations of formats), such as the Rasch rating-scale model (Andrich, 1978) and the partial-credit Rasch model (Wright and Masters, 1982).
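The two ideas in this paragraph can be sketched in a few lines. `within_between` is a descriptive check, not a factor analysis: it compares the average correlation among items from the same content domain with the average correlation among items from different domains, the pattern a two-domain structure (e.g., prokaryote vs. eukaryote items) would lead one to expect. `p_correct_3pl` evaluates the three-parameter logistic IRT model, in which the probability of a correct response depends on ability θ and on item discrimination a, difficulty b, and pseudo-guessing c; fixing a = 1 and c = 0 gives the Rasch form. The function names and toy scores are hypothetical, and in practice item parameters would be estimated from data with dedicated psychometric software rather than supplied by hand.

```python
import math
from itertools import combinations


def pearson(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def within_between(item_scores, domain_of):
    """Mean inter-item correlation within the same domain vs. across domains.

    item_scores: dict item ID -> list of scores (same respondents, same order)
    domain_of: dict item ID -> content domain label
    """
    within, between = [], []
    for i, j in combinations(item_scores, 2):
        r = pearson(item_scores[i], item_scores[j])
        (within if domain_of[i] == domain_of[j] else between).append(r)
    return sum(within) / len(within), sum(between) / len(between)


def p_correct_3pl(theta, a=1.0, b=0.0, c=0.0):
    """3PL IRT model: P(correct | theta) = c + (1 - c) / (1 + exp(-a * (theta - b)))."""
    return c + (1.0 - c) / (1.0 + math.exp(-a * (theta - b)))


if __name__ == "__main__":
    # Toy 0/1 item scores for six respondents (made up for illustration)
    scores = {
        "P1": [1, 1, 1, 0, 0, 0], "P2": [1, 1, 1, 0, 0, 0],  # prokaryote items
        "E1": [1, 0, 1, 0, 1, 0], "E2": [1, 0, 1, 0, 1, 0],  # eukaryote items
    }
    domains = {"P1": "prokaryotes", "P2": "prokaryotes",
               "E1": "eukaryotes", "E2": "eukaryotes"}
    print(within_between(scores, domains))  # within 1.0, between approximately 0.333
    print(round(p_correct_3pl(0.0, a=1.7, b=0.0, c=0.2), 3))  # 0.6
```

At θ = b the logistic term equals 1/2, so the 3PL probability is c + (1 − c)/2; with c = 0.2 that is 0.6, which is why the guessing parameter keeps low-ability respondents above zero.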
Validity evidence based on relations with other variables concerns “the relationship of test scores to variables external to the test” (AERA, APA, and NCME, 2014, p. 16). Collecting this form of evidence centers on examining how test scores relate both to measures of the same or similar constructs and to measures of distinct constructs (termed “convergent validity” and “discriminant validity” evidence, respectively). In other words, such evidence pertains to whether scores relate to other variables as theory would predict. For example, if a new self-report instrument purports to measure experimental design skills, its scores should correlate highly with an existing measure of experimental design ability such as the EDAT (Sirum and Humburg, 2011). On the other hand, its scores should be considerably less correlated, or uncorrelated, with scores from a personality measure such as the Minnesota Multiphasic Personality Inventory (Greene, 2000). As another discipline-based education research example, Nehm and Schonfeld (2008) collected discriminant validity evidence by relating scores from the Conceptual Inventory of Natural Selection (CINS) and the Open Response Instrument (ORI), both of which purport to assess understanding of and conceptions concerning natural selection, to scores from a geology test of knowledge about rocks. A subcategory of evidence based on relations with other variables is evidence related to test-criterion relationships, which concerns how test scores relate to some other nontest indicator or outcome, either at the same time (so-called concurrent validity evidence) or in the future (so-called predictive validity evidence).
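Convergent and discriminant evidence is usually summarized as a set of correlations. The minimal sketch below, with hypothetical function names and made-up scores, correlates a new instrument’s scores with a measure of the same construct and with a measure of a different construct, then applies a simple rule of thumb (our assumption, not a fixed standard): every convergent correlation should exceed every discriminant correlation in absolute value.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def convergent_discriminant(new_scores, convergent, discriminant):
    """Correlate new_scores with each external measure.

    convergent / discriminant: dict measure name -> scores for the same respondents.
    Returns both correlation dicts and whether min |r_convergent| > max |r_discriminant|.
    """
    r_conv = {name: pearson(new_scores, s) for name, s in convergent.items()}
    r_disc = {name: pearson(new_scores, s) for name, s in discriminant.items()}
    pattern_holds = (min(abs(r) for r in r_conv.values())
                     > max(abs(r) for r in r_disc.values()))
    return r_conv, r_disc, pattern_holds


if __name__ == "__main__":
    # Made-up scores for five respondents, centered for readability
    new_instrument = [-2, -1, 0, 1, 2]
    r_conv, r_disc, ok = convergent_discriminant(
        new_instrument,
        convergent={"existing design-skills test": [-4, -2, 0, 2, 4]},
        discriminant={"personality scale": [4, 1, 0, 1, 4]},
    )
    print(r_conv, r_disc, ok)
    # {'existing design-skills test': 1.0} {'personality scale': 0.0} True
```

With real data one would also report confidence intervals and use adequate sample sizes rather than comparing bare point estimates; the same correlation helper applies to concurrent test-criterion comparisons.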
For test-criterion relationships, for instance, developers of a new biostatistics test might examine whether scores from the test correlate as expected with professors’ judgments of ability or with mathematics course grade point average at the same point in time; alternatively, they might follow tested individuals over time to examine how scores relate to the probability of successfully completing biostatistics coursework. As another example, given prior research on self-efficacy, scores from instruments that probe teaching self-efficacy should be related to respondents’ levels of teacher training and experience (Prieto and Altmaier, 1994; Prieto and Meyers, 1999). Examining whether test scores are related to other variables as expected is often associational in nature (e.g., correlational analysis). Two other specific methods can also elicit such validity evidence. The first, the “known groups method,” examines score differences between theoretically different groups (e.g., whether scientists’ and nonscientists’ scores on an experimental design test differ systematically on average). The second examines whether scores increase or decrease as expected in response to an intervention (Hattie and Cooksey, 1984; AERA, APA, and NCME, 2014). For example, Marbach-Ad et al. (2009, 2010) examined HPI concept inventory score differences between majors and nonmajors and between students in introductory and upper-level courses. To inform the collection of validity evidence based on relations with other variables, individuals should consult the literature to formulate a theory of how good measures of the construct should relate to different variables. Note also that the quality of such validity evidence hinges on the quality (e.g., validity) of the measures of the external variables.

Finally, validity evidence based on the consequences of testing concerns the “soundness of proposed interpretations [of test scores] for their intended uses” (AERA, APA, and NCME, 2014, p. 19) and the value implications and social consequences of testing (Messick, 1994, 1995). Such evidence pertains to both the intended and unintended effects of test score interpretation and use (Linn, 1991; Messick, 1995). Examples of intended consequences of test use include motivating students, better-targeted instruction, and populating a special program with only those students who need it (if those are the intended purposes of test use). Examples of unintended consequences include a significant reduction in instructional time because test administration is overly time-consuming (assuming, of course, that this is not a desired outcome) or the dropout of particular student populations because an excessively difficult test is administered early in a course. In K–12 settings, a classic example of an unintended consequence of testing is the “narrowing of the curriculum” that occurred under the No Child Left Behind Act testing regime: faced with annual tests focused only on particular content areas (i.e., English/language arts and mathematics), schools and teachers focused more on tested content and less on nontested content such as science, social studies, art, and music (e.g., Berliner, 2011). Evidence based on the consequences of a test is often gathered via surveys, interviews, and focus groups conducted with test users.

TEST VALIDITY ARGUMENT EXAMPLE

In this section, we provide an example validity argument for a test designed to elicit evidence of students’ skills in analyzing the elements of evidence-based scientific arguments. This hypothetical test presents students with text-based arguments concerning scientific topics (e.g., global climate change, natural selection); students then interact directly with the texts to identify their elements (i.e., claims, reasons, and warrants).
The test is intended to support inferences about 1) students’ overall evidence-based science argument-element analysis skills; 2) students’ skills in identifying particular evidence-based science argument elements (e.g., claims); and 3) errors students make when identifying particular argument elements (e.g., evidence). Additionally, the test is intended to 4) support instructional decision-making to improve science teaching and learning. The validity argument claims undergirding this example test’s score interpretations and uses (and the categories of validity evidence advanced to substantiate each) are shown in Table 2.

Table 2. Example validity argument and validation approach for a test of students’ ability to analyze the elements of evidence-based scientific arguments, showing argument claims and subclaims concerning the validity of the intended test score interpretations and uses, and the validity evidence used to substantiate those claims

| Validity argument claims and subclaims | Relevant validity evidence based on |
|---|---|
| 1. The overall score represents a student’s current level of argument-element analysis skills, because: | – |
| a single higher-order construct (i.e., argument-element analysis skill) underlies all item responses. | Internal structure |
| the overall score is distinct from content knowledge and thinking dispositions. | Relations with other variables |
| the items represent a variety of arguments and argument elements. | Test content |
| items engage respondents in the cognitive process of argument-element analysis. | Response processes |
| the overall score is highly related to other argument analysis measures and less related to content knowledge and thinking disposition measures. | Relations with other variables |
| 2. A subscore (e.g., claim identification) represents a student’s current level of argument-element identification skill, because: | – |
| each subscore is distinct from other subscores and the total score (the internal structure is multidimensional and hierarchical). | Internal structure |
| the items represent a variety of arguments and particular argument elements (e.g., claims). | Test content |
| the subscore is distinct from content knowledge and thinking dispositions. | Relations with other variables |
| items engage respondents in the cognitive process of identifying a particular argument element (e.g., claims). | Response processes |
| subscores are highly related to other argument analysis measures and less related to content knowledge and thinking disposition measures. | Relations with other variables |
| 3. Error indicators can be interpreted to represent students’ current errors in identifying particular argument elements, because when students misclassify an element in the task, they are making cognitive errors. | Response processes |
| 4. Use of the test will facilitate improved argument instruction and student learning, because: | – |
| teachers report that the test is useful and easy to use and have positive attitudes toward it. | Consequences of testing |
| teachers report using the test to improve argument instruction. | Consequences of testing |
| teachers report that the provided information is timely. | Consequences of testing |
| teachers learn about argumentation with test use. | Consequences of testing |
| students learn about argumentation with test use. | Consequences of testing |
| any unintended consequences of test use do not outweigh intended consequences. | Consequences of testing |
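Recording a validity argument like Table 2 as data makes it easy to see which sources of evidence each claim leans on and whether any of the five sources goes unused. The sketch below transcribes the evidence column of Table 2, one entry per subclaim; the names `SOURCES`, `ARGUMENT`, and `evidence_profile` are hypothetical. The tally is only bookkeeping and does not assess how strong the evidence for any claim actually is.

```python
from collections import Counter

# The five sources of validity evidence used in Table 2
SOURCES = (
    "Test content",
    "Response processes",
    "Internal structure",
    "Relations with other variables",
    "Consequences of testing",
)

# Evidence column of Table 2: claim number -> evidence source for each subclaim
ARGUMENT = {
    1: ["Internal structure", "Relations with other variables", "Test content",
        "Response processes", "Relations with other variables"],
    2: ["Internal structure", "Test content", "Relations with other variables",
        "Response processes", "Relations with other variables"],
    3: ["Response processes"],
    4: ["Consequences of testing"] * 6,
}


def evidence_profile(argument, sources):
    """Return sources used per claim (in SOURCES order), subclaim counts per source,
    and any sources that no claim uses."""
    per_claim = {claim: [s for s in sources if s in cited]
                 for claim, cited in argument.items()}
    counts = Counter(s for cited in argument.values() for s in cited)
    unused = [s for s in sources if counts[s] == 0]
    return per_claim, counts, unused


if __name__ == "__main__":
    per_claim, counts, unused = evidence_profile(ARGUMENT, SOURCES)
    for claim, used in per_claim.items():
        print(claim, used)
    print(dict(counts))
    print("Unused sources:", unused)  # Unused sources: []
```

In this example every source is used somewhere, but claims 3 and 4 each rest on a single source, which is the kind of imbalance such a profile makes visible when planning further validation work.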