- Int J Environ Res Public Health

The Effectiveness of an Evidence-Based Practice (EBP) Educational Program on Undergraduate Nursing Students’ EBP Knowledge and Skills: A Cluster Randomized Control Trial

Daniela Cardoso

1 Health Sciences Research Unit: Nursing, Nursing School of Coimbra, Portugal Centre for Evidence-Based Practice: A Joanna Briggs Institute Centre of Excellence, 3004-011 Coimbra, Portugal; tp.cfnese@osodracf (A.F.C.); tp.cfnese@oiregor (R.R.); moc.liamg@7ramed (M.A.R.); tp.cfnese@olotsopa (J.A.)

2 FMUC—Faculty of Medicine, University of Coimbra, 3000-370 Coimbra, Portugal

Filipa Couto

3 Alfena Hospital—Trofa Health Group, Health Sciences Research Unit: Nursing, Nursing School of Coimbra, 3000-232 Coimbra, Portugal; moc.liamg@otuoccdapilif

Ana Filipa Cardoso

Elzbieta Bobrowicz-Campos

4 Health Sciences Research Unit: Nursing, Nursing School of Coimbra, 3004-011 Coimbra, Portugal; [email protected] (E.B.-C.); tp.cfnese@stnasasiul (L.S.); tp.cfnese@ohnituocv (V.C.); tp.cfnese@otnipaleinad (D.P.)

Luísa Santos

Rogério Rodrigues, Verónica Coutinho, Daniela Pinto, Mary-Anne Ramis

5 Mater Health, Evidence in Practice Unit & Queensland Centre for Evidence Based Nursing and Midwifery: A Joanna Briggs Institute Centre of Excellence, 4101 Brisbane, Australia; [email protected]

Manuel Alves Rodrigues

João Apóstolo

Associated Data

The data presented in this study are available on request from the corresponding author. The data are not publicly available because this issue was not considered within the informed consent signed by the participants of the study.

Evidence-based practice (EBP) prevents unsafe/inefficient practices and improves healthcare quality, but its implementation is challenging due to research and practice gaps. A focused educational program can assist future nurses to minimize these gaps. This study aims to assess the effectiveness of an EBP educational program on undergraduate nursing students’ EBP knowledge and skills. A cluster randomized controlled trial was undertaken. Six optional courses in the Bachelor of Nursing final year were randomly assigned to the experimental (EBP educational program) or control group. Nursing students’ EBP knowledge and skills were measured at baseline and post-intervention. A qualitative analysis of 18 students’ final written work was also performed. Results show a statistically significant interaction between the intervention and time on EBP knowledge and skills ( p = 0.002). From pre- to post-intervention, students’ knowledge and skills on EBP improved in both groups (intervention group: p < 0.001; control group: p < 0.001). At the post-intervention, there was a statistically significant difference in EBP knowledge and skills between intervention and control groups ( p = 0.011). Students in the intervention group presented monographs with clearer review questions, inclusion/exclusion criteria, and methodology compared to students in the control group. The EBP educational program showed a potential to promote the EBP knowledge and skills of future nurses.

1. Introduction

Evidence-based practice (EBP) is defined as “clinical decision-making that considers the best available evidence; the context in which the care is delivered; client preference; and the professional judgment of the health professional” [ 1 ] (p. 2). EBP implementation is recommended in clinical settings [ 2 , 3 , 4 , 5 ] as it has been attributed to promoting high-value health care, improving the patient experience and health outcomes, as well as reducing health care costs [ 6 ]. Nevertheless, EBP is not the standard of care globally [ 7 , 8 , 9 ], and some studies acknowledge education as an approach to promote EBP adoption, implementation, and sustainment [ 10 , 11 , 12 , 13 , 14 , 15 ].

It has been recommended that educational curricula for health students should be based on the five steps of EBP in order to support developing knowledge, skills, and positive attitudes toward EBP [ 16 ]. These steps are: translation of uncertainty into an answerable question; search for and retrieval of evidence; critical appraisal of evidence for validity and clinical importance; application of appraised evidence to practice; and evaluation of performance [ 16 ].

To respond to this recommendation, undergraduate nursing curricula should include courses, teaching strategies, and training that focus on the development of research and EBP skills for nurses to be able to incorporate valid and relevant research findings in practice. Nevertheless, teaching research and EBP to undergraduate nursing students is a challenging task. Some studies report that undergraduate students have negative attitudes/beliefs toward research and EBP, especially toward the statistical components of the research courses and the complex terminology used. Additionally, students may not understand the importance of the link between research and clinical practice [ 17 , 18 , 19 ]. In fact, a lack of EBP and research knowledge is commonly reported by nurses and nursing students as a barrier to EBP. It is imperative to provide the future nurses with research and EBP skills in order to overcome the barriers to EBP use in clinical settings.

At an international level, several studies have been performed with undergraduate nursing students to assess the effectiveness of EBP interventions on multiple outcomes, such as EBP knowledge and skills [ 20 , 21 , 22 , 23 ]. The Classification Rubric for EBP Assessment Tools in Education (CREATE) [ 24 ] suggests EBP knowledge should be assessed cognitively using paper and pencil tests, as EBP knowledge is defined as “learners’ retention of facts and concepts about EBP” [ 24 ] (p. 5). Additionally, the CREATE framework suggests EBP skills should be assessed using performance tests, as skills are defined as “the application of knowledge” [ 24 ] (p. 5). Despite these recommendations, few studies have assessed EBP knowledge and skills using both cognitive and performance instruments.

Therefore, this study aims to evaluate the effectiveness of an EBP educational program on undergraduate nursing students’ EBP knowledge and skills using a specific cognitive and performance instrument. The intervention used in this study was recently developed [ 25 ], and this is the first study designed to assess its effectiveness in undergraduate EBP.

2. Materials and Methods

2.1. Design

A cluster randomized controlled trial with a two-armed parallel-group design was undertaken (ClinicalTrials.gov Identifier: NCT03411668).

2.2. Sample Size Calculation

The sample size was calculated using G*Power 3.1.9.2 (Heinrich-Heine-Universität Düsseldorf, Düsseldorf, Germany). Because no previous studies had used a cognitive and performance instrument to assess the effectiveness of an EBP educational program on undergraduate nursing students’ EBP knowledge and skills, we used an effect size of 0.25, which is a small effect size as proposed by Cohen [ 26 ]. A power analysis based on a type I error of 0.05, a power of 0.80, an effect size of f = 0.25, and a repeated measures ANOVA (between factors) determined a total sample size of 98.

Taking into account that our study used clusters (optional courses) and that each one had an average of 25 students, we needed at least four clusters to cover the total sample size of 98. However, to cover potential losses to follow-up, we included a total of six optional courses.
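The cluster arithmetic in this paragraph can be reproduced with a short sketch. It uses only the figures reported above (a total sample of 98, an average of 25 students per course, six courses included). The variable names are illustrative, and no adjustment for within-cluster correlation is applied because none is described in the text.

```python
import math

# Figures reported in Section 2.2
total_sample = 98          # total sample size from the power analysis
students_per_cluster = 25  # average number of students per optional course

# Smallest whole number of courses that covers the required sample
min_clusters = math.ceil(total_sample / students_per_cluster)
print(min_clusters)

# Expected enrolment with the six courses actually included,
# and the margin this leaves for losses to follow-up
planned_clusters = 6
expected_students = planned_clusters * students_per_cluster
margin = expected_students - total_sample
print(expected_students, margin)
```

Under these figures, six courses give an expected enrolment well above the required 98, which is consistent with the stated aim of covering potential losses to follow-up.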

2.3. Participants’ Recruitment and Randomization

We recruited participants from one Portuguese nursing school in 2018. From the 12 optional clinical nursing courses (such as Community Nursing Intervention in Vulnerable Groups; Ageing; Health and Citizenship; The Child with Special Needs: Diagnoses and Interventions in Pediatric Nursing; Liaison Psychiatry Nursing; Nursing in the Emergency Room; etc.) in the 8th semester of the nursing program (last year before graduation), students from three clinical nursing courses were randomly assigned to the experimental group (EBP educational program) and students from another three clinical nursing courses were randomly assigned to the control group (no intervention— education as usual ) before the baseline assessment. An independent researcher performed this assignment using a random number generator from the random.org website [ 27 ]. This assignment was performed based on a list of the 12 optional courses provided through the nursing school’s website.
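As an illustration only, the allocation described above (three of the 12 optional courses to each arm) could be sketched as follows. The study used the random.org generator; this sketch substitutes Python's pseudo-random generator, and the function name, course labels, and seed are hypothetical.

```python
import random

def allocate_clusters(courses, n_per_arm=3, seed=None):
    """Randomly pick 2 * n_per_arm courses and split them between the two arms."""
    rng = random.Random(seed)  # seeded so the allocation can be reproduced
    selected = rng.sample(courses, 2 * n_per_arm)  # sampling without replacement
    return {
        "intervention": selected[:n_per_arm],
        "control": selected[n_per_arm:],
    }

# Hypothetical labels standing in for the 12 optional courses
courses = [f"Course {i}" for i in range(1, 13)]
allocation = allocate_clusters(courses, seed=2018)
print(allocation)
```

Sampling without replacement guarantees that no course can end up in both arms, and the remaining six courses are simply not part of the trial.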

2.4. Intervention Condition

The participants in the intervention group received education as usual plus the EBP educational program, which was developed by Cardoso, Rodrigues, and Apóstolo [ 25 ]. This intervention included EBP contents regarding models of thinking about EBP, systematic reviews types, review question development, searching for studies, study selection process, data extraction, and data synthesis.

This program was implemented in 6 sessions over 17 weeks:

- Sessions 1–3—total of 12 h (4 h per session) during the first 7 weeks using expository methods with practice tasks to groups of 20–30 students.

- Sessions 4–6—total of 6 h (2 h per session) during the last 10 weeks using active methods through mentoring to groups of 2–3 students.

Due to the nature of the intervention, it was not possible to blind participants regarding treatment assignment nor was it feasible to blind the individuals delivering treatment.

2.5. Control Condition

The participants in the control group received only education as usual; i.e., students allocated to this control condition received the standard educational contents (theoretical, theoretical–practical, practical) delivered by the nursing educators of the selected nursing school.

2.6. Assessment

All participants were assessed before (week 0) and after the intervention (week 18) using a self-report instrument. EBP knowledge and skills were assessed by the Adapted Fresno Test for undergraduate nursing students [ 28 ]. This instrument was adapted from the Fresno Test, which was originally developed in 2003 to measure knowledge and skills on EBP in family practice residents [ 29 ]. The Adapted Fresno Test for undergraduate nursing students has seven short answer questions and two fill-in-the-blank questions [ 28 ]. At the beginning of the instrument, two scenarios, which suggest clinical uncertainty, are presented. These two scenarios are used to guide the answers to questions 1 to 4: (1) write a clinical question; (2) identify and discuss the strengths and weaknesses of information sources as well as the advantages and disadvantages of information sources; (3) identify the type of study most suitable for answering the question of one of the clinical scenarios and justify the choice; and (4) describe a possible search strategy in Medline for one of the clinical scenarios, explaining the rationale. The next three short answer questions require that the students identify topics for determining the relevance and validity of a research study and address the magnitude and value of research findings. The last two questions are fill-in-the-blank questions. The answers are scored using a modified standardized grading system [ 28 ], which was adapted from the original [ 29 ]. The instrument has a total minimum score of 0 and a maximum score of 101. The inter-rater correlation for the total score of the Adapted Fresno Test was 0.826 [ 28 ]. The rater that graded the answers to the Adapted Fresno Test was blinded to treatment assignment.

Although the study proposal did not include any qualitative analysis, we decided during the development of the study to perform a qualitative analysis of monographs at the posttest in order to assess EBP knowledge and skills in a more practical context. The monographs were developed by small groups of nursing students and were the final written work submitted by the students for their bachelor’s degree course. In this work, the students were asked to define a review question regarding the context of clinical practice where they were performing their clinical training. Students then proceeded to answer the review question through a systematic process of searching and selecting relevant studies and extracting and synthesizing the data. From the 58 submitted monographs (30 from the control group and 28 from the intervention group), 18 were randomly selected for evaluation (nine from the control group and nine from the intervention group) by an independent researcher using the random.org website [ 27 ] based on a list provided by the research team. Three independent experts (one psychologist with a doctoral qualification and two qualified nurses, one with a master’s degree) performed a qualitative analysis of the selected monographs. All experts had experience with the EBP approach and were blinded to treatment assignment. The experts independently used an evaluation form to guide the qualitative analysis of each monograph. This form presented 11 guiding criteria regarding review questions, inclusion/exclusion criteria, methodology (namely search strategy, study selection process, data extraction, and data synthesis), results presentation, and congruency between the review questions and the answers to them that were provided in the conclusion section. Thereafter, the experts met to discuss any discrepancies in their qualitative analysis until consensus was reached.

2.7. Statistical Analyses

The data were analyzed using the Statistical Package for the Social Sciences (SPSS; version 24.0; SPSS Inc., Chicago, IL, USA). Differences in sociodemographic characteristics of study participants and outcome data at baseline were analyzed using Pearson’s chi-squared test for nominal data and the independent t-test for continuous data.

Taking into account the central limit theorem and that ANOVA tests are robust to violation of assumptions [ 30 ], we decided to perform a two-way mixed ANOVA to compare the outcome between and within groups. The Wilcoxon signed-rank test was used to analyze, item by item, how many participants had improved their EBP knowledge and skills, how many remained the same, and how many had decreased performance within each group. Statistical significance was determined by p-values less than 0.05.

To minimize the noncompliance impact, an intention-to-treat (ITT) analysis was used to analyze participants in the groups that they were initially randomized to [ 31 ] by using the last observation carried forward imputation method.
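A minimal sketch of last observation carried forward, assuming each participant's scores are stored as an ordered list with `None` marking a missing assessment. The participant identifiers and scores below are hypothetical and are not taken from the study data.

```python
def locf(scores):
    """Replace each missing value (None) with the most recent observed value."""
    filled = []
    last_seen = None
    for value in scores:
        if value is not None:
            last_seen = value  # remember the latest observed score
        filled.append(last_seen)
    return filled

# Hypothetical [baseline, post-intervention] scores; P02 missed the posttest
participants = {
    "P01": [12.0, 20.5],
    "P02": [9.5, None],
}
imputed = {pid: locf(scores) for pid, scores in participants.items()}
print(imputed)  # {'P01': [12.0, 20.5], 'P02': [9.5, 9.5]}
```

With only two time points, this method reuses the baseline score as the post-intervention score for anyone lost to follow-up, so those participants contribute zero change to the analysis.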

2.8. Ethics

This study was approved by the Ethical Committee of the Faculty of Medicine of the University of Coimbra (Reference: CE-037/2017). The institution where the study was carried out provided written approval. All participants gave informed consent, and the data were managed in a confidential way.

3. Results

Twelve potential clusters (optional courses in the 8th semester of the nursing program) were identified as eligible for this study. Of these, three were randomized to the intervention group and three to the control group. During the intervention, eight participants (two in the intervention group and six in the control group) were lost to follow-up because they did not complete the instrument at post-intervention. Figure 1 shows the flow of participants through each stage of the trial.

Figure 1. Consolidated Standards of Reporting Trials (CONSORT) diagram showing the flow of participants through each stage of the trial. ITT: intention-to-treat.

3.1. Demographic Characteristics

As Table 1 displays, 148 undergraduate nursing students with an average age of 21.95 years (SD = 2.25; range: 21–41) participated in the study. A large majority of the sample were female (n = 118, 79.7%), had a 12th grade educational level (n = 144, 97.3%), and had participated in some form of EBP training (n = 121, 81.8%).

Table 1. Socio-demographic characterization of the sample (ITT analysis).

* Defined as any kind and duration of evidence-based practice (EBP) training, such as EBP contents in a course, a workshop, a seminar.

At baseline, the experimental and control groups were comparable regarding sex, age, education, EBP training, and performance on the Adapted Fresno Test (Table 1 and Table 3). The baseline data were similar with dropouts excluded; therefore, only ITT analysis results are presented.

3.2. EBP Knowledge and Skills

3.2.1. Adapted Fresno Test

The two-way mixed ANOVA showed a statistically significant interaction between the intervention and time on EBP knowledge and skills, F(1, 146) = 9.550, p = 0.002, partial η² = 0.061 (Table 2). Excluding the dropouts, the two-way mixed ANOVA analysis was similar. Thus, only the ITT analysis results are presented.

Table 2. Main effects of time and group and interaction effects on EBP knowledge and skills (ITT analysis).

To determine the difference between groups at baseline and post-intervention, two separate between-subjects ANOVAs (i.e., two separate one-way ANOVAs) were performed. At pre-intervention, there was no statistically significant difference in EBP knowledge and skills between groups: F(1, 146) = 0.221, p = 0.639, partial η² = 0.002. At post-intervention, there was a statistically significant difference in EBP knowledge and skills between groups: F(1, 146) = 6.720, p = 0.011, partial η² = 0.044 (Table 3).

Table 3. Repeated measures ANOVA and between-subjects ANOVA (ITT analysis).

To determine the differences within groups from baseline to post-intervention, two separate within-subjects ANOVAs (repeated measures ANOVAs) were performed. There was a statistically significant effect of time on EBP knowledge and skills for the intervention group, F(1, 73) = 53.028, p < 0.001, partial η² = 0.421, and for the control group, F(1, 73) = 13.832, p < 0.001, partial η² = 0.159 (Table 3).
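The reported effect sizes can be cross-checked against the F statistics with the standard identity partial η² = (F × df_effect) / (F × df_effect + df_error). The sketch below applies it to the values quoted in this section. The function name and labels are illustrative, and this is a consistency check rather than a reanalysis of the data.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

# (label, F, df_effect, df_error) as reported in Section 3.2.1
reported = [
    ("group x time interaction", 9.550, 1, 146),
    ("between groups, pre-intervention", 0.221, 1, 146),
    ("between groups, post-intervention", 6.720, 1, 146),
    ("within intervention group", 53.028, 1, 73),
    ("within control group", 13.832, 1, 73),
]

effect_sizes = {
    label: round(partial_eta_squared(f, df1, df2), 3)
    for label, f, df1, df2 in reported
}
for label, value in effect_sizes.items():
    print(f"{label}: {value:.3f}")
```

Each computed value rounds to the partial η² reported in the text (0.061, 0.002, 0.044, 0.421, and 0.159).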

The results of repeated measures ANOVA and between-subjects ANOVA analysis are similar if we exclude the dropouts; therefore, only ITT analysis results are presented.

The results of the Wilcoxon signed-rank test for each item of the Adapted Fresno Test are presented in Table 4. The results of this analysis revealed that students in both the intervention and control groups significantly improved their knowledge and skills in writing a focused clinical question (item 1) (intervention group: Z = −4.572, p < 0.001; control group: Z = −2.338, p = 0.019), in building a search strategy (item 3) (intervention group: Z = −4.740, p < 0.001; control group: Z = −4.757, p < 0.001), in identifying and justifying the study design most suitable for answering the question of one of the clinical scenarios (item 4) (intervention group: Z = −4.508, p < 0.001; control group: Z = −3.738, p < 0.001), and in describing the characteristics of a study to determine its relevance (item 5) (intervention group: Z = −2.699, p = 0.007; control group: Z = −1.980, p = 0.048).

Table 4. Within-groups comparison with the Wilcoxon signed-rank test for each item of the Adapted Fresno Test (ITT analysis).

The students in the control group significantly improved their knowledge and skills in describing the characteristics of a study to determine its validity (item 6) (Z = −2.714, p = 0.007). The students in the intervention group significantly improved their knowledge and skills in describing the characteristics of a study to determine its magnitude and significance (item 7) (Z = −2.543, p = 0.011). No other significant differences were detected.

The results of the within groups comparison with the Wilcoxon signed-rank test are similar if we exclude the dropouts; therefore, only ITT analysis results are presented.

3.2.2. Qualitative Analysis of Monographs

Based on the experts’ consensus report of each monograph, the analysis of the intervention group monographs showed that the students’ groups clearly defined their review questions and inclusion/exclusion criteria. These groups of students effectively searched for studies using appropriate databases, keywords, Boolean operators, and truncation. Additionally, we found thorough descriptions from students concerning the selection process, data extraction, and data synthesis. However, only three students’ groups provided a good description of the review findings with an appropriate data synthesis as well as a clear answer to the review question in the conclusion section of their monographs. It is noted that the criteria for the results and conclusion sections were more difficult to successfully achieve, even in the intervention group.

The monographs of the control group showed weaknesses throughout. Of the nine monographs from the control group, only two presented a clearly defined review question. In all of the monographs, the inclusion/exclusion criteria were either not very informative, unclear, or did not match the defined review questions. Additionally, the search strategies were not clear and demonstrated limited understanding, such as a lack of appropriate synonyms, absent truncation, and no definition of the search field for each word or expression to be searched. None of the monographs from the control group reported information about the methods used for the study selection process, data extraction, or data synthesis. In the conclusion section, students from the control group also demonstrated difficulties in synthesizing the data and limitations in providing a clear answer to the review question.

4. Discussion

This study sought to evaluate the effectiveness of an EBP educational program on undergraduate nursing students’ EBP knowledge and skills. Even though both groups improved after the intervention in EBP knowledge and skills, the study results showed that the improvement was greater in the intervention group. This result was reinforced by the results of the qualitative analysis of monographs.

To the best of our knowledge, this is the first study to use a cognitive and performance assessment instrument (Adapted Fresno Test) with undergraduate nursing students, as suggested by CREATE [ 24 ]. Additionally, it is the first study conducted using the EBP education program [ 25 ]. Therefore, comparison of our findings with similar studies in terms of the type of assessment instrument and intervention is limited.

However, comparing our study with other previous research using other types of instruments and interventions demonstrates similar results [ 20 , 21 , 22 , 23 ]. In a quasi-experimental study [ 20 ], it was found that an EBP educational teaching strategy showed positive results in improving EBP knowledge in undergraduate nursing students. A study showed that undergraduate nursing students who received an EBP-focused interactive teaching intervention improved their EBP knowledge [ 21 ]. Another study indicated that a 15-week educational intervention in undergraduate nursing students (second- and third-year) significantly improved their EBP knowledge and skills [ 22 ]. In addition, a study by Zhang, Zeng, Chen, and Li revealed a significant improvement in undergraduate nursing students’ EBP knowledge after participating in a two-phase intervention: a self-directed learning process and a workshop for critical appraisal of literature [ 23 ].

Despite the effectiveness of the program in improving EBP knowledge and skills, the students included in the present study had low levels of EBP knowledge and skills as assessed by the Adapted Fresno Test at the pretest and posttest. These low levels of EBP knowledge and skills, especially at the pretest, might have influenced our study results. As a matter of fact, the Adapted Fresno Test is a demanding test since it requires that students retrieve and apply knowledge while doing a task associated with EBP based on scenarios involving clinical uncertainty. Consequently, this kind of test is very useful to truly assess EBP knowledge retention and abilities in clinical scenarios that do not allow guessing the answers. Notwithstanding, due to these characteristics, the Adapted Fresno Test may possibly be less sensitive when small changes occur or when students have low levels of EBP knowledge and skills. Nevertheless, even using instruments with Likert scales, other studies also showed that students have low levels of EBP knowledge and skills [ 21 , 22 , 23 ].

The low levels of EBP knowledge and skills of the undergraduate nursing students may be a reflection of a persistent, traditional education with regard to research. By this we mean that the focus of training remains on primary research—preparing students to be “research generators” instead of preparing them to be “evidence users” [ 32 ]. Furthermore, the designed and tested intervention used in this study was limited in time (only 17 weeks), was provided by only two instructors, and was delivered to fourth-year undergraduate nursing students, which are limitations for curriculum-wide integration of EBP.

Indeed, a curriculum that promotes EBP should facilitate students’ acquisition of EBP knowledge and skills over time and with levels of increasing complexity through their participation in EBP courses and during their clinical practice experiences [ 32 , 33 , 34 , 35 ]. As Moch, Cronje, and Branson suggest, “It is only in such practical settings that students can experience the challenges intrinsic to applying scientific evidence to the care of real patients. In these clinical settings, students can experience both the frustrations and the triumphs inevitable to integrating scientific knowledge into patient care.” [ 35 ] (p. 11). Therefore, in future studies, other broad approaches for curriculum-wide integration of EBP as well as its long-term effects should be evaluated.

Previously in the Discussion, we highlighted the limitations of the proposed intervention in terms of time constraints (only 17 weeks), instructors’ constraints (only two instructors provided the intervention), and participants’ constraints (fourth-year undergraduate nursing students). In addition, the study was restricted to one Portuguese nursing school, which can limit the generalization of the results. However, our study tried to address some of the weaknesses identified in other studies [ 20 , 21 , 22 , 23 ] on the effectiveness of EBP educational interventions by including a control group and by measuring EBP knowledge and skills with an objective measure and not a self-reported measure.

Bearing this in mind, future studies in multiple sites should assess the long-term effects of the EBP educational intervention and the impact on EBP knowledge and skills of potential variations in contents and teaching methods. In addition, studies using more broad interventions for curriculum-wide integration of EBP should also be performed.

5. Conclusions

Our findings show that the EBP educational program was effective in improving the EBP knowledge and skills of undergraduate nursing students. Therefore, the use of an EBP approach as a complement to the research education of undergraduate nursing students should be promoted by nursing schools and educators. This will help to prepare the future nurses with the EBP knowledge and skills that are essential to overcome the barriers to EBP use in clinical settings, and consequently, to contribute to better health outcomes.

Acknowledgments

This paper contributed toward the D.C. PhD in Health Sciences—Nursing. The authors gratefully acknowledge the support of the Health Sciences Research Unit: Nursing (UICISA: E), hosted by the Nursing School of Coimbra (ESEnfC) and funded by the Foundation for Science and Technology (FCT). Moreover, the authors gratefully thank Catarina Oliveira for all the support as a Ph.D. supervisor and Isabel Fernandes, Maria da Nazaré Cerejo, and Irma Brito for help and facilitation of data collection.

Author Contributions

Conceptualization, D.C., M.A.R., and J.A.; methodology, D.C., M.A.R., and J.A.; validation, D.C., M.A.R., and J.A.; formal analysis, D.C., F.C., and A.F.C.; investigation, D.C., F.C., A.F.C., E.B.-C., L.S., R.R., V.C., D.P., M.-A.R., M.A.R., and J.A.; resources, D.C., M.A.R., and J.A.; data curation, D.C., F.C., and A.F.C.; writing—original draft preparation, D.C.; writing—review and editing, F.C., A.F.C., E.B.-C., L.S., R.R., V.C., D.P., M.-A.R., M.A.R., and J.A.; supervision, M.A.R. and J.A.; project administration, D.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded by National Funds through the FCT—Foundation for Science and Technology, I.P., within the scope of the project Ref. UIDP/00742/2020.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki and approved by the Ethical Committee of the Faculty of Medicine of the University of Coimbra (protocol code: CE-037/2017; date of approval: 22 May 2017).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Conflicts of Interest

The authors declare no conflict of interest.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Psychology: Research and Review

- Open access

- Published: 19 March 2021

Appraising psychotherapy case studies in practice-based evidence: introducing Case Study Evaluation-tool (CaSE)

- Greta Kaluzeviciute ORCID: orcid.org/0000-0003-1197-177X 1

Psicologia: Reflexão e Crítica volume 34, Article number: 9 (2021)

Systematic case studies are often placed at the low end of evidence-based practice (EBP) due to lack of critical appraisal. This paper seeks to attend to this research gap by introducing a novel Case Study Evaluation-tool (CaSE). First, issues around knowledge generation and validity are assessed in both EBP and practice-based evidence (PBE) paradigms. Although systematic case studies are more aligned with the PBE paradigm, the paper argues for a complementary, third way approach between the two paradigms and their ‘exemplary’ methodologies: case studies and randomised controlled trials (RCTs). Second, the paper argues that all forms of research can produce ‘valid evidence’ but the validity itself needs to be assessed against each specific research method and purpose. Existing appraisal tools for qualitative research (JBI, CASP, ETQS) are shown to have limited relevance for the appraisal of systematic case studies through a comparative tool assessment. Third, the paper develops purpose-oriented evaluation criteria for systematic case studies through the CaSE Checklist for Essential Components in Systematic Case Studies and the CaSE Purpose-based Evaluative Framework for Systematic Case Studies. The checklist approach aids reviewers in assessing the presence or absence of essential case study components (internal validity). The framework approach aims to assess the effectiveness of each case against its set out research objectives and aims (external validity), based on different systematic case study purposes in psychotherapy. Finally, the paper demonstrates the application of the tool with a case example and notes further research trajectories for the development of the CaSE tool.

Introduction

Due to growing demands of evidence-based practice, standardised research assessment and appraisal tools have become common in healthcare and clinical treatment (Hannes, Lockwood, & Pearson, 2010 ; Hartling, Chisholm, Thomson, & Dryden, 2012 ; Katrak, Bialocerkowski, Massy-Westropp, Kumar, & Grimmer, 2004 ). This allows researchers to critically appraise research findings on the basis of their validity, results, and usefulness (Hill & Spittlehouse, 2003 ). Despite the upsurge of critical appraisal in qualitative research (Williams, Boylan, & Nunan, 2019 ), there are no assessment or appraisal tools designed for psychotherapy case studies.

Although not without controversies (Michels, 2000 ), case studies remain central to the investigation of psychotherapy processes (Midgley, 2006 ; Willemsen, Della Rosa, & Kegerreis, 2017 ). This is particularly true of systematic case studies, the most common form of case study in contemporary psychotherapy research (Davison & Lazarus, 2007 ; McLeod & Elliott, 2011 ).

Unlike the classic clinical case study, systematic cases usually involve a team of researchers, who gather data from multiple different sources (e.g., questionnaires, observations by the therapist, interviews, statistical findings, clinical assessment, etc.), and involve a rigorous data triangulation process to assess whether the data from different sources converge (McLeod, 2010 ). Since systematic case studies are methodologically pluralistic, they have a greater interest in situating patients within the study of a broader population than clinical case studies (Iwakabe & Gazzola, 2009 ). Systematic case studies are considered to be an accessible method for developing research evidence-base in psychotherapy (Widdowson, 2011 ), especially since they correct some of the methodological limitations (e.g. lack of ‘third party’ perspectives and bias in data analysis) inherent to classic clinical case studies (Iwakabe & Gazzola, 2009 ). They have been used for the purposes of clinical training (Tuckett, 2008 ), outcome assessment (Hilliard, 1993 ), development of clinical techniques (Almond, 2004 ) and meta-analysis of qualitative findings (Timulak, 2009 ). All these developments signal a revived interest in the case study method, but also point to the obvious lack of a research assessment tool suitable for case studies in psychotherapy (Table 1 ).

To attend to this research gap, this paper first reviews issues around the conceptualisation of validity within the paradigms of evidence-based practice (EBP) and practice-based evidence (PBE). Although case studies are often positioned at the low end of EBP (Aveline, 2005 ), the paper suggests that systematic cases are a valuable form of evidence, capable of complementing large-scale studies such as randomised controlled trials (RCTs). However, there remains a difficulty in assessing the quality and relevance of case study findings to broader psychotherapy research.

As a way forward, the paper introduces a novel Case Study Evaluation-tool (CaSE) in the form of the CaSE Purpose-based Evaluative Framework for Systematic Case Studies and the CaSE Checklist for Essential Components in Systematic Case Studies. The long-term development of CaSE would contribute to psychotherapy research and practice in three ways.

Given the significance of methodological pluralism and diverse research aims in systematic case studies, CaSE will not seek to prescribe explicit case study writing guidelines, which has already been done by numerous authors (McLeod, 2010 ; Meganck, Inslegers, Krivzov, & Notaerts, 2017 ; Willemsen et al., 2017 ). Instead, CaSE will enable the retrospective assessment of systematic case study findings and their relevance (or lack thereof) to broader psychotherapy research and practice. However, there is no reason to assume that CaSE cannot be used prospectively (i.e. producing systematic case studies in accordance with the CaSE evaluative framework, as per point 3 in Table 2 ).

The development of a research assessment or appraisal tool is a lengthy, ongoing process (Long & Godfrey, 2004 ). It is particularly challenging to develop a comprehensive purpose-oriented evaluative framework, suitable for the assessment of diverse methodologies, aims and outcomes. As such, this paper should be treated as an introduction to the broader development of the CaSE tool. It will introduce the rationale behind CaSE and lay out its main approach to evidence and evaluation, with further development in mind. A case example from the Single Case Archive (SCA) ( https://singlecasearchive.com ) will be used to demonstrate the application of the tool ‘in action’. The paper notes further research trajectories and discusses some of the limitations around the use of the tool.

Separating the wheat from the chaff: what is and is not evidence in psychotherapy (and who gets to decide?)

The common approach: evidence-based practice

In the last two decades, psychotherapy has become increasingly centred around the idea of an evidence-based practice (EBP). Initially introduced in medicine, EBP has been defined as ‘conscientious, explicit and judicious use of current best evidence in making decisions about the care of individual patients’ (Sackett, Rosenberg, Gray, Haynes, & Richardson, 1996 ). EBP revolves around efficacy research: it seeks to examine whether a specific intervention has a causal (in this case, measurable) effect on clinical populations (Barkham & Mellor-Clark, 2003 ). From a conceptual standpoint, Sackett and colleagues defined EBP as a paradigm that is inclusive of many methodologies, so long as they contribute towards clinical decision-making process and accumulation of best currently available evidence in any given set of circumstances (Gabbay & le May, 2011 ). Similarly, the American Psychological Association (APA, 2010 ) has recently issued calls for evidence-based systematic case studies in order to produce standardised measures for evaluating process and outcome data across different therapeutic modalities.

However, given EBP’s focus on establishing cause-and-effect relationships (Rosqvist, Thomas, & Truax, 2011 ), it is unsurprising that qualitative research is generally not considered to be ‘gold standard’ or ‘efficacious’ within this paradigm (Aveline, 2005 ; Cartwright & Hardie, 2012 ; Edwards, 2013 ; Edwards, Dattilio, & Bromley, 2004 ; Longhofer, Floersch, & Hartmann, 2017 ). Qualitative methods like systematic case studies maintain an appreciation for context, complexity and meaning making. Therefore, instead of measuring regularly occurring causal relations (as in quantitative studies), the focus is on studying complex social phenomena (e.g. relationships, events, experiences, feelings, etc.) (Erickson, 2012 ; Maxwell, 2004 ). Edwards ( 2013 ) points out that, although context-based research in systematic case studies is the bedrock of psychotherapy theory and practice, it has also become shrouded by an unfortunate ideological description: ‘anecdotal’ case studies (i.e. unscientific narratives lacking evidence, as opposed to ‘gold standard’ evidence, a term often used to describe the RCT method and the therapeutic modalities supported by it), leading to a further need for advocacy in and defence of the unique epistemic process involved in case study research (Fishman, Messer, Edwards, & Dattilio, 2017 ).

The EBP paradigm prioritises the quantitative approach to causality, most notably through its focus on high generalisability and the ability to deal with bias through the randomisation process. These conditions are associated with randomised controlled trials (RCTs) but are limited (or, as some argue, impossible) in qualitative research methods such as the case study (Margison et al., 2000 ) (Table 3 ).

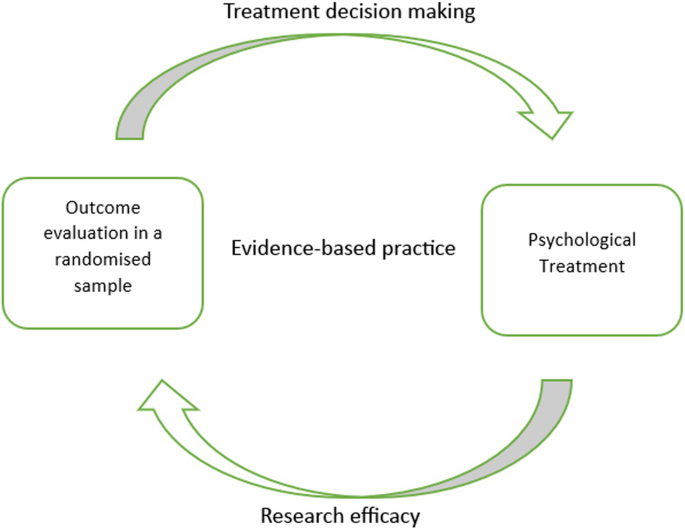

‘Evidence’ from an EBP standpoint rests on the epistemological assumption of procedural objectivity: knowledge can be generated in a standardised, non-erroneous way, thus producing objective (i.e. with minimised bias) data. This can be achieved by anyone, as long as they are able to perform the methodological procedure (e.g. RCT) appropriately, in a ‘clearly defined and accepted process that assists with knowledge production’ (Douglas, 2004 , p. 131). If there is a well-outlined quantitative form for knowledge production, the same outcome should be achieved regardless of who processes or interprets the information. For example, researchers using Cochrane Review assess the strength of evidence using meticulously controlled and scrupulous techniques; in turn, this minimises individual judgment and creates unanimity of outcomes across different groups of people (Gabbay & le May, 2011 ). The typical process of knowledge generation (through employing RCTs and procedural objectivity) in EBP is demonstrated in Fig. 1 .

Typical knowledge generation process in evidence–based practice (EBP)

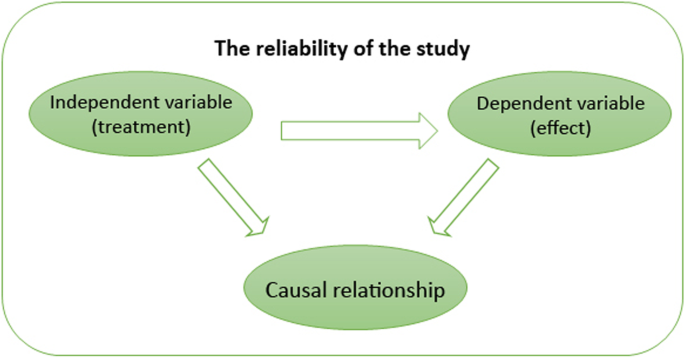

In EBP, the concept of validity remains somewhat controversial, with many critics stating that it limits rather than strengthens knowledge generation (Berg, 2019 ; Berg & Slaattelid, 2017 ; Lilienfeld, Ritschel, Lynn, Cautin, & Latzman, 2013 ). This is because efficacy research relies on internal validity. At a general level, this concept refers to the congruence between the research study and the research findings (i.e. the research findings were not influenced by anything external to the study, such as confounding variables, methodological errors and bias); at a more specific level, internal validity determines the extent to which a study establishes a reliable causal relationship between an independent variable (e.g. treatment) and a dependent variable (outcome or effect) (Margison et al., 2000 ). This approach to validity is demonstrated in Fig. 2 .

Internal validity

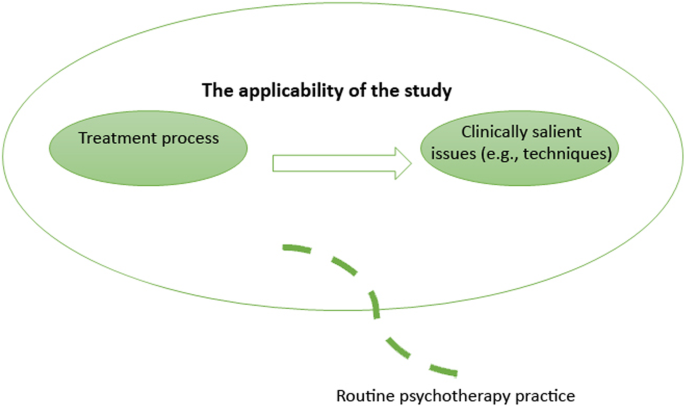

Social scientists have argued that there is a trade-off between research rigour and generalisability: the more specific the sample and the more rigorously defined the intervention, the less applicable the outcome is likely to be to everyday, routine practice. As such, there remains a tension between employing procedural objectivity, which increases the rigour of research outcomes, and applying such outcomes to routine psychotherapy practice, where scientific standards of evidence are not uniform.

According to McLeod ( 2002 ), the inability to address questions that are most relevant for practitioners has contributed to a deepening research–practice divide in psychotherapy. Studies investigating how practitioners make clinical decisions and the kinds of evidence they refer to show that there is a strong preference for knowledge that is not generated procedurally, i.e. knowledge that encompasses concrete clinical situations, experiences and techniques. A study by Stewart and Chambless ( 2007 ) sought to assess how a larger population of clinicians (under APA, from varying clinical schools of thought and independent practices, sample size 591) make treatment decisions in private practice. The study found that large-scale statistical data was not the primary source of information sought by clinicians. The most important influences were identified as past clinical experiences and clinical expertise (M = 5.62). Treatment materials based on clinical case observations and theory (M = 4.72) were used almost as frequently as psychotherapy outcome research findings (M = 4.80) (i.e. evidence-based research). These numbers are likely to fluctuate across different forms of psychotherapy; however, they are indicative of the need for research about routine clinical settings that does not isolate or generalise the effect of an intervention but examines the variations in psychotherapy processes.

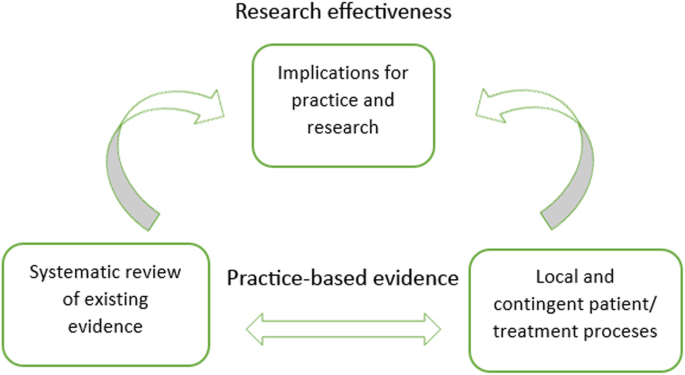

The alternative approach: practice-based evidence

In an attempt to dissolve or lessen the research–practice divide, an alternative paradigm of practice-based evidence (PBE) has been suggested (Barkham & Mellor-Clark, 2003 ; Fox, 2003 ; Green & Latchford, 2012 ; Iwakabe & Gazzola, 2009 ; Laska, Motulsky, Wertz, Morrow, & Ponterotto, 2014 ; Margison et al., 2000 ). PBE represents a shift in how we think about evidence and knowledge generation in psychotherapy. PBE treats research as a local and contingent process (at least initially), which means it focuses on variations (e.g. in patient symptoms) and complexities (e.g. of clinical setting) in the studied phenomena (Fox, 2003 ). Moreover, research and theory-building are seen as complementary rather than detached activities from clinical practice. That is to say, PBE seeks to examine how and which treatments can be improved in everyday clinical practice by flagging up clinically salient issues and developing clinical techniques (Barkham & Mellor-Clark, 2003 ). For this reason, PBE is concerned with the effectiveness of research findings: it evaluates how well interventions work in real-world settings (Rosqvist et al., 2011 ). Therefore, although it is not unlikely for RCTs to be used in order to generate practice-informed evidence (Horn & Gassaway, 2007 ), qualitative methods like the systematic case study are seen as ideal for demonstrating the effectiveness of therapeutic interventions with individual patients (van Hennik, 2020 ) (Table 4 ).

PBE’s epistemological approach to ‘evidence’ may be understood through the process of concordant objectivity (Douglas, 2004 ): ‘Instead of seeking to eliminate individual judgment, … [concordant objectivity] checks to see whether the individual judgments of people in fact do agree’ (p. 462). This does not mean that anyone can contribute to the evaluation process like in procedural objectivity, where the main criterion is following a set quantitative protocol or knowing how to operate a specific research design. Concordant objectivity requires that there is a set of competent observers who are closely familiar with the studied phenomenon (e.g. researchers and practitioners who are familiar with depression from a variety of therapeutic approaches).

Systematic case studies are a good example of PBE ‘in action’: they allow for the examination of detailed unfolding of events in psychotherapy practice, making them the most pragmatic and practice-oriented form of psychotherapy research (Fishman, 1999 , 2005 ). Furthermore, systematic case studies approach evidence and results through concordant objectivity (Douglas, 2004 ) by involving a team of researchers and rigorous data triangulation processes (McLeod, 2010 ). This means that, although systematic case studies remain focused on particular clinical situations and detailed subjective experiences (similar to classic clinical case studies; see Iwakabe & Gazzola, 2009 ), they still involve a series of validity checks and considerations on how findings from a single systematic case pertain to broader psychotherapy research (Fishman, 2005 ). The typical process of knowledge generation (through employing systematic case studies and concordant objectivity) in PBE is demonstrated in Fig. 3 . The figure exemplifies a bidirectional approach to research and practice, which includes the development of research-supported psychological treatments (through systematic reviews of existing evidence) as well as the perspectives of clinical practitioners in the research process (through the study of local and contingent patient and/or treatment processes) (Teachman et al., 2012 ; Westen, Novotny, & Thompson-Brenner, 2004 ).

Typical knowledge generation process in practice-based evidence (PBE)

From a PBE standpoint, external validity is a desirable research condition: it measures the extent to which the impact of interventions applies to real patients and therapists in everyday clinical settings. As such, external validity is not based on the strength of causal relationships between treatment interventions and outcomes (as in internal validity); instead, the use of specific therapeutic techniques and problem-solving decisions is considered to be important for generalising findings to routine clinical practice (even if the findings are explicated from a single case study; see Aveline, 2005 ). This approach to validity is demonstrated in Fig. 4 .

External validity

Since effectiveness research is less focused on limiting the context of the studied phenomenon (indeed, explicating the context is often one of the research aims), there is more potential for confounding factors (e.g. bias and uncontrolled variables) which in turn can reduce the study’s internal validity (Barkham & Mellor-Clark, 2003 ). This is also an important challenge for research appraisal. Douglas ( 2004 ) argues that appraising research in terms of its effectiveness may produce significant disagreements or group illusions, since what might work for some practitioners may not work for others: ‘It cannot guarantee that values are not influencing or supplanting reasoning; the observers may have shared values that cause them to all disregard important aspects of an event’ (Douglas, 2004 , p. 462). Douglas further proposes that an interactive approach to objectivity may be employed as a more complex process in debating the evidential quality of a research study: it requires a discussion among observers and evaluators in the form of peer-review, scientific discourse, as well as research appraisal tools and instruments. While these processes of rigour are also applied in EBP, there appears to be much more space for debate, disagreement and interpretation in PBE’s approach to research evaluation, partly because the evaluation criteria themselves are the subject of methodological debate and are often employed in different ways by researchers (Williams et al., 2019 ). This issue will be addressed more explicitly again in relation to CaSE development (‘Developing purpose-oriented evaluation criteria for systematic case studies’ section).

A third way approach to validity and evidence

The research–practice divide shows us that there may be something significant in establishing complementarity between EBP and PBE rather than treating them as mutually exclusive forms of research (Fishman et al., 2017 ). For one, EBP is not a sufficient condition for delivering research relevant to practice settings (Bower, 2003 ). While RCTs can demonstrate that an intervention works on average in a group, clinicians who are facing individual patients need to answer a different question: how can I make therapy work with this particular case ? (Cartwright & Hardie, 2012 ). Systematic case studies are ideal for filling this gap: they contain descriptions of microprocesses (e.g. patient symptoms, therapeutic relationships, therapist attitudes) in psychotherapy practice that are often overlooked in large-scale RCTs (Iwakabe & Gazzola, 2009 ). In particular, systematic case studies describing the use of specific interventions with less researched psychological conditions (e.g. childhood depression or complex post-traumatic stress disorder) can deepen practitioners’ understanding of effective clinical techniques before the results of large-scale outcome studies are disseminated.

Secondly, establishing a working relationship between systematic case studies and RCTs will contribute towards a more pragmatic understanding of validity in psychotherapy research. Indeed, the very tension and so-called trade-off between internal and external validity is based on the assumption that research methods are designed on an either/or basis; either they provide a sufficiently rigorous study design or they produce findings that can be applied to real-life practice. Jimenez-Buedo and Miller ( 2010 ) call this assumption into question: in their view, if a study is not internally valid, then ‘little, or rather nothing, can be said of the outside world’ (p. 302). In this sense, internal validity may be seen as a pre-requisite for any form of applied research and its external validity, but it need not be constrained to the quantitative approach of causality. For example, Levitt, Motulsky, Wertz, Morrow, and Ponterotto ( 2017 ) argue that what is typically conceptualised as internal validity is, in fact, a much broader construct, involving the assessment of how the research method (whether qualitative or quantitative) is best suited for the research goal, and whether it obtains the relevant conclusions. Similarly, Truijens, Cornelis, Desmet, and De Smet ( 2019 ) suggest that we should think about validity in a broader epistemic sense: not just in terms of psychometric measures, but also in terms of the research design, procedure, goals (research questions), approaches to inquiry (paradigms, epistemological assumptions), etc.

The overarching argument from the research cited above is that all forms of research, qualitative and quantitative, can produce ‘valid evidence’ but the validity itself needs to be assessed against each specific research method and purpose. For example, RCTs are accompanied by a variety of clearly outlined appraisal tools and instruments such as CASP (Critical Appraisal Skills Programme) that are well suited for the assessment of RCT validity and their implications for EBP. Systematic case studies (or case studies more generally) currently have no appraisal tools in any discipline. The next section evaluates whether existing qualitative research appraisal tools are relevant for systematic case studies in psychotherapy and specifies the missing evaluative criteria.

The relevance of existing appraisal tools for qualitative research to systematic case studies in psychotherapy

What is a research tool?

Currently, there are several research appraisal tools, checklists and frameworks for qualitative studies. It is important to note that tools, checklists and frameworks are not equivalent to one another but actually refer to different approaches to appraising the validity of a research study. As such, it is erroneous to assume that all forms of qualitative appraisal feature the same aims and methods (Hannes et al., 2010 ; Williams et al., 2019 ).

Generally, research assessment falls into two categories: checklists and frameworks. Checklist approaches are often contrasted with quantitative research, since the focus is on assessing the internal validity of research (i.e. researcher’s independence from the study). This involves the assessment of bias in sampling, participant recruitment, data collection and analysis. Framework approaches to research appraisal, on the other hand, revolve around traditional qualitative concepts such as transparency, reflexivity, dependability and transferability (Williams et al., 2019 ). Framework approaches to appraisal are often challenging to use because they depend on the reviewer’s familiarisation with and interpretation of the qualitative concepts.

Because of these different approaches, there is some ambiguity in terminology, particularly between research appraisal instruments and research appraisal tools . These terms are often used interchangeably in appraisal literature (Williams et al., 2019 ). In this paper, research appraisal tool is defined as a method-specific (i.e. it identifies a specific research method or component) form of appraisal that draws from both checklist and framework approaches. Furthermore, a research appraisal tool seeks to inform decision making in EBP or PBE paradigms and provides explicit definitions of the tool’s evaluative framework (thus minimising—but by no means eliminating—the reviewers’ interpretation of the tool). This definition will be applied to CaSE (Table 5 ).

In contrast, research appraisal instruments are generally seen as a broader form of appraisal in the sense that they may evaluate a variety of methods (i.e. they are non-method specific or they do not target a particular research component), and are aimed at checking whether the research findings and/or the study design contain specific elements (e.g. the aims of research, the rationale behind design methodology, participant recruitment strategies, etc.).

There is often an implicit difference in audience between appraisal tools and instruments. Research appraisal instruments are often aimed at researchers who want to assess the strength of their study; however, the process of appraisal may not be made explicit in the study itself (besides mentioning that the tool was used to appraise the study). Research appraisal tools are aimed at researchers who wish to explicitly demonstrate the evidential quality of the study to the readers (which is particularly common in RCTs). All forms of appraisal used in the comparative exercise below are defined as ‘tools’, even though they have different appraisal approaches and aims.

Comparing different qualitative tools

Hannes et al. ( 2010 ) identified CASP (Critical Appraisal Skills Programme-tool), JBI (Joanna Briggs Institute-tool) and ETQS (Evaluation Tool for Qualitative Studies) as the critical appraisal tools most frequently used by qualitative researchers. All three instruments are available online and are free of charge, which means that any researcher or reviewer can readily apply the CASP, JBI or ETQS evaluative frameworks to their research. Furthermore, all three instruments were developed within the context of organisational, institutional or consortium support (Tables 6 , 7 and 8 ).

It is important to note that none of the three tools is specific to systematic case studies or psychotherapy case studies (which would include not only systematic but also experimental and clinical cases). This means that using CASP, JBI or ETQS for case study appraisal may come at the cost of overlooking elements and components specific to the systematic case study method.

Based on Hannes et al.’s ( 2010 ) comparative study of qualitative appraisal tools as well as the different evaluation criteria explicated in CASP, JBI and ETQS evaluative frameworks, I assessed how well each of the three tools is attuned to the methodological, clinical and theoretical aspects of systematic case studies in psychotherapy. The latter components were based on case study guidelines featured in the journal Pragmatic Case Studies in Psychotherapy as well as components commonly used by published systematic case studies across a variety of other psychotherapy journals (e.g. Psychotherapy Research, Research In Psychotherapy: Psychopathology Process And Outcome, etc.) (see Table 9 for detailed descriptions of each component).

The evaluation criteria for each tool in Table 9 follow Joanna Briggs Institute (JBI) ( 2017a , 2017b ); Critical Appraisal Skills Programme (CASP) ( 2018 ); and ETQS Questionnaire (first published in 2004 but revised continuously since). Table 10 demonstrates how each tool should be used (i.e. recommended reviewer responses to checklists and questionnaires).

Using CASP, JBI and ETQS for systematic case study appraisal

Although JBI, CASP and ETQS were all developed to appraise qualitative research, it is evident from the above comparison that there are significant differences between the three tools. For example, JBI and ETQS are well suited to assess the researcher’s interpretations (Hannes et al. ( 2010 ) defined this as interpretive validity, a subcategory of internal validity): the researcher’s ability to portray, understand and reflect on the research participants’ experiences, thoughts, viewpoints and intentions. JBI has an explicit requirement for participant voices to be clearly represented, whereas ETQS involves a set of questions about key characteristics of events, persons, times and settings that are relevant to the study. Furthermore, both JBI and ETQS seek to assess the researcher’s influence on the research, with ETQS particularly focusing on the evaluation of reflexivity (the researcher’s personal influence on the interpretation and collection of data). These elements are absent or addressed to a lesser extent in the CASP tool.

The appraisal of transferability of findings (what this paper previously referred to as external validity ) is addressed only by ETQS and CASP. Both tools have detailed questions about the value of research to practice and policy as well as its transferability to other populations and settings. Methodological research aspects are also extensively addressed by CASP and ETQS, but less so by JBI (which relies predominantly on congruity between research methodology and objectives without any particular assessment criteria for other data sources and/or data collection methods). Finally, the evaluation of theoretical aspects (referred to by Hannes et al. ( 2010 ) as theoretical validity ) is addressed only by JBI and ETQS; there are no assessment criteria for theoretical framework in CASP.

Given these differences, it is unsurprising that CASP, JBI and ETQS have limited relevance for systematic case studies in psychotherapy. First, it is evident that none of the three tools has specific evaluative criteria for the clinical component of systematic case studies. Although JBI and ETQS feature some relevant questions about participants and their context, the conceptualisation of patients (and/or clients) in psychotherapy involves other kinds of data elements (e.g. diagnostic tools and questionnaires as well as therapist observations) that go beyond the usual participant data. Furthermore, much of the clinical data is intertwined with the therapist’s clinical decision-making and thinking style (Kaluzeviciute & Willemsen, 2020 ). As such, there is a need to appraise patient data and therapist interpretations not only on a separate basis, but also as two forms of knowledge that are deeply intertwined in the case narrative.

Secondly, since systematic case studies involve various forms of data, there is a need to appraise how these data converge (or how different methods complement one another in the case context) and how they can be transferred or applied in broader psychotherapy research and practice. These systematic case study components are attended to a degree by CASP (which is particularly attentive to methodological components) and ETQS (which has particularly specific criteria for research transferability to policy and practice). These components are not addressed, or are addressed less explicitly, by JBI. Overall, none of the tools is attuned to all methodological, theoretical and clinical components of the systematic case study. Specifically, there are no clear evaluation criteria for the description of research teams (i.e. different data analysts and/or clinicians); the suitability of the systematic case study method; the description of the patient’s clinical assessment; the use of other methods or data sources; and the general data about therapeutic progress.

Finally, there is something to be said about the recommended reviewer responses (Table 10 ). Systematic case studies can vary significantly in their formulation and purpose. The methodological, theoretical and clinical components outlined in Table 9 follow guidelines made by case study journals; however, these are recommendations, not ‘set in stone’ case templates. For this reason, the straightforward checklist approaches adopted by JBI and CASP may be difficult to use for case study researchers and those reviewing case study research. The ETQS open-ended questionnaire approach suggested by Long and Godfrey ( 2004 ) enables a comprehensive, detailed and purpose-oriented assessment, suitable for the evaluation of systematic case studies. That said, there remains a challenge of ensuring that there is less space for the interpretation of evaluative criteria (Williams et al., 2019 ). The combination of checklist and framework approaches would, therefore, provide a more stable appraisal process across different reviewers.

Developing purpose-oriented evaluation criteria for systematic case studies

The starting point in developing evaluation criteria for the Case Study Evaluation-tool (CaSE) is addressing the significance of pluralism in systematic case studies. Unlike RCTs, systematic case studies are pluralistic in the sense that they employ divergent practices in methodological procedures (research process), and they may include significantly different research aims and purpose (the end-goal) (Kaluzeviciute & Willemsen, 2020 ). While some systematic case studies will have an explicit intention to conceptualise and situate a single patient’s experiences and symptoms within a broader clinical population, others will focus on the exploration of phenomena as they emerge from the data. It is therefore important that CaSE is positioned within a purpose-oriented evaluative framework, suitable for the assessment of what each systematic case is good for (rather than determining an absolute measure of ‘good’ and ‘bad’ systematic case studies). This approach to evidence and appraisal is in line with the PBE paradigm. PBE emphasises the study of clinical complexities and variations through local and contingent settings (e.g. single case studies) and promotes methodological pluralism (Barkham & Mellor-Clark, 2003 ).

CaSE checklist for essential components in systematic case studies

In order to conceptualise purpose-oriented appraisal questions, we must first look at what unites and differentiates systematic case studies in psychotherapy. The commonly used theoretical, clinical and methodological systematic case study components were identified earlier in Table 9. These components will be seen as essential and common to most systematic case studies in CaSE evaluative criteria. If these essential components are missing in a systematic case study, then it may be implied there is a lack of information, which in turn diminishes the evidential quality of the case. As such, the checklist serves as a tool for checking whether a case study is, indeed, systematic (as opposed to experimental or clinical; see Iwakabe & Gazzola, 2009 for further differentiation between methodologically distinct case study types) and should be used before the CaSE Purpose-based Evaluative Framework for Systematic Case Studies (which is designed for the appraisal of different purposes common to systematic case studies).

As noted earlier in the paper, checklist approaches to appraisal are useful when evaluating the presence or absence of specific information in a research study. This approach can be used to appraise essential components in systematic case studies, as shown below. From a pragmatic point of view (Levitt et al., 2017; Truijens et al., 2019), the CaSE Checklist for Essential Components in Systematic Case Studies can be seen as a way to ensure the internal validity of a systematic case study: the reviewer is assessing whether sufficient information is provided about the case design, procedure, approaches to inquiry, etc., and whether they are relevant to the researcher’s objectives and conclusions (Table 11).
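For reviewers who keep their appraisals in electronic form, the presence/absence logic of a checklist can be sketched in a few lines of Python. This is only an illustrative sketch, not part of CaSE itself: the component names are hypothetical labels based on examples mentioned in this paper (they do not reproduce Table 11), and the function name `missing_components` is an assumption introduced here.

```python
# Illustrative component labels only; they do NOT reproduce the full
# contents of Table 11 and are named here purely for the example.
ESSENTIAL_COMPONENTS = (
    "rationale_for_case_study_method",
    "patient_history",
    "research_objectives",
    "assessment_procedures",
    "data_analysis",
)


def missing_components(reported):
    """Return the essential components a case study does not report.

    `reported` is the set of component labels that the reviewer judged
    to be present in the case study.
    """
    return [c for c in ESSENTIAL_COMPONENTS if c not in reported]


# Hypothetical review: only two components were found in the case study.
reported = {"patient_history", "research_objectives"}
gaps = missing_components(reported)
print(gaps)
```

An empty result would indicate that all listed components are reported; any remaining labels point to missing information that, as argued above, may diminish the evidential quality of the case.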

CaSE purpose-based evaluative framework for systematic case studies

Identifying differences between systematic case studies means identifying the different purposes systematic case studies have in psychotherapy. Based on the earlier work by social scientist Yin (1984, 1993), we can differentiate between exploratory (hypothesis generating, indicating a beginning phase of research), descriptive (particularising case data as it emerges) and representative (a case that is typical of a broader clinical population, referred to as the ‘explanatory case’ by Yin) cases.

Another increasingly significant strand of systematic case studies is transferable (aggregating and transferring case study findings) cases. These cases are based on the process of meta-synthesis (Iwakabe & Gazzola, 2009): by examining processes and outcomes in many different case studies dealing with similar clinical issues, researchers can identify common themes and inferences. In this way, single case studies that have relatively little impact on clinical practice, research or health care policy (in the sense that they capture psychotherapy processes rather than produce generalisable claims as in Yin’s representative case studies) can contribute to the generation of a wider knowledge base in psychotherapy (Iwakabe, 2003, 2005). However, there is an ongoing issue of assessing the evidential quality of such transferable cases. According to Duncan and Sparks (2020), although meta-synthesis and meta-analysis are considered to be the ‘gold standard’ for assessing interventions across disparate studies in psychotherapy, they often contain case studies with significant research limitations, inappropriate interpretations and insufficient information. It is therefore important to have a research appraisal process in place for selecting transferable case studies.

Two other types of systematic case study research include: critical (testing and/or confirming existing theories) cases, which are described as an excellent method for falsifying existing theoretical concepts and testing whether therapeutic interventions work in practice with concrete patients (Kaluzeviciute, 2021), and unique (going beyond the ‘typical’ cases and demonstrating deviations) cases (Merriam, 1998). These two systematic case study types are often seen as less valuable for psychotherapy research given that unique/falsificatory findings are difficult to generalise. But it is clear that practitioners and researchers in our field seek out context-specific data, as well as detailed information on the effectiveness of therapeutic techniques in single cases (Stiles, 2007) (Table 12).

Each purpose-based case study contributes to PBE in different ways. Representative cases provide qualitatively rich, in-depth data about a clinical phenomenon within its particular context. This offers other clinicians and researchers access to a ‘closed world’ (Mackrill & Iwakabe, 2013) containing a wide range of attributes about a conceptual type (e.g. clinical condition or therapeutic technique). Descriptive cases generally seek to demonstrate a realistic snapshot of therapeutic processes, including complex dynamics in therapeutic relationships, and instances of therapeutic failure (Maggio, Molgora, & Oasi, 2019). Data in descriptive cases should be presented in a transparent manner (e.g. if there are issues in standardising patient responses to a self-report questionnaire, this should be made explicit). Descriptive cases are commonly used in psychotherapy training and supervision. Unique cases are relevant for both clinicians and researchers: they often contain novel treatment approaches and/or introduce new diagnostic considerations about patients who deviate from the clinical population. Critical cases demonstrate the application of psychological theories ‘in action’ with particular patients; as such, they are relevant to clinicians, researchers and policymakers (Mackrill & Iwakabe, 2013). Exploratory cases bring new insight and observations into clinical practice and research. This is particularly useful when comparing (or introducing) different clinical approaches and techniques (Trad & Raine, 1994). Findings from exploratory cases often include future research suggestions. Finally, transferable cases provide one solution to the generalisation issue in psychotherapy research through the previously mentioned process of meta-synthesis. Grouped together, transferable cases can contribute to theory building and development, as well as higher levels of abstraction about a chosen area of psychotherapy research (Iwakabe & Gazzola, 2009).

With this plurality in mind, it is evident that CaSE has a challenging task of appraising research components that are distinct across six different types of purpose-based systematic case studies. The purpose-specific evaluative criteria in Table 13 were developed in close consultation with epistemological literature associated with each type of case study, including: Yin’s (1984, 1993) work on establishing the typicality of representative cases; Duncan and Sparks’ (2020) and Iwakabe and Gazzola’s (2009) case selection criteria for meta-synthesis and meta-analysis; Stake’s (1995, 2010) research on particularising case narratives; Merriam’s (1998) guidelines on distinctive attributes of unique case studies; Kennedy’s (1979) epistemological rules for generalising from case studies; Mahrer’s (1988) discovery-oriented case study approach; and Edelson’s (1986) guidelines for rigorous hypothesis generation in case studies.

Research on epistemic issues in case writing (Kaluzeviciute, 2021) and different forms of scientific thinking in psychoanalytic case studies (Kaluzeviciute & Willemsen, 2020) was also utilised to identify case study components that would help improve therapist clinical decision-making and reflexivity.

For the analysis of more complex research components (e.g. the degree of therapist reflexivity), the purpose-based evaluation will utilise a framework approach, in line with comprehensive and open-ended reviewer responses in ETQS (Evaluation Tool for Qualitative Studies) (Long & Godfrey, 2004) (Table 13). That is to say, the evaluation here is not so much about the presence or absence of information (as in the checklist approach) but the degree to which the information helps the case with its unique purpose, whether it is generalisability or typicality. Therefore, although the purpose-oriented evaluation criteria below encompass comprehensive questions at a considerable level of generality (in the sense that not all components may be required or relevant for each case study), they nevertheless seek to engage with each type of purpose-based systematic case study on an individual basis (attending to research or clinical components that are unique to each type of case study).

It is important to note that, as this is an introductory paper to CaSE, the evaluative framework is still preliminary: it involves some of the core questions that pertain to the nature of all six purpose-based systematic case studies. However, there is a need to develop a more comprehensive and detailed CaSE appraisal framework for each purpose-based systematic case study in the future.

Using CaSE on published systematic case studies in psychotherapy: an example

To illustrate the use of the CaSE Purpose-based Evaluative Framework for Systematic Case Studies, a case study by Lunn, Daniel, and Poulsen (2016) titled ‘Psychoanalytic Psychotherapy With a Client With Bulimia Nervosa’ was selected from the Single Case Archive (SCA) and analysed in Table 14. Based on the core questions associated with the six purpose-based systematic case study types in Table 13 (1 to 6), the purpose of Lunn et al.’s (2016) case was identified as critical (testing an existing theoretical suggestion).

Sometimes, case study authors will explicitly define the purpose of their case in the form of research objectives (as was the case in Lunn et al.’s study); this helps to identify which purpose-based questions are most relevant for the evaluation of the case. However, some case studies will require comprehensive analysis in order to identify their purpose (or multiple purposes). As such, it is recommended that CaSE reviewers first assess the degree and manner in which information about the studied phenomenon, patient data, clinical discourse and research are presented before deciding on the case purpose.

Although each purpose-based systematic case study will contribute to different strands of psychotherapy (theory, practice, training, etc.) and focus on different forms of data (e.g. theory testing vs extensive clinical descriptions), the overarching aim across all systematic case studies in psychotherapy is to study local and contingent processes, such as variations in patient symptoms and complexities of the clinical setting. The comprehensive framework approach will therefore allow reviewers to assess the degree of external validity in systematic case studies (Barkham & Mellor-Clark, 2003). Furthermore, assessing the case against its purpose will let reviewers determine whether the case achieves its set goals (research objectives and aims). The example below shows that Lunn et al.’s (2016) case is successful in functioning as a critical case as the authors provide relevant, high-quality information about their tested therapeutic conditions.

Finally, it is also possible to use CaSE to gather specific types of systematic case studies for one’s research, practice, training, etc. For example, a CaSE reviewer might want to identify as many descriptive case studies focusing on negative therapeutic relationships as possible for their clinical supervision. The reviewer will therefore only need to refer to CaSE questions in Table 13 (2) on descriptive cases. If the reviewed cases do not align with the questions in Table 13 (2), then they are not suitable for the CaSE reviewer who is looking for “know-how” knowledge and detailed clinical narratives.
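A reviewer assembling such a collection electronically could record the purpose(s) assigned to each appraised case and filter on them. The following Python sketch is offered only as an illustration under stated assumptions: the six purposes and their aims follow the types identified in this paper, whereas the `Purpose` enumeration, the `select_cases` function, the record layout and the case titles are hypothetical and not part of CaSE.

```python
from enum import Enum


class Purpose(Enum):
    """The six purpose-based case types and the aim attached to each."""
    REPRESENTATIVE = "typicality"
    DESCRIPTIVE = "particularity"
    UNIQUE = "deviation"
    CRITICAL = "falsification/confirmation"
    EXPLORATORY = "hypothesis generation"
    TRANSFERABLE = "generalisability"


def select_cases(cases, purpose):
    """Keep the cases whose reviewer-assigned purposes include `purpose`."""
    return [case for case in cases if purpose in case["purposes"]]


# Hypothetical appraisal records; a case may serve more than one purpose.
cases = [
    {"title": "Case A", "purposes": {Purpose.DESCRIPTIVE}},
    {"title": "Case B", "purposes": {Purpose.CRITICAL}},
    {"title": "Case C", "purposes": {Purpose.DESCRIPTIVE, Purpose.EXPLORATORY}},
]

descriptive = select_cases(cases, Purpose.DESCRIPTIVE)
print([case["title"] for case in descriptive])
```

Storing purposes as a set reflects the point made above that some case studies have multiple purposes; the purpose itself must still be assigned by a reviewer through the open-ended appraisal, not by the code.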

Concluding comments

This paper introduces a novel Case Study Evaluation-tool (CaSE) for systematic case studies in psychotherapy. Unlike most appraisal tools in EBP, CaSE is positioned within purpose-oriented evaluation criteria, in line with the PBE paradigm. CaSE enables reviewers to assess what each systematic case is good for (rather than determining an absolute measure of ‘good’ and ‘bad’ systematic case studies). In order to explicate a purpose-based evaluative framework, six different systematic case study purposes in psychotherapy have been identified: representative cases (purpose: typicality), descriptive cases (purpose: particularity), unique cases (purpose: deviation), critical cases (purpose: falsification/confirmation), exploratory cases (purpose: hypothesis generation) and transferable cases (purpose: generalisability). Each case was linked with an existing epistemological network, such as Iwakabe and Gazzola’s (2009) work on case selection criteria for meta-synthesis. The framework approach includes core questions specific to each purpose-based case study (Table 13 (1–6)). The aim is to assess the external validity and effectiveness of each case study against its stated research objectives and aims. Reviewers are required to perform a comprehensive and open-ended data analysis, as shown in the example in Table 14.

Along with the CaSE Purpose-based Evaluative Framework (Table 13), the paper also developed the CaSE Checklist for Essential Components in Systematic Case Studies (Table 11). The checklist approach is meant to aid reviewers in assessing the presence or absence of essential case study components, such as the rationale behind choosing the case study method and description of the patient’s history. If essential components are missing in a systematic case study, then it may be implied that there is a lack of information, which in turn diminishes the evidential quality of the case. Following broader definitions of validity set out by Levitt et al. (2017) and Truijens et al. (2019), it could be argued that the checklist approach allows for the assessment of (non-quantitative) internal validity in systematic case studies: does the researcher provide sufficient information about the case study design, rationale, research objectives, epistemological/philosophical paradigms, assessment procedures, data analysis, etc., to account for their research conclusions?