
- Volume 25, Issue 3

- Impact of virtual simulation on nursing students’ learning outcomes: a systematic review

- Terri Kean (ORCID: http://orcid.org/0000-0002-0211-3055)

- Retired Faculty of Nursing, University of Prince Edward Island, Harrington, PE C1A 4P3, Canada

- Correspondence to Terri Kean, Retired Faculty of Nursing, University of Prince Edward Island, Charlottetown, PE C1A 4P3, Canada; tkean1965{at}gmail.com

https://doi.org/10.1136/ebnurs-2022-103567

Background and purpose

This is a summary of: Foronda C, Fernandez-Burgos M, Nadeau C, et al. Virtual simulation in nursing education: a systematic review spanning 1996 to 2018. Simulation in Healthcare. 2020;15(1):46.

Despite its growing use, there is limited synthesised knowledge on the effectiveness of virtual simulation (VS) as a pedagogical approach in nursing education.

Measuring the effectiveness of VS as a nursing pedagogy may contribute to the development of best practices for its use and enhance student learning.

This systematic …

Funding The authors have not declared a specific grant for this research from any funding agency in the public, commercial or not-for-profit sectors.

Competing interests None declared.

Provenance and peer review Commissioned; internally peer reviewed.

- Open access

- Published: 01 May 2024

The effectiveness of virtual reality training on knowledge, skills and attitudes of health care professionals and students in assessing and treating mental health disorders: a systematic review

- Cathrine W. Steen 1, 2

- Kerstin Söderström 1, 2

- Bjørn Stensrud 3

- Inger Beate Nylund 2

- Johan Siqveland 4, 5

BMC Medical Education volume 24, Article number: 480 (2024)

Virtual reality (VR) training can enhance health professionals’ learning. However, there are ambiguous findings on the effectiveness of VR as an educational tool in mental health. We therefore reviewed the existing literature on the effectiveness of VR training on health professionals’ knowledge, skills, and attitudes in assessing and treating patients with mental health disorders.

We searched MEDLINE, PsycINFO (via Ovid), the Cochrane Library, ERIC, CINAHL (on EBSCOhost), Web of Science Core Collection, and the Scopus database for studies published from January 1985 to July 2023. We included all studies evaluating the effect of VR training interventions on attitudes, knowledge, and skills pertinent to the assessment and treatment of mental health disorders and published in English or Scandinavian languages. The quality of the evidence in randomized controlled trials was assessed with the Cochrane Risk of Bias Tool 2.0. For non-randomized studies, we assessed the quality of the studies with the ROBINS-I tool.

Of 4170 unique records identified, eight studies were eligible. The four randomized controlled trials were assessed as having some concerns or a high overall risk of bias. The four non-randomized studies were assessed as having a moderate to serious overall risk of bias. Of the eight included studies, four used a virtual standardized patient design to simulate training situations, two used interactive patient scenario training designs, and two used a virtual patient game design. The results suggest that VR training interventions can promote knowledge and skills acquisition.

Conclusions

The findings indicate that VR interventions can effectively train health care personnel to acquire knowledge and skills in the assessment and treatment of mental health disorders. However, study heterogeneity, the prevalence of small sample sizes, and the many studies with a high or serious risk of bias suggest an uncertain evidence base. Future research on the effectiveness of VR training should include assessment of immersive VR training designs and focus on more robust studies with larger sample sizes.

Trial registration

This review was pre-registered in the Open Science Framework register (ID Z8EDK).

A robustly trained health care workforce is pivotal to forging a resilient health care system [ 1 ], and there is an urgent need to develop innovative methods and emerging technologies for health care workforce education [ 2 ]. Virtual reality technology designs for clinical training have emerged as a promising avenue for increasing the competence of health care professionals, reflecting their potential to provide effective training [ 3 ].

Virtual reality (VR) is a dynamic and diverse field. It can be described as a computer-generated environment that simulates sensory experiences, in which user interactions shape the course of events [ 4 ]. When optimally designed, VR gives users the feeling of being physically present in this simulated space, which underpins its potential as an immersive learning tool [ 5 ]. Central to the appeal of VR is its capacity to create artificial settings through sensory illusion, a quality captured by the term ‘immersion’. Immersion conveys the sensation of being deeply engrossed in, or enveloped by, an alternate world, akin to absorption in a video game. Some VR systems are more immersive than others, depending on the technology used to engage the senses. However, the degree of immersion does not necessarily determine the user’s level of engagement with the application [ 6 ].

A common approach to categorizing VR systems is based on the design of the technology used, allowing them to be classified into: 1) non-immersive desktop systems, where users experience virtual environments through a computer screen, 2) immersive CAVE systems with large projected images and motion trackers to adjust the image to the user, and 3) fully immersive head-mounted display systems that involve users wearing a headset that fully covers their eyes and ears, thus entirely immersing them in the virtual environment [ 7 ]. Advances in VR technology have enabled a wide range of VR experiences. The possibility for health care professionals to repeatedly practice clinical skills with virtual patients in a risk-free environment offers an invaluable learning platform for health care education.

The impact of VR training on health care professionals’ learning has predominantly been researched in terms of technical surgical abilities, including procedural planning, familiarity with medical instruments, and psychomotor skills such as dexterity, accuracy, and speed [ 8 , 9 ]. In contrast, VR training for non-technical or ‘soft’ skills, such as communication and teamwork, appears to have been explored less [ 10 ]. A recent systematic review evaluated the outcomes of VR training in non-technical skills across various medical specialties [ 11 ], focusing on cognitive abilities (e.g., situation awareness, decision-making) and interprofessional social competencies (e.g., teamwork, conflict resolution, leadership). These skills are pivotal for collaboration among colleagues and for a safe health care environment. However, they do not fully cover the competencies required in encounters with patients with mental health disorders.

For health care professionals providing care to patients with mental health disorders, acquiring specific skills, knowledge, and empathic attitudes is of utmost importance. Many individuals experiencing mental health challenges may find it difficult to communicate their thoughts and feelings, so it is essential for health care providers to cultivate an environment where patients feel safe and encouraged to share them. Beyond fostering trust, health care professionals must also possess in-depth knowledge about the nature and treatment of various mental health disorders. Moreover, they must actively practice and internalize the skills necessary to translate their knowledge into clinical practice. Mental health clinical skills have conventionally been trained through simulation or role-play with peers under expert supervision and through practice with real patients, but the emergence of VR applications presents a compelling alternative. This technology offers a potentially transformative way to train mental health professionals. Our review examines specific outcomes in knowledge, skills, and attitudes, covering areas from theoretical understanding to practical application and patient interaction. By focusing on these measurable concepts, which are in line with current healthcare education guidelines [ 12 ], we aim to contribute to the knowledge base and provide a detailed analysis of the complexities in mental health care training. This approach is designed to highlight the practical relevance of VR training alongside its contribution to academic discourse.

A recent systematic review evaluated the effects of virtual patient (VP) interventions on knowledge, skills, and attitudes in undergraduate psychiatry education [ 13 ]. However, that review is limited to VP interventions and does not cover other types of VR training interventions. Furthermore, it adopts a classification of VP that differs from ours, so its findings and conclusions are not directly comparable with those of the present review.

To the best of our knowledge, no systematic review has assessed and summarized the effectiveness of VR training interventions for health professionals in the assessment and treatment of mental health disorders. This systematic review addresses the gap by exploring the effectiveness of virtual reality in the training of knowledge, skills, and attitudes health professionals need to master in the assessment and treatment of mental health disorders.

This systematic review follows the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines [ 14 ]. The protocol was registered in the Open Science Framework register (registration ID Z8EDK).

We included randomized controlled trials, cohort studies, and pretest–posttest studies that met the following criteria: a) the population consisted of health care professionals or health care professional students, b) the study assessed the effectiveness of a VR application for training in assessing and treating mental health disorders, and c) the study reported changes in knowledge, skills, or attitudes. We excluded studies evaluating VR interventions not designed for training in assessing and treating mental health disorders (e.g., training of surgical skills), studies evaluating VR training from the first-person perspective, studies that used VR interventions for non-educational purposes, and studies in which VR interventions were used to train patients with mental health problems (e.g., social skills training). We also excluded studies not published in English or a Scandinavian language.

Search strategy

The literature search reporting was guided by relevant items in PRISMA-S [ 15 ]. We developed the search strategy in collaboration with a senior academic librarian (IBN). Inspired by the ‘pearl harvesting’ information retrieval approach [ 16 ], we anticipated a broad spectrum of terms related to our interdisciplinary query. Recognizing that various terminologies could capture our central concepts, we harvested an array of terms for each of the four elements ‘health care professionals and health care students’, ‘VR’, ‘training’, and ‘mental health’. We followed the four steps of the pearl harvesting framework [ 16 ] with some minor adaptations. Step 1: We searched for and sampled a set of relevant research articles, a book chapter, and literature reviews. Step 2: The librarian scrutinized titles, abstracts, author keywords, and database subject headings, and collected relevant terms. Step 3: The librarian refined the lists of terms. Step 4: The review group, in collaboration with a VR consultant from KildeGruppen AS (a Norwegian media company), validated the refined lists to ensure they included all relevant VR search terms. For the VR element, this process yielded search terms such as ‘3D simulated environment’, ‘second life simulation’, ‘virtual patient’, and ‘virtual world’. An academic librarian at Inland Norway University of Applied Sciences peer reviewed the search strategy.
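The four harvested term lists are ultimately combined into a database query. The sketch below assumes a common block-building convention that the text does not spell out: terms within one element are joined with OR, and the four elements are joined with AND. Apart from the four VR terms quoted above, the example terms are hypothetical, and real Ovid, EBSCOhost, and Scopus syntax (field tags, truncation, proximity operators) differs from this generic form; the published strategies at DataverseNO [ 17 ] remain the authoritative versions.

```python
# Hypothetical sketch: combine harvested term lists into one Boolean query.
# Assumption: OR within each element, AND between elements, in a generic
# syntax that does not match any specific database platform exactly.


def quote(term: str) -> str:
    """Wrap multi-word terms in double quotes so they are searched as phrases."""
    return f'"{term}"' if " " in term else term


def build_query(elements: dict[str, list[str]]) -> str:
    """Join terms with OR inside each element block, then join blocks with AND."""
    blocks = []
    for name, terms in elements.items():
        if not terms:
            raise ValueError(f"element {name!r} has no search terms")
        blocks.append("(" + " OR ".join(quote(t) for t in terms) + ")")
    return " AND ".join(blocks)


if __name__ == "__main__":
    elements = {
        # Hypothetical example terms, except the four VR terms quoted in the text.
        "health care professionals and health care students": ["nurse*", "psychiatrist*"],
        "VR": ["3D simulated environment", "second life simulation",
               "virtual patient", "virtual world"],
        "training": ["training", "education"],
        "mental health": ["mental health", "psychiatry"],
    }
    print(build_query(elements))
```

Keeping each element as its own OR block makes it easy to add newly harvested synonyms to one concept without touching the others.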

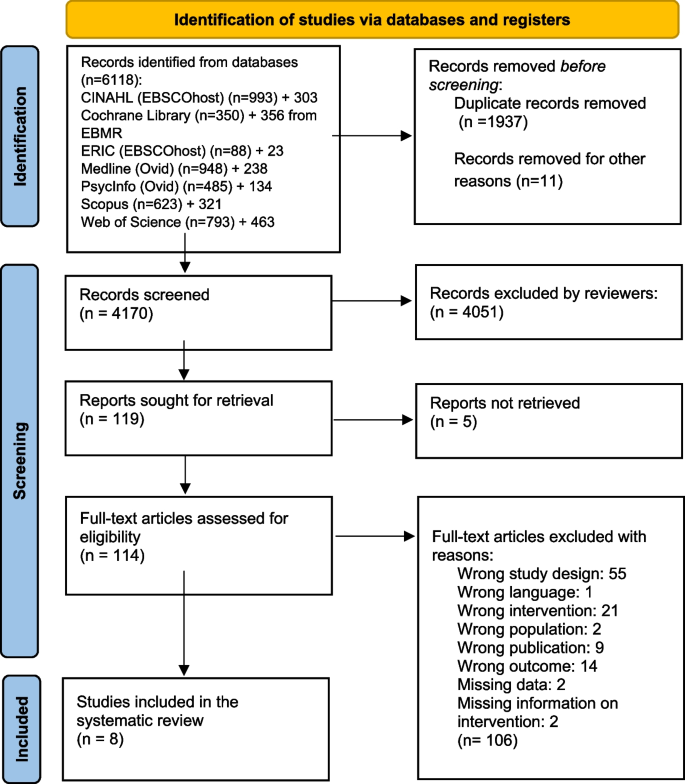

In June and July 2021, we performed comprehensive searches for publications dating from January 1985 to the present. This period was chosen because VR systems designed for training in health care first emerged in the early 1990s. The searches were carried out in seven databases: MEDLINE and PsycInfo (on Ovid), ERIC and CINAHL (on EBSCOhost), the Cochrane Library, Web of Science Core Collection, and Scopus. Detailed search strategies for each database are publicly available at DataverseNO [ 17 ]. On July 2, 2021, a search in CINAHL yielded 993 hits. However, when we attempted to transfer these records to EndNote using ‘Folder View’ (a feature for organizing and managing selected records before export), only 982 records were transferred. The remaining 11 records could not be transferred through Folder View, for unspecified reasons, and repeating the process twice produced the same discrepancy. These 11 missing records pose a risk that relevant studies were not captured in the initial search. In July 2023, we updated the initial searches, focusing on entries since January 1, 2021, to capture the latest publications and any references recently added to the databases. Because we lacked access to the Cochrane Library in July 2023, we instead used EBMR (Evidence Based Medicine Reviews) on the Ovid platform, including the Cochrane Central Register of Controlled Trials, the Cochrane Database of Systematic Reviews, and Cochrane Clinical Answers. All references were exported to EndNote and duplicates were removed. The number of records from each database is shown in the PRISMA flow diagram [ 14 ] (Fig. 1).

Fig. 1 PRISMA flow chart of the records and study selection process
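The search description states that duplicates were removed in EndNote, but not how records were matched. As a hypothetical illustration of what such a step does, the sketch below treats two records as duplicates if they share a DOI (case-insensitive) or the same normalized title and publication year. The matching rule, the `Record` type, and the placeholder DOIs are assumptions; this is not EndNote's algorithm or the authors' procedure.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Record:
    """Hypothetical minimal bibliographic record exported from a database search."""
    title: str
    year: int
    doi: Optional[str] = None
    source: str = ""


def normalize_title(title: str) -> str:
    """Lowercase and collapse every run of non-alphanumeric characters to one space.

    Simplification: non-ASCII letters are treated as separators.
    """
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def match_keys(record: Record) -> set[tuple]:
    """Keys under which a record can match another: title+year, plus DOI if present."""
    keys = {("title", normalize_title(record.title), record.year)}
    if record.doi:
        keys.add(("doi", record.doi.strip().lower()))
    return keys


def deduplicate(records: list[Record]) -> list[Record]:
    """Keep the first record of each duplicate group, preserving input order."""
    seen: set[tuple] = set()
    unique = []
    for record in records:
        keys = match_keys(record)
        is_duplicate = bool(keys & seen)
        seen |= keys  # remember every key so later records can match via any of them
        if not is_duplicate:
            unique.append(record)
    return unique


if __name__ == "__main__":
    # Made-up records; the DOIs are placeholders, not real articles.
    records = [
        Record("Virtual patients in psychiatry: a trial", 2019, "10.1000/ABC", "MEDLINE"),
        Record("Virtual Patients in Psychiatry - A Trial", 2019, "10.1000/abc", "CINAHL"),
        Record("Virtual patients in psychiatry a trial", 2019, None, "Scopus"),
        Record("Another study", 2020, None, "ERIC"),
    ]
    kept = deduplicate(records)
    print(f"{len(records)} records, {len(kept)} after deduplication")
```

Matching on either key catches records that one database exports with a DOI and another without; a real deduplication pass would usually also be checked by hand.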

Study selection and data collection

Two reviewers (JS, CWS) independently screened the titles and abstracts of the records retrieved in the literature search against the eligibility criteria, using the Rayyan web application [ 18 ]. The same reviewers (JS, CWS) then assessed the full texts of the articles selected in the initial screening. Articles meeting the eligibility criteria were included in the review. Any disagreements were resolved through discussion.

Data were extracted from the studies by the first author (CWS) and cross-checked by another reviewer (JS). The extracted data included the authors, publication year, country, study design, participant details (education, setting), interventions (VR system, class label), comparison types, outcomes, and main findings. These data are summarized in Table 1 and Additional file 1. When reviewing the VR interventions used in the included studies, we sought expertise from advisers associated with VRINN, a Norwegian immersive learning cluster, and SIMInnlandet, a center dedicated to simulation in mental health care at Innlandet Hospital Trust. This collaboration ensured a thorough examination and accurate categorization of the VR technologies applied. Furthermore, the learning designs used in the VP interventions were classified under the guidance of an experienced VP scholar at Paracelsus Medical University in Salzburg.

Data analysis

We initially intended to perform separate meta-analyses for each of the primary outcomes: knowledge, skills, and attitudes. However, substantial heterogeneity among the included studies made a meta-analysis infeasible. We therefore conducted a narrative synthesis organized by these pre-determined outcomes, which allowed us to examine relationships both within and between studies. Effect sizes were calculated using a web-based effect size calculator [ 27 ]. We interpreted effect sizes using conventional benchmarks: for Cohen’s d, small = 0.2, moderate = 0.5, and large = 0.8; for Cramér’s V, small = 0.10, medium = 0.30, and large = 0.50.
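The review does not state which formulas the web-based calculator [ 27 ] applies. A minimal sketch, assuming the textbook definitions (Cohen’s d with a pooled standard deviation for two independent groups, and Cramér’s V from an uncorrected chi-square statistic on a contingency table), shows how such effect sizes are computed and mapped onto the benchmarks above. The function names and the example numbers are hypothetical and do not reproduce any included study.

```python
import math


def cohens_d(mean1: float, sd1: float, n1: int,
             mean2: float, sd2: float, n2: int) -> float:
    """Cohen's d for two independent groups, using the pooled standard deviation."""
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2)
    return (mean1 - mean2) / math.sqrt(pooled_var)


def cramers_v(table: list[list[int]]) -> float:
    """Cramér's V from an r x c table of observed counts (no continuity correction)."""
    k = min(len(table), len(table[0])) - 1  # smaller table dimension minus one
    if k < 1:
        raise ValueError("table must be at least 2 x 2")
    n = sum(sum(row) for row in table)
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    if 0 in row_totals or 0 in col_totals:
        raise ValueError("every row and column must have a non-zero total")
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    return math.sqrt(chi2 / (n * k))


# Benchmarks as stated in the text.
COHEN_D_BENCHMARKS = {"small": 0.2, "moderate": 0.5, "large": 0.8}
CRAMER_V_BENCHMARKS = {"small": 0.10, "medium": 0.30, "large": 0.50}


def describe(value: float, benchmarks: dict[str, float]) -> str:
    """Return the largest benchmark label whose threshold |value| reaches."""
    for label, threshold in sorted(benchmarks.items(), key=lambda kv: kv[1], reverse=True):
        if abs(value) >= threshold:
            return label
    return "below small"


if __name__ == "__main__":
    # Hypothetical numbers, not data from any included study.
    d = cohens_d(10.0, 2.0, 20, 9.0, 2.0, 20)
    v = cramers_v([[30, 10], [10, 30]])
    print(f"d = {d:.2f} ({describe(d, COHEN_D_BENCHMARKS)})")
    print(f"V = {v:.2f} ({describe(v, CRAMER_V_BENCHMARKS)})")
```

With these hypothetical inputs, d is 0.50 (moderate) and V is 0.50 (large); for a 2 x 2 table, Cramér’s V equals the absolute value of the phi coefficient.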

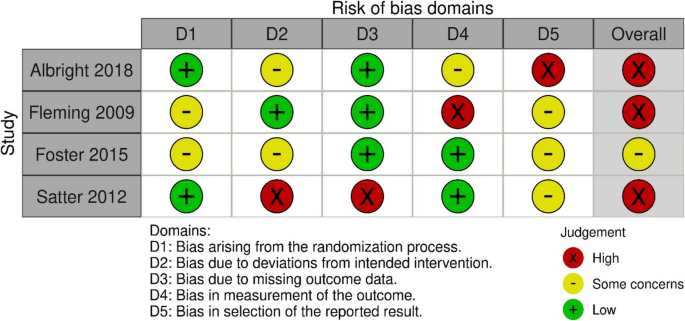

Risk of bias assessment

JS and CWS independently evaluated the risk of bias for all studies using two distinct assessment tools. We used the Cochrane risk of bias tool RoB 2 [ 28 ] to assess the risk of bias in the RCTs. With the RoB 2 tool, the bias was assessed as high, some concerns or low for five domains: randomization process, deviations from the intended interventions, missing outcome data, measurement of the outcome, and selection of the reported result [ 28 ].
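The RoB 2 guidance derives an overall judgement from the five domain judgements: low if every domain is low, some concerns if at least one domain raises some concerns and none is high, and high if at least one domain is high. The guidance also allows a high overall rating when several domains raise some concerns that together substantially lower confidence in the result; that is a reviewer judgement, represented below only as a hypothetical boolean flag. This is a simplified sketch of the rule, not the official tool, and the function and flag names are illustrative.

```python
from enum import IntEnum


class RoB2(IntEnum):
    """RoB 2 judgement levels, ordered from least to most severe."""
    LOW = 0
    SOME_CONCERNS = 1
    HIGH = 2


# The five RoB 2 domains named in the text.
ROB2_DOMAINS = (
    "randomization process",
    "deviations from the intended interventions",
    "missing outcome data",
    "measurement of the outcome",
    "selection of the reported result",
)


def rob2_overall(judgements: dict[str, RoB2],
                 concerns_substantially_lower_confidence: bool = False) -> RoB2:
    """Simplified overall RoB 2 judgement derived from the domain judgements.

    The flag stands in for the reviewer's judgement that several 'some concerns'
    domains together warrant an overall 'high' rating; it is not computed here.
    """
    missing = set(ROB2_DOMAINS) - set(judgements)
    if missing:
        raise ValueError(f"missing domain judgements: {sorted(missing)}")
    values = [judgements[domain] for domain in ROB2_DOMAINS]
    if RoB2.HIGH in values:
        return RoB2.HIGH
    n_concerns = values.count(RoB2.SOME_CONCERNS)
    if n_concerns > 1 and concerns_substantially_lower_confidence:
        return RoB2.HIGH
    if n_concerns >= 1:
        return RoB2.SOME_CONCERNS
    return RoB2.LOW


if __name__ == "__main__":
    # Hypothetical trial: some concerns for randomization, high for outcome measurement.
    trial = {domain: RoB2.LOW for domain in ROB2_DOMAINS}
    trial["randomization process"] = RoB2.SOME_CONCERNS
    trial["measurement of the outcome"] = RoB2.HIGH
    print(rob2_overall(trial).name)
```

Because a single high domain is enough to make the overall judgement high, a trial can be rated low or some concerns for randomization and still be at high overall risk of bias.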

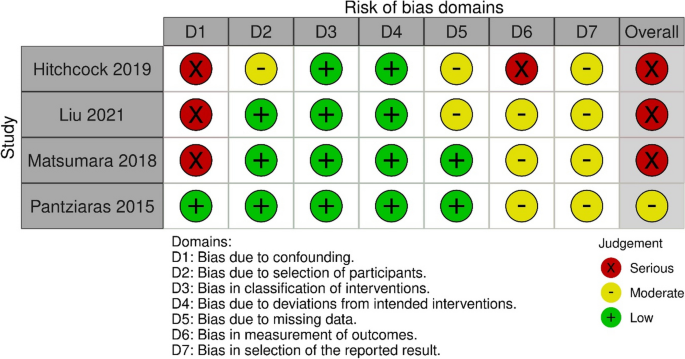

We used the Risk Of Bias In Non-randomized Studies of Interventions (ROBINS-I) tool [ 29 ] to assess the risk of bias in the non-randomized studies. With ROBINS-I, the risk of bias was rated as low, moderate, serious, critical, or no information for seven domains: confounding, selection of participants, classification of interventions, deviations from intended interventions, missing data, measurement of outcomes, and selection of the reported result [ 29 ].
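ROBINS-I likewise combines the domain judgements into an overall judgement that in effect takes the most severe rating: critical if any domain is critical, serious if any domain is serious (and none is critical), moderate if all domains are low or moderate, and low only if all are low. The ‘no information’ category applies when there is no indication of serious or critical risk but information is lacking in key domains; the sketch below simplifies this by treating every domain as key, which is an assumption rather than part of the tool. Names are illustrative.

```python
from enum import Enum


class Robins(Enum):
    """ROBINS-I judgement categories named in the text."""
    LOW = "low"
    MODERATE = "moderate"
    SERIOUS = "serious"
    CRITICAL = "critical"
    NO_INFORMATION = "no information"


# The seven ROBINS-I domains named in the text.
ROBINS_I_DOMAINS = (
    "confounding",
    "selection of participants",
    "classification of interventions",
    "deviations from intended interventions",
    "missing data",
    "measurement of outcomes",
    "selection of the reported result",
)


def robins_i_overall(judgements: dict[str, Robins]) -> Robins:
    """Simplified overall judgement: the most severe domain rating wins.

    Assumption: every domain is treated as 'key', so any 'no information' domain
    yields an overall 'no information' unless a domain is serious or critical.
    """
    missing = set(ROBINS_I_DOMAINS) - set(judgements)
    if missing:
        raise ValueError(f"missing domain judgements: {sorted(missing)}")
    values = {judgements[domain] for domain in ROBINS_I_DOMAINS}
    # Check categories from most to least severe.
    for level in (Robins.CRITICAL, Robins.SERIOUS, Robins.NO_INFORMATION, Robins.MODERATE):
        if level in values:
            return level
    return Robins.LOW


if __name__ == "__main__":
    # Hypothetical study rated serious for confounding and low elsewhere.
    study = {domain: Robins.LOW for domain in ROBINS_I_DOMAINS}
    study["confounding"] = Robins.SERIOUS
    print(robins_i_overall(study).value)
```

Under this rule, a study with serious risk in a single domain such as confounding is rated serious overall, however favorable its other domains are.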

We included eight studies in the review (Fig. 1 ). An overview of the included studies is presented in detail in Table 1 .

Four studies were RCTs [ 19 , 20 , 21 , 22 ], two were single group pretest–posttest studies [ 23 , 26 ], one was a controlled before and after study [ 25 ], and one was a cohort study [ 24 ]. The studies included health professionals from diverse educational backgrounds, including some from mental health and medical services, as well as students in medicine, social work, and nursing. All studies, published from 2009 to 2021, used interventions based on non-immersive desktop VR systems featuring various forms of VP design. We have described the characteristics of the interventions in Table 1 using an updated classification of VP interventions by Kononowicz et al. [ 30 ], developed from a model proposed by Talbot et al. [ 31 ]. Four of the studies used a virtual standardized patient (VSP) intervention [ 20 , 21 , 22 , 23 ], a conversational agent that simulates clinical presentations for training purposes. Two studies employed an interactive patient scenario (IPS) design [ 25 , 26 ], an approach that primarily uses text-based multimedia, enhanced with images and case histories presented through text or voice narratives, to simulate clinical scenarios. Lastly, two studies used a virtual patient game (VP game) intervention [ 19 , 24 ]. These interventions feature training scenarios with 3D avatars, specifically designed to improve clinical reasoning and team training skills. Note that the interventions classified as VSPs in this review, being a few years old, do not incorporate artificial intelligence (AI) as it is understood today. However, because they include some kind of algorithm that answers questions, we consider them conversational agents and therefore VSPs. As the eight included studies varied significantly in design, interventions, and outcome measures, we could not incorporate them into a meta-analysis.

The overall risk of bias for the four RCTs was high [ 19 , 20 , 22 ] or raised some concerns [ 21 ] (Fig. 2). All four were judged as low risk or some concerns in the randomization domain. Three studies were judged to be at high risk of bias in one [ 19 , 20 ] or two domains [ 22 ]: selection of the reported result [ 19 ], measurement of the outcome [ 20 ], and deviations from the intended interventions and missing outcome data [ 22 ]. One study was not judged to be at high risk of bias in any domain [ 21 ].

Fig. 2 Risk of bias summary: review authors’ assessments of each risk of bias item in the included RCTs

For the four non-randomized studies, the overall risk of bias was judged to be moderate [ 26 ] or serious [ 23 , 24 , 25 ] (Fig. 3 ). One study had a serious risk of bias in two domains: confounding and measurement of outcomes [ 23 ]. Two studies had a serious risk of bias in one domain, namely confounding [ 24 , 25 ], while one study was judged not to have a serious risk of bias in any domain [ 26 ].

Fig. 3 Risk of bias summary: review authors’ assessments of each risk of bias item in the included non-randomized studies

Knowledge

Three studies investigated the impact of virtual reality training on mental health knowledge [ 24 , 25 , 26 ]. One study with 32 resident psychiatrists in a single group pretest–posttest design assessed the effect of a VR training intervention on knowledge of posttraumatic stress disorder (PTSD) symptomatology, clinical management, and communication skills [ 26 ]. The intervention consisted of an IPS. Knowledge was assessed with an 11-item multiple-choice test administered before and after the intervention. This study reported a significant improvement on the knowledge test after the VR training intervention.

The second study used a controlled before and after design to examine the effect of a VR training intervention on knowledge of dementia [ 25 ]. Seventy-nine medical students in clinical training were divided into two groups, both following a traditional learning program, and the experimental group also received an IPS intervention. Knowledge was assessed with a test administered before and after the intervention; posttest scores were significantly higher in the experimental group than in the control group, with a moderate effect size between the groups.

A third study evaluated the effect of a VR training intervention on 299 undergraduate nursing students’ diagnostic recognition of depression and schizophrenia (classified as knowledge) [ 24 ]. The study used a prospective cohort design in which the VR intervention was the only difference in mental health-related educational content between the two cohorts. The intervention used a VP game design, developed to simulate training situations with virtual patient case scenarios, including depression and schizophrenia. The outcome was the accuracy of diagnoses made after reviewing case vignettes of depression and schizophrenia. The study found no statistically significant difference in diagnostic accuracy between the simulation and non-simulation cohorts.

Summary: All three studies assessing the effect of a VR intervention on knowledge were non-randomized studies with different study designs and different outcome measures. Two studies used an IPS design, while one study used a VP game design. Two of the studies found a significant effect of VR training on knowledge. Of these, one study had a moderate overall risk of bias [ 26 ], while the other was assessed as having a serious overall risk of bias [ 25 ]. The third study, which found no significant effect of the virtual reality intervention on knowledge, was assessed as having a serious risk of bias [ 24 ].

Skills

Three RCTs assessed the effectiveness of VR training on skills [ 20 , 21 , 22 ]. One of them evaluated the effect of VR training on clinical skills in alcohol screening and intervention [ 20 ]. In this study, 102 health care professionals were randomly allocated to either a group receiving no training or a group receiving a VSP intervention. To evaluate the outcome, three standardized patients rated each participant using a checklist based on clinical criteria. The VSP intervention group demonstrated significantly improved posttest skills in alcohol screening and brief intervention compared to the control group, with moderate and small effect sizes, respectively.

Another RCT, including 67 medical college students, evaluated the effect of VR training on clinical skills by comparing a VSP intervention group with a video module group [ 21 ]. Outcomes were assessed in a psychiatric interview with a standardized patient, and the primary outcome was how frequently the students asked the standardized patient five questions about suicide risk. Effect sizes favoring the VSP intervention were minimal to small and did not reach statistical significance for any outcome.

One posttest-only RCT evaluated the effect of three training programs on skills in detecting and diagnosing major depressive disorder and PTSD [ 22 ]. The study included 30 family physicians, and the interventions consisted of two different VSPs designed to simulate training situations and one text-based program. Outcomes were assessed with a diagnostic form that participants completed after the intervention. Both groups receiving VR training showed significantly greater diagnostic accuracy for major depressive disorder than the text-based group, with large effect sizes. For PTSD, the intervention using a fixed avatar significantly improved diagnostic accuracy with a large effect size, whereas the intervention with a choice avatar showed a moderate to large effect size compared with the text-based program.

Summary: Three RCTs assessed the effectiveness of VR training on clinical skills [ 20 , 21 , 22 ], all using a VSP design. Two of the studies evaluated the effect of training using standardized patients with checklists, while the third measured skills with a diagnostic form completed by the participants. Two studies found a significant effect on skills [ 20 , 22 ], and both were assessed as having a high risk of bias. The third study, which found no significant effect of VR training on skills, was assessed as having some concerns for risk of bias [ 21 ].

Knowledge and skills

One RCT with 227 health care professionals assessed knowledge and skills as a combined outcome, comparing a VR training group with a waitlist control group [ 19 ]. The training intervention was a VP game designed for practicing knowledge and skills related to mental health and substance abuse disorders. Participants completed a self-report scale measuring perceived knowledge and skills before and after the training. Changes between presimulation and postsimulation scores were reported only within the treatment group (n = 117), in which the composite postsimulation score was significantly higher than the presimulation score, with a large effect size. The study was judged to have a high risk of bias in the domain of selection of the reported result.

Attitudes

One single group pretest–posttest study with 100 social work and nursing students assessed the effect of VSP training on attitudes towards individuals with substance abuse disorders [ 23 ]. To assess the effect of the training, participants completed an online pretest and posttest survey including questions from a substance abuse attitudes survey. This study found no significant effect of VR training on attitudes and was assessed as having a serious risk of bias.

Perceived competence

The same single group pretest–posttest study also assessed the effect of a VSP training intervention on perceived competence in screening, brief intervention, and referral to treatment in encounters with patients with substance abuse disorders [ 23 ]. A commonly accepted definition of competence is that it comprises integrated components of knowledge, skills, and attitudes that enable the successful execution of a professional task [ 32 ]. To assess the effect of the training, participants completed an online pretest and posttest survey including questions on perceived competence. The study findings demonstrated a significant increase in perceived competence following the VSP intervention. The risk of bias in this study was judged as serious.

This systematic review aimed to investigate the effectiveness of VR training on knowledge, skills, and attitudes that health professionals need to master in the assessment and treatment of mental health disorders. A narrative synthesis of eight included studies identified VR training interventions that varied in design and educational content. Although mixed results emerged, most studies reported improvements in knowledge and skills after VR training.

We found that all interventions used some type of VP design, predominantly VSP interventions. Although our review includes a limited number of studies, it is noteworthy that this distribution contrasts with a 2015 literature review on the use of ‘virtual patient’ in health care education [ 30 ], which identified IPS as the most frequent intervention. This difference may stem from our review’s focus on the mental health field, which may have different intervention needs than general medical education. A fundamental aspect of mental health education is training in interpersonal communication, clinical interviewing, and symptom assessment, which makes VSPs particularly appropriate. While VP games may be suitable for clinical reasoning in medical fields, offering the opportunity to perform technical medical procedures in a virtual environment, these designs may have limitations for skills training in mental health education. Notably, avatars in a VP game do not comprehend natural language and cannot engage in conversation. The continued advancement of conversational agents such as VSPs is therefore particularly compelling, and scholars consider them to hold the greatest potential for clinical skills training in mental health education [ 3 ]. VSPs equipped with AI dialogue capabilities are particularly valuable for repetitive practice of key skills such as interviewing and counseling [ 31 ], which are crucial in the assessment and treatment of mental health disorders. VSPs could also support training methods such as deliberate practice, which has gained attention in psychotherapy training in recent years [ 33 ] for its effectiveness in refining specific areas of performance through consistent repetition [ 34 ].

Within this evolving landscape, AI systems based on large language models (LLMs), such as ChatGPT, stand out as a promising innovation. Trained on extensive datasets comprising billions of words from a variety of sources, these models can generate and respond to text in a manner akin to human interaction [ 35 ]. The integration of LLMs into educational contexts shows promise, yet careful consideration and thorough evaluation of their limitations are essential [ 36 ]. One concern is that LLMs can generate inaccurate information, which is a challenge in healthcare education, where precision is crucial [ 37 ]. Furthermore, generative AI raises ethical questions, notably because training datasets may include unverified content from books and the internet, so their biases risk being perpetuated [ 38 ]. Developing strategies to mitigate these challenges is imperative to ensure that LLMs are used safely in healthcare education.

All interventions in our review were based on non-immersive desktop VR systems. This is somewhat surprising given the growing body of literature highlighting the impact of immersive VR technology in education, as exemplified by reviews such as that of Radianti et al. [ 39 ], and given the recent availability of affordable, high-quality head-mounted displays. Research has indicated that immersive learning based on head-mounted displays generally yields better learning outcomes than non-immersive approaches [ 40 ], making it an interesting research area for mental health care training and education. In the present review, studies of immersive interventions were excluded because of methodological concerns, paralleling findings of a systematic review on immersive VR in education [ 41 ] and suggesting that research in this field may still be at an early stage. Moreover, integrating immersive VR technology into mental health care education may face challenges associated with complex ethical and regulatory frameworks, including data privacy concerns exemplified by the integration of the Oculus VR headset with Facebook, which could restrict the implementation of this technology in healthcare settings. The training methodologies prioritized for skill development may also affect the use of immersive VR in mental health education. For example, integrating interactive VSPs into a fully immersive VR environment remains costly, potentially limiting the widespread adoption of immersive VR in mental health care, whereas 360-degree videos in immersive VR environments can be used for training purposes [ 42 ] at a significantly lower cost. Immersive VR offers promising opportunities for innovative training, but realizing its full potential in mental health care education requires broader research validation and the resolution of existing obstacles.

This review bears some resemblance to the 2024 systematic review by Jensen et al. on virtual patients in undergraduate psychiatry education [ 13 ], which found that virtual patients improved learning outcomes compared with traditional methods. However, those authors expanded the commonly used definition of virtual patient, which makes their results difficult to compare with the findings of the present review. A recognized challenge in understanding VR applications in health care training is that the literature on VR training for health care personnel uses ‘virtual patient’ broadly to describe a diverse range of VR interventions that vary significantly in technology and educational design [ 3 , 30 ]. For instance, reviews may group interventions using different VR systems and designs under a single label (virtual patient), or primary studies may use misleading or inadequately defined classifications for the virtual patient interventions they evaluate. Clarifying the similarities and differences among these interventions is vital to inform development and to enhance communication and understanding in educational contexts [ 43 ].

Strengths and limitations

To the best of our knowledge, this is the first systematic review to evaluate the effectiveness of VR training on knowledge, skills, and attitudes in health care professionals and students in assessing and treating mental health disorders. This review therefore provides valuable insights into the use of VR technology in training and education for mental health care. Another strength of this review is the comprehensive search strategy developed by a senior academic librarian at Inland Norway University of Applied Sciences (HINN) and the authors in collaboration with an adviser from KildeGruppen AS (a Norwegian media company). The search strategy was peer-reviewed by an academic librarian at HINN. Advisers from VRINN (an immersive learning cluster in Norway) and SIMInnlandet (a center for simulation in mental health care at Innlandet Hospital Trust) provided assistance in reviewing the VR systems of the studies, while the classification of the learning designs was conducted under the guidance of a VP scholar. This systematic review relies on an established and recognized classification of VR interventions for training health care personnel and may enhance understanding of the effectiveness of VR interventions designed for the training of mental health care personnel.

This review has some limitations. Because we aimed to measure the effect of the VR intervention alone rather than the effect of blended training designs, the selection of included studies was limited, and studies not covered in this review might have offered different insights. Blended learning designs, in which technology is combined with other forms of learning, have significant positive effects on learning outcomes [ 44 ]; by excluding them, we were unable to evaluate interventions that may be more effective in clinical settings. Further, by limiting the outcomes to knowledge, skills, and attitudes, we may have missed insights into other outcomes that are pivotal to competence acquisition.

Limitations in many of the included studies necessitate cautious interpretation of the review’s findings. Small sample sizes and weak designs in several studies, coupled with the use of non-validated outcome measures in some studies, diminish the robustness of the findings. Furthermore, the risk of bias assessment in this review indicates a predominantly high or serious risk of bias across most of the studies, regardless of their design. In addition, the heterogeneity of the studies in terms of study design, interventions, and outcome measures prevented us from conducting a meta-analysis.

Further research

Future research on the effectiveness of VR training for specific learning outcomes in assessing and treating mental health disorders should include more rigorous experimental studies with larger sample sizes. These studies should provide verifiable descriptions of the VR interventions and use validated tools to measure outcomes. Moreover, because much professional learning involves interactive and reflective practice, research on VR training would probably benefit from more in-depth study designs that evaluate not only the immediate learning outcomes of VR training but also the broader learning processes associated with it. Future research should also focus on immersive VR training applications and explore the integration of large language models to enhance interactive learning in mental health care. Finally, this review underscores the need for health education research involving VR to report findings using agreed terms and classifications, so as to provide a clearer and more comprehensive understanding of the research.

This systematic review investigated the effect of VR training interventions on knowledge, skills, and attitudes in the assessment and treatment of mental health disorders. The results suggest that VR training interventions can promote knowledge and skills acquisition. Further studies are needed to evaluate VR training interventions as a learning tool for mental health care providers. This review emphasizes the need to improve future study designs. Additionally, intervention studies of immersive VR applications are lacking in current research and should be a future area of focus.

Availability of data and materials

Detailed search strategies for each database are available in the DataverseNO repository, https://doi.org/10.18710/TI1E0O .

Abbreviations

VR: Virtual reality

CAVE: Cave Automatic Virtual Environment

RCT: Randomized controlled trial

NRS: Non-randomized study

VSP: Virtual standardized patient

IPS: Interactive patient scenario

VP: Virtual patient

PTSD: Post-traumatic stress disorder

SP: Standardized patient

AI: Artificial intelligence

HINN: Inland Norway University of Applied Sciences

PhD: Doctor of Philosophy

Frenk J, Chen L, Bhutta ZA, Cohen J, Crisp N, Evans T, et al. Health professionals for a new century: transforming education to strengthen health systems in an interdependent world. Lancet. 2010;376(9756):1923–58.


World Health Organization. eLearning for undergraduate health professional education: a systematic review informing a radical transformation of health workforce development. Geneva: World Health Organization; 2015.


Talbot T, Rizzo AS. Virtual human standardized patients for clinical training. In: Rizzo AS, Bouchard S, editors. Virtual reality for psychological and neurocognitive interventions. New York: Springer; 2019. p. 387–405.


Merriam-Webster dictionary. Springfield: Merriam-Webster Incorporated; c2024. Virtual reality. Available from: https://www.merriam-webster.com/dictionary/virtual%20reality . [cited 2024 Mar 24].

Winn W. A conceptual basis for educational applications of virtual reality. Technical Publication R-93–9. Seattle: Human Interface Technology Laboratory, University of Washington; 1993.

Bouchard S, Rizzo AS. Applications of virtual reality in clinical psychology and clinical cognitive neuroscience–an introduction. In: Rizzo AS, Bouchard S, editors. Virtual reality for psychological and neurocognitive interventions. New York: Springer; 2019. p. 1–13.

Waller D, Hodgson E. Sensory contributions to spatial knowledge of real and virtual environments. In: Steinicke F, Visell Y, Campos J, Lécuyer A, editors. Human walking in virtual environments: perception, technology, and applications. New York: Springer New York; 2013. p. 3–26. https://doi.org/10.1007/978-1-4419-8432-6_1 .

Choudhury N, Gélinas-Phaneuf N, Delorme S, Del Maestro R. Fundamentals of neurosurgery: virtual reality tasks for training and evaluation of technical skills. World Neurosurg. 2013;80(5):e9–19. https://doi.org/10.1016/j.wneu.2012.08.022 .

Gallagher AG, Ritter EM, Champion H, Higgins G, Fried MP, Moses G, et al. Virtual reality simulation for the operating room: proficiency-based training as a paradigm shift in surgical skills training. Ann Surg. 2005;241(2):364–72. https://doi.org/10.1097/01.sla.0000151982.85062.80 .

Kyaw BM, Saxena N, Posadzki P, Vseteckova J, Nikolaou CK, George PP, et al. Virtual reality for health professions education: systematic review and meta-analysis by the Digital Health Education Collaboration. J Med Internet Res. 2019;21(1):e12959. https://doi.org/10.2196/12959 .

Bracq M-S, Michinov E, Jannin P. Virtual reality simulation in nontechnical skills training for healthcare professionals: a systematic review. Simul Healthc. 2019;14(3):188–94. https://doi.org/10.1097/sih.0000000000000347 .

World Health Organization. Transforming and scaling up health professionals’ education and training: World Health Organization guidelines 2013. Geneva: World Health Organization; 2013. Available from: https://www.who.int/publications/i/item/transforming-and-scaling-up-health-professionals%E2%80%99-education-and-training . Accessed 15 Jan 2024.

Jensen RAA, Musaeus P, Pedersen K. Virtual patients in undergraduate psychiatry education: a systematic review and synthesis. Adv Health Sci Educ. 2024;29(1):329–47. https://doi.org/10.1007/s10459-023-10247-6 .

Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. Syst Rev. 2021;10(1):89. https://doi.org/10.1186/s13643-021-01626-4 .

Rethlefsen ML, Kirtley S, Waffenschmidt S, Ayala AP, Moher D, Page MJ, et al. PRISMA-S: an extension to the PRISMA statement for reporting literature searches in systematic reviews. Syst Rev. 2021;10(1):39. https://doi.org/10.1186/s13643-020-01542-z .

Sandieson RW, Kirkpatrick LC, Sandieson RM, Zimmerman W. Harnessing the power of education research databases with the pearl-harvesting methodological framework for information retrieval. J Spec Educ. 2010;44(3):161–75. https://doi.org/10.1177/0022466909349144 .

Steen CW, Söderström K, Stensrud B, Nylund IB, Siqveland J. Replication data for: the effectiveness of virtual reality training on knowledge, skills and attitudes of health care professionals and students in assessing and treating mental health disorders: a systematic review. Inland Norway University of Applied Sciences. DataverseNO; 2024. V1. https://doi.org/10.18710/TI1E0O .

Ouzzani M, Hammady H, Fedorowicz Z, Elmagarmid A. Rayyan—a web and mobile app for systematic reviews. Syst Rev. 2016;5(1):210. https://doi.org/10.1186/s13643-016-0384-4 .

Albright G, Bryan C, Adam C, McMillan J, Shockley K. Using virtual patient simulations to prepare primary health care professionals to conduct substance use and mental health screening and brief intervention. J Am Psychiatr Nurses Assoc. 2018;24(3):247–59. https://doi.org/10.1177/1078390317719321 .

Fleming M, Olsen D, Stathes H, Boteler L, Grossberg P, Pfeifer J, et al. Virtual reality skills training for health care professionals in alcohol screening and brief intervention. J Am Board Fam Med. 2009;22(4):387–98. https://doi.org/10.3122/jabfm.2009.04.080208 .

Foster A, Chaudhary N, Murphy J, Lok B, Waller J, Buckley PF. The use of simulation to teach suicide risk assessment to health profession trainees—rationale, methodology, and a proof of concept demonstration with a virtual patient. Acad Psychiatry. 2015;39:620–9. https://doi.org/10.1007/s40596-014-0185-9 .

Satter R. Diagnosing mental health disorders in primary care: evaluation of a new training tool [dissertation]. Tempe (AZ): Arizona State University; 2012.

Hitchcock LI, King DM, Johnson K, Cohen H, McPherson TL. Learning outcomes for adolescent SBIRT simulation training in social work and nursing education. J Soc Work Pract Addict. 2019;19(1/2):47–56. https://doi.org/10.1080/1533256X.2019.1591781 .

Liu W. Virtual simulation in undergraduate nursing education: effects on students’ correct recognition of and causative beliefs about mental disorders. Comput Inform Nurs. 2021;39(11):616–26. https://doi.org/10.1097/CIN.0000000000000745 .

Matsumura Y, Shinno H, Mori T, Nakamura Y. Simulating clinical psychiatry for medical students: a comprehensive clinic simulator with virtual patients and an electronic medical record system. Acad Psychiatry. 2018;42(5):613–21. https://doi.org/10.1007/s40596-017-0860-8 .

Pantziaras I, Fors U, Ekblad S. Training with virtual patients in transcultural psychiatry: Do the learners actually learn? J Med Internet Res. 2015;17(2):e46. https://doi.org/10.2196/jmir.3497 .

Wilson DB. Practical meta-analysis effect size calculator [Online calculator]. n.d. https://campbellcollaboration.org/research-resources/effect-size-calculator.html . Accessed 08 March 2024.

Sterne JA, Savović J, Page MJ, Elbers RG, Blencowe NS, Boutron I, et al. RoB 2: a revised tool for assessing risk of bias in randomised trials. BMJ. 2019;366:l4898.

Sterne JA, Hernán MA, Reeves BC, Savović J, Berkman ND, Viswanathan M, et al. ROBINS-I: a tool for assessing risk of bias in non-randomised studies of interventions. BMJ. 2016;355:i4919. https://doi.org/10.1136/bmj.i4919 .

Kononowicz AA, Zary N, Edelbring S, Corral J, Hege I. Virtual patients - what are we talking about? A framework to classify the meanings of the term in healthcare education. BMC Med Educ. 2015;15(1):11. https://doi.org/10.1186/s12909-015-0296-3 .

Talbot TB, Sagae K, John B, Rizzo AA. Sorting out the virtual patient: how to exploit artificial intelligence, game technology and sound educational practices to create engaging role-playing simulations. Int J Gaming Comput-Mediat Simul. 2012;4(3):1–19.

Baartman LKJ, de Bruijn E. Integrating knowledge, skills and attitudes: conceptualising learning processes towards vocational competence. Educ Res Rev. 2011;6(2):125–34. https://doi.org/10.1016/j.edurev.2011.03.001 .

Mahon D. A scoping review of deliberate practice in the acquisition of therapeutic skills and practices. Couns Psychother Res. 2023;23(4):965–81. https://doi.org/10.1002/capr.12601 .

Ericsson KA, Lehmann AC. Expert and exceptional performance: evidence of maximal adaptation to task constraints. Annu Rev Psychol. 1996;47(1):273–305.

Roumeliotis KI, Tselikas ND. ChatGPT and Open-AI models: a preliminary review. Future Internet. 2023;15(6):192. https://doi.org/10.3390/fi15060192 .

Kasneci E, Sessler K, Küchemann S, Bannert M, Dementieva D, Fischer F, et al. ChatGPT for good? On opportunities and challenges of large language models for education. Learn Individ Differ. 2023;103:102274. https://doi.org/10.1016/j.lindif.2023.102274 .

Thirunavukarasu AJ, Ting DSJ, Elangovan K, Gutierrez L, Tan TF, Ting DSW. Large language models in medicine. Nat Med. 2023;29(8):1930–40. https://doi.org/10.1038/s41591-023-02448-8 .

Touvron H, Lavril T, Izacard G, Martinet X, Lachaux M-A, Lacroix T, et al. LLaMA: open and efficient foundation language models. arXiv. 2023;2302.13971. https://doi.org/10.48550/arxiv.2302.13971 .

Radianti J, Majchrzak TA, Fromm J, Wohlgenannt I. A systematic review of immersive virtual reality applications for higher education: design elements, lessons learned, and research agenda. Comput Educ. 2020;147:103778. https://doi.org/10.1016/j.compedu.2019.103778 .

Wu B, Yu X, Gu X. Effectiveness of immersive virtual reality using head-mounted displays on learning performance: a meta-analysis. Br J Educ Technol. 2020;51(6):1991–2005. https://doi.org/10.1111/bjet.13023 .

Di Natale AF, Repetto C, Riva G, Villani D. Immersive virtual reality in K-12 and higher education: a 10-year systematic review of empirical research. Br J Educ Technol. 2020;51(6):2006–33. https://doi.org/10.1111/bjet.13030 .

Haugan S, Kværnø E, Sandaker J, Hustad JL, Thordarson GO. Playful learning with VR-SIMI model: the use of 360-video as a learning tool for nursing students in a psychiatric simulation setting. In: Akselbo I, Aune I, editors. How can we use simulation to improve competencies in nursing? Cham: Springer International Publishing; 2023. p. 103–16. https://doi.org/10.1007/978-3-031-10399-5_9 .

Huwendiek S, de Leng BA, Zary N, Fischer MR, Ruiz JG, Ellaway R. Towards a typology of virtual patients. Med Teach. 2009;31(8):743–8. https://doi.org/10.1080/01421590903124708 .

Ødegaard NB, Myrhaug HT, Dahl-Michelsen T, Røe Y. Digital learning designs in physiotherapy education: a systematic review and meta-analysis. BMC Med Educ. 2021;21(1):48. https://doi.org/10.1186/s12909-020-02483-w .


Acknowledgements

The authors thank Mole Meyer, adviser at SIMInnlandet, Innlandet Hospital Trust, and Keith Mellingen, manager at VRINN, for their assistance with the categorization and classification of VR interventions, and Associate Professor Inga Hege at the Paracelsus Medical University in Salzburg for valuable contributions to the final classification of the interventions. The authors would also like to thank Håvard Røste from the media company KildeGruppen AS for assistance with the search strategy; Academic Librarian Elin Opheim at the Inland Norway University of Applied Sciences for valuable peer review of the search strategy; and the Library at the Inland Norway University of Applied Sciences for their support. Additionally, we acknowledge the assistance provided by OpenAI’s ChatGPT for support with translations and language refinement.

Open access funding provided by Inland Norway University of Applied Sciences. The study forms part of a collaborative PhD project funded by the South-Eastern Norway Regional Health Authority through Innlandet Hospital Trust and the Inland Norway University of Applied Sciences.

Author information

Authors and affiliations

Mental Health Department, Innlandet Hospital Trust, P.B 104, Brumunddal, 2381, Norway

Cathrine W. Steen & Kerstin Söderström

Inland Norway University of Applied Sciences, P.B. 400, Elverum, 2418, Norway

Cathrine W. Steen, Kerstin Söderström & Inger Beate Nylund

Norwegian National Advisory Unit On Concurrent Substance Abuse and Mental Health Disorders, Innlandet Hospital Trust, P.B 104, Brumunddal, 2381, Norway

Bjørn Stensrud

Akershus University Hospital, P.B 1000, Lørenskog, 1478, Norway

Johan Siqveland

National Centre for Suicide Research and Prevention, Oslo, 0372, Norway


Contributions

CWS, KS, BS, and JS collaboratively designed the study. CWS and JS collected and analysed the data and were primarily responsible for writing the manuscript text. All authors contributed to the development of the search strategy. IBN conducted the literature searches and authored the chapter on the search strategy in the manuscript. All authors reviewed, gave feedback, and granted their final approval of the manuscript.

Corresponding author

Correspondence to Cathrine W. Steen.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1: Table 2

Effects of VR training in the included studies: Randomized controlled trials (RCTs) and non-randomized studies (NRSs).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ . The Creative Commons Public Domain Dedication waiver ( http://creativecommons.org/publicdomain/zero/1.0/ ) applies to the data made available in this article, unless otherwise stated in a credit line to the data.


About this article

Cite this article

Steen, C.W., Söderström, K., Stensrud, B. et al. The effectiveness of virtual reality training on knowledge, skills and attitudes of health care professionals and students in assessing and treating mental health disorders: a systematic review. BMC Med Educ 24 , 480 (2024). https://doi.org/10.1186/s12909-024-05423-0


Received : 19 January 2024

Accepted : 12 April 2024

Published : 01 May 2024

DOI : https://doi.org/10.1186/s12909-024-05423-0


- Health care professionals

- Health care students

- Virtual reality

- Mental health

- Clinical skills

- Systematic review

BMC Medical Education

ISSN: 1472-6920


- Open access

- Published: 17 June 2024

The effects of simulation-based education on undergraduate nursing students' competences: a multicenter randomized controlled trial

Lai Kun Tong¹, Yue Yi Li¹, Mio Leng Au¹, Wai I. Ng¹, Si Chen Wang¹, Yongbing Liu², Yi Shen³, Liqiang Zhong⁴ & Xichenhui Qiu⁵

BMC Nursing volume 23, Article number: 400 (2024)

Simulation-based education has been shown to have positive effects in nursing education. Although many studies have examined these effects, most were conducted at a single institution, used nonrandomized designs and small samples, and relied on subjective evaluations of outcomes. The purpose of this multicenter randomized controlled trial was to evaluate the effects of high-fidelity simulation, computer-based simulation, high-fidelity simulation combined with computer-based simulation, and case study on undergraduate nursing students.

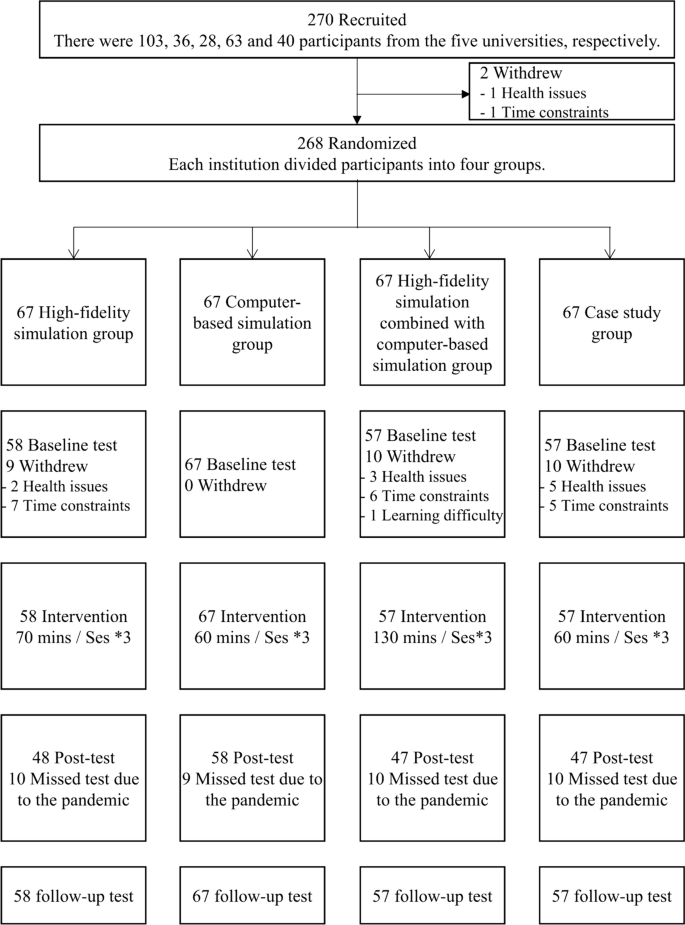

A total of 270 nursing students were recruited from five universities in China. At each institution, participants were randomly divided into four groups: the high-fidelity simulation group, the computer-based simulation group, the high-fidelity simulation combined with computer-based simulation group, and the case study group. In total, 239 participants completed the intervention and evaluation: 58, 67, 57, and 57 in the respective groups. Data were collected at three time points: before the intervention, immediately after the intervention, and three months after the intervention.

The demographic data and baseline evaluation indices did not differ significantly among the four groups. No statistically significant differences were observed among the four methods in improving knowledge, interprofessional collaboration, critical thinking, caring, or interest in learning. Skill improvement differed significantly among the groups immediately after the intervention ( p = 0.020), but not after three months ( p = 0.139). At the end of the intervention, skill improvement in the computer-based simulation group was significantly lower than in the high-fidelity simulation group ( p = 0.048) and the high-fidelity simulation combined with computer-based simulation group ( p = 0.020).

Conclusions

Nursing students benefit equally from the four methods in developing their knowledge, interprofessional collaboration, critical thinking, caring, and interest in learning, both immediately and over time. High-fidelity simulation, alone or combined with computer-based simulation, improves skills more effectively than computer-based simulation in the short term. Nursing educators can select the most suitable teaching method for the intended learning outcomes, depending on their specific circumstances.

Trial registration

This clinical trial was registered at the Chinese Clinical Trial Registry (clinical trial number: ChiCTR2400084880, date of the registration: 27/05/2024).


Introduction

Nursing students face many challenges in the clinical setting because of the gap between theory and practice, the lack of resources, and unfamiliarity with the medical environment [ 1 ]. Nursing education therefore needs innovative teaching methods that are more closely related to the clinical environment. Simulation-based education is an effective teaching method for nursing students [ 2 ]. It provides an immersive clinical environment in which students can practice skills and gain experience in a safe, controlled setting [ 3 ]. This approach not only supports the development of various competencies [ 2 , 4 ], including knowledge, skill, interprofessional collaboration, critical thinking, caring, and interest in learning, but also enables students to apply learned concepts to complex and challenging situations [ 5 ].

Manikin-based and computer-based simulations are commonly used in nursing education. Manikin-based simulation uses a manikin to mimic a patient’s characteristics, such as heart and lung sounds [ 6 ]. Computer-based simulation models real-life processes solely on a computer, usually with a keyboard and monitor for input and output [ 6 ]. According to a recent meta-analysis, manikin-based simulation improves nursing students' knowledge acquisition more than computer-based simulation does, but the two do not differ significantly in confidence or satisfaction with learning [ 4 ].

Depending on its level of fidelity, manikin-based simulation can be categorized as low, medium, or high fidelity [ 7 ]. High-fidelity simulation has become increasingly popular because it can replace part of clinical placement without compromising the quality of nursing education [ 8 ]. However, compared with other teaching methods, it involves higher equipment and labor costs [ 9 ], so maximizing its impact is essential for cost-effectiveness. Mixed learning has gained popularity across higher education in recent years as a way to improve learning outcomes [ 10 ], and the most widely used mixed learning approach for simulation education in nursing is high-fidelity simulation combined with computer-based simulation. Only a few studies have examined the effect of this combination on nursing students, and these are either pre-post comparisons without control groups [ 11 ] or quasi-experimental studies without randomization [ 12 ]. A randomized controlled trial is needed to better understand the effects of combining high-fidelity and computer-based simulation.

Besides enhancing effectiveness, cost-effectiveness can be optimized by reducing costs. Case study, which requires no additional equipment, offers a relatively low-cost alternative. A traditional case study provides all pertinent information, whereas an unfolding case study purposefully withholds information [ 13 ]. Unfolding case studies have been shown to foster critical thinking more effectively than traditional case studies [ 14 ]. Although the unfolding case study is regarded as an innovative and inexpensive teaching method, little research has compared it with other simulation-based teaching methods, and further study is needed to address this knowledge gap.

An umbrella review highlights that the existing literature on the learning outcomes of simulation-based education focuses predominantly on knowledge and skills, with limited attention to other core competencies such as interprofessional collaboration and caring [ 15 ]. Future research should therefore evaluate a broader range of learning outcomes.

This multicenter randomized controlled trial aimed to assess the effectiveness of high-fidelity simulation, computer-based simulation, high-fidelity simulation combined with computer-based simulation, and case study on nursing students’ knowledge, skill, interprofessional collaboration, critical thinking, caring, and interest in learning.

Study design

A multicenter randomized controlled trial was conducted between March 2022 and May 2023 in China. The study conforms to the CONSORT guidelines. This clinical trial was registered at the Chinese Clinical Trial Registry (clinical trial number: ChiCTR2400084880, date of the registration: 27/05/2024).

Participants and setting

Participants were recruited from five universities in China, two private and three public. Four of the five universities were each equipped with two high-fidelity simulation laboratories: three of these had laboratories simulating an intensive care unit ward and a delivery room, while the fourth had two laboratories simulating general wards. The fifth university had one high-fidelity simulation laboratory designed to simulate a general ward. Three universities used Laerdal patient simulators in their laboratories, and the other two used Gaumard patient simulators.

A recruitment poster giving the time and location of a project briefing was posted on the school bulletin board. At the designated time and location, the research team briefed students on the project, giving them the opportunity to ask questions and learn more about it.

The inclusion criteria were: 1) enrollment in an undergraduate nursing program; 2) full-time student status; 3) completion of courses in Anatomy and Physiology, Pathophysiology, Pharmacology, Health Assessment, Basic Nursing, and Medical and Surgical Nursing (Respiratory System); 4) proficiency in reading and writing Chinese; and 5) voluntary participation. Students were excluded if they 1) already held a degree or diploma or 2) were retaking the course.

The sample size was calculated with G*Power 3.1 using an F test (ANOVA: repeated measures, between factors). The assumptions were a 5% significance level, 80% power, four groups, three measurements, and a correlation of 0.50 between the repeated measurements. Compared with other teaching methods, high-fidelity simulation has shown a medium effect size (d = 0.49 for knowledge, d = 0.50 for performance) [ 16 ]. The calculation therefore used an effect size of f = 0.25, which corresponds to a medium effect of d ≈ 0.50 (f = d/2 for two groups). Under these assumptions, the required total sample size was 124, or 31 participants per group.
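As a rough check on the reported sample size, the quantities G*Power works with for this design can be written out. This sketch assumes that the effect size of 0.25 is Cohen's f, that G*Power's noncentrality parameter for a between-subjects factor in a repeated-measures design is λ = f²·m·N / (1 + (m − 1)ρ), with m = 3 measurements and ρ = 0.50, and that k = 4 groups. Power is then the probability that a noncentral F variate with these degrees of freedom and this λ exceeds the α = 0.05 critical value; G*Power searches for the smallest N at which that probability reaches 0.80.

```latex
\begin{aligned}
f &= \frac{d}{2} = \frac{0.50}{2} = 0.25 \qquad \text{(two equal groups)}\\
\lambda &= \frac{f^{2}\, m\, N}{1 + (m-1)\rho}
        = \frac{0.25^{2} \times 3 \times 124}{1 + (3-1) \times 0.5}
        = \frac{23.25}{2} = 11.625\\
\mathrm{df}_1 &= k - 1 = 3, \qquad \mathrm{df}_2 = N - k = 124 - 4 = 120
\end{aligned}
```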

Randomization and blinding

Because teaching schedules differed across the five universities, allocation was performed separately at each institution, where participants were divided into four groups: the high-fidelity simulation group, the computer-based simulation group, the high-fidelity simulation combined with computer-based simulation group, and the case study group. Allocation was carried out by study team members who were not involved in the intervention or evaluation. Each participant was assigned a unique random number between 0 and 100 using Microsoft Excel, and participants were then divided by the quartile of their number: those in the lowest quartile, the second quartile, the third quartile, and the highest quartile were assigned to the high-fidelity simulation group, the computer-based simulation group, the high-fidelity simulation combined with computer-based simulation group, and the case study group, respectively. Participants could not be blinded because the four teaching methods differed substantially; however, outcome assessment and data analysis were blinded. Each participant was assigned a unique identifier to maintain anonymity throughout the study.
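The quartile-based allocation can be sketched in Python. This is a minimal illustration, not the Excel procedure the study team used: it assumes one call per institution, draws unique integers from 0 to 100 with Python's `random` module, and splits participants by rank into four near-equal quarters. The function name, group labels, seed, and participant identifiers are hypothetical.

```python
import random
from collections import Counter

# Groups in the order of the quartile mapping (lowest quartile first).
GROUPS = (
    "high-fidelity simulation",
    "computer-based simulation",
    "high-fidelity + computer-based simulation",
    "case study",
)


def allocate_by_quartile(participant_ids, seed=None):
    """Give each participant a unique random integer in 0..100, then split by quartile.

    `participant_ids` is a hypothetical list of identifiers for ONE institution;
    the allocation is run separately at each university.
    """
    n = len(participant_ids)
    if n > 101:
        raise ValueError("0..100 only provides 101 distinct numbers")
    rng = random.Random(seed)
    numbers = rng.sample(range(101), n)  # sampling without replacement -> no duplicates
    # Rank participants from the lowest to the highest random number.
    ranked = sorted(zip(numbers, participant_ids))
    allocation = {}
    for rank, (_, pid) in enumerate(ranked):
        quartile = rank * 4 // n  # 0 = lowest quarter ... 3 = highest quarter
        allocation[pid] = GROUPS[quartile]
    return allocation


if __name__ == "__main__":
    site_a = [f"A{i:02d}" for i in range(54)]  # hypothetical site with 54 students
    allocation = allocate_by_quartile(site_a, seed=2022)
    print(Counter(allocation.values()))
```

Splitting by rank rather than by fixed cut-points (25, 50, 75) keeps the four groups within one participant of each other at every site, whatever random numbers happen to be drawn.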

Baseline test

Baseline testing began after recruitment had ended, so its timing varied between universities. The baseline test was the same for all participants and covered general characteristics, knowledge, skills, interprofessional collaboration, critical thinking, caring, and interest in learning. Skills were assessed by trained assessors, and all other outcomes were assessed with an online survey.

Intervention

All four groups were taught the same three cases, in the following order: worsening asthma, drug allergy, and ventricular fibrillation. These cases represent commonly encountered situations requiring emergency treatment, and training with them was intended to improve students' ability to handle emergencies in clinical settings. The cases used in simulation-based education must be valid for the teaching to be effective [ 17 ]. The cases in this study were taken from vSim® for Nursing | Lippincott Nursing Education, developed by Wolters Kluwer Health (Lippincott), Laerdal Medical, and the National League for Nursing, which supports their validity. Participants received all materials, including learning outcomes, theoretical learning materials, and case materials (medical history and nursing documents), at least one day before teaching. The lesson plans were drafted by three members of the research team; all instructors involved in teaching then met to discuss them, and the plans were revised based on their feedback until consensus was reached. Table 1 summarizes how each case was taught in the different intervention groups. All instructors had at least five years of teaching experience and a master's degree or higher.

Posttest and follow-up test

The posttest was administered within one week after the intervention, using the same items as the baseline test. The follow-up test was administered three months after the intervention.

General characteristics

The general characteristics of the participants included gender, age, and previous semester grade.

- Knowledge

Knowledge was measured with five multiple-choice items developed for this study and derived from the National Nurse Licensing Examination [ 18 ]. Each correct answer scored one point, for a maximum score of five. The questionnaire showed high content validity (CVI = 1.00) and good reliability (Kuder-Richardson 20 = 0.746).
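The Kuder-Richardson 20 coefficient applies to dichotomously scored items such as these multiple-choice questions. A minimal Python sketch of the standard formula, KR-20 = k/(k − 1) · (1 − Σ pᵢqᵢ / σ²ₜ), follows, where pᵢ is the proportion answering item i correctly, qᵢ = 1 − pᵢ, and σ²ₜ is the variance of the total scores. The function name and the toy response matrix are hypothetical, and the total-score variance uses the population form so that it matches the pᵢqᵢ item variances.

```python
from statistics import pvariance


def kr20(responses):
    """Kuder-Richardson 20 for dichotomous (0/1) items.

    `responses` holds one row per student and one 0/1 entry per item.
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("KR-20 needs at least two items")
    n = len(responses)
    totals = [sum(row) for row in responses]
    # Sum of item variances p*q, where p is the proportion answering correctly.
    sum_pq = 0.0
    for item in range(k):
        p = sum(row[item] for row in responses) / n
        sum_pq += p * (1 - p)
    total_var = pvariance(totals)  # population variance, consistent with p*q
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return (k / (k - 1)) * (1 - sum_pq / total_var)


# Hypothetical answers from six students on five items (1 = correct).
toy = [
    [1, 1, 1, 1, 1],
    [1, 1, 1, 1, 0],
    [1, 1, 1, 0, 0],
    [1, 1, 0, 0, 0],
    [1, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
]
print(round(kr20(toy), 3))  # 0.833
```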

- Skills

The Creighton Competency Evaluation Instrument (CCEI) assesses clinical skills in a simulated environment by rating 23 general nursing behaviors. It was originally developed by Todd et al. [ 19 ] and later modified by Hayden et al. [ 20 ]. The Chinese version of the CCEI has good reliability (Cronbach’s α = 0.94) and validity (CVI = 0.98) [ 21 ]. The CCEI was scored by nurses with master’s degrees who had been trained by the research team and were blinded to group allocation. One dedicated rater scored all participants at each university, and raters did not rotate among participants. Kendall's W for the raters' scores was 0.832, indicating high interrater agreement. All participants were tested on a high-fidelity simulator, with each test lasting ten minutes. Because the skills test was performed by a single student without debriefing, and the nursing procedures did not depend on laboratory results, four items ("Delegates Appropriately," "Reflects on Clinical Experience," "Interprets Lab Results," and "Reflects on Potential Hazards and Errors") were excluded from the assessment. Total scores therefore ranged from 0 to 19, with higher scores indicating a higher level of skill.
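Kendall's W summarizes agreement among raters who rank the same set of performances. The text does not describe which performances the raters scored in common, so this minimal Python sketch simply assumes a layout in which every rater scored the same n performances. It converts each rater's scores to ranks (ties get the average rank) and applies W = 12S / (m²(n³ − n)), where S is the sum of squared deviations of the rank sums from their mean. No tie correction is applied, so W is slightly understated when raters give tied scores. Function names and data are hypothetical.

```python
def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared_rank = (i + j) / 2 + 1  # positions are 0-based, ranks are 1-based
        for pos in range(i, j + 1):
            ranks[order[pos]] = shared_rank
        i = j + 1
    return ranks


def kendalls_w(scores_by_rater):
    """Kendall's W (no tie correction) for m raters each scoring the same n performances."""
    m = len(scores_by_rater)
    n = len(scores_by_rater[0])
    if m < 2 or n < 2 or any(len(s) != n for s in scores_by_rater):
        raise ValueError("need >= 2 raters scoring the same >= 2 performances")
    ranked = [average_ranks(scores) for scores in scores_by_rater]
    rank_sums = [sum(r[i] for r in ranked) for i in range(n)]
    mean_rank_sum = m * (n + 1) / 2  # mean of the rank sums
    s = sum((total - mean_rank_sum) ** 2 for total in rank_sums)
    return 12 * s / (m ** 2 * (n ** 3 - n))


# Hypothetical CCEI totals (0-19) given by three raters to the same five performances.
ratings = [
    [12, 15, 9, 18, 14],
    [11, 16, 10, 17, 13],
    [12, 14, 8, 19, 15],
]
print(round(kendalls_w(ratings), 3))
```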

- Interprofessional collaboration

The Assessment of the Interprofessional Team Collaboration Scale for Students (AITCS-II Student) was used to assess interprofessional collaboration. It consists of 17 items rated on a 5-point Likert scale (1 = never, 5 = always), for a total score ranging from 17 to 85 [ 22 ]. The Chinese version of the AITCS-II has good reliability (Cronbach’s α = 0.961) and validity [ 23 ].
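Cronbach's α, the reliability coefficient reported for this and the following Likert-type instruments, compares the sum of the item variances with the variance of the total score: α = k/(k − 1) · (1 − Σ σᵢ² / σₜ²). A minimal Python sketch, assuming a complete respondents × items matrix with no missing ratings; the function name and data are hypothetical.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a respondents x items matrix of ratings."""
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Sample variance of each item across respondents.
    item_vars = [variance([row[i] for row in responses]) for i in range(k)]
    # Sample variance of the respondents' total scores.
    total_var = variance([sum(row) for row in responses])
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical ratings from four respondents on three 5-point items.
print(round(cronbach_alpha([[4, 5, 4], [2, 3, 2], [5, 5, 4], [3, 3, 3]]), 3))
```

Because sample variances appear in both the numerator and the denominator, using population variances throughout would give the same α.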

- Critical thinking

Critical thinking was measured with Yoon's Critical Thinking Disposition Scale (YCTD), whose 27 items are rated on a five-point Likert scale (1 to 5), giving total scores from 27 to 135 [ 24 ]. Higher scores indicate a stronger critical thinking disposition. The YCTD has good reliability (Cronbach’s α = 0.948) and validity when applied to Chinese nursing students [ 25 ].

- Caring

Caring was assessed with the Caring Dimensions Inventory (CDI), whose 25 items are rated on a five-point Likert scale, giving total scores from 25 to 125 [ 26 ]. Higher CDI scores indicate a greater level of caring. The Chinese version of the CDI has good reliability (Cronbach’s α = 0.97) and validity [ 27 ].

- Interest in learning

The Study Interest Questionnaire (SIQ) was used to assess interest in learning. Its 18 items are rated on a four-point Likert scale, giving total scores from 18 to 72; higher scores indicate greater interest in the field of study [ 28 ]. The SIQ has good reliability (Cronbach’s α = 0.90) and validity when applied to Chinese nursing students [ 29 ].
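The reported score ranges follow from summing item ratings: a scale with n items rated from a to b has totals from n·a to n·b. A minimal Python sketch of that scoring, with item counts inferred from the reported ranges (minimum total divided by the lowest rating). It assumes all items are summed directly; reverse-keyed items, if any of these instruments have them, are not modelled. Names are hypothetical.

```python
# (items, lowest rating, highest rating); item counts inferred from the reported ranges.
SCALES = {
    "AITCS-II Student": (17, 1, 5),
    "YCTD": (27, 1, 5),
    "CDI": (25, 1, 5),
    "SIQ": (18, 1, 4),
}


def score_range(n_items, low, high):
    """Smallest and largest possible total for a summed Likert scale."""
    return n_items * low, n_items * high


def total_score(ratings, n_items, low, high):
    """Sum one respondent's ratings after checking item count and rating bounds."""
    if len(ratings) != n_items:
        raise ValueError(f"expected {n_items} ratings, got {len(ratings)}")
    if any(not low <= r <= high for r in ratings):
        raise ValueError(f"ratings must lie between {low} and {high}")
    return sum(ratings)


for name, spec in SCALES.items():
    print(name, score_range(*spec))
```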

Ethical considerations

Ethical approval was granted by the first author's institution (ethical approval number: REC-2021.801). Written informed consent was obtained from all participants, who could withdraw at any time for any reason without penalty. Participants received guidelines on safety measures and precautions during the intervention, and study coordinators closely monitored laboratory and simulation sessions so that concerns or potential harm could be addressed promptly.

Data analysis

Descriptive statistics were used to describe participant and baseline characteristics. Continuous variables are presented as means and standard deviations, and categorical variables as frequencies and percentages. Quantile–quantile (Q–Q) plots indicated that the data were approximately normally distributed, and Levene's test showed no evidence of unequal variances for knowledge, skill, interprofessional collaboration, critical thinking, caring, and interest in learning (p = 0.171, 0.249, 0.986, 0.634, 0.992, and 0.407, respectively). Baseline characteristics of the four groups were compared using one-way analysis of variance (ANOVA). Knowledge, skill, interprofessional collaboration, critical thinking, caring, and interest in learning were assessed at baseline, immediately after the intervention, and three months after the intervention. Changes from baseline were calculated for the postintervention and three-month follow-up assessments, and these changes were compared among the four groups using one-way ANOVA. Cohen's d effect sizes were computed for the between-group comparisons (small = 0.2; medium = 0.5; large = 0.8). Missing data were not imputed. The analysis was conducted in jamovi 2.3.28 ( https://www.jamovi.org/ ), a statistical package built on the R language with a graphical user interface. Statistical significance was set at a two-sided p < 0.05.
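The core steps of this analysis (change scores, a one-way ANOVA on those changes, and Cohen's d between two groups) can be sketched in pure Python. This is not jamovi's implementation: it assumes missing values are coded as `None` and the affected participant is dropped rather than imputed, uses the pooled-standard-deviation form of Cohen's d (the study does not state its denominator), and stops at the F statistic, since a p-value needs the F distribution (for example `scipy.stats.f.sf(F, df_between, df_within)` if SciPy is available). Function names are hypothetical.

```python
from statistics import mean, stdev


def change_scores(baseline, follow_up):
    """Follow-up minus baseline; pairs with a missing value (None) are dropped, not imputed."""
    if len(baseline) != len(follow_up):
        raise ValueError("baseline and follow-up must be paired")
    return [after - before for before, after in zip(baseline, follow_up)
            if before is not None and after is not None]


def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of groups of change scores."""
    values = [x for g in groups for x in g]
    grand_mean = mean(values)
    group_means = [mean(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    df_between = len(groups) - 1
    df_within = len(values) - len(groups)
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within


def cohens_d(a, b):
    """Cohen's d for two independent groups using the pooled standard deviation."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * stdev(a) ** 2 + (nb - 1) * stdev(b) ** 2) / (na + nb - 2)
    return (mean(a) - mean(b)) / pooled_var ** 0.5
```

Comparing change scores with a one-way ANOVA, as described above, reduces each participant's pair of measurements to a single number before the between-group test.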

Participants

A total of 270 participants were initially recruited from the five universities. Thirty-one participants withdrew (attrition rate 11.5%), so the final analysis included data from 239 participants who completed the intervention: 58 in the high-fidelity simulation group, 67 in the computer-based simulation group, 57 in the high-fidelity simulation combined with computer-based simulation group, and 57 in the case study group (Fig. 1 ). Participant demographics and baseline characteristics are shown in Table 2 ; none differed significantly among the groups.
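The participant counts can be cross-checked with a few lines of Python. The figures are those reported in this section, and the per-group minimum of 31 comes from the sample-size calculation in the Methods; the variable names are illustrative only.

```python
recruited = 270
withdrew = 31
completed = {
    "high-fidelity simulation": 58,
    "computer-based simulation": 67,
    "high-fidelity + computer-based simulation": 57,
    "case study": 57,
}
required_per_group = 31  # from the G*Power calculation

analysed = recruited - withdrew
attrition_rate = withdrew / recruited

print(analysed, sum(completed.values()))  # both should be 239
print(f"attrition: {attrition_rate:.1%}")  # 31 / 270 rounds to 11.5%
print(all(n >= required_per_group for n in completed.values()))
```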

Study subject disposition flow chart

Efficacy outcomes

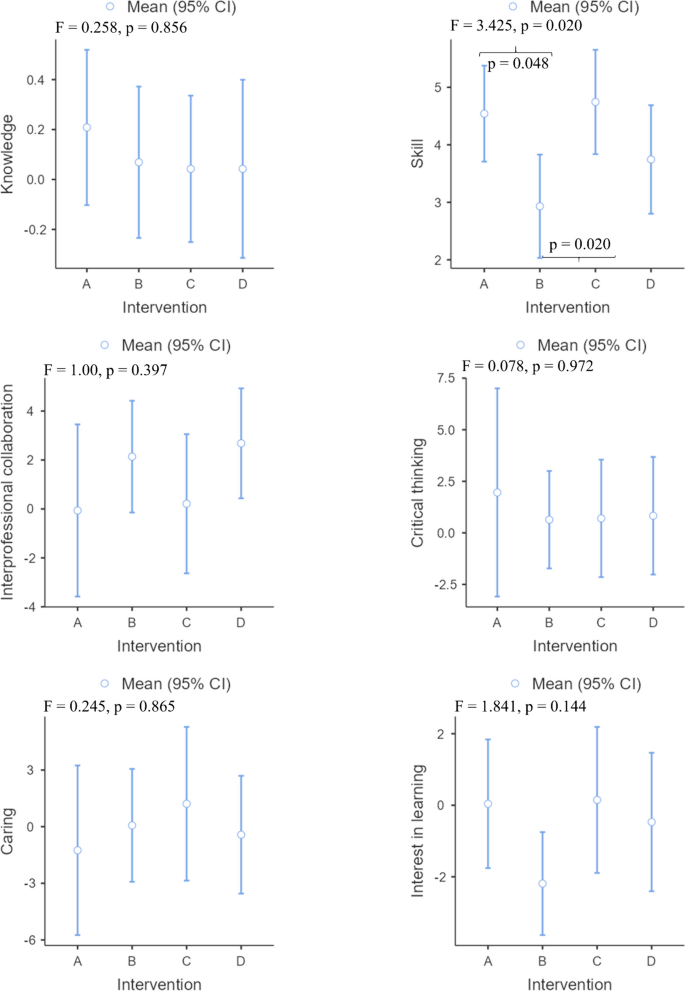

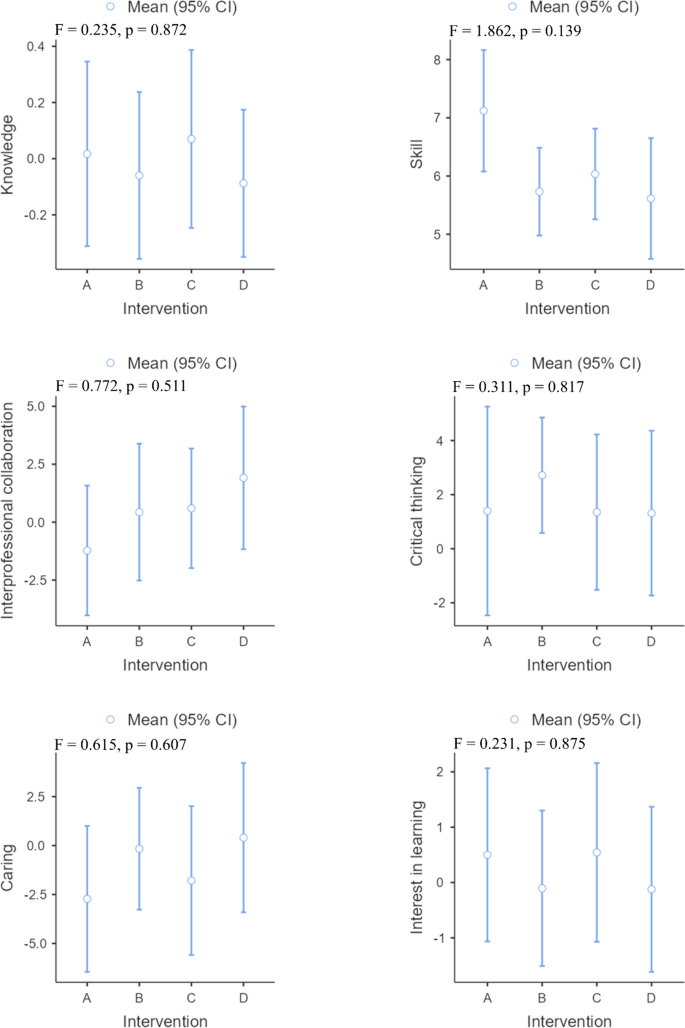

All groups improved in knowledge after the intervention, with the high-fidelity simulation group showing the greatest improvement (Fig. 2 ); however, the differences in knowledge improvement among the groups were not significant (p = 0.856). Three months after the intervention, knowledge in the computer-based simulation group and the case study group had fallen below baseline, whereas the other two groups remained above baseline. The high-fidelity simulation combined with computer-based simulation group performed best at three months (Fig. 3 ), but the differences were not significant (p = 0.872). Between-group effect sizes were small both immediately after the intervention and at the three-month follow-up (Table 3 ).

Changes in all effectiveness outcomes after the intervention. Note: A High-fidelity simulation group; B Computer-based simulation group; C High-fidelity simulation combined with computer-based simulation group; D Case study group

Changes in all effectiveness outcomes three months after the intervention. Note: A High-fidelity simulation group; B Computer-based simulation group; C High-fidelity simulation combined with computer-based simulation group; D Case study group

All groups improved in skills both after the intervention and three months later. The high-fidelity simulation combined with computer-based simulation group showed the greatest improvement after the intervention (Fig. 2 ), whereas the high-fidelity simulation group showed the greatest improvement at three months (Fig. 3 ). Skill improvement differed significantly among the groups after the intervention ( p = 0.020): the improvement in the computer-based simulation group was significantly smaller than that in both the high-fidelity simulation group ( p = 0.048) and the high-fidelity simulation combined with computer-based simulation group ( p = 0.020). Three months after the intervention, however, skill improvement did not differ significantly among the groups ( p = 0.139). After the intervention, the between-group effect sizes were medium for the high-fidelity simulation group versus the computer-based simulation group (Cohen's d = 0.51) and for the computer-based simulation group versus the high-fidelity simulation combined with computer-based simulation group (Cohen's d = 0.56); all other between-group effect sizes were small, both after the intervention and three months later (Table 3 ).
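The effect-size labels follow Cohen's benchmarks stated in the Data analysis section (0.2 small, 0.5 medium, 0.8 large). A minimal Python sketch of that classification, applied to the two skill comparisons reported as medium. Values below 0.2 are labelled "negligible" here, a common but not universal convention; the study may have grouped them with "small". The function name is hypothetical, and the group letters follow the figure notes.

```python
def effect_size_label(d):
    """Classify |d| against Cohen's benchmarks: 0.2 small, 0.5 medium, 0.8 large."""
    magnitude = abs(d)
    if magnitude >= 0.8:
        return "large"
    if magnitude >= 0.5:
        return "medium"
    if magnitude >= 0.2:
        return "small"
    return "negligible"  # below 0.2; an assumed label, not one used in the study


# A = high-fidelity, B = computer-based, C = combined (as in the figure notes).
for comparison, d in [("A vs B", 0.51), ("B vs C", 0.56)]:
    print(comparison, d, effect_size_label(d))
```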

Interprofessional collaboration improved both after the intervention and three months later in all groups except the high-fidelity simulation group, with the case study group showing the greatest improvement (Figs. 2 and 3 ). Changes in interprofessional collaboration did not differ significantly among the groups at either time point, and between-group effect sizes were small both immediately after the intervention and three months later (Table 3 ).