Systematic Reviews and Meta Analysis


Systematic review Q & A

What is a systematic review?

A systematic review is a guided filtering and synthesis of all available evidence addressing a specific, focused research question, generally about a specific intervention or exposure. The use of standardized, systematic methods and pre-selected eligibility criteria reduces the risk of bias in identifying, selecting and analyzing relevant studies. A well-designed systematic review includes clear objectives, pre-selected criteria for identifying eligible studies, an explicit methodology, a thorough and reproducible search of the literature, an assessment of the validity or risk of bias of each included study, and a systematic synthesis, analysis and presentation of the findings of the included studies. A systematic review may include a meta-analysis.

For details about carrying out systematic reviews, see the Guides and Standards section of this guide.

Is my research topic appropriate for systematic review methods?

A systematic review is best deployed to test a specific hypothesis about a healthcare or public health intervention or exposure. By focusing on a single intervention or a few specific interventions for a particular condition, the investigator can ensure a manageable result set. Moreover, examining a single intervention, exposure, or outcome, or a small set of related ones, simplifies the assessment of studies and the synthesis of the findings.

Systematic reviews are poor tools for hypothesis generation: for instance, to determine what interventions have been used to increase the awareness and acceptability of a vaccine, or to investigate the ways that predictive analytics have been used in health care management. In the first case, we don't know what interventions to search for and so have to screen all the articles about awareness and acceptability. In the second, there is no agreed-upon set of methods that make up predictive analytics, and health care management is far too broad. The search will necessarily be incomplete, vague and very large all at the same time. In most cases, reviews without clearly and exactly specified populations, interventions, exposures, and outcomes will produce result sets that quickly outstrip the resources of a small team and offer no consistent way to assess and synthesize findings from the studies that are identified.

If not a systematic review, then what?

You might consider performing a scoping review. This framework allows iterative searching over a reduced number of data sources and does not require assessing individual studies for risk of bias. The framework includes built-in mechanisms to adjust the analysis as the work progresses and more is learned about the topic. A scoping review won't help you limit the number of records you'll need to screen (broad questions lead to large result sets) but may give you a means of dealing with a large set of results.

This tool can help you decide what kind of review is right for your question.

Can my student complete a systematic review during her summer project?

Probably not. Systematic reviews are a lot of work. Counting the time needed to create the protocol, build and run a quality search, collect all the papers, evaluate the studies that meet the inclusion criteria, and extract and analyze the summary data, a well-done review can require dozens to hundreds of hours of work spread over several months. Moreover, a systematic review requires subject expertise, statistical support and a librarian to help design and run the search. Be aware that librarians sometimes have queues for their search time, so it may take several weeks to complete and run a search. In addition, all guidelines for carrying out systematic reviews recommend that at least two subject experts screen the studies identified in the search. The first round of screening can consume 1 hour per screener for every 100-200 records. A systematic review is a labor-intensive team effort.
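To get a feel for what that screening rate means for a given search, the arithmetic can be sketched in a few lines of Python. This is only a rough planning aid: the function name, the default of two screeners, and the 3,000-record example are illustrative assumptions, and the estimate covers first-round screening only, not the search, full-text review, or data extraction.

```python
def screening_hours(n_records, n_screeners=2, records_per_hour=(100, 200)):
    """Return (low, high) total person-hours for first-round screening.

    records_per_hour is the (slow, fast) screening rate per screener,
    taken from the 100-200 records per hour figure quoted above.
    """
    slow, fast = records_per_hour
    low = n_records / fast * n_screeners   # everyone screens at the fast rate
    high = n_records / slow * n_screeners  # everyone screens at the slow rate
    return low, high


# Hypothetical search that returns 3,000 records, screened by two people.
low, high = screening_hours(3000)
print(f"{low:.0f} to {high:.0f} person-hours")  # prints "30 to 60 person-hours"
```

Because every record is screened by each screener, the total scales with the number of screeners as well as with the size of the result set.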

How can I know if my topic has been reviewed already?

Before starting out on a systematic review, check to see if someone has done it already. In PubMed you can use the systematic review subset to limit to a broad group of papers that is enriched for systematic reviews. You can invoke the subset by selecting it from the Article Types filters to the left of your PubMed results, or you can append AND systematic[sb] to your search. For example:

"neoadjuvant chemotherapy" AND systematic[sb]

The systematic review subset is very noisy, however. To quickly focus on systematic reviews (knowing that you may be missing some), simply search for the word systematic in the title:

"neoadjuvant chemotherapy" AND systematic[ti]

Any PRISMA-compliant systematic review will be captured by this method since including the words "systematic review" in the title is a requirement of the PRISMA checklist. Cochrane systematic reviews do not include 'systematic' in the title, however. It's worth checking the Cochrane Database of Systematic Reviews independently.
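If you want to run both checks for several topics, the two query patterns above can be generated programmatically. The sketch below only builds the query strings and the corresponding request URLs for NCBI's E-utilities `esearch` endpoint; it makes no network request, and the helper names (`review_check_queries`, `esearch_url`) and the `retmax` value are illustrative assumptions rather than anything prescribed by this guide.

```python
from urllib.parse import urlencode

# NCBI E-utilities search endpoint for querying PubMed.
ESEARCH = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"


def review_check_queries(topic):
    """Build the two PubMed queries described above for a quoted topic phrase."""
    phrase = f'"{topic}"'
    return {
        "subset": f"{phrase} AND systematic[sb]",  # broad but noisy subset
        "title": f"{phrase} AND systematic[ti]",   # 'systematic' in the title
    }


def esearch_url(term, retmax=20):
    """Return an esearch URL for the given PubMed query (no request is sent)."""
    return ESEARCH + "?" + urlencode({"db": "pubmed", "term": term, "retmax": retmax})


queries = review_check_queries("neoadjuvant chemotherapy")
for name, query in queries.items():
    print(name, esearch_url(query))
```

Neither query replaces a separate check of the Cochrane Database of Systematic Reviews, for the reason given above.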

You can also search for protocols that will indicate that another group has set out on a similar project. Many investigators will register their protocols in PROSPERO, a registry of review protocols. Other published protocols as well as Cochrane Review protocols appear in the Cochrane Methodology Register, a part of the Cochrane Library.


- Last Updated: Feb 26, 2024 3:17 PM

- URL: https://guides.library.harvard.edu/meta-analysis

How to Do a Systematic Review: A Best Practice Guide for Conducting and Reporting Narrative Reviews, Meta-Analyses, and Meta-Syntheses

Affiliations

- 1 Behavioural Science Centre, Stirling Management School, University of Stirling, Stirling FK9 4LA, United Kingdom.

- 2 Department of Psychological and Behavioural Science, London School of Economics and Political Science, London WC2A 2AE, United Kingdom.

- 3 Department of Statistics, Northwestern University, Evanston, Illinois 60208, USA.

- PMID: 30089228

- DOI: 10.1146/annurev-psych-010418-102803

Systematic reviews are characterized by a methodical and replicable methodology and presentation. They involve a comprehensive search to locate all relevant published and unpublished work on a subject; a systematic integration of search results; and a critique of the extent, nature, and quality of evidence in relation to a particular research question. The best reviews synthesize studies to draw broad theoretical conclusions about what a literature means, linking theory to evidence and evidence to theory. This guide describes how to plan, conduct, organize, and present a systematic review of quantitative (meta-analysis) or qualitative (narrative review, meta-synthesis) information. We outline core standards and principles and describe commonly encountered problems. Although this guide targets psychological scientists, its high level of abstraction makes it potentially relevant to any subject area or discipline. We argue that systematic reviews are a key methodology for clarifying whether and how research findings replicate and for explaining possible inconsistencies, and we call for researchers to conduct systematic reviews to help elucidate whether there is a replication crisis.

Keywords: evidence; guide; meta-analysis; meta-synthesis; narrative; systematic review; theory.

- Guidelines as Topic

- Meta-Analysis as Topic*

- Publication Bias

- Review Literature as Topic

- Systematic Reviews as Topic*


The PRISMA 2020 statement: an updated guideline for reporting systematic reviews

PRISMA 2020 explanation and elaboration: updated guidance and exemplars for reporting systematic reviews


- Matthew J Page, senior research fellow 1

- Joanne E McKenzie, associate professor 1

- Patrick M Bossuyt, professor 2

- Isabelle Boutron, professor 3

- Tammy C Hoffmann, professor 4

- Cynthia D Mulrow, professor 5

- Larissa Shamseer, doctoral student 6

- Jennifer M Tetzlaff, research product specialist 7

- Elie A Akl, professor 8

- Sue E Brennan, senior research fellow 1

- Roger Chou, professor 9

- Julie Glanville, associate director 10

- Jeremy M Grimshaw, professor 11

- Asbjørn Hróbjartsson, professor 12

- Manoj M Lalu, associate scientist and assistant professor 13

- Tianjing Li, associate professor 14

- Elizabeth W Loder, professor 15

- Evan Mayo-Wilson, associate professor 16

- Steve McDonald, senior research fellow 1

- Luke A McGuinness, research associate 17

- Lesley A Stewart, professor and director 18

- James Thomas, professor 19

- Andrea C Tricco, scientist and associate professor 20

- Vivian A Welch, associate professor 21

- Penny Whiting, associate professor 17

- David Moher, director and professor 22

- 1 School of Public Health and Preventive Medicine, Monash University, Melbourne, Australia

- 2 Department of Clinical Epidemiology, Biostatistics and Bioinformatics, Amsterdam University Medical Centres, University of Amsterdam, Amsterdam, Netherlands

- 3 Université de Paris, Centre of Epidemiology and Statistics (CRESS), Inserm, F 75004 Paris, France

- 4 Institute for Evidence-Based Healthcare, Faculty of Health Sciences and Medicine, Bond University, Gold Coast, Australia

- 5 University of Texas Health Science Center at San Antonio, San Antonio, Texas, USA; Annals of Internal Medicine

- 6 Knowledge Translation Program, Li Ka Shing Knowledge Institute, Toronto, Canada; School of Epidemiology and Public Health, Faculty of Medicine, University of Ottawa, Ottawa, Canada

- 7 Evidence Partners, Ottawa, Canada

- 8 Clinical Research Institute, American University of Beirut, Beirut, Lebanon; Department of Health Research Methods, Evidence, and Impact, McMaster University, Hamilton, Ontario, Canada

- 9 Department of Medical Informatics and Clinical Epidemiology, Oregon Health & Science University, Portland, Oregon, USA

- 10 York Health Economics Consortium (YHEC Ltd), University of York, York, UK

- 11 Clinical Epidemiology Program, Ottawa Hospital Research Institute, Ottawa, Canada; School of Epidemiology and Public Health, University of Ottawa, Ottawa, Canada; Department of Medicine, University of Ottawa, Ottawa, Canada

- 12 Centre for Evidence-Based Medicine Odense (CEBMO) and Cochrane Denmark, Department of Clinical Research, University of Southern Denmark, Odense, Denmark; Open Patient data Exploratory Network (OPEN), Odense University Hospital, Odense, Denmark

- 13 Department of Anesthesiology and Pain Medicine, The Ottawa Hospital, Ottawa, Canada; Clinical Epidemiology Program, Blueprint Translational Research Group, Ottawa Hospital Research Institute, Ottawa, Canada; Regenerative Medicine Program, Ottawa Hospital Research Institute, Ottawa, Canada

- 14 Department of Ophthalmology, School of Medicine, University of Colorado Denver, Denver, Colorado, United States; Department of Epidemiology, Johns Hopkins Bloomberg School of Public Health, Baltimore, Maryland, USA

- 15 Division of Headache, Department of Neurology, Brigham and Women's Hospital, Harvard Medical School, Boston, Massachusetts, USA; Head of Research, The BMJ, London, UK

- 16 Department of Epidemiology and Biostatistics, Indiana University School of Public Health-Bloomington, Bloomington, Indiana, USA

- 17 Population Health Sciences, Bristol Medical School, University of Bristol, Bristol, UK

- 18 Centre for Reviews and Dissemination, University of York, York, UK

- 19 EPPI-Centre, UCL Social Research Institute, University College London, London, UK

- 20 Li Ka Shing Knowledge Institute of St. Michael's Hospital, Unity Health Toronto, Toronto, Canada; Epidemiology Division of the Dalla Lana School of Public Health and the Institute of Health Management, Policy, and Evaluation, University of Toronto, Toronto, Canada; Queen's Collaboration for Health Care Quality Joanna Briggs Institute Centre of Excellence, Queen's University, Kingston, Canada

- 21 Methods Centre, Bruyère Research Institute, Ottawa, Ontario, Canada; School of Epidemiology and Public Health, Faculty of Medicine, University of Ottawa, Ottawa, Canada

- 22 Centre for Journalology, Clinical Epidemiology Program, Ottawa Hospital Research Institute, Ottawa, Canada; School of Epidemiology and Public Health, Faculty of Medicine, University of Ottawa, Ottawa, Canada

- Correspondence to: M J Page matthew.page{at}monash.edu

- Accepted 4 January 2021

The Preferred Reporting Items for Systematic reviews and Meta-Analyses (PRISMA) statement, published in 2009, was designed to help systematic reviewers transparently report why the review was done, what the authors did, and what they found. Over the past decade, advances in systematic review methodology and terminology have necessitated an update to the guideline. The PRISMA 2020 statement replaces the 2009 statement and includes new reporting guidance that reflects advances in methods to identify, select, appraise, and synthesise studies. The structure and presentation of the items have been modified to facilitate implementation. In this article, we present the PRISMA 2020 27-item checklist, an expanded checklist that details reporting recommendations for each item, the PRISMA 2020 abstract checklist, and the revised flow diagrams for original and updated reviews.

Systematic reviews serve many critical roles. They can provide syntheses of the state of knowledge in a field, from which future research priorities can be identified; they can address questions that otherwise could not be answered by individual studies; they can identify problems in primary research that should be rectified in future studies; and they can generate or evaluate theories about how or why phenomena occur. Systematic reviews therefore generate various types of knowledge for different users of reviews (such as patients, healthcare providers, researchers, and policy makers). 1 2 To ensure a systematic review is valuable to users, authors should prepare a transparent, complete, and accurate account of why the review was done, what they did (such as how studies were identified and selected) and what they found (such as characteristics of contributing studies and results of meta-analyses). Up-to-date reporting guidance facilitates authors achieving this. 3

The Preferred Reporting Items for Systematic reviews and Meta-Analyses (PRISMA) statement published in 2009 (hereafter referred to as PRISMA 2009) 4 5 6 7 8 9 10 is a reporting guideline designed to address poor reporting of systematic reviews. 11 The PRISMA 2009 statement comprised a checklist of 27 items recommended for reporting in systematic reviews and an “explanation and elaboration” paper 12 13 14 15 16 providing additional reporting guidance for each item, along with exemplars of reporting. The recommendations have been widely endorsed and adopted, as evidenced by its co-publication in multiple journals, citation in over 60 000 reports (Scopus, August 2020), endorsement from almost 200 journals and systematic review organisations, and adoption in various disciplines. Evidence from observational studies suggests that use of the PRISMA 2009 statement is associated with more complete reporting of systematic reviews, 17 18 19 20 although more could be done to improve adherence to the guideline. 21

Many innovations in the conduct of systematic reviews have occurred since publication of the PRISMA 2009 statement. For example, technological advances have enabled the use of natural language processing and machine learning to identify relevant evidence, 22 23 24 methods have been proposed to synthesise and present findings when meta-analysis is not possible or appropriate, 25 26 27 and new methods have been developed to assess the risk of bias in results of included studies. 28 29 Evidence on sources of bias in systematic reviews has accrued, culminating in the development of new tools to appraise the conduct of systematic reviews. 30 31 Terminology used to describe particular review processes has also evolved, as in the shift from assessing “quality” to assessing “certainty” in the body of evidence. 32 In addition, the publishing landscape has transformed, with multiple avenues now available for registering and disseminating systematic review protocols, 33 34 disseminating reports of systematic reviews, and sharing data and materials, such as preprint servers and publicly accessible repositories. Capturing these advances in the reporting of systematic reviews necessitated an update to the PRISMA 2009 statement.

Summary points

To ensure a systematic review is valuable to users, authors should prepare a transparent, complete, and accurate account of why the review was done, what they did, and what they found

The PRISMA 2020 statement provides updated reporting guidance for systematic reviews that reflects advances in methods to identify, select, appraise, and synthesise studies

The PRISMA 2020 statement consists of a 27-item checklist, an expanded checklist that details reporting recommendations for each item, the PRISMA 2020 abstract checklist, and revised flow diagrams for original and updated reviews

We anticipate that the PRISMA 2020 statement will benefit authors, editors, and peer reviewers of systematic reviews, and different users of reviews, including guideline developers, policy makers, healthcare providers, patients, and other stakeholders

Development of PRISMA 2020

A complete description of the methods used to develop PRISMA 2020 is available elsewhere. 35 We identified PRISMA 2009 items that were often reported incompletely by examining the results of studies investigating the transparency of reporting of published reviews. 17 21 36 37 We identified possible modifications to the PRISMA 2009 statement by reviewing 60 documents providing reporting guidance for systematic reviews (including reporting guidelines, handbooks, tools, and meta-research studies). 38 These reviews of the literature were used to inform the content of a survey with suggested possible modifications to the 27 items in PRISMA 2009 and possible additional items. Respondents were asked whether they believed we should keep each PRISMA 2009 item as is, modify it, or remove it, and whether we should add each additional item. Systematic review methodologists and journal editors were invited to complete the online survey (110 of 220 invited responded). We discussed proposed content and wording of the PRISMA 2020 statement, as informed by the review and survey results, at a 21-member, two-day, in-person meeting in September 2018 in Edinburgh, Scotland. Throughout 2019 and 2020, we circulated an initial draft and five revisions of the checklist and explanation and elaboration paper to co-authors for feedback. In April 2020, we invited 22 systematic reviewers who had expressed interest in providing feedback on the PRISMA 2020 checklist to share their views (via an online survey) on the layout and terminology used in a preliminary version of the checklist. Feedback was received from 15 individuals and considered by the first author, and any revisions deemed necessary were incorporated before the final version was approved and endorsed by all co-authors.

The PRISMA 2020 statement

Scope of the guideline.

The PRISMA 2020 statement has been designed primarily for systematic reviews of studies that evaluate the effects of health interventions, irrespective of the design of the included studies. However, the checklist items are applicable to reports of systematic reviews evaluating other interventions (such as social or educational interventions), and many items are applicable to systematic reviews with objectives other than evaluating interventions (such as evaluating aetiology, prevalence, or prognosis). PRISMA 2020 is intended for use in systematic reviews that include synthesis (such as pairwise meta-analysis or other statistical synthesis methods) or do not include synthesis (for example, because only one eligible study is identified). The PRISMA 2020 items are relevant for mixed-methods systematic reviews (which include quantitative and qualitative studies), but reporting guidelines addressing the presentation and synthesis of qualitative data should also be consulted. 39 40 PRISMA 2020 can be used for original systematic reviews, updated systematic reviews, or continually updated (“living”) systematic reviews. However, for updated and living systematic reviews, there may be some additional considerations that need to be addressed. Where there is relevant content from other reporting guidelines, we reference these guidelines within the items in the explanation and elaboration paper 41 (such as PRISMA-Search 42 in items 6 and 7, Synthesis without meta-analysis (SWiM) reporting guideline 27 in item 13d). Box 1 includes a glossary of terms used throughout the PRISMA 2020 statement.

Glossary of terms

Systematic review —A review that uses explicit, systematic methods to collate and synthesise findings of studies that address a clearly formulated question 43

Statistical synthesis —The combination of quantitative results of two or more studies. This encompasses meta-analysis of effect estimates (described below) and other methods, such as combining P values, calculating the range and distribution of observed effects, and vote counting based on the direction of effect (see McKenzie and Brennan 25 for a description of each method)

Meta-analysis of effect estimates —A statistical technique used to synthesise results when study effect estimates and their variances are available, yielding a quantitative summary of results 25

Outcome —An event or measurement collected for participants in a study (such as quality of life, mortality)

Result —The combination of a point estimate (such as a mean difference, risk ratio, or proportion) and a measure of its precision (such as a confidence/credible interval) for a particular outcome

Report —A document (paper or electronic) supplying information about a particular study. It could be a journal article, preprint, conference abstract, study register entry, clinical study report, dissertation, unpublished manuscript, government report, or any other document providing relevant information

Record —The title or abstract (or both) of a report indexed in a database or website (such as a title or abstract for an article indexed in Medline). Records that refer to the same report (such as the same journal article) are “duplicates”; however, records that refer to reports that are merely similar (such as a similar abstract submitted to two different conferences) should be considered unique.

Study —An investigation, such as a clinical trial, that includes a defined group of participants and one or more interventions and outcomes. A “study” might have multiple reports. For example, reports could include the protocol, statistical analysis plan, baseline characteristics, results for the primary outcome, results for harms, results for secondary outcomes, and results for additional mediator and moderator analyses
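To make the glossary entries for "Result" and "Meta-analysis of effect estimates" concrete, here is a minimal Python sketch of one common approach, inverse-variance weighting under a fixed-effect (common-effect) model. PRISMA 2020 does not prescribe this or any other synthesis method; the function name, the 1.96 multiplier for an approximate 95% confidence interval, and the three log risk ratios are illustrative assumptions, not data from any real review.

```python
import math


def fixed_effect_pool(estimates, variances):
    """Pool study effect estimates with inverse-variance weights.

    Returns (pooled estimate, standard error, approximate 95% CI).
    Assumes a fixed-effect model: every study estimates the same effect.
    """
    weights = [1.0 / v for v in variances]  # more precise studies weigh more
    total = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, estimates)) / total
    se = math.sqrt(1.0 / total)
    ci = (pooled - 1.96 * se, pooled + 1.96 * se)
    return pooled, se, ci


# Hypothetical log risk ratios and their variances from three studies.
estimates = [-0.2, -0.4, 0.0]
variances = [0.04, 0.04, 0.04]

pooled, se, ci = fixed_effect_pool(estimates, variances)
print(f"pooled log RR = {pooled:.3f}, SE = {se:.3f}")
print(f"approx. 95% CI = ({ci[0]:.3f}, {ci[1]:.3f})")
```

In the glossary's terms, each input pair is a "result" (a point estimate with a measure of its precision), and the pooled value with its interval is the quantitative summary. When study effects are expected to differ, a random-effects model is usually considered instead.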

PRISMA 2020 is not intended to guide systematic review conduct, for which comprehensive resources are available. 43 44 45 46 However, familiarity with PRISMA 2020 is useful when planning and conducting systematic reviews to ensure that all recommended information is captured. PRISMA 2020 should not be used to assess the conduct or methodological quality of systematic reviews; other tools exist for this purpose. 30 31 Furthermore, PRISMA 2020 is not intended to inform the reporting of systematic review protocols, for which a separate statement is available (PRISMA for Protocols (PRISMA-P) 2015 statement 47 48 ). Finally, extensions to the PRISMA 2009 statement have been developed to guide reporting of network meta-analyses, 49 meta-analyses of individual participant data, 50 systematic reviews of harms, 51 systematic reviews of diagnostic test accuracy studies, 52 and scoping reviews 53 ; for these types of reviews we recommend authors report their review in accordance with the recommendations in PRISMA 2020 along with the guidance specific to the extension.

How to use PRISMA 2020

The PRISMA 2020 statement (including the checklists, explanation and elaboration, and flow diagram) replaces the PRISMA 2009 statement, which should no longer be used. Box 2 summarises noteworthy changes from the PRISMA 2009 statement. The PRISMA 2020 checklist includes seven sections with 27 items, some of which include sub-items (table 1). A checklist for journal and conference abstracts for systematic reviews is included in PRISMA 2020. This abstract checklist is an update of the 2013 PRISMA for Abstracts statement, 54 reflecting new and modified content in PRISMA 2020 (table 2). A template PRISMA flow diagram is provided, which can be modified depending on whether the systematic review is original or updated (fig 1).

Noteworthy changes to the PRISMA 2009 statement

Inclusion of the abstract reporting checklist within PRISMA 2020 (see item #2 and table 2).

Movement of the ‘Protocol and registration’ item from the start of the Methods section of the checklist to a new Other section, with addition of a sub-item recommending authors describe amendments to information provided at registration or in the protocol (see item #24a-24c).

Modification of the ‘Search’ item to recommend authors present full search strategies for all databases, registers and websites searched, not just at least one database (see item #7).

Modification of the ‘Study selection’ item in the Methods section to emphasise the reporting of how many reviewers screened each record and each report retrieved, whether they worked independently, and if applicable, details of automation tools used in the process (see item #8).

Addition of a sub-item to the ‘Data items’ item recommending authors report how outcomes were defined, which results were sought, and methods for selecting a subset of results from included studies (see item #10a).

Splitting of the ‘Synthesis of results’ item in the Methods section into six sub-items recommending authors describe: the processes used to decide which studies were eligible for each synthesis; any methods required to prepare the data for synthesis; any methods used to tabulate or visually display results of individual studies and syntheses; any methods used to synthesise results; any methods used to explore possible causes of heterogeneity among study results (such as subgroup analysis, meta-regression); and any sensitivity analyses used to assess robustness of the synthesised results (see item #13a-13f).

Addition of a sub-item to the ‘Study selection’ item in the Results section recommending authors cite studies that might appear to meet the inclusion criteria, but which were excluded, and explain why they were excluded (see item #16b).

Splitting of the ‘Synthesis of results’ item in the Results section into four sub-items recommending authors: briefly summarise the characteristics and risk of bias among studies contributing to the synthesis; present results of all statistical syntheses conducted; present results of any investigations of possible causes of heterogeneity among study results; and present results of any sensitivity analyses (see item #20a-20d).

Addition of new items recommending authors report methods for and results of an assessment of certainty (or confidence) in the body of evidence for an outcome (see items #15 and #22).

Addition of a new item recommending authors declare any competing interests (see item #26).

Addition of a new item recommending authors indicate whether data, analytic code and other materials used in the review are publicly available and if so, where they can be found (see item #27).

Table 1: PRISMA 2020 item checklist

Table 2: PRISMA 2020 for Abstracts checklist*

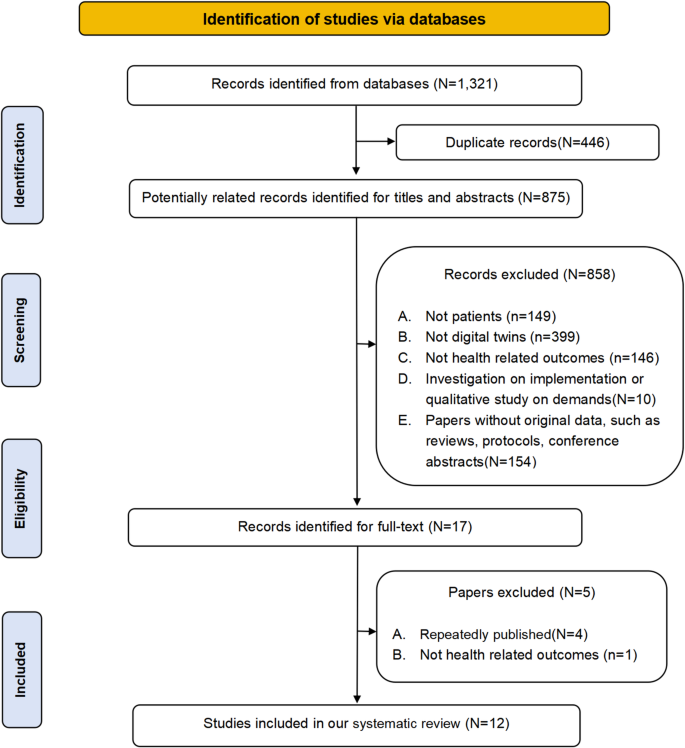

PRISMA 2020 flow diagram template for systematic reviews. The new design is adapted from flow diagrams proposed by Boers, 55 Mayo-Wilson et al. 56 and Stovold et al. 57 The boxes in grey should only be completed if applicable; otherwise they should be removed from the flow diagram. Note that a “report” could be a journal article, preprint, conference abstract, study register entry, clinical study report, dissertation, unpublished manuscript, government report or any other document providing relevant information.
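The bookkeeping behind a flow diagram of this kind can be sketched as a simple consistency check in Python. The stage names below follow one reading of the template for a review that searches databases and registers only, and should be treated as assumptions; the counts are invented for illustration. Note that the final count here is of reports, and the number of included studies may be smaller, since one study can have several reports.

```python
from dataclasses import dataclass


@dataclass
class FlowCounts:
    """Counts entered by the reviewer; the other boxes are derived from them."""
    records_identified: int
    removed_before_screening: int  # e.g. duplicates
    records_excluded: int          # excluded at title/abstract screening
    reports_not_retrieved: int
    reports_excluded: int          # excluded at full-text assessment

    def stages(self):
        """Derive the remaining boxes and check that none goes negative."""
        screened = self.records_identified - self.removed_before_screening
        sought = screened - self.records_excluded
        assessed = sought - self.reports_not_retrieved
        included = assessed - self.reports_excluded
        result = {
            "records_screened": screened,
            "reports_sought": sought,
            "reports_assessed": assessed,
            "reports_included": included,
        }
        for name, count in result.items():
            if count < 0:
                raise ValueError(f"{name} is negative; check the entered counts")
        return result


# Hypothetical counts for illustration only.
counts = FlowCounts(1200, 300, 800, 5, 70)
flow = counts.stages()
print(flow)
```

A check like this catches arithmetic slips before the diagram is drawn, but it does not replace the template itself, which also records reasons for exclusion.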


We recommend authors refer to PRISMA 2020 early in the writing process, because prospective consideration of the items may help to ensure that all the items are addressed. To help keep track of which items have been reported, the PRISMA statement website ( http://www.prisma-statement.org/ ) includes fillable templates of the checklists to download and complete (also available in the data supplement on bmj.com). We have also created a web application that allows users to complete the checklist via a user-friendly interface 58 (available at https://prisma.shinyapps.io/checklist/ and adapted from the Transparency Checklist app 59 ). The completed checklist can be exported to Word or PDF. Editable templates of the flow diagram can also be downloaded from the PRISMA statement website.

We have prepared an updated explanation and elaboration paper, in which we explain why reporting of each item is recommended and present bullet points that detail the reporting recommendations (which we refer to as elements). 41 The bullet-point structure is new to PRISMA 2020 and has been adopted to facilitate implementation of the guidance. 60 61 An expanded checklist, which comprises an abridged version of the elements presented in the explanation and elaboration paper, with references and some examples removed, is available in the data supplement on bmj.com. Consulting the explanation and elaboration paper is recommended if further clarity or information is required.

Journals and publishers might impose word and section limits, and limits on the number of tables and figures allowed in the main report. In such cases, if the relevant information for some items already appears in a publicly accessible review protocol, referring to the protocol may suffice. Alternatively, placing detailed descriptions of the methods used or additional results (such as for less critical outcomes) in supplementary files is recommended. Ideally, supplementary files should be deposited to a general-purpose or institutional open-access repository that provides free and permanent access to the material (such as Open Science Framework, Dryad, figshare). A reference or link to the additional information should be included in the main report. Finally, although PRISMA 2020 provides a template for where information might be located, the suggested location should not be seen as prescriptive; the guiding principle is to ensure the information is reported.

Use of PRISMA 2020 has the potential to benefit many stakeholders. Complete reporting allows readers to assess the appropriateness of the methods, and therefore the trustworthiness of the findings. Presenting and summarising characteristics of studies contributing to a synthesis allows healthcare providers and policy makers to evaluate the applicability of the findings to their setting. Describing the certainty in the body of evidence for an outcome and the implications of findings should help policy makers, managers, and other decision makers formulate appropriate recommendations for practice or policy. Complete reporting of all PRISMA 2020 items also facilitates replication and review updates, as well as inclusion of systematic reviews in overviews (of systematic reviews) and guidelines, so teams can leverage work that is already done and decrease research waste. 36 62 63

We updated the PRISMA 2009 statement by adapting the EQUATOR Network’s guidance for developing health research reporting guidelines. 64 We evaluated the reporting completeness of published systematic reviews, 17 21 36 37 reviewed the items included in other documents providing guidance for systematic reviews, 38 surveyed systematic review methodologists and journal editors for their views on how to revise the original PRISMA statement, 35 discussed the findings at an in-person meeting, and prepared this document through an iterative process. Our recommendations are informed by the reviews and survey conducted before the in-person meeting, theoretical considerations about which items facilitate replication and help users assess the risk of bias and applicability of systematic reviews, and co-authors’ experience with authoring and using systematic reviews.

Various strategies to increase the use of reporting guidelines and improve reporting have been proposed. They include educators introducing reporting guidelines into graduate curricula to promote good reporting habits of early career scientists 65 ; journal editors and regulators endorsing use of reporting guidelines 18 ; peer reviewers evaluating adherence to reporting guidelines 61 66 ; journals requiring authors to indicate where in their manuscript they have adhered to each reporting item 67 ; and authors using online writing tools that prompt complete reporting at the writing stage. 60 Multi-pronged interventions, where more than one of these strategies is combined, may be more effective (such as completion of checklists coupled with editorial checks). 68 However, of 31 interventions proposed to increase adherence to reporting guidelines, the effects of only 11 have been evaluated, mostly in observational studies at high risk of bias due to confounding. 69 It is therefore unclear which strategies should be used. Future research might explore barriers and facilitators to the use of PRISMA 2020 by authors, editors, and peer reviewers, design interventions that address the identified barriers, and evaluate those interventions using randomised trials. To inform possible revisions to the guideline, it would also be valuable to conduct think-aloud studies 70 to understand how systematic reviewers interpret the items, and reliability studies to identify items that are interpreted inconsistently.

We encourage readers to submit evidence that informs any of the recommendations in PRISMA 2020 (via the PRISMA statement website: http://www.prisma-statement.org/ ). To enhance accessibility of PRISMA 2020, several translations of the guideline are under way (see available translations at the PRISMA statement website). We encourage journal editors and publishers to raise awareness of PRISMA 2020 (for example, by referring to it in journal “Instructions to authors”), endorse its use, advise editors and peer reviewers to evaluate submitted systematic reviews against the PRISMA 2020 checklists, and make changes to journal policies to accommodate the new reporting recommendations. We recommend existing PRISMA extensions 47 49 50 51 52 53 71 72 be updated to reflect PRISMA 2020 and advise developers of new PRISMA extensions to use PRISMA 2020 as the foundation document.

We anticipate that the PRISMA 2020 statement will benefit authors, editors, and peer reviewers of systematic reviews, and different users of reviews, including guideline developers, policy makers, healthcare providers, patients, and other stakeholders. Ultimately, we hope that uptake of the guideline will lead to more transparent, complete, and accurate reporting of systematic reviews, thus facilitating evidence based decision making.

Acknowledgments

We dedicate this paper to the late Douglas G Altman and Alessandro Liberati, whose contributions were fundamental to the development and implementation of the original PRISMA statement.

We thank the following contributors who completed the survey to inform discussions at the development meeting: Xavier Armoiry, Edoardo Aromataris, Ana Patricia Ayala, Ethan M Balk, Virginia Barbour, Elaine Beller, Jesse A Berlin, Lisa Bero, Zhao-Xiang Bian, Jean Joel Bigna, Ferrán Catalá-López, Anna Chaimani, Mike Clarke, Tammy Clifford, Ioana A Cristea, Miranda Cumpston, Sofia Dias, Corinna Dressler, Ivan D Florez, Joel J Gagnier, Chantelle Garritty, Long Ge, Davina Ghersi, Sean Grant, Gordon Guyatt, Neal R Haddaway, Julian PT Higgins, Sally Hopewell, Brian Hutton, Jamie J Kirkham, Jos Kleijnen, Julia Koricheva, Joey SW Kwong, Toby J Lasserson, Julia H Littell, Yoon K Loke, Malcolm R Macleod, Chris G Maher, Ana Marušic, Dimitris Mavridis, Jessie McGowan, Matthew DF McInnes, Philippa Middleton, Karel G Moons, Zachary Munn, Jane Noyes, Barbara Nußbaumer-Streit, Donald L Patrick, Tatiana Pereira-Cenci, Ba’ Pham, Bob Phillips, Dawid Pieper, Michelle Pollock, Daniel S Quintana, Drummond Rennie, Melissa L Rethlefsen, Hannah R Rothstein, Maroeska M Rovers, Rebecca Ryan, Georgia Salanti, Ian J Saldanha, Margaret Sampson, Nancy Santesso, Rafael Sarkis-Onofre, Jelena Savović, Christopher H Schmid, Kenneth F Schulz, Guido Schwarzer, Beverley J Shea, Paul G Shekelle, Farhad Shokraneh, Mark Simmonds, Nicole Skoetz, Sharon E Straus, Anneliese Synnot, Emily E Tanner-Smith, Brett D Thombs, Hilary Thomson, Alexander Tsertsvadze, Peter Tugwell, Tari Turner, Lesley Uttley, Jeffrey C Valentine, Matt Vassar, Areti Angeliki Veroniki, Meera Viswanathan, Cole Wayant, Paul Whaley, and Kehu Yang. We thank the following contributors who provided feedback on a preliminary version of the PRISMA 2020 checklist: Jo Abbott, Fionn Büttner, Patricia Correia-Santos, Victoria Freeman, Emily A Hennessy, Rakibul Islam, Amalia (Emily) Karahalios, Kasper Krommes, Andreas Lundh, Dafne Port Nascimento, Davina Robson, Catherine Schenck-Yglesias, Mary M Scott, Sarah Tanveer and Pavel Zhelnov. 
We thank Abigail H Goben, Melissa L Rethlefsen, Tanja Rombey, Anna Scott, and Farhad Shokraneh for their helpful comments on the preprints of the PRISMA 2020 papers. We thank Edoardo Aromataris, Stephanie Chang, Toby Lasserson and David Schriger for their helpful peer review comments on the PRISMA 2020 papers.

Contributors: JEM and DM are joint senior authors. MJP, JEM, PMB, IB, TCH, CDM, LS, and DM conceived this paper and designed the literature review and survey conducted to inform the guideline content. MJP conducted the literature review, administered the survey and analysed the data for both. MJP prepared all materials for the development meeting. MJP and JEM presented proposals at the development meeting. All authors except for TCH, JMT, EAA, SEB, and LAM attended the development meeting. MJP and JEM took and consolidated notes from the development meeting. MJP and JEM led the drafting and editing of the article. JEM, PMB, IB, TCH, LS, JMT, EAA, SEB, RC, JG, AH, TL, EMW, SM, LAM, LAS, JT, ACT, PW, and DM drafted particular sections of the article. All authors were involved in revising the article critically for important intellectual content. All authors approved the final version of the article. MJP is the guarantor of this work. The corresponding author attests that all listed authors meet authorship criteria and that no others meeting the criteria have been omitted.

Funding: There was no direct funding for this research. MJP is supported by an Australian Research Council Discovery Early Career Researcher Award (DE200101618) and was previously supported by an Australian National Health and Medical Research Council (NHMRC) Early Career Fellowship (1088535) during the conduct of this research. JEM is supported by an Australian NHMRC Career Development Fellowship (1143429). TCH is supported by an Australian NHMRC Senior Research Fellowship (1154607). JMT is supported by Evidence Partners Inc. JMG is supported by a Tier 1 Canada Research Chair in Health Knowledge Transfer and Uptake. MML is supported by The Ottawa Hospital Anaesthesia Alternate Funds Association and a Faculty of Medicine Junior Research Chair. TL is supported by funding from the National Eye Institute (UG1EY020522), National Institutes of Health, United States. LAM is supported by a National Institute for Health Research Doctoral Research Fellowship (DRF-2018-11-ST2-048). ACT is supported by a Tier 2 Canada Research Chair in Knowledge Synthesis. DM is supported in part by a University Research Chair, University of Ottawa. The funders had no role in considering the study design or in the collection, analysis, interpretation of data, writing of the report, or decision to submit the article for publication.

Competing interests: All authors have completed the ICMJE uniform disclosure form at http://www.icmje.org/conflicts-of-interest/ and declare: EL is head of research for the BMJ ; MJP is an editorial board member for PLOS Medicine ; ACT is an associate editor and MJP, TL, EMW, and DM are editorial board members for the Journal of Clinical Epidemiology ; DM and LAS were editors in chief, LS, JMT, and ACT are associate editors, and JG is an editorial board member for Systematic Reviews . None of these authors were involved in the peer review process or decision to publish. TCH has received personal fees from Elsevier outside the submitted work. EMW has received personal fees from the American Journal for Public Health , for which he is the editor for systematic reviews. VW is editor in chief of the Campbell Collaboration, which produces systematic reviews, and co-convenor of the Campbell and Cochrane equity methods group. DM is chair of the EQUATOR Network, IB is adjunct director of the French EQUATOR Centre and TCH is co-director of the Australasian EQUATOR Centre, which advocates for the use of reporting guidelines to improve the quality of reporting in research articles. JMT received salary from Evidence Partners, creator of DistillerSR software for systematic reviews; Evidence Partners was not involved in the design or outcomes of the statement, and the views expressed solely represent those of the author.

Provenance and peer review: Not commissioned; externally peer reviewed.

Patient and public involvement: Patients and the public were not involved in this methodological research. We plan to disseminate the research widely, including to community participants in evidence synthesis organisations.

This is an Open Access article distributed in accordance with the terms of the Creative Commons Attribution (CC BY 4.0) license, which permits others to distribute, remix, adapt and build upon this work, for commercial use, provided the original work is properly cited. See: http://creativecommons.org/licenses/by/4.0/ .

Systematic Reviews

What Makes a Systematic Review Different from Other Types of Reviews?

Reproduced from Grant, M. J. and Booth, A. (2009), A typology of reviews: an analysis of 14 review types and associated methodologies. Health Information & Libraries Journal, 26: 91–108. doi:10.1111/j.1471-1842.2009.00848.x

- Last Updated: Apr 17, 2024 2:02 PM

- URL: https://guides.library.ucla.edu/systematicreviews

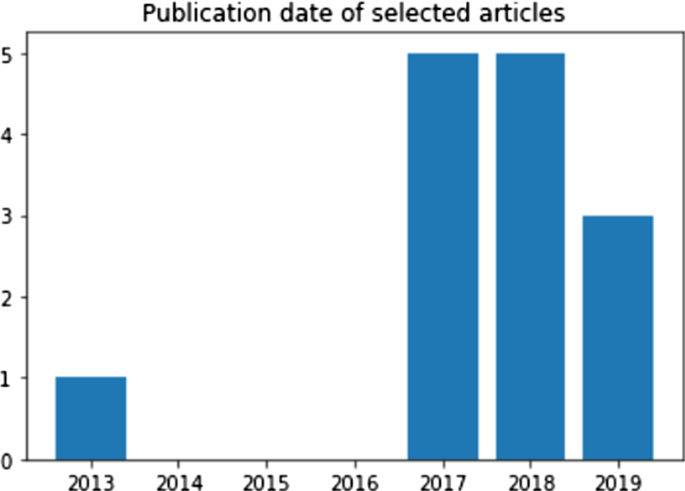

Systematic reviews in sentiment analysis: a tertiary study

- Open access

- Published: 03 March 2021

- Volume 54 , pages 4997–5053, ( 2021 )

- Alexander Ligthart 1 ,

- Cagatay Catal ORCID: orcid.org/0000-0003-0959-2930 2 &

- Bedir Tekinerdogan 1

With advanced digitalisation, we can observe a massive increase of user-generated content on the web that provides opinions of people on different subjects. Sentiment analysis is the computational study of people's feelings and opinions towards an entity. The field of sentiment analysis has been the topic of extensive research in the past decades. In this paper, we present the results of a tertiary study, which aims to investigate the current state of the research in this field by synthesizing the results of published secondary studies (i.e., systematic literature review and systematic mapping study) on sentiment analysis. This tertiary study follows the guidelines of systematic literature reviews (SLR) and covers only secondary studies. The outcome of this tertiary study provides a comprehensive overview of the key topics and the different approaches for a variety of tasks in sentiment analysis. Different features, algorithms, and datasets used in sentiment analysis models are mapped. Challenges and open problems are identified, which can help pinpoint areas that require research effort in sentiment analysis. In addition to the tertiary study, we also identified 112 recent deep learning-based sentiment analysis papers and categorized them based on the applied deep learning algorithms. According to this analysis, LSTM and CNN are the most used deep learning algorithms for sentiment analysis.

1 Introduction

Sentiment analysis or opinion mining is the computational study of people's opinions, sentiments, emotions, and attitudes towards entities such as products, services, issues, events, topics, and their attributes (Liu 2015). As such, sentiment analysis can allow tracking the mood of the public about a particular entity to create actionable knowledge. Also, this type of knowledge can be used to understand, explain, and predict social phenomena (Pozzi et al. 2017 ). For the business domain, sentiment analysis plays a vital role in enabling businesses to improve strategy and gain insight into customers' feedback about their products. In today's customer-oriented business culture, understanding the customer is increasingly important (Chagas et al. 2018 ).

The explosive growth of discussion platforms, product review websites, e-commerce, and social media facilitates a continuous stream of thoughts and opinions. This growth makes it challenging for companies to get a better understanding of customers' aggregate opinions and attitudes towards products. The explosion of internet-generated content coupled with techniques like sentiment analysis provides opportunities for marketers to gain intelligence on consumers' attitudes towards their products (Rambocas and Pacheco 2018 ). Extracting sentiments from product reviews helps marketers to reach out to customers who need extra care, which improves customer satisfaction and sales and ultimately benefits businesses (Vyas and Uma 2019 ).

Sentiment analysis is a multidisciplinary field, including psychology, sociology, natural language processing, and machine learning. Recently, the exponentially growing amounts of data and computing power enabled more advanced forms of analytics. Machine learning, therefore, became a dominant tool for sentiment analysis. There is an abundance of scientific literature available on sentiment analysis, and there are also several secondary studies conducted on the topic.

A secondary study can be considered as a review of primary studies that empirically analyze one or more research questions (Nurdiani et al. 2016 ). The use of secondary studies (i.e., systematic reviews) in software engineering was suggested in 2004, and the term “Evidence-based Software Engineering” (EBSE) was coined by Kitchenham et al. ( 2004 ). Nowadays, secondary studies are widely used as a well-established tool in software engineering research (Budgen et al. 2018 ). The following two kinds of secondary studies can be conducted within the scope of EBSE:

Systematic Literature Review (SLR): An SLR study aims to identify relevant primary studies, extract the required information regarding the research questions (RQs), and synthesize the information to respond to these RQs. It follows a well-defined methodology and assesses the literature in an unbiased and repeatable way (Kitchenham and Charters 2007 ).

Systematic Mapping Study (SMS): An SMS study presents an overview of a particular research area by categorizing and mapping the studies based on several dimensions (i.e., facets) (Petersen et al. 2008 ).

SLR and SMS studies differ from traditional review papers (a.k.a., survey articles) because their authors systematically search electronic databases and follow a well-defined protocol to identify the articles. There are also several differences between SLR and SMS studies (Catal and Mishra 2013 ; Kitchenham et al. 2010b ). For instance, while the RQs of SLR studies are very specific, the RQs of SMS studies are general. The search process of the SLR is driven by research questions, but the search process of the SMS is based on the research topic. For the SLR, all relevant papers must be retrieved, and quality assessments of identified articles must be performed; however, requirements for the SMS are less stringent.

When there is a sufficient number of secondary studies on a research topic, a tertiary study can be performed (Kitchenham et al. 2010a ; Nurdiani et al. 2016 ). A tertiary study synthesizes data from secondary studies and provides a comprehensive review of research in a research area (Rios et al. 2018 ). Tertiary studies are used to summarize the existing secondary studies and can be considered a special form of review that uses other secondary studies as primary studies (Raatikainen et al. 2019 ).

Although sentiment analysis has been the topic of some SLR studies, a tertiary study characterizing these systematic reviews has not been performed yet. As such, the aim of our study is to identify and characterize systematic reviews in sentiment analysis and present a consolidated view of the published literature to better understand the limitations and challenges of sentiment analysis. We follow the research methodology guidelines suggested for the tertiary studies (Kitchenham et al. 2010a ).

The objective of this study is thus to better understand the sentiment analysis research area by synthesizing results of these secondary studies, namely SLR and SMS, and providing a thorough overview of the topic. The methodology that we followed applies a systematic literature review to a sample of systematic reviews, and therefore, this type of tertiary study is valuable to determine the potential research areas for further research.

As part of this tertiary study, different models, tasks, features, datasets, and approaches in sentiment analysis have been mapped, and challenges and open problems in this field have been identified. Although tertiary studies have been performed for other topics in several fields such as software engineering and software testing (Raatikainen et al. 2019 ; Nurdiani et al. 2016 ; Verner et al. 2014 ; Cruzes and Dybå, 2011 ; Cadavid et al. 2020 ), this is the first tertiary study on sentiment analysis.

The main contributions of this article are three-fold:

We present the results of the first tertiary study in the literature on sentiment analysis.

We identify systematic review studies of sentiment analysis systematically and explain the consolidated view of these systematic studies.

We support our study with recent survey papers that review deep learning-based sentiment analysis papers and explain the popular lexicons in this field.

The rest of the paper is organized as follows: Sect. 2 provides the background and related work. Section 3 explains the methodology, which was followed in this study. Section 4 presents the results in detail. Section 5 provides the discussion, and Sect. 6 explains the conclusions.

2 Background and related work

Sentiment analysis and opinion mining are often used interchangeably. Some researchers indicate a subtle difference between sentiments and opinions, namely that opinions are more concrete thoughts, whereas sentiments are feelings (Pozzi et al. 2017 ). However, sentiment and opinion are related constructs, and both sentiment and opinion are included when referring to either one. This research adopts sentiment analysis as a general term for both opinion mining and sentiment analysis.

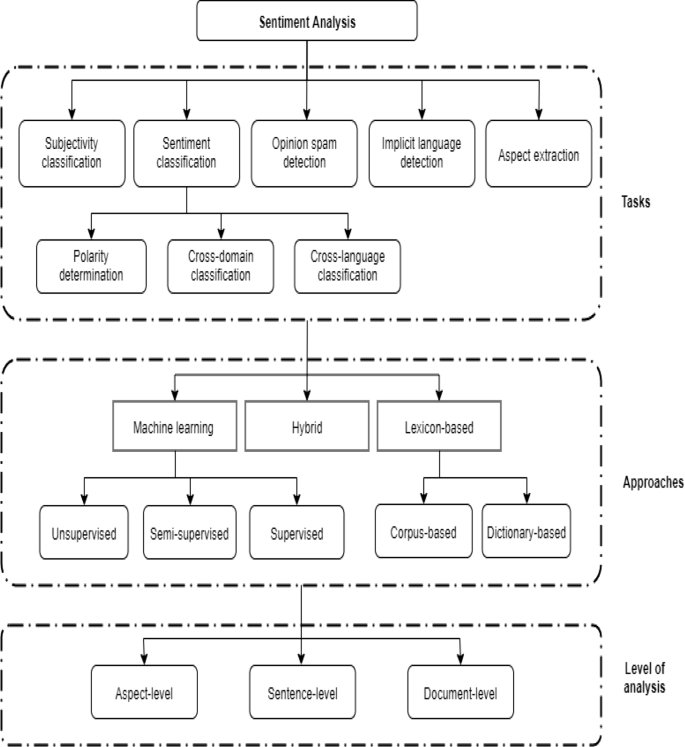

Sentiment analysis is a broad concept that consists of many different tasks, approaches, and types of analysis, which are explained in this section. In addition, an overview of sentiment analysis is represented in Fig. 1 , which is adapted from (Hemmatian and Sohrabi 2017 ; Kumar and Jaiswal 2020 ; Mite-Baidal et al. 2018 ; Pozzi et al. 2017 ; Ravi and Ravi 2015 ). Cambria et al. ( 2017 ) stated that a holistic approach to sentiment analysis is required, and only categorization or classification is not sufficient. They presented the problem as a three-layer structure that includes 15 Natural Language Processing (NLP) problems as follows:

Syntactics layer: Microtext normalization, sentence boundary disambiguation, POS tagging, text chunking, and lemmatization

Semantics layer: Word sense disambiguation, concept extraction, named entity recognition, anaphora resolution, and subjectivity detection

Pragmatics layer: Personality recognition, sarcasm detection, metaphor understanding, aspect extraction, and polarity detection

Fig. 1 Sentiment analysis concept overview

Cambria ( 2016 ) states that approaches for sentiment analysis and affective computing can be divided into the following three categories: knowledge-based techniques, statistical approaches (e.g., machine learning and deep learning approaches), and hybrid techniques that combine the knowledge-based and statistical techniques.

Sentiment analysis models can adopt different pre-processing methods and apply a variety of feature selection methods. While pre-processing means transforming the text into normalized tokens (e.g., removing article words and applying the stemming or lemmatization techniques), feature selection means determining what features will be used as inputs. In the following subsections, related tasks, approaches, and levels of analysis are presented in detail.
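The pre-processing and feature steps described above can be sketched in a few lines of Python. This is a minimal illustration, not a method taken from any of the reviewed studies: the stop-word list, the suffix-stripping `crude_stem` helper, and the bag-of-words feature choice are all assumptions made for the example, and a real pipeline would use an established stemmer or lemmatizer.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stop-word list; real systems use larger ones.
STOP_WORDS = {"a", "an", "the", "is", "are", "was", "of", "and", "to"}


def crude_stem(token: str) -> str:
    """Strip a few common English suffixes (a stand-in for a real stemmer)."""
    for suffix in ("ing", "ed", "es", "s"):
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def preprocess(text: str) -> list:
    """Lowercase, tokenize, drop stop words, and stem the remaining tokens."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return [crude_stem(t) for t in tokens if t not in STOP_WORDS]


def bag_of_words(tokens: list) -> Counter:
    """One simple feature choice: how often each normalized token occurs."""
    return Counter(tokens)


tokens = preprocess("The battery is amazing and the screens looked great")
print(tokens)  # ['battery', 'amaz', 'screen', 'look', 'great']
print(bag_of_words(tokens))
```

The output shows why stemming is a trade-off: "amazing" becomes the non-word "amaz", which is acceptable as a feature key but not as readable text.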

2.1.1 Sentiment classification

One of the most widely known and researched tasks in sentiment analysis is sentiment classification. Polarity determination is a subtask of sentiment classification and is often improperly used when referring to sentiment analysis. However, it is merely a subtask aimed at identifying sentiment polarity in each text document. Traditionally, polarity is classified as either positive or negative (Wang et al. 2014 ). Some studies include a third class called neutral . Cross-domain and cross-language classification are subtasks of sentiment classification that aim to transfer knowledge from a data-rich source domain to a target domain where data and labels are limited. The cross-domain analysis predicts the sentiment of a target domain, with a model (partly) trained on a more data-rich source domain. A popular method is to extract domain invariant features whose distribution in the source domain is close to that of the target domain (Peng et al. 2018 ). The model can be extended with target domain-specific information. The cross-language analysis is practiced in a similar way by training a model on a source language dataset and testing it on a different language where data is limited, for example by translating the target language to the source language before processing (Can et al. 2018 ). Xia et al. ( 2015 ) stated that opinion-level context is beneficial to solve polarity ambiguity of sentiment words and applied the Bayesian model. Word polarity ambiguity is one of the challenges that need to be addressed for sentiment analysis. Vechtomova ( 2017 ) showed that the information retrieval-based model is an alternative to machine learning-based approaches for word polarity disambiguation.

2.1.2 Subjectivity classification

Subjectivity classification is the task of determining whether subjectivity exists in a text (Kasmuri and Basiron 2017 ). The goal of subjectivity classification is to exclude unwanted objective data objects from further processing (Kamal 2013 ). It is often considered the first step in sentiment analysis. Subjectivity classification detects subjective clues, i.e., words that carry emotion or subjective notions like ‘expensive’, ‘easy’, and ‘better’ (Kasmuri and Basiron 2017 ). These clues are used to classify text objects as subjective or objective.
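A clue-based subjectivity filter can be sketched as follows. The three clue words come from the examples above; treating a sentence as subjective as soon as it contains a single clue is an assumption made for illustration, and the function names are hypothetical.

```python
import re

# Clue words taken from the examples in the text; a real lexicon is far larger.
SUBJECTIVE_CLUES = {"expensive", "easy", "better"}


def is_subjective(sentence: str, clues=SUBJECTIVE_CLUES) -> bool:
    """Label a sentence subjective if it contains at least one clue word."""
    tokens = re.findall(r"[a-z]+", sentence.lower())
    return any(token in clues for token in tokens)


def filter_subjective(sentences):
    """Keep only subjective sentences for the later sentiment steps."""
    return [s for s in sentences if is_subjective(s)]


reviews = ["The phone is expensive", "The phone weighs 150 grams"]
print(filter_subjective(reviews))  # ['The phone is expensive']
```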

2.1.3 Opinion spam detection

The growing popularity of e-commerce and review websites has made opinion spam detection a prominent issue in sentiment analysis. Opinion spam, also referred to as false or fake reviews, consists of intelligently written comments that either promote or discredit a product. Opinion spam detection aims to identify three types of features that relate to a fake review: review content, metadata of the review, and real-life knowledge about the product (Ravi and Ravi 2015 ). Review content is often analyzed with machine learning techniques to uncover deception. Metadata includes the star rating, IP address, geo-location, user-id, etc.; however, in many cases, it is not accessible for analysis. The third feature type draws on real-life knowledge. For instance, if a product has a good reputation and an inferior product is suddenly rated superior during some period, the reviews from that period might be suspect.

2.1.4 Implicit language detection

Implicit language refers to humor, sarcasm, and irony. There is vagueness and ambiguity in this form of speech, which is sometimes hard to detect even for humans. However, an implicit meaning can completely flip the polarity of a sentence. Implicit language detection often aims at understanding facts related to an event. For example, in the phrase “I love pain”, pain is a factual word with a negative polarity load. The contradiction between the factual word ‘pain’ and the subjective word ‘love’ can indicate sarcasm, irony, or humor. More traditional methods for implicit language detection include exploring clues such as emoticons, expressions for laughter, and heavy punctuation mark usage (Filatova 2012 ).
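Both kinds of cue mentioned above can be approximated with simple heuristics. The sketch below is illustrative only: the two word lists are hypothetical mini-lexicons, the regular expressions cover just a handful of emoticons and laughter tokens, and a polarity clash is at best a weak signal of sarcasm rather than a detector.

```python
import re

# Hypothetical mini-lexicons, assumed for this example.
POSITIVE_SUBJECTIVE = {"love", "enjoy", "adore"}
NEGATIVE_FACTUAL = {"pain", "traffic", "delays"}


def has_polarity_clash(text: str) -> bool:
    """True if a positive subjective word co-occurs with a negative factual word."""
    tokens = set(re.findall(r"[a-z]+", text.lower()))
    return bool(tokens & POSITIVE_SUBJECTIVE) and bool(tokens & NEGATIVE_FACTUAL)


def surface_cues(text: str) -> dict:
    """Traditional surface clues: emoticons, laughter, heavy punctuation."""
    lowered = text.lower()
    return {
        "emoticon": bool(re.search(r"[:;]-?[)D(P]", text)),
        "laughter": bool(re.search(r"\b(?:ha){2,}h?\b|\blol\b", lowered)),
        "heavy_punctuation": bool(re.search(r"[!?]{2,}", text)),
    }


print(has_polarity_clash("I love pain"))   # True
print(has_polarity_clash("I love music"))  # False
print(surface_cues("Great, another delay!!! haha :)"))
```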

2.1.5 Aspect extraction

Aspect extraction refers to retrieving the target entity and aspects of the target entity in the document. The target entity can be a product, person, event, organization, etc. (Akshi Kumar and Sebastian 2012 ). People's opinions on various parts of a product need to be identified for fine-grained sentiment analysis (Ravi and Ravi 2015 ). Aspect extraction is especially important in sentiment analysis of social media and blogs that often do not have predefined topics.

Multiple methods exist for aspect extraction. The first and most traditional method is frequency-based analysis. This method finds frequently used nouns or compound nouns (POS tags), which are likely to be aspects. A rule of thumb that is often used is that if the (compound) noun occurs in at least 1% of the sentences, it is considered an aspect. This straightforward method turns out to be quite powerful (Schouten and Frasincar 2016 ). However, there are some drawbacks to this method (e.g., not all nouns are referring to aspects).
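The frequency-based rule of thumb can be sketched as follows. The example assumes that sentences arrive already POS-tagged as lists of `(token, tag)` pairs with Penn Treebank-style noun tags (`NN`, `NNS`, ...); the tagging step itself is outside the sketch, and compound nouns are not merged here for brevity.

```python
from collections import Counter


def frequent_aspects(tagged_sentences, min_share=0.01):
    """Return nouns that occur in at least `min_share` of the sentences."""
    sentence_counts = Counter()
    for sentence in tagged_sentences:
        # Use a set so a noun is counted once per sentence, not once per mention.
        nouns = {token.lower() for token, tag in sentence if tag.startswith("NN")}
        sentence_counts.update(nouns)
    total = len(tagged_sentences)
    return {noun for noun, count in sentence_counts.items() if count / total >= min_share}


corpus = [
    [("The", "DT"), ("battery", "NN"), ("lasts", "VBZ")],
    [("Great", "JJ"), ("battery", "NN"), ("life", "NN")],
    [("The", "DT"), ("screen", "NN"), ("cracked", "VBD")],
]
# With only three sentences the 1% threshold keeps every noun,
# so a stricter share is used to make the filtering visible.
print(frequent_aspects(corpus, min_share=0.5))  # {'battery'}
```

The drawback noted above is visible even here: any frequent noun passes the threshold, whether or not it actually names an aspect of the product.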

Syntax-based methods find aspects by means of the syntactic relations in which they occur. A simple example is identifying aspects that are preceded by a modifying adjective that is a sentiment word. This method allows low-frequency aspects to be identified. The drawback of this method is that many relations need to be found for complete coverage, which requires knowledge of sentiment words. Extra aspects can be found if more sentiment words that serve as adjectives can be identified. Qiu et al. ( 2009 ) propose a syntax-based algorithm that works in both directions: it identifies sentiment words for known aspects and aspects for known sentiment words.
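The two-way idea can be illustrated with a heavily simplified sketch. It is not the algorithm of Qiu et al.: it assumes pre-tagged `(token, tag)` input and uses a single relation, an adjective (`JJ`) immediately preceding a noun, whereas a real syntax-based method would rely on a dependency parser and several relation types. The function name `propagate` is hypothetical.

```python
def propagate(tagged_sentences, seed_sentiment_words):
    """Grow aspect and sentiment-word sets from adjective-noun pairs until stable."""
    sentiment_words = {w.lower() for w in seed_sentiment_words}
    aspects = set()
    changed = True
    while changed:
        changed = False
        for sentence in tagged_sentences:
            # Look at every pair of adjacent tokens.
            for (word1, tag1), (word2, tag2) in zip(sentence, sentence[1:]):
                if tag1 != "JJ" or not tag2.startswith("NN"):
                    continue
                adjective, noun = word1.lower(), word2.lower()
                # Known sentiment word -> the noun it modifies becomes an aspect.
                if adjective in sentiment_words and noun not in aspects:
                    aspects.add(noun)
                    changed = True
                # Known aspect -> the adjective modifying it becomes a sentiment word.
                if noun in aspects and adjective not in sentiment_words:
                    sentiment_words.add(adjective)
                    changed = True
    return aspects, sentiment_words


corpus = [
    [("great", "JJ"), ("screen", "NN")],
    [("sharp", "JJ"), ("screen", "NN")],
    [("sharp", "JJ"), ("camera", "NN")],
]
aspects, words = propagate(corpus, {"great"})
print(sorted(aspects))  # ['camera', 'screen']
print(sorted(words))    # ['great', 'sharp']
```

Starting from the single seed "great", the sketch reaches "camera" only through the intermediate discovery of "sharp", which shows how low-frequency aspects can be found without a frequency threshold.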

2.2 Approaches

2.2.1 Machine learning-based approaches

Machine learning approaches for sentiment analysis tasks can be divided into three categories: unsupervised learning, semi-supervised learning, and supervised learning.

The unsupervised learning methods group unlabelled data into clusters that are similar to each other. For example, the algorithm can consider data as similar based on common words or word pairs in the document (Li and Liu 2014 ).
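Grouping by common words can be sketched with Jaccard similarity and a greedy single pass. Both choices are assumptions made for illustration (they are not attributed to Li and Liu), as are the threshold value and the use of the first document of each cluster as its representative.

```python
def jaccard(a: set, b: set) -> float:
    """Share of words two documents have in common."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def cluster_documents(docs, threshold=0.3):
    """Greedy clustering: join the first cluster whose representative is similar enough."""
    word_sets = [set(doc.lower().split()) for doc in docs]
    clusters = []  # each cluster is a list of document indices
    for index, words in enumerate(word_sets):
        for cluster in clusters:
            representative = word_sets[cluster[0]]
            if jaccard(words, representative) >= threshold:
                cluster.append(index)
                break
        else:
            # No existing cluster was similar enough, so start a new one.
            clusters.append([index])
    return clusters


docs = [
    "great battery life",
    "battery life great value",
    "terrible customer service",
    "customer service was terrible",
]
print(cluster_documents(docs))  # [[0, 1], [2, 3]]
```

No labels are used at any point; whether a resulting cluster corresponds to positive or negative sentiment still has to be determined afterwards.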

Semi-supervised learning uses both labeled and unlabelled data in the training process (da Silva et al. 2016a, b). A set of unlabelled data is complemented with some (often limited) examples of labeled data when building a classifier. This technique can yield decent accuracy and requires less human effort than supervised learning. In cross-domain and cross-language classification, domain- or language-invariant features can be extracted with the help of unlabelled data, while the classifier is fine-tuned with labeled target data (Peng et al. 2018). Semi-supervised learning is especially popular for Twitter sentiment analysis, where large sets of unlabelled data are available (da Silva et al. 2016a, b). Hussain and Cambria (2018) compared the computational complexity of several semi-supervised learning methods and presented a new semi-supervised model based on biased SVM (bSVM) and biased Regularized Least Squares (bRLS). Wu et al. (2019) developed a semi-supervised Dimensional Sentiment Analysis (DSA) model using the variational autoencoder algorithm. DSA calculates the sentiment score of texts along several dimensions, such as dominance, valence, and arousal. Xu and Tan (2019) proposed the target-oriented semi-supervised sequential generative model (TSSGM) for target-oriented aspect-based sentiment analysis and showed that this approach outperforms two semi-supervised learning methods. Han et al. (2019) developed a semi-supervised model using dynamic thresholding and multiple classifiers for sentiment analysis. They evaluated their model on the Large Movie Review dataset and showed that it provides higher performance than the other models. Duan et al. (2020) proposed the Generative Emotion Model with Categorized Words (GEM-CW) for stock message sentiment classification and demonstrated that this model is effective. Gupta et al. (2018) investigated semi-supervised approaches for low-resource sentiment classification and showed that their proposed methods improve model performance over supervised learning models.
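One common way to combine a few labeled examples with a larger unlabelled pool is self-training. The sketch below is a generic illustration of that idea, not a reproduction of any of the models cited above; the word-vote classifier, the confidence threshold of 0.6, and all names are assumptions chosen to keep the example short.

```python
from collections import Counter


def train(labeled):
    """Count how often each word appears under each label."""
    counts = {"pos": Counter(), "neg": Counter()}
    for text, label in labeled:
        counts[label].update(text.lower().split())
    return counts


def predict(counts, text):
    """Return (label, confidence) from add-one smoothed word votes."""
    pos = neg = 1.0
    for word in text.lower().split():
        pos += counts["pos"][word]
        neg += counts["neg"][word]
    label = "pos" if pos >= neg else "neg"
    return label, max(pos, neg) / (pos + neg)


def self_train(labeled, unlabeled, min_conf=0.6, max_rounds=5):
    """Repeatedly pseudo-label confident unlabelled texts and retrain.
    min_conf and max_rounds are illustrative assumptions."""
    labeled, pool = list(labeled), list(unlabeled)
    for _ in range(max_rounds):
        counts = train(labeled)
        confident, rest = [], []
        for text in pool:
            label, conf = predict(counts, text)
            (confident if conf >= min_conf else rest).append((text, label))
        if not confident:
            break
        labeled.extend(confident)
        pool = [text for text, _ in rest]
    return train(labeled), pool


seed_examples = [("good phone", "pos"), ("bad phone", "neg")]
unlabelled = ["good camera", "bad battery", "camera excellent", "battery awful"]
model, remaining = self_train(seed_examples, unlabelled)
print(predict(model, "awful"), remaining)
```

With only two labeled examples, the word "awful" is unknown to the initial classifier; it becomes informative only after "bad battery" and then "battery awful" have been pseudo-labeled in successive rounds.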

The most widely known machine learning method is supervised learning. This approach trains a model with labeled source data. The trained model can subsequently predict outputs for new unlabelled input data. In most cases, supervised learning outperforms unsupervised and semi-supervised learning approaches, but its dependency on labeled training data can require considerable human effort and is therefore sometimes inefficient (Hemmatian and Sohrabi 2017).
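To make the train-then-predict cycle concrete, the following sketch fits a multinomial Naive Bayes classifier with add-one smoothing on a handful of labeled sentences. Naive Bayes is chosen here only as a familiar supervised baseline; the toy training data and function names are assumptions for illustration.

```python
import math
from collections import Counter


def fit_naive_bayes(samples):
    """samples: list of (text, label) pairs. Returns the fitted counts."""
    word_counts, doc_counts, vocab = {}, Counter(), set()
    for text, label in samples:
        words = text.lower().split()
        word_counts.setdefault(label, Counter()).update(words)
        doc_counts[label] += 1
        vocab.update(words)
    return {"words": word_counts, "docs": doc_counts, "vocab": vocab}


def predict_nb(model, text):
    """Pick the label with the highest log prior plus log likelihood."""
    total_docs = sum(model["docs"].values())
    vocab_size = len(model["vocab"])
    best_label, best_score = None, -math.inf
    for label, n_docs in model["docs"].items():
        counts = model["words"][label]
        total_words = sum(counts.values())
        score = math.log(n_docs / total_docs)
        for word in text.lower().split():
            # Add-one (Laplace) smoothing keeps unseen words from zeroing the score.
            score += math.log((counts[word] + 1) / (total_words + vocab_size))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


training_data = [  # toy labeled data, assumed for illustration
    ("great acting and a great plot", "pos"),
    ("I loved this film", "pos"),
    ("boring plot and terrible acting", "neg"),
    ("I hated this film", "neg"),
]
nb_model = fit_naive_bayes(training_data)
print(predict_nb(nb_model, "a great film"))
print(predict_nb(nb_model, "terrible boring film"))
```

Every training sentence needs a human-assigned label, which is the cost that the paragraph above refers to.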

Machine learning methods are increasingly popular for aspect extraction. The most commonly used approach for aspect extraction is topic modeling, an unsupervised method that assumes any document contains a certain number of hidden topics (Hemmatian and Sohrabi 2017). The Latent Dirichlet Allocation (LDA) algorithm, which has many variations, is a popular topic modeling algorithm (Nguyen and Shirai 2015) that allows observations to be explained by unsupervised grouping of similar data. LDA outputs a set of topics for a text document and attributes each word in the document to one of the identified topics. The drawback of machine learning methods is that they require large amounts of labeled data.
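The word-to-topic attribution performed by LDA can be sketched with a small collapsed Gibbs sampler. This is one standard inference scheme for LDA and only an illustrative assumption here; the cited works may use other variants. The hyperparameters `alpha` and `beta`, the iteration count, and the toy documents are likewise assumed.

```python
import random


def lda_gibbs(docs, n_topics=2, alpha=0.1, beta=0.01, n_iter=200, seed=0):
    """docs: list of token lists. Returns per-token topic assignments and
    per-topic word counts after collapsed Gibbs sampling."""
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    doc_topic = [[0] * n_topics for _ in docs]                  # tokens per (doc, topic)
    topic_word = [dict.fromkeys(vocab, 0) for _ in range(n_topics)]
    topic_total = [0] * n_topics                                # tokens per topic
    assign = []
    for d, doc in enumerate(docs):                              # random initialisation
        z_doc = []
        for w in doc:
            k = rng.randrange(n_topics)
            z_doc.append(k)
            doc_topic[d][k] += 1
            topic_word[k][w] += 1
            topic_total[k] += 1
        assign.append(z_doc)
    for _ in range(n_iter):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                k = assign[d][i]                                # remove current assignment
                doc_topic[d][k] -= 1
                topic_word[k][w] -= 1
                topic_total[k] -= 1
                weights = [                                     # conditional topic weights
                    (doc_topic[d][t] + alpha)
                    * (topic_word[t][w] + beta)
                    / (topic_total[t] + len(vocab) * beta)
                    for t in range(n_topics)
                ]
                k = rng.choices(range(n_topics), weights=weights)[0]
                assign[d][i] = k                                # record the new topic
                doc_topic[d][k] += 1
                topic_word[k][w] += 1
                topic_total[k] += 1
    return assign, topic_word


toy_docs = [
    "battery charge battery power".split(),
    "screen display screen pixel".split(),
    "battery power charge".split(),
    "display pixel screen".split(),
]
assignments, topic_words = lda_gibbs(toy_docs)
print(assignments)
```

Each token ends up with exactly one topic index, which mirrors the description above; for aspect extraction, the most frequent words of each topic would then be read as candidate aspects.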

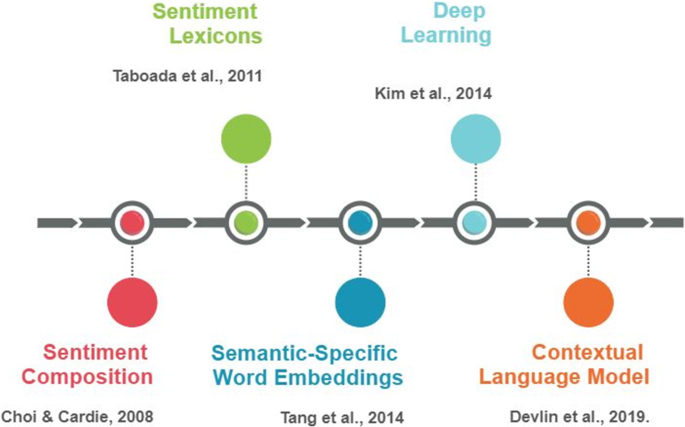

2.2.2 Deep learning-based approaches

Deep learning is a sub-branch of machine learning that uses deep neural networks. Recently, deep learning algorithms have been widely applied for sentiment analysis. In this section, first, we discuss the articles that present an overview of papers that applied deep learning for sentiment analysis. These articles are neither SLR nor SMS papers. Instead, they are either traditional review (a.k.a., survey) articles or comparative assessment papers that explain the existing deep learning-based approaches in addition to the experimental analysis. Later, we also present some of the deep learning-based models used in sentiment analysis papers.

In Table 1, we present the survey papers that analyzed deep learning-based sentiment analysis papers. In this table, we also show the number of papers investigated in these survey papers.

Dang et al. (2020) presented a summary of 32 deep learning-based sentiment analysis papers and analyzed the performance of Deep Neural Networks (DNN), Convolutional Neural Networks (CNN), and Recurrent Neural Networks (RNN) on eight datasets. They selected these algorithms because they were the most widely used according to their analysis of the 32 papers. They used both word embedding and term frequency-inverse document frequency (TF-IDF) to prepare inputs for the classification algorithms and reported that the RNN-based model using word embedding achieved the best performance among the algorithms. However, the processing time of the RNN-based model was ten times longer than that of the CNN-based one. In addition, they reported that the following deep learning algorithms were used in the 32 papers: CNN, Long Short-Term Memory (LSTM) (tree-LSTM, discourse-LSTM, coattention-LSTM, bi-LSTM), Gated Recurrent Units (GRU), RNN, Coattention-MemNet, Latent Rating Neural Network (LRNN), Simple Recurrent Networks (SRN), and Recurrent Neural Tensor Network (RNTN).
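For readers unfamiliar with TF-IDF, the sketch below computes one common variant: relative term frequency multiplied by the logarithm of the inverse document frequency. Several TF-IDF formulations exist, and the exact one used by Dang et al. (2020) is not stated here, so this choice and the toy documents are assumptions.

```python
import math
from collections import Counter


def tf_idf(docs):
    """Return one {word: weight} dict per document.
    weight = (count / document length) * log(N / document frequency)."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    doc_freq = Counter(w for words in tokenized for w in set(words))
    vectors = []
    for words in tokenized:
        counts = Counter(words)
        vectors.append({
            w: (c / len(words)) * math.log(n_docs / doc_freq[w])
            for w, c in counts.items()
        })
    return vectors


reviews = ["good good movie", "bad movie"]  # toy corpus, assumed for illustration
weights = tf_idf(reviews)
print(weights[0])
```

In this variant, a word that occurs in every document (here "movie") receives a weight of zero, whereas a word concentrated in one document (here "good") is weighted up.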

Yadav and Vishwakarma (2019) reviewed 130 research papers that apply deep learning techniques in sentiment analysis. They identified the following deep learning methods used for sentiment analysis: CNN, Recursive Neural Network (Rec NN), RNN (LSTM and GRU), Deep Belief Networks (DBN), Attention-based Network, Bi-RNN, and Capsule Network. They reported that LSTM provides better results and that the use of deep learning approaches for sentiment analysis is promising. However, they stated that these approaches require a huge amount of data and that there is a lack of training datasets.

Zhang et al. (2018) published a survey article on the application of deep learning methods for sentiment analysis. They discussed several papers, each of which addresses one of the following levels: document-level, sentence-level, and aspect-level sentiment classification. The applied algorithms per analysis level are listed as follows:

Document-level sentiment classification: Artificial Neural Networks (ANN), Stacked Denoising Autoencoder (SDA), Denoising Autoencoder, CNN, LSTM, GRU, Memory Network, and GRU-based Encoder

Sentence-level sentiment classification: CNN, RNN, Semi-supervised Recursive Autoencoders Network (RAE), Recursive Neural Network, Recursive Neural Tensor Network, Dynamic CNN, LSTM, CNN-LSTM, Bi-LSTM, and Recurrent Random Walk Network

Aspect-level sentiment classification: Adaptive Recursive Neural Network, LSTM, Bi-LSTM, Attention-based LSTM, Memory Network, Interactive Attention Network, Recurrent Attention Network, and Dyadic Memory Network

Rojas‐Barahona (2016) presented an overview of deep learning approaches used for sentiment analysis and divided the techniques into the following categories:

Non-Recursive Neural Networks: RNN (variant: Bi-RNN), LSTM (variant: Bi-LSTM), and CNN (variants: CNN-Multichannel, CNN-non-static, Dynamic CNN)

Recursive Neural Networks: Recursive Autoencoders and Constituency Tree Recursive Neural Networks

Combination of Non-Recursive and Recursive Methods: Tree-Long Short-Term Memory (Tree-LSTM) and Deep Recursive Neural Networks (Deep RsNN)

For the movie reviews dataset, Rojas‐Barahona (2016) showed that the Dynamic CNN model provides the best performance. For the Sentiment TreeBank dataset, the Constituency Tree-LSTM, which is a Recursive Neural Network, outperforms all the other algorithms.

Habimana et al. (2020a) reviewed papers that applied deep learning algorithms for sentiment analysis and also performed several experiments with the specified algorithms on different datasets. They reported that dynamic sentiment analysis, sentiment analysis for heterogeneous information, and language structure are the main challenges for the sentiment analysis research field. They categorized the techniques used in the papers by analysis level, as follows:

Document-level Sentiment Analysis: CNN-based models, RNN with attention-based models, RNN with the user and product attention-based models, Adversarial Network Models, and Hybrid Models

Sentence-Level Sentiment Classification: Unsupervised Pre-Trained Networks (UPN), CNN, Recurrent Neural Networks, Deep Reinforcement Learning (DRL), RNN, RNN with cognition attention-based models

Aspect-based Sentiment Analysis: Attention-based models with aspect information, attention-based models with the aspect context, RNN with attention memory model, RNN with commonsense knowledge model, CNN-based model, and Hybrid model

Do et al. (2019) presented an overview of over 40 deep learning approaches used for aspect-based sentiment analysis (ABSA). They grouped the papers into the following categories: CNN, RNN, Recursive Neural Network, and Hybrid methods. They also presented the advantages, disadvantages, and implications of these approaches for ABSA. They concluded that deep learning and ABSA are still in the early stages and that there are four main challenges in this field, namely domain adaptation, multi-lingual application, technical requirements (labeled data and computational resources and time), and linguistic complications.

Minaee et al. (2020) reviewed more than 150 deep learning-based text classification studies and presented their strengths and contributions. Twenty-two of these studies proposed approaches for sentiment analysis. They provided more than 40 popular text classification datasets and showed the performance of some deep learning models on popular datasets. Since they did not focus only on sentiment analysis problems, they also explained models used for other tasks such as news categorization, topic analysis, question answering (QA), and natural language inference. They explained the following deep learning models in their paper: Feed-forward neural networks, RNN-based models, CNN-based models, Capsule Neural Networks, Models with attention mechanism, Memory augmented networks, Transformers, Graph Neural Networks, Siamese Neural Networks, Hybrid models, Autoencoders, Adversarial training, and Reinforcement learning. The challenges reported in this study are new datasets for multi-lingual text classification, interpretable deep learning models, and memory-efficient models. They concluded that the use of deep learning in text classification improves the performance of the models.

Some of the highly cited deep learning-based sentiment analysis papers are shown in Table 2.

Kim (2014) performed several experiments with the CNN algorithm for sentence classification and showed that, even with little parameter tuning, a CNN model with only one convolutional layer performs better than the state-of-the-art sentiment analysis models.
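The core operation of a single convolutional layer over a sentence can be sketched as one filter sliding over windows of consecutive word vectors, followed by max-over-time pooling. The sketch below shows only this forward pass with hand-set toy weights; the two-dimensional vectors, the filter, and the bias are assumptions for illustration and are not Kim's (2014) trained model, which uses many learned filters and a final classification layer.

```python
def conv_max_pool(embeddings, filt, bias=0.0):
    """Apply one convolution filter to every window of len(filt) consecutive
    word vectors, pass the result through ReLU, and return the maximum
    activation over all window positions (max-over-time pooling)."""
    width = len(filt)
    activations = []
    for start in range(len(embeddings) - width + 1):
        window = embeddings[start:start + width]
        total = bias + sum(
            f * x
            for f_row, x_row in zip(filt, window)
            for f, x in zip(f_row, x_row)
        )
        activations.append(max(0.0, total))
    # Sentences shorter than the filter width produce no window at all.
    return max(activations, default=0.0)


# Toy 2-dimensional word vectors (assumed): all other words map to zeros.
TOY_VECTORS = {"not": [1.0, 0.0], "good": [0.0, 1.0]}


def embed(sentence):
    return [TOY_VECTORS.get(w, [0.0, 0.0]) for w in sentence.lower().split()]


# A width-2 filter hand-set to respond to the bigram "not good".
NOT_GOOD_FILTER = [[1.0, 0.0], [0.0, 1.0]]

negated = conv_max_pool(embed("the movie was not good"), NOT_GOOD_FILTER, bias=-1.0)
plain = conv_max_pool(embed("the movie was good"), NOT_GOOD_FILTER, bias=-1.0)
print(negated, plain)
```

Because pooling keeps only the strongest response, the feature fires wherever the bigram occurs in the sentence, which is one reason a single convolutional layer can already capture useful local patterns.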

Wang et al. (2016) developed an attention-based LSTM approach that can learn aspect embeddings. These aspect embeddings are used to compute the attention weights. Their models achieved state-of-the-art performance on the SemEval 2014 dataset. Similarly, Pergola et al. (2019) proposed a topic-dependent attention model for sentiment classification and showed that the use of a recurrent unit and multi-task learning provides better representations for accurate sentiment analysis.
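How an aspect embedding can steer attention is sketched below: each hidden state is scored against the aspect vector, the scores are normalised with a softmax, and the weighted sum of hidden states forms the sentence representation. Plain dot-product scoring is used here as a simplifying assumption; it is not the exact scoring function of Wang et al. (2016), and the toy vectors are invented for illustration.

```python
import math


def aspect_attention(hidden_states, aspect):
    """Return (attention weights, context vector) for one sentence.
    hidden_states: list of equal-length vectors, one per word.
    aspect: vector of the same length representing the aspect."""
    scores = [sum(h * a for h, a in zip(state, aspect)) for state in hidden_states]
    peak = max(scores)                       # subtract max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(hidden_states[0])
    context = [
        sum(w * state[i] for w, state in zip(weights, hidden_states))
        for i in range(dim)
    ]
    return weights, context


# Toy hidden states for three words and a toy aspect vector (assumed values).
states = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
aspect_vec = [0.0, 2.0]
attn, ctx = aspect_attention(states, aspect_vec)
print(attn, ctx)
```

The word whose hidden state aligns best with the aspect vector receives the largest weight, so changing the aspect changes which parts of the sentence dominate the representation.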

Chen et al. (2017) developed the Recurrent Attention on Memory (RAM) model, which combines multiple attention mechanisms with a Recurrent Neural Network. They showed that their model outperforms other state-of-the-art techniques on four datasets, namely SemEval 2014 (two datasets), a Twitter dataset, and a Chinese news comment dataset.

Ma et al. (2018) incorporated a hierarchical attention mechanism into the LSTM network and also extended the LSTM cell to incorporate commonsense knowledge. They demonstrated that the combination of this new LSTM model, called Sentic LSTM, and the attention architecture outperforms the other models for targeted aspect-based sentiment analysis.

Chen et al. (2016) developed a hierarchical LSTM model that incorporates user and product information via different levels of attention. They showed that their model achieves significant improvements over models without user and product information on the IMDB, Yelp2013, and Yelp2014 datasets.

Wehrmann et al. (2017) proposed a language-agnostic sentiment analysis model based on the CNN algorithm that does not require any translation. They demonstrated that their model outperforms other models on a dataset that includes tweets in four languages, namely English, German, Spanish, and Portuguese. The dataset consists of 1.6 million annotated tweets (i.e., positive, negative, and neutral) from 13 European languages.

Ebrahimi et al. ( 2017 ) presented the challenges of building a sentiment analysis platform and focused on the 2016 US presidential election. They reported that they reached the best accuracy using the CNN algorithm, and the content-related challenges were hashtags, links, and sarcasm.

Poria et al. ( 2018 ) investigated three deep learning-based architectures for multimodal sentiment analysis and created a baseline based on state-of-the-art models.

Xu et al. (2019) developed an improved word representation approach. They fed the weighted word vectors into a Bi-LSTM model to obtain the comment text representation and applied a feedforward neural network classifier to predict the comment sentiment tendency.

Majumder et al. ( 2019 ) proposed a GRU-based Neural Network that can be trained on sarcasm or sentiment datasets. They demonstrated that multitask learning-based approaches provide better performance than standalone classifiers developed on sarcasm and sentiment datasets.

After investigating the above-mentioned survey and highly cited articles, we searched Google Scholar using our search criteria (i.e., “deep learning” and “sentiment analysis”) to find recent state-of-the-art deep learning-based studies published in 2020. We retrieved 112 deep learning-based sentiment analysis papers published in 2020 and extracted the applied deep learning algorithms from these papers. In the Appendix (Table 16), we present these recent deep learning-based sentiment analysis papers. In Table 3, we show the distribution of the deep learning algorithms applied in these 112 recent papers.