Research methods--quantitative, qualitative, and more: overview.

- Quantitative Research

- Qualitative Research

- Data Science Methods (Machine Learning, AI, Big Data)

- Text Mining and Computational Text Analysis

- Evidence Synthesis/Systematic Reviews

- Get Data, Get Help!

About Research Methods

This guide provides an overview of research methods, how to choose and use them, and supports and resources at UC Berkeley.

As Patten and Newhart note in the book Understanding Research Methods, "Research methods are the building blocks of the scientific enterprise. They are the 'how' for building systematic knowledge. The accumulation of knowledge through research is by its nature a collective endeavor. Each well-designed study provides evidence that may support, amend, refute, or deepen the understanding of existing knowledge... Decisions are important throughout the practice of research and are designed to help researchers collect evidence that includes the full spectrum of the phenomenon under study, to maintain logical rules, and to mitigate or account for possible sources of bias. In many ways, learning research methods is learning how to see and make these decisions."

The choice of methods varies by discipline, by the kind of phenomenon being studied and the data being used to study it, by the technology available, and more. This guide is an introduction, but if you don't see what you need here, always contact your subject librarian, and/or take a look to see if there's a library research guide that will answer your question.

Suggestions for changes and additions to this guide are welcome!

START HERE: SAGE Research Methods

Without question, the most comprehensive resource available from the library is SAGE Research Methods. HERE IS THE ONLINE GUIDE to this one-stop shopping collection, and some helpful links are below:

- SAGE Research Methods

- Little Green Books (Quantitative Methods)

- Little Blue Books (Qualitative Methods)

- Dictionaries and Encyclopedias

- Case studies of real research projects

- Sample datasets for hands-on practice

- Streaming video--see methods come to life

- Methodspace--a community for researchers

- SAGE Research Methods Course Mapping

Library Data Services at UC Berkeley

Library Data Services Program and Digital Scholarship Services

The LDSP offers a variety of services and tools! From this link, check out pages for each of the following topics: discovering data, managing data, collecting data, GIS data, text data mining, publishing data, digital scholarship, open science, and the Research Data Management Program.

Be sure also to check out the visual guide to where to seek assistance on campus with any research question you may have!

Library GIS Services

Other Data Services at Berkeley

- D-Lab: Supports Berkeley faculty, staff, and graduate students with research in data-intensive social science, including a wide range of training and workshop offerings.

- Dryad: A simple self-service tool for researchers to use in publishing their datasets. It provides tools for the effective publication of and access to research data.

- Geospatial Innovation Facility (GIF): Provides leadership and training across a broad array of integrated mapping technologies on campus.

- Research Data Management: A UC Berkeley guide and consulting service for research data management issues.

General Research Methods Resources

Here are some general resources for assistance:

- Assistance from ICPSR (must create an account to access): Getting Help with Data, and Resources for Students

- Wiley Stats Ref for background information on statistics topics

- Survey Documentation and Analysis (SDA): a program for easy web-based analysis of survey data.

Consultants

- D-Lab/Data Science Discovery Consultants: Request help with your research project from peer consultants.

- Research data (RDM) consulting: Meet with RDM consultants before designing the data security, storage, and sharing aspects of your qualitative project.

- Statistics Department Consulting Services: A service in which advanced graduate students, under faculty supervision, are available to consult during specified hours in the Fall and Spring semesters.

Related Resources

- IRB / CPHS: Qualitative research projects with human subjects often require that you go through an ethics review.

- OURS (Office of Undergraduate Research and Scholarships): OURS supports undergraduates who want to embark on research projects and assistantships. In particular, check out their "Getting Started in Research" workshops.

- Sponsored Projects: Sponsored Projects works with researchers applying for major external grants.

- Last Updated: Apr 25, 2024 11:09 AM

- URL: https://guides.lib.berkeley.edu/researchmethods

Module 2: Research Methods in Learning and Behavior

Module Overview

Module 2 will cover the critical issue of how research is conducted in the experimental analysis of behavior. To do this, we will discuss the scientific method, research designs, the apparatus we use, how we collect data, and dependent measures used to show that learning has occurred. We also will break down the structure of a research article and make a case for the use of both humans and animals in learning and behavior research.

Module Outline

2.1. The Scientific Method

2.2. Research Designs Used in the Experimental Analysis of Behavior

2.3. Dependent Measures

2.4. Animal and Human Research

Module Learning Outcomes

- Describe the steps in the scientific method and how this process is utilized in the experimental analysis of behavior.

- Describe specific research designs, data collection methods, and apparatus used in the experimental analysis of behavior.

- Understand the basic structure of a research article.

- List and describe dependent measures used in learning experiments.

- Explain why animals are used in learning research.

- Describe safeguards to protect human beings in scientific research.

Section Learning Objectives

- Define scientific method.

- Outline and describe the steps of the scientific method, defining all key terms.

- Define functional relationship and explain how it produces a contingency.

- Explain the concept of a behavioral definition.

- Distinguish between stimuli and responses and define related concepts.

- Distinguish the types of contiguity, and distinguish contiguity from contingency.

- Describe the typical phases in learning research.

2.1.1. The Steps of The Scientific Method

In Module 1, we learned that psychology was the scientific study of behavior and mental processes. We will spend quite a lot of time on the behavior and mental processes part, but before we proceed, it is prudent to elaborate more on what makes psychology scientific. It is safe to say that most people not within our discipline or a sister science would be surprised to learn that psychology utilizes the scientific method at all.

So what is the scientific method? Simply, the scientific method is a systematic method for gathering knowledge about the world around us. The key word here is that it is systematic, meaning there is a set way to use it. What is that way? Well, depending on what source you look at it can include a varying number of steps. For our purposes, the following will be used:

Table 2.1: The Steps of the Scientific Method

2.1.2. Making Cause and Effect Statements in the Experimental Analysis of Behavior

As you have seen, scientists seek to make causal statements about what they are studying. In the study of learning and behavior, we call this a functional relationship. This occurs when we can say a target behavior has changed due to the use of a procedure/treatment/strategy and this relationship has been replicated at least one other time. A contingency is when one thing occurs due to another. Think of it as an if-then statement. If I do X then Y will happen. We can also say that when we experience Y that X preceded it. Concerning a functional relationship, if I introduce a treatment, then the animal responds as such or if that animal pushes the lever, then she receives a food pellet.

To help arrive at a functional relationship, we have to understand what we are studying. In science, we say we operationally define our variables. In the realm of learning, we call this a behavioral definition, or a precise, objective, unambiguous description of the behavior. The key is that we must state our behavioral definition with enough precision that anyone can read it and be able to accurately measure the behavior when it occurs.

2.1.3. Frequently Used Terms in the Experimental Analysis of Behavior

In the experimental analysis of behavior, we frequently talk about an animal or person experiencing a trial. Simply, a trial is one instance or attempt at learning. Each time a rat is placed in a maze this is considered one trial. We can then determine if learning is occurring using different dependent measures described in Section 2.3. If a child is asked to complete a math problem and then a second is introduced, and then a third, each practice problem represents a trial.

As you saw in Module 1, behaviorism is the science of stimuli and responses. What do these terms indicate? Stimuli are the environmental events that have the potential to trigger behavior, called a response . If your significant other does something nice for you and you say, ‘Thank you,’ the kind act is the stimulus which leads to your response of thanking him/her. Stimuli have to be sensed to bring about a response. This occurs through the five senses — vision, hearing, touch, smell, and taste. Stimuli can take on two forms. Appetitive stimuli are those that an organism desires and seeks out while aversive stimuli are readily avoided. An example of the former would be food or water and the latter is exemplified by extremes of temperature, shock, or a spanking by a parent.

As you will come to see in Module 6, we can make a stimulus more desirable or undesirable, called an establishing operation , or make it less desirable or undesirable, called an abolishing operation . Such techniques are called motivating operations . Food may be seen as more attractive, desirable, or pleasant if we are hungry but less desirable (or more undesirable) if we are full. A punishment such as taking away video games is more undesirable if the child likes to play games such as Call of Duty or Madden but is less undesirable (or maybe even has no impact) if they do not enjoy video games. Linked to the discussion above, food is an appetitive stimulus and could be an establishing operation if we are hungry. A valued video game also represents an establishing operation if we threaten its removal, and we will want to avoid such punishment, which makes the threat an aversive stimulus.

As noted earlier, the response is simply the behavior that is made and can take on many different forms. A dog may learn to salivate (response) to the sound of a bell (stimulus). A person may begin going to the gym if he or she seeks to gain tokens to purchase backup reinforcers (more on this in Module 7). A person may work harder in the future if they received a compliment from their boss today (either by email, which is sensed visually, or spoken, which is sensed through hearing).

Another important concept is contiguity, which occurs when two events are associated with one another because they occur together closely, whether in time, called temporal contiguity, or in space, called spatial contiguity. In the case of time, we may come to associate thanking someone for saying ‘good job’ if we hear others doing this and the two verbal behaviors occur very close in time. Usually, the ‘Thank you’ (or other response) follows the praise within seconds. In the case of space, we may learn to use a spatula to flip our hamburgers on the grill if the spatula is placed next to the stove and not in another room. Do not confuse contiguity with contingency. Though the terms look similar, they have very different meanings.

Finally, in learning research, we often distinguish two phases — baseline and treatment. Baseline Phase occurs before any strategy or strategies are put into effect. This phase will essentially be used to compare against the treatment phase. We are also trying to find out exactly how much of the target behavior the person or animal is engaging in. Treatment Phase occurs when the strategy or strategies are used, or you might say when the manipulation is implemented. Note that in behavior modification we also talk about what is called the maintenance phase. More on this in Module 7.

2.2. Research Designs Used in the Experimental Analysis of Behavior

Section Learning Objectives

- List the five main research methods used in psychology.

- Describe observational research, listing its advantages and disadvantages.

- Describe the case study approach to research, listing its advantages and disadvantages.

- Describe survey research, listing its advantages and disadvantages.

- Describe correlational research, listing its advantages and disadvantages.

- Describe experimental research, listing its advantages and disadvantages.

- Define key terms related to experiments.

- Describe specific types of experimental designs used in learning research.

- Describe the ways we gather data in learning research (or applied behavior analysis).

- Outline the types of apparatus used in learning experiments.

- Outline the parts of a research article and describe their function.

Step 3 called on the scientist to test his or her hypothesis. Psychology as a discipline uses five main research designs to do just that. These include observational research, case studies, surveys, correlational designs, and experiments.

2.2.1. Observational Research

In terms of naturalistic observation , the scientist studies human or animal behavior in its natural environment which could include the home, school, or a forest. The researcher counts, measures, and rates behavior in a systematic way and at times uses multiple judges to ensure accuracy in how the behavior is being measured. This is called inter-rater reliability . The advantage of this method is that you witness behavior as it occurs and it is not tainted by the experimenter. The disadvantage is that it could take a long time for the behavior to occur and if the researcher is detected then this may influence the behavior of those being observed. In the case of the latter, the behavior of the observed becomes artificial .
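One simple way to put a number on how closely multiple judges agree is percent agreement. The sketch below is only an illustration: the function name, the interval-by-interval coding scheme, and the data are all made up, and the text above does not specify which agreement statistic is used.

```python
def percent_agreement(rater_a, rater_b):
    """Share of observation intervals on which two raters gave the same code."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must code the same, non-empty set of intervals.")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return matches / len(rater_a)


# Hypothetical codes for ten intervals: 1 = behavior observed, 0 = not observed.
judge_1 = [1, 0, 1, 1, 0, 0, 1, 0, 1, 1]
judge_2 = [1, 0, 1, 0, 0, 0, 1, 0, 1, 1]

agreement = percent_agreement(judge_1, judge_2)
print(f"Agreement: {agreement:.0%}")
```

Here the two hypothetical judges disagree on one interval out of ten. Percent agreement does not correct for agreement that would occur by chance, so it is best read as a rough first check.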

Laboratory observation involves observing people or animals in a laboratory setting. The researcher might want to know more about parent-child interactions and so brings a mother and her child into the lab to engage in preplanned tasks such as playing with toys, eating a meal, or the mother leaving the room for a short period of time. The advantage of this method over the naturalistic method is that the experimenter can use sophisticated equipment and videotape the session to examine it later. The problem is that since the subjects know the experimenter is watching them, their behavior could become artificial.

2.2.2. Case Studies

Psychology can also utilize a detailed description of one person or a small group based on careful observation. The advantage of this method is that you arrive at a rich description of the behavior being investigated, but the disadvantage is that what you are learning may be unrepresentative of the larger population and so lacks generalizability . Again, bear in mind that you are studying one person or a very small group. Can you possibly make conclusions about all people from just one or even five or ten? The other issue is that the case study is subject to the bias of the researcher in terms of what is included in the final write up and what is left out. Despite these limitations, case studies can lead us to novel ideas about the cause of a behavior and help us to study unusual conditions that occur too infrequently to study with large sample sizes and in a systematic way.

2.2.3. Surveys/Self-Report Data

A survey is a questionnaire consisting of at least one scale with a number of questions that assess a psychological construct of interest such as parenting style, depression, locus of control, attitudes, or sensation-seeking behavior. It may be administered by paper and pencil or computer. Surveys allow for the collection of large amounts of data quickly, but the actual survey could be tedious for the participant, and social desirability, which occurs when a participant answers questions dishonestly so that he/she is seen in a more favorable light, could be an issue. For instance, if you are asking high school students about their sexual activity, they may not give genuine answers for fear that their parents will find out. Or if you wanted to know about prejudiced attitudes of a group of people, you could use the survey method. You could alternatively gather this information via an interview in a structured, semi-structured, or unstructured fashion. Important to survey research is random sampling, in which everyone in the population has an equal chance of being included in the sample. This helps the survey to be representative of the population in terms of key demographic variables such as gender, age, ethnicity, race, education level, and religious orientation. Surveys are not frequently used in the experimental analysis of behavior.
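Random sampling as described above can be sketched in a few lines. The population list, the sample size, and the helper name `draw_random_sample` are hypothetical and used only for illustration.

```python
import random


def draw_random_sample(population, sample_size, seed=None):
    """Draw a simple random sample without replacement.

    Every member of the population has an equal chance of being selected.
    The optional seed only makes the illustration repeatable.
    """
    rng = random.Random(seed)
    return rng.sample(population, sample_size)


# Hypothetical sampling frame of 500 students.
population = [f"student_{i:03d}" for i in range(1, 501)]
sample = draw_random_sample(population, 50, seed=42)

print(len(sample), "students selected, for example:", sample[:3])
```

In practice the hard part is usually obtaining a complete list of the population to sample from, which this sketch simply assumes.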

2.2.4. Correlational Research

This research method examines the relationship between two variables or two groups of variables. A numerical measure of the strength of this relationship is derived, called the correlation coefficient, and can range from -1.00, which indicates a perfect inverse relationship meaning that as one variable goes up the other goes down, to 0 or no relationship at all, to +1.00 or a perfect positive relationship in which as one variable goes up or down so does the other. In terms of a negative correlation we might say that as a parent becomes more rigid, controlling, and cold, the attachment of the child to parent goes down. In contrast, as a parent becomes warmer, more loving, and provides structure, the child becomes more attached, which is a positive correlation. The advantage of correlational research is that you can correlate anything. The disadvantage is also that you can correlate anything: variables that do not have any relationship to one another could be viewed as related. For instance, we might correlate instances of making peanut butter and jelly sandwiches with someone we are attracted to sitting near us at lunch. Are the two related? Not likely, unless you make a really good PB&J, but then the person is probably only interested in you for food and not companionship. The main issue here is that correlation does not allow you to make a causal statement.
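To make the range from -1.00 to +1.00 concrete, here is a minimal sketch that computes a correlation coefficient by hand. It assumes Pearson's r, which the text above does not name explicitly, and all variable names and data are invented for the example.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length lists of numbers."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("Need two lists of the same length with at least two values.")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products of deviations from each mean.
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("Correlation is undefined when a variable does not vary.")
    return cross / math.sqrt(ss_x * ss_y)


# Hypothetical scores for five participants.
warmth = [1, 2, 3, 4, 5]
attachment_up = [2, 4, 6, 8, 10]     # rises in step with warmth
attachment_down = [10, 8, 6, 4, 2]   # falls as the other variable rises

r_positive = pearson_r(warmth, attachment_up)
r_negative = pearson_r(warmth, attachment_down)
print(round(r_positive, 2), round(r_negative, 2))
```

The two made-up data sets sit at the extremes of the scale. Real data almost always fall somewhere in between, and even a strong coefficient says nothing about which variable, if either, causes the other.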

2.2.5. Experiments

An experiment is a controlled test of a hypothesis in which a researcher manipulates one variable and measures its effect on another. A variable is anything that varies over time or from one situation to the next. Patience could be an example of a variable. Though we may be patient in one situation, we may have less if a second situation occurs close in time. The first could have lowered our ability to cope making an emotional reaction quicker to occur even if the two situations are about the same in terms of impact. Another variable is weight. Anyone who has tried to shed some pounds and weighs in daily knows just how much weight can vary from day to day, or even on the same day. In terms of experiments, the variable that is manipulated is called the independent variable (IV) and the one that is measured is called the dependent variable (DV) .

A common feature of experiments is to have a control group that does not receive the treatment, or is not manipulated, and an experimental group that does receive the treatment or manipulation. If the experiment includes random assignment, participants have an equal chance of being placed in the control or experimental group. The control group allows the researcher to make a comparison to the experimental group, making a causal statement possible, and stronger.
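Random assignment to a control and an experimental group can be sketched as a shuffle followed by a split. The participant IDs and the function name `randomly_assign` are hypothetical, and equal group sizes are an assumption of this example rather than a requirement stated above.

```python
import random


def randomly_assign(participants, seed=None):
    """Shuffle participants and split them into control and experimental groups.

    Each participant has an equal chance of landing in either group.
    The optional seed only makes the illustration repeatable.
    """
    rng = random.Random(seed)
    shuffled = list(participants)  # copy so the caller's list is left untouched
    rng.shuffle(shuffled)
    midpoint = len(shuffled) // 2
    return {"control": shuffled[:midpoint], "experimental": shuffled[midpoint:]}


# Hypothetical participant IDs.
participants = [f"P{i:02d}" for i in range(1, 21)]
groups = randomly_assign(participants, seed=7)

print("Control:", groups["control"])
print("Experimental:", groups["experimental"])
```

Because chance alone decides group membership, pre-existing differences between participants tend to be spread evenly across the two groups, which is what makes the later comparison meaningful.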

Within the experimental analysis of behavior (and applied behavior analysis), experimental procedures take on several different forms. In discussing each, understand that we will use the following notations:

A will represent the baseline phase and B will represent the treatment phase.

- A-B design — This is by far the most basic of all designs used in behavior modification and includes just one rotation from baseline to treatment phase and from that we see if the behavior changed in the predicted manner. The issue with this design is that no functional relationship can be established since there is no replication. It is possible that the change occurred not due to the treatment that was used, but due to an extraneous variable, or an unseen and unaccounted-for influence on the results and specifically on our DV.

- A-B-A-B Reversal Design — In this design, the baseline and treatment phases are implemented twice. After the first treatment phase occurs, the individual is taken back to baseline and then the treatment phase is implemented again. Replication is built into this design, allowing for a causal statement, but it may not be possible or ethical to take the person back to baseline after a treatment has been introduced, especially one that is likely working well. What if you developed a successful treatment to reduce self-injurious behavior in children or to increase feelings of self-worth? You would want to know if the decrease in this behavior or increase in the positive thoughts was due to your treatment and not extraneous variables, but can you take the person back to baseline? Is it ethical to remove a treatment for something potentially harmful to the person? Now let’s say a teacher developed a new way to teach fractions to a fourth-grade class. Was it the educational paradigm or maybe additional help a child received from his/her parents or a tutor that accounts for improvement in performance? Well, we need to take the child back to baseline and see if the strategy works again, but can we? How can the child forget what has been learned already? A-B-A-B Reversal Designs work well at establishing functional relationships if you can take the person back to baseline but are problematic if you cannot. An example of them working well includes establishing a system, such as a token economy (more on this later), to ensure your son does his chores, having success with it, and then taking it away. If the child stops doing chores and only restarts when the token economy is put back into place, then your system works. Note that with time the behavior of doing chores would occur on its own and the token economy would be phased out.

- Multiple-baseline designs — This design can take on three different forms. In an across-subjects design, there is a baseline and treatment phase for two or more subjects for the same target behavior. For example, an applied behavior analyst is testing a new intervention to reduce disruptions in the classroom. The intervention involves a combination of antecedent manipulations, prompts, social support, differential reinforcement, and time-outs. He uses the intervention on six problematic students in a 6th period math class. Secondly, the across-settings design has a baseline and treatment phase for two or more settings in the same person for which the same behavior is measured. What if this same specialist now tests the intervention with one student but across her other five classes, which include social studies, gym, science, English, and shop? Finally, in an across-behaviors design, there is a baseline and treatment phase for two or more different behaviors the same participant makes. The intervention continues to show promise and now the ABA specialist wants to see if it can help the same student but with his problem with procrastination and inability to organize.

- Changing-Criterion Design — In this design, the performance criterion changes as the subject achieves specific goals. The individual may go from having to work out at the gym 2 days a week to 3 days, then 4 days, and then finally 5 days. Once the goal of 2 days a week is met, the criterion changes to 3 days a week. In a learning study, a rat may have to press the lever 5 times to receive a food pellet and then once this is occurring regularly, the schedule changes to 10 times to receive the same food pellet. We are asking the rat to make more behaviors for the same consequence. The changing-criterion design has an A-B structure but rules out extraneous variables since the person or animal continues meeting the changing criterion/new goals using the same treatment plan or experimental manipulation. Hence successfully moving from one goal to the next must be due to the strategies that were selected.
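The replication logic of the A-B-A-B reversal design in the list above can be illustrated with a small sketch. Everything here is hypothetical: the daily counts of chores, the phase labels, and the function `effect_replicated`. Comparing phase means is a deliberate simplification, since single-case data are usually judged by inspecting a graph rather than by one summary number.

```python
from statistics import mean

# Hypothetical number of chores completed per day in each phase.
# A = baseline (no token economy), B = treatment (token economy in place).
phases = {
    "A1": [1, 0, 1, 1],
    "B1": [4, 5, 4, 5],
    "A2": [1, 2, 1, 0],
    "B2": [5, 4, 5, 5],
}

phase_means = {label: mean(values) for label, values in phases.items()}


def effect_replicated(means):
    """True if behavior rises with treatment, falls at reversal, and rises again."""
    rose_first_time = means["B1"] > means["A1"]
    fell_at_reversal = means["A2"] < means["B1"]
    rose_second_time = means["B2"] > means["A2"]
    return rose_first_time and fell_at_reversal and rose_second_time


for label, value in phase_means.items():
    print(f"{label}: mean chores per day = {value:.2f}")
print("Pattern consistent with a functional relationship:", effect_replicated(phase_means))
```

An A-B design would stop after the first two phases, which is why it cannot rule out an extraneous variable: there is no second change to compare against.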

2.2.6. Ways We Gather Data

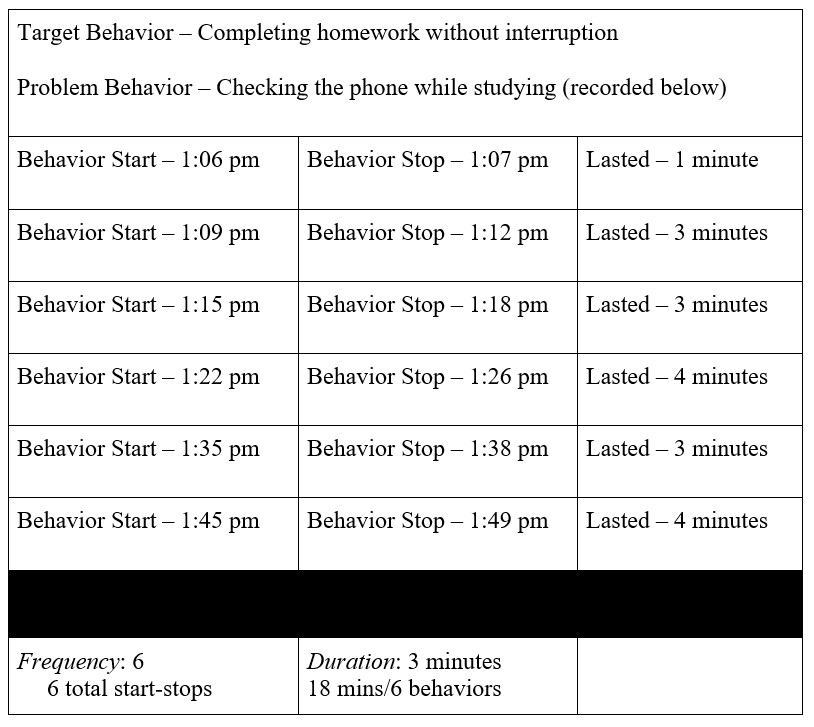

When we record, we need to decide what method we will use. Several strategies are possible, including continuous, product or outcome, and interval recording. First, in continuous recording, we watch a person or animal continuously throughout an observation period, or the time when observations will be made, and all occurrences of the behavior are recorded. This technique allows you to record both frequency and duration. The frequency is reported as a rate, or the number of responses that occur per minute. Duration is the total time the behavior takes from start to finish. You can also record the intensity using a rating scale in which 1 is low intensity and 5 is high intensity. Finally, latency can be recorded by noting how long it took the person to engage in the desirable behavior, or to discontinue a problem behavior, from when the demand was uttered. You can also use real-time recording in which you write down the time when the behavior starts and when it ends, and then do this each time the behavior occurs. You can look at the number of start-stops to get the frequency and then average out the time each start-stop lasted to get the duration. For instance:
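A minimal sketch of real-time recording is shown here. The start and stop times are invented, as are the function name `summarize_real_time` and the 10-minute observation period. It follows the description above: count the start-stops for frequency, divide by the observation time for rate, and average the episode lengths for duration.

```python
def summarize_real_time(episodes, observation_minutes):
    """Summarize real-time recording data.

    episodes: list of (start_sec, end_sec) pairs, timed from the start
    of the observation period.
    """
    for start, end in episodes:
        if end < start:
            raise ValueError("An episode cannot end before it starts.")
    durations = [end - start for start, end in episodes]
    frequency = len(episodes)
    return {
        "frequency": frequency,
        "rate_per_minute": frequency / observation_minutes,
        "total_duration_sec": sum(durations),
        "mean_duration_sec": sum(durations) / frequency if frequency else 0.0,
    }


# Hypothetical record: four episodes of the target behavior in a 10-minute period.
episodes = [(30, 75), (200, 230), (410, 485), (560, 590)]
summary = summarize_real_time(episodes, observation_minutes=10)

for name, value in summary.items():
    print(f"{name}: {value}")
```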

Next is product or outcome recording . This technique can be used when there is a tangible outcome you are interested in, such as looking at how well a student has improved his long division skills by examining his homework assignment or a test. Or you might see if your friend’s plan to keep a cleaner house is working by inspecting his or her house randomly once a week. This will allow you to know if an experimental teaching technique works. It is an indirect assessment method meaning that the observer does not need to be present. You can also examine many types of behaviors. But because the observer is not present, you are not sure if the person did the work himself or herself. It may be that answers were looked up online, cheating occurred as in the case of a test, or someone else did the homework for the student such as a sibling, parent, or friend. Also, you have to make sure you are examining the result/outcome of the behavior and not the behavior itself.

Finally, interval recording occurs when you take the observation period and divide it up into shorter periods of time. The person or animal is observed, and the target behavior recorded based on whether it occurs during the entire interval, called whole interval recording, or some part of the interval, called partial interval recording. With the latter, you are not interested in the dimensions of duration and frequency. We also say the interval recording is continuous if each subsequent interval follows immediately after the current one. Let’s say you are studying students in a classroom. Your observation period is the 50 minutes the student is in his home economics class and you divide it up into ten 5-minute intervals. If using whole interval recording, then the behavior must occur during the entire 5-minute interval. If using partial interval recording, it need only occur at some point during the 5-minute interval. You can also use what is called time sample recording in which you divide the observation period into intervals of time but then observe and record during part of each interval (the sample). There are periods of time in between the observation periods in which no observation and recording occur. As such, the recording is discontinuous. This is a useful method since the observer does not have to observe the entire interval and the level of behavior is reported as the percentage of intervals in which the behavior occurred. Also, more than one behavior can be observed.
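The difference between whole and partial interval recording can be sketched with the 50-minute class from the example above, divided into ten 5-minute intervals. The episode times and the function names are hypothetical. For simplicity, the whole-interval rule here requires a single uninterrupted episode to cover the interval.

```python
def score_intervals(episodes, period_length, interval_length):
    """Score each interval under whole and partial interval recording.

    episodes: list of (start_min, end_min) pairs when the behavior occurred.
    Returns two lists of booleans, one entry per interval.
    """
    whole, partial = [], []
    for i in range(period_length // interval_length):
        start, end = i * interval_length, (i + 1) * interval_length
        # Partial: the behavior overlaps the interval at any point.
        partial.append(any(s < end and e > start for s, e in episodes))
        # Whole: one episode spans the interval from its start to its end.
        whole.append(any(s <= start and e >= end for s, e in episodes))
    return whole, partial


def percent_of_intervals(scores):
    """Percentage of intervals in which the behavior was scored as occurring."""
    return 100 * sum(scores) / len(scores)


# Hypothetical episodes of the target behavior, in minutes from the start of class.
episodes = [(3, 12), (27, 29), (40, 50)]
whole, partial = score_intervals(episodes, period_length=50, interval_length=5)

print("Whole interval:  ", percent_of_intervals(whole), "% of intervals")
print("Partial interval:", percent_of_intervals(partial), "% of intervals")
```

With the same made-up observations, partial interval recording scores more intervals than whole interval recording, which is worth keeping in mind when comparing results across studies that used different rules.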

2.2.7. The Apparatus We Use

What we need to understand next in relation to learning research is what types of apparatus are used. As you might expect, the maze is the primary tool and has been so for over 100 years. Through the use of mazes, we can determine general principles about learning that apply to not only animals such as rats, but to human beings too. The standard or classic maze is built on a large platform with vertical walls and a transparent ceiling. The rat begins at a start point or box and moves through the maze until it reaches the end or goal box. There may be a reward at the end such as food or water to encourage the rat to learn the maze. Through the use of such a maze, we can determine how many trials it takes for the rat to reach the goal box without making a mistake. As you will see in Section 2.3, we can also determine how long it took the rat to run the maze.

An alternative to this design is what is called the T-maze which obtains its name from its characteristic T-structure. The rat begins in a start box and proceeds up the corridor until it reaches a decision point – go left or right. We might discover if rats have a side preference or how fast they can learn if food-deprived the night before. One arm would have a food pellet while the other would not. It is also a great way to distinguish place and response learning (Blodgett & McCutchan, 1947). Some forms of the T-maze have multiple T-junctions in which the rat can make the correct decision and continues in the maze or makes a wrong decision. The rat can use cues in the environment to learn how to correctly navigate the maze and once learned, the rat will make few errors and run through it very quickly (Gentry, Brown, & Lee, 1948; Stone & Nyswander, 1927).

Similar to the T-maze is what is called the Y-maze . Starting in one arm, the rat moves forward and then has to choose one of two arms. The turns are not as sharp as in a T-maze making learning a bit easier. There is also a radial arm maze (Olton, 1987; Olton, Collison, & Werz, 1977) in which a rat starts in the center and can choose to enter any of 8, 12, or 16 spokes radiating out from this central location. It is a great test of short-term memory as the rat has to recall which arms have been visited and which have not. The rat successfully completes the maze when all arms have been visited.

One final maze is worth mentioning. The Morris water maze (Morris, 1984) is an apparatus that includes a large round tub of opaque water. There are two hidden platforms 1-2 cm under the water’s surface. The rat begins on a start platform and swims around until the other platform is located and it stands on it. It utilizes external cues placed outside the maze to find the end platform and run time is the typical dependent measure that is used.

To learn more about rat mazes, please visit: http://ratbehavior.org/RatsAndMazes.htm

Check this Out

Do you want to increase how fast rats learn their way through a multiple T-maze? Research has shown that you can do this by playing Mozart. Rats were exposed in utero plus 60 days to either a complex piece of music in the form of a sonata from Mozart, minimalist music, white noise, or silence. They were then tested over 5 days with 3 trials per day in a multiple T-maze. Results showed that rats exposed to Mozart completed the maze quicker and made fewer errors than the rats in the other conditions. The authors state that exposure to complex music facilitates spatial-temporal learning in rats and this matches results found in humans (Rauscher, Robinson, & Jens, 1998). Another line of research found that when rats were stressed they performed worse in water maze learning tasks than their non-stressed counterparts (Holscher, 1999).

So when you are studying for your quizzes or exams in this class (or other classes), play Mozart and minimize stress. These actions could result in a higher grade.

Outside of mazes, learning researchers may also utilize a Skinner Box. This is a small chamber used to conduct operant conditioning experiments with animals such as rats or pigeons. Inside the chamber, there is a lever for rats to push or a key for pigeons to peck which results in the delivery of food or water. The behavior of pushing or pecking is recorded through electronic equipment which allows for the behavior to be counted or quantified. This device is also called an operant conditioning chamber.

Finally, Edward Thorndike (1898) used a puzzle box to arrive at his law of effect or the idea that an organism will be more likely to repeat a behavior if it produced a satisfying effect in the past than if the effect was negative. This later became the foundation upon which operant conditioning was built. In his experiments, a hungry cat was placed in a box with a plate of fish outside the box. It was close enough that the cat could see and smell it but could not touch it. To get to the food, the cat had to figure out how to escape the box or which mechanism would help it to escape. Once free, the cat would take a bite, be placed back into the box, and then had to work to get out again. Thorndike discovered that the cat was able to get out quicker each time which demonstrated learning.

2.2.8. The Scientific Research Article

In scientific research, it is common practice to communicate the findings of our investigation. By reporting what we found in our study, other researchers can critique our methodology and address our limitations. Publishing allows psychology to grow its knowledge base about human behavior. We can also see where gaps still exist. We move it into the public domain so others can read and comment on it. Scientists can also replicate what we did and possibly extend our work if it is published.

As noted earlier, there are several ways to communicate our findings. We can do so at conferences in the form of posters or oral presentations, through newsletters from APA itself or one of its many divisions or other organizations, or through research journals and specifically scientific research articles. Published journal articles represent a form of communication between scientists and in them, the researchers describe how their work relates to previous research, how it replicates and/or extends this work, and what their work might mean theoretically.

Research articles begin with an abstract, a 150-250-word summary of the entire article. Its purpose is to describe the experiment and to allow the reader to decide whether he or she wants to read further. The abstract provides a statement of purpose, an overview of the methods, the main results, and a brief statement of what these results mean. Keywords are also given that allow students and other researchers alike to find the article when doing a search.

The abstract is followed by four major sections – Introduction, Method, Results, and Discussion. First, the introduction is designed to provide a summary of the current literature as it relates to the topic. It helps the reader to see how the researcher arrived at their hypothesis and the design of the study. Essentially, it gives the logic behind the decisions that were made.

Next, is the method section. Since replication is a required element of science, we must have a way to share information on our design and sample with readers. This is the essence of the method section and covers three major aspects of a study — the participants, materials or apparatus, and procedure. The reader needs to know who was in the study so that limitations related to the generalizability of the findings can be identified and investigated in the future. The researcher will also state the operational/behavioral definition, describe any groups that were used, identify random sampling or assignment procedures, and provide information about how a scale was scored or if a specific piece of apparatus was used, etc. Think of the method section as a cookbook. The participants are the ingredients, the materials or apparatus are whatever tools are needed, and the procedure is the instructions for how to bake the cake.

Third, is the results section. In this section, the researcher states the outcome of the experiment and whether it was statistically significant or not. The researchers can also present tables and figures. It is here we will find both descriptive and inferential statistics.

Finally, the discussion section starts by restating the main findings and hypothesis of the study. Next comes an interpretation of the findings and what their significance might be. Last, the strengths and limitations of the study are stated, which allows the researcher to propose future directions or other researchers to identify potential areas of exploration for their own work. Whether you are writing a research paper for a class, preparing an article for publication, or reading a research article, the structure and function of a research article are the same. Understanding this will help you when reading articles in learning and behavior, but also note that this same structure is used across disciplines.

- List typical dependent measures used in learning experiments.

- Describe the use of errors as a dependent measure.

- Describe the use of frequency as a dependent measure.

- Describe the use of intensity as a dependent measure.

- Describe the use of duration/run time/speed as a dependent measure.

- Describe the use of latency as a dependent measure.

- Describe the use of topography as a dependent measure.

- Describe the use of rate as a dependent measure.

- Describe the use of fluency as a dependent measure.

As we have learned, experiments include dependent and independent variables. The independent variable is the manipulation we are making while the dependent variable is what is being measured to see the effect of the manipulation. So, what types of DVs might we use in the experimental analysis of behavior or applied behavior analysis? We will cover the following: errors, frequency, intensity, duration, latency, topography, rate, and fluency.

2.3.1. Errors

A very simple measure of learning is to assess the number of errors made. If an animal running a maze has learned the maze, it should make fewer errors or mistakes with each trial, compared to, say, the first trial when many errors were made. The same goes for a child learning how to do multiplication. There will be numerous errors at the start and then few to none later.

2.3.2. Frequency

Frequency is a measure of how often a behavior occurs. If we want to run more often, we may increase the number of days we run each week from 3 to 5. In terms of behavior modification, I once had a student who wished to decrease the number of times he used expletives throughout the day.

2.3.3. Intensity

Intensity is a measure of how strong the response is. For instance, a person on a treadmill may increase the intensity from 5 mph to 6 mph meaning the belt moves quicker and so the runner will have to move faster to keep up. We might tell children in a classroom to use their inside voices or to speak softer as opposed to their playground voices when they can yell.

2.3.4. Duration/Run Time/Speed

Duration is a measure of how long the behavior lasts. A runner may run more often (frequency), faster (intensity), or may run longer (duration). In the case of the latter, the runner may wish to build endurance and run for increasingly longer periods of time. A parent may wish to decrease the amount of time a child plays video games or is on his/her phone before bed. For rats in a maze, the first few attempts will likely take longer to reach the goal box than later attempts once the path needed to follow is learned. In other words, duration, or run time, will go down which demonstrates learning.

2.3.5. Latency

Latency represents the time it takes for a behavior to follow from the presentation of a stimulus. For instance, if a parent tells a child to take out the trash and he does so 5 minutes later, then the latency for the behavior of walking the trash outside is 5 minutes.
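To make the difference between frequency, duration, and latency concrete, here is a minimal sketch that computes all three from a log of behavior episodes. The `Episode` record and its field names are hypothetical, invented for this illustration, and times are assumed to be in seconds.

```python
from dataclasses import dataclass


@dataclass
class Episode:
    """One occurrence of a behavior (hypothetical record format)."""
    prompt_time: float  # when the stimulus or instruction was presented
    start_time: float   # when the behavior began
    end_time: float     # when the behavior ended


def frequency(episodes):
    """How often the behavior occurred."""
    return len(episodes)


def total_duration(episodes):
    """How long the behavior lasted, summed over all episodes."""
    return sum(e.end_time - e.start_time for e in episodes)


def latencies(episodes):
    """Time from stimulus presentation to the start of each behavior."""
    return [e.start_time - e.prompt_time for e in episodes]


# The trash example: the instruction is given at t = 0 and the child
# starts 5 minutes (300 s) later; the 2-minute task length is made up.
trash = Episode(prompt_time=0.0, start_time=300.0, end_time=420.0)
print(frequency([trash]), total_duration([trash]), latencies([trash]))
```

Keeping the prompt time separate from the start time is what lets latency be measured independently of how long the behavior itself lasts.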

2.3.6. Topography

Topography represents the physical form a behavior takes. For instance, if a child is being disruptive, in what way is this occurring? Could it be the child is talking out of turn, being aggressive with other students, fidgeting in his/her seat, etc? In the case of rats and pushing levers, the mere act of pushing may not be of interest, but which paw is used or how much pressure is applied to the lever?

2.3.7. Rate

Rate is a measure of the change in response over time, or how often a behavior occurs. We may wish the rat to push the lever more times per minute to earn food reinforcement. Initially, the rat was required to push the lever 20 times per minute and now the experimenter requires 35 times per minute to receive a food pellet. In humans, a measure of rate would be words typed per minute. I may start at 20 words per minute but with practice (representing learning) I could type 60 words per minute or more.

2.3.8. Fluency

Though I may type fast, do I type accurately? This is where fluency comes in. Think about a foreign language. If you are fluent, you speak it well. So, fluency is a measure of the number of correct responses made per minute. I may make 20 errors per minute of typing, but with practice, I not only get quicker (up to 60 words per minute) but also more accurate, reducing my mistakes to 5 errors per minute. A student taking a semester of Spanish may measure learning by how many verbs he can correctly conjugate in a minute. Initially, he could only conjugate 8 verbs per minute, but by the end of the semester he can conjugate 24.
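The relationship between rate and fluency can be sketched in a few lines of code. The function names below are made up for this illustration, and the typing example assumes that each error spoils exactly one word, which is a simplification rather than a standard scoring rule.

```python
def rate_per_minute(responses, minutes):
    """Rate: number of responses per minute of observation."""
    if minutes <= 0:
        raise ValueError("minutes must be positive")
    return responses / minutes


def fluency_per_minute(responses, errors, minutes):
    """Fluency: number of correct responses per minute."""
    return rate_per_minute(responses - errors, minutes)


# A typist produces 300 words in 5 minutes with 25 errors
# (assumption: one error = one incorrect word).
print(rate_per_minute(300, 5))         # words per minute
print(fluency_per_minute(300, 25, 5))  # correct words per minute
```

With these numbers the typist's rate is 60 words per minute while fluency is 55 correct words per minute, so two people with the same rate can still differ in fluency.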

- Defend the use of animals in research.

- Describe safeguards to protect human research subjects.

2.4.1. Animal Models of Behavior

Learning research frequently uses animal models. According to AnimalResearch.info, animals are used “…when there is a need to find out what happens in the whole, living body, which is far more complex than the sum of its parts. It is difficult, and in most cases simply not yet possible, to replace the use of living animals in research with alternative methods.” They cite four main reasons to use animals. First, to advance scientific understanding such as how living things work to apply that knowledge for the benefit of both humans and animals. They state, “Many basic cell processes are the same in all animals, and the bodies of animals are like humans in the way that they perform many vital functions such as breathing, digestion, movement, sight, hearing, and reproduction.”

Second, animals can serve as models to study disease. For example, “Dogs suffer from cancer, diabetes, cataracts, ulcers and bleeding disorders such as hemophilia, which make them natural candidates for research into these disorders. Cats suffer from some of the same visual impairments as humans.” Therefore, animal models help us to understand how diseases affect the body and how our immune system responds.

Third, animals can be used to develop and test potential treatments for these diseases. As the website says, “Data from animal studies is essential before new therapeutic techniques and surgical procedures can be tested on human patients.”

Finally, animals help protect the safety of people, other animals, and our environment. Before a new medicine can go to market, it must be tested to ensure that the benefits outweigh the harmful effects. Legally and ethically, we have to move from in vitro testing of tissues and isolated organs to suitable animal models and then to testing in humans.

In conducting research with animals, three principles are followed. First, when possible, animals should be replaced with alternative techniques such as cell cultures, tissue engineering, and computer modeling. Second, the number of animals used in research should be reduced to a minimum. We can do this by “re-examining the findings of studies already conducted (e.g. by systematic reviews), by improving animal models, and by use of good experimental design.” Finally, we should refine the way experiments are conducted to reduce any suffering the animals may experience as much as possible. This can include better housing and improving animal welfare. Outside of the obvious benefit to the animals, the quality of research findings can also increase due to reduced stress in the animals. This framework is called the 3Rs.

Please visit: http://www.animalresearch.info/en/

One way to guarantee these principles are followed is through what is called the Institutional Animal Care and Use Committee (IACUC). The IACUC is responsible for the oversight and review of the humane care and use of animals; upholds standards set forth in laws, policies, and guidance; inspects animal housing facilities; approves protocols for use of animals in research, teaching, or education; addresses animal welfare concerns of the public; and reports to the appropriate bodies within a university, accrediting organizations, or government agencies. At times, projects may have to be suspended if found to be noncompliant with the regulations and policies of that institution.

- For more on the IACUC within the National Institutes of Health, please visit: https://olaw.nih.gov/resources/tutorial/iacuc.htm

- For another article on the use of animals in research, please check out the following published in the National Academies Press – https://www.nap.edu/read/10089/chapter/3

- The following is an article published on the ethics of animal research and discusses the 3Rs in more detail – https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2002542/

- And finally, here is a great article published by the Washington State University IACUC on the use of animals in research and teaching at WSU – https://research.wsu.edu/frequently-asked-questions-about-animal-care-and-use-at-washington-state-university/

2.4.2. Human Models of Behavior

Throughout this module, we have seen that it is important for researchers to understand the methods they are using. Equally important, they must understand and appreciate ethical standards in research. As we have seen already in Section 2.4.1, such standards exist for the use of animals in research. The American Psychological Association (APA) identifies high standards of ethics and conduct as one of its four main guiding principles or missions, and this principle applies to research with humans. To read about the other three, please visit https://www.apa.org/about/index.aspx. Studies such as Milgram’s obedience study, Zimbardo’s Stanford prison study, and others, have necessitated standards for the use of humans in research. The standards can be broken down in terms of when they should occur during the process of a person participating in the study.

2.4.2.1. Before participating. First, researchers must obtain informed consent, which occurs when the person agrees to participate after being told what will happen to them. They are given information about any risks they face, or potential harm that could come to them, whether physical or psychological. They are also told about confidentiality, or the person’s right not to be identified. Since most research is conducted with students taking introductory psychology courses, they have to be given the right to do something other than a research study to earn any required credits for the class. This is called an alternative activity and could take the form of reading and summarizing a research article. The amount of time taken to do this should not exceed the amount of time the student would be expected to participate in a study.

2.4.2.2. While participating. Participants are afforded the ability to withdraw, or the right to exit the study if any discomfort is experienced.

2.4.2.3. After participating. Once their participation is over, participants should be debriefed, which occurs when the true purpose of the study is revealed and they are told where to go if they need assistance and how to reach the researcher if they have questions. So, can researchers deceive participants, or intentionally withhold the true purpose of the study from them? According to the APA, a minimal amount of deception is allowed.

Human research must be approved by an Institutional Review Board or IRB. It is the IRB that will determine whether the researcher is providing enough information for the participant to give consent that is truly informed, if debriefing is adequate, and if any deception is allowed or not. According to the Food and Drug Administration (FDA), “The purpose of IRB review is to assure, both in advance and by periodic review, that appropriate steps are taken to protect the rights and welfare of humans participating as subjects in the research. To accomplish this purpose, IRBs use a group process to review research protocols and related materials (e.g., informed consent documents and investigator brochures) to ensure the protection of the rights and welfare of human subjects of research.”

If you would like to learn more about how to use ethics in your research, please read: https://opentext.wsu.edu/carriecuttler/chapter/putting-ethics-into-practice/

To learn more about IRBs, please visit: https://www.fda.gov/RegulatoryInformation/Guidances/ucm126420.htm

Module Recap

That’s it. In Module 2 we discussed the process of research used when studying learning and behavior. We learned about the scientific method and its steps, which are used across the natural and social sciences. Our breakdown consisted of six steps, but be advised that other authors could combine steps or separate some of the ones in this module. Still, the overall spirit is the same. In the experimental analysis of behavior, we talk about making a causal statement in the form of an If-Then statement; that is, we discuss functional relationships and contingencies. We also define our terms clearly, objectively, and precisely through a behavioral definition. In terms of research designs, psychology uses five main ones and our investigation of learning and behavior focuses on three of those designs, with experiment and observation being the main two. Methods by which we collect data, the apparatus we use, and later, who our participants/subjects are, were discussed. The structure of a research article, which is consistent across disciplines, was outlined, and we covered some typical dependent variables or measures used in the study of learning and behavior. These include errors, frequency, intensity, duration, latency, topography, rate, and fluency.

Armed with this information we begin to explore the experimental analysis of behavior by investigating elicited behaviors and more in Module 3. From this, we will move to a discussion of respondent and then operant conditioning and finally observational learning. Before closing out with complementary cognitive processes we will engage in an exercise to see how the three models complement one another and are not competing with each other.

Learning Strategies That Work

Dr. Mark A. McDaniel shares effective, evidence-based strategies about learning to replace less effective but widely accepted practices.

How do we learn and absorb new information? Which learning strategies actually work and which are mere myths?

Such questions are at the center of the work of Mark McDaniel, professor of psychology and the director of the Center for Integrative Research on Cognition, Learning, and Education at Washington University in St. Louis. McDaniel coauthored the book Make it Stick: The Science of Successful Learning.

In this Q&A adapted from a Career & Academic Resource Center podcast episode, McDaniel discusses his research on human learning and memory, including the most effective strategies for learning throughout a lifetime.

Harvard Extension: In your book, you talk about strategies to help students be better learners in and outside of the classroom. You write, “We harbor deep convictions that we learn better through single-minded focus and dogged repetition. And these beliefs are validated time and again by the visible improvement that comes during practice, practice, practice.”

McDaniel: This judgment that repetition is effective is hard to shake. There are cues present that your brain picks up when you’re rereading, when you’re repeating something that give you the metacognitive, that is your judgment about your own cognition, give you the misimpression that you really have learned this stuff well.

There are two primary cues, and the first is familiarity. So as you keep rereading, the material becomes more familiar to you. And we mistakenly judge familiarity as meaning robust learning.

And the second cue is fluency. It’s very clear from much work in reading and cognitive processes during reading that when you reread something at every level, the processes are more fluent. Word identification is more fluent. Parsing the structure of the sentence is more fluent. Extracting the ideas is more fluent. Everything is more fluent. And we misinterpret these fluency cues that the brain is getting. And these are accurate cues. It is more fluent. But we misinterpret that as meaning, I’ve really got this. I’ve really learned this. I’m not going to forget this. And that’s really misleading.

So let me give you another example. It’s not just rereading. It’s situations in, say, the STEM fields or any place where you’ve got to learn how to solve certain kinds of problems. One of the standard ways that instructors present homework is to present the same kind of problem in block fashion. You may have encountered this in your own math courses, your own physics courses.

So for example, in a physics course, you might get a particular type of work problem. And the parameters on it, the numbers might change, but in your homework, you’re trying to solve two or three or four of these work problems in a row. Well, it gets more and more fluid because you know exactly what formula you have to use. You know exactly what the problem is about. And as you get more fluid, and as we say in the book, it looks like you’re getting better. You are getting better at these problems.

But the issue is that can you remember how to identify which kinds of problems go with which kinds of solutions a week later when you’re asked to do a test where you have all different kinds of problems? And the answer is no, you cannot when you’ve done this block practice. So even though instructors who feel like their students are doing great with block practice and students will feel like they’re doing great, they are doing great on that kind of block practice, but they’re not at all good now at retaining information about what distinguishing features or problems are signaling certain kinds of approaches.

What you want to do is interleave practice in these problems. You want to randomly have a problem of one type and then solve a problem of another type and then a problem of another type. And in doing that, it feels difficult and it doesn’t feel fluent. And the signals to your brain are, I’m not getting this. I’m not doing very well. But in fact, that effort to try to figure out what kinds of approaches do I need for each problem as I encounter a different kind of problem, that’s producing learning. That’s producing robust skills that stick with you.

So this is a seductive thing that we have to, instructors and students alike, have to understand and have to move beyond those initial judgments, I haven’t learned very much, and trust that the more difficult practice schedule really is the better learning.

And I’ve written more on this since Make It Stick . And one of my strong theoretical tenets now is that in order for students to really embrace these techniques, they have to believe that they work for them. Each student has to believe it works for them. So I prepare demonstrations to show students these techniques work for them.

The net result of adopting these strategies is that students aren’t spending more time. Instead they’re spending more effective time. They’re working better. They’re working smarter.

When students take an exam after doing lots of retrieval practice, they see how well they’ve done. The classroom becomes very exciting. There’s lots of buy-in from the students. There’s lots of energy. There’s lots of stimulation to want to do more of this retrieval practice, more of this difficulty. Because trying to retrieve information is a lot more difficult than rereading it. But it produces robust learning for a number of reasons.

I think students have to trust that these techniques, and I think they also have to observe that these techniques work for them. It’s creating better learning. And then as a learner, you are more motivated to replace these ineffective techniques with more effective techniques.

Harvard Extension: You talk about tips for learners , how to make it stick. And there are several methods or tips that you share: elaboration, generation, reflection, calibration, among others. Which of these techniques is best?

McDaniel: It depends on the learning challenges that are faced. So retrieval practice, which is practicing trying to recall information from memory is really super effective if the requirements of your course require you to reproduce factual information.

For other things, it may be that you want to try something like generating understanding, creating mental models. So if your exams require you to draw inferences and work with new kinds of problems that are illustrative of the principles, but they’re new problems you haven’t seen before, a good technique is to try to connect the information into what I would call mental models. This is your representation of how the parts and the aspects fit together, relate together.

It’s not that one technique is better than the other. It’s that different techniques produce certain kinds of outcomes. And depending on the outcome you want, you might select one technique or the other.

I really firmly believe that to the extent that you can make learning fun and to the extent that one technique really seems more fun to you, that may be your go to technique. I teach a learning strategy course and I make it very clear to students. You don’t need to use all of these techniques. Find a couple that really work for you and then put those in your toolbox and replace rereading with these techniques.

Harvard Extension: You reference lifelong learning and lifelong learners. You talk about the brain being plastic, mutability of the brain in some ways, and give examples of how some lifelong learners approach their learning.

McDaniel: In some sense, more mature learners, older learners, have an advantage because they have more knowledge. And part of learning involves relating new information that’s coming into your prior knowledge, relating it to your knowledge structures, relating it to your schemas for how you think about certain kinds of content.

And so older adults have the advantage of having this richer knowledge base with which they can try to integrate new material. So older learners shouldn’t feel that they’re at a definitive disadvantage, because they’re not. Older learners really want to try to leverage their prior knowledge and use that as a basis to structure and frame and understand new information coming in.

Our challenges as older learners is that we do have these habits of learning that are not very effective. We turn to these habits. And if these aren’t such effective habits, we maybe attribute our failures to learn to age or a lack of native ability or so on and so forth. And in fact, that’s not it at all. In fact, if you adopt more effective strategies at any age, you’re going to find that your learning is more robust, it’s more successful, it falls into place.

You can learn these strategies at any age. Successful lifelong learning is getting these effective strategies in place, trusting them, and having them become a habit for how you’re going to approach your learning challenges.

- Tutorial Review

- Open access

- Published: 24 January 2018

Teaching the science of learning

- Yana Weinstein (ORCID: orcid.org/0000-0002-5144-968X),

- Christopher R. Madan &

- Megan A. Sumeracki

Cognitive Research: Principles and Implications, volume 3, Article number: 2 (2018)

The science of learning has made a considerable contribution to our understanding of effective teaching and learning strategies. However, few instructors outside of the field are privy to this research. In this tutorial review, we focus on six specific cognitive strategies that have received robust support from decades of research: spaced practice, interleaving, retrieval practice, elaboration, concrete examples, and dual coding. We describe the basic research behind each strategy and relevant applied research, present examples of existing and suggested implementation, and make recommendations for further research that would broaden the reach of these strategies.

Significance

Education does not currently adhere to the medical model of evidence-based practice (Roediger, 2013). However, over the past few decades, our field has made significant advances in applying cognitive processes to education. From this work, specific recommendations can be made for students to maximize their learning efficiency (Dunlosky, Rawson, Marsh, Nathan, & Willingham, 2013; Roediger, Finn, & Weinstein, 2012). In particular, a review published 10 years ago identified a limited number of study techniques that have received solid evidence from multiple replications testing their effectiveness in and out of the classroom (Pashler et al., 2007). A recent textbook analysis (Pomerance, Greenberg, & Walsh, 2016) took the six key learning strategies from this report by Pashler and colleagues, and found that very few teacher-training textbooks cover any of these six principles – and none cover them all, suggesting that these strategies are not systematically making their way into the classroom. This is the case in spite of multiple recent academic (e.g., Dunlosky et al., 2013) and general audience (e.g., Dunlosky, 2013) publications about these strategies. In this tutorial review, we present the basic science behind each of these six key principles, along with more recent research on their effectiveness in live classrooms, and suggest ideas for pedagogical implementation. The target audience of this review is (a) educators who might be interested in integrating the strategies into their teaching practice, (b) science of learning researchers who are looking for open questions to help determine future research priorities, and (c) researchers in other subfields who are interested in the ways that principles from cognitive psychology have been applied to education.

While the typical teacher may not be exposed to this research during teacher training, a small cohort of teachers intensely interested in cognitive psychology has recently emerged. These teachers are mainly based in the UK, and, anecdotally (e.g., Dennis (2016), personal communication), appear to have taken an interest in the science of learning after reading Make it Stick (Brown, Roediger, & McDaniel, 2014; see Clark (2016) for an enthusiastic review of this book on a teacher’s blog, and “Learning Scientists” (2016c) for a collection). In addition, a grassroots teacher movement has led to the creation of “researchED” – a series of conferences on evidence-based education (researchED, 2013). The teachers who form part of this network frequently discuss cognitive psychology techniques and their applications to education on social media (mainly Twitter; e.g., Fordham, 2016; Penfound, 2016) and on their blogs, such as Evidence Into Practice (https://evidenceintopractice.wordpress.com/), My Learning Journey (http://reflectionsofmyteaching.blogspot.com/), and The Effortful Educator (https://theeffortfuleducator.com/). In general, the teachers who write about these issues pay careful attention to the relevant literature, often citing some of the work described in this review.

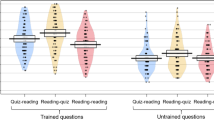

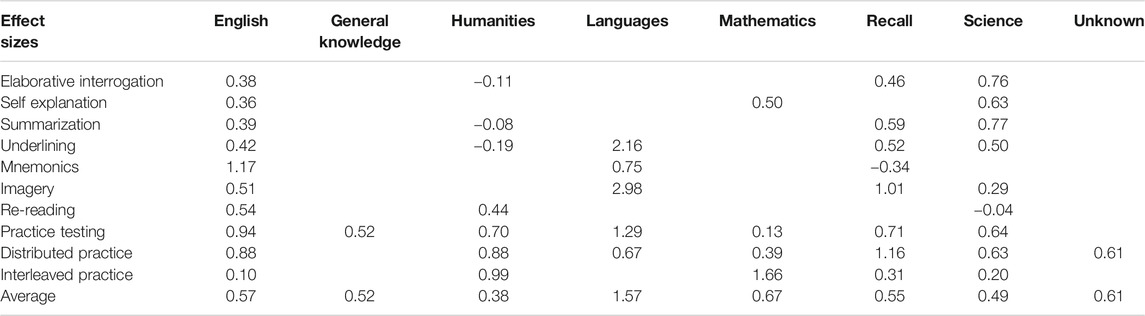

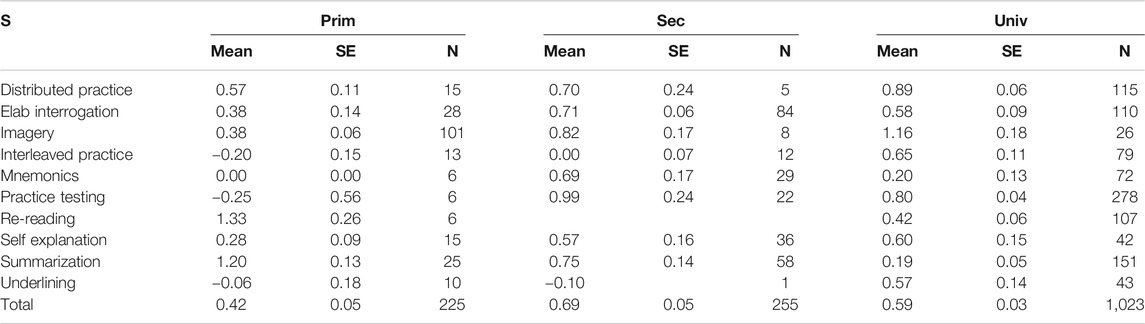

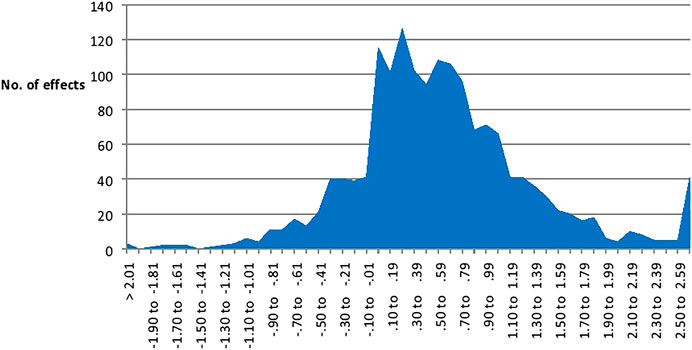

These informal writings, while allowing teachers to explore their approach to teaching practice (Luehmann, 2008), give us a unique window into the application of the science of learning to the classroom. By examining these blogs, we can not only observe how basic cognitive research is being applied in the classroom by teachers who are reading it, but also how it is being misapplied, and what questions teachers may be posing that have gone unaddressed in the scientific literature. Throughout this review, we illustrate each strategy with examples of how it can be implemented (see Table 1 and Figs. 1, 2, 3, 4, 5, 6 and 7), as well as with relevant teacher blog posts that reflect on its application, and draw upon this work to pinpoint fruitful avenues for further basic and applied research.

Fig. 1. Spaced practice schedule for one week. This schedule is designed to represent a typical timetable of a high-school student. The schedule includes four one-hour study sessions, one longer study session on the weekend, and one rest day. Notice that each subject is studied one day after it is covered in school, to create spacing between classes and study sessions. Copyright note: this image was produced by the authors.

Fig. 2. (a) Blocked practice and interleaved practice with fraction problems. In the blocked version, students answer four multiplication problems consecutively. In the interleaved version, students answer a multiplication problem followed by a division problem and then an addition problem, before returning to multiplication. For an experiment with a similar setup, see Patel et al. (2016). Copyright note: this image was produced by the authors. (b) Illustration of interleaving and spacing. Each color represents a different homework topic. Interleaving involves alternating between topics, rather than blocking. Spacing involves distributing practice over time, rather than massing. Interleaving inherently involves spacing as other tasks naturally “fill” the spaces between interleaved sessions. Copyright note: this image was produced by the authors, adapted from Rohrer (2012).

Fig. 3. Concept map illustrating the process and resulting benefits of retrieval practice. Retrieval practice involves the process of withdrawing learned information from long-term memory into working memory, which requires effort. This produces direct benefits via the consolidation of learned information, making it easier to remember later and causing improvements in memory, transfer, and inferences. Retrieval practice also produces indirect benefits of feedback to students and teachers, which in turn can lead to more effective study and teaching practices, with a focus on information that was not accurately retrieved. Copyright note: this figure originally appeared in a blog post by the first and third authors (http://www.learningscientists.org/blog/2016/4/1-1).

Fig. 4. Illustration of “how” and “why” questions (i.e., elaborative interrogation questions) students might ask while studying the physics of flight. To help figure out how physics explains flight, students might ask themselves the following questions: “How does a plane take off?”; “Why does a plane need an engine?”; “How does the upward force (lift) work?”; “Why do the wings have a curved upper surface and a flat lower surface?”; and “Why is there a downwash behind the wings?”. Copyright note: the image of the plane was downloaded from Pixabay.com and is free to use, modify, and share.

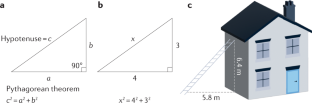

Fig. 5. Three examples of physics problems that would be categorized differently by novices and experts. The problems in (a) and (c) look similar on the surface, so novices would group them together into one category. Experts, however, will recognize that the problems in (b) and (c) both relate to the principle of energy conservation, and so will group those two problems into one category instead. Copyright note: the figure was produced by the authors, based on figures in Chi et al. (1981).

Fig. 6. Example of how to enhance learning through use of a visual example. Students might view this visual representation of neural communications with the words provided, or they could draw a similar visual representation themselves. Copyright note: this figure was produced by the authors.

Fig. 7. Example of word properties associated with visual, verbal, and motor coding for the word “SPOON”. A word can evoke multiple types of representation (“codes” in dual coding theory). Viewing a word will automatically evoke verbal representations related to its component letters and phonemes. Words representing objects (i.e., concrete nouns) will also evoke visual representations, including information about similar objects, component parts of the object, and information about where the object is typically found. In some cases, additional codes can also be evoked, such as motor-related properties of the represented object, where contextual information related to the object’s functional intention and manipulation action may also be processed automatically when reading the word. Copyright note: this figure was produced by the authors and is based on Aylwin (1990; Fig. 2) and Madan and Singhal (2012a, Fig. 3).

Spaced practice

The benefits of spaced (or distributed) practice to learning are arguably one of the strongest contributions that cognitive psychology has made to education (Kang, 2016). The effect is simple: the same amount of repeated studying of the same information spaced out over time will lead to greater retention of that information in the long run, compared with repeated studying of the same information for the same amount of time in one study session. The benefits of distributed practice were first empirically demonstrated in the 19th century. As part of his extensive investigation into his own memory, Ebbinghaus (1885/1913) found that when he spaced out repetitions across 3 days, he could almost halve the number of repetitions necessary to relearn a series of 12 syllables in one day (Chapter 8). He thus concluded that “a suitable distribution of [repetitions] over a space of time is decidedly more advantageous than the massing of them at a single time” (Section 34). For those who want to read more about Ebbinghaus’s contribution to memory research, Roediger (1985) provides an excellent summary.

Since then, hundreds of studies have examined spacing effects both in the laboratory and in the classroom (Kang, 2016). Spaced practice appears to be particularly useful at large retention intervals: in the meta-analysis by Cepeda, Pashler, Vul, Wixted, and Rohrer (2006), all studies with a retention interval longer than a month showed a clear benefit of distributed practice. The “new theory of disuse” (Bjork & Bjork, 1992) provides a helpful mechanistic explanation for the benefits of spacing to learning. This theory posits that memories have both retrieval strength and storage strength. Whereas retrieval strength is thought to measure the ease with which a memory can be recalled at a given moment, storage strength (which cannot be measured directly) represents the extent to which a memory is truly embedded in the mind. When studying is taking place, both retrieval strength and storage strength receive a boost. However, the extent to which storage strength is boosted depends upon retrieval strength, and the relationship is negative: the greater the current retrieval strength, the smaller the gains in storage strength. Thus, the information learned through “cramming” will be rapidly forgotten due to high retrieval strength and low storage strength (Bjork & Bjork, 2011), whereas spacing out learning increases storage strength by allowing retrieval strength to wane before restudy.
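The qualitative logic of this account can be illustrated with a toy simulation. The sketch below is not the model of Bjork and Bjork (1992); the functional forms (exponential decay of retrieval strength, a storage gain proportional to one minus retrieval strength) and the parameter values `gain` and `decay` are assumptions chosen only to make the negative relationship concrete.

```python
import math


def final_storage_strength(gaps_in_days, gain=1.0, decay=0.5):
    """Toy illustration of the storage/retrieval trade-off.

    gaps_in_days lists the delay before each restudy session.
    All functional forms and parameter values are illustrative assumptions.
    """
    retrieval = 0.0  # nothing has been learned yet
    storage = 0.0

    # First study session: retrieval strength is zero, so the storage gain is maximal.
    storage += gain * (1.0 - retrieval)
    retrieval = 1.0  # studying makes the item fully accessible for the moment

    for gap in gaps_in_days:
        # Retrieval strength wanes while no studying takes place (assumed exponential).
        retrieval *= math.exp(-decay * gap)
        # The higher the current retrieval strength, the smaller the storage gain.
        storage += gain * (1.0 - retrieval)
        retrieval = 1.0

    return storage


massed = final_storage_strength([0, 0])  # three sessions back to back
spaced = final_storage_strength([1, 1])  # three sessions, one day apart

print(f"massed: {massed:.2f}, spaced: {spaced:.2f}")
```

Under these assumptions, restudying immediately adds nothing to storage strength because retrieval strength is still at its ceiling, whereas restudying after a delay adds a gain each time. The absolute numbers carry no meaning; only the ordering of the two conditions reflects the verbal theory.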

Teachers can introduce spacing to their students in two broad ways. One involves creating opportunities to revisit information throughout the semester, or even in future semesters. This does involve some up-front planning, and can be difficult to achieve, given time constraints and the need to cover a set curriculum. However, spacing can be achieved at little cost if teachers set aside a few minutes per class to review information from previous lessons. The second method involves putting the onus to space on the students themselves. Of course, this would work best with older students – high school and above. Because spacing requires advance planning, it is crucial that the teacher helps students plan their studying. For example, teachers could suggest that students schedule study sessions on days that alternate with the days on which a particular class meets (e.g., schedule review sessions for Tuesday and Thursday when the class meets Monday and Wednesday; see Fig. 1 for a more complete weekly spaced practice schedule). It is important to note that the spacing effect refers to information that is repeated multiple times, rather than the idea of studying different material in one long session versus spaced out in small study sessions over time. However, for teachers and particularly for students planning a study schedule, the subtle difference between the two situations (spacing out restudy opportunities, versus spacing out studying of different information over time) may be lost. Future research should address the effects of spacing out studying of different information over time, whether the same considerations apply in this situation as compared to spacing out restudy opportunities, and how important it is for teachers and students to understand the difference between these two types of spaced practice.
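The scheduling suggestion above (review each subject on the day after it is taught) can be sketched in a few lines of Python. This is a minimal illustration only: the function name `review_days` and the subjects in `timetable` are hypothetical, and a real schedule would also need to account for rest days and session length, as in Fig. 1.

```python
DAYS = ["Monday", "Tuesday", "Wednesday", "Thursday", "Friday", "Saturday", "Sunday"]


def review_days(class_days):
    """Return the day after each class day, wrapping around the end of the week."""
    return [DAYS[(DAYS.index(day) + 1) % len(DAYS)] for day in class_days]


# Hypothetical timetable: subject -> days on which the class meets.
timetable = {
    "Chemistry": ["Monday", "Wednesday"],
    "History": ["Tuesday", "Thursday"],
}

# Review sessions fall on the day after each class meeting.
schedule = {subject: review_days(days) for subject, days in timetable.items()}

for subject, days in schedule.items():
    print(f"{subject}: review on {', '.join(days)}")
```

For a class that meets Monday and Wednesday, this yields review sessions on Tuesday and Thursday, matching the example in the text.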

It is important to note that students may feel less confident when they space their learning (Bjork, 1999) than when they cram. This is because spaced learning is harder – but it is this “desirable difficulty” that helps learning in the long term (Bjork, 1994). Students tend to cram for exams rather than space out their learning. One explanation for this is that cramming does “work”, if the goal is only to pass an exam. In order to change students’ minds about how they schedule their studying, it might be important to emphasize the value of retaining information beyond a final exam in one course.

Ideas for how to apply spaced practice in teaching have appeared in numerous teacher blogs (e.g., Fawcett, 2013; Kraft, 2015; Picciotto, 2009). In England in particular, as of 2013, high-school students need to be able to remember content from up to 3 years back on cumulative exams (General Certificate of Secondary Education (GCSE) and A-level exams; see CIFE, 2012). A-levels in particular determine what subject students study in university and which programs they are accepted into, and thus shape the path of their academic career. A common approach for dealing with these exams has been to include a “revision” (i.e., studying or cramming) period of a few weeks leading up to the high-stakes cumulative exams. Now, teachers who follow cognitive psychology are advocating a shift of priorities to spacing learning over time across the 3 years, rather than teaching a topic once and then intensely reviewing it weeks before the exam (Cox, 2016a; Wood, 2017). For example, some teachers have suggested using homework assignments as an opportunity for spaced practice by giving students homework on previous topics (Rose, 2014). However, questions remain, such as whether spaced practice can ever be effective enough to completely eliminate the need for, or the utility of, a cramming period (Cox, 2016b), and how one can possibly figure out the optimal lag for spacing (Benney, 2016; Firth, 2016).

There has been considerable research on the question of optimal lag, and much of it is quite complex; two sessions neither too close together (i.e., cramming) nor too far apart are ideal for retention. In a large-scale study, Cepeda, Vul, Rohrer, Wixted, and Pashler (2008) examined the effects of the gap between study sessions and the interval between study and test across long periods, and found that the optimal gap between study sessions was contingent on the retention interval. Thus, it is not clear how teachers can apply the complex findings on lag to their own classrooms.