- Create Account

Design and Analysis of Algorithms

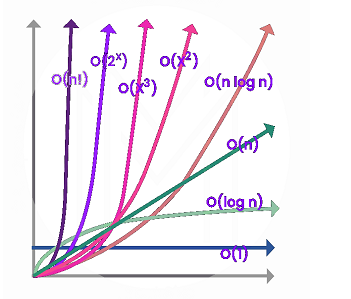

This course introduces basic elements of the design and analysis of computer algorithms. Topics include asymptotic notations and analysis, divide and conquer strategy, greedy methods, dynamic programming, basic graph algorithms, NP-completeness, and approximation algorithms. For each topic, beside in-depth coverage, one or more representative problems and their algorithms shall be discussed.

- Question Banks

- DAA Question Bank 2080

- DAA Question Bank 2079

- DAA Question Bank 2076

- DAA Question Bank 2078

Tribhuvan University

Institute of Science and Technology

Bachelor Level / fifth-semester / Science

Computer Science and Information Technology( CSC314 )

Full Marks: 60 + 20 + 20

Pass Marks: 24 + 8 + 8

Time: 3 Hours

Candidates are required to give their answers in their own words as far as practicable.

The figures in the margin indicate full marks.

Attempt any two questions.

What is recurrence relation? How it can be solved? Show that time complexity of the recurrence relation T(n) = 2T(n/2) + 1 is O(n) using substitution method.

Write down the advantages of dynamic programming over greedy strategy. Find optimal bracketing to multiply 4 matrices of order 2,3,4,2,5.

Discuss heapify operation with example. Write down its algorithm and analyze its time and space complexity.

Attempt any eight questions

Define RAM model. Write down iterative algorithm for finding factorial and provide its detailed analysis.

Write down algorithm of insertion sort and analyze its time and space complexity.

Write down minmax algorithm and analyze its complexity.

When greedy strategy provides optimal solution? Write down job sequencing with deadlines algorithm and analyze its complexity.

Suppose that a message contains alphabet frequencies as given below and find Huffman codes for each alphabet

Does backtracking give multiple solution? Trace subset sum algorithm for the set {3,5,2,4,1} andd sum=8.

Why extended euclidean algorithm is used? Write down its algorithm and analyze its complexity.

Define NP-complete problems with examples. Give brief proof of the statement “SAT is NP-complete”.

Write short notes on

a) Aggregate Analysis

b) Selection problems

Design and Analysis of Algorithms Question Bank Solution 2080

Share this link via

Or copy link

Copyright 2024 | HAMROCSIT.COM | All Right Reserved - Nymna Technology

Browse Course Material

Course info, instructors.

- Prof. Erik Demaine

- Prof. Srini Devadas

- Prof. Nancy Lynch

Departments

- Electrical Engineering and Computer Science

- Mathematics

As Taught In

- Algorithms and Data Structures

- Computer Networks

- Cryptography

- Applied Mathematics

Learning Resource Types

Design and analysis of algorithms, class on design and analysis of algorithms, problem set 1.

This resource contains information regarding class on design and analysis of algorithms, problem set 1.

You are leaving MIT OpenCourseWare

- Arts & Humanities

- English Literature

DAA Assignment Questions - Farah Institute of Technology

Related documents

![daa assignment questions with solutions ADA Assignment 2 Questions 2023[1]](https://s2.studylib.net/store/data/027098425_1-6a9cd014cdd3327d019d0649645a34db-300x300.png)

Add this document to collection(s)

You can add this document to your study collection(s)

Add this document to saved

You can add this document to your saved list

Suggest us how to improve StudyLib

(For complaints, use another form )

Input it if you want to receive answer

DAA Unit 1: Introduction to Algorithm Previous Year Questions

In this article, you will get the all-important questions that are mostly asked in the semester exams. We have collected this list of questions by taking help from the previous year’s question paper from the subject design and analysis of the algorithm.

So Before going for the final semester exam of the subject Algorithm, Please revise all the below questions and answers at least once. It will boost your knowledge and confidence as well.

The below questions are common question that is applicable to all colleges and universities. In the upcoming days, we will bring the university-wise questions and answers.

- What do you mean by algorithm? Explain it with example.

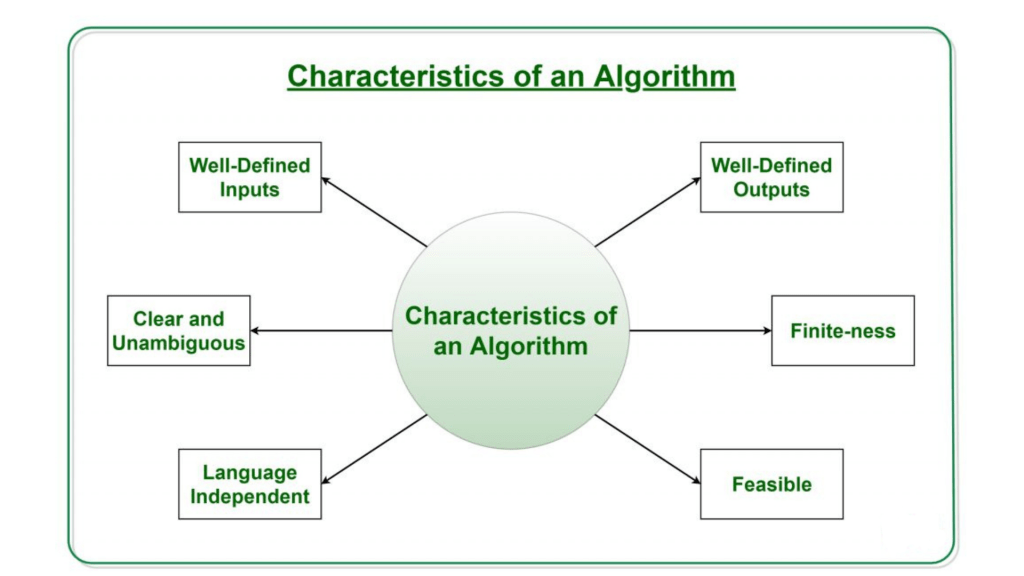

- Write the characteristics of algorithm.

- What do you mean by the complexity of algorithm?

- Explain Asymptotic Notation.

- Explain Little-oh notation and Little omega notation . Also Discuss relationship between O, o, theta, omega, and omega notation.

- Write a note on optimality and Reduction.

- Explain Master’s Theorem.

- Explain divide and conquer recurrences.

- What do you mean by recursion? Explain your answer with an example.

- Explain Recursion Tree.

- Write pseudocode of Insertion sort. Also find out its computing time and do analysis of insertion sort.

- Write pseudocode for QuickSort. Also find out its computing time and do analysis of QuickSort.

- Write a short note on Randomized Version of Quick Sort.

- Explain Merge Sort. Write its algorithm and do its analysis.

- Explain Heap Sort. Write its algorithm and do its analysis.

- Explain Heapify sort algorithm with its analysis.

- Explain Shell sort algorithm with its analysis.

- Explain Radix sort algorithm with its analysis.

- Explain Bucket sort algorithm with its analysis.

- Explain Counting sort algorithm with its analysis.

- Determine the best case time complexity of merge sort Algorithm.

All Interview

Company interview, technical interview, web interview, php interview, .net interview, java interview, database interview, you may also like:.

- Java Interview Questions

- SQL Interview Questions

- Python Interview Questions

- JavaScript Interview Questions

- Angular Interview Questions

- Selenium Interview Questions

- Spring Boot Interview Questions

- HR Interview Questions

- C Programming Interview Questions

- C++ Interview Questions

- Data Structure Interview Questions

- DBMS Interview Questions

- HTML Interview Questions

- IAS Interview Questions

- Manual Testing Interview Questions

- OOPs Interview Questions

- .Net Interview Questions

- C# Interview Questions

- ReactJS Interview Questions

- Networking Interview Questions

- PHP Interview Questions

- CSS Interview Questions

- Node.js Interview Questions

- Spring Interview Questions

- Hibernate Interview Questions

- AWS Interview Questions

- Accounting Interview Questions

Learn Latest Tutorials

Transact-SQL

Reinforcement Learning

R Programming

React Native

Python Design Patterns

Python Pillow

Python Turtle

Preparation

Verbal Ability

Interview Questions

Company Questions

Trending Technologies

Artificial Intelligence

Cloud Computing

Data Science

Machine Learning

B.Tech / MCA

Data Structures

Operating System

Computer Network

Compiler Design

Computer Organization

Discrete Mathematics

Ethical Hacking

Computer Graphics

Software Engineering

Web Technology

Cyber Security

C Programming

Control System

Data Mining

Data Warehouse

- Analysis of Algorithms

- Backtracking

- Dynamic Programming

- Divide and Conquer

- Geometric Algorithms

- Mathematical Algorithms

- Pattern Searching

- Bitwise Algorithms

- Branch & Bound

- Randomized Algorithms

Design and Analysis of Algorithms

- Complete Guide On Complexity Analysis - Data Structure and Algorithms Tutorial

- Why the Analysis of Algorithm is important?

- Types of Asymptotic Notations in Complexity Analysis of Algorithms

- Worst, Average and Best Case Analysis of Algorithms

- Asymptotic Notation and Analysis (Based on input size) in Complexity Analysis of Algorithms

- How to Analyse Loops for Complexity Analysis of Algorithms

- Sample Practice Problems on Complexity Analysis of Algorithms

- Basics on Analysis of Algorithms

- How to analyse Complexity of Recurrence Relation

- Introduction to Amortized Analysis

- Asymptotic Notations

- Big O Notation Tutorial - A Guide to Big O Analysis

- Difference between Big O vs Big Theta Θ vs Big Omega Ω Notations

- Examples of Big-O analysis

- Difference between big O notations and tilde

- Analysis of Algorithms | Big-Omega Ω Notation

- Analysis of Algorithms | Big - Θ (Big Theta) Notation

Some Advance Topics

- P, NP, CoNP, NP hard and NP complete | Complexity Classes

- Can Run Time Complexity of a comparison-based sorting algorithm be less than N logN?

- Why does accessing an Array element take O(1) time?

- What is the time efficiency of the push(), pop(), isEmpty() and peek() operations of Stacks?

- Complexity Proofs

- Proof that Clique Decision problem is NP-Complete

- Proof that Independent Set in Graph theory is NP Complete

- Prove that a problem consisting of Clique and Independent Set is NP Complete

- Prove that Dense Subgraph is NP Complete by Generalisation

- Prove that Sparse Graph is NP-Complete

- Top MCQs on Complexity Analysis of Algorithms with Answers

Design and Analysis of Algorithms is a fundamental aspect of computer science that involves creating efficient solutions to computational problems and evaluating their performance. DSA focuses on designing algorithms that effectively address specific challenges and analyzing their efficiency in terms of time and space complexity .

.png)

Complete Guide On Complexity Analysis

Table of Content

- What is meant by Algorithm Analysis?

- Why Analysis of Algorithms is important?

- Types of Algorithm Analysis

- Some Advance topics

Basics on Analysis of Algorithms:

- What is algorithm and why analysis of it is important?

Asymptotic Notations:

- Analysis of Algorithms | Big-O analysis

- Difference between Big Oh, Big Omega and Big Theta

- Analysis of Algorithms | Big – Ω (Big- Omega) Notation

- Analysis of Algorithms | Big – Θ (Big Theta) Notation

Some Advance topics:

- Types of Complexity Classes | P, NP, CoNP, NP hard and NP complete

Complexity Proofs:

Please login to comment..., similar reads.

- Algorithms-Analysis of Algorithms

Improve your Coding Skills with Practice

What kind of Experience do you want to share?

Navigation Menu

Search code, repositories, users, issues, pull requests..., provide feedback.

We read every piece of feedback, and take your input very seriously.

Saved searches

Use saved searches to filter your results more quickly.

To see all available qualifiers, see our documentation .

- Notifications

ROHITVERMAA/DAA-elab-answers

Folders and files, repository files navigation, srm university daa e-lab answers.

This repository contains the answers to the e-lab of DAA of SRM university.

The repository is organized by questions, labeled according to the Question Content.

Feel free to use these answers as a reference or for study purposes. However, I would strongly advise against copying these answers directly and submitting them as your own work. Not only is this plagiarism, but it also goes against the principles of academic integrity.

Contributing

If you have any suggestions for improving my answers, feel free to open a pull request or submit an issue. I am always looking for ways to improve my understanding of the material, and I welcome any constructive feedback.

- IT Jobs in India

- Freshers Jobs

- Internships

- Private Jobs

- Placement Papers

- IT Companies Syllabus

- Technical Interview Questions

- Technical Quizzes

- Startup Jobs

- AP Govt Jobs

- UP Govt Jobs

- Telangana Govt Jobs

- Punjab Govt Jobs

- Gujarat Govt Jobs

- TN Govt Jobs

- MP Govt Jobs

- Other State Govt Jobs

- Central Govt Jobs

- Government Jobs

- Freejobalert

- Employment News

- Jobs By Company

- Govt Jobs by Qualification

- Jobs by Designation

- Sarkari Naukri

- PSC Notifications

- Post Office Recruitment

- Railway Jobs

- Police Jobs

- Teaching Jobs

- Indian Army Jobs

- Indian Navy Jobs

- Indian Air Force Jobs

- RRB Recruitment

- Preparation Tips

- Free Mock Tests

- Engineering

- Common Entrance Exams

- University Time Tables

- University Hall Tickets

- University Results

- Syllabus (Govt)

- Previous Papers (Govt)

- Admit Cards

- Answer Keys

- Exam Calendars

- Academic Calendars

- Exam Syllabus

- Question Papers

- Hall Tickets

- Application Form

- Exam Analysis

- Scholarships in India

- TET (All States)

- Career Guidance

- Govt Schemes

- Seat Allotment

- Computer Awareness

- Schools.Freshersnow.com

- Privacy Policy

Home » Technical Interview » Top 100 DAA Interview Questions and Answers 2023

Top 100 DAA Interview Questions and Answers 2023

DAA Interview Questions and Answers – DAA (Design and Analysis of Algorithms) is a critical aspect of computer science and programming that deals with creating efficient and effective algorithms for solving complex problems. The process involves designing an algorithm, analyzing its efficiency, and optimizing it for better performance. The study of DAA is crucial for anyone seeking a career in software development or computer science, as it helps in understanding the fundamental principles of problem-solving and algorithmic design. So candidates, who are appearing for the interview can have a look at these Top 100 DAA Interview Questions and prepare well for the interview.

Table of Contents

DAA Interview Questions and Answers

In this article, we will explore the DAA Interview Questions that are frequently asked in the interview. So, let’s delve into the world of DAA and equip ourselves with the necessary knowledge and skills to tackle complex programming challenges and get succeed in the interview.

1. What is an algorithm?

Answer: An algorithm is a step-by-step procedure for solving a problem or accomplishing a task.

2. What are the characteristics of a good algorithm?

Answer: A good algorithm should be correct, efficient, and easy to understand.

3. What is the difference between an algorithm and a program?

Answer: An algorithm is a step-by-step procedure for solving a problem, while a program is an implementation of an algorithm in a particular programming language.

4. What is the time complexity of an algorithm?

Answer: The time complexity of an algorithm is a measure of the amount of time it takes to run as a function of the size of the input data.

5. What is the space complexity of an algorithm?

Answer: The space complexity of an algorithm is a measure of the amount of memory it requires as a function of the size of the input data.

6. What is the Big O notation?

Answer: The Big O notation is used to describe the upper bound on the time complexity of an algorithm.

7. What is the worst-case time complexity of an algorithm?

Answer: The worst-case time complexity of an algorithm is the maximum amount of time it takes to run

over all possible inputs of a given size.

8. What is the best-case time complexity of an algorithm?

Answer: The best-case time complexity of an algorithm is the minimum amount of time it takes to run over all possible inputs of a given size.

9. What is the average-case time complexity of an algorithm?

Answer: The average-case time complexity of an algorithm is the expected amount of time it takes to run over all possible inputs of a given size.

10. What is the difference between the best-case and worst-case time complexity of an algorithm?

Answer: The best-case time complexity is the minimum amount of time an algorithm can take to run, while the worst-case time complexity is the maximum amount of time an algorithm can take to run.

11. What is the difference between the average-case and worst-case time complexity of an algorithm?

Answer: The worst-case time complexity is the maximum amount of time an algorithm can take to run, while the average-case time complexity is the expected amount of time an algorithm will take to run.

12. What is the difference between time complexity and space complexity?

Answer: Time complexity measures the amount of time an algorithm takes to run, while space complexity measures the amount of memory an algorithm requires.

13. What is the difference between an array and a linked list?

Answer: An array is a contiguous block of memory used to store a collection of data items, while a linked list is a data structure in which each data item is stored in a separate node that contains a reference to the next node.

14. What is a binary search?

Answer: A binary search is an algorithm that searches for a particular value in a sorted array by repeatedly dividing the search interval in half.

15. What is a sorting algorithm?

Answer: A sorting algorithm is an algorithm that puts a collection of data items into a specific order, such as alphabetical or numerical order.

16. What is the difference between a stable and an unstable sorting algorithm?

Answer: A stable sorting algorithm preserves the relative order of equal elements in the sorted output, while an unstable sorting algorithm does not guarantee the relative order of equal elements.

17. What is the difference between top-down and bottom-up dynamic programming approaches?

Answer: The top-down approach starts from the main problem and recursively breaks it down into subproblems, while the bottom-up approach starts from subproblems and builds up to solve the main problem.

18. What is memoization in dynamic programming?

Answer: Memoization is a technique of storing the results of solved subproblems in a table to avoid their repeated calculation in future recursive calls.

19. What is the difference between dynamic programming and divide-and-conquer algorithms?

Answer: Dynamic programming involves solving subproblems and reusing their solutions to solve the main problem, while divide-and-conquer algorithms divide the problem into independent subproblems and solve them separately.

20. What is the time complexity of the brute-force approach?

Answer: The time complexity of the brute-force approach is typically O(n^n) or O(2^n), where n is the size of the input.

21. What is the difference between stable and unstable sorting algorithms?

Answer: Stable sorting algorithms preserve the relative order of equal elements in the input, while unstable sorting algorithms may not.

22. What is the time complexity of the quicksort algorithm?

Answer: The average-case time complexity of the quicksort algorithm is O(n*logn), where n is the size of the input.

23. What is the difference between in-place and out-of-place sorting algorithms?

Answer: In-place sorting algorithms sort the input array in place without using additional memory, while out-of-place sorting algorithms require additional memory to store the sorted output.

24. What is the time complexity of the mergesort algorithm?

Answer: The time complexity of the mergesort algorithm is O(n*logn), where n is the size of the input.

25. What is the difference between breadth-first search and depth-first search algorithms?

Answer: Breadth-first search explores the nodes in the graph in a breadth-first order, while depth-first search explores the nodes in a depth-first order.

26. What is the difference between a graph and a tree data structure?

Answer: A tree is a special case of a graph, where there are no cycles, and every pair of nodes is connected by a unique path.

27. What is the time complexity of the Dijkstra’s algorithm?

Answer: The time complexity of Dijkstra’s algorithm is O(E*logV), where E is the number of edges and V is the number of vertices in the graph.

28. What is the difference between a directed graph and an undirected graph?

Answer: A directed graph has directed edges, where each edge points from one vertex to another, while an undirected graph has undirected edges, where each edge connects two vertices without any direction.

29. What is the difference between a complete graph and a sparse graph?

Answer: A complete graph has all possible edges between every pair of vertices, while a sparse graph has relatively fewer edges.

30. What is the time complexity of the Bellman-Ford algorithm?

Answer: The time complexity of the Bellman-Ford algorithm is O(VE), where V is the number of vertices and E is the number of edges in the graph.

31. What is the difference between a greedy algorithm and a dynamic programming algorithm?

Answer: A greedy algorithm makes locally optimal choices at each step, while a dynamic programming algorithm solves subproblems and reuses their solutions to solve the main problem.

32. What is a divide-and-conquer algorithm?

Answer: A divide-and-conquer algorithm is an algorithm that recursively divides a problem into subproblems of smaller size, solves the subproblems, and combines the solutions to solve the original problem.

33. What is a greedy algorithm?

Answer: A greedy algorithm is an algorithm that makes locally optimal choices at each step, hoping to find a globally optimal solution.

34. What is dynamic programming?

Answer: Dynamic programming is an algorithmic technique that solves problems by breaking them down into smaller subproblems and storing the solutions to these subproblems to avoid redundant calculations.

35. What is backtracking?

Answer: Backtracking is an algorithmic technique that involves exploring all possible solutions to a problem by systematically trying different choices, and undoing choices that lead to dead ends.

36. What is memoization?

Answer: Memoization is an optimization technique that stores the results of expensive function calls and returns the cached result when the same inputs occur again.

37. What is recursion?

Answer: Recursion is a programming technique in which a function calls itself to solve a problem.

38. What is the difference between recursion and iteration?

Answer: Recursion involves calling a function from within itself to solve a problem, while iteration involves using loops to repeat a block of code until a condition is met.

39. What is the difference between top-down and bottom-up dynamic programming?

Answer: Top-down dynamic programming involves solving a problem by breaking it down into subproblems, while bottom-up dynamic programming involves solving the subproblems first and combining them to solve the original problem.

40. What is the Knapsack problem?

Answer: The Knapsack problem is a classic optimization problem in which a set of items with different weights and values must be packed into a knapsack of a given capacity, while maximizing the total value of the items.

41. What is the traveling salesman problem?

Answer: The traveling salesman problem is a classic optimization problem in which a salesman must visit a set of cities, each only once, and return to his starting point, while minimizing the total distance traveled.

42. What is the complexity of the brute-force solution to the traveling salesman problem?

Answer: The brute-force solution to the traveling salesman problem has a time complexity of O(n!), where n is the number of cities.

43. What is the greedy approach to the traveling salesman problem?

Answer: The greedy approach to the traveling salesman problem involves starting at a random city, and at each step, choosing the closest unvisited city as the next destination.

44. What is the complexity of the greedy approach to the traveling salesman problem?

Answer: The greedy approach to the traveling salesman problem has a time complexity of O(n^2), where n is the number of cities.

45. What is a heuristic?

Answer: A heuristic is a technique that is used to find a good solution to a problem quickly, but does not guarantee an optimal solution.

46. What is the A* algorithm?

Answer: The A* algorithm is a heuristic search algorithm that uses both the cost of the path already taken and an estimate of the cost of the remaining path to guide the search.

47. What is the difference between breadth-first search and depth-first search?

Answer: Breadth-first search explores all nodes at the same level before moving on to the next level, while depth-first search explores as far as possible along each branch before backtracking.

48. What is the difference between Dijkstra’s algorithm and A* algorithm?

Answer: Dijkstra’s algorithm is a shortest path algorithm that uses only the cost of the path already taken, while the A* algorithm uses both the cost of the path already taken and an estimate of the cost of the remaining path.

49. What is a hash table?

Answer: A hash table is a data structure that maps keys to values by using a hash function to compute an index into an array of buckets.

50. What is collision resolution in hash tables?

Answer: Collision resolution is the process of dealing with two or more keys that hash to the same index in a hash table. There are several techniques for collision resolution, such as chaining and open addressing.

51. What is the time complexity of a hash table operation?

Answer: The time complexity of a hash table operation depends on the specific operation and the implementation, but is typically O(1) on average.

52. What is a priority queue?

Answer: A priority queue is a data structure that stores a collection of elements and allows access to the element with the highest priority according to a specified priority function.

53. What is a heap?

Answer: A heap is a data structure that maintains a partially ordered tree in which each node is greater than or equal to its children (for a max heap) or less than or equal to its children (for a min heap).

54. What is the time complexity of a heap operation?

Answer: The time complexity of a heap operation depends on the specific operation and the implementation, but is typically O(log n) for insertion and deletion, and O(1) for accessing the maximum or minimum element.

55. What is a graph?

Answer: A graph is a data structure that consists of a set of vertices (nodes) and a set of edges that connect pairs of vertices.

56. What is a directed graph?

Answer: A directed graph is a graph in which each edge has a direction, indicating a one-way connection between two vertices.

57. What is an undirected graph?

Answer: An undirected graph is a graph in which each edge has no direction, indicating a bidirectional connection between two vertices.

58. What is a weighted graph?

Answer: A weighted graph is a graph in which each edge has a weight or cost assigned to it, indicating the cost or distance between the two vertices it connects.

59. What is a cycle in a graph?

Answer: A cycle in a graph is a path that starts and ends at the same vertex, and includes at least one edge.

60. What is a connected graph?

Answer: A connected graph is a graph in which there is a path between every pair of vertices.

61. What is a spanning tree?

Answer: A spanning tree of a graph is a subgraph that includes all the vertices of the graph and forms a tree (a connected acyclic graph).

62. What is the minimum spanning tree of a graph?

Answer: The minimum spanning tree of a graph is the spanning tree with the minimum sum of the weights of its edges.

63. What is Kruskal’s algorithm?

Answer: Kruskal’s algorithm is a greedy algorithm that finds the minimum spanning tree of a graph by repeatedly adding the next lightest edge that does not form a cycle.

64. What is Prim’s algorithm?

Answer: Prim’s algorithm is a greedy algorithm that finds the minimum spanning tree of a graph by starting at a random vertex and repeatedly adding the next lightest edge that connects a vertex in the tree to a vertex outside the tree.

65. What is the time complexity of Kruskal’s algorithm and Prim’s algorithm?

Answer: The time complexity of Kruskal’s algorithm and Prim’s algorithm is O(E log E), where E is the number of edges in the graph.

66. What is a topological sort?

Answer: A topological sort of a directed acyclic graph is a linear ordering of its vertices such that for every directed edge from vertex u to vertex v, u comes before v in the ordering.

67. What is the time complexity of a topological sort?

Answer: The time complexity of a topological sort is O(V+E), where V is the number of vertices and E is the number of edges in the graph.

68. What is the difference between breadth-first search and topological sort?

Answer: Breadth-first search is a graph traversal algorithm that visits all the vertices in the graph at a given distance (level) from a starting vertex before moving on to vertices at a greater distance. Topological sort, on the other hand, is a way of ordering the vertices of a directed acyclic graph such that for every directed edge u->v, u comes before v in the ordering. While both algorithms involve visiting the vertices of a graph, their purposes and methods are quite different.

69. What is Dijkstra’s algorithm?

Answer: Dijkstra’s algorithm is a greedy algorithm that finds the shortest path between a starting vertex and all other vertices in a graph with non-negative edge weights. It works by maintaining a set of vertices for which the shortest path is known, and repeatedly selecting the vertex with the shortest path and updating the shortest paths to its neighbors.

70. What is the time complexity of Dijkstra’s algorithm?

Answer: The time complexity of Dijkstra’s algorithm is O((V+E)log V) using a binary heap, where V is the number of vertices and E is the number of edges in the graph.

71. What is the Bellman-Ford algorithm?

Answer: The Bellman-Ford algorithm is a dynamic programming algorithm that finds the shortest path between a starting vertex and all other vertices in a graph with possibly negative edge weights. It works by repeatedly relaxing the edges in the graph, i.e., updating the shortest path estimate for each vertex based on the shortest path estimate of its neighbors.

72. What is the Floyd-Warshall algorithm?

Answer: The Floyd-Warshall algorithm is a dynamic programming algorithm that finds the shortest path between all pairs of vertices in a graph with possibly negative edge weights. It works by maintaining a table of shortest path estimates for all pairs of vertices, and repeatedly updating these estimates based on intermediate vertices.

73. What is the time complexity of the Floyd-Warshall algorithm?

Answer: The time complexity of the Floyd-Warshall algorithm is O(V^3), where V is the number of vertices in the graph.

74. What is the difference between dynamic programming and greedy algorithms?

Answer: Both dynamic programming and greedy algorithms are techniques for solving optimization problems, but they differ in their approach. Dynamic programming involves breaking a problem down into smaller subproblems and solving each subproblem only once, storing the solution in a table to avoid recomputation. Greedy algorithms, on the other hand, make the locally optimal choice at each step, without considering the global optimal solution. Dynamic programming is generally more computationally expensive than greedy algorithms, but can handle more complex optimization problems.

75. What is the principle of optimality?

Answer: The principle of optimality is a key concept in dynamic programming that states that an optimal solution to a problem can be constructed from optimal solutions to its subproblems.

76. What is a heuristic algorithm?

Answer: A heuristic algorithm is an algorithm that uses an approximate, “rule of thumb” approach to find a solution to a problem, rather than an exact method. Heuristic algorithms are often used when exact solutions are infeasible or too expensive to compute.

77. What is simulated annealing?

Answer: Simulated annealing is a probabilistic metaheuristic algorithm for finding global optima in a large search space. It is inspired by the process of annealing in metallurgy, where a metal is slowly cooled to increase its strength and reduce defects. Simulated annealing works by accepting moves that decrease the objective function with a probability that depends on the current “temperature” of the system, which is gradually decreased over time.

78. What is genetic algorithm?

Answer: Genetic algorithm is a metaheuristic algorithm inspired by the process of natural selection in biological evolution. It involves maintaining a population of potential solutions to a problem, and using a process of selection, recombination, and mutation to create new solutions and evolve the population towards better solutions.

79. What is quicksort algorithm?

Answer: Quicksort is a popular sorting algorithm that uses a divide-and-conquer strategy to sort an array of elements. It works by selecting a pivot element from the array, partitioning the array into two subarrays based on the pivot, and recursively sorting the subarrays. Quicksort has an average case time complexity of O(n log n), making it one of the fastest sorting algorithms in practice.

80. What is merge sort algorithm?

Answer: Merge sort is a popular sorting algorithm that uses a divide-and-conquer strategy to sort an array of elements. It works by dividing the array into two halves, recursively sorting each half, and then merging the two sorted halves together. Merge sort has a worst-case time complexity of O(n log n), making it a good choice for sorting large datasets.

81. What is the difference between quicksort and mergesort?

Answer: Quicksort and mergesort are both popular sorting algorithms that use the divide-and-conquer strategy, but they differ in their approach. Quicksort uses a pivot element to partition the array and sort the subarrays recursively, while mergesort divides the array into two halves and sorts them recursively before merging the sorted halves together. In general, quicksort is faster than mergesort in practice, but mergesort has a more predictable worst-case time complexity.

82. What is dynamic programming used for?

Answer: Dynamic programming is a technique used to solve optimization problems that can be broken down into smaller subproblems. It is often used in problems involving sequence alignment, shortest path finding, knapsack problems, and other optimization problems.

83. What is the difference between a breadth-first search and a depth-first search?

Answer: Breadth-first search and depth-first search are two popular graph traversal algorithms that differ in their approach. Breadth-first search visits all the vertices at a given distance from the starting vertex before moving on to vertices at a greater distance, while depth-first search explores each branch of the graph as far as possible before backtracking. Breadth-first search is guaranteed to find the shortest path in an unweighted graph, while depth-first search can be used to search for a target vertex in a large graph.

84. What is the time complexity of binary search?

Answer: The time complexity of binary search is O(log n), where n is the size of the array being searched. Binary search works by repeatedly dividing the search interval in half, so the number of comparisons needed to find a target element is proportional to the logarithm of the size of the array.

85. What is the time complexity of bubble sort?

Answer: The time complexity of bubble sort is O(n^2), where n is the size of the array being sorted. Bubble sort works by repeatedly swapping adjacent elements that are out of order, so it needs to make O(n^2) comparisons and swaps in the worst case.

86. What is the time complexity of insertion sort?

Answer: The time complexity of insertion sort is O(n^2), where n is the size of the array being sorted. Insertion sort works by iteratively inserting each element in the proper position in a sorted subarray, so it needs to make O(n^2) comparisons and swaps in the worst case.

87. What is the time complexity of selection sort?

Answer: The time complexity of selection sort is O(n^2), where n is the size of the array being sorted. Selection sort works by repeatedly finding the smallest element in the unsorted portion of the array and swapping it with the first unsorted element, so it needs to make O(n^2) comparisons and swaps in the worst case.

88. What is the time complexity of a linear search?

Answer: The time complexity of a linear search is O(n), where n is the size of the array being searched. Linear search works by iterating through each element in the array until it finds the target element, so it needs to make O(n) comparisons in the worst case.]

89. What is the time complexity of a hash table lookup?

Answer: The time complexity of a hash table lookup is O(1) on average, but can be O(n) in the worst case, where n is the number of keys in the table. Hash table lookup works by computing the hash value of a key, which maps it to an index in an array, and then checking if the key is present at that index. In the average case, there is only one key at each index, so the lookup takes constant time. However, in the worst case, all the keys map to the same index, resulting in a linear search through a linked list at that index, which takes O(n) time.

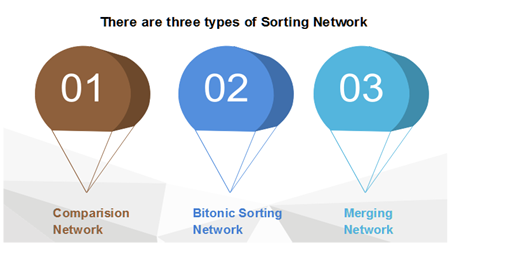

90. What is Sorting Network?

Answer: A sorting network is a numerical representation of a network comprising wires and comparator modules, which is utilized for sorting a sequence of numbers. The comparator modules connect two wires and sort the values by directing the smaller value to one wire and the higher value to the other. The key distinction between sorting networks and comparison sorting algorithms is that the sequence of comparisons is predetermined in a sorting network, irrespective of the outcomes of prior comparisons. This decoupling of comparison series is beneficial for parallel implementation of the algorithms.

91. What is the difference between the Dynamic programming and Greedy method?

92. List the advantage of Huffman’s encoding?

Answer: Huffman’s encoding is a crucial file compression technique that provides the following benefits:

- Easy to use

- Implements optimal and minimum length encoding

93. What is the Algorithm’s Time Complexity?

Answer: The time complexity of an algorithm refers to the total amount of time required for the program to run until it finishes.

It is typically expressed using the big O notation.

The algorithm’s time complexity indicates the length of time needed for the program to run entirely.

94. What is a Greedy method in DAA?

Answer: Greedy algorithms solve optimization problems by constructing a solution piece by piece. At each step, they select the next component that provides an immediate benefit without taking prior decisions into account. This method is primarily employed for addressing optimization problems.

95. Can you explain Asymptotic Notation?

Answer: Asymptotic Notation is a mathematical technique used to analyze and describe the behavior of functions as their input size approaches infinity. This notation involves methods whose domains are the set of natural numbers and is useful for defining the worst-case running time function T(n). It can also be extended to the domain of the real numbers.

96. What is the difference between Time Efficiency and Space Efficiency?

Time Efficiency refers to the measure of the number of times the critical algorithm functions are executed, while Space Efficiency calculates the number of additional memory units utilized by the algorithm.

97. Can you provide an overview of how Merge sort works, and can you give an example of its implementation?

Answer: Merge sort is a sorting algorithm that involves dividing the original list into two smaller sub-lists until only one item is left in each sub-list. These sub-lists are then sorted, and the sorted sub-lists are merged to form a sorted parent list. This process is repeated recursively until the original list is completely sorted.

For example, suppose we have an unsorted list of numbers: [5, 2, 8, 4, 7, 1, 3, 6]. The Merge sort algorithm will first divide the list into two sub-lists: [5, 2, 8, 4] and [7, 1, 3, 6]. Each sub-list will then be recursively divided until only one item is left in each sub-list: [5], [2], [8], [4], [7], [1], [3], [6]. These single-item sub-lists are then sorted and merged pairwise to form new sub-lists: [2, 5], [4, 8], [1, 7], [3, 6]. The process continues recursively until the final sorted list is obtained: [1, 2, 3, 4, 5, 6, 7, 8].

98. Can you explain the concept of Huffman code?

Answer: Huffman code refers to a variable-length encoding technique that involves constructing an optimal prefix tree to assign bit strings to characters based on their frequency in a given text.

99. Can you describe dynamic Huffman coding?

Answer: Dynamic Huffman coding involves updating the coding tree every time a new character is read from the source text. It is an improved version of the simplest Huffman coding technique and is used to overcome its limitations.

100. Can you define the n-queen problem?

Answer: The n-queen problem involves placing n queens on an n-by-n chessboard in a way that none of the queens attack each other by being in the same row, column, or diagonal.

The study of DAA algorithms is essential for computer science professionals and software developers as it equips them with critical problem-solving and algorithmic design skills. By understanding the fundamental principles of DAA and familiarizing oneself DAA Interview Questions and Answers , one can confidently navigate complex programming challenges and excel in their career. And follow our Freshersnow website frequently to know more interview questions to get succeed in an technical interview easily.

Jobs by Qualification

Freshersnow.com is one of the best job sites in India. On this website you can find list of jobs such as IT jobs, government jobs, bank jobs, railway jobs, work from home jobs, part time jobs, online jobs, pharmacist jobs, software jobs etc. Along with employment updates, we also provide online classes for various courses through our android app. Freshersnow.com also offers recruitment board to employers to post their job advertisements for free.

Academia.edu no longer supports Internet Explorer.

To browse Academia.edu and the wider internet faster and more securely, please take a few seconds to upgrade your browser .

Enter the email address you signed up with and we'll email you a reset link.

- We're Hiring!

- Help Center

DAA PROBLEMS WITH SOLUTIONS

Related Papers

parvin asude

Aliakbar Haghighi

IEEE Transactions on Computers

manoj kumar

Ciclo de discusion de tesis doctorales

Alejandra Verde

Glaucio Moro

HAL (Le Centre pour la Communication Scientifique Directe)

Volker Ziegler

Education Sciences

Gabriela Chavira , Patchareeya Kwan

RELATED PAPERS

Biochimica et Biophysica Acta (BBA) - Molecular Basis of Disease

sara caldarola

Coral CALERO

Molecular Microbiology

Michael Ferguson

Research in Consumer Behavior

Annamma Joy

Proceedings of the National Academy of Sciences

Lila Davachi

Revista De Ciencias Agroveterinarias

Jackson A D R I A N O Albuquerque

Amal Jayawardane

Tạp chí phát triển Khoa học và Công nghệ

Thanh Hiền Nguyễn

Biochimica et Biophysica Acta (BBA) - Bioenergetics

H. Westerhoff

MATEC Web of Conferences

Applied Physics Letters

Cadernos de Pesquisa

Marina Muniz Rossa Nunes

Revista de Gestão e Secretariado

Raimundo Nonato

Positif, nº 540, feb 2006

Floreal Peleato

Journal of Advanced Studies in Topology

Syed Ali Fathima

Revista Brasileira de Fruticultura

elias Almeida de Sousa

Andrew Shankman

RELATED TOPICS

- We're Hiring!

- Help Center

- Find new research papers in:

- Health Sciences

- Earth Sciences

- Cognitive Science

- Mathematics

- Computer Science

- Academia ©2024

- Course Enquiry +91-7669 100 251 +91-9953 306 008

- Hire from Us +91-7845 696 520

- Interview Questions

40+ [REAL-TIME] DAA Interview Questions and Answers

Last updated on 30th Apr 2024, Popular Course

DAA , or Design and Analysis of Algorithms, is a field in computer science concerned with the study of algorithms: their design, analysis, implementation, and application. It involves understanding various algorithmic techniques, such as divide and conquer, dynamic programming, greedy algorithms, and more, and analyzing their efficiency in terms of time and space complexity. The goal is to develop algorithms that solve computational problems effectively and efficiently.

1. What is an algorithm?

An algorithm is a step-by-step procedure or set of rules used to solve a specific problem or perform a particular task. It’s a finite sequence of well-defined instructions that transforms input into output. They can range from simple calculations to complex processes used in artificial intelligence and data analysis. Essentially, they provide a systematic approach to solving problems and achieving desired outcomes efficiently and accurately.

2. What is the significance of analyzing algorithms?

- Analyzing algorithms helps in understanding their efficiency in terms of time and space requirements.

- It allows us to compare different algorithms for the same problem and choose the most suitable one based on performance characteristics.

- This analysis is crucial for optimizing software performance, improving resource utilization, and ultimately enhancing user experience.

3. Explain the importance of time complexity in algorithm analysis.

Time complexity measures the amount of time an algorithm takes to complete as a function of the size of its input. It’s crucial because it helps us predict how the algorithm will perform as the input size grows. By understanding the time complexity, we can choose the most efficient algorithm for a given problem.

4. What is space complexity, and why is it important?

Space complexity measures the amount of memory an algorithm requires as a function of the size of its input. It’s essential because it helps us understand an algorithm’s memory usage, which is crucial, especially in resource-constrained environments like embedded systems or when dealing with large datasets.

5. What is the difference between worst-case, best-case, and average-case time complexity?

6. Explain the divide and conquer algorithm paradigm with an example.

- Divide and conquer is a problem-solving technique where a problem is divided into smaller subproblems, solved recursively, and then combined to find the solution to the original problem.

- An example is the merge sort algorithm, where the array is divided into halves, sorted recursively, and then merged.

7. What is dynamic programming, and when is it used?

Dynamic programming is a technique used to solve problems by breaking them down into simpler subproblems and solving each subproblem only once. It’s typically used when the problem exhibits overlapping subproblems and optimal substructure properties, such as in the case of the Fibonacci sequence or the knapsack problem.

8. Explain greedy algorithms and provide an example of their application.

Greedy algorithms make locally optimal choices at each step in the hope of finding a global optimum. They’re used when a problem can be solved by making a series of optimal choices at the time. An example is the coin change problem, where the goal is to make change for a given amount using the fewest possible coins.

9. What is backtracking, and how is it different from brute force?

- Backtracking is a technique for solving problems by exploring all possible solutions and eliminating those that do not satisfy the problem constraints.

- It differs from brute force in that it intelligently searches the solution space, pruning branches that cannot lead to a valid solution, which makes it more efficient than exhaustively trying every possible solution.

10. Explain the importance of algorithm design paradigms in problem-solving.

Algorithm design paradigms provide structured approaches for solving different types of problems efficiently. By understanding and applying these paradigms, such as divide and conquer, dynamic programming, greedy algorithms, and backtracking, we can develop algorithms that are easier to understand, analyze, and implement, ultimately leading to more effective solutions to complex problems.

11. What are the main steps involved in designing an algorithm?

The main steps in designing an algorithm include understanding the problem, determining the inputs and outputs, choosing appropriate data structures and algorithmic techniques, devising a plan or algorithm, implementing the algorithm, testing and debugging it, and finally, analyzing its efficiency.

12. Explain the concept of asymptotic analysis in algorithm analysis.

Asymptotic analysis evaluates an algorithm’s performance as the input size approaches infinity. It focuses on the algorithm’s behaviour for large inputs and provides insights into how the algorithm scales. Common notations used in asymptotic analysis include Big O, Big Omega, and Big Theta, which describe the upper, lower, and tight bounds of an algorithm’s time or space complexity, respectively.

13. What are the different types of algorithm complexity?

- The different types of algorithm complexity include time complexity, space complexity, and sometimes auxiliary space complexity.

- Time complexity measures the number of operations or steps an algorithm takes to complete as a function of the input size.

- Space complexity measures the amount of memory an algorithm uses as a function of the input size.

- Auxiliary space complexity accounts for the extra space used by the algorithm apart from the input space.

14. Explain the concept of recurrence relation in algorithm analysis.

A recurrence relation is a mathematical equation that recursively defines a sequence or function in terms of its previous values. In algorithm analysis, recurrence relations are often used to describe the time complexity of recursive algorithms. Solving recurrence relations involves finding a closed-form solution that expresses the time complexity of the algorithm in terms of its input size.

15. What is the Master Theorem, and when is it used in algorithm analysis?

The Master Theorem is a mathematical tool used to solve recurrence relations that arise in the analysis of divide-and-conquer algorithms. It provides a way to determine the time complexity of algorithms with a specific form of recurrence relation. The Master Theorem simplifies the process of analyzing the time complexity of algorithms like merge sort, binary search, and quicksort.

16. Explain the concept of amortized analysis in algorithm analysis.

- Amortized analysis is a method for analyzing the average time or space complexity of a sequence of operations on a data structure.

- It considers the total cost of a series of operations divided by the number of operations, providing an average cost per operation.

- Amortized analysis is particularly useful for analyzing data structures with expensive individual operations but overall efficient performance, such as dynamic arrays or hash tables.

17. What are some common algorithmic techniques used to solve graph problems?

Some common algorithmic techniques used to solve graph problems include depth-first search (DFS) and breadth-first search (BFS) for traversal and pathfinding, Dijkstra’s algorithm for single-source shortest paths, the Bellman-Ford algorithm for single-source shortest paths with negative weights, and the Floyd-Warshall algorithm for all pairs of shortest paths

18. Explain the concept of NP-completeness and its significance in algorithm analysis.

NP-completeness is a class of problems for which no known efficient algorithm exists to solve them in polynomial time. A problem is considered NP-complete if every problem in the NP class can be reduced to it in polynomial time. The significance of NP-completeness lies in its implications for algorithm design. If a problem is NP-complete, finding an efficient solution is unlikely, and heuristic or approximation algorithms may be necessary.

19. What is the difference between a greedy algorithm and a dynamic programming algorithm?

- Greedy algorithms make locally optimal choices at each step with the hope of finding a global optimum.

- In contrast, dynamic programming algorithms solve problems by breaking them down into simpler subproblems and solving each subproblem only once.

- The main difference lies in their approach to problem-solving: greedy algorithms make decisions based solely on the current state, while dynamic programming algorithms consider the entire problem space and optimize globally.

20. How do you determine the correctness of an algorithm?

An algorithm’s correctness can be determined by analyzing its logic, verifying its compliance with the problem requirements, and testing it with various input cases, including boundary cases and edge cases. Additionally, mathematical proofs, such as loop invariants or induction, can be used to formally verify an algorithm’s correctness. Debugging techniques, such as step-by-step execution and code reviews, are also essential for identifying and fixing any errors or inconsistencies in the algorithm.

Subscribe For Free Demo

21. Explain the concept of a greedy choice property in greedy algorithms.

The greedy choice property states that at each step of a greedy algorithm, the locally optimal choice leads to a globally optimal solution. In other words, a greedy algorithm makes the best possible choice at each step without considering the consequences of that choice on future steps. This property is crucial for the correctness of greedy algorithms and is often proven using mathematical induction or contradiction.

22. What is a dynamic programming table, and how is it used in dynamic programming algorithms?

- A dynamic programming table is a data structure used to store intermediate results in dynamic programming algorithms.

- It typically has dimensions corresponding to the sizes of the input parameters and is filled in a bottom-up or top-down fashion with the solutions to subproblems.

- The table allows dynamic programming algorithms to avoid redundant computations by storing and reusing previously computed results, leading to improved efficiency.

23. Explain the concept of memoization in dynamic programming.

Memoization is a technique used to optimize dynamic programming algorithms by storing the results of expensive function calls and returning the cached result when the same inputs occur again. It typically involves using a data structure, such as a dictionary or an array, to store the computed values along with their corresponding inputs. Memoization helps reduce redundant computations and improves the efficiency of dynamic programming algorithms, especially for problems with overlapping subproblems.

24. What is the difference between top-down and bottom-up dynamic programming?

Top-down dynamic programming, also known as memoization, starts with the original problem and recursively solves smaller subproblems, storing the results in a memoization table to avoid redundant computations. Bottom-up dynamic programming, on the other hand, starts with the smallest subproblems and iteratively builds up the solution to the original problem by solving larger subproblems. While both approaches yield the same result, bottom-up dynamic programming tends to be more efficient and space-saving since it avoids the overhead of function call stack and recursion.

25. Explain the concept of backtracking and provide an example of its application.

- Backtracking is a technique used to solve problems by recursively exploring all possible solutions and eliminating those that do not satisfy the problem constraints.

- It involves systematically searching the solution space and backtracking when a dead end is reached.

- An example of backtracking is the N-Queens problem, where the goal is to place N queens on an N×N chessboard such that no two queens threaten each other.

26. What is a branch-and-bound algorithm, and when is it used?

Branch-and-bound is a technique for solving optimization problems. It involves systematically enumerating all possible solutions and pruning branches that do not lead to a better solution than the current best-known solution. This technique is typically used for combinatorial optimization problems, such as the travelling salesperson problem or the knapsack problem, where an exhaustive search is impractical due to the large solution space.

27. Explain the concept of a heuristic in algorithm design.

A heuristic is a rule of thumb or a practical approach used to solve problems more efficiently, especially when an optimal solution is difficult or impossible to find in a reasonable amount of time. Heuristics often sacrifice optimality for efficiency by making informed guesses or approximations based on available information. Common examples of heuristics include greedy algorithms, genetic algorithms, and simulated annealing.

28. What is the role of randomness in algorithm design, and when are randomized algorithms used?

Randomness plays a crucial role in algorithm design by introducing uncertainty or randomness into the algorithm’s behaviour. Randomized algorithms use random numbers to make decisions or computations, leading to probabilistic outcomes. They are often used in situations where the problem space is too large to explore exhaustively or when the input data is uncertain or noisy. Examples of randomized algorithms include quicksort with random pivot selection and the Monte Carlo method for numerical integration.

29. Explain the concept of approximation algorithms and when they are used.

- Approximation algorithms are algorithms that find near-optimal solutions to optimization problems in polynomial time, even if an exact solution is computationally infeasible.

- They provide solutions that are guaranteed to be within a certain factor of the optimal solution, known as the approximation ratio.

- Approximation algorithms are used when finding an exact solution to a problem is NP-hard or impractical, but finding a good approximation quickly is sufficient for practical purposes.

30. What are some common techniques for reducing the time complexity of algorithms?

Some common techniques for reducing the time complexity of algorithms include optimizing loop structures, minimizing unnecessary computations, using efficient data structures (such as hash tables, binary search trees, or heaps), applying algorithmic paradigms like divide and conquer or dynamic programming, parallelizing computations, and exploiting problem-specific properties or constraints. Additionally, algorithmic analysis and optimization tools, such as profiling and benchmarking, can help identify and eliminate bottlenecks in the algorithm.

31. Explain the concept of NP-hardness and NP-completeness. How are they related?

NP-hardness refers to a problem’s difficulty, indicating that no known polynomial-time algorithm exists to solve it. NP-completeness, on the other hand, is a specific subset of NP-hard problems for which every problem in the NP class can be reduced to it in polynomial time. In other words, NP-completeness implies both NP-hardness and the property of being as hard as any problem in NP. Problems that are NP-complete are among the most challenging in computer science, as finding efficient solutions to them is unlikely.

32. Explain the concept of approximation ratio in approximation algorithms.

- The approximation ratio of an approximation algorithm is a measure of how close the solution it provides is to the optimal solution for a given optimization problem.

- It’s defined as the ratio of the value of the approximate solution to the value of the optimal solution.

- A good approximation algorithm aims for a small approximation ratio, indicating that its solution is close to the optimal solution.

- The approximation ratio provides a quantitative measure of the quality of the approximation achieved by the algorithm.

33. What is the difference between deterministic and randomized algorithms? Provide examples of each.

Deterministic algorithms always produce the same output for a given input and have predictable behaviour. Examples include sorting algorithms like merge sort and binary search. Randomized algorithms, on the other hand, use randomness or random numbers during their execution to achieve a desired result or improve efficiency. Examples include quicksort with random pivot selection and the Monte Carlo algorithm for estimating the value of pi.

34. Explain the concept of a reduction in the context of algorithm analysis.

In algorithm analysis, reduction refers to the process of transforming one problem into another problem in a way that preserves the complexity of the original problem. It’s commonly used to show that one problem is at least as hard as another problem by demonstrating that if the second problem could be solved efficiently, then so could the first problem. Reductions are often used in proving NP-completeness and in designing approximation algorithms.

35. What are some common strategies for solving optimization problems using metaheuristic algorithms?

- Some common strategies for solving optimization problems using metaheuristic algorithms include simulated annealing, genetic algorithms, ant colony optimization, particle swarm optimization, and tabu search.

- These algorithms typically use stochastic or probabilistic techniques to explore the solution space and escape local optima.

- They are well-suited for solving complex optimization problems where traditional methods may fail.

36. Explain the concept of a local search algorithm and provide an example of its application.

A local search algorithm is a type of optimization algorithm that iteratively explores the neighbourhood of a current solution, making small incremental changes to improve it. It does not guarantee finding the global optimum but aims to find a satisfactory solution within a reasonable amount of time. An example of a local search algorithm is hill climbing, which repeatedly adjusts a single solution component to improve it until no further improvements are possible.

37. What is the travelling salesperson problem (TSP), and how is it typically solved?

The travelling salesperson problem (TSP) is a classic optimization problem where the goal is to find the shortest possible route that visits each city exactly once and returns to the starting city. TSP is NP-hard, meaning that there is no known polynomial-time algorithm to solve it optimally for large instances. It’s typically solved using heuristic or approximation algorithms, such as a nearest neighbour, genetic algorithms, or simulated annealing, which provide near-optimal solutions in a reasonable amount of time.

38. Explain the concept of a greedy choice property in the context of greedy algorithms.

- The greedy choice property states that at each step of a greedy algorithm, the locally optimal choice leads to a globally optimal solution.

- In other words, a greedy algorithm makes the best possible choice at each step without considering the consequences of that choice on future steps.

- This property is crucial for the correctness of greedy algorithms and is often proven using mathematical induction or contradiction.

39. What are some common techniques for solving integer programming problems?

Some common techniques for solving integer programming problems include branch and bound, cutting-plane methods, branch and cut, and branch and price. These techniques efficiently explore the solution space and identify the optimal integer solution or prove optimality within a reasonable amount of time. Integer programming problems arise in various applications, such as resource allocation, scheduling, and network optimization.

40. Explain the concept of linear programming relaxation and its role in solving integer programming problems.

Linear programming relaxation involves relaxing the integrality constraints of an integer programming problem, converting it into a linear programming problem that can be solved efficiently using standard techniques like the simplex method. The solution to the relaxed problem provides a lower bound on the optimal solution of the original integer programming problem. Linear programming relaxation is often used as a component of branch and bound algorithms for solving integer programming problems, helping guide the search for the optimal integer solution.

Get JOB DAA Training for Beginners By MNC Experts

- Instructor-led Sessions

- Real-life Case Studies

- Assignments

41. Explain the concept of parallel algorithms and their significance in algorithm design.

- Parallel algorithms are designed to execute multiple tasks simultaneously, taking advantage of parallel processing architectures such as multi-core CPUs or distributed computing systems.

- They aim to improve the efficiency and performance of algorithms by dividing tasks into smaller, independent units that can be executed concurrently.

- Parallel algorithms are significant because they can exploit the computational power of modern hardware and solve large-scale problems more efficiently than sequential algorithms.

42. What are some common parallel algorithm design paradigms? Provide examples.

Some common parallel algorithm design paradigms include task parallelism, where independent tasks are executed concurrently (e.g., parallel mergesort); data parallelism, where the same operation is performed on different data elements concurrently (e.g., parallel matrix multiplication); and pipeline parallelism, where tasks are divided into stages and executed in a pipeline fashion (e.g., parallel pipeline for image processing).

43. Explain the concept of MapReduce and its role in parallel and distributed computing.

MapReduce is a programming model and framework for processing and generating large datasets in parallel and distributed computing environments. It divides a computation into two phases: the map phase, where input data is processed and transformed into key-value pairs, and the reduce phase, where the intermediate results are aggregated and combined to produce the final output. MapReduce is widely used for data-intensive tasks such as large-scale data processing, indexing, and web crawling.

44. What is the significance of algorithmic optimization techniques in practical algorithm design?

- Algorithmic optimization techniques play a crucial role in practical algorithm design by improving their efficiency and performance.

- They aim to reduce algorithms’ time and space complexity, making them faster and more scalable for large-scale problems.

- Optimization techniques include algorithmic analysis, algorithmic profiling, code optimization, parallelization, and exploiting problem-specific properties or constraints.

45. Explain the concept of dynamic programming in the context of optimization problems.

Dynamic programming is a technique for solving optimization problems by breaking them down into simpler subproblems and solving each subproblem only once. It involves storing the solutions to subproblems in a table and reusing them to solve larger subproblems, leading to improved efficiency. Dynamic programming is particularly useful for optimization problems with overlapping subproblems and optimal substructure properties, such as the knapsack problem or the longest common subsequence problem.

46. What are some common applications of dynamic programming algorithms in real-world problems?

Dynamic programming algorithms are used in various real-world problems, including sequence alignment in bioinformatics, resource allocation and scheduling in operations research, route planning and shortest path algorithms in transportation and logistics, text processing and pattern matching in natural language processing, and image processing and computer vision tasks such as image segmentation and object recognition.

47. Explain the concept of a maximum subarray problem and provide an efficient algorithm to solve it.

- The maximum subarray problem involves finding the contiguous subarray within an array of numbers that has the largest sum.

- An efficient algorithm to solve it is Kadane’s algorithm, which iterates through the array, maintaining two variables: the current maximum sum ending at the current position and the maximum sum seen so far.

- By updating these variables at each step, Kadane’s algorithm finds the maximum subarray sum in linear time complexity.

48. What is the difference between a linear programming problem and an integer programming problem?

Linear programming problems involve optimizing a linear objective function subject to linear inequality and equality constraints, where the decision variables are continuous real numbers. Integer programming problems, on the other hand, are a generalization of linear programming problems where the decision variables are restricted to integer values. Integer programming problems are often more challenging to solve due to the combinatorial nature of the decision variables.

49. Explain the concept of a greedy choice property in the context of Huffman coding.

In Huffman coding, the greedy choice property states that at each step of the algorithm, the two least frequent symbols are combined into a single composite symbol with a frequency equal to the sum of their frequencies. This process continues recursively until all symbols are combined into a binary tree, with the most frequent symbols closer to the root. The resulting binary tree represents the optimal prefix-free code for encoding the input symbols, with shorter codes assigned to more frequent symbols.

50. What are some common techniques for solving constraint satisfaction problems?

Some common techniques for solving constraint satisfaction problems include backtracking, constraint propagation, local search algorithms such as simulated annealing or genetic algorithms, and constraint satisfaction programming (CSP) frameworks. These techniques aim to efficiently explore the solution space and find assignments to variables that satisfy all constraints, making them suitable for a wide range of combinatorial optimization problems.

51. Explain the concept of a greedy choice property in the context of Prim’s algorithm for minimum spanning trees.

- In Prim’s algorithm for minimum spanning trees, the greedy choice property states that at each step, the algorithm selects the edge with the smallest weight that connects a vertex in the current tree to a vertex outside the tree.

- This locally optimal choice ensures that the algorithm always adds the edge that contributes the least to the total weight of the spanning tree.

- By repeatedly applying this property, Prim’s algorithm constructs a minimum spanning tree with optimal weight.

52. What is the Floyd-Warshall algorithm, and how does it work?

The Floyd-Warshall algorithm is a dynamic programming algorithm used to find the shortest paths between all pairs of vertices in a weighted graph, including negative edge weights. It works by iteratively updating a matrix of shortest path distances between all pairs of vertices. Each iteration considers all possible intermediate vertices and updates the shortest path distances accordingly. After completing all iterations, the resulting matrix contains the shortest path distances between all pairs of vertices.

53. Explain the concept of NP-hardness in the context of decision problems.

In the context of decision problems, NP-hardness refers to the property of a problem being at least as hard as the hardest problems in the NP class. A decision problem is NP-hard if every problem in NP can be reduced to it in polynomial time. This means that if an efficient algorithm exists for solving an NP-hard problem, then efficient algorithms also exist for solving all problems in NP, implying that P = NP. NP-hard problems are among the most challenging in computer science, as finding efficient solutions to them is unlikely.

54. What is the Knapsack problem, and how is it typically solved?

- The Knapsack problem is a classic optimization problem where the goal is to maximize the value of items that can be included in a knapsack without exceeding its weight capacity.

- It’s typically solved using dynamic programming techniques, where the decision to include or exclude each item is made iteratively, considering the maximum value that can be achieved for each possible combination of items and knapsack capacities.

55. Explain the concept of a stable matching problem and provide an algorithm to solve it.

In a stable matching problem, the goal is to find a matching between two sets of elements such that there are no unstable pairs, where an unstable pair consists of two elements who prefer each other over their current partners. The Gale-Shapley algorithm is a popular algorithm to solve the stable matching problem. It involves iteratively proposing and accepting or rejecting matches between elements from one set to the other until a stable matching is found.

56. What is the travelling salesperson problem (TSP), and how is it typically solved?

- The travelling salesperson problem (TSP) is a classic optimization problem where the goal is to find the shortest possible route that visits each city exactly once and returns to the starting city.

- TSP is NP-hard, meaning that there is no known polynomial-time algorithm to solve it optimally for large instances.

- It’s typically solved using heuristic or approximation algorithms, such as a nearest neighbour, genetic algorithms, or simulated annealing, which provide near-optimal solutions in a reasonable amount of time.

57. Explain the concept of a matching problem and provide an algorithm to solve it.

In a matching problem, the goal is to find a subset of edges in a graph such that no two edges share a common vertex. The maximum matching problem seeks to find the largest possible matching, while the maximum weight matching problem aims to maximize the sum of weights of the edges in the matching. The Ford-Fulkerson algorithm, also known as the augmenting path algorithm, is commonly used to solve maximum matching problems by iteratively augmenting the matching until no further improvements are possible.

58. What is the concept of amortized analysis, and how is it used to analyze the time complexity of algorithms?

Amortized analysis is a method for analyzing the average time or space complexity of a sequence of operations on a data structure. It considers the total cost of a series of operations divided by the number of operations, providing an average cost per operation. Amortized analysis is particularly useful for analyzing data structures with expensive individual operations but overall efficient performance, such as dynamic arrays or hash tables.

59. Explain the concept of a flow network and provide an example of its application.

- A flow network is a directed graph where each edge has a capacity and represents the maximum amount of flow that can be sent from one vertex to another.

- It’s commonly used to model transportation or network flow problems, such as the maximum flow problem, where the goal is to find the maximum amount of flow that can be sent from a source vertex to a sink vertex without violating capacity constraints along the paths.

60. What is the concept of network flow algorithms, and how are they used in practical applications?

Network flow algorithms solve optimization problems involving flow networks, such as the maximum flow problem, minimum cut problem, or circulation problem. They are applied in various practical applications, including transportation and logistics, telecommunications, computer networking, and supply chain management, to optimize resource allocation, minimize costs, or maximize efficiency in flow networks.

Develop Your Skills with DAA Certification Training

61. Explain the concept of the Bellman-Ford algorithm and its application.

The Bellman-Ford algorithm is used to find the shortest paths from a single source vertex to all other vertices in a weighted graph, even in the presence of negative edge weights. It iteratively relaxes the edges in the graph by considering all possible paths from the source vertex to each destination vertex and updating the shortest path distances accordingly. The algorithm detects negative weight cycles and reports if they exist. Bellman-Ford is commonly used in network routing protocols and distributed systems.

62. What is the concept of maximum bipartite matching, and how is it typically solved?