SASC - Student Academic Success Center - UNE

Dr. Eric Drown

Reading and Writing are Superpowers*

TRIAC Paragraph Structure

Emerging academic writers often wonder how to beef up their paragraphs without adding fluff. TRIAC can help writers explain their ideas in depth. TRIAC paragraphs (or paragraph sequences) feature these elements:

Topic. Start the paragraph by introducing a topic (the central problem or idea that the paragraph aims to explore) that supports or complicates your thesis. Better topic sentences function as a mini-thesis and make a claim about the topic.

Restriction. Because there can be many aspects and approaches to a topic, it makes sense to specify the aspect or approach you’re going to take. Follow the topic with sentences that narrow the scope of the topic. In these sentences, you’re rewriting the topic in more specific terms and setting the direction the paragraph will follow.

Illustration/Example/Evidence. Help your reader understand the restricted topic in concrete terms, and give him or her something to think about by offering an example, exhibit, or evidence.

Analysis. Help your reader see your point by looking at the evidence you offered through your eyes. What parts of it are the most significant? How does the evidence support, complicate, or counter the restricted claim about the topic you (or another writer) are making?

Conclusion/Connections. What, having understood your evidence as you see it, should your reader have learned from your paragraph? How can this conclusion be connected to other relevant ideas in your essay?

Here’s an example of a short TRIAC paragraph.

[TOPIC] Many critics worry that the way we use the Internet is reshaping our minds. [RESTRICTION] Their biggest concern is that our shallow-reading habits are fostering inattention and undermining literacy. [ILLUSTRATION/EXAMPLE] For example, in “Is Google Making Us Stupid?,” journalist Nicholas Carr worries that the connection-making state of mind promoted by slow, deep reading is giving way to an information-seeking state of mind best adapted to finding separate bits of information. In his view, instead of diving deep into the ocean of ideas, we merely “zip along the surface like a guy on a Jet Ski.” [ANALYSIS] Carr rightly points out that our reading habits are changing. It is true that much of our everyday reading feeds our information-seeking appetites. It is also true that it takes work to learn how to read and think slowly and deeply. But his insistence that we are losing our ability to think in complex ways is countered by the slow, patient thinking that takes place in activities such as prayer, meditation, and scholarship. [CONCLUSION] While it may indeed take conscious and disciplined effort to learn how to read and think well, today’s students are capable of making that effort, provided we recognize that, like previous generations, some may need guided practice in the habit.

Visit my Barclay’s Formula or Observation-Implication-Conclusion pages for other useful paragraph structures.


How to structure paragraphs using the PEEL method

Sophia Gardner

Sep 1, 2023

You may have heard of the acronym PEEL for essays, but what exactly does it mean? And how can it help you? We’re here to explain it all, plus give you some tips on how to nail your next essay.

There’s certainly an art to writing essays. If you haven’t written one for a while, or if you would like to hone your academic writing skills, the PEEL paragraph method is an easy way to get your point across clearly and concisely, in a form the reader can easily digest.

So, what exactly is PEEL?

The PEEL paragraph method is a technique used in writing to help structure paragraphs in a way that presents a single clear and focused argument, which links back to the essay topic or thesis statement.

It’s good practice to dedicate each paragraph to one aspect of your argument, and the PEEL structure simplifies this for you.

It allows you to create a paragraph that is easy for others to understand. Remember, when you’re writing something, you aren’t the only one reading it; you need to consider the reader and how they are going to digest this new information.

What does PEEL stand for?

P = Point: start your paragraph with a clear topic sentence that establishes what your paragraph is going to be about. Your point should support your essay argument or thesis statement.

E = Evidence/Example: here you should use a piece of evidence or an example that helps to reaffirm your initial point and develop the argument.

E = Explain: next you need to explain exactly how your evidence/example supports your point, giving further information to ensure that your reader understands its relevance.

L = Link: to finish the paragraph off, you need to link the point you’ve just made back to your essay question, topic, or thesis.


Studiosity English specialist Ellen says students often underestimate the importance of a well-structured paragraph.

PEEL in practice

Here’s an example of what you might include in a PEEL structured paragraph:

Topic: Should infants be given iPads? Thesis/argument: Infants should not be given iPads.

Point: Infants should not be given iPads, because studies show children under two can face developmental delays if they are exposed to too much screen time.

Evidence/Example: A recent paediatric study showed that infants who are exposed to too much screen time may experience delays in speech development.

Explanation: Infants are facing these delays because screen time is replacing other key developmental activities.

Link: The evidence suggests that infants who have a lot of screen time experience negative consequences in their speech development, and therefore they should not be exposed to iPads at such a young age.

Once you’ve written your PEEL paragraph, run through a checklist to make sure you have covered all four elements of the PEEL structure. Your point should clearly introduce the argument you are making in this paragraph; your example or evidence should be strong and relevant (ask yourself: have you chosen the best example?); your explanation should demonstrate why your evidence is important and how it conveys meaning; and your link should summarise the point you’ve just made and connect it back to the broader essay argument or topic.

Keep your paragraphs clear, focused, and not too long. If you find your paragraphs are getting lengthy, take a look at how you could split them into multiple paragraphs, and ensure you’re creating a new paragraph for each new idea you introduce to the essay.

Finally, it’s important to always proofread your paragraph. Read it once, twice, and then read it again. Check your paragraph for spelling, grammar, language and sentence flow. A good way to do this is to read it aloud to yourself, and if it sounds clunky or unclear, consider rewriting it.

That’s it! We hope this helps explain the PEEL method and how it can help you with your next essay. 😊



Beef Up Critical Thinking and Writing Skills: Comparison Essays

Organizing the Compare-Contrast Essay


- M.Ed., Curriculum and Instruction, University of Florida

- B.A., History, University of Florida

The compare/contrast essay is an excellent opportunity to help students develop their critical thinking and writing skills. A compare and contrast essay examines two or more subjects by comparing their similarities and contrasting their differences.

Compare and contrast is high on Bloom's Taxonomy of critical reasoning and is associated with a level of complexity at which students break ideas down into simpler parts to see how the parts relate. For example, to break down ideas for comparison or contrast in an essay, students may need to categorize, classify, dissect, differentiate, distinguish, list, and simplify.

Preparing to Write the Essay

First, students need to select comparable objects, people, or ideas and list their individual characteristics. A graphic organizer, like a Venn Diagram or top hat chart, is helpful in preparing to write the essay. Questions to consider include:

- What is the most interesting topic for comparison? Is the evidence available?

- What is the most interesting topic to contrast? Is the evidence available?

- Which characteristics highlight the most significant similarities?

- Which characteristics highlight the most significant differences?

- Which characteristics will lead to a meaningful analysis and an interesting paper?

The list of 101 compare and contrast essay topics gives students opportunities to practice identifying similarities and differences, with pairs such as:

- Fiction vs. Nonfiction

- Renting a home vs. Owning a home

- General Robert E. Lee vs. General Ulysses S. Grant

Writing the Block Format Essay: A, B, C points vs A, B, C points

The block method for writing a compare and contrast essay can be illustrated using points A, B, and C to signify individual characteristics or critical attributes.

A. history B. personalities C. commercialization

This block format allows students to compare and contrast subjects, for example, dogs vs. cats, using the same characteristics for each subject, one subject at a time.

The introductory paragraph should signal a compare and contrast essay by identifying the two subjects and explaining that they are very similar, very different, or have many important (or interesting) similarities and differences. The thesis statement must include the two topics that will be compared and contrasted.

The body paragraph(s) after the introduction describe characteristic(s) of the first subject. Students should provide the evidence and examples that prove the similarities and/or differences exist, without mentioning the second subject. Each point could be a body paragraph. For example,

A. Dog history. B. Dog personalities. C. Dog commercialization.

The body paragraphs dedicated to the second subject should be organized in the same method as the first body paragraphs, for example:

A. Cat history. B. Cat personalities. C. Cat commercialization.

The benefit of this format is that it allows the writer to concentrate on one characteristic at a time. The drawback is that the two subjects may not be compared or contrasted with the same rigor.

In the final paragraph, the conclusion, the student should provide a general summary of the most important similarities and differences. The student could end with a personal statement, a prediction, or another snappy clincher.

Point by Point Format: AA, BB, CC

Just as in the block paragraph essay format, students should begin the point by point format by catching the reader's interest. This might be a reason people find the topic interesting or important, or it might be a statement about something the two subjects have in common. The thesis statement for this format must also include the two topics that will be compared and contrasted.

In the point by point format, the students can compare and/or contrast the subjects using the same characteristics within each body paragraph. Here the characteristics labeled A, B, and C are used to compare dogs vs. cats together, paragraph by paragraph.

A. Dog history; A. Cat history

B. Dog personalities; B. Cat personalities

C. Dog commercialization; C. Cat commercialization

This format helps students concentrate on one characteristic at a time, which may result in a more equitable comparison or contrast of the subjects within each body paragraph.

Transitions to Use

Regardless of the format of the essay, block or point-by-point, the student must use transition words or phrases to compare or contrast one subject with another. This will help the essay sound connected rather than disjointed.

Transitions in the essay for comparison can include:

- in the same way or by the same token

- in like manner or likewise

- in similar fashion

Transitions for contrasts can include:

- nevertheless or nonetheless

- however or though

- otherwise or on the contrary

- in contrast

- notwithstanding

- on the other hand

- at the same time

In the final concluding paragraph, the student should give a general summary of the most important similarities and differences. The student could also end with a personal statement, a prediction, or another snappy clincher.

Part of the ELA Common Core State Standards

The text structure of compare and contrast is so critical to literacy that it is referenced in several of the English Language Arts Common Core State Standards in both reading and writing for K-12 grade levels. For example, the reading standards ask students to participate in comparing and contrasting as a text structure in the anchor standard R.9:

"Analyze how two or more texts address similar themes or topics in order to build knowledge or to compare the approaches the authors take."

The reading standards are then referenced in the grade-level writing standards, for example, in W7.9:

"Apply grade 7 Reading standards to literature (e.g., 'Compare and contrast a fictional portrayal of a time, place, or character and a historical account of the same period as a means of understanding how authors of fiction use or alter history')."

Being able to identify and create compare and contrast text structures is one of the more important critical reasoning skills that students should develop, regardless of grade level.


Portable Walls™ for Academic Writing

Included in University-Ready Writing.

Specifications: 1 three-panel folder

ISBN: 978-1-62341-409-2

Copyright Date: 2024

All rights reserved.

No part of this product may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, or otherwise, without the prior written permission of the author, except as provided by U.S.A. copyright law.


Essay Papers Writing Online

Tips and tricks for crafting engaging and effective essays.

Writing essays can be a challenging task, but with the right approach and strategies, you can create compelling and impactful pieces that captivate your audience. Whether you’re a student working on an academic paper or a professional honing your writing skills, these tips will help you craft essays that stand out.

Effective essays are not just about conveying information; they are about persuading, engaging, and inspiring readers. To achieve this, it’s essential to pay attention to various elements of the essay-writing process, from brainstorming ideas to polishing your final draft. By following these tips, you can elevate your writing and produce essays that leave a lasting impression.

Understanding the Essay Prompt

Before you start writing your essay, it is crucial to thoroughly understand the essay prompt or question provided by your instructor. The essay prompt serves as a roadmap for your essay and outlines the specific requirements or expectations.

Here are a few key things to consider when analyzing the essay prompt:

- Read the prompt carefully and identify the main topic or question being asked.

- Pay attention to any specific instructions or guidelines provided, such as word count, formatting requirements, or sources to be used.

- Identify key terms or phrases in the prompt that can help you determine the focus of your essay.

By understanding the essay prompt thoroughly, you can ensure that your essay addresses the topic effectively and meets the requirements set forth by your instructor.

Researching Your Topic Thoroughly

One of the key elements of writing an effective essay is conducting thorough research on your chosen topic. Research helps you gather the necessary information, facts, and examples to support your arguments and make your essay more convincing.

Here are some tips for researching your topic thoroughly:

- Don’t rely on a single source for your research. Use a variety of sources such as books, academic journals, reliable websites, and primary sources to gather different perspectives and valuable information.

- While conducting research, make sure to take detailed notes of important information, quotes, and references. This will help you keep track of your sources and easily refer back to them when writing your essay.

- Before using any information in your essay, evaluate the credibility of the sources. Make sure they are reliable, up-to-date, and authoritative to strengthen the validity of your arguments.

- Organize your research materials in a systematic way to make it easier to access and refer to them while writing. Create an outline or a research plan to structure your essay effectively.

By following these tips and conducting thorough research on your topic, you will be able to write a well-informed and persuasive essay that effectively communicates your ideas and arguments.

Creating a Strong Thesis Statement

A thesis statement is a crucial element of any well-crafted essay. It serves as the main point or idea that you will be discussing and supporting throughout your paper. A strong thesis statement should be clear, specific, and arguable.

To create a strong thesis statement, follow these tips:

- Be specific: Your thesis statement should clearly state the main idea of your essay. Avoid vague or general statements.

- Be concise: Keep your thesis statement concise and to the point. Avoid unnecessary details or lengthy explanations.

- Be argumentative: Your thesis statement should present an argument or perspective that can be debated or discussed in your essay.

- Be relevant: Make sure your thesis statement is relevant to the topic of your essay and reflects the main point you want to make.

- Revise as needed: Don’t be afraid to revise your thesis statement as you work on your essay. It may change as you develop your ideas.

Remember, a strong thesis statement sets the tone for your entire essay and provides a roadmap for your readers to follow. Put time and effort into crafting a clear and compelling thesis statement to ensure your essay is effective and persuasive.

Developing a Clear Essay Structure

One of the key elements of writing an effective essay is developing a clear and logical structure. A well-structured essay helps the reader follow your argument and enhances the overall readability of your work. Here are some tips to help you develop a clear essay structure:

1. Start with a strong introduction: Begin your essay with an engaging introduction that introduces the topic and clearly states your thesis or main argument.

2. Organize your ideas: Before you start writing, outline the main points you want to cover in your essay. This will help you organize your thoughts and ensure a logical flow of ideas.

3. Use topic sentences: Begin each paragraph with a topic sentence that introduces the main idea of the paragraph. This helps the reader understand the purpose of each paragraph.

4. Provide evidence and analysis: Support your arguments with evidence and analysis to back up your main points. Make sure your evidence is relevant and directly supports your thesis.

5. Transition between paragraphs: Use transitional words and phrases to create flow between paragraphs and help the reader move smoothly from one idea to the next.

6. Conclude effectively: End your essay with a strong conclusion that summarizes your main points and reinforces your thesis. Avoid introducing new ideas in the conclusion.

By following these tips, you can develop a clear essay structure that will help you effectively communicate your ideas and engage your reader from start to finish.

Using Relevant Examples and Evidence

When writing an essay, it’s crucial to support your arguments and assertions with relevant examples and evidence. This not only adds credibility to your writing but also helps your readers better understand your points. Here are some tips on how to effectively use examples and evidence in your essays:

- Choose examples that are specific and relevant to the topic you’re discussing. Avoid using generic examples that may not directly support your argument.

- Provide concrete evidence to back up your claims. This could include statistics, research findings, or quotes from reliable sources.

- Interpret the examples and evidence you provide, explaining how they support your thesis or main argument. Don’t assume that the connection is obvious to your readers.

- Use a variety of examples to make your points more persuasive. Mixing personal anecdotes with scholarly evidence can make your essay more engaging and convincing.

- Cite your sources properly to give credit to the original authors and avoid plagiarism. Follow the citation style required by your instructor or the publication you’re submitting to.

By integrating relevant examples and evidence into your essays, you can craft a more convincing and well-rounded piece of writing that resonates with your audience.

Editing and Proofreading Your Essay Carefully

Once you have finished writing your essay, the next crucial step is to edit and proofread it carefully. Editing and proofreading are essential parts of the writing process that help ensure your essay is polished and error-free. Here are some tips to help you effectively edit and proofread your essay:

1. Take a Break: Before you start editing, take a short break from your essay. This will help you approach the editing process with a fresh perspective.

2. Read Aloud: Reading your essay aloud can help you catch any awkward phrasing or grammatical errors that you may have missed while writing. It also helps you check the flow of your essay.

3. Check for Consistency: Make sure that your essay has a consistent style, tone, and voice throughout. Check for inconsistencies in formatting, punctuation, and language usage.

4. Remove Unnecessary Words: Look for any unnecessary words or phrases in your essay and remove them to make your writing more concise and clear.

5. Proofread for Errors: Carefully proofread your essay for spelling, grammar, and punctuation errors. Pay attention to commonly misused words and homophones.

6. Get Feedback: It’s always a good idea to get feedback from someone else. Ask a friend, classmate, or teacher to review your essay and provide constructive feedback.

By following these tips and taking the time to edit and proofread your essay carefully, you can improve the overall quality of your writing and make sure your ideas are effectively communicated to your readers.


An Introduction to TRIAC Basics

Learn about the triode for alternating current (TRIAC), including its construction, circuit characteristics, applications, and testing procedures.

A thyristor is a common term for a wide variety of semiconductor components used as electronic switches. Like a mechanical switch, a thyristor has only two states: on (conductive) and off (non-conductive). In addition to switching, thyristors can be used to adjust the amount of power delivered to a load.

Thyristors are used mostly with high voltages and currents. The triode for alternating current (TRIAC) and the silicon controlled rectifier (SCR) are the most commonly used thyristor devices. This article explores the construction, characteristics, and applications of TRIACs.

What is a TRIAC?

A TRIAC is a bidirectional, three-electrode AC switch that allows electrons to flow in either direction. It is the equivalent of two SCRs connected in a reverse-parallel arrangement with gates connected to each other.

Like an SCR, a TRIAC is triggered into conduction by a gate signal, but it can be triggered to conduct in both directions. TRIACs were developed to improve AC power control.

TRIACs are available in a variety of packages and can handle a wide range of currents and voltages. However, TRIACs generally have lower current ratings than SCRs. They are usually limited to less than 50 A and cannot replace SCRs in high-current applications.

TRIACs are considered versatile because they can operate with either positive or negative voltage across their terminals. Because an SCR conducts current in only one direction, a TRIAC is usually the better choice for controlling low power in an AC circuit.

TRIAC Construction

Although TRIACs and SCRs look alike, their schematic symbols are dissimilar. The terminals of a TRIAC are the gate, terminal 1 (T1), and terminal 2 (T2). See Figure 1.

Figure 1. TRIAC terminals include a gate, terminal 1 (T1), and terminal 2 (T2).

There is no designation of anode and cathode. Current may flow in either direction through the main switch terminals, T1 and T2. Terminal 1 is the reference terminal for all voltages. Terminal 2 is the case or metal-mounting tab to which a heat sink can be attached.

TRIAC Triggering Circuit and its Advantages

Until it is triggered, a TRIAC blocks current in either direction between T1 and T2. It can be triggered into conduction in either direction by a momentary positive or negative pulse supplied to the gate.

If the appropriate signal is applied to the TRIAC gate, it conducts electricity. The TRIAC remains off until the gate is triggered at point A. See Figure 2.

Figure 2. A TRIAC remains off until its gate is triggered.

At point A, the trigger circuit pulses the gate, and the TRIAC is turned on, allowing the current to flow.

At point B, the forward current is reduced to zero, and the TRIAC is turned off.

The trigger circuit can be designed to generate the gate pulse at any point in the positive or negative half-cycle. Moving the trigger point therefore varies the average current, and thus the power, delivered to the load.
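The sketch below is a rough illustration of how moving the trigger point changes what the load receives. It computes the fraction of full power delivered to a purely resistive load when the TRIAC is fired at the same angle α in both half-cycles. It assumes an ideal switch with no on-state voltage drop that turns off at each current zero. Under those assumptions the fraction is 1 - α/π + sin(2α)/(2π). The function names and the 120 V supply are illustrative choices, not values from this article.

```python
import math


def power_fraction(alpha: float) -> float:
    """Fraction of full power delivered to a resistive load when the TRIAC
    is fired at angle alpha (radians) in every half-cycle.

    Assumes an ideal switch with no on-state voltage drop that turns off at
    each current zero crossing.
    """
    if alpha < 0.0 or alpha > math.pi + 1e-12:
        raise ValueError("firing angle must lie between 0 and pi radians")
    fraction = 1.0 - alpha / math.pi + math.sin(2.0 * alpha) / (2.0 * math.pi)
    # Clamp tiny out-of-range values caused by floating-point rounding.
    return min(1.0, max(0.0, fraction))


def load_rms_voltage(supply_rms: float, alpha: float) -> float:
    """RMS voltage across the resistive load for the same idealized circuit."""
    return supply_rms * math.sqrt(power_fraction(alpha))


for degrees in (0, 45, 90, 135, 180):
    alpha = math.radians(degrees)
    print(f"{degrees:3d} deg: {power_fraction(alpha):.3f} of full power, "
          f"{load_rms_voltage(120.0, alpha):6.1f} V rms")
```

Firing later in each half-cycle (larger α) cuts off more of the waveform, so the delivered power falls smoothly from full power at 0° to zero at 180°.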

One advantage of the TRIAC is that very little power is wasted as heat. Heat is generated mainly when current is impeded, not when current is simply switched on or off. The TRIAC is either fully ON or fully OFF; it never partially limits current.

Another important feature of the TRIAC is the absence of a reverse breakdown condition, the combination of high voltage and high current found in diodes and SCRs.

If the voltage across the TRIAC rises above its breakover voltage, the TRIAC turns on even without a gate signal. Once on, the TRIAC can conduct a reasonably high current.

The Characteristic Curve of TRIACs

The characteristics of a TRIAC are based on T1 as the voltage reference point. The polarities shown for voltage and current are the polarities of T2 with respect to T1.

The polarities shown for the gate are also with respect to T1. See Figure 3.

Figure 3. A TRIAC characteristic curve shows the characteristics of a TRIAC when triggered into conduction.

Again, the TRIAC may be triggered into conduction in either direction by a gate current (IG) of either polarity.
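Datasheets often summarize this T1-referenced polarity convention as four triggering quadrants:

- I: T2 positive, gate positive.
- II: T2 positive, gate negative.
- III: T2 negative, gate negative.
- IV: T2 negative, gate positive.

That quadrant numbering is a common convention assumed here, not something this article defines. The hypothetical helper below derives both polarities from terminal potentials. It uses gate voltage relative to T1 as a stand-in for the direction of gate current.

```python
def triac_quadrant(v_t1: float, v_t2: float, v_gate: float) -> int:
    """Return the conventional triggering quadrant (1-4).

    Terminal potentials may be measured against any common point; both
    polarities are then taken with respect to T1, as in the characteristic
    curve. Gate voltage relative to T1 stands in for gate-current direction.
    """
    v_t2_t1 = v_t2 - v_t1   # main-terminal polarity: T2 relative to T1
    v_g_t1 = v_gate - v_t1  # gate polarity: gate relative to T1
    if v_t2_t1 == 0 or v_g_t1 == 0:
        raise ValueError("quadrant is undefined when either polarity is zero")
    if v_t2_t1 > 0:
        return 1 if v_g_t1 > 0 else 2
    return 4 if v_g_t1 > 0 else 3


# T1 at 5 V, T2 at 15 V, gate at 4 V: T2 is positive, gate is negative.
print(triac_quadrant(5.0, 15.0, 4.0))  # prints 2
```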

TRIAC Applications

TRIACs are often used instead of mechanical switches because of their versatility. Also, where current is low, TRIACs are more economical than back-to-back SCRs.

Single-Phase Motor Starters

Often, a capacitor-start or split-phase motor must operate where arcing of a mechanical cut-out start switch is undesirable or even dangerous. In such cases, the mechanical cut-out start switch can be replaced by a TRIAC. See Figure 4.

Figure 4. A mechanical cut-out start switch may be replaced by a TRIAC.

A TRIAC can operate in such dangerous environments because it does not create an arc. The gate and cut-out signals are supplied to the TRIAC through a current transformer.

As the motor speeds up, the current is reduced in the current transformer, and the transformer no longer triggers the TRIAC. With the TRIAC turned off, the start windings are removed from the circuit.
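The sketch below models the cut-out sequence with hypothetical numbers:

- The current transformer's secondary current (line current divided by an assumed 200:1 ratio) drives the gate.
- The TRIAC keeps the start winding in circuit only while that current meets an assumed 50 mA gate trigger threshold.

The function name, ratio, threshold, and speed/current pairs are illustrative only. A real starter depends on the specific motor, transformer, and TRIAC ratings.

```python
def start_winding_engaged(line_current_a: float, ct_ratio: float,
                          gate_trigger_current_a: float) -> bool:
    """Return True while the current transformer still triggers the TRIAC.

    Hypothetical model: CT secondary current = line current / turns ratio,
    and the TRIAC is triggered only while that current reaches the
    gate trigger threshold.
    """
    gate_current_a = line_current_a / ct_ratio
    return gate_current_a >= gate_trigger_current_a


CT_RATIO = 200.0       # hypothetical 200:1 current transformer
GATE_TRIGGER_A = 0.05  # hypothetical 50 mA gate trigger current

# Hypothetical line current falling as the motor accelerates.
for speed_pct, line_current_a in [(0, 30.0), (40, 22.0), (75, 9.0), (95, 6.0)]:
    engaged = start_winding_engaged(line_current_a, CT_RATIO, GATE_TRIGGER_A)
    state = "in circuit" if engaged else "removed"
    print(f"{speed_pct:3d}% speed, {line_current_a:4.1f} A: start winding {state}")
```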

Testing Procedures for TRIACs

TRIACs should be tested under operating conditions using an oscilloscope. A digital multimeter (DMM) may be used to make a rough test with the TRIAC out of the circuit. See Figure 5.

Figure 5. A DMM may be used to make a rough test of a TRIAC that is out of the circuit.

To test a TRIAC using a DMM, the following procedure is applied:

- Set the DMM to the Ω scale.

- Connect the negative lead to main terminal 1.

- Connect the positive lead to main terminal 2. The DMM should read infinity.

- Short-circuit the gate to main terminal 2 using a jumper wire. The DMM should read almost 0 Ω. The zero reading should remain when the jumper is removed.

- Reverse the DMM leads so that the positive lead is on main terminal 1 and the negative lead is on main terminal 2. The DMM should read infinity.

- Short-circuit the gate of the TRIAC to main terminal 2 using a jumper wire. The DMM should read almost 0 Ω. The zero reading should remain after the jumper is removed.
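The six-step check above comes down to two readings that should show infinity and four that should show almost 0 Ω. The sketch below records those readings and applies pass/fail limits. The 1 MΩ and 10 Ω limits, the reading names, and the sample values are hypothetical choices for illustration. `math.inf` stands in for an over-range (infinity) display.

```python
import math

# Hypothetical limits for interpreting the readings.
OPEN_MIN_OHMS = 1e6     # at or above 1 MΩ counts as "infinity"
SHORT_MAX_OHMS = 10.0   # at or below 10 Ω counts as "almost 0 Ω"

# Readings taken with no gate signal; these should be open.
SHOULD_BE_OPEN = ("forward_no_gate", "reverse_no_gate")
# Readings with the gate jumpered, then with the jumper removed; these should be low.
SHOULD_BE_LOW = ("forward_gate_jumpered", "forward_jumper_removed",
                 "reverse_gate_jumpered", "reverse_jumper_removed")


def triac_passes_dmm_test(readings: dict) -> bool:
    """Apply the rough out-of-circuit DMM check to a set of readings (ohms)."""
    open_ok = all(readings[name] >= OPEN_MIN_OHMS for name in SHOULD_BE_OPEN)
    low_ok = all(readings[name] <= SHORT_MAX_OHMS for name in SHOULD_BE_LOW)
    return open_ok and low_ok


good = {
    "forward_no_gate": math.inf,       # positive lead on T2, negative on T1
    "forward_gate_jumpered": 1.2,
    "forward_jumper_removed": 1.5,
    "reverse_no_gate": math.inf,       # leads reversed
    "reverse_gate_jumpered": 1.3,
    "reverse_jumper_removed": 1.6,
}
print(triac_passes_dmm_test(good))  # prints True
```

Either failure mode described by the procedure causes a fail: a low reading with no gate signal (a shorted TRIAC), or a reading that jumps back to infinity once the jumper is removed.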


TRIAC method of paragraphing, writing homework help


Description

Essay structure:

Introduction (with the article’s title and author’s name) that must contain your thesis

At least three TRIAC body paragraphs (with in-text parenthetical citations of the articles)

A Conclusion


Explanation & Answer

Applying the TRIAC Method in Arguments

There is no shortage of tales of pest control methods that have caused serious problems. Examples of pest control going terribly wrong are abundant in history (Lafrance, 2016). The importation of the mongoose to the Hawaiian Islands in the 1880s is one such example. Australia's ill-fated introduction of poisonous toads in the 1930s, in an attempt to control beetle invasions of sugar cane fields, is another. These tales are a historical lesson in how complex ecosystems are. In light of the recent Zika virus epidemic, there is a debate on the viability of...


Copyright © 2024. Studypool Inc.


Linking essay-writing tests using many-facet models and neural automated essay scoring

- Original Manuscript

- Open access

- Published: 20 August 2024


- Masaki Uto (ORCID: orcid.org/0000-0002-9330-5158)

- Kota Aramaki


For essay-writing tests, challenges arise when scores assigned to essays are influenced by the characteristics of raters, such as rater severity and consistency. Item response theory (IRT) models incorporating rater parameters have been developed to tackle this issue, exemplified by the many-facet Rasch models. These IRT models enable the estimation of examinees’ abilities while accounting for the impact of rater characteristics, thereby enhancing the accuracy of ability measurement. However, difficulties can arise when different groups of examinees are evaluated by different sets of raters. In such cases, test linking is essential for unifying the scale of model parameters estimated for individual examinee–rater groups. Traditional test-linking methods typically require administrators to design groups in which either examinees or raters are partially shared. However, this is often impractical in real-world testing scenarios. To address this, we introduce a novel method for linking the parameters of IRT models with rater parameters that uses neural automated essay scoring technology. Our experimental results indicate that our method successfully accomplishes test linking with accuracy comparable to that of linear linking using few common examinees.


Introduction

The growing demand for assessing higher-order skills, such as logical reasoning and expressive capabilities, has led to increased interest in essay-writing assessments (Abosalem, 2016 ; Bernardin et al., 2016 ; Liu et al., 2014 ; Rosen & Tager, 2014 ; Schendel & Tolmie, 2017 ). In these assessments, human raters assess the written responses of examinees to specific writing tasks. However, a major limitation of these assessments is the strong influence that rater characteristics, including severity and consistency, have on the accuracy of ability measurement (Bernardin et al., 2016 ; Eckes, 2005 , 2023 ; Kassim, 2011 ; Myford & Wolfe, 2003 ). Several item response theory (IRT) models that incorporate parameters representing rater characteristics have been proposed to mitigate this issue (Eckes, 2023 ; Myford & Wolfe, 2003 ; Uto & Ueno, 2018 ).

The most prominent among them are many-facet Rasch models (MFRMs) (Linacre, 1989 ), and various extensions of MFRMs have been proposed to date (Patz & Junker, 1999 ; Patz et al., 2002 ; Uto & Ueno, 2018 , 2020 ). These IRT models have the advantage of being able to estimate examinee ability while accounting for rater effects, making them more accurate than simple scoring methods based on point totals or averages.

However, difficulties can arise when essays from different groups of examinees are evaluated by different sets of raters, a scenario often encountered in real-world testing. For instance, in academic settings such as university admissions, individual departments may use different pools of raters to assess essays from specific applicant pools. Similarly, in the context of large-scale standardized tests, different sets of raters may be allocated to various test dates or locations. Thus, when applying IRT models with rater parameters to account for such real-world testing cases while also ensuring that ability estimates are comparable across groups of examinees and raters, test linking becomes essential for unifying the scale of model parameters estimated for each group.

Conventional test-linking methods generally require some overlap of examinees or raters across the groups being linked (Eckes, 2023 ; Engelhard, 1997 ; Ilhan, 2016 ; Linacre, 2014 ; Uto, 2021a ). For example, linear linking based on common examinees, a popular linking method, estimates the IRT parameters for shared examinees using data from each group. These estimates are then used to build a linear regression model, which adjusts the parameter scales across groups. However, the design of such overlapping groups can often be impractical in real-world testing environments.

To facilitate test linking in these challenging environments, we introduce a novel method that leverages neural automated essay scoring (AES) technology. Specifically, we employ a cutting-edge deep neural AES method (Uto & Okano, 2021 ) that can predict IRT-based abilities from examinees’ essays. The central concept of our linking method is to construct an AES model using the ability estimates of examinees in a reference group, along with their essays, and then to apply this model to predict the abilities of examinees in other groups. Because the AES model is trained to predict examinee abilities on the scale established by the reference group, it predicts the abilities of examinees in other groups on that same scale. Therefore, we use the predicted abilities to calculate the linking coefficients required for linear linking and to perform test linking. In this study, we conducted experiments based on real-world data to demonstrate that our method successfully accomplishes test linking with accuracy comparable to that of linear linking using few common examinees.

It should be noted that previous studies have attempted to employ AES technologies for test linking (Almond, 2014 ; Olgar, 2015 ), but their focus has primarily been on linking tests with varied writing tasks or a mixture of essay tasks and objective items, while overlooking the influence of rater characteristics. This differs from the specific scenarios and goals that our study aims to address. To the best of our knowledge, this is the first study that employs AES technologies to link IRT models incorporating rater parameters for writing assessments without the need for common examinees and raters.

Setting and data

In this study, we assume scenarios in which two groups of examinees respond to the same writing task and their written essays are assessed by two distinct sets of raters following the same scoring rubric. We refer to one group as the reference group , which serves as the basis for the scale, and the other as the focal group , whose scale we aim to align with that of the reference group.

Let \(u^{\text {ref}}_{jr}\) be the score assigned by rater \(r \in \mathcal {R}^{\text {ref}}\) to the essay of examinee \(j \in \mathcal {J}^{\text {ref}}\) , where \(\mathcal {R}^{\text {ref}}\) and \(\mathcal {J}^{\text {ref}}\) denote the sets of raters and examinees in the reference group, respectively. Then, a collection of scores for the reference group can be defined as

\[ \textbf{U}^{\text{ref}} = \left\{ u^{\text{ref}}_{jr} \in \mathcal{K} \cup \{-1\} \;\middle|\; j \in \mathcal{J}^{\text{ref}},\ r \in \mathcal{R}^{\text{ref}} \right\}, \]

where \(\mathcal{K} = \{1,\ldots ,K\}\) represents the rating categories, and \(-1\) indicates missing data.

Similarly, a collection of scores for the focal group can be defined as

\[ \textbf{U}^{\text{foc}} = \left\{ u^{\text{foc}}_{jr} \in \mathcal{K} \cup \{-1\} \;\middle|\; j \in \mathcal{J}^{\text{foc}},\ r \in \mathcal{R}^{\text{foc}} \right\}, \]

where \(u^{\text {foc}}_{jr}\) indicates the score assigned by rater \(r \in \mathcal {R}^{\text {foc}}\) to the essay of examinee \(j \in \mathcal {J}^{\text {foc}}\) , and \(\mathcal {R}^{\text {foc}}\) and \(\mathcal {J}^{\text {foc}}\) represent the sets of raters and examinees in the focal group, respectively.

The primary objective of this study is to apply IRT models with rater parameters to the two sets of data, \(\textbf{U}^{\text {ref}}\) and \(\textbf{U}^{\text {foc}}\) , and to establish IRT parameter linking without shared examinees and raters: \(\mathcal {J}^{\text {ref}} \cap \mathcal {J}^{\text {foc}} = \emptyset \) and \(\mathcal {R}^{\text {ref}} \cap \mathcal {R}^{\text {foc}} = \emptyset \) . More specifically, we seek to align the scale derived from \(\textbf{U}^{\text {foc}}\) with that of \(\textbf{U}^{\text {ref}}\) .
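The sketch below shows one minimal way to represent these score collections. It assumes each group's ratings arrive as (examinee, rater, score) triples. Each group becomes a \(J \times R\) matrix in which \(-1\) marks missing ratings. The setting's requirement that the groups share no examinees or raters is then checked with set intersections. The identifiers, scores, and helper name are made-up toy values.

```python
MISSING = -1  # code for "this rater did not score this essay"


def build_score_matrix(ratings, examinees, raters, num_categories):
    """Arrange (examinee, rater, score) triples as a J x R matrix.

    Scores must lie in 1..num_categories; unscored cells stay MISSING.
    """
    row = {j: i for i, j in enumerate(examinees)}
    col = {r: i for i, r in enumerate(raters)}
    matrix = [[MISSING] * len(raters) for _ in examinees]
    for j, r, k in ratings:
        if not 1 <= k <= num_categories:
            raise ValueError(f"score {k} is outside 1..{num_categories}")
        matrix[row[j]][col[r]] = k
    return matrix


ref_examinees, ref_raters = ["e1", "e2", "e3"], ["r1", "r2"]
foc_examinees, foc_raters = ["e4", "e5"], ["r3", "r4"]

U_ref = build_score_matrix(
    [("e1", "r1", 3), ("e2", "r1", 4), ("e2", "r2", 3), ("e3", "r2", 2)],
    ref_examinees, ref_raters, num_categories=5)
U_foc = build_score_matrix(
    [("e4", "r3", 2), ("e5", "r4", 5)],
    foc_examinees, foc_raters, num_categories=5)

# The setting studied here: the groups share no examinees and no raters.
assert not set(ref_examinees) & set(foc_examinees)
assert not set(ref_raters) & set(foc_raters)
print(U_ref)  # [[3, -1], [4, 3], [-1, 2]]
```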

Item response theory

IRT (Lord, 1980 ), a test theory grounded in mathematical models, has recently gained widespread use in various testing situations due to the growing prevalence of computer-based testing. In objective testing contexts, IRT makes use of latent variable models, commonly referred to as IRT models. Traditional IRT models, such as the Rasch model and the two-parameter logistic model, give the probability of an examinee’s response to a test item as a probabilistic function influenced by both the examinee’s latent ability and the item’s characteristic parameters, such as difficulty and discrimination. These IRT parameters can be estimated from a dataset consisting of examinees’ responses to test items.

However, traditional IRT models are not directly applicable to essay-writing test data, where the examinees’ responses to test items are assessed by multiple human raters. Extended IRT models with rater parameters have been proposed to address this issue (Eckes, 2023 ; Jin & Wang, 2018 ; Linacre, 1989 ; Shin et al., 2019 ; Uto, 2023 ; Wilson & Hoskens, 2001 ).

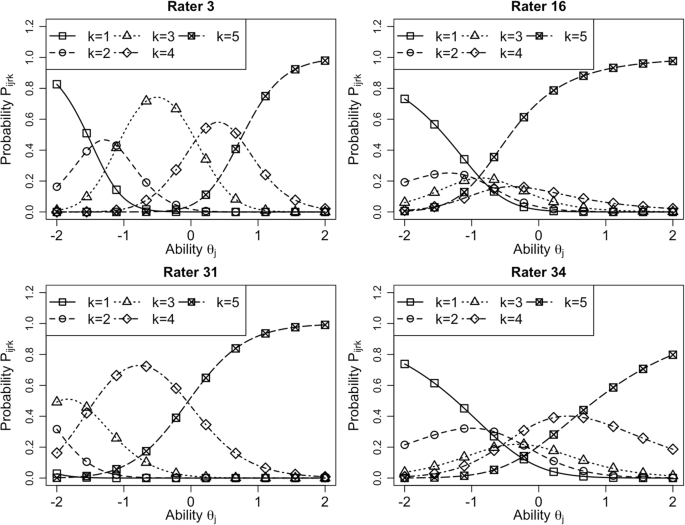

Many-facet Rasch models and their extensions

The MFRM (Linacre, 1989 ) is the most commonly used IRT model that incorporates rater parameters. Although several variants of the MFRM exist (Eckes, 2023 ; Myford & Wolfe, 2004 ), the most representative model defines the probability that the essay of examinee j for a given test item (either a writing task or prompt) i receives a score of k from rater r as

\[ P_{ijrk} = \frac{\exp \sum_{m=1}^{k} D\left(\theta_j - \beta_i - \beta_r - d_m\right)}{\sum_{l=1}^{K} \exp \sum_{m=1}^{l} D\left(\theta_j - \beta_i - \beta_r - d_m\right)}, \]

where \(\theta _j\) is the latent ability of examinee j , \(\beta _{i}\) represents the difficulty of item i , \(\beta _{r}\) represents the severity of rater r , and \(d_{m}\) is a step parameter denoting the difficulty of transitioning between scores \(m-1\) and m . \(D = 1.7\) is a scaling constant used to minimize the difference between the normal and logistic distribution functions. For model identification, \(\sum _{i} \beta _{i} = 0\) , \(d_1 = 0\) , \(\sum _{m = 2}^{K} d_{m} = 0\) , and a normal distribution for the ability \(\theta _j\) are assumed.

Another popular MFRM is one in which \(d_{m}\) is replaced with \(d_{rm}\) , a rater-specific step parameter denoting the severity of rater r when transitioning from score \(m-1\) to m . This model is often used to investigate variations in rating scale criteria among raters caused by differences in the central tendency, extreme response tendency, and range restriction among raters (Eckes, 2023 ; Myford & Wolfe, 2004 ; Qiu et al., 2022 ; Uto, 2021a ).

A recent extension of the MFRM is the generalized many-facet model (GMFM) (Uto & Ueno, 2020 ), which incorporates parameters denoting rater consistency and item discrimination. GMFM defines the probability \(P_{ijrk}\) as

\[ P_{ijrk} = \frac{\exp \sum_{m=1}^{k} D\alpha_i \alpha_r\left(\theta_j - \beta_i - \beta_r - d_{rm}\right)}{\sum_{l=1}^{K} \exp \sum_{m=1}^{l} D\alpha_i \alpha_r\left(\theta_j - \beta_i - \beta_r - d_{rm}\right)}, \]

where \(\alpha _i\) indicates the discrimination power of item i , and \(\alpha _r\) indicates the consistency of rater r . For model identification, \(\prod _{r} \alpha _r = 1\) , \(\sum _{i} \beta _{i} = 0\) , \(d_{r1} = 0\) , \(\sum _{m = 2}^{K} d_{rm} = 0\) , and a normal distribution for the ability \(\theta _j\) are assumed.

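In this study, we seek to apply the aforementioned IRT models to data involving a single test item, as detailed in the Setting and data section. When there is only one test item, the item parameters in the above equations become superfluous and can be omitted. Consequently, the equations for these models can be simplified as follows.

MFRM:

\[ P_{jrk} = \frac{\exp \sum_{m=1}^{k} D\left(\theta_j - \beta_r - d_m\right)}{\sum_{l=1}^{K} \exp \sum_{m=1}^{l} D\left(\theta_j - \beta_r - d_m\right)} \]

MFRM with rater-specific step parameters (referred to as MFRM with RSS in the subsequent sections):

\[ P_{jrk} = \frac{\exp \sum_{m=1}^{k} D\left(\theta_j - \beta_r - d_{rm}\right)}{\sum_{l=1}^{K} \exp \sum_{m=1}^{l} D\left(\theta_j - \beta_r - d_{rm}\right)} \]

GMFM:

\[ P_{jrk} = \frac{\exp \sum_{m=1}^{k} D\alpha_r\left(\theta_j - \beta_r - d_{rm}\right)}{\sum_{l=1}^{K} \exp \sum_{m=1}^{l} D\alpha_r\left(\theta_j - \beta_r - d_{rm}\right)} \]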

Note that the GMFM can simultaneously capture the following typical characteristics of raters, whereas the MFRM and MFRM with RSS can only consider a subset of these characteristics.

Severity : This refers to the tendency of some raters to systematically assign higher or lower scores compared with other raters regardless of the actual performance of the examinee. This tendency is quantified by the parameter \(\beta _r\) .

Consistency : This is the extent to which raters apply their scoring criteria uniformly over time and across different examinees. Consistent raters exhibit stable scoring patterns, which make their evaluations more reliable and predictable. In contrast, inconsistent raters show varying scoring tendencies. This characteristic is represented by the parameter \(\alpha _r\) .

Range Restriction : This describes the limited variability in scores assigned by a rater. Central tendency and extreme response tendency are special cases of range restriction. This characteristic is represented by the parameter \(d_{rm}\) .

For details on how these characteristics are represented in the GMFM, see the article (Uto & Ueno, 2020 ).

Based on the above, it is evident that both the MFRM and MFRM with RSS are special cases of the GMFM. Specifically, the GMFM with constant rater consistency corresponds to the MFRM with RSS. Moreover, the MFRM with RSS that assumes no differences in the range restriction characteristic among raters aligns with the MFRM.
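These special-case relationships can be checked numerically. The sketch below computes single-item GMFM category probabilities for one examinee and one rater:

- Fixing `alpha_r = 1` yields the MFRM with RSS.
- Additionally giving every rater the same step list yields the MFRM.

The function names and parameter values are arbitrary illustrations. Subtracting the maximum before exponentiating is only a numerical-stability choice.

```python
import math

D = 1.7  # scaling constant used in the models above


def category_probs(theta, alpha_r, beta_r, d_r):
    """Single-item GMFM probabilities P_jrk for k = 1..K.

    d_r holds the rater's step parameters d_r1..d_rK (d_r1 = 0).
    alpha_r = 1 gives the MFRM with RSS; sharing one d_r across raters
    as well gives the MFRM.
    """
    cumulative, running = [], 0.0
    for d in d_r:  # running sum over m = 1..k
        running += D * alpha_r * (theta - beta_r - d)
        cumulative.append(running)
    top = max(cumulative)  # subtract the max before exp for stability
    weights = [math.exp(c - top) for c in cumulative]
    total = sum(weights)
    return [w / total for w in weights]


def expected_category(probs):
    """Mean category, sum_k k * P_k."""
    return sum(k * p for k, p in enumerate(probs, start=1))


steps = [0.0, -0.4, 0.4]  # K = 3, with d_r2 + d_r3 = 0
for theta in (-1.0, 0.0, 1.0):
    lenient = category_probs(theta, alpha_r=1.0, beta_r=-0.5, d_r=steps)
    severe = category_probs(theta, alpha_r=1.0, beta_r=0.5, d_r=steps)
    print(theta, round(expected_category(lenient), 3),
          round(expected_category(severe), 3))
```

The more severe rater (larger \(\beta_r\)) yields a lower expected category at every ability level, which is the severity effect the text attributes to \(\beta_r\).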

When the aforementioned IRT models are applied to datasets from multiple groups composed of different examinees and raters, such as \(\textbf{U}^{\text {ref}}\) and \(\textbf{U}^{\text {foc}}\) , the scales of the estimated parameters generally differ among them. This discrepancy arises because IRT permits arbitrary scaling of parameters for each independent dataset. An exception occurs when it is feasible to assume equality in between-test distributions of examinee abilities and rater parameters (Linacre, 2014 ). However, real-world testing conditions may not always satisfy this assumption. Therefore, if the aim is to compare parameter estimates between different groups, test linking is generally required to unify the scale of model parameters estimated from each individual group’s dataset.

One widely used approach for test linking is linear linking . In the context of the essay-writing test considered in this study, implementing linear linking necessitates designing two groups so that there is some overlap in examinees between them. With this design, IRT parameters for the shared examinees are estimated individually for each group. These estimates are then used to construct a linear regression model for aligning the parameter scales across groups, thereby rendering them comparable. We now introduce the mean and sigma method (Kolen & Brennan, 2014 ; Marco, 1977 ), a popular method for linear linking, and illustrate the procedures for parameter linking specifically for the GMFM, as defined in Eq. 7 , because both the MFRM and the MFRM with RSS can be regarded as special cases of the GMFM, as explained earlier.

To elucidate this, let us assume that the datasets corresponding to the reference and focal groups, denoted as \(\textbf{U}^{\text {ref}}\) and \(\textbf{U}^{\text {foc}}\) , contain overlapping sets of examinees. Furthermore, let us assume that \(\hat{\varvec{\theta }}^{\text {foc}}\) , \(\hat{\varvec{\alpha }}^{\text {foc}}\) , \(\hat{\varvec{\beta }}^{\text {foc}}\) , and \(\hat{\varvec{d}}^{\text {foc}}\) are the GMFM parameters estimated from \(\textbf{U}^{\text {foc}}\) . The mean and sigma method aims to transform these parameters linearly so that their scale aligns with those estimated from \(\textbf{U}^{\text {ref}}\) . This transformation is guided by the equations

\[ \tilde{\varvec{\theta}}^{\text{foc}} = A\hat{\varvec{\theta}}^{\text{foc}} + B, \quad \tilde{\varvec{\alpha}}^{\text{foc}} = \frac{\hat{\varvec{\alpha}}^{\text{foc}}}{A}, \quad \tilde{\varvec{\beta}}^{\text{foc}} = A\hat{\varvec{\beta}}^{\text{foc}} + B, \quad \tilde{\varvec{d}}^{\text{foc}} = A\hat{\varvec{d}}^{\text{foc}}, \]

where \(\tilde{\varvec{\theta }}^{\text {foc}}\) , \(\tilde{\varvec{\alpha }}^{\text {foc}}\) , \(\tilde{\varvec{\beta }}^{\text {foc}}\) , and \(\tilde{\varvec{d}}^{\text {foc}}\) represent the scale-transformed parameters for the focal group. The linking coefficients are defined as

\[ A = \frac{\sigma^{\text{ref}}}{\sigma^{\text{foc}}}, \quad B = \mu^{\text{ref}} - A\mu^{\text{foc}}, \]

where \({\mu }^{\text {ref}}\) and \({\sigma }^{\text {ref}}\) represent the mean and standard deviation (SD) of the common examinees’ ability values estimated from \(\textbf{U}^{\text {ref}}\) , and \({\mu }^{\text {foc}}\) and \({\sigma }^{\text {foc}}\) represent those values obtained from \(\textbf{U}^{\text {foc}}\) .
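Below is a minimal sketch of the mean and sigma method as described above. The function names are hypothetical, and the coefficients are written as \(A\) and \(B\). The coefficients come from the common examinees' two sets of ability estimates, and the focal-group GMFM parameters are then transformed. Using population rather than sample SDs does not change \(A\) here, because both SDs are computed over the same common examinees.

```python
import statistics


def mean_sigma_coefficients(theta_ref_common, theta_foc_common):
    """Linking coefficients A and B from the common examinees' abilities,
    estimated separately from U_ref and U_foc (same examinee order)."""
    A = statistics.pstdev(theta_ref_common) / statistics.pstdev(theta_foc_common)
    B = statistics.fmean(theta_ref_common) - A * statistics.fmean(theta_foc_common)
    return A, B


def transform_focal_gmfm(theta, alpha, beta, d, A, B):
    """Put focal-group GMFM estimates on the reference scale."""
    theta_t = [A * t + B for t in theta]
    alpha_t = [a / A for a in alpha]
    beta_t = [A * b + B for b in beta]
    d_t = [[A * x for x in steps] for steps in d]  # one step list per rater
    return theta_t, alpha_t, beta_t, d_t


# Toy common-examinee estimates where the reference scale is 2 * focal + 1.
theta_foc_common = [-1.0, 0.0, 0.5, 1.5]
theta_ref_common = [2 * t + 1 for t in theta_foc_common]
A, B = mean_sigma_coefficients(theta_ref_common, theta_foc_common)
print(round(A, 6), round(B, 6))  # 2.0 1.0
```

Because \(\alpha_r(\theta_j - \beta_r - d_{rm})\) is unchanged by this transformation, the GMFM probabilities are preserved while the parameters move to the reference scale.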

This linear linking method is applicable when there are common examinees across different groups. However, as discussed in the introduction, arranging for multiple groups with partially overlapping examinees (and/or raters) can often be impractical in real-world testing environments. To address this limitation, we aim to facilitate test linking without the need for common examinees and raters by leveraging AES technology.

Automated essay scoring models

Many AES methods have been developed over recent decades and can be broadly categorized into either feature-engineering or automatic feature extraction approaches (Hussein et al., 2019 ; Ke & Ng, 2019 ). The feature-engineering approach predicts essay scores using either a regression or classification model that employs manually designed features, such as essay length and the number of spelling errors (Amorim et al., 2018 ; Dascalu et al., 2017 ; Nguyen & Litman, 2018 ; Shermis & Burstein, 2002 ). The advantages of this approach include greater interpretability and explainability. However, it generally requires considerable effort in developing effective features to achieve high scoring accuracy for various datasets. Automatic feature extraction approaches based on deep neural networks (DNNs) have recently attracted attention as a means of eliminating the need for feature engineering. Many DNN-based AES models have been proposed in the last decade and have achieved state-of-the-art accuracy (Alikaniotis et al., 2016 ; Dasgupta et al., 2018 ; Farag et al., 2018 ; Jin et al., 2018 ; Mesgar & Strube, 2018 ; Mim et al., 2019 ; Nadeem et al., 2019 ; Ridley et al., 2021 ; Taghipour & Ng, 2016 ; Uto, 2021b ; Wang et al., 2018 ). In the next section, we introduce the most widely used DNN-based AES model, which utilizes Bidirectional Encoder Representations from Transformers (BERT) (Devlin et al., 2019 ).

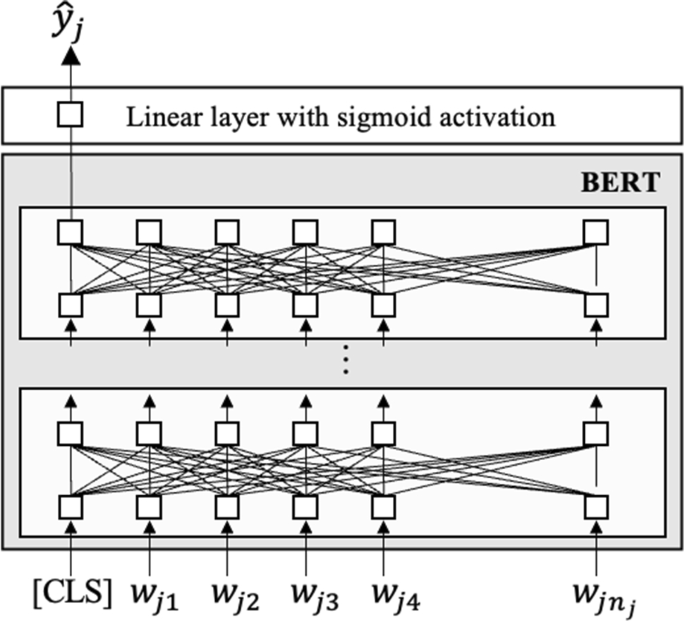

BERT-based AES model

BERT, a pre-trained language model developed by Google’s AI language team, achieved state-of-the-art performance in various natural language processing (NLP) tasks in 2019 (Devlin et al., 2019 ). Since then, it has frequently been applied to AES (Rodriguez et al., 2019 ) and automated short-answer grading (Liu et al., 2019 ; Lun et al., 2020 ; Sung et al., 2019 ) and has demonstrated high accuracy.

BERT is structured as a multilayer bidirectional transformer network, where the transformer is a neural network architecture designed to handle ordered sequences of data using an attention mechanism. See Ref. (Vaswani et al., 2017 ) for details of transformers.

BERT undergoes training in two distinct phases, pretraining and fine-tuning . The pretraining phase utilizes massive volumes of unlabeled text data and is conducted through two unsupervised learning tasks, specifically, masked language modeling and next-sentence prediction . Masked language modeling predicts the identities of words that have been masked out of the input text, while next-sentence prediction predicts whether two given sentences are adjacent.

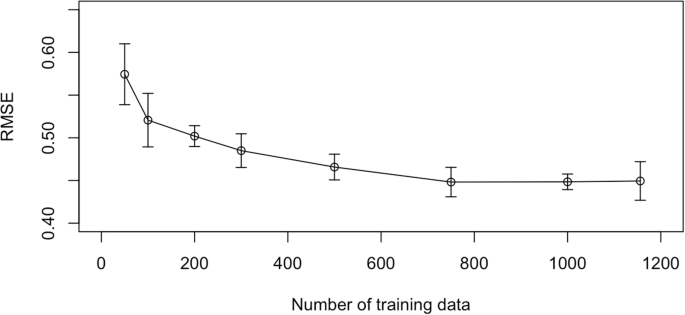

Fine-tuning is required to adapt a pre-trained BERT model for a specific NLP task, including AES. This entails retraining the BERT model using a task-specific supervised dataset after initializing the model parameters with pre-trained values and augmenting with task-specific output layers. For AES applications, the addition of a special token, [CLS] , at the beginning of each input is required. Then, BERT condenses the entire input text into a fixed-length real-valued hidden vector referred to as the distributed text representation , which corresponds to the output of the special token [CLS] (Devlin et al., 2019 ). AES scores can thus be derived by feeding the distributed text representation into a linear layer with sigmoid activation , as depicted in Fig. 1 . More formally, let \( \varvec{h} \) be the distributed text representation. The linear layer with sigmoid activation is defined as \(\sigma (\varvec{W}\varvec{h}+\text{ b})\) , where \(\varvec{W}\) is a weight matrix and \(\text{ b }\) is a bias, both learned during the fine-tuning process. The sigmoid function \(\sigma ()\) maps its input to a value between 0 and 1. Therefore, the model is trained to minimize an error loss function between the predicted scores and the gold-standard scores, which are normalized to the [0, 1] range. Moreover, score prediction using the trained model is performed by linearly rescaling the predicted scores back to the original score range.

Fig. 1 BERT-based AES model architecture. \(w_{jt}\) is the t -th word in the essay of examinee j , \(n_j\) is the number of words in the essay, and \(\hat{y}_{j}\) represents the predicted score from the model
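The sketch below illustrates the output layer and score rescaling described above. It applies \(\sigma(\varvec{W}\varvec{h}+\text{b})\) to a toy three-dimensional vector standing in for the [CLS] representation, which is 768-dimensional for BERT-base. It then maps the result back to an assumed 1-5 score range. It treats \(\varvec{W}\) as a single row producing one score. The function names, weights, and score range are hypothetical.

```python
import math


def sigmoid(x):
    """Numerically stable logistic sigmoid mapping any real x into (0, 1)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def normalize_score(score, min_score, max_score):
    """Rescale a gold-standard score linearly into [0, 1] for training."""
    return (score - min_score) / (max_score - min_score)


def predict_score(h, w, b, min_score, max_score):
    """Apply sigma(w . h + b) and rescale the result to the original range.

    h stands in for the [CLS] representation; w is one row of W, so the
    layer emits a single score.
    """
    z = sum(w_i * h_i for w_i, h_i in zip(w, h)) + b
    return min_score + (max_score - min_score) * sigmoid(z)


h = [0.2, -0.1, 0.4]   # toy stand-in for a 768-dimensional BERT vector
w = [1.5, 0.3, -0.8]   # weights that fine-tuning would learn
print(predict_score(h, w, b=0.1, min_score=1, max_score=5))
```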

Problems with AES model training

As mentioned above, BERT-based and other DNN-based AES models must be trained or fine-tuned using a large dataset of essays that have been graded by human raters. Typically, the mean-squared error (MSE) between the predicted and the gold-standard scores serves as the loss function for model training. Specifically, let \(y_{j}\) be the normalized gold-standard score for the j -th examinee’s essay, and let \(\hat{y}_{j}\) be the predicted score from the model. The MSE loss function is then defined as

\[ \mathrm{MSE} = \frac{1}{J}\sum_{j=1}^{J}\left(y_j - \hat{y}_j\right)^2, \]

where J denotes the number of examinees, which is equivalent to the number of essays, in the training dataset.

Here, note that a large-scale training dataset is often created by assigning a few raters from a pool of potential raters to each essay to reduce the scoring burden and to increase scoring reliability. In such cases, the gold-standard score for each essay is commonly determined by averaging the scores given by multiple raters assigned to that essay. However, as discussed in earlier sections, these straightforward average scores are highly sensitive to rater characteristics. When training data includes rater bias effects, an AES model trained on that data can show decreased performance as a result of inheriting these biases (Amorim et al., 2018 ; Huang et al., 2019 ; Li et al., 2020 ; Wind et al., 2018 ). An AES method that uses IRT has been proposed to address this issue (Uto & Okano, 2021 ).

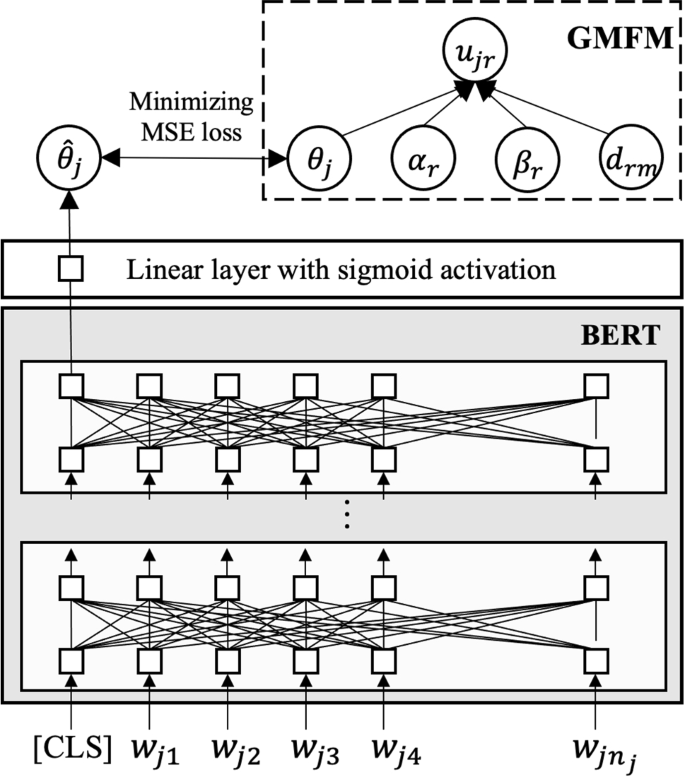

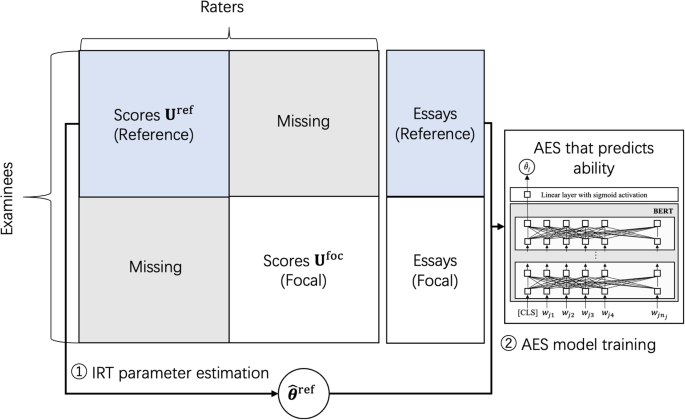

AES method using IRT

The main idea behind the AES method using IRT (Uto & Okano, 2021 ) is to train an AES model using the ability value \(\theta _j\) estimated by IRT models with rater parameters, such as MFRM and its extensions, from the data given by multiple raters for each essay, instead of a simple average score. Specifically, AES model training in this method occurs in two steps, as outlined in Fig. 2 .

1. Estimate the IRT-based abilities \(\varvec{\theta }\) from a score dataset, which includes scores given to essays by multiple raters.

2. Train an AES model given the ability estimates as the gold-standard scores. Specifically, the MSE loss function for training is defined as

\[ \mathrm{MSE} = \frac{1}{J}\sum_{j=1}^{J}\left(\theta_j - \hat{\theta}_j\right)^2, \]

where \(\hat{\theta }_j\) represents the AES’s predicted ability of the j -th examinee, and \(\theta _{j}\) is the gold-standard ability for the examinee obtained from Step 1. Note that the gold-standard scores are rescaled into the range [0, 1] by applying a linear transformation from the logit range \([-3, 3]\) to [0, 1]. See the original paper (Uto & Okano, 2021 ) for details.
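The rescaling between the logit range \([-3, 3]\) and [0, 1], together with the MSE loss of Step 2, can be sketched as follows. The function names are hypothetical, and the AES outputs in the usage lines are made-up values. The text does not say how abilities outside \([-3, 3]\) are handled, so the sketch simply lets them fall outside [0, 1].

```python
LOGIT_LO, LOGIT_HI = -3.0, 3.0


def theta_to_unit(theta):
    """Linearly map an ability from the logit range [-3, 3] to [0, 1]."""
    return (theta - LOGIT_LO) / (LOGIT_HI - LOGIT_LO)


def unit_to_theta(y):
    """Inverse map, used when turning an AES output back into an ability."""
    return LOGIT_LO + (LOGIT_HI - LOGIT_LO) * y


def mse(targets, predictions):
    """Mean-squared error over the J training essays."""
    if not targets or len(targets) != len(predictions):
        raise ValueError("need equally long, non-empty sequences")
    return sum((t - p) ** 2 for t, p in zip(targets, predictions)) / len(targets)


theta_gold = [-1.2, 0.4, 2.1]                      # Step 1: IRT ability estimates
targets = [theta_to_unit(t) for t in theta_gold]   # rescaled gold standard
predictions = [0.33, 0.55, 0.80]                   # hypothetical AES outputs
print(mse(targets, predictions))
```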

Architecture of a BERT-based AES model that uses IRT

Because the IRT-based abilities \(\varvec{\theta }\) are estimated with rater bias effects removed, an AES model trained with this method does not reflect those bias effects.

In the prediction phase, the score for an essay from examinee \(j^{\prime }\) is calculated in two steps.

1. Predict the IRT-based ability \(\theta _{j^{\prime }}\) of the examinee using the trained AES model, and then linearly rescale the prediction to the logit range \([-3, 3]\).

2. Calculate the expected score \(\mathbb {E}_{r,k}\left[ P_{j^{\prime }rk}\right] \) given \(\theta _{j'}\) and the rater parameters. This expected score corresponds to a score on the original rating scale that is free of rater bias, and it is used as the predicted essay score in this method.
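To make the expected-score step concrete, the sketch below computes \(\sum _k k\,P_{j'rk}\) for each rater and averages the result over raters. It assumes a rating-scale MFRM in which \(P_{jrk}\) is proportional to \(\exp \sum _{m=1}^{k}(\theta _j - \beta _r - d_m)\) with \(d_1 = 0\), as a stand-in for the model equations defined elsewhere in the paper; reading \(\mathbb {E}_{r,k}\) as "average over raters of the category-weighted sum" is likewise an assumption. Categories are numbered 1 to K, and all parameter values are hypothetical.

```python
import numpy as np

def category_probs(theta, beta_r, d):
    """P_{jrk} for k = 1..K under an ASSUMED rating-scale MFRM.

    Assumed form: P_{jrk} proportional to exp(sum_{m=1}^{k} (theta - beta_r - d_m)),
    with d[0] = 0 as the identifying constraint.
    """
    d = np.asarray(d, dtype=float)
    logits = np.cumsum(theta - beta_r - d)  # cumulative sum gives one logit per category
    logits -= logits.max()                  # subtract the max to avoid overflow in exp
    p = np.exp(logits)
    return p / p.sum()

def expected_score(theta, rater_severities, d):
    """Average over raters of sum_k k * P_{jrk}, on the original 1..K scale."""
    categories = np.arange(1, len(d) + 1)
    per_rater = [categories @ category_probs(theta, b, d) for b in rater_severities]
    return float(np.mean(per_rater))

# Step 1 output: an AES prediction of 0.5 on [0, 1], rescaled to the logit range [-3, 3].
theta_pred = 0.5 * 6.0 - 3.0
d = [0.0, -1.5, -0.5, 0.5, 1.5]  # hypothetical step parameters for five categories
score = expected_score(theta_pred, [-0.5, 0.5], d)
# With these symmetric hypothetical values, score is approximately 3.0 (the middle category).
```

Averaging over raters is what removes the dependence on any single rater's severity: the predicted score reflects the examinee's ability as seen through the whole pool of calibrated raters.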

This method was originally designed to train an AES model while mitigating the impact of the varying rater characteristics present in the training data. A key feature, however, is its ability to predict an examinee’s IRT-based ability from their essay text. Our linking approach leverages this feature to enable test linking without requiring common examinees and raters.

Outline of our proposed method, steps 1 and 2

Outline of our proposed method, steps 3–6

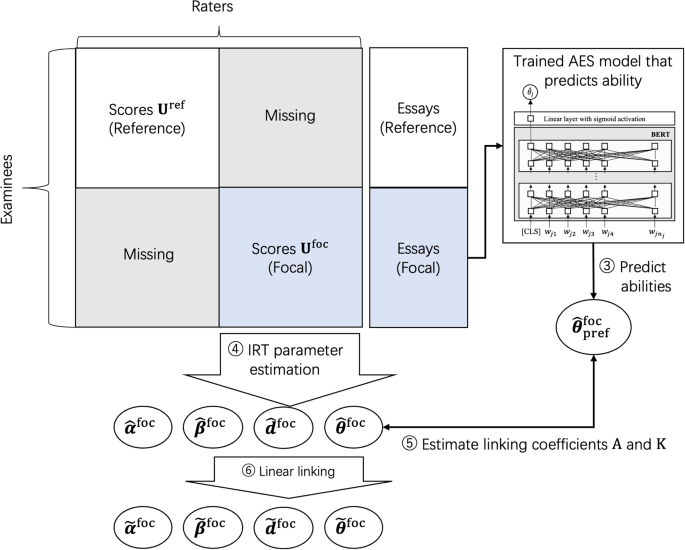

Proposed method

The core idea behind our method is to develop an AES model that predicts examinee ability using score and essay data from the reference group, and then to use this model to predict the abilities of examinees in the focal group. These predictions are then used to estimate the linking coefficients for linear linking. An outline of our method is illustrated in Figs. 3 and 4. The detailed steps of the procedure are as follows.

1. Estimate the IRT model parameters from the reference group’s data \(\textbf{U}^{\text {ref}}\) to obtain \(\hat{\varvec{\theta }}^{\text {ref}}\), the ability estimates of the examinees in the reference group.

2. Use the ability estimates \(\hat{\varvec{\theta }}^{\text {ref}}\) and the essays written by the examinees in the reference group to train an AES model that predicts examinee ability.

3. Use the trained AES model to predict the abilities of the examinees in the focal group from their essays. We denote these AES-predicted abilities as \(\hat{\varvec{\theta }}^{\text {foc}}_{\text {pred}}\) from here on. Importantly, the AES model is trained to predict ability values on the parameter scale of the reference group’s data, so the predicted abilities for examinees in the focal group follow the same scale.

4. Estimate the IRT model parameters from the focal group’s data \(\textbf{U}^{\text {foc}}\).

5. Calculate the linking coefficients A and K from the AES-predicted abilities \(\hat{\varvec{\theta }}^{\text {foc}}_{\text {pred}}\) and the IRT-based ability estimates \(\hat{\varvec{\theta }}^{\text {foc}}\) for the examinees in the focal group as follows:

\[ A = \frac{\sigma ^{\text {foc}}_{\text {pred}}}{\sigma ^{\text {foc}}}, \qquad K = \mu ^{\text {foc}}_{\text {pred}} - A\,\mu ^{\text {foc}}, \]

where \({\mu }^{\text {foc}}_{\text {pred}}\) and \({\sigma }^{\text {foc}}_{\text {pred}}\) represent the mean and the SD of the AES-predicted abilities \(\hat{\varvec{\theta }}^{\text {foc}}_{\text {pred}}\), respectively, and \({\mu }^{\text {foc}}\) and \({\sigma }^{\text {foc}}\) represent the corresponding values for the IRT-based ability estimates \(\hat{\varvec{\theta }}^{\text {foc}}\).

6. Apply linear linking based on the mean and sigma method given in Eq. 8, using the above linking coefficients and the parameter estimates for the focal group obtained in Step 4. This procedure yields parameter estimates for the focal group that are aligned with the scale of the reference group’s parameters.
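The coefficient calculation and the final transformation in the last two steps can be sketched as follows. This is a minimal NumPy illustration with hypothetical function names. The transformation rules (locations \(\theta \) and \(\beta \) mapped to \(A x + K\), step parameters \(d\) scaled by \(A\), discriminations \(\alpha \) divided by \(A\)) are the standard mean and sigma rules and are assumed here to match Eq. 8, which is not reproduced in this section. Note that the SD convention (population or sample) cancels in the ratio for \(A\), provided both SDs use the same one.

```python
import numpy as np

def linking_coefficients(theta_pred_foc, theta_hat_foc):
    """Mean and sigma coefficients: A = sd_pred / sd_foc, K = mu_pred - A * mu_foc."""
    pred = np.asarray(theta_pred_foc, dtype=float)
    est = np.asarray(theta_hat_foc, dtype=float)
    A = pred.std() / est.std()  # same ddof for both SDs, so the convention cancels
    K = pred.mean() - A * est.mean()
    return A, K

def link_focal_parameters(A, K, theta, beta, d, alpha=None):
    """ASSUMED standard mean-and-sigma rules (stand-in for Eq. 8).

    theta, beta -> A * x + K; step parameters d -> A * d; alpha -> alpha / A.
    These keep alpha * (theta - beta - d) unchanged, so model probabilities are preserved.
    """
    linked = {
        "theta": A * np.asarray(theta, dtype=float) + K,
        "beta": A * np.asarray(beta, dtype=float) + K,
        "d": A * np.asarray(d, dtype=float),
    }
    if alpha is not None:
        linked["alpha"] = np.asarray(alpha, dtype=float) / A
    return linked

# Hypothetical focal-group values: IRT estimates and AES predictions on the reference scale.
theta_hat_foc = np.array([-1.0, 0.0, 1.0])
theta_pred_foc = 2.0 * theta_hat_foc + 0.5
A, K = linking_coefficients(theta_pred_foc, theta_hat_foc)  # A is approximately 2.0, K approximately 0.5
linked = link_focal_parameters(A, K, theta_hat_foc, beta=[0.3], d=[0.0, -0.4], alpha=[1.2])
```

Only the group-level mean and SD of the predictions enter the coefficients, which matches the method's reliance on distributional rather than individual predicted abilities.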

As described in Step 3, the AES model used in our method is trained to predict examinee abilities on the scale derived from the reference data \(\textbf{U}^{\text {ref}}\) . Therefore, the abilities predicted by the trained AES model for the examinees in the focal group, denoted as \(\hat{\varvec{\theta }}^{\text {foc}}_{\text {pred}}\) , also follow the ability scale derived from the reference data. Consequently, by using the AES-predicted abilities, we can infer the differences in the ability distribution between the reference and focal groups. This enables us to estimate the linking coefficients, which then allows us to perform linear linking based on the mean and sigma method. Thus, our method allows for test linking without the need for common examinees and raters.

It is important to note that the current AES model for predicting examinees’ abilities does not necessarily offer sufficient prediction accuracy for individual ability estimates. This implies that their direct use in mid- to high-stakes assessments could be problematic. Therefore, we focus solely on the mean and SD of the distribution of predicted abilities rather than on individual predicted ability values. Our underlying assumption is that, even if individual predictions are somewhat inaccurate, these AES models can capture differences in the ability distribution across groups, which is what makes them useful for test linking.

Experiments

In this section, we provide an overview of the experiments we conducted using actual data to evaluate the effectiveness of our method.

Actual data

We used the dataset previously collected in Uto and Okano ( 2021 ). It consists of essays written in English by 1805 students in grades 7 to 10, along with scores for these essays from 38 raters. The essays originally came from the ASAP (Automated Student Assessment Prize) dataset, a well-known benchmark dataset for AES studies. The raters were native English speakers recruited from Amazon Mechanical Turk (AMT), a popular crowdsourcing platform. To alleviate the scoring burden, only a few raters were assigned to each essay, rather than having all raters evaluate every essay. Rater assignment followed a systematic links design (Shin et al., 2019 ; Uto, 2021a ; Wind & Jones, 2019 ) to achieve IRT-scale linking. Consequently, each rater evaluated approximately 195 essays, and each essay was graded by four raters on average. The raters were asked to grade the essays using a holistic rubric with five rating categories, identical to the one used in the original ASAP dataset. The raters received no training before scoring began. The average Pearson correlation between the AMT raters’ scores and the ground-truth scores in the original ASAP dataset was 0.70 (SD = 0.09), with a minimum of 0.37 and a maximum of 0.81. We also calculated the intraclass correlation coefficient (ICC) between the scores from each AMT rater and the ground-truth scores. The average ICC was 0.60 (SD = 0.15), with a minimum of 0.29 and a maximum of 0.79. For each AMT rater, the correlation coefficients and ICC were calculated only over the essays that rater assessed. Because the ground-truth scores were given as the total of two raters’ scores, we divided them by two to align their scale with that of the AMT raters’ scores.
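The per-rater comparison with the ground truth, restricted to the essays each rater actually scored, can be sketched as below for the Pearson correlation. The data layout (an essays-by-raters matrix with `np.nan` for unassigned essays) and the function name are assumptions for illustration, and the numbers are made up. Halving the two-rater totals does not change Pearson's r, which is invariant to linear rescaling; it matters for the ICC, which is sensitive to absolute differences in scale.

```python
import numpy as np

def per_rater_correlations(scores, ground_truth_total):
    """Pearson r between each rater and the ground truth, over essays that rater scored.

    scores: (n_essays, n_raters) array with np.nan where a rater did not score an essay.
    ground_truth_total: (n_essays,) totals from two raters, halved to the single-rater scale.
    """
    gt = np.asarray(ground_truth_total, dtype=float) / 2.0
    correlations = []
    for r in range(scores.shape[1]):
        scored = ~np.isnan(scores[:, r])  # exclude essays this rater did not assess
        correlations.append(np.corrcoef(scores[scored, r], gt[scored])[0, 1])
    return np.array(correlations)

# Hypothetical data: 5 essays, 2 raters, each rater scoring only some essays.
scores = np.array([
    [1, np.nan],
    [2, 3],
    [3, 2],
    [4, np.nan],
    [np.nan, 4],
])
gt_total = np.array([2, 4, 6, 8, 6])
r = per_rater_correlations(scores, gt_total)  # [1.0, 0.0] up to floating-point rounding
```

Summary statistics such as those reported above would then be taken over the resulting array of per-rater values.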

For further analysis, we also evaluated the ICC among the AMT raters as a measure of their interrater reliability. This analysis required missing-value imputation because each essay was evaluated by only a subset of the AMT raters. We therefore first applied multiple imputation with predictive mean matching to the AMT raters’ score dataset, generating five imputed datasets. For each imputed dataset, we calculated the ICC among all AMT raters. Finally, we aggregated the ICC values across the imputed datasets to obtain the mean ICC and its SD. The results revealed a mean ICC of 0.43 with an SD of 0.01.

These results suggest that rater reliability is not necessarily high. This variability in scoring behavior among raters underscores the importance of applying IRT models with rater parameters. For further details on the dataset, see Uto and Okano ( 2021 ).

Experimental procedures

Using this dataset, we conducted the following experiment for three IRT models with rater parameters, namely MFRM, MFRM with RSS, and GMFM, defined by Eqs. 5, 6, and 7, respectively.

1. We estimated the IRT parameters from the entire dataset using a Markov chain Monte Carlo (MCMC) algorithm based on the No-U-Turn sampler, with prior distributions \(\theta _j, \beta _r, d_m, d_{rm} \sim N(0, 1)\) and \(\alpha _r \sim LN(0, 0.5)\), following previous work (Uto & Ueno, 2020 ). Here, \( N(\cdot , \cdot )\) and \(LN(\cdot , \cdot )\) denote normal and log-normal distributions parameterized by mean and SD values, respectively. Expected a posteriori (EAP) estimates were used as point estimates.

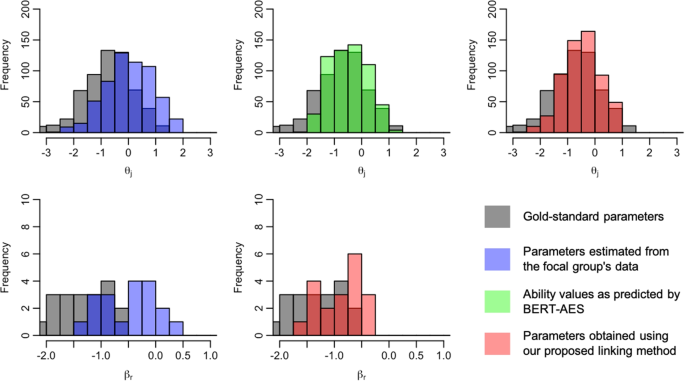

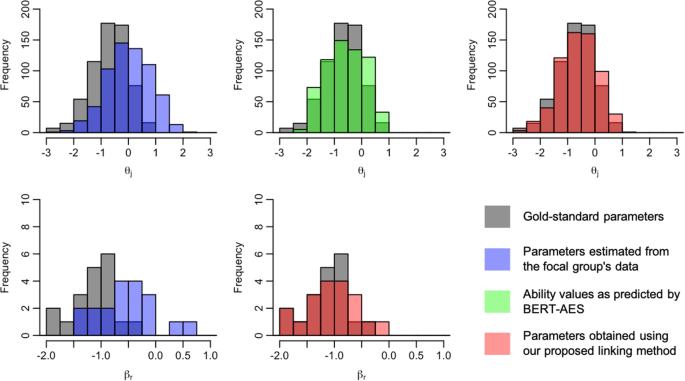

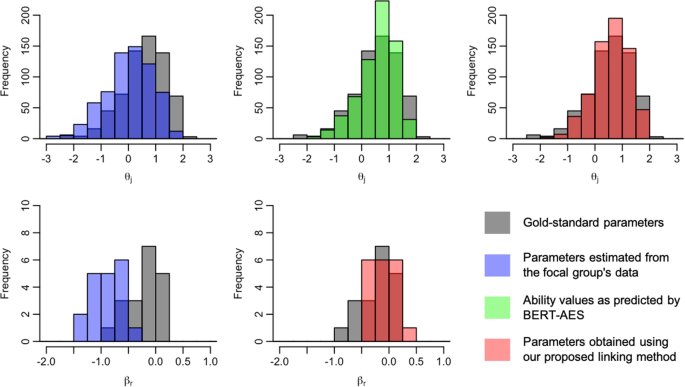

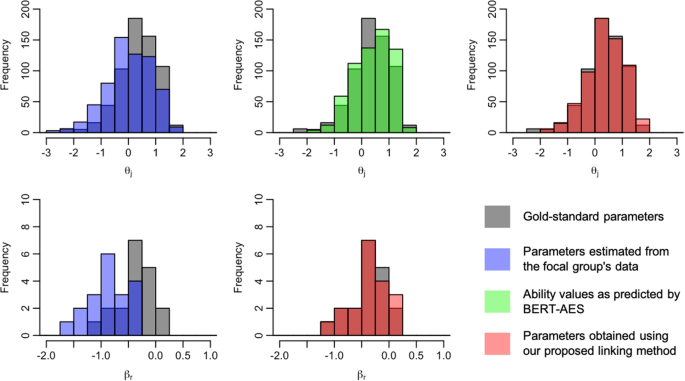

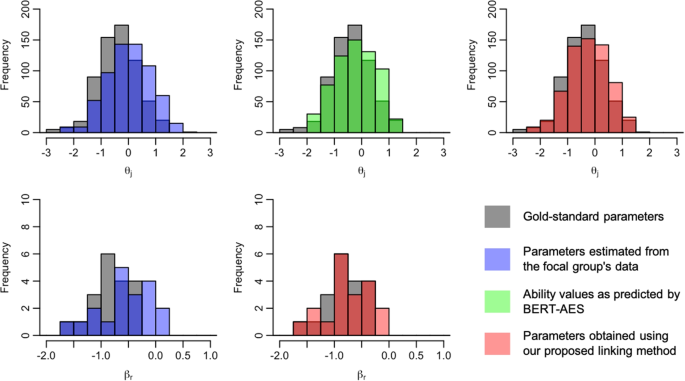

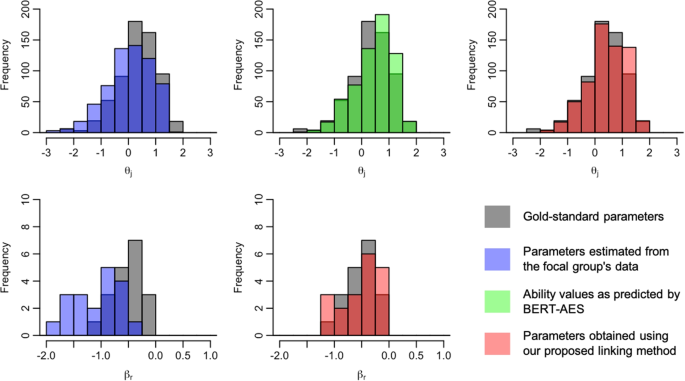

2. We then randomly separated the dataset into two groups, the reference group and the focal group, with no overlap of examinees or raters between them. In this separation, we selected the examinees and raters in each group so that the groups had distinct distributions of examinee abilities and rater severities. The separation patterns we tested are listed in Table 1. For example, condition 1 in Table 1 means that the reference group comprised randomly selected high-ability examinees and low-severity raters, while the focal group comprised low-ability examinees and high-severity raters. Condition 2 used a similar separation but with a narrower variance of rater severity in the focal group. Details of the group creation procedures can be found in Appendix A.

3. Using the resulting reference and focal group data, we conducted test linking with our method, as detailed in the Proposed method section. The IRT parameter estimation in this step used the same MCMC algorithm as in Step 1.

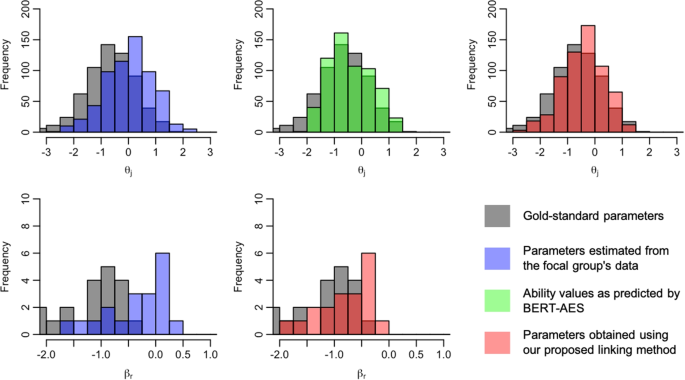

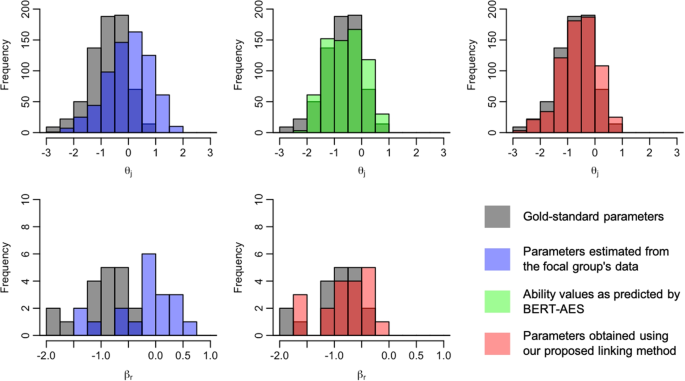

4. We calculated the root mean squared error (RMSE) between the focal group’s IRT parameters linked by our proposed method and their gold-standard parameters. Here, the gold-standard parameters were obtained by transforming the parameters estimated from the entire dataset in Step 1 onto the scale of the reference group. Specifically, we estimated the IRT parameters from the reference group’s data alone and took the parameters estimated from the entire dataset in Step 1. Then, treating the examinees in the reference group as common examinees, we applied linear linking based on the mean and sigma method to align the scale of the entire-dataset estimates with that of the reference group.

5. For comparison, we also calculated the RMSE between the focal group’s IRT parameters obtained without linking and their gold-standard parameters. This serves as the worst-case baseline against which the results of the proposed method are compared. Additionally, we examined baselines that use linear linking based on common examinees. For these baselines, we randomly selected five or ten examinees from the reference group who had been scored by at least two of the focal group’s raters in the entire dataset. The scores given to these selected examinees by the focal group’s raters were then merged into the focal group’s data, so that the added examinees served as common examinees between the reference and focal groups. Using these data, we estimated the IRT parameters for the focal group with common examinees and applied linear linking based on the mean and sigma method, using the ability estimates of the common examinees, to align the scale with that of the reference group. Finally, we calculated the RMSE between the linked parameter estimates for the examinees and raters belonging only to the original focal group and their gold-standard parameters. Note that this common-examinee approach operates under more advantageous conditions than the proposed linking method because it can use larger samples to estimate the parameters of the focal group’s raters.
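The selection of common examinees for the baseline can be sketched as a simple filter over the entire dataset. The record format `(examinee, rater, score)`, the function name, and the use of Python's `random.sample` are assumptions for illustration only; the IRT estimation that follows the merge is not shown.

```python
import random

def select_common_examinees(records, ref_examinees, focal_raters, n, seed=None):
    """Pick n reference examinees scored by at least two focal raters in the entire dataset.

    records: iterable of (examinee, rater, score) tuples (hypothetical format).
    Returns the chosen examinees and their focal-rater score records, which would
    then be merged into the focal group's data as common examinees.
    """
    records = list(records)
    ref_examinees, focal_raters = set(ref_examinees), set(focal_raters)
    raters_by_examinee = {}
    for examinee, rater, _ in records:
        if examinee in ref_examinees and rater in focal_raters:
            raters_by_examinee.setdefault(examinee, set()).add(rater)
    eligible = sorted(e for e, raters in raters_by_examinee.items() if len(raters) >= 2)
    # random.sample raises ValueError if fewer than n examinees are eligible.
    chosen = set(random.Random(seed).sample(eligible, n))
    added = [rec for rec in records if rec[0] in chosen and rec[1] in focal_raters]
    return chosen, added

# Hypothetical records: examinee 2 has only one focal rater, so it is not eligible.
records = [(1, "a", 3), (1, "b", 4), (2, "a", 2), (3, "a", 1),
           (3, "b", 2), (3, "c", 3), (1, "x", 5)]
chosen, added = select_common_examinees(records, {1, 2, 3}, {"a", "b", "c"}, n=2, seed=0)
```

Only the focal raters' scores for the chosen examinees are carried over, which is why the rater "x" record above is excluded from `added`.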

We repeated Steps 2–5 ten times for each data separation condition and calculated the average RMSE for four cases: our proposed linking method, no linking, and linear linking with five and with ten common examinees.
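The gold-standard construction used in the RMSE evaluation can be sketched as follows: the reference examinees act as common examinees between the entire-dataset calibration (source scale) and the reference-only calibration (target scale), and the resulting coefficients move the entire-dataset focal estimates onto the reference scale. All values below are hypothetical, and the function names are illustrative; rater severities would be transformed with the same \(A x + K\) rule as abilities.

```python
import numpy as np

def mean_sigma(source_common, target_common):
    """Coefficients mapping the source scale onto the target scale via common examinees."""
    s = np.asarray(source_common, dtype=float)
    t = np.asarray(target_common, dtype=float)
    A = t.std() / s.std()
    K = t.mean() - A * s.mean()
    return A, K

def rmse(estimates, gold):
    """Root mean squared error between linked estimates and gold-standard values."""
    e = np.asarray(estimates, dtype=float)
    g = np.asarray(gold, dtype=float)
    return float(np.sqrt(np.mean((e - g) ** 2)))

# Reference examinees estimated from the entire dataset and from reference data alone.
theta_full_ref = np.array([-1.0, 0.0, 1.0])
theta_refonly_ref = np.array([0.5, 1.5, 2.5])
A, K = mean_sigma(theta_full_ref, theta_refonly_ref)

# Gold standard for focal examinees: entire-dataset estimates moved onto the reference scale.
theta_full_foc = np.array([0.2, -0.4])
gold_foc = A * theta_full_foc + K

# Hypothetical focal estimates after linking with the method under evaluation.
linked_foc = np.array([1.8, 1.0])
error = rmse(linked_foc, gold_foc)  # approximately 0.1
```

Averaging such RMSE values over the ten repetitions gives the per-condition figures compared across the four cases.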