- University of Texas Libraries


Systematic Reviews & Evidence Synthesis Methods


Once you have completed your analysis, you will want to both summarize and synthesize those results. You may have a qualitative synthesis, a quantitative synthesis, or both.

Qualitative Synthesis

In a qualitative synthesis, you describe for readers how the pieces of your work fit together. You will summarize, compare, and contrast the characteristics and findings, exploring the relationships between them. Further, you will discuss the relevance and applicability of the evidence to your research question. You will also analyze the strengths and weaknesses of the body of evidence. Focus on where the gaps are in the evidence and provide recommendations for further research.

Quantitative Synthesis

Whether or not your systematic review includes a full meta-analysis, there is typically some element of data analysis. A quantitative synthesis combines and analyzes the evidence using statistical techniques. This includes comparing methodological similarities and differences across studies and, potentially, the quality of the studies conducted.

Summarizing vs. Synthesizing

In a systematic review, researchers do more than summarize findings from identified articles. You will synthesize the information you want to include.

While a summary concisely relates the important themes and elements of a larger work or works in condensed form, a synthesis takes information from a variety of works and combines it to create something new.

Synthesis:

"The goal of a systematic synthesis of qualitative research is to integrate or compare the results across studies in order to increase understanding of a particular phenomenon, not to add studies together. Typically the aim is to identify broader themes or new theories – qualitative syntheses usually result in a narrative summary of cross-cutting or emerging themes or constructs, and/or conceptual models."

Denner, J., Marsh, E. & Campe, S. (2017). Approaches to reviewing research in education. In D. Wyse, N. Selwyn, & E. Smith (Eds.), The BERA/SAGE Handbook of educational research (Vol. 2, pp. 143-164). doi: 10.4135/9781473983953.n7

- Approaches to Reviewing Research in Education from Sage Knowledge

Data synthesis (Collaboration for Environmental Evidence Guidebook)

Interpreting findings and reporting conduct (Collaboration for Environmental Evidence Guidebook)

Interpreting results and drawing conclusions (Cochrane Handbook, Chapter 15)

Guidance on the conduct of narrative synthesis in systematic reviews (ESRC Methods Programme)

- Last Updated: May 16, 2024 11:05 AM

- URL: https://guides.lib.utexas.edu/systematicreviews


- Volume 8, Issue 2

- Improving Conduct and Reporting of Narrative Synthesis of Quantitative Data (ICONS-Quant): protocol for a mixed methods study to develop a reporting guideline


- Mhairi Campbell 1 ,

- Srinivasa Vittal Katikireddi 1 ,

- Amanda Sowden 2 ,

- Joanne E McKenzie 3 ,

- Hilary Thomson 1

- 1 MRC/CSO Social and Public Health Sciences Unit, University of Glasgow, Glasgow, UK

- 2 Centre for Reviews and Dissemination, University of York, York, UK

- 3 School of Public Health and Preventive Medicine, Monash University, Melbourne, Victoria, Australia

- Correspondence to Ms Mhairi Campbell; Mhairi.Campbell{at}glasgow.ac.uk

Introduction Reliable evidence syntheses, based on rigorous systematic reviews, provide essential support for evidence-informed clinical practice and health policy. Systematic reviews should use reproducible and transparent methods to draw conclusions from the available body of evidence. Narrative synthesis of quantitative data (NS) is a method commonly used in systematic reviews where it may not be appropriate, or possible, to meta-analyse estimates of intervention effects. A common criticism of NS is that it is opaque and subject to author interpretation, casting doubt on the trustworthiness of a review’s conclusions. Despite published guidance funded by the UK’s Economic and Social Research Council on the conduct of NS, recent work suggests that this guidance is rarely used and many review authors appear to be unclear about best practice. To improve the way that NS is conducted and reported, we are developing a reporting guideline for NS of quantitative data.

Methods We will assess how NS is implemented and reported in Cochrane systematic reviews and the findings will inform the creation of a Delphi consensus exercise by an expert panel. We will use this Delphi survey to develop a checklist for reporting standards for NS. This will be accompanied by supplementary guidance on the conduct and reporting of NS, as well as an online training resource.

Ethics and dissemination Ethical approval for the Delphi survey was obtained from the University of Glasgow in December 2017 (reference 400170060). Dissemination of the results of this study will be through peer-reviewed publications, and national and international conferences.

- evidence synthesis

- health policy

This is an Open Access article distributed in accordance with the terms of the Creative Commons Attribution (CC BY 4.0) license, which permits others to distribute, remix, adapt and build upon this work, for commercial use, provided the original work is properly cited. See: http://creativecommons.org/licenses/by/4.0/

https://doi.org/10.1136/bmjopen-2017-020064


Strengths and limitations of this study

This study will be the first to develop a consensus-based reporting guideline for narrative synthesis of quantitative data (NS) in systematic reviews.

The study follows the recommended methodology for developing reporting standards.

The online Delphi survey of international experts in NS will be an effective method of gaining reliable consensus from a group of experts.

The reporting guideline and the supplementary materials developed to support use of existing guidance will aid the implementation of best practice conduct and reporting of NS.

Introduction

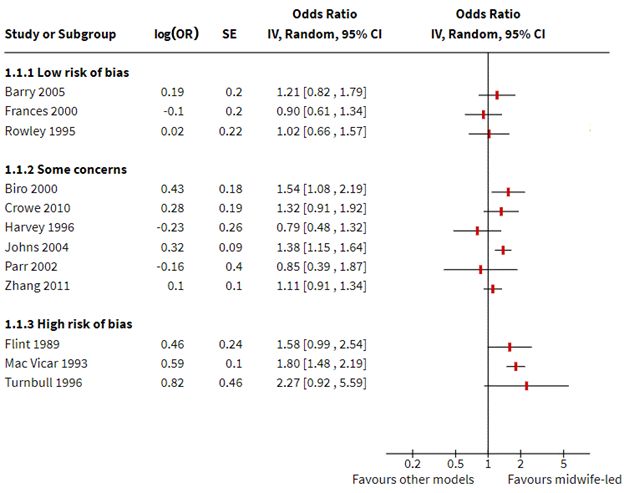

Well-conducted systematic reviews are important for informing clinical practice and health policy. 1 In some reviews, meta-analysis of effect estimates may not be possible or sensible. For example, data may be insufficient to allow calculation of standardised effect estimates, the effect metrics arising from different study designs may not be amenable to synthesis (eg, those arising from interrupted time series and randomised trials), or high levels of statistical heterogeneity may mean that presenting an average effect is misleading. For reviews of quantitative data where statistical synthesis is not possible, narrative synthesis of quantitative data (NS) is often the alternative method of choice. A major concern about NS is that it lacks transparency and therefore introduces bias into the synthesis. 2 3 This is an important criticism, which raises questions about the validity and utility of reviews using NS, and ultimately increases the risk of adding to research waste. 4 NS involves collating study findings into a coherent textual narrative, with descriptions of differences in characteristics of the studies including context and validity, often using tables and graphs to display results. 5 6 Published guidance for NS funded by the UK’s Economic and Social Research Council (ESRC) describes techniques for promoting transparency between review level data and conclusions; these include graphical and structured tabulation of the data. 5 However, a recent analysis of systematic reviews of public health interventions suggests that this guidance is rarely used. 7

Relative to developments in meta-analysis or statistical synthesis, and synthesis of qualitative data in the past decade, work to support improved conduct and transparent reporting in NS has been scarce. While a reporting guideline has been developed for systematic reviews and meta-analysis, the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA), 8 the focus of the synthesis items is on meta-analysis of effect estimates, with no items for alternative approaches to synthesis. The Cochrane Methodological Expectations of Cochrane Intervention Reviews (MECIR) standards for conducting and reporting Cochrane reviews specify one general item referring to non-quantitative synthesis or non-statistical synthesis, and do not have any items specifically for NS. 2 Reporting guidelines have had some impact on improving the reporting for randomised trials and may have similar benefits for improving the reporting of methods and results from NS. 9

There is a growing demand for reviews addressing complex questions, and which incorporate diverse sources of data. Cochrane, a global leader in evidence synthesis of health and public health interventions, has recognised this. 10 Following the prioritisation of relevance and breadth of coverage in the Cochrane strategy, 10 it is likely that the proportion of Cochrane reviews addressing complex questions will increase; this may result in increased use of NS methods. Realising the need for improved implementation and reporting of NS methods, the Cochrane Strategic Methods Fund has funded the ICONS-Quant project: Improving Conduct and Reporting of Narrative Synthesis of Quantitative Data. This paper presents the protocol for the work that will be undertaken.

ICONS-Quant

The ICONS-Quant project aims to improve the implementation of NS methods through enhancing existing guidance on the conduct of NS and developing a reporting guideline. Provision of reporting guidelines alone will not necessarily lead to improved research conduct; provision of explanatory guidance, dissemination, endorsement and support for adherence is also necessary. 11 We will produce materials to support the implementation of best practice in the application of NS methods, and improved reporting. While our focus is on Cochrane reviews, the key outputs of the project will be of use for reviews published elsewhere and will be made freely available. We will:

describe current practice in conduct and reporting of NS in Cochrane reviews;

achieve expert consensus on reporting standards for NS;

provide support for those involved in NS through the provision of enhanced guidance on NS conduct and online training resources.

We intend the ICONS-Quant guideline to be used in combination with the PRISMA guidelines. 8 The PRISMA guidelines provide items relating to the various stages of review conduct, for example, providing a clear abstract, explaining the literature search strategy and reporting methods to assess risk of bias. The ICONS-Quant reporting guideline will focus on the methods of synthesis, expanding most closely on PRISMA Item 14 ‘synthesis of results’ and outlining the details that need to be reported to promote transparency in NS.

Methods and analysis

The ICONS-Quant project will be conducted over a period of 24 months from May 2017. Here, we outline the development of a reporting guideline for NS and supporting materials for existing guidance. In line with recommendations for best practice in developing reporting guidelines, 11 we will:

identify the need for the ICONS-Quant guideline (Work Programme One);

conduct a Delphi survey and consensus meeting (Work Programme Two);

enhance existing guidance on NS (Work Programme Three);

develop learning materials for implementation of NS (Work Programme Four).

Below we outline the Project Advisory Group (PAG) and the research that will be conducted within each Work Programme. Details of the ICONS-Quant project have been registered with the Enhancing the Quality and Transparency of Health Research Network, which provides a database of reporting guidelines in development ( http://www.equator-network.org/library/reporting-guidelines-under-development/ #74).

Project Advisory Group

We have established an ICONS-Quant PAG which will provide governance for the project as well as expert advice. The ICONS PAG includes named project collaborators from Cochrane Review Groups (Effective Practice and Organisation of Care, Consumers and Communication, and Tobacco Addiction), a representative with experience of NS from the Campbell Collaboration Methods Group and a user representative from the National Institute for Health and Care Excellence.

Work Programme One: assessment of current reporting and conduct of NS in Cochrane reviews

Previously we investigated current practice in the conduct and reporting of NS in systematic reviews of public health interventions. 7 Work Programme One will extend this exercise to assess use of NS methods and their reporting across all Cochrane Review Groups. We will identify all Cochrane reviews published between April 2016 and April 2017 and screen them to determine the method of synthesis for the primary outcome. Reviews will be included for further examination if the method for reporting the synthesis of the primary outcomes relies on text. We will identify those that use NS or that synthesise studies using text only, whether or not the authors refer to the use of NS or textual methods for synthesis. Reviews will be excluded if they are empty, include only one study, report on diagnostic test accuracy, or are a review of methodology. We will record how the synthesis has been conducted and reported. We will use the existing data extraction template designed for our previous assessment of NS in public health reviews. This template is based on key sources of best practice for NS, 12–15 including the ESRC guidance on the conduct of NS. 5 Questions relate to use of theory; investigation of differences across included studies and reported findings; transparency of links between data and text (including data visualisation tools used); assessment of robustness of the synthesis; and adequacy of description of NS methods. 5 Using a similar format to our review of NS in public health reviews, 16 we will tabulate the extracted data. This will allow description of:

the extent of reporting of NS methods: the amount and type of detail included;

the range of approaches and tools used to narratively synthesise data;

how conceptual and methodological heterogeneity is managed;

review authors’ reflection on robustness of synthesis.

The results of this exercise will be used to inform development of the initial checklist for inclusion in the Delphi survey.

Work Programme Two: Delphi survey

A Delphi consensus survey will be conducted. This is the standard approach to elicit expert opinion for the purposes of developing consensus-based reporting guidelines. 17 18 The results of the assessment exercise in Work Programme One, in conjunction with key texts on NS, 12–15 19 20 findings from the previous assessment of reporting NS in public health reviews 16 and input from the ICONS PAG, will be used to develop the initial items for Round One of the Delphi survey. An expert panel will then be consulted to inform the development of the Delphi survey. The panel will be identified by the project team and members of the ICONS PAG, and will comprise 15–20 authors and methodologists experienced in or familiar with the purpose and conduct of NS. A videoconference with the expert panel will be used to present findings from Work Programme One and a draft of the proposed Delphi survey. Participants’ input will be recorded and used to refine the Delphi survey.

The Delphi online survey will use a questionnaire to achieve consensus on the content and wording of reporting items considered to capture the pertinent details of NS. The online platform will be created by the MRC/CSO Social and Public Health Sciences Unit, University of Glasgow, using a web-based platform recently developed for this purpose. The platform facilitates personalised invitations to participate, password-protected logins and personalised reminders, and enables data collation for quantitative and qualitative analyses. There will be two rounds of the survey, with a third round conducted if necessary to gain consensus among participants.

Participants will include members of the ICONS PAG and others experienced in NS. Suitable participants will be identified by the project team, through recommendations from the ICONS PAG and through the data extraction exercise described in Work Programme One. The data extraction exercise will help articulate identified gaps in reporting of methods and findings of NS where transparency is particularly lacking. The identified gaps will be used when drafting reporting item questions for the Delphi consensus exercise, to improve transparency in NS. We will invite a maximum of 100 individuals to participate and they will be recruited via their workplace email address. We will ask for their professional opinion on the content of a draft reporting guideline. The invitation will outline the aim of the Delphi survey, the process involved and the time commitment, and include a participant information sheet. Individuals who accept the invitation will be asked to take part in each round of the survey. It will be clearly stated that at any stage, a respondent can opt out of the Delphi survey. The survey will ask participants to provide details of their job category; no personal information will be collected. Respondents will be asked to use their email address to log in to the survey. This information will be used only to verify the appropriate use of the survey and will not be used in the analysis. The Delphi survey will involve implied consent: it will be made clear to participants that by responding to the survey, they are consenting to participate in the study. It will be explained to respondents that: their responses will not be linked to their identity (deidentified); only researchers will have access to the data; and the data will be stored on a password-encrypted computer and stored and destroyed in accordance with Medical Research Council guidelines.

The Delphi survey will consist of closed and open-ended questions. Round One of the survey will provide an introduction to the project and instructions for the survey. Participants will be invited to rate each of the proposed guideline items on a 4-point Likert scale (essential, desirable, possible, omit), as used in previous Delphi surveys for developing reporting guidelines, 21 22 and to comment on each item. A reminder email will be sent approximately 2 weeks after the initial invitation, and Round One will close approximately 4 weeks after the first invitations are issued. Responses to Round One will be exported verbatim into a Microsoft Excel spreadsheet and collated. Ratings will be summarised as counts and percentage frequencies, and the free-text comments will be collated and summarised. The results from both the quantitative and qualitative data will inform the development of Round Two of the Delphi and the content of the final guideline checklist. The Delphi survey items will be redrafted in discussion with all study group members within 1 month of the round closing.
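A minimal Python sketch of the Round One summary step, which reports rating counts and percentage frequencies per item, appears below. The item IDs, the ratings and the function name `summarise_ratings` are hypothetical. The protocol itself describes collating responses in a Microsoft Excel spreadsheet, so this only illustrates the arithmetic.

```python
from collections import Counter

# The four response options named in the protocol, in scale order.
SCALE = ("essential", "desirable", "possible", "omit")


def summarise_ratings(responses):
    """Return {item: {option: (count, percent)}} for one Delphi round.

    `responses` maps each (hypothetical) item ID to the list of ratings it
    received; skipped ratings are assumed to have been dropped already.
    """
    summary = {}
    for item, ratings in responses.items():
        counts = Counter(ratings)
        unknown = set(counts) - set(SCALE)
        if unknown:
            raise ValueError(f"{item}: unexpected ratings {sorted(unknown)}")
        n = len(ratings)
        summary[item] = {
            option: (counts[option], round(100 * counts[option] / n, 1) if n else 0.0)
            for option in SCALE
        }
    return summary


# Hypothetical ratings from five respondents.
round_one = {
    "item_01": ["essential", "essential", "desirable", "essential", "possible"],
    "item_02": ["omit", "possible", "omit", "omit", "desirable"],
}

for item, row in summarise_ratings(round_one).items():
    print(item, row)
```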

All participants from Round One will be invited to take part in Round Two. In Round Two, the proposed checklist items will be presented in three sections:

Items that reached high consensus in Round One and that are expected to be included in the final checklist. These are items that met the a priori consensus threshold of >70% approval, as recommended by Diamond et al. 23 Participants will not be asked to rate these items again. Instead, they will be asked whether they agree with including each item in the checklist, and to comment if they disagree or to suggest clearer wording.

Items that have been significantly altered or are additional as a result of Round One. The participants will be invited to rate these items on the 4-point scale and provide comments on each item.

Items that were rated as ‘omit’ in Round One and that are not expected to be included in the final checklist. Participants will not be asked to rate these items; they will be invited to provide their opinion on the removal of these items from the final checklist.
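The three-way split of Round Two items can be sketched in Python under stated assumptions. The protocol specifies only the >70% approval threshold. Treating "approval" as a rating of essential or desirable, and sending an item towards removal when more than half of respondents rated it "omit", are hypothetical rules chosen for this illustration. In the study, the second section also covers items that were substantially redrafted after Round One, and no rating rule can capture that.

```python
def classify_items(responses, approve=("essential", "desirable"),
                   consensus=0.70, omit_share=0.50):
    """Split items into Round Two sections using hypothetical decision rules.

    approve    -- ratings counted as approval (an assumption, not the protocol's)
    consensus  -- approval share that must be strictly exceeded (>70%)
    omit_share -- share of 'omit' ratings above which removal is proposed (assumed)
    """
    sections = {"retain": [], "re-rate": [], "remove": []}
    for item, ratings in responses.items():
        n = len(ratings)
        approval = sum(r in approve for r in ratings) / n
        omitted = ratings.count("omit") / n
        if approval > consensus:
            sections["retain"].append(item)
        elif omitted > omit_share:
            sections["remove"].append(item)
        else:
            sections["re-rate"].append(item)
    return sections


# Hypothetical Round One ratings.
round_one = {
    "item_01": ["essential", "essential", "desirable", "essential", "possible"],
    "item_02": ["omit", "possible", "omit", "omit", "desirable"],
    "item_03": ["essential", "possible", "desirable", "omit", "possible"],
}
print(classify_items(round_one))
```

Because the threshold is strict, an item approved by exactly 70% of respondents is not retained automatically and goes back for re-rating.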

If there is a substantial lack of consensus remaining following Round Two of the Delphi, a third round will be prepared and conducted. Round Three will follow the same format as Round Two, providing the reporting guideline items in three sections: items that are expected to be included in the final guideline; those significantly altered; and items that will be removed from the final checklist.

Consensus meeting

An expert panel of individuals experienced in NS methods will be invited to participate in the consensus meeting to finalise the content of the guideline. It is anticipated that this will be held as a face-to-face meeting at the Cochrane Colloquium in 2018 in Edinburgh, UK. If this is not possible, an online consensus meeting will be conducted using webinar software. If necessary, an additional virtual meeting will be held to accommodate the different time zones of invitees. At the consensus meeting, the reporting guideline items developed from the Delphi survey will be discussed, with priority given to establishing consensus on the content and wording of items for which the level of consensus is less clear.

Work Programme Three: enhancement of existing guidance on NS methods

We will produce materials that add to the current guidance, covering both the rationale for and the implementation of each stage of NS. These materials will be developed as a supplement to the reporting guideline items. The enhanced guidance will be accessible to novice reviewers and will provide examples of good practice to illustrate how methods of NS may be used. The findings of Work Programmes One and Two (the assessment of current reporting of NS and the Delphi consensus) will be used to inform development of enhanced guidance on NS. 5 Cochrane Review Groups who publish reviews incorporating NS will be identified through the process of Work Programme One. We anticipate that these will include a range of Cochrane Review Groups, and examples will be developed that are relevant to all groups. An overview of methodological tools that can be used to support NS and that have been developed since publication of the ESRC guidance in 2006 will also be incorporated. The PAG will be asked for comments on the draft guidance before it is piloted.

Work Programme Four: development of learning materials on implementation of NS

Training materials based on the guidance developed in Work Programmes Two and Three will be produced to promote improved use of NS methods. We have secured support from Cochrane Training to collaborate in Work Programme Four. We will deliver two to three live participatory webinars (to allow for different time zones) to present the agreed guidance developed in Work Programme Two. One webinar will be recorded and provided on a web page, along with a record of the questions raised in the webinar, and any other frequently asked questions that emerge.

In addition, an online training module on NS will be developed in collaboration with Cochrane Training and a specialist e-learning company. The module will include a mix of didactic and participatory teaching methods involving assessment and interpretation of data and syntheses. We will work with Cochrane colleagues to incorporate the reporting items into the MECIR standards, and offer to update the relevant chapters of the Cochrane Handbook.

Ethics and dissemination

Dissemination of the results of this study will be through peer-reviewed publications, and national and international conferences. In addition, the objectives of Work Programmes Three and Four are to distribute and encourage use of the ICONS-Quant guideline through webinars and an online training module.

- Posada FB, Haines A, et al

- Higgins J, Lasserson T, Chandler J, et al

- Valentine JC, Wilson SJ, Rindskopf D, et al

- Glasziou P, Altman DG, Bossuyt P, et al

- Roberts H, Sowden A, et al

- Petticrew M, Rehfuess E, Noyes J, et al

- Campbell M, Thomson H, Katikireddi SV, et al

- Liberati A, Tetzlaff J, et al

- Shamseer L, Altman DG, et al

- 10. Cochrane Collaboration. Cochrane strategy to 2020. 2015. http://community.cochrane.org/organizational-info/resources/strategy-2020

- Schulz KF, Simera I, et al

- Armstrong R, Jackson N, et al

- Williamson PR

- Higgins JP

- 20. World Health Organization. WHO handbook for guideline development. Geneva: World Health Organization, 2014.

- Hoffmann TC, Glasziou PP, Boutron I, et al

- Craig P, et al

- Diamond IR, Feldman BM, et al

Contributors HT conceived the idea of the study. HT, SVK, AS, JEM and MC designed the study methodology. MC prepared the first draft of the protocol manuscript and all authors critically reviewed and approved the final manuscript.

Funding This project was supported by funds provided by the Cochrane Strategic Methods Fund. MC, HT and SVK receive funding from the UK Medical Research Council (MC_UU_12017-13 and MC_UU_12017-15) and the Scottish Government Chief Scientist Office (SPHSU13 and SPHSU15). SVK is supported by an NHS Research Scotland Senior Clinical Fellowship (SCAF/15/02). JEM is supported by a National Health and Medical Research Council (NHMRC) Australian Public Health Fellowship (1072366).

Disclaimer The views expressed in the protocol are those of the authors and not necessarily those of Cochrane or its registered entities, committees or working groups.

Competing interests HT and SVK are Cochrane editors. JEM is a co-convenor of the Cochrane Statistical Methods Group.

Patient consent Not required.

Ethics approval University of Glasgow College of Social Sciences Ethics Committee (reference number 400170060)

Provenance and peer review Not commissioned; externally peer reviewed.


- Methodology

- Open access

- Published: 24 April 2023

Quantitative evidence synthesis: a practical guide on meta-analysis, meta-regression, and publication bias tests for environmental sciences

- Shinichi Nakagawa ORCID: orcid.org/0000-0002-7765-5182 1 , 2 ,

- Yefeng Yang ORCID: orcid.org/0000-0002-8610-4016 1 ,

- Erin L. Macartney ORCID: orcid.org/0000-0003-3866-143X 1 ,

- Rebecca Spake ORCID: orcid.org/0000-0003-4671-2225 3 &

- Malgorzata Lagisz ORCID: orcid.org/0000-0002-3993-6127 1

Environmental Evidence volume 12, Article number: 8 (2023)

7320 Accesses

18 Citations

29 Altmetric

Metrics details

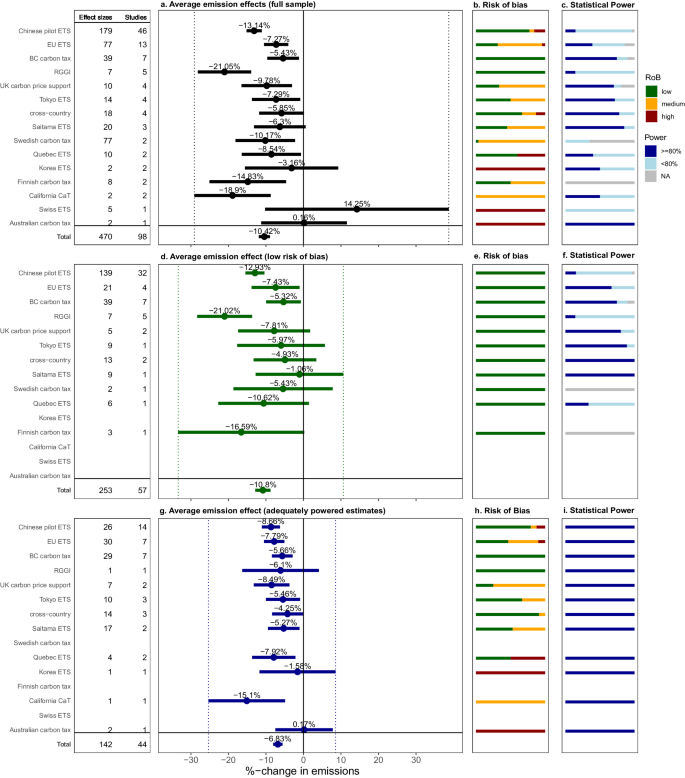

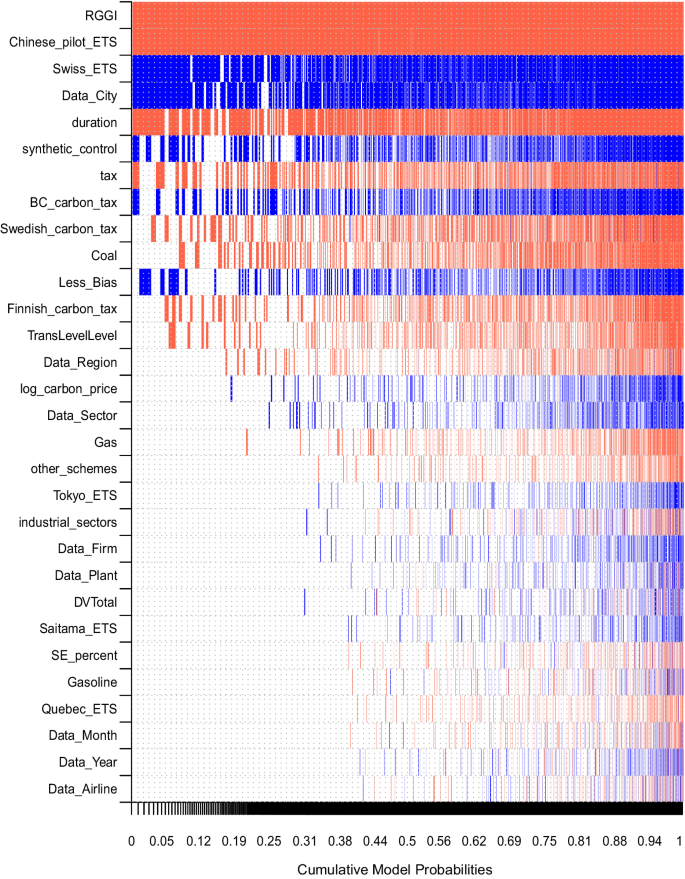

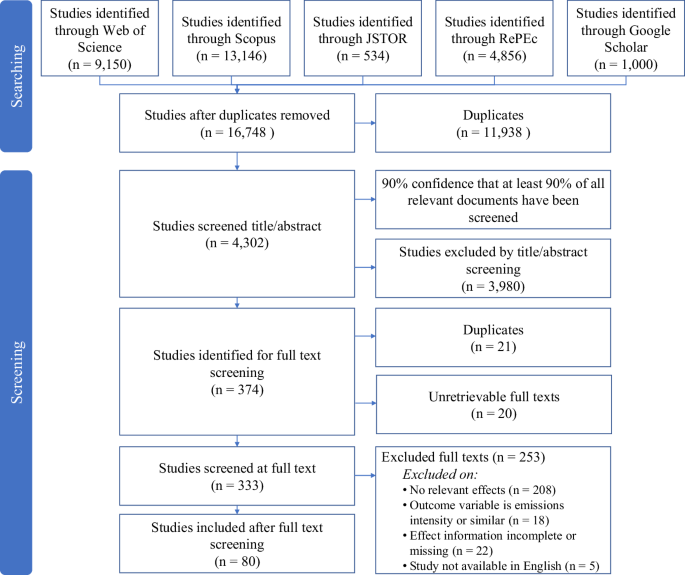

Meta-analysis is a quantitative way of synthesizing results from multiple studies to obtain reliable evidence of an intervention or phenomenon. Indeed, an increasing number of meta-analyses are conducted in environmental sciences, and resulting meta-analytic evidence is often used in environmental policies and decision-making. We conducted a survey of recent meta-analyses in environmental sciences and found poor standards of current meta-analytic practice and reporting. For example, only ~40% of the 73 reviewed meta-analyses reported heterogeneity (variation among effect sizes beyond sampling error), and publication bias was assessed in fewer than half. Furthermore, although almost all the meta-analyses had multiple effect sizes originating from the same studies, non-independence among effect sizes was considered in only half of the meta-analyses. To improve the implementation of meta-analysis in environmental sciences, we here outline practical guidance for conducting a meta-analysis in environmental sciences. We describe the key concepts of effect size and meta-analysis and detail procedures for fitting multilevel meta-analysis and meta-regression models and performing associated publication bias tests. We demonstrate a clear need for environmental scientists to embrace multilevel meta-analytic models, which explicitly model dependence among effect sizes, rather than the commonly used random-effects models. Further, we discuss how reporting and visual presentations of meta-analytic results can be much improved by following reporting guidelines such as PRISMA-EcoEvo (Preferred Reporting Items for Systematic Reviews and Meta-Analyses for Ecology and Evolutionary Biology).
This paper, along with the accompanying online tutorial, serves as a practical guide on conducting a complete set of meta-analytic procedures (i.e., meta-analysis, heterogeneity quantification, meta-regression, publication bias tests and sensitivity analysis) and also as a gateway to more advanced, yet appropriate, methods.

Evidence synthesis is an essential part of science. The method of systematic review provides the most trusted and unbiased way to achieve the synthesis of evidence [ 1 , 2 , 3 ]. Systematic reviews often include a quantitative summary of studies on the topic of interest, referred to as a meta-analysis (for discussion on the definitions of ‘meta-analysis’, see [ 4 ]). The term meta-analysis can also mean a set of statistical techniques for quantitative data synthesis. Meta-analytic methods were initially developed and applied in medical and social sciences. However, they are now used in many other fields, including environmental sciences [ 5 , 6 , 7 ]. In environmental sciences, the outcomes of meta-analyses (within systematic reviews) have been used to inform environmental and related policies (see [ 8 ]). Therefore, the reliability of meta-analytic results in environmental sciences matters beyond mere academic interest; indeed, incorrect results could lead to ineffective or sometimes harmful environmental policies [ 8 ].

As in medical and social sciences, environmental scientists frequently use traditional meta-analytic models, namely fixed-effect and random-effects models [ 9 , 10 ]. However, we contend that such models in their original formulation are no longer useful and are often incorrectly used, leading to unreliable estimates and errors. This is mainly because the traditional models assume independence among effect sizes, but almost all primary research papers include more than one effect size, and this non-independence is often not considered (e.g., [ 11 , 12 , 13 ]). Furthermore, previous reviews of published meta-analyses in environmental sciences (hereafter, ‘environmental meta-analyses’) have demonstrated that fewer than half report or investigate heterogeneity (inconsistency) among effect sizes [ 14 , 15 , 16 ]. Many environmental meta-analyses also do not present any sensitivity analysis, for example, for publication bias (i.e., statistically significant effects being more likely to be published, making collated data unreliable; [ 17 , 18 ]). These issues might have arisen for several reasons, including the lack of a clear guideline for conducting the statistical part of meta-analyses in environmental sciences and rapid developments in meta-analytic methods. Taken together, the field urgently requires a practical guide to implement correct meta-analyses and associated procedures (e.g., heterogeneity analysis, meta-regression, and publication bias tests; cf. [ 19 ]).
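To make the two traditional models concrete, here is a minimal Python sketch of the inverse-variance fixed-effect mean and a random-effects mean. The random-effects version uses the DerSimonian-Laird estimator of the between-study variance τ². That estimator is chosen here only because it has a closed form. It is one of several options, and the metafor package used in the authors' tutorial defaults to REML. Both functions treat every effect size as independent, which is the assumption criticised above, so neither is the multilevel model the paper recommends. The example data are hypothetical.

```python
import math


def fixed_effect(y, v):
    """Inverse-variance weighted mean and its standard error (fixed-effect model)."""
    w = [1 / vi for vi in v]
    mean = sum(wi * yi for wi, yi in zip(w, y)) / sum(w)
    return mean, math.sqrt(1 / sum(w))


def dersimonian_laird(y, v):
    """Random-effects mean, its standard error, and the DerSimonian-Laird tau^2."""
    w = [1 / vi for vi in v]
    mu_fe, _ = fixed_effect(y, v)
    q = sum(wi * (yi - mu_fe) ** 2 for wi, yi in zip(w, y))  # Cochran's Q
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - (len(y) - 1)) / c)  # truncated at zero
    w_re = [1 / (vi + tau2) for vi in v]  # tau^2 added to every sampling variance
    mean = sum(wi * yi for wi, yi in zip(w_re, y)) / sum(w_re)
    return mean, math.sqrt(1 / sum(w_re)), tau2


# Hypothetical effect sizes and sampling variances, one per study.
effects = [0.2, 0.5, -0.1, 0.8]
variances = [0.01, 0.04, 0.02, 0.05]
print(fixed_effect(effects, variances))
print(dersimonian_laird(effects, variances))
```

Adding τ² to each sampling variance makes the random-effects weights more equal across studies than the fixed-effect weights. As a result, the standard error of the overall mean widens whenever τ² > 0.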

To assist environmental scientists in conducting meta-analyses, this paper has five aims. First, we provide an overview of the processes involved in a meta-analysis while introducing some key concepts. Second, after introducing the main types of effect size measures, we mathematically describe the two commonly used traditional meta-analytic models, demonstrate their utility, and introduce a practical, multilevel meta-analytic model for environmental sciences that appropriately handles non-independence among effect sizes. Third, we show how to quantify heterogeneity (i.e., inconsistencies among effect sizes and/or studies) using this model, and then how to explain such heterogeneity using meta-regression. Fourth, we show how to test for publication bias in a meta-analysis and describe other common types of sensitivity analysis. Fifth, we cover other technical issues relevant to environmental sciences (e.g., scale and phylogenetic dependence) as well as some advanced meta-analytic techniques. In addition, these five aims (sections) are interspersed with two further sections, named ‘Notes’, on: (1) visualisation and interpretation; and (2) reporting and archiving. Some of these sections are accompanied by results from a survey of 73 environmental meta-analyses published between 2019 and 2021; the survey results depict current practices and highlight associated problems (for the survey method, see Additional file 1). Importantly, we provide easy-to-follow implementations of much of what is described below, using the R package metafor [20] and other R packages, at the webpage (https://itchyshin.github.io/Meta-analysis_tutorial/), which also connects the reader to the wealth of online information on meta-analysis (note that we also provide this tutorial as Additional file 2; see also [21]).

Overview with key concepts

Statistically speaking, we have three general objectives when conducting a meta-analysis [12]: (1) estimating an overall mean, (2) quantifying heterogeneity (inconsistency) between studies, and (3) explaining the heterogeneity (see Table 1 for the definitions of the terms in italics). A notable feature of a meta-analysis is that an overall mean is estimated by taking the sampling variance of each effect size into account: a study (effect size) with a low sampling variance (usually based on a larger sample size) is assigned more weight in estimating an overall mean than one with a high sampling variance (usually based on a smaller sample size). However, an overall mean estimate by itself is often not informative because the same overall mean can arise in very different ways. For example, we may get an overall estimate of zero if all studies have zero effects with no heterogeneity. In contrast, we might also obtain a zero mean across studies that have highly variable effects (e.g., ranging from strongly positive to strongly negative), signifying high heterogeneity. Therefore, quantifying indicators of heterogeneity is an essential part of a meta-analysis, necessary for interpreting the overall mean appropriately. Once we observe non-zero heterogeneity among effect sizes, our job is to explain this variation by running meta-regression models and, at the same time, to quantify how much variation is accounted for (often quantified as \(R^2\)). In addition, it is important to conduct an extra set of analyses, often referred to as publication bias tests, which are a type of sensitivity analysis [11], to check the robustness of meta-analytic results.

Choosing an effect size measure

In this section, we introduce different kinds of ‘effect size measures’ or ‘effect measures’. In the literature, the term ‘effect size’ is typically used to refer to the magnitude or strength of an effect of interest or its biological interpretation (e.g., environmental significance). Effect sizes can be quantified using a range of measures (for details, see [22]). In our survey of environmental meta-analyses (Additional file 1), the two most commonly used effect size measures are the logarithm of the response ratio, lnRR ([23]; also known as the ratio of means; [24]), and the standardized mean difference, SMD (often referred to as Hedges’ g or Cohen’s d [25, 26]). These are followed by proportion (%) and Fisher’s z-transformation of the correlation coefficient, Zr. These four effect measures fit neatly into three categories: (1) single-group measures (a statistical summary from one group; e.g., proportion), (2) comparative measures (comparisons between two groups; e.g., SMD and lnRR), and (3) association measures (relationships between two variables; e.g., Zr). Table 2 summarizes effect measures that are common or potentially useful for environmental scientists. It is important to note that any measure with a sampling variance can serve as an ‘effect size’. The main reason why SMD, lnRR, Zr, and proportion are popular effect measures is that they are unitless, whereas a meta-analysis of means, or mean differences, can only be conducted when all effect sizes share the same unit (e.g., cm, kg).
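As a minimal sketch of how three of these measures and their sampling variances are typically computed from summary statistics, the Python functions below use widely cited textbook formulas: lnRR with its delta-method variance, Hedges' g with the usual small-sample correction, and Zr with variance \(1/(n-3)\). We assume these match the formulas in Table 2, which may present slightly different variants; the function names are our own and are not taken from any package.

```python
import math

def lnrr(m_t, sd_t, n_t, m_c, sd_c, n_c):
    """Log response ratio and its delta-method sampling variance.
    Assumes both group means are positive (lnRR is undefined otherwise)."""
    yi = math.log(m_t / m_c)
    vi = sd_t**2 / (n_t * m_t**2) + sd_c**2 / (n_c * m_c**2)
    return yi, vi

def smd(m_t, sd_t, n_t, m_c, sd_c, n_c):
    """Hedges' g (bias-corrected SMD) and a common large-sample variance."""
    df = n_t + n_c - 2
    sd_pooled = math.sqrt(((n_t - 1) * sd_t**2 + (n_c - 1) * sd_c**2) / df)
    j = 1 - 3 / (4 * df - 1)  # small-sample correction factor
    g = j * (m_t - m_c) / sd_pooled
    vi = (n_t + n_c) / (n_t * n_c) + g**2 / (2 * (n_t + n_c))
    return g, vi

def zr(r, n):
    """Fisher's z-transformed correlation and its sampling variance."""
    return math.atanh(r), 1 / (n - 3)
```

For example, `lnrr(12, 3, 10, 10, 2, 10)` returns \(\ln(1.2)\) with a sampling variance of 0.01025. Because lnRR and SMD are unitless, values like these can be pooled across studies that measured the response in different units.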

Table 2 also includes effect measures that are likely to be unfamiliar to environmental scientists; these are effect sizes that characterise differences in the observed variability between samples (i.e., lnSD, lnCV, lnVR and lnCVR; [27, 28]) rather than in central tendencies (averages). These dispersion-based effect measures can provide extra insights alongside average-based effect measures. Although none of these were used in our surveyed sample, these effect sizes have been used in many fields, including agriculture (e.g., [29]), ecology (e.g., [30]), evolutionary biology (e.g., [31]), psychology (e.g., [32]), education (e.g., [33]), psychiatry (e.g., [34]), and neurosciences (e.g., [35]). It is not difficult to think of an environmental intervention that can affect not only the mean but also the variance of measurements taken on a group of individuals or a set of plots. For example, environmental stressors such as pesticides and eutrophication are likely to increase variability in biological systems because stress accentuates individual differences in environmental responses (e.g., [36, 37]). Such ideas are yet to be tested meta-analytically (cf. [38, 39]).
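To illustrate what a dispersion-based effect size looks like in practice, the sketch below computes lnVR, the log ratio of two standard deviations with small-sample bias-correction terms, and its sampling variance. We assume the commonly used formulation (as we understand it, the one behind the "VR" measure in metafor's `escalc()`); Table 2 should be treated as the authority if the two differ. The function name is hypothetical.

```python
import math

def lnvr(sd_t, n_t, sd_c, n_c):
    """Log variability ratio (treatment vs control) and its sampling variance.
    The 1 / (2(n - 1)) terms correct the small-sample bias of log(SD)."""
    yi = math.log(sd_t / sd_c) + 1 / (2 * (n_t - 1)) - 1 / (2 * (n_c - 1))
    vi = 1 / (2 * (n_t - 1)) + 1 / (2 * (n_c - 1))
    return yi, vi
```

With equal group sizes the correction terms cancel, so `lnvr(4, 11, 2, 11)` gives \(\ln 2\) with a variance of 0.1: a positive lnVR would indicate, for instance, that a stressor increased variability among plots.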

Choosing a meta-analytic model

Fixed-effect and random-effects models.

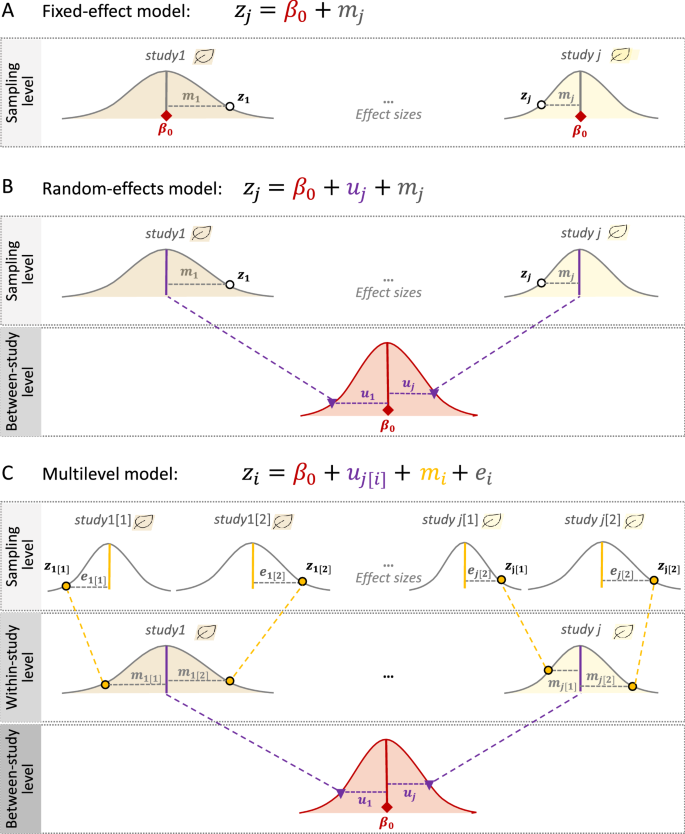

Two traditional meta-analytic models are the ‘fixed-effect’ model and the ‘random-effects’ model. The former assumes that all effect sizes (from different studies) come from one population (i.e., they share one true overall mean), while the latter does not make this assumption (i.e., each study has its own true mean, so heterogeneity exists among studies; see below for more). The fixed-effect model, which should probably be more correctly referred to as the ‘common-effect’ model, can be written as [9, 10, 40]:

\[
z_j = \beta_0 + m_j, \qquad m_j \sim \mathcal{N}(0, v_j) \tag{1}
\]

where the intercept, \(\beta_0\), is the overall mean, \(z_j\) (the response/dependent variable) is the effect size from the \(j\)th study (\(j = 1, 2, \ldots, N_{study}\); in this model, \(N_{study}\) = the number of studies = the number of effect sizes), and \(m_j\) is the sampling error, related to the \(j\)th sampling variance (\(v_j\)); it is normally distributed with a mean of 0 and the ‘study-specific’ sampling variance, \(v_j\) (see also Fig. 1A).

Visualisation of the three statistical models of meta-analysis: A a fixed-effect model (1-level), B a random-effects model (2-level), and C a multilevel model (3-level; see the text for what symbols mean)

The overall mean needs to be estimated, and this is often done by taking the weighted average with weights \(w_j = 1/v_j\) (i.e., the inverse-variance approach). An important, but sometimes untenable, assumption of meta-analysis is that the sampling variance is known. In practice, we estimate sampling variances using formulas such as those in Table 2, meaning that \(v_j\) is replaced by a sampling-variance estimate (see also section ‘Scale dependence’). Of relevance, the inverse-variance approach has recently been criticized, especially for SMD and lnRR [41, 42], and we note that the inverse-variance approach using the formulas in Table 2 is only one of several weighting approaches used in meta-analysis (e.g., for adjusted sampling-variance weighting, see [43, 44]; for sample-size-based weighting, see [41, 42, 45, 46]). Importantly, the fixed-effect model assumes that the only source of variation in effect sizes (\(z_j\)) is sampling error (whose variance is inversely proportional to the sample size, \(n\); Table 2).
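The inverse-variance approach for the fixed-effect model can be sketched in a few lines. This minimal Python version (the function name is ours) computes \(\beta_0 = \sum_j w_j z_j / \sum_j w_j\) with \(w_j = 1/v_j\), together with its standard error \(\sqrt{1/\sum_j w_j}\), assuming the \(v_j\) are treated as known.

```python
import math

def fixed_effect(z, v):
    """Inverse-variance weighted (common-effect) estimate of beta_0 (Eq. 1)."""
    w = [1 / vj for vj in v]                      # w_j = 1 / v_j
    beta0 = sum(wj * zj for wj, zj in zip(w, z)) / sum(w)
    se = math.sqrt(1 / sum(w))                    # standard error of beta_0
    return beta0, se
```

For instance, `fixed_effect([0.2, 0.4], [0.01, 0.04])` returns an estimate of 0.24: the first effect size, with a four-times smaller sampling variance, receives four times the weight.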

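Similarly, the random-effects model can be expressed as:

\[
z_j = \beta_0 + u_j + m_j, \qquad u_j \sim \mathcal{N}(0, \tau^2), \qquad m_j \sim \mathcal{N}(0, v_j) \tag{2}
\]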

where \(u_j\) is the \(j\)th study effect, which is normally distributed with a mean of 0 and the between-study variance, \(\tau^2\) (for different estimation methods, see [47, 48, 49, 50]), and other notations are the same as in Eq. 1 (Fig. 1B). Here, the overall mean can be estimated as the weighted average with weights \(w_j = 1/(\tau^2 + v_j)\) (note that the different weighting approaches mentioned above are applicable to the random-effects model, and some of them to the multilevel model introduced below). The model assumes that each study has its own specific mean, \(\beta_0 + u_j\), and (in)consistencies among studies (effect sizes) are indicated by \(\tau^2\). When \(\tau^2\) is 0 (or not statistically different from 0), the random-effects model simplifies to the fixed-effect model (cf. Eqs. 1 and 2). Given that no two studies in environmental sciences are conducted in exactly the same manner, or at exactly the same place and time, we should expect different studies to have different means. Therefore, in almost all cases in the environmental sciences, the random-effects model is a more ‘realistic’ model [9, 10, 40]. Accordingly, most environmental meta-analyses in our survey (68.5%; 50 out of 73 studies) used the random-effects model, while only 2.7% (2 out of 73 studies) used the fixed-effect model (Additional file 1).
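The sketch below shows how \(\tau^2\) enters the random-effects weights. We estimate \(\tau^2\) with the DerSimonian–Laird method-of-moments estimator only because it has a closed form; it is just one of the estimation methods cited above, and REML (the default in metafor's `rma()`) requires iterative optimisation and will generally give different values. The function name is hypothetical.

```python
import math

def random_effects_dl(z, v):
    """Random-effects estimate (Eq. 2), with tau^2 from DerSimonian-Laird."""
    k = len(z)
    w = [1 / vj for vj in v]
    sw = sum(w)
    fe_mean = sum(wj * zj for wj, zj in zip(w, z)) / sw
    q = sum(wj * (zj - fe_mean) ** 2 for wj, zj in zip(w, z))  # Cochran's Q
    c = sw - sum(wj**2 for wj in w) / sw
    tau2 = max(0.0, (q - (k - 1)) / c)          # truncated at zero
    w_star = [1 / (tau2 + vj) for vj in v]      # w_j = 1 / (tau^2 + v_j)
    beta0 = sum(wj * zj for wj, zj in zip(w_star, z)) / sum(w_star)
    se = math.sqrt(1 / sum(w_star))
    return beta0, se, tau2
```

When all effect sizes agree, the estimated \(\tau^2\) is 0 and the result reduces to the fixed-effect estimate, mirroring the relationship between Eqs. 1 and 2.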

Multilevel meta-analytic models

Although we have introduced the random-effects model (Eq. 2) as being more realistic than the fixed-effect model, we argue that the random-effects model is rather limited and impractical for the environmental sciences. This is because random-effects models, like fixed-effect models, assume all effect sizes (\(z_j\)) to be independent. However, when multiple effect sizes are obtained from a study, these effect sizes are dependent (for more details, see the next section on non-independence). Indeed, our survey showed that this type of non-independence among effect sizes occurred in almost all datasets used in environmental meta-analyses (97.3%; 71 out of 73 studies, with the remaining two being unclear, so effectively 100%; Additional file 1). Therefore, we propose the simplest and most practical meta-analytic model for environmental sciences as [13, 40] (see also [51, 52]):

\[
z_i = \beta_0 + u_{j[i]} + e_i + m_i, \qquad u_{j[i]} \sim \mathcal{N}(0, \tau^2), \quad e_i \sim \mathcal{N}(0, \sigma^2), \quad m_i \sim \mathcal{N}(0, v_i) \tag{3}
\]

where we explicitly recognize that \(N_{effect}\) (\(i = 1, 2, \ldots, N_{effect}\)) > \(N_{study}\) (\(j = 1, 2, \ldots, N_{study}\)), and, therefore, we now have the study (between-study) effect, \(u_{j[i]}\) (for the \(j\)th study and \(i\)th effect size), and the effect-size-level (within-study) effect, \(e_i\) (for the \(i\)th effect size), with the between-study variance, \(\tau^2\), and the within-study variance, \(\sigma^2\), respectively; other notations are the same as above. We note that this model (Eq. 3) is an extension of the random-effects model (Eq. 2), and we refer to it as the multilevel/hierarchical model (used in 7 out of 73 studies: 9.6% [Additional file 1]; note that Eq. 3 is also known as a three-level meta-analytic model; Fig. 1C). Environmental scientists who are familiar with (generalised) linear mixed models may recognize \(u_{j[i]}\) (the study effect) as the effect of a random factor associated with a variance component, i.e., \(\tau^2\) [53]; likewise, \(e_i\) and \(m_i\) can be seen as parts of random factors, associated with \(\sigma^2\) and \(v_i\), respectively (the former is comparable to the residuals, while the latter is the sampling variance, specific to a given effect size).
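One way to see what the three variance terms of Eq. 3 mean is to simulate data from the model. In this sketch (function name and parameter values hypothetical), every study has the same number of effect sizes and all effect sizes share one sampling variance \(v\), which are simplifying assumptions. The key point is that \(u_j\) is drawn once per study and reused, whereas \(e_i\) and \(m_i\) are drawn once per effect size.

```python
import random

def simulate_multilevel(n_study, k_per_study, beta0, tau2, sigma2, v, seed=1):
    """Draw effect sizes from Eq. 3: z_i = beta0 + u_j[i] + e_i + m_i."""
    rng = random.Random(seed)
    study, z = [], []
    for j in range(n_study):
        u_j = rng.gauss(0, tau2 ** 0.5)          # shared by all effects in study j
        for _ in range(k_per_study):
            e_i = rng.gauss(0, sigma2 ** 0.5)    # effect-size-level deviation
            m_i = rng.gauss(0, v ** 0.5)         # sampling error
            study.append(j)
            z.append(beta0 + u_j + e_i + m_i)
    return study, z
```

Because \(u_j\) is shared, two effect sizes from the same simulated study have an expected correlation of \(\tau^2/(\tau^2 + \sigma^2 + v)\), which is exactly the dependence that Eqs. 1 and 2 ignore.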

Many researchers appear to be aware of the issue of non-independence, and they often use an average effect size per study or choose one effect size per study (at least 28.8%, 21 out of 73 environmental meta-analyses; Additional file 1). However, as we discussed elsewhere [13, 40], such averaging or selection of one effect size per study dramatically reduces our ability to investigate environmental drivers of variation among effect sizes [13]. Therefore, we strongly support the use of the multilevel model. Nevertheless, the proposed multilevel model, formulated as Eq. 3, does not usually deal with the issue of non-independence completely, which we elaborate on in the next section.

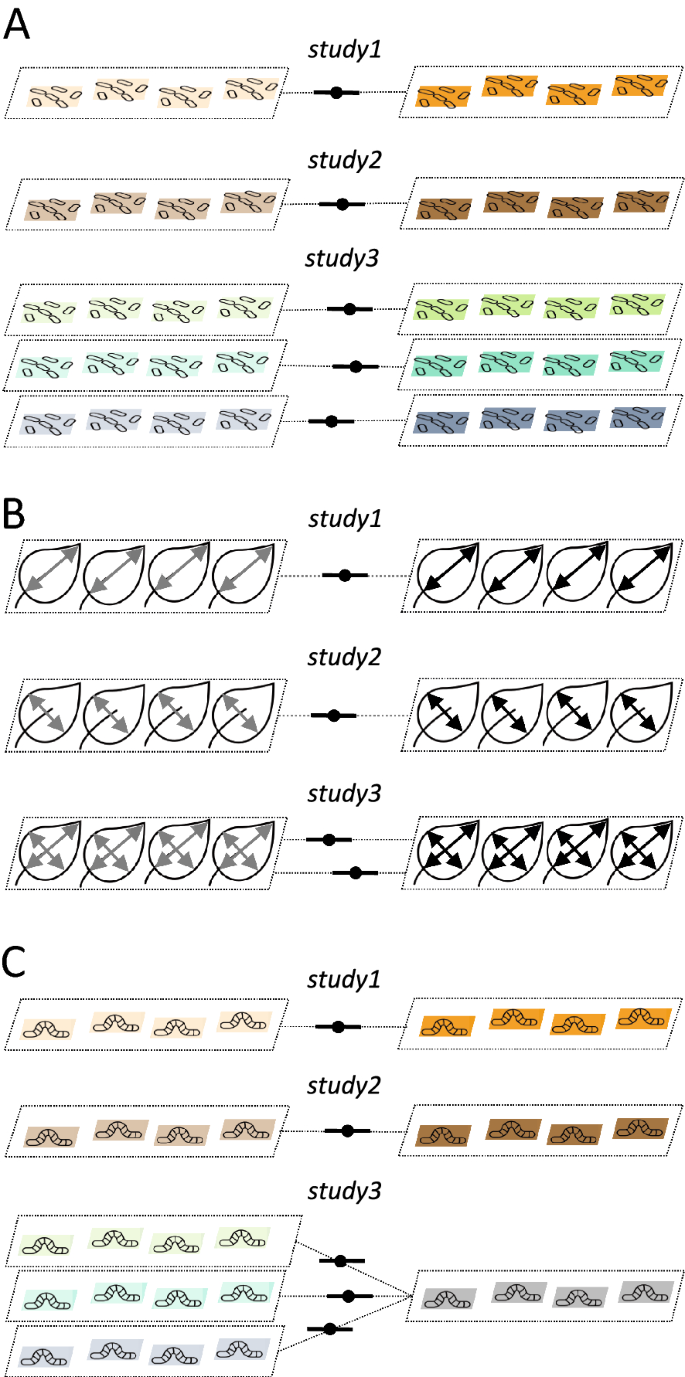

Non-independence among effect sizes and among sampling errors

When you have multiple effect sizes from a study, there are two broad types and three cases of non-independence (cf. [11, 12]): (1) effect sizes are calculated from different cohorts of individuals (or groups of plots) within a study (Fig. 2A, referred to as ‘shared study identity’), and (2) effect sizes are calculated from the same cohort of individuals (or group of plots; Fig. 2B, referred to as ‘shared measurements’) or partially from the same individuals and plots, more concretely, sharing individuals and plots from the control group (Fig. 2C, referred to as ‘shared control group’). The first type of non-independence induces dependence among effect sizes, but not among sampling errors, whereas the second type leads to non-independence among sampling errors. Many datasets, if not almost all, will have a combination of these three cases (or be even more complex; see the section ‘Complex non-independence’). Failing to deal with these forms of non-independence will inflate the Type 1 error rate (note that the overall estimate, \(\beta_0\), is unlikely to be biased, but its standard error, \(\mathrm{se}(\beta_0)\), will be underestimated; this is also true for all other regression coefficients, e.g., \(\beta_1\); see Table 1). The multilevel model (as in Eq. 3) only takes care of non-independence due to shared study identity, not that due to shared measurements or a shared control group.

Visualisation of the three types of non-independence among effect sizes: A due to shared study identities (effect sizes from the same study), B due to shared measurements (effect sizes come from the same group of individuals/plots but are based on different types of measurements), and C due to shared control (effect sizes are calculated using the same control group and multiple treatment groups; see the text for more details)

There are two practical ways to deal with non-independence among sampling errors. The first method is to model such dependence explicitly using a variance–covariance (VCV) matrix (used in 6 out of 73 studies: 8.2%; Additional file 1). Imagine a simple scenario with a dataset of three effect sizes from two studies, where the two effect sizes from the first study are calculated (partially) using the same cohort of individuals (Fig. 2B); in such a case, the sampling error effect, \(m_i\), as in Eq. 3, should be written for \(\mathbf{m} = (m_1, m_2, m_3)^\top\) as:

\[
\mathbf{m} \sim \mathcal{N}(\mathbf{0}, \mathbf{M}), \qquad
\mathbf{M} = \begin{bmatrix}
v_{1[1]} & \rho\sqrt{v_{1[1]}v_{1[2]}} & 0 \\
\rho\sqrt{v_{1[2]}v_{1[1]}} & v_{1[2]} & 0 \\
0 & 0 & v_{2[3]}
\end{bmatrix} \tag{4}
\]

where \(\mathbf{M}\) is the VCV matrix with the sampling variances \(v_{1[1]}\) (study 1 and effect size 1), \(v_{1[2]}\) (study 1 and effect size 2), and \(v_{2[3]}\) (study 2 and effect size 3) on its diagonal, and the sampling covariance \(\rho\sqrt{v_{1[1]}v_{1[2]}} = \rho\sqrt{v_{1[2]}v_{1[1]}}\) in its off-diagonal elements, where \(\rho\) is the correlation between the two sampling errors due to shared samples (individuals/plots). Once this VCV matrix is incorporated into the multilevel model (Eq. 3), all the types of non-independence in Fig. 2 are taken care of. Table 3 shows formulas for the sampling variance and covariance of the four common effect sizes (SMD, lnRR, proportion and Zr). For comparative effect measures (Table 2), exact covariances can be calculated in the case of a ‘shared control group’ (see [54, 55]). However, this is not feasible in most circumstances because we usually do not know what \(\rho\) should be. Some have suggested fixing this value at 0.5 (e.g., [11]) or 0.8 (e.g., [56]); the latter is a more conservative assumption. Alternatively, one can run both and use one for the main analysis and the other for a sensitivity analysis (for more, see the section ‘Conducting sensitivity analysis and critical appraisal’).
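A VCV matrix like \(\mathbf{M}\) can be assembled from the sampling variances, a label identifying which effect sizes share individuals or plots, and an assumed \(\rho\). The hypothetical helper below does this with plain lists; in R, metafor's `vcalc()` serves a similar purpose with many more options. Here \(\rho\) is an assumption you supply (e.g., 0.5 or 0.8, as discussed above), not something estimated from the data.

```python
import math

def build_vcv(v, cluster, rho):
    """Sampling variance-covariance matrix M (as in Eq. 4).

    v       : sampling variances, one per effect size
    cluster : label of the shared cohort/control group; effect sizes with the
              same label are assumed to have correlated sampling errors
    rho     : assumed correlation between sampling errors within a cluster
    """
    k = len(v)
    m = [[0.0] * k for _ in range(k)]
    for a in range(k):
        for b in range(k):
            if a == b:
                m[a][b] = v[a]                           # sampling variance
            elif cluster[a] == cluster[b]:
                m[a][b] = rho * math.sqrt(v[a] * v[b])   # sampling covariance
    return m
```

Calling `build_vcv([0.04, 0.09, 0.01], ["s1", "s1", "s2"], 0.5)` reproduces the structure of Eq. 4: a covariance of 0.03 between the first two effect sizes and zeros elsewhere off the diagonal. Re-running with `rho=0.8` gives the sensitivity-analysis version.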

The second method overcomes this very issue of unknown \(\rho\) by approximating the average dependence among sampling errors (and effect sizes) from the data and incorporating this dependence when estimating standard errors (only used in 1 out of 73 studies; Additional file 1). This method is known as ‘robust variance estimation’ (RVE), and the original estimator was proposed by Hedges and colleagues in 2010 [57]. Meta-analysis using RVE is relatively new, and this method has only recently been applied to multilevel meta-analytic models [58]. Note that the random-effects model (Eq. 2) combined with RVE could correctly model both types of non-independence. However, we do not recommend using RVE with Eq. 2 because, as we show later, estimating \(\sigma^2\) as well as \(\tau^2\) is an important part of understanding and gaining insights from one’s data. We do not yet have a definite recommendation on which method (the VCV matrix or RVE) to use to account for non-independence among sampling errors, because no simulation study has yet compared them in the context of multilevel meta-analysis [13, 58]. For now, one could use both VCV matrices and RVE in the same model [58] (see also [21]).
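The core idea of RVE is a "sandwich" variance that sums weighted residuals within each cluster (study) before squaring them, so that any correlation within a study is absorbed without specifying \(\rho\). The sketch below is the simplest (CR0) version for an intercept-only model with given working weights, for example \(w_i = 1/(v_i + \tau^2 + \sigma^2)\). It is only an illustration: it omits the small-sample corrections (such as CR2, provided in R by the clubSandwich package) that are recommended in practice, and the function name is hypothetical.

```python
import math
from collections import defaultdict

def robust_se_intercept(z, w, cluster):
    """Weighted intercept-only estimate with a cluster-robust (CR0) SE."""
    sw = sum(w)
    beta0 = sum(wi * zi for wi, zi in zip(w, z)) / sw
    score = defaultdict(float)
    for zi, wi, ci in zip(z, w, cluster):
        score[ci] += wi * (zi - beta0)     # weighted residuals summed per study
    var = sum(s**2 for s in score.values()) / sw**2
    return beta0, math.sqrt(var)
```

With `z = [1, 1, -1, -1]` and equal weights, treating the effect sizes as two studies of two gives a standard error of about 0.71, whereas treating all four as independent gives 0.5. This illustrates how ignoring within-study dependence understates \(\mathrm{se}(\beta_0)\).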

Quantifying and explaining heterogeneity

Measuring consistencies with heterogeneity.

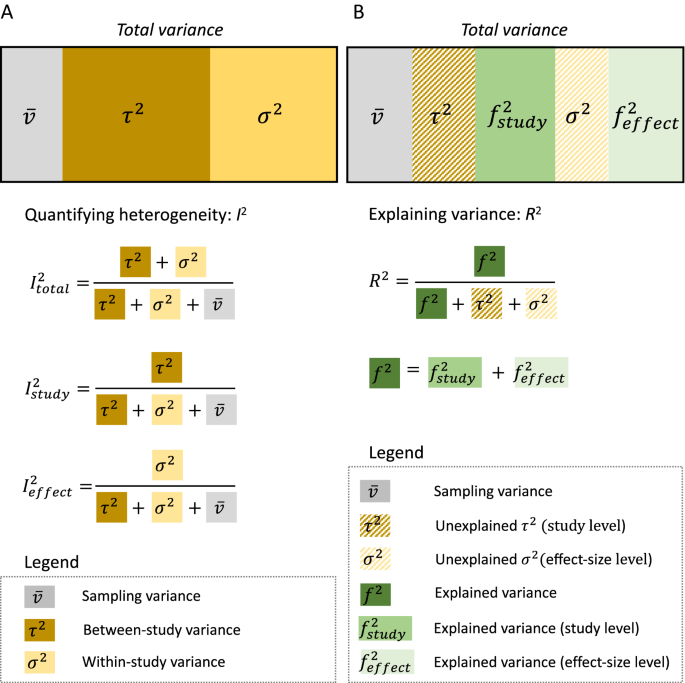

As mentioned earlier, quantifying heterogeneity among effect sizes is an essential component of any meta-analysis. Yet, our survey showed that only 28 out of 73 environmental meta-analyses (38.4%; Additional file 1) reported at least one index of heterogeneity (e.g., \(\tau^2\), \(Q\), and \(I^2\)). Conventionally, the presence of heterogeneity is tested by Cochran’s \(Q\) test. However, \(Q\) (often denoted \(Q_T\) or \(Q_{total}\)) and its associated p value are not particularly informative: the test does not tell us about the extent of heterogeneity (e.g., [10]), only whether heterogeneity differs significantly from zero (when p < 0.05). Therefore, for environmental scientists, we recommend two common ways of quantifying heterogeneity from a meta-analytic model: an absolute heterogeneity measure (i.e., the variance components \(\tau^2\) and \(\sigma^2\)) and a relative heterogeneity measure (i.e., \(I^2\); see also the section ‘Notes on visualisation and interpretation’ for another way of quantifying and visualising heterogeneity at the same time, using prediction intervals; see also [59]). We have already covered the absolute measure (Eqs. 2 and 3), so here we explain \(I^2\), which ranges from 0 to 1 (for some caveats about \(I^2\), see [60, 61]). The heterogeneity measure \(I^2\) for the random-effects model (Eq. 2) can be written as:

\[
I^2 = \frac{\tau^2}{\tau^2 + \bar{v}} \tag{5}
\]

\[
\bar{v} = \frac{(N_{study} - 1)\sum_{j=1}^{N_{study}} w_j}{\left(\sum_{j=1}^{N_{study}} w_j\right)^2 - \sum_{j=1}^{N_{study}} w_j^2}, \qquad w_j = \frac{1}{v_j} \tag{6}
\]

where \(\bar{v}\) is referred to as the typical sampling variance (originally called the ‘within-study’ variance, as in Eq. 2; note that in this formulation, the within-study effect and the effect of sampling error are confounded; see [62, 63]; see also [64]), and the other notations are as above. As Eq. 5 shows, we can interpret \(I^2\) as the relative variation due to differences between studies (between-study variance) or, equivalently, as the relative variation not due to sampling variance.
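Equations 5 and 6 translate directly into code. In this sketch (function names ours), \(\tau^2\) is taken as already estimated, for example from a fitted random-effects model, and the typical sampling variance uses \(w_j = 1/v_j\).

```python
def typical_sampling_variance(v):
    """Typical sampling variance v-bar (Eq. 6), with weights w_j = 1 / v_j."""
    w = [1 / vj for vj in v]
    k = len(w)
    sw = sum(w)
    return (k - 1) * sw / (sw**2 - sum(wj**2 for wj in w))

def i2_random_effects(tau2, v):
    """I^2 for the random-effects model (Eq. 5)."""
    vbar = typical_sampling_variance(v)
    return tau2 / (tau2 + vbar)
```

When all sampling variances are equal, \(\bar{v}\) equals that common value. For instance, with four effect sizes of variance 0.02 and \(\tau^2 = 0.06\), \(I^2 = 0.06/0.08 = 0.75\), so 75% of the variation is not attributable to sampling variance.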

By viewing \(I^2\) as a type of intraclass correlation (also known as repeatability [65]), we can generalize \(I^2\) to multilevel models. In the case of Eq. 3 ([40, 66]; see also [52]), we have:

\[
I^2_{total} = \frac{\tau^2 + \sigma^2}{\tau^2 + \sigma^2 + \bar{v}} \tag{7}
\]

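Because we can define two more \(I^2\) values, Eq. 7 is written as \(I^2_{total}\); the other two are \(I^2_{study}\) and \(I^2_{effect}\), respectively:

\[
I^2_{study} = \frac{\tau^2}{\tau^2 + \sigma^2 + \bar{v}} \tag{8}
\]

\[
I^2_{effect} = \frac{\sigma^2}{\tau^2 + \sigma^2 + \bar{v}} \tag{9}
\]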

\(I^2_{total}\) represents the relative variance due to differences both between and within studies (between- and within-study variance), or the relative variation not due to sampling variance, while \(I^2_{study}\) is the relative variation due to differences between studies, and \(I^2_{effect}\) is the relative variation due to differences within studies (Fig. 3A). Once heterogeneity is quantified (note that almost all datasets will have non-zero heterogeneity; an earlier meta-meta-analysis suggests that, in ecology, \(I^2\) is on average close to 90% [66]), it is time to fit a meta-regression model to explain the heterogeneity. Notably, the magnitudes of \(I^2_{study}\) (and \(\tau^2\)) and \(I^2_{effect}\) (and \(\sigma^2\)) can already indicate at which level (study or effect size) predictor variables (usually referred to as ‘moderators’) are likely to be important, as we explain in the next section.
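The three \(I^2\) values of Eqs. 7–9 share one denominator, so they can be computed together. In this sketch (function name ours), \(\tau^2\), \(\sigma^2\) and \(\bar{v}\) are assumed to come from an already fitted multilevel model.

```python
def multilevel_i2(tau2, sigma2, vbar):
    """Partition heterogeneity for the three-level model (Eqs. 7-9)."""
    total = tau2 + sigma2 + vbar
    return {
        "total": (tau2 + sigma2) / total,
        "study": tau2 / total,
        "effect": sigma2 / total,
    }
```

By construction, \(I^2_{total} = I^2_{study} + I^2_{effect}\). With \(\tau^2 = 0.3\), \(\sigma^2 = 0.6\) and \(\bar{v} = 0.1\), for example, the larger \(I^2_{effect}\) (0.6 vs 0.3) would suggest that moderators varying within studies are worth prioritising.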

Visualisation of variation (heterogeneity) partitioned into different variance components: A quantifying different types of I 2 from a multilevel model (3-level; see Fig. 1 C) and B variance explained, R 2 , by moderators. Note that different levels of variances would be explained, depending on which level a moderator belongs to (study level and effect-size level)

Explaining variance with meta-regression

We can extend the multilevel model (Eq. 3) to a meta-regression model with one moderator (also known as a predictor, independent variable, explanatory variable, or fixed factor), as below:

\[
z_i = \beta_0 + \beta_1 x_{1j[i]} + u_{j[i]} + e_i + m_i \tag{10}
\]

where \(\beta_1\) is the slope of the moderator (\(x_1\)), and \(x_{1j[i]}\) denotes the value of \(x_1\) corresponding to the \(j\)th study (and the \(i\)th effect size). Equation 10 (meta-regression) is comparable to the simplest regression with an intercept (\(\beta_0\)) and a slope (\(\beta_1\)). Notably, \(x_{1j[i]}\) differs between studies and, therefore, will mainly explain the variance component \(\tau^2\) (which relates to \(I^2_{study}\)). On the other hand, if denoted as \(x_{1i}\), the moderator would vary within studies, or at the level of effect sizes, and would therefore explain \(\sigma^2\) (relating to \(I^2_{effect}\)). Therefore, when \(\tau^2\) (\(I^2_{study}\)) or \(\sigma^2\) (\(I^2_{effect}\)) is close to zero, there is little point in fitting moderators at the level of studies or effect sizes, respectively.
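To make the intercept-and-slope analogy concrete, the sketch below fits one moderator by weighted least squares in closed form, \((\mathbf{X}^\top\mathbf{W}\mathbf{X})^{-1}\mathbf{X}^\top\mathbf{W}\mathbf{z}\). This is a deliberate simplification of Eq. 10, not a substitute for it. It assumes weights such as \(w_i = 1/(v_i + \tau^2 + \sigma^2)\) with the variance components treated as known, and it ignores the fact that effect sizes from the same study share \(u_{j[i]}\). A real analysis would estimate everything jointly (e.g., with metafor's `rma.mv()`). The function name is hypothetical.

```python
import math

def wls_one_moderator(z, x, w):
    """Weighted least-squares intercept and slope with model-based SEs,
    treating effect sizes as independent with weights w."""
    sw = sum(w)
    swx = sum(wi * xi for wi, xi in zip(w, x))
    swxx = sum(wi * xi * xi for wi, xi in zip(w, x))
    swz = sum(wi * zi for wi, zi in zip(w, z))
    swxz = sum(wi * xi * zi for wi, xi, zi in zip(w, x, z))
    det = sw * swxx - swx**2          # determinant of X'WX
    beta1 = (sw * swxz - swx * swz) / det
    beta0 = (swz - beta1 * swx) / sw
    se0 = math.sqrt(swxx / det)       # valid only if w = 1 / (total variance)
    se1 = math.sqrt(sw / det)
    return beta0, beta1, se0, se1
```

For effect sizes lying exactly on \(z = 1 + 2x\), the function recovers \(\beta_0 = 1\) and \(\beta_1 = 2\) regardless of the weights.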

As in multiple regression, we can have a multiple (multi-moderator) meta-regression, which can be written as:

\[
z_i = \beta_0 + \sum_{h=1}^{q} \beta_h x_{h[i]} + u_{j[i]} + e_i + m_i \tag{11}
\]

where \(\sum_{h=1}^{q}\beta_h x_{h[i]}\) denotes the sum of all the moderator effects, with \(q\) being the number of slopes (starting with \(h = 1\)). We note that \(q\) is not necessarily the number of moderators. This is because when we have a categorical moderator with more than two levels (e.g., methods A, B and C), which is common, the fixed-effect part of the formula is \(\beta_0 + \beta_1 x_1 + \beta_2 x_2\), where \(x_1\) and \(x_2\) are ‘dummy’ variables coding whether the \(i\)th effect size belongs to, for example, method B or C, with \(\beta_1\) and \(\beta_2\) being the contrasts between A and B and between A and C, respectively (for more explanation of dummy variables, see our tutorial page [https://itchyshin.github.io/Meta-analysis_tutorial/]; also see [67, 68]). Traditionally, researchers conduct separate meta-analyses for different groups (known as ‘sub-group analysis’), but we prefer a meta-regression approach with a categorical variable, which is statistically more powerful [40]. Importantly, what can be used as a moderator is also very flexible, including, for example, individual/plot characteristics (e.g., age, location), environmental factors (e.g., temperature), methodological differences between studies (e.g., randomization), and bibliometric information (e.g., publication year; see more in the section ‘Checking for publication bias and robustness’). Note that moderators should be decided and listed a priori in the meta-analysis plan (i.e., a review protocol or pre-registration).
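The dummy coding described above can be sketched as follows (the helper is hypothetical; R's model formulas do this automatically via treatment contrasts). Each non-reference level gets a 0/1 column, so a three-level moderator contributes \(q = 2\) slopes, each a contrast with the reference level.

```python
def dummy_code(levels, reference):
    """Treatment (dummy) coding for a categorical moderator: one 0/1 column
    per non-reference level, so each slope is a contrast with the reference."""
    others = sorted(set(levels) - {reference})
    columns = {lvl: [1 if x == lvl else 0 for x in levels] for lvl in others}
    return others, columns
```

For effect sizes measured with methods `["A", "B", "C", "A"]` and reference `"A"`, this yields the columns \(x_1 = (0, 1, 0, 0)\) for B and \(x_2 = (0, 0, 1, 0)\) for C, matching \(\beta_0 + \beta_1 x_1 + \beta_2 x_2\) in the text.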

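As with meta-analysis, the \(Q\)-test (\(Q_M\) or \(Q_{moderator}\)) is often used to test the significance of the moderator(s). To complement this test, we can also quantify the variance explained by the moderator(s) using \(R^2\). We can define \(R^2\) using Eq. 11 as:

\[
R^2 = \frac{f^2}{f^2 + \tau^2 + \sigma^2} \tag{12}
\]

\[
f^2 = \mathrm{Var}\left(\sum_{h=1}^{q} \beta_h x_{h[i]}\right) \tag{13}
\]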

where \(R^2\) is known as the marginal \(R^2\) (sensu [69, 70]; cf. [71]), \(f^2\) is the variance due to the moderator(s), and \(f^2 + \tau^2 + \sigma^2\) here equals \(\tau^2 + \sigma^2\) in Eq. 7 (from the model without moderators), as \(f^2\) ‘absorbs’ variance from \(\tau^2\) and/or \(\sigma^2\). These similarities and differences are illustrated in Fig. 3B, where we denote the part of \(f^2\) originating from \(\tau^2\) as \(f^2_{study}\) and the part originating from \(\sigma^2\) as \(f^2_{effect}\). In a multiple meta-regression model, we often want to find a model with the ‘best’ or an adequate set of predictors (i.e., moderators). \(R^2\) can potentially help such a model selection process, yet methods based on information criteria (such as the Akaike information criterion, AIC) may be preferable. Although model selection based on information criteria is beyond the scope of this paper, we refer the reader to relevant articles (e.g., [72, 73]), and we show an example of this procedure in our online tutorial (https://itchyshin.github.io/Meta-analysis_tutorial/).
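Equations 12 and 13 can be sketched as follows, assuming the slopes and the residual \(\tau^2\) and \(\sigma^2\) come from a fitted meta-regression. Here \(f^2\) is taken as the sample variance (denominator \(n - 1\)) of the moderator-based predictions across effect sizes. This choice is our assumption, following how marginal \(R^2\) is usually computed for mixed models. The function name is hypothetical.

```python
import statistics

def marginal_r2(slopes, x_rows, tau2, sigma2):
    """Marginal R^2 (Eqs. 12-13).

    slopes : beta_1..beta_q (the intercept is omitted; it does not change f^2)
    x_rows : moderator values per effect size, one row of length q each
    tau2, sigma2 : residual variance components from the meta-regression
    """
    fitted = [sum(b * x for b, x in zip(slopes, row)) for row in x_rows]
    f2 = statistics.variance(fitted)          # variance due to the moderators
    return f2 / (f2 + tau2 + sigma2)
```

For a single binary moderator with slope 0.5, balanced across four effect sizes, \(f^2 = 1/12\); with residual \(\tau^2 + \sigma^2 = 0.25\), the moderator explains 25% of the non-sampling variance.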

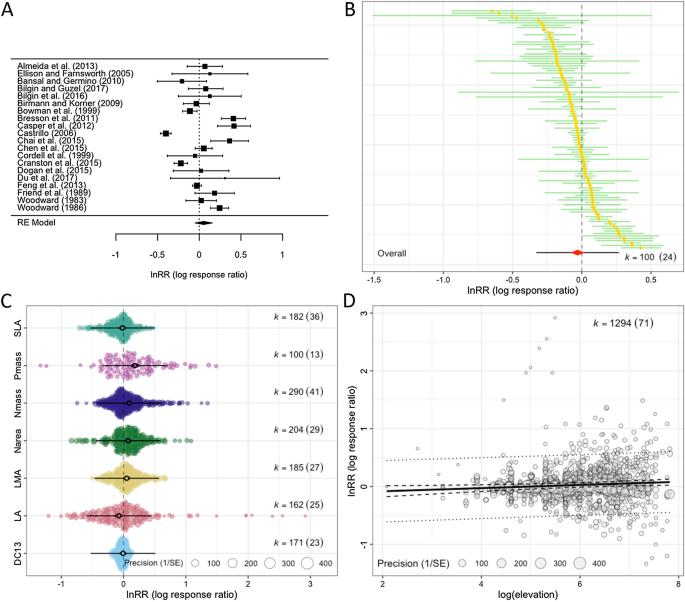

Notes on visualisation and interpretation

Visualization and interpretation of results are an essential part of a meta-analysis [74, 75]. Traditionally, a forest plot is used to display the value and 95% confidence interval (CI) of each effect size, as well as the overall effect and its 95% CI (often drawn as a diamond, as shown in Fig. 4A). More recently, adding a 95% prediction interval (PI) to the overall estimate has been strongly recommended because a 95% PI shows the predicted range of values in which an effect size from a new study would fall, assuming there is no sampling error [76]. Here, we think that examining the formulas for the 95% CI and PI of the overall mean (from Eq. 3) is illuminating:

\[
\mathrm{CI}_{95\%} = \beta_0 \pm t_{df[\alpha=0.05]}\,\mathrm{se}(\beta_0) \tag{14}
\]

\[
\mathrm{PI}_{95\%} = \beta_0 \pm t_{df[\alpha=0.05]}\sqrt{\mathrm{se}(\beta_0)^2 + \tau^2 + \sigma^2} \tag{15}
\]

where \(t_{df[\alpha=0.05]}\) denotes the \(t\) value with \(df\) degrees of freedom at the 97.5th percentile (i.e., a two-sided \(\alpha = 0.05\)), and other notations are as above. In meta-analysis, it has been conventional to use the \(z\) value 1.96 instead of \(t_{df[\alpha=0.05]}\), but simulation studies have shown that using the \(t\) value reduces Type 1 errors in many scenarios, and it is therefore recommended (e.g., [13, 77]). It is also worth noting that by plotting 95% PIs, we can visualize heterogeneity, as Eq. 15 includes \(\tau^2\) and \(\sigma^2\).
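Equations 14 and 15 differ only in what sits under the square root, which is easiest to see side by side. Python's standard library has no \(t\) quantile function, so this sketch takes the critical value as an argument. You would obtain it elsewhere, e.g., `qt(0.975, df)` in R or `scipy.stats.t.ppf(0.975, df)` in Python (about 2.086 for \(df = 20\)). The function name is ours.

```python
import math

def ci_pi(beta0, se_beta0, tau2, sigma2, t_crit):
    """95% confidence and prediction intervals for the overall mean (Eqs. 14-15)."""
    ci = (beta0 - t_crit * se_beta0, beta0 + t_crit * se_beta0)
    pi_half = t_crit * math.sqrt(se_beta0**2 + tau2 + sigma2)
    pi = (beta0 - pi_half, beta0 + pi_half)
    return ci, pi
```

With \(\beta_0 = 0.3\), \(\mathrm{se}(\beta_0) = 0.1\), \(\tau^2 = 0.03\), \(\sigma^2 = 0.06\) and a critical value of 2, the CI is \([0.1, 0.5]\) but the PI is roughly \([-0.33, 0.93]\). The PI spans zero because it carries the heterogeneity that the CI ignores.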

Different types of plots useful for a meta-analysis using data from Midolo et al. [ 133 ]: A a typical forest plot with the overall mean shown as a diamond at the bottom (20 effect sizes from 20 studies are used), B a caterpillar plot (100 effect sizes from 24 studies are used), C an orchard plot of categorical moderator with seven levels (all effect sizes are used), and D a bubble plot of a continuous moderator. Note that the first two only show confidence intervals, while the latter two also show prediction intervals (see the text for more details)

A ‘forest’ plot can quickly become illegible as the number of studies (effect sizes) grows, so other ways of visualizing the distribution of effect sizes have been suggested. Some have suggested presenting a ‘caterpillar’ plot, which is a version of the forest plot, instead (Fig. 4B; e.g., [78]). We recommend an ‘orchard’ plot, as it can present results across different groups (or the result of a meta-regression with a categorical variable), as shown in Fig. 4C [78]. For visualizing a continuous moderator, we suggest what is called a ‘bubble’ plot, shown in Fig. 4D. Visualization not only helps us interpret meta-analytic results but can also help us identify things we may not see from statistical results, such as influential data points and outliers that could threaten the robustness of our results.

Checking for publication bias and robustness

Detecting and correcting for publication bias.

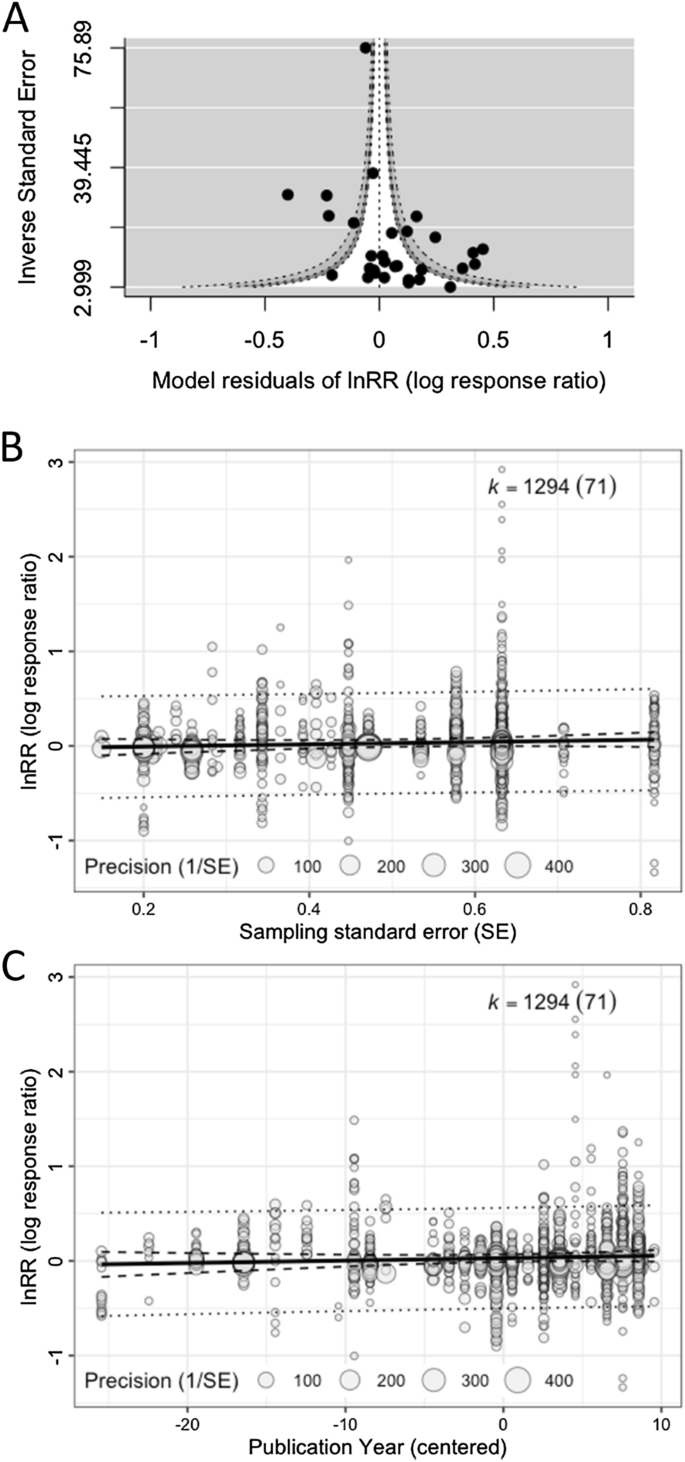

Checking for and adjusting for any publication bias is necessary to ensure the validity of meta-analytic inferences [79]. However, our survey showed that almost half of the environmental meta-analyses (46.6%; 34 out of 73 studies; Additional file 1) neither tested nor corrected for publication bias (cf. [14, 15, 16]). The most popular methods used were: (1) graphical tests using funnel plots (26 studies; 35.6%), (2) regression-based tests such as Egger’s regression (18 studies; 24.7%), (3) fail-safe number tests (12 studies; 16.4%), and (4) trim-and-fill tests (10 studies; 13.7%). We recently showed that these methods, with the exception of funnel plots, are unsuitable for datasets with non-independent effect sizes [80] (for an example of a funnel plot, see Fig. 5A). This is because these methods, like the fixed-effect and random-effects models, cannot deal with non-independence. Here, we only introduce a two-step method for multilevel models that can both detect and correct for publication bias [80] (originally proposed by [81, 82]), more specifically, the ‘small-study effect’, where an effect size from a study with a small sample size can be much larger in magnitude than the ‘true’ effect [83, 84]. This method is a simple extension of Egger’s regression [85] and can easily be implemented using Eq. 10:

\[
z_i = \beta_0 + \beta_1 \sqrt{\frac{1}{\tilde{n}_i}} + u_{j[i]} + e_i + m_i \tag{16}
\]

\[
z_i = \beta_0 + \beta_1 \frac{1}{\tilde{n}_i} + u_{j[i]} + e_i + m_i \tag{17}
\]

where \(\tilde{n}_i\) is known as the effective sample size; for Zr and proportion it is simply \(n_i\), and for SMD and lnRR it is \(n_{iC}n_{iT}/(n_{iC} + n_{iT})\), as in Table 2. When \(\beta_1\) in Eq. 16 is significant, we conclude that a small-study effect exists (in terms of a funnel plot, this is equivalent to significant funnel asymmetry). Then, we fit Eq. 17 and look at the intercept \(\beta_0\), which will be a bias-corrected overall estimate (note that \(\beta_0\) in Eq. 16 provides less accurate estimates when non-zero overall effects exist [81, 82]; Fig. 5B). An intuitive explanation of why \(\beta_0\) in Eq. 17 is the ‘bias-corrected’ estimate is that the intercept corresponds to \(1/\tilde{n}_i = 0\) (or \(\tilde{n}_i = \infty\)); in other words, \(\beta_0\) is the estimate of the overall effect when we have a very large (infinite) sample size. Of note, appropriate bias correction requires a selection-model-based approach, although such an approach is yet to be available for multilevel meta-analytic models [80].
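The two moderators used in Eqs. 16 and 17 are simple transformations of the effective sample size. This sketch (function names ours) computes \(\tilde{n}_i\) for one- and two-group effect measures and the corresponding predictors. Fitting them as moderators still requires a multilevel meta-regression, as in Eq. 10.

```python
import math

def effective_n(n_c, n_t=None):
    """Effective sample size: n for Zr/proportion (single n), or
    n_C * n_T / (n_C + n_T) for SMD and lnRR (two groups)."""
    if n_t is None:
        return float(n_c)
    return n_c * n_t / (n_c + n_t)

def egger_predictors(n_tilde):
    """Moderators for Eq. 16 (sqrt(1 / n~)) and Eq. 17 (1 / n~)."""
    return math.sqrt(1 / n_tilde), 1 / n_tilde
```

For a comparison of two groups of 10, `effective_n(10, 10)` is 5. Because the intercept of Eq. 17 is the prediction at `1 / n_tilde == 0`, it represents an infinitely large study.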

Different types of plots for publication bias tests: A a funnel plot using model residuals, showing a funnel (white) that shows the region of statistical non-significance (30 effect sizes from 30 studies are used; note that we used the inverse of standard errors for the y -axis, but for some effect sizes, sample size or ‘effective’ sample size may be more appropriate), B a bubble plot visualising a multilevel meta-regression that tests for the small study effect (note that the slope was non-significant: b = 0.120, 95% CI = [− 0.095, 0.334]; all effect sizes are used), and C a bubble plot visualising a multilevel meta-regression that tests for the decline effect (the slope was non-significant: b = 0.003, 95%CI = [− 0.002, 0.008])

Conveniently, this proposed framework can be extended to test for another type of publication bias, known as time-lag bias or the decline effect, where effect sizes tend to get closer to zero over time, as larger or statistically significant effects are published more quickly than smaller or non-significant effects [86, 87]. Again, a decline effect can be statistically tested by adding publication year to Eq. 3:

\[
z_i = \beta_0 + \beta_1 c(\mathrm{year}_{j[i]}) + u_{j[i]} + e_i + m_i \tag{18}
\]
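
\[
z_{i}={\beta }_{0}+{\beta }_{1}c\left(yea{r}_{j\left[i\right]}\right)+{u}_{j\left[i\right]}+{e}_{i}+{m}_{i} \tag{18}
\]
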
where \(c\left(yea{r}_{j\left[i\right]}\right)\) is the mean-centred publication year of a particular study (study j and effect size i); this centring makes the intercept \({\beta }_{0}\) meaningful, because it then represents the overall effect estimate at the mean publication year (see [68]). When the slope is significantly different from 0, we conclude that there is a decline effect (or time-lag bias; Fig. 5C).
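The role of mean-centring can be checked with a small sketch. Assuming a simplified fixed-effect WLS fit (no random effects) and hypothetical study labels and years, publication year is looked up per study, so effect sizes from the same study share one year; centring is done here across effect sizes, which is one reasonable choice (centring across studies is another). The intercept is then the fitted effect at the mean year, and the slope is the change per year.

```python
def centre(values):
    """Mean-centre values (e.g., publication years)."""
    mean = sum(values) / len(values)
    return [x - mean for x in values]


def wls_line(x, y, w):
    """Weighted least-squares fit of y = b0 + b1 * x; returns (b0, b1)."""
    sw = sum(w)
    x_bar = sum(wi * xi for wi, xi in zip(w, x)) / sw
    y_bar = sum(wi * yi for wi, yi in zip(w, y)) / sw
    sxy = sum(wi * (xi - x_bar) * (yi - y_bar) for wi, xi, yi in zip(w, x, y))
    sxx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, x))
    b1 = sxy / sxx
    return y_bar - b1 * x_bar, b1


# Hypothetical data: year is a study-level variable, year_j[i]
study_year = {"s1": 2000, "s2": 2004, "s3": 2008, "s4": 2012}
study_of_effect = ["s1", "s2", "s2", "s3", "s4"]  # study s2 contributes two effect sizes
years = [study_year[s] for s in study_of_effect]

# Made-up effect sizes that decline linearly over time
z = [0.5 - 0.02 * (y - 2000) for y in years]
v = [0.10, 0.05, 0.05, 0.08, 0.02]

b0, b1 = wls_line(centre(years), z, [1.0 / vi for vi in v])
mean_year = sum(years) / len(years)
print(f"estimate at mean year {mean_year:.1f}: {b0:.3f}; slope per year: {b1:.3f}")
```

Without centring, the intercept would instead be the extrapolated effect at year 0, which has no practical meaning.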

However, there may be confounding moderators, which need to be modelled together. Indeed, Egger’s regression (Eqs. 16 and 17) is known to detect funnel asymmetry reliably only when there is little heterogeneity; this means that we need to model \(\sqrt{1/{\widetilde{n}}_{i}}\) together with other moderators that account for heterogeneity. Given this, we should use a multiple meta-regression model, as below:
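
\[
z_{i}={\beta }_{0}+{\beta }_{1}\sqrt{1/{\widetilde{n}}_{i}}+{\beta }_{2}c\left(yea{r}_{j\left[i\right]}\right)+\sum_{h=3}^{q}{\beta }_{h}{x}_{h\left[i\right]}+{u}_{j\left[i\right]}+{e}_{i}+{m}_{i} \tag{19}
\]
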
where \(\sum_{h=3}^{q}{\beta }_{h}{x}_{h\left[i\right]}\) is the sum of the other moderator effects apart from the small-study effect and the decline effect, and other notations are as above (for more details, see [80]). We need to carefully consider which moderators should go into Eq. 19 (e.g., fitting all moderators or using an AIC-based model selection method; see [72, 73]). Of relevance, when running complex models, some model parameters cannot be estimated well; that is, they are not ‘identifiable’ [88]. This is especially so for variance components (the random-effect part) rather than regression coefficients (the fixed-effect part). Therefore, it is advisable to check whether all model parameters are identifiable, which can be done using the profile function in metafor (for an example, see our tutorial webpage [https://itchyshin.github.io/Meta-analysis_tutorial/]).
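The fixed part of Eq. 19 is an ordinary design matrix with columns for the intercept, \(\sqrt{1/{\widetilde{n}}_{i}}\), centred year, and any further moderators \(x_{h}\) (h ≥ 3). The sketch below builds that matrix and solves the weighted normal equations. It is only an illustration under a fixed-effect WLS assumption: it omits \(u_{j[i]}\) and \(e_{i}\), so its implied standard errors would be too small, and it says nothing about identifiability of variance components, which is what profiling in metafor addresses. The moderator `habitat` and all numbers are hypothetical.

```python
import math


def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [bi] for row, bi in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def design_matrix(n_eff, years, moderators):
    """Columns: intercept, sqrt(1/n~), centred year, then other moderators x_h."""
    mean_year = sum(years) / len(years)
    return [[1.0, math.sqrt(1.0 / n), yr - mean_year] + [col[i] for col in moderators]
            for i, (n, yr) in enumerate(zip(n_eff, years))]


def wls(x_rows, z, w):
    """Weighted least squares: solve (X'WX) beta = X'Wz."""
    p = len(x_rows[0])
    xtwx = [[sum(wi * r[a] * r[b] for r, wi in zip(x_rows, w)) for b in range(p)]
            for a in range(p)]
    xtwz = [sum(wi * r[a] * zi for r, wi, zi in zip(x_rows, w, z)) for a in range(p)]
    return solve(xtwx, xtwz)


# Hypothetical data generated from known coefficients
n_eff = [5, 10, 20, 40, 8, 16]
years = [2001, 2005, 2010, 2015, 2003, 2012]
habitat = [0, 1, 0, 1, 1, 0]  # hypothetical binary moderator (x_3)
X = design_matrix(n_eff, years, [habitat])
true_beta = [0.1, 0.3, -0.01, 0.2]
z = [sum(b * x for b, x in zip(true_beta, row)) for row in X]
v = [0.2, 0.1, 0.05, 0.02, 0.15, 0.06]

beta = wls(X, z, [1.0 / vi for vi in v])
print([round(b, 4) for b in beta])
```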

Conducting sensitivity analysis and critical appraisal

Sensitivity analysis explores the robustness of meta-analytic results by running a different set of analyses from the original analysis and comparing the results (note that some consider publication bias tests a part of sensitivity analysis; [11]). For example, we might be interested in assessing how robust results are to the presence of influential studies, to the choice of method for addressing non-independence, or to the weighting of effect sizes. Unfortunately, in our survey, only 37% of environmental meta-analyses (27 out of 73) conducted sensitivity analysis (Additional file 1). There are two general and interrelated ways to conduct sensitivity analyses [73, 89, 90]. The first is to remove influential studies (e.g., outliers) and re-run the meta-analytic and meta-regression models. We can also systematically remove each effect size in turn and run a series of meta-analytic models to see whether any of the resulting overall estimates differ from the others; this method, known as ‘leave-one-out’, is considered less subjective and is thus recommended.
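A leave-one-out analysis is straightforward to express. To keep the sketch short and self-contained, it swaps in a standard DerSimonian–Laird random-effects estimate in place of the multilevel model described above; the function names and data are hypothetical. Each effect size is dropped in turn, the overall estimate is recomputed, and the effect size whose removal shifts the estimate most is flagged.

```python
def dl_estimate(z, v):
    """Random-effects overall estimate using the DerSimonian-Laird tau^2."""
    w = [1.0 / vi for vi in v]
    sw = sum(w)
    fixed = sum(wi * zi for wi, zi in zip(w, z)) / sw
    q = sum(wi * (zi - fixed) ** 2 for wi, zi in zip(w, z))  # Cochran's Q
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - (len(z) - 1)) / c)
    w_star = [1.0 / (vi + tau2) for vi in v]
    return sum(wi * zi for wi, zi in zip(w_star, z)) / sum(w_star)


def leave_one_out(z, v):
    """Overall estimate after removing each effect size in turn."""
    return [dl_estimate(z[:i] + z[i + 1:], v[:i] + v[i + 1:]) for i in range(len(z))]


# Hypothetical data with one unusually large effect size (index 4)
z = [0.20, 0.25, 0.30, 0.22, 1.50]
v = [0.01] * 5

full = dl_estimate(z, v)
loo = leave_one_out(z, v)
shift = [abs(e - full) for e in loo]
most_influential = shift.index(max(shift))
print(f"full: {full:.3f}; most influential index: {most_influential}")
```

The same loop can be run at the study level (dropping all effect sizes of one study at a time), which is closer to the first approach described above.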

The second way of approaching sensitivity analysis is known as subset analysis, where a certain group of effect sizes (studies) is excluded and the models are re-run without it. For example, one may want to run an analysis without studies that did not randomize samples. Yet, as mentioned earlier, we recommend using meta-regression (Eq. 13) with a categorical variable of randomization status (‘randomized’ or ‘not randomized’) to statistically test for an influence of this moderator. It is important to note that such tests for risk of bias (or study quality) can be considered a way of quantitatively evaluating the importance of study features that were noted at the stage of critical appraisal, which is an essential part of any systematic review (see [11, 91]). In other words, we can use meta-regression or subset analysis to quantitatively conduct critical appraisal using study-level moderators that code, for example, blinding, randomization, and selective reporting. Despite the importance of critical appraisal ([91]), only 4 of 73 environmental meta-analyses (5.6%) in our survey assessed the risk of bias in each study included in a meta-analysis (i.e., evaluating a primary study in terms of the internal validity of its design and reporting; Additional file 1). We emphasize that critically appraising each paper or checking it for risk of bias is an extremely important topic. However, critical appraisal is not restricted to quantitative synthesis, so we do not cover it further in this paper (for more, see [92, 93]).
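The relationship between subset analysis and a meta-regression with a binary randomization moderator can be seen in a simplified fixed-effect setting. With a single two-level moderator and inverse-variance weights, the regression intercept equals the weighted mean of the reference group (here, ‘not randomized’), and the slope equals the difference between the two weighted group means, with sampling variance \(1/\sum w_{\text{rand}} + 1/\sum w_{\text{not}}\). This sketch ignores heterogeneity and non-independence, so it is not a substitute for the multilevel version of Eq. 13; the helper names and data are hypothetical.

```python
import math


def group_summary(z, v, keep):
    """Inverse-variance weighted mean and its sampling variance for rows where keep is True."""
    w = [1.0 / vi for vi, k in zip(v, keep) if k]
    zs = [zi for zi, k in zip(z, keep) if k]
    sw = sum(w)  # assumes at least one row is kept
    return sum(wi * zi for wi, zi in zip(w, zs)) / sw, 1.0 / sw


def randomization_contrast(z, v, randomized):
    """Compare a subset analysis with a binary-moderator meta-regression (fixed-effect)."""
    subset_mean, subset_var = group_summary(z, v, randomized)  # randomized studies only
    ref_mean, ref_var = group_summary(z, v, [not r for r in randomized])  # reference level
    slope = subset_mean - ref_mean  # regression coefficient for 'randomized'
    se = math.sqrt(subset_var + ref_var)
    return {"subset_estimate": subset_mean, "intercept": ref_mean,
            "slope": slope, "z_value": slope / se}


# Hypothetical data
result = randomization_contrast(
    z=[0.1, 0.3, 0.5, 0.7], v=[0.1] * 4, randomized=[True, True, False, False]
)
print(result)
```

The subset estimate describes only the randomized studies, whereas the slope directly tests whether randomization status is associated with the size of the effect.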

Notes on transparent reporting and open archiving

For environmental systematic reviews and maps, there are reporting guidelines called RepOrting standards for Systematic Evidence Syntheses in environmental research (ROSES [94]) and a synthesis assessment checklist, the Collaboration for Environmental Evidence Synthesis Appraisal Tool (CEESAT; [95]). However, these guidelines are somewhat limited in terms of reporting quantitative synthesis because they cover only a few core items. These two guidelines are complemented by the Preferred Reporting Items for Systematic Reviews and Meta-Analyses for Ecology and Evolutionary Biology (PRISMA-EcoEvo; [96]; cf. [97, 98]), which provides an extended set of reporting items covering what we have described above. Items 20–24 from PRISMA-EcoEvo are most relevant: these items outline what should be reported in the Methods section: (i) sample sizes and study characteristics, (ii) meta-analysis, (iii) heterogeneity, (iv) meta-regression and (v) outcomes of publication bias and sensitivity analysis (see Table 4). Our survey, as well as earlier surveys, suggests that there is considerable room for improvement in current practice ([14, 15, 16]). Incidentally, the orchard plot is well aligned with Item 20, as this plot type shows both the number of effect sizes and the number of studies for different groups (Fig. 4C). Further, our survey of environmental meta-analyses highlighted poor standards of data openness (24 studies shared data: 32.9%) and code sharing (7 studies, i.e., 29.2% of the 24 that shared data; Additional file 1). Environmental scientists must archive their data as well as their analysis code in accordance with the FAIR principles (Findable, Accessible, Interoperable, and Reusable [99]) using dedicated repositories such as Dryad, FigShare, Open Science Framework (OSF), Zenodo or others (cf. [100, 101]), preferably not on publishers’ webpages (as paywalls may block access). However, archiving itself is not enough; data require metadata (detailed descriptions), and code also needs to be FAIR [102, 103].

Other relevant and advanced issues

Scale dependence

The issue of scale dependence is a unique yet widespread problem in environmental sciences (see [7, 104]); our literature survey indicated that three quarters of the environmental meta-analyses (56 out of 73 studies) have inferences that are potentially vulnerable to scale dependence [105]. For example, biodiversity measures such as species richness, which studies often compare between groups, vary as a function of the scale (size) of the sampling unit. When the unit of replication is a plot (not an individual animal or plant), the areal size of a plot (e.g., 100 cm² or 1 km²) will affect both the precision and accuracy of effect size estimates (e.g., lnRR and SMD). In general, a study with larger plots might estimate species richness differences more accurately, but less precisely, than a study with smaller plots and greater replication. Lower replication means that sampling variance estimates are likely to be inaccurate, and the study with larger plots will generally receive less weight than the study with smaller plots, owing to its higher sampling variance. Inaccurate variance estimates in little-replicated ecological studies are known to cause an accumulating bias in precision-weighted meta-analysis, requiring correction [43]. To assess the potential for scale dependence, it is recommended that analysts test for possible covariation among plot size, replication, variances, and effect sizes [104]. If such covariation is detected, analysts should use an effect size measure that is less sensitive to scale dependence (lnRR) and could use plot size as a moderator in meta-regression, or alternatively consider running an unweighted model ([7]; note that only 12%, 9 out of 73 studies, accounted for sampling area in some way; Additional file 1).
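A first screen for the covariation described above can be as simple as pairwise correlations among plot size, replication, sampling variance, and effect size, together with a comparison of weighted and unweighted overall means. The sketch below assumes Pearson correlations and log-transformed plot area (because areas typically span orders of magnitude); both choices, the column names, and the data are hypothetical, and a formal analysis would use meta-regression with plot size as a moderator.

```python
import math
from itertools import combinations


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def covariation_screen(columns):
    """Pairwise correlations for every pair of named columns."""
    return {(a, b): pearson(columns[a], columns[b]) for a, b in combinations(columns, 2)}


def weighted_vs_unweighted(z, v):
    """Inverse-variance weighted mean versus simple (unweighted) mean."""
    w = [1.0 / vi for vi in v]
    return sum(wi * zi for wi, zi in zip(w, z)) / sum(w), sum(z) / len(z)


# Hypothetical study-level data
plot_area_m2 = [1, 10, 100, 1000]
columns = {
    "log_area": [math.log10(a) for a in plot_area_m2],
    "replication": [20, 15, 10, 5],
    "variance": [0.02, 0.04, 0.08, 0.16],
    "lnRR": [0.10, 0.18, 0.25, 0.40],
}
for pair, r in covariation_screen(columns).items():
    print(pair, round(r, 2))

weighted, unweighted = weighted_vs_unweighted(columns["lnRR"], columns["variance"])
print(round(weighted, 3), round(unweighted, 3))
```

In this toy example, larger plots have fewer replicates, larger variances, and larger effects, so precision weighting pulls the overall estimate towards the small-plot studies.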

Missing data

In many fields, meta-analytic data almost always contain missing values (see [106, 107, 108]). Broadly, we have two types of missing data in meta-analyses [109, 110]: (1) missing standard deviations or sample sizes associated with means, which prevent effect size calculation (Table 2), and (2) missing data in moderators. There are several solutions for both types. The best, and the first to try, is contacting the authors. If this fails, we can potentially ‘impute’ missing data. Single imputation methods that exploit the strong correlation between standard deviations and means (known as the mean–variance relationship) are available, although single imputation can inflate Type I error rates [106, 107] (see also [43]) because it does not model the uncertainty of the imputation itself. In contrast, multiple imputation, which creates multiple versions of imputed datasets, incorporates such uncertainty. Indeed, multiple imputation is a preferred and proven solution for missing data in effect sizes and moderators [109, 110]. Yet, correct implementation can be challenging (see [110]). What we now require is an automated pipeline that merges meta-analysis and multiple imputation and accounts for imputation uncertainty, although this may be challenging for complex meta-analytic models. Fortunately, for lnRR, there is a series of new methods that can outperform the conventional method and can deal with missing SDs [44]; note that these methods do not deal with missing moderators. Therefore, where applicable, we recommend these new methods until an easy-to-implement multiple imputation workflow arrives.
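To illustrate what single imputation based on the mean–variance relationship looks like, the sketch below fills each missing SD with the absolute mean multiplied by the average coefficient of variation (CV) of the rows that do report SDs. This is one simple variant chosen for illustration, not the specific methods of [44]; the function name and data are hypothetical. As noted above, it treats imputed SDs as if they were observed, which is exactly why single imputation understates uncertainty.

```python
def impute_sd_by_cv(means, sds):
    """Single imputation: replace missing SDs (None) with |mean| * average observed CV.

    Assumes means are on a ratio scale (non-zero) and that at least one SD is reported.
    """
    cvs = [sd / abs(m) for m, sd in zip(means, sds) if sd is not None]
    mean_cv = sum(cvs) / len(cvs)
    return [sd if sd is not None else abs(m) * mean_cv for m, sd in zip(means, sds)]


# Hypothetical group means with two missing SDs
means = [10.0, 20.0, 40.0, 5.0]
sds = [2.0, 4.0, None, None]
completed = impute_sd_by_cv(means, sds)
print(completed)
```

A multiple imputation workflow would instead draw several plausible SDs per missing value, re-run the meta-analysis on each completed dataset, and pool the results.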

Complex non-independence

Above, we have only dealt with the model that includes study identities as a clustering/grouping (random) factor. However, many datasets are more complex, with potentially more clustering variables in addition to the study identity. It is certainly possible that an environmental meta-analysis contains data from multiple species. Such a situation creates an interesting dependence among effect sizes from different species, known as phylogenetic relatedness, where closely related species are more likely to be similar in effect sizes compared to distantly related ones (e.g., mice vs. rats and mice vs. sparrows). Our multilevel model framework is flexible and can accommodate phylogenetic relatedness. A phylogenetic multilevel meta-analytic model can be written as [ 40 , 111 , 112 ]:
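
\[
z_{i}={\beta }_{0}+{a}_{k\left[i\right]}+{s}_{k\left[i\right]}+{u}_{j\left[i\right]}+{e}_{i}+{m}_{i},\quad \mathbf{a}\sim \mathcal{N}\left(\mathbf{0},{\omega }^{2}\mathbf{A}\right),\quad {s}_{k\left[i\right]}\sim \mathcal{N}\left(0,{\gamma }^{2}\right)
\]
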
where \({a}_{k\left[i\right]}\) is the phylogenetic (species) effect for the kth species (effect size i; \(N_{\text{effect}}\) (i = 1, 2, …, \(N_{\text{effect}}\)) > \(N_{\text{study}}\) (j = 1, 2, …, \(N_{\text{study}}\)) > \(N_{\text{species}}\) (k = 1, 2, …, \(N_{\text{species}}\))), normally distributed with variance–covariance matrix \({\omega }^{2}\mathbf{A}\), where \({\omega }^{2}\) is the phylogenetic variance and A is a correlation matrix coding how closely related each pair of species is; \({s}_{k\left[i\right]}\) is the non-phylogenetic (species) effect for the kth species (effect size i), normally distributed with variance \({\gamma }^{2}\) (the non-phylogenetic variance); and other notations are as above. It is important to realize that A explicitly models relatedness among species, and we need to provide this correlation matrix, using a distance relationship usually derived from a molecular-based phylogenetic tree (for more details, see [40, 111, 112]). Some may think that the non-phylogenetic term (\({s}_{k\left[i\right]}\)) is unnecessary or redundant because \({s}_{k\left[i\right]}\) and the phylogenetic term (\({a}_{k\left[i\right]}\)) both model variance at the species level. However, a recent simulation demonstrated that omitting the non-phylogenetic term (\({s}_{k\left[i\right]}\)) often inflates the phylogenetic variance \({\omega }^{2}\), leading to the incorrect conclusion that there is a strong phylogenetic signal (as shown in [112]). The non-phylogenetic variance (\({\gamma }^{2}\)) arises from, for example, ecological similarities among species (herbivores vs. carnivores or arboreal vs. ground-living) rather than from phylogeny [40].
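One way to see how the random terms of the phylogenetic model combine is to write out the implied covariance between any two effect sizes, assuming all random effects are independent: \(\omega^2 A_{k[i]k[i']}\) from phylogeny, plus \(\gamma^2\) if both effect sizes come from the same species, plus \(\tau^2\) (the between-study variance) if both come from the same study, plus \(\sigma^2 + v_i\) on the diagonal. The sketch below builds this matrix with the variance components treated as known; in practice they are estimated (in metafor, a known correlation matrix such as A is supplied to `rma.mv` through its `R` argument). The correlation values, species, and variances are hypothetical.

```python
def marginal_covariance(species, study, species_names, A, omega2, gamma2, tau2, sigma2, v):
    """Covariance matrix of effect sizes implied by the phylogenetic multilevel model."""
    idx = {name: k for k, name in enumerate(species_names)}
    n = len(v)
    V = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            ki, kj = idx[species[i]], idx[species[j]]
            cov = omega2 * A[ki][kj]  # phylogenetic effect a_k (correlated via A)
            if ki == kj:
                cov += gamma2  # non-phylogenetic species effect s_k
            if study[i] == study[j]:
                cov += tau2  # study effect u_j
            if i == j:
                cov += sigma2 + v[i]  # effect-size-level effect e_i and sampling error m_i
            V[i][j] = cov
    return V


# Hypothetical example: two closely related rodents and one bird
names = ["mouse", "rat", "sparrow"]
A = [[1.0, 0.8, 0.1],
     [0.8, 1.0, 0.1],
     [0.1, 0.1, 1.0]]
V = marginal_covariance(
    species=["mouse", "rat", "sparrow"], study=["s1", "s1", "s2"],
    species_names=names, A=A, omega2=0.3, gamma2=0.1, tau2=0.2, sigma2=0.05,
    v=[0.01, 0.02, 0.03],
)
for row in V:
    print([round(x, 3) for x in row])
```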

Like phylogenetic relatedness, effect sizes arising from closer geographical locations are likely to be more correlated [113]. Statistically, spatial correlation can also be modelled in a manner analogous to phylogenetic relatedness (i.e., rather than a phylogenetic correlation matrix, A, we fit a spatial correlation matrix). For example, Maire and colleagues [114] used a meta-analytic model with spatial autocorrelation to investigate temporal trends of fish communities in the river network of France. We note that a similar argument can be made for temporal correlation, but in many cases, temporal correlation could be dealt with, albeit less accurately, as a special case of ‘shared measurements’, as in Fig. 2. An important idea to take away is that one can model many, if not all, types of non-independence as random factors in a multilevel model.
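A spatial correlation matrix plays the same role as A. As a minimal sketch, the code below computes great-circle (haversine) distances between sampling sites and converts them to correlations with an exponential decay, \(\rho = \exp(-d/\phi)\). The exponential form and the range parameter \(\phi\) are assumptions made for illustration; other correlation functions exist, and in a real analysis the range would usually be estimated rather than fixed. The coordinates are hypothetical.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two (latitude, longitude) points in degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    h = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def exponential_correlation(coords, range_km):
    """Spatial correlation matrix with rho = exp(-distance / range_km)."""
    return [[math.exp(-haversine_km(*a, *b) / range_km) for b in coords] for a in coords]


# Hypothetical sampling sites (latitude, longitude)
sites = [(45.0, 2.0), (46.0, 2.0), (48.0, 5.0)]
R = exponential_correlation(sites, range_km=200.0)
for row in R:
    print([round(x, 3) for x in row])
```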

Advanced techniques

Here we touch upon five advanced meta-analytic techniques with potential utility for environmental sciences, providing relevant references so that interested readers can obtain more information on these advanced topics. The first is the meta-analysis of magnitudes, or absolute values of effect sizes, where researchers may be interested in deviations from 0 rather than in the direction of the effect [115]. For example, Cohen and colleagues [116] investigated absolute values of phenological responses, as they were concerned with the magnitudes of changes in phenology rather than their direction.
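Simply taking \(|z_i|\) is biased upwards when the true effect is close to zero, because sampling error alone produces non-zero magnitudes. One way to handle this, assuming each effect size is normally distributed with mean \(\mu\) and variance \(\sigma^2\), is to use the moments of the folded normal distribution: \(\text{E}|z| = \sigma\sqrt{2/\pi}\,\exp(-\mu^2/2\sigma^2) + \mu\left(1 - 2\Phi(-\mu/\sigma)\right)\) and \(\text{Var}|z| = \mu^2 + \sigma^2 - (\text{E}|z|)^2\). The sketch below computes these; the function names are hypothetical, and whether this exact approach suits a given dataset should be checked against [115].

```python
import math


def normal_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def folded_normal_moments(mu, var):
    """Mean and variance of |X| when X ~ Normal(mu, var)."""
    sd = math.sqrt(var)
    mean = (sd * math.sqrt(2.0 / math.pi) * math.exp(-mu ** 2 / (2.0 * var))
            + mu * (1.0 - 2.0 * normal_cdf(-mu / sd)))
    variance = mu ** 2 + var - mean ** 2
    return mean, variance


# A zero true effect still has a positive expected magnitude
print(folded_normal_moments(0.0, 1.0))
# A large, precise negative effect: the magnitude is essentially |mu|
print(folded_normal_moments(-5.0, 0.01))
```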

The second method is the meta-analysis of interaction, where our focus is on synthesizing the interaction effect from, usually, a 2 × 2 factorial design (e.g., the effect of two simultaneous environmental stressors [54, 117, 118]; see also [119]). Recently, Siviter and colleagues [120] showed that agrochemicals interact synergistically (i.e., non-additively) to increase the mortality of bees; that is, two agrochemicals together caused more mortality than the sum of the mortalities caused by each chemical alone.
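For a 2 × 2 design with a control (C), stressor A alone, stressor B alone, and both together (AB), one commonly used interaction effect size on the log response ratio scale is \(\ln\bar{X}_{AB} - \ln\bar{X}_{A} - \ln\bar{X}_{B} + \ln\bar{X}_{C}\), with sampling variance \(\sum_{g} SD_g^2/(n_g\bar{X}_g^2)\) over the four groups. Note the assumption built into this choice: a value of zero corresponds to a multiplicative (log-additive) expectation, whereas SMD-based interaction effect sizes instead use an additive expectation on the original scale, so the two can disagree about what counts as ‘synergistic’. The function name and numbers below are hypothetical.

```python
import math


def lnrr_interaction(means, sds, ns):
    """Log response ratio interaction for a 2 x 2 design and its sampling variance.

    means, sds, ns: dicts keyed by 'C' (control), 'A', 'B' and 'AB' (both stressors).
    """
    estimate = (math.log(means["AB"]) - math.log(means["A"])
                - math.log(means["B"]) + math.log(means["C"]))
    variance = sum(sds[g] ** 2 / (ns[g] * means[g] ** 2) for g in ("C", "A", "B", "AB"))
    return estimate, variance


# Hypothetical survival data: each stressor alone halves or more than halves survival,
# and the combined effect equals the multiplicative expectation (no interaction)
means = {"C": 10.0, "A": 5.0, "B": 4.0, "AB": 2.0}
sds = {"C": 1.0, "A": 1.0, "B": 1.0, "AB": 1.0}
ns = {"C": 10, "A": 10, "B": 10, "AB": 10}
est, var = lnrr_interaction(means, sds, ns)
print(round(est, 6), round(var, 5))
```

If survival under both stressors fell below the multiplicative expectation (e.g., a mean of 1.0 instead of 2.0 for AB), the estimate would become negative, indicating a synergistic effect on this scale.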