Guidelines for Reporting Statistics
- April 25, 2024 09:39

JMIR Publications employs professional external copyeditors (see What are the steps during copyediting? and What are the authors' responsibilities during copyediting?) to bring articles into editorial style, so authors do not have to worry too much about the style on initial submission, although it does shorten the production process after acceptance if the article is roughly aligned with our guidelines (see also JMIR's editorial guidelines).

Pointing out issues in statistical reporting is also a responsibility of the editor/section editor, who should flag missing and incorrectly reported statistics during the review process (and not rely on external peer reviewers). Copyeditors act as the "second line of defense" and must enforce reporting in line with generally accepted guidelines. For details on common statistical terms, please refer to the AMA's Glossary of Statistical Terms. The SAMPL Reporting Guideline, which provides best practices on statistical reporting, is a useful resource for copyeditors.

Omission of leading zero

- We follow the guidelines set out by the AMA (sections 18.7.1 and 19.5).

- A zero should be placed before the decimal point for numbers less than 1, except when expressing the 3 values related to probability: P, α, and β. These values cannot equal 1, except when rounding. Because they appear frequently, eliminating the zero can save substantial space in tables and text. (Although other statistical values also may never equal 1, their use is less frequent; to simplify usage, the zero before the decimal point is included.)

Our predetermined α level was .05.

- Sometimes α and β are used to indicate other statistics (eg, Cronbach α or β coefficient), which may have values ≥1. In these cases, a leading zero is necessary.

N and n

- N designates the entire population under study.

- n designates a sample of the population under study.

- Do not insert spaces before and after the equal sign, and delete spaces on either side of mathematical operators, except in equations.

Examples: N=468, n=234

Percentages and decimal places

- If N<100, report percentages without decimal places.

- If N is between 100 and 999, report 1 decimal place.

- If N≥1000, 2 decimal places are preferred, but 1 decimal place is acceptable.

- BUT if a table contains mixed denominators, be consistent and use, for example, 1 decimal place throughout, even if some denominators are less than 100.

- Do not add a zero after the decimal if the percentage value is a whole number (ie, 64/100=64%, not 64.0%). There are no exceptions to this guideline.

- Note: When rounding significant digits, if the digit immediately to the right of the last significant digit is 5, with either no digits or all zeros after the 5, the last significant digit is rounded up if it is odd and not changed if it is even (eg, 47.75 is rounded to 47.8, but 47.65 is rounded to 47.6; AMA section 19.4.2).

- See AMA sections 18.7.1, 18.7.2, and 18.7.3.

If N=87, use 45%

If N=356, use 45.1%

If N=1024, use 45.13%
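The decimal-place and rounding rules above can be combined into one helper. This is a minimal Python sketch, not a JMIR tool: the function names (`percent_places`, `round_half_even`, `format_percent`) are hypothetical, and it assumes the preferred 2 decimal places for N≥1000. It uses the standard `decimal` module so that values such as 47.65 are rounded exactly rather than through binary floating point.

```python
from decimal import Decimal, ROUND_HALF_EVEN


def percent_places(denominator: int) -> int:
    """Decimal places for a percentage, chosen by the size of N."""
    if denominator < 100:
        return 0
    if denominator < 1000:
        return 1
    return 2  # 2 places preferred for N>=1000 (1 place is also acceptable)


def round_half_even(value: Decimal, places: int) -> Decimal:
    """Round so that a trailing 5 goes to the even digit (47.75 -> 47.8, 47.65 -> 47.6)."""
    quantum = Decimal(1).scaleb(-places)  # places=1 -> Decimal("0.1")
    return value.quantize(quantum, rounding=ROUND_HALF_EVEN)


def format_percent(numerator: int, denominator: int) -> str:
    """Percentage string with the number of decimals implied by the denominator."""
    pct = Decimal(numerator) * 100 / Decimal(denominator)
    rounded = round_half_even(pct, percent_places(denominator))
    if rounded == rounded.to_integral_value():
        rounded = rounded.quantize(Decimal(1))  # whole numbers: 64%, never 64.0%
    return f"{rounded}%"


print(format_percent(39, 87))     # 45%
print(format_percent(488, 550))   # 88.7%
print(format_percent(462, 1024))  # 45.12%
```

Passing the counts rather than a precomputed percentage keeps the denominator available, which is what decides the number of decimal places.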

Percentages within a sentence

- Preferred JMIR style is to always make clear what the numerator and denominator associated with a percentage are.

"the majority of participants (59%) felt..." or "the majority of participants (59%, 59/100) felt..."

should be revised as follows:

"the majority of participants (59/100, 59%) felt..."

When the percentage is emphasized in the sentence:

"…where 59% (59/100) of the participants felt that…"

Example II:

"a vast majority (n=488, 88.7%) of participants"

should be changed as follows:

"a vast majority (488/550, 88.7%)" (Note: The "n=" has been dropped and the "N" value, that is, 550, has been added.)

- In expressing a series of proportions or percentages drawn from the same sample, the denominator need be provided only once.

Example: Of the 200 patients, 6 (3%) died, 18 (9%) experienced an adverse event, and 22 (11%) were lost to follow-up.

- Do not use square brackets within parentheses for statistics. Separate values with a comma if statistics are linked (eg, % and numerator/denominator); separate values with a semicolon if statistics are unlinked (eg, % and odds ratio).

Examples:

“a vast majority (488/550, 88.7%; P=.002)”

“…were not significant (P=.50; OR 1.35, 95% CI 0.56-3.25)”

"The most common functions among studies that involved children with special needs were consultation (8 studies [73%]) and diagnosis (7 studies [64%]). "
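The comma/semicolon rule amounts to two joins: linked values within a group are joined with commas, and unlinked groups with semicolons, all inside a single pair of parentheses. The Python sketch below is illustrative only; `count_percent`, `linked`, and `parenthetical` are hypothetical helper names, and the percentage is passed in already formatted.

```python
def count_percent(numerator: int, denominator: int, percent: str) -> str:
    """JMIR order for linked values: numerator/denominator first, then the percentage."""
    return f"{numerator}/{denominator}, {percent}"


def linked(*values: str) -> str:
    """Statistics that describe the same quantity are separated by commas."""
    return ", ".join(values)


def parenthetical(*groups: str) -> str:
    """Unlinked groups are separated by semicolons inside one pair of parentheses."""
    return "(" + "; ".join(groups) + ")"


print(parenthetical(count_percent(488, 550, "88.7%"), "P=.002"))
# (488/550, 88.7%; P=.002)
print(parenthetical(linked("OR 2.92", "95% CI 2.36-3.62")))
# (OR 2.92, 95% CI 2.36-3.62)
```

Building the string from groups also makes it impossible to produce nested parentheses or square brackets by accident.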

Mean, standard deviation, standard error, and range

- Equal signs are not used; separate the value from the statistic with a space.

- The SD should always be displayed along with mean values; preferred format: mean (SD)

mean 4.71 (SD 0.47) cm; mean 35% (SD 4.3%)

...were aged 20 to 69 years (mean 38.9, SD 11.1 years)

- When reporting multiple statistics in a sentence, use a semicolon to separate the unrelated items.

Example: “The age (in years) of the participants (mean 4.71, SD 0.47; range 4-5)...”

- For mean (SD), we prefer not to use the ± sign. Instead, an expression like 1.11 ± 2.33 should be formatted as "1.11 (SD 2.33)." Authors are advised to review such changes made by their copyeditor. Copyeditors are advised to add a query notifying the author(s) of this change, in case other statistics (eg, SE) have also been formatted with a ± sign; in that case, the authors need to specify which statistic is meant.

- When reporting a value that is calculated from a mean and SD value, report it in the following manner: mean + SD = 6

- When reporting mean and SD in a table, include both values in the same column. The column or row header should include the wording “mean (SD)” after the variable name.

Example: Age (years), mean (SD)

- As of December 4, 2013, AMA no longer requires the expansion of "SD" or "SE" in the text (section 19.6 ). Also see Which abbreviations don't need to be expanded?.
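A small Python sketch of the mean (SD) rules, using only the standard library. Two assumptions apply: the SD is the sample SD (`statistics.stdev`, n-1 denominator), and the ± rewrite handles only plain numeric pairs. As noted above, the author still has to confirm that the value after ± is an SD and not an SE. The names `mean_sd` and `replace_plus_minus` are hypothetical.

```python
import re
from statistics import fmean, stdev


def mean_sd(values, places: int = 2, unit: str = "") -> str:
    """'mean X (SD Y)' from raw data, with the unit after the parentheses."""
    m, s = fmean(values), stdev(values)  # stdev = sample SD (n-1 denominator)
    suffix = f" {unit}" if unit else ""
    return f"mean {m:.{places}f} (SD {s:.{places}f}){suffix}"


# Matches "1.11 ± 2.33" and "1.11±2.33"
PLUS_MINUS = re.compile(r"(\d+(?:\.\d+)?)\s*±\s*(\d+(?:\.\d+)?)")


def replace_plus_minus(text: str) -> str:
    """Rewrite 'a ± b' as 'a (SD b)'; query the author in case b is really an SE."""
    return PLUS_MINUS.sub(r"\1 (SD \2)", text)


print(mean_sd([2, 4, 4, 4, 5, 5, 7, 9], unit="cm"))   # mean 5.00 (SD 2.14) cm
print(replace_plus_minus("Scores were 1.11 ± 2.33."))  # Scores were 1.11 (SD 2.33).
```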

Median and interquartile range

- The interquartile range (IQR), the distance between the 25th and 75th percentiles, should be displayed along with median values; preferred format: median (IQR).

- Include the leading zero before the decimal point for values <1.

- Do not format as a single number indicating the difference between the 75th and 25th percentiles (AMA section 19.5).

- We prefer indicating the 25th and 75th percentiles as a hyphenated range, not as comma-separated values.

- IQR does not need to be expanded.

Examples:

A median value of 54 (IQR 45-62)...

The median (IQR) was 54 (45-62)...
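To produce the median (IQR) format from raw data, Python's standard `statistics` module is enough. This is a sketch: `median_iqr` is a hypothetical name, and the quartiles come from `statistics.quantiles(..., method="inclusive")`, which is only one of several quartile definitions, so values may differ slightly from those reported by other statistical software. The `g` format keeps the leading zero for values below 1.

```python
from statistics import median, quantiles


def median_iqr(values) -> str:
    """'median (IQR Q1-Q3)', with the quartiles shown as a hyphenated range."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    return f"{median(values):g} (IQR {q1:g}-{q3:g})"


print(median_iqr([1, 2, 3, 4, 5, 6, 7, 8, 9]))  # 5 (IQR 3-7)
```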

Odds ratio and confidence intervals

- ORs should always be presented with CIs.

- If one value in the CI range is negative, then “to” should be used rather than a hyphen. If this occurs in a table, replace the hyphen in all ranges in that table for consistent presentation.

- Avoid brackets within parentheses. If brackets within parentheses are necessary, use square brackets. In addition, avoid using parentheses inside another set of parentheses altogether; eg, (OR 2.92 (2.36-3.62)) should be rewritten as (OR 2.92, 95% CI 2.36-3.62).

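- CI does not need to be expanded (AMA section 19.6; also see Which abbreviations don't need to be expanded?).

- When defining “OR” within parentheses, use square brackets.

- The odds ratio was 3.1 (95% CI 2.2-4.8).

- Risk difference 1.2% (95% CI 0.8%-1.6%)

- …(odds ratio [OR] 2.92, 95% CI 2.36-3.62). Note that OR needs to be defined in tables (through a footnote or in the caption).

- …(mean difference 0.35, 95% CI –0.1 to 0.8). An OR is always positive, so its CI never contains a negative value; the “to” format applies to statistics, such as differences, that can be negative.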

Confidence limit

- Report the lower and upper confidence limits, separated by a comma.

Example:

The mean (95% confidence limits) was 30% (28%, 32%).
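The OR and CI rules in the sections above can be sketched as one formatting function. This Python sketch is illustrative, not a JMIR tool; `estimate_with_ci` is a hypothetical name. Following the –0.1 example above, it writes negative values with an en dash (U+2013) and switches from a hyphen to "to" when either limit is negative. Because an OR is always positive, the "to" case arises only for statistics that can be negative, such as differences.

```python
def _num(x: float, places: int) -> str:
    """Fixed decimals, with the minus sign rendered as an en dash."""
    return f"{x:.{places}f}".replace("-", "\u2013")


def estimate_with_ci(label: str, estimate: float, lower: float, upper: float,
                     level: int = 95, places: int = 2) -> str:
    """'<label> <estimate>, 95% CI <lower>-<upper>', or '<lower> to <upper>' if a limit is negative."""
    sep = " to " if lower < 0 or upper < 0 else "-"
    return (f"{label} {_num(estimate, places)}, {level}% CI "
            f"{_num(lower, places)}{sep}{_num(upper, places)}")


print(estimate_with_ci("OR", 2.92, 2.36, 3.62))
# OR 2.92, 95% CI 2.36-3.62
print(estimate_with_ci("mean difference", 0.35, -0.1, 0.8))
# mean difference 0.35, 95% CI –0.10 to 0.80
```

The result is meant to be wrapped in a single pair of parentheses, which avoids the nested (OR 2.92 (2.36-3.62)) form.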

P value

From our instructions for authors:

(Again, this is the primary responsibility of the academic editor, but the copyeditor acts as a second line of defense if this has been overlooked by the editor/section editor.)

- Note for copyeditors: point the author to the relevant section in the Instructions for Authors if P values are missing (ie, stating "no significant differences were found..." without reporting the P value), incorrectly reported, or replaced by statements of inequality such as P<.05 (or by asterisks in tables instead of exact values).

- Ensure P values are present and correctly reported rather than a statement of inequality (eg, P<.05), unless P<.001 (eg, if P=.00005, change to P<.001; see exception below).

- Express specific P values as decimals (eg, P=.34).

- If reported as P=0, change to P<.001.

- If reported as P=1, change to P>.99.

- Copyeditors should leave a note for authors when making such changes.

- Use two-digit precision for most P values.

- Use three digits for P<.01 and when rounding affects significance.

- P=.0027 is rounded to P=.003.

- P=.049 should not be rounded to P=.05 (P is considered significant at the ≤.05 level).

- Note: our typesetting scripts can convert the P to italics if the copyeditor forgets this.

- Do not use leading zero before the decimal point.

- Remove spaces around mathematical operators in P values (eg, P < .001 → P<.001).

- In tables, use "P value" as the column heading (not just "P").

- Use superscripted letters (a-z) for table footnote symbols.

- Use of asterisks as footnotes to indicate significance levels is discouraged (eg, *P<.05, **P<.01, ***P<.001). Authors are asked to provide exact P values instead, except in the following cases:

- In tables of systematic reviews, which tend to be busy and where the original P values can't be found in the original publications

- When odds ratios instead of P values are presented

- If for any reason the authors are unable to provide the exact P values
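The P value rules above (no leading zero, 2 decimals by default, 3 decimals below .01 or when rounding would change significance, and the P<.001 and P>.99 limits) can be collected in one function. This Python sketch rests on two assumptions that JMIR does not state: significance means P<α with α=.05 by default, and rounding follows the AMA half-even rule. `format_p` is a hypothetical name, the italic P still has to be applied in the manuscript, and the large-sample exception described below is not handled.

```python
from decimal import Decimal, ROUND_HALF_EVEN


def format_p(p: float, alpha: float = 0.05) -> str:
    """JMIR-style P value string, eg, P=.34, P=.003, P<.001."""
    if not 0 <= p <= 1:
        raise ValueError("P must lie between 0 and 1")
    if p < 0.001:   # covers P=0 and, eg, P=.00005
        return "P<.001"
    if p > 0.99:    # covers P=1
        return "P>.99"
    d, a = Decimal(str(p)), Decimal(str(alpha))
    two = d.quantize(Decimal("0.01"), rounding=ROUND_HALF_EVEN)
    # 3 digits below .01, or when 2-digit rounding would cross the threshold
    if d < Decimal("0.01") or (d < a) != (two < a):
        shown = d.quantize(Decimal("0.001"), rounding=ROUND_HALF_EVEN)
    else:
        shown = two
    return "P=" + str(shown).lstrip("0")  # drop the leading zero: 0.34 -> .34


print(format_p(0.0027))  # P=.003
print(format_p(0.049))   # P=.049
print(format_p(0.5))     # P=.50
```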

P values for very large sample sizes (according to AMA guidelines)

- Express P values to the level required by the study's significance threshold.

- Although the AMA (section 19.5) states that “[expressing] P to more than 3 significant digits does not add useful information to P<.001,” more digits may be needed in specific cases, such as genome-wide association studies (GWAS), studies using adjustments like the Bonferroni correction, or studies with stringent significance thresholds.

- For example, if the threshold of significance is P<.0004, then by definition the P value must be expressed to at least 4 digits to indicate whether a result is statistically significant. GWAS express P values to very small numbers, using scientific notation. If a manuscript you are editing defines statistical significance as a P value substantially less than .05, possibly even using scientific notation to express P values to very small numbers, it is best to retain the values as the author presents them.

- For very large sample sizes, it may be necessary to report P values smaller than .001 to show statistical significance, at the editor's discretion.

“Trending” towards significance

- Avoid phrases like “trending towards significance” (eg, “There was a trend (P=.06) showing that…was significant”).

- Instead, state whether there was a trend and acknowledge that the results were not statistically significant. Alternatively, clearly state the results’ significance without using variations of “trending.”

Guidance on formatting common statistical measures

| Statistic | Guidelines | Zero before decimal | Example |
|---|---|---|---|
| F test | Italicize F and df | Yes | Text: $F_{4,76}$=12.2; table header: F test (df) → 12.2 (4, 76) |
| t test | Italicize t and df | Yes | Text: $t_{15}$=2.68; table header: t test (df) → 2.68 (15) |
| Effect size | None | Yes | ...an effect size of 0.277 SD units. |
| α (significance level) | None | No | Our predetermined α level was .05. |
| Cronbach α | | Yes | Cronbach α=0.78 |
| Cohen d | | Yes | Cohen d=0.29; Cohen d=1.45 |
| Pearson r | Italicize “r” | Yes | r=0.92 |
| β (type II error) | None | No | β=.2 |
| Standardized β coefficient | None | Yes | Standardized β coefficient=2.34 |
| ρ | | Yes | ρ=0.67 |
| κ | | Yes | κ=0.51 |
| χ² | df as a subscript in text and “(df)” in table headers | Yes | Text: $\chi^2_4$=0.3; table header: Chi-square (df) → 0.3 (4) |

Other statistics

Formatting rules for additional statistics are specified below:

- $F_1$-score: italicize “F,” place “1” as a subscript, use a hyphen before “score,” and start “score” with a lowercase “s”

- $I^2$ for heterogeneity: use an uppercase italicized “I”

- Type I error and type II error: do not use the numerals 1 and 2; AMA section 19.5

- $R^2$: use the uppercase italicized “R”; do not italicize the superscript

- z score: lowercase italicized “z” without a hyphen

Additional guidelines

- Do not use possessives for the name of any statistical test (see Use of possessives with eponyms and AMA section 15.2 ).

Example: Hedges g (instead of Hedges’ g)

Equal and inequality signs

- Mac shortcuts: Option+period (the > key) produces ≥ and Option+comma (the < key) produces ≤

- Windows shortcuts: Alt+8805 produces ≥ and Alt+8804 produces ≤

- Do not use an underlined greater than/less than symbol.

Example: y≤0

- For very small or large numbers (eg, P values), do not precede the exponent with “e” or “^”. Superscript the exponent.

- Use an en dash to indicate negative exponents.

- For more information, refer to the AMA (section 20.3 ).
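For anyone generating manuscript text programmatically, the exponent rules above can be sketched in Python. Because superscripting is applied in the word processor, the hypothetical `sci_parts` function only returns the two pieces: the text before the exponent (the ×10 form is an assumption) and the exponent itself, with an en dash for negative values and no “e” or “^”.

```python
def sci_parts(value: float, sig_digits: int = 2) -> tuple[str, str]:
    """Split a number into ('3.2×10', '–8'); superscript the second part in the manuscript."""
    mantissa, exp_text = f"{value:.{sig_digits - 1}e}".split("e")
    exponent = int(exp_text)                 # '-08' -> -8, '+06' -> 6
    sign = "\u2013" if exponent < 0 else ""  # en dash for negative exponents
    return f"{mantissa}\u00d710", f"{sign}{abs(exponent)}"


base, exponent = sci_parts(3.2e-8)
print(base, exponent)  # 3.2×10 –8
```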

Greek letters in text

- Use of Greek letters rather than spelled-out words is preferred per AMA guidelines, unless common usage dictates otherwise.

- In titles, subtitles, headings, and at the beginning of sentences, the first non-Greek letter after a lowercase Greek letter should be capitalized.

Cronbach α

Beta-thalassemia

tau protein

β-Blockers help control heart rate...
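The capitalization rule for text that starts with a lowercase Greek letter can be checked mechanically. This is a Python sketch under a simplifying assumption: only the basic lowercase Greek range (α to ω, U+03B1 to U+03C9) is recognized, and `capitalize_start` is a hypothetical name for a function applied to titles, headings, and sentence starts.

```python
GREEK_LOWER = ("\u03b1", "\u03c9")  # α ... ω


def is_greek_lower(ch: str) -> bool:
    return GREEK_LOWER[0] <= ch <= GREEK_LOWER[1]


def capitalize_start(text: str) -> str:
    """Capitalize the start; after a lowercase Greek letter, capitalize the first non-Greek letter."""
    if not text:
        return text
    if not is_greek_lower(text[0]):
        return text[0].upper() + text[1:]
    for i, ch in enumerate(text):
        if ch.isalpha() and not is_greek_lower(ch):
            return text[:i] + ch.upper() + text[i + 1:]
    return text


print(capitalize_start("β-blockers help control heart rate"))
# β-Blockers help control heart rate
```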

Currency

- Specify all currencies in US$. For amounts reported in non-US currency, the current exchange rate should be used to calculate the amount in US dollars, and that amount should be shown in parentheses.

- If there are more than 10 instances of another currency presented, a blanket statement of the exchange rate from the original currency to US$ should be included: "A currency exchange rate of CAD $1=US $0.72 is applicable." This rate should be the current conversion rate and is available online here: https://www1.oanda.com/currency/converter/ (AMA-recommended resource). You can directly add this into the manuscript (and ask the author to verify) or ask the author to add it in for you.

- For all currency measures, refer to the 11th edition of the AMA here: https://www.amamanualofstyle.com/view/10.1093/jama/9780190246556.001.0001/med-9780190246556-chapter-17-div2-34?rskey=z4zEI1&result=1 .

- Do not add decimal zeros after whole-number currency amounts.

CAD $125.35

Aus $100 (Note: AMA uses "A$" for Australian dollars, but since we use a space before the $, this would be confusing as "A $100"; therefore, we'll abbreviate to "Aus $")

Each participant was rewarded with Amazon gift cards worth CAD $5 (US $3.60) for their participation.
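The conversion and “no trailing zeros” rules can be combined in a short Python sketch. It assumes the blanket-rate approach described above (a single rate such as CAD $1=US $0.72), rounds to cents with the standard `decimal` module, and uses half-even rounding for consistency with the AMA rule; `money` and `with_usd` are hypothetical names, and the rate itself must still be checked against a current source.

```python
from decimal import Decimal, ROUND_HALF_EVEN


def money(amount: Decimal) -> str:
    """Amount to cents; whole amounts are shown without '.00'."""
    cents = amount.quantize(Decimal("0.01"), rounding=ROUND_HALF_EVEN)
    if cents == cents.to_integral_value():
        return str(cents.quantize(Decimal(1)))
    return str(cents)


def with_usd(amount: Decimal, prefix: str, usd_rate: Decimal) -> str:
    """Original amount first, US$ equivalent in parentheses."""
    return f"{prefix} ${money(amount)} (US ${money(amount * usd_rate)})"


print(with_usd(Decimal("5"), "CAD", Decimal("0.72")))       # CAD $5 (US $3.60)
print(with_usd(Decimal("125.35"), "CAD", Decimal("0.72")))  # CAD $125.35 (US $90.25)
```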

Equations

In the text, whenever possible, characters in equations should be inserted using the Advanced Symbols feature of MS Word. Equations can be inserted within a paragraph (in line with the text) or on a separate line. Do not use the equation editor/tools in Word; the special formatting will not be picked up by our scripts.

Simple equations that are kept in the text should be indented and numbered, with the number in bold and in parentheses after the equation, if they meet any of the following criteria: (1) there are numerous equations (3 or more) in the manuscript, (2) the equations are related to each other, or (3) the equations are referred to after their initial presentation (eg, Per equation 3, we recalculated…).

$$y_i = C_i - c_i \qquad (\mathbf{1})$$

Use spaces around all mathematical operators in complex equations (including “=”). Note that in this case, “=” functions as an operator; it is not a statement of equality. In the case of “n=2,” no spaces are used because it is a statement of equality.

Guidelines for formatting complex equations can be found here.

Forest plots

Meta-analyses and systematic reviews often contain forest plots to summarize their results—these display individual study results (tabularly) and, usually, the weighted mean of studies (graphically) included in a meta-analysis focused on a specific outcome. For details, see chapter 4.2.1.11 in the AMA Manual of Style (11th edition). Shown below are 2 ways in which a forest plot can be presented.

(1) As a table:

Table 1. Forest plot of 2 studies comparing the effectiveness of serious games with that of conventional exercises on verbal learning.

(2) As a figure:

Figure 1. Forest plot of 6 studies comparing the effectiveness of serious games with that of no or sham interventions on verbal learning [29,30,31-34].


Reporting Statistics APA Style

APA style can be finicky. Trying to remember the very particular rules for spacing, italics and other formatting rules can be overwhelming if you’re also writing a fairly technical paper. My best advice is to write your paper and then edit it for grammar. Don’t worry about reporting statistics APA style until your paper is almost ready to submit for publication. Then go through your paper and make a second edit for statistical notation based on this list.

1. General tips for Reporting Statistics APA Style

- Use a space after commas and around mathematical operators:

- Correct: r(55) = .49, p < .001

- Incorrect: r(55)=.49,p<.001

- Don’t state formulas for common statistics (e.g., variance, z score). Similarly, don’t cite references for statistics unless they are uncommon or the focus of your study.

- Place a zero before the decimal point if the statistic can be greater than one (e.g., 0.26 lb).

- If the number cannot be greater than one, leave out the leading zero (e.g., p = .015).

- Do not bold or italicize abbreviations (unless they are variables), Greek letters, or a subscript that is an identifier (e.g., $z_{i-1}$).

- Vectors and matrices are bolded (not italicized): V, Σ

- Use an uppercase N for the number in the total sample (N = 45) and a lowercase n for a fraction of the sample (n = 20).

- Place percentages in parentheses. For example: “Almost a quarter of the sample (25.5%) was already infected with the virus.”

- If you use a table to report results, don’t duplicate the information in the text.

2. Reporting Specific Statistics in APA Style

Confidence intervals: For CIs, use brackets: 95% CI [2.47, 2.99], [-5.1, 1.56], and [-3.43, 2.89]. If you are reporting a list of statistics within parentheses, you do not need to use brackets within the parentheses. For example: (SD = 1.5, CI = -5, 5)

Use parentheses to enclose degrees of freedom. For example, t(10) = 2.16.

Probability values: Report the p value exactly, unless it is less than .001. In that case, the convention is to report it as p < .001. Note: I wrote “Probability values” here for a reason: a lowercase symbol cannot be capitalized at the beginning of a sentence or as a table header, because uppercase and lowercase letters are significant.

Mean, Standard Deviation (and similar single statistics): use parentheses: (M = 22, SD = 3.4).

3. Hypothesis Tests in APA Style

Nouns (p value, z test, t test) are not hyphenated, but as adjectives they are: t-test results, z-test score.

At the beginning of the results section, restate your hypothesis and then state whether your results supported it. This should be followed by the data and statistics to support or reject the null hypothesis.

One-Way/Two-Way ANOVA: State the between-groups degrees of freedom, then the within-groups degrees of freedom, followed by the F statistic and significance level. For example: “The main effect was not significant, F(1, 149) = 2.12, p = .15.”

Chi-Square Test of Independence: Report degrees of freedom and sample size in parentheses, then the chi-square value, followed by the significance level. For example: “Animal response to the stimuli did not differ by species, χ²(1, N = 75) = 0.89, p = .35.”

t tests: Report the t value and significance level as follows: t(54) = 5.43, p < .001. The wording differs slightly depending on whether you have a one-sample t test or a t test for groups. Examples:

- One sample: “Younger teens woke up earlier (M = 7:30, SD = 0.45) than teens in general, t(33) = 2.10, p = .04.”

- Dependent/Independent samples: “Younger teens indicated a significantly stronger preference for video games (M = 7.45, SD = 2.51) than for books (M = 4.22, SD = 2.23), t(15) = 4.00, p < .001.”

Report correlations with degrees of freedom (N-2), followed by the significance level. For example: “The two sets of exam results are strongly correlated, r(55) = .49, p < .001.”
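The APA patterns above share one shape: symbol, degrees of freedom in parentheses, the statistic to 2 decimals, then the p value. The Python sketch below is illustrative; the function names are hypothetical, the test statistics and p values are assumed to come from your analysis software, and p is shown to 2 decimals (3 below .01), which is one common APA choice.

```python
def apa_p(p: float) -> str:
    """p without a leading zero; p < .001 for very small values."""
    if p < 0.001:
        return "p < .001"
    places = 3 if p < 0.01 else 2
    return "p = " + f"{p:.{places}f}".lstrip("0")


def no_leading_zero(x: float) -> str:
    """For statistics that cannot exceed 1 in magnitude, such as r: .49, -.49."""
    return f"{x:.2f}".replace("0.", ".", 1)


def apa_t(t: float, df: int, p: float) -> str:
    return f"t({df}) = {t:.2f}, {apa_p(p)}"


def apa_f(f: float, df_between: int, df_within: int, p: float) -> str:
    return f"F({df_between}, {df_within}) = {f:.2f}, {apa_p(p)}"


def apa_chi2(chi2: float, df: int, n: int, p: float) -> str:
    return f"\u03c7\u00b2({df}, N = {n}) = {chi2:.2f}, {apa_p(p)}"


def apa_r(r: float, df: int, p: float) -> str:
    return f"r({df}) = {no_leading_zero(r)}, {apa_p(p)}"


print(apa_t(5.43, 54, 0.00001))  # t(54) = 5.43, p < .001
print(apa_r(0.49, 55, 0.00002))  # r(55) = .49, p < .001
```

The symbols (t, F, χ², r, p) still need italics in the final document; plain strings cannot carry that formatting.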

Thank you to Mark Suggs for contributions to this article.

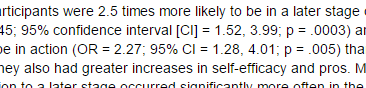



Reporting Statistics In APA – A Guide With Rules & Examples


In academic writing, accurately reporting statistics is crucial. Following the APA guidelines for reporting statistics ensures clarity and consistency. This guide provides researchers with a definitive roadmap for presenting quantitative results in APA style , from basic descriptive statistics to complex inferential analyses. Mastering the art of reporting statistics in APA enhances the credibility and impact of research, fostering academic rigor and evidence-based conclusions.

Contents

- 1 In a Nutshell: Reporting statistics in APA

- 2 Definition: Reporting statistics in APA

- 3 Reporting statistics in APA: Statistical results

- 4 Reporting statistics in APA: Formatting guidelines

- 5 Reporting statistic tests in APA

In a Nutshell: Reporting statistics in APA

- Statistical analysis is the process of collecting and testing quantitative data to draw conclusions about a population or the world in general.

- The APA Publication Manual provides guidelines and standard suggestions for reporting statistics in APA.

- The format for reporting statistics in APA differs depending on the type of statistic.

Definition: Reporting statistics in APA

The APA Publication Manual provides guidelines and standard suggestions for formatting and reporting statistics in APA. Here are the general rules for reporting statistics in APA:

- Use words for numbers under ten (1-9) and numerals for ten and over

- Use a space after commas, variables, and mathematical symbols

- Round to two decimal places, except for p values, which may need three

- Italicize statistical symbols and abbreviations, except Greek letters


Reporting statistics in APA: Statistical results

Below are some basic guidelines for reporting statistics in APA:

- Before presenting the data, repeat the hypotheses and explain if your statistical results support them.

- Present the results in a condensed format without interpretation

- Do not describe the tests you used in detail

- Every report should relate to the hypothesis

Reporting statistics in APA: Formatting guidelines

This section provides guidelines for presenting test results when reporting statistics in APA.

Stating numbers

The general APA guidelines recommend using words for numbers below ten and numerals for ten and above. Use numerals for exact numbers before measurement units, equations, percentages, points on a scale, money, ratios, uncommon fractions, and decimals.

Measuring units

Regarding units of measurement, the rule is to use numerals to report exact measurements.

The stone weighed 9 kg.

In reporting statistics in APA, include a space between the number and the unit abbreviation. When stating approximate numbers, on the other hand, use words for numbers below ten and spell out the unit names.

The stone weighed approximately nine kilograms.

All quantities should be reported in metric units. If you recorded them in non-metric units, report the original units together with their metric equivalents.

Percentages

When using percentages while reporting statistics in APA, use numerals followed by the % symbol.

Of the participants, 19% disagreed with the statement.

Decimal places

The content or information you wish to report will influence the number of decimal places you use. The general rule for reporting statistics in APA is to round numbers while retaining the necessary precision. Here are some statistics you can round to one decimal place when reporting statistics in APA:

- Standard deviation

- Descriptive statistics based on discrete data

You can round to two decimal places when reporting:

- Correlation coefficients

- Proportions and ratios

- Inferential statistics, like t and F values

- Exact p values (.001 or greater)

Use a leading zero

The zero before the decimal point in a number less than one is called the leading zero. According to APA guidelines, use a leading zero only when the statistic you want to describe can be greater than one.

In contrast, omit the leading zero when the statistic can never be greater than one, for example:

- Pearson correlation coefficient

- Coefficient of determination

- Cronbach’s alpha
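The APA leading-zero rule depends only on whether the statistic can exceed one, so it can be captured with a flag. This Python sketch is illustrative (`apa_number` is a hypothetical name); deciding whether a given statistic can exceed one remains the writer's job.

```python
import re


def apa_number(value: float, can_exceed_one: bool, places: int = 2) -> str:
    """Format to `places` decimals, dropping the leading zero when the
    statistic can never be greater than one (e.g., r, R², Cronbach's alpha)."""
    text = f"{value:.{places}f}"
    if not can_exceed_one:
        text = re.sub(r"^(-?)0\.", r"\1.", text)  # 0.87 -> .87, -0.32 -> -.32
    return text


print(apa_number(0.26, can_exceed_one=True))   # 0.26 (e.g., a weight in lb)
print(apa_number(0.87, can_exceed_one=False))  # .87 (e.g., Cronbach's alpha)
```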

Mathematical formulas

While reporting statistics in APA, you must provide formulas for new and uncommon equations. If the formulas are short, present them in one line within the main text. For complex equations, you can take more than one line to present them.

Using parentheses and brackets

While reporting statistics in APA, use parentheses for the innermost grouping (the operation carried out first), square brackets for the next level, and braces (curly brackets) for the level after that. Avoid nesting brackets unless it is necessary.

Reporting statistic tests in APA

When reporting statistics in APA, use descriptive statistics as summaries for your data. Below are guidelines for reporting statistics in APA regarding statistical tests.

Descriptive statistics: means and standard deviations

Means and standard deviations can appear in the main text, in parentheses, or both. For statistics relating to the same data, you do not need to repeat the measurement units.

The average productivity rate was 124.7 minutes (SD = 12.1).

Chi-square tests

Include the following when reporting chi-square tests:

- Degrees of freedom in parentheses (df)

- Chi-square values (χ²)

The chi-square test revealed a link between weather and productivity, χ²(9) = 21.7, p = .013.

Hypothesis tests

When reporting statistics in APA, reports for z tests should include the z value and the p value:

Participants’ scores were higher than the population, z = 3.35, p < .001.

When reporting statistics in APA, reports for t tests should include:

- Degrees of freedom in parentheses

Females experienced more severe symptoms than males, t(33) = 3.87, p < .001.

Reporting ANOVA

When reporting statistics in APA, the ANOVA reports should include:

- Degrees of freedom in parentheses

We found a statistically significant effect of leadership style on productivity, F(3, 78) = 5.68, p = .018.

Correlations

Correlation reports when reporting statistics in APA should include the degrees of freedom in parentheses, the r value, and the p value:

We found a strong link between temperature and productivity levels, r(401) = .43, p < .001.

Regressions

Display regression results in a table. However, if you present them in the text, the report should include R², the F value with its degrees of freedom, and the p value:

GAT Severity scores predicted anxiety levels, R² = .31, F(1, 510) = 6.71, p = .009.

Confidence intervals

When reporting statistics in APA, you should report confidence intervals. Use brackets to enclose the lower and upper limits of the confidence interval, separated by a comma.

- Male participants experienced more positive results than female ones, t(19) = 3.94, p = .007, d = 0.88, 90% CI [0.6, 1.13].

- On average, the tests resulted in a 43% increase in positive feelings, 97% CI [21.34, 44.5]
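The bracket format for confidence intervals is easy to generate consistently. This Python sketch is illustrative: `apa_ci` and `normal_mean_ci` are hypothetical names, `apa_ci` gives both limits the same number of decimals (a design choice), and `normal_mean_ci` uses a large-sample normal approximation (`statistics.NormalDist`); for small samples a t-based interval from your statistics package is more appropriate.

```python
from math import sqrt
from statistics import NormalDist, fmean, stdev


def apa_ci(lower: float, upper: float, level: int = 95, places: int = 2) -> str:
    """'95% CI [lower, upper]' with both limits to the same number of decimals."""
    return f"{level}% CI [{lower:.{places}f}, {upper:.{places}f}]"


def normal_mean_ci(data, level: int = 95) -> tuple[float, float]:
    """Large-sample CI for a mean using the normal approximation."""
    z = NormalDist().inv_cdf(0.5 + level / 200)  # 95 -> 0.975 -> about 1.96
    half_width = z * stdev(data) / sqrt(len(data))
    m = fmean(data)
    return m - half_width, m + half_width


print(apa_ci(0.6, 1.13, level=90))  # 90% CI [0.60, 1.13]
```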


Which specific statistical results need to be reported in APA format?

Statistical results such as proportions, measurements, ranges, percentages, and other values that describe samples can be reported in APA format.

What is the formula for reporting statistics in APA?

The format differs depending on the type of statistic; for example, a t test is reported as t(df) = value, p = value.

What results do you need for reporting statistics in APA?

You need the p value, the test statistic (e.g., the t value), the degrees of freedom, and the direction of the effect.

How many decimal places can you use when reporting statistics in APA?

Depending on the statistic, use one or two decimal places; p values are usually reported to two or three decimal places.


Purdue Online Writing Lab Purdue OWL® College of Liberal Arts

Statistics in APA


This page is brought to you by the OWL at Purdue University. When printing this page, you must include the entire legal notice.

Copyright ©1995-2018 by The Writing Lab & The OWL at Purdue and Purdue University. All rights reserved. This material may not be published, reproduced, broadcast, rewritten, or redistributed without permission. Use of this site constitutes acceptance of our terms and conditions of fair use.

Note: This page reflects APA 6, which is now out of date. It will remain online until 2021, but will not be updated. There is currently no equivalent 7th edition page, but we're working on one. Thank you for your patience. Here is a link to our APA 7 "General Format" page.

When including statistics in written text, be sure to include enough information for the reader to understand the study. Although the amount of explanation and data included depends upon the study, APA style has guidelines for the representation of statistical information:

- Do not give references for statistics unless the statistic is uncommon, used unconventionally, or is the focus of the article

- Do not give formulas for common statistics (e.g., mean, t test)

- Do not repeat descriptive statistics in the text if they’re represented in a table or figure

- Use terms like respectively and in order when enumerating a series of statistics; this illustrates the relationship between the numbers in the series.

Punctuating statistics

Use parentheses to enclose statistical values: (SD = 12.1)

Use parentheses to enclose degrees of freedom: t(33) = 3.87

Use brackets to enclose limits of confidence intervals: 90% CI [0.60, 1.13]

Use standard typeface (no bolding or italicization) when writing Greek letters, subscripts that function as identifiers, and abbreviations that are not variables.

Use boldface for vectors and matrices.

Use italics for statistical symbols other than vectors and matrices (e.g., M, SD, t, F, p).

Use an italicized, uppercase N to refer to a total population.

Use an italicized, lowercase n to refer to a sample of the population.

Statistics in Psychological Research


August 2023

Unlock the power of data with this 10-hour, comprehensive course in data analysis. This course is perfect for anyone looking to deepen their knowledge and apply statistical methods effectively in psychology or related fields.

The course begins with consideration of how researchers define and categorize variables, including the nature of various scales of measurement and how these classifications impact data analysis and interpretation. This is followed by a thorough introduction to the measures of central tendency, variability, and correlation that researchers use to describe their findings, providing an understanding of such topics as which descriptive statistics are appropriate for given research designs, the meaning of a correlation coefficient, and how graphs are used to visualize data.

The course then moves on to a conceptual treatment of foundational inferential statistics that researchers use to make predictions or inferences about a population based on a sample. The focus is on understanding the logic of these statistics, rather than on making calculations. Specifically, the course explores the logic behind null hypothesis significance testing, long a cornerstone of statistical analysis. Learn how to formulate and test hypotheses and understand the significance of p-values in determining the validity of your results. The course reviews how to select the appropriate inferential test based on your study criteria. Whether it’s t-tests, ANOVA, chi-square tests, or regression analysis, you’ll know which test to apply and when.

In keeping with growing concerns about some of the limitations of null hypothesis significance testing, such as its role in the so-called replication crisis, the course also delves into these concerns and possible ways to address them, including introductory consideration of statistical power and alternatives to hypothesis testing like estimation techniques and confidence intervals, meta-analysis, modeling, and Bayesian inference.

Learning objectives

- Explain various ways to categorize variables.

- Describe the logic of inferential statistics.

- Explain the logic of null hypothesis significance testing.

- Select the appropriate inferential test based on study criteria.

- Compare and contrast the use of statistical significance, effect size, and confidence intervals.

- Explain the importance of statistical power.

- Describe how alternative procedures address the major objections to null hypothesis significance testing.

- Explain various ways to describe data.

- Describe how graphs are used to visualize data.

- Explain the meaning of a correlation coefficient.

This program does not offer CE credit.



Descriptive Statistics for Summarising Data

Ray W. Cooksey

UNE Business School, University of New England, Armidale, NSW Australia

This chapter discusses and illustrates descriptive statistics . The purpose of the procedures and fundamental concepts reviewed in this chapter is quite straightforward: to facilitate the description and summarisation of data. By ‘describe’ we generally mean either the use of some pictorial or graphical representation of the data (e.g. a histogram, box plot, radar plot, stem-and-leaf display, icon plot or line graph) or the computation of an index or number designed to summarise a specific characteristic of a variable or measurement (e.g., frequency counts, measures of central tendency, variability, standard scores). Along the way, we explore the fundamental concepts of probability and the normal distribution. We seldom interpret individual data points or observations primarily because it is too difficult for the human brain to extract or identify the essential nature, patterns, or trends evident in the data, particularly if the sample is large. Rather we utilise procedures and measures which provide a general depiction of how the data are behaving. These statistical procedures are designed to identify or display specific patterns or trends in the data. What remains after their application is simply for us to interpret and tell the story.


Reflect on the QCI research scenario and the associated data set discussed in Chap. 4. Consider the following questions that Maree might wish to address with respect to decision accuracy and speed scores:

- What was the typical level of accuracy and decision speed for inspectors in the sample? [see Procedure 5.4 – Assessing central tendency.]

- What was the most common accuracy and speed score amongst the inspectors? [see Procedure 5.4 – Assessing central tendency.]

- What was the range of accuracy and speed scores; the lowest and the highest scores? [see Procedure 5.5 – Assessing variability.]

- How frequently were different levels of inspection accuracy and speed observed? What was the shape of the distribution of inspection accuracy and speed scores? [see Procedure 5.1 – Frequency tabulation, distributions & crosstabulation.]

- What percentage of inspectors would have ‘failed’ to ‘make the cut’ assuming the industry standard for acceptable inspection accuracy and speed combined was set at 95%? [see Procedure 5.7 – Standard ( z ) scores.]

- How variable were the inspectors in their accuracy and speed scores? Were all the accuracy and speed levels relatively close to each other in magnitude or were the scores widely spread out over the range of possible test outcomes? [see Procedure 5.5 – Assessing variability.]

- What patterns might be visually detected when looking at various QCI variables singly and together as a set? [see Procedure 5.2 – Graphical methods for displaying data, Procedure 5.3 – Multivariate graphs & displays, and Procedure 5.6 – Exploratory data analysis.]

This chapter includes discussions and illustrations of a number of procedures available for answering questions about data like those posed above. In addition, you will find discussions of two fundamental concepts, namely probability and the normal distribution; concepts that provide building blocks for Chaps. 6 and 7.

Procedure 5.1: Frequency Tabulation, Distributions & Crosstabulation

Frequency tabulation and distributions.

Frequency tabulation serves to provide a convenient counting summary for a set of data that facilitates interpretation of various aspects of those data. Basically, frequency tabulation occurs in two stages:

- First, the scores in a set of data are rank ordered from the lowest value to the highest value.

- Second, the number of times each specific score occurs in the sample is counted. This count records the frequency of occurrence for that specific data value.
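The two stages above map directly onto code: sort the distinct scores, then count each one. Below is a sketch using `collections.Counter`. The function name and scores are hypothetical, and `None` is assumed to mark a missing response.

```python
from collections import Counter


def frequency_table(scores):
    """Return (score, frequency) pairs from the lowest to the highest score."""
    # Stage 2 (counting) is done by Counter; missing values are skipped.
    counts = Counter(s for s in scores if s is not None)
    # Stage 1 (rank ordering) is the sort over the distinct scores.
    return [(score, counts[score]) for score in sorted(counts)]


print(frequency_table([3, 1, 3, 2, 7, 3, None]))  # hypothetical ratings
```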

Consider the overall job satisfaction variable, jobsat, from the QCI data scenario. Performing frequency tabulation across the 112 Quality Control Inspectors on this variable using the SPSS Frequencies procedure (Allen et al. 2019, ch. 3; George and Mallery 2019, ch. 6) produces the frequency tabulation shown in Table 5.1. Note that three of the inspectors in the sample did not provide a rating for jobsat, thereby producing three missing values (= 2.7% of the sample of 112) and leaving 109 inspectors with valid data for the analysis.

Table 5.1 Frequency tabulation of overall job satisfaction scores

The display of frequency tabulation is often referred to as the frequency distribution for the sample of scores. For each value of a variable, the frequency of its occurrence in the sample of data is reported. It is possible to compute various percentages and percentile values from a frequency distribution.

Table 5.1 shows the ‘Percent’ or relative frequency of each score (the percentage of the 112 inspectors obtaining each score, including those inspectors who were missing scores, which SPSS labels as ‘System’ missing). Table 5.1 also shows the ‘Valid Percent’ which is computed only for those inspectors in the sample who gave a valid or non-missing response.

Finally, it is possible to add up the ‘Valid Percent’ values, starting at the low score end of the distribution, to form the cumulative distribution or ‘Cumulative Percent’. A cumulative distribution is useful for finding percentiles, which reflect what percentage of the sample scored at a specific value or below.
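The three percentage columns differ only in their denominators. The sketch below assumes `None` marks a missing response, in the way SPSS's ‘System’ missing does. The function name and data are hypothetical.

```python
from collections import Counter


def spss_style_percentages(scores):
    """Rows of (score, frequency, Percent, Valid Percent, Cumulative Percent).

    Percent divides by all cases, including missing ones; Valid Percent and
    Cumulative Percent divide by the non-missing cases only.
    """
    n_total = len(scores)
    valid = [s for s in scores if s is not None]
    counts = Counter(valid)
    rows, cumulative = [], 0.0
    for score in sorted(counts):  # start at the low score end
        freq = counts[score]
        valid_pct = 100 * freq / len(valid)
        cumulative += valid_pct
        rows.append((score, freq, 100 * freq / n_total, valid_pct, cumulative))
    return rows


for row in spss_style_percentages([1, 1, 2, 3, None]):  # hypothetical data
    print(row)
```

As a check on the Table 5.1 figures, 4 valid responses out of 109 give 100 × 4 / 109 ≈ 3.7%, and 18 out of 109 give ≈ 16.5%.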

We can see in Table 5.1 that 4 of the 109 valid inspectors (a ‘Valid Percent’ of 3.7%) indicated the lowest possible level of job satisfaction—a value of 1 (Very Low) – whereas 18 of the 109 valid inspectors (a ‘Valid Percent’ of 16.5%) indicated the highest possible level of job satisfaction—a value of 7 (Very High). The ‘Cumulative Percent’ number of 18.3 in the row for the job satisfaction score of 3 can be interpreted as “roughly 18% of the sample of inspectors reported a job satisfaction score of 3 or less”; that is, nearly a fifth of the sample expressed some degree of negative satisfaction with their job as a quality control inspector in their particular company.

If you have a large data set having many different scores for a particular variable, it may be more useful to tabulate frequencies on the basis of intervals of scores.

For the accuracy scores in the QCI database, you could count scores occurring in intervals such as ‘less than 75% accuracy’, ‘between 75% but less than 85% accuracy’, ‘between 85% but less than 95% accuracy’, and ‘95% accuracy or greater’, rather than counting the individual scores themselves. This would yield what is termed a ‘grouped’ frequency distribution since the data have been grouped into intervals or score classes. Producing such an analysis using SPSS would involve extra steps to create the new category or ‘grouping’ system for scores prior to conducting the frequency tabulation.
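A grouped frequency distribution only needs a rule that maps each score to its interval. The sketch below uses the four accuracy intervals named above and treats each as half-open. For example, a score of exactly 85 falls in ‘85% but less than 95%’. `bisect` does the lookup. The names and scores are hypothetical.

```python
import bisect
from collections import Counter

# Interval cut points from the accuracy example; a score equal to a cut
# point belongs to the higher interval.
CUTS = [75, 85, 95]
LABELS = [
    "less than 75%",
    "75% to less than 85%",
    "85% to less than 95%",
    "95% or greater",
]


def grouped_frequencies(scores):
    """Count how many scores fall into each accuracy interval."""
    # bisect_right gives the number of cut points <= the score, which is
    # exactly the index of the score's interval.
    counts = Counter(bisect.bisect_right(CUTS, s) for s in scores)
    return {label: counts.get(i, 0) for i, label in enumerate(LABELS)}


print(grouped_frequencies([70, 75, 84.9, 85, 94, 95, 100]))
```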

Crosstabulation

In a frequency crosstabulation, we count frequencies on the basis of two variables simultaneously rather than one; thus we have a bivariate situation.

For example, Maree might be interested in the number of male and female inspectors in the sample of 112 who obtained each jobsat score. Here there are two variables to consider: inspector’s gender and inspector’s jobsat score. Table 5.2 shows such a crosstabulation as compiled by the SPSS Crosstabs procedure (George and Mallery 2019, ch. 8). Note that inspectors who did not report a score for jobsat and/or gender have been omitted as missing values, leaving 106 valid inspectors for the analysis.

Table 5.2 Frequency crosstabulation of jobsat scores by gender category for the QCI data

The crosstabulation shown in Table 5.2 gives a composite picture of the distribution of satisfaction levels for male inspectors and for female inspectors. If frequencies or ‘Counts’ are added across the gender categories, we obtain the numbers in the ‘Total’ column (the percentages or relative frequencies are also shown immediately below each count) for each discrete value of jobsat (note this column of statistics differs from that in Table 5.1 because the gender variable was missing for certain inspectors). By adding down each gender column, we obtain, in the bottom row labelled ‘Total’, the number of males and the number of females that comprised the sample of 106 valid inspectors.

The totals, either across the rows or down the columns of the crosstabulation, are termed the marginal distributions of the table. These marginal distributions are equivalent to frequency tabulations for each of the variables jobsat and gender. As with frequency tabulation, various percentage measures can be computed in a crosstabulation, including the percentage of the sample associated with a specific count within either a row (‘% within jobsat’) or a column (‘% within gender’). You can see in Table 5.2 that 18 inspectors indicated a job satisfaction level of 7 (Very High); of these 18 inspectors reported in the ‘Total’ column, 8 (44.4%) were male and 10 (55.6%) were female. The marginal distribution for gender in the ‘Total’ row shows that 57 inspectors (53.8% of the 106 valid inspectors) were male and 49 inspectors (46.2%) were female. Of the 57 male inspectors in the sample, 8 (14.0%) indicated a job satisfaction level of 7 (Very High). Furthermore, we could generate some additional interpretive information of value by adding the ‘% within gender’ values for job satisfaction levels of 5, 6 and 7 (i.e. differing degrees of positive job satisfaction). Here we would find that 68.4% (= 24.6% + 29.8% + 14.0%) of male inspectors indicated some degree of positive job satisfaction compared to 61.2% (= 10.2% + 30.6% + 20.4%) of female inspectors.
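A crosstabulation is a count over pairs of values, and its marginal distributions are counts over each variable alone. The sketch below uses hypothetical names and data. It assumes `None` marks a missing value and that any case missing either variable is dropped, as with the 106 valid inspectors in Table 5.2.

```python
from collections import Counter


def crosstab(pairs):
    """Return (cell counts, row totals, column totals, number of valid cases)."""
    valid = [(r, c) for r, c in pairs if r is not None and c is not None]
    cells = Counter(valid)
    row_totals = Counter(r for r, _ in valid)  # marginal distribution of rows
    col_totals = Counter(c for _, c in valid)  # marginal distribution of columns
    return cells, row_totals, col_totals, len(valid)


def pct_within_row(cells, row_totals, row, col):
    """Cell count as a percentage of its row total (e.g. '% within jobsat')."""
    return 100 * cells[(row, col)] / row_totals[row]


def pct_within_column(cells, col_totals, row, col):
    """Cell count as a percentage of its column total (e.g. '% within gender')."""
    return 100 * cells[(row, col)] / col_totals[col]


# Hypothetical (jobsat, gender) responses; None marks a missing answer.
responses = [(7, "male"), (7, "female"), (7, "female"), (5, "male"),
             (None, "male"), (6, None)]
cells, rows, cols, n_valid = crosstab(responses)
print(n_valid, pct_within_column(cells, cols, 7, "male"))
```

Applied to the Table 5.2 counts, the ‘% within gender’ figure for males at level 7 is 100 × 8 / 57 ≈ 14.0%.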

This helps to build a picture of the possible relationship between an inspector’s gender and their level of job satisfaction (a relationship that, as we will see later, can be quantified and tested using procedures in Chaps. 6 and 7).

It should be noted that a crosstabulation table such as that shown in Table 5.2 is often referred to as a contingency table, about which more will be said later (see the relevant procedures in Chap. 7).

Frequency tabulation is useful for providing convenient data summaries which can aid in interpreting trends in a sample, particularly where the number of discrete values for a variable is relatively small. A cumulative percent distribution provides additional interpretive information about the relative positioning of specific scores within the overall distribution for the sample.

Crosstabulation permits the simultaneous examination of the distributions of values for two variables obtained from the same sample of observations. This examination can yield some useful information about the possible relationship between the two variables. More complex crosstabulations can also be done where the values of three or more variables are tracked in a single systematic summary. The use of frequency tabulation or crosstabulation in conjunction with various other statistical measures, such as measures of central tendency (see Procedure 5.4) and measures of variability (see Procedure 5.5), can provide a relatively complete descriptive summary of any data set.

Disadvantages

Frequency tabulations can get messy if interval or ratio-level measures are tabulated simply because of the large number of possible data values. Grouped frequency distributions really should be used in such cases. However, certain choices, such as the size of the score interval (group size), must be made, often arbitrarily, and such choices can affect the nature of the final frequency distribution.

Additionally, percentage measures have certain problems associated with them, most notably, the potential for their misinterpretation in small samples. One should be sure to know the sample size on which percentage measures are based in order to obtain an interpretive reference point for the actual percentage values.

For example

In a sample of 10 individuals, 20% represents only two individuals whereas in a sample of 300 individuals, 20% represents 60 individuals. If all that is reported is the 20%, then the mental inference drawn by readers is likely to be that a sizeable number of individuals had a score or scores of a particular value—but what is ‘sizeable’ depends upon the total number of observations on which the percentage is based.

Where Is This Procedure Useful?

Frequency tabulation and crosstabulation are very commonly applied procedures used to summarise information from questionnaires, both in terms of tabulating various demographic characteristics (e.g. gender, age, education level, occupation) and in terms of actual responses to questions (e.g. numbers responding ‘yes’ or ‘no’ to a particular question). They can be particularly useful in helping to build up the data screening and demographic stories discussed in Chap. 4. Categorical data from observational studies can also be analysed with this technique (e.g. the number of times Suzy talks to Frank, to Billy, and to John in a study of children’s social interactions).

Certain types of experimental research designs may also be amenable to analysis by crosstabulation with a view to drawing inferences about distribution differences across the sets of categories for the two variables being tracked.

You could employ crosstabulation in conjunction with the tests described in Chap. 7 to see if two different styles of advertising campaign differentially affect the product purchasing patterns of male and female consumers.

In the QCI database, Maree could employ crosstabulation to help her answer the question “do different types of electronic manufacturing firms (company) differ in terms of their tendency to employ male versus female quality control inspectors (gender)?”

Software Procedures

| Application | Procedures |
|---|---|
| SPSS | or . and select the variable(s) you wish to analyse; for the procedure, hitting the ‘ ’ button will allow you to choose various types of statistics and percentages to show in each cell of the table. |
| NCSS | or and select the variable(s) you wish to analyse. |
| SYSTAT | or ➔ and select the variable(s) you wish to analyse and choose the optional statistics you wish to see. |
| STATGRAPHICS | or and select the variable(s) you wish to analyse; hit ‘ ’ and when the ‘Tables and Graphs’ window opens, choose the Tables and Graphs you wish to see. |
| Commander | or and select the variable(s) you wish to analyse and choose the optional statistics you wish to see. |

Procedure 5.2: Graphical Methods for Displaying Data

Graphical methods for displaying data include bar and pie charts, histograms and frequency polygons, line graphs and scatterplots. It is important to note that what is presented here is a small but representative sampling of the types of simple graphs one can produce to summarise and display trends in data. Generally speaking, SPSS offers the easiest facility for producing and editing graphs, but with a rather limited range of styles and types. SYSTAT, STATGRAPHICS and NCSS offer a much wider range of graphs (including graphs unique to each package), but with the drawback that it takes somewhat more effort to get the graphs in exactly the form you want.

Bar and Pie Charts

These two types of graphs are useful for summarising the frequency of occurrence of various values (or ranges of values) where the data are categorical (nominal or ordinal level of measurement).

- A bar chart uses vertical and horizontal axes to summarise the data. The vertical axis is used to represent frequency (number) of occurrence or the relative frequency (percentage) of occurrence; the horizontal axis is used to indicate the data categories of interest.

- A pie chart gives a simpler visual representation of category frequencies by cutting a circular plot into wedges or slices whose sizes are proportional to the relative frequency (percentage) of occurrence of specific data categories. Some pie charts can have one or more slices emphasised by ‘exploding’ them out from the rest of the pie.

Consider the company variable from the QCI database. This variable depicts the types of manufacturing firms that the quality control inspectors worked for. Figure 5.1 illustrates a bar chart summarising the percentage of female inspectors in the sample coming from each type of firm. Figure 5.2 shows a pie chart representation of the same data, with an ‘exploded slice’ highlighting the percentage of female inspectors in the sample who worked for large business computer manufacturers – the lowest percentage of the five types of companies. Both graphs were produced using SPSS.

Figure 5.1 Bar chart: Percentage of female inspectors

Figure 5.2 Pie chart: Percentage of female inspectors

The pie chart was modified with an option to show the actual percentage along with the label for each category. The bar chart shows that computer manufacturing firms have relatively fewer female inspectors compared to the automotive and electrical appliance (large and small) firms. This trend is less clear from the pie chart which suggests that pie charts may be less visually interpretable when the data categories occur with rather similar frequencies. However, the ‘exploded slice’ option can help interpretation in some circumstances.

Certain software programs, such as SPSS, STATGRAPHICS, NCSS and Microsoft Excel, offer the option of generating 3-dimensional bar charts and pie charts and incorporating other ‘bells and whistles’ that can potentially add visual richness to the graphic representation of the data. However, you should generally be careful with these fancier options as they can produce distortions and create ambiguities in interpretation (e.g. see discussions in Jacoby 1997; Smithson 2000; Wilkinson 2009). Such distortions and ambiguities could ultimately end up providing misinformation to researchers as well as to those who read their research.

Histograms and Frequency Polygons

These two types of graphs are useful for summarising the frequency of occurrence of various values (or ranges of values) where the data are essentially continuous (interval or ratio level of measurement) in nature. Both histograms and frequency polygons use vertical and horizontal axes to summarise the data. The vertical axis is used to represent the frequency (number) of occurrence or the relative frequency (percentage) of occurrences; the horizontal axis is used for the data values or ranges of values of interest. The histogram uses bars of varying heights to depict frequency; the frequency polygon uses lines and points.

There is a visual difference between a histogram and a bar chart: the bar chart uses bars that do not physically touch, signifying the discrete and categorical nature of the data, whereas the bars in a histogram physically touch to signal the potentially continuous nature of the data.

Suppose Maree wanted to graphically summarise the distribution of speed scores for the 112 inspectors in the QCI database. Figure 5.3 (produced using NCSS) illustrates a histogram representation of this variable. Figure 5.3 also illustrates another representational device called the ‘density plot’ (the solid tracing line overlaying the histogram) which gives a smoothed impression of the overall shape of the distribution of speed scores. Figure 5.4 (produced using STATGRAPHICS) illustrates the frequency polygon representation for the same data.

Figure 5.3 Histogram of the speed variable (with density plot overlaid)

Figure 5.4 Frequency polygon plot of the speed variable

These graphs employ a grouped format where speed scores which fall within specific intervals are counted as being essentially the same score. The shape of the data distribution is reflected in these plots. Each graph tells us that the inspection speed scores are positively skewed with only a few inspectors taking very long times to make their inspection judgments and the majority of inspectors taking rather shorter amounts of time to make their decisions.

Both representations tell a similar story; the choice between them is largely a matter of personal preference. However, if the number of bars to be plotted in a histogram is potentially very large (and this is usually directly controllable in most statistical software packages), then a frequency polygon would be the preferred representation simply because the amount of visual clutter in the graph will be much reduced.

It is somewhat of an art to choose an appropriate width for the score grouping intervals (or ‘bins’ as they are often termed) to be used in the plot. Choose too many narrow bins and the plot may look too lumpy, so that the overall distributional trend is not obvious; choose too few wide bins and the plot will be too coarse to give a useful depiction. Programs like SPSS, SYSTAT, STATGRAPHICS and NCSS are designed to choose an ‘appropriate’ number of bins, but the analyst’s eye is often a better judge than any statistical rule that a software package would use.
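Two widely used starting rules illustrate what such an automatic choice can look like: Sturges' rule and the Freedman–Diaconis rule. This is a sketch only. We are not claiming that these are the rules SPSS, SYSTAT, STATGRAPHICS or NCSS actually apply, and the result is a starting point to refine by eye.

```python
import math
from statistics import quantiles

def sturges_bins(n):
    """Sturges' rule: k = ceil(log2(n)) + 1 bins."""
    return math.ceil(math.log2(n)) + 1

def freedman_diaconis_bins(values):
    """Freedman-Diaconis rule: bin width h = 2 * IQR / n**(1/3)."""
    n = len(values)
    q1, _, q3 = quantiles(values, n=4)  # quartiles (default 'exclusive' method)
    iqr = q3 - q1
    if iqr == 0:
        return sturges_bins(n)  # fallback when the middle half of the data has no spread
    width = 2 * iqr / n ** (1 / 3)
    return max(1, math.ceil((max(values) - min(values)) / width))

print(sturges_bins(112))                          # bins suggested for 112 scores
print(freedman_diaconis_bins(list(range(1, 101))))
```

For a sample of 112 inspectors, Sturges' rule suggests 8 bins. The Freedman–Diaconis rule adapts the width to the spread of the middle half of the data, which tends to suit skewed distributions like the speed scores better.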

There are several interesting variations of the histogram which can highlight key data features or facilitate interpretation of certain trends in the data. One such variation is called a dual histogram (available in SYSTAT; a variation called a ‘comparative histogram’ can be created in NCSS). This is a graph that facilitates visual comparison of the frequency distributions of a specific variable for participants from two distinct groups.

Suppose Maree wanted to graphically compare the distributions of speed scores for inspectors in the two categories of education level (educlev) in the QCI database. Figure 5.5 shows a dual histogram (produced using SYSTAT) that accomplishes this goal. This graph still employs the grouped format where speed scores falling within particular intervals are counted as being essentially the same score. The shape of the data distribution within each group is also clearly reflected in this plot. However, the story conveyed by the dual histogram is that, while the inspection speed scores are positively skewed for inspectors in both categories of educlev, the comparison suggests that inspectors with a high school level of education (= 1) tend to take slightly longer to make their inspection decisions than do their colleagues who have a tertiary qualification (= 2).

Dual histogram of speed for the two categories of educlev

Line Graphs

The line graph is similar in style to the frequency polygon but is much more general in its potential for summarising data. In a line graph, we seldom deal with percentage or frequency data. Instead we can summarise other types of information about data such as averages or means (see Procedure 5.4 for a discussion of this measure), often for different groups of participants. Thus, one important use of the line graph is to break down scores on a specific variable according to membership in the categories of a second variable.
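The points plotted in such a line graph are the group means, one for each category of the second variable. Below is a minimal sketch of that breakdown. The company labels and scores are hypothetical and are not QCI values.

```python
from collections import defaultdict
from statistics import mean

def group_means(records):
    """Average a score within each category; records are (category, score) pairs."""
    groups = defaultdict(list)
    for category, score in records:
        groups[category].append(score)
    # dicts keep insertion order, so categories appear in the order first seen
    return {category: mean(scores) for category, scores in groups.items()}

# Hypothetical (company, accuracy) pairs
records = [("Company A", 80), ("Company B", 70), ("Company A", 90), ("Company B", 74)]
for company, avg in group_means(records).items():
    print(company, avg)  # one line-graph point per company
```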

In the context of the QCI database, Maree might wish to summarise the average inspection accuracy scores for the inspectors from different types of manufacturing companies. Figure 5.6 was produced using SPSS and shows such a line graph.

Line graph comparison of companies in terms of average inspection accuracy

Note how the trend in performance across the different companies becomes clearer with such a visual representation. It appears that the inspectors from the Large Business Computer and PC manufacturing companies have better average inspection accuracy compared to the inspectors from the remaining three industries.

With many software packages, it is possible to further elaborate a line graph by including error bars or confidence interval bars (see Procedure 10.1007/978-981-15-2537-7_8#Sec18). These give some indication of the precision with which the average level for each category in the population has been estimated (narrow bars signal a more precise estimate; wide bars signal a less precise estimate).
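Each bar spans the group mean plus or minus a critical value times the standard error of the mean. The sketch below defaults to the normal (z) critical value, which is a large-sample approximation. Software usually uses a t critical value with n − 1 degrees of freedom, which gives wider bars for small groups, so the function also accepts one. The scores are hypothetical.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev

def mean_ci(scores, level=0.95, critical=None):
    """Return (lower, upper) of a confidence interval for the mean.

    By default uses the normal (z) critical value, a large-sample
    approximation; pass a t critical value for small groups.
    """
    n = len(scores)
    if n < 2:
        raise ValueError("need at least two scores")
    if critical is None:
        critical = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for 95%
    se = stdev(scores) / sqrt(n)  # standard error of the mean
    m = mean(scores)
    return m - critical * se, m + critical * se

scores = [1, 2, 3, 4, 5]                 # hypothetical group of five
print(mean_ci(scores))                   # z-based (approximate)
print(mean_ci(scores, critical=2.776))   # t with 4 df: a wider interval
```

Larger groups give a smaller standard error and therefore narrower bars. This is why narrow bars signal a more precise estimate of the population mean.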

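Figure 5.7 shows such an elaborated line graph, using 95% confidence interval bars, which can help us make more defensible judgments (compared to Fig. 5.6) about whether the companies are substantively different from each other in average inspection performance. Companies whose confidence interval bars do not overlap each other can be inferred to be substantively different in performance characteristics. The converse does not hold, however. Bars that overlap do not by themselves show that two companies perform equivalently, because non-overlap of two 95% intervals is a stricter criterion than a formal test of the difference between two means.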

Line graph using confidence interval bars to compare accuracy across companies

The accuracy confidence interval bars for participants from the Large Business Computer manufacturing firms do not overlap those from the Large or Small Electrical Appliance manufacturers or the Automobile manufacturers.

We might conclude that quality control inspection accuracy is substantially better in the Large Business Computer manufacturing companies than in these other industries. Because the bars overlap, however, we cannot claim on this basis that it is substantially better than in the PC manufacturing companies. Likewise, the overlapping bars give no clear evidence that inspection accuracy in PC manufacturing companies differs substantially from that in Small Electrical Appliance manufacturers.
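The asymmetry between overlap and non-overlap can be seen with a small worked example. The two groups below have hypothetical means of 10 and 13, each with a standard error of 1.0, and the groups are assumed to be independent. Their individual 95% intervals overlap, yet the 95% interval for the difference between the means excludes zero. The normal critical value is used throughout for simplicity.

```python
from math import sqrt
from statistics import NormalDist

Z95 = NormalDist().inv_cdf(0.975)  # about 1.96

def ci(mean, se, z=Z95):
    """Interval mean +/- z * se."""
    return mean - z * se, mean + z * se

def intervals_overlap(a, b):
    """True if intervals a and b share at least one point."""
    return a[0] <= b[1] and b[0] <= a[1]

def difference_ci(mean1, se1, mean2, se2, z=Z95):
    """Interval for mean2 - mean1, assuming independent groups."""
    diff = mean2 - mean1
    se_diff = sqrt(se1 ** 2 + se2 ** 2)
    return diff - z * se_diff, diff + z * se_diff

group1 = ci(10.0, 1.0)  # about (8.04, 11.96)
group2 = ci(13.0, 1.0)  # about (11.04, 14.96)
print(intervals_overlap(group1, group2))       # True: the bars overlap
print(difference_ci(10.0, 1.0, 13.0, 1.0))     # about (0.23, 5.77): excludes 0
```

Overlapping bars should therefore be read as "not clearly different on this visual criterion", not as evidence that the groups are equal.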

Scatterplots

Scatterplots are useful in displaying the relationship between two interval- or ratio-scaled variables or measures of interest obtained on the same individuals, particularly in correlational research (see Fundamental Concept 10.1007/978-981-15-2537-7_6#Sec1 and Procedure 10.1007/978-981-15-2537-7_6#Sec4).

In a scatterplot, one variable is chosen to be represented on the horizontal axis; the second variable is represented on the vertical axis. In this type of plot, every pair of scores in the sample is plotted as a single point. The shape and tilt of the cloud of points in a scatterplot provide visual information about the strength and direction of the relationship between the two variables. A very compact elliptical cloud of points signals a strong relationship; a very loose or nearly circular cloud signals a weak or non-existent relationship. A cloud of points generally tilted upward toward the right side of the graph signals a positive relationship (higher scores on one variable associated with higher scores on the other and vice versa). A cloud of points generally tilted downward toward the right side of the graph signals a negative relationship (higher scores on one variable associated with lower scores on the other and vice versa).
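The Pearson correlation coefficient r summarises the same two features numerically. Its sign gives the direction of the tilt, and its size (from 0 to 1 in absolute value) reflects how tightly the cloud hugs a straight line. Here is a minimal sketch using hypothetical data. Python 3.10 and later also provide `statistics.correlation`.

```python
from math import sqrt

def pearson_r(xs, ys):
    """Pearson correlation between paired scores xs and ys."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length lists with at least two pairs")
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)

x = [1, 2, 3, 4, 5]
print(pearson_r(x, [2, 4, 6, 8, 10]))  # points on a rising line: r = +1
print(pearson_r(x, [10, 8, 6, 4, 2]))  # points on a falling line: r = -1
print(pearson_r(x, [2, 1, 4, 3, 5]))   # rising but scattered cloud: 0 < r < 1
```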

Maree might be interested in displaying the relationship between inspection accuracy and inspection speed in the QCI database. Figure 5.8, produced using SPSS, shows what such a scatterplot might look like. Several characteristics of the data for these two variables can be noted in Fig. 5.8. The shape of the distribution of data points is evident. The plot has a fan-shaped characteristic, which indicates that accuracy scores are highly variable (exhibiting a very wide range of scores) at very fast inspection speeds but become much less variable, and tend to be somewhat higher, as speed scores increase (that is, as inspectors take longer to make their quality control decisions). Thus, there does appear to be some relationship between inspection accuracy and inspection speed. It is a weak positive relationship, since the cloud of points is very loose but tilted generally upward toward the right side of the graph: slower speeds tend to be slightly associated with higher accuracy.

Scatterplot relating inspection accuracy to inspection speed

However, it is not the case that the inspection decisions which take longest to make are necessarily the most accurate (see the labelled points for inspectors 7 and 62 in Fig. 5.8). Thus, Fig. 5.8 does not show a simple relationship that can be unambiguously summarised by a statement like “the longer an inspector takes to make a quality control decision, the more accurate that decision is likely to be”. The story is more complicated.
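A fan shape means that the spread of the vertical variable changes across the horizontal axis. One simple, hypothetical way to quantify this is to split the cases at the median of the horizontal variable and compare the standard deviation of the vertical variable in each half. The data below are invented so that they mimic the pattern described for Fig. 5.8. They are not QCI scores, and this is only a rough descriptive check, not a formal test.

```python
from statistics import mean, median, stdev

def spread_by_x_half(xs, ys):
    """Split cases at the median of xs (ties go to the lower half) and
    return (sd, mean) of ys for the lower and upper halves."""
    cut = median(xs)
    lower = [y for x, y in zip(xs, ys) if x <= cut]
    upper = [y for x, y in zip(xs, ys) if x > cut]
    return (stdev(lower), mean(lower)), (stdev(upper), mean(upper))

# Hypothetical speed (x, larger = slower) and accuracy (y) scores
speed = [1, 2, 3, 4, 5, 6, 7, 8]
accuracy = [40, 95, 60, 90, 82, 86, 84, 88]
fast, slow = spread_by_x_half(speed, accuracy)
print("fast half: sd=%.1f mean=%.1f" % fast)  # wide spread
print("slow half: sd=%.1f mean=%.1f" % slow)  # narrower spread, higher mean
```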

Some software packages, such as SPSS, STATGRAPHICS and SYSTAT, offer the option of using different plotting symbols or markers to represent the members of different groups so that the relationship between the two focal variables (the ones anchoring the X and Y axes) can be clarified with reference to a third categorical measure.

Maree might want to see if the relationship depicted in Fig. 5.8 changes depending upon whether the inspector was tertiary-qualified or not (this information is represented in the educlev variable of the QCI database).

Figure 5.9 shows what such a modified scatterplot might look like; the legend in the upper corner of the figure defines the marker symbols for each category of the educlev variable. Note that for both High School only-educated inspectors and Tertiary-qualified inspectors, the general fan-shaped relationship between accuracy and speed is the same. However, it appears that the distribution of points for the High School only-educated inspectors is shifted somewhat upward and toward the right of the plot suggesting that these inspectors tend to be somewhat more accurate as well as slower in their decision processes.

Scatterplot displaying accuracy vs speed conditional on educlev group
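A shift of one group's cloud "upward and toward the right" corresponds to that group having higher average scores on both plotted variables. One way to check such an impression is to compute each group's centroid, that is, its mean on the horizontal and vertical variables. The sketch below does this for hypothetical (educlev, speed, accuracy) rows, which are not taken from the QCI database.

```python
from collections import defaultdict
from statistics import mean

def group_centroids(rows):
    """Return {group: (mean x, mean y)} from (group, x, y) rows."""
    xs, ys = defaultdict(list), defaultdict(list)
    for group, x, y in rows:
        xs[group].append(x)
        ys[group].append(y)
    return {g: (mean(xs[g]), mean(ys[g])) for g in xs}

# Hypothetical rows: educlev 1 = High School only, 2 = Tertiary-qualified
rows = [(1, 4.0, 85), (1, 6.0, 89), (2, 2.0, 80), (2, 4.0, 82)]
for group, (mean_speed, mean_accuracy) in group_centroids(rows).items():
    print(group, mean_speed, mean_accuracy)
```

In this invented example, group 1's centroid lies to the right (slower) of and above (more accurate than) group 2's. This is the kind of shift the modified scatterplot conveys visually.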

There are many other styles of graphs available, often dependent upon the specific statistical package you are using. Interestingly, NCSS and, particularly, SYSTAT and STATGRAPHICS, appear to offer the most variety in terms of types of graphs available for visually representing data. A reading of the user’s manuals for these programs (see the Useful additional readings) would expose you to the great diversity of plotting techniques available to researchers. Many of these techniques go by rather interesting names such as: Chernoff’s faces, radar plots, sunflower plots, violin plots, star plots, Fourier blobs, and dot plots.

These graphical methods provide summary techniques for visually presenting certain characteristics of a set of data. Visual representations are generally easier to understand than a tabular representation, and when these plots are combined with available numerical statistics, they can give a very complete picture of a sample of data. Newer methods have become available which permit more complex representations to be depicted, opening possibilities for creatively representing more aspects and features of the data visually (leading to a style of visual data storytelling called infographics; see, for example, McCandless 2014; Toseland and Toseland 2012). Many of these newer methods can display data patterns from multiple variables in the same graph (several of these newer graphical methods are illustrated and discussed in Procedure 5.3).

Graphs tend to be cumbersome and space consuming if a great many variables need to be summarised. In such cases, using numerical summary statistics (such as means or correlations) in tabular form alone will provide a more economical and efficient summary. Also, it can be very easy to give a misleading picture of data trends using graphical methods by simply choosing the ‘correct’ scaling for maximum effect or choosing a display option (such as a 3-D effect) that ‘looks’ presentable but which actually obscures a clear interpretation (see Smithson 2000; Wilkinson 2009).

Thus, you must be careful in creating and interpreting visual representations so that aesthetic choices made for the sake of appearance do not become more important than obtaining a faithful and valid representation of the data. This is a very real danger with many of today’s statistical packages, where ‘default’ drawing options have been pre-programmed in. No single plot can completely summarise all possible characteristics of a sample of data. Thus, choosing a specific method of graphical display may, of necessity, force a behavioural researcher to represent certain data characteristics (such as frequency) at the expense of others (such as averages).

Virtually any research design which produces quantitative data and statistics (even to the extent of just counting the number of occurrences of several events) provides opportunities for graphical data display which may help to clarify or illustrate important data characteristics or relationships. Remember, graphical displays are communication tools just like numbers; which tool to choose depends upon the message to be conveyed. Visual representations of data are generally more useful in communicating to lay persons who are unfamiliar with statistics. Care must be taken, though, as these same lay people are precisely the people most likely to misinterpret a graph if it has been incorrectly drawn or scaled.

| Application | Procedures |