
Image processing articles within Nature Methods

Article | 12 April 2024

Pretraining a foundation model for generalizable fluorescence microscopy-based image restoration

A pretrained foundation model (UniFMIR) enables versatile and generalizable performance across diverse fluorescence microscopy image reconstruction tasks.

- , Weimin Tan

- & Bo Yan

Resource 09 April 2024 | Open Access

Three million images and morphological profiles of cells treated with matched chemical and genetic perturbations

The CPJUMP1 Resource comprises Cell Painting images and profiles of 75 million cells treated with hundreds of chemical and genetic perturbations. The dataset enables exploration of their relationships and lays the foundation for the development of advanced methods to match perturbations.

- Srinivas Niranj Chandrasekaran

- , Beth A. Cimini

- & Anne E. Carpenter

Research Briefing | 01 April 2024

Creating a universal cell segmentation algorithm

Cell segmentation currently involves the use of various bespoke algorithms designed for specific cell types, tissues, staining methods and microscopy technologies. We present a universal algorithm that can segment all kinds of microscopy images and cell types across diverse imaging protocols.

Analysis | 26 March 2024

The multimodality cell segmentation challenge: toward universal solutions

Cell segmentation is crucial in many image analysis pipelines. This analysis compares many tools on a multimodal cell segmentation benchmark. A Transformer-based model achieved the best performance and the broadest general applicability.

- , Ronald Xie

- & Bo Wang

Editorial | 12 February 2024

Where imaging and metrics meet

When it comes to bioimaging and image analysis, details matter. Papers in this issue offer guidance for improved robustness and reproducibility.

Correspondence | 24 January 2024

EfficientBioAI: making bioimaging AI models efficient in energy and latency

- , Jiajun Cao

- & Jianxu Chen

Correspondence | 08 January 2024

JDLL: a library to run deep learning models on Java bioimage informatics platforms

- Carlos García López de Haro

- , Stéphane Dallongeville

- & Jean-Christophe Olivo-Marin

Article 08 January 2024 | Open Access

Unsupervised and supervised discovery of tissue cellular neighborhoods from cell phenotypes

CytoCommunity enables both supervised and unsupervised analyses of spatial omics data in order to identify complex tissue cellular neighborhoods based on cell phenotypes and spatial distributions.

- , Jiazhen Rong

- & Kai Tan

Article 04 January 2024 | Open Access

Image restoration of degraded time-lapse microscopy data mediated by near-infrared imaging

InfraRed-mediated Image Restoration (IR²) uses deep learning to combine the benefits of deep-tissue imaging with NIR probes and the convenience of imaging with GFP for improved time-lapse imaging of embryogenesis.

- Nicola Gritti

- , Rory M. Power

- & Jan Huisken

Method to Watch | 06 December 2023

Imaging across scales

New twists on established methods and multimodal imaging are poised to bridge gaps between cellular and organismal imaging.

- Rita Strack

Visual proteomics

Advances will enable proteome-scale structure determination in cells.

Article 06 December 2023 | Open Access

Embryo mechanics cartography: inference of 3D force atlases from fluorescence microscopy

Foambryo is an analysis pipeline for three-dimensional force-inference measurements in developing embryos.

- Sacha Ichbiah

- , Fabrice Delbary

- & Hervé Turlier

Article | 06 December 2023

TubULAR: tracking in toto deformations of dynamic tissues via constrained maps

TubULAR is an in toto tissue cartography method for mapping complex dynamic surfaces.

- Noah P. Mitchell

- & Dillon J. Cislo

Research Briefing | 05 December 2023

Inferring how animals deform improves cell tracking

Tracking cells is a time-consuming part of biological image analysis, and traditional manual annotation methods are prohibitively laborious for tracking neurons in the deforming and moving Caenorhabditis elegans brain. By leveraging machine learning to develop a ‘targeted augmentation’ method, we substantially reduced the number of labeled images required for tracking.

Article | 05 December 2023

Automated neuron tracking inside moving and deforming C. elegans using deep learning and targeted augmentation

Targettrack is a deep-learning-based pipeline for automatic tracking of neurons within freely moving C. elegans. Using targeted augmentation, the pipeline reduces the need for manually annotated training data.

- Core Francisco Park

- , Mahsa Barzegar-Keshteli

- & Sahand Jamal Rahi

Brief Communication | 16 November 2023

Improving resolution and resolvability of single-particle cryoEM structures using Gaussian mixture models

This manuscript describes a refinement protocol that extends the e2gmm method to optimize both the orientation and conformation estimation of particles to improve the alignment for flexible domains of proteins.

- Muyuan Chen

- , Michael F. Schmid

- & Wah Chiu

Article 13 November 2023 | Open Access

Bio-friendly long-term subcellular dynamic recording by self-supervised image enhancement microscopy

DeepSeMi is a self-supervised denoising framework that can enhance SNR by more than 12 dB across diverse samples and imaging modalities. DeepSeMi enables extended longitudinal imaging of subcellular dynamics with high spatiotemporal resolution.

- Guoxun Zhang

- , Xiaopeng Li

- & Qionghai Dai

High-fidelity 3D live-cell nanoscopy through data-driven enhanced super-resolution radial fluctuation

Enhanced super-resolution radial fluctuations (eSRRF) offers improved image fidelity and resolution compared to the popular SRRF method and further enables volumetric live-cell super-resolution imaging at high speeds.

- Romain F. Laine

- , Hannah S. Heil

- & Ricardo Henriques

Article 26 October 2023 | Open Access

nextPYP: a comprehensive and scalable platform for characterizing protein variability in situ using single-particle cryo-electron tomography

nextPYP is a turn-key framework for single-particle cryo-electron tomography that streamlines complex data analysis pipelines, from pre-processing of tilt series to high-resolution refinement, for efficient analysis and visualization of large datasets.

- Hsuan-Fu Liu

- & Alberto Bartesaghi

Article | 07 September 2023

FIOLA: an accelerated pipeline for fluorescence imaging online analysis

FIOLA is a pipeline for processing calcium or voltage imaging data. Its advantages include fast processing speed and online operation.

- Changjia Cai

- , Cynthia Dong

- & Andrea Giovannucci

Correspondence | 18 August 2023

napari-imagej: ImageJ ecosystem access from napari

- Gabriel J. Selzer

- , Curtis T. Rueden

- & Kevin W. Eliceiri

Article 17 August 2023 | Open Access

Alignment of spatial genomics data using deep Gaussian processes

Gaussian Process Spatial Alignment (GPSA) aligns multiple spatially resolved genomics and histology datasets and improves downstream analysis.

- Andrew Jones

- , F. William Townes

- & Barbara E. Engelhardt

Brief Communication 27 July 2023 | Open Access

Segmentation metric misinterpretations in bioimage analysis

This study shows the importance of proper metrics for comparing algorithms for bioimage segmentation and object detection by exploring the impact of metrics on the relative performance of algorithms in three image analysis competitions.
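As a minimal sketch of the pixel-level metrics that such comparisons rely on, the Python below computes intersection over union (IoU) and the Dice coefficient for two binary masks. The function name `iou_and_dice` and the convention of scoring two empty masks as 1.0 are illustrative assumptions, not taken from the study. Because Dice = 2·IoU/(1 + IoU), these two metrics alone never reorder algorithms. Ranking differences typically come from other choices, such as object-level matching thresholds or how per-image scores are aggregated.

```python
def iou_and_dice(pred, truth):
    """Return (IoU, Dice) for two same-shaped binary masks given as nested lists."""
    inter = union = pred_sum = truth_sum = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            p, t = bool(p), bool(t)
            inter += p and t   # pixel in both masks
            union += p or t    # pixel in either mask
            pred_sum += p
            truth_sum += t
    if union == 0:
        # Both masks empty: treated as perfect agreement (an assumed convention).
        return 1.0, 1.0
    return inter / union, 2 * inter / (pred_sum + truth_sum)


iou, dice = iou_and_dice([[1, 1, 0], [0, 1, 0]], [[1, 0, 0], [0, 1, 1]])
print(f"IoU={iou:.3f}, Dice={dice:.3f}")  # IoU=0.500, Dice=0.667
```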

- Dominik Hirling

- , Ervin Tasnadi

- & Peter Horvath

Article | 27 July 2023

DBlink: dynamic localization microscopy in super spatiotemporal resolution via deep learning

DBlink uses deep learning to capture long-term dependencies between different frames in single-molecule localization microscopy data, yielding super spatiotemporal resolution videos of fast dynamic processes in living cells.

- , Onit Alalouf

- & Yoav Shechtman

Editorial | 11 July 2023

What’s next for bioimage analysis?

Advanced bioimage analysis tools are poised to disrupt the way in which microscopy images are acquired and analyzed. This Focus issue shares the hopes and opinions of experts on the near and distant future of image analysis.

Comment | 11 July 2023

The future of bioimage analysis: a dialog between mind and machine

The field of bioimage analysis is poised for a major transformation, owing to advancements in imaging technologies and artificial intelligence. The emergence of multimodal foundation models — which are akin to large language models (such as ChatGPT) but are capable of comprehending and processing biological images — holds great potential for ushering in a revolutionary era in bioimage analysis.

- Loïc A. Royer

Unveiling the vision: exploring the potential of image analysis in Africa

Here we discuss the prospects of bioimage analysis in the context of the African research landscape as well as challenges faced in the development of bioimage analysis in countries on the continent. We also speculate about potential approaches and areas of focus to overcome these challenges and thus build the communities, infrastructure and initiatives that are required to grow image analysis in African research.

- Mai Atef Rahmoon

- , Gizeaddis Lamesgin Simegn

- & Michael A. Reiche

The Twenty Questions of bioimage object analysis

The language used by microscopists who wish to find and measure objects in an image often differs in critical ways from that used by computer scientists who create tools to help them do this, making communication hard across disciplines. This work proposes a set of standardized questions that can guide analyses and shows how it can improve the future of bioimage analysis as a whole by making image analysis workflows and tools more FAIR (findable, accessible, interoperable and reusable).

- Beth A. Cimini

Smart microscopes of the future

We dream of a future where light microscopes have new capabilities: language-guided image acquisition, automatic image analysis based on extensive prior training from biologist experts, and language-guided image analysis for custom analyses. Most capabilities have reached the proof-of-principle stage, but implementation would be accelerated by efforts to gather appropriate training sets and make user-friendly interfaces.

- Anne E. Carpenter

Using AI in bioimage analysis to elevate the rate of scientific discovery as a community

The future of bioimage analysis is increasingly defined by the development and use of tools that rely on deep learning and artificial intelligence (AI). For this trend to continue in a way most useful for stimulating scientific progress, it will require our multidisciplinary community to work together, establish FAIR (findable, accessible, interoperable and reusable) data sharing and deliver usable and reproducible analytical tools.

- Damian Dalle Nogare

- , Matthew Hartley

- & Florian Jug

Scaling biological discovery at the interface of deep learning and cellular imaging

Concurrent advances in imaging technologies and deep learning have transformed the nature and scale of data that can now be collected with imaging. Here we discuss the progress that has been made and outline potential research directions at the intersection of deep learning and imaging-based measurements of living systems.

- Morgan Schwartz

- , Uriah Israel

- & David Van Valen

Towards effective adoption of novel image analysis methods

The bridging of domains such as deep learning-driven image analysis and biology brings exciting promises of previously impossible discoveries as well as perils of misinterpretation and misapplication. We encourage continual communication between method developers and application scientists that emphasizes likely pitfalls and provides validation tools alongside new techniques.

- Talley Lambert

- & Jennifer Waters

Towards foundation models of biological image segmentation

In the ever-evolving landscape of biological imaging technology, it is crucial to develop foundation models capable of adapting to various imaging modalities and tackling complex segmentation tasks.

When seeing is not believing: application-appropriate validation matters for quantitative bioimage analysis

A key step toward biologically interpretable analysis of microscopy image-based assays is rigorous quantitative validation with metrics appropriate for the particular application in use. Here we describe this challenge for both classical and modern deep learning-based image analysis approaches and discuss possible solutions for automating and streamlining the validation process in the next five to ten years.

- Jianxu Chen

- , Matheus P. Viana

- & Susanne M. Rafelski

Article | 10 July 2023

SCS: cell segmentation for high-resolution spatial transcriptomics

Subcellular spatial transcriptomics cell segmentation (SCS) combines information from stained images and sequencing data to improve cell segmentation in high-resolution spatial transcriptomics data.

- , Dongshunyi Li

- & Ziv Bar-Joseph

Research Highlight | 09 June 2023

Capturing hyperspectral images

A single-shot hyperspectral phasor camera (SHy-Cam) enables fast, multiplexed volumetric imaging.

Correspondence | 05 June 2023

Distributed-Something: scripts to leverage AWS storage and computing for distributed workflows at scale

- Erin Weisbart

- & Beth A. Cimini

Brief Communication | 29 May 2023

New measures of anisotropy of cryo-EM maps

This paper proposes two new anisotropy metrics—the Fourier shell occupancy and the Bingham test—that can be used to understand the quality of cryogenic electron microscopy maps.

- Jose-Luis Vilas

- & Hemant D. Tagare

Analysis 18 May 2023 | Open Access

The Cell Tracking Challenge: 10 years of objective benchmarking

This updated analysis of the Cell Tracking Challenge explores how algorithms for cell segmentation and tracking in both 2D and 3D have advanced in recent years, pointing users to high-performing tools and developers to open challenges.

- Martin Maška

- , Vladimír Ulman

- & Carlos Ortiz-de-Solórzano

Article 15 May 2023 | Open Access

TomoTwin: generalized 3D localization of macromolecules in cryo-electron tomograms with structural data mining

TomoTwin is a deep metric learning-based particle picking method for cryo-electron tomograms. TomoTwin obviates the need for annotating training data and retraining a picking model for each protein.

- , Thorsten Wagner

- & Stefan Raunser

Research Briefing | 12 May 2023

Mapping the motion and structure of flexible proteins from cryo-EM data

A deep learning algorithm maps out the continuous conformational changes of flexible protein molecules from single-particle cryo-electron microscopy images, allowing the visualization of the conformational landscape of a protein with improved resolution of its moving parts.

Article 11 May 2023 | Open Access

Cross-modality supervised image restoration enables nanoscale tracking of synaptic plasticity in living mice

XTC is a supervised deep-learning-based image-restoration approach that is trained with images from different modalities and applied to an in vivo modality with no ground truth. XTC’s capabilities are demonstrated in synapse tracking in the mouse brain.

- Yu Kang T. Xu

- , Austin R. Graves

- & Jeremias Sulam

3DFlex: determining structure and motion of flexible proteins from cryo-EM

3D Flexible Refinement (3DFlex) is a generative neural network model for continuous molecular heterogeneity for cryo-EM data that can be used to determine the structure and motion of flexible biomolecules. It enables visualization of nonrigid motion and improves 3D structure resolution by aggregating information from particle images spanning the conformational landscape of the target molecule.

- Ali Punjani

- & David J. Fleet

Resource 08 May 2023 | Open Access

EmbryoNet: using deep learning to link embryonic phenotypes to signaling pathways

EmbryoNet is an automated approach to the phenotyping of developing embryos that surpasses experts in terms of speed, accuracy and sensitivity. A large annotated image dataset of zebrafish, medaka and stickleback development rounds out this resource.

- Daniel Čapek

- , Matvey Safroshkin

- & Patrick Müller

Article 01 April 2023 | Open Access

Rapid detection of neurons in widefield calcium imaging datasets after training with synthetic data

DeepWonder removes background signals from widefield calcium recordings and enables accurate and efficient neuronal segmentation with high throughput.

- Yuanlong Zhang

- , Guoxun Zhang

Correspondence | 10 February 2023

MoBIE: a Fiji plugin for sharing and exploration of multi-modal cloud-hosted big image data

- Constantin Pape

- , Kimberly Meechan

- & Christian Tischer

Article 23 January 2023 | Open Access

Convolutional networks for supervised mining of molecular patterns within cellular context

DeePiCt (deep picker in context) is a versatile, open-source deep-learning framework for supervised segmentation and localization of subcellular organelles and biomolecular complexes in cryo-electron tomography.

- Irene de Teresa-Trueba

- , Sara K. Goetz

- & Judith B. Zaugg

Correspondence | 10 January 2023

JIPipe: visual batch processing for ImageJ

- Ruman Gerst

- , Zoltán Cseresnyés

- & Marc Thilo Figge

Research Briefing | 30 December 2022

Digital brain atlases reveal postnatal development to 2 years of age in human infants

During the first two years of postnatal development, the human brain undergoes rapid, pronounced changes in size, shape and content. Using high-resolution MRI, we constructed month-to-month atlases of infants 2 weeks to 2 years old, capturing key spatiotemporal traits of early brain development in terms of cortical geometries and tissue properties.

Resource 30 December 2022 | Open Access

Multifaceted atlases of the human brain in its infancy

This Resource presents surface and volume atlases of human brain development during early infancy, at monthly intervals.

- Sahar Ahmad

- & Pew-Thian Yap


Editorial: Current Trends in Image Processing and Pattern Recognition

- PAMI Research Lab, Computer Science, University of South Dakota, Vermillion, SD, United States

Editorial on the Research Topic Current Trends in Image Processing and Pattern Recognition

Technological advancements in computing have opened multiple opportunities in a wide variety of fields, ranging from document analysis (Santosh, 2018), biomedical and healthcare informatics (Santosh et al., 2019; Santosh et al., 2021; Santosh and Gaur, 2021; Santosh and Joshi, 2021), and biometrics to intelligent language processing. These applications primarily leverage AI tools and techniques, drawing on topics such as image processing, signal and pattern recognition, machine learning and computer vision.

With this theme, we opened a call for papers on Current Trends in Image Processing & Pattern Recognition that followed the third International Conference on Recent Trends in Image Processing & Pattern Recognition (RTIP2R), 2020 (URL: http://rtip2r-conference.org). Our call was not limited to RTIP2R 2020; it was open to all. Altogether, 12 papers were submitted and seven of them were accepted for publication.

In Deshpande et al., the authors addressed the use of global fingerprint features (e.g., ridge flow, frequency, and other interest/key points) for matching. Using a convolutional neural network (CNN) matching model, which they called “Combination of Nearest-Neighbor Arrangement Indexing (CNNAI),” on the FVC2004 and NIST SD27 datasets, they achieved a highest rank-1 identification rate of 84.5%. The authors claimed that their results are comparable with state-of-the-art algorithms and that their approach is robust to rotation and scale. Similarly, in Deshpande et al., the same authors used the same datasets to address the importance of minutiae extraction and matching for low-quality latent fingerprint images. Their minutiae extraction technique markedly improved their results, which, as claimed by the authors, were comparable to state-of-the-art systems.
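The 84.5% figure above is a rank-1 identification rate: the fraction of query (probe) prints whose correct match is ranked first among all gallery candidates. A minimal sketch of how such a rank-k rate can be computed from a probe-by-gallery similarity matrix follows. It is not the authors' CNNAI matcher; the function name and the toy scores are hypothetical.

```python
def rank_k_identification_rate(scores, true_ids, k=1):
    """Fraction of probes whose true gallery index is among the top-k most similar.

    scores[i][j]: similarity of probe i to gallery entry j (higher = more similar).
    true_ids[i]: gallery index of the correct mate for probe i.
    """
    hits = 0
    for row, true_j in zip(scores, true_ids):
        # Rank gallery entries by descending similarity.
        ranked = sorted(range(len(row)), key=lambda j: row[j], reverse=True)
        if true_j in ranked[:k]:
            hits += 1
    return hits / len(true_ids)


# Hypothetical scores for 3 probes against a 3-entry gallery.
scores = [[0.9, 0.1, 0.3], [0.2, 0.4, 0.8], [0.5, 0.6, 0.1]]
true_ids = [0, 1, 0]
r1 = rank_k_identification_rate(scores, true_ids, k=1)
r2 = rank_k_identification_rate(scores, true_ids, k=2)
print(f"rank-1: {r1:.3f}, rank-2: {r2:.3f}")  # rank-1: 0.333, rank-2: 1.000
```

Plotting this rate against k gives the cumulative match characteristic (CMC) curve commonly reported for identification systems.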

In Gornale et al., the authors used Hu's invariant moments to extract distinguishing features that remain stable under geometric distortion or transformation. With these features, they focused on the early detection and grading of knee osteoarthritis, and they claimed that their results were validated by orthopedic surgeons and rheumatologists.
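Hu's invariants are built from normalized central moments η_pq = μ_pq / μ_00^(1+(p+q)/2), which makes them unchanged under translation, scaling and rotation of a shape. The first two are φ1 = η20 + η02 and φ2 = (η20 − η02)² + 4η11². The sketch below computes only these two of Hu's seven invariants for an image stored as a nested list. It illustrates the invariance property and is not the feature pipeline of Gornale et al.; the function name is hypothetical.

```python
import math


def hu_first_two(img):
    """Return (phi1, phi2), the first two Hu moment invariants of a 2D intensity grid."""
    m00 = sum(v for row in img for v in row)
    # Centroid (x = column index, y = row index), weighted by intensity.
    xbar = sum(x * v for row in img for x, v in enumerate(row)) / m00
    ybar = sum(y * v for y, row in enumerate(img) for v in row) / m00

    def mu(p, q):
        # Central moment: translation invariant.
        return sum((x - xbar) ** p * (y - ybar) ** q * v
                   for y, row in enumerate(img) for x, v in enumerate(row))

    def eta(p, q):
        # Normalized central moment: additionally scale invariant.
        return mu(p, q) / m00 ** (1 + (p + q) / 2)

    e20, e02, e11 = eta(2, 0), eta(0, 2), eta(1, 1)
    return e20 + e02, (e20 - e02) ** 2 + 4 * e11 ** 2


shape = [[0, 0, 0, 0], [0, 1, 1, 0], [0, 1, 0, 0], [0, 0, 0, 0]]
rotated = [list(r) for r in zip(*shape[::-1])]  # 90-degree rotation
same = all(math.isclose(u, v) for u, v in zip(hu_first_two(shape), hu_first_two(rotated)))
print(same)  # True
```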

In Tamilmathi and Chithra, the authors introduced a new deep-learned, quantization-based coding scheme for 3D airborne LiDAR point cloud images. In their experiments, the model compressed an image into a constant 16 bits of data and decompressed it with a PSNR of approximately 160 dB, an execution time of 174.46 s and an execution speed of 0.6 s per instruction. The authors claimed that their method is comparable with previous algorithms/techniques in terms of space and time.
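PSNR, the figure of merit quoted above, is defined as PSNR = 10·log10(MAX²/MSE). MAX is the peak possible sample value and MSE is the mean squared error between the original and the reconstruction. A minimal sketch follows. The default peak of 255 assumes 8-bit data, and the editorial does not say which peak value the authors used for LiDAR data. For scale, 160 dB corresponds to a root-mean-square error of 10^-8 times the peak value.

```python
import math


def psnr(reference, reconstructed, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equal-length flat sequences of samples."""
    if len(reference) != len(reconstructed) or not reference:
        raise ValueError("inputs must be non-empty and the same length")
    mse = sum((a - b) ** 2 for a, b in zip(reference, reconstructed)) / len(reference)
    if mse == 0:
        return math.inf  # identical signals: PSNR is unbounded
    return 10 * math.log10(max_value ** 2 / mse)


print(round(psnr([0, 0, 0, 0], [0, 0, 0, 2]), 2))  # MSE = 1, so 48.13
```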

In Tamilmathi and Chithra, the authors carefully inspected possible signs of plant leaf diseases. They employed feature learning and observed the correlation and/or similarity between disease-related symptoms, which makes disease identification possible.

In Das Chagas Silva Araujo et al., the authors proposed a benchmark environment to compare multiple algorithms for depth reconstruction from two event-based sensors. In their evaluation, a stereo matching algorithm was implemented, and multiple experiments were run with different camera settings and parameters. The authors claimed that this work could serve as a benchmark for the robust evaluation of the multitude of new techniques in event-based stereo vision.

In Steffen et al.; Gornale et al., the authors employed handwritten signatures to better understand this behavioral biometric trait for authenticating or verifying documents such as letters, contracts, and wills. They extracted handcrafted features such as LBP and HOG from 4,790 signatures so that shallow learning could be applied efficiently. Using k-NN, decision tree and support vector machine classifiers, they reported promising performance.
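As a simplified illustration of the handcrafted-feature approach described above, the sketch computes a basic 8-neighbour local binary pattern (LBP) histogram. It then classifies a query by its nearest training histogram (1-NN with L1 distance). Several details are assumptions rather than the authors' settings: the radius of 1, the absence of uniform-pattern binning, the distance, and the function names. Their exact LBP/HOG parameters are not given here.

```python
def lbp_histogram(img):
    """Basic 3x3 LBP: normalized 256-bin histogram over interior pixels of a 2D nested list."""
    # Neighbour offsets (dy, dx), clockwise from top-left; bit i is set if neighbour >= centre.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    hist = [0] * 256
    h, w = len(img), len(img[0])
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            centre = img[y][x]
            code = 0
            for bit, (dy, dx) in enumerate(offsets):
                if img[y + dy][x + dx] >= centre:
                    code |= 1 << bit
            hist[code] += 1
    total = sum(hist)
    return [v / total for v in hist] if total else hist


def nearest_neighbor_label(query, train):
    """train: list of (histogram, label); return the label of the closest histogram (L1)."""
    best = min(train, key=lambda item: sum(abs(a - b) for a, b in zip(query, item[0])))
    return best[1]


flat = [[5] * 4 for _ in range(4)]
edge = [[0, 0, 9, 9] for _ in range(4)]
train = [(lbp_histogram(flat), "flat"), (lbp_histogram(edge), "edge")]
print(nearest_neighbor_label(lbp_histogram([[1, 1, 5, 5] for _ in range(4)]), train))  # edge
```

A k-NN variant would take a majority vote over the k closest histograms instead of the single nearest one.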

Author Contributions

The author confirms being the sole contributor of this work and has approved it for publication.

Conflict of Interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Santosh, KC, Antani, S., Guru, D. S., and Dey, N. (2019). Medical Imaging Artificial Intelligence, Image Recognition, and Machine Learning Techniques. United States: CRC Press. ISBN: 9780429029417. doi:10.1201/9780429029417

Santosh, KC, Das, N., and Ghosh, S. (2021). Deep Learning Models for Medical Imaging, Primers in Biomedical Imaging Devices and Systems. United States: Elsevier. eBook ISBN: 9780128236505.

Santosh, KC (2018). Document Image Analysis - Current Trends and Challenges in Graphics Recognition. United States: Springer. ISBN: 978-981-13-2338-6. doi:10.1007/978-981-13-2339-3

Santosh, KC, and Gaur, L. (2021). Artificial Intelligence and Machine Learning in Public Healthcare: Opportunities and Societal Impact. Spain: SpringerBriefs in Computational Intelligence Series. ISBN: 978-981-16-6768-8. doi:10.1007/978-981-16-6768-8

Santosh, KC, and Joshi, A. (2021). COVID-19: Prediction, Decision-Making, and its Impacts, Book Series in Lecture Notes on Data Engineering and Communications Technologies. United States: Springer Nature. ISBN: 978-981-15-9682-7. doi:10.1007/978-981-15-9682-7

Keywords: artificial intelligence, computer vision, machine learning, image processing, signal processing, pattern recognition

Citation: Santosh KC (2021) Editorial: Current Trends in Image Processing and Pattern Recognition. Front. Robot. AI 8:785075. doi: 10.3389/frobt.2021.785075

Received: 28 September 2021; Accepted: 06 October 2021; Published: 09 December 2021.

Edited and reviewed by:

Copyright © 2021 Santosh. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: KC Santosh

This article is part of the Research Topic

Current Trends in Image Processing and Pattern Recognition

Image Processing Technology Based on Machine Learning


Real-time intelligent image processing for security applications

- Guest Editorial

- Published: 05 September 2021

- Volume 18, pages 1787–1788 (2021)


- Akansha Singh,

- Ping Li,

- Krishna Kant Singh &

- Vijayalakshmi Saravana


The advent of machine learning and image processing techniques has opened new research opportunities in security. Machine learning enables the automatic extraction and analysis of information from images, and its convergence with image processing is useful in a variety of security applications. Image processing plays a significant role in both physical and digital security. Physical security applications include homeland security, surveillance, identity authentication, and so on. Digital security means protecting digital data, which techniques such as digital watermarking, network security, and steganography make possible.


1 Accepted papers

The rapidly increasing capabilities of imaging systems and techniques have opened new research areas in the security domain. The rise in cyber and physical crime requires novel techniques to counter it. In both physical and digital security, real-time performance is crucial, because having the right image information at the right time enables situational awareness. Real-time image processing techniques perform the required operation with a latency that stays within the required time frame. Physical security applications such as surveillance and object tracking are practical only if they run in real time. Similarly, biometric authentication, watermarking and network security are time-constrained applications that require real-time image processing. This special issue aims to bring together researchers to present novel tools and techniques for real-time image processing in security applications, augmented by machine learning.

This special issue on Real-Time Intelligent Image Processing for Security Applications comprises contributions on theory and applications related to the latest developments in security applications of image processing. Real-time imaging and video processing can help solve a variety of security problems, and the articles in this special issue address such problems.

The paper entitled “RGB + D and deep learning-based real-time detection of suspicious event in Bank ATMs” presents a real-time method for detecting human activities, applied to enhance the surveillance and security of bank automated teller machines (ATMs) [1]. The increasing number of illicit activities at ATMs has become a security concern.

Existing surveillance methods that rely on human monitoring are not very efficient, because they depend heavily on the behavior of security personnel. The proposed solution enables real-time surveillance of these machines. The authors present a deep learning-based method for detecting different kinds of motion in the video stream, and motions are classified as abnormal whenever suspicious activity occurs.

The paper entitled “A real-time person tracking system based on SiamMask network for intelligent video surveillance” presents a real-time surveillance system that tracks persons and can be applied in various public places, offices, buildings, etc. [2]. The authors present a person tracking and segmentation system that uses an overhead camera perspective.

The paper entitled “Adaptive and stabilized real-time super-resolution control for UAV-assisted smart harbor surveillance platforms” presents a method for smart harbor surveillance platforms [3]. The method uses drones for flexible localization of nodes. The authors propose an algorithm for scheduling among the data transmitted by different drones and multi-access edge computing systems. In the second stage of the algorithm, all drones transmit their own data, which are then used for surveillance. The authors further apply super-resolution to improve data quality and therefore surveillance quality. A Lyapunov optimization-based method maximizes the time-average performance of the system subject to the stability of the self-adaptive super-resolution control.

The paper entitled “Real-Time Video Summarizing using Image Semantic Segmentation for CBVR” presents a real-time video summarization method that uses image semantic segmentation for content-based video retrieval (CBVR) [4]. Videos are summarized frame-wise using stacked generalization over an ensemble of different machine learning algorithms, and they are ranked by how long a particular building or monument appears in them. The videos are retrieved using a KD tree. The summaries are built from the prominent objects in each video scene and are used to query the video for the required frames, with labeling done by machine learning and image matching algorithms. The method can be applied to various security surveillance applications.
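The editorial notes only that videos are retrieved with a KD tree. As a generic sketch, the Python below builds a k-d tree over feature vectors and answers nearest-neighbour queries with the standard branch-pruning rule. What the authors actually index is not stated, so the tuple-of-floats feature vectors, the squared Euclidean distance and the function names here are hypothetical.

```python
def build_kdtree(points, depth=0):
    """Recursively build a k-d tree; each node is (point, left, right, axis)."""
    if not points:
        return None
    axis = depth % len(points[0])  # cycle through coordinate axes
    pts = sorted(points, key=lambda p: p[axis])
    mid = len(pts) // 2  # median point becomes the splitting node
    return (pts[mid],
            build_kdtree(pts[:mid], depth + 1),
            build_kdtree(pts[mid + 1:], depth + 1),
            axis)


def nearest(node, target, best=None):
    """Return the stored point with the smallest squared Euclidean distance to target."""
    if node is None:
        return best
    point, left, right, axis = node

    def dist2(p):
        return sum((a - b) ** 2 for a, b in zip(p, target))

    if best is None or dist2(point) < dist2(best):
        best = point
    diff = target[axis] - point[axis]
    near, far = (left, right) if diff < 0 else (right, left)
    best = nearest(near, target, best)
    # Search the far side only if the splitting plane is closer than the current best.
    if diff ** 2 < dist2(best):
        best = nearest(far, target, best)
    return best


tree = build_kdtree([(2, 3), (5, 4), (9, 6), (4, 7), (8, 1), (7, 2)])
print(nearest(tree, (9, 2)))  # (8, 1)
```

The pruning test is what makes the tree worthwhile: whole subtrees whose splitting plane lies farther away than the best match found so far are never visited.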

The paper entitled “A real-time classification model based on joint sparse-collaborative representation” presents a classification model based on joint sparse-collaborative representation [5]. It builds on the two-phase test sample representation method. The authors improve the first phase of the traditional two-phase method, and because the second phase suffers from an imbalance in the training samples, they also include the unselected training samples in the model. The proposed method was applied to several face databases and showed good recognition accuracy.
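Joint sparse-collaborative models such as the one above build on collaborative representation. A test sample is coded over all training samples at once and then assigned to the class whose samples reconstruct it best. The sketch below shows only the basic collaborative representation classifier (ridge-regularized least squares followed by a class-wise regularized residual). It is not the paper's two-phase joint model. The regularization weight `lam`, the helper names and the toy vectors are assumptions. In practice, training vectors are usually normalized to unit length first.

```python
import math


def solve(a, b):
    """Solve a x = b for a small dense square system (Gauss-Jordan, partial pivoting)."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= f * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def crc_classify(train, labels, y, lam=0.01):
    """train: training vectors; labels: class of each; y: test vector. Returns a label."""
    n = len(train)
    # Ridge normal equations: (X^T X + lam*I) alpha = X^T y, with X's columns = train.
    gram = [[sum(a * b for a, b in zip(train[i], train[j])) + (lam if i == j else 0.0)
             for j in range(n)] for i in range(n)]
    rhs = [sum(a * b for a, b in zip(train[i], y)) for i in range(n)]
    alpha = solve(gram, rhs)
    best_label, best_score = None, math.inf
    for cls in sorted(set(labels)):
        idx = [i for i in range(n) if labels[i] == cls]
        # Reconstruct y from this class's samples and their coefficients only.
        recon = [sum(alpha[i] * train[i][d] for i in idx) for d in range(len(y))]
        resid = math.sqrt(sum((yd - rd) ** 2 for yd, rd in zip(y, recon)))
        norm = math.sqrt(sum(alpha[i] ** 2 for i in idx))
        score = resid / norm if norm > 0 else math.inf  # regularized residual
        if score < best_score:
            best_label, best_score = cls, score
    return best_label


train = [[1, 0, 0], [0.9, 0.1, 0], [0, 0, 1], [0, 0.1, 0.9]]
labels = ["a", "a", "b", "b"]
print(crc_classify(train, labels, [0.95, 0.05, 0]))  # a
```

The single linear solve over all training samples, rather than a sparse (ℓ1) optimization, is what keeps collaborative representation fast enough for real-time use.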

The paper entitled “Recognizing Human Violent Action Using Drone Surveillance within Real-Time Proximity” presents a method for recognizing violent human actions using drone surveillance [6]. The authors present automated recognition and classification of human actions from drone videos, and they also create a database captured with drones in an unconstrained environment. Key points are extracted to generate 2D skeletons for the people in each frame, and these key points are passed as features to the classification module, which uses SVM and Random Forest classifiers. The proposed method can thus recognize violent actions.
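Before key points are fed to an SVM or Random Forest, they are commonly normalized so that a person's position and apparent size in the frame do not dominate the features. The sketch below shows one such normalization. The COCO 17-keypoint layout and the hip-centred, torso-scaled feature design are assumptions for illustration, not the paper's documented features.

```python
"""Normalize a 2D skeleton into a feature vector for an action classifier.

Assumption: keypoints follow the COCO 17-point ordering used by common
pose estimators (5/6 = shoulders, 11/12 = hips).
"""
from __future__ import annotations

import math
from typing import Sequence

L_SHOULDER, R_SHOULDER, L_HIP, R_HIP = 5, 6, 11, 12


def midpoint(a: Sequence[float], b: Sequence[float]) -> tuple[float, float]:
    return ((a[0] + b[0]) / 2.0, (a[1] + b[1]) / 2.0)


def skeleton_features(keypoints: Sequence[Sequence[float]]) -> list[float]:
    """Return [x0, y0, x1, y1, ...] centred on the hips and scaled by torso length."""
    hip = midpoint(keypoints[L_HIP], keypoints[R_HIP])
    shoulder = midpoint(keypoints[L_SHOULDER], keypoints[R_SHOULDER])
    torso = math.dist(hip, shoulder)
    if torso == 0:
        raise ValueError("degenerate skeleton: shoulders and hips coincide")
    feats: list[float] = []
    for x, y in keypoints:
        # Subtracting the hip centre gives translation invariance; dividing
        # by torso length gives scale invariance across drone altitudes.
        feats.extend(((x - hip[0]) / torso, (y - hip[1]) / torso))
    return feats
```

Because the output does not change when a skeleton is shifted or uniformly scaled, the classifier can focus on pose rather than on where the person stands in the frame.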

2 Conclusion

The editors believe that the papers selected for this special issue will enhance the body of knowledge in the field of security using real-time imaging. We would like to thank the authors for contributing their work to this special issue. The editors would also like to thank the reviewers for their insightful comments, which have been a guiding force in improving the quality of the papers, and the editorial staff for their support and help. We are especially thankful to the Journal of Real-Time Image Processing Chief Editors, Nasser Kehtarnavaz and Matthias F. Carlsohn, who gave us the opportunity to offer this special issue.

Khaire, P.A., Kumar, P.: RGB+D and deep learning-based real-time detection of suspicious event in Bank-ATMs. J Real-Time Image Proc 23, 1–3 (2021)

Ahmed, I., Jeon, G.: A real-time person tracking system based on SiamMask network for intelligent video surveillance. J Real-Time Image Proc 28, 1–2 (2021)

Jung, S., Kim, J.: Adaptive and stabilized real-time super-resolution control for UAV-assisted smart harbor surveillance platforms. J Real-Time Image Proc 17, 1–1 (2021)

Jain, R., Jain, P., Kumar, T., Dhiman, G.: Real time video summarizing using image semantic segmentation for CBVR. J Real-Time Image Proc (2021)

Li, Y., Jin, J., Chen, C.L.P.: A real-time classification model based on joint sparse-collaborative representation. J Real-Time Image Proc (2021)

Srivastava, A., Badal, T., Garg, A., Vidyarthi, A., Singh, R.: Recognizing human violent action using drone surveillance within real time proximity. J Real-Time Image Proc (2021)

Author information

Authors and affiliations.

Computer Science Engineering Department, Bennett University, Greater Noida, India

Akansha Singh

Department of Computing, The Hong Kong Polytechnic University, Kowloon, Hong Kong

Faculty of Engineering and Technology, Jain (Deemed-To-Be University), Bengaluru, India

Krishna Kant Singh

Department of Computer Science, University of South Dakota, Vermillion, USA

Vijayalakshmi Saravana


Corresponding author

Correspondence to Akansha Singh .

Additional information

Publisher's note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


About this article

Singh, A., Li, P., Singh, K.K. et al. Real-time intelligent image processing for security applications. J Real-Time Image Proc 18, 1787–1788 (2021). https://doi.org/10.1007/s11554-021-01169-w


Published : 05 September 2021

Issue Date : October 2021

DOI : https://doi.org/10.1007/s11554-021-01169-w


Image preprocessing: recently published documents


Degraded document image preprocessing using local adaptive sharpening and illumination compensation

Quantitative identification cracks of heritage rock based on digital image technology.

Digital image processing technologies are used in this paper to extract and evaluate the cracks in heritage rock. First, the image goes through a series of preprocessing operations, such as graying, enhancement, filtering and binarization, to remove most of the noise. Then, to extract the crack area accurately, the image is segmented to isolate the crack area and morphologically filtered. After evaluation, the extracted fracture area can provide data support for the restoration and protection of heritage rock. Cracks are extracted at three different locations of the heritage rock. The results show that the three groups of rock fractures affect the rock differently, but all of them need to be repaired to maintain the appearance of the heritage rock.
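The preprocessing chain described in this abstract (graying, enhancement, filtering, binarization, then morphological filtering) can be sketched in NumPy as follows. The specific operators, namely BT.601 luminance weights, a linear contrast stretch, a 3×3 median filter, Otsu thresholding of dark pixels and a 3×3 closing, are illustrative assumptions; the abstract does not say which ones the authors used.

```python
"""Sketch of a crack-extraction chain: gray -> enhance -> filter -> binarize -> morphology.

Operator choices are assumptions; cracks are assumed darker than the rock.
"""
import numpy as np


def neighbourhood(img: np.ndarray, **pad_kwargs) -> np.ndarray:
    """Stack the 9 shifted copies that form each pixel's 3x3 window."""
    h, w = img.shape
    p = np.pad(img, 1, **pad_kwargs)
    return np.stack([p[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)])


def to_gray(rgb: np.ndarray) -> np.ndarray:
    # ITU-R BT.601 luminance weights
    return rgb[..., :3].astype(np.float64) @ np.array([0.299, 0.587, 0.114])


def stretch(gray: np.ndarray) -> np.ndarray:
    # Linear contrast stretch to the full 0-255 range
    lo, hi = gray.min(), gray.max()
    if hi == lo:
        return np.zeros_like(gray)
    return (gray - lo) * 255.0 / (hi - lo)


def otsu_threshold(img: np.ndarray) -> int:
    # Choose t maximizing the between-class variance of {<= t} and {> t}.
    hist = np.bincount(img.ravel(), minlength=256).astype(np.float64)
    prob = hist / hist.sum()
    omega = np.cumsum(prob)
    mu = np.cumsum(prob * np.arange(256))
    with np.errstate(divide="ignore", invalid="ignore"):
        between = (mu[-1] * omega - mu) ** 2 / (omega * (1.0 - omega))
    between[~np.isfinite(between)] = 0.0
    return int(np.argmax(between))


def closing(mask: np.ndarray) -> np.ndarray:
    # Dilation followed by erosion with a 3x3 square bridges small gaps.
    dilated = neighbourhood(mask, mode="constant", constant_values=False).any(axis=0)
    return neighbourhood(dilated, mode="constant", constant_values=True).all(axis=0)


def extract_cracks(rgb: np.ndarray) -> np.ndarray:
    gray = stretch(to_gray(rgb))
    smooth = np.median(neighbourhood(gray, mode="edge"), axis=0)  # 3x3 median filter
    quantized = np.clip(np.rint(smooth), 0, 255).astype(np.uint8)
    return closing(quantized <= otsu_threshold(quantized))
```

The median filter removes isolated dark specks before thresholding, and the closing afterwards reconnects crack segments broken by a pixel or two.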

Image Preprocessing Method in Radiographic Inspection for Automatic Detection of Ship Welding Defects

Welding defects must be inspected to verify that the welds meet the requirements for ship welded joints, and among nondestructive inspection methods, radiographic inspection is widely applied during the production process. For nondestructive inspection, the completed weldment must be transported to the inspection station, which is expensive; consequently, automated detection of welding defects is required. Recently, several companies have been attempting to apply deep learning at their processing sites to detect defects more accurately. To detect welding defects automatically with deep learning during radiographic nondestructive inspection, the radiographic images should first be preprocessed. In this study, we analyzed the pixel values and developed an image preprocessing method that integrates the defect features. After the contrast between the defect and the background in the radiographic images was maximized through CLAHE (contrast-limited adaptive histogram equalization), denoising (noise removal), thresholding (threshold processing), and concatenation were performed sequentially. The improvement in detection performance due to preprocessing was verified by comparing the algorithm's results on raw images, typically preprocessed images, and images preprocessed with the proposed method. The mAP on the training and test data was 84.9% and 51.2% for the model trained on images preprocessed with the proposed method, compared with 82.0% and 43.5% for the model trained on typically preprocessed images and 78.0% and 40.8% for the model trained on raw images. Object detection algorithms improve every year, with mAP gains of approximately 3% to 10%; this study achieved a comparable improvement through data preprocessing alone.
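A simplified version of this preprocessing sequence (contrast enhancement, denoising, thresholding, concatenation) is sketched below. Two assumptions are worth stating plainly: the enhancement is a global contrast-limited histogram equalization rather than tiled CLAHE, and "concatenation" is interpreted as stacking the three intermediate images as channels of one network input. The clip limit, the 3×3 mean filter and the mean-based threshold are hypothetical choices.

```python
"""Sketch: contrast-limited equalization -> denoising -> thresholding -> concatenation.

Assumptions: global (not tiled) contrast limiting, a 3x3 mean filter for
denoising, a mean-based threshold, and channel stacking as "concatenation".
"""
import numpy as np


def clipped_equalize(img: np.ndarray, clip_limit: float = 0.01) -> np.ndarray:
    """Global contrast-limited histogram equalization of a uint8 image."""
    hist = np.bincount(img.ravel(), minlength=256).astype(np.float64)
    cap = clip_limit * img.size                     # maximum count allowed per bin
    excess = np.clip(hist - cap, 0.0, None).sum()
    hist = np.minimum(hist, cap) + excess / 256.0   # spread clipped counts evenly
    cdf = np.cumsum(hist)
    lut = np.rint(255.0 * cdf / cdf[-1]).astype(np.uint8)
    return lut[img]


def box_blur3(img: np.ndarray) -> np.ndarray:
    """3x3 mean filter with edge replication."""
    h, w = img.shape
    p = np.pad(img.astype(np.float64), 1, mode="edge")
    acc = sum(p[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3))
    return np.rint(acc / 9.0).astype(np.uint8)


def preprocess(img: np.ndarray, clip_limit: float = 0.01) -> np.ndarray:
    enhanced = clipped_equalize(img, clip_limit)
    denoised = box_blur3(enhanced)
    # Hypothetical threshold: flag pixels darker than the mean as candidates.
    binary = np.where(denoised < denoised.mean(), 255, 0).astype(np.uint8)
    # "Concatenation" interpreted as stacking the three results as channels.
    return np.stack([enhanced, denoised, binary], axis=-1)
```

Lowering `clip_limit` caps how much any single intensity range can be stretched, which limits noise amplification. Tiled CLAHE, as used in the study, is available in OpenCV through `cv2.createCLAHE`; the global variant here only illustrates the clipping idea.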

IMAGE PREPROCESSING METHODS IN PERSONAL IDENTIFICATION SYSTEMS

A measurement of visual complexity for heterogeneity in the built environment based on fractal dimension and its application in two gardens.

In this study, a fractal dimension-based method was developed to compute the visual complexity of heterogeneity in the built environment. The built environment is a highly complex combination of natural and artificial structural elements. Computing its fractal dimension is often disturbed by homogeneous visual redundancy, which is textured but needs little attention to process, and this leads to a misleading evaluation of visual complexity in the built environment. Based on human visual perception, the study developed a method, the fractal dimension of heterogeneity in the built environment, which uses Potts segmentation and Canny edge detection as the image preprocessing procedure and the fractal dimension as the computation procedure. The proposed method effectively extracts perceptually meaningful edge structures in the visual image and computes a visual complexity that is consistent with human visual characteristics. In addition, an evaluation system combining the proposed method and the traditional method was established to classify and assess the visual complexity of a scene more comprehensively. Two different gardens were analyzed to demonstrate that the proposed method and the evaluation system provide a robust and accurate way to measure visual complexity in the built environment.
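The fractal-dimension step is often computed by box counting on a binary edge map, such as the output of Canny edge detection. The sketch below estimates the dimension as the slope of log N(s) against log(1/s), where N(s) is the number of s × s boxes containing at least one edge pixel. Box counting itself and the default box sizes are assumptions; the abstract does not name the estimator, and the Potts segmentation stage is not reproduced.

```python
"""Box-counting estimate of the fractal dimension of a binary edge map."""
import numpy as np


def box_count(mask: np.ndarray, size: int) -> int:
    """Number of size x size boxes that contain at least one edge pixel."""
    h, w = mask.shape
    return sum(
        bool(mask[y:y + size, x:x + size].any())
        for y in range(0, h, size)
        for x in range(0, w, size)
    )


def box_counting_dimension(mask: np.ndarray, sizes=(1, 2, 4, 8, 16)) -> float:
    if not mask.any():
        raise ValueError("edge map is empty")
    sizes = np.asarray(sizes, dtype=np.float64)
    counts = np.array([box_count(mask, int(s)) for s in sizes], dtype=np.float64)
    # The dimension is the slope of log N(s) versus log(1/s).
    slope, _intercept = np.polyfit(np.log(1.0 / sizes), np.log(counts), 1)
    return float(slope)
```

A straight edge scores close to 1 and a completely filled region close to 2, so richer, more heterogeneous edge structure lands in between.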

Extracting Weld Bead Shapes from Radiographic Testing Images with U-Net

Weld beads are the metal formed by melting the base metal and welding rods during welding operations. The weld bead shape allows pores and defects such as cracks in the weld zone to be observed. Radiographic testing images are used to determine the quality of the weld zone. Extracting only the weld bead to determine its generative pattern can help locate defects in the weld zone efficiently. However, manually extracting the weld bead from weld images is neither time- nor cost-effective. Automating weld bead extraction through deep learning enables efficient and rapid welding quality inspection and, as a result, secures objectivity in the quality inspection and assessment of the weld zone in the shipbuilding and offshore plant industry. This study presents a method that detects the weld bead shape and location in the weld zone image using image preprocessing and deep learning models, and then extracts the weld bead through image post-processing. In addition, data augmentation was performed to artificially expand the image data, diversifying the data and improving deep learning performance. Contrast-limited adaptive histogram equalization (CLAHE) is used for image preprocessing, and the bead is extracted using U-Net, a pixel-based deep learning model. The resulting mean intersection over union (mIoU) values are 90.58% and 85.44% in the training and test experiments, respectively, and the bead is successfully extracted from the radiographic testing image through post-processing.
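The reported mIoU averages the intersection over union across classes. A small NumPy sketch of that metric for integer label maps follows; skipping classes that are absent from both the prediction and the ground truth is one common convention among several, and it is an assumption here rather than the study's stated protocol.

```python
"""Mean intersection over union (mIoU) for integer label maps."""
import numpy as np


def mean_iou(pred: np.ndarray, target: np.ndarray, num_classes: int) -> float:
    ious = []
    for c in range(num_classes):
        p = pred == c
        t = target == c
        union = np.logical_or(p, t).sum()
        if union == 0:
            continue  # class absent from both maps: skipped by assumption
        ious.append(np.logical_and(p, t).sum() / union)
    if not ious:
        raise ValueError("no classes present in either map")
    return float(np.mean(ious))
```

For a bead/background task, `num_classes=2` averages the bead IoU with the background IoU; whether the background class is included varies between papers.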

An Improved Homomorphic Filtering Algorithm for Face Image Preprocessing

Automatic detection of mesiodens on panoramic radiographs using artificial intelligence.

This study aimed to develop an artificial intelligence model that can detect mesiodens on panoramic radiographs of various dentition groups. Panoramic radiographs of 612 patients were used for training. A convolutional neural network (CNN) model based on YOLOv3 was developed to detect mesiodens. Model performance was evaluated for three dentition groups (primary, mixed, and permanent dentition), both internally (130 images) and externally (118 images), using a multi-center dataset. To investigate the effect of image preprocessing, contrast-limited adaptive histogram equalization (CLAHE) was applied to the original images. On the original images, the accuracy was 96.2% on the internal test dataset and 89.8% on the external test dataset. For primary, mixed, and permanent dentition, the accuracy was 96.7%, 97.5%, and 93.3%, respectively, on the internal test dataset and 86.7%, 95.3%, and 86.7%, respectively, on the external test dataset. The CLAHE images yielded less accurate results than the original images on both test datasets. The proposed model performed well on the internal and external test datasets and has potential for clinical use in detecting mesiodens on panoramic radiographs of all dentition types. CLAHE preprocessing had a negligible effect on model performance.

Vehicle License Plate Image Preprocessing Strategy Under Fog/Hazy Weather Conditions

An image preprocessing model of coal and gangue in high dust and low light conditions based on the joint enhancement algorithm.

Because of heavy dust pollution in coal mines, lighting is impaired, which causes dim areas, shadows, or reflections in coal and gangue images and makes it difficult to distinguish coal and gangue from the background. To solve these problems, this paper proposes a preprocessing model for low-quality coal and gangue images based on a joint enhancement algorithm. First, the characteristics of coal and gangue images are analyzed in detail, and ways to improve them are proposed. Second, an image preprocessing flow for coal and gangue is established based on local features. Finally, a joint image enhancement algorithm based on bilateral filtering is proposed. In the experiments, K-means clustering segmentation is applied, and the segmentation results of different preprocessing methods are compared using information entropy and structural similarity. Simulation experiments on six scenes show that the proposed preprocessing model effectively reduces noise, improves overall brightness and contrast, and enhances image details, while also yielding better segmentation. Together, these results provide a better basis for target recognition.
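Information entropy, one of the two metrics used here to compare preprocessing methods, can be computed from a grayscale histogram as H = -Σ p_i log2(p_i), where p_i is the fraction of pixels with intensity i. A minimal sketch, assuming 8-bit grayscale input, follows.

```python
"""Shannon entropy (in bits) of an 8-bit grayscale image's histogram."""
import numpy as np


def image_entropy(gray) -> float:
    hist = np.bincount(np.asarray(gray, dtype=np.uint8).ravel(), minlength=256)
    p = hist[hist > 0] / hist.sum()  # drop empty bins: 0 * log(0) is taken as 0
    return float(-(p * np.log2(p)).sum())
```

A flat image scores 0 bits and an image that uses all 256 levels equally scores the maximum of 8 bits, so higher entropy after enhancement is usually read as more visible detail.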


MIT Technology Review


Google helped make an exquisitely detailed map of a tiny piece of the human brain

A small brain sample was sliced into 5,000 pieces, and machine learning helped stitch it back together.

- Cassandra Willyard

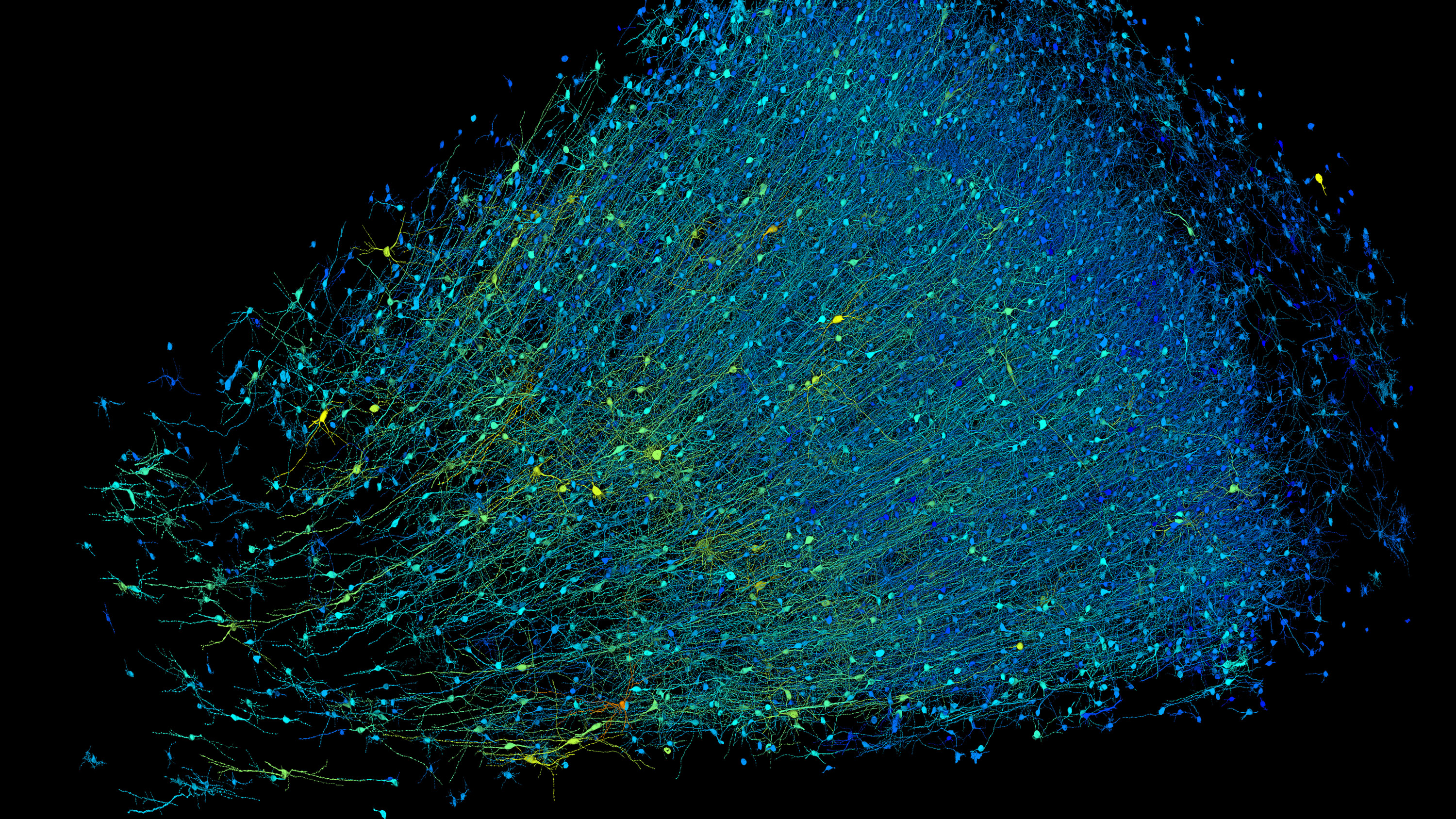

A team led by scientists from Harvard and Google has created a 3D, nanoscale-resolution map of a single cubic millimeter of the human brain. Although the map covers just a fraction of the organ—a whole brain is a million times larger—that piece contains roughly 57,000 cells, about 230 millimeters of blood vessels, and nearly 150 million synapses. It is currently the highest-resolution picture of the human brain ever created.

To make a map this finely detailed, the team had to cut the tissue sample into 5,000 slices and scan them with a high-speed electron microscope. Then they used a machine-learning model to help electronically stitch the slices back together and label the features. The raw data set alone took up 1.4 petabytes. “It’s probably the most computer-intensive work in all of neuroscience,” says Michael Hawrylycz, a computational neuroscientist at the Allen Institute for Brain Science, who was not involved in the research. “There is a Herculean amount of work involved.”

Many other brain atlases exist, but most provide much lower-resolution data. At the nanoscale, researchers can trace the brain’s wiring one neuron at a time to the synapses, the places where they connect. “To really understand how the human brain works, how it processes information, how it stores memories, we will ultimately need a map that’s at that resolution,” says Viren Jain, a senior research scientist at Google and coauthor on the paper, published in Science on May 9. The data set itself and a preprint version of this paper were released in 2021.

Brain atlases come in many forms. Some reveal how the cells are organized. Others cover gene expression. This one focuses on connections between cells, a field called “connectomics.” The outermost layer of the brain contains roughly 16 billion neurons that link up with each other to form trillions of connections. A single neuron might receive information from hundreds or even thousands of other neurons and send information to a similar number. That makes tracing these connections an exceedingly complex task, even in just a small piece of the brain.

To create this map, the team faced a number of hurdles. The first problem was finding a sample of brain tissue. The brain deteriorates quickly after death, so cadaver tissue doesn’t work. Instead, the team used a piece of tissue removed from a woman with epilepsy during brain surgery that was meant to help control her seizures.

Once the researchers had the sample, they had to carefully preserve it in resin so that it could be cut into slices, each about a thousandth the thickness of a human hair. Then they imaged the sections using a high-speed electron microscope designed specifically for this project.

Next came the computational challenge. “You have all of these wires traversing everywhere in three dimensions, making all kinds of different connections,” Jain says. The team at Google used a machine-learning model to stitch the slices back together, align each one with the next, color-code the wiring, and find the connections. This is harder than it might seem. “If you make a single mistake, then all of the connections attached to that wire are now incorrect,” Jain says.

“The ability to get this deep a reconstruction of any human brain sample is an important advance,” says Seth Ament, a neuroscientist at the University of Maryland. The map is “the closest to the ground truth that we can get right now.” But he also cautions that it’s a single brain specimen taken from a single individual.

The map, which is freely available at a web platform called Neuroglancer, is meant to be a resource other researchers can use to make their own discoveries. “Now anybody who’s interested in studying the human cortex in this level of detail can go into the data themselves. They can proofread certain structures to make sure everything is correct, and then publish their own findings,” Jain says. (The preprint has already been cited at least 136 times.)

The team has already identified some surprises. For example, some of the long tendrils that carry signals from one neuron to the next formed “whorls,” spots where they twirled around themselves. Axons typically form a single synapse to transmit information to the next cell. The team identified single axons that formed repeated connections—in some cases, 50 separate synapses. Why that might be isn’t yet clear, but the strong bonds could help facilitate very quick or strong reactions to certain stimuli, Jain says. “It’s a very simple finding about the organization of the human cortex,” he says. But “we didn’t know this before because we didn’t have maps at this resolution.”

The data set was full of surprises, says Jeff Lichtman, a neuroscientist at Harvard University who helped lead the research. “There were just so many things in it that were incompatible with what you would read in a textbook.” The researchers may not have explanations for what they’re seeing, but they have plenty of new questions: “That’s the way science moves forward.”


A research team from Technion – Israel Institute of Technology and Google Research has introduced SliCK, a framework designed to examine how new knowledge is integrated into LLMs. The method categorizes knowledge into distinct levels, ranging from HighlyKnown to Unknown, which allows a granular analysis of how different types of information affect model performance. This setup enables a precise evaluation of the model’s ability to assimilate new facts while maintaining the accuracy of its existing knowledge.
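The summary lists the four categories without defining them. As we understand the SliCK paper, a question's category depends on how often the model answers it correctly under greedy decoding (T = 0) and under temperature sampling (T > 0) across few-shot prompts. The sketch below encodes that reading; treat the exact rules as an assumption to be checked against the paper.

```python
"""SliCK-style knowledge categories from per-question correctness (assumed rules)."""
from enum import Enum
from typing import Sequence


class Category(Enum):
    HIGHLY_KNOWN = "HighlyKnown"
    MAYBE_KNOWN = "MaybeKnown"
    WEAKLY_KNOWN = "WeaklyKnown"
    UNKNOWN = "Unknown"


def categorize(greedy_correct: Sequence[bool], sampled_correct: Sequence[bool]) -> Category:
    """greedy_correct: correctness of greedy (T=0) answers over few-shot prompts;
    sampled_correct: correctness of temperature-sampled (T>0) answers."""
    if not greedy_correct:
        raise ValueError("need at least one greedy answer")
    p_greedy = sum(greedy_correct) / len(greedy_correct)
    if p_greedy == 1.0:
        return Category.HIGHLY_KNOWN      # always right under greedy decoding
    if p_greedy > 0.0:
        return Category.MAYBE_KNOWN       # sometimes right under greedy decoding
    if any(sampled_correct):
        return Category.WEAKLY_KNOWN      # only sampling ever finds the answer
    return Category.UNKNOWN               # never answered correctly
```

Under this reading, the "Known" share of a fine-tuning mix is everything outside `UNKNOWN`.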

The study uses PaLM, an LLM developed by Google, fine-tuned on datasets designed to contain varying proportions of the four knowledge categories: HighlyKnown, MaybeKnown, WeaklyKnown, and Unknown. These datasets are derived from a curated subset of factual questions mapped from Wikidata relations, which enables a controlled examination of the model’s learning dynamics. The model’s performance across these categories is measured with exact match (EM) to assess how effectively it integrates new information without hallucinating. This structured approach gives a clear view of how fine-tuning on familiar versus novel data affects accuracy.
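Exact match counts an answer as correct only if it equals a gold answer after normalization. The normalization below (lower-casing, then removing punctuation, English articles and extra whitespace) follows the widely used SQuAD-style convention; whether the study used exactly these steps is an assumption.

```python
"""Exact-match (EM) scoring with SQuAD-style answer normalization (assumed)."""
import re
import string
from typing import Sequence

_PUNCT = set(string.punctuation)


def normalize(text: str) -> str:
    text = text.lower()
    text = "".join(ch for ch in text if ch not in _PUNCT)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, gold_answers: Sequence[str]) -> float:
    # 1.0 if the normalized prediction equals any normalized gold answer.
    return float(any(normalize(prediction) == normalize(g) for g in gold_answers))


def em_score(predictions: Sequence[str], golds: Sequence[Sequence[str]]) -> float:
    return sum(exact_match(p, g) for p, g in zip(predictions, golds)) / len(predictions)
```

EM is strict: "Paris, France" does not match "Paris", which is why normalization choices can shift reported accuracies.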

The study’s findings demonstrate the effectiveness of the SliCK categorization in improving the fine-tuning process. Models trained with this structured approach, particularly with a 50% Known and 50% Unknown mix, achieved the best balance, with 5% higher accuracy in generating correct responses than models trained predominantly on Unknown data. Conversely, when the proportion of Unknown data exceeded 70%, the models’ propensity to hallucinate increased by approximately 12%. These results show how SliCK can be used to quantify and manage the risk of errors as new information is integrated during the fine-tuning of LLMs.

To summarize, the research by Technion – Israel Institute of Technology and Google Research thoroughly examines fine-tuning LLMs using the SliCK framework to manage the integration of new knowledge. The study highlights the delicate balance required in model training, with the PaLM model demonstrating improved accuracy and reduced hallucinations when trained under controlled knowledge conditions. These findings underscore the importance of strategic data categorization in enhancing model reliability and performance, offering valuable insights for future developments in machine learning methodologies.


Nikhil is an intern consultant at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology, Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in Material Science, he is exploring new advancements and creating opportunities to contribute.


Image processing articles from across Nature Portfolio ... Research Open Access 30 Apr 2024 npj ... When it comes to bioimaging and image analysis, details matter. Papers in this issue offer ...

The 5th International Conference on Recent Trends in Image Processing and Pattern Recognition (RTIP2R) aims to attract current and/or advanced research on image processing, pattern recognition, computer vision, and machine learning. The RTIP2R will take place at the Texas A&M University—Kingsville, Texas (USA), on November 22-23, 2022, in ...

Read the latest Research articles in Image processing from Nature Methods ... When it comes to bioimaging and image analysis, details matter. Papers in this issue offer guidance for improved ...

Image enhancement plays an important role in improving image quality in the field of image processing, which is achieved by highlighting useful information and suppressing redundant information in the image. In this paper, the development of image enhancement algorithms is surveyed. The purpose of our review is to provide relevant researchers with a comprehensive and systematic analysis on ...

IET Image Processing journal publishes the latest research in image and video processing, covering the generation, processing and communication of visual information. Abstract Image denoising aims to remove noise from images and improve the quality of images. However, most image denoising methods heavily rely on pairwise training strategies and ...


The field of image processing has been the subject of intensive research and development activities for several decades. This broad area encompasses topics such as image/video processing, image/video analysis, image/video communications, image/video sensing, modeling and representation, computational imaging, electronic imaging, information forensics and security, 3D imaging, medical imaging ...

In Tamilmathi and Chithra, authors introduced a new deep learned quantization-based coding for 3D airborne LiDAR point cloud image. In their experimental results, authors showed that their model compressed an image into constant 16-bits of data and decompressed with approximately 160 dB of PSNR value, 174.46 s execution time with 0.6 s ...
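For reference, PSNR for 8-bit images is 10 · log10(MAX² / MSE) with MAX = 255, so every halving of the mean squared error adds about 3 dB. The sketch below computes this image-style PSNR. Point-cloud studies may define the peak value differently, so this is only an illustration of the formula and not necessarily the definition used in the cited work.

```python
"""Image-style peak signal-to-noise ratio (PSNR) in decibels."""
import math

import numpy as np


def psnr(reference, reconstructed, max_value: float = 255.0) -> float:
    ref = np.asarray(reference, dtype=np.float64)
    rec = np.asarray(reconstructed, dtype=np.float64)
    mse = float(np.mean((ref - rec) ** 2))
    if mse == 0.0:
        return math.inf  # identical signals: PSNR is unbounded
    return 10.0 * math.log10(max_value ** 2 / mse)
```

With MAX = 255, a reconstruction that is off by exactly one gray level everywhere (MSE = 1) scores about 48.1 dB.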

The Call for Papers of the special issue was initially sent out to the participants of the 2018 conference (2nd International Conference on Recent Trends in Image Processing and Pattern Recognition). To attract high quality research articles, we also accepted papers for review from outside the conference event.

With the recent advances in digital technology, ML and image processing are increasingly being integrated to help solve complex problems. In this special issue, we received six interesting papers covering the following topics: image prediction, image segmentation, clustering, compressed sensing, variational learning, and dynamic light coding.

results, then, finally, Section V concludes the paper. II. BACKGROUND AND RELATED WORKS. Over the past years, research has renewed interest in modeling image compression as a learning problem, giving a series of pioneering works [5]-[9], [14], [28]-[30] that have contributed to a universal fashion effect, and have

Deep learning is a powerful multi-layer architecture that has important applications in image processing and text classification. This paper first introduces the development of deep learning and two important algorithms of deep learning: convolutional neural networks and recurrent neural networks. The paper then introduces three applications of deep learning for image recognition, image ...


AI has had a substantial influence on image processing, allowing cutting-edge methods and uses. The foundations of image processing are covered in this chapter, along with representation, formats ...

IET Image Processing journal publishes the latest research in image and video processing, covering the generation, processing ... This paper offers a comprehensive review of recent advancements in optic disc and optic cup segmentation techniques crucial for glaucoma screening. It presents insights into commonly used datasets, evaluation metrics ...

2.2 Rotation. Rotation (Sifre and Mallat 2013) is another classical type of geometric data augmentation for images. The image is rotated around an axis, either to the right or to the left, by an angle between 1 and 359 degrees. Rotation may also be applied in fixed angular increments, for example rotating the image in steps of about 30 degrees.
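Rotation augmentation of this kind can be sketched with inverse mapping: for each output pixel, rotate its coordinates back to find the source pixel it came from. The nearest-neighbour sampling, the rotation about the image centre and the constant fill for uncovered corners are illustrative choices, not the method of the cited survey.

```python
"""Rotate an image about its centre by inverse mapping (nearest neighbour)."""
import math

import numpy as np


def rotate(img: np.ndarray, degrees: float, fill=0) -> np.ndarray:
    """Positive angles rotate counter-clockwise as displayed (row 0 at the top)."""
    h, w = img.shape[:2]
    theta = math.radians(degrees)
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    ys, xs = np.mgrid[0:h, 0:w]
    dy, dx = ys - cy, xs - cx
    # Inverse mapping: where did each output pixel come from in the input?
    src_x = np.rint(cx + dx * math.cos(theta) - dy * math.sin(theta)).astype(int)
    src_y = np.rint(cy + dx * math.sin(theta) + dy * math.cos(theta)).astype(int)
    inside = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)
    out = np.full_like(img, fill)  # corners not covered by the source get `fill`
    out[inside] = img[src_y[inside], src_x[inside]]
    return out
```

Inverse mapping guarantees every output pixel receives exactly one value, whereas forward mapping of source pixels would leave holes at non-right angles.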


Machine learning is a relatively new field. As research in this field deepens, machine learning is being applied ever more widely. On the other hand, with the development of science and technology, images have become an indispensable medium of information transmission, and image processing technology is also booming. This paper introduces machine learning into ...

direction of digital image processing technology is expressed. This paper is beneficial to understand the latest technology and development trends in digital image processing, and can promote in-depth research of this technology and apply it to real life. 2. Digital image processing Technology

Our research focuses on the critical field of early diagnosis of disease by examining retinal blood vessels in fundus images. While automatic segmentation of retinal blood vessels holds promise for early detection, accurate analysis remains challenging due to the limitations of existing methods, which often lack discrimination power and are susceptible to influences from pathological regions ...

Artificial neural networks (ANNs) perform extraordinarily on numerous tasks including classification or prediction, e.g., speech processing and image classification. These new functions are based on a computational model that is enabled to select freely all necessary internal model parameters as long as it eventually delivers the functionality it is supposed to exhibit. Here, we review the ...

The advent of machine learning and image processing techniques has opened new research opportunities in this area. Machine learning enables the automatic extraction and analysis of information from images, and its convergence with image processing is useful in a variety of security applications. Image processing plays a significant role in physical as well as digital ...

The mAP on the training and test data was 84.9% and 51.2%, respectively, for the preprocessed-image learning model, compared with 82.0% and 43.5% for the typical preprocessed-image learning model and 78.0% and 40.8% for the raw-image learning model. Object detection algorithms are improved every year, with mAP increasing by approximately 3% to 10%.
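Because the results above are reported as mAP, a short sketch of how average precision (AP) is commonly computed for a single class may help. mAP is then the mean of the per-class AP values. The sketch assumes PASCAL VOC-style all-point interpolation. It also assumes each detection has already been marked as a true or false positive, for example by an IoU threshold. The source does not state which evaluation protocol was used, and the function names are hypothetical.

```python
import numpy as np


def average_precision(scores, is_tp, num_gt):
    """All-point interpolated AP for one class.

    scores: confidence of each detection.
    is_tp:  1 if the detection matched a not-yet-matched ground-truth box, else 0.
    num_gt: number of ground-truth objects of this class.
    """
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    tp = np.asarray(is_tp, dtype=float)[order]  # rank detections by confidence
    cum_tp = np.cumsum(tp)
    cum_fp = np.cumsum(1.0 - tp)
    recall = cum_tp / num_gt
    precision = cum_tp / (cum_tp + cum_fp)
    # Pad the curve, then make precision non-increasing from right to left.
    mrec = np.concatenate(([0.0], recall, [1.0]))
    mpre = np.concatenate(([0.0], precision, [0.0]))
    mpre = np.maximum.accumulate(mpre[::-1])[::-1]
    # Area under the interpolated precision-recall curve.
    idx = np.where(mrec[1:] != mrec[:-1])[0]
    return float(np.sum((mrec[idx + 1] - mrec[idx]) * mpre[idx + 1]))


def mean_average_precision(per_class_aps):
    """mAP: the unweighted mean of per-class AP values."""
    return float(np.mean(per_class_aps))
```

COCO-style mAP additionally averages AP over IoU thresholds from 0.5 to 0.95, so figures computed under different protocols are not directly comparable.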

X-ray imaging is a valuable non-destructive tool for examining bronze wares, but their complex surface coverings and the limitations of single-energy imaging techniques often obscure critical details such as lesions and ornamentation. Multiple images must therefore be acquired to fully present the key information of bronze artifacts, which affects the complete presentation of ...

Image Processing: Research Opportunities and Challenges. Ravindra S. Hegadi. Department of Computer Science, Karnatak University, Dharwad-580003. ravindrahegadi@rediffmail. Abstract. Interest in ...

Researchers built a 3D image of nearly every neuron and its connections within a small piece of human brain tissue. This version shows excitatory neurons colored by their depth from the surface of ...

Prior to GPT-4o, you could use Voice Mode to talk to ChatGPT with average latencies of 2.8 seconds (GPT-3.5) and 5.4 seconds (GPT-4). To achieve this, Voice Mode was a pipeline of three separate models: one simple model transcribed audio to text, GPT-3.5 or GPT-4 took in text and output text, and a third simple model converted that text back to audio.
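The three-model cascade can be sketched as a simple composition of stages. The stages run one after another, so their latencies add up, and the middle model sees only the transcribed text. The class and stage names below are hypothetical placeholders for illustration; they are not OpenAI APIs, and the toy stages only stand in for real speech and language models.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class VoicePipeline:
    """Cascade of three independent stages: speech-to-text, text-to-text, text-to-speech."""
    transcribe: Callable[[bytes], str]   # stage 1: audio -> text (hypothetical)
    respond: Callable[[str], str]        # stage 2: text -> text, the language model (hypothetical)
    synthesize: Callable[[str], bytes]   # stage 3: text -> audio (hypothetical)

    def __call__(self, audio: bytes) -> bytes:
        text_in = self.transcribe(audio)
        text_out = self.respond(text_in)
        return self.synthesize(text_out)


# Toy stand-ins that treat "audio" as UTF-8 bytes, just to exercise the wiring.
pipe = VoicePipeline(
    transcribe=lambda audio: audio.decode("utf-8"),
    respond=lambda text: text.upper(),
    synthesize=lambda text: text.encode("utf-8"),
)
reply = pipe(b"hello")  # -> b"HELLO"
```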

Research in computational linguistics continues to explore how large language models (LLMs) can be adapted to integrate new knowledge without compromising the integrity of existing information. A key challenge is ensuring that these models, fundamental to various language processing applications, maintain accuracy even as they expand their knowledge bases. One conventional approach involves ...