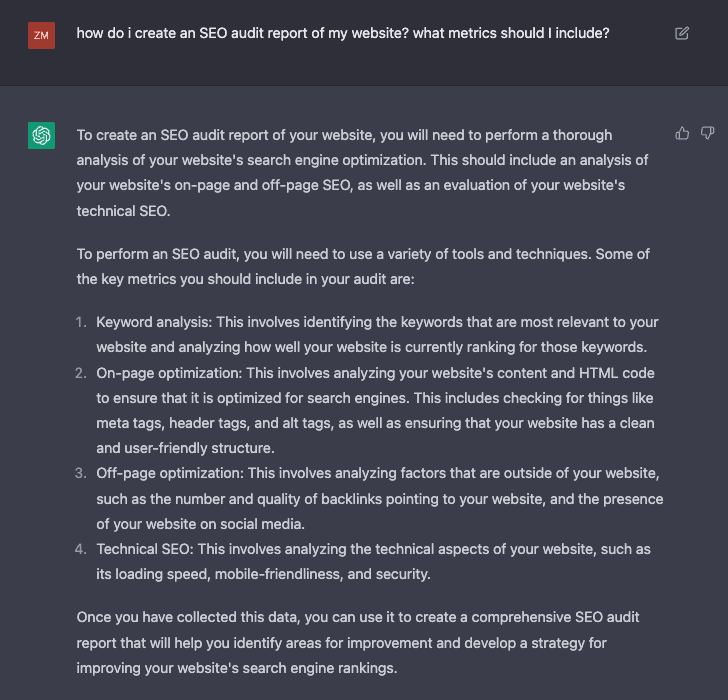

Mastering ChatGPT: The Ultimate Prompts Guide for Academic Writing Excellence

ChatGPT, with its advanced AI capabilities, has emerged as a game-changer for many. Yet its true potential is unlocked only when it is approached with the right queries. The prompts listed in this article have been crafted to optimize your interaction with this powerful tool. By leveraging them, you not only streamline your writing process but also enhance the quality of your research and insights. But don’t take our word for it. Dive into the world of ChatGPT, armed with these prompts, and witness the transformation in your academic writing firsthand.

ChatGPT Prompts for Idea Generation

If you’re stuck or unsure where to begin, ChatGPT can help brainstorm ideas or topics for your paper, thesis, or dissertation.

- Suggest some potential topics on [your broader subject or theme] for an academic paper.

- Suggest some potential topics within the field of [your broader subject] related to [specific interest or theme].

- I’m exploring the field of [broader subject, e.g., “psychology”]. Could you suggest some topics that intersect with [specific interest, e.g., “child development”] and are relevant to [specific context or region, e.g., “urban settings in Asia”]?

- Within the realm of [broader subject, e.g., “philosophy”], I’m intrigued by [specific interest, e.g., “existentialism”]. Could you recommend topics that bridge it with [another field or theme, e.g., “modern technology”] in the context of [specific region or era, e.g., “21st-century Europe”]?

- Act as my brainstorming partner. I’m working on [your broader subject or theme]. What topics could be pertinent for an academic paper?

- Act as my brainstorming partner for a moment. Given the broader subject of [discipline, e.g., ‘sociology’], can you help generate ideas that intertwine with [specific theme or interest, e.g., ‘social media’] and cater to an audience primarily from [region or demographic, e.g., ‘South East Asia’]?
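The bracketed slots in these templates can also be filled in programmatically before a prompt is pasted into ChatGPT. The following is a minimal sketch, not part of the original guide: the helper names `slot_name` and `fill_prompt` are hypothetical, and the code assumes each slot is written as `[label]` or `[label, e.g., “hint”]`, as in the prompts above.

```python
import re

# Matches one bracketed slot, e.g. [broader subject, e.g., “psychology”].
SLOT = re.compile(r"\[([^\[\]]+)\]")


def slot_name(raw: str) -> str:
    """Return the slot label without its trailing ', e.g., ...' hint."""
    return raw.split(", e.g.,")[0].strip()


def fill_prompt(template: str, values: dict) -> str:
    """Replace every [slot] with values[label]; raise KeyError if one is missing."""
    def substitute(match):
        name = slot_name(match.group(1))
        if name not in values:
            raise KeyError(f"no value supplied for slot: {name!r}")
        return values[name]

    return SLOT.sub(substitute, template)


# Template copied from the list above; the values are illustrative only.
template = (
    "I’m exploring the field of [broader subject, e.g., “psychology”]. "
    "Could you suggest some topics that intersect with "
    "[specific interest, e.g., “child development”] and are relevant to "
    "[specific context or region, e.g., “urban settings in Asia”]?"
)

prompt = fill_prompt(template, {
    "broader subject": "economics",
    "specific interest": "informal labor markets",
    "specific context or region": "West African cities",
})
print(prompt)
```

Raising an error on a missing slot, rather than leaving the bracket in place, is a deliberate choice here: it keeps a half-filled template from being sent by accident.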

ChatGPT Prompts for Structuring Content

The model can provide suggestions for how to organize your content, including potential section headers, logical flow of arguments, etc.

- How should I structure my paper on [your specific topic]? Provide an outline or potential section headers.

- I’m writing a paper about [your specific topic]. How should I structure it and which sub-topics should I cover within [chosen section, e.g., “Literature Review”]?

- For a paper that discusses [specific topic, e.g., “climate change”], how should I structure the [chosen section, e.g., “Literature Review”] and integrate studies from [specific decade or period, e.g., “the 2010s”]?

- I’m compiling a paper on [specific topic, e.g., “biodiversity loss”]. How should I arrange the [chosen section, e.g., “Discussion”] to incorporate perspectives from [specific discipline, e.g., “socio-economics”] and findings from [specified region or ecosystem, e.g., “tropical rainforests”]?

- Act as an editor for a moment. Based on a paper about [your specific topic], how would you recommend I structure it? Are there key sections or elements I should include?

- Act as a structural consultant for my paper on [topic, e.g., ‘quantum physics’]. Could you suggest a logical flow and potential section headers, especially when I aim to cover aspects like [specific elements, e.g., ‘quantum entanglement and teleportation’]?

- Act as my editorial guide. For a paper focused on [specific topic, e.g., “quantum computing”], how might I structure my [chosen section, e.g., “Findings”]? Especially when integrating viewpoints from [specific discipline, e.g., “software engineering”] and case studies from [specified region, e.g., “East Asia”]?

ChatGPT Prompts for Proofreading

While it might not replace a human proofreader, ChatGPT can help you identify grammatical errors, awkward phrasing, or inconsistencies in your writing.

- Review this passage for grammatical or stylistic errors: [paste your text here].

- Review this paragraph from my [type of document, e.g., “thesis”] for grammatical or stylistic errors: [paste your text here].

- Please review this passage from my [type of document, e.g., “dissertation”] on [specific topic, e.g., “renewable energy”] for potential grammatical or stylistic errors: [paste your text here].

- Kindly scrutinize this segment from my [type of document, e.g., “journal article”] concerning [specific topic, e.g., “deep-sea exploration”]. Highlight any linguistic or structural missteps and suggest how it might better fit the style of [target publication or audience, e.g., “Nature Journal”]: [paste your text here].

- Act as my proofreader. In this passage: [paste your text here], are there any grammatical or stylistic errors I should be aware of?

- Act as my preliminary proofreader. I’ve drafted a section for my [type of document, e.g., “research proposal”] about [specific topic, e.g., “nanotechnology”]. I’d value feedback on grammar, coherence, and alignment with [target publication or style, e.g., “IEEE standards”]: [paste your text here].

ChatGPT Prompts for Citation Guidance

Need help formatting citations or understanding the nuances of different citation styles (like APA, MLA, Chicago)? ChatGPT can guide you.

- How do I format this citation in [desired style, e.g., APA, MLA]? Here’s the source: [paste source details here].

- I’m referencing a [type of source, e.g., “conference paper”] authored by [author’s name] in my document. How should I format this citation in the [desired style, e.g., “Chicago”] style?

- Act as a citation guide. I need to reference a [source type, e.g., ‘journal article’] for my work. How should I format this using the [citation style, e.g., ‘APA’] method?

- Act as my citation assistant. I’ve sourced a [type of source, e.g., “web article”] from [author’s name] published in [year, e.g., “2018”]. How should I present this in [desired style, e.g., “MLA”] format?

ChatGPT Prompts for Paraphrasing

If you’re trying to convey information from sources without plagiarizing, the model can assist in rephrasing the content.

- Can you help me paraphrase this statement? [paste your original statement here].

- Help me convey the following idea from [source author’s name] in my own words: [paste the original statement here].

- I’d like to reference an idea from [source author’s name]’s work on [specific topic, e.g., “quantum physics”]. Can you help me paraphrase this statement without losing its essence: [paste the original statement here]?

- Act as a wordsmith. I’d like a rephrased version of this statement without losing its essence: [paste your original statement here].

- Act as my rephraser. Here’s a statement from [author’s name]’s work on [topic, e.g., ‘cognitive development’]: [paste original statement here]. How can I convey this without plagiarizing?

- Act as my plagiarism prevention aid. I’d like to include insights from [source author’s name]’s research on [specific topic, e.g., “solar energy”]. Help me convey this in my own words while maintaining the tone of my [type of work, e.g., “doctoral thesis”]: [paste the original statement here].

ChatGPT Prompts for Vocabulary Enhancement

If you’re looking for more sophisticated or subject-specific terminology, ChatGPT can suggest synonyms or alternative phrasing.

- I want a more academic or sophisticated way to express this: [paste your sentence or phrase here].

- In the context of [specific field or subject], can you suggest a more academic way to express this phrase: [paste your phrase here]?

- I’m writing a paper in the field of [specific discipline, e.g., “bioinformatics”]. How can I convey this idea more academically: [paste your phrase here]?

- Within the purview of [specific discipline, e.g., “astrophysics”], I wish to enhance this assertion: [paste your phrase here]. What terminologies or phrasing would resonate more with an audience well-versed in [related field or topic, e.g., “stellar evolution”]?

- Act as my thesaurus. For this phrase: [paste your sentence or phrase here], is there a more academic or sophisticated term or phrase I could use?

- Act as a lexicon expert in [field, e.g., ‘neuroscience’]. How might I express this idea more aptly: [paste your phrase here]?

ChatGPT Prompts for Clarifying Concepts

If you’re working in a field that’s not your primary area of expertise, the model can provide explanations or definitions for unfamiliar terms or concepts.

- Can you explain the concept of [specific term or concept] in the context of academic research?

- In [specific field, e.g., “sociology”], what does [specific term or concept] mean? And how does it relate to [another term or concept]?

- In the realm of [specific discipline, e.g., “neuroscience”], how would you define [term or concept A], and how does it differentiate from [term or concept B]?

- Act as my tutor. I’m a bit lost on the concept of [specific term or concept]. Can you break it down for me in the context of [specific academic field]?

- Act as my academic tutor for a moment. I’ve encountered some challenging terms in [specific discipline, e.g., “metaphysics”]. Could you elucidate the distinctions between [term A], [term B], and [term C], especially when applied in [specific context or theory, e.g., “Kantian philosophy”]?

ChatGPT Prompts for Draft Review

You can share sections or excerpts of your draft, and ChatGPT can provide general feedback or points for consideration.

- Please provide feedback on this excerpt from my draft: [paste excerpt here].

- Could you review this excerpt from my [type of document, e.g., “research proposal”] and provide feedback on [specific aspect, e.g., “clarity and coherence”]: [paste excerpt here]?

- I’d appreciate feedback on this fragment from my [type of document, e.g., “policy analysis”] that centers on [specific topic, e.g., “renewable energy adoption”]. Specifically, I’m looking for guidance on its [specific aspect, e.g., “argumentative flow”] and how it caters to [intended audience, e.g., “policy-makers in Southeast Asia”]: [paste excerpt here].

- Act as a reviewer for my journal submission. Could you critique this section of my draft: [paste excerpt here]?

- Act as my critique partner. I’ve written a segment for my [type of document, e.g., “literature review”] on [specific topic, e.g., “cognitive biases”]. Could you assess its [specific quality, e.g., “objectivity”], especially considering its importance for [target audience or application, e.g., “clinical psychologists”]: [paste excerpt here].

ChatGPT Prompts for Reference Pointers

If you’re looking for additional sources or literature on a topic, ChatGPT can point you to key papers, authors, or studies (though its knowledge is up to 2022, so it won’t have the latest publications).

- Can you recommend key papers or studies related to [your topic or research question]?

- I need references related to [specific topic] within the broader field of [your subject area]. Can you suggest key papers or authors?

- I’m researching [specific topic, e.g., “machine learning in healthcare”]. Can you suggest seminal works from the [specific decade, e.g., “2000s”] within the broader domain of [your general field, e.g., “computer science”]?

- My study orbits around [specific topic, e.g., “augmented reality in education”]. I’m especially keen on understanding its evolution during the [specific time frame, e.g., “late 2010s”]. Can you direct me to foundational papers or figures within [your overarching domain, e.g., “educational technology”]?

- Act as a literature guide. I’m diving into [your topic or research question]. Do you have suggestions for seminal papers or must-read studies?

- Act as my literary guide. My work revolves around [specific topic, e.g., “virtual reality in pedagogy”]. I’d appreciate direction towards key texts or experts from the [specific era, e.g., “early 2000s”], especially those that highlight applications in [specific setting, e.g., “higher education institutions”].

ChatGPT Prompts for Writing Prompts

For those facing writer’s block, ChatGPT can generate prompts or questions to help you think critically about your topic and stimulate your writing.

- I’m facing writer’s block on [your topic]. Can you give me some prompts or questions to stimulate my thinking?

- I’m writing about [specific topic] in the context of [broader theme or issue]. Can you give me questions that would enhance my discussion?

- I’m discussing [specific topic, e.g., “urban planning”] in relation to [another topic, e.g., “sustainable development”] in [specific region or country, e.g., “Latin America”]. Can you offer some thought-provoking prompts?

- Act as my muse. I’m struggling with [your topic]. Could you generate some prompts or lead questions to help steer my writing?

- Act as a muse for my writer’s block. Given the themes of [topic A, e.g., ‘climate change’] and its impact on [topic B, e.g., ‘marine ecosystems’], can you generate thought-provoking prompts?

ChatGPT Prompts for Thesis Statements

If you’re struggling with framing your thesis statement, ChatGPT can help you refine and articulate it more clearly.

- Help me refine this thesis statement for clarity and impact: [paste your thesis statement here].

- Here’s a draft thesis statement for my paper on [specific topic]: [paste your thesis statement]. How can it be made more compelling?

- I’m drafting a statement for my research on [specific topic, e.g., “cryptocurrency adoption”] in the context of [specific region, e.g., “European markets”]. Here’s my attempt: [paste your thesis statement]. Any suggestions for enhancement?

- Act as my thesis advisor. I’m shaping a statement on [topic, e.g., ‘blockchain in finance’]. Here’s my draft: [paste your thesis statement]. How might it be honed further?

ChatGPT Prompts for Abstract and Summary

The model can help in drafting, refining, or summarizing abstracts for your papers.

- Can you help me draft/summarize an abstract based on this content? [paste main points or brief content here].

- I’m submitting a paper to [specific conference or journal]. Can you help me summarize my findings from [paste main content or points] into a concise abstract?

- I’m aiming to condense my findings on [specific topic, e.g., “gene therapy”] from [source or dataset, e.g., “recent clinical trials”] into an abstract for [specific event, e.g., “a biotech conference”]. Can you assist?

- Act as an abstracting service. Based on the following content: [paste main points or brief content here], how might you draft or summarize an abstract?

- Act as my editorial assistant. I’ve compiled findings on [topic, e.g., ‘genetic modifications’] from my research. Help me craft or refine a concise abstract suitable for [event or publication, e.g., ‘an international biology conference’].

ChatGPT Prompts for Methodological Assistance

If you’re unsure about the methodology section of your paper, ChatGPT can provide insights or explanations about various research methods.

- I’m using [specific research method, e.g., qualitative interviews] for my study on [your topic]. Can you provide insights or potential pitfalls?

- For a study on [specific topic], I’m considering using [specific research method]. Can you explain its application and potential challenges in this context?

- I’m considering a study on [specific topic, e.g., “consumer behavior”] using [research method, e.g., “ethnographic studies”]. Given the demographic of [target group, e.g., “millennials in urban settings”], what might be the methodological challenges?

- My exploration of [specific topic, e.g., “consumer sentiment”] deploys [research method, e.g., “mixed-method analysis”]. Given my target demographic of [specific group, e.g., “online shoppers aged 18-25”], what are potential methodological challenges and best practices in [specific setting or platform, e.g., “e-commerce platforms”]?

- Act as a methodological counselor. I’m exploring [topic, e.g., ‘consumer behavior patterns’] using [research technique, e.g., ‘qualitative interviews’]. Given the scope of [specific context or dataset, e.g., ‘online retail platforms’], what insights can you offer?

ChatGPT Prompts for Language Translation

While not perfect, ChatGPT can assist in translating content to and from various languages, which might be helpful for non-native English speakers or when dealing with sources in other languages.

- Please translate this passage to [desired language]: [paste your text here].

- I’m integrating a passage for my research on [specific topic, e.g., “Mesoamerican civilizations”]. Could you assist in translating this content from [source language, e.g., “Nahuatl”] to [target language, e.g., “English”] while preserving academic rigor: [paste your text here]?

- Act as my translation assistant. I have this passage in [source language, e.g., ‘French’] about [topic, e.g., ‘European history’]: [paste your text here]. Can you render it in [target language, e.g., ‘English’] while maintaining academic integrity?

ChatGPT Prompts for Ethical Considerations

ChatGPT can provide a general overview of ethical considerations in research, though specific guidance should come from institutional review boards or ethics committees.

- What are some general ethical considerations when conducting research on [specific topic or population]?

- I’m conducting research involving [specific group or method, e.g., “minors” or “online surveys”]. What are key ethical considerations I should be aware of in the context of [specific discipline or field]?

- My investigation encompasses [specific method or technique, e.g., “genome editing”] on [target population or organism, e.g., “plant species”]. As I operate within the framework of [specific institution or body, e.g., “UNESCO guidelines”], what ethical imperatives should I foreground, especially when considering implications for [broader context, e.g., “global food security”]?

- Act as an ethics board member. I’m conducting research on [specific topic or population]. Could you outline key ethical considerations I should bear in mind?

- Act as an ethics overview guide. My research involves [specific technique or method, e.g., ‘live human trials’] in the realm of [specific discipline, e.g., ‘medical research’]. What general ethical considerations might be paramount, especially when targeting [specific population or group, e.g., ‘adolescents’]?

ChatGPT’s advanced AI capabilities have made it a standout tool in the world of academic writing. However, its real strength shines when paired with the right questions. The prompts in this article are tailored to optimize your experience with ChatGPT. By using them, you can streamline your writing and elevate the depth of your research. But don’t just take our word for it. Explore ChatGPT with these prompts and see the transformation in your academic writing for yourself. Excellent writing is just one prompt away.

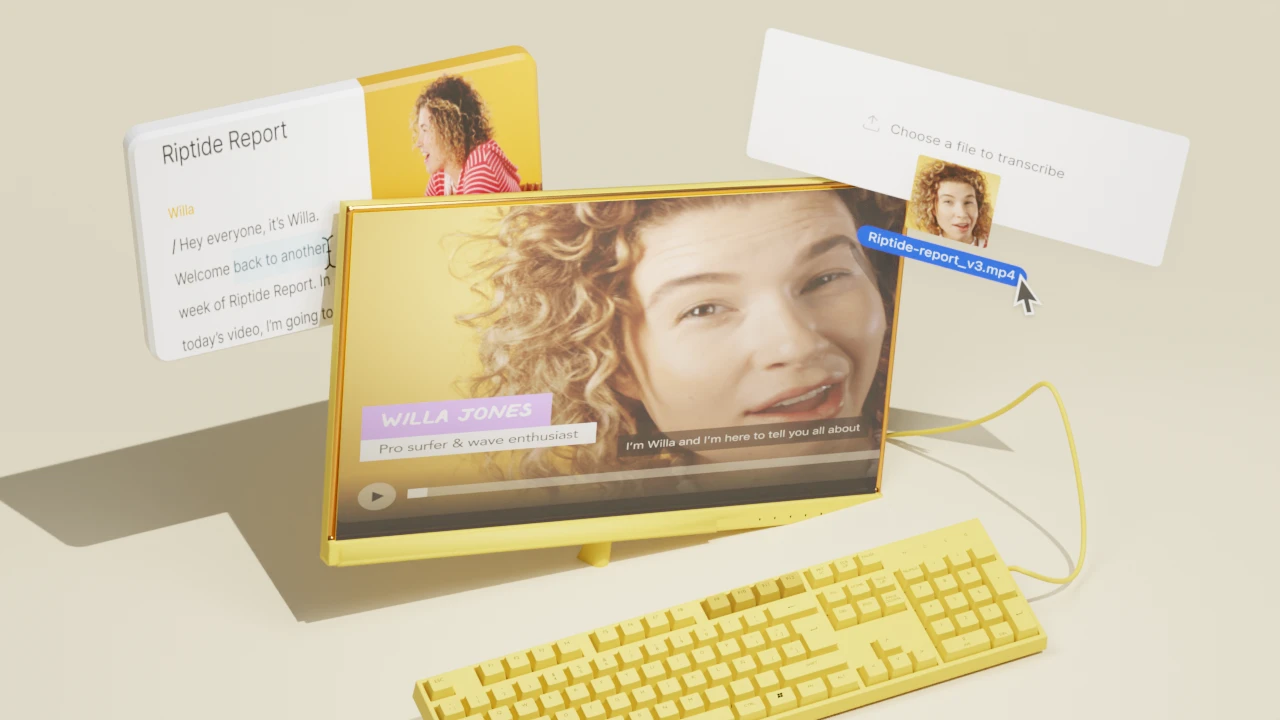

How ChatGPT (and other AI chatbots) can help you write an essay

ChatGPT can do many different things very well. One of its standout features is the ability to compose all sorts of text within seconds, including songs, poems, bedtime stories, and essays.

The chatbot's writing abilities are not only fun to experiment with, but can also help with everyday tasks. Whether you are a student, a working professional, or just trying to get things done, you probably spend part of every day composing emails, texts, posts, and more. ChatGPT can help you claim some of that time back by helping you brainstorm and then compose any text you need.

Contrary to popular belief, ChatGPT can do much more than just write an essay for you from scratch (which would be considered plagiarism). A more useful way to use the chatbot is to have it guide your writing process.

Below, we show you how to use ChatGPT to do both the writing and assisting, as well as some other helpful writing tips.

How ChatGPT can help you write an essay

If you are looking to use ChatGPT to support or replace your writing, here are five different techniques to explore.

Before you get started, it is also worth noting that other AI chatbots can produce results that match ChatGPT's or even surpass them, depending on your needs.

For example, Copilot has access to the internet, and as a result, it can source its answers from recent information and current events. Copilot also includes footnotes linking back to the original source for all of its responses, making the chatbot a more valuable tool if you're writing a paper on a more recent event, or if you want to verify your sources.

Regardless of which AI chatbot you pick, you can use the tips below to get the most out of your prompts and from AI assistance.

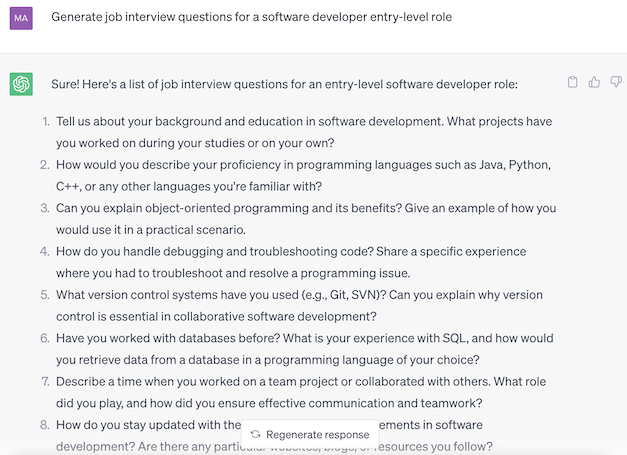

1. Use ChatGPT to generate essay ideas

Before you can even get started writing an essay, you need to flesh out the idea. When professors assign essays, they generally give students a prompt that gives them leeway for their own self-expression and analysis.

As a result, students have the task of finding the angle to approach the essay on their own. If you have written an essay recently, you know that finding the angle is often the trickiest part -- and this is where ChatGPT can help.

All you need to do is input the assignment topic, include as much detail as you'd like -- such as what you're thinking about covering -- and let ChatGPT do the rest. For example, based on a paper prompt I had in college, I asked:

Can you help me come up with a topic idea for this assignment, "You will write a research paper or case study on a leadership topic of your choice." I would like it to include Blake and Mouton's Managerial Leadership Grid, and possibly a historical figure.

Within seconds, the chatbot produced a response that provided me with the title of the essay, options of historical figures I could focus my article on, and insight on what information I could include in my paper, with specific examples of a case study I could use.

2. Use the chatbot to create an outline

Once you have a solid topic, it's time to start brainstorming what you actually want to include in the essay. To facilitate the writing process, I always create an outline, including all the different points I want to touch upon in my essay. However, the outline-writing process is usually tedious.

With ChatGPT, all you have to do is ask it to write the outline for you.

Using the topic that ChatGPT helped me generate in step one, I asked the chatbot to write me an outline by saying:

Can you create an outline for a paper, "Examining the Leadership Style of Winston Churchill through Blake and Mouton's Managerial Leadership Grid."

After a couple of seconds, the chatbot produced a holistic outline divided into seven different sections, with three different points under each section.

This outline is thorough and can be condensed for a shorter essay or elaborated on for a longer paper. If you don't like something or want to tweak the outline further, you can do so either manually or with more instructions to ChatGPT.

As mentioned before, since Copilot is connected to the internet, if you use Copilot to produce the outline, it will even include links and sources throughout, further expediting your essay-writing process.

3. Use ChatGPT to find sources

Now that you know exactly what you want to write, it's time to find reputable sources to get your information. If you don't know where to start, you can just ask ChatGPT.

All you need to do is ask the AI to find sources for your essay topic. For example, I asked the following:

Can you help me find sources for a paper, "Examining the Leadership Style of Winston Churchill through Blake and Mouton's Managerial Leadership Grid."

The chatbot output seven sources, with a bullet point for each that explained what the source was and why it could be useful.

The one caveat you will want to be aware of when using ChatGPT for sources is that it does not have access to information after 2021, so it will not be able to suggest the freshest sources. If you want up-to-date information, you can always use Copilot.

Another perk of using Copilot is that it automatically links to sources in its answers.

4. Use ChatGPT to write an essay

It is worth noting that if you take the text directly from the chatbot and submit it, your work could be considered a form of plagiarism since it is not your original work. As with any information taken from another source, text generated by an AI should be clearly identified and credited in your work.

In most educational institutions, the penalties for plagiarism are severe, ranging from a failing grade to expulsion from the school. A better use of ChatGPT's writing features would be to use it to create a sample essay to guide your writing.

If you still want ChatGPT to create an essay from scratch, enter the topic and the desired length, and then watch what it generates. For example, I input the following text:

Can you write a five-paragraph essay on the topic, "Examining the Leadership Style of Winston Churchill through Blake and Mouton's Managerial Leadership Grid."

Within seconds, the chatbot gave the exact output I required: a coherent, five-paragraph essay on the topic. You could then use that text to guide your own writing.

At this point, it's worth remembering how tools like ChatGPT work: they put words together in a form that they think is statistically valid, but they don't know if what they are saying is true or accurate.

As a result, the output you receive might include invented facts, details, or other oddities. The output might be a useful starting point for your own work, but don't expect it to be entirely accurate, and always double-check the content.

5. Use ChatGPT to co-edit your essay

Once you've written your own essay, you can use ChatGPT's advanced writing capabilities to edit the piece for you.

You can simply tell the chatbot what you want it to edit. For example, I asked ChatGPT to edit our five-paragraph essay for structure and grammar, but other options could have included flow, tone, and more.

Once you ask the tool to edit your essay, it will prompt you to paste your text into the chatbot. ChatGPT will then output your essay with corrections made. This feature is particularly useful because ChatGPT edits your essay more thoroughly than a basic proofreading tool, as it goes beyond simply checking spelling.

You can also co-edit with the chatbot, asking it to take a look at a specific paragraph or sentence, and asking it to rewrite or fix the text for clarity. Personally, I find this feature very helpful.

Write an Essay From Scratch With Chat GPT: Step-by-Step Tutorial

Santiago Mallea

Chief of Content At Gradehacker

Chat GPT Essay Writer: Step-by-Step Tutorial

To write an essay with Chat GPT, you need to:

- Understand your prompt

- Choose a topic

- Write the entire prompt in Chat GPT

- Break down the arguments you got

- Write one prompt at a time

- Check the sources

- Create your first draft

- Edit your draft

How amazing would it be if there was a robot willing to help you write a college essay from scratch?

A few years ago, that may have sounded so futuristic that it could only be seen in movies. But actually, we are closer than you might think.

Artificial Intelligence tools are everywhere, and college students have noticed. Among them, there is one revolutionary AI that learns over time and writes all types of content, from typical conversations to academic texts.

But can Chat GPT write essays from scratch?

We tried it, and the answer is kind of (for now, at least).

Here at Gradehacker, we have spent years as the non-traditional adult students’ #1 resource.

We have lots of experience helping people like you write their essays on time or get their college degree sooner, and we know how important it is to stay up to date with the latest tools.

AIs and Chat GPT are here to stay for a while, so you had better learn how to use them properly. If you ever wondered whether it was possible to write an essay from scratch with Chat GPT, you are about to find out!

Now, in case you aren’t familiar with Chat GPT or don’t know the basics of how it works, we recommend watching our video first!

How we Used Chat GPT to Write Essays

So, to run our experiment with Chat GPT, we created two different college assignments that any student might encounter:

- An argumentative essay about America's healthcare system

- A book review of George Orwell's 1984

Our main goal is to test Chat GPT’s essay-writing skills and see how much students can use it to write their academic assignments.

Now, we are well aware that this (or any) artificial intelligence can come with a range of problems. It may:

- Give you incorrect premises and information

- Deliver a piece of writing that is plagiarised from somewhere else

- Leave out citations or fail to list the sources it used

- Be unavailable when you need it

That’s why after receiving our first rough draft, we’ll edit the parts of the text that are necessary and run what we get through our plagiarism checker. After our revision, we’ll ask the AI to expand on the information or make the changes we need.

We’ll consider that final version after our revision as the best possible work that Chat GPT could have done to write an essay from scratch.

And to cover the lack of citations, we’ll see what academic sources the chatbot considers worthy for us to use when writing our paper.

Now, we don’t think that AIs are ready to deliver fully edited and well-written academic writing assignments that you can simply submit to your professor without reading them first.

But is it possible to speed up the writing process and save time by asking Chat GPT to write essays?

Let’s see!

Can Chat GPT Write an Argumentative Paper?

First, we’ll see how it can handle one of the most common academic essays: an argumentative paper.

We chose the American healthcare system as our topic, but as we know that we need to find a specific subject with a wide range of sources to write a strong and persuasive essay, we are focusing on structural racism in our healthcare system and how African Americans accessed it during COVID.

It’s a clear and specific topic that we included in our list of best topics for your research paper. If you want similar alternatives for college papers, be sure to watch our video!

Instructions and Essay Prompt

Take a position on an issue and compose a 5-page paper that supports it.

In the introduction, establish why your topic is important and present a specific, argumentative thesis statement that previews your argument.

The body of your essay should be logical, coherent, and purposeful. It should synthesize your research and your own informed opinions in order to support your thesis.

Address other positions on the topic along with arguments and evidence that support those positions.

Write a conclusion that restates your thesis and reminds your reader of your main points.

First Results

After giving Chat GPT this prompt, this is what we received:

To begin with, after copying and pasting these paragraphs into a Word document, the text only covered two and a half pages.

While the introduction directly tackles the main topic, it fails to provide a clear thesis statement. And even if it’s included in a separate section, the thesis is broad and lacks factual evidence or statistics to support it.

Throughout the body of the text, the AI lists many real-life issues that contribute to the topic of the paper. Still, these are never fully explained nor supported with evidence.

For example, in the first paragraph, it says that “African Americans have long experienced poorer health outcomes compared to other racial groups.” Here it would be interesting to add statistics that prove this information is correct.

Something that really stood out to us was that Chat GPT credited a source to back up important data, even though it didn’t cite it properly. It mentions a study conducted by the Kaiser Family Foundation which found that in 2019, 11% of African Americans and 6% of non-Hispanic Whites were uninsured.

We checked the original article and found that the information was close but not fully accurate. The correct rates were 8% for White Americans and 10.9% for African Americans, but the biggest issue was that the study included more updated statistics from 2021.
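To put numbers on that gap, a quick calculation in Python compares the figures Chat GPT gave with the ones we found in the KFF article. This is only our own back-of-the-envelope check, and the variable and function names are made up for illustration.

```python
# Uninsured rates (percent): what Chat GPT wrote vs. what we found in the KFF article.
# The names below are illustrative, not part of any tool.
CHATGPT_FIGURES = {"African Americans": 11.0, "White Americans": 6.0}
SOURCE_FIGURES = {"African Americans": 10.9, "White Americans": 8.0}


def percentage_point_gaps(quoted, source):
    """Return the absolute gap, in percentage points, for each group."""
    return {group: round(abs(quoted[group] - source[group]), 1) for group in source}


if __name__ == "__main__":
    for group, gap in percentage_point_gaps(CHATGPT_FIGURES, SOURCE_FIGURES).items():
        print(f"{group}: off by {gap} percentage points")
```

The figure for African Americans is essentially a rounding difference, while the one for White Americans is two full points off, which is why checking the original source matters.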

Then, when addressing other issues like transportation and discrimination, the issues are presented clearly, but once again, there are no sources that support them.

Once the essay starts developing the thesis statement on how these issues could be fixed, we can see the same problem.

But even if they lack supporting evidence, the arguments listed are cohesive and make sense. These were:

- Expand Medicaid coverage

- Provide incentives for healthcare providers to practice in underserved areas

- Invest in telehealth services

- Improve transportation infrastructure, particularly in rural areas

- Train healthcare providers on cultural competence and anti-racism

- Increase diversity in the healthcare workforce

- Implement patient-centered care models

These are all strong ideas that could be stronger and more persuasive with specific information and statistics.

Still, the main problem is that the draft includes no counter-arguments to the essay’s main claims.

Overall, Chat GPT delivered a cohesive first draft that tackled the topic by explaining its multiple issues and listing possible solutions. However, there is a clear lack of evidence, no counter-arguments were included, and the essay we got was half the length we needed.

Changes and Final Results

In our second attempt, we asked the AI to expand on each section and subtopic of the essay. While the final result ended up repeating some parts on multiple occasions, Chat GPT wrote more extensively and even included in-text citations with their corresponding reference.

By pasting all these new texts (without editing) into a new document, we get more than seven pages, which is a great starting point for writing a better essay.

Explanation of the issues and use of sources

The new introduction stayed pretty much the same, but the difference is that now the thesis statement is stronger and even has a cited statistic to back it up. Unfortunately, while the information is correct, the source isn’t.

Clicking on the link included in the references took us to a non-existent page, and after looking for that data on Google, we found that it actually belonged to a study from the National Library of Medicine.

After that, the AI did a solid job expanding on the issues that were related to the paper’s topic. But again, while some sources were useful, sometimes the information in the text didn’t match what the cited source said.

For example, it cited an article posted on KFF as evidence of the importance of transportation as a critical factor in health disparities, but when we went to the site, we didn’t find any mention of that issue.

Similarly, when addressing the higher rates of infection and death compared to White Americans, the AI once again cited the wrong source. The statistics came from a study conducted by the CDC, but from a different article than the one that is credited.

And sometimes, the information displayed was incorrect.

In that same section, when listing the percentages of death in specific states, we see in the cited source that the statistics don’t match.

However, what’s interesting is that if we search for that data on Google, we find a different study that backs it up. So, even if Chat GPT didn’t include inaccurate information in the text, it failed to properly acknowledge the real source.

And so this problem of having correct information but citing the wrong source continued throughout the paper.

Solutions and counter-arguments

When we asked the AI to write more about the solutions it mentioned in the first draft, we received more extensive arguments with supporting evidence for each case.

As we were expecting, the statistics were real, but the source credited wasn’t the original and didn’t mention anything related to what was included in the text.

And it wasn’t any different with the counterarguments. They made sense and had a strong point, but the sources credited weren’t correct.

For instance, regarding telehealth services, it recognized the multiple barriers low-income areas would face in adopting this modality. It credited an article posted on KFF mainly written by “Gillespie,” but after searching for the information, we saw that the original study was conducted by other people.

Still, the fact that Chat GPT now provided us with data and information we could use to develop counter-arguments and later refute them is excellent progress.

The good news is that none of the multiple paragraphs that Chat GPT delivered had plagiarism issues.

After running them through our plagiarism checker, it only found a few parts that had duplicated content, but these were sentences composed of commonly used phrases that other articles about different topics also had.

For example, multiple times it flagged as plagiarism phrases like “according to the CDC” or “according to a report by the Kaiser Family Foundation.” And even these “plagiarism issues” could be easily solved by rearranging the order or adding new words.

Checking for plagiarism is a critical part of the essay writing process. If you are not using one yet, be sure to pick one as soon as possible. We recommend checking our list of best plagiarism checkers.

Key Takeaways

So, what did we learn by asking Chat GPT to write an argumentative paper?

- It's better if the AI writes section by section

- It can give you accurate information related to issues, solutions, and counterarguments

- There is a high chance the source credited won't be the right one

- The texts, which can have duplicated content among themselves, don't appear to be plagiarized

It’s clear that we still need to do a lot of editing and writing.

However, considering that Chat GPT wrote this in less than an hour, the AI proved to be a solid tool. It gave us many strong arguments, interesting and accurate statistics, and an order that we can follow to structure our argumentative paper.

If writing these types of assignments isn’t your strength, be sure to watch our tutorial on how to write an exceptional argumentative essay!

Can Chat GPT Write a Book Review?

For our second experiment, we want to see if Chat GPT can write an essay for a literature class.

To do so, we picked one of the 5 books we consider must-reads for any college student: 1984 by George Orwell. There is so much written and discussed about this literary classic that we thought it would be a perfect choice for an artificial intelligence chatbot like Chat GPT to write something about.

Write a book review of the book 1984 by George Orwell. The paper needs to include an introduction with the author’s title, publication information (Publisher, year, number of pages), genre, and a brief introduction to the review.

Then, write a summary of the plot with the basic parts of the plot: situation, conflict, development, climax, and resolution.

Continue by describing the setting and the point of view and discussing the book’s literary devices.

Lastly, analyze the book, and explain the particular style of writing or literary elements used.

And then write a conclusion.

This is the first draft we got:

Starting with the introduction, all the information is correct, although including the number of pages adds little, as it depends on the edition of the book.

The summary is also accurate, but it relies too heavily on the plot instead of the context and world described in the novel, which is arguably the reason 1984 transcended time. For example, there is no mention of Big Brother, the leader of the totalitarian superstate.

Now, the setting and point of view section is the poorest section written by Chat GPT. It is very short and lacks development.

The literary devices are not necessarily wrong, but it would be better to focus more on each. For instance, it could say more about the importance of symbolism or explain how the book critiques propaganda, totalitarianism, and individual freedom.

The analysis of Orwell’s writing is simple, but the conclusion is clear and straightforward, so it might be the best piece that the AI wrote.

For the second draft, instead of submitting the entire prompt, we wrote one command per section. As a result, Chat GPT focused on each part of the review and produced more paragraphs with more detailed information in every case.

It’s clear that this way, the AI can write better and more developed texts that are easier to edit and improve. Each section analyzes its topic in more depth, which makes it easier to later structure the most useful paragraphs into a cohesive essay.
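If you want to prepare those per-section commands in advance, the idea can be sketched in a few lines of Python. This is only an illustration: the section names follow our book review prompt, while `build_section_prompts` and the exact wording of each command are assumptions of ours, and the sketch only builds the prompts without sending anything to Chat GPT.

```python
# Hypothetical helper: turns one long assignment into one prompt per section.
# The section list mirrors the book review prompt; the wording is illustrative.

BOOK = "1984 by George Orwell"

SECTIONS = [
    ("introduction", "Include the author, title, publication information, and genre."),
    ("plot summary", "Cover the situation, conflict, development, climax, and resolution."),
    ("setting and point of view", "Describe the setting and the point of view."),
    ("literary devices", "Discuss the book's literary devices and give specific examples."),
    ("analysis", "Explain the particular style of writing or literary elements used."),
    ("conclusion", "Close the review with a clear overall assessment."),
]


def build_section_prompts(book, sections):
    """Return one self-contained prompt string per review section."""
    prompts = []
    for name, details in sections:
        prompts.append(f"Write the {name} section of a book review of {book}. {details}")
    return prompts


if __name__ == "__main__":
    for number, prompt in enumerate(build_section_prompts(BOOK, SECTIONS), start=1):
        print(f"{number}. {prompt}")
```

Each printed line can then be pasted into the chat as its own command, so the AI concentrates on one part of the review at a time.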

For example, it now added more literary devices used by Orwell and gave specific examples of the symbolism of the novel.

Of course, there are many sentences and ideas that are repeated throughout the different sections. But now, because each has more specific information, we can take these parts and structure a new paragraph that comprises the most valuable sentences.

Now, even if sometimes book reviews don’t need to include citations from external sources apart from the novel we are analyzing, Chat GPT gave us five different options for us to choose from.

The only problem was that we couldn’t find any of them on Google.

The names of the authors were real people, but the titles of the articles and essays were nowhere to be found. This made us think that it’s likely that the AI picked real-life writers and created a title for a fictional essay about 1984 or George Orwell.

Finally, we need to see if the texts are original or plagiarized material.

After running it through our plagiarism detection software, we found that it was mostly original content with only a few issues in sight. But nothing too big to worry about.

One easy-to-solve example is in the literary devices section, where it directly quotes a sentence from the book. In this case, we would just need to add the in-text citation.

The biggest plagiarism problem was with one sentence (or six words, to be more specific) from the conclusion that matched the introduction of a summary review. But by rearranging the word order or adding synonyms, this issue can be easily solved too.

So, what are the most important tips we can take from Chat GPT writing a book review?

- It will review each section more in-depth if you ask it one prompt at a time

- The analysis and summary of the book were accurate

- If you ask it to list scholarly sources, the AI will create nonexistent sources based on real authors

- Very few plagiarism issues

Once again, there is still a lot of work to do.

The writing sample Chat GPT gave us is a solid start, but we need to rearrange all the paragraphs into one cohesive essay that summarizes the different aspects of the novel. Plus, we would also have to find scholarly sources on our own.

Still, the AI can do the heavy lifting and give you a great starting point.

If writing book reviews isn’t your strong suit, you have our tutorial and tips!

Save Time And Use Chat GPT to Write Your Essay

We know that writing essays can be a tedious task.

Sometimes, kicking off the process can be harder than it looks. That’s why understanding how to use a powerful tool like Chat GPT can truly make a difference.

It may not have the critical thinking skills you have or write a high-quality essay from scratch, but by using our tips, it can deliver you a solid first draft to start writing your entire essay.

But if you want to have an expert team of writers giving you personalized support or aren’t sure about editing an AI-written essay, you can trust Gradehacker to help you with your assignments.

Santiago Mallea is a curious and creative journalist who first helped many college students as a Gradehacker consultant in subjects like literature, communications, ethics, and business. Now, as a Content Creator in our blog, YouTube channel, and TikTok, he helps non-traditional students improve their college experience by sharing the best tips. You can find him on LinkedIn.

© 2024 Gradehacker LLC All Rights Reserved.

Chat GPT Essay Example: Enhancing Communication and Creativity

Introduction

In the realm of artificial intelligence (AI), the emergence of cutting-edge technologies has revolutionized various aspects of human life. One such remarkable innovation is the application of Chat Generative Pre-trained Transformers (Chat GPT), a sophisticated AI model developed to enhance communication and foster creativity. This article delves into a comprehensive chat GPT essay example, shedding light on its potential to revolutionize essay writing, nurture creativity, and reshape the boundaries of human-machine interaction.

Unleashing the Power of Chat GPT in Essay Writing

Crafting Engaging Introductions

Essay writing often hinges on the ability to captivate readers from the outset. With chat GPT, crafting captivating introductions becomes a seamless endeavor. By analyzing vast databases of literary masterpieces, historical narratives, and contemporary discourse, chat GPT generates introductions that effortlessly grab readers' attention. For instance, when tasked with an essay about climate change, chat GPT might begin with a thought-provoking quote or a startling statistic to immediately immerse the audience.

Developing Well-Structured Arguments

The hallmark of a compelling essay lies in its coherent structure and logical progression of arguments. Chat GPT excels in this domain by employing its intricate algorithms to organize ideas seamlessly. It sifts through a plethora of information, identifying key points and arranging them into a structured framework. As a result, essay writers can leverage chat GPT to streamline the process of outlining and presenting arguments in a clear and organized manner.

Fostering Creativity and Originality

Creativity is the lifeblood of impactful essay writing. Chat GPT serves as a wellspring of inspiration by generating novel perspectives and creative insights. By amalgamating diverse concepts and innovative viewpoints, chat GPT empowers essayists to infuse their compositions with fresh ideas and imaginative flair. Writers can collaborate with chat GPT to explore unconventional angles, injecting a unique and captivating essence into their essays.

Enhancing Language Proficiency

A hallmark of exceptional essayists is their command over language and eloquence in expression. Chat GPT functions as a linguistic virtuoso, providing writers with an extensive lexicon and refined syntax. As writers engage in a collaborative dance with chat GPT, they absorb linguistic nuances and expand their vocabulary. This symbiotic relationship elevates the quality of written communication, enabling writers to convey complex ideas with eloquence and precision.

Realizing the Benefits of Chat GPT Essay Example

Efficiency and Time Savings

The utilization of chat GPT in essay writing translates to unparalleled efficiency and time savings. Traditional research and idea generation often consume significant hours. However, chat GPT's rapid data analysis and idea synthesis expedite these preliminary phases. Writers can harness this efficiency to focus on refining their arguments, conducting deeper analyses, and perfecting the overall essay structure.

Diverse Essay Topics and Styles

Chat GPT transcends the limitations of human expertise by delving into a multitude of subjects and writing styles. Whether crafting a persuasive argument, a reflective personal essay, or a comprehensive research paper, chat GPT adapts to the desired tone and style. This adaptability equips writers with a versatile tool that seamlessly tailors its output to suit the requirements of diverse essay genres.

Optimized Research and Data Utilization

The chat GPT essay example illustrates the AI's prowess in data-driven research and information synthesis. By swiftly scouring vast repositories of information, chat GPT extracts relevant data points, statistics, and scholarly references. Writers can integrate these meticulously curated sources to bolster their arguments, thereby enhancing the essay's credibility and substantiating key claims.

Promotion of Critical Thinking

Contrary to misconceptions about AI stifling human creativity, chat GPT fosters critical thinking and analytical prowess. Collaborating with chat GPT prompts writers to engage in thoughtful deliberation, as they evaluate the AI-generated content and mold it to align with their vision. This iterative process stimulates cognitive faculties, encouraging essayists to critically assess, modify, and augment the AI-generated material.

Applications Beyond Essay Writing

Educational Tool for Learning

The chat GPT essay example not only revolutionizes essay composition but also serves as an invaluable educational tool. Students can interact with chat GPT to explore intricate concepts, seek clarifications, and brainstorm ideas. This AI-driven learning experience cultivates a dynamic environment that nurtures intellectual curiosity and facilitates holistic understanding.

Innovative Content Generation

Beyond academia, chat GPT finds its footing in creative content creation. From crafting compelling marketing copy to generating engaging blog posts, chat GPT proves its mettle in diverse content-generation endeavors. Brands and businesses can harness its capabilities to resonate with target audiences, amplify brand messaging, and establish a distinctive online presence.

Language Translation and Cross-Cultural Communication

Chat GPT's language proficiency extends beyond essay writing, offering seamless translation services and fostering cross-cultural communication. In an increasingly interconnected world, chat GPT bridges language barriers, enabling individuals to communicate effortlessly across diverse linguistic landscapes.

Virtual Collaborative Writing Partner

Imagine embarking on a writing journey with an AI companion that understands your voice, style, and preferences. Chat GPT evolves into a virtual collaborative writing partner, providing real-time suggestions, refining sentence structures, and injecting creative sparks into your narrative. This partnership promises to elevate the art of writing and stimulate unparalleled literary synergies.

Frequently Asked Questions (FAQs)

How does chat GPT enhance creativity in essay writing?

Chat GPT enhances creativity by generating novel perspectives, unique insights, and imaginative angles, empowering writers to infuse their essays with fresh ideas.

Is chat GPT proficient in various writing styles?

Absolutely, chat GPT adapts to diverse writing styles, whether persuasive, informative, reflective, or analytical, ensuring seamless alignment with the desired tone and genre.

Can chat GPT assist in refining essay arguments?

Yes, chat GPT excels in structuring arguments by organizing ideas coherently and logically, offering writers a streamlined approach to presenting their viewpoints.

Does chat GPT replace human critical thinking?

No, chat GPT complements human critical thinking by stimulating thoughtful evaluation and iterative refinement of AI-generated content, fostering cognitive engagement.

What are the practical applications of chat GPT beyond essay writing?

Chat GPT finds utility in education as a learning tool, content creation for marketing and branding, language translation, cross-cultural communication, and virtual collaborative writing partnerships.

How can chat GPT revolutionize language translation?

Chat GPT breaks down language barriers by providing accurate and contextually relevant translations, facilitating seamless communication across diverse linguistic landscapes.

In the dynamic landscape of AI-driven innovation, the chat GPT essay example stands as a testament to the transformative potential of technology in the realm of communication and creativity. As writers embark on a collaborative journey with chat GPT, they unlock a tapestry of benefits, ranging from enhanced efficiency and creativity to optimized research and critical thinking. Beyond essay writing, chat GPT's applications span realms as diverse as education, content creation, language translation, and collaborative writing partnerships. As we traverse this exhilarating era of human-AI symbiosis, the chat GPT essay example beckons writers to explore uncharted horizons, ushering in a new chapter of limitless expression and intellectual evolution.

Celebrating 150 years of Harvard Summer School. Learn about our history.

Should I Use ChatGPT to Write My Essays?

Everything high school and college students need to know about using — and not using — ChatGPT for writing essays.

Jessica A. Kent

ChatGPT is one of the most buzzworthy technologies today.

In addition to other generative artificial intelligence (AI) models, it is expected to change the world. In academia, students and professors are preparing for the ways that ChatGPT will shape education, and especially how it will impact a fundamental element of any course: the academic essay.

Students can use ChatGPT to generate full essays based on a few simple prompts. But can AI actually produce high quality work, or is the technology just not there yet to deliver on its promise? Students may also be asking themselves if they should use AI to write their essays for them and what they might be losing out on if they did.

AI is here to stay, and it can either be a help or a hindrance depending on how you use it. Read on to become better informed about what ChatGPT can and can’t do, how to use it responsibly to support your academic assignments, and the benefits of writing your own essays.

What is Generative AI?

Artificial intelligence isn’t a twenty-first century invention. Beginning in the 1950s, data scientists started programming computers to solve problems and understand spoken language. AI’s capabilities grew as computer speeds increased and today we use AI for data analysis, finding patterns, and providing insights on the data it collects.

But why the sudden popularity in recent applications like ChatGPT? This new generation of AI goes further than just data analysis. Instead, generative AI creates new content. It does this by analyzing large amounts of data — GPT-3 was trained on 45 terabytes of data, or a quarter of the Library of Congress — and then generating new content based on the patterns it sees in the original data.

It’s like the predictive text feature on your phone; as you start typing a new message, predictive text makes suggestions of what should come next based on data from past conversations. Similarly, ChatGPT creates new text based on past data. With the right prompts, ChatGPT can write marketing content, code, business forecasts, and even entire academic essays on any subject within seconds.
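To make the predictive-text analogy concrete, here is a toy sketch in Python. It is a deliberate oversimplification and not how ChatGPT is actually built: it only counts which word follows which in a tiny made-up sample and suggests the most frequent follower. The names `SAMPLE_TEXT`, `build_bigram_counts`, and `suggest_next` are hypothetical and exist only for this illustration.

```python
from collections import Counter, defaultdict

# Tiny made-up "past conversations"; real systems learn from vastly more text.
SAMPLE_TEXT = "see you soon . see you later . see you soon . talk to you soon"


def build_bigram_counts(text):
    """Count, for each word, how often each other word follows it."""
    counts = defaultdict(Counter)
    words = text.split()
    for current_word, next_word in zip(words, words[1:]):
        counts[current_word][next_word] += 1
    return counts


def suggest_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


if __name__ == "__main__":
    counts = build_bigram_counts(SAMPLE_TEXT)
    print(suggest_next(counts, "see"))
    print(suggest_next(counts, "you"))
```

The suggestion is driven purely by how often words appeared together in past text, and a word the sample never contained gets no suggestion at all.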

But is generative AI as revolutionary as people think it is, or is it lacking in real intelligence?

The Drawbacks of Generative AI

It seems simple. You’ve been assigned an essay to write for class. You go to ChatGPT and ask it to write a five-paragraph academic essay on the topic you’ve been assigned. You wait a few seconds and it generates the essay for you!

But ChatGPT is still in its early stages of development, and that essay is likely not as accurate or well-written as you’d expect it to be. Be aware of the drawbacks of having ChatGPT complete your assignments.

It’s not intelligence, it’s statistics

One of the misconceptions about AI is that it has a degree of human intelligence. However, its intelligence is actually statistical analysis, as it can only generate “original” content based on the patterns it sees in already existing data and work.

It “hallucinates”

Generative AI models often provide false information, so much so that there’s a term for it: “AI hallucination.” OpenAI even has a warning on its home screen, saying that “ChatGPT may produce inaccurate information about people, places, or facts.” This may be due to gaps in its data, or because it lacks the ability to verify what it’s generating.

It doesn’t do research

If you ask ChatGPT to find and cite sources for you, it will do so, but they could be inaccurate or even made up.

This is because AI doesn’t know how to look for relevant research that can be applied to your thesis. Instead, it generates content based on past content, so if a number of papers cite certain sources, it will generate new content that sounds like it comes from a credible source, except it may not.

There are data privacy concerns

When you input your data into a public generative AI model like ChatGPT, where does that data go and who has access to it?

Prompting ChatGPT with original research should be a cause for concern — especially if you’re inputting study participants’ personal information into the third-party, public application.

JPMorgan has restricted use of ChatGPT due to privacy concerns, Italy temporarily blocked ChatGPT in March 2023 after a data breach, and Security Intelligence advises that “if [a user’s] notes include sensitive data … it enters the chatbot library. The user no longer has control over the information.”

It is important to be aware of these issues and take steps to ensure that you’re using the technology responsibly and ethically.

It skirts the plagiarism issue

AI creates content by drawing on a large library of information that’s already been created, but is it plagiarizing? Could there be instances where ChatGPT “borrows” from previous work and places it into your work without citing it? Schools and universities today are wrestling with this question of what’s plagiarism and what’s not when it comes to AI-generated work.

To demonstrate this, one Elon University professor gave his class an assignment: Ask ChatGPT to write an essay for you, and then grade it yourself.

“Many students expressed shock and dismay upon learning the AI could fabricate bogus information,” he writes, adding that he expected some essays to contain errors, but all of them did.

His students were disappointed that “major tech companies had pushed out AI technology without ensuring that the general population understands its drawbacks” and were concerned about how many embraced such a flawed tool.

How to Use AI as a Tool to Support Your Work

As more students are discovering, generative AI models like ChatGPT just aren’t as advanced or intelligent as they may believe. While AI may be a poor option for writing your essay, it can be a great tool to support your work.

Generate ideas for essays

Have ChatGPT help you come up with ideas for essays. For example, input specific prompts, such as, “Please give me five ideas for essays I can write on topics related to WWII,” or “Please give me five ideas for essays I can write comparing characters in twentieth century novels.” Then, use what it provides as a starting point for your original research.

Generate outlines

You can also use ChatGPT to help you create an outline for an essay. Ask it, “Can you create an outline for a five paragraph essay based on the following topic” and it will create an outline with an introduction, body paragraphs, conclusion, and a suggested thesis statement. Then, you can expand upon the outline with your own research and original thought.

Generate titles for your essays

Titles should draw a reader into your essay, yet they’re often hard to get right. Have ChatGPT help you by prompting it with, “Can you suggest five titles that would be good for a college essay about [topic]?”

The Benefits of Writing Your Essays Yourself

Asking a robot to write your essays for you may seem like an easy way to get ahead in your studies or save some time on assignments. But outsourcing your work to ChatGPT can negatively impact not just your grades, but your ability to communicate and think critically as well. The best approach is always to write your essays yourself.

Create your own ideas

Writing an essay yourself means that you’re developing your own thoughts, opinions, and questions about the subject matter, then testing, proving, and defending those thoughts.

When you complete school and start your career, projects aren’t simply about getting a good grade or checking a box, but can instead affect the company you’re working for — or even impact society. Being able to think for yourself is necessary to create change and not just cross work off your to-do list.

Building a foundation of original thinking and ideas now will help you carve your unique career path in the future.

Develop your critical thinking and analysis skills

In order to test or examine your opinions or questions about a subject matter, you need to analyze a problem or text, and then use your critical thinking skills to determine the argument you want to make to support your thesis. Critical thinking and analysis skills aren’t just necessary in school — they’re skills you’ll apply throughout your career and your life.

Improve your research skills

Writing your own essays will train you in how to conduct research, including where to find sources, how to determine if they’re credible, and their relevance in supporting or refuting your argument. Knowing how to do research is another key skill required throughout a wide variety of professional fields.

Learn to be a great communicator

Writing an essay involves communicating an idea clearly to your audience, structuring an argument that a reader can follow, and making a conclusion that challenges them to think differently about a subject. Effective and clear communication is necessary in every industry.

Be impacted by what you’re learning about

Engaging with the topic, conducting your own research, and developing original arguments allows you to really learn about a subject you may not have encountered before. Maybe a simple essay assignment around a work of literature, historical time period, or scientific study will spark a passion that can lead you to a new major or career.

Resources to Improve Your Essay Writing Skills

While there are many rewards to writing your essays yourself, the act of writing an essay can still be challenging, and the process may come easier for some students than others. But essay writing is a skill that you can hone, and students at Harvard Summer School have access to a number of on-campus and online resources to assist them.

Students can start with the Harvard Summer School Writing Center, where writing tutors can offer you help and guidance on any writing assignment in one-on-one meetings. Tutors can help you strengthen your argument, clarify your ideas, improve the essay’s structure, and lead you through revisions.

The Harvard libraries are a great place to conduct your research, and their librarians can help you define your essay topic, plan and execute a research strategy, and locate sources.

Finally, review “The Harvard Guide to Using Sources,” which can guide you on what to cite in your essay and how to do it. Be sure to review the “Tips For Avoiding Plagiarism” on the “Resources to Support Academic Integrity” webpage as well to help ensure your success.

The Future of AI in the Classroom

ChatGPT and other generative AI models are here to stay, so it’s worthwhile to learn how you can leverage the technology responsibly and wisely so that it can be a tool to support your academic pursuits. However, nothing can replace the experience and achievement gained from communicating your own ideas and research in your own academic essays.

About the Author

Jessica A. Kent is a freelance writer based in Boston, Mass. and a Harvard Extension School alum. Her digital marketing content has been featured on Fast Company, Forbes, Nasdaq, and other industry websites; her essays and short stories have been featured in North American Review, Emerson Review, Writer’s Bone, and others.

Harvard Division of Continuing Education

The Division of Continuing Education (DCE) at Harvard University is dedicated to bringing rigorous academics and innovative teaching capabilities to those seeking to improve their lives through education. We make Harvard education accessible to lifelong learners from high school to retirement.

22 Interesting ChatGPT Examples

ChatGPT is an artificial intelligence chatbot, with a unique ability to communicate with people in a human-like way. Developed by OpenAI, the large language model is equipped with cutting-edge natural language processing capabilities and has been trained on massive amounts of data, which enables it to generate written content and converse with users.

Whether it’s answering a question, generating a piece of prose or writing code, ChatGPT continues to push the boundaries of human creativity and productivity.

Interesting Ways to Use ChatGPT

- Prepare for a job interview

- Report the news

- Write songs

- Grade homework

- Help you cook

- As a (sort of) search engine

That said, ChatGPT does come with limitations. The technology can be notoriously inaccurate, generating what experts call “hallucinations,” or content that is stylistically correct but factually wrong. And, like any AI model, ChatGPT has a tendency to produce biased results.

So, it’s good to double-check the information that ChatGPT provides before using it. And, to be safe, don’t use the information generated by ChatGPT to make critical financial or health decisions without thorough verification and maybe even a second or third opinion.

While ChatGPT is very much a work in progress, it can be used in a variety of interesting ways.

ChatGPT Examples

Job Search Examples

1. ChatGPT Can Write Your Cover Letter

Writing a cover letter is one of the most tedious and time-consuming parts of any job hunt, particularly if you’re applying to several different jobs at once. There are only so many ways one can express how excited they are about a particular company, or distill their career in an engaging way. Fortunately, ChatGPT can do the heavy lifting.

2. ChatGPT Can Improve Your Resume

Thanks to its natural language processing capabilities, ChatGPT can take an existing piece of text and make improvements on it. So, if you already have a resume written up, ChatGPT can be a useful tool in making it that much better. In a world where you can be competing with thousands of people for one job, this can be a good way to stand out among the rest.

3. ChatGPT Can Help You Prepare for an Interview

A tried-and-true way to prepare for any upcoming job interview is to have practice runs, where you test out talking points and run through various scenarios. ChatGPT can help, with the ability to generate anything from hypothetical questions to intelligent responses to those questions. It can give you tips for how to dress, or etiquette suggestions. It can even offer a few jokes, if you’re feeling playful.

4. ChatGPT Can Jumpstart Your Job Search

Knowing where to start in a job search may not be that obvious, especially for recent college graduates and professionals switching careers. ChatGPT can give job seekers ideas of positions to pursue based on a quick description. For example, a professional may type “jobs for an experienced professional who has a passion for social equity and enjoys writing blog posts.”

Content Generation Examples

5. ChatGPT Can Generate Newsletter Ideas

Newsletters can be a great way for companies and individuals alike to increase visibility, as well as draw traffic to their site. But coming up with consistently solid topic ideas that align with one’s brand can be challenging. With a brief prompt, ChatGPT can not only churn out newsletter ideas, but it can also draft up an outline and even write the entire thing. And once it has written the content, it can translate the text into several different languages.

6. ChatGPT Can Write a Marketing Email

When it comes to marketing, email is an essential line of communication with customers. ChatGPT can be used to generate not only the written content within the email itself, but suggestions for eye-catching subject lines as well — which can make all the difference when it is sent to an inbox with hundreds or thousands of other emails. It can also help marketers quickly create an email marketing calendar that schedules regular sends while avoiding weekends or holidays, which could affect an email’s open and read-through rates.
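The send calendar described above can be sketched in a few lines of Python. This is only an illustration of the idea, not output from ChatGPT: the function name `send_dates`, the weekly interval, and the one-entry holiday set are all assumptions made for the example.

```python
from datetime import date, timedelta

def send_dates(start, count, interval_days, holidays=frozenset()):
    """Return `count` send dates spaced `interval_days` apart,
    moving any date that lands on a weekend or holiday to the
    next available working day."""
    dates = []
    candidate = start
    while len(dates) < count:
        send = candidate
        # weekday() returns 5 for Saturday and 6 for Sunday
        while send.weekday() >= 5 or send in holidays:
            send += timedelta(days=1)
        dates.append(send)
        candidate += timedelta(days=interval_days)
    return dates

# Hypothetical holiday list for illustration only
holidays = frozenset({date(2024, 7, 4)})

schedule = send_dates(date(2024, 6, 27), count=3, interval_days=7,
                      holidays=holidays)
for d in schedule:
    print(d.isoformat())
```

Here the second weekly send would fall on July 4, so it is moved to the following day. A real calendar would need the holiday list for the audience's region, which this sketch leaves to the caller.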

7. ChatGPT Can Report the News

Again, ChatGPT has been trained on several terabytes of data across the internet, making it quite knowledgeable on many subjects. This knowledge can easily be used to generate quick news articles, or to distill the contents of a longer piece written by a human.

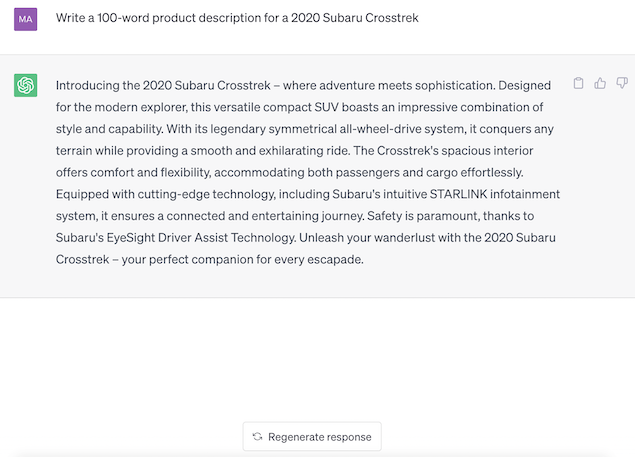

8. ChatGPT Can Be a Copywriter

Consider the immense amount of content that exists on something like an e-commerce site (the product descriptions, the image captions, the alerts about certain sales or deals), not to mention all of the promotional emails, social media posts and advertisements. Until very recently, all of that copy had to be written and edited by humans. But now, ChatGPT can handle it in a matter of seconds. Plus, it can generate several iterations of that copy so it can be targeted to specific people.

Coding Examples

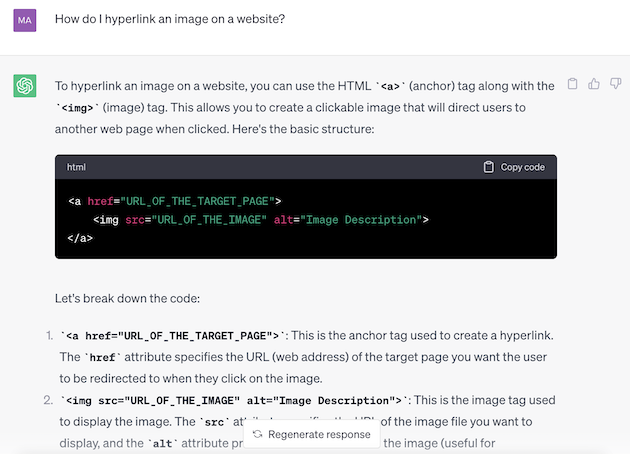

9. ChatGPT Can Debug Your Code

ChatGPT can be a useful tool for debugging code. All coders have to do is type in a line of code, and the chatbot will not only identify problems and fix them, but also explain why it made the decisions it did. ChatGPT is also capable of developing complete blocks of functional code (in many different languages) on its own, as well as translating code from one language to another. But, when it comes to code written by someone else (or, in this case, some thing else), it’s important that you fully understand how the code works before deploying it.
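As a rough illustration of the kind of exchange described above, here is a hypothetical buggy function of the sort a coder might paste into the chatbot, next to a corrected version. Both functions are invented for this example and are not actual ChatGPT output. The assertion at the end shows one simple way to confirm you understand the fix before relying on it.

```python
def average_buggy(values):
    """Intended to return the mean of a list of numbers."""
    total = 0
    # Bug: the loop starts at index 1, so the first value is never added
    for i in range(1, len(values)):
        total += values[i]
    return total / len(values)

def average_fixed(values):
    """Return the mean of a non-empty list of numbers."""
    if not values:
        # Guard against dividing by zero on an empty list
        raise ValueError("values must not be empty")
    return sum(values) / len(values)

scores = [2, 4, 6]
print(average_buggy(scores))  # wrong: skips the first score
print(average_fixed(scores))  # mean of all three scores

# A quick check that the corrected version behaves as expected
assert average_fixed(scores) == 4.0
```

Writing a small test like this for any suggested fix is a cheap way to catch cases where the chatbot's explanation sounds plausible but the code is still wrong.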

10. ChatGPT Can Help Create Machine Learning Algorithms

ChatGPT can even handle something as complicated as machine learning, so long as the user inputs the appropriate data. This can be in the form of labels, numbers or any other data that is useful in training a chatbot. Then, ChatGPT can act as a sort of data scientist and provide anything from an example for a linear regression machine learning algorithm, to an example of a machine learning model capable of predicting sales revenue.
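To give a sense of what a linear regression example for predicting sales revenue might look like, here is a minimal sketch in plain Python using ordinary least squares. The data, the variable names, and the choice of advertising spend as the single input are assumptions made for illustration; a real project would more likely use a library and far more data.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of squared deviations of x, and of x-y co-deviations
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical training data: ad spend and revenue, both in thousands
ad_spend = [1.0, 2.0, 3.0, 4.0, 5.0]
revenue = [12.0, 14.0, 16.0, 18.0, 20.0]

slope, intercept = fit_line(ad_spend, revenue)

# Predict revenue for a spend level not in the training data
predicted = slope * 6.0 + intercept
print(f"slope={slope}, intercept={intercept}, predicted={predicted}")
```

The made-up data lies exactly on a straight line, so the fit recovers it perfectly. Real sales data is noisy, and the fitted line would only approximate it.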

11. ChatGPT Can Answer Coding-Related Questions

Coders can also look to ChatGPT for advice on specific coding-related questions. Whether a person wants to figure out a way to animate a button on their Shopify page, or set up a snapshot tool in a Fastlane configuration file, ChatGPT usually has some tips for how to make it happen. And it can even do it in the style of a fast-talkin’ wiseguy from a 1940s gangster movie if you ask it to. Just a reminder, though: ChatGPT can absolutely get things wrong. In fact, Stack Overflow has temporarily banned ChatGPT-generated responses from its platform, citing the bot’s penchant for errors.

Art and Music Examples

12. ChatGPT Can Brainstorm Ideas for AI-Generated Art

While ChatGPT is not capable of producing images itself, it can still be helpful in the creation of AI-generated art. Most AI art generators require that the user input a text prompt describing what they want the model to produce. If you’re having a hard time coming up with a good prompt to feed a given generator, ChatGPT can help. For example, one user asked ChatGPT for some “interesting, fantastical ways of decorating a living room” and then plugged the bot’s answers right into Midjourney.

13. ChatGPT Can Be Your Creative Writing Assistant

Although many writers have railed against using ChatGPT to produce pieces of creative writing, it can certainly be a useful tool. Instead of replacing writers outright, ChatGPT can serve as a kind of writing assistant, helping to generate ideas, produce story outlines, provide various character perspectives, and more. And it doesn’t have to be a longform novel either: ChatGPT can help write things like poems and screenplays too.

14. ChatGPT Can Write Songs