How to Convert Text to Speech in Python


Speech synthesis (or Text to Speech) is the computer-generated simulation of human speech. It converts human language text into human-like speech audio. In this tutorial, you will learn how to convert text to speech in Python.

Please note that I will use text-to-speech or speech synthesis interchangeably in this tutorial, as they're essentially the same thing.

In this tutorial, we won't be building neural networks and training the model from scratch to achieve results, as it is pretty complex and hard to do for regular developers. Instead, we will use some APIs, engines, and pre-trained models that offer it.

More specifically, we will use four different techniques to do text-to-speech:

- gTTS : There are a lot of APIs out there that offer speech synthesis; one of the commonly used services is Google Text to Speech; we will play around with the gTTS library.

- pyttsx3 : A library that looks for pre-installed speech synthesis engines on your operating system and, therefore, performs text-to-speech without needing an Internet connection.

- openai : We'll be using the OpenAI Text to Speech API.

- Huggingface Transformers : The famous transformer library that offers a wide range of pre-trained deep learning (transformer) models that are ready to use. We'll be using a model called SpeechT5 that does this.

To clarify, this tutorial is about converting text to speech and not vice versa. If you want to convert speech to text instead, check this tutorial.

Table of contents:

- Online Text to Speech

- Offline Text to Speech

- Speech Synthesis using OpenAI API

- Speech Synthesis using 🤗 Transformers

To get started, let's install the required modules:

As you may guess, gTTS stands for Google Text To Speech; it is a Python library that interfaces with Google Translate's text-to-speech API. It requires an Internet connection, and it's pretty easy to use.

Open up a new Python file and import:

It's pretty straightforward to use this library; you just need to pass text to the gTTS object, which is an interface to Google Translate's Text to Speech API:

At this point, the gTTS object holds our text. gTTS sends it to the API and retrieves the actual audio when we write the speech out. Let's save this audio to a file:

Awesome, you'll see a new file appear in the current directory; let's play it using the playsound module we installed previously:

And that's it! You'll hear a robot saying what you just told it to say!
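Here is a minimal sketch of the create, save and play steps, assuming the gTTS and playsound packages are installed. The function name `text_to_mp3` and the default file name `hello.mp3` are illustrative and not taken from the original code.

```python
def text_to_mp3(text, path="hello.mp3", lang="en", play=False):
    """Convert text to speech with gTTS, save it as an MP3 file and optionally play it."""
    # Imported inside the function so this module loads even without gTTS installed.
    from gtts import gTTS

    tts = gTTS(text=text, lang=lang)  # holds the text and language settings
    tts.save(path)                    # downloads the MP3 audio and writes it to disk
    if play:
        from playsound import playsound  # the third-party player used in this tutorial
        playsound(path)
    return path
```

For example, `text_to_mp3("Hello, world!", play=True)` saves and plays English speech, and `text_to_mp3("Bonjour", "bonjour.mp3", lang="fr")` produces French speech.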

It isn't limited to English; you can use other languages as well by passing the lang parameter:

If you don't want to save it to a file and just want to play it directly, you should use tts.write_to_fp(), which accepts an io.BytesIO() object to write into; check this link for more information.
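A minimal sketch of the in-memory approach, assuming gTTS is installed. The helper name `text_to_mp3_bytes` is hypothetical. It returns the raw MP3 bytes, so nothing is written to disk.

```python
import io


def text_to_mp3_bytes(text, lang="en"):
    """Return the synthesized speech as MP3 bytes held in memory (no file on disk)."""
    from gtts import gTTS  # lazy import; the call itself needs Internet access

    buffer = io.BytesIO()
    gTTS(text=text, lang=lang).write_to_fp(buffer)  # writes MP3 data into the buffer
    return buffer.getvalue()
```

To play the bytes without a file, you need a library that reads from file-like objects. One option is pydub: `AudioSegment.from_file(io.BytesIO(data), format="mp3")` followed by `pydub.playback.play(...)`. Note that pydub requires FFmpeg and a playback backend.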

To get the list of available languages, use this:
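gTTS exposes the supported languages as a `{code: name}` dictionary through `gtts.lang.tts_langs()`. The small search helper below (`find_languages`, a hypothetical name) filters that dictionary. You can also pass in your own dictionary, which lets it run without gTTS.

```python
def find_languages(query, langs=None):
    """Return the {code: name} entries whose code or name contains `query` (case-insensitive)."""
    if langs is None:
        from gtts.lang import tts_langs  # e.g. {"en": "English", "it": "Italian", ...}
        langs = tts_langs()
    q = query.lower()
    return {code: name for code, name in langs.items()
            if q in code.lower() or q in name.lower()}
```

For instance, `find_languages("port")` should list the Portuguese entries, and `print(find_languages(""))` prints every supported language.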

Here are the supported languages:

Now you know how to use Google's API, but what if you want to use text-to-speech technologies offline?

Well, the pyttsx3 library comes to the rescue. It is a text-to-speech conversion library in Python that looks for TTS engines pre-installed on your platform and uses them. Here are the text-to-speech synthesizers that this library uses:

- SAPI5 on Windows XP, Windows Vista, 8, 8.1, 10 and 11.

- NSSpeechSynthesizer on Mac OS X.

- espeak on Ubuntu Desktop Edition.

Here are the main features of the pyttsx3 library:

- It works fully offline

- You can choose among different voices that are installed on your system

- Controlling the speed of speech

- Tweaking volume

- Saving the speech audio into a file

Note : If you're on a Linux system and the voice output is not working with this library, then you should install espeak, FFmpeg, and libespeak1:

To get started with this library, open up a new Python file and import it:

Now, we need to initialize the TTS engine:

To convert some text, we need to use say() and runAndWait() methods:

The say() method adds an utterance to the event queue, while the runAndWait() method runs the event loop until all queued commands have been processed. So you can call say() multiple times and a single runAndWait() at the end to hear the synthesis. Try it out!
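The queue-then-run pattern fits in a few lines. The helper `speak_all` is a hypothetical name, and the engine is passed in as an argument (normally the object returned by `pyttsx3.init()`).

```python
def speak_all(engine, sentences):
    """Queue several utterances, then speak them all with a single runAndWait() call."""
    for sentence in sentences:
        engine.say(sentence)  # only adds to the queue; nothing is spoken yet
    engine.runAndWait()       # blocks until every queued utterance has been spoken
```

Usage: `engine = pyttsx3.init()` followed by `speak_all(engine, ["Hello.", "How are you today?"])`.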

This library provides us with some properties we can tweak based on our needs. For instance, let's get the details of the speaking rate:

Alright, let's change this to 300 (make the speaking rate much faster):
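Reading and changing the rate both go through the engine's property interface. Here is a small sketch with a hypothetical helper, `set_rate`, that also returns the old value so you can restore it later. The pyttsx3 documentation gives 200 words per minute as the default.

```python
def set_rate(engine, words_per_minute):
    """Change the speaking rate (words per minute) and return the previous rate."""
    previous = engine.getProperty("rate")  # current rate in words per minute
    engine.setProperty("rate", words_per_minute)
    return previous
```

Usage: `old = set_rate(engine, 300)`, then `set_rate(engine, old)` to go back.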

Another useful property is voices, which allows us to get details of all the voices available on your machine:

Here is the output in my case:

As you can see, my machine has three voice speakers. Let's use the second, for example:
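Voices are selected by setting the `voice` property to a voice's `id`. A sketch with a hypothetical helper `use_voice` that picks a voice by its position in the list and fails clearly if the index is out of range:

```python
def use_voice(engine, index):
    """Switch the engine to the voice at position `index` in engine.getProperty('voices')."""
    voices = engine.getProperty("voices")
    if not 0 <= index < len(voices):
        raise IndexError(f"only {len(voices)} voices are installed")
    engine.setProperty("voice", voices[index].id)  # voices are selected by their id
    return voices[index]
```

Usage: `use_voice(engine, 1)` selects the second voice, as in the text above.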

You can also save the audio as a file using the save_to_file() method, instead of playing the sound using say() method:

A new MP3 file will appear in the current directory; check it out!
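Saving works through the same queue as say(), so runAndWait() is still needed. Here is a sketch with a hypothetical helper `save_speech`. As far as I know, the file's actual encoding is decided by the platform driver (often WAV or AIFF), so the extension you pick does not by itself force MP3 output.

```python
def save_speech(engine, text, path):
    """Write the synthesized speech to `path` instead of playing it."""
    engine.save_to_file(text, path)  # queued, just like say()
    engine.runAndWait()              # the file is written while the event loop runs
    return path
```

Usage: `save_speech(pyttsx3.init(), "Hello from pyttsx3", "hello.mp3")`.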

In this section, we'll be using the newly released OpenAI audio models. Before we get started, make sure to update the openai library to the latest version:

Next, you must create an OpenAI account and navigate to the API key page to create a new secret key. Make sure to save it somewhere safe and do not share it with anyone.

Next, let's open up a new Python file and initialize our OpenAI API client:

After that, we can simply use client.audio.speech.create() to perform text to speech:
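A minimal sketch of the call, assuming openai 1.x and an API key in the `OPENAI_API_KEY` environment variable (the original may instead pass the key to the client explicitly). The helper name `openai_tts` and the output file name are hypothetical.

```python
from pathlib import Path


def openai_tts(text, path="speech.mp3", model="tts-1", voice="nova"):
    """Synthesize `text` with OpenAI's speech endpoint and write the audio to `path`."""
    from openai import OpenAI  # lazy import; requires the openai>=1.0 client

    client = OpenAI()  # reads the OPENAI_API_KEY environment variable
    response = client.audio.speech.create(model=model, voice=voice, input=text)
    response.write_to_file(Path(path))  # binary response body -> file on disk
    return path
```

Usage: `openai_tts("Today is a wonderful day to build something people love!")`.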

This is a paid API, and at the time of writing, there are two models: tts-1 for $0.015 per 1,000 characters and tts-1-hd for $0.03 per 1,000 characters. tts-1 is cheaper and faster, whereas tts-1-hd provides higher-quality audio.
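Because pricing is per character, a cost estimate is simple arithmetic. The sketch below hard-codes the prices quoted above, which may have changed since. For example, a 10,000-character text costs about 10 × $0.015 = $0.15 with tts-1 and $0.30 with tts-1-hd.

```python
# Prices per 1,000 characters as quoted in this tutorial; check OpenAI's pricing page.
PRICE_PER_1K_CHARS = {"tts-1": 0.015, "tts-1-hd": 0.03}


def estimate_cost(text, model="tts-1"):
    """Estimated US-dollar cost of synthesizing `text` with `model`."""
    return len(text) / 1000 * PRICE_PER_1K_CHARS[model]
```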

There are currently 6 voices you can choose from. I've chosen nova, but you can also use alloy, echo, fable, onyx, or shimmer.

You can also experiment with the speed parameter. The default is 1.0; values below 1.0 generate slower speech, and values above 1.0 generate faster speech.

Another parameter is response_format. The default is mp3, but you can set it to opus, aac, or flac.
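To catch typos before paying for a request, you can validate these options locally. The helper `speech_params` is hypothetical. The voice and format lists are the ones named in this tutorial (newer API versions may accept more), and the 0.25 to 4.0 speed range comes from OpenAI's API reference.

```python
# Options named in this tutorial; the live API may support additional ones.
VOICES = {"alloy", "echo", "fable", "onyx", "nova", "shimmer"}
FORMATS = {"mp3", "opus", "aac", "flac"}


def speech_params(text, voice="nova", speed=1.0, response_format="mp3", model="tts-1"):
    """Build validated keyword arguments for client.audio.speech.create()."""
    if voice not in VOICES:
        raise ValueError(f"unknown voice: {voice!r}")
    if response_format not in FORMATS:
        raise ValueError(f"unsupported format: {response_format!r}")
    if not 0.25 <= speed <= 4.0:
        raise ValueError("speed must be between 0.25 and 4.0")
    return {"model": model, "voice": voice, "input": text,
            "speed": speed, "response_format": response_format}
```

Usage: `client.audio.speech.create(**speech_params("Hello!", speed=1.25, response_format="flac"))`.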

In this section, we will use the 🤗 Transformers library to load a pre-trained text-to-speech transformer model. More specifically, we will use the SpeechT5 model fine-tuned for speech synthesis on LibriTTS. You can learn more about the model in this paper.

To get started, let's install the required libraries (if you haven't already):

Open up a new Python file named tts_transformers.py and import the following:

Let's load everything:
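A loading sketch using the checkpoint and dataset names from Hugging Face's SpeechT5 documentation (`microsoft/speecht5_tts`, `microsoft/speecht5_hifigan`, `Matthijs/cmu-arctic-xvectors`). I'm assuming the original code uses the same ones. The wrapper name `load_speecht5` is hypothetical. Depending on your `datasets` version, this script-based dataset may need extra loading options.

```python
def load_speecht5():
    """Load the SpeechT5 processor, TTS model, HiFi-GAN vocoder and speaker x-vectors."""
    # Lazy imports: transformers, datasets and torch are large dependencies.
    from datasets import load_dataset
    from transformers import SpeechT5ForTextToSpeech, SpeechT5HifiGan, SpeechT5Processor

    processor = SpeechT5Processor.from_pretrained("microsoft/speecht5_tts")
    model = SpeechT5ForTextToSpeech.from_pretrained("microsoft/speecht5_tts")
    vocoder = SpeechT5HifiGan.from_pretrained("microsoft/speecht5_hifigan")
    # Speaker embeddings (x-vectors) computed from the CMU ARCTIC recordings.
    embeddings = load_dataset("Matthijs/cmu-arctic-xvectors", split="validation")
    return processor, model, vocoder, embeddings
```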

The processor is the tokenizer of the input text, whereas the model is the actual model that converts text to speech.

The vocoder (short for voice encoder) turns the acoustic representation produced by the model into an audio waveform. It is responsible for the final production of the audio file.

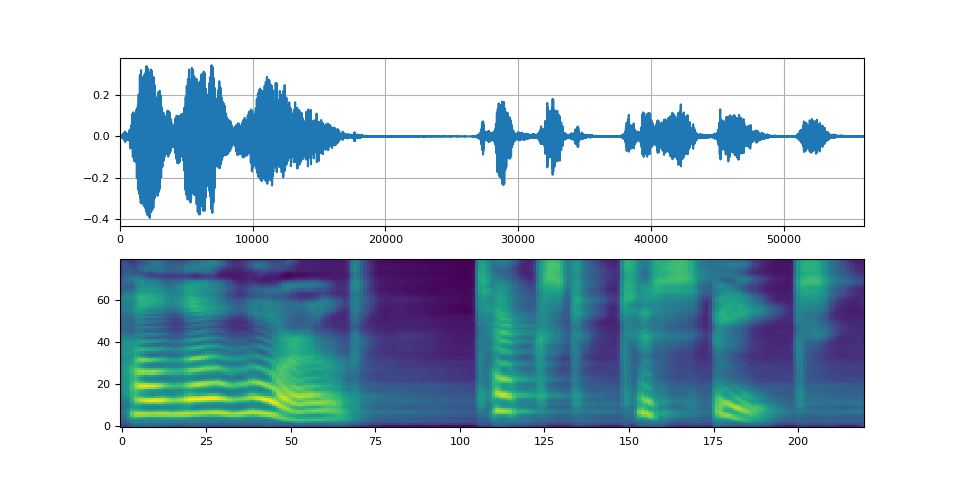

In our case, the SpeechT5 model transforms the input text we provide into a sequence of mel-filterbank features (a type of representation of the sound). These features are acoustic features often used in speech and audio processing, derived from a Fourier transform of the signal.

The HiFi-GAN vocoder we're using takes these representations and synthesizes them into actual audible speech.

Finally, we load a dataset that will help us get the speaker's voice vectors to synthesize speech with various speakers. Here are the speakers:

Next, let's make our function that does all the speech synthesis for us:

The function takes the text and the speaker (optional) as arguments and does the following:

- It tokenizes the input text into a sequence of token IDs.

- If the speaker is passed, then we use the speaker vector to mimic the sound of the passed speaker during synthesis.

- If it's not passed, we simply make a random vector using torch.randn(), although I do not think it's a reliable way of making a random voice.

- Next, we use the model.generate_speech() method to generate the speech tensor; it takes the input IDs, speaker embeddings, and the vocoder.

- Finally, we build our output filename and save the audio with a 16 kHz sampling rate. (A fun experiment: if you reduce the sampling rate to 12 kHz or 8 kHz, you'll get a deeper and slower voice, and vice versa: a higher-pitched and faster voice when you increase it to values like 22050 or 24000.)
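The steps above can be sketched as follows. This is not the original function. `output_filename` and `save_text_to_speech` are hypothetical names, and the filename scheme (speaker index plus the first six words) is inferred from the example file name used later in this section. `processor`, `model`, `vocoder` and `embeddings` are the objects loaded earlier. WAV output is the default here because writing MP3 with soundfile needs libsndfile 1.1.0 or later.

```python
def output_filename(text, speaker=None, ext="mp3"):
    """'<speaker or random>-<first six words joined by dashes>.<ext>' (hypothetical scheme)."""
    prefix = "random" if speaker is None else str(speaker)
    return f"{prefix}-{'-'.join(text.split()[:6])}.{ext}"


def save_text_to_speech(text, processor, model, vocoder, embeddings,
                        speaker=None, sampling_rate=16000, ext="wav"):
    """Tokenize, pick a speaker vector, generate speech and save it to disk."""
    import soundfile as sf
    import torch

    inputs = processor(text=text, return_tensors="pt")  # text -> token IDs
    if speaker is not None:
        # One CMU ARCTIC x-vector, reshaped to (1, 512)
        speaker_embeddings = torch.tensor(embeddings[speaker]["xvector"]).unsqueeze(0)
    else:
        speaker_embeddings = torch.randn((1, 512))  # random "voice"
    with torch.no_grad():
        speech = model.generate_speech(inputs["input_ids"], speaker_embeddings,
                                       vocoder=vocoder)  # waveform tensor
    path = output_filename(text, speaker, ext)
    # 16 kHz is the native rate; other values change pitch and speed as described above.
    sf.write(path, speech.numpy(), samplerate=sampling_rate)
    return path
```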

Let's use the function now:

This generates speech with the US female voice (my favorite among all the speakers). The following generates speech with a random voice:

Let's now call the function with all the speakers so you can compare speakers:

Listen to 6799-In-his-miracle-year,-he-published.mp3:

Great, that's it for this tutorial; I hope that will help you build your application or maybe your own virtual assistant in Python!

To conclude, we have used four different methods for text-to-speech:

- Online Text to speech using the gTTS library

- Offline Text to speech using pyttsx3 library that uses an existing engine on your OS.

- The convenient OpenAI Audio API.

- Finally, we used 🤗 Transformers to perform text-to-speech (offline) using our computing resources.

So, to wrap it up: if you want reliable synthesis, you can go for the OpenAI Audio API, the Google TTS API, or any other reliable API you choose. If you want a reliable but offline method, you can use the SpeechT5 transformer. And if you just want to make it work quickly without an Internet connection, you can use the pyttsx3 library.

You can get the complete code for all the methods used in the tutorial here.

Here is the documentation for used libraries:

- gTTS (Google Text-to-Speech)

- pyttsx3 - Text-to-speech x-platform

- OpenAI Text to Speech

- SpeechT5 (TTS task)

Related: How to Play and Record Audio in Python.

Happy Coding ♥


CodeFatherTech


Text to Speech in Python [With Code Examples]

In this article, you will learn how to create text-to-speech programs in Python. You will create a Python program that converts any text you provide into speech.

This is an interesting experiment to discover what can be created with Python and to show you the power of Python and its modules.

How can you make Python speak?

Python provides hundreds of thousands of packages that allow developers to write pretty much any type of program. Two cross-platform packages you can use to convert text into speech using Python are PyTTSx3 and gTTS.

Together we will create a simple program to convert text into speech. This program will show you how powerful Python is as a language. It allows us to do even complex things with very few lines of code.

The Libraries to Make Python Speak

In this guide, we will try two different text-to-speech libraries:

- PyTTSx3

- gTTS (Google Text-to-Speech API)

They are both available on the Python Package Index (PyPI), the official repository for Python third-party software. Below you can see the page on PyPI for the two libraries:

- PyTTSx3: https://pypi.org/project/pyttsx3/

- gTTS: https://pypi.org/project/gTTS/

There are different ways to create a program in Python that converts text to speech and some of them are specific to the operating system.

The reason why we will be using PyTTSx3 and gTTS is to create a program that can run in the same way on Windows, Mac, and Linux (cross-platform).

Let’s see how PyTTSx3 works first…

Text-To-Speech With the PyTTSx3 Module

Before using this module remember to install it using pip:

If you are using Windows and you see one of the following error messages, you will also have to install the module pypiwin32 :

You can use pip for that module too:

If the pyttsx3 module is not installed you will see the following error when executing your Python program:

There’s also a module called PyTTSx (without the 3 at the end), but it’s not compatible with both Python 2 and Python 3.

We are using PyTTSx3 because it is compatible with both Python versions.

It’s great to see that to make your computer speak using Python you just need a few lines of code:

Run your program and you will hear the message coming from your computer.

With just four lines of code! (excluding comments)

Also, notice the difference that commas make in your phrase. Try to remove the comma before “and you?” and run the program again.

Can you see (hear) the difference?

Also, you can use multiple calls to the say() function, so:

could be written also as:

The messages passed to the say() function are not spoken until the Python interpreter reaches a call to runAndWait(). You can confirm that by commenting out the last line of the program.

Change Voice with PyTTSx3

What else can we do with PyTTSx?

Let’s see if we can change the voice starting from the previous program.

First of all, let’s look at the voices available. To do that we can use the following program:

You will see an output similar to the one below:

The voices available depend on your system and they might be different from the ones present on a different computer.

Considering that our message is in English we want to find all the voices that support English as a language. To do that we can add an if statement inside the previous for loop.

Also to make the output shorter we just print the id field for each Voice object in the voices list (you will understand why shortly):
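Here is a sketch of such a filter as a standalone function (`english_voice_ids` is a hypothetical name). Drivers report languages differently. macOS gives strings like "en_US". Other drivers may give bytes with a leading control byte (espeak, for example, may report something like b"\x05en-us"). The function therefore normalizes both before checking for an "en" prefix.

```python
def english_voice_ids(voices):
    """Return the ids of voices whose `languages` list includes an English locale."""
    ids = []
    for voice in voices:
        for lang in voice.languages:
            # Some drivers return bytes, possibly with a leading control byte.
            if isinstance(lang, bytes):
                lang = lang.decode("utf-8", errors="ignore")
            code = "".join(ch for ch in str(lang) if ch.isprintable()).lower()
            if code.startswith("en"):
                ids.append(voice.id)
                break  # one English locale is enough for this voice
    return ids
```

Usage: `print(english_voice_ids(engine.getProperty("voices")))`.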

Here are the voice IDs printed by the program:

Let’s choose a female voice; to do that, we use the following:

I select the id com.apple.speech.synthesis.voice.samantha, so our program becomes:

How does it sound? 🙂

You can also modify the standard rate (speed) and volume of the voice by setting the following engine properties before calling the say() function.

Below you can see some examples of how to do it:
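A sketch that applies rate, volume and voice in one place (`configure_voice` is a hypothetical helper). Volume in pyttsx3 ranges from 0.0 to 1.0, so the value is clamped to that range.

```python
def configure_voice(engine, rate=None, volume=None, voice_id=None):
    """Apply optional rate/volume/voice settings; call this before engine.say()."""
    if rate is not None:
        engine.setProperty("rate", int(rate))  # words per minute
    if volume is not None:
        engine.setProperty("volume", max(0.0, min(1.0, float(volume))))  # clamp to 0.0-1.0
    if voice_id is not None:
        engine.setProperty("voice", voice_id)
```

Usage: `configure_voice(engine, rate=150, volume=0.8, voice_id="com.apple.speech.synthesis.voice.samantha")`.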

Play with voice id, rate, and volume to find the settings you like the most!

Text to Speech with gTTS

Now, let’s create a program using the gTTS module instead.

I’m curious to see which one is simpler to use and if there are benefits in gTTS over PyTTSx or vice versa.

As usual, we install gTTS using pip:

One difference between gTTS and PyTTSx is that gTTS also provides a CLI tool, gtts-cli .

Let’s get familiar with gtts-cli first, before writing a Python program.

To see all the available languages, you can use:

That’s an impressive list!

The first thing you can do with the CLI is convert text into an MP3 file that you can then play using any suitable application on your system.

We will convert the same message used in the previous section: “I love Python for text to speech, and you?”

I’m on a Mac and I will use afplay to play the MP3 file.

The thing I notice immediately is that the comma and the question mark don’t make much difference. One point for PyTTSx, which handles them better.

I can use the --lang flag to specify a different language; here is an example in Italian…

…the message says: “I like programming in Python, and you?”

Now we will write a Python program to do the same thing.

If you run the program you will hear the message.

Remember that I’m using afplay because I’m on a Mac. You can replace it with any utility that can play sounds on your system.

Looking at the gTTS documentation, I can also have the text read more slowly by passing the slow parameter to the gTTS() function.
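Here is a sketch that combines the slow parameter with a platform-dependent player. The helpers `player_command` and `speak_italian` are hypothetical. The player choice (afplay on macOS, mpg321 on Linux) is an assumption, and the player must already be installed.

```python
import subprocess
import sys


def player_command(path, platform=sys.platform):
    """Pick a command-line MP3 player for the given platform (assumed to be installed)."""
    if platform == "darwin":
        return ["afplay", path]  # ships with macOS
    if platform.startswith("linux"):
        return ["mpg321", path]  # e.g. installed with your distribution's package manager
    raise RuntimeError(f"no player configured for {platform!r}")


def speak_italian(text, path="italian.mp3", slow=False):
    """Save Italian speech with gTTS (optionally slowed down) and play it."""
    from gtts import gTTS  # lazy import; needs Internet access

    gTTS(text=text, lang="it", slow=slow).save(path)  # slow=True reads more slowly
    subprocess.run(player_command(path), check=True)
```

Usage: `speak_italian("Mi piace programmare in Python, e a te?", slow=True)`.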

Give it a try!

Change Voice with gTTS

How easy is it to change the voice with gTTS?

Is it even possible to customize the voice?

It wasn’t easy to find an answer to this. After playing a bit with the parameters passed to the gTTS() function, I noticed that the English voice changes if the value of the lang parameter is ‘en-US’ instead of ‘en’.

The language parameter uses IETF language tags.

The voice seems to take into account the comma and the question mark better than before.

Also from another test it looks like ‘en’ (the default language) is the same as ‘en-GB’.
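To my knowledge, recent gTTS releases select regional accents with the `tld` parameter (the Google domain used for the request) and deprecate region-suffixed codes like 'en-US'. The sketch below assumes that behavior. The accent-to-domain values come from gTTS's "Localized accents" documentation, and `english_with_accent` is a hypothetical helper.

```python
# A few English accents and the Google domains gTTS uses for them (gTTS docs).
ENGLISH_ACCENT_TLDS = {"us": "us", "uk": "co.uk", "australia": "com.au", "india": "co.in"}


def english_with_accent(text, accent, path=None):
    """Save English speech using the regional accent selected via gTTS's `tld`."""
    from gtts import gTTS  # lazy import; needs Internet access

    tts = gTTS(text=text, lang="en", tld=ENGLISH_ACCENT_TLDS[accent])
    tts.save(path or f"hello_{accent}.mp3")
    return tts
```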

It looks to me like there’s more variety in the voices available with PyTTSx3 compared to gTTS.

Before finishing this section I also want to show you a way to create a single MP3 file that contains multiple messages, in this case in different languages:

The write_to_fp() function writes bytes to a file-like object, which we save as hello_ciao.mp3.
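The same idea as a reusable sketch. `save_messages` is a hypothetical name. Each (text, lang) pair is written into the same open file, one after another. The optional `tts_factory` argument only exists so the function can be tried without network access. Concatenated MP3 data usually plays back-to-back in common players.

```python
def save_messages(messages, path="hello_ciao.mp3", tts_factory=None):
    """Write several (text, lang) messages one after another into a single MP3 file."""
    if tts_factory is None:
        from gtts import gTTS  # lazy import; needs Internet access
        tts_factory = gTTS
    with open(path, "wb") as fp:
        for text, lang in messages:
            tts_factory(text=text, lang=lang).write_to_fp(fp)  # appends this message's audio
    return path
```

Usage: `save_messages([("Hello", "en"), ("Ciao", "it")])`.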

Make sense?

Work With Text to Speech Offline

One last question about text-to-speech in Python.

Can you do it offline or do you need an Internet connection?

Let’s run the first one of the programs we created using PyTTSx3.

From my tests, everything works well, so I can convert text into audio even if I’m offline.

This can be very handy for the creation of any voice-based software.

Let’s try gTTS now…

If I run the program using gTTS after disabling my connection, I see the following error:

So, gTTS doesn’t work without a connection because it requires access to translate.google.com.

If you want to make Python speak offline use PyTTSx3.
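One way to get the best of both is to try gTTS first and fall back to PyTTSx3 when the request fails. The function `synthesize_to_file` is a hypothetical sketch. It catches any exception from the online path (gTTS errors, connection errors, or a missing package). The offline file extension is an assumption, because the real format depends on the platform driver.

```python
def synthesize_to_file(text, path_stem="speech", online=None, offline=None):
    """Save speech with gTTS when online; otherwise fall back to pyttsx3 (offline)."""
    def with_gtts(t):
        from gtts import gTTS  # needs translate.google.com to be reachable
        path = f"{path_stem}.mp3"
        gTTS(text=t).save(path)
        return path

    def with_pyttsx3(t):
        import pyttsx3  # uses the OS speech engine, no network needed
        path = f"{path_stem}.wav"  # actual container depends on the platform driver
        engine = pyttsx3.init()
        engine.save_to_file(t, path)
        engine.runAndWait()
        return path

    try:
        return (online or with_gtts)(text)
    except Exception:  # gTTS errors, connection errors, or gTTS not installed
        return (offline or with_pyttsx3)(text)
```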

We have covered a lot!

You have seen how to use two cross-platform Python modules, PyTTSx3 and gTTS, to convert text into speech and to make your computer talk!

We also went through customizing the voice, rate, volume, and language; from what I can see in the programs we created here, the PyTTSx3 module is more flexible in this respect.

Are you planning to use this for a specific project?

Let me know in the comments below 🙂

Claudio Sabato is an IT expert with over 15 years of professional experience in Python programming, Linux Systems Administration, Bash programming, and IT Systems Design. He is a professional certified by the Linux Professional Institute.

With a Master’s degree in Computer Science, he has a strong foundation in Software Engineering and a passion for robotics with Raspberry Pi.


Python: Text to Speech

Text-to-Speech (TTS) is a kind of speech synthesis which converts typed text into audible human-like voice.

There are several speech synthesizers that can be used with Python. In this tutorial, we take a look at three of them: pyttsx, Google Text-to-Speech (gTTS) and Amazon Polly.

We first install pip , the package installer for Python.

If you have already installed it, upgrade it.

We will start with pyttsx, a text-to-speech (TTS) conversion library compatible with both Python 2 and 3. The best thing about pyttsx is that it works offline, with very little delay. Install it via pip.

By default, the pyttsx3 library loads the best driver available in an operating system: nsss on Mac, sapi5 on Windows and espeak on Linux and any other platform.
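The default driver mapping described above can be written as a small function (`default_driver` is a hypothetical name). Passing its result to `pyttsx3.init()` makes the driver choice explicit. Calling `pyttsx3.init()` with no argument lets the library choose on its own.

```python
import sys


def default_driver(platform=sys.platform):
    """Driver name pyttsx3 picks by default on each platform, per the mapping above."""
    if platform == "darwin":
        return "nsss"
    if platform == "win32":
        return "sapi5"
    return "espeak"  # Linux and any other platform
```

Usage: `engine = pyttsx3.init(default_driver())`.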

Import the installed pyttsx3 into your program.

Here is the basic program which shows how to use it.

pyttsx3 Female Voices

Now let us change the voice in pyttsx3 from male to female. On my machine, voices[10] and voices[17] in the engine's voices property are female voices; the indices will differ on other systems. Of course, I have picked the accents that are easier for me to make out.

You can actually loop through all the available voices and pick the index of the voice you desire.

Google Text to Speech (gTTS)

Google has also developed an application that reads on-screen text aloud for its Android operating system. It was first released on November 6, 2013.

It has a library and CLI tool in Python called gTTS to interface with the Google Translate text-to-speech API.

We first install gTTS via pip.

gTTS creates an mp3 file from spoken text via the Google Text-to-Speech API.

We will install mpg321 to play these created mp3 files from the command-line.

Using gtts-cli, we read the text 'Hello, World!' and save it as an MP3 file.

We now start the Python interactive shell known as the Python Shell

You will see the prompt consisting of three greater-than signs ( >>> ), which is known as the Python REPL prompt.

Import the os module and play the created hello.mp3 file.

Putting it all together in a single .py file

The created hello.mp3 file is saved in the current working directory, which is usually the folder you run your Python program from.

gTTS supports quite a number of languages. You will find the list here.

The below line creates an mp3 file which reads the text "你好" in Chinese.

The below program creates an mp3 file out of text "안녕하세요" in Korean and plays it.

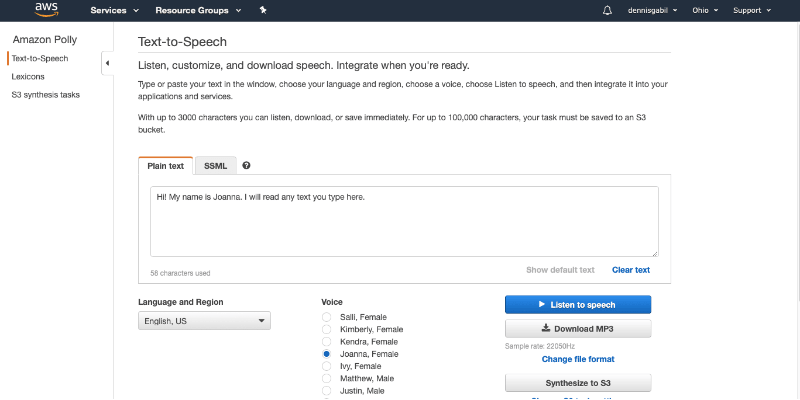

Amazon Polly

Amazon also has a cloud-based text-to-speech service called Amazon Polly.

If you have an AWS account, you can access and try out the Amazon Polly console here:

https://console.aws.amazon.com/polly/

The interface looks as follows.

There is a Language and Region dropdown to choose the desired language from and several male and female voices to pick too. Pressing the Listen to speech button reads out the text typed into the text box. Also, the speech is available to download in several formats like MP3, OGG, PCM and Speech Marks.

Now, to use Polly in a Python program, we need an SDK. The AWS SDK for Python is known as Boto (its current major version is Boto3).

We first install it.

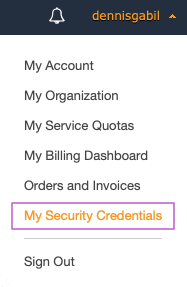

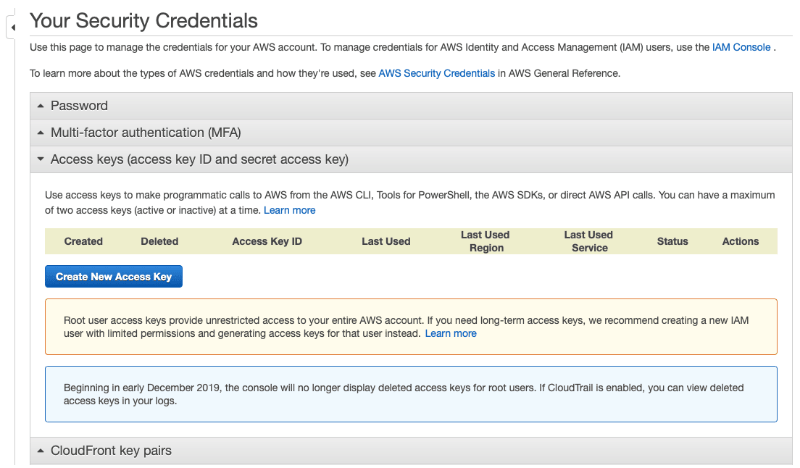

Now, to initiate a boto session, we need two more ingredients: the Access Key ID and the Secret Access Key.

Log in to your AWS account and expand the dropdown menu next to your user name, located at the top right of the page. Next, select My Security Credentials from the menu.

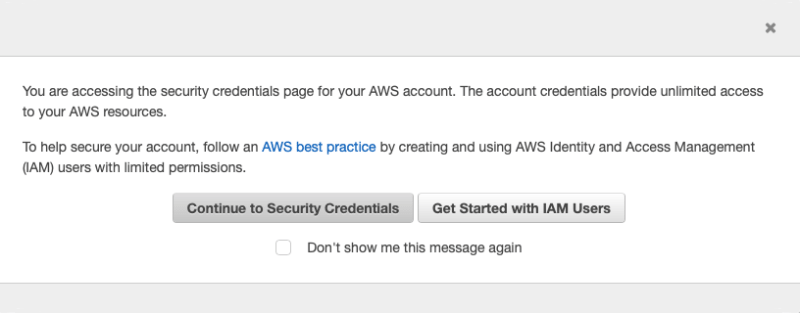

A pop-up appears. Click on the Continue to Security Credentials button on the left.

Expand the Access keys tab and click on the Create New Access Key button.

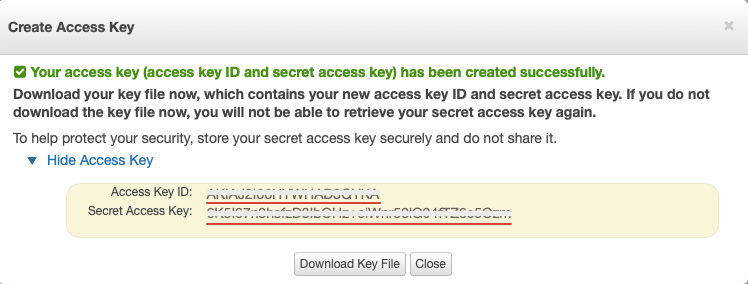

As soon as you click on the Create New Access Key button, AWS automatically creates the two keys: the Access Key ID, a 20-character string, and the Secret Access Key, a 40-character string.

Now that we have the two keys, here is the basic Python code that reads a given block of text, converts it into MP3, and plays it with mpg321.
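Here is a sketch of the same flow with boto3. The helper names are hypothetical. The region `us-east-1` and the voice `Joanna` are assumptions, so pick any region and voice available to you. Avoid hard-coding real keys: read them from environment variables or use `aws configure`.

```python
import subprocess


def make_polly_client(access_key_id, secret_access_key, region="us-east-1"):
    """Create an Amazon Polly client from explicit credentials."""
    import boto3  # lazy import: pip install boto3

    session = boto3.Session(
        aws_access_key_id=access_key_id,
        aws_secret_access_key=secret_access_key,
        region_name=region,
    )
    return session.client("polly")


def polly_to_mp3(client, text, path="speech.mp3", voice_id="Joanna", play=True):
    """Synthesize `text` with Polly, save the MP3 stream, optionally play it with mpg321."""
    response = client.synthesize_speech(Text=text, OutputFormat="mp3", VoiceId=voice_id)
    with open(path, "wb") as fp:
        fp.write(response["AudioStream"].read())  # streaming body -> bytes on disk
    if play:
        subprocess.run(["mpg321", path], check=True)
    return path
```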

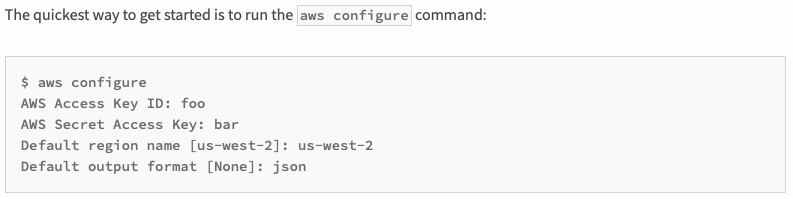

There is also another way to configure the Access Key ID and the Secret Access Key. You can install awscli, the universal command-line environment for AWS,

and configure them by typing the following command.

- The latest documentation on pyttsx3 is available here.

- You can also access the updated documentation on gTTS here.


How to Perform Speech Synthesis in Python

Last Updated on July 24, 2023 by Editorial Team

Author(s): Tommaso De Ponti

Originally published on Towards AI.

Introduction to Text-To-Speech(TTS) in Python to perform useful tasks

Text to speech (TTS) is the use of software to create an audio output in the form of a spoken voice. The program that converts the text on the page into spoken audio is normally called a text-to-speech engine. TTS engines are also needed to produce spoken output from machine translation results.

TTS software is widely used by major companies such as Google, Apple, Microsoft, Amazon, and others: Google developed Google Assistant, Apple developed Siri, Microsoft developed Cortana, and Amazon developed Alexa. Among many other ML techniques and algorithms, all of these advanced assistants use text-to-speech.

When you ask Siri something, it processes an answer using machine learning and then, using TTS, answers you vocally.

Today we will not build a voice assistant. Instead, we will first introduce TTS in Python and then create a program that can read a text file aloud.

Finally, we’ll embed our basic text-to-speech functionality in a GUI made with Kivy.

To perform speech synthesis, we will use the pyttsx3 Python package. To install it via pip, we open our terminal and type:

With this package installed, we can start to perform Text-To-Speech

First Speech Synthesis Program

In this section, we will create a very simple TTS script that performs text-to-speech on a given input:

Here we imported pyttsx3 (Line 1) and created the engine object with the pyttsx3 module (Line 2). At Lines 5/6, we perform speech synthesis on the string.

Pretty simple! Now, before building another TTS program, let’s make some improvements to this code.

Here we defined a function so we can use TTS whenever we want; in this case, we used it in a loop. For each text we give the program, it performs speech synthesis. Cool, but still not very useful.
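The function-plus-loop idea can be sketched like this. `say` wraps pyttsx3. `tts_loop` is a hypothetical name that takes the speak function and the input function as parameters, so it can be exercised without audio. Stopping on an empty line is an assumption, since the original loop condition isn't shown.

```python
def say(text):
    """Speak `text` with the system's default pyttsx3 voice."""
    import pyttsx3  # lazy import

    engine = pyttsx3.init()
    engine.say(text)
    engine.runAndWait()


def tts_loop(speak=say, read_line=input):
    """Ask for text repeatedly and speak it; an empty line ends the loop."""
    spoken = 0
    while True:
        text = read_line("Text to speak (empty to quit): ").strip()
        if not text:
            return spoken  # number of texts that were spoken
        speak(text)
        spoken += 1
```

Usage: call `tts_loop()` and type sentences at the prompt.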

Let’s see how we can apply Speech Synthesis to do some useful tasks.

Have you ever had to read a long, boring document by the next day? Well, I have.

We are programmers: if there is a problem, we solve it!

The first thing that came to mind after I learned to use TTS was that this problem could be solved. Listening to something is much faster and more relaxing than reading it, especially if it is boring or too long.

So, for a given text file, I choose to use TTS in order to listen to it instead of reading it:

In this simple script, we read the content of the example.txt file. Then we use the say function we talked about previously to apply text-to-speech to that content.

When you use this script, make sure to replace the example.txt file (at Line 3) with the file you want read aloud.
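A sketch of the read-aloud script, assuming a UTF-8 text file. Splitting the file into paragraphs, with one say() call each, is my own addition and not necessarily what the original script does. `read_paragraphs` and `read_aloud` are hypothetical names.

```python
def read_paragraphs(path, encoding="utf-8"):
    """Split a text file into non-empty paragraphs separated by blank lines."""
    with open(path, encoding=encoding) as fp:
        content = fp.read()
    # Collapse internal line breaks and extra spaces inside each paragraph.
    return [" ".join(p.split()) for p in content.split("\n\n") if p.strip()]


def read_aloud(path="example.txt"):
    """Speak every paragraph of the file with pyttsx3."""
    import pyttsx3  # lazy import

    engine = pyttsx3.init()
    for paragraph in read_paragraphs(path):
        engine.say(paragraph)  # one queued utterance per paragraph
    engine.runAndWait()
```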

Embed TTS basic functionalities in a GUI

This step is very important. Knowing how to embed your program’s functionality in a GUI, even a simple one, can really make a difference. As I said in the introduction, we will use Kivy, an open-source Python library that allows us to quickly develop GUI apps with innovative graphics. More about Kivy can be found here.

First, let’s create a hello-world app with it:

As you can see, the code is really simple:

Lines 1/2: Imported Kivy.app and the Kivy button

Lines 4/6: Created the TestApp Class that returns us a GUI with a hello world button.

Line 8: Ran the app

This simple script gives us this as output:

Now we can embed our TTS functionalities in a GUI: we want a GUI App that for a given text performs speech synthesis.

In these 30 lines of code, we:

- Imported the needed modules → Lines 1/5

- Pasted the say() function we created in the previous steps → Lines 8/13

- Built our GUI:

  - Created a layout using Kivy’s BoxLayout → Line 18

  - Generated a TextInput object, where we will enter our text → Line 19

  - Generated a Button that, when pressed, calls the function we define at line 25 → Line 20

  - Added the generated objects to the layout → Lines 21/22

  - Returned the layout containing the TextInput and the Button objects → Line 23

  - Defined the self.perform() method, which runs when the button is pressed: it grabs the text of our TextInput object and performs TTS on it using the say() function defined at line 8 → Lines 25/27

- Executed the GUI App
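Here is a minimal sketch of such an app, assuming Kivy and pyttsx3 are installed. It is not the original 30-line program, so its line numbers will not match the ones referenced above. `run_tts_app` and `TTSApp` are hypothetical names. The imports sit inside the function so it can be defined without Kivy installed.

```python
def run_tts_app():
    """Build and run a tiny Kivy app: a text box plus a button that speaks its content."""
    import pyttsx3
    from kivy.app import App
    from kivy.uix.boxlayout import BoxLayout
    from kivy.uix.button import Button
    from kivy.uix.textinput import TextInput

    def say(text):
        engine = pyttsx3.init()
        engine.say(text)
        engine.runAndWait()  # note: blocks the UI until speaking finishes

    class TTSApp(App):
        def build(self):
            layout = BoxLayout(orientation="vertical")
            self.text_input = TextInput(hint_text="Type something to speak")
            button = Button(text="Perform Speech Synthesis", size_hint=(1, 0.2))
            button.bind(on_press=self.perform)  # call perform() when pressed
            layout.add_widget(self.text_input)
            layout.add_widget(button)
            return layout

        def perform(self, instance):
            say(self.text_input.text)  # speak whatever is in the text box

    TTSApp().run()
```

Call `run_tts_app()` to open the window.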

After executing this code, you should see this as output:

After entering your text and clicking the Perform Speech Synthesis button, the app performs TTS on the given text.

Today we have seen how speech synthesis works in Python, and we implemented text-to-speech in a useful app that reads documents aloud. TTS applications have grown significantly in recent years, and learning how to build this type of app is a good way to improve your programming skills. Knowing how to implement speech synthesis also helps in everyday code; for example, you can use TTS while testing code to receive a vocal notification of what is happening during execution.
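As a sketch of the vocal-notification idea, here is a hypothetical decorator, `announce`, that speaks a message after a function finishes or fails. The speak function is passed in, so you can use a pyttsx3-based say() or anything else.

```python
import functools


def announce(speak, message="Done"):
    """Decorator: call `speak` with a short message when the wrapped function ends."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            try:
                result = func(*args, **kwargs)
            except Exception:
                speak(f"{func.__name__} failed")
                raise  # keep the original error
            speak(f"{message}: {func.__name__}")
            return result
        return wrapper
    return decorator
```

Usage: decorate a long-running function with `@announce(say)` to hear when it completes.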


Text-to-Speech with Tacotron2

Author : Yao-Yuan Yang , Moto Hira

This tutorial shows how to build a text-to-speech pipeline using the pretrained Tacotron2 model in torchaudio.

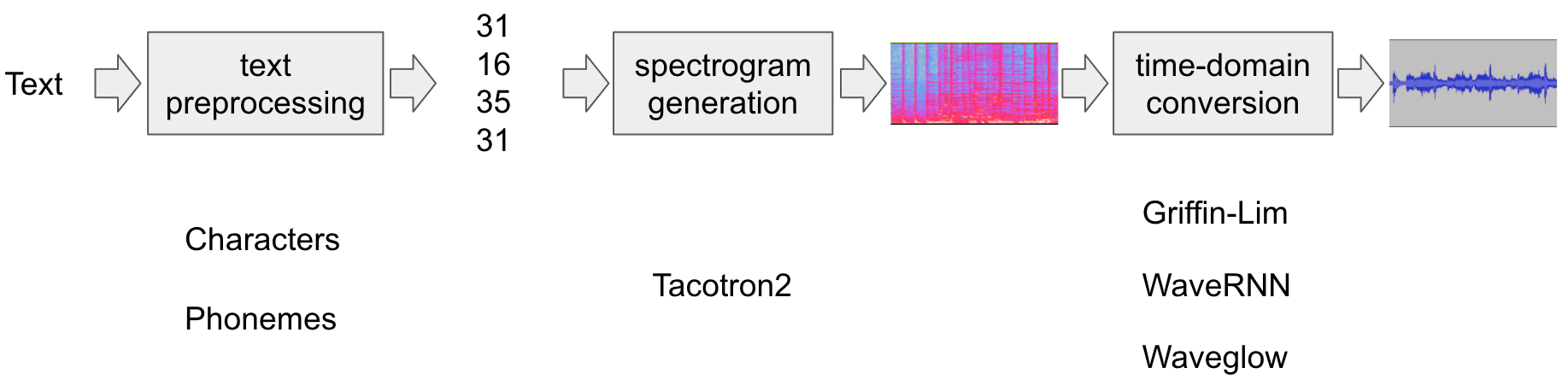

The text-to-speech pipeline goes as follows:

Text preprocessing

First, the input text is encoded into a list of symbols. In this tutorial, we will use English characters and phonemes as the symbols.

Spectrogram generation

From the encoded text, a spectrogram is generated. We use the Tacotron2 model for this.

Time-domain conversion

The last step is converting the spectrogram into the waveform. The component that generates speech from a spectrogram is called a vocoder. In this tutorial, three different vocoders are used: WaveRNN, GriffinLim, and Nvidia's WaveGlow.

The following figure illustrates the whole process.

All the related components are bundled in torchaudio.pipelines.Tacotron2TTSBundle , but this tutorial will also cover the process under the hood.

Preparation

First, we install the necessary dependencies. In addition to torchaudio , DeepPhonemizer is required to perform phoneme-based encoding.

Text Processing

Character-based encoding

In this section, we will go through how the character-based encoding works.

Since the pretrained Tacotron2 model expects a specific symbol table, torchaudio provides this encoding as well. However, we will first implement the encoding manually to aid understanding.

First, we define the set of symbols '_-!\'(),.:;? abcdefghijklmnopqrstuvwxyz'. Then, we map each character of the input text to the index of the corresponding symbol in the table. Symbols that are not in the table are ignored.
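The character-to-index mapping just described can be sketched in plain Python as follows. The table is the one quoted above; the name `text_to_sequence` is our own, and lowercasing the input first is an assumption that matches the lowercase-only table.

```python
from __future__ import annotations

# Symbol table quoted in the text; a symbol's position is its token index.
SYMBOLS = "_-!'(),.:;? abcdefghijklmnopqrstuvwxyz"
LOOK_UP = {s: i for i, s in enumerate(SYMBOLS)}


def text_to_sequence(text: str) -> list[int]:
    """Map each character to its symbol index, silently skipping unknown characters."""
    text = text.lower()  # the table only contains lowercase letters
    return [LOOK_UP[ch] for ch in text if ch in LOOK_UP]
```

For instance, `text_to_sequence("Hello world!")` gives `[19, 16, 23, 23, 26, 11, 34, 26, 29, 23, 15, 2]`.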

As mentioned above, the symbol table and indices must match what the pretrained Tacotron2 model expects. torchaudio provides the same transform along with the pretrained model. You can instantiate and use such a transform as follows.

Note: The output of our manual encoding and the torchaudio text_processor output match (meaning we correctly re-implemented what the library does internally). The processor takes either a single text or a list of texts as input. When a list of texts is provided, the returned lengths variable holds the valid length of each processed token sequence in the output batch.
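To make the batch `lengths` idea concrete, here is a pure-Python sketch (not torchaudio's actual implementation) that encodes several texts with the same character table, right-pads them to a common length, and records each sequence's valid length. Padding with index 0, the `_` symbol, is our assumption for illustration, and `encode_batch` is a hypothetical helper name.

```python
from __future__ import annotations

SYMBOLS = "_-!'(),.:;? abcdefghijklmnopqrstuvwxyz"
LOOK_UP = {s: i for i, s in enumerate(SYMBOLS)}
PAD_ID = 0  # assumption: pad with the index of "_"


def encode(text: str) -> list[int]:
    """Character-based encoding, as in the previous sketch."""
    return [LOOK_UP[ch] for ch in text.lower() if ch in LOOK_UP]


def encode_batch(texts: list[str]) -> tuple[list[list[int]], list[int]]:
    """Encode texts, right-pad them to the longest one, and return (batch, lengths)."""
    sequences = [encode(t) for t in texts]
    lengths = [len(seq) for seq in sequences]  # valid (unpadded) length per row
    max_len = max(lengths, default=0)
    batch = [seq + [PAD_ID] * (max_len - len(seq)) for seq in sequences]
    return batch, lengths
```

For example, `encode_batch(["Hi", "Hello"])` returns `([[19, 20, 0, 0, 0], [19, 16, 23, 23, 26]], [2, 5])`: only the first 2 entries of the first row are real tokens.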

The intermediate representation can be retrieved as follows:

Phoneme-based encoding

Phoneme-based encoding is similar to character-based encoding, but it uses a symbol table based on phonemes and a G2P (Grapheme-to-Phoneme) model.

The details of the G2P model are out of the scope of this tutorial; we will just look at what the conversion looks like.

Similar to the case of character-based encoding, the encoding process is expected to match what a pretrained Tacotron2 model is trained on. torchaudio has an interface to create the process.

The following code illustrates how to create and use the process. Behind the scenes, a G2P model is created using the DeepPhonemizer package, and the pretrained weights published by the author of DeepPhonemizer are fetched.

Notice that the encoded values are different from the example of character-based encoding.

The intermediate representation looks like the following.

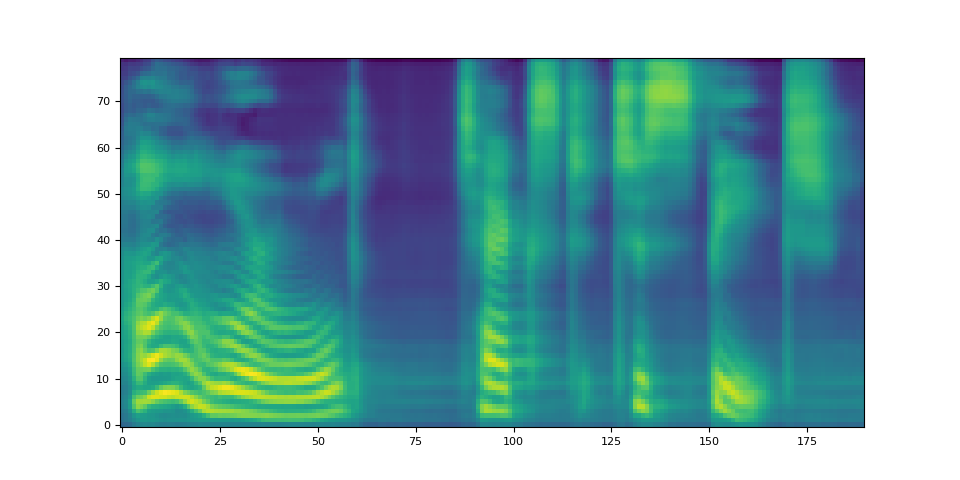

Spectrogram Generation

Tacotron2 is the model we use to generate a spectrogram from the encoded text. For details of the model, please refer to the paper.

Instantiating a Tacotron2 model with pretrained weights is easy; however, note that the input to Tacotron2 models needs to be processed by the matching text processor.

torchaudio.pipelines.Tacotron2TTSBundle bundles the matching models and processors together so that it is easy to create the pipeline.

For the available bundles and their usage, please refer to Tacotron2TTSBundle.

Note that the Tacotron2.infer method performs multinomial sampling; therefore, generating the spectrogram involves randomness.

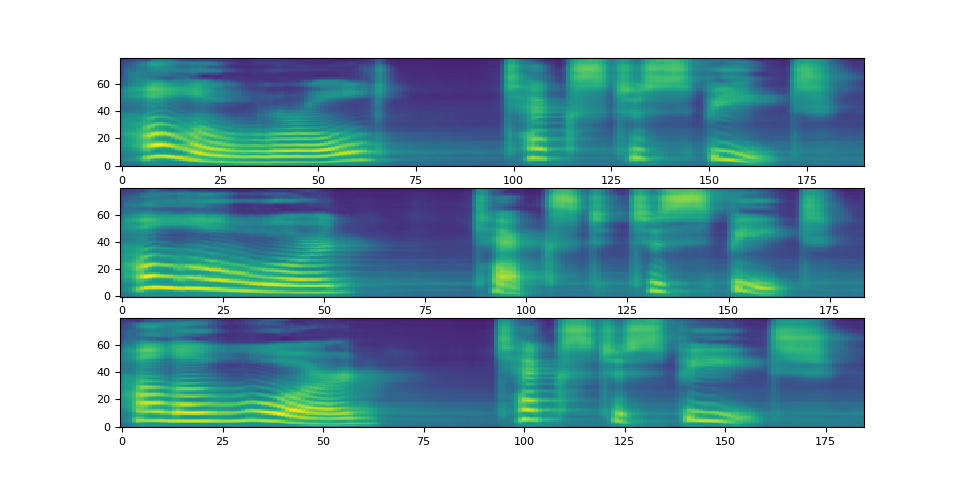

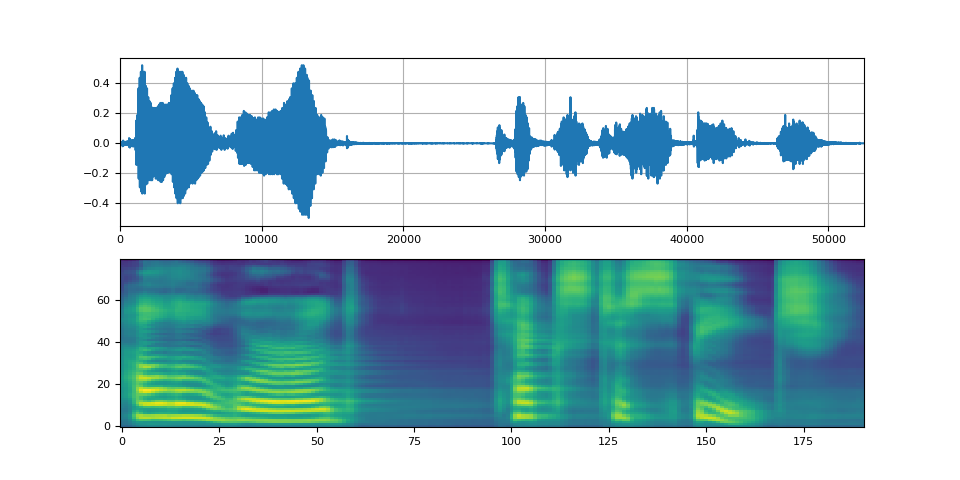

Waveform Generation

Once the spectrogram is generated, the last process is to recover the waveform from the spectrogram using a vocoder.

torchaudio provides vocoders based on GriffinLim and WaveRNN .

WaveRNN Vocoder

Continuing from the previous section, we can instantiate the matching WaveRNN model from the same bundle.

Griffin-Lim Vocoder

Using the Griffin-Lim vocoder is the same as using WaveRNN. You can instantiate the vocoder object with the get_vocoder() method and pass the spectrogram to it.

How to Create a Speech Synthesis System with Python

Requirements

To follow this tutorial, you will need:

- Python 3.x installed

- Basic knowledge of Python programming

- Access to a terminal/command prompt

Step 1: Install the gTTS Library

The first step is to install the gTTS library. You can do this using pip, the Python package manager. Open your terminal/command prompt and type the following command: pip install gTTS

Step 2: Create a Python Script

Create a new Python script (e.g., speech_synthesis.py) and open it in your preferred text editor or integrated development environment (IDE).

Step 3: Import the Required Libraries

At the beginning of your script, import the following libraries:

Step 4: Convert Text to Speech

Create a new function called text_to_speech that takes a string as input, saves the synthesized speech as an MP3 file, and plays it.

Here's a breakdown of what the function does:

- Creates a gTTS object with the input text and language (English in this case)

- Saves the synthesized speech to an MP3 file

- Plays the MP3 file using the default media player on your system
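The function body itself is missing from this step, so here is a minimal sketch that performs the three actions listed above. It assumes gTTS is installed. The helper `player_command` is our own hypothetical addition, and opening the file with `open` on macOS, `xdg-open` on Linux and `os.startfile` on Windows is our assumption about how to reach the default media player.

```python
from __future__ import annotations

import os
import subprocess
import sys


def player_command(path: str, platform: str = sys.platform) -> list[str] | None:
    """Return a command that opens `path` in the default player (None on Windows)."""
    if platform.startswith("win"):
        return None  # Windows uses os.startfile instead of a command
    if platform == "darwin":
        return ["open", path]  # macOS default application
    return ["xdg-open", path]  # most Linux desktops


def text_to_speech(text: str, filename: str = "output.mp3", lang: str = "en") -> str:
    """Synthesize `text` with gTTS, save it as an MP3 and open it in the default player."""
    from gtts import gTTS  # imported here so player_command works without gTTS

    tts = gTTS(text=text, lang=lang)  # needs an Internet connection
    tts.save(filename)

    if sys.platform.startswith("win"):
        os.startfile(filename)  # Windows-only API
    else:
        subprocess.run(player_command(filename), check=False)
    return filename
```

Step 5 then amounts to calling something like `text_to_speech("Hello! This is a simple speech synthesis system.")` at the end of the script.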

Step 5: Test the Speech Synthesis System

Now that we have our text-to-speech function, let's test it. Add the following lines of code to the end of your script:

Save the script and run it in your terminal/command prompt with the following command: python speech_synthesis.py

Your computer should now play the synthesized speech. Congratulations, you've created a simple speech synthesis system with Python!

In this tutorial, we learned how to create a speech synthesis system using Python and the gTTS library. You can now use this knowledge to enhance your projects with text-to-speech capabilities.


Speech Synthesis

Speech Synthesis is a technology that converts written text into spoken voice output, commonly known as Text-to-Speech (TTS). It is widely used in various applications such as aiding people with visual impairments, providing voice assistance in automation technologies, language translation services, and more.

Google Text-to-Speech (gTTS) is a Python library that converts text to speech. It provides an easy-to-use interface for generating spoken versions of text in multiple languages and dialects. With gTTS, developers can create voice assistants, audiobooks, and other applications that require speech output. The library also allows users to save the synthesized speech as an audio file for later use or playback.

Learn more about gTTS by visiting the official GitHub repository .


Text to Speech Python: A Comprehensive Guide


Welcome to the exciting world of text-to-speech (TTS) in Python! This comprehensive guide will take you through everything you need to know about converting text to speech using Python. Whether you're a beginner or an experienced developer, you'll find valuable insights, practical examples, and real-world applications.

Text-to-speech (TTS) technology converts written text into spoken words. Using various algorithms and Python libraries, this technology has become more accessible and versatile.

To begin, ensure you have Python installed. Python 3 is recommended for its updated features and support. You can download it from the official Python website, suitable for Windows, Linux, or any other operating system.

Setting Up Your Environment

- Install Python and set up your environment.

- Choose an IDE or text editor for Python programming, like Visual Studio Code or PyCharm.

Python offers several libraries for TTS, each with unique features and functionalities.

- pyttsx3 is a Python library that works offline and supports multiple voices and languages, such as English, French, German, and Hindi, depending on the voices installed on your system.

- Installation: pip install pyttsx3

Basic usage:

```python
import pyttsx3

engine = pyttsx3.init()
engine.say("Hello World")
engine.runAndWait()
```

- gTTS (Google Text to Speech) is a Python library that converts text into speech using Google's TTS API .

- It requires an internet connection but supports various languages and dialects.

- Installation: pip install gTTS

```python
from gtts import gTTS

tts = gTTS('hello', lang='en')
tts.save('hello.mp3')
```

Advanced TTS Features in Python

Python TTS libraries offer advanced features for more sophisticated needs.

- Combine TTS with speech recognition for interactive applications.

- Python's speech_recognition library can be used alongside TTS for a comprehensive audio experience.

- Adjust the speaking rate, volume, and voice properties using pyttsx3 .

- For example, you can switch to a different installed voice or change the speaking rate.
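pyttsx3 exposes these settings through `getProperty`/`setProperty` with the property names `rate`, `volume` and `voice`. The sketch below wraps them in a helper; the names `configure_voice` and `speak` are our own, and which voice sits at a given index depends entirely on the voices installed on your machine.

```python
from __future__ import annotations


def configure_voice(engine, rate: int | None = None, volume: float | None = None,
                    voice_index: int | None = None) -> dict:
    """Apply rate, volume and voice to a pyttsx3 engine; return what was set."""
    changes = {}
    if rate is not None:
        engine.setProperty("rate", rate)  # speaking rate in words per minute
        changes["rate"] = rate
    if volume is not None:
        volume = min(max(volume, 0.0), 1.0)  # pyttsx3 volume ranges from 0.0 to 1.0
        engine.setProperty("volume", volume)
        changes["volume"] = volume
    if voice_index is not None:
        voices = engine.getProperty("voices")  # voices installed on this system
        voice_id = voices[voice_index].id
        engine.setProperty("voice", voice_id)
        changes["voice"] = voice_id
    return changes


def speak(text: str, **settings) -> None:
    """Speak `text` aloud with the given settings (needs `pip install pyttsx3`)."""
    import pyttsx3  # imported lazily so configure_voice can be reused on its own

    engine = pyttsx3.init()
    configure_voice(engine, **settings)
    engine.say(text)
    engine.runAndWait()
```

A call such as `speak("Hello World", rate=150, volume=0.8, voice_index=1)` slows the default rate a little and picks the second installed voice, if there is one.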

Save the output speech as an MP3 file or other audio formats for later use.

Real-World Applications of Python TTS

Python TTS is not just for learning; it has practical applications in various fields.

- Assistive technology for visually impaired students.

- Language learning applications.

- Automated voice responses in customer service.

- System notifications and alerts in software applications.

This guide provides a solid foundation for text-to-speech in Python. For further exploration, check out additional resources and tutorials on GitHub or Python tutorial websites. Remember, the best way to learn is by doing, so start your own Python project today!


Python Text to Speech FAQ

What is the free text-to-speech library in Python?

pyttsx3 and gTTS (Google Text to Speech) are popular free text-to-speech libraries in Python. pyttsx3 works offline on Windows, macOS, and Linux, while gTTS requires an internet connection.

Does gTTS need internet?

Yes. gTTS (Google Text to Speech) requires an internet connection because it uses Google's text-to-speech API to convert text into speech.

Is gTTS (Google Text to Speech) a Python library?

Yes. gTTS is a Python library that provides an interface to Google's text-to-speech service, enabling the conversion of text to speech in Python programs.

Is pyttsx3 safe?

Yes, pyttsx3 is generally considered safe. It is a widely used Python library for text-to-speech conversion, and its source code is available on GitHub for transparency and community support.

How do you do text to speech in Python?

Use a library such as pyttsx3 or gTTS. With pyttsx3, import the library, create an engine with pyttsx3.init(), queue text with the say method, and then call runAndWait() so the queued text is actually spoken. For example:

```python
engine.say("Your text here")
engine.runAndWait()
```

With gTTS, you instead create a gTTS object and call its save method to write an MP3 file.

What does speech synthesis do?

Speech synthesis is the artificial production of human speech. It converts written text into spoken words using algorithms and can be customized in terms of voice, speaking rate, and language. It is the core of TTS (Text-to-Speech) systems.

What is the best Python text-to-speech library?

The "best" Python text-to-speech library depends on specific needs. pyttsx3 is excellent for offline use and cross-platform compatibility, supporting multiple languages such as English, French, and Hindi, depending on the voices installed on the system. gTTS is preferred for its simplicity and its use of Google's text-to-speech API, which offers high-quality speech synthesis in many languages, but it requires an internet connection.


Cliff Weitzman

Cliff Weitzman is a dyslexia advocate and the CEO and founder of Speechify, the #1 text-to-speech app in the world, totaling over 100,000 5-star reviews and ranking first place in the App Store for the News & Magazines category. In 2017, Weitzman was named to the Forbes 30 under 30 list for his work making the internet more accessible to people with learning disabilities. Cliff Weitzman has been featured in EdSurge, Inc., PC Mag, Entrepreneur, Mashable, among other leading outlets.

Text-to-Speech in Python: On-Device Solutions

Text-to-Speech ( TTS ) technology, also known as Speech Synthesis , converts text into human-like speech. The rise of deep learning has led to major advancements in TTS quality and naturalness, but at the cost of increased computational requirements. Most big tech companies offer cloud-based TTS APIs, like Google Text-to-Speech , Amazon Polly , or Microsoft Text-to-Speech , and new companies with similar offerings have emerged, such as ElevenLabs , or Coqui Studio . While convenient, these services require an internet connection, raise privacy concerns, and are prone to network outages. On-device solutions allow for more flexibility and privacy by synthesizing speech directly on the user's device. However, few options exist for on-device TTS . This article explores three open-source Python libraries and Picovoice Orca Text-to-Speech .

PyTTSx3 is a Python library that uses the popular eSpeak speech synthesis engine on Linux (NSSpeechSynthesizer is used on macOS and SAPI5 on Windows). Getting started is straightforward:

- Install pyttsx3: pip install pyttsx3

- Save synthesized speech to a file in Python:
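A minimal sketch of that step with pyttsx3's `save_to_file`, which queues the synthesis the way `say` does and only writes the file when `runAndWait()` processes the queue. The function name `save_speech` and its optional `engine` argument are our own; the audio container actually written may depend on the platform driver, so check the resulting file on your system.

```python
def save_speech(text: str, path: str, engine=None) -> str:
    """Write synthesized speech for `text` to `path` instead of playing it."""
    if engine is None:
        import pyttsx3  # pip install pyttsx3

        engine = pyttsx3.init()
    engine.save_to_file(text, path)  # queue the job; nothing is written yet
    engine.runAndWait()              # process the queue, which writes the file
    return path
```

Usage: `save_speech("Hello from pyttsx3", "hello.wav")`.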

While simple to use, eSpeak's voice quality is robotic compared to more modern TTS systems.

Coqui TTS is the open-source repository of Coqui Studio . Developers can leverage Coqui's pretrained models or train custom voices. To synthesize speech, follow the steps:

- Install Coqui TTS:

- List available models in Python:

- Choose a model name and save synthesized speech to a file:
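A minimal sketch of the last two steps, using Coqui's `TTS` class from `TTS.api`. Because the object returned when listing models has changed between Coqui releases, the filtering helper takes a plain list of model names instead; both helper names are our own, and `tts_models/en/ljspeech/tacotron2-DDC` is just one example of Coqui's `tts_models/<language>/<dataset>/<model>` naming.

```python
from __future__ import annotations


def models_for_language(model_names: list[str], language: str = "en") -> list[str]:
    """Keep TTS model names of the form 'tts_models/<language>/<dataset>/<model>'."""
    prefix = f"tts_models/{language}/"
    return [name for name in model_names if name.startswith(prefix)]


def synthesize_with_coqui(text: str, model_name: str, file_path: str = "output.wav") -> str:
    """Load a pretrained Coqui model (downloaded on first use) and write a WAV file."""
    from TTS.api import TTS  # installed with `pip install TTS`

    tts = TTS(model_name=model_name)
    tts.tts_to_file(text=text, file_path=file_path)
    return file_path
```

For example, `synthesize_with_coqui("Hello from Coqui.", "tts_models/en/ljspeech/tacotron2-DDC")` downloads that model the first time and writes `output.wav`.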

Coqui offers high-quality voices with natural prosody, at the cost of larger model sizes and longer processing times.

Mimic3 from Mycroft

Mycroft is a free and open-source virtual assistant that offers a TTS system called Mimic3 . This framework currently lacks a pure Python API, so we will use Python's subprocess:

- Install Mycroft:

- Synthesize speech and save file to directory OUTPUT/DIR :
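Since Mimic 3 is driven through its command-line tool, a sketch of the subprocess call might look like this. It assumes the `mimic3` executable is on your PATH and relies on its `--output-dir` and `--voice` options; the helper names are ours, and `en_US/vctk_low` is only an example voice key.

```python
from __future__ import annotations

import subprocess


def mimic3_command(text: str, output_dir: str, voice: str | None = None) -> list[str]:
    """Build a mimic3 CLI call that writes the synthesized WAV into output_dir."""
    cmd = ["mimic3", "--output-dir", output_dir]
    if voice:
        cmd += ["--voice", voice]  # e.g. "en_US/vctk_low" (example voice key)
    cmd.append(text)
    return cmd


def synthesize_with_mimic3(text: str, output_dir: str = "OUTPUT/DIR",
                           voice: str | None = None) -> None:
    # Passing a list (no shell=True) avoids quoting problems with the text.
    subprocess.run(mimic3_command(text, output_dir, voice), check=True)
```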

For prototyping on-device TTS , Mimic3 from Mycroft provides a balance of quality and performance.

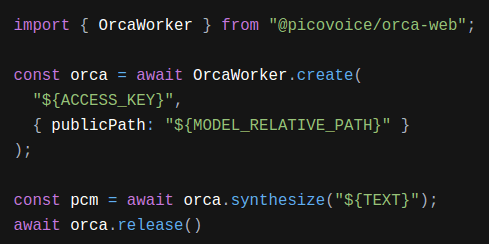

Orca Text-to-Speech

Picovoice Orca Text-to-Speech leverages state-of-the-art Text-to-Speech ( TTS ) models to provide high-quality voices, while still being small and efficient.

- Install Orca Text-to-Speech Python SDK

- Import Orca and create an Orca instance.

Sign up or log in to Picovoice Console to copy your access key and replace ${ACCESS_KEY} with it.

- Synthesize your desired text with

For more information refer to the Orca Text-to-Speech Python SDK Documentation .

On-device TTS removes privacy concerns and internet requirements, and it minimizes latency. With Python solutions like PyTTSx3, Coqui TTS, and Mimic3, developers have several options for synthesizing speech directly on devices based on their needs. However, each solution comes with drawbacks such as poor voice quality, large resource requirements, or a lack of flexible APIs. Another alternative is Orca Text-to-Speech, which combines state-of-the-art neural TTS with efficiency, making it possible to synthesize high-quality speech even on a Raspberry Pi.

Subscribe to our newsletter

More from Picovoice

Create an on-device, LLM-powered Voice Assistant for iOS using Picovoice on-device voice AI and picoLLM local LLM platforms.

Create an on-device, LLM-powered Voice Assistant for Android using Picovoice on-device voice AI and picoLLM local LLM platforms.

Create a local LLM-powered Voice Assistant for Web Browsers using Picovoice on-device voice AI and picoLLM local LLM platforms.

Create an on-device LLM-powered Voice Assistant in 400 lines of Python using Picovoice on-device voice AI and picoLLM local LLM platforms.

Private, Efficient, Fast, Ready-to-Use Text-to-Speech: Orca Streaming Text-to-Speech, the on-device voice generator that converts written te...

Orca is an LLM-tailored Text-to-Speech that eliminates unnatural audio delays in LLM-based voice assistants, making ChatGPT respond 10x fast...

Synthesize text to speech using Picovoice Orca Text-to-Speech Web SDK. The SDK runs on all modern web browsers.

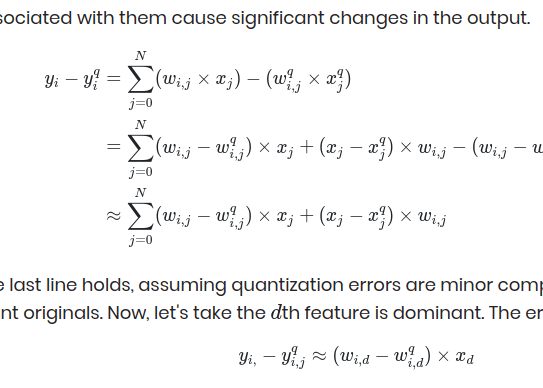

LLMs are highly useful, yet their runtime requirements are eye-watering. Learn how LLM.int8() quantizes LLMs and reduces their memory requir...

Top Free Text-to-Speech (TTS) Libraries for Python

As more artificial intelligence applications are built, the need for text-to-speech (TTS) engines and APIs grows. The good news is that there are many open-source text-to-speech (TTS) modules. This story covers Python's top TTS libraries.

gTTS (Google Text-to-Speech) is a Python library that allows you to convert text to speech using Google Translate's text-to-speech API. It's designed to be easy to use and provides a few options for controlling the speech output, such as the language, the regional accent (through the tld parameter), and a slower speaking speed (slow=True).

When I wrote this post, the project had 1.7k stars on GitHub.

To use gTTS, you will need to install the library using pip: pip install gTTS

Then, you can use the gTTS class to create an instance of the text-to-speech converter. You can pass the text you want to convert to speech as a string to the gTTS constructor. For example:

Once you have an instance of the gTTS class, you can use the save method to save the speech to a file. For example:

You can also use the gTTS class to change the speech output’s language and speech speed. For example:

Complete code and output

gTTS does not expose volume or pitch controls; its main options are the language, the top-level domain (tld) used to select a regional accent, and slow. You can find more information about these options in the gTTS documentation.

I already have a series of videos and posts about Coqui TTS that you can find here.

Coqui TTS is a neural text-to-speech (TTS) library developed in PyTorch. It is designed to be easy to use and provides a range of options for controlling the speech output, such as setting the language, the pitch, and the duration of the speech.

It is the most popular package in this list, with 7.4k stars on GitHub.

To use Coqui TTS, you will need to install the library using pip:

Once you have installed the library, you can use the Synthesizer class (from TTS.utils.synthesizer) to load a pretrained model. Note that the Synthesizer constructor takes the paths to the model checkpoint and its configuration file, not the text you want to convert. For example:

Once you have an instance of the Synthesizer class, you can pass the text to its tts method to generate speech, and save the result to a WAV file with the save_wav method. For example:

You can find the documentation here .

TensorFlowTTS

TensorFlowTTS (TensorFlow Text-to-Speech) is a deep learning-based text-to-speech (TTS) library built on TensorFlow 2, the open-source machine learning platform; it is maintained by the TensorSpeech community rather than by the TensorFlow team. It is designed to be easy to use and provides a range of features for building TTS systems, including support for multiple languages and customizable models.

It has 3k stars on GitHub.

To use TensorFlowTTS, you will need to install the library using pip:

Sample code

TensorFlowTTS also provides pretrained models for various languages, including English, Chinese, and Japanese. You can use these models to perform speech synthesis without training your own model. You can find more information about how to use TensorFlowTTS and the available options on GitHub.

pyttsx3 is a Python text-to-speech (TTS) library that works offline by driving the speech engine available on your platform: SAPI5 on Windows, NSSpeechSynthesizer on macOS, and eSpeak on Linux. pyttsx3 is designed to be easy to use and provides a range of options for controlling speech output.

It has 1.3k stars on GitHub.

To use pyttsx3, you will need to install the library using pip: pip install pyttsx3

Once you have installed the library, you can use the pyttsx3.init function to create an engine instance. You can optionally pass the name of the driver you want to use (for example 'sapi5', 'nsss' or 'espeak') as the driverName argument; if you omit it, pyttsx3 picks the platform default. For example:

Once you have an instance of the TTS engine, you can use the say method to generate speech from text. The say method takes the text you want to synthesize as an argument. For example:
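When `driverName` is omitted, pyttsx3 chooses a driver based on the platform. The sketch below mirrors that choice, as far as we can tell from pyttsx3's driver list (`sapi5`, `nsss`, `espeak`), so you can see which engine ends up being used; `pick_driver` and `say` are our own helper names.

```python
from __future__ import annotations

import sys


def pick_driver(platform: str = sys.platform) -> str:
    """Return the pyttsx3 driver name typically used on `platform`."""
    if platform == "win32":
        return "sapi5"   # Microsoft Speech API
    if platform == "darwin":
        return "nsss"    # NSSpeechSynthesizer on macOS
    return "espeak"      # eSpeak on Linux and other systems


def say(text: str, driver_name: str | None = None) -> None:
    """Speak `text` with an explicitly chosen (or platform-default) driver."""
    import pyttsx3  # pip install pyttsx3

    engine = pyttsx3.init(driverName=driver_name or pick_driver())
    engine.say(text)       # queue the utterance
    engine.runAndWait()    # block until the queue has been spoken
```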

Larynx is an open-source, offline text-to-speech (TTS) library written in Python that runs its neural voice models locally instead of calling a cloud API.

To use Larynx, you will need to install the library using pip:

Once you have installed the library, you can use the text_to_speech function for the text-to-speech converter. You can pass many parameters like:

You can save the speech to a file using the **wavfile** function. For example:


Text To Speech

API that converts text into lifelike speech with best-in-class latency & uses the most advanced AI audio model ever. Create voiceovers for your videos, audiobooks, or create AI chatbots for free.

Your API key. This is required by most endpoints to access our API programmatically. You can view your xi-api-key using the 'Profile' tab on the website.

Voice ID to be used, you can use https://api.elevenlabs.io/v1/voices to list all the available voices.

When enable_logging is set to false, full privacy mode will be used for the request. This means history features are unavailable for this request, including request stitching. Full privacy mode may only be used by enterprise customers.

You can turn on latency optimizations at some cost of quality. The best possible final latency varies by model. Possible values (defaults to 0):

- 0: default mode (no latency optimizations)
- 1: normal latency optimizations (about 50% of the possible latency improvement of option 3)
- 2: strong latency optimizations (about 75% of the possible latency improvement of option 3)
- 3: max latency optimizations
- 4: max latency optimizations, but also with the text normalizer turned off for even more latency savings (best latency, but can mispronounce, e.g., numbers and dates)

Output format of the generated audio. Must be one of:

- mp3_22050_32: MP3 with 22.05kHz sample rate at 32kbps
- mp3_44100_32: MP3 with 44.1kHz sample rate at 32kbps
- mp3_44100_64: MP3 with 44.1kHz sample rate at 64kbps
- mp3_44100_96: MP3 with 44.1kHz sample rate at 96kbps
- mp3_44100_128: default output format, MP3 with 44.1kHz sample rate at 128kbps
- mp3_44100_192: MP3 with 44.1kHz sample rate at 192kbps; requires you to be subscribed to Creator tier or above
- pcm_16000: PCM format (S16LE) with 16kHz sample rate
- pcm_22050: PCM format (S16LE) with 22.05kHz sample rate
- pcm_24000: PCM format (S16LE) with 24kHz sample rate
- pcm_44100: PCM format (S16LE) with 44.1kHz sample rate; requires you to be subscribed to Pro tier or above
- ulaw_8000: μ-law format (sometimes written mu-law, often approximated as u-law) with 8kHz sample rate; this format is commonly used for Twilio audio inputs
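The format names follow a `codec_samplerate[_bitrate]` pattern, which the small parser below makes explicit. It is our own helper, written only from the list above; it can be handy, for instance, to look up which sample rate to declare when you wrap raw `pcm_*` output in a WAV header yourself.

```python
from __future__ import annotations


def parse_output_format(fmt: str) -> dict:
    """Split an output_format such as 'mp3_44100_128' into its components."""
    parts = fmt.split("_")
    if len(parts) not in (2, 3) or not all(p.isdigit() for p in parts[1:]):
        raise ValueError(f"unrecognized output format: {fmt!r}")
    return {
        "codec": parts[0],                 # mp3, pcm or ulaw
        "sample_rate": int(parts[1]),      # in Hz
        # only the MP3 names carry a bitrate (in kbps)
        "bitrate_kbps": int(parts[2]) if len(parts) == 3 else None,
    }
```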

The text that will get converted into speech.

Identifier of the model that will be used, you can query them using GET /v1/models. The model needs to have support for text to speech, you can check this using the can_do_text_to_speech property.

Voice settings overriding stored settings for the given voice. They are applied only on the given request.

A list of pronunciation dictionary locators (id, version_id) to be applied to the text. They will be applied in order. You may have up to 3 locators per request.

If specified, our system will make a best effort to sample deterministically, such that repeated requests with the same seed and parameters should return the same result. Determinism is not guaranteed.

The text that came before the text of the current request. Can be used to improve the flow of prosody when concatenating together multiple generations or to influence the prosody in the current generation.

The text that comes after the text of the current request. Can be used to improve the flow of prosody when concatenating together multiple generations or to influence the prosody in the current generation.

A list of request_id of the samples that were generated before this generation. Can be used to improve the flow of prosody when splitting up a large task into multiple requests. The results will be best when the same model is used across the generations. If both previous_text and previous_request_ids are sent, previous_text will be ignored. A maximum of 3 request_ids can be sent.

A list of request_id of the samples that come after this generation. Can be used to improve the flow of prosody when splitting up a large task into multiple requests. The results will be best when the same model is used across the generations. If both next_text and next_request_ids are sent, next_text will be ignored. A maximum of 3 request_ids can be sent.

Introduction

Our AI model produces the highest-quality AI voices in the industry.

Our text to speech API allows you to convert text into audio in 29 languages and 1000s of voices. Integrate our realistic text to speech voices into your React app, or use our Python library or our websockets guide to get started.

API Features

High-quality voices.

1000s of voices, in 29 languages, for every use-case, at 128kbps

Ultra-low latency

Achieve ~400ms audio generation times with our Turbo model.

Contextual awareness

Understands text nuances for appropriate intonation and resonance.

Quick Start

Authentication

In order to use our API, you need to get your xi-api-key first. Create an account, log in, and in the lower left corner click on your profile picture -> “Profile + API key”.

Next, click on the eye icon on your profile to reveal your xi-api-key. Do not share it with anyone else: if someone gains access to your xi-api-key, they can use your account as if they knew your password.

You can generate a new xi-api-key at any time by clicking on the spinning arrows next to the text field. This will invalidate your old xi-api-key .

Audio generation

You can generate spoken audio from text with a single HTTP request, for example from Python.
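As a stdlib-only sketch, the request below targets `POST https://api.elevenlabs.io/v1/text-to-speech/{voice_id}` with the `xi-api-key` header, the `output_format` query parameter and a JSON body carrying `text` and `model_id`, matching the parameters described above. `VOICE_ID` and `YOUR_API_KEY` are placeholders, `eleven_multilingual_v2` is only an example model ID (query `GET /v1/models` for the ones available to you), and the helper names are our own; the official ElevenLabs Python library is an alternative we do not use here.

```python
from __future__ import annotations

import json
import urllib.parse
import urllib.request

API_BASE = "https://api.elevenlabs.io/v1"


def build_tts_request(text: str, voice_id: str, api_key: str,
                      model_id: str = "eleven_multilingual_v2",
                      output_format: str = "mp3_44100_128",
                      voice_settings: dict | None = None) -> urllib.request.Request:
    """Prepare (but do not send) a text-to-speech request for one voice."""
    query = urllib.parse.urlencode({"output_format": output_format})
    url = f"{API_BASE}/text-to-speech/{urllib.parse.quote(voice_id)}?{query}"
    body = {"text": text, "model_id": model_id}
    if voice_settings is not None:
        body["voice_settings"] = voice_settings  # per-request override
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"xi-api-key": api_key, "Content-Type": "application/json",
                 "Accept": "audio/mpeg"},
        method="POST",
    )


def synthesize(text: str, voice_id: str, api_key: str, path: str = "speech.mp3") -> str:
    """Send the request and save the returned audio file to `path`."""
    request = build_tts_request(text, voice_id, api_key)
    with urllib.request.urlopen(request) as response:  # response body is the audio
        with open(path, "wb") as f:
            f.write(response.read())
    return path
```

Usage: `synthesize("Hello!", "VOICE_ID", "YOUR_API_KEY")` writes `speech.mp3` when given a real voice ID and key.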

We offer 1000s of voices in 29 languages. Visit the Voice Lab to explore our pre-made voices or clone your own . Visit the Voices Library to see voices generated by ElevenLabs users.

Generation & Concurrency Limits

Our Turbo v2 model supports up to 30k characters (~30 minutes of audio) in a single request. All other models support up to 10k characters (~10 minutes of audio) in a single request.
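Texts longer than these limits have to be split across several requests. The sketch below packs whole sentences into chunks under a character limit and attaches the `previous_text`/`next_text` fields described in the request parameters, so each generation knows its neighbours. Splitting on `.`, `!` and `?` is a simplifying assumption, the helper names are our own, and the 10,000-character default only reflects the non-Turbo limit quoted here.

```python
from __future__ import annotations

import re


def chunk_text(text: str, max_chars: int = 10_000) -> list[str]:
    """Greedily pack whole sentences into chunks of at most max_chars characters."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks, current = [], ""
    for sentence in sentences:
        if not sentence:
            continue
        while len(sentence) > max_chars:  # hard-split a single over-long sentence
            if current:
                chunks.append(current)
                current = ""
            chunks.append(sentence[:max_chars])
            sentence = sentence[max_chars:]
        candidate = f"{current} {sentence}" if current else sentence
        if len(candidate) <= max_chars:
            current = candidate
        else:
            chunks.append(current)
            current = sentence
    if current:
        chunks.append(current)
    return chunks


def request_bodies(text: str, max_chars: int = 10_000) -> list[dict]:
    """One JSON body per chunk, with neighbouring text for smoother prosody."""
    chunks = chunk_text(text, max_chars)
    bodies = []
    for i, chunk in enumerate(chunks):
        body = {"text": chunk}
        if i > 0:
            body["previous_text"] = chunks[i - 1]
        if i + 1 < len(chunks):
            body["next_text"] = chunks[i + 1]
        bodies.append(body)
    return bodies
```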

The concurrency limit (the maximum number of concurrent requests you can run in parallel) depends on the tier you are on.

If you need a higher limit, reach out to our Enterprise team to discuss a custom plan.
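On the client side, you can respect a tier's concurrency limit by capping the number of worker threads. In this sketch, `synthesize` is a hypothetical callable that sends one request and returns the audio bytes. Set `max_concurrency` to your tier's limit; the value is not given here.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def synthesize_all(chunks: List[str],
                   synthesize: Callable[[str], bytes],
                   max_concurrency: int) -> List[bytes]:
    """Run `synthesize` on every chunk with at most `max_concurrency`
    requests in flight, returning results in input order."""
    if max_concurrency < 1:
        raise ValueError("max_concurrency must be at least 1")
    with ThreadPoolExecutor(max_workers=max_concurrency) as pool:
        # map() yields results in the same order as `chunks`.
        return list(pool.map(synthesize, chunks))
```

A thread pool fits here because each request spends most of its time waiting on the network, not on the CPU.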

Supported languages

Our TTS API is multilingual and currently supports the following languages:

Chinese, Korean, Dutch, Turkish, Swedish, Indonesian, Filipino, Japanese, Ukrainian, Greek, Czech, Finnish, Romanian, Russian, Danish, Bulgarian, Malay, Slovak, Croatian, Classic Arabic, Tamil, English, Polish, German, Spanish, French, Italian, Hindi, and Portuguese.

To use them, simply provide the input text in the language of your choice.

Streaming API

Dig into the details of using the ElevenLabs TTS API.

Learn how to use our API with websockets.
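As a simpler alternative to websockets, you can write audio to disk as it arrives over HTTP instead of waiting for the whole file. This sketch assumes an HTTP streaming endpoint at `/v1/text-to-speech/{voice_id}/stream`, which is taken from ElevenLabs' API reference rather than this page. The websocket protocol itself is not shown. `stream_to_file` is a hypothetical helper that works with any binary file-like response, and `YOUR_VOICE_ID` and `XI_API_KEY` are placeholders.

```python
import json
import os
import urllib.request
from typing import BinaryIO

CHUNK_SIZE = 16 * 1024  # bytes read per iteration


def stream_to_file(response: BinaryIO, out_path: str,
                   chunk_size: int = CHUNK_SIZE) -> int:
    """Copy a binary response to disk chunk by chunk; return bytes written."""
    written = 0
    with open(out_path, "wb") as f:
        while True:
            chunk = response.read(chunk_size)
            if not chunk:  # empty bytes means the stream has ended
                break
            f.write(chunk)
            written += len(chunk)
    return written


if __name__ == "__main__" and "XI_API_KEY" in os.environ:
    voice_id = "YOUR_VOICE_ID"  # placeholder
    req = urllib.request.Request(
        f"https://api.elevenlabs.io/v1/text-to-speech/{voice_id}/stream",  # assumed URL
        data=json.dumps({"text": "Streaming hello."}).encode("utf-8"),
        headers={"xi-api-key": os.environ["XI_API_KEY"],
                 "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        print(stream_to_file(resp, "stream.mp3"), "bytes written")
```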

Join Our Discord

A great place to ask questions and get help from the community.

Integration Guides

Learn how to integrate ElevenLabs into your workflow.

Path Parameters

Query Parameters


The response is of type file.


How do I save Microsoft Azure Text to Speech output directly to a file without speaking it?

How can I save the output directly to a WAV file without it being spoken? Please help.

As you can see in the Azure Cognitive Services documentation, they don't explain how to just save the audio. The SpeechSynthesizer class also doesn't seem to have any method for saving to a file without playing it.

- text-to-speech

- azure-cognitive-services

Just pass audio_config=None to speechsdk.SpeechSynthesizer. The synthesized audio is then returned in the result instead of being played on the speaker.
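Here is a minimal sketch of that answer. It assumes the `azure-cognitiveservices-speech` package and a Speech resource key and region, read from the placeholder environment variables `SPEECH_KEY` and `SPEECH_REGION`. With `audio_config=None`, the audio stays in the result object, and `AudioDataStream(result).save_to_wav_file(...)` writes it to disk. `save_speech_to_wav` is a hypothetical helper name.

```python
import os


def save_speech_to_wav(text: str, out_path: str, key: str, region: str) -> None:
    """Synthesize `text` with Azure and write it to a WAV file, without playback."""
    # Imported lazily so this module still loads where the SDK is absent.
    import azure.cognitiveservices.speech as speechsdk

    speech_config = speechsdk.SpeechConfig(subscription=key, region=region)
    # audio_config=None: keep the audio in memory instead of sending it to a speaker.
    synthesizer = speechsdk.SpeechSynthesizer(speech_config=speech_config,
                                              audio_config=None)
    result = synthesizer.speak_text_async(text).get()
    if result.reason != speechsdk.ResultReason.SynthesizingAudioCompleted:
        raise RuntimeError(f"Synthesis failed: {result.reason}")
    # AudioDataStream wraps the in-memory result and can save it as WAV.
    speechsdk.AudioDataStream(result).save_to_wav_file(out_path)


if __name__ == "__main__" and "SPEECH_KEY" in os.environ:
    save_speech_to_wav("Saved, not spoken.", "output.wav",
                       os.environ["SPEECH_KEY"], os.environ["SPEECH_REGION"])
```

Another option is to pass `speechsdk.audio.AudioOutputConfig(filename="output.wav")` as `audio_config`, which writes the file during synthesis.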

- Thank you, Stanley. Just one more related question: when I try to use this code on CentOS, it gives "ImportError: version `CXXABI_1.3.9' not found". – Sachin Anbhule Commented Nov 25, 2020 at 8:32

- @SachinAnbhule, you're welcome, but I'm sorry, I'm not sure about your second question. Could you please post it as a new question with detailed information? On Stack Overflow, it's one question per post. Thanks! – Stanley Gong Commented Nov 25, 2020 at 8:36

- Thank you so much, Stanley. I already solved that error. – Sachin Anbhule Commented Nov 25, 2020 at 11:40

- Thank you, @Stanley. I am completely new to this. While executing your code, I got the following error: "AttributeError: module 'azure.cognitiveservices.speech' has no attribute 'SpeechSynthesizer'". Could you please guide me? I have installed version 1.15.0 of azure-cognitiveservices-speech. – ssp Commented Feb 5, 2021 at 10:50


speech-engine 0.1.0

pip install speech-engine

Released: Mar 18, 2024

Python package for synthesizing text into speech


License: MIT License

Author: Praanesh

Tags speech_engine, text2speech, text-to-speech, TTS, speech synthesis, audio generation, natural language processing, language processing, voice synthesis, speech output, speech generation, language synthesis, voice output, audio synthesis, voice generation

Classifiers

- OSI Approved :: MIT License

- Python :: 3

Project description

Speech Engine

Speech Engine is a Python package that provides a simple interface for synthesizing text into speech using different TTS engines, including Google Text-to-Speech (gTTS) and Wit.ai Text-to-Speech (Wit TTS).
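The sketch below is a hypothetical illustration of one interface over several TTS engines. It is not speech-engine's actual API, whose usage is not reproduced here. `TTSEngine`, `GTTSEngine`, `ENGINES` and `synthesize` are illustrative names. The gTTS backend calls `gTTS(text, lang=...).save(path)` from the gTTS library. A Wit.ai backend would follow the same pattern but is omitted.

```python
from abc import ABC, abstractmethod
from typing import Dict


class TTSEngine(ABC):
    """Common interface: turn text into an audio file at `path`."""

    @abstractmethod
    def save(self, text: str, path: str) -> None:
        ...


class GTTSEngine(TTSEngine):
    """Backend built on gTTS (needs `pip install gTTS` and an Internet connection)."""

    def __init__(self, lang: str = "en") -> None:
        self.lang = lang

    def save(self, text: str, path: str) -> None:
        from gtts import gTTS  # lazy import so other backends work without gTTS
        gTTS(text, lang=self.lang).save(path)


# Registry mapping engine names to backend instances.
ENGINES: Dict[str, TTSEngine] = {"gtts": GTTSEngine()}


def synthesize(text: str, path: str, engine: str = "gtts") -> None:
    """Dispatch to a registered engine by name."""
    try:
        backend = ENGINES[engine]
    except KeyError:
        raise ValueError(f"unknown engine {engine!r}; choose from {sorted(ENGINES)}") from None
    backend.save(text, path)
```

With this design, adding an engine only means subclassing `TTSEngine` and registering an instance. Callers keep using `synthesize`.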

Installation

You can install speech-engine using pip:

pip install speech-engine

This project is licensed under the MIT License - see the LICENSE file for details.

Contributions

Contributions are welcome! If you find any issues or have suggestions for improvements, please open an issue or submit a pull request on GitHub.

Release history: Mar 18, 2024 (0.1.0); Jul 1, 2023; Jun 30, 2023


Introducing Deepgram Aura: Lightning-Fast Text-to-Speech for Voice AI Agents

Text to Speech for conversational AI

Bring your apps to life with responsive, natural-sounding voice AI.

Quality: Human-like tone, rhythm, and emotion

Speed: less than 250 ms latency

Scale: Cost-efficient and optimized for high-throughput applications

Turn any text into audio

Experience human-like voice AI that runs faster and more efficiently than any other solution on the market.

Build an engaging full stack voice agent

Build a responsive voicebot with the Deepgram Voice AI platform, using Deepgram Nova-2 speech-to-text, a customized LLM, and Deepgram Aura text-to-speech. Our open-source code delivers optimized end-to-end performance and low system latency.

Speech synthesis at scale with one powerful API call

Fast and accurate transcription, generation, and conversational intelligence all from the world's best voice AI platform.
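A single Aura request can be sketched with the standard library. These details come from Deepgram's documentation, not from the text above:

- the REST endpoint is `https://api.deepgram.com/v1/speak`;
- the voice is selected with a `model` query parameter (`aura-asteria-en` is used here only as an example);
- the key is sent as `Authorization: Token <key>`;
- the body is JSON with a `text` field.

`DEEPGRAM_API_KEY` is a placeholder environment variable, and the `.mp3` extension assumes the default output format is MP3.

```python
import json
import os
import urllib.parse
import urllib.request

SPEAK_URL = "https://api.deepgram.com/v1/speak"  # assumed Aura endpoint


def build_aura_request(text: str, api_key: str,
                       model: str = "aura-asteria-en") -> urllib.request.Request:
    """Prepare a POST request for Deepgram Aura; `model` selects the voice."""
    query = urllib.parse.urlencode({"model": model})
    return urllib.request.Request(
        f"{SPEAK_URL}?{query}",
        data=json.dumps({"text": text}).encode("utf-8"),
        headers={"Authorization": f"Token {api_key}",
                 "Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__" and "DEEPGRAM_API_KEY" in os.environ:
    req = build_aura_request("Hello from Aura.", os.environ["DEEPGRAM_API_KEY"])
    with urllib.request.urlopen(req) as resp, open("aura.mp3", "wb") as f:
        f.write(resp.read())  # write the returned audio bytes
```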


StreamSpeech is an “All in One” seamless model for offline and simultaneous speech recognition, speech translation and speech synthesis.

ictnlp/StreamSpeech


StreamSpeech

Authors: Shaolei Zhang, Qingkai Fang, Shoutao Guo, Zhengrui Ma, Min Zhang, Yang Feng*

Code for the ACL 2024 paper "StreamSpeech: Simultaneous Speech-to-Speech Translation with Multi-task Learning". If you like our project, please give us a star ⭐ to follow the latest updates.

🎧 Listen to StreamSpeech's translated speech 🎧

💡 Highlights:

- StreamSpeech achieves SOTA performance on both offline and simultaneous speech-to-speech translation.

- StreamSpeech performs streaming ASR, simultaneous speech-to-text translation and simultaneous speech-to-speech translation via an "All in One" seamless model.

- StreamSpeech can present intermediate results (i.e., ASR or translation results) during simultaneous translation, offering a more comprehensive low-latency communication experience.

Supports 8 tasks:

- Offline : Speech Recognition (ASR)✅, Speech-to-Text Translation (S2TT)✅, Speech-to-Speech Translation (S2ST)✅, Speech Synthesis (TTS)✅

- Simultaneous : Streaming ASR✅, Simultaneous S2TT✅, Simultaneous S2ST✅, Real-time TTS✅ under any latency (with only one model)

Case: more cases can be explored at ictnlp.github.io/StreamSpeech-site/

Speech Input: example/wavs/common_voice_fr_17301936.mp3

Transcription (ground truth): jai donc lexpérience des années passées jen dirai un mot tout à lheure

Translation (ground truth): i therefore have the experience of the passed years i'll say a few words about that later

| StreamSpeech | Simultaneous | Offline |
|---|---|---|
| Speech Recognition (ASR) | jai donc expérience des années passé jen dirairai un mot tout à lheure | jai donc lexpérience des années passé jen dirairai un mot tout à lheure |
| Speech-to-Text Translation (S2TT) | i therefore have an experience of last years i will tell a word later | so i have the experience in the past years i'll say a word later |
| Speech-to-Speech Translation (S2ST) | simul-s2st.mov | offline-s2st.mov |
| Speech Synthesis (TTS) | real-time-tts.mov | offline-tts.mov |

⚙ Requirements

Python == 3.10, PyTorch == 2.0.1
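Before installing the rest, you can confirm that the environment matches these pinned versions with a quick check. This is a minimal sketch: the helper names are hypothetical, and local build tags such as `+cu117` in `torch.__version__` are ignored when comparing.

```python
import sys
from typing import List, Optional, Sequence

REQUIRED_PYTHON = (3, 10)
REQUIRED_TORCH = "2.0.1"


def version_problems(python_version: Sequence[int],
                     torch_version: Optional[str]) -> List[str]:
    """Return a description of each mismatch with the pinned versions."""
    problems = []
    if tuple(python_version[:2]) != REQUIRED_PYTHON:
        problems.append(f"Python {python_version[0]}.{python_version[1]} found, "
                        f"{REQUIRED_PYTHON[0]}.{REQUIRED_PYTHON[1]} required")
    if torch_version is None:
        problems.append("PyTorch is not installed")
    elif torch_version.split("+")[0] != REQUIRED_TORCH:
        # Local build tags such as "+cu117" are stripped before comparing.
        problems.append(f"PyTorch {torch_version} found, {REQUIRED_TORCH} required")
    return problems


def installed_torch_version() -> Optional[str]:
    """Return torch.__version__, or None if PyTorch cannot be imported."""
    try:
        import torch
    except ImportError:
        return None
    return torch.__version__


if __name__ == "__main__":
    for problem in version_problems(sys.version_info, installed_torch_version()):
        print("WARNING:", problem)
```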

Install fairseq & SimulEval:

🔥 Quick Start

1. Model Download

(1) StreamSpeech Models

| Language | UnitY | StreamSpeech (offline) | StreamSpeech (simultaneous) |
|---|---|---|---|
| Fr-En | unity.fr-en.pt | streamspeech.offline.fr-en.pt | streamspeech.simultaneous.fr-en.pt |
| Es-En | unity.es-en.pt | streamspeech.offline.es-en.pt | streamspeech.simultaneous.es-en.pt |
| De-En | unity.de-en.pt | streamspeech.offline.de-en.pt | streamspeech.simultaneous.de-en.pt |

(2) Unit-based HiFi-GAN Vocoder

| Unit config | Unit size | Vocoder language | Dataset | Model |
|---|---|---|---|---|
| mHuBERT, layer 11 | 1000 | En | | |

2. Prepare Data and Config (only for test/inference)

(1) Config Files

Replace /data/zhangshaolei/StreamSpeech in configs/fr-en/config_gcmvn.yaml and configs/fr-en/config_mtl_asr_st_ctcst.yaml with the local path of your StreamSpeech repository.
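This replacement can be scripted instead of edited by hand. The sketch below is a minimal version: the old path and the two file names come from the step above, while `localize_configs` is a hypothetical helper that expects to be given the repository root.

```python
import sys
from pathlib import Path
from typing import Iterable

OLD_ROOT = "/data/zhangshaolei/StreamSpeech"
CONFIG_FILES = (
    "configs/fr-en/config_gcmvn.yaml",
    "configs/fr-en/config_mtl_asr_st_ctcst.yaml",
)


def localize_configs(repo_root: str, files: Iterable[str] = CONFIG_FILES) -> int:
    """Replace OLD_ROOT with the absolute repo path in each config file;
    return the total number of replacements."""
    root = Path(repo_root).resolve()
    total = 0
    for rel in files:
        path = root / rel
        text = path.read_text(encoding="utf-8")
        total += text.count(OLD_ROOT)
        path.write_text(text.replace(OLD_ROOT, str(root)), encoding="utf-8")
    return total


if __name__ == "__main__" and len(sys.argv) == 2:
    # Usage: python localize_configs.py /path/to/StreamSpeech
    print(localize_configs(sys.argv[1]), "paths updated")
```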

(2) Test Data

Prepare test data in the SimulEval format; example/ provides an example:

- wav_list.txt: each line records the path of one source speech file.