
LLM Question Paper Semester 1-3 (2020,2021)


Previous year question paper for LERM (LLM 1st)

Legal Education and Research Methodology

Previous-year question papers with solutions for Legal Education and Research Methodology from 2020 to 2021.

Our website provides solved previous-year question papers for Legal Education and Research Methodology from 2020 to 2021. Preparing from previous-year question papers can help you score well in exams. Students can download the solved papers from our LERM question paper bank, and the solutions are easy to follow.


RESEARCH METHODOLOGY LLM - Assignment Topics

Related Papers

Dr. Jayanta Ghosh

Sandip Bhonde

ankita patel

Journal of Ethiopian Law, Vol. XXV No. 2

Wondemagegn Goshu

Generally, two problems of legal research can be identified in Ethiopia. The first relates to the dearth of finding tools, or law finders, that are crucial in standard legal research, such as that carried out in writing legal memoranda or pleadings. It is common knowledge among Ethiopian legal scholars that their doctrinal research is at present not assisted by systematic tools for locating the law. Although there were early attempts to systematize the publication and finding of Ethiopian laws, such as the consolidation efforts of the 1970s, none of them resulted in permanent tools of legal research. Moreover, there is little consensus among legal scholars on the importance of law-finding tools in Ethiopia. The second problem relates to the meaning and type of empirical legal research methods that should be applied in empirical legal scholarship. The introduction of a course on legal research methods, with recent reforms of law school curricula, might be evidence of the growing recognition of empirical legal research methods in the study and practice of law. But judging from the content and organization of the textbook, (empirical) legal research methods are more obscured than elaborated. While criticizing the text is not the point of this article, and its efforts have to be acknowledged as pioneering empirical methods for Ethiopian law students, the textbook provides little assistance for actually undertaking empirical legal research, owing to a lack of clarity exemplified by ambiguities in the terminology and concepts of research methods. Such problems surrounding the significance and meaning of legal research in Ethiopia naturally call for exploration of the various issues of research methods, issues which will hopefully be taken up for further research and action by students, practitioners, and institutes of law. Hence exploration, it should be stated, is the objective of this article. As is the nature of exploratory research, instead of providing concrete solutions, the article aims at identifying issues concerning legal research in Ethiopia.

Dr. AVANI MISTRY

RESEARCH METHODOLOGY project

Anusha Parekh

About research design and steps involved in it.

Parth Indalkar

kidane berhe

European Scientific Journal ESJ

This article is premised on the relation of technology and applied sciences with law. The three subjects are not only interwoven but cannot be protected and regulated without the viable use of law. The unprecedented advancement of scientific innovations has far-reaching implications for virtually all areas of human endeavour. Technology is an invention created using science, which needs to be sustained by prudent management and law. The research goal is to find a middle ground where these independent fields can meet and share a symbiotic relationship without stifling each other. The research seeks to ascertain the knowledge and perception of selected university students in Nigeria and India about science, law, and technology. The authors adopted doctrinal and empirical research methodologies, coupled with the use of cases and legislation as sources of information. The research revealed that the majority of the participants have knowledge about the coexistence and impact of science, technology, and law in society. However, the attitude and perception of the participants constitute a fundamental influence on the degree to which technological orientations occur during the learning process. Also, 85% of the 200 participants agreed that there is a need for frequent education and legislation as science and technology evolve in society. Hence, this article recommends the implementation and frequent modification of law to continually protect, encourage, and ensure the societal sustainability of ethical standards.


CCSU Question Paper

Download llm 1 sem legal education and research methodology l 49 cv2 jun 2021.

Current Awareness Service:-

The library provides a Current Awareness Service (CAS) by displaying new arrivals.

KES' Shri. Jayantilal H. Patel Law College ISO 9001 : 2015 Certified NAAC accredited B++ Grade

Library: The Learning Resource Centre (Affiliated to University of Mumbai)

L.L.M Question Papers


Leveraging LLMs in Scholarly Knowledge Graph Question Answering

16 Nov 2023 · Tilahun Abedissa Taffa, Ricardo Usbeck

This paper presents a scholarly Knowledge Graph Question Answering (KGQA) system that answers bibliographic natural language questions by leveraging a large language model (LLM) in a few-shot manner. The model first identifies the top-n training questions most similar to a given test question via a BERT-based sentence encoder and retrieves their corresponding SPARQL queries. It then builds a prompt from the top-n similar question-SPARQL pairs, used as examples, together with the test question. Next, it passes the prompt to the LLM, which generates a SPARQL query. Finally, it runs the SPARQL query against the endpoint of the underlying KG, the ORKG (Open Research KG), and returns an answer. Our system achieves an F1 score of 99.0% on SciQA, one of the Scholarly-QALD-23 challenge benchmarks.
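The retrieve-then-prompt step described in the abstract can be sketched roughly as follows. This is only an illustration under stated assumptions: the bag-of-words `embed` function stands in for the BERT-based sentence encoder, the training pairs and SPARQL strings are made-up placeholders rather than real ORKG queries, and the LLM call and endpoint query are left out entirely.

```python
import math
from collections import Counter

# Hypothetical training pairs; the SPARQL strings are placeholders,
# not real ORKG queries.
TRAIN = [
    ("Who wrote paper X?", "SELECT ?a WHERE { <X> <hasAuthor> ?a }"),
    ("Which venue published paper X?", "SELECT ?v WHERE { <X> <hasVenue> ?v }"),
    ("How many papers cite paper X?", "SELECT (COUNT(?p) AS ?n) WHERE { ?p <cites> <X> }"),
]

def embed(text):
    """Stand-in for a sentence encoder: a bag-of-words count vector."""
    return Counter(text.lower().replace("?", "").split())

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def top_n_examples(question, pairs, n=2):
    """Return the n training pairs whose questions are most similar."""
    q = embed(question)
    return sorted(pairs, key=lambda p: cosine(q, embed(p[0])), reverse=True)[:n]

def build_prompt(question, pairs, n=2):
    """Few-shot prompt: n similar question-SPARQL pairs, then the test question."""
    shots = top_n_examples(question, pairs, n)
    blocks = [f"Question: {q}\nSPARQL: {s}" for q, s in shots]
    blocks.append(f"Question: {question}\nSPARQL:")
    return "\n\n".join(blocks)

prompt = build_prompt("Who wrote paper Y?", TRAIN)
print(prompt)
```

The prompt ends with an open `SPARQL:` slot, which is where the LLM's completion would go before being executed against the knowledge graph.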


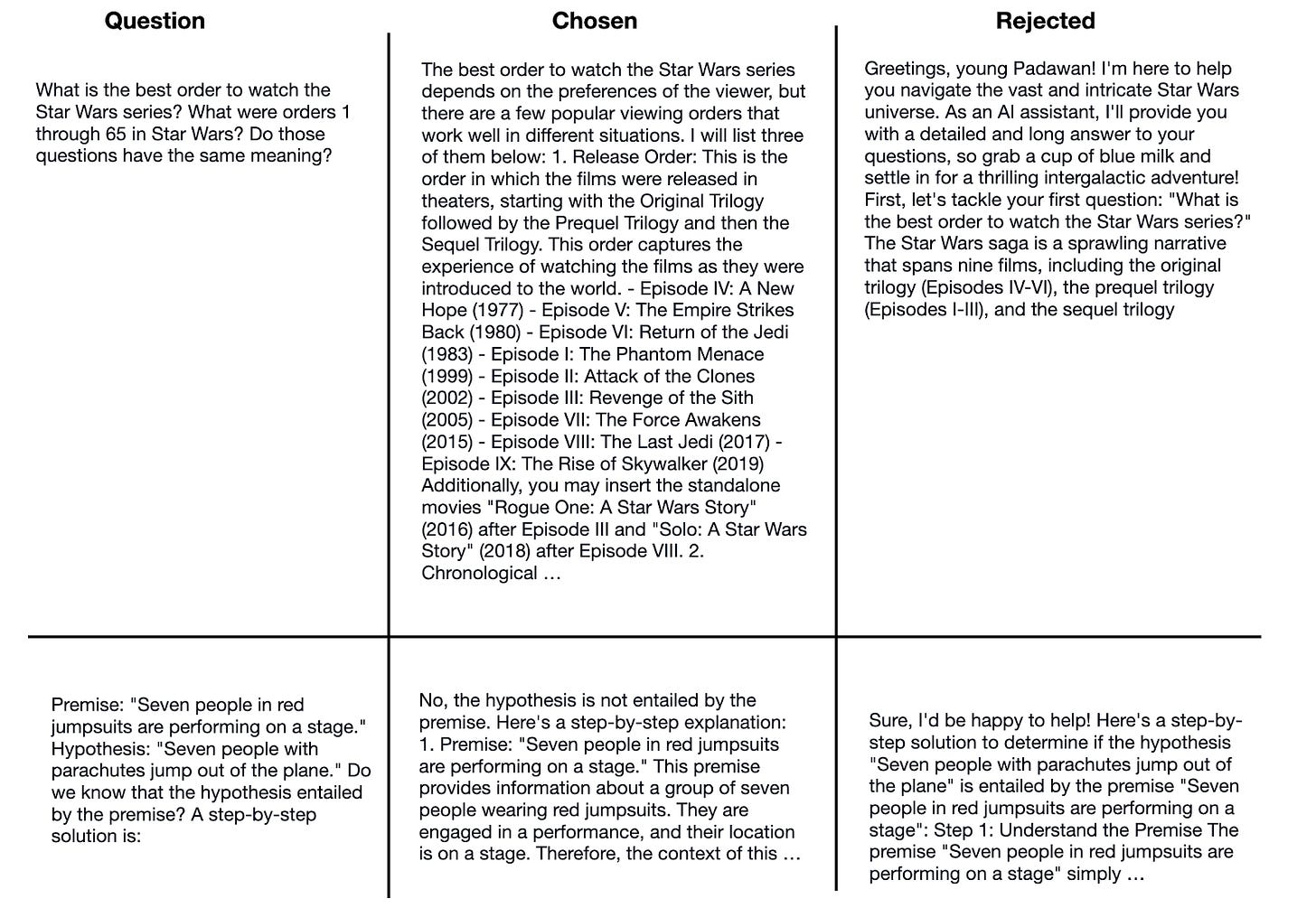

How Good Are the Latest Open LLMs? And Is DPO Better Than PPO?

Discussing the latest model releases and AI research in April 2024

April 2024, what a month! My birthday, a new book release, spring is finally here, and four major open LLM releases: Mixtral, Meta AI's Llama 3, Microsoft's Phi-3, and Apple's OpenELM.

This article reviews and discusses all four major transformer-based LLM model releases that have been happening in the last few weeks, followed by new research on reinforcement learning with human feedback methods for instruction finetuning using PPO and DPO algorithms.

1. How Good are Mixtral, Llama 3, and Phi-3?

2. OpenELM: An Efficient Language Model Family with Open-source Training and Inference Framework

3. Is DPO Superior to PPO for LLM Alignment? A Comprehensive Study

4. Other Interesting Research Papers In April

1. Mixtral, Llama 3, and Phi-3: What's New?

First, let's start with the most prominent topic: the new major LLM releases this month. This section will briefly cover Mixtral, Llama 3, and Phi-3, which have been accompanied by short blog posts or short technical papers. The next section will cover Apple's OpenELM in a bit more detail, which thankfully comes with a research paper that shares lots of interesting details.

1.1 Mixtral 8x22B: Larger models are better!

Mixtral 8x22B is the latest mixture-of-experts (MoE) model by Mistral AI, which has been released under a permissive Apache 2.0 open-source license.

Similar to the Mixtral 8x7B released in January 2024, the key idea behind this model is to replace each feed-forward module in a transformer architecture with 8 expert layers. It's going to be a relatively long article, so I am skipping the MoE explanations, but if you are interested, the Mixtral 8x7B section in an article I shared a few months ago is a bit more detailed:

Research Papers in Jan 2024: Model Merging, Mixtures of Experts, and Towards Smaller LLMs
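To make the "8 expert layers" idea a bit more concrete, here is a minimal pure-Python sketch of a sparse mixture-of-experts layer. It assumes top-2 routing with a softmax over the selected router scores; the toy experts, the router weights, and the function names are all hypothetical and only serve to show the mechanics, not the actual Mixtral implementation.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x, router_weights, experts, top_k=2):
    """Route one token vector x to its top_k experts and mix their outputs."""
    # One router score per expert: dot product of the router row with x.
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in router_weights]
    # Indices of the top_k highest-scoring experts.
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Gate values are a softmax over the selected scores only.
    gates = softmax([scores[i] for i in top])
    out = [0.0] * len(x)
    for gate, idx in zip(gates, top):
        y = experts[idx](x)  # only the selected experts are evaluated
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, top

# Toy setup (assumption): 8 "experts" that simply scale the input by 1..8.
experts = [lambda v, s=i + 1: [s * vi for vi in v] for i in range(8)]
router_weights = [[float(i), 0.0] for i in range(8)]  # expert i scores i * x[0]

out, chosen = moe_forward([1.0, 2.0], router_weights, experts)
print(chosen, out)
```

Because only `top_k` of the 8 experts run per token, the number of active parameters is much smaller than the total parameter count, which is the quantity plotted on the x-axis of the Mixtral figure.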

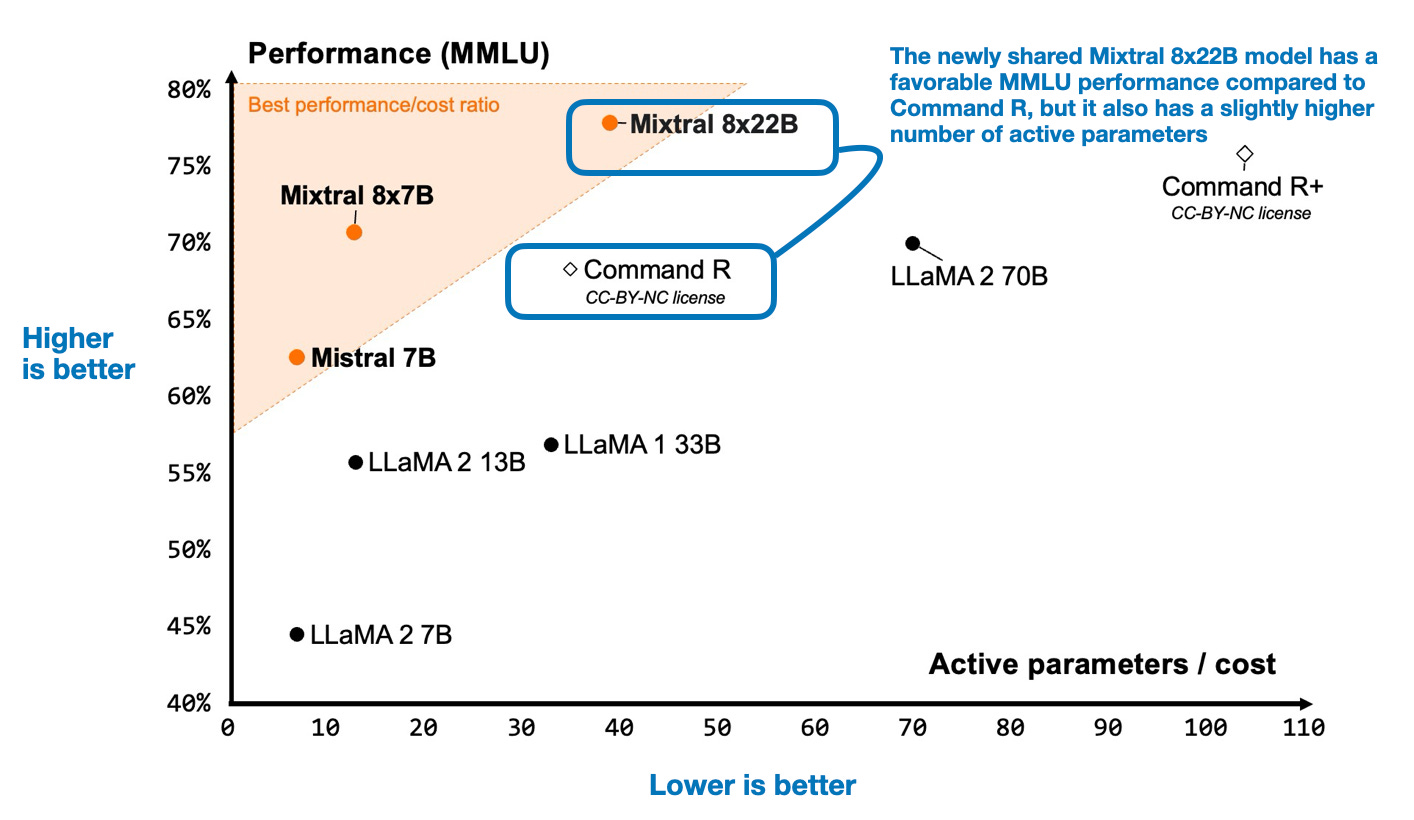

The perhaps most interesting plot from the Mixtral blog post, which compares Mixtral 8x22B to several LLMs on two axes: modeling performance on the popular Measuring Massive Multitask Language Understanding (MMLU) benchmark and active parameters (related to computational resource requirements).

1.2 Llama 3: Larger data is better!

Meta AI's first Llama model release in February 2023 was a big breakthrough and a pivotal moment for open(-source) LLMs. So, naturally, everyone was excited about the Llama 2 release last year. Now, the Llama 3 models, which Meta AI has started to roll out, are similarly exciting.

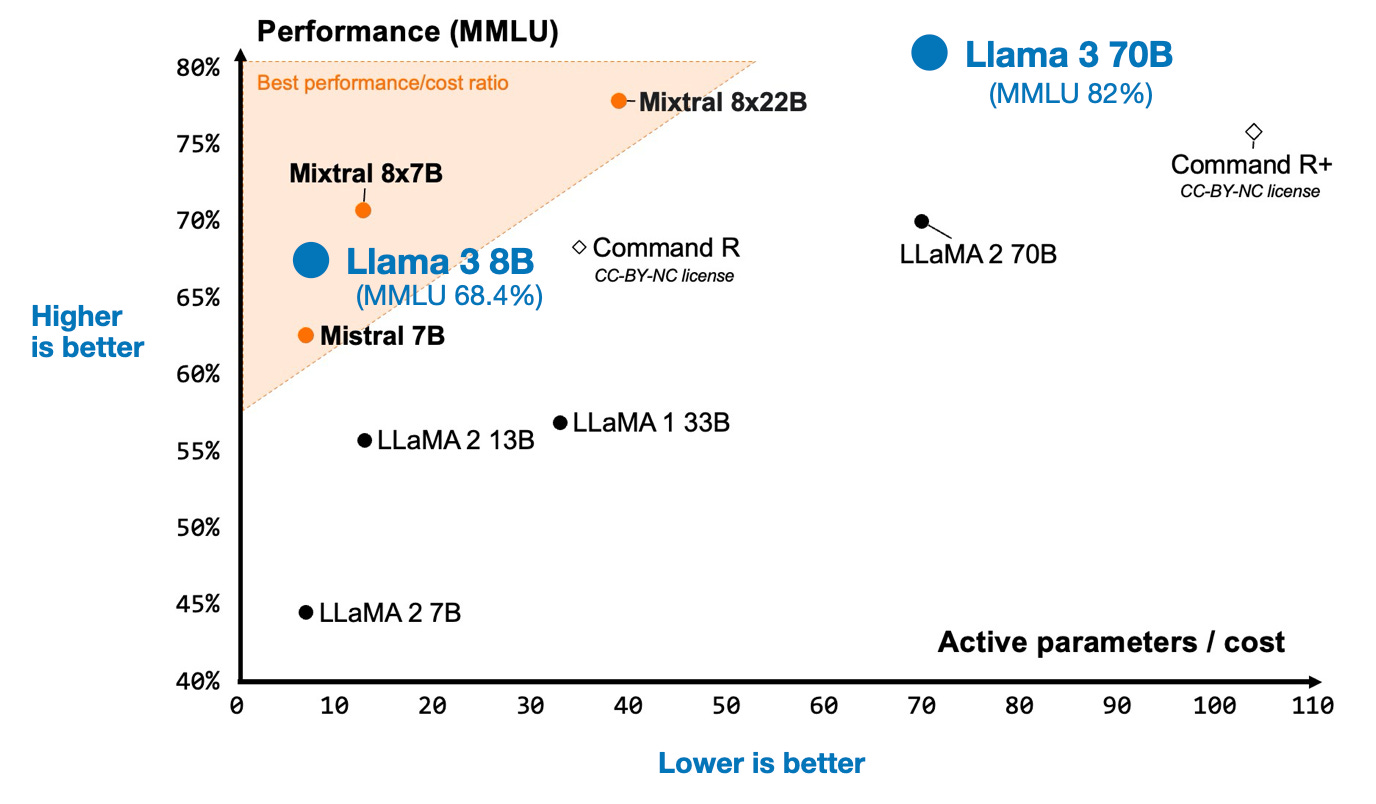

While Meta is still training some of their largest models (e.g., the 400B variant), they released models in the familiar 8B and 70B size ranges. And they are good! Below, I added the MMLU scores from the official Llama 3 blog article to the Mixtral plot I shared earlier.

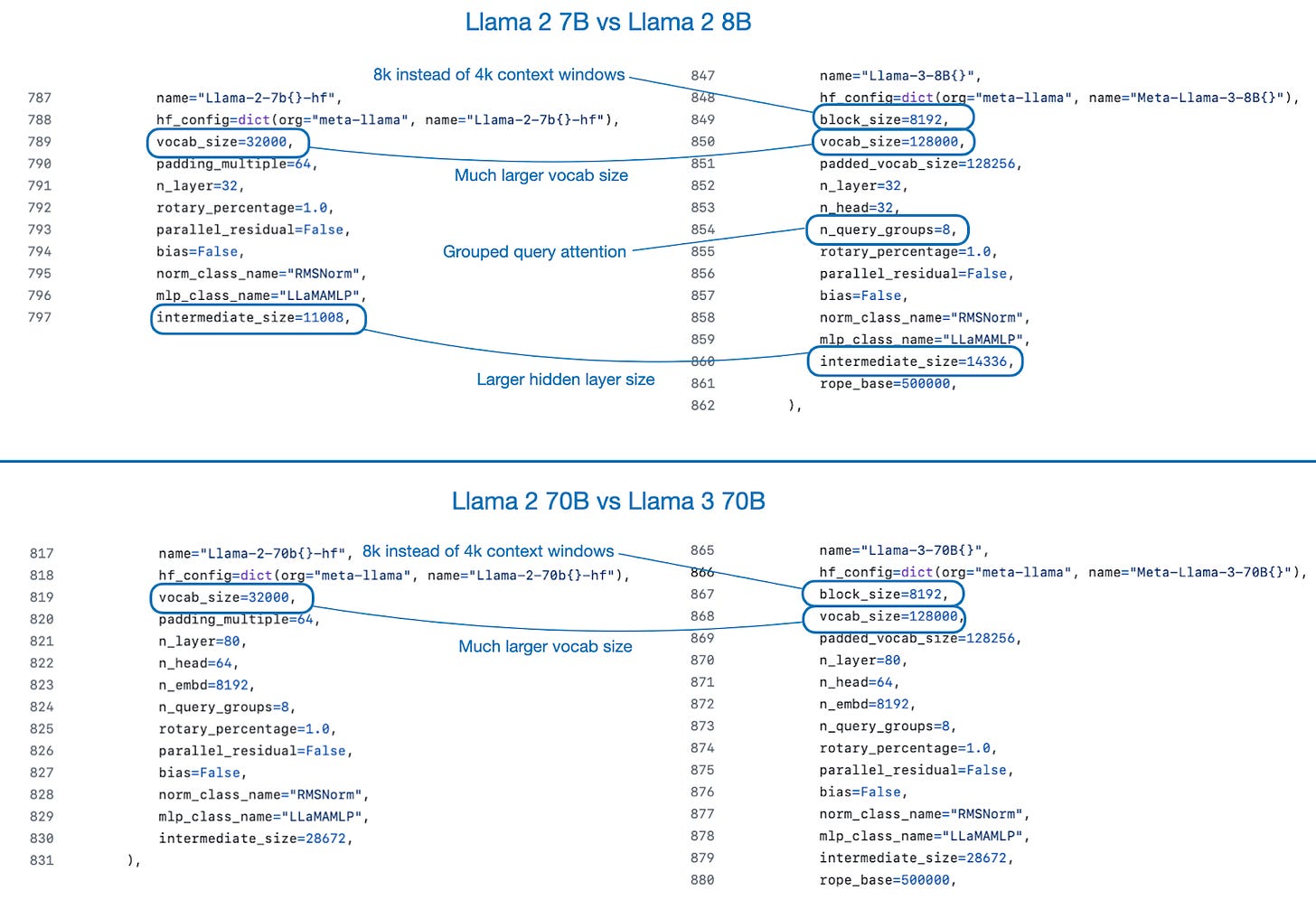

Overall, the Llama 3 architecture is almost identical to Llama 2. The main differences are the increased vocabulary size and the fact that Llama 3 also uses grouped-query attention for the smaller-sized model. If you are looking for a grouped-query attention explainer, I've written about it here:

New Foundation Models: CodeLlama and other highlights in Open-Source AI
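As a quick illustration of what grouped-query attention changes, the sketch below maps query heads to shared key-value heads and compares KV-cache sizes. The head counts used in the example (32 query heads, 8 key-value heads, head dimension 128, 32 layers) are assumed illustrative values, and the helper names are made up for this sketch.

```python
def kv_head_for_query_head(q_head, n_q_heads, n_kv_heads):
    """Return the index of the key-value head shared by a given query head."""
    if n_q_heads % n_kv_heads != 0:
        raise ValueError("n_q_heads must be divisible by n_kv_heads")
    group_size = n_q_heads // n_kv_heads  # query heads per key-value head
    return q_head // group_size

def kv_cache_elements(n_kv_heads, head_dim, seq_len, n_layers):
    """Number of cached values: keys and values for every layer and position."""
    return 2 * n_kv_heads * head_dim * seq_len * n_layers

# Assumed illustrative configuration.
n_q_heads, n_kv_heads, head_dim, n_layers, seq_len = 32, 8, 128, 32, 8192

mapping = [kv_head_for_query_head(h, n_q_heads, n_kv_heads) for h in range(n_q_heads)]
mha_cache = kv_cache_elements(n_q_heads, head_dim, seq_len, n_layers)   # one KV head per query head
gqa_cache = kv_cache_elements(n_kv_heads, head_dim, seq_len, n_layers)  # shared KV heads
print(mapping)
print(mha_cache // gqa_cache)
```

With these numbers, every group of four query heads reads from the same key-value head, so the KV cache shrinks by the same factor of four relative to regular multi-head attention.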

Below are the configuration files used for implementing Llama 2 and Llama 3 in LitGPT, which help show the main differences at a glance.

Training data size

The main contributor to the substantially better performance compared to Llama 2 is the much larger dataset. Llama 3 was trained on 15 trillion tokens, as opposed to "only" 2 trillion for Llama 2.

This is a very interesting finding because, as the Llama 3 blog post notes, according to the Chinchilla scaling laws, the optimal amount of training data for an 8 billion parameter model is much smaller, approximately 200 billion tokens. Moreover, the authors of Llama 3 observed that both the 8 billion and 70 billion parameter models demonstrated log-linear improvements even at the 15 trillion scale. This suggests that we (that is, researchers in general) could further enhance the model with more training data beyond 15 trillion tokens.
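To put these numbers side by side, here is a small back-of-the-envelope calculation. It assumes the commonly quoted Chinchilla rule of thumb of roughly 20 training tokens per parameter, which is not stated in the Llama 3 blog post and only lands in the same ballpark as the approximately 200 billion tokens mentioned above.

```python
TOKENS_PER_PARAM = 20  # assumed rule of thumb, not an exact constant

def chinchilla_tokens(n_params, tokens_per_param=TOKENS_PER_PARAM):
    """Rough compute-optimal token budget for a model with n_params parameters."""
    return n_params * tokens_per_param

def tokens_per_param(n_tokens, n_params):
    """How many training tokens each parameter 'sees'."""
    return n_tokens / n_params

llama3_tokens = 15e12  # 15 trillion tokens, as stated above

for name, n_params in [("8B", 8e9), ("70B", 70e9)]:
    optimal = chinchilla_tokens(n_params)
    ratio = llama3_tokens / optimal
    print(f"Llama 3 {name}: ~{optimal / 1e9:.0f}B tokens by the rule of thumb, "
          f"trained on {ratio:.0f}x more "
          f"({tokens_per_param(llama3_tokens, n_params):.0f} tokens per parameter)")
```

Under this assumption, the 8B model is trained on nearly two orders of magnitude more data than the rule of thumb suggests, which is what makes the reported log-linear improvements notable.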

Instruction finetuning and alignment

For instruction finetuning and alignment, researchers usually choose between using reinforcement learning with human feedback (RLHF) via proximal policy optimization (PPO) or the reward-model-free direct preference optimization (DPO). Interestingly, the Llama 3 researchers did not favor one over the other; they used both! (More on PPO and DPO in a later section.)

The Llama 3 blog post stated that a Llama 3 research paper would follow in the coming month, and I am looking forward to the additional details that will hopefully be shared in that paper.

1.3 Phi-3: Higher-quality data is better!

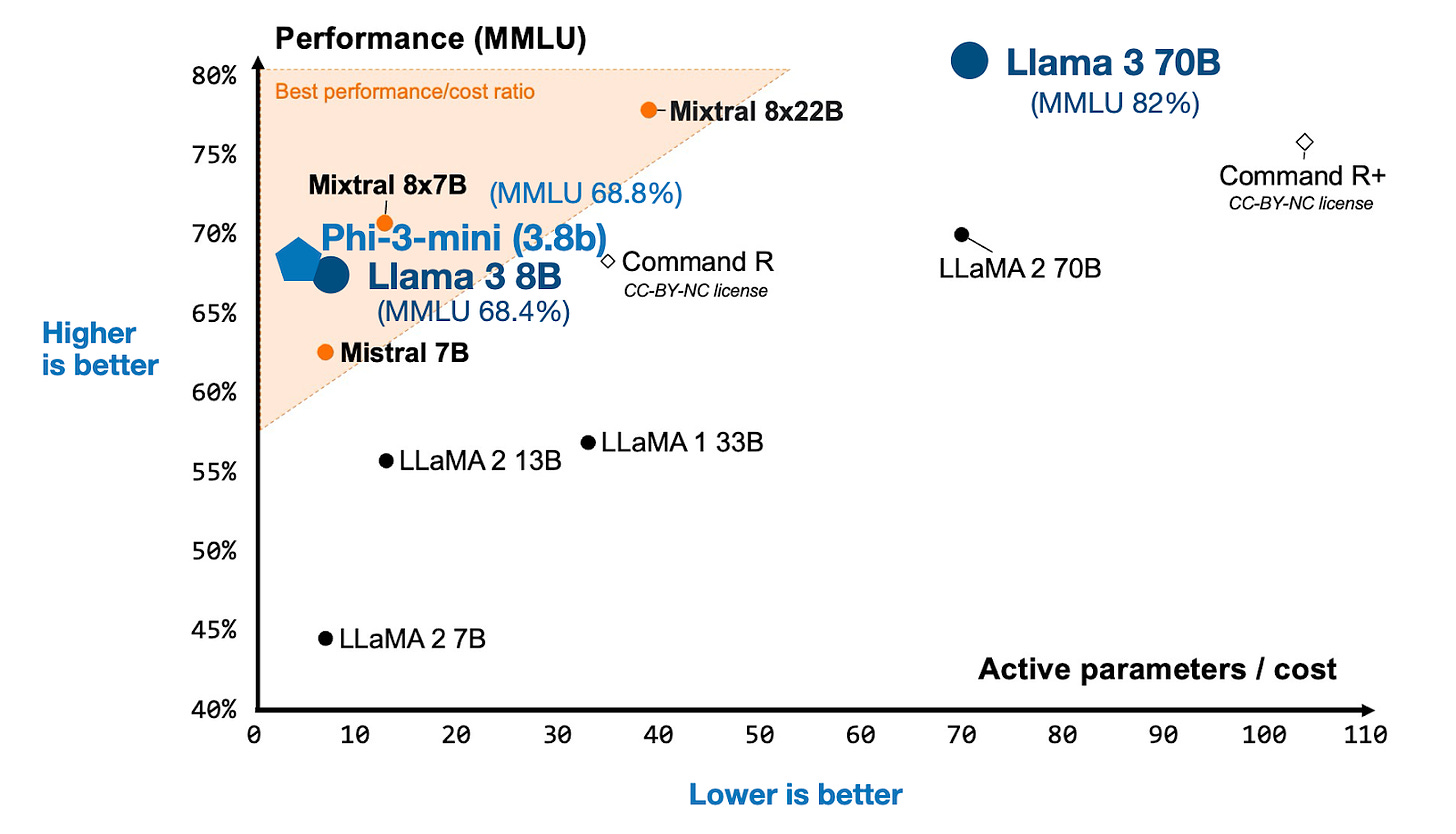

Just one week after the big Llama 3 release, Microsoft shared their new Phi-3 LLM. According to the benchmarks in the technical report, even the smallest Phi-3 model outperforms the Llama 3 8B model despite being less than half its size.

Notably, Phi-3, which is based on the Llama architecture, has been trained on roughly 4.5x fewer tokens than Llama 3 (3.3 trillion instead of 15 trillion). Phi-3 even uses the same tokenizer with a vocabulary size of 32,064 as Llama 2, which is much smaller than the Llama 3 vocabulary size.

Also, Phi-3-mini has "only" 3.8 billion parameters, which is less than half the size of Llama 3 8B.

So, what is the secret sauce? According to the technical report, it's dataset quality over quantity: "heavily filtered web data and synthetic data".

The paper didn't go into too much detail regarding the data curation, but it largely follows the recipe used for previous Phi models. I wrote more about Phi models a few months ago here:

LLM Business and Busyness: Recent Company Investments and AI Adoption, New Small Openly Available LLMs, and LoRA Research

As of this writing, people are still unsure whether Phi-3 is really as good as promised. For instance, many people I talked to noted that Phi-3 is much worse than Llama 3 for non-benchmark tasks.

1.4 Conclusion

Based on the three major releases described above, this has been an exceptional month for openly available LLMs. And I haven't even talked about my favorite model, OpenELM, which is discussed in the next section.

Which model should we use in practice? I think all three models above are attractive for different reasons. Mixtral has a lower active-parameter count than Llama 3 70B but still maintains a pretty good performance level. Phi-3 3.8B may be very appealing for mobile devices; according to the authors, a quantized version of it can run on an iPhone 14. And Llama 3 8B might be the most interesting all-rounder for fine-tuning since it can be comfortably fine-tuned on a single GPU when using LoRA.

2. OpenELM: An Efficient Language Model Family with Open-source Training and Inference Framework

OpenELM: An Efficient Language Model Family with Open-source Training and Inference Framework is the latest LLM suite and paper shared by researchers at Apple, aiming to provide small LLMs for deployment on mobile devices.

Similar to OLMo, it's refreshing to see an LLM paper that shares details discussing the architecture, training methods, and training data.

Let's start with the most interesting tidbits:

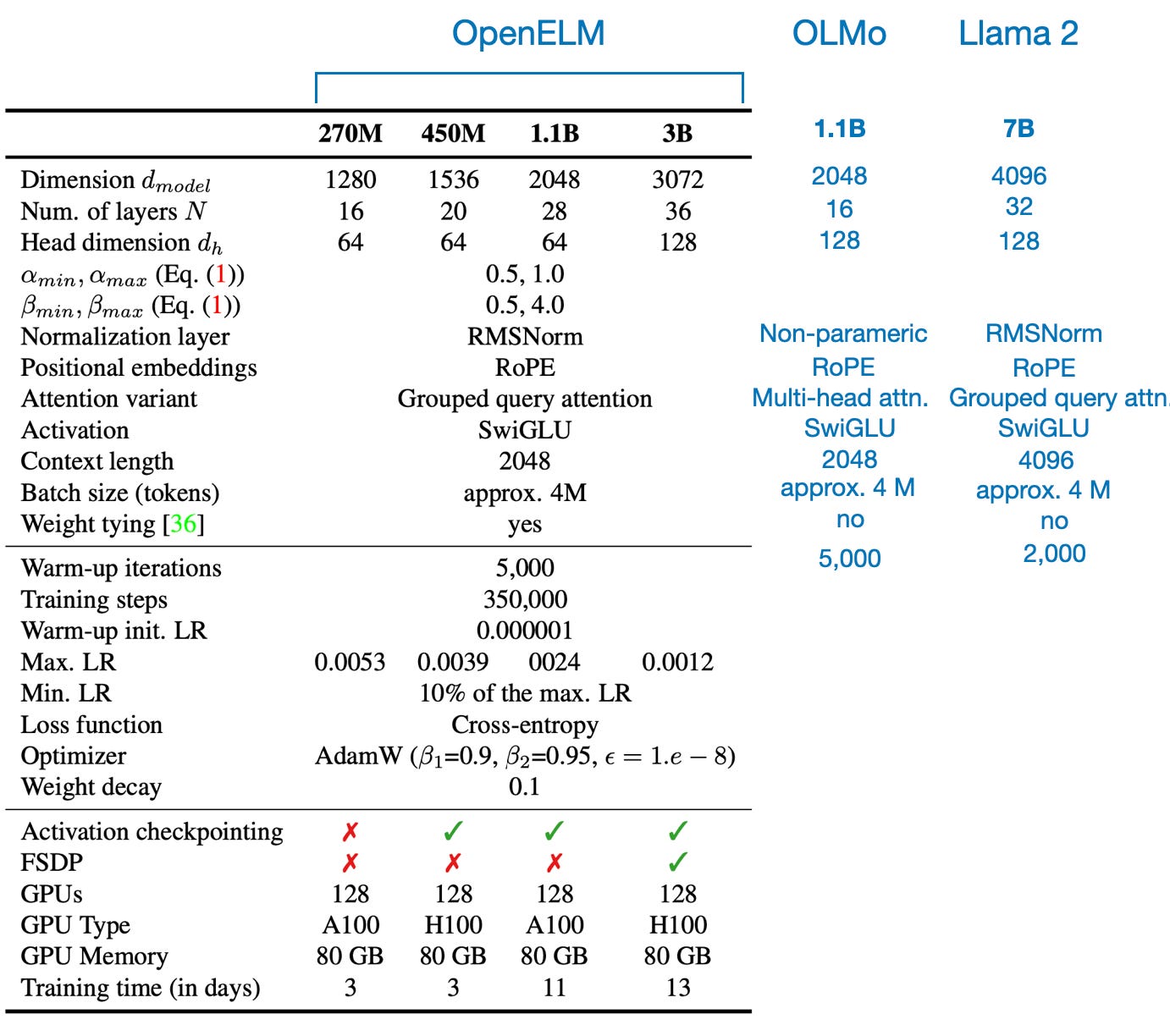

OpenELM comes in 4 relatively small and convenient sizes: 270M, 450M, 1.1B, and 3B

For each size, there's also an instruct-version available trained with rejection sampling and direct preference optimization

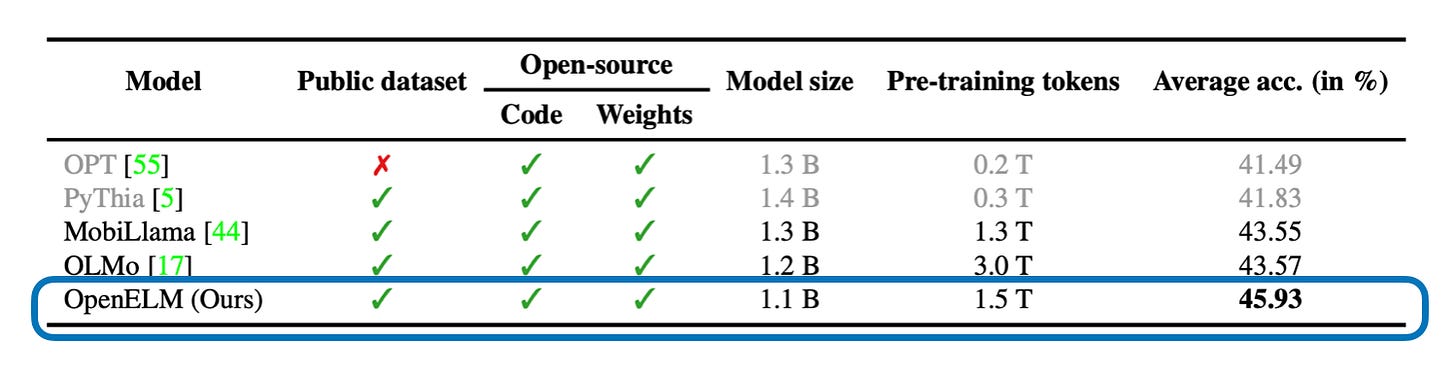

OpenELM performs slightly better than OLMo even though it's trained on 2x fewer tokens

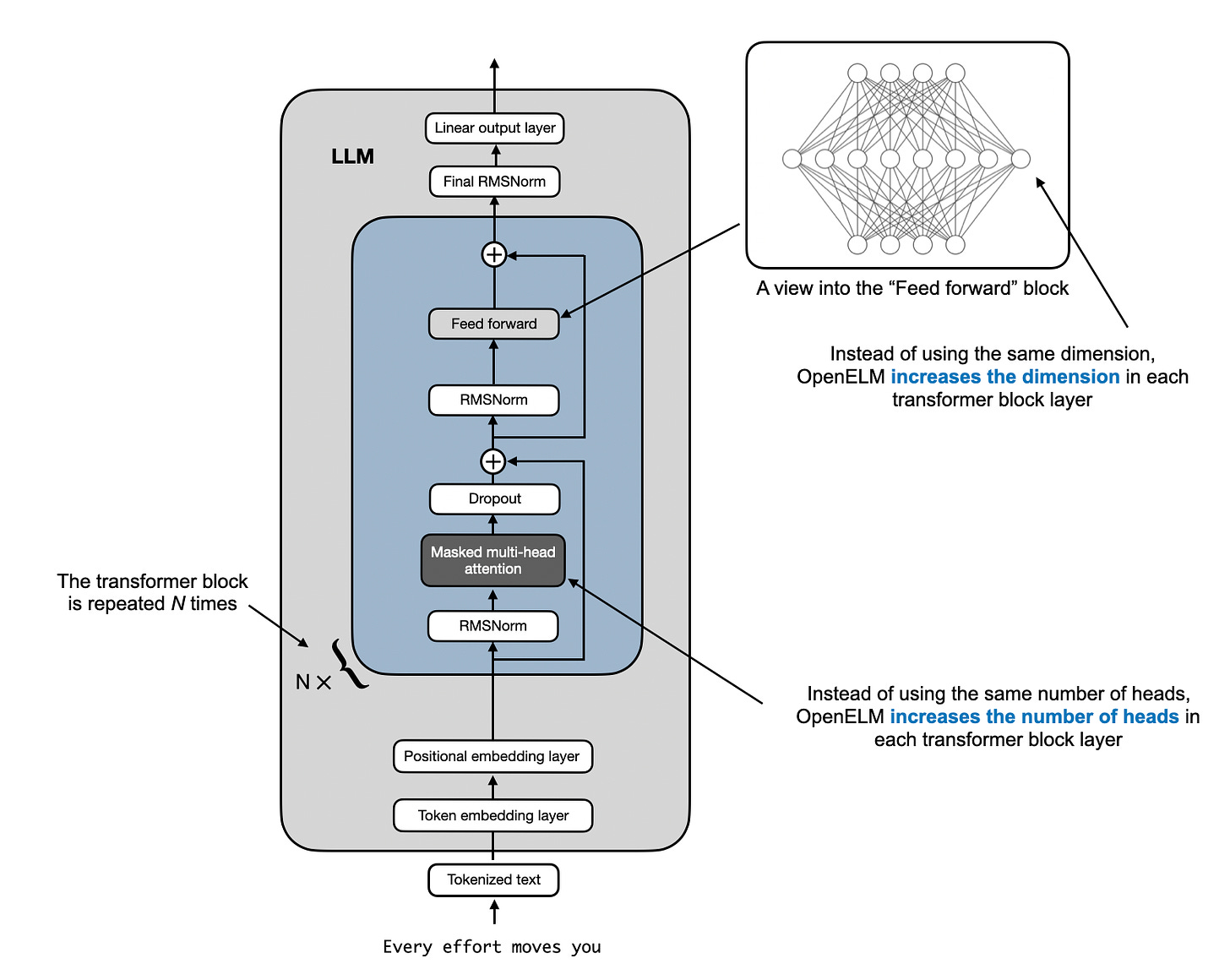

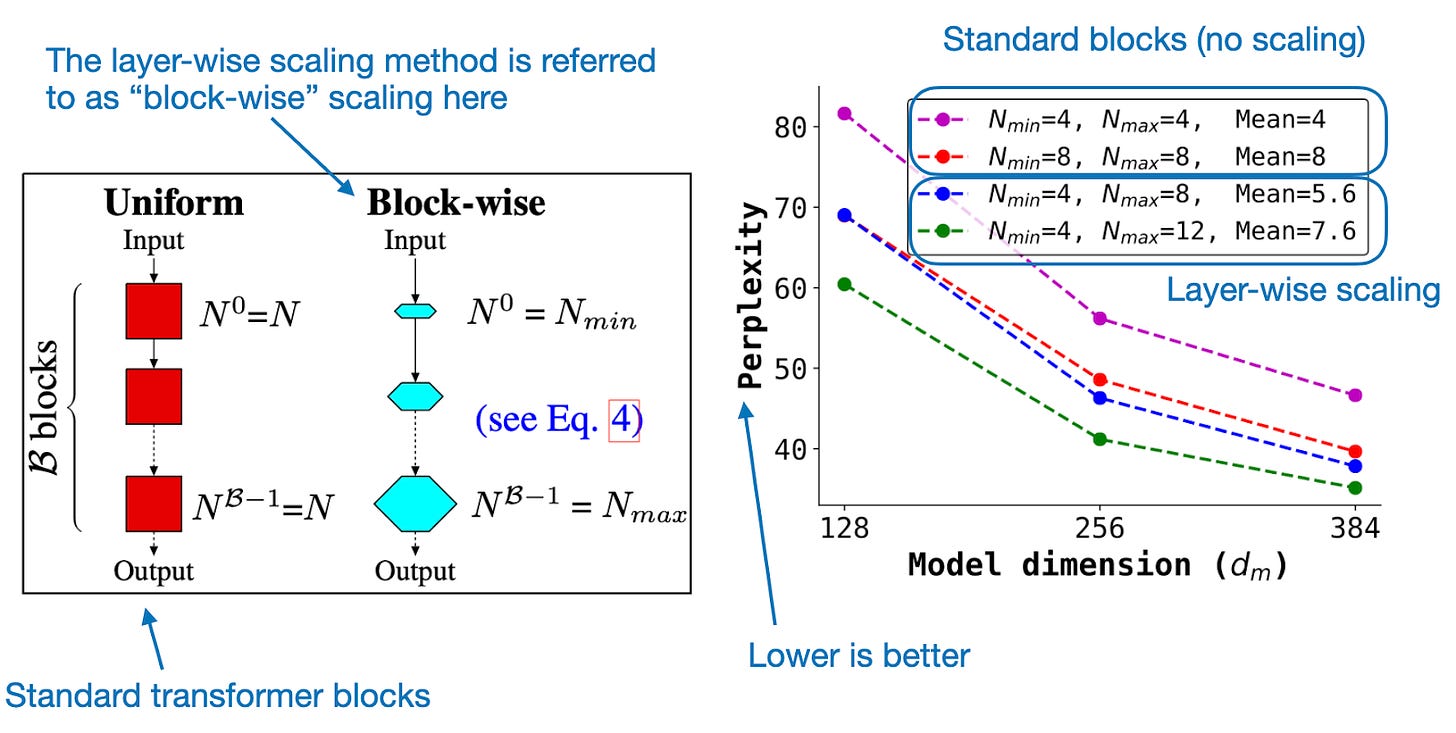

The main architecture tweak is a layer-wise scaling strategy

2.1 Architecture details

Besides the layer-wise scaling strategy (more details later), the overall architecture settings and hyperparameter configuration are relatively similar to other LLMs like OLMo and Llama, as summarized in the figure below.

2.2 Training dataset

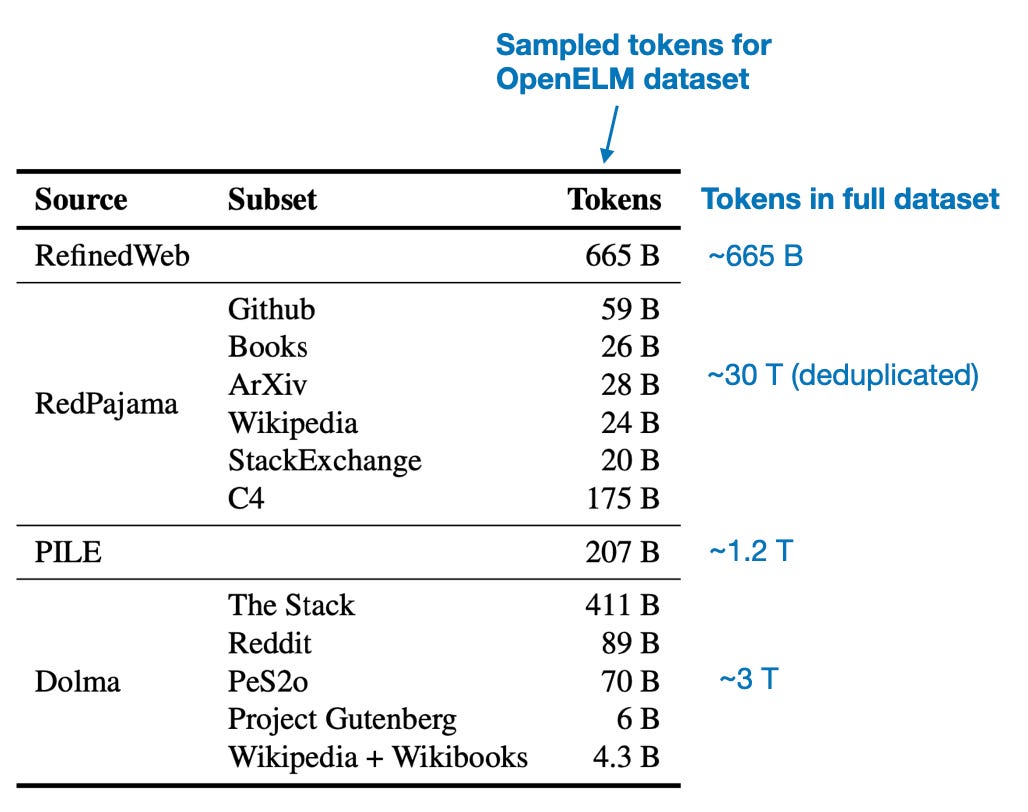

Sharing details is not the same as explaining them, which is what research papers aimed to do when I was a student. For instance, they sampled a relatively small subset of 1.8T tokens from various public datasets (RefinedWeb, RedPajama, The PILE, and Dolma). This subset was 2x smaller than Dolma, which was used for training OLMo. But what was the rationale for this subsampling, and what were the sampling criteria?

One of the authors kindly followed up with me on that saying "Regarding dataset: We did not have any rationale behind dataset sampling, except we wanted to use public datasets of about 2T tokens (following LLama2)."

2.3 Layer-wise scaling

The layer-wise scaling strategy (adopted from the DeLighT: Deep and Light-weight Transformer paper) is very interesting. Essentially, the researchers gradually widen the layers from the early to the later transformer blocks. In particular, keeping the head size constant, the researchers increase the number of heads in the attention module. They also scale the hidden dimension of the feed-forward module, as illustrated in the figure below.
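A rough sketch of how such a layer-wise schedule could be computed is shown below. It assumes that both the attention width and the feed-forward multiplier are interpolated linearly from the first to the last transformer block; the scaling ranges, the model dimensions, and the function name are illustrative assumptions, not values taken from the OpenELM code.

```python
def layerwise_config(n_layers, d_model, head_dim,
                     alpha=(0.5, 1.0), beta=(0.5, 4.0)):
    """Return (n_heads, ffn_dim) per layer, widening from early to late layers.

    alpha scales the attention width (number of heads at a fixed head_dim),
    beta scales the hidden dimension of the feed-forward module.
    Both ranges are assumed values for illustration.
    """
    configs = []
    for i in range(n_layers):
        # Position of layer i between the first (0.0) and last (1.0) block.
        t = i / (n_layers - 1) if n_layers > 1 else 0.0
        a = alpha[0] + (alpha[1] - alpha[0]) * t
        b = beta[0] + (beta[1] - beta[0]) * t
        n_heads = max(1, round(a * d_model / head_dim))  # head size stays constant
        ffn_dim = round(b * d_model)
        configs.append((n_heads, ffn_dim))
    return configs

configs = layerwise_config(n_layers=4, d_model=1024, head_dim=64)
for layer, (n_heads, ffn_dim) in enumerate(configs):
    print(f"layer {layer}: {n_heads} heads, FFN hidden dim {ffn_dim}")
```

In contrast, a standard transformer would use the same number of heads and the same feed-forward width in every block, so the parameter budget is spread uniformly rather than shifted toward later layers.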

I wish there was an ablation study training an LLM with and without the layer-wise scaling strategy on the same dataset. But those experiments are expensive, and I can understand why it wasn't done.

However, we can find ablation studies in the DeLighT: Deep and Light-weight Transformer paper that first introduced the layer-wise scaling on a smaller dataset based on the original encoder-decoder architecture, as shown below.

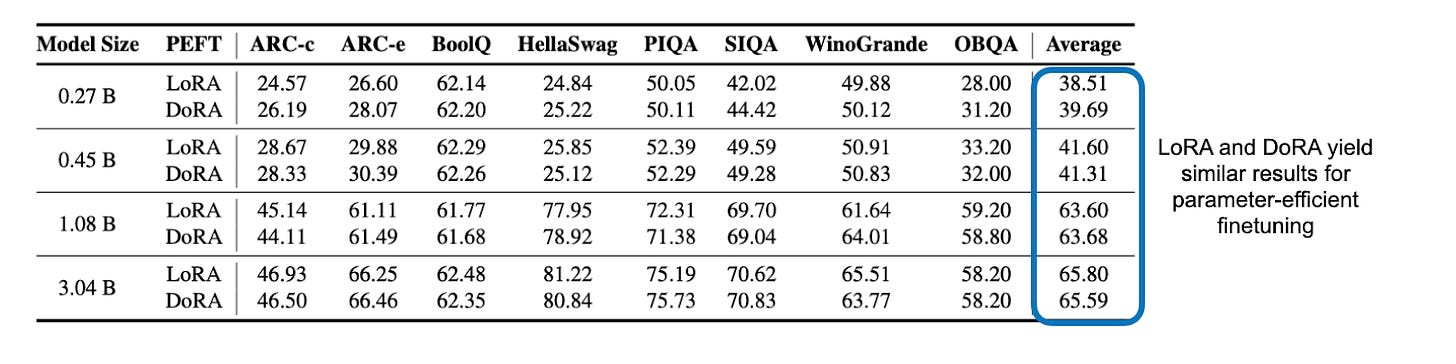

2.4 LoRA vs DoRA

An interesting bonus I didn't expect was that the researchers compared LoRA and DoRA (which I discussed a few weeks ago) for parameter-efficient finetuning! It turns out that there wasn't a noticeable difference between the two methods, though.

2.5 Conclusion

While the paper doesn't answer any research questions, it's a great, transparent write-up of the LLM implementation details. The layer-wise scaling strategy might be something that we could see more often in LLMs from now on. Also, the paper is only one part of the release. For further details, Apple also shared the OpenELM code on GitHub.

Anyways, great work, and big kudos to the researchers (and Apple) for sharing!

3. Is DPO Superior to PPO for LLM Alignment? A Comprehensive Study

Is DPO Superior to PPO for LLM Alignment? A Comprehensive Study finally answers one of the key questions I've been raising in previous months.

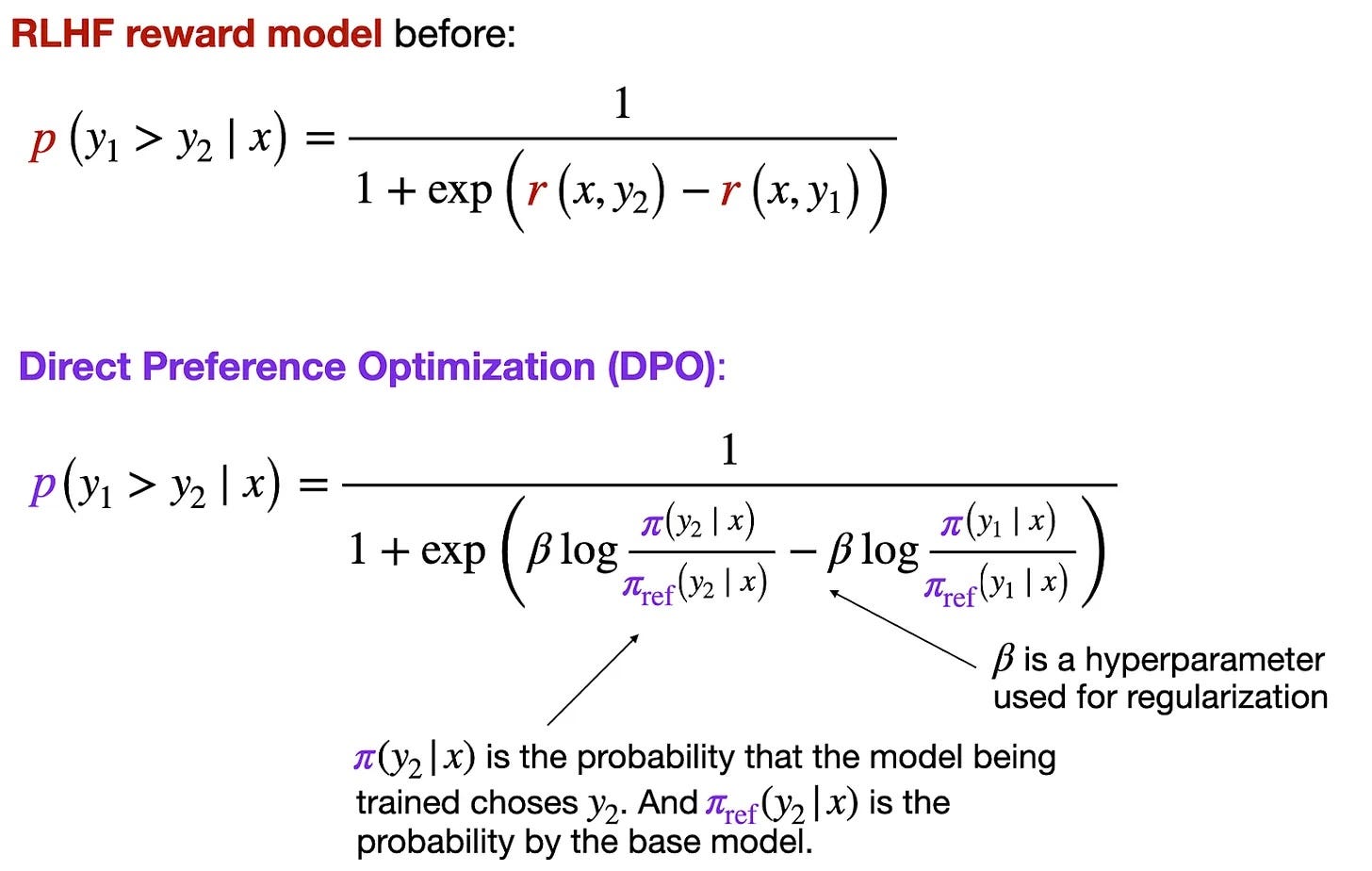

Let's start with a brief overview before diving into the results: Both PPO (proximal policy optimization) and DPO (direct preference optimization) are popular methods for aligning LLMs via reinforcement learning with human feedback (RLHF).

RLHF is a key component of LLM development, and it's used to align LLMs with human preferences, for example, to improve the safety and helpfulness of LLM-generated responses.

For a more detailed explanation and comparison, also see the Evaluating Reward Modeling for Language Modeling section in my Tips for LLM Pretraining and Evaluating Reward Models article that I shared last month.

3.1 What are RLHF-PPO and DPO?

RLHF-PPO, the original LLM alignment method, has been the backbone of OpenAI's InstructGPT and the LLMs deployed in ChatGPT. However, the landscape has shifted in recent months with the emergence of DPO-finetuned LLMs, which have made a significant impact on public leaderboards. This surge in popularity can be attributed to DPO being a reward-model-free alternative that is notably easier to use: unlike PPO, DPO doesn't require training a separate reward model but uses a classification-like objective to update the LLM directly.
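To show what "classification-like objective" means in practice, here is a minimal sketch of the DPO loss for a single preference pair. It assumes the inputs are sequence-level log-probabilities (summed over response tokens) under the policy being trained and under a frozen reference model; the function and argument names are made up for this sketch, and real implementations operate on batched tensors.

```python
import math

def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one (chosen, rejected) response pair.

    loss = -log(sigmoid(beta * ((pi_c - ref_c) - (pi_r - ref_r))))
    """
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_logratio - rejected_logratio)
    # -log(sigmoid(m)) equals softplus(-m); computed in a numerically stable way.
    x = -margin
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

# Policy identical to the reference: no preference signal yet.
print(dpo_loss(-2.0, -2.0, -2.0, -2.0))

# Policy raises the chosen response and lowers the rejected one: loss decreases.
print(dpo_loss(-1.0, -3.0, -2.0, -2.0))
```

The loss looks like binary logistic regression on the difference of log-ratios, which is why no separate reward model or sampling loop is needed during training.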

Today, most LLMs on top of public leaderboards have been trained with DPO rather than PPO. Unfortunately, though, there have not been any direct head-to-head comparisons where the same model was trained with either PPO or DPO using the same dataset until this new paper came along.

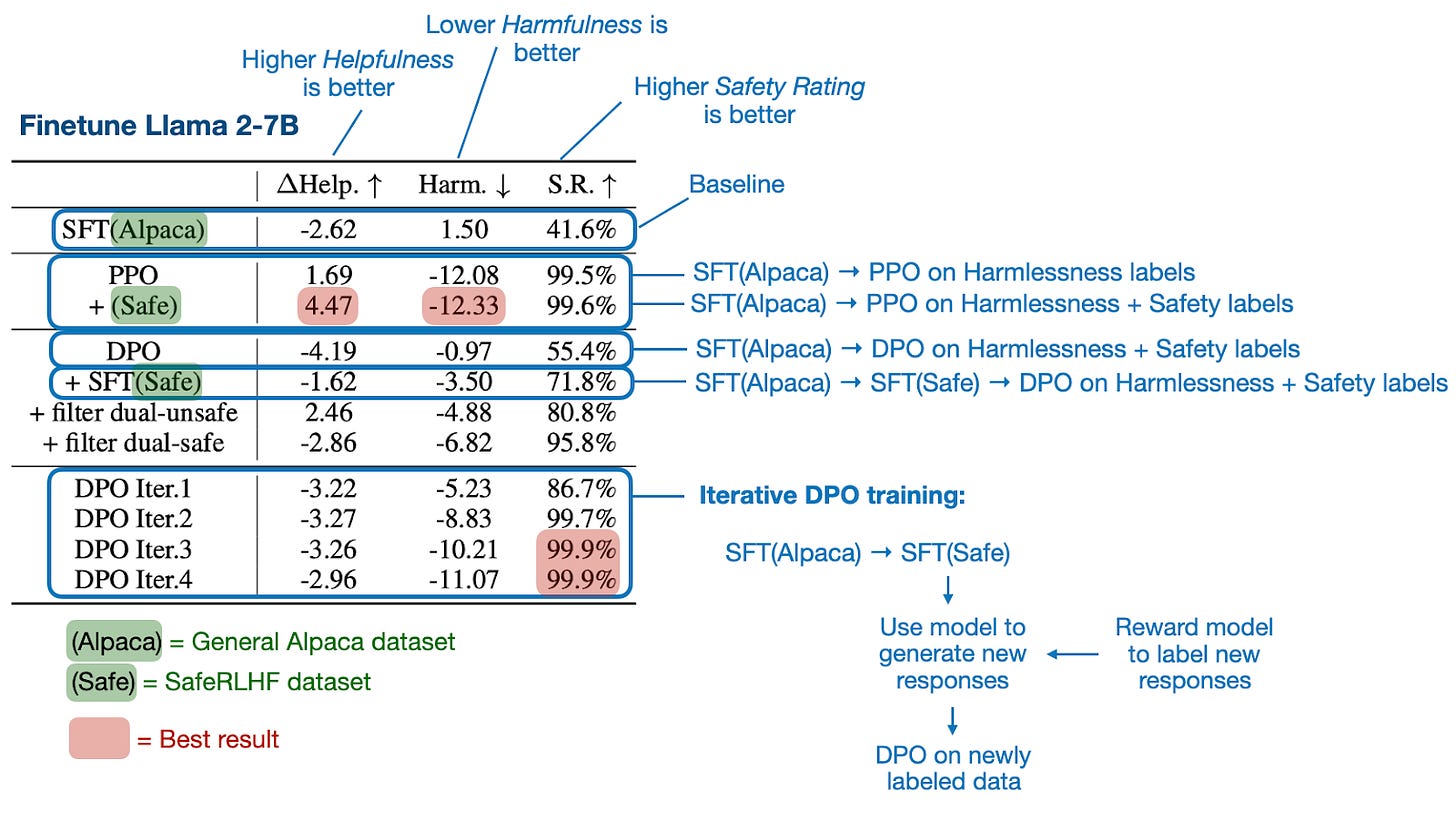

3.2 PPO is generally better than DPO

Is DPO Superior to PPO for LLM Alignment? A Comprehensive Study is a well-written paper with lots of experiments and results, but the main takeaways are that PPO is generally better than DPO, and DPO suffers more heavily from out-of-distribution data.

Here, out-of-distribution data means that the LLM has been previously trained on instruction data (using supervised finetuning) that is different from the preference data for DPO. For example, an LLM has been trained on the general Alpaca dataset before being DPO-finetuned on a different dataset with preference labels. (One way to improve DPO on out-of-distribution data is to add a supervised instruction-finetuning round on the preference dataset before following up with DPO finetuning.)

The main findings are summarized in the figure below.

In addition to the main results above, the paper includes several additional experiments and ablation studies that I recommend checking out if you are interested in this topic.

3.3 Best practices

Furthermore, interesting takeaways from this paper include best-practice recommendations when using DPO and PPO.

For instance, if you use DPO, make sure to perform supervised finetuning on the preference data first. Also, iterative DPO, which involves labeling additional data with an existing reward model, is better than DPO on the existing preference data.

If you use PPO, the key success factors are large batch sizes, advantage normalization, and parameter updates via an exponential moving average.
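Two of these factors are simple enough to sketch in a few lines. The snippet below shows per-batch advantage normalization and an exponential-moving-average parameter update on plain Python lists; which parameters are averaged, the decay value, and the function names are assumptions for illustration rather than details taken from the paper.

```python
import math

def normalize_advantages(advantages, eps=1e-8):
    """Shift and scale a batch of advantages to zero mean and unit variance."""
    mean = sum(advantages) / len(advantages)
    var = sum((a - mean) ** 2 for a in advantages) / len(advantages)
    std = math.sqrt(var)
    return [(a - mean) / (std + eps) for a in advantages]

def ema_update(ema_params, new_params, decay=0.99):
    """Move the averaged parameters a small step toward the latest parameters."""
    return [decay * e + (1.0 - decay) * p for e, p in zip(ema_params, new_params)]

# Larger batches give more reliable mean and variance estimates,
# which is one reason the batch size matters here.
normalized = normalize_advantages([1.0, 2.0, 3.0])
print(normalized)

averaged = ema_update([1.0, 1.0], [0.0, 2.0], decay=0.9)
print(averaged)
```

Both tricks reduce the variance of the updates: normalization keeps the scale of the policy gradient stable across batches, and the moving average smooths out abrupt parameter changes.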

3.4 Conclusion

Based on this paper's results, PPO seems superior to DPO if used correctly. However, given that DPO is more straightforward to use and implement, I expect DPO to remain a popular go-to method.

A good practical recommendation may be to use PPO if you have ground truth reward labels (so you don't have to pretrain your own reward model) or if you can download an in-domain reward model. Otherwise, use DPO for simplicity.

Also, based on what we know from the Llama 3 blog post, we don't have to decide whether to use PPO or DPO, but we can use both! For instance, the recipe behind Llama 3 has been the following pipeline: Pretraining → supervised finetuning → rejection sampling → PPO → DPO. (I am hoping the Llama 3 developers will share a paper with more details soon!)

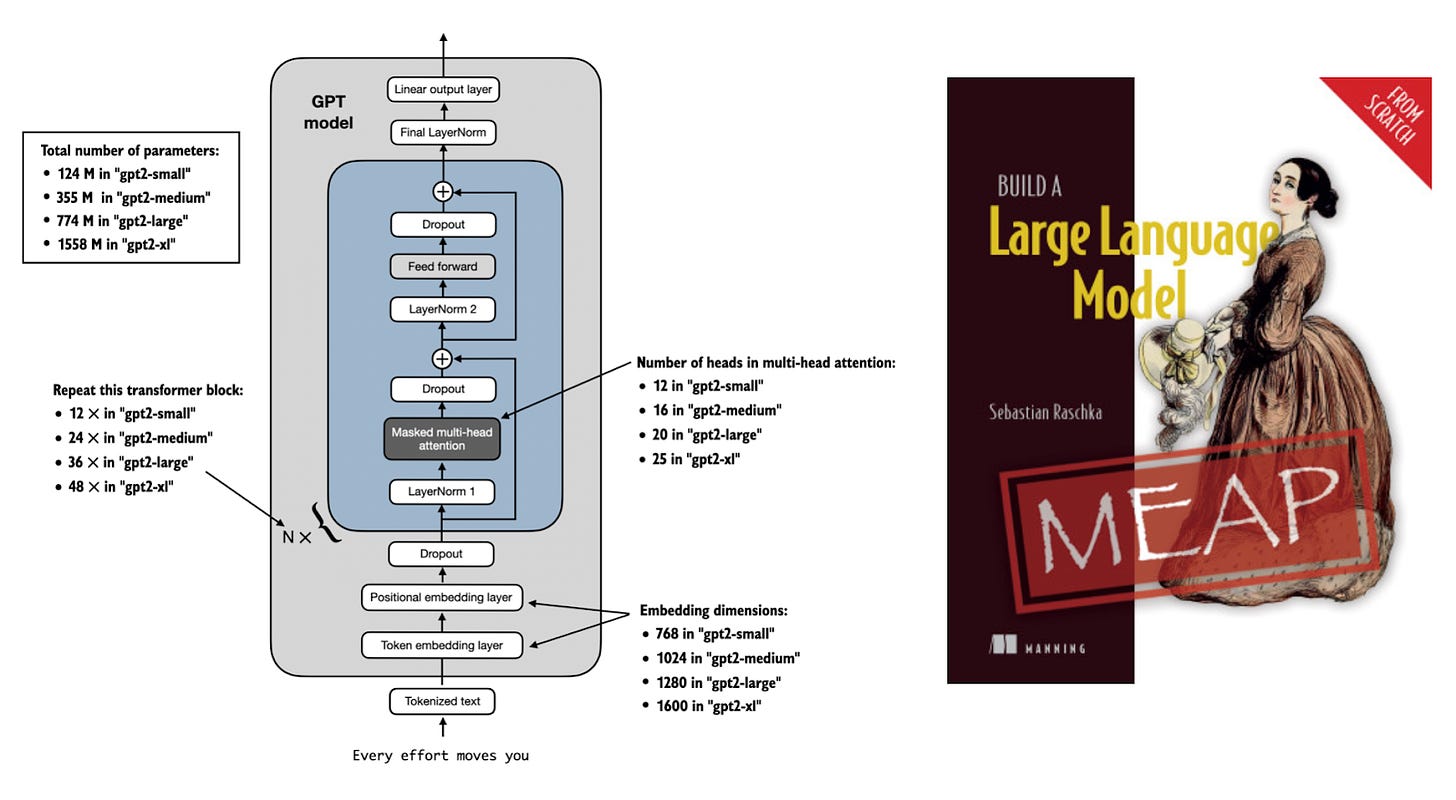

Understanding LLMs (really well)

One of the best ways to understand LLMs is to code one from scratch!

If you are interested in learning more about LLMs, I am covering, implementing, and explaining the whole LLM lifecycle in my "Build a Large Language Model from Scratch" book, which is currently available at a discounted price before it is published in Summer 2024.

Chapter 5, which covers the pretraining, was just released 2 weeks ago. If this sounds interesting and useful to you, you can take a sneak peek at the code on GitHub here.

4. Other Interesting Research Papers In April

Below is a selection of other interesting papers I stumbled upon this month. Even compared to strong previous months, I think that LLM research in April has been really exceptional.

KAN: Kolmogorov–Arnold Networks by Liu, Wang, Vaidya, et al. (30 Apr), https://arxiv.org/abs/2404.19756

Kolmogorov-Arnold Networks (KANs), which replace linear weight parameters with learnable spline-based functions on edges and lack fixed activation functions, seem to offer an attractive new alternative to Multi-Layer Perceptrons, which they outperform in accuracy, neural scaling, and interpretability.

When to Retrieve: Teaching LLMs to Utilize Information Retrieval Effectively by Labruna, Ander Campos, and Azkune (30 Apr), https://arxiv.org/abs/2404.19705

This paper proposes a custom training approach for LLMs that teaches them to either rely on their parametric memory or call an external information retrieval system via a special token <RET> when they don't know the answer.

A Primer on the Inner Workings of Transformer-based Language Models by Ferrando, Sarti, Bisazza, and Costa-jussa (30 Apr), https://arxiv.org/abs/2405.00208

This primer offers a succinct technical overview of the techniques used to interpret Transformer-based, decoder-only language models.

RAG and RAU: A Survey on Retrieval-Augmented Language Model in Natural Language Processing by Hu and Lu (30 Apr), https://arxiv.org/abs/2404.19543

This survey provides a comprehensive view of retrieval-augmented LLMs, detailing their components, structures, applications, and evaluation methods.

Better & Faster Large Language Models via Multi-token Prediction by Gloeckle, Idrissi, Rozière, et al. (30 Apr), https://arxiv.org/abs/2404.19737

This paper suggests that training LLMs to predict multiple future tokens simultaneously rather than just the next token not only improves sample efficiency but also improves performance on generative tasks.

LoRA Land: 310 Fine-tuned LLMs that Rival GPT-4, A Technical Report by Zhao, Wang, Abid, et al. (28 Apr), https://arxiv.org/abs/2405.00732

LoRA is one of the most widely used parameter-efficient finetuning techniques, and this study finds that 4-bit LoRA finetuned models significantly outperform both their base models and GPT-4.

Make Your LLM Fully Utilize the Context by An, Ma, Lin, et al. (25 Apr), https://arxiv.org/abs/2404.16811

The study introduces FILM-7B, a model trained using an information-intensive approach to address the "lost-in-the-middle" challenge, which describes the problem where LLMs are not able to retrieve information if it's not at the beginning or end of the context window.

Layer Skip: Enabling Early Exit Inference and Self-Speculative Decoding by Elhoushi, Shrivastava, Liskovich, et al. (25 Apr), https://arxiv.org/abs/2404.16710

LayerSkip can accelerate the inference of LLMs by using layer dropout and early exit loss during training, and implementing self-speculative decoding during inference.

Retrieval Head Mechanistically Explains Long-Context Factuality by Wu, Wang, Xiao, et al. (24 Apr), https://arxiv.org/abs/2404.15574

This paper explores how transformer-based models with long-context capabilities use specific "retrieval heads" in their attention mechanisms to effectively retrieve information, revealing that these heads are universal, sparse, intrinsic, dynamically activated, and crucial for tasks requiring reference to prior context or reasoning.

Graph Machine Learning in the Era of Large Language Models (LLMs) by Fan, Wang, Huang, et al. (23 Apr), https://arxiv.org/abs/2404.14928

This survey paper describes, among others, how Graph Neural Networks and LLMs are increasingly integrated to improve graph machine learning and reasoning capabilities.

NExT: Teaching Large Language Models to Reason about Code Execution by Ni, Allamanis, Cohan, et al. (23 Apr), https://arxiv.org/abs/2404.14662

NExT is a method to improve how LLMs understand and fix code by teaching them to analyze program execution.

Multi-Head Mixture-of-Experts by Wu, Huang, Wang, and Wei (23 Apr), https://arxiv.org/abs/2404.15045

The proposed Multi-Head Mixture-of-Experts (MH-MoE) model addresses Sparse Mixtures of Experts' issues of low expert activation and poor handling of multiple semantic concepts by introducing a multi-head mechanism that splits tokens into sub-tokens processed by diverse experts in parallel.

A Survey on Self-Evolution of Large Language Models by Tao, Lin, Chen, et al. (22 Apr), https://arxiv.org/abs/2404.14387

This work presents a comprehensive survey of self-evolution approaches in LLMs, proposing a conceptual framework for LLM self-evolution and identifying challenges and future directions to enhance these models' capabilities.

OpenELM: An Efficient Language Model Family with Open-source Training and Inference Framework by Mehta, Sekhavat, Cao et al. (22 Apr), https://arxiv.org/abs/2404.14619

OpenELM is an LLM suite by researchers from Apple in the spirit of OLMo (a model family I covered previously), including full training and evaluation frameworks, logs, checkpoints, configurations, and other artifacts for reproducible research.

Phi-3 Technical Report: A Highly Capable Language Model Locally on Your Phone by Abdin, Jacobs, Awan, et al. (22 Apr), https://arxiv.org/abs/2404.14219

Phi-3-mini is a 3.8 billion parameter LLM trained on 3.3 trillion tokens that matches the performance of larger models like Mixtral 8x7B and GPT-3.5 according to benchmarks.

How Good Are Low-bit Quantized LLaMA3 Models? An Empirical Study by Huang, Ma, and Qin (22 Apr), https://arxiv.org/abs/2404.14047

This empirical study finds that Meta's LLaMA3 models suffer significant performance degradation at ultra-low bit-widths.

The Instruction Hierarchy: Training LLMs to Prioritize Privileged Instructions by Wallace, Xiao, Leike, et al. (19 Apr), https://arxiv.org/abs/2404.13208

This study introduces an instruction hierarchy for LLMs to prioritize trusted prompts, enhancing their robustness against attacks without compromising their standard capabilities.

OpenBezoar: Small, Cost-Effective and Open Models Trained on Mixes of Instruction Data by Dissanayake, Lowe, Gunasekara, and Ratnayake (18 Apr), https://arxiv.org/abs/2404.12195

The research finetunes the OpenLLaMA 3Bv2 model using synthetic data from Falcon-40B and techniques like RLHF and DPO, achieving top performance in LLM tasks at a reduced model size by systematically filtering and finetuning data.

Toward Self-Improvement of LLMs via Imagination, Searching, and Criticizing by Tian, Peng, Song, et al. (18 Apr), https://arxiv.org/abs/2404.12253

Despite the impressive capabilities of LLMs in various tasks, they struggle with complex reasoning and planning; the proposed AlphaLLM integrates Monte Carlo Tree Search to create a self-improving loop, enhancing LLMs' performance in reasoning tasks without additional data annotations.

When LLMs are Unfit Use FastFit: Fast and Effective Text Classification with Many Classes by Yehudai and Bendel (18 Apr), https://arxiv.org/abs/2404.12365

FastFit is a new Python package that rapidly and accurately handles few-shot classification for language tasks with many similar classes by integrating batch contrastive learning and token-level similarity scoring, showing a 3-20x increase in training speed and superior performance over other methods like SetFit and HF Transformers.

A Survey on Retrieval-Augmented Text Generation for Large Language Models by Huang and Huang (17 Apr), https://arxiv.org/abs/2404.10981

This survey article discusses how Retrieval-Augmented Generation (RAG) combines retrieval techniques and deep learning to improve LLMs by dynamically incorporating up-to-date information, categorizes the RAG process, reviews recent developments, and proposes future research directions.

How Faithful Are RAG Models? Quantifying the Tug-of-War Between RAG and LLMs' Internal Prior by Wu, Wu, and Zou (16 Apr), https://arxiv.org/abs/2404.10198

Providing correct retrieved information generally corrects errors in large language models like GPT-4, but incorrect information is often repeated unless countered by strong internal knowledge.

Scaling (Down) CLIP: A Comprehensive Analysis of Data, Architecture, and Training Strategies by Li, Xie, and Cubuk (16 Apr), https://arxiv.org/abs/2404.08197

This paper explores the scaling down of Contrastive Language-Image Pretraining (CLIP) to fit limited computational budgets, demonstrating that high-quality, smaller datasets often outperform larger, lower-quality ones, and that smaller ViT models are optimal for these datasets.

Is DPO Superior to PPO for LLM Alignment? A Comprehensive Study by Xu, Fu, Gao et al. (16 Apr), https://arxiv.org/abs/2404.10719

This research explores the effectiveness of Direct Preference Optimization (DPO) and Proximal Policy Optimization (PPO) in Reinforcement Learning from Human Feedback (RLHF), finding that PPO can surpass all other alternative methods in all cases if applied properly.

Learn Your Reference Model for Real Good Alignment by Gorbatovski, Shaposhnikov, Malakhov, et al. (15 Apr), https://arxiv.org/abs/2404.09656

The research highlights that the new alignment method, Trust Region Direct Preference Optimization (TR-DPO), which updates the reference policy during training, outperforms existing techniques by improving model quality across multiple parameters, demonstrating up to a 19% improvement on specific datasets.

Chinchilla Scaling: A Replication Attempt by Besiroglu, Erdil, Barnett, and You (15 Apr), https://arxiv.org/abs/2404.10102

The authors attempt to replicate one of Hoffmann et al.'s methods for estimating compute-optimal scaling laws, finding inconsistencies and implausible results compared to the original estimates from other methods.

State Space Model for New-Generation Network Alternative to Transformers: A Survey by Wang, Wang, Ding, et al. (15 Apr), https://arxiv.org/abs/2404.09516

The paper provides a comprehensive review and experimental analysis of State Space Models (SSM) as an efficient alternative to the Transformer architecture, detailing the principles of SSM, its applications across diverse domains, and offering statistical comparisons to demonstrate its advantages and potential areas for future research.

LLM In-Context Recall is Prompt Dependent by Machlab and Battle (13 Apr), https://arxiv.org/abs/2404.08865

The research assesses the in-context recall ability of various LLMs by embedding a factoid within a block of text and evaluating the models' performance in retrieving this information under different conditions, revealing that performance is influenced by both the prompt content and potential biases in training data.

Dataset Reset Policy Optimization for RLHF by Chang, Zhan, Oertell, et al. (12 Apr), https://arxiv.org/abs/2404.08495

This work introduces Dataset Reset Policy Optimization (DR-PO), a new Reinforcement Learning from Human Preference-based feedback (RLHF) algorithm that enhances training by integrating an offline preference dataset directly into the online policy training.

Pre-training Small Base LMs with Fewer Tokens by Sanyal, Sanghavi, and Dimakis (12 Apr), https://arxiv.org/abs/2404.08634

The study introduces "Inheritune," a method for developing smaller base language models by inheriting a few transformer blocks from larger models and training on a tiny fraction of the larger model's data, demonstrating that these smaller models perform comparably to larger models despite using significantly less training data and resources.

Rho-1: Not All Tokens Are What You Need by Lin, Gou, Gong, et al. (11 Apr), https://arxiv.org/abs/2404.07965

Rho-1 is a new language model that is trained selectively on tokens that demonstrate higher excess loss as opposed to traditional next-token prediction methods.

Best Practices and Lessons Learned on Synthetic Data for Language Models by Liu, Wei, Liu, et al. (11 Apr), https://arxiv.org/abs/2404.07503

This paper reviews synthetic data research in the context of LLMs.

JetMoE: Reaching Llama2 Performance with 0.1M Dollars by Shen, Guo, Cai, and Qin (11 Apr), https://arxiv.org/abs/2404.07413

JetMoE-8B, an 8-billion parameter, sparsely-gated Mixture-of-Experts model trained on 1.25 trillion tokens for under $100,000, outperforms more expensive models such as Llama2-7B by using only 2 billion parameters per input token and "only" 30,000 GPU hours.

LLoCO: Learning Long Contexts Offline by Tan, Li, Patil et al. (11 Apr), https://arxiv.org/abs/2404.07979

LLoCO is a method combining context compression, retrieval, and parameter-efficient finetuning with LoRA to effectively expand the context window of a LLaMA2-7B model to handle up to 128k tokens.

Leave No Context Behind: Efficient Infinite Context Transformers with Infini-attention by Munkhdalai, Faruqui, and Gopal (10 Apr), https://arxiv.org/abs/2404.07143

This research introduces a method to scale transformer-based LLMs for processing infinitely long inputs efficiently by combining several attention strategies within a single transformer block for tasks with extensive contextual demands.

Adapting LLaMA Decoder to Vision Transformer by Wang, Shao, Chen, et al. (10 Apr), https://arxiv.org/abs/2404.06773

This research adapts decoder-only transformer-based LLMs like Llama for computer vision by modifying a standard vision transformer (ViT) with techniques like a post-sequence class token and a soft mask strategy.

LLM2Vec: Large Language Models Are Secretly Powerful Text Encoders by BehnamGhader, Adlakha, Mosbach, et al. (9 Apr), https://arxiv.org/abs/2404.05961

This research introduces a simple, unsupervised approach to transform decoder-style LLMs (like GPT and Llama) into strong text encoders via 1) disabling the causal attention mask, 2) masked next token prediction, and 3) unsupervised contrastive learning.

Elephants Never Forget: Memorization and Learning of Tabular Data in Large Language Models by Bordt, Nori, Rodrigues, et al. (9 Apr), https://arxiv.org/abs/2404.06209

This study highlights critical issues of data contamination and memorization in LLMs, showing that LLMs often memorize popular tabular datasets and perform better on datasets seen during training, which leads to overfitting.

MiniCPM: Unveiling the Potential of Small Language Models with Scalable Training Strategies by Hu, Tu, Han, et al. (9 Apr), https://arxiv.org/abs/2404.06395

This research introduces new resource-efficient "small" language models in the 1.2-2.4 billion parameter range, along with techniques such as the warmup-stable-decay learning rate scheduler, which is helpful for continuous pretraining and domain adaptation.

CodecLM: Aligning Language Models with Tailored Synthetic Data by Wang, Li, Perot, et al. (8 Apr), https://arxiv.org/abs/2404.05875

CodecLM introduces a framework using encode-decode principles and LLMs as codecs to adaptively generate high-quality synthetic data for aligning LLMs with various instruction distributions to improve their ability to follow complex, diverse instructions.

Eagle and Finch: RWKV with Matrix-Valued States and Dynamic Recurrence by Peng, Goldstein, Anthony, et al. (8 Apr), https://arxiv.org/abs/2404.05892

Eagle and Finch are new sequence models based on the RWKV architecture, introducing features like multi-headed matrix states and dynamic recurrence.

AutoCodeRover: Autonomous Program Improvement by Zhang, Ruan, Fan, and Roychoudhury (8 Apr), https://arxiv.org/abs/2404.05427

AutoCodeRover is an automated approach utilizing LLMs and advanced code search to solve GitHub issues by modifying software programs.

Sigma: Siamese Mamba Network for Multi-Modal Semantic Segmentation by Wan, Wang, Yong, et al. (5 Apr), https://arxiv.org/abs/2404.04256

Sigma is an approach to multi-modal semantic segmentation using a Siamese Mamba (structure state space model) network, which combines different modalities like thermal and depth with RGB and presents an alternative to CNN- and vision transformer-based approaches.

Verifiable by Design: Aligning Language Models to Quote from Pre-Training Data by Zhang, Marone, Li, et al. (5 Apr), https://arxiv.org/abs/2404.03862

Quote-Tuning improves the trustworthiness and accuracy of large language models by training them to increase verbatim quoting from reliable sources by 55% to 130% compared to standard models.

ReFT: Representation Finetuning for Language Models by Wu, Arora, Wang, et al. (5 Apr), https://arxiv.org/abs/2404.03592

This paper introduces Representation Finetuning (ReFT) methods, analogous to parameter-efficient finetuning (PEFT), for adapting large models efficiently by modifying only their hidden representations rather than the full set of parameters.

CantTalkAboutThis: Aligning Language Models to Stay on Topic in Dialogues by Sreedhar, Rebedea, Ghosh, and Parisien (4 Apr), https://arxiv.org/abs/2404.03820

This paper introduces the CantTalkAboutThis dataset, which is designed to help LLMs stay on topic during task-oriented conversations (it includes synthetic dialogues across various domains, featuring distractor turns to challenge and train models to resist topic diversion).

Training LLMs over Neurally Compressed Text by Lester, Lee, Alemi, et al. (4 Apr), https://arxiv.org/abs/2404.03626

This paper introduces a method for training LLMs on neurally compressed text (text compressed by a small language model) using Equal-Info Windows, a technique that segments text into blocks of equal bit length.

Direct Nash Optimization: Teaching Language Models to Self-Improve with General Preferences by Rosset, Cheng, Mitra, et al. (4 Apr), https://arxiv.org/abs/2404.03715

This paper introduces Direct Nash Optimization (DNO), a post-training method for LLMs that uses preference feedback from an oracle to iteratively improve model performance as an alternative to other reinforcement learning from human feedback (RLHF) approaches.

Cross-Attention Makes Inference Cumbersome in Text-to-Image Diffusion Models by Zhang, Liu, Xie, et al. (3 Apr), https://arxiv.org/abs/2404.02747

The study looks into how cross-attention functions in text-conditional diffusion models during inference, discovers that it stabilizes at a certain point, and finds that bypassing text inputs after this convergence point simplifies the process without compromising on output quality.

BAdam: A Memory Efficient Full Parameter Training Method for Large Language Models by Luo, Yu, and Li (3 Apr), https://arxiv.org/abs/2404.02827

BAdam is a memory-efficient optimizer for finetuning LLMs that is also easy to use and comes with only one additional hyperparameter.

On the Scalability of Diffusion-based Text-to-Image Generation by Li, Zou, Wang, et al. (3 Apr), https://arxiv.org/abs/2404.02883

This study empirically investigates the scaling properties of diffusion-based text-to-image models by analyzing the effects of scaling denoising backbones and training sets, uncovering that the efficiency of cross-attention and transformer blocks significantly influences performance, and identifying strategies for enhancing text-image alignment and learning efficiency at lower costs.

Jailbreaking Leading Safety-Aligned LLMs with Simple Adaptive Attacks by Andriushchenko, Croce, and Flammarion (2 Apr), https://arxiv.org/abs/2404.02151

This study reveals that even the latest safety-focused LLMs can be easily jailbroken using adaptive techniques, achieving nearly 100% success across various models through methods like adversarial prompting, exploiting API vulnerabilities, and token search space restriction.

Emergent Abilities in Reduced-Scale Generative Language Models by Muckatira, Deshpande, Lialin, and Rumshisky (2 Apr), https://arxiv.org/abs/2404.02204

This study finds that very "small" LLMs (from 1 to 165 million parameters) can also exhibit emergent properties if the dataset for pretraining is scaled down and simplified.

Long-context LLMs Struggle with Long In-context Learning by Li, Zheng, Do, et al. (2 Apr), https://arxiv.org/abs/2404.02060

LIConBench, a new benchmark focusing on long in-context learning and extreme-label classification, reveals that while LLMs excel up to 20K tokens, their performance drops in longer sequences, with GPT-4 being an exception, highlighting a gap in processing extensive context-rich information.

Mixture-of-Depths: Dynamically Allocating Compute in Transformer-Based Language Models by Raposo, Ritter, Richard et al. (2 Apr), https://arxiv.org/abs/2404.02258

This research introduces a method for transformer-based language models to dynamically allocate computational resources (FLOPs) across different parts of an input sequence, optimizing performance and efficiency by selecting specific tokens for processing at each layer.

Diffusion-RWKV: Scaling RWKV-Like Architectures for Diffusion Models by Fei, Fan, Yu, et al. (6 Apr), https://arxiv.org/abs/2404.04478

This paper introduces Diffusion-RWKV, an adaptation of the RWKV architecture from NLP for diffusion models in image generation.

The Fine Line: Navigating Large Language Model Pretraining with Down-streaming Capability Analysis by Yang, Li, Niu, et al. (1 Apr), https://arxiv.org/abs/2404.01204

This research identifies early-stage training checkpoints capable of predicting the eventual performance of LLMs, which is helpful for analyzing LLMs during pretraining and improving the pretraining setup.

Bigger is not Always Better: Scaling Properties of Latent Diffusion Models by Mei, Tu, Delbracio, et al. (1 Apr), https://arxiv.org/abs/2404.01367

This study explores how the size of latent diffusion models affects sampling efficiency across different steps and tasks, revealing that smaller models often produce higher quality results within a given inference budget.

Do Language Models Plan Ahead for Future Tokens? by Wu, Morris, and Levine (1 Apr), https://arxiv.org/abs/2404.00859

The research paper finds empirical evidence that transformers anticipate future information during inference through "pre-caching" and "breadcrumbs" mechanisms.

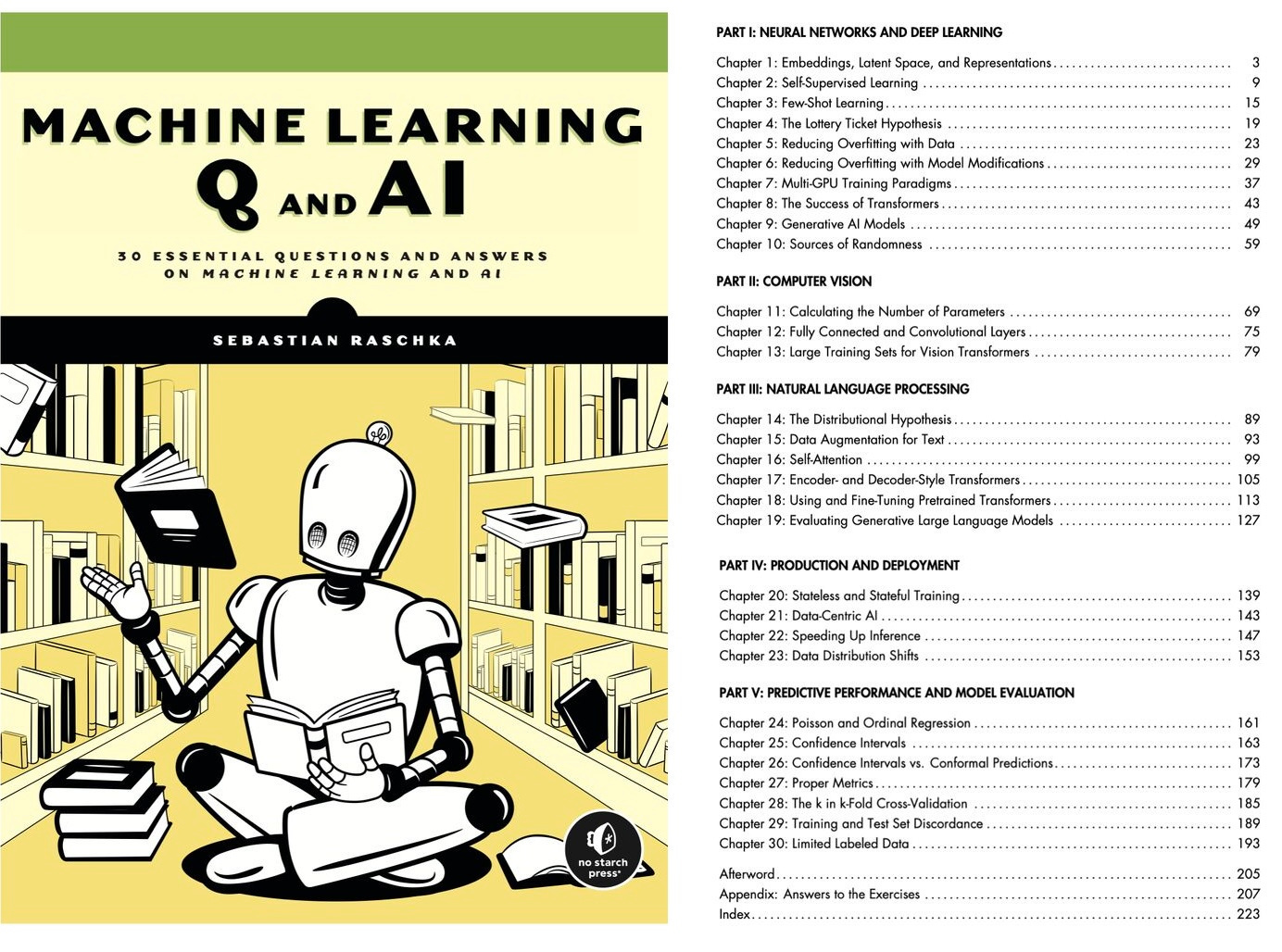

Machine Learning Q and AI

If you are looking for a book that explains intermediate to advanced topics in machine learning and AI in a focused manner, you might like my book, "Machine Learning Q and AI." The print version was just released two weeks ago!

Ahead of AI is a personal passion project that does not offer direct compensation, and I’d appreciate your support.

If you got the book, a review on Amazon would be really appreciated, too!


Topics, Authors, and Institutions in Large Language Model Research: Trends from 17K arXiv Papers

Large language models (LLMs) are dramatically influencing AI research, spurring discussions on what has changed so far and how to shape the field’s future. To clarify such questions, we analyze a new dataset of 16,979 LLM-related arXiv papers, focusing on recent trends in 2023 vs. 2018-2022. First, we study disciplinary shifts: LLM research increasingly considers societal impacts, evidenced by 20× growth in LLM submissions to the Computers and Society sub-arXiv. An influx of new authors – half of all first authors in 2023 – are entering from non-NLP fields of CS, driving disciplinary expansion. Second, we study industry and academic publishing trends. Surprisingly, industry accounts for a smaller publication share in 2023, largely due to reduced output from Google and other Big Tech companies; universities in Asia are publishing more. Third, we study institutional collaboration: while industry-academic collaborations are common, they tend to focus on the same topics that industry focuses on rather than bridging differences. The most prolific institutions are all US- or China-based, but there is very little cross-country collaboration. We discuss implications around (1) how to support the influx of new authors, (2) how industry trends may affect academics, and (3) possible effects of (the lack of) collaboration.

Rajiv Movva¹, Sidhika Balachandar¹, Kenny Peng¹, Gabriel Agostini¹, Nikhil Garg², Emma Pierson²
Cornell Tech
[email protected]

1 Introduction

Recent advances in language modeling have caused disruptive shifts throughout AI research, spurring conversation about how the field is changing and how it should change. Discussions so far have drawn on surveys and interviews of NLP community members (Michael et al., 2022; Gururaja et al., 2023; Lee et al., 2023), and recurring themes under consideration include which topics are shifting in importance, which directions remain fruitful for academics and other compute-limited researchers, and which institutions hold power to shape LLM research.

In periods of flux like this one, bibliometrics – quantitative study of publication patterns – offers a useful lens. Prior bibliometric analyses have been clarifying in NLP, identifying topic shifts (Hall et al., 2008), flows of authors in and out of the field (Anderson et al., 2012), and the growing role of industry (Abdalla et al., 2023). Due to the rapid recent growth of the LLM literature, fundamental questions about the topics, authors, and institutions driving its growth remain understudied.

Addressing this gap, we conduct a bibliometric analysis of recent trends in the LLM literature, focusing on changes in 2023 compared to 2018-22. We collect and analyze 16,979 LLM-related papers posted to arXiv from Jan. 1, 2018 through Sep. 7, 2023. In addition to arXiv metadata, we annotate these papers with topic labels, author affiliations, and citation counts, and make all data and code publicly available (https://github.com/rmovva/LLM-publication-patterns-public). We analyze three questions:

Which topics and authors are driving the growth of LLM research? Following prior work, we study topics and author movement as markers of a field’s evolution (Kuhn, 1962; Uban et al., 2021; Anderson et al., 2012). In 2023, LLM research increasingly focuses on societal impacts: the Computers and Society sub-arXiv has grown faster than any other sub-arXiv in its proportion of LLM papers, up by a factor of 20× in 2023. A more granular topic-level analysis echoes this result: the “Applications of ChatGPT” and “Societal Implications of LLMs” topics have grown 8× and 4× respectively. We also see rapid growth in other sub-arXivs outside of core NLP, including Human-Computer Interaction, Security, and Software Engineering. Simultaneously, work on BERT and task-specific architectures is shrinking due to centralization around newer models (e.g., GPT-4 and LLaMA).

The increased focus on societal impacts and non-NLP disciplines is driven by a strikingly large proportion of authors new to LLMs. Half (49.5%) of LLM first authors in 2023 have never previously co-authored an NLP paper (and nearly two-thirds haven’t previously co-authored an LLM paper), and a substantial fraction (38.6%) of the last authors haven’t either. These new authors are entering from other fields like Computer Vision, Software Engineering, and Security, and they are writing LLM papers on topics further out from NLP, e.g., vision-language models, applications in the natural sciences, and privacy/adversarial risks.

What are the roles of industry and academia? The role of industry – both what it is, and what it should be – has been a topic of prior empirical measurement and normative discussion (Abdalla et al., 2023; Michael et al., 2022); the latest wave of LLM research has raised concerns of centralization around industry models and increased industry secrecy (Bommasani et al., 2023). We identify two competing trends. On one hand, industry publishes an outsize fraction of top-cited research, including widely-used foundation models and methodological work on topics like efficiency. However, in 2023, industry is publishing less: Big Tech companies account for 13.0% of papers in 2023, compared to 19.3% before then, with a particular drop from Google. This trend suggests that reduced openness is not only playing out in high-profile cases (e.g., the opaque GPT-4 technical report (Rogers, 2023)), but is emerging as a broader, industry-wide phenomenon of reduced publishing. Academics, meanwhile, account for a larger share of papers (particularly universities in Asia). Relative to industry, more academic work applies models to non-NLP tasks and studies social impacts.

How are institutions collaborating? Motivated by broader discussion around the risks of AI competition between nations and institutions (Cuéllar and Sheehan, 2023; Hao, 2023), we analyze the network of collaborations between the 20 most prolific institutions, all of which are either US- or China-based. We document a US/China schism: pairs of institutions which frequently collaborate are almost exclusively based in the same country. Microsoft, which collaborates with both American and Chinese universities, is the one exception. We also find that while industry-academic collaborations are common, rather than bridging differences, these papers skew significantly towards the topics usually pursued by industry. Collaborations between multiple companies are rare.

How might these insights inform the NLP community, policymakers, and other stakeholders in the future of LLM research? First, our analysis of topics and authors reveals that LLMs are increasingly being applied to diverse fields outside of core NLP. Researchers performing interdisciplinary work should involve domain experts in both NLP and the other areas of study (e.g., education, medicine, law); community leaders should reflect on how publication venues and peer review processes can best accommodate interdisciplinary work. We also show that LLM research is experiencing an influx of new authors, implying heightened value of educational resources, research checklists (Magnusson et al., 2023), and other frameworks to encourage good research practice (Dodge et al., 2019; Kapoor et al., 2023). Second, we find that LLM research continues to be shaped by industry, but large tech companies are publishing less and revealing less about their models. Academics lead important research on society-facing applications and harms of AI, but closed-source models hinder detailed evaluations (Rogers, 2023). Open-source datasets and models are therefore increasingly valuable, and the community should consider how to incentivize these contributions. Third, we provide evidence of a lack of collaboration between the US and China, substantiating concerns about AI-related competition (Cuéllar and Sheehan, 2023; Hao, 2023). Institutions may have incentives that make collaboration difficult, but efforts to create consensus may help avoid unethical or risky uses of AI.

We summarize our data and methods here and provide full details in Appendix A; Table S1 lists the fields we use in our analysis. Our primary dataset consists of all 418K papers posted to the CS and Stat arXivs between January 1, 2018 and September 7, 2023. Following past ML survey papers (Fan et al., 2023; Peng et al., 2021; Blodgett et al., 2020; Field et al., 2021), we identify an analysis subset by searching for a list of keywords in paper titles or abstracts. Keyword search has the benefits of transparency, simplicity, and consistency with past work, but also has caveats; see §A.2 for further details. Our keyword list surfaces 16,979 papers; the specific terms we include are {language model, foundation model, BERT, XLNet, GPT-2, GPT-3, GPT-4, GPT-Neo, GPT-J, ChatGPT, PaLM, LLaMA}. Details about this list are in §A.2.
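As a rough illustration of the keyword filter described above, the following Python sketch selects papers whose title or abstract mentions any listed term. The keyword list is taken from the text; everything else is an assumption made for illustration, including the case-insensitive substring matching, the dictionary fields `title` and `abstract`, and the helper names `is_llm_paper` and `select_llm_papers`. The authors' actual matching rules are described in their appendix and released code and may differ.

```python
import re

# Keyword list as quoted in the text.
KEYWORDS = [
    "language model", "foundation model", "BERT", "XLNet", "GPT-2", "GPT-3",
    "GPT-4", "GPT-Neo", "GPT-J", "ChatGPT", "PaLM", "LLaMA",
]

# Assumption: case-insensitive substring matching. This is a simplification;
# it would, for example, also match "palm" in an unrelated abstract.
PATTERN = re.compile("|".join(re.escape(k) for k in KEYWORDS), re.IGNORECASE)


def is_llm_paper(title, abstract):
    """Return True if any keyword occurs in the title or the abstract."""
    return PATTERN.search(f"{title} {abstract}") is not None


def select_llm_papers(papers):
    """Keep only papers (dicts with 'title' and 'abstract') that match a keyword."""
    return [p for p in papers if is_llm_paper(p["title"], p["abstract"])]
```

A filter of this kind is transparent and cheap to run over hundreds of thousands of abstracts, which fits the trade-off the text describes, but it inherits the stated caveat that surface matching can over- or under-select.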

We define several fields for each paper in this subset. In doing so, we follow past work and conduct manual audits to assess the reliability of our annotations; however, there remain inherent limitations in how these fields are defined, as we discuss in Appendix A. We tracked a paper’s primary sub-arXiv category, e.g., Computation and Language (cs.CL). For more fine-grained topic understanding, we assigned each paper one of 40 LLM-related topics (§A.3). We clustered embeddings of paper abstracts (Zhang et al., 2022; Grootendorst, 2022), then titled the clusters using a combination of LLM annotation and manual annotation. We annotated papers for whether their authors list academic or industry affiliations (§A.4). We pulled citation counts from Semantic Scholar (Kinney et al., 2023), and tracked the citation percentile for each paper: the percentile of its citation count relative to papers from the same 3-month window (§A.5).
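The citation percentile can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it treats the 3-month windows as calendar quarters, defines the percentile as the share of same-window papers with a citation count at or below the paper's own, and uses hypothetical field names (`id`, `date`, `citations`).

```python
from collections import defaultdict
from datetime import date


def quarter_key(d):
    """Map a date to a (year, quarter index) pair.

    Assumption: windows are calendar quarters; the text only says "3-month window".
    """
    return (d.year, (d.month - 1) // 3)


def citation_percentiles(papers):
    """Return {paper id: percentile in (0, 100]} within each 3-month window.

    papers: list of dicts with 'id', 'date' (datetime.date), 'citations' (int).
    """
    groups = defaultdict(list)
    for p in papers:
        groups[quarter_key(p["date"])].append(p["citations"])

    result = {}
    for p in papers:
        peers = groups[quarter_key(p["date"])]
        # Share of same-window papers cited no more often than this one.
        at_or_below = sum(c <= p["citations"] for c in peers)
        result[p["id"]] = 100.0 * at_or_below / len(peers)
    return result
```

Normalizing within a window makes papers of different ages comparable, since older papers have had more time to accumulate citations than recent ones.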

Past work has shown that the raw count of LLM papers has risen steeply (Fan et al., 2023; Zhao et al., 2023). These trends replicate on arXiv: 12% of all CS/Stat papers were LLM-related in mid-2023 (Figure S1). Compared to all other topics (Figure S2) and all words/bigrams in paper abstracts (Table S2), LLM- & generative AI-related topics and terms are growing fastest. We dissect these ongoing changes by studying the topics, authors, and institutions that are accounting for them.

3.1 Which topics and authors are driving the growth of LLM research?

3.1.1 How have topics shifted in 2023?

We begin by analyzing the changing topic distribution of language modeling research – taxonomized by sub-arXiv category and semantic clusters – to identify which threads within LLM research are expanding and shrinking fastest.

LLM papers increasingly involve societal impacts and fields beyond NLP.

For a coarse analysis of where LLMs are growing fastest, we use a paper’s designated primary sub-arXiv category. We rank sub-arXivs by how quickly their proportion of LLM papers is increasing, i.e., according to the ratio $\frac{p(\text{LLM paper} \mid \text{paper on sub-arXiv \& 2023})}{p(\text{LLM paper} \mid \text{paper on sub-arXiv \& pre-2023})}$. Figure 1 displays all sub-arXivs (with at least 50 LLM papers) sorted by their 2023 to pre-2023 ratios. Computers and Society (cs.CY) ranks first, with a ratio of 20×: in 2023, 16% of its papers are about LLMs, compared to just 0.8% pre-2023. This society-facing work ranges widely, including discussions of the impacts of LLMs on education (Kasneci et al. 2023; Chan and Hu 2023, inter alia), ethics and safety (Ferrara, 2023; Sison et al., 2023), and law (Henderson et al., 2023; Li, 2023). Other sub-arXivs with both rapid growth and at least 10% prevalence of LLM papers in 2023 include HCI (up to 10% of all papers in 2023), AI (16%), and Software Engineering (19%). Strikingly, 55% of Computation and Language (cs.CL) papers are LLM-related, but due to its already-large fraction before 2023 (29%), its rate of increase ranks last. LLMs are clearly impacting much of CS research beyond NLP, especially in society- and human-facing fields.
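The growth ratio used for this ranking can be computed with a few lines of Python. The sketch below is illustrative only: the record fields (`category`, `year`, `is_llm`) and the function names are assumptions, not the schema of the released dataset.

```python
def llm_share(papers, category, in_2023):
    """Fraction of papers in `category` (and the chosen period) that are LLM-related.

    papers: list of dicts with 'category' (str), 'year' (int), 'is_llm' (bool).
    Returns None if the subset is empty.
    """
    subset = [
        p for p in papers
        if p["category"] == category and (p["year"] == 2023) == in_2023
    ]
    if not subset:
        return None
    return sum(p["is_llm"] for p in subset) / len(subset)


def growth_ratio(papers, category):
    """p(LLM | category & 2023) divided by p(LLM | category & pre-2023)."""
    after = llm_share(papers, category, in_2023=True)
    before = llm_share(papers, category, in_2023=False)
    if after is None or not before:
        return None  # undefined when either period is empty or the baseline is zero
    return after / before
```

With the Computers and Society shares quoted above, the same arithmetic gives 0.16 / 0.008, i.e. the reported ratio of about 20.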

The fastest-growing LLM topics cover applications, capabilities, and methods.

To study topic shifts at a more granular level, we observe the changing distribution of the 40 LLM-related topics with which we annotated the corpus. Since these topics are learned only on the LLM paper distribution, they are more specific than sub-arXivs, which span all of Computer Science. Figure S3 lists the five fastest growing and shrinking topics in 2023 according to $\frac{p(\text{topic} \mid \text{published in 2023})}{p(\text{topic} \mid \text{published pre-2023})}$, and the results corroborate the sub-arXiv analysis (full results in Table S3). The fastest-growing topic is “Applications of LLMs/ChatGPT”, which has risen from 0.9% of LLM papers before 2023 to 7% in 2023, an 8× increase. This cluster of papers, along with “Societal Implications of LLMs” (4× growth, 4th fastest-growing), captures papers on empirical studies of LLMs for applied tasks, discussions of societal applications of ChatGPT, and ethical arguments (Dai et al. 2023; Mogavi et al. 2023; Derczynski et al. 2023, inter alia). The next two fastest-growing topics, “Software, Planning, Robotics” and “Human Feedback & Interaction”, hint further at applications. The former topic includes papers on two promising use cases of recent models, code generation and robotics, while the latter concerns the growing role of human feedback and HCI in developing useful language systems.

Shrinking topics highlight centralization around closed-source models.

The “BERT & Embeddings” topic is shrinking, consistent with prompt-based, few-shot models now replacing fine-tuned BERT systems. Many papers in this shrinking topic also study internal model representations, which may be less common now that token probabilities and activations are inaccessible from widely-used closed-source models like GPT-4. Topics on transformers, transfer learning, and language correction are also shrinking; recent, general-purpose models may have rendered some of this architecture-/task-specific research less relevant. While centralization is not new to NLP – nearly half of papers in recent years cited BERT (Gururaja et al., 2023) – the centralization around closed-source models specifically may harm scientific practice (Rogers, 2023).

3.1.2 Who are the authors driving the expansion of LLM research?

Based on our findings that LLM research is expanding outside of core NLP, it is natural to ask who is driving the broadening of the field.

In 2023, nearly half of LLM first authors have not previously published on NLP.

To what extent are researchers from non-NLP fields responsible for the growth of LLM research? For each LLM paper in our corpus, we ask whether the first author of the paper had previously written an NLP paper on arXiv – that is, any co-authored paper for which cs.CL was listed as one of the paper’s sub-arXiv categories (§B.1). We similarly code papers according to their last authors. We refer to LLM authors without a prior NLP paper as new, and authors with a prior NLP paper as experienced.
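The new/experienced coding can be sketched as a single chronological pass over the corpus. The sketch below rests on assumptions that are not from the paper: authors are identified by exact name string (real author disambiguation is harder), dates are sortable values such as ISO strings, and the field names and the function `label_first_authors` are hypothetical.

```python
def label_first_authors(papers):
    """Label the first author of each LLM paper as 'new' or 'experienced'.

    papers: iterable of dicts with 'id', 'date' (sortable), 'authors' (list of
    names), 'categories' (list of sub-arXiv codes), and 'is_llm' (bool).
    Returns a list of (paper id, label) pairs in chronological order.
    """
    seen_nlp_authors = set()  # anyone who has co-authored a cs.CL paper so far
    labels = []
    for p in sorted(papers, key=lambda p: p["date"]):
        if p["is_llm"]:
            first = p["authors"][0]
            label = "experienced" if first in seen_nlp_authors else "new"
            labels.append((p["id"], label))
        # Update only after labeling, so a paper never counts as its own prior work.
        if "cs.CL" in p["categories"]:
            seen_nlp_authors.update(p["authors"])
    return labels
```

Coding last authors would follow the same pattern with `p["authors"][-1]` in place of the first author.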

Consistent with the broader growth of the field, the raw counts of new first and last authors have increased consistently since 2018, with an especially large jump in 2023 (Figure S4). More surprisingly, however, the percentage of authors without NLP background has also increased this year: in 2022, the percentages of new first and last authors were 41% and 29% respectively, and are up to 50% and 39% in 2023. Figure S4 plots these statistics each year since 2018. The last time the percentage of new authors was this high was in 2018, which was another inflection point as language models started to become useful for many tasks (e.g., ELMo, BERT). In the four years following 2018, the field grew significantly, but the percentage of authors without an NLP background was constant or declining. The increase in new author percentage in 2023, then, reflects that more LLM authors are either new to research entirely or are moving into LLM research from other fields. Seeing as both first authors and last authors are more likely to be new, the field is widening in its composition both of junior researchers and senior researchers. Next, we analyze the contributions of these first-time authors further, to understand both their disciplinary origins and the types of contributions they are making to LLM research.

What fields are new authors coming from?

Out of the 2,746 unique first authors in 2023 who had no prior NLP paper, 57% of them had at least one paper in another field. We compare the publication histories of these new authors to experienced authors (Table S4; further details in Appendix B.2). About half of new authors’ prior papers are in Computer Vision and Machine Learning, which are common categories overall. But a long tail of less common sub-arXivs further distinguishes new and experienced authors: new authors have published more in Software Engineering, Robotics, Security, and Social Networks, which are the same sub-arXivs growing in overall LLM proportion in 2023 (§3.1.1).

New authors are driving the increased disciplinary diversity of LLM research.

We close the loop by studying the LLM-related papers being written in 2023 by new authors. Confirming intuition, new author LLM papers are more likely to fall in non-NLP sub-arXivs, including Software, Computers and Society, HCI, and Security (Table S5). Comparing paper topic distributions, new authors are more likely to publish on “Visual Foundation Models,” “Applications of LLMs/ChatGPT,” and “Natural Sciences,” while experienced authors publish more on “Interpretability & Reasoning,” “Knowledge Distillation,” and “Summarization & Evaluation” (additional topics in Figure S5). That is, authors without an NLP background are using LLMs in their home fields, while authors with an NLP background continue to focus more on improving, understanding, and evaluating LLMs.

Overall, there is a clear trend that authors without prior NLP experience are accounting for a substantial, increasing fraction of LLM-related research contributions. These new authors include both junior and senior researchers, many of whom have previously published in other fields, and are widening the scope of LLM-related research directions. The influx of new authors is clearly valuable, but also creates room for error or miscommunication: the large number of junior researchers entering LLM research may not be familiar with prior work, and the broader use of LLMs outside NLP may result in improper usage or experimental practice (Narayanan and Kapoor, 2023). Onboarding resources, research checklists, and other standards can help maintain good practice despite growth (Magnusson et al., 2023; Kapoor et al., 2023).

3.2 What are the roles of industry & academia?

We turn our focus from authors to larger-scale institutional trends. To study institutions, we annotated each paper with author affiliations extracted from full-texts (§A.4). We manually coded every institution with at least 10 papers as either academic or industry, resulting in 280 academic and 41 industry institutions. (Eight institutions did not fall clearly into this binary, e.g., nonprofits and government labs; AllenAI was the only such institution in the top 100 producers.) Out of the 14,179 papers with at least one extracted affiliation, 11,627 (82.0%) were written by one of these 321 institutions.

A large (and growing) majority of LLM papers are published by academic institutions.

Figure S6 displays the institutions with the most LLM-related papers. Several Big Tech companies lead in paper count: Microsoft and Google are the top two, and Amazon, Meta, and Alibaba are also in the top 10. However, overall, industry institutions account for a relatively small fraction of research output: just 32% of papers have an industry affiliation (and over half of these are industry-academic collaborations). 85% of papers have an academic affiliation, and 41 out of the top 50 institutions are academic, led by CMU, Stanford, Tsinghua, Peking, and UW. Further, academic LLM research has grown faster in 2023 than has industry research: there are 3.3× as many academic as industry papers in 2023, compared to 2.3× pre-2023. This result is somewhat surprising in light of the longer-term trend of greater industry presence in NLP and AI (Abdalla et al., 2023; Birhane et al., 2022), suggesting that the shift we observe is specific to LLM research in 2023.

Big Tech companies are publishing less, and universities in Asia are publishing more.

Examining which specific institutions are publishing more and less reveals two trends in publication share (Table 1). Strikingly, out of all 321 institutions, the top four Big Tech publishers (Google, Microsoft, Amazon, and Meta) are the four institutions with the largest decreases in publication fraction in 2023. Collectively, they accounted for 19.3% of all LLM papers pre-2023, down to 13.0% in 2023. Google has shown a particularly large drop: its share of LLM papers roughly halved in 2023 relative to prior years. Reduced industry publishing in 2023, especially from Big Tech companies, may have multiple explanations, such as a deprioritization of basic research or heightened secrecy due to competition. That companies are releasing fewer modeling details has been noted previously, but only in high-profile cases like the GPT-4 technical report (Rogers, 2023). (A more recent example is Gemini: https://x.com/JesseDodge/status/1732444597593203111.) Our data suggest that reduced openness is emerging as a broader phenomenon, where less is being published at all by top companies.

All of the top ten institutions with the largest increases in publication share are academic institutions, concordant with the overall rise in academic percentage. Notably, they are all institutions in Asia (China, Singapore, Hong Kong, UAE). These ten institutions account for 16.4% of papers in 2023, up from 9.1% pre-2023. In contrast, the top ten US universities have not changed significantly in their publishing fraction (18.8% to 18.0%). The growth of LLM research from universities in Asia deserves further consideration, especially given prior discussions of citation gaps between countries (Rungta et al., 2022) and the geopolitics around LLM development (Ding and Xiao, 2023).

OpenAI and DeepMind publish infrequently with high impact.

Paper count is an imperfect metric of influence: there are outlier institutions that rarely publish, but have large impact when they do. In Figure 2, we plot mean citation percentile against overall paper count to identify some of these institutions. OpenAI and DeepMind are the largest outliers: they both publish infrequently, but receive many citations. OpenAI has just 21 papers as annotated in our corpus, but, on average, they are in the 90th percentile of citation counts. Other institutions like Google, Meta, UW, and AllenAI both publish frequently and receive high citation counts.
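
To make the two axes of such a plot concrete, here is a minimal sketch of computing paper count and mean citation percentile per institution. It is an illustration under stated assumptions, not the procedure used for Figure 2: the percentile of a paper is taken here as the share of all papers in the corpus with a citation count less than or equal to its own, with no adjustment for publication date. The function name and input layout are hypothetical.

```python
from bisect import bisect_right
from collections import defaultdict


def mean_citation_percentile(papers):
    """Return {institution: (paper_count, mean_citation_percentile)}.

    papers: list of (institutions, citation_count) pairs, where
        `institutions` is a list of affiliation names for one paper.
    """
    sorted_cites = sorted(cites for _, cites in papers)
    n = len(sorted_cites)
    percentiles = defaultdict(list)
    for institutions, cites in papers:
        # Share of papers with a citation count <= this paper's count.
        pct = 100.0 * bisect_right(sorted_cites, cites) / n
        for inst in set(institutions):  # count each institution once per paper
            percentiles[inst].append(pct)
    return {
        inst: (len(pcts), sum(pcts) / len(pcts))
        for inst, pcts in percentiles.items()
    }


toy_papers = [(["A"], 100), (["B"], 1), (["B"], 2), (["B", "A"], 50)]
toy_stats = mean_citation_percentile(toy_papers)
```

In the toy data, institution "A" has fewer papers than "B" but a higher mean percentile, which is the kind of outlier pattern described above.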

Top-cited papers are split between industry and academia.

We manually annotated the top 50 cited papers in 2023 (as of September): 30 papers have an industry affiliation and 30 have an academic affiliation, with 10 papers counted in both groups. The fact that 60% of top-cited papers have an industry affiliation (compared to 27% of papers overall in 2023) shows that industry research continues to strongly influence the field, even if companies are publishing less overall. We also annotated these 50 papers for whether they are model-focused, i.e., reporting on a newly trained language/foundation model, or evaluation-focused, i.e., evaluating a model on new tasks or applications (for example, Nori et al., 2023; Guo et al., 2023; Gilardi et al., 2023). Of the 50 papers, 23 were model-focused, of which the majority (19) had an industry affiliation, and 17 were evaluation-focused, of which the majority (12) had an academic affiliation. These two categories of top-cited papers allude to the relative specializations of industry and academia, which we explore further.

Industry focuses more on general-purpose methods, while academics apply these models.

To understand their publishing differences at scale, we compute the topics most skewed towards either industry or academia. Figure 3 illustrates the five topics with the largest ratios of $\frac{p(\text{topic} \mid \text{industry})}{p(\text{topic} \mid \text{academic})}$ in blue, and the five with the largest $\frac{p(\text{topic} \mid \text{academic})}{p(\text{topic} \mid \text{industry})}$ in red. (Papers resulting from collaboration are excluded from this analysis and studied later.) Industry papers are roughly 2-3× more likely to cover methodological contributions, especially involving efficiency or model architecture. They also work more on certain tasks (speech recognition, search, retrieval) which may be more commercially relevant, and therefore more relevant to industry practitioners. Academic papers more often apply LLMs to downstream, society-facing tasks, like legal document analysis, social media data, and hate speech detection. They also prioritize studying biases & harms more than industry. Finally, academics are 3× as likely to write on “Applications & Benchmark Evals.” These topic differences support the idea that industry is leading the way on model development research, while academics are using models for applied tasks and studying their implications.
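
The skew ratio above can be illustrated with a short sketch. This is a hypothetical implementation, not the authors' code: it assumes each non-collaborative paper carries exactly one sector label and one topic label, and it adds an optional smoothing constant (an assumption of this sketch) so that topics absent from one sector do not cause a division by zero.

```python
from collections import Counter


def topic_skew(papers, smoothing=0.5):
    """Return {topic: p(topic | industry) / p(topic | academic)}.

    papers: iterable of (sector, topic) pairs, with sector equal to
        "industry" or "academic".
    smoothing: additive constant applied to every topic count; with
        smoothing=0 every topic must occur in the academic sector.
    """
    counts = {"industry": Counter(), "academic": Counter()}
    for sector, topic in papers:
        counts[sector][topic] += 1
    topics = set(counts["industry"]) | set(counts["academic"])
    totals = {
        sector: sum(c.values()) + smoothing * len(topics)
        for sector, c in counts.items()
    }
    return {
        topic: ((counts["industry"][topic] + smoothing) / totals["industry"])
        / ((counts["academic"][topic] + smoothing) / totals["academic"])
        for topic in topics
    }


toy = (
    [("industry", "efficiency")] * 3
    + [("industry", "bias")]
    + [("academic", "efficiency")]
    + [("academic", "bias")] * 3
)
ratios = topic_skew(toy, smoothing=0.0)
most_industry_skewed = sorted(ratios, key=ratios.get, reverse=True)
```

Sorting topics by this ratio in descending order gives the industry-skewed end of the ranking; sorting in ascending order (equivalently, by the inverse ratio) gives the academic-skewed end.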

3.3 What are the patterns of collaboration?

We examine which institutions tend to co-author papers, focusing in particular on patterns of academic/industry and international collaboration.

Academic-academic and industry-academic collaborations are both frequent, while industry-industry collaborations are rare.

We consider collaborations among those institutions with at least 10 LLM papers. There are 2,250 papers produced by a collaboration between at least two academic institutions, and 2,084 by at least one academic institution and one industry institution; 55% of all industry papers involve an academic collaboration. There are only 104 papers by a collaboration between at least two industry institutions.
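
A minimal sketch of how papers might be sorted into these collaboration types follows. It assumes the three categories are not mutually exclusive (a paper with two universities and one company would count as both academic-academic and industry-academic); the text does not state this, so treat it as an assumption. The function name and the `sector_of` lookup are hypothetical.

```python
def collaboration_types(institutions, sector_of):
    """Flag which collaboration types a single paper belongs to.

    institutions: list of affiliation names on the paper.
    sector_of: dict mapping institution name to "academic" or "industry";
        institutions missing from the dict (e.g., those below a paper-count
        threshold) are ignored.
    """
    sectors = [sector_of[i] for i in set(institutions) if i in sector_of]
    n_academic = sectors.count("academic")
    n_industry = sectors.count("industry")
    return {
        "academic-academic": n_academic >= 2,
        "industry-academic": n_academic >= 1 and n_industry >= 1,
        "industry-industry": n_industry >= 2,
    }


sector_toy = {
    "CMU": "academic",
    "Stanford": "academic",
    "Google": "industry",
    "Meta": "industry",
}
mixed = collaboration_types(["CMU", "Stanford", "Google"], sector_toy)
industry_only = collaboration_types(["Google", "Meta"], sector_toy)
```

Summing each flag over all papers would yield per-type counts comparable in form to those reported above.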

Industry-academic collaborations typically align with industry topics.

We compute the topics that are most common in industry-academic collaborations relative to the baseline topic frequencies. Similarly, we compute baseline-adjusted topic frequencies for industry-only author teams and academic-only author teams. We find that collaborations focus on very similar topics to industry-only teams: their normalized topic distributions have Spearman $\rho = 0.66$, $p < 10^{-5}$. Meanwhile, collaboration topics are as different from academic topics ($\rho = -0.87$) as industry topics are from academic topics ($\rho = -0.92$). For example, both industry-only and industry-academic papers focus on efficiency, translation, and vision-language models, while both groups publish infrequently on social media & misinformation, bias & harms, and interpretability. These results are natural given that many collaborations result from PhD interns or student researchers, where company priorities may hold more weight. However, as compute and modeling resources continue to separate academics from researchers at Big Tech companies (Lee et al., 2023), stakeholders should consider where collaborations (most of which involve Big Tech companies) can be recast as an opportunity to bridge different expertise for mutual benefit.
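
For readers unfamiliar with the statistic, the sketch below computes Spearman's rank correlation between two vectors of per-topic scores using only the standard library. It is an illustration, not the authors' code: the inputs stand in for baseline-adjusted topic frequencies of two groups, the example values are made up, and the p-value is not computed. Ties receive average ranks.

```python
def average_ranks(values):
    """Return 1-based ranks of `values`, giving tied values their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman correlation: the Pearson correlation of the rank vectors."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have equal length of at least 2")
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mean_x, mean_y = sum(rx) / n, sum(ry) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    var_x = sum((a - mean_x) ** 2 for a in rx)
    var_y = sum((b - mean_y) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (var_x ** 0.5 * var_y ** 0.5)


# Made-up per-topic scores for two hypothetical groups, aligned by topic.
group_a = [1.0, 2.0, 3.0, 4.0]
group_b = [1.0, 3.0, 2.0, 4.0]
rho = spearman_rho(group_a, group_b)
```

A value near 1 means the two groups rank topics in nearly the same order, and a value near -1 means the rankings are nearly reversed, which is how the correlations reported above should be read.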

Collaborations between American and Chinese institutions are rare.

In Figure 4, we present the network of the 20 institutions that produced the most LLM papers in our dataset, all of which are Chinese or American. An edge between two institutions indicates that at least five papers resulted from a collaboration between them. The network suggests little collaboration between the US and China with the exception of Microsoft, which collaborates with institutions in both countries. There is also only one edge between a pair of companies.
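
The edge rule described above (an edge when at least five papers are shared) can be sketched as follows. This is a hypothetical illustration rather than the code behind Figure 4; the function name, the input layout, and the toy data are assumptions.

```python
from collections import Counter
from itertools import combinations


def collaboration_edges(paper_affiliations, min_papers=5):
    """Return {(inst_a, inst_b): n_papers} for pairs sharing >= min_papers.

    paper_affiliations: iterable of lists, one list of institution names
        per paper. Pairs are stored in alphabetical order so each
        undirected edge appears once.
    """
    pair_counts = Counter()
    for institutions in paper_affiliations:
        # Deduplicate so repeated affiliations on one paper count once.
        for pair in combinations(sorted(set(institutions)), 2):
            pair_counts[pair] += 1
    return {pair: n for pair, n in pair_counts.items() if n >= min_papers}


toy_affiliations = (
    [["Microsoft", "Tsinghua"]] * 5
    + [["Microsoft", "CMU"]] * 4
    + [["CMU", "Stanford", "CMU"]] * 6
)
edges = collaboration_edges(toy_affiliations)
```

In the toy data, the Microsoft and CMU pair shares only four papers and so falls below the threshold, while the other two pairs become edges.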

4 Related Work

Our work applies methods from bibliometrics and science of science (Borgman and Furner, 2002; Fortunato et al., 2018) to understand the emerging LLM literature. These methods have a rich history in NLP; since the 2008 release of the ACL Anthology, several papers have applied quantitative methods to trace topic changes and paradigm shifts in the Anthology over time (Hall et al., 2008; Anderson et al., 2012; Mohammad, 2019; Pramanick et al., 2023). Another line of work focuses specifically on citation patterns: Wahle et al. (2023) study cross-disciplinary citations from NLP to other fields, Singh et al. (2023) show that NLP papers are citing increasingly recent work (and less older work), and Rungta et al. (2022) identify geographic disparities in citation counts of published papers.

Some bibliometrics papers also ask similar research questions to ours. Abdalla et al. (2023) study Big Tech companies in NLP research, identifying key players and showing significant growth in overall industry presence since 2017. Their findings complement our more specific analysis of the language modeling literature, in which there are different trends than in NLP overall (for example, we find that industry research actually declined relative to academia in 2023). Fan et al. (2023) apply bibliometric methods to a similar paper corpus, pulling 5,752 published language modeling papers from Web of Science from January 2017 to February 2023. They perform topic modeling and analyze co-citation networks to taxonomize the LLM literature, and they also study cross-country collaboration. Our work differs in that we focus on specific changes in 2023 compared to years prior (rather than analyzing the entire time period collectively), and we use the arXiv in order to capture a recent sample with less publication delay. Our empirical analyses also cover different questions, with more focus on emerging topics, first-time authors, and industry/academia dynamics.