- SUGGESTED TOPICS

- The Magazine

- Newsletters

- Managing Yourself

- Managing Teams

- Work-life Balance

- The Big Idea

- Data & Visuals

- Reading Lists

- Case Selections

- HBR Learning

- Topic Feeds

- Account Settings

- Email Preferences

Present Your Data Like a Pro

- Joel Schwartzberg

Demystify the numbers. Your audience will thank you.

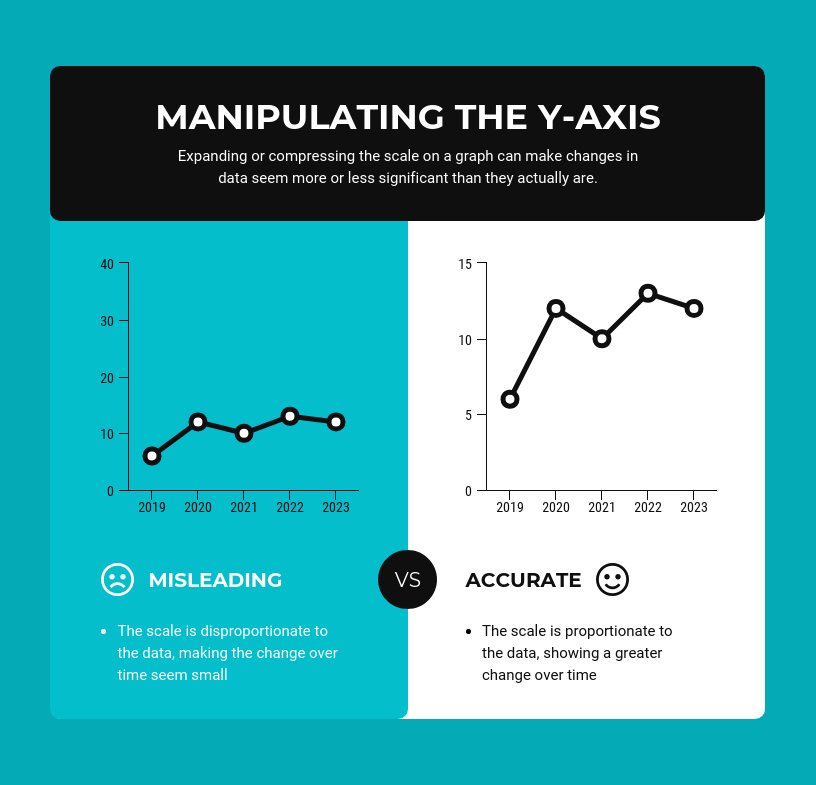

While a good presentation has data, data alone doesn’t guarantee a good presentation. It’s all about how that data is presented. The quickest way to confuse your audience is by sharing too many details at once. The only data points you should share are those that significantly support your point — and ideally, one point per chart. To avoid the debacle of sheepishly translating hard-to-see numbers and labels, rehearse your presentation with colleagues sitting as far away as the actual audience would. While you’ve been working with the same chart for weeks or months, your audience will be exposed to it for mere seconds. Give them the best chance of comprehending your data by using simple, clear, and complete language to identify X and Y axes, pie pieces, bars, and other diagrammatic elements. Try to avoid abbreviations that aren’t obvious, and don’t assume labeled components on one slide will be remembered on subsequent slides. Every valuable chart or pie graph has an “Aha!” zone — a number or range of data that reveals something crucial to your point. Make sure you visually highlight the “Aha!” zone, reinforcing the moment by explaining it to your audience.

With so many ways to spin and distort information these days, a presentation needs to do more than simply share great ideas — it needs to support those ideas with credible data. That’s true whether you’re an executive pitching new business clients, a vendor selling her services, or a CEO making a case for change.

- JS Joel Schwartzberg oversees executive communications for a major national nonprofit, is a professional presentation coach, and is the author of Get to the Point! Sharpen Your Message and Make Your Words Matter and The Language of Leadership: How to Engage and Inspire Your Team . You can find him on LinkedIn and X. TheJoelTruth

Partner Center

Home Blog Design Understanding Data Presentations (Guide + Examples)

Understanding Data Presentations (Guide + Examples)

In this age of overwhelming information, the skill to effectively convey data has become extremely valuable. Initiating a discussion on data presentation types involves thoughtful consideration of the nature of your data and the message you aim to convey. Different types of visualizations serve distinct purposes. Whether you’re dealing with how to develop a report or simply trying to communicate complex information, how you present data influences how well your audience understands and engages with it. This extensive guide leads you through the different ways of data presentation.

Table of Contents

What is a Data Presentation?

What should a data presentation include, line graphs, treemap chart, scatter plot, how to choose a data presentation type, recommended data presentation templates, common mistakes done in data presentation.

A data presentation is a slide deck that aims to disclose quantitative information to an audience through the use of visual formats and narrative techniques derived from data analysis, making complex data understandable and actionable. This process requires a series of tools, such as charts, graphs, tables, infographics, dashboards, and so on, supported by concise textual explanations to improve understanding and boost retention rate.

Data presentations require us to cull data in a format that allows the presenter to highlight trends, patterns, and insights so that the audience can act upon the shared information. In a few words, the goal of data presentations is to enable viewers to grasp complicated concepts or trends quickly, facilitating informed decision-making or deeper analysis.

Data presentations go beyond the mere usage of graphical elements. Seasoned presenters encompass visuals with the art of data storytelling , so the speech skillfully connects the points through a narrative that resonates with the audience. Depending on the purpose – inspire, persuade, inform, support decision-making processes, etc. – is the data presentation format that is better suited to help us in this journey.

To nail your upcoming data presentation, ensure to count with the following elements:

- Clear Objectives: Understand the intent of your presentation before selecting the graphical layout and metaphors to make content easier to grasp.

- Engaging introduction: Use a powerful hook from the get-go. For instance, you can ask a big question or present a problem that your data will answer. Take a look at our guide on how to start a presentation for tips & insights.

- Structured Narrative: Your data presentation must tell a coherent story. This means a beginning where you present the context, a middle section in which you present the data, and an ending that uses a call-to-action. Check our guide on presentation structure for further information.

- Visual Elements: These are the charts, graphs, and other elements of visual communication we ought to use to present data. This article will cover one by one the different types of data representation methods we can use, and provide further guidance on choosing between them.

- Insights and Analysis: This is not just showcasing a graph and letting people get an idea about it. A proper data presentation includes the interpretation of that data, the reason why it’s included, and why it matters to your research.

- Conclusion & CTA: Ending your presentation with a call to action is necessary. Whether you intend to wow your audience into acquiring your services, inspire them to change the world, or whatever the purpose of your presentation, there must be a stage in which you convey all that you shared and show the path to staying in touch. Plan ahead whether you want to use a thank-you slide, a video presentation, or which method is apt and tailored to the kind of presentation you deliver.

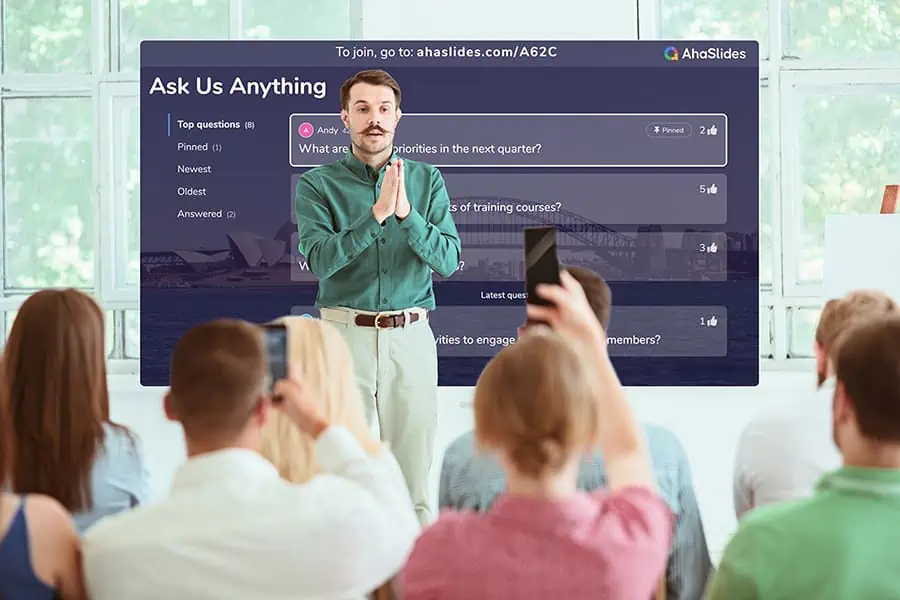

- Q&A Session: After your speech is concluded, allocate 3-5 minutes for the audience to raise any questions about the information you disclosed. This is an extra chance to establish your authority on the topic. Check our guide on questions and answer sessions in presentations here.

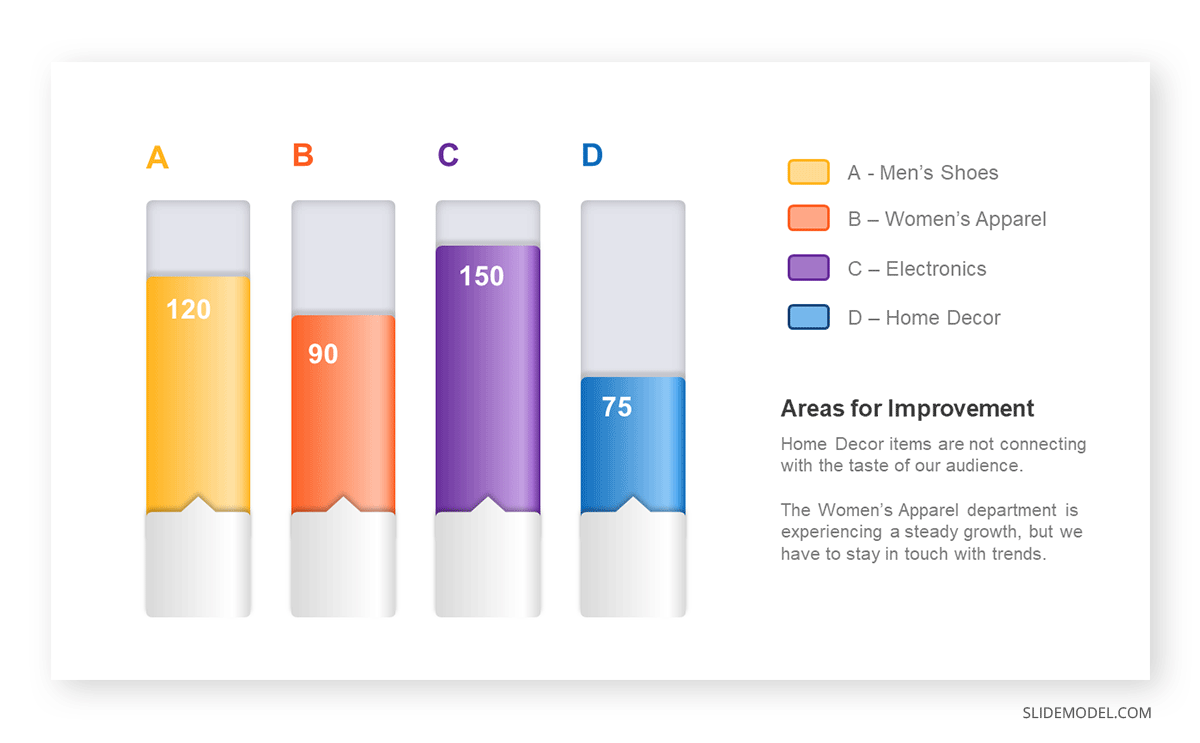

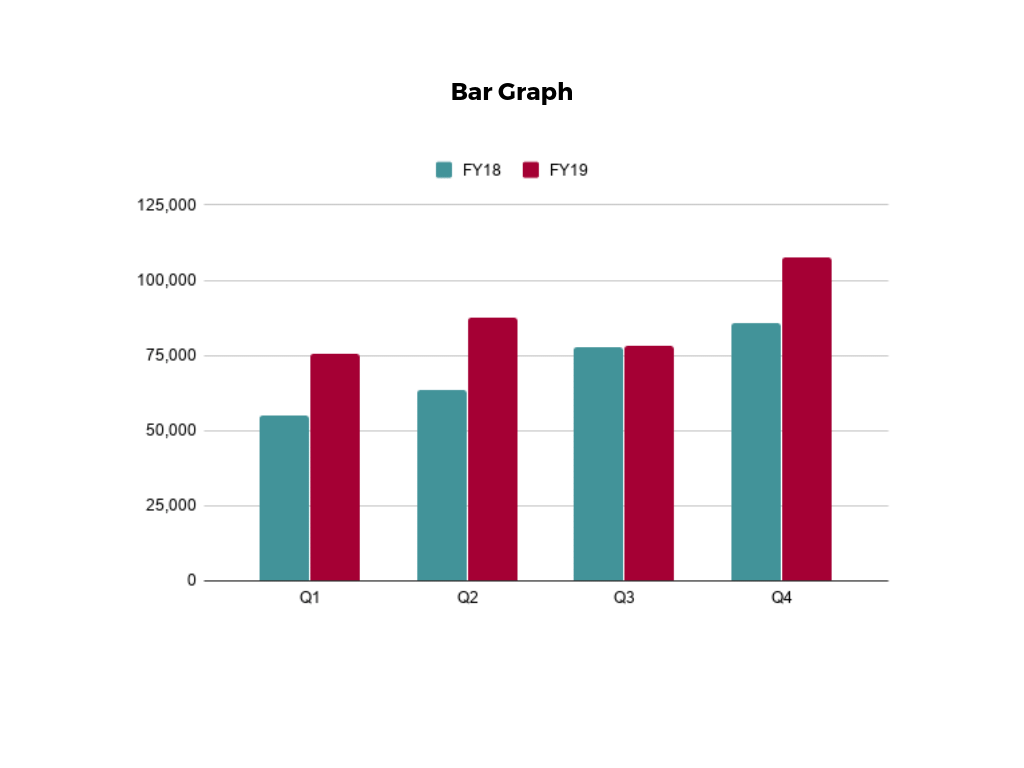

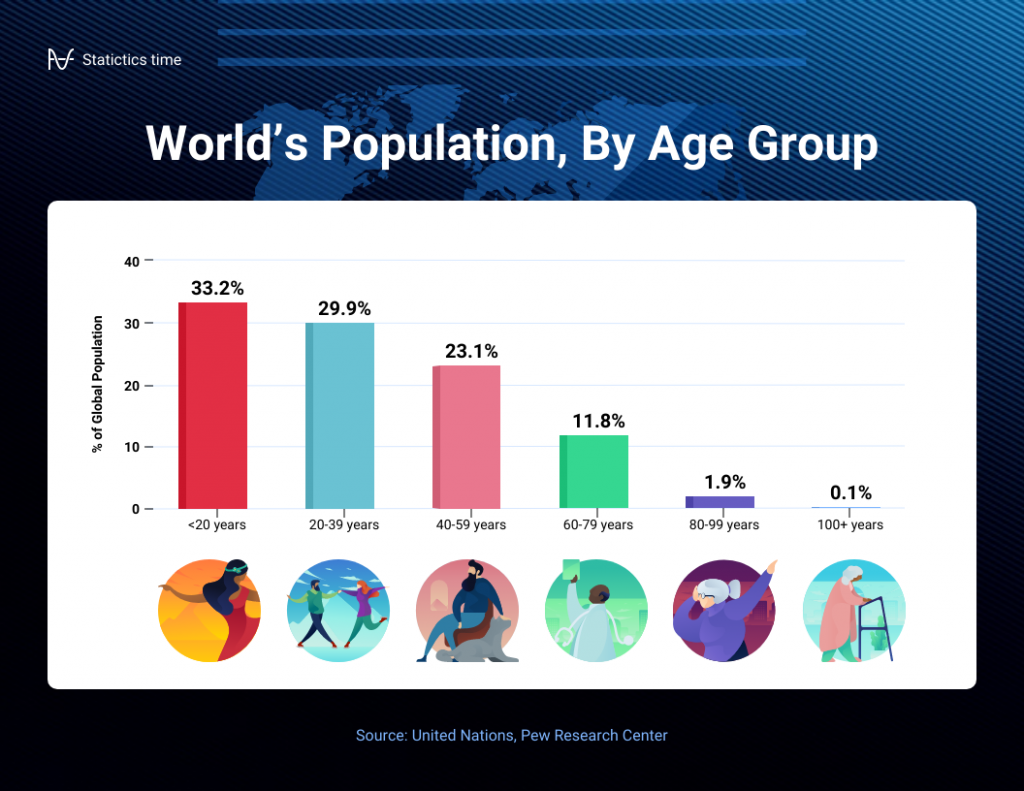

Bar charts are a graphical representation of data using rectangular bars to show quantities or frequencies in an established category. They make it easy for readers to spot patterns or trends. Bar charts can be horizontal or vertical, although the vertical format is commonly known as a column chart. They display categorical, discrete, or continuous variables grouped in class intervals [1] . They include an axis and a set of labeled bars horizontally or vertically. These bars represent the frequencies of variable values or the values themselves. Numbers on the y-axis of a vertical bar chart or the x-axis of a horizontal bar chart are called the scale.

Real-Life Application of Bar Charts

Let’s say a sales manager is presenting sales to their audience. Using a bar chart, he follows these steps.

Step 1: Selecting Data

The first step is to identify the specific data you will present to your audience.

The sales manager has highlighted these products for the presentation.

- Product A: Men’s Shoes

- Product B: Women’s Apparel

- Product C: Electronics

- Product D: Home Decor

Step 2: Choosing Orientation

Opt for a vertical layout for simplicity. Vertical bar charts help compare different categories in case there are not too many categories [1] . They can also help show different trends. A vertical bar chart is used where each bar represents one of the four chosen products. After plotting the data, it is seen that the height of each bar directly represents the sales performance of the respective product.

It is visible that the tallest bar (Electronics – Product C) is showing the highest sales. However, the shorter bars (Women’s Apparel – Product B and Home Decor – Product D) need attention. It indicates areas that require further analysis or strategies for improvement.

Step 3: Colorful Insights

Different colors are used to differentiate each product. It is essential to show a color-coded chart where the audience can distinguish between products.

- Men’s Shoes (Product A): Yellow

- Women’s Apparel (Product B): Orange

- Electronics (Product C): Violet

- Home Decor (Product D): Blue

Bar charts are straightforward and easily understandable for presenting data. They are versatile when comparing products or any categorical data [2] . Bar charts adapt seamlessly to retail scenarios. Despite that, bar charts have a few shortcomings. They cannot illustrate data trends over time. Besides, overloading the chart with numerous products can lead to visual clutter, diminishing its effectiveness.

For more information, check our collection of bar chart templates for PowerPoint .

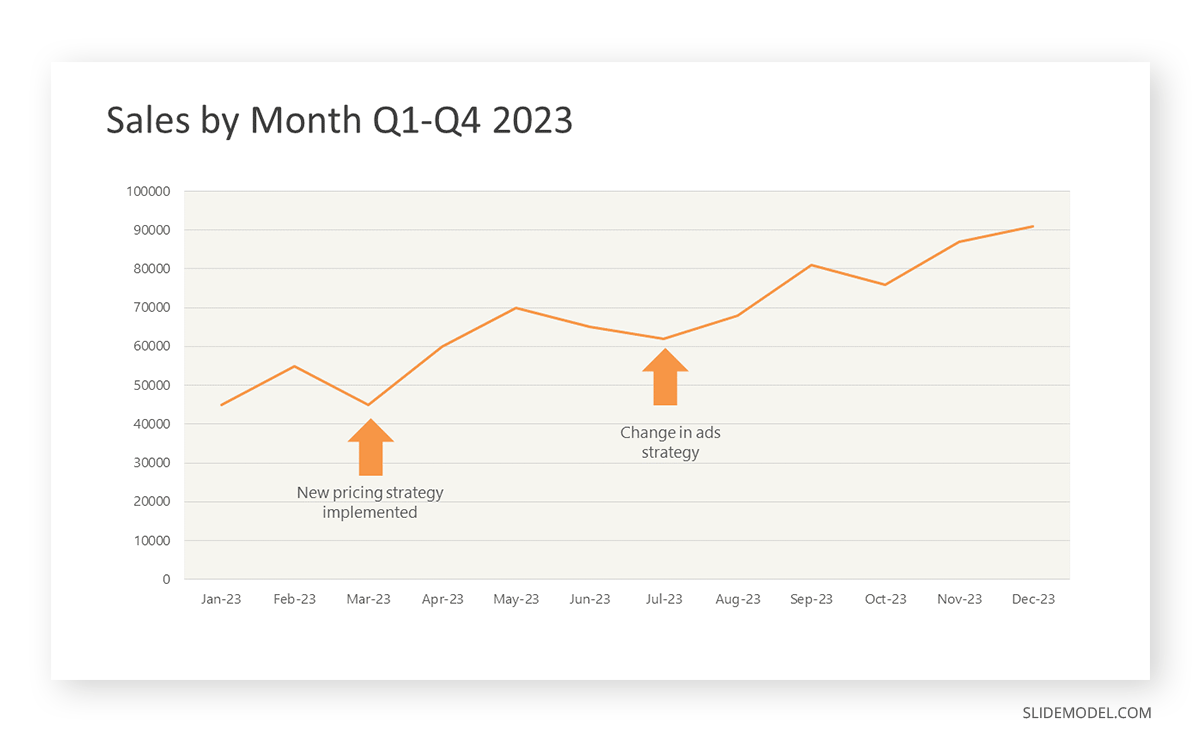

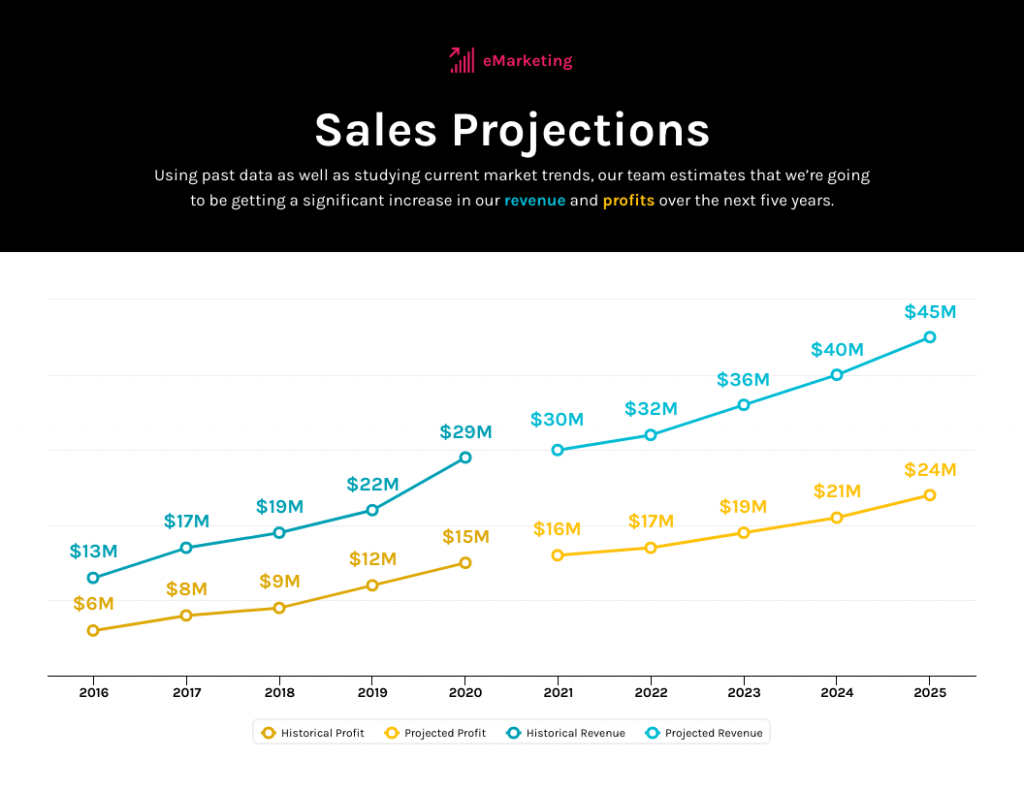

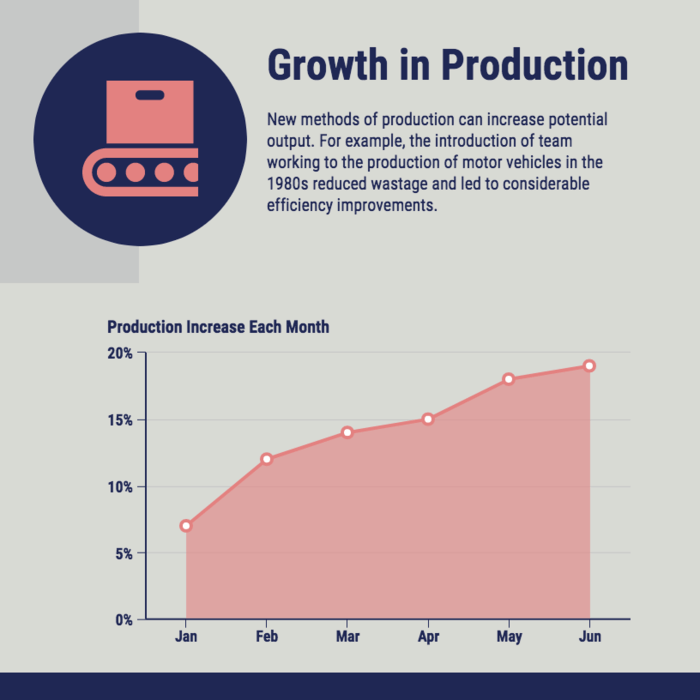

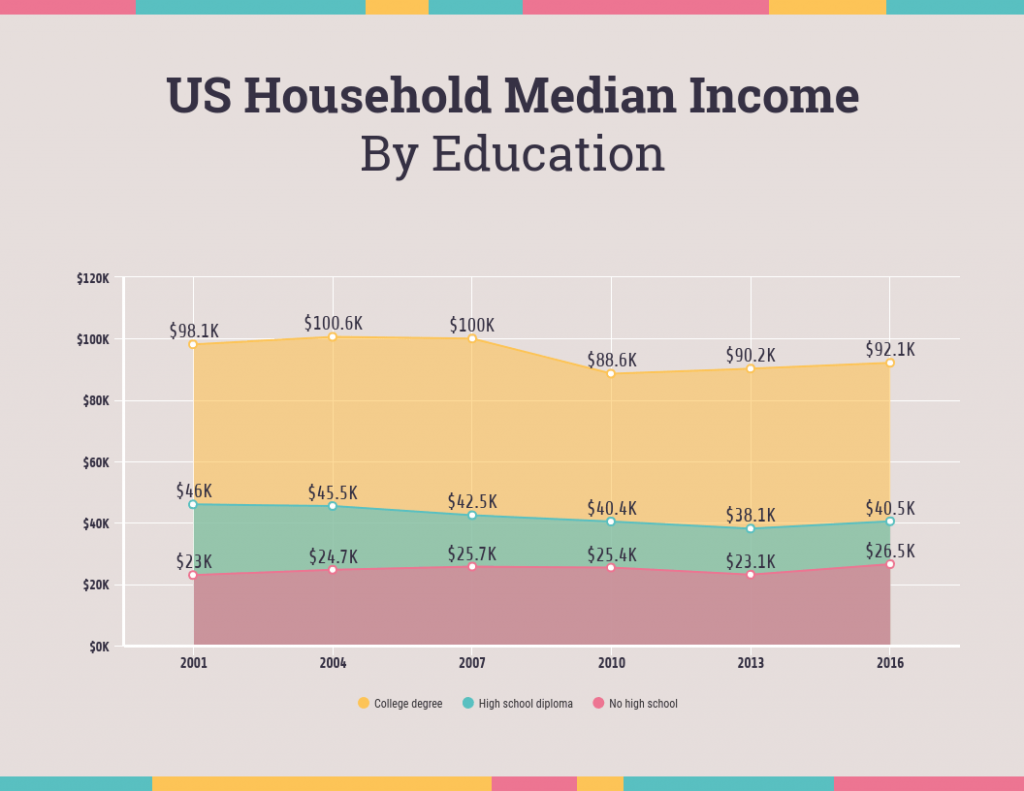

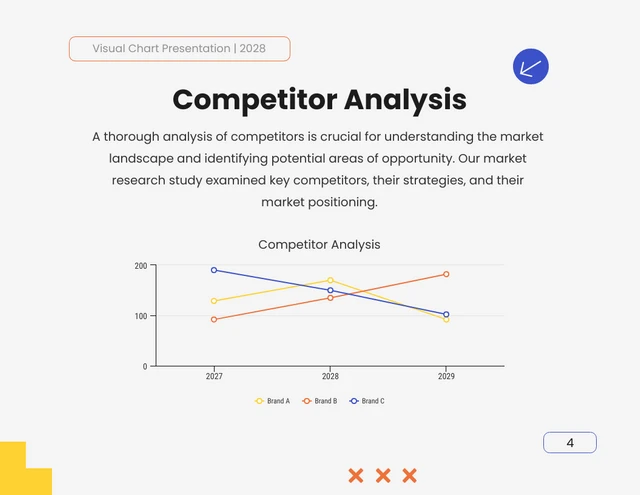

Line graphs help illustrate data trends, progressions, or fluctuations by connecting a series of data points called ‘markers’ with straight line segments. This provides a straightforward representation of how values change [5] . Their versatility makes them invaluable for scenarios requiring a visual understanding of continuous data. In addition, line graphs are also useful for comparing multiple datasets over the same timeline. Using multiple line graphs allows us to compare more than one data set. They simplify complex information so the audience can quickly grasp the ups and downs of values. From tracking stock prices to analyzing experimental results, you can use line graphs to show how data changes over a continuous timeline. They show trends with simplicity and clarity.

Real-life Application of Line Graphs

To understand line graphs thoroughly, we will use a real case. Imagine you’re a financial analyst presenting a tech company’s monthly sales for a licensed product over the past year. Investors want insights into sales behavior by month, how market trends may have influenced sales performance and reception to the new pricing strategy. To present data via a line graph, you will complete these steps.

First, you need to gather the data. In this case, your data will be the sales numbers. For example:

- January: $45,000

- February: $55,000

- March: $45,000

- April: $60,000

- May: $ 70,000

- June: $65,000

- July: $62,000

- August: $68,000

- September: $81,000

- October: $76,000

- November: $87,000

- December: $91,000

After choosing the data, the next step is to select the orientation. Like bar charts, you can use vertical or horizontal line graphs. However, we want to keep this simple, so we will keep the timeline (x-axis) horizontal while the sales numbers (y-axis) vertical.

Step 3: Connecting Trends

After adding the data to your preferred software, you will plot a line graph. In the graph, each month’s sales are represented by data points connected by a line.

Step 4: Adding Clarity with Color

If there are multiple lines, you can also add colors to highlight each one, making it easier to follow.

Line graphs excel at visually presenting trends over time. These presentation aids identify patterns, like upward or downward trends. However, too many data points can clutter the graph, making it harder to interpret. Line graphs work best with continuous data but are not suitable for categories.

For more information, check our collection of line chart templates for PowerPoint and our article about how to make a presentation graph .

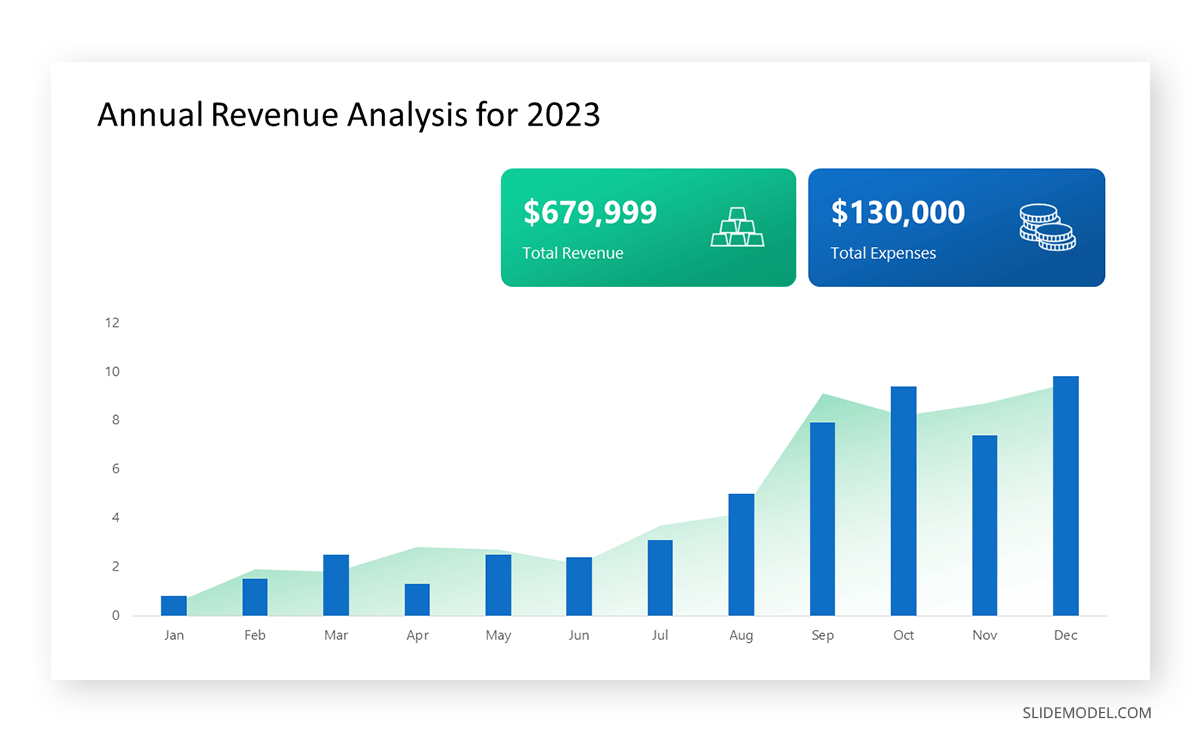

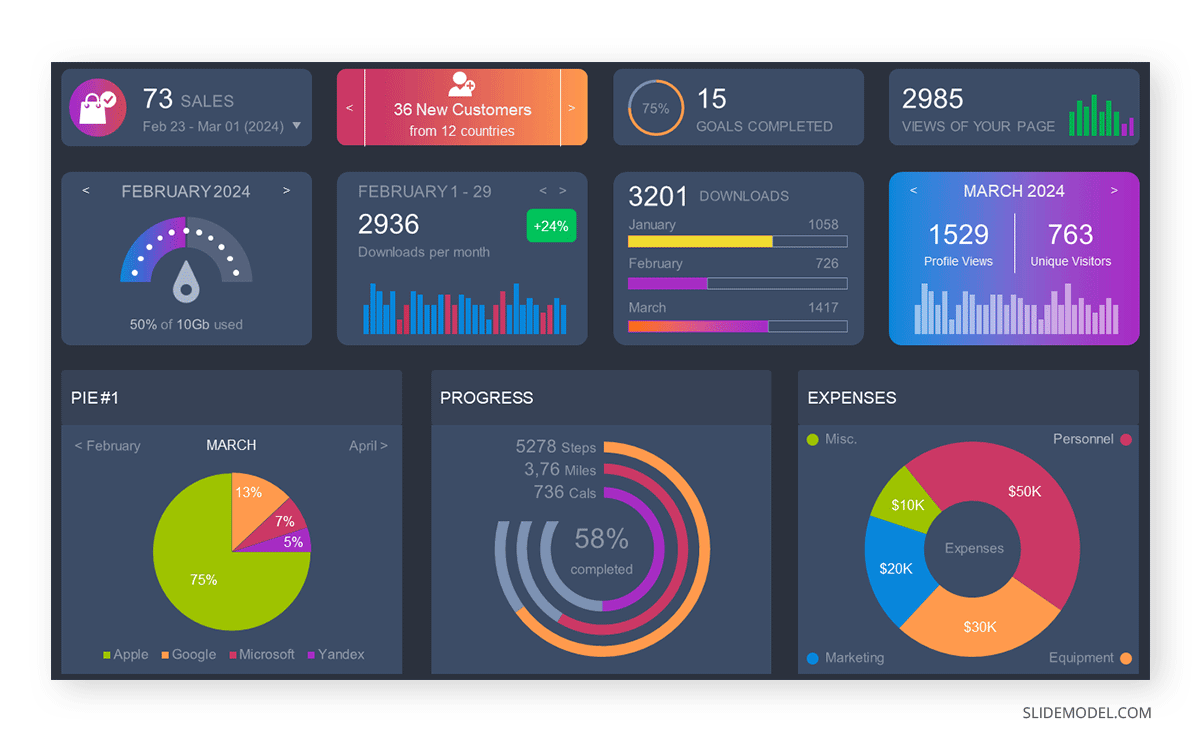

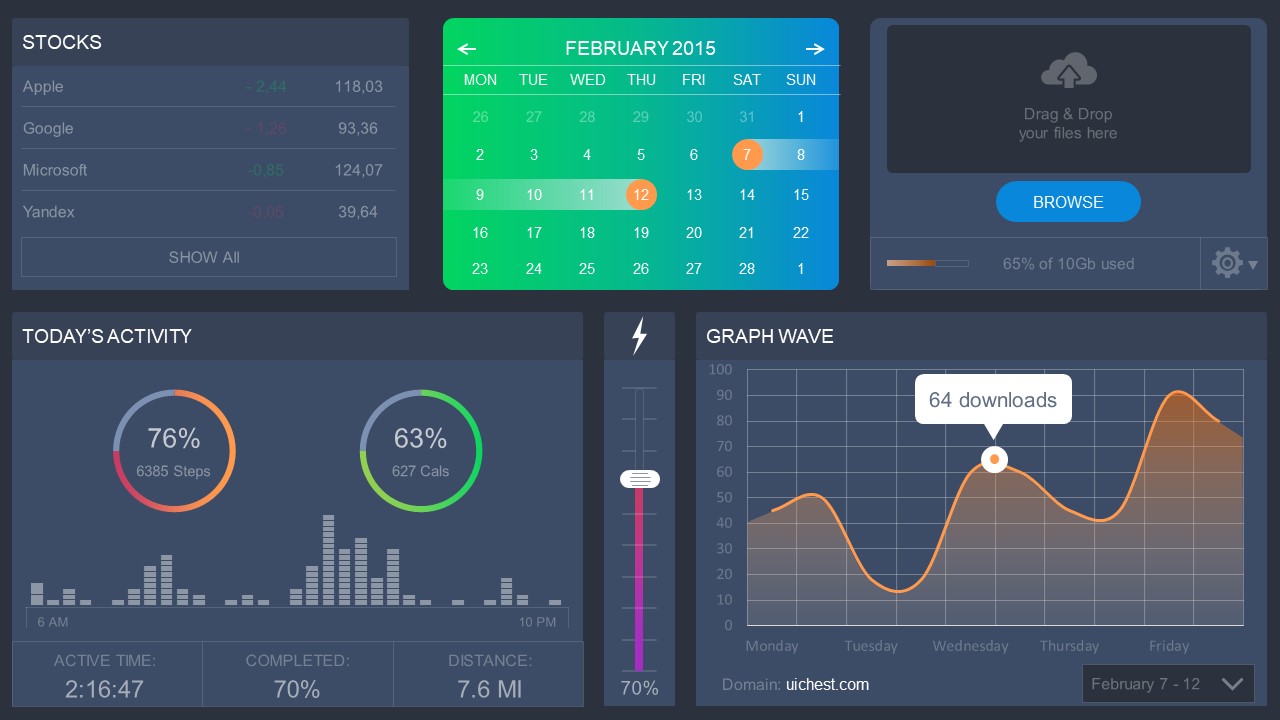

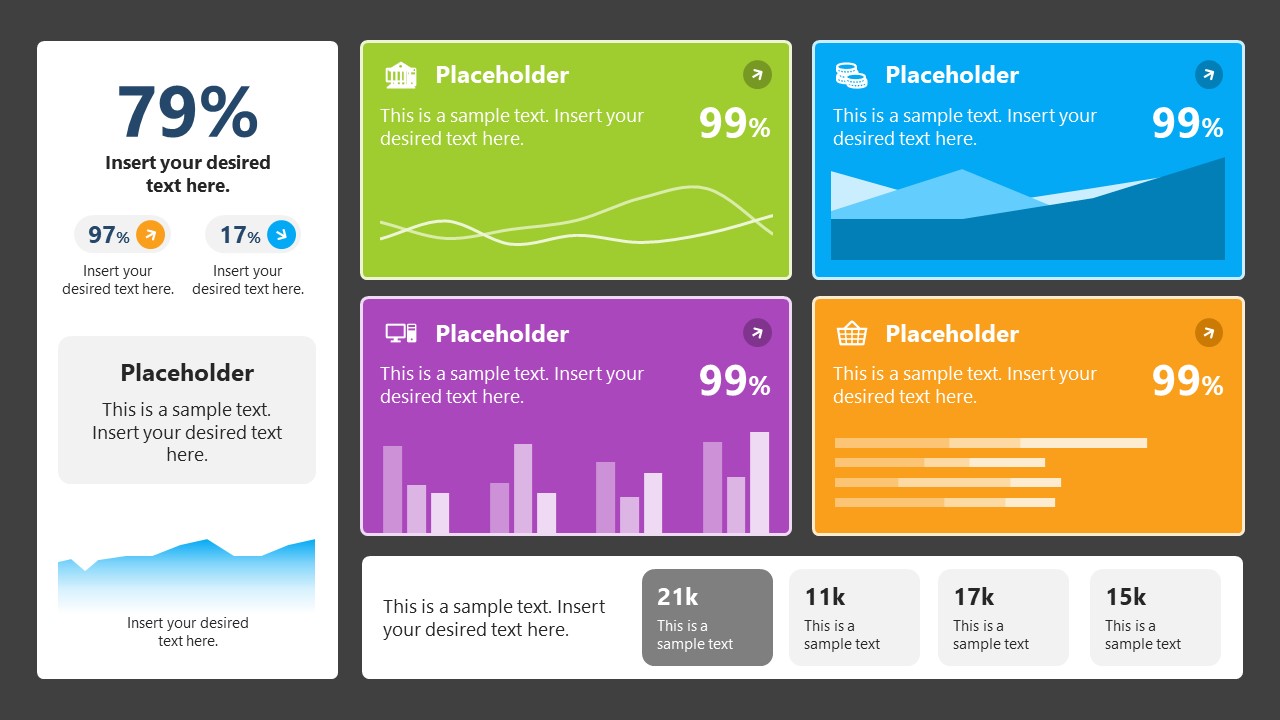

A data dashboard is a visual tool for analyzing information. Different graphs, charts, and tables are consolidated in a layout to showcase the information required to achieve one or more objectives. Dashboards help quickly see Key Performance Indicators (KPIs). You don’t make new visuals in the dashboard; instead, you use it to display visuals you’ve already made in worksheets [3] .

Keeping the number of visuals on a dashboard to three or four is recommended. Adding too many can make it hard to see the main points [4]. Dashboards can be used for business analytics to analyze sales, revenue, and marketing metrics at a time. They are also used in the manufacturing industry, as they allow users to grasp the entire production scenario at the moment while tracking the core KPIs for each line.

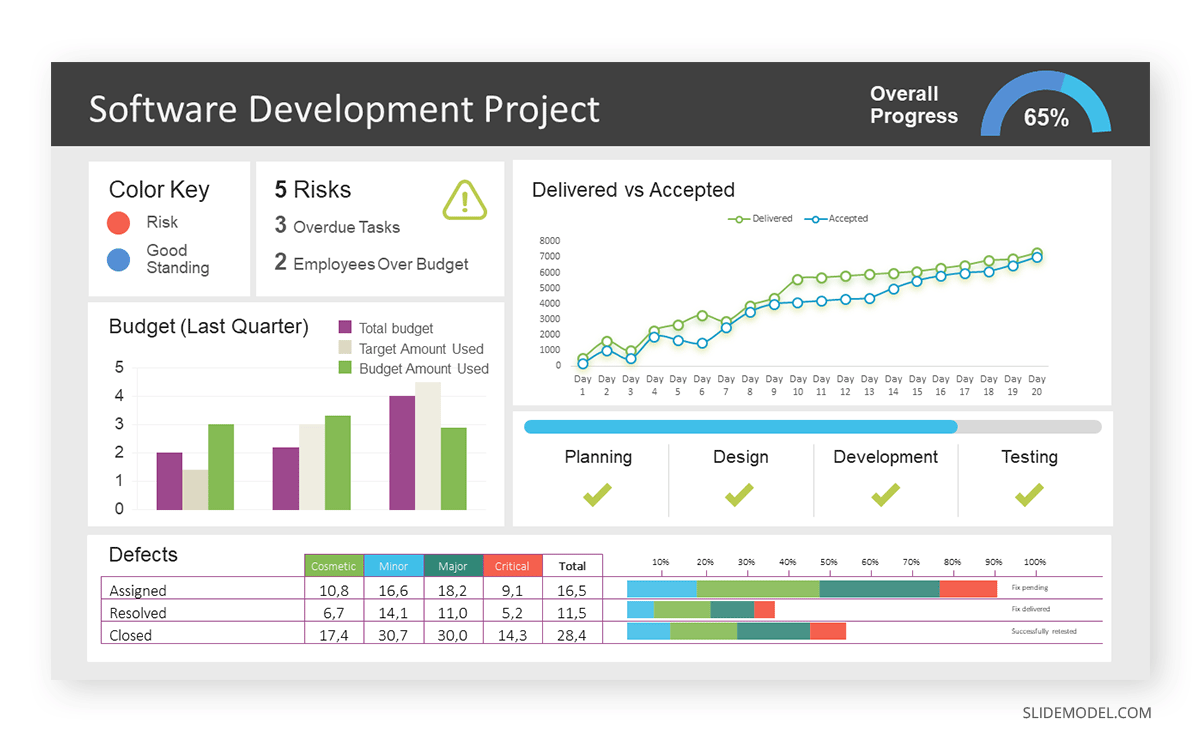

Real-Life Application of a Dashboard

Consider a project manager presenting a software development project’s progress to a tech company’s leadership team. He follows the following steps.

Step 1: Defining Key Metrics

To effectively communicate the project’s status, identify key metrics such as completion status, budget, and bug resolution rates. Then, choose measurable metrics aligned with project objectives.

Step 2: Choosing Visualization Widgets

After finalizing the data, presentation aids that align with each metric are selected. For this project, the project manager chooses a progress bar for the completion status and uses bar charts for budget allocation. Likewise, he implements line charts for bug resolution rates.

Step 3: Dashboard Layout

Key metrics are prominently placed in the dashboard for easy visibility, and the manager ensures that it appears clean and organized.

Dashboards provide a comprehensive view of key project metrics. Users can interact with data, customize views, and drill down for detailed analysis. However, creating an effective dashboard requires careful planning to avoid clutter. Besides, dashboards rely on the availability and accuracy of underlying data sources.

For more information, check our article on how to design a dashboard presentation , and discover our collection of dashboard PowerPoint templates .

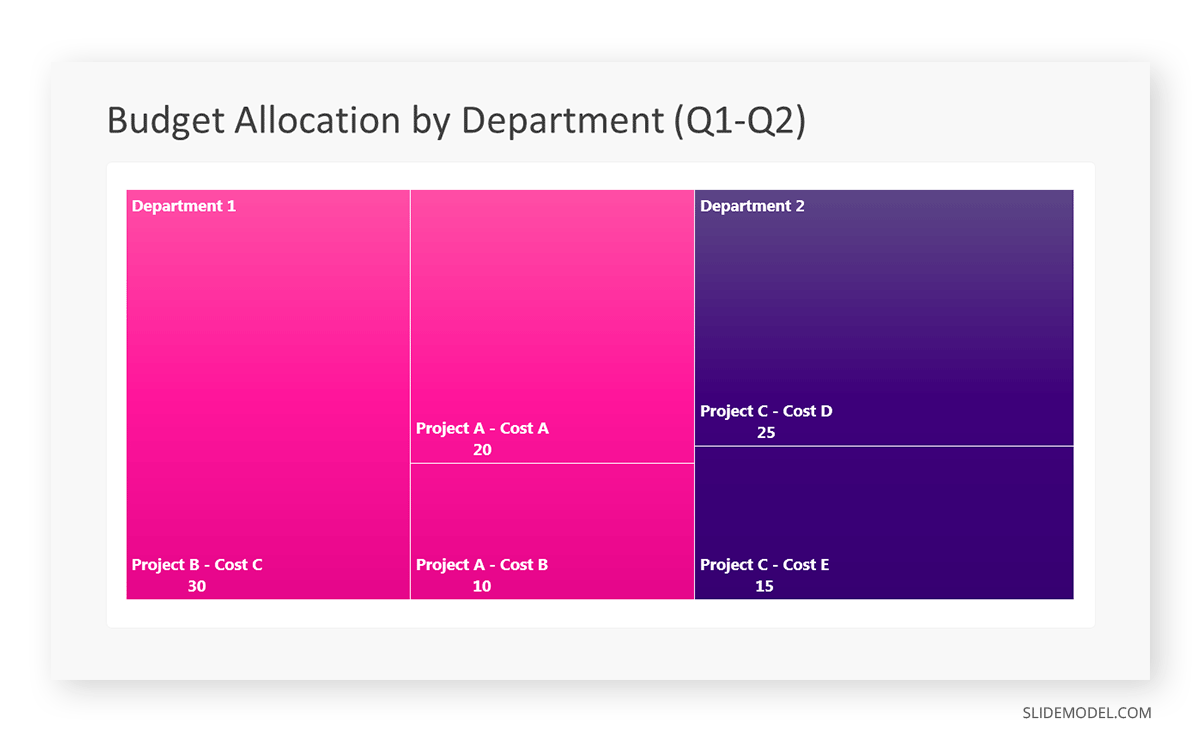

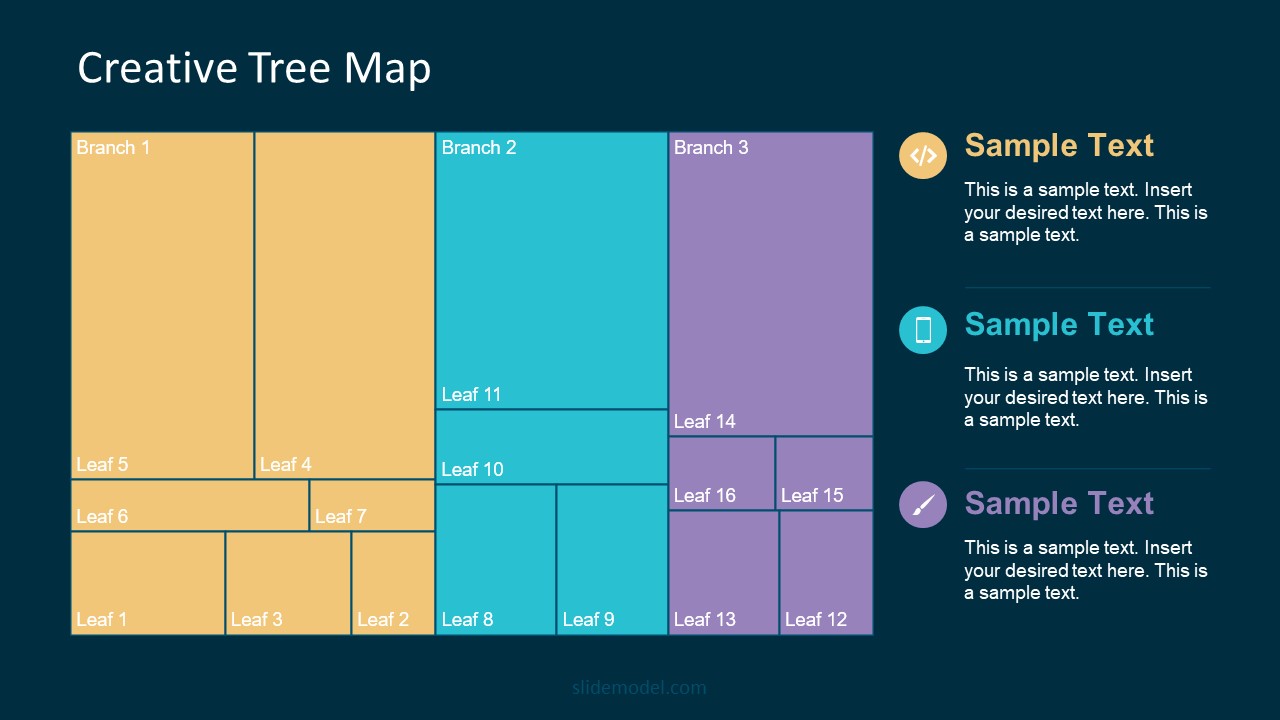

Treemap charts represent hierarchical data structured in a series of nested rectangles [6] . As each branch of the ‘tree’ is given a rectangle, smaller tiles can be seen representing sub-branches, meaning elements on a lower hierarchical level than the parent rectangle. Each one of those rectangular nodes is built by representing an area proportional to the specified data dimension.

Treemaps are useful for visualizing large datasets in compact space. It is easy to identify patterns, such as which categories are dominant. Common applications of the treemap chart are seen in the IT industry, such as resource allocation, disk space management, website analytics, etc. Also, they can be used in multiple industries like healthcare data analysis, market share across different product categories, or even in finance to visualize portfolios.

Real-Life Application of a Treemap Chart

Let’s consider a financial scenario where a financial team wants to represent the budget allocation of a company. There is a hierarchy in the process, so it is helpful to use a treemap chart. In the chart, the top-level rectangle could represent the total budget, and it would be subdivided into smaller rectangles, each denoting a specific department. Further subdivisions within these smaller rectangles might represent individual projects or cost categories.

Step 1: Define Your Data Hierarchy

While presenting data on the budget allocation, start by outlining the hierarchical structure. The sequence will be like the overall budget at the top, followed by departments, projects within each department, and finally, individual cost categories for each project.

- Top-level rectangle: Total Budget

- Second-level rectangles: Departments (Engineering, Marketing, Sales)

- Third-level rectangles: Projects within each department

- Fourth-level rectangles: Cost categories for each project (Personnel, Marketing Expenses, Equipment)

Step 2: Choose a Suitable Tool

It’s time to select a data visualization tool supporting Treemaps. Popular choices include Tableau, Microsoft Power BI, PowerPoint, or even coding with libraries like D3.js. It is vital to ensure that the chosen tool provides customization options for colors, labels, and hierarchical structures.

Here, the team uses PowerPoint for this guide because of its user-friendly interface and robust Treemap capabilities.

Step 3: Make a Treemap Chart with PowerPoint

After opening the PowerPoint presentation, they chose “SmartArt” to form the chart. The SmartArt Graphic window has a “Hierarchy” category on the left. Here, you will see multiple options. You can choose any layout that resembles a Treemap. The “Table Hierarchy” or “Organization Chart” options can be adapted. The team selects the Table Hierarchy as it looks close to a Treemap.

Step 5: Input Your Data

After that, a new window will open with a basic structure. They add the data one by one by clicking on the text boxes. They start with the top-level rectangle, representing the total budget.

Step 6: Customize the Treemap

By clicking on each shape, they customize its color, size, and label. At the same time, they can adjust the font size, style, and color of labels by using the options in the “Format” tab in PowerPoint. Using different colors for each level enhances the visual difference.

Treemaps excel at illustrating hierarchical structures. These charts make it easy to understand relationships and dependencies. They efficiently use space, compactly displaying a large amount of data, reducing the need for excessive scrolling or navigation. Additionally, using colors enhances the understanding of data by representing different variables or categories.

In some cases, treemaps might become complex, especially with deep hierarchies. It becomes challenging for some users to interpret the chart. At the same time, displaying detailed information within each rectangle might be constrained by space. It potentially limits the amount of data that can be shown clearly. Without proper labeling and color coding, there’s a risk of misinterpretation.

A heatmap is a data visualization tool that uses color coding to represent values across a two-dimensional surface. In these, colors replace numbers to indicate the magnitude of each cell. This color-shaded matrix display is valuable for summarizing and understanding data sets with a glance [7] . The intensity of the color corresponds to the value it represents, making it easy to identify patterns, trends, and variations in the data.

As a tool, heatmaps help businesses analyze website interactions, revealing user behavior patterns and preferences to enhance overall user experience. In addition, companies use heatmaps to assess content engagement, identifying popular sections and areas of improvement for more effective communication. They excel at highlighting patterns and trends in large datasets, making it easy to identify areas of interest.

We can implement heatmaps to express multiple data types, such as numerical values, percentages, or even categorical data. Heatmaps help us easily spot areas with lots of activity, making them helpful in figuring out clusters [8] . When making these maps, it is important to pick colors carefully. The colors need to show the differences between groups or levels of something. And it is good to use colors that people with colorblindness can easily see.

Check our detailed guide on how to create a heatmap here. Also discover our collection of heatmap PowerPoint templates .

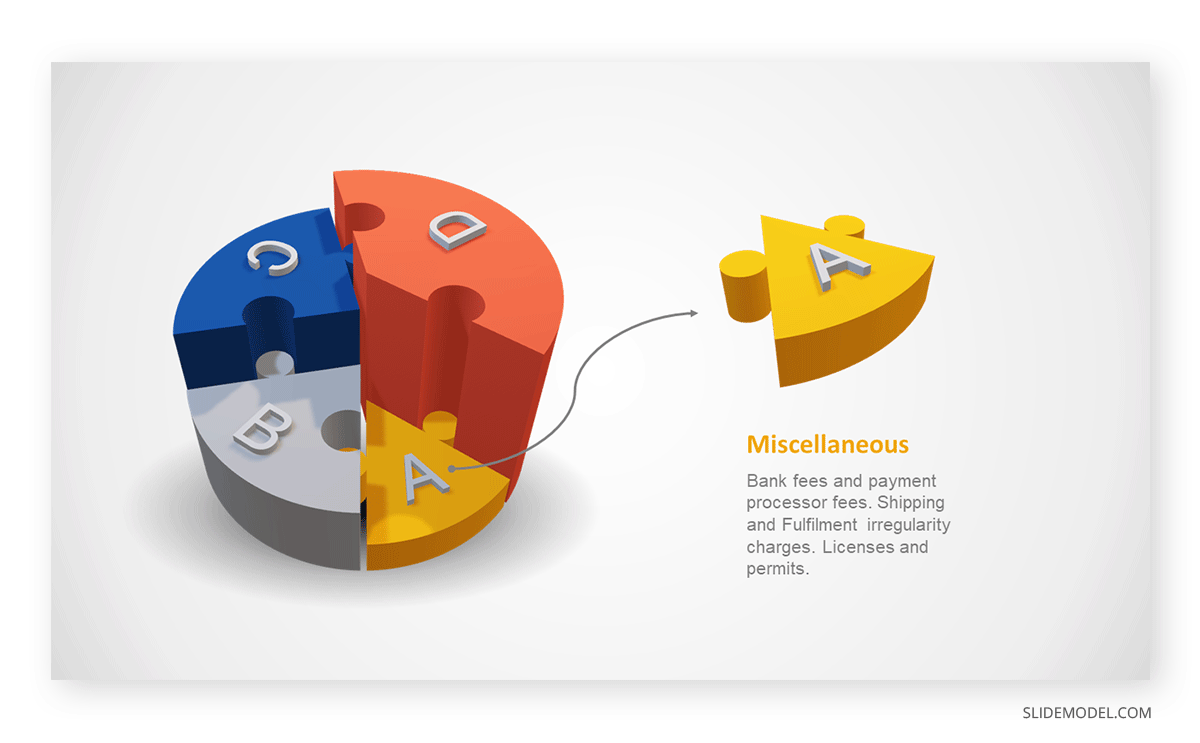

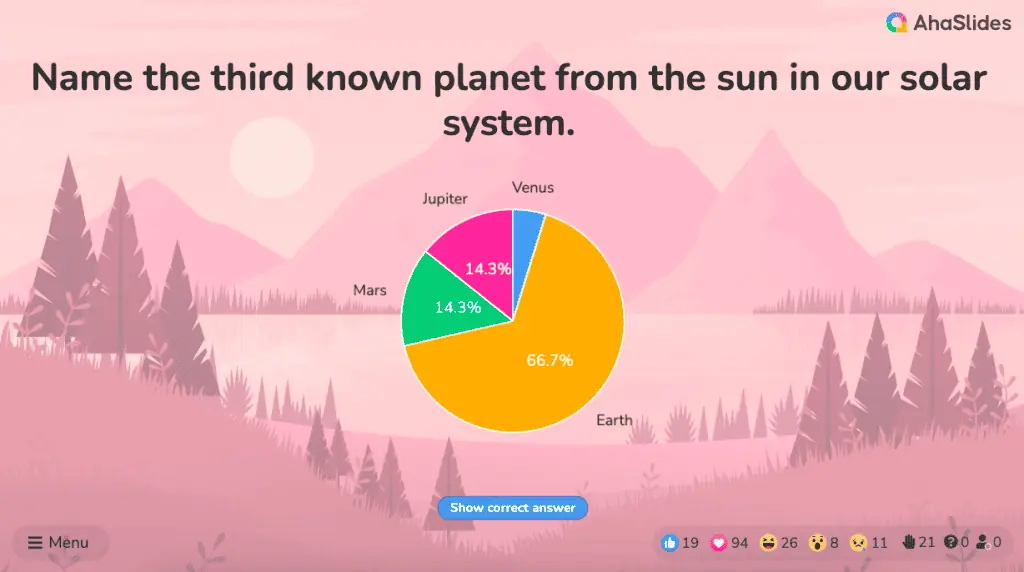

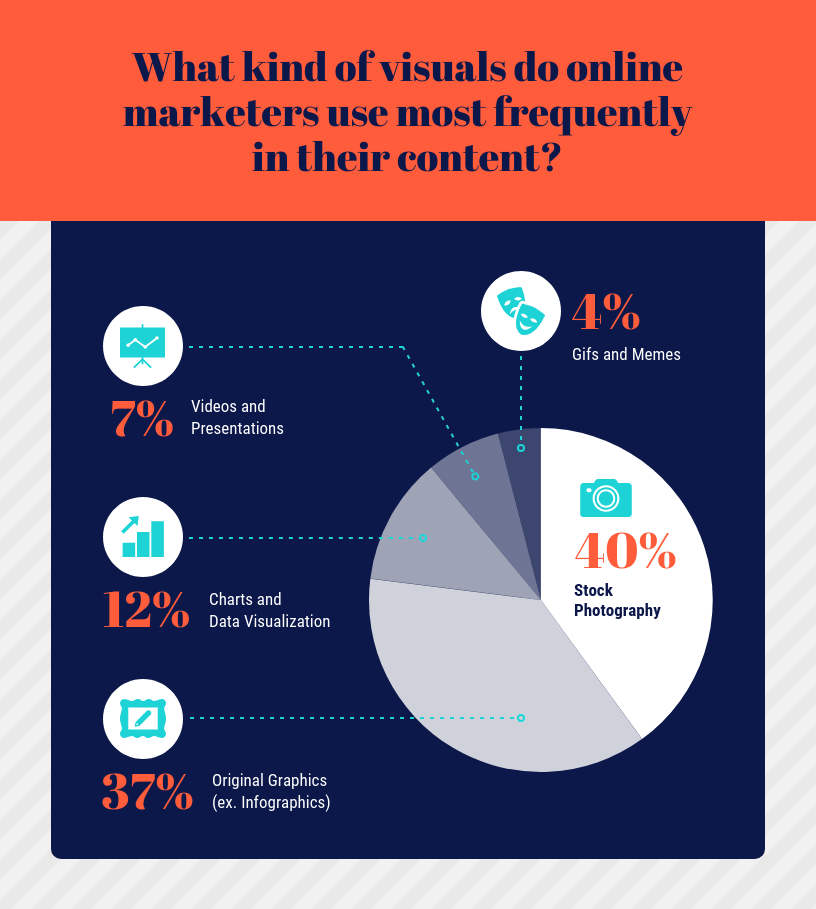

Pie charts are circular statistical graphics divided into slices to illustrate numerical proportions. Each slice represents a proportionate part of the whole, making it easy to visualize the contribution of each component to the total.

The size of the pie charts is influenced by the value of data points within each pie. The total of all data points in a pie determines its size. The pie with the highest data points appears as the largest, whereas the others are proportionally smaller. However, you can present all pies of the same size if proportional representation is not required [9] . Sometimes, pie charts are difficult to read, or additional information is required. A variation of this tool can be used instead, known as the donut chart , which has the same structure but a blank center, creating a ring shape. Presenters can add extra information, and the ring shape helps to declutter the graph.

Pie charts are used in business to show percentage distribution, compare relative sizes of categories, or present straightforward data sets where visualizing ratios is essential.

Real-Life Application of Pie Charts

Consider a scenario where you want to represent the distribution of the data. Each slice of the pie chart would represent a different category, and the size of each slice would indicate the percentage of the total portion allocated to that category.

Step 1: Define Your Data Structure

Imagine you are presenting the distribution of a project budget among different expense categories.

- Column A: Expense Categories (Personnel, Equipment, Marketing, Miscellaneous)

- Column B: Budget Amounts ($40,000, $30,000, $20,000, $10,000) Column B represents the values of your categories in Column A.

Step 2: Insert a Pie Chart

Using any of the accessible tools, you can create a pie chart. The most convenient tools for forming a pie chart in a presentation are presentation tools such as PowerPoint or Google Slides. You will notice that the pie chart assigns each expense category a percentage of the total budget by dividing it by the total budget.

For instance:

- Personnel: $40,000 / ($40,000 + $30,000 + $20,000 + $10,000) = 40%

- Equipment: $30,000 / ($40,000 + $30,000 + $20,000 + $10,000) = 30%

- Marketing: $20,000 / ($40,000 + $30,000 + $20,000 + $10,000) = 20%

- Miscellaneous: $10,000 / ($40,000 + $30,000 + $20,000 + $10,000) = 10%

You can make a chart out of this or just pull out the pie chart from the data.

3D pie charts and 3D donut charts are quite popular among the audience. They stand out as visual elements in any presentation slide, so let’s take a look at how our pie chart example would look in 3D pie chart format.

Step 03: Results Interpretation

The pie chart visually illustrates the distribution of the project budget among different expense categories. Personnel constitutes the largest portion at 40%, followed by equipment at 30%, marketing at 20%, and miscellaneous at 10%. This breakdown provides a clear overview of where the project funds are allocated, which helps in informed decision-making and resource management. It is evident that personnel are a significant investment, emphasizing their importance in the overall project budget.

Pie charts provide a straightforward way to represent proportions and percentages. They are easy to understand, even for individuals with limited data analysis experience. These charts work well for small datasets with a limited number of categories.

However, a pie chart can become cluttered and less effective in situations with many categories. Accurate interpretation may be challenging, especially when dealing with slight differences in slice sizes. In addition, these charts are static and do not effectively convey trends over time.

For more information, check our collection of pie chart templates for PowerPoint .

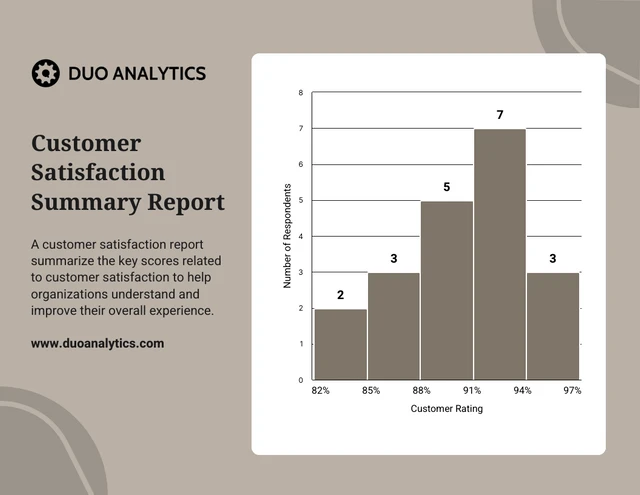

Histograms present the distribution of numerical variables. Unlike a bar chart that records each unique response separately, histograms organize numeric responses into bins and show the frequency of reactions within each bin [10] . The x-axis of a histogram shows the range of values for a numeric variable. At the same time, the y-axis indicates the relative frequencies (percentage of the total counts) for that range of values.

Whenever you want to understand the distribution of your data, check which values are more common, or identify outliers, histograms are your go-to. Think of them as a spotlight on the story your data is telling. A histogram can provide a quick and insightful overview if you’re curious about exam scores, sales figures, or any numerical data distribution.

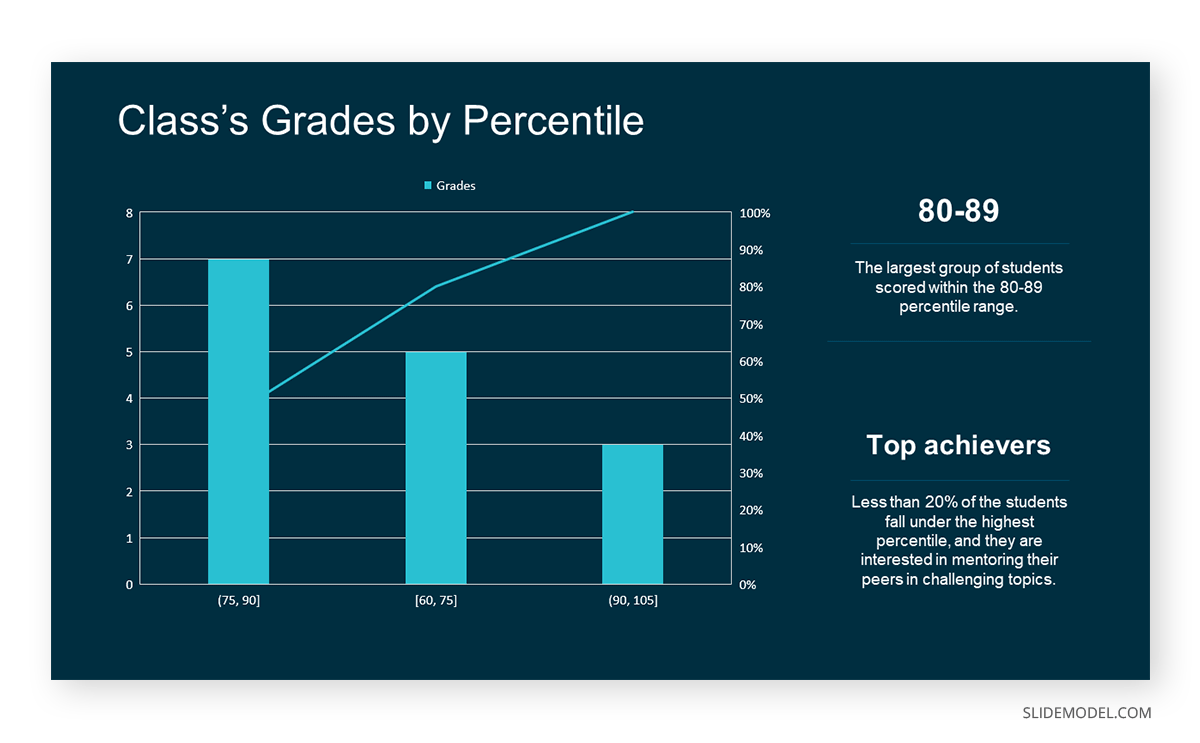

Real-Life Application of a Histogram

In the histogram data analysis presentation example, imagine an instructor analyzing a class’s grades to identify the most common score range. A histogram could effectively display the distribution. It will show whether most students scored in the average range or if there are significant outliers.

Step 1: Gather Data

He begins by gathering the data. The scores of each student in class are gathered to analyze exam scores.

| Names | Score |

|---|---|

| Alice | 78 |

| Bob | 85 |

| Clara | 92 |

| David | 65 |

| Emma | 72 |

| Frank | 88 |

| Grace | 76 |

| Henry | 95 |

| Isabel | 81 |

| Jack | 70 |

| Kate | 60 |

| Liam | 89 |

| Mia | 75 |

| Noah | 84 |

| Olivia | 92 |

After arranging the scores in ascending order, bin ranges are set.

Step 2: Define Bins

Bins are like categories that group similar values. Think of them as buckets that organize your data. The presenter decides how wide each bin should be based on the range of the values. For instance, the instructor sets the bin ranges based on score intervals: 60-69, 70-79, 80-89, and 90-100.

Step 3: Count Frequency

Now, he counts how many data points fall into each bin. This step is crucial because it tells you how often specific ranges of values occur. The result is the frequency distribution, showing the occurrences of each group.

Here, the instructor counts the number of students in each category.

- 60-69: 1 student (Kate)

- 70-79: 4 students (David, Emma, Grace, Jack)

- 80-89: 7 students (Alice, Bob, Frank, Isabel, Liam, Mia, Noah)

- 90-100: 3 students (Clara, Henry, Olivia)

Step 4: Create the Histogram

It’s time to turn the data into a visual representation. Draw a bar for each bin on a graph. The width of the bar should correspond to the range of the bin, and the height should correspond to the frequency. To make your histogram understandable, label the X and Y axes.

In this case, the X-axis should represent the bins (e.g., test score ranges), and the Y-axis represents the frequency.

The histogram of the class grades reveals insightful patterns in the distribution. Most students, with seven students, fall within the 80-89 score range. The histogram provides a clear visualization of the class’s performance. It showcases a concentration of grades in the upper-middle range with few outliers at both ends. This analysis helps in understanding the overall academic standing of the class. It also identifies the areas for potential improvement or recognition.

Thus, histograms provide a clear visual representation of data distribution. They are easy to interpret, even for those without a statistical background. They apply to various types of data, including continuous and discrete variables. One weak point is that histograms do not capture detailed patterns in students’ data, with seven compared to other visualization methods.

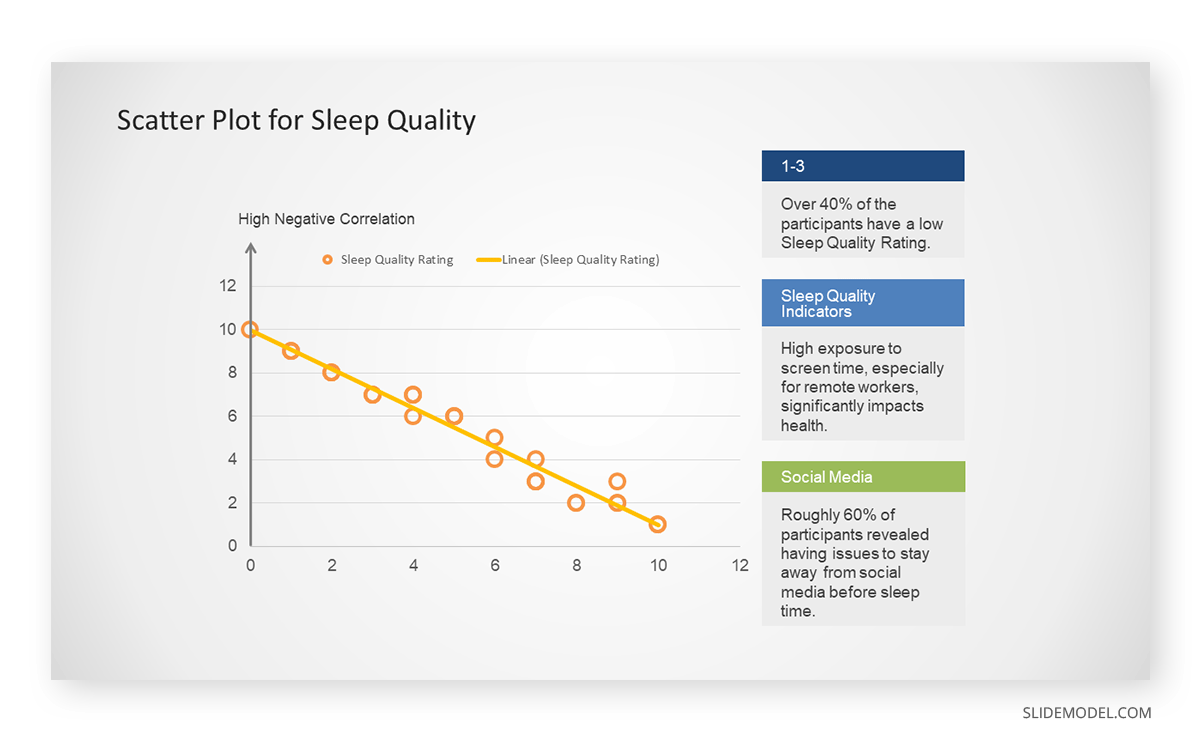

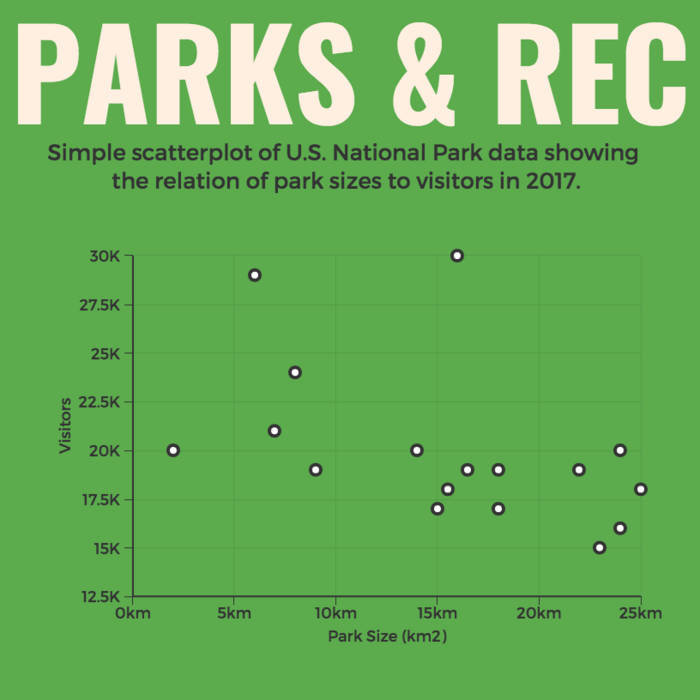

A scatter plot is a graphical representation of the relationship between two variables. It consists of individual data points on a two-dimensional plane. This plane plots one variable on the x-axis and the other on the y-axis. Each point represents a unique observation. It visualizes patterns, trends, or correlations between the two variables.

Scatter plots are also effective in revealing the strength and direction of relationships. They identify outliers and assess the overall distribution of data points. The points’ dispersion and clustering reflect the relationship’s nature, whether it is positive, negative, or lacks a discernible pattern. In business, scatter plots assess relationships between variables such as marketing cost and sales revenue. They help present data correlations and decision-making.

Real-Life Application of Scatter Plot

A group of scientists is conducting a study on the relationship between daily hours of screen time and sleep quality. After reviewing the data, they managed to create this table to help them build a scatter plot graph:

| Participant ID | Daily Hours of Screen Time | Sleep Quality Rating |

|---|---|---|

| 1 | 9 | 3 |

| 2 | 2 | 8 |

| 3 | 1 | 9 |

| 4 | 0 | 10 |

| 5 | 1 | 9 |

| 6 | 3 | 7 |

| 7 | 4 | 7 |

| 8 | 5 | 6 |

| 9 | 5 | 6 |

| 10 | 7 | 3 |

| 11 | 10 | 1 |

| 12 | 6 | 5 |

| 13 | 7 | 3 |

| 14 | 8 | 2 |

| 15 | 9 | 2 |

| 16 | 4 | 7 |

| 17 | 5 | 6 |

| 18 | 4 | 7 |

| 19 | 9 | 2 |

| 20 | 6 | 4 |

| 21 | 3 | 7 |

| 22 | 10 | 1 |

| 23 | 2 | 8 |

| 24 | 5 | 6 |

| 25 | 3 | 7 |

| 26 | 1 | 9 |

| 27 | 8 | 2 |

| 28 | 4 | 6 |

| 29 | 7 | 3 |

| 30 | 2 | 8 |

| 31 | 7 | 4 |

| 32 | 9 | 2 |

| 33 | 10 | 1 |

| 34 | 10 | 1 |

| 35 | 10 | 1 |

In the provided example, the x-axis represents Daily Hours of Screen Time, and the y-axis represents the Sleep Quality Rating.

The scientists observe a negative correlation between the amount of screen time and the quality of sleep. This is consistent with their hypothesis that blue light, especially before bedtime, has a significant impact on sleep quality and metabolic processes.

There are a few things to remember when using a scatter plot. Even when a scatter diagram indicates a relationship, it doesn’t mean one variable affects the other. A third factor can influence both variables. The more the plot resembles a straight line, the stronger the relationship is perceived [11] . If it suggests no ties, the observed pattern might be due to random fluctuations in data. When the scatter diagram depicts no correlation, whether the data might be stratified is worth considering.

Choosing the appropriate data presentation type is crucial when making a presentation . Understanding the nature of your data and the message you intend to convey will guide this selection process. For instance, when showcasing quantitative relationships, scatter plots become instrumental in revealing correlations between variables. If the focus is on emphasizing parts of a whole, pie charts offer a concise display of proportions. Histograms, on the other hand, prove valuable for illustrating distributions and frequency patterns.

Bar charts provide a clear visual comparison of different categories. Likewise, line charts excel in showcasing trends over time, while tables are ideal for detailed data examination. Starting a presentation on data presentation types involves evaluating the specific information you want to communicate and selecting the format that aligns with your message. This ensures clarity and resonance with your audience from the beginning of your presentation.

1. Fact Sheet Dashboard for Data Presentation

Convey all the data you need to present in this one-pager format, an ideal solution tailored for users looking for presentation aids. Global maps, donut chats, column graphs, and text neatly arranged in a clean layout presented in light and dark themes.

Use This Template

2. 3D Column Chart Infographic PPT Template

Represent column charts in a highly visual 3D format with this PPT template. A creative way to present data, this template is entirely editable, and we can craft either a one-page infographic or a series of slides explaining what we intend to disclose point by point.

3. Data Circles Infographic PowerPoint Template

An alternative to the pie chart and donut chart diagrams, this template features a series of curved shapes with bubble callouts as ways of presenting data. Expand the information for each arch in the text placeholder areas.

4. Colorful Metrics Dashboard for Data Presentation

This versatile dashboard template helps us in the presentation of the data by offering several graphs and methods to convert numbers into graphics. Implement it for e-commerce projects, financial projections, project development, and more.

5. Animated Data Presentation Tools for PowerPoint & Google Slides

A slide deck filled with most of the tools mentioned in this article, from bar charts, column charts, treemap graphs, pie charts, histogram, etc. Animated effects make each slide look dynamic when sharing data with stakeholders.

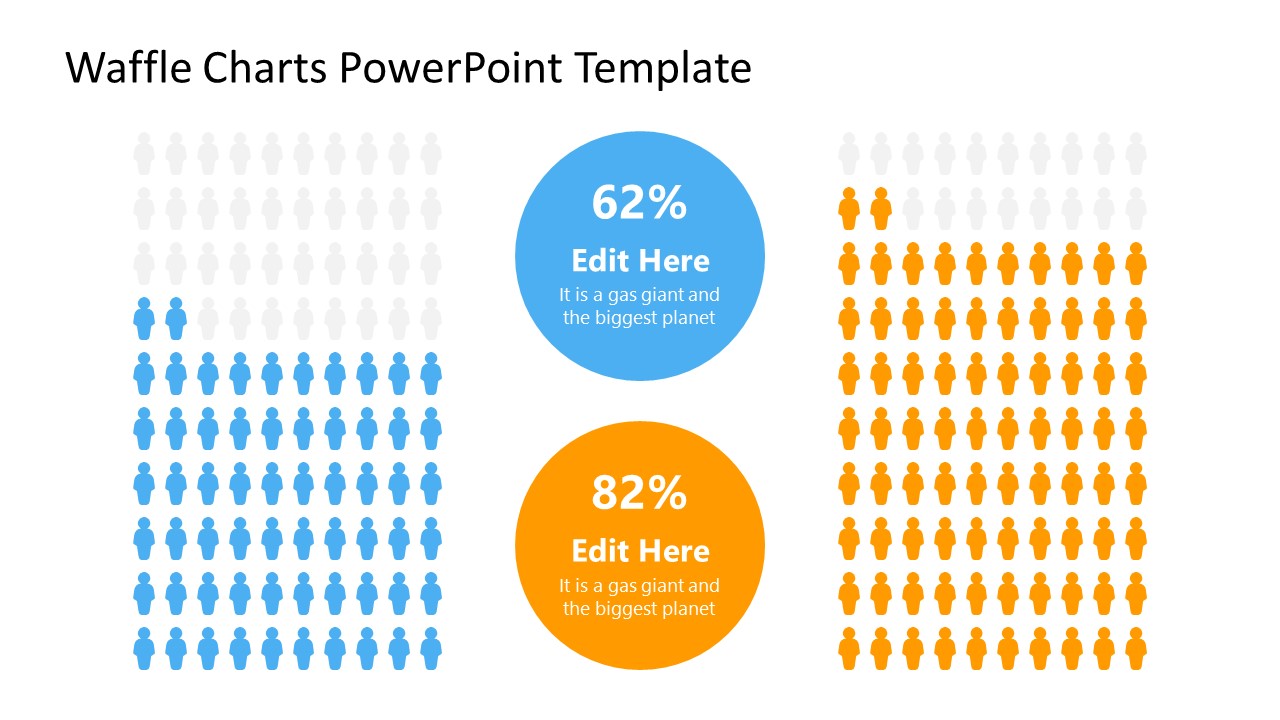

6. Statistics Waffle Charts PPT Template for Data Presentations

This PPT template helps us how to present data beyond the typical pie chart representation. It is widely used for demographics, so it’s a great fit for marketing teams, data science professionals, HR personnel, and more.

7. Data Presentation Dashboard Template for Google Slides

A compendium of tools in dashboard format featuring line graphs, bar charts, column charts, and neatly arranged placeholder text areas.

8. Weather Dashboard for Data Presentation

Share weather data for agricultural presentation topics, environmental studies, or any kind of presentation that requires a highly visual layout for weather forecasting on a single day. Two color themes are available.

9. Social Media Marketing Dashboard Data Presentation Template

Intended for marketing professionals, this dashboard template for data presentation is a tool for presenting data analytics from social media channels. Two slide layouts featuring line graphs and column charts.

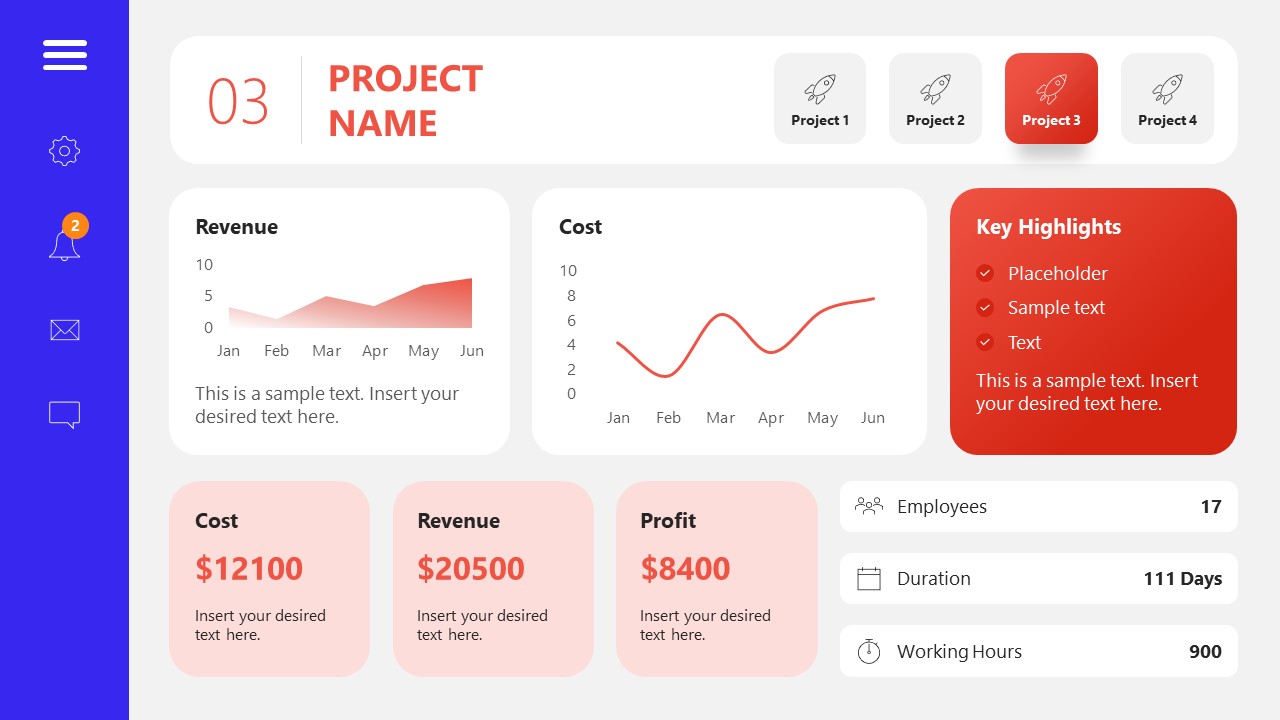

10. Project Management Summary Dashboard Template

A tool crafted for project managers to deliver highly visual reports on a project’s completion, the profits it delivered for the company, and expenses/time required to execute it. 4 different color layouts are available.

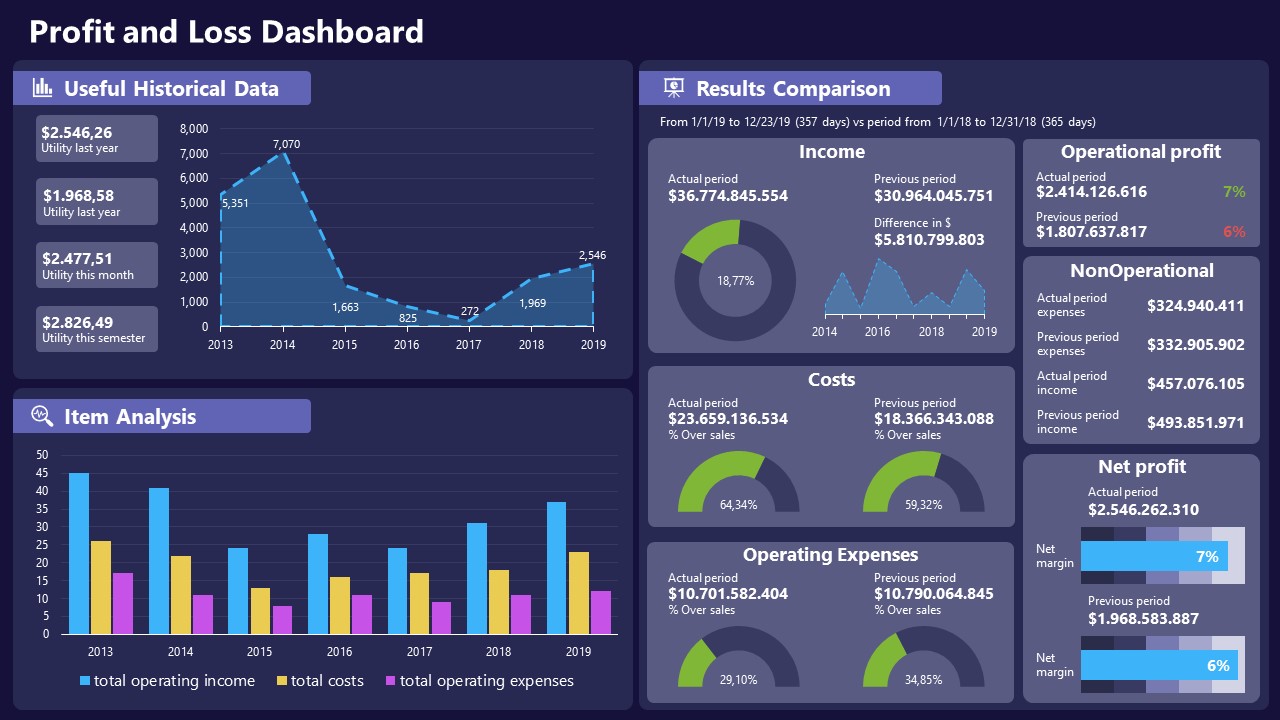

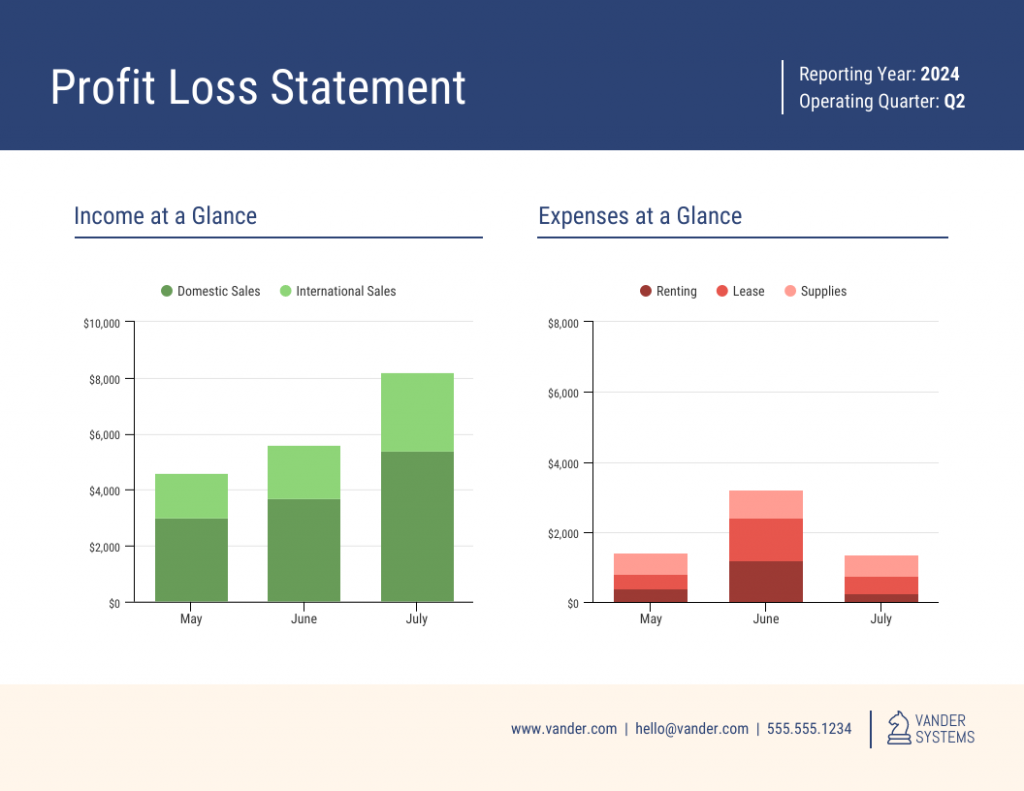

11. Profit & Loss Dashboard for PowerPoint and Google Slides

A must-have for finance professionals. This typical profit & loss dashboard includes progress bars, donut charts, column charts, line graphs, and everything that’s required to deliver a comprehensive report about a company’s financial situation.

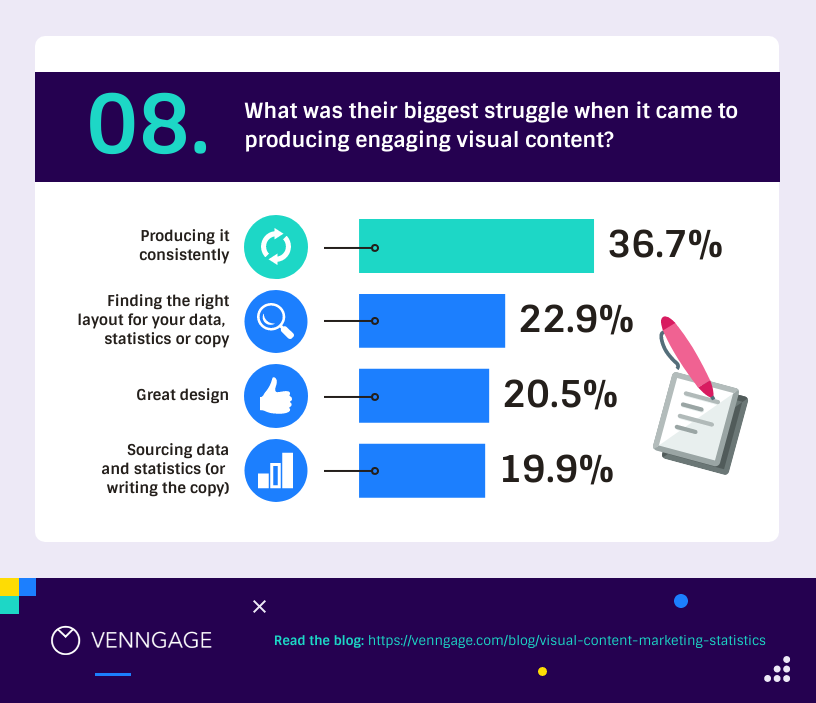

Overwhelming visuals

One of the mistakes related to using data-presenting methods is including too much data or using overly complex visualizations. They can confuse the audience and dilute the key message.

Inappropriate chart types

Choosing the wrong type of chart for the data at hand can lead to misinterpretation. For example, using a pie chart for data that doesn’t represent parts of a whole is not right.

Lack of context

Failing to provide context or sufficient labeling can make it challenging for the audience to understand the significance of the presented data.

Inconsistency in design

Using inconsistent design elements and color schemes across different visualizations can create confusion and visual disarray.

Failure to provide details

Simply presenting raw data without offering clear insights or takeaways can leave the audience without a meaningful conclusion.

Lack of focus

Not having a clear focus on the key message or main takeaway can result in a presentation that lacks a central theme.

Visual accessibility issues

Overlooking the visual accessibility of charts and graphs can exclude certain audience members who may have difficulty interpreting visual information.

In order to avoid these mistakes in data presentation, presenters can benefit from using presentation templates . These templates provide a structured framework. They ensure consistency, clarity, and an aesthetically pleasing design, enhancing data communication’s overall impact.

Understanding and choosing data presentation types are pivotal in effective communication. Each method serves a unique purpose, so selecting the appropriate one depends on the nature of the data and the message to be conveyed. The diverse array of presentation types offers versatility in visually representing information, from bar charts showing values to pie charts illustrating proportions.

Using the proper method enhances clarity, engages the audience, and ensures that data sets are not just presented but comprehensively understood. By appreciating the strengths and limitations of different presentation types, communicators can tailor their approach to convey information accurately, developing a deeper connection between data and audience understanding.

[1] Government of Canada, S.C. (2021) 5 Data Visualization 5.2 Bar Chart , 5.2 Bar chart . https://www150.statcan.gc.ca/n1/edu/power-pouvoir/ch9/bargraph-diagrammeabarres/5214818-eng.htm

[2] Kosslyn, S.M., 1989. Understanding charts and graphs. Applied cognitive psychology, 3(3), pp.185-225. https://apps.dtic.mil/sti/pdfs/ADA183409.pdf

[3] Creating a Dashboard . https://it.tufts.edu/book/export/html/1870

[4] https://www.goldenwestcollege.edu/research/data-and-more/data-dashboards/index.html

[5] https://www.mit.edu/course/21/21.guide/grf-line.htm

[6] Jadeja, M. and Shah, K., 2015, January. Tree-Map: A Visualization Tool for Large Data. In GSB@ SIGIR (pp. 9-13). https://ceur-ws.org/Vol-1393/gsb15proceedings.pdf#page=15

[7] Heat Maps and Quilt Plots. https://www.publichealth.columbia.edu/research/population-health-methods/heat-maps-and-quilt-plots

[8] EIU QGIS WORKSHOP. https://www.eiu.edu/qgisworkshop/heatmaps.php

[9] About Pie Charts. https://www.mit.edu/~mbarker/formula1/f1help/11-ch-c8.htm

[10] Histograms. https://sites.utexas.edu/sos/guided/descriptive/numericaldd/descriptiven2/histogram/ [11] https://asq.org/quality-resources/scatter-diagram

Like this article? Please share

Data Analysis, Data Science, Data Visualization Filed under Design

Related Articles

Filed under Design • March 27th, 2024

How to Make a Presentation Graph

Detailed step-by-step instructions to master the art of how to make a presentation graph in PowerPoint and Google Slides. Check it out!

Filed under Presentation Ideas • January 6th, 2024

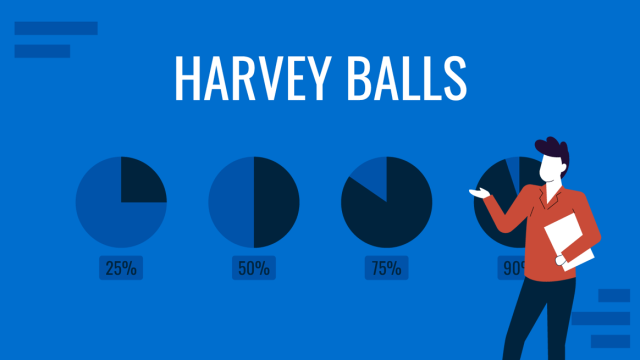

All About Using Harvey Balls

Among the many tools in the arsenal of the modern presenter, Harvey Balls have a special place. In this article we will tell you all about using Harvey Balls.

Filed under Business • December 8th, 2023

How to Design a Dashboard Presentation: A Step-by-Step Guide

Take a step further in your professional presentation skills by learning what a dashboard presentation is and how to properly design one in PowerPoint. A detailed step-by-step guide is here!

Leave a Reply

The 7 Most Useful Data Analysis Methods and Techniques

Data analytics is the process of analyzing raw data to draw out meaningful insights. These insights are then used to determine the best course of action.

When is the best time to roll out that marketing campaign? Is the current team structure as effective as it could be? Which customer segments are most likely to purchase your new product?

Ultimately, data analytics is a crucial driver of any successful business strategy. But how do data analysts actually turn raw data into something useful? There are a range of methods and techniques that data analysts use depending on the type of data in question and the kinds of insights they want to uncover.

You can get a hands-on introduction to data analytics in this free short course .

In this post, we’ll explore some of the most useful data analysis techniques. By the end, you’ll have a much clearer idea of how you can transform meaningless data into business intelligence. We’ll cover:

- What is data analysis and why is it important?

- What is the difference between qualitative and quantitative data?

- Regression analysis

- Monte Carlo simulation

- Factor analysis

- Cohort analysis

- Cluster analysis

- Time series analysis

- Sentiment analysis

- The data analysis process

- The best tools for data analysis

- Key takeaways

The first six methods listed are used for quantitative data , while the last technique applies to qualitative data. We briefly explain the difference between quantitative and qualitative data in section two, but if you want to skip straight to a particular analysis technique, just use the clickable menu.

1. What is data analysis and why is it important?

Data analysis is, put simply, the process of discovering useful information by evaluating data. This is done through a process of inspecting, cleaning, transforming, and modeling data using analytical and statistical tools, which we will explore in detail further along in this article.

Why is data analysis important? Analyzing data effectively helps organizations make business decisions. Nowadays, data is collected by businesses constantly: through surveys, online tracking, online marketing analytics, collected subscription and registration data (think newsletters), social media monitoring, among other methods.

These data will appear as different structures, including—but not limited to—the following:

The concept of big data —data that is so large, fast, or complex, that it is difficult or impossible to process using traditional methods—gained momentum in the early 2000s. Then, Doug Laney, an industry analyst, articulated what is now known as the mainstream definition of big data as the three Vs: volume, velocity, and variety.

- Volume: As mentioned earlier, organizations are collecting data constantly. In the not-too-distant past it would have been a real issue to store, but nowadays storage is cheap and takes up little space.

- Velocity: Received data needs to be handled in a timely manner. With the growth of the Internet of Things, this can mean these data are coming in constantly, and at an unprecedented speed.

- Variety: The data being collected and stored by organizations comes in many forms, ranging from structured data—that is, more traditional, numerical data—to unstructured data—think emails, videos, audio, and so on. We’ll cover structured and unstructured data a little further on.

This is a form of data that provides information about other data, such as an image. In everyday life you’ll find this by, for example, right-clicking on a file in a folder and selecting “Get Info”, which will show you information such as file size and kind, date of creation, and so on.

Real-time data

This is data that is presented as soon as it is acquired. A good example of this is a stock market ticket, which provides information on the most-active stocks in real time.

Machine data

This is data that is produced wholly by machines, without human instruction. An example of this could be call logs automatically generated by your smartphone.

Quantitative and qualitative data

Quantitative data—otherwise known as structured data— may appear as a “traditional” database—that is, with rows and columns. Qualitative data—otherwise known as unstructured data—are the other types of data that don’t fit into rows and columns, which can include text, images, videos and more. We’ll discuss this further in the next section.

2. What is the difference between quantitative and qualitative data?

How you analyze your data depends on the type of data you’re dealing with— quantitative or qualitative . So what’s the difference?

Quantitative data is anything measurable , comprising specific quantities and numbers. Some examples of quantitative data include sales figures, email click-through rates, number of website visitors, and percentage revenue increase. Quantitative data analysis techniques focus on the statistical, mathematical, or numerical analysis of (usually large) datasets. This includes the manipulation of statistical data using computational techniques and algorithms. Quantitative analysis techniques are often used to explain certain phenomena or to make predictions.

Qualitative data cannot be measured objectively , and is therefore open to more subjective interpretation. Some examples of qualitative data include comments left in response to a survey question, things people have said during interviews, tweets and other social media posts, and the text included in product reviews. With qualitative data analysis, the focus is on making sense of unstructured data (such as written text, or transcripts of spoken conversations). Often, qualitative analysis will organize the data into themes—a process which, fortunately, can be automated.

Data analysts work with both quantitative and qualitative data , so it’s important to be familiar with a variety of analysis methods. Let’s take a look at some of the most useful techniques now.

3. Data analysis techniques

Now we’re familiar with some of the different types of data, let’s focus on the topic at hand: different methods for analyzing data.

a. Regression analysis

Regression analysis is used to estimate the relationship between a set of variables. When conducting any type of regression analysis , you’re looking to see if there’s a correlation between a dependent variable (that’s the variable or outcome you want to measure or predict) and any number of independent variables (factors which may have an impact on the dependent variable). The aim of regression analysis is to estimate how one or more variables might impact the dependent variable, in order to identify trends and patterns. This is especially useful for making predictions and forecasting future trends.

Let’s imagine you work for an ecommerce company and you want to examine the relationship between: (a) how much money is spent on social media marketing, and (b) sales revenue. In this case, sales revenue is your dependent variable—it’s the factor you’re most interested in predicting and boosting. Social media spend is your independent variable; you want to determine whether or not it has an impact on sales and, ultimately, whether it’s worth increasing, decreasing, or keeping the same. Using regression analysis, you’d be able to see if there’s a relationship between the two variables. A positive correlation would imply that the more you spend on social media marketing, the more sales revenue you make. No correlation at all might suggest that social media marketing has no bearing on your sales. Understanding the relationship between these two variables would help you to make informed decisions about the social media budget going forward. However: It’s important to note that, on their own, regressions can only be used to determine whether or not there is a relationship between a set of variables—they don’t tell you anything about cause and effect. So, while a positive correlation between social media spend and sales revenue may suggest that one impacts the other, it’s impossible to draw definitive conclusions based on this analysis alone.

There are many different types of regression analysis, and the model you use depends on the type of data you have for the dependent variable. For example, your dependent variable might be continuous (i.e. something that can be measured on a continuous scale, such as sales revenue in USD), in which case you’d use a different type of regression analysis than if your dependent variable was categorical in nature (i.e. comprising values that can be categorised into a number of distinct groups based on a certain characteristic, such as customer location by continent). You can learn more about different types of dependent variables and how to choose the right regression analysis in this guide .

Regression analysis in action: Investigating the relationship between clothing brand Benetton’s advertising expenditure and sales

b. Monte Carlo simulation

When making decisions or taking certain actions, there are a range of different possible outcomes. If you take the bus, you might get stuck in traffic. If you walk, you might get caught in the rain or bump into your chatty neighbor, potentially delaying your journey. In everyday life, we tend to briefly weigh up the pros and cons before deciding which action to take; however, when the stakes are high, it’s essential to calculate, as thoroughly and accurately as possible, all the potential risks and rewards.

Monte Carlo simulation, otherwise known as the Monte Carlo method, is a computerized technique used to generate models of possible outcomes and their probability distributions. It essentially considers a range of possible outcomes and then calculates how likely it is that each particular outcome will be realized. The Monte Carlo method is used by data analysts to conduct advanced risk analysis, allowing them to better forecast what might happen in the future and make decisions accordingly.

So how does Monte Carlo simulation work, and what can it tell us? To run a Monte Carlo simulation, you’ll start with a mathematical model of your data—such as a spreadsheet. Within your spreadsheet, you’ll have one or several outputs that you’re interested in; profit, for example, or number of sales. You’ll also have a number of inputs; these are variables that may impact your output variable. If you’re looking at profit, relevant inputs might include the number of sales, total marketing spend, and employee salaries. If you knew the exact, definitive values of all your input variables, you’d quite easily be able to calculate what profit you’d be left with at the end. However, when these values are uncertain, a Monte Carlo simulation enables you to calculate all the possible options and their probabilities. What will your profit be if you make 100,000 sales and hire five new employees on a salary of $50,000 each? What is the likelihood of this outcome? What will your profit be if you only make 12,000 sales and hire five new employees? And so on. It does this by replacing all uncertain values with functions which generate random samples from distributions determined by you, and then running a series of calculations and recalculations to produce models of all the possible outcomes and their probability distributions. The Monte Carlo method is one of the most popular techniques for calculating the effect of unpredictable variables on a specific output variable, making it ideal for risk analysis.

Monte Carlo simulation in action: A case study using Monte Carlo simulation for risk analysis

c. Factor analysis

Factor analysis is a technique used to reduce a large number of variables to a smaller number of factors. It works on the basis that multiple separate, observable variables correlate with each other because they are all associated with an underlying construct. This is useful not only because it condenses large datasets into smaller, more manageable samples, but also because it helps to uncover hidden patterns. This allows you to explore concepts that cannot be easily measured or observed—such as wealth, happiness, fitness, or, for a more business-relevant example, customer loyalty and satisfaction.

Let’s imagine you want to get to know your customers better, so you send out a rather long survey comprising one hundred questions. Some of the questions relate to how they feel about your company and product; for example, “Would you recommend us to a friend?” and “How would you rate the overall customer experience?” Other questions ask things like “What is your yearly household income?” and “How much are you willing to spend on skincare each month?”

Once your survey has been sent out and completed by lots of customers, you end up with a large dataset that essentially tells you one hundred different things about each customer (assuming each customer gives one hundred responses). Instead of looking at each of these responses (or variables) individually, you can use factor analysis to group them into factors that belong together—in other words, to relate them to a single underlying construct. In this example, factor analysis works by finding survey items that are strongly correlated. This is known as covariance . So, if there’s a strong positive correlation between household income and how much they’re willing to spend on skincare each month (i.e. as one increases, so does the other), these items may be grouped together. Together with other variables (survey responses), you may find that they can be reduced to a single factor such as “consumer purchasing power”. Likewise, if a customer experience rating of 10/10 correlates strongly with “yes” responses regarding how likely they are to recommend your product to a friend, these items may be reduced to a single factor such as “customer satisfaction”.

In the end, you have a smaller number of factors rather than hundreds of individual variables. These factors are then taken forward for further analysis, allowing you to learn more about your customers (or any other area you’re interested in exploring).

Factor analysis in action: Using factor analysis to explore customer behavior patterns in Tehran

d. Cohort analysis

Cohort analysis is a data analytics technique that groups users based on a shared characteristic , such as the date they signed up for a service or the product they purchased. Once users are grouped into cohorts, analysts can track their behavior over time to identify trends and patterns.

So what does this mean and why is it useful? Let’s break down the above definition further. A cohort is a group of people who share a common characteristic (or action) during a given time period. Students who enrolled at university in 2020 may be referred to as the 2020 cohort. Customers who purchased something from your online store via the app in the month of December may also be considered a cohort.

With cohort analysis, you’re dividing your customers or users into groups and looking at how these groups behave over time. So, rather than looking at a single, isolated snapshot of all your customers at a given moment in time (with each customer at a different point in their journey), you’re examining your customers’ behavior in the context of the customer lifecycle. As a result, you can start to identify patterns of behavior at various points in the customer journey—say, from their first ever visit to your website, through to email newsletter sign-up, to their first purchase, and so on. As such, cohort analysis is dynamic, allowing you to uncover valuable insights about the customer lifecycle.

This is useful because it allows companies to tailor their service to specific customer segments (or cohorts). Let’s imagine you run a 50% discount campaign in order to attract potential new customers to your website. Once you’ve attracted a group of new customers (a cohort), you’ll want to track whether they actually buy anything and, if they do, whether or not (and how frequently) they make a repeat purchase. With these insights, you’ll start to gain a much better understanding of when this particular cohort might benefit from another discount offer or retargeting ads on social media, for example. Ultimately, cohort analysis allows companies to optimize their service offerings (and marketing) to provide a more targeted, personalized experience. You can learn more about how to run cohort analysis using Google Analytics .

Cohort analysis in action: How Ticketmaster used cohort analysis to boost revenue

e. Cluster analysis

Cluster analysis is an exploratory technique that seeks to identify structures within a dataset. The goal of cluster analysis is to sort different data points into groups (or clusters) that are internally homogeneous and externally heterogeneous. This means that data points within a cluster are similar to each other, and dissimilar to data points in another cluster. Clustering is used to gain insight into how data is distributed in a given dataset, or as a preprocessing step for other algorithms.

There are many real-world applications of cluster analysis. In marketing, cluster analysis is commonly used to group a large customer base into distinct segments, allowing for a more targeted approach to advertising and communication. Insurance firms might use cluster analysis to investigate why certain locations are associated with a high number of insurance claims. Another common application is in geology, where experts will use cluster analysis to evaluate which cities are at greatest risk of earthquakes (and thus try to mitigate the risk with protective measures).

It’s important to note that, while cluster analysis may reveal structures within your data, it won’t explain why those structures exist. With that in mind, cluster analysis is a useful starting point for understanding your data and informing further analysis. Clustering algorithms are also used in machine learning—you can learn more about clustering in machine learning in our guide .

Cluster analysis in action: Using cluster analysis for customer segmentation—a telecoms case study example

f. Time series analysis

Time series analysis is a statistical technique used to identify trends and cycles over time. Time series data is a sequence of data points which measure the same variable at different points in time (for example, weekly sales figures or monthly email sign-ups). By looking at time-related trends, analysts are able to forecast how the variable of interest may fluctuate in the future.

When conducting time series analysis, the main patterns you’ll be looking out for in your data are:

- Trends: Stable, linear increases or decreases over an extended time period.

- Seasonality: Predictable fluctuations in the data due to seasonal factors over a short period of time. For example, you might see a peak in swimwear sales in summer around the same time every year.

- Cyclic patterns: Unpredictable cycles where the data fluctuates. Cyclical trends are not due to seasonality, but rather, may occur as a result of economic or industry-related conditions.

As you can imagine, the ability to make informed predictions about the future has immense value for business. Time series analysis and forecasting is used across a variety of industries, most commonly for stock market analysis, economic forecasting, and sales forecasting. There are different types of time series models depending on the data you’re using and the outcomes you want to predict. These models are typically classified into three broad types: the autoregressive (AR) models, the integrated (I) models, and the moving average (MA) models. For an in-depth look at time series analysis, refer to our guide .

Time series analysis in action: Developing a time series model to predict jute yarn demand in Bangladesh

g. Sentiment analysis

When you think of data, your mind probably automatically goes to numbers and spreadsheets.

Many companies overlook the value of qualitative data, but in reality, there are untold insights to be gained from what people (especially customers) write and say about you. So how do you go about analyzing textual data?

One highly useful qualitative technique is sentiment analysis , a technique which belongs to the broader category of text analysis —the (usually automated) process of sorting and understanding textual data.

With sentiment analysis, the goal is to interpret and classify the emotions conveyed within textual data. From a business perspective, this allows you to ascertain how your customers feel about various aspects of your brand, product, or service.

There are several different types of sentiment analysis models, each with a slightly different focus. The three main types include:

Fine-grained sentiment analysis

If you want to focus on opinion polarity (i.e. positive, neutral, or negative) in depth, fine-grained sentiment analysis will allow you to do so.

For example, if you wanted to interpret star ratings given by customers, you might use fine-grained sentiment analysis to categorize the various ratings along a scale ranging from very positive to very negative.

Emotion detection

This model often uses complex machine learning algorithms to pick out various emotions from your textual data.

You might use an emotion detection model to identify words associated with happiness, anger, frustration, and excitement, giving you insight into how your customers feel when writing about you or your product on, say, a product review site.

Aspect-based sentiment analysis

This type of analysis allows you to identify what specific aspects the emotions or opinions relate to, such as a certain product feature or a new ad campaign.

If a customer writes that they “find the new Instagram advert so annoying”, your model should detect not only a negative sentiment, but also the object towards which it’s directed.

In a nutshell, sentiment analysis uses various Natural Language Processing (NLP) algorithms and systems which are trained to associate certain inputs (for example, certain words) with certain outputs.

For example, the input “annoying” would be recognized and tagged as “negative”. Sentiment analysis is crucial to understanding how your customers feel about you and your products, for identifying areas for improvement, and even for averting PR disasters in real-time!

Sentiment analysis in action: 5 Real-world sentiment analysis case studies

4. The data analysis process

In order to gain meaningful insights from data, data analysts will perform a rigorous step-by-step process. We go over this in detail in our step by step guide to the data analysis process —but, to briefly summarize, the data analysis process generally consists of the following phases:

Defining the question

The first step for any data analyst will be to define the objective of the analysis, sometimes called a ‘problem statement’. Essentially, you’re asking a question with regards to a business problem you’re trying to solve. Once you’ve defined this, you’ll then need to determine which data sources will help you answer this question.

Collecting the data

Now that you’ve defined your objective, the next step will be to set up a strategy for collecting and aggregating the appropriate data. Will you be using quantitative (numeric) or qualitative (descriptive) data? Do these data fit into first-party, second-party, or third-party data?

Learn more: Quantitative vs. Qualitative Data: What’s the Difference?

Cleaning the data

Unfortunately, your collected data isn’t automatically ready for analysis—you’ll have to clean it first. As a data analyst, this phase of the process will take up the most time. During the data cleaning process, you will likely be:

- Removing major errors, duplicates, and outliers

- Removing unwanted data points

- Structuring the data—that is, fixing typos, layout issues, etc.

- Filling in major gaps in data

Analyzing the data

Now that we’ve finished cleaning the data, it’s time to analyze it! Many analysis methods have already been described in this article, and it’s up to you to decide which one will best suit the assigned objective. It may fall under one of the following categories:

- Descriptive analysis , which identifies what has already happened

- Diagnostic analysis , which focuses on understanding why something has happened

- Predictive analysis , which identifies future trends based on historical data

- Prescriptive analysis , which allows you to make recommendations for the future

Visualizing and sharing your findings

We’re almost at the end of the road! Analyses have been made, insights have been gleaned—all that remains to be done is to share this information with others. This is usually done with a data visualization tool, such as Google Charts, or Tableau.

Learn more: 13 of the Most Common Types of Data Visualization

To sum up the process, Will’s explained it all excellently in the following video:

5. The best tools for data analysis

As you can imagine, every phase of the data analysis process requires the data analyst to have a variety of tools under their belt that assist in gaining valuable insights from data. We cover these tools in greater detail in this article , but, in summary, here’s our best-of-the-best list, with links to each product:

The top 9 tools for data analysts

- Microsoft Excel

- Jupyter Notebook

- Apache Spark

- Microsoft Power BI

6. Key takeaways and further reading

As you can see, there are many different data analysis techniques at your disposal. In order to turn your raw data into actionable insights, it’s important to consider what kind of data you have (is it qualitative or quantitative?) as well as the kinds of insights that will be useful within the given context. In this post, we’ve introduced seven of the most useful data analysis techniques—but there are many more out there to be discovered!

So what now? If you haven’t already, we recommend reading the case studies for each analysis technique discussed in this post (you’ll find a link at the end of each section). For a more hands-on introduction to the kinds of methods and techniques that data analysts use, try out this free introductory data analytics short course. In the meantime, you might also want to read the following:

- The Best Online Data Analytics Courses for 2024

- What Is Time Series Data and How Is It Analyzed?

- What is Spatial Analysis?

- Business Essentials

- Leadership & Management

- Credential of Leadership, Impact, and Management in Business (CLIMB)

- Entrepreneurship & Innovation

- Digital Transformation

- Finance & Accounting

- Business in Society

- For Organizations

- Support Portal

- Media Coverage

- Founding Donors

- Leadership Team

- Harvard Business School →

- HBS Online →

- Business Insights →

Business Insights

Harvard Business School Online's Business Insights Blog provides the career insights you need to achieve your goals and gain confidence in your business skills.

- Career Development

- Communication

- Decision-Making

- Earning Your MBA

- Negotiation

- News & Events

- Productivity

- Staff Spotlight

- Student Profiles

- Work-Life Balance

- AI Essentials for Business

- Alternative Investments

- Business Analytics

- Business Strategy

- Business and Climate Change

- Design Thinking and Innovation

- Digital Marketing Strategy

- Disruptive Strategy

- Economics for Managers

- Entrepreneurship Essentials

- Financial Accounting

- Global Business

- Launching Tech Ventures

- Leadership Principles

- Leadership, Ethics, and Corporate Accountability

- Leading Change and Organizational Renewal

- Leading with Finance

- Management Essentials

- Negotiation Mastery

- Organizational Leadership

- Power and Influence for Positive Impact

- Strategy Execution

- Sustainable Business Strategy

- Sustainable Investing

- Winning with Digital Platforms

17 Data Visualization Techniques All Professionals Should Know

- 17 Sep 2019

There’s a growing demand for business analytics and data expertise in the workforce. But you don’t need to be a professional analyst to benefit from data-related skills.

Becoming skilled at common data visualization techniques can help you reap the rewards of data-driven decision-making , including increased confidence and potential cost savings. Learning how to effectively visualize data could be the first step toward using data analytics and data science to your advantage to add value to your organization.

Several data visualization techniques can help you become more effective in your role. Here are 17 essential data visualization techniques all professionals should know, as well as tips to help you effectively present your data.

Access your free e-book today.

What Is Data Visualization?

Data visualization is the process of creating graphical representations of information. This process helps the presenter communicate data in a way that’s easy for the viewer to interpret and draw conclusions.

There are many different techniques and tools you can leverage to visualize data, so you want to know which ones to use and when. Here are some of the most important data visualization techniques all professionals should know.

Data Visualization Techniques

The type of data visualization technique you leverage will vary based on the type of data you’re working with, in addition to the story you’re telling with your data .

Here are some important data visualization techniques to know:

- Gantt Chart

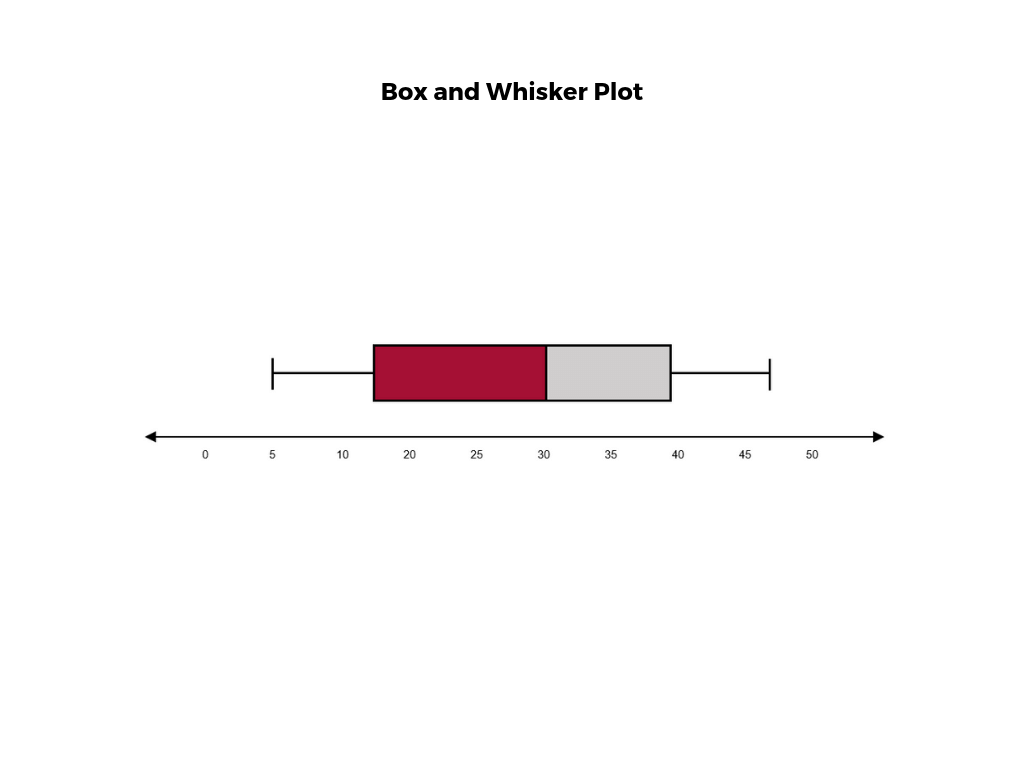

- Box and Whisker Plot

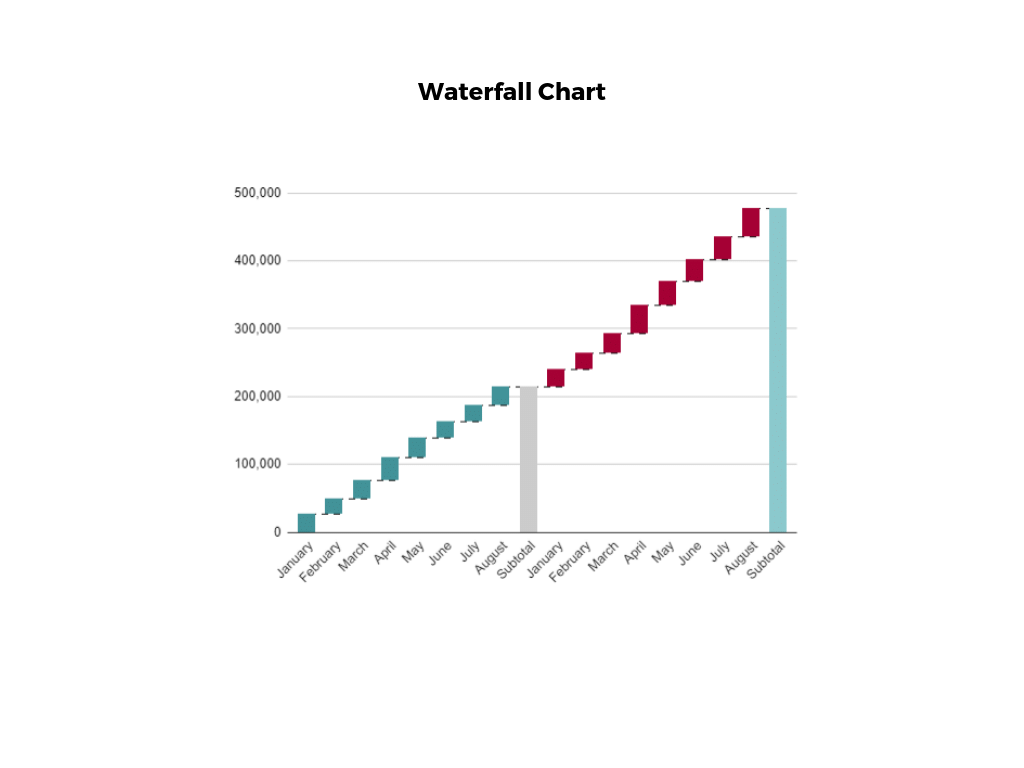

- Waterfall Chart

- Scatter Plot

- Pictogram Chart

- Highlight Table

- Bullet Graph

- Choropleth Map

- Network Diagram

- Correlation Matrices

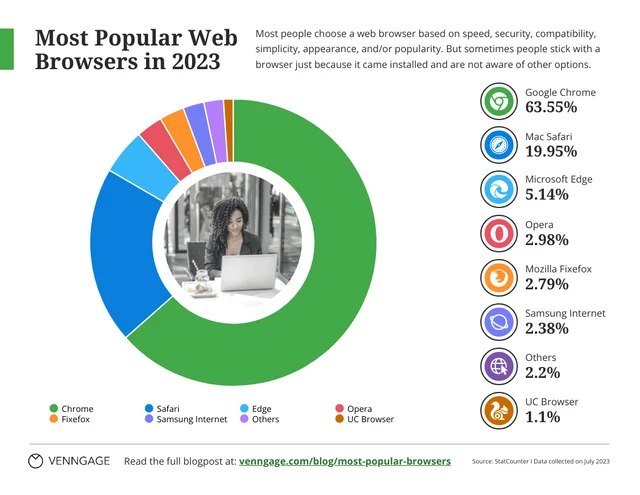

1. Pie Chart

Pie charts are one of the most common and basic data visualization techniques, used across a wide range of applications. Pie charts are ideal for illustrating proportions, or part-to-whole comparisons.

Because pie charts are relatively simple and easy to read, they’re best suited for audiences who might be unfamiliar with the information or are only interested in the key takeaways. For viewers who require a more thorough explanation of the data, pie charts fall short in their ability to display complex information.

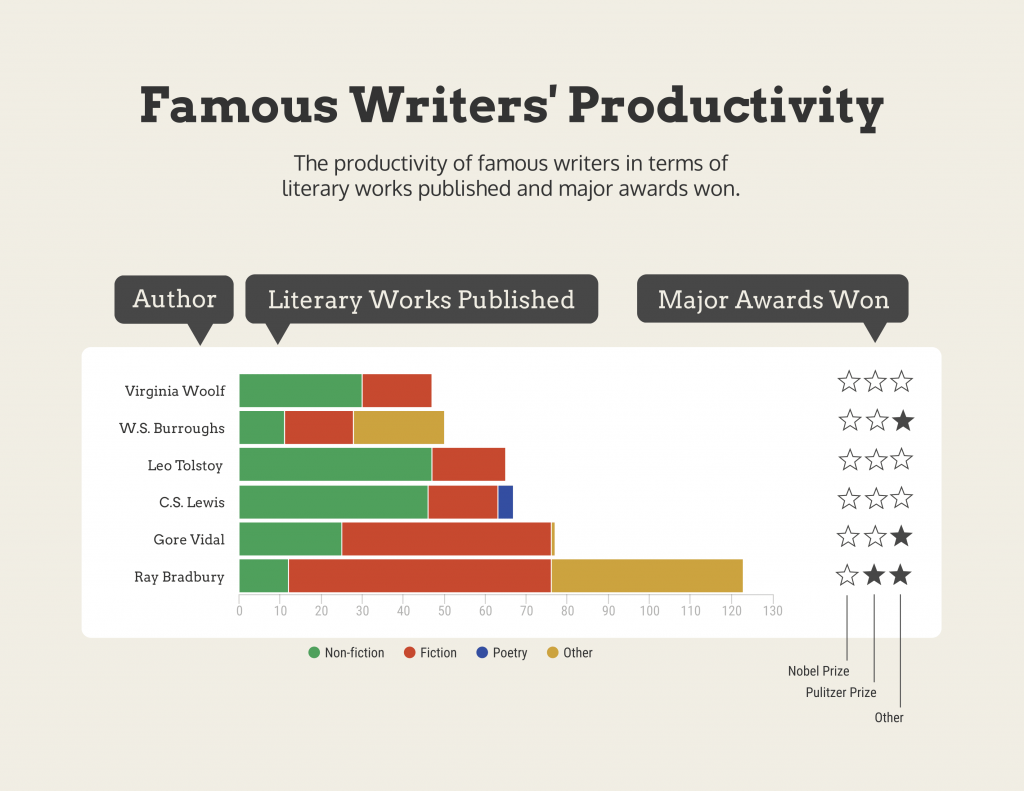

2. Bar Chart

The classic bar chart , or bar graph, is another common and easy-to-use method of data visualization. In this type of visualization, one axis of the chart shows the categories being compared, and the other, a measured value. The length of the bar indicates how each group measures according to the value.

One drawback is that labeling and clarity can become problematic when there are too many categories included. Like pie charts, they can also be too simple for more complex data sets.

3. Histogram

Unlike bar charts, histograms illustrate the distribution of data over a continuous interval or defined period. These visualizations are helpful in identifying where values are concentrated, as well as where there are gaps or unusual values.

Histograms are especially useful for showing the frequency of a particular occurrence. For instance, if you’d like to show how many clicks your website received each day over the last week, you can use a histogram. From this visualization, you can quickly determine which days your website saw the greatest and fewest number of clicks.

4. Gantt Chart

Gantt charts are particularly common in project management, as they’re useful in illustrating a project timeline or progression of tasks. In this type of chart, tasks to be performed are listed on the vertical axis and time intervals on the horizontal axis. Horizontal bars in the body of the chart represent the duration of each activity.

Utilizing Gantt charts to display timelines can be incredibly helpful, and enable team members to keep track of every aspect of a project. Even if you’re not a project management professional, familiarizing yourself with Gantt charts can help you stay organized.

5. Heat Map

A heat map is a type of visualization used to show differences in data through variations in color. These charts use color to communicate values in a way that makes it easy for the viewer to quickly identify trends. Having a clear legend is necessary in order for a user to successfully read and interpret a heatmap.

There are many possible applications of heat maps. For example, if you want to analyze which time of day a retail store makes the most sales, you can use a heat map that shows the day of the week on the vertical axis and time of day on the horizontal axis. Then, by shading in the matrix with colors that correspond to the number of sales at each time of day, you can identify trends in the data that allow you to determine the exact times your store experiences the most sales.

6. A Box and Whisker Plot

A box and whisker plot , or box plot, provides a visual summary of data through its quartiles. First, a box is drawn from the first quartile to the third of the data set. A line within the box represents the median. “Whiskers,” or lines, are then drawn extending from the box to the minimum (lower extreme) and maximum (upper extreme). Outliers are represented by individual points that are in-line with the whiskers.

This type of chart is helpful in quickly identifying whether or not the data is symmetrical or skewed, as well as providing a visual summary of the data set that can be easily interpreted.

7. Waterfall Chart

A waterfall chart is a visual representation that illustrates how a value changes as it’s influenced by different factors, such as time. The main goal of this chart is to show the viewer how a value has grown or declined over a defined period. For example, waterfall charts are popular for showing spending or earnings over time.

8. Area Chart

An area chart , or area graph, is a variation on a basic line graph in which the area underneath the line is shaded to represent the total value of each data point. When several data series must be compared on the same graph, stacked area charts are used.

This method of data visualization is useful for showing changes in one or more quantities over time, as well as showing how each quantity combines to make up the whole. Stacked area charts are effective in showing part-to-whole comparisons.

9. Scatter Plot

Another technique commonly used to display data is a scatter plot . A scatter plot displays data for two variables as represented by points plotted against the horizontal and vertical axis. This type of data visualization is useful in illustrating the relationships that exist between variables and can be used to identify trends or correlations in data.

Scatter plots are most effective for fairly large data sets, since it’s often easier to identify trends when there are more data points present. Additionally, the closer the data points are grouped together, the stronger the correlation or trend tends to be.

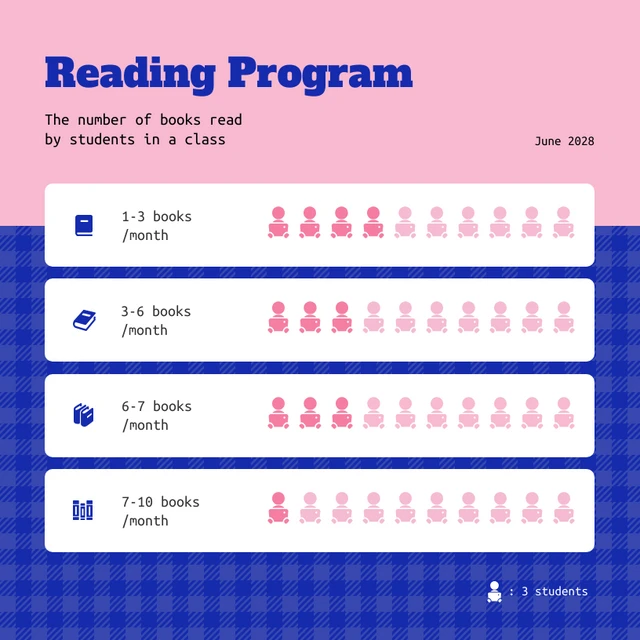

10. Pictogram Chart

Pictogram charts , or pictograph charts, are particularly useful for presenting simple data in a more visual and engaging way. These charts use icons to visualize data, with each icon representing a different value or category. For example, data about time might be represented by icons of clocks or watches. Each icon can correspond to either a single unit or a set number of units (for example, each icon represents 100 units).

In addition to making the data more engaging, pictogram charts are helpful in situations where language or cultural differences might be a barrier to the audience’s understanding of the data.

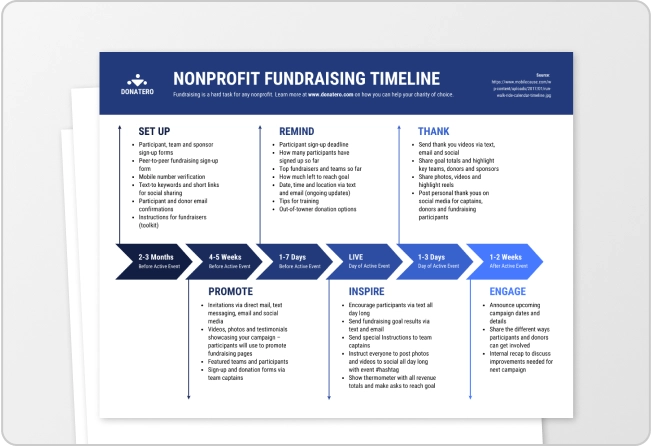

11. Timeline

Timelines are the most effective way to visualize a sequence of events in chronological order. They’re typically linear, with key events outlined along the axis. Timelines are used to communicate time-related information and display historical data.

Timelines allow you to highlight the most important events that occurred, or need to occur in the future, and make it easy for the viewer to identify any patterns appearing within the selected time period. While timelines are often relatively simple linear visualizations, they can be made more visually appealing by adding images, colors, fonts, and decorative shapes.

12. Highlight Table

A highlight table is a more engaging alternative to traditional tables. By highlighting cells in the table with color, you can make it easier for viewers to quickly spot trends and patterns in the data. These visualizations are useful for comparing categorical data.

Depending on the data visualization tool you’re using, you may be able to add conditional formatting rules to the table that automatically color cells that meet specified conditions. For instance, when using a highlight table to visualize a company’s sales data, you may color cells red if the sales data is below the goal, or green if sales were above the goal. Unlike a heat map, the colors in a highlight table are discrete and represent a single meaning or value.

13. Bullet Graph

A bullet graph is a variation of a bar graph that can act as an alternative to dashboard gauges to represent performance data. The main use for a bullet graph is to inform the viewer of how a business is performing in comparison to benchmarks that are in place for key business metrics.

In a bullet graph, the darker horizontal bar in the middle of the chart represents the actual value, while the vertical line represents a comparative value, or target. If the horizontal bar passes the vertical line, the target for that metric has been surpassed. Additionally, the segmented colored sections behind the horizontal bar represent range scores, such as “poor,” “fair,” or “good.”

14. Choropleth Maps

A choropleth map uses color, shading, and other patterns to visualize numerical values across geographic regions. These visualizations use a progression of color (or shading) on a spectrum to distinguish high values from low.

Choropleth maps allow viewers to see how a variable changes from one region to the next. A potential downside to this type of visualization is that the exact numerical values aren’t easily accessible because the colors represent a range of values. Some data visualization tools, however, allow you to add interactivity to your map so the exact values are accessible.

15. Word Cloud

A word cloud , or tag cloud, is a visual representation of text data in which the size of the word is proportional to its frequency. The more often a specific word appears in a dataset, the larger it appears in the visualization. In addition to size, words often appear bolder or follow a specific color scheme depending on their frequency.

Word clouds are often used on websites and blogs to identify significant keywords and compare differences in textual data between two sources. They are also useful when analyzing qualitative datasets, such as the specific words consumers used to describe a product.

16. Network Diagram

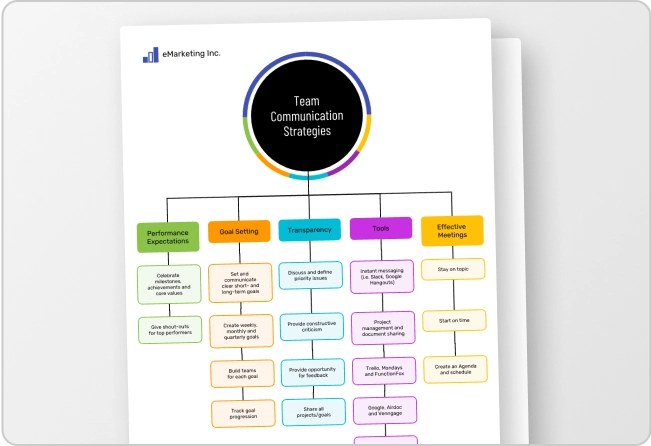

Network diagrams are a type of data visualization that represent relationships between qualitative data points. These visualizations are composed of nodes and links, also called edges. Nodes are singular data points that are connected to other nodes through edges, which show the relationship between multiple nodes.

There are many use cases for network diagrams, including depicting social networks, highlighting the relationships between employees at an organization, or visualizing product sales across geographic regions.

17. Correlation Matrix