Cognitive Distortions: 22 Examples & Worksheets (& PDF)

Our brains are constantly interpreting the world around us and telling us what it all means. Generally, this is a good thing: our brain has been wired to alert us to danger, attract us to potential mates, and find solutions to the problems we encounter every day.

However, there are some occasions when you may want to second-guess what your brain is telling you. It’s not that your brain is purposely lying to you; it’s just that it may have developed some faulty or unhelpful connections over time.

It can be surprisingly easy to create faulty connections in the brain. Our brains are predisposed to making connections between thoughts, ideas, actions, and consequences, whether they are truly connected or not.

This tendency to make connections where there is no true relationship is the basis of a common problem when it comes to interpreting research: the assumption that because two variables are correlated, one causes or leads to the other. The refrain “correlation does not equal causation!” is a familiar one to any student of psychology or the social sciences.

It is all too easy to view a coincidence or a complicated relationship and make false or overly simplistic assumptions in research—just as it is easy to connect two events or thoughts that occur around the same time when there are no real ties between them.

There are many terms for this kind of mistake in social science research, complete with academic jargon and overly complicated phrasing. In the context of our thoughts and beliefs, these mistakes are referred to as “cognitive distortions.”


This Article Contains:

- What Are Cognitive Distortions?

- Experts in Cognitive Distortions: Aaron Beck and David Burns

- A List of the Most Common Cognitive Distortions

- Changing Your Thinking: Examples of Techniques to Combat Cognitive Distortions

- A Take-Home Message

What Are Cognitive Distortions?

Cognitive distortions are biased perspectives we take on ourselves and the world around us. They are irrational thoughts and beliefs that we unknowingly reinforce over time.

These patterns and systems of thought are often subtle; it’s difficult to recognize them when they are a regular feature of your day-to-day thoughts. That is why they can be so damaging: it’s hard to change what you don’t recognize as something that needs to change!

Cognitive distortions come in many forms (which we’ll cover later in this piece), but they all have some things in common.

All cognitive distortions are:

- tendencies or patterns of thinking or believing;

- that are false or inaccurate;

- and have the potential to cause psychological damage.

It can be scary to admit that you may fall prey to distorted thinking. You might be thinking, “There’s no way I am holding on to any blatantly false beliefs!” While most people don’t suffer significantly from these kinds of cognitive distortions in their daily lives, it seems that no one can escape them completely.

If you’re human, you have likely fallen for a few of the numerous cognitive distortions at one time or another. The difference between those who occasionally stumble into a cognitive distortion and those who struggle with them on a more long-term basis is the ability to identify and modify or correct these faulty patterns of thinking.

As with many skills and abilities in life, some people are far better at this than others, but with practice, you can improve your ability to recognize and respond to these distortions.

These distortions have been shown to relate positively to symptoms of depression, meaning that where cognitive distortions abound, symptoms of depression are likely to occur as well (Burns, Shaw, & Croker, 1987).

In the words of the renowned psychiatrist and researcher David Burns:

“I suspect you will find that a great many of your negative feelings are in fact based on such thinking errors.”

Errors in thinking, or cognitive distortions, are particularly effective at provoking or exacerbating symptoms of depression. It is still unclear whether these distortions cause depression or depression brings out these distortions (after all, correlation does not equal causation!), but it is clear that they frequently go hand in hand.

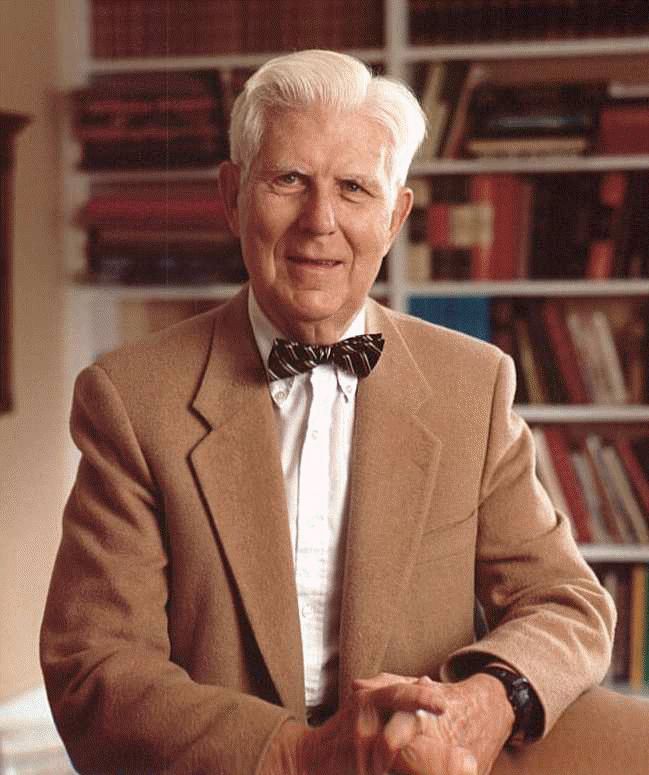

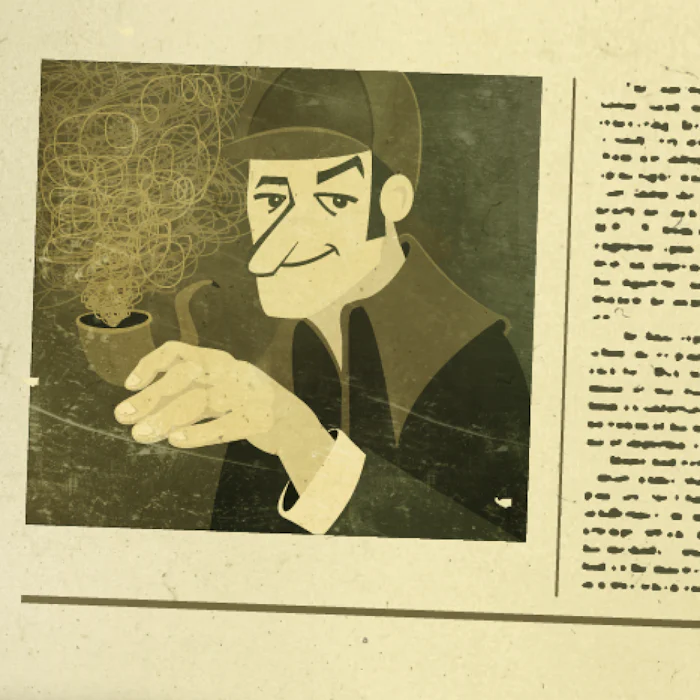

Experts in Cognitive Distortions: Aaron Beck and David Burns

If you dig deeper into cognitive distortions and their role in depression, anxiety, and other mental health issues, you will find two names over and over again: Aaron Beck and David Burns. Both are prominent in the fields of psychiatry and psychotherapy, and much of our knowledge about cognitive distortions comes from their research.

These two psychiatrists literally wrote the book(s) on depression, cognitive distortions, and the treatment of these problems.

Aaron Beck

Aaron Beck began his career at Yale Medical School, where he graduated in 1946 (GoodTherapy, 2015). His required rotations in psychiatry during his residency ignited his passion for research on depression, suicide, and effective treatment.

In 1954, he joined the University of Pennsylvania’s Department of Psychiatry, where he later became Professor Emeritus of Psychiatry.

In addition to his prodigious catalog of publications, Beck founded the Beck Initiative to teach therapists how to conduct cognitive therapy with their patients, an endeavor that has helped cognitive therapy grow into the therapy juggernaut that it is today.

Beck also applied his knowledge as a member of or consultant to the National Institute of Mental Health, as an editor for several peer-reviewed journals, and through lectures and visiting professorships at various academic institutions throughout the world (GoodTherapy, 2015).

While there are clearly many honors, awards, and achievements Beck may be known for, perhaps his greatest contribution to the field of psychology is his role in the development of cognitive therapy.

Beck developed the basis for Cognitive Behavioral Therapy, or CBT, when he noticed that many of his patients struggling with depression were operating on false assumptions and distorted thinking (GoodTherapy, 2015). He connected these distorted thinking patterns with his patients’ symptoms and hypothesized that changing their thinking could change their symptoms.

This is the foundation of CBT: the idea that our thought patterns and deeply held beliefs about ourselves and the world around us drive our experiences. When these patterns and beliefs are distorted, they can lead to mental health disorders, but they can also be modified or changed to relieve troublesome symptoms.

In line with his general research focus, Beck also developed two important scales that are among the most widely used in psychology: the Beck Depression Inventory and the Beck Hopelessness Scale. These scales are used to evaluate symptoms of depression and the risk of suicide and are still applied decades after their original development (GoodTherapy, 2015).

David Burns

Another big name in depression and treatment research, Dr. David Burns, also spent some time learning and developing his skills at the University of Pennsylvania – it seems that UPenn is particularly good at producing future leaders in psychology!

Burns graduated from Stanford University School of Medicine and moved on to the University of Pennsylvania School of Medicine, where he completed his psychiatry residency and cemented his interest in the treatment of mental health disorders (Feeling Good, n.d.).

He is currently serving as a Professor Emeritus of Psychiatry and Behavioral Sciences at the Stanford University School of Medicine, in addition to continuing his research on treating depression and training therapists to conduct effective psychotherapy sessions (Feeling Good, n.d.). Much of his work is based on Beck’s research revealing the potential impacts of distorted thinking and suggesting ways to correct this thinking.

He is perhaps best known outside of strictly academic circles for his worldwide best-selling book Feeling Good: The New Mood Therapy. This book has sold more than 4 million copies in the United States alone and is often recommended by therapists to their patients struggling with depression (Summit for Clinical Excellence, n.d.).

This book outlines Burns’ approach to treating depression, which focuses mostly on identifying, correcting, and replacing distorted systems and patterns of thinking. If you are interested in learning more about this book, you can find it on Amazon, where it has over 1,400 reader reviews.

Burns has also discussed his work on the treatment of depression in a TED talk, where he explains how his studies of depression have also influenced research on joy and self-esteem.

His book Feeling Good presents the principles of cognitive behavioral therapy, currently the most researched form of psychotherapy, with the aim of making its tools available to the general public.

A List of the Most Common Cognitive Distortions

Many others have picked up the torch for this research, often with their own take on cognitive distortions. As a result, numerous cognitive distortions are floating around in the literature, but we’ll limit this list to the sixteen most common.

The first eleven distortions come straight from Burns’ Feeling Good Handbook (1989).

1. All-or-Nothing Thinking / Polarized Thinking

Also known as “Black-and-White Thinking,” this distortion manifests as an inability or unwillingness to see shades of gray. In other words, you see things in terms of extremes: something is either fantastic or awful, and you believe you are either perfect or a total failure.

2. Overgeneralization

This sneaky distortion takes one instance or example and generalizes it to an overall pattern. For example, a student may receive a C on one test and conclude that she is stupid and a failure. Overgeneralizing can lead to overly negative thoughts about yourself and your environment based on only one or two experiences.

3. Mental Filter

Similar to overgeneralization, the mental filter distortion focuses on a single negative piece of information and excludes all the positive ones. An example of this distortion is one partner in a romantic relationship dwelling on a single negative comment made by the other partner and viewing the relationship as hopelessly lost, while ignoring the years of positive comments and experiences.

The mental filter can foster a decidedly pessimistic view of everything around you by focusing only on the negative.

4. Disqualifying the Positive

On the flip side, the “Disqualifying the Positive” distortion acknowledges positive experiences but rejects them instead of embracing them.

For example, a person who receives a positive review at work might reject the idea that they are a competent employee and attribute the positive review to political correctness, or to their boss simply not wanting to talk about their performance problems.

This is an especially malignant distortion since it can facilitate the continuation of negative thought patterns even in the face of strong evidence to the contrary.

5. Jumping to Conclusions – Mind Reading

This “Jumping to Conclusions” distortion manifests as the inaccurate belief that we know what another person is thinking. Of course, it is possible to have an idea of what other people are thinking, but this distortion refers to the negative interpretations that we jump to.

Seeing a stranger with an unpleasant expression and jumping to the conclusion that they are thinking something negative about you is an example of this distortion.

6. Jumping to Conclusions – Fortune Telling

A sister distortion to mind reading, fortune telling refers to the tendency to draw conclusions and make predictions based on little to no evidence and to hold them as gospel truth.

One example of fortune-telling is a young, single woman predicting that she will never find love or have a committed and happy relationship based only on the fact that she has not found it yet. There is simply no way for her to know how her life will turn out, but she sees this prediction as fact rather than one of several possible outcomes.

7. Magnification (Catastrophizing) or Minimization

Also known as the “Binocular Trick” for its stealthy skewing of your perspective, this distortion involves exaggerating or minimizing the meaning, importance, or likelihood of things.

An athlete who is generally a good player but makes a mistake may magnify the importance of that mistake and believe that he is a terrible teammate, while an athlete who wins a coveted award in her sport may minimize the importance of the award and continue believing that she is only a mediocre player.

8. Emotional Reasoning

This may be one of the most surprising distortions to many readers, and it is also one of the most important to identify and address. What surprises most people is not the logic behind this distortion but the realization that virtually all of us have bought into it at one time or another.

Emotional reasoning refers to the acceptance of one’s emotions as fact. It can be described as “I feel it, therefore it must be true.” Just because we feel something doesn’t mean it is true; for example, we may become jealous and think our partner has feelings for someone else, but that doesn’t make it true. Of course, we know it isn’t reasonable to take our feelings as fact, but it is a common distortion nonetheless.


9. Should Statements

Another particularly damaging distortion is the tendency to make “should” statements. Should statements are statements that you make to yourself about what you “should” do, what you “ought” to do, or what you “must” do. They can also be applied to others, imposing a set of expectations that will likely not be met.

When we hang on too tightly to our “should” statements about ourselves, the result is often guilt when we cannot live up to them. When we cling to our “should” statements about others, we are generally disappointed by their failure to meet our expectations, leading to anger and resentment.

10. Labeling and Mislabeling

These tendencies are basically extreme forms of overgeneralization, in which we assign judgments of value to ourselves or to others based on one instance or experience.

For example, a student who labels herself as “an utter fool” for failing an assignment is engaging in this distortion, as is the waiter who labels a customer “a grumpy old miser” if he fails to thank the waiter for bringing his food. Mislabeling refers to the application of highly emotional, loaded, and inaccurate or unreasonable language when labeling.

11. Personalization

As the name implies, this distortion involves taking everything personally or assigning blame to yourself without any logical reason to believe you are to blame.

This distortion covers a wide range of situations, from assuming you are the reason a friend did not enjoy the girls’ night out, to the more severe examples of believing that you are the cause of every instance of moodiness or irritation in those around you.

In addition to these basic cognitive distortions, Beck and Burns have mentioned a few others (Beck, 1976; Burns, 1980):

12. Control Fallacies

A control fallacy manifests as one of two beliefs: (1) that we have no control over our lives and are helpless victims of fate, or (2) that we are in complete control of ourselves and our surroundings, giving us responsibility for the feelings of those around us. Both beliefs are damaging, and both are equally inaccurate.

No one is in complete control of what happens to them, and no one has absolutely no control over their situation. Even in extreme situations where an individual seemingly has no choice in what they do or where they go, they still have a certain amount of control over how they approach their situation mentally.

13. Fallacy of Fairness

While we would all probably prefer to operate in a world that is fair, the assumption of an inherently fair world is not based in reality and can foster negative feelings when we are faced with proof of life’s unfairness.

A person who judges every experience by its perceived fairness has fallen for this fallacy, and will likely feel anger, resentment, and hopelessness when they inevitably encounter a situation that is not fair.

14. Fallacy of Change

Another ‘fallacy’ distortion involves expecting others to change if we pressure or encourage them enough. This distortion is usually accompanied by a belief that our happiness and success rest on other people, leading us to believe that forcing those around us to change is the only way to get what we want.

A man who thinks “If I just encourage my wife to stop doing the things that irritate me, I can be a better husband and a happier person” is exhibiting the fallacy of change.

15. Always Being Right

Perfectionists and those struggling with Imposter Syndrome will recognize this distortion – it is the belief that we must always be right. For those struggling with this distortion, the idea that we could be wrong is absolutely unacceptable, and we will fight to the metaphorical death to prove that we are right.

For example, the internet commenters who spend hours arguing with each other over an opinion or political issue far beyond the point where reasonable individuals would conclude that they should “agree to disagree” are engaging in the “Always Being Right” distortion. To them, it is not simply a matter of a difference of opinion; it is an intellectual battle that must be won at all costs.

16. Heaven’s Reward Fallacy

This distortion is a popular one, and it’s easy to see myriad examples of this fallacy playing out on big and small screens across the world. The “Heaven’s Reward Fallacy” manifests as a belief that one’s struggles, one’s suffering, and one’s hard work will result in a just reward.

It is obvious why this type of thinking is a distortion – how many examples can you think of, just within the realm of your personal acquaintances, where hard work and sacrifice did not pay off?

Sometimes no matter how hard we work or how much we sacrifice, we will not achieve what we hope to achieve. To think otherwise is a potentially damaging pattern of thought that can result in disappointment, frustration, anger, and even depression when the awaited reward does not materialize.

These distortions in our thinking are often subtle and hard to spot, and, as noted earlier, they tend to go hand in hand with symptoms of depression (Burns et al., 1987).

But all is not lost. Identifying and being mindful of when we engage in these distorted thoughts can be really helpful. Ways to tackle them include keeping a thought log, checking whether our thoughts are facts or merely our own or others’ opinions, and even putting our thoughts on trial to actively challenge them.

Attempting to recognize and challenge our cognitive distortions can be difficult, but know that we aren’t alone in this experience. Shedding a gentle awareness onto our thoughts can be a great first step.

Changing Your Thinking: Examples of Techniques to Combat Cognitive Distortions

These distortions, while common and potentially extremely damaging, are not something we must simply resign ourselves to living with.

Beck, Burns, and other researchers in this area have developed numerous ways to identify, challenge, minimize, or erase these distortions from our thinking.

Some of the most effective and evidence-based techniques and resources are listed below.

Cognitive Distortions Handout

Since you must first identify the distortions you struggle with before you can effectively challenge them, this resource is a must-have.

The Cognitive Distortions handout lists and describes several types of cognitive distortions to help you figure out which ones you might be dealing with.

The distortions listed include:

- All-or-Nothing Thinking;

- Overgeneralizing;

- Discounting the Positive;

- Jumping to Conclusions;

- Mind Reading;

- Fortune Telling;

- Magnification (Catastrophizing) and Minimizing;

- Emotional Reasoning;

- Should Statements;

- Labeling and Mislabeling;

- Personalization.

Each description is accompanied by a couple of helpful examples.

This information can be found in the Increasing Awareness of Cognitive Distortions exercise in the Positive Psychology Toolkit©.

Automatic Thought Record

This worksheet is an excellent tool for identifying and understanding your cognitive distortions. Our automatic, negative thoughts are often related to a distortion that we may or may not realize we have. Completing this exercise can help you to figure out where you are making inaccurate assumptions or jumping to false conclusions.

The worksheet is split into six columns:

- Date and Time

- Situation

- Automatic Thoughts (ATs)

- Emotions

- A More Adaptive Response

- Outcome

First, you note the date and time of the thought.

In the second column, you will write down the situation. Ask yourself:

- What led to this event?

- What caused the unpleasant feelings I am experiencing?

The third component of the worksheet directs you to write down the negative automatic thought, including any images or feelings that accompanied the thought. You will consider the thoughts and images that went through your mind, write them down, and determine how much you believed these thoughts.

After you have identified the thought, the worksheet instructs you to note the emotions that ran through your mind along with the thoughts and images identified. Ask yourself what emotions you felt at the time and how intense the emotions were on a scale from 1 (barely felt it) to 10 (completely overwhelming).

Next, you have an opportunity to come up with an adaptive response to those thoughts. This is where the real work happens, where you identify the distortions that are cropping up and challenge them.

Ask yourself these questions:

- Which cognitive distortions were you employing?

- What is the evidence that the automatic thought(s) is true, and what evidence is there that it is not true?

- You’ve thought about the worst that can happen, but what’s the best that could happen? What’s the most realistic scenario?

- How likely are the best-case and most realistic scenarios?

Finally, you will consider the outcome of this event. Think about how much you believe the automatic thought now that you’ve come up with an adaptive response, and rate your belief. Determine what emotion(s) you are feeling now and at what intensity you are experiencing them.


Decatastrophizing

This is a particularly good tool for talking yourself out of catastrophizing a situation.

The worksheet begins with a description of cognitive distortions in general and catastrophizing in particular. Catastrophizing is when you distort the importance or meaning of a problem to be much worse than it is, or you assume that the worst possible scenario is going to come to pass. It’s a self-reinforcing distortion, since the more you think about it, the more anxious you get, but there are ways to combat it.

First, write down your worry. Identify the issue you are catastrophizing by answering the question, “What are you worried about?”

Once you have articulated the issue that is worrying you, you can move on to thinking about how this issue will turn out.

Think about how terrible it would be if the catastrophe actually came to pass. What is the worst-case scenario? Consider whether a similar event has occurred in your past and, if so, how often it occurred. With the frequency of this catastrophe in mind, make an educated guess of how likely the worst-case scenario is to happen.

After this, think about what is most likely to happen: not the best possible outcome, not the worst possible outcome, but the most likely one. Consider this scenario in detail and write it down. Note how likely you think this scenario is to happen as well.

Next, think about your chances of surviving in one piece. How likely is it that you’ll be okay one week from now if your fear comes true? How likely is it that you’ll be okay in one month? How about one year? For all three, write down “Yes” if you think you’d be okay and “No” if you don’t think you’d be okay.

Finally, come back to the present and think about how you feel right now. Are you still just as worried, or did the exercise help you think a little more realistically? Write down how you’re feeling about it.

This worksheet can be an excellent resource for anyone who is worrying excessively about a potentially negative event.

You can download the Decatastrophizing Worksheet here.

Cataloging Your Inner Rules

Cognitive distortions include assumptions and rules that we hold dearly or have decided we must live by. Sometimes these rules or assumptions help us to stick to our values or our moral code, but often they can limit and frustrate us.

This exercise can help you to think more critically about an assumption or rule that may be harmful.

First, think about a recent scenario where you felt bad about your thoughts or behavior afterward. Write down a description of the scenario and the infraction (what you did to break the rule).

Next, based on your infraction, identify the rule or assumption that was broken. What are the parameters of the rule? How does it compel you to think or act?

Once you have described the rule or assumption, think about where it came from. Consider when you acquired this rule, how you learned about it, and what was happening in your life that encouraged you to adopt it. What makes you think it’s a good rule to have?

Now that you have outlined a definition of the rule or assumption and its origins and impact on your life, you can move on to comparing its advantages and disadvantages. Every rule or assumption we follow will likely have both advantages and disadvantages.

The presence of one advantage does not mean the rule or assumption is necessarily a good one, just as the presence of one disadvantage does not automatically make the rule or assumption a bad one. This is where you must think critically about how the rule or assumption helps and/or hurts you.

Finally, you have an opportunity to think about everything you have listed and decide to either accept the rule as it is, throw it out entirely and create a new one, or modify it into a rule that would suit you better. This may be a small change or a big modification.

If you decide to change the rule or assumption, the new version should maximize the advantages of the rule, minimize or limit the disadvantages, or both. Write down this new and improved rule and consider how you can put it into practice in your daily life.

You can download the Cataloging Your Inner Rules Worksheet.

Facts or Opinions?

This is one of the first lessons that participants in cognitive behavioral therapy (CBT) learn: facts are not opinions. As obvious as this seems, it can be difficult to remember and adhere to in your day-to-day life.

This exercise can help you learn the difference between fact and opinion, and prepare you to distinguish between your own opinions and facts.

The worksheet lists the following statements and asks the reader to decide whether each one is a fact or an opinion:

- I am a failure.

- I’m uglier than him/her.

- I said “no” to a friend in need.

- A friend in need said “no” to me.

- I suck at everything.

- I yelled at my partner.

- I can’t do anything right.

- He said some hurtful things to me.

- She didn’t care about hurting me.

- This will be an absolute disaster.

- I’m a bad person.

- I said things I regret.

- I’m shorter than him.

- I am not loveable.

- I’m selfish and uncaring.

- Everyone is a way better person than I am.

- Nobody could ever love me.

- I am overweight for my height.

- I ruined the evening.

- I failed my exam.

Practicing making this distinction between fact and opinion can improve your ability to quickly differentiate between the two when they pop up in your own thoughts.

Here is the Facts or Opinions Worksheet.

In case you’re wondering which is which, here is the key:

- I am a failure. Opinion

- I’m uglier than him/her. Opinion

- I said “no” to a friend in need. Fact

- A friend in need said “no” to me. Fact

- I suck at everything. Opinion

- I yelled at my partner. Fact

- I can’t do anything right. Opinion

- He said some hurtful things to me. Fact

- She didn’t care about hurting me. Opinion

- This will be an absolute disaster. Opinion

- I’m a bad person. Opinion

- I said things I regret. Fact

- I’m shorter than him. Fact

- I am not loveable. Opinion

- I’m selfish and uncaring. Opinion

- Everyone is a way better person than I am. Opinion

- Nobody could ever love me. Opinion

- I am overweight for my height. Fact

- I ruined the evening. Opinion

- I failed my exam. Fact

Putting Thoughts on Trial

This exercise uses CBT theory and techniques to help you examine your irrational thoughts. You will act as the defense attorney, prosecutor, and judge all at once, providing evidence for and against the irrational thought and evaluating the merit of the thought based on this evidence.

The worksheet begins with an explanation of the exercise and a description of the roles you will be playing.

The first box to be completed is “The Thought.” This is where you write down the irrational thought that is being put on trial.

Next, you fill out “The Defense” box with evidence that corroborates or supports the thought. Once you have listed all of the defense’s evidence, do the same for “The Prosecution” box. Write down all of the evidence calling the thought into question or instilling doubt in its accuracy.

When you have listed all of the evidence you can think of, both for and against the thought, evaluate the evidence and write down the results of your evaluation in “The Judge’s Verdict” box.

This worksheet is a fun and engaging way to think critically about your negative or irrational thoughts and make good decisions about which thoughts to modify and which to embrace.

Click here to see this worksheet for yourself (TherapistAid).


A Take-Home Message

Hopefully, this piece has given you a good understanding of cognitive distortions. These sneaky, inaccurate patterns of thinking and believing are common, but their potential impact should not be underestimated.

Even if you are not struggling with depression, anxiety, or another serious mental health issue, it doesn’t hurt to evaluate your own thoughts every now and then. The sooner you catch a cognitive distortion and mount a defense against it, the less likely it is to make a negative impact on your life.

What is your experience with cognitive distortions? Which ones do you struggle with? Do you think we missed any important ones? How have you tackled them, whether in CBT or on your own?

Let us know in the comments below. We love hearing from you.


- Beck, A. T. (1976). Cognitive therapy and the emotional disorders. New York, NY: New American Library.

- Burns, D. D. (1980). Feeling good: The new mood therapy. New York, NY: New American Library.

- Burns, D. D. (1989). The feeling good handbook. New York, NY: Morrow.

- Burns, D. D., Shaw, B. F., & Croker, W. (1987). Thinking styles and coping strategies of depressed women: An empirical investigation. Behaviour Research and Therapy, 25, 223-225.

- Feeling Good. (n.d.). About. Feeling Good. Retrieved from https://feelinggood.com/about/

- GoodTherapy. (2015). Aaron Beck. GoodTherapy LLC. Retrieved from https://www.goodtherapy.org/famous-psychologists/aaron-beck.html

- Summit for Clinical Excellence. (n.d.). David Burns, MD. Summit for clinical excellence faculty page. Retrieved from https://summitforclinicalexcellence.com/partners/faculty/david-burns/

- TherapistAid. (n.d.). Cognitive restructuring: Thoughts on trial. Retrieved from https://www.therapistaid.com/worksheets/putting-thoughts-on-trial.pdf




Critical Thinking and Decision-Making - Logical Fallacies


Lesson 7: Logical Fallacies


Logical fallacies

If you think about it, vegetables are bad for you. I mean, after all, the dinosaurs ate plants, and look at what happened to them...

Let's pause for a moment: that argument was pretty ridiculous, and that's because it contained a logical fallacy.

A logical fallacy is any kind of error in reasoning that renders an argument invalid. Logical fallacies can involve distorting or manipulating facts, drawing false conclusions, or distracting you from the issue at hand. In theory, they seem like they'd be easy to spot, but this isn't always the case.


Logical fallacies are sometimes used deliberately to try to win a debate. In these cases, speakers often present them with a certain level of confidence, which makes them more persuasive: if a speaker sounds like they know what they're talking about, we're more likely to believe them, even if their stance doesn't make complete logical sense.

False cause

One common logical fallacy is the false cause. This is when someone incorrectly identifies the cause of something. In my argument above, I stated that dinosaurs became extinct because they ate vegetables. While both of these things did happen, a diet of vegetables was not the cause of their extinction.

You may have heard false cause summed up by the phrase "correlation does not equal causation": just because two things occur around the same time, it doesn't necessarily mean that one caused the other.

Straw man

A straw man is when someone misrepresents an argument so that it's easier to attack. For example, let's say Callie is advocating that sporks should be the new standard for silverware because they're more efficient. Madeline responds that she's shocked Callie would want to outlaw spoons and forks and put millions out of work at the fork and spoon factories.

A straw man is frequently used in politics in an effort to discredit another politician's views on a particular issue.

Begging the question

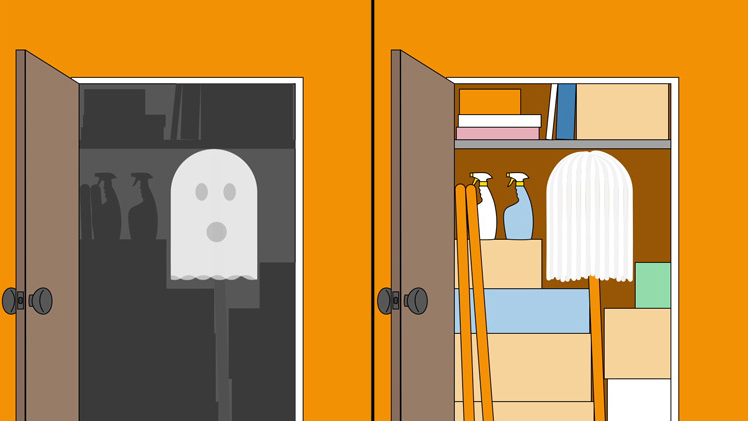

Begging the question is a type of circular argument where someone includes the conclusion as part of their reasoning. For example, George says, “Ghosts exist because I saw a ghost in my closet!”

George's conclusion is that ghosts exist, but his premise (that he saw a ghost) already assumes that ghosts exist. Rather than assuming this from the outset, George should have used evidence and reasoning to try to prove that ghosts exist.

Since George assumed that ghosts exist, he was less likely to see other explanations for what he saw. Maybe the ghost was nothing more than a mop!

False dilemma

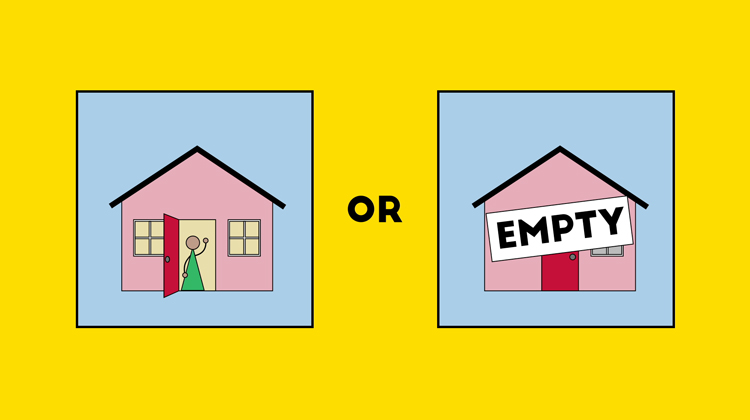

The false dilemma (or false dichotomy) is a logical fallacy where a situation is presented as being an either/or option when, in reality, there are more possible options available than just the chosen two. Here's an example: Rebecca rings the doorbell but Ethan doesn't answer. She then thinks, "Oh, Ethan must not be home."

Rebecca posits that either Ethan answers the door or he isn't home. In reality, he could be sleeping, doing some work in the backyard, or taking a shower.

Most logical fallacies can be spotted by thinking critically. Make sure to ask questions: Is logic at work here, or is it simply rhetoric? Does their "proof" actually lead to the conclusion they're proposing? By applying critical thinking, you'll be able to detect logical fallacies in the world around you and avoid using them yourself.

Sources of Errors in Thinking and How to Avoid Them

First Online: 20 December 2016

Balu H. Athreya and Chrystalla Mouza

In this chapter we discuss ways in which our experiences might mislead our thinking. We identify sources of errors in thinking as well as time-honored strategies to avoid these errors. Being aware of these errors is crucial to developing critical thinking skills.

“Natural intelligence is no barrier to the propagation of error.” (John Dewey, 1910, p. 21)

“Distortions in thinking are often due to unconscious bias and unrecognized ignorance.” (Susan Stebbing, 1939, p. 5)


Adler, M. J. (1978). Aristotle for everybody. New York: Bantam Books.

Baron, J. (1993). Why teach thinking? Applied Psychology, 42(3), 191–214.

Beall, J. C., & Restall, G. (2013). Logical consequence. Retrieved May 7, 2016, from Stanford Encyclopedia of Philosophy: plato.stanford.edu

Beveridge, W. I. (1957). The art of scientific investigation (3rd ed.). London: William Heinemann.

Boss, J. (2014). Think: Critical thinking and logic skills for everyday life (3rd ed.). Columbus, OH: McGraw-Hill Education.

Browne, N. M., & Keeley, S. M. (2015). Asking the right questions: A guide to critical thinking. Upper Saddle River, NJ: Pearson Education Inc.

Buckwalter, J. A., Tolo, V. T., & O’Keefe, R. J. (2015). How do you know it is true? Integrity in research and publications. Journal of Bone and Joint Surgery, 97, e-2.

Croskerry, P. (2003). The importance of cognitive errors in diagnosis and strategies to minimize them. Academic Medicine, 78(8), 775–780.

Dawes, R. M. (1988). Rational choice in an uncertain world. Orlando, FL: Harcourt Brace College Publishers.

de Bono, E. (1994). De Bono’s thinking course. New York: Facts on File Inc.

De Condorcet, M. (1802). A historical review of the progress of the human mind. Baltimore, MD: J. Frank.

Deutsch, D. (2011). The beginning of infinity. New York: Viking.

Dewey, J. (1910). How we think. Boston, MA: D.C. Heath & Co.

Dimnet, E. (1928). The art of thinking. Greenwich, CT: Fawcett.

Durant, W., & Durant, A. (1961). The story of civilization. New York: Simon and Schuster.

Eddy, D. A., & Clanton, C. H. (1982). The art of diagnosis: Solving the clinicopathological exercise. New England Journal of Medicine, 306, 1263–1268.

Elenjimittam, A. (1974). The yoga philosophy of Patanjali. Allahabad, India: Better Yourself Books.

Etkin, J., Evangelidis, I., & Aker, J. (2014). Pressed for time? Goal conflict shapes how time is perceived, spent, and valued. Journal of Marketing Research. doi:10.1509/jmr.14.0130

Fisher, M., Goddu, M. K., & Keil, F. C. (2015). Searching for explanations: How the Internet inflates estimates of internal knowledge. Journal of Experimental Psychology: General, 144(3), 674–687.

Flesch, R. (1951). The art of clear thinking. New York, NY: Harper & Row.

Gilovich, T. (1991). How we know what isn’t so: The fallibility of human reason in everyday life. New York: Free Press.

Goodman, S. N. (2016). Aligning statistical and scientific reasoning: Misunderstanding and misuse of statistical significance impede science. Science, 352(6290), 1180–1181.

Graber, M. L., Franklin, N., & Gordon, R. (2005). Diagnostic error in internal medicine. Archives of Internal Medicine, 165, 1493–1499.

Guyatt, G., Rennie, D., Meade, M. O., & Cook, D. J. (2015). Users’ guides to the medical literature: Essentials of evidence-based clinical practice. New York: McGraw-Hill Education/Medicine.

Habits of the Mind. (1989). Retrieved May 25, 2016, from AAAS: Science for All Americans Online: http://www.project2061.org/publications/sfaa/online/chap12.htm

Hobbs, R. (2010). Digital and media literacy: A plan for action. Aspen, CO: The Aspen Institute.

Hofstadter, D., & Sander, E. (2013). Surfaces and essences: Analogy as the fuel and fire of thinking. New York: Basic Books.

Kahneman, D. (2011). Thinking, fast and slow. New York: Farrar, Straus and Giroux.

Kornfield, J. (2008). Meditation for beginners. Boulder, CO: Sounds True Inc.

Kukla, A. (2006). Mental traps: Stupid things that sane people do to mess up their minds. New York: McGraw-Hill.

MacKnight, C. B. (2000). Teaching critical thinking through online discussions. Educause Quarterly, 4, 38–41.

McIntyre, N., & Popper, K. (1983). The critical attitude in medicine: The need for a new ethics. British Medical Journal, 287, 1919–1923.

Metzger, M. (2009). Credibility research to date. Retrieved from Credibility and Media @UCSB: http://www.credibilty.ucsb.edu/past_research.php

Miller, G. A. (1956). The magical number seven, plus or minus two: Some limits on our capacity for processing information. Psychological Review, 63, 81–97.

Ophir, E., Nass, C., & Wagner, A. D. (2009). Cognitive control in media multitaskers. Proceedings of the National Academy of Sciences, 106(37), 15583–15587.

Patten, B. M. (2004). Truth, knowledge, just plain bull: How to tell the difference. Amherst, NY: Prometheus Books.

Pei, M. (1978). Weasel words: The art of saying what you do not mean. New York: Harper & Row.

Pingdom. (2012). Internet 2012 in numbers. Retrieved June 7, 2016, from http://royal.pingdom.com/2013/01/16/internet-2012-in-numbers/

Potter, W. J. (2013). Media literacy (7th ed.). Thousand Oaks, CA: Sage Publications.

Priest, G. (2000). Logic: A very short introduction. Oxford, UK: Oxford University Press.

Ross, L., Greene, D., & House, P. (1977). The “false consensus effect”: An egocentric bias in social perception and attribution processes. Journal of Experimental Social Psychology, 13, 279–301.

Rupert, C. W. (2012). The comforts of unreason. London: Forgotten Books.

Schulte, B. (2014). Overwhelmed: Work, love and play when no one has the time. New York: Sarah Crichton Books.

Sheridan, H., & Reingold, E. M. (2013). The mechanism and boundary conditions of the Einstellung effect in chess: Evidence from eye movements. PLoS One. doi:10.1371/journal.pone.0075796

Shermer, M. (2015, March). Forging doubt. Scientific American, p. 74.

Stebbing, S. L. (1939). Thinking to some purpose. London, UK: Penguin.

Taleb, N. N. (2010). The black swan: The impact of the highly improbable (2nd ed.). New York: Random House Trade Paperbacks.

The National Council for Excellence in Critical Thinking. (2015). Retrieved October 24, 2016, from http://www.criticalthinking.org/pages/the-national-council-for-excellence-in-critical-thinking/406

Tversky, A., & Kahneman, D. (1974). Judgment under uncertainty: Heuristics and biases. Science, 185, 1124–1131.

Wasserstein, R. L., & Lazar, N. A. (2016). The ASA’s statement on p-values: Context, process, and purpose. The American Statistician, 70(2), 129–133.

Author information

Authors and affiliations

Balu H. Athreya (Professor Emeritus of Pediatrics, Teaching Consultant): University of Pennsylvania – Perelman School of Medicine and Thomas Jefferson University – Sidney Kimmel Medical College, Philadelphia, PA, USA; Nemours/A.I. duPont Hospital for Children, Wilmington, DE, USA

Chrystalla Mouza: School of Education, University of Delaware, Newark, DE, USA


Copyright information

© 2017 Springer International Publishing Switzerland

About this chapter

Athreya, B.H., Mouza, C. (2017). Sources of Errors in Thinking and How to Avoid Them. In: Thinking Skills for the Digital Generation. Springer, Cham. https://doi.org/10.1007/978-3-319-12364-6_7


DOI: https://doi.org/10.1007/978-3-319-12364-6_7

Published: 20 December 2016

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-12363-9

Online ISBN: 978-3-319-12364-6

eBook Packages: Education, Education (R0)


Critical Thinking

Developing the right mindset and skills.

By the Mind Tools Content Team

We make hundreds of decisions every day and, whether we realize it or not, we're all critical thinkers.

We use critical thinking each time we weigh up our options, prioritize our responsibilities, or think about the likely effects of our actions. It's a crucial skill that helps us to cut out misinformation and make wise decisions. The trouble is, we're not always very good at it!

In this article, we'll explore the key skills you need to think critically, and how to adopt a critical thinking mindset, so that you can make well-informed decisions.

What Is Critical Thinking?

Critical thinking is the discipline of rigorously and skillfully using information, experience, observation, and reasoning to guide your decisions, actions, and beliefs. You'll need to actively question every step of your thinking process to do it well.

Collecting, analyzing and evaluating information is an important skill in life, and a highly valued asset in the workplace. People who score highly in critical thinking assessments are also rated by their managers as having good problem-solving skills, creativity, strong decision-making skills, and good overall performance. [1]

Key Critical Thinking Skills

Critical thinkers possess a set of key characteristics which help them to question information and their own thinking. Focus on the following areas to develop your critical thinking skills:

Curiosity

Being willing and able to explore alternative approaches and experimental ideas is crucial. Can you think through "what if" scenarios, create plausible options, and test out your theories? If not, you'll tend to write off ideas and options too soon, so you may miss the best answer to your situation.

To nurture your curiosity, stay up to date with facts and trends. You'll overlook important information if you allow yourself to become "blinkered," so always be open to new information.

But don't stop there! Look for opposing views or evidence to challenge your information, and seek clarification when things are unclear. This will help you to reassess your beliefs and make a well-informed decision later. Read our article, Opening Closed Minds, for more ways to stay receptive.

Logical Thinking

You must be skilled at reasoning and extending logic to come up with plausible options or outcomes.

It's also important to emphasize logic over emotion. Emotion can be motivating, but it can also lead you to take hasty and unwise action, so control your emotions and be cautious in your judgments. Know when a conclusion is "fact" and when it is not. "Could-be-true" conclusions are based on assumptions and must be tested further. Read our article, Logical Fallacies, for help with this.

Use creative problem solving to balance cold logic. By thinking outside the box, you can identify new possible outcomes using information that you already have.

Self-Awareness

Many of the decisions we make in life are subtly informed by our values and beliefs. These influences are called cognitive biases and it can be difficult to identify them in ourselves because they're often subconscious.

Practicing self-awareness will allow you to reflect on the beliefs you have and the choices you make. You'll then be better equipped to challenge your own thinking and make better, less biased decisions.

One particularly useful tool for critical thinking is the Ladder of Inference. It allows you to test and validate your thinking process, rather than jumping to poorly supported conclusions.

Developing a Critical Thinking Mindset

Combine the above skills with the right mindset so that you can make better decisions and adopt more effective courses of action. You can develop your critical thinking mindset by following this process:

Gather Information

First, collect data, opinions and facts on the issue that you need to solve. Draw on what you already know, and turn to new sources of information to help inform your understanding. Consider what gaps there are in your knowledge and seek to fill them. And look for information that challenges your assumptions and beliefs.

Be sure to verify the authority and authenticity of your sources. Not everything you read is true! Use this checklist to ensure that your information is valid:

- Are your information sources trustworthy? (For example, well-respected authors, trusted colleagues or peers, recognized industry publications, websites, blogs, etc.)

- Is the information you have gathered up to date?

- Has the information received any direct criticism?

- Does the information have any errors or inaccuracies?

- Is there any evidence to support or corroborate the information you have gathered?

- Is the information you have gathered subjective or biased in any way? (For example, is it based on opinion, rather than fact? Is any of the information you have gathered designed to promote a particular service or organization?)

If any information appears to be irrelevant or invalid, don't include it in your decision making. But don't omit information just because you disagree with it, or your final decision will be flawed and biased.

Analyze Information

Now observe the information you have gathered, and interpret it. What are the key findings and main takeaways? What does the evidence point to? Start to build one or two possible arguments based on what you have found.

You'll need to look for the details within the mass of information, so use your powers of observation to identify any patterns or similarities. You can then analyze and extend these trends to make sensible predictions about the future.

To help you to sift through the multiple ideas and theories, it can be useful to group and order items according to their characteristics. From here, you can compare and contrast the different items. And once you've determined how similar or different things are from one another, Paired Comparison Analysis can help you to analyze them.

Evaluate Information

The next step involves challenging the information and assessing the arguments built on it.

Apply the laws of reason (induction, deduction, analogy) to judge an argument and determine its merits. To do this, you need to be able to judge the significance and validity of an argument so that you can put it in the correct perspective. Take a look at our article, Rational Thinking, for more information about how to do this.

Once you have considered all of the arguments and options rationally, you can finally make an informed decision.

Afterward, take time to reflect on what you have learned and what you found challenging. Step back from the detail of your decision or problem, and look at the bigger picture. Record what you've learned from your observations and experience.

Critical thinking involves rigorously and skillfully using information, experience, observation, and reasoning to guide your decisions, actions, and beliefs. It's a useful skill in the workplace and in life.

You'll need to be curious and creative to explore alternative possibilities, rational to apply logic, and self-aware to identify when your beliefs could affect your decisions or actions.

You can demonstrate a high level of critical thinking by validating your information, analyzing its meaning, and finally evaluating the argument.




9 May 2021

What are Thinking Errors in CBT (and how to manage them)

In discussing how effective CBT can be, we have described the two main components involved in this type of work: Cognitive Therapy, which deals with the way we think, and Behavioural Therapy, which deals with our actions.

In this post, we’ll focus on one aspect of Cognitive Therapy that is crucial to CBT: dealing with Thinking Errors. These are automatic, often unrealistic patterns of thinking that can rapidly affect our mood and keep us stuck in a cycle of anxiety, sadness or other difficult emotions. In CBT, learning how to identify and label them can make the difference between escalating and containing these emotions.

What are Thinking Errors?

Thinking Errors, also known as Cognitive Distortions, are irrational and extreme ways of thinking that can maintain mental and emotional difficulties. Anxiety, low mood, worry and anger problems are often fuelled by this type of thinking.

Thinking errors, first proposed by Aaron Beck (1963), one of the leading figures in CBT, are central to how CBT works with anxiety and other issues.

Although we all fall prey to irrational and extreme thinking at times, Thinking Errors are a distinctive feature of everyday life for people who often experience unpleasant emotions. In anxiety, for instance, unpleasant feelings are triggered by frequent negative, unbalanced thinking. This thinking then shapes decisions about how to act, and those decisions are equally unhelpful. This chain of events keeps us stuck in a vicious anxiety cycle, such as the one below.

[Figure: CBT Vicious Cycle of Anxiety]

How do Thinking Errors Affect Anxiety?

Thinking errors play an essential role in keeping us anxious, low or frustrated. They’re what makes the difference between seeing the glass half full or half empty.

Cognitive distortions tend to be consistent with the expectations we have of a situation. For instance, if we have a generally negative outlook on how others see us, it’s more likely that our thinking errors will confirm such negative expectations. For example, after our boss expresses dissatisfaction with our department’s performance, typical thinking errors that may arise could be: “She thinks I am rubbish” (Mind Reading) and “I will lose my job” (Catastrophising).

If we fall into the trap of believing that these thinking errors are facts, we sink deeper into the negative emotions associated with them, such as worry, anxiety or fear.

This is why thinking errors are a critical component in increasing and maintaining our anxiety.

How do Thoughts Affect our Mental Health?

If you missed our main article on CBT therapy for anxiety, let’s briefly recap why thoughts are so crucial to our mental health. CBT holds that we feel anxious, sad or angry because of the thoughts (or images) that certain situations trigger in us.

With anxiety, for instance, if you notice your heart racing and have the thought: “I’ll have a heart attack!” this is likely to make you feel anxious. Although it’s completely normal to feel anxious if you believe you’re having a heart attack, if you look closely (and if you don’t have a physical condition), the likelihood of that thought being true is generally very low. Yet, by believing in such a thought, you’re accidentally falling into the trap of anxiety. This is the reason we label these kinds of thoughts as negative or ‘unhelpful’. Your unhelpful thoughts become a prime target of CBT Therapy.

How does CBT work with Thinking Errors?

One of the aims of CBT Therapy for Anxiety (and other issues) is to work on challenging and reframing negative, unhelpful thoughts. This almost always involves some form of journaling and keeping a diary of the thoughts that affect your mood daily. The goal here is to help you identify your unhelpful thoughts and label them as irrational. Following that, with the help of a CBT therapist, the work shifts towards generating more balanced and rational, evidence-based alternative thoughts.

When new, realistic and balanced thoughts are adopted, replacing the unhelpful, irrational ones, you will start to notice a reduction in the intensity of your anxiety reaction. The more unhelpful thoughts are recognised and replaced with helpful, realistic ones, the more anxiety loses its grip on you. You’re able to live a life guided by your choices rather than by fear.

[Infographic: The Most Common Thinking Errors in CBT]

How Can We Identify Thinking Errors? A Practical Example

Challenging and reframing unhelpful thoughts becomes much easier when you can categorise them as thinking errors.

Once you know what thinking errors sound like, it’s easier to spot when one of your thoughts is one of them. A thought identified as a thinking error is much more likely to lose its credibility, leaving you feeling less anxious, sad or angry.

In other words, recognising and labelling thinking errors when they arise can significantly improve our ability to start escaping the anxiety trap.

Let’s see how it’s done with an example:

- Jennifer is at a work meeting with 10 other people; she’s usually a bit shy and tends to not talk too much when there are many people around for fear of being judged.

- Jennifer’s boss explains that her department did not meet the targets and expresses her disappointment.

- Jennifer feels anxious and on edge. Although she knows her team has done everything they could to meet the target, she chooses not to say a word for fear of confronting her boss.

- After the meeting, Jennifer returns to her desk and notices her anxiety increasing. Her heart is racing. Many thoughts about her boss and the meeting crop up: “She thinks I am rubbish”, “I will be fired”. Her mind is on a roll, and her thoughts escalate: “I will never get another job”, “I won’t be able to pay the rent and will end up living on the street”.

- The more these thoughts mount up unchallenged, the more Jennifer’s anxiety grows, until she needs to take the afternoon off to go home and cool down.

- Back home, Jennifer feels less anxious. However, other negative thoughts crop up, such as: “I’m such a failure for having left early”, “Others must have noticed my anxiety and believe I am rubbish”. Ultimately, these thoughts contribute to maintaining her anxiety.

What’s the issue with Jennifer, then? The main problem is that whenever thoughts naturally arise, she accepts them as facts, no matter how potentially far-fetched or irrational they might be.

If we look closely, many of her thoughts are pretty unhelpful and irrational. Crucially, the most powerful ones are precisely thinking errors! Here they are:

– “She thinks I am rubbish” – Mind Reading

– “I will never get another job” – Catastrophising/Overgeneralising

– “I will end up living on the street” – Catastrophising/Overgeneralising

[Infographic: Tips to Correct Thinking Errors in CBT]

How Can We Correct Thinking Errors? Some Practical Tips

Catching our minds making thinking errors and labelling them can be an effective way to avoid falling for them and to tackle anxiety and other challenging emotions in our everyday lives.

In our example, if Jennifer could recognise and label her thoughts as thinking errors, she would have a chance to dismiss them and start reducing her anxiety. She could then choose not to escape the situation by leaving work early, and would ultimately feel better about herself.

Over time, Jennifer would feel more confident and would be less likely to fall prey to her thinking errors in the future!

1) Start a Daily Journal.

Pick your favourite medium (a notebook, your notes app or anything else) and write down the negative emotions you feel each day (e.g., anxiety, worry, sadness). Next to each one, jot down the thoughts associated with it (e.g., “my boss thinks I am rubbish”). To do this, ask yourself: “What thought or image is making me feel distressed?”

2) Identify and Label your Thinking Errors.

After step one, take a look at the table (or infographic) below, with a list of some of the most well-known thinking errors, and see if any of the thoughts you wrote down can be labelled as thinking errors.

3) Reality Check!

Once you have identified any of your thoughts as thinking errors, it’s time for a reality check. Ask yourself whether they are actually true and remind yourself that these cognitive distortions are known to be unrealistic, extreme and irrational. There is very likely no good reason to believe them.

Repeating these steps consistently has the potential to help you gradually reduce your anxiety (as well as other negative emotions).

What Are Common Thinking Errors?

Overcome thinking errors starting today.

Thinking errors (or cognitive distortions) are well-known mechanisms that keep our negative emotions going. Hopefully, this article has helped you learn more about them and get started on tackling them. Keep in mind that at times we may be stuck in vicious cycles of anxiety, sadness and other difficult emotions that we cannot get out of on our own. If your difficult emotions have started to significantly affect your life, get in touch with us for professional help. Our CBT-trained therapists have the expertise to help you work with your thinking errors and negative emotions. Discover more about CBT Therapy.

With our help, you’ll have the chance to make the crucial changes to bring balance and fulfilment back into your life!

Get in touch with us for a FREE 15 min consultation today!


- Dr. Raffaello Antonino


A Practical Guide to Critical Thinking, 2nd Edition by David A. Hunter


Appendix A: Critical Thinking Mistakes

Critical thinking is reasonable and reflective thinking aimed at deciding what to believe and what to do. Throughout this book, we have identified mistakes that a good critical thinker should avoid. Some are mistakes that can arise in clarifying or defining a view. Others are mistakes that can arise as we collect or rely on evidence or reasons for a view. Still others arise when we try to draw conclusions from our evidence. And there are even mistakes that can arise as we assess other people's views or reasons. Knowing what they are will help us to avoid them in our own reasoning. But it will also help to make it clear just what the value is in being a critical thinker: thinking critically is valuable in part because it helps us to avoid some mistakes. This appendix lists all of the mistakes we have discussed.

Personalizing Reasons. It is a mistake to personalize reasons by treating them as if they belonged to someone. That is a mistake for two reasons. First, epistemic reasons are universal: if they are reasons for me to believe something, then they are equally reasons for anyone else to believe it. Second, epistemic reasons are objective: whether a piece of evidence is sufficient or acceptable is an objective matter. It has nothing to do with me or with anyone else. Personalizing reasons can obscure the fact that they are universal and objective. It can also allow emotion to get in the way of thinking critically, if one identifies too ...


Common Critical Thinking Fallacies

Critical thinking is the process of reaching a decision or judgment by analyzing, evaluating, and reasoning with facts and data presented. However, nobody is thinking critically 100% of the time. Logical reasoning can be prone to fallacies.

A fallacy is an error in reasoning. When there is a fallacy in the reasoning, conclusions are less credible and can be deemed invalid.

How can critical thinking fallacies be avoided? The first step is to be aware of the possible fallacies that can be committed. This article will highlight the most common logical fallacies.

Common fallacies fall under two categories:

- Fallacies of Relevance

- Fallacies of Unacceptable Premises

In fallacies of relevance, reasons are offered for a conclusion, but those reasons are not actually relevant to whether the conclusion is true, even if they may seem persuasive.

Fallacies of relevance include:

- Ad Hominem

“Ad Hominem” is Latin for “to the person”. It’s a fallacy that attacks the person making the argument instead of the argument itself.

This is common in informal arguments, where a person’s looks or other characteristics are attacked instead of the argument they’re making.

- Red Herring

This is a fallacy of distraction. It sidetracks the main argument by raising a different issue and then claiming that this new issue is relevant to the current one. People who do this aim to divert the audience or their opponent from the argument at hand.

- Tu Quoque Fallacy

“Tu Quoque” means “you also” in Latin. This fallacy discredits a person’s argument based on the fact that the person does not practice what he or she preaches.

- Strawman Fallacy

This fallacy occurs when a person claims to refute another person’s argument by attacking a weakened or distorted version of it rather than the original argument.

- Appeal to Authority

The Appeal to Authority fallacy claims that an argument is true because someone who has “authority” on the subject believes that it’s true. For example: a policeman believes that guns should not require permits, so, the argument goes, this view should be accepted as the truth because policemen know what they are talking about. Policemen know how to use guns properly and can therefore be called “experts” on the subject. Yet knowing how to handle a gun does not make someone an authority on firearm licensing policy.

- Appeal to Popularity or Ad Populum

Much like the previous fallacy, Appeal to Popularity claims that something is true because many people, or the majority, believe that it’s true. We should steer clear of this fallacy because having 100,000 believers doesn’t make a false claim true.