
Assessing the impact of online-learning effectiveness and benefits in knowledge management, the antecedent of online-learning strategies and motivations: an empirical study.




| Variables | Category | Frequency | Percentage (%) |
|---|---|---|---|
| Gender | Male | 243 | 51.81 |
| | Female | 226 | 48.19 |
| Education program level | Undergraduate program | 210 | 44.78 |
| | Master program | 154 | 32.84 |
| | Doctoral program | 105 | 22.39 |
| Online learning tools | Smartphone | 255 | 54.37 |
| | Computer/PC | 125 | 26.65 |
| | Tablet | 89 | 18.98 |
| Online learning media | Google Meet | 132 | 28.14 |
| | Microsoft Teams | 99 | 21.11 |
| | Zoom | 196 | 41.79 |
| | Others | 42 | 8.96 |

| Construct | Measurement Items | Factor Loading/Coefficient (t-Value) | AVE | Composite Reliability | Cronbach’s Alpha |
|---|---|---|---|---|---|
| Online-learning benefit (LBE) | LBE1 | 0.88 | 0.68 | 0.86 | 0.75 |
| | LBE2 | 0.86 | | | |
| | LBE3 | 0.71 | | | |
| Online-learning effectiveness (LEF) | LEF1 | 0.83 | 0.76 | 0.90 | 0.84 |
| | LEF2 | 0.88 | | | |
| | LEF3 | 0.90 | | | |
| Online-learning motivation (LMT) | LMT1 | 0.86 | 0.77 | 0.91 | 0.85 |
| | LMT2 | 0.91 | | | |
| | LMT3 | 0.85 | | | |
| Online-learning strategies (LST) | LST1 | 0.90 | 0.75 | 0.90 | 0.84 |
| | LST2 | 0.87 | | | |
| | LST3 | 0.83 | | | |
| Online-learning attitude (OLA) | OLA1 | 0.89 | 0.75 | 0.90 | 0.84 |
| | OLA2 | 0.83 | | | |
| | OLA3 | 0.87 | | | |
| Online-learning confidence-in-technology (OLC) | OLC1 | 0.87 | 0.69 | 0.87 | 0.76 |
| | OLC2 | 0.71 | | | |
| | OLC3 | 0.89 | | | |
| Online-learning monitoring (OLM) | OLM1 | 0.88 | 0.75 | 0.89 | 0.83 |
| | OLM2 | 0.91 | | | |
| | OLM3 | 0.79 | | | |
| Online-learning self-efficacy (OLS) | OLS1 | 0.79 | 0.64 | 0.84 | 0.73 |
| | OLS2 | 0.81 | | | |
| | OLS3 | 0.89 | | | |
| Online-learning willpower (OLW) | OLW1 | 0.91 | 0.69 | 0.87 | 0.77 |
| | OLW2 | 0.84 | | | |
| | OLW3 | 0.73 | | | |
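
The AVE and composite reliability columns can be approximately reproduced from the factor loadings. The sketch below assumes the commonly used formulas for standardized loadings (AVE as the mean squared loading, composite reliability as the squared sum of loadings over that quantity plus the summed error variances); the page does not state which formulas the authors applied, and the function names are illustrative only.

```python
from math import fsum


def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings (assumed formula)."""
    return fsum(l * l for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """CR: (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances).

    Each error variance is taken as 1 - loading^2 (assumed, standardized items).
    """
    total = fsum(loadings)
    error = fsum(1.0 - l * l for l in loadings)
    return total ** 2 / (total ** 2 + error)


# Rounded loadings copied from the measurement table for two constructs.
loadings_by_construct = {
    "LBE": [0.88, 0.86, 0.71],
    "LEF": [0.83, 0.88, 0.90],
}

for construct, loadings in loadings_by_construct.items():
    ave = average_variance_extracted(loadings)
    cr = composite_reliability(loadings)
    print(f"{construct}: AVE={ave:.3f}, CR={cr:.3f}")
```

Because the published loadings are rounded to two decimals, the recomputed values can differ from the tabulated ones in the last digit.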

| | LBE | LEF | LMT | LST | OLA | OLC | OLM | OLS | OLW |
|---|---|---|---|---|---|---|---|---|---|
| LBE | | | | | | | | | |
| LEF | 0.82 | | | | | | | | |
| LMT | 0.81 | 0.80 | | | | | | | |
| LST | 0.80 | 0.84 | 0.86 | | | | | | |
| OLA | 0.69 | 0.63 | 0.78 | 0.81 | | | | | |
| OLC | 0.76 | 0.79 | 0.85 | 0.79 | 0.72 | | | | |
| OLM | 0.81 | 0.85 | 0.81 | 0.76 | 0.63 | 0.83 | | | |
| OLS | 0.71 | 0.59 | 0.69 | 0.57 | 0.56 | 0.69 | 0.75 | | |
| OLW | 0.75 | 0.75 | 0.80 | 0.74 | 0.64 | 0.81 | 0.80 | 0.79 | |

| | LBE | LEF | LMT | LST | OLA | OLC | OLM | OLS | OLW |
|---|---|---|---|---|---|---|---|---|---|
| LBE1 | 0.88 | 0.76 | 0.87 | 0.66 | 0.54 | 0.79 | 0.78 | 0.63 | 0.74 |
| LBE2 | 0.86 | 0.68 | 0.74 | 0.63 | 0.57 | 0.75 | 0.91 | 0.73 | 0.79 |
| LBE3 | 0.71 | 0.54 | 0.59 | 0.71 | 0.63 | 0.55 | 0.50 | 0.36 | 0.53 |
| LEF1 | 0.63 | 0.83 | 0.72 | 0.65 | 0.51 | 0.62 | 0.69 | 0.46 | 0.57 |
| LEF2 | 0.77 | 0.88 | 0.78 | 0.71 | 0.55 | 0.73 | 0.78 | 0.52 | 0.69 |
| LEF3 | 0.72 | 0.90 | 0.80 | 0.83 | 0.57 | 0.72 | 0.76 | 0.58 | 0.69 |
| LMT1 | 0.88 | 0.76 | 0.87 | 0.66 | 0.54 | 0.79 | 0.78 | 0.63 | 0.74 |
| LMT2 | 0.79 | 0.89 | 0.91 | 0.79 | 0.62 | 0.73 | 0.88 | 0.61 | 0.67 |
| LMT3 | 0.72 | 0.65 | 0.85 | 0.77 | 0.89 | 0.72 | 0.67 | 0.59 | 0.69 |
| LST1 | 0.61 | 0.63 | 0.68 | 0.90 | 0.78 | 0.64 | 0.57 | 0.39 | 0.57 |
| LST2 | 0.74 | 0.59 | 0.72 | 0.87 | 0.78 | 0.68 | 0.61 | 0.48 | 0.63 |
| LST3 | 0.72 | 0.90 | 0.80 | 0.83 | 0.57 | 0.72 | 0.76 | 0.58 | 0.69 |
| OLA1 | 0.72 | 0.65 | 0.85 | 0.79 | 0.89 | 0.72 | 0.67 | 0.59 | 0.69 |
| OLA2 | 0.51 | 0.48 | 0.55 | 0.59 | 0.83 | 0.58 | 0.47 | 0.42 | 0.43 |
| OLA3 | 0.52 | 0.44 | 0.55 | 0.70 | 0.87 | 0.55 | 0.43 | 0.39 | 0.47 |
| OLC1 | 0.78 | 0.70 | 0.73 | 0.65 | 0.53 | 0.87 | 0.77 | 0.65 | 0.91 |
| OLC2 | 0.51 | 0.53 | 0.57 | 0.62 | 0.75 | 0.71 | 0.46 | 0.39 | 0.47 |
| OLC3 | 0.81 | 0.73 | 0.78 | 0.69 | 0.55 | 0.89 | 0.80 | 0.66 | 0.75 |
| OLM1 | 0.79 | 0.89 | 0.91 | 0.79 | 0.62 | 0.73 | 0.88 | 0.61 | 0.69 |
| OLM2 | 0.86 | 0.68 | 0.74 | 0.63 | 0.57 | 0.75 | 0.91 | 0.73 | 0.79 |
| OLM3 | 0.69 | 0.55 | 0.57 | 0.47 | 0.39 | 0.67 | 0.79 | 0.61 | 0.73 |
| OLS1 | 0.41 | 0.23 | 0.35 | 0.28 | 0.39 | 0.41 | 0.40 | 0.69 | 0.49 |
| OLS2 | 0.45 | 0.41 | 0.48 | 0.38 | 0.43 | 0.48 | 0.52 | 0.81 | 0.49 |
| OLS3 | 0.75 | 0.66 | 0.72 | 0.60 | 0.49 | 0.69 | 0.77 | 0.89 | 0.82 |
| OLW1 | 0.78 | 0.70 | 0.73 | 0.65 | 0.53 | 0.87 | 0.77 | 0.65 | 0.91 |
| OLW2 | 0.75 | 0.65 | 0.71 | 0.59 | 0.51 | 0.69 | 0.77 | 0.87 | 0.84 |
| OLW3 | 0.57 | 0.49 | 0.54 | 0.59 | 0.57 | 0.57 | 0.53 | 0.39 | 0.73 |

| Hypothesis | Path | Standardized Path Coefficient | t-Value | Result |
|---|---|---|---|---|
| H1 | OLS → LST | 0.29 *** | 2.14 | Accepted |
| H2 | OLM → LST | 0.24 *** | 2.29 | Accepted |
| H3 | OLC → LST | 0.28 *** | 1.99 | Accepted |
| H4 | OLC → LMT | 0.36 *** | 2.96 | Accepted |
| H5 | OLW → LMT | 0.26 *** | 2.55 | Accepted |
| H6 | OLA → LMT | 0.34 *** | 4.68 | Accepted |
| H7 | LMT → LST | 0.71 *** | 4.96 | Accepted |
| H8 | LMT → LEF | 0.60 *** | 5.89 | Accepted |
| H9 | LST → LEF | 0.32 *** | 3.04 | Accepted |
| H10 | LEF → LBE | 0.81 *** | 23.6 | Accepted |
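
The table reports only direct paths. As an illustration of how such coefficients are usually combined, the sketch below applies the standard product-of-coefficients rule to the route from motivation (LMT) through strategies (LST) to effectiveness (LEF). The resulting indirect and total effects are arithmetic on the tabulated values, not results reported by the authors, and they carry no significance test; the helper name is hypothetical.

```python
# Standardized path coefficients copied from the hypothesis table (H7, H8, H9).
paths = {
    ("LMT", "LST"): 0.71,
    ("LMT", "LEF"): 0.60,
    ("LST", "LEF"): 0.32,
}


def indirect_effect(path_coefficients, route):
    """Multiply the coefficients along consecutive links of a route."""
    product = 1.0
    for source, target in zip(route, route[1:]):
        product *= path_coefficients[(source, target)]
    return product


direct = paths[("LMT", "LEF")]
indirect = indirect_effect(paths, ["LMT", "LST", "LEF"])
total = direct + indirect  # total effect = direct + indirect (illustrative only)

print(f"direct={direct:.4f}, indirect={indirect:.4f}, total={total:.4f}")
```

Under this rule, part of the association between motivation and effectiveness would run through learning strategies, which is consistent with H7 to H9 all being accepted.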


Citation: Hongsuchon, Tanaporn, Ibrahiem M. M. El Emary, Taqwa Hariguna, and Eissa Mohammed Ali Qhal. 2022. "Assessing the Impact of Online-Learning Effectiveness and Benefits in Knowledge Management, the Antecedent of Online-Learning Strategies and Motivations: An Empirical Study" Sustainability 14, no. 5: 2570. https://doi.org/10.3390/su14052570


Open Access

Peer-reviewed

Research Article

COVID-19’s impacts on the scope, effectiveness, and interaction characteristics of online learning: A social network analysis

Roles Data curation, Formal analysis, Methodology, Writing – review & editing

¶ ‡ JZ and YD contributed equally to this work as first authors.

Affiliation School of Educational Information Technology, South China Normal University, Guangzhou, Guangdong, China

Roles Data curation, Formal analysis, Methodology, Writing – original draft

Affiliations School of Educational Information Technology, South China Normal University, Guangzhou, Guangdong, China, Hangzhou Zhongce Vocational School Qiantang, Hangzhou, Zhejiang, China

Roles Data curation, Writing – original draft

Roles Data curation

Roles Writing – original draft

Affiliation Faculty of Education, Shenzhen University, Shenzhen, Guangdong, China

Roles Conceptualization, Supervision, Writing – review & editing

* E-mail: [email protected] (JH); [email protected] (YZ)

- Junyi Zhang,

- Yigang Ding,

- Xinru Yang,

- Jinping Zhong,

- XinXin Qiu,

- Zhishan Zou,

- Yujie Xu,

- Xiunan Jin,

- Xiaomin Wu,

- Published: August 23, 2022

- https://doi.org/10.1371/journal.pone.0273016


The COVID-19 outbreak brought online learning to the forefront of education. Scholars have conducted many studies on online learning during the pandemic, but only a few have performed quantitative comparative analyses of students’ online learning behavior before and after the outbreak. We collected review data from icourse.163, a massive open online course platform in China, and performed social network analysis on 15 courses to explore their interaction characteristics before, during, and after the COVID-19 pandemic. Specifically, we focused on the following aspects: (1) variations in the scale of online learning amid COVID-19; (2a) the characteristics of online learning interaction during the pandemic; (2b) the characteristics of online learning interaction after the pandemic; and (3) differences in the interaction characteristics of social science courses and natural science courses. Results revealed that only a small number of courses witnessed an uptick in online interaction, suggesting that the pandemic’s role in promoting the scale of courses was not significant. During the pandemic, online learning interaction became more frequent among members of course networks whose interaction scale increased. After the pandemic, although the scale of interaction declined, online learning interaction became more effective. The scale and level of interaction in Electrodynamics (a natural science course) and Economics (a social science course) both rose during the pandemic. However, long after the pandemic, the Economics course sustained online interaction whereas interaction in the Electrodynamics course steadily declined. This discrepancy could be due to the distinct characteristics of natural science courses and social science courses.

Citation: Zhang J, Ding Y, Yang X, Zhong J, Qiu X, Zou Z, et al. (2022) COVID-19’s impacts on the scope, effectiveness, and interaction characteristics of online learning: A social network analysis. PLoS ONE 17(8): e0273016. https://doi.org/10.1371/journal.pone.0273016

Editor: Heng Luo, Central China Normal University, CHINA

Received: April 20, 2022; Accepted: July 29, 2022; Published: August 23, 2022

Copyright: © 2022 Zhang et al. This is an open access article distributed under the terms of the Creative Commons Attribution License , which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Data Availability: The data underlying the results presented in the study were downloaded from https://www.icourse163.org/ and are now shared fully on Github ( https://github.com/zjyzhangjunyi/dataset-from-icourse163-for-SNA ). These data have no private information and can be used for academic research free of charge.

Funding: The author(s) received no specific funding for this work.

Competing interests: The authors have declared that no competing interests exist.

1. Introduction

The development of the mobile internet has spurred rapid advances in online learning, offering novel prospects for teaching and learning and a learning experience completely different from traditional instruction. Online learning harnesses the advantages of network technology and multimedia technology to transcend the boundaries of conventional education [ 1 ]. Online courses have become a popular learning mode owing to their flexibility and openness. During online learning, teachers and students are in different physical locations but interact in multiple ways (e.g., via online forum discussions and asynchronous group discussions). An analysis of online learning therefore calls for attention to students’ participation. Alqurashi [ 2 ] defined interaction in online learning as the process of constructing meaningful information and thought exchanges between more than two people; such interaction typically occurs between teachers and learners, learners and learners, and the course content and learners.

Massive open online courses (MOOCs), a 21st-century teaching mode, have greatly influenced global education. Data released by China’s Ministry of Education in 2020 show that the country ranks first globally in the number and scale of higher education MOOCs. The COVID-19 outbreak has further propelled this learning mode, with universities being urged to leverage MOOCs and other online resource platforms in response to the government’s “School’s Out, But Class’s On” policy [ 3 ]. Beyond MOOCs, various online learning methods have become ubiquitous as a way to reduce in-person gatherings and curb the spread of COVID-19 [ 4 ]. Lederman asserted that the COVID-19 outbreak has positioned online learning technologies as the best way for teachers and students to obtain satisfactory learning experiences [ 5 ]. However, it remains unclear whether the pandemic has encouraged interaction in online learning, even though interactions between students and others play key roles in academic performance and largely determine the quality of learning experiences [ 6 ]. It is likewise unclear what impact the pandemic has had on the scale of online learning.

Social constructivism paints learning as a social phenomenon. As such, analyzing the social structures or patterns that emerge during the learning process can shed light on learning-based interaction [ 7 ]. Social network analysis helps to explain how a social network, rooted in interactions between learners and their peers, guides individuals’ behavior, emotions, and outcomes. This analytical approach is especially useful for evaluating interactive relationships between network members [ 8 ]. Mohammed cited social network analysis (SNA) as a method that can provide timely information about students, learning communities and interactive networks. SNA has been applied in numerous fields, including education, to identify the number and characteristics of interelement relationships. For example, Lee et al. also used SNA to explore the effects of blogs on peer relationships [ 7 ]. Therefore, adopting SNA to examine interactions in online learning communities during the COVID-19 pandemic can uncover potential issues with this online learning model.

Taking China’s icourse.163 MOOC platform as an example, we chose 15 courses with a large number of participants for SNA, focusing on learners’ interaction characteristics before, during, and after the COVID-19 outbreak. We visually assessed changes in the scale of network interaction before, during, and after the outbreak along with the characteristics of interaction in Gephi. Examining students’ interactions in different courses revealed distinct interactive network characteristics, the pandemic’s impact on online courses, and relevant suggestions. Findings are expected to promote effective interaction and deep learning among students in addition to serving as a reference for the development of other online learning communities.

2. Literature review and research questions

Interaction is deemed as central to the educational experience and is a major focus of research on online learning. Moore began to study the problem of interaction in distance education as early as 1989. He defined three core types of interaction: student–teacher, student–content, and student–student [ 9 ]. Lear et al. [ 10 ] described an interactivity/ community-process model of distance education: they specifically discussed the relationships between interactivity, community awareness, and engaging learners and found interactivity and community awareness to be correlated with learner engagement. Zulfikar et al. [ 11 ] suggested that discussions initiated by the students encourage more students’ engagement than discussions initiated by the instructors. It is most important to afford learners opportunities to interact purposefully with teachers, and improving the quality of learner interaction is crucial to fostering profound learning [ 12 ]. Interaction is an important way for learners to communicate and share information, and a key factor in the quality of online learning [ 13 ].

Timely feedback is the main component of online learning interaction. Woo and Reeves discovered that students often become frustrated when they fail to receive prompt feedback [ 14 ]. Shelley et al. conducted a three-year study of graduate and undergraduate students’ satisfaction with online learning at universities and found that interaction with educators and students is the main factor affecting satisfaction [ 15 ]. Teachers therefore need to provide students with scoring justification, support, and constructive criticism during online learning. Some researchers examined online learning during the COVID-19 pandemic. They found that most students preferred face-to-face learning to online learning due to obstacles faced online, such as a lack of motivation, limited teacher-student interaction, and a sense of isolation when learning in different times and spaces [ 16 , 17 ]. However, these obstacles can be reduced by enhancing the online interaction between teachers and students [ 18 ].

Research showed that interactions contributed to maintaining students’ motivation to continue learning [ 19 ]. Baber argued that interaction played a key role in students’ academic performance and influenced the quality of the online learning experience [ 20 ]. Hodges et al. maintained that well-designed online instruction can lead to unique teaching experiences [ 21 ]. Banna et al. mentioned that using discussion boards, chat sessions, blogs, wikis, and other tools could promote student interaction and improve participation in online courses [ 22 ]. During the COVID-19 pandemic, Mahmood proposed a series of teaching strategies suitable for distance learning to improve its effectiveness [ 23 ]. Lapitan et al. devised an online strategy to ease the transition from traditional face-to-face instruction to online learning [ 24 ]. The preceding discussion suggests that online learning goes beyond simply providing learning resources; teachers should ideally design real-life activities to give learners more opportunities to participate.

As mentioned, COVID-19 has driven many scholars to explore the online learning environment. However, most have ignored the uniqueness of online learning during this time and have rarely compared pre- and post-pandemic online learning interaction. Taking China’s icourse.163 MOOC platform as an example, we chose 15 courses with a large number of participants for SNA, centering on student interaction before and after the pandemic. Gephi was used to visually analyze changes in the scale and characteristics of network interaction. The following questions were of particular interest:

- (1) Can the COVID-19 pandemic promote the expansion of online learning?

- (2a) What are the characteristics of online learning interaction during the pandemic?

- (2b) What are the characteristics of online learning interaction after the pandemic?

- (3) How do interaction characteristics differ between social science courses and natural science courses?

3. Methodology

3.1 Research context

We selected several courses with a large number of participants and extensive online interaction among hundreds of courses on the icourse.163 MOOC platform. These courses had been offered on the platform for at least three semesters, covering three periods (i.e., before, during, and after the COVID-19 outbreak). To eliminate the effects of shifts in irrelevant variables (e.g., course teaching activities), we chose several courses with similar teaching activities and compared them on multiple dimensions. All course content was taught online. The teachers of each course posted discussion threads related to learning topics; students were expected to reply via comments. Learners could exchange ideas freely in their responses in addition to asking questions and sharing their learning experiences. Teachers could answer students’ questions as well. Conversations in the comment area could partly compensate for a relative absence of online classroom interaction. Teacher–student interaction is conducive to the formation of a social network structure and enabled us to examine teachers’ and students’ learning behavior through SNA. The comment areas in these courses were intended for learners to construct knowledge via reciprocal communication. Meanwhile, by answering students’ questions, teachers could encourage them to reflect on their learning progress. These courses’ successive terms also spanned several phases of COVID-19, allowing us to ascertain the pandemic’s impact on online learning.

3.2 Data collection and preprocessing

To avoid interference from invalid or unclear data, the following criteria were applied to select representative courses: (1) generality (i.e., public courses and professional courses were chosen from different schools across China); (2) time validity (i.e., courses were held before, during, and after the pandemic); and (3) notability (i.e., each course had at least 2,000 participants). We ultimately chose 15 courses across the social sciences and natural sciences (see Table 1 ). Each course name is represented by a code.

https://doi.org/10.1371/journal.pone.0273016.t001

To discern courses’ evolution during the pandemic, we gathered data on three terms before, during, and after the COVID-19 outbreak in addition to obtaining data from two terms completed well before the pandemic and long after. Our final dataset comprised five sets of interactive data. Finally, we collected about 120,000 comments for SNA. Because each course had a different start time—in line with fluctuations in the number of confirmed COVID-19 cases in China and the opening dates of most colleges and universities—we divided our sample into five phases: well before the pandemic (Phase I); before the pandemic (Phase Ⅱ); during the pandemic (Phase Ⅲ); after the pandemic (Phase Ⅳ); and long after the pandemic (Phase Ⅴ). We sought to preserve consistent time spans to balance the amount of data in each period ( Fig 1 ).

https://doi.org/10.1371/journal.pone.0273016.g001

3.3 Instrumentation

Participants’ comments and “thumbs-up” behavior data were converted into a network structure and compared using social network analysis (SNA). Network analysis, according to M’Chirgui, is an effective tool for clarifying network relationships by employing sophisticated techniques [ 25 ]. Specifically, SNA can help explain the underlying relationships among team members and provide a better understanding of their internal processes. Yang and Tang used SNA to discuss the relationship between team structure and team performance [ 26 ]. Golbeck argued that SNA could improve the understanding of students’ learning processes and reveal learners’ and teachers’ role dynamics [ 27 ].
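The conversion of comments and "thumbs-up" records into a network can be sketched as follows. This is a minimal illustration, not the authors' pipeline: the record layout, the user names, and the choice to count every interaction between two users as one unit of edge weight are all assumptions, as is the Source/Target/Weight row layout, chosen here because edge lists of that shape are commonly imported into graph tools such as Gephi.

```python
from collections import Counter

# Hypothetical interaction records: (acting user, addressed user, kind of interaction).
records = [
    ("teacher_1", "student_a", "comment"),
    ("student_a", "teacher_1", "comment"),
    ("student_b", "student_a", "thumbs_up"),
    ("student_c", "teacher_1", "comment"),
]


def build_network(records):
    """Return (nodes, weights); weights maps an undirected edge to its interaction count."""
    nodes = set()
    weights = Counter()
    for source, target, _kind in records:
        nodes.update((source, target))
        if source != target:  # a reply to oneself adds a node but no edge
            weights[frozenset((source, target))] += 1
    return nodes, weights


def edge_rows(weights):
    """Flatten the edge weights into sorted [source, target, weight] rows."""
    return sorted([*sorted(edge), weight] for edge, weight in weights.items())


nodes, weights = build_network(records)
rows = edge_rows(weights)
```

Treating the network as undirected and weighted keeps the sketch aligned with the indicators used later (degree, weighted degree, density); a directed variant would keep `(source, target)` tuples instead of `frozenset` keys.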

To analyze Question (1), the number of nodes and diameter in the generated network were deemed as indicators of changes in network size. Social networks are typically represented as graphs with nodes and edges, and node count indicates the sample size [ 15 ]. Wellman et al. proposed that the larger the network scale, the greater the number of network members providing emotional support, goods, services, and companionship [ 28 ]. Jan’s study measured the network size by counting the nodes which represented students, lecturers, and tutors [ 29 ]. Similarly, network nodes in the present study indicated how many learners and teachers participated in the course, with more nodes indicating more participants. Furthermore, we investigated the network diameter, a structural feature of social networks, which is a common metric for measuring network size in SNA [ 30 ]. The network diameter refers to the longest of the shortest paths between any two nodes in the network. There has been evidence that a larger network diameter leads to greater spread of behavior [ 31 ]. Likewise, Gašević et al. found that larger networks were more likely to spread innovative ideas about educational technology when analyzing MOOC-related research citations [ 32 ]. Therefore, we employed node count and network diameter to measure the network’s spatial size and further explore the expansion characteristic of online courses. These indicators are summarized in Table 2 .

https://doi.org/10.1371/journal.pone.0273016.t002
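As a rough illustration of the two size indicators, the sketch below counts nodes and computes the diameter as the longest shortest path, using a breadth-first search from every node. The toy adjacency map and the function names are hypothetical, and the sketch assumes a non-empty, connected, undirected network.

```python
from collections import deque

# Hypothetical toy network as an adjacency map: node -> set of neighbours.
toy_network = {
    "a": {"b"},
    "b": {"a", "c", "e"},
    "c": {"b", "d"},
    "d": {"c"},
    "e": {"b"},
}


def bfs_distances(adj, start):
    """Shortest-path length (in edges) from start to every reachable node."""
    dist = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbour in adj[node]:
            if neighbour not in dist:
                dist[neighbour] = dist[node] + 1
                queue.append(neighbour)
    return dist


def node_count(adj):
    """Number of participants (learners and teachers) in the network."""
    return len(adj)


def diameter(adj):
    """Longest shortest path between any two mutually reachable nodes."""
    return max(max(bfs_distances(adj, node).values()) for node in adj)


size = node_count(toy_network)
longest = diameter(toy_network)
```

In the toy network the farthest pairs (for example `a` and `d`) are three steps apart, so the diameter is 3 for a network of 5 nodes.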

To address Question (2), a list of interactive analysis metrics in SNA were introduced to scrutinize learners’ interaction characteristics in online learning during and after the pandemic, as shown below:

- (1) The average degree reflects the density of the network by calculating the average number of connections for each node. As Rong and Xu suggested, the average degree of a network indicates how active its participants are [ 33 ]. According to Hu, a higher average degree implies that more students are interacting directly with each other in a learning context [ 34 ]. The present study inherited the concept of the average degree from these previous studies: the higher the average degree, the more frequent the interaction between individuals in the network.

- (2) Essentially, a weighted average degree in a network is calculated by multiplying each degree by its respective weight, and then taking the average. Bydžovská took the strength of the relationship into account when determining the weighted average degree [ 35 ]. By calculating friendship’s weighted value, Maroulis assessed peer achievement within a small-school reform [ 36 ]. Accordingly, we considered the number of interactions as the weight of the degree, with a higher weighted average degree indicating more active interaction among learners.

- (3) Network density is the ratio between actual connections and potential connections in a network. The more connections group members have with each other, the higher the network density. In SNA, network density is similar to group cohesion, i.e., a network of more strong relationships is more cohesive [ 37 ]. Network density also reflects how much all members are connected together [ 38 ]. Therefore, we adopted network density to indicate the closeness among network members. Higher network density indicates more frequent interaction and closer communication among students.

- (4) Clustering coefficient describes local network attributes and indicates that two nodes in the network could be connected through adjacent nodes. The clustering coefficient measures users’ tendency to gather (cluster) with others in the network: the higher the clustering coefficient, the more frequently users communicate with other group members. We regarded this indicator as a reflection of the cohesiveness of the group [ 39 ].

- (5) In a network, the average path length is the average number of steps along the shortest paths between any two nodes. Oliveres has observed that when an average path length is small, the route from one node to another is shorter when graphed [ 40 ]. This is especially true in educational settings where students tend to become closer friends. So we consider that the smaller the average path length, the greater the possibility of interaction between individuals in the network.

- (6) A network that has a large number of nodes but a surprisingly small average path length exhibits the small-world effect [ 41 ]. A higher clustering coefficient and shorter average path length are important indicators of a small-world network: a shorter average path length enables the network to spread information faster and more accurately; a higher clustering coefficient can promote frequent knowledge exchange within the group while boosting the timeliness and accuracy of knowledge dissemination [ 42 ]. These indicators are summarized in Table 3 .

https://doi.org/10.1371/journal.pone.0273016.t003
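The interaction indicators listed above can be sketched on a small weighted network. This is an illustrative implementation under stated assumptions, not the computation performed by the authors' tooling: the edge data are hypothetical, the network is treated as undirected and connected, the weighted degree of a node is taken to be the sum of its edge weights, and the clustering coefficient is the average of the local coefficients (with 0 for nodes that have fewer than two neighbours).

```python
from collections import deque
from itertools import combinations

# Hypothetical weighted, undirected network: edge -> number of interactions.
edges = {("a", "b"): 3, ("a", "c"): 1, ("b", "c"): 2, ("c", "d"): 1}


def adjacency(edges):
    """Build node -> {neighbour: weight} from an undirected edge map."""
    adj = {}
    for (u, v), weight in edges.items():
        adj.setdefault(u, {})[v] = weight
        adj.setdefault(v, {})[u] = weight
    return adj


def average_degree(adj):
    return sum(len(nbrs) for nbrs in adj.values()) / len(adj)


def weighted_average_degree(adj):
    return sum(sum(nbrs.values()) for nbrs in adj.values()) / len(adj)


def density(adj):
    """Actual connections divided by potential connections n(n-1)/2."""
    n = len(adj)
    m = sum(len(nbrs) for nbrs in adj.values()) / 2
    return 2 * m / (n * (n - 1))


def average_clustering(adj):
    """Mean share of each node's neighbour pairs that are themselves connected."""
    total = 0.0
    for nbrs in adj.values():
        k = len(nbrs)
        if k < 2:
            continue  # contributes 0 to the average
        links = sum(1 for u, v in combinations(nbrs, 2) if v in adj[u])
        total += 2 * links / (k * (k - 1))
    return total / len(adj)


def average_path_length(adj):
    """Mean shortest-path length over all ordered pairs of reachable nodes."""
    total, pairs = 0, 0
    for start in adj:
        dist = {start: 0}
        queue = deque([start])
        while queue:
            node = queue.popleft()
            for neighbour in adj[node]:
                if neighbour not in dist:
                    dist[neighbour] = dist[node] + 1
                    queue.append(neighbour)
        total += sum(dist.values())
        pairs += len(dist) - 1
    return total / pairs


adj = adjacency(edges)
metrics = {
    "average_degree": average_degree(adj),
    "weighted_average_degree": weighted_average_degree(adj),
    "density": density(adj),
    "clustering": average_clustering(adj),
    "average_path_length": average_path_length(adj),
}
```

A relatively high clustering coefficient combined with a short average path length is the pattern described in item (6) as the small-world effect; the sketch reports the two values separately rather than combining them into a single index.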

To analyze Question (3), we used the concept of closeness centrality, which determines how close a vertex is to others in the network. As Opsahl et al. explained, closeness centrality reveals how closely actors are coupled with their entire social network [ 43 ]. In order to analyze social network-based engineering education, Putnik et al. examined closeness centrality and found that it was significantly correlated with grades [ 38 ]. We used closeness centrality to measure the position of an individual in the network. This indicator is summarized in Table 4 .

https://doi.org/10.1371/journal.pone.0273016.t004
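Closeness centrality can be illustrated with a short sketch. It assumes one common normalized definition, the number of other reachable nodes divided by the sum of shortest-path distances to them; software packages differ in how they normalize this value, so the numbers here are not meant to reproduce any particular tool. The star-shaped toy network and the function name are hypothetical.

```python
from collections import deque

# Hypothetical star network: a hub (e.g., a teacher) linked to three learners.
star = {
    "hub": {"s1", "s2", "s3"},
    "s1": {"hub"},
    "s2": {"hub"},
    "s3": {"hub"},
}


def closeness_centrality(adj, node):
    """(reachable nodes - 1) / (sum of shortest-path distances from node)."""
    dist = {node: 0}
    queue = deque([node])
    while queue:
        current = queue.popleft()
        for neighbour in adj[current]:
            if neighbour not in dist:
                dist[neighbour] = dist[current] + 1
                queue.append(neighbour)
    total = sum(dist.values())
    if total == 0:  # isolated node: no one to be close to
        return 0.0
    return (len(dist) - 1) / total


hub_score = closeness_centrality(star, "hub")
leaf_score = closeness_centrality(star, "s1")
```

The hub reaches everyone in one step and scores 1.0, whereas each learner needs two steps to reach the other learners and scores 0.6, which matches the intuition that a higher value marks a more central position.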

3.4 Ethics statement

This study was approved by the Academic Committee Office (ACO) of South China Normal University ( http://fzghb.scnu.edu.cn/ ), Guangzhou, China. Research data were collected from the open platform and analyzed anonymously. There are thus no privacy issues involved in this study.

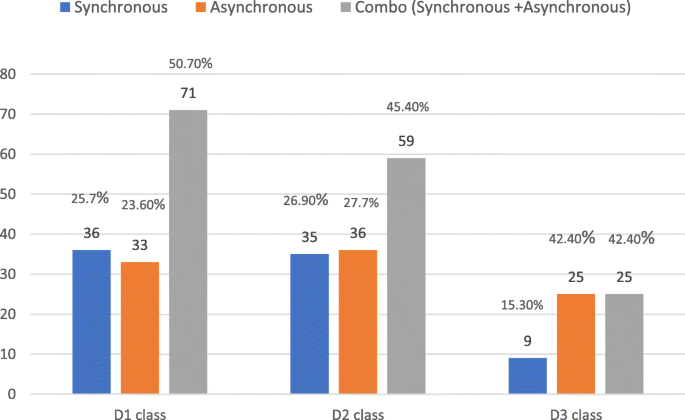

4.1 COVID-19’s role in promoting the scale of online courses was not as important as expected

As shown in Fig 2 , the number of course participants and nodes are closely correlated with the pandemic’s trajectory. Because the number of participants in each course varied widely, we normalized the number of participants and nodes to more conveniently visualize course trends. Fig 2 depicts changes in the chosen courses’ number of participants and nodes before the pandemic (Phase II), during the pandemic (Phase III), and after the pandemic (Phase IV). The number of participants in most courses during the pandemic exceeded the numbers before and after the pandemic. However, in some courses the number of people who participated in interaction did not increase.

https://doi.org/10.1371/journal.pone.0273016.g002

To better analyze the trend of interaction scale in online courses before, during, and after the pandemic, the selected courses were categorized according to their scale change. When the number of participants increased (decreased) by more than 20% (a threshold chosen from statistical experience) and the diameter also increased (decreased), the course scale was determined to have increased (decreased); otherwise, no significant change was identified in the course’s interaction scale. Courses were subsequently divided into three categories: increased interaction scale, decreased interaction scale, and no significant change. Results appear in Table 5 .

https://doi.org/10.1371/journal.pone.0273016.t005
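The categorization rule described above can be expressed as a small function. This is a sketch of the stated rule only: the function name, argument names, and the example figures are hypothetical, and the 20% threshold is applied to the relative change in participants, which is our reading of the text.

```python
def classify_scale_change(participants_before, participants_after,
                          diameter_before, diameter_after, threshold=0.20):
    """Label a course's interaction scale as increased, decreased, or unchanged."""
    change = (participants_after - participants_before) / participants_before
    # Both conditions must point the same way: participants AND diameter.
    if change > threshold and diameter_after > diameter_before:
        return "increased"
    if change < -threshold and diameter_after < diameter_before:
        return "decreased"
    return "no significant change"


# Hypothetical courses: (participants before, participants after, diameter before, diameter after).
examples = {
    "course_x": (1000, 1300, 5, 7),  # +30% participants, larger diameter
    "course_y": (1000, 700, 7, 5),   # -30% participants, smaller diameter
    "course_z": (1000, 1300, 5, 5),  # +30% participants, diameter unchanged
}
labels = {name: classify_scale_change(*values) for name, values in examples.items()}
```

Requiring both indicators to move in the same direction is what makes the rule conservative: a course such as `course_z`, whose participant count grows while its diameter stays flat, is reported as showing no significant change.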

From before the pandemic until it broke out, the interaction scale of five courses increased, accounting for 33.3% of the full sample; one course’s interaction scale declined, accounting for 6.7%. The interaction scale of the remaining nine courses did not change significantly, accounting for 60%. The pandemic’s role in promoting online courses thus was not as important as anticipated, and most courses’ interaction scale did not change significantly throughout.

No courses displayed growing interaction scale after the pandemic: the interaction scale of nine courses fell, accounting for 60%; and the interaction scale of six courses did not shift significantly, accounting for 40%. Courses with an increased scale of interaction during the pandemic did not maintain an upward trend. On the contrary, as the pandemic eased, learners’ enthusiasm for online learning waned. We next analyzed several interaction metrics to further explore course interaction during different pandemic periods.

4.2 Characteristics of online learning interaction amid COVID-19

4.2.1 During the COVID-19 pandemic, online learning interaction in some courses became more active.

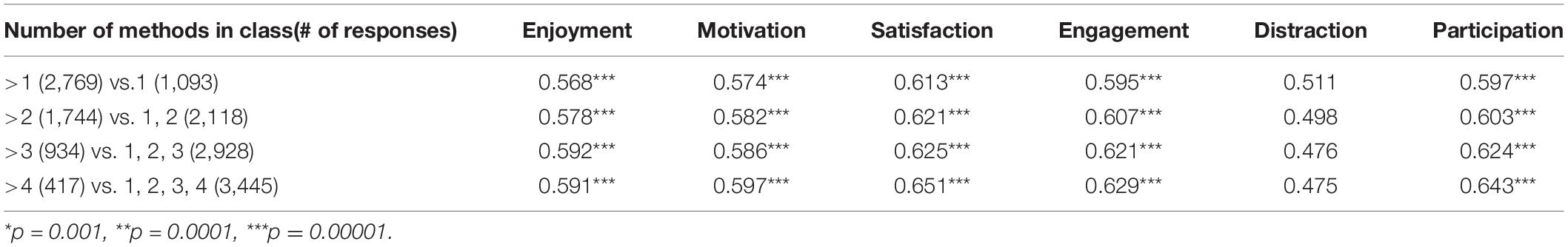

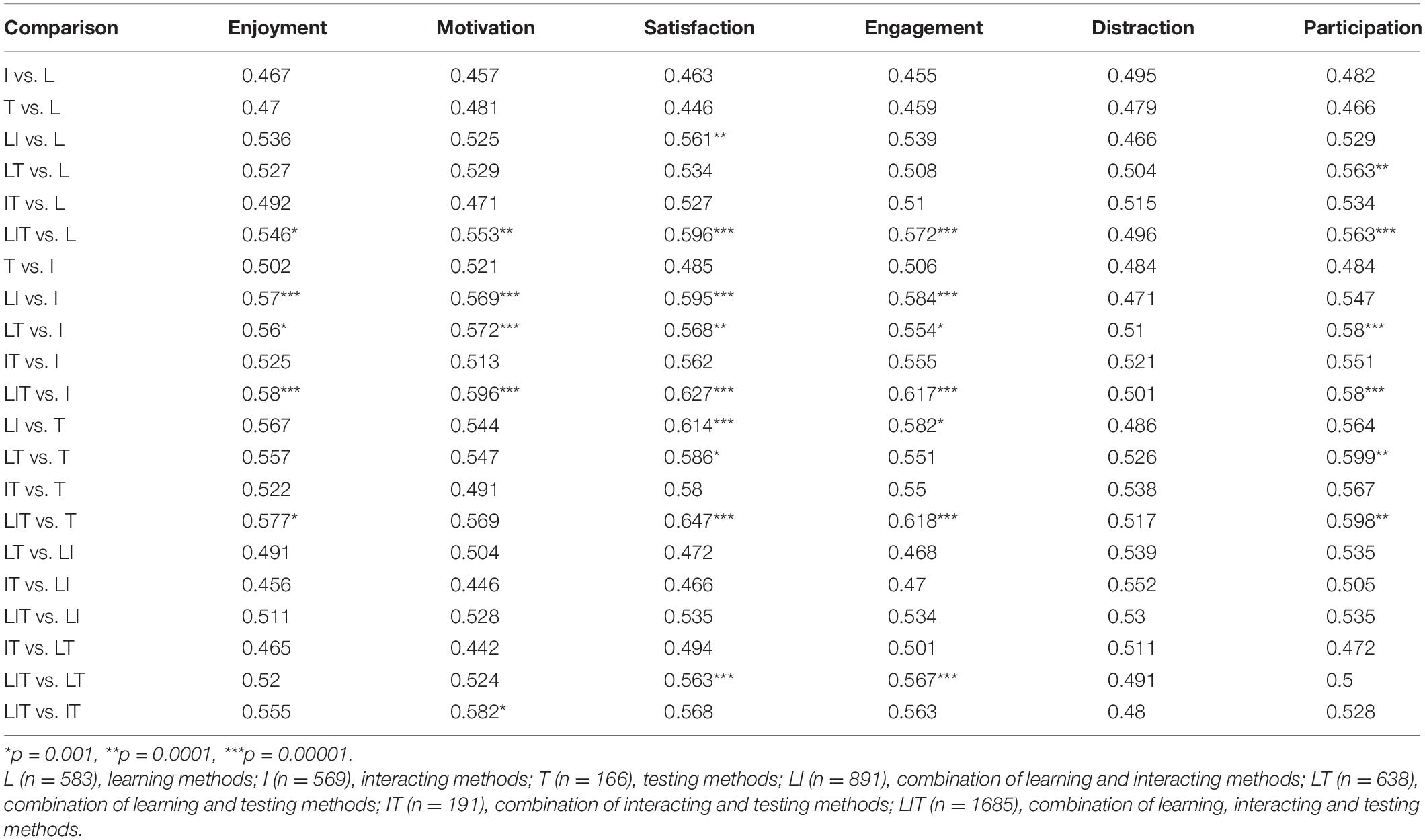

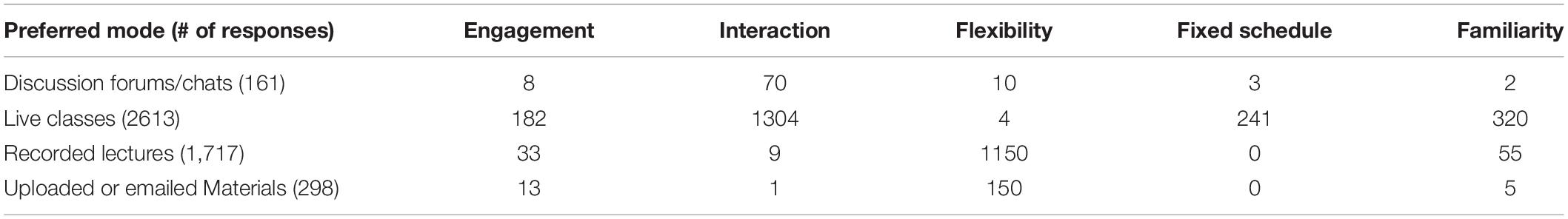

Fig 3 presents changes in the indicators of the five courses whose interaction scale grew during the pandemic: SS5, SS6, NS1, NS3, and NS8. The horizontal axis indicates the number of courses; red represents a rise in the indicator shown on the vertical axis, and blue represents a decline.

https://doi.org/10.1371/journal.pone.0273016.g003

Specifically: (1) The average degree and weighted average degree of the five course networks demonstrated an upward trend. The emergence of the pandemic promoted students’ enthusiasm; learners were more active in the interactive network. (2) Fig 3 shows that 3 courses had increased network density and 2 courses had decreased. The higher the network density, the more communication within the team. Even though the pandemic accelerated the interaction scale and frequency, the tightness between learners in some courses did not improve. (3) The clustering coefficient of social science courses rose whereas the clustering coefficient and small-world property of natural science courses fell. The higher the clustering coefficient and the small-world property, the better the relationship between adjacent nodes and the higher the cohesion [ 39 ]. (4) Most courses’ average path length increased as the interaction scale increased. However, when the average path length grew, adverse effects could manifest: communication between learners might be limited to a small group without multi-directional interaction.

When the pandemic emerged, the only course whose network scale declined was a natural science course (NS2). The change in each course index is pictured in Fig 4 . The horizontal axis indicates the size of the value, with larger values to the right. The red dot indicates the index value before the pandemic; the blue dot indicates its value during the pandemic. If the blue dot is to the right of the red dot, then the value of the index increased; otherwise, the index value declined. Only the weighted average degree of the course network increased. The average degree and network density decreased, indicating that network members were not active and that learners’ interaction degree and communication frequency lessened. Despite reduced learner interaction, the average path length was small and the connectivity between learners was adequate.

https://doi.org/10.1371/journal.pone.0273016.g004

4.2.2 After the COVID-19 pandemic, the scale decreased rapidly, but most course interaction was more effective.

Fig 5 shows the changes in various courses’ interaction indicators after the pandemic, including SS1, SS2, SS3, SS6, SS7, NS2, NS3, NS7, and NS8.

https://doi.org/10.1371/journal.pone.0273016.g005

Specifically: (1) The average degree and weighted average degree of most course networks decreased. The scope and intensity of interaction among network members declined rapidly, as did learners’ enthusiasm for communication. (2) The network density of seven courses also fell, indicating weaker connections between learners in most courses. (3) In addition, the clustering coefficient and small-world property of most course networks decreased, suggesting little possibility of small groups in the network. The scope of interaction between learners was not limited to a specific space, and the interaction objects had no significant tendencies. (4) Although the scale of course interaction became smaller in this phase, the average path length of members’ social networks shortened in nine courses. A shorter average path length would expedite the spread of information within the network as well as communication and sharing among network members.

Fig 6 displays the evolution of course interaction indicators without significant changes in interaction scale after the pandemic, including SS4, SS5, NS1, NS4, NS5, and NS6.

https://doi.org/10.1371/journal.pone.0273016.g006

Specifically: (1) Some course members’ social networks exhibited an increase in the average degree and weighted average degree. In these cases, even though the course network’s scale did not continue to increase, communication among network members rose and interaction became more frequent and deeper than before. (2) Network density and average path length are indicators of social network density. The greater the network density, the denser the social network; the shorter the average path length, the more concentrated the communication among network members. However, at this phase, the average path length and network density in most courses had increased. Yet the network density remained small despite having risen ( Table 6 ). Even with more frequent learner interaction, connections remained distant and the social network was comparatively sparse.

https://doi.org/10.1371/journal.pone.0273016.t006

In summary, the scale of interaction did not change significantly overall. Nonetheless, some course members’ frequency and extent of interaction increased, and the relationships between network members became closer as well. Interestingly, the interaction scale of Economics (a social science course) and Electrodynamics (a natural science course) expanded rapidly during the pandemic and was retained thereafter. We next assessed these two courses to determine whether their level of interaction persisted after the pandemic.

4.3 Analyses of natural science courses and social science courses

4.3.1 Analyses of the interaction characteristics of Economics and Electrodynamics.

Economics and Electrodynamics are a social science course and a natural science course, respectively. Members’ interaction within these courses was similar: the interaction scale increased significantly when COVID-19 broke out (Phase Ⅲ), and no significant changes emerged after the pandemic (Phase Ⅳ). We hence focused on course interaction long after the outbreak (Phase V) and compared changes across multiple indicators, as listed in Table 7 .

https://doi.org/10.1371/journal.pone.0273016.t007

As the pandemic continued to improve, the number of participants and the diameter long after the outbreak (Phase V) each declined for Economics compared with after the pandemic (Phase IV). The interaction scale decreased, but the interaction between learners was much deeper. Specifically: (1) The weighted average degree, network density, clustering coefficient, and small-world property each reflected upward trends. The pandemic therefore exerted a strong impact on this course. Interaction was well maintained even after the pandemic. The smaller network scale promoted members’ interaction and communication. (2) Compared with after the pandemic (Phase IV), members’ network density increased significantly, showing that relationships between learners were closer and that cohesion was improving. (3) At the same time, as the clustering coefficient and small-world property grew, network members demonstrated strong small-group characteristics: the communication between them was deepening and their enthusiasm for interaction was higher. (4) Long after the COVID-19 outbreak (Phase V), the average path length was reduced compared with previous terms, knowledge flowed more quickly among network members, and the degree of interaction gradually deepened.

The average degree, weighted average degree, network density, clustering coefficient, and small-world property of Electrodynamics all decreased long after the COVID-19 outbreak (Phase V) and were lower than during the outbreak (Phase Ⅲ). The level of learner interaction therefore gradually declined long after the outbreak (Phase V), and connections between learners were no longer active. Although the pandemic increased course members’ extent of interaction, this rise was merely temporary: students’ enthusiasm for learning waned rapidly and their interaction decreased after the pandemic (Phase IV). To further analyze the interaction characteristics of course members in Economics and Electrodynamics, we evaluated the closeness centrality of their social networks, as shown in section 4.3.2.

4.3.2 Analysis of the closeness centrality of Economics and Electrodynamics.

The change in the closeness centrality of social networks in Economics was small, and no sharp upward trend appeared during the pandemic outbreak, as shown in Fig 7 . The emergence of COVID-19 apparently fostered learners’ interaction in Economics, albeit without a significant impact. The change in closeness centrality in Electrodynamics differed from that in Economics: upon the COVID-19 outbreak, closeness centrality was significantly different from that in other semesters. Communication between learners was closer and interaction was more effective. Electrodynamics course members’ social network proximity decreased rapidly after the pandemic, and learners’ communication lessened. In general, the Economics course showed better interaction before the outbreak and was less affected by the pandemic; the Electrodynamics course was more affected by the pandemic and showed different interaction characteristics at different periods of the pandemic.

(Note: "****" indicates the significant distinction in closeness centrality between the two periods, otherwise no significant distinction).

https://doi.org/10.1371/journal.pone.0273016.g007

5. Discussion

We referred to discussion forums from several courses on the icourse.163 MOOC platform to compare online learning before, during, and after the COVID-19 pandemic via SNA and to delineate the pandemic’s effects on online courses. Only 33.3% of courses in our sample increased in terms of interaction during the pandemic; the scale of interaction did not rise in any courses thereafter. When the course scale rose, the scope and frequency of interaction showed upward trends during the pandemic, and the clustering coefficients of natural science courses and social science courses differed: the coefficient for social science courses tended to rise whereas that for natural science courses generally declined. When the pandemic broke out, the interaction scale of a single natural science course decreased along with its interaction scope and frequency. The amount of interaction in most courses shrank rapidly during the pandemic and network members were not as active as they had been before. However, after the pandemic, some courses saw declining interaction but greater communication between members; interaction also became more frequent and deeper than before.

5.1 During the COVID-19 pandemic, the scale of interaction increased in only a few courses

The pandemic outbreak led to a rapid increase in the number of participants in most courses; however, the change in network scale was not significant. The scale of online interaction expanded swiftly in only a few courses; in others, the scale either did not change significantly or displayed a downward trend. After the pandemic, the interaction scale in most courses decreased quickly; the same pattern applied to communication between network members. Learners’ enthusiasm for online interaction declined as the circumstances of the pandemic improved, potentially because, during the pandemic, China’s Ministry of Education declared the “School’s Out, But Class’s On” policy. Major colleges and universities were encouraged to use the Internet and informational resources to provide learning support, hence the sudden increase in the number of participants and interaction in online courses [ 46 ]. After the pandemic, students’ enthusiasm for online learning gradually weakened, presumably due to easing of the pandemic [ 47 ]. More activities also transitioned from online to offline, which tempered learners’ online discussion. Research has shown that long-term online learning can even bore students [ 48 ].

Most courses’ interaction scale decreased significantly after the pandemic. First, teachers and students occupied separate spaces during the outbreak, had few opportunities for mutual cooperation and friendship, and lacked a sense of belonging [ 49 ]. Students’ enthusiasm for learning dissipated over time [ 50 ]. Second, some teachers were especially concerned about adapting in-person instructional materials for digital platforms; their pedagogical methods were ineffective, and they did not provide learning activities germane to student interaction [ 51 ]. Third, although teachers and students in remote areas were actively engaged in online learning, some students could not continue to participate in distance learning due to inadequate technology later in the outbreak [ 52 ].

5.2 Characteristics of online learning interaction during and after the COVID-19 pandemic

5.2.1 During the COVID-19 pandemic, online interaction in most courses did not change significantly.

The interaction scale of only a few courses increased during the pandemic. The interaction scope and frequency of these courses climbed as well. Yet even as the degree of network interaction rose, course network density did not expand in all cases. The pandemic sparked a surge in the number of online learners and a rapid increase in network scale, but students found it difficult to interact with all learners. Yau pointed out that a greater network scale did not enrich the range of interaction between individuals; rather, the number of individuals who could interact directly was limited [ 53 ]. The internet facilitates interpersonal communication. However, not everyone has the time or ability to establish close ties with others [ 54 ].

In addition, social science courses and natural science courses in our sample revealed disparate trends in this regard: the clustering coefficient of social science courses increased and that of natural science courses decreased. Social science courses usually employ learning approaches distinct from those in natural science courses [ 55 ]. Social science courses emphasize critical and innovative thinking along with personal expression [ 56 ]. Natural science courses focus on practical skills, methods, and principles [ 57 ]. Therefore, the content of social science courses can spur large-scale discussion among learners. Some course evaluations indicated that the course content design was suboptimal as well: teachers paid close attention to knowledge transmission and much less to piquing students’ interest in learning. In addition, the thread topics that teachers posted were scarcely diversified and teachers’ questions lacked openness. These attributes could not spark active discussion among learners.

5.2.2 Online learning interaction declined after the COVID-19 pandemic.

Most courses’ interaction scale and intensity decreased rapidly after the pandemic, but some did not change. Courses with a larger network scale did not continue to expand after the outbreak, and students’ enthusiasm for learning waned. The pandemic’s reduced severity also influenced the number of participants in online courses. Meanwhile, the restoration of normal school operations moved many learning activities from virtual to in-person spaces. Face-to-face learning gradually replaced online learning, resulting in lower enrollment and less interaction in online courses. Prolonged online courses could also have led students to feel lonely and to lack a sense of belonging [ 58 ].

The scale of interaction in some courses did not change substantially after the pandemic, yet learners’ connections became tighter. We hence recommend that teachers seize pandemic-related opportunities to design suitable activities. Additionally, instructors should promote student-teacher and student-student interaction, encourage students to actively participate online, and generally intensify the impact of online learning.

5.3 What are the characteristics of interaction in social science courses and natural science courses?

The level of interaction in Economics (a social science course) was significantly higher than that in Electrodynamics (a natural science course), and the small-world property in Economics increased as well. To boost online courses’ learning-related impacts, teachers can divide learners into groups based on the clustering coefficient and the average path length. Working with small groups of students can benefit teachers in several ways: teachers can participate actively in activities intended to expand students’ knowledge and can serve as key actors in these small groups. Cultivating students’ keenness to participate in class activities and self-management can also help teachers guide learner interaction and foster deep knowledge construction.
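The two indicators named above can be computed directly from a reply network. The following is a minimal sketch, not the authors' procedure: it assumes an undirected, unweighted network stored as an adjacency dictionary, and the learner labels and ties in `replies` are hypothetical. A high clustering coefficient combined with a short average path length is the usual signature of a small-world network.

```python
from collections import deque
from itertools import combinations


def average_clustering(adj):
    """Mean local clustering coefficient of an undirected graph."""
    total = 0.0
    for node, nbrs in adj.items():
        k = len(nbrs)
        if k < 2:
            continue  # nodes with fewer than two neighbours contribute 0
        # Count ties that exist between pairs of this node's neighbours.
        links = sum(1 for u, v in combinations(nbrs, 2) if v in adj[u])
        total += 2 * links / (k * (k - 1))
    return total / len(adj)


def average_path_length(adj):
    """Mean shortest-path length over all reachable ordered pairs (BFS)."""
    total, pairs = 0, 0
    for source in adj:
        dist = {source: 0}
        queue = deque([source])
        while queue:
            u = queue.popleft()
            for v in adj[u]:
                if v not in dist:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        total += sum(dist.values())
        pairs += len(dist) - 1  # exclude the source itself
    return total / pairs if pairs else 0.0


# Hypothetical reply network: two tight triads joined by a single tie (C-D).
replies = {
    "A": {"B", "C"},
    "B": {"A", "C"},
    "C": {"A", "B", "D"},
    "D": {"C", "E", "F"},
    "E": {"D", "F"},
    "F": {"D", "E"},
}

if __name__ == "__main__":
    print(average_clustering(replies), average_path_length(replies))
```

On this toy network the two triads are natural candidate groups, and learners C and D, who bridge them, are the kind of key actors a teacher might support or join.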

As evidenced by comments posted in the Electrodynamics course, we observed less interaction between students. Teachers also rarely urged students to contribute to conversations. These trends may have arisen because teachers and students were in different spaces. Teachers might have struggled to discern students’ interaction status. Teachers could also have failed to intervene in time, to design online learning activities that piqued learners’ interest, and to employ sound interactive theme planning and guidance. Teachers are often active in traditional classroom settings. Their roles are comparatively weakened online, such that they possess less control over instruction [ 59 ]. Online instruction also requires a stronger hand in learning: teachers should play a leading role in regulating network members’ interactive communication [ 60 ]. Teachers can guide learners to participate, help learners establish social networks, and heighten students’ interest in learning [ 61 ]. Teachers should attend to core members in online learning while also considering edge members; by doing so, all network members can be driven to share their knowledge and become more engaged. Finally, teachers and assistant teachers should help learners develop knowledge, exchange topic-related ideas, pose relevant questions during course discussions, and craft activities that enable learners to interact online [ 62 ]. These tactics can improve the effectiveness of online learning.

As described, network members displayed distinct interaction behavior in Economics and Electrodynamics courses. First, these courses varied in their difficulty: the social science course seemed easier to understand and focused on divergent thinking. Learners were often willing to express their views in comments and to ponder others’ perspectives [ 63 ]. The natural science course seemed more demanding and was oriented around logical thinking and skills [ 64 ]. Second, courses’ content differed. In general, social science courses favor the acquisition of declarative knowledge and creative knowledge compared with natural science courses. Social science courses also entertain open questions [ 65 ]. Natural science courses revolve around principle knowledge, strategic knowledge, and transfer knowledge [ 66 ]. Problems in these courses are normally more complicated than those in social science courses. Third, the indicators affecting students’ attitudes toward learning were unique. Guo et al. discovered that “teacher feedback” most strongly influenced students’ attitudes towards learning social science courses but had less impact on students in natural science courses [ 67 ]. Therefore, learners in social science courses likely expect more feedback from teachers and greater interaction with others.

6. Conclusion and future work

Our findings show that the network interaction scale of some online courses expanded during the COVID-19 pandemic. The network scale of most courses did not change significantly, demonstrating that the pandemic did not notably alter the scale of course interaction. In courses whose interaction scale increased, online learning interaction among network members also became more frequent during the pandemic. Once the outbreak was under control, although the scale of interaction declined, the level and scope of some courses’ interactive networks continued to rise; interaction was thus particularly effective in these cases. Overall, the pandemic appeared to have a relatively positive impact on online learning interaction. We considered a pair of courses in detail and found that Economics (a social science course) fared much better than Electrodynamics (a natural science course) in classroom interaction; learners were more willing to partake in class activities, perhaps due to these courses’ unique characteristics. Brint et al. came to similar conclusions [ 57 ].

This study was intended to be rigorous. Even so, several constraints can be addressed in future work. The first limitation involves our sample: we focused on a select set of courses hosted on China’s icourse.163 MOOC platform. Future studies should involve an expansive collection of courses to provide a more holistic understanding of how the pandemic has influenced online interaction. Second, we only explored the interactive relationship between learners and did not analyze interactive content. More in-depth content analysis should be carried out in subsequent research. All in all, the emergence of COVID-19 has provided a new path for online learning and has reshaped the distance learning landscape. To cope with associated challenges, educational practitioners will need to continue innovating in online instructional design, strengthen related pedagogy, optimize online learning conditions, and bolster teachers’ and students’ competence in online learning.

- 30. Serrat O. Social network analysis. Knowledge solutions: Springer; 2017. p. 39–43. https://doi.org/10.1007/978-981-10-0983-9_9

- 33. Rong Y, Xu E, editors. Strategies for the Management of the Government Affairs Microblogs in China Based on the SNA of Fifty Government Affairs Microblogs in Beijing. 14th International Conference on Service Systems and Service Management 2017.

- 34. Hu X, Chu S, editors. A comparison on using social media in a professional experience course. International Conference on Social Media and Society; 2013.

- 35. Bydžovská H. A Comparative Analysis of Techniques for Predicting Student Performance. Proceedings of the 9th International Conference on Educational Data Mining; Raleigh, NC, USA: International Educational Data Mining Society; 2016. p. 306–311.

- 40. Olivares D, Adesope O, Hundhausen C, et al., editors. Using social network analysis to measure the effect of learning analytics in computing education. 19th IEEE International Conference on Advanced Learning Technologies 2019.

- 41. Travers J, Milgram S. An experimental study of the small world problem. Social Networks: Elsevier; 1977. p. 179–197. https://doi.org/10.1016/B978-0-12-442450-0.50018-3

- 43. Okamoto K, Chen W, Li X-Y, editors. Ranking of closeness centrality for large-scale social networks. International Workshop on Frontiers in Algorithmics; 2008; Berlin, Heidelberg: Springer.

- 47. Ding Y, Yang X, Zheng Y, editors. COVID-19’s Effects on the Scope, Effectiveness, and Roles of Teachers in Online Learning Based on Social Network Analysis: A Case Study. International Conference on Blended Learning; 2021: Springer.

- 64. Boys C, Brennan J, Henkel M, Kirkland J, Kogan M, Youl P. Higher Education and Preparation for Work. Jessica Kingsley Publishers; 1988. https://doi.org/10.1080/03075079612331381467

ORIGINAL RESEARCH article

Insights into students’ experiences and perceptions of remote learning methods: from the COVID-19 pandemic to best practice for the future

- 1 Minerva Schools at Keck Graduate Institute, San Francisco, CA, United States

- 2 Ronin Institute for Independent Scholarship, Montclair, NJ, United States

- 3 Department of Physics, University of Toronto, Toronto, ON, Canada

This spring, students across the globe transitioned from in-person classes to remote learning as a result of the COVID-19 pandemic. This unprecedented change to undergraduate education saw institutions adopting multiple online teaching modalities and instructional platforms. We sought to understand students’ experiences with and perspectives on those methods of remote instruction in order to inform pedagogical decisions during the current pandemic and in future development of online courses and virtual learning experiences. Our survey gathered quantitative and qualitative data regarding students’ experiences with synchronous and asynchronous methods of remote learning and specific pedagogical techniques associated with each. A total of 4,789 undergraduate participants representing institutions across 95 countries were recruited via Instagram. We find that most students prefer synchronous online classes, and students whose primary mode of remote instruction has been synchronous report being more engaged and motivated. Our qualitative data show that students miss the social aspects of learning on campus, and it is possible that synchronous learning helps to mitigate some feelings of isolation. Students whose synchronous classes include active-learning techniques (which are inherently more social) report significantly higher levels of engagement, motivation, enjoyment, and satisfaction with instruction. Respondents’ recommendations for changes emphasize increased engagement, interaction, and student participation. We conclude that active-learning methods, which are known to increase motivation, engagement, and learning in traditional classrooms, also have a positive impact in the remote-learning environment. Integrating these elements into online courses will improve the student experience.

Introduction

The COVID-19 pandemic has dramatically changed the demographics of online students. Previously, almost all students engaged in online learning elected the online format, starting with individual online courses in the mid-1990s through today’s robust online degree and certificate programs. These students prioritize convenience, flexibility, and the ability to work while studying, and they are older than traditional college-age students ( Harris and Martin, 2012 ; Levitz, 2016 ). These students also find asynchronous elements of a course more useful than synchronous elements ( Gillingham and Molinari, 2012 ). In contrast, students who choose to take courses in person prioritize face-to-face instruction and connection with others and skew considerably younger ( Harris and Martin, 2012 ). This leaves open the question of whether students who prefer to learn in person but are forced to learn remotely will prefer synchronous or asynchronous methods. One study of student preferences following a switch to remote learning during the COVID-19 pandemic indicates that students enjoy synchronous course elements more than asynchronous ones and find them more effective ( Gillis and Krull, 2020 ). Now that millions of traditional in-person courses have transitioned online, our survey expands the data on student preferences and explores whether those preferences align with pedagogical best practices.