What’s a Hypothesis Space?

Last updated: March 18, 2024



1. Introduction

Machine-learning algorithms come with implicit or explicit assumptions about the actual patterns in the data. Mathematically, this means that each algorithm can learn a specific family of models, and that family goes by the name of the hypothesis space.

In this tutorial, we’ll talk about hypothesis spaces and how to choose the right one for the data at hand.

2. Hypothesis Spaces

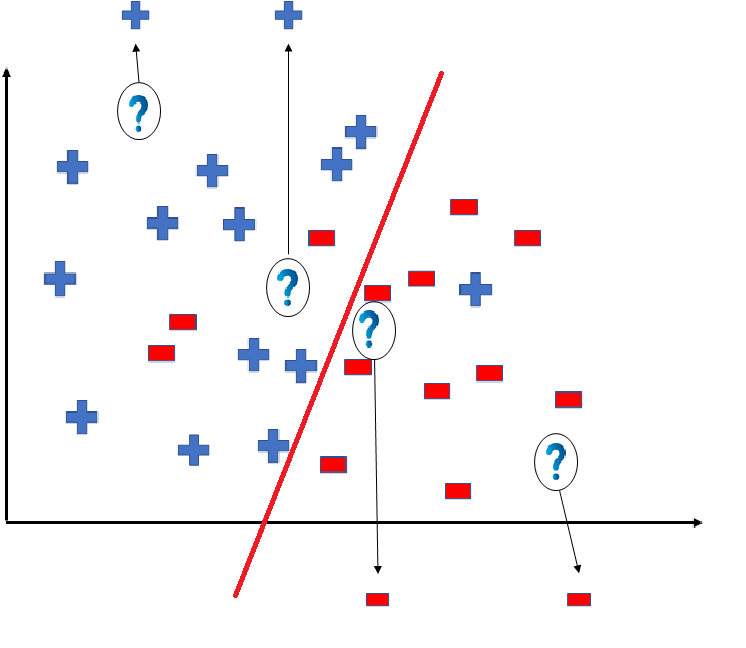

Let’s say that we have a binary classification task and that the data are two-dimensional. Our goal is to find a model that classifies objects as positive or negative. Applying Logistic Regression, we can get the models of the form:

$$h(x) = \frac{1}{1 + e^{-(w_0 + w_1 x_1 + w_2 x_2)}} \tag{1}$$

which estimate the probability that the object at hand is positive.
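As a sketch of what a single member of this hypothesis space looks like in code, the Python snippet below evaluates hypothesis (1) for one fixed set of weights. The weight values, the function names `logistic_hypothesis` and `classify`, and the 0.5 classification threshold are illustrative assumptions, not part of the original text.

```python
import math


def logistic_hypothesis(w0: float, w1: float, w2: float):
    """Build h(x) = 1 / (1 + exp(-(w0 + w1*x1 + w2*x2))) for fixed weights."""

    def h(x1: float, x2: float) -> float:
        z = w0 + w1 * x1 + w2 * x2
        # Numerically stable sigmoid: avoids overflow in exp() for large |z|.
        if z >= 0:
            return 1.0 / (1.0 + math.exp(-z))
        ez = math.exp(z)
        return ez / (1.0 + ez)

    return h


def classify(h, x1: float, x2: float, threshold: float = 0.5) -> int:
    """Label an object positive (1) if its estimated probability reaches the threshold."""
    return 1 if h(x1, x2) >= threshold else 0


# Hypothetical weights; every choice of (w0, w1, w2) picks a different hypothesis.
h = logistic_hypothesis(-1.0, 2.0, 0.5)
print(h(0.5, 0.0))            # z = 0, so the estimated probability is 0.5
print(classify(h, 2.0, 1.0))  # z = 3.5 > 0, so the object is labelled positive
```

Training a logistic regression model amounts to choosing the weights; the shape of `h` itself, and therefore the hypothesis space, stays fixed.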

2.1. Hypotheses and Assumptions

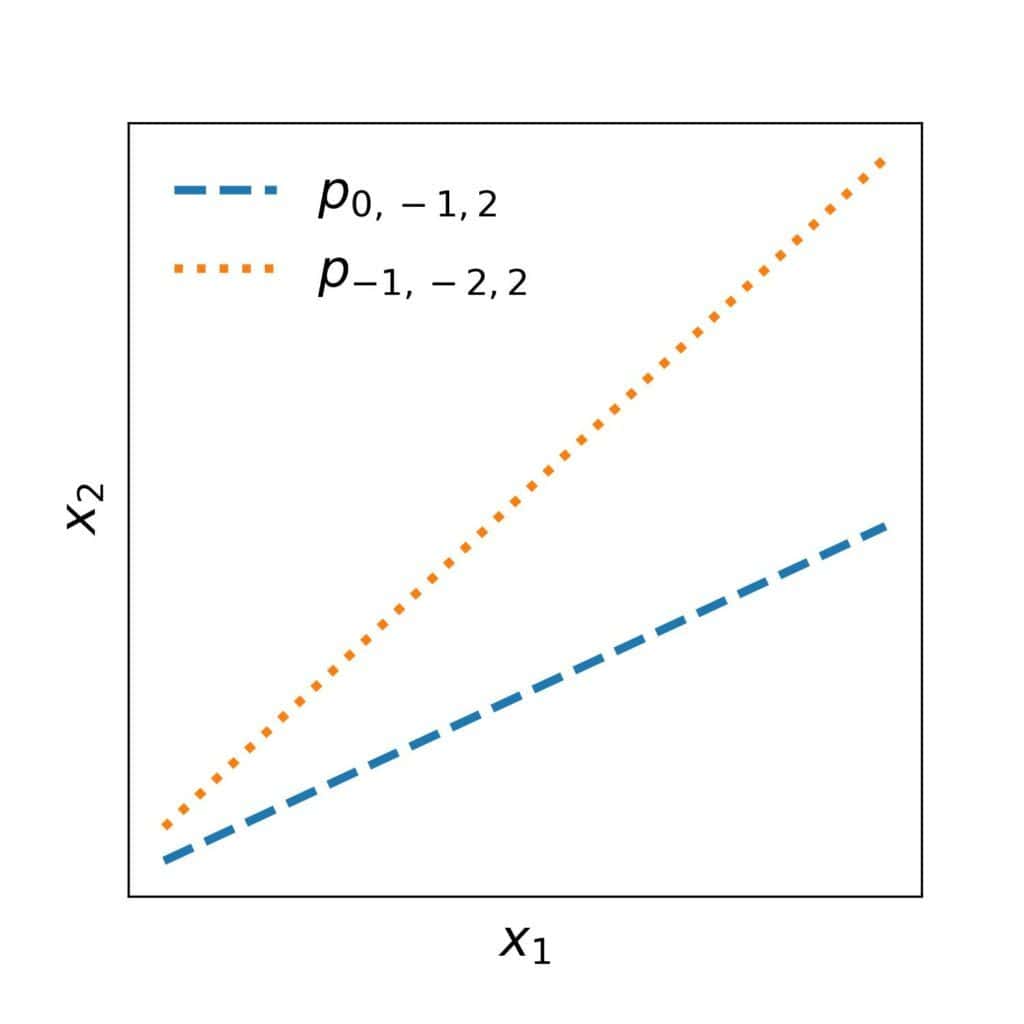

The underlying assumption of hypotheses (1) is that the boundary separating the positive from negative objects is a straight line. So, every hypothesis from this space corresponds to a straight line in a 2D plane. For instance:

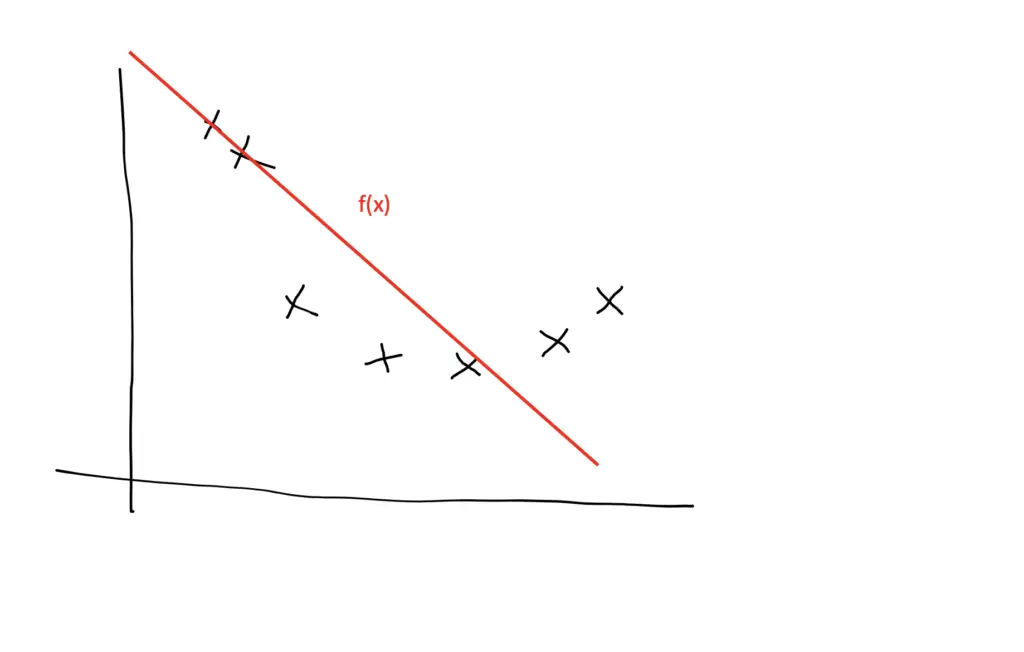
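Assuming positives are predicted when $h(x) \ge 0.5$ (a common convention; the text does not fix a threshold), a short derivation shows why each hypothesis in (1) draws a straight line:

$$
\begin{aligned}
h(x) \ge \tfrac{1}{2}
&\iff 1 + e^{-(w_0 + w_1 x_1 + w_2 x_2)} \le 2 \\
&\iff e^{-(w_0 + w_1 x_1 + w_2 x_2)} \le 1 \\
&\iff w_0 + w_1 x_1 + w_2 x_2 \ge 0
\end{aligned}
$$

So the decision boundary is the set of points where $w_0 + w_1 x_1 + w_2 x_2 = 0$; for $w_2 \neq 0$ this is the line $x_2 = -(w_0 + w_1 x_1)/w_2$.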

2.2. Regression

3. Expressivity of a Hypothesis Space

We could informally say that one hypothesis space is more expressive than another if its hypotheses are more diverse and complex.

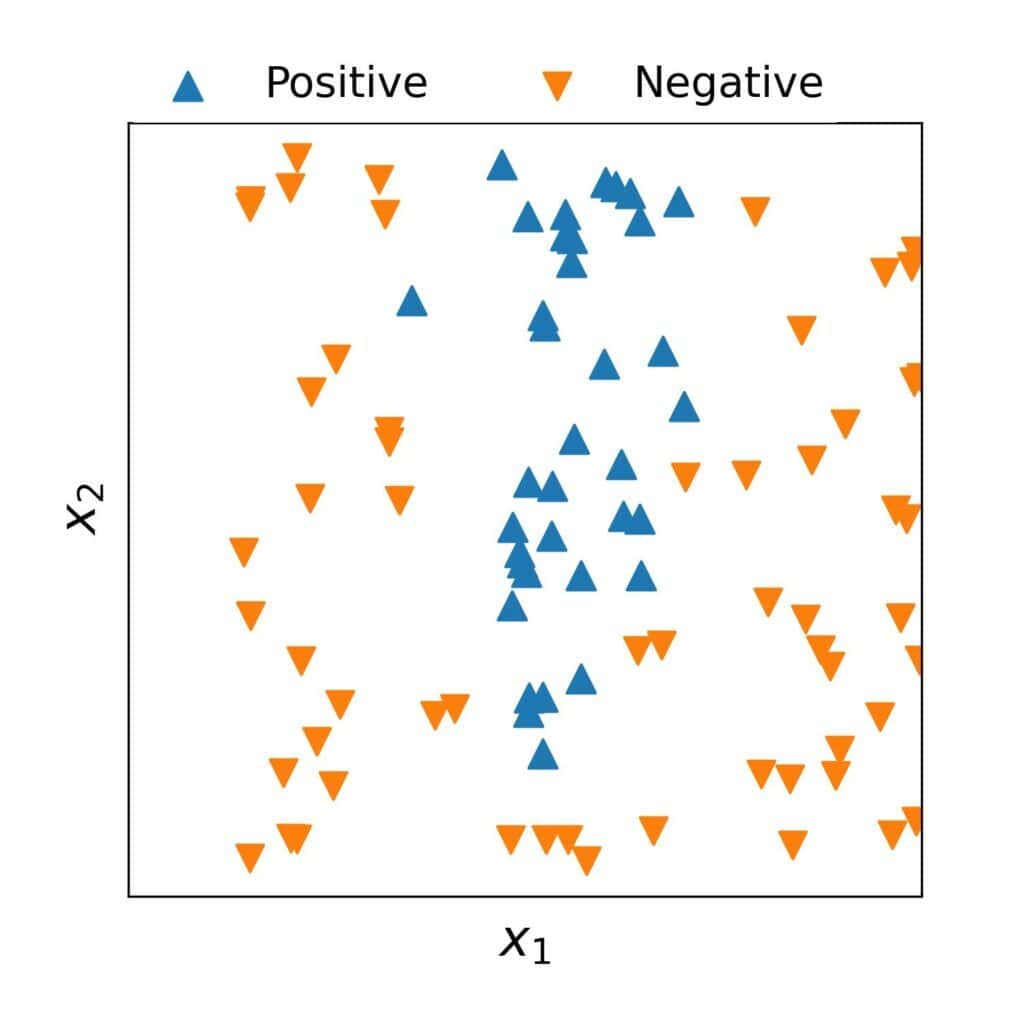

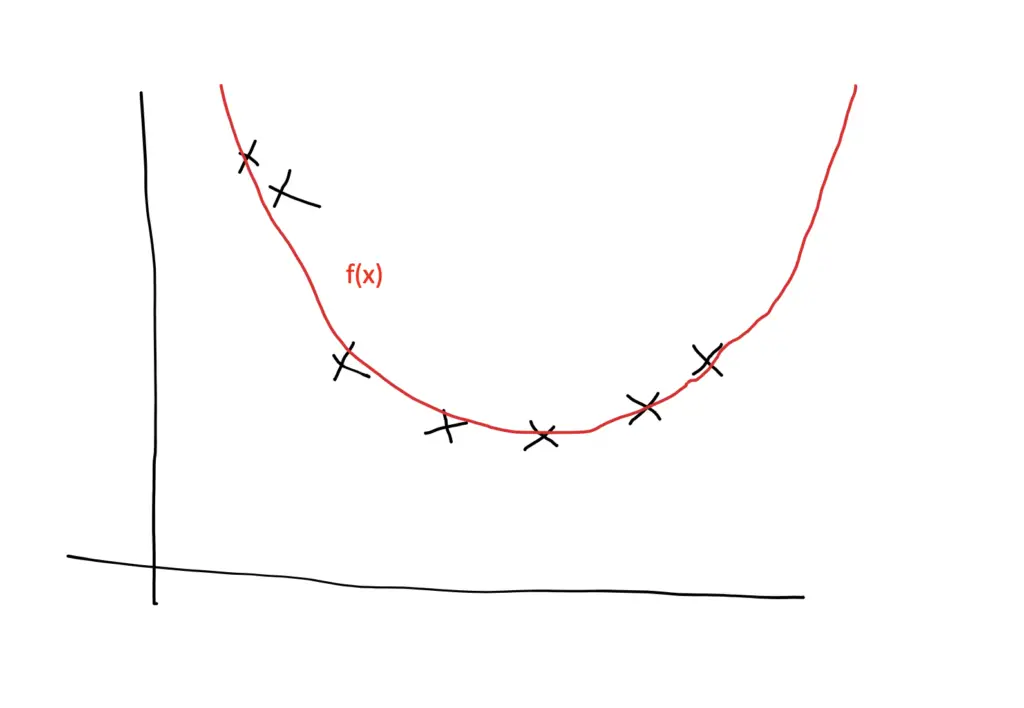

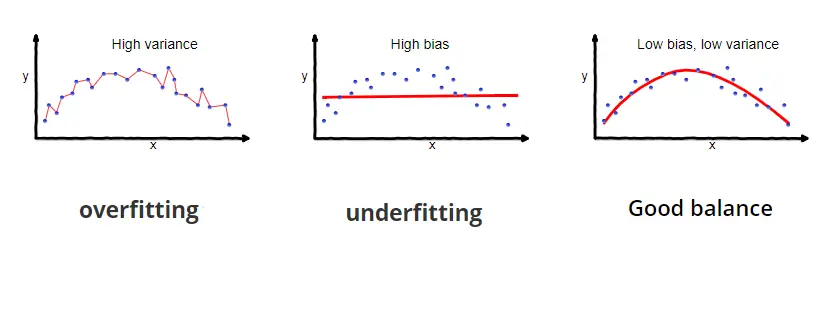

We may underfit the data if our algorithm’s hypothesis space isn’t expressive enough. For instance, linear hypotheses aren’t particularly good options if the actual data are extremely non-linear:

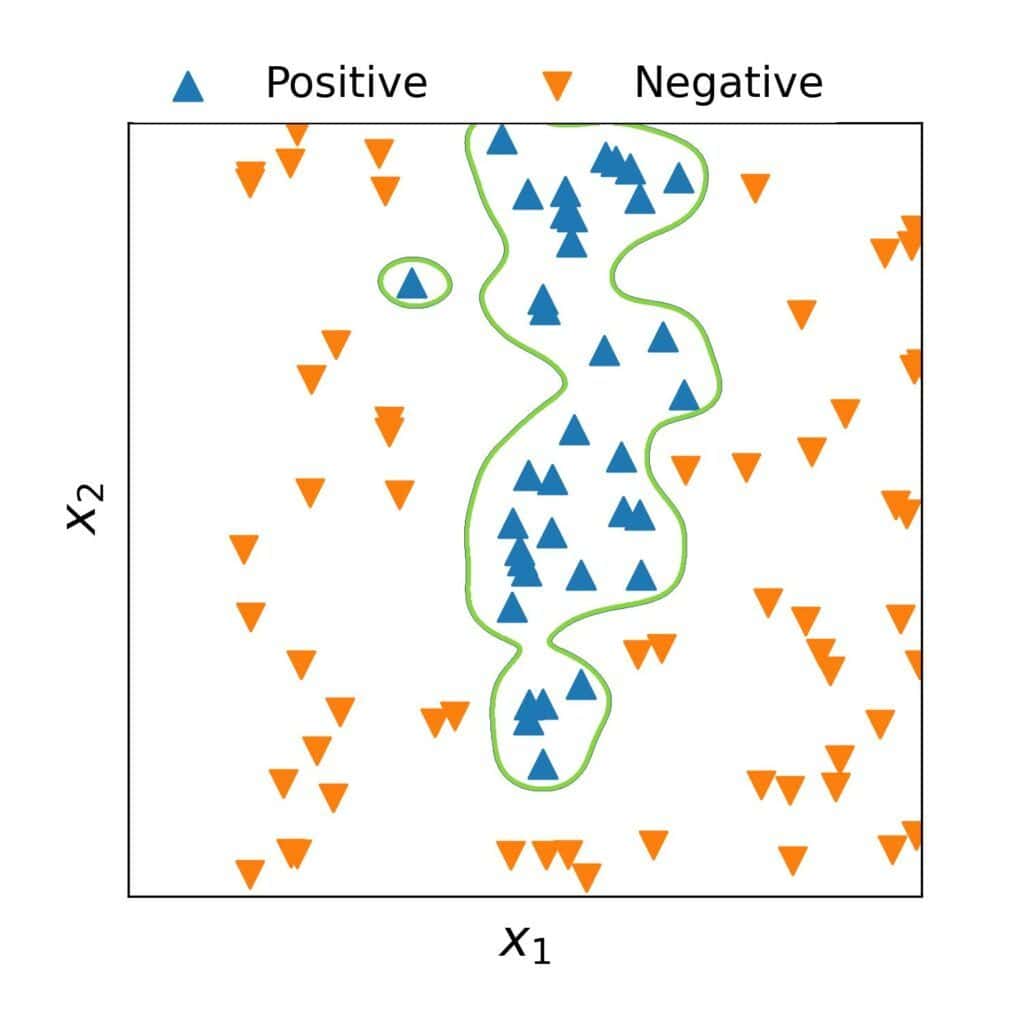

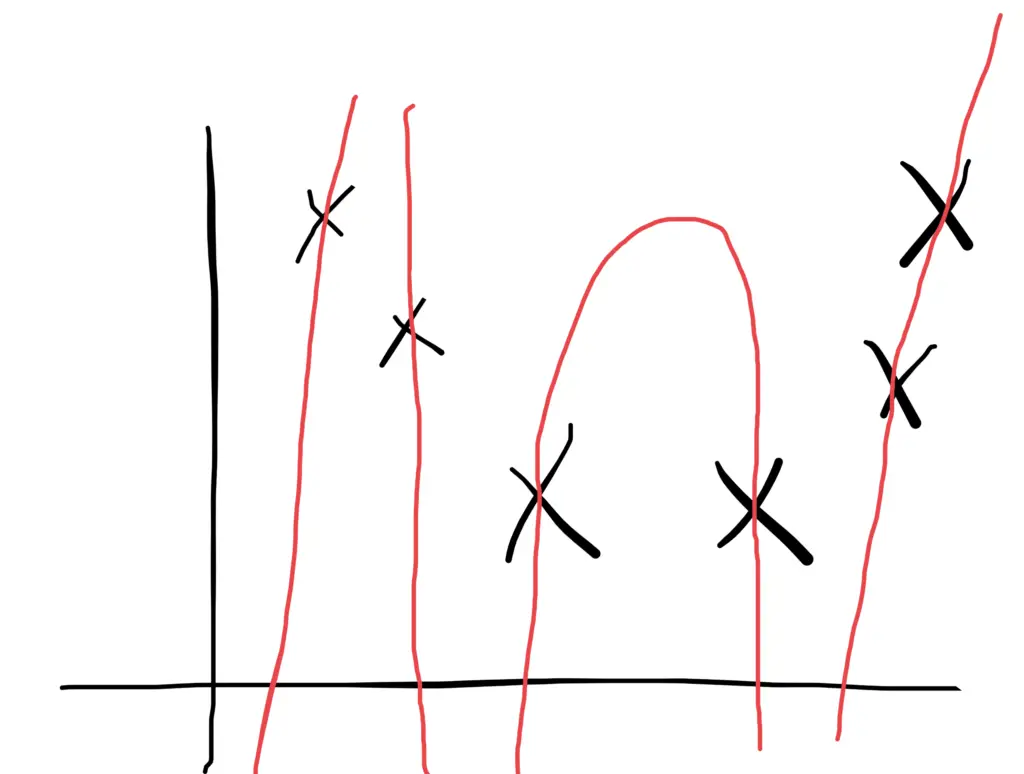

So, training an algorithm that has a very expressive space increases the chance of completely capturing the patterns in the data. However, it also increases the risk of overfitting. For instance, a space containing the hypotheses of the form:

would start modelling the noise, which we see from its decision boundary:

Such models would generalize poorly to unseen data.
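To make the expressivity trade-off concrete, here is a small Python sketch using NumPy. It swaps the classification setting for polynomial regression (an assumption made to keep the example short): each polynomial degree defines a hypothesis space that contains every lower-degree one. The synthetic data (a noisy sine wave) and the chosen degrees are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)


def make_data(n):
    """Hypothetical non-linear ground truth (a sine wave) plus Gaussian noise."""
    x = rng.uniform(-1.0, 1.0, size=n)
    y = np.sin(3.0 * x) + rng.normal(scale=0.2, size=n)
    return x, y


def mse(coeffs, x, y):
    return float(np.mean((np.polyval(coeffs, x) - y) ** 2))


x_train, y_train = make_data(30)
x_test, y_test = make_data(200)

results = {}
for degree in (1, 3, 5, 9):
    # Degree d spans every polynomial of degree <= d, so the spaces are nested.
    coeffs = np.polyfit(x_train, y_train, degree)
    results[degree] = (mse(coeffs, x_train, y_train), mse(coeffs, x_test, y_test))

for degree, (train_err, test_err) in results.items():
    print(f"degree {degree}: train MSE {train_err:.4f}, test MSE {test_err:.4f}")
```

Because the spaces are nested, the least-squares training error can only stay the same or drop as the degree grows; the test error printed alongside it is what reveals whether the extra expressivity captures the pattern or the noise.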

3.1. Expressivity vs. Interpretability

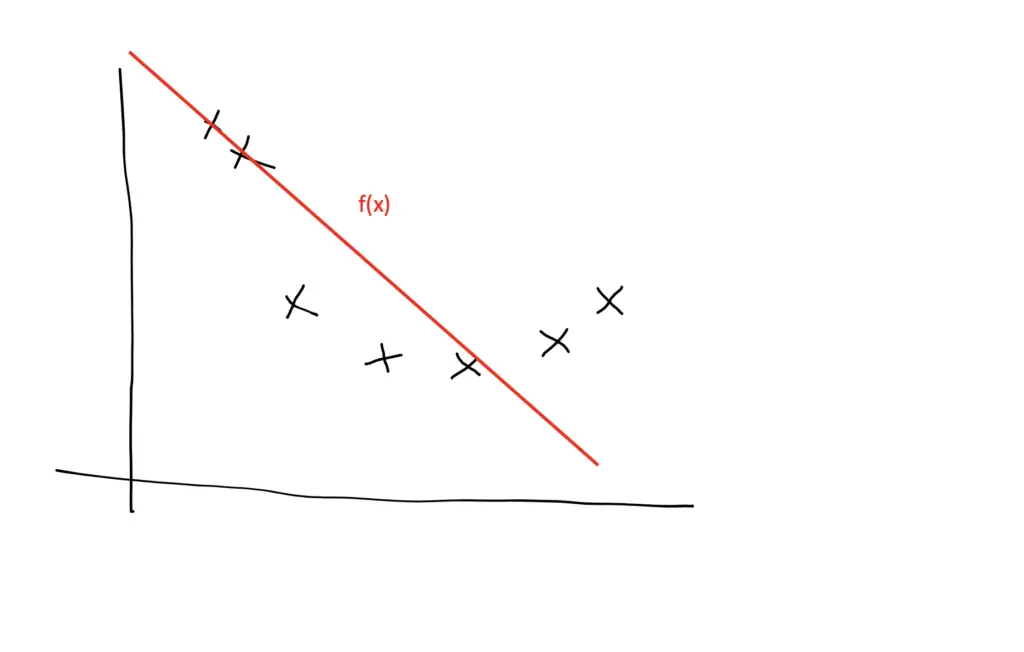

Additionally, even if a complex hypothesis has a good generalization capability, it may be unusable in practice because it’s too complicated to understand or compute. What’s more, intricate hypotheses offer limited insight into the real-world process that generated the data. For example, a quadratic model:

4. How to Choose the Hypothesis Space?

We need to find the right balance between expressivity and simplicity. Unfortunately, that’s easier said than done. Most of the time, we need to rely on our intuition about the data.

So, we should start by exploring the dataset, using visualizations as much as possible. For instance, we can conclude that a straight line isn’t likely to be an adequate boundary for the above classification data. However, a high-order curve would probably be too complex even though it might split the dataset into two classes without an error.

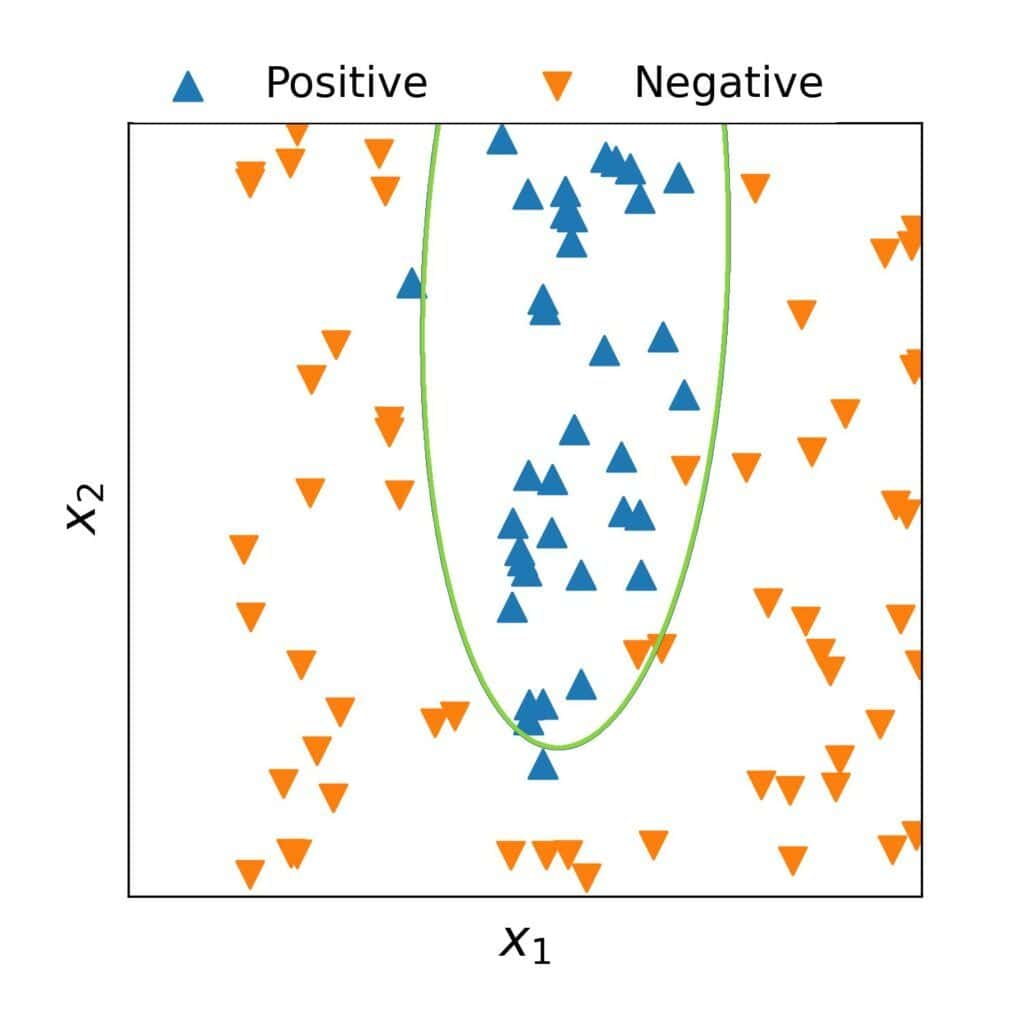

A second-degree curve might be the compromise we seek, but we aren’t sure. So, we start with the space of quadratic hypotheses:

$$h(x) = \frac{1}{1 + e^{-(w_0 + w_1 x_1 + w_2 x_2 + w_3 x_1^2 + w_4 x_1 x_2 + w_5 x_2^2)}}$$

We get a model whose decision boundary appears to be a good fit even though it misclassifies some objects:

Since we’re satisfied with the model, we can stop here. If that hadn’t been the case, we could have tried a space of cubic models. The idea is to iteratively try increasingly complex families until we find a model that both performs well and is easy to understand.
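The iterative strategy above can be sketched in Python as a loop over increasingly expressive families that stops once a held-out validation error stops improving. Again this uses polynomial regression rather than classification, and the helper `select_degree`, its `tol` parameter, the stop-at-first-non-improvement rule, and the synthetic quadratic data are all illustrative assumptions.

```python
import numpy as np


def select_degree(x_train, y_train, x_val, y_val, max_degree=8, tol=1e-3):
    """Try polynomial spaces of increasing degree; stop when validation MSE stops improving."""
    best_degree, best_err = None, float("inf")
    for degree in range(1, max_degree + 1):
        coeffs = np.polyfit(x_train, y_train, degree)
        val_err = float(np.mean((np.polyval(coeffs, x_val) - y_val) ** 2))
        if val_err < best_err - tol:
            best_degree, best_err = degree, val_err
        else:
            break  # more expressivity no longer pays off on held-out data
    return best_degree, best_err


rng = np.random.default_rng(1)
x = rng.uniform(-2.0, 2.0, size=80)
y = 1.0 - 0.5 * x + 0.8 * x**2 + rng.normal(scale=0.3, size=x.size)  # hypothetical quadratic data

# Hold out the last 20 points for validation.
degree, val_err = select_degree(x[:60], y[:60], x[60:], y[60:])
print(f"selected degree {degree}, validation MSE {val_err:.4f}")
```

A greedy stopping rule like this is cheap but can stop too early if the validation error is noisy; comparing all candidate degrees, or using cross-validation, is a more robust variant.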

5. Conclusion

In this article, we talked about hypothesis spaces in machine learning. An algorithm’s hypothesis space contains all the models it can learn from any dataset.

Algorithms with overly expressive spaces can generalize poorly to unseen data and be too complex to understand, whereas those with overly simple hypotheses may underfit the data. So, when applying machine-learning algorithms in practice, we need to find the right balance between expressivity and simplicity.


Hypothesis in Machine Learning

The concept of a hypothesis is fundamental in Machine Learning and data science endeavours. In the realm of machine learning, a hypothesis serves as an initial assumption made by data scientists and ML professionals when attempting to address a problem. Machine learning involves conducting experiments based on past experiences, and these hypotheses are crucial in formulating potential solutions.

It’s important to note that in machine learning discussions, the terms “hypothesis” and “model” are sometimes used interchangeably. However, a hypothesis represents an assumption, while a model is a mathematical representation employed to test that hypothesis. This section on “Hypothesis in Machine Learning” explores key aspects related to hypotheses in machine learning and their significance.


How does a Hypothesis work?


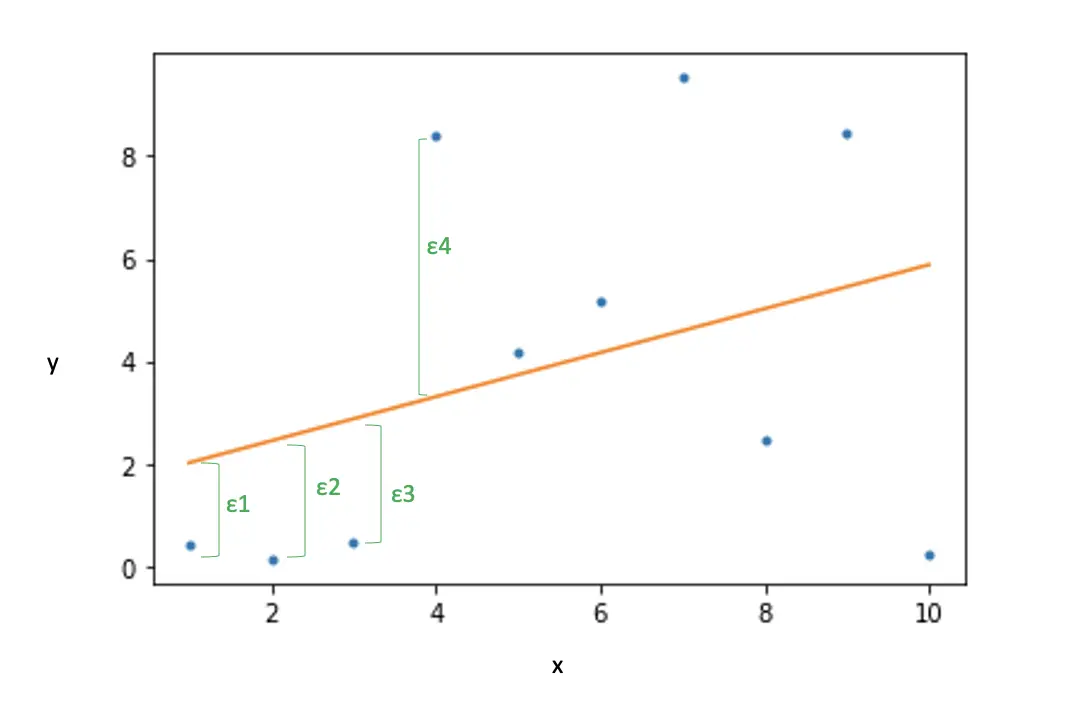

A hypothesis in machine learning is the model’s presumption about the relationship between the input features and the result. It represents the mapping function that the algorithm tries to learn from the training set. The learning process adjusts the weights that parameterize the hypothesis to minimize the discrepancy between the expected and actual outputs, and a cost function is used to measure that discrepancy. The objective is to optimize the model’s parameters to achieve the best predictive performance on new, unseen data.
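A minimal sketch of that learning process, assuming a one-variable linear hypothesis $h(x) = mx + b$, a mean-squared-error cost, and plain batch gradient descent as the optimizer (the text does not name a specific cost or optimizer). The function names `fit_line_gd` and `cost`, the learning rate, and the epoch count are hypothetical choices.

```python
def fit_line_gd(xs, ys, lr=0.05, epochs=2000):
    """Fit h(x) = m*x + b by gradient descent on J(m, b) = mean((h(x) - y)^2)."""
    m, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        residuals = [m * x + b - y for x, y in zip(xs, ys)]
        # Partial derivatives of the mean-squared-error cost.
        grad_m = 2.0 / n * sum(r * x for r, x in zip(residuals, xs))
        grad_b = 2.0 / n * sum(residuals)
        # Move the parameters against the gradient to reduce the cost.
        m -= lr * grad_m
        b -= lr * grad_b
    return m, b


def cost(m, b, xs, ys):
    return sum((m * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # noise-free points on y = 2x + 1
m, b = fit_line_gd(xs, ys)
print(round(m, 4), round(b, 4), cost(m, b, xs, ys))
```

The learning rate matters: if it is too large for the data's scale, the updates overshoot and the cost grows instead of shrinking.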

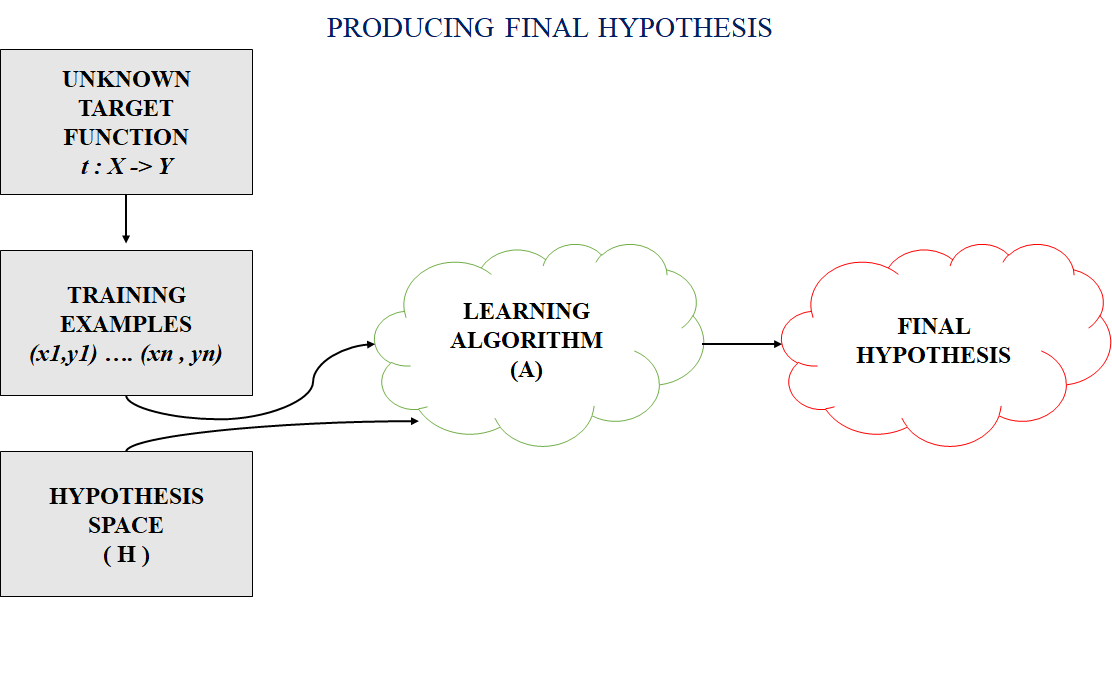

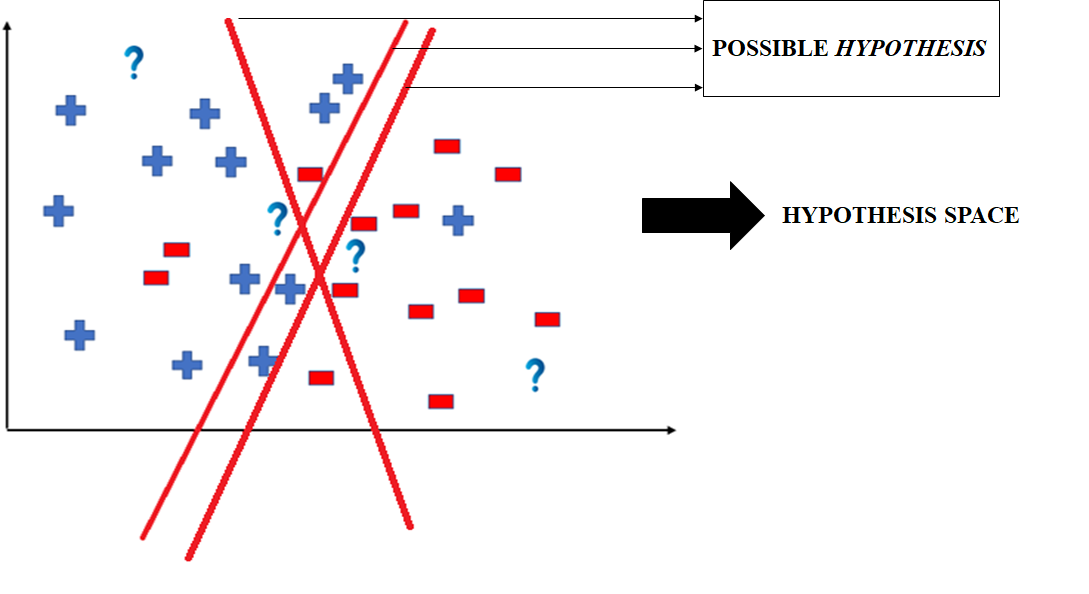

In most supervised machine learning algorithms, our main goal is to find a hypothesis in the hypothesis space that maps the inputs to the correct outputs. The following figure shows the common method for finding a possible hypothesis in the hypothesis space:

Hypothesis Space (H)

The hypothesis space is the set of all possible legal hypotheses. It is the set from which the machine learning algorithm selects the single hypothesis that best describes the target function, that is, the outputs.

Hypothesis (h)

A hypothesis is a function that best describes the target in supervised machine learning. The hypothesis an algorithm comes up with depends on the data, as well as on the restrictions and bias that we impose on the data.

For a simple linear model, the hypothesis can be written as:

$$y = mx + b$$

where:

- m = slope of the line

- b = intercept
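The definitions above can be made concrete with a deliberately tiny, finite hypothesis space. The Python sketch below assumes (hypothetically) that only a coarse grid of slopes and intercepts is allowed; each $(m, b)$ pair is one hypothesis, and the learner simply returns the pair with the lowest mean squared error on the data. Real algorithms search continuous spaces, so this is an illustration rather than how linear regression is normally fitted.

```python
from itertools import product


def mse(m, b, data):
    """Mean squared error of the hypothesis y = m*x + b on (x, y) pairs."""
    return sum((m * x + b - y) ** 2 for x, y in data) / len(data)


data = [(0.0, 1.1), (1.0, 2.9), (2.0, 5.2), (3.0, 6.8)]

# Hypothetical finite hypothesis space H: every line with m and b on a coarse grid.
slopes = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5]
intercepts = [-1.0, 0.0, 1.0, 2.0]
H = list(product(slopes, intercepts))  # 24 hypotheses h = (m, b)

best_m, best_b = min(H, key=lambda h: mse(h[0], h[1], data))
print(f"picked y = {best_m}x + {best_b} out of {len(H)} hypotheses")
```

Enlarging the grid enlarges H: the best available line can only get closer to the data, at the cost of searching more candidates.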

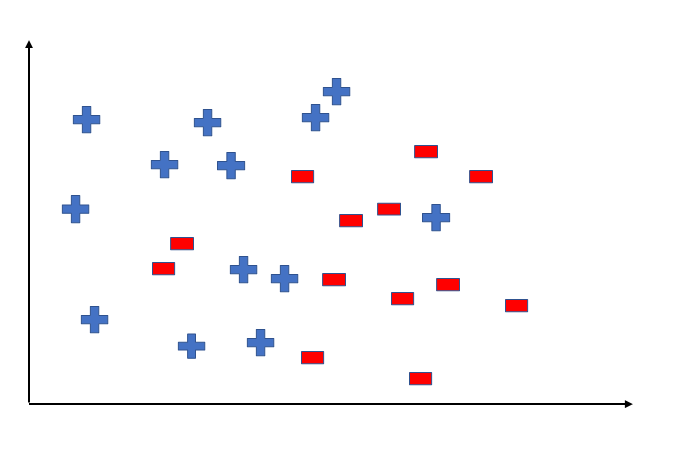

To better understand the hypothesis space and the hypothesis, consider the following coordinate plane, which shows the distribution of some data:

Suppose we have test data for which we have to determine the outputs or results. The test data is as shown below:

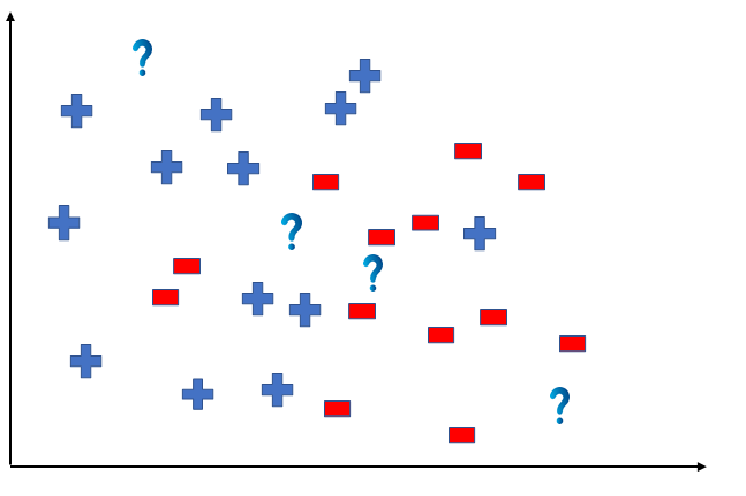

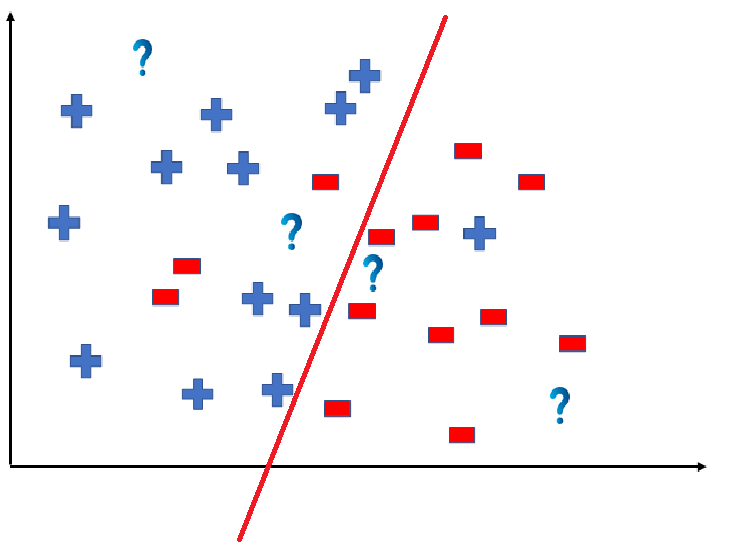

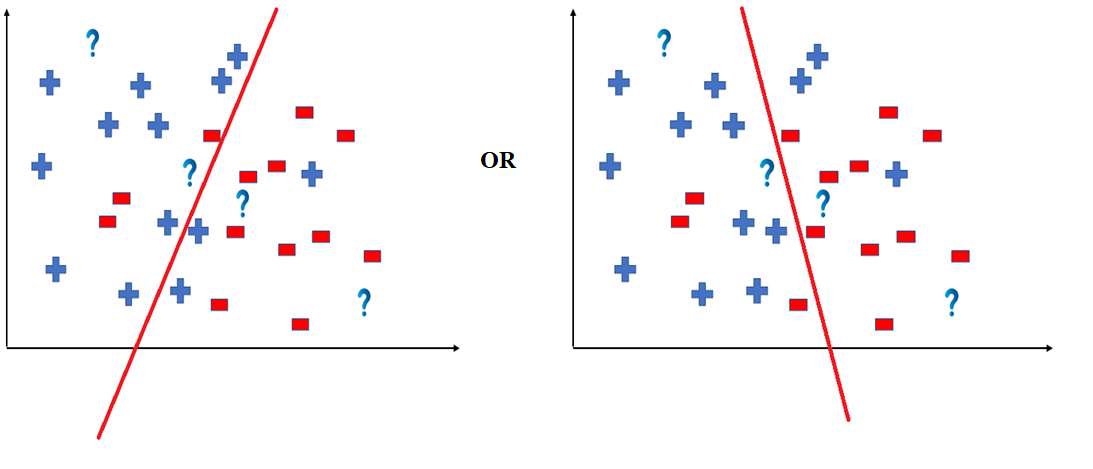

We can predict the outcomes by dividing the coordinate plane as shown below:

So the test data would yield the following result:

But note here that we could have divided the coordinate plane as:

The way in which the coordinate plane is divided depends on the data, the algorithm, and the constraints.

- Together, all the legal ways in which we can divide the coordinate plane to predict the outcome of the test data make up the hypothesis space.

- Each individual way is known as a hypothesis.

Hence, in this example the hypothesis space would be like:

Hypothesis Space and Representation in Machine Learning

The hypothesis space comprises all possible legal hypotheses that a machine learning algorithm can consider. Hypotheses are formulated based on various algorithms and techniques, including linear regression, decision trees, and neural networks. These hypotheses capture the mapping function transforming input data into predictions.

Hypothesis Formulation and Representation in Machine Learning

Hypotheses in machine learning are formulated based on various algorithms and techniques, each with its representation. For example:

- Linear Regression: $h(X) = \theta_0 + \theta_1 X_1 + \theta_2 X_2 + \dots + \theta_n X_n$

- Decision Trees: $h(X) = \text{Tree}(X)$

- Neural Networks: $h(X) = \text{NN}(X)$

In the case of complex models like neural networks, the hypothesis may involve multiple layers of interconnected nodes, each performing a specific computation.
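To show that each formulation is simply a different function family, here is a NumPy sketch of the linear-regression hypothesis and of a one-hidden-layer neural network hypothesis with ReLU activations. The network architecture, the function names, and all weight values are hypothetical choices for illustration.

```python
import numpy as np


def linear_hypothesis(theta, X):
    """h(X) = theta_0 + theta_1*X_1 + ... + theta_n*X_n, evaluated for each row of X."""
    return theta[0] + X @ theta[1:]


def nn_hypothesis(params, X):
    """One hidden ReLU layer: h(X) = relu(X W1 + b1) W2 + b2."""
    W1, b1, W2, b2 = params
    hidden = np.maximum(0.0, X @ W1 + b1)  # outputs of the hidden-layer nodes
    return hidden @ W2 + b2


X = np.array([[1.0, 2.0], [3.0, -1.0]])  # two samples, two features each

theta = np.array([0.5, 1.0, -2.0])
print(linear_hypothesis(theta, X))  # [0.5 + 1 - 4, 0.5 + 3 + 2] = [-2.5, 5.5]

params = (
    np.array([[1.0, 0.0, -1.0], [0.0, 1.0, 1.0]]),  # W1: 2 inputs -> 3 hidden units
    np.zeros(3),                                     # b1
    np.ones(3),                                      # W2: 3 hidden units -> 1 output
    0.0,                                             # b2
)
print(nn_hypothesis(params, X))  # [4. 3.]
```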

Hypothesis Evaluation:

The process of machine learning involves not only formulating hypotheses but also evaluating their performance. This evaluation is typically done using a loss function or an evaluation metric that quantifies the disparity between predicted outputs and ground truth labels. Common evaluation metrics include mean squared error (MSE), accuracy, precision, recall, F1-score, and others. By comparing the predictions of the hypothesis with the actual outcomes on a validation or test dataset, one can assess the effectiveness of the model.
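The metrics named above are simple to compute by hand. The plain-Python sketch below computes MSE for a regression hypothesis and accuracy, precision, recall, and F1 for a binary classifier; returning 0.0 when a denominator is zero is an assumed convention, and the labels are made-up example data.

```python
def mean_squared_error(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def binary_classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 from the confusion-matrix counts."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


print(mean_squared_error([3.0, -0.5, 2.0, 7.0], [2.5, 0.0, 2.0, 8.0]))  # 0.375
print(binary_classification_metrics([1, 0, 1, 1, 0, 0, 1, 0],
                                    [1, 0, 0, 1, 0, 1, 1, 0]))
```

For the made-up labels there are 3 true positives, 3 true negatives, 1 false positive, and 1 false negative, so all four classification metrics come out to 0.75.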

Hypothesis Testing and Generalization:

Once a hypothesis is formulated and evaluated, the next step is to test its generalization capabilities. Generalization refers to the ability of a model to make accurate predictions on unseen data. A hypothesis that performs well on the training dataset but fails to generalize to new instances is said to suffer from overfitting. Conversely, a hypothesis that generalizes well to unseen data is deemed robust and reliable.

The process of hypothesis formulation, evaluation, testing, and generalization is often iterative in nature. It involves refining the hypothesis based on insights gained from model performance, feature importance, and domain knowledge. Techniques such as hyperparameter tuning, feature engineering, and model selection play a crucial role in this iterative refinement process.

Hypothesis in Statistics

In statistics, a hypothesis refers to a statement or assumption about a population parameter. It is a proposition or educated guess that helps guide statistical analyses. There are two types of hypotheses: the null hypothesis (H0) and the alternative hypothesis (H1 or Ha).

- Null Hypothesis (H0): This hypothesis suggests that there is no significant difference or effect, and any observed results are due to chance. It often represents the status quo or a baseline assumption.

- Alternative Hypothesis (H1 or Ha): This hypothesis contradicts the null hypothesis, proposing that there is a significant difference or effect in the population. It is what researchers aim to support with evidence.
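A worked example of the null/alternative framing, as a plain-Python sketch of an exact two-sided binomial test. The scenario is hypothetical: a classifier answers 60 of 100 balanced binary test cases correctly, H0 says its accuracy is 0.5 (guessing), and H1 says it is not. The two-sided p-value sums the probabilities of all outcomes no more likely than the observed one under H0, and the 0.05 significance level is an assumed, conventional choice.

```python
from math import comb


def binomial_two_sided_p(k: int, n: int, p0: float = 0.5) -> float:
    """Exact two-sided binomial test of H0: success probability == p0."""
    pmf = [comb(n, i) * p0**i * (1 - p0) ** (n - i) for i in range(n + 1)]
    observed = pmf[k]
    # Sum every outcome at most as likely as the observed one; the tiny relative
    # tolerance keeps ties that differ only by floating-point rounding.
    p = sum(q for q in pmf if q <= observed * (1 + 1e-7))
    return min(1.0, p)


alpha = 0.05
p_value = binomial_two_sided_p(60, 100)
decision = "reject H0" if p_value < alpha else "fail to reject H0"
print(f"p = {p_value:.4f}: {decision}")
```

With these numbers the p-value lands slightly above 0.05, so the test fails to reject H0 even though 60% looks better than guessing; a larger test set would be needed to support H1.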

FAQs on Hypothesis in Machine Learning

Q. How does the training process use the hypothesis?

The learning algorithm uses the hypothesis as a guide to minimise the discrepancy between expected and actual outputs by adjusting its parameters during training.

Q. How is the hypothesis’s accuracy assessed?

Usually, a cost function that calculates the difference between expected and actual values is used to assess accuracy. The aim is to optimise the model to reduce this cost.

Q. What is Hypothesis testing?

Hypothesis testing is a statistical method for deciding, based on sample data, whether there is enough evidence to reject the null hypothesis in favour of the alternative. The hypothesis can be about two variables in a dataset, about an association between two groups, or about a situation.

Q. What distinguishes the null hypothesis from the alternative hypothesis in machine learning experiments?

The null hypothesis (H0) assumes no significant effect, while the alternative hypothesis (H1 or Ha) contradicts H0, suggesting a meaningful impact. Statistical testing is employed to decide between these hypotheses.


The hypothesis is a common term in machine learning and data science projects. Machine learning is one of the most powerful technologies in the world, and it helps us predict results based on past experience. Data scientists and ML professionals conduct experiments that aim to solve a problem, and they make an initial assumption about the solution. In machine learning, this assumption is known as a hypothesis. The terms hypothesis and model are sometimes used interchangeably; however, a hypothesis is an assumption made by scientists, whereas a model is a mathematical representation used to test the hypothesis. In this topic, "Hypothesis in Machine Learning," we will discuss a few important concepts related to hypotheses in machine learning and why they matter.

What Is a Hypothesis?

A hypothesis is a guess based on some known facts that has not yet been proven. A good hypothesis is testable: testing it shows it to be either true or false.

Example: Let's understand the hypothesis with a common example. Some scientists claim that ultraviolet (UV) light can damage the eyes, and we may then assume that it can also cause blindness. In this example, the scientists only claim that UV rays are harmful to the eyes, whereas we assume they may cause blindness, which may or may not be true. Assumptions of this type are called hypotheses.

The hypothesis is one of the commonly used statistical concepts in machine learning. It is used specifically in supervised machine learning, where an ML model learns, from an available dataset, a function that best maps the inputs to the corresponding outputs.
Hypothesis Space (H) and Hypothesis (h)

There are some common methods for finding a possible hypothesis in the hypothesis space, where the hypothesis space is represented by H and a hypothesis by h. These are defined as follows:

- Hypothesis space (H): the set of hypotheses that supervised machine learning algorithms search to determine the one that best describes the target function, that is, best maps inputs to outputs. It is often constrained by how the problem is framed, the choice of model, and the choice of model configuration.

- Hypothesis (h): a function that best describes the target in supervised machine learning. It depends primarily on the data, as well as on the bias and restrictions applied to the data. Hence, a hypothesis (h) is a single hypothesis that maps inputs to the proper outputs and can be evaluated as well as used to make predictions.

The hypothesis (h) can be formulated in machine learning as follows:

$$y = mx + c$$

where:

- y: range (output)

- m: slope of the line that divides the test data, that is, the change in y divided by the change in x

- x: domain (input)

- c: intercept (constant)

Example: Let's understand the hypothesis (h) and the hypothesis space (H) with a two-dimensional coordinate plane showing the distribution of data. The hypothesis space (H) is composed of all the legal ways to divide the coordinate plane so that it best maps inputs to the proper outputs. Each individual way is called a hypothesis (h). Hence, the hypothesis and hypothesis space would look like this:

Hypothesis in Statistics

Similar to the hypothesis in machine learning, a statistical hypothesis is an assumption about the outcome. However, it is falsifiable, which means it can be rejected in the presence of sufficient evidence. Unlike in machine learning, in statistics we never formally accept a hypothesis, because conclusions are based on probability: we either reject the null hypothesis or fail to reject it. Before starting an experiment, we must be aware of two important types of hypotheses.

A null hypothesis is a type of statistical hypothesis stating that no statistically significant effect exists in the given set of observations.
The null hypothesis is also known as a conjecture, and it is used in quantitative analysis to test theories about markets, investment, and finance to decide whether an idea is true or false.

An alternative hypothesis is a direct contradiction of the null hypothesis: if one of the two hypotheses is true, the other must be false. In other words, an alternative hypothesis is a statistical hypothesis stating that some significant effect exists in the given set of observations.

Significance Level

The significance level must be set before starting an experiment. It defines the tolerated probability of error, that is, the threshold at which an effect is considered significant. A common choice is a significance level of 5% (α = 0.05), which corresponds to a 95% confidence level. The significance level also serves as the threshold against which the p-value is compared; for example, for a 98% confidence level, the threshold is α = 0.02.

P-value

The p-value is a measure of the evidence against a null hypothesis. More precisely, it is the probability, assuming the null hypothesis is true, of obtaining data at least as extreme as the data actually observed. The smaller the p-value, the stronger the evidence against the null hypothesis. It is usually reported as a decimal, such as 0.035. When a statistical test is carried out to find the p-value, the result is compared with the significance level: if the p-value is less than the significance level, the effect is considered significant and the null hypothesis can be rejected. If it is higher, there is no significant effect, and we fail to reject the null hypothesis.
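The decision rule described above, comparing a p-value with a significance level chosen in advance, can be sketched with a permutation test, which estimates the p-value by reshuffling group labels under the null hypothesis that they do not matter. This is one possible test among many, not a method the text prescribes; the function name `permutation_p_value`, the 10,000 resamples, the fixed seed, and the two groups of scores are hypothetical.

```python
import random


def permutation_p_value(a, b, n_resamples=10_000, seed=0):
    """Two-sided permutation test for a difference in means.

    H0: both groups come from the same distribution, so group labels are exchangeable.
    """
    rng = random.Random(seed)
    observed = abs(sum(a) / len(a) - sum(b) / len(b))
    pooled = list(a) + list(b)
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)
        left, right = pooled[: len(a)], pooled[len(a):]
        # Small tolerance so floating-point rounding does not hide ties with `observed`.
        if abs(sum(left) / len(left) - sum(right) / len(right)) >= observed - 1e-12:
            extreme += 1
    # Counting the observed split itself (+1) keeps the estimate strictly positive.
    return (extreme + 1) / (n_resamples + 1)


alpha = 0.05  # significance level, fixed before looking at the data
scores_a = [0.81, 0.79, 0.84, 0.80, 0.82, 0.83]
scores_b = [0.78, 0.76, 0.80, 0.77, 0.79, 0.75]
p = permutation_p_value(scores_a, scores_b)
print(f"p = {p:.4f}:", "reject H0" if p < alpha else "fail to reject H0")
```

Because the p-value here is estimated by random resampling, it varies slightly with the seed and the number of resamples; decisions very close to α deserve more resamples.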
In supervised machine learning, where we learn to map input instances to outputs, the hypothesis is a very useful concept that helps approximate the target function. It is used across analytics domains and is also one of the important factors in deciding whether a change should be introduced. It is evaluated over the entire training data set to assess the efficiency and performance of the models. In this topic, we have covered important concepts related to the hypothesis in machine learning and statistics, along with parameters such as the p-value and significance level, to understand hypotheses better.


Javatpoint provides tutorials and interview questions of all technology like java tutorial, android, java frameworks


Programmathically

Introduction to the hypothesis space and the bias-variance tradeoff in machine learning.

In this post, we introduce the hypothesis space and discuss how machine learning models function as hypotheses. Furthermore, we discuss the challenges encountered when choosing an appropriate machine learning hypothesis and building a model, such as overfitting, underfitting, and the bias-variance tradeoff.

The hypothesis space in machine learning is a set of all possible models that can be used to explain a data distribution given the limitations of that space. A linear hypothesis space is limited to the set of all linear models. If the data distribution follows a non-linear distribution, the linear hypothesis space might not contain a model that is appropriate for our needs.

To understand the concept of a hypothesis space, we need to learn to think of machine learning models as hypotheses.

The Machine Learning Model as Hypothesis

Generally speaking, a hypothesis is a potential explanation for an outcome or a phenomenon. In scientific inquiry, we test hypotheses to figure out how well and if at all they explain an outcome. In supervised machine learning, we are concerned with finding a function that maps from inputs to outputs.

But machine learning is inherently probabilistic. It is the art and science of deriving useful hypotheses from limited or incomplete data. Our functions are not axioms that explain the data perfectly, and for most real-life problems, we will never have all the data that exists. Accordingly, we will not find the one true function that perfectly describes the data. Instead, we find a function through training a model to map from known training input to known training output. This way, the model gradually approximates the assumed true function that describes the distribution of the data. So we treat our model as a hypothesis that needs to be tested as to how well it explains the output from a given input. We do this using a test or validation data set.

The Hypothesis Space

During the training process, we select a model from a hypothesis space that is subject to our constraints. For example, a linear hypothesis space only provides linear models. We can approximate data that follows a quadratic distribution using a model from the linear hypothesis space.

Of course, a linear model will never have the same predictive performance as a quadratic model, so we can adjust our hypothesis space to also include non-linear models or at least quadratic models.
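Here is a minimal NumPy sketch that makes this contrast concrete. It assumes synthetic data generated from a quadratic function plus Gaussian noise; the coefficients, noise level, and seed are arbitrary choices. Least-squares polynomial fitting with `np.polyfit` stands in for "training": degree 1 searches the linear hypothesis space, and degree 2 searches the quadratic one.

```python
import numpy as np

# Synthetic data from an assumed quadratic "true" function plus noise.
rng = np.random.default_rng(0)
x = rng.uniform(-3, 3, 200)
y = 2 * x**2 - x + 1 + rng.normal(0, 0.5, x.size)


def training_mse(degree: int) -> float:
    """Fit the best polynomial of the given degree and return its training MSE."""
    coeffs = np.polyfit(x, y, degree)  # least-squares search within the space
    residuals = np.polyval(coeffs, x) - y
    return float(np.mean(residuals**2))


print(f"linear hypothesis space:    MSE = {training_mse(1):.2f}")
print(f"quadratic hypothesis space: MSE = {training_mse(2):.2f}")
```

The best linear model leaves most of the curvature unexplained. The quadratic space, by contrast, contains a model whose error is close to the noise level.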

The Data Generating Process

The data generating process describes a hypothetical process subject to some assumptions that make training a machine learning model possible. We need to assume that the data points are from the same distribution but are independent of each other. When these requirements are met, we say that the data is independent and identically distributed (i.i.d.).

Independent and Identically Distributed Data

How can we assume that a model trained on a training set will perform better than random guessing on new and previously unseen data? First of all, the training data needs to come from the same or at least a similar problem domain. If you want your model to predict stock prices, you need to train the model on stock price data or data that is similarly distributed. It wouldn't make much sense to train it on weather data. Statistically, this means the data is identically distributed. But even if the data come from the same problem, training data and test data might not be completely independent. To account for this, we need to make sure that the test data is not in any way influenced by the training data or vice versa. If you use a subset of the training data as your test set, the test data evidently is not independent of the training data. Statistically, we say the data must be independently distributed.
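One practical consequence of the independence requirement is that test examples must never also be training examples. Below is a minimal sketch of a random hold-out split using only the standard library. The function name and default test fraction are illustrative. Disjoint indices are necessary but not sufficient: duplicated records or time-ordered data can still leak information between the two sets.

```python
import random


def train_test_split_indices(n, test_fraction=0.2, seed=0):
    """Shuffle the indices 0..n-1 once and cut them into disjoint train/test lists."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)  # seeded RNG for reproducibility
    n_test = round(n * test_fraction)
    return indices[n_test:], indices[:n_test]


train_idx, test_idx = train_test_split_indices(100)
print(len(train_idx), len(test_idx))        # 80 20
print(set(train_idx).isdisjoint(test_idx))  # True
```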

Overfitting and Underfitting

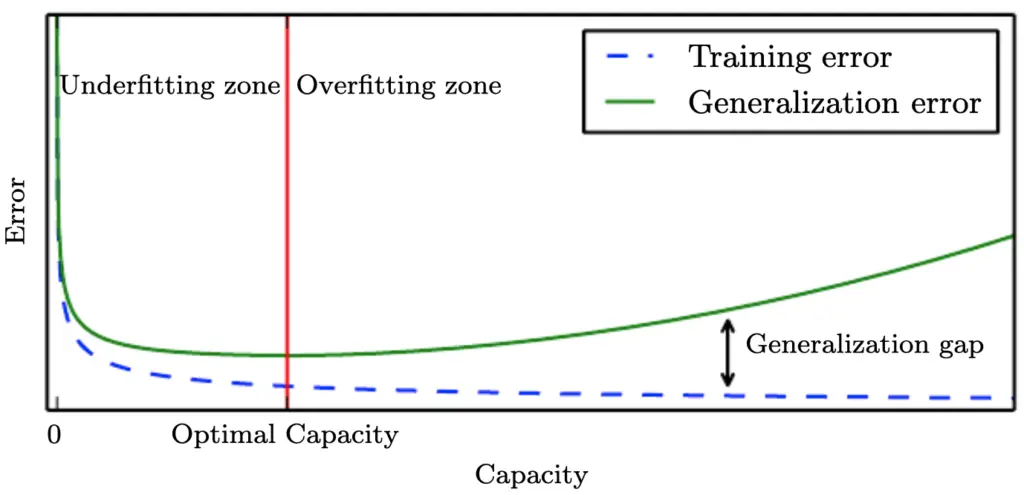

We want to select a model from the hypothesis space that explains the data sufficiently well. During training, we can make a model so complex that it perfectly fits every data point in the training dataset. But ultimately, the model should be able to predict outputs on previously unseen input data. The ability to do well when predicting outputs on previously unseen data is also known as generalization. There is an inherent conflict between those two requirements.

If we make the model so complex that it fits every point in the training data, it will pick up lots of noise and random variation specific to the training set, which might obscure the larger underlying patterns. As a result, it will be more sensitive to random fluctuations in new data and predict values that are far off. A model with this problem is said to overfit the training data and, as a result, to suffer from high variance .

To avoid the problem of overfitting, we can choose a simpler model or use regularization techniques to prevent the model from fitting the training data too closely. The model should then be less influenced by random fluctuations and instead, focus on the larger underlying patterns in the data. The patterns are expected to be found in any dataset that comes from the same distribution. As a consequence, the model should generalize better on previously unseen data.

But if we go too far, the model might become too simple or too constrained by regularization to accurately capture the patterns in the data. Then the model will neither generalize well nor fit the training data well. A model that exhibits this problem is said to underfit the data and to suffer from high bias . If the model is too simple to accurately capture the patterns in the data (for example, when using a linear model to fit non-linear data), its capacity is insufficient for the task at hand.
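To see both failure modes side by side, here is a minimal sketch that sweeps model complexity. It assumes a true function of sin(πx), a small noisy training set, and a large held-out set drawn from the same distribution. Polynomial degree, fitted with `np.polyfit`, acts as the complexity knob. All settings are illustrative.

```python
import numpy as np

rng = np.random.default_rng(42)


def make_data(n: int, sigma: float = 0.2):
    """Sample n noisy points from an assumed true function sin(pi * x)."""
    x = rng.uniform(-1, 1, n)
    return x, np.sin(np.pi * x) + rng.normal(0, sigma, n)


def mse(coeffs, x, y) -> float:
    return float(np.mean((np.polyval(coeffs, x) - y) ** 2))


x_train, y_train = make_data(20)   # small training set
x_test, y_test = make_data(1000)   # large held-out set, same distribution

train_err, test_err = {}, {}
for degree in range(1, 10):
    coeffs = np.polyfit(x_train, y_train, degree)
    train_err[degree] = mse(coeffs, x_train, y_train)
    test_err[degree] = mse(coeffs, x_test, y_test)
    print(f"degree {degree}: train {train_err[degree]:.3f}   test {test_err[degree]:.3f}")
```

Training error does not increase as the degree grows (up to rounding), because each polynomial space contains the previous one. Test error typically falls at first (less underfitting) and then rises again once the model starts fitting noise (overfitting). The exact numbers depend on the seed.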

When training neural networks, for example, we go through multiple iterations of training in which the model learns to fit an increasingly complex function to the data. Typically, your training error will decrease during learning the more complex your model becomes and the better it learns to fit the data. In the beginning, the training error decreases rapidly. In later training iterations, it typically flattens out as it approaches the minimum possible error. Your test or generalization error should initially decrease as well, albeit likely at a slower pace than the training error. As long as the generalization error is decreasing, your model is underfitting because it doesn’t live up to its full capacity. After a number of training iterations, the generalization error will likely reach a trough and start to increase again. Once it starts to increase, your model is overfitting, and it is time to stop training.

Ideally, you should stop training once your model reaches the lowest point of the generalization error. The gap between the minimum generalization error and no error at all is an irreducible error term known as the Bayes error that we won’t be able to completely get rid of in a probabilistic setting. But if the error term seems too large, you might be able to reduce it further by collecting more data, manipulating your model’s hyperparameters, or altogether picking a different model.
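The stopping rule described here is often implemented as patience-based early stopping: keep the epoch with the lowest validation error, and stop once that error has not improved for a few consecutive epochs. Below is a minimal sketch. The function name and the `patience` parameter are illustrative and are not taken from any particular library.

```python
def early_stopping_epoch(val_errors, patience=3):
    """Return the index of the best epoch, stopping once the validation error
    has failed to improve for `patience` consecutive epochs."""
    best_epoch, best_err, waited = 0, float("inf"), 0
    for epoch, err in enumerate(val_errors):
        if err < best_err:
            best_epoch, best_err, waited = epoch, err, 0  # new minimum
        else:
            waited += 1
            if waited >= patience:
                break  # generalization error has stopped improving
    return best_epoch


val_errors = [1.0, 0.8, 0.6, 0.55, 0.57, 0.6, 0.65, 0.5]
print(early_stopping_epoch(val_errors, patience=3))  # 3
print(early_stopping_epoch(val_errors, patience=5))  # 7
```

With `patience=3`, the loop stops at epoch 6 and returns epoch 3, which misses the later improvement at epoch 7. A larger patience finds that improvement at the cost of more training.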

Bias Variance Tradeoff

We’ve talked about bias and variance in the previous section. Now it is time to clarify what we actually mean by these terms.

Understanding Bias and Variance

In a nutshell, bias measures if there is any systematic deviation from the correct value in a specific direction. If we could repeat the same process of constructing a model several times over, and the results predicted by our model always deviate in a certain direction, we would call the result biased.

Variance measures how much the results vary between model predictions. If you repeat the modeling process several times over and the results are scattered all across the board, the model exhibits high variance.

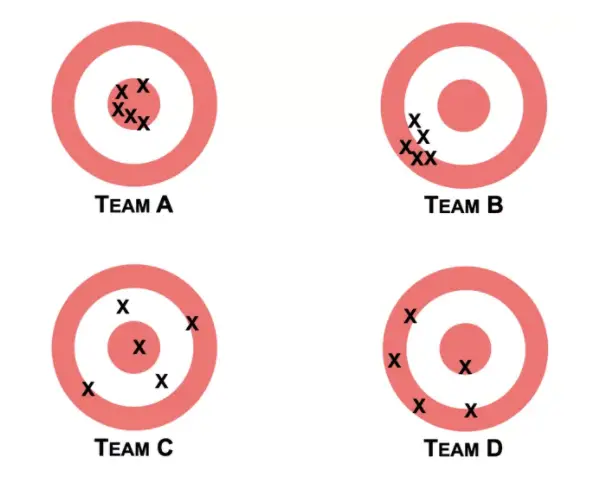

In their book “Noise”, Daniel Kahneman and his co-authors provide an intuitive example that helps explain the concepts of bias and variance. Imagine you have four teams at a shooting range.

Team B is biased because the shots of its team members all deviate in a certain direction from the center. Team B also exhibits low variance because the shots of all the team members are relatively concentrated in one location. Team C has the opposite problem. The shots are scattered across the target with no discernible bias in a certain direction. Team D is both biased and has high variance. Team A would be the equivalent of a good model. The shots are in the center with little bias in one direction and little variance between the team members.

Generally speaking, linear models such as linear regression exhibit high bias and low variance. Nonlinear algorithms such as decision trees are more prone to overfitting the training data and thus exhibit high variance and low bias.

A linear model used with non-linear data would exhibit a bias toward predicting data points along a straight line instead of accommodating the curves. But it is not as susceptible to random fluctuations in the data. A nonlinear algorithm that is trained on noisy data with lots of deviations would be more capable of avoiding bias but more prone to incorporating the noise into its predictions. As a result, a small deviation in the test data might lead to very different predictions.

To get our model to learn the patterns in data, we need to reduce the training error while at the same time reducing the gap between the training and the testing error. In other words, we want to reduce both bias and variance. To a certain extent, we can reduce both by picking an appropriate model, collecting enough training data, and selecting appropriate training features and hyperparameter values. At some point, we have to trade off minimizing bias against minimizing variance. How you balance this trade-off is up to you.

The Bias Variance Decomposition

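Mathematically, the total error can be decomposed into the bias, the variance, and an irreducible error term according to the following formula.

$$\text{Total Error} = \text{Bias}^2 + \text{Variance} + \text{Bayes Error}$$

Remember that Bayes’ error is an error that cannot be eliminated.

Our machine learning model represents an estimating function \hat f(X) for the true data generating function f(X), where X represents the predictors and Y the output values.

Now the mean squared error of our model is the expected value of the squared difference between the output produced by the estimating function \hat f(X) and the true output Y. Here, expectations are taken over the possible training sets and the noise, at a fixed input X.

$$MSE = E\left[(Y - \hat f(X))^2\right]$$

The bias is a systematic deviation from the true value. We can measure it as the squared difference between the expected value produced by the estimating function (the model) and the value produced by the true data-generating function.

$$\text{Bias}^2 = \left(E[\hat f(X)] - f(X)\right)^2$$

Of course, we don’t know the true data generating function, but we do know the observed outputs Y, which correspond to the values generated by f(X) plus an error term ε with mean zero and variance σ², the irreducible (Bayes) error.

$$Y = f(X) + \epsilon, \quad E[\epsilon] = 0, \quad Var(\epsilon) = \sigma^2$$

The variance of the model is the expected squared difference between the model’s predictions and their expected value.

$$\text{Variance} = E\left[\left(\hat f(X) - E[\hat f(X)]\right)^2\right]$$

Now that we have the bias and the variance, we can add them up along with the irreducible error to get the total error.

$$E\left[(Y - \hat f(X))^2\right] = \left(E[\hat f(X)] - f(X)\right)^2 + E\left[\left(\hat f(X) - E[\hat f(X)]\right)^2\right] + \sigma^2$$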
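The decomposition can be checked numerically. The sketch below estimates each term by simulation under several assumptions: the true function is sin(πx), the model is a least-squares polynomial (`np.polyfit`), and expectations are approximated by averaging over many freshly drawn training sets at one fixed input x0 = 0.5. The function names and settings are illustrative.

```python
import numpy as np


def f_true(x):
    """Assumed true data-generating function f(x) (unknown in practice)."""
    return np.sin(np.pi * x)


def bias_variance_at(x0, degree, n_train=30, n_trials=2000, sigma=0.3, seed=0):
    """Monte Carlo estimate of MSE, bias^2 and variance of a polynomial fit at x0."""
    rng = np.random.default_rng(seed)
    preds = np.empty(n_trials)
    for t in range(n_trials):
        # Draw a fresh training set and refit the model each time.
        x = rng.uniform(-1, 1, n_train)
        y = f_true(x) + rng.normal(0, sigma, n_train)
        preds[t] = np.polyval(np.polyfit(x, y, degree), x0)
    # Fresh noisy observations Y = f(x0) + eps to score the predictions against.
    y0 = f_true(x0) + rng.normal(0, sigma, n_trials)
    mse = np.mean((y0 - preds) ** 2)
    bias_sq = (preds.mean() - f_true(x0)) ** 2
    variance = preds.var()
    return mse, bias_sq, variance, sigma**2


for degree in (1, 5):
    mse, b2, var, noise = bias_variance_at(0.5, degree)
    print(f"degree {degree}: MSE={mse:.4f}  bias^2={b2:.4f}  "
          f"variance={var:.4f}  sigma^2={noise:.4f}  sum={b2 + var + noise:.4f}")
```

With these settings, the degree-1 model should show a much larger bias² than the degree-5 model. In both cases the MSE should be close to bias² + variance + σ², up to Monte Carlo noise.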

A machine learning model represents an approximation to the hypothesized function that generated the data. The chosen model is a hypothesis since we hypothesize that this model represents the true data generating function.

We choose the hypothesis from a hypothesis space that may be subject to certain constraints. For example, we can constrain the hypothesis space to the set of linear models.

When choosing a model, we aim to reduce the bias and the variance to prevent our model from either overfitting or underfitting the data. In the real world, we cannot completely eliminate bias and variance, and we have to trade them off against each other. The total error produced by a model can be decomposed into the bias, the variance, and irreducible (Bayes) error.


Best Guesses: Understanding The Hypothesis in Machine Learning

- February 22, 2024

- General, Supervised Learning, Unsupervised Learning

Machine learning is a vast and complex field that has inherited many terms from other places all over the mathematical domain.

It can sometimes be challenging to get your head around all the different terminologies, never mind trying to understand how everything comes together.

In this blog post, we will focus on one particular concept: the hypothesis.

While you may think this is simple, there is a little caveat in machine learning: the term has a statistics side and a learning side.

Don’t worry; we’ll do a full breakdown below.

You’ll learn the following:

- What is a hypothesis in machine learning?

- Is this any different from the hypothesis in statistics?

- What is the difference between the alternative hypothesis and the null?

- Why do we restrict hypothesis space in artificial intelligence?

- Example code performing hypothesis testing in machine learning

In machine learning, the term ‘hypothesis’ can refer to two things.

First, it can refer to the hypotheses in a hypothesis space: the set of all candidate functions the learning algorithm could use to predict the answer for a new instance.

Second, it can refer to the traditional null and alternative hypotheses from statistics.

Since machine learning works so closely with statistics, when someone refers to “the hypothesis,” they are often referring to hypothesis tests from statistics.

Is This Any Different Than The Hypothesis In Statistics?

In statistics, the hypothesis is an assumption made about a population parameter.

The statistician’s goal is to determine whether the data provide enough evidence to reject it.

This will take the form of two different hypotheses, one called the null, and one called the alternative.

Usually, you’ll establish your null hypothesis as an assumption that a population parameter equals some value.

For example, in Welch’s T-Test Of Unequal Variance, our null hypothesis is that the two population means we are testing are equal.

We run our statistical test, and if our p-value is significant (below the chosen significance level), we reject the null hypothesis.

This would suggest that the population means underlying the two samples you are testing are unequal.

Usually, statisticians use a significance level of 0.05 as the p-value cut-off, which means accepting a 5% risk of rejecting a null hypothesis that is actually true.

What Is The Difference Between The Alternative Hypothesis And The Null?

The null hypothesis is our default assumption, which we keep unless the data provide significant evidence against it.

The alternative hypothesis is usually the opposite of our null and is much broader in scope.

For most statistical tests, the null and alternative hypotheses are already defined.

You are then just trying to find “significant” evidence we can use to reject our null hypothesis.

These two hypotheses are easy to spot by their specific notation. The null hypothesis is usually denoted by H₀, while H₁ denotes the alternative hypothesis.

Example Code Performing Hypothesis Testing In Machine Learning

Since there are many different hypothesis tests in machine learning and data science, we will focus on one of my favorites.

This test is Welch’s T-Test Of Unequal Variance, where we are trying to determine if the population means of these two samples are different.

There are a couple of assumptions for this test, but we will ignore those for now and show the code.

You can read more about this here in our other post, Welch’s T-Test of Unequal Variance .
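As a stand-in for the test code, here is a minimal sketch of Welch’s t-test that uses only the Python standard library. The sample data are hypothetical and generated with a fixed seed. The p-value is obtained by numerically integrating the Student t density with Simpson’s rule, which is an implementation choice made here for self-containment. In practice, SciPy’s `scipy.stats.ttest_ind(a, b, equal_var=False)` runs this test directly.

```python
import math
import random
import statistics


def t_pdf(x: float, df: float) -> float:
    """Density of Student's t distribution with df degrees of freedom."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))


def two_sided_p(t: float, df: float, steps: int = 2000) -> float:
    """P(|T| >= |t|), via Simpson's rule for P(0 <= T <= |t|); steps must be even."""
    b = abs(t)
    if b == 0:
        return 1.0
    h = b / steps
    area = t_pdf(0.0, df) + t_pdf(b, df)
    for i in range(1, steps):
        area += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area *= h / 3
    return max(0.0, 1.0 - 2.0 * area)  # symmetry: mass beyond -|t| and +|t|


def welch_t_test(a, b):
    """Welch's t-test: returns (t statistic, Welch-Satterthwaite df, two-sided p)."""
    va = statistics.variance(a) / len(a)  # squared standard error of mean(a)
    vb = statistics.variance(b) / len(b)
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va**2 / (len(a) - 1) + vb**2 / (len(b) - 1))
    return t, df, two_sided_p(t, df)


# Hypothetical samples with different means and different variances.
random.seed(0)
group_a = [random.gauss(10.0, 1.0) for _ in range(50)]
group_b = [random.gauss(13.0, 3.0) for _ in range(40)]

t, df, p = welch_t_test(group_a, group_b)
print(f"t = {t:.2f}, df = {df:.1f}, p = {p:.3g}")
print("reject H0" if p < 0.05 else "fail to reject H0")
```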

We see that our p-value is very low, and we reject the null hypothesis.

What Is The Difference Between The Biased And Unbiased Hypothesis Spaces?

The difference between the biased and unbiased hypothesis spaces is how many of the possible input combinations (instances) your algorithm can account for when it predicts.

The unbiased space has all of them, and the biased space only has the training examples you’ve supplied.

Since neither of these is optimal (one is too small, one is much too big), your algorithm creates generalized rules (inductive learning) to be able to handle examples it hasn’t seen before.

Here’s an example of each:

Example of The Biased Hypothesis Space In Machine Learning

The biased hypothesis space in machine learning is a restricted subspace in which your algorithm does not consider all possible examples when making predictions.

This is easiest to see with an example.

Let’s say you have the following data:

Happy and Sunny and Stomach Full = True

Whenever your algorithm sees those three together in the biased hypothesis space, it’ll automatically default to true.

This means when your algorithm sees:

Sad and Sunny And Stomach Full = False

It’ll automatically default to False since it didn’t appear in our subspace.

This is a greedy approach, but it has some practical applications.
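The behaviour described above amounts to a rote learner: only memorized combinations are predicted True, and everything else falls back to False. Below is a minimal sketch, assuming each example is a set of feature values paired with a boolean label. The class name is hypothetical.

```python
class RoteLearner:
    """Hypothetical 'biased' learner: memorizes the feature combinations seen
    with a True label and predicts False for everything else."""

    def __init__(self):
        self.positives = set()

    def fit(self, examples):
        for features, label in examples:
            if label:
                # frozenset makes the lookup independent of feature order
                self.positives.add(frozenset(features))
        return self

    def predict(self, features):
        return frozenset(features) in self.positives


learner = RoteLearner().fit([({"Happy", "Sunny", "Stomach Full"}, True)])
print(learner.predict({"Happy", "Sunny", "Stomach Full"}))  # True
print(learner.predict({"Sad", "Sunny", "Stomach Full"}))    # False
```

Such a learner never generalizes beyond the combinations it has memorized.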

Example of the Unbiased Hypothesis Space In Machine Learning

The unbiased hypothesis space is a space where all combinations are stored.

We can reuse our example above:

This would start to break down as:

Happy = True

Happy and Sunny = True

Happy and Stomach Full = True

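Let’s say you have four options for each of the three features.

That gives 4 × 4 × 4 = 64 distinct instances. An unbiased hypothesis space contains every possible subset of those instances, so it would hold 2^64 (about 1.8 × 10^19) hypotheses just for our little three-feature problem.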

This is practically impossible; the space would become huge.

So while it would be highly accurate, this has no scalability.
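A short sketch counts both quantities for this kind of example. It treats the unbiased hypothesis space as every subset of the instance space, which is the usual definition and is assumed here. Brute-force enumeration on a tiny case confirms the formula. The function names are illustrative.

```python
import math
from itertools import chain, combinations, product


def count_instances(options_per_feature):
    """Number of distinct instances: product of the options per feature."""
    return math.prod(options_per_feature)


def unbiased_space_size(options_per_feature):
    """An unbiased space contains every subset of the instances: 2^(#instances)."""
    return 2 ** count_instances(options_per_feature)


def enumerate_unbiased_space(options_per_feature):
    """Explicitly list every subset of instances (only feasible for tiny cases)."""
    instances = list(product(*(range(k) for k in options_per_feature)))
    return list(chain.from_iterable(
        combinations(instances, r) for r in range(len(instances) + 1)))


print(count_instances([4, 4, 4]))                 # 64
print(unbiased_space_size([4, 4, 4]))             # 18446744073709551616
print(len(enumerate_unbiased_space([2, 2])))      # 16
```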

More reading on this idea can be found in our post, Inductive Bias In Machine Learning .

Why Do We Restrict Hypothesis Space In Artificial Intelligence?

We have to restrict the hypothesis space in machine learning. Without any restrictions, our domain becomes much too large, and we lose any form of scalability.

This is why our algorithm creates general rules to handle unseen examples it encounters in production.

This gives our algorithms a generalized approach that will be able to handle all new examples that are in the same format.


Hypothesis Space

- Reference work entry


- Hendrik Blockeel


Synonyms: Model space

The hypothesis space used by a machine learning system is the set of all hypotheses that might possibly be returned by it. It is typically defined by a Hypothesis Language, possibly in conjunction with a Language Bias.

Motivation and Background

Many machine learning algorithms rely on some kind of search procedure: given a set of observations and a space of all possible hypotheses that might be considered (the “hypothesis space”), they look in this space for those hypotheses that best fit the data (or are optimal with respect to some other quality criterion).

To describe the context of a learning system in more detail, we introduce the following terminology. The key terms have separate entries in this encyclopedia, and we refer to those entries for more detailed definitions.

A learner takes observations as inputs. The Observation Language is the language used to describe these observations.

The hypotheses that a learner may produce will be formulated in...


Editor information

School of Computer Science and Engineering, University of New South Wales, Sydney, Australia, 2052

Claude Sammut

Faculty of Information Technology, Clayton School of Information Technology, Monash University, P.O. Box 63, Victoria, Australia, 3800

Geoffrey I. Webb


Copyright information

© 2011 Springer Science+Business Media, LLC

About this entry

Cite this entry.

Blockeel, H. (2011). Hypothesis Space. In: Sammut, C., Webb, G.I. (eds) Encyclopedia of Machine Learning. Springer, Boston, MA. https://doi.org/10.1007/978-0-387-30164-8_373


DOI : https://doi.org/10.1007/978-0-387-30164-8_373

Publisher Name : Springer, Boston, MA

Print ISBN : 978-0-387-30768-8

Online ISBN : 978-0-387-30164-8

eBook Packages : Computer Science Reference Module Computer Science and Engineering


VC Dimensions

This is a continuation of my notes on Computational Learning Theory .

One caveat mentioned at the end of that lecture series was that the sample complexity bound (the minimum number of training samples $m$) breaks down in the case of an infinite hypothesis space. That formula follows. In particular, as $|H| \rightarrow \infty$, $m \rightarrow \infty$.

$$m \geq \frac{1}{\epsilon}\left(\ln|H| + \ln \frac{1}{\delta}\right)$$

This is problematic because each of the following hypothesis spaces is infinite: linear separators, artificial neural networks, and decision trees (continuous inputs). An example of a finite hypothesis space is a decision tree (discrete inputs).

Example: inputs $X = \lbrace 1,2,3,4,5,6,7,8,9,10 \rbrace$; hypotheses $h(x) = x \geq \theta$, where $\theta \in \mathbb R$, so $|H| = \infty$.

Syntactic hypothesis space: all the things you could write. Semantic hypothesis space: the functions you are actually representing, i.e., the meaningfully different ones.

So the hypothesis space for this example is syntactically infinite but semantically finite. Because the inputs are the integers 1 to 10, every real $\theta$ produces the same labeling as one of $\theta \in \lbrace 1, 2, \dots, 11 \rbrace$. Tracking those 11 values therefore gives the same answers as tracking infinitely many hypotheses.
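A brute-force check of the semantic size of this hypothesis space sweeps θ over a fine grid of real values and counts how many distinct labelings of X = {1, ..., 10} appear. The grid range and step are arbitrary choices.

```python
def labeling(theta, inputs):
    """The labeling that h(x) = [x >= theta] assigns to each input."""
    return tuple(x >= theta for x in inputs)


inputs = range(1, 11)
thetas = [i / 4 for i in range(-20, 61)]  # theta from -5.0 to 15.0 in steps of 0.25
distinct = {labeling(theta, inputs) for theta in thetas}
print(len(distinct))  # 11
```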

Power of a Hypothesis Space

What is the largest set of inputs that the hypothesis class can label in all possible ways?

Consider the set of inputs $S=\lbrace 6 \rbrace$. This set can be labeled in all possible ways (either True or False) by choosing different values of $\theta$. Now consider the larger set of inputs $S=\lbrace 5, 6 \rbrace$. There are four possible labelings of this set: {(False, False), (False, True), (True, False), (True, True)}. In practice, only 3 of these four are achievable, because the labeling (True, False) would require $\theta \leq 5$ and $\theta > 6$ at the same time.

The technical term for “labeling in all possible ways” is to “shatter” the set of inputs.

The size of the largest set of inputs that the hypothesis class can shatter is called the VC dimension, where VC stands for Vapnik-Chervonenkis. In this example, the largest set this hypothesis class can shatter has 1 element, so its VC dimension is 1.
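The labelings of the sets above can be enumerated directly. Below is a minimal sketch for the threshold class h(x) = [x ≥ θ]. It uses one θ below all points, one between each pair of neighbours, and one above all points, which is enough to produce every achievable labeling.

```python
def threshold_labelings(points):
    """All labelings of `points` produced by h(x) = [x >= theta], theta real."""
    xs = sorted(points)
    thetas = [xs[0] - 1] + [(p + q) / 2 for p, q in zip(xs, xs[1:])] + [xs[-1] + 1]
    return {tuple(x >= t for x in points) for t in thetas}


def is_shattered(points):
    """A set is shattered if all 2^n labelings are achievable."""
    return len(threshold_labelings(points)) == 2 ** len(points)


print(is_shattered([6]))                  # True
print(is_shattered([5, 6]))               # False
print(sorted(threshold_labelings([5, 6])))
# [(False, False), (False, True), (True, True)]
```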

Example: inputs $X=\mathbb R$; hypothesis space $H=\lbrace h(x)=x\in [a,b]\rbrace$, parametrized by $a,b \in \mathbb R$.

Based on the figure below, the VC dimension is clearly greater than or equal to 2.

Based on the figure below, the VC dimension is clearly less than 3: for any three points, no interval can label the two outer points True and the middle point False. So the VC dimension is 2.

To show the VC dimension is at least a given number, it is only necessary to find one set of that size that can be shattered. However, to show the VC dimension is less than a given number, it is necessary to prove that no set of that size can be shattered.
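The interval case can be checked the same way. The sketch below enumerates every labeling that h(x) = [a ≤ x ≤ b] can produce on a given set of points, trying endpoints below, between, and above the points. Checking one specific 3-point set is not a proof for all of them. However, the missing labeling (outer points in, middle point out) is missing for any three distinct points.

```python
def interval_labelings(points):
    """All labelings of `points` produced by h(x) = [a <= x <= b], a, b real."""
    xs = sorted(points)
    # Endpoints below, between, and above the points cover every distinct case;
    # pairs with a > b give the empty interval (all False).
    cuts = [xs[0] - 1] + [(p + q) / 2 for p, q in zip(xs, xs[1:])] + [xs[-1] + 1]
    return {tuple(a <= x <= b for x in points) for a in cuts for b in cuts}


def shattered_by_intervals(points):
    return len(interval_labelings(points)) == 2 ** len(points)


print(shattered_by_intervals([1.0, 2.0]))       # True: a 2-point set is shattered
print(shattered_by_intervals([1.0, 2.0, 3.0]))  # False: (True, False, True) is missing
```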

Example: inputs $X=\mathbb R^2$; hypothesis space $H=\lbrace h(x)=W^T x \geq \theta\rbrace$.

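Based on the example below, the VC dimension is at least 3: for three points that are not collinear, a line can be drawn that separates the points under every possible labeling.

Once there are four inputs, however, shattering becomes impossible. For any four points in the plane, at least one labeling cannot be produced by a line. For example, with four points at the corners of a square, the labeling that makes each diagonal pair alike (the XOR pattern) cannot be produced. So the VC dimension is 3.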
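One way to sanity-check both claims is to run the perceptron, which finds a separating line whenever one exists. Below is a minimal sketch with ±1 labels. For the triangle it should succeed on all 8 labelings. On the XOR pattern it exhausts its epoch budget. Failing within a budget only illustrates non-separability and is not a proof; the geometric argument is the proof.

```python
from itertools import product


def perceptron_separates(points, labels, max_epochs=1000):
    """Run the perceptron on 2-D points with +1/-1 labels. Return True if some
    epoch makes no mistakes, i.e. sign(w.x + b) matches every label."""
    w1 = w2 = b = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for (x1, x2), y in zip(points, labels):
            if y * (w1 * x1 + w2 * x2 + b) <= 0:  # misclassified or on the line
                w1, w2, b = w1 + y * x1, w2 + y * x2, b + y
                mistakes += 1
        if mistakes == 0:
            return True
    return False  # no separator found within the budget


triangle = [(0, 0), (1, 0), (0, 1)]
square = [(0, 0), (1, 1), (1, 0), (0, 1)]

print(all(perceptron_separates(triangle, lab) for lab in product([-1, 1], repeat=3)))  # True
print(perceptron_separates(square, [1, 1, -1, -1]))  # False (XOR pattern)
```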

Summarizing the examples outlined above:

| Hypothesis class | VC dimension | Parameters |
|---|---|---|
| 1-D threshold | 1 | $\theta$ |
| Interval | 2 | $a$, $b$ |
| 2-D linear separator | 3 | $w_1$, $w_2$, $\theta$ |

The VC dimension often equals the number of parameters. For a linear separator (hyperplane) in d dimensions, there are d+1 parameters (d weights and a threshold), and the VC dimension is d+1.

Sample Complexity and VC Dimensions

If the training data has at least $m$ samples, that is sufficient to get error at most $\epsilon$ with probability at least $1-\delta$, where $VC(H)$ is the VC dimension of the hypothesis class.

Infinite case: $$m \geq \frac{1}{\epsilon}\left(8VC(H) \log_2 \frac{13}{\epsilon}+4\log_2 \frac{2}{\delta}\right)$$

Finite case: $$m \geq \frac{1}{\epsilon}\left(\ln|H| + \ln \frac{1}{\delta}\right)$$
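The two bounds are easy to evaluate. The sketch below rounds each one up to a whole number of samples. The example inputs are illustrative: |H| = 11 matches the semantic size of the threshold example, and VC(H) = 3 matches the 2-D linear separator.

```python
import math


def m_finite(h_size: int, eps: float, delta: float) -> int:
    """Sufficient sample size for a finite hypothesis space H."""
    return math.ceil((math.log(h_size) + math.log(1 / delta)) / eps)


def m_vc(vc: int, eps: float, delta: float) -> int:
    """Sufficient sample size for an infinite H with finite VC dimension."""
    return math.ceil((8 * vc * math.log2(13 / eps) + 4 * math.log2(2 / delta)) / eps)


print(m_finite(11, eps=0.1, delta=0.05))  # 54
print(m_vc(3, eps=0.1, delta=0.05))       # 1899
```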

What is VC-Dimension of a finite H?

Let $d$ equal the VC dimension of some finite hypothesis space $H$: $d = VC(H)$. Then some set of $d$ inputs is shattered, which means there are $2^d$ distinct labelings of that set, and each must be produced by a different hypothesis. It follows that $2^d \leq |H|$, and by rearranging, $d \leq \log_2 |H|$.
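For small finite classes, the VC dimension can be computed by brute force and compared with the log₂|H| bound. Below is a minimal sketch. It represents each hypothesis as a Python callable on the domain {1, ..., 10} and uses the threshold and interval classes from earlier as examples. The function and variable names are illustrative.

```python
import math
from itertools import combinations


def vc_dimension(hypotheses, domain):
    """Brute-force VC dimension of a finite hypothesis class on a finite domain.
    Each hypothesis is a callable mapping a domain element to True/False."""
    vc = 0
    for d in range(1, len(domain) + 1):
        shattered_some = any(
            len({tuple(h(x) for x in subset) for h in hypotheses}) == 2**d
            for subset in combinations(domain, d)
        )
        if not shattered_some:
            break  # subsets of shattered sets are shattered, so no larger set works
        vc = d
    return vc


domain = list(range(1, 11))
thresholds = [lambda x, t=t: x >= t for t in range(1, 12)]  # 11 hypotheses
intervals = [lambda x, a=a, b=b: a <= x <= b
             for a in domain for b in domain if a <= b] + [lambda x: False]

for name, H in [("thresholds", thresholds), ("intervals", intervals)]:
    print(name, len(H), vc_dimension(H, domain), round(math.log2(len(H)), 2))
```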

From similar reasoning, we get the following theorem: H is PAC-learnable if and only if its VC dimension is finite.

So a hypothesis class is PAC-learnable if it has a finite VC dimension. Conversely, a class with infinite VC dimension is not PAC-learnable.

The VC dimension captures in one quantity the notion of PAC-Learnability.

For Fall 2019, CS 7461 is instructed by Dr. Charles Isbell . The course content was originally created by Dr. Charles Isbell and Dr. Michael Littman .


What is the difference between hypothesis space and representational capacity?

I am reading Goodfellow et al.'s Deep Learning book. I found it difficult to understand the difference between the definitions of the hypothesis space and the representational capacity of a model.

In Chapter 5 , it is written about hypothesis space:

One way to control the capacity of a learning algorithm is by choosing its hypothesis space, the set of functions that the learning algorithm is allowed to select as being the solution.

And about representational capacity:

The model specifies which family of functions the learning algorithm can choose from when varying the parameters in order to reduce a training objective. This is called the representational capacity of the model.

If we take the linear regression model as an example and allow our output $y$ to take polynomial inputs, I understand the hypothesis space as the set of quadratic functions of the input $x$, i.e., $y = a_0 + a_1x + a_2x^2$.

How is it different from the definition of the representational capacity, where parameters are $a_0$ , $a_1$ and $a_2$ ?

- machine-learning

- terminology

- computational-learning-theory

- hypothesis-class

3 Answers

Consider a target function $f: x \mapsto f(x)$ .

A hypothesis refers to an approximation of $f$ . A hypothesis space refers to the set of possible approximations that an algorithm can create for $f$ . The hypothesis space consists of the set of functions the model is limited to learn. For instance, linear regression can be limited to linear functions as its hypothesis space, or it can be expanded to learn polynomials.

The representational capacity of a model determines its flexibility, that is, its ability to fit a variety of functions (i.e., which functions the model is able to learn). At the same time, it specifies the family of functions the learning algorithm can choose from.

- Does it mean that the set of functions described by the representational capacity is strictly included in the hypothesis space? By definition, is it possible to have functions in the hypothesis space NOT described in the representational capacity? – Qwarzix, Aug 23, 2018 at 8:43

- It's still pretty confusing to me. Most sources say that a "model" is an instance (after execution/training on data) of a "learning algorithm". How, then, can a model specify the family of functions the learning algorithm can choose from? It doesn't make sense to me. The authors of the book should've explained these concepts in more depth. – Talendar, Oct 9, 2020 at 13:09

A hypothesis space is defined as the set of functions $\mathcal H$ that can be chosen by a learning algorithm to minimize loss (in general).

$$\mathcal H = \{h_1, h_2,....h_n\}$$

The hypothesis class can be finite or infinite. For example, a discrete set of shapes used to encircle a certain portion of the input space is a finite hypothesis space, whereas the hypothesis space of parametrized functions like neural nets and linear regressors is infinite.

Although the term representational capacity is not in vogue, a rough definition would be: the representational capacity of a model is the ability of its hypothesis space to approximate a complex function with 0 error, which can only be approximated by infinitely many hypothesis spaces whose representational capacity is equal to or exceeds the representational capacity required to approximate the complex function.

The most popular measure of representational capacity is the VC dimension of a model. For a finite hypothesis space, the VC dimension ($d$) of a model is bounded above by: $$d \leq \log_2| \mathcal H|$$ where $|\mathcal H|$ is the cardinality of the hypothesis space.

A hypothesis space/class is the set of functions that the learning algorithm considers when picking one function to minimize some risk/loss functional.

The capacity of a hypothesis space is a number or bound that quantifies the size (or richness) of the hypothesis space, i.e. the number (and type) of functions that can be represented by the hypothesis space. So a hypothesis space has a capacity. The two most famous measures of capacity are VC dimension and Rademacher complexity.

In other words, the hypothesis class is the object and the capacity is a property (that can be measured or quantified) of this object, but there is not a big difference between hypothesis class and its capacity, in the sense that a hypothesis class naturally defines a capacity, but two (different) hypothesis classes could have the same capacity.

Note that representational capacity (unlike capacity, which is common) is not a standard term in computational learning theory, while hypothesis space/class is commonly used. For example, this famous book on machine learning and learning theory uses the term hypothesis class in many places, but it never uses the term representational capacity.

In my opinion, your book's definition of representational capacity is bad if representational capacity is supposed to be a synonym for capacity, because that definition also coincides with the definition of hypothesis class. So your confusion is understandable.

- I agree with you. The authors of the book should've explained these concepts in more depth. Most sources say that a "model" is an instance (after execution/training on data) of a "learning algorithm". How, then, can a model specify the family of functions the learning algorithm can choose from? Also, as you pointed out, the definitions of the terms "hypothesis space" and "representational capacity" given by the authors are practically the same, although they use the terms as if they represent different concepts. – Talendar, Oct 9, 2020 at 13:18


ID3 Algorithm and Hypothesis space in Decision Tree Learning

The collection of potential decision trees is the hypothesis space searched by ID3. ID3 searches this hypothesis space in a hill-climbing fashion, starting with the empty tree and moving on to increasingly detailed hypotheses in pursuit of a decision tree that properly classifies the training data.

In this blog, we’ll have a look at the Hypothesis space in Decision Trees and the ID3 Algorithm.

ID3 Algorithm:

The ID3 algorithm (Iterative Dichotomiser 3) is a classification technique that builds a decision tree greedily. At each node it picks the attribute with the highest Information Gain (IG), or equivalently the lowest weighted Entropy (H) of the resulting subsets.

What are Information Gain and Entropy?

Information Gain:

Information gain measures the reduction in entropy achieved by splitting a dataset on an attribute.

It establishes how much information a feature provides about a class.

Nodes are split, and the decision tree is built, according to the information gain of each attribute.

A decision tree algorithm always tries to maximize information gain, so the attribute with the highest information gain is used to split a node first.

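The formula for information gain is:

$$\mathrm{IG}(S, A) = H(S) - \sum_{v \in \mathrm{Values}(A)} \frac{|S_v|}{|S|} H(S_v)$$

where $S_v$ is the subset of $S$ in which attribute $A$ takes the value $v$.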

Entropy measures the impurity of a set of samples, i.e. how unpredictable its class labels are. For a yes/no classification it is computed as:

$$H(S) = -P(\text{yes})\log_2 P(\text{yes}) - P(\text{no})\log_2 P(\text{no})$$

$S$ is the set of training samples.

$P(\text{yes})$ is the proportion of samples in $S$ with a yes label.

$P(\text{no})$ is the proportion of samples in $S$ with a no label.
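Entropy and information gain can be sketched in Python as follows. This version is a minimal sketch. It assumes each sample is a dict of categorical attribute values. It generalizes the yes/no entropy to any number of classes, and for two classes it reduces to the $P(\text{yes})$/$P(\text{no})$ form. The weather data is a hypothetical toy example.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """H(S) = -sum_c p_c * log2(p_c) over the class proportions in S."""
    n = len(labels)
    return sum(-(c / n) * log2(c / n) for c in Counter(labels).values())


def information_gain(rows, labels, attribute):
    """IG(S, A) = H(S) - sum_v |S_v|/|S| * H(S_v) for a discrete attribute."""
    n = len(labels)
    subsets = {}  # attribute value -> labels of the samples having that value
    for row, label in zip(rows, labels):
        subsets.setdefault(row[attribute], []).append(label)
    weighted = sum(len(s) / n * entropy(s) for s in subsets.values())
    return entropy(labels) - weighted


# Hypothetical toy data: should we play outside?
rows = [
    {"outlook": "sunny", "windy": False},
    {"outlook": "sunny", "windy": True},
    {"outlook": "overcast", "windy": False},
    {"outlook": "rain", "windy": False},
    {"outlook": "rain", "windy": True},
    {"outlook": "overcast", "windy": True},
]
labels = ["no", "no", "yes", "yes", "no", "yes"]

for attr in ("outlook", "windy"):
    print(attr, information_gain(rows, labels, attr))
```

Here `outlook` leaves only the `rain` subset impure, so its gain (about 0.67) is far above that of `windy` (about 0.08). ID3 would split on `outlook` first.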

The ID3 algorithm proceeds as follows:

- Calculate the entropy of the dataset.

- For each feature/attribute:

  - Determine the entropy of the subset for each of its category values.

  - Calculate the feature's information gain.

- Split on the feature that provides the most information gain.

- Repeat recursively on each resulting subset until every node is pure or no attributes remain.
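The steps above can be sketched as a small recursive function. This is a minimal sketch, not a reference implementation. It assumes categorical attributes stored in dicts and represents an inner node as a hypothetical `(attribute, {value: subtree})` tuple. It does no pruning, does not handle continuous attributes, and `predict` raises `KeyError` for attribute values never seen during training.

```python
from collections import Counter
from math import log2


def entropy(labels):
    n = len(labels)
    return sum(-(c / n) * log2(c / n) for c in Counter(labels).values())


def information_gain(rows, labels, attribute):
    n = len(labels)
    subsets = {}
    for row, label in zip(rows, labels):
        subsets.setdefault(row[attribute], []).append(label)
    return entropy(labels) - sum(len(s) / n * entropy(s) for s in subsets.values())


def id3(rows, labels, attributes):
    """Grow a tree greedily; leaves are labels, inner nodes are (attribute, branches)."""
    # Stop when the node is pure ...
    if len(set(labels)) == 1:
        return labels[0]
    # ... or when no attributes are left: predict the majority label.
    if not attributes:
        return Counter(labels).most_common(1)[0][0]
    # Greedy step: pick the attribute with the highest information gain.
    best = max(attributes, key=lambda a: information_gain(rows, labels, a))
    remaining = [a for a in attributes if a != best]
    branches = {}
    for value in {row[best] for row in rows}:
        idx = [i for i, row in enumerate(rows) if row[best] == value]
        branches[value] = id3([rows[i] for i in idx], [labels[i] for i in idx], remaining)
    return (best, branches)


def predict(tree, row):
    """Follow the branches matching the row's attribute values down to a leaf."""
    while isinstance(tree, tuple):
        attribute, branches = tree
        tree = branches[row[attribute]]
    return tree


# Hypothetical toy data.
rows = [
    {"outlook": "sunny", "windy": False},
    {"outlook": "sunny", "windy": True},
    {"outlook": "overcast", "windy": False},
    {"outlook": "rain", "windy": False},
    {"outlook": "rain", "windy": True},
    {"outlook": "overcast", "windy": True},
]
labels = ["no", "no", "yes", "yes", "no", "yes"]

tree = id3(rows, labels, ["outlook", "windy"])
print(tree)
```

The function keeps a single tree and never revisits a split once it is made, which is the hill-climbing behavior described in this post.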

Characteristics of ID3:

- ID3 takes a greedy approach, which means it can get stuck in local optima and hence cannot guarantee an optimal result.

- ID3 has the potential to overfit the training data (to avoid overfitting, smaller decision trees should be preferred over larger ones).

- This method usually produces small trees, but it does not always yield the smallest possible tree.

- On continuous data, ID3 is not easy to use (if the values of any given attribute are continuous, then there are many more places to split the data on this attribute, and searching for the best value to split by takes a lot of time).

Overfitting:

Good generalization is the desired property in our decision trees (and, indeed, in all classification problems), as we noted before.

This means we want the model fit on the labeled training data to make predictions on new, unseen observations that are as accurate as its predictions on the training data.

Capabilities and Limitations of ID3:

- ID3's hypothesis space of all decision trees is a complete space of finite discrete-valued functions of the available attributes.

- As it searches the space of decision trees, ID3 maintains only a single current hypothesis. This differs from the earlier version-space Candidate-Elimination approach, which maintains the set of all hypotheses consistent with the training examples.

- By settling on a single hypothesis, ID3 loses the capabilities that come from explicitly representing all consistent hypotheses. For example, it cannot determine how many alternative decision trees are consistent with the training data.

- One benefit of using statistical properties of all the examples (e.g., information gain) is that the resulting search is less sensitive to errors in individual training examples.

- ID3 can easily be extended to handle noisy training data by modifying its termination criterion to accept hypotheses that imperfectly fit the training data.

- In its pure form, ID3 performs no backtracking: once it has chosen an attribute to test at a particular level of the tree, it never reconsiders that choice. As a result, it is susceptible to the usual risks of hill-climbing search without backtracking, converging to locally optimal solutions that are not globally optimal.

- At each step of the search, ID3 uses all training examples to make statistically based decisions about how to refine its current hypothesis. This contrasts with approaches that make incremental decisions based on individual training examples (e.g., FIND-S or CANDIDATE-ELIMINATION).
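To see what ID3 gives up by keeping a single hypothesis, the following sketch does what ID3 does not do. It enumerates a complete hypothesis space and counts every hypothesis consistent with the training data, which is the version space that Candidate-Elimination represents. The setting is hypothetical: two boolean attributes and two labeled examples. This is only feasible for tiny attribute spaces, since $n$ boolean attributes allow $2^{2^n}$ functions.

```python
from itertools import product

# Hypothetical setting: two boolean attributes, so the instance space has 4 points.
instances = list(product((0, 1), repeat=2))

# The complete hypothesis space: every boolean function on the instances,
# i.e. every way of labeling the 4 instances (2^4 = 16 functions).
# Each of these functions can be represented by some decision tree.
all_functions = [dict(zip(instances, outputs))
                 for outputs in product((0, 1), repeat=len(instances))]

# Hypothetical training data: labels observed for two of the four instances.
training = {(0, 0): 0, (1, 1): 1}

# Version space: every hypothesis that agrees with all training examples.
version_space = [f for f in all_functions
                 if all(f[x] == y for x, y in training.items())]

print(len(all_functions), len(version_space))
```

Four functions remain consistent with the data, because the two unlabeled instances can take any labels. ID3 would return a tree for just one of them, without reporting that the other three exist.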

Hypothesis Space Search by ID3:

- ID3 performs a hill-climbing search through the space of possible decision trees.

- It searches a complete space of finite discrete-valued functions: every such function is represented by at least one tree.

- It maintains only a single current hypothesis (unlike Candidate-Elimination), so it cannot tell us how many other consistent hypotheses exist.

- Because it never backtracks, it can get stuck in local optima.

- It uses all training examples at each step, so errors in individual examples have less impact on the outcome.
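The following is a small illustration of how a greedy, non-backtracking choice can lead away from the simplest consistent tree. In the hypothetical data, the target is $y = a \oplus b$ (XOR), and a third attribute `c` agrees with $y$ on only 6 of 8 rows. Individually, `a` and `b` have zero information gain. ID3's first split therefore goes to `c`, even though a depth-two tree on `a` and then `b` classifies every example correctly.

```python
from collections import Counter
from math import log2


def entropy(labels):
    n = len(labels)
    return sum(-(c / n) * log2(c / n) for c in Counter(labels).values())


def information_gain(rows, labels, attribute):
    n = len(labels)
    subsets = {}
    for row, label in zip(rows, labels):
        subsets.setdefault(row[attribute], []).append(label)
    return entropy(labels) - sum(len(s) / n * entropy(s) for s in subsets.values())


# Hypothetical data: y = a XOR b; c is a noisy correlate of y (agrees on 6 of 8 rows).
rows = [
    {"a": 0, "b": 0, "c": 0}, {"a": 0, "b": 0, "c": 0},
    {"a": 0, "b": 1, "c": 1}, {"a": 0, "b": 1, "c": 1},
    {"a": 1, "b": 0, "c": 1}, {"a": 1, "b": 0, "c": 0},
    {"a": 1, "b": 1, "c": 0}, {"a": 1, "b": 1, "c": 1},
]
labels = [row["a"] ^ row["b"] for row in rows]

gains = {attr: information_gain(rows, labels, attr) for attr in ("a", "b", "c")}
best = max(gains, key=gains.get)  # the attribute ID3 would split on first
print(gains, best)
```

Each of `a` and `b` is uninformative on its own and only predicts $y$ in combination with the other. A one-step information-gain lookahead cannot detect that interaction.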


Could anyone explain the terms "hypothesis space", "sample space", "parameter space" and "feature space" in machine learning with one concrete example?

I am confused by these machine learning terms and am trying to distinguish them with one concrete example.

For instance, suppose I use logistic regression to classify a set of cat images.

Assume there are 1,000 images, each labeled as being a cat image or not.

Each image has a size of 100×100.

Given the above, is my following understanding right?

- The sample space is the 1,000 images.

- The feature space is the 100×100 pixels.

- The parameter space is a vector of length 100×100+1.

- The hypothesis space is the set of all possible hyperplanes with some property that I have no idea about.


People are a bit loose with their definitions (different people use different definitions, depending on the context), but here is what I would say, mostly in the context of modern computer vision.

First, more generally, define $X$ as the space of the input data, and $Y$ as the output label space (some subset of the integers or, equivalently, one-hot vectors). A dataset is then $D=\{ d=(x,y)\in X\times Y \}$, where $d\sim P_{X\times Y}$ is sampled from some joint distribution over the input and output spaces.

Now, let $\mathcal{H}$ be a set of functions such that each element $f \in \mathcal{H}$ is a map $f: X\rightarrow Y$. This is the space of functions we will consider for our problem. Then let $g_\theta \in \mathcal{H}$ be some specific function with parameters $\theta\in\mathbb{R}^n$, so that we write $\widehat{y} = g_\theta(x|\theta)$.

Finally, let's assume that any $f\in\mathcal{H}$ consists of a sequence of mappings $f=f_\ell\circ f_{\ell-1}\circ\ldots\circ f_2\circ f_1$, where $f_i: F_{i}\rightarrow F_{i+1}$, $F_1 = X$ and $F_{\ell+1}=Y$.
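To make this notation concrete for the question's logistic-regression example, here is one possible reading, offered as an illustrative sketch rather than the answer's own code. Each parameter vector $\theta = (w_1, \ldots, w_d, b) \in \mathbb{R}^{d+1}$ picks one $g_\theta \in \mathcal H$. That $g_\theta$ is a composition $f_3 \circ f_2 \circ f_1$ of an affine score, a sigmoid, and a 0.5 threshold. The helper names `compose` and `make_logistic_hypothesis` are hypothetical. The sketch also assumes grayscale images flattened to $d = 100 \times 100$ features; RGB images would triple $d$.

```python
import math


def compose(*fs):
    """Return f_l ∘ ... ∘ f_1, taking the maps in application order (f_1 first)."""
    def composed(x):
        for f in fs:
            x = f(x)
        return x
    return composed


def make_logistic_hypothesis(theta):
    """Build g_theta: X -> Y for theta = (w_1, ..., w_d, b), i.e. n = d + 1 parameters."""
    *w, b = theta

    def f1(x):  # F_1 = X = R^d -> F_2 = R: affine score w . x + b
        return sum(wi * xi for wi, xi in zip(w, x)) + b

    def f2(z):  # F_2 = R -> F_3 = (0, 1): sigmoid, read as P(cat | x)
        return 1.0 / (1.0 + math.exp(-z))

    def f3(p):  # F_3 -> F_4 = Y = {0, 1}: threshold at 0.5
        return int(p >= 0.5)

    return compose(f1, f2, f3)


# Assumed setting: 100x100 grayscale images flattened to d = 10,000 features.
d = 100 * 100
theta = [0.0] * (d + 1)  # one point in the parameter space R^(d+1)
g = make_logistic_hypothesis(theta)
print(len(theta), g([0.5] * d))
```

Under this reading, the parameter space is $\mathbb{R}^{d+1}$ and the feature space is $X = \mathbb{R}^{d}$. The hypothesis space is the set of all such $g_\theta$, whose decision boundaries $w \cdot x + b = 0$ are the hyperplanes the question asks about.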

Ok, now for the definitions: