November 12, 2020

amanjeetsahu/Natural-Language-Processing-Specialization

This repo contains my coursework, assignments, and slides for the Natural Language Processing Specialization by deeplearning.ai on Coursera.

| field | value |
|---|---|
| repo name | amanjeetsahu/Natural-Language-Processing-Specialization |
| repo link | |
| homepage | |
| language | Jupyter Notebook |
| size (curr.) | 299445 kB |
| stars (curr.) | 67 |
| created | 2020-07-06 |
| license | |

Natural Language Processing Specialization

Natural Language Processing (NLP) uses algorithms to understand and manipulate human language. This technology is one of the most broadly applied areas of machine learning. As AI continues to expand, so will the demand for professionals skilled at building models that analyze speech and language, uncover contextual patterns, and produce insights from text and audio. This Specialization will equip you with the state-of-the-art deep learning techniques needed to build cutting-edge NLP systems. By the end of this Specialization, you will be ready to design NLP applications that perform question-answering and sentiment analysis, create tools to translate languages and summarize text, and even build chatbots.

This Specialization is for students of machine learning or artificial intelligence as well as software engineers looking for a deeper understanding of how NLP models work and how to apply them. Learners should have a working knowledge of machine learning, intermediate Python including experience with a deep learning framework (e.g., TensorFlow, Keras), as well as proficiency in calculus, linear algebra, and statistics. If you would like to brush up on these skills, we recommend the Deep Learning Specialization, offered by deeplearning.ai and taught by Andrew Ng.

This Specialization is designed and taught by two experts in NLP, machine learning, and deep learning. Younes Bensouda Mourri is an Instructor of AI at Stanford University who also helped build the Deep Learning Specialization. Łukasz Kaiser is a Staff Research Scientist at Google Brain and the co-author of TensorFlow, the Tensor2Tensor and Trax libraries, and the Transformer paper.

Course 1: Classification and Vector Spaces in NLP

This is the first course of the Natural Language Processing Specialization.

Week 1: Logistic Regression for Sentiment Analysis of Tweets

- Use a simple method to classify positive or negative sentiment in tweets

Week 2: Naïve Bayes for Sentiment Analysis of Tweets

- Use a more advanced model for sentiment analysis

Week 3: Vector Space Models

- Use vector space models to discover relationships between words and use principal component analysis (PCA) to reduce the dimensionality of the vector space and visualize those relationships

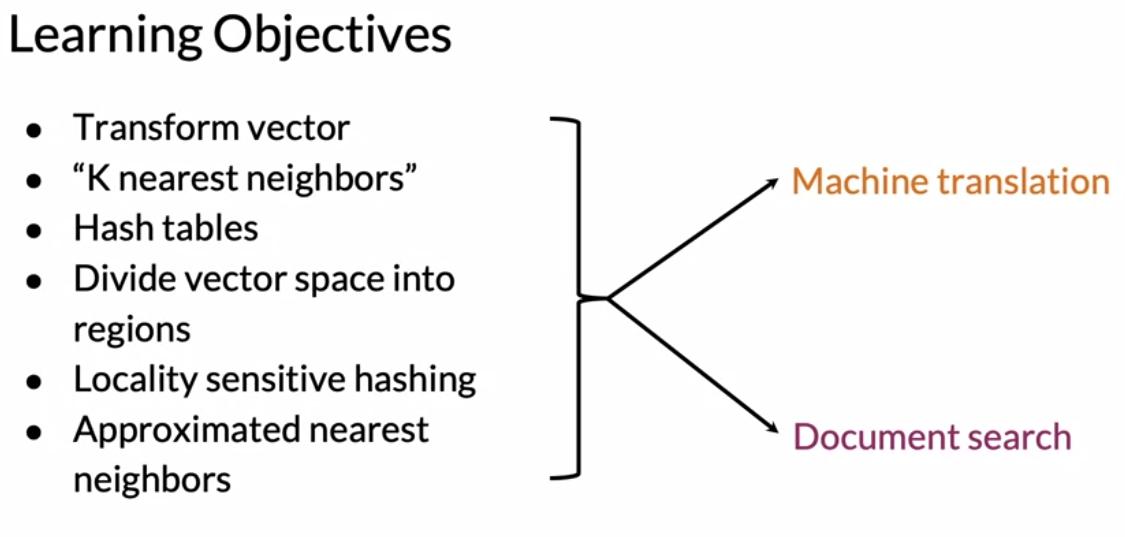

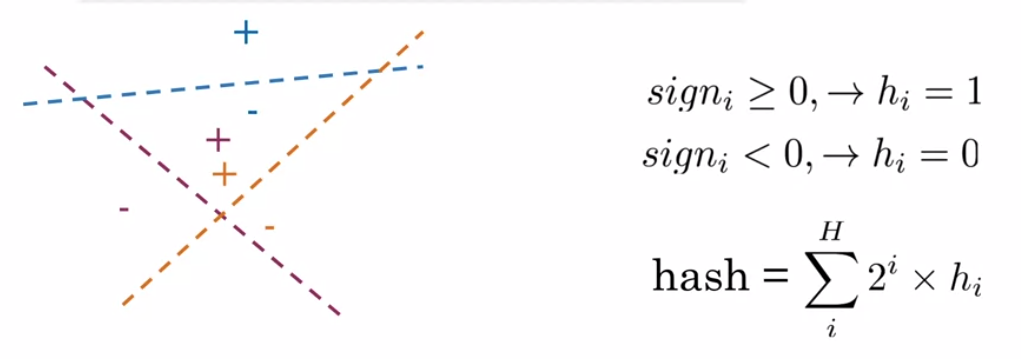

Week 4: Word Embeddings and Locality Sensitive Hashing for Machine Translation

- Write a simple English-to-French translation algorithm using pre-computed word embeddings and locality sensitive hashing to relate words via approximate k-nearest neighbors search

Course 2: Probabilistic Models in NLP

This is the second course of the Natural Language Processing Specialization.

Week 1: Auto-correct using Minimum Edit Distance

- Create a simple auto-correct algorithm using minimum edit distance and dynamic programming

Week 2: Part-of-Speech (POS) Tagging

- Apply the Viterbi algorithm for POS tagging, which is important for computational linguistics

Week 3: N-gram Language Models

- Write a better auto-complete algorithm using an N-gram model (similar models are used for translation, determining the author of a text, and speech recognition)

Week 4: Word2Vec and Stochastic Gradient Descent

- Write your own Word2Vec model that uses a neural network to compute word embeddings using a continuous bag-of-words model

Course 3: Sequence Models in NLP

This is the third course in the Natural Language Processing Specialization.

Week 1: Sentiment with Neural Nets

- Train a neural network with GloVe word embeddings to perform sentiment analysis of tweets

Week 2: Language Generation Models

- Generate synthetic Shakespeare text using a Gated Recurrent Unit (GRU) language model

Week 3: Named Entity Recognition (NER)

- Train a recurrent neural network to perform NER using LSTMs with linear layers

Week 4: Siamese Networks

- Use so-called ‘Siamese’ LSTM models to compare questions in a corpus and identify those that are worded differently but have the same meaning

Course 4: Attention Models in NLP

This is the fourth course in the Natural Language Processing Specialization.

Week 1: Neural Machine Translation with Attention

- Translate complete English sentences into French using an encoder/decoder attention model

Week 2: Summarization with Transformer Models

- Build a transformer model to summarize text

Week 3: Question-Answering with Transformer Models

- Use T5 and BERT models to perform question answering

Week 4: Chatbots with a Reformer Model

- Build a chatbot using a reformer model

Specialization Completion Certificate


Natural Language Processing (Coursera)

Dec 20, 2020

The following are my notes for the NLP Specialization by DeepLearning.AI on Coursera

Course 1 - Natural Language Processing with Classification and Vector Spaces

Course 2 - Natural Language Processing with Probabilistic Models

Course 3 - Natural Language Processing with Sequence Models

Course 4 - Natural Language Processing with Attention Models

- Use logistic regression, naïve Bayes, and word vectors to implement sentiment analysis, complete analogies, and translate words, and use locality sensitive hashing for approximate nearest neighbors.

- Use dynamic programming, hidden Markov models, and word embeddings to autocorrect misspelled words, autocomplete partial sentences, and identify part-of-speech tags for words.

- Use dense and recurrent neural networks, LSTMs, GRUs, and Siamese networks in TensorFlow and Trax to perform advanced sentiment analysis, text generation, named entity recognition, and to identify duplicate questions.

- Use encoder-decoder, causal, and self-attention to perform advanced machine translation of complete sentences, text summarization, question-answering and to build chatbots. Models covered include T5, BERT, transformer, reformer, and more!

- Natural Language Processing (NLP) uses algorithms to understand and manipulate human language. This technology is one of the most broadly applied areas of machine learning. As AI continues to expand, so will the demand for professionals skilled at building models that analyze speech and language, uncover contextual patterns, and produce insights from text and audio.

- By the end of this specialization, you will be ready to design NLP applications that perform question-answering, sentiment analysis, language translation and text summarization, and even build chatbots. These and other NLP applications are going to be at the forefront of the coming transformation to an AI-powered future.

- This Specialization is designed and taught by two experts in NLP, machine learning, and deep learning. Younes Bensouda Mourri is an Instructor of AI at Stanford University who also helped build the Deep Learning Specialization. Łukasz Kaiser is a Staff Research Scientist at Google Brain and the co-author of TensorFlow, the Tensor2Tensor and Trax libraries, and the Transformer paper.

Coursera NLP Module 1 Week 4 Notes

Sep 4, 2020 - 12:09 • Marcos Leal

Machine Translation: An Overview

Transforming word vectors

Given a matrix X of English word embeddings, a transformation matrix R, and a matrix Y of the corresponding French word embeddings, we look for the R that satisfies

- \[XR \approx Y\]

- We initialize the weights R randomly and in a loop execute the following steps

- \[Loss = || XR - Y||_F\]

- \[g = \frac{d}{dR} Loss\]

- \[R = R - \alpha g\]

The Frobenius norm is the square root of the sum of the squares of all elements of the matrix.

- \[||A||_F = \sqrt{\sum_{i=1}^{m} \sum_{j=1}^{n} |a_{ij}|^2}\]

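To simplify the gradient, we can minimize the squared norm instead, averaged over the m rows of XR - Y (one row per word pair), thus:

- \[||A||^2_F = \sum_{i=1}^{m} \sum_{j=1}^{n} |a_{ij}|^2\]

- \[Loss = \frac{1}{m} ||XR - Y||^2_F\]

- \[g = \frac{d}{dR} Loss = \frac{2}{m} (X^T (XR-Y))\]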
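The update loop above can be sketched in a few lines of NumPy. This is a minimal sketch, not the course's reference solution: it assumes the loss is the squared Frobenius norm divided by the number of rows m (which matches the gradient formula above), and the function names, step count, learning rate, and toy data are all illustrative assumptions.

```python
import numpy as np

def frobenius_loss(X, Y, R):
    """Loss (1/m) * ||XR - Y||_F^2, where m is the number of rows of X."""
    m = X.shape[0]
    diff = X @ R - Y
    return np.sum(diff ** 2) / m

def gradient(X, Y, R):
    """Gradient of the loss with respect to R: (2/m) * X^T (XR - Y)."""
    m = X.shape[0]
    return (2.0 / m) * (X.T @ (X @ R - Y))

def align_embeddings(X, Y, steps=500, alpha=0.1, seed=0):
    """Start from a random R and repeat R = R - alpha * g."""
    rng = np.random.default_rng(seed)
    R = rng.normal(size=(X.shape[1], Y.shape[1]))
    for _ in range(steps):
        R = R - alpha * gradient(X, Y, R)
    return R

# Toy data (hypothetical): Y is X transformed by a known matrix, so R is recoverable.
rng = np.random.default_rng(1)
X_toy = rng.normal(size=(20, 3))
R_true = np.array([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])
Y_toy = X_toy @ R_true
R_learned = align_embeddings(X_toy, Y_toy)
```

On this toy problem the learned matrix should end up close to `R_true`; with real embeddings the fit is only approximate, which is why the relation is written with an approximation sign.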

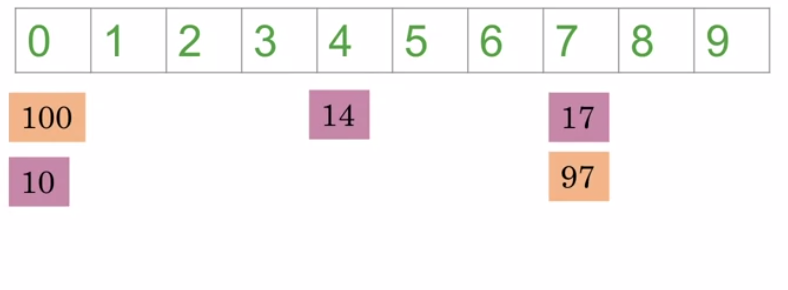

Hash tables and hash functions

Locality sensitive hashing

Ungraded Lab: Rotation Matrices in R2

Notebook / HTML

Ungraded Lab: Hash Tables and Multiplanes

Programming assignment: word translation.

Resources on various topics being worked on at IvLabs

Natural language processing.

These courses will help you understand the basics of Natural Language Processing along with enabling you to read and implement papers.

Sequence Models This course, like all the other courses by Andrew Ng (on Coursera), is a simple overview or montage of NLP. It will probably give you some confidence, but the assignments contain a lot of ready-made code, which, once you have seen it, you cannot unsee. Also, if all your knowledge comes from this course, you will not be able to read a paper on NLP. So all in all, it is a simple, basic, and gentle scratch on the surface.

CS224n: Natural Language Processing with Deep Learning This is one of the most rigorous courses on NLP; it starts from the very basics and scales up almost all the way to SOTA (since it is a relatively new course). It has a good amount of conceptual depth and reasonable math. The best part is that they provide you with concepts and let you figure out how to implement them. This is very helpful in the long run, and once you are done with 12-13 lectures of this course, you can actually read, understand, and implement papers right from scratch in PyTorch. **Note:** This course may seem boring in the beginning, but the concepts taught in the lectures (which may seem too rudimentary) really go a long way.

Natural Language Processing by National Research University, Russia This course aims to teach you Natural Language Processing from the ground up, starting from dated statistical methods and covering everything up to the latest deep-learning-based techniques. The quizzes test mathematical and theoretical knowledge, and the programming assignments make you build stuff. The result is a good blend of theory and practice, which can help if you're the kind that gets bored of just studying and not doing. The final project is enticing and requires you to deploy a Telegram chatbot on an AWS machine, which gives you real-world experience of how these systems run in production.

More Courses

- Natural Language Processing Specialization by Coursera

- Fast.ai - NLP

- Hugging Face Course

Tutorials/Implementations

- IvLabs’ Natural Language Processing Repository

- NLP From Scratch: Generating names with a character-level RNN

- Sequence-to-Sequence Modeling with nn.Transformer and TorchText

- NLP From Scratch: Translation with a Sequence to Sequence Network and Attention

- PyTorch Seq-2-Seq

- Introduction to Word Embedding and Word2Vec

- Illustrated Word2Vec

- Illustrated: Self-Attention

- The Illustrated Transformer

Research Papers

Notes for some of the below-mentioned papers can be found here.

- Sequence to Sequence Learning with Neural Networks

- Neural Machine Translation by Jointly Learning to Align and Translate

- Convolutional Sequence to Sequence Learning

- Attention Is All You Need

- BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding

- Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks

Natural Language Processing Specialization

What you will learn

- Use logistic regression, naïve Bayes, and word vectors to implement sentiment analysis, complete analogies, translate words, and use locality-sensitive hashing to approximate nearest neighbors.

- Use dynamic programming, hidden Markov models, and word embeddings to autocorrect misspelled words, autocomplete partial sentences, and identify part-of-speech tags for words.

- Use dense and recurrent neural networks, LSTMs, GRUs, and Siamese networks in TensorFlow and Trax to perform advanced sentiment analysis, text generation, named entity recognition, and to identify duplicate questions.

- Use encoder-decoder, causal, and self-attention to perform advanced machine translation of complete sentences, text summarization, question-answering, and build chatbots. Models covered include T5, BERT, transformer, reformer, and more!

Skills you will gain

- Sentiment Analysis

- Transformers

- Attention Models

- Machine Translation

- Word Embeddings

- Locality-Sensitive Hashing

- Vector Space Models

- Parts-of-Speech Tagging

- N-gram Language Models

- Autocorrect

- Sentiment with Neural Networks

- Siamese Networks

- Natural Language Generation

- Named Entity Recognition (NER)

- Reformer Models

- Neural Machine Translation

- T5 + BERT Models

Natural language processing is a subfield of linguistics, computer science, and artificial intelligence that uses algorithms to interpret and manipulate human language.

In the Natural Language Processing (NLP) Specialization, you will learn how to design NLP applications that perform question-answering and sentiment analysis, create tools to translate languages, summarize text, and even build chatbots. These and other NLP applications will be at the forefront of the coming transformation to an AI-powered future.

NLP is one of the most broadly applied areas of machine learning and is critical in effectively analyzing massive quantities of unstructured, text-heavy data. As AI continues to expand, so will the demand for professionals skilled at building models that analyze speech and language, uncover contextual patterns, and produce insights from text and audio.

- > 4 months (6 hours/week)

- Intermediate

In Course 1 of the Natural Language Processing Specialization, you will:

a) Perform sentiment analysis of tweets using logistic regression and then naïve Bayes, b) Use vector space models to discover relationships between words and use PCA to reduce the dimensionality of the vector space and visualize those relationships, and c) Write a simple English to French translation algorithm using pre-computed word embeddings and locality-sensitive hashing to relate words via approximate k-nearest neighbor search.

Week 1: Sentiment Analysis with Logistic Regression

Learn how to extract features from text into numerical vectors, then build a binary classifier for tweets using logistic regression.
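As a rough illustration of the two steps named above (feature extraction, then a binary classifier), here is a minimal pure-Python sketch. The feature scheme (a bias term plus summed positive and negative word counts), the function names, the learning rate, and the four toy tweets are assumptions made for this example, not the course's exact assignment code.

```python
import math
from collections import Counter

def build_freqs(tweets, labels):
    """Count how often each (word, label) pair occurs in the training set."""
    freqs = Counter()
    for text, label in zip(tweets, labels):
        for word in text.lower().split():
            freqs[(word, label)] += 1
    return freqs

def extract_features(text, freqs):
    """Map a tweet to [bias, summed positive counts, summed negative counts]."""
    words = set(text.lower().split())
    pos = sum(freqs[(w, 1)] for w in words)
    neg = sum(freqs[(w, 0)] for w in words)
    return [1.0, float(pos), float(neg)]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train(features, labels, alpha=0.1, steps=500):
    """Batch gradient descent on the logistic (cross-entropy) loss."""
    theta = [0.0] * len(features[0])
    m = len(features)
    for _ in range(steps):
        grad = [0.0] * len(theta)
        for x, y in zip(features, labels):
            err = sigmoid(sum(t * xi for t, xi in zip(theta, x))) - y
            for j, xi in enumerate(x):
                grad[j] += err * xi / m
        theta = [t - alpha * g for t, g in zip(theta, grad)]
    return theta

def predict(text, freqs, theta):
    """Probability that the tweet is positive."""
    x = extract_features(text, freqs)
    return sigmoid(sum(t * xi for t, xi in zip(theta, x)))

# Hypothetical toy training set: 1 = positive, 0 = negative.
tweets = ["i love this", "great happy day", "i hate this", "sad bad day"]
labels = [1, 1, 0, 0]
freqs = build_freqs(tweets, labels)
theta = train([extract_features(t, freqs) for t in tweets], labels)
```

A tweet is classified as positive when `predict` returns a value above 0.5. Real tweets would also need preprocessing (tokenization, stop-word removal, stemming), which this sketch leaves out.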

Week 2: Sentiment Analysis with Naïve Bayes

Understand the theory behind Bayes’ rule for conditional probabilities, then apply it toward building a Naïve Bayes tweet classifier of your own.
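A Naïve Bayes classifier of this kind can be sketched as follows. The sketch scores a tweet by the log of the ratio P(positive | tweet) / P(negative | tweet), which by Bayes' rule splits into a log prior plus one log-likelihood ratio per word. Laplace (add-one) smoothing, the function names, and the toy tweets are assumptions for illustration only.

```python
import math
from collections import Counter

def train_naive_bayes(tweets, labels):
    """Return the log prior and per-word log-likelihood ratios."""
    counts = {0: Counter(), 1: Counter()}
    for text, label in zip(tweets, labels):
        counts[label].update(text.lower().split())
    vocab = set(counts[0]) | set(counts[1])
    n_pos = sum(counts[1].values())
    n_neg = sum(counts[0].values())
    docs_pos = sum(1 for y in labels if y == 1)
    docs_neg = len(labels) - docs_pos
    log_prior = math.log(docs_pos / docs_neg)
    log_likelihood = {}
    for word in vocab:
        # Add-one smoothing keeps unseen (word, class) pairs from giving zero probability.
        p_word_pos = (counts[1][word] + 1) / (n_pos + len(vocab))
        p_word_neg = (counts[0][word] + 1) / (n_neg + len(vocab))
        log_likelihood[word] = math.log(p_word_pos / p_word_neg)
    return log_prior, log_likelihood

def score(text, log_prior, log_likelihood):
    """Positive score: more likely positive. Words outside the vocabulary add 0."""
    return log_prior + sum(log_likelihood.get(w, 0.0) for w in text.lower().split())

# Hypothetical toy training set: 1 = positive, 0 = negative.
tweets = ["i love this", "great happy day", "i hate this", "sad bad day"]
labels = [1, 1, 0, 0]
log_prior, log_likelihood = train_naive_bayes(tweets, labels)
```

Words that occur equally often in both classes (such as "i" here) get a ratio of 1 and so contribute nothing to the score.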

Week 3: Vector Space Models

Vector space models capture semantic meaning and relationships between words. You’ll learn how to create word vectors that capture dependencies between words, then visualize their relationships in two dimensions using PCA.
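One common way to measure relationships between word vectors is cosine similarity; the paragraph above does not name a metric, so treat that choice as an assumption. The sketch below uses made-up two-dimensional vectors (real embeddings have hundreds of dimensions) to complete an analogy by vector arithmetic.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between u and v: 1 = same direction, 0 = orthogonal."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def nearest(target, vectors, exclude=()):
    """Word whose vector is most similar to target, skipping excluded words."""
    candidates = {w: v for w, v in vectors.items() if w not in exclude}
    return max(candidates, key=lambda w: cosine_similarity(target, candidates[w]))

# Hypothetical toy embeddings, chosen by hand for the example.
toy = {
    "king": [0.9, 0.8],
    "queen": [0.9, 0.2],
    "man": [0.5, 0.9],
    "woman": [0.5, 0.3],
    "apple": [0.1, 0.9],
}

# king - man + woman should land near queen.
analogy = [k - m + w for k, m, w in zip(toy["king"], toy["man"], toy["woman"])]
answer = nearest(analogy, toy, exclude=("king", "man", "woman"))
```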

Week 4: Machine Translation and Document Search

Learn how to transform word vectors and assign them to subsets using locality-sensitive hashing to perform machine translation and document search.
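To make "assign them to subsets" concrete, here is a minimal sketch of locality-sensitive hashing with random hyperplanes: each plane contributes one bit depending on which side of it the vector falls, so nearby vectors tend to share a bucket. The function names, the number of planes, and the toy vectors are assumptions for illustration.

```python
import random

def make_planes(n_planes, dim, seed=0):
    """Random hyperplanes through the origin, each given by its normal vector."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_planes)]

def hash_vector(vec, planes):
    """Bit i is set when vec lies on the non-negative side of plane i."""
    value = 0
    for i, plane in enumerate(planes):
        dot = sum(p * v for p, v in zip(plane, vec))
        if dot >= 0:
            value += 2 ** i
    return value

def build_table(vectors, planes):
    """Group words into buckets keyed by their hash value."""
    table = {}
    for key, vec in vectors.items():
        table.setdefault(hash_vector(vec, planes), []).append(key)
    return table

# Hypothetical toy vectors.
planes = make_planes(n_planes=3, dim=2)
vectors = {"cat": [1.0, 0.9], "dog": [0.9, 1.0], "car": [-1.0, 0.2]}
table = build_table(vectors, planes)
```

An approximate nearest-neighbor search then compares a query only against the words in its own bucket instead of the whole vocabulary. Because a single set of planes can separate true neighbors, several independent sets of planes are typically used.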

In Course 2 of the Natural Language Processing Specialization, you will:

a) Create a simple auto-correct algorithm using minimum edit distance and dynamic programming, b) Apply the Viterbi Algorithm for part-of-speech (POS) tagging, which is vital for computational linguistics, c) Write a better auto-complete algorithm using an N-gram language model, and d) Write your own Word2Vec model that uses a neural network to compute word embeddings using a continuous bag-of-words model.

Week 1: Auto-correct

Learn about autocorrect, minimum edit distance, and dynamic programming, then build your own spellchecker to correct misspelled words.
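Minimum edit distance is a standard dynamic-programming problem, sketched below. The cost settings (insert 1, delete 1, replace 2) are an assumption for this example; other conventions, such as a replace cost of 1, are equally common.

```python
def min_edit_distance(source, target, ins_cost=1, del_cost=1, rep_cost=2):
    """Cheapest sequence of inserts, deletes, and replaces turning source into target."""
    m, n = len(source), len(target)
    # D[i][j] = cost of converting source[:i] into target[:j]
    D = [[0] * (n + 1) for _ in range(m + 1)]
    for i in range(1, m + 1):
        D[i][0] = D[i - 1][0] + del_cost
    for j in range(1, n + 1):
        D[0][j] = D[0][j - 1] + ins_cost
    for i in range(1, m + 1):
        for j in range(1, n + 1):
            replace = 0 if source[i - 1] == target[j - 1] else rep_cost
            D[i][j] = min(
                D[i - 1][j] + del_cost,      # delete from source
                D[i][j - 1] + ins_cost,      # insert into source
                D[i - 1][j - 1] + replace,   # replace or keep the character
            )
    return D[m][n]

distance = min_edit_distance("play", "stay")
```

A spellchecker built on this would generate candidate words within a small distance of the misspelling and rank them, for example by how frequent each candidate is in a corpus.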

Week 2: Part-of-Speech (POS) Tagging and Hidden Markov Models

Learn about Markov chains and Hidden Markov models, then use them to create part-of-speech tags for a Wall Street Journal text corpus.
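Decoding the most likely tag sequence from a hidden Markov model is usually done with the Viterbi algorithm. The sketch below multiplies raw probabilities for readability (practical implementations work with log probabilities to avoid underflow), and the two-tag model and all of its probabilities are invented for the example.

```python
def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return the most probable state sequence for the observations."""
    # best[t][s]: probability of the best path that ends in state s at time t
    best = [{s: start_p[s] * emit_p[s].get(observations[0], 0.0) for s in states}]
    back = [{}]
    for t in range(1, len(observations)):
        best.append({})
        back.append({})
        for s in states:
            prev, prob = max(
                ((p, best[t - 1][p] * trans_p[p][s]) for p in states),
                key=lambda pair: pair[1],
            )
            best[t][s] = prob * emit_p[s].get(observations[t], 0.0)
            back[t][s] = prev
    # Follow the back-pointers from the best final state.
    last = max(states, key=lambda s: best[-1][s])
    path = [last]
    for t in range(len(observations) - 1, 0, -1):
        path.append(back[t][path[-1]])
    path.reverse()
    return path

# Hypothetical toy model with two tags.
states = ["NOUN", "VERB"]
start_p = {"NOUN": 0.7, "VERB": 0.3}
trans_p = {
    "NOUN": {"NOUN": 0.3, "VERB": 0.7},
    "VERB": {"NOUN": 0.8, "VERB": 0.2},
}
emit_p = {
    "NOUN": {"dogs": 0.6, "cats": 0.3, "bark": 0.1},
    "VERB": {"bark": 0.6, "chase": 0.3, "dogs": 0.1},
}
tags = viterbi(["dogs", "chase", "cats"], states, start_p, trans_p, emit_p)
```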

Week 3: Auto-complete and Language Models

Learn about how N-gram language models work by calculating sequence probabilities, then build your own autocomplete language model using a text corpus from Twitter.
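For the simplest case, a bigram model, the sequence probabilities reduce to counting word pairs. The sketch below estimates P(word | previous word) with add-k smoothing and suggests the most probable next word; the smoothing constant, the start and end markers, the function names, and the three-sentence corpus are assumptions for illustration.

```python
from collections import Counter

def train_bigram_model(sentences):
    """Count contexts and bigrams, padding each sentence with <s> and </s>."""
    contexts, bigrams = Counter(), Counter()
    for sentence in sentences:
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        contexts.update(tokens[:-1])            # every token that has a successor
        bigrams.update(zip(tokens, tokens[1:]))
    vocab = {word for (_, word) in bigrams}     # everything that can follow a context
    return contexts, bigrams, vocab

def bigram_probability(prev, word, contexts, bigrams, vocab, k=1.0):
    """P(word | prev) with add-k smoothing."""
    return (bigrams[(prev, word)] + k) / (contexts[prev] + k * len(vocab))

def suggest(prev, contexts, bigrams, vocab):
    """Most probable next word; sorting makes tie-breaking deterministic."""
    return max(
        sorted(vocab),
        key=lambda w: bigram_probability(prev, w, contexts, bigrams, vocab),
    )

# Hypothetical toy corpus.
corpus = ["i like cats", "i like dogs", "i love cats"]
contexts, bigrams, vocab = train_bigram_model(corpus)
next_word = suggest("i", contexts, bigrams, vocab)
```

Larger N works the same way, with the context being the previous N-1 words instead of one.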

Week 4: Word Embeddings with Neural Networks

Learn how word embeddings carry the semantic meaning of words, making them more powerful for NLP tasks. Then build your own continuous bag-of-words model to create word embeddings from Shakespeare text.

In Course 3 of the Natural Language Processing Specialization, you will:

a) Train a neural network with GloVe word embeddings to perform sentiment analysis of tweets, b) Generate synthetic Shakespeare text using a Gated Recurrent Unit (GRU) language model, c) Train a recurrent neural network to perform named entity recognition (NER) using LSTMs with linear layers, and d) Use so-called ‘Siamese’ LSTM models to compare questions in a corpus and identify those that are worded differently but have the same meaning.

Week 1: Neural Network for Sentiment Analysis

Learn about neural networks for deep learning, then build a sophisticated tweet classifier that places tweets into positive or negative sentiment categories using a deep neural network.

Week 2: Recurrent Neural Networks for Language Modeling

Learn about the limitations of traditional language models and see how RNNs and GRUs use sequential data for text prediction. Then build your own next-word generator using a simple RNN on Shakespeare text data.

Week 3: LSTMs and Named Entity Recognition (NER)

Learn how long short-term memory units (LSTMs) mitigate the vanishing gradient problem and how Named Entity Recognition systems quickly extract essential information from text. Then build your own Named Entity Recognition system using an LSTM and data from Kaggle.

Week 4: Siamese Networks

Learn about Siamese networks, a special type of neural network made of two identical networks that are eventually merged, then build your own Siamese network that identifies question duplicates in a dataset from Quora.

In Course 4 of the Natural Language Processing Specialization, you will:

a) Translate complete English sentences into German using an encoder-decoder attention model, b) Build a Transformer model to summarize text, c) Use T5 and BERT models to perform question-answering, and d) Build a chatbot using a Reformer model.

Week 1: Neural Machine Translation with Attention models

Discover some of the shortcomings of a traditional seq2seq model and how to solve for them by adding an attention mechanism, then build a Neural Machine Translation model with Attention that translates English sentences into German.
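The core of an attention mechanism fits in a few lines. The sketch below uses scaled dot-product attention for a single query, which is one common variant and an assumption here, since the description above does not say which scoring function the course uses. The function names and toy vectors are likewise illustrative.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1 (shifted for numerical stability)."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Weight each value by how well its key matches the query."""
    d = len(query)
    scores = [
        sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
        for key in keys
    ]
    weights = softmax(scores)
    context = [
        sum(w * value[j] for w, value in zip(weights, values))
        for j in range(len(values[0]))
    ]
    return weights, context

# Hypothetical toy example: the query matches the first key much better.
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]
weights, context = attend([5.0, 0.0], keys, values)
```

In a translation model the query would come from the decoder state and the keys and values from the encoder states, so each output word can focus on the most relevant input words rather than on one fixed-size summary of the sentence.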

Week 2: Text Summarization with Transformer models

Compare RNNs and other sequential models to the more modern Transformer architecture, then create a tool that generates text summaries.

Week 3: Question-Answering

Explore transfer learning with state-of-the-art models like T5 and BERT, then build a model that can answer questions.

Week 4: Chatbots with Reformer models

Examine some unique challenges Transformer models face and their solutions, then build a chatbot using a Reformer model.

Course 1 : Natural Language Processing with Classification and Vector Spaces

Course 2 : Natural Language Processing with Probabilistic Models

Course 3 : Natural Language Processing with Sequence Models

Course 4 : Natural Language Processing with Attention Models

Instructors

Younes Bensouda Mourri

Łukasz Kaiser

Course Slides

You can download the annotated version of the course slides below.


This repository contains my full work and notes on Coursera's NLP Specialization (Natural Language Processing), taught by Younes Bensouda Mourri and Łukasz Kaiser and offered by deeplearning.ai.

ibrahimjelliti/Deeplearning.ai-Natural-Language-Processing-Specialization


My gan specialization repository.

DeepLearning.ai NLP Specialization Courses Notes

This repository contains my personal notes on DeepLearning.ai NLP specialization courses.

The DeepLearning.ai NLP Specialization consists of four courses, which can be taken on Coursera. The four courses are:

- Natural Language Processing with Classification and Vector Spaces

- Natural Language Processing with Probabilistic Models

- Natural Language Processing with Sequence Models

- Natural Language Processing with Attention Models

About This Specialization (From the official NLP Specialization page)

Natural Language Processing (NLP) uses algorithms to understand and manipulate human language. This technology is one of the most broadly applied areas of machine learning. As AI continues to expand, so will the demand for professionals skilled at building models that analyze speech and language, uncover contextual patterns, and produce insights from text and audio.

By the end of this Specialization, you will be ready to design NLP applications that perform question-answering and sentiment analysis, create tools to translate languages and summarize text, and even build chatbots. These and other NLP applications are going to be at the forefront of the coming transformation to an AI-powered future.

This Specialization is designed and taught by two experts in NLP, machine learning, and deep learning. Younes Bensouda Mourri is an Instructor of AI at Stanford University who also helped build the Deep Learning Specialization. Łukasz Kaiser is a Staff Research Scientist at Google Brain and the co-author of TensorFlow, the Tensor2Tensor and Trax libraries, and the Transformer paper.

Applied Learning Project

This Specialization will equip you with the state-of-the-art deep learning techniques needed to build cutting-edge NLP systems:

• Use logistic regression, naïve Bayes, and word vectors to implement sentiment analysis, complete analogies, and translate words, and use locality sensitive hashing for approximate nearest neighbors.

• Use dynamic programming, hidden Markov models, and word embeddings to autocorrect misspelled words, autocomplete partial sentences, and identify part-of-speech tags for words.

• Use dense and recurrent neural networks, LSTMs, GRUs, and Siamese networks in TensorFlow and Trax to perform advanced sentiment analysis, text generation, named entity recognition, and to identify duplicate questions.

• Use encoder-decoder, causal, and self-attention to perform advanced machine translation of complete sentences, text summarization, question-answering and to build chatbots. Models covered include T5, BERT, transformer, reformer, and more! Enjoy!

I share the assignment notebooks, prefilled with my code and with code from contributors, structured as in the course (Course/Week). The assignment notebooks are subject to change over time.

Connect with your mentors and fellow learners on Slack!

Once you have enrolled in the course, you are invited to join a Slack workspace for this specialization. Please join the Slack workspace by going to the following link: deeplearningai-nlp.slack.com. This Slack workspace includes all courses of this specialization.

Contact Information

- Twitter: @IbrahimJelliti

- LinkedIn: @ibrahimjelliti

- the specialization slack channel: @ibrahim

Stargazers over Time

Ibrahim Jelliti © 2020


Contributors 2.

- Jupyter Notebook 98.0%

- Python 1.5%

