10 Influential Memory Theories and Studies in Psychology

Discover the experiments and theories that shaped our understanding of how we develop and recall memories.

How do our memories store information? Why is it that we can recall a memory at will from decades ago, and what purpose does forgetting information serve?

Human memory was the subject of investigation for many 20th-century psychologists and remains an active area of study for today’s cognitive scientists. Below, we look at some of the most influential studies, experiments and theories that continue to guide our understanding of how memory functions.

1 Multi-Store Model

(Atkinson & Shiffrin, 1968)

An influential theory of memory known as the multi-store model was proposed by Richard Atkinson and Richard Shiffrin in 1968. This model suggested that information exists in one of 3 states of memory: the sensory, short-term and long-term stores. Information passes from one stage to the next as we attend to it and rehearse it in our minds, but can fade away if we do not pay enough attention to it.

Information enters memory through the senses: for instance, the eyes observe a picture, olfactory receptors in the nose detect the smell of coffee, or we hear a piece of music. This stream of information is held in the sensory memory store, and because it contains a huge amount of data describing our surroundings, we only need to retain a small portion of it. As a result, most sensory information ‘decays’ and is forgotten after a very short time. A sight or sound that we find interesting captures our attention, and attending to this information promotes it to the short-term memory store, where it is held for only a matter of seconds unless it is rehearsed.

The short-term memory gives us access to information that is salient to our current situation, but is limited in its capacity.

Therefore, we need to further rehearse information in the short-term memory to remember it for longer. This may involve merely recalling and thinking about a past event, or remembering a fact by rote - by thinking or writing about it repeatedly. Rehearsal then further promotes this significant information to the long-term memory store, where Atkinson and Shiffrin believed that it could survive for years, decades or even a lifetime.
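To make the flow between the three stores concrete, here is a toy Python sketch of the multi-store model. It is an illustration under stated assumptions, not Atkinson and Shiffrin's formal model: the class and method names are hypothetical, the short-term store is assumed to hold 7 items (borrowing Miller's estimate, discussed below), attention is modelled as a simple filter on sensory input, a full short-term store is assumed to displace its oldest item, and a single rehearsal is assumed to be enough to transfer an item to long-term memory.

```python
from dataclasses import dataclass, field

# Assumed capacity of the short-term store (borrowed from Miller's 7 +/- 2 estimate).
STM_CAPACITY = 7


@dataclass
class MultiStoreMemory:
    """Toy sketch of the multi-store flow: sensory -> short-term -> long-term (hypothetical names)."""
    short_term: list = field(default_factory=list)
    long_term: set = field(default_factory=set)

    def perceive(self, stimuli, attended):
        """Sensory store: only attended stimuli reach short-term memory; everything else decays."""
        for item in stimuli:
            if item not in attended:
                continue  # unattended sensory information is simply forgotten
            self.short_term.append(item)
            # Assumed displacement rule: a full store loses its oldest item.
            if len(self.short_term) > STM_CAPACITY:
                self.short_term.pop(0)

    def rehearse(self, item):
        """Rehearsal copies an item that is still in short-term memory into long-term memory."""
        if item in self.short_term:
            self.long_term.add(item)
            return True
        return False  # already displaced or never attended: nothing to rehearse


memory = MultiStoreMemory()
memory.perceive(["coffee smell", "song", "face", "street noise"], attended={"song", "face"})
memory.rehearse("face")
print(memory.short_term)  # ['song', 'face']
print(memory.long_term)   # {'face'}
```

The displacement rule is just one simple way to express the limited capacity described above; a different forgetting rule would not change the basic direction of flow between the stores.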

Key information regarding people that we have met, important life events and other important facts makes it through the sensory and short-term memory stores to reach the long-term memory.

2 Levels of Processing

(Craik & Lockhart, 1972)

Fergus Craik and Robert Lockhart were critical of the explanation of memory provided by the multi-store model, so in 1972 they proposed an alternative known as the levels of processing effect. According to this model, memories do not reside in 3 separate stores; instead, the strength of a memory trace depends upon the depth, or quality, of processing (rehearsal) of a stimulus. In other words, the more deeply we think about something, the longer-lasting our memory of it (Craik & Lockhart, 1972).

Craik and Lockhart distinguished between two types of processing that take place when we make an observation: shallow and deep processing. Shallow processing, which considers only the overall appearance or sound of something, generally leads to a stimulus being forgotten. This explains why we may walk past many people in the street on a morning commute but not remember a single face by lunchtime.

Deep (or semantic) processing, on the other hand, involves elaborative rehearsal: focusing on a stimulus in a more considered way, such as thinking about the meaning of a word or the consequences of an event. For example, merely reading a news story involves shallow processing, but thinking about the repercussions of the story (how it will affect people) requires deep processing, which increases the likelihood of details of the story being memorized.

In 1975, Craik and another psychologist, Endel Tulving, published the findings of an experiment which sought to test the levels of processing effect.

Participants were shown a list of 60 words and, for each word, answered a question that required either shallow processing (of its appearance or sound) or more elaborative, semantic processing. When the original words were later mixed into a longer list of words, the words that had been processed deeply, for their meaning, were picked out more accurately than the words processed only for their appearance or sound (Craik & Tulving, 1975).

3 Working Memory Model

(Baddeley & Hitch, 1974)

Whilst the multi-store model (see above) provided a compelling insight into how sensory information is filtered and made available for recall according to its importance to us, Alan Baddeley and Graham Hitch viewed its short-term memory (STM) store as oversimplified and proposed a working memory model to replace it (Baddeley & Hitch, 1974).

The working memory model proposed two subsidiary components, a visuo-spatial sketchpad (the ‘inner eye’) and an articulatory-phonological loop (the ‘inner ear’), each of which focuses on a different type of sensory information. The two work independently of one another but are regulated by a central executive, which collects and processes information from the other components, much as a computer processor handles data held separately on a hard disk.

According to Baddeley and Hitch, the visuo-spatial sketchpad handles visual data - our observations of our surroundings - and spatial information - our understanding of objects’ size and location in our environment and their position in relation to ourselves. This enables us to interact with objects: to pick up a drink or avoid walking into a door, for example.

The visuo-spatial sketchpad also enables a person to recall and consider visual information stored in the long-term memory. When you try to recall a friend’s face, your ability to visualize their appearance involves the visuo-spatial sketchpad.

The articulatory-phonological loop handles the sounds and voices that we hear. Auditory memory traces are normally forgotten but may be rehearsed using the ‘inner voice’, a process which can strengthen our memory of a particular sound.
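The division of labour in the working memory model can be sketched as a simple routing table. This is a hypothetical Python illustration, not a formal implementation of Baddeley and Hitch's model: the function names and modality labels are assumptions, and the central executive is reduced to a lookup that sends each input to one subsystem. Because the two subsystems are described as working independently, the sketch assumes that two concurrent tasks compete for capacity only when they are routed to the same subsystem.

```python
# Hypothetical mapping from input modality to the subsystem that handles it.
SUBSYSTEM_FOR_MODALITY = {
    "visual": "visuo-spatial sketchpad",
    "spatial": "visuo-spatial sketchpad",
    "auditory": "articulatory-phonological loop",
}


def central_executive(modality: str) -> str:
    """Toy central executive: decide which subsystem should handle an input."""
    try:
        return SUBSYSTEM_FOR_MODALITY[modality]
    except KeyError:
        raise ValueError(f"no subsystem handles modality {modality!r}") from None


def compete_for_capacity(modality_a: str, modality_b: str) -> bool:
    """Assumption: two concurrent tasks compete only if routed to the same subsystem."""
    return central_executive(modality_a) == central_executive(modality_b)


print(central_executive("spatial"))                # visuo-spatial sketchpad
print(compete_for_capacity("visual", "spatial"))   # True
print(compete_for_capacity("visual", "auditory"))  # False
```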

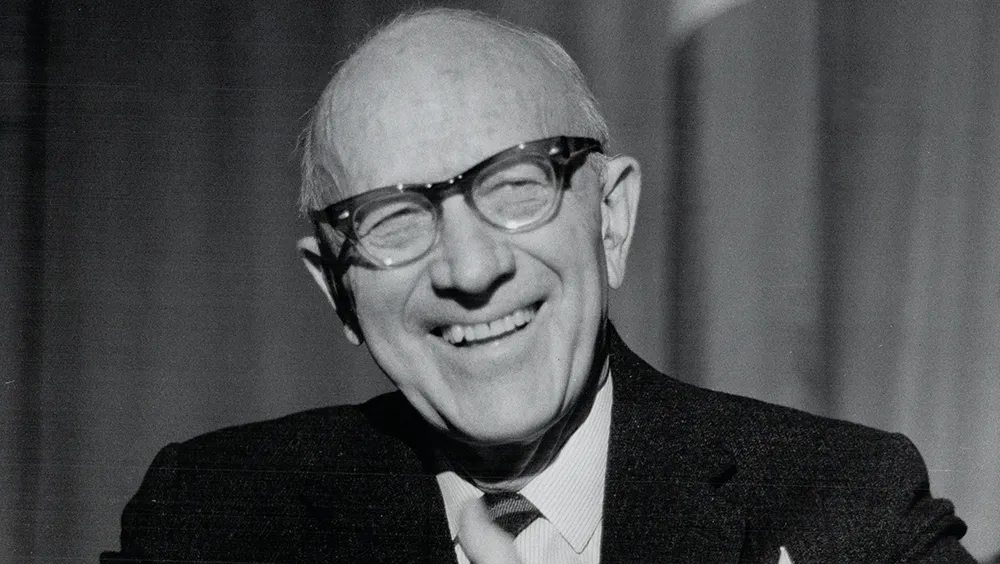

4 Miller’s Magic Number

(Miller, 1956)

Before the working memory model was proposed, U.S. cognitive psychologist George A. Miller examined the limits of short-term memory capacity. In a renowned 1956 paper published in the journal Psychological Review, Miller reviewed the results of earlier memory experiments and concluded that people can hold, on average, about 7 chunks of information (plus or minus two) in short-term memory before needing to process them further for longer storage. For instance, most people can remember a 7-digit phone number but struggle to remember a 10-digit one. This led Miller to describe the number 7 ± 2 as a “magical” number in our understanding of memory.

But why are we able to remember a whole sentence that a friend has just uttered, when it consists of dozens of individual letters? With a background in linguistics, having studied speech at the University of Alabama, Miller understood that the brain is able to ‘chunk’ items of information together, and that it is these chunks, rather than the individual items, that count towards the 7-chunk limit of short-term memory. A long word, for example, consists of many letters, which in turn form numerous phonemes. Rather than treating each letter as a separate item, the mind “recodes” the word as a single chunk. This process extends the limit of recollection from about 7 letters to a list of about 7 separate words.
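Recoding can be illustrated with the phone-number example above. The Python sketch below is a hypothetical illustration: the 10-digit number is made up, the 3-3-4 grouping is an assumed way of chunking it, and a fixed capacity of 7 stands in for Miller's 7 ± 2.

```python
def chunk(sequence: str, sizes: list[int]) -> list[str]:
    """Split a string into consecutive chunks of the given sizes (a toy model of recoding)."""
    if sum(sizes) != len(sequence):
        raise ValueError("chunk sizes must cover the whole sequence")
    chunks, start = [], 0
    for size in sizes:
        chunks.append(sequence[start:start + size])
        start += size
    return chunks


def fits_in_stm(items: list, capacity: int = 7) -> bool:
    """Assumed fixed capacity of 7 chunks, the midpoint of Miller's 7 +/- 2."""
    return len(items) <= capacity


digits = "2025550143"                # a made-up 10-digit phone number
print(fits_in_stm(list(digits)))     # False: 10 separate digits exceed the limit
grouped = chunk(digits, [3, 3, 4])
print(grouped)                       # ['202', '555', '0143']
print(fits_in_stm(grouped))          # True: only 3 chunks
```

The same 10 digits are remembered as 3 units once they are grouped, which is the effect Miller called recoding.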

Miller’s understanding of the limits of human memory applies to both the short-term store in the multi-store model and Baddeley and Hitch’s working memory. Only through sustained effort of rehearsing information are we able to memorize data for longer than a short period of time.

5 Memory Decay

(Peterson & Peterson, 1959)

Following Miller’s ‘magic number’ paper regarding the capacity of the short-term memory, Peterson and Peterson set out to measure memories’ longevity - how long will a memory last without being rehearsed before it is forgotten completely?

In an experiment employing a Brown-Peterson task, participants were given trigrams (meaningless sets of 3 consonants, e.g. GRT, PXM, RBZ) to remember. After each trigram had been shown, participants were asked to count backwards in threes from a given number, and then to recall the trigram after an interval of between 3 and 18 seconds.

The use of such trigrams made it impractical for participants to assign meaning to the letters to help encode them, while the counting task prevented rehearsal, enabling the researchers to measure the duration of short-term memories more accurately.

Whilst almost all participants were initially able to recall the trigrams, after 18 seconds recall accuracy fell to around just 10%. Peterson and Peterson’s study demonstrated the surprising brevity of memories in the short-term store, before decay affects our ability to recall them.
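The Brown-Peterson procedure can be sketched as a small trial generator. This Python example is illustrative only: the function names are hypothetical, the retention intervals of 3 to 18 seconds follow the description above, starting numbers are drawn from an assumed range of 100 to 999, and the distractor is assumed to produce one counting response per second. It does not model forgetting itself; it only builds trials and scores recall.

```python
import random
import string

# Trigrams use consonants only, so they are hard to turn into meaningful words.
CONSONANTS = [c for c in string.ascii_uppercase if c not in "AEIOU"]
RETENTION_INTERVALS_S = [3, 6, 9, 12, 15, 18]


def make_trigram(rng: random.Random) -> str:
    """Three distinct consonants, e.g. 'GRT'."""
    return "".join(rng.sample(CONSONANTS, 3))


def count_backwards_in_threes(start: int, responses: int) -> list[int]:
    """Distractor task: the numbers a participant would say, assuming one per second."""
    return [start - 3 * i for i in range(responses)]


def make_trial(rng: random.Random, interval_s: int) -> dict:
    start = rng.randint(100, 999)  # assumed range for the starting number
    return {
        "trigram": make_trigram(rng),
        "distractor": count_backwards_in_threes(start, interval_s),
        "recall_after_s": interval_s,
    }


def proportion_correct(targets: list[str], responses: list[str]) -> float:
    """Score recall as the share of trigrams reproduced exactly."""
    correct = sum(1 for target, response in zip(targets, responses) if target == response)
    return correct / len(targets)


rng = random.Random(1959)  # fixed seed so the generated trials are reproducible
trials = [make_trial(rng, interval) for interval in RETENTION_INTERVALS_S]
print(trials[0]["recall_after_s"], len(trials[0]["distractor"]))  # 3 3
print(proportion_correct(["GRT", "PXM"], ["GRT", "PMX"]))         # 0.5
```

Scoring each retention interval separately would produce a recall curve of the kind Peterson and Peterson reported, once real responses are collected.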

6 Flashbulb Memories

(Brown & Kulik, 1977)

There are particular moments in living history that vast numbers of people seem to hold vivid recollections of. You will likely be able to recall such an event that you hold unusually detailed memories of yourself. When many people learned that JFK, Elvis Presley or Princess Diana died, or they heard of the terrorist attacks taking place in New York City in 2001, a detailed memory seems to have formed of what they were doing at the particular moment that they heard such news.

Psychologists Roger Brown and James Kulik recognized this memory phenomenon as early as 1977, when they published a paper describing flashbulb memories - vivid and highly detailed snapshots created often (but not necessarily) at times of shock or trauma.

We are able to recall minute details of our personal circumstances, including the otherwise mundane activities we were engaged in when we learnt of such events. Moreover, we do not need to be personally connected to an event for it to affect us and to lead to the creation of a flashbulb memory.

7 Memory and Smell

The link between memory and sense of smell helps many species - not just humans - to survive. The ability to remember and later recognize smells enables animals to detect the nearby presence of members of the same group, potential prey and predators. But how has this evolutionary advantage survived in modern-day humans?

Researchers at the University of North Carolina tested the effects of smell on memory encoding and retrieval in a 1989 experiment. Male college students were shown a series of slides of photographs of women and asked to rate their attractiveness on a scale. Whilst viewing the slides, the participants were exposed to either a pleasant odor (aftershave) or an unpleasant one. Their recollection of the faces in the slides was later tested in an environment containing either the same or a different scent.

The results showed that participants were better able to recall memories when the scent at the time of encoding matched that at the time of recall (Cann & Ross, 1989). These findings suggest that a link between our sense of smell and memories remains, even if it provides less of a survival advantage than it did for our more primitive ancestors.

8 Interference

Interference theory postulates that we forget memories due to other memories interfering with our recall. Interference can be either retroactive or proactive: new information can interfere with older memories (retroactive interference), whilst information we already know can affect our ability to memorize new information (proactive interference).

Both types of interference are more likely to occur when two memories are semantically related, as demonstrated in a 1960 experiment in which one group of participants was given a list of word pairs to learn, so that they could recall the second ‘response’ word when given the first as a stimulus. A second group was also given the list to learn, but afterwards was asked to memorize a second list of word pairs. When both groups were asked to recall the words from the first list, those who had learnt only that list were able to recall more words than the group that had learnt a second list (Underwood & Postman, 1960). This supported the concept of retroactive interference: the second list impaired memories of words from the first list.

Interference also works in the opposite direction: existing memories sometimes inhibit our ability to memorize new information. This might occur when you receive a work schedule, for instance. When you are given a new schedule a few months later, you may find yourself adhering to the original times. The schedule that you already knew interferes with your memory of the new schedule.

9 False Memories

Can false memories be implanted in our minds? The idea may sound like the basis of a dystopian science fiction story, but evidence suggests that memories that we already hold can be manipulated long after their encoding. Moreover, we can even be coerced into believing invented accounts of events to be true, creating false memories that we then accept as our own.

Cognitive psychologist Elizabeth Loftus has spent much of her career researching the reliability of our memories, particularly in circumstances where their accuracy has wider consequences, such as eyewitness testimony in criminal trials. Loftus found that the phrasing of the questions used to elicit accounts of events can lead witnesses to describe those events inaccurately.

In one experiment, Loftus showed participants video clips of car collisions. She then asked them to estimate how fast the cars had been travelling, using a question whose verb depicted the crash as anything from mild to severe. When the verb suggested that the crash had been severe, participants gave higher speed estimates than when it suggested a gentle bump, even though all groups had watched the same footage (Loftus & Palmer, 1974). As Loftus demonstrated, leading questions can retroactively interfere with existing memories of events.

James Coan (1997) demonstrated that false memories can even be created for entire events. He prepared booklets describing various childhood events and gave them to family members to read. The booklet given to his brother contained a false account of him being lost in a shopping mall, being found by an older man and then being reunited with his family. When asked to recall the events, Coan’s brother came to believe that the lost-in-a-mall story had actually occurred, and even embellished the account with details of his own (Coan, 1997).

10 The Weapon Effect on Eyewitness Testimonies

(Johnson & Scott, 1976)

A person’s ability to memorize an event inevitably depends not just on rehearsal but also on the attention paid to it at the time it occurred. In a situation such as a bank robbery, you may have other things on your mind besides memorizing the appearance of the perpetrator. A witness’s ability to give testimony can also be affected by whether or not a weapon was involved in a crime. This phenomenon is known as the weapon effect: witnesses to a situation in which a weapon is present have been found to remember details less accurately than witnesses to a similar situation without a weapon.

The weapon effect on eyewitness testimony was the subject of a 1976 experiment in which one group of participants sitting in a waiting room watched a man leave a room carrying a pen in one hand. Another group heard an aggressive argument and then saw a man leave a room carrying a blood-stained knife.

Later, when asked to identify the man in a line-up, participants who saw the man carrying a weapon were less able to identify him than those who had seen the man carrying a pen (Johnson & Scott, 1976). Witnesses’ focus of attention had been distracted by a weapon, impeding their ability to remember other details of the event.

© 2024 Psychologist World.

News Release

Tuesday, June 8, 2021

Study shows how taking short breaks may help our brains learn new skills

NIH scientists discover that the resting brain repeatedly replays compressed memories of what was just practiced.

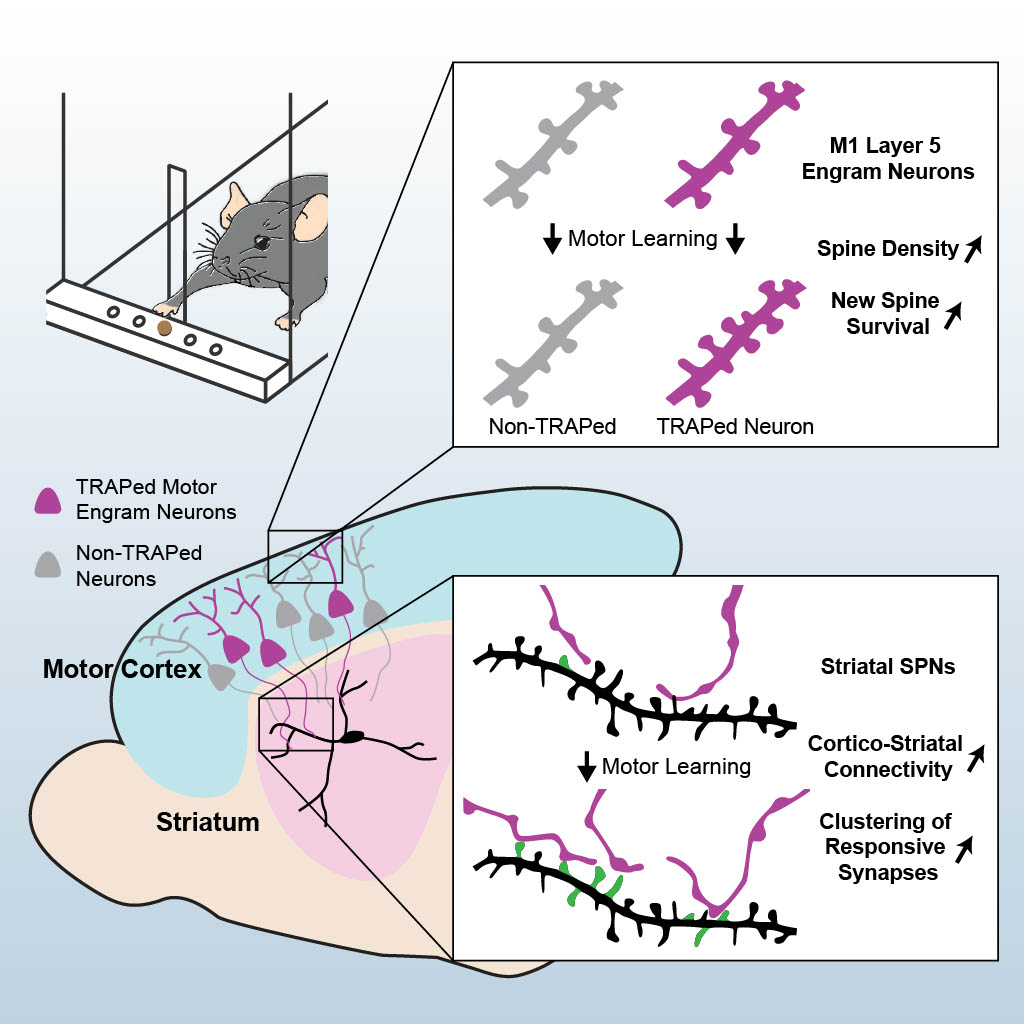

In a study of healthy volunteers, National Institutes of Health researchers have mapped out the brain activity that flows when we learn a new skill, such as playing a new song on the piano, and discovered why taking short breaks from practice is a key to learning. The researchers found that during rest the volunteers’ brains rapidly and repeatedly replayed faster versions of the activity seen while they practiced typing a code. The more a volunteer replayed the activity the better they performed during subsequent practice sessions, suggesting rest strengthened memories.

“Our results support the idea that wakeful rest plays just as important a role as practice in learning a new skill. It appears to be the period when our brains compress and consolidate memories of what we just practiced,” said Leonardo G. Cohen, M.D., senior investigator at the NIH’s National Institute of Neurological Disorders and Stroke (NINDS) and the senior author of the study published in Cell Reports. “Understanding this role of neural replay may not only help shape how we learn new skills but also how we help patients recover skills lost after neurological injury like stroke.”

The study was conducted at the NIH Clinical Center. Dr. Cohen’s team used a highly sensitive scanning technique, called magnetoencephalography, to record the brain waves of 33 healthy, right-handed volunteers as they learned to type a five-digit test code with their left hands. The subjects sat in a chair under the scanner’s long, cone-shaped cap. An experiment began when a subject was shown the code “41234” on a screen and asked to type it out as many times as possible for 10 seconds and then take a 10-second break. Subjects were asked to repeat this cycle of alternating practice and rest sessions a total of 35 times.

During the first few trials, the speed at which subjects correctly typed the code improved dramatically and then leveled off around the 11th cycle. In a previous study, led by former NIH postdoctoral fellow Marlene Bönstrup, M.D., Dr. Cohen’s team showed that most of these gains happened during short rests, and not when the subjects were typing. Moreover, the gains were greater than those made after a night’s sleep and were correlated with a decrease in the size of brain waves, called beta rhythms. In this new report, the researchers searched for something different in the subjects’ brain waves.

“We wanted to explore the mechanisms behind memory strengthening seen during wakeful rest. Several forms of memory appear to rely on the replaying of neural activity, so we decided to test this idea out for procedural skill learning,” said Ethan R. Buch, Ph.D., a staff scientist on Dr. Cohen’s team and leader of the study.

To do this, Leonardo Claudino, Ph.D., a former postdoctoral fellow in Dr. Cohen’s lab, helped Dr. Buch develop a computer program which allowed the team to decipher the brain wave activity associated with typing each number in the test code.

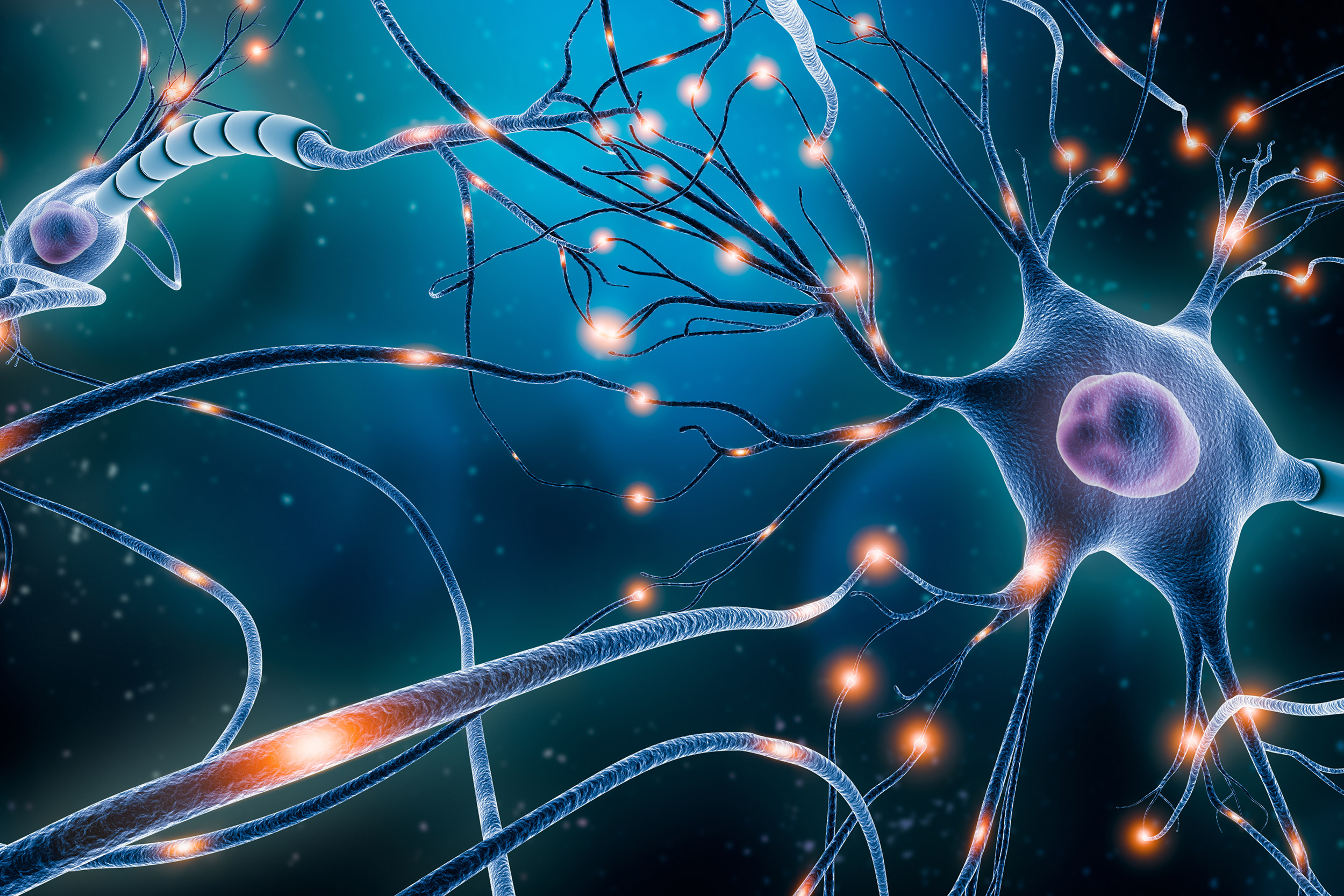

The program helped them discover that a much faster version of the brain activity seen during typing (about 20 times faster) was replayed during the rest periods. Over the course of the first eleven practice trials, these compressed versions of the activity were replayed many times (about 25 times) per rest period. This was two to three times more often than the activity seen during later rest periods or after the experiments had ended.

Interestingly, they found that the frequency of replay during rest predicted memory strengthening. In other words, the subjects whose brains replayed the typing activity more often showed greater jumps in performance after each trial than those who replayed it less often.

“During the early part of the learning curve we saw that wakeful rest replay was compressed in time, frequent, and a good predictor of variability in learning a new skill across individuals,” said Dr. Buch. “This suggests that during wakeful rest the brain binds together the memories required to learn a new skill.”

As expected, the team discovered that the replay activity often happened in the sensorimotor regions of the brain, which are responsible for controlling movements. However, they also saw activity in other brain regions, namely the hippocampus and entorhinal cortex.

“We were a bit surprised by these last results. Traditionally, it was thought that the hippocampus and entorhinal cortex may not play such a substantive role in procedural memory. In contrast, our results suggest that these regions are rapidly chattering with the sensorimotor cortex when learning these types of skills,” said Dr. Cohen. “Overall, our results support the idea that manipulating replay activity during waking rest may be a powerful tool that researchers can use to help individuals learn new skills faster and possibly facilitate rehabilitation from stroke.”

This study was supported by the NIH Intramural Research Program at the NINDS.

NINDS is the nation’s leading funder of research on the brain and nervous system. The mission of NINDS is to seek fundamental knowledge about the brain and nervous system and to use that knowledge to reduce the burden of neurological disease.

About the National Institutes of Health (NIH): NIH, the nation's medical research agency, includes 27 Institutes and Centers and is a component of the U.S. Department of Health and Human Services. NIH is the primary federal agency conducting and supporting basic, clinical, and translational medical research, and is investigating the causes, treatments, and cures for both common and rare diseases. For more information about NIH and its programs, visit www.nih.gov .

NIH…Turning Discovery Into Health ®

Buch et al., Consolidation of human skill linked to waking hippocampo-neocortical replay, Cell Reports, June 8, 2021, DOI: 10.1016/j.celrep.2021.109193

Evidence of memory from brain data

Emily R. D. Murphy, Jesse Rissman

PhD, JD. Associate Professor of Law, University of California Hastings College of the Law. Dr Murphy is a neuroscientist and law professor specializing in the intersection of law, policy, brain, and behavior. She writes about brain-based technologies as evidence as well as the implications of advances in neuroscience and understanding of human behavior on various aspects of law and policy.

PhD. Associate Professor, Psychology and Psychiatry and Biobehavioral Sciences, University of California, Los Angeles. Dr Rissman is a psychologist whose research focuses on the cognitive and neural mechanisms of human memory. He has pioneered novel memory research techniques using functional magnetic resonance imaging and machine learning, and also writes and teaches about the intersection of neuroscience, ethics, and policy.

Received 2020 May 14; Revised 2020 May 14; Accepted 2020 Jul 31; Collection date 2020 Jan-Dec.

This is an Open Access article distributed under the terms of the Creative Commons Attribution License ( http://creativecommons.org/licenses/by/4.0/ ), which permits unrestricted reuse, distribution, and reproduction in any medium, provided the original work is properly cited.

Much courtroom evidence relies on assessing witness memory. Recent advances in brain imaging analysis techniques offer new information about the nature of autobiographical memory and introduce the potential for brain-based memory detection. In particular, the use of powerful machine-learning algorithms reveals the limits of technological capacities to detect true memories and contributes to existing psychological understanding that all memory is potentially flawed. This article first provides the conceptual foundation for brain-based memory detection as evidence. It then comprehensively reviews the state of the art in brain-based memory detection research before establishing a framework for admissibility of brain-based memory detection evidence in the courtroom and considering whether and how such use would be consistent with notions of justice. The central question that this interdisciplinary analysis presents is: if the science is sophisticated enough to demonstrate that accurate, veridical memory detection is limited by biological, rather than technological, constraints, what should that understanding mean for broader legal conceptions of how memory is traditionally assessed and relied upon in legal proceedings? Ultimately, we argue that courtroom admissibility is presently a misdirected pursuit, though there is still much to be gained from advancing our understanding of the biology of human memory.

Keywords: brain, court, evidence, fMRI, machine learning, memory detection

I. INTRODUCTION

In 2008, Aditi Sharma was convicted by an Indian court of killing her fiancé, Udit Bharati. 1 The court relied in part on evidence derived from the so-called Brain Electrical Oscillations Signature (BEOS) test. 2 To take this test, Sharma sat alone in a room wearing a skullcap with protruding wires that measured brain activity under her scalp. She listened to a series of statements detailing some aspects of the murder that were based on the investigators’ understanding. Throughout the test, she said not a word. But when she heard statements such as ‘I had an affair with Udit’, ‘I got arsenic from the shop’, ‘I called Udit’, ‘I gave him the sweets mixed with arsenic’, and ‘The sweets killed Udit’, the computer analyzing her brain activity purported to detect ‘experiential knowledge’ in her brain. 3 In the subsequent 6 months, the same lab provided evidence in the murder convictions of two more people. 4

Recent advances in peer-reviewed research on memory and its detection present an important question for evidence law: what would it mean to be able to detect the contents of a person’s memory? If a brain-based approach were scientifically reliable, would it be admitted as courtroom evidence? Admissibility in court and the persuasiveness (or prejudicial effect) of evidence is often the focus of legal analysis of the new brain science technology. 5 Indeed, some memory detection researchers perceive courtroom admissibility to be the sine qua non for forensic applications of memory detection technology. 6

Both the inventors of BEOS and some US-based researchers insist that their brain-based memory detection technologies are not ‘lie detection’ and should not be painted with the same brush of unreliability and thus inadmissibility. 7 Others have claimed that memory detection technology will soon be admissible as evidence. 8 However, ‘admissibility’ is not an inherent quality of novel technology; rather, it is a complicated legal, factual, and scientific question in a particular case. Because admissibility is a recurrent focal point for some researchers and advocates, and because brain-based memory detection technology has been admitted in some jurisdictions, 9 we offer a framework for the assessment of evidentiary use of brain-based memory detection in trial proceedings. The primary focus for this analysis will be on memories of witnesses and suspects as the most likely targets of forensic applications. 10

The admissibility of memory detection evidence is a complex legal question not just because it requires careful judicial gatekeeping of complex scientific evidence, but also because it directly concerns one of the core ‘testimonial capacities’ of a witness, and thus directly bears on the jury’s task of assessing witness credibility. Part I introduces the conceptual framework for memory detection research being translated to forensic practice. Part II provides an overview of the current state of the technology, with further details for scientific readers published elsewhere. 11 Part III sets up the framework for assessing courtroom admissibility, establishing the nature of the factual and legal issues raised by the current technological capabilities and scientific understanding. Memory detection may also implicate individual constitutional and compulsory process rights, limiting or enabling its use as courtroom evidence. 12 Part IV then analyzes the potential objections to memory detection as courtroom evidence, starting with those that are surmountable challenges before moving on to stronger objections and normative considerations. Finally, Part V concludes by considering the use of the technology outside of the adversarial context. The question that this analysis presents is: if the science is in fact sophisticated enough to demonstrate that accurate, veridical memory detection is limited by biological, rather than technological, constraints, what should that understanding mean for broader legal conceptions of how memory is traditionally assessed and relied upon in legal proceedings? Ultimately, we argue that courtroom admissibility is presently a misdirected pursuit, though there is still much to be gained from advancing our understanding of the biology of human memory.

II. MEMORY DETECTION: THEORY TO FORENSIC PRACTICE

Human memory plays a critical role in many different aspects of law and legal proceedings, not the least of which is witness testimony. For example, decades of scientific research into the nature of memory have recently supported significant structural legal developments, such as changes in pretrial motion practice and jury instructions about evaluating eyewitness identification testimony. 13 Periodic assessment of what we know about memory, and of what we can do to detect it, is therefore important for legal and interdisciplinary scholars. Parts I and II synthesize several lines of research centering on the question: is it possible to detect the presence (or absence) of a specific memory? The answer depends both on advances in measurement (in technology and behavioral test design) and on a better understanding of the biological (including psychological) nature and limitations of human memory, an ongoing subject of basic science research.

Significant technological advances have been made in neuroimaging since this literature was last comprehensively reviewed for an interdisciplinary audience, 14 and a key question now is whether remaining limitations are fundamentally technological or biological problems. Technological problems are of the kind that permits researchers and commentators to say ‘in the future, we may be able to…’ based on advances in technology. Biological problems, in contrast, may be true boundary conditions on what type of detection or characterization may be possible. As technology advances, what seemed like biological problems may yield to advanced scrutiny.

II.A. What Is (and Is Not) Memory Detection?

What do we mean when we refer to ‘memory detection’? Let us first distinguish memory detection from lie detection, or ‘truth verification’. 15 The existence (or absence) of a memory trace 16 could theoretically be detected regardless of whether the subject is affirmatively misrepresenting or concealing that information. 17 This article evaluates work aimed at detecting the presence or absence of recognition of some sort of stimuli, rather than deception per se .

Researcher Peter Rosenfeld and colleagues explain that a canonical version of the ‘guilty knowledge test’ used in most memory detection protocols ‘actually does not claim or aim to detect lies; it is instead aimed at detecting whether or not a suspect recognizes information ordinarily known only by guilty perpetrators and, of course, enforcement authorities’. 18 That is, present forms of memory detection require the human designing the test to know something about the ground truth of interest, and obtain or design stimuli and testing protocols to determine whether the subject also has that knowledge. Presently, no brain-based memory detection technology functions as one might imagine ‘mind-reading’ to work, via uncued reconstruction of the subjective contents of a subject’s memory. 19

In theory—and consistent with lived experience—our brains are generally able to distinguish autobiographical memories (such as having personally witnessed or participated in an event) from other sources of event knowledge (such as knowing details of people’s lives that we have read or heard about, or knowing the mere fact that an event occurred at a given time and place). 20 That is, we know the stories of our own lives and can generally tell the difference between our own lives and the events and information in the world around us. Of course, our brains are not perfect at this task, and normal people experience spontaneous memory errors (such as the powerful experience of déjà vu, the subjective feeling of having previously lived through a current, novel experience) 21 as well as imagined or suggested memory errors (sometimes referred to as ‘source confusions’ because we misattribute the source of our event knowledge). 22

Exactly how the brain distinguishes autobiographical memories from other memories, and the base rates of inaccuracies or distortions, remain the subject of ongoing memory research. For example, very recent research suggests that different kinds of episodic memories, varying in their degree of autobiographical content, may be neurobiologically distinguishable. 23 A neurobiological distinction would not be surprising; rather, one would expect distinct biological mechanisms to underlie how these different types of memories are subjectively experienced. These findings, explored further below in Part II, may have significant import for ecological validity, that is, for the assumption that laboratory-based memory creation and detection, even using mock crime scenarios, generalizes to real-world memory creation and detection. Each of these characteristics contributes to a technique’s false positive and false negative rates, which are critical measures for any test used to classify an outcome as present/absent.
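To make the two error rates concrete, the following minimal Python sketch computes them from the four cells of a present/absent classification. The class name `Outcomes` and the counts in the usage example are hypothetical, chosen only for illustration; they do not come from any study discussed here.

```python
from dataclasses import dataclass


@dataclass
class Outcomes:
    """Counts from a hypothetical validation study of a present/absent memory test."""
    true_pos: int   # memory present, test reports present
    false_neg: int  # memory present, test reports absent (a miss)
    false_pos: int  # memory absent, test reports present (a false alarm)
    true_neg: int   # memory absent, test reports absent

    def false_positive_rate(self) -> float:
        # Share of subjects WITHOUT the memory whom the test wrongly flags.
        return self.false_pos / (self.false_pos + self.true_neg)

    def false_negative_rate(self) -> float:
        # Share of subjects WITH the memory whom the test fails to flag.
        return self.false_neg / (self.false_neg + self.true_pos)

    def accuracy(self) -> float:
        # Share of all subjects classified correctly.
        total = self.true_pos + self.false_neg + self.false_pos + self.true_neg
        return (self.true_pos + self.true_neg) / total


if __name__ == "__main__":
    # Illustrative counts only: 50 subjects with the memory, 50 without.
    o = Outcomes(true_pos=45, false_neg=5, false_pos=10, true_neg=40)
    print(f"accuracy={o.accuracy():.2f}")                        # accuracy=0.85
    print(f"false positive rate={o.false_positive_rate():.2f}")  # false positive rate=0.20
    print(f"false negative rate={o.false_negative_rate():.2f}")  # false negative rate=0.10
```

With these illustrative counts the test is 85 per cent accurate overall, yet it wrongly flags one in five subjects who lack the memory, which is why a single accuracy figure says little about the risk of a false positive.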

As with methods of ‘truth verification’, a potential forensic appeal of memory detection is based on an essentialization—the assumption that certain brain activity is more automatic, less under conscious control, and less subject to fabrication, reinterpretation, or concealment than subjective reports or even physiological measurements of the body such as skin conductance, heart rate, breathing rate, and even eye movements. 24 One way this assumption has been tested is through more rigorous attempts to quantify how vulnerable different kinds of memory detection are to countermeasures—deliberately applied behavioral or cognitive strategies for ‘beating the test’ or manipulating the test results. But active countermeasures are only one form of potential distortion; others come from the innate imperfections of human memory. 25 How these ‘sins’ such as transience, absent-mindedness, misattribution, and subsequent misinformation constrain memory detection is a question now squarely raised by the most recent research on functional magnetic resonance imaging (fMRI)-based memory detection, discussed in Part II.

Some proponents of forensic memory detection tend to approach the problem as one of technological limitations, and indeed the literature reviewed below is organized primarily by methodology. More complex technology (fMRI and machine-learning data analysis) has enabled major advances in memory detection and scientific knowledge about the nature of memory. But it has also brought us closer to asking whether the constraints on memory detection may be ‘biological problems’ as well as technological problems. That is, which limitations on memory detection come from the nature of memory itself?

Most obviously, for many aspects of our experiences, a memory beyond a brief sensory trace never gets formed. As an a priori theoretical matter, it will not be possible to detect a memory that was never formed because the information or stimulus was never attended to. But as an empirical matter, it is extremely difficult to test for the true absence of something, and thus it would be extremely difficult for a negative result from a memory detection test to be interpreted as the true absence of a memory and thus a true lack of personal experience. Conversely, lots of things we do not pay attention to may nonetheless get stored into memory at some level. These unattended stimuli are less likely to be consciously remembered and will sometimes be completely forgotten, but other times will influence behavior in subtle ways. 26

A second challenge is that, even if a memory were formed, it may be degraded or forgotten to a degree that it is no longer detectable. This boundary condition is at least theoretically easier to investigate experimentally: a memory trace could be detected at time one, and then be absent when probed at a later time. But the mere act of testing for the memory could itself modify or strengthen the memory, thus preventing ‘normal’ degradation and forgetting. 27 The reactivation of a memory through questioning or testing procedures could strengthen degraded recognition, a subject’s confidence in their recall, or both. 28 How such influences affect the detectability of a memory will critically shape the marginal utility of brain-based memory detection versus the testimony of compliant, but perhaps forgetful, witnesses.

II.B. What Kinds of Memories Are to Be Detected?

The next important concept is to define precisely what kinds of memories we are most interested in detecting and what kinds of memories various tests actually detect or could detect. For laypersons without a psychology background, this may seem obvious: we would like to detect true/veridical memories, rather than false, inaccurate, or distorted memories. False memories in legal proceedings are an important area of psychological study with many legal implications, which have been considered in detail elsewhere. 29 For our purposes, it is important to home in on what kinds of memories forensic applications would likely be most interested in detecting: objectively true, autobiographical memories.

Broadly speaking, autobiographical memories can be semantic (facts about yourself, such as the places you have lived and the names of your family members and friends) or episodic (recollection of events that happened to you at a specific time and place). 30 Both are types of ‘explicit’ or ‘declarative’ memory: generally speaking, those that can be consciously recalled and described verbally. These may be contrasted with ‘implicit’ memories, where current behavior is influenced by prior learning in a non-conscious manner, such as the procedural skill of riding a bike. Although some researchers in this field have limited the categories of forensic interest to episodic memories, there may be types of semantic autobiographical memories of forensic interest, such as the names of criminal associates whom one has never personally met, targets of attack one has only been told about, or one’s true name or place of birth. 31

The taxonomy just described is a drastic simplification of an active and ongoing area of research (just ‘how’ memory should be classified and operationalized), and one that does not seem to be in active dialogue with memory detection researchers. A recent review described ‘personal semantic’ (PS) memory, knowledge of one’s past, as an intermediate form of memory between semantic and episodic memory. 32 PS memory has been ‘assumed to be a form of semantic memory, [thus] formal studies of PS have been rare and PS is not well integrated into existing models of semantic memory and knowledge’. 33 The authors describe four ways in which PS memory has been operationalized: as autobiographical facts, self-knowledge, repeated events, and autobiographically significant concepts. Depending upon how PS memory is operationalized, in different studies it appears neurobiologically more similar to general semantic memory or to episodic memory, meaning that tasks engaging it show patterns of neural activation on functional brain imaging resembling those of one or the other. Although this is a sophisticated field of research with a voluminous literature, consensus about the taxonomy of memory has been difficult to reach, in part because memory is extremely difficult to study. 34

One final thing must be said about a type of autobiographical memory that is not the subject of memory detection studies, despite being extremely relevant as courtroom evidence: memories, or beliefs, about one’s past mental states. Memories of ‘events that happened’ are the subject of brain-based memory detection research. Memories of ‘past subjective mental state’ are not, notwithstanding the concept’s obvious relevance to legal issues of mens rea. Of course, a past mens rea could potentially be inferred from the detection of a memory of a specific event or item. For instance, if brain-based memory detection could establish that a defendant had actually seen bullets in a gun’s chamber, such evidence might weigh against the defendant’s claim that he believed the gun was unloaded. But direct detection of past mental states, even if considered as a subtype of autobiographical memory, has not been attempted, nor is it clear how it could be. Although a single recent study suggested that brain imaging could distinguish between present, active mental states of legal relevance (knowing vs. reckless, to the extent those legal concepts can be operationalized for behavioral study), 35 the ‘time travel’ problem will not likely be resolved by increasing technological sophistication. 36

III. STATE OF THE ART IN BRAIN-BASED MEMORY DETECTION

With this conceptual framework in mind, brain-based memory detection can be examined along two axes: biological and technological. 37 The biological axis considers and explores how memory actually works in the brain. The technological axis is concerned with the tools used to explore that biology. Advances in understanding on one axis reciprocally inform the other.

Organizing this part by technological method makes it easier to follow technological improvements and approaches to the challenges discussed above, which in turn helps show where limitations are biological, rather than technological, in nature. We start with electroencephalography (EEG)-based technology, which uses electrodes to detect electrical brain activity through a subject’s scalp. We then discuss fMRI, which uses powerful magnetic fields to non-invasively detect correlates of brain activity. Those readers who are interested in the high-level summary and analysis may skip ahead to Part II.C.

III.A. EEG-Based Technology

There is now a substantial body of research using EEG-based techniques to detect memories, reporting impressive detection accuracy, often exceeding 90 per cent. EEG-based technologies are deployed in various commercial efforts 38 and are in forensic use internationally. 39 This section will first review the basic technique and theory behind EEG-based memory detection, and then report on recent findings and remaining limitations on forensic use. 40 Notably, a small handful of research groups have dominated the EEG-based memory detection research efforts. The large bulk of peer-reviewed publications comes from the lab of Peter Rosenfeld and colleagues at Northwestern University. 41

EEG-based memory detection protocols measure electrical brain activity from dozens of small electrodes placed on the scalp as a subject is presented with a series of stimuli—typically words or pictures shown on a computer screen, or sounds played through headphones or speakers. EEG-based memory detection protocols vary, and subtle variations in those protocols are the subject of intense research because small changes in testing protocol have large impacts on results.

The fundamental concept relies on a test framework exploiting the brain’s different responses to personally meaningful versus non-meaningful stimuli, called the ‘orienting response.’ 42 The logic of EEG-based memory detection protocols is that the person administering the EEG can measure the subject’s brain responses to different stimuli and use them to discriminate between meaningful and non-meaningful information. In this context, a ‘meaningful’ stimulus is one that would be salient or significant only to someone with prior knowledge of or experience with that stimulus, such that its ‘meaningfulness’ is at least partially based on recognition memory. Thus, the nature of the information presented in the stimuli, which must be carefully selected beforehand by the test administrator, is the most important methodological factor in EEG-based memory detection.

Nearly all EEG-based memory detection protocols attempt to detect a characteristic waveform of electrical activity evoked by meaningful/familiar/salient stimuli, and in most research this waveform is the P300 ‘event related potential’, or ERP. 43 An ERP is a particular spike or dip in brain voltage activity in response to a discrete event (such as a bright light or loud noise). The P300 is a positive ERP occurring between 300 and 1000 milliseconds after the onset of events that are both infrequent and meaningful, with a maximal effect typically observed for those electrodes situated over the parietal lobe. 44 A shorthand way to think of what the P300 represents is a response to stimuli that are ‘rare, recognized, and meaningful’. 45
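The time window in this definition is what lets an analyst separate the P300 from earlier deflections. The Python sketch below, which assumes NumPy, locates the largest positive voltage between 300 and 1000 milliseconds on a synthetic averaged waveform. The waveform shape, the 4-millisecond sampling interval, and the function name `p300_peak` are assumptions made for illustration, and this simple baseline-to-peak measure is only one of several amplitude measures used in the literature.

```python
import numpy as np


def p300_peak(times_ms: np.ndarray, voltage_uv: np.ndarray,
              window: tuple[float, float] = (300.0, 1000.0)) -> tuple[float, float]:
    """Return (latency_ms, amplitude_uv) of the largest positive voltage
    inside the post-stimulus window, measured from a 0 µV baseline."""
    in_window = (times_ms >= window[0]) & (times_ms <= window[1])
    t, v = times_ms[in_window], voltage_uv[in_window]
    i = int(np.argmax(v))  # index of the most positive sample in the window
    return float(t[i]), float(v[i])


# Synthetic averaged waveform sampled every 4 ms from -200 ms to 1196 ms.
times = np.arange(-200.0, 1200.0, 4.0)
# Hypothetical shape: a larger early deflection at 100 ms that the window
# should ignore, plus a 10 µV P300-like component peaking at 500 ms.
wave = (12.0 * np.exp(-0.5 * ((times - 100.0) / 40.0) ** 2)
        + 10.0 * np.exp(-0.5 * ((times - 500.0) / 100.0) ** 2))

if __name__ == "__main__":
    latency, amplitude = p300_peak(times, wave)
    print(f"P300 peak at {latency:.0f} ms, {amplitude:.1f} µV")  # P300 peak at 500 ms, 10.0 µV
```

Restricting the search to the window matters here: the largest value in the whole trace is the early 12 µV deflection at 100 ms, which a window-free maximum would wrongly report as the response of interest.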

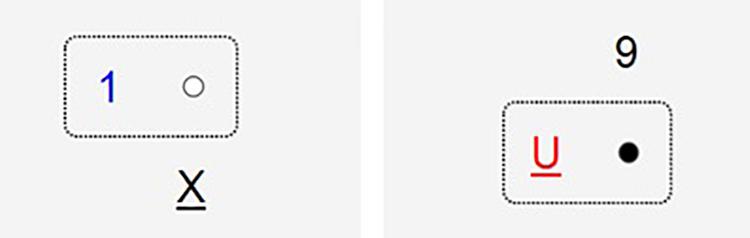

As mentioned above, EEG-based detection relies on the comparison of brain responses between different categories of stimuli. Most protocols are based on the concealed information test (CIT; also known as the ‘guilty knowledge test’) and use three types of stimuli: (i) infrequent ‘probe’ stimuli with information relevant to the event of interest that would only be recognized by someone with prior knowledge (that is, meaningful to some people but meaningless to others); (ii) infrequent ‘target’ stimuli that subjects are explicitly instructed to monitor for and respond to with a unique button-press, which the subject is expected to know, recognize, or be familiar with (meaningful to everyone); and (iii) frequent ‘irrelevant’ stimuli, of no relevance to the event of interest nor of any particular importance to the subject (meaningless to everyone). For all subjects, the target stimuli should elicit a greater P300 response amplitude than the irrelevant stimuli, providing an internal check that the procedure is working and the data are of sufficient quality. Most critically, for ‘guilty’ examinees, probe stimuli should elicit a P300 similar in magnitude to that observed for target stimuli, indicating that their brain ‘knows’ the probe stimuli are not irrelevant. Conversely, the brains of ‘innocent’ examinees should show little or no P300 response to the probes, such that this waveform will look more similar to that observed for irrelevant stimuli than for target stimuli. 46
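The three-way comparison just described can be reduced to a simple decision rule. The Python sketch below is a deliberately simplified illustration, not the published scoring method of any protocol: it takes one averaged P300 amplitude per stimulus category, first applies the target-versus-irrelevant internal check, and then asks whether the probe response lies closer to the target or to the irrelevant amplitude. The function name `classify_cit`, the midpoint rule, and the 2 µV minimum target gap are all hypothetical choices; research protocols generally rely on statistical comparisons across individual trials instead.

```python
from enum import Enum


class Verdict(Enum):
    INVALID = "target check failed; session not interpretable"
    RECOGNIZED = "probe response resembles target response"
    NOT_RECOGNIZED = "probe response resembles irrelevant response"


def classify_cit(probe_uv: float, target_uv: float, irrelevant_uv: float,
                 min_target_gap_uv: float = 2.0) -> Verdict:
    """Hypothetical midpoint rule over averaged P300 amplitudes (µV)."""
    # Internal check: targets, known to every subject, must evoke a clearly
    # larger P300 than irrelevants, or the data cannot be interpreted.
    if target_uv - irrelevant_uv < min_target_gap_uv:
        return Verdict.INVALID
    # Is the probe amplitude nearer the target level or the irrelevant level?
    midpoint = (target_uv + irrelevant_uv) / 2.0
    if probe_uv >= midpoint:
        return Verdict.RECOGNIZED
    return Verdict.NOT_RECOGNIZED


if __name__ == "__main__":
    print(classify_cit(probe_uv=9.0, target_uv=10.0, irrelevant_uv=3.0))  # Verdict.RECOGNIZED
    print(classify_cit(probe_uv=3.5, target_uv=10.0, irrelevant_uv=3.0))  # Verdict.NOT_RECOGNIZED
    print(classify_cit(probe_uv=8.0, target_uv=4.0, irrelevant_uv=3.5))   # Verdict.INVALID
```

Because the verdict turns entirely on relative amplitudes, anything that raises the response to supposedly irrelevant items shrinks the gap the rule depends on.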

An important point to appreciate is that for EEG-based memory detection, subtle variations in methods can substantially affect the results. The past several years of research have largely been dedicated to these methodological challenges. One methodological constraint of much EEG-based memory detection deserves particular attention: most studies report results on ‘blocks’ of trials for a given condition, rather than on a single trial. Generally speaking, the reason is that EEG data are extremely noisy, such that a meaningful ‘signal’ can only be pulled out from background ‘noise’ by averaging responses over several trials of a given condition. 47 As such, to detect the ERP, researchers typically average the EEG samples of repeated presentations of the same stimulus, or of several stimuli in the same category. That is, relatively smooth-looking graphs of P300 responses should be understood as averages of multiple repeated trials. Indeed, several hundred presentations of test items are typically required for reliable recording of the P300 ERP. 48
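Why averaging works can be shown with a short simulation. The Python sketch below, which assumes NumPy, adds independent random noise to the same underlying waveform on every trial and then averages the trials; the leftover noise shrinks roughly in proportion to one over the square root of the number of trials averaged. The 10 µV signal, the 20 µV single-trial noise level, and the assumption that noise is independent and Gaussian from trial to trial are simplifications chosen for illustration; real EEG noise is neither perfectly independent nor perfectly Gaussian.

```python
import numpy as np


def average_trials(erp: np.ndarray, n_trials: int, noise_sd: float,
                   rng: np.random.Generator) -> np.ndarray:
    """Simulate n_trials single-trial epochs (the same ERP plus independent
    Gaussian noise on each trial) and return their average."""
    noise = rng.normal(0.0, noise_sd, size=(n_trials, erp.size))
    epochs = erp + noise        # broadcasting: one row per trial
    return epochs.mean(axis=0)  # the averaged waveform


def residual_rms(estimate: np.ndarray, erp: np.ndarray) -> float:
    """Root-mean-square of the noise left after subtracting the true ERP."""
    return float(np.sqrt(np.mean((estimate - erp) ** 2)))


times = np.arange(-200.0, 1200.0, 4.0)                      # 4 ms samples
erp = 10.0 * np.exp(-0.5 * ((times - 500.0) / 100.0) ** 2)  # hypothetical 10 µV P300

if __name__ == "__main__":
    rng = np.random.default_rng(seed=0)
    for n in (1, 4, 100, 400):
        avg = average_trials(erp, n, noise_sd=20.0, rng=rng)
        # Expected residual is about 20 / sqrt(n): roughly 20, 10, 2 and 1 µV.
        print(f"{n:>3} trials: residual noise ≈ {residual_rms(avg, erp):.1f} µV")
```

Under these assumptions a single trial buries the 10 µV component under noise twice its size, while an average of a few hundred trials leaves residual noise of around 1 µV, which is why published P300 curves look smooth.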

Researchers have been studying the use of the P300 ERP to detect ‘concealed information’ since the 1980s. The first P300-based protocol was based on the CIT. 49 Eventually, the standard CIT task was determined to be vulnerable to countermeasures, 50 and Rosenfeld and colleagues developed a new countermeasure-resistant variant, which they dubbed the ‘Complex Trial Protocol’ (CTP). The CTP is designed to make the standard CIT more difficult and to reduce the likelihood that a subject could successfully, and secretly, designate a category of ‘irrelevant’ items as ‘relevant’. Such a countermeasure reduces the difference in P300 amplitude between the ‘probes’ and the secretly relevant ‘irrelevants’ for guilty subjects, essentially beating the test by creating their own false positives. 51 The reasoning behind the countermeasure vulnerability of the older three-stimulus protocol is that when covert targets and probe stimuli compete for attentional resources, the P300 response is reduced. This proposed mechanism highlights a particular analytic weakness of relying on an internal comparison between different categories of stimuli to detect whether one subset is recognized or recalled. 52

As discussed, much recent research in EEG-based memory detection has focused on subtle changes in protocol design. Those that are particularly significant for purposes of assessing forensic capacity and potential admissibility are methodological developments addressing vulnerability to more sophisticated countermeasures, issues of ecological validity, and, most recently, the application of pattern recognition techniques to analyze EEG data. The highlights of those areas of research, and consideration of the outstanding limitations for forensic use, are briefly discussed below.

III.A.1. Sophisticated countermeasure vulnerability

Alternative mental actions as countermeasures. Several studies have shown that subtle physical countermeasures, such as instructing participants to wiggle a toe or finger during the presentation of certain stimuli (as a means to increase their salience), can be highly effective at preventing experimenters from obtaining an accurate assessment of concealed knowledge. 53 But countermeasures could be even more covert. Mental countermeasures such as silently ‘saying’ one’s own name in response to different ‘irrelevant’ stimuli would be nearly impossible to detect through even the closest observation of a test subject. 54

But does using mental countermeasures slow down task performance, such that countermeasure use could be detected? Early work with the CTP found that slower reaction times indicated countermeasure use. 55 This telltale slowing seems to work only when subjects execute the covert countermeasure separately from a required ‘I saw it’ motor response. If participants are trained to execute both at the same time, this ‘lumping’ strategy can eliminate the ability to use reaction time as an indication of countermeasure use, though the P300 ERP signal can still detect more than 80 per cent of the ‘guilty’ test subjects who were trained to use this strategy (note that this is still a significant reduction from reported detection thresholds exceeding 90 per cent). 56 That is, there may be countermeasures to countermeasure detection strategies, possibly able to characterize mendacious subjects, though without high degrees of certainty.

Voluntary suppression of memory. Can someone beat a memory detection test by voluntarily suppressing their memories? Two papers, in 2013 and 2015, reported that the P300 signal for episodic information could be voluntarily suppressed. 57 But it may be possible to design the task in a slightly easier way so as to protect against this countermeasure. 58 Moreover, it may not be possible to suppress responses to probes targeting semantic memories, such as the knowledge of one’s true name. 59 At present, the effect of countermeasures on well-constructed CTP applications appears to be modest and perhaps available only to highly trained subjects. Nevertheless, a complete understanding of potential countermeasure vulnerability is critical to accurate forensic application of any P300-based memory detection protocol, and research that can differentiate the effects of task demands from mechanisms of countermeasure deployment is especially needed.

III.A.2. Ecological validity

Ecological validity is the extent to which laboratory conditions and results translate to those in the real world. A canonical memory detection study with low ecological validity involves having a subject study a list of random words, then asking her to respond whether or not certain words were on that list, while attempting to conceal the actual studied list with false responses. Studying a list of random words is not a task that resembles real-world or forensically relevant events, and it is unwise to generalize from this kind of task to a forensic task that involves presenting a subject with a list of words that may be relevant to a crime being investigated. Alternatively, studies aiming for greater ecological validity may engage their subjects in mock crime scenarios, such as ‘stealing’ a particular item and then attempting to conceal their ‘crime’ from the examiners. These types of studies began with the earliest efforts at EEG-based memory detection. 60 But in all studies using volunteers, the genuine motivation to engage in and then conceal truly criminal or antisocial actions cannot be replicated, as of course all laboratory ‘thefts’ are designed and sanctioned by researchers.

Use of real-world scenarios and detecting autobiographical memory in individuals. A fundamental question for forensic application of memory detection technology is the extent to which lab-created memories (even those with more realistic mnemonic content than a memorized word list, such as a mock crime) are similar to or meaningfully different from real-world memories. There are many reasons such memories could differ, including the amount of attention a subject allocates to lab-based, instructed tasks such as stealing a document, compared with the attention paid when something unexpected happens while going about the normal routines of the day.

A recent study has tried to address the detectability of incidentally acquired, real-world memories. 61 Meixner and Rosenfeld had subjects wear a small video camera for 4 hours as they went about their normal daily routine. The next day, subjects returned to the lab and, while hooked up to the EEG, were shown words associated with activities they experienced the previous day (such as ‘grocery store’), as well as irrelevant words of the same category but not relating to the subject’s personal activities (such as ‘movie theater’ or ‘mall’). 62 The authors reported that the EEG data could be used to perfectly discriminate between the 12 ‘knowledgeable’ subjects who viewed words related to their personal activities and the 12 ‘non-knowledgeable’ subjects who simply viewed irrelevant items. Notably, this was the first P300 study to examine whether autobiographical memory could be detected at an individual level, since most psychological studies of autobiographical memory use group-averaged data, which is not helpful for forensic purposes. But it still presents the question of whether comparisons between subjects are necessary to make a decision about an individual’s ‘knowledgeable’ status. More importantly, the study design does not quite get at the question of whether real-life memories are different, at some important neural level, from lab-created memories (which most studies investigate); it simply suggests that this particular tool can be used to discriminate between subjects who did or did not have discrete real-life experiences.

Innocent-but-informed participants. Other work focused on assessing the ecological validity of a P300-based CIT demonstrated that prior knowledge of crime details has an effect on detection rates, illustrating potential risks of using probes that may have become known to an innocent subject (such as someone who received instructions to steal a ‘ring’ but did not go through with the crime). 63 These ‘innocent-but-informed’ participants were ‘essentially indistinguishable’ from those who actually committed the mock crime: ‘Simple knowledge of probe items was sufficient to induce a high rate of false positives in innocent participants’. 64 Rosenfeld and colleagues counsel that crime details must remain secret, known only to police, perpetrators, eyewitnesses, and victims. But how feasible is it for investigators to know for certain that the probed details are, indeed, secret? This may be easier said than done, and it is not always possible to know the degree to which critical details about the event in question have been inadvertently disclosed. Further relevant to forensic application, the studies investigating variables reviewed in this section have not distinguished between witnesses (who may be innocent-but-informed) and participants (‘guilty’ subjects), such that variables such as time delays and quality of encoding are poorly understood in applications of detection of witness (rather than suspect) memory.

Time delay between event and test. In lab studies using mock crimes, participants are often tested immediately or a few days after the incident. In the real world, interrogation about an event may come weeks, months, or even years later. One study attempting to quantify the impact of a delay in testing asked students to ‘steal’ an exam copy from a professor’s mailbox. 65 Some students were tested using a P300-based CTP procedure immediately after the theft, whereas others returned to the lab a month later. Researchers found no difference in detection efficiency. This is an encouraging result, but more evidence is needed. The test used only a single probe item (the stolen exam), and, as with other mock crime scenarios, subjects were explicitly instructed to engage in the theft, likely heightening the salience of the central detail. What is presently unknown is how P300-based detection fares over time for peripheral crime details, which may be less robustly encoded but more likely to be uniquely known only to a perpetrator, and thus useful for minimizing false positive results.

Quality of encoding and incidentally acquired information. Not all information is equally well remembered. This is, of course, an important feature of human memory, as it would be highly inefficient for a lawyer to remember where she parked her car two Tuesdays ago as well as she remembers the legal standard for summary judgment. How well information is remembered has substantial implications for how well it may be detected. Yet behavioral tests of people’s memory for incidental details of real-world experiences show that sometimes surprisingly little is retained. 66 Rosenfeld’s research group acknowledges that the sensitivity of their P300-based tests is lower for incidentally acquired information than for well-rehearsed information. 67 Strategies under investigation to improve the sensitivity of detection of incidentally acquired information include providing feedback to focus a participant’s attention on the probe, 68 using an additional ERP component, 69 and combining separately administered testing with the CTP. 70 This is a critically important problem for memory detection, as incident-relevant details important for determining guilt may be only ‘incidentally encoded’, particularly under conditions of stress. Is this a problem that can be addressed with technical advances, such as those explored by Rosenfeld et al? Or will the technical improvements simply reveal the outer boundaries of conditions under which subtle but critical details may be recalled and detected, given that they were not central to the incident but may be necessary for avoiding a false positive? It is well known that stimuli that are unattended during learning are often only weakly remembered, or not remembered at all, when assessed on a later recognition test. 71

Existing research has focused on how the use of countermeasures by a guilty subject may lead to missed detection—a false negative because of countermeasures. What has not been firmly established is how often a guilty subject will fail to show a P300 response to a probe stimulus for another reason, such as the fact that he may not have encoded the particular details of a weapon used, because of intoxication, darkness, mental illness, impulsivity, or stress—attributes that may be more prevalent in a criminal defendant population than in a research population. 72

Quality of retrieval environment. A final concern for ecological validity is the effect of stress or other contextual factors on memory ‘retrieval’ as well as encoding. 73 Most P300 studies using volunteer subjects—even those who participate in a mock crime—cannot fully replicate the stress of a real-world, high-stakes memory probe of a suspect (or witness). Although studies generally suggest that stress has a negative effect on episodic memory retrieval, 74 the effect of real-world stress on EEG-based memory detection is not adequately studied.

III.B. fMRI-Based Technology

Functional magnetic resonance imaging is a safe, non-invasive, and widely used research and clinical tool. The details of how it works have been reviewed extensively elsewhere in the legal literature, so only a high-level orientation is provided here for the unfamiliar reader. 75 Functional magnetic resonance imaging uses powerful magnetic fields and precisely tuned radio waves to detect small differences in blood oxygenation that serve as a proxy for neural activity. When a population of neurons becomes active, the brain’s vascular system quickly delivers a supply of richly oxygenated blood to replenish the metabolic needs of those neurons. At present, some fMRI scanners are capable of collecting a snapshot of the blood oxygenation level-dependent signal across the entire human brain every 1 or 2 seconds at reasonably high spatial resolution (ie the images are composed of 2–3 mm cubes called ‘voxels’). 76 Subjects must lie with their head very still in a large, loud tube, but can perform behavioral tasks during scanning by looking at visual stimuli presented via a projection screen (or a virtual reality headset), 77 listening to audio stimuli over headphones, and/or making responses using a keypad or button-box.

Two attributes in particular distinguish fMRI-based memory detection from EEG-based memory detection. The first is technological access to more and different biological sources: fMRI can provide data from the entire brain. Multiple, interconnected brain regions are involved in forming and retrieving memories. One physiological limitation of EEG-based memory detection technologies is that EEG predominantly measures electrical responses from the cortex, the outer covering of the brain closest to the scalp, and cannot measure signals from ‘deeper’ brain structures such as the hippocampus. Although fMRI offers slower temporal resolution than EEG (that is, brain activity levels are sampled on the order of seconds in fMRI, instead of milliseconds in EEG), it offers substantially greater spatial resolution across the entire brain.

The second attribute concerns analytic capability: the most advanced and interesting fMRI studies of memory detection leverage the massive amounts of data obtained from brain scans to assess complex network connections and use machine-learning algorithms to recognize subtle patterns in networks, rather than activation in local areas. 78 These analytic techniques, combined with experimental paradigms and research questions aimed directly at ecological validity concerns, represent a significant advancement in the technological aspects of memory detection. These methodological advances also make it possible to assess where biological constraints may set ultimate boundaries on forensic use.

III.B.1. Early fMRI work on true and false memories

Early fMRI work examining neural correlates of true and false memories reported dissociations—that is, unique differences—between brain areas activated in response to test stimuli in a recognition task asking subjects to remember which stimuli they had previously studied. 79 A recent review of this literature identified as a ‘ubiquitous’ finding the ‘considerable overlap in the neural networks mediating both true and false memories’ for recognition responses to items that share the same ‘gist’ as items that were actually studied during encoding. 80 Many studies also find differences, notably increased activity in regions related to sensory processing for true as compared to false retrieval, leading to the ‘sensory reactivation hypothesis’ that true memories involve bringing back to mind more sensory and perceptual details than false memories do. 81 Overall, empirical support for the intuitively appealing sensory reactivation hypothesis is mixed, and other dissociations, such as different patterns of activity in the medial temporal lobe and prefrontal cortices, have been reported. 82 Other work testing episodic memory retrieval in an fMRI scanner a week after viewing a narrative documentary movie reported that the coactivations of certain brain areas were greater when subjects responded correctly to factually accurate statements about the movie, but such coactivations did not differ between responses to inaccurate statements about the movie. 83 Collectively, this work provided some suggestion that activation patterns could differentiate between true and false recognitions, based on distinct memory processes, though no clear potential for diagnostic assessment of true or false memories emerged. That is, there is no particular ‘spot’ in the brain that serves as a litmus test for whether a memory is true or false.

III.B.2. Newer fMRI methods: advanced techniques reveal the biological limitations of memory detection

A limitation of the classic fMRI analysis paradigms is that memories do not exist in discrete regions of the brain. Memories are encoded and stored in networks of brain regions. 84 Moreover, EEG studies using event-related potentials and fMRI studies using classic ‘univariate’ contrasts of brain activity are only equipped to gauge the relative level of activation across different regions of the brain, and the level of activation provides only rudimentary information about one’s memory state. But newer fMRI analysis methods can make use of the massive amounts of data to assess complex network connections and use machine-learning algorithms to recognize subtle activity patterns in networks, rather than activation in local areas.

This is a substantially more powerful way to analyze complex data, and the remainder of this review will focus on fMRI studies that employ such methods. These methods offer the best hope for reliable forensic memory detection, the greatest insights into the biological limitations of memory detection, and the subtlest challenges for a fact finder to assess the credibility of the techniques used to make claims about the presence or absence of a memory of interest.

III.C. Multi-Voxel Pattern Analysis and Machine-Learning Classifiers

Classic fMRI analysis looks at chunks of the brain: clusters of voxels and regions of interest. By analyzing each voxel separately, this ‘univariate’ analysis approach ignores the rich information that is encoded in the spatial topography of the distributed activation patterns—that is, it spotlights a particular area, but misses patterns in the broader network of brain activity. But the brain is highly interconnected, and not organized as a highly localized series of components.

A newer ‘multivariate’ technique, multi-voxel pattern analysis (MVPA), tries to exploit the information that is represented in the distributed patterns throughout a brain region or even across the entire brain. It is a more sensitive method of analysis because it is more adept at detecting distributed networks of processing. MVPA techniques use machine-learning algorithms to train classifiers on data patterns from test subjects. The classifier learns the distributed ‘neural signatures’ that differentiate unique mental states or behavioral conditions. Once adequately trained, the classifier is then tested on new fMRI data (that it has not been trained on) to determine whether it can accurately classify the condition of a subject’s brain on a given trial based solely on brain data. 85 Simply put, there is more informational content in fMRI activity ‘patterns’ than is typically detected with conventional fMRI analyses. The accuracy with which the classifier can discriminate trials from Condition A and Condition B gives a quantitative assessment of how reliably two putatively distinct mental states are differentiated by their brain activity patterns.
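The train-then-test logic described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not any study’s actual pipeline: the ‘voxel patterns’ are simulated random numbers, the classifier is an L2-regularized logistic regression fit by plain gradient descent (the penalty type, learning rate, and number of epochs are assumptions chosen for illustration), and the helper names `make_trials`, `train_logreg`, and `accuracy` are hypothetical. The essential point is that accuracy is measured only on trials the classifier never saw during training.

```python
import math
import random


def make_trials(n_per_class, n_voxels, signal, rng):
    """Simulate hypothetical voxel patterns for two conditions (labels 0 and 1).

    Condition 1 adds a small shift of `signal` to every voxel, so the difference
    is spread thinly across the whole pattern rather than sitting in one voxel.
    """
    trials = []
    for label in (0, 1):
        for _ in range(n_per_class):
            pattern = [rng.gauss(signal * label, 1.0) for _ in range(n_voxels)]
            trials.append((pattern, label))
    rng.shuffle(trials)
    return trials


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logreg(trials, lam=0.1, lr=0.1, epochs=150):
    """Fit L2-regularized logistic regression by batch gradient descent."""
    n_voxels = len(trials[0][0])
    n = len(trials)
    w, b = [0.0] * n_voxels, 0.0
    for _ in range(epochs):
        grad_w = [lam * wi for wi in w]  # gradient of the penalty (lam/2)*||w||^2
        grad_b = 0.0
        for x, y in trials:
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            for j, xj in enumerate(x):
                grad_w[j] += err * xj / n
            grad_b += err / n
        w = [wi - lr * g for wi, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict_proba(model, x):
    """Classifier's estimated probability that pattern x came from condition 1."""
    w, b = model
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def accuracy(model, trials):
    hits = sum((predict_proba(model, x) >= 0.5) == (y == 1) for x, y in trials)
    return hits / len(trials)


rng = random.Random(0)
train = make_trials(n_per_class=60, n_voxels=40, signal=0.3, rng=rng)
test = make_trials(n_per_class=60, n_voxels=40, signal=0.3, rng=rng)  # held out
model = train_logreg(train)
print(f"held-out accuracy: {accuracy(model, test):.2f}")
```

Because the simulated condition difference is spread thinly across all 40 voxels, no single voxel separates the conditions well, yet a classifier that weighs them jointly can; that is the intuition behind describing MVPA as more sensitive than voxel-by-voxel analysis.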

MVPA has enabled significant advances in memory detection research. In 2010, the first paper to apply an MVPA approach to memory detection in fMRI ‘evaluated whether individuals’ subjective memory experiences, as well as their veridical experiential history, can be decoded from distributed fMRI activity patterns evoked in response to individual stimuli’. 86 Before entering the scanner, participants studied 200 faces for 4 seconds each. In the scanner about an hour later, they were presented with the 200 studied faces interspersed with 200 unstudied faces and pressed a button to indicate whether or not they recognized a given face. Participants were accurate about 70 per cent of the time, giving researchers the ability to examine their brain activity both when they were correct and when their memory failed them. The classifier was first trained to differentiate brain patterns responding to old faces that subjects correctly recognized from brain patterns responding to new faces that they correctly judged to be novel—both situations in which the subjective experience and objective reality of the response were identical. The classifier performed well above chance, with a mean classification accuracy of 83 per cent, rising to 95 per cent when only the classifier’s ‘most confident’ guesses were considered. But since subjects did not perform perfectly (sometimes misidentifying new faces as having been previously seen, or rejecting old faces as novel), the classifier could also be tested on trials in which subjective mnemonic experience and objective history diverged. On those trials, the classifier performed relatively poorly, near chance, when applied to detect the ‘true’ experiential history of a given stimulus. That is, the classifier proved to be very good at decoding a participant’s ‘subjective’ memory state, but not nearly as good at detecting the true, veridical, ‘objective’ experiential history of a given stimulus. Subjective recognition, of course, can be susceptible to memory interference, resulting in commonly experienced, but false, memories. 87
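Two details in this description affect what an ‘accuracy’ figure means: which labels the classifier’s guesses are scored against (the participant’s response or the stimulus’s true history), and whether all trials or only the most confident ones are counted. The sketch below shows both calculations on invented classifier outputs for six hypothetical trials; these numbers are not data from the study, and measuring ‘confidence’ as the distance of the predicted probability from 0.5 is an assumption.

```python
def accuracy_at_confidence(probs, labels, top_fraction=1.0):
    """Accuracy over the `top_fraction` of trials on which the classifier is most
    confident. probs[i] is the classifier's P("old") for trial i; labels[i] is
    1 for "old" and 0 for "new". Confidence = distance of the probability from 0.5.
    """
    ranked = sorted(zip(probs, labels), key=lambda pl: abs(pl[0] - 0.5), reverse=True)
    k = max(1, round(top_fraction * len(ranked)))
    kept = ranked[:k]
    return sum((p >= 0.5) == (y == 1) for p, y in kept) / k


# Invented classifier outputs for six hypothetical trials (not data from any study).
probs = [0.95, 0.90, 0.15, 0.60, 0.45, 0.08]
# Trials at index 1 (false alarm) and index 5 (miss) are memory errors, so the
# participant's response and the ground truth disagree only there.
subjective = [1, 1, 0, 0, 1, 0]  # participant's response: 1 = "seen before"
objective = [1, 0, 0, 0, 1, 1]   # ground truth: 1 = face was actually studied

for name, labels in (("subjective", subjective), ("objective", objective)):
    print(name,
          "all trials:", round(accuracy_at_confidence(probs, labels), 2),
          "most confident half:", round(accuracy_at_confidence(probs, labels, 0.5), 2))
```

Scoring the same outputs against the two label sets can yield very different numbers, and restricting the count to the most confident trials can raise accuracy simply because borderline guesses are dropped.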

Three other aspects of this study deserve separate mention, as they are particularly relevant to assessing the forensic capabilities and limitations of fMRI/MVPA-based memory detection. First, though many stimuli were shown to subjects in order to train the classifier, once trained it could be applied to single trials—that is, a face shown just one time provided sufficient neural information for the classifier to make a categorization decision. 88 This is a significant advantage over EEG-based memory detection paradigms that require multiple presentations to detect an event-related potential, as well as ‘classic’ univariate fMRI analyses that assess averages of trials across conditions. A subsequent study confirmed that the MVPA classifier could decode the memory status of an individual retrieval trial, and attempted to assess the vulnerability of this single-trial assessment to countermeasures by instructing participants who had studied a set of faces to attempt to conceal their true memory state. 89 Participants were instructed to feign the subjective experience of novelty for any faces they actually recognized and to feign the experience of recognition for any faces they did not recognize (eg by recalling someone that the novel face reminded them of). Using only this easy-to-implement countermeasure strategy, participants were able to prevent the experimenters from accurately differentiating brain responses to studied versus novel faces, with the mean classification accuracy dropping to chance level. 90 That is, although MVPA classification of fMRI data can enable single-trial memory assessment, it may still be vulnerable to simple mental countermeasures. 91

Second, the researchers specifically considered whether the same classifier would work across subjects by training it on data from some individuals, but then testing it on data from others. This would be an important feature of any technology to be forensically applied, but depends on an assumption of some unknown degree of consistency across the brains of different people. The classifier worked well across individuals, ‘suggesting high across-participant consistency in memory-related activation patterns’. 92 This is a significant result in that it suggests that, biologically, different people’s brains may be similar enough in how they process memories for technological solutions to be somewhat standardized.
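Training on some participants and testing on others is commonly organized as leave-one-subject-out cross-validation: each participant is held out in turn, the classifier is fit on everyone else, and accuracy is measured on the held-out person. The sketch below assumes that scheme and, for brevity, swaps in a simple nearest-centroid classifier rather than the regularized logistic regression the study used. The simulated participants, the size of their idiosyncratic offsets, and the function names are all hypothetical.

```python
import random


def simulate_subject(rng, n_per_class=30, n_voxels=20, signal=0.5):
    """Hypothetical data for one participant: 'old' trials (label 1) share a
    common distributed shift across participants, plus a small
    participant-specific offset that applies to every trial from that person."""
    offset = [rng.gauss(0.0, 0.3) for _ in range(n_voxels)]
    trials = []
    for label in (0, 1):
        for _ in range(n_per_class):
            x = [signal * label + o + rng.gauss(0.0, 1.0) for o in offset]
            trials.append((x, label))
    return trials


def centroids(trials):
    """Mean pattern for each label across the training trials."""
    n_voxels = len(trials[0][0])
    sums = {0: [0.0] * n_voxels, 1: [0.0] * n_voxels}
    counts = {0: 0, 1: 0}
    for x, y in trials:
        counts[y] += 1
        sums[y] = [s + xi for s, xi in zip(sums[y], x)]
    return {y: [s / counts[y] for s in sums[y]] for y in (0, 1)}


def predict(cents, x):
    """Assign x to the label whose centroid is nearest (squared Euclidean)."""
    dist = {y: sum((xi - ci) ** 2 for xi, ci in zip(x, c)) for y, c in cents.items()}
    return min(dist, key=dist.get)


def leave_one_subject_out(subjects):
    """Train on all participants but one, test on the held-out participant."""
    scores = []
    for held_out in range(len(subjects)):
        train = [t for i, s in enumerate(subjects) if i != held_out for t in s]
        cents = centroids(train)
        test = subjects[held_out]
        scores.append(sum(predict(cents, x) == y for x, y in test) / len(test))
    return scores


rng = random.Random(1)
subjects = [simulate_subject(rng) for _ in range(8)]
scores = leave_one_subject_out(subjects)
print([round(s, 2) for s in scores])
print(f"mean across held-out participants: {sum(scores) / len(scores):.2f}")
```

If each simulated participant’s idiosyncratic offset is made large relative to the shared signal, held-out accuracy tends toward chance, which is the sense in which cross-participant transfer depends on consistency across brains.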

Third, recall that the ‘classifier’ is not a person making a judgment call—it is a machine-learning algorithm, in this case based on regularized logistic regression 93 —assessing patterns of neural data. What the 2010 study suggests is that memories that feel true but are objectively false may have neural signatures quite similar to memories that feel true and are true. 94 If that finding holds, it has substantial implications for the ability to detect true memories and avoid detection of false memories.

III.D. Advances in Experimental Paradigms with MVPA: Real-World Life Tracking, Single-Trial Detection, and Boundary Conditions Revealed

Lab-created memories such as studying a series of faces may be relatively impoverished, from a neural data perspective, as compared to real-life memories that have potential for context and meaning. It is possible that where MVPA-based detection met limits for lab-created memories (in particular, the inability to distinguish objectively false but subjectively experienced memories, and vulnerability to simple countermeasures), additional information available to the classifier from a richer memory set may make real-life memories more distinguishable. This enrichment of the memory experience, and delays between the experience and time of test, also address concerns about ecological validity of research for potential forensic application. 95

To address this, Rissman and colleagues had participants wear a necklace camera for a three-week period while going about their daily lives before returning a week later to be scanned while making memory judgments about sequences of photos from their own life or from others’ lives. 96 After viewing a short sequence of four photographs depicting one event, participants made a self/other judgment and then indicated how strong their experience of recollection or familiarity was for photos judged to be from their own life, or their degree of certainty about photos judged to be from someone else’s life.