Integrations

What's new?

In-Product Prompts

Participant Management

Interview Studies

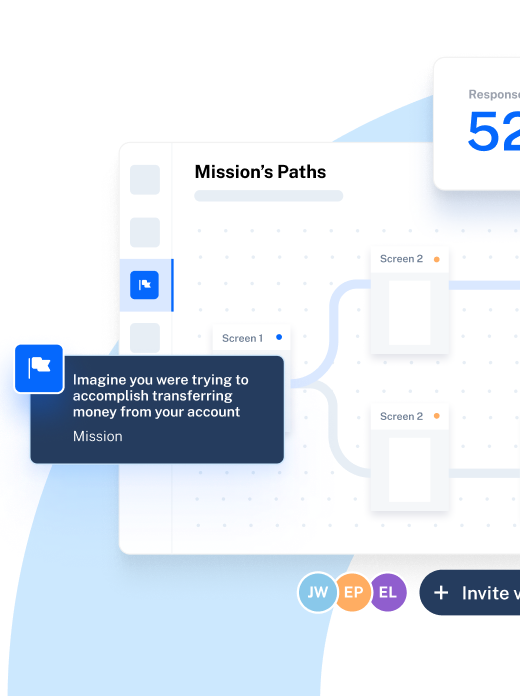

Prototype Testing

Card Sorting

Tree Testing

Live Website Testing

Automated Reports

Templates Gallery

Choose from our library of pre-built mazes to copy, customize, and share with your own users

Browse all templates

Financial Services

Tech & Software

Product Designers

Product Managers

User Researchers

By use case

Concept & Idea Validation

Wireframe & Usability Test

Content & Copy Testing

Feedback & Satisfaction

Content Hub

Educational resources for product, research and design teams

Explore all resources

Question Bank

Maze Research Success Hub

Guides & Reports

Help Center

Future of User Research Report

The Optimal Path Podcast

User Research

Mar 21, 2024

Creating a research hypothesis: How to formulate and test UX expectations

A research hypothesis helps guide your UX research with focused predictions you can test and learn from. Here’s how to formulate your own hypotheses.

Armin Tanovic

All great products were once just thoughts—the spark of an idea waiting to be turned into something tangible.

A research hypothesis in UX is very similar. It’s the starting point for your user research; the jumping off point for your product development initiatives.

Formulating a UX research hypothesis helps guide your UX research project in the right direction, collect insights, and evaluate not only whether an idea is worth pursuing, but how to go after it.

In this article, we’ll cover what a research hypothesis is, how it's relevant to UX research, and the best formula to create your own hypothesis and put it to the test.


What defines a research hypothesis?

A research hypothesis is a statement or prediction that needs testing to be proven or disproven.

Let’s say you have an inkling that changing a feature icon will increase the number of users who engage with it. With some minor adjustments, this theory becomes a research hypothesis: “Adjusting Feature X’s icon will increase daily average users by 20%”.

A research hypothesis is the starting point that guides user research. It takes your thought and turns it into something you can quantify and evaluate. In this case, you could conduct usability tests and user surveys, and run A/B tests to see if you’re right or, just as importantly, wrong.

A good research hypothesis has three main features:

- Specificity: A hypothesis should clearly define what variables you’re studying and what you expect an outcome to be, without ambiguity in its wording

- Relevance: A research hypothesis should have significance for your research project by addressing a potential opportunity for improvement

- Testability: Your research hypothesis must be testable in some way, such as through empirical observation or data collection
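One way to make specificity and testability concrete is to write each hypothesis down as a structured record before testing it. The sketch below is a hypothetical Python helper, not part of any Maze feature; the field names and the allowed directions are assumptions. Relevance can't be checked by code and still has to be judged by you and your team.

```python
from dataclasses import dataclass
from typing import Optional

# Allowed directions for the expected outcome (hypothetical convention)
DIRECTIONS = {"increase", "decrease", "differ"}


@dataclass(frozen=True)
class Hypothesis:
    """Hypothetical record for a UX research hypothesis."""
    change: str                     # independent variable: the design change
    metric: str                     # dependent variable: what you will measure
    direction: str                  # "increase", "decrease", or "differ"
    method: str                     # how you will test it, e.g. "A/B test"
    target: Optional[float] = None  # expected relative change, e.g. 0.20

    def is_specific(self) -> bool:
        # Specific: names a change, a measurable outcome, and a direction
        return bool(self.change and self.metric) and self.direction in DIRECTIONS

    def is_testable(self) -> bool:
        # Testable: the outcome is paired with a method that can collect data on it
        return bool(self.metric and self.method)

    def statement(self) -> str:
        # Render the hypothesis as a single sentence
        if self.direction == "differ":
            return f"{self.change} will make a difference in {self.metric}"
        text = f"{self.change} will {self.direction} {self.metric}"
        if self.target is not None:
            text += f" by {self.target:.0%}"
        return text


icon = Hypothesis("Adjusting Feature X's icon", "daily average users",
                  "increase", "A/B test", target=0.20)
print(icon.statement())
print(icon.is_specific(), icon.is_testable())
```

A vague idea such as "a new icon will be better" fails both checks, because it names no metric, no recognised direction, and no method.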

What is the difference between a research hypothesis and a research question?

Research questions and research hypotheses are often treated as one and the same, but they’re not quite identical.

A research hypothesis acts as a prediction or educated guess of outcomes, while a research question poses a query on the subject you’re investigating. Put simply, a research hypothesis is a statement, whereas a research question is (you guessed it) a question.

For example, here’s a research hypothesis: “Implementing a navigation bar on our dashboard will improve customer satisfaction scores by 10%.”

This statement is a testable prediction rather than a question. Here’s what the same idea would look like as a research question: “Will integrating a navigation bar on our dashboard improve customer satisfaction scores?”

The distinction is minor, and both are focused on uncovering the truth behind the topic, but they’re not quite the same.

Why do you use a research hypothesis in UX?

Research hypotheses in UX are used to establish the direction of a particular study, research project, or test. Formulating a hypothesis and testing it ensures the UX research you conduct is methodical, focused, and actionable. It aids every phase of your research process, acting as a north star that guides your efforts toward successful product development.

Typically, UX researchers will formulate a testable hypothesis to help them fulfill a broader objective, such as improving customer experience or product usability. They’ll then conduct user research to gain insights into their prediction and confirm or reject the hypothesis.

A proven or disproven hypothesis tells you whether your prediction is right, and whether you should move forward with your proposed design or go back to the drawing board.

Formulating a hypothesis can be helpful in anything from prototype testing to idea validation and design iteration. Put simply, it’s one of the first steps in conducting user research.

Whether you’re in the initial stages of product discovery for a new product or a single feature, or conducting ongoing research, a strong hypothesis gives your research a clear purpose and angle. It also helps you decide which user research methodology to use to get your answers.

What are the types of research hypotheses?

Not all hypotheses are built the same—there are different types with different objectives. Understanding the different types enables you to formulate a research hypothesis that outlines the angle you need to take to prove or disprove your predictions.

Here are some of the different types of hypotheses to keep in mind.

Null and alternative hypotheses

While a normal research hypothesis predicts that a specific outcome will occur based upon a certain change of variables, a null hypothesis predicts that no difference will occur when you introduce a new condition.

By that reasoning, a null hypothesis would be:

- Adding a new CTA button to the top of our homepage will make no difference in conversions

Null hypotheses are useful because they state what your test or research study is trying to disprove, rather than what your research hypothesis is trying to prove.

An alternative hypothesis states the exact opposite of a null hypothesis. It proposes that a certain change will occur when you introduce a new condition or variable. For example:

- Adding a CTA button to the top of our homepage will cause a difference in conversion rates
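In quantitative studies such as A/B tests, the null and alternative hypotheses map directly onto a statistical test. As a minimal sketch, assuming hypothetical conversion counts and samples large enough for the normal approximation to hold, a two-proportion z-test checks the null hypothesis that the CTA button makes no difference to conversions:

```python
import math


def normal_cdf(x: float) -> float:
    # Standard normal cumulative distribution function via the error function
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Two-sided z-test. H0: rate A == rate B; H1: rate A != rate B."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Under H0 both groups share one conversion rate, so pool the data
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - normal_cdf(abs(z)))
    return z, p_value


# Hypothetical data: homepage without (A) and with (B) the new CTA button
z, p = two_proportion_z_test(conv_a=120, n_a=2000, conv_b=156, n_b=2000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

With these made-up counts (6.0% vs. 7.8%), z comes out at roughly 2.25 and the two-sided p-value at roughly 0.025, so at the conventional 0.05 level you would reject the null hypothesis in favour of the alternative.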

Simple hypotheses and complex hypotheses

A simple hypothesis is a prediction that includes only two variables in a cause-and-effect sequence, with one variable dependent on the other. It predicts that you'll achieve a particular outcome based on a certain condition. The outcome is known as the dependent variable and the change causing it is the independent variable.

For example, this is a simple hypothesis:

- Including the search function on our mobile app will increase user retention

The expected outcome of increasing user retention is based on the condition of including a new search function. But, what happens when there are more than two factors at play?

We get what’s called a complex hypothesis. Instead of a single condition and outcome, a complex hypothesis includes multiple variables, such as several expected results. This makes it a good fit for framing complex studies or tracking multiple KPIs based on a single action.

Building upon our previous example, a complex research hypothesis could be:

- Including the search function on our mobile app will increase user retention and boost conversions

Directional and non-directional hypotheses

Research hypotheses can also differ in the specificity of outcomes. Put simply, any hypothesis that has a specific outcome or direction based on the relationship of its variables is a directional hypothesis. That means that our previous example of a simple hypothesis is also a directional hypothesis.

Non-directional hypotheses don’t specify the outcome or difference the variables will see. They just state that a difference exists. Following our example above, here’s what a non-directional hypothesis would look like:

- Including the search function on our mobile app will make a difference in user retention

In this non-directional hypothesis, the direction of difference (increase or decrease) hasn’t been specified; we’ve only noted that there will be a difference.
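In quantitative testing, this distinction shows up in the p-value: a directional hypothesis corresponds to a one-sided test and a non-directional one to a two-sided test. A minimal sketch, assuming a z statistic has already been computed (the value 1.8 below is hypothetical):

```python
import math


def normal_cdf(x: float) -> float:
    # Standard normal cumulative distribution function
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def p_values(z: float):
    """Return (one-sided, two-sided) p-values for a z statistic."""
    # Directional H1 ("the metric will increase"): only the upper tail counts
    one_sided = 1 - normal_cdf(z)
    # Non-directional H1 ("the metric will differ"): both tails count
    two_sided = 2 * (1 - normal_cdf(abs(z)))
    return one_sided, two_sided


one, two = p_values(1.8)  # hypothetical test statistic
print(f"directional p = {one:.3f}, non-directional p = {two:.3f}")
```

For z = 1.8 the one-sided p-value is about 0.036 and the two-sided one about 0.072, so the same data can support a directional hypothesis at the 0.05 level but not a non-directional one. That is why the direction should be decided before you look at the data.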

The type of hypothesis you write helps guide your research—let’s get into it.

How to write and test your UX research hypothesis

Now that we’ve covered the types of research hypotheses, it’s time to get practical.

Creating your research hypothesis is the first step in conducting successful user research.

Here are the four steps for writing and testing a UX research hypothesis to help you make informed, data-backed decisions for product design and development.

1. Formulate your hypothesis

Start by writing out your hypothesis in a way that’s specific and relevant to a distinct aspect of your user or product experience. Meaning: your prediction should include a design choice followed by the outcome you’d expect—this is what you’re looking to validate or reject.

Your proposed research hypothesis should also be testable through user research data analysis. There’s little point in a hypothesis you can’t test!

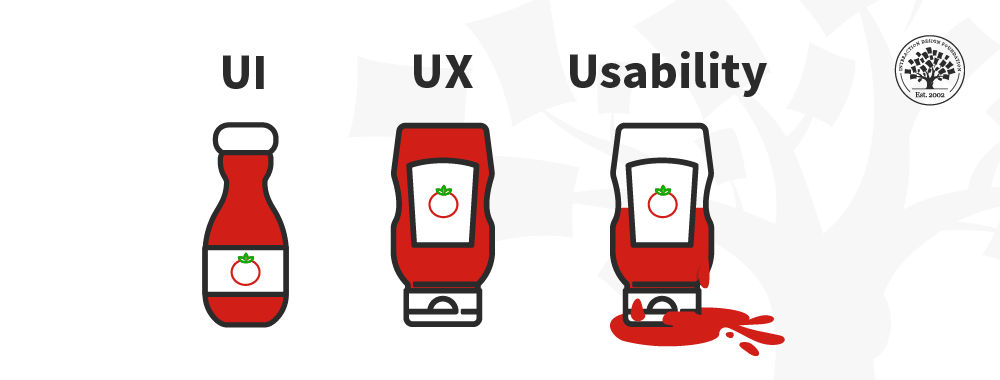

Let’s say your focus is your product’s user interface—and how you can improve it to better meet customer needs. A research hypothesis in this instance might be:

- Adding a settings tab to the navigation bar will improve usability

By writing out a research hypothesis in this way, you’re able to conduct relevant user research to prove or disprove your hypothesis. You can then use the results of your research—and the validation or rejection of your hypothesis—to decide whether or not you need to make changes to your product’s interface.

2. Identify variables and choose your research method

Once you’ve got your hypothesis, you need to map out exactly how you’ll test it. Consider which variables relate to your hypothesis. In our case, the independent variable is adding a settings tab to the navigation bar, and the dependent variable is usability.

Once you’ve defined the relevant variables, you’re in a better position to decide on the best UX research method for the job. If you’re after metrics that signal improvement, you’ll want a method that yields quantifiable results, like usability testing. If your outcome is geared toward how users feel, research methods for qualitative user insights, like user interviews, are the way to go.

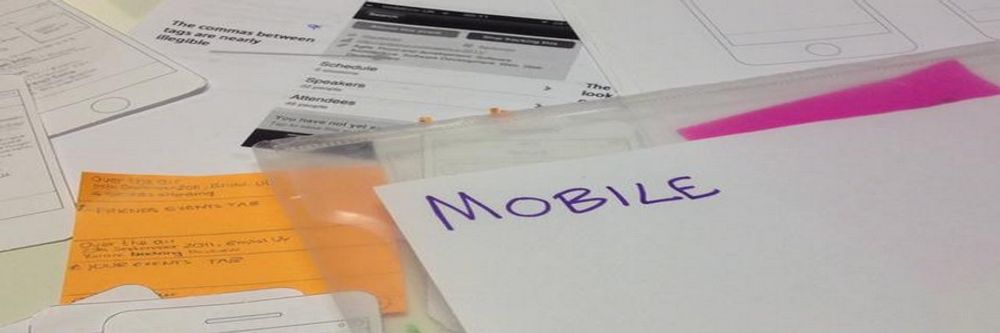

3. Carry out your study

It’s go time. Now that you’ve got your hypothesis, identified the relevant variables, and outlined your method for testing them, you’re ready to run your study. This step involves recruiting participants and reaching out to them through relevant channels like email, live website testing, or social media.

Given our hypothesis, our best bet is to conduct A/B and usability tests with a prototype that includes the additional UI elements, then compare the usability metrics to see whether users find navigation easier with or without the settings button.

We can also follow up with UX surveys to get qualitative insights and ask users how they found the task, what they preferred about each design, and to see what additional customer insights we uncover.

💡 Want more insights from your usability tests? Maze Clips enables you to gather real-time recordings and reactions of users participating in usability tests.

4. Analyze your results and compare them to your hypothesis

By this point, you’ve neatly outlined a hypothesis, chosen a research method, and carried out your study. It’s now time to analyze your findings and evaluate whether they support or reject your hypothesis.

Look at the data you’ve collected and what it means. Given that we conducted usability testing, we’ll want to look to some key usability metrics for an indication of whether the additional settings button improves usability.

For example, with the usability task ‘In account settings, find your profile and change your username’, we can conduct task analysis to compare the time on task and misclick rate of the new design with the same metrics from the old design.
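For the time-on-task comparison, one common option is Welch's t-test, which does not assume the two designs have equal variances. The sketch below uses only Python's standard library and hypothetical task times in seconds; it returns the t statistic and Welch-Satterthwaite degrees of freedom, which you would compare against a t table. If SciPy is available, `scipy.stats.ttest_ind(a, b, equal_var=False)` runs the same test and reports a p-value directly.

```python
import math
from statistics import mean, variance


def welch_t(a, b):
    """Welch's t statistic and degrees of freedom for two independent samples."""
    # Squared standard error of each sample mean (sample variance / n)
    va = variance(a) / len(a)
    vb = variance(b) / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    # Welch-Satterthwaite approximation for the degrees of freedom
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


# Hypothetical time on task (seconds) for the username-change task
old_design = [48, 52, 61, 45, 70, 58, 66, 49]
new_design = [38, 41, 50, 36, 44, 47, 40, 39]

t, df = welch_t(old_design, new_design)
print(f"t = {t:.2f}, df = {df:.1f}")
```

A positive t here means the old design took longer on average. Task times are usually right-skewed, so you might also run the same function on `math.log` of each time.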

If you also conduct follow-up surveys or interviews, you can ask users directly about their experience and analyze their answers to gather additional qualitative data. Maze AI can handle the analysis automatically, but you can also manually read through responses to get an idea of what users think about the change.

By comparing the findings to your research hypothesis, you can identify whether your research supports or refutes it. If most users struggled to find the settings page with the original design but had a higher success rate with your new prototype, your findings support the hypothesis.

However, it's also crucial to acknowledge if the findings refute your hypothesis rather than prove it as true. Ruling something out is just as valuable as confirming a suspicion.

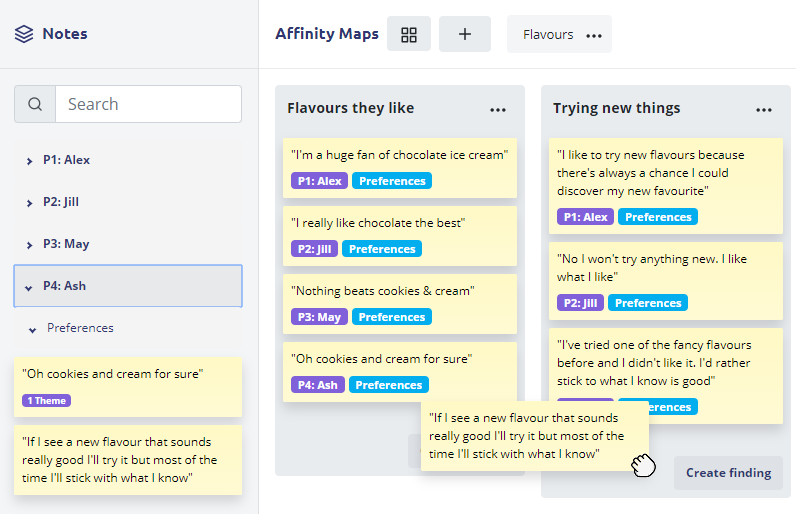

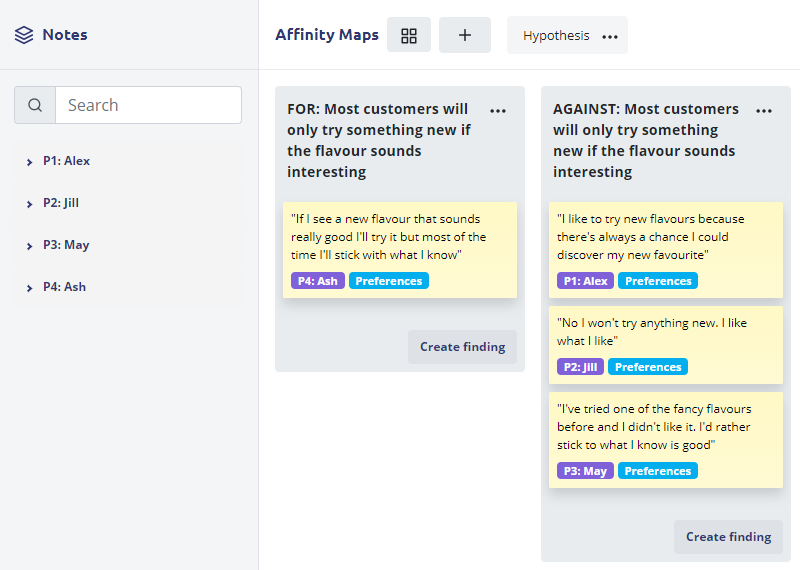

In either case, make sure to draw conclusions based on the relationship between the variables and store findings in your UX research repository. You can conduct deeper analysis with techniques like thematic analysis or affinity mapping.

UX research hypotheses: four best practices to guide your research

Knowing the big steps for formulating and testing a research hypothesis ensures that your next UX research project gives you focused, impactful results and insights. But, that’s only the tip of the research hypothesis iceberg. There are some best practices you’ll want to consider when using a hypothesis to test your UX design ideas.

Here are four research hypothesis best practices to help guide testing and make your UX research systematic and actionable.

Align your hypothesis to broader business and UX goals

Before you begin to formulate your hypothesis, be sure to pause and think about how it connects to broader goals in your UX strategy. This ensures that your efforts and predictions align with your overarching design and development goals.

For example, implementing a brand new navigation menu for current account holders might work for usability, but if the wider team is focused on boosting conversion rates for first-time site viewers, there might be a different research project to prioritize.

Create clear and actionable reports for stakeholders

Once you’ve conducted your testing and proved or disproved your hypothesis, UX reporting and analysis is the next step. You’ll need to present your findings to stakeholders in a way that's clear, concise, and actionable. If your hypothesis insights come in the form of metrics and statistics, then quantitative data visualization tools and reports will help stakeholders understand the significance of your study, while setting the stage for design changes and solutions.

If you went with a research method like user interviews, a narrative UX research report including key themes and findings, proposed solutions, and your original hypothesis will help inform your stakeholders on the best course of action.

Consider different user segments

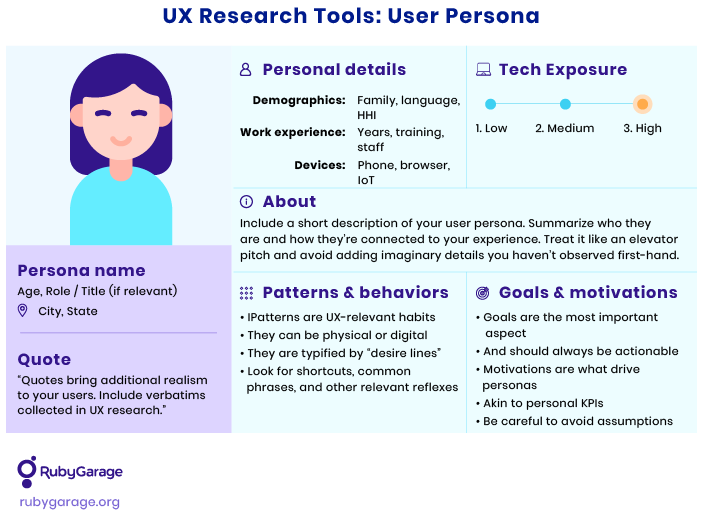

While getting enough responses is crucial for proving or disproving your hypothesis, you’ll also want to consider which users will give you the highest quality and most relevant responses. Remember to consider user personas. For example, if you’re only introducing a change for premium users, exclude users who are on a free trial of your product from testing.

You can recruit and target specific user demographics with the Maze Panel, which enables you to search for and filter participants that meet your requirements. Doing so allows you to better understand how different users will respond to your hypothesis testing. It also helps you uncover specific needs or issues different users may have.

Involve stakeholders from the start

Before testing or even formulating a research hypothesis by yourself, ensure all your stakeholders are on board. Informing everyone of your plan to formulate and test your hypothesis does three things:

Firstly, it keeps your team in the loop. They’ll be able to inform you of any relevant insights, special considerations, or existing data they already have about your particular design change idea, or KPIs to consider that would benefit the wider team.

Secondly, informing stakeholders ensures seamless collaboration across multiple departments. Together, you’ll be able to fit your testing results into your overall CX strategy, ensuring alignment with business goals and broader objectives.

Finally, getting everyone involved enables them to contribute potential hypotheses to test. You’re not the only one with ideas about what changes could positively impact the user experience, and keeping everyone in the loop brings fresh ideas and perspectives to the table.

Test your UX research hypotheses with Maze

Formulating and testing out a research hypothesis is a great way to define the scope of your UX research project clearly. It helps keep research on track by providing a single statement to come back to and anchor your research in.

Whether you run usability tests or user interviews to assess your hypothesis—Maze's suite of advanced research methods enables you to get the in-depth user and customer insights you need.

Frequently asked questions about research hypotheses

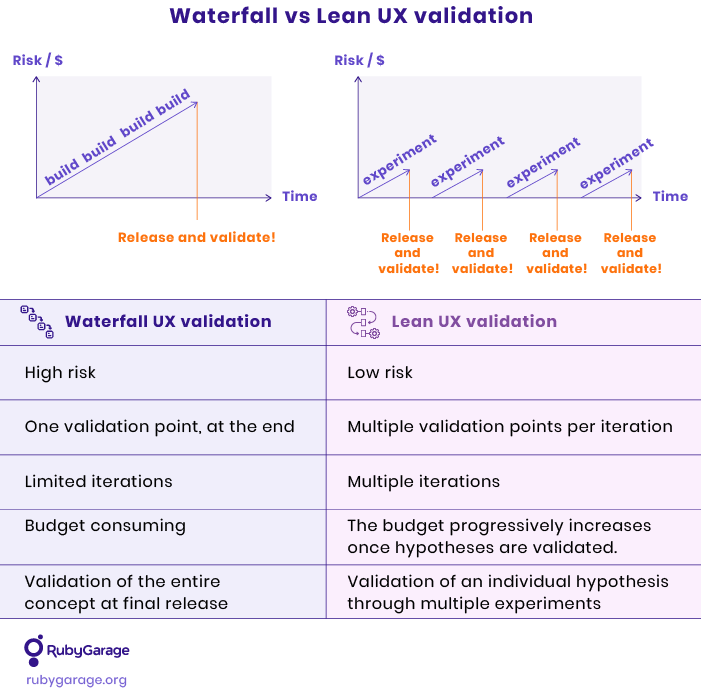

What is the difference between a hypothesis and a problem statement in UX?

A problem statement identifies a specific issue in your design that you intend to solve. It will typically include a user persona, an issue they have, and a desired outcome they need. A research hypothesis, on the other hand, describes your prediction about, or proposed method of, solving that problem.

How many hypotheses should a UX research problem have?

Technically, there is no limit to the number of hypotheses you can have for a certain problem or study. However, you should limit yourself to one hypothesis per specific issue in UX research. This ensures that you can conduct focused testing and reach clear, actionable results.

Hypothesis Testing in the User Experience

It’s something we have all done, and if you have kids, you might see it each year at the school science fair.

- Does an expensive baseball travel farther than a cheaper one?

- Which melts an ice block quicker, salt water or tap water?

- Does changing the amount of vinegar affect the color when dyeing Easter eggs?

While the science project might be relegated to the halls of elementary schools or your fading childhood memory, it provides an important lesson for improving the user experience.

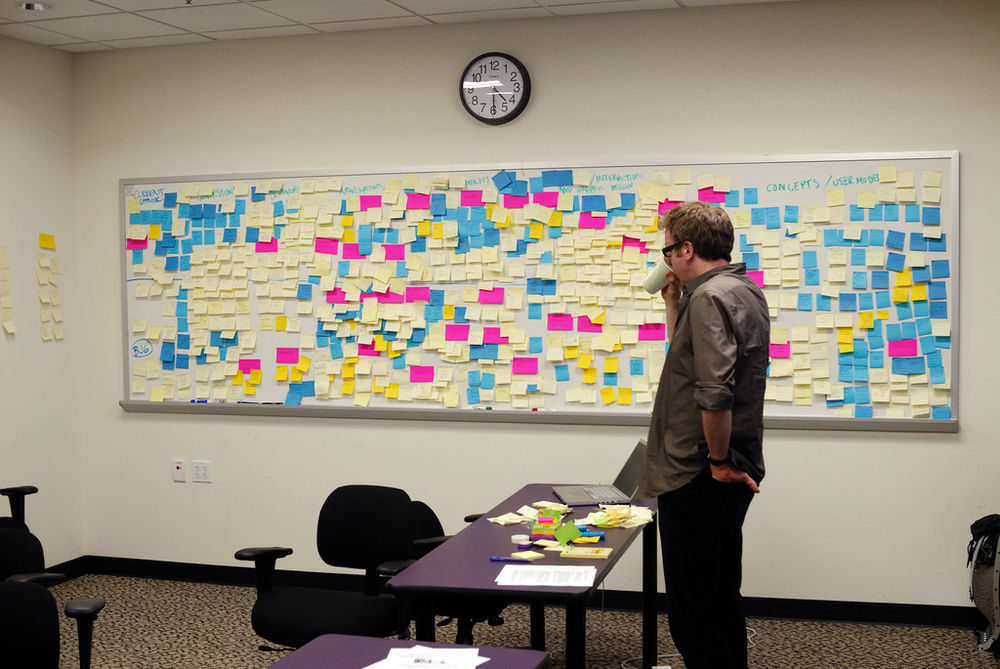

The science project provides us with a template for designing a better user experience. Form a clear hypothesis, identify metrics, and collect data to see if there is evidence to refute or confirm it. Hypothesis testing is at the heart of modern statistical thinking and a core part of the Lean methodology.

Instead of approaching design decisions with pure instinct and arguments in conference rooms, form a testable statement, invite users, define metrics, collect data and draw a conclusion.

- Does requiring the user to enter their email twice result in more valid email addresses?

- Will labels on the top of form fields or the left of form fields reduce the time to complete the form?

- Does requiring the last four digits of your Social Security Number improve application rates over asking for a full SSN?

- Do users have more trust in the website if we include the McAfee security symbol or the Verisign symbol?

- Do more users make purchases if the checkout button is blue or red?

- Does a single long form generate more form submissions than the same form divided across three smaller pages?

- Will users find items faster using mega menu navigation or standard drop-down navigation?

- Does the number of monthly invoices a small business sends affect which payment solution they prefer?

- Do mobile users prefer to download an app to shop for furniture or use the website?

Each of the above questions is both testable and represents a real example. It’s best to make your hypothesis as specific as possible and isolate the variable of interest. Many of these hypotheses can be tested with a simple A/B test, an unmoderated usability test, a survey, or some combination of them all.

Even before you collect any data, there is an immediate benefit gained from forming hypotheses. It forces you and your team to think through the assumptions in your designs and business decisions. For example, many registration systems require users to enter their email address twice. If an email address is wrong, in many cases a company has no communication with a prospective customer.

Requiring two email fields would presumably reduce the number of mistyped email addresses. But just as legislation can have unintended consequences, so can rules in the user interface. Do users just copy and paste their email, negating the double fields? If you then disable pasting email addresses into the field, does this lead to more form abandonment and fewer customers overall?

With a clear hypothesis to test, the next step involves identifying metrics that help quantify the experience. As in most tests, you can use a simple binary metric (yes/no, pass/fail, convert/didn’t convert). For example, you could collect how many users registered using the double email vs. the single email form, how many submitted using the last four digits of their SSN vs. the full SSN, and how many found an item with the mega menu vs. the standard menu.
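Usability studies often involve small samples, where the normal approximation behind a z-test is unreliable. For a binary metric such as registered/didn’t register, Fisher's exact test is one standard choice. A minimal standard-library sketch, using hypothetical counts of 9 of 10 users completing the single-email form versus 4 of 10 completing the double-email form:

```python
from math import comb


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    Rows are designs; columns are completed / did not complete.
    """
    row1, row2, col1 = a + b, c + d, a + c
    total = comb(row1 + row2, col1)

    def prob(x: int) -> float:
        # Hypergeometric probability that row 1 has x completions,
        # given the fixed row and column totals
        return comb(row1, x) * comb(row2, col1 - x) / total

    observed = prob(a)
    p_value = 0.0
    for x in range(max(0, col1 - row2), min(row1, col1) + 1):
        px = prob(x)
        # Count every table no more likely than the observed one
        if px <= observed * (1 + 1e-9):
            p_value += px
    return min(p_value, 1.0)


# Hypothetical counts: single email form 9/10 completed, double email 4/10
p = fisher_exact_two_sided(9, 1, 4, 6)
print(f"p = {p:.3f}")
```

For these made-up counts the two-sided p-value is about 0.057, so even a 90% versus 40% completion gap falls just short of significance at the 0.05 level with only ten users per group. If SciPy is installed, `scipy.stats.fisher_exact` should give the same two-sided result.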

Binary metrics are simple, but they usually can’t fully describe the experience. This is why we routinely collect multiple metrics, both performance and attitudinal. You can measure the time it takes users to submit alternate versions of the forms, or the time it takes to find items using different menus. Rating scales and forced ranking questions are good ways of measuring preferences for downloading apps or choosing a payment solution.

With a clear research hypothesis and some appropriate metrics, the next steps involve collecting data from the right users and analyzing the data statistically to test the hypothesis. Technically, we rework our research hypothesis into what’s called the Null Hypothesis, then look for evidence against the Null Hypothesis, usually in the form of the p-value. This is, of course, a much larger topic that we cover in Quantifying the User Experience.
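To make "looking for evidence against the null hypothesis" concrete, here is a minimal permutation test, one of several ways to obtain a p-value and not necessarily the method covered in Quantifying the User Experience. Under the null hypothesis the design label doesn't matter, so repeatedly shuffling the labels shows how often a difference as large as the observed one arises by chance. The completion times are hypothetical.

```python
import random


def sample_mean(values):
    return sum(values) / len(values)


def permutation_p_value(a, b, n_resamples=10_000, seed=0):
    """Two-sided permutation test for a difference in means.

    H0: the design label is irrelevant, so any split of the pooled data
    into groups of the original sizes is equally likely.
    """
    rng = random.Random(seed)
    observed = abs(sample_mean(a) - sample_mean(b))
    pooled = list(a) + list(b)
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)
        diff = abs(sample_mean(pooled[:len(a)]) - sample_mean(pooled[len(a):]))
        if diff >= observed:
            extreme += 1
    # Add-one correction keeps the estimated p-value above zero
    return (extreme + 1) / (n_resamples + 1)


# Hypothetical form completion times (seconds): one long form vs three pages
single_page = [62, 58, 71, 66, 75, 69, 64, 70]
three_pages = [81, 77, 90, 85, 79, 88, 83, 92]
print(f"p = {permutation_p_value(single_page, three_pages):.4f}")
```

A small p-value says the observed difference would be rare if the null hypothesis were true; it does not say how large or important the difference is.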

While the process of subjecting data to statistical analysis intimidates many designers and researchers (recalling those school memories again), remember that the hardest and most important part is working with a good testable hypothesis. It takes practice to convert fuzzy business questions into testable hypotheses. Once you’ve got that down, the rest is mechanics that we can help with.

UX Research: Objectives, Assumptions, and Hypothesis

by Rick Dzekman

An often neglected step in UX research

Introduction

UX research should always be done for a clear purpose – otherwise you’re wasting both your time and the time of your participants. But many people who do UX research fail to properly articulate that purpose in their research objectives. A major issue is that the research objectives include assumptions that have not been properly defined.

When planning UX research you have some goal in mind:

- For generative research it’s usually to find out something about users or customers that you previously did not know

- For evaluative research it’s usually to identify any potential issues in a solution

As part of this goal you write down research objectives that help you achieve it. But many researchers (especially more junior ones) miss some key steps:

- How will those research objectives help to reach that goal?

- What assumptions have you made that are necessary for those objectives to reach that goal?

- How does your research (questions, tasks, observations, etc.) help meet those objectives?

- What kind of responses or observations do you need from your participants to meet those objectives?

One approach people use is to write their objectives in the form of research hypotheses. There are a lot of problems when trying to validate a hypothesis with qualitative research, and sometimes even with quantitative research.

This article focuses largely on qualitative research: interviews, user tests, diary studies, ethnographic research, etc. With qualitative research in mind, let’s start by taking a look at a few examples of UX research hypotheses and how they may be problematic.

Research hypothesis

Example hypothesis: users want to be able to filter products by colour.

At first it may seem that there are a number of ways to test this hypothesis with qualitative research. For example we might:

- Observe users shopping on sites with and without colour filters and see whether or not they use them

- Ask users who are interested in our products about how they narrow down their choices

- Run a diary study where participants document the ways they narrowed down their searches on various stores

- Make a prototype with colour filters and see if participants use them unprompted

These approaches are all effective, but they do not and cannot prove or disprove our hypothesis. It’s not that the research methods are ineffective; it’s that the hypothesis itself is poorly expressed.

The first problem is that there are hidden assumptions made by this hypothesis. Presumably we would be doing this research to decide between a choice of possible filters we could implement. But there’s no obvious link between users wanting to filter by colour and a benefit from us implementing a colour filter. Users may say they want it but how will that actually benefit their experience?

The second problem with this hypothesis is that we’re asking a question about “users” in general. How many users would have to want colour filters before we could say that this hypothesis is true?

Example Hypothesis: Adding a colour filter would make it easier for users to find the right products

This is an obvious improvement to the first example but it still has problems. We could of course identify further assumptions but that will be true of pretty much any hypothesis. The problem again comes from speaking about users in general.

Perhaps if we add the ability to filter by colour it might make the possible filters crowded and make it more difficult for users who don’t need colour to find the filter that they do need. Perhaps there is a sample bias in our research participants that does not apply broadly to our user base.

It is difficult (though not impossible) to design research that could prove or disprove this hypothesis. Any such research would have to be quantitative in nature. And we would have to spend time mapping out what it means for something to be “easier” or what “the right products” are.

Example Hypothesis: Travelers book flights before they book their hotels

The problem with this hypothesis should now be obvious: what would it actually mean for this hypothesis to be proved or disproved? What portion of travelers would need to book their flights first for us to consider this true?

Example Hypothesis: Most users who come to our app know where and when they want to fly

This hypothesis is better because it talks about “most users” rather than users in general. “Most” would need to be better defined but at least this hypothesis is possible to prove or disprove.

We could address this hypothesis with quantitative research. If we found out that it was true we could focus our design around the primary use case or do further research about how to attract users at different stages of their journey.

However there is no clear way to prove or disprove this hypothesis with qualitative research. If the app has a million users and 15/20 research participants tell you that this is true would your findings generalise to the entire user base? The margin of error on that finding is 20-25%, meaning that the true results could be closer to 50% or even 100% depending on how unlucky you are with your sample.
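The margin of error for 15 of 20 participants can be checked with a little arithmetic. The sketch below computes two common confidence intervals for a proportion, the simple Wald interval and the adjusted Wald (Agresti-Coull) interval; the choice of these two methods is ours and not necessarily the calculation behind the figure above.

```python
import math


def wald_interval(successes: int, n: int, z: float = 1.96):
    """Wald (normal-approximation) interval for a proportion."""
    p = successes / n
    margin = z * math.sqrt(p * (1 - p) / n)
    return margin, max(0.0, p - margin), min(1.0, p + margin)


def adjusted_wald_interval(successes: int, n: int, z: float = 1.96):
    """Adjusted Wald (Agresti-Coull) interval; better behaved for small n."""
    n_adj = n + z ** 2
    p_adj = (successes + z ** 2 / 2) / n_adj
    margin = z * math.sqrt(p_adj * (1 - p_adj) / n_adj)
    return margin, max(0.0, p_adj - margin), min(1.0, p_adj + margin)


# 15 of 20 participants say they know where and when they want to fly
for name, fn in [("Wald", wald_interval), ("Adjusted Wald", adjusted_wald_interval)]:
    margin, low, high = fn(15, 20)
    print(f"{name}: ±{margin:.1%} -> {low:.0%} to {high:.0%}")
```

At 95% confidence (z = 1.96) the margin comes out at roughly 18 to 19 percentage points, with the interval running from roughly 53-56% up to 89-94% depending on the method. At 99% confidence (z ≈ 2.576) the Wald margin grows to about 25 points, in line with the 20-25% range above.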

Example Hypothesis: Customers want their bank to help them build better savings habits

There are many things wrong with this hypothesis but we will focus on the hidden assumptions and the links to design decisions. Two big assumptions are that (1) it’s possible to find out what research participants want and (2) people’s wants should dictate what features or services to provide.

Research objectives

One of the biggest problems with using hypotheses is that they set the wrong expectations about what your research results are telling you. In Thinking, Fast and Slow, Daniel Kahneman points out that:

- “extreme outcomes (both high and low) are more likely to be found in small than in large samples”

- “the prominence of causal intuitions is a recurrent theme in this book because people are prone to apply causal thinking inappropriately, to situations that require statistical reasoning”

- “when people believe a conclusion is true, they are also very likely to believe arguments that appear to support it, even when these arguments are unsound”

Using a research hypothesis primes us to think that we have found some fundamental truth about user behaviour from our qualitative research. This leads to overconfidence about what the research is saying, and to poor-quality research that could have been skipped in favour of simply making an assumption. To once again quote Kahneman: “you do not believe that these results apply to you because they correspond to nothing in your subjective experience”.

We can fix these problems by instead putting our focus on research objectives. We pay attention to the reason that we are doing the research and work to understand if the results we get could help us with our objectives.

This does not get us off the hook, however, because we can still create poor research objectives.

Let’s look back at one of our prior hypothesis examples and try to find effective research objectives instead.

Example objectives: deciding on filters

In thinking about the colour filter, we might imagine that this fits into a larger project where we are trying to decide what filters we should implement. This is decidedly different from research that tries to decide what order to implement filters in or to understand how they should work. In this case perhaps we have limited resources and just want to decide what to implement first.

A good approach would be quantitative research designed to produce some sort of ranking. But we should not dismiss qualitative research for this particular project – provided our assumptions are well defined.

Let’s consider this research objective: Understand how users might map their needs against the products that we offer. There are three key aspects to this objective:

- “Understand” is a common form of research objective and is a way that qualitative research can discover things that we cannot find with quant. If we don’t yet understand some user attitude or behaviour we cannot quantify it. By focusing our objective on understanding we are looking at uncovering unknowns.

- By using the word “might” we are not definitively stating that our research will reveal all of the ways that users think about their needs.

- Our focus is on understanding the users’ mental models. This means we are not designing for what users say that they want, and we aren’t even designing for existing behaviour. Instead we are designing for some underlying need.

The next step is to look at the assumptions that we are making. One assumption is that mental models are roughly the same between most people. So even though different users may have different problems, for the most part people tend to think about solving problems with the same mental machinery. As we do more research we might discover that this assumption is not true and there are distinctly different kinds of behaviours. Perhaps we know what those are in advance and we can recruit our research participants in a way that covers those distinct behaviours.

Another assumption is that if we understand our users’ mental models, we will be able to design a solution that will work for most people. There are of course more assumptions we could map, but this is a good start.

Now let’s look at another research objective: Understand why users choose particular filters. Again, we are looking to understand something that we did not know before.

Perhaps we have some prior research that tells us what the biggest pain points are that our products solve. If we have an understanding of why certain filters are used we can think about how those motivations fit in with our existing knowledge.

Mapping objectives to our research plan

Our actual research will involve some form of asking questions and/or making observations. It’s important that we don’t forget about our research objectives and simply start writing questions. Doing so can lead to completing the research only to realise that you haven’t captured anything about a specific objective.

An important step is to explicitly write down all the assumptions that we are making in our research and to update those assumptions as we write our questions or instructions. These assumptions will help us frame our research plan and make sure that we are actually learning the things that we think we are learning. Consider even high-level assumptions, such as that a solution we design with these insights will lead to a better experience, or that a better experience is necessarily better for the user.

Once we have our main assumptions defined the next step is to break our research objective down further.

Breaking down our objectives

The best way to consider this breakdown is to think about what things we could learn that would contribute to meeting our research objective. Let’s consider one of the previous examples: Understand how users might map their needs against the products that we offer.

We may have an assumption that users do in fact have some mental representation of their needs that aligns with the products they might purchase. An aspect of this research objective is to understand whether or not this is true. So two sub-objectives may be to (1) understand why users actually buy these sorts of products (if at all), and (2) understand how users go about choosing which product to buy.

Next, we might want to understand what our users’ needs actually are or, if we already have research about this, understand which particular needs apply to our research participants and why.

And finally, we would want to understand what factors go into addressing a particular need. We may leave this open-ended, or even show participants attributes of the products and ask which ones address those needs and why.

Once we have a list of sub-objectives we could continue to drill down until we feel we’ve exhausted all the nuances. If we’re happy with our objectives the next step is to think about what responses (or observations) we would need in order to answer those objectives.

It’s still important that we ask open-ended questions and see what our participants say unprompted. But we also don’t want our research to be so open that we never actually make any progress on our research objectives.

Reviewing our objectives and pilot studies

At the end, it’s important to review every task, question, scenario, etc. and see which research objectives each one addresses. This is vital to make sure that your planning is worthwhile and that you haven’t missed anything.
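One way to make this review mechanical is to tag each task or question with the objectives it serves. You can then list any objective that nothing covers, and any question that serves no objective. The sketch below assumes the plan is stored as a dictionary. The objective IDs, question texts and function names are hypothetical and chosen for illustration.

```python
from typing import Dict, List, Set


def uncovered_objectives(objectives: List[str], plan: Dict[str, Set[str]]) -> List[str]:
    """Return objective IDs that no task or question in the plan addresses."""
    covered: Set[str] = set()
    for served in plan.values():
        covered |= served
    # Keep the objectives in the order they were written down.
    return [obj for obj in objectives if obj not in covered]


def orphan_items(objectives: List[str], plan: Dict[str, Set[str]]) -> List[str]:
    """Return tasks or questions that map to no known objective."""
    known = set(objectives)
    return [item for item, served in plan.items() if not (served & known)]


# Hypothetical sub-objectives of "map needs against products":
# O1 = why users buy, O2 = how users choose, O3 = which needs apply.
objectives = ["O1", "O2", "O3"]
plan = {
    "Tell me about the last time you bought one of these.": {"O1"},
    "Walk me through how you picked that one.": {"O2"},
    "What do you think of our logo?": set(),
}

print(uncovered_objectives(objectives, plan))  # ['O3']
print(orphan_items(objectives, plan))  # ['What do you think of our logo?']
```

An uncovered objective means the plan needs another question or task. An orphan question is a candidate for cutting, or a sign that an objective was never written down.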

If there’s time it’s also useful to run a pilot study and analyse the responses to see if they help to address your objectives.

Plan accordingly

It should be easy to see why research hypotheses are not suitable for most qualitative research. While it is possible to create suitable hypotheses, more often than not they will lead to poor-quality research. This is because hypotheses create the impression that qualitative research can find things that generalise to the entire user base. In general, this is not true for the sample sizes typically used in qualitative research, and it is also generally not the reason we do qualitative research in the first place.

Instead we should focus on producing effective research objectives and making sure every part of our research plan maps to a suitable objective.


How to create a perfect design hypothesis

A design hypothesis is a cornerstone of the UX and UI design process. It guides the entire process, defines research needs, and heavily influences the final outcome.

Doing any design work without a well-defined hypothesis is like driving a car at night without headlights. Although still possible, it forces you to go slower and dramatically increases the chances of unpleasant pitfalls.

The importance of a hypothesis in the design process


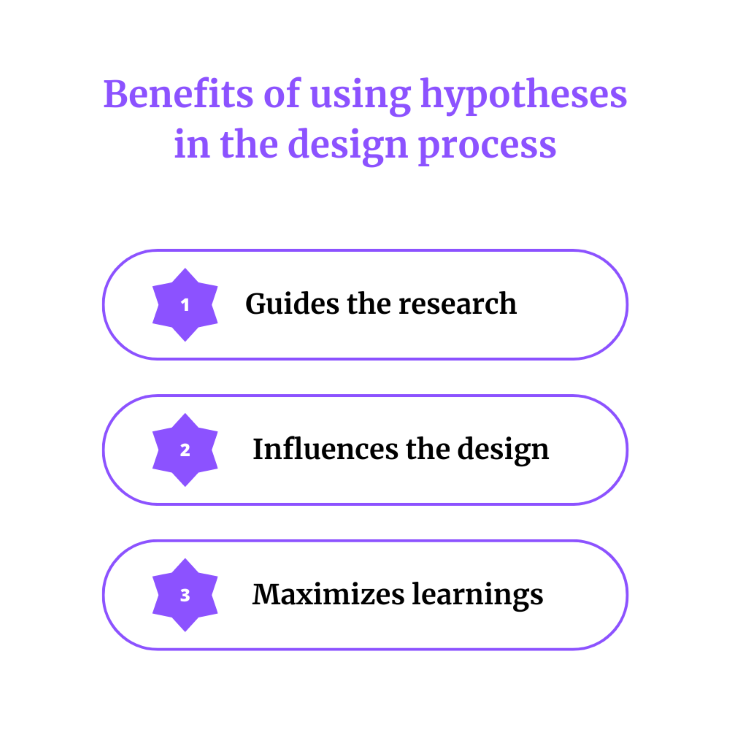

There are three main reasons why no discovery or design process should start without a well-defined and framed hypothesis. A good design hypothesis helps us:

- Guide the research

- Nail the solutions

- Maximize learnings and enable iterative design

A design hypothesis guides research

A good hypothesis not only states what we want to achieve but also the final objective and our current beliefs. It allows designers to assess how much actual evidence there is to support the hypothesis and focus their research and discovery efforts on areas they are least confident about.

Research for the sake of research brings waste. Research for the sake of validating specific hypotheses brings learnings.

A design hypothesis influences the design and solution

A design hypothesis gives much-needed context. It helps you:

- Ideate the right solutions

- Focus on the proper UX

- Polish UI details

The more detailed and robust the design hypothesis, the more context you have to help you make the best design decisions.

A design hypothesis maximizes learnings and enables iterative design

If you design new features blindly, it’s hard to truly learn from the launch. Some metrics might go up. Others might go down, so what?

With a well-defined design hypothesis, you can not only validate whether the design itself works but also better understand why and how to improve it in the future. This helps you iterate on your learnings.

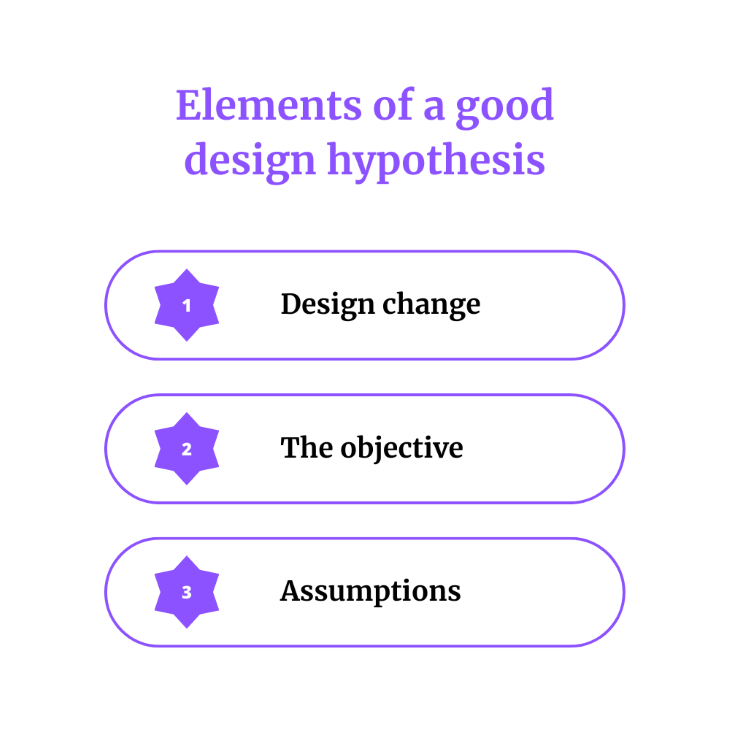

Components of a good design hypothesis

I am not a fan of templatizing how a solid design hypothesis should look. There are various ways to approach it, and you should choose whatever works best for you. However, you should include three essential elements to get all the benefits of design hypotheses mentioned earlier:

- Design change

- The objective

- Underlying assumptions

Design change for your hypothesis

The fundamental part is the definition of what you are trying to do. If you are working on shortening the onboarding process, you might simply put “[…] we’d like to shorten the onboarding process […].”

The goal here is to give context to a wider audience and make it easy to quickly reference what the design hypothesis concerns. Don’t fret too much about this part; simply boil the problem down to its essentials. What is frustrating your users?

The objective of your hypothesis

The objective is the “why” behind the change. What exactly are you trying to achieve with the planned design change? The objective serves a few purposes.


First, it’s a great sanity check. You’d be surprised how many designers propose various ideas, changes, and improvements without a clear goal. Changing the design just for the sake of change is a no-no.

It also helps you step back and see if the change you are considering is the best approach. For instance, if you are considering shortening the onboarding to increase the percentage of users completing it, are there any other design changes you can think of to achieve the same goal? Maybe instead of shortening the onboarding, there’s a bigger opportunity in simply adjusting the copy? Defining clear objectives invites conversations about whether you are focusing on the right things.

Additionally, a clearly defined objective gives you a measure of success to evaluate the effectiveness of your solution. If you believed you could boost the completion rate by 40 percent, but achieved only a 10 percent lift, then either the hypothesis was flawed (good learning point for the future), or there’s still room for improvements.
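To make the "measure of success" concrete, the check below compares the lift actually achieved with the lift the hypothesis predicted. This is a minimal sketch. It assumes the 40 percent target is a relative lift over the current completion rate, since the text does not say whether it means relative change or percentage points. The function names and the 50 and 55 percent rates are hypothetical.

```python
def relative_lift(baseline: float, observed: float) -> float:
    """Relative change of the observed rate over the baseline rate (0.10 = 10%)."""
    if baseline <= 0:
        raise ValueError("baseline rate must be positive")
    return (observed - baseline) / baseline


def evaluate_objective(baseline: float, observed: float, predicted_lift: float) -> str:
    """Compare the lift actually achieved with the lift the hypothesis predicted."""
    lift = relative_lift(baseline, observed)
    verdict = "met" if lift >= predicted_lift else "missed"
    return f"objective {verdict}: {lift:.0%} lift vs {predicted_lift:.0%} predicted"


# Hypothetical onboarding completion rates: 50% before the change, 55% after.
print(evaluate_objective(baseline=0.50, observed=0.55, predicted_lift=0.40))
# objective missed: 10% lift vs 40% predicted
```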

Last but not least, a clear objective is essential for the next step: mapping underlying assumptions.

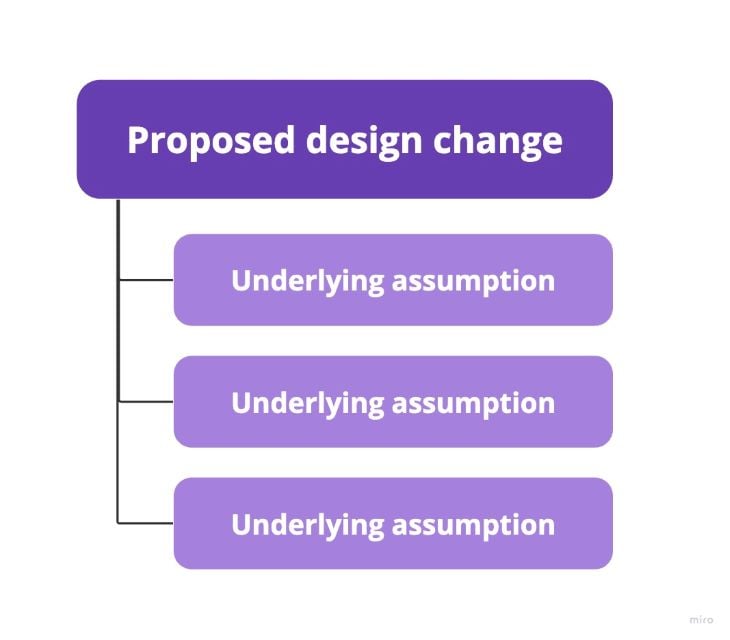

Mapping underlying assumptions in your hypothesis

Now that you know what you plan to do and which goal you are trying to achieve, it’s time for the most critical question.

Why do you believe the proposed design change will achieve the desired objective? Whether it’s because you heard some interesting insights during user interviews or spotted patterns in users’ behavioral data, note it down.

Even if you don’t have any strong justification and base your hypothesis on pure guesses (we all do that sometimes!), clearly name these beliefs. Listing out all your assumptions will help you:

- Focus your discovery efforts on validating these assumptions to avoid late disappointments

- Better analyze results post-launch to maximize your learnings

You’ll see exactly how in the examples of good design hypotheses below.

Examples of good design hypotheses

Let’s put it all into practice and see what a good design hypothesis might look like.

I’ll use two examples:

- A simple design hypothesis

- A robust design hypothesis

You should still formulate a design hypothesis if you are working on minor changes, such as changing the copy on buttons. But there’s also no point in spending hours formulating a perfect hypothesis for a fifteen-minute test. In these cases, I’d just use a simple one-sentence hypothesis.

Yet, suppose you are working on an extensive and critical initiative, such as redesigning the whole conversion funnel. In that case, you might want to put more effort into a more robust and detailed design hypothesis to guide your entire process.

Example 1: A simple design hypothesis

A simple example of a design hypothesis could be:

Moving the sign-up button to the top of the page will increase our conversion to registration by 10 percent, as most users don’t look at the bottom of the page.

Although it’s pretty straightforward, it still can help you in a few ways.

First of all, it helps prioritize experiments. If there is another small experiment in the backlog, but with the hypothesis that it’ll improve conversion to registration by 15 percent, it might influence the order of things you work on.
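This kind of prioritization can be sketched as sorting the backlog by the lift each hypothesis predicts for the same metric. The sketch assumes every backlog item records its target metric and a predicted relative lift. The `Experiment` type, experiment names and figures are hypothetical. A real prioritization would usually also weigh effort and confidence, which this deliberately leaves out.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Experiment:
    name: str
    metric: str
    predicted_lift: float  # relative lift the hypothesis predicts, e.g. 0.10 = 10%


def prioritize(backlog: List[Experiment], metric: str) -> List[Experiment]:
    """Order experiments targeting `metric` by predicted lift, largest first."""
    same_metric = [e for e in backlog if e.metric == metric]
    return sorted(same_metric, key=lambda e: e.predicted_lift, reverse=True)


backlog = [
    Experiment("Move sign-up button to top", "registration", 0.10),
    Experiment("Shorter sign-up form", "registration", 0.15),
    Experiment("New pricing copy", "subscription", 0.05),
]
for exp in prioritize(backlog, "registration"):
    print(exp.name, exp.predicted_lift)
```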

Impact assessments (where the 10 percent or 15 percent comes from) are a fairly advanced topic, so I won’t cover them in detail, but in most cases you can ask your product manager and/or data analyst for help.

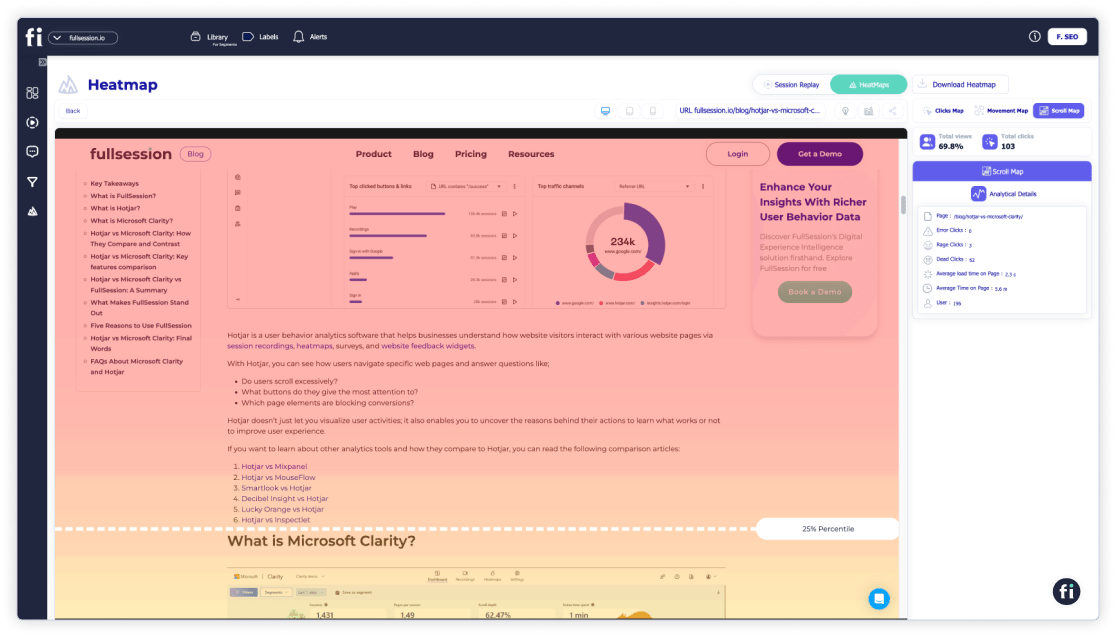

It also allows you to validate the hypothesis without even experimenting. If you guessed that people don’t look at the bottom of the page, you can check your analytics tools to see what the scroll rate is or check heatmaps.
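If your analytics tool can export each session's deepest scroll position, checking the assumption takes only a few lines. The sketch assumes a hypothetical export of per-session scroll depth as a fraction of page height (0.0 = top, 1.0 = bottom). It counts 0.9 or more as reaching the bottom. Neither the export format nor the threshold comes from a specific tool.

```python
from typing import Sequence


def share_reaching_bottom(max_scroll_depths: Sequence[float], threshold: float = 0.9) -> float:
    """Fraction of sessions whose deepest scroll reached `threshold` of the page."""
    if not max_scroll_depths:
        raise ValueError("no sessions to analyze")
    reached = sum(1 for depth in max_scroll_depths if depth >= threshold)
    return reached / len(max_scroll_depths)


# Hypothetical export: deepest scroll position for ten sessions.
depths = [0.2, 0.35, 0.5, 0.95, 0.3, 1.0, 0.4, 0.25, 0.6, 0.15]
share = share_reaching_bottom(depths)
print(f"{share:.0%} of sessions reached the bottom")  # 20% of sessions reached the bottom
```

A low share supports the "most users don't look at the bottom of the page" assumption before any experiment runs. A high share would be a reason to rethink the hypothesis first.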

Lastly, if your hypothesis fails (that is, the conversion rate doesn’t improve), you get valuable insights that can help you reassess other hypotheses based on the “most users don’t look at the bottom of the page” assumption.

Example 2: A robust design hypothesis

Now let’s take a look at a slightly more robust hypothesis. An example could be:

Cutting the number of onboarding screens in half will boost our free trial to subscription conversion by 20 percent because:

- Most users don’t complete the whole onboarding flow

- Shorter onboarding will increase the onboarding completion rate

- Focusing on the most important features will increase their adoption

- Which will lead to aha moments and better premium retention

- Users will perceive our product as simpler and less complex
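Writing the hypothesis down as a structured record keeps each assumption individually trackable, both during discovery and after launch. This is a minimal sketch. The `DesignHypothesis` and `Assumption` types and the `validated` field are hypothetical names. Marking the last assumption as refuted is also hypothetical and only shows how a post-launch finding would be recorded.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Assumption:
    statement: str
    validated: Optional[bool] = None  # None = not yet checked


@dataclass
class DesignHypothesis:
    change: str
    objective: str
    assumptions: List[Assumption] = field(default_factory=list)

    def unverified(self) -> List[str]:
        """Assumptions still awaiting evidence: candidates for discovery work."""
        return [a.statement for a in self.assumptions if a.validated is None]

    def refuted(self) -> List[str]:
        """Assumptions the evidence contradicted: inputs to post-launch analysis."""
        return [a.statement for a in self.assumptions if a.validated is False]


onboarding = DesignHypothesis(
    change="Cut the number of onboarding screens in half",
    objective="Boost free trial to subscription conversion by 20 percent",
    assumptions=[
        Assumption("Most users don't complete the whole onboarding flow"),
        Assumption("Shorter onboarding will increase the onboarding completion rate"),
        Assumption("Users will perceive our product as simpler and less complex", validated=False),
    ],
)
print(onboarding.unverified())
print(onboarding.refuted())
```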

The most significant difference is our effort to map all relevant assumptions.

Listing out assumptions can help you test them out in isolation before committing to the initiative.

For example, if you believe most users don’t complete the onboarding flow, you can check self-serve tools or ask your PM for help to validate if that’s true. If the data shows only 10 percent of users finish the onboarding, the hypothesis is stronger and more likely to be successful. If, on the other hand, most users do complete the whole onboarding, the idea suddenly becomes less promising.
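Checking this first assumption is often a simple funnel calculation. The sketch assumes you can count how many users reached each onboarding screen. The funnel counts and the 50 percent cut-off used to interpret "most users" are hypothetical.

```python
from typing import List


def completion_rate(users_per_screen: List[int]) -> float:
    """Share of users who started onboarding and reached the final screen."""
    if not users_per_screen or users_per_screen[0] == 0:
        raise ValueError("no users started onboarding")
    return users_per_screen[-1] / users_per_screen[0]


# Hypothetical counts of users reaching each of eight onboarding screens.
funnel = [1000, 820, 640, 480, 350, 240, 160, 100]
rate = completion_rate(funnel)

# Hypothetical cut-off: treat "most users don't complete" as a rate below 50%.
if rate < 0.5:
    print(f"Completion rate {rate:.0%}: assumption supported")
else:
    print(f"Completion rate {rate:.0%}: assumption weakened")
```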

The second advantage is the number of learnings you can get from the post-release analysis.

Say the change led to a 10 percent increase in conversion rather than the expected 20 percent. Instead of blindly guessing why it didn’t meet expectations, you can see how each assumption turned out.

It might turn out that some users actually perceive the product as more complex (rather than less complex, as you assumed), as they have difficulty figuring out some functionalities that were skipped in the onboarding. Thus, they are less willing to convert.

Not only can this help you propose a second iteration of the experiment, but that learning will also help you greatly when working on other initiatives based on a similar assumption.

Closing thoughts

Ensuring everything you work on is based on a solid design hypothesis can greatly help you and your career.

It’ll guide your research and discovery in the right direction, enable better iterative design, maximize learning, and help you make better design decisions.

Some designers might think, “Hypotheses are the job of a product manager, not a designer.”

While that’s partly true, I believe designers should be proactive in working with hypotheses.

If there are none set, do it yourself for the sake of your own success. If your designs flunk, no one will care who did or didn’t set the hypotheses behind those decisions; you’ll be judged, too.

If there’s a hypothesis set upfront, try to understand it, refine it, and challenge it if needed.

The most senior and sought-after product designers are not just pixel-pushers who do what they’re told; they also play an active role in shaping the direction of the product as a whole. Becoming fluent in working with hypotheses is a significant step toward true seniority.

Header image source: IconScout


5 rules for creating a good research hypothesis

UserTesting

A hypothesis is a proposed explanation made on the basis of limited evidence. It is the starting point for further investigation of something that piques your curiosity.

A good hypothesis is critical to creating a measurable study with successful outcomes. Without one, you’re stumbling through the fog and merely guessing which direction to travel in. It’s an especially critical step in A/B and Multivariate testing.

Every user research study needs clear goals and objectives, and a hypothesis is essential for this to happen. Writing a good hypothesis looks like this:

1: Problem: Think about the problem you’re trying to solve and what you know about it.

2: Question: Consider which questions you want to answer.

3: Hypothesis: Write your research hypothesis.

4: Goal: State one or two SMART goals for your project (specific, measurable, achievable, relevant, time-bound).

5: Objective: Draft a measurable objective that aligns directly with each goal.

In this article, we will focus on writing your hypothesis.

Five rules for a good hypothesis

1: A hypothesis is your best guess about what will happen. A good hypothesis says, "This change will result in this outcome." The "change" is a variation of an element, for example a manipulated label, color, or text. The "outcome" is the measure of success or metric, such as click-through rate or conversion.

2: Your hypothesis may be wrong—just learn from it. The initial hypothesis might be quite bold, such as “Variation B will result in 40% conversion over variation A”. If the conversion uptick is only 35% then your hypothesis is false. But you can still learn from it.

3: It must be specific. Explicitly stating values is important. Be bold, but not unrealistic: you must believe that what you suggest is indeed possible. When possible, assign numeric values to your predictions.

4: It must be measurable. The hypothesis must lead to concrete success metrics for the key measure. If you choose to evaluate click through, then measure clicks. If looking for conversion, then measure conversion, even if on a subsequent page. If measuring both, state in the study design which is more important, click through or conversion.

5: It should be repeatable. With a good hypothesis, you should be able to run multiple experiments that test different variants. And when retesting these variants, you should get the same results. If you find that your results are inconsistent, re-evaluate prior versions and try a different direction.

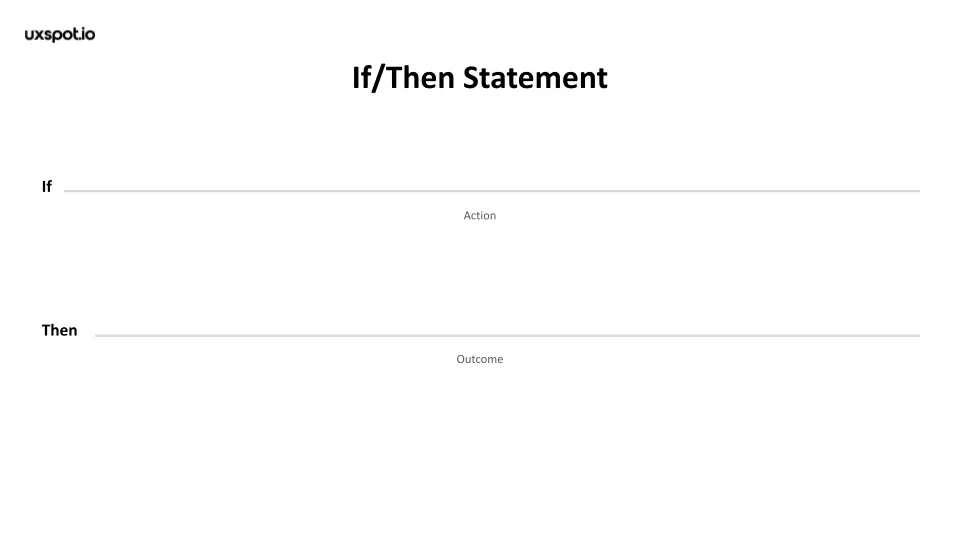

How to structure your hypothesis

Any good hypothesis has two key parts, the variant and the result.

First, state which variant will be affected. Only state one (A, B, or C), or the recipe if multivariate (A & B). Be sure that you’ve recorded each version of variant testing in your documentation for clarity. Also, be sure to include detailed descriptions of flows or processes for the purpose of re-testing.

Next, state the expected outcome: “Variant B will result in a 40% higher rate of course completion.” After the hypothesis, be sure to specifically document the metric that will measure the result (in this case, completion). Leave no ambiguity in your metric.

Remember, always use a "control" when testing. The control is a factor that will not change during testing. It will be used as a benchmark to compare the results of the variants. The control is generally the current design in use.
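The structure described above can be captured as a small record that renders the one-sentence hypothesis: one variant, one expected outcome, one unambiguous metric, and a control. This is a sketch. The `ABHypothesis` type and its field names are hypothetical and not part of any testing tool's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ABHypothesis:
    control: str          # the current design, used as the benchmark
    variant: str          # the single variant expected to win
    metric: str           # exactly one success metric, stated without ambiguity
    expected_lift: float  # predicted relative improvement, e.g. 0.40 for 40%

    def statement(self) -> str:
        """Render the one-sentence form: variant, expected outcome, metric."""
        return (f"{self.variant} will result in a {self.expected_lift:.0%} higher "
                f"{self.metric} than {self.control} (control).")


hypothesis = ABHypothesis(
    control="the current design",
    variant="Variant B",
    metric="rate of course completion",
    expected_lift=0.40,
)
print(hypothesis.statement())
# Variant B will result in a 40% higher rate of course completion than the current design (control).
```

Making the record immutable (`frozen=True`) reflects the idea that the hypothesis is fixed before the test runs rather than adjusted afterward.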

A good hypothesis begins with data. Whether the data is from web analytics, user research, competitive analyses, or your gut, a hypothesis should start with data you want to better understand.

It should make sense, be easy to read without ambiguity, and be based on reality rather than pie-in-the-sky thinking or simply shooting for a company KPI or objectives and key results (OKR).

The data that results from a hypothesis is incremental and yields small insights to be built over time.

Hypothesis example

Imagine you run an ecommerce website and are trying to better understand your customers’ journey. Based on data and insights gathered, you notice that many website visitors are struggling to locate the checkout button at the end of their journey. You find that 30% of visitors abandon the site with items still in the cart.

You are trying to understand whether changing the checkout icon on your site will increase checkout completion.

The shopping bag icon is variant A, the shopping cart icon is variant B, and the checkmark is the control (the current icon you are using on your website).

Hypothesis: The shopping cart icon (variant B) will increase checkout completion by 15%.

After exposing users to three versions of the site, each with a different checkout icon, the data shows:

- 55% of visitors shown the checkmark (control) completed their checkout.

- 70% of visitors shown the shopping bag icon (variant A) completed their checkout.

- 73% of visitors shown the shopping cart icon (variant B) completed their checkout.
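It helps to keep absolute and relative differences apart. Variant B is 18 percentage points above the control, which is a relative lift of about 33 percent. Whether B "beat" the 15% prediction depends on which of the two the hypothesis meant, and here it clears both. The sketch below computes both figures from the rates above. The function name is hypothetical.

```python
from typing import Dict, Tuple


def lifts_vs_control(rates: Dict[str, float], control_key: str) -> Dict[str, Tuple[float, float]]:
    """Map each variant to (difference in percentage points, relative lift over control)."""
    control = rates[control_key]
    return {
        name: ((rate - control) * 100, (rate - control) / control)
        for name, rate in rates.items()
        if name != control_key
    }


# Checkout completion rates from the example above.
rates = {"control (checkmark)": 0.55, "A (shopping bag)": 0.70, "B (shopping cart)": 0.73}
for name, (points, relative) in lifts_vs_control(rates, "control (checkmark)").items():
    print(f"{name}: +{points:.0f} points, +{relative:.1%} relative")
# A (shopping bag): +15 points, +27.3% relative
# B (shopping cart): +18 points, +32.7% relative
```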

The results suggest that a change in the icon increased checkout completion. Now we can take these insights further with statistical testing to see whether these differences are statistically significant. Variant B exceeded our control by 18 percentage points, but is that difference significant enough to completely abandon the checkmark? Variants A and B both showed an increase, but which of the two is better? This is the beginning of optimizing our site for a seamless customer journey.
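A common choice for comparing two completion rates is the two-proportion z-test. The example only reports percentages, so this sketch assumes a hypothetical 1,000 visitors per arm. With different sample sizes, the conclusions could change. The function name is hypothetical. The p-value uses the standard identity 2(1 − Φ(|z|)) = erfc(|z| / √2).

```python
import math
from typing import Tuple


def two_proportion_z_test(successes_a: int, n_a: int,
                          successes_b: int, n_b: int) -> Tuple[float, float]:
    """Two-sided z-test for the difference between two independent proportions.

    Uses the pooled proportion under the null hypothesis that both groups share
    the same underlying rate. Returns (z statistic, two-sided p-value).
    """
    p_a, p_b = successes_a / n_a, successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical sample sizes: 1,000 visitors per arm (the example gives only rates).
N = 1000
completions = {"control": 550, "A": 700, "B": 730}

for a, b in [("control", "A"), ("control", "B"), ("A", "B")]:
    z, p = two_proportion_z_test(completions[a], N, completions[b], N)
    print(f"{a} vs {b}: z = {z:.2f}, p = {p:.3g}")
```

With these hypothetical counts, both variants differ clearly from the control. The 3-point gap between A and B does not reach the conventional 0.05 level. Because three pairwise comparisons are made, a correction such as Bonferroni (testing each at 0.05 / 3) is a reasonable precaution.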

Quick tips for creating a good hypothesis

- Keep it short—just one clear sentence

- State the variant you believe will “win” (include screenshots in your doc background)

- State the metric that will define your winner (a download, purchase, sign-up … )

- Avoid adding attitudinal metrics with words like “because” or “since”

- Always use a control to measure against your variant


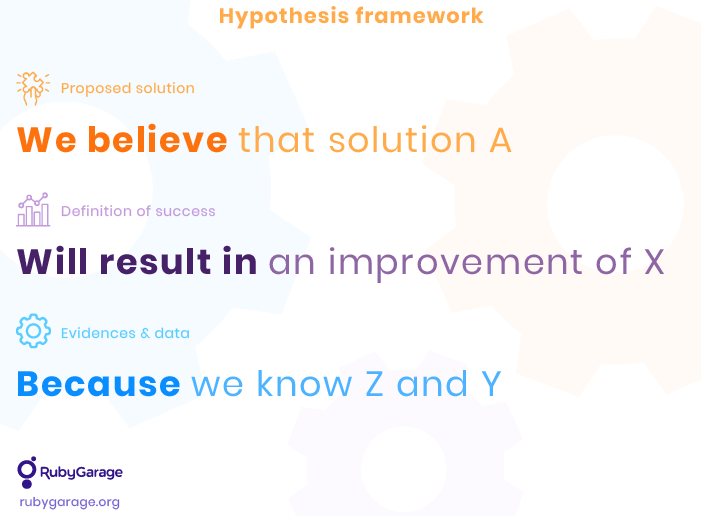

- Formulate hypotheses as a foundation for this method. The hypotheses can be statements of stakeholders or users, a research outcome, or even a possible Future Trend.

- Conduct research to question the hypothesis. Depending on the size of the target group, it makes sense to conduct Surveys or perform User Interviews. Remember not to ask leading questions.

- Record the results of your research. Interpret the recordings to match them with your hypotheses.

- Verify or disprove the hypothesis if possible. If you were not able to do so, the hypothesis might be phrased incorrectly. In either case, you should continue to research around your hypotheses to bring them into a more detailed shape and stay aware of future changes.


Hypothesis Testing

Hypothesis testing: validating design decisions through data.

Hypothesis testing is a fundamental aspect of user research and UX design that involves making predictions about user behavior and validating these predictions with empirical data. It helps designers and researchers make informed decisions, improving the overall user experience by relying on evidence rather than assumptions.

What is Hypothesis Testing?

Hypothesis testing is a statistical method used to determine whether there is enough evidence in a sample of data to infer that a certain condition is true for the entire population. In UX design, it involves formulating a hypothesis about user behavior or design effectiveness, collecting data, and then analyzing this data to confirm or refute the hypothesis.

Importance of Hypothesis Testing in UX Design

- Informed Decision-Making: By relying on data rather than assumptions, designers can make more informed and reliable decisions.

- Improving User Experience: Hypothesis testing helps identify what works and what doesn’t, leading to a more user-centered design approach and improved user satisfaction.

- Efficiency: It allows for the identification of ineffective design elements early in the process, saving time and resources by focusing on solutions that work.

- Objective Validation: Provides objective evidence to support design decisions, which can be crucial for stakeholder buy-in and collaboration.

Steps in Hypothesis Testing

- Formulate a Hypothesis: Start with a clear, testable statement about what you expect to happen. This could be based on previous research, user feedback, or design goals. For example, “Changing the color of the call-to-action button will increase click-through rates.”

- Set Up the Experiment: Design an experiment to test your hypothesis. This might involve A/B testing, usability testing, or other research methods. Define the metrics you will use to measure success.

- Collect Data: Run the experiment and collect data on user behavior. Ensure that your sample size is large enough to provide reliable results.

- Analyze the Data: Use statistical methods to analyze the data. This could involve comparing the performance of different design variations or measuring changes in user behavior.

- Interpret Results: Determine whether the data supports or refutes your hypothesis. Consider the statistical significance of your findings to judge how plausibly chance alone could explain them.

- Make Decisions: Based on the results, make informed design decisions. If the hypothesis is supported, implement the changes. If not, consider alternative hypotheses or further testing.
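Whether a sample is "large enough" for the Collect Data step depends on the smallest effect you want to detect. A widely used normal-approximation formula for comparing two proportions gives a rough per-group size. The sketch assumes a two-sided test, a 5 percent significance level and 80 percent power. The 10 to 12 percent click-through rates in the usage example are hypothetical. It relies on `statistics.NormalDist` from the Python standard library (3.8+).

```python
import math
from statistics import NormalDist


def sample_size_per_group(p1: float, p2: float, alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate users needed per group to detect a change from p1 to p2
    with a two-sided two-proportion z-test (normal approximation)."""
    if not (0 < p1 < 1 and 0 < p2 < 1) or p1 == p2:
        raise ValueError("need two different proportions strictly between 0 and 1")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the test
    z_beta = NormalDist().inv_cdf(power)           # quantile for the desired power
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p1 - p2) ** 2)


# Hypothetical: current click-through rate 10%, smallest lift worth detecting is to 12%.
print(sample_size_per_group(0.10, 0.12))
```

Smaller expected differences need much larger samples. This is one reason hypotheses with explicit numeric predictions are easier to test.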

Best Practices for Hypothesis Testing

- Clear and Testable Hypotheses: Ensure your hypothesis is specific, measurable, and testable. Vague hypotheses are difficult to test and analyze.

- Representative Samples: Use a sample that accurately represents your user base to ensure the findings are applicable to the broader population.

- Control Variables: Keep other variables constant to ensure that any changes in behavior are due to the variable being tested.

- Iterate and Refine: Hypothesis testing is an iterative process. Use the results to refine your hypotheses and continue testing to improve the design.

- Statistical Significance: Check that your results are statistically significant to reduce the risk of making decisions based on random variation.

Real-World Examples

- E-commerce Sites: Online retailers often use hypothesis testing to optimize their checkout process. For example, they might test different layouts or promotional messages to see which version leads to higher conversion rates.

- Social Media Platforms: Social media companies frequently test changes to their algorithms or interface designs to determine how they affect user engagement and retention.

- Mobile Apps: App developers might test different onboarding processes to see which one results in higher user retention and satisfaction.

Hypothesis testing is a critical tool in UX design and user research, enabling data-driven decision-making and enhancing the user experience. By formulating clear hypotheses, designing effective experiments, and analyzing the results, designers can validate their ideas and create more user-centered products.

Ondrej Zoricak


UX Hypothesis Testing Resources

Delivered August 14th, 2021. Contributors: Mariana D.

Key takeaways

- UX Research published an article about how UX objectives can be written in the form of research hypotheses. It includes hypothesis examples and issues found around them.

- In a recent article, Jeff Gothelf, a product designer, explains the Hypothesis Prioritization Canvas, which helps select useful hypotheses.

- UXPin provides an article that contains the steps of the Lean UX process, as well as how to write a good hypothesis and test it.

Introduction

1. UX Research: Objectives, Assumptions, and Hypothesis

- The author talks about how UX objectives can be written in the form of research hypotheses. It includes hypothesis examples and issues found around them.

2. Getting Started with Statistics for UX

- This article explains two main types of hypotheses: null and alternative. It also covers how these should be tested.

3. The Hypothesis Prioritization Canvas

- Through this article, the author explains the Hypothesis Prioritization Canvas, which helps select useful hypotheses.

4. Hypotheses in User Research and Discovery

- This write-up focuses on UX hypotheses and how they can help organize user research. The author includes an explanation of testable assumptions (hypotheses), the unit of measurement, and the research plan.

5. Framing Hypotheses in Problem Discovery Phase

- In this article, an expert from SumUp shares steps for problem discovery, including observation and hypothesis design.

6. Lean UX: Expert Tips to Maximize Efficiency in UX

- This piece contains the steps of the Lean UX process, as well as how to write a good hypothesis and test it.

7. The 6 Steps That We Use For Hypothesis-Driven Development

- Throughout this paper, an expert explains hypothesis-driven development and its process, which includes the development of hypotheses, testing, and learning.

8. A/B Testing: Optimizing The UX

- This paper explains how to effectively conduct A/B testing on hypotheses.

9. How Does Statistical Hypothesis Testing Work?

- The author thoroughly explains the framework of hypothesis testing, which includes the definition of the null hypothesis, data collection, p-value computation, and determination of statistical significance.

10. How to Create Product Design Hypotheses: A Step-by-Step Guide

- This article provides a guide to creating product design hypotheses and includes five steps to do so. It also contains a shorter, one-minute guide.

Sources

- UX Research: Objectives, Assumptions, and Hypothesis (Rick Dzekman)

- Getting Started with Statistics for UX (UX Booth)

- The Hypothesis Prioritization Canvas (Jeff Gothelf)

- Hypotheses in User Research and Discovery

- Framing Hypotheses in Problem Discovery Phase

- Lean UX: Expert Tips to Maximize Efficiency in UX

- The 6 Steps That We Use For Hypothesis-Driven Development

- A/B Testing: Optimizing the UX (Usability Geek)

- How Does Statistical Hypothesis Testing Work?

- How to Create Rock-Solid Product Design Hypotheses: A Step-by-Step Guide


How to Test UX Design: UX Problem Discovery, Hypothesis Validation & User Testing


Feb 23, 2022

Oleksandra I., Head of Product Management Office

Customer-driven product development implies designing and developing a product based on customer feedback. The match between actual product capabilities and end users’ expectations defines the success of any software project.

At RubyGarage, we create design mockups, wireframes, and prototypes to communicate assumptions regarding how your app should look and perform. User testing is then needed to validate these ideas before the actual product development starts. Why? Because early UX validation takes significantly less time and effort than rebuilding a ready-made product.

Learn how to test UX design ideas with this ultimate practical guide from RubyGarage UX experts.

What is UX validation?

In a broad sense, UX validation is the process of gathering evidence about an idea through experiments and user testing so that the team can make informed decisions about product design. You can validate a business idea, a user experience, or a specific problem with an existing product, or you can pick the most viable solution from several available options.

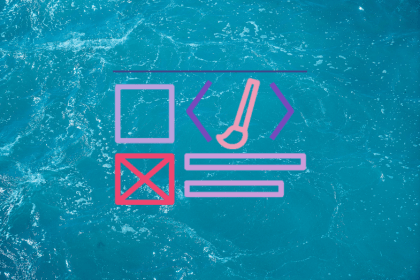

There are two approaches to UX validation:

- Waterfall: Validation of the whole concept at the final release

- Lean: Validation of individual hypotheses through multiple experiments

Lean UX validation is preferable for startups because, compared with the Waterfall approach, it lowers the risk of failure and distributes the budget more efficiently.
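The Lean approach treats each hypothesis as its own unit of validation, tested through one or more experiments. The following is a minimal Python sketch of that bookkeeping. The class names, example hypotheses, experiment labels, and the "latest conclusive result wins" decision rule are hypothetical assumptions for illustration, not RubyGarage's actual process.

```python
from dataclasses import dataclass, field
from enum import Enum


class Outcome(Enum):
    VALIDATED = "validated"
    INVALIDATED = "invalidated"
    INCONCLUSIVE = "inconclusive"


@dataclass
class Experiment:
    method: str  # illustrative label, e.g. "prototype test" or "A/B test"
    outcome: Outcome


@dataclass
class Hypothesis:
    statement: str
    experiments: list[Experiment] = field(default_factory=list)

    def record(self, method: str, outcome: Outcome) -> None:
        """Log one more experiment run against this hypothesis."""
        self.experiments.append(Experiment(method, outcome))

    def status(self) -> Outcome:
        """Hypothetical rule: the most recent conclusive experiment decides;
        with no conclusive evidence yet, the hypothesis stays inconclusive."""
        for exp in reversed(self.experiments):
            if exp.outcome is not Outcome.INCONCLUSIVE:
                return exp.outcome
        return Outcome.INCONCLUSIVE


# Each hypothesis is validated independently, possibly over several experiments.
backlog = [
    Hypothesis("Users will find the new checkout button without help"),
    Hypothesis("A one-page signup form will reduce drop-off"),
]
backlog[0].record("prototype test", Outcome.INCONCLUSIVE)
backlog[0].record("prototype test (revised)", Outcome.VALIDATED)
backlog[1].record("A/B test", Outcome.INVALIDATED)

for h in backlog:
    print(f"{h.status().value:>12}: {h.statement}")
```

Keeping the experiment history per hypothesis, rather than a single pass/fail flag for the whole concept, is what distinguishes this from a Waterfall-style check at the final release.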

Why do you need UX validation?

The validation phase of UX research gives the product team an understanding of what the future (or existing) product should be like to satisfy the end user. It helps the team:

- Understand customer value more deeply. Get precise feedback on each feature and on every blocker along the user's path to conversion.