
External Validity | Types, Threats & Examples

Published on 3 May 2022 by Pritha Bhandari . Revised on 18 December 2023.

External validity is the extent to which you can generalise the findings of a study to other situations, people, settings, and measures. In other words, can you apply the findings of your study to a broader context?

The aim of scientific research is to produce generalisable knowledge about the real world. Without high external validity, you cannot apply results from the laboratory to other people or the real world.

In qualitative studies, external validity is referred to as transferability.

Table of contents

Types of external validity, trade-off between external and internal validity, threats to external validity and how to counter them, frequently asked questions about external validity.

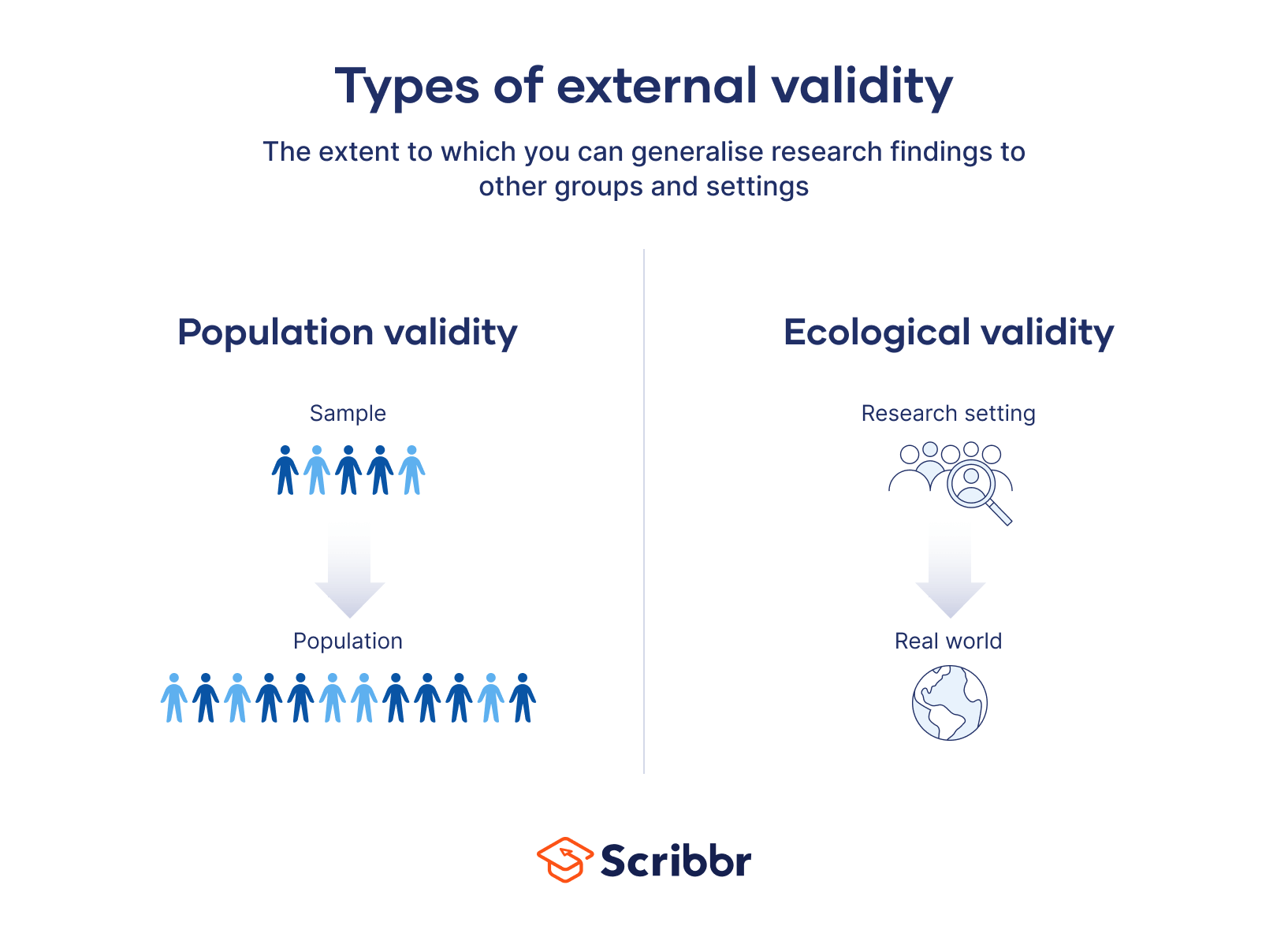

There are two main types of external validity: population validity and ecological validity.

Population validity

Population validity refers to whether you can reasonably generalise the findings from your sample to a larger group of people (the population).

Population validity depends on the choice of population and on the extent to which the study sample mirrors that population. Non-probability sampling methods are often used for convenience. With this type of sampling, the generalisability of results is limited to populations that share similar characteristics with the sample.

You recruit over 200 participants. They are science and engineering students; most of them are British, male, 18–20 years old, and from a high socioeconomic background. In a laboratory setting, you administer a mathematics and science test and then ask them to rate how well they think they performed. You find that the average participant believes they are smarter than 66% of their peers.

Here, your sample is not representative of the whole population of students at your university. The findings can only reasonably be generalised to populations that share characteristics with the participants, e.g. university-educated men studying STEM subjects.

For higher population validity, your sample would need to include people with different characteristics (e.g., women, nonbinary people, and students from different fields, countries, and socioeconomic backgrounds).

Samples like this one, from Western, educated, industrialised, rich, and democratic (WEIRD) countries, are used in an estimated 96% of psychology studies, even though they represent only 12% of the world’s population. As outliers in terms of visual perception, moral reasoning, and categorisation (among many other topics), WEIRD samples limit broad population validity in the social sciences.

Ecological validity

Ecological validity refers to whether you can reasonably generalise the findings of a study to other situations and settings in the ‘real world’.

In a laboratory setting, you set up a simple computer-based task to measure reaction times. Participants are told to imagine themselves driving around a racetrack and to double-click the mouse whenever they see an orange cat on the screen. For one round, participants listen to a podcast. In the other round, they do not listen to anything.

In the example above, it is difficult to generalise the findings to real-life driving conditions. A computer-based task using a mouse does not resemble real-life driving conditions with a steering wheel. Additionally, a static image of an orange cat may not represent common real-life hurdles when driving.

To improve ecological validity in a lab setting, you could use an immersive driving simulator with a steering wheel and foot pedal instead of a computer and mouse. This increases psychological realism by more closely mirroring the experience of driving in the real world.

Alternatively, for higher ecological validity, you could conduct the experiment using a real driving course.


Internal validity is the extent to which you can be confident that the causal relationship established in your experiment cannot be explained by other factors.

There is an inherent trade-off between external and internal validity; the more applicable you make your study to a broader context, the less you can control extraneous factors in your study.

Threats to external validity are important to recognise and counter in a research design for a robust study.

For example, suppose you want to test whether daily mindfulness practice reduces anxiety. Participants are given a pretest and a post-test measuring how often they experienced anxiety in the past week. During the study, all participants receive individual mindfulness training and are asked to practise mindfulness for 15 minutes every morning.

How to counter threats to external validity

There are several ways to counter threats to external validity:

- Replications counter almost all threats by enhancing generalisability to other settings, populations and conditions.

- Field experiments counter testing and situation effects by using natural contexts.

- Probability sampling counters selection bias by giving every member of the population a known, non-zero chance of being selected for the study sample (in simple random sampling, an equal chance).

- Recalibration or reprocessing also counters selection bias using algorithms to correct weighting of factors (e.g., age) within study samples.
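Reweighting can be illustrated with post-stratification, one common weighting method. The source does not say which algorithm it means, so treat this as a swapped-in example. The sketch assumes you know each group's population share from an external source such as a census. The age bands, scores and population shares are hypothetical.

```python
from collections import Counter

def poststratification_weights(groups, population_shares):
    """Return one weight per respondent so that the weighted sample
    matches the known population share of each group.

    groups: group label for each respondent (e.g. an age band)
    population_shares: dict mapping each group label to its population proportion
    """
    n = len(groups)
    counts = Counter(groups)
    # weight = population share / sample share: under-represented groups get
    # weights above 1, over-represented groups get weights below 1.
    return [population_shares[g] / (counts[g] / n) for g in groups]

def weighted_mean(values, weights):
    """Mean of values, with each value counted in proportion to its weight."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)

# Hypothetical survey: the sample over-represents 18-29 year olds.
age_bands = ["18-29"] * 6 + ["30-49"] * 3 + ["50+"] * 1
scores = [4, 4, 5, 4, 5, 4, 3, 3, 2, 1]
population_shares = {"18-29": 0.2, "30-49": 0.4, "50+": 0.4}

weights = poststratification_weights(age_bands, population_shares)
print("unweighted mean:", sum(scores) / len(scores))
print("weighted mean:", round(weighted_mean(scores, weights), 2))
```

In this made-up sample the unweighted mean is 3.5. Down-weighting the over-represented youngest group lowers the estimate to about 2.33. Weighting only corrects for the factors you weight on. It cannot fix bias on characteristics that were never measured.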

The external validity of a study is the extent to which you can generalise your findings to different groups of people, situations, and measures.

The two types of external validity are population validity (whether you can generalise to other groups of people) and ecological validity (whether you can generalise to other situations and settings).

There are seven threats to external validity: selection bias, history, experimenter effect, Hawthorne effect, testing effect, aptitude-treatment interaction, and situation effect.

Attrition bias can skew your sample so that your final sample differs significantly from your original sample. Your sample is biased because some groups from your population are underrepresented.

With a biased final sample, you may not be able to generalise your findings to the original population that you sampled from, so your external validity is compromised.
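One simple way to see whether attrition has skewed a sample is to compare each group's share of the sample before and after drop-out. The groups and counts below are hypothetical. In practice you would compare whichever characteristics matter for your research question.

```python
def shares(counts):
    """Convert group counts into proportions of the total."""
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}

# Hypothetical counts at the start and end of a study.
enrolled = {"full-time students": 120, "part-time students": 80}
completed = {"full-time students": 108, "part-time students": 40}

before, after = shares(enrolled), shares(completed)
for group in enrolled:
    # Proportion of each group that stayed until the end of the study.
    retention = completed[group] / enrolled[group]
    print(f"{group}: {retention:.0%} retained, "
          f"share of sample {before[group]:.0%} -> {after[group]:.0%}")
```

In this invented case, 90% of full-time students are retained but only 50% of part-time students. The part-time share of the sample therefore falls from 40% to about 27%, so findings from the final sample may no longer generalise to the original population.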

Cite this Scribbr article


Bhandari, P. (2023, December 18). External Validity | Types, Threats & Examples. Scribbr. Retrieved 2 April 2024, from https://www.scribbr.co.uk/research-methods/external-validity-explained/


External Validity – Threats, Examples and Types


External Validity

Definition:

External validity refers to the extent to which the results of a study can be generalized or applied to a larger population, settings, or conditions beyond the specific context of the study. It is a measure of how well the findings of a study can be considered representative of the real world.

How To Increase External Validity

To increase external validity in research, researchers can employ several strategies to enhance the generalizability of their findings. Here are some common approaches:

Representative Sampling

Ensure that the sample used in the study is representative of the target population of interest. Random sampling techniques, such as simple random sampling or stratified sampling, can help reduce sampling bias and increase the likelihood of obtaining a representative sample.
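Here is a short Python sketch of stratified sampling with proportional allocation, one of the techniques mentioned above. It is a minimal illustration that assumes each unit's stratum is known in advance. The student IDs and fields of study are hypothetical.

```python
import random
from collections import Counter

def stratified_sample(population, stratum_of, total_n, seed=None):
    """Draw a stratified random sample with proportional allocation.

    population: list of units (for example, participant records)
    stratum_of: function that returns the stratum label of a unit
    total_n: desired overall sample size (at most len(population))
    """
    rng = random.Random(seed)

    # Group the population into strata.
    strata = {}
    for unit in population:
        strata.setdefault(stratum_of(unit), []).append(unit)

    sample = []
    for units in strata.values():
        # Each stratum contributes draws in proportion to its population share.
        # Rounding means the total can differ slightly from total_n.
        n_h = round(total_n * len(units) / len(population))
        # Simple random sampling without replacement within the stratum.
        sample.extend(rng.sample(units, n_h))
    return sample

# Hypothetical sampling frame: 1,000 students, 60% engineering,
# 30% science, 10% arts.
students = [
    (f"student_{i}", "engineering" if i < 600 else "science" if i < 900 else "arts")
    for i in range(1000)
]
sample = stratified_sample(students, lambda s: s[1], total_n=50, seed=1)
print(Counter(field for _, field in sample))  # 30 engineering, 15 science, 5 arts
```

Proportional allocation guarantees that each field appears in the sample in the same proportion as in the sampling frame. A simple random sample of 50 would match those proportions only on average.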

Diverse Participant Characteristics

Include participants with diverse demographic characteristics, such as age, gender, socioeconomic status, and cultural backgrounds. This helps to ensure that the findings are applicable to a wider range of individuals.

Multiple Settings

Conduct the study in multiple settings or contexts to assess the robustness of the findings across different environments. This could involve replicating the study in different geographical locations, institutions, or organizations.

Large Sample Size

Increasing the sample size can improve the statistical power of the study and the precision of its estimates. Larger random samples have smaller sampling error, so their estimates tend to fall closer to the population values. However, enlarging a sample drawn by a non-representative method does not remove the bias that method introduces.
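The usual normal-approximation margin of error for a proportion, 1.96 × sqrt(p(1 − p)/n), makes the link between sample size and precision concrete. This sketch assumes simple random sampling. It quantifies random sampling error only and says nothing about bias from how participants were recruited.

```python
import math

def margin_of_error(p, n, z=1.96):
    """Approximate 95% margin of error for a sample proportion p
    from a simple random sample of size n (normal approximation)."""
    return z * math.sqrt(p * (1 - p) / n)

# p = 0.5 gives the widest (most conservative) margin.
for n in (100, 400, 1600):
    print(n, round(margin_of_error(0.5, n), 4))
```

The margins come out at about ±9.8, ±4.9 and ±2.45 percentage points. Each fourfold increase in sample size therefore only halves the margin of error.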

Longitudinal Studies

Consider conducting longitudinal studies, which follow participants over an extended period. By observing changes and trends over time, researchers can provide a more comprehensive understanding of the phenomenon under investigation and check whether their findings hold across time.

Real-world Conditions

Strive to create conditions in the study that closely resemble real-world situations. This can be achieved by conducting field experiments, using naturalistic observation, or implementing interventions in real-life settings.

External Validation of Measures

Use established and validated measurement instruments to assess variables of interest. By employing recognized measures, researchers increase the likelihood that their findings can be compared and replicated in other studies.

Meta-Analysis

Conducting a meta-analysis, which involves systematically analyzing and combining the results of multiple studies on the same topic, can provide a more comprehensive view and increase the external validity by pooling findings from various sources.
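The pooling step of a meta-analysis can be sketched with the standard fixed-effect, inverse-variance method, which weights each study by the inverse of its squared standard error. This is a simplified illustration with hypothetical effect sizes, not a full meta-analysis workflow.

```python
import math

def fixed_effect_meta(effects, std_errors):
    """Pool study effect sizes with inverse-variance (fixed-effect) weights.

    effects: effect size from each study (e.g. standardized mean differences)
    std_errors: standard error of each study's effect size
    Returns the pooled effect, its standard error, and a 95% confidence interval.
    """
    # More precise studies (smaller standard errors) receive more weight.
    weights = [1 / se ** 2 for se in std_errors]
    total_weight = sum(weights)
    pooled = sum(w * e for w, e in zip(weights, effects)) / total_weight
    pooled_se = math.sqrt(1 / total_weight)
    ci = (pooled - 1.96 * pooled_se, pooled + 1.96 * pooled_se)
    return pooled, pooled_se, ci

# Hypothetical results from three studies of the same intervention.
pooled, se, ci = fixed_effect_meta([0.30, 0.50, 0.40], [0.10, 0.20, 0.10])
print(f"pooled = {pooled:.3f}, SE = {se:.3f}, 95% CI = ({ci[0]:.3f}, {ci[1]:.3f})")
```

A fixed-effect model assumes that all studies estimate one common effect. That assumption is often doubtful when studies come from different populations and settings, which is exactly the situation that matters for external validity. In that case, a random-effects model that also estimates between-study heterogeneity is usually more appropriate.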

Replication

Encourage replication of the study by other researchers. When multiple studies yield similar results, it strengthens the external validity of the findings.

Transparent Reporting

Clearly document the study design, methodology, and limitations in research publications. Transparent reporting allows readers to evaluate the study’s external validity and consider the potential generalizability of the findings.

Threats to External Validity

There are several threats to external validity that researchers should be aware of when interpreting the generalizability of their findings. These threats include:

Selection Bias

Participants in a study may not be representative of the target population due to the way they were selected or recruited. This can limit the generalizability of the findings to the broader population.

Sampling Bias

Even when random sampling is used, sampling bias can still arise. For example, the sampling frame may omit some groups, or some groups may be less likely to respond. As a result, certain segments of the population are underrepresented or overrepresented in the sample, leading to a skewed representation of the population.

Reactive or Interaction Effects of Testing

The act of participating in a study or being exposed to a specific experimental condition can influence participants’ behaviors or responses. This can lead to artificial results that may not occur in natural settings.

Experimental Setting

The controlled environment of a laboratory or research setting may differ significantly from real-world situations, potentially influencing participant behavior and limiting the generalizability of the findings.

Demand Characteristics

Participants may alter their behavior based on their perception of the study’s purpose or the researcher’s expectations. This can introduce biases and limit the external validity of the findings.

Novelty Effects

Participants may respond differently to novel or unusual conditions in a study, which may not accurately reflect their behavior in everyday life.

Hawthorne Effect

Participants may change their behavior simply because they are aware they are being observed. This effect can distort the findings and limit generalizability.

Experimenter Bias

The actions or behaviors of the researchers conducting the study can inadvertently influence participant responses or outcomes, impacting the generalizability of the findings.

Time-related Threats

The passage of time can affect the external validity of findings. Social, cultural, or technological changes that occur between the study and the application of the findings may limit their relevance.

Specificity of the Intervention or Treatment

If the study involves a specific intervention or treatment, the findings may be limited to that particular intervention and may not generalize to other similar interventions or treatments.

Publication Bias

The tendency of researchers or journals to publish studies with significant or positive findings can introduce a bias in the literature and limit the generalizability of research findings.

Types of External Validity

The types of external validity are as follows:

Population Validity

Population validity refers to the extent to which the findings of a study can be generalized to the larger target population from which the study sample was drawn. If the sample is representative of the population in terms of relevant characteristics, such as age, gender, socioeconomic status, and ethnicity, the study’s findings are more likely to have high population validity.

Ecological Validity

Ecological validity refers to the extent to which the findings of a study can be generalized to real-world settings or conditions. It assesses whether the experimental conditions and procedures accurately represent the complexity and dynamics of the natural environment. High ecological validity suggests that the findings are applicable to everyday situations.

Temporal Validity

Temporal validity, also known as historical validity or generalizability over time, refers to the extent to which the findings of a study can be generalized across different time periods. It assesses whether the relationships or effects observed during the study remain consistent or change over time.

Cross-Cultural Validity

Cross-cultural validity refers to the extent to which the findings of a study can be generalized to different cultural contexts or populations. It examines whether the relationships or effects observed in one culture hold true in other cultures. Conducting research in multiple cultural settings can help establish cross-cultural validity.

Setting Validity

Setting validity refers to the extent to which the findings of a study can be generalized to different settings or environments. It assesses whether the relationships or effects observed in one specific setting can be replicated in other similar settings.

Task Validity

Task validity refers to the extent to which the findings of a study can be generalized to different tasks or activities. It examines whether the relationships or effects observed during a specific task are applicable to other tasks that share similar characteristics.

Measurement Validity

Measurement validity refers to the extent to which the chosen measurements or instruments accurately capture the constructs or variables of interest. It examines whether the relationships or effects observed are robust across different measurement tools or techniques.

Examples of External Validity

Here are some real-world examples of external validity:

Medical Research: A pharmaceutical company conducts a clinical trial to test the efficacy of a new drug in a specific population group (e.g., adults with diabetes). To improve external validity, the company includes participants from diverse backgrounds, ages, and geographical locations so that the results can be generalized to a broader population.

Educational Research: A study examines the effectiveness of a teaching method in improving student performance in mathematics. Researchers choose a sample of schools from different regions, representing various socioeconomic backgrounds, to ensure the findings can be applied to a wider range of schools and students.

Opinion Polls: A polling agency conducts a survey to understand public opinion on a particular political issue. To support external validity, the agency draws a sample of respondents that is representative in terms of factors such as age, gender, ethnicity, education level, and geographic location. This approach allows the findings to be generalized to the broader population.

Social Science Research: A study investigates the impact of a social intervention program on reducing crime rates in a specific neighborhood. To enhance external validity, researchers select neighborhoods that represent diverse socio-economic conditions and urban and rural settings. This approach increases the likelihood that the findings can be applied to similar neighborhoods in other locations.

Psychological Research: A psychology study examines the effects of a therapy technique on reducing anxiety levels in individuals. To improve external validity, the researchers recruit a diverse sample of participants, including individuals of different ages, genders, and cultural backgrounds. This makes it more likely that the findings apply to a broader range of individuals experiencing anxiety.

Applications of External Validity

External validity has several practical applications across various fields. Here are some specific applications of external validity:

Policy Development:

External validity helps policymakers make informed decisions by considering research findings from different contexts and populations. By examining the external validity of studies, policymakers can determine the applicability and generalizability of research results to their target population and policy goals.

Program Evaluation:

External validity is crucial in evaluating the effectiveness of programs or interventions. By assessing the external validity of evaluation studies, policymakers and program administrators can determine if the findings are applicable to their target population and whether similar interventions can be implemented in different settings.

Market Research:

External validity is essential in market research to understand consumer behavior and preferences. By conducting studies with representative samples, companies can extrapolate the findings to the broader consumer population, allowing them to make informed marketing and product development decisions.

Health Interventions:

External validity plays a significant role in healthcare research. It helps researchers and healthcare practitioners understand the generalizability of treatment outcomes to diverse patient populations. By considering external validity, healthcare providers can determine if a specific treatment or intervention will be effective for their patients.

Education and Training:

External validity is important in educational research to ensure that instructional methods, educational interventions, and training programs are effective across diverse student populations and different educational settings. It helps educators and trainers make evidence-based decisions about instructional strategies that are likely to have positive outcomes in different contexts.

Public Opinion Research:

External validity is crucial in public opinion research, such as political polling or survey research. By ensuring a representative sample and considering external validity, researchers can generalize their findings to the larger population, providing insights into public sentiment and informing decision-making processes.

Advantages of External Validity

Here are some advantages of external validity:

- Generalizability: External validity allows researchers to generalize their findings to broader populations, settings, or conditions. It enables them to make inferences about how the results of a study might hold true in real-world situations beyond the controlled environment of the study.

- Real-world applicability: When a study has high external validity, the findings are more likely to be applicable and relevant to real-world scenarios. This is particularly important in fields such as medicine, psychology, and social sciences, where the goal is often to understand and improve human behavior and well-being.

- Increased confidence in findings: Studies with high external validity provide stronger evidence and increase confidence in the findings. When the results can be generalized to diverse populations or different contexts, it suggests that the observed effects are more robust and reliable.

- Enhanced ecological validity: Ecological validity, the degree to which a study reflects real-life situations, is one type of external validity. When a study is designed for good external validity, it is more likely that the findings accurately represent the complexities and nuances of the real world.

- Policy implications: Research findings with high external validity are more likely to have practical implications for policy-making. Policymakers are interested in studies that can inform decisions and interventions on a larger scale. Studies with strong external validity provide a basis for making informed decisions and implementing effective policies.

- Replication and meta-analysis: External validity facilitates replication studies and meta-analyses, which involve combining the results of multiple studies. When studies have high external validity, it becomes easier to replicate the findings in different contexts or conduct meta-analyses to examine the overall effects across a range of studies.

- Improved understanding of causal relationships: External validity allows researchers to test the generalizability of causal relationships. By replicating studies in different settings or populations, researchers can examine whether the causal relationships observed in one context hold true in other contexts, providing a more comprehensive understanding of the phenomenon under investigation.

Limitations of External Validity

While external validity offers several advantages, it also has limitations that researchers need to consider. Here are some limitations of external validity:

- Specificity of conditions: The specific conditions and settings of a study may limit the generalizability of the findings. Factors such as the time period, location, and sample characteristics can influence the results. For example, cultural, socioeconomic, or geographical differences between the study sample and the target population may affect the generalizability of the findings.

- Selection bias: In many studies, participants are recruited through convenience sampling or other non-random methods, which can introduce selection bias. This means that the sample may not be representative of the larger population, reducing the external validity of the findings. Selection bias can limit the generalizability of the results to other populations or contexts.

- Artificiality of experimental settings: Studies conducted in controlled laboratory or experimental settings may lack ecological validity. The artificial conditions and controlled variables may not accurately reflect real-world complexities. Participants’ behavior in a laboratory setting may differ from their behavior in naturalistic settings, leading to limited generalizability.

- Novelty and awareness effects: Participants in research studies may behave differently simply because they are aware they are being studied. This awareness can lead to the novelty effect or demand characteristics, where participants alter their behavior in response to the study context or the researchers’ expectations. As a result, the observed effects may not accurately represent real-world behavior.

- Time-dependent effects: The relevance and applicability of research findings can change over time due to societal, technological, or cultural shifts. What may be true and valid today may not hold true in the future. Therefore, the external validity of a study’s findings may diminish as time progresses.

- Lack of contextual variation: Studies often focus on a narrow range of contexts or populations, limiting the understanding of how findings may vary across different contexts. The external validity of a study may be compromised if it fails to account for contextual variations that can influence the generalizability of the results.

- Replication challenges: While replication is important for assessing the external validity of a study, it can be challenging to replicate studies in different contexts or with diverse populations. Replication studies may encounter practical constraints, such as resource limitations, time constraints, or ethical considerations, which can limit the ability to establish external validity.


About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer


The 4 Types of Validity in Research | Definitions & Examples

Published on September 6, 2019 by Fiona Middleton . Revised on June 22, 2023.

Validity tells you how accurately a method measures something. If a method measures what it claims to measure, and the results closely correspond to real-world values, then it can be considered valid. There are four main types of validity:

- Construct validity: Does the test measure the concept that it’s intended to measure?

- Content validity: Is the test fully representative of what it aims to measure?

- Face validity: Does the content of the test appear to be suitable to its aims?

- Criterion validity: Do the results accurately measure the concrete outcome they are designed to measure?

In quantitative research, you have to consider the reliability and validity of your methods and measurements.

Note that this article deals with types of test validity, which determine the accuracy of the actual components of a measure. If you are doing experimental research, you also need to consider internal and external validity, which deal with the experimental design and the generalizability of results.


Construct validity evaluates whether a measurement tool really represents the thing we are interested in measuring. It’s central to establishing the overall validity of a method.

What is a construct?

A construct refers to a concept or characteristic that can’t be directly observed, but can be measured by observing other indicators that are associated with it.

Constructs can be characteristics of individuals, such as intelligence, obesity, job satisfaction, or depression; they can also be broader concepts applied to organizations or social groups, such as gender equality, corporate social responsibility, or freedom of speech.

There is no objective, observable entity called “depression” that we can measure directly. But based on existing psychological research and theory, we can measure depression based on a collection of symptoms and indicators, such as low self-confidence and low energy levels.

What is construct validity?

Construct validity is about ensuring that the method of measurement matches the construct you want to measure. If you develop a questionnaire to diagnose depression, you need to know: does the questionnaire really measure the construct of depression? Or is it actually measuring the respondent’s mood, self-esteem, or some other construct?

To achieve construct validity, you have to ensure that your indicators and measurements are carefully developed based on relevant existing knowledge. The questionnaire must include only relevant questions that measure known indicators of depression.

The other types of validity described below can all be considered as forms of evidence for construct validity.


Content validity assesses whether a test is representative of all aspects of the construct.

To produce valid results, the content of a test, survey or measurement method must cover all relevant parts of the subject it aims to measure. If some aspects are missing from the measurement (or if irrelevant aspects are included), the validity is threatened and the research is likely suffering from omitted variable bias.

A mathematics teacher develops an end-of-semester algebra test for her class. The test should cover every form of algebra that was taught in the class. If some types of algebra are left out, then the results may not be an accurate indication of students’ understanding of the subject. Similarly, if she includes questions that are not related to algebra, the results are no longer a valid measure of algebra knowledge.

Face validity considers how suitable the content of a test seems to be on the surface. It’s similar to content validity, but face validity is a more informal and subjective assessment.

You create a survey to measure the regularity of people’s dietary habits. You review the survey items, which ask questions about every meal of the day and snacks eaten in between for every day of the week. On its surface, the survey seems like a good representation of what you want to test, so you consider it to have high face validity.

As face validity is a subjective measure, it’s often considered the weakest form of validity. However, it can be useful in the initial stages of developing a method.

Criterion validity evaluates how well a test can predict a concrete outcome, or how well the results of your test approximate the results of another test.

What is a criterion variable?

A criterion variable is an established and effective measurement that is widely considered valid, sometimes referred to as a “gold standard” measurement. Criterion variables can be very difficult to find.

What is criterion validity?

To evaluate criterion validity, you calculate the correlation between the results of your measurement and the results of the criterion measurement. If there is a high correlation, this gives a good indication that your test is measuring what it intends to measure.

A university professor creates a new test to measure applicants’ English writing ability. To assess how well the test really does measure students’ writing ability, she finds an existing test that is considered a valid measurement of English writing ability, and compares the results when the same group of students take both tests. If the outcomes are very similar, the new test has high criterion validity.
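The correlation described above is usually the Pearson correlation coefficient. The scores below are hypothetical, and a real validation study would use a much larger group of students. The article does not give a threshold for a "high" correlation, so this sketch does not assume one.

```python
import math

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    # Covariance and variances, omitting the common 1/(n - 1) factor,
    # which cancels in the ratio.
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

# Hypothetical scores for the same six students on both tests.
new_test = [55, 62, 70, 74, 81, 90]
established_test = [58, 60, 71, 70, 85, 88]

r = pearson_r(new_test, established_test)
print(f"r = {r:.2f}")
```

For these invented scores r is about 0.97, which would indicate close agreement between the new writing test and the established one.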


Face validity and content validity are similar in that they both evaluate how suitable the content of a test is. The difference is that face validity is subjective and assesses content only at a surface level.

When a test has strong face validity, anyone would agree that the test’s questions appear to measure what they are intended to measure.

For example, looking at a 4th grade math test consisting of problems in which students have to add and multiply, most people would agree that it has strong face validity (i.e., it looks like a math test).

On the other hand, content validity evaluates how well a test represents all the aspects of a topic. Assessing content validity is more systematic and relies on expert evaluation of each question, analyzing whether each one covers the aspects that the test was designed to cover.

A 4th grade math test would have high content validity if it covered all the skills taught in that grade. Experts (in this case, math teachers) would have to evaluate the content validity by comparing the test to the learning objectives.

Criterion validity evaluates how well a test measures the outcome it was designed to measure. An outcome can be, for example, the onset of a disease.

Criterion validity consists of two subtypes depending on the time at which the two measures (the criterion and your test) are obtained:

- Concurrent validity is a validation strategy where the scores of a test and the criterion are obtained at the same time.

- Predictive validity is a validation strategy where the criterion variables are measured after the scores of the test.

Convergent validity and discriminant validity are both subtypes of construct validity. Together, they help you evaluate whether a test measures the concept it was designed to measure.

- Convergent validity indicates whether a test that is designed to measure a particular construct correlates with other tests that assess the same or similar construct.

- Discriminant validity indicates whether two tests that should not be highly related to each other are indeed not related. This type of validity is also called divergent validity.

You need to assess both in order to demonstrate construct validity. Neither one alone is sufficient for establishing construct validity.
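To illustrate how the two subtypes work together, here is a Python sketch with invented scores. It compares a hypothetical new anxiety scale with two other measures. An established anxiety scale should correlate strongly with it. An unrelated vocabulary test should not. The cut-offs of 0.5 and 0.3 are illustrative assumptions, not standards.

```python
import math

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

# Hypothetical scores for six participants.
new_anxiety_scale = [10, 14, 18, 22, 26, 30]
established_anxiety_scale = [12, 15, 17, 23, 25, 31]  # same construct
vocabulary_test = [5, 9, 4, 8, 6, 7]                   # unrelated construct

r_convergent = pearson_r(new_anxiety_scale, established_anxiety_scale)
r_discriminant = pearson_r(new_anxiety_scale, vocabulary_test)

# Illustrative cut-offs only; there is no universal threshold.
supports_convergent = r_convergent >= 0.5
supports_discriminant = abs(r_discriminant) < 0.3
print(f"convergent r = {r_convergent:.2f}, discriminant r = {r_discriminant:.2f}")
print("construct validity supported:", supports_convergent and supports_discriminant)
```

Neither check alone settles the question. This mirrors the point above that both kinds of evidence are needed.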

The purpose of theory-testing mode is to find evidence in order to disprove, refine, or support a theory. As such, generalizability is not the aim of theory-testing mode.

Due to this, the priority of researchers in theory-testing mode is to eliminate alternative causes for relationships between variables. In other words, they prioritize internal validity over external validity, including ecological validity.

It’s often best to ask a variety of people to review your measurements. You can ask experts, such as other researchers, or laypeople, such as potential participants, to judge the face validity of tests.

While experts have a deep understanding of research methods, the people you’re studying can provide you with valuable insights you may have missed otherwise.

Cite this Scribbr article


Middleton, F. (2023, June 22). The 4 Types of Validity in Research | Definitions & Examples. Scribbr. Retrieved April 2, 2024, from https://www.scribbr.com/methodology/types-of-validity/


Fiona Middleton


Research Methods Knowledge Base


External Validity


External validity is related to generalizing. That’s the major thing you need to keep in mind. Recall that validity refers to the approximate truth of propositions, inferences, or conclusions. So, external validity refers to the approximate truth of conclusions that involve generalizations. Put in more pedestrian terms, external validity is the degree to which the conclusions in your study would hold for other persons in other places and at other times.

In science there are two major approaches to how we provide evidence for a generalization. I’ll call the first approach the Sampling Model. In the sampling model, you start by identifying the population you would like to generalize to. Then, you draw a fair sample from that population and conduct your research with the sample. Finally, because the sample is representative of the population, you can automatically generalize your results back to the population. There are several problems with this approach. First, perhaps you don’t know at the time of your study who you might ultimately like to generalize to. Second, you may not easily be able to draw a fair or representative sample. Third, it’s impossible to sample across all times that you might like to generalize to (like next year).
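The three steps of the sampling model can be sketched in Python under simplifying assumptions. The "population" here is simulated and fully listed. It is sampled by simple random sampling without replacement, and the interval uses a normal approximation. In real research the population is rarely fully enumerable, which is one of the problems listed above.

```python
import random
import statistics

random.seed(42)  # fixed seed so the sketch is reproducible

# Step 1: identify the population (here, 10,000 hypothetical test scores).
population = [random.gauss(100, 15) for _ in range(10_000)]

# Step 2: draw a fair sample, using simple random sampling without replacement.
sample = random.sample(population, k=200)

# Step 3: generalize the sample estimate back to the population.
estimate = statistics.mean(sample)
se = statistics.stdev(sample) / len(sample) ** 0.5
ci = (estimate - 1.96 * se, estimate + 1.96 * se)  # approximate 95% interval

print(f"Sample mean {estimate:.1f}, approx. 95% CI {ci[0]:.1f} to {ci[1]:.1f}")
print(f"True population mean {statistics.mean(population):.1f}")
```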

I’ll call the second approach to generalizing the Proximal Similarity Model. ‘Proximal’ means ‘nearby’ and ‘similarity’ means… well, it means ‘similarity’. The term proximal similarity was suggested by Donald T. Campbell as an appropriate relabeling of the term external validity (although he was the first to admit that it probably wouldn’t catch on!). Under this model, we begin by thinking about different generalizability contexts and developing a theory about which contexts are more like our study and which are less so. For instance, we might imagine several settings that have people who are more similar to the people in our study or people who are less similar. This also holds for times and places. When we place different contexts in terms of their relative similarities, we can call this implicit theoretical dimension a gradient of similarity. Once we have developed this proximal similarity framework, we are able to generalize. How? We conclude that we can generalize the results of our study to other persons, places or times that are more like our study (that is, more proximally similar to it). Notice that here, we can never generalize with certainty; it is always a question of more or less similar.
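Campbell’s model is conceptual, but a gradient of similarity can be illustrated numerically. In this hypothetical Python sketch, each context is described by a few features scaled from 0 to 1. Similarity is defined as one minus the mean absolute difference from the study context. The features, values, and similarity measure are all assumptions chosen for illustration.

```python
def similarity(a, b):
    """1 minus the mean absolute difference across shared features (all scaled 0-1)."""
    diffs = [abs(a[k] - b[k]) for k in a]
    return 1 - sum(diffs) / len(diffs)

# Hypothetical description of the study context and of candidate contexts.
study = {"mean_age": 0.2, "urban": 1.0, "income": 0.7}
contexts = {
    "nearby college town": {"mean_age": 0.25, "urban": 0.9, "income": 0.7},
    "rural county": {"mean_age": 0.6, "urban": 0.1, "income": 0.4},
    "large city": {"mean_age": 0.4, "urban": 1.0, "income": 0.5},
}

# The gradient: contexts ordered from most to least proximally similar.
gradient = sorted(contexts, key=lambda c: similarity(study, contexts[c]), reverse=True)
for name in gradient:
    print(f"{name}: {similarity(study, contexts[name]):.2f}")
```

Under this model, generalizing to the top of the list is better supported than generalizing to the bottom. No context earns a guarantee.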

Threats to External Validity

A threat to external validity is an explanation of how you might be wrong in making a generalization. For instance, you conclude that the results of your study (which was done in a specific place, with certain types of people, and at a specific time) can be generalized to another context (for instance, another place, with slightly different people, at a slightly later time). There are three major threats to external validity because there are three ways you could be wrong: people, places or times. Your critics could come along, for example, and argue that the results of your study are due to the unusual type of people who were in the study. Or, they could argue that it might only work because of the unusual place you did the study in (perhaps you did your educational study in a college town with lots of high-achieving educationally-oriented kids). Or, they might suggest that you did your study in a peculiar time. For instance, if you did your smoking cessation study the week after the Surgeon General issued the well-publicized results of the latest smoking and cancer studies, you might get different results than if you had done it the week before.

Improving External Validity

How can we improve external validity? One way, based on the sampling model, suggests that you do a good job of drawing a sample from a population. For instance, you should use random selection, if possible, rather than a nonrandom procedure. And, once selected, you should try to ensure that the respondents participate in your study and that you keep your dropout rates low. A second approach would be to use the theory of proximal similarity more effectively. How? Perhaps you could do a better job of describing the ways your contexts and others differ, providing lots of data about the degree of similarity between various groups of people, places, and even times. You might even be able to map out the degree of proximal similarity among various contexts with a methodology like concept mapping. Perhaps the best approach to criticisms of generalizations is simply to show them that they’re wrong: do your study in a variety of places, with different people and at different times. That is, your external validity (ability to generalize) will be stronger the more you replicate your study.


External Validity: Types, Research Methods & Examples

External validity is a key concern for researchers who want the cause-and-effect relationships they find to hold beyond their own study.

When research has external validity, its results can be applied to other people in different situations or places. Without it, findings can’t be generalized, and researchers can’t apply the results of their studies to the real world. This is one reason some psychology research is conducted outside a lab setting.

Still, researchers sometimes prioritize establishing causal relationships between variables over being able to generalize the results.

In this article, we’ll talk about what external validity means, its types, and research methods that can improve it.


What is external validity?

External validity describes how effectively the findings of an experiment may be generalized to different people, places, or times. Most scientific investigations do not intend to obtain outcomes that only apply to the few persons who participated in the study.

Instead, researchers want to be able to take the results of an experiment and apply them to a larger group of people. This is a large part of what inferential statistics aim to do.

For example, if you’re testing a new drug or educational program, you don’t want to know only that it works for a few people. You want to apply those results beyond the experiment and its participants. This is called “generalizability,” and it is the essence of external validity.

Types of external validity

Generally, there are three main types of external validity. We’ll discuss each one below, with examples.

Population validity

Population validity is a type of external validity that looks at how well a study’s results apply to a larger group of people. Here, “population” refers to the group of people about whom a researcher wants to draw conclusions, whereas a sample is the particular group of people who participate in the research.

If the results from the sample apply to that larger group, the study has high population validity.

Example: low population validity

You want to test the theory about how exercise and sleep are linked. You think that adults will sleep better when they do physical activities regularly. Your target group is adults in the United States, but your sample comprises about 300 college students.

Even though they are all adults, population validity is hard to ensure here, because college students represent only a subset of adults in the US.

So, your study has limited population validity, and you can apply the results to only part of the population.

Ecological validity

Ecological validity is another type of external validity; it describes how well research results apply in different situations. In simple terms, ecological validity is about whether your results can be applied in the real world.

So, if a study has high ecological validity, its results can be applied in the real world. Low ecological validity, on the other hand, means the results may not apply outside the experiment.

Example: low ecological validity

The Milgram Experiment is a classic example of low ecological validity.

Stanley Milgram studied obedience to authority in the 1960s. He instructed participants to give increasingly strong electric shocks to an actor whenever the actor answered a question wrongly. Although the shocks were fake and the victim’s reactions were acted, the study showed high levels of obedience to authority.

The results of this study were revolutionary for the field of social psychology. However, the study is often criticized for its low ecological validity, because Milgram’s set-up did not resemble real-life situations.

In the experiment, he created a situation in which participants were under strong pressure to keep obeying instructions. Real-world situations can be very different.

Temporal validity

When assessing external validity, time is just as important as the people involved and potential confounding factors.

Temporal validity refers to whether findings hold up over time. Specifically, it describes how well research results can be extended to another period.

High temporal validity means that research results still apply at different times and that the factors studied will remain relevant in the future.

Imagine that you’re a psychologist studying conformity, that is, why people act the same way as those around them.

You find that social pressure from the majority group has a large effect on the choices of the minority, which leads people to act similarly. Even though psychologist Solomon Asch did this research in the 1950s, the results still apply in the real world today.

This study therefore still has temporal validity roughly 70 years later.

Research methods of external validity

There are several methods you can use to improve the external validity of your research. Some of them are described below:

Field experiments

Field experiments are conducted in real-world settings rather than in a controlled environment such as a laboratory.

Criteria for inclusion and exclusion

Establish clear criteria for who can participate in the research, and make sure the group being examined is properly identified.

Realism in psychology

If you want participants to believe that the events that take place throughout the study are real, you can give them a cover story about the purpose of the research. This helps ensure they don’t behave differently from how they would in real life just because they know they are being studied.

Replication

Repeat the study with different samples or in a different place to see if you get the same results. When many studies have been done on the same topic, a meta-analysis can be used to check whether the effect of an independent variable replicates across them.
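Here is a minimal sketch of one common way to combine replications: a fixed-effect, inverse-variance meta-analysis in Python. It uses Cochran’s Q as a rough check of whether the effect is consistent across studies. The effect sizes and standard errors are invented. A real meta-analysis would usually also consider a random-effects model.

```python
import math

# Hypothetical replications: (effect size, standard error) per study.
studies = [(0.40, 0.10), (0.30, 0.15), (0.50, 0.20)]

# Inverse-variance weights: more precise studies count for more.
weights = [1 / se ** 2 for _, se in studies]
pooled = sum(w * d for w, (d, _) in zip(weights, studies)) / sum(weights)
pooled_se = math.sqrt(1 / sum(weights))

# Cochran's Q: weighted squared deviations from the pooled effect.
# With k studies, Q is compared with a chi-square distribution on k - 1 df;
# a Q far above k - 1 suggests the effect does not replicate consistently.
q = sum(w * (d - pooled) ** 2 for w, (d, _) in zip(weights, studies))

print(f"pooled effect = {pooled:.3f} (SE {pooled_se:.3f}), Q = {q:.2f} on {len(studies) - 1} df")
```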

Reprocessing

This involves using statistical methods to correct problems with external validity, for example by reweighting groups whose makeup differs from the population in some way, such as age.
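As a sketch of reweighting, the Python example below applies post-stratification weights by age group, in the spirit of the exercise-and-sleep example above. All counts, population shares, and group means are hypothetical. Each group’s weight is its population share divided by its share of the sample.

```python
# Hypothetical sample that over-represents younger adults.
sample_counts = {"18-29": 150, "30-49": 100, "50+": 50}
population_share = {"18-29": 0.20, "30-49": 0.35, "50+": 0.45}  # assumed census shares
group_means = {"18-29": 7.5, "30-49": 6.8, "50+": 6.0}          # e.g. mean hours of sleep

n = sum(sample_counts.values())
# Post-stratification weight = population share / sample share.
weights = {g: population_share[g] / (sample_counts[g] / n) for g in sample_counts}

unweighted = sum(sample_counts[g] * group_means[g] for g in sample_counts) / n
weighted = (
    sum(sample_counts[g] * weights[g] * group_means[g] for g in sample_counts)
    / sum(sample_counts[g] * weights[g] for g in sample_counts)
)

print(f"unweighted mean = {unweighted:.2f}, reweighted mean = {weighted:.2f}")
```

Reweighting only corrects for the variables you weight on (here, age). It cannot fix differences the weights do not capture.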


As discussed above, the ability to replicate the results of an experiment is a key component of its external validity. Good sampling methods can also improve the external validity of research.

Researchers compare their results with other relevant data to judge external validity. They can also repeat the research with more people from the target population. External validity is hard to assess, but it matters if the results are to be applied in the future.


- Open access

- Published: 06 April 2022

Identification of tools used to assess the external validity of randomized controlled trials in reviews: a systematic review of measurement properties

- Andres Jung (ORCID: orcid.org/0000-0003-0201-0694)

- Julia Balzer (ORCID: orcid.org/0000-0001-7139-229X)

- Tobias Braun (ORCID: orcid.org/0000-0002-8851-2574)

- Kerstin Luedtke (ORCID: orcid.org/0000-0002-7308-5469)

BMC Medical Research Methodology volume 22, Article number: 100 (2022)


Background

Internal and external validity are the most relevant components when critically appraising randomized controlled trials (RCTs) for systematic reviews. However, there is no gold standard to assess external validity. This might be related to the heterogeneity of the terminology as well as to unclear evidence of the measurement properties of available tools. The aim of this review was to identify tools to assess the external validity of RCTs. A further aim was to evaluate the quality of the identified tools and to recommend the use of individual tools to assess the external validity of RCTs in future systematic reviews.

Methods

A two-phase systematic literature search was performed in four databases: PubMed, Scopus, PsycINFO via OVID, and CINAHL via EBSCO. First, tools to assess the external validity of RCTs were identified. Second, studies investigating the measurement properties of these tools were selected. The measurement properties of each included tool were appraised using an adapted version of the COnsensus-based Standards for the selection of health Measurement INstruments (COSMIN) guidelines.

Results

Thirty-eight publications reporting on the development or validation of 28 tools were included. For 61% (17/28) of the included tools, there was no evidence for measurement properties. For the remaining tools, reliability was the most frequently assessed property. Reliability was judged as “sufficient” for three tools (very low certainty of evidence). Content validity was rated as “sufficient” for one tool (moderate certainty of evidence).

Conclusions

Based on these results, no available tool can be fully recommended to assess the external validity of RCTs in systematic reviews. Several steps are required to overcome the identified difficulties to either adapt and validate available tools or to develop a better suitable tool.

Trial registration

Prospective registration at Open Science Framework (OSF): https://doi.org/10.17605/OSF.IO/PTG4D .


Systematic reviews are powerful research formats to summarize and synthesize the evidence from primary research in health sciences [ 1 , 2 ]. In clinical practice, their results are often applied for the development of clinical guidelines and treatment recommendations [ 3 ]. Consequently, the methodological quality of systematic reviews is of great importance. In turn, the informative value of systematic reviews depends on the overall quality of the included controlled trials [ 3 , 4 ]. Accordingly, the evaluation of the internal and external validity is considered a key step in systematic review methodology [ 4 , 5 ].

Internal validity relates to the systematic error or bias in clinical trials [ 6 ] and expresses how methodologically robust the study was. External validity is the inference about the extent to which “a causal relationship holds over variations in persons, settings, treatments and outcomes” [ 7 , 8 ]. There are plenty of definitions for external validity and a variety of different terms; external validity, generalizability, applicability, and transferability, among others, are used interchangeably in the literature [ 9 ]. Schünemann et al. [ 10 ] suggest that: (1) generalizability “may refer to whether or not the evidence can be generalized from the population from which the actual research evidence is obtained to the population for which a healthcare answer is required”; (2) applicability may be interpreted as “whether or not the research evidence answers the healthcare question asked by a clinician or public health practitioner” and (3) transferability is often interpreted as to “whether research evidence can be transferred from one setting to another”. Four essential dimensions are proposed to evaluate the external validity of controlled clinical trials in systematic reviews: patients, treatment (including comparator) variables, settings, and outcome modalities [ 4 , 11 ]. Its evaluation depends on the specificity of the reviewers’ research question, the review’s inclusion and exclusion criteria compared to the trial’s population, the setting of the study, as well as the quality of reporting these four dimensions.

In health research, however, external validity is often neglected when critically appraising clinical studies [ 12 , 13 ]. One possible explanation might be the lack of a gold standard for assessing the external validity of clinical trials. Systematic and scoping reviews have examined published frameworks and tools for assessing the external validity of clinical trials in health research [ 9 , 12 , 14 – 18 ]. They found substantial heterogeneity of terminology and criteria as well as a lack of guidance on how to assess the external validity of intervention studies [ 9 , 12 , 15 – 18 ]. The results and conclusions of previous reviews were based on descriptive as well as content analysis of frameworks and tools on external validity [ 9 , 14 – 18 ]. Although the feasibility of some frameworks and tools was assessed [ 12 ], none of the previous reviews evaluated the quality of the development and validation processes of the frameworks and tools used.

RCTs are considered the most suitable research design for investigating cause and effect mechanisms of interventions [ 19 ]. However, the study design of RCTs is susceptible to a lack of external validity due to the randomization, the use of exclusion criteria and the limited willingness of eligible individuals to participate [ 20 , 21 ]. There is evidence that the reliability of external validity evaluations with the same measurement tool differed between randomized and non-randomized trials [ 22 ]. In addition, due to differences in requested information from reporting guidelines (e.g. consolidated standards of reporting trials (CONSORT) statement, strengthening the reporting of observational studies in Epidemiology (STROBE) statement), respective items used for assessing the external validity vary between research designs. Acknowledging the importance of RCTs in the medical field, this review focused only on tools developed to assess the external validity of RCTs. The aim was to identify tools to assess the external validity of RCTs in systematic reviews and to evaluate the quality of evidence regarding their measurement properties. Objectives: (1) to identify published measurement tools to assess the external validity of RCTs in systematic reviews; (2) to evaluate the quality of identified tools; (3) to recommend the use of tools to assess the external validity of RCTs in future systematic reviews.

This systematic review was reported in accordance with the Preferred Reporting Items for Systematic reviews and Meta-Analyses (PRISMA) 2020 Statement [ 23 ] and used an adapted version of the PRISMA flow diagram to illustrate the systematic search strategy used to identify clinimetric papers [ 24 ]. This study was conducted according to an adapted version of the COnsensus-based Standards for the selection of health Measurement INstruments (COSMIN) methodology for systematic reviews of measurement instruments in health sciences [ 25 – 27 ] and followed recommendations of the JBI manual for systematic reviews of measurement properties [ 28 ]. The COSMIN methodology was chosen since this method is comprehensive and validation processes do not differ substantially between patient-reported outcome measures (PROMs) and measurement instruments of other latent constructs. According to the COSMIN authors, it is acceptable to use this methodology for non-PROMs [ 26 ]. Furthermore, because of its flexibility, it has already been used in systematic reviews assessing measurement tools which are not health measurement instruments [ 29 – 31 ]. However, adaptations or modifications may be necessary [ 26 ]. The type of measurement instrument of interest for the current study was reviewer-reported measurement tools. Pilot tests and adaptation-processes of the COSMIN methodology are described below (see section “Quality assessment and evidence synthesis”). The definition of each measurement property evaluated in the present review is based on COSMIN’s taxonomy, terminology and definition of measurement properties [ 32 ]. The review protocol was prospectively registered on March 6, 2020 in the Open Science Framework (OSF) with the registration DOI: https://doi.org/10.17605/OSF.IO/PTG4D [ 33 ].

Deviations from the preregistered protocol

One of the aims listed in the review protocol was to evaluate the characteristics and restrictions of measurement tools in terms of terminology and criteria for assessing external validity. This issue has been addressed in two recent reviews with a similar scope [ 9 , 17 ]. Although our eligibility criteria differed, it was concluded that no novel data was available for the present review to extract, since authors of included tools did not describe the definition or construct of interest or cited the same reports. Therefore, this objective was omitted.

Literature search and screening

A search of the literature was conducted in four databases: PubMed, Scopus, PsycINFO via OVID, and CINAHL via EBSCO. The eligibility criteria and search strategy were predefined in collaboration with a research librarian and are detailed in Table S1 (see Additional file 1 ). The search strategy was designed according to the COSMIN methodology and consists of the following four key elements: (1) construct (external validity of RCTs from the review authors’ perspective), (2) population(s) (RCTs), (3) type of instrument(s) (measurement tools, checklists, surveys etc.), and (4) measurement properties (e.g. validity and reliability) [ 34 ]. The four key elements were divided into two main searches (adapted from previous reviews [ 24 , 35 , 36 ]): the phase 1 search contained the first three key elements to identify measurement tools to assess the external validity of RCTs. The phase 2 search aimed to identify studies evaluating the measurement properties of each tool identified and included during phase 1. For this second search, a sensitive PubMed search filter developed by Terwee et al. [ 37 ] was applied. Translations of this filter for the remaining databases were taken from the COSMIN website and from other published COSMIN reviews [ 38 , 39 ] with permission from the authors. Both searches were conducted until March 2021 without restriction regarding the time of publication (databases were searched from inception). In addition, forward citation tracking with Scopus (which is a specialized citation database) was conducted in phase 2 using the ‘cited by’-function. The Scopus search filter was then entered into the ‘search within results’-function. The results from the forward citation tracking with Scopus were added to the database search results in the Rayyan app for screening.
Reference lists of the retrieved full-text articles and forward citations with PubMed were scanned manually for any additional studies by one reviewer (AJ) and checked by a second reviewer (KL).
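The Python sketch below illustrates how key elements can be combined into the two searches. It is hypothetical. Synonyms within an element are joined with OR, and elements are joined with AND. The search terms and `EXAMPLE-TOOL` are placeholders, not the review's actual strategy, which is given in Table S1. Real database syntax (field tags, truncation) also differs between PubMed, Scopus, PsycINFO and CINAHL.

```python
def or_block(terms):
    """Join synonyms with OR, quoting multi-word phrases, and wrap in parentheses."""
    quoted = [f'"{t}"' if " " in t else t for t in terms]
    return "(" + " OR ".join(quoted) + ")"

def and_blocks(*blocks):
    """Combine key elements so that every element must match (AND)."""
    return " AND ".join(or_block(b) for b in blocks)

# Phase 1: construct AND population AND instrument type (placeholder terms).
construct = ["external validity", "generalizability", "applicability", "transferability"]
population = ["randomized controlled trial", "RCT"]
instrument = ["tool", "checklist", "instrument"]
phase1 = and_blocks(construct, population, instrument)

# Phase 2: a tool found in phase 1 AND a measurement-properties filter.
tool_name = ["EXAMPLE-TOOL"]  # hypothetical placeholder for an included tool
measurement_properties = ["reliability", "validity", "reproducibility"]
phase2 = and_blocks(tool_name, measurement_properties)

print(phase1)
print(phase2)
```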

Title and abstract screening for both searches and the full-text screening during phase 2 were performed independently by at least two out of three involved researchers (AJ, KL & TB). For pragmatic reasons, full-text screening and tool/data extraction in phase 1 were performed by one reviewer (AJ) and checked by a second reviewer (TB). This screening method is acceptable for full-text screening as well as data extraction [ 40 ]. Data extraction for both searches was performed with a predesigned extraction sheet based on the recommendations of the COSMIN user manual [ 34 ]. The Rayyan Qatar Computing Research Institute (QCRI) web app [ 41 ] was used to facilitate the screening process (both searches) according to a priori defined eligibility criteria. A pilot test was conducted for both searches in order to reach agreement between the reviewers during the screening process. For this purpose, the first 100 records in phase 1 and the first 50 records in phase 2 (sorted by date) in the Rayyan app were screened by two reviewers independently, and issues regarding the feasibility of screening methods were subsequently discussed in a meeting.
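The review reports reaching agreement through pilot screening and discussion. It does not state that an agreement statistic was computed. Purely as a swapped-in illustration, Cohen’s kappa is one common way to quantify agreement between two screeners beyond chance. The decisions below are invented.

```python
from collections import Counter

def cohens_kappa(rater1, rater2):
    """Cohen's kappa for two raters' categorical decisions on the same records."""
    n = len(rater1)
    observed = sum(a == b for a, b in zip(rater1, rater2)) / n
    counts1, counts2 = Counter(rater1), Counter(rater2)
    categories = set(rater1) | set(rater2)
    # Agreement expected by chance, from each rater's marginal proportions.
    expected = sum(counts1[c] * counts2[c] for c in categories) / n ** 2
    if expected == 1:
        return 1.0  # both raters used one identical category throughout
    return (observed - expected) / (1 - expected)

# Hypothetical include/exclude decisions for ten records in a pilot round.
reviewer_a = ["in", "out", "out", "in", "out", "out", "out", "in", "out", "out"]
reviewer_b = ["in", "out", "out", "out", "out", "out", "out", "in", "out", "in"]

kappa = cohens_kappa(reviewer_a, reviewer_b)
print(f"kappa = {kappa:.2f}")
```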

Eligibility criteria

Phase 1 search (identification of tools).

Records were considered for inclusion based on their title and abstract according to the following criteria: (1) records that described the development and/or implementation (application), e.g. manual or handbook, of any tool to assess the external validity of RCTs; (2) systematic reviews that applied tools to assess the external validity of RCTs and which explicitly mentioned the tool in the title or abstract; (3) systematic reviews or any other publication potentially using a tool for external validity assessment, but the tool was not explicitly mentioned in the title or abstract; (4) records that gave other references to, or dealt with, tools for the assessment of external validity of RCTs, e.g. method papers, commentaries.

The full-text screening was performed to extract or to find references to potential tools. If a tool was cited, but not presented or available in the full-text version, the internet was searched for websites on which this tool was presented, to extract and review for inclusion. Potential tools were extracted and screened for eligibility as follows: measurement tools aiming to assess the external validity of RCTs and designed for implementation in systematic reviews of intervention studies. Since the terms external validity, applicability, generalizability, relevance and transferability are used interchangeably in the literature [ 10 , 11 ], tools aiming to assess one of these constructs were eligible. Exclusion criteria: (1) The multidimensional tool included at least one item related to external validity, but it was not possible to assess and interpret external validity separately. (2) The tool was developed exclusively for study designs other than RCTs. (3) The tool contained items assessing information not requested in the CONSORT-Statement [ 42 ] (e.g. cost-effectiveness of the intervention, salary of health care provider) and these items could not be separated from items on external validity. (4) The tool was published in a language other than English or German. (5) The tool was explicitly designed for a specific medical profession or field and could not be used in other medical fields.

Phase 2 search (identification of reports on the measurement properties of included tools)

For the phase 2 search, records evaluating the measurement properties of at least one of the included measurement tools were selected. Reports that only used a measurement tool as an outcome measure, without evaluating at least one of its measurement properties, were excluded. Accordingly, reports that provided data only on the validity or reliability of the sum scores of multidimensional tools were excluded if the dimension “external validity” was not evaluated separately.

If data or information were missing (in phase 1 or phase 2), the corresponding authors were contacted.

Quality assessment and evidence synthesis

All included reports were systematically evaluated (1) for their methodological quality using the adapted COSMIN Risk of Bias (RoB) checklist [ 25 ] and (2) against the updated criteria for good measurement properties [ 26 , 27 ]. Subsequently, all available evidence for each measurement property of each tool was summarized, rated against the updated criteria for good measurement properties, and graded for its certainty according to COSMIN’s modified GRADE approach [ 26 , 27 ]. The quality assessment was performed by two independent reviewers (AJ & JB). In case of irreconcilable disagreement, a third reviewer (TB) was consulted to reach consensus.

The COSMIN RoB checklist [ 25 , 27 , 32 , 43 ] is a tool designed for the systematic evaluation of the methodological quality of studies assessing the measurement properties of health measurement instruments [ 25 ]. Although this checklist was specifically developed for systematic reviews of PROMs, it can also be used for reviews of non-PROMs [ 26 ] or of measurement tools for other latent constructs [ 28 , 29 ]. As mentioned in the COSMIN user manual, some items of the COSMIN RoB checklist may need to be adapted to the construct being measured [ 34 ]. Therefore, before data extraction, the assessment of the measurement properties of tools evaluating the quality of RCTs was pilot tested to ensure that the planned evaluation of the included tools was feasible. The pilot tests used a random sample of publications on potentially relevant measurement tools. After each pilot test, results and problems regarding the comprehensibility, relevance and feasibility of the instructions, items, and response options in relation to the construct of interest were discussed. Where necessary, the instructions for the evaluation with the COSMIN RoB checklist were adapted or supplemented. Saturation was reached after two rounds of pilot testing. Substantial adaptations or supplements were required for Box 1 (‘development process’) and Box 10 (‘responsiveness’) of the COSMIN RoB checklist, whereas only minor adaptations were necessary for the remaining boxes. The specification list, including the adaptations, is given in Table S2 (see Additional file 2 ). The methodological quality of the included studies was rated on the four-point scale of the COSMIN RoB checklist as “inadequate”, “doubtful”, “adequate”, or “very good” [ 25 ]. Following the “worst score counts” principle, the lowest rating of any item in a box determines the overall rating of the methodological quality of each single study on a measurement property [ 25 ].
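The rule that the lowest item rating in a box determines the overall rating can be sketched as follows. This is a minimal illustration, assuming the item ratings for one box and one study are already available as strings. The function name is hypothetical, and items judged not applicable are assumed to have been removed beforehand.

```python
# COSMIN RoB four-point scale, ordered from worst to best.
RATING_ORDER = ["inadequate", "doubtful", "adequate", "very good"]


def box_rating(item_ratings):
    """Overall methodological quality of one study on one measurement property.

    The lowest-rated item in the box determines the result ("worst score counts").
    Items judged not applicable are assumed to have been dropped beforehand.
    """
    if not item_ratings:
        raise ValueError("a box needs at least one rated item")
    unknown = set(item_ratings) - set(RATING_ORDER)
    if unknown:
        raise ValueError(f"unknown rating(s): {sorted(unknown)}")
    # min() with the scale position as key picks the worst rating.
    return min(item_ratings, key=RATING_ORDER.index)


print(box_rating(["very good", "adequate", "doubtful", "very good"]))  # doubtful
```

A single “doubtful” item is enough to cap the whole box at “doubtful”, regardless of how many other items are rated “very good”.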

After the RoB assessment, the result of each single study on a measurement property was rated as “sufficient” (+), “insufficient” (-), or “indeterminate” (?) against the updated criteria for good measurement properties, both for content validity [ 27 ] and for the remaining measurement properties [ 26 ]. These ratings were summarized, and an overall rating for each measurement property was given as “sufficient” (+), “insufficient” (-), “inconsistent” (±), or “indeterminate” (?). However, the criteria for the overall rating of content validity were adapted to the research topic of the present review. The original method usually requires an additional subjective judgement from the reviewers [ 44 ]. Since one of the biggest limitations in this field of research is the lack of consensus on terminology and criteria, as well as on how to assess external validity [ 9 , 12 ], a subjective reviewer judgement was considered inappropriate. After this issue had also been discussed with a leading member of the COSMIN steering committee, the reviewers’ rating was omitted. A “sufficient” (+) overall rating was given if there was evidence of face or content validity of the final version of the measurement tool, assessed by a user or expert panel. Otherwise, content validity was rated “indeterminate” (?) or “insufficient” (-).
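Summarizing per-study ratings into an overall rating can be illustrated with a small function. This is a simplified sketch under an assumed rule: indeterminate results are set aside when determinate ones exist, agreement among the determinate results gives that rating, and any mix of sufficient and insufficient results gives “inconsistent” (±). It does not model any reviewer judgement for resolving inconsistent results, and the function name is hypothetical.

```python
def overall_rating(study_ratings):
    """Summarize per-study ratings ("+", "-", "?") into an overall rating.

    Simplified, assumed rule: indeterminate ("?") results are set aside when
    determinate ones exist; if all determinate results agree, that rating is
    returned, otherwise the evidence is "inconsistent" ("±").
    """
    allowed = {"+", "-", "?"}
    if not set(study_ratings) <= allowed:
        raise ValueError("per-study ratings must be '+', '-' or '?'")
    determinate = {r for r in study_ratings if r != "?"}
    if not determinate:
        return "?"                # no study allows a determinate rating
    if len(determinate) == 1:
        return determinate.pop()  # all determinate results agree
    return "±"                    # mix of sufficient and insufficient results


print(overall_rating(["+", "?", "+"]))  # +
print(overall_rating(["+", "-"]))       # ±
```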

The summarized evidence for each measurement property of each tool was graded using COSMIN’s modified GRADE approach [ 26 , 27 ]. The certainty (quality) of evidence was graded as “high”, “moderate”, “low”, or “very low” according to the approach for content validity [ 27 ] and for the remaining measurement properties [ 26 ]. COSMIN’s modified GRADE approach distinguishes four factors influencing the certainty of evidence: risk of bias, inconsistency, indirectness, and imprecision. The starting point for all measurement properties is high certainty of evidence, which is subsequently downgraded by one to three levels per factor in the presence of risk of bias, (unexplained) inconsistency, imprecision (not considered for content validity [ 27 ]), or indirect results [ 26 , 27 ]. If there is no study on the content validity of a tool, the starting point for this measurement property is “moderate”, which is subsequently downgraded depending on the quality of the development process [ 27 ]. The grading process according to COSMIN [ 26 , 27 ] is described in Table S4. Selective reporting bias and publication bias are not taken into account in COSMIN’s modified GRADE approach, because registries for studies on measurement properties are lacking [ 26 ].
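The downgrading logic described above can be sketched as a walk down a four-level scale. This is a minimal illustration, assuming the reviewers have already decided how many levels (0 to 3, as stated in the text) to downgrade for each factor. The function and argument names are hypothetical, and the decision of how far to downgrade remains a reviewer judgement that the code does not make.

```python
LEVELS = ["very low", "low", "moderate", "high"]
FACTORS = {"risk of bias", "inconsistency", "imprecision", "indirectness"}


def grade_certainty(downgrades, content_validity=False, content_validity_study=True):
    """Certainty of evidence for one measurement property of one tool.

    downgrades: dict mapping a factor in FACTORS to the number of levels (0-3)
    the reviewers decided to downgrade for it.
    """
    # Start at "high"; for content validity without any content validity study,
    # start at "moderate" (downgrades then reflect the development process).
    start = "moderate" if content_validity and not content_validity_study else "high"
    total = 0
    for factor, levels in downgrades.items():
        if factor not in FACTORS or not 0 <= levels <= 3:
            raise ValueError(f"invalid downgrade: {factor!r}={levels}")
        if content_validity and factor == "imprecision":
            continue  # imprecision is not considered for content validity
        total += levels
    return LEVELS[max(LEVELS.index(start) - total, 0)]  # floor at "very low"


print(grade_certainty({"risk of bias": 1}))                     # moderate
print(grade_certainty({"risk of bias": 2, "indirectness": 1}))  # very low
print(grade_certainty({"risk of bias": 1}, content_validity=True,
                      content_validity_study=False))            # low
```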

The evidence synthesis was performed qualitatively according to the COSMIN methodology [ 26 ]. If several reports provided homogeneous quantitative data (e.g. same statistics, same population) on the internal consistency, reliability, measurement error or hypotheses testing of a measurement tool, pooling of the results using generic inverse-variance (random-effects) methodology, with weighted means and 95% confidence intervals for each measurement property, was considered [ 34 ]. No subgroup analysis was planned. However, statistical pooling was not possible in the present review.
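For readers unfamiliar with the planned pooling method, the sketch below shows generic inverse-variance random-effects pooling of study estimates and their standard errors. The review does not name an estimator for the between-study variance. The DerSimonian–Laird estimator used here is an assumption, as is the normal approximation (1.96) for the 95% confidence interval. Correlation-type statistics may need to be transformed first (e.g. Fisher’s z for correlation coefficients), which this sketch omits. As stated above, no pooling was actually performed in the review.

```python
import math


def pool_random_effects(estimates, std_errors):
    """Generic inverse-variance random-effects pooling (DerSimonian-Laird tau^2).

    Returns (pooled estimate, lower 95% limit, upper 95% limit).
    """
    if len(estimates) != len(std_errors) or len(estimates) < 2:
        raise ValueError("need at least two studies with matching standard errors")
    w = [1 / se ** 2 for se in std_errors]                        # fixed-effect weights
    fixed = sum(wi * yi for wi, yi in zip(w, estimates)) / sum(w)
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, estimates))  # Cochran's Q
    df = len(estimates) - 1
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c)                                 # between-study variance
    w_star = [1 / (se ** 2 + tau2) for se in std_errors]          # random-effects weights
    pooled = sum(wi * yi for wi, yi in zip(w_star, estimates)) / sum(w_star)
    se_pooled = math.sqrt(1 / sum(w_star))
    return pooled, pooled - 1.96 * se_pooled, pooled + 1.96 * se_pooled


result = pool_random_effects([0.7, 0.9], [0.1, 0.1])
print([round(x, 3) for x in result])  # [0.8, 0.604, 0.996]
```

With identical estimates, Cochran’s Q falls below its degrees of freedom, tau² is truncated to zero, and the result reduces to a fixed-effect inverse-variance mean.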

In accordance with the COSMIN manual, we used three categories for the recommendation of a measurement tool [ 26 ]: (A) tools with “evidence for sufficient content validity (any level) and at least low-quality evidence for sufficient internal consistency” can be recommended; (B) tools “categorized not in A or C” require further research on their quality before they can be recommended; and (C) tools with “high quality evidence for an insufficient psychometric property” should not be recommended.
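The three recommendation categories can be expressed as a short decision function. This is a minimal sketch, assuming the overall rating and certainty for each measurement property are available as a dictionary. The function name and input format are hypothetical, and the order in which A and C are checked is an assumption of this sketch.

```python
CERTAINTY = ["very low", "low", "moderate", "high"]


def recommendation_category(evidence):
    """Assign recommendation category A, B, or C.

    evidence: dict mapping a measurement property name to a tuple
    (overall rating, certainty), e.g. {"content validity": ("+", "low")}.
    """
    cv = evidence.get("content validity")
    ic = evidence.get("internal consistency")
    # A: sufficient content validity (any level) and at least low-quality
    # evidence for sufficient internal consistency.
    if (cv is not None and cv[0] == "+"
            and ic is not None and ic[0] == "+"
            and CERTAINTY.index(ic[1]) >= CERTAINTY.index("low")):
        return "A"
    # C: high-quality evidence for an insufficient measurement property.
    if any(rating == "-" and certainty == "high"
           for rating, certainty in evidence.values()):
        return "C"
    # B: neither A nor C; further research is needed.
    return "B"


print(recommendation_category({"content validity": ("+", "very low"),
                               "internal consistency": ("+", "low")}))  # A
print(recommendation_category({"content validity": ("?", "low")}))      # B
```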

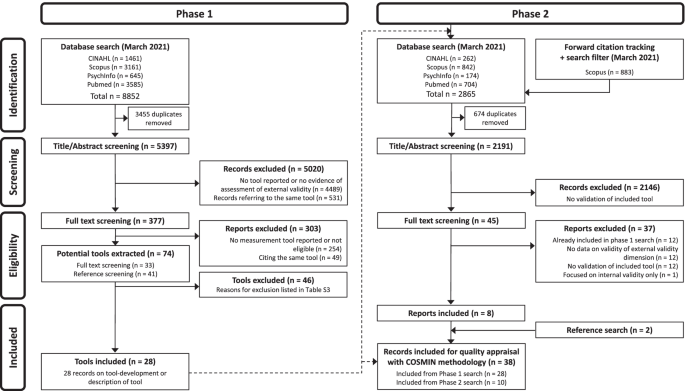

Literature search and selection process

Figure 1 shows the selection process. In the phase 1 search, 5020 of 5397 non-duplicate records were excluded as irrelevant. The remaining 377 reports were screened, and 74 potential tools were extracted. After reaching consensus, we excluded 46 tools (reasons for exclusion are presented in Table S3 (see Additional file 3 )) and included 28. Any disagreements during the screening process were resolved through discussion. In one case during full-text screening in the phase 1 search, the whole review team was involved in reaching consensus on the inclusion or exclusion of two tools (the Agency for Healthcare Research and Quality (AHRQ) criteria for applicability and the TRANSFER approach, both listed in Table S3).

In the phase 2 search, 2191 non-duplicate records were screened by title and abstract, and 2146 were excluded because they did not assess any measurement property of the included tools. Of the remaining 45 reports, 8 were included. The most common reason for exclusion was that reports evaluating the measurement properties of multidimensional tools did not evaluate external validity as a separate dimension. For example, one study assessing the interrater reliability of the GRADE method [ 45 ] was identified during full-text screening but had to be excluded, since it did not provide separate data on the reliability of the indirectness domain (representing external validity). Two additional reports were included during reference screening. Any disagreements during the screening process were resolved through discussion.
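The counts reported for the two search phases can be cross-checked with simple arithmetic. The sketch below only re-derives numbers stated in the text, and the variable names are illustrative. Adding the 8 reports from full-text screening to the 2 from reference screening reflects our reading of the phase 2 description.

```python
# Counts as reported in the text for the two search phases.
phase1 = {"records": 5397, "excluded_title_abstract": 5020,
          "tools_extracted": 74, "tools_excluded": 46}
phase2 = {"records": 2191, "excluded_title_abstract": 2146,
          "reports_included_full_text": 8, "reports_from_reference_screening": 2}

# Phase 1: records left for full-text screening, and tools finally included.
phase1_full_text = phase1["records"] - phase1["excluded_title_abstract"]
phase1_tools_included = phase1["tools_extracted"] - phase1["tools_excluded"]

# Phase 2: reports left for full-text screening, and reports finally included.
phase2_full_text = phase2["records"] - phase2["excluded_title_abstract"]
phase2_reports_included = (phase2["reports_included_full_text"]
                           + phase2["reports_from_reference_screening"])

print(phase1_full_text, phase1_tools_included)    # 377 28
print(phase2_full_text, phase2_reports_included)  # 45 10
```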

Thirty-eight publications on the development of the 28 included tools, or on the evaluation of their measurement properties, were included for quality appraisal according to the adapted COSMIN guidelines.

Figure 1. Flow diagram “of systematic search strategy used to identify clinimetric papers” [ 24 ]

We contacted the corresponding authors of three reports [ 46 – 48 ] for additional information; one of them replied [ 48 ].

Methods to assess the external validity of RCTs

During full-text screening in phase 1, several concepts for assessing the external validity of RCTs were found (Table 1 ). Two main concepts were identified: experimental/statistical methods and non-experimental methods. The experimental/statistical methods were collated into five subcategories to give a descriptive overview of the different approaches used to assess external validity. However, these methods were excluded according to our eligibility criteria, since they were not developed for use in systematic reviews of interventions. In addition, neither a comparison of these methods nor an appraisal of their risk of bias with the COSMIN RoB checklist would have been feasible. Therefore, the experimental/statistical methods described below were not included in the further evaluation.

Characteristics of included measurement tools

The included tools and their characteristics are listed in Table 2 . Overall, the tools were heterogeneous with respect to the number of items or dimensions, response options and development processes. The number of items ranged from 1 to 26, and the response options ranged from 2-point to 5-point scales. Most tools used a 3-point scale ( n = 20/28, 71%). For 14/28 (50%) of the tools, the development process was not described in detail [ 63 – 76 ]. In seven cases, review authors appear to have developed their own tool without providing any information on the development process [ 63 – 68 , 71 ].

The constructs that the tools (or their dimensions of interest) aimed to measure were diverse. Two of the tools focused on characterizing RCTs on an efficacy-effectiveness continuum [ 47 , 86 ], three tools focused predominantly on the reporting quality of factors essential to external validity [ 69 , 75 , 88 ] (rather than on external validity itself), 18 tools aimed to assess the representativeness, generalizability or applicability of the population, setting, intervention, and/or outcome measure to usual practice [ 22 , 63 – 65 , 70 , 71 , 73 , 74 , 76 – 78 , 81 – 83 , 92 , 94 , 100 ], and five tools appeared to measure a mixture of these constructs related to external validity [ 66 , 68 , 72 , 79 , 98 ]. However, for most tools the construct of interest was not described adequately (see below).

Measurement properties

The results of the methodological quality assessment according to the adapted COSMIN RoB checklist are detailed in Table 3 . If all data on hypotheses testing in an article had the same methodological quality rating, they were combined and summarized in Table 3 in accordance with the COSMIN manual [ 34 ]. The results of the ratings against the updated criteria for good measurement properties and the overall certainty of evidence, according to the modified GRADE approach, can be seen in Table 4 . The detailed grading is described in Table S4 (see Additional file 4 ). Disagreements between reviewers during the quality assessment were resolved through discussion.

Content validity

The methodological quality of the development process was “inadequate” for 19/28 (68%) of the included tools [ 63 – 66 , 68 – 74 , 76 , 78 , 81 , 88 , 98 , 100 ]. This was mainly due to an insufficient description of the construct to be measured or of the target population, or to missing pilot tests. Six development studies had a “doubtful” methodological quality [ 22 , 75 , 77 , 79 , 82 , 83 ], and three had an “adequate” methodological quality [ 47 , 48 , 94 ].