
Qualitative Data Analysis: What is it, Methods + Examples

In a world rich with information and narrative, understanding the deeper layers of human experience requires looking beyond numbers and figures. This is where qualitative data analysis comes in.

In this blog post, we’ll explain what qualitative data analysis is, explore its main methods, and walk through real-life examples that show how it uncovers insights.

What is Qualitative Data Analysis?

Qualitative data analysis is a systematic process of examining non-numerical data to extract meaning, patterns, and insights.

In contrast to quantitative analysis, which focuses on numbers and statistical metrics, qualitative analysis works with non-numerical data such as text, images, audio, and video. It seeks to understand human experiences, perceptions, and behaviors by examining the richness of that data.

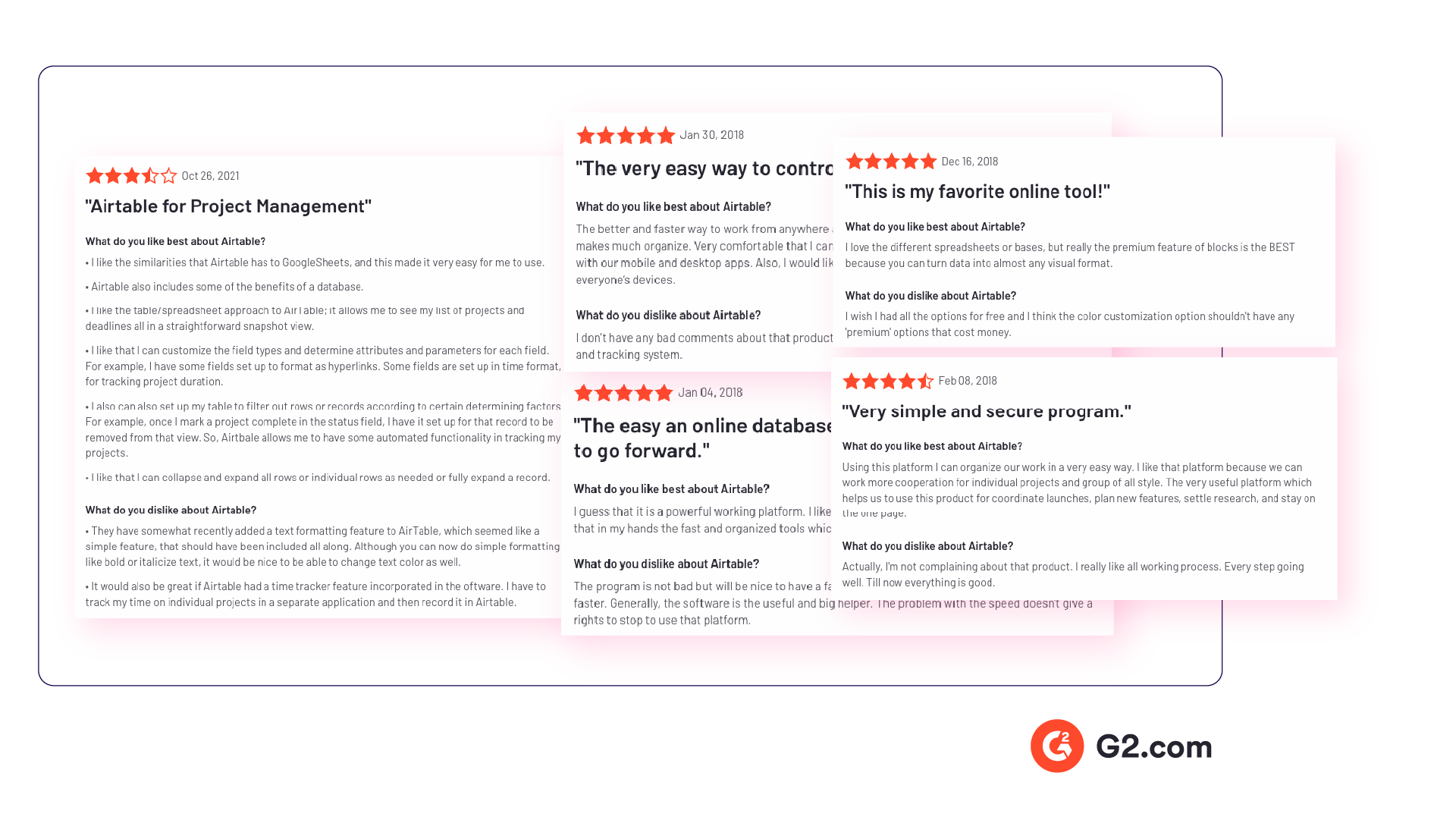

Companies frequently conduct this analysis on customer feedback. You can collect qualitative data from reviews, complaints, chat messages, interactions with support centers, customer interviews, case notes, or even social media comments. This kind of data holds the key to understanding customer sentiments and preferences in a way that goes beyond mere numbers.

Importance of Qualitative Data Analysis

Qualitative data analysis plays a crucial role in your research and decision-making process across various disciplines. Let’s explore some key reasons that underline the significance of this analysis:

In-Depth Understanding

It enables you to explore complex and nuanced aspects of a phenomenon, delving into the ‘how’ and ‘why’ questions. This method provides you with a deeper understanding of human behavior, experiences, and contexts that quantitative approaches might not capture fully.

Contextual Insight

You can use this analysis to give context to numerical data. It will help you understand the circumstances and conditions that influence participants’ thoughts, feelings, and actions. This contextual insight becomes essential for generating comprehensive explanations.

Theory Development

Qualitative data analysis can generate new hypotheses or refine existing ones. As you analyze the data closely, you can develop hypotheses, concepts, and frameworks that guide future research and contribute to theoretical advances.

Participant Perspectives

When performing qualitative research, you can highlight participant voices and opinions. This approach is especially useful for understanding marginalized or underrepresented people, as it allows them to communicate their experiences and points of view.

Exploratory Research

The analysis is frequently used at the exploratory stage of your project. It assists you in identifying important variables, developing research questions, and designing quantitative studies that will follow.

Types of Qualitative Data

When conducting qualitative research, you can use several qualitative data collection methods. Each produces a different type of qualitative data that can give you unique insights into your study topic and add new perspectives to your understanding and analysis.

Interviews and Focus Groups

Interviews and focus groups will be among your key methods for gathering qualitative data. Interviews are one-on-one talks in which participants can freely share their thoughts, experiences, and opinions.

Focus groups, on the other hand, are discussions in which members interact with one another, resulting in dynamic exchanges of ideas. Both methods provide rich qualitative data and direct access to participant perspectives.

Observations and Field Notes

Observations and field notes are another useful sort of qualitative data. You can immerse yourself in the research environment through direct observation, carefully documenting behaviors, interactions, and contextual factors.

You record these observations in your field notes, which provide a complete picture of the environment and the behaviors you’re researching. This data type is especially important for understanding behavior in its natural setting.

Textual and Visual Data

Textual and visual data include a wide range of resources that can be qualitatively analyzed. Documents, written narratives, and transcripts from various sources, such as interviews or speeches, are examples of textual data.

Photographs, films, and even artwork add a visual layer to your research. These forms of data let you investigate not only what is said but also the underlying emotions, details, and symbols expressed through language or images.

When to Choose Qualitative Data Analysis over Quantitative Data Analysis

As you begin your research, understanding why qualitative analysis matters will guide your approach to complex events. Qualitative analysis provides insights that complement quantitative methods, giving you a broader understanding of your study topic.

It is critical to know when to use qualitative analysis instead of quantitative procedures. Prefer qualitative data analysis when:

- Complexity Reigns: When your research questions involve deep human experiences, motivations, or emotions, qualitative research excels at revealing these complexities.

- Exploration is Key: Qualitative analysis is ideal for exploratory research. It will assist you in understanding a new or poorly understood topic before formulating quantitative hypotheses.

- Context Matters: If you want to understand how context affects behaviors or results, qualitative data analysis provides the depth needed to grasp these relationships.

- Unanticipated Findings: When your study provides surprising new viewpoints or ideas, qualitative analysis helps you to delve deeply into these emerging themes.

- Subjective Interpretation is Vital: When it comes to understanding people’s subjective experiences and interpretations, qualitative data analysis is the way to go.

You can make informed decisions regarding the right approach for your research objectives if you understand the importance of qualitative analysis and recognize the situations where it shines.

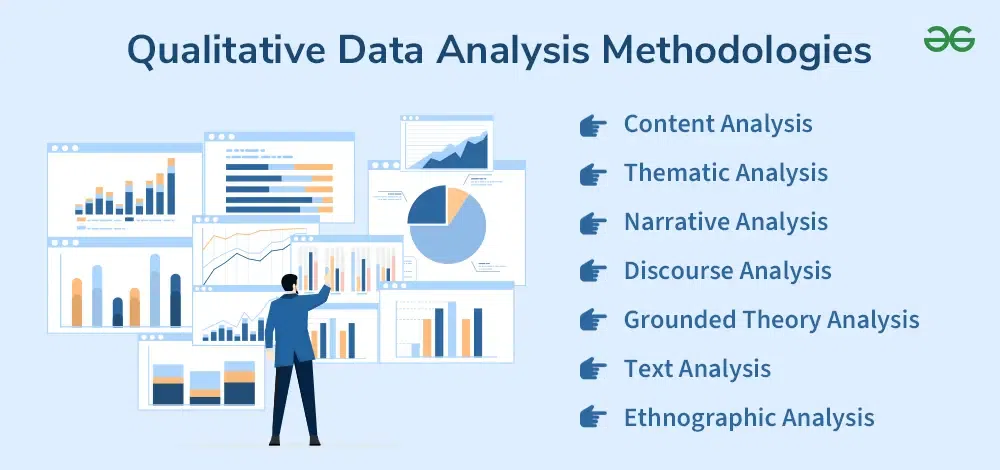

Qualitative Data Analysis Methods and Examples

Knowing a range of qualitative data analysis methods gives you a broad toolkit for making sense of your research findings. Once the data has been collected, you can choose a method based on your research objectives and the type of data you’ve collected.

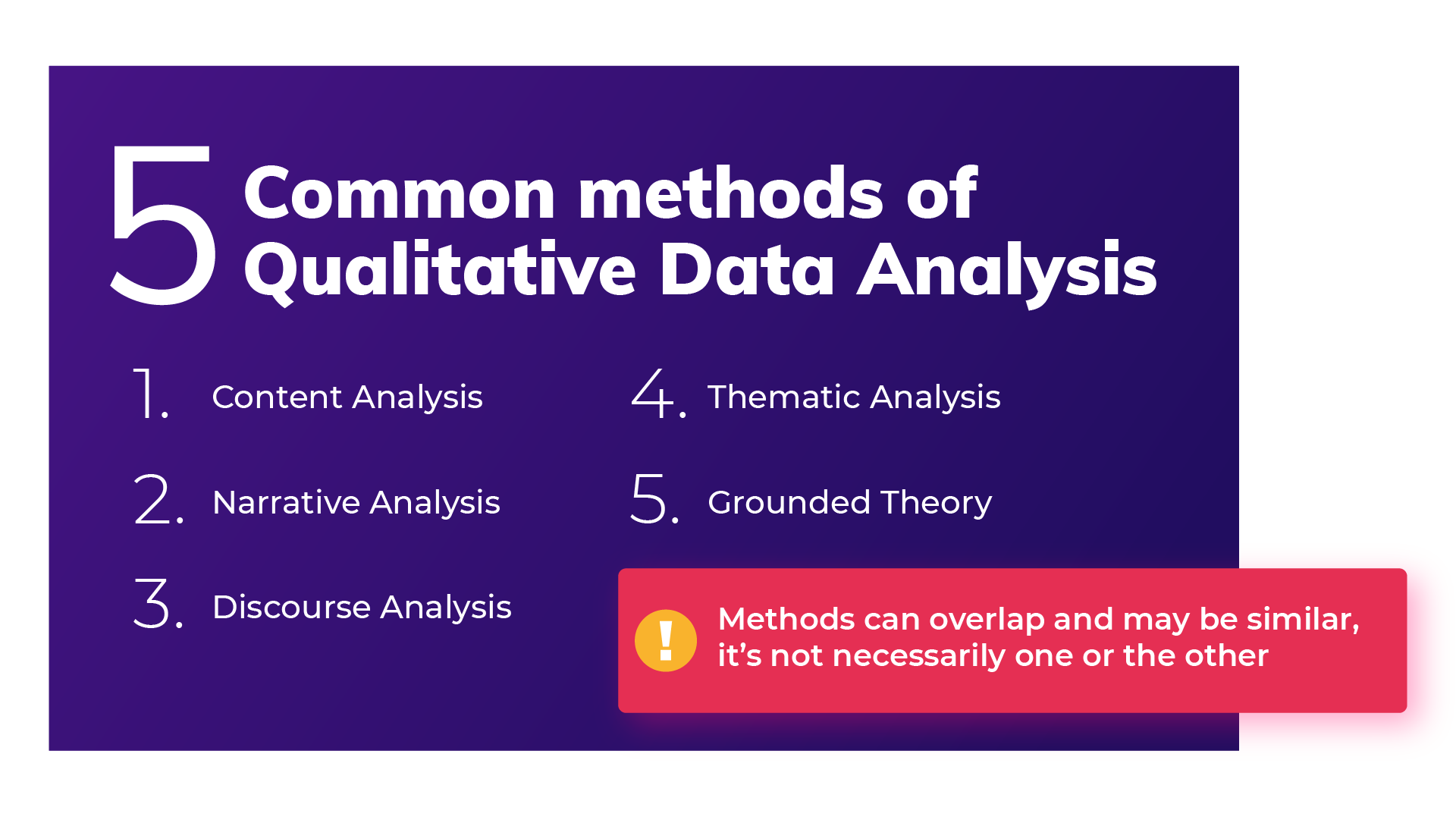

This post covers five widely used methods for analyzing qualitative data. Each method takes a distinct approach to identifying patterns, themes, and insights within your data. They are:

Method 1: Content Analysis

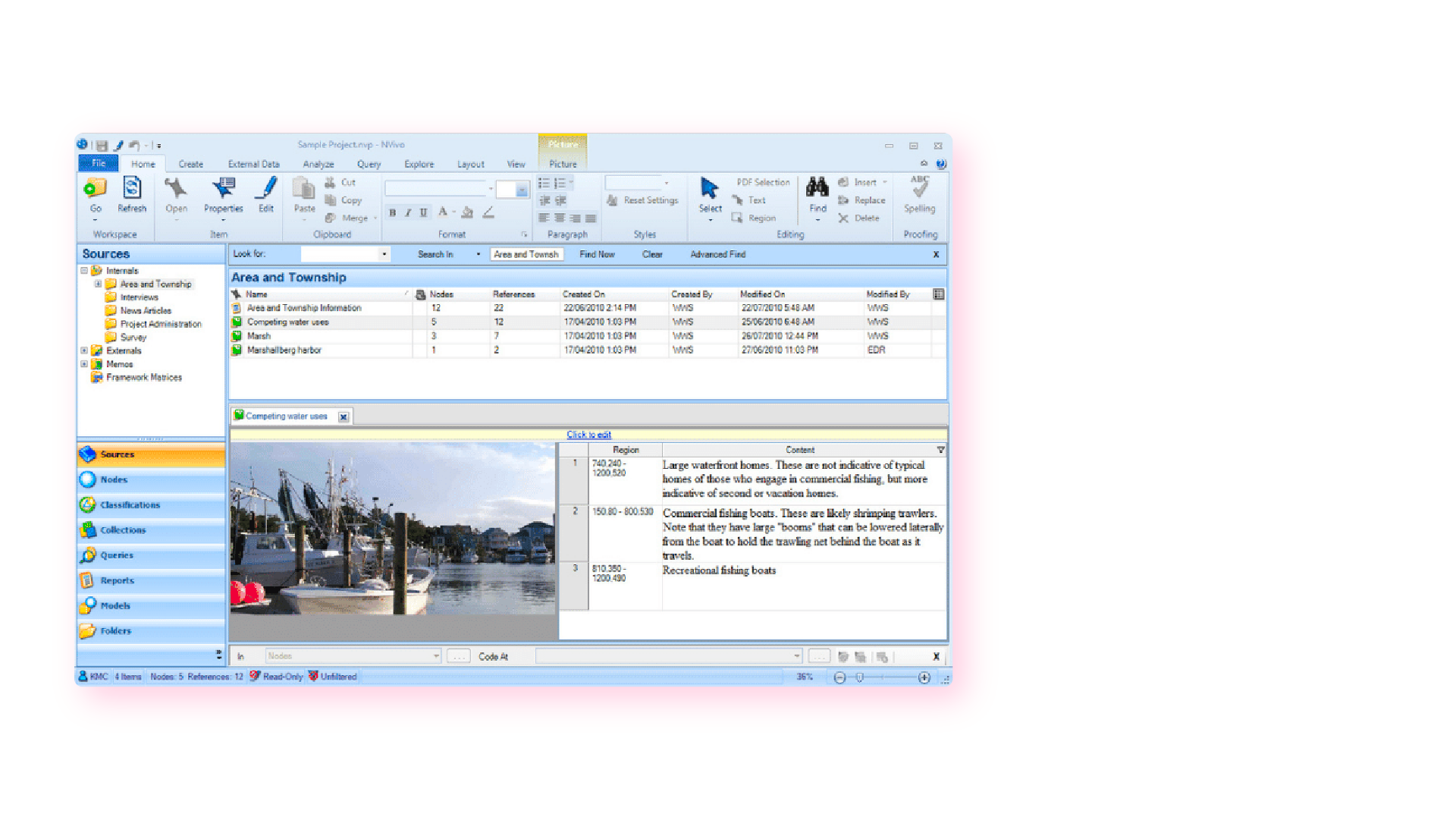

Content analysis is a methodical technique for analyzing textual or visual data in a structured manner. In this method, you categorize qualitative data by splitting it into manageable units and assigning codes to those units, often by hand.

As you go, you’ll notice recurring codes and patterns that let you draw conclusions about the content. This method is very useful for detecting common ideas, concepts, or themes in your data without losing the context.

Steps to Do Content Analysis

Follow these steps when conducting content analysis:

- Collect and Immerse: Begin by collecting the necessary textual or visual data. Immerse yourself in this data to fully understand its content, context, and complexities.

- Assign Codes and Categories: Assign codes to relevant data sections that systematically represent major ideas or themes. Arrange comparable codes into groups that cover the major themes.

- Analyze and Interpret: Develop a structured framework from the categories and codes. Then, evaluate the data in the context of your research question, investigate relationships between categories, discover patterns, and draw meaning from these connections.

Benefits & Challenges

There are various advantages to using content analysis:

- Structured Approach: It offers a systematic approach to dealing with large data sets and ensures consistency throughout the research.

- Objective Insights: Explicit coding rules promote objectivity, which helps reduce potential bias in your study.

- Pattern Discovery: Content analysis can help uncover hidden trends, themes, and patterns that are not always obvious.

- Versatility: You can apply content analysis to various data formats, including text, internet content, images, etc.

However, keep in mind the challenges that arise:

- Subjectivity: Even with the best attempts, a certain bias may remain in coding and interpretation.

- Complexity: Analyzing huge data sets requires time and great attention to detail.

- Contextual Nuances: Content analysis may not capture all of the contextual richness that other qualitative methods highlight.

Example of Content Analysis

Suppose you’re conducting market research and looking at customer feedback on a product. As you collect relevant data and analyze feedback, you’ll see repeating codes like “price,” “quality,” “customer service,” and “features.” These codes are organized into categories such as “positive reviews,” “negative reviews,” and “suggestions for improvement.”

According to your findings, themes such as “price” and “customer service” stand out and show that pricing and customer service greatly impact customer satisfaction. This example highlights the power of content analysis for obtaining significant insights from large textual data collections.
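To make the coding-and-counting step concrete, here is a minimal Python sketch that tags made-up feedback with the codes from the example and counts how many responses receive each code. The keyword lists in `CODEBOOK` and the sample comments are hypothetical. Keyword matching is only a rough first pass. In real content analysis, a researcher builds the codebook and checks how it is applied.

```python
import re
from collections import Counter

# Hypothetical codebook: each code maps to keywords that signal it.
# In a real study the researcher develops and refines this codebook.
CODEBOOK = {
    "price": {"price", "expensive", "cheap", "cost"},
    "quality": {"quality", "broke", "durable", "sturdy"},
    "customer service": {"support", "service", "agent", "refund"},
    "features": {"feature", "features", "battery", "screen", "app"},
}


def code_response(text: str) -> set[str]:
    """Return the codes whose keywords appear as whole words in one response."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    return {code for code, keywords in CODEBOOK.items() if words & keywords}


def code_frequencies(responses: list[str]) -> Counter:
    """Count how many responses were assigned each code."""
    counts: Counter = Counter()
    for response in responses:
        counts.update(code_response(response))
    return counts


# Made-up feedback used only to exercise the functions.
feedback = [
    "Great quality, but the price is too high.",
    "The support agent was rude and the refund took weeks.",
    "Too expensive for what you get.",
    "Love the battery life and the screen.",
]
print(dict(code_frequencies(feedback)))
```

Matching whole words rather than substrings avoids false hits, such as "app" matching inside "happy". Grouping the resulting codes into categories like "positive reviews" or "negative reviews" remains an interpretive step.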

Method 2: Thematic Analysis

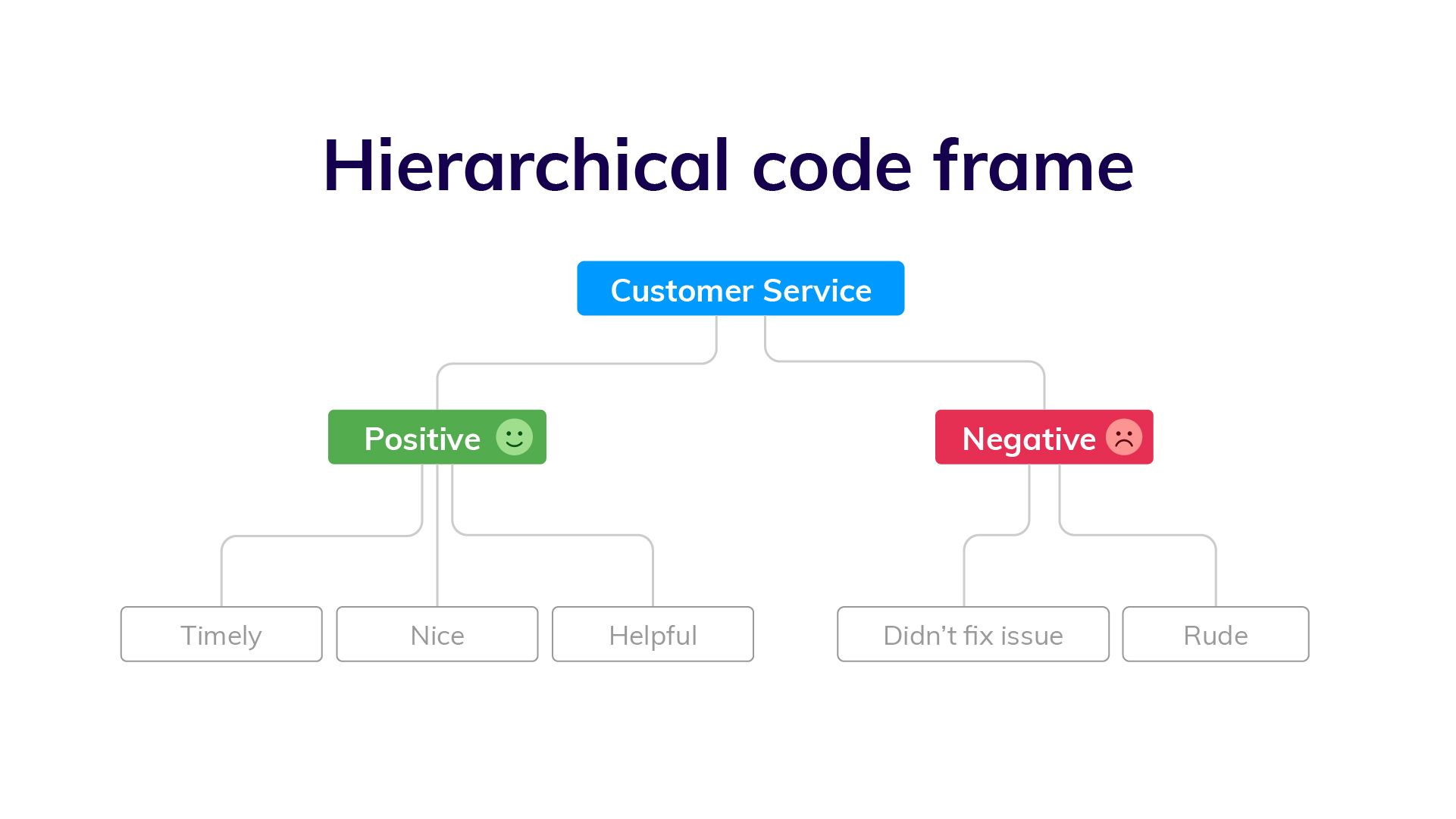

Thematic analysis is a well-structured procedure for identifying and analyzing recurring themes in your data. As you become more engaged in the data, you’ll generate codes or short labels representing key concepts. These codes are then organized into themes, providing a consistent framework for organizing and comprehending the substance of the data.

The analysis allows you to organize complex narratives and perspectives into meaningful categories, which will allow you to identify connections and patterns that may not be visible at first.

Steps to Do Thematic Analysis

Follow these steps when conducting a thematic analysis:

- Code and Group: Immerse yourself in the data and assign initial codes to notable segments. Then group related codes together to construct initial themes.

- Analyze and Report: Analyze the data within each theme to derive relevant insights. Organize the themes into a consistent structure and explain your findings, along with data extracts that represent each theme.

Thematic analysis has various benefits:

- Structured Exploration: It is a method for identifying patterns and themes in complex qualitative data.

- Comprehensive Knowledge: Thematic analysis promotes an in-depth understanding of the complexities and meanings of the data.

- Application Flexibility: This method can be adapted to various research situations and data types.

However, challenges may arise, such as:

- Interpretive Nature: Thematic analysis relies heavily on the researcher’s interpretation, so managing researcher bias is critical.

- Time-consuming: The analysis can be time-consuming, especially with large data sets.

- Subjectivity: The selection of codes and themes can be subjective.

Example of Thematic Analysis

Assume you’re conducting a thematic analysis on job satisfaction interviews. Following your immersion in the data, you assign initial codes such as “work-life balance,” “career growth,” and “colleague relationships.” As you organize these codes, you’ll notice themes develop, such as “Factors Influencing Job Satisfaction” and “Impact on Work Engagement.”

Further investigation reveals the stories and experiences within these themes and shows how various factors influence job satisfaction. This example demonstrates how thematic analysis can reveal meaningful patterns and insights in qualitative data.
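Once codes exist, grouping them into themes is partly bookkeeping. The Python sketch below uses the job-satisfaction codes from the example and records, for each theme, which participants contributed to it and which extracts support it. The code-to-theme mapping, the extra "motivation" code, the participant IDs, and the extracts are all hypothetical. Deciding which codes belong to which theme is still the researcher’s interpretive work.

```python
from collections import defaultdict

# Hypothetical mapping from initial codes to candidate themes.
THEMES = {
    "work-life balance": "Factors Influencing Job Satisfaction",
    "career growth": "Factors Influencing Job Satisfaction",
    "colleague relationships": "Factors Influencing Job Satisfaction",
    "motivation": "Impact on Work Engagement",  # hypothetical extra code
}

# Each coded segment is (participant id, code, extract); all made up.
segments = [
    ("P1", "work-life balance", "I can finally pick my kids up from school."),
    ("P1", "motivation", "When I'm happy here, I put in extra effort."),
    ("P2", "career growth", "There's no clear path to promotion."),
    ("P3", "colleague relationships", "My team is the reason I stay."),
    ("P3", "motivation", "Good colleagues make me want to do more."),
]


def build_themes(segments):
    """Group coded segments by theme, tracking participants and supporting extracts."""
    participants = defaultdict(set)
    extracts = defaultdict(list)
    for pid, code, extract in segments:
        theme = THEMES.get(code, "Uncategorized")  # unmapped codes stay visible
        participants[theme].add(pid)
        extracts[theme].append(extract)
    return participants, extracts


participants, extracts = build_themes(segments)
for theme in sorted(participants):
    print(f"{theme}: {len(participants[theme])} participant(s)")
    print(f'  e.g. "{extracts[theme][0]}"')
```

Counting participants rather than raw segments keeps one talkative interviewee from inflating a theme. The stored extracts are the quotations you would cite when reporting each theme.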

Method 3: Narrative Analysis

Narrative analysis focuses on the stories people tell. You’ll investigate the stories in your data, looking at how they are constructed and the meanings they express. This method is excellent for learning how people make sense of their experiences through narrative.

Steps to Do Narrative Analysis

The following steps are involved in narrative analysis:

- Gather and Analyze: Start by collecting narratives, such as first-person tales, interviews, or written accounts. Analyze the stories, focusing on the plot, feelings, and characters.

- Find Themes: Look for recurring themes or patterns across narratives. Consider how these themes are similar or different across individuals’ experiences.

- Interpret and Extract Insights: Place the narratives within their larger context. Accept the subjective nature of each narrative and analyze the narrator’s voice and style. Extract insights by examining the emotions, motivations, and implications the stories communicate.

There are various advantages to narrative analysis:

- Deep Exploration: It lets you look deeply into people’s personal experiences and perspectives.

- Human-Centered: This method prioritizes the human perspective, allowing individuals to express themselves.

However, difficulties may arise, such as:

- Interpretive Complexity: Analyzing narratives requires dealing with the complexities of meaning and interpretation.

- Time-consuming: Because of the richness and complexities of tales, working with them can be time-consuming.

Example of Narrative Analysis

Assume you’re conducting narrative analysis on refugee interviews. As you read the stories, you’ll notice common themes of resilience, loss, and hope. The narratives provide insight into the obstacles refugees face, their strengths, and the dreams that guide them.

The analysis can provide a deeper insight into the refugees’ experiences and the broader social context they navigate by examining the narratives’ emotional subtleties and underlying meanings. This example highlights how narrative analysis can reveal important insights into human stories.

Method 4: Grounded Theory Analysis

Grounded theory analysis is an iterative and systematic approach that allows you to create theories directly from data without being limited by pre-existing hypotheses. With an open mind, you collect data and generate early codes and labels that capture essential ideas or concepts within the data.

As you progress, you refine these codes and increasingly connect them, eventually developing a theory based on the data. Grounded theory analysis is a dynamic process for developing new insights and hypotheses based on details in your data.

Steps to Do Grounded Theory Analysis

Grounded theory analysis requires the following steps:

- Initial Coding: First, immerse yourself in the data, producing initial codes that represent major concepts or patterns.

- Categorize and Connect: Use axial coding to organize the initial codes into categories and establish relationships and connections between them.

- Build the Theory: Focus on creating a core category that connects the codes and themes. Regularly refine the theory by comparing and integrating new data, ensuring that it evolves organically from the data.

Grounded theory analysis has various benefits:

- Theory Generation: It lets you generate theories and hypotheses directly from data, which promotes new insights.

- In-depth Understanding: The analysis allows you to deeply analyze the data and reveal complex relationships and patterns.

- Flexible Process: This method is customizable and ongoing, which allows you to enhance your research as you collect additional data.

However, challenges might arise with:

- Time and Resources: Because grounded theory analysis is a continuous process, it requires a large commitment of time and resources.

- Theoretical Development: Creating a grounded theory requires a thorough understanding of qualitative analysis techniques and theoretical concepts.

- Interpretation of Complexity: Interpreting a newly developed theory and integrating it into existing literature can be intellectually demanding.

Example of Grounded Theory Analysis

Assume you’re performing a grounded theory analysis on workplace collaboration interviews. As you apply open coding to the data, you discover concepts such as “communication barriers,” “team dynamics,” and “leadership roles.” Axial coding reveals links between these concepts and emphasizes the importance of effective communication in building collaboration.

Through selective coding, you create the core category “Integrated Communication Strategies,” which unifies the emerging concepts.

This core category serves as the framework for understanding how various factors contribute to effective team collaboration. This example shows how grounded theory analysis lets you generate a theory directly from the data.
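One simple aid during axial coding is to check which open codes tend to be applied to the same interview segments. The Python sketch below counts code co-occurrence using the collaboration codes from the example. The coded segments are made up. Co-occurrence is only a hint about possible relationships. It does not perform axial coding, and the researcher must still interpret each link through constant comparison with the data.

```python
from collections import Counter
from itertools import combinations

# Open codes assigned to each interview segment (made-up illustration).
coded_segments = [
    {"communication barriers", "team dynamics"},
    {"communication barriers", "leadership roles"},
    {"communication barriers", "team dynamics", "leadership roles"},
    {"team dynamics"},
]


def co_occurrences(segments):
    """Count how often each pair of codes is applied to the same segment."""
    pairs = Counter()
    for codes in segments:
        # Sorting gives each pair one canonical order, so (a, b) and (b, a) are not split.
        for pair in combinations(sorted(codes), 2):
            pairs[pair] += 1
    return pairs


for (a, b), n in co_occurrences(coded_segments).most_common():
    print(f"{a} + {b}: {n}")
```

Frequently co-occurring pairs, such as communication barriers with team dynamics here, are candidates to examine when you write memos about how categories relate.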

Method 5: Discourse Analysis

Discourse analysis focuses on language and communication. You’ll look at how language produces meaning and how it reflects power relations, identities, and cultural influences. This strategy examines both what is said and how it is said: the words, phrasing, and larger context of communication.

The analysis is especially valuable when investigating power dynamics, identities, and cultural influences encoded in language. By evaluating the language used in your data, you can identify underlying assumptions, cultural norms, and how individuals negotiate meaning through communication.

Steps to Do Discourse Analysis

Conducting discourse analysis entails the following steps:

- Select Discourse: For analysis, choose language-based data such as texts, speeches, or media content.

- Analyze Language: Immerse yourself in the conversation, examining language choices, metaphors, and underlying assumptions.

- Discover Patterns: Recognize the recurring themes, ideologies, and power dynamics in the discourse. To fully understand the effects of these patterns, place them in their larger context.
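When examining language choices, it helps to see every use of a word together with the words around it. The Python sketch below builds a simple keyword-in-context (KWIC) concordance, a technique borrowed from corpus linguistics. It could show, for instance, how often coverage frames an event with conflict words like "battle". The headlines and the `window` size are hypothetical. A concordance only gathers the evidence, and interpreting the framing remains the analyst’s job.

```python
import re


def kwic(texts, keyword, window=4):
    """Return keyword-in-context lines showing `window` words on each side of every match."""
    lines = []
    for text in texts:
        words = text.split()
        for i, word in enumerate(words):
            # Ignore punctuation and case when comparing a word to the keyword.
            if re.sub(r"\W", "", word).lower() == keyword.lower():
                left = " ".join(words[max(0, i - window):i])
                right = " ".join(words[i + 1:i + 1 + window])
                lines.append(f"{left} [{word}] {right}")
    return lines


# Made-up headlines used only for illustration.
articles = [
    "Rival parties battle over the budget as talks collapse.",
    "Lawmakers prepare for another battle in the chamber today.",
]
for line in kwic(articles, "battle", window=3):
    print(line)
```

Lining up matches this way makes repeated framings easy to spot. You can then compare them against alternative wordings that the sources could have used.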

There are various advantages of using discourse analysis:

- Understanding Language: It provides an extensive understanding of how language builds meaning and influences perceptions.

- Uncovering Power Dynamics: The analysis reveals how power dynamics appear via language.

- Cultural Insights: This method identifies cultural norms, beliefs, and ideologies embedded in communication.

However, the following challenges may arise:

- Complexity of Interpretation: Language analysis involves navigating multiple levels of nuance and interpretation.

- Subjectivity: Interpretation can be subjective, so controlling researcher bias is important.

- Time-Intensive: Discourse analysis can be time-consuming because it requires careful linguistic study.

Example of Discourse Analysis

Consider doing discourse analysis on media coverage of a political event. You notice repeating linguistic patterns in news articles that depict the event as a conflict between opposing parties. Through deconstruction, you can expose how this framing supports particular ideologies and power relations.

You can illustrate how language choices influence public perceptions and contribute to building the narrative around the event by analyzing the speech within the broader political and social context. This example shows how discourse analysis can reveal hidden power dynamics and cultural influences on communication.

How to Do Qualitative Data Analysis with the QuestionPro Research Suite

QuestionPro is a popular survey and research platform that offers tools for collecting and analyzing qualitative and quantitative data. Follow these general steps for conducting qualitative data analysis using the QuestionPro Research Suite:

- Collect Qualitative Data: Set up your survey to capture qualitative responses. It might involve open-ended questions, text boxes, or comment sections where participants can provide detailed responses.

- Export Qualitative Responses: Export the responses once you’ve collected qualitative data through your survey. QuestionPro typically allows you to export survey data in various formats, such as Excel or CSV.

- Prepare Data for Analysis: Review the exported data and clean it if necessary. Remove irrelevant or duplicate entries to ensure your data is ready for analysis.

- Code and Categorize Responses: Segment and label data, letting new patterns emerge naturally, then develop categories through axial coding to structure the analysis.

- Identify Themes: Analyze the coded responses to identify recurring themes, patterns, and insights. Look for similarities and differences in participants’ responses.

- Generate Reports and Visualizations: Utilize the reporting features of QuestionPro to create visualizations, charts, and graphs that help communicate the themes and findings from your qualitative research.

- Interpret and Draw Conclusions: Interpret the themes and patterns you’ve identified in the qualitative data. Consider how these findings answer your research questions or provide insights into your study topic.

- Integrate with Quantitative Data (if applicable): If you’re also conducting quantitative research using QuestionPro, consider integrating your qualitative findings with quantitative results to provide a more comprehensive understanding.
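If you export responses to CSV (one of the formats mentioned above) and prepare them outside the platform, the cleaning step might look like the generic Python sketch below. It is not a QuestionPro feature or API. The column names `respondent_id` and `comments` are hypothetical, so use the headers in your own export. The sketch drops blank answers, keeps only the first submission per respondent, and normalizes whitespace.

```python
import csv
import io


def load_open_ended(csv_text, id_column, text_column):
    """Return (respondent id, answer) pairs, dropping blank answers and repeated IDs."""
    seen_ids = set()
    cleaned = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        rid = (row.get(id_column) or "").strip()
        answer = " ".join((row.get(text_column) or "").split())  # collapse extra whitespace
        if not answer or rid in seen_ids:
            continue  # skip empty answers and duplicate submissions
        seen_ids.add(rid)
        cleaned.append((rid, answer))
    return cleaned


# Made-up export; real column headers depend on your survey.
sample_export = """respondent_id,comments
101,The checkout page kept timing out.
102,
103,Support resolved my issue   quickly.
103,Support resolved my issue   quickly.
"""

for rid, answer in load_open_ended(sample_export, "respondent_id", "comments"):
    print(rid, answer)
```

Deduplicating by respondent ID rather than by answer text is deliberate. Two different people giving the same answer is real data, while the same person submitting twice usually is not.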

Qualitative data analysis is vital in uncovering various human experiences, views, and stories. If you’re ready to transform your research journey and apply the power of qualitative analysis, now is the moment to do it. Book a demo with QuestionPro today and begin your journey of exploration.


Qualitative Data Analysis Methods 101:

The “big 6” methods + examples.

By: Kerryn Warren (PhD) | Reviewed By: Eunice Rautenbach (D.Tech) | May 2020 (Updated April 2023)

Qualitative data analysis methods. Wow, that’s a mouthful.

If you’re new to the world of research, qualitative data analysis can look rather intimidating. So much bulky terminology and so many abstract, fluffy concepts. It certainly can be a minefield!

Don’t worry – in this post, we’ll unpack the most popular analysis methods, one at a time, so that you can approach your analysis with confidence and competence – whether that’s for a dissertation, thesis or really any kind of research project.

What (exactly) is qualitative data analysis?

To understand qualitative data analysis, we need to first understand qualitative data – so let’s step back and ask the question, “what exactly is qualitative data?”.

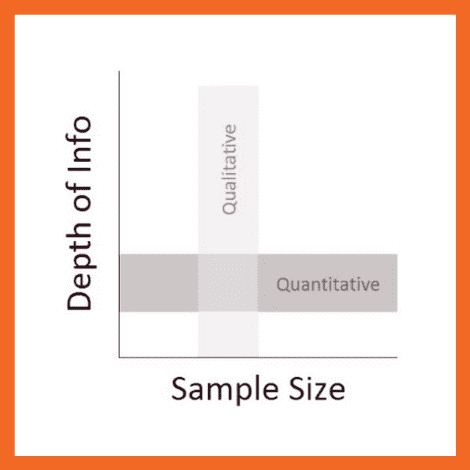

Qualitative data refers to pretty much any data that’s “not numbers”. In other words, it’s not the stuff you measure using a fixed scale or complex equipment, nor do you analyse it using complex statistics or mathematics.

So, if it’s not numbers, what is it?

Words, you guessed? Well… sometimes, yes. Qualitative data can, and often does, take the form of interview transcripts, documents and open-ended survey responses – but it can also involve the interpretation of images and videos. In other words, qualitative data isn’t limited to text.

So, how’s that different from quantitative data, you ask?

Simply put, qualitative research focuses on words, descriptions, concepts or ideas – while quantitative research focuses on numbers and statistics. Qualitative research investigates the “softer side” of things to explore and describe, while quantitative research focuses on the “hard numbers”, to measure differences between variables and the relationships between them. If you’re keen to learn more about the differences between qual and quant, we’ve got a detailed post on that topic.

So, qualitative analysis is easier than quantitative, right?

Not quite. In many ways, qualitative data can be challenging and time-consuming to analyse and interpret. At the end of your data collection phase (which itself takes a lot of time), you’ll likely have many pages of text-based data or hours upon hours of audio to work through. You might also have subtle nuances of interactions or discussions that have danced around in your mind, or that you scribbled down in messy field notes. All of this needs to work its way into your analysis.

Making sense of all of this is no small task and you shouldn’t underestimate it. Long story short – qualitative analysis can be a lot of work! Of course, quantitative analysis is no piece of cake either, but it’s important to recognise that qualitative analysis still requires a significant investment in terms of time and effort.


In this post, we’ll explore qualitative data analysis by looking at some of the most common analysis methods we encounter. We’re not going to cover every possible qualitative method and we’re not going to go into heavy detail – we’re just going to give you the big picture. That said, we will of course include links to loads of extra resources so that you can learn more about whichever analysis method interests you.

Without further delay, let’s get into it.

The “Big 6” Qualitative Analysis Methods

There are many different types of qualitative data analysis, all of which serve different purposes and have unique strengths and weaknesses. We’ll start by outlining the analysis methods and then we’ll dive into the details for each.

The 6 most popular methods (or at least the ones we see at Grad Coach) are:

- Content analysis

- Narrative analysis

- Discourse analysis

- Thematic analysis

- Grounded theory (GT)

- Interpretive phenomenological analysis (IPA)

Let’s take a look at each of them…

QDA Method #1: Qualitative Content Analysis

Content analysis is possibly the most common and straightforward QDA method. At the simplest level, content analysis is used to evaluate patterns within a piece of content (for example, words, phrases or images) or across multiple pieces of content or sources of communication, such as a collection of newspaper articles or political speeches.

With content analysis, you could, for instance, identify the frequency with which an idea is shared or spoken about – like the number of times a Kardashian is mentioned on Twitter. Or you could identify patterns of deeper underlying interpretations – for instance, by identifying phrases or words in tourist pamphlets that highlight India as an ancient country.

Because content analysis can be used in such a wide variety of ways, it’s important to go into your analysis with a very specific question and goal, or you’ll get lost in the fog. With content analysis, you’ll group large amounts of text into codes, summarise these into categories, and possibly even tabulate the data to calculate the frequency of certain concepts or variables. Because of this, content analysis provides a small splash of quantitative thinking within a qualitative method.

Naturally, while content analysis is widely useful, it’s not without its drawbacks. One of the main issues with content analysis is that it can be very time-consuming, as it requires lots of reading and re-reading of the texts. Also, because of its multidimensional focus on both qualitative and quantitative aspects, it is sometimes accused of losing important nuances in communication.

Content analysis also tends to concentrate on a very specific timeline and doesn’t take into account what happened before or after that timeline. This isn’t necessarily a bad thing though – just something to be aware of. So, keep these factors in mind if you’re considering content analysis. Every analysis method has its limitations, so don’t be put off by these – just be aware of them!

QDA Method #2: Narrative Analysis

As the name suggests, narrative analysis is all about listening to people telling stories and analysing what that means. Since stories serve a functional purpose of helping us make sense of the world, we can gain insights into the ways that people deal with and make sense of reality by analysing their stories and the ways they’re told.

You could, for example, use narrative analysis to explore whether how something is being said is important. For instance, the narrative of a prisoner trying to justify their crime could provide insight into their view of the world and the justice system. Similarly, analysing the ways entrepreneurs talk about the struggles in their careers or cancer patients telling stories of hope could provide powerful insights into their mindsets and perspectives. Simply put, narrative analysis is about paying attention to the stories that people tell – and more importantly, the way they tell them.

Of course, the narrative approach has its weaknesses, too. Sample sizes are generally quite small due to the time-consuming process of capturing narratives. Because of this, along with the multitude of social and lifestyle factors which can influence a subject, narrative analysis can be quite difficult to reproduce in subsequent research. This means that it’s difficult to test the findings of some of this research.

Similarly, researcher bias can have a strong influence on the results here, so you need to be particularly careful about the potential biases you can bring into your analysis when using this method. Nevertheless, narrative analysis is still a very useful qualitative analysis method – just keep these limitations in mind and be careful not to draw broad conclusions.

QDA Method #3: Discourse Analysis

Discourse is simply a fancy word for written or spoken language or debate. So, discourse analysis is all about analysing language within its social context. In other words, analysing language – such as a conversation, a speech, etc – within the culture and society in which it takes place. For example, you could analyse how a janitor speaks to a CEO, or how politicians speak about terrorism.

To truly understand these conversations or speeches, the culture and history of those involved in the communication are important factors to consider. For example, a janitor might speak more casually with a CEO in a company that emphasises equality among workers. Similarly, a politician might speak more about terrorism if there was a recent terrorist incident in the country.

As you can see, by using discourse analysis, you can identify how culture, history or power dynamics (to name a few) affect the way concepts are spoken about. So, if your research aims and objectives involve understanding culture or power dynamics, discourse analysis can be a powerful method.

Because there are many social influences on how we speak to each other, the potential use of discourse analysis is vast. Of course, this also means it’s important to have a very specific research question (or questions) in mind when analysing your data and looking for patterns and themes, or you might end up going down a winding rabbit hole.

Discourse analysis can also be very time-consuming, as you need to sample the data to the point of saturation – in other words, until no new information and insights emerge. But this is, of course, part of what makes discourse analysis such a powerful technique. So, keep these factors in mind when considering this QDA method.
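Judging saturation is ultimately the researcher’s call, but the bookkeeping behind it can be sketched. The minimal Python example below assumes each transcript has already been coded by hand into a set of code labels (the labels are invented), and it uses a hypothetical stopping rule: saturation counts as confirmed once a set number of consecutive transcripts (`window`, default 2) adds no new codes. There is no universal threshold, so treat `window` as an assumption you would need to justify in your own study.

```python
from typing import Optional


def saturation_point(coded_transcripts: list[set[str]], window: int = 2) -> Optional[int]:
    """Return how many transcripts had been analysed when saturation was
    confirmed, i.e. when `window` consecutive transcripts added no new codes.
    Return None if that never happens.

    The default `window` of 2 is an illustrative stopping rule, not a standard.
    """
    seen: set[str] = set()
    consecutive_without_new = 0
    for count, codes in enumerate(coded_transcripts, start=1):
        if codes - seen:
            consecutive_without_new = 0  # something new emerged, so keep sampling
        else:
            consecutive_without_new += 1
            if consecutive_without_new == window:
                return count
        seen |= codes
    return None


# Hypothetical codes from hand-coded workplace conversations.
transcripts = [
    {"deference", "formal address"},
    {"deference", "humour"},
    {"informal address", "humour"},
    {"deference", "formal address"},
    {"humour", "informal address"},
]
print(saturation_point(transcripts))  # 5
```

Here the fourth and fifth transcripts repeat codes already seen, so under this rule saturation is confirmed after five transcripts; with only four, it would not yet be confirmed.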

QDA Method #4: Thematic Analysis

Thematic analysis looks at patterns of meaning in a data set – for example, a set of interviews or focus group transcripts. But what exactly does that… mean? Well, a thematic analysis takes bodies of data (which are often quite large) and groups them according to similarities – in other words, themes. These themes help us make sense of the content and derive meaning from it.

Let’s take a look at an example.

With thematic analysis, you could analyse 100 online reviews of a popular sushi restaurant to find out what patrons think about the place. By reviewing the data, you would then identify the themes that crop up repeatedly within the data – for example, “fresh ingredients” or “friendly wait staff”.
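Identifying themes is interpretive work, but once reviews have been coded, tallying how many of them touch on each theme is simple bookkeeping. The Python sketch below uses a hypothetical keyword list as a crude stand-in for a human coder (for instance, “fresh” would also match “refreshing”), so read it as an illustration of grouping data by theme rather than a way to automate the analysis. The reviews, themes and keywords are all invented.

```python
from collections import Counter

# Hypothetical codebook: each theme maps to phrases a coder might treat as evidence.
# In a real thematic analysis, themes emerge from reading the data, not from a fixed list.
THEME_KEYWORDS: dict[str, list[str]] = {
    "fresh ingredients": ["fresh", "quality fish"],
    "friendly wait staff": ["friendly", "welcoming", "attentive"],
    "long waits": ["long wait", "slow"],
}


def code_review(review: str) -> set[str]:
    """Return the themes whose keywords appear in the review (case-insensitive)."""
    text = review.lower()
    return {
        theme
        for theme, keywords in THEME_KEYWORDS.items()
        if any(keyword in text for keyword in keywords)
    }


def theme_counts(reviews: list[str]) -> Counter[str]:
    """Count how many reviews touch on each theme (a review counts once per theme)."""
    counts: Counter[str] = Counter()
    for review in reviews:
        counts.update(code_review(review))
    return counts


reviews = [
    "The fish was so fresh and the staff were friendly.",
    "Friendly service, but a long wait for a table.",
    "Quality fish and fresh rice.",
]
for theme, n in theme_counts(reviews).most_common():
    print(f"{theme}: {n} of {len(reviews)} reviews")
```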

So, as you can see, thematic analysis can be pretty useful for finding out about people’s experiences, views, and opinions. Therefore, if your research aims and objectives involve understanding people’s experience or view of something, thematic analysis can be a great choice.

Since thematic analysis is a somewhat exploratory process, it’s not unusual for your research questions to develop, or even change, as you progress through the analysis. While this is natural in exploratory research, it can also be a disadvantage, as the data needs to be re-reviewed each time a research question is adjusted. In other words, thematic analysis can be quite time-consuming – but for a good reason. So, keep this in mind if you choose to use thematic analysis for your project, and budget extra time for unexpected adjustments.

QDA Method #5: Grounded theory (GT)

Grounded theory is a powerful qualitative analysis method where the intention is to create a new theory (or theories) using the data at hand, through a series of “tests” and “revisions”. Strictly speaking, GT is more a research design type than an analysis method, but we’ve included it here as it’s often referred to as a method.

What’s most important with grounded theory is that you go into the analysis with an open mind and let the data speak for itself – rather than dragging existing hypotheses or theories into your analysis. In other words, your analysis must develop from the ground up (hence the name).

Let’s look at an example of GT in action.

Assume you’re interested in developing a theory about what factors influence students to watch a YouTube video about qualitative analysis. Using grounded theory, you’d start with this general overarching question about the given population (i.e., graduate students). First, you’d approach a small sample – for example, five graduate students in a department at a university. Ideally, this sample would be reasonably representative of the broader population. You’d interview these students to identify what factors lead them to watch the video.

After analysing the interview data, a general pattern could emerge. For example, you might notice that graduate students are more likely to watch a video about qualitative methods if they are just starting on their dissertation journey, or if they have an upcoming test about research methods.

From here, you’ll look for another small sample – for example, five more graduate students in a different department – and see whether this pattern holds true for them. If not, you’ll look for commonalities and adapt your theory accordingly. As this process continues, the theory develops. As we mentioned earlier, what’s important with grounded theory is that the theory develops from the data – not from some preconceived idea.
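The sample, compare and revise cycle can be pictured as a loop. The toy Python sketch below assumes each interview has already been coded into a set of factors, and it applies a hypothetical rule: a factor belongs to the emerging theory while at least half of everyone interviewed so far mentions it. Real grounded theory relies on constant comparison and the researcher’s judgement rather than a fixed cut-off, so this only illustrates how the theory gets revised as each new sample arrives. All factor labels are invented.

```python
from collections import Counter


def revise_theory(batches: list[list[set[str]]], min_share: float = 0.5) -> list[set[str]]:
    """Return the emerging set of factors after each batch of coded interviews.

    A factor belongs to the theory while at least `min_share` of everyone
    interviewed so far mentions it. This pooled threshold is a hypothetical
    rule for illustration only, not part of grounded theory itself.
    """
    mentions: Counter[str] = Counter()
    interviewed = 0
    history: list[set[str]] = []
    for batch in batches:
        for interview in batch:
            mentions.update(interview)  # a factor counts once per interview
        interviewed += len(batch)
        if interviewed == 0:
            history.append(set())  # no data yet, so no theory yet
            continue
        history.append({f for f, n in mentions.items() if n / interviewed >= min_share})
    return history


# Hypothetical coded interviews: five students in one department, then five in another.
batches = [
    [
        {"dissertation starting", "upcoming test"},
        {"dissertation starting"},
        {"upcoming test"},
        {"dissertation starting", "upcoming test"},
        {"supervisor advice"},
    ],
    [
        {"supervisor advice", "dissertation starting"},
        {"supervisor advice"},
        {"supervisor advice", "upcoming test"},
        {"dissertation starting"},
        {"supervisor advice"},
    ],
]
for round_number, theory in enumerate(revise_theory(batches), start=1):
    print(round_number, sorted(theory))
# 1 ['dissertation starting', 'upcoming test']
# 2 ['dissertation starting', 'supervisor advice']
```

In this toy run, the second department does not support the “upcoming test” pattern, so it drops out, while “supervisor advice” gains enough support to enter.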

So, what are the drawbacks of grounded theory? Well, some argue that there’s a tricky circularity to grounded theory. For it to work, in principle, you should know as little as possible regarding the research question and population, so that you reduce the bias in your interpretation. However, in many circumstances, it’s also thought to be unwise to approach a research question without knowledge of the current literature. In other words, it’s a bit of a “chicken or the egg” situation.

Regardless, grounded theory remains a popular (and powerful) option. Naturally, it’s a very useful method when you’re researching a topic that is completely new or has very little existing research about it, as it allows you to start from scratch and work your way from the ground up.

QDA Method #6: Interpretive Phenomenological Analysis (IPA)

Interpretive. Phenomenological. Analysis. IPA. Try saying that three times fast…

Let’s just stick with IPA, okay?

IPA is designed to help you understand the personal experiences of a subject (for example, a person or group of people) concerning a major life event, an experience or a situation. This event or experience is the “phenomenon” that makes up the “P” in IPA. Such phenomena may range from relatively common events – such as motherhood, or being involved in a car accident – to those which are extremely rare – for example, someone’s personal experience in a refugee camp. So, IPA is a great choice if your research involves analysing people’s personal experiences of something that happened to them.

It’s important to remember that IPA is subject-centred. In other words, it’s focused on the experiencer. This means that, while you’ll likely use a coding system to identify commonalities, it’s important not to lose the depth of experience or meaning by trying to reduce everything to codes. Also, keep in mind that since your sample size will generally be very small with IPA, you often won’t be able to generalise your findings broadly. But that’s okay as long as it aligns with your research aims and objectives.

Another thing to be aware of with IPA is personal bias. While researcher bias can creep into all forms of research, self-awareness is critically important with IPA, as it can have a major impact on the results. For example, a researcher who had been a victim of crime could project their own feelings of frustration and anger onto their interpretation of the experience of someone who was kidnapped. So, if you’re going to undertake IPA, you need to be very self-aware, or you could muddy the analysis.

How to choose the right analysis method

In light of all of the qualitative analysis methods we’ve covered so far, you’re probably asking yourself the question, “How do I choose the right one?”

Much like all the other methodological decisions you’ll need to make, selecting the right qualitative analysis method largely depends on your research aims, objectives and questions. In other words, the best tool for the job depends on what you’re trying to build. For example:

- Perhaps your research aims to analyse the use of words and what they reveal about the intention of the storyteller and the cultural context of the time.

- Perhaps your research aims to develop an understanding of the unique personal experiences of people who have been through a certain event.

- Perhaps your research aims to develop insight regarding the influence of a certain culture on its members.

As you can probably see, each of these research aims is distinctly different, and therefore different analysis methods would be suitable for each one. For example, narrative analysis would likely be a good option for the first aim, while grounded theory wouldn’t be as relevant.

It’s also important to remember that each method has its own set of strengths, weaknesses and general limitations. No single analysis method is perfect. So, depending on the nature of your research, it may make sense to adopt more than one method (this is called triangulation). Keep in mind, though, that this will of course be quite time-consuming.

As we’ve seen, all of the qualitative analysis methods we’ve discussed make use of coding and theme-generating techniques, but the intent and approach of each analysis method differ quite substantially. So, it’s very important to come into your research with a clear intention before you decide which analysis method (or methods) to use.

Start by reviewing your research aims, objectives and research questions to assess what exactly you’re trying to find out – then select a qualitative analysis method that fits. Never pick a method just because you like it or have experience using it – your analysis method (or methods) must align with your broader research aims and objectives.

Let’s recap on QDA methods…

In this post, we looked at six popular qualitative data analysis methods:

- First, we looked at content analysis, a straightforward method that blends a little bit of quant into a primarily qualitative analysis.

- Then we looked at narrative analysis, which is about analysing how stories are told.

- Next up was discourse analysis – which is about analysing conversations and interactions.

- Then we moved on to thematic analysis – which is about identifying themes and patterns.

- From there, we turned to grounded theory – which is about starting from scratch with a specific question and using the data alone to build a theory in response to that question.

- And finally, we looked at IPA – which is about understanding people’s unique experiences of a phenomenon.

Of course, these aren’t the only options when it comes to qualitative data analysis, but they’re a great starting point if you’re dipping your toes into qualitative research for the first time.

If you’re still feeling a bit confused, consider our private coaching service, where we hold your hand through the research process to help you develop your best work.





Qualitative Research – Methods, Analysis Types and Guide


Qualitative Research

Qualitative research is a type of research methodology that focuses on exploring and understanding people’s beliefs, attitudes, behaviors, and experiences through the collection and analysis of non-numerical data. It seeks to answer research questions by examining subjective data gathered through interviews, focus groups, observations, and the analysis of texts.

Qualitative research aims to uncover the meaning and significance of social phenomena, and it typically involves a more flexible and iterative approach to data collection and analysis compared to quantitative research. Qualitative research is often used in fields such as sociology, anthropology, psychology, and education.

Qualitative Research Methods

Qualitative Research Methods are as follows:

One-to-One Interview

This method involves conducting an interview with a single participant to gain a detailed understanding of their experiences, attitudes, and beliefs. One-to-one interviews can be conducted in-person, over the phone, or through video conferencing. The interviewer typically uses open-ended questions to encourage the participant to share their thoughts and feelings. One-to-one interviews are useful for gaining detailed insights into individual experiences.

Focus Groups

This method involves bringing together a group of people to discuss a specific topic in a structured setting. The focus group is led by a moderator who guides the discussion and encourages participants to share their thoughts and opinions. Focus groups are useful for generating ideas and insights, exploring social norms and attitudes, and understanding group dynamics.

Ethnographic Studies

This method involves immersing oneself in a culture or community to gain a deep understanding of its norms, beliefs, and practices. Ethnographic studies typically involve long-term fieldwork and observation, as well as interviews and document analysis. Ethnographic studies are useful for understanding the cultural context of social phenomena and for gaining a holistic understanding of complex social processes.

Text Analysis

This method involves analyzing written or spoken language to identify patterns and themes. Text analysis can be quantitative or qualitative. Qualitative text analysis involves close reading and interpretation of texts to identify recurring themes, concepts, and patterns. Text analysis is useful for understanding media messages, public discourse, and cultural trends.

Case Studies

This method involves an in-depth examination of a single person, group, or event to gain an understanding of complex phenomena. Case studies typically involve a combination of data collection methods, such as interviews, observations, and document analysis, to provide a comprehensive understanding of the case. Case studies are useful for exploring unique or rare cases, and for generating hypotheses for further research.

Process of Observation

This method involves systematically observing and recording behaviors and interactions in natural settings. The observer may take notes, use audio or video recordings, or use other methods to document what they see. Process of observation is useful for understanding social interactions, cultural practices, and the context in which behaviors occur.

Record Keeping

This method involves keeping detailed records of observations, interviews, and other data collected during the research process. Record keeping is essential for ensuring the accuracy and reliability of the data, and for providing a basis for analysis and interpretation.

Surveys

This method involves collecting data from a large sample of participants through a structured questionnaire. In qualitative research, the questions are typically open-ended so that participants can respond in their own words. Surveys can be conducted in person, over the phone, through mail, or online. Surveys are useful for collecting data on attitudes, beliefs, and behaviors, and for identifying patterns and trends in a population.

Qualitative data analysis is a process of turning unstructured data into meaningful insights. It involves extracting and organizing information from sources like interviews, focus groups, and surveys. The goal is to understand people’s attitudes, behaviors, and motivations.

Qualitative Research Analysis Methods

Qualitative research analysis methods involve a systematic approach to interpreting and making sense of the data collected in qualitative research. Here are some common qualitative data analysis methods:

Thematic Analysis

This method involves identifying patterns or themes in the data that are relevant to the research question. The researcher reviews the data, identifies keywords or phrases, and groups them into categories or themes. Thematic analysis is useful for identifying patterns across multiple data sources and for generating new insights into the research topic.

Content Analysis

This method involves analyzing the content of written or spoken language to identify key themes or concepts. Content analysis can be quantitative or qualitative. Qualitative content analysis involves close reading and interpretation of texts to identify recurring themes, concepts, and patterns. Content analysis is useful for identifying patterns in media messages, public discourse, and cultural trends.

Discourse Analysis

This method involves analyzing language to understand how it constructs meaning and shapes social interactions. Discourse analysis can involve a variety of methods, such as conversation analysis, critical discourse analysis, and narrative analysis. Discourse analysis is useful for understanding how language shapes social interactions, cultural norms, and power relationships.

Grounded Theory Analysis

This method involves developing a theory or explanation based on the data collected. Grounded theory analysis starts with the data and uses an iterative process of coding and analysis to identify patterns and themes in the data. The theory or explanation that emerges is grounded in the data, rather than preconceived hypotheses. Grounded theory analysis is useful for understanding complex social phenomena and for generating new theoretical insights.

Narrative Analysis

This method involves analyzing the stories or narratives that participants share to gain insights into their experiences, attitudes, and beliefs. Narrative analysis can involve a variety of methods, such as structural analysis, thematic analysis, and discourse analysis. Narrative analysis is useful for understanding how individuals construct their identities, make sense of their experiences, and communicate their values and beliefs.

Phenomenological Analysis

This method involves analyzing how individuals make sense of their experiences and the meanings they attach to them. Phenomenological analysis typically involves in-depth interviews with participants to explore their experiences in detail. Phenomenological analysis is useful for understanding subjective experiences and for developing a rich understanding of human consciousness.

Comparative Analysis

This method involves comparing and contrasting data across different cases or groups to identify similarities and differences. Comparative analysis can be used to identify patterns or themes that are common across multiple cases, as well as to identify unique or distinctive features of individual cases. Comparative analysis is useful for understanding how social phenomena vary across different contexts and groups.
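Once each case or group has been coded, the first pass of a comparison (which themes are common to every case, and which are unique to one) comes down to set operations. The Python sketch below assumes the themes for each case have already been identified through the analysis; the clinic names and themes are hypothetical.

```python
def compare_cases(
    themes_by_case: dict[str, set[str]],
) -> tuple[set[str], dict[str, set[str]]]:
    """Return (themes shared by every case, themes unique to each case)."""
    if not themes_by_case:
        return set(), {}
    # Themes present in every case.
    shared = set.intersection(*themes_by_case.values())
    # For each case, themes that appear in no other case.
    unique = {
        case: themes
        - set().union(*(other for name, other in themes_by_case.items() if name != case))
        for case, themes in themes_by_case.items()
    }
    return shared, unique


# Hypothetical themes coded from patient interviews at three clinics.
themes_by_case = {
    "urban clinic": {"long waiting times", "trust in staff", "transport costs"},
    "rural clinic": {"long waiting times", "trust in staff", "travel distance"},
    "mobile clinic": {"long waiting times", "irregular schedule"},
}
shared, unique = compare_cases(themes_by_case)
print(sorted(shared))  # ['long waiting times']
print(unique["rural clinic"])  # {'travel distance'}
```

Themes shared by some cases but not all, such as “trust in staff” here, fall into neither group; those partial overlaps are worth examining separately, since they show how the phenomenon varies with context.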

Applications of Qualitative Research

Qualitative research has many applications across different fields and industries. Here are some examples of how qualitative research is used:

- Market Research: Qualitative research is often used in market research to understand consumer attitudes, behaviors, and preferences. Researchers conduct focus groups and one-on-one interviews with consumers to gather insights into their experiences and perceptions of products and services.

- Health Care: Qualitative research is used in health care to explore patient experiences and perspectives on health and illness. Researchers conduct in-depth interviews with patients and their families to gather information on their experiences with different health care providers and treatments.

- Education: Qualitative research is used in education to understand student experiences and to develop effective teaching strategies. Researchers conduct classroom observations and interviews with students and teachers to gather insights into classroom dynamics and instructional practices.

- Social Work: Qualitative research is used in social work to explore social problems and to develop interventions to address them. Researchers conduct in-depth interviews with individuals and families to understand their experiences with poverty, discrimination, and other social problems.

- Anthropology: Qualitative research is used in anthropology to understand different cultures and societies. Researchers conduct ethnographic studies and observe and interview members of different cultural groups to gain insights into their beliefs, practices, and social structures.

- Psychology: Qualitative research is used in psychology to understand human behavior and mental processes. Researchers conduct in-depth interviews with individuals to explore their thoughts, feelings, and experiences.

- Public Policy: Qualitative research is used in public policy to explore public attitudes and to inform policy decisions. Researchers conduct focus groups and one-on-one interviews with members of the public to gather insights into their perspectives on different policy issues.

How to Conduct Qualitative Research

Here are some general steps for conducting qualitative research:

- Identify your research question: Qualitative research starts with a research question or set of questions that you want to explore. This question should be focused and specific, but also broad enough to allow for exploration and discovery.

- Select your research design: There are different types of qualitative research designs, including ethnography, case study, grounded theory, and phenomenology. You should select a design that aligns with your research question and that will allow you to gather the data you need to answer your research question.

- Recruit participants: Once you have your research question and design, you need to recruit participants. The number of participants you need will depend on your research design and the scope of your research. You can recruit participants through advertisements, social media, or through personal networks.

- Collect data: There are different methods for collecting qualitative data, including interviews, focus groups, observation, and document analysis. You should select the method or methods that align with your research design and that will allow you to gather the data you need to answer your research question.

- Analyze data: Once you have collected your data, you need to analyze it. This involves reviewing your data, identifying patterns and themes, and developing codes to organize your data. You can use different software programs to help you analyze your data, or you can do it manually.

- Interpret data: Once you have analyzed your data, you need to interpret it. This involves making sense of the patterns and themes you have identified, and developing insights and conclusions that answer your research question. You should be guided by your research question and use your data to support your conclusions.

- Communicate results: Once you have interpreted your data, you need to communicate your results. This can be done through academic papers, presentations, or reports. You should be clear and concise in your communication, and use examples and quotes from your data to support your findings.
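Whether the analysis step is done in software or by hand, coding typically produces an index from each code to the excerpts it was applied to, so that everything filed under one code can be retrieved and compared. A minimal Python sketch of that structure follows; the `Codebook` and `Excerpt` classes, source IDs, and code names are hypothetical and do not model any particular analysis package.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Excerpt:
    source: str  # e.g. an interview or document identifier
    text: str    # the passage the researcher coded


class Codebook:
    """Maps each code to the excerpts it has been applied to."""

    def __init__(self) -> None:
        self._index: dict[str, list[Excerpt]] = defaultdict(list)

    def apply(self, code: str, excerpt: Excerpt) -> None:
        """Record that the researcher applied `code` to `excerpt`."""
        self._index[code].append(excerpt)

    def retrieve(self, code: str) -> list[Excerpt]:
        """Return every excerpt coded with `code` (an empty list if none)."""
        return list(self._index.get(code, []))

    def sources_for(self, code: str) -> set[str]:
        """Return the distinct sources in which `code` appears."""
        return {excerpt.source for excerpt in self._index.get(code, [])}


# Hypothetical coding of teacher interviews.
book = Codebook()
book.apply("workload", Excerpt("interview-01", "I rarely finish marking before midnight."))
book.apply("workload", Excerpt("interview-03", "The admin never stops."))
book.apply("peer support", Excerpt("interview-01", "My colleagues cover for me when I'm ill."))
print([e.text for e in book.retrieve("workload")])
```

Keeping the source with each excerpt makes it easy to check whether a code rests on one talkative participant or recurs across many, which matters when you move on to interpreting the data.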

Examples of Qualitative Research

Here are some real-world examples of qualitative research:

- Customer Feedback: A company may conduct qualitative research to understand the feedback and experiences of its customers. This may involve conducting focus groups or one-on-one interviews with customers to gather insights into their attitudes, behaviors, and preferences.

- Healthcare: A healthcare provider may conduct qualitative research to explore patient experiences and perspectives on health and illness. This may involve conducting in-depth interviews with patients and their families to gather information on their experiences with different health care providers and treatments.

- Education: An educational institution may conduct qualitative research to understand student experiences and to develop effective teaching strategies. This may involve conducting classroom observations and interviews with students and teachers to gather insights into classroom dynamics and instructional practices.

- Social Work: A social worker may conduct qualitative research to explore social problems and to develop interventions to address them. This may involve conducting in-depth interviews with individuals and families to understand their experiences with poverty, discrimination, and other social problems.

- Anthropology: An anthropologist may conduct qualitative research to understand different cultures and societies. This may involve conducting ethnographic studies and observing and interviewing members of different cultural groups to gain insights into their beliefs, practices, and social structures.

- Psychology: A psychologist may conduct qualitative research to understand human behavior and mental processes. This may involve conducting in-depth interviews with individuals to explore their thoughts, feelings, and experiences.

- Public Policy: A government agency or non-profit organization may conduct qualitative research to explore public attitudes and to inform policy decisions. This may involve conducting focus groups and one-on-one interviews with members of the public to gather insights into their perspectives on different policy issues.

Purpose of Qualitative Research

The purpose of qualitative research is to explore and understand the subjective experiences, behaviors, and perspectives of individuals or groups in a particular context. Unlike quantitative research, which focuses on numerical data and statistical analysis, qualitative research aims to provide in-depth, descriptive information that can help researchers develop insights and theories about complex social phenomena.

Qualitative research can serve multiple purposes, including:

- Exploring new or emerging phenomena: Qualitative research can be useful for exploring new or emerging phenomena, such as new technologies or social trends. This type of research can help researchers develop a deeper understanding of these phenomena and identify potential areas for further study.

- Understanding complex social phenomena: Qualitative research can be useful for exploring complex social phenomena, such as cultural beliefs, social norms, or political processes. This type of research can help researchers develop a more nuanced understanding of these phenomena and identify factors that may influence them.

- Generating new theories or hypotheses: Qualitative research can be useful for generating new theories or hypotheses about social phenomena. By gathering rich, detailed data about individuals’ experiences and perspectives, researchers can develop insights that may challenge existing theories or lead to new lines of inquiry.

- Providing context for quantitative data: Qualitative research can be useful for providing context for quantitative data. By gathering qualitative data alongside quantitative data, researchers can develop a more complete understanding of complex social phenomena and identify potential explanations for quantitative findings.

When to use Qualitative Research

Here are some situations where qualitative research may be appropriate:

- Exploring a new area: If little is known about a particular topic, qualitative research can help to identify key issues, generate hypotheses, and develop new theories.

- Understanding complex phenomena: Qualitative research can be used to investigate complex social, cultural, or organizational phenomena that are difficult to measure quantitatively.

- Investigating subjective experiences: Qualitative research is particularly useful for investigating the subjective experiences of individuals or groups, such as their attitudes, beliefs, values, or emotions.

- Conducting formative research: Qualitative research can be used in the early stages of a research project to develop research questions, identify potential research participants, and refine research methods.

- Evaluating interventions or programs: Qualitative research can be used to evaluate the effectiveness of interventions or programs by collecting data on participants’ experiences, attitudes, and behaviors.

Characteristics of Qualitative Research

Qualitative research is characterized by several key features, including:

- Focus on subjective experience: Qualitative research is concerned with understanding the subjective experiences, beliefs, and perspectives of individuals or groups in a particular context. Researchers aim to explore the meanings that people attach to their experiences and to understand the social and cultural factors that shape these meanings.

- Use of open-ended questions: Qualitative research relies on open-ended questions that allow participants to provide detailed, in-depth responses. Researchers seek to elicit rich, descriptive data that can provide insights into participants’ experiences and perspectives.

- Sampling based on purpose and diversity: Qualitative research often involves purposive sampling, in which participants are selected based on specific criteria related to the research question. Researchers may also seek to include participants with diverse experiences and perspectives to capture a range of viewpoints.

- Data collection through multiple methods: Qualitative research typically involves the use of multiple data collection methods, such as in-depth interviews, focus groups, and observation. This allows researchers to gather rich, detailed data from multiple sources, which can provide a more complete picture of participants’ experiences and perspectives.

- Inductive data analysis: Qualitative research typically relies on inductive data analysis, in which researchers develop theories and insights from the data rather than testing pre-existing hypotheses. Researchers use coding and thematic analysis to identify patterns and themes in the data and to develop theories and explanations based on these patterns.

- Emphasis on researcher reflexivity: Qualitative research recognizes the importance of the researcher’s role in shaping the research process and outcomes. Researchers are encouraged to reflect on their own biases and assumptions and to be transparent about their role in the research process.

Advantages of Qualitative Research

Qualitative research offers several advantages over other research methods, including:

- Depth and detail: Qualitative research allows researchers to gather rich, detailed data that provides a deeper understanding of complex social phenomena. Through in-depth interviews, focus groups, and observation, researchers can gather detailed information about participants’ experiences and perspectives that may be missed by other research methods.

- Flexibility: Qualitative research is a flexible approach that allows researchers to adapt their methods to the research question and context. Researchers can adjust their methods in real time to gather more information or explore unexpected findings.

- Contextual understanding: Qualitative research is well-suited to exploring the social and cultural context in which individuals or groups are situated. Researchers can gather information about cultural norms, social structures, and historical events that may influence participants’ experiences and perspectives.

- Participant perspective: Qualitative research prioritizes the perspective of participants, allowing researchers to explore subjective experiences and understand the meanings that participants attach to their experiences.

- Theory development: Qualitative research can contribute to the development of new theories and insights about complex social phenomena. By gathering rich, detailed data and using inductive data analysis, researchers can develop new theories and explanations that may challenge existing understandings.

- Validity: Qualitative research can offer high validity by using multiple data collection methods, purposive and diverse sampling, and researcher reflexivity. This can help ensure that findings are credible and trustworthy.

Limitations of Qualitative Research

Qualitative research also has some limitations, including:

- Subjectivity: Qualitative research relies on the subjective interpretation of researchers, which can introduce bias into the research process. The researcher’s perspective, beliefs, and experiences can influence the way data is collected, analyzed, and interpreted.

- Limited generalizability: Qualitative research typically involves small, purposive samples that may not be representative of larger populations. This limits the generalizability of findings to other contexts or populations.

- Time-consuming: Qualitative research can take a long time, requiring significant effort for data collection, analysis, and interpretation.

- Resource-intensive: Qualitative research may require more resources than other research methods, including specialized training for researchers, specialized software for data analysis, and transcription services.

- Limited reliability: Qualitative research may be less reliable than quantitative research, as it relies on the subjective interpretation of researchers. This can make it difficult to replicate findings or compare results across different studies.

- Ethics and confidentiality: Qualitative research often involves collecting sensitive information from participants, which raises ethical concerns about confidentiality and informed consent. Researchers must take care to protect the privacy and confidentiality of participants and obtain informed consent.


About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer


What Is Qualitative Research? | Methods & Examples

Published on 4 April 2022 by Pritha Bhandari . Revised on 30 January 2023.

Qualitative research involves collecting and analysing non-numerical data (e.g., text, video, or audio) to understand concepts, opinions, or experiences. It can be used to gather in-depth insights into a problem or generate new ideas for research.

Qualitative research is the opposite of quantitative research, which involves collecting and analysing numerical data for statistical analysis.

Qualitative research is commonly used in the humanities and social sciences, in subjects such as anthropology, sociology, education, health sciences, and history.

Examples of qualitative research questions include:

- How does social media shape body image in teenagers?

- How do children and adults interpret healthy eating in the UK?

- What factors influence employee retention in a large organisation?

- How is anxiety experienced around the world?

- How can teachers integrate social issues into science curriculums?

Table of contents

- Approaches to qualitative research

- Qualitative research methods

- Qualitative data analysis

- Advantages of qualitative research

- Disadvantages of qualitative research

- Frequently asked questions about qualitative research

Approaches to qualitative research

Qualitative research is used to understand how people experience the world. While there are many approaches to qualitative research, they tend to be flexible and focus on retaining rich meaning when interpreting data.

Common approaches include grounded theory, ethnography, action research, phenomenological research, and narrative research. They share some similarities, but emphasise different aims and perspectives.


Qualitative research methods

Each of the research approaches involves using one or more data collection methods. These are some of the most common qualitative methods:

- Observations: recording what you have seen, heard, or encountered in detailed field notes.

- Interviews: personally asking people questions in one-on-one conversations.

- Focus groups: asking questions and generating discussion among a group of people.

- Surveys: distributing questionnaires with open-ended questions.

- Secondary research: collecting existing data in the form of texts, images, audio or video recordings, etc.

For example, suppose you are studying the culture of the company you work for:

- You take field notes with observations and reflect on your own experiences of the company culture.

- You distribute open-ended surveys to employees across all the company’s offices by email to find out if the culture varies across locations.

- You conduct in-depth interviews with employees in your office to learn about their experiences and perspectives in greater detail.

Qualitative researchers often consider themselves ‘instruments’ in research because all observations, interpretations and analyses are filtered through their own personal lens.

For this reason, when writing up your methodology for qualitative research, it’s important to reflect on your approach and to thoroughly explain the choices you made in collecting and analysing the data.

Qualitative data analysis

Qualitative data can take the form of texts, photos, videos and audio. For example, you might be working with interview transcripts, survey responses, fieldnotes, or recordings from natural settings.

Most types of qualitative data analysis share the same five steps:

- Prepare and organise your data. This may mean transcribing interviews or typing up fieldnotes.

- Review and explore your data. Examine the data for patterns or repeated ideas that emerge.

- Develop a data coding system. Based on your initial ideas, establish a set of codes that you can apply to categorise your data.

- Assign codes to the data. For example, in qualitative survey analysis, this may mean going through each participant’s responses and tagging them with codes in a spreadsheet. As you go through your data, you can create new codes to add to your system if necessary.

- Identify recurring themes. Link codes together into cohesive, overarching themes.
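As a rough illustration of steps 3 to 5, the Python sketch below represents a coding system as a codebook that maps each code to a broader theme, then rolls participants' coded survey responses up into themes. The codes, themes and participants are hypothetical, and the codes are assumed to have been assigned by a researcher reading each response; the script only organises and tallies those judgements and does not do the interpretive coding itself.

```python
from collections import Counter

# Hypothetical codebook (step 3): each code maps to the broader theme it belongs to.
CODEBOOK = {
    "flexible hours": "work-life balance",
    "remote work": "work-life balance",
    "low pay": "compensation",
    "no raise": "compensation",
    "supportive manager": "leadership",
}

# Hypothetical survey responses, with codes assumed to be assigned by a human coder (step 4).
coded_responses = [
    {"participant": "P1", "codes": ["flexible hours", "low pay"]},
    {"participant": "P2", "codes": ["supportive manager", "remote work"]},
    {"participant": "P3", "codes": ["no raise", "low pay"]},
]


def themes_by_participant(responses, codebook):
    """Link each participant's codes to the set of themes they touch (step 5)."""
    themes = {}
    for response in responses:
        unknown = [code for code in response["codes"] if code not in codebook]
        if unknown:
            # New codes are fine, but they must be added to the codebook first.
            raise KeyError(f"Codes missing from codebook: {unknown}")
        themes[response["participant"]] = {codebook[code] for code in response["codes"]}
    return themes


def theme_frequency(responses, codebook):
    """Count how many participants touched each theme (each participant counted once)."""
    counts = Counter()
    for themes in themes_by_participant(responses, codebook).values():
        counts.update(themes)
    return counts


print(theme_frequency(coded_responses, CODEBOOK))
```

With this sample data, the compensation and work-life balance themes each recur across two of the three participants. A tally like this can point you towards recurring themes, but how often a theme appears does not by itself show how important it is, so treat the counts as a prompt for interpretation rather than a finding.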

There are several specific approaches to analysing qualitative data. Although these methods share similar processes, they emphasise different concepts.

Advantages of qualitative research

Qualitative research often tries to preserve the voice and perspective of participants and can be adjusted as new research questions arise. Qualitative research is good for:

- Flexibility: The data collection and analysis process can be adapted as new ideas or patterns emerge; it is not rigidly decided beforehand.

- Natural settings: Data collection occurs in real-world contexts or in naturalistic ways.

- Meaningful insights: Detailed descriptions of people’s experiences, feelings and perceptions can be used in designing, testing or improving systems or products.

- Generation of new ideas: Open-ended responses mean that researchers can uncover novel problems or opportunities that they wouldn’t have thought of otherwise.

Disadvantages of qualitative research

Researchers must consider practical and theoretical limitations in analysing and interpreting their data. Qualitative research suffers from:

- Unreliability: The real-world setting often makes qualitative research unreliable because of uncontrolled factors that affect the data.