7 Quantitative Data Examples in Education

Quantitative data plays a crucial role in education, providing valuable insights into various aspects of the learning process. By analyzing numerical information, educators can make informed decisions and implement effective strategies to improve educational outcomes. But what exactly is quantitative data in education, and why is it essential? In this article, we’ll look at seven illustrative examples of quantitative data in education and analyze their impact.

- Standardized Test Scores: Measuring Performance at Scale

- Attendance Rates: More than Just Numbers

- Graduation Rates: Tracking Long-Term Success

- Class Average Scores: Gauging Collective Performance

- Student-to-Teacher Ratios: A Reflection of Learning Environments

- Homework Completion Rates: Analyzing Daily Academic Engagement

- Frequency of Library Book Checkouts: Monitoring Reading Habits

The Importance of Quantitative Data Examples in Education

Before turning to specific examples, it helps to understand why quantitative data matters in education.

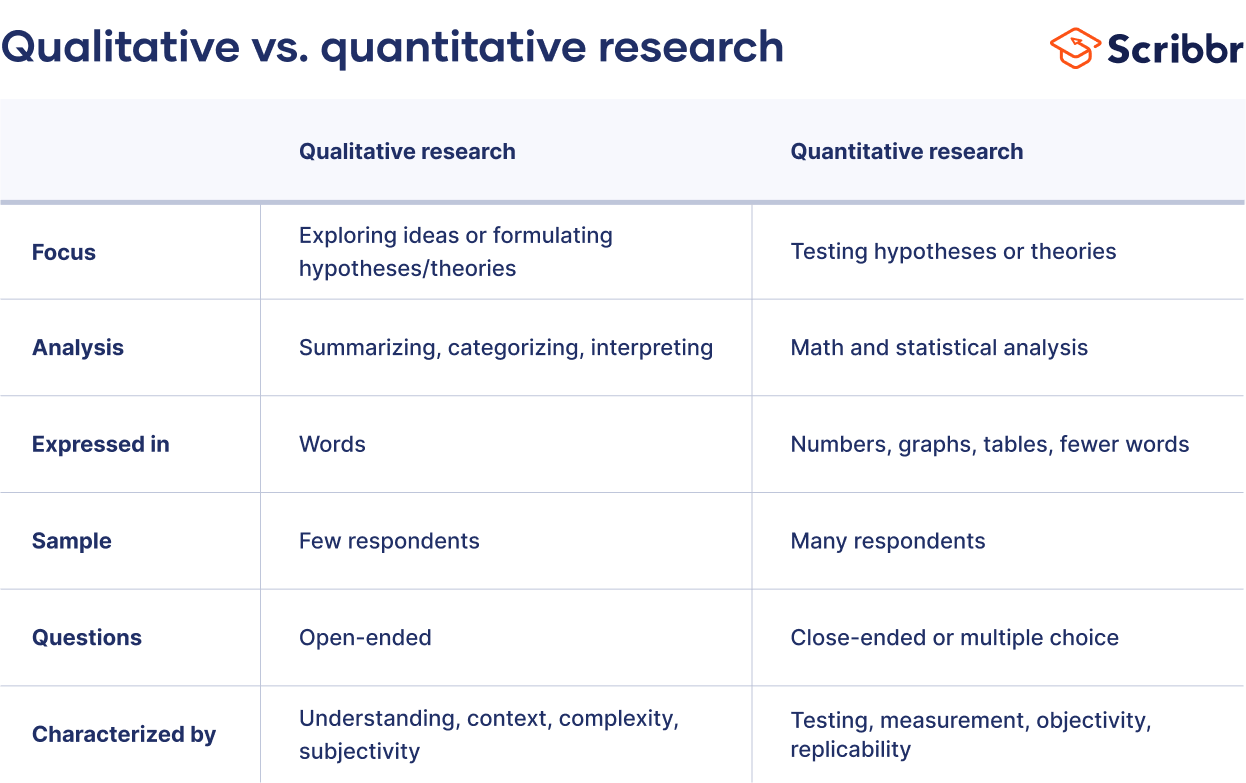

Quantitative data provides objective evidence of student achievement and progress. Mining educational data allows educators to identify trends and patterns, enabling them to tailor teaching methods and interventions to the individual needs of students. For example, if a particular group of students consistently underperforms on standardized tests, quantitative data can help educators identify the specific areas where additional support is needed. This data-driven approach helps ensure that resources are allocated effectively and that students receive the targeted support they need to succeed.


In addition to informing classroom instruction, quantitative data also plays a significant role in shaping education policies. Policymakers rely on this data to make informed decisions about curriculum development, resource allocation, and educational reforms. By analyzing quantitative data on a larger scale, policymakers can identify systemic issues and implement evidence-based strategies to address them. For instance, if quantitative data reveals a high dropout rate in a specific region, policymakers can develop targeted interventions to improve graduation rates and ensure that students have access to quality education.

1. Standardized Test Scores: Measuring Performance at Scale

Standardized test scores, spanning from globally recognized exams like the SAT and ACT to national or regional board examinations, have become a cornerstone in the world of education. These scores serve multiple purposes, providing a consistent, objective measure of a student’s grasp of specific subjects and skills. This universal consistency allows for comparisons across regions, states, or even countries, simplifying the monumental task for college and university admissions offices when they sift through thousands of applications from varied educational backgrounds. For these institutions, these scores are invaluable in determining a student’s readiness for the rigors of higher education.

However, the significance of these scores isn’t restricted to tertiary institutions. K-12 schools and districts also harness these numbers to assess the efficacy of their teaching methodologies, curricula, and allocated resources. Consistently low scores might hint at areas where instructional techniques need refinement or indicate students who require additional support. But, as pivotal as they are, it’s essential to approach standardized test scores with a balanced perspective. They capture just one dimension of a student’s academic journey, and their true value is unlocked when integrated with other forms of quantitative and qualitative data.

2. Attendance Rates: More than Just Numbers

Attendance rates in schools often serve as more than basic metrics of student presence. At their core, these data indicate how engaged, motivated, and committed students are to their educational pursuits. By calculating the percentage of days students are present over a set period, institutions can gain insight into a range of underlying factors. A consistently high attendance rate, for instance, could indicate a thriving school environment where students feel inspired and eager to participate. Conversely, a sudden drop might hint at external challenges, from health outbreaks to socio-economic disturbances.

However, diving deeper, these rates also unveil more subtle issues affecting education. Consistent absences can indicate personal struggles, whether they be familial, psychological, or health-related. For educators and administrators, understanding the intricacies behind these numbers is essential. Addressing the root causes, whether they involve bolstering student engagement through innovative teaching methods or providing additional resources for those facing challenges, ensures a more inclusive and responsive educational environment.
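To make the attendance calculation concrete, here is a minimal Python sketch. The student names, day counts, and the `attendance_rate` helper are all hypothetical; real student information systems will define enrolled days and excused absences in their own ways.

```python
def attendance_rate(days_present: int, days_enrolled: int) -> float:
    """Return the percentage of enrolled days on which a student was present."""
    if days_enrolled <= 0:
        raise ValueError("days_enrolled must be positive")
    if not 0 <= days_present <= days_enrolled:
        raise ValueError("days_present must be between 0 and days_enrolled")
    return 100.0 * days_present / days_enrolled


# Hypothetical records: student -> (days present, days enrolled)
records = {
    "student_a": (171, 180),
    "student_b": (153, 180),
    "student_c": (180, 180),
}

# Per-student rates help spot individual patterns of absence.
rates = {name: attendance_rate(p, e) for name, (p, e) in records.items()}

# A school-wide rate pools all present days over all enrolled days.
total_present = sum(p for p, _ in records.values())
total_enrolled = sum(e for _, e in records.values())
school_rate = attendance_rate(total_present, total_enrolled)

for name, rate in rates.items():
    print(f"{name}: {rate:.1f}%")
print(f"school-wide: {school_rate:.1f}%")
```

Looking at per-student rates alongside the pooled figure matters because a healthy school-wide average can hide a small group of students with consistent absences.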

3. Graduation Rates: Tracking Long-Term Success

Graduation rates stand as a pivotal metric in assessing the long-term success and effectiveness of educational institutions. This rate, which depicts the percentage of students who complete their academic programs within a standard timeframe, is more than just a reflection of student diligence. It also provides insights into the quality of instruction, the adequacy of resources, and the overall support infrastructure in place. High graduation rates often suggest that an institution is not only providing valuable academic content but also fostering an environment conducive to sustained student success.

On the flip side, lower graduation rates can act as an early warning sign for potential challenges within the educational framework. Whether it’s a curriculum that doesn’t resonate with the student body, inadequate support for those with learning differences, or external factors like socio-economic challenges that affect a student’s ability to prioritize education, these numbers prompt introspection. For educators and institutional leaders, these rates serve as a guidepost, highlighting areas of success and illuminating opportunities for enhancement in the ever-evolving landscape of academia.
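As a rough illustration of how a graduation rate can be computed, the sketch below counts the students in a cohort who finished within a standard timeframe. The cohort data, the four-year standard, and the `graduation_rate` helper are assumptions made for the example; official definitions (for instance, how transfers are handled) vary by jurisdiction.

```python
from typing import Optional, Sequence


def graduation_rate(
    years_to_complete: Sequence[Optional[float]], standard_years: float
) -> float:
    """Percentage of a cohort that completed within standard_years.

    Each entry is the number of years a student took to finish,
    or None if the student has not completed the program.
    """
    if not years_to_complete:
        raise ValueError("cohort must not be empty")
    on_time = sum(
        1 for y in years_to_complete if y is not None and y <= standard_years
    )
    return 100.0 * on_time / len(years_to_complete)


# Hypothetical ten-student cohort; None marks a student who did not finish.
cohort = [4, 4, 5, None, 4, 4, 6, 4, None, 4]
rate = graduation_rate(cohort, standard_years=4)
print(f"On-time graduation rate: {rate:.1f}%")
```

Students who finish late and students who never finish both count against the on-time rate here, which is why the choice of timeframe should be stated whenever the number is reported.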

4. Class Average Scores: Gauging Collective Performance

Class average scores play a fundamental role in deciphering the collective performance of a student group, offering a holistic view of how a class or cohort is faring academically. By taking the mean of scores across a specific subject or class, educators can identify patterns, strengths, and areas that may require more attention. High averages might suggest that teaching methods, curricula, and learning materials are resonating with students, leading to broad comprehension and mastery of the content.

Conversely, consistently lower average scores can serve as a catalyst for introspection and change. They may indicate potential misalignments between the curriculum and students’ learning styles, a need for more interactive or varied teaching methods, or even external factors impacting students’ ability to grasp content. By closely monitoring and analyzing these averages, educational institutions can adapt dynamically, ensuring that teaching strategies evolve to meet the unique needs of every student cohort.
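Taking the mean of scores is simple enough to sketch in a few lines of Python. The class names and scores below are invented for illustration, and the standard-library `statistics.mean` function does the arithmetic.

```python
from statistics import mean

# Hypothetical test scores for two classes.
scores_by_class = {
    "algebra_1": [72, 85, 90, 64, 79],
    "biology": [88, 91, 76, 95],
}

# Class average: sum of scores divided by the number of scores.
averages = {name: mean(scores) for name, scores in scores_by_class.items()}

# The class with the lowest average may be the first place to look closer.
lowest = min(averages, key=averages.get)

for name, avg in averages.items():
    print(f"{name}: {avg:.1f}")
print(f"lowest average: {lowest}")
```

An average compresses a whole class into one number, so it is worth reading it next to the spread of individual scores before drawing conclusions about teaching methods.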

5. Student-to-Teacher Ratios: A Reflection of Learning Environments

The student-to-teacher ratio in educational settings offers a clear, quantifiable snapshot of the learning environment’s structure. A direct representation of how many students are assigned to each educator, this metric provides insights into the potential for individualized attention within a class. In instances where the ratio is low, it often implies that teachers have fewer students to manage, allowing for more one-on-one interactions, personalized feedback, and a closer understanding of each student’s needs and challenges.

However, a higher ratio can signify challenges in resource allocation or an influx of students beyond the institution’s standard capacity. In such scenarios, teachers might find it challenging to address individual student concerns, potentially leading to overlooked learning gaps or unmet needs. Recognizing the implications of these ratios allows educational institutions to strategize effectively, whether it’s hiring additional staff, incorporating teaching assistants, or leveraging technology to ensure every student receives the attention and support they deserve.
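The ratio itself is a single division, and the same numbers can show how many teachers a target ratio would require. The enrollment figures, the target of 16 students per teacher, and both helper functions in this sketch are hypothetical.

```python
import math


def student_teacher_ratio(students: int, teachers: float) -> float:
    """Number of students per teacher."""
    if teachers <= 0:
        raise ValueError("teachers must be positive")
    return students / teachers


def teachers_needed(students: int, target_ratio: float) -> int:
    """Smallest whole number of teachers that keeps the ratio at or below target."""
    if target_ratio <= 0:
        raise ValueError("target_ratio must be positive")
    return math.ceil(students / target_ratio)


# Hypothetical school: 480 students and 24 teachers.
students, teachers = 480, 24
ratio = student_teacher_ratio(students, teachers)
print(f"Current ratio: {ratio:.1f}:1")

# How many additional teachers would a target of 16:1 require?
required = teachers_needed(students, target_ratio=16)
additional = max(0, required - teachers)
print(f"Teachers required for 16:1: {required} ({additional} additional)")
```

A school-wide ratio is not the same as class size, since some staff counted as teachers may not lead a full class, so the result is best read as a rough indicator.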

6. Homework Completion Rates: Analyzing Daily Academic Engagement

Homework, a staple of the K-12 educational journey, can provide more insight than individual student performance alone. By tracking homework completion rates, schools gain a clearer perspective on daily academic engagement outside the classroom. Consistently high completion rates typically indicate a student body that’s committed, understands the material, and can effectively manage its time. They can also suggest that the homework given is appropriately challenging and relevant, resonating with students and thus motivating them to complete it.

Conversely, lower homework completion rates might raise flags about potential challenges students face. These can range from the homework being perceived as too difficult or irrelevant, to external factors such as familial obligations or extracurricular activities taking up significant time. Schools can use this quantitative data to reassess the nature and volume of homework assigned or to initiate conversations with students about their challenges, ensuring that homework remains a productive, beneficial aspect of the learning process.
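One way to spot assignments that students may find too difficult or irrelevant is to compute a completion rate per assignment. In this sketch the assignment names, the student names, and the 50% review threshold are all made up for illustration.

```python
# Hypothetical submission log: assignment -> {student: completed?}
submissions = {
    "worksheet_1": {"ana": True, "ben": True, "cy": True, "dee": False},
    "essay_draft": {"ana": True, "ben": False, "cy": False, "dee": False},
}

# Completion rate per assignment: completed submissions / students assigned.
completion = {
    assignment: 100.0 * sum(done.values()) / len(done)
    for assignment, done in submissions.items()
}

# Flag assignments below an (assumed) review threshold of 50%.
REVIEW_THRESHOLD = 50.0
needs_review = [a for a, rate in completion.items() if rate < REVIEW_THRESHOLD]

for assignment, rate in completion.items():
    print(f"{assignment}: {rate:.0f}% completed")
print("needs review:", needs_review)
```

A low rate only says that something is off. Whether the cause is difficulty, relevance, or students' time outside school still has to come from conversations with them.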

7. Frequency of Library Book Checkouts: Monitoring Reading Habits

In K-12 schools, libraries often serve as hubs of exploration, learning, and growth. Tracking the frequency of library book checkouts can provide a quantitative measure of students’ reading habits and interests. A high frequency indicates an enthusiastic student body actively engaging with literature, research, or both. It can also reflect the effectiveness of library programs, reading challenges, or events aimed at promoting literary exploration.

On the other hand, a decline or consistently low checkout rate might signal a waning interest in reading or challenges in accessing library resources. This could prompt schools to examine the relevance and variety of available books, consider introducing digital reading platforms, or revamp the library’s ambiance to make it more inviting. Ultimately, this quantitative data aids schools in ensuring their libraries remain vibrant centers of literary exploration and learning for all students.
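Checkout frequency can be tracked by counting checkouts per month and checking whether the counts are falling. The dates below are invented, and treating a strict month-over-month drop as a decline is just one simple assumption; a real analysis would likely account for holidays and term length.

```python
from collections import Counter
from datetime import date

# Hypothetical checkout dates exported from a library system.
checkouts = [
    date(2024, 9, 3),
    date(2024, 9, 17),
    date(2024, 9, 24),
    date(2024, 10, 1),
    date(2024, 10, 15),
    date(2024, 11, 5),
]

# Count checkouts per calendar month, keyed as "YYYY-MM".
per_month = Counter(d.strftime("%Y-%m") for d in checkouts)

# "YYYY-MM" keys sort chronologically, so sorted() gives time order.
months = sorted(per_month)
counts = [per_month[m] for m in months]

# Declining if every month has fewer checkouts than the one before it.
declining = all(later < earlier for earlier, later in zip(counts, counts[1:]))

for month, count in zip(months, counts):
    print(f"{month}: {count} checkouts")
print("steady decline:", declining)
```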

Quantitative data examples in education offer valuable insights into various aspects of the learning process. By analyzing different types of data in education, educators and policymakers can make informed decisions and develop strategies to enhance educational outcomes. Harnessing the power of quantitative data allows educators to foster an environment where every student has the opportunity to thrive and reach their full potential.

As you delve into the diverse landscape of quantitative data in education, it’s paramount to harness tools that streamline analysis and interpretation. The Inno™ Starter Kits have been meticulously crafted to assist educators in navigating the intricate world of data. Whether you’re just beginning your data-driven journey or are an established expert, these kits offer a comprehensive solution to visualizing, understanding, and applying quantitative insights. Explore today and unlock unparalleled potential in educational outcomes!



Quantitative Research Designs in Educational Research

by James H. McMillan, Richard S. Mohn, Micol V. Hammack

LAST REVIEWED: 24 July 2013
LAST MODIFIED: 24 July 2013
DOI: 10.1093/obo/9780199756810-0113

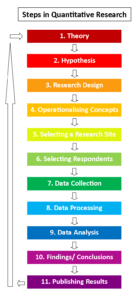

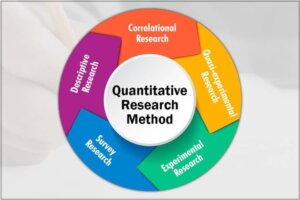

Introduction

The field of education has embraced quantitative research designs since early in the 20th century. The foundation for these designs was based primarily in the psychological literature, and psychology and the social sciences more generally continued to have a strong influence on quantitative designs until the assimilation of qualitative designs in the 1970s and 1980s. More recently, a renewed emphasis on quasi-experimental and nonexperimental quantitative designs to infer causal conclusions has resulted in many newer sources specifically targeting these approaches to the field of education. This bibliography begins with a discussion of general introductions to all quantitative designs in the educational literature. The sources in this section tend to be textbooks or well-known sources written many years ago, though still very relevant and helpful. It should be noted that there are many other sources in the social sciences more generally that contain principles of quantitative designs that are applicable to education. This article then classifies quantitative designs primarily as either nonexperimental or experimental but also emphasizes the use of nonexperimental designs for making causal inferences. Among experimental designs the article distinguishes between those that include random assignment of subjects, those that are quasi-experimental (with no random assignment), and those that are single-case (single-subject) designs. Quasi-experimental and nonexperimental designs used for making causal inferences, particularly those using structural equation modeling (SEM), are becoming more popular in education given the practical difficulties and expense of conducting well-controlled experiments. There have also been recent developments in statistical analyses that allow stronger causal inferences.

Historically, quantitative designs have been tied closely to sampling, measurement, and statistics. In this bibliography there are important sources for newer statistical procedures that are needed for particular designs, especially single-case designs, but relatively little attention to sampling or measurement. The literature on quantitative designs in education is not well focused or comprehensively addressed in very many sources, except in general overview textbooks. Those sources that do include the range of designs are introductory in nature; more advanced designs and statistical analyses tend to be found in journal articles and other individual documents, with a couple of exceptions. Another new trend in educational research designs is the use of mixed-method designs (both quantitative and qualitative), though this article does not emphasize these designs.

General Overviews

For many years there have been textbooks that present the range of quantitative research designs, both in education and the social sciences more broadly. Indeed, most of the quantitative design research principles are much the same for education, psychology, and other social sciences. These sources provide an introduction to basic designs that are used within the broader context of other educational research methodologies such as qualitative and mixed-method. Examples of these textbooks written specifically for education include Johnson and Christensen 2012; Mertens 2010; Arthur, et al. 2012; and Creswell 2012. An example of a similar text, written for the social sciences including education and dedicated only to quantitative research, is Gliner, et al. 2009. In these texts separate chapters are devoted to different types of quantitative designs. For example, Creswell 2012 contains three quantitative design chapters: experimental, which includes both randomized and quasi-experimental designs; correlational (nonexperimental); and survey (also nonexperimental). Johnson and Christensen 2012 also includes three quantitative design chapters, with greater emphasis on quasi-experimental and single-subject research. Mertens 2010 includes a chapter on causal-comparative designs (nonexperimental). Often survey research is addressed as a distinct type of quantitative research with an emphasis on sampling and measurement (how to design surveys). Green, et al. 2006 also presents introductory chapters on different types of quantitative designs, but each of the chapters has different authors. In this book chapters extend basic designs by examining in greater detail nonexperimental methodologies structured for causal inferences and scaled-up experiments.

Two additional sources are noted because they represent the types of publications for the social sciences more broadly that discuss many of the same principles of quantitative design among other types of designs. Bickman and Rog 2009 uses different chapter authors to cover topics such as statistical power for designs, sampling, randomized controlled trials, and quasi-experiments, and educational researchers will find this information helpful in designing their studies. Little 2012 provides comprehensive coverage of topics related to quantitative methods in the social, behavioral, and education fields.

Arthur, James, Michael Waring, Robert Coe, and Larry V. Hedges, eds. 2012. Research methods & methodologies in education. Thousand Oaks, CA: SAGE.

Readers will find this book more of a handbook than a textbook. Different individuals author each of the chapters, representing quantitative, qualitative, and mixed-method designs. The quantitative chapters are on the treatment of advanced statistical applications, including analysis of variance, regression, and multilevel analysis.

Bickman, Leonard, and Debra J. Rog, eds. 2009. The SAGE handbook of applied social research methods. 2d ed. Thousand Oaks, CA: SAGE.

This handbook includes quantitative design chapters that are written for the social sciences broadly. There are relatively advanced treatments of statistical power, randomized controlled trials, and sampling in quantitative designs, though the coverage of additional topics is not as complete as other sources in this section.

Creswell, John W. 2012. Educational research: Planning, conducting, and evaluating quantitative and qualitative research. 4th ed. Boston: Pearson.

Creswell presents an introduction to all major types of research designs. Three chapters cover quantitative designs—experimental, correlational, and survey research. Both the correlational and survey research chapters focus on nonexperimental designs. Overall the introductions are complete and helpful to those beginning their study of quantitative research designs.

Gliner, Jeffrey A., George A. Morgan, and Nancy L. Leech. 2009. Research methods in applied settings: An integrated approach to design and analysis. 2d ed. New York: Routledge.

This text, unlike others in this section, is devoted solely to quantitative research. As such, all aspects of quantitative designs are covered. There are separate chapters on experimental, nonexperimental, and single-subject designs and on internal validity, sampling, and data-collection techniques for quantitative studies. The content of the book is somewhat more advanced than others listed in this section and is unique in its quantitative focus.

Green, Judith L., Gregory Camilli, and Patricia B. Elmore, eds. 2006. Handbook of complementary methods in education research. Mahwah, NJ: Lawrence Erlbaum.

Green, Camilli, and Elmore edited forty-six chapters that represent many contemporary issues and topics related to quantitative designs. Written by noted researchers, the chapters cover design experiments, quasi-experimentation, randomized experiments, and survey methods. Other chapters include statistical topics that have relevance for quantitative designs.

Johnson, Burke, and Larry B. Christensen. 2012. Educational research: Quantitative, qualitative, and mixed approaches. 4th ed. Thousand Oaks, CA: SAGE.

This comprehensive textbook of educational research methods includes extensive coverage of qualitative and mixed-method designs along with quantitative designs. Three of twenty chapters focus on quantitative designs (experimental, quasi-experimental, and single-case) and nonexperimental, including longitudinal and retrospective, designs. The level of material is relatively high, and there are introductory chapters on sampling and quantitative analyses.

Little, Todd D., ed. 2012. The Oxford handbook of quantitative methods. Vol. 1, Foundations. New York: Oxford Univ. Press.

This handbook is a relatively advanced treatment of quantitative design and statistical analyses. Multiple authors are used to address strengths and weaknesses of many different issues and methods, including advanced statistical tools.

Mertens, Donna M. 2010. Research and evaluation in education and psychology: Integrating diversity with quantitative, qualitative, and mixed methods. 3d ed. Thousand Oaks, CA: SAGE.

This textbook is an introduction to all types of educational designs and includes four chapters devoted to quantitative research: experimental and quasi-experimental, causal comparative and correlational, survey, and single-case research. The author’s treatment of some topics is somewhat more advanced than texts such as Creswell 2012, with extensive attention to threats to internal validity for some of the designs.


- Restorative Practices

- Risky Play in Early Childhood Education

- Role of Gender Equity Work on University Campuses through ...

- Scale and Sustainability of Education Innovation and Impro...

- Scaling Up Research-based Educational Practices

- School Accreditation

- School Choice

- School Culture

- School District Budgeting and Financial Management in the ...

- School Improvement through Inclusive Education

- School Reform

- Schools, Private and Independent

- School-Wide Positive Behavior Support

- Science Education

- Secondary to Postsecondary Transition Issues

- Self-Regulated Learning

- Self-Study of Teacher Education Practices

- Service-Learning

- Severe Disabilities

- Single Salary Schedule

- Single-sex Education

- Social Context of Education

- Social Justice

- Social Pedagogy

- Social Science and Education Research

- Social Studies Education

- Sociology of Education

- Standards-Based Education

- Student Access, Equity, and Diversity in Higher Education

- Student Assignment Policy

- Student Engagement in Tertiary Education

- Student Learning, Development, Engagement, and Motivation ...

- Student Participation

- Student Voice in Teacher Development

- Sustainability Education in Early Childhood Education

- Sustainability in Early Childhood Education

- Sustainability in Higher Education

- Teacher Beliefs and Epistemologies

- Teacher Collaboration in School Improvement

- Teacher Evaluation and Teacher Effectiveness

- Teacher Preparation

- Teacher Training and Development

- Teacher Unions and Associations

- Teacher-Student Relationships

- Teaching Critical Thinking

- Technologies, Teaching, and Learning in Higher Education

- Technology Education in Early Childhood

- Technology, Educational

- Technology-based Assessment

- The Bologna Process

- The Regulation of Standards in Higher Education

- Theories of Educational Leadership

- Three Conceptions of Literacy: Media, Narrative, and Gamin...

- Tracking and Detracking

- Traditions of Quality Improvement in Education

- Transformative Learning

- Transitions in Early Childhood Education

- Tribally Controlled Colleges and Universities in the Unite...

- Understanding the Psycho-Social Dimensions of Schools and ...

- University Faculty Roles and Responsibilities in the Unite...

- Using Ethnography in Educational Research

- Value of Higher Education for Students and Other Stakehold...

- Virtual Learning Environments

- Vocational and Technical Education

- Wellness and Well-Being in Education

- Women's and Gender Studies

- Young Children and Spirituality

- Young Children's Learning Dispositions

- Young Children's Working Theories


Conducting Quantitative Research in Education

- January 2020

- ISBN: 978-981-13-9131-6

- Edith Cowan University

- University of Notre Dame Australia


Trends and Motivations in Critical Quantitative Educational Research: A Multimethod Examination Across Higher Education Scholarship and Author Perspectives

- Open access

- Published: 04 June 2024


- Christa E. Winkler (ORCID: 0000-0002-1700-5444)

- Annie M. Wofford (ORCID: 0000-0002-2246-1946)


To challenge “objective” conventions in quantitative methodology, higher education scholars have increasingly employed critical lenses (e.g., quantitative criticalism, QuantCrit). Yet, specific approaches remain opaque. We use a multimethod design to examine researchers’ use of critical approaches and explore how authors discussed embedding strategies to disrupt dominant quantitative thinking. We draw data from a systematic scoping review of critical quantitative higher education research between 2007 and 2021 ( N = 34) and semi-structured interviews with 18 manuscript authors. Findings illuminate (in)consistencies across scholars’ incorporation of critical approaches, including within study motivations, theoretical framing, and methodological choices. Additionally, interview data reveal complex layers to authors’ decision-making processes, indicating that decisions about embracing critical quantitative approaches must be asset-based and intentional. Lastly, we discuss findings in the context of their guiding frameworks (e.g., quantitative criticalism, QuantCrit) and offer implications for employing and conducting research about critical quantitative research.


Across the field of higher education and within many roles (including policymakers, researchers, and administrators), key leaders and educational partners have historically relied on quantitative methods to inform system-level and student-level changes to policy and practice. This reliance is rooted, in part, in the misconception that quantitative methods depict the objective state of affairs in higher education. This perception is not only inaccurate but also dangerous, as the numbers produced from quantitative methods are “neither objective nor color-blind” (Gillborn et al., 2018, p. 159). In fact, like all research, quantitative data collection and analysis are informed by theories and beliefs that are susceptible to bias. Further, such bias may come in multiple forms, such as researcher bias and bias within the statistical methods themselves (e.g., Bierema et al., 2021; Torgerson & Torgerson, 2003). Thus, if left unexamined from a critical perspective, quantitative research may inform policies and practices that fuel the engine of cultural and social reproduction in higher education (e.g., Bourdieu, 1977).

Largely, critical approaches to higher education research have been dominated by qualitative methods (McCoy & Rodricks, 2015 ). While qualitative approaches are vital, some have argued that a wider conceptualization of critical inquiry may propel our understanding of processes in higher education (Stage & Wells, 2014 ) and that critical research need not be explicitly qualitative (refer to Sablan, 2019 ; Stage, 2007 ). If scholars hope to embrace multiple ways of challenging persistent inequities and structures of oppression in higher education, such as racism, advancing critical quantitative work can help higher education researchers “expose and challenge hidden assumptions that frequently encode racist perspectives beneath the façade of supposed quantitative objectivity” (Gillborn et al., 2018 , p. 158).

Across professional networks in higher education, the perspectives of association leaders (e.g., Association for the Study of Higher Education [ASHE]) have often placed qualitative and quantitative research in opposition to each other, with qualitative research being a primary way to amplify the voices of systemically minoritized students, faculty, and staff (Kimball & Friedensen, 2019 ). Yet, given the vast growth of critical higher education research (e.g., Byrd, 2019 ; Espino, 2012 ; Martínez-Alemán et al., 2015 ), recent ASHE presidents have recognized how prior leaders planted transformative seeds of critical theory and praxis (Renn, 2020 ) and advocated for critical higher education scholarship as a disrupter (Stewart, 2022 ). With this shift in discourse, many members of the higher education research community have also grown their desire to expand upon the legacy of critical research—in both qualitative and quantitative forms.

Critical quantitative approaches hold promise as one avenue for meeting recent calls to embrace equity-mindedness and transform the future of higher education research, yet current structures of training and resources for quantitative methods lack guidance on engaging such approaches. For higher education scholars to advance critical inquiry via quantitative methods, we must first understand the extent to which such approaches have been adopted. Accordingly, this study sheds light on critical quantitative approaches used in higher education literature and provides storied insights from the experiences of scholars who have engaged critical perspectives with quantitative methods. We were guided by the following research questions:

1. To what extent do higher education scholars incorporate critical perspectives into quantitative research?

2. How do higher education scholars discuss specific strategies to leverage critical perspectives in quantitative research?

Contextualizing Existing Critical Approaches to Quantitative Research

To foreground our analysis of literature employing critical quantitative lenses to studies about higher education, we first must understand the roots of such framing. Broadly, the foundations of critical quantitative approaches align with many elements of equity-mindedness. Equity-mindedness prompts individuals to question divergent patterns in educational outcomes, recognize that racism is embedded in everyday practices, and invest in un/learning the effects of racial identity and racialized expectations (Bensimon, 2018 ). Yet, researchers’ commitments to critical quantitative approaches stand out as a unique thread in the larger fabric of opportunities to embrace equity-mindedness in higher education research. Below, we discuss three significant publications that have been widely applied as frameworks to engage critical quantitative approaches in higher education. While these publications are not the only ones associated with critical inquiry in quantitative research, their evolution, commonalities, and distinctions offer a robust background of epistemological development in this area of scholarship.

Quantitative Criticalism (Stage, 2007)

Although some higher education scholars have applied critical perspectives in their research for many years, Stage’s ( 2007 ) introduction of quantitative criticalism was a salient contribution to creating greater discourse related to such perspectives. Quantitative criticalism, as a coined paradigmatic approach for engaging critical questions using quantitative data, was among the first of several crucial publications on this topic in a 2007 edition of New Directions for Institutional Research . Collectively, this special issue advanced perspectives on how higher education scholars may challenge traditional positivist and post-positivist paradigms in quantitative inquiry. Instead, researchers could apply (what Stage referred to as) quantitative criticalism to develop research questions centering on social inequities in educational processes and outcomes as well as challenge widely accepted models, measures, and analytic practices.

Notably, Stage ( 2007 ) grounded the motivation for this new paradigmatic approach in the core concepts of critical inquiry (e.g., Kincheloe & McLaren, 1994 ). Tracing critical inquiry back to the German Frankfurt school, Stage discussed how the principles of critical theory have evolved over time and highlighted Kincheloe and McLaren’s ( 1994 ) definition of critical theory as most relevant to the principles of quantitative criticalism. Kincheloe and McLaren’s definition of critical describes how researchers applying critical paradigms in their scholarship center concepts such as socially and historically created power structures, subjectivity, privilege and oppression, and the reproduction of oppression in traditional research approaches. Perhaps most importantly, Kincheloe and McLaren urge scholars to be self-conscious in their decision making—a tall ask of quantitative scholars operating from positivist and post-positivist vantage points.

In advancing quantitative criticalism, Stage ( 2007 ) first argued that all critical scholars must center their outcomes on equity. To enact this core focus on equity in quantitative criticalism, Stage outlined two tasks for researchers. First, critical quantitative researchers must “use data to represent educational processes and outcomes on a large scale to reveal inequities and to identify social or institutional perpetuation of systematic inequities in such processes and outcomes” (p. 10). Second, Stage advocated for critical quantitative researchers to “question the models, measures, and analytic practices of quantitative research in order to offer competing models, measures, and analytic practices that better describe experiences of those who have not been adequately represented” (p. 10). Stage’s arguments and invitations for criticalism spurred crucial conversations, many of which led to the development of a two-part series on critical quantitative approaches in New Directions for Institutional Research (Stage & Wells, 2014 ; Wells & Stage, 2015 ). With nearly a decade of new perspectives to offer, manuscripts within these subsequent special issues expanded the concepts of quantitative criticalism. Specifically, these new contributions advanced the notion that quantitative criticalism should include all parts of the research process—instead of maintaining a focus on paradigm and research questions alone—and made inroads when it came to challenging the (default, dominant) process of quantitative research. While many scholars offered noteworthy perspectives in these special issues (Stage & Wells, 2014 ; Wells & Stage, 2015 ), we now turn to one specific article within these special issues that offered a conceptual model for critical quantitative inquiry.

Critical Quantitative Inquiry (Rios-Aguilar, 2014)

Building from and guided by the work of other criticalists (namely, Estela Bensimon, Sara Goldrick-Rab, Frances Stage, and Erin Leahey), Rios-Aguilar ( 2014 ) developed a complementary framework representing the process and application of critical quantitative inquiry in higher education scholarship. At the heart of Rios-Aguilar’s conceptualization lies the acknowledgment that quantitative research is a human activity that requires careful decisions. With this foundation comes the pressing need for quantitative scholars to engage in self-reflection and transparency about the processes and outcomes of their methodological choices—actions that could potentially disrupt traditional notions and deficit assumptions that maintain systems of oppression in higher education.

Rios-Aguilar ( 2014 ) offered greater specificity to build upon many principles from other criticalists. For one, methodologically, Rios-Aguilar challenged the notion of using “fancy” statistical methods just for the sake of applying advanced methods. Instead, she argued that critical quantitative scholars should engage “in a self-reflection of the actual research practices and statistical approaches (i.e., choice of centering approach, type of model estimated, number of control variables, etc.) they use and the various influences that affect those practices” (Rios-Aguilar, 2014 , p. 98). In this purview, scholars should ensure that all methodological choices advance their ability to reveal inequities; such choices may include those that challenge the use of reference groups in coding, the interpretation of statistics in ways that move beyond p -values for statistical significance, or the application and alignment of theoretical and conceptual frameworks that focus on the assets of systemically minoritized students. Rios-Aguilar also noted, in agreement with the foundations of equity-mindedness and critical theory, that quantitative criticalists have an obligation to translate findings into tangible changes in policy and practice that can redress inequities.
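To make the point about reference groups concrete, the following minimal sketch contrasts two ways of summarizing the same group means. It assumes effect coding as one commonly discussed alternative to reference-group (dummy) coding; the source does not prescribe this technique. The group labels and scores are hypothetical and not real data. In a model containing only group indicators, regression coefficients reduce to the mean differences computed here, so no regression library is needed.

```python
from statistics import mean

# Hypothetical outcome scores for three hypothetical groups (illustrative only).
scores = {
    "group_a": [3.0, 3.4, 3.2],
    "group_b": [2.8, 3.0, 2.6],
    "group_c": [3.6, 3.8, 3.4],
}

group_means = {group: mean(values) for group, values in scores.items()}


def dummy_contrasts(means, reference):
    """Dummy coding: each group is compared with one chosen reference group."""
    ref_mean = means[reference]
    return {g: m - ref_mean for g, m in means.items() if g != reference}


def effect_contrasts(means):
    """Effect coding: each group is compared with the unweighted mean of all group means."""
    grand_mean = mean(list(means.values()))
    return {g: m - grand_mean for g, m in means.items()}


# With dummy coding, the reference group silently becomes the norm.
print("Dummy coding (reference = group_a):", dummy_contrasts(group_means, "group_a"))

# With effect coding, no single group serves as the baseline.
print("Effect coding:", effect_contrasts(group_means))
```

The design point is that the choice of `reference` changes which group is treated as the default comparison, while the effect-coded contrasts stay the same regardless of group ordering.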

Ultimately, Rios-Aguilar’s ( 2014 ) framework focused on “the interplay between research questions, theory, method/research practices, and policy/advocacy” to identify how quantitative criticalists’ scholarship can be “relevant and meaningful” (p. 96). Specifically, Rios-Aguilar called upon quantitative criticalists to ask research questions that center on equity and power, engage in self-reflection about their data sources, analyses, and disaggregation techniques, attend to interpretation with practical/policy-related significance, and expand beyond field-level silos in theory and implications. Without challenging dominant approaches in quantitative higher education research, Rios-Aguilar noted that the field will continue to inaccurately capture the experiences of systemically minoritized students. In college access and success, for example, ignoring this need for evolving approaches and models would continue what Bensimon ( 2007 ) referred to as the Tintonian Dynasty, with scholars widely applying and citing Tinto’s work but failing to acknowledge the unique experiences of systemically minoritized students. These and other concrete recommendations have served as a springboard for quantitative criticalists, prompting scholars to incorporate critical approaches in more cohesive and congruent ways.

QuantCrit (Gillborn et al., 2018)

As an epistemologically different but related form of critical quantitative scholarship, QuantCrit—quantitative critical race theory—has emerged as a vital stream of inquiry that applies critical race theory to methodological approaches. Given that statistical methods were developed in support of the eugenics movement (Zuberi, 2001 ), QuantCrit researchers must consider how the “norms” of quantitative research support white supremacy (Zuberi & Bonilla-Silva, 2008 ). Fortunately, as Garcia et al. ( 2018 ) noted, “[t]he problems concerning the ahistorical and decontextualized ‘default’ mode and misuse of quantitative research methods are not insurmountable” (p. 154). As such, the goal of QuantCrit is to conduct quantitative research in a way that can contextualize and challenge historical, social, political, and economic power structures that uphold racism (e.g., Garcia et al., 2018 ; Gillborn et al., 2018 ).

In coining the term QuantCrit, Gillborn et al. ( 2018 ) provided five QuantCrit tenets adapted from critical race theory. First, the centrality of racism offers a methodological and political statement about how racism is complex, fluid, and rooted in social dynamics of power. Second, numbers are not neutral demonstrates an imperative for QuantCrit researchers—one that prompts scholars to understand how quantitative data have been collected and analyzed to prioritize interests rooted in white, elite worldviews. As such, QuantCrit researchers must reject numbers as “true” and as presenting a unidimensional truth. Third, categories are neither “natural” nor given prompts researchers to consider how “even the most basic decisions in research design can have fundamental consequences for the re/presentation of race inequity” (Gillborn et al., 2018 , p. 171). Notably, even when race is a focus, scholars must operationalize and interpret findings related to race in the context of racism. Fourth, prioritizing voice and insight advances the notion that data cannot “speak for itself” and numerous interpretations are possible. In QuantCrit, this tenet leverages experiential knowledge among People of Color as an interpretive tool. Finally, the fifth tenet explicates how numbers can be used for social justice but statistical research cannot be placed in a position of greater legitimacy in equity efforts relative to qualitative research. Collectively, although Gillborn et al. ( 2018 ) stated that they expect—much like all epistemological foundations—the tenets of QuantCrit to be expanded, we must first understand how these stated principles arise in critical quantitative research.

Bridging Critical Quantitative Concepts as a Guiding Framework

Guided by these framings (i.e., quantitative criticalism, critical quantitative inquiry, QuantCrit) as a specific stream of inquiry within the larger realm of equity-minded educational research, we explore the extent to which the primary elements of these critical quantitative frameworks are applied in higher education. Across the framings discussed, the commitment to equity-mindedness contributes to a shared underlying essence of critical quantitative approaches. Not only do Stage, Rios-Aguilar, and Gillborn et al. aim for researchers to center on inequities and commit to disrupting “neutral” decisions about and interpretations of statistics, but they also advocate for critical quantitative research (by any name) to serve as a tool for advocacy and praxis—creating structural changes to discriminatory policies and practices, rather than ceasing equity-based commitments with publications alone. Thus, the conceptual framework for the present study brings together alignments and distinctions in scholars’ motivations and actualizations of quantitative research through a critical lens.

Specifically, looking to Stage ( 2007 ), quantitative criticalists must center on inequity in their questions and actions to disrupt traditional models, methods, and practices. Second, extending critical inquiry through all aspects of quantitative research (Rios-Aguilar, 2014 ), researchers must interrogate how critical perspectives can be embedded in every part of research. The embedded nature of critical approaches should consider how study questions, frameworks, analytic practices, and advocacy are developed with intentionality, reflexivity, and the goal of unmasking inequities. Third, centering on the five known tenets of QuantCrit (Gillborn et al., 2018 ), QuantCrit researchers should adapt critical race theory for quantitative research. Although QuantCrit tenets are likely to be expanded in the future, the foundations of such research should continue to acknowledge the centrality of racism, advance critiques of statistical neutrality and categories that serve white racial interests, prioritize the lived experiences of People of Color, and complicate how statistics can be one—but not the lone—part of social justice endeavors.

Over many years, higher education scholars have advanced more critical research, as illustrated through publication trends of critical quantitative manuscripts in higher education (Wofford & Winkler, 2022 ). However, the application of critical quantitative approaches remains laced with tensions among paradigms and analytic strategies. Despite recent systematic examinations of critical quantitative scholarship across educational research broadly (Tabron & Thomas, 2023 ), there has yet to be a comprehensive, systematic review of higher education studies that attempt to apply principles rooted in quantitative criticalism, critical quantitative inquiry, and QuantCrit. Thus, much remains to be learned regarding whether and how higher education researchers have been able to apply the principles previously articulated. In order for researchers to fully (re)imagine possibilities for future critical approaches to quantitative higher education research, we must first understand the landscape of current approaches.

Study Aims and Role of the Researchers

Study Aims and Scope

For this study, we examined the extent to which authors adopted critical quantitative approaches in higher education research and the trends in tools and strategies they employed to do so. In other words, we sought to understand to what extent, and in what ways, authors—in their own perspectives—applied critical perspectives to quantitative research. We relied on the nomenclature used by the authors of each manuscript (e.g., whether they operated from the lens of quantitative criticalism, QuantCrit, or another approach determined by the authors). Importantly, our intent was not to evaluate the quality of authors’ applications of critical approaches to quantitative research in higher education.

Researcher Positionality

As with all research, our positions and motivations shape how we conceptualized and executed the present study. We come to this work as early career higher education faculty, drawn to the study of higher education as one way to rectify educational disparities, and thus are both deeply invested in understanding how critical quantitative approaches may advance such efforts. After engaging in initial discussions during an association-sponsored workshop on critical quantitative research in higher education, we were motivated to explore these perspectives, understand trends in our field, and inform our own empirical engagement. Throughout our collaboration, we were also reflexive about the social privileges we hold in the academy and society as white, cisgender women—particularly given how quantitative criticalism and QuantCrit create inroads for systemically minoritized scholars to combat the erasure of perspectives from their communities due to small sample sizes. As we work to understand prior critical quantitative endeavors, with the goal of creating opportunity for this work to flourish in the future, we continually reflect on how we can use our positions of privilege to be co-conspirators in the advancement of quantitative research for social justice in higher education.

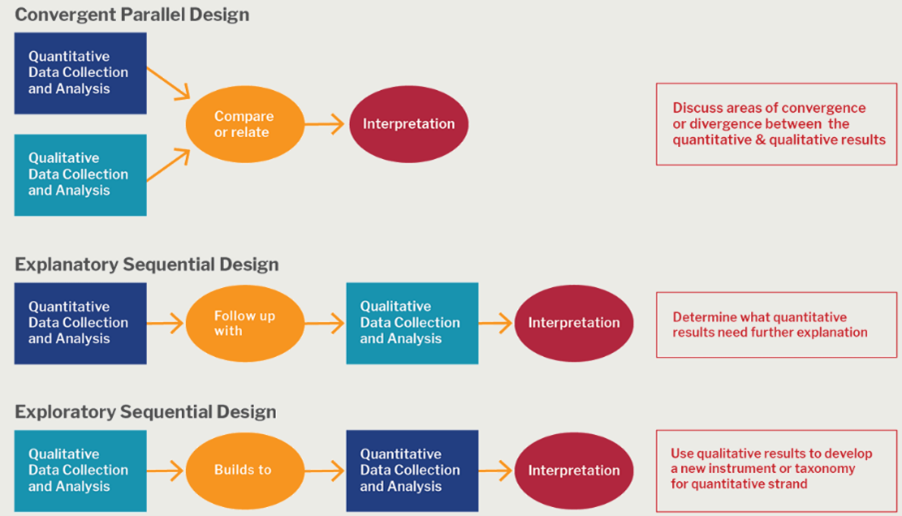

This study employed a qualitatively driven multimethod sequential design (Hesse-Biber et al., 2015 ) to illuminate how critical quantitative perspectives and methods have been applied in higher education contexts over 15 years. Anguera et al. ( 2018 ) noted that the hallmark feature of multimethod studies is the coexistence of different methodologies. Unlike mixed-methods studies, which integrate both quantitative and qualitative methods, multimethod studies can be exclusively qualitative, exclusively quantitative, or a combination of qualitative and quantitative methods. A multimethod research design was also appropriate given the distinct research questions in this study—each answered using a different stream of data. Specifically, we conducted a systematic scoping review of existing literature and facilitated follow-up interviews with a subset of corresponding authors from included publications, as detailed below and in Fig. 1 . We employed a systematic scoping review to examine the extent to which higher education scholars incorporated critical perspectives into quantitative research (research question one), and we then conducted follow-up interviews to elucidate how those scholars discussed specific strategies for leveraging critical perspectives in their quantitative research (research question two).

Fig. 1 Sequential multimethod approach to data collection and analysis

Given the scope of our work—which examined the extent to which, and in what ways, authors applied critical perspectives to quantitative higher education research—we employed an exploratory approach with a constructivist lens. Using a constructivist paradigm allowed us to explore the many realities of doing critical quantitative research, with the authors themselves constructing truths from their worldviews (Magoon, 1977 ). In what follows, we contextualize both our methodological choices and the limitations of those choices in executing this study.

Data Sources

Systematic Scoping Review

First, we employed a systematic scoping review of published higher education literature. Consistent with the purpose of a scoping review, we sought to “examine the extent, range, and nature” of critical quantitative approaches in higher education that integrate quantitative methods and critical inquiry (Arksey & O’Malley, 2005, p. 6). We used a multi-stage scoping framework (Arksey & O’Malley, 2005; Levac et al., 2010) to identify studies that were (a) empirical, (b) conducted within a higher education context, and (c) guided by critical quantitative perspectives. We restricted our review to literature published in 2007 or later (i.e., since Stage’s formal introduction of quantitative criticalism in higher education). All studies considered for review were written in the English language.

The literature search spanned multiple databases, including Academic Search Premier, Scopus, ERIC, PsycINFO, Web of Science, SocINDEX, Psychological and Behavioral Sciences Collection, Sociological Abstracts, and JSTOR. To locate relevant works, we used independent and combined keywords that reflected the inclusion criteria, with the initial search resulting in 285 unique records for eligibility screening. All screening was conducted separately by both authors using the CADIMA online platform (Kohl et al., 2018). All 285 title/abstract records were screened for eligibility, and 40 full-text records were subsequently screened for eligibility. After separately screening all records, we discussed inconsistencies in title/abstract and full-text eligibility ratings to reach consensus. This strategy led us to a sample of 34 manuscripts that met all inclusion criteria (Fig. 2).

Fig. 2 Identification of systematic scoping review sample via literature search and screening
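The screening flow described above can be summarized with simple arithmetic. The sketch below uses only the three counts reported in the text (285, 40, and 34); the stage labels and the `retention` helper are illustrative names, not part of the authors' procedure.

```python
# Counts reported in the text; the stage labels are illustrative.
stages = [
    ("title/abstract records screened", 285),
    ("full-text records screened", 40),
    ("manuscripts included", 34),
]


def retention(stages):
    """Return (label, count, share of previous stage, share of first stage) per stage."""
    first = stages[0][1]
    previous = first
    rows = []
    for label, count in stages:
        rows.append((label, count, count / previous, count / first))
        previous = count
    return rows


for label, count, of_previous, of_first in retention(stages):
    print(f"{label:<34} {count:>4}  {of_previous:6.1%} of previous  {of_first:6.1%} of initial")
```

Read this way, roughly one in seven screened records reached full-text review, and most full-text records were retained in the final sample.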

Systematic scoping reviews are particularly well suited for initial examinations of emerging approaches in the literature (Munn et al., 2018), aligning with our goal to establish an initial understanding of the landscape of critical quantitative research applications in higher education. This approach also relies heavily on researcher-led qualitative review of the literature, which we viewed as a vital component of our study, as we sought to identify not just what researchers did (e.g., what topics they explored or in what outlets they did so), but also how they articulated their decision-making process in the literature. Alternative methods of examining the literature, such as bibliometric analysis, supervised topic modeling, and network analysis, may reveal additional insights regarding the scope and structure of critical quantitative research in higher education not addressed in the current study. As noted by Munn et al. (2018), systematic scoping reviews can serve as a useful precursor to more advanced approaches of research synthesis.

Semi-structured Interviews

To understand how scholars navigated the opportunities and tensions of critical quantitative inquiry in their research, we then conducted semi-structured interviews with authors whose work was identified in the scoping review. For each article meeting the review criteria (N = 34), we compiled information about the corresponding author and their contact information as our sample universe (Robinson, 2014). Each corresponding author was contacted via email for participation in a semi-structured interview. There were 32 distinct corresponding authors for the 34 manuscripts, as two corresponding authors led two manuscripts each within our corpus of data. In the recruitment email, we provided corresponding authors with a link to a Qualtrics intake survey; this survey confirmed potential participants’ role as corresponding author on the identified manuscript, collected information about their professional roles and social identities, and provided information about informed consent in the study. Twenty-five authors responded to the Qualtrics survey, with 18 corresponding authors ultimately participating in an interview.
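The recruitment figures above imply response and participation rates that the text does not state directly. The following is a minimal sketch of that arithmetic; the variable names and the helper `rate` are assumptions introduced for illustration, and the resulting percentages are derived here rather than reported by the authors.

```python
# Counts reported in the text.
manuscripts = 34
authors_with_two_manuscripts = 2      # each led two manuscripts in the corpus
contacted = manuscripts - authors_with_two_manuscripts   # distinct corresponding authors
responded = 25                        # completed the Qualtrics intake survey
interviewed = 18                      # participated in an interview


def rate(part, whole):
    """Percentage of `whole` represented by `part`."""
    return 100 * part / whole


survey_response_rate = rate(responded, contacted)
interview_rate_of_contacted = rate(interviewed, contacted)
interview_rate_of_respondents = rate(interviewed, responded)

print(f"Distinct corresponding authors contacted: {contacted}")
print(f"Survey response rate: {survey_response_rate:.1f}%")
print(f"Interviewed, as share of contacted: {interview_rate_of_contacted:.1f}%")
print(f"Interviewed, as share of respondents: {interview_rate_of_respondents:.1f}%")
```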

Individual semi-structured interviews were conducted via Zoom and lasted approximately 45–60 min. The interview protocol began with questions about corresponding authors’ backgrounds, then moved to questions regarding their motivations for engaging in critical approaches to quantitative methods; navigation of the epistemological and methodological tensions that may arise when doing quantitative research with a critical lens; approaches to research design, frameworks, and methods that challenged quantitative norms; and experiences with the publication process for their manuscript included in the scoping review. In other words, we asked that corresponding authors explicitly relay the thought processes underlying their methodological choices in the article(s) from our scoping review. Importantly, given the semi-structured nature of these interviews, conversations also reflected participants’ broader trajectory to and through critical quantitative thinking as well as their general reflections about how the field of higher education has grappled with critical approaches to quantitative scholarship. To increase consistency in our data collection and the nature of these conversations, the first author conducted all interviews. With participants’ consent, we recorded each interview, had interviews professionally transcribed, and then de-identified data for subsequent analysis. All interview participants were compensated for their time and contributions with a $50 Amazon gift card.

At the conclusion of each interview, participants were given the opportunity to select their own pseudonym. A profile of interview participants, along with their self-selected pseudonyms, is provided in Table 1. Although we invited all corresponding authors to participate in interviews, our sample may reflect some self-selection bias, as authors had to opt in to be represented in the interview data. Further, interview insights do not represent all perspectives from participants’ co-authors, some of which may diverge based on lived experiences, history with quantitative research, or engagement with critical quantitative approaches.

Data Analysis

After identifying the sample of 34 publications, we began data analysis for the scoping review by uploading manuscripts to Dedoose. Both researchers then independently applied a priori codes (Saldaña, 2015) from Stage’s (2007) conceptualization of quantitative criticalism, Rios-Aguilar’s (2014) framework for quantitative critical inquiry, and Gillborn et al.’s (2018) QuantCrit tenets (Table 2). While we applied codes in accordance with Stage’s and Rios-Aguilar’s conceptualizations to each article, codes relevant to Gillborn et al.’s tenets of QuantCrit were only applied to manuscripts where authors self-identified as explicitly employing QuantCrit. Given the distinct epistemological origin of QuantCrit from broader forms of critical quantitative scholarship, codes representing the tenets of QuantCrit reflect its origins in critical race theory and may not be appropriate to apply to broader streams of critical quantitative scholarship that do not center on racism (e.g., scholarship related to (dis)ability, gender identity, sexual identity and orientation). After individually completing a priori coding, we met to reconcile discrepancies and engage in peer debriefing (Creswell & Miller, 2000). Data synthesis involved tabulating and reporting findings to explore how each manuscript component aligned with critical quantitative frameworks in higher education research to date.
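The conditional rule for applying a priori code families can be expressed compactly. This is a hypothetical sketch, not the authors’ Dedoose workflow: the `Manuscript` class, the `applicable_frameworks` function, and the example article IDs are all invented for illustration, and the individual codes within each framework (listed in Table 2) are not reproduced here.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Manuscript:
    article_id: int                    # hypothetical identifier
    self_identifies_quantcrit: bool    # authors explicitly name QuantCrit


# Frameworks whose codes were applied to every article in the sample.
ALWAYS_APPLIED = ("Stage (2007)", "Rios-Aguilar (2014)")
# Framework whose codes were applied only to self-identified QuantCrit work.
QUANTCRIT_ONLY = "Gillborn et al. (2018)"


def applicable_frameworks(m: Manuscript) -> tuple[str, ...]:
    """Return the code families to apply to a manuscript under the stated rule."""
    if m.self_identifies_quantcrit:
        return ALWAYS_APPLIED + (QUANTCRIT_ONLY,)
    return ALWAYS_APPLIED


# Two made-up manuscripts showing both branches of the rule.
examples = [Manuscript(1, False), Manuscript(2, True)]
for m in examples:
    print(m.article_id, applicable_frameworks(m))
```

Encoding the rule as a function keeps the restriction on QuantCrit codes explicit, which matters because those codes reflect origins in critical race theory and may not suit scholarship that does not center on racism.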

We analyzed interview data through a multiphase process that engaged deductive and inductive coding strategies. After interviews were transcribed and redacted, we uploaded the transcripts to Dedoose for collaborative qualitative coding. The second author read each transcript in full to holistically understand participants’ insights about generating critical quantitative research. During this initial read, the second author noted quotes that were salient to our question regarding the strategies that scholars use to employ critical quantitative approaches.

Then, using the a priori codes drawn from Stage’s (2007), Rios-Aguilar’s (2014), and Gillborn et al.’s (2018) conceptualizations relevant to quantitative criticalism, critical quantitative inquiry, and QuantCrit, we collaboratively established a working codebook for deductive coding by defining the a priori codes in ways that could capture how participants discussed their work. Although these a priori codes had been previously applied to the manuscripts in the scoping review, definitions and applications of the same codes for interview analysis were noticeably broader (to align with the nature of conversations during interviews). For example, we originally applied the code “policy/advocacy” (established from Rios-Aguilar’s work) to components from the implications section of scoping review manuscripts. When (re)developed for deductive coding of interview data, however, we expanded the definition of “policy/advocacy” to include participants’ policy- and advocacy-related actions (beyond writing) that advanced critical inquiry and equity for their educational communities.

In the final phase of analysis, each research team member engaged in inductive coding of the interview data. Specifically, we relied on open coding (Saldaña, 2015) to analyze excerpts pertaining to participants’ strategies for employing critical quantitative approaches that were not previously captured by deductive codes. Through open coding, we used successive analysis to work in sequence from a single case to multiple cases (Miles et al., 2014). Then, as suggested by Saldaña (2015), we collapsed our initial codes into broader categories that gave us insight into how participants’ strategies in critical quantitative research expanded beyond those that have been previously articulated. Finally, to draw cohesive interpretations from these data, we independently drafted analytic memos for each interview participant’s transcript, later bridging examples from the scoping review that mapped onto qualitative codes as a form of establishing greater confidence and trustworthiness in our multimethod design.

In introducing study findings through a synthesized lens that heeds our multimethod design, we organize the sections below to draw from both scoping review and interview data. Specifically, we organize findings into two primary areas that address authors’ (1) articulated motivations to adopt critical approaches to quantitative higher education research, and (2) methodological choices that they perceive to align with critical approaches to quantitative higher education research. Within these sections, we discuss several coherent areas where authors collectively grappled with tensions in motivation (i.e., broad motivations, using coined names of critical approaches, conveying positionality, leveraging asset-based frameworks) and method (i.e., using data sources and choosing variables, challenging coding norms, interpreting statistical results), all of which signal authors’ efforts to embody criticality in quantitative research about higher education. Given our sequential research questions, which first examined the landscape of critical quantitative higher education research and then asked authors to elucidate their thought processes and strategies underlying their approaches to these manuscripts, our findings primarily focus on areas of convergence across data sources; we do, however, highlight challenges and tensions authors faced in conducting such work.

Articulated Motivations in Critical Approaches to Quantitative Research

To date, critical quantitative researchers in higher education have heeded Stage’s (2007) call to use data to reveal the large-scale perpetuation of inequities in educational processes and outcomes. This emerged as a defining aspect of higher education scholars’ critical quantitative work, as all manuscripts (N = 34) in the scoping review articulated underlying motivations to identify and/or address inequities.

Often, these motivations were reflected in the articulated research questions (n = 31; 91.2%). For example, one manuscript sought to “critically examine […] whether students were differentially impacted” by an educational policy based on intersecting race/ethnicity, gender, and income (Article 29, p. 39). Others sought to challenge notions of homogeneity across groups of systemically minoritized individuals by “explor[ing] within-group heterogeneity” of constructs such as sense of belonging among Asian American students (Article 32, p. iii) and “challenging the assumption that [economically and educationally challenged] students are a monolithic group with the same values and concerns” (Article 31, p. 5). These underlying motivations for conducting critical quantitative research emerged most clearly in the named approaches, positionality statements, and asset-based frameworks articulated in manuscripts.

Adopting the Coined Names of Quantitative Criticalism, QuantCrit, and Related Approaches

Based on the inclusion criteria applied in the scoping review, we anticipated that all manuscripts would employ approaches that were explicitly critical and quantitative in nature. Accordingly, all manuscripts (N = 34; 100%) adopted approaches that were coined as quantitative criticalism, QuantCrit, critical policy analysis (CPA), critical quantitative intersectionality (CQI), or some combination of those terms. Twenty-one manuscripts (61.8%) identified their approach as quantitative criticalism, nine manuscripts (26.5%) identified their approach as QuantCrit, two manuscripts (5.9%) identified their approach as CPA, and two manuscripts (5.9%) identified their approach as CQI.
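The reported percentages follow directly from the manuscript counts and can be checked with a short tabulation. The sketch below assumes each manuscript is counted under a single named approach, which is consistent with the four counts summing to 34; the dictionary and variable names are illustrative.

```python
# Manuscript counts by self-identified approach, as reported in the text.
approach_counts = {
    "quantitative criticalism": 21,
    "QuantCrit": 9,
    "critical policy analysis (CPA)": 2,
    "critical quantitative intersectionality (CQI)": 2,
}

total = sum(approach_counts.values())

# Share of the sample for each approach, rounded to one decimal place.
shares = {name: round(100 * n / total, 1) for name, n in approach_counts.items()}

for name, n in approach_counts.items():
    print(f"{name}: n = {n} ({shares[name]}%)")
print(f"Total manuscripts: {total}")

# The same calculation applied to the 31 manuscripts whose research
# questions reflected equity-oriented motivations.
research_question_share = round(100 * 31 / total, 1)
```

Because each share is rounded independently, the rounded percentages need not sum to exactly 100.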