
Neural Networks Provide Solutions to Real-World Problems: Powerful new algorithms to explore, classify, and identify patterns in data

By Matthew J. Simoneau, MathWorks and Jane Price, MathWorks

Inspired by research into the functioning of the human brain, artificial neural networks are able to learn from experience. These powerful problem solvers are highly effective where traditional, formal analysis would be difficult or impossible. Their strength lies in their ability to make sense out of complex, noisy, or nonlinear data. Neural networks can provide robust solutions to problems in a wide range of disciplines, particularly areas involving classification, prediction, filtering, optimization, pattern recognition, and function approximation.


A look at a specific application of neural network technology will illustrate how it can be applied to solve real-world problems. An interesting example can be found at the University of Saskatchewan, where researchers are using MATLAB and the Neural Network Toolbox to determine whether a popcorn kernel will pop.

Knowing that nothing is worse than a half-popped bag of popcorn, they set out to build a system that could recognize which kernels will pop when heated by looking at their physical characteristics. The neural network learns to recognize what differentiates a poppable from an unpoppable kernel by looking at 16 features, such as roughness, color, and size.

The goal is to design a neural network that maps a set of inputs (the 16 features extracted from a kernel) to the proper output, in this case a 1 for popped, and -1 for unpopped. The first step is to gather this data from hundreds of kernels. To do this, the researchers extract the characteristics of each kernel using a machine vision system, then heat the kernel to see if it pops. This data, when combined with the proper learning algorithm, will be used to teach the network to recognize a good kernel from a bad one.

Designing the network

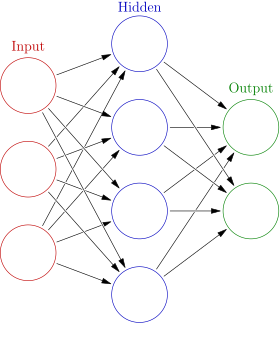

As the name suggests, a neural network is a collection of connected artificial neurons. Each artificial neuron is based on a simplified model of the neurons found in the human brain. The complexity of the task dictates the size and structure of the network. The popcorn problem requires a standard feed-forward network, an example of which is shown in Figure 1. The popcorn network itself, however, has 16 inputs, 15 neurons in the first hidden layer, 35 in the second, and 1 output neuron. Each neuron has a connection to each of the neurons in the previous layer, and each of these connections has a weight that determines the strength of the coupling.
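The layer sizes are easier to picture in code. The following is a minimal Python sketch of a forward pass through a fully connected 16-15-35-1 network. The tanh activation, the random initial weights, and the helper names (`init_layer`, `layer_output`, `forward`) are assumptions for illustration; the article does not say which transfer functions were used. tanh is chosen here only because its output range of -1 to 1 matches the 1/-1 targets.

```python
import math
import random

def init_layer(n_inputs, n_neurons, rng):
    """One weight row (plus a bias) per neuron; every neuron connects to every input."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_inputs)] for _ in range(n_neurons)]
    biases = [rng.uniform(-0.5, 0.5) for _ in range(n_neurons)]
    return weights, biases

def layer_output(inputs, weights, biases):
    """Weighted sum of all inputs for each neuron, passed through tanh (assumed activation)."""
    return [math.tanh(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def forward(features, layers):
    """Propagate a feature vector through each layer in turn, from input to output."""
    signal = features
    for weights, biases in layers:
        signal = layer_output(signal, weights, biases)
    return signal

rng = random.Random(0)
sizes = [16, 15, 35, 1]  # 16 inputs, hidden layers of 15 and 35 neurons, 1 output
layers = [init_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]

kernel_features = [rng.random() for _ in range(16)]  # hypothetical stand-in for 16 measured features
output = forward(kernel_features, layers)
print(output)  # a single untrained value between -1 and 1
```

Counting connections, this architecture has 16 × 15 + 15 × 35 + 35 × 1 = 800 weights, plus 51 biases in this sketch, and training must adjust all of them.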

For this problem, the backpropagation algorithm guides the network's training. It holds the network's structure constant and modifies the weight associated with each connection. This is an iterative process that takes these initially random weights and adjusts them so the network will perform better after each pass through the data. Each set of features is presented to the neural network along with the corresponding desired output. The input signal propagates through the network and emerges at the output. The network's actual output is compared to the desired output to measure the network's performance. The algorithm then adjusts the weights to decrease this error. This training process continues until the network's performance can no longer improve.
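A minimal sketch of this loop in Python, assuming a tiny network (2 inputs, 4 tanh hidden neurons, 1 tanh output), a squared-error measure, plain per-example gradient descent, and a made-up two-feature data set labelled 1/-1 in place of the real kernel measurements. The `patience` rule is one simple, assumed way to implement "continue until performance can no longer improve"; it is not the Neural Network Toolbox's own training function.

```python
import math
import random

def train(data, n_hidden=4, lr=0.1, max_epochs=2000, tol=1e-6, patience=20, seed=0):
    """Backpropagation on a 1-hidden-layer tanh network; returns weights and per-epoch error."""
    rng = random.Random(seed)
    n_in = len(data[0][0])
    # Random initial weights; the structure (layer sizes) stays fixed during training.
    w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    w2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
    b2 = 0.0
    history, best, stale = [], float("inf"), 0
    for _ in range(max_epochs):
        total = 0.0
        for x, target in data:
            # Forward pass: the input signal propagates through to the output.
            h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(w1, b1)]
            y = math.tanh(sum(w * hj for w, hj in zip(w2, h)) + b2)
            # Compare the actual output with the desired output.
            error = y - target
            total += 0.5 * error ** 2
            # Backward pass: gradient of 0.5 * error**2, using tanh'(z) = 1 - tanh(z)**2.
            delta_out = error * (1 - y ** 2)
            delta_hid = [delta_out * w2[j] * (1 - h[j] ** 2) for j in range(n_hidden)]
            # Adjust every weight a small step in the direction that reduces the error.
            for j in range(n_hidden):
                w2[j] -= lr * delta_out * h[j]
                for i in range(n_in):
                    w1[j][i] -= lr * delta_hid[j] * x[i]
                b1[j] -= lr * delta_hid[j]
            b2 -= lr * delta_out
        history.append(total / len(data))
        # Stop once the average error has not improved for `patience` epochs in a row.
        if history[-1] < best - tol:
            best, stale = history[-1], 0
        else:
            stale += 1
            if stale >= patience:
                break
    return (w1, b1, w2, b2), history

# Hypothetical two-feature examples labelled 1 ("pops") or -1 ("does not pop").
data = [([0.0, 0.0], -1), ([0.2, 0.3], -1), ([0.1, 0.6], -1), ([0.4, 0.2], -1),
        ([0.9, 0.8], 1), ([0.7, 0.9], 1), ([1.0, 0.4], 1), ([0.6, 0.7], 1)]
params, history = train(data)
print(f"epochs: {len(history)}, error: {history[0]:.3f} -> {history[-1]:.3f}")
```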

The desired result is a neural network that is able to distinguish a poppable kernel from an unpoppable one. The key to the training is that the network doesn't just memorize specific kernels. Rather, it generalizes from the training sample and builds an internal model of which combinations of features determine “poppability.” The test, of course, is to give the network some data extracted from kernels it has never seen before and have it classify them. Illustrated in Figure 2, the network is correct three out of four times, providing the manufacturer with a method to significantly increase popcorn quality.
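Scoring such a test can be sketched as follows. The rule that an output of 0 or above counts as "pops" and the four output values are hypothetical; they are chosen only to show how a three-out-of-four result is computed.

```python
def classify(network_output):
    """Map a network output in [-1, 1] to a label: 1 = pops, -1 = does not pop (assumed cutoff at 0)."""
    return 1 if network_output >= 0 else -1

def accuracy(outputs, labels):
    """Fraction of held-out kernels whose predicted label matches the observed one."""
    correct = sum(classify(o) == t for o, t in zip(outputs, labels))
    return correct / len(labels)

# Hypothetical outputs for four unseen kernels, and what actually happened when heated.
test_outputs = [0.83, -0.41, 0.12, -0.77]
test_labels = [1, -1, -1, -1]
print(accuracy(test_outputs, test_labels))  # 0.75
```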

More Than Popcorn

Neural network technology has been proven to excel in solving a variety of complex problems in engineering, science, finance, and market analysis. Examples of the practical applications of this technology are widespread. For example, NOW! Software uses the Neural Network Toolbox to predict prices in futures markets for the financial community. The model is able to generate highly accurate, next-day price predictions. Meanwhile, researchers at Scientific Monitoring, Inc., are using MATLAB and the Neural Network Toolbox to apply a neural-network-based sensor validation system to a simulation of a turbofan engine. Their ultimate goal is to improve the time-limited dispatch of an aircraft by deferring engine sensor maintenance without a loss in operational safety or performance.

Highlights of Neural Network Toolbox 3.0

The latest release offers several new features, including new network types, learning and training algorithms, improved network performance, easier customization, and increased design flexibility.

- New modular network representation: all network properties can be easily customized and are collected in a single network object

- New reduced-memory Levenberg-Marquardt algorithm for handling very large problems

- New supervised networks: Generalized Regression and Probabilistic

- New network training algorithms: Resilient Backpropagation (Rprop), Conjugate Gradient, and two quasi-Newton methods

- Flexible and easy-to-customize network performance, initialization, learning and training functions

- Automatic creation of network simulation blocks for use with Simulink

- New training options: automatic regularization, training with validation, and early stopping

- New pre- and post-processing functions

Published 1998



Deep Learning Neural Networks Explained in Plain English

Machine learning, and especially deep learning, is changing the world.

After a long "AI winter" that spanned 30 years, computing power and data sets have finally caught up to the artificial intelligence algorithms that were proposed during the second half of the twentieth century.

This means that deep learning models are finally being used to make effective predictions that solve real-world problems.

It's more important than ever for data scientists and software engineers to have a high-level understanding of how deep learning models work. This article will explain the history and basic concepts of deep learning neural networks in plain English.

The History of Deep Learning

Geoffrey Hinton’s research in the 1980s was central to the development of deep learning, and he is widely considered one of the founding fathers of the field. Hinton joined Google in March 2013, when his company, DNNresearch Inc., was acquired.

Hinton’s work drew heavily on comparisons between machine learning techniques and the human brain.

More specifically, he championed the "neural network", an algorithm structured similarly to the organization of neurons in the brain. (The idea itself is older than Hinton’s work: Frank Rosenblatt created the perceptron in 1958.) This approach is appealing because the human brain is arguably the most powerful computational engine known today.

This structure is called an artificial neural network (or artificial neural net for short). Here’s a brief description of how they function:

- Artificial neural networks are composed of layers of nodes

- Each node is designed to behave similarly to a neuron in the brain

- The first layer of a neural net is called the input layer, followed by hidden layers, then finally the output layer

- Each node in the neural net performs some sort of calculation, which is passed on to other nodes deeper in the neural net

Here is a simplified visualization to demonstrate how this works:

Neural nets represented an immense stride forward in the field of deep learning.

However, it took decades for machine learning (and especially deep learning) to gain prominence.

We’ll explore why in the next section.

Why Deep Learning Did Not Immediately Work

If deep learning was originally conceived decades ago, why is it just beginning to gain momentum today?

It’s because any mature deep learning model requires an abundance of two resources:

- Data

- Computing power

At the time of deep learning’s conceptual birth, researchers did not have access to enough of either data or computing power to build and train meaningful deep learning models. This has changed over time, which has led to deep learning’s prominence today.

Understanding Neurons in Deep Learning

Neurons are a critical component of any deep learning model.

In fact, one could argue that you can’t fully understand deep learning without having a deep knowledge of how neurons work.

This section will introduce you to the concept of neurons in deep learning. We’ll talk about the origin of deep learning neurons, how they were inspired by the biology of the human brain, and why neurons are so important in deep learning models today.

What is a Neuron in Biology?

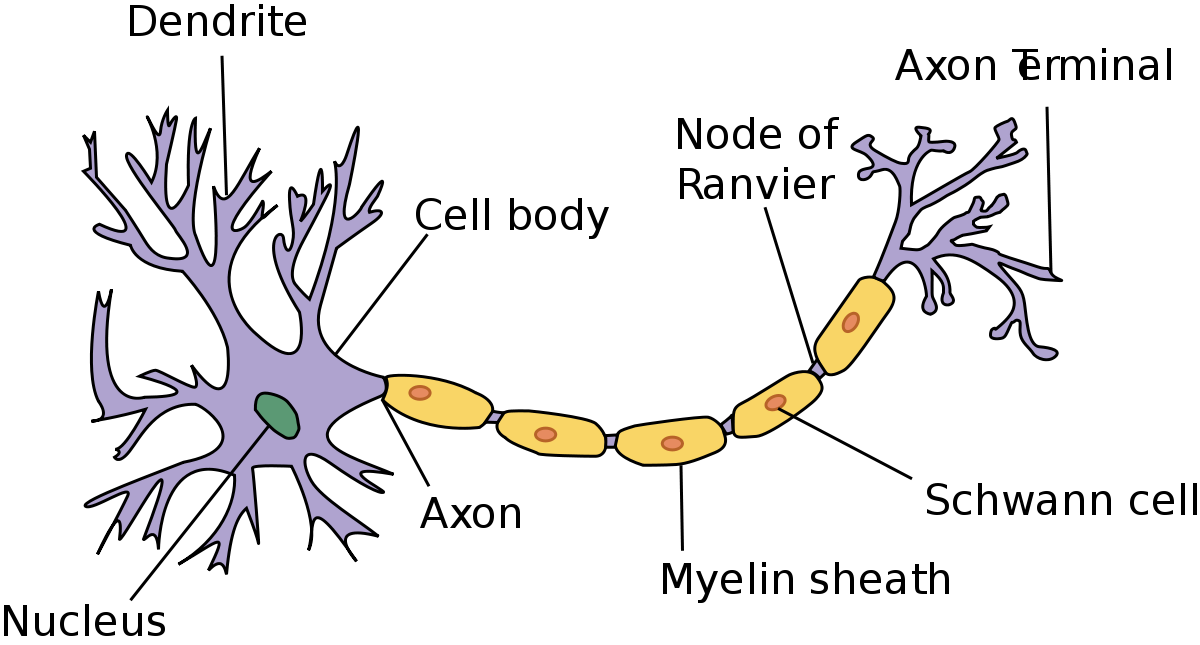

Neurons in deep learning were inspired by neurons in the human brain. Here is a diagram of the anatomy of a brain neuron:

As you can see, neurons have quite an interesting structure. Groups of neurons work together inside the human brain to perform the functionality that we require in our day-to-day lives.

The question that Geoffrey Hinton asked during his seminal research in neural networks was whether we could build computer algorithms that behave similarly to neurons in the brain. The hope was that by mimicking the brain’s structure, we might capture some of its capability.

To do this, researchers studied the way that neurons behaved in the brain. One important observation was that a neuron by itself is useless. Instead, you require networks of neurons to generate any meaningful functionality.

This is because neurons function by receiving and sending signals. More specifically, the neuron’s dendrites receive signals and pass them along through the axon.

The dendrites of one neuron are connected to the axon of another neuron. These connections are called synapses, a concept that has been carried over to the field of deep learning.

What is a Neuron in Deep Learning?

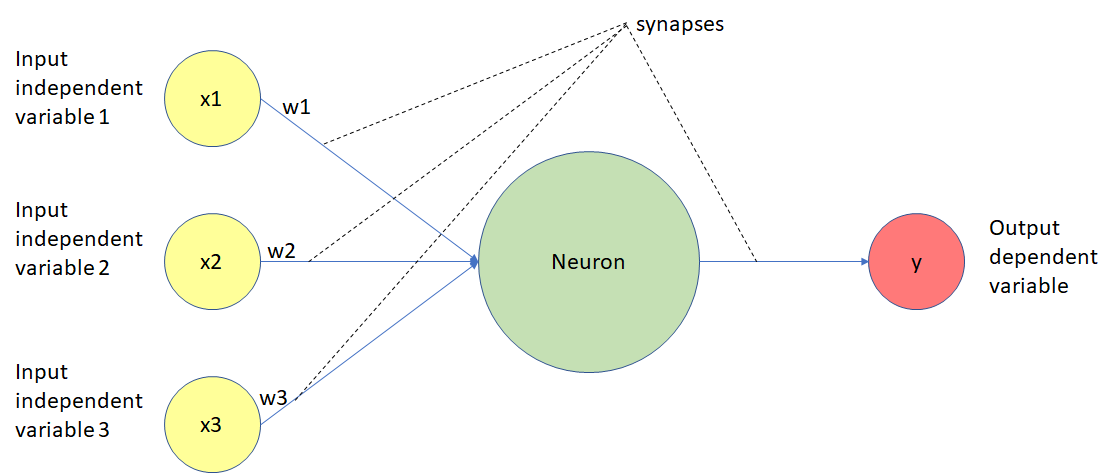

Neurons in deep learning models are nodes through which data and computations flow.

Neurons work like this:

- They receive one or more input signals. These input signals can come from either the raw data set or from neurons positioned at a previous layer of the neural net.

- They perform some calculations.

- They send output signals to neurons deeper in the neural net through a synapse.

Here is a diagram of the functionality of a neuron in a deep learning neural net:

Let’s walk through this diagram step-by-step.

As you can see, neurons in a deep learning model can have synapses that connect to more than one neuron in the preceding layer. Each synapse has an associated weight, which determines how much the preceding neuron’s signal matters in the overall neural network.

Weights are a very important topic in the field of deep learning because adjusting a model’s weights is the primary way through which deep learning models are trained. You’ll see this in practice later on when we build our first neural networks from scratch.

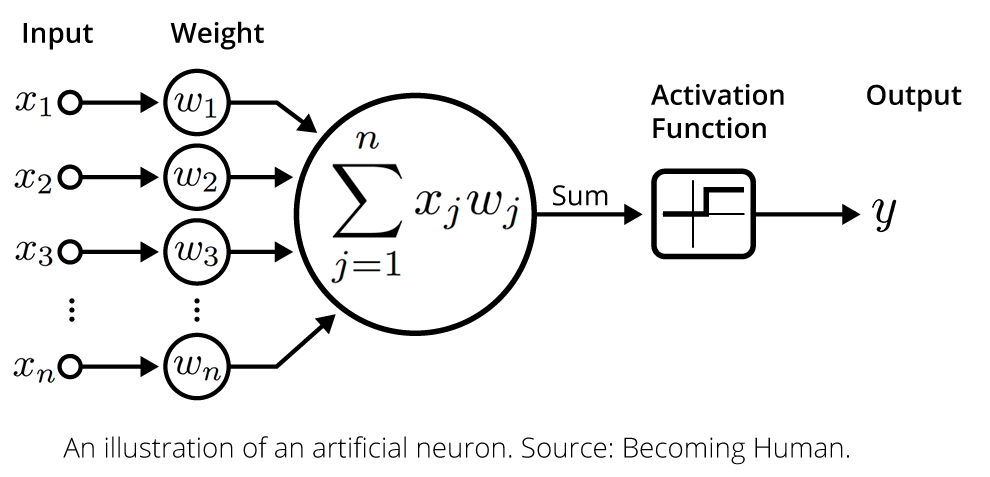

Once a neuron receives its inputs from the neurons in the preceding layer of the model, it multiplies each signal by its corresponding weight, adds up the results, and passes the sum to an activation function, like this:

The activation function calculates the output value for the neuron. This output value is then passed on to the next layer of the neural network through another synapse.
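A minimal Python sketch of one such neuron. The sigmoid activation and the bias term (a constant added to the weighted sum, common in practice but not mentioned above) are assumptions, and the input and weight values are made up.

```python
import math

def sigmoid(z):
    """Example activation function (one of several covered below)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias=0.0, activation=sigmoid):
    """Multiply each incoming signal by its synapse weight, add them up, then activate."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(weighted_sum)

# Three signals arriving from the preceding layer, with hypothetical weights.
out = neuron([0.5, -1.0, 2.0], [0.4, 0.3, 0.1])
print(out)  # sigmoid(0.1), roughly 0.525
```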

This serves as a broad overview of deep learning neurons. Do not worry if it was a lot to take in – we’ll learn much more about neurons in the rest of this tutorial. For now, it’s sufficient for you to have a high-level understanding of how they are structured in a deep learning model.

Deep Learning Activation Functions

Activation functions are a core concept to understand in deep learning.

They are what allow neurons in a neural network to communicate with each other through their synapses.

In this section, you will learn about the importance and functionality of activation functions in deep learning.

What Are Activation Functions in Deep Learning?

In the last section, we learned that neurons receive input signals from the preceding layer of a neural network. A weighted sum of these signals is fed into the neuron's activation function, then the activation function's output is passed onto the next layer of the network.

There are four main types of activation functions that we’ll discuss in this tutorial:

- Threshold functions

- Sigmoid functions

- Rectifier functions, or ReLUs

- Hyperbolic Tangent functions

Let’s work through these activation functions one by one.

Threshold Functions

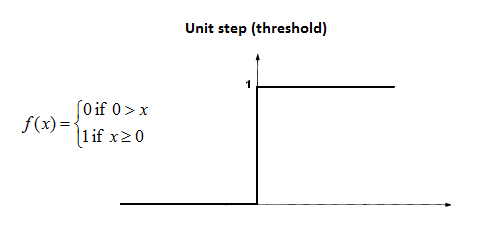

Threshold functions compute a different output signal depending on whether their input lies above or below a certain threshold. Remember, the input value to an activation function is the weighted sum of the input values from the preceding layer in the neural network.

Mathematically speaking, here is the formal definition of a deep learning threshold function:
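Using x for the weighted sum that enters the neuron, the unit step is usually written as follows (texts differ on which value is assigned at exactly x = 0):

```latex
\phi(x) =
\begin{cases}
1 & \text{if } x \ge 0 \\
0 & \text{if } x < 0
\end{cases}
```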

As the image above suggests, the threshold function is sometimes also called a unit step function .

Threshold functions are similar to boolean variables in computer programming. Their computed value is either 1 (similar to True ) or 0 (equivalent to False ).

The Sigmoid Function

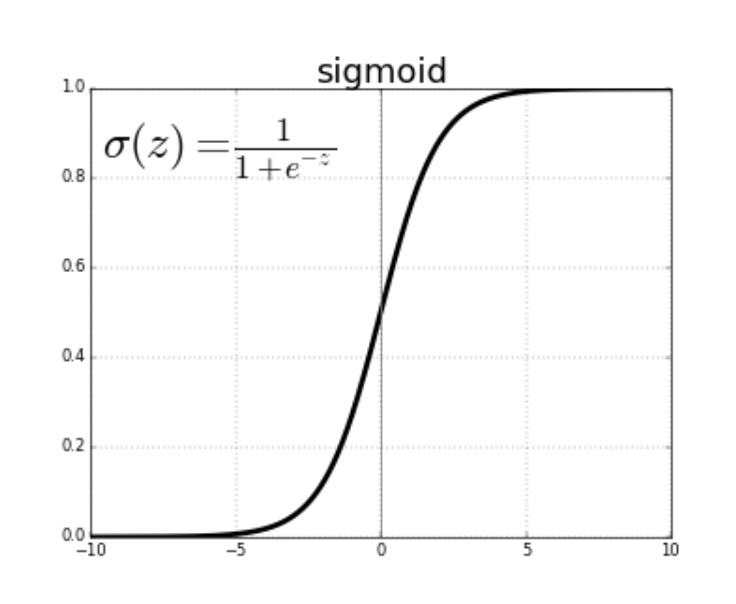

The sigmoid function is well known in the data science community because of its use in logistic regression, one of the core machine learning techniques used to solve classification problems.

The sigmoid function can accept any real value, but its output always lies strictly between 0 and 1.

Here is the mathematical definition of the sigmoid function:
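In standard notation, with x the weighted-sum input and e Euler's number, the sigmoid and its derivative are shown below. The derivative exists at every point, which is what makes the sigmoid convenient for gradient-based training:

```latex
\sigma(x) = \frac{1}{1 + e^{-x}}
\qquad
\sigma'(x) = \sigma(x)\,\bigl(1 - \sigma(x)\bigr)
```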

One benefit of the sigmoid function over the threshold function is that its curve is smooth. This means it is possible to calculate derivatives at any point along the curve.

The Rectifier Function

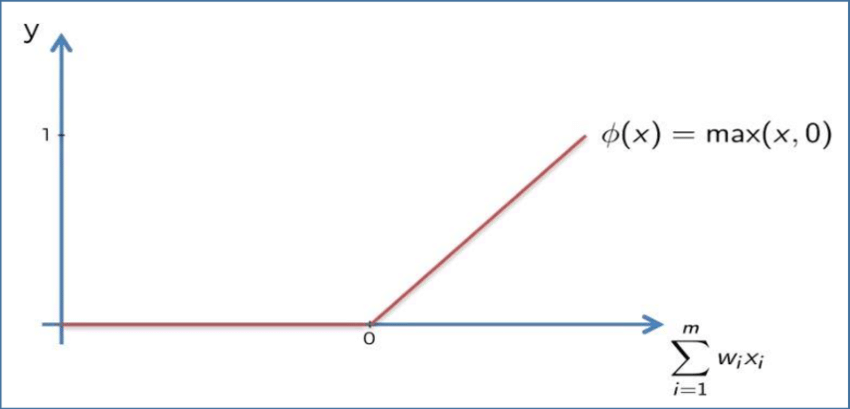

The rectifier function does not have the same smoothness property as the sigmoid function from the last section. However, it is still very popular in the field of deep learning.

The rectifier function is defined as follows:

- If the input value is less than 0, then the function outputs 0

- If not, the function outputs its input value

Here is this concept explained mathematically:
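In symbols, with x the weighted-sum input:

```latex
\phi(x) = \max(0, x)
```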

Rectifier functions are often called Rectified Linear Unit activation functions, or ReLUs for short.

The Hyperbolic Tangent Function

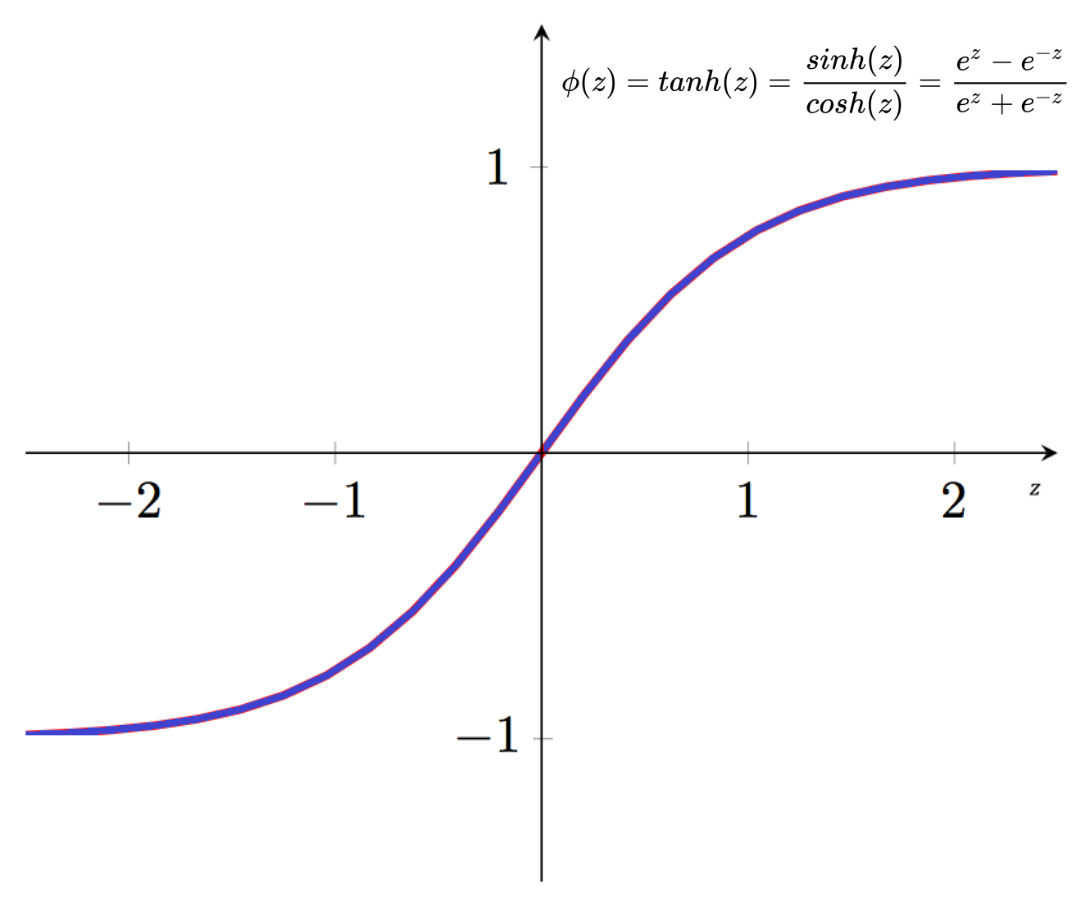

The hyperbolic tangent function is the only activation function included in this tutorial that is the hyperbolic counterpart of a trigonometric function (the tangent).

Its mathematical definition is below:
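Written in terms of exponentials, with x the weighted-sum input:

```latex
\tanh(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}
```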

The hyperbolic tangent function is similar in appearance to the sigmoid function, but its outputs range from -1 to 1 instead of 0 to 1. It is a sigmoid that has been rescaled and shifted downwards: tanh(x) = 2 * sigmoid(2x) - 1.
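The four activation functions can be sketched in Python as follows. The function names are our own choice rather than a particular library's API; the final assertion checks numerically that tanh is a rescaled, shifted sigmoid.

```python
import math

def threshold(x):
    """Unit step: 1 if the weighted sum is at least 0, else 0."""
    return 1.0 if x >= 0 else 0.0

def sigmoid(x):
    """Logistic function: smooth, with output strictly between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))

def relu(x):
    """Rectifier: 0 for negative inputs, otherwise the input itself."""
    return max(0.0, x)

def tanh(x):
    """Hyperbolic tangent: output between -1 and 1."""
    return math.tanh(x)

for x in (-2.0, 0.0, 2.0):
    print(x, threshold(x), round(sigmoid(x), 3), relu(x), round(tanh(x), 3))

# tanh is a stretched and shifted sigmoid: tanh(x) = 2 * sigmoid(2x) - 1
assert all(abs(tanh(x) - (2 * sigmoid(2 * x) - 1)) < 1e-12 for x in (-3.0, -0.5, 0.0, 1.5))
```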

How Do Neural Networks Really Work?

So far in this tutorial, we have discussed two of the building blocks for building neural networks:

- Neurons

- Activation functions

However, you’re probably still a bit confused as to how neural networks really work.

This tutorial will put together the pieces we’ve already discussed so that you can understand how neural networks work in practice.

The Example We’ll Be Using In This Tutorial

This tutorial will work through a real-world example step-by-step so that you can understand how neural networks make predictions.

More specifically, we will be dealing with property valuations.

You probably already know that a ton of factors influence house prices, including the economy, interest rates, a home’s number of bedrooms and bathrooms, and its location.

The high dimensionality of this data set makes it an interesting candidate for building and training a neural network on.

One caveat about this section: the neural network we will be using to make predictions has already been trained. We’ll explore the process for training a new neural network in the next section of this tutorial.

The Parameters In Our Data Set

Let’s start by discussing the parameters in our data set. More specifically, let’s imagine that the data set contains the following parameters:

- Square footage

- Number of bedrooms

- Distance to city center

These four parameters will form the input layer of the artificial neural network. Note that in reality, there are likely many more parameters that you could use to train a neural network to predict housing prices. We have constrained this number to four to keep the example reasonably simple.

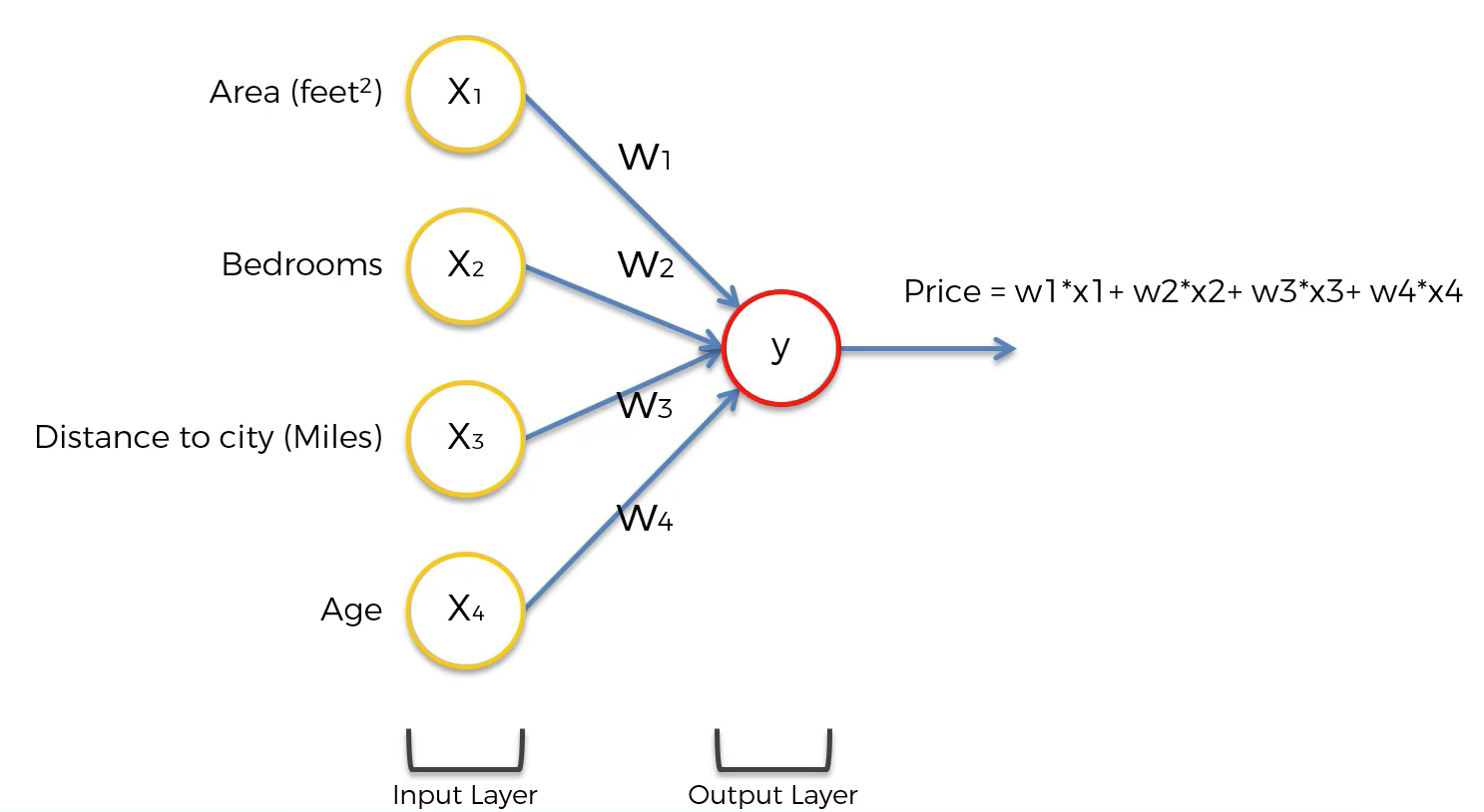

The Most Basic Form of a Neural Network

In its most basic form, a neural network only has two layers - the input layer and the output layer. The output layer is the component of the neural net that actually makes predictions.

For example, if you wanted to make predictions using a simple weighted sum (also called linear regression) model, your neural network would take the following form:

While this diagram is a bit abstract, the point is that most neural networks can be visualized in this manner:

- An input layer

- Possibly some hidden layers

- An output layer
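With no hidden layer, the output neuron just computes a weighted sum of the inputs plus a bias, which is exactly a linear regression model. A minimal Python sketch follows; the choice of three features and every number in it are hypothetical values picked for readability rather than learned from data.

```python
def predict_price(features, weights, bias):
    """Input layer wired straight to one output neuron: a plain weighted sum plus a bias."""
    return sum(w * x for w, x in zip(weights, features)) + bias

# Hypothetical, hand-picked numbers for illustration only (not fitted to real data).
house = [1500.0, 3.0, 5.0]           # square footage, bedrooms, miles to city center
weights = [150.0, 10000.0, -4000.0]  # dollars contributed per unit of each feature
price = predict_price(house, weights, bias=50000.0)
print(price)  # 285000.0
```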

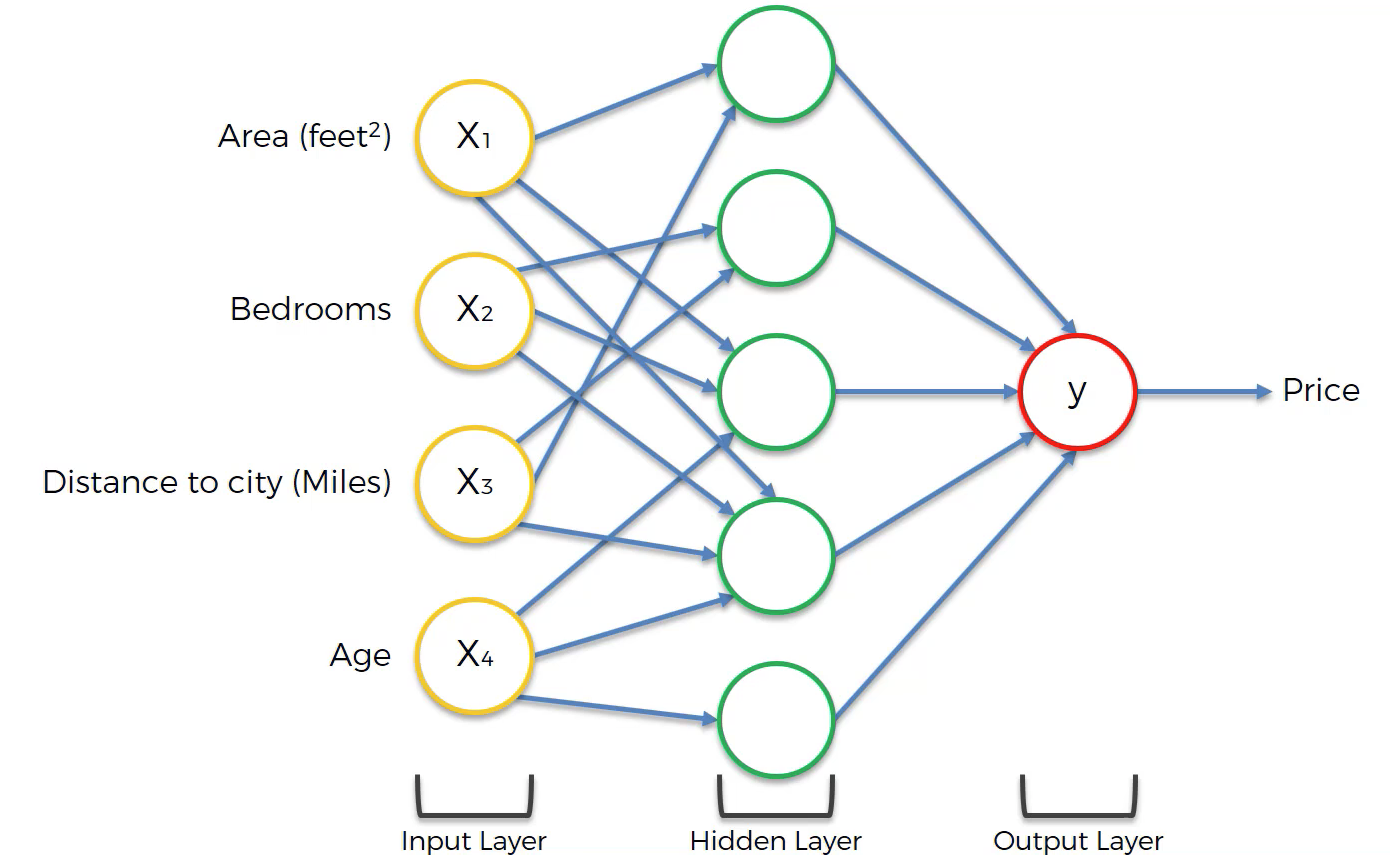

It is the hidden layer of neurons that causes neural networks to be so powerful for calculating predictions.

For each neuron in a hidden layer, it performs calculations using some (or all) of the neurons in the last layer of the neural network. These values are then used in the next layer of the neural network.

The Purpose of Neurons in the Hidden Layer of a Neural Network

You are probably wondering: what exactly does each neuron in the hidden layer mean? Said differently, how should machine learning practitioners interpret these values?

Generally speaking, neurons in the hidden layers of a neural net are activated (meaning their activation function returns 1) for an input value that satisfies certain sub-properties.

For our housing price prediction model, one example might be 5-bedroom houses with small distances to the city center.

In most other cases, describing the characteristics that would cause a neuron in a hidden layer to activate is not so easy.

How Neurons Determine Their Input Values

Earlier in this tutorial, I wrote “For each neuron in a hidden layer, it performs calculations using some (or all) of the neurons in the last layer of the neural network.”

This illustrates an important point – that each neuron in a neural net does not need to use every neuron in the preceding layer.

The process through which neurons determine which input values to use from the preceding layer of the neural net is called training the model. We will learn more about training neural nets in the next section of this course.

Visualizing A Neural Net’s Prediction Process

When visualizing a neural network, we generally draw a line from a neuron in the previous layer to a neuron in the current layer whenever the preceding neuron has a nonzero weight in the weighted sum formula for the current neuron.

The following image will help visualize this:

As you can see, not every neuron-neuron pair has a synapse. For example, x4 only feeds three out of the five neurons in the hidden layer. This illustrates an important point when building neural networks: not every neuron in a preceding layer must be used in the next layer of a neural network.
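One way to represent such a partially connected layer in Python is to treat a weight of exactly zero as a missing synapse. The weight values, and the ReLU activation used in `hidden_layer`, are hypothetical choices for illustration; they are arranged so that x4 feeds three of the five hidden neurons.

```python
# Hypothetical 5x4 weight matrix: row j holds the weights hidden neuron j uses for x1..x4.
# A zero weight means "no synapse", so that line would not be drawn in the diagram.
hidden_weights = [
    [0.8, 0.0, -0.5, 0.3],
    [0.0, 1.2, 0.0, 0.0],
    [0.4, 0.6, -0.9, 0.7],
    [0.0, 0.0, -1.1, 0.0],
    [0.5, 0.0, 0.0, 0.9],
]

def neurons_fed_by(input_index, weights):
    """Indices of hidden neurons that actually use a given input (nonzero weight)."""
    return [j for j, row in enumerate(weights) if row[input_index] != 0.0]

def hidden_layer(x, weights):
    """ReLU of each neuron's weighted sum; a zero weight simply drops that input."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in weights]

print(neurons_fed_by(3, hidden_weights))  # hidden neurons fed by x4 (index 3)
print(hidden_layer([1.0, 1.0, 1.0, 1.0], hidden_weights))
```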

How Neural Networks Are Trained

So far you have learned the following about neural networks:

- That they are composed of neurons

- That each neuron uses an activation function applied to the weighted sum of the outputs from the preceding layer of the neural network

- A broad, no-code overview of how neural networks make predictions

We have not yet covered a very important part of the neural network engineering process: how neural networks are trained.

Now you will learn how neural networks are trained. We’ll discuss data sets, algorithms, and broad principles used in training modern neural networks that solve real-world problems.

Hard-Coding vs. Soft-Coding

There are two main ways that you can develop computer applications. Before digging into how neural networks are trained, it’s important to make sure that you understand the difference between hard-coding and soft-coding computer programs.

Hard-coding means that you explicitly specify input variables and your desired output variables. Said differently, hard-coding leaves no room for the computer to interpret the problem that you’re trying to solve.

Soft-coding is the complete opposite. It leaves room for the program to understand what is happening in the data set. Soft-coding allows the computer to develop its own problem-solving approaches.

A specific example is helpful here. Here are two instances of how you might identify cats within a data set using soft-coding and hard-coding techniques.

- Hard-coding: you use specific parameters to predict whether an animal is a cat. More specifically, you might say that if an animal’s weight and length lie within certain ranges, then it is a cat.

- Soft-coding: you provide a data set that contains animals labelled with their species type and characteristics about those animals. Then you build a computer program to predict whether an animal is a cat or not based on the characteristics in the data set.

As you might imagine, training neural networks falls into the category of soft-coding. Keep this in mind as you proceed through this course.

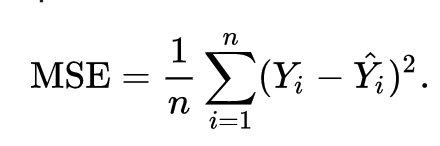

Training A Neural Network Using A Cost Function

Neural networks are trained using a cost function, which is an equation used to measure the error contained in a network’s prediction.

The formula for a deep learning cost function (of which there are many – this is just one example) is below:
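One common way to write it, where y-hat is the predicted value, y the actual value, i indexes the observations, and m is the number of observations:

```latex
\text{MSE} = \frac{1}{2m} \sum_{i=1}^{m} \left( \hat{y}^{(i)} - y^{(i)} \right)^2
```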

Note: this cost function is called the mean squared error, which is why there is an MSE on the left side of the equal sign.

While the equation looks math-heavy, it is best summarized as follows:

For each observation, take the difference between the predicted output value and the actual output value, and square it. Average these squared differences over all observations, then divide by 2.

To reiterate, note that this is simply one example of a cost function that could be used in machine learning (although it is a very common choice, especially for regression problems like predicting prices). The choice of which cost function to use is a complex and interesting topic on its own, and outside the scope of this tutorial.

The goal of training an artificial neural network is to minimize the value of the cost function. The cost function is minimized when your algorithm’s predicted value is as close to the actual value as possible. Said differently, the goal of a neural network is to minimize the error it makes in its predictions!
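A direct Python translation of the cost, assuming the 1/(2m) form. The factor of 1/2 only simplifies the derivative and does not change where the minimum lies. The prices are hypothetical and simply show that closer predictions give a lower cost.

```python
def mse_cost(predicted, actual):
    """Half the mean of the squared prediction errors: (1 / 2m) * sum((y_hat - y)**2)."""
    m = len(actual)
    return sum((p - a) ** 2 for p, a in zip(predicted, actual)) / (2 * m)

actual_prices = [300.0, 250.0, 410.0]  # hypothetical prices, in $1,000s
close_guesses = [310.0, 240.0, 400.0]
poor_guesses = [200.0, 350.0, 300.0]
print(mse_cost(close_guesses, actual_prices))  # 50.0
print(mse_cost(poor_guesses, actual_prices))   # 5350.0
```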

Modifying A Neural Network

After an initial neural network is created and its cost function is computed, changes are made to the neural network to see if they reduce the value of the cost function.

More specifically, the components of the neural network that are modified are the weights on the synapses through which each neuron communicates with the next layer of the network.

The mechanism through which the weights are modified to move the neural network toward weights with less error is called gradient descent. For now, it’s enough for you to understand that the process of training neural networks looks like this:

- Initial weights for the input values of each neuron are assigned

- Predictions are calculated using these initial values

- The predictions are fed into a cost function to measure the error of the neural network

- A gradient descent algorithm changes the weights for each neuron’s input values

- This process is continued until the weights stop changing (or until the amount of their change at each iteration falls below a specified threshold)
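The five steps can be sketched in Python for the simplest possible case: a single linear neuron y = w*x + b trained with the 1/(2m) mean squared error. The data are made up so that the answer (w = 2, b = 1) is known in advance, and the learning rate and stopping threshold are assumed values. A real network repeats the same loop over many more weights, computing the gradients by backpropagation.

```python
def gradient_descent(xs, ys, lr=0.05, tol=1e-9, max_iters=100_000):
    """Fit y ~ w*x + b by repeatedly stepping against the gradient of the MSE cost."""
    w, b = 0.0, 0.0                                   # step 1: initial weights
    m = len(xs)
    for _ in range(max_iters):
        preds = [w * x + b for x in xs]               # step 2: predictions
        errors = [p - y for p, y in zip(preds, ys)]   # step 3: errors that feed the cost
        # Gradients of (1 / 2m) * sum(error**2) with respect to w and b.
        grad_w = sum(e * x for e, x in zip(errors, xs)) / m
        grad_b = sum(errors) / m
        step_w, step_b = lr * grad_w, lr * grad_b
        w, b = w - step_w, b - step_b                 # step 4: adjust the weights
        if max(abs(step_w), abs(step_b)) < tol:       # step 5: stop when changes become tiny
            break
    return w, b

xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]  # hypothetical data generated from y = 2x + 1
w, b = gradient_descent(xs, ys)
print(round(w, 3), round(b, 3))
```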

This may seem very abstract - and that’s OK! These concepts are usually only fully understood when you begin training your first machine learning models.

Final Thoughts

In this tutorial, you learned about how neural networks perform computations to make useful predictions.


I write about software, machine learning, and entrepreneurship at https://nickmccullum.com. I also sell premium courses on Python programming and machine learning.


A neural network is a machine learning program, or model, that makes decisions in a manner similar to the human brain, by using processes that mimic the way biological neurons work together to identify phenomena, weigh options and arrive at conclusions.

Every neural network consists of layers of nodes, or artificial neurons—an input layer, one or more hidden layers, and an output layer. Each node connects to others, and has its own associated weight and threshold. If the output of any individual node is above the specified threshold value, that node is activated, sending data to the next layer of the network. Otherwise, no data is passed along to the next layer of the network.

Neural networks rely on training data to learn and improve their accuracy over time. Once they are fine-tuned for accuracy, they are powerful tools in computer science and artificial intelligence , allowing us to classify and cluster data at a high velocity. Tasks in speech recognition or image recognition can take minutes versus hours when compared to the manual identification by human experts. One of the best-known examples of a neural network is Google’s search algorithm.

Neural networks are sometimes called artificial neural networks (ANNs) or simulated neural networks (SNNs). They are a subset of machine learning, and at the heart of deep learning models.


Think of each individual node as its own linear regression model, composed of input data, weights, a bias (or threshold), and an output. The formula would look something like this:

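$$\sum_{i=1}^{3} w_i x_i + \text{bias} = w_1 x_1 + w_2 x_2 + w_3 x_3 + \text{bias}$$

$$\text{output} = f(x) = \begin{cases} 1 & \text{if } \sum_i w_i x_i + \text{bias} \ge 0 \\ 0 & \text{if } \sum_i w_i x_i + \text{bias} < 0 \end{cases}$$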

Once an input layer is determined, weights are assigned. These weights help determine the importance of any given variable, with larger ones contributing more significantly to the output compared to other inputs. All inputs are then multiplied by their respective weights and then summed. Afterward, the sum is passed through an activation function, which determines the node’s output. If that output exceeds a given threshold, it “fires” (or activates) the node, passing data to the next layer in the network. This results in the output of one node becoming the input of the next node. This process of passing data from one layer to the next defines this neural network as a feedforward network.

Let’s break down what one single node might look like using binary values. We can apply this concept to a more tangible example, like whether you should go surfing (Yes: 1, No: 0). The decision to go or not to go is our predicted outcome, or y-hat. Let’s assume that there are three factors influencing your decision-making:

- Are the waves good? (Yes: 1, No: 0)

- Is the line-up empty? (Yes: 1, No: 0)

- Has there been a recent shark attack? (Yes: 0, No: 1)

Then, let’s assume the following, giving us the following inputs:

- X1 = 1, since the waves are pumping

- X2 = 0, since the crowds are out

- X3 = 1, since there hasn’t been a recent shark attack

Now, we need to assign some weights to determine importance. Larger weights signify that particular variables are of greater importance to the decision or outcome.

- W1 = 5, since large swells don’t come around often

- W2 = 2, since you’re used to the crowds

- W3 = 4, since you have a fear of sharks

Finally, we’ll also assume a threshold value of 3, which would translate to a bias value of –3. With all the various inputs, we can start to plug in values into the formula to get the desired output.

Y-hat = (1*5) + (0*2) + (1*4) – 3 = 6

If we use the activation function from the beginning of this section, we can determine that the output of this node would be 1, since 6 is greater than 0. In this instance, you would go surfing; but if we adjust the weights or the threshold, we can achieve different outcomes from the model. When we observe one decision, like in the above example, we can see how a neural network could make increasingly complex decisions depending on the output of previous decisions or layers.
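The surfing calculation is small enough to check in a few lines of Python. The function names are illustrative, and the values are the ones assumed in the example above.

```python
def step(z):
    """Activation from the formula above: 1 if the weighted sum plus bias is >= 0, else 0."""
    return 1 if z >= 0 else 0

def perceptron(inputs, weights, bias):
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return z, step(z)

inputs = [1, 0, 1]    # X1: good waves, X2: crowded line-up, X3: no recent shark attack
weights = [5, 2, 4]   # W1, W2, W3
bias = -3             # threshold of 3
z, go_surfing = perceptron(inputs, weights, bias)
print(z, go_surfing)  # 6 1

# Raising the threshold to 10 (bias -10) flips the decision.
print(perceptron(inputs, weights, -10))  # (-1, 0)
```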

In the example above, we used perceptrons to illustrate some of the mathematics at play here, but in practice neural networks typically use neurons with smooth activation functions, such as sigmoid neurons, whose outputs lie between 0 and 1. Because data cascades from one node to another, much as it does in a decision tree, this smoothness matters: a small change in a single weight or input produces only a small change in the output of that node, and subsequently in the output of the neural network, instead of abruptly flipping it between 0 and 1.
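The difference is easy to see by nudging a neuron's weighted sum across the threshold. This sketch compares the step activation used above with a sigmoid; the nudge size of 0.01 is an arbitrary choice.

```python
import math

def step(z):
    return 1.0 if z >= 0 else 0.0

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Nudge the weighted sum slightly across the threshold at 0.
before, after = -0.01, 0.01
print(step(after) - step(before))        # 1.0: the step output jumps all the way
print(sigmoid(after) - sigmoid(before))  # about 0.005: the sigmoid output barely moves
```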

As we start to think about more practical use cases for neural networks, like image recognition or classification, we’ll leverage supervised learning, or labeled datasets, to train the algorithm. As we train the model, we’ll want to evaluate its accuracy using a cost (or loss) function. A common choice is the mean squared error (MSE). In the equation below,

- i represents the index of the sample,

- y-hat is the predicted outcome,

- y is the actual value, and

- m is the number of samples.

$$\text{Cost Function} = \text{MSE} = \frac{1}{2m} \sum_{i=1}^{m} \left( \hat{y}^{(i)} - y^{(i)} \right)^2$$

Ultimately, the goal is to minimize our cost function to ensure correctness of fit for any given observation. As the model adjusts its weights and bias, it uses the cost function to reach the point of convergence, or a local minimum. The algorithm adjusts its weights through gradient descent, which lets the model determine the direction to take to reduce errors (or minimize the cost function). With each training example, the parameters of the model adjust to gradually converge at the minimum.

See this IBM Developer article for a deeper explanation of the quantitative concepts involved in neural networks .

Most deep neural networks are feedforward, meaning data flows in one direction only, from input to output. However, you can also train your model through backpropagation; that is, by moving in the opposite direction, from output to input. Backpropagation allows us to calculate and attribute the error associated with each neuron, so that we can adjust and fit the parameters of the model(s) appropriately.
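The sketch below shows a forward pass and backpropagation for a tiny network with one sigmoid hidden layer and one sigmoid output node, trained with the 1/(2m) squared-error cost. It relies on NumPy. The layer sizes, random initialization, learning rate, and the XOR toy dataset are all illustrative assumptions. The chain rule carries the output error backward, layer by layer, giving a gradient for every weight and bias.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def forward(params, X):
    """Forward pass: input -> hidden layer (sigmoid) -> output node (sigmoid)."""
    W1, b1, W2, b2 = params
    h = sigmoid(X @ W1 + b1)
    y_hat = sigmoid(h @ W2 + b2)
    return h, y_hat


def cost(params, X, y):
    """Cost = 1/(2m) * sum((y_hat - y)**2)."""
    _, y_hat = forward(params, X)
    return np.sum((y_hat - y) ** 2) / (2 * len(X))


def backprop(params, X, y):
    """Return the gradients of the cost with respect to W1, b1, W2, b2."""
    W1, b1, W2, b2 = params
    m = len(X)
    h, y_hat = forward(params, X)
    # Output error: dCost/dy_hat times the sigmoid derivative y_hat * (1 - y_hat).
    delta2 = (y_hat - y) * y_hat * (1 - y_hat) / m
    # Send that error backward through W2 to each hidden neuron.
    delta1 = (delta2 @ W2.T) * h * (1 - h)
    return [X.T @ delta1, delta1.sum(axis=0), h.T @ delta2, delta2.sum(axis=0)]


rng = np.random.default_rng(0)
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([[0], [1], [1], [0]], dtype=float)  # XOR labels (toy data)
params = [rng.normal(size=(2, 4)), np.zeros(4), rng.normal(size=(4, 1)), np.zeros(1)]

initial = cost(params, X, y)
learning_rate = 1.0
for _ in range(10000):
    grads = backprop(params, X, y)
    params = [p - learning_rate * g for p, g in zip(params, grads)]
print(f"cost before: {initial:.4f}, after training: {cost(params, X, y):.4f}")
```

A finite-difference gradient check, which compares one backpropagated gradient entry against (cost(w + ε) - cost(w - ε)) / 2ε, is a standard way to confirm that a hand-written backward pass is correct.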


Neural networks can be classified into different types, which are used for different purposes. While this isn’t a comprehensive list, the types below are representative of the most common neural networks you’ll come across, along with their common use cases:

The perceptron is the oldest neural network, created by Frank Rosenblatt in 1958.

Feedforward neural networks, or multi-layer perceptrons (MLPs), are what we’ve primarily been focusing on within this article. They consist of an input layer, one or more hidden layers, and an output layer. While these neural networks are also commonly referred to as MLPs, it’s important to note that they are actually composed of sigmoid neurons, not perceptrons, as most real-world problems are nonlinear. Data is usually fed into these models to train them, and they are the foundation for computer vision, natural language processing, and other neural networks.

Convolutional neural networks (CNNs) are similar to feedforward networks, but they’re usually utilized for image recognition, pattern recognition, and/or computer vision. These networks harness principles from linear algebra, particularly matrix multiplication, to identify patterns within an image.
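The core operation of a convolutional layer can be sketched as sliding a small kernel over an image and taking a weighted sum at each position. The example uses NumPy, and the 4×5 image and vertical-edge kernel are made-up illustrations. Like most deep-learning libraries, it actually computes cross-correlation (the kernel is not flipped). It keeps only the positions where the kernel fits entirely inside the image ("valid" mode).

```python
import numpy as np


def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Slide `kernel` over `image` and return the map of weighted sums."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for r in range(out_h):
        for c in range(out_w):
            patch = image[r:r + kh, c:c + kw]
            out[r, c] = np.sum(patch * kernel)
    return out


# Made-up image: dark on the left (0), bright on the right (1).
image = np.array([[0, 0, 1, 1, 1]] * 4, dtype=float)
# This kernel responds where brightness increases from left to right.
kernel = np.array([[-1, 1],
                   [-1, 1]], dtype=float)
print(conv2d_valid(image, kernel))  # every row is [0. 2. 0. 0.]: the edge sits at column 1
```

In practice, libraries implement this far more efficiently, for example by rearranging patches so the whole operation becomes one matrix multiplication.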

Recurrent neural networks (RNNs) are identified by their feedback loops. These learning algorithms are primarily leveraged when using time-series data to make predictions about future outcomes, such as stock market predictions or sales forecasting.
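The feedback loop that defines an RNN can be sketched as a hidden state h that is updated at every time step from both the current input and its own previous value: h_t = tanh(W_x x_t + W_h h_(t-1) + b). This is a minimal, untrained NumPy sketch, and the sizes, scaled random weights, and toy sequence are assumptions. It shows that the final state depends on the order of the inputs, which is what lets RNNs model sequences such as time series.

```python
import numpy as np


def rnn_forward(xs, W_x, W_h, b):
    """Run a simple (Elman-style) RNN over a sequence and return every hidden state."""
    h = np.zeros(W_h.shape[0])
    states = []
    for x_t in xs:
        # The previous hidden state h feeds back into the next update.
        h = np.tanh(W_x @ x_t + W_h @ h + b)
        states.append(h)
    return np.array(states)


rng = np.random.default_rng(42)
hidden, features = 3, 1
W_x = 0.5 * rng.normal(size=(hidden, features))
W_h = 0.5 * rng.normal(size=(hidden, hidden))
b = np.zeros(hidden)

sequence = np.array([[1.0], [2.0], [3.0]])  # e.g. three time steps of a series
forward_states = rnn_forward(sequence, W_x, W_h, b)
reversed_states = rnn_forward(sequence[::-1], W_x, W_h, b)
print(forward_states[-1])
print(reversed_states[-1])  # same inputs in a different order give a different final state
```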

Deep learning and neural networks tend to be used interchangeably in conversation, which can be confusing. As a result, it’s worth noting that the “deep” in deep learning simply refers to the depth of layers in a neural network. A neural network that consists of more than three layers, including the input and output layers, can be considered a deep learning algorithm. A neural network that only has two or three layers is just a basic neural network.

To learn more about the differences between neural networks and other forms of artificial intelligence, like machine learning, please read the blog post “AI vs. Machine Learning vs. Deep Learning vs. Neural Networks: What’s the Difference?”

The history of neural networks is longer than most people think. While the idea of “a machine that thinks” can be traced to the Ancient Greeks, we’ll focus on the key events that led to the evolution of thinking around neural networks, which has ebbed and flowed in popularity over the years:

1943: Warren S. McCulloch and Walter Pitts published “A logical calculus of the ideas immanent in nervous activity.” This research sought to understand how the human brain could produce complex patterns through connected brain cells, or neurons. One of the main ideas that came out of this work was the comparison of neurons with a binary threshold to Boolean logic (i.e., 0/1 or true/false statements).

1958: Frank Rosenblatt is credited with the development of the perceptron, documented in his research, “The Perceptron: A Probabilistic Model for Information Storage and Organization in the Brain.” He takes McCulloch and Pitts’ work a step further by introducing weights to the equation. Leveraging an IBM 704, Rosenblatt was able to get a computer to learn how to distinguish cards marked on the left from cards marked on the right.

1974: While numerous researchers contributed to the idea of backpropagation, Paul Werbos was the first person in the US to note its application to neural networks, in his PhD thesis.

1989: Yann LeCun published a paper illustrating how the use of constraints in backpropagation and its integration into the neural network architecture can be used to train algorithms. This research successfully leveraged a neural network to recognize hand-written zip code digits provided by the U.S. Postal Service.



What is a Neural Network?

- Written by John Terra

- Updated on November 27, 2023

Many of today’s information technologies aspire to mimic human behavior and thought processes as closely as possible. But do you realize that these efforts extend to imitating a human brain? The human brain is a marvel of organic engineering, and any attempt to create an artificial version will ultimately send the fields of Artificial Intelligence (AI) and Machine Learning (ML) to new heights.

This article tackles the question, “What is a neural network?” We will define the term, outline the types of neural networks, compare their pros and cons, explore neural network applications, and finally, present a way for you to upskill in AI and machine learning.

So, before we explore the fantastic world of artificial neural networks and how they are poised to revolutionize what we know about AI, let’s first establish a definition.

What Is a Neural Network?

So, what is a neural network anyway? A neural network is a method of artificial intelligence: a series of algorithms that teach computers to recognize underlying relationships in data sets and process the data in a way that imitates the human brain. It is also considered a type of machine learning process, usually called deep learning, that uses interconnected nodes or neurons in a layered structure loosely modeled on the pattern of neurons found in organic brains.

This process creates an adaptive system that lets computers continuously learn from their mistakes and improve performance. Humans use artificial neural networks to solve complex problems, such as summarizing documents or recognizing faces, with greater accuracy.

Neural networks are sometimes called artificial neural networks (ANN) to distinguish them from organic neural networks. After all, every person walking around today is equipped with a neural network. Neural networks interpret sensory data using a method of machine perception that labels or clusters raw input. The patterns that ANNs recognize are numerical and contained in vectors, so all real-world data, including text, images, sound, or time series, must first be translated into that form.
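Because a network operates only on numbers, non-numeric data has to be encoded as vectors first. One of the simplest encodings is one-hot encoding, sketched below for words from a tiny, made-up vocabulary. Real text systems typically use learned embeddings instead, so treat this purely as an illustration of the idea.

```python
def one_hot_encode(tokens: list[str]) -> tuple[dict[str, int], list[list[int]]]:
    """Map each distinct token to an index, and each token to a one-hot vector."""
    vocabulary = {tok: i for i, tok in enumerate(sorted(set(tokens)))}
    vectors = []
    for tok in tokens:
        vec = [0] * len(vocabulary)
        vec[vocabulary[tok]] = 1  # a single 1 at the token's index
        vectors.append(vec)
    return vocabulary, vectors


vocab, vecs = one_hot_encode(["cat", "dog", "cat", "bird"])
print(vocab)  # {'bird': 0, 'cat': 1, 'dog': 2}
print(vecs)   # [[0, 1, 0], [0, 0, 1], [0, 1, 0], [1, 0, 0]]
```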

Artificial neural networks form the basis of large language models (LLMs) used by tools such as ChatGPT, Google’s Bard, Microsoft’s Bing, and Meta’s Llama.

Neural networks come in several types, listed below.


What Are the Various Types of Neural Networks?

Here’s a rundown of the types of neural networks available today. ANN types are distinguished largely by the path data takes as it moves from input to output.

Feed-forward Neural Networks

This ANN is one of the least complex networks. Information passes through various input nodes in one direction until it reaches the output node. For example, computer vision and facial recognition use feed-forward networks.

Recurrent Neural Networks

Recurrent neural networks are more complex than feed-forward networks. They save a processing node’s output and feed it back into the model, a process that trains the network to predict a layer’s outcome. Each node in an RNN model acts as a memory cell that continues computation and implements operations. For example, RNNs are commonly used in text-to-speech conversion.

Convolutional Neural Networks

Convolutional neural networks are one of today’s most popular ANN models. This model uses a variation of multilayer perceptrons and contains at least one convolutional layer, which may be fully connected or pooled. These layers generate feature maps that record regions of an image: the image is broken down into rectangles, and the results are passed on for further processing. This ANN model is used primarily in image recognition and in many of the more complex applications of artificial intelligence, like facial recognition, natural language processing, and text digitization.
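A common form of the pooling mentioned above is max pooling. Each non-overlapping rectangle of a feature map is replaced by its largest value, which shrinks the map while keeping the strongest responses. Below is a minimal NumPy sketch. It assumes a 2×2 window and a feature map whose sides are divisible by the window size, and the feature-map values are made up.

```python
import numpy as np


def max_pool(feature_map: np.ndarray, size: int = 2) -> np.ndarray:
    """Non-overlapping max pooling with a square window of side `size`."""
    h, w = feature_map.shape
    if h % size or w % size:
        raise ValueError("feature map sides must be divisible by the window size")
    # View the map as (h/size, size, w/size, size) blocks and take each block's maximum.
    blocks = feature_map.reshape(h // size, size, w // size, size)
    return blocks.max(axis=(1, 3))


fmap = np.array([[1, 3, 2, 0],
                 [4, 2, 1, 1],
                 [0, 1, 5, 6],
                 [2, 2, 7, 1]])
print(max_pool(fmap))  # expected values: [[4, 2], [2, 7]]
```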

Deconvolutional Neural Networks

This type of neural network reverses the CNN process to recover lost signals or features that the CNN previously treated as irrelevant. This model works well for image synthesis and analysis.

Modular Neural Networks

Finally, modular neural networks have multiple neural networks that work separately from each other. These networks don’t communicate or interfere with each other’s operations during the computing process. As a result, large or complex computational processes can be conducted more efficiently.


What is a Neural Network and How Does a Neural Network Work?

Neural network architecture emulates the human brain. Human brain cells, referred to as neurons, form a highly interconnected, complex network and transmit electrical signals to each other, helping us process information. Likewise, artificial neural networks consist of artificial neurons that work together to solve problems. Artificial neurons are software modules called nodes, and artificial neural networks are software programs or algorithms that ultimately use computing systems to perform mathematical calculations. Nodes are called perceptrons and are comparable to multiple linear regressions. Perceptrons feed the signal created by multiple linear regressions into an activation function that could be nonlinear.

Here’s a look at basic neural network architecture.

- Input layer. Data from the outside world enters the ANN via the input layer. Input nodes process, analyze, and categorize the data, then pass it along to the next layer.

- Hidden layer. The hidden layer takes the input data from either the input layer or other hidden layers in the network. ANNs can have numerous hidden layers. Each of the network’s hidden layers analyzes the previous layer’s output, performs any necessary processing, then sends it along to the next layer.

- Output layer. The output layer produces the final result of the artificial neural network’s data processing. The output layer can have single or multiple nodes. For example, if the problem is a binary (yes/no) classification issue, the output layer has just one output node, resulting in either 1 or 0. But if it’s a multi-class classification problem, the output layer can consist of multiple output nodes.

- Deep neural network architecture. Deep neural networks, also called deep learning networks, consist of numerous hidden layers containing millions of linked artificial neurons. Each connection between nodes is represented by a number called a “weight.” A weight is positive if one node excites another and negative if one node suppresses another. Nodes connected by weights of larger magnitude influence the other nodes more.
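The binary-versus-multi-class distinction for the output layer, described in the list above, can be sketched as follows. For a binary problem, a single sigmoid output node is thresholded at 0.5 to give 1 or 0. For a multi-class problem, one node per class is typically combined with a softmax, and the class with the highest probability wins. The raw scores are invented for illustration. Softmax is a common choice here, not something the article prescribes.

```python
import math


def binary_output(score: float) -> int:
    """Single output node: sigmoid, then threshold at 0.5 to get 1 or 0."""
    probability = 1.0 / (1.0 + math.exp(-score))
    return 1 if probability >= 0.5 else 0


def multiclass_output(scores: list[float]) -> tuple[int, list[float]]:
    """One node per class: softmax turns raw scores into probabilities."""
    largest = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    probs = [e / total for e in exps]
    return probs.index(max(probs)), probs


print(binary_output(1.3))   # 1
print(binary_output(-0.7))  # 0
label, probs = multiclass_output([2.0, 0.5, -1.0])
print(label, [round(p, 3) for p in probs])  # 0 [0.786, 0.175, 0.039]
```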

A deep neural network can theoretically map any type of input to any type of output. However, such a network also needs considerably more training than other machine learning methods. Consequently, deep neural networks typically need millions of training examples instead of the hundreds or thousands a simpler network may require.

Speaking of deep learning, let’s explore the neural network machine learning concept.

Machine Learning and Deep Learning: A Comparison

Standard machine learning methods need humans to input data for the machine learning software to work correctly. Then, data scientists determine the set of relevant features the software must analyze. This tedious process limits the software’s ability.

On the other hand, with deep learning, the data scientist only needs to give the software raw data. The deep learning network then extracts the relevant features by itself, thereby learning more independently. This also allows it to analyze unstructured data sets such as text documents, identify which data attributes need prioritization, and solve more challenging and complex problems.


A Look at the Applications of Neural Networks

To get a more in-depth answer to the question “What is a neural network?” it’s super helpful to get an idea of the real-world applications they’re used for. Neural networks have countless uses, and as the technology improves, we’ll see more of them in our everyday lives. Here’s a partial list of how neural networks are being used today.

Speech Recognition

Let’s start off the list with one of the most popular applications. Neural networks can analyze human speech despite disparate languages, speech patterns, pitch, tone, and accents. Virtual assistants such as Amazon Alexa and transcription software use speech recognition to:

- Help call center agents and automatically classify calls

- Turn clinical conversations into documentation in real-time

- Place accurate subtitles in videos and meeting recordings

Computer Vision

Computer vision lets computers extract insights and information from images and videos. Using neural networks, computers can distinguish and recognize images as humans can. Computer vision is used for:

- Visual recognition in self-driving cars

- Content moderation that automatically removes inappropriate or unsafe content from image and video databases

- Facial recognition for identifying faces and recognizing characteristics such as open eyes, facial hair, and glasses

Natural Language Processing

Natural language processing (NLP) is a computer’s ability to process natural, human-made text. Neural networks aid computers in gathering insights and meaning from documents and other text data. NLP has many uses, including:

- Automated chatbots and virtual agents

- Automatically organizing and classifying written data

- Business intelligence (BI) analysis of long-form documents such as e-mails, contracts, and other forms

- Indexing key phrases that show sentiment, such as positive or negative comments on social media posts

Recommendation Engines

If you’ve ever ordered something online and later noticed that your social media newsfeed got flooded with recommendations for related products, congratulations! You’ve encountered a recommendation engine! Neural networks can track user activity and use the results to develop personalized recommendations. They can also analyze all aspects of a user’s behavior and discover new products or services that could interest them.


What is a Neural Network: Advantages and Disadvantages of Neural Networks

Neural networks bring plenty of advantages to the table but also have downsides. So let’s break things down into a list of pros and cons.

Advantages of Neural Networks

Neural networks have a lot going for them, and as the technology gets better, they will only improve and offer more functionality.

- Parallel processing abilities allow the network to perform multiple jobs simultaneously.

- The neural network can learn from events and make decisions based on its observations.

- Information gets accessed faster because it’s stored on an entire network, not just in a single database.

- Thanks to fault tolerance, neural network output generation won’t be interrupted if one or more cells get corrupted.

- Gradual corruption means the network degrades slowly over time rather than the network getting instantly destroyed by a problem. So, IT staff has time to address the problem and root out the corruption.

- The ANNs can produce output with incomplete information, and performance loss will be based on how important the missing information is. Consequently, output production doesn’t have to be interrupted due to irrelevant information not being available.

- ANNs can learn hidden relationships in the data without imposing any fixed relationships, so the network can better model highly volatile data and non-constant variances.

- Neural networks can generalize: after learning from training data, they can infer relationships in unseen data that would otherwise go unnoticed and predict the output for that data.

Disadvantages of Neural Networks

Unfortunately, it’s not all sunshine and smooth sailing. Neural networks aren’t perfect and have their drawbacks.

- Neural networks are bad at the “show your work” requirement. ANNs are saddled with the inability to explain their solutions’ hows and whys, thus breeding a lack of trust in the process. This situation is possibly the biggest drawback of neural networks.

- Neural networks are hardware-dependent because they require processors with parallel processing abilities.

- Since there aren’t any rules for determining proper network structures, data scientists must resort to trial and error and their own experience to find the appropriate artificial neural network architecture.

- Neural networks operate with numerical information; thus, all problems must be converted into numerical values before the ANN can work on them.

Do You Want More Training in the Field of Artificial Intelligence?

Neural networks are gaining in popularity, so if you’re interested in an exciting career in a technology that’s still in its infancy, consider taking an AI course and setting your sights on an AI/ML position.

This six-month course provides a high-engagement learning experience that teaches concepts and skills such as computer vision, deep learning, speech recognition, neural networks, NLP, and much more.

The job website Glassdoor.com reports that an Artificial Intelligence Engineer’s average yearly salary in the United States is $105,013. So, if you’re ready to claim a good seat at the table of an industry that’s still new and growing, getting in at the ground floor of this exciting technology while enjoying excellent compensation, consider this bootcamp and get that ball rolling.


Dynamic Neural Network Models for Time-Varying Problem Solving: A Survey on Model Structures



- Published: 23 June 2022

A hybrid biological neural network model for solving problems in cognitive planning

- Henry Powell, Mathias Winkel, Alexander V. Hopp & Helmut Linde

Scientific Reports volume 12, Article number: 10628 (2022)


- Computational biology and bioinformatics

- Neuroscience

- Systems biology

A variety of behaviors, like spatial navigation or bodily motion, can be formulated as graph traversal problems through cognitive maps. We present a neural network model which can solve such tasks and is compatible with a broad range of empirical findings about the mammalian neocortex and hippocampus. The neurons and synaptic connections in the model represent structures that can result from self-organization into a cognitive map via Hebbian learning, i.e. into a graph in which each neuron represents a point of some abstract task-relevant manifold and the recurrent connections encode a distance metric on the manifold. Graph traversal problems are solved by wave-like activation patterns which travel through the recurrent network and guide a localized peak of activity onto a path from some starting position to a target state.


Introduction

Building a bridge between structure and function of neural networks is an ambition at the heart of neuroscience. Historically, the first models studied were simplistic artificial neurons arranged in a feed-forward architecture. Such models are still widely applied today, forming the conceptual basis for Deep Learning. They have shaped our intuition of neurons as “feature detectors” which fire when a certain approximate configuration of input signals is present, and which aggregate simple features to more and more complex ones layer by layer. Yet in the brain, the vast majority of neural connections are recurrent, and although several possible explanations of their function have been proposed 1, 2, 3, their computational purpose is still little understood 4.

In the present paper, we propose a new algorithmic role which recurrent neural connections might play, namely as a computational substrate to solve graph traversal problems. We argue that many cognitive tasks like navigation or motion planning can be framed as finding a path from a starting position to some target position in a space of possible states. The possible states may be encoded by neurons via their “feature-detector property”. Allowed transitions between nearby states would then be encoded in recurrent connections, which can form naturally via Hebbian learning since the feature detectors’ receptive fields overlap. They may eventually form a “map” of some external system. Activation propagating through the network can then be used to find a short path through this map. In effect, the neural dynamics then implement an algorithm similar to Breadth-First Search on a graph.
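For reference, here is a sketch of plain Breadth-First Search, the algorithm the model's dynamics are compared to. This is the textbook algorithm on an explicit graph, not the neural model itself. The small graph is a hypothetical stand-in for a maze with arbitrarily named nodes.

```python
from collections import deque
from typing import Optional


def bfs_path(graph: dict[str, list[str]], start: str, goal: str) -> Optional[list[str]]:
    """Return a shortest path (fewest edges) from start to goal, or None."""
    parents = {start: None}
    frontier = deque([start])
    while frontier:
        node = frontier.popleft()
        if node == goal:
            # Walk the parent pointers back from the goal to the start.
            path = []
            while node is not None:
                path.append(node)
                node = parents[node]
            return path[::-1]
        for neighbor in graph[node]:
            if neighbor not in parents:
                parents[neighbor] = node
                frontier.append(neighbor)
    return None


# Hypothetical maze: "B" and "D" are dead ends; the route to "F" goes through "C" and "E".
maze = {
    "A": ["B", "C"],
    "B": ["A"],
    "C": ["A", "D", "E"],
    "D": ["C"],
    "E": ["C", "F"],
    "F": ["E"],
}
print(bfs_path(maze, "A", "F"))  # ['A', 'C', 'E', 'F']
```

BFS explores the graph in expanding "rings" of equal distance from the start, which is the property the wave-like activity in the model resembles.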

Proposed model

A network of neurons that represents a manifold of stimuli.

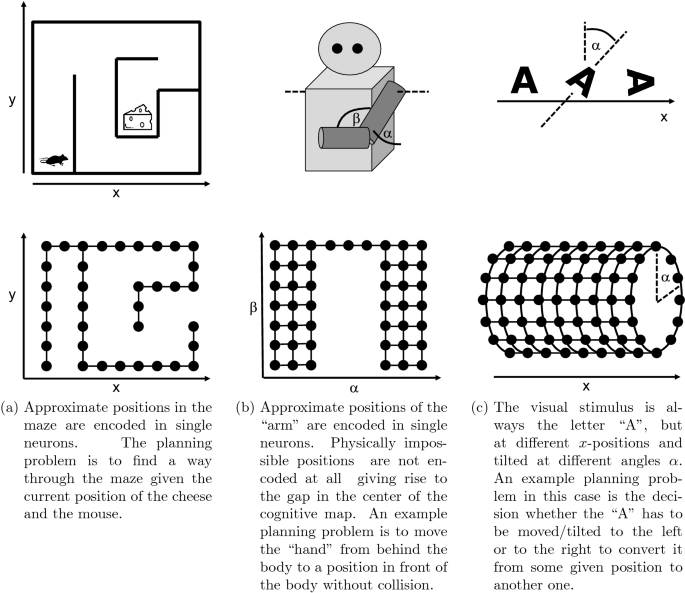

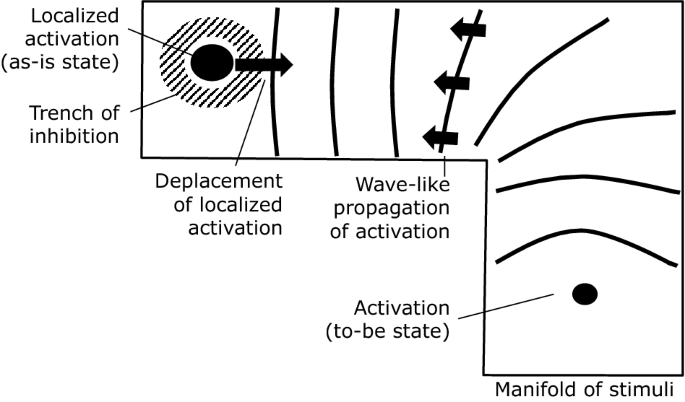

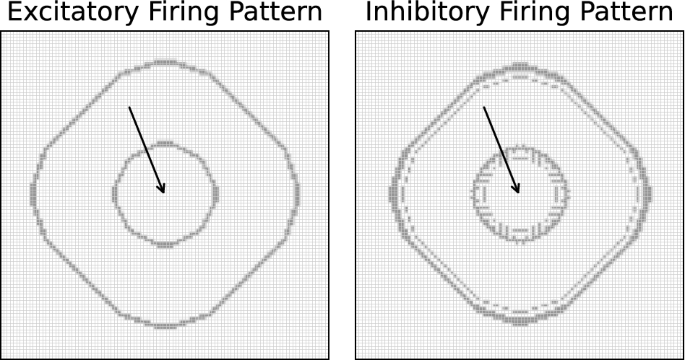

We consider a neural network which is exposed to some external stimuli-generating process under the assumption that the possible stimuli can be organized in some continuous manifold in the sense that similar stimuli are located close to each other on this manifold. For example, in the case of a mouse running through a maze, all possible perceptions can be associated with a particular position in a two-dimensional map, and neighboring positions will generate similar perceptions, see Fig. 1 a.

Proprioception, i. e. the sense of location of body parts, can also be a source of stimuli. For example, for a simplified arm with two degrees of freedom every possible position of the arm corresponds to one specific stimulus, cf. Fig. 1 b. All possible stimuli combined give rise to a two-dimensional manifold. The example also shows that the manifold will usually be restricted since not every conceivable combination of two joint angles might be a physically viable position for the arm.

The manifold of potential stimuli need not necessarily be embedded in a flat Euclidean space as in the case of the maze. For example, if the stimuli are two-dimensional figures which can be shifted horizontally or rotated on a screen, the corresponding manifold is two-dimensional (one translational parameter plus one for the rotation angle) but it is not isomorphic to a flat plane since a change of the rotation angle by \(2\pi\) maps the figure onto itself again, see Fig. 1 c.
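The non-flat geometry of the shifted-and-rotated figure can be made concrete with a distance function on its manifold. The horizontal shift behaves like an ordinary line, but the rotation angle wraps around, so angles near 0 and near \(2\pi\) are close together. The sketch below combines the two components with equal weighting in a Euclidean way. That weighting is an illustrative assumption, not something defined in the paper.

```python
import math


def stimulus_distance(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Distance between stimuli (x shift, rotation angle) on a cylinder-shaped manifold."""
    dx = a[0] - b[0]
    # The angle wraps around: a rotation by 2*pi maps the figure onto itself.
    dtheta = abs(a[1] - b[1]) % (2 * math.pi)
    dtheta = min(dtheta, 2 * math.pi - dtheta)
    return math.hypot(dx, dtheta)


# Tilts of 0.1 rad and (2*pi - 0.1) rad are only 0.2 rad apart on the manifold,
# even though the raw angle values differ by almost 2*pi.
print(round(stimulus_distance((0.0, 0.1), (0.0, 2 * math.pi - 0.1)), 6))  # 0.2
```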

We assume that such manifolds of stimuli are approximated by the connectivity structure of a neural network which forms via a learning process. The result is a neural structure which we call a cognitive map. The defining property of a cognitive map is that it has a neural encoding for every possible stimulus and that two similar stimuli, i. e. stimuli which are close to each other in the manifold of stimuli, are represented by similar encodings, i. e. encodings which are close to each other in the cognitive map (of course, we do not imply that two neurons which are close to each other in the connectivity structure are also close to each other with respect to their physical location in the neural tissue).

Figure 1. Three examples of stimuli-generating processes and recurrent neural networks representing the corresponding manifold of stimuli. ( a ) Approximate positions in the maze are encoded in single neurons. The planning problem is to find a way through the maze given the current position of the cheese and the mouse. ( b ) Approximate positions of the "arm" are encoded in single neurons. Physically impossible positions are not encoded at all giving rise to the gap in the center of the cognitive map. An example planning problem is to move the "hand" from behind the body to a position in front of the body without collision. ( c ) The visual stimulus is always the letter "A", but at different x-positions and tilted at different angles α . An example planning problem in this case is the decision whether the "A" has to be moved/tilted to the left or to the right to convert it from some given position to another one.

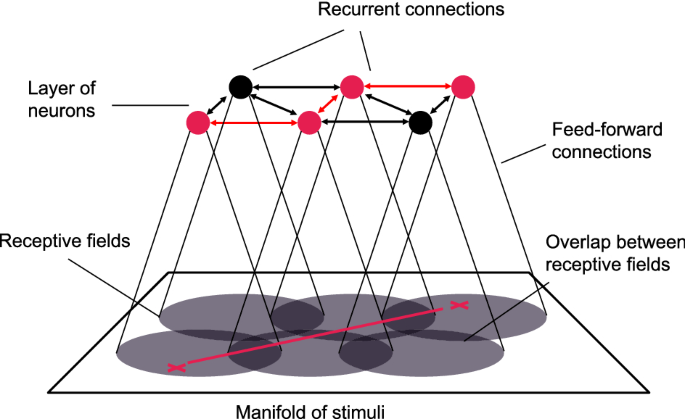

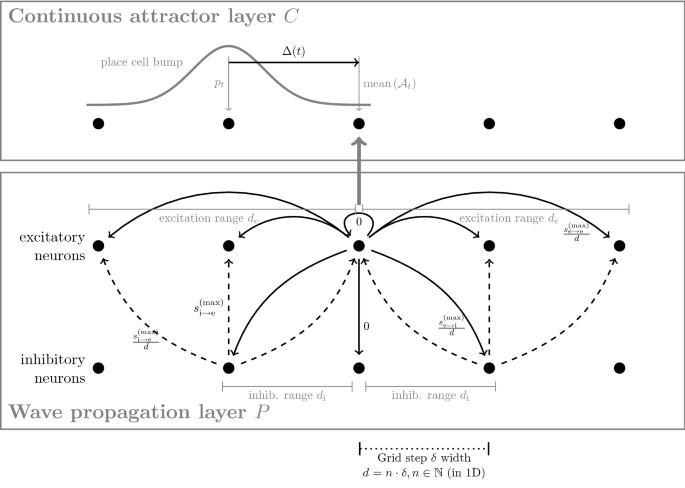

For the model, we make a very simplistic choice and assume a single-neuron encoding, i. e. the manifold of stimuli is covered by the receptive fields of individual neurons. Each such receptive field is a small localized area in the manifold and two neighboring receptive fields may overlap, see Fig. 2 . Such an encoding is a typical outcome for a single layer of neurons which are trained in a competitive Hebbian learning process 5 .

The key idea of the model is that a problem that can be formulated as a planning problem in the manifold of stimuli can be solved as a planning problem in a corresponding cognitive map. To this end, it is not enough to consider the cognitive map as a set of individual points; its topology must be known as well. This topological information will be encoded in the recurrent connections of the neural network.

It seems natural that a neural network could learn this topology via Hebbian learning: Two neurons with close-by receptive fields in the manifold will be excited simultaneously relatively often because their receptive fields overlap. Consequently, recurrent connections within the cognitive map will be strengthened between such neurons and the topology of the neural network will approximate the topology of the manifold, see Fig. 2 . This idea has been explored in more detail by Curto and Itskov in 6 . Indeed, previous work on the formation of neocortical maps that code for ocular dominance and stimulus orientation suggests that the formation of cognitive maps could well occur in this fashion 7 . For a review and comparison of these kinds of cognitive maps see 8 . Recent studies also show that recurrent neural networks might serve even more purposes, for example for working memory 9 , 10 or image recognition 11 .
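The Hebbian argument can be checked with a small simulation. The sketch assumes ten neurons with Gaussian receptive fields evenly spaced along a one-dimensional stimulus line. It sweeps stimuli across the line and applies the basic Hebbian rule Δw_ij = η·a_i·a_j to the recurrent weights. The receptive-field shape and width, the learning rate, and the stimulus sweep are illustrative assumptions, not the paper's competitive Hebbian learning process. The result is that each neuron's strongest recurrent connection goes to an immediate neighbor whose receptive field overlaps its own.

```python
import numpy as np

centers = np.arange(10.0)  # receptive-field centers along a 1-D stimulus manifold
width = 0.7                # receptive-field width (assumed)
eta = 0.01                 # Hebbian learning rate (assumed)


def activations(stimulus: float) -> np.ndarray:
    """Gaussian receptive fields: each neuron responds most to stimuli near its center."""
    return np.exp(-((stimulus - centers) ** 2) / (2 * width ** 2))


W = np.zeros((len(centers), len(centers)))
for s in np.linspace(0.0, 9.0, 901):
    a = activations(s)
    W += eta * np.outer(a, a)  # Hebb: strengthen connections between co-active neurons
np.fill_diagonal(W, 0.0)       # ignore self-connections

strongest = W.argmax(axis=1)
print(strongest)  # every entry is an immediate neighbor (index +/- 1) of its row
```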

Figure 2. In the model, the recurrent connections within a single layer of neurons approximate the topology of the manifold of stimuli. During the learning process, the strongest recurrent connections are formed between neurons with overlapping receptive fields. The problem of finding a route through the manifold (red line) is thus approximated by the problem of finding a path through the graph of recurrent neural connections (red path).

To avoid confusion with related concepts in machine learning, note that the present definition of recurrence is not exactly the same as the one used, for example, in Long Short-Term Memory networks 12 . Those algorithms employ recurrent connections as a loop to mix some input signal of a neural network with the output signal from a previous time step. The present model, however, distinguishes between the primary excitation by some external stimulus via feed-forward connections and the resulting dynamics of the network mediated by the recurrent connections, as described in the following.

Dynamics required for solving planning problems

Having set up a network that represents a manifold of stimuli, we need to endow this network of feed-forward and recurrent connections with dynamics. We do so by imposing two interacting mechanisms.

First, the neurons in the network should exhibit continuous attractor dynamics 13 : If a “clique” of a few tightly connected neurons is activated by a stimulus via the corresponding feed-forward pass, its members keep activating each other while inhibiting their wider neighborhood. The result is a self-sustained, localized neural activity surrounded by a “trench of inhibition”. In the model, this encodes the as-is situation or the starting position for the planning problem. Such a state is called an “attractor” since it is stable under small perturbations of the dynamics, and it is part of a continuous landscape of attractors with different locations across the network. The dynamics of such bumps of activity in neural sheets of various kinds has been studied in depth in 14 and applied to more general problems in neuroscience 15 , but such bumps have not, as yet, been used as a means to solve planning problems in the way proposed here.
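A continuous attractor in its simplest form can be sketched with binary threshold neurons on a ring. This is an illustrative stand-in, not the rate-coded network used in the numerical experiments: the weights (excitation +1 up to ring distance 2, inhibition -0.5 from all farther neurons), the threshold 0.75 and all names are assumptions, chosen so that a clique of five neighbouring neurons is a fixed point of the synchronous update.

```python
N = 40            # neurons on a ring (1-D manifold with periodic boundary)
EXC_RANGE = 2     # ring distance up to which neurons excite each other
W_EXC = 1.0       # short-range excitatory weight (assumed)
W_INH = -0.5      # longer-range inhibitory weight (assumed)
THETA = 0.75      # firing threshold (assumed)


def ring_distance(i, j):
    d = abs(i - j) % N
    return min(d, N - d)


def weight(i, j):
    d = ring_distance(i, j)
    if d == 0:
        return 0.0
    return W_EXC if d <= EXC_RANGE else W_INH


WEIGHTS = [[weight(i, j) for j in range(N)] for i in range(N)]


def step(active, external):
    """Synchronous update of all binary threshold neurons."""
    drive = [
        sum(WEIGHTS[i][j] * active[j] for j in range(N)) + external[i]
        for i in range(N)
    ]
    return [1 if d > THETA else 0 for d in drive]


def run(centre, cue_steps=1, total_steps=50):
    """Cue a clique around `centre`, withdraw the cue, return the neurons still active."""
    active = [0] * N
    cue = [1.0 if ring_distance(i, centre) <= EXC_RANGE else 0.0 for i in range(N)]
    silence = [0.0] * N
    for t in range(total_steps):
        active = step(active, cue if t < cue_steps else silence)
    return [i for i in range(N) if active[i]]


print(run(10))  # → [8, 9, 10, 11, 12]
print(run(0))   # → [0, 1, 2, 38, 39]
```

A brief cue creates a bump that outlives the cue, and the same bump can persist at any position on the ring, which mirrors the continuous landscape of attractors. With these weights, neurons at ring distance 3 from the bump centre receive a drive of 0.5 (below threshold) and those farther away receive negative drive, a crude version of the trench of inhibition.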

Second, the neural network should allow for wave-like expansion of activity. If a small number of close-by neurons are activated by some hypothetical executive brain function (i. e. not via the feed-forward pass), they activate their neighbors, which in turn activate theirs, and so on. The result is a wave-like front of activity propagating through the recurrent network. The neurons which have been activated first encode the to-be state or the end position of the planning problem.
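The wave-like expansion can be sketched with a classical excitable-medium toy model, a three-state cellular automaton with resting, excited and refractory cells on a 4-connected grid. This is an assumption for illustration only (the numerical experiments use Izhikevich neurons), and the function names are hypothetical. The one-step refractory period is what keeps the front moving outward instead of re-exciting cells behind it.

```python
RESTING, EXCITED, REFRACTORY = 0, 1, 2


def neighbours(cell, width, height):
    """4-connected neighbours on a width x height grid."""
    x, y = cell
    for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1)):
        nx, ny = x + dx, y + dy
        if 0 <= nx < width and 0 <= ny < height:
            yield (nx, ny)


def propagate_wave(width, height, source, steps):
    """Excite `source` once and let activity spread; return first-excitation times and final states."""
    state = {(x, y): RESTING for x in range(width) for y in range(height)}
    state[source] = EXCITED
    first_excited = {source: 0}
    for t in range(1, steps + 1):
        new_state = {}
        for cell, s in state.items():
            if s == EXCITED:
                new_state[cell] = REFRACTORY   # a cell cannot fire twice in a row
            elif s == REFRACTORY:
                new_state[cell] = RESTING      # recovers after one step
            elif any(state[n] == EXCITED for n in neighbours(cell, width, height)):
                new_state[cell] = EXCITED      # excited by an active neighbour
                first_excited.setdefault(cell, t)
            else:
                new_state[cell] = RESTING
        state = new_state
    return first_excited, state


arrival, final = propagate_wave(6, 5, source=(1, 2), steps=20)
print(arrival[(5, 4)])  # → 6
```

The first-excitation time of every cell equals its grid (Manhattan) distance from the stimulated cell, and once the front has passed, the whole sheet returns to rest.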

The key to solving a planning problem lies in the interaction between the two types of dynamics, namely in what happens when the expanding wave front hits the stationary peak of activity. On the side from which the wave approaches, the “trench of inhibition” surrounding the peak is partly neutralized by the additional excitatory activation from the wave. Consequently, the containment of the activity peak is somewhat “softer” on the side where the wave hit it, and the peak may move one step in the direction of the incoming wave. This process repeats, leading to a small change of position with every incoming wave front. The localized peak of excitation thus follows the wave fronts back to their source, moving along a route through the manifold from start to end position, see Fig. 3.
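The net effect of this interaction on the bump can be abstracted as follows, under the simplifying assumption that each wave front shifts the bump by exactly one cell towards the neighbour that the front reached first. Since a front reaches each cell at a time equal to its graph distance from the target, the sketch computes the wave arrival times directly by breadth-first search, and the bump then descends along these times. The function names are hypothetical, and the continuous dynamics of both layers are ignored.

```python
from collections import deque


def neighbours(cell, width, height):
    """4-connected neighbours on a width x height grid."""
    x, y = cell
    for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1)):
        nx, ny = x + dx, y + dy
        if 0 <= nx < width and 0 <= ny < height:
            yield (nx, ny)


def wave_arrival_times(width, height, target):
    """Step at which a wave emitted by `target` first reaches each cell (= graph distance)."""
    times = {target: 0}
    queue = deque([target])
    while queue:
        cell = queue.popleft()
        for n in neighbours(cell, width, height):
            if n not in times:
                times[n] = times[cell] + 1
                queue.append(n)
    return times


def follow_waves(width, height, start, target):
    """Move the bump one cell per wave front, towards the neighbour the front reached first."""
    times = wave_arrival_times(width, height, target)
    path = [start]
    while path[-1] != target:
        here = path[-1]
        # The incoming front arrives from the neighbour with the earliest arrival time;
        # ties are broken by cell coordinates so the result is deterministic.
        path.append(min(neighbours(here, width, height), key=lambda c: (times[c], c)))
    return path


route = follow_waves(8, 8, start=(0, 0), target=(7, 7))
print(len(route) - 1)  # → 14
```

On an open 8 x 8 grid, the bump needs 14 wave fronts to travel from corner to corner, which is the length of a shortest path.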

The as-is state of the system is encoded in a stable, localized, and self-sustained peak of activity surrounded by a “trench” of inhibition (top left corner). A planning process is started by stimulating the neurons which encode the to-be position (bottom right corner). The resulting waves of activity travel through the network and interact with the localized peak. Each incoming wave front shifts the peak slightly towards its direction of origin. Note that, for reasons of simplicity, we did not draw the neural network in this figure but only the manifold which it approximates.

The two types of dynamics described above are seemingly contradictory, since the first one restricts the system to localized activity, while the second one permits a wave-like propagation of activity throughout the system. To resolve the conflict in numerical simulations, we have split the dynamics into a continuous attractor layer and a wave propagation layer, which are responsible for different aspects of the system’s dynamical behaviour. We discuss the concepts of a numerical implementation in the section “Implementation in a numerical proof-of-concept” and ideas for a biologically more plausible implementation in the “Discussion” section.

Connection to real-life cognitive processes

To make the proposed concept more tangible, we present a rough sketch of how it could be embedded in a real-life cognitive process along with a speculative proposal for its anatomical implementation in the special case of motor control.

As an example, we consider a human grabbing a cup of coffee and we explain how the presented model complements and details the processes described in 16 for that particular case. According to our hypothesis, the as-is position of the subject’s arm is encoded as a localized peak of activity in the cognitive map encoding the complex manifold of arm positions. Anatomically, this cognitive map is certainly of a more complicated structure than the one in our simple model and it is possibly shared between primary motor cortex and primary somatosensory cortex.

We assume that the encoding of the arm’s state works in a bi-directional way, somewhat like the string of a puppet: When the arm is moved by external forces, the neural representation of its position mediated by afferent somatosensory signals moves along with it. On the other hand, if the representation in the cortical map is changed slightly by some cognitive process, then some hypothetical control mechanism of the primary motor cortex sends efferent signals to the muscles in an attempt to make the arm follow its neural representation and bring the limb and its representation back into congruence.

If the human subject now decides to grab the cup of coffee, some executive brain function with heavy involvement of the prefrontal cortex constructs a to-be state of holding the cup: the final position of the hand with the fingers around the cup handle is what the person consciously thinks of. The high-level instructions generated by the prefrontal cortex are possibly translated by the premotor cortex into a specific target state in the cognitive map that represents the manifold of possible arm positions. The neurons of the primary motor cortex and/or the primary somatosensory cortex representing this target state are thus activated.

The activation creates waves of activity that propagate through the network, reach the representation of the as-is state and shift it slightly towards the to-be state. The hypothetical muscle control mechanism reacts to this disturbance and performs a motor action to keep the physical position of the arm and its representation in the cognitive map in line. As long as the person implicitly represents the to-be state, the arm “automatically” performs the complicated sequence of many individual joint movements that is necessary to grab the cup.

This concept can be extended to flexibly consider restrictions that have not been hard-coded in the cognitive map by learning. For example, in order to grab the cup of coffee, the arm may need to avoid obstacles on the way. To this end, the hypothetical executive brain function which defines the target state of the hand could also temporarily “block” certain regions of the cognitive map (e. g. via inhibition) which it associates with the discomfort of a collision. Those parts of the network which are blocked cannot conduct the “planning waves” anymore and thus a path around those regions will be found.

Implementation in a numerical proof-of-concept

To substantiate the presented conceptual ideas, we performed numerical experiments with several different setups. In each case, the implementation of the model employs two neural networks that both represent the same manifold of stimuli.

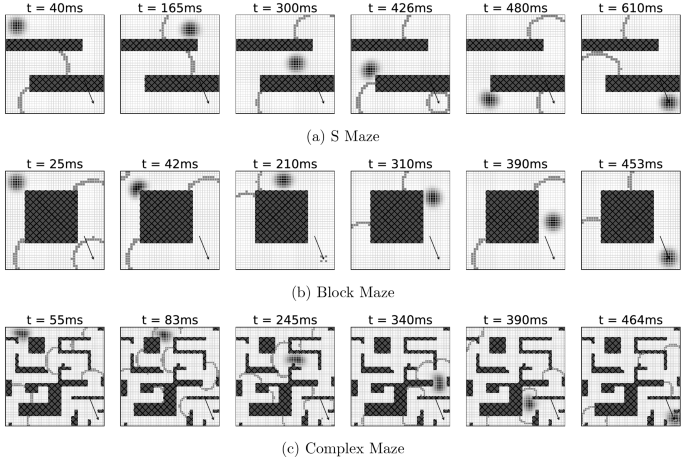

The continuous attractor layer is a sheet of neurons that models the functionality of a network of place cells in the human hippocampus 17 , 18 . Each neuron is implemented as a rate-coded cell embedded in its neighborhood via short-range excitatory and long-range inhibitory connections as in 19 . This structure allows the formation of a self-sustaining “bump” of activity, which can be shifted through the network by external perturbations.

The wave propagation layer is constructed with an identical number of excitatory and inhibitory Izhikevich neurons 20 , 21 , properly connected to allow for stable signal propagation across the manifold of stimuli. The target node is permanently stimulated, causing it to emit waves of activation which travel through the network.
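For reference, a single Izhikevich neuron obeys \(v' = 0.04v^2 + 5v + 140 - u + I\) and \(u' = a(bv - u)\), with the reset \(v \leftarrow c,\ u \leftarrow u + d\) once \(v \ge 30\,\mathrm{mV}\) (time in ms). The sketch below integrates one such neuron with the forward Euler method. The regular-spiking (excitatory) and fast-spiking (inhibitory) parameter sets are the standard values from Izhikevich's 2003 model; the parameters and connectivity used in the experiments may differ, and `count_spikes` is a hypothetical helper.

```python
# Standard regular-spiking (excitatory) and fast-spiking (inhibitory) parameter
# sets from Izhikevich (2003); assumed here, not taken from the experiments.
REGULAR_SPIKING = dict(a=0.02, b=0.2, c=-65.0, d=8.0)
FAST_SPIKING = dict(a=0.1, b=0.2, c=-65.0, d=2.0)


def count_spikes(current, params, t_max=1000.0, dt=0.5):
    """Forward-Euler integration of one Izhikevich neuron driven by a constant current."""
    a, b, c, d = params["a"], params["b"], params["c"], params["d"]
    v = -65.0   # membrane potential (mV)
    u = b * v   # recovery variable
    spikes = 0
    for _ in range(int(t_max / dt)):
        v += dt * (0.04 * v * v + 5.0 * v + 140.0 - u + current)
        u += dt * a * (b * v - u)
        if v >= 30.0:   # spike: reset v and increment the recovery variable
            spikes += 1
            v = c
            u += d
    return spikes


print(count_spikes(10.0, REGULAR_SPIKING), count_spikes(10.0, FAST_SPIKING))
```

Without input current, both neuron types settle at rest, while a constant current of 10 drives repetitive spiking, which is the basic ingredient for relaying a wave from neuron to neuron.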

The interaction between the two layers is modeled in a rather simplistic way. As in 19 , a time-dependent direction vector was introduced into the synaptic weight matrix of the continuous attractor layer. It shifts the synaptic weights in a particular direction, which in turn causes the activation bump in the attractor layer to move to a neighbouring neuron. The direction vector is updated whenever a wave of activity in the wave propagation layer newly enters the region that corresponds to the bump in the continuous attractor layer. It is set to point from the center of the bump to the center of the overlap area between bump and wave, thus shifting the bump towards the incoming wave fronts.
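The direction-vector rule can be written down compactly. The sketch assumes that both layers share one grid, so that the bump and the current wave front can each be described as a set of (x, y) cells; `direction_vector` and `DirectionTracker` are hypothetical names, and the subsequent shift of the synaptic weight matrix, which actually moves the bump, is not modelled.

```python
import math


def direction_vector(bump_cells, wave_cells):
    """Unit vector from the bump centre to the centre of the bump/wave overlap (None if no overlap)."""
    overlap = bump_cells & wave_cells
    if not overlap:
        return None
    bx = sum(x for x, _ in bump_cells) / len(bump_cells)
    by = sum(y for _, y in bump_cells) / len(bump_cells)
    ox = sum(x for x, _ in overlap) / len(overlap)
    oy = sum(y for _, y in overlap) / len(overlap)
    dx, dy = ox - bx, oy - by
    norm = math.hypot(dx, dy)
    return (dx / norm, dy / norm) if norm > 0 else None


class DirectionTracker:
    """Updates the direction only when a wave front newly enters the bump."""

    def __init__(self):
        self.direction = (0.0, 0.0)
        self._was_overlapping = False

    def update(self, bump_cells, wave_cells):
        overlapping = bool(bump_cells & wave_cells)
        if overlapping and not self._was_overlapping:  # a new front has just entered
            new = direction_vector(bump_cells, wave_cells)
            if new is not None:
                self.direction = new
        self._was_overlapping = overlapping
        return self.direction


# A disc-shaped bump of radius 2 around (5, 5) and a straight front arriving from +x.
bump = {(5 + dx, 5 + dy) for dx in range(-2, 3) for dy in range(-2, 3) if dx * dx + dy * dy <= 4}
front = {(7, y) for y in range(10)}
print(direction_vector(bump, front))  # → (1.0, 0.0)
```

A front that keeps overlapping the bump leaves the direction unchanged; only the next front entering the bump can redirect it.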

For more details on the implementation, see “Methods and experiments” below.

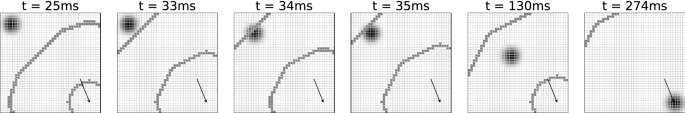

Results of the numerical experiments

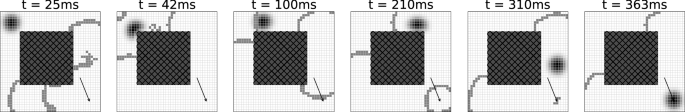

In a very simple initial configuration, the path-finding algorithm was tested on a fully populated square grid of neurons as described before. Figure 4 shows snapshots of wave activity and of the continuous attractor position at representative time points during the simulation. As expected, stimulating the wave propagation layer in the lower right of the cognitive map causes the emission of waves, which in turn shift the bump in the continuous attractor layer from its starting position in the upper left towards its target state.

Activity in the wave propagation layer (greyish lines) and the continuous attractor layer (circular blob-like structure), overlaid on top of each other at different time points during the simulation. The grid signifies the neural network structure, i. e. every grid cell in the visualization corresponds to one neuron in each of the two layers, the wave propagation layer and the continuous attractor layer. The position of the external stimulation of the wave propagation layer (to-be state) is marked with an arrow. Starting from an initial position in the top left of the sheet, the activation bump traces the incoming waves back to their source in the bottom right.

As described in the section “Connection to real-life cognitive processes” above, the manifold of stimuli represented by the neural network can be curved, branched, or of different topology, either permanently or temporarily. The purpose of the model is to allow for a reliable solution to the underlying graph traversal problems regardless of obstacles in the networks. We therefore investigated whether the bump of activation in the continuous attractor layer was able to navigate through the graph from the starting node to the end node in the presence of nodes that could not be traversed. To test this, we constructed different “mazes”, blocking off sections of the graph by zeroing the synaptic connections of the respective neurons in the wave propagation layer and by clamping the activation functions of the corresponding neurons in the continuous attractor layer to zero, see Fig. 5. We found that in all these setups, the algorithm successfully navigated the bump in the continuous attractor layer through the mazes.
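Blocking can be added to an abstracted sketch in which the bump moves one cell per wave front towards the neighbour the front reached first. Cells marked `#` neither conduct waves (as if their connections in the wave propagation layer were zeroed) nor can host the bump (as if their activation were clamped to zero). The maze layout, the parser and all function names are assumptions for illustration, and the wave arrival times are computed by breadth-first search rather than simulated.

```python
from collections import deque

# '#' = blocked neuron, 'S' = as-is position, 'T' = to-be position (hypothetical maze).
MAZE = [
    "S..#....",
    ".#.#.##.",
    ".#...#..",
    ".####.#.",
    "......#T",
]


def parse(maze):
    open_cells, start, target = set(), None, None
    for r, row in enumerate(maze):
        for c, ch in enumerate(row):
            if ch != "#":
                open_cells.add((r, c))
            if ch == "S":
                start = (r, c)
            elif ch == "T":
                target = (r, c)
    return open_cells, start, target


def open_neighbours(cell, open_cells):
    r, c = cell
    for n in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
        if n in open_cells:  # blocked cells neither conduct waves nor host the bump
            yield n


def wave_arrival_times(open_cells, target):
    times = {target: 0}
    queue = deque([target])
    while queue:
        cell = queue.popleft()
        for n in open_neighbours(cell, open_cells):
            if n not in times:
                times[n] = times[cell] + 1
                queue.append(n)
    return times


def solve(maze):
    open_cells, start, target = parse(maze)
    times = wave_arrival_times(open_cells, target)
    if start not in times:
        raise ValueError("no wave ever reaches the start position")
    path = [start]
    while path[-1] != target:
        # Step towards the open neighbour that the wave front reached first.
        path.append(min(open_neighbours(path[-1], open_cells),
                        key=lambda n: (times.get(n, float("inf")), n)))
    return path


route = solve(MAZE)
print(len(route) - 1)  # → 15
```

In this maze, the corridor along the bottom row is a dead end, so the bump follows the 15-step route over the top of the maze.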

Simulations where specific portions of the neural layers were blocked for traversal (dark hatched regions) show the model’s capability of solving complex planning problems. Note that, especially in the very fine structure of Fig. 5c, leftover excitation can trigger apparently spontaneous waves in the simulation region, such as at the right center at \(t = 83\,\mathrm{ms}\). As the corresponding neurons are not constantly stimulated, these are usually isolated events that do not disturb the overall process (Supplementary Videos).

Relation to existing graph traversal algorithms

To conclude this section, we highlight a few parallels between the presented approach and the classical Breadth-First Search (BFS) algorithm.

BFS begins at some start node \(s\) of the graph and marks this node as “visited”. In each step, it then chooses the node that was marked “visited” earliest but is not yet “finished” (nodes are processed in first-in, first-out order) and checks whether any unvisited nodes share an edge with it. All such nodes are marked as “visited”, the current node is marked as “finished”, and the next iteration of the algorithm starts.
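For comparison, the classical sequential algorithm can be sketched with a first-in, first-out queue holding the nodes that are visited but not yet finished. The example graph and the function name are arbitrary choices for illustration.

```python
from collections import deque


def bfs(graph, s):
    """Classical BFS: order in which nodes are marked 'visited', and their hop distance from s."""
    distance = {s: 0}
    order = [s]
    queue = deque([s])            # visited but not yet finished, first-in first-out
    while queue:
        node = queue.popleft()    # earliest-visited unfinished node
        for neighbour in graph[node]:
            if neighbour not in distance:   # unvisited -> mark as visited
                distance[neighbour] = distance[node] + 1
                order.append(neighbour)
                queue.append(neighbour)
        # `node` is now finished
    return order, distance


GRAPH = {
    "a": ["b", "c"],
    "b": ["a", "d"],
    "c": ["a", "d", "e"],
    "d": ["b", "c", "f"],
    "e": ["c", "f"],
    "f": ["d", "e"],
}
order, dist = bfs(GRAPH, "a")
print(order)  # → ['a', 'b', 'c', 'd', 'e', 'f']
print(dist)   # → {'a': 0, 'b': 1, 'c': 1, 'd': 2, 'e': 2, 'f': 3}
```

Each node is expanded in its own iteration, so this graph takes six iterations of the loop.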

The approach presented here is a parallelized variant of this algorithm. Assuming that all neurons always receive sufficient current to become activated, the propagating wave corresponds to the step of the algorithm in which the neighbors of the currently considered node are investigated. Unlike BFS, however, the approach performs this step for all candidate nodes simultaneously: it considers all nodes currently marked as visited, checks the neighbors of all these nodes at once and marks them as visited if necessary.
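The parallel variant can be sketched as a level-synchronous BFS, in which the whole frontier (the nodes reached in the previous step, corresponding to the current wave front) is expanded at once. The function name and the example graph are illustrative assumptions.

```python
def parallel_bfs(graph, s):
    """Level-synchronous BFS: every node on the frontier is expanded in the same step."""
    visited = {s}
    frontier = {s}
    levels = [frontier]
    while frontier:
        # One "wave" step: the neighbours of all frontier nodes at once.
        frontier = {n for node in frontier for n in graph[node]} - visited
        visited |= frontier
        if frontier:
            levels.append(frontier)
    return levels


GRAPH = {
    "a": ["b", "c"],
    "b": ["a", "d"],
    "c": ["a", "d", "e"],
    "d": ["b", "c", "f"],
    "e": ["c", "f"],
    "f": ["d", "e"],
}
levels = parallel_bfs(GRAPH, "a")
print(levels)
```

Each expansion step corresponds to one step of wave propagation, so the number of steps equals the largest distance from the start node (three here) rather than the number of nodes (six).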

Having all ingredients of the proposed conceptual framework in place, the following section reviews some experimental evidence indicating that it could in principle be employed by biological brains.

Empirical evidence

Cognitive maps.

The concept of “cognitive maps” was first proposed by Edward Tolman 22 , 23 , who conducted experiments to understand how rats were able to navigate mazes to seek rewards.

A body of evidence suggests that neural structures in the hippocampus and entorhinal cortex potentially support cognitive maps used for spatial navigation 17 , 24 , 25 . Within these networks, specific kinds of neurons are thought to be responsible for the representation of particular aspects of cognitive maps. Some examples are place cells 17 , 24 which code for the current location of a subject in space, grid cells which contribute to locating the subject in that space 26 as well as to stabilising the attractor dynamics of the place cell network 19 , head-direction cells 27 which code for the direction in which the subject’s head is currently facing, and reward cells 28 which code for the location of a reward in the same environment.