11.2 Writing a Research Report in American Psychological Association (APA) Style

Learning objectives.

- Identify the major sections of an APA-style research report and the basic contents of each section.

- Plan and write an effective APA-style research report.

In this section, we look at how to write an APA-style empirical research report, an article that presents the results of one or more new studies. Recall that the standard sections of an empirical research report provide a kind of outline. Here we consider each of these sections in detail, including what information it contains, how that information is formatted and organized, and tips for writing each section. At the end of this section is a sample APA-style research report that illustrates many of these principles.

Sections of a Research Report

Title Page and Abstract

An APA-style research report begins with a title page. The title is centered in the upper half of the page, with each important word capitalized. The title should clearly and concisely (in about 12 words or fewer) communicate the primary variables and research questions. This sometimes requires a main title followed by a subtitle that elaborates on the main title, in which case the main title and subtitle are separated by a colon. Here are some titles from recent issues of professional journals published by the American Psychological Association.

- Sex Differences in Coping Styles and Implications for Depressed Mood

- Effects of Aging and Divided Attention on Memory for Items and Their Contexts

- Computer-Assisted Cognitive Behavioral Therapy for Child Anxiety: Results of a Randomized Clinical Trial

- Virtual Driving and Risk Taking: Do Racing Games Increase Risk-Taking Cognitions, Affect, and Behavior?

Below the title are the authors’ names and, on the next line, their institutional affiliation—the university or other institution where the authors worked when they conducted the research. As we have already seen, the authors are listed in an order that reflects their contribution to the research. When multiple authors have made equal contributions to the research, they often list their names alphabetically or in a randomly determined order.

It’s Soooo Cute! How Informal Should an Article Title Be?

In some areas of psychology, the titles of many empirical research reports are informal in a way that is perhaps best described as “cute.” They usually take the form of a play on words or a well-known expression that relates to the topic under study. Here are some examples from recent issues of the journal Psychological Science.

- “Smells Like Clean Spirit: Nonconscious Effects of Scent on Cognition and Behavior”

- “Time Crawls: The Temporal Resolution of Infants’ Visual Attention”

- “Scent of a Woman: Men’s Testosterone Responses to Olfactory Ovulation Cues”

- “Apocalypse Soon?: Dire Messages Reduce Belief in Global Warming by Contradicting Just-World Beliefs”

- “Serial vs. Parallel Processing: Sometimes They Look Like Tweedledum and Tweedledee but They Can (and Should) Be Distinguished”

- “How Do I Love Thee? Let Me Count the Words: The Social Effects of Expressive Writing”

Individual researchers differ quite a bit in their preference for such titles. Some use them regularly, while others never use them. What might be some of the pros and cons of using cute article titles?

For articles that are being submitted for publication, the title page also includes an author note that lists the authors’ full institutional affiliations, any acknowledgments the authors wish to make to agencies that funded the research or to colleagues who commented on it, and contact information for the authors. For student papers that are not being submitted for publication—including theses—author notes are generally not necessary.

The abstract is a summary of the study. It is the second page of the manuscript and is headed with the word Abstract. The first line is not indented. The abstract presents the research question, a summary of the method, the basic results, and the most important conclusions. Because the abstract is usually limited to about 200 words, it can be a challenge to write a good one.

Introduction

The introduction begins on the third page of the manuscript. The heading at the top of this page is the full title of the manuscript, with each important word capitalized as on the title page. The introduction includes three distinct subsections, although these are typically not identified by separate headings. The opening introduces the research question and explains why it is interesting, the literature review discusses relevant previous research, and the closing restates the research question and comments on the method used to answer it.

The Opening

The opening, which is usually a paragraph or two in length, introduces the research question and explains why it is interesting. To capture the reader’s attention, researcher Daryl Bem recommends starting with general observations about the topic under study, expressed in ordinary language (not technical jargon)—observations that are about people and their behavior (not about researchers or their research; Bem, 2003). Concrete examples are often very useful here. According to Bem, this would be a poor way to begin a research report:

Festinger’s theory of cognitive dissonance received a great deal of attention during the latter part of the 20th century (p. 191).

The following would be much better:

The individual who holds two beliefs that are inconsistent with one another may feel uncomfortable. For example, the person who knows that he or she enjoys smoking but believes it to be unhealthy may experience discomfort arising from the inconsistency or disharmony between these two thoughts or cognitions. This feeling of discomfort was called cognitive dissonance by social psychologist Leon Festinger (1957), who suggested that individuals will be motivated to remove this dissonance in whatever way they can (p. 191).

After capturing the reader’s attention, the opening should go on to introduce the research question and explain why it is interesting. Will the answer fill a gap in the literature? Will it provide a test of an important theory? Does it have practical implications? Giving readers a clear sense of what the research is about and why they should care about it will motivate them to continue reading the literature review—and will help them make sense of it.

Breaking the Rules

Researcher Larry Jacoby reported several studies showing that a word that people see or hear repeatedly can seem more familiar even when they do not recall the repetitions—and that this tendency is especially pronounced among older adults. He opened his article with the following humorous anecdote:

A friend whose mother is suffering symptoms of Alzheimer’s disease (AD) tells the story of taking her mother to visit a nursing home, preliminary to her mother’s moving there. During an orientation meeting at the nursing home, the rules and regulations were explained, one of which regarded the dining room. The dining room was described as similar to a fine restaurant except that tipping was not required. The absence of tipping was a central theme in the orientation lecture, mentioned frequently to emphasize the quality of care along with the advantages of having paid in advance. At the end of the meeting, the friend’s mother was asked whether she had any questions. She replied that she only had one question: “Should I tip?” (Jacoby, 1999, p. 3)

Although both humor and personal anecdotes are generally discouraged in APA-style writing, this example is a highly effective way to start because it both engages the reader and provides an excellent real-world example of the topic under study.

The Literature Review

Immediately after the opening comes the literature review, which describes relevant previous research on the topic and can be anywhere from several paragraphs to several pages in length. However, the literature review is not simply a list of past studies. Instead, it constitutes a kind of argument for why the research question is worth addressing. By the end of the literature review, readers should be convinced that the research question makes sense and that the present study is a logical next step in the ongoing research process.

Like any effective argument, the literature review must have some kind of structure. For example, it might begin by describing a phenomenon in a general way along with several studies that demonstrate it, then describing two or more competing theories of the phenomenon, and finally presenting a hypothesis to test one or more of the theories. Or it might describe one phenomenon, then describe another phenomenon that seems inconsistent with the first one, then propose a theory that resolves the inconsistency, and finally present a hypothesis to test that theory. In applied research, it might describe a phenomenon or theory, then describe how that phenomenon or theory applies to some important real-world situation, and finally suggest a way to test whether it does, in fact, apply to that situation.

Looking at the literature review in this way emphasizes a few things. First, it is extremely important to start with an outline of the main points that you want to make, organized in the order that you want to make them. The basic structure of your argument, then, should be apparent from the outline itself. Second, it is important to emphasize the structure of your argument in your writing. One way to do this is to begin the literature review by summarizing your argument even before you begin to make it. “In this article, I will describe two apparently contradictory phenomena, present a new theory that has the potential to resolve the apparent contradiction, and finally present a novel hypothesis to test the theory.” Another way is to open each paragraph with a sentence that summarizes the main point of the paragraph and links it to the preceding points. These opening sentences provide the “transitions” that many beginning researchers have difficulty with. Instead of beginning a paragraph by launching into a description of a previous study, such as “Williams (2004) found that…,” it is better to start by indicating something about why you are describing this particular study. Here are some simple examples:

Another example of this phenomenon comes from the work of Williams (2004).

Williams (2004) offers one explanation of this phenomenon.

An alternative perspective has been provided by Williams (2004).

We used a method based on the one used by Williams (2004).

Finally, remember that your goal is to construct an argument for why your research question is interesting and worth addressing—not necessarily why your favorite answer to it is correct. In other words, your literature review must be balanced. If you want to emphasize the generality of a phenomenon, then of course you should discuss various studies that have demonstrated it. However, if there are other studies that have failed to demonstrate it, you should discuss them too. Or if you are proposing a new theory, then of course you should discuss findings that are consistent with that theory. However, if there are other findings that are inconsistent with it, again, you should discuss them too. It is acceptable to argue that the balance of the research supports the existence of a phenomenon or is consistent with a theory (and that is usually the best that researchers in psychology can hope for), but it is not acceptable to ignore contradictory evidence. Besides, a large part of what makes a research question interesting is uncertainty about its answer.

The Closing

The closing of the introduction—typically the final paragraph or two—usually includes two important elements. The first is a clear statement of the main research question and hypothesis. This statement tends to be more formal and precise than in the opening and is often expressed in terms of operational definitions of the key variables. The second is a brief overview of the method and some comment on its appropriateness. Here, for example, is how Darley and Latané (1968) concluded the introduction to their classic article on the bystander effect:

These considerations lead to the hypothesis that the more bystanders to an emergency, the less likely, or the more slowly, any one bystander will intervene to provide aid. To test this proposition it would be necessary to create a situation in which a realistic “emergency” could plausibly occur. Each subject should also be blocked from communicating with others to prevent his getting information about their behavior during the emergency. Finally, the experimental situation should allow for the assessment of the speed and frequency of the subjects’ reaction to the emergency. The experiment reported below attempted to fulfill these conditions. (p. 378)

Thus the introduction leads smoothly into the next major section of the article—the method section.

Method

The method section is where you describe how you conducted your study. An important principle for writing a method section is that it should be clear and detailed enough that other researchers could replicate the study by following your “recipe.” This means that it must describe all the important elements of the study—basic demographic characteristics of the participants, how they were recruited, whether they were randomly assigned to conditions, how the variables were manipulated or measured, how counterbalancing was accomplished, and so on. At the same time, it should avoid irrelevant details such as the fact that the study was conducted in Classroom 37B of the Industrial Technology Building or that the questionnaire was double-sided and completed using pencils.

The method section begins immediately after the introduction ends, with the heading “Method” (not “Methods”) centered on the page. Immediately after this is the subheading “Participants,” left justified and in italics. The participants subsection indicates how many participants there were, the number of women and men, some indication of their age, other demographics that may be relevant to the study, and how they were recruited, including any incentives given for participation.

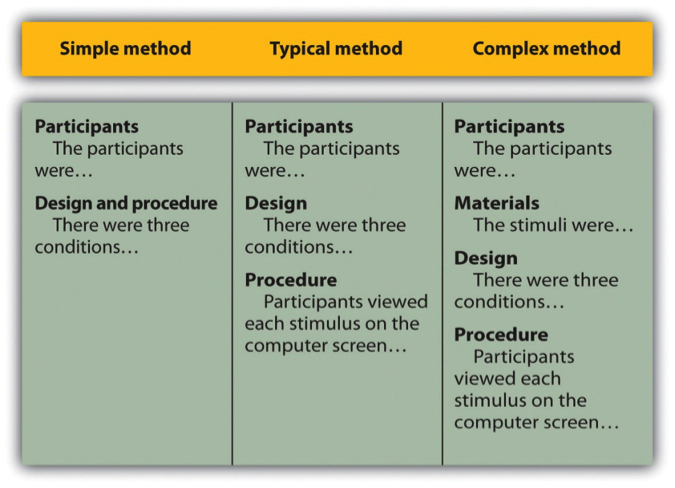

Figure 11.1 Three Ways of Organizing an APA-Style Method

After the participants section, the structure can vary a bit. Figure 11.1 shows three common approaches. In the first, the participants section is followed by a design and procedure subsection, which describes the rest of the method. This works well for methods that are relatively simple and can be described adequately in a few paragraphs. In the second approach, the participants section is followed by separate design and procedure subsections. This works well when both the design and the procedure are relatively complicated and each requires multiple paragraphs.

What is the difference between design and procedure? The design of a study is its overall structure. What were the independent and dependent variables? Was the independent variable manipulated, and if so, was it manipulated between or within subjects? How were the variables operationally defined? The procedure is how the study was carried out. It often works well to describe the procedure in terms of what the participants did rather than what the researchers did. For example, the participants gave their informed consent, read a set of instructions, completed a block of four practice trials, completed a block of 20 test trials, completed two questionnaires, and were debriefed and excused.

In the third basic way to organize a method section, the participants subsection is followed by a materials subsection before the design and procedure subsections. This works well when there are complicated materials to describe. This might mean multiple questionnaires, written vignettes that participants read and respond to, perceptual stimuli, and so on. The heading of this subsection can be modified to reflect its content. Instead of “Materials,” it can be “Questionnaires,” “Stimuli,” and so on. The materials subsection is also a good place to refer to the reliability and/or validity of the measures. This is where you would present test-retest correlations, Cronbach’s α, or other statistics to show that the measures are consistent across time and across items and that they accurately measure what they are intended to measure.
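To make one of these reliability statistics concrete, here is a minimal Python sketch of Cronbach’s α using the standard formula α = k / (k − 1) × (1 − Σ item variances / variance of total scores), where k is the number of items. The data layout (one row of item scores per participant), the function name `cronbach_alpha`, and the sample responses are all hypothetical; in practice you would likely compute α with a statistics package and simply report the value in the materials subsection.

```python
from statistics import pvariance


def cronbach_alpha(scores):
    """Cronbach's alpha for a participants-by-items table of scores.

    `scores` is a list of rows, one row per participant, each row holding
    that participant's k item scores (hypothetical layout). Implements
        alpha = k / (k - 1) * (1 - sum(item variances) / variance(totals)).
    Population variances are used; sample variances give the same alpha
    because the n / (n - 1) factor cancels in the ratio.
    """
    k = len(scores[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Variance of each item (column) across participants
    item_vars = [pvariance([row[i] for row in scores]) for i in range(k)]
    # Variance of participants' total (summed) scores
    total_var = pvariance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical data: 5 participants answering 4 Likert items (1-5)
responses = [
    [4, 5, 4, 4],
    [2, 2, 3, 2],
    [3, 3, 3, 4],
    [5, 4, 5, 5],
    [1, 2, 1, 2],
]
print(f"alpha = {cronbach_alpha(responses):.2f}")  # alpha = 0.96
```

Values near 1 indicate that the items vary together across participants; a report would state the value (e.g., α = .96) alongside the description of the measure.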

Results

The results section is where you present the main results of the study, including the results of the statistical analyses. Although it does not include the raw data—individual participants’ responses or scores—researchers should save their raw data and make them available to other researchers who request them. Several journals now encourage the open sharing of raw data online.

Although there are no standard subsections, it is still important for the results section to be logically organized. Typically it begins with certain preliminary issues. One is whether any participants or responses were excluded from the analyses and why. The rationale for excluding data should be described clearly so that other researchers can decide whether it is appropriate. A second preliminary issue is how multiple responses were combined to produce the primary variables in the analyses. For example, if participants rated the attractiveness of 20 stimulus people, you might have to explain that you began by computing the mean attractiveness rating for each participant. Or if they recalled as many items as they could from a study list of 20 words, did you count the number correctly recalled, compute the percentage correctly recalled, or perhaps compute the number correct minus the number incorrect? A final preliminary issue is whether the manipulation was successful. This is where you would report the results of any manipulation checks.
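The scoring choices described above can yield different primary variables from the same raw responses, which is why they need to be reported. The following minimal Python sketch computes all three recall scores for one hypothetical participant and collapses a set of hypothetical ratings into a mean. The function names and the decision to count repeated responses only once are illustrative assumptions, not a standard procedure.

```python
from statistics import mean


def recall_scores(study_list, recalled):
    """Score one participant's free recall three alternative ways.

    Assumption for illustration: repeated responses are counted once, and
    any recalled word not on the study list counts as an incorrect
    response (an intrusion). Both are decisions a report would state.
    """
    studied = set(study_list)
    responses = set(recalled)
    n_correct = len(responses & studied)
    n_incorrect = len(responses - studied)
    return {
        "number_correct": n_correct,
        "percent_correct": 100 * n_correct / len(studied),
        "correct_minus_incorrect": n_correct - n_incorrect,
    }


def mean_rating(ratings):
    """Collapse one participant's ratings of many stimuli into a single mean."""
    return mean(ratings)


# Hypothetical participant: 20-word study list, five responses recalled
study_list = [f"word{i}" for i in range(1, 21)]
recalled = ["word1", "word2", "word3", "word5", "apple"]
print(recall_scores(study_list, recalled))
# {'number_correct': 4, 'percent_correct': 20.0, 'correct_minus_incorrect': 3}
print(mean_rating([4, 6, 5, 7]))  # 5.5
```

The same participant scores 4, 20%, or 3 depending on the rule chosen, so readers cannot interpret the analyses without knowing which one was used.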

The results section should then tackle the primary research questions, one at a time. Again, there should be a clear organization. One approach would be to answer the most general questions and then proceed to answer more specific ones. Another would be to answer the main question first and then to answer secondary ones. Regardless, Bem (2003) suggests the following basic structure for discussing each new result:

- Remind the reader of the research question.

- Give the answer to the research question in words.

- Present the relevant statistics.

- Qualify the answer if necessary.

- Summarize the result.

Notice that only the third step (presenting the relevant statistics) necessarily involves numbers. The rest of the steps involve presenting the research question and the answer to it in words. In fact, the basic results should be clear even to a reader who skips over the numbers.

Discussion

The discussion is the last major section of the research report. Discussions usually consist of some combination of the following elements:

- Summary of the research

- Theoretical implications

- Practical implications

- Limitations

- Suggestions for future research

The discussion typically begins with a summary of the study that provides a clear answer to the research question. In a short report with a single study, this might require no more than a sentence. In a longer report with multiple studies, it might require a paragraph or even two. The summary is often followed by a discussion of the theoretical implications of the research. Do the results provide support for any existing theories? If not, how can they be explained? Although you do not have to provide a definitive explanation or detailed theory for your results, you at least need to outline one or more possible explanations. In applied research—and often in basic research—there is also some discussion of the practical implications of the research. How can the results be used, and by whom, to accomplish some real-world goal?

The theoretical and practical implications are often followed by a discussion of the study’s limitations. Perhaps there are problems with its internal or external validity. Perhaps the manipulation was not very effective or the measures not very reliable. Perhaps there is some evidence that participants did not fully understand their task or that they were suspicious of the intent of the researchers. Now is the time to discuss these issues and how they might have affected the results. But do not overdo it. All studies have limitations, and most readers will understand that a different sample or different measures might have produced different results. Unless there is good reason to think they would have, however, there is no reason to mention these routine issues. Instead, pick two or three limitations that seem like they could have influenced the results, explain how they could have influenced the results, and suggest ways to deal with them.

Most discussions end with some suggestions for future research. If the study did not satisfactorily answer the original research question, what will it take to do so? What new research questions has the study raised? This part of the discussion, however, is not just a list of new questions. It is a discussion of two or three of the most important unresolved issues. This means identifying and clarifying each question, suggesting some alternative answers, and even suggesting ways they could be studied.

Finally, some researchers are quite good at ending their articles with a sweeping or thought-provoking conclusion. Darley and Latané (1968), for example, ended their article on the bystander effect by discussing the idea that whether people help others may depend more on the situation than on their personalities. Their final sentence is, “If people understand the situational forces that can make them hesitate to intervene, they may better overcome them” (p. 383). However, this kind of ending can be difficult to pull off. It can sound overreaching or just banal and end up detracting from the overall impact of the article. It is often better simply to end by returning to the problem or issue introduced in your opening paragraph and clearly stating how your research has addressed that issue or problem.

References

The references section begins on a new page with the heading “References” centered at the top of the page. All references cited in the text are then listed in the format presented earlier. They are listed alphabetically by the last name of the first author. If two sources have the same first author, they are listed alphabetically by the last name of the second author. If all the authors are the same, then they are listed chronologically by the year of publication. Everything in the reference list is double-spaced both within and between references.
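The ordering rules above amount to a sort on a composite key. The minimal Python sketch below, with hypothetical entries and a hypothetical `Reference` record, compares authors’ last names one position at a time and then the year. It also reflects the APA convention that a single-author work precedes multi-author works beginning with the same surname, because a shorter tuple that is a prefix of a longer one sorts first. It is a simplification: it ignores same-year suffixes (2010a, 2010b), surname particles, and the fact that plain string comparison places accented letters after “z.”

```python
from typing import NamedTuple, Tuple


class Reference(NamedTuple):
    authors: Tuple[str, ...]  # last names in byline order (hypothetical record)
    year: int


def reference_sort_key(ref):
    """Sort key: authors' last names position by position, then year.

    Tuples compare element by element, so entries are ordered by the first
    author's last name, ties are broken by the second author's, and so on.
    When all authors match, the earlier year comes first. A single-author
    work sorts before multi-author works sharing its first author, because
    a shorter tuple that is a prefix of a longer one compares as smaller.
    """
    return (tuple(name.casefold() for name in ref.authors), ref.year)


# Hypothetical entries, deliberately out of order
refs = [
    Reference(("Smith", "Jones"), 2010),
    Reference(("Smith",), 2012),
    Reference(("Adams",), 2015),
    Reference(("Smith",), 2008),
]
for ref in sorted(refs, key=reference_sort_key):
    print(", ".join(ref.authors), f"({ref.year})")
# Adams (2015)
# Smith (2008)
# Smith (2012)
# Smith, Jones (2010)
```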

Appendices, Tables, and Figures

Appendices, tables, and figures come after the references. An appendix is appropriate for supplemental material that would interrupt the flow of the research report if it were presented within any of the major sections. An appendix could be used to present lists of stimulus words, questionnaire items, detailed descriptions of special equipment or unusual statistical analyses, or references to the studies that are included in a meta-analysis. Each appendix begins on a new page. If there is only one, the heading is “Appendix,” centered at the top of the page. If there is more than one, the headings are “Appendix A,” “Appendix B,” and so on, and they appear in the order they were first mentioned in the text of the report.

After any appendices come tables and then figures. Tables and figures are both used to present results. Figures can also be used to display graphs, illustrate theories (e.g., in the form of a flowchart), display stimuli, outline procedures, and present many other kinds of information. Each table and figure appears on its own page. Tables are numbered in the order that they are first mentioned in the text (“Table 1,” “Table 2,” and so on). Figures are numbered the same way (“Figure 1,” “Figure 2,” and so on). A brief explanatory title, with the important words capitalized, appears above each table. Each figure is given a brief explanatory caption, where (aside from proper nouns or names) only the first word of each sentence is capitalized. More details on preparing APA-style tables and figures are presented later in the book.

Sample APA-Style Research Report

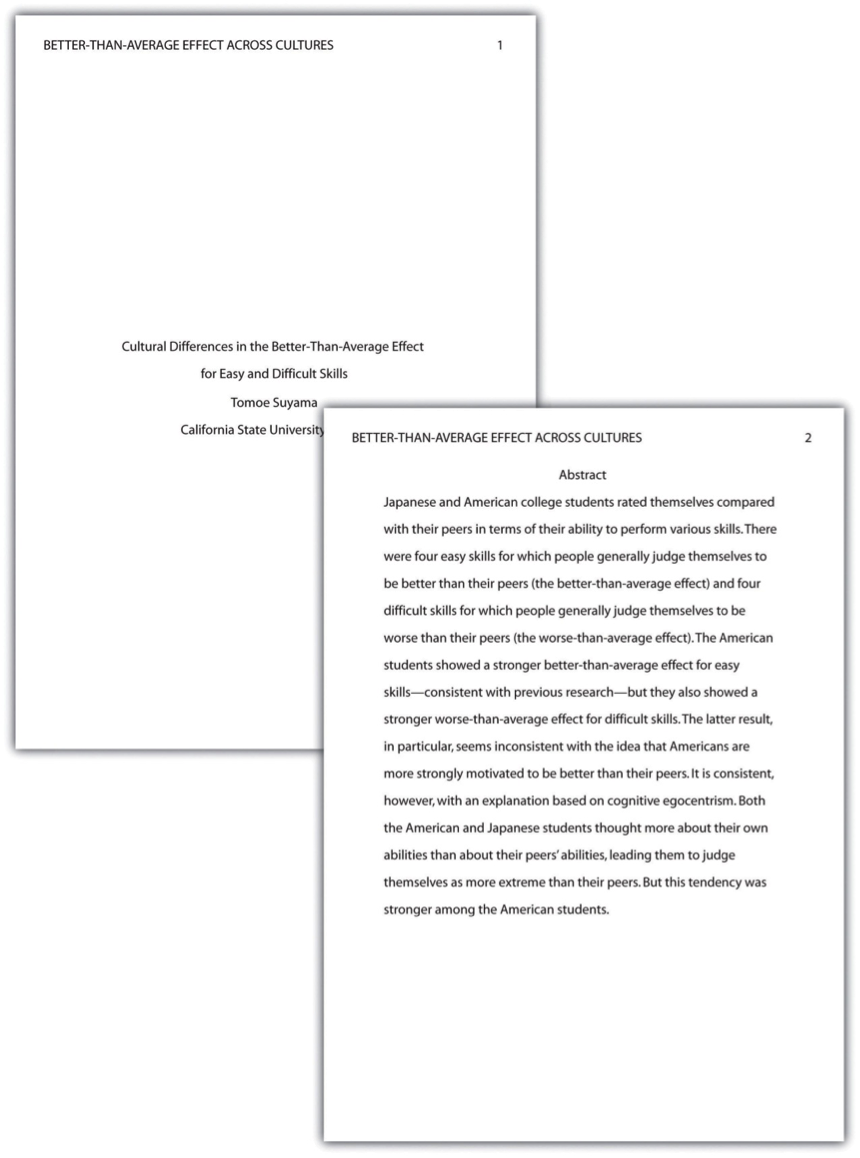

Figures 11.2, 11.3, 11.4, and 11.5 show some sample pages from an APA-style empirical research report originally written by undergraduate student Tomoe Suyama at California State University, Fresno. The main purpose of these figures is to illustrate the basic organization and formatting of an APA-style empirical research report, although many high-level and low-level style conventions can be seen here too.

Figure 11.2 Title Page and Abstract. This student paper does not include the author note on the title page. The abstract appears on its own page.

Figure 11.3 Introduction and Method. Note that the introduction is headed with the full title, and the method section begins immediately after the introduction ends.

Figure 11.4 Results and Discussion. The discussion begins immediately after the results section ends.

Figure 11.5 References and Figure. If there were appendices or tables, they would come before the figure.

Key Takeaways

- An APA-style empirical research report consists of several standard sections. The main ones are the abstract, introduction, method, results, discussion, and references.

- The introduction consists of an opening that presents the research question, a literature review that describes previous research on the topic, and a closing that restates the research question and comments on the method. The literature review constitutes an argument for why the current study is worth doing.

- The method section describes the method in enough detail that another researcher could replicate the study. At a minimum, it consists of a participants subsection and a design and procedure subsection.

- The results section describes the results in an organized fashion. Each primary result is presented in terms of statistical results but also explained in words.

- The discussion typically summarizes the study, discusses theoretical and practical implications and limitations of the study, and offers suggestions for further research.

- Practice: Look through an issue of a general-interest professional journal (e.g., Psychological Science). Read the opening of the first five articles and rate the effectiveness of each one from 1 (very ineffective) to 5 (very effective). Write a sentence or two explaining each rating.

- Practice: Find a recent article in a professional journal and identify where the opening, literature review, and closing of the introduction begin and end.

- Practice: Find a recent article in a professional journal and highlight in a different color each of the following elements in the discussion: summary, theoretical implications, practical implications, limitations, and suggestions for future research.

- Bem, D. J. (2003). Writing the empirical journal article. In J. M. Darley, M. P. Zanna, & H. R. Roediger III (Eds.), The complete academic: A practical guide for the beginning social scientist (2nd ed.). Washington, DC: American Psychological Association.

- Darley, J. M., & Latané, B. (1968). Bystander intervention in emergencies: Diffusion of responsibility. Journal of Personality and Social Psychology, 4, 377–383.


Research Paper Structure

Whether you are writing a B.S. Degree Research Paper or completing a research report for a Psychology course, it is highly likely that you will need to organize your research paper in accordance with American Psychological Association (APA) guidelines. Here we discuss the structure of research papers according to APA style.

Major Sections of a Research Paper in APA Style

A complete research paper in APA style that is reporting on experimental research will typically contain a Title page, Abstract, Introduction, Methods, Results, Discussion, and References sections. Many will also contain Figures and Tables and some will have an Appendix or Appendices. These sections are detailed as follows (for a more in-depth guide, please refer to “How to Write a Research Paper in APA Style”, a comprehensive guide developed by Prof. Emma Geller).

Title Page

What is this paper called and who wrote it? – the first page of the paper; this includes the name of the paper, a “running head”, authors, and institutional affiliation of the authors. The institutional affiliation is usually listed in an Author Note that is placed towards the bottom of the title page. In some cases, the Author Note also contains an acknowledgment of any funding support and of any individuals that assisted with the research project.

Abstract

One-paragraph summary of the entire study – typically no more than 250 words in length (and in many cases it is well shorter than that), the Abstract provides an overview of the study.

Introduction

What is the topic and why is it worth studying? – the first major section of text in the paper, the Introduction commonly describes the topic under investigation, summarizes or discusses relevant prior research (for related details, please see the Writing Literature Reviews section of this website), identifies unresolved issues that the current research will address, and provides an overview of the research that is to be described in greater detail in the sections to follow.

Methods

What did you do? – a section which details how the research was performed. It typically features a description of the participants/subjects that were involved, the study design, the materials that were used, and the study procedure. If there were multiple experiments, then each experiment may require a separate Methods section. A rule of thumb is that the Methods section should be sufficiently detailed for another researcher to duplicate your research.

Results

What did you find? – a section which describes the data that were collected and the results of any statistical tests that were performed. It may also be prefaced by a description of the analysis procedure that was used. If there were multiple experiments, then each experiment may require a separate Results section.

Discussion

What is the significance of your results? – the final major section of text in the paper. The Discussion commonly features a summary of the results that were obtained in the study, describes how those results address the topic under investigation and/or the issues that the research was designed to address, and may expand upon the implications of those findings. Limitations and directions for future research are also commonly addressed.

References

List of articles and any books cited – an alphabetized list of the sources that are cited in the paper (by last name of the first author of each source). Each reference should follow specific APA guidelines regarding author names, dates, article titles, journal titles, journal volume numbers, page numbers, book publishers, publisher locations, websites, and so on (for more information, please see the Citing References in APA Style page of this website).

Tables and Figures

Graphs and data (optional in some cases) – depending on the type of research being performed, there may be Tables and/or Figures (however, in some cases, there may be neither). In APA style, each Table and each Figure is placed on a separate page and all Tables and Figures are included after the References. Tables are included first, followed by Figures. However, for some journals and undergraduate research papers (such as the B.S. Research Paper or Honors Thesis), Tables and Figures may be embedded in the text (depending on the instructor’s or editor’s policies; for more details, see "Departures from APA Style" below).

Supplementary information (optional) – in some cases, additional information that is not critical to understanding the research paper, such as a list of experiment stimuli, details of a secondary analysis, or programming code, is provided. This is often placed in an Appendix.

Variations of Research Papers in APA Style

Although the major sections described above are common to most research papers written in APA style, there are variations on that pattern. These variations include:

- Literature reviews – when a paper is reviewing prior published research and not presenting new empirical research itself (such as in a review article, and particularly a qualitative review), then the authors may forgo any Methods and Results sections. Instead, there is a different structure such as an Introduction section followed by sections for each of the different aspects of the body of research being reviewed, and then perhaps a Discussion section.

- Multi-experiment papers – when there are multiple experiments, it is common to follow the Introduction with an Experiment 1 section, itself containing Methods, Results, and Discussion subsections. Then there is an Experiment 2 section with a similar structure, an Experiment 3 section with a similar structure, and so on until all experiments are covered. Towards the end of the paper there is a General Discussion section followed by References. Additionally, in multi-experiment papers, it is common for the Results and Discussion subsections for individual experiments to be combined into single “Results and Discussion” sections.

Departures from APA Style

In some cases, official APA style might not be followed (however, be sure to check with your editor, instructor, or other sources before deviating from standards of the Publication Manual of the American Psychological Association). Such deviations may include:

- Placement of Tables and Figures – in some cases, to make the paper easier to read, Tables and/or Figures are embedded in the text (for example, a bar graph placed in the relevant Results section). Embedding Tables and/or Figures in the text is one of the most common deviations from APA style (and is commonly allowed in B.S. Degree Research Papers and Honors Theses; however, you should check with your instructor, supervisor, or editor first).

- Incomplete research – sometimes a B.S. Degree Research Paper in this department is written about research that is currently being planned or is in progress. In those circumstances, sometimes only an Introduction and Methods section, followed by References, is included (that is, in cases where the research itself has not formally begun). In other cases, preliminary results are presented and noted as such in the Results section (such as in cases where the study is underway but not complete), and the Discussion section includes caveats about the in-progress nature of the research. Again, you should check with your instructor, supervisor, or editor first.

- Class assignments – in some classes in this department, an assignment must be written in APA style but is not exactly a traditional research paper (for instance, when a student is asked to write a report in APA style about an article they read). In that case, the structure of the paper might approximate, but not exactly match, the typical sections of a research paper in APA style. You should check with your instructor for further guidelines.


Further Resources


APA Journal Article Reporting Guidelines

- Appelbaum, M., Cooper, H., Kline, R. B., Mayo-Wilson, E., Nezu, A. M., & Rao, S. M. (2018). Journal article reporting standards for quantitative research in psychology: The APA Publications and Communications Board task force report. American Psychologist, 73(1), 3.

- Levitt, H. M., Bamberg, M., Creswell, J. W., Frost, D. M., Josselson, R., & Suárez-Orozco, C. (2018). Journal article reporting standards for qualitative primary, qualitative meta-analytic, and mixed methods research in psychology: The APA Publications and Communications Board task force report. American Psychologist, 73(1), 26.


1 VandenBos, G. R. (Ed.). (2010). Publication manual of the American Psychological Association (6th ed., pp. 41–60). Washington, DC: American Psychological Association.

2 Geller, E. (2018). How to write an APA-style research report [Instructional materials]. Prepared by S. C. Pan for UCSD Psychology.


Guidelines and Recommendations for Writing a Rigorous Quantitative Methods Section in Counseling and Related Fields

Volume 12 - Issue 3

Michael T. Kalkbrenner

Conducting and publishing rigorous empirical research based on original data is essential for advancing and sustaining high-quality counseling practice. The purpose of this article is to provide a one-stop shop for writing a rigorous quantitative Methods section in counseling and related fields. The importance of judiciously planning, implementing, and writing quantitative research methods cannot be overstated, as methodological flaws can completely undermine the integrity of the results. This article includes an overview, considerations, guidelines, best practices, and recommendations for conducting and writing quantitative research designs. The author concludes with an exemplar Methods section to provide a sample of one way to apply the guidelines for writing or evaluating quantitative research methods that are detailed in this manuscript.

Keywords: empirical, quantitative, methods, counseling, writing

The findings of rigorous empirical research based on original data are crucial for promoting and maintaining high-quality counseling practice (American Counseling Association [ACA], 2014; Giordano et al., 2021; Lutz & Hill, 2009; Wester et al., 2013). Peer-reviewed publication outlets play a crucial role in ensuring the rigor of counseling research and distributing the findings to counseling practitioners. The four major sections of an original empirical study usually include (a) Introduction/Literature Review, (b) Methods, (c) Results, and (d) Discussion (American Psychological Association [APA], 2020; Heppner et al., 2016). Although every section of a research study must be carefully planned, executed, and reported (Giordano et al., 2021), scholars have commented on the importance of a rigorous and clearly written Methods section for decades (Korn & Bram, 1988; Lutz & Hill, 2009). The Methods section is the “conceptual epicenter of a manuscript” (Smagorinsky, 2008, p. 390) and should include clear and specific details about how the study was conducted (Heppner et al., 2016). It is essential that producers and consumers of research are aware of key methodological standards, as the quality of quantitative methods in published research can vary notably, which has serious implications for the merit of research findings (Lutz & Hill, 2009; Wester et al., 2013).

Careful planning prior to launching data collection is especially important for conducting and writing a rigorous quantitative Methods section, as it is rarely appropriate, for both practical and ethical reasons, to alter quantitative methods after data collection is complete (ACA, 2014; Creswell & Creswell, 2018). A well-written Methods section is also crucial for publishing research in a peer-reviewed journal; serious methodological flaws tend to trigger rejection without an invitation to revise. Accordingly, the purpose of this article is to provide both producers and consumers of quantitative research with guidelines and recommendations for writing or evaluating the rigor of a Methods section in counseling and related fields. Specifically, this manuscript includes a general overview of major quantitative methodological subsections as well as an exemplar Methods section. The recommended subsections and guidelines for writing a rigorous Methods section in this manuscript (see Appendix) are based on a synthesis of (a) the extant literature (e.g., Creswell & Creswell, 2018; Flinn & Kalkbrenner, 2021; Giordano et al., 2021); (b) the Standards for Educational and Psychological Testing (American Educational Research Association [AERA] et al., 2014); (c) the ACA Code of Ethics (ACA, 2014); and (d) the Journal Article Reporting Standards (JARS) in the seventh edition of the APA (2020) Publication Manual.

Quantitative Methods: An Overview of the Major Sections

The Methods section is typically the second major section in a research manuscript and can begin with an overview of the theoretical framework and research paradigm that ground the study (Creswell & Creswell, 2018; Leedy & Ormrod, 2019). Research paradigms and theoretical frameworks are more commonly reported in qualitative, conceptual, and dissertation studies than in quantitative studies. However, research paradigms and theoretical frameworks can also be highly applicable to quantitative research designs (see the exemplar Methods section below). Readers are encouraged to consult Creswell and Creswell (2018) for a clear and concise overview of the utility of a theoretical framework and a research paradigm in quantitative research.

Research Design

The research design should be clearly specified at the beginning of the Methods section. Commonly employed quantitative research designs in counseling include, but are not limited to, group comparisons (e.g., experimental, quasi-experimental, ex post facto), correlational/predictive, meta-analysis, descriptive, and single-subject designs (Creswell & Creswell, 2018; Flinn & Kalkbrenner, 2021; Leedy & Ormrod, 2019). A well-written literature review and strong research question(s) will dictate the most appropriate research design. Readers can refer to Flinn and Kalkbrenner (2021) for free (open access) commentary on and examples of conducting a literature review, formulating research questions, and selecting the most appropriate corresponding research design.

Researcher Bias and Reflexivity

Counseling researchers have an ethical responsibility to minimize their personal biases throughout the research process (ACA, 2014). A researcher’s personal beliefs, values, expectations, and attitudes create a lens or framework for how data will be collected and interpreted. Researcher reflexivity or positionality statements are well-established methodological standards in qualitative research (Hays & Singh, 2012; Heppner et al., 2016; Rovai et al., 2013). Researcher bias is rarely reported in quantitative research; however, researcher bias can be just as present in quantitative studies as it is in qualitative studies. Being reflexive and transparent about one’s biases strengthens the rigor of the research design (Creswell & Creswell, 2018; Onwuegbuzie & Leech, 2005). Accordingly, quantitative researchers should consider reflecting on their biases in similar ways as qualitative researchers (Onwuegbuzie & Leech, 2005). For example, a researcher’s topical and methodological choices are, at least in part, based on their personal interests and experiences. To this end, quantitative researchers are encouraged to reflect on and consider reporting their beliefs, assumptions, and expectations throughout the research process.

Participants and Procedures

The major aim of the Participants and Procedures subsection of the Methods section is to describe the study’s participants and procedures in enough detail for replication (ACA, 2014; APA, 2020; Giordano et al., 2021; Heppner et al., 2016). When working with human subjects, authors should briefly discuss research ethics, including, but not limited to, receiving institutional review board (IRB) approval (Giordano et al., 2021; Korn & Bram, 1988). Additional considerations for the Participants and Procedures section include details about the authors’ sampling procedure, inclusion and/or exclusion criteria for participation, sample size, participant background information, location/site, and protocol for interventions (APA, 2020).

Sampling Procedure and Sample Size

Sampling procedures should be clearly stated in the Methods section. At a minimum, the description of the sampling procedure should include researcher access to prospective participants, recruitment procedures, data collection modality (e.g., online survey), and sample size considerations. Quantitative sampling approaches tend to be clustered into either probability or non-probability techniques (Creswell & Creswell, 2018; Leedy & Ormrod, 2019). The key distinguishing feature of probability sampling is random selection, in which all prospective participants in the population have an equal chance of being randomly selected to participate in the study (Leedy & Ormrod, 2019). Examples of probability sampling techniques include simple random sampling, systematic random sampling, stratified random sampling, and cluster sampling (Leedy & Ormrod, 2019).
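To make the distinctions among probability sampling techniques concrete, the following minimal Python sketch draws simple random, systematic, and stratified samples from a hypothetical sampling frame. The frame, the `site` variable, and all function names are illustrative assumptions rather than part of any cited procedure. Cluster sampling is not shown.

```python
import random

def simple_random_sample(population, n, seed=None):
    """Draw n members without replacement; every member is equally likely to be chosen."""
    rng = random.Random(seed)
    return rng.sample(population, n)

def systematic_sample(population, n, seed=None):
    """Take every k-th member after a random start within the first interval."""
    rng = random.Random(seed)
    k = len(population) // n          # sampling interval (assumes n <= len(population))
    start = rng.randrange(k)          # random starting position in [0, k)
    return [population[start + i * k] for i in range(n)]

def stratified_sample(population, stratum_of, n_per_stratum, seed=None):
    """Draw a simple random sample of the same size within each stratum."""
    rng = random.Random(seed)
    strata = {}
    for member in population:
        strata.setdefault(stratum_of(member), []).append(member)
    sample = []
    for key in sorted(strata):        # sorted so a given seed reproduces the same draw
        sample.extend(rng.sample(strata[key], n_per_stratum))
    return sample

# Hypothetical sampling frame of 100 prospective participants recruited from two sites.
frame = [{"id": i, "site": "A" if i < 60 else "B"} for i in range(100)]
srs = simple_random_sample(frame, 10, seed=2021)
sys_sample = systematic_sample(frame, 10, seed=2021)
strat = stratified_sample(frame, lambda p: p["site"], 5, seed=2021)
print([p["id"] for p in sys_sample])
```

Seeding `random.Random` lets a researcher report and reproduce the exact draw. With equal allocation across strata of unequal size, members of the smaller stratum (site B, 5 of 40) have a higher chance of selection than members of the larger one (site A, 5 of 60). Proportional allocation avoids this imbalance.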

Non-probability sampling techniques lack random selection, and there is no way of determining whether every member of the population had a chance of being selected to participate in the study (Leedy & Ormrod, 2019). Examples of non-probability sampling procedures include volunteer sampling, convenience sampling, purposive sampling, quota sampling, snowball sampling, and matched sampling. In quantitative research, probability sampling procedures are more rigorous in terms of generalizability (i.e., the extent to which research findings based on sample data extend or generalize to the larger population from which the sample was drawn). However, probability sampling is not always possible, and non-probability sampling procedures are rigorous in their own right. Readers are encouraged to review Leedy and Ormrod’s (2019) commentary on probability and non-probability sampling procedures. Ultimately, the selection of a sampling technique should be based on the population parameters, available resources, and the purpose and goals of the study.

A Priori Statistical Power Analysis. It is essential that quantitative researchers determine the minimum sample size necessary for their statistical analyses before launching data collection (Balkin & Sheperis, 2011; Sink & Mvududu, 2010). An insufficient sample size substantially increases the probability of committing a Type II error, which occurs when statistical testing yields non–statistically significant findings even though, unbeknownst to the researcher, a real effect exists. Computing an a priori statistical power analysis (i.e., one computed before starting data collection) reduces the chances of a Type II error by determining the smallest sample size needed to detect an effect of a specified size at a chosen significance level and level of power, if that effect exists (Balkin & Sheperis, 2011). Readers can consult Balkin and Sheperis (2011) as well as Sink and Mvududu (2010) for an overview of statistical significance, effect size, and statistical power. A number of statistical power analysis programs are available to researchers; for example, G*Power (Faul et al., 2009) is a free software program for computing a priori statistical power analyses.
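The logic of an a priori power analysis can be illustrated with the large-sample normal approximation for comparing two independent group means. The following Python sketch is an assumption-laden illustration, not G*Power's algorithm. It takes an anticipated effect size (Cohen's d), a two-tailed significance level, and a desired power, and returns the approximate number of participants needed per group.

```python
import math
from statistics import NormalDist

def n_per_group_two_sample(d, alpha=0.05, power=0.80):
    """Approximate participants per group for a two-tailed, two-independent-groups comparison.

    Normal approximation: n = 2 * ((z_{1 - alpha/2} + z_{power}) / d) ** 2,
    where d is the anticipated standardized mean difference (Cohen's d).
    """
    z = NormalDist()                      # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)    # critical value for the two-tailed test
    z_power = z.inv_cdf(power)            # quantile matching the desired power
    return math.ceil(2 * ((z_alpha + z_power) / d) ** 2)

# Medium effect (d = 0.5), alpha = .05, power = .80
print(n_per_group_two_sample(0.5))  # 63
```

This approximation uses the normal rather than the t distribution, so it runs slightly low. A t-based calculation such as G*Power's returns 64 per group for the same inputs. Dedicated software should be used for the analysis actually reported in a manuscript.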

Sampling Frame and Location

Counselors should report their sampling frame (total number of potential participants), response rate, raw sample (total number of participants who engaged with the study at any level, including those with missing and incomplete data), and the size of the final usable sample. It is also important to report the breakdown of the sample by demographic and other important participant background characteristics, for example, “XX.X% (n = XXX) of participants were first-generation college students, XX.X% (n = XXX) were second-generation . . .” The selection of demographic variables as well as inclusion and exclusion criteria should be justified in the literature review. Readers are encouraged to consult Creswell and Creswell (2018) for commentary on writing a strong literature review.
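The reporting conventions above involve simple arithmetic that is easy to script. The following Python sketch uses hypothetical counts and an illustrative background variable. It computes a response rate, defined here as the raw sample divided by the sampling frame (an assumption for this sketch), and formats a demographic breakdown in the “XX.X% (n = XXX)” style.

```python
from collections import Counter

def response_rate(sampling_frame, raw_sample):
    """Raw sample as a percentage of the sampling frame (definition assumed for this sketch)."""
    return 100 * raw_sample / sampling_frame

def breakdown_phrases(values, categories):
    """Build 'XX.X% (n = X) of participants were ...' phrases for one background variable."""
    counts = Counter(values)
    total = len(values)
    return [
        f"{100 * counts[c] / total:.1f}% (n = {counts[c]}) of participants were {c}"
        for c in categories
    ]

# Hypothetical counts: sampling frame of 40, raw sample of 10, final usable sample of 8.
print(f"Response rate: {response_rate(40, 10):.1f}%")  # Response rate: 25.0%
categories = ["first-generation college students", "second-generation college students"]
usable = [categories[0]] * 5 + [categories[1]] * 3
for phrase in breakdown_phrases(usable, categories):
    print(phrase)
```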

The timeframe, setting, and location in which data were collected are important methodological considerations (APA, 2020). Specific names of institutions and agencies should be masked to protect their privacy and confidentiality; however, authors can give descriptions of the setting and location (e.g., “Data were collected between April 2021 and February 2022 from clients seeking treatment for addictive disorders at an outpatient, integrated behavioral health care clinic located in the Northeastern United States.”). Authors should also report details about any interventions, curriculum, qualifications and background information for research assistants, experimental design protocol(s), and any other procedural design issues that would be necessary for replication. When describing a treatment or condition in full would be unwieldy (e.g., step-by-step manualized therapy, programs, or interventions), researchers can include footnotes, appendices, and/or references that direct the reader to more information about the intervention protocol.

Missing Data

Procedures for handling missing values (e.g., incomplete survey responses) are important considerations in quantitative data analysis. Perhaps the most straightforward option for handling missing data is to simply delete cases with missing responses. However, depending on the percentage of data that are missing and how the data are missing (e.g., missing completely at random, missing at random, or not missing at random), data imputation techniques can be employed to estimate missing values (Cook, 2021; Myers, 2011). Quantitative researchers should provide a clear rationale for their decision to delete missing values or to use a data imputation method. Readers are encouraged to review Cook’s (2021) commentary on procedures for handling missing data in quantitative research.
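The following Python sketch uses hypothetical data. It computes the percentage of missing responses per item and contrasts two simple options: listwise deletion and mean imputation. Mean imputation appears only because it is the simplest form of imputation to show. It shrinks item variances and is generally not recommended over approaches such as multiple imputation, which are beyond this sketch.

```python
import statistics

# Hypothetical item responses; None marks an item the participant skipped.
responses = [
    {"item1": 4, "item2": 5, "item3": 3},
    {"item1": 2, "item2": None, "item3": 4},
    {"item1": 5, "item2": 4, "item3": None},
    {"item1": 3, "item2": 3, "item3": 2},
]

def percent_missing(rows, item):
    """Percentage of participants with no response to the given item."""
    return 100 * sum(row[item] is None for row in rows) / len(rows)

def listwise_delete(rows):
    """Keep only participants with complete data (complete-case analysis)."""
    return [row for row in rows if all(v is not None for v in row.values())]

def mean_impute(rows):
    """Replace each missing value with that item's observed mean (simplest form of imputation)."""
    items = list(rows[0])
    means = {item: statistics.mean(r[item] for r in rows if r[item] is not None) for item in items}
    return [{item: means[item] if r[item] is None else r[item] for item in items} for r in rows]

for item in responses[0]:
    print(item, f"{percent_missing(responses, item):.0f}% missing")
print(len(listwise_delete(responses)), "complete cases retained")
```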

Measures

Counseling and other social science researchers often use instruments and screening tools to appraise latent traits, which can be defined as variables that are inferred rather than observed (AERA et al., 2014). The purpose of the Measures (aka Instrumentation) section is to operationalize the construct(s) of measurement (Heppner et al., 2016). Specifically, the Measures subsection of the Methods in a quantitative manuscript tends to include a presentation of (a) the instrument and construct(s) of measurement, (b) reliability and validity evidence of test scores, and (c) cross-cultural fairness and norming. The Measures section might also include a Materials subsection for studies that employed data-gathering techniques or equipment besides or in addition to instruments (Heppner et al., 2016), for instance, a biofeedback device used to collect data on changes in participants’ body functions.

Instrument and Construct of Measurement

Begin the Measures section by introducing the questionnaire or screening tool, its construct(s) of measurement, number of test items, example test items, and scale points. If applicable, the Measures section can also include information on scoring procedures and cutoff criteria, for example, total score benchmarks for low, medium, and high levels of the trait. Authors might also include commentary about how test scores will be operationalized to constitute the variables in the upcoming Data Analysis section.
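Scoring procedures and cutoff criteria are easiest to report clearly when they are written out step by step. The sketch below assumes a hypothetical four-item, 5-point scale with one reverse-worded item and hypothetical benchmarks for low, medium, and high totals. An actual instrument's scoring rules and cutoffs should be taken from its manual or validation studies.

```python
import math

def score_scale(item_responses, reverse_items=(), scale_min=1, scale_max=5):
    """Sum Likert-type responses after reverse-scoring the listed items."""
    total = 0
    for item, value in item_responses.items():
        if item in reverse_items:
            value = scale_min + scale_max - value  # on a 1-5 scale: 5 -> 1, 2 -> 4
        total += value
    return total

def classify_total(total, benchmarks):
    """Return the label of the first (upper_bound, label) pair whose bound the total does not exceed."""
    for upper_bound, label in benchmarks:
        if total <= upper_bound:
            return label
    raise ValueError("benchmarks must end with an upper bound of math.inf")

# Hypothetical benchmarks and responses; q3 is the (hypothetical) reverse-worded item.
BENCHMARKS = [(9, "low"), (14, "medium"), (math.inf, "high")]
total = score_scale({"q1": 4, "q2": 5, "q3": 2, "q4": 3}, reverse_items={"q3"})
print(total, classify_total(total, BENCHMARKS))  # 16 high
```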

Reliability and Validity Evidence of Test Scores

Reliability evidence involves the degree to which test scores are stable or consistent, and validity evidence refers to the extent to which scores on a test succeed in measuring what the test was designed to measure (AERA et al., 2014; Bardhoshi & Erford, 2017). Researchers should report both reliability and validity evidence of scores for each instrument they use (Wester et al., 2013). A number of forms of reliability evidence exist (e.g., internal consistency, test-retest, interrater, and alternate/parallel/equivalent forms), and the AERA standards (2014) outline five sources of validity evidence. For the purposes of this article, I focus on internal consistency reliability, the most popular and most commonly misused reliability estimate in social sciences research (Kalkbrenner, 2021a; McNeish, 2018), and on construct validity. The psychometric properties of a test (including reliability and validity evidence) are contingent upon the scores from which they were derived. As such, no test is inherently valid or reliable; test scores are only reliable and valid for a certain purpose, at a particular time, for use with a specific sample. Accordingly, authors should discuss reliability and validity evidence in terms of scores, for example, “Stamm (2010) found reliability and validity evidence of scores on the Professional Quality of Life (ProQOL 5) with a sample of . . . ”

Internal Consistency Reliability Evidence. Internal consistency estimates are derived from associations between the test items based on one administration (Kalkbrenner, 2021a). Cronbach’s coefficient alpha (α) is indisputably the most popular internal consistency reliability estimate in counseling and throughout social sciences research in general (Kalkbrenner, 2021a; McNeish, 2018). The appropriate use of coefficient alpha depends on the data meeting the following statistical assumptions: (a) essential tau equivalence, (b) continuous level scale of measurement, (c) normally distributed data, (d) uncorrelated error, (e) unidimensional scale, and (f) unit-weighted scaling (Kalkbrenner, 2021a). For decades, coefficient alpha has been passed down in the instructional practice of counselor training programs, and it has appeared as the dominant reliability index in national counseling and psychology journals without most authors conducting and reporting the necessary statistical assumption checks (Kalkbrenner, 2021a; McNeish, 2018). The psychometrically questionable practice of using alpha without assumption checking poses a threat to the veracity of counseling research, as the accuracy of coefficient alpha is threatened if the data violate one or more of the required assumptions.
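For reference, coefficient alpha can be computed directly from item and total-score variances. The Python sketch below uses hypothetical data and computes the coefficient only. It does not test any of the six assumptions listed above, which is exactly the gap this section warns against.

```python
from statistics import variance

def cronbach_alpha(rows):
    """Coefficient alpha from a participants-by-items matrix of item scores.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    This computes the coefficient only; it does NOT check alpha's assumptions.
    """
    k = len(rows[0])                           # number of items
    items = list(zip(*rows))                   # transpose: one tuple of scores per item
    sum_item_var = sum(variance(item) for item in items)
    total_var = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - sum_item_var / total_var)

# Hypothetical responses from five participants to a three-item scale.
scores = [
    [4, 5, 4],
    [2, 3, 3],
    [5, 5, 4],
    [3, 2, 3],
    [1, 2, 2],
]
print(round(cronbach_alpha(scores), 3))  # 0.923
```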

Internal Consistency Reliability Indices and Their Appropriate Use. Composite reliability (CR) internal consistency estimates are derived in similar ways as coefficient alpha; however, the proper computation of CRs does not depend on the data meeting many of alpha’s statistical assumptions (Kalkbrenner, 2021a; McNeish, 2018). For example, McDonald’s coefficient omega (ω or ωt) is a CR estimate that is not dependent on the data meeting most of alpha’s assumptions (Kalkbrenner, 2021a). In addition, omega hierarchical (ωh) and coefficient H are CR estimates that can be more advantageous than alpha. Despite the utility of CRs, they have historically been underused in research practice, in part because of the complexity of computing them. However, recent versions of SPSS include a point-and-click feature for computing coefficient omega as easily as coefficient alpha. Readers can refer to the SPSS user guide for steps to compute omega.
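The difference between coefficient omega and coefficient H can be seen from their formulas for a single-factor model. The sketch below assumes standardized factor loadings from a previously fitted one-factor model, with hypothetical loadings and uncorrelated errors. It does not estimate the factor model itself. Omega hierarchical requires a bifactor or higher-order model and is not shown.

```python
def omega_total(loadings):
    """McDonald's omega for a one-factor model with standardized loadings.

    omega = (sum of loadings)^2 / [(sum of loadings)^2 + sum of error variances],
    with each error variance taken as 1 - loading^2 (standardized, uncorrelated errors).
    """
    common = sum(loadings) ** 2
    error = sum(1 - lam ** 2 for lam in loadings)
    return common / (common + error)

def coefficient_h(loadings):
    """Hancock and Mueller's coefficient H (maximal reliability) from standardized loadings."""
    signal = sum(lam ** 2 / (1 - lam ** 2) for lam in loadings)
    return 1 / (1 + 1 / signal)

# Hypothetical standardized loadings for a three-item scale.
loadings = [0.9, 0.5, 0.3]
print(round(omega_total(loadings), 2), round(coefficient_h(loadings), 2))  # 0.61 0.82
```

When all loadings are equal, the two estimates coincide. When loadings differ, H is at least as large as ω because it reflects an optimally weighted composite rather than a unit-weighted sum score.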

Guidelines for Reporting Internal Consistency Reliability. In the Measures subsection of the Methods section, researchers should report existing reliability evidence of scores for their instruments. This can be done briefly by reporting the results of multiple studies in the same sentence, as in: “A number of past investigators found internal consistency reliability evidence for scores on the [name of test] with a number of different samples, including college students (α = .XX, ω = .XX; Authors et al., 20XX), clients living with chronic back pain (α = .XX, ω = .XX; Authors et al., 20XX), and adults in the United States (α = .XX, ω = .XX; Authors et al., 20XX) . . .”

Researchers should also compute and report reliability estimates of test scores with their data set in the Measures section. If a researcher is using coefficient alpha, they have a duty to complete and report assumption checking to demonstrate that the properties of their sample data were suitable for alpha (Kalkbrenner, 2021a; McNeish, 2018). Another option is to compute a CR (e.g., ω or H) instead of alpha. That said, Kalkbrenner (2021a) recommended that researchers report both coefficient alpha (because of its popularity) and coefficient omega (because of the robustness of the estimate). Reliability estimates of test scores should be interpreted on a case-by-case basis, as the meaning of reliability coefficients is contingent upon the construct of measurement and the stakes or consequences of the results for test takers (Kalkbrenner, 2021a). The following tentative interpretative guidelines for adults’ scores on attitudinal measures were offered by Kalkbrenner (2021b) for coefficient alpha: α < .70 = poor, α > .70 to .84 = acceptable, α > .85 = strong; and for coefficient omega: ω < .65 = poor, ω > .65 to .80 = acceptable, ω > .80 = strong. It is important to note that these thresholds are for adults’ scores on attitudinal measures; acceptable internal consistency reliability estimates of scores should be much stronger for high-stakes testing.
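Kalkbrenner's (2021b) tentative thresholds can be encoded as a small helper, shown here as a Python sketch. The published ranges leave a gap between .84 and .85 for alpha, so the handling of boundary values is an assumption stated in the code.

```python
def interpret_reliability(value, estimate="alpha"):
    """Label a reliability estimate with Kalkbrenner's (2021b) tentative guidelines.

    Intended only for adults' scores on attitudinal measures, not high-stakes testing.
    Boundary handling is an assumption: alpha values from .70 up to (but not including) .85
    are treated as acceptable, and omega of exactly .80 as acceptable.
    """
    if estimate == "alpha":
        if value < 0.70:
            return "poor"
        return "acceptable" if value < 0.85 else "strong"
    if estimate == "omega":
        if value < 0.65:
            return "poor"
        return "acceptable" if value <= 0.80 else "strong"
    raise ValueError("estimate must be 'alpha' or 'omega'")

print(interpret_reliability(0.82, "alpha"), interpret_reliability(0.82, "omega"))  # acceptable strong
```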

Construct Validity Evidence of Test Scores. Construct validity involves the test’s ability to accurately capture a theoretical or latent construct (AERA et al., 2014). Construct validity considerations are particularly important for counseling researchers who tend to investigate latent traits as outcome variables. At a minimum, counseling researchers should report construct validity evidence for both internal structure and relations with theoretically relevant constructs. Internal structure (aka factorial validity) is a source of construct validity that represents the degree to which “the relationships among test items and test components conform to the construct on which the proposed test score interpretations are based” (AERA et al., 2014, p. 16). Readers can refer to Kalkbrenner (2021b) for a free (open access publishing) overview of exploratory factor analysis (EFA) and confirmatory factor analysis (CFA) that is written in layperson’s terms. Relations with theoretically relevant constructs (e.g., convergent and divergent validity) are another source of construct validity evidence that involves comparing scores on the test in question with scores on other reputable tests (AERA et al., 2014; Strauss & Smith, 2009).

Guidelines for Reporting Validity Evidence. Counseling researchers should report existing evidence of at least internal structure and relations with theoretically relevant constructs (e.g., convergent or divergent validity) for each instrument they use. EFA results alone are inadequate for demonstrating internal structure validity evidence of scores, as EFA is a much less rigorous test of internal structure than CFA (Kalkbrenner, 2021b). In addition, EFA results can reveal multiple retainable factor solutions, which need to be tested/confirmed via CFA before even initial internal structure validity evidence of scores can be established. Thus, both EFA and CFA are necessary for reporting/demonstrating initial evidence of internal structure of test scores. Beyond internal structure, counselors should also report existing convergent and/or divergent validity evidence of scores. High correlations (r > .50) demonstrate evidence of convergent validity, and moderate-to-low correlations (r < .30, preferably r < .10) support divergent validity evidence of scores (Sink & Stroh, 2006; Swank & Mullen, 2017).

In an ideal situation, a researcher will have the resources to test and report the internal structure (e.g., compute CFA firsthand) of scores on the instrumentation with their sample. However, CFA requires large sample sizes (Kalkbrenner, 2021b), which oftentimes is not feasible. It might be more practical for researchers to test and report relations with theoretically relevant constructs, though adding one or more questionnaire(s) to data collection efforts can come with the cost of increasing respondent fatigue. In these instances, researchers might consider reporting other forms of validity evidence (e.g., evidence based on test content, criterion validity, or response processes; AERA et al., 2014). In instances when computing firsthand validity evidence of scores is not logistically viable, researchers should be transparent about this limitation and pay especially careful attention to presenting evidence for cross-cultural fairness and norming.

Cross-Cultural Fairness and Norming

In a psychometric context, fairness (sometimes referred to as cross-cultural fairness) is a fundamental validity issue and a complex construct to define (AERA et al., 2014; Kane, 2010; Neukrug & Fawcett, 2015). I offer the following composite definition of cross-cultural fairness for the purposes of a quantitative Measures section: the degree to which test construction, administration procedures, interpretations, and uses of results are equitable and represent an accurate depiction of a diverse group of test takers’ abilities, achievement, attitudes, perceptions, values, and/or experiences (AERA et al., 2014; Educational Testing Service [ETS], 2016; Kane, 2010; Kane & Bridgeman, 2017). Counseling researchers should consider the following central fairness issues when selecting or developing instrumentation: measurement bias, accessibility, universal design, equivalent meaning (invariance), test content, opportunity to learn, test adaptations, and comparability (AERA et al., 2014; Kane & Bridgeman, 2017). Providing a comprehensive overview of fairness is beyond the scope of this article; however, readers are encouraged to read Chapter 3 in the AERA standards (2014) on Fairness in Testing.

In the Measures section, counseling researchers should include commentary on how and in what ways cross-cultural fairness guided their selection, administration, and interpretation of procedures and test results (AERA et al., 2014; Kalkbrenner, 2021b). Cross-cultural fairness and construct validity are related constructs (AERA et al., 2014). Accordingly, citing construct validity of test scores (see the previous section) with normative samples similar to the researcher’s target population is one way to provide evidence of cross-cultural fairness. However, construct validity evidence alone might not be a sufficient indication of cross-cultural fairness, as the latent meaning of test scores is a function of test takers’ cultural context (Kalkbrenner, 2021b). To this end, when selecting instrumentation, researchers should review original psychometric studies and consider the normative sample(s) from which test scores were derived.

Commentary on the Danger of Using Self-Developed and Untested Scales

Counseling researchers have an ethical duty to “carefully consider the validity, reliability, psychometric limitations, and appropriateness of instruments when selecting assessments” (ACA, 2014, p. 11). Quantitative researchers might encounter instances in which a scale is not available to measure their desired construct of measurement (latent/inferred variable). In these cases, the first step in the line of research is often to conduct an instrument development and score validation study (AERA et al., 2014; Kalkbrenner, 2021b). Detailing the protocol for conducting psychometric research is outside the scope of this article; however, readers can refer to the MEASURE Approach to Instrument Development (Kalkbrenner, 2021c) for a free (open access publishing) overview of the steps in an instrument development and score validation study. Adapting an existing scale can be an option in lieu of instrument development; however, according to the AERA standards (2014), “an index that is constructed by manipulating and combining test scores should be subjected to the same validity, reliability, and fairness investigations that are expected for the test scores that underlie the index” (p. 210). Although it is not necessary that all quantitative researchers become psychometricians and conduct full-fledged psychometric studies to validate scores on instrumentation, researchers do have a responsibility to report evidence of the reliability, validity, and cross-cultural fairness of test scores for each instrument they used. Without at least initial construct validity testing of scores (calibration), researchers cannot determine what, if anything at all, an untested instrument actually measures.

Data Analysis

Counseling researchers should report and explain the selection of their data analytic procedures (e.g., statistical analyses) in a Data Analysis (or Statistical Analysis) subsection of the Methods or Results section (Giordano et al., 2021; Leedy & Ormrod, 2019). The placement of the Data Analysis section in either the Methods or Results section can vary between publication outlets; however, this section tends to include commentary on variables, statistical models and analyses, and statistical assumption checking procedures.

Operationalizing Variables and Corresponding Statistical Analyses

Clearly outlining each variable is an important first step in selecting the most appropriate statistical analysis for answering each research question (Creswell & Creswell, 2018). Researchers should specify the independent variable(s) and corresponding levels as well as the dependent variable(s); for example, “The first independent variable, time, was composed of the three following levels: pre, middle, and post. The dependent variables were participants’ scores on the burnout and compassion satisfaction subscales of the ProQOL 5.” After articulating the variables, counseling researchers are tasked with identifying each variable’s scale of measurement (Creswell & Creswell, 2018; Field, 2018; Flinn & Kalkbrenner, 2021). Researchers can then select the most appropriate statistical test(s) for answering their research question(s). They do so by considering the scale of measurement of each variable and consulting Table 8.3 on page 159 in Creswell and Creswell (2018), Figure 1 in Flinn and Kalkbrenner (2021), or the chart on page 1072 in Field (2018).
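The logic of matching each variable's scale of measurement to a test can be sketched as a small decision function. The Python sketch below is hypothetical: `suggest_test` and its arguments are illustrative names, and the rule is deliberately simplified. It does not reproduce Table 8.3 in Creswell and Creswell (2018), Figure 1 in Flinn and Kalkbrenner (2021), or Field's (2018) chart. It also ignores covariates, multiple dependent variables, and assumption violations.

```python
SCALES = {"nominal", "ordinal", "interval", "ratio"}
CONTINUOUS = {"interval", "ratio"}


def suggest_test(iv_scale: str, dv_scale: str, levels: int = 2,
                 design: str = "between") -> str:
    """Return a rough first-pass test suggestion for one IV and one DV.

    levels and design ("between", "within", or "mixed") only matter when the
    IV is categorical (nominal or ordinal) and the DV is continuous.
    """
    if iv_scale not in SCALES or dv_scale not in SCALES:
        raise ValueError("scales must be nominal, ordinal, interval, or ratio")
    iv_continuous = iv_scale in CONTINUOUS
    dv_continuous = dv_scale in CONTINUOUS

    if dv_continuous and not iv_continuous:
        # Group comparisons on a continuous outcome.
        if design == "mixed":
            return "mixed (between-within) ANOVA"
        if levels == 2:
            return ("paired-samples t test" if design == "within"
                    else "independent-samples t test")
        return "repeated-measures ANOVA" if design == "within" else "one-way ANOVA"
    if dv_continuous and iv_continuous:
        return "Pearson correlation or simple linear regression"
    if dv_scale == "nominal":
        # Categorical outcome: cross-tabulate or model the odds.
        return "logistic regression" if iv_continuous else "chi-square test of independence"
    # Ordinal outcome: fall back to rank-based procedures.
    return "nonparametric test (e.g., Mann-Whitney U, Kruskal-Wallis, Spearman's rho)"


# Hypothetical use for a group (3 levels, between) by time (within) design
# with an outcome treated as interval-level.
print(suggest_test("nominal", "interval", levels=3, design="mixed"))
```

Treating summed Likert-type totals as interval-level data is a common but debatable choice. If the outcome is declared ordinal instead, the helper falls back to rank-based tests.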

Assumption Checking

Statistical analyses used in quantitative research rest on a set of underlying assumptions (Field, 2018; Giordano et al., 2021). Accordingly, it is essential that quantitative researchers outline their protocol for testing their sample data against the appropriate statistical assumptions. Assumptions of common statistical tests in counseling research include normality, absence of outliers (multivariate and/or univariate), homogeneity of covariance, homogeneity of regression slopes, homoscedasticity, independence, linearity, and absence of multicollinearity (Flinn & Kalkbrenner, 2021; Giordano et al., 2021). Readers can refer to Figure 2 in Flinn and Kalkbrenner (2021) for an overview of statistical assumptions for the major statistical analyses in counseling research.
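A first pass at univariate screening can be scripted. The Python sketch below computes moment-based skewness and excess kurtosis. It also flags cases whose absolute z score exceeds 3.29 (roughly p < .001, two-tailed). That cutoff is a common convention, but it is an assumption here because the text does not name one. Multivariate outliers, homogeneity, linearity, and the other assumptions listed above need their own checks. The function names and toy data are hypothetical.

```python
from statistics import mean, stdev


def skewness_kurtosis(values):
    """Moment-based skewness (g1) and excess kurtosis (g2).

    Packages such as SPSS report bias-adjusted versions, so their values
    will differ slightly, especially in small samples.
    """
    n = len(values)
    m = sum(values) / n
    m2 = sum((v - m) ** 2 for v in values) / n
    m3 = sum((v - m) ** 3 for v in values) / n
    m4 = sum((v - m) ** 4 for v in values) / n
    return m3 / m2 ** 1.5, m4 / m2 ** 2 - 3


def univariate_outliers(values, cutoff=3.29):
    """Indices of cases whose absolute z score (sample SD) exceeds the cutoff."""
    m, s = mean(values), stdev(values)
    return [i for i, v in enumerate(values) if abs((v - m) / s) > cutoff]


scores = [10] * 19 + [100]  # hypothetical toy data with one extreme case
print(skewness_kurtosis([1, 2, 3, 4, 5]))
print(univariate_outliers(scores))  # [19]
```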

Exemplar Quantitative Methods Section

This section presents an exemplar quantitative Methods section based on a hypothetical example and a practice data set. Producers and consumers of quantitative research can use it as a model for writing their own Methods section or for evaluating the rigor of an existing one. As stated previously, a well-written literature review and research question(s) are essential for grounding the study and Methods section (Flinn & Kalkbrenner, 2021). The final piece of a literature review section is typically the research question(s). Accordingly, the following research question guided the exemplar Methods section below: To what extent are there differences in anxiety severity between college students who participate in deep breathing exercises with progressive muscle relaxation, a group exercise program, or both group exercise and deep breathing with progressive muscle relaxation?

——-Exemplar——-

A quantitative group comparison research design was employed based on a post-positivist philosophy of science (Creswell & Creswell, 2018). Specifically, I implemented a quasi-experimental, control group pretest/posttest design to answer the research question (Leedy & Ormrod, 2019). Consistent with a post-positivist philosophy of science, I sought a probabilistic, objective answer situated within the context of imperfect and fallible evidence. The rationale for the present study was grounded in Dr. David Servan-Schreiber’s (2009) theory of lifestyle practices for integrated mental and physical health. According to Servan-Schreiber, simultaneously focusing on improving one’s mental and physical health is more effective than focusing on either physical health or mental wellness in isolation. Consistent with Servan-Schreiber’s theory, the aim of the present study was to compare the utility of three different approaches for anxiety reduction: a behavioral approach alone, a physiological approach alone, and a combined behavioral and physiological approach.

I am in my late 30s and identify as a White man. I have a PhD in counselor education as well as an MS in clinical mental health counseling. I have a deep belief in and an active line of research on the utility of total wellness (combined mental and physical health). My research and clinical experience have informed my passion and interest in studying the utility of integrated physical and psychological health services. More specifically, my personal beliefs, values, and interest in total wellness influenced my decision to conduct the present study. I carefully followed the procedures outlined below to reduce the chances that my personal values biased the research design.

Participants and Procedures

Data collection began following approval from the IRB. Data were collected during the fall 2022 semester from undergraduate students who were at least 18 years old and enrolled in at least one class at a land grant, research-intensive university located in the Southwestern United States. An a priori statistical power analysis was conducted using G*Power (Faul et al., 2009). Results revealed that a sample size of at least 42 would provide 80% power to detect a moderate effect size (f = 0.25) at α = .05.
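The exemplar reports the inputs and output of its G*Power run but not which test family was selected. The Python sketch below shows one way such a figure can arise. Its assumptions are introduced here for illustration and are not stated in the exemplar:

- the test is a within-between (group × time) interaction in a repeated measures ANOVA, with three groups and two measurement occasions;
- the correlation between repeated measures is ρ = .5;
- sphericity holds (ε = 1);
- the noncentrality parameter is λ = f² · m · N / (1 − ρ), which is our reading of the formula G*Power uses for this design.

Power is the tail probability of a noncentral F distribution beyond the critical F value. To stay within the standard library, the sketch evaluates that tail as a Poisson mixture of beta distributions, which only works when the numerator degrees of freedom are even. All function names are hypothetical.

```python
import math


def beta_cdf_integer_a(x: float, a: int, b: float) -> float:
    """P(Beta(a, b) <= x) for a positive integer a and any real b > 0.

    Finite-sum identity:
    I_x(a, b) = 1 - sum_{i=0}^{a-1} Gamma(b+i) / (Gamma(b) * i!) * x**i * (1-x)**b
    """
    term = (1.0 - x) ** b  # i = 0 term of the sum
    total = 0.0
    for i in range(a):
        total += term
        term *= (b + i) / (i + 1) * x  # ratio between successive terms
    return 1.0 - total


def critical_beta_x(df1: int, df2: int, alpha: float) -> float:
    """Critical value of the central F test on the Beta(df1/2, df2/2) scale,
    x = df1*F / (df1*F + df2), found by bisection."""
    lo, hi = 0.0, 1.0
    for _ in range(100):
        mid = (lo + hi) / 2
        if beta_cdf_integer_a(mid, df1 // 2, df2 / 2) < 1 - alpha:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def noncentral_f_power(df1: int, df2: int, lam: float, alpha: float = 0.05) -> float:
    """P(noncentral F > critical F) as a Poisson(lam/2) mixture of beta tails."""
    if df1 % 2:
        raise ValueError("this sketch only handles an even numerator df")
    x_crit = critical_beta_x(df1, df2, alpha)
    mu = lam / 2
    weight = math.exp(-mu)  # Poisson probability of k = 0
    power = 0.0
    for k in range(200):
        power += weight * (1.0 - beta_cdf_integer_a(x_crit, df1 // 2 + k, df2 / 2))
        weight *= mu / (k + 1)
    return power


def mixed_anova_interaction_power(n_total, groups, measurements, f, rho, alpha=0.05):
    """Power for a group x time interaction, assuming sphericity (epsilon = 1)."""
    lam = f ** 2 * measurements * n_total / (1 - rho)  # assumed G*Power-style lambda
    df1 = (groups - 1) * (measurements - 1)
    df2 = (n_total - groups) * (measurements - 1)
    return noncentral_f_power(df1, df2, lam, alpha)


def minimum_total_n(groups, measurements, f, rho, target=0.80, alpha=0.05):
    """Smallest total N whose interaction power reaches the target."""
    n = groups + 1
    while mixed_anova_interaction_power(n, groups, measurements, f, rho, alpha) < target:
        n += 1
    return n


print(round(mixed_anova_interaction_power(42, groups=3, measurements=2, f=0.25, rho=0.5), 3))
print(minimum_total_n(groups=3, measurements=2, f=0.25, rho=0.5))
```

Under these assumptions, the power at N = 42 comes out at roughly .80, consistent with the exemplar's figure. The required N is sensitive to ρ and to the number of measurement occasions. Reporting those settings alongside f, α, and power therefore makes the analysis reproducible.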

I obtained an email list from the registrar’s office of all students enrolled in a section of a Career Excellence course. This course was selected to recruit students from a variety of academic majors because all undergraduate students in the College of Education are required to take it. The focus of this study (mental and physical wellness) was also consistent with the purpose of the course (success in college). A non-probability, convenience sampling procedure was employed: a recruitment message was sent to students’ email addresses via the Qualtrics online survey platform. The response rate was approximately 15%. A total of 222 prospective participants indicated their interest in the study by clicking on the electronic recruitment link, which automatically sent them an invitation to attend an information session about the study. One hundred forty-four students attended the information session, 129 of whom provided voluntary informed consent to enroll in the study. Participants were given a confidential identification number to track their pretest/posttest responses, and then they completed the pretest (see the Measures section below). Participants were randomly assigned in equal numbers to one of three conditions: (a) deep breathing with progressive muscle relaxation, (b) group exercise, or (c) both group exercise and deep breathing with progressive muscle relaxation.
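The exemplar does not say how the random assignment was carried out. One simple approach is to shuffle the consenting participants and then cut the shuffled list into equal blocks, as in the Python sketch below. The ID format, condition labels, and seed are hypothetical. Fixing the seed lets another researcher reproduce the allocation.

```python
import random


def assign_equal_groups(participant_ids, conditions, seed=None):
    """Randomly split participants into equally sized condition groups."""
    if len(participant_ids) % len(conditions) != 0:
        raise ValueError("participants cannot be split into equal groups")
    rng = random.Random(seed)  # seeded so the allocation can be reproduced
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    size = len(shuffled) // len(conditions)
    return {cond: shuffled[i * size:(i + 1) * size]
            for i, cond in enumerate(conditions)}


ids = [f"P{n:03d}" for n in range(1, 130)]  # 129 hypothetical confidential IDs
groups = assign_equal_groups(
    ids, ["breathing_jpmr", "group_exercise", "combined"], seed=2022)
print({cond: len(members) for cond, members in groups.items()})
# {'breathing_jpmr': 43, 'group_exercise': 43, 'combined': 43}
```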

A missing values analysis showed that less than 5% of data were missing for every case. Expectation maximization was used to impute missing values, as Little’s Missing Completely at Random (MCAR) test indicated that the data could be treated as MCAR (p = .367). Data from five participants who did not return to complete the posttest at the end of the semester were removed, yielding a final sample of N = 124. Participants ranged in age from 18 to 33 (M = 21.64, SD = 3.70). In terms of gender identity, 64.5% (n = 80) self-identified as female, 32.3% (n = 40) as male, 0.8% (n = 1) as transgender, and 2.4% (n = 3) did not specify their gender identity. For ethnic identity, 50.0% (n = 62) identified as White, 26.6% (n = 33) as Latinx, 12.1% (n = 15) as Asian, 9.7% (n = 12) as Black, 0.8% (n = 1) as Alaska Native, and 0.8% (n = 1) did not specify their ethnic identity. In terms of generational status, 36.3% (n = 45) of participants were first-generation college students and 63.7% (n = 79) were second-generation or beyond.
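Demographic percentages are simple ratios of subgroup counts to the analytic sample. They can therefore be recomputed as a consistency check before submission. The Python sketch below is a hypothetical helper that uses the counts reported in the exemplar and rounds to one decimal place to match its reporting. It refuses to run if the counts do not sum to N.

```python
def percentages(counts, n_total, decimals=1):
    """Convert subgroup counts to percentages of the analytic sample."""
    if sum(counts.values()) != n_total:
        raise ValueError("subgroup counts do not sum to the sample size")
    return {group: round(100 * n / n_total, decimals) for group, n in counts.items()}


gender = {"female": 80, "male": 40, "transgender": 1, "not specified": 3}
ethnicity = {"White": 62, "Latinx": 33, "Asian": 15, "Black": 12,
             "Alaska Native": 1, "not specified": 1}
print(percentages(gender, 124))
print(percentages(ethnicity, 124))
```

Independently rounded percentages need not sum to exactly 100. Small discrepancies of a tenth of a point are expected; larger ones usually signal a transcription slip.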

Group Exercise and Deep Breathing Programs

I was awarded a small grant to offer on-campus deep breathing with progressive muscle relaxation and group exercise programs. The structure of the group exercise program was based on Patterson et al. (2021), which consisted of more than 50 available exercise classes each week (e.g., cycling, yoga, swimming, dance). There was no limit to the number of classes that participants could attend; however, attending at least one class each week was required for participation in the study. Readers can refer to Patterson et al. for more information about the group exercise programming.

Neeru et al.’s (2015) deep breathing and progressive muscle relaxation programming was used in the present study. Participants completed daily deep breathing and Jacobson Progressive Muscle Relaxation (JPMR). JPMR was selected because of its documented success in treating anxiety disorders (Neeru et al., 2015). Specifically, the program consisted of four deep breathing steps completed five times and approximately 25 minutes of JPMR daily. Participants attended a weekly deep breathing and JPMR session facilitated by a licensed professional counselor. Participants also practiced deep breathing and JPMR on their own daily and kept a log to document their practice sessions. Readers can refer to Neeru et al. for more information about JPMR and the deep breathing exercises.

Measures

Prospective participants read an informed consent statement and indicated their voluntary informed consent by clicking on a checkbox. Next, participants confirmed that they met the following inclusion criteria: (a) at least 18 years old and (b) currently enrolled in at least one undergraduate college class. The instrumentation began with demographic items regarding participants’ gender identity, ethnic identity, and age, along with their confidential identification number to track their pretest and posttest scores. Finally, participants completed a convergent validity measure (Mental Health Inventory–5) and the Generalized Anxiety Disorder (GAD)-7 to measure the outcome variable (anxiety severity).
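The GAD-7 consists of seven items rated from 0 (not at all) to 3 (nearly every day), so total scores range from 0 to 21. Cut points of 5, 10, and 15 are widely used to label mild, moderate, and severe anxiety. The exemplar does not say whether totals were banded. In a group comparison like this one, the continuous total would typically serve as the outcome, with the labels used only for description. The Python sketch below (hypothetical function name and respondent) shows the scoring step with that banding as an assumption.

```python
# Commonly used GAD-7 cut points (assumed here; the exemplar does not state them).
SEVERITY_CUTS = [(15, "severe"), (10, "moderate"), (5, "mild"), (0, "minimal")]


def score_gad7(responses):
    """Sum seven items scored 0-3 and attach a severity label."""
    if len(responses) != 7 or any(r not in (0, 1, 2, 3) for r in responses):
        raise ValueError("GAD-7 needs exactly seven responses scored 0-3")
    total = sum(responses)  # possible range: 0-21
    for cut, label in SEVERITY_CUTS:
        if total >= cut:
            return total, label


print(score_gad7([2, 2, 1, 1, 2, 1, 1]))  # (10, 'moderate') for a hypothetical respondent
```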

Reliability and Validity Evidence of Test Scores

Internal consistency reliability was computed for scores on the screening tool for appraising anxiety severity among undergraduate students in the present sample. Coefficient alpha (α) and coefficient omega (ω) were computed with the following minimum thresholds for adults’ scores on attitudinal measures: α > .70 and ω > .65, based on the recommendations of Kalkbrenner (2021b).
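The two coefficients and the stated thresholds can be sketched in Python as follows. Alpha is computed directly from a respondents-by-items score matrix. Omega is computed from standardized loadings of a one-factor model. That model is an assumption of this sketch: the loadings would have to come from a factor analysis run elsewhere, which the sketch does not perform. The function names, responses, and loadings are hypothetical.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Coefficient alpha from a respondents-by-items score matrix."""
    k = len(rows[0])
    item_variance_sum = sum(variance(item) for item in zip(*rows))
    total_variance = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


def omega_total(loadings):
    """McDonald's omega from standardized loadings of a one-factor model."""
    common = sum(loadings) ** 2
    unique = sum(1 - lam ** 2 for lam in loadings)  # standardized error variances
    return common / (common + unique)


def meets_thresholds(alpha, omega, alpha_min=0.70, omega_min=0.65):
    """Apply the minimum thresholds stated in the text (alpha > .70, omega > .65)."""
    return alpha > alpha_min and omega > omega_min


responses = [[1, 2, 2], [2, 3, 3], [3, 3, 4], [4, 5, 4], [2, 2, 3]]  # hypothetical
loadings = [0.70, 0.75, 0.80]  # hypothetical one-factor solution
a, w = cronbach_alpha(responses), omega_total(loadings)
print(round(a, 3), round(w, 3), meets_thresholds(a, w))
```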

The Mental Health Inventory–5.

Participants completed the Mental Health Inventory (MHI)-5 to test the convergent validity of the present sample’s scores on the GAD-7, which was used to measure the outcome variable in this study, anxiety severity. The MHI-5 is a 5-item measure for appraising overall mental health (Berwick et al., 1991). Higher MHI-5 scores reflect better mental health. Participants responded to test items (example: “How much of the time, during the past month, have you been a very nervous person?”) on the following Likert-type scale: 0 = none of the time, 1 = a little of the time, 2 = some of the time, 3 = a good bit of the time, 4 = most of the time, or 5 = all of the time. The MHI-5 has particular utility as a convergent validity measure because of its brevity (5 items) coupled with extensive support for its psychometric properties (e.g., Berwick et al., 1991; Rivera-Riquelme et al., 2019; Thorsen et al., 2013). For example, Rivera-Riquelme et al. (2019) found acceptable internal consistency reliability evidence (α = .71, ω = .78) and internal structure validity evidence of MHI-5 scores. In addition, the findings of Thorsen et al. (2013) demonstrated convergent validity evidence of MHI-5 scores. Findings in the extant literature (e.g., Foster et al., 2016; Vijayan & Joseph, 2015) established an inverse relationship between anxiety and mental health. Thus, a strong negative correlation (r < −.50; Sink & Stroh, 2006) between the MHI-5 and GAD-7 would support convergent validity evidence of scores.
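The convergent validity decision reduces to a Pearson correlation between the two totals, compared against the −.50 criterion. Here a strong inverse relationship means r falls below −.50. The Python sketch below assumes MHI-5 totals are already scored so that higher values mean better mental health, as the text states. The score lists and function names are hypothetical. In practice a statistics package would also supply a confidence interval for r.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two paired score lists."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need paired scores for at least three participants")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def supports_convergent_validity(r, cutoff=-0.50):
    """A strong inverse MHI-5/GAD-7 relationship means r below the cutoff."""
    return r < cutoff


mhi5 = [20, 18, 15, 12, 9, 6]   # hypothetical MHI-5 totals
gad7 = [2, 4, 6, 11, 14, 18]    # hypothetical GAD-7 totals
r = pearson_r(mhi5, gad7)
print(round(r, 2), supports_convergent_validity(r))
```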