Subscribe to the PwC Newsletter

Join the community, trending research, autocoder: enhancing code large language model with \textsc{aiev-instruct}.

bin123apple/autocoder • 23 May 2024

We introduce AutoCoder, the first Large Language Model to surpass GPT-4 Turbo (April 2024) and GPT-4o in pass@1 on the Human Eval benchmark test ($\mathbf{90. 9\%}$ vs. $\mathbf{90. 2\%}$).

FinRobot: An Open-Source AI Agent Platform for Financial Applications using Large Language Models

ai4finance-foundation/finrobot • 23 May 2024

As financial institutions and professionals increasingly incorporate Large Language Models (LLMs) into their workflows, substantial barriers, including proprietary data and specialized knowledge, persist between the finance sector and the AI community.

EasyAnimate: A High-Performance Long Video Generation Method based on Transformer Architecture

The motion module can be adapted to various DiT baseline methods to generate video with different styles.

YOLOv10: Real-Time End-to-End Object Detection

In this work, we aim to further advance the performance-efficiency boundary of YOLOs from both the post-processing and model architecture.

InstaDrag: Lightning Fast and Accurate Drag-based Image Editing Emerging from Videos

magic-research/instadrag • 22 May 2024

Accuracy and speed are critical in image editing tasks.

$\textit{S}^3$Gaussian: Self-Supervised Street Gaussians for Autonomous Driving

Photorealistic 3D reconstruction of street scenes is a critical technique for developing real-world simulators for autonomous driving.

Switch Transformers: Scaling to Trillion Parameter Models with Simple and Efficient Sparsity

We design models based off T5-Base and T5-Large to obtain up to 7x increases in pre-training speed with the same computational resources.

Looking Backward: Streaming Video-to-Video Translation with Feature Banks

This paper introduces StreamV2V, a diffusion model that achieves real-time streaming video-to-video (V2V) translation with user prompts.

LLaVA-UHD: an LMM Perceiving Any Aspect Ratio and High-Resolution Images

To address the challenges, we present LLaVA-UHD, a large multimodal model that can efficiently perceive images in any aspect ratio and high resolution.

FlashRAG: A Modular Toolkit for Efficient Retrieval-Augmented Generation Research

With the advent of Large Language Models (LLMs), the potential of Retrieval Augmented Generation (RAG) techniques have garnered considerable research attention.

machine learning Recently Published Documents

Total documents.

- Latest Documents

- Most Cited Documents

- Contributed Authors

- Related Sources

- Related Keywords

An explainable machine learning model for identifying geographical origins of sea cucumber Apostichopus japonicus based on multi-element profile

A comparison of machine learning- and regression-based models for predicting ductility ratio of rc beam-column joints, alexa, is this a historical record.

Digital transformation in government has brought an increase in the scale, variety, and complexity of records and greater levels of disorganised data. Current practices for selecting records for transfer to The National Archives (TNA) were developed to deal with paper records and are struggling to deal with this shift. This article examines the background to the problem and outlines a project that TNA undertook to research the feasibility of using commercially available artificial intelligence tools to aid selection. The project AI for Selection evaluated a range of commercial solutions varying from off-the-shelf products to cloud-hosted machine learning platforms, as well as a benchmarking tool developed in-house. Suitability of tools depended on several factors, including requirements and skills of transferring bodies as well as the tools’ usability and configurability. This article also explores questions around trust and explainability of decisions made when using AI for sensitive tasks such as selection.

Automated Text Classification of Maintenance Data of Higher Education Buildings Using Text Mining and Machine Learning Techniques

Data-driven analysis and machine learning for energy prediction in distributed photovoltaic generation plants: a case study in queensland, australia, modeling nutrient removal by membrane bioreactor at a sewage treatment plant using machine learning models, big five personality prediction based in indonesian tweets using machine learning methods.

<span lang="EN-US">The popularity of social media has drawn the attention of researchers who have conducted cross-disciplinary studies examining the relationship between personality traits and behavior on social media. Most current work focuses on personality prediction analysis of English texts, but Indonesian has received scant attention. Therefore, this research aims to predict user’s personalities based on Indonesian text from social media using machine learning techniques. This paper evaluates several machine learning techniques, including <a name="_Hlk87278444"></a>naive Bayes (NB), K-nearest neighbors (KNN), and support vector machine (SVM), based on semantic features including emotion, sentiment, and publicly available Twitter profile. We predict the personality based on the big five personality model, the most appropriate model for predicting user personality in social media. We examine the relationships between the semantic features and the Big Five personality dimensions. The experimental results indicate that the Big Five personality exhibit distinct emotional, sentimental, and social characteristics and that SVM outperformed NB and KNN for Indonesian. In addition, we observe several terms in Indonesian that specifically refer to each personality type, each of which has distinct emotional, sentimental, and social features.</span>

Compressive strength of concrete with recycled aggregate; a machine learning-based evaluation

Temperature prediction of flat steel box girders of long-span bridges utilizing in situ environmental parameters and machine learning, computer-assisted cohort identification in practice.

The standard approach to expert-in-the-loop machine learning is active learning, where, repeatedly, an expert is asked to annotate one or more records and the machine finds a classifier that respects all annotations made until that point. We propose an alternative approach, IQRef , in which the expert iteratively designs a classifier and the machine helps him or her to determine how well it is performing and, importantly, when to stop, by reporting statistics on a fixed, hold-out sample of annotated records. We justify our approach based on prior work giving a theoretical model of how to re-use hold-out data. We compare the two approaches in the context of identifying a cohort of EHRs and examine their strengths and weaknesses through a case study arising from an optometric research problem. We conclude that both approaches are complementary, and we recommend that they both be employed in conjunction to address the problem of cohort identification in health research.

Export Citation Format

Share document.

Frequently Asked Questions

JMLR Papers

Select a volume number to see its table of contents with links to the papers.

Volume 23 (January 2022 - Present)

Volume 22 (January 2021 - December 2021)

Volume 21 (January 2020 - December 2020)

Volume 20 (January 2019 - December 2019)

Volume 19 (August 2018 - December 2018)

Volume 18 (February 2017 - August 2018)

Volume 17 (January 2016 - January 2017)

Volume 16 (January 2015 - December 2015)

Volume 15 (January 2014 - December 2014)

Volume 14 (January 2013 - December 2013)

Volume 13 (January 2012 - December 2012)

Volume 12 (January 2011 - December 2011)

Volume 11 (January 2010 - December 2010)

Volume 10 (January 2009 - December 2009)

Volume 9 (January 2008 - December 2008)

Volume 8 (January 2007 - December 2007)

Volume 7 (January 2006 - December 2006)

Volume 6 (January 2005 - December 2005)

Volume 5 (December 2003 - December 2004)

Volume 4 (Apr 2003 - December 2003)

Volume 3 (Jul 2002 - Mar 2003)

Volume 2 (Oct 2001 - Mar 2002)

Volume 1 (Oct 2000 - Sep 2001)

Special Topics

Bayesian Optimization

Learning from Electronic Health Data (December 2016)

Gesture Recognition (May 2012 - present)

Large Scale Learning (Jul 2009 - present)

Mining and Learning with Graphs and Relations (February 2009 - present)

Grammar Induction, Representation of Language and Language Learning (Nov 2010 - Apr 2011)

Causality (Sep 2007 - May 2010)

Model Selection (Apr 2007 - Jul 2010)

Conference on Learning Theory 2005 (February 2007 - Jul 2007)

Machine Learning for Computer Security (December 2006)

Machine Learning and Large Scale Optimization (Jul 2006 - Oct 2006)

Approaches and Applications of Inductive Programming (February 2006 - Mar 2006)

Learning Theory (Jun 2004 - Aug 2004)

Special Issues

In Memory of Alexey Chervonenkis (Sep 2015)

Independent Components Analysis (December 2003)

Learning Theory (Oct 2003)

Inductive Logic Programming (Aug 2003)

Fusion of Domain Knowledge with Data for Decision Support (Jul 2003)

Variable and Feature Selection (Mar 2003)

Machine Learning Methods for Text and Images (February 2003)

Eighteenth International Conference on Machine Learning (ICML2001) (December 2002)

Computational Learning Theory (Nov 2002)

Shallow Parsing (Mar 2002)

Kernel Methods (December 2001)

Thank you for visiting nature.com. You are using a browser version with limited support for CSS. To obtain the best experience, we recommend you use a more up to date browser (or turn off compatibility mode in Internet Explorer). In the meantime, to ensure continued support, we are displaying the site without styles and JavaScript.

- View all journals

- My Account Login

- Explore content

- About the journal

- Publish with us

- Sign up for alerts

- Open access

- Published: 01 February 2021

An open source machine learning framework for efficient and transparent systematic reviews

- Rens van de Schoot ORCID: orcid.org/0000-0001-7736-2091 1 ,

- Jonathan de Bruin ORCID: orcid.org/0000-0002-4297-0502 2 ,

- Raoul Schram 2 ,

- Parisa Zahedi ORCID: orcid.org/0000-0002-1610-3149 2 ,

- Jan de Boer ORCID: orcid.org/0000-0002-0531-3888 3 ,

- Felix Weijdema ORCID: orcid.org/0000-0001-5150-1102 3 ,

- Bianca Kramer ORCID: orcid.org/0000-0002-5965-6560 3 ,

- Martijn Huijts ORCID: orcid.org/0000-0002-8353-0853 4 ,

- Maarten Hoogerwerf ORCID: orcid.org/0000-0003-1498-2052 2 ,

- Gerbrich Ferdinands ORCID: orcid.org/0000-0002-4998-3293 1 ,

- Albert Harkema ORCID: orcid.org/0000-0002-7091-1147 1 ,

- Joukje Willemsen ORCID: orcid.org/0000-0002-7260-0828 1 ,

- Yongchao Ma ORCID: orcid.org/0000-0003-4100-5468 1 ,

- Qixiang Fang ORCID: orcid.org/0000-0003-2689-6653 1 ,

- Sybren Hindriks 1 ,

- Lars Tummers ORCID: orcid.org/0000-0001-9940-9874 5 &

- Daniel L. Oberski ORCID: orcid.org/0000-0001-7467-2297 1 , 6

Nature Machine Intelligence volume 3 , pages 125–133 ( 2021 ) Cite this article

73k Accesses

228 Citations

165 Altmetric

Metrics details

- Computational biology and bioinformatics

- Computer science

- Medical research

A preprint version of the article is available at arXiv.

To help researchers conduct a systematic review or meta-analysis as efficiently and transparently as possible, we designed a tool to accelerate the step of screening titles and abstracts. For many tasks—including but not limited to systematic reviews and meta-analyses—the scientific literature needs to be checked systematically. Scholars and practitioners currently screen thousands of studies by hand to determine which studies to include in their review or meta-analysis. This is error prone and inefficient because of extremely imbalanced data: only a fraction of the screened studies is relevant. The future of systematic reviewing will be an interaction with machine learning algorithms to deal with the enormous increase of available text. We therefore developed an open source machine learning-aided pipeline applying active learning: ASReview. We demonstrate by means of simulation studies that active learning can yield far more efficient reviewing than manual reviewing while providing high quality. Furthermore, we describe the options of the free and open source research software and present the results from user experience tests. We invite the community to contribute to open source projects such as our own that provide measurable and reproducible improvements over current practice.

Similar content being viewed by others

Testing theory of mind in large language models and humans

Highly accurate protein structure prediction with AlphaFold

A guide to artificial intelligence for cancer researchers

With the emergence of online publishing, the number of scientific manuscripts on many topics is skyrocketing 1 . All of these textual data present opportunities to scholars and practitioners while simultaneously confronting them with new challenges. Scholars often develop systematic reviews and meta-analyses to develop comprehensive overviews of the relevant topics 2 . The process entails several explicit and, ideally, reproducible steps, including identifying all likely relevant publications in a standardized way, extracting data from eligible studies and synthesizing the results. Systematic reviews differ from traditional literature reviews in that they are more replicable and transparent 3 , 4 . Such systematic overviews of literature on a specific topic are pivotal not only for scholars, but also for clinicians, policy-makers, journalists and, ultimately, the general public 5 , 6 , 7 .

Given that screening the entire research literature on a given topic is too labour intensive, scholars often develop quite narrow searches. Developing a search strategy for a systematic review is an iterative process aimed at balancing recall and precision 8 , 9 ; that is, including as many potentially relevant studies as possible while simultaneously limiting the total number of studies retrieved. The vast number of publications in the field of study often leads to a relatively precise search, with the risk of missing relevant studies. The process of systematic reviewing is error prone and extremely time intensive 10 . In fact, if the literature of a field is growing faster than the amount of time available for systematic reviews, adequate manual review of this field then becomes impossible 11 .

The rapidly evolving field of machine learning has aided researchers by allowing the development of software tools that assist in developing systematic reviews 11 , 12 , 13 , 14 . Machine learning offers approaches to overcome the manual and time-consuming screening of large numbers of studies by prioritizing relevant studies via active learning 15 . Active learning is a type of machine learning in which a model can choose the data points (for example, records obtained from a systematic search) it would like to learn from and thereby drastically reduce the total number of records that require manual screening 16 , 17 , 18 . In most so-called human-in-the-loop 19 machine-learning applications, the interaction between the machine-learning algorithm and the human is used to train a model with a minimum number of labelling tasks. Unique to systematic reviewing is that not only do all relevant records (that is, titles and abstracts) need to seen by a researcher, but an extremely diverse range of concepts also need to be learned, thereby requiring flexibility in the modelling approach as well as careful error evaluation 11 . In the case of systematic reviewing, the algorithm(s) are interactively optimized for finding the most relevant records, instead of finding the most accurate model. The term researcher-in-the-loop was introduced 20 as a special case of human-in-the-loop with three unique components: (1) the primary output of the process is a selection of the records, not a trained machine learning model; (2) all records in the relevant selection are seen by a human at the end of the process 21 ; (3) the use-case requires a reproducible workflow and complete transparency is required 22 .

Existing tools that implement such an active learning cycle for systematic reviewing are described in Table 1 ; see the Supplementary Information for an overview of all of the software that we considered (note that this list was based on a review of software tools 12 ). However, existing tools have two main drawbacks. First, many are closed source applications with black box algorithms, which is problematic as transparency and data ownership are essential in the era of open science 22 . Second, to our knowledge, existing tools lack the necessary flexibility to deal with the large range of possible concepts to be learned by a screening machine. For example, in systematic reviews, the optimal type of classifier will depend on variable parameters, such as the proportion of relevant publications in the initial search and the complexity of the inclusion criteria used by the researcher 23 . For this reason, any successful system must allow for a wide range of classifier types. Benchmark testing is crucial to understand the real-world performance of any machine learning-aided system, but such benchmark options are currently mostly lacking.

In this paper we present an open source machine learning-aided pipeline with active learning for systematic reviews called ASReview. The goal of ASReview is to help scholars and practitioners to get an overview of the most relevant records for their work as efficiently as possible while being transparent in the process. The open, free and ready-to-use software ASReview addresses all concerns mentioned above: it is open source, uses active learning, allows multiple machine learning models. It also has a benchmark mode, which is especially useful for comparing and designing algorithms. Furthermore, it is intended to be easily extensible, allowing third parties to add modules that enhance the pipeline. Although we focus this paper on systematic reviews, ASReview can handle any text source.

In what follows, we first present the pipeline for manual versus machine learning-aided systematic reviews. We then show how ASReview has been set up and how ASReview can be used in different workflows by presenting several real-world use cases. We subsequently demonstrate the results of simulations that benchmark performance and present the results of a series of user-experience tests. Finally, we discuss future directions.

Pipeline for manual and machine learning-aided systematic reviews

The pipeline of a systematic review without active learning traditionally starts with researchers doing a comprehensive search in multiple databases 24 , using free text words as well as controlled vocabulary to retrieve potentially relevant references. The researcher then typically verifies that the key papers they expect to find are indeed included in the search results. The researcher downloads a file with records containing the text to be screened. In the case of systematic reviewing it contains the titles and abstracts (and potentially other metadata such as the authors’s names, journal name, DOI) of potentially relevant references into a reference manager. Ideally, two or more researchers then screen the records’s titles and abstracts on the basis of the eligibility criteria established beforehand 4 . After all records have been screened, the full texts of the potentially relevant records are read to determine which of them will be ultimately included in the review. Most records are excluded in the title and abstract phase. Typically, only a small fraction of the records belong to the relevant class, making title and abstract screening an important bottleneck in systematic reviewing process 25 . For instance, a recent study analysed 10,115 records and excluded 9,847 after title and abstract screening, a drop of more than 95% 26 . ASReview therefore focuses on this labour-intensive step.

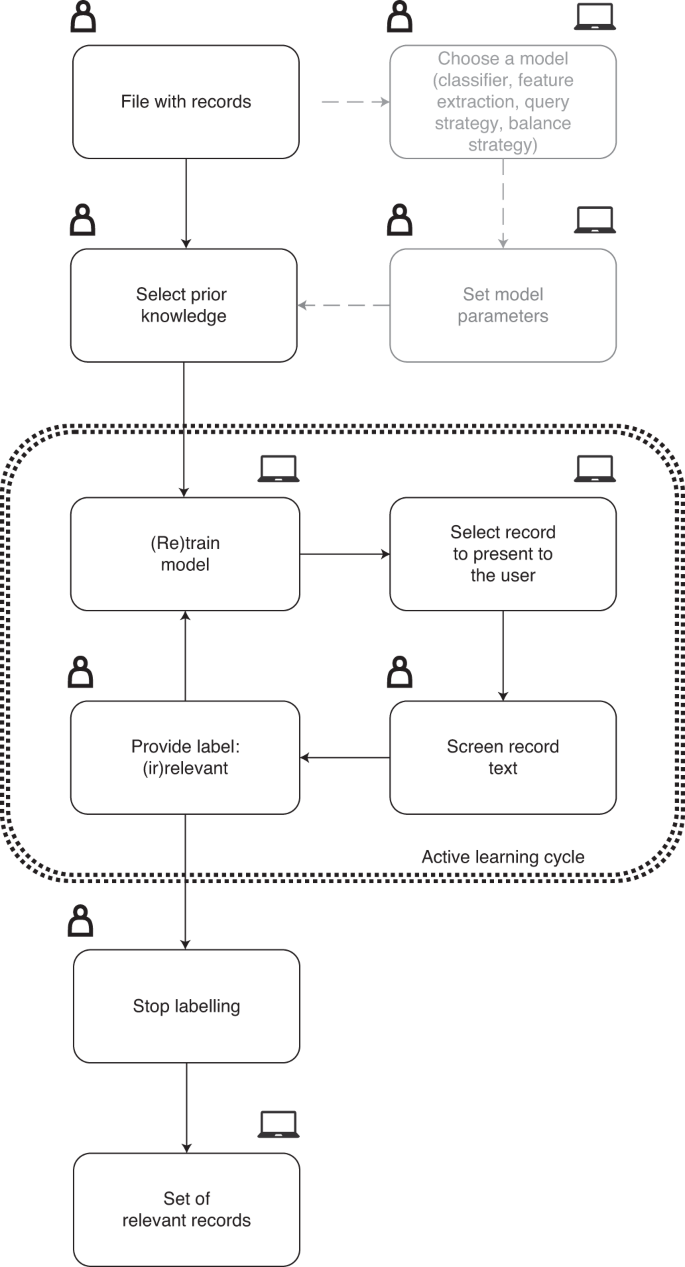

The research pipeline of ASReview is depicted in Fig. 1 . The researcher starts with a search exactly as described above and subsequently uploads a file containing the records (that is, metadata containing the text of the titles and abstracts) into the software. Prior knowledge is then selected, which is used for training of the first model and presenting the first record to the researcher. As screening is a binary classification problem, the reviewer must select at least one key record to include and exclude on the basis of background knowledge. More prior knowledge may result in improved efficiency of the active learning process.

The symbols indicate whether the action is taken by a human, a computer, or whether both options are available.

A machine learning classifier is trained to predict study relevance (labels) from a representation of the record-containing text (feature space) on the basis of prior knowledge. We have purposefully chosen not to include an author name or citation network representation in the feature space to prevent authority bias in the inclusions. In the active learning cycle, the software presents one new record to be screened and labelled by the user. The user’s binary label (1 for relevant versus 0 for irrelevant) is subsequently used to train a new model, after which a new record is presented to the user. This cycle continues up to a certain user-specified stopping criterion has been reached. The user now has a file with (1) records labelled as either relevant or irrelevant and (2) unlabelled records ordered from most to least probable to be relevant as predicted by the current model. This set-up helps to move through a large database much quicker than in the manual process, while the decision process simultaneously remains transparent.

Software implementation for ASReview

The source code 27 of ASReview is available open source under an Apache 2.0 license, including documentation 28 . Compiled and packaged versions of the software are available on the Python Package Index 29 or Docker Hub 30 . The free and ready-to-use software ASReview implements oracle, simulation and exploration modes. The oracle mode is used to perform a systematic review with interaction by the user, the simulation mode is used for simulation of the ASReview performance on existing datasets, and the exploration mode can be used for teaching purposes and includes several preloaded labelled datasets.

The oracle mode presents records to the researcher and the researcher classifies these. Multiple file formats are supported: (1) RIS files are used by digital libraries such as IEEE Xplore, Scopus and ScienceDirect; the citation managers Mendeley, RefWorks, Zotero and EndNote support the RIS format too. (2) Tabular datasets with the .csv, .xlsx and .xls file extensions. CSV files should be comma separated and UTF-8 encoded; the software for CSV files accepts a set of predetermined labels in line with the ones used in RIS files. Each record in the dataset should hold the metadata on, for example, a scientific publication. Mandatory metadata is text and can, for example, be titles or abstracts from scientific papers. If available, both are used to train the model, but at least one is needed. An advanced option is available that splits the title and abstracts in the feature-extraction step and weights the two feature matrices independently (for TF–IDF only). Other metadata such as author, date, DOI and keywords are optional but not used for training the models. When using ASReview in the simulation or exploration mode, an additional binary variable is required to indicate historical labelling decisions. This column, which is automatically detected, can also be used in the oracle mode as background knowledge for previous selection of relevant papers before entering the active learning cycle. If unavailable, the user has to select at least one relevant record that can be identified by searching the pool of records. At least one irrelevant record should also be identified; the software allows to search for specific records or presents random records that are most likely to be irrelevant due to the extremely imbalanced data.

The software has a simple yet extensible default model: a naive Bayes classifier, TF–IDF feature extraction, a dynamic resampling balance strategy 31 and certainty-based sampling 17 , 32 for the query strategy. These defaults were chosen on the basis of their consistently high performance in benchmark experiments across several datasets 31 . Moreover, the low computation time of these default settings makes them attractive in applications, given that the software should be able to run locally. Users can change the settings, shown in Table 2 , and technical details are described in our documentation 28 . Users can also add their own classifiers, feature extraction techniques, query strategies and balance strategies.

ASReview has a number of implemented features (see Table 2 ). First, there are several classifiers available: (1) naive Bayes; (2) support vector machines; (3) logistic regression; (4) neural networks; (5) random forests; (6) LSTM-base, which consists of an embedding layer, an LSTM layer with one output, a dense layer and a single sigmoid output node; and (7) LSTM-pool, which consists of an embedding layer, an LSTM layer with many outputs, a max pooling layer and a single sigmoid output node. The feature extraction techniques available are Doc2Vec 33 , embedding LSTM, embedding with IDF or TF–IDF 34 (the default is unigram, with the option to run n -grams while other parameters are set to the defaults of Scikit-learn 35 ) and sBERT 36 . The available query strategies for the active learning part are (1) random selection, ignoring model-assigned probabilities; (2) uncertainty-based sampling, which chooses the most uncertain record according to the model (that is, closest to 0.5 probability); (3) certainty-based sampling (max in ASReview), which chooses the record most likely to be included according to the model; and (4) mixed sampling, which uses a combination of random and certainty-based sampling.

There are several balance strategies that rebalance and reorder the training data. This is necessary, because the data is typically extremely imbalanced and therefore we have implemented the following balance strategies: (1) full sampling, which uses all of the labelled records; (2) undersampling the irrelevant records so that the included and excluded records are in some particular ratio (closer to one); and (3) dynamic resampling, a novel method similar to undersampling in that it decreases the imbalance of the training data 31 . However, in dynamic resampling, the number of irrelevant records is decreased, whereas the number of relevant records is increased by duplication such that the total number of records in the training data remains the same. The ratio between relevant and irrelevant records is not fixed over interactions, but dynamically updated depending on the number of labelled records, the total number of records and the ratio between relevant and irrelevant records. Details on all of the described algorithms can be found in the code and documentation referred to above.

By default, ASReview converts the records’s texts into a document-term matrix, terms are converted to lowercase and no stop words are removed by default (but this can be changed). As the document-term matrix is identical in each iteration of the active learning cycle, it is generated in advance of model training and stored in the (active learning) state file. Each row of the document-term matrix can easily be requested from the state-file. Records are internally identified by their row number in the input dataset. In oracle mode, the record that is selected to be classified is retrieved from the state file and the record text and other metadata (such as title and abstract) are retrieved from the original dataset (from the file or the computer’s memory). ASReview can run on your local computer, or on a (self-hosted) local or remote server. Data (all records and their labels) remain on the users’s computer. Data ownership and confidentiality are crucial and no data are processed or used in any way by third parties. This is unique by comparison with some of the existing systems, as shown in the last column of Table 1 .

Real-world use cases and high-level function descriptions

Below we highlight a number of real-world use cases and high-level function descriptions for using the pipeline of ASReview.

ASReview can be integrated in classic systematic reviews or meta-analyses. Such reviews or meta-analyses entail several explicit and reproducible steps, as outlined in the PRISMA guidelines 4 . Scholars identify all likely relevant publications in a standardized way, screen retrieved publications to select eligible studies on the basis of defined eligibility criteria, extract data from eligible studies and synthesize the results. ASReview fits into this process, particularly in the abstract screening phase. ASReview does not replace the initial step of collecting all potentially relevant studies. As such, results from ASReview depend on the quality of the initial search process, including selection of databases 24 and construction of comprehensive searches using keywords and controlled vocabulary. However, ASReview can be used to broaden the scope of the search (by keyword expansion or omitting limitation in the search query), resulting in a higher number of initial papers to limit the risk of missing relevant papers during the search part (that is, more focus on recall instead of precision).

Furthermore, many reviewers nowadays move towards meta-reviews when analysing very large literature streams, that is, systematic reviews of systematic reviews 37 . This can be problematic as the various reviews included could use different eligibility criteria and are therefore not always directly comparable. Due to the efficiency of ASReview, scholars using the tool could conduct the study by analysing the papers directly instead of using the systematic reviews. Furthermore, ASReview supports the rapid update of a systematic review. The included papers from the initial review are used to train the machine learning model before screening of the updated set of papers starts. This allows the researcher to quickly screen the updated set of papers on the basis of decisions made in the initial run.

As an example case, let us look at the current literature on COVID-19 and the coronavirus. An enormous number of papers are being published on COVID-19. It is very time consuming to manually find relevant papers (for example, to develop treatment guidelines). This is especially problematic as urgent overviews are required. Medical guidelines rely on comprehensive systematic reviews, but the medical literature is growing at breakneck pace and the quality of the research is not universally adequate for summarization into policy 38 . Such reviews must entail adequate protocols with explicit and reproducible steps, including identifying all potentially relevant papers, extracting data from eligible studies, assessing potential for bias and synthesizing the results into medical guidelines. Researchers need to screen (tens of) thousands of COVID-19-related studies by hand to find relevant papers to include in their overview. Using ASReview, this can be done far more efficiently by selecting key papers that match their (COVID-19) research question in the first step; this should start the active learning cycle and lead to the most relevant COVID-19 papers for their research question being presented next. A plug-in was therefore developed for ASReview 39 , which contained three databases that are updated automatically whenever a new version is released by the owners of the data: (1) the Cord19 database, developed by the Allen Institute for AI, with over all publications on COVID-19 and other coronavirus research (for example SARS, MERS and so on) from PubMed Central, the WHO COVID-19 database of publications, the preprint servers bioRxiv and medRxiv and papers contributed by specific publishers 40 . The CORD-19 dataset is updated daily by the Allen Institute for AI and updated also daily in the plugin. (2) In addition to the full dataset, we automatically construct a daily subset of the database with studies published after December 1st, 2019 to search for relevant papers published during the COVID-19 crisis. (3) A separate dataset of COVID-19 related preprints, containing metadata of preprints from over 15 preprints servers across disciplines, published since January 1st, 2020 41 . The preprint dataset is updated weekly by the maintainers and then automatically updated in ASReview as well. As this dataset is not readily available to researchers through regular search engines (for example, PubMed), its inclusion in ASReview provided added value to researchers interested in COVID-19 research, especially if they want a quick way to screen preprints specifically.

Simulation study

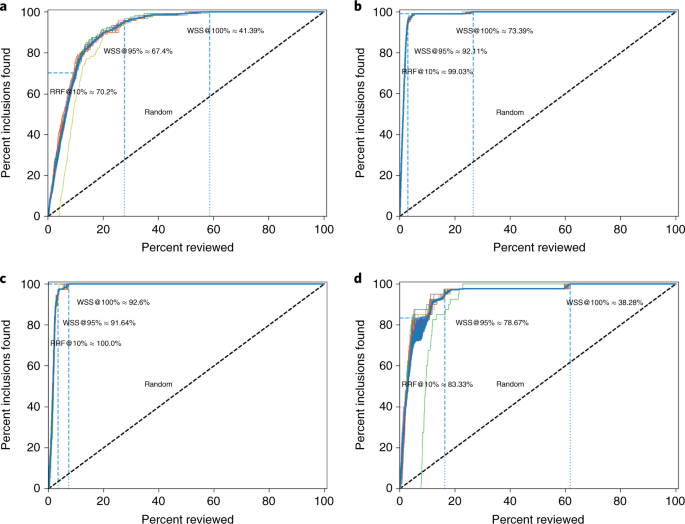

To evaluate the performance of ASReview on a labelled dataset, users can employ the simulation mode. As an example, we ran simulations based on four labelled datasets with version 0.7.2 of ASReview. All scripts to reproduce the results in this paper can be found on Zenodo ( https://doi.org/10.5281/zenodo.4024122 ) 42 , whereas the results are available at OSF ( https://doi.org/10.17605/OSF.IO/2JKD6 ) 43 .

First, we analysed the performance for a study systematically describing studies that performed viral metagenomic next-generation sequencing in common livestock such as cattle, small ruminants, poultry and pigs 44 . Studies were retrieved from Embase ( n = 1,806), Medline ( n = 1,384), Cochrane Central ( n = 1), Web of Science ( n = 977) and Google Scholar ( n = 200, the top relevant references). After deduplication this led to 2,481 studies obtained in the initial search, of which 120 were inclusions (4.84%).

A second simulation study was performed on the results for a systematic review of studies on fault prediction in software engineering 45 . Studies were obtained from ACM Digital Library, IEEExplore and the ISI Web of Science. Furthermore, a snowballing strategy and a manual search were conducted, accumulating to 8,911 publications of which 104 were included in the systematic review (1.2%).

A third simulation study was performed on a review of longitudinal studies that applied unsupervised machine learning techniques to longitudinal data of self-reported symptoms of the post-traumatic stress assessed after trauma exposure 46 , 47 ; 5,782 studies were obtained by searching Pubmed, Embase, PsychInfo and Scopus and through a snowballing strategy in which both the references and the citation of the included papers were screened. Thirty-eight studies were included in the review (0.66%).

A fourth simulation study was performed on the results for a systematic review on the efficacy of angiotensin-converting enzyme inhibitors, from a study collecting various systematic review datasets from the medical sciences 15 . The collection is a subset of 2,544 publications from the TREC 2004 Genomics Track document corpus 48 . This is a static subset from all MEDLINE records from 1994 through 2003, which allows for replicability of results. Forty-one publications were included in the review (1.6%).

Performance metrics

We evaluated the four datasets using three performance metrics. We first assess the work saved over sampling (WSS), which is the percentage reduction in the number of records needed to screen achieved by using active learning instead of screening records at random; WSS is measured at a given level of recall of relevant records, for example 95%, indicating the work reduction in screening effort at the cost of failing to detect 5% of the relevant records. For some researchers it is essential that all relevant literature on the topic is retrieved; this entails that the recall should be 100% (that is, WSS@100%). We also propose the amount of relevant references found after having screened the first 10% of the records (RRF10%). This is a useful metric for getting a quick overview of the relevant literature.

For every dataset, 15 runs were performed with one random inclusion and one random exclusion (see Fig. 2 ). The classical review performance with randomly found inclusions is shown by the dashed line. The average work saved over sampling at 95% recall for ASReview is 83% and ranges from 67% to 92%. Hence, 95% of the eligible studies will be found after screening between only 8% to 33% of the studies. Furthermore, the number of relevant abstracts found after reading 10% of the abstracts ranges from 70% to 100%. In short, our software would have saved many hours of work.

a – d , Results of the simulation study for the results for a study systematically review studies that performed viral metagenomic next-generation sequencing in common livestock ( a ), results for a systematic review of studies on fault prediction in software engineering ( b ), results for longitudinal studies that applied unsupervised machine learning techniques on longitudinal data of self-reported symptoms of posttraumatic stress assessed after trauma exposure ( c ), and results for a systematic review on the efficacy of angiotensin-converting enzyme inhibitors ( d ). Fiteen runs (shown with separate lines) were performed for every dataset, with only one random inclusion and one random exclusion. The classical review performances with randomly found inclusions are shown by the dashed lines.

Usability testing (user experience testing)

We conducted a series of user experience tests to learn from end users how they experience the software and implement it in their workflow. The study was approved by the Ethics Committee of the Faculty of Social and Behavioral Sciences of Utrecht University (ID 20-104).

Unstructured interviews

The first user experience (UX) test—carried out in December 2019—was conducted with an academic research team in a substantive research field (public administration and organizational science) that has conducted various systematic reviews and meta-analyses. It was composed of three university professors (ranging from assistant to full) and three PhD candidates. In one 3.5 h session, the participants used the software and provided feedback via unstructured interviews and group discussions. The goal was to provide feedback on installing the software and testing the performance on their own data. After these sessions we prioritized the feedback in a meeting with the ASReview team, which resulted in the release of v.0.4 and v.0.6. An overview of all releases can be found on GitHub 27 .

A second UX test was conducted with four experienced researchers developing medical guidelines based on classical systematic reviews, and two experienced reviewers working at a pharmaceutical non-profit organization who work on updating reviews with new data. In four sessions, held in February to March 2020, these users tested the software following our testing protocol. After each session we implemented the feedback provided by the experts and asked them to review the software again. The main feedback was about how to upload datasets and select prior papers. Their feedback resulted in the release of v.0.7 and v.0.9.

Systematic UX test

In May 2020 we conducted a systematic UX test. Two groups of users were distinguished: an unexperienced group and an experienced user who already used ASReview. Due to the COVID-19 lockdown the usability tests were conducted via video calling where one person gave instructions to the participant and one person observed, called human-moderated remote testing 49 . During the tests, one person (SH) asked the questions and helped the participant with the tasks, the other person observed and made notes, a user experience professional at the IT department of Utrecht University (MH).

To analyse the notes, thematic analysis was used, which is a method to analyse data by dividing the information in subjects that all have a different meaning 50 using the Nvivo 12 software 51 . When something went wrong the text was coded as showstopper, when something did not go smoothly the text was coded as doubtful, and when something went well the subject was coded as superb. The features the participants requested for future versions of the ASReview tool were discussed with the lead engineer of the ASReview team and were submitted to GitHub as issues or feature requests.

The answers to the quantitative questions can be found at the Open Science Framework 52 . The participants ( N = 11) rated the tool with a grade of 7.9 (s.d. = 0.9) on a scale from one to ten (Table 2 ). The unexperienced users on average rated the tool with an 8.0 (s.d. = 1.1, N = 6). The experienced user on average rated the tool with a 7.8 (s.d. = 0.9, N = 5). The participants described the usability test with words such as helpful, accessible, fun, clear and obvious.

The UX tests resulted in the new release v0.10, v0.10.1 and the major release v0.11, which is a major revision of the graphical user interface. The documentation has been upgraded to make installing and launching ASReview more straightforward. We made setting up the project, selecting a dataset and finding past knowledge is more intuitive and flexible. We also added a project dashboard with information on your progress and advanced settings.

Continuous input via the open source community

Finally, the ASReview development team receives continuous feedback from the open science community about, among other things, the user experience. In every new release we implement features listed by our users. Recurring UX tests are performed to keep up with the needs of users and improve the value of the tool.

We designed a system to accelerate the step of screening titles and abstracts to help researchers conduct a systematic review or meta-analysis as efficiently and transparently as possible. Our system uses active learning to train a machine learning model that predicts relevance from texts using a limited number of labelled examples. The classifier, feature extraction technique, balance strategy and active learning query strategy are flexible. We provide an open source software implementation, ASReview with state-of-the-art systems across a wide range of real-world systematic reviewing applications. Based on our experiments, ASReview provides defaults on its parameters, which exhibited good performance on average across the applications we examined. However, we stress that in practical applications, these defaults should be carefully examined; for this purpose, the software provides a simulation mode to users. We encourage users and developers to perform further evaluation of the proposed approach in their application, and to take advantage of the open source nature of the project by contributing further developments.

Drawbacks of machine learning-based screening systems, including our own, remain. First, although the active learning step greatly reduces the number of manuscripts that must be screened, it also prevents a straightforward evaluation of the system’s error rates without further onerous labelling. Providing users with an accurate estimate of the system’s error rate in the application at hand is therefore a pressing open problem. Second, although, as argued above, the use of such systems is not limited in principle to reviewing, no empirical benchmarks of actual performance in these other situations yet exist to our knowledge. Third, machine learning-based screening systems automate the screening step only; although the screening step is time-consuming and a good target for automation, it is just one part of a much larger process, including the initial search, data extraction, coding for risk of bias, summarizing results and so on. Although some other works, similar to our own, have looked at (semi-)automating some of these steps in isolation 53 , 54 , to our knowledge the field is still far removed from an integrated system that would truly automate the review process while guaranteeing the quality of the produced evidence synthesis. Integrating the various tools that are currently under development to aid the systematic reviewing pipeline is therefore a worthwhile topic for future development.

Possible future research could also focus on the performance of identifying full text articles with different document length and domain-specific terminologies or even other types of text, such as newspaper articles and court cases. When the selection of past knowledge is not possible based on expert knowledge, alternative methods could be explored. For example, unsupervised learning or pseudolabelling algorithms could be used to improve training 55 , 56 . In addition, as the NLP community pushes forward the state of the art in feature extraction methods, these are easily added to our system as well. In all cases, performance benefits should be carefully evaluated using benchmarks for the task at hand. To this end, common benchmark challenges should be constructed that allow for an even comparison of the various tools now available. To facilitate such a benchmark, we have constructed a repository of publicly available systematic reviewing datasets 57 .

The future of systematic reviewing will be an interaction with machine learning algorithms to deal with the enormous increase of available text. We invite the community to contribute to open source projects such as our own, as well as to common benchmark challenges, so that we can provide measurable and reproducible improvement over current practice.

Data availability

The results described in this paper are available at the Open Science Framework ( https://doi.org/10.17605/OSF.IO/2JKD6 ) 43 . The answers to the quantitative questions of the UX test can be found at the Open Science Framework (OSF.IO/7PQNM) 52 .

Code availability

All code to reproduce the results described in this paper can be found on Zenodo ( https://doi.org/10.5281/zenodo.4024122 ) 42 . All code for the software ASReview is available under an Apache 2.0 license ( https://doi.org/10.5281/zenodo.3345592 ) 27 , is maintained on GitHub 63 and includes documentation ( https://doi.org/10.5281/zenodo.4287120 ) 28 .

Bornmann, L. & Mutz, R. Growth rates of modern science: a bibliometric analysis based on the number of publications and cited references. J. Assoc. Inf. Sci. Technol. 66 , 2215–2222 (2015).

Article Google Scholar

Gough, D., Oliver, S. & Thomas, J. An Introduction to Systematic Reviews (Sage, 2017).

Cooper, H. Research Synthesis and Meta-analysis: A Step-by-Step Approach (SAGE Publications, 2015).

Liberati, A. et al. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: explanation and elaboration. J. Clin. Epidemiol. 62 , e1–e34 (2009).

Boaz, A. et al. Systematic Reviews: What have They Got to Offer Evidence Based Policy and Practice? (ESRC UK Centre for Evidence Based Policy and Practice London, 2002).

Oliver, S., Dickson, K. & Bangpan, M. Systematic Reviews: Making Them Policy Relevant. A Briefing for Policy Makers and Systematic Reviewers (UCL Institute of Education, 2015).

Petticrew, M. Systematic reviews from astronomy to zoology: myths and misconceptions. Brit. Med. J. 322 , 98–101 (2001).

Lefebvre, C., Manheimer, E. & Glanville, J. in Cochrane Handbook for Systematic Reviews of Interventions (eds. Higgins, J. P. & Green, S.) 95–150 (John Wiley & Sons, 2008); https://doi.org/10.1002/9780470712184.ch6 .

Sampson, M., Tetzlaff, J. & Urquhart, C. Precision of healthcare systematic review searches in a cross-sectional sample. Res. Synth. Methods 2 , 119–125 (2011).

Wang, Z., Nayfeh, T., Tetzlaff, J., O’Blenis, P. & Murad, M. H. Error rates of human reviewers during abstract screening in systematic reviews. PLoS ONE 15 , e0227742 (2020).

Marshall, I. J. & Wallace, B. C. Toward systematic review automation: a practical guide to using machine learning tools in research synthesis. Syst. Rev. 8 , 163 (2019).

Harrison, H., Griffin, S. J., Kuhn, I. & Usher-Smith, J. A. Software tools to support title and abstract screening for systematic reviews in healthcare: an evaluation. BMC Med. Res. Methodol. 20 , 7 (2020).

O’Mara-Eves, A., Thomas, J., McNaught, J., Miwa, M. & Ananiadou, S. Using text mining for study identification in systematic reviews: a systematic review of current approaches. Syst. Rev. 4 , 5 (2015).

Wallace, B. C., Trikalinos, T. A., Lau, J., Brodley, C. & Schmid, C. H. Semi-automated screening of biomedical citations for systematic reviews. BMC Bioinf. 11 , 55 (2010).

Cohen, A. M., Hersh, W. R., Peterson, K. & Yen, P.-Y. Reducing workload in systematic review preparation using automated citation classification. J. Am. Med. Inform. Assoc. 13 , 206–219 (2006).

Kremer, J., Steenstrup Pedersen, K. & Igel, C. Active learning with support vector machines. WIREs Data Min. Knowl. Discov. 4 , 313–326 (2014).

Miwa, M., Thomas, J., O’Mara-Eves, A. & Ananiadou, S. Reducing systematic review workload through certainty-based screening. J. Biomed. Inform. 51 , 242–253 (2014).

Settles, B. Active Learning Literature Survey (Minds@UW, 2009); https://minds.wisconsin.edu/handle/1793/60660

Holzinger, A. Interactive machine learning for health informatics: when do we need the human-in-the-loop? Brain Inform. 3 , 119–131 (2016).

Van de Schoot, R. & De Bruin, J. Researcher-in-the-loop for Systematic Reviewing of Text Databases (Zenodo, 2020); https://doi.org/10.5281/zenodo.4013207

Kim, D., Seo, D., Cho, S. & Kang, P. Multi-co-training for document classification using various document representations: TF–IDF, LDA, and Doc2Vec. Inf. Sci. 477 , 15–29 (2019).

Nosek, B. A. et al. Promoting an open research culture. Science 348 , 1422–1425 (2015).

Kilicoglu, H., Demner-Fushman, D., Rindflesch, T. C., Wilczynski, N. L. & Haynes, R. B. Towards automatic recognition of scientifically rigorous clinical research evidence. J. Am. Med. Inform. Assoc. 16 , 25–31 (2009).

Gusenbauer, M. & Haddaway, N. R. Which academic search systems are suitable for systematic reviews or meta‐analyses? Evaluating retrieval qualities of Google Scholar, PubMed, and 26 other resources. Res. Synth. Methods 11 , 181–217 (2020).

Borah, R., Brown, A. W., Capers, P. L. & Kaiser, K. A. Analysis of the time and workers needed to conduct systematic reviews of medical interventions using data from the PROSPERO registry. BMJ Open 7 , e012545 (2017).

de Vries, H., Bekkers, V. & Tummers, L. Innovation in the Public Sector: a systematic review and future research agenda. Public Adm. 94 , 146–166 (2016).

Van de Schoot, R. et al. ASReview: Active Learning for Systematic Reviews (Zenodo, 2020); https://doi.org/10.5281/zenodo.3345592

De Bruin, J. et al. ASReview Software Documentation 0.14 (Zenodo, 2020); https://doi.org/10.5281/zenodo.4287120

ASReview PyPI Package (ASReview Core Development Team, 2020); https://pypi.org/project/asreview/

Docker container for ASReview (ASReview Core Development Team, 2020); https://hub.docker.com/r/asreview/asreview

Ferdinands, G. et al. Active Learning for Screening Prioritization in Systematic Reviews—A Simulation Study (OSF Preprints, 2020); https://doi.org/10.31219/osf.io/w6qbg

Fu, J. H. & Lee, S. L. Certainty-enhanced active learning for improving imbalanced data classification. In 2011 IEEE 11th International Conference on Data Mining Workshops 405–412 (IEEE, 2011).

Le, Q. V. & Mikolov, T. Distributed representations of sentences and documents. Preprint at https://arxiv.org/abs/1405.4053 (2014).

Ramos, J. Using TF–IDF to determine word relevance in document queries. In Proc. 1st Instructional Conference on Machine Learning Vol. 242, 133–142 (ICML, 2003).

Pedregosa, F. et al. Scikit-learn: machine learning in Python. J. Mach. Learn. Res. 12 , 2825–2830 (2011).

MathSciNet MATH Google Scholar

Reimers, N. & Gurevych, I. Sentence-BERT: sentence embeddings using siamese BERT-networks Preprint at https://arxiv.org/abs/1908.10084 (2019).

Smith, V., Devane, D., Begley, C. M. & Clarke, M. Methodology in conducting a systematic review of systematic reviews of healthcare interventions. BMC Med. Res. Methodol. 11 , 15 (2011).

Wynants, L. et al. Prediction models for diagnosis and prognosis of COVID-19: systematic review and critical appraisal. Brit. Med. J . 369 , 1328 (2020).

Van de Schoot, R. et al. Extension for COVID-19 Related Datasets in ASReview (Zenodo, 2020). https://doi.org/10.5281/zenodo.3891420 .

Lu Wang, L. et al. CORD-19: The COVID-19 open research dataset. Preprint at https://arxiv.org/abs/2004.10706 (2020).

Fraser, N. & Kramer, B. Covid19_preprints (FigShare, 2020); https://doi.org/10.6084/m9.figshare.12033672.v18

Ferdinands, G., Schram, R., Van de Schoot, R. & De Bruin, J. Scripts for ‘ASReview: Open Source Software for Efficient and Transparent Active Learning for Systematic Reviews’ (Zenodo, 2020); https://doi.org/10.5281/zenodo.4024122

Ferdinands, G., Schram, R., van de Schoot, R. & de Bruin, J. Results for ‘ASReview: Open Source Software for Efficient and Transparent Active Learning for Systematic Reviews’ (OSF, 2020); https://doi.org/10.17605/OSF.IO/2JKD6

Kwok, K. T. T., Nieuwenhuijse, D. F., Phan, M. V. T. & Koopmans, M. P. G. Virus metagenomics in farm animals: a systematic review. Viruses 12 , 107 (2020).

Hall, T., Beecham, S., Bowes, D., Gray, D. & Counsell, S. A systematic literature review on fault prediction performance in software engineering. IEEE Trans. Softw. Eng. 38 , 1276–1304 (2012).

van de Schoot, R., Sijbrandij, M., Winter, S. D., Depaoli, S. & Vermunt, J. K. The GRoLTS-Checklist: guidelines for reporting on latent trajectory studies. Struct. Equ. Model. Multidiscip. J. 24 , 451–467 (2017).

Article MathSciNet Google Scholar

van de Schoot, R. et al. Bayesian PTSD-trajectory analysis with informed priors based on a systematic literature search and expert elicitation. Multivar. Behav. Res. 53 , 267–291 (2018).

Cohen, A. M., Bhupatiraju, R. T. & Hersh, W. R. Feature generation, feature selection, classifiers, and conceptual drift for biomedical document triage. In Proc. 13th Text Retrieval Conference (TREC, 2004).

Vasalou, A., Ng, B. D., Wiemer-Hastings, P. & Oshlyansky, L. Human-moderated remote user testing: orotocols and applications. In 8th ERCIM Workshop, User Interfaces for All Vol. 19 (ERCIM, 2004).

Joffe, H. in Qualitative Research Methods in Mental Health and Psychotherapy: A Guide for Students and Practitioners (eds Harper, D. & Thompson, A. R.) Ch. 15 (Wiley, 2012).

NVivo v. 12 (QSR International Pty, 2019).

Hindriks, S., Huijts, M. & van de Schoot, R. Data for UX-test ASReview - June 2020. OSF https://doi.org/10.17605/OSF.IO/7PQNM (2020).

Marshall, I. J., Kuiper, J. & Wallace, B. C. RobotReviewer: evaluation of a system for automatically assessing bias in clinical trials. J. Am. Med. Inform. Assoc. 23 , 193–201 (2016).

Nallapati, R., Zhou, B., dos Santos, C. N., Gulcehre, Ç. & Xiang, B. Abstractive text summarization using sequence-to-sequence RNNs and beyond. In Proc. 20th SIGNLL Conference on Computational Natural Language Learning 280–290 (Association for Computational Linguistics, 2016).

Xie, Q., Dai, Z., Hovy, E., Luong, M.-T. & Le, Q. V. Unsupervised data augmentation for consistency training. Preprint at https://arxiv.org/abs/1904.12848 (2019).

Ratner, A. et al. Snorkel: rapid training data creation with weak supervision. VLDB J. 29 , 709–730 (2020).

Systematic Review Datasets (ASReview Core Development Team, 2020); https://github.com/asreview/systematic-review-datasets

Wallace, B. C., Small, K., Brodley, C. E., Lau, J. & Trikalinos, T. A. Deploying an interactive machine learning system in an evidence-based practice center: Abstrackr. In Proc. 2nd ACM SIGHIT International Health Informatics Symposium 819–824 (Association for Computing Machinery, 2012).

Cheng, S. H. et al. Using machine learning to advance synthesis and use of conservation and environmental evidence. Conserv. Biol. 32 , 762–764 (2018).

Yu, Z., Kraft, N. & Menzies, T. Finding better active learners for faster literature reviews. Empir. Softw. Eng . 23 , 3161–3186 (2018).

Ouzzani, M., Hammady, H., Fedorowicz, Z. & Elmagarmid, A. Rayyan—a web and mobile app for systematic reviews. Syst. Rev. 5 , 210 (2016).

Przybyła, P. et al. Prioritising references for systematic reviews with RobotAnalyst: a user study. Res. Synth. Methods 9 , 470–488 (2018).

ASReview: Active learning for Systematic Reviews (ASReview Core Development Team, 2020); https://github.com/asreview/asreview

Download references

Acknowledgements

We would like to thank the Utrecht University Library, focus area Applied Data Science, and departments of Information and Technology Services, Test and Quality Services, and Methodology and Statistics, for their support. We also want to thank all researchers who shared data, participated in our user experience tests or who gave us feedback on ASReview in other ways. Furthermore, we would like to thank the editors and reviewers for providing constructive feedback. This project was funded by the Innovation Fund for IT in Research Projects, Utrecht University, the Netherlands.

Author information

Authors and affiliations.

Department of Methodology and Statistics, Faculty of Social and Behavioral Sciences, Utrecht University, Utrecht, the Netherlands

Rens van de Schoot, Gerbrich Ferdinands, Albert Harkema, Joukje Willemsen, Yongchao Ma, Qixiang Fang, Sybren Hindriks & Daniel L. Oberski

Department of Research and Data Management Services, Information Technology Services, Utrecht University, Utrecht, the Netherlands

Jonathan de Bruin, Raoul Schram, Parisa Zahedi & Maarten Hoogerwerf

Utrecht University Library, Utrecht University, Utrecht, the Netherlands

Jan de Boer, Felix Weijdema & Bianca Kramer

Department of Test and Quality Services, Information Technology Services, Utrecht University, Utrecht, the Netherlands

Martijn Huijts

School of Governance, Faculty of Law, Economics and Governance, Utrecht University, Utrecht, the Netherlands

Lars Tummers

Department of Biostatistics, Data management and Data Science, Julius Center, University Medical Center Utrecht, Utrecht, the Netherlands

Daniel L. Oberski

You can also search for this author in PubMed Google Scholar

Contributions

R.v.d.S. and D.O. originally designed the project, with later input from L.T. J.d.Br. is the lead engineer, software architect and supervises the code base on GitHub. R.S. coded the algorithms and simulation studies. P.Z. coded the very first version of the software. J.d.Bo., F.W. and B.K. developed the systematic review pipeline. M.Huijts is leading the UX tests and was supported by S.H. M.Hoogerwerf developed the architecture of the produced (meta)data. G.F. conducted the simulation study together with R.S. A.H. performed the literature search comparing the different tools together with G.F. J.W. designed all the artwork and helped with formatting the manuscript. Y.M. and Q.F. are responsible for the preprocessing of the metadata under the supervision of J.d.Br. R.v.d.S, D.O. and L.T. wrote the paper with input from all authors. Each co-author has written parts of the manuscript.

Corresponding author

Correspondence to Rens van de Schoot .

Ethics declarations

Competing interests.

The authors declare no competing interests.

Additional information

Peer review information Nature Machine Intelligence thanks Jian Wu and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary information.

Overview of software tools supporting systematic reviews.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/ .

Reprints and permissions

About this article

Cite this article.

van de Schoot, R., de Bruin, J., Schram, R. et al. An open source machine learning framework for efficient and transparent systematic reviews. Nat Mach Intell 3 , 125–133 (2021). https://doi.org/10.1038/s42256-020-00287-7

Download citation

Received : 04 June 2020

Accepted : 17 December 2020

Published : 01 February 2021

Issue Date : February 2021

DOI : https://doi.org/10.1038/s42256-020-00287-7

Share this article

Anyone you share the following link with will be able to read this content:

Sorry, a shareable link is not currently available for this article.

Provided by the Springer Nature SharedIt content-sharing initiative

This article is cited by

A systematic review, meta-analysis, and meta-regression of the prevalence of self-reported disordered eating and associated factors among athletes worldwide.

- Hadeel A. Ghazzawi

- Lana S. Nimer

- Haitham Jahrami

Journal of Eating Disorders (2024)

Systematic review using a spiral approach with machine learning

- Amirhossein Saeidmehr

- Piers David Gareth Steel

- Faramarz F. Samavati

Systematic Reviews (2024)

The spatial patterning of emergency demand for police services: a scoping review

- Samuel Langton

- Stijn Ruiter

- Linda Schoonmade

Crime Science (2024)

The SAFE procedure: a practical stopping heuristic for active learning-based screening in systematic reviews and meta-analyses

- Josien Boetje

- Rens van de Schoot

Effect of capacity building interventions on classroom teacher and early childhood educator perceived capabilities, knowledge, and attitudes relating to physical activity and fundamental movement skills: a systematic review and meta-analysis

- Matthew Bourke

- Ameena Haddara

- Patricia Tucker

BMC Public Health (2024)

Quick links

- Explore articles by subject

- Guide to authors

- Editorial policies

Sign up for the Nature Briefing: AI and Robotics newsletter — what matters in AI and robotics research, free to your inbox weekly.

Machine Learning

Ieee account.

- Change Username/Password

- Update Address

Purchase Details

- Payment Options

- Order History

- View Purchased Documents

Profile Information

- Communications Preferences

- Profession and Education

- Technical Interests

- US & Canada: +1 800 678 4333

- Worldwide: +1 732 981 0060

- Contact & Support

- About IEEE Xplore

- Accessibility

- Terms of Use

- Nondiscrimination Policy

- Privacy & Opting Out of Cookies

A not-for-profit organization, IEEE is the world's largest technical professional organization dedicated to advancing technology for the benefit of humanity. © Copyright 2024 IEEE - All rights reserved. Use of this web site signifies your agreement to the terms and conditions.

An official website of the United States government

The .gov means it’s official. Federal government websites often end in .gov or .mil. Before sharing sensitive information, make sure you’re on a federal government site.

The site is secure. The https:// ensures that you are connecting to the official website and that any information you provide is encrypted and transmitted securely.

- Publications

- Account settings

Preview improvements coming to the PMC website in October 2024. Learn More or Try it out now .

- Advanced Search

- Journal List

- Springer Nature - PMC COVID-19 Collection

Machine Learning-Based Research for COVID-19 Detection, Diagnosis, and Prediction: A Survey

Yassine meraihi.

1 LIST Laboratory, University of M’Hamed Bougara Boumerdes, Avenue of Independence, 35000 Boumerdes, Algeria

Asma Benmessaoud Gabis

2 Ecole nationale Supérieure d’Informatique, Laboratoire des Méthodes de Conception des Systèmes, BP 68 M, 16309 Oued-Smar, Alger Algeria

Seyedali Mirjalili

3 Centre for Artificial Intelligence Research and Optimisation, Torrens University Australia, Fortitude Valley, Brisbane, QLD 4006 Australia

4 Yonsei Frontier Lab, Yonsei University, Seoul, Korea

Amar Ramdane-Cherif

5 LISV Laboratory, University of Versailles St-Quentin-en-Yvelines, 10-12 Avenue of Europe, 78140 Velizy, France

Fawaz E. Alsaadi

6 Information Technology Department, Faculty of Computing and Information Technology, King Abdulaziz University, Jeddah, Saudi Arabia

The year 2020 experienced an unprecedented pandemic called COVID-19, which impacted the whole world. The absence of treatment has motivated research in all fields to deal with it. In Computer Science, contributions mainly include the development of methods for the diagnosis, detection, and prediction of COVID-19 cases. Data science and Machine Learning (ML) are the most widely used techniques in this area. This paper presents an overview of more than 160 ML-based approaches developed to combat COVID-19. They come from various sources like Elsevier, Springer, ArXiv, MedRxiv, and IEEE Xplore. They are analyzed and classified into two categories: Supervised Learning-based approaches and Deep Learning-based ones. In each category, the employed ML algorithm is specified and a number of used parameters is given. The parameters set for each of the algorithms are gathered in different tables. They include the type of the addressed problem (detection, diagnosis, or detection), the type of the analyzed data (Text data, X-ray images, CT images, Time series, Clinical data,...) and the evaluated metrics (accuracy, precision, sensitivity, specificity, F1-Score, and AUC). The study discusses the collected information and provides a number of statistics drawing a picture about the state of the art. Results show that Deep Learning is used in 79% of cases where 65% of them are based on the Convolutional Neural Network (CNN) and 17% use Specialized CNN. On his side, supervised learning is found in only 16% of the reviewed approaches and only Random Forest, Support Vector Machine (SVM) and Regression algorithms are employed.

Introduction

COVID-19 has led to one of the most disruptive disasters in the current century and is caused by severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2). The health system and economy of a large number of countries have been impacted. As per World Health Organization (WHO) data, there have been 225,024,781 confirmed cases of COVID-19, including 4,636,153 deaths as of 14 September 2021. Immediately, after its outbreak, several studies are conducted to understand the characteristics of this coronavirus.

It is argued that human-to-human transmission of SARS-CoV-2 is typically done via direct contacts and respiratory droplets [ 1 ]. On the other side, the incubation of the infection is estimated to a period of 2–14 days. This helps in controlling it and preventing the spread of COVID-19 is the primary intervention being used. Moreover, studies on clinical forms reveal the presence of asymptomatic carriers in the population and the most affected age groups [ 2 ]. After almost a year in this situation, and the high number of researches conducted in different disciplines to bring a relief, a huge amount of data is generated. Computer science researchers find themselves involved to provide their help. One of the first registered contributions is the visualization of data. The latter was mapped and/or plotted in graphs which allows to: (i) better track the propagation of the virus over the globe in general and country by country in particular (Fig. 1 );

Propagation of COVID-19 over the world

ii) better track the propagation of the pandemic over the time; iii) better estimate the number of confirmed cases and the number of deaths (Fig. (Fig.2a, 2 a, b). Later, more advanced techniques based essentially on Artificial Intelligence (AI) are employed. Bringing AI to go against COVID-19 has served in the prevention and monitoring of infectious patients. In fact, by using geographical coordinates of people, some governments were able to limit their movements and locate people with whom they were in contact. The second aspect in which AI benefits is the ability to classify individuals whether they are affected or not. Finally, AI offers the ability to make a prediction on possible future contaminations. To this purpose, Machine Learning (ML), which is often confused with AI, is precisely used. Beyond the different ML algorithms, Neural Network (NN) is one of the most used to solve real-world problems which gives the emergence of Deep Learning (DL).

Data-visualization for tracking COVID-19 progress

Deep learning is particularly suited to contexts where the data is complex and where there are large datasets available as it is the case with COVID-19.

In this context, the present paper gives an overview of the Machine Learning researches performed to handle COVID-19 data. It specifies for each of them the targeted objectives and the type of data used to achieve them.

To accomplish this study, we use Google scholar by employing the following search strings to build a database of COVID-19 related articles:

- COVID-19 detection using Machine learning;

- COVID-19 detection using Deep learning;

- COVID-19 detection using Artificial intelligence;

- COVID-19 diagnosis using Machine learning;

- COVID-19 diagnosis using Deep learning;

- COVID-19 diagnosis using Artificial intelligence;

- COVID-19 prediction using Machine learning;

- Deep learning for COVID-19 prediction;

- Artificial intelligence for COVID-19 prediction.

We retain all articles in this field which:

- Are published in scientific journals;

- Propose new algorithms to deal with COVID-19;

- Have more than 4 pages;

- Are written in English;

- Represent complete versions when several are available;

- Do not report the statistical tests used to assess the significance of the presented results.

- Do not report details on the source of their data sets.

The result is impressive. In fact, since February 2020, several papers are published in this area every month. As we can see in Fig. 3 , India and China seem to be those having the highest number of COVID-19 publications. However, many other countries showed a strong activity in the number of contributions. This is expected as the situation affects the entire world. The different papers appeared from various well-known publishers such as IEEE, Elsevier, Springer, ArXIv and many others as shown in Fig. 4 .

Number of COVID-19 published articles by countries

Percentage of identified COVID-19 papers in different scientific publishers

In this paper, the surveyed approaches are presented according to the Machine Learning classification given in Fig. 8 . Techniques highlighted in yellow color are those employed in the different propositions to go against COVID-19. We show that most of them are based on Convolutional Neural Networks (CNN) which allows making Deep Learning. Almost half of these techniques use X-ray images. Nevertheless, several other data sources are used at different proportions as shown in Fig. 5 . They include Computed Tomography (CT) images, Text data, Time series, Sounds, Coughing/Breathing videos, and even Blood Samples world cloud of the works we have summarized, reviewed, and analyzed in this paper can be seen in Fig. 6 .

Proportion of the different data sources used in COVID-19 publications

A world cloud of the works we have summarized, reviewed, and analyzed in this paper

Classification of Machine Learning Algorithms

There are similar surveys on AI and COVID-19 (e.g. in the works of Rasheed et al. [ 3 ], Shah et al. [ 4 ], Mehta et al. [ 5 ], Shinde et al. [ 6 ] and Chiroma et al. [ 7 ]). What makes this survey different is the focus on specialized Machine Learning techniques proposed globally to detect, diagnose, and predict COVID-19.

The remainder of this paper is organized as follows. In the second section, the definition of Deep Learning and its connection with AI and Machine Learning is given with descriptions of the most used algorithms. The third section presents a classification of the different approaches proposed to deal with COVID-19. They are illustrated by multiple tables highlighting the most important parameters of each of them. The fourth section discusses the results revealed from the conducted study in regard to the techniques used and their evaluation. It notes the limitations encountered and possible solutions to overcome them. The last section concludes the present article.

Artificial Intelligence, Machine Learning and Deep Learning

Artificial Intelligence (AI) as it is traditionally known is considered weak. Making it stronger results in making it capable of reproducing human behavior with consciousness, sensitivity and spirit. The appearance of Machine Learning (ML) was the means that made it possible to take a step towards achieving this objective. By definition, Machine Learning is a subfield of AI concerned with giving computers the ability to learn without being explicitly programmed. It is based on the principle of reproducing a behavior thanks to algorithms, themselves fed by a large amount of data. Faced with many situations, the algorithm learns which decision to make and creates a model. The machine can therefore automate the tasks according to the situations. The general process to carry out a Machine Learning requires a training dataset, a test dataset and an algorithm to generate a predictive model (Fig. 7 ). Four types of ML can be distinguished as we can see in Fig. 8 .

Machine learning prediction process

Supervised Learning

It is a form of machine learning that falls under artificial intelligence. The idea is to “guide” the algorithm on the way of learning based on pre-labeled examples of expected results. Artificial intelligence then learns from each example by adjusting its parameters to reduce the gap between the results obtained and the expected ones. The margin of error is thus reduced over the training sessions, with the aim of being able to generalize learning in the objective to predict the result of new cases [ 8 , 9 ]. The output is called classification if labels are like discrete classes or regression if they are like continuous quantities. Within each category, there exists several algorithms [ 10 , 11 ]. We define below those which was applied in the detection/prediction of COVID-19.

Linear Regression

Linea regression can be considered as one of the most conventional machine learning techniques [ 12 ], in which the best fit line/hyperplane for the available training data is determined using the minimum mean squared error function. This algorithm considers the predictive function as linear. Its general form is as follows: Y = a ∗ X + b + ϵ with a and b two constants. Y is the variable to be predicted, X the variable used to predict, a is the slope of the regression and b is the intercept, that is, the value of Y when X is zero.

Logistic Regression

Despite its name, Logistic Regression [ 13 ] can be employed to perform regression as classification. It is based on the sigmoid predictive function defined as: h ( z ) = 1 1 + e - z where z is a linear function. The function returns a probability score P between 0 and 1. In order to map this to two discrete classes ( 0 or 1), a threshold value θ is fixed. The predicted class is equal to 1 if P ≥ θ , to 0 otherwise.

Support Vector Machine (SVM)

Similar to the previously defined algorithms, the idea behind SVM [ 14 , 15 ] is to distinctly classifies data points by finding an hyperplane in an N-dimensional space. Since there are several possibilities to choose the hyperplane, in SVM a margin distance is calculated between data points of the two classes to separate. The objective is to maximize the value of this margin to get a clear decision boundary helping in the classification of future data points.

Decision Tree

A Decision Tree [ 16 ] is an algorithm that seeks to partition the individuals into groups of individuals as similar as possible from the point of view of the variable to be predicted. The result of the algorithm produces a tree that reveals hierarchical relationships between the variables. An iterative process is used where at each iteration a sub-population of individuals is obtained by choosing the explanatory variable which allows the best separation of individuals. The algorithm stops when no more split is possible.

Random Forest Algorithms

Random Forest Algorithms are methods that provide predictive models for classification and regression [ 17 , 18 ]. They are composed of a large number of Decision Tree blocks used as individual predictors. The fundamental idea behind the method is that instead of trying to get an optimized method all at once, several predictors are generated and their different predictions are pooled. The final predicted class is the one having the most votes.

Artificial Neural Network (ANN)