
Kafka rebalancing

Apache Kafka® is a distributed messaging system that handles large data volumes efficiently and reliably. Producers publish data to topics, and consumers subscribe to these topics to consume the data. It also stores data across multiple brokers and partitions, allowing it to scale horizontally.

Nonetheless, Kafka is not immune to its own set of limitations. One of the key challenges is maintaining the balance of partitions across the available brokers and consumers.

This article explores the concept of Kafka rebalancing. We look at what triggers a rebalance and the side effects of rebalancing. We also discuss best practices to reduce rebalancing occurrences and alternative rebalancing approaches.

Summary of key concepts

Before we dive into Kafka rebalancing, we must understand some key concepts.

What is rebalancing?

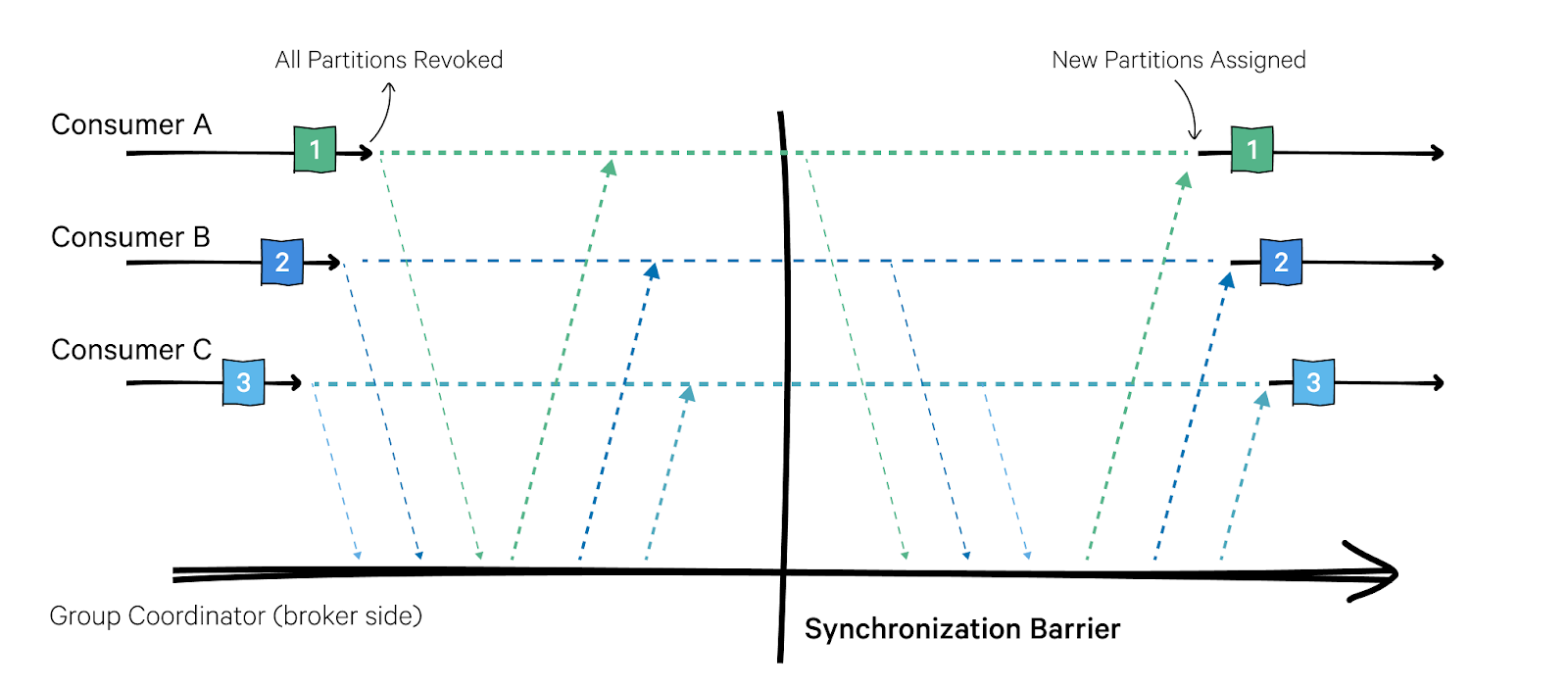

The concept of rebalancing is fundamental to Kafka's consumer group architecture. When a consumer group is created, the group coordinator assigns partitions to each consumer in the group. Each consumer is responsible for consuming data from its assigned partitions. However, as consumers join or leave the group or new partitions are added to a topic, the partition assignments become unbalanced. This is where rebalancing comes into play.

Kafka rebalancing is the process by which Kafka redistributes partitions across consumers to ensure that each consumer is processing an approximately equal number of partitions. This ensures that data processing is distributed evenly across consumers and that each consumer is processing data as efficiently as possible. As a result, Kafka can scale efficiently and effectively, preventing any single consumer from becoming overloaded or underused.

How rebalancing works

Kafka provides several partition assignment strategies, each implemented by an “assignor”, to determine how partitions are assigned during a rebalance. In the Java client, the default has long been the range assignor (since Kafka 3.0, a list of range followed by cooperative sticky), which gives each consumer a contiguous block of each topic's partitions. Kafka also provides “round-robin”, “sticky”, and “cooperative sticky” assignment strategies, which may be more appropriate for specific use cases.
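The difference between range-style and round-robin-style assignment is easier to see with a toy model. The sketch below is not Kafka's own assignor code: the class and method names are made up for illustration, it handles a single topic only, and it assumes the consumer list is already sorted.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Toy model of two assignment strategies for a single topic; not Kafka's actual classes. */
final class AssignorSketch {

    /** Range style: each consumer gets a contiguous block; the first (n % c) consumers get one extra. */
    static Map<String, List<Integer>> range(List<String> consumers, int partitions) {
        Map<String, List<Integer>> out = new LinkedHashMap<>();
        int base = partitions / consumers.size();
        int extra = partitions % consumers.size();
        int next = 0;
        for (int i = 0; i < consumers.size(); i++) {
            int count = base + (i < extra ? 1 : 0);
            List<Integer> owned = new ArrayList<>();
            for (int p = 0; p < count; p++) owned.add(next++);
            out.put(consumers.get(i), owned);
        }
        return out;
    }

    /** Round-robin style: partitions are dealt out one at a time, like cards. */
    static Map<String, List<Integer>> roundRobin(List<String> consumers, int partitions) {
        Map<String, List<Integer>> out = new LinkedHashMap<>();
        for (String c : consumers) out.put(c, new ArrayList<>());
        for (int p = 0; p < partitions; p++) {
            out.get(consumers.get(p % consumers.size())).add(p);
        }
        return out;
    }

    public static void main(String[] args) {
        List<String> group = List.of("c1", "c2", "c3");
        System.out.println(range(group, 7));      // {c1=[0, 1, 2], c2=[3, 4], c3=[5, 6]}
        System.out.println(roundRobin(group, 7)); // {c1=[0, 3, 6], c2=[1, 4], c3=[2, 5]}
    }
}
```

Both strategies give every consumer two or three of the seven partitions. They differ in which partitions end up together, which matters when a group subscribes to several topics.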

When a rebalance occurs:

Consumers learn that a rebalance is in progress from the group coordinator's responses to their heartbeats.

Each consumer then sends a JoinGroup request to the coordinator to rejoin the group.

The coordinator picks one consumer as the group leader. The leader applies the selected partition assignment strategy, and the coordinator distributes the resulting assignment to every member in its SyncGroup response.

During a rebalance, Kafka may need to pause data consumption temporarily. This is necessary to ensure all consumers have an up-to-date view of the partition assignments before they resume consuming.


What triggers Kafka rebalancing?

Here are some common scenarios that trigger a consumer rebalance in Kafka.

Consumer joins or leaves

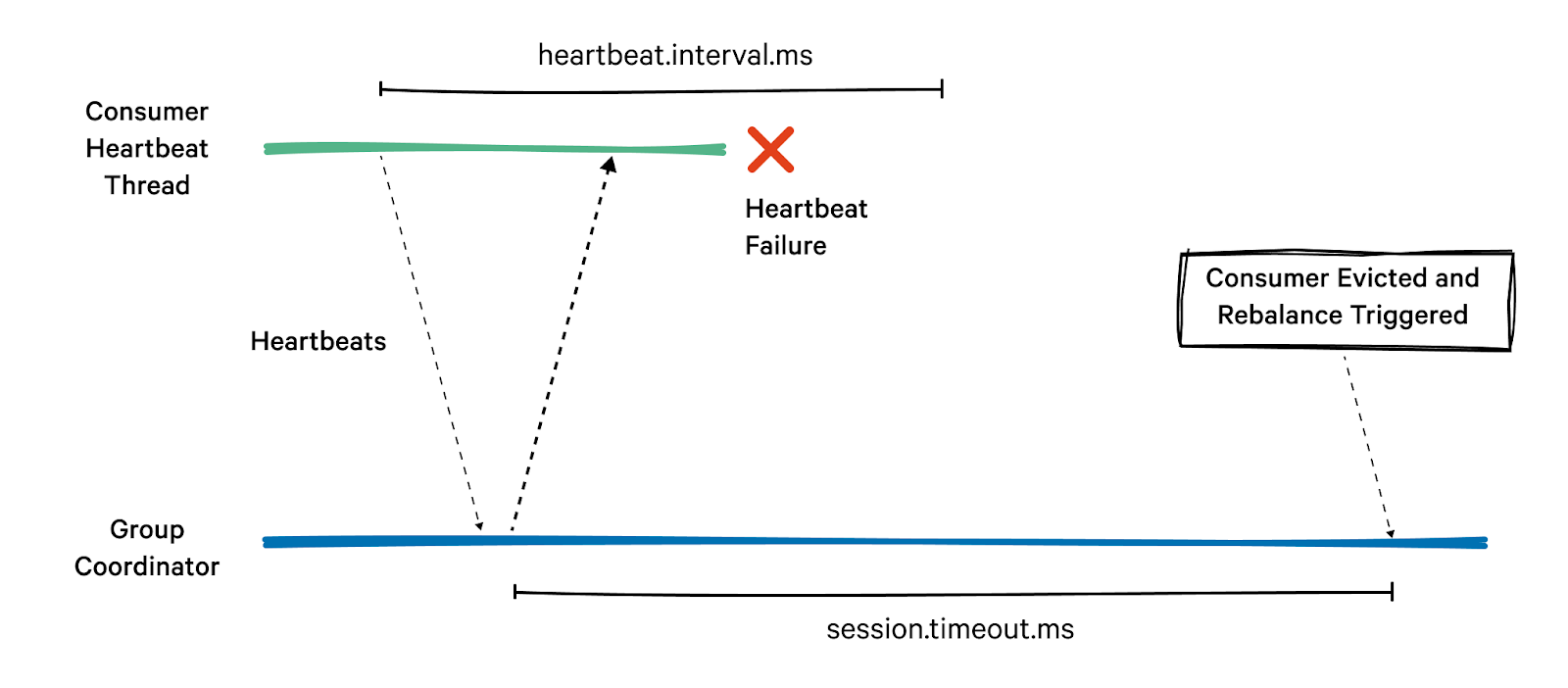

When a consumer joins or leaves a group, Kafka must rebalance the partitions across the available consumers. This can happen during a shutdown or restart, or when the application scales up or down. A heartbeat is a signal that the consumer is still alive and actively participating in the group. If the group coordinator receives no heartbeat from a consumer within the session timeout (session.timeout.ms), it considers the consumer dead and initiates a rebalance to reassign its partitions to other members.
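The heartbeat check can be pictured as a small bookkeeping task on the coordinator side. The following is a simplified sketch rather than the broker's real implementation; HeartbeatTracker and its methods are hypothetical names, and time is passed in explicitly to keep the example deterministic.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Toy coordinator-side liveness tracker; names and structure are illustrative only. */
final class HeartbeatTracker {
    private final long sessionTimeoutMs;
    private final Map<String, Long> lastHeartbeatMs = new HashMap<>();

    HeartbeatTracker(long sessionTimeoutMs) {
        this.sessionTimeoutMs = sessionTimeoutMs;
    }

    /** Records a heartbeat from a member at the given time. */
    void heartbeat(String memberId, long nowMs) {
        lastHeartbeatMs.put(memberId, nowMs);
    }

    /** Removes and returns members whose last heartbeat is older than the session timeout. */
    List<String> expire(long nowMs) {
        List<String> dead = new ArrayList<>();
        for (Map.Entry<String, Long> e : lastHeartbeatMs.entrySet()) {
            if (nowMs - e.getValue() > sessionTimeoutMs) dead.add(e.getKey());
        }
        dead.forEach(lastHeartbeatMs::remove);
        return dead; // a non-empty result is what would trigger a rebalance
    }
}
```

With a 10-second timeout, a member that last sent a heartbeat at time 0 is still considered alive at 9 seconds and is expired by 12 seconds.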

Temporary consumer failure

When a consumer experiences a temporary failure or network interruption, Kafka may consider it a failed consumer and remove it from the group. This can trigger consumer rebalancing to redistribute the partitions across the remaining active consumers in the group. However, once the failed consumer is back online, it can rejoin the group and participate in the rebalancing process again.

Consumer idle for too long

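When a consumer goes too long without calling poll(), for example because it is stuck processing a batch, it exceeds max.poll.interval.ms. The consumer then leaves the group even though it is still sending heartbeats, and Kafka treats it as failed. This triggers a rebalance to redistribute its partitions across the remaining active consumers in the group.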

Topic partitions added

If new partitions are added to a topic, Kafka initiates a rebalance to distribute the new partitions among the consumers in the group.

Side effects of Kafka rebalancing

While rebalancing helps ensure each consumer in a group receives an equal share of the workload, it can also have some side effects on the performance and reliability of the Kafka cluster. Some unintended consequences are given below.

Increased latency

The rebalancing process involves reassigning partitions from one consumer to another, and under the default eager protocol consumption pauses until the new assignment is in place, so some data is temporarily delayed. In addition, depending on the size of the consumer group and the volume of data being processed, rebalancing can take several minutes or more, increasing the latency consumers experience.

Reduced throughput

While a rebalance is in progress, some consumers can be temporarily overloaded and others idle, leading to slower data processing. However, once the rebalancing process is complete, the performance of the Kafka cluster returns to normal.

Increased resource usage

Kafka uses additional resources, such as CPU, memory, and network bandwidth, to move partitions between brokers or consumers. As a result, rebalancing increases resource usage for the Kafka cluster and can adversely impact the performance of other applications running on the same infrastructure.

Potential data duplication and loss

Kafka rebalancing may result in significant data duplication (40% or more in some cases), adding to throughput and cost issues. Duplicates occur when a consumer has processed messages but has not yet committed their offsets when its partitions are reassigned, so the new owner processes them again. In rare cases, Kafka rebalancing leads to data loss if improperly handled. For example, if a consumer commits offsets for messages it has not finished processing and then leaves the group, the new owner resumes after the committed offset and those messages are never processed. To prevent data loss, commit offsets only after the corresponding messages have been fully processed.
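Both effects come down to the gap between the last committed offset and the work actually completed when a partition changes hands. Here is a minimal sketch of that gap for a single partition, using a hypothetical helper rather than any Kafka API:

```java
import java.util.ArrayList;
import java.util.List;

/** Toy model of one partition being handed from one consumer to another. */
final class RedeliverySketch {

    /**
     * Returns every offset processed in total when the first owner processed
     * offsets [0, processed) but had committed `committed` as its position
     * before the rebalance, and the new owner then reads from `committed`
     * up to `logEnd`.
     */
    static List<Integer> processedOffsets(int processed, int committed, int logEnd) {
        List<Integer> seen = new ArrayList<>();
        for (int o = 0; o < processed; o++) seen.add(o);      // first owner
        for (int o = committed; o < logEnd; o++) seen.add(o); // new owner resumes at the committed offset
        return seen;
    }

    public static void main(String[] args) {
        // Commit lags processing: offsets 3 and 4 are handled twice.
        System.out.println(processedOffsets(5, 3, 6)); // [0, 1, 2, 3, 4, 3, 4, 5]
        // Commit runs ahead of processing: offsets 3 and 4 are never handled.
        System.out.println(processedOffsets(3, 5, 6)); // [0, 1, 2, 5]
    }
}
```

The first case is the at-least-once behavior most applications accept and handle with idempotent processing. The second is the data-loss case to avoid.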

Increased complexity

Kafka rebalancing adds complexity to the overall architecture of a Kafka cluster. For example, rebalancing involves coordinating multiple brokers or consumers, which can be challenging to manage and debug. It may also impact other applications or processes that rely on Kafka for data processing. As a result, you may find it more difficult to ensure the overall reliability and availability of the system.


Measures to reduce rebalancing

There are measures you can take to reduce rebalancing events. However, rebalancing is necessary and must occur occasionally to maintain reasonably reliable consumption.

Increase session timeout

The session timeout is the maximum time the group coordinator waits without receiving a heartbeat from a consumer before removing it from the group. Increasing the session timeout increases the time a broker waits before marking a consumer as inactive. You can increase the session timeout by setting the session.timeout.ms parameter to a higher value in the Kafka client configuration. However, keep in mind that setting this parameter too high means a consumer that has really failed takes longer to detect, and its partitions go unprocessed in the meantime.
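As a rough illustration, the setting can be placed in the properties handed to the consumer. The values below are examples rather than recommendations, and the broker address and group name are placeholders. Kafka's documentation suggests keeping heartbeat.interval.ms at no more than a third of the session timeout, and the broker only accepts session timeouts within its configured minimum and maximum.

```java
import java.util.Properties;

final class SessionTimeoutConfig {
    /** Builds consumer properties with a longer session timeout; values are examples only. */
    static Properties build() {
        Properties props = new Properties();
        props.put("bootstrap.servers", "localhost:9092"); // hypothetical broker address
        props.put("group.id", "example-group");           // hypothetical group name
        props.put("session.timeout.ms", "60000");         // tolerate up to 60 s without a heartbeat
        props.put("heartbeat.interval.ms", "20000");      // at most one third of the session timeout
        return props;
    }
}
```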

Reduce partitions per topic

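Having many partitions per topic makes each rebalance more expensive, because more partitions have to be revoked, reassigned, and resumed. You can choose a lower partition count when creating a topic, or lower the broker's num.partitions parameter, which sets the default for automatically created topics. Note that the partition count of an existing topic cannot be decreased. Also remember that reducing the number of partitions reduces the parallelism and throughput of your Kafka cluster.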

Increase poll interval time

Sometimes messages take longer to process because processing involves multiple network or I/O calls, failures, and retries. In such cases, the consumer may be removed from the group frequently. The max.poll.interval.ms consumer setting specifies the maximum time allowed between calls to poll() before the consumer is considered inactive and removed from the group. Increasing the max.poll.interval.ms value in the consumer config, or fetching fewer records per poll with max.poll.records, helps avoid frequent consumer group changes.
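A quick back-of-the-envelope check shows whether a consumer is at risk: the worst-case time to work through one batch has to stay below max.poll.interval.ms. The helper below is a hypothetical sketch of that arithmetic. It uses the client defaults of 500 for max.poll.records and 300000 ms for max.poll.interval.ms, while the per-record times are made up.

```java
final class PollBudget {
    /** Worst-case time between two poll() calls if every record in a batch is slow. */
    static long worstCaseBatchMs(int maxPollRecords, long worstCaseRecordMs) {
        return (long) maxPollRecords * worstCaseRecordMs;
    }

    /** True when a full batch of slow records still fits within max.poll.interval.ms. */
    static boolean fits(int maxPollRecords, long worstCaseRecordMs, long maxPollIntervalMs) {
        return worstCaseBatchMs(maxPollRecords, worstCaseRecordMs) < maxPollIntervalMs;
    }

    public static void main(String[] args) {
        System.out.println(fits(500, 500, 300_000));   // true: 250 s worst case
        System.out.println(fits(500, 1_000, 300_000)); // false: 500 s worst case
        System.out.println(fits(100, 1_000, 300_000)); // true: smaller batches fit again
    }
}
```

When the check fails, either raise max.poll.interval.ms or lower max.poll.records until the worst case fits with some headroom.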

What is incremental cooperative rebalance?

Incremental cooperative rebalance protocol was introduced in Kafka 2.4 to minimize the disruption caused by Kafka rebalancing. In a traditional rebalance, all consumers in the group stop consuming data while the rebalance is in progress, commonly called a “stop the world effect”. This causes delays and interruptions in data processing.

The incremental cooperative rebalance protocol splits rebalancing into smaller sub-tasks, and consumers continue consuming data while these sub-tasks are completed. As a result, rebalancing occurs more quickly and with less interruption to data processing.

The protocol also limits what changes in each round. Consumers keep the partitions that do not need to move and give up only those being reassigned to another member, which picks them up in a follow-up rebalance. This avoids needlessly revoking partitions while still converging on a balanced assignment.
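To make the contrast concrete, the sketch below counts how many partitions stop being consumed when a third consumer joins a group of two. It is a toy model with made-up names, not the protocol implementation, and the "after" assignment is simply given rather than computed.

```java
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

/** Toy comparison of how many partitions are revoked under each protocol. */
final class RevocationSketch {

    /** Eager: every member gives up everything it owns before the new assignment arrives. */
    static int eagerRevoked(Map<String, Set<Integer>> before) {
        return before.values().stream().mapToInt(Set::size).sum();
    }

    /** Cooperative: a member gives up only partitions missing from its new assignment. */
    static int cooperativeRevoked(Map<String, Set<Integer>> before, Map<String, Set<Integer>> after) {
        int revoked = 0;
        for (Map.Entry<String, Set<Integer>> e : before.entrySet()) {
            Set<Integer> lost = new HashSet<>(e.getValue());
            lost.removeAll(after.getOrDefault(e.getKey(), Set.of()));
            revoked += lost.size();
        }
        return revoked;
    }

    public static void main(String[] args) {
        // Two consumers own three partitions each; a third consumer joins.
        Map<String, Set<Integer>> before = Map.of("c1", Set.of(0, 1, 2), "c2", Set.of(3, 4, 5));
        Map<String, Set<Integer>> after =
            Map.of("c1", Set.of(0, 1), "c2", Set.of(3, 4), "c3", Set.of(2, 5));
        System.out.println(eagerRevoked(before));              // 6
        System.out.println(cooperativeRevoked(before, after)); // 2
    }
}
```

In this example, four of the six partitions are never interrupted under the cooperative protocol.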

What is static group membership in Kafka rebalancing?

Static group membership, available since Kafka 2.3, gives each consumer in a group a persistent identity through the group.instance.id setting. If a static member restarts and rejoins with the same ID before its session timeout expires, the group coordinator returns its previous partitions without triggering a rebalance. This makes it useful for rolling restarts and short outages.

Static membership is often confused with manual partition assignment, which is a different technique. With manual assignment, the developer defines the partition assignment explicitly instead of letting the consumer group protocol manage it dynamically. Each consumer only consumes messages from a specific subset of partitions, and the partition assignment remains fixed until explicitly changed by the consumer.

However, it's important to note that manual assignment can lead to uneven workload distribution among consumers and leaves failover to the application, so it is only suitable for some use cases.

How to create a consumer with static membership

To use static group membership in Kafka, set a unique group.instance.id on each consumer and keep using the subscribe() method as usual. To assign partitions manually instead, use the assign() method in place of subscribe(). The assign() method takes a collection of TopicPartition objects, each identifying a topic and partition for assignment.

A sample Java consumer that uses manual assignment works as follows:

KafkaConsumer is configured with the group.id property set to static-group, which is used when committing offsets.

Two partitions (partition 0 and partition 1) of my-topic are explicitly assigned to the consumer using the assign() method.

The consumer polls for new messages from the assigned partitions using the poll() method and commits the offsets using the commitSync() method.
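In the Kafka consumer client, the setting that marks a consumer as a static member of its group is group.instance.id. As a hedged sketch, the properties for such a consumer might look like the following. The broker address and timeout are illustrative values, and the instance ID is whatever stable, unique name your deployment provides (a host or pod name, for example).

```java
import java.util.Properties;

final class StaticMemberConfig {
    /** Consumer properties for a static member; instanceId must be unique and stable per instance. */
    static Properties build(String instanceId) {
        Properties props = new Properties();
        props.put("bootstrap.servers", "localhost:9092"); // hypothetical broker address
        props.put("group.id", "static-group");
        props.put("group.instance.id", instanceId);       // same value after every restart
        props.put("session.timeout.ms", "120000");        // example: long enough to cover a restart
        return props;
    }
}
```

A consumer built from these properties would still call subscribe(). Its partitions are only held for it while it is away for less than the session timeout.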


Kafka rebalancing is an important feature that allows consumers in a Kafka cluster to dynamically redistribute the load when new consumers are added, or existing ones leave. It ensures all consumers receive an equal share of the work and helps prevent the overloading of any one consumer. However, excessive rebalancing results in reduced performance and increased latency and may even lead to duplicates and/or data loss in some cases.

So, it's essential to configure Kafka consumers properly and follow best practices to minimize the frequency and impact of rebalancing. By understanding how Kafka rebalancing works and implementing the right strategies, developers and administrators can ensure a smooth and efficient Kafka cluster that delivers reliable and scalable data processing.


Kafka topic partitioning: Strategies and best practices

By Amy Boyle

If you’re a recent adopter of Apache Kafka, you’re undoubtedly trying to determine how to handle all the data streaming through your system. The Events Pipeline team at New Relic processes a huge amount of “event data” on an hourly basis, so we’re thinking about Kafka monitoring and this question a lot. Unless you’re processing only a small amount of data, you need to distribute your data onto separate partitions.

In part one of this series, Using Apache Kafka for Real-Time Event Processing at New Relic, we explained how we built some of the underlying architecture of our event processing streams using Kafka. In this post, we explain how the partitioning strategy for your producers depends on what your consumers will do with the data.

What are Kafka topics and partitions?

Apache Kafka uses topics and partitions for efficient data processing. Topics are data categories to which records are published; consumers subscribe to these topics. For scalability and performance, topics are divided into partitions, allowing parallel data processing and fault tolerance. Partitions enable multiple consumers to read concurrently and are replicated across nodes for resilience against failures. Let's dive into these further.

A partition in Kafka is the storage unit that allows for a topic log to be separated into multiple logs and distributed over the Kafka cluster.

Partitions allow Kafka clusters to scale smoothly.

Why partition your data in Kafka?

If you have so much load that you need more than a single instance of your application, you need to partition your data. How you partition serves as your load balancing for the downstream application. The producer clients decide which topic partition that the data ends up in, but it’s what the consumer applications do with that data that drives the decision logic.

How does Kafka partitioning improve performance?

Kafka partitioning allows for parallel data processing, enabling multiple consumers to work on different partitions simultaneously. This helps achieve higher throughput and ensures the processing load is distributed across the Kafka cluster.

What factors should you consider when determining the number of partitions?

Choose the number of partitions based on factors like expected data volume, the number of consumers, and the desired level of parallelism. It's essential to strike a balance to avoid over-partitioning or under-partitioning, which can impact performance.
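One commonly cited rule of thumb, which does not come from this article, is to measure the throughput a single partition sustains on the producer side and on the consumer side, then take enough partitions to meet the target on whichever side is slower. The sketch below encodes that arithmetic with hypothetical numbers. Treat the result as a starting point to validate with load tests.

```java
final class PartitionCountEstimate {
    /**
     * Rule-of-thumb estimate: enough partitions that neither producers nor
     * consumers are the bottleneck for the target throughput.
     * All three rates must use the same unit (for example MB/s).
     */
    static int estimate(double targetRate, double perPartitionProduceRate, double perPartitionConsumeRate) {
        double forProducers = targetRate / perPartitionProduceRate;
        double forConsumers = targetRate / perPartitionConsumeRate;
        return (int) Math.ceil(Math.max(forProducers, forConsumers));
    }

    public static void main(String[] args) {
        // Hypothetical measurements: 100 MB/s target, 10 MB/s produce and 4 MB/s consume per partition.
        System.out.println(estimate(100, 10, 4)); // 25
    }
}
```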

Can the number of partitions be changed after creating a topic?

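Yes, but only upward: Kafka lets you increase the number of partitions for a topic, not decrease it. Doing so requires careful consideration, because existing data is not redistributed and keyed messages may map to different partitions afterward, which affects data distribution and consumer behavior. We recommend planning a partitioning strategy during the initial topic creation.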
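The effect on keyed data is easy to demonstrate. The sketch below uses String.hashCode() as a stand-in hash, which is an assumption made for brevity: Kafka's default partitioner hashes the serialized key with murmur2. The modulo step is what matters here.

```java
import java.util.ArrayList;
import java.util.List;

final class RepartitionSketch {
    /** Illustrative hash partitioner; Kafka's default uses murmur2 on the serialized key, not hashCode(). */
    static int partitionFor(String key, int numPartitions) {
        return Math.floorMod(key.hashCode(), numPartitions);
    }

    /** Returns the keys whose partition changes when the topic grows from `before` to `after` partitions. */
    static List<String> movedKeys(List<String> keys, int before, int after) {
        List<String> moved = new ArrayList<>();
        for (String k : keys) {
            if (partitionFor(k, before) != partitionFor(k, after)) moved.add(k);
        }
        return moved;
    }
}
```

Calling movedKeys with a handful of keys and partition counts of 4 and 6 returns the keys whose new messages would land on a different partition than their older messages. Per-key ordering across the change is therefore no longer guaranteed.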

Can a consumer read from multiple partitions simultaneously?

Yes, consumers can read from multiple partitions concurrently. Kafka consumers are designed to handle parallel processing, allowing them to consume messages from different partitions at the same time, thus increasing overall throughput.

Kafka topics

A Kafka topic is a category or feed name to which records are published. Topics in Kafka are always multi-subscriber; that is, a topic can have zero, one, or many consumers that subscribe to the data written to it.

The relationship between Kafka topics and partitions

To enhance scalability and performance, each topic is further divided into partitions. These partitions allow for the distribution and parallel processing of data across multiple brokers within a Kafka cluster. Each partition is an ordered, immutable sequence of records, where order is maintained only within the partition, not across the entire topic. This partitioning mechanism enables Kafka to handle a high volume of data efficiently, as multiple producers can write to different partitions simultaneously, and multiple consumers can read from different partitions in parallel. The relationship between topics and partitions is fundamental to Kafka's ability to provide high throughput and fault tolerance in data streaming applications.

Kafka topic partitioning strategy: Which one should you choose?

In Kafka, partitioning is a versatile and crucial feature, with several strategies available to optimize data distribution and processing efficiency. These strategies determine how records are allocated across different partitions within a topic. Each approach caters to specific use cases and performance requirements, influencing the balance between load distribution and ordering guarantees. We'll now dive into these various partitioning strategies, exploring how they can effectively enhance Kafka's performance and reliability in diverse scenarios.

Partitioning on an attribute

You may need to partition on an attribute of the data if:

- The consumers of the topic need to aggregate by some attribute of the data

- The consumers need some sort of ordering guarantee

- Another resource is a bottleneck and you need to shard data

- You want to concentrate data for efficiency of storage and/or indexing

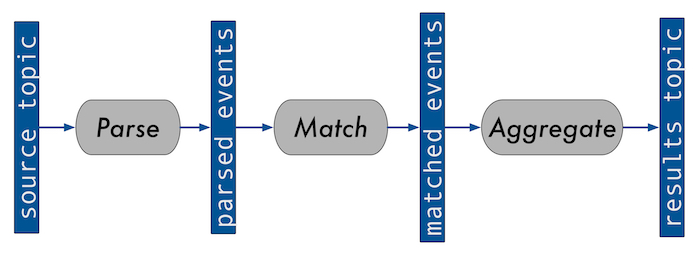

In part one , we used the following diagram to illustrate a simplification of a system we run for processing ongoing queries on event data:

Random partitioning of Kafka data

We use this system on the input topic for our most CPU-intensive application, the match service. This means that all instances of the match service must know about all registered queries to be able to match any event. While the event volume is large, the number of registered queries is relatively small, and thus a single application instance can handle holding all of them in memory, for now at least.

Kafka random partitioning diagram

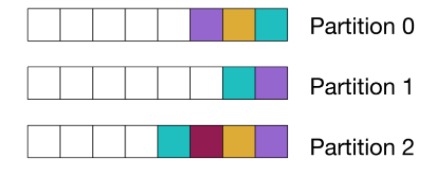

The following diagram uses colored squares to represent events that match to the same query. It shows messages randomly allocated to partitions:

When to use random partitioning

Random partitioning results in the most even spread of load for consumers, and thus makes scaling the consumers easier. It is particularly suited for stateless or “embarrassingly parallel” services.

This is effectively what you get when using the default partitioner while not manually specifying a partition or a message key. To get an efficiency boost, the default partitioner in Kafka from version 2.4 onwards uses a “sticky” algorithm, which groups all messages to the same random partition for a batch.

Partition by aggregate

On the topic consumed by the service that does the query aggregation, however, we must partition according to the query identifier since we need all of the events that we’re aggregating to end up at the same place.

Kafka aggregate partitioning diagram

This diagram shows that events matching to the same query are all co-located on the same partition. The colors represent which query each event matches to:

After releasing the original version of the service, we discovered that the top 1.5% of queries accounted for approximately 90% of the events processed for aggregation. As you can imagine, this resulted in some pretty bad hot spots on the unlucky partitions.
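The hot-spot effect follows directly from how keyed partitioning works: every event with the same key lands on the same partition, so a skewed key distribution becomes a skewed partition load. The sketch below illustrates this with a hypothetical stand-in partitioner. It uses String.hashCode() where Kafka would use murmur2 on the serialized key, and the query IDs are invented.

```java
import java.util.List;

final class HotSpotSketch {
    /** Illustrative stand-in for a keyed partitioner; not Kafka's actual hash function. */
    static int partitionFor(String queryId, int numPartitions) {
        return Math.floorMod(queryId.hashCode(), numPartitions);
    }

    /** Counts how many events land on each partition when events are keyed by query ID. */
    static int[] load(List<String> eventQueryIds, int numPartitions) {
        int[] perPartition = new int[numPartitions];
        for (String q : eventQueryIds) perPartition[partitionFor(q, numPartitions)]++;
        return perPartition;
    }
}
```

If 90 out of 100 events share one query ID, the partition that ID hashes to receives at least 90 events, regardless of how many partitions the topic has.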

When to use aggregate partitioning

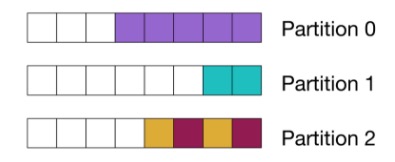

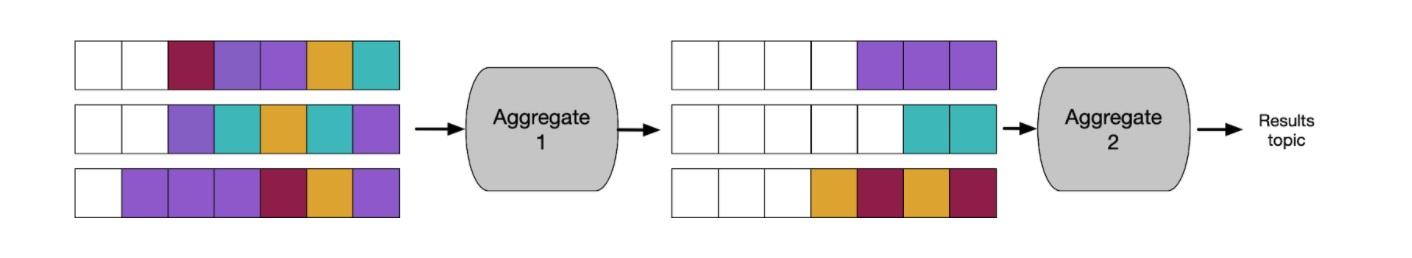

In the following example, you can see that we broke up the aggregation service into two pieces. Now we can randomly partition on the first stage, where we partially aggregate the data and then partition by the query ID to aggregate the final results per window. This approach allows us to greatly condense the larger streams at the first aggregation stage, so they are manageable to load balance at the second stage.
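The two-stage idea can be sketched in a few lines. This is a simplified illustration, not New Relic's implementation: it uses a plain count as the aggregate, the query IDs are invented, and the hand-off between stages stands in for the intermediate Kafka topic.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Toy two-stage count per query ID; the stage boundary stands in for a Kafka topic. */
final class TwoStageAggregation {

    /** Stage 1: one instance condenses whatever randomly partitioned events it received. */
    static Map<String, Long> partialCounts(List<String> eventQueryIds) {
        Map<String, Long> counts = new HashMap<>();
        for (String q : eventQueryIds) counts.merge(q, 1L, Long::sum);
        return counts;
    }

    /** Stage 2: partial results, now keyed by query ID, are merged into final counts. */
    static Map<String, Long> finalCounts(List<Map<String, Long>> partials) {
        Map<String, Long> totals = new HashMap<>();
        for (Map<String, Long> partial : partials) {
            partial.forEach((q, n) -> totals.merge(q, n, Long::sum));
        }
        return totals;
    }

    public static void main(String[] args) {
        // Two stage-1 instances each saw a random slice of the events.
        Map<String, Long> a = partialCounts(List.of("q1", "q1", "q2", "q1"));
        Map<String, Long> b = partialCounts(List.of("q1", "q2", "q1"));
        // Seven events shrink to four partial records before the keyed hop.
        System.out.println(finalCounts(List.of(a, b)).get("q1")); // 5
    }
}
```

The heavier a query is, the more its events are condensed in stage 1, which is what keeps the keyed second stage manageable.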

Of course, this approach comes with a resource-cost trade-off. Writing an extra hop to Kafka and having to split the service into two means that we spend more on network and service costs.

In this example, co-locating all the data for a query on a single client also sets us up to be able to make better ordering guarantees.

Ordering guarantee

We partition our final results by the query identifier, as the clients that consume from the results topic expect the windows to be provided in order:

Planning for resource bottlenecks and storage efficiency

When choosing a partitioning strategy, it’s important to plan for resource bottlenecks and storage efficiency.

(Note that the examples in this section reference other services that are not a part of the streaming query system I’ve been discussing.)

Resource bottleneck

We have another service that has a dependency on some databases that have been split into shards. We partition its topic according to how the shards are split in the databases. This approach produces a result similar to the diagram in our partition by aggregate example. Each consumer will be dependent only on the database shard it is linked with. Thus, issues with other database shards will not affect the instance or its ability to keep consuming from its partition. Also, if the application needs to keep state in memory related to the database, it will be a smaller share. Of course, this method of partitioning data is also prone to hotspots.

Storage efficiency

The source topic in our query processing system shares a topic with the system that permanently stores the event data. It reads all the same data using a separate consumer group. The data on this topic is partitioned by which customer account the data belongs to. For efficiency of storage and access, we concentrate an account’s data into as few nodes as possible.

While many accounts are small enough to fit on a single node, some accounts must be spread across multiple nodes. If an account becomes too large, we have custom logic to spread it across nodes, and, when needed, we can shrink the node count back down.

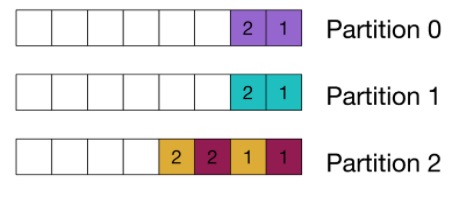

Consumer partition assignment

Whenever a consumer enters or leaves a consumer group, the brokers rebalance the partitions across consumers, meaning Kafka handles load balancing with respect to the number of partitions per application instance for you. This is great—it’s a major feature of Kafka. We use consumer groups on nearly all our services.

By default, when a rebalance happens, all consumers drop their partitions and are reassigned new ones (which is called the “eager” protocol). If you have an application that has a state associated with the consumed data, like our aggregator service, for example, you need to drop that state and start fresh with data from the new partition.

StickyAssignor

To reduce this partition shuffling on stateful services, you can use the StickyAssignor. This assignor makes some attempt to keep partition numbers assigned to the same instance, as long as they remain in the group, while still evenly distributing the partitions across members.

Because partitions are always revoked at the start of a rebalance, the consumer client code must track whether it has kept, lost, or gained partitions if partition moves are important to the logic of the application. This is the approach we use for our aggregator service.
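One way to do that tracking is to remember the assignment from before the rebalance and compare it with the new one. The helper below is a hypothetical sketch of that comparison only. In a real consumer, the two sets would come from the callbacks of a rebalance listener.

```java
import java.util.Set;
import java.util.TreeSet;

final class AssignmentDiff {
    /** Partitions present in both the old and the new assignment; their state can be reused. */
    static Set<Integer> kept(Set<Integer> before, Set<Integer> after) {
        Set<Integer> s = new TreeSet<>(before);
        s.retainAll(after);
        return s;
    }

    /** Partitions owned before the rebalance but not after it; their state can be dropped. */
    static Set<Integer> lost(Set<Integer> before, Set<Integer> after) {
        Set<Integer> s = new TreeSet<>(before);
        s.removeAll(after);
        return s;
    }

    /** Partitions that are new to this instance; their state must be built from scratch. */
    static Set<Integer> gained(Set<Integer> before, Set<Integer> after) {
        return lost(after, before);
    }
}
```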

CooperativeStickyAssignor

I want to highlight a few other options. From Kafka release 2.4 onward, you can use the CooperativeStickyAssignor. Rather than always revoking all partitions at the start of a rebalance, the consumer's rebalance listener receives only the difference: the partitions actually revoked and those newly assigned over the course of the rebalance.

The rebalances as a whole do take longer, and in our application, we need to optimize for shortening the time of rebalances when a partition does move. That's why we stayed with using the “eager” protocol under the StickyAssignor for our aggregator service. However, starting with Kafka release 2.5, we have the ability to keep consuming from partitions during a cooperative rebalance, so it might be worth revisiting.

Static membership

Additionally, you might be able to take advantage of static membership, which can avoid triggering a rebalance altogether, if clients consistently ID themselves as the same member. This approach works even if the underlying container restarts, for example. (Both brokers and clients must be on Kafka release 2.3 or later.)

Instead of using a consumer group, you can directly assign partitions through the consumer client, which does not trigger rebalances. Of course, in that case, you must balance the partitions yourself and also make sure that all partitions are consumed. We do this in situations where we’re using Kafka to snapshot state. We keep snapshot messages manually associated with the partitions of the input topic that our service reads from.
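Balancing the partitions yourself can be as simple as a fixed rule that every instance evaluates independently. The sketch below shows one such rule, a modulo split. It assumes each instance knows its own zero-based index and the total instance count, which is not something the article specifies.

```java
import java.util.ArrayList;
import java.util.List;

final class ManualBalance {
    /**
     * Partitions that instance `index` (0-based) out of `instances` should claim.
     * Every partition goes to exactly one instance as long as all indexes
     * 0..instances-1 are running.
     */
    static List<Integer> partitionsFor(int index, int instances, int numPartitions) {
        List<Integer> mine = new ArrayList<>();
        for (int p = index; p < numPartitions; p += instances) mine.add(p);
        return mine;
    }
}
```

Each instance would turn its list into TopicPartition objects and pass them to the consumer's assign() method. If an instance is down, nothing picks up its partitions automatically, which is the trade-off described above.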

Kafka topic partitioning best practices

Following these best practices ensures that your Kafka topic partitioning strategy is well-designed, scalable, and aligned with your specific use case requirements. Regular monitoring and occasional adjustments will help maintain optimal performance as your system evolves.

Understand your data access patterns:

- Analyze how your data is produced and consumed.

- Consider the read-and-write patterns to design a partitioning strategy that aligns with your specific use case.

Choose an appropriate number of partitions:

- Avoid over-partitioning or under-partitioning.

- The number of partitions should match the desired level of parallelism and the expected workload.

- Consider the number of consumers, data volume, and the capacity of your Kafka cluster.

Use key-based partitioning when necessary:

- For scenarios where ordering or grouping of related messages is crucial, leverage key-based partitioning.

- Ensure that messages with the same key are consistently assigned to the same partition for strict ordering.

Consider data skew and load balancing:

- Be aware of potential data skew, where certain partitions receive more data than others.

- Use key-based partitioning or adjust partitioning logic to distribute the load evenly across partitions.

Plan for scalability:

- Design your partitioning strategy with scalability in mind.

- Ensure that adding more consumers or brokers can be achieved without significantly restructuring partitions.

Set an appropriate replication factor:

- Configure replication to ensure fault tolerance.

- Set a replication factor that suits your level of fault tolerance requirements.

- Consider the balance between replication and storage overhead.

Avoid frequent partition changes:

- Changing the number of partitions for a topic can be disruptive.

- Plan partitioning strategies during the initial topic creation and avoid frequent adjustments.

Monitor and tune as needed:

- Regularly monitor the performance of your Kafka cluster.

- Adjust partitioning strategies based on changes in data patterns, workloads, or cluster resources.

Choosing the best Kafka topic partitioning strategy

Your partitioning strategies will depend on the shape of your data and what type of processing your applications do. As you scale, you might need to adapt your strategies to handle new volume and shape of data. Consider what the resource bottlenecks are in your architecture, and spread load accordingly across your data pipelines. It might be CPU, database traffic, or disk space, but the principle is the same. Be efficient with your most limited/expensive resources.

To learn more tips for working with Kafka, see 20 Best Practices for Working with Kafka at Scale.


Amy Boyle is a principal software engineer at New Relic, working on the core data platform. Her interests include distributed systems, readable code, and puppies.



The Ultimate Comparison of Kafka Partition Strategies: Everything You Need to Know

Explore the best Kafka partition strategies for optimal data distribution and processing efficiency. Learn everything you need to know about Kafka partition strategy.

Apache Kafka has experienced exponential growth, with over 100,000 organizations leveraging its capabilities. The importance of partitioning in Kafka cannot be overstated, as it significantly impacts scalability and performance optimization. Partition strategies play a crucial role in data distribution, load balancing, and message ordering within a Kafka cluster. Different partitioning strategies offer distinct implications for handling data and ensuring fault tolerance. Additionally, the number of partitions directly correlates with higher throughput in a Kafka cluster. Understanding the significance of partitioning strategies is essential for optimizing data processing efficiency and ensuring seamless operations within distributed systems.

Key statistics:

- Over 100,000 organizations using Apache Kafka

- 41,000 Kafka meetup attendees

- 32,000 Stack Overflow questions

- 12,000 Jiras for Apache Kafka

- 31,000 open job listings requesting Kafka skills

"Partitioning is a versatile and crucial feature in Kafka, with several strategies available to optimize data distribution and processing efficiency."

Apache Kafka provides a total order over messages within a partition but not between different partitions in a topic. This emphasizes the critical role that partitioning plays in managing message flow within distributed systems.

Introduction to Kafka Partition Strategies

In the realm of Apache Kafka, partitioning plays a pivotal role in ensuring efficient data distribution and processing. Understanding the fundamental concepts and key strategies associated with partitioning is essential for optimizing the performance of Kafka clusters.

The Role of Partitioning in Kafka

Basics of Kafka Partitioning

Kafka partitions work by creating multiple logs from a single topic log and spreading them across one or more brokers. This division enables parallel processing and storage of data, thereby enhancing the scalability and fault tolerance of the system. Each partition operates as an independent unit, allowing for concurrent consumption and production of messages within a topic.

Benefits of Effective Partitioning

Effective partitioning offers several advantages, including improved throughput, enhanced parallelism, and optimized resource utilization. By distributing data across multiple partitions, Kafka can handle higher message volumes while maintaining low latency. Moreover, it facilitates load balancing by evenly distributing message processing tasks among brokers, thereby preventing bottlenecks and ensuring seamless operations.

Key Concepts in Partitioning

Partitions and brokers.

Partitions are distributed across brokers within a Kafka cluster to ensure fault tolerance and high availability. Each broker hosts one or more partitions, collectively managing the storage and replication of messages. This distributed architecture enhances resilience against node failures while enabling horizontal scalability.

The Impact of Partitioning on Performance

Partitioning significantly impacts the overall performance of a Kafka cluster. It allows for concurrent read and write operations on different partitions, thereby maximizing throughput. Additionally, it enables efficient resource allocation by distributing data processing tasks across multiple nodes, leading to improved system responsiveness.

Understanding Kafka Partitioning

Apache Kafka's ability to manage partitions efficiently is a critical aspect of its architecture. By understanding how Kafka manages partitions and the available partition strategies, organizations can optimize their data distribution and processing operations.

How Kafka Manages Partitions

Partition logs.

Kafka manages partitions by creating logs for each partition within a topic. These logs are then distributed across multiple brokers, allowing for parallel processing and storage of data. This approach enhances fault tolerance and scalability by enabling concurrent read and write operations on different partitions. As a result, it ensures that message processing tasks are evenly distributed across the cluster, preventing bottlenecks and optimizing resource utilization.

Message Ordering Within Partitions

One key feature of Kafka is its ability to provide total message ordering within a partition. This means that messages within a single partition are guaranteed to be in a specific order, facilitating sequential processing of data. However, it's important to note that this ordering does not extend across different partitions within a topic, as each partition operates independently.
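The ordering guarantee can be illustrated with a small in-memory model. This is only a sketch: the partition logs are plain Python lists, and the helper names `produce` and `consume_interleaved` are hypothetical, not part of any Kafka client. It shows that reading each partition front to back preserves per-partition order, while the interleaving across partitions is arbitrary.

```python
import random

def produce(num_records, num_partitions):
    """Append sequence numbers to partition logs in round-robin order."""
    logs = [[] for _ in range(num_partitions)]
    for seq in range(num_records):
        logs[seq % num_partitions].append(seq)
    return logs

def consume_interleaved(logs, rng):
    """Read every partition front to back, switching partitions at random."""
    cursors = [0] * len(logs)
    consumed = []
    while True:
        pending = [p for p, log in enumerate(logs) if cursors[p] < len(log)]
        if not pending:
            return consumed
        p = rng.choice(pending)
        consumed.append((p, logs[p][cursors[p]]))
        cursors[p] += 1

logs = produce(num_records=12, num_partitions=3)
consumed = consume_interleaved(logs, random.Random(7))

# Order within each partition is always the production order...
per_partition = {p: [seq for q, seq in consumed if q == p] for p in range(3)}
print(per_partition)
# ...but the order across partitions depends on how reads interleave.
print([seq for _, seq in consumed])
```

Whatever seed is used, `per_partition` comes out the same, whereas the combined sequence usually does not match the production order.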

Kafka Partition strategy

Partition selection by producers.

When producers send messages to Kafka topics, they have the option to set a key for each message. The key is then used to determine which partition the message will be pushed to through hashing. This allows producers to control how messages are distributed across partitions based on specific criteria or business logic.
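As a rough sketch of key-based partition selection, the function below hashes a serialized key and takes the result modulo the partition count. The name `partition_for` is hypothetical, and `zlib.crc32` is used only as a stable stand-in hash; it is an assumption for illustration and will not reproduce the partition numbers a real Kafka client computes.

```python
from zlib import crc32

def partition_for(key: bytes, num_partitions: int) -> int:
    """Map a serialized key to a partition index (crc32 is a stand-in hash)."""
    return crc32(key) % num_partitions

# The same key always maps to the same partition for a fixed partition count.
for key in (b"order-17", b"order-42", b"order-17"):
    print(key, "->", partition_for(key, num_partitions=6))
```

Because the result depends on `num_partitions`, adding partitions to a topic can change where a given key lands.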

Partitioning Strategies Overview

Developers have several partitioning strategies at their disposal when configuring Kafka topics. These strategies dictate how messages are assigned to partitions based on different criteria such as keys, round-robin distribution, or custom logic. Each strategy offers unique benefits and considerations depending on the specific use case and requirements.

Interviews:

- Kafka Developer: "The ability to select an appropriate partitioning strategy is crucial for optimizing performance and ensuring even distribution of message processing tasks."

The flexibility in choosing partitioning strategies empowers organizations to tailor their approach based on specific data distribution needs.

Default Partitioning Strategy

Apache Kafka offers various partitioning strategies to optimize data distribution and processing efficiency. The two most basic are Key-Based Partitioning, which the default partitioner applies to records that carry a key, and Round-Robin Partitioning, which the default partitioner applied to records without a key before Kafka 2.4. Each has its own mechanism, use cases, advantages, and limitations.

Round-Robin Partitioning

Mechanism and use cases.

Round-robin distribution appears in two places in Kafka. On the producer side, a round-robin partitioner cycles through a topic's partitions and sends each successive record to the next one. On the consumer side, the Round-Robin Assignor distributes the available partitions evenly across all members of a consumer group, so that each consumer receives an approximately equal share. Both promote load balancing, which is particularly beneficial where an even distribution of workload is essential for system stability and performance.

- Producer side: ensures an equal distribution of data across partitions, facilitating balanced consumption of messages.

- Consumer side: achieves an even distribution of workload among consumers within a consumer group.

- Limitation: the Round-Robin Assignor does not attempt to reduce partition movements when the number of consumers changes, potentially leading to inefficient rebalancing operations.
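The partition-movement limitation can be seen in a simplified model of round-robin assignment for a single topic. This is a sketch under stated assumptions: `round_robin_assign` and `owner` are hypothetical helpers, and the real assignor also handles multiple topics and differing subscriptions.

```python
def round_robin_assign(partitions, consumers):
    """Deal sorted partitions to sorted consumers one at a time."""
    members = sorted(consumers)
    assignment = {m: [] for m in members}
    for i, p in enumerate(sorted(partitions)):
        assignment[members[i % len(members)]].append(p)
    return assignment

def owner(assignment, partition):
    """Return the consumer that currently owns the given partition."""
    return next(m for m, ps in assignment.items() if partition in ps)

partitions = list(range(6))
before = round_robin_assign(partitions, ["c1", "c2", "c3"])
after = round_robin_assign(partitions, ["c1", "c2"])  # c3 has left the group

# Partitions that moved between consumers that stayed in the group.
moved = [
    p for p in partitions
    if owner(before, p) != "c3" and owner(before, p) != owner(after, p)
]
print(before)  # {'c1': [0, 3], 'c2': [1, 4], 'c3': [2, 5]}
print(after)   # {'c1': [0, 2, 4], 'c2': [1, 3, 5]}
print(moved)   # [3, 4]
```

Only partitions 2 and 5 needed a new owner, yet partitions 3 and 4 also changed hands between the surviving consumers. Avoiding that kind of churn is the motivation behind sticky assignment strategies.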

Key-Based Partitioning

How it works.

In contrast to round-robin partitioning, Key-Based Partitioning involves assigning messages to partitions based on specific keys provided by producers. This approach allows producers to control how messages are distributed across partitions by setting keys that align with their business logic or specific criteria. By leveraging key-based partitioning, organizations can tailor their data distribution strategies to align with their unique requirements and optimize message processing efficiency.

- Enables customized data distribution based on specific criteria or business logic defined by producers.

- Offers flexibility in optimizing message routing and ensuring efficient resource allocation within a Kafka cluster.

Limitations

- May lead to uneven distributions if keys are not uniformly distributed or if certain keys dominate message production.
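A quick way to see this limitation is to count records per partition when one key dominates. The sketch below is illustrative only: the tenant key names are made up, `partition_counts` is a hypothetical helper, and `zlib.crc32` again stands in for the real hash.

```python
from collections import Counter
from zlib import crc32

def partition_counts(keys, num_partitions):
    """Count how many records land on each partition under hash partitioning."""
    return Counter(crc32(k) % num_partitions for k in keys)

# One hot key produces 900 records; 100 other keys produce one record each.
keys = [b"tenant-big"] * 900 + [f"tenant-{i}".encode() for i in range(100)]
counts = partition_counts(keys, num_partitions=4)
hot_partition = crc32(b"tenant-big") % 4

print(dict(counts))
print("hot partition:", hot_partition, "holds", counts[hot_partition], "records")
```

Whichever partition the hot key hashes to receives at least 90% of the traffic, regardless of how many partitions the topic has.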

The default partitioning strategy in Apache Kafka provides developers with essential tools for managing data distribution and optimizing system performance. Understanding the mechanisms, use cases, advantages, and limitations of round-robin and key-based partitioning is crucial for selecting the most suitable strategy based on specific use cases and requirements.

Sticky Partitioning Strategy

Apache Kafka version 2.4 introduced a new partitioning strategy known as "Sticky Partitioning," which has garnered significant attention for its impact on message processing efficiency and system performance. This innovative approach aims to address specific challenges related to data distribution and latency reduction, offering distinct benefits and potential drawbacks for organizations leveraging Kafka clusters.

Introduction to Sticky Partitioning

The concept of Sticky Partitioning applies to records that have no key. Instead of spreading such records across all partitions one at a time, the producer picks one partition and keeps sending keyless records to it until the current batch is complete, which produces larger batches and reduces latency. In Kafka 2.4, the default partitioner adopted this behavior for keyless records, signaling its role in enhancing producer performance.

The Concept of Sticky Partitioning

Sticky Partitioning temporarily associates a producer with a single partition: all keyless records go to that partition until its batch is full or linger.ms has elapsed, after which the producer chooses a new partition and sticks to it in turn. Records with a key are unaffected and are still routed by hashing the key. Over a longer period, keyless records are spread roughly evenly across all partitions while each request carries a larger batch.

Sticky Partitioning in Kafka 2.4

In Kafka 2.4, the implementation of Sticky Partitioning resulted in a notable decrease in latency when producing messages. This outcome underscored the effectiveness of this strategy in optimizing message routing within distributed systems, contributing to enhanced throughput and reduced processing delays.

Benefits and Drawbacks of Sticky Partitioning

Performance improvements.

The adoption of Sticky Partitioning offers several compelling performance improvements for organizations utilizing Apache Kafka:

- Larger Batches: By sending consecutive keyless records to the same partition, the producer fills batches faster and sends fewer, larger requests, reducing per-record overhead.

- Latency Reduction: The outcomes observed in Kafka 2.4 highlighted a significant decrease in message production latency, indicating that Sticky Partitioning contributes to faster data processing and delivery.

- Enhanced Resource Utilization: With fewer batches and requests to process, producers and brokers can spend less CPU per record, leading to improved overall system performance.

Potential Issues

While Sticky Partitioning presents notable advantages, it also introduces potential considerations that organizations should be mindful of:

- Short-Term Imbalance: At any given moment, all keyless records from a producer go to a single partition, so the load is even across partitions only when averaged over time, not within a short window.

- Dynamic Workloads : Adapting to dynamic workloads or changing producer patterns may require careful monitoring and adjustments to maintain optimal partition assignments.

Choosing the Right Partition Strategy

When it comes to Apache Kafka, choosing the appropriate partitioning strategy is crucial for optimizing performance and scalability. The decision largely depends on the nuances of the use case, data volume, and specific requirements. Each strategy has implications for data distribution, message ordering, and load balancing, making it essential to carefully consider the best approach for a given scenario.

Factors to Consider

Data volume and velocity.

The volume and velocity of data being processed play a significant role in determining the most suitable partitioning strategy. Organizations should assess their expected data volume and processing speed to strike a balance between over-partitioning and under-partitioning. Over-partitioning can lead to unnecessary overhead, while under-partitioning may impact system performance.

Message Ordering Requirements

Selecting the right partition strategy also hinges on the importance of message ordering. Kafka guarantees order only within a partition, so if related messages must be processed in order, give them the same key so that they land in the same partition. If message order is not a critical requirement, round-robin partitioning could suffice.

Kafka Partition Strategy

Custom partitioning strategies.

Developers have the flexibility to implement custom partitioning strategies tailored to their specific use cases. This customization allows organizations to align their partitioning approach with unique business logic or criteria. By leveraging custom partitioning strategies, they can optimize data distribution and processing efficiency based on their distinct requirements.

Best Practices for Partitioning

In addition to throughput considerations, several best practices should be taken into account when selecting a partition strategy:

- Scalability : Partition strategies should enable scalable data processing across a cluster of machines while ensuring efficient information distribution.

- Key Hashing : The default partitioner used by Kafka producers employs key hashing to determine how records are assigned to particular partitions. This method maps keys to specific partitions, facilitating effective data distribution.

Choosing an optimal partition strategy involves careful evaluation of data volume, message ordering needs, application requirements, and system scalability. Organizations leveraging Kafka clusters should weigh the benefits and limitations of each strategy, including its implications for load balancing and fault tolerance, while adhering to best practices for efficient information distribution. Ultimately, the ability to tailor partitioning strategies empowers organizations to optimize performance and ensure seamless data processing operations within distributed systems.

RisingWave Marketing Team


Apache Kafka Producer Improvements with the Sticky Partitioner


- Justine Olshan Senior Software Engineer II

The amount of time it takes for a message to move through a system plays a big role in the performance of distributed systems like Apache Kafka®. In Kafka, the latency of the producer is often defined as the time it takes for a message produced by the client to be acknowledged by Kafka. As the old saying goes, time is money, and it is always best to reduce latency when possible in order for the system to run faster. When the producer is able to send out its messages faster, the whole system benefits.

Each Kafka topic contains one or more partitions. When a Kafka producer sends a record to a topic, it needs to decide which partition to send it to. If we send several records to the same partition at around the same time, they can be sent as a batch. Processing each batch requires a bit of overhead, with each of the records inside the batch contributing to that cost. Records in smaller batches have a higher effective cost per record. Generally, smaller batches lead to more requests and queuing, resulting in higher latency.

A batch is completed either when it reaches a certain size (batch.size) or after a period of time (linger.ms) is up. Both batch.size and linger.ms are configured in the producer. The default for batch.size is 16,384 bytes, and the default of linger.ms is 0 milliseconds. Once batch.size is reached or at least linger.ms time has passed, the system will send the batch as soon as it is able.
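The completion rule can be written as a single predicate. This is a minimal sketch of the rule as described above, not the producer's actual implementation; `batch_is_ready` is a hypothetical name, and the defaults mirror the values quoted in the text.

```python
def batch_is_ready(batch_bytes, age_ms, batch_size=16_384, linger_ms=0):
    """A batch is complete once it is full or has waited at least linger_ms."""
    return batch_bytes >= batch_size or age_ms >= linger_ms

# With the default linger_ms of 0, even a tiny, brand-new batch is ready to send.
print(batch_is_ready(batch_bytes=200, age_ms=0))                 # True
# With linger_ms=10, a small batch waits...
print(batch_is_ready(batch_bytes=200, age_ms=3, linger_ms=10))   # False
# ...unless it fills up first, or the linger time elapses.
print(batch_is_ready(batch_bytes=16_384, age_ms=3, linger_ms=10))  # True
print(batch_is_ready(batch_bytes=200, age_ms=10, linger_ms=10))    # True
```

"Ready" here only means eligible to be sent; as the text notes, the batch goes out as soon as the system is able to send it.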

At first glance, it might seem like setting linger.ms to 0 would only lead to the production of single-record batches. However, this is usually not the case. Even when linger.ms is 0, the producer will group records into batches when they are produced to the same partition around the same time. This is because the system needs a bit of time to handle each request, and batches form when the system cannot attend to them all right away.

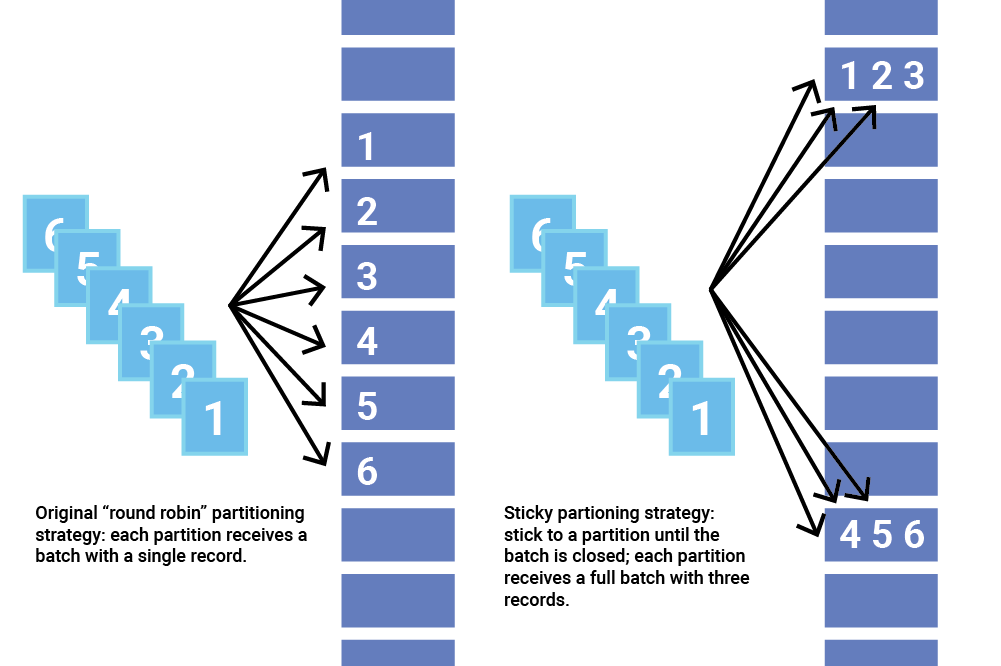

Part of what determines how batches form is the partitioning strategy; if records are not sent to the same partition, they cannot form a batch together. Fortunately, Kafka allows users to select a partitioning strategy by configuring a Partitioner class. The Partitioner assigns the partition for each record. The default behavior is to hash the key of a record to get the partition, but some records may have a key that is null. In this case, the old partitioning strategy before Apache Kafka 2.4 would be to cycle through the topic’s partitions and send a record to each one. Unfortunately, this method does not batch very well and may in fact add latency.

Due to the potential for increased latency with small batches, the original strategy for partitioning records with null keys can be inefficient. This changes with Apache Kafka version 2.4, which introduces sticky partitioning, a new strategy for assigning records to partitions with proven lower latency.

Sticky partitioning strategy

The sticky partitioner addresses the problem of spreading out records without keys into smaller batches by picking a single partition to send all non-keyed records. Once the batch at that partition is filled or otherwise completed, the sticky partitioner randomly chooses and “sticks” to a new partition. That way, over a larger period of time, records are about evenly distributed among all the partitions while getting the added benefit of larger batch sizes.
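The batching effect can be sketched with a toy model. Everything here is an assumption made for illustration: `count_batches`, `batch_capacity`, and `drain_every` are hypothetical, batches are counted in records rather than bytes, and the sender is modeled as draining all open batches after every few records. It is not how the real producer is implemented, but it shows why sticking to one partition yields fewer, larger batches.

```python
import random

def count_batches(num_records, choose, batch_capacity=16, drain_every=8):
    """Count batches sent when keyless records are routed by choose()."""
    open_batches = {}  # partition -> number of records in its open batch
    sent = 0
    for i in range(num_records):
        p = choose(i, open_batches)
        open_batches[p] = open_batches.get(p, 0) + 1
        if open_batches[p] == batch_capacity:  # batch filled: send it
            del open_batches[p]
            sent += 1
        if (i + 1) % drain_every == 0:  # sender wakes up: send all open batches
            sent += len(open_batches)
            open_batches.clear()
    return sent + len(open_batches)

def round_robin(num_partitions):
    """Cycle through partitions, one record each (pre-2.4 null-key behavior)."""
    return lambda i, open_batches: i % num_partitions

def sticky(num_partitions, rng):
    """Stay on one partition until its batch is sent, then pick a new one."""
    state = {"current": None}
    def choose(i, open_batches):
        if state["current"] not in open_batches:
            state["current"] = rng.randrange(num_partitions)
        return state["current"]
    return choose

rr_batches = count_batches(1600, round_robin(16))
sticky_batches = count_batches(1600, sticky(16, random.Random(0)))
print("round-robin batches:", rr_batches)   # 1600, one record per batch
print("sticky batches:", sticky_batches)    # 200, eight records per batch
```

In this model the same 1,600 records travel in one-eighth as many batches under the sticky strategy, which is the mechanism behind the lower per-record cost.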

In order to change the sticky partition, Apache Kafka 2.4 also adds a new method called onNewBatch to the partitioner interface for use right before a new batch is created, which is the perfect time to change the sticky partition. DefaultPartitioner implements this feature.

Basic tests: Producer latency

It’s important to quantify the impact of our performance improvements. Apache Kafka provides a test framework called Trogdor that can run different benchmarks, including one that measures producer latency. I used a test harness called Castle to run ProduceBench tests using a modified version of small_aws_produce.conf. These tests used three brokers and 1–3 producers and ran on Amazon Web Services (AWS) m3.xlarge instances with SSD.

Most of the tests included ran with the specifications below, and you can modify the Castle specification by replacing the default task specification with this example task spec. Some tests ran with slightly different settings, and those are mentioned below.

In order to get the best comparison, it is important to set the fields useConfiguredPartitioner and skipFlush in taskSpecs to true. This ensures that the partitions are assigned with the DefaultPartitioner and that batches are sent not through flushing but through filled batches or linger.ms triggering. Of course, you should set the keyGenerator to only generate null keys.

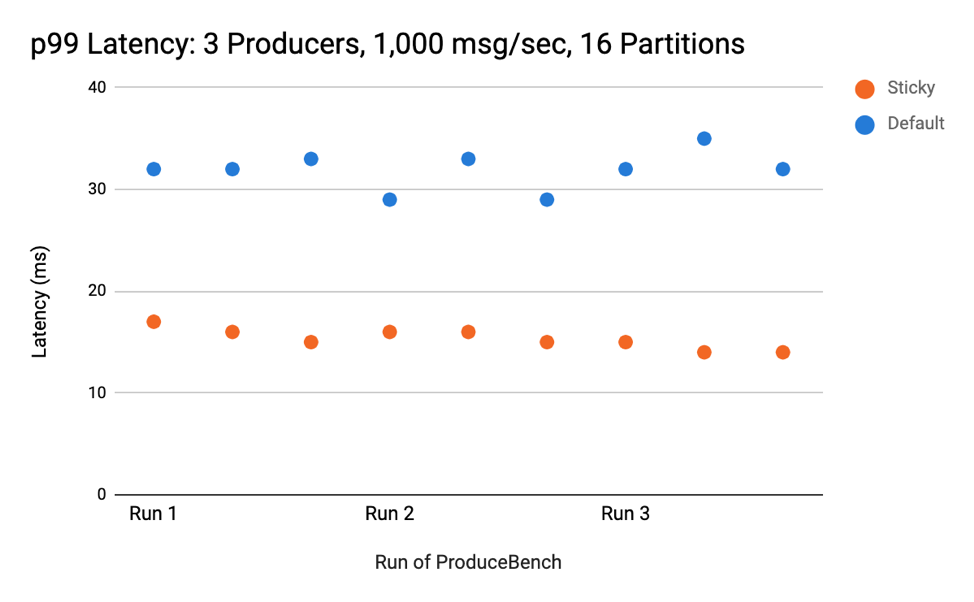

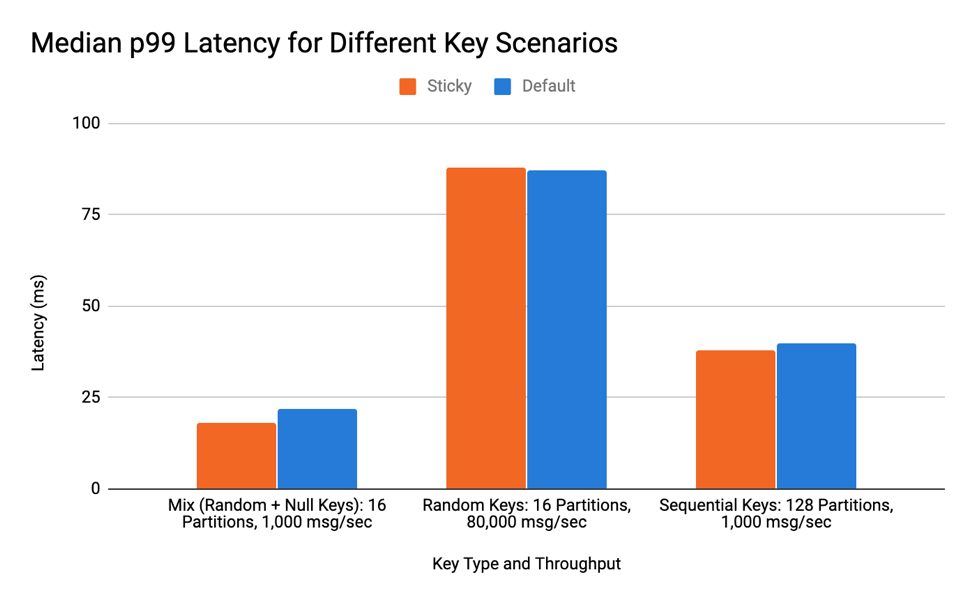

In almost all tests comparing the original DefaultPartitioner to the new and improved sticky version, the latter (sticky) had equal or less latency than the original DefaultPartitioner (default). When comparing the 99th percentile (p99) latency of a cluster with three producers that produced 1,000 messages per second to topics with 16 partitions, the sticky partitioning strategy had around half the latency of the default strategy. Here are the results from three runs:

The decrease in latency became more apparent as partitions increased, in line with the idea that a few larger batches result in lower latency than many small batches. The difference is noticeable with as few as 16 partitions.

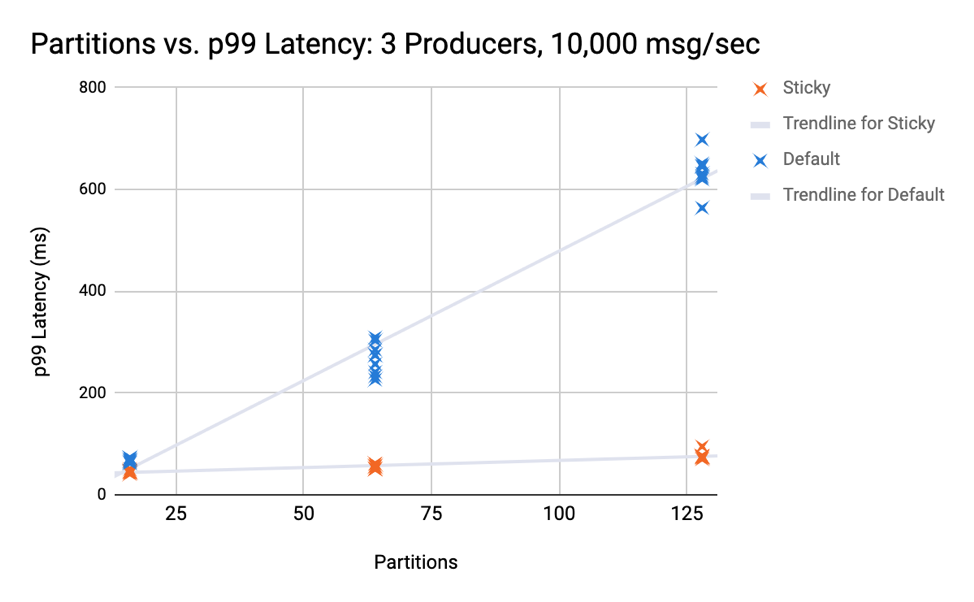

The next set of tests kept three producers producing 10,000 messages per second constant but increased the number of partitions. The graph below shows the results for 16, 64, and 128 partitions, indicating that the latency from the default partitioning strategy increases at a much faster rate. Even in the case with 16 partitions, the average p99 latency of the default partitioning strategy is 1.5x that of the sticky partitioning strategy.

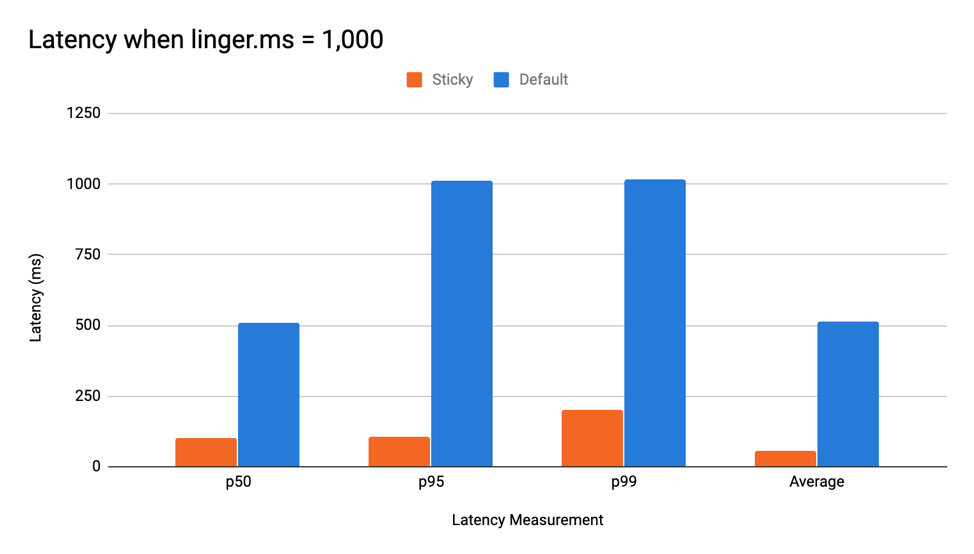

Linger latency tests and performance with different keys

As mentioned earlier, waiting for linger.ms can inject latency into the system. The sticky partitioner aims to prevent this by sending all records to one batch and potentially filling it earlier. Using the sticky partitioner with linger.ms > 0 in a relatively low-throughput scenario can mean incredible reductions in latency. When running with one producer that sends 1,000 messages per second and a linger.ms of 1,000, the p99 latency of the default partitioning strategy was five times larger. The graph below shows the results of the ProduceBench test.

The sticky partitioner helps improve the client’s performance when producing keyless messages. But how does it perform when the producer generates a mix of keyless and keyed messages? A test with randomly generated keys and a mixture of keys and no keys revealed that there is no significant difference in latency.

In this scenario, I examined a mixture of random and null keys. This sees slightly better batching, but since keyed values ignore the sticky partitioner, the benefit is not very significant. The graph below shows the median p99 latency of three runs. Over the course of testing, the latency did not drastically differ, so the median provides an accurate representation of a “typical” run.

The second scenario tested was random keys in a high-throughput situation. Since implementing the sticky partitioner changed the code slightly, it was important to see that running through the bit of extra logic would not affect the latency to produce. Since no sticky behavior or batching occurs here, it makes sense that the latency is roughly the same as the default. The median result of the random key test is shown in the graph below.

Finally, I tested the scenario that I thought would be worst for the sticky partition implementation—sequential keys with a high number of partitions. Since the extra bit of logic occurs near the time new batches are created, and this scenario creates a batch on virtually every record, checking that this did not cause an increase in latency was crucial. As the graph below shows, there was no significant difference.

CPU utilization for producer bench tasks

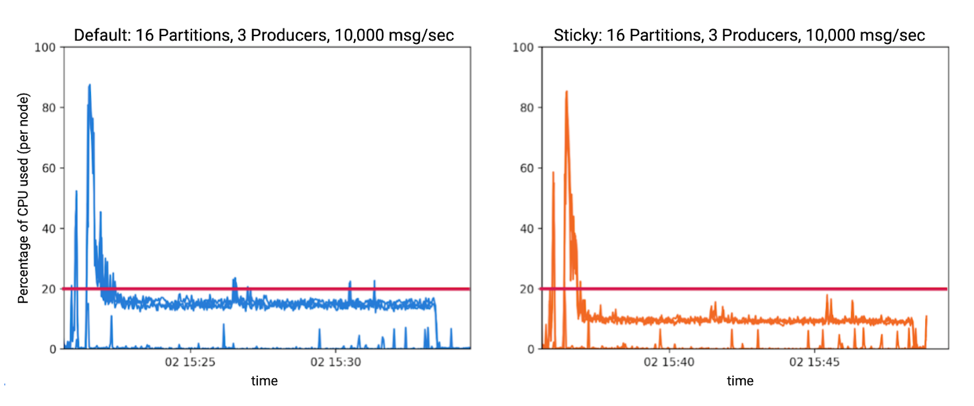

When performing these benchmarks, one thing to note is that the sticky partitioner decreased CPU usage in many cases.

For example, when running three producers producing 10,000 messages per second to 16 partitions, a noticeable drop in CPU usage was observed. Each line on the graphs below represents the percentage of CPU used by a node. Each node was both a producer and a broker, and the lines for the nodes are superimposed. This decrease in CPU was seen in tests with more partitions and with lower throughput as well.

Sticking it all together

The main goal of the sticky partitioner is to increase the number of records in each batch in order to decrease the total number of batches and eliminate excess queuing. When there are fewer batches with more records in each batch, the cost per record is lower and the same number of records can be sent more quickly using the sticky partitioning strategy. The data shows that this strategy does decrease latency in cases where null keys are used, and the effect becomes more pronounced when the number of partitions increases. In addition, CPU usage is often decreased when using the sticky partitioning strategy. By sticking to a partition and sending fewer but bigger batches, the producer sees great performance improvements.

And the best part is: this producer is simply built into Apache Kafka 2.4!

If you’d like, you can also download the Confluent Platform to get started with the leading distribution of Apache Kafka.

Justine graduated from Carnegie Mellon University in 2020 with a degree in computer science. During summer 2019, she was a software engineering intern at Confluent where she worked on improving the Apache Kafka® producer. After graduating, she returned full time to Confluent and continues to work on improving Kafka through various KIPs including KIP-516 which introduced topic IDs to Kafka and KIP-890 which strengthened the transactional protocol. She became an Apache Kafka committer in 2022 and PMC member in 2023.


Kafka Topic Partitions Walkthrough via Python


A partition is the unit of parallelism in a Kafka cluster. Partitions are replicated across the brokers of a Kafka cluster for fault tolerance and throughput. This article shows you how to work with Kafka partitions using Python as the programming language.

Package kafka-python will be used in the following sections.

Function KafkaConsumer.partitions_for_topic (or KafkaProducer.partitions_for) can be used to retrieve partitions of a topic.

In my Kafka cluster, there is only one partition for this topic with partition number 0.

The previous method returns a set of partition numbers and TopicPartition can be constructed using partition number.

For example, the following code snippet creates a TopicPartition object using topic name and partition number:

Example output:

To retrieve the partitions currently assigned to a consumer, the function assignment can be used.

This function returns a set of TopicPartition instances:

KafkaAdminClient class can be used to manage partitions for a topic.

The following is the function signature:

The above code will increase topic 'kontext-kafka' partitions to 3.

Producer clients can use the send function to write messages to a Kafka cluster. The signature of this function looks like the following:

As you can see, partition can be specified when calling this function.

We can also read from a specific Kafka topic partition in consumer.

Example code:

A Kafka partitioner decides which partition of a topic a message goes to. The Kafka Java library ships partitioners such as RoundRobinPartitioner and UniformStickyPartitioner. The Python library we are using provides a default partitioner, DefaultPartitioner. This default partitioner hashes keys with murmur2, a Python port of the Java method org.apache.kafka.common.utils.Utils.murmur2.

We can also implement a customized partitioner.

Let's create a user function to partition.

This partitioner uses the hash function instead of murmur2 to calculate the partition.
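The original code for this partitioner is not reproduced here, so the following is only a sketch of what such a function might look like. It assumes the kafka-python convention that a partitioner is a callable receiving the serialized key, the list of all partitions, and the list of available partitions. The name `crc32_partitioner` is hypothetical, and `zlib.crc32` is swapped in for Python's built-in `hash`, because `hash` on bytes is salted per process by default and would not give the same partition across runs.

```python
import random
from zlib import crc32

def crc32_partitioner(key_bytes, all_partitions, available_partitions):
    """Choose a partition for a serialized key (illustrative sketch only)."""
    if key_bytes is None:
        # No key: pick any available partition at random.
        return random.choice(available_partitions or all_partitions)
    # Keyed record: a stable hash keeps the same key on the same partition.
    return all_partitions[crc32(key_bytes) % len(all_partitions)]

partitions = [0, 1, 2]
for key in (b"key-a", b"key-b", b"key-a"):
    print(key, "->", crc32_partitioner(key, partitions, partitions))
```

A function like this would be supplied through the partitioner parameter when constructing the producer client.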

The following are some examples of message keys and the corresponding partitions for topic 'kontext-kafka'.

Produce message using custom partitioner

To produce messages using the custom partitioner, we need to ensure each message has a key; otherwise the partition will be decided randomly. The customized partitioner can be passed through the parameter partitioner when constructing the producer client.

All messages were written into partition 2.

