Imperial College London

Interview protocol design

On this page you will find our recommendations for creating an interview protocol for both structured and semi-structured interviews. Your protocol can be viewed as a guide for the interview: what to say at the beginning to introduce yourself and the topic, how to collect participant consent, the interview questions themselves, and what to say when you end the interview. These tips have been adapted from Jacob and Furgerson’s (2012) guide to writing interview protocols and conducting interviews for those new to qualitative research. Your protocol may have more questions if you are planning a structured interview. If you are planning a semi-structured interview, it may have fewer, more open-ended questions, leaving more time for participants to elaborate on their responses and for you to ask follow-up questions.

Use a script to open and close the interview

This will allow you to share all of the relevant information about your study and critical details about informed consent before you begin the interview. It will also give you a space to close the interview and offer the participant an opportunity to share any additional thoughts that have not yet been discussed.

Collect informed consent

The most common (and encouraged) means of gaining informed consent is to give the participant a participant information sheet and an informed consent form to read through and sign before you begin the interview. You can find templates for the participant information sheet and the informed consent form on the Imperial College London Education Ethics Review Process (EERP) webpage. Other resources for the EERP process can also be found there.

Start with the basics

To help build rapport and a comfortable space for the participant, start with questions that ask for some basic background information. This could include their name, their course year, how they are doing, whether anything interesting is happening for them at the moment, and their likes and interests (although be careful not to come across as inauthentic). This will help both you and the participant to have an open conversation throughout the interview.

Create open-ended questions

Open-ended questions enable more time and space for the participant to open up and share more detail about their experiences. Using phrases like “Tell me about…” rather than “Did you ever experience X?” will be less likely to elicit only “yes” or “no” answers, which do not provide rich data. If a participant does give a “yes” or “no” answer, but you would like to know more, you can ask, “Can you tell me why?” or “Could you please elaborate on that answer a bit more?” For example, if you are interviewing a student about their sense of belonging at Imperial, you could ask, “Can you tell me about a time when you felt a real sense that you belonged at Imperial College London?”

Ensure your questions are informed by existing research

Before creating your interview questions, conduct a thorough review of the literature on the topic you plan to investigate through interviews. For example, research on “students’ sense of belonging” has emphasised the importance of students feeling respected by other members of the university. It would therefore be a good idea to include a question about “respect” if you are interested in your students’ sense of belonging at Imperial or within their departments and study areas (e.g. the classroom). See our sense of belonging interview protocol for an example.

Begin with questions that are easier to answer, then move to more difficult or abstract questions

Be aware that even if you have explained your topic to the participant, you should not assume that they understand it the same way you do. Resist the temptation to ask your research questions directly, particularly at the beginning of the interview, as these will often be too conceptual and abstract for participants to answer easily. Asking abstract questions too early can alienate your participant, whereas asking concrete questions that they can answer easily will build rapport and trust more quickly. Start by asking about concrete experiences, preferably ones that are very recent or ongoing. For example, if you are interested in students’ sense of belonging, do not start by asking whether a student “belongs” or how they perceive their “belonging.” Instead, try asking how they have felt in recent modules, which gives them the opportunity to raise any positive or negative experiences themselves. Later, you can ask questions that specifically address concepts related to sense of belonging, for example whether they always feel “respected” (to follow on from our earlier example). Then, at the end of the interview, you could ask your participant to reflect more directly and generally on your topic; for example, you might end by asking them to summarise the extent to which they feel they “belong” and what the main factors are. This advice is particularly important for topics on which it may be difficult to form an opinion, such as those that require students to remember things from the distant past or those that are controversial.

Use prompts

If you are asking open-ended questions, the intention is that the participant will use them as an opportunity to provide rich qualitative detail about their experiences and perceptions. However, participants sometimes need prompts to get them going. Try to anticipate what prompts you could give to help someone answer each of your open-ended questions (Jacob & Furgerson, 2012). For example, if you are investigating sense of belonging and the participant is struggling to respond to the question “What could someone see about you that would show them that you felt like you belonged?”, you might prompt them to think about their clothes or accessories (for example, whether they wear or carry anything with the Imperial College London logo) or their activities (for example, membership of student groups), and what meaning they attach to these.

Be prepared to revise your protocol during and after the interview

During the interview, additional questions may occur to you, or you may need to re-order the questions depending on the participant’s responses and the direction the interview is taking. This is fine, as it probably means the interview is flowing like a natural conversation. You might even find that the new order should be adopted for future interviews, in which case you can adjust the protocol accordingly.

Be mindful of how much time the interview will take

When designing the protocol, keep in mind that six to ten well-written questions may make for an interview lasting approximately one hour. Consider who you are interviewing, and remember that you are asking people to share their experiences and their time with you, so be mindful of how long you expect the interview to last.

Pilot test your questions with a colleague

Pilot testing your interview protocol will help you to assess whether your interview questions make sense. Pilot testing gives you the chance to familiarise yourself with the order and flow of the questions out loud, which will help you to feel more comfortable when you begin conducting the interviews for your data collection.

Jacob, S. A., & Furgerson, S. P. (2012). Writing Interview Protocols and Conducting Interviews: Tips for Students New to the Field of Qualitative Research. The Qualitative Report, 17(2), 1–10.

Welch, C., & Piekkari, R. (2006). Crossing Language Boundaries. Management International Review, 46, 417–437. Retrieved from https://link.springer.com/content/pdf/10.1007%2Fs11575-006-0099-1.pdf

5 - Designing the interview guide

Published online by Cambridge University Press: 05 October 2015

This chapter shows you how to prepare a comprehensive interview guide. You need to prepare such a guide before you start interviewing. The interview guide serves many purposes. Most important, it is a memory aid to ensure that the interviewer covers every topic and obtains the necessary detail about the topic. For this reason, the interview guide should contain all the interview items in the order that you have decided. The exact wording of the items should be given, although the interviewer may sometimes depart from this wording. Interviews often contain some questions that are sensitive or potentially offensive. For such questions, it is vital to work out the best wording of the question ahead of time and to have it available in the interview.

To study people's meaning-making, researchers must create a situation that enables people to tell about their experiences and that also foregrounds each person's particular way of making sense of those experiences. Put another way, the interview situation must encourage participants to tell about their experiences in their own words and in their own way without being constrained by categories or classifications imposed by the interviewer. The type of interview that you will learn about here has a conversational and relaxed tone. However, the interview is far from extemporaneous. The interviewer works from the interview guide that has been carefully prepared ahead of time. It contains a detailed and specific list of items that concern topics that will shed light on the researchable questions.

Often researchers are in a hurry to get into the field and gather their material. It may seem obvious to them what questions to ask participants. Seasoned interviewers may feel ready to approach interviewing with nothing but a laundry list of topics. But it is always wise to move slowly at this point. Time spent designing and refining interview items – polishing the wording of the items, weighing language choices, considering the best sequence of topics, and then pretesting and revising the interview guide – will always pay off in producing better interviews. Moreover, it will also provide you with a deep knowledge of the elements of the interview and a clear idea of the intent behind each of the items. This can help you to keep the interviews on track.

- Designing the interview guide

- Eva Magnusson, Umeå Universitet, Sweden; Jeanne Marecek, Swarthmore College, Pennsylvania

- Book: Doing Interview-based Qualitative Research

- Online publication: 05 October 2015

- Chapter DOI: https://doi.org/10.1017/CBO9781107449893.005

Chapter 11. Interviewing

Introduction

Interviewing people is at the heart of qualitative research. It is not merely a way to collect data but an intrinsically rewarding activity—an interaction between two people that holds the potential for greater understanding and interpersonal development. Unlike many of our daily interactions with others that are fairly shallow and mundane, sitting down with a person for an hour or two and really listening to what they have to say is a profound and deep enterprise, one that can provide not only “data” for you, the interviewer, but also self-understanding and a feeling of being heard for the interviewee. I always approach interviewing with a deep appreciation for the opportunity it gives me to understand how other people experience the world. That said, there is not one kind of interview but many, and some of these are shallower than others. This chapter will provide you with an overview of interview techniques but with a special focus on the in-depth semistructured interview guide approach, which is the approach most widely used in social science research.

An interview can be variously defined as “a conversation with a purpose” (Lune and Berg 2018) or as an attempt to understand the world from the point of view of the person being interviewed: “to unfold the meaning of peoples’ experiences, to uncover their lived world prior to scientific explanations” (Kvale 2007). It is a form of active listening in which the interviewer steers the conversation to subjects and topics of interest to their research but also leaves enough space for those interviewed to say surprising things. Achieving that balance is tricky, which is why most practitioners believe interviewing is both an art and a science. In my experience as a teacher, some students are “natural” interviewers (often they are introverts), but anyone can learn to conduct interviews, and everyone, even those of us who have been doing this for years, can improve their interviewing skills. This might be a good time to highlight that the interview is a joint product of interviewer and interviewee and that this product is only as good as the rapport established between the two participants. Active listening is the key to establishing this necessary rapport.

Patton (2002) makes the argument that we use interviews because there are certain things that are not observable. In particular, “we cannot observe feelings, thoughts, and intentions. We cannot observe behaviors that took place at some previous point in time. We cannot observe situations that preclude the presence of an observer. We cannot observe how people have organized the world and the meanings they attach to what goes on in the world. We have to ask people questions about those things” (341).

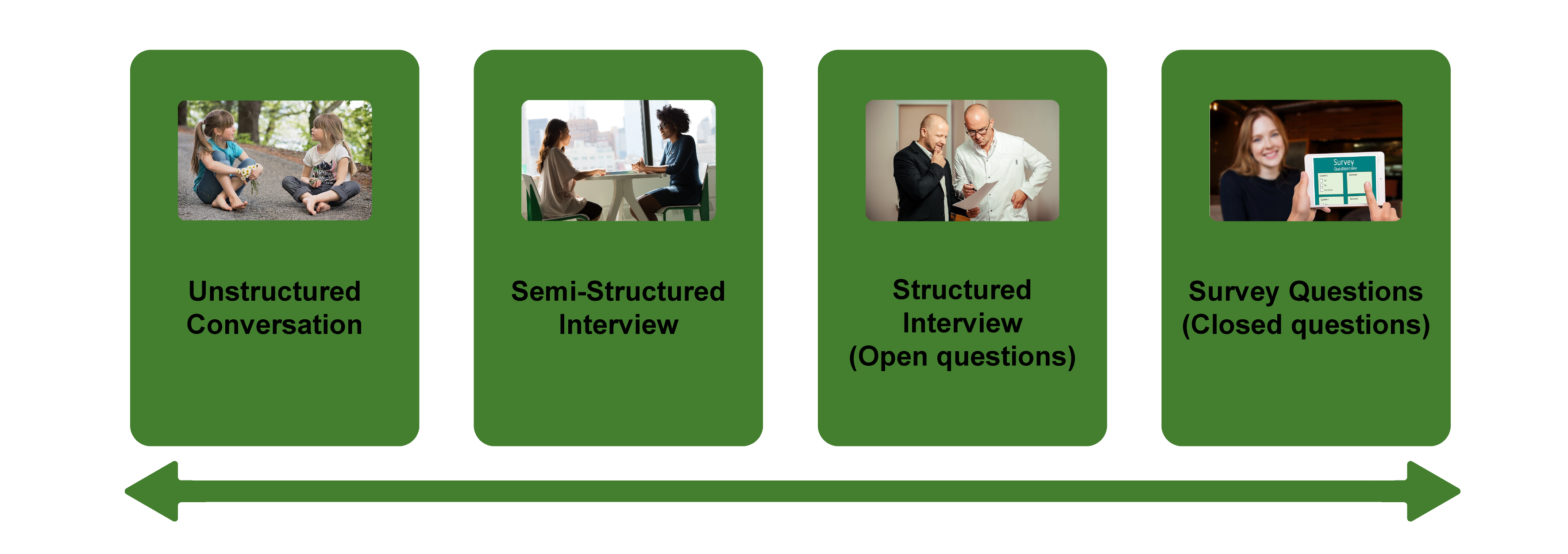

Types of Interviews

There are several distinct types of interviews. Imagine a continuum (figure 11.1). On one side are unstructured conversations, the kind you have with your friends. No one is in control of those conversations, and what you talk about is often random: whatever pops into your head. There is no secret, underlying purpose to your talking; if anything, the purpose is to talk to and engage with each other, and the words you use and the things you talk about are a little beside the point. An unstructured interview is a little like this informal conversation, except that one of the parties to the conversation (you, the researcher) does have an underlying purpose, and that is to understand the other person. You are not friends speaking for no purpose, but it might feel just as unstructured to the “interviewee” in this scenario. That is one side of the continuum. On the other side are fully structured and standardized survey-type questions asked face-to-face. Here it is very clear who is asking the questions and who is answering them. This doesn’t feel like a conversation at all! A lot of people new to interviewing have this (erroneously!) in mind when they think about interviews as data collection. Somewhere in the middle of these two extreme cases is the “semistructured” interview, in which the researcher uses an “interview guide” to gently move the conversation to certain topics and issues. This is the primary form of interviewing for qualitative social scientists and will be what I refer to as interviewing for the rest of this chapter, unless otherwise specified.

Informal (unstructured conversations). This is the most “open-ended” approach to interviewing. It is particularly useful in conjunction with observational methods (see chapters 13 and 14). There are no predetermined questions. Each interview will be different. Imagine you are researching the Oregon Country Fair, an annual event in Veneta, Oregon, that includes live music, artisan craft booths, face painting, and a lot of people walking through forest paths. It’s unlikely that you will be able to get a person to sit down with you and talk intensely about a set of questions for an hour and a half. But you might be able to sidle up to several people and engage with them about their experiences at the fair. You might have a general interest in what attracts people to these events, so you could start a conversation by asking strangers why they are here or why they come back every year. That’s it. Then you have a conversation that may lead you anywhere. Maybe one person tells a long story about how their parents brought them here when they were a kid. A second person talks about how this is better than Burning Man. A third person shares their favorite traveling band. And yet another enthuses about the public library in the woods. During your conversations, you also talk about a lot of other things—the weather, the utilikilts for sale, the fact that a favorite food booth has disappeared. It’s all good. You may not be able to record these conversations. Instead, you might jot down notes on the spot and then, when you have the time, write down as much as you can remember about the conversations in long fieldnotes. Later, you will have to sit down with these fieldnotes and try to make sense of all the information (see chapters 18 and 19).

Interview guide (semistructured interview). This is the primary type employed by social science qualitative researchers. The researcher creates an “interview guide” in advance, which she uses in every interview. In theory, every person interviewed is asked the same questions. In practice, every person interviewed is asked about mostly the same topics but not always the same questions, as the whole point of a “guide” is that it guides the direction of the conversation but does not command it. The guide is typically between five and ten questions or question areas, sometimes with suggested follow-ups or prompts. For example, one question might be “What was it like growing up in Eastern Oregon?” with prompts such as “Did you live in a rural area? What kind of high school did you attend?” to help the conversation develop. These interviews generally take place in a quiet place (not a busy walkway during a festival) and are recorded. The recordings are transcribed, and those transcriptions then become the “data” that is analyzed (see chapters 18 and 19). The conventional length of one of these interviews is between one and two hours, optimally ninety minutes. Less than one hour doesn’t allow for much development of questions and thoughts, and two hours (or more) is a lot of time to ask someone to sit still and answer questions. If you have a lot of ground to cover, and the person is willing, I highly recommend two separate interview sessions, with the second session slightly shorter than the first (e.g., ninety minutes the first day, sixty minutes the second). There are many good reasons for this, but the most compelling is that it allows you to listen to the first day’s recording, catch anything interesting you might have missed in the moment, and develop follow-up questions that probe further. It also gives the person being interviewed some time to think about the issues raised in the interview and go a little deeper with their answers.

Standardized questionnaire with open responses (structured interview). This is the type of interview a lot of people have in mind when they hear “interview”: a researcher comes to your door with a clipboard and proceeds to ask you a series of questions. These questions are all the same whoever answers the door; they are “standardized.” Both the wording and the exact order are important, as people’s responses may vary depending on how and when a question is asked. These are qualitative only in that the questions allow for “open-ended responses”: people can say whatever they want rather than select from a predetermined menu of responses. For example, a survey I collaborated on included this open-ended response question: “How does class affect one’s career success in sociology?” Some of the answers were simply one word long (e.g., “debt”), and others were long statements with stories and personal anecdotes. It is possible to be surprised by the responses. Although it’s a stretch to call this kind of questioning a conversation, it does allow the person answering the question some degree of freedom in how they answer.

Survey questionnaire with closed responses (not an interview!). Standardized survey questions with specific answer options (i.e., closed responses) are not really interviews at all, and they do not generate qualitative data. For example, if we offered five fixed options for the question “How does class affect one’s career success in sociology?”, namely (1) debt, (2) social networks, (3) alienation, (4) family doesn’t understand, and (5) type of grad program, we would leave no room for surprises at all. Instead, we would most likely look at patterns in these responses, thinking quantitatively rather than qualitatively (e.g., using regression analysis techniques, we might find that working-class sociologists were twice as likely to bring up alienation). This can sometimes confuse new students, because the very same survey can include both closed-ended and open-ended questions. The key is to think about how the responses will be analyzed and the extent to which surprises are possible. If your plan is to turn all responses into numbers and make predictions about correlations and relationships, you are no longer conducting qualitative research. This is true even if you are conducting the survey face-to-face with a real live human. Closed-response questions are not conversations of any kind, purposeful or not.

In summary, the semistructured interview guide approach is the predominant form of interviewing for social science qualitative researchers because it allows a high degree of freedom of responses from those interviewed (thus allowing for novel discoveries) while still maintaining some connection to a research question area or topic of interest. The rest of the chapter assumes the employment of this form.

Creating an Interview Guide

Your interview guide is the instrument used to bridge your research question(s) and what the people you are interviewing want to tell you. Unlike a standardized questionnaire, the questions actually asked do not need to be exactly what you have written down in your guide. The guide is meant to create space for those you are interviewing to talk about the phenomenon of interest, but sometimes you are not even sure what that phenomenon is until you start asking questions. A priority in creating an interview guide is to ensure it offers space. One of the worst mistakes is to create questions that are so specific that the person answering them will not stray. Relatedly, questions that sound “academic” will shut down a lot of respondents. A good interview guide invites respondents to talk about what is important to them, not to feel like they are performing or being evaluated by you.

Good interview questions should not sound like your “research question” at all. For example, let’s say your research question is “How do patriarchal assumptions influence men’s understanding of climate change and responses to climate change?” It would be worse than unhelpful to ask a respondent, “How do your assumptions about the role of men affect your understanding of climate change?” You need to unpack this into manageable nuggets that pull your respondent into the area of interest without leading him anywhere. You could start by asking him what he thinks about climate change in general. Or, even better, whether he has any concerns about heatwaves or increased tornadoes or polar icecaps melting. Once he starts talking about that, you can ask follow-up questions that bring in issues around gendered roles, perhaps asking if he is married (to a woman) and whether his wife shares his thoughts and, if not, how they negotiate that difference. The fact is, you won’t really know the right questions to ask until he starts talking.

There are several distinct types of questions that can be used in your interview guide, either as main questions or as follow-up probes. If you remember that the point is to leave space for the respondent, you will craft a much more effective interview guide! You will also want to think about the place of time in both the questions themselves (past, present, future orientations) and the sequencing of the questions.

Researcher Note

Suggestion: As you read the next three sections (types of questions, temporality, question sequence), have in mind a particular research question, and try to draft questions and sequence them in a way that opens space for a discussion that helps you answer your research question.

Types of Questions

Experience and behavior questions ask about what a respondent does regularly (their behavior) or has done (their experience). These are relatively easy questions for people to answer because they appear more “factual” and less subjective. This makes them good opening questions. For the study on climate change above, you might ask, “Have you ever experienced an unusual weather event? What happened?” Or “You said you work outside? What is a typical summer workday like for you? How do you protect yourself from the heat?”

Opinion and values questions, in contrast, get inside the minds of those you are interviewing. “Do you think climate change is real? Who or what is responsible for it?” are two such questions. Note that you don’t have to literally ask, “What is your opinion of X?”; instead, you can find a way to ask the specific question relevant to the conversation you are having. These questions are a bit trickier to ask because the answers you get may depend in part on how your respondent perceives you and whether they want to please you or not. We’ve talked a fair amount about being reflective. Here is another place where this comes into play. You need to be aware of the effect your presence might have on the answers you are receiving and adjust accordingly. If you are a woman who is perceived as liberal asking a man who identifies as conservative about climate change, there may be a lot of subtext in the interview. There is no one right way to resolve this, but you must at least be aware of it.

Feeling questions ask respondents to draw on their emotional responses. It’s pretty common for academic researchers to forget that we have bodies and emotions, but people’s understandings of the world often operate at this affective level, sometimes unconsciously or barely consciously. It is a good idea to include questions that leave space for respondents to remember, imagine, or relive emotional responses to particular phenomena. “What was it like when you heard your cousin’s house burned down in that wildfire?” doesn’t explicitly use any emotion words, but it allows your respondent to remember what was probably a pretty emotional day. And if they respond in an emotionally neutral way, that is pretty interesting data too. Note that asking someone “How do you feel about X?” is not always going to evoke an emotional response, as they might simply turn around and respond with “I think that…” It is better to craft a question that actually pushes the respondent into the affective category. This might be a specific follow-up to an experience and behavior question, for example, “You just told me about your daily routine during the summer heat. Do you worry it is going to get worse?” or “Have you ever been afraid it will be too hot to get your work accomplished?”

Knowledge questions ask respondents what they actually know about something factual. We have to be careful when we ask these types of questions so that respondents do not feel like we are evaluating them (which would shut them down), but, for example, it is helpful to know when you are having a conversation about climate change that your respondent does in fact know that unusual weather events have increased and that these have been attributed to climate change! Asking these questions can set the stage for deeper questions and can ensure that the conversation makes the same kind of sense to both participants. For example, a conversation about political polarization can be put back on track once you realize that the respondent doesn’t really have a clear understanding that there are two parties in the US. Instead of asking a series of questions about Republicans and Democrats, you might shift your questions to talk more generally about political disagreements (e.g., “people against abortion”). And sometimes what you do want to know is the level of knowledge about a particular program or event (e.g., “Are you aware you can discharge your student loans through the Public Service Loan Forgiveness program?”).

Sensory questions call on all senses of the respondent to capture deeper responses. These are particularly helpful in sparking memory. “Think back to your childhood in Eastern Oregon. Describe the smells, the sounds…” Or you could use these questions to help a person access the full experience of a setting they customarily inhabit: “When you walk through the doors to your office building, what do you see? Hear? Smell?” As with feeling questions, these questions often supplement experience and behavior questions. They are another way of allowing your respondent to report fully and deeply rather than remain on the surface.

Creative questions employ illustrative examples, suggested scenarios, or simulations to get respondents to think more deeply about an issue, topic, or experience. There are many options here. In The Trouble with Passion, Erin Cech (2021) provides a scenario in which “Joe” is trying to decide whether to stay at his decent but boring computer job or follow his passion by opening a restaurant. She asks respondents, “What should Joe do?” Their answers illuminate the attraction of “passion” in job selection. In my own work, I have used a news story about an upwardly mobile young man who no longer has time to see his mother and sisters to probe respondents’ feelings about the costs of social mobility. Jessi Streib and Betsy Leondar-Wright have used single-page cartoon “scenes” to elicit evaluations of potential racial discrimination, sexual harassment, and classism. Barbara Sutton (2010) has employed lists of words (“strong,” “mother,” “victim”) on notecards that she fans out, asking her female respondents to select and discuss them.

Background/Demographic Questions

You most definitely will want to know more about the person you are interviewing in terms of conventional demographic information, such as age, race, gender identity, occupation, and educational attainment. These are not questions that normally open up inquiry. [1] For this reason, my practice has been to include a separate “demographic questionnaire” sheet that I ask each respondent to fill out at the conclusion of the interview. Only include those aspects that are relevant to your study. For example, if you are not exploring religion or religious affiliation, do not include questions about a person’s religion on the demographic sheet. See the example provided at the end of this chapter.

Temporality

Any type of question can have a past, present, or future orientation. For example, if you are asking a behavior question about workplace routine, you might ask the respondent to talk about past work, present work, and ideal (future) work. Similarly, if you want to understand how people cope with natural disasters, you might ask your respondent how they felt during the wildfire, how they feel about it now in retrospect, and whether and to what extent they are concerned about future wildfire disasters. It’s a relatively simple suggestion (don’t forget to ask about past, present, and future), but it can have a big impact on the quality of the responses you receive.

Question Sequence

Having a list of good questions or good question areas is not enough to make a good interview guide. You will want to pay attention to the order in which you ask your questions. Even though any one respondent can derail this order (perhaps by jumping to answer a question you haven’t yet asked), a good advance plan is always helpful. When thinking about sequence, remember that your goal is to get your respondent to open up to you and to say things that might surprise you. To establish rapport, it is best to start with nonthreatening questions. Asking about the present is often the safest place to begin, followed by the past (they have to know you a little bit to get there), and lastly, the future (talking about hopes and fears requires the most rapport). To allow for surprises, it is best to move from very general questions to more particular questions only later in the interview. This ensures that respondents have the freedom to bring up the topics that are relevant to them rather than feel like they are constrained to answer you narrowly. For example, refrain from asking about particular emotions until these have come up previously—don’t lead with them. Often, your more particular questions will emerge only during the course of the interview, tailored to what is emerging in conversation.

Once you have a set of questions, read through them aloud and imagine you are being asked the same questions. Does the set of questions have a natural flow? Would you be willing to answer the very first question to a total stranger? Does your sequence establish facts and experiences before moving on to opinions and values? Did you include prefatory statements, where necessary; transitions; and other announcements? These can be as simple as “Hey, we talked a lot about your experiences as a barista while in college.… Now I am turning to something completely different: how you managed friendships in college.” That is an abrupt transition, but acknowledging it openly softens it.

Probes and Flexibility

Once you have the interview guide, you will also want to leave room for probes and follow-up questions. As in the sample probe included here, you can write out the obvious probes and follow-up questions in advance. You might not need them, as your respondent might anticipate them and include full responses to the original question. Or you might need to tailor them to how your respondent answered the question. Some common probes and follow-up questions include asking for more details (When did that happen? Who else was there?), asking for elaboration (Could you say more about that?), asking for clarification (Does that mean what I think it means or something else? I understand what you mean, but someone else reading the transcript might not), and asking for contrast or comparison (How did this experience compare with last year’s event?). “Probing is a skill that comes from knowing what to look for in the interview, listening carefully to what is being said and what is not said, and being sensitive to the feedback needs of the person being interviewed” (Patton 2002:374). It takes work! And energy. Many interviewers I know, myself included, report feeling emotionally and even physically drained after conducting an interview. You are tasked with listening actively and rearranging your interview guide on the fly as needed. If you only ask the questions written down in your interview guide with no deviations, you are doing it wrong. [2]

The Final Question

Every interview guide should include a very open-ended final question that allows the respondent to say whatever it is they have been dying to tell you but you’ve forgotten to ask. About half the time they are tired too and will tell you they have nothing else to say. But incredibly, some of the most honest and complete responses take place here, at the end of a long interview. You have to realize that the person being interviewed is often discovering things about themselves as they talk to you and that this process of discovery can lead to new insights for them. Making space at the end is therefore crucial. Be sure you convey that you actually do want them to tell you more, so that the offer of “anything else?” is not read as an empty convention where the polite response is no. Here is where you can draw on your active listening and tailor the final question to the particular person. For example, “I’ve asked you a lot of questions about what it was like to live through that wildfire. I’m wondering if there is anything I’ve forgotten to ask, especially because I haven’t had that experience myself” is a much more inviting final question than “Great. Anything you want to add?” It’s also helpful to convey to the person that you have the time to listen to their full answer, even if you are near the end of the allotted time. After all, there are no more questions to ask, so the respondent knows exactly how much time is left. Do them the courtesy of listening to them!

Conducting the Interview

Once you have your interview guide, you are on your way to conducting your first interview. I always practice my interview guide with a friend or family member. I do this even when the questions don’t make perfect sense for them, as it still helps me realize which questions make no sense, are poorly worded (too academic), or don’t follow sequentially. I also practice the routine I will use for interviewing, which goes something like this:

- Introduce myself and reintroduce the study

- Provide consent form and ask them to sign and retain/return copy

- Ask if they have any questions about the study before we begin

- Ask if I can begin recording

- Ask questions (from interview guide)

- Turn off the recording device

- Ask if they are willing to fill out my demographic questionnaire

- Collect questionnaire and, without looking at the answers, place in same folder as signed consent form

- Thank them and depart

A note on remote interviewing: Interviews have traditionally been conducted face-to-face in a private or quiet public setting. You don’t want a lot of background noise, as this will make transcriptions difficult. During the recent global pandemic, many interviewers, myself included, learned the benefits of interviewing remotely. Although face-to-face is still preferable for many reasons, Zoom interviewing is not a bad alternative, and it does allow more interviews across great distances. Zoom also includes automatic transcription, which significantly cuts down on the time it normally takes to convert our conversations into “data” to be analyzed. These automatic transcriptions are not perfect, however, and you will still need to listen to the recording and clarify and clean up the transcription. Nor do automatic transcriptions include notations of body language or change of tone, which you may want to include. When interviewing remotely, you will want to collect the consent form before you meet: ask them to read, sign, and return it as an email attachment. I think it is better to ask for the demographic questionnaire after the interview, but because some respondents may never return it then, it is probably best to ask for this at the same time as the consent form, in advance of the interview.

What should you bring to the interview? I would recommend bringing two copies of the consent form (one for you and one for the respondent), a demographic questionnaire, a manila folder in which to place the signed consent form and filled-out demographic questionnaire, a printed copy of your interview guide (I print with three-inch right margins so I can jot down notes on the page next to relevant questions), a pen, a recording device, and water.

After the interview, you will want to secure the signed consent form in a locked filing cabinet (if in print) or a password-protected folder on your computer. Using Excel or a similar program that allows tables/spreadsheets, create an identifying number for your interview that links to the consent form without using the name of your respondent. For example, let’s say that I conduct interviews with US politicians, and the first person I meet with is George W. Bush. I will assign the transcription the number “INT#001” and add it to the signed consent form. [3] The signed consent form goes into a locked filing cabinet, and I never use the name “George W. Bush” again. I take the information from the demographic sheet, open my Excel spreadsheet, and add the relevant information in separate columns for the row INT#001: White, male, Republican. When I interview Bill Clinton as my second interview, I include a second row: INT#002: White, male, Democrat. And so on. The only link between the respondent’s actual name and this information is the interview number stamped on the consent form, which is unavailable to anyone but me.
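The bookkeeping described above is done by hand in a spreadsheet. For readers who prefer to script it, here is a minimal Python sketch of the same idea (assuming Python 3.9 or later); it is an illustration, not the author's method. The names `make_id`, `InterviewLog`, and `add_interview` are hypothetical, and the in-memory `_key` dictionary merely stands in for the stamped consent form, which in practice belongs in a separate locked or password-protected location rather than alongside the analysis data.

```python
import csv
import io


def make_id(n: int, prefix: str = "INT") -> str:
    """Return a zero-padded identifier such as INT#001 (hypothetical helper)."""
    # Three digits, as in the numbering scheme described in the text.
    if not 1 <= n <= 999:
        raise ValueError("the three-digit scheme supports numbers 1-999")
    return f"{prefix}#{n:03d}"


class InterviewLog:
    """De-identified demographic table keyed only by interview ID (illustrative)."""

    def __init__(self) -> None:
        self.rows: list[dict[str, str]] = []  # analysis table: contains no names
        self._key: dict[str, str] = {}        # ID -> name; stand-in for the consent form

    def add_interview(self, name: str, demographics: dict[str, str]) -> str:
        """Assign the next sequential ID and store demographics under it."""
        interview_id = make_id(len(self.rows) + 1)
        self._key[interview_id] = name  # the only place the name is recorded
        self.rows.append({"id": interview_id, **demographics})
        return interview_id

    def to_csv(self) -> str:
        """Export only the de-identified rows, e.g. for opening in a spreadsheet."""
        fields = ["id"] + sorted({k for row in self.rows for k in row if k != "id"})
        buf = io.StringIO()
        writer = csv.DictWriter(buf, fieldnames=fields, lineterminator="\n")
        writer.writeheader()
        writer.writerows(self.rows)
        return buf.getvalue()
```

Because `to_csv` exports only `rows`, names never reach the analysis file. The `prefix` parameter mirrors the footnoted practice of using other prefixes (such as FG for focus groups) for other kinds of data.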

Many students get very nervous before their first interview. Actually, many of us are always nervous before an interview! But do not worry: this is normal, and it does pass. Chances are, you will be pleasantly surprised at how comfortable it begins to feel. These “purposeful conversations” are often a delight for both participants. This is not to say that things never go wrong. I often have my students practice several “bad scenarios” (e.g., a respondent who will not open up; a respondent who is too talkative and dominates the conversation, steering it away from the topics you are interested in; emotions that completely take over; or shocking disclosures you are ill-prepared to handle), but most of the time, things go quite well. Be prepared for the unexpected, but know that interviews are so popular as a technique of data collection precisely because they are usually richly rewarding for both participants.

One thing that I stress to my methods students and remind myself about is that interviews are still conversations between people. If there’s something you might feel uncomfortable asking someone about in a “normal” conversation, you will likely also feel a bit of discomfort asking it in an interview. Maybe more importantly, your respondent may feel uncomfortable. Social research—especially about inequality—can be uncomfortable. And it’s easy to slip into an abstract, intellectualized, or removed perspective as an interviewer. This is one reason trying out interview questions is important. Another is that sometimes the question sounds good in your head but doesn’t work as well out loud in practice. I learned this the hard way when a respondent asked me how I would answer the question I had just posed, and I realized that not only did I not really know how I would answer it, but I also wasn’t quite as sure I knew what I was asking as I had thought.

—Elizabeth M. Lee, Associate Professor of Sociology at Saint Joseph’s University, author of Class and Campus Life, and co-author of Geographies of Campus Inequality

How Many Interviews?

Your research design has included a targeted number of interviews and a recruitment plan (see chapter 5). Follow your plan, but remember that “saturation” is your goal. You interview as many people as you can until you reach a point at which you are no longer surprised by what they tell you. This means not that no one after your first twenty interviews will have surprising, interesting stories to tell you but rather that the picture you are forming about the phenomenon of interest to you from a research perspective has come into focus, and none of the interviews are substantially refocusing that picture. That is when you should stop collecting interviews. Note that to know when you have reached this point, you will need to read your transcripts as you go. More about this in chapters 18 and 19.

Your Final Product: The Ideal Interview Transcript

A good interview transcript will demonstrate a subtly controlled conversation by the skillful interviewer. In general, you want to see replies that are about one paragraph long, not short sentences and not running on for several pages. Although it is sometimes necessary to follow respondents down tangents, it is also often necessary to pull them back to the questions that form the basis of your research study. This is not really a free conversation, although it may feel like that to the person you are interviewing.

Final Tips from an Interview Master

Annette Lareau is arguably one of the masters of the trade. In Listening to People, she provides several guidelines for good interviews and then offers a detailed example of an interview gone wrong and how it could be addressed (please see the “Further Readings” at the end of this chapter). Here is an abbreviated version of her set of guidelines: (1) interview respondents who are experts on the subjects of most interest to you (as a corollary, don’t ask people about things they don’t know); (2) listen carefully and talk as little as possible; (3) keep in mind what you want to know and why you want to know it; (4) be a proactive interviewer (subtly guide the conversation); (5) assure respondents that there aren’t any right or wrong answers; (6) use the respondent’s own words to probe further (this both allows you to accurately identify what you heard and pushes the respondent to explain further); (7) reuse effective probes (don’t reinvent the wheel as you go; if repeating the words back works, do it again and again); (8) focus on learning the subjective meanings that events or experiences have for a respondent; (9) don’t be afraid to ask a question that draws on your own knowledge (unlike trial lawyers who are trained never to ask a question for which they don’t already know the answer, sometimes it’s worth it to ask risky questions based on your hypotheses or just plain hunches); (10) keep thinking while you are listening (so difficult…and important); (11) return to a theme raised by a respondent if you want further information; (12) be mindful of power inequalities (and never ever coerce a respondent to continue the interview if they want out); (13) take control with overly talkative respondents; (14) expect overly succinct responses, and develop strategies for probing further; (15) balance digging deep and moving on; (16) develop a plan to deflect questions (e.g., let them know you are happy to answer any questions at the end of the interview, but you don’t want to take time away from them now); and at the end, (17) check to see whether you have asked all your questions. You don’t always have to ask everyone the same set of questions, but if there is a big area you have forgotten to cover, now is the time to recover (Lareau 2021:93–103).

Sample: Demographic Questionnaire

ASA Taskforce on First-Generation and Working-Class Persons in Sociology – Class Effects on Career Success

Supplementary Demographic Questionnaire

Thank you for your participation in this interview project. We would like to collect a few pieces of key demographic information from you to supplement our analyses. Your answers to these questions will be kept confidential and stored by ID number. All of your responses here are entirely voluntary!

What best captures your race/ethnicity? (please check any/all that apply)

- White (non-Hispanic/Latina/o/x)

- Black or African American

- Hispanic, Latino/a/x, or Spanish origin

- Asian or Asian American

- American Indian or Alaska Native

- Middle Eastern or North African

- Native Hawaiian or Pacific Islander

- Other (please write in: ________________)

What is your current position?

- Grad Student

- Full Professor

Please check any and all of the following that apply to you:

- I identify as a working-class academic

- I was the first in my family to graduate from college

- I grew up poor

What best reflects your gender?

- Transgender female/Transgender woman

- Transgender male/Transgender man

- Gender queer/ Gender nonconforming

Anything else you would like us to know about you?

Example: Interview Guide

In this example, follow-up prompts are italicized. Note the sequence of questions. That second question often elicits an entire life history, answering several later questions in advance.

Introduction Script/Question

Thank you for participating in our survey of ASA members who identify as first-generation or working-class. As you may have heard, ASA has sponsored a taskforce on first-generation and working-class persons in sociology and we are interested in hearing from those who so identify. Your participation in this interview will help advance our knowledge in this area.

- The first thing we would like to ask you is why you have volunteered to be part of this study. What does it mean to you to be first-gen or working class? Why were you willing to be interviewed?

- How did you decide to become a sociologist?

- Can you tell me a little bit about where you grew up? (prompts: What did your parent(s) do for a living? What kind of high school did you attend?)

- Has this identity been salient to your experience? (how? How much?)

- How welcoming was your grad program? Your first academic employer?

- Why did you decide to pursue sociology at the graduate level?

- Did you experience culture shock in college? In graduate school?

- Has your FGWC status shaped how you’ve thought about where you went to school? Debt? Other decisions?

- Were you mentored? How did this work (not work)? How might it?

- What did you consider when deciding where to go to grad school? Where to apply for your first position?

- What, to you, is a mark of career success? Have you achieved that success? What has helped or hindered your pursuit of success?

- Do you think sociology, as a field, cares about prestige?

- Let’s talk a little bit about intersectionality. How does being first-gen/working class work alongside other identities that are important to you?

- What do your friends and family think about your career? Have you had any difficulty relating to family members or past friends since becoming highly educated?

- Do you have any debt from college/grad school? Are you concerned about this? Could you explain more about how you paid for college/grad school? (here, include assistance from family, fellowships, scholarships, etc.)

- (You’ve mentioned issues or obstacles you had because of your background.) What could have helped? Or, who or what did? Can you think of fortuitous moments in your career?

- Do you have any regrets about the path you took?

- Is there anything else you would like to add? Anything that the Taskforce should take note of, that we did not ask you about here?

Further Readings

Britten, Nicky. 1995. “Qualitative Interviews in Medical Research.” BMJ: British Medical Journal 311(6999):251–253. A good basic overview of interviewing, particularly useful for students of public health and medical research generally.

Corbin, Juliet, and Janice M. Morse. 2003. “The Unstructured Interactive Interview: Issues of Reciprocity and Risks When Dealing with Sensitive Topics.” Qualitative Inquiry 9(3):335–354. Weighs the potential benefits and harms of conducting interviews on topics that may cause emotional distress. Argues that the researcher’s skills and code of ethics should ensure that the interviewing process provides more of a benefit to both participant and researcher than a harm to the former.

Gerson, Kathleen, and Sarah Damaske. 2020. The Science and Art of Interviewing. New York: Oxford University Press. A useful guidebook/textbook for both undergraduates and graduate students, written by sociologists.

Kvale, Steiner. 2007. Doing Interviews. London: SAGE. An easy-to-follow guide to conducting and analyzing interviews, written by psychologists.

Lamont, Michèle, and Ann Swidler. 2014. “Methodological Pluralism and the Possibilities and Limits of Interviewing.” Qualitative Sociology 37(2):153–171. Written as a response to various debates surrounding the relative value of interview-based and ethnographic studies, this article defends the particular strengths of interviewing. This is a must-read article for anyone seriously engaging in qualitative research!

Pugh, Allison J. 2013. “What Good Are Interviews for Thinking about Culture? Demystifying Interpretive Analysis.” American Journal of Cultural Sociology 1(1):42–68. Another defense of interviewing written against those who champion ethnographic methods as superior, particularly in the area of studying culture. A classic.

Rapley, Timothy John. 2001. “The ‘Artfulness’ of Open-Ended Interviewing: Some considerations in analyzing interviews.” Qualitative Research 1(3):303–323. Argues for the importance of “local context” of data production (the relationship built between interviewer and interviewee, for example) in properly analyzing interview data.

Weiss, Robert S. 1995. Learning from Strangers: The Art and Method of Qualitative Interview Studies. New York: Simon and Schuster. A classic and well-regarded textbook on interviewing. Because Weiss has extensive experience conducting surveys, he contrasts the qualitative interview with the survey questionnaire well; particularly useful for those trained in the latter.

- I say “normally” because how people understand their various identities can itself be an expansive topic of inquiry. Here, I am merely talking about collecting otherwise unexamined demographic data, similar to how we ask people to check boxes on surveys. ↵

- Again, this applies to “semistructured in-depth interviewing.” When conducting standardized questionnaires, you will want to ask each question exactly as written, without deviations! ↵

- I always include “INT” in the number because I sometimes have other kinds of data with their own numbering: FG#001 would mean the first focus group, for example. I also always use three digits, as this allows for up to 999 interviews (or, more realistically, allows me to interview up to one hundred persons without having to reset my numbering system). ↵

A method of data collection in which the researcher asks the participant questions; the answers to these questions are often recorded and transcribed verbatim. There are many different kinds of interviews; see also semistructured interview, structured interview, and unstructured interview.

A document listing key questions and question areas for use during an interview. It is used most often for semi-structured interviews. A good interview guide may have no more than ten primary questions for two hours of interviewing, but these ten questions will be supplemented by probes and relevant follow-ups throughout the interview. Most IRBs require the inclusion of the interview guide in applications for review. See also interview and semi-structured interview.

A data-collection method that relies on casual, conversational, and informal interviewing. Despite its apparent conversational nature, the researcher usually has a set of particular questions or question areas in mind but allows the interview to unfold spontaneously. This is a common data-collection technique among ethnographers. Compare to the semi-structured or in-depth interview.

A form of interview that follows a standard guide of questions asked, although the order of the questions may change to match the particular needs of each individual interview subject, and probing “follow-up” questions are often added during the course of the interview. The semi-structured interview is the primary form of interviewing used by qualitative researchers in the social sciences. It is sometimes referred to as an “in-depth” interview. See also interview and interview guide.

The cluster of data-collection tools and techniques that involve observing interactions between people, the behaviors and practices of individuals (sometimes in contrast to what they say about how they act and behave), and cultures in context. Observational methods are the key tools employed by ethnographers and Grounded Theory researchers.

Follow-up questions used in a semi-structured interview to elicit further elaboration. Suggested prompts can be included in the interview guide to be used/deployed depending on how the initial question was answered or if the topic of the prompt does not emerge spontaneously.

A form of interview that follows a strict set of questions, asked in a particular order, for all interview subjects. The questions are also the kind that elicits short answers, and the data is more “informative” than probing. This is often used in mixed-methods studies, accompanying a survey instrument. Because there is no room for nuance or the exploration of meaning in structured interviews, qualitative researchers tend to employ semi-structured interviews instead. See also interview.

The point at which you can conclude data collection because every person you are interviewing, the interaction you are observing, or content you are analyzing merely confirms what you have already noted. Achieving saturation is often used as the justification for the final sample size.

An interview variant in which a person’s life story is elicited in a narrative form. Turning points and key themes are established by the researcher and used as data points for further analysis.

Introduction to Qualitative Research Methods Copyright © 2023 by Allison Hurst is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License , except where otherwise noted.


Appendix: Qualitative Interview Design

Daniel W. Turner III and Nicole Hagstrom-Schmidt

Qualitative Interview Design: A Practical Guide for Novice Investigators

Qualitative research design can be complicated depending upon the level of experience a researcher may have with a particular type of methodology. As researchers, many aspire to grow and expand their knowledge and experiences with qualitative design in order to better utilize a variety of research paradigms. One of the more popular areas of interest in qualitative research design is that of the interview protocol. Interviews provide in-depth information pertaining to participants’ experiences and viewpoints of a particular topic. Oftentimes, interviews are coupled with other forms of data collection in order to provide the researcher with a well-rounded collection of information for analyses. This paper explores the effective ways to conduct in-depth, qualitative interviews for novice investigators by expanding upon the practical components of each interview design.

Categories of Qualitative Interview Design

As is common with quantitative analyses, there are various forms of interview design that can be developed to obtain thick, rich data using a qualitative investigational perspective. [1] For the purpose of this examination, three formats for interview design will be explored, as summarized by Gall, Gall, and Borg:

- Informal conversational interview,

- General interview guide approach,

- Standardized open-ended interview. [2]

In addition, I will expand on some suggestions for conducting qualitative interviews, including the construction of research questions and the analysis of interview data. These suggestions come from both my personal experiences with interviewing and recommendations from the literature to assist novice interviewers.

Informal Conversational Interview

The informal conversational interview is outlined by Gall, Gall, and Borg as relying “…entirely on the spontaneous generation of questions in a natural interaction, typically one that occurs as part of ongoing participant observation fieldwork.” [3] For example, I am curious about other cultures and religions, and I enjoy immersing myself in these environments as an active participant. I ask questions in order to learn more about these social settings without having a predetermined set of structured questions. The questions come primarily from “in the moment experiences” as a means of further understanding or clarifying what I am witnessing or experiencing at a particular moment. With the informal conversational approach, the researcher does not ask any specific types of questions but instead relies on the interaction with the participants to guide the interview process. [4] Think of this type of interview as an “off the top of your head” style of interview, in which you construct questions as you move forward. Many consider this type of interview beneficial because its lack of structure allows for flexibility. However, many researchers view this type of interview as unstable or unreliable because of the inconsistency in the interview questions, which makes it difficult to code data. [5] If you choose to conduct an informal conversational interview, it is critical to understand that flexibility and originality in questioning are the keys to success.

General Interview Guide Approach

The general interview guide approach is more structured than the informal conversational interview, although there is still quite a bit of flexibility in its composition. [6] The way questions are worded depends on the researcher who is conducting the interview. Therefore, one obvious issue with this type of interview is a lack of consistency in the way research questions are posed, because each researcher may word them differently. As a result, respondents may not answer the same question(s) consistently, depending on how the interviewer posed them. [7] During research for my doctoral dissertation, I was able to interact with alumni participants in a relaxed and informal manner, which gave me the opportunity to learn more about their in-depth experiences through structured interviews. This informal environment allowed me to develop rapport with the participants so that I could ask follow-up or probing questions based on their responses to pre-constructed questions. I found this quite useful because I could ask new questions or change questions based on participant responses to previous ones. The questions were structured, but adapting them allowed me to take a more personal approach to each alumni interview.

According to McNamara, the strength of the general interview guide approach is the ability of the researcher “…to ensure that the same general areas of information are collected from each interviewee; this provides more focus than the conversational approach, but still allows a degree of freedom and adaptability in getting information from the interviewee.” [8] The researcher remains in the driver’s seat with this type of interview approach, but flexibility takes precedence based on perceived prompts from the participants.

You might ask, “What does this mean anyway?” The easiest way to answer that question is to think about your own experiences at a job interview. When you were invited to a job interview in the past, you might have prepared for all sorts of curveball questions. You wanted an answer for every potential question. If the interviewer were using a general interview guide approach, he or she would ask the questions in his or her own style, which might differ from the way the questions were originally written. You as the interviewee would then respond to the questions as the interviewer asked them, and your responses would dictate how the interview continued. Depending on how the interviewer asked the question(s), you might have been able to provide more or less information than other job candidates. It is therefore easy to see how a general interview guide approach could positively or negatively influence a job candidate.

Standardized Open-Ended Interviews

The standardized open-ended interview is extremely structured in terms of the wording of the questions. Participants are always asked identical questions, but the questions are worded so that responses are open-ended. [9] This open-endedness allows participants to contribute as much detailed information as they desire, and it also allows the researcher to ask probing questions as a means of follow-up. Standardized open-ended interviews are likely the most popular form of interviewing used in research studies because their open-ended questions allow participants to fully express their viewpoints and experiences. The weakness most often identified with open-ended interviewing is the difficulty of coding the data. [10] Because open-ended interviews invite participants to express their responses in as much detail as desired, it can be quite difficult for researchers to extract similar themes or codes from the interview transcripts, compared with less open-ended responses. Although the data provided by participants are rich and thick, it can be a cumbersome process for the researcher to sift through the narrative responses in order to reflect fully and accurately an overall perspective of all interview responses through the coding process. However, according to Gall, Gall, and Borg, asking every participant the same standardized questions reduces researcher bias within the study, particularly when the interviewing process involves many participants. [11]

Suggestions for Conducting Qualitative Interviews

Now that we know a few of the more popular interview designs available to qualitative researchers, we can more closely examine various suggestions for conducting qualitative interviews based on the available research. These suggestions are designed to provide the researcher with the tools needed to conduct a well-constructed, professional interview with participants. According to Creswell, the literature on interviews most commonly addresses the following topics: [12]

- The preparation for the interview,

- The construction of effective research questions, and

- The actual implementation of the interview(s). [13]

Preparation for the Interview

Probably the most helpful tip for the interview process is to prepare well. Preparation can make or break the study, and it can either alleviate or exacerbate problems that could arise once the research is implemented. McNamara stresses the importance of the preparation stage in order to maintain an unambiguous focus on how the interviews will be structured to provide maximum benefit to the proposed research study. [14] Along these lines, Chenail provides a number of pre-interview exercises researchers can use to improve their instrumentality and address potential biases. [15] McNamara applies eight principles to the preparation stage of interviewing, which include the following:

- Choose a setting with little distraction;

- Explain the purpose of the interview;

- Address terms of confidentiality;

- Explain the format of the interview;

- Indicate how long the interview usually takes;

- Tell them how to get in touch with you later if they want to;

- Ask them if they have any questions before you both get started with the interview;

- Don’t count on your memory to recall their answers. [16]

Selecting Participants

Creswell discusses the importance of selecting appropriate candidates for interviews. He asserts that the researcher should use one of the various sampling strategies, such as criterion-based sampling or critical case sampling (among many others), in order to obtain qualified candidates who will provide the most credible information to the study. [17] Creswell also stresses the importance of recruiting participants who will be willing to share information, or “their story,” openly and honestly. [18] It may also be easier to conduct the interviews in a comfortable environment where participants do not feel restricted or reluctant to share information.

Pilot Testing

Another important element of interview preparation is conducting a pilot test. The pilot test will help the researcher determine whether there are flaws, limitations, or other weaknesses within the interview design and will allow him or her to make necessary revisions before the study is implemented. [19] A pilot test should be conducted with participants who have interests similar to those of the participants in the implemented study. The pilot test will also help researchers refine the research questions, which are discussed in the next section.

Constructing Effective Research Questions

Creating effective research questions for the interview process is one of the most crucial components of interview design. Researchers conducting such an investigation should make sure that each question allows them to dig deep into the experiences and/or knowledge of the participants in order to gain maximum data from the interviews. McNamara offers several recommendations for creating effective research questions for interviews, which include the following:

- Wording should be open-ended (respondents should be able to choose their own terms when answering questions);

- Questions should be as neutral as possible (avoid wording that might influence answers, e.g., evocative, judgmental wording);

- Questions should be asked one at a time;

- Questions should be worded clearly (this includes knowing any terms particular to the program or the respondents’ culture); and

- Be careful asking “why” questions. [20]

Examples of Useful and Not-So-Useful Research Questions

To assist the novice interviewer with preparing research questions, I will propose a useful research question and a not-so-useful research question. Based on McNamara’s suggestion, it is important to ask an open-ended question. [21] For the useful question, I propose the following: “How have your experiences as a kindergarten teacher influenced or not influenced you in the decisions that you have made in raising your children?” As you can see, the question allows respondents to discuss how their experiences as kindergarten teachers have or have not affected their decision-making with their own children, without assuming that the experience has influenced their decision-making. On the other hand, if you were to ask a similar question from a less useful perspective, you might word it this way: “How have your experiences as a kindergarten teacher affected you as a parent?” The question is still open-ended, but it assumes that the experiences have indeed affected the respondent as a parent. As researchers, we cannot build this assumption into the wording of our questions.

Follow-Up Questions

Creswell also suggests remaining flexible when constructing research questions. [22] He asserts that respondents in an interview will not necessarily answer the question the researcher asks and, in fact, may answer a question that appears later in the interview. Creswell believes that the researcher must construct questions in a way that keeps participants focused in their responses. In addition, the researcher must be prepared with follow-up questions or prompts in order to obtain optimal responses from participants. When I was an Assistant Director for a large division at my university a couple of years ago, I was responsible for hiring student affairs coordinators at our off-campus educational centers. Throughout the interviewing process, I found that interviewees did indeed get off topic with certain questions, either because they misunderstood the question(s) or because they did not wish to answer directly. I was able to apply Creswell’s suggestion [23] by rewording questions so that they were clearly constructed to reduce misunderstanding and by developing effective follow-up prompts to further understanding. This alleviated many of the problems I had and helped me extract the information I needed from the interviews through follow-up questioning.

Implementation of Interviews

As with other sections of interview design, McNamara makes some excellent recommendations for the implementation stage of the interview process. He includes the following tips for interview implementation:

- Occasionally verify the tape recorder (if used) is working;

- Ask one question at a time;

- Attempt to remain as neutral as possible (that is, don’t show strong emotional reactions to their responses);

- Encourage responses with occasional nods of the head, “uh huh”s, etc.;

- Be careful about how your note taking appears (that is, if you jump to take a note, it may appear as if you’re surprised or very pleased about an answer, which may influence answers to future questions);

- Provide transition between major topics, e.g., “we’ve been talking about (some topic) and now I’d like to move on to (another topic)”;

- Don’t lose control of the interview (this can occur when respondents stray to another topic, take so long to answer a question that time begins to run out, or even begin asking the interviewer questions). [24]

Interpreting Data

The final constituent in the interview design process is interpreting the data gathered during the interviews. During this phase, the researcher must make “sense” of what was just uncovered and compile the data into sections or groups of information, also known as themes or codes. [25] These themes or codes are consistent phrases, expressions, or ideas that were common among research participants. [26] How researchers formulate themes or codes varies. Many researchers suggest employing a third-party consultant who can review the codes or themes and assess their quality and effectiveness against the interview transcripts. [27] This helps reduce researcher bias and can reveal where data may have been over-analyzed. Some researchers may also choose an iterative review process in which a committee of nonparticipating researchers provides constructive feedback and suggestions to the researcher(s) primarily involved with the study.

From choosing the appropriate type of interview design through interpreting the interview data, this guide for conducting qualitative research interviews proposes a practical way to perform an investigation based on the recommendations and experiences of qualified researchers in the field and on my own personal experiences. Although qualitative investigation provides a myriad of opportunities for conducting investigational research, interview design has remained one of the more popular methods. As a greater variety of qualitative research methods becomes widely used across research institutions, we will continue to see more practical guides for protocol implementation published in peer-reviewed journals around the world.

This text was derived from

Turner, Daniel W., III. “Qualitative Interview Design: A Practical Guide for Novice Investigators.” The Qualitative Report 15, no. 3 (2010): 754-760. https://doi.org/10.46743/2160-3715/2010.1178. Licensed under a Creative Commons Attribution-Noncommercial-Share Alike 4.0 International License.

It has been edited and reformatted by Nicole Hagstrom-Schmidt.

- John W. Creswell, Qualitative Inquiry and Research Design: Choosing Among Five Approaches, 2nd ed. (Thousand Oaks, CA: Sage, 2007).

- M.D. Gall, Walter R. Borg, and Joyce P. Gall, Educational Research: An Introduction, 7th ed. (Boston, MA: Pearson, 2003).

- M.D. Gall, Walter R. Borg, and Joyce P. Gall, Educational Research: An Introduction, 7th ed. (Boston, MA: Pearson, 2003), 239.

- Carter McNamara, “General Guidelines for Conducting Interviews,” Free Management Library, accessed January 11, 2010, https://managementhelp.org/businessresearch/interviews.htm.