Nurse Selection Criteria + Example Responses

When it comes to nursing positions, education and experience are important, but they are not the only factors employers take into consideration. Employers also look for certain key selection criteria that demonstrate a candidate’s ability to perform the role effectively. As with any interview, it’s often recommended that you follow the STAR method when providing a response. The STAR method is a structured way of responding to behavioural interview questions by describing the specific Situation, Task, Action, and Result of a particular scenario. This article will go beyond education and experience, and provide insight into the key selection criteria for nursing positions, along with example STAR responses.

1. Communication & Interpersonal Skills

Effective communication and interpersonal skills are critical in the nursing profession. You need to be able to communicate with patients, their families, and other healthcare professionals in a clear and concise manner. Additionally, being able to form strong relationships with others is important in building trust and providing the best care for your patients. Employers will be looking for evidence of your communication and interpersonal skills during the interview.

Example response:

- Situation: During my time working as a nurse in a hospital, I was faced with a patient who was non-verbal and unable to communicate their needs.

- Task: I needed to assess their condition and administer medication.

- Action: I used non-verbal communication techniques, such as gestures and facial expressions, to understand their needs and communicate with them effectively. I also formed a rapport with the patient, by talking to them in a calm and reassuring tone.

- Result: The patient was able to receive the necessary treatment and was much more comfortable with the process. The patient and their family also expressed their gratitude for my compassionate and empathetic approach.

2. Compassion & Empathy

Compassion and empathy are key traits for nurses as they must be able to understand and connect with their patients. This requires an ability to listen, understand, and respond to the emotional and physical needs of patients. Nurses must be able to show compassion and empathy towards their patients and provide comfort and support.

Employers are looking for nurses who can demonstrate their compassion and empathy skills and show that they are able to connect with and understand their patients. They want to see that you have a genuine concern for the well-being of your patients and are able to provide comfort and support. They also want to know that you are able to maintain a professional demeanor and provide care in a respectful and empathetic manner.

By demonstrating your compassion and empathy skills, you show that you are a caring and empathetic nurse who is able to understand and connect with your patients. You also show that you are able to provide comfort and support to your patients, which is essential for providing high-quality patient care. Your compassion and empathy skills demonstrate your commitment to providing patient-centered care and helping your patients feel supported and understood.

- Situation: I was working in a hospice where a patient was in their final stages of life.

- Task: The patient was in a lot of pain and their family was upset and worried, so I needed to help manage the patient’s pain and support the family.

- Action: I listened to the patient’s concerns and provided comfort and reassurance to both the patient and their family. I also kept in close communication with the patient’s physician to ensure that their pain was managed appropriately.

- Result: The patient was able to pass away peacefully, and the family felt comforted knowing that their loved one was not alone. They also expressed their appreciation for my compassionate and empathetic approach.

3. Teamwork

Nursing is a team-oriented profession, and it is important to be able to work well with others. This involves being able to collaborate with other healthcare professionals, such as physicians and nursing assistants, to provide the best care for your patients. Nurses must be able to work towards a common goal and support their colleagues, while also being able to take initiative and lead when necessary.

Employers are looking for nurses who can demonstrate their teamwork skills and show that they are able to collaborate effectively with others. They want to see that you have a positive attitude, are supportive of your colleagues, and can work well under pressure. They also want to know that you have the ability to take initiative and lead when necessary, as this is essential for providing high-quality patient care.

By demonstrating your teamwork skills, you show that you are a collaborative and supportive nurse who is able to work well with others. You also show that you have the ability to take initiative and lead when necessary, which is essential for providing high-quality patient care. You demonstrate your commitment to teamwork and collaboration, which is essential for ensuring the best outcomes for your patients and the success of the healthcare team.

- Situation: I was working on a busy medical-surgical unit where the staff was stretched thin.

- Task: I needed to ensure that all of my patients received the care they needed in a timely manner.

- Action: I worked closely with my fellow nurses and nursing assistants to prioritize patient care, delegate tasks, and provide support when needed. I also kept open communication with the physician to ensure that everyone was on the same page.

- Result: We were able to provide the best care for our patients and maintain a positive and productive work environment. The unit received positive feedback from patients and their families for our teamwork and collaboration.

4. Quality Improvement

Quality improvement is an essential aspect of the nursing profession as it helps to ensure that patients receive the best care possible. It involves identifying areas for improvement and implementing changes to improve the quality of care. This could include improving patient outcomes, reducing errors, increasing patient satisfaction, or improving efficiency.

Quality improvement requires a systematic approach, collaboration, and an ongoing commitment to continuous improvement. Nurses play a vital role in this process as they are often on the front lines, working with patients and providing care. By being involved in quality improvement initiatives, nurses can make a positive impact on patient outcomes and contribute to the overall success of the healthcare organisation.

Employers will be looking for evidence of your ability to identify areas for improvement, implement changes, and monitor the results during the interview. They want to see that you have a commitment to providing the best care for your patients and are proactive in seeking ways to improve the quality of care.

- Situation: I was working in a hospital where the discharge process was taking longer than it should.

- Task: I needed to find a solution to improve the discharge process for patients.

- Action: I analyzed the current process, identified areas for improvement, and made suggestions for changes. I also collaborated with the rest of the nursing staff and physicians to implement the changes and monitor the results.

- Result: The discharge process was streamlined, and patients were able to be discharged faster, which improved their experience and satisfaction. The hospital also received positive feedback from patients and their families for the improved discharge process.

5. Continuous Professional Development (CPD)

Continuous professional development is important for nurses, as it helps them to stay up-to-date with the latest developments in the field and maintain their competency. Employers are looking for nurses who are committed to their ongoing professional development and have a strong desire to learn and grow in their careers. By demonstrating a commitment to CPD, nurses show that they are dedicated to providing the best care for their patients and are interested in staying current in their field.

- Situation: I was working as a nurse and wanted to further my knowledge in a specific area of nursing.

- Task: I needed to find ways to continue my professional development.

- Action: I researched and attended conferences, workshops, and courses related to my area of interest. I also sought out mentorship opportunities with experienced nurses.

- Result: I was able to expand my knowledge and skills in my area of interest, which helped me provide better care for my patients. I also received recognition from my peers and supervisors for my commitment to continuous professional development.

6. Problem-Solving

Problem-solving is a crucial skill for nurses as they often face complex and challenging situations in their daily work. It requires critical thinking, effective communication, and the ability to identify and analyse problems and find solutions. Nurses must be able to make informed decisions, prioritise tasks, and work effectively under pressure.

Employers are looking for nurses who can demonstrate their problem-solving skills and show that they can handle challenging situations in a calm and effective manner. They want to see that you can think creatively and come up with innovative solutions to problems. They also want to know that you have the ability to make decisions that benefit your patients, your team, and the organisation.

By demonstrating your problem-solving skills, you show that you are a competent nurse who can handle complex and challenging situations and make informed decisions. You also show that you have the ability to think critically and creatively, which is essential for providing high-quality patient care.

- Situation: I was working as a nurse in a busy emergency room where a patient was in critical condition.

- Task: I needed to find a solution to provide the best care for the patient in a limited amount of time.

- Action: I assessed the patient’s condition, gathered relevant information, and considered multiple options for treatment. I then collaborated with the physician to determine the best course of action.

- Result: The patient received the necessary treatment, and their condition stabilized. The patient and their family also expressed their gratitude for my quick thinking and effective problem-solving skills.

7. Legal Understanding

Legal understanding is an important aspect of nursing as nurses must be aware of and adhere to the laws and regulations that govern their practice. This includes understanding the laws and regulations related to patient privacy, informed consent, and medical ethics. Nurses must also be aware of the legal implications of their actions and understand how to handle difficult and complex legal situations.

Employers are looking for nurses who have a good understanding of the laws and regulations that govern their practice and who can demonstrate their ability to apply this knowledge in their daily work. They want to see that you have a commitment to upholding the ethical and legal standards of the nursing profession and are able to make informed decisions that are in line with these standards.

By demonstrating your legal understanding, you show that you are a responsible and ethical nurse who is committed to providing high-quality care to your patients. You also show that you are aware of the laws and regulations that govern your practice and have the ability to handle difficult and complex legal situations in a professional and responsible manner.

- Situation: I was working as a nurse and was faced with a situation where a patient’s privacy was in question.

- Task: I needed to ensure that the patient’s privacy was protected.

- Action: I consulted the relevant laws and regulations, and determined the appropriate course of action. I also kept the patient informed of the situation and their rights.

- Result: The patient’s privacy was protected, and the hospital was able to comply with the relevant laws and regulations. The patient also expressed their appreciation for my understanding of their rights and protection of their privacy.

In conclusion, education and experience are important factors when it comes to nursing positions, but they are not the only factors that employers take into consideration. Employers also look for evidence of key selection criteria such as communication and interpersonal skills, compassion and empathy, teamwork, quality improvement, continuous professional development, problem-solving, and legal knowledge.

It is essential for nursing candidates to understand these criteria and be able to provide examples of how they demonstrate them during the interview. By following the STAR method and being able to articulate your experiences and accomplishments, you can show the interviewer that you possess the skills and qualities necessary for a successful nursing career.

So, when preparing for a nursing interview, take the time to reflect on your experiences and think about how you can demonstrate these key selection criteria. Show the interviewer that you are a well-rounded and competent nurse who is committed to providing the best care for your patients. Good luck!

need help with selection criteria

Published Jun 27, 2014

I am Stacy. I have nearly graduated and am applying for a transitional program at the moment. However, I am finding it difficult to address the selection criteria. Could anyone give me some help?

1. Demonstrated high level interpersonal, verbal and written communication skills

2. Demonstrated clinical knowledge and clinical problem solving abilities

3. An understanding of and ability to work within an interdisciplinary team

4. An understanding of professional, ethical and legal requirements of registered nurse

5. An understanding of EEO, Work health and safety, infection control and continuous quality improvement principle

6. A demonstrated understanding of NSW health's core values- openness, collaboration, respect and empowerment

NotMyProblem MSN, ASN, BSN, MSN, LPN, RN

2,690 Posts

If I understand your question correctly, I will give you my thoughts on what I believe you are searching for. If you are hoping to be selected for a work position or a program seat, this is what the panel is looking for. I hope this helps you...

1. This area means that you are competent in written and spoken exchanges. You need to be able to understand information that you receive as well as making sure that others can understand what you are writing and saying. And you need to be able to do it in a professional, non-offensive manner at all times.

2. This means that you understand the information from your nursing training and are able to apply/use what you've learned so far to the work setting as it pertains to solving problems in patient care.

3. Working with an interdisciplinary team (RN, LPN, CNA, MD, PT/OT, Case Manager, etc.) means that you understand that other members of the healthcare team have specific jobs to do with your patients as well, and you are able to use their suggestions to help get your patients healthy, just as you would expect them to accept your suggestions (your clinical knowledge) that help to keep your patients safe as they return to good health. In other words, you are all working together and understanding that everyone has his/her own job to do that brings the patient to the point of being ready for discharge.

4. This means that you understand your obligations and duties as a nurse, as well as knowing your limitations. You are expected to follow your state's Nurse Practice Act and the Code of Ethics set forth by the American Nurses Association. Basically, if you've not been trained to do it, don't do it! You must always work within your scope of practice. Also, you need to be able to act in the best interest of your patients. Believe it or not, that may even mean having the patient assigned to another nurse. You see this more in ethical situations.

5. This section means that there are policies and procedures that you are expected to follow to keep everyone safe at that facility. That includes but is not limited to hand washing, fall prevention, following isolation procedures, etc., which includes protecting yourself, your patients, their families/visitors, or ANYONE you come in contact with while on duty.

6. If NSW is a facility that intends to employ you, this means that, like most places, they have a mission statement. This particular statement indicates that they (all team members) strive to be as open as possible about the care they provide (keep the patients and team members informed) and try to work WITH each other (collaboration) in a manner that shows that they treat each other and their customers as they should be treated in a way that improves and strengthens (empowerment) the facility as a whole.

Thanks for your help. That is very helpful.

You're very welcome!

(The last statement in my post should read "Good luck with the transition". This autocorrect on my iPad should be called auto-add. Sorry about the typos).

Just wondering, should I put some theory in for the last question? It seems to be more about understanding, so I should paraphrase and express my own idea of openness, collaboration, respect, and empowerment. However, they are all interrelated. Should I explain all of them?

I would summarize it based on my understanding and interpretation of the mission statement if I were you. No need to add theory.

As nurses, we use a range of communication skills, both verbal and written, to acquire, interpret and record our knowledge and understanding of people’s needs. The interpersonal skills that we use include working with others, showing empathy with clients, family and colleagues, using sensitivity when dealing with people, and relating to persons from differing cultural, spiritual, social and religious backgrounds. I have acquired and refined strong communication and interpersonal skills through my course and my work experience as an AIN at St Vincent Private hospital. I have used active listening, asked for clarification and negotiated solutions to fulfil my role as specified by SVPH. I have also demonstrated clear and precise written communication skills, using standard medical terminology and abbreviations. For example, I have written “PAC”, “FASF” and “IDC” in the handover sheet for the other AIN and provided a handover using ISBAR. Moreover, I have correctly recorded the patient’s FBC and followed organisational protocols for electronic communication, such as using the “web delacy” to order patients’ meals.

2. Demonstrated clinical knowledge and clinical problem solving abilities

A very important clinical nursing skill is knowing how to safely administer medications to patients. There are many routes of administration and many different drugs. To prevent errors when giving drugs to patients, nurses follow "The 5 Rights of Medication Administration." The five rights refer to: the right patient, the right drug, the right dosage, the right time and the right route. The nurse repeats the "5 Rights" check three times before giving a drug to a patient and follows the organisation's medication administration policy, such as having the medication verified by two RNs and understanding which drugs are nurse-initiated.

My clinical problem-solving approach incorporates the nursing process: assessing, interpreting, implementing and evaluating. I recently demonstrated my ability to utilise this process in my role as an AIN at SVPH, when I addressed a patient’s constipation while I was doing my AIN special. I noticed that he had a distended abdomen that was as hard as a rock. Considering his surgery and the documentation showing that he had not opened his bowels, I suspected that he was constipated, so I told the RN and Movicol was given to the patient. I then assessed the patient for any bowel movement and toileting in order to evaluate the intervention.

3. An understanding of and ability to work within an interdisciplinary team

Working within an interdisciplinary team means that each member of the health care team contributes to the patient’s health in different ways and roles. Nursing care also involves communicating with other health-care professionals, including physicians, speech therapists, physiotherapists and social workers. Being a team player involves sharing insights, observations and concerns with the relevant providers so that adjustments can be made to patient care. Good teamwork ensures high-quality patient care, increases patient safety and ensures that person-centred care is delivered effectively. I have developed effective teamwork skills through my several clinical placements in different facilities and my work experience at SVPH. During my clinical practice in a gastroenterology ward, I worked together with an RN who guided me. After a handover told us that three post-op patients would be arriving, we divided the work: I prepared the beds, IV poles and obs machine, and I was assigned to do the hourly obs and IDC, order the diet and refer the patient to the dietitian. Furthermore, when I was in the orthopaedic ward at SVPH, I worked with a physiotherapist to help my patient get up from the bed.

Ensure that person-centred care is delivered. Refer to the right person to allocate the care. Refer to the social workers.

4. An understanding of professional, ethical and legal requirements of registered nurse

I endeavor to provide quality, safe nursing care in accordance with the ANMC competency standards, the Code of Ethics and the Code of Professional Conduct. I understand the legal requirements of a nurse, such as scope of practice, duty of care and informed consent. As a professional, I treat personal information as private and confidential; this is shown through the proper disposal of handover sheets. I also maintain a professional relationship with patients and am aware of professional boundaries. Ethically, I respect the values, customs and spiritual beliefs of individuals. I also respect patients’ privacy and dignity at all times by closing the door or the curtain during procedures. Legally, I perform my duty of care, for example by carrying out adequate obs assessments and the relevant interventions. One of the essential legal requirements is informed consent; we rely on oral or implied consent for most nursing interventions. When performing my duty as an AIN of providing a post-op wash for my patient, I always obtain consent before proceeding with the wash, and I always assess the patient’s condition before rolling the patient onto their side, to prevent any potential risk.

5. An understanding of EEO, Work health and safety, infection control and continuous quality improvement principle

There are policies and procedures that we are expected to follow to keep everyone safe. EEO means that a person cannot be discriminated against because of their race, cultural background, appearance and so on; for example, a co-worker cannot be discriminated against due to his race. Work health and safety is about promoting the health, safety and wellbeing of staff, patients and others in the workplace. I comply with the infection control standards in the nursing regulations by using standard precautions at all times, following the 5 Moments for Hand Hygiene, using PPE appropriately and segregating waste into the correct waste management stream. I have gained a clear understanding of these principles by undertaking annual mandatory training in work health and safety during my employment at SVPH. During my duty as an AIN at SVPH, I assist in maintaining a clean and tidy unit and always double-check the sharps bin to avoid needlestick injuries.

Is this a class assignment? I thought maybe you were preparing for a job or college intake interview...

Yes, it is for my job application; this is my draft. But my brain just doesn't seem to work properly, and I feel like I can't write it down properly. Some questions ask me to show that I understand something, so I just write out what it is.

Oh, I see. If that's the case, these questions/statements are requirements that are expected of the RN. I've seen this on many applications. All-in-all, they just expect you to behave and function as the professional you were trained to be, in accordance with your state's nurse practice act combined with the facility's policies and procedures. Trying to memorize those sections is admirable but a bit of overkill. Just follow the rules and demonstrate respect for others. Your preceptor will train you on what you need to know to get you started once you get the job. And remember, when there is something that you don't know, ASK.

Selection Criteria Response: Nursing Examples

In this post:

1. Effective communication and interpersonal skills

2. Teamwork skills

3. Problem solving skills

You will often be expected to respond to key selection criteria when applying for nursing jobs. Here are 3 examples to get you started.

While it might feel like your cover letter and resume should suffice when you’re submitting a job application, sometimes your recruiter expects a little more, depending on the field of work you’re in.

If you’re working in nursing, you’re often expected to respond to some key selection criteria in the application process.

This is an excellent opportunity to make your application stand out by matching your real-life experiences to your resume.

Here are some selection criteria examples for a registered nurse application in Australia, along with some answer examples to help you out:

1. Effective communication and interpersonal skills

Effective communication skills are essential for nursing. Show the recruiter that you’re good with people by explaining your methods, and reiterating your relevant skills.

Throughout my career in nursing care, I have proven my robust verbal communication skills when interacting one-on-one with my patients or their partners/spouses. I can effectively communicate medical terminology in digestible terms, making outcomes easier to understand by clearly indicating what any new information will mean for their overall diagnosis. I have also proven to be an effective communicator in group settings, with my patients’ larger family groups. One of my techniques is to direct my speech and eye contact between both my patient and their loved ones, to ensure that everyone feels included in the conversation. I know that I need to be conscious of the emotional impacts of what I am communicating to the group since health concerns often come hand-in-hand with hardship.

2. Teamwork skills

Teamwork is essential in any workplace, but especially in a hospital environment where you work with others every day. In your response to this prompt, don’t just exemplify your ability to work collaboratively; you should also prove that you’re a great team leader.

In my five years working at the Royal Melbourne Hospital as a registered nurse, I did a lot of shift work, meaning that I would not always be working with the same team. Through this experience, I thoroughly developed my teamwork skills and proved to be very adaptable as a team member. My team members were different every shift, but the outcomes of my work remained consistent, as a result of my effective interpersonal skills. I can slot into any working team and adapt my methods accordingly. Towards the end of my time at the RMH, I was occasionally rostered on with several junior staff members, meaning that I would have to step into a senior position and delegate tasks accordingly. My ability to perform as a team member or as a team leader, depending on the circumstances, is one of my strongest assets.

In a nursing environment, you’re faced with new problems every day and need to be able to think on your feet, with an ability to act quickly. Provide examples of your skills in this area in your response.

When I first began my career in nursing, I primarily worked in aged care. A majority of my patients were living with degenerative brain conditions like Alzheimer’s disease. This meant that new problems arose all the time, for example when a patient wandered off and got lost or was otherwise confused, having forgotten something significant. Patients would be lucid one second, and then stubborn and disoriented the next. This meant that I had to adapt to new circumstances quickly and efficiently, addressing new concerns every moment. It was also essential that, while I solved each problem, I remained calm and conscious of the patient’s vulnerability.

Overall, your key selection criteria response should come from your own real-life experience. Tailor your responses to the keywords of the position description, and try to use specific examples. Prove that your real experiences have earned you the skillset you have today.


- Exploring the ACGME Core Competencies: Medical Knowledge (Part 5 of 7)

These Core Competencies define the basic skill sets and behavioral attributes deemed a requirement for every resident and practicing physician. Their success is measured in terms of the ability to: 1) administer a high level of care to diagnose and treat illness, 2) offer and implement strategies to improve patient health, 3) provide resources for disease prevention, and 4) support patients’ and families’ emotional needs while also treating their physical needs.

Therefore, these competencies are incorporated into the training and continuing education of almost every major medical education program. This includes the adoption by the American Board of Medical Specialties (ABMS), and, later, the integration of the ACGME Core Competencies into the Maintenance of Certification (MOC) program.

The ACGME Core Competencies are defined as:

- Practice-Based Learning and Improvement

- Patient Care and Procedural Skills

- Systems-Based Practice

- Medical Knowledge

- Interpersonal and Communication Skills

- Professionalism

In Part 1 of this blog series, we listed the ACGME Core Competencies with a focus on EPAs and Milestones. Other articles in this series have addressed Practice-Based Learning and Improvement, Patient Care and Procedural Skills, and Systems-Based Practice. In this article, we break down the various components of the Medical Knowledge core competency. Each ACGME Core Competency represents a necessary skill set and attitude for training and practicing physicians to continually hone and develop. Medical Knowledge includes an understanding of all established and evolving biomedical, clinical, epidemiological, and social-behavioral sciences. However, resident physicians must also go beyond simply obtaining medical knowledge for themselves. They must also demonstrate the ability to apply it to patient care and appropriately and enthusiastically transfer that knowledge to others.

Medicine is constantly evolving, and even a seasoned physician with years of experience hasn’t “seen it all.” A desire for lifelong learning, and an understanding of why the practice of medicine requires it, is a requisite attribute for physicians providing quality health care. For this reason, the ACGME Core Competency of Medical Knowledge seeks to ensure that residents are trained to continually investigate, question, and seek new knowledge. But knowledge without application is fruitless. Sharing those best practices with medical colleagues and employing that knowledge in the diagnosis and treatment of patients is as important as obtaining it.

The ACGME Core Competencies: Sub-competencies for Medical Knowledge

The Medical Knowledge subcompetencies break down into manageable pieces—the skills and attributes that comprise this core competency. These include being able to demonstrate:

- An investigative and analytical approach to clinical problem solving and knowledge acquisition

- An ability to apply medical knowledge to clinical situations

- An ability to teach others

Investigative and Analytical Approach

First and foremost, residents must always maintain an open mind. They will demonstrate a willingness to never shy away from asking questions and searching out new and useful sources of information. They will consider alternative or additional diagnoses, seeking and initiating discussions with faculty. The continual search for medical knowledge includes identifying both universal and individualized goals and objectives for learning, at every stage in a resident’s career. This includes an awareness of areas needing improvement and a humility to absorb and process feedback on areas where growth is needed.

The lifelong-learner attribute is evidenced by the physician’s commitment to querying literature and texts on a regular basis, attending conferences, and critically evaluating new medical information and scientific evidence in order to modify their knowledge base accordingly.

Apply Medical Knowledge to Clinical Situations

The acquisition of medical knowledge must continually circulate back to the application of it and provide better and more relevant, quality patient care. Residents will demonstrate competence through the combination of a physical exam and interpretation of ancillary studies, such as laboratory work and imaging, to form a working diagnosis and initiate a therapeutic approach.

The application of medical knowledge should also be measured using Miller’s Pyramid, a framework for assessing clinical competence developed in 1990. According to it, a resident progresses from “Knows” to “Knows How” to “Shows How” to “Does.” The “Does” component is the key: what a resident may know and be able to demonstrate in a controlled setting should match how they perform in actual day-to-day interactions with patients on a regular basis.

Subsequently, a physician exemplifying the attributes of this core competency will apply the evidence-based medical skills and knowledge that they have obtained in a patient-centered approach, and do so consistently. It is a skill set that is evidenced and witnessed in all patient interactions and clinical situations, not just testing scenarios.

Finally, competent residents pay attention to the clinical outcomes first and foremost, but in a manner that takes into consideration the cost-effectiveness, the risk-benefit ratio, and patient preferences.

Ability to Teach Others

Obtaining and applying medical knowledge skills is essential for delivering quality health care. But they cannot survive in a vacuum. Practitioners must regularly participate in the act of sharing knowledge. Being a lifelong learner is only half of the equation. The other half requires the ability to teach and pass along to others the experiences and knowledge each individual has acquired through the years. To successfully embody the Medical Knowledge Core Competency, a resident needs to demonstrate the ability to educate others in an organized, enthusiastic, and effective manner.

Medical knowledge is where all medical education begins. And it never ends. Residents must understand that the continual process of investigating, questioning, and learning is an integral part of what makes residents and physicians successful, and contributes to the growth and improvement of the health care field as a whole.

Read more about the six ACGME Core Competencies:

- Exploring the ACGME Core Competencies (Part 1 of 7)

- Exploring the ACGME Core Competencies: Practice-Based Learning and Improvement (Part 2 of 7)

- Exploring the ACGME Core Competencies: Patient Care and Procedural Skills (Part 3 of 7)

- Exploring the ACGME Core Competencies: Systems-Based Practice (Part 4 of 7)

- Exploring the ACGME Core Competencies: Interpersonal and Communication Skills (Part 6 of 7)

- Exploring the ACGME Core Competencies: Professionalism (Part 7 of 7)


I feel that if medical students entering residency do not recognize the role of the ACGME or understand the competencies by which they will be evaluated, it is difficult to assess the efficacy, utility, and success of the Outcome Project.

Medical knowledge is one competency that is most important to a physician’s practice. It is an ongoing activity that needs self commitment to be up-to-date with current concepts. It is a reality that the busier a physician is in his/her clinical practice, the less time he/she has for reading. This sounds contradictory but is true. It is also important to distinguish continuing competency from continuing education as one can be educated but not competent or vice versa both of which are dangerous.


- Research article

- Open access

- Published: 24 November 2016

Knowledge is not enough to solve the problems – The role of diagnostic knowledge in clinical reasoning activities

- Jan Kiesewetter ORCID: orcid.org/0000-0001-8165-402X 1 ,

- Rene Ebersbach 1 ,

- Nike Tsalas 2 ,

- Matthias Holzer 1 ,

- Ralf Schmidmaier 1 , 3 &

- Martin R. Fischer 1

BMC Medical Education volume 16, Article number: 303 (2016)


Clinical reasoning is a key competence in medicine. Little is known about how non-experts such as medical students solve clinical problems. It is known that they have difficulties applying conceptual knowledge to clinical cases, that they lack metacognitive awareness, and that higher-level cognitive actions correlate with diagnostic accuracy. However, the role of conceptual, strategic, conditional, and metacognitive knowledge for clinical reasoning is unknown.

Medical students (n = 21) were exposed to three different clinical cases and instructed to use the think-aloud method. The recorded sessions were transcribed and coded with regard to the four different categories of diagnostic knowledge (see above). The transcripts were coded using the frequencies and time-coding of the categories of knowledge. The relationship between the coded data and accuracy of diagnosis was investigated with inferential statistical methods.

The use of metacognitive knowledge is correlated with application of conceptual, but not with conditional and strategic knowledge. Furthermore, conceptual and strategic knowledge application is associated with longer time on task. However, in contrast to cognitive action levels, the use of different categories of diagnostic knowledge was not associated with better diagnostic accuracy.

Conclusions

The longer case work and the more intense application of conceptual knowledge in individuals with high metacognitive activity may hint towards reduced premature closure as one of the major cognitive causes of errors in medicine. Additionally, for correct case solution the cognitive actions seem to be more important than the diagnostic knowledge categories.


Clinical experts need general and specific problem solving strategies in order to make adequate treatment decisions for their patients. Clinical problem solving (or clinical reasoning) as a skill involves different categories of knowledge as well as several cognitive abilities and is key for becoming a clinical expert [ 1 ]. Problem-solving occurs in well-known phases, described in models like the hypothetical-deductive model and pattern recognition, a process that requires the use of knowledge [ 2 – 4 ]. In university, the focus lies on teaching medical knowledge, in order to give the student a foundation for further clinical problem-solving when dealing with real patients [ 5 ]. According to recent studies [ 6 ] diagnostic knowledge can be categorised into three categories: Conceptual knowledge (“what information”), strategic knowledge (“how information”) and conditional knowledge (“why information”) [ 5 ]. Table 1 shows an overview of the definitions. These categories have been investigated in several studies regarding clinical reasoning of medical students and medical doctors [ 6 – 8 ].

The Revision of Bloom’s Taxonomy added a fourth category: Metacognitive knowledge, which “involves knowledge about cognition in general as well as awareness of one’s own knowledge about one’s own cognition” [ 9 , 10 ]. While handling a case, medical students or doctors are able to externalize their thoughts about the strategies of problem-solving or their application of knowledge [ 11 ]. Metacognition in this sense includes the judgements of how easily one believes one learns and whether one has the feeling of knowing something.

Surprisingly, little is known about the assessment and applicability of metacognition within the medical context and its relation to the knowledge categories in the situated learning contexts of medical students.

Whereas several methods are used to assess “classic” knowledge categories (e.g. multiple choice tests, key feature problems, interviews, questions, stimulated recall) it has proven difficult to measure and observe metacognition in a realistic setting [ 7 ]. Since metacognition cannot be observed directly in students [ 12 ], self-report methods like questionnaires, rating scales and stimulated recall are used. However, these self-report measures depend on the student’s metacognitive activities and verbal capacity, since both are needed to be able to talk about what one thinks [ 13 ]. When students are thinking aloud, registering the metacognitive activities without the student’s awareness is possible and the otherwise implicit cognitive processes can be observed [ 14 ].

In clinical problem solving research, traditionally only small parts of knowledge are investigated in relation to the correct diagnosis. Thus far, there is no model of clinical reasoning that, if applied, can explain how and why successful students come to the correct diagnosis, while unsuccessful students do not. However, it seems worthwhile to create evidence for such a holistic model of clinical problem solving of medical students that should include all knowledge categories. We therefore set out to observe all aforementioned knowledge categories simultaneously in order to identify the relationship between them. More specifically we wanted to answer the following research questions:

How are diagnostic knowledge categories interrelated?

The interplay of knowledge categories gives insight into how students store clinical knowledge and whether some categories seem more important to them than others. Further, it has not been investigated how knowledge categories relate to previous knowledge.

How is the use of the diagnostic knowledge categories related to time on task?

It is important to understand how much time the application of the different knowledge categories takes.

How is the use of diagnostic knowledge categories related to diagnostic accuracy?

In particular, it seems interesting to identify the role each category plays in solving a clinical case.

How are the knowledge categories divided over the course of a case solution?

It is interesting to see if some of the knowledge categories are used more frequently in the beginning and others are used more towards the end of the case solutions.

To answer these research questions we conducted a study where medical students first received a short knowledge training for clinical nephrology and a subsequent knowledge test to standardize previous knowledge. After that the students worked on paper-based, clinical case scenarios while thinking aloud. The think-aloud protocols were transcribed and coded according to the aforementioned knowledge categories. In the following paragraphs each step of the methodology is explained in detail.

Participants

Twenty-one medical students (female = 11) of two German medical faculties in their third, fourth and fifth year (M = 23.9 years; range 20–34) volunteered to take part in the study. These curricular years were chosen because the participants would have finished their internal medicine curriculum and should have enough prior knowledge to solve clinical problems but would not have experienced the final sixth clinical year of full-time electives that usually elevates students’ problem-solving substantially. This study was approved by the Ethical Committee of the Medical Faculty of LMU Munich. Written, informed consent was obtained from all participants and all participants received a small monetary compensation for participation.

Coding scheme

A coding scheme was established on the foundation of the knowledge type definitions [ 7 , 10 , 15 ]. The definition used in the coding scheme is illustrated in Table 2 . The coding scheme had an overall interrater reliability of k = .79; SD = .9 for the categories. One investigator (R.E.) coded all transcripts; a random 10% sample of the text was double coded.
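The interrater reliability reported above is a chance-corrected agreement statistic. As a minimal sketch, assuming the reported k is Cohen's kappa for two coders (the text does not say which variant was used), it could be computed as follows. The function name `cohens_kappa` and the labels are hypothetical and are not data from the study.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who labelled the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items that received identical labels.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement, from each rater's marginal label frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if p_expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical codes for ten double-coded text segments (illustration only).
coder_1 = ["CcK", "CcK", "SK", "CdK", "CcK", "SK", "CdK", "CcK", "SK", "CdK"]
coder_2 = ["CcK", "CcK", "SK", "CdK", "SK", "SK", "CdK", "CcK", "CdK", "CdK"]
print(round(cohens_kappa(coder_1, coder_2), 2))
```

Kappa discounts the agreement two coders would reach by chance given how often each uses every category, which is why it is usually preferred over raw percentage agreement for coding schemes like this one.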

Course of study

Students arrived and first filled out a pre-study questionnaire (see below). They then spent three hours practicing a standardized learning unit in the field of clinical nephrology; upon completion, their retention of content-specific medical knowledge was tested using a multiple choice test. Participants were then instructed on the think-aloud method in a short practice exercise. Finally, students solved three cases in clinical nephrology with the think-aloud method (see below).

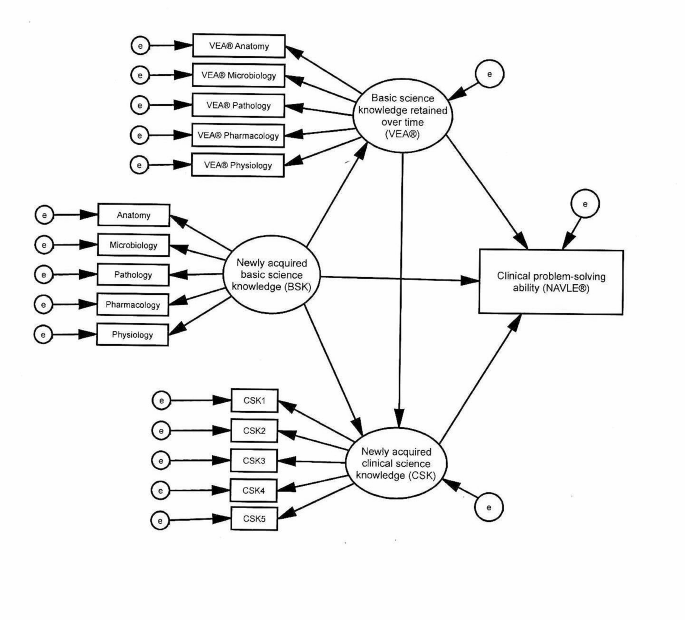

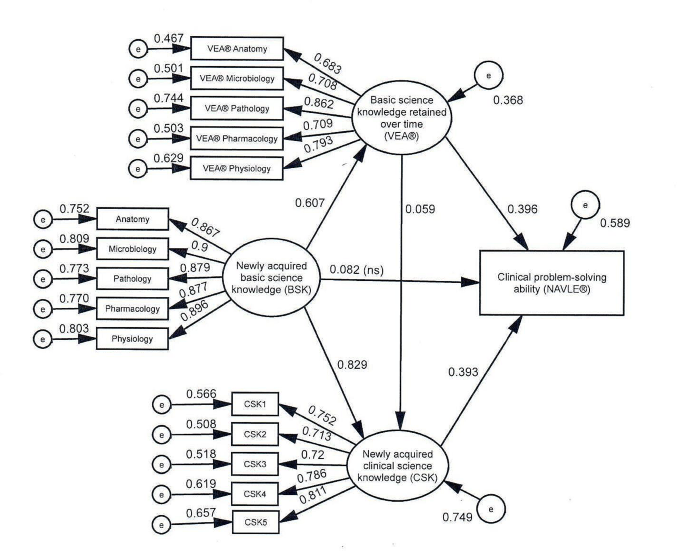

Figure 1 shows the course of the study with knowledge training, a subsequent knowledge test, and work on the paper-based, clinical case scenarios.

Overview of the study

All students were recorded and recordings were transcribed and coded according to the defined knowledge categories. Codings were analysed for accuracy of the diagnosis.

Pre-study questionnaire

All participants completed a questionnaire containing items about their socio-demographic data, gender and age to control for possible confounders. Further, the participants were asked for their overall grade in the preliminary medical examination. The reliability of this national multiple-choice exam is very high (Cronbach’s α = .957) [ 16 ]. The performance of participants in this exam was used as an indicator for general prior knowledge in medicine. The results of the questionnaire and all other obtained data were pseudonymized.

Knowledge training and test

Although all participants had successfully passed their internal medicine curriculum, a standardized learning tool was provided to refresh their textbook knowledge. Thirty flashcards were used, containing 98 items with factual information on clinical nephrology, more precisely on acute renal failure and chronic renal insufficiency. This content matches the pathomechanisms of the cases used. The content of the flashcards was previously published in another study (appendix S1 (online) of Schmidmaier et al. [ 17 ]). Within a 3 h electronic learning module, testing ensured that all participants could retrieve the contents of each flashcard at least once. This was to help ensure that all students were able to show their problem-solving strategy and ability because they had the knowledge needed for application of strategies.

Clinical case scenarios

The three, paper-based case scenarios within the field of clinical nephrology were real cases from the department of internal medicine adapted by experts with anonymized, real supplemental material (i.e. lab values). After the transformation into paper-based scenarios, the cases were additionally reviewed by two content experts and one expert of medical education to ensure best possible authenticity of a paper-based case. All cases were structured the same way, containing two or three pages describing the patient’s symptoms and medical history. The results of the physical examination, blood tests, urine sample, ECG, and ultrasound scan were each described on separate pages.

The students’ task was to work on each case to show their problem-solving abilities with no instructions being given other than “Please work on this case”. They were not explicitly asked to state a diagnosis. Only one student and the test instructor were present in the room during the case elaboration. The test instructor sat behind the participant to avoid any diversion of thought [ 18 ]. The only interaction between the participant and instructor was when the instructor provided the next page of a case upon the participant’s request. Every case was interrupted after 10 min, independent of whether the case was solved or not. While participants were working on the cases using the think-aloud method, they were audio-recorded. All students did voluntarily state a diagnosis at the end of each case.

Data analysis

All audio recordings (total time of over 12 h) were transcribed and coded using the operationalized definitions of knowledge categories and metacognition described above. Data of three case sessions of 21 participants were evaluated and 63 sessions were analysed.

The standard qualitative content analysis [ 19 ] was used to assess, code, and analyse the process of thought, as it also yields very detailed quantitative data in consecutive analysis. It uses models with several categories for the coding of a text. In this study, the knowledge categories were used. The shortest section of text matching a particular knowledge category was determined as an episode. When different knowledge categories took place at the same time, one text section could be coded as more than one category. For examples see Table 2 .
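The episode coding described above, in which one text section can carry more than one category, maps naturally onto a small record type. The sketch below is an illustrative assumption rather than the authors' pipeline (they used f4, Excel and R). The `Episode` class, its field names and the sample values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Episode:
    start_s: float         # episode start, in seconds from the start of the case
    end_s: float           # episode end, in seconds from the start of the case
    categories: frozenset  # one or more codes, e.g. {"CcK", "MK"}


def category_frequencies(episodes):
    """Count codings per category; a multi-coded episode counts once per category."""
    counts = {}
    for ep in episodes:
        for cat in ep.categories:
            counts[cat] = counts.get(cat, 0) + 1
    return counts


def time_share(episodes, total_time_s):
    """Percentage of the total case time spent in each category."""
    seconds = {}
    for ep in episodes:
        for cat in ep.categories:
            seconds[cat] = seconds.get(cat, 0.0) + (ep.end_s - ep.start_s)
    return {cat: 100.0 * s / total_time_s for cat, s in seconds.items()}


# Hypothetical two-minute session (illustration only, not study data).
session = [
    Episode(0, 30, frozenset({"CcK"})),
    Episode(30, 60, frozenset({"SK", "MK"})),  # two categories at the same time
    Episode(60, 120, frozenset({"CdK"})),
]
print(category_frequencies(session))
print(time_share(session, total_time_s=120))
```

Because overlapping codes are counted once per category, the percentages returned by `time_share` can add up to more than 100.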

Subsequently, the codings were marked as sections in the transcription software “f4” (f4 2011, Dr. T. Dresing, http://www.audiotranskription.de) and exported to Microsoft Excel 2010 (Microsoft, 2010). For further analysis, the statistical environment “R” was used (http://www.r-project.org/).

A predefined alpha level of p < .05 was used for all tests of significance. If the data were used multiple times for comparisons, we applied a Bonferroni correction for alpha error accumulation and report results as significant accordingly. Graphical illustrations were processed as the percentage of time spent on one knowledge category relative to the overall time. Although the categories of the model were described qualitatively, this was the basis for a quantitative analysis and graphical illustration of the results.
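A Bonferroni correction divides the alpha level by the number of comparisons made on the same data. The following is a minimal sketch of that rule. The function name `bonferroni_significant` and the p-values are hypothetical, and the authors' own computation was done in R.

```python
def bonferroni_significant(p_values, alpha=0.05):
    """Flag each p-value as significant against the threshold alpha / m."""
    m = len(p_values)
    if m == 0:
        return []
    threshold = alpha / m  # per-comparison alpha after correction
    return [p < threshold for p in p_values]


# Hypothetical p-values from three comparisons on the same data.
p_values = [0.004, 0.02, 0.03]
print(bonferroni_significant(p_values))
```

With three comparisons the threshold drops from .05 to about .0167, so only the first hypothetical p-value would still be reported as significant.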

The frequencies of the categories and length of the episodes were analysed as quantitative dependent variables. The accuracy of diagnosis was established in a binary form (correct or not correct) as a dependent variable. Chi-squared tests were used to verify the relationship of dependent variables to all dichotomous socio-demographic participant variables (like gender), while Pearson correlation was used for all continuous dependent variables to correlate them to previously obtained participant data. Correlations between two dichotomous variables were calculated using the crosstabs correlation coefficient ϕ. To gain insight into how knowledge categories are divided over the course of time, the cases were divided into six parts of equal duration. Knowledge categories per sixth of the case were analysed as frequencies. As there are so many possible comparisons between the categories and sixths, we chose not to apply nonparametric statistical tests because of too high an alpha error accumulation.
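Dividing each case into six parts of equal duration and counting categories per part can be sketched as below. The text does not state how an episode spanning two parts was assigned, so this sketch assumes assignment by the episode's start time. The function names and sample values are hypothetical.

```python
def sixth_index(time_s, case_duration_s, parts=6):
    """Return the 0-based index of the equal-duration part that contains time_s."""
    if not 0 <= time_s <= case_duration_s:
        raise ValueError("time_s must lie within the case duration")
    index = int((time_s * parts) // case_duration_s)
    return min(index, parts - 1)  # the exact end of the case belongs to the last part


def frequencies_per_sixth(episodes, case_duration_s, parts=6):
    """episodes: iterable of (start_s, category) pairs; returns one count dict per part."""
    table = [{} for _ in range(parts)]
    for start_s, category in episodes:
        bucket = table[sixth_index(start_s, case_duration_s, parts)]
        bucket[category] = bucket.get(category, 0) + 1
    return table


# Hypothetical episodes in a 600-second case (illustration only, not study data).
episodes = [(10, "CcK"), (20, "SK"), (250, "MK"), (590, "CdK")]
for part, counts in enumerate(frequencies_per_sixth(episodes, 600), start=1):
    print(part, counts)
```

Normalising by case duration, rather than using fixed time windows, keeps cases of different lengths comparable.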

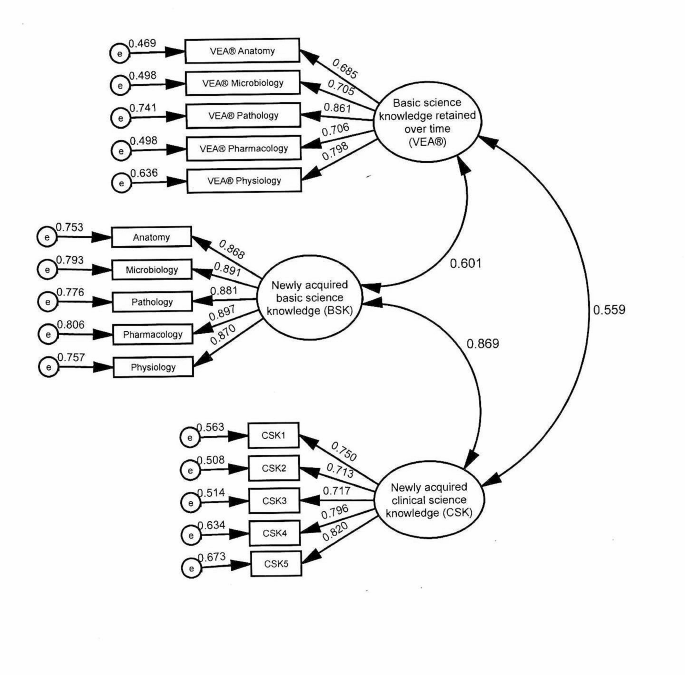

Descriptive data

Overall 983 distinct episodes of knowledge categories were coded. Table 3 shows that the students’ reasoning consists mainly of conceptual and strategic knowledge. All cases contain these categories. Most often conceptual knowledge was used (CcK) with a 44% frequency, conditional knowledge (CdK) was used with a 36% frequency, strategic knowledge (SK) with a 21% frequency. Metacognition was identified most frequently (58%) but always in combination with other knowledge categories. Metacognition was used in every case with a mean of M = 9.02 per case (SD = 6.21). Figure 2 shows the time-line graphs of two participants, exemplifying little and extensive use of metacognition.

Time-line graph (Gantt-charts) of two participants of a session with a clinical case. The Gantt-chart shows the distribution of the use of different diagnostic knowledge categories over time. As metacognitive knowledge was only in use in combination with other knowledge categories its use is presented additively on top. The upper part of the figure shows a participant with only little use of the knowledge categories and the lower part of the figure a participant with much use of the knowledge categories

How are the diagnostic knowledge categories interrelated?

To answer this research question, the frequencies per case of the use of knowledge categories were correlated. Results show that conceptual knowledge is not significantly related to strategic or conditional knowledge ($r_{\mathrm{CcK;SK}} = .23$, n.s.; $r_{\mathrm{CcK;CdK}} = .00$, n.s.). Conceptual knowledge and metacognitive knowledge ($r_{\mathrm{CcK;MK}} = .35$) are significantly related, as are conditional and strategic knowledge ($r_{\mathrm{CdK;SK}} = .27$). The results are presented in Table 4.

Interestingly, prior knowledge (grades of PME and assessment of the learning phase in the field of clinical nephrology) was significantly correlated with metacognitive knowledge ($r_{\mathrm{MK;PME}} = .41$, $r_{\mathrm{MK;\,LEARNING\ PHASE}} = .28$).
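The correlations reported here are Pearson coefficients between per-case frequencies of two categories. A minimal sketch of that computation follows. The function name `pearson_r` and the counts are hypothetical and are not taken from the study.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length with at least two values")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical per-case counts of two knowledge categories in five case sessions.
conceptual = [12, 7, 9, 15, 4]
metacognitive = [10, 6, 11, 13, 5]
print(round(pearson_r(conceptual, metacognitive), 2))
```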

How is the use of diagnostic knowledge categories related to time on task?

To answer this research question, the time-on-task (TT) was correlated with the use of knowledge categories. In three cases the students had to be interrupted after 10 min. These students were included in the analysis with the maximum time. The overall time-on-task was not correlated with diagnostic accuracy ($r_{\mathrm{TT;\,DIAGNOSTIC\ ACCURACY}} = -.13$, n.s.). However, conceptual and strategic knowledge are significantly correlated with TT (see Table 5).

When correlating the use of the four knowledge categories with the correct solution, none of them showed a significant result. Likewise, chi-squared tests of socio-demographic data of the participants (age, year of studies) and correct versus incorrect diagnosis yielded no significant result.

We found that the frequencies of the categories used are not equally distributed over the case. Interestingly, in the first two sixths of the case the students used more conceptual and strategic knowledge. From the third sixth onwards the students used more metacognition than any other category. Of course, metacognition could only be coded together with other categories, so this category depends on the others. However, the frequencies of conceptual and strategic knowledge decline in the fifth and sixth sixths. All frequencies over the course of the cases are depicted in Fig. 3.

Diagnostic knowledge dimensions used by medical students over the course of the cases

We found the occurrence of a pattern of conditional and strategic knowledge right before the closure of cases, named sequence-at-closure (s@c). This sequence-at-closure appeared in 24 of the 63 case solutions (38%) and is significantly correlated with the correct solution of the case ($r_{\phi;\,\mathrm{S@C;\,CORRECT\ SOLUTION}} = .37$).
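The ϕ coefficient used here measures association between two dichotomous variables, in this case presence of the sequence-at-closure and a correct solution, from a 2×2 table. The sketch below shows the standard formula. The cell counts are invented for illustration, since the underlying table is not given in the text.

```python
import math


def phi_coefficient(a, b, c, d):
    """Phi for a 2x2 table.

    a: both present, b: first only, c: second only, d: neither.
    """
    denominator = math.sqrt((a + b) * (c + d) * (a + c) * (b + d))
    if denominator == 0:
        raise ValueError("phi is undefined when a row or column total is zero")
    return (a * d - b * c) / denominator


# Invented counts (NOT the study's table): pattern present/absent vs. correct/incorrect.
print(round(phi_coefficient(a=10, b=5, c=5, d=10), 2))
```

For a 2×2 table, ϕ equals the Pearson correlation of the two variables coded as 0 and 1.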

In this study the different knowledge categories including metacognition in case work of medical students were empirically coded and described. The diagnostic knowledge categories were applied for the first time to medical students’ problem-solving in a realistic environment. The result was application of conceptual, strategic and conditional knowledge throughout the cases. None of the knowledge categories on its own has a crucial role for good performance. Further, prior knowledge was not directly related to the correct diagnosis. These results support the claim that it is not simply knowledge which solves clinical cases, and that more does not directly mean better in this sense. Instead, it is the goal-directed application of knowledge in a certain order that helps to solve cases. Over the course of the cases it seems that the application of conceptual and strategic knowledge declines, while the importance of metacognitive knowledge increases. We found that the last two categories before a diagnostic decision was made by the participants often consisted of a pattern of conditional and strategic knowledge at the closure of cases, named sequence-at-closure, which correlated with the correct solution of the case. This result relates to our previous findings regarding the so-called higher loop of cognitive actions, which was associated with better diagnostic performance [ 20 ]. The higher loop consisted of the cognitive actions Evaluation, Representation and Integration. It seems that students who are ready to state a correct diagnosis evaluate and summarize their represented knowledge about the case with this final pattern of conditional and strategic knowledge before integrating it into the correct solution.

If students have a clear representation of the case in relation to their predefined clinical knowledge, and know why the patient’s symptoms and clinical findings occur and how to deal with them, then they have a very good chance of correctly diagnosing the patient. This finding has direct implications for instructional medical education research, which we will discuss further below.

Metacognition could be coded in all participants. However, it always appeared in conjunction with other knowledge categories. This result seems plausible as the application of metacognition cannot be separated from the content of a case. The coding of metacognition was worthwhile; it significantly correlated with conceptual knowledge and with two distinct measures of prior knowledge. People who know more and scored better in their previous studies seem to have additional capacity to control and monitor their solution in a better way. Knowledge is regularly measured in assessment and learning research [ 6 ]. Thus far only a few studies take metacognitive knowledge into account. The few available studies consider interventional aspects, namely reflective practice [ 21 – 23 ]. There are many ways to assess metacognition. With our method, we tried to go one step beyond the current approaches to understand what is happening in the mind of medical students. It shows that high and low performers are not distinguished simply by their use of knowledge categories.

Limitations of the study

Our study has several limitations. First, aside from the correct solution of the case, we did not code performance within the knowledge categories, so the coded knowledge could contain incorrect explanations and procedures. However, thus far no study has shown that a student who arrives at a correct diagnosis can only have deduced it from correct knowledge.

The study included 21 participants and three cases per participant. We are aware that this sample is limited; this was necessary due to the elaborate data preparation process. On the other hand, qualitative research lets the phenomenon of interest unfold naturally rather than controlling the interplay of variables [24], and the sample is relatively large for a qualitative approach. The paper-based cases, although constructed with great care and the best possible authenticity, are still cases and not real patient encounters with gestures, appearance and the possibility of asking the patient for additional information. Thus, the transferability to an authentic clinical environment might be limited. The think-aloud method limits our findings in that only verbal expressions can be analysed, coded and thus interpreted. Talking during the thought process requires metacognitive ability, which may be confounded with our dependent variable. Therefore, participants who were more talkative than others could possibly have provided more information in all categories. However, we did not find any significant correlation between the number of words expressed and the number of categories coded.
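
The check for a talkativeness confound mentioned above can be illustrated with a short Python sketch. It assumes a Pearson correlation, which the text does not specify, and all values are invented for illustration rather than taken from the study data. The function name `pearson_r` and the variable names are likewise hypothetical.

```python
from math import sqrt
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equally long samples."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two samples of equal length with at least 2 values")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sums of cross-products and squared deviations from the means.
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return cov / sqrt(var_x * var_y)


# Invented values: words spoken and segments coded per participant.
words_spoken = [850, 1200, 640, 990, 1430]
categories_coded = [41, 38, 45, 36, 40]
print(round(pearson_r(words_spoken, categories_coded), 2))
```

A coefficient close to zero would be consistent with the reported absence of a relationship, although judging significance requires a statistical test that is not shown here.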

The students who took part in our study volunteered, and thus we cannot exclude a selection bias. The PME scores that we obtained, however, are spread evenly over the passing grades from 65 to 87% (M = 77.1%; SD = 6.6) and do not differ from those of the rest of the student cohort.

The findings presented here show that the use of knowledge is not enough to distinguish between high and low performers. Further, they show that the time students spend on the task is neither a positive nor a negative predictor of diagnostic accuracy. When medical educators design interventions to foster clinical reasoning, it is important not to focus too much on the use of specific knowledge categories, but to teach the use of the right sequences of knowledge at the right time, including the application of metacognition. This is in line with a renewed conceptualization of the term “script”, in which students are supposed to go beyond the illness of the patient and see diagnostic actions as a stereotypic process through which they learn the content of illnesses [25]. Studies investigating the interplay of cognitive actions and knowledge categories with instructional methods such as self-explanation prompts [8, 21, 26, 27] are a promising next step in the endeavour to understand and foster clinical reasoning in medical students.

Boshuizen H, Schmidt HG. On the role of biomedical knowledge in clinical reasoning by experts, intermediates and novices. Cogn Sci. 1992;16(2):153–84.

Elstein A, Shulman L, Sprafka S. Medical Problem Solving—An Analysis of Clinical Reasoning. 1978.

Gräsel C. Problemorientiertes Lernen: Strategieanwendung und Gestaltungsmöglichkeiten. Hogrefe, Verl. für Psychologie; 1997.

Eva KW, Hatala RM, LeBlanc VR, Brooks LR. Teaching from the clinical reasoning literature: combined reasoning strategies help novice diagnosticians overcome misleading information. Med Educ. 2007;41(12):1152–8.

Kassirer JP. Teaching clinical reasoning: case-based and coached. Acad Med. 2010;85(7):1118.

Kopp V, Stark R, Kühne Eversmann L, Fischer MR. Do worked examples foster medical students’ diagnostic knowledge of hyperthyroidism? Med Educ. 2009;43(12):1210–7.

Schmidmaier R, Eiber S, Ebersbach R, Schiller M, Hege I, Holzer M, Fischer MR. Learning the facts in medical school is not enough: which factors predict successful application of procedural knowledge in a laboratory setting? BMC Med Educ. 2013;13(1):28.

Heitzmann N, Fischer F, Kühne-Eversmann L, Fischer MR. Enhancing Diagnostic Competence with Self-Explanation Prompts and Adaptable Feedback? Med Educ. 2015.

Pintrich PR. The role of metacognitive knowledge in learning, teaching, and assessing. Theory Pract. 2002;41(4):219–25.

Krathwohl DR. A revision of Bloom’s taxonomy: An overview. Theory Pract. 2002;41(4):212–8.

Flavell JH. Metacognition and cognitive monitoring: A new area of cognitive–developmental inquiry. Am Psychol. 1979;34(10):906.

Lai ER. Metacognition: A literature review. Always learning: Pearson research report 2011.

Sperling RA, Howard BC, Miller LA, Murphy C. Measures of children’s knowledge and regulation of cognition. Contemp Educ Psychol. 2002;27(1):51–79.

Whitebread D, Coltman P, Pasternak DP, Sangster C, Grau V, Bingham S, Almeqdad Q, Demetriou D. The development of two observational tools for assessing metacognition and self-regulated learning in young children. Metacognition Learn. 2009;4(1):63–85.

Van Gog T, Paas F, Van Merriënboer JJG. Process-oriented worked examples: Improving transfer performance through enhanced understanding. Instr Sci. 2004;32(1):83–98.

Fischer MR, Herrmann S, Kopp V. Answering multiple‐choice questions in high‐stakes medical examinations. Med Educ. 2005;39(9):890–4.

Schmidmaier R, Ebersbach R, Schiller M, Hege I, Holzer M, Fischer MR. Using electronic flashcards to promote learning in medical students: retesting versus restudying. Med Educ. 2011;45(11):1101–10.

Ericsson KA, Simon HA. Verbal reports as data. Psychol Rev. 1980;87(3):215.

Mayring P. Qualitative content analysis. In: A companion to qualitative research. Thousand Oaks: Sage; 2004. p. 266–9.

Kiesewetter J, Ebersbach R, Görlitz A, Holzer M, Fischer MR, Schmidmaier R. Cognitive Problem Solving Patterns of Medical Students Correlate with Success in Diagnostic Case Solutions. PloS One. 2013;8(8).

Chamberland M, St‐Onge C, Setrakian J, Lanthier L, Bergeron L, Bourget A, Mamede S, Schmidt H, Rikers R. The influence of medical students’ self‐explanations on diagnostic performance. Med Educ. 2011;45(7):688–95.

Mamede S, van Gog T, Sampaio AM, de Faria RMD, Maria JP, Schmidt HG. How can students’ diagnostic competence benefit most from practice with clinical cases? The effects of structured reflection on future diagnosis of the same and novel diseases. Acad Med. 2014;89(1):121–7.

Mamede S, van Gog T, van den Berge K, Rikers RM, van Saase JL, van Guldener C, Schmidt HG. Effect of availability bias and reflective reasoning on diagnostic accuracy among internal medicine residents. JAMA. 2010;304(11):1198–203.

Patton MQ. Qualitative research. Wiley Online Library; 2005.

Berthold K, Eysink TH, Renkl A. Assisting self-explanation prompts are more effective than open prompts when learning with multiple representations. Instr Sci. 2009;37(4):345–63.

Chamberland M, Mamede S, St‐Onge C, Setrakian J, Bergeron L, Schmidt H. Self‐explanation in learning clinical reasoning: the added value of examples and prompts. Med Educ. 2015;49(2):193–202.

Kiesewetter J, Kollar I, Fernandez N, Lubarsky S, Kiessling C, Fischer MR, Charlin B. Crossing boundaries in interprofessional education: A call for instructional integration of two script concepts. J Interprof Care. 2016;1–4.

Acknowledgements

RS held a fellowship awarded by the private trust of Dr. med. Hildegard Hampp administered by LMU Munich, Germany during the project. The authors would like to acknowledge all students participating in the study. The authors would also like to thank Dorothea Lipp for her helpful comments on earlier versions of the manuscript.

Availability of data and materials

The datasets generated and/or analysed during the current study are not publicly available due to the large amount of raw written text but are available from the corresponding author on reasonable request. The abstract was submitted to the 4th Research in Medical Education (RIME) Symposium 2015 and is available online.

Funds for this project were provided by Dr. med. Hildegard Hampp Trust administered by LMU Munich, Germany.

Authors’ contributions

JK contributed to the conceptual design of the study, the analysis and interpretation of data, as well as the drafting and revision of the paper. RE contributed to the conceptual design of the study, the acquisition and coding of data, the analysis and interpretation of data, as well as the drafting and revision of the paper. NT contributed to the conceptual design and analysis of data and the revision of the paper. MH contributed to the conceptual design of the study, the analysis of data, and the revision of the paper. MRF contributed to the conceptual design of the study, to the interpretation of data, and the drafting and revision of the paper. RS contributed to the conceptual design of the study, to the interpretation of data, and the drafting and revision of the paper. All authors approved the final manuscript for submission.

Competing interests

Consent for publication

Not applicable.

Ethics approval and consent to participate

This study was approved by the Ethical Committee of the Medical Faculty of LMU Munich. Written informed consent was obtained from all participants.

Author information

Authors and affiliations

Institut für Didaktik und Ausbildungsforschung in der Medizin am Klinikum der Universität München, Ludwig-Maximilians-Universität, Munich, Germany

Jan Kiesewetter, Rene Ebersbach, Matthias Holzer, Ralf Schmidmaier & Martin R. Fischer

Lehrstuhl für Entwicklungspsychologie, Ludwig-Maximilians-Universität, Munich, Germany

Nike Tsalas

Medizinische Klinik und Poliklinik IV, Klinikum der Universität München, Ludwig-Maximilians-University, Munich, Germany

Ralf Schmidmaier

Corresponding author

Correspondence to Jan Kiesewetter.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License ( http://creativecommons.org/licenses/by/4.0/ ), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver ( http://creativecommons.org/publicdomain/zero/1.0/ ) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Kiesewetter, J., Ebersbach, R., Tsalas, N. et al. Knowledge is not enough to solve the problems – The role of diagnostic knowledge in clinical reasoning activities. BMC Med Educ 16, 303 (2016). https://doi.org/10.1186/s12909-016-0821-z

Received: 18 December 2015

Accepted: 14 November 2016

Published: 24 November 2016

DOI: https://doi.org/10.1186/s12909-016-0821-z

- Medical problem-solving

- Metacognition

- Knowledge categories

- Clinical reasoning

- Diagnostic reasoning

BMC Medical Education

ISSN: 1472-6920
