
Fostering students’ motivation towards learning research skills: the role of autonomy, competence and relatedness support

Louise Maddens

1 Centre for Instructional Psychology and Technology, Faculty of Psychology and Educational Sciences, KU Leuven and KU Leuven Campus Kulak Kortrijk, Etienne Sabbelaan 51 – bus 7800, 8500 Kortrijk, Belgium

2 Itec, imec Research Group at KU Leuven, imec, Leuven, Belgium

3 Vives University of Applied Sciences, Kortrijk, Belgium

Fien Depaepe

Annelies Raes

In order to design learning environments that foster students’ research skills, one can draw on instructional design models for complex learning, such as the 4C/ID model (in: van Merriënboer and Kirschner, Ten steps to complex learning, Routledge, London, 2018). However, few attempts have been undertaken to foster students’ motivation towards learning complex skills in environments based on the 4C/ID model. This study explores the effects of providing autonomy, competence and relatedness support (in Deci and Ryan, Psychol Inquiry 11(4): 227–268, https://doi.org/10.1207/S15327965PLI1104_01, 2000) in a 4C/ID based online learning environment on upper secondary school behavioral sciences students’ cognitive and motivational outcomes. Students’ cognitive outcomes are measured by means of a research skills test consisting of short multiple choice and short answer items (in order to assess research skills in a broad way), and a research skills task in which students are asked to integrate their skills in writing a research proposal (in order to assess research skills in an integrative manner). Students’ motivational outcomes are measured by means of students’ autonomous and controlled motivation, and students’ amotivation. A pretest-intervention-posttest design was set up in order to compare 233 upper secondary school behavioral sciences students’ outcomes among (1) a 4C/ID based online learning environment condition, and (2) an identical condition additively providing support for students’ need satisfaction. Both learning environments proved equally effective in improving students’ scores on the research skills test. Students in the need supportive condition scored higher on the research skills task compared to their peers in the baseline condition. Students’ autonomous and controlled motivation were not affected by the intervention. 
Although, unexpectedly, students’ amotivation increased in both conditions, amotivation was lower in the need supportive condition than in the baseline condition. Theoretical relationships were established between students’ need satisfaction, students’ motivation (autonomous, controlled, and amotivation), and students’ cognitive outcomes. These findings are discussed taking into account the COVID-19 affected setting in which the study took place.

Introduction

Several scholars have argued that the process of learning research skills is often obstructed by motivational problems (Lehti & Lehtinen, 2005; Murtonen, 2005). Some even describe these issues as students having an aversion towards research (Pietersen, 2002). Examples of motivational problems are that students experience research courses as boring, inaccessible, or irrelevant to their daily lives (Braguglia & Jackson, 2012). In a research synthesis on teaching and learning research methods, Earley (2014) argues that students fail to see the relevance of research methods courses, are anxious or nervous about the course, are uninterested and unmotivated to learn the material, and have poor attitudes towards learning research skills. It should be noted that the studies cited above focused on higher education. In upper secondary education, to date, students’ motivation towards learning research skills has rarely been studied. As difficulties in learning research skills seem to relate to students’ previous experiences with learning research skills (Murtonen, 2005), we argue that fostering students’ motivation from secondary education onwards is a promising area of research.

The current study combines insights from instructional design theory and self-determination theory (SDT; Deci & Ryan, 2000) in order to investigate the cognitive and motivational effects of providing psychological need support (support for the needs for autonomy, competence and relatedness) in a 4C/ID based (van Merriënboer & Kirschner, 2018) online learning environment fostering upper secondary school students’ research skills. In the following section, we elaborate on the definition of research skills in the understudied domain of behavioral sciences; on 4C/ID (van Merriënboer & Kirschner, 2018) as an instructional design model for complex learning; and on self-determination theory and its related need theory (Deci & Ryan, 2000). In addition, the research questions addressed in the current study are outlined.

Conceptual framework

Research skills

Following Fischer et al. (2014, p. 29), we define research skills as a broad set of skills used “to understand how scientific knowledge is generated in different scientific disciplines, to evaluate the validity of science-related claims, to assess the relevance of new scientific concepts, methods, and findings, and to generate new knowledge using these concepts and methods”. Furthermore, eight scientific activities learners engage in while performing research are distinguished, namely: (1) problem identification, (2) questioning, (3) hypothesis generation, (4) construction and redesign of artefacts, (5) evidence generation, (6) evidence evaluation, (7) drawing conclusions, and (8) communicating and scrutinizing (Fischer et al., 2014). Fischer et al. (2014) argue that both the nature of each of these activities and the weight attributed to it differ between domains. Intervention studies aiming to foster research skills are almost exclusively situated in natural sciences domains (Engelmann et al., 2016), leaving behavioral sciences domains largely understudied. The current study focuses on research skills in the understudied domain of behavioral sciences. We refer to the domain of behavioral sciences as the study of questions related to how people behave, and why they do so. Human behavior is understood in its broadest sense, and is the object of study in the fields of psychology, educational sciences, and cultural and social sciences.

The design of the learning environments used in this study is based on an existing instructional design model, namely the 4C/ID model (van Merriënboer & Kirschner, 2018). The 4C/ID model has repeatedly been shown to be effective in fostering complex skills (Costa et al., 2021), and thus drew our attention for the case of research skills, as research skills can be considered complex skills (they require learners to integrate knowledge, skills and attitudes while performing complex learning tasks). Since the 4C/ID model focuses on supporting students’ cognitive outcomes, it might not be considered relevant from a motivational point of view. However, since we argue that a learning environment that is deliberately designed from a cognitive point of view is an important prerequisite for providing high-quality motivational support, we briefly sketch the 4C/ID model and its characteristics. The 4C/ID model has a comprehensive character, integrating insights from different theories and models (Merrill, 2002), and highlights the relevance of four crucial components: learning tasks, supportive information, part-task practice, and just-in-time information. Central characteristics of these four components are that (a) high variability in authentic learning tasks is needed in order to deal with the complexity of the task; (b) supportive information is provided to the students in order to help them build mental models and strategies for solving the task under study (Cook & McDonald, 2008); (c) part-task practice is provided for recurrent skills that need to be automated; and (d) just-in-time (procedural) information is provided for recurrent skills.

Taking into account students’ cognitive struggles regarding research skills, and the existing research on the role of support in fostering research skills (see for example de Jong & van Joolingen, 1998), the 4C/ID model was found suitable to design a learning environment for research skills. This is partly because it includes (almost) all of the support found effective in the literature on research skills, such as providing direct access to domain information at the appropriate moment, providing learners with assignments, including model progression, involving students in authentic activities, and so on (Chi, 2009; de Jong, 2006; de Jong & van Joolingen, 1998; Engelmann et al., 2016). While mainly implemented in vocationally oriented programs, the 4C/ID model has been proposed as a good model to design learning environments aiming to foster research skills as well (Bastiaens et al., 2017; Maddens et al., 2020b). Indeed, acquiring research skills requires complex learning processes (such as coordinating different constituent skills). Overall, the 4C/ID model can be considered to be highly suitable for designing learning environments aiming to foster research skills. Given its holistic design approach, it helps “to deal with complexity without losing sight of the interrelationships between the elements taught” (van Merriënboer & Kirschner, 2018, p. 5).

Although the 4C/ID model has been used widely to construct learning environments enhancing students’ cognitive outcomes (see for example Fischer, 2018 ), research focusing on students’ motivational outcomes related to the 4C/ID model is scarce (van Merriënboer & Kirschner, 2018 ). Van Merriënboer and Kirschner ( 2018 ) suggest self-determination theory (SDT; Deci & Ryan, 2000 ) and its related need theory as a sound theoretical framework to investigate motivation in relation to 4C/ID.

Self-determination theory

Self-determination theory (SDT; Deci & Ryan, 2000) provides a broad framework for the study of motivation and distinguishes three types of motivation: amotivation (a lack of the ability to self-regulate with respect to a behaviour), extrinsic motivation (extrinsically motivated behaviours, whether self-determined or controlled), and intrinsic motivation (the ‘highest form’ of self-determined behaviour) (Deci & Ryan, 2000). According to Deci and Ryan (2000, p. 237), intrinsic motivation can be considered “a standard against which the qualities of an extrinsically motivated behavior can be compared to determine its degree of self-determination”. Moreover, the authors (Deci & Ryan, 2000, p. 237) argue that “extrinsic motivation does not typically become intrinsic motivation”. As the current study focuses on research skills in an academic context in which students did not voluntarily choose to learn research skills, and thus learning research skills can be considered instrumental (directed to attaining a goal), the current study focuses on students’ amotivation and students’ extrinsic motivation, realistically striving for the most self-determined types of extrinsic motivation.

Four types of extrinsic motivation are distinguished by SDT (external regulation, introjection, identification, and integration). These types can be categorized in two overarching types of motivation (autonomous and controlled motivation). Autonomous motivation contains the integrated and identified regulation towards a task (be it because the task is considered interesting, or because the task is considered personally relevant respectively). Controlled motivation refers to the external and introjected regulation towards the task (as a consequence of external or internal pressure respectively) (Vansteenkiste et al., 2009 ). More autonomous types of motivation have been found to be related to more positive cognitive and motivational outcomes (Deci & Ryan, 2000 ).
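The grouping of the four regulation types into the two overarching categories can be written down as a small lookup table. The sketch below is purely illustrative: the mapping follows the description above, but the function name `composite_scores`, the example values, and the averaging rule are assumptions made for illustration and are not the scoring procedure of any instrument used in this study.

```python
from statistics import mean

# Mapping of SDT regulation types to the two overarching categories,
# as described in the text above.
REGULATION_CATEGORY = {
    "external": "controlled",
    "introjected": "controlled",
    "identified": "autonomous",
    "integrated": "autonomous",
}


def composite_scores(subscale_scores):
    """Average subscale scores per overarching category.

    Hypothetical scoring rule, used here only to illustrate the grouping.
    """
    grouped = {"autonomous": [], "controlled": []}
    for regulation, score in subscale_scores.items():
        grouped[REGULATION_CATEGORY[regulation]].append(score)
    return {category: mean(values) for category, values in grouped.items() if values}


# Made-up subscale means for one fictional student (not study data).
example = {"external": 2.0, "introjected": 3.0, "identified": 4.0, "integrated": 5.0}
scores = composite_scores(example)
print(scores)
```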

SDT further maintains that one should consider three innate psychological needs related to students’ motivation. These needs are the need for autonomy, the need for competence, and the need for relatedness. The need for autonomy can be described as the need to experience activities as being “concordant with one’s integrated sense of self” (Deci & Ryan, 2000 , p. 231). The need for competence refers to the need to feel effective when dealing with the environment (Deci & Ryan, 2000 ). The need for relatedness contains the need to have close relationships with others, including peers and teachers (Deci & Ryan, 2000 ). The satisfaction of these needs is hypothesized to be related to more internalization, and thus to more autonomous types of motivation (Deci & Ryan, 2000 ). This relationship has been studied frequently (for a recent overview, see Vansteenkiste et al., 2020 ). Indeed, research established the positive relationships between perceived autonomy (see for example Deci et al., 1996 ), perceived competence (see for example Vallerand & Reid, 1984 ), and perceived relatedness (see for example Ryan & Grolnick, 1986 for a self-report based study) with students’ more positive motivational outcomes. Apart from students’ need satisfaction, several scholars also aim to investigate need frustration as a different notion, as “it involves an active threat of the psychological needs (rather than a mere absence of need satisfaction)” (Vansteenkiste et al., 2020 , p. 9). In what follows, possible operationalizations are defined for the three needs.

Possible operationalizations of autonomy need support found in the literature are: teachers accepting irritation or negative feelings related to aspects of a task perceived as “uninteresting” (Reeve, 2006; Reeve & Jang, 2006; Reeve et al., 2002); providing a meaningful rationale in order to explain the value/usefulness of a certain task and stressing why engaging in the task is important or why a rule exists (Deci & Ryan, 2000); using autonomy-supportive, inviting language (Deci et al., 1996); and allowing learners to regulate their own learning and to work at their own pace (Martin et al., 2018). Related to competence support, possible operationalizations are: providing a clear task rationale and providing structure (Reeve, 2006; Vansteenkiste et al., 2012); providing informational positive feedback after a learning activity (Deci et al., 1996; Martin et al., 2018; Vansteenkiste et al., 2012); providing an indication of progress and dividing content into manageable blocks (Martin et al., 2018; Schunk, 2003); and evaluating performance by means of previously introduced criteria (Ringeisen & Bürgermeister, 2015). Possible operationalizations concerning relatedness support are: teachers’ relational support (Ringeisen & Bürgermeister, 2015); encouraging interaction between course participants and providing opportunities for learners to connect with each other (Butz & Stupnisky, 2017; van Merriënboer & Kirschner, 2018); using a warm and friendly approach or welcoming learners personally into a course (Martin et al., 2018); and offering a platform for learners to share ideas and to connect (Butz & Stupnisky, 2017; Martin et al., 2018).

In the current research, SDT is selected as a theoretical framework to investigate students’ motivation towards learning research skills because, in contrast to other more purely goal-directed theories, it includes the concept of innate psychological needs, or the Basic Psychological Need Theory (Deci & Ryan, 2000; Ryan, 1995; Vansteenkiste et al., 2020), and it describes the relation between these perceived needs and students’ autonomous motivation: higher levels of perceived need satisfaction relate to more autonomous forms of motivation. The inclusion of this need theory is considered an advantage in the case of research skills because research revealed that students experience problems with respect to both their feelings of competence in relation to research skills (Murtonen, 2005) and their feelings of autonomy in relation to research skills (Martin et al., 2018), as was indicated in the introduction. As such, fostering students’ psychological needs while learning research skills seems a promising way of fostering students’ motivation towards learning research skills.

4C/ID and SDT

One study (Bastiaens et al., 2017) was found to implement need support in 4C/ID based learning environments, comparing a traditional module, a 4C/ID based module and an autonomy supportive 4C/ID based module in a vocational undergraduate education context. Autonomy support was operationalized by means of providing choice to the learners. No main effect of the conditions was found on students’ motivation. Surprisingly, providing autonomy support also did not lead to an increase in students’ autonomy satisfaction. Similarly, no effects were found on students’ relatedness and competence satisfaction. Remarkably, students did qualitatively report positive experiences towards the need support, but this was not reflected in their quantitatively reported need experiences. In a previous study performed in the current research trajectory, Maddens et al. (under review) investigated the motivational effects of providing autonomy support in a 4C/ID based online learning environment fostering students’ research skills, compared to a learning environment not providing such support. Autonomy support was operationalized as stressing task meaningfulness to the students. Based on insights from self-determination theory, it was hypothesized that students in the autonomy condition would show more positive motivational outcomes compared to students in the baseline condition. However, results showed that students’ motivational outcomes appeared to be unaffected by the autonomy support. One possible explanation for this unexpected finding was that optimal circumstances for positive motivational outcomes are those that allow satisfaction of the needs for autonomy, competence, and relatedness together (Deci & Ryan, 2000; Niemiec & Ryan, 2009), and thus, that the intervention was insufficiently powerful for effects to occur. Autonomy support has often been manipulated in experimental research (Deci et al., 1994; Reeve et al., 2002; Sheldon & Filak, 2008). However, the three needs are rarely simultaneously manipulated (Sheldon & Filak, 2008).

Integrated need support

Although not making use of 4C/ID based learning environments, some scholars have focused on the impact of integrated (autonomy, competence and relatedness) need support on learners’ motivation. For example, Raes and Schellens (2015) found differential effects of a need supportive inquiry environment on upper secondary school students’ motivation: positive effects on autonomous motivation were only found in students in a general track, and not in students in a science track. This indicates that motivational effects of need-supportive environments might differ between tracks and disciplines. However, Raes and Schellens (2015) did not experimentally manipulate need support, as the learning environment was assumed to be need-supportive and was not compared to a non-need supportive learning environment. Pioneers in manipulating competence, relatedness and autonomy support in one study are Sheldon and Filak (2008), who predicted need satisfaction and motivation based on a game-learning experience with introductory psychology students. Relatedness support (mainly operationalized by emphasizing interest in participants’ experiences in a caring way) had a significant effect on intrinsic motivation. Competence support (mainly operationalized by means of explicating positive expectations) had a marginally significant effect on intrinsic motivation. No main effects on intrinsic motivation were found regarding autonomy support (mainly operationalized by means of emphasizing choice, self-direction and participants’ perspective upon the task). However, as is often the case in motivational research based on SDT, the task at hand was quite straightforward (a timed task in which students try to form as many words as possible from a 4 × 4 letter grid), and thus, the applicability of the findings for providing need support in 4C/ID based learning environments for complex learning might be limited.

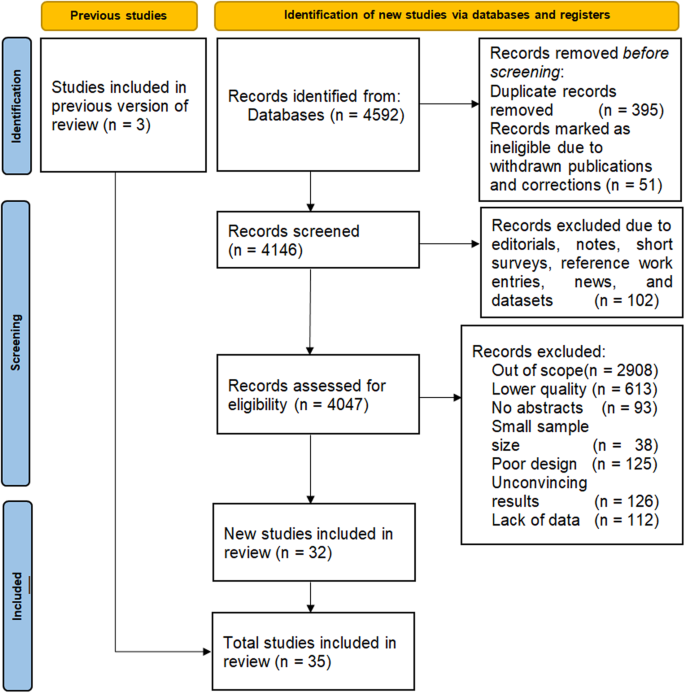

In the preceding section, several operationalizations of need support were discussed. Deci and Ryan (2000) argue that optimal circumstances for positive motivational outcomes are those that allow satisfaction of the needs for autonomy, competence, and relatedness together. However, such integrated need support has rarely been empirically studied (Sheldon & Filak, 2008). In addition, research investigating how need support can be implemented in learning environments based on the 4C/ID model is particularly scarce (van Merriënboer & Kirschner, 2018). This study aims to combine insights from instructional design theory for complex learning (van Merriënboer & Kirschner, 2018) and self-determination theory (Deci & Ryan, 2000) in order to investigate the motivational effects of providing need support in a 4C/ID based learning environment for students’ research skills. A pretest-intervention-posttest design is set up in order to compare 233 upper secondary school behavioral sciences students’ cognitive and motivational outcomes among two conditions: (1) a 4C/ID based online learning environment condition, and (2) an identical condition additively providing support for students’ need satisfaction. The following research questions are answered based on a combination of quantitative and qualitative data (see ‘method’): (1) Does a deliberately designed (4C/ID-based) learning environment improve students’ research skills, as measured by a research skills test and a research skills task? (2) What is the effect of providing autonomy, competence and relatedness support in a deliberately designed (4C/ID-based) learning environment fostering students’ research skills, on students’ motivational outcomes (i.e. students’ amotivation, autonomous motivation, controlled motivation, students’ perceived value/usefulness, and students’ perceived needs of competence, relatedness and autonomy)? (3) What are the relationships between students’ need satisfaction, students’ need frustration, students’ autonomous and controlled motivation and students’ cognitive outcomes (research skills test and research skills task)? (4) How do students experience need satisfaction and need frustration in a deliberately designed (4C/ID-based) learning environment?

The first three questions are answered by means of quantitative data. Since the learning environment is constructed in line with existing instructional design principles for complex learning, we hypothesize that both learning environments will succeed in improving students’ research skills (RQ1). Relying on insights from self-determination theory (Deci & Ryan, 2000), we hypothesize that providing need support will enhance students’ autonomous motivation (RQ2). In addition, we hypothesize students’ need satisfaction to be positively related to students’ autonomous motivation (RQ3). These hypotheses on the relationship between students’ needs and students’ motivation rely on Vallerand’s (1997) finding that changes in motivation can be largely explained by students’ perceived competence, autonomy and relatedness (as psychological mediators). More specifically, Vallerand (1997) argues that environmental factors (in this case the characteristics of a learning environment) influence students’ perceptions of competence, autonomy, and relatedness, which, in turn, influence students’ motivation and other affective outcomes. In addition, based on the self-determination literature (Deci & Ryan, 2000), we expect students’ motivation to be positively related to students’ cognitive outcomes. In order to answer the fourth research question, qualitative data (students’ qualitative feedback on the learning environments) are analysed and categorized based on the need satisfaction and need frustration concepts (RQ4), in order to thoroughly capture the meaning of the quantitative results collected in light of RQ1–3. No hypotheses are formulated in this respect.

Methodology

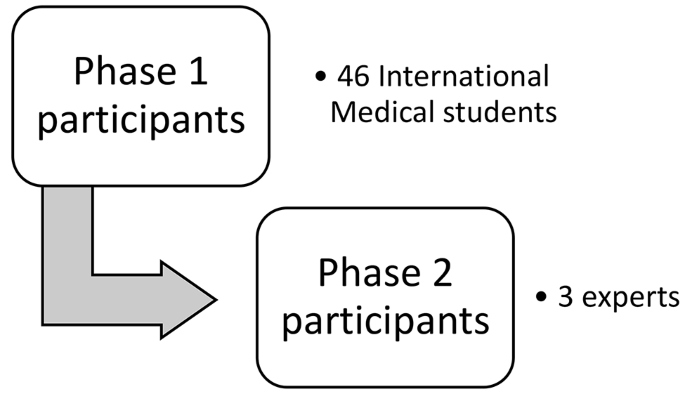

Participants

The study took place in authentic classroom settings in upper secondary behavioral sciences classes. In total, 233 students from 12 classes from eight schools in Flanders participated in the study. All participants are 11th or 12th grade students in a behavioral sciences track in general upper secondary education in Flanders (Belgium). Classes were randomly assigned to one of two experimental conditions. Of all 233 students, 105 students (with a mean age of 16.32, SD 0.90) worked in the baseline condition (of which 62% 11th grade students, 36% 12th grade students, and 2% not determined; and of which 31% male, 68% female, and 1% ‘other’), and 128 students (with a mean age of 16.02, SD 0.59) worked in the need supportive condition (of which 80% 11th grade students, and 20% 12th grade students; and of which 19% male, and 81% female). As the current study did not randomly assign students within classes to one of the two conditions, this study should be considered quasi-experimental. Full randomization was considered but was not feasible, as students worked in the learning environments in class and would potentially notice the experimental differences when observing their peers working in the learning environment. We argued that this would potentially cause bias in the study. By taking into account students’ pretest scores on the relevant variables (cognitive and motivational outcomes) as covariates, we aimed to adjust for inter-conditional differences. No such differences were found for students’ autonomous motivation, t(226) = −0.115, p = 0.909, d = 0.015, or students’ amotivation, t(226) = −0.658, p = 0.511, d = −0.088. However, differences were observed for students’ controlled motivation, t(226) = −2.385, p = 0.018, d = −0.318, and students’ scores on the LRST pretest, t(225) = −5.200, p < 0.001, d = −0.695.
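The pretest comparisons reported above can be sanity-checked from the t statistics alone. The sketch below is not the analysis used in the study: it computes a two-sided p-value by numerically integrating the Student's t density, and it recovers Cohen's d through the standard conversion d = t·√(1/n₁ + 1/n₂) for two independent groups. The helper names are hypothetical, and the group sizes of 105 and 128 are an assumption taken from the full sample; the degrees of freedom indicate slightly fewer analyzed cases, so the recovered d values only approximate the reported ones.

```python
import math


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))


def two_sided_p(t, df, steps=2000):
    """Two-sided p-value, using Simpson's rule on [0, |t|] (steps must be even)."""
    upper = abs(t)
    if upper == 0:
        return 1.0
    h = upper / steps
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central_area = total * h / 3  # P(0 < T < |t|)
    return max(0.0, 1.0 - 2.0 * central_area)


def cohens_d_from_t(t, n1, n2):
    """Cohen's d for two independent groups, recovered from the t statistic."""
    return t * math.sqrt(1 / n1 + 1 / n2)


# t statistics and degrees of freedom as reported in the text.
reported = {
    "autonomous motivation": (-0.115, 226),
    "amotivation": (-0.658, 226),
    "controlled motivation": (-2.385, 226),
    "LRST pretest": (-5.200, 225),
}

# Assumed group sizes (full sample); the analyzed samples were slightly smaller.
N_BASELINE, N_NEED_SUPPORT = 105, 128

for name, (t, df) in reported.items():
    p = two_sided_p(t, df)
    d = cohens_d_from_t(t, N_BASELINE, N_NEED_SUPPORT)
    print(f"{name}: p = {p:.3f}, approximate d = {d:.3f}")
```

Numerical integration is used here only to keep the sketch free of third-party dependencies; a statistics library would normally provide the t distribution directly.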

Study design and procedure

In a pretest session of at most two lesson hours, the Leuven Research Skills Test (LRST; Maddens et al., 2020a), the Academic Self-Regulation Scale (ASRS; Vansteenkiste et al., 2009), and four items related to students’ amotivation (Aydin et al., 2014) were administered in class via an online questionnaire, under supervision of the teacher. In the subsequent eight weeks, participants worked in the online learning environment for one hour a week. Out of the 233 participating students, 105 students studied in a baseline online learning environment. The baseline online learning environment is systematically designed using existing instructional design principles for complex learning based on the 4C/ID model (van Merriënboer & Kirschner, 2018). All four components of the 4C/ID model were taken into account in the design process. Regarding the first component, the learning tasks included real-life, authentic cases. More specifically, tasks were selected from the domains of psychology, educational sciences and sociology. As such, there was a large variety in the cases used in the learning tasks. This large variety in learning tasks is expected to facilitate transfer of learners’ research skills to a wide range of contexts. Furthermore, the tasks were ill-structured and required learners to make judgments, in order to provoke deep learning processes. Regarding the second component, supportive information was provided for complex tasks in the learning environment, such as formulating a research question, where students can consult general information on what constitutes a good research question, can consult examples or demonstrations of this general information, and can receive cognitive feedback on their answers (for example by means of example answers). Examples of the implementation of the third component (procedural information) are information on how to recognize a dependent and an independent variable, presented on demand (just-in-time) by means of pop-ups; information on how to use Boolean operators; and information on how to read a graph. To avoid split attention, this kind of information was integrated with the task environment itself (van Merriënboer & Kirschner, 2018). Finally, the fourth component, part-task practice (by means of short tests), was implemented for routine aspects of research skills that should be automated, for example the formulation of a search query.

The remaining participating students (n = 128) completed an adapted version of the baseline online learning environment, in which autonomy, relatedness and competence support are provided. In total, need support consisted of 12 implementations (four implementations for each need), based on existing research on need support. An overview of these adaptations can be found in Tables 1 and 2. Although, ideally, students would work in class, under supervision of their teacher, this was not possible for all classes, due to the COVID-19 restrictions. As a consequence, some students completed the learning environment partly at home. All students were supervised by their teachers (be it virtually or in class), and the researcher kept track of students’ overall activities in order to be able to contact students who did not complete the main activities. During the last two sessions of the intervention, participants submitted a two-page research proposal (“two-pager”). One week after the intervention, the LRST (Maddens et al., 2020a), the ASRS (Vansteenkiste et al., 2009), four items related to students’ amotivation (Aydin et al., 2014), the value/usefulness scale (Ryan, 1982) and the Basic Psychological Need Satisfaction and Frustration Scale (BPNSNF; Chen et al., 2015) were administered in a posttest session of at most two hours. Although most classes succeeded in organizing this posttest session in class, for some classes this posttest was administered at home. However, all classes were supervised by the teacher (be it virtually or in class). These contextual differences at the test moments will be reflected upon in the discussion section.

Adaptations online learning environment

| Support type | Implementations | Concrete operationalizations in the need supportive learning environment |
|---|---|---|
| Autonomy support | A1. Providing meaningful rationales in order to explain the value/usefulness of a certain task and stressing why involving in the task is important or why a rule exists (Assor et al., ; Deci et al., ; Deci & Ryan, ; Steingut et al., ) | –A1a. Video of a peer (student) stressing value/usefulness of learning environment before starting the learning environment –A1b. Teacher stressing importance learning environment before starting the learning environment –A1c. Avatars stressing importance (see Author et al., under review); for example an avatar mentioning “After having completed this module, I know how to formulate a research question for example when I am writing a bachelor thesis in my future academic career” –A1d. 2-pager: adding examples of subjects of peers, in order for the task to feel more familiar |
| | A2. Accepting irritation/acknowledging negative feelings (acknowledgment of aspects of a task perceived as uninteresting) (Reeve & Jang, ; Reeve et al., ) | –A2a. Including statements during tasks: “We understand that this might cost an effort, but previous studies proved that students can learn from performing this activity…” –A2b. At the end of each module: teacher asks about students’ difficulties |
| | A3. Using autonomy-supportive, inviting language (Deci et al., ) | –A3a. Personal task rationale, for example: “I am curious about how you would tackle this problem.”, systematically implemented in the assignments |
| | A4. Allowing learners to regulate their own learning and to work at their own pace. The use of a non-pressured environment (Martin et al., ) | –A4a. Adding a statement after each task class: “no need to compare your progress to that of your peers, you can work at your own pace!” |
| Relatedness support | R1. Teacher’s relational supports (Ringeisen & Bürgermeister, ) | –R1a. Before starting the learning environment: stressing that students can contact researcher and teacher –R1b. Researcher (scientist-mentor) sends motivational messages to the group (on a weekly basis) |
| | R2. Encouraging interaction between course participants; providing opportunities for learners to connect with each other; introducing learning tasks that require group work or learning networks (Butz & Stupnisky, ; van Merriënboer & Kirschner, ) | –R2a. Opening every task class: reminding students they can contact the researcher with questions –R2b. Every task class: one opportunity to share answers in the forum |
| | R3. Using a warm and friendly approach, welcoming learners personally into a course (Martin et al., ) | –R3a. Personal welcoming message in the beginning of the online learning environment |
| | R4. Offering a platform for learners to share ideas and to connect (Butz & Stupnisky, ; Martin et al., ) | –R4a. Asking students to post an introduction post in the forum to sum up their expectations of the course (once, in the beginning of the learning environment) |
| Competence support | C1. Clear task rationale, providing structure (Reeve, ; Vansteenkiste et al., ) | –Introductory video of researcher explaining what students will learn in the online learning environment |
| | C2. Informational positive feedback after learning activity (Deci et al., ; Martin et al., ; Vansteenkiste et al., ) | –Personal short feedback after every task class, formulated in a positive manner –Adding motivational quotes to example answers: “Thank you for submitting your answer! You will receive feedback at the end of this module, but until then, you can compare your answer to the example answer” |
| | C3. Indication of progress; dividing content into manageable blocks (Martin et al., ) | –After every task class: ask students to mark their progress |
| | C4. Evaluating performance by means of previously introduced criteria (Ringeisen & Bürgermeister, ) | –SAP-chart referring to instructions 2-pager task –Short guide 2-pager task |

Overview instruments

| Measured construct(s) | Instrument | Format | Number of items | Internal consistency reliability/interrater reliability | When administered? |
|---|---|---|---|---|---|
| Psychological need frustration and satisfaction | BPNSNF-training scale (Chen et al., 2015; translated version Aelterman et al., 2016) | Likert-type items, 5-point scale | 24 items (4 items per scale) | autonomy satisfaction, α = 0.67; ω = 0.67; autonomy frustration, α = 0.76; ω = 0.76; relatedness satisfaction, α = 0.79; ω = 0.79; relatedness frustration, α = 0.60; ω = 0.61; competence satisfaction, α = 0.72; ω = 0.73; competence frustration, α = 0.68; ω = 0.67 | Post |
| Experienced value/usefulness of the learning environment | Intrinsic Motivation Inventory (Ryan, 1982) | Likert-type items, 7-point scale | 7 items | α = 0.92; ω = 0.92 | Post |
| Autonomous and controlled motivation | ASRS (Vansteenkiste et al., 2009) | Likert-type items, 5-point scale | 16 items (8 items for autonomous motivation, 8 items for controlled motivation) | Autonomous motivation: α = 0.91; 0.92; ω = 0.90; 0.92. Controlled motivation: α = 0.83; 0.86; ω = 0.82; 0.85 | Pre, post |
| Amotivation | Academic Motivation Scale for Learning Biology (adapted for the context) (Aydin et al., 2014) | Likert-type items, 5-point scale | 4 items | α = 0.80; 0.75; ω = 0.81; 0.75 | Pre, post |
| Research skills test | LRST (Maddens et al., 2020a) | Combination of open ended and close ended conceptual and procedural knowledge items, each scored as 0 or 1 | 37 items | α = 0.79; 0.82; ω = 0.78; 0.80 | Pre, post |
| Research skills task | Two pager task (Author et al., under review) | Open ended question (performance assessment), assessed by means of a pairwise comparison technique | 1 task | Interrater reliability score = 0.79 | Post |

a When administered at both pretest and posttest level (see ‘procedure’), the internal consistency values are reported respectively

Instruments

In this section, we elaborate on the tests used during the pretest and the posttest. Example items for each scale are presented in Appendix 1.

Motivational outcomes

In the current study, two groups of motivational outcomes are assessed: (1) students’ need satisfaction and frustration, and students’ experiences of value/usefulness; and (2) students’ level of autonomous motivation, controlled motivation, and amotivation. When administered at both pretest and posttest level (see ‘procedure’), the internal consistency values are reported respectively.

The BPNSNF-training scale (The Basic Psychological Need Satisfaction and Frustration Scale, Chen et al., 2015 ; translated version Aelterman et al., 2016 5 ) measured students’ need satisfaction and need frustration while working in the learning environment, and consists of 24 items (four items per scale): (autonomy satisfaction, α = 0.67; ω = 0.67; autonomy frustration, α = 0.76; ω = 0.76; relatedness satisfaction, α = 0.79; ω = 0.79; relatedness frustration, α = 0.60; ω = 0.61; competence satisfaction, α = 0.72; ω = 0.73; competence frustration, α = 0.68; ω = 0.67). The items are Likert-type items ranging from one (not at all true) to five (entirely true). Although the current study focusses mainly on students’ need satisfaction, the scales regarding students’ need frustration are included in order to be able to also detect students’ potential ill-being and in order to detect potential critical issues regarding students’ needs. In addition to the BPNSNF, by means of seven Likert-type items ranging from one (not at all true) to seven (entirely true), the (for the purpose of this research translated) value/usefulness scale of the Intrinsic Motivation Inventory (IMI, Ryan, 1982 ) measured to what extent students valued the activities of the online learning environment ( α = 0.92; ω = 0.92). Since in the research skills literature problems have been observed related to students’ perceived value/usefulness of research skills (Earley, 2014 ; Murtonen, 2005 ), and this concept is not sufficiently stressed in the BPNSNF-scale, we found it useful to include this value/usefulness scale to the study. The difference in the range of the answer possibilities (one to five vs one to seven) exists because we wanted to keep the range as initially prescribed by the authors of each instrument. All motivational measures are calculated by adding the scores on every item, and dividing this sum score by the number of items on a scale, leading to continuous outcomes. 
Although the IMI and the BPNSNF targeted students’ experiences while completing the online learning environment, these measures were administered during the posttest. Thus, students had to think retrospectively about their experiences. In order to prevent cognitive overload while completing the online learning environment, these measures were not administered during the intervention itself.
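The scoring rule described above (the sum of the item scores divided by the number of items on the scale) can be illustrated with a minimal sketch. The function name and the responses below are hypothetical and only show the arithmetic; they are not data from the study.

```python
def scale_score(item_responses):
    """Return the mean item score for one scale (sum of items / number of items)."""
    if not item_responses:
        raise ValueError("a scale needs at least one item response")
    return sum(item_responses) / len(item_responses)


# Hypothetical responses of one student to a four-item scale (1-5 Likert range).
example_responses = [4, 3, 4, 3]
print(scale_score(example_responses))  # 3.5
```

Because the sum is divided by the number of items, scores stay on the original answer range of each instrument (one to five, or one to seven for the value/usefulness scale).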

Students’ autonomous and controlled motivation towards learning research skills was measured by means of the Dutch version of the Academic Self-Regulation Scale (ASRS; Vansteenkiste et al., 2009), adapted to ‘research skills’. The ASRS consists of Likert-type items ranging from one (do not agree at all) to five (totally agree), and contains eight items per subscale (autonomous and controlled motivation). In the autonomous motivation scale, four items are related to identified regulation, and four items are related to intrinsic motivation. 6 In the controlled motivation scale, four items are related to external regulation, and four items are related to introjected regulation. Both scales (autonomous motivation and controlled motivation) showed good internal consistency for the study’s data (autonomous motivation: α = 0.91; 0.92; ω = 0.90; 0.92; controlled motivation: α = 0.83; 0.86; ω = 0.82; 0.85). The items were adapted to the domain under study (motivation to learn about research skills). Given students’ motivational issues related to research skills, we found it useful to also include a scale to assess students’ amotivation. This was measured with four items (translated for the purpose of the current research) related to students’ amotivation regarding learning research skills, adapted from the Academic Motivation Scale for Learning Biology (Aydin et al., 2014) ( α = 0.80; 0.75; ω = 0.81; 0.75). This measure also consists of Likert-type items ranging from one (do not agree at all) to five (totally agree).

Cognitive outcomes

Students’ research skills proficiency was measured by means of a research skills test (Maddens et al., 2020a ) and a research skills task.

The research skills test used in this study is the LRST (Maddens et al., 2020a ) consisting of a combination of 37 open ended and close ended items ( α = 0.79; 0.82; ω = 0.78; ω = 0.80 for this data set), administered via an online questionnaire. Each item of the LRST is related to one of the eight epistemic activities regarding research skills as mentioned in the introduction (Fischer et al., 2014 ), and is scored as 0 or 1. The total score on the LRST is calculated by adding the mean subscale scores (related to the eight epistemic activities), and dividing them by eight (the number of scales). In a previous study (Maddens et al., 2020a ), the LRST was checked and found suitable in light of interrater reliability ( κ = 0.89). As the same researchers assessed the same test with a similar cohort in the current study, the interrater reliability was not calculated for this study.
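The LRST total score described above (the mean of the eight subscale means) can be sketched as follows. The activity labels and item scores are hypothetical placeholders, since the distribution of the 37 items over the eight epistemic activities is not given in this section.

```python
def lrst_total(item_scores_by_activity):
    """Average the eight per-activity mean scores into one total in the range 0-1."""
    if len(item_scores_by_activity) != 8:
        raise ValueError("expected item scores for eight epistemic activities")
    subscale_means = []
    for activity, scores in item_scores_by_activity.items():
        if not scores or any(s not in (0, 1) for s in scores):
            raise ValueError(f"items of {activity!r} must be scored 0 or 1")
        subscale_means.append(sum(scores) / len(scores))
    return sum(subscale_means) / 8


# Hypothetical student: four activities with two items, four with four items.
example_student = {f"activity_{k}": [1, 0] for k in range(1, 5)}
example_student.update({f"activity_{k}": [1, 1, 1, 0] for k in range(5, 9)})
print(lrst_total(example_student))  # 0.625
```

Note that this rule weights every epistemic activity equally, regardless of how many items it contains. In the hypothetical example the student answers 16 of 24 items correctly (about 0.67), while the total score is 0.625.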

In the research skills task (“two pager task”), students were asked to write a research proposal of maximum two pages long. The concrete instructions for this research proposal are given in Appendix 1. In this research proposal, students were asked to formulate a research question and its relevance; to explain how they would tackle this research question (method and participants); to explain their hypotheses or expectations; and to explain how they would communicate their results. The two-pager task was analyzed using a pairwise comparison technique, in which four evaluators (i.e. the four authors of this paper) made comparative judgements by comparing two two-pagers at a time, and indicating which two-pager they think is best. All four evaluators are researchers in educational sciences and are familiar with the research project and with assessing students’ texts. This shared understanding and expertise is a prerequisite for obtaining reliable results (Lesterhuis et al., 2018 ). The comparison technique is performed by means of the Comproved tool ( https://comproved.com ). As described by Lesterhuis et al. ( 2018 , p. 18), “the comparative judgement method involves assessing a text on its overall quality. However, instead of requiring an assessor to assign an absolute score to a single text, comparative judgement simplifies the process to a decision about which of two texts is better”. In total, 1635 comparisons were made (each evaluator made 545 comparisons), and this led to a (interrater)reliability score of 0.79. In a next step, these comparative judgements were used to rank the 218 products (15 students did not submit a two-pager) on their quality; and the products were graded based on their ranking. This method was used to grade the two-pagers because it facilitates the holistic evaluation of the tasks, based on the judgement of multiple experts (interrater reliability).
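To illustrate how pairwise judgements can be turned into a ranking, the sketch below fits a Bradley-Terry model, a common choice for comparative judgement data. This is an assumption for illustration only: the text does not specify which model the Comproved tool uses, and the proposals and judgements below are hypothetical.

```python
from collections import defaultdict


def bradley_terry(comparisons, n_iter=200):
    """Estimate Bradley-Terry strengths from (winner, loser) pairs via MM updates."""
    items = sorted({x for pair in comparisons for x in pair})
    wins = defaultdict(int)         # number of comparisons each item won
    pair_counts = defaultdict(int)  # number of comparisons per unordered pair
    for winner, loser in comparisons:
        wins[winner] += 1
        pair_counts[frozenset((winner, loser))] += 1

    strength = {item: 1.0 for item in items}
    for _ in range(n_iter):
        updated = {}
        for i in items:
            # Sum over all opponents j of n_ij / (p_i + p_j).
            denom = sum(
                count / (strength[i] + strength[j])
                for pair, count in pair_counts.items() if i in pair
                for j in pair - {i}
            )
            updated[i] = wins[i] / denom
        total = sum(updated.values())
        strength = {item: value / total for item, value in updated.items()}
    return strength


# Hypothetical judgements on four proposals; every pair is compared twice.
hypothetical_comparisons = [
    ("A", "B"), ("A", "B"), ("A", "C"), ("A", "C"), ("A", "D"), ("D", "A"),
    ("B", "C"), ("B", "C"), ("B", "D"), ("B", "D"), ("C", "D"), ("D", "C"),
]
strengths = bradley_terry(hypothetical_comparisons)
ranking = sorted(strengths, key=strengths.get, reverse=True)
print(ranking)
```

The estimated strengths place all products on one scale, which is what allows a ranking (and a grade based on it) to be derived from judgements that only ever compare two texts at a time.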

Qualitative feedback

Students’ experiences with the online learning environment were investigated in the online learning environment itself. After completing the learning environment, students were asked how they experienced the tasks, the theory, and the opportunity to post answers in the forum and to ask questions via the chat, and what they liked and disliked in the online learning environment (Fig. 1).

Study overview

The first research question (“Does a deliberately designed (4C/ID-based) learning environment improve students’ research skills, as measured by a research skills test and a research skills task?”) is answered by means of a paired samples t -test to detect potential general trends in overall improvement, followed by a full factorial MANCOVA, as this allows us to investigate the effectiveness for both conditions taking into account students’ pretest scores. Hence, the condition is included as an experimental factor, and students’ scores on the LRST and the two-pager task are included as continuous outcome variables. Students’ pretest scores on the LRST are included as a covariate. Prior to the analysis, a MANCOVA model is defined taking into account possible interaction effects between the experimental factor and the covariate.

The second research question (“What is the effect of providing autonomy, competence and relatedness support in a deliberately designed (4C/ID-based) learning environment fostering students’ research skills, on students’ motivational outcomes, i.e. students’ amotivation, autonomous motivation, controlled motivation, students’ perceived value/usefulness, and students’ perceived needs of competence, relatedness and autonomy?”) is answered by means of a full factorial MANCOVA. The condition (need satisfaction condition versus baseline condition) is included as an experimental factor, and students’ responses on the value/usefulness, autonomous and controlled motivation, amotivation, and need satisfaction scales are included as continuous outcome variables. ASRS pretest scores (autonomous and controlled motivation) are included as covariates in order to test the differences between group means, adjusted for students’ a priori motivation. Prior to the analysis, a MANCOVA model is defined taking into account possible interaction effects between the experimental factor and the covariates, and the assumptions for performing a MANCOVA are checked. 7

The third research question ( “ What are the relationships between students’ need satisfaction, students’ need frustration, students’ autonomous and controlled motivation and students’ cognitive outcomes (research skills test and research skills task)?” ), is initially answered by means of five multiple regression analyses. The first three regressions include the need satisfaction and frustration scales, and students’ value/usefulness as independent variables, and students’ (1) autonomous motivation, (2) controlled motivation, and (3) amotivation as dependent variables. The fourth and fifth regressions include students’ autonomous motivation, controlled motivation, and amotivation as independent variables, and students’ (4) LRST scores, and (5) scores on the two-pager task as dependent variables. As a follow-up analysis (see ‘ results ’) two additional regression analyses are performed to look into the direct relationships between students’ perceived needs and students’ experienced value/usefulness, with students’ cognitive outcomes (LRST (6) and two-pager (7)). As the goal of this analysis is to investigate the relationships between variables as described in SDT research, this analysis focuses on the full sample, rather than distinguishing between the two conditions. An ‘Enter’ method (Field, 2013 ) is used in order to enter the independent variables simultaneously (in line with Sheldon et al., 2008 ).

The fourth research question (“ How do students experience need satisfaction and need frustration in a deliberately designed (4C/ID-based) learning environment?” ) is analyzed by means of the knowledge management tool Citavi. Based on the theoretical framework, students’ experiences are labeled by the codes ‘autonomy satisfaction, autonomy frustration, competence satisfaction, competence frustration, relatedness satisfaction, and relatedness frustration’. For example, students’ quotes referring to the value/usefulness of the learning environment, are labeled as ‘autonomy satisfaction’ or ‘autonomy frustration’. Students’ references towards their feelings of mastery of the learning content are labeled as ‘competence satisfaction’ or ‘competence frustration’. Students’ quotes regarding their relationships with peers and teachers are labeled as ‘relatedness satisfaction’ or ‘relatedness frustration’ (Fig. 2 ).

Overview variables

Do the deliberately designed (4C/ID based) learning environments improve students’ research skills, as measured by a research skills test and a research skills task?

Paired samples t -test. A paired samples t -test reveals that, in general, students ( n = 210) improved on the LRST posttest ( M = 0.57, SD = 0.16) compared to the pretest ( M = 0.51, SD = 0.15) (range 0–1). The difference between the posttest and the pretest is significant, t (209) = − 8.215, p < 0.001, d 8 = − 0.567. The correlation between the LRST pretest and posttest is 0.70 ( p < 0.010).
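As a worked check, the reported effect size is consistent with computing Cohen’s d for paired scores as the t statistic divided by the square root of the number of pairs. This is an assumption about the calculation; the footnote attached to d may specify the exact formula used. The function name is illustrative.

```python
import math


def cohens_d_from_paired_t(t_value, n_pairs):
    """Standardized mean difference of paired scores, d = t / sqrt(n)."""
    return t_value / math.sqrt(n_pairs)


# Values reported for the LRST: t(209) = -8.215 with n = 210 students.
d_lrst = cohens_d_from_paired_t(-8.215, 210)
print(round(d_lrst, 3))  # -0.567
```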

MANCOVA. A MANCOVA model ( n = 196) was defined checking for possible interaction effects between the experimental factor and the covariate in order to control for the assumption of ‘independence of the covariate and treatment effect’ (Field, 2013 ). The covariate LRST pretest did not show significant interaction effects for the two outcome variables LRST post ( p = 0.259) and the two-pager task ( p = 0.702). The correlation between the outcome variables (LRST post and two-pager), is 0.28 ( p < 0.050).

Of all 233 students, 36 students were excluded from the main analysis because of missing data (for example, because they were absent during a pretest or posttest moment). These students were excluded by means of listwise deletion because we found it important to use a complete dataset: in many cases, students who did not complete the pretest or posttest also did not complete the entire learning environment, so including partial data for these students could bias the results. The baseline condition counted 86 students, and the need satisfaction condition counted 111 students. Using Pillai’s Trace [ V = 0.070, F (2,193) = 7.285, p ≤ 0.001], there was a significant effect of the condition on the cognitive outcome variables, taking into account students’ LRST pretest scores. Separate univariate ANOVAs on the outcome variables revealed no significant effect of the condition on the LRST posttest measure, F (1,194) = 2.45, p = 0.120. However, a significant effect of condition was found on the two-pager scores, F (1,194) = 13.69, p < 0.001 (in the baseline group, the mean score was 6.6/20; in the need satisfaction group, the mean score was 7.6/20). It should be mentioned that both scores are rather low.
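When two conditions are compared, the condition effect has one degree of freedom, and Pillai’s trace V then converts to an exact F statistic as F = V / (1 − V) × (df2 / df1). The sketch below applies this conversion to the reported values, assuming this is how the F values were obtained; small deviations are expected because V is reported to three decimals. The second pair of values is the one reported further on for the motivational outcomes.

```python
def f_from_pillai_two_groups(pillai_v, numerator_df, denominator_df):
    """Exact F for Pillai's trace when the effect has one degree of freedom (s = 1)."""
    return (pillai_v / (1.0 - pillai_v)) * (denominator_df / numerator_df)


# Cognitive outcomes: V = 0.070 with F(2, 193); reported F = 7.285.
f_cognitive = f_from_pillai_two_groups(0.070, 2, 193)
# Motivational outcomes: V = 0.113 with F(10, 201); reported F = 2.558.
f_motivational = f_from_pillai_two_groups(0.113, 10, 201)
print(round(f_cognitive, 2), round(f_motivational, 2))
```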

What is the effect of providing autonomy, competence and relatedness support in a deliberately designed (4C/ID based) learning environment fostering students’ research skills, on students’ motivational outcomes (students’ amotivation, autonomous motivation, controlled motivation, students’ perceived value/usefulness, and students’ perceived needs of competence, relatedness and autonomy)?

Paired samples t -tests. The correlations between students’ pretest and posttest scores for the motivational measures are 0.67 ( p < 0.010) for autonomous motivation, 0.44 ( p < 0.010) for controlled motivation, and 0.38 ( p < 0.010) for amotivation. Regarding the differences in students’ motivation, three unexpected findings were observed. Overall, students’ ( n = 215) amotivation was higher on the posttest ( M = 2.26, SD = 0.89) compared to the pretest ( M = 1.77, SD = 0.79) (based on a score between 1 and 5). The difference between the posttest and the pretest is significant, t (214) = − 7.69, p < 0.001, d = − 0.524. Further analyses show that mean amotivation increased by 0.65 in the baseline group and by 0.37 in the need support group. In addition, students’ ( n = 215) autonomous motivation was higher on the pretest ( M = 2.81, SD = 0.81) compared to the posttest ( M = 2.64, SD = 0.82). The difference between the posttest and the pretest is significant, t (214) = 3.72, p < 0.001, d = 0.254. Students’ mean scores on autonomous motivation decreased by 0.19 in the baseline condition and by 0.15 in the need support condition. Students’ ( n = 215) controlled motivation was higher on the posttest ( M = 2.33, SD = 0.75) compared to the pretest ( M = 1.93, SD = 0.67). The difference between the posttest and the pretest is significant, t (214) = − 7.72, p < 0.001, d = − 0.527. Students’ controlled motivation increased by 0.36 in the baseline group and by 0.43 in the need support group. However, overall, all mean scores are and remain below the neutral score (below 3), indicating consistently low autonomous motivation, controlled motivation and amotivation scores (see Table 3).
An independent samples t -test on the mean differences between these measures shows that the increases/decreases in autonomous motivation [ t (213) = − 0.506, p = 0.613, d = − 0.069] and controlled motivation [ t (213) = − 0.656, p = 0.513, d = − 0.090] did not differ between the two groups. However, the increase in amotivation [ t (213) = 2.196, p = 0.029, d = 0.301] does differ significantly between the two conditions. More specifically, the increase was smaller in the need supportive condition than in the baseline condition.
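The effect sizes of the independent samples comparison are consistent with the standard conversion d = t × √(1/n₁ + 1/n₂). The sketch below assumes the group sizes of 97 (baseline) and 118 (need supportive) reported for the motivational analyses, which match the 213 degrees of freedom; the function name is illustrative.

```python
import math


def cohens_d_from_independent_t(t_value, n_group_1, n_group_2):
    """Cohen's d for two independent groups, d = t * sqrt(1/n1 + 1/n2)."""
    return t_value * math.sqrt(1.0 / n_group_1 + 1.0 / n_group_2)


# Difference in amotivation change between conditions: t(213) = 2.196.
d_amotivation_change = cohens_d_from_independent_t(2.196, 97, 118)
print(round(d_amotivation_change, 3))  # 0.301
```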

Mean scores and standard deviations motivational variables

| Variable | Range | Baseline condition: pretest (M; SD) | Baseline condition: posttest (M; SD) | Need supportive condition: pretest (M; SD) | Need supportive condition: posttest (M; SD) |
|---|---|---|---|---|---|
| Value/usefulness | 1–7 | | 5.12; .94 | | 5.14; 1.14 |
| Autonomy satisfaction | 1–5 | | 3.14; .62 | | 3.13; .62 |
| Autonomy frustration | 1–5 | | 2.94; .79 | | 3; .85 |
| Competence satisfaction | 1–5 | | 3.18; .62 | | 3.19; .58 |
| Competence frustration | 1–5 | | 2.77; .74 | | 2.74; .71 |
| Relatedness satisfaction | 1–5 | | 2.73; .80 | | 2.43; .82 |
| Relatedness frustration | 1–5 | | 1.91; .73 | | 2.43; .65 |
| Autonomous motivation | 1–5 | 2.83; .82 | 2.65; .87 | 2.81; .81 | 2.65; .77 |
| Controlled motivation | 1–5 | 1.82; .66 | 2.19; .72 | 2.02; .66 | 2.45; .76 |
| Amotivation | 1–5 | 1.74; .72 | 2.38; .91 | 1.81; .86 | 2.18; .87* |

a Overall, students’ ( n = 215) autonomous motivation was significantly higher on the pretest compared to the posttest ( t (214) = 3.72, p ≤ 0.001, d = 0.254)

b Students’ ( n = 215) controlled motivation was significantly higher on the posttest compared to the pretest ( t (214) = − 7.72, p ≤ 0.001, d = − 0.527)

c Students’ ( n = 215) amotivation was significantly higher on the posttest compared to the pretest ( t (214) = − 7.69, p ≤ 0.001, d = − 0.524)

MANCOVA. Of all 233 students, 18 students were excluded from the analysis because of missing data (for example, because they were absent during a pretest or posttest moment). Compared to the cognitive analyses, the amount of missing data is lower for the motivational outcomes because, for the cognitive outcomes, some students did not complete the two-pager task. However, we found it important to use all relevant data and chose to report this in a clear way. In total, the baseline condition counted 97 students, and the experimental condition counted 118 students. Similar to the analysis for the cognitive outcomes, a MANCOVA model was defined to check for possible interaction effects between the experimental factor and the covariate in order to control for the assumption of ‘independence of the covariate and treatment effect’ (Field, 2013). The covariates did not show significant interaction effects for the outcome variables. 9

Using Pillai’s Trace [ V = 0.113, F (10,201) = 2.558, p = 0.006], there was a significant effect of condition on the motivational variables, taking into account students’ autonomous and controlled pretest scores, and students’ a priori amotivation. Separate univariate ANOVAs on the outcome variables revealed a significant effect of the condition on the outcome variables amotivation, F (1,210) = 3.98, p = 0.047, and relatedness satisfaction, F (1,210) = 6.41, p = 0.012. As was hypothesized, students in the need satisfaction group reported less amotivation ( M = 2.18) compared to students in the baseline group ( M = 2.38). In contrast to what was hypothesized, students in the need satisfaction group reported less relatedness satisfaction ( M = 2.43) compared to students in the baseline group ( M = 2.73). No significant effects of condition were found on the outcome variables autonomous motivation post, controlled motivation post, value/usefulness, autonomy satisfaction, autonomy frustration, competence satisfaction, competence frustration, and relatedness frustration. Table 4 shows the correlations between the motivational outcome variables.

Correlations motivational outcome variables

| | AM | CM | AMOT | VU | AS | AF | CS | CF | RS | RF |
|---|---|---|---|---|---|---|---|---|---|---|
| AM | 1 | | | | | | | | | |
| CM | − 0.03 | 1 | | | | | | | | |
| AMOT | − 0.21** | 0.41** | 1 | | | | | | | |
| VU | 0.66** | − 0.07 | − 0.36** | 1 | | | | | | |
| AS | 0.64** | − 0.16** | − 0.28** | 0.60** | 1 | | | | | |
| AF | − 0.40** | 0.40** | 0.35** | − 0.41** | − 0.58** | 1 | | | | |
| CS | 0.48** | − 0.19** | − 0.16* | 0.46** | 0.58** | − 0.41** | 1 | | | |
| CF | − 0.11 | 0.29** | 0.22** | − 0.11 | − 0.31** | 0.41** | − 0.52** | 1 | | |
| RS | 0.27** | − 0.03 | − 0.03 | 0.15* | 0.30** | − 0.33** | 0.29** | − 0.19** | 1 | |
| RF | − 0.03 | 0.19** | 0.11 | − 0.13 | − 0.10** | 0.21** | *0.25** | 0.32** | − 0.28** | 1 |

AM autonomous motivation, CM controlled motivation, AMOT amotivation, VU value/usefulness, AS autonomy satisfaction, AF autonomy frustration, CS competence satisfaction, CF competence frustration, RS relatedness satisfaction, RF relatedness frustration

**Correlation is significant at the 0.010 level (2-tailed)

*Correlation is significant at the 0.050 level (2-tailed)

What are the relationships between students’ need satisfaction, students’ need frustration, students’ autonomous and controlled motivation and students’ cognitive outcomes (research skills test and research skills task)?

The third research question (investigating the relationships between students’ need satisfaction, students’ motivation and students’ cognitive outcomes) is answered by means of five multiple regression analyses. The first three regressions include the need satisfaction and frustration scales, and students’ value/usefulness as independent variables, and students’ (1) autonomous motivation, (2) controlled motivation, and (3) amotivation as dependent variables ( n = 219). The fourth and fifth regressions include students’ autonomous motivation, controlled motivation, and amotivation as independent variables, and students’ (4) LRST scores ( n = 215), and (5) scores on the two-pager task as dependent variables ( n = 206). Table 4 depicts the correlations for the first three analyses. Table 5 depicts the correlations for the last two analyses.

Correlations motivational and cognitive outcome variables

| | AM | CM | AMOT | LRST | Twopager |
|---|---|---|---|---|---|
| AM | 1 | | | | |
| CM | − 0.03 | 1 | | | |
| AMOT | − 0.21** | 0.41** | 1 | | |
| LRST | 0.10 | − 0.10 | − 0.32** | 1 | |
| Twopager | 0.05 | 0.70 | − 0.11 | 0.28** | 1 |

AM autonomous motivation, CM controlled motivation, AMOT amotivation, LRST score on LRST, Twopager score on Twopager

In Table 3, we can see that students in both conditions experience average competence and autonomy satisfaction. However, students’ relatedness satisfaction seems low in both conditions. This finding will be further discussed in the discussion section. For autonomous motivation, a significant regression equation was found, F (7,211) = 37.453, p < 0.001. The regression analysis (see Table 5) further reveals that all three satisfaction scores (competence satisfaction, relatedness satisfaction and autonomy satisfaction) contribute positively to students’ autonomous motivation, as does students’ experienced value/usefulness. For students’ controlled motivation, a significant regression equation was also found, F (7,211) = 8.236, p < 0.001, with students’ autonomy frustration and students’ relatedness satisfaction contributing to students’ controlled motivation. The aforementioned relationships are in line with the expectations, with one exception: relatedness satisfaction contributed to students’ controlled motivation in the opposite direction of what was expected (the higher students’ relatedness satisfaction, the higher students’ controlled motivation). This finding will be reflected upon in the discussion section. For students’ amotivation, a significant regression equation was also found, F (7,211) = 7.913, p < 0.001. Students’ autonomy frustration, competence frustration and students’ value/usefulness contributed to students’ amotivation in an expected way. For cognitive outcomes related to the research skills test, a significant regression equation was likewise found, F (3,211) = 8.351, p < 0.001. In line with the expectations, the regression analysis revealed that the higher students’ amotivation, the lower students’ scores on the research skills test. No significant regression equation was found for the outcome variable related to the research skills task, F (3,202) = 0.954, p = 0.416.
For all regression equations, the R² and the exact regression weights are presented in Table 6.

Linear model of predictors of autonomous motivation, controlled motivation, amotivation, LRST scores, and two-pager scores with beta values, standard errors, standardized beta values and significance values

| Regression | Dependent variable | Independent variable | b | SE | β | p |
|---|---|---|---|---|---|---|
| 1 (R² = 0.55) | AM | AS | 0.39 | 0.09 | 0.30 | 0.000* |
| | | AF | − 0.02 | 0.06 | − 0.02 | 0.691 |
| | | CS | 0.22 | 0.09 | 0.16 | 0.014* |
| | | CF | 0.13 | 0.07 | 0.11 | 0.060 |
| | | RS | 0.11 | 0.05 | 0.11 | 0.026* |
| | | RF | 0.10 | 0.06 | 0.09 | 0.088 |
| | | VU | 0.31 | 0.05 | 0.40 | 0.000* |
| 2 (R² = 0.46) | CM | AS | 0.07 | 0.11 | 0.06 | 0.521 |
| | | AF | 0.40 | 0.07 | 0.44 | 0.000* |
| | | CS | − 0.05 | 0.11 | − 0.04 | 0.667 |
| | | CF | 0.12 | 0.08 | 0.11 | 0.154 |
| | | RS | 0.13 | 0.06 | 0.14 | 0.035* |
| | | RF | 0.12 | 0.07 | 0.11 | 0.097 |
| | | VU | 0.06 | 0.06 | 0.09 | 0.263 |
| 3 (R² = 0.46)* | AMOT | AS | − 0.04 | 0.14 | − 0.03 | 0.794 |
| | | AF | 0.25 | 0.09 | 0.23 | 0.006* |
| | | CS | 0.24 | 0.13 | 0.16 | 0.072 |
| | | CF | 0.21 | 0.10 | 0.17 | 0.033* |
| | | RS | 0.10 | 0.07 | 0.09 | 0.180 |
| | | RF | 0.03 | 0.09 | 0.03 | 0.699 |
| | | VU | − 0.26 | 0.07 | − 0.31 | 0.000* |
| 4 (R² = 0.33)* | LRST | AM | 0.00 | 0.01 | 0.02 | 0.740 |
| | | CM | 0.01 | 0.02 | 0.04 | 0.629 |
| | | AMOT | − 0.06 | 0.01 | − 0.33 | 0.000* |
| 5 (R² = 0.12) | 2-pager | AM | 0.06 | 0.14 | 0.03 | 0.687 |
| | | CM | 0.05 | 0.16 | 0.02 | 0.758 |
| | | AMOT | − 0.20 | 0.14 | − 0.12 | 0.137 |

*Significant at .050 level

As a follow-up analysis and in order to better understand the outcomes, we decided to also look into the direct relationships between students’ perceived needs and students’ experienced value/usefulness, with students’ cognitive outcomes (LRST and two-pager) by means of two additional regression analyses. The motivation behind this decision relates to possible issues regarding the motivational measures used, which might complicate the investigation of indirect relationships (see discussion). The results are provided in Table 7, and show that a significant regression equation was found for the LRST [ F (7,207) = 4.252, p < 0.001] and a marginally significant regression equation for the two-pager [ F (7,199) = 2.029, p = 0.053]. More specifically, students’ relatedness satisfaction and students’ perceived value/usefulness contribute to students’ scores on the two-pager and on the research skills test. As one would expect, the higher students’ value/usefulness, the higher students’ scores on both cognitive outcomes. In contrast to what one would expect, the higher students’ relatedness satisfaction, the lower students’ scores on the cognitive outcomes. These findings are reflected upon in the discussion section.

Linear model of predictors of LRST scores, and two-pager scores with beta values, standard errors, standardized beta values and significance values

| Regression | Dependent variable | Independent variable | B | SE | β | p |
|---|---|---|---|---|---|---|
| 6 (R² = 0.13) | LRST | AS | − 0.05 | 0.03 | − 0.19 | 0.055 |
| | | AF | − 0.01 | 0.02 | − 0.02 | 0.783 |
| | | CS | 0.03 | 0.02 | 0.11 | 0.239 |
| | | CF | 0.01 | 0.02 | − 0.04 | 0.667 |
| | | RS | − 0.03 | 0.01 | − 0.16 | 0.025* |
| | | RF | 0.03 | 0.02 | 0.14 | 0.061 |
| | | VU | 0.05 | 0.01 | 0.33 | 0.000* |
| 7 (R² = 0.07) | 2-pager | AS | − 0.22 | 0.27 | − 0.09 | 0.413 |
| | | AF | 0.07 | 0.17 | 0.04 | 0.667 |
| | | CS | 0.02 | 0.25 | 0.01 | 0.936 |
| | | CF | − 0.30 | 0.19 | − 0.14 | 0.116 |
| | | RS | − 0.31 | 0.14 | − 0.17 | 0.030* |
| | | RF | − 0.02 | 0.17 | − 0.12 | 0.906 |
| | | VU | 0.33 | 0.13 | 0.22 | 0.015* |
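As a plausibility check on the two follow-up regressions, the overall F statistic of an ordinary least squares model can be converted back into the proportion of explained variance using the standard identity R² = F·df₁ / (F·df₁ + df₂). The sketch below assumes both regressions are ordinary least squares models with an intercept; the function name is illustrative and not part of the original analysis. Under that assumption, the F values reported in the text reproduce the values of 0.13 and 0.07 listed next to regressions 6 and 7 in the table.

```python
def r_squared_from_f(f_value: float, df_model: int, df_residual: int) -> float:
    """Recover R^2 of an OLS regression from its overall F statistic.

    Uses the identity F = (R^2 / df_model) / ((1 - R^2) / df_residual),
    solved for R^2.
    """
    explained = f_value * df_model
    return explained / (explained + df_residual)


# F statistics and degrees of freedom as reported in the text.
lrst_r2 = r_squared_from_f(4.252, df_model=7, df_residual=207)
two_pager_r2 = r_squared_from_f(2.029, df_model=7, df_residual=199)

print(f"LRST:      R^2 = {lrst_r2:.2f}")       # 0.13
print(f"Two-pager: R^2 = {two_pager_r2:.2f}")  # 0.07
```

Both values are small, which is in line with the text's description of the two-pager regression as only marginally significant.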

How do students experience need satisfaction and need frustration in a deliberately designed (4C/ID based) learning environment?

As was mentioned in the method section, the fourth research question was analysed by labelling students’ qualitative feedback with the codes ‘autonomy satisfaction, autonomy frustration, competence satisfaction, competence frustration, relatedness satisfaction, and relatedness frustration’. By means of this approach, we could analyse students’ need experiences in a fine-grained manner. When a quote was applicable to more than one code, it was assigned multiple codes. In what follows, students’ quotes are indicated with the codes “BC” (baseline condition) or “NSC” (need satisfaction condition) in order to indicate which learning environment the student completed. Of all 233 students, 124 students provided qualitative feedback (44 in BC and 80 in NSC). In total, 266 quotes were labelled. Autonomy satisfaction was coded 40 times in BC and 41 times in NSC; autonomy frustration was coded 13 times in BC and four times in NSC; competence satisfaction was coded 28 times in BC and 34 times in NSC; competence frustration was coded 31 times in BC and 27 times in NSC; relatedness satisfaction was coded 10 times in BC and 16 times in NSC; and relatedness frustration was coded five times in BC and 17 times in NSC. Several observations could be drawn from the qualitative data.
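Because the two conditions differ in the number of students who gave feedback (44 versus 80), the raw code frequencies are easier to compare once they are totalled per condition and divided by the number of responding students. The following sketch does this with the frequencies reported above. It is a descriptive illustration only, not an analysis performed in the study, and the variable names are chosen for this example.

```python
# Code frequencies as reported in the text: (baseline, need supportive).
code_counts = {
    "autonomy satisfaction": (40, 41),
    "autonomy frustration": (13, 4),
    "competence satisfaction": (28, 34),
    "competence frustration": (31, 27),
    "relatedness satisfaction": (10, 16),
    "relatedness frustration": (5, 17),
}

# Number of students who provided qualitative feedback in each condition.
respondents = {"BC": 44, "NSC": 80}

bc_total = sum(bc for bc, _ in code_counts.values())
nsc_total = sum(nsc for _, nsc in code_counts.values())

# The per-condition totals should add up to the 266 labelled quotes.
assert bc_total + nsc_total == 266

# Average number of assigned codes per responding student.
bc_rate = bc_total / respondents["BC"]
nsc_rate = nsc_total / respondents["NSC"]

print(f"BC:  {bc_total} codes, {bc_rate:.1f} per responding student")
print(f"NSC: {nsc_total} codes, {nsc_rate:.1f} per responding student")
```

The totals are 127 codes in BC and 139 in NSC, or roughly 2.9 and 1.7 codes per responding student. Since a single quote could receive several codes, these rates describe how often codes were assigned, not how many distinct remarks each student made.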

Related to autonomy satisfaction, in both conditions, several students explicitly mentioned the personal value and usefulness of what they had learned in the learning environment. While in the baseline condition these references were often vague (“Now I know what people expect from me next year”; “I think I might use this information in the future”), some references appeared to be more specific in the need support condition (“I want to study psychology and I think I can use this information!”; “This is a good preparation for higher education and university”; “I can use this information to write an essay”; “I think the theory was interesting, because you are sure you will need it once. I don’t always have that feeling during a normal lesson in school”). In addition, students in both conditions mentioned that they found the material interesting, and that they appreciated the online format: “It’s different than just listening to a teacher, I kept interested because of the large variety in exercises and overall, I found it fun” (NSC).

Several comments were coded as ‘autonomy frustration’ in both conditions. Some students indicated that they found the material “useless” (BC), or that “they did not remember that much” (BC). Others found the material “uninteresting” (BC), “heavy and boring” (NSC) or “not fun” (BC). In addition, some students “did not like to complete the assignments” (NSC), or “prefer a book to learn theory” (NSC).

Related to competence satisfaction, students in both conditions found the material “clear” (BC, NSC). In addition, students appreciated the example answers, the difficulty level (“Some exercises were hard, but that is good. That’s a sign you’re learning something new” (NSC)), and the fact that the theory was segmented into several parts. Students also recognized that the material required complex skills: “I learned a lot, you had to think deeper or gain insights in order to solve the exercises” (NSC), “you really had to think to complete the exercises” (NSC). In the need satisfaction group, several quotes related to the specific need support provided. For example, students indicated that they appreciated the forum option: “If something was not clear, you could check your peer’s answers” (NSC). Students also valued the fact that they could work at their own pace: “I found it very good that we could solve everything at our own pace” (NSC); “good that you could choose your own pace, and if something was not clear to you, you could reread it at your own pace” (NSC). In addition, students appreciated the immediate feedback provided by the researcher: “I found it very good that we received personal feedback from xxx (name researcher). That way, I knew whether I understood the theory correctly” (NSC); and the fact that they could indicate their progress: “It was good that you could see how far you proceeded in the learning environment” (NSC).

In both the baseline and the need supportive condition, there were also several comments related to competence frustration. For example, students found exercises vague, unclear or too difficult. While students, overall, understood the theory provided, applying the theory to an integrative assignment appeared to be very difficult: “I did understand the several parts of the learning environment, but I did not succeed in writing a research proposal myself” (NSC); “I just found it hard to respond to questions. When I had to write my two-pager research proposal, I really struggled. I really felt like I was doing it entirely wrong” (NSC). In addition, many comments related to the fact that the theory was a lot to process in a short time frame, and students therefore indicated that it was hard to remember all the theory provided. This also led to pressure for some students: “Sometimes, I experienced pressure. When you see that your peers are finished, you automatically start working faster.” (BC).

Concerning relatedness satisfaction, in the baseline condition, students appreciated the chat function: “you could help each other and it was interesting to hear each other’s opinions about the topics we were working on” (BC). However, most students indicated that they did not make use of the chat or forum options. In the need satisfaction condition, students appreciated the forum and the chat function: “You knew you could always ask questions. This helped to process the learning material” (NSC), “My peers’ answers inspired me” (NSC), “Thanks to the chat function, I felt more connected to my peers” (NSC). In addition, students in the need satisfaction condition appreciated the fact that they could contact the researcher at any time.

Several students made comments related to relatedness frustration. In both groups, students missed the ‘live teaching’: “I tried my best, but sometimes I did not like it, because you do not receive the information in ‘real time’, but through videos” (BC). In addition, students missed their peers: “We had to complete the environment individually” (BC). While some students appreciated the opportunity of a forum, other students found this possibility stressful: “I think the forum is very scary. I posted everything I had to, but I found it very scary that everyone can see what you post” (NSC). Others did not like the fact that they needed to work individually: “Sometimes I lost my attention because no one was watching my screen with me” (NSC); “I found it hard because this was new information and we could not discuss it with each other” (NSC); “I felt lonely” (NSC); “It is hard to complete exercises without the help of a teacher. In the future this will happen more often, so I guess I will have to get used to it” (NSC); “When I see the teacher physically, I feel less reluctant to ask questions” (NSC).

The current intervention study aimed to explore the motivational and cognitive effects of providing need support in an online learning environment fostering upper secondary school students’ research skills. More specifically, we investigated the impact of autonomy, competence and relatedness support in an online learning environment on students’ scores on a research skills test, a research skills task, students’ autonomous motivation, controlled motivation, amotivation, need satisfaction, need frustration, and experienced value/usefulness. Adopting a pretest-intervention-posttest design, 233 upper secondary school behavioral sciences students’ motivational outcomes were compared between two conditions: (1) a 4C/ID inspired online learning environment condition (baseline condition), and (2) a condition with an identical online learning environment additionally providing support for students’ autonomy, relatedness and competence need satisfaction (need supportive condition). This study aims to contribute to the literature by exploring the integration of need support for all three needs (the need for competence, relatedness and autonomy) in an ecologically valid setting. In what follows, the findings are discussed taking into account the COVID-19 affected circumstances in which the study took place.

As was hypothesized based on existing research (Costa et al., 2021), results showed significant learning gains on the LRST cognitive measure in both conditions, indicating that the learning environments in general succeeded in improving students’ research skills. The current study did not find any significant differences in these learning gains between the two conditions. Controlling for a priori differences between the conditions on the LRST pretest measure, students in the need support condition outperformed students in the baseline condition on the two-pager task. However, overall, the scores on the research skills task were quite low, indicating that students still struggle to write a research proposal. This task can be considered more complex (van Merriënboer & Kirschner, 2018) than the research skills test, as students are required to combine their conceptual and procedural knowledge in one assignment. Indeed, in the qualitative feedback, students indicate that they understand the theory and are able to apply the theory in basic exercises, but that they struggle to integrate their knowledge in a research proposal. Future research could set up more extensive interventions explicitly targeting students’ progress while writing a research proposal, for example using development portfolios (van Merriënboer et al., 2006).

The effect of the intervention on the motivational outcome measures was also investigated. Since we experimentally manipulated need support, this study hypothesized that students in the need supportive condition would show higher scores for autonomous motivation, value/usefulness and need satisfaction, and lower scores for controlled motivation, amotivation and need frustration, compared to students in the baseline condition (Deci & Ryan, 2000). However, the analyses showed that students in the two conditions did not differ on the value/usefulness, autonomy satisfaction, autonomy frustration, competence satisfaction, competence frustration and relatedness frustration measures. In contrast to what was hypothesized, students in the baseline condition reported higher relatedness satisfaction compared to students in the need supportive condition. No differences were found in students’ autonomous motivation and controlled motivation. However, as was expected, students in the need supportive condition did report lower levels of amotivation compared to students in the baseline condition. Still, for the current study, one could question the role of the need support in this respect, as the current intervention did not succeed in manipulating students’ need experiences. In what follows, possible explanations for these findings are outlined in light of the existing literature.

Need experiences