- Help & FAQ

Gamification of Education and Learning: A Review of Empirical Literature

Research output : Chapter in Book/Report/Conference proceeding › Conference contribution › Scientific › peer-review

Publication series

Publication forum classification.

- Publication forum level 1

Access to Document

- http://urn.fi/urn:nbn:de:0074-2186-5

- http://urn.fi/URN:NBN:fi:uta-201811132835

T1 - Gamification of Education and Learning

T2 - International GamiFIN Conference

AU - Majuri, Jenni

AU - Koivisto, Jonna

AU - Hamari, Juho

N1 - jufoid=53269 INT=tie,"Majuri, Jenni"

M3 - Conference contribution

T3 - CEUR Workshop Proceedings

BT - Proceedings of the 2nd International GamiFIN Conference, GamiFIN 2018

A2 - Koivisto, Jonna

A2 - Hamari, Juho

PB - CEUR-WS

Y2 - 21 May 2018 through 23 May 2018

The Gamification of Learning: a Meta-analysis

- Meta-Analysis

- Open access

- Published: 15 August 2019

- Volume 32 , pages 77–112, ( 2020 )

Cite this article

You have full access to this open access article

- Michael Sailer ORCID: orcid.org/0000-0001-6831-5429 1 &

- Lisa Homner 1

131k Accesses

506 Citations

112 Altmetric

Explore all metrics

This meta-analysis was conducted to systematically synthesize research findings on effects of gamification on cognitive, motivational, and behavioral learning outcomes. Results from random effects models showed significant small effects of gamification on cognitive ( g = .49, 95% CI [0.30, 0.69], k = 19, N = 1686), motivational ( g = .36, 95% CI [0.18, 0.54], k = 16, N = 2246), and behavioral learning outcomes ( g = .25, 95% CI [0.04, 0.46], k = 9, N = 951). Whereas the effect of gamification on cognitive learning outcomes was stable in a subsplit analysis of studies employing high methodological rigor, effects on motivational and behavioral outcomes were less stable. Given the heterogeneity of effect sizes, moderator analyses were conducted to examine inclusion of game fiction , social interaction , learning arrangement of the comparison group , as well as situational, contextual, and methodological moderators, namely, period of time , research context , randomization , design , and instruments . Inclusion of game fiction and social interaction were significant moderators of the effect of gamification on behavioral learning outcomes. Inclusion of game fiction and combining competition with collaboration were particularly effective within gamification for fostering behavioral learning outcomes. Results of the subsplit analysis indicated that effects of competition augmented with collaboration might also be valid for motivational learning outcomes. The results suggest that gamification as it is currently operationalized in empirical studies is an effective method for instruction, even though factors contributing to successful gamification are still somewhat unresolved, especially for cognitive learning outcomes.

Similar content being viewed by others

Gamification in Education and Its Impact on Student Motivation—A Critical Review

How to Use Game Elements to Enhance Learning: Applications of the Theory of Gamified Learning

Gamification for educational purposes: What are the factors contributing to varied effectiveness?

Avoid common mistakes on your manuscript.

In recent years, the concept of gamification, defined as “the use of game design elements in non-game contexts” (Deterding et al. 2011 , p. 9), has received increased attention and interest in academia and practice, with education among the top fields of gamification research (Dichev and Dicheva 2017 ; Hamari et al. 2014 ; Seaborn and Fels 2015 ). Its hypothesized motivational power has made gamification an especially promising method for instructional contexts. However, as the popularity of gamification has increased, so have critical voices describing gamification as “the latest buzzword and the next fad” (Boulet 2012 , p. 1) or “Pavlovication” (Klabbers 2018 , p. 232). But how effective is gamification when it comes to learning, and what factors contribute to successful gamification?

Even though considerable research efforts have been made in this field (Hamari et al. 2014 ; Seaborn and Fels 2015 ), conclusive meta-analytic evidence on the effectiveness of gamification in the context of learning and education has yet to be provided. Therefore, the aim of this analysis was to statistically synthesize the state of research on the effects of gamification on cognitive, motivational, and behavioral learning outcomes in an exploratory manner. Furthermore, with this meta-analysis, not only did we try to answer the question of whether learning should be gamified but also how . Thus, we also investigated potential moderating factors of successful gamification to account for conceptual heterogeneity in gamification (Sailer et al. 2017a ). What is more, we included contextual, situational, and methodological moderators to account for different research contexts and study setups as well as methodological rigor in primary studies. To further investigate the stability and robustness of results, we assessed publication bias and computed subsplit analyses, which included studies with high methodological rigor.

Effects of Gamification on Learning

Gamification in the context of learning can be referred to as gamified learning (see Armstrong and Landers 2017 ; Landers 2014 ). Even though gamified learning and game-based learning have overlapping research literatures, a common game design element toolkit (Landers et al. 2018 ), and the same focus on adding value beyond entertainment, that is, using the entertaining quality of gamification interventions or (serious) games for learning (see Deterding et al. 2011 ; Zyda 2005 ), they are different in nature. Whereas game-based learning approaches imply the design of fully fledged (serious) games (see Deterding et al. 2011 ), gamified learning approaches focus on augmenting or altering an existing learning process to create a revised version of this process that users experience as game-like (Landers et al. 2018 ). Thus, gamification is not a product in the way that a (serious) game is; gamification in the context of learning is a design process of adding game elements in order to change existing learning processes (see Deterding et al. 2011 ; Landers et al. 2018 ).

Although many studies that have examined gamification have lacked a theoretical foundation (Hamari et al. 2014 ; Seaborn and Fels 2015 ), some authors have attempted to explain the relationship between gamification and learning by providing frameworks such as the theory of gamified learning (Landers 2014 ). This theory defines four components: instructional content, behaviors and attitudes, game characteristics, and learning outcomes. The theory proposes that instructional content directly influences learning outcomes as well as learners’ behavior. Because gamification is usually not used to replace instruction, but rather to improve it, effective instructional content is a prerequisite for successful gamification (Landers 2014 ). The aim of gamification is to directly affect behaviors and attitudes relevant to learning. In turn, these behaviors and attitudes are hypothesized to affect the relationship between the instructional content and learning outcomes via either moderation or mediation, depending on the nature of the behaviors and attitudes targeted by gamification (Landers 2014 ). The theory of gamified learning proposes a positive, indirect effect of gamification on learning outcomes. However, it is important to note that this theory provides no information about effective learning mechanisms triggered by game design elements. Such mechanisms can be found in well-established psychological theories such as self-determination theory (Ryan and Deci 2002 ).

Self-determination theory has already been successfully applied in the contexts of games (see Rigby and Ryan 2011 ) and gamification (see Mekler et al. 2017 ; Sailer et al. 2017a ). It postulates psychological needs for competence, autonomy, and social relatedness. The satisfaction of these needs is central for intrinsic motivation and subsequently for high-quality learning (see Ryan and Deci 2000 ), with self-determination theory emphasizing the importance of the environment in satisfying these psychological needs (Ryan and Deci 2002 ). Enriching learning environments with game design elements modifies these environments and potentially affects learning outcomes. From the self-determination perspective, different types of feedback can be central learning mechanisms triggered by game design elements. Constantly providing learners with feedback is a central characteristic of serious games (Wouters et al. 2013 ; Prensky 2001 ) and of gamification (Werbach and Hunter 2012 ). Although the effectiveness of feedback has been shown to vary depending on a variety of criteria such as the timing of feedback (immediate, delayed), frame of reference (criterial, individual, social), or level of feedback (task, process, self-regulation, self), feedback is among the most powerful factors in the relationship between educational interventions and learning in general (Hattie and Timperley 2007 ). Based on the theory of gamified learning as well as self-determination theory, gamification might influence learning outcomes in a positive way.

Previous research that has attempted to synthesize effects of gamification on learning outcomes has done this almost exclusively with (systematic) reviews. An exception is an early meta-analysis on gamification in educational contexts that was based on data from 14 studies published between 2013 and 2015 (Garland 2015 ). Under a random effects model, this meta-analysis identified a positive, medium-sized correlation between the use of gamification and learning outcomes ( r = .31, 95% CI [0.11, 0.47]). It should be noted that learning outcomes in this case almost exclusively pertained to motivational outcomes, except for one study that investigated knowledge retention. Though promising, these results should be viewed with caution because they were methodologically limited. The analysis was highly likely to be underpowered and had only limited generalizability due to the small sample size. Furthermore, r as an estimator of effect size cannot correct for the bias caused by small samples, which can lead to an overestimation of the effect. Also, no evaluation of publication bias was attempted.

Apart from this analysis, a series of reviews providing at least the general tendency of research findings in the field have been conducted. A mutlidisciplinary literature review identified 24 peer-reviewed empirical studies published between 2008 and 2013 (Hamari et al. 2014 ). Nine of the 24 studies were conducted in the context of education and learning. All in all, the results were mixed, despite the presence of a positive tendency suggesting the existence of confounding variables. Seaborn and Fels ( 2015 ) returned concordant findings in their review of eight empirical studies (published between 2011 and 2013) from the domain of education; they also identified a positive tendency but predominantly found mixed results. Results from these reviews were attributed to differences in gamified contexts and participant characteristics, but effects of novelty and publication bias were also discussed as possible reasons for these mixed findings.

A series of reviews specifically focusing on educational contexts was conducted by Dicheva et al. ( 2015 ), Dicheva and Dichev ( 2015 ), and Dichev and Dicheva ( 2017 ). The review by Dicheva et al. ( 2015 ) consisted of 34 empirical studies published between 2010 and 2014. The majority of experiments ( k = 18) reported positive results for gamification on various motivational, behavioral, and cognitive variables. Using the same search strategies as Dicheva et al. ( 2015 ), a subsequent review by Dicheva and Dichev ( 2015 ) resulted in 41 reports published between July 2014 and June 2015. The majority of studies were identified as inconclusive due to methodological inadequacies. Only 10 reports provided conclusive positive evidence, and three studies showed negative effects. Dichev and Dicheva ( 2017 ) followed up by conducting another subsequent literature search focusing on studies published between July 2014 and December 2015. They identified 51 additional studies, of which 41 experiments investigated the effect of gamification on motivational, behavioral, and cognitive outcomes. Whereas 12 experiments reported positive results, three reported negative results. Again, the majority of the experiments ( k = 26) were inconclusive. The large numbers of inconclusive studies in these reviews point toward a general problem: Gamification research lacks methodological rigor.

According to meta-analytic results and several reviews, gamification tends to have positive effects on different kinds of learning outcomes, albeit with mixed results. Thus, in this meta-analysis, we statistically synthesized the state of current research on gamification to investigate the extent to which gamification affects cognitive, motivational, and behavioral learning outcomes compared with conventional instructional methods.

Moderating Factors

Gamification applications can be very diverse, and research has often failed to acknowledge that there are many different game design elements at work that can result in different affordances for learners, modes of social interactions, and learning arrangements (Sailer et al. 2017a ). Thus, we included different moderating factors to account for conceptual heterogeneity in gamification. Further, because contextual and situational factors might influence the effects of gamification on learning outcomes (Hamari et al. 2014 ), and gamification research lacks methodological rigor (Dicheva and Dichev 2015 ; Dichev and Dicheva 2017 ), we also included contextual, situational, and methodological moderators. The process of choosing potential moderating factors for the effects of gamification on learning outcomes was iterative in nature. We included moderating factors that were both theoretically interesting and which the literature was large enough to support their inclusion.

Inclusion of Game Fiction

From a self-determination theory perspective, game contexts can potentially satisfy the need for autonomy and relatedness by including choices, volitional engagement, sense of relevance, and shared goals (Rigby and Ryan 2011 ). These are assumed to be triggered by meaningful stories, avatars, nonplayer characters, or (fictional) teammates (Rigby and Ryan 2011 ; Sailer et al. 2017a ). A shared attribute of these elements is that they provide narrative characteristics or introduce a game world, both of which include elements of fantasy (Bedwell et al. 2012 ; Garris et al. 2002 ). In general, they focus on game fiction , which is defined as the inclusion of a fictional game world or story (Armstrong and Landers 2017 ). Inclusion of game fiction is closely related to the use of narrative anchors and has been shown to be effective for learning as it situates and anchors learning in a context (Clark et al. 2016 ) and can further serve as a cognitive framework for problem solving (Dickey 2006 ). In the context of games, results on the effectiveness of the inclusion of game fiction have been mixed (Armstrong and Landers 2017 ). Whereas Bedwell et al. ( 2012 ) found positive effects of the inclusion of game fiction on knowledge and motivation in their review, meta-analyses by others such as Wouters et al. ( 2013 ) found that serious games with narrative elements are not more effective than serious games without narrative elements. Thus, we investigated whether the use of game fiction moderates the effects of gamification on cognitive, motivational, and behavioral learning outcomes.

Social Interaction

The impact of relatedness in interpersonal activities can be crucial (Ryan and Deci 2002 ). Therefore, the type of social interaction that is likely to occur as a result of gamification could affect its relationship with learning outcomes. Collaboration and competition can be regarded as particularly important in this context (Rigby and Ryan 2011 ).

The term collaboration in this meta-analysis subsumes both collaborative and cooperative learning arrangements (i.e., situations in which learners work together in groups to achieve a shared goal), while being assessed either as a group (collaborative) or individually (cooperative; Prince 2004 ). In the broader context of games, collaboration has the potential to affect the needs for both relatedness and competence (Rigby and Ryan 2011 ). Collaboration not only allows for team work and thus the experience of being important to others, but it also enables learners to master challenges they otherwise might not be able to overcome on their own, which can result in feelings of competence.

Competition can cause social pressure to increase learners’ level of engagement and can have a constructive effect on participation and learning (Burguillo 2010 ). However, it also has the potential to either enhance or undermine intrinsic motivation (Rigby and Ryan 2011 ). In this context, two types of competition can be distinguished. On the one hand, destructive competition occurs if succeeding by tearing others down is required, resulting in feelings of irrelevance and oppression. On the other hand, constructive competition occurs if it is good-natured and encourages cooperation and mutual support (i.e., if competition is aimed at improving everyone’s skills instead of defeating someone). In this sense, constructive competition has the potential to foster feelings of relatedness, thereby enhancing intrinsic motivation (Rigby and Ryan 2011 ).

Collaboration—as well as competition augmented by aspects of collaboration (i.e., constructive competition)—can have additional beneficial effects on intrinsic motivation when compared with solitary engagement in an activity; as in cases of collaboration and competition augmented by aspects of collaboration, the need for relatedness is fostered additionally. Mere competition, however, can thwart feelings of relatedness when the goal is to defeat each other rather than to improve skills together (see Rigby and Ryan 2011 ).

Findings from the context of games have shown that collaborative gameplay can be more effective than individual gameplay (Wouters et al. 2013 ). Clark et al. ( 2016 ) included competition in their meta-analysis on digital games and found that combinations of competition and collaboration as well as single-player games without competitive elements can outperform games with mere competition. In this meta-analysis, we investigated whether different types of social interaction moderate the effects of gamification on cognitive, motivational, and behavioral learning outcomes.

Learning Arrangement of the Comparison Group

Active engagement in cognitive processes is necessary for effective and sustainable learning as well as deep levels of understanding (see Wouters et al. 2008 ). This emphasis on active learning in educational psychology is aligned with the (inter)active nature of games (Wouters et al. 2013 ). Similar to games, gamification also has high potential to create instructional affordances for learners to engage in active learning. Based on the theory of gamified learning, gamification is assumed to affect learning outcomes by enhancing the attitudes and behaviors that are relevant for learning (e.g., when rewards for taking high-quality notes are provided in gamification; Landers 2014 ). A prerequisite is that the behavior or attitude that is targeted by gamification must itself influence learning (Landers 2014 ) and thus create instructional affordances for learners to actively engage in cognitive processes with the learning material. However, how learners interact with the environment has to be considered because learners may interact with the environment in different ways and carry out certain learning activities whether or not they are intended by gamification designers or researchers (see Chi and Wylie 2014 ; Young et al. 2012 ).

Further, in between-subject studies, which were included in this meta-analysis, the learning arrangement of the comparison condition, against which gamification was contrasted, is crucial (see Chi and Wylie 2014 ). Learners in a comparison condition can receive different prompts or instructions to engage in different learning activities and thus bias the effects of gamification. Therefore, it is important to differentiate between the passive and active instructions of comparison groups. Whereas passive instruction includes listening to lectures, watching instructional videos, and reading textbooks, active instruction involves explicitly prompting the learners to engage in learning activities (e.g., assignments, exercises, laboratory experiments; Sitzmann 2011 ; Wouters et al. 2013 ). Similar to the approach used by Wouters et al. ( 2013 ) in the context of games and Sitzmann ( 2011 ) in the context of simulation games, we included the comparison group’s learning arrangement as a potential moderator of effects of gamification on cognitive, motivational, and behavioral learning outcomes.

Apart from these moderators, situational and contextual moderators were also included in the analysis. Thus, we included the period of time in which gamification was applied and the research context as potential moderators.

Period of Time

Previous reviews have indicated that the period of time during which gamification was used and investigated in primary studies has shown substantial variance (Dichev and Dicheva 2017 ; Seaborn and Fels 2015 ). One the one hand, reviews have raised the question of whether effects of gamification persist in the long run (Dichev and Dicheva 2017 ; Hamari et al. 2014 ; Seaborn and Fels 2015 ). On the other hand, research in the context of games has indicated that the effects of games are larger when players engage in multiple sessions and thus play over longer periods of time (Wouters et al. 2013 ). Thus, we included the period of time gamification was used as a potential moderator of its effects on cognitive, motivational, and behavioral learning outcomes.

Research Context

Gamification has been studied in different research contexts. Whereas the majority of studies found in reviews focusing on education were conducted in higher education settings, some of them were performed in primary and secondary school settings (see Dichev and Dicheva 2017 ). Further, some studies have been deployed in the context of further education or work-oriented learning (e.g., Lombriser et al. 2016 ) or informal settings with no reference to formal, higher, or work-related education (e.g., Sailer et al. 2017a ). Therefore, this meta-analysis includes the context of research as a moderating factor for effects of gamification.

As meta-analytic methods allow for a synthesis of studies using different designs and instruments, the degree of methodological rigor can vary between primary studies and thus jeopardize the conclusions drawn from meta-analyses (Wouters et al. 2013 ). Because studies in the context of gamification have often lacked methodological rigor (Dicheva and Dichev 2015 , 2017 ), we included methodological factors to account for possible differences in methodological study design and rigor across primary studies.

Randomization

Randomly assigning learners to experimental conditions allows researchers to rule out alternative explanations for differences in learning outcomes between different conditions. However, quasi-experimental studies do not allow alternative explanations to be ruled out (Wouters et al. 2013 ; Sitzmann 2011 ). For this reason, we included randomization as a moderating factor to account for methodological rigor.

Besides randomization, the design of the primary studies can indicate methodological rigor. Primary studies using posttest-only designs cannot account for prior knowledge or initial motivation. The administration of pretests is particularly relevant for quasi-experimental studies because effects can be biased first by not randomly assigning learners to conditions and second by not controlling for learners’ prior knowledge and motivation. Thus, we included design as a moderating factor to further account for methodological rigor in primary studies.

Instruments

Previous reviews have indicated that primary studies investigating gamification have often not used properly validated psychometric measurements to assess relevant outcomes (Dichev and Dicheva 2017 ; Hamari et al. 2014 ; Seaborn and Fels 2015 ). The use of standardized instruments can help to ensure the comparability of study results and further ensure the reliable measurement of variables (see Hamari et al. 2014 ). Thus, we included the type of instruments used in primary studies as a moderating factor of effects of gamification on cognitive, motivational, and behavioral learning outcomes.

To sum up, in this meta-analysis, we aimed to statistically synthesize the state of current research on gamification on cognitive, motivational, and behavioral learning outcomes. Further, we included the moderating factors inclusion of game fiction , social interaction , learning arrangement of the comparison group , period of time , research context , randomization , design , and instruments that potentially affect the relationships between gamification and cognitive, motivational, and behavioral learning outcomes. Further, we investigated publication bias and the stability of the findings by performing subsplits that included only primary studies that applied high methodological rigor.

Literature Search

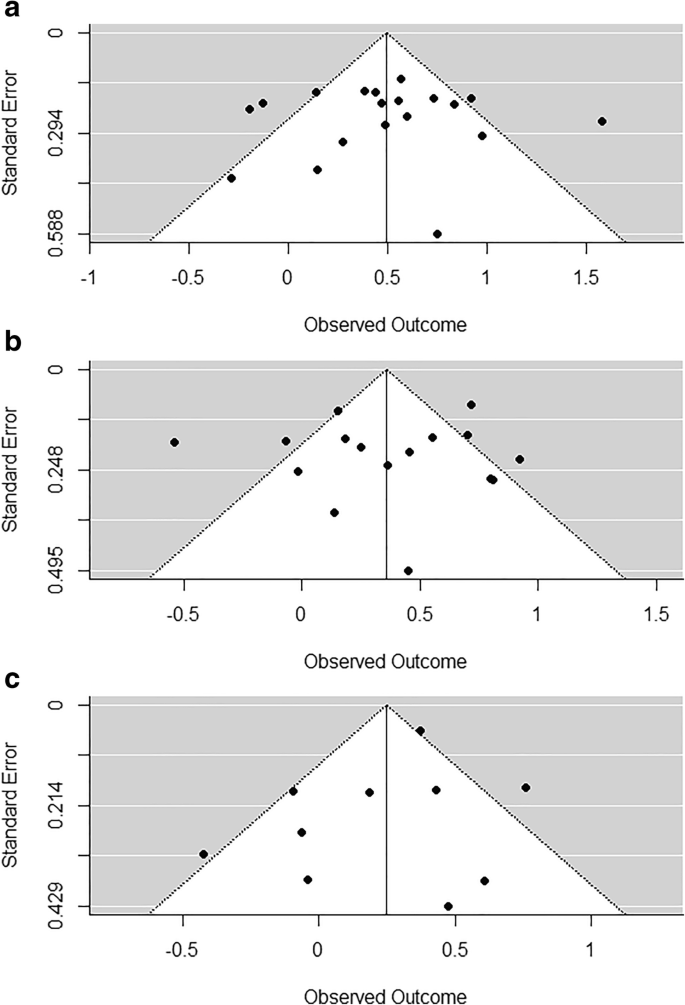

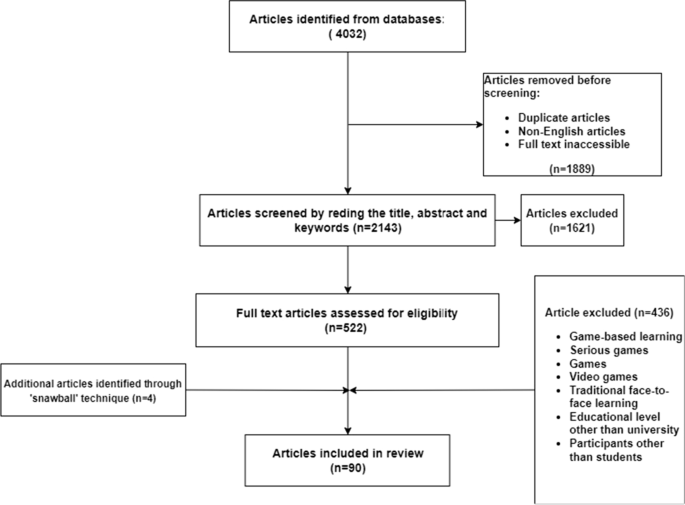

In order to maximize the sensitivity of the search and adopting the search criteria used in the review by Seaborn and Fels ( 2015 ), the terms gamification and gamif* were used in the academic literature search in all subject areas. Specific game design elements were not used as search terms because gamification research lacks consistent terms, definitions, and taxonomies for game design elements and agreed upon lists of game design elements that claim to be exhaustive are lacking (see Landers et al. 2018 ; Sailer et al. 2017a ). Including specific game design elements as search terms would put the analysis in danger of being biased toward specific game design elements while leaving out others that are less common and thus potentially not part of the search terms. The years of publication were not restricted. The literature search was conducted on March 3, 2017. We searched the following academic data bases: ACM Digital Library, ERIC, IEEE Xplore, JSTOR, PubMed, ScienceDirect, and SpringerLink. Citations from these data bases were directly exported from the websites. Aside from this search in academic data bases, we conducted a Google Scholar search with the same terms to further maximize the scope of our literature search. To retrieve citations from Google Scholar, we used Publish or Perish (version 5), a software tool that allows all accessible search results to be automatically downloaded. We also screened the reference lists from Garland’s ( 2015 ) meta-analysis and the reviews by Hamari et al. ( 2014 ), Seaborn and Fels ( 2015 ), Dicheva et al. ( 2015 ), Dicheva and Dichev ( 2015 ), and Dichev and Dicheva ( 2017 ). After removing duplicates, the literature search resulted in a total of 5548 possibly eligible records. An overview of the total number of search results per data base is found in Fig. 1 . Google Scholar only shows a maximum of 1000 search results per search query. Therefore, 1100 denotes the number of records identified and retrieved from separate queries using gamification and gamif* .

Study flow diagram

Inclusion and Exclusion Criteria

- Gamification

Studies were required to include at least one condition in which gamification, defined as “the use of game design elements in non-game contexts” (Deterding et al. 2011 , p. 9), was used as an independent variable. Accordingly, studies describing interventions using the term gamification were excluded if the definition of gamification used in this meta-analysis did not apply to the intervention.

Learning Outcomes

Eligible studies were required to assess at least one learning outcome. Learning outcomes were divided into three categories: cognitive, motivational, and behavioral learning outcomes. Cognitive learning outcomes refer to conceptual knowledge or application-oriented knowledge. Conceptual knowledge contains knowledge of facts, principles, and concepts, whereas application-oriented knowledge comprises procedural knowledge, strategic knowledge, and situational knowledge (de Jong and Ferguson-Hessler 1996 ). Adopting a broad view of motivation (see Wouters et al. 2013 ), m otivational learning outcomes encompass (intrinsic) motivation, dispositions, preferences, attitudes, engagement, as well as feelings of confidence and self-efficacy. Behavioral learning outcomes refer to technical skills, motor skills, or competences, such as learners’ performance on a specific task, for example, a test flight after aviation training (Garris et al. 2002 ).

Eligible studies were required to be published in English.

Research Design

Only primary studies applying quantitative statistical methods to examine samples of human participants were eligible. Furthermore, descriptive studies that did not compare different groups were excluded because the data obtained from such studies does not allow effect sizes to be calculated.

Control Group

Studies were required to use a between-subject design and to compare at least one gamification condition with at least one condition involving another instructional approach. As the goal of this meta-analysis was to investigate the addition of game design elements to systems that did not already contain them, studies comparing gamification with fully fledged games were ineligible.

Availability of Statistical Data

Studies were required to report sufficient statistical data to allow for the application of meta-analytic techniques.

Coding Procedure

First, we screened all titles for clearly ineligible publications (e.g., publications published in languages other than English). Next, we coded all remaining abstracts for eligibility. Finally, the remaining publications were retrieved and coded at the full-text level. An overview of the number of search results excluded per step is found in Fig. 1 .

Moderator coding for eligible studies was then performed by two independent coders on a random selection of 10 studies (approximately 25%), with interrater reliability ranging from κ = .76 to perfect agreement. Remaining coding discrepancies were discussed between the authors until mutual agreement was reached before coding the remaining studies. Furthermore, all statistical data were double-coded, and differing results were recalculated by the authors. Finally, data on moderator variables were extracted. The previously introduced moderators, which potentially influence the effectiveness of gamification on learning outcomes, were coded as follows.

Studies using game fiction by providing a narrative context or introducing a game world (e.g., meaningful stories or avatars) were coded yes , whereas studies that did not use game fiction were coded no . For this moderator, interrater reliability was κ = .76.

Studies in which learners competed against each other or nonplayer characters during gamified interventions were coded as competitive , whereas studies in which learners collaborated with each other or nonplayer characters were coded as collaborative . If a study included both competitive and collaborative elements, it was assigned the code competitive-collaborative . Studies in which learners engaged in a learning activity entirely on their own were coded as none . For this moderator, interrater reliability was κ = .83.

Passive instructional methods of the comparison group include listening to lectures, watching instructional videos, and reading textbooks. Active instruction refers to learning arrangements that explicitly prompt learners to engage in learning activities (e.g., assignments, exercises, laboratory experiments; see Sitzmann 2011 ). Mixed instruction refers to a combination of passive and active instructional methods (see Wouters et al. 2013 ). Studies using a waitlist condition as a control group were coded as untreated. For this moderator, interrater reliability was κ = .86.

The duration of the intervention was operationalized as the period of time over which the intervention took place. Studies were assigned to one of the categories: 1 day or less , 1 week or less (but longer than 1 day), 1 month or less (but longer than 1 week), half a year or less (but longer than 1 month), or more than half a year . For this moderator, coders achieved perfect agreement.

Depending on the research context, studies were coded as school setting or higher education setting . Studies in the context of further education or work-oriented learning were coded as work-related learning setting. Studies with no reference to formal, higher, or work-related education were coded as informal training setting . For this moderator, coders achieved perfect agreement.

If participants were assigned to the experimental and control groups randomly, the study was coded as experimental , whereas publications using nonrandom assignment were assigned the value quasi-experimental . For this moderator, coders reached perfect agreement.

Furthermore, studies were coded on the basis of whether they exclusively used posttest measures ( posttest only ) or also administered a pretest ( pre- and posttest ). For this moderator, coders achieved perfect agreement.

Studies using preexisting, standardized instruments to measure the variables of interest were coded as standardized , whereas studies using adapted versions of standardized measures were coded as adapted . If the authors developed a new measure, studies were assigned the value self-developed . For this moderator, coders reached perfect agreement.

Final Sample

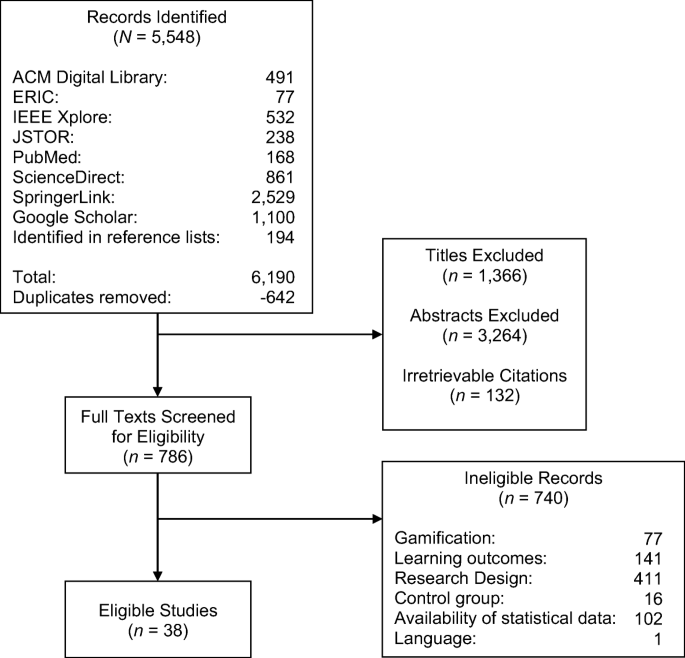

Application of the exclusion criteria detailed above resulted in a final sample of 38 publications reporting 40 experiments. Three of the studies (Wasson et al. 2013 ; Grivokostopoulou et al. 2016 ; Sanmugam et al. 2016 ) were excluded from the analysis to avoid bias after the forest plot; displaying the effect sizes for the analysis of cognitive learning outcomes showed that the corresponding effect sizes were extraordinarily large (see Fig. 2 ). An examination of the forest plots for motivational and behavioral learning outcomes revealed no remarkable outliers (see Fig. 2 ). Furthermore, the studies by de-Marcos et al. ( 2014 ) and Su and Cheng ( 2014 ) plus the first of two studies reported by Hew et al. ( 2016 ) were excluded from the analysis because they reported the same experiments as previously published or more extensive studies included in this sample.

Forest plots showing the distribution of effect sizes for cognitive (a), motivational (b), and behavioral (c) learning outcomes. Points and lines display effect sizes (Hedges’ g ) and confidence intervals, respectively. The overall effect size is shown by the diamond on the bottom, with its width reflecting the corresponding confidence interval

The final sample of studies reporting cognitive learning outcomes comprised 19 primary studies reporting 19 independent experiments. They were published between 2013 and 2017 and examined a total of 1686 participants. Considering motivational learning outcomes, the final sample of studies consisted of 16 primary studies reporting 16 independent experiments that were published between 2013 and 2017 and examined a total of 2246 participants. Finally, the final sample of studies examining behavioral learning outcomes consisted of nine primary studies reporting 10 independent experiments. These experiments were published between 2014 and 2017 and examined a total of 951 participants.

Statistical Analysis

Effect sizes were estimated using the formulas provided by Borenstein et al. ( 2009 ). First, Cohen’s d was determined by dividing the difference between the means of the experimental and control groups by the pooled standard deviation. If no means and/or standard deviations were available, effect sizes were estimated on the basis of the t , F , or r statistics. To correct for possible bias caused by small samples, Cohen’s d was then used to calculate Hedges’ g (Hedges 1981 ).

For studies with pretests, effect sizes were adjusted to allow for a more accurate estimation of the effect by controlling for pretest effects. In these cases, Hedges’ g was calculated for both pre- and posttest comparisons between groups. Posttest values were then adjusted by subtracting pretest effect sizes from posttest effect sizes, while the respective variances were added up (see Borenstein et al. 2009 ).

Another issue concerns studies reporting multiple effect sizes. This can occur when studies use multiple outcome measures or compare the same control group with more than one experimental group. To avoid the bias caused by dependent effect sizes, they were synthesized by calculating the mean effect size per study and the respective variance, taking into account the correlation between outcomes as recommended by Borenstein et al. ( 2009 ). The aggregation was performed using the R statistical environment (version 3.4.1) and Rstudio (version 1.0.143) with the MAd package.

For some studies that reported data on different experimental groups or outcomes, even though they belonged to the same study, they required assignment to different moderator levels. In these cases, aggregation would lead to a loss of data and not allow these studies to be included in the respective subgroup analyses because they could not be unambiguously assigned to a single moderator level. Therefore, groups were treated separately and not aggregated for the moderator analysis but were aggregated for all analyses not affected by this problem.

Because heterogeneity among the participant samples and the experimental conditions could be presumed, we used a random effects model for the main analysis (Borenstein et al. 2009 ), which was also conducted using R and Rstudio with the metafor and matrix packages. Because true effect sizes can vary under a random effects model, it is important to identify and quantify this heterogeneity. Using the Q statistic (Borenstein et al. 2009 ), for which the p value indicates the presence or absence of heterogeneity, the degrees of homogeneity were assessed for the effect sizes. Additionaly, I 2 was used to quantify the degree of inconsistency for the results of the included studies on a scale ranging from 0 to 100%.

Meta-regressions under a mixed effects model (Borenstein et al. 2009 ) were conducted for the moderator analyses using the metafor and matrix packages in R and R studio. This analysis uses a Q test as an omnibus test to estimate the heterogeneity among studies explained by the moderator, as well as residual heterogeneity (i.e., whether unexplained between-study variance remained). If a moderator level had a sample size smaller than two, it was excluded from the analysis. For categorical moderators, post hoc comparisons under a random effects model were calculated with the MAd package if the omnibus test indicated significance.

Because the reporting of a sufficient amount of statistical data on at least one outcome of interest was used as an eligibility criterion, there were no missing data in the summary effect analyses. However, there were missing data for the moderation analyses because not all studies reported sufficient information about all variables, and thus, they could not be unambiguously coded. These cases were excluded from the respective moderator analyses.

Furthermore, bivariate correlations between the presence of learning outcomes and moderator levels were computed for all studies. Therefore, all learning outcomes and moderator levels were dummy coded and included in a correlation matrix. The moderators inclusion of game fiction , randomization , and design were already initially dichotomously coded, and thus, only the moderator levels that indicated the presence of game fiction and randomization and the application of pre-posttest designs were included in the matrix. The database for this analysis is the final sample detailed above, excluding studies reporting the same experiment as other studies and the outliers described above.

Publication Bias

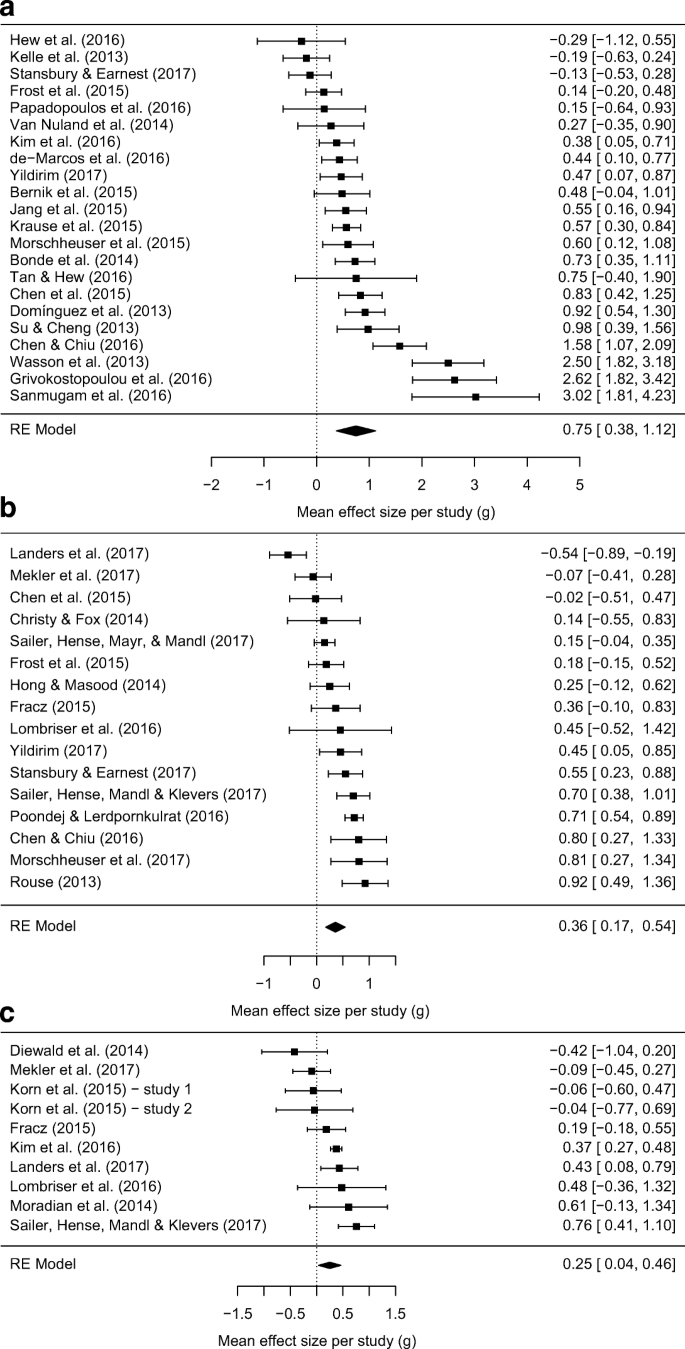

The absence of publication bias is highly unlikely unless a set of preregistered reports is examined (Carter et al. 2019 ). Because this was not the case for the primary studies included in this meta-analysis, the possibility of publication bias was explored by using a funnel plot, a selection model, and the fail-safe number. Evaluations of the funnel plots showed no obvious asymmetries for the samples of studies reporting cognitive, motivational, and behavioral learning outcomes (see Fig. 3 ), initially indicating the absence of strong publication bias.

Funnel plot for cognitive (a), motivational (b), and behavioral (c) learning outcomes. Black dots indicate studies from the present sample positioned by their respective estimated effect size and standard error

The selection model indicated that there was no publication bias for the subsets of studies reporting cognitive, χ 2 (1) = .45, p = .50, motivational, χ 2 (1) = 1.04, p = .31, and behavioral learning outcomes, χ 2 (1) = .44, p = .50. For this reason, estimates from the initial random effects model were computed for the cognitive, motivational, and behavioral learning outcomes.

Further, Rosenberg’s ( 2005 ) fail-safe number was used as an estimate of the degree to which publication bias existed in the sample. The fail-safe number indicates the number of nonsignificant studies that would need to be added to reduce a significant effect to a nonsignificant effect. It was computed for all significant summary effects as well as for every moderator level that showed a statistically significant effect. The fail-safe number can be considered robust when it is greater than 5 n + 10, with n standing for the number of primary studies (Rosenthal 1991 ).

To further investigate the stability of the effects, subsplits were computed for the summary effect and moderator analyses for conceptual, situational, and contextual moderators. In these subsplits for cognitive, motivational, and behavioral learning outcomes, only studies applying experimental designs or using quasi-experimental designs with pre- and posttests were included. Thus, we assumed that the studies included in these subsplit analyses were characterized by high methodological rigor.

Before reporting the summary effect and moderator analyses, we report correlations of the presence of different learning outcomes and moderator levels in gamification studies included in the final sample to illustrate possible co-occurrences of outcomes and moderator levels. The resulting correlation matrix, including the number of studies for each cell, is shown in Table 1 . All of the following results refer to significant correlations. Studies investigating cognitive learning outcomes were less likely to also observe motivational ( r = − .47) and behavioral learning outcomes ( r = − .60). Further, studies observing cognitive learning outcomes were more likely to use a mixed instruction comparison group ( r = .41), including self-developed instruments ( r = .35), and less likely to last 1 day ( r = − .53), indicating that these studies often took longer periods of time. Motivational learning outcomes were more likely to use standardized ( r = .44) or adapted instruments ( r = .54). Investigating behavioral learning outcomes was positively correlated with using an active comparison group ( r = .35).

Studies showed some specific patterns for their respective research context: Studies performed in school settings were more likely to use passive instruction for the comparison group ( r = .43). Higher education studies were more likely to investigate cognitive learning outcomes ( r = .35) that were compared with a mixed instruction control group ( r = .45) over a period of half a year ( r = .48). Some kind of social interaction was more likely to occur in higher education contexts (correlation with no social interaction r = − .39). In work-related training settings, studies were more likely to observe behavioral learning outcomes ( r = .37), and results were likely to be compared with a comparison group receiving active instruction ( r = .34). The use of no social interaction was positively correlated with work settings ( r = .49), and no study investigated competitive or collaborative gamification implementation in this setting. Studies in informal training settings were likely to apply randomization ( r = .35).

Further significant correlations were shown for the inclusion of game fiction: Studies including game fiction were less likely to apply randomization ( r = − .41) and were more likely to include collaborative modes of social interaction ( r = .45).

Summary Effect Analyses

Cognitive learning outcomes.

The random effects model yielded a significant, small effect of gamification on cognitive learning outcomes ( g = .49, SE = .10, p < .01, 95% CI [0.30, 0.69]). Homogeneity estimates showed a significant and substantial amount of heterogeneity for cognitive learning outcomes, Q (18) = 57.97, p < .01, I 2 = 72.21%. The fail-safe number could be considered robust for cognitive learning outcomes (fail-safe N = 469).

Motivational Learning Outcomes

The results of the random effects model showed a significant, small effect of gamification on motivational learning outcomes ( g = .36, SE = .09, p < .01, 95% CI [0.18, 0.54]) and a significant amount of heterogeneity, Q (15) = 73.54, p < .01, I 2 = 75.13%. The fail-safe number indicated a robust effect for motivational learning outcomes (fail-safe N = 316).

Behavioral Learning Outcomes

The random effects model showed a significant, small effect of gamification on behavioral learning outcomes ( g = .25, SE = .11, p < .05, 95% CI [0.04, 0.46]). Results showed a significant and substantial amount of heterogeneity for behavioral learning outcomes, Q (9) = 22.10, p < .01, I 2 = 63.80%. The fail-safe number for behavioral learning outcomes could be interpreted as robust (fail-safe N = 136).

Moderator Analyses

As the homogeneity estimates showed a significant and substantial amount of heterogeneity for cognitive, motivational, and behavioral learning outcomes, moderator analyses were conducted to determine whether additional factors could account for the variance observed in the samples. Not all of the following comparisons contained all possible levels of the respective moderator because levels with k ≤ 1 were excluded from these analyses. Summaries of the moderator analysis are shown in Table 2 for cognitive, Table 3 for motivational, and Table 4 for behavioral learning outcomes.

The mixed effects analysis concerning game fiction resulted in no significant effect size differences for cognitive learning outcomes, Q (1) = 0.04, p = .85, and the residual variance was significant ( p < .01). For motivational learning outcomes, effect size magnitude did not vary significantly, Q (1) = 0.13, p = .72, with significant residual variance remaining ( p < .01). Finally, the results of the mixed effects analysis for behavioral learning outcomes showed a significant difference in the magnitudes of the effect sizes for the game fiction moderator, Q (1) = 5.45, p < .05, and no residual variance was left ( p = .08). An evaluation of the separate levels showed that inclusion of game fiction yielded a significant, small effect on behavioral learning outcomes in contrast to a nonsignificant result of not including game fiction.

Concerning social interaction, the results of the mixed effects analysis showed no significant difference in effect sizes between different forms of social interaction for cognitive learning outcomes, Q (3) = 0.80, p = .85, or for motivational learning outcomes, Q (3) = 3.85, p = .28. For both outcomes, significant residual variance remained ( p < .01). However, for behavioral learning outcomes, there was a significant difference between competitive, competitive-collaborative, and no social interaction, Q (2) = 12.80, p < .01, with no significant residual variance remaining ( p = .05). Post hoc comparisons under a random effects model showed a significant difference between competitive-collaborative interaction and no social interaction ( p < .05), with the former outperforming the latter.

As for the learning arrangement of the comparison group, no difference in effect sizes could be found regarding cognitive learning outcomes, Q (2) = 1.49, p = .48, or for motivational learning outcomes, Q (2) = 1.17, p = .56. Significant residual variance remained for both outcomes ( p < .01). We could not assess the effect of the learning arrangement of the comparison group for behavioral learning outcomes because there was only one subgroup with more than one study.

There was no significant difference between interventions with different durations for cognitive, Q (2) = 0.17, p = .92, or behavioral learning outcomes, Q (1) = 0.28, p = .59. For both outcomes, significant residual variance remained ( p < .01). For motivational learning outcomes, there was a significant difference between gamification interventions lasting 1 day or less and interventions lasting half a year or less, Q (1) = 4.93, p < .05. Gamification interventions lasting half a year or less showed significantly larger effects on motivational learning outcomes than interventions lasting 1 day or less. Significant residual variance remained ( p < .01).

Mixed effects analyses showed no significant difference between different research contexts for motivational, Q (3) = 5.09, p = .17, or behavioral learning outcomes, Q (2) = 0.67, p = .71. For both outcomes, significant residual variance remained ( p < .01). For cognitive learning outcomes, there was a significant difference between school, higher education, and informal training settings, Q (2) = 12.48, p < .01, with significant residual variance remaining ( p < .01). Post hoc comparisons under a random effects model showed a significant difference between studies performed in school settings and studies performed in higher education settings ( p < .01) or informal training settings ( p < .05). The effects found in school settings were significantly larger than those found in either higher education settings or informal education settings.

Results from the mixed effects analyses showed no significant difference between experimental and quasi-experimental studies regarding cognitive, Q (1) = 1.18, p = .28, or behavioral learning outcomes, Q (1) = 1.63, p = .20. The residual variance was significant ( p < .01). However, for motivational learning outcomes, there was a significant difference in the magnitude of the effect size between experimental and quasi-experimental studies, Q (1) = 4.67, p < .05. Quasi-experimental studies showed a significant medium-sized effect on motivational learning outcomes, whereas experimental studies showed a nonsignificant effect. The residual variance was significant ( p < .01).

Mixed effects analyses showed no significant effects of applied designs for cognitive learning outcomes, Q (1) = 0.05, p = .82, and the residual variance was significant ( p < .01). An evaluation of the influence of the applied design was not possible for the motivational and behavioral learning outcomes because there was only one subgroup with more than one study.

An evaluation of the influence of the instrument that was used was not possible for cognitive or behavioral learning outcomes because there was only one subgroup with more than one study in each of these areas. Moreover, there was no significant difference in effect sizes between studies using standardized, adapted, or self-developed instruments for motivational learning outcomes, Q (2) = 2.85, p = .24, with significant residual variance remaining ( p < .01).

Subsplit Analyses Including Studies with High Methodological Rigor

Subsplits were performed for studies with high methodological rigor. Thus, in the following analyses, only studies with experimental designs or quasi-experimental designs that used pre- and posttests were included. In line with the summary effect analysis above, the subsplit for cognitive learning outcomes showed a small effect of gamification on cognitive learning outcomes ( g = .42, SE = .14, p < .01, 95% CI [0.14, 0.68], k = 9, N = 686, fail-safe N = 51), with homogeneity estimates showing a significant and substantial amount of heterogeneity, Q (8) = 19.90, p < .05, I 2 = 51.33%. In contrast to the summary effects analysis above, results of the subsplit for motivational learning outcomes showed no significant summary effect of gamification on motivational learning outcomes ( g = .22, SE = .17, p = .20, 95% CI [− 0.11, 0.56], k = 7, N = 1063). Significant residual variance was left, Q (6) = 35.99, p < .01, I 2 = 84.23%. Further, the subsplit summary effect of gamification on behavioral learning outcomes was not significant ( g = .27, SE = .22, p = .22, 95% CI [− 0.16, 0.70], k = 5, N = 667). The residual variance was significant, Q (4) = 17.66, p < .01, I 2 = 78.59%.

Moderator analyses for the subsplit for cognitive learning outcomes could only be performed for social interaction , learning arrangement of the comparison group , and period of time because the subgroups were too small for several other moderator levels (see Table 5 ). In line with the moderator analysis described above (see Table 2 ), social interaction, learning arrangement of the comparison group, and period of time did not significantly moderate the effects of gamification on cognitive learning outcomes in the subsplit analysis.

For motivational learning outcomes, moderator analyses were conducted for inclusion of game fiction , social interaction , and research context . Again, other moderator analyses were not possible because the subgroups were too small (see Table 6 ). In line with the initial moderator analyses for motivational learning outcomes (see Table 3 ), game fiction and research context did not significantly moderate the effects of gamification on motivational learning outcomes. Contrary to the initial moderator analysis, the subsplit analysis for social interaction showed significant differences in the magnitude of the effect size, Q (2) = 7.20, p < .05, with significant residual variance remaining ( p < .01). Gamification with combinations of competition and collaboration showed a medium-sized effect on motivational learning outcomes and thus outperformed the gamification environments that solely used competition.

Subsplit moderator analyses for behavioral learning outcomes were only possible for the social interaction moderator (see Table 7 ). The results of this analysis were in line with the initial moderator analysis (see Table 4 ) in showing a significant moderating effect of social interaction on the relationship between gamification and behavioral learning outcomes, Q (1) = 6.87, p < .01. Gamification with competitive-collaborative modes of social interaction outperformed gamification that solely used competitive modes of social interaction.

The aim of this meta-analysis was to statistically synthesize the current state of research on the effects of gamification on cognitive, motivational, and behavioral learning outcomes, taking into account potential moderating factors. Overall, the results indicated significant, small positive effects of gamification on cognitive, motivational, and behavioral learning outcomes. These findings provide evidence that gamification benefits learning, and they are in line with the theory of gamified learning (Landers 2014 ) and self-determination theory (Ryan and Deci 2002 ). In addition, the results of the summary effect analyses were similar to results from meta-analyses conducted in the context of games (see Clark et al. 2016 ; Wouters et al. 2013 ), indicating that the power of games can be transferred to non-game contexts by using game design elements. Given that gamification research is an emerging field of study, the number of primary studies eligible for this meta-analysis was rather small, and effects found in this analysis were in danger of being unstable. Therefore, we investigated the stability of the summary effects. Fail-safe numbers as an estimate of the degree to which publication bias may exist in the samples were applied and indicated that the summary effects for cognitive, motivational, and behavioral outcomes were stable. However, subsplits exclusively including studies with high methodological rigor only supported the summary effect of gamification on cognitive learning outcomes. Although the analyses were underpowered, the summary effects for motivational and behavioral learning outcomes were not significant. Thus, summary effects of gamification on motivational and behavioral learning outcomes are not robust according to the subsplit analyses. For both motivational and behavioral learning outcomes, the subsplits indicate effects of gamification depend on the mode of social interaction. In a nutshell, gamification of motivational and behavioral learning outcomes can be effective when applied in competitive-collaborative settings in contrast to mere competitive settings.

The significant, substantial amount of heterogeneity identified in all three subsamples was in line with the positive, albeit mixed findings of previous reviews. For this reason, moderator analyses were computed to determine which factors were most likely to contribute to the observed variance.

Moderators of the Effectiveness of Learning with Gamification

For both cognitive and motivational learning outcomes, there was no significant difference in effect sizes between the inclusion and the exclusion of game fiction. The mechanisms that are supposed to be at work according to self-determination theory (Rigby and Ryan 2011 ) were not fully supported in this analysis. However, the results on cognitive and motivational learning outcomes were in line with meta-analytic evidence from the context of games, which found that including game fiction was not more effective than excluding it (Wouters et al. 2013 ). Nevertheless, for behavioral learning outcomes, the effects of including game fiction were significantly larger than the effects without game fiction. The fail-safe number for the effect of game fiction on behavioral learning outcomes indicated a stable effect that did not suffer from publication bias. However, studies including game fiction were less likely to use experimental designs, which can be a confounding factor.

These results raise the question as to why the use of game fiction seems to matter only for behavioral learning outcomes. A striking divergence between the data representing behavioral learning outcomes and cognitive or motivational learning outcomes is the point of time in which the data were collected: Whereas cognitive and motivational learning outcomes were almost exclusively measured after interventions, behavioral learning outcomes were almost exclusively measured during interventions (i.e., the measurement was to some extent confounded with the intervention itself). Therefore, it makes sense to ask whether the significant difference in behavioral learning outcomes regarding the use of game fiction really reflects a difference in effectiveness for learning or rather points toward an effect of gamification with game fiction on assessment. Previous research has in fact shown that gamification can affect the data (e.g., the number of questions completed or the length of answers produced; Cechanowicz et al. 2013 ). Even though behavioral learning outcomes were typically measured by participants completing specific tasks such as assembling Lego® cars (Korn et al. 2015 ), it may well be the case that these findings also transfer to the number of completed tasks or the time and effort invested in completing a task. From a theoretical point of view, the present results might merely reflect the idea that including game fiction was more effective in getting learners to invest more effort in completing tasks than not including game fiction. It remains uncertain whether these differences would also appear if performance was assessed without gamification.

We attempted to include the time of assessment as a moderator in this analysis; however, there were not enough studies in the subgroups to allow for a conclusive evaluation. Future studies should consider assessing behavioral learning outcomes not only as process data during the intervention but also after the intervention to avoid confounding effects.

Additionally, as gamification can be described as a design process in which game elements are added, the nonsignificant result of this moderator for cognitive and motivational learning outcomes could be explained by the quality with which the (game) design methods were applied: Most learning designers who apply and investigate gamification in the context of learning are not trained as writers and are probably, on average, not successful at applying game fiction effectively. Further, the findings could also be affected by how the moderator was coded. For example, the effectiveness of gamification might be affected by whether game fiction was only used at the beginning of an invervention to provide initial motivation, but it might not be relevant afterwards (e.g., avatars that cannot be developed), or they might continue to be relevant throughout the intervention (e.g., meaningful stories that continue). These possible qualitative differences in the design and use of game fiction could have contributed to the mixed results found in the present analysis. Due to the small sample size, a more fine-grained coding was not possible because it would have led to subgroups that were too small to be used to conduct any conclusive comparisons. Further, subsplit analyses regarding the moderator inclusion of game fiction were not possible for behavioral learning outcomes because the subgroups were too small.

For the cognitive and motivational learning outcomes, no significant difference in effect sizes were found between the different types of social interaction. For behavioral outcomes, a significant difference was found between competitive-collaborative and no interaction in favor of the former. As mentioned previously, behavioral learning outcomes, as opposed to cognitive and motivational learning outcomes, were almost exclusively comprised of process data. Therefore, the results of this analysis suggested that different types of social interactions affect learners’ behavior within gamification. Evoking social interactions via gamification in the form of combinations of collaboration and competition was most promising for behavioral learning outcomes. This result for behavioral learning outcomes is in line with evidence from the context of games, showing that combinations of competition and collaboration in games are promising for learning (Clark et al. 2016 ).

Although the positive effect of competitive-collaborative modes of interaction on behavioral learning outcomes was in danger of being unstable with respect to its fail-safe number, the subsplit of studies with high methodological rigor confirmed the advantage of the combination of competition and collaboration, but here, the advantage was over competition by itself. Interestingly, the findings from the subsplits of motivational and behavioral learning outcomes showed parallels as both showed that combinations of competition and collaboration outperformed mere competition. These results can be interpreted to mean that mere competition might be problematic for fostering learners’ motivation and performance—at least for some learners under certain circumstances.

A factor that might have contributed to these results is learners’ subjective perceptions. As Rigby and Ryan ( 2011 ) pointed out, satisfying the need for relatedness, especially in competitive situations, largely depends on how competition is experienced. Perceiving competition as constructive or destructive is “a function of the people involved rather than the activity itself” (Rigby and Ryan 2011 , p. 79). The interaction between learner characteristics and gamification should therefore be investigated in primary studies. A closely related problem concerns whether learners engaged in the interventions in the manner in which they were intended. Most of the studies did not provide information about whether or not learners took advantage of the affordances to engage in collaborative and/or competitive interactions. These issues can only be resolved by conducting future primary studies with data on learning processes, which will allow for investigations of these aspects on a level more closely related to what actually occurs during interventions and checks for implementation fidelity. Besides observations of learners’ interactions with learning environments, gamification research should take advantage of log-file analyses and learning analytics to analyze the human-environment interaction in gamified environments (see Young et al. 2012 ).

Additionally, differences in learners’ skill levels may have also contributed to the mixed results found in this analysis. Competition may be problematic if it occurs between learners with widely different skill levels because succeeding can be unattainable for learners with lower skill levels (Slavin 1980 ; Werbach and Hunter 2012 ). Only a few primary studies have considered participants’ skill levels. Future studies applying competitive or competitive-collaborative interventions should take prior skill level into account and report it. Further, the effectiveness of different types of social interaction could be influenced by the way the gamification environment and the specific game design elements were designed. Landers et al. ( 2017 ) showed how leaderbords that are implemented to foster competition can be interpreted as goals, suggesting that leaderbords with easy goals would likely be less effective than leaderboards with difficult goals. This thereby suggests that also within the single moderator levels of our meta-analysis, the effects could vary depending on their specific design.

Results of the moderator learning arrangement of the comparison group did not show significant differences between gamification when compared with different types of instruction (i.e., passive, active, or mixed instruction) in the comparison group for cognitive and motivational learning outcomes. For behavioral learning outcomes, the analysis was not possible because the moderator subgroups were too small. On the one hand, these results may indicate that gamification is not yet used in a way that focuses on fostering high-quality learning activities and, thus, does not take full advantage of the possibilities that gamification might have. As proposed by the theory of gamified learning, gamification can affect learning outcomes by enhancing activities that are relevant for learning and might thus create instructional affordances for learners to actively engage in cognitive processes with the learning material. Several primary studies included in this analysis could have failed to specifically provide affordances for high-quality learning activities.

On the other hand, learners might not actually take advantage of the instructional affordances provided by the gamified system. Similar to the social interaction moderator, learners probably did not engage in certain (high-quality) learning activities, as intended by the gamification environment (see Chi and Wylie 2014 ). Primary studies in this meta-analysis did not report sufficient data on the learning processes to clarify this issue. Therefore, future primary studies should use the human-environment interaction as the unit of analysis to account for learners interacting in different ways with the gamified environment (see Young et al. 2012 ). This would allow researchers to investigate different levels of learning activities that are fostered by certain game design elements while taking into account different situational factors and learner characteristics. Differentiating actual learning activities on a more fine-grained level into passive, active, constructive, and interactive learning activities, as suggested by Chi and Wylie ( 2014 ), would enable gamification research to find answers about how, for whom, and under which conditions gamification might work best.

Situational, Contextual, and Methodological Moderators

Besides the moderators discussed above, period of time , research context , randomization , design , and instruments were included as moderators to account for situational and contextual factors as well as methodological rigor. Results regarding period of time for cognitive and behavioral learning outcomes indicated that gamification can be effective in both the short and long term. For motivational learning outcomes, interventions lasting half a year or less (but more than 1 month) showed a medium-sized effect, whereas interventions lasting 1 day or less showed a nonsignificant result. The results of this meta-analysis can weaken the fear that effects of gamification might not persist in the long run and might thus contradict the interpretations presented in reviews (see Hamari et al. 2014 ; Seaborn and Fels 2015 ). For motivational outcomes, it might even take longer to affect motivation. However, this does not allow for any conclusions about how enduring the obtained effects will be. An attempt to include the time of the posttest as an additional methodological moderator in this analysis was not possible across all three subsamples because only one study reported a delayed posttest. Future primary studies should therefore focus on conducting follow-up tests so that conclusions about the endurance of the effects of gamification can be drawn.

Regarding the research context, a significant difference was found between school settings and higher education settings as well as informal settings in favor of the school setting for cognitive learning outcomes. Therefore, gamification works especially well for school students’ knowledge acquisition. No significant differences were found for motivational and behavioral learning outcomes. It should be noted that studies in school contexts were more likely to compare gamification groups with groups receiving passive instruction as opposed to studies from higher education, which were more likely to use control groups that received mixed instructions. This could have led to biased effects.

Randomization did not affect the relationship between gamification and cognitive learning outcomes but did so for motivational and behavioral learning outcomes, indicating that methodological rigor might moderate the size of the effect. For both outcomes, effect sizes for quasi-experimental studies were significantly larger than for experimental studies. In cases in which evaluation of the other methodological moderators, namely design and instruments , was possible, no influence on the size of the effect for the respective learning outcomes could be detected.

Limitations

One limitation of our study was that the sample size was rather small, especially for behavioral learning outcomes and all subsplit analyses. This limits the generalizability of the results and is also problematic for statistical power because, for random effects models, power depends on the total number of participants across all studies and on the number of primary studies (Borenstein et al. 2009 ). If there is substantial between-study variance, as in this meta-analysis, power is likely to be low. For this reason, nonsignificant results do not necessarily indicate the absence of an effect but could be explained by a lack of statistical power, especially if effects are rather small.

A common criticism of meta-analytic techniques is that they combine studies that differ widely in certain characteristics, often referred to as comparing apples and oranges (Borenstein et al. 2009 ). This issue can be addressed, to some extent, by including moderators that can account for such differences. In the present meta-analysis, we therefore investigated a set of moderating factors, even though only some of them were shown to be significant moderators of the relationship between gamification and learning. It is important for future research not to disregard the factors that did not show any significant influence, given the issues discussed above regarding power. Furthermore, this set of factors is not exhaustive, and other variables could also account for the observed heterogeneity. A few such factors (e.g., the more fine-grained distinction of game fiction or the time of the posttest) were discussed above, but other factors such as participant characteristics (e.g., familiarity with gaming or differences in the individual perception of game design elements, personality traits, or player types; see Hamari et al. 2014 ; Seaborn and Fels 2015 ) or different types of feedback addressed by certain game design elements may also account for heterogeneity in effect sizes. These aspects could not be investigated in the present analysis because most primary studies did not examine or report them. Further, some of the aspects mentioned above refer to design decisions made by the developers of gamification interventions. Such design decisions can lead to variance in the effect sizes of certain game design elements as shown in the context of leaderboards (Landers et al. 2017 ) and in the context of achievements, showing that effectivity of achievements strongly depends on the design (i.e., the quantity and difficulty of achievements; Groening and Binnewies 2019 ). Our meta-analysis did not include such aspects of design and rather synthesized effects of gamification as it is currently operationalized in the literature. By doing so, a mean design effect was assumed. Nevertheless, our meta-analysis offers an interpretive frame for applying and further investigating gamification. Such a frame is especially needed in such a young field. However, research investigating different aspects of certain game design elements is valuable for fully capturing the role of design in the process of applying gamification.

Another limitation concerns the quality of primary studies in the present analysis, a problem often described with the metaphor garbage in , garbage out (Borenstein et al. 2009 ). As mentioned earlier, gamification research suffers from a lack of methodological rigor, which, from the perspective of meta-analyses, can be addressed by either assessing methodological differences as moderators or excluding studies with insufficient methodological rigor. In this analysis, both approaches were applied: methodological factors were included as moderators, and subsplits involving studies that applied high methodological rigor were performed. For motivational and behavioral learning outcomes, the results showed that quasi-experimental studies found significant effects, whereas experimental study showed nonsignificant results, emphasizing the need for more rigorous primary study designs that allow alternative explanations for differences in learning outcomes between different conditions to be ruled out. The subsplit analyses showed that the summary effects for the motivational and behavioral outcomes were not robust. However, given the small sample sizes in the subgroup analyses, these findings were highly likely to be underpowered and should be viewed with caution.

Outcome measures are a particular aspect affected by both these problems: A lack of uniformity in measurement leads to differences in reliability and validity that are not considered in the calculation of the mean effect sizes (Walker et al. 2008 ). The use of agreed-upon measurement instruments with good psychometric properties is therefore needed to increase both the comparability of studies and methodological rigor.

Gamification in the context of learning has received increased attention and interest over the last decade for its hypothesized benefits on motivation and learning. However, some researchers doubt that effects of games can be transferred to non-game contexts (see Boulet 2012 ; Klabbers 2018 ). The present meta-analysis supports the claim that gamification of learning works because we found significant, positive effects of gamification on cognitive, motivational, and behavioral learning outcomes. Whereas the positive effect of gamification on cognitive learning outcomes can be interpreted as stable, results on motivational and behavioral learning outcomes have been shown to be less stable. Further, the substantial amount of heterogeneity identified in the subsamples could not be accounted for by several moderating factors investigated in this analysis, leaving partly unresolved the question of which factors contribute to successful gamification. More theory-guided empirical research is needed to work toward a comprehensive theoretical framework with clearly defined components that describes precise mechanisms by which gamification can affect specific learning processes and outcomes. Future research should therefore explore possible theoretical avenues in order to construct a comprehensive framework that can be empirically tested and refined.

The theory of gamified learning offers a suitable framework for doing so (see Landers 2014 ; Landers et al. 2018 ). Combined with evidence from well-established educational and psychological theories that provide clear starting points for effective gamification, primary studies should work toward an evidence-based understanding of how gamification works: focusing on specific game design elements affecting specific psychological needs postulated by self-determination theory (see Ryan and Deci 2002 ) and exploring ways to create affordances for high-quality learning activities, namely, constructive and interactive learning activities (see Chi and Wylie 2014 ). Future research has to take into account how psychological needs and high-quality learning activities can be fostered by gamification and to what extent as well as under what conditions learners actually take advantage of these affordances by focusing on the human-environment interaction of learners in gamified interventions (see Young et al. 2012 ).