- Research article

- Open access

- Published: 24 February 2021

Is the evidence on the effectiveness of pay for performance schemes in healthcare changing? Evidence from a meta-regression analysis

- Arezou Zaresani (ORCID: orcid.org/0000-0002-6271-9374)

- Anthony Scott

BMC Health Services Research volume 21, Article number: 175 (2021)

This study investigated whether the evidence on the success of Pay for Performance (P4P) schemes in healthcare is changing as the schemes continue to evolve, by updating a previous systematic review.

A meta-regression analysis was conducted using 116 studies evaluating P4P schemes published between January 2010 and February 2018. The effects of the research design, incentive schemes, use of incentives, and the size of the payment to revenue ratio on the proportion of statistically significant effects in each study were examined.

There was evidence of an increase in the range of countries adopting P4P schemes and weak evidence that the proportion of studies with statistically significant effects has increased. Factors hypothesized to influence the success of schemes have not changed. Studies evaluating P4P schemes that made payments for improvement over time were associated with a lower proportion of statistically significant effects. There was weak evidence of a positive association between the incentives’ size and the proportion of statistically significant effects.

The evidence on the effectiveness of P4P schemes is evolving slowly, with little evidence that lessons are being learned concerning the design and evaluation of P4P schemes.

The use of Pay for Performance (P4P) schemes in healthcare has had its successes and failures. Using financial incentives targeted at healthcare providers to improve value-based healthcare provision is a key policy issue. Many governments and insurers use P4P schemes as a policy lever to change healthcare providers’ behavior to improve value for money in healthcare and make providers accountable. However, numerous literature reviews based on narrative syntheses of evidence have concluded that the evidence on P4P schemes’ impacts is both weak and heterogeneous (see, for instance, [1, 2, 3]). The key reasons proposed for the weak evidence are that schemes have been either poorly designed (insufficient size of incentives, unintended consequences, unclear objectives, crowding out of intrinsic motivation, myopia, multi-tasking concerns, external validity, the scheme is voluntary, gaming), or poorly evaluated (poor study designs where causality cannot be inferred, no account of provider selection into or out of schemes, poor reporting of incentive design, poor reporting of parallel interventions such as performance feedback).

In this paper, we examined whether more recent studies provided improved evidence on the effectiveness of P4P schemes. One may expect that those designing and evaluating P4P schemes are improving how these schemes work and are being assessed. We updated the first meta-regression analysis of the effectiveness of P4P schemes [4] to investigate how more recent studies differed from the previous ones in the overall effects of the P4P schemes, the effects of study design on the reported effectiveness of the P4P schemes, and the effects of the size of incentives as a percentage of total revenue. A larger number of studies evaluating the effects of P4P helped us to increase the precision of the estimates of the effects in a meta-regression analysis and provided new evidence of changes in effects, study designs, and payment designs.

Scott et al. [4] found that an average of 56% of outcome measures per scheme were statistically significant. Their findings suggested that studies with better study designs, such as Difference-in-Differences (DD) designs, had a lower chance of finding a statistically significant effect. They also provided preliminary evidence that the size of incentives as a proportion of revenue may not be associated with the chance of finding a statistically significant outcome.

Our method was identical to that used in [4]. The same search strategy (databases and keywords) was extended from studies published between January 2010 and July 2015 (old studies) to studies published between August 2015 and February 2018 (new studies), and the same data were extracted from the new studies. Studies were included if they examined the effectiveness of a scheme on any type of outcome (e.g., costs, utilization, expenditures, quality of care, health outcomes) and if incentives were targeted at individuals or groups of medical practitioners or hospitals. Footnote 1

A vote-counting procedure was used to record the proportion of reported effect sizes that were statistically significant (at the 10% level) per study (noting any unit-of-analysis errors or small sample sizes). Footnote 2 Some studies examined a range of different outcome indicators, each with a reported effect size (i.e., usually the difference in an outcome indicator between an intervention and control group), while others examined the heterogeneity of effect sizes across time or sub-groups of the sample. Each separate effect size was counted when constructing the dependent variable (a proportion) for each study. For example, if a study reported an overall effect size and then analyzed the results separately by gender, then three effect sizes were counted (the overall effect plus one for each gender).
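The vote-counting step can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' extraction code: the function name `proportion_significant`, the strict `p < 0.10` cutoff, and the p-values are all assumptions made for the example.

```python
def proportion_significant(p_values, alpha=0.10):
    """Share of a study's reported effect sizes with p below alpha.

    Assumption: an effect counts as significant when p < alpha (strict).
    """
    if not p_values:
        raise ValueError("a study must report at least one effect size")
    significant = sum(1 for p in p_values if p < alpha)
    return significant / len(p_values)


# Hypothetical study: one overall effect plus one effect per gender subgroup,
# so three effect sizes enter the count.
example_p_values = [0.03, 0.20, 0.08]
example_share = proportion_significant(example_p_values)
```

In this made-up study, two of the three effect sizes fall below the cutoff, so the study contributes a dependent-variable value of 2/3.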

A meta-regression analysis was conducted to examine factors associated with the proportion of statistically significant effects in each study, with each published paper as the unit of analysis. A generalized linear model with a logit link function (a fractional logit with a binomial distribution) was used because the dependent variable was a proportion with observations at both extremes of zero and one [7]. The error terms would not be independent if there was more than one study evaluating the same scheme, and so standard errors were clustered at the scheme level.
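To make the fractional logit concrete, the sketch below fits the conditional mean logistic(b0 + b1·x) by Newton-Raphson on the Bernoulli quasi-likelihood, using only the standard library. It is a simplified illustration under stated assumptions, not the authors' model: it has a single binary covariate (a hypothetical DD-versus-BA indicator), the data are invented, and it does not compute scheme-clustered standard errors.

```python
import math


def logistic(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_fractional_logit(x, y, max_iter=100, tol=1e-12):
    """Fit E[y | x] = logistic(b0 + b1 * x) by Newton-Raphson.

    y may be any proportion in [0, 1], including exact zeros and ones;
    the Bernoulli quasi-likelihood only needs the conditional mean.
    """
    b0, b1 = 0.0, 0.0
    for _ in range(max_iter):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for xi, yi in zip(x, y):
            mu = logistic(b0 + b1 * xi)
            w = mu * (1.0 - mu)
            g0 += yi - mu          # score for the intercept
            g1 += (yi - mu) * xi   # score for the slope
            h00 += w               # entries of X'WX
            h01 += w * xi
            h11 += w * xi * xi
        det = h00 * h11 - h01 * h01
        step0 = (h11 * g0 - h01 * g1) / det
        step1 = (h00 * g1 - h01 * g0) / det
        b0 += step0
        b1 += step1
        if abs(step0) + abs(step1) < tol:
            break
    return b0, b1


# Hypothetical data: x = 1 for a DD study, 0 for a BA study;
# y = proportion of significant effect sizes in each study.
design_is_dd = [0, 0, 0, 0, 1, 1, 1, 1]
share_significant = [1.0, 0.8, 0.5, 0.7, 0.0, 0.4, 0.6, 0.2]

b0, b1 = fit_fractional_logit(design_is_dd, share_significant)
predicted_ba = logistic(b0)
predicted_dd = logistic(b0 + b1)
# Difference in predicted proportions, i.e. the effect in proportion units.
marginal_effect = predicted_dd - predicted_ba
```

With a single dummy covariate the fitted proportions reproduce the group means (0.75 and 0.30 in this invented data), so the difference of -0.45 reads as "45 percentage points less likely", which is the scale on which the regression results are reported. A full analysis would add the remaining covariates and cluster-robust standard errors, typically with a statistics package.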

Our regression models included studies from [4] in addition to the new studies. Our estimates differed slightly from those in [4] because we controlled for additional variables, including publication year, a dummy variable indicating studies from the new review, and the size of the P4P payments as a proportion of total revenue. The payment size was calculated from studies that reported both the incentive amount and the provider’s total income or revenue. If a study reported a range of incentive amounts, we used the mid-point in the regression analysis.
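As a small worked example of the payment-size variable, the following sketch computes the incentive as a proportion of revenue and takes the mid-point when a range is reported. The function name and all monetary figures are hypothetical.

```python
def payment_share_of_revenue(incentive_low, incentive_high, total_revenue):
    """Incentive size as a proportion of revenue, using the range mid-point."""
    if total_revenue <= 0:
        raise ValueError("total revenue must be positive")
    midpoint = (incentive_low + incentive_high) / 2.0
    return midpoint / total_revenue


# Hypothetical scheme: bonus between 2,000 and 6,000 on revenue of 200,000.
range_share = payment_share_of_revenue(2_000, 6_000, 200_000)
# A study reporting a single amount passes the same value twice.
point_share = payment_share_of_revenue(5_000, 5_000, 200_000)
```

Here the range gives a mid-point of 4,000, or 2% of revenue, and the single reported amount gives 2.5%.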

Additional features of payment designs were extracted from the included papers and used in the regression models as independent variables, to the extent that they were reported in the papers reviewed. The additional features included whether the scheme included incentives for improvements in both quality and cost or quality alone (an important design innovation as used in shared savings schemes in the US’s Accountable Care Organizations), whether the scheme rewarded quality improvement over time rather than meeting a threshold at a specific point in time, and how incentives were used by those receiving the incentive payments (physician income, discretionary use, or specific use such as quality improvement initiatives). We also included a categorical variable for the study design used: Difference-in-Differences (DD), Interrupted Time Series (ITS), Randomized Controlled Trial (RCT), and Before-After with regression (BA) as the reference group. Studies with no control groups and studies which did not adjust for covariates were excluded. We further included a dummy variable for schemes in the US, where arguably most experimentation has occurred to date, and a variable for whether the scheme was in a hospital or primary care setting.

A total of 448 new papers were found, of which 302 papers were included after screening the title and abstract. Of these, 163 were empirical studies, and full-text screening identified 37 new studies that were eligible for inclusion [4].

Table 1 presents a summary of the new studies by the country of the evaluated P4P scheme. Footnote 3 The new studies evaluated 23 different schemes across 12 countries, including schemes in new countries (Afghanistan, Kenya, Sweden, and Tanzania). This finding reflects an increase in the proportion of P4P schemes from countries other than the US (from 23% to 56%) compared to the studies in [4]. There was also a slightly lower proportion of schemes in the new studies that were conducted in hospitals (25% versus 29.5%).

Table 2 summarizes the overall effects of the schemes evaluated in new studies. The 37 studies across 23 schemes reported on 620 effect sizes (average of 26.95 per scheme and 16.76 per study), of which 53% were statistically significant (53% per scheme; 60% per study). Compared with 46, 56, and 54%, respectively, in [4], this suggested a slight increase in the proportion of significant effects but overall mixed effects of P4P schemes.
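The pooled, per-scheme, and per-study percentages can differ because each weights the effect sizes differently. The sketch below illustrates this with invented records. The per-scheme rule shown (pool within a scheme, then average across schemes) is an assumption, since the text does not spell out how that figure was computed.

```python
from collections import defaultdict

# Hypothetical records: (scheme, study, effect sizes reported, significant ones).
records = [
    ("A", "study 1", 10, 4),
    ("A", "study 2", 2, 2),
    ("B", "study 3", 4, 3),
]

# Pooled: every effect size has equal weight.
pooled = sum(r[3] for r in records) / sum(r[2] for r in records)

# Per study: every study has equal weight, however many effects it reports.
per_study = sum(r[3] / r[2] for r in records) / len(records)

# Per scheme (assumed rule): pool effect sizes within a scheme,
# then average across schemes.
totals = defaultdict(lambda: [0, 0])
for scheme, _study, n_effects, n_significant in records:
    totals[scheme][0] += n_effects
    totals[scheme][1] += n_significant
per_scheme = sum(sig / n for n, sig in totals.values()) / len(totals)
```

In this toy data the three figures are 56.25% (pooled), 62.5% (per scheme), and about 71.7% (per study). A small study in which every effect is significant pulls the per-study average up, which is one way a per-study figure can exceed the pooled one.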

Table 3 suggests that the P4P schemes had mixed effects on the range of outcomes considered. Table 4 shows that countries with the highest proportion of statistically significant effects are Kenya (82%), France (81%), Taiwan (75%), and Tanzania (73%). Only one study, in Afghanistan, showed no effects.

Meta-regression analysis

Table 5 presents the meta-regression analysis using 116 studies evaluating P4P schemes published between January 2010 and February 2018. A full set of descriptive statistics is presented in Additional file 2 Appendix B. The three models in Table 5 had different sample sizes because the covariates in each model were different.

The first model suggested that the new studies were 11 percentage points more likely to report statistically significant effects, although the estimated coefficient itself was not statistically significant. Studies with DD and RCT designs were respectively 20 and 19 percentage points less likely to report statistically significant effects compared with the studies with BA with control designs. Studies with ITS design were not statistically different compared with the BA studies. The role of study design in explaining the proportion of statistically significant effects was weaker compared with [4], which reported 24 and 25 percentage points differences for DD and RCT designs, respectively. The proportions of different study designs in the new and old reviews were similar, suggesting that the difference in the effect of study design might be due to the studies with RCT, ITS, and DD designs in the new review having lower proportions of statistically significant effects relative to the studies with BA designs.

Studies evaluating P4P schemes that used incentives for improving both costs and quality (compared to quality alone) were 11 percentage points more likely to report statistically significant effect sizes. The coefficient was slightly larger than in [4] and statistically significant at the 10% level. This might be due to overall weaker effects from the new studies since none of the new studies examined this type of payment design. Studies evaluating P4P schemes that rewarded improvement over time were associated with a lower proportion of statistically significant effect sizes.

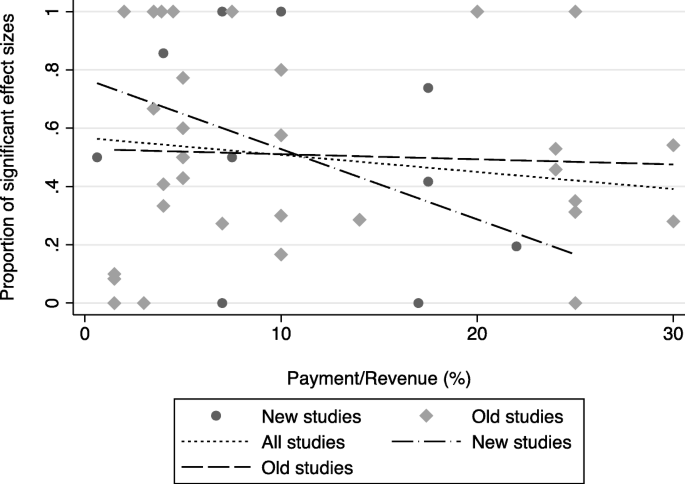

Scott et al. [4] found that the use of payments for specific purposes (such as payments for investment in quality improvement rather than physician income) was associated with a 24 percentage points increase in the proportion of statistically significant effect sizes, but this has fallen to 8 percentage points (Model 2). Of the 23 new schemes included in the review, eight schemes included information on the size of the incentive payments relative to total revenue, ranging from 0.05 to 28% (see Table 6 for more details). Figure 1 shows the unadjusted correlation between the size of payments and the proportion of statistically significant effect sizes, and includes regression lines for old, new, and all studies combined. Studies from the new review showed a stronger negative relationship between the payment size and effectiveness, based on only 11 studies (Table 6). After controlling for other factors in the main regression in Table 5, the results suggested a small positive association between the size of incentives and the proportion of effect sizes statistically significant at the 10% level (Model 3).

Figure 1. Relationship between the size of incentive payments relative to revenue and the proportion of significant effect sizes
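The unadjusted regression lines for old, new, and all studies can be illustrated with a simple least-squares fit per group. This sketch uses invented points and a hand-written `ols_line` helper; it does not reproduce the figure or the extracted data.

```python
def ols_line(x, y):
    """Return (intercept, slope) of the least-squares line through (x, y)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


# Hypothetical points: (payment as % of revenue, proportion significant, review).
points = [
    (1, 0.50, "old"), (2, 0.55, "old"), (3, 0.60, "old"), (4, 0.65, "old"),
    (1, 0.80, "new"), (2, 0.60, "new"), (3, 0.50, "new"), (4, 0.30, "new"),
]

lines = {}
for group in ("old", "new", "all"):
    subset = [p for p in points if group == "all" or p[2] == group]
    lines[group] = ols_line([p[0] for p in subset], [p[1] for p in subset])
```

In this toy data the old studies slope upward (0.05 per percentage point of revenue), the new studies slope downward (-0.16), and the combined line sits in between (-0.055). Because such lines are unadjusted, their sign can differ from the coefficient obtained after controlling for other study characteristics.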

We examined whether more recent studies changed the conclusions of the first meta-analysis of the P4P literature as P4P schemes continue to evolve. This evolution could reflect better scheme design or the same designs being used in more settings; our results suggested the latter. Thirty-seven recent studies over a two-year period were added to the existing 80 studies reported in a previous review. The RCT and DD study designs reduced the proportion of statistically significant effects by a smaller amount than in the previous review. The range of countries in which P4P is being evaluated had increased, with some weak evidence that the proportion of studies with statistically significant effects had increased. The effectiveness of P4P remained mixed overall.

There may be unobserved factors within each scheme associated with both the size of the incentive and the likelihood of a statistically significant effect. The size of the incentive could also be measured with error, as some studies presented a range rather than an average, of which we took the midpoint.

Despite mounting evidence on the effectiveness of P4P schemes, differences in payment design that could be extracted from the studies seemed to play a minor role. However, this meta-analysis masked much heterogeneity in the context, design, and implementation of schemes that were unlikely to be reported in the published studies. Schemes included various diseases, outcome measures, and payment designs, including schemes where additional funding was used rather than re-allocating existing funding. The choice of outcome indicators may influence the effectiveness of each scheme as some may be more costly for physicians to change than others, while others, such as health outcomes, may be more dependent on patients’ behavior change.

Clustering by the scheme may have accounted for some of this unobserved heterogeneity within schemes. There may also be interactions between different features of payment design, though examining these would require clearer hypotheses about such interactions and more detailed reporting of payment designs. Data extraction was limited to published studies that varied in how they reported the payment designs and study designs. There needs to be improved and more standardized reporting in the literature to enable more general lessons to be learned from P4P schemes that can guide their successful design and implementation [19].

The factors influencing the success of schemes have not changed remarkably. When assessing the studies, none provided any justification for the particular incentive design used, and none stated they were attempting to improve the way incentives were designed compared to previous schemes. This may be because many schemes were designed within a specific local context, and so were constrained in the design they could use and the scheme’s objectives. This could also be because they were constrained in the indicators they could use, collect, and report, suggesting that improvements in information and data on quality occur only very slowly over time.

There should be no expectation that incentive designs should necessarily become more complex over time, as this can lead to gaming and ignores the existence of already strong altruistic motives to improve the quality of care. Incentive schemes were also a result of negotiation between providers and payors, and this presented constraints in how incentives were designed. Providers attempted to extract maximum additional income for minimum behavior change, and payors attempted to achieve the opposite. This could mean that the resulting schemes were relatively weak in terms of incentives’ strength and size. The value of some schemes to policymakers could be more about the increased accountability they seemed to provide, rather than changing behavior and improving value-based healthcare itself. Similarly, although providers were interested in enhancing quality, their preference for self-regulation, autonomy, and protection of income meant that top-down comparisons of performance and potential reductions in earnings were likely to have been unwelcome. Though payment models must support and not hinder value-based healthcare provision, they remained fairly blunt ‘one size fits all’ instruments that needed to be supplemented with other behavior change interventions.

Article summary

Strengths and limitations

This study updated the first meta-regression analysis of the effectiveness of Pay-for-Performance (P4P) schemes to examine if the factors affecting P4P schemes’ success had changed over time as these schemes evolved.

Data extraction was limited to published studies that varied in how they reported the payment and the study designs.

This meta-analysis masked much of the heterogeneity in the context, design, and implementation of schemes that were unlikely to be reported in the published studies.

Availability of data and materials

The extracted data and the statistical analysis code are available from the corresponding author upon reasonable request.

Footnote 1: We searched the “PubMed” and “EconLit” electronic databases for journal articles with the keywords “value-based purchasing,” “pay for performance,” and “accountable care organisations,” focusing on studies evaluating a P4P scheme or schemes rewarding for cost reductions. We restricted our search to studies in English from high-, middle-, and low-income countries, and we excluded studies using qualitative data, reviews of the literature, editorials, opinion pieces, studies with no control group, and studies which did not adjust for confounders. We included studies in our review if they examined the impact of schemes on any type of outcome (e.g., costs, utilization, expenditures, quality of care, health outcomes), and if the incentives were targeted at individuals or groups of medical practitioners or hospitals. We identified 37 new studies.

Footnote 2: Vote-counting is recommended where the outcomes of studies are too heterogeneous to pool the data together [5]. For an overview of reviews using vote-counting, see [6].

Footnote 3: More details of the new studies are provided in Appendix A. A summary of the pooled new and old studies is presented in Table B.1 in Appendix B.

Abbreviations

P4P: Pay for performance

DD: Difference in Differences

RCT: Randomized Control Trial

BA: Before-After

ITS: Interrupted Time Series

UK: United Kingdom

US: United States

Quality and Outcomes Framework

Spontaneous breathing trials

Accountable Care Organizations

General practitioner

France’s national P4P scheme for primary care physicians

Quality Blue

Low-density lipoprotein cholesterol

Physician Integrated Network

Akaike information criterion

Bayesian information criterion

Intensive Care Unit

Medicare Advantage Prescription Drug

Medicare Value-Based Purchasing

Mental Health Information Program

Quality Improvement Demonstration Study

Cattel D, Eijkenaar F. Value-Based Provider Payment Initiatives Combining Global Payments With Explicit Quality Incentives: A Systematic Review. Med Care Res Rev. 2019;77(6):2020.

Flodgren G, Eccles MP, Shepperd S, Scott A, Parmelli E, Beyer FR. An overview of reviews evaluating the effectiveness of financial incentives in changing healthcare professional behaviours and patient outcomes. Cochrane Database Syst Rev. 2011. https://doi.org/10.1002/14651858.CD009255 .

Van Herck P, De Smedt D, Annemans L, et al. Systematic review: Effects, design choices, and context of pay-for-performance in health care. BMC Health Serv Res. 2010;10:247. https://doi.org/10.1186/1472-6963-10-247 .

Scott A, Liu M, Yong J. Financial incentives to encourage value-based health care. Med Care Res Rev. 2018;75:3–32.

Sun X, Liu X, Sun W, Yip W, Wagstaff A, Meng Q. The Impact of a Pay-For-Performance Scheme on Prescription Quality in Rural China. Health Econ. 2016;25:706–22.

Bushman BJ, Wang M. Vote-counting procedures in meta-analysis. The handbook of research synthesis, vol. 236; 1994. p. 193–213.

Papke LE, Wooldridge JM. Econometric methods for fractional response variables with an application to 401 (k) plan participation rates. J Appl Econ. 1996;11:619–32.

Barbash IJ, Pike F, Gunn SR, Seymour CW, Kahn JM. Effects of physician-targeted pay for performance on use of spontaneous breathing trials in mechanically ventilated patients. Am J Respir Crit Care Med. 2017;196:56–63. https://doi.org/10.1164/rccm.201607-1505OC .

Sicsic J, Franc C. Impact assessment of a pay-for-performance program on breast cancer screening in France using micro data. Eur J Health Econ. 2017;18:609–21.

Michel-Lepage A, Ventelou B. The true impact of the French pay-for-performance program on physicians’ benzodiazepines prescription behavior. Eur J Health Econ. 2016;17:723–32. https://doi.org/10.1007/s10198-015-0717-6 .

Constantinou P, Sicsic J, Franc C. Effect of pay-for-performance on cervical cancer screening participation in France. Int J Health Econ Manage. 2017;17:181–201.

Sherry TB, Bauhoff S, Mohanan M. Multitasking and Heterogeneous Treatment Effects in Pay-for-Performance in Health Care: Evidence from Rwanda; 2016. https://doi.org/10.2139/ssrn.2170393 .

Anselmi L, Binyaruka P, Borghi J. Understanding causal pathways within health systems policy evaluation through mediation analysis: an application to payment for performance (P4P) in Tanzania. Implement Sci. 2017;12:1–18.

Binyaruka P, Robberstad B, Torsvik G, Borghi J. Who benefits from increased service utilisation? Examining the distributional effects of payment for performance in Tanzania. Int J Equity Health. 2018;17:14. https://doi.org/10.1186/s12939-018-0728-x .

Sherry TB. A note on the comparative statics of pay-for-performance in health care. Health Econ. 2016;25:637–44.

Ellegard LM, Dietrichson J, Anell A. Can pay-for-performance to primary care providers stimulate appropriate use of antibiotics? Health economics. May. 2017;2017:39–54. https://doi.org/10.1002/hec.3535 .

Ryan AM, Krinsky S, Kontopantelis E, Doran T. Long-term evidence for the effect of pay-for-performance in primary care on mortality in the UK: a population study. Lancet. 2016;388:268–74. https://doi.org/10.1016/S0140-6736(16)00276-2 .

Engineer CY, Dale E, Agarwal A, Agarwal A, Alonge O, Edward A, et al. Effectiveness of a pay-for-performance intervention to improve maternal and child health services in Afghanistan: a cluster-randomized trial. Int J Epidemiol. 2016;45:451–9. https://doi.org/10.1093/ije/dyv362 .

Ogundeji YK, Sheldon TA, Maynard A. A reporting framework for describing and a typology for categorizing and analyzing the designs of health care pay for performance schemes. BMC Health Serv Res. 2018;18:1–15.

Gleeson S, Kelleher K, Gardner W. Evaluating a pay-for-performance program for Medicaid children in an accountable care organization. JAMA Pediatr. 2016;170:259. https://doi.org/10.1001/jamapediatrics.2015.3809 .

Hu T, Decker SL, Chou S-Y. Medicaid pay for performance programs and childhood immunization status. Am J Prev Med. 2016;50:S51–7. https://doi.org/10.1016/j.amepre.2016.01.012 .

Bastian ND, Kang H, Nembhard HB, Bloschichak A, Griffin PM. The impact of a pay-for-performance program on central line–associated blood stream infections in Pennsylvania. Hosp Top. 2016;94:8–14. https://doi.org/10.1080/00185868.2015.1130542 .

Asch DA, Troxel AB, Stewart WF, Sequist TD, Jones JB, Hirsch AMG, et al. Effect of financial incentives to physicians, patients, or both on lipid levels: a randomized clinical trial. JAMA. 2015;314:1926–35.

Vermeulen MJ, Stukel TA, Boozary AS, Guttmann A, Schull MJ. The Effect of Pay for Performance in the Emergency Department on Patient Waiting Times and Quality of Care in Ontario, Canada: A Difference-in-Differences Analysis. Ann Emerg Med. 2016;67:496–505.e7. https://doi.org/10.1016/j.annemergmed.2015.06.028 .

Lapointe-Shaw L, Mamdani M, Luo J, Austin PC, Ivers NM, Redelmeier DA, et al. Effectiveness of a financial incentive to physicians for timely follow-up after hospital discharge: a population-based time series analysis. CMAJ. 2017;189:E1224–9.

Rudoler D, de Oliveira C, Cheng J, Kurdyak P. Payment incentives for community-based psychiatric care in Ontario, Canada. CMAJ. 2017;189:E1509–16.

Lavergne MR, Law MR, Peterson S, Garrison S, Hurley J, Cheng L, et al. Effect of incentive payments on chronic disease management and health services use in British Columbia, Canada: interrupted time series analysis. Health Policy. 2018;122:157–64. https://doi.org/10.1016/j.healthpol.2017.11.001 .

Puyat JH, Kazanjian A, Wong H, Goldner EM. Is the road to mental health paved with good incentives? Estimating the population impact of physician incentives on mental health care using linked administrative data. Med Care. 2017;55:182–90.

Katz A, Enns JE, Chateau D, Lix L, Jutte D, Edwards J, et al. Does a pay-for-performance program for primary care physicians alleviate health inequity in childhood vaccination rates? Int J Equity Health. 2015;14:114. https://doi.org/10.1186/s12939-015-0231-6 .

LeBlanc E, Bélanger M, Thibault V, Babin L, Greene B, Halpine S, et al. Influence of a pay-for-performance program on glycemic control in patients living with diabetes by family physicians in a Canadian Province. Can J Diabetes. 2017;41:190–6. https://doi.org/10.1016/j.jcjd.2016.09.008 .

Kasteridis P, Mason A, Goddard M, Jacobs R, Santos R, Rodriguez-Sanchez B, et al. Risk of care home placement following acute hospital admission: effects of a pay-for-performance scheme for dementia. PLoS One. 2016;11:e0155850. https://doi.org/10.1371/journal.pone.0155850 .

Haarsager J, Krishnasamy R, Gray NA. Impact of pay for performance on access at first dialysis in Queensland. Nephrology. 2018;23:469–75.

Chen TT, Lai MS, Chung KP. Participating physician preferences regarding a pay-for-performance incentive design: a discrete choice experiment. Int J Qual Health Care. 2016;28:40–6.

Huang Y-C, Lee M-C, Chou Y-J, Huang N. Disease-specific pay-for-performance programs. Med Care. 2016;54:977–83. https://doi.org/10.1097/MLR.0000000000000598 .

Hsieh H-M, Gu S-M, Shin S-J, Kao H-Y, Lin Y-C, Chiu H-C. Cost-effectiveness of a diabetes pay-for-performance program in diabetes patients with multiple chronic conditions. PLoS One. 2015;10:e0133163. https://doi.org/10.1371/journal.pone.0133163 .

Pan C-C, Kung P-T, Chiu L-T, Liao YP, Tsai W-C. Patients with diabetes in pay-for-performance programs have better physician continuity of care and survival. Am J Manag Care. 2017;23:e57–66. http://www.ncbi.nlm.nih.gov/pubmed/28245660 .

Chen Y-C, Lee CT-C, Lin BJ, Chang Y-Y, Shi H-Y. Impact of pay-for-performance on mortality in diabetes patients in Taiwan. Medicine. 2016;95:e4197. https://doi.org/10.1097/MD.0000000000004197 .

Hsieh H-M, Lin T-H, Lee I-C, Huang C-J, Shin S-J, Chiu H-C. The association between participation in a pay-for-performance program and macrovascular complications in patients with type 2 diabetes in Taiwan: a nationwide population-based cohort study. Prev Med. 2016;85:53–9. https://doi.org/10.1016/j.ypmed.2015.12.013 .

Hsieh H-M, Shin S-J, Tsai S-L, Chiu H-C. Effectiveness of pay-for-performance incentive designs on diabetes care. Med Care. 2016;54:1063–9. https://doi.org/10.1097/MLR.0000000000000609 .

Chen H-J, Huang N, Chen L-S, Chou Y-J, Li C-P, Wu C-Y, et al. Does pay-for-performance program increase providers adherence to guidelines for managing hepatitis B and hepatitis C virus infection in Taiwan? PLoS One. 2016;11:e0161002. https://doi.org/10.1371/journal.pone.0161002 .

Chen T-T, Hsueh Y-S (Arthur), Ko C-H, Shih L-N, Yang S-S. The effect of a hepatitis pay-for-performance program on outcomes of patients undergoing antiviral therapy. Eur J Pub Health. 2017;27:955–60. https://doi.org/10.1093/eurpub/ckx114 .

Binyaruka P, Patouillard E, Powell-Jackson T, Greco G, Maestad O, Borghi J. Effect of paying for performance on utilisation, quality, and user costs of health services in Tanzania: a controlled before and after study. PLoS One. 2015;10:1–16.

Menya D, Platt A, Manji I, Sang E, Wafula R, Ren J, et al. Using pay for performance incentives (P4P) to improve management of suspected malaria fevers in rural Kenya: a cluster randomized controlled trial. BMC Med. 2015;13:268. https://doi.org/10.1186/s12916-015-0497-y .

Ryan AM, Burgess JF Jr, Pesko MF, Borden WB, Dimick JB. The Early Effects of Medicare's Mandatory Hospital Pay-for-Performance Program. Health Serv Res. 2015;50:81–97. https://doi.org/10.1111/1475-6773.12206 .

Jha AK. Value-based purchasing: time for reboot or time to move on? JAMA. 2017;317:1107. https://doi.org/10.1001/jama.2017.1170 .

Shih T, Nicholas LH, Thumma JR, Birkmeyer JD, Dimick JB. Does pay-for-performance improve surgical outcomes? Ann Surg. 2014;259:677–81.

Swaminathan S, Mor V, Mehrotra R, Trivedi AN. Effect of Medicare dialysis payment reform on use of erythropoiesis stimulating agents. Health Serv Res. 2015;50:790–808.

Kuo RNC, Chung KP, Lai MS. Effect of the Pay-for-Performance Program for Breast Cancer Care in Taiwan. J Oncol Pract. 2011;7(3S):e8s–15s.

Chen JY, Tian H, Juarez DT, Yermilov I, Braithwaite RS, Hodges KA, et al. Does pay for performance improve cardiovascular care in a “real-world” setting? Am J Med Qual. 2011;26:340–8.

Gavagan TF, Du H, Saver BG, Adams GJ, Graham DM, McCray R, et al. Effect of financial incentives on improvement in medical quality indicators for primary care. J Am Board Fam Med. 2010;23:622–31.

Kruse G, Chang Y, Kelley JH, Linder JA, Einbinder J, Rigotti NA. Healthcare system effects of pay-for-performance for smoking status documentation. Am J Manag Care. 2013;23:554–61 https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3624763/pdf/nihms412728.pdf .

Kiran T, Wilton AS, Moineddin R, Paszat L, Glazier RH. Effect of payment incentives on cancer screening in Ontario primary care. Ann Fam Med. 2014;12:317–23.

Li J, Hurley J, Decicca P, Buckley G. Physician response to pay-for-performance: Evidence from a natural experiment. Health Econ. 2014;23(8):962–78.

Kristensen SR, Meacock R, Turner AJ, Boaden R, McDonald R, Roland M, et al. Long-term effect of hospital pay for performance on mortality in England. N Engl J Med. 2014;371:540–8.

Sutton M, Nikolova S, Boaden R, Lester H, McDonald R, Roland M. Reduced mortality with hospital pay for performance in England. N Engl J Med. 2012;367:1821–8.

Bhalla R, Schechter CB, Strelnick AH, Deb N, Meissner P, Currie BP. Pay for performance improves quality across demographic groups. Qual Manag Health Care. 2013;22:199–209.

Bardach NS, Wang JJ, de Leon SF, Shih SC, Boscardin WJ, Goldman LE, et al. Effect of pay-for-performance incentives on quality of care in small practices with electronic health records: a randomized trial. JAMA. 2013;310:1051–9.

Ryan AM, McCullough CM, Shih SC, Wang JJ, Ryan MS, Casalino LP. The intended and unintended consequences of quality improvement interventions for small practices in a community-based electronic health record implementation project. Med Care. 2014;52:826–32.

Peabody JW, Shimkhada R, Quimbo S, Solon O, Javier X, McCulloch C. The impact of performance incentives on child health outcomes: results from a cluster randomized controlled trial in the Philippines. Health Policy Plan. 2014;29:615–21.

Lemak CH, Nahra TA, Cohen GR, Erb ND, Paustian ML, Share D, et al. Michigan’s fee-for-value physician incentive program reduces spending and improves quality in primary care. Health Aff. 2015;34:645–52.

Saint-Lary O, Sicsic J. Impact of a pay for performance programme on French GPs’ consultation length. Health Policy. 2015;119:417–26. https://doi.org/10.1016/j.healthpol.2014.10.001 .

Sharp AL, Song Z, Safran DG, Chernew ME, Mark FA. The effect of bundled payment on emergency department use: alternative quality contract effects after year one. Acad Emerg Med. 2013;20:961–4.

Song Z, Fendrick AM, Safran DG, Landon B, Chernew ME. Global budgets and technology-intensive medical services. Healthcare. 2013;1:15–21.

Song Z, Safran DG, Landon BE, He Y, Ellis RP, Mechanic RE, et al. Health care spending and quality in year 1 of the alternative quality contract. N Engl J Med. 2011;365:909–18.

de Walque D, Gertler PJ, Bautista-Arredondo S, Kwan A, Vermeersch C, de Dieu BJ, et al. Using provider performance incentives to increase HIV testing and counseling services in Rwanda. J Health Econ. 2015;40:1–9. https://doi.org/10.1016/j.jhealeco.2014.12.001 .

Chien AT, Li Z, Rosenthal M. Improving timely childhood immunizations through pay for performance in Medicaid-managed care. Health Res Educ Trust. 2010;45:1934–47.

Allen T, Fichera E, Sutton M. Can payers use prices to improve quality? Evidence from English hospitals. Health Econ. 2016;25:56–70.

McDonald R, Zaidi S, Todd S, Konteh F, Hussein K, Roe J, et al. A qualitative and quantitative evaluation of the introduction of best practice tariffs: An evaluation report commissioned by the Department of Health. 2012; October. http://www.nottingham.ac.uk/business/documents/bpt-dh-report-21nov2012.pdf .

Unutzer J, Chan YF, Hafer E, Knaster J, Shields A, Powers D, et al. Quality improvement with pay-for-performance incentives in integrated behavioral health care. Am J Public Health. 2012;102:41–5.

Serumaga B, Ross-Degnan D, Avery AJ, Elliott RA, Majumdar SR, Zhang F, et al. Effect of pay for performance on the management and outcomes of hypertension in the United Kingdom: interrupted time series study. BMJ. 2011;342:d108.

Simpson CR, Hannaford PC, Ritchie LD, Sheikh A, Williams D. Impact of the pay-for-performance contract and the management of hypertension in Scottish primary care: a 6-year population-based repeated cross-sectional study. Br J Gen Pract. 2011;61:443–51.

Ross JS, Lee JT, Netuveli G, Majeed A, Millett C. The effects of pay for performance on disparities in stroke, hypertension, and coronary heart disease management: interrupted time series study. PLoS One. 2011;6:e27236.

Sun X, Liu X, Sun Q, Yip W, Wagstaff A, Meng Q. The impact of a pay-for-performance scheme on prescription quality in rural China impact evaluation; 2014.

Yip W, Powell-Jackson T, Chen W, Hu M, Fe E, Hu M, et al. Capitation combined with pay-for-performance improves antibiotic prescribing practices in rural China. Health Aff. 2014;33:502–10.

Powell-Jackson T, Yip WC-M. Realigning demand and supply side incentives to improve primary health care seeking in rural China. Health Econ. 2015;24:755–72.

Acknowledgements

The authors acknowledge the data provided by the authors of the previous review [ 4 ].

Financial support for this study was provided by the National Health and Medical Research Council (NHMRC) Partnership Centre on Health System Sustainability.

Author information

Authors and affiliations.

University of Manitoba, Institute for Labor Studies (IZA) and Tax and Transfer Policy Institute (TTPI), 15 Chancellors Circle, Fletcher Argue Building, Winnipeg, Manitoba, Canada

Arezou Zaresani

The University of Melbourne, Melbourne, Australia

Anthony Scott

Contributions

AZ conducted the literature search, data extraction, and statistical analysis and contributed to data interpretation and paper drafting. AS provided management oversight of the whole project and contributed to data interpretation and drafting of the paper. Both authors have read and approved the manuscript.

Corresponding author

Correspondence to Arezou Zaresani .

Ethics declarations

Ethics approval and consent to participate.

Not applicable.

Consent for publication

Competing interests.

Authors have no conflict of interest to declare.

Additional information

Publisher’s note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1.

Appendix A. Metadata collected by the authors and [ 4 ] ([ 20 , 21 , 22 , 23 , 24 , 25 , 26 , 27 , 28 , 29 , 30 , 31 , 32 , 33 , 34 , 35 , 36 , 37 , 38 , 39 , 40 , 41 , 42 , 43 ]).

Additional file 2.

Appendix B. Metadata collected by the authors of [ 4 ] ([ 44 , 45 , 46 , 47 , 48 , 49 , 50 , 51 , 52 , 53 , 54 , 55 , 56 , 57 , 58 , 59 , 60 , 61 , 62 , 63 , 64 , 65 , 66 , 67 , 68 , 69 , 70 , 71 , 72 , 73 , 74 , 75 ]).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ . The Creative Commons Public Domain Dedication waiver ( http://creativecommons.org/publicdomain/zero/1.0/ ) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article.

Zaresani, A., Scott, A. Is the evidence on the effectiveness of pay for performance schemes in healthcare changing? Evidence from a meta-regression analysis. BMC Health Serv Res 21 , 175 (2021). https://doi.org/10.1186/s12913-021-06118-8

Received : 27 May 2020

Accepted : 25 January 2021

Published : 24 February 2021

DOI : https://doi.org/10.1186/s12913-021-06118-8

- Financial incentives

- Pay for performance (P4P)

- Value-based healthcare

- Accountable care organization

BMC Health Services Research

ISSN: 1472-6963

Open Access

Peer-reviewed

Research Article

Pay for performance, satisfaction and retention in longitudinal crowdsourced research

Contributed equally to this work with: Elena M. Auer, Tara S. Behrend, Andrew B. Collmus, Richard N. Landers, Ahleah F. Miles

Roles Data curation, Formal analysis, Visualization, Writing – original draft, Writing – review & editing

Affiliation Department of Psychology, University of Minnesota, Minneapolis, Minnesota, United States of America

Roles Conceptualization, Methodology, Writing – original draft, Writing – review & editing

* E-mail: [email protected]

Affiliation Department of Organizational Sciences and Communication, George Washington University, Washington, District of Columbia, United States of America

Roles Conceptualization, Investigation, Methodology, Writing – review & editing

Affiliation Department of Psychology, Old Dominion University, Norfolk, Virginia, United States of America

Roles Conceptualization, Methodology, Writing – review & editing

Roles Visualization, Writing – original draft, Writing – review & editing

- Elena M. Auer,

- Tara S. Behrend,

- Andrew B. Collmus,

- Richard N. Landers,

- Ahleah F. Miles

- Published: January 20, 2021

- https://doi.org/10.1371/journal.pone.0245460

In the social and cognitive sciences, crowdsourcing provides up to half of all research participants. Despite this popularity, researchers typically do not conceptualize participants accurately, as gig-economy worker-participants. Applying theories of employee motivation and the psychological contract between employees and employers, we hypothesized that pay and pay raises would drive worker-participant satisfaction, performance, and retention in a longitudinal study. In an experiment hiring 359 Amazon Mechanical Turk Workers, we found that initial pay, relative increase of pay over time, and overall pay did not have substantial influence on subsequent performance. However, pay significantly predicted participants' perceived choice, justice perceptions, and attrition. Given this, we conclude that worker-participants are particularly vulnerable to exploitation, having relatively low power to negotiate pay. Results of this study suggest that researchers wishing to crowdsource research participants using MTurk might not face practical dangers such as decreased performance as a result of lower pay, but they must recognize an ethical obligation to treat Workers fairly.

Citation: Auer EM, Behrend TS, Collmus AB, Landers RN, Miles AF (2021) Pay for performance, satisfaction and retention in longitudinal crowdsourced research. PLoS ONE 16(1): e0245460. https://doi.org/10.1371/journal.pone.0245460

Editor: Sergio A. Useche, Universitat de Valencia, SPAIN

Received: February 26, 2020; Accepted: December 30, 2020; Published: January 20, 2021

This is an open access article, free of all copyright, and may be freely reproduced, distributed, transmitted, modified, built upon, or otherwise used by anyone for any lawful purpose. The work is made available under the Creative Commons CC0 public domain dedication.

Data Availability: We are unable to provide the raw dataset for public use. We specifically noted that only members of the research team would have access to the raw data (which includes potentially identifiable information) in both the IRB submission and the consent forms provided to participants. Additionally, we did not stipulate an exception for de-identified data, thus all requests for data would need to be made via the Institutional Review Board of Old Dominion University at 757-683-3460 (Reference number: 15-183). Email communication regarding data availability and requests to the ODU IRB can be directed to Adam Rubenstein ( [email protected] ), Director of Compliance, Office of Research. Syntax and results for all analyses can be found at https://osf.io/zg2fj/ .

Funding: The authors received no specific funding for this work.

Competing interests: The authors have declared that no competing interests exist.

Introduction

In the social and cognitive sciences, crowdsourcing [ 1 ] provides up to half of all research participants [ 2 ] and is growing in popularity, with Amazon Mechanical Turk (MTurk) as a dominant source [ 3 ]. For researchers, crowdsourcing has provided access to a large, diverse, and convenient pool of participants. Referred to by Amazon as Workers, we conceptualize these individuals as “worker-participants” based on their self-identification as workers and their role as research participants. Past research suggests that although the characteristics of individuals participating in crowdsourcing may be somewhat idiosyncratic, these differences do not generally threaten the conclusions of studies, with some exceptions (e.g., nonnaiveté; [ 1 , 4 , 5 ]).

As platforms such as MTurk reach maturity, the dual goals of ensuring scientific validity and protecting worker-participants’ rights must both be met. Past research in this area has focused almost exclusively on questions of generalizability and representativeness in relation to other samples (e.g., [ 4 , 5 ]) but has not generally considered the ethical and practical implications of sampling from a population of gig-economy contract workers versus more traditional populations.

MTurk Workers share common characteristics with other paid research participants and may be conceptualized as professional subjects whose participation in academic research is solely to generate income. Previous research has examined unique behaviors of professional subjects such as deception in screening questionnaires to ensure selection for participation [ 6 ]. The MTurk Worker population is comprised of both professional research subjects and individuals who engage in various other Human Intelligence Tasks (HITs) outside of academic research (e.g., editing a computer-generated transcription of an audio file). They have made efforts to self-identify as contract employees and publicize their expectations of the Worker-Requester relationship in addition to other behaviors that indicate a nontraditional work environment with characteristics of both professional subjects and gig contract workers in an employment setting.

All academic research is bound by an ethical code that is concerned with protecting the rights of human subjects, explicated in guidelines such as the Belmont Report and enforced by the review boards of academic institutions and other governing bodies [ 7 ]. However, it is important to consider the ways in which crowdsourced research conducted through online platforms has implications for both general research ethics and gig work. MTurk provides a unique population of independent contractors with aspects that differentiate it from other types of research participants and other types of employees, which can provide novel and useful information for both groups. MTurk allows Workers to explicitly sign up for a marketplace with the opportunity to complete tasks for pay at their own discretion. This exerts a certain market pressure on Workers that is analogous to traditional labor halls for union members seen in the industrial era [ 8 , 9 ]. This labor model has also seen a renaissance with day laborers in the agriculture and logistics industries [ 10 ]. This analogy can be applied to many online marketplaces such as Prolific, Upwork, and Fiverr; however, we have chosen MTurk for this study based on its prevalent usage for social science research and the researchers’ direct payment of participants. Other types of online research panels obtain participants from many different sources with varying types of rewards or compensation (e.g., game tokens and points toward gift cards) that do not typically align with the labor hall model. In these cases, researchers may not have direct knowledge of the type and level of participant incentives, but instead pay the crowdsourcing platform for the collection of a panel. This type of compensation has more definitive ethical violations than the nuances afforded by the labor market created on the MTurk platform. Thus, MTurk represents a unique subset of research participants who are also gig economy workers. This conceptualization necessarily benefits from previous research on work motivation, behavior, and attitudes.

The work motivation literature [ 11 ] suggests that of the many relevant factors that may determine motivation, pay and expectations about pay stand out as especially relevant. Locke, Feren, McCaleb, Shaw, and Denny [ 12 ] argued, “No other incentive or motivational technique comes even close to money with respect to its instrumental value” (p. 379). Thus, we seek to understand the effect of pay on crowdsourced worker-participant behavior. We focused on the special case of a longitudinal study in which participants must return on a separate occasion to explore how pay affects worker-participant performance (i.e., data quality), satisfaction, and attrition.

Since the creation of MTurk in 2007, researchers have explored how pay influences behavior in crowdsourced work marketplaces. In an early study, Buhrmester et al. [ 3 ] found that relatively higher pay rates (50 cents vs 5 cents) resulted in faster overall data collection time, with no major differences in data quality as assessed by scale reliability. Litman, Robinson, and Rosenzweig [ 13 ] found that monetary compensation was the highest-rated motivation for completing a research study among US-based Workers, contrary to findings just four years prior [ 3 ]. A recent poll found that on average MTurk Workers estimated fair payment hovered just above the United States minimum wage ($7.25/hr), up from the previous standard of $6/hr [ 14 ]. The MTurk community is evolving over time, and the norms and expectations for pay have changed, with new tools constantly emerging to meet Workers’ demands for fair pay (e.g., Turkopticon, TurkerView).

As Workers develop more employee-like identities, we argue that they follow patterns explicated in pay-for-performance theory [ 15 ]. Pay predicts a number of goal-directed behaviors because it supports physiological and safety needs [ 16 ]. Classic studies have found that pay for performance leads employees to increased productivity [ 12 , 17 ]. Aligned with this evidence on the extrinsic motivation provided by pay, we hypothesize:

Hypothesis 1: Base pay, pay increases, and total pay positively affect the performance of worker-participants as measured by indicators of data quality.

Although pay is often critical to work motivation, meta-analytic findings suggest that in traditional forms of work, pay is only slightly related to job satisfaction [ 18 ] and performance [ 19 ]. In short, pay might encourage worker-participants to exert just enough effort to be compensated and no more. Compensation is better considered a multifaceted issue in which the level of compensation matters, but so too do worker-participants’ expectations and their understanding of their compensation. In most organizations, many aspects of the employment relationship are left unstated, yet form a psychological contract between the employee and employer [ 20 – 22 ]. Each party, the employee and employer, holds beliefs about what they expect from the other and what they are obligated to provide in return [ 22 ]. Contractual beliefs come in part from schema, norms, and past experiences [ 21 ]. When the employer and employee hold mutual beliefs, effective performance, feelings of trust and commitment, and reciprocity follow [ 22 ]. This contract is an important framework within which to understand compensation.

The development of psychological contracts also has major implications for perceived organizational justice. Specifically, distributive justice [ 23 ], which focuses on the perceptions of decision outcomes in an organization or group, has previously been applied to compensation fairness [ 24 , 25 ]. Typically, distributive justice is cultivated when these outcomes are aligned with norms for the allocation of rewards (i.e., equity and fair pay for good performance) [ 26 ]. Especially relevant for research participants, this concept also has roots in research ethics (see the Belmont Report; [ 7 ]).

As the norms of MTurk evolve, the expectations and the psychological contract between Workers and Requesters (i.e., those providing tasks to complete) also change. In early days, Workers did not have strong prior experience to draw from in forming expectations. Now, as a mature system, Workers have strong beliefs and have formed expectations of their employers. By examining websites such as Turkopticon, where MTurk users report violations of their self-formed Bill of Rights, it becomes clear that Workers are not traditional paid participants [ 27 ]. Among these expectations are fair pay equivalent to US minimum wage, swift payment for good work, and bonuses for outstanding work [ 28 ]. To address these motivational aspects of crowdsourced research, we hypothesize:

Hypothesis 2: Base pay, pay raises, and total pay positively affect worker-participant satisfaction as measured by intrinsic motivation, compensation reactions, and distributive justice.

As in any other workplace, trust and credibility are essential in determining whether a worker-participant will return to complete additional work. Attrition in traditional employment settings can often be attributed to dissatisfaction, depending on an employee’s job embeddedness, agency, or commitment [ 29 – 31 ]. Absolute pay level is also related to turnover [ 32 ], although pay raises have been demonstrated to be more important in determining both turnover and fairness perceptions [ 19 ]. The crowdsourcing environment is unusual compared to other forms of work, however, in that worker-participants have less obvious opportunities to interact with each other, reducing their likelihood of forming bonds that drive retention decisions unless they seek out online communities built for that purpose. Further, the physical environment is not fixed, removing concerns such as location and community in determining retention. Thus, in the specific context of longitudinal crowdsourced work, we hypothesize:

Hypothesis 3: Base pay, pay increases, and total pay negatively affect attrition of worker-participants.

This study was approved and monitored by the Institutional Review Board of Old Dominion University (Reference number: 15–183).

Participants

Participants (N = 359) were adult users of MTurk located in the United States. One participant completed more than one condition and was removed from the data set. 50% of participants identified as female, 70% identified as White, 6% as African American, 14% as Asian American, 1.7% as Native American or Native Alaskan and 9.2% identified as “other.” 63% of participants reported working full-time, 16% reported working part-time and 21% were unemployed. Of those employed, about 20% were employed in business service, 12% in education, 12% in finance, 8% in healthcare, 10% in manufacturing, and 10% in retail. There were minimal differences in demographics between the initial sample and the retained sample in the second wave of the study ( Table 1 ).

https://doi.org/10.1371/journal.pone.0245460.t001

We used a 3x3 between-subjects design in which the manipulated factors were Time 1 (T1) Pay (X1: $.50, $1, $2) and Pay Multiplier (X2: 100%, 200%, 400%) which represented a relative pay increase at Time 2 (T2). Thus, worker-participants who completed both waves of the study were paid anywhere between $1 and $10 total, and this total pay represents the interaction between X1 and X2. See Table 2 for a closer examination of each cell of the experimental design in addition to the observed hourly wage based on average completion time in each condition. To control for time of day effects and potential time-zone availability differences, each condition was split into two halves, which were deployed at either 12:00 p.m. or 8:00 p.m. EST. The T2 follow up for each wave was matched to the T1 day and time. The groups were made available sequentially, every 3–4 days, from January 11 to May 13. The T1 waves were each open for 12 hours, and the T2 waves were open for up to 6 weeks. The T2 deployments were accompanied by a reminder email.

https://doi.org/10.1371/journal.pone.0245460.t002
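The nine cells of the design can be enumerated with a short sketch. It assumes that Time 2 pay equals Time 1 pay times the multiplier, a reading inferred from the stated $1 to $10 range of total pay rather than spelled out in the text. The names `T1_PAY`, `PAY_MULTIPLIER`, and `design_cells` are illustrative only.

```python
from itertools import product

# Factor levels taken from the design description.
T1_PAY = [0.50, 1.00, 2.00]        # dollars paid at Time 1
PAY_MULTIPLIER = [1.0, 2.0, 4.0]   # 100%, 200%, 400%


def design_cells():
    """Return one dict per cell of the 3x3 design.

    Assumption: T2 pay = T1 pay * multiplier, so total pay = T1 pay + T2 pay.
    """
    cells = []
    for t1, mult in product(T1_PAY, PAY_MULTIPLIER):
        t2 = t1 * mult
        cells.append(
            {"t1_pay": t1, "multiplier": mult, "t2_pay": t2, "total_pay": t1 + t2}
        )
    return cells


cells = design_cells()
totals = sorted(cell["total_pay"] for cell in cells)
for cell in cells:
    print(cell)
```

Under this assumption the lowest total ($.50 at 100%) is $1 and the highest ($2 at 400%) is $10, which matches the range reported above.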

Participants first viewed a recruitment notice for the task on Amazon’s MTurk and self-selected to participate. The recruitment notice included the time-to-completion estimate (30 minutes), compensation for both the current task and the follow-up, and information about the second questionnaire invitation to follow in approximately 30 days. This information was also repeated in the consent script upon acceptance of the Human Intelligence Task (HIT) on the MTurk platform. Each condition received HIT recruitment notices and consent scripts specific to their experimental manipulation. Participants gave informed consent by clicking “YES” on a consent script before proceeding to the experiment. Participants who accepted the terms were directed to complete the questionnaire containing all measures. They had 12 hours to complete the survey and were told that their choice to participate in the second part of the study would not affect their payment for part one. The last page of the questionnaire contained a unique ID to submit for payment. Six weeks later, participants were emailed with a direct invitation to participate at Time 2. Participant contact was managed within the MTurk platform. Minimal identifiable information was collected (demographics and MTurk ID for payment), and no attempts were made to re-identify individuals based on their unique MTurk ID. If the participant accepted the invitation to the second wave, they were directed to an identical survey and followed an initial set of procedures. After the study was completed for all participants, debriefing documentation was emailed to all participants.

As the core “work”, worker-participants completed a HIT (Human Intelligence Task; a piece of work offered on the MTurk platform) comprising a series of well-validated cognitive and personality instruments. These included a ten-item Big 5 personality measure [ 33 ], a positive and negative affectivity questionnaire [ 34 ], a 30-item cognitive ability test [ 35 ], a personal altruism questionnaire [ 36 ], the Neutral Objects Satisfaction Questionnaire (NOSQ; [ 37 ]), and an Adult Decision-making competency questionnaire [ 38 ]. Post-work attitude measures included compensation reactions, intrinsic motivation [ 39 ], and distributive justice [ 26 ]. The above measures served both as outcome variables in their own right and as a means to assess data quality and reliability.

Performance was operationalized in several ways. The first indicator of performance was the number of attention check items answered correctly at each wave. Both instructed items and bogus items were used [ 40 ]. For example, participants were asked to “Select the option that is at the left end of the scale for this question.” (see [ 41 ]). Ostensibly, anyone answering questions arbitrarily would miss some of these items. Each wave contained five attention checks. Second, personal reliability (test-retest) for two scales, personality and cognitive ability, was calculated, such that higher reliability indicated better performance [ 3 ]. Third, following recommendations from Meade & Craig [ 41 ], maximum and average LongString values were calculated, representing the maximum and average number of identical responses in a row, respectively. LongString values were calculated using all non-outcome scales that would permit long-string responses, including the Big Five personality, affect, personal altruism, and neutral objects questionnaires.
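As a rough illustration of the LongString indices, the sketch below computes the longest run and the mean run length of identical consecutive responses for one participant. The function name `longstring` is hypothetical, and treating the average as the mean length of all runs is one plausible reading of the description above; the original analysis may have aggregated differently (for example, per scale or per page).

```python
from itertools import groupby


def longstring(responses):
    """Return (max_run, avg_run) for a sequence of item responses.

    A "run" is a stretch of identical consecutive responses.
    Assumption: the average is taken over all runs in the sequence.
    """
    if not responses:
        raise ValueError("responses must be non-empty")
    # groupby collapses consecutive equal values into one group per run.
    runs = [len(list(group)) for _, group in groupby(responses)]
    return max(runs), sum(runs) / len(runs)


# Hypothetical responses to seven Likert-type items.
example = [3, 3, 3, 4, 2, 2, 5]
max_run, avg_run = longstring(example)
print(max_run, avg_run)
```

Higher values on either index suggest more straight-lining and therefore lower data quality.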

Motivation was measured using three subscales from the Intrinsic Motivation Inventory [ 39 ], including the Interest/Enjoyment Subscale (e.g., “I enjoyed doing this HIT very much”; α = .79) along with Perceived Effort/Importance (e.g., “I put a lot of effort into this.”; α = .81), and Perceived Choice (“I believe I had some choice about doing this HIT.”; α = .82).
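The α values reported for these subscales are internal-consistency coefficients, presumably Cronbach's alpha. A minimal sketch of that computation follows, assuming complete data and the standard formula α = k/(k−1) · (1 − Σ item variances / variance of total scores). The function name and the sample data are illustrative, not taken from the study.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Compute Cronbach's alpha.

    item_scores: list of respondents, each a list of k item responses.
    Uses sample variances (n - 1 denominator) throughout.
    """
    k = len(item_scores[0])
    if k < 2:
        raise ValueError("alpha requires at least two items")
    columns = list(zip(*item_scores))                # one tuple per item
    item_var_sum = sum(variance(col) for col in columns)
    total_var = variance([sum(row) for row in item_scores])
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Made-up responses from four respondents to a three-item subscale.
sample = [[4, 5, 4], [2, 3, 2], [5, 5, 4], [1, 2, 2]]
alpha = cronbach_alpha(sample)
print(round(alpha, 3))
```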

Satisfaction with compensation was measured with two separate items regarding the current task (e.g., “I am satisfied with the overall pay I will receive for this HIT”) and overall compensation for both tasks (e.g., “I am satisfied with the overall pay I will receive for these two HITs.”) and four items from Colquitt’s [ 26 ] distributive justice scale (e.g., “Does your compensation reflect the effort you have put into your work?”; α = .86).

Retention was operationalized as successful completion of the second wave of the study.

Manipulation checks of the experimental conditions occurred in both waves of the study. Participants were asked to confirm how much money they were paid for each wave in addition to stating whether they knew this was the first or second wave of a two-part study.

Descriptive statistics and intercorrelations for all variables are in Table 3 . For this study, manipulation checks served their typical purpose of flagging insufficient effort responding; this also served as one test of the effect of experimental conditions on performance [ 40 ]. Approximately 90% of participants correctly reported how much money they were paid for the first wave, 94% indicated that they were aware they were taking the first part of a two-part study, and 96% indicated that they intended to complete the second part of the study. Seventy-seven percent of participants correctly identified how much they were paid in wave two and 93% indicated that they were aware they were taking the second part of a two-part study. Although there is no clear pattern explaining the noticeable drop in correct pay identification in the second wave, there are a number of possible explanations, including careless responding and confusion about total pay as opposed to current wave pay. Additionally, Workers often sort HITs based on pay and, once they have reached a personal threshold, may not remember the exact pay for each HIT they accept. Based on the research questions being addressed in our study, retaining those individuals who did not pass the manipulation checks for the final analysis made our sample more representative of the typical MTurk population.

https://doi.org/10.1371/journal.pone.0245460.t003

All hypotheses were tested using regression with appropriate considerations for dispersion (e.g., linear, Poisson, and logistic regression). Independent variables (including the interaction term) were dummy-coded. As in any multiple regression, coefficients should be interpreted as the effect conditional on all other variables in the model being held at zero. Given the dummy coding, holding variables at zero results in an estimated effect compared to the referent group where T1 Pay = $.50 and Pay Multiplier = 100% (see table notes and [ 42 ] for more details on interpreting dummy-variable regression models). These coefficients are not an exact replication of an ANOVA framework. For this reason, regression results are presented in addition to analysis of variance or analysis of deviance tables where appropriate.
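The dummy-coding interpretation can be made concrete with a small sketch. For a linear model that includes both sets of dummies and all their interactions (a saturated model), the least-squares coefficients are simple functions of the cell means: the intercept is the referent-cell mean, each main-effect coefficient is a difference from the referent cell, and each interaction coefficient is a difference of differences. The cell means below are invented for illustration and are not study results; all function names are hypothetical.

```python
T1_LEVELS = ["0.50", "1.00", "2.00"]   # referent level: "0.50"
MULT_LEVELS = ["100", "200", "400"]    # referent level: "100"


def dummy_row(t1, mult):
    """Design row: intercept, 2 T1 dummies, 2 multiplier dummies, 4 interactions."""
    t1_d = [int(t1 == lvl) for lvl in T1_LEVELS[1:]]
    m_d = [int(mult == lvl) for lvl in MULT_LEVELS[1:]]
    inter = [a * b for a in t1_d for b in m_d]
    return [1] + t1_d + m_d + inter


def saturated_coefficients(cell_means):
    """Coefficients of the saturated dummy-coded linear model from cell means."""
    r_t1, r_m = T1_LEVELS[0], MULT_LEVELS[0]
    ref = cell_means[(r_t1, r_m)]
    t1_eff = [cell_means[(a, r_m)] - ref for a in T1_LEVELS[1:]]
    m_eff = [cell_means[(r_t1, b)] - ref for b in MULT_LEVELS[1:]]
    inter = [
        cell_means[(a, b)] - cell_means[(a, r_m)] - cell_means[(r_t1, b)] + ref
        for a in T1_LEVELS[1:]
        for b in MULT_LEVELS[1:]
    ]
    return [ref] + t1_eff + m_eff + inter


# Invented cell means on an arbitrary outcome scale.
values = [3.0, 3.5, 3.25, 3.5, 4.0, 4.5, 4.0, 4.25, 5.0]
keys = [(a, b) for a in T1_LEVELS for b in MULT_LEVELS]
cell_means = dict(zip(keys, values))

coefs = saturated_coefficients(cell_means)
predicted = {
    key: sum(x * b for x, b in zip(dummy_row(*key), coefs)) for key in keys
}
print(coefs)
```

Here `coefs[0]` is the mean of the referent cell (T1 Pay = $.50, Pay Multiplier = 100%), and each main-effect coefficient applies only when the other factor is at its referent level. That is why these coefficients do not map directly onto ANOVA main effects.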

Analyses are presented for both Time 1 and Time 2 outcome measures of performance and satisfaction. As was expected and used to test H3, only a portion of participants were retained at Time 2. Given this, some cell sizes at Time 2 across conditions are quite low, possibly resulting in reduced power to detect significant effects. Differences in significant effects between Time 1 and Time 2 therefore cannot be attributed exclusively to the study variables and should be interpreted with this reduced power in mind.

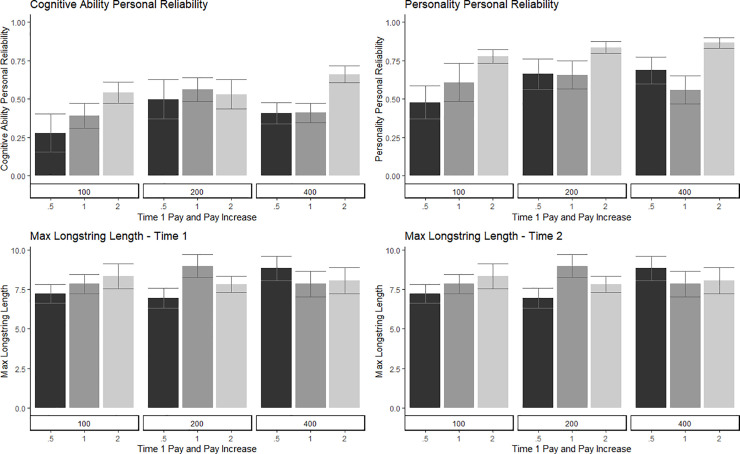

H1 predicted that pay would positively affect worker-participant performance. The effect of pay on passed attention checks was tested using two modeling approaches, one cross-sectional and the other longitudinal. In the first model, using Poisson regression, the number of passed Attention Checks at T1 was regressed onto T1 Pay, Pay Multiplier, and the interaction (Total Pay). Neither T1 Pay nor Pay Multiplier significantly predicted the number of Passed Attention Checks at T1. In the second model, which included only participants who completed both waves, Attention Checks passed at T2 was regressed onto T1 Pay, Pay Multiplier, and the interaction (Total Pay; Tables 4 and 5 ). There were no significant effects of pay on performance in the second wave of the study. The effect of pay on personal reliability, using both personality and general mental ability responses, was tested by regressing Personal Reliability scores on T1 Pay, Pay Multiplier, and the interaction (Total Pay; Tables 4 and 6 , Fig 1 ). There was a significant effect of T1 Pay on Personality Personal Reliability scores, but not General Mental Ability. Generally, as T1 Pay increased, Personality Personal Reliability scores increased. Lastly, the effect of pay on Maximum and Average LongString values was tested (Tables 4 – 6 , Fig 1 ). Total Pay had a significant effect on Maximum LongString at T1, although there was no interpretable pattern based on condition. Pay did not have a significant effect on Average LongString at T1 or T2 and did not have a significant effect on Maximum LongString at T2. To summarize, across the indicators of performance, there was no convincing evidence that initial pay or pay multiplier significantly affected data quality; H1 was not supported.

https://doi.org/10.1371/journal.pone.0245460.g001

https://doi.org/10.1371/journal.pone.0245460.t004

https://doi.org/10.1371/journal.pone.0245460.t005

https://doi.org/10.1371/journal.pone.0245460.t006

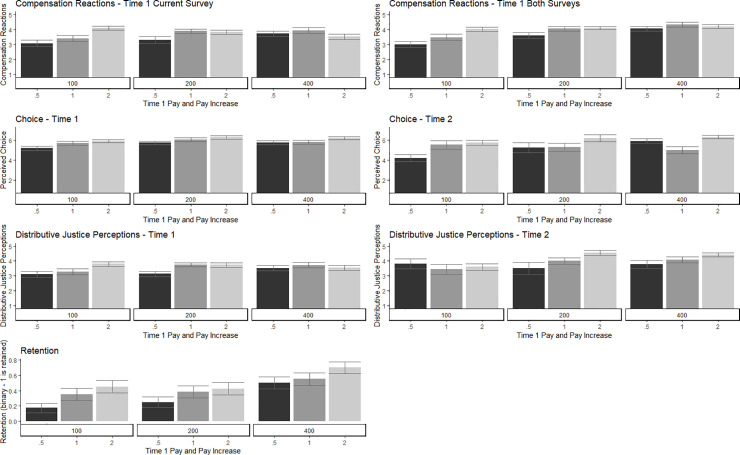

H2 predicted that pay would positively affect worker-participant satisfaction measured by post-test intrinsic motivation, compensation reactions, and distributive justice perceptions. Scores of each T1 satisfaction measure were regressed onto initial Pay, Pay Multiplier, and Total Pay (Tables 7 and 8, Fig 2). There were no significant effects of Pay on Enjoyment or Perceived Effort. There was a significant positive effect of T1 Pay and Pay Multiplier on Perceived Choice. There was also a significant effect of Total Pay on Compensation Reactions at T1. Participants initially receiving $0.50 with no increase (100% multiplier) in the second wave had the lowest compensation reactions, while any participant making a total of at least $3.00 generally scored highest. T1 Pay had a significant effect on T1 Distributive Justice, with those initially receiving $2 scoring highest. A second regression was conducted for participants with scores on each T2 satisfaction measure (Tables 7 and 8, Fig 2). Again, there were no significant effects of pay on Enjoyment or Perceived Effort. Total Pay did, however, significantly predict Perceived Choice at T2. Of those receiving the largest increase in pay (400% multiplier), participants receiving an initial pay of $1 scored the lowest on Perceived Choice, but overall the lowest total pay of $1 resulted in the least perceived choice. Pay did not predict Compensation Reactions at T2. Total Pay positively affected Distributive Justice at T2. H2 was partially supported.
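The pay conditions referred to above can be made concrete with a short arithmetic sketch. It assumes that T2 pay equals T1 pay times the multiplier, so that a 100% multiplier means no increase, and that total pay is the sum of both waves. This reading is consistent with the statement that $0.50 at a 100% multiplier gives the lowest total pay of $1, but the helper `total_pay` is a hypothetical illustration rather than the authors' definition.

```python
def total_pay(t1_pay, multiplier_pct):
    """Total pay over both waves, assuming T2 pay = T1 pay * multiplier.

    Assumption: a 100% multiplier means the T2 payment equals the T1 payment.
    """
    t2_pay = t1_pay * multiplier_pct / 100
    return t1_pay + t2_pay


# Two conditions mentioned in the text: (T1 pay in dollars, multiplier in percent)
for t1, pct in [(0.50, 100), (1.00, 400)]:
    print(f"${t1:.2f} at {pct}% multiplier: total ${total_pay(t1, pct):.2f}")
```

Under this assumption, the $1 initial pay with a 400% multiplier yields $5 in total, well above the $3.00 level associated with the highest compensation reactions.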

https://doi.org/10.1371/journal.pone.0245460.g002

https://doi.org/10.1371/journal.pone.0245460.t007

https://doi.org/10.1371/journal.pone.0245460.t008

H3 predicted a negative effect of pay on attrition. We used a logistic regression model in which the binary outcome of Completion was regressed onto pay (Tables 7 and 9, Fig 2). T1 Pay and Pay Multiplier significantly predicted whether a participant completed the second survey, such that those with higher T1 Pay and a larger Pay Multiplier were more likely to complete the survey at T2. H3 was supported.
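As an illustration of this kind of model, the sketch below fits a logistic regression of completion on T1 pay and pay multiplier using plain gradient ascent. It is not the authors' analysis: the data are invented toy values, the interaction term is omitted for brevity, and the function `fit_logistic` is a hypothetical helper. In practice a statistics package would be used to obtain standard errors and significance tests.

```python
import math


def fit_logistic(rows, outcomes, lr=0.1, epochs=5000):
    """Fit a logistic regression by batch gradient ascent on the mean log-likelihood.

    rows: list of predictor tuples; outcomes: list of 0/1 values.
    Returns [intercept, coefficient_1, coefficient_2, ...].
    """
    weights = [0.0] * (len(rows[0]) + 1)
    for _ in range(epochs):
        gradient = [0.0] * len(weights)
        for x, y in zip(rows, outcomes):
            features = (1.0, *x)  # prepend the intercept term
            linear = sum(w * f for w, f in zip(weights, features))
            prob = 1.0 / (1.0 + math.exp(-linear))
            for j, f in enumerate(features):
                gradient[j] += (y - prob) * f
        weights = [w + lr * g / len(rows) for w, g in zip(weights, gradient)]
    return weights


# Invented toy data (NOT study data): (T1 pay in dollars, multiplier as a ratio)
rows = [(0.5, 1), (0.5, 1), (0.5, 4), (0.5, 4),
        (1.0, 1), (1.0, 1), (1.0, 4), (1.0, 4),
        (2.0, 1), (2.0, 1), (2.0, 4), (2.0, 4)]
completed = [0, 0, 0, 1, 0, 1, 1, 1, 1, 0, 1, 1]  # 1 = returned at T2

coefs = fit_logistic(rows, completed)
print("intercept, b_t1_pay, b_multiplier:", [round(c, 3) for c in coefs])
```

Positive coefficients on both predictors correspond to the pattern reported above: higher initial pay and a larger multiplier are each associated with higher odds of completing the second wave.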

https://doi.org/10.1371/journal.pone.0245460.t009

The scientific community has expressed both excitement and skepticism about the value of MTurk Workers as a population. The purpose of this study was to explore whether pay was a motivator for Workers, specifically in a longitudinal study. Findings showed that pay mattered for satisfaction and attrition but not performance. The norms of MTurk exert significant pressure on Workers to do a “good job” regardless of their satisfaction, because they risk rejection of their work if their performance is not acceptable. Thus, if a Worker submits a task, it is probable that the task will be of high quality regardless of pay level or Worker satisfaction, even at very low pay rates. On the other hand, low pay will likely damage the Requester’s reputation. Even when data quality in an initial HIT is acceptable, decreased satisfaction and increased attrition are likely to jeopardize future data collection efforts (especially for longitudinal studies) and undermine the success of the MTurk platform for researchers. Further, such low pay is unethical. MTurk Workers view themselves as employees who are entitled to fair pay, generally US minimum wage. A Worker’s average compensation was only US$1.38 per hour in 2010 [ 43 ]. Little progress has been made since: recent research estimates that the median hourly wage (taking into account unpaid work such as time spent searching for HITs or working on HITs that are ultimately rejected) is about US$2 per hour, with only four percent of Workers earning more than US$7.25 per hour [ 44 ].

In the special case of multi-wave studies, the scope of the current study, it appears that worker-participants generally do not take the average of the two pay rates in determining fairness during the first wave. With the exception of initial participant satisfaction in the first wave and distributive justice at T2, there were few significant effects of the combination of pay increase and T1 pay on satisfaction, performance, retention or data quality. Rather, participant performance, satisfaction, and retention as well as the quality of their work all depend on the initial T1 pay. Lower initial pay generally resulted in worse outcomes. Participant satisfaction with compensation across both waves was dependent on the pay increase; participants were more satisfied with their compensation when their pay increase was steeper. Pay increase also affected retention and perceptions of justice in the second wave of the study.

There was mixed support that initial pay affects performance/data quality. Generally, data quality was not affected by pay. Personal reliability across personality measures did seem to increase as T1 pay increased, and maximum LongString was affected by total pay. However, data quality and performance did not seem to be affected by initial pay, pay increase, or total pay. Researchers’ concerns that MTurk Workers are only participating for the money may initially be warranted, but when considering longitudinal research other factors may be more important.

As expected, compensation reactions and distributive justice perceptions at T1 are typically related to T1 pay. Pay does not necessarily offer much intrinsic motivation, but participants do report more perceived choice as a function of pay. A decrease in perceived choice in T2 was possibly related to individuals with higher perceived choice in T1 exercising this choice and not returning for the second wave. Paired with the performance findings, this suggests that Workers are in a social context in which they have a certain level of choice over which HITs to accept (based on pay), but that after engaging in an unofficial contract with a Requester, their level of effort and the resulting performance do not change as a function of pay.

In explaining our findings on the relationship between pay and satisfaction, we took an approach similar to that of Judge and colleagues [ 18 ] in their landmark meta-analysis of pay satisfaction. Helson’s [ 45 ] adaptation level theory suggests that individuals judge their current experiences based on a reference point that is adjusted as a function of previous experiences and contextual stimuli. As such, a pay increase may shift this reference point and lose its value over time. Similarly, Lucas et al. [ 46 ] discuss the effect of hedonic leveling, whereby individual well-being stabilizes over time such that positive events affect those whose lives are already satisfying less than those with poor well-being. Based on this rationale, high pay would be expected to be most satisfying for individuals who, like MTurk Workers, have historically been underpaid for their work, as well as for those who receive large pay increases over time after initially lower pay.

T1 pay and T2 pay multiplier significantly predicted retention, but there was not a significant interaction between the two. MTurk Workers may recognize T1 pay as an initial hurdle to participation, but after completing T1 tasks, they renegotiate their psychological contract about the value of participation relative to the time costs associated with returning for T2. Here, a higher pay increase represents a recognition from the Requester that the Workers’ time is valuable.

The current study makes a major contribution to current discussion surrounding ethical treatment of MTurk Workers by applying psychological principles of work motivation, psychological contracts, and pay. The findings are generally applicable to a new kind of virtual work environment similar to traditional labor halls of the industrial revolution. However, the inferences made based on these findings have three limitations which may offer guidance for future research in this area.

As a first limitation, by nature, the current study does not allow us to infer the psychological and motivational characteristics of those MTurk Workers who did not accept the HIT. Though non-respondents are admittedly a blind spot in any social science research, it is particularly important for this study because it indicates a possible preferred threshold for initial pay level. The MTurk platform allows Workers to sort and filter HITs based on pay, thus non-respondents for this study include those who never saw the HIT due to pay and those who outright chose not to complete it after previewing the task and comparing it with pay. The current study does not allow us to disentangle these two scenarios.

Secondly, age was not collected as a demographic variable. There is a lack of evidence to suggest that age is a significant determinant of motivation, especially in gig work [ 11 ]. Age and tenure are highly correlated and when controlling for the latter, age is typically not a determinant of pay fairness perceptions [ 47 ]. Given the fact that MTurk is an informal marketplace and does not represent a typical employee-organization relationship, the effect of tenure is unclear. However, meta-analytic findings from Bal and colleagues suggest age moderates the relationship between psychological contract breach and attitudinal outcomes, such that as age increased the negative relationship between contract breach and trust and organizational commitment weakened [ 48 ]. MTurk Workers are typically older and more age-diverse than other convenience samples used for social science research such as undergraduate students [ 1 ]. This makes age an interesting factor to explore in future research regarding variable expectations of the working environment and reactions to pay, justice perceptions, and psychological contract breaches.

Thirdly, the current study makes inferences about low pay on crowdsourced work platforms such as MTurk, but it does not consider the possible undue influence of excessive pay compared to similar tasks. The Belmont Report, which is concerned with the fair treatment of human subjects, states that “undue influence…occurs through an offer of an excessive, unwarranted, inappropriate or improper reward or other overture in order to obtain compliance” [ 7 ]. Though not particularly relevant for the tasks that participants completed in this study, other research that requires disclosure of socially unacceptable attitudes and behavior should be particularly concerned about undue influence, especially with populations as vulnerable as underpaid MTurk Workers. Accordingly, future research should examine the boundary conditions of an appropriate level of pay for social science research, with a focus on finding a balance between exploitation and undue influence.

Despite differences between worker-participants and voluntary or student research participants, academic Requesters may not view themselves as employers. Nonetheless, they have an equal ethical obligation to all types of participants which should incorporate unique participant motivations and expectations and the psychological contract. We have shown that although the evidence does not suggest pay affects the overall performance of a Worker, it does affect satisfaction and attrition. We hope to demonstrate that MTurk can be viewed and understood as a unique workplace, with unique needs in terms of compensation, and Requester-Worker expectations. Attention toward these characteristics, as with any research participant population, is one of many critical determinants of retention in longitudinal research.


- 9. Johnston H, Land-Kazlauskas C. Organizing on-demand: Representation, voice, and collective bargaining in the gig economy. Geneva: International Labour Organization; 2018.

- 12. Locke EA, Feren DB, McCaleb VM, Shaw KN, Denny AT. The relative effectiveness of four methods of motivating employee performance. In: Duncan KD, Gruenberg MM, Wallis D, editors. Changes in Working Life. New York: Wiley; 1980. pp. 363–388.

- 15. Lawler EE 3rd. Pay and organizational effectiveness: A psychological view. New York: McGraw Hill; 1971.

- 20. Rousseau DM. Psychological contracts in organizations: Understanding written and unwritten agreements. SAGE Publications; 1995. https://doi.org/10.4135/9781452231594

- 23. Leventhal GS. The distribution of rewards and resources in groups and organizations. In: Berkowitz L, Walster W, editors. Advances in experimental social psychology. Vol. 9. New York: Academic Press; 1976. pp. 91–131.

- 42. Fox J. Dummy-Variable Regression. In: Fox J. Applied Regression Analysis and Generalized Linear Models. Los Angeles: SAGE Publications; 2015. pp. 120–142.


Pay for performance, satisfaction and retention in longitudinal crowdsourced research

Elena M Auer, Tara S Behrend, Andrew B Collmus, Richard N Landers, Ahleah F Miles.