A monthly newsletter from the National Institutes of Health, part of the U.S. Department of Health and Human Services

January 2023

Health Capsule

Artificial Intelligence and Medical Research

Artificial intelligence, or AI, has been around for decades. In the past 20 years or so, it’s become a growing part of our lives. Researchers are now drawing on the power of AI to improve medicine and health care in innovative and far-reaching ways. NIH is on the cutting edge supporting these efforts.

At first, computers could simply do calculations based on human input. In AI, they learn to perform certain tasks. Some early forms of AI could play checkers or chess and even defeat human world champions. Others could recognize and convert speech to text.

Today, different forms of AI are being used to improve medical care. Researchers are exploring how AI could be used to sift through test results and image data. AI could then make recommendations to help with treatment decisions.

Some NIH-funded studies are using AI to develop “smart clothing” that can reduce low back pain. This technology could warn the wearer of unsafe body movements. Other studies are seeking ways to better manage blood glucose (or blood sugar) levels using wearable sensors.

Learn more about the different types of AI and their use in medical research.

NIH Office of Communications and Public Liaison Building 31, Room 5B52 Bethesda, MD 20892-2094 [email protected] Tel: 301-451-8224

Editor: Harrison Wein, Ph.D. Managing Editor: Tianna Hicklin, Ph.D. Illustrator: Alan Defibaugh

Attention Editors: Reprint our articles and illustrations in your own publication. Our material is not copyrighted. Please acknowledge NIH News in Health as the source and send us a copy.

For more consumer health news and information, visit health.nih.gov.

For wellness toolkits, visit www.nih.gov/wellnesstoolkits.

Healthcare research & technology advancements

Our team of clinicians, researchers, and engineers are all working together to create new AI and discover opportunities to increase the availability and accuracy of healthcare technologies globally, to realize long-term health technology potential.

Meet Med-PaLM 2, our large language model designed for the medical domain

Developing AI that can answer medical questions accurately has been a challenge for several decades. With Med-PaLM 2, a version of PaLM 2 fine-tuned for the medical domain, we showed state-of-the-art performance in answering medical licensing exam questions. With thorough human evaluation, we’re exploring how Med-PaLM 2 can help healthcare organizations by drafting responses, summarizing documents, and providing insights.

Expanding the power of AI in medicine

We are building and testing AI models with the goal of helping alleviate the global shortages of physicians, as well as the low access to modern imaging and diagnostic tools in certain parts of the world. With improved tech, we hope to increase accessibility and help more patients receive timely and accurate diagnoses and care.

How DeepVariant is improving the accuracy of genomic analysis

Sequencing genomes enables us to identify variants in a person’s DNA that indicate genetic disorders such as an elevated risk for breast cancer. DeepVariant is an open-source variant caller that uses a deep neural network to call genetic variants from next-generation DNA sequencing data.

Healthcare research led by scientists, enhanced by Google

Google Health is providing secure technology to partners that helps doctors, nurses, and other healthcare professionals conduct research and help improve our understanding of health. If you are a researcher interested in working with Google Health to conduct health research, enter your details to be notified when Google Health is available for research partnerships.

Using AI to give doctors a 48-hour head start on life-threatening illness

In research published in Nature, we demonstrated how artificial intelligence could accurately predict acute kidney injuries (AKI) in patients up to 48 hours earlier than they are currently diagnosed. Notoriously difficult to spot, AKI affects up to one in five hospitalized patients in the US and UK, and deterioration can happen quickly.

Deep Learning

Deep learning for electronic health records

In a paper published in npj Digital Medicine, we used deep learning models to make a broad set of predictions relevant to hospitalized patients using de-identified electronic health records, and showed how that model could be used to render an accurate prediction 24 hours after a patient was admitted to the hospital.

Protecting patients from medication errors

Research shows that 2% of hospitalized patients experience serious preventable medication-related incidents that can be life-threatening, cause permanent harm, or result in death. In work published in Clinical Pharmacology and Therapeutics, our best-performing AI model was able to anticipate physicians’ actual prescribing decisions 75% of the time, based on de-identified electronic health records and the doctors’ prescribing records. This is an early step towards testing the hypothesis that machine learning can support clinicians in ways that prevent mistakes and help to keep patients safe.

Discover the latest

Learn more about our most recent developments from Google’s health-related research and initiatives.

Detecting Signs of Disease from External Images of the Eye

Detecting abnormal chest X-rays using deep learning

Improving genomic discovery with machine learning

How AI is advancing science and medicine

Google researchers have been exploring ways technologies could help advance the fields of medicine and science, working with scientists, doctors, and others in the field. In this video, we share a few research projects that have big potential.

We are continuously publishing new research in health

AI has the potential to help save lives by transforming healthcare and medicine through the creation of more personalized, accessible and effective solutions. This is particularly true in more resource challenged communities where there is often a shortage of healthcare workers. In collaboration with healthcare providers, researchers and industry partners, we’ve published research, created open-source tools, and built AI systems that have the potential to positively impact health outcomes for people globally. With bold innovation that's responsibly developed, AI stands to be a powerful force for health equity, improving outcomes for everyone, everywhere.

Explore how teams at Google are catalyzing the adoption of human-centered AI in healthcare.

A suite of AI models designed for the medical domain

Building on innovations from Med-PaLM, the first large language model to reach expert performance on medical licensing exam-style questions, MedLM is our collection of medically tuned large models for commercial applications. MedLM can complete a wide range of complex tasks, from answering medical questions to summarizing dense medical information to deriving insights from unstructured data. It is now available to Cloud customers through Vertex AI.

Mammography

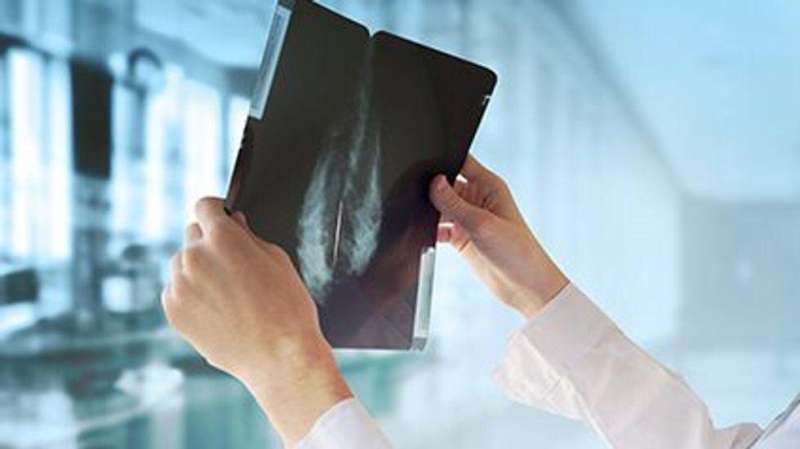

Improving breast cancer screening with AI

Breast cancer is the most common form of cancer globally, and early detection through breast cancer screening can lead to better chances of survival. Working with healthcare partners like Northwestern Medicine, we developed an AI system that integrates into breast cancer screening workflows to help radiologists identify breast cancer earlier and more consistently. Our published research shows that our technology can identify signs of breast cancer as well as trained radiologists. We are now bringing this research to reality by partnering with iCAD to embed this technology in clinical settings.

Expanding access to ultrasound with AI

Ultrasound is a versatile and increasingly more accessible early disease detection tool, providing real-time dynamic views of major organ systems. We are developing AI models to make it easier to interpret important health information from ultrasound images. Notably, we are focusing on maternal ultrasound and partnering with Jacaranda Health in Kenya to improve our AI models. Our goal is to expand access to care in areas where access to trained sonographers is limited.

Open Health Stack

Building blocks for next-generation healthcare apps

Digital mobile health apps are capable of lowering the barrier to equitable healthcare. However, it’s costly and difficult for developers to build tools that share health information across systems and work well in areas that often lack reliable internet connectivity. Open Health Stack is a suite of open-source building blocks built on an interoperable data standard. This suite of components makes it easier for developers to quickly build apps allowing healthcare workers to access the information and insights they need to make informed decisions.

- Review Article

- Published: 15 September 2022

Multimodal biomedical AI

- Julián N. Acosta (ORCID: orcid.org/0000-0001-6497-5454),

- Guido J. Falcone,

- Pranav Rajpurkar (ORCID: orcid.org/0000-0002-8030-3727) &

- Eric J. Topol (ORCID: orcid.org/0000-0002-1478-4729)

Nature Medicine volume 28, pages 1773–1784 (2022)

- Computational biology and bioinformatics

- Health care

The increasing availability of biomedical data from large biobanks, electronic health records, medical imaging, wearable and ambient biosensors, and the lower cost of genome and microbiome sequencing have set the stage for the development of multimodal artificial intelligence solutions that capture the complexity of human health and disease. In this Review, we outline the key applications enabled, along with the technical and analytical challenges. We explore opportunities in personalized medicine, digital clinical trials, remote monitoring and care, pandemic surveillance, digital twin technology and virtual health assistants. Further, we survey the data, modeling and privacy challenges that must be overcome to realize the full potential of multimodal artificial intelligence in health.

While artificial intelligence (AI) tools have transformed several domains (for example, language translation, speech recognition and natural image recognition), medicine has lagged behind. This is partly due to complexity and high dimensionality—in other words, a large number of unique features or signals contained in the data—leading to technical challenges in developing and validating solutions that generalize to diverse populations. However, there is now widespread use of wearable sensors and improved capabilities for data capture, aggregation and analysis, along with decreasing costs of genome sequencing and related ‘omics’ technologies. Collectively, this sets the foundation and need for novel tools that can meaningfully process this wealth of data from multiple sources, and provide value across biomedical discovery, diagnosis, prognosis, treatment and prevention.

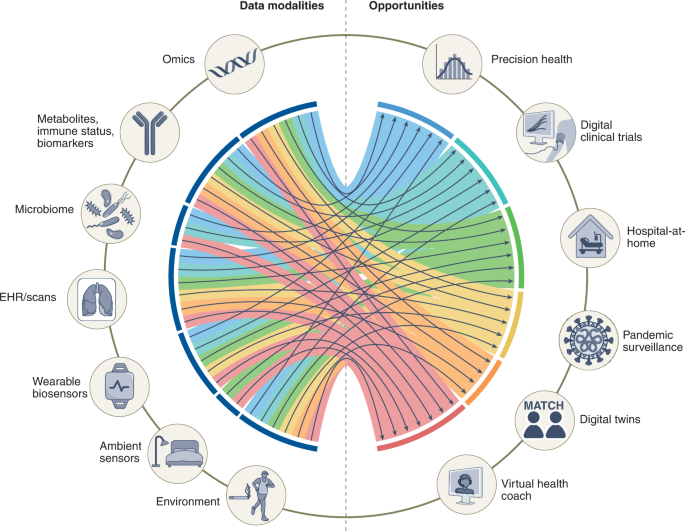

Most of the current applications of AI in medicine have addressed narrowly defined tasks using one data modality, such as a computed tomography (CT) scan or retinal photograph. In contrast, clinicians process data from multiple sources and modalities when diagnosing, making prognostic evaluations and deciding on treatment plans. Furthermore, current AI assessments are typically one-off snapshots, based on a moment of time when the assessment is performed, and therefore not ‘seeing’ health as a continuous state. In theory, however, AI models should be able to use all data sources typically available to clinicians, and even those unavailable to most of them (for example, most clinicians do not have a deep understanding of genomic medicine). The development of multimodal AI models that incorporate data across modalities—including biosensors, genetic, epigenetic, proteomic, microbiome, metabolomic, imaging, text, clinical, social determinants and environmental data—is poised to partially bridge this gap and enable broad applications that include individualized medicine, integrated, real-time pandemic surveillance, digital clinical trials and virtual health coaches (Fig. 1 ). In this Review, we explore the opportunities for such multimodal datasets in healthcare; we then discuss the key challenges and promising strategies for overcoming these. Basic concepts in AI and machine learning will not be discussed here but are reviewed in detail elsewhere 1 , 2 , 3 .

Created with BioRender.com .

Opportunities for leveraging multimodal data

Personalized ‘omics’ for precision health

With the remarkable progress in sequencing over the past two decades, there has been a revolution in the amount of fine-grained biological data that can be obtained using novel technical developments. These are collectively referred to as the ‘omes’ and include the genome, proteome, transcriptome, immunome, epigenome, metabolome and microbiome 4 . These can be analyzed in bulk or at the single-cell level, which is relevant because many medical conditions such as cancer are quite heterogeneous at the tissue level, and much of biology shows cell and tissue specificity.

Each of the omics has shown value in different clinical and research settings individually. Genetic and molecular markers of malignant tumors have been integrated into clinical practice 5 , 6 , with the US Food and Drug Administration (FDA) providing approval for several companion diagnostic devices and nucleic acid-based tests 7 , 8 . As an example, Foundation Medicine and Oncotype IQ (Genomic Health) offer comprehensive genomic profiling tailored to the main classes of genomic alterations across a broad panel of genes, with the final goal of identifying potential therapeutic targets 9 , 10 . Beyond these molecular markers, liquid biopsy samples—easily accessible biological fluids such as blood and urine—are becoming a widely used tool for analysis in precision oncology, with some tests based on circulating tumor cells and circulating tumor DNA already approved by the FDA 11 . Beyond oncology, there has been a remarkable increase in the last 15 years in the availability and sharing of genetic data, which enabled genome-wide association studies 12 and characterization of the genetic architecture of complex human conditions and traits 13 . This has improved our understanding of biological pathways and produced tools such as polygenic risk scores 14 (which capture the overall genetic propensity to complex traits for each individual), and may be useful for risk stratification and individualized treatment, as well as in clinical research to enrich the recruitment of participants most likely to benefit from interventions 15 , 16 .
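As a rough illustration of the polygenic risk score idea mentioned above, the sketch below computes a score as a weighted sum of risk-allele counts. The variant names, effect sizes and genotype are hypothetical placeholders, and real scores involve many more variants plus quality control, imputation and ancestry adjustment that are omitted here.

```python
from typing import Mapping


def polygenic_risk_score(dosages: Mapping[str, float],
                         weights: Mapping[str, float]) -> float:
    """Weighted sum of risk-allele dosages (0, 1 or 2 copies per variant)."""
    # Variants missing from the genotype are simply skipped in this sketch;
    # a real pipeline would usually impute them instead.
    return sum(w * dosages[v] for v, w in weights.items() if v in dosages)


# Hypothetical per-variant effect sizes (for example, log odds ratios from a GWAS).
weights = {"variant_a": 0.5, "variant_b": -0.25, "variant_c": 0.125}
# Hypothetical genotype: number of risk alleles carried at each variant.
person = {"variant_a": 2, "variant_b": 1, "variant_c": 0}

score = polygenic_risk_score(person, weights)
print(score)
```

In practice the raw score is usually compared against a reference population distribution rather than interpreted on its own.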

The integration of these very distinct types of data remains challenging. Yet, overcoming this problem is paramount, as the successful integration of omics data, in addition to other types such as electronic health record (EHR) and imaging data, is expected to increase our understanding of human health even further and allow for precise and individualized preventive, diagnostic and therapeutic strategies 4 . Several approaches have been proposed for multi-omics data integration in precision health contexts 17 . Graph neural networks are one example 18 , 19 ; these are deep learning model architectures that process computational graphs—a well-known data structure comprising nodes (representing concepts or entities) and edges (representing connections or relationships between nodes)—thereby allowing scientists to account for the known interrelated structure of multiple types of omics data, which can improve performance of a model 20 . Another approach is dimensionality reduction, including novel methods such as PHATE and Multiscale PHATE, which can learn abstract representations of biological and clinical data at different levels of granularity, and have been shown to predict clinical outcomes, for example, in people with coronavirus disease 2019 (COVID-19) 21 , 22 .
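To make the node-and-edge description of graph neural networks more concrete, here is a minimal sketch of a single message-passing step, in which each node's features are replaced by the average over itself and its neighbours. It is a simplification: a trained graph neural network would also apply learned weight matrices and nonlinearities, and the node names, features and edges below are hypothetical.

```python
from typing import Dict, List, Tuple


def message_passing_step(features: Dict[str, List[float]],
                         edges: List[Tuple[str, str]]) -> Dict[str, List[float]]:
    """One round of mean aggregation over each node and its neighbours."""
    # Start every neighbourhood with the node itself (a self-loop).
    neighbours = {node: [node] for node in features}
    for a, b in edges:  # edges are treated as undirected
        neighbours[a].append(b)
        neighbours[b].append(a)

    updated = {}
    for node, nbrs in neighbours.items():
        dim = len(features[node])
        updated[node] = [
            sum(features[n][i] for n in nbrs) / len(nbrs) for i in range(dim)
        ]
    return updated


# Hypothetical multi-omics graph: a gene, its protein product and a metabolite.
features = {
    "gene_x": [1.0, 0.0],
    "protein_x": [0.0, 1.0],
    "metabolite_y": [0.0, 0.0],
}
edges = [("gene_x", "protein_x"), ("protein_x", "metabolite_y")]

updated = message_passing_step(features, edges)
print(updated)
```

Stacking several such steps lets information travel further along the graph, which is how known relationships between omics layers can inform each node's representation.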

In the context of cancer, overcoming challenges related to data access, sharing and accurate labeling could potentially lead to impactful tools that leverage the combination of personalized omics data with histopathology, imaging and clinical data to inform clinical trajectories and improve patient outcomes 23 . The integration of histopathological morphology data with transcriptomics data, resulting in spatially resolved transcriptomics 24 , constitutes a novel and promising methodological advancement that will enable finer-grained research into gene expression within a spatial context. Of note, researchers have utilized deep learning to leverage histopathology images to predict spatial gene expression from these images alone, pointing to morphological features in these images not captured by human experts that could potentially enhance the utility and lower the costs of this technology 25 , 26 .

Genetic data are increasingly cost effective, requiring only a once-in-a-lifetime ascertainment, but they also have limited predictive ability on their own 27 . Integrating genomics data with other omics data may capture more dynamic and real-time information on how each particular combination of genetic background and environmental exposures interact to produce the quantifiable continuum of health status. As an example, Kellogg et al. 28 conducted an N -of-1 study performing whole-genome sequencing (WGS) and periodic measurements of other omics layers (transcriptome, proteome, metabolome, antibodies and clinical biomarkers); polygenic risk scoring showed an increased risk of type II diabetes mellitus, and comprehensive profiling of other omics enabled early detection and dissection of signaling network changes during the transition from health to disease.

As the scientific field advances, the cost-effectiveness profile of WGS will become increasingly favorable, facilitating the combination of clinical and biomarker data with already available genetic data to arrive at a rapid diagnosis of conditions that were previously difficult to detect 29 . Ultimately, the capability to develop multimodal AI that includes many layers of omics data will get us to the desired goal of deep phenotyping of an individual; in other words, a true understanding of each person’s biological uniqueness and how that affects health.

Digital clinical trials

Randomized clinical trials are the gold standard study design to investigate causation and provide evidence to support the use of novel diagnostic, prognostic and therapeutic interventions in clinical medicine. Unfortunately, planning and executing a high-quality clinical trial is not only time consuming (usually taking many years to recruit enough participants and follow them in time) but also financially very costly 30 , 31 . In addition, geographic, sociocultural and economic disparities in access to enrollment have led to a remarkable underrepresentation of several groups in these studies. This limits the generalizability of results and leads to a scenario whereby widespread underrepresentation in biomedical research further perpetuates existing disparities 32 . Digitizing clinical trials could provide an unprecedented opportunity to overcome these limitations, by reducing barriers to participant enrollment and retention, promoting engagement and optimizing trial measurements and interventions. At the same time, the use of digital technologies can enhance the granularity of the information obtained from participants, thereby increasing the value of these studies 33 .

Data from wearable technology (including heart rate, sleep, physical activity, electrocardiography, oxygen saturation and glucose monitoring) and smartphone-enabled self-reported questionnaires can be useful for monitoring clinical trial patients, identifying adverse events or ascertaining trial outcomes 34 . Additionally, a recent study highlighted the potential of data from wearable sensors to predict laboratory results 35 . Consequently, the number of studies using digital products has been growing rapidly in the last few years, with a compound annual growth rate of around 34% 36 . Most of these studies utilize data from a single wearable device. One pioneering trial used a ‘band-aid’ patch sensor for detecting atrial fibrillation; the sensor was mailed to participants who were enrolled remotely, without the use of any clinical sites, and set the foundation for digitized clinical trials 37 . Many remote, site-less trials using wearables were conducted during the COVID-19 pandemic to detect coronavirus 38 .
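For readers unfamiliar with the compound annual growth rate quoted above, the sketch below shows how the figure is defined and what a 34% rate implies over a few years. The starting count of 100 studies is a hypothetical number chosen only to make the arithmetic easy to follow.

```python
def compound_annual_growth_rate(start: float, end: float, years: float) -> float:
    """Constant yearly growth rate that takes `start` to `end` in `years`."""
    return (end / start) ** (1.0 / years) - 1.0


def project(start: float, rate: float, years: float) -> float:
    """Value after compounding `rate` once per year for `years` years."""
    return start * (1.0 + rate) ** years


# Hypothetical baseline of 100 studies growing at 34% per year.
after_three_years = project(100, 0.34, 3)
print(round(after_three_years, 1))

# Recover the rate from the start and end values.
print(round(compound_annual_growth_rate(100, after_three_years, 3), 2))
```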

Effectively combining data from different wearable sensors with clinical data remains a challenge and an opportunity. Digital clinical trials could leverage multiple sources of participants’ data to enable automatic phenotyping and subgrouping 34 , which could be useful for adaptive clinical trial designs that use ongoing results to modify the trial in real time 39 , 40 . In the future, we expect that the increased availability of these data and novel multimodal learning techniques will improve our capabilities in digital clinical trials. Of note, recent work in a time-series analysis by Google has demonstrated the promise of attention-based model architectures to combine both static and time-dependent inputs to achieve interpretable time-series forecasting. As a hypothetical example, these models could understand whether to focus on static features such as genetic background, known time-varying features such as time of the day or observed features such as current glycemic levels, to make predictions on future risk of hypoglycemia or hyperglycemia 41 . Graph neural networks have been recently proposed to overcome the problem of missing or irregularly sampled data from multiple health sensors, by leveraging information from the interconnection between these 42 .
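The idea of a model deciding which group of inputs to focus on can be sketched with a softmax over relevance scores, which turns the scores into weights that sum to one. This is only an illustration of the weighting step, not the architecture from the cited work: in a trained attention model the scores are learned from data, whereas the scores and per-group risk estimates below are fixed by hand and hypothetical.

```python
import math
from typing import Dict


def softmax(scores: Dict[str, float]) -> Dict[str, float]:
    """Convert raw relevance scores into weights that sum to one."""
    peak = max(scores.values())  # subtract the maximum for numerical stability
    exps = {name: math.exp(value - peak) for name, value in scores.items()}
    total = sum(exps.values())
    return {name: value / total for name, value in exps.items()}


# Hypothetical relevance scores for three kinds of input.
scores = {
    "genetic_background": 0.0,      # static feature
    "time_of_day": 0.0,             # known time-varying feature
    "current_glucose": math.log(2.0),  # observed feature
}
weights = softmax(scores)

# Hypothetical per-group estimates of hypoglycemia risk, blended by the weights.
group_risk = {"genetic_background": 0.2, "time_of_day": 0.4, "current_glucose": 0.8}
blended_risk = sum(weights[name] * group_risk[name] for name in weights)

print(weights)
print(blended_risk)
```

Because the weights are explicit, they can be inspected afterwards to see which input group drove a given prediction, which is the sense in which such models are described as interpretable.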

Patient recruitment and retention in clinical trials are essential but remain a challenge. In this setting, there is an increasing interest in the utilization of synthetic control methods (that is, using external data to create controls). Although synthetic control trials are still relatively novel 43 , the FDA has already approved medications based on historical controls 44 and has developed a framework for the utilization of real-world evidence 45 . AI models utilizing data from different modalities can potentially help identify or generate the most optimal synthetic controls 46 , 47 .
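One simple way to picture the use of external data to create controls is nearest-neighbour matching, where each trial participant is paired with the most similar record from an external pool. The sketch below uses that swapped-in technique purely for illustration; it is not the method of the cited studies, and the covariates (age in years, hemoglobin A1c in percent) and records are hypothetical.

```python
import math
from typing import Dict, Tuple

Covariates = Tuple[float, float]  # hypothetical: (age in years, HbA1c in %)


def nearest_external_control(participant: Covariates,
                             external_pool: Dict[str, Covariates]) -> str:
    """Return the id of the external record closest to the participant."""
    # Plain Euclidean distance on raw values; a real analysis would
    # standardize covariates and check balance after matching.
    return min(external_pool,
               key=lambda rid: math.dist(participant, external_pool[rid]))


participant = (64.0, 7.5)
external_pool = {
    "ext_1": (30.0, 5.5),
    "ext_2": (65.0, 7.0),
    "ext_3": (70.0, 9.5),
}

match = nearest_external_control(participant, external_pool)
print(match)
```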

Remote monitoring: the ‘hospital-at-home’

Recent progress with biosensors, continuous monitoring and analytics raises the possibility of simulating the hospital setting in a person’s home. This offers the promise of marked reduction of cost, less requirement for healthcare workforce, avoidance of nosocomial infections and medical errors that occur in medical facilities, along with the comfort, convenience and emotional support of being with family members 48 .

In this context, wearable sensors have a crucial role in remote patient monitoring. The availability of relatively affordable noninvasive devices (smartwatches or bands) that can accurately measure several physiological metrics is increasing rapidly 49 , 50 . Combining these data with those derived from EHRs—using standards such as the Fast Healthcare Interoperability Resources, a global industry standard for exchanging healthcare data 51 —to query relevant information about a patient’s underlying disease risk could create a more personalized remote monitoring experience for patients and caregivers. Ambient wireless sensors offer an additional opportunity to collect valuable data. Ambient sensors are devices located within the environment (for example, a room, a wall or a mirror) ranging from video cameras and microphones to depth cameras and radio signals. These ambient sensors can potentially improve remote care systems at home and in healthcare institutions 52 .
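As a small illustration of what exchanging data through the Fast Healthcare Interoperability Resources standard looks like, the sketch below parses a single Observation resource and extracts the fields a remote monitoring tool might display. The resource content (patient reference, timestamp, value) is a made-up example, and the helper name `summarize_observation` is hypothetical; only a handful of the fields defined by the standard are used.

```python
import json

# Hypothetical FHIR Observation for a heart-rate reading.
OBSERVATION_JSON = """
{
  "resourceType": "Observation",
  "status": "final",
  "code": {
    "coding": [
      {"system": "http://loinc.org", "code": "8867-4", "display": "Heart rate"}
    ]
  },
  "subject": {"reference": "Patient/example"},
  "effectiveDateTime": "2022-09-15T08:00:00Z",
  "valueQuantity": {"value": 72, "unit": "beats/minute"}
}
"""


def summarize_observation(resource: dict) -> dict:
    """Pull out the fields a monitoring dashboard might need."""
    if resource.get("resourceType") != "Observation":
        raise ValueError("expected a FHIR Observation resource")
    coding = resource["code"]["coding"][0]
    quantity = resource["valueQuantity"]
    return {
        "patient": resource["subject"]["reference"],
        "measurement": coding.get("display", coding["code"]),
        "value": quantity["value"],
        "unit": quantity["unit"],
        "time": resource["effectiveDateTime"],
    }


summary = summarize_observation(json.loads(OBSERVATION_JSON))
print(summary)
```

Because wearable readings and EHR entries can both be expressed in this shape, the same parsing code can serve either source, which is the practical benefit of a shared standard.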

The integration of data from these multiple modalities and sensors represents a promising opportunity to improve remote patient monitoring, and some studies have already demonstrated the potential of multimodal data in these scenarios. For example, the combination of ambient sensors (such as depth cameras and microphones) with wearables data (for example, accelerometers, which measure physical activity) has the potential to improve the reliability of fall detection systems while keeping a low false alarm rate 53 , and to improve gait analysis performance 54 . Early detection of impairments in physical functional status via activities of daily living such as bathing, dressing and eating is remarkably important to provide timely clinical care, and the utilization of multimodal data from wearable devices and ambient sensors can potentially help with accurate detection and classification of difficulties in these activities 55 .
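The fall detection example can be sketched as late fusion: each modality produces its own probability that a fall occurred, and the two are combined before an alarm is raised. The weighting, threshold and probabilities below are hypothetical values chosen for illustration; the cited systems may fuse modalities quite differently.

```python
def fuse_fall_probabilities(p_wearable: float, p_ambient: float,
                            w_wearable: float = 0.5) -> float:
    """Weighted average of the per-modality fall probabilities."""
    return w_wearable * p_wearable + (1.0 - w_wearable) * p_ambient


def is_fall(p_wearable: float, p_ambient: float, threshold: float = 0.7) -> bool:
    """Raise an alarm only when the fused probability clears the threshold."""
    return fuse_fall_probabilities(p_wearable, p_ambient) >= threshold


# The accelerometer spikes but the depth camera sees nothing unusual,
# for example because the watch was dropped: no alarm.
print(is_fall(p_wearable=0.9, p_ambient=0.2))

# Both modalities agree that a fall is likely: alarm.
print(is_fall(p_wearable=0.9, p_ambient=0.8))
```

Requiring agreement between modalities is one way a combined system can keep the false alarm rate low without discarding either signal.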

Beyond management of chronic or degenerative disorders, multimodal remote patient monitoring could also be useful in the setting of acute disease. A recent program conducted by the Mayo Clinic showcased the feasibility and safety of remote monitoring in people with COVID-19 (ref. 56 ). Remote patient monitoring for hospital-at-home applications—not yet validated—requires randomized trials of multimodal AI-based remote monitoring versus hospital admission to show no impairment of safety. We need to be able to predict impending deterioration and have a system to intervene, and this has not been achieved yet.

Pandemic surveillance and outbreak detection

The current COVID-19 pandemic has highlighted the need for effective infectious disease surveillance at national and state levels 57 , with some countries successfully integrating multimodal data from migration maps, mobile phone utilization and health delivery data to forecast the spread of the outbreak and identify potential cases 58 , 59 .

One study has also demonstrated the utilization of resting heart rate and sleep minutes tracked using wearable devices to improve surveillance of influenza-like illness in the USA 60 . This initial success evolved into the Digital Engagement and Tracking for Early Control and Treatment (DETECT) Health study, launched by the Scripps Research Translational Institute as an app-based research program aiming to analyze a diverse set of data from wearables to allow for rapid detection of the emergence of influenza, coronavirus and other fast-spreading viral illnesses. A follow-up study from this program showed that jointly considering participant self-reported symptoms and sensor metrics improved performance relative to either modality alone, reaching an area under the receiver operating characteristic curve value of 0.80 (95% confidence interval 0.73–0.86) for classifying COVID-19-positive versus COVID-19-negative status 61 .
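The area under the receiver operating curve reported above can be read as the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case. The sketch below computes it directly from that definition on a tiny toy dataset; the labels and scores are invented to show the mechanics of comparing single modalities with a simple average of the two, and say nothing about the actual study data.

```python
from typing import Sequence


def auroc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Fraction of positive/negative pairs ranked correctly (ties count half)."""
    positives = [s for label, s in zip(labels, scores) if label == 1]
    negatives = [s for label, s in zip(labels, scores) if label == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Toy data: 1 = test-positive, 0 = test-negative (hypothetical participants).
labels = [1, 1, 1, 0, 0, 0]
symptom_scores = [0.9, 0.4, 0.6, 0.5, 0.3, 0.2]
sensor_scores = [0.3, 0.8, 0.7, 0.2, 0.6, 0.1]
combined = [(a + b) / 2 for a, b in zip(symptom_scores, sensor_scores)]

for name, scores in [("symptoms", symptom_scores),
                     ("sensors", sensor_scores),
                     ("combined", combined)]:
    print(name, round(auroc(labels, scores), 3))
```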

Several other use cases for multimodal AI models in pandemic preparedness and response have been tested with promising results, but further validation and replication of these results are needed 62 , 63 .

Digital twins

We currently rely on clinical trials as the best evidence to identify successful interventions. Interventions that help 10 of 100 people may be considered successful, but these are applied to the other 90 without proven or likely benefit. A complementary approach known as ‘digital twins’ can fill the knowledge gaps by leveraging large amounts of data to model and predict with high precision how a certain therapeutic intervention would benefit or harm a particular patient.
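The 10-in-100 example can be restated as a number needed to treat. The sketch below assumes, for simplicity, that the 10 people helped represent the absolute benefit of the intervention over no treatment; the function name is a hypothetical helper.

```python
def number_needed_to_treat(helped: int, treated: int) -> float:
    """People who must be treated for one of them to benefit."""
    if helped <= 0:
        raise ValueError("no one benefited, so the value is undefined")
    return treated / helped


treated, helped = 100, 10
print(number_needed_to_treat(helped, treated))  # people treated per person helped
print(treated - helped)                         # people treated without proven benefit
```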

Digital twin technology is a concept borrowed from engineering that uses computational models of complex systems (for example, cities, airplanes or patients) to develop and test different strategies or approaches more quickly and economically than in real-life scenarios 64 . In healthcare, digital twins are a promising tool for drug target discovery 65 , 66 .

Integrating data from multiple sources to develop digital twin models using AI tools has already been proposed in precision oncology and cardiovascular health 67 , 68 . An open-source modular framework has also been proposed for the development of medical digital twin models 69 . From a commercial point of view, Unlearn.AI has developed and tested digital twin models that leverage diverse sets of clinical data to enhance clinical trials for Alzheimer’s disease and multiple sclerosis 70 , 71 .

Considering the complexity of human organisms, the development of accurate and useful digital twin technology in medicine will depend on the ability to collect large and diverse multimodal data ranging from omics data and physiological sensors to clinical and sociodemographic data. This will likely require large collaborations across health systems, research groups and industry, such as the Swedish Digital Twins Consortium 65 , 72 . The American Society of Clinical Oncology, through its subsidiary called CancerLinQ, developed a platform that enables researchers to utilize a wealth of data from patients with cancer to help guide optimal treatment and improve outcomes 73 . The development of AI models capable of effectively learning from all these data modalities together, to make real-time predictions, is paramount.

Virtual health assistant

More than one-third of US consumers have acquired a smart speaker in the last few years. However, virtual health assistants—digital AI-enabled coaches that can advise people on their health needs—have not been developed widely to date, and those currently in the market often target a particular condition or use case. In addition, a recent review of health-focused conversational agent apps found that most of these rely on rule-based approaches and predefined app-led dialog 74 .

One of the most popular, although not multimodal AI-based, current applications of these narrowly focused virtual health assistants is in diabetes care. Virta Health, Accolade and Onduo by Verily (Alphabet) have all developed applications that aim to improve diabetes control, with some demonstrating improvement in hemoglobin A1c levels in individuals who followed the programs 75 . Many of these companies have expanded or are in the process of expanding to other use cases such as hypertension control and weight loss. Other examples of virtual health coaches have tackled common conditions such as migraine, asthma and chronic obstructive pulmonary disease, among others 76 . Unfortunately, most of these applications have been tested only in small observational studies, and much more research, including randomized clinical trials, is needed to evaluate their benefits.

Looking into the future, the successful integration of multiple data sources in AI models will facilitate the development of broadly focused personalized virtual health assistants 77 . These virtual health assistants can leverage individualized profiles based on genome sequencing, other omics layers, continuous monitoring of blood biomarkers and metabolites, biosensors and other relevant biomedical data—to promote behavior change, answer health-related questions, triage symptoms or communicate with healthcare providers when appropriate. Importantly, these AI-enabled medical coaches will need to demonstrate beneficial effects on clinical outcomes via randomized trials to achieve widespread acceptance in the medical field. As most of these applications are focused on improving health choices, they will need to provide evidence of influencing health behavior, which represents the ultimate pathway for the successful translation of most interventions 78 .

We still have a long way to go to achieve the full potential of AI and multimodal data integration into virtual health assistants, including the technical challenges, data-related challenges and privacy challenges discussed below. Given the rapid advances in conversational AI 79 , coupled with the development of increasingly sophisticated multimodal learning approaches, we expect future digital health applications to embrace the potential of AI to deliver accurate and personalized health coaching.

Multimodal data collection

The first requirement for the successful development of multimodal data-enabled applications is the collection, curation and harmonization of well-phenotyped and large annotated datasets, as no amount of technical sophistication can derive information not present in the data 80 . In the last 20 years, many national and international studies have collected multimodal data with the ultimate goal of accelerating precision health (Table 1 ). In the UK, the UK Biobank initiated enrollment in 2006, reaching a final participant count of over 500,000, and plans to follow participants for at least 30 years after enrollment 81 . This large biobank has collected multiple layers of data from participants, including sociodemographic and lifestyle information, physical measurements, biological samples, 12-lead electrocardiograms and EHR data 82 . Further, almost all participants underwent genome-wide array genotyping and, more recently, proteome, whole-exome sequencing 83 and WGS 84 . A subset of individuals also underwent brain magnetic resonance imaging (MRI), cardiac MRI, abdominal MRI, carotid ultrasound and dual-energy X-ray absorptiometry, including repeat imaging across at least two time points 85 .

Similar initiatives have been conducted in other countries, such as the China Kadoorie Biobank 86 and Biobank Japan 87 . In the USA, the Department of Veterans Affairs launched the Million Veteran Program 88 in 2011, aiming to enroll 1 million veterans to contribute to scientific discovery. Two important efforts funded by the National Institutes of Health (NIH) include the Trans-Omics for Precision Medicine (TOPMed) program and the All of Us Research Program. TOPMed collects WGS with the aim of integrating this genetic information with other omics data 89 . The All of Us Research Program 90 constitutes another novel and ambitious initiative by the NIH that has enrolled about 400,000 diverse participants of the 1 million people planned across the USA, and is focused on enrolling individuals from broadly defined groups that are underrepresented in biomedical research, which is especially needed in medical AI 91 , 92 .

Besides these large national initiatives, independent institutional and multi-institutional efforts are also building deep, multimodal data resources in smaller numbers of people. The Project Baseline Health Study, funded by Verily and managed in collaboration with Stanford University, Duke University and the California Health and Longevity Institute, aims to enroll at least 10,000 individuals, starting with an initial 2,500 participants from whom a broad range of multimodal data are collected, with the aim of evolving into a combined virtual-in-person research effort 93 . As another example, the American Gut Project collects microbiome data from self-selected participants across several countries 94 . These participants also complete surveys about general health status, disease history, lifestyle data and food frequency. The Medical Information Mart for Intensive Care (MIMIC) database 95 , organized by the Massachusetts Institute of Technology, represents another example of multidimensional data collection and harmonization. Currently in its fourth version, MIMIC is an open-source database that contains de-identified data from thousands of patients who were admitted to the critical care units of the Beth Israel Deaconess Medical Center, including demographic information, EHR data (for example, diagnosis codes, medications ordered and administered, laboratory data and physiological data such as blood pressure or intracranial pressure values), imaging data (for example, chest radiographs) 96 and, in some versions, natural language text such as radiology reports and medical notes. This granularity of data is particularly useful for the data science and machine learning community, and MIMIC has become one of the benchmark datasets for AI models aiming to predict the development of clinical events such as kidney failure, or outcomes such as survival or readmissions 97 , 98 .

The availability of multimodal data in these datasets may help achieve better diagnostic performance across a range of different tasks. As an example, recent work has demonstrated that the combination of imaging and EHR data outperforms each of these modalities alone to identify pulmonary embolism 99 , and to differentiate between common causes of acute respiratory failure, such as heart failure, pneumonia or chronic obstructive pulmonary disease 100 . The Michigan Predictive Activity & Clinical Trajectories in Health (MIPACT) study constitutes another example, with participants contributing data from wearables, physiological data (blood pressure), clinical information (EHR and surveys) and laboratory data 101 . The North American Prodrome Longitudinal Study is yet another example. This multisite program recruited individuals, and collected demographic, clinical and blood biomarker data with the goal of understanding the prodromal stages of psychosis 102 , 103 . Other studies focusing on psychiatric disorders such as the Personalised Prognostic Tools for Early Psychosis Management also collected several types of data and have already empowered the development of multimodal machine learning workflows 104 .

Technical challenges

Implementation and modeling challenges.

Health data are inherently multimodal. Our health status encompasses many domains (social, biological and environmental) that influence well-being in complex ways. Additionally, each of these domains is hierarchically organized, with data being abstracted from the big picture macro level (for example, disease presence or absence) to the in-depth micro level (for example, biomarkers, proteomics and genomics). Furthermore, current healthcare systems add to this multimodal approach by generating data in multiple ways: radiology and pathology images are, for example, paired with natural language data from their respective reports, while disease states are also documented in natural language and tabular data in the EHR.

Multimodal machine learning (also referred to as multimodal learning) is a subfield of machine learning that aims to develop and train models that can leverage multiple different types of data and learn to relate these multiple modalities or combine them, with the goal of improving prediction performance 105 . A promising approach is to learn accurate representations that are similar for different modalities (for example, a picture of an apple should be represented similarly to the word ‘apple’). In early 2021, OpenAI released an architecture termed Contrastive Language Image Pretraining (CLIP), which, when trained on millions of image–text pairs, matched the performance of competitive, fully supervised models without fine-tuning 106 . CLIP was inspired by a similar approach developed in the medical imaging domain termed Contrastive Visual Representation Learning from Text (ConVIRT) 107 . With ConVIRT, an image encoder and a text encoder are trained to generate image and text representations by maximizing the similarity of correctly paired image and text examples and minimizing the similarity of incorrectly paired examples—this is called contrastive learning. This approach for paired image–text co-learning has been used recently to learn from chest X-rays and their associated text reports, outperforming other self-supervised and fully supervised methods 108 . Other architectures have also been developed to integrate multimodal data from images, audio and text, such as the Video-Audio-Text Transformer, which uses videos to obtain paired multimodal image, text and audio and to train accurate multimodal representations able to generalize with good performance on many tasks—such as recognizing actions in videos, classifying audio events, classifying images, and selecting the most adequate video for an input text 109 .
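The contrastive objective described above can be sketched in a few lines. This is a minimal illustration, not the published ConVIRT or CLIP implementation: the function names, the temperature value and the toy two-dimensional embeddings are all assumptions, and the two directions (image-to-text and text-to-image) are simply averaged, whereas the original methods may weight them differently.

```python
import math

def l2_normalize(vector):
    # Scale a vector to unit length so dot products become cosine similarities.
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]

def cross_entropy_row(logits, target):
    # Negative log-probability of the target index under a softmax over logits.
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[target]

def contrastive_loss(image_embs, text_embs, temperature=0.1):
    # Pair k of image_embs is assumed to belong with pair k of text_embs.
    imgs = [l2_normalize(v) for v in image_embs]
    txts = [l2_normalize(v) for v in text_embs]
    n = len(imgs)
    sim = [[sum(a * b for a, b in zip(i, t)) / temperature for t in txts] for i in imgs]
    # Image-to-text: each image should pick out its own report among all reports.
    img_to_txt = sum(cross_entropy_row(sim[k], k) for k in range(n)) / n
    # Text-to-image: each report should pick out its own image among all images.
    txt_to_img = sum(cross_entropy_row([sim[r][k] for r in range(n)], k) for k in range(n)) / n
    return 0.5 * (img_to_txt + txt_to_img)

# Toy embeddings (hypothetical): correctly paired versus deliberately mismatched.
aligned_images = [[1.0, 0.0], [0.0, 1.0]]
aligned_texts = [[0.9, 0.1], [0.1, 0.9]]
shuffled_texts = [aligned_texts[1], aligned_texts[0]]
loss_aligned = contrastive_loss(aligned_images, aligned_texts)
loss_shuffled = contrastive_loss(aligned_images, shuffled_texts)
```

In this toy setting the loss is small when each image embedding sits close to its own text embedding and large when the pairs are swapped, which is the signal the encoders would be trained to minimize.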

Another desirable feature for multimodal learning frameworks is the ability to learn from different modalities without the need for different model architectures. Ideally, a unified multimodal model would incorporate different types of data (images, physiological sensor data and structured and unstructured text data, among others), codify concepts contained in these different types of data in a flexible and sparse way (that is, a unique task activates only a small part of the network, with the model learning which parts of the network should handle each unique task) 110 , produce aligned representations for similar concepts across modalities (for example, the picture of a dog, and the word ‘dog’ should produce similar internal representations), and provide any arbitrary type of output as required by the task 111 .

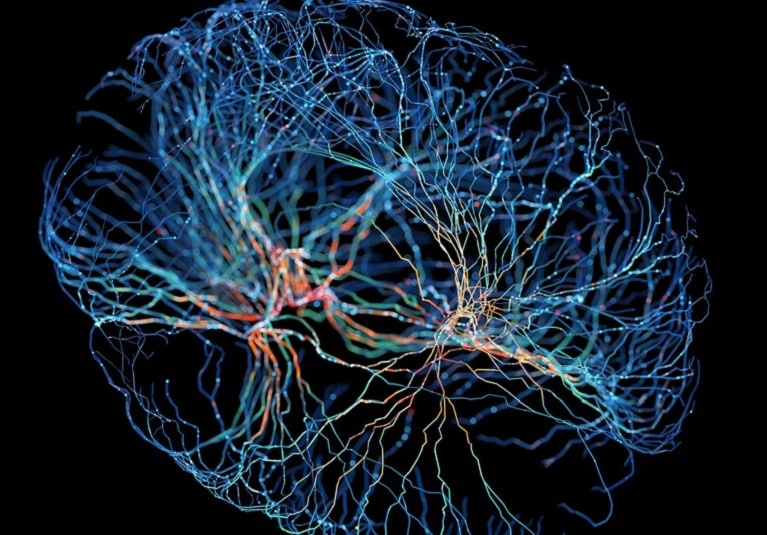

In the last few years, there has been a transition from architectures with strong modality-specific biases—such as convolutional neural networks for images, or recurrent neural networks for text and physiological signals—to a relatively novel architecture called the Transformer, which has demonstrated good performance across a wide variety of input and output modalities and tasks 112 . The key strategy behind transformers is to allow neural networks—which are artificial learning models that loosely mimic the behavior of the human brain—to dynamically pay attention to different parts of the input when processing and ultimately making decisions. Originally proposed for natural language processing, thus providing a way to capture the context of each word by attending to other words of the input sentence, this architecture has been successfully extended to other modalities 113 .
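To make the idea of "paying attention to different parts of the input" concrete, the following is a minimal sketch of scaled dot-product self-attention. It is a simplification under stated assumptions: the learned query, key and value projections of a real Transformer are omitted, and the token vectors are hypothetical.

```python
import math

def softmax(scores):
    # Convert raw scores into positive weights that sum to 1.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    # For each query, weight every value by the similarity of the query to its key.
    dim = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim) for k in keys]
        w = softmax(scores)
        weights.append(w)
        outputs.append([sum(wj * v[c] for wj, v in zip(w, values))
                        for c in range(len(values[0]))])
    return outputs, weights

# Self-attention: the same token vectors act as queries, keys and values.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
```

Each row of `weights` describes how strongly one token attends to every token in the sequence, so the representation of a token becomes a context-dependent mixture of the others.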

While each input token (that is, the smallest unit for processing) in natural language processing corresponds to a specific word, other modalities have generally used segments of images or video clips as tokens 114 . Transformer architectures allow us to unify the framework for learning across modalities but may still need modality-specific tokenization and encoding. A recent study by Meta AI (Meta Platforms) proposed a unified framework for self-supervised learning that is independent of the modality of interest, but still requires modality-specific preprocessing and training 115 . Benchmarks for self-supervised multimodal learning allow us to measure the progress of methods across modalities: for instance, the Domain-Agnostic Benchmark for Self-supervised learning (DABS) is a recently proposed benchmark that includes chest X-rays, sensor data and natural image and text data 116 .
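As an illustration of modality-specific tokenization, the sketch below splits a small image into non-overlapping patches and flattens each patch into one token. The function name `patchify` and the 4×4 toy image are assumptions for illustration; real systems typically follow this step with a learned embedding of each patch.

```python
def patchify(image, patch_size):
    # Split a 2D image (list of rows) into flattened, non-overlapping square patches.
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    tokens = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [image[r][c]
                     for r in range(top, top + patch_size)
                     for c in range(left, left + patch_size)]
            tokens.append(patch)
    return tokens

# A 4x4 toy image whose pixel values are simply their row-major index.
image = [[r * 4 + c for c in range(4)] for r in range(4)]
tokens = patchify(image, 2)
```

Here a 4×4 image becomes a sequence of four tokens of length four, which can then be processed like a sequence of word tokens.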

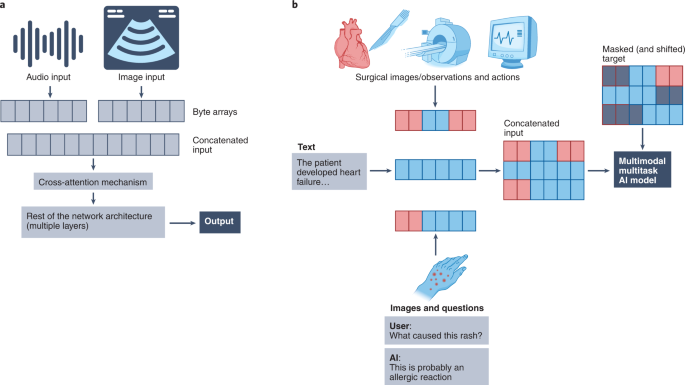

Recent advances from DeepMind (Alphabet), including Perceiver 117 and Perceiver IO 118 , provide a framework for learning across modalities with the same backbone architecture. Importantly, the inputs to the Perceiver architectures are modality-agnostic byte arrays, which are condensed through an attention bottleneck (that is, an architecture feature that restricts the flow of information, forcing models to condense the most relevant information) to avoid size-dependent large memory costs (Fig. 2a ). After processing these inputs, the Perceiver can then feed the representations to a final classification layer to obtain the probability of each output category, while the Perceiver IO can decode these representations directly into arbitrary outputs such as pixels, raw audio and classification labels, through a query vector that specifies the task of interest; for example, the model could output the predicted imaging appearance of an evolving brain tumor, in addition to the probability of successful treatment response.
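The attention bottleneck can be illustrated with a small cross-attention sketch in which a fixed number of latent vectors query an input of arbitrary length. This is a loose illustration rather than the Perceiver architecture itself: learned projections and the subsequent latent self-attention layers are omitted, and the latent vectors and the `toy_input` helper are hypothetical.

```python
import math

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attend(latents, inputs):
    # Each latent vector queries every input element; the output has one row per latent.
    dim = len(latents[0])
    condensed = []
    for latent in latents:
        scores = [sum(a * b for a, b in zip(latent, x)) / math.sqrt(dim) for x in inputs]
        weights = softmax(scores)
        condensed.append([sum(w * x[c] for w, x in zip(weights, inputs))
                          for c in range(dim)])
    return condensed

def toy_input(length, dim=4):
    # Hypothetical stand-in for an input array of any modality.
    return [[math.sin(i + c) for c in range(dim)] for i in range(length)]

latents = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]
short_summary = cross_attend(latents, toy_input(5))
long_summary = cross_attend(latents, toy_input(500))
```

Whether the input has 5 or 500 elements, the condensed representation has the same fixed size, and the number of attention scores grows linearly with input length (latents × inputs) rather than quadratically.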

a , Simplified schematic of the Perceiver-like architecture: images, text and other inputs are converted agnostically into byte arrays that are concatenated (that is, fused) and passed through cross-attention mechanisms (that is, a mechanism to project or condense information into a fixed-dimensional representation) to feed information into the network. b , Simplified illustration of the conceptual framework behind the multimodal multitask architectures (for example, Gato), within a hypothetical medical example: distinct input modalities ranging from images, text and actions are tokenized and fed to the network as input sequences, with masked shifted versions of these sequences fed as targets (that is, the network only sees information from previous time points to predict future actions, only previous words to predict the next or only the image to predict text); the network then learns to handle multiple modalities and tasks.

A promising aspect of transformers is the ability to learn meaningful representations with unlabeled data, which is paramount in biomedical AI given the limited and expensive resources needed to obtain high-quality labels. Many of the approaches mentioned above require aligned data from different modalities (for example, image–text pairs). A study from DeepMind, in fact, suggested that curating higher-quality image–text datasets may be more important than generating large single-modality datasets, and other aspects of algorithm development and training 119 . However, these data may not be readily available in the setting of biomedical AI. One possible solution to this problem is to leverage available data from one modality to help learning with another—a multimodal learning task termed ‘co-learning’ 105 . As an example, some studies suggest that transformers pretrained on unlabeled language data might be able to generalize well to a broad range of other tasks 120 . In medicine, a model architecture called ‘CycleGANs’, trained on unpaired contrast and non-contrast CT scans, has been used to generate synthetic non-contrast or contrast CT scans 121 , with this approach showing improvements, for instance, in COVID-19 diagnosis 122 . While promising, this approach has not been tested widely in the biomedical setting and requires further exploration.

Another important modeling challenge relates to the exceedingly high number of dimensions contained in multimodal health data, collectively termed ‘the curse of dimensionality’. As the number of dimensions (that is, variables or features contained in a dataset) increases, the number of people carrying some specific combinations of these features decreases (or for some combinations, even disappears), leading to ‘dataset blind spots’, that is, portions of the feature space (the set of all possible combinations of features or variables) that do not have any observation. These dataset blind spots can hurt model performance in terms of real-life prediction and should therefore be considered early in the model development and evaluation process 123 . Several strategies can be used to mitigate this issue, and have been described in detail elsewhere 123 . In brief, these include collecting data using maximum performance tasks (for example, rapid finger tapping for motor control, as opposed to passively collected data during everyday movement), ensuring large and diverse sample sizes (that is, with the conditions matching those expected at clinical deployment of the model), using domain knowledge to guide feature engineering and selection (with a focus on feature repeatability), appropriate model training and regularization, rigorous model validation and comprehensive model monitoring (including monitoring the difference between the distributions of training data and data found after deployment). Looking to the future, developing models able to incorporate previous knowledge (for example, known gene regulatory pathways and protein interactions) might be another promising approach to overcome the curse of dimensionality. 
Along these lines, recent studies demonstrated that models augmented by retrieving information from large databases outperform larger models trained on larger datasets, effectively leveraging available information and also providing added benefits such as interpretability 124 , 125 .
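A small simulation can make dataset blind spots tangible. The sketch assumes, purely for illustration, that every feature is binary and uniformly distributed, and it measures what fraction of all possible feature combinations is observed at least once in a cohort of fixed size.

```python
import random

def occupied_fraction(n_people, n_features, seed=0):
    # Fraction of all 2**n_features binary feature combinations seen in the cohort.
    rng = random.Random(seed)
    seen = {tuple(rng.randint(0, 1) for _ in range(n_features))
            for _ in range(n_people)}
    return len(seen) / 2 ** n_features

# Same cohort size, increasing number of features per person.
fractions = {d: occupied_fraction(1000, d) for d in (5, 10, 15, 20)}
```

With 1,000 simulated people, coverage of the feature space falls sharply as features are added; with 20 binary features, at most 1,000 of roughly one million combinations can be observed, so the rest of the space is a blind spot for any model trained on this cohort.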

An increasingly used approach in multimodal learning is to combine the data from different modalities, as opposed to simply inputting several modalities separately into a model, to increase prediction performance, a process termed ‘multimodal fusion’ 126 , 127 . Fusion of different data modalities can be performed at different stages of the process. The simplest approach involves concatenating input modalities or features before any processing (early fusion). While simple, this approach is not suitable for many complex data modalities. A more sophisticated approach is to combine and co-learn representations of these different modalities during the training process (joint fusion), allowing for modality-specific preprocessing while still capturing the interaction between data modalities. Finally, an alternative approach is to train separate models for each modality and combine the output probabilities (late fusion), a simple and robust approach, but at the cost of missing any information that could be abstracted from the interaction between modalities. Early work on fusion focused on allowing time-series models to leverage information from structured covariates for tasks such as forecasting osteoarthritis progression and predicting surgical outcomes in patients with cerebral palsy 128 . As another example of fusion, a group from DeepMind used a high-dimensional EHR-based dataset comprising 620,000 dimensions that were projected into a continuous embedding space with only 800 dimensions, capturing a wide array of information in a 6-h time frame for each patient, and built a recurrent neural network to predict acute kidney injury over time 129 . Many studies have used fusion of two modalities (bimodal fusion) to improve predictive performance. Imaging and EHR-based data have been fused to improve detection of pulmonary embolism, outperforming single-modality models 99 .
Another bimodal study fused imaging features from chest X-rays with clinical covariates, improving the diagnosis of tuberculosis in individuals with HIV 130 . Optical coherence tomography and infrared reflectance optic disc imaging have been combined to better predict visual field maps compared to using either of those modalities alone 131 .
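The difference between early and late fusion can be sketched with two toy logistic models. Everything numeric here is a made-up assumption (feature values, weights and biases are not taken from any study), and joint fusion is left out because it requires training the modality encoders together.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def logistic(features, weights, bias):
    # Probability from a linear model over the given features.
    return sigmoid(sum(f * w for f, w in zip(features, weights)) + bias)

def early_fusion(imaging, ehr, weights, bias):
    # Concatenate raw features from both modalities, then apply a single model.
    fused = imaging + ehr
    return logistic(fused, weights, bias)

def late_fusion(imaging, ehr, imaging_model, ehr_model):
    # Score each modality with its own model, then average the probabilities.
    p_imaging = logistic(imaging, *imaging_model)
    p_ehr = logistic(ehr, *ehr_model)
    return 0.5 * (p_imaging + p_ehr)

# Hypothetical features for one patient and hypothetical model parameters.
imaging_features = [0.8, 0.2]
ehr_features = [1.0]
p_early = early_fusion(imaging_features, ehr_features, [1.2, -0.4, 0.6], -0.3)
p_late = late_fusion(imaging_features, ehr_features,
                     ([1.5, -0.5], -0.2), ([0.8], -0.1))
```

The early-fusion model can in principle learn interactions between imaging and EHR features because it sees them together, whereas the late-fusion estimate only combines two independent opinions.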

Multimodal fusion is a general concept that can be tackled using any architectural choice. Although not biomedical, we can learn from some AI imaging work; modern guided image generation models such as DALL-E 132 and GLIDE 133 often concatenate information from different modalities into the same encoder. This approach has demonstrated success in a recent study conducted by DeepMind (using Gato, a generalist agent) showing that concatenating a wide variety of tokens created from text, images and button presses, among others, can be used to teach a model to perform several distinct tasks ranging from captioning images and playing Atari games to stacking blocks with a robot arm (Fig. 2b ) 134 . Importantly, a recent study titled Align Before Fuse suggested that aligning representations across modalities before fusing them might result in better performance in downstream tasks, such as for creating text captions for images 135 . A recent study from Google Research proposed using attention bottlenecks for multimodal fusion, thereby restricting the flow of cross-modality information to force models to share the most relevant information across modalities and hence improving computational performance 136 .

Another paradigm of using two modalities together is to ‘translate’ from one to the other. In many cases, one data modality may be strongly associated with clinical outcomes but be less affordable, accessible or require specialized equipment or invasive procedures. Deep learning-enabled computer vision has been shown to capture information typically requiring a higher-fidelity modality for human interpretation. As an example, one study developed a convolutional neural network that uses echocardiogram videos to predict laboratory values of interest such as cardiac biomarkers (troponin I and brain natriuretic peptide) and other commonly obtained biomarkers, and found that predictions from the model were accurate, with some of them even having more prognostic performance for heart failure admissions than conventional laboratory testing 137 . Deep learning has also been widely studied in cancer pathology to make predictions beyond typical pathologist interpretation tasks with H&E stains, with several applications including prediction of genotype and gene expression, response to treatment and survival using only pathology images as inputs 138 .

Many other important challenges relating to multimodal model architectures remain. For some modalities (for example, three-dimensional imaging), even models using only a single time point require large computing capabilities, and the prospect of implementing a model that also processes large-scale omics or text data represents an important infrastructural challenge.

While multimodal learning has improved at an accelerated rate for the past few years, we expect that current methods are unlikely to be sufficient to overcome all the major challenges mentioned above. Therefore, further innovation will be required to fully enable effective, multimodal AI models.

Data challenges

The multidimensional data underpinning health lead to a broad range of challenges in terms of collecting, linking and annotating these data. Medical datasets can be described along several axes 139 , including the sample size, depth of phenotyping, the length and intervals of follow-up, the degree of interaction between participants, the heterogeneity and diversity of the participants, the level of standardization and harmonization of the data and the amount of linkage between data sources. While science and technology have advanced remarkably to facilitate data collection and phenotyping, there are inevitable trade-offs among these features of biomedical datasets. For example, although large sample sizes (in the range of hundreds of thousands to millions) are desirable in most cases for the training of AI models (especially multimodal AI models), the costs of achieving deep phenotyping and good longitudinal follow-up scale rapidly with larger numbers of participants, becoming financially unsustainable unless automated methods of data collection are put in place.

There are large-scale efforts to provide meaningful harmonization to biomedical datasets, such as the Observational Medical Outcomes Partnership Common Data Model developed by the Observational Health Data Sciences and Informatics collaboration 140 . Harmonization enormously facilitates research efforts and enhances reproducibility and translation into clinical practice. However, harmonization may obscure some relevant pathophysiological processes underlying certain diseases. As an example, ischemic stroke subtypes tend not to be accurately captured by existing ontologies 141 , but utilizing raw data from EHRs or radiology reports could allow for the use of natural language processing for phenotyping 142 . Similarly, the Diagnostic and Statistical Manual of Mental Disorders categorizes diagnoses based on clinical manifestations, which might not fully represent underlying pathophysiological processes 143 .

Achieving diversity across race/ethnicity, ancestry, income level, education level, healthcare access, age, disability status, geographic locations, gender and sexual orientation has proven difficult in practice. Genomics research is a prominent example, with the vast majority of studies focusing on individuals of European ancestry 144 . However, diversity of biomedical datasets is paramount as it constitutes the first step to ensure generalizability to the broader population 145 . Beyond these considerations, a required step for multimodal AI is the appropriate linking of all data types available in the datasets, which represents another challenge owing to the increasing risk of identification of individuals and regulatory constraints 146 .

Another frequent problem with biomedical data is the usually high proportion of missing data. While simply excluding patients with missing data before training is an option in some cases, selection bias can arise when other factors influence missing data 147 , and it is often more appropriate to address these gaps with statistical tools, such as multiple imputation 148 . As a result, imputation is a pervasive preprocessing step in many biomedical scientific fields, ranging from genomics to clinical data. Imputation has remarkably improved the statistical power of genome-wide association studies to identify novel genetic risk loci, and is facilitated by large reference datasets with deep genotypic coverage such as 1000 Genomes 149 , the UK10K 150 , the Haplotype Reference Consortium 151 and, recently, TOPMed 89 . Beyond genomics, imputation has also demonstrated utility for other types of medical data 152 . Different strategies have been suggested to make fewer assumptions. These include carry-forward imputation, with imputed values flagged and information added on when they were last measured 153 , and more complex strategies such as capturing the presence of missing data and time intervals using learnable decay terms 154 .
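Carry-forward imputation with flags, as described above, can be sketched as follows. The record layout, field names and the toy creatinine series are assumptions for illustration only.

```python
def carry_forward(series):
    # series: list of (hour, value or None), sorted by hour.
    rows = []
    last_value, last_hour = None, None
    for hour, value in series:
        if value is not None:
            # A real measurement: record it and remember it for later gaps.
            last_value, last_hour = value, hour
            rows.append({"hour": hour, "value": value,
                         "imputed": False, "hours_since_measured": 0})
        else:
            # A gap: reuse the last measurement, flag it and record its age.
            age = None if last_hour is None else hour - last_hour
            rows.append({"hour": hour, "value": last_value,
                         "imputed": True, "hours_since_measured": age})
    return rows

# Toy laboratory series with gaps (values are made up).
creatinine = [(0, None), (6, 1.1), (12, None), (18, None), (24, 1.4)]
rows = carry_forward(creatinine)
```

Keeping the `imputed` flag and the time since the last measurement lets a downstream model distinguish a fresh value from a stale one instead of treating them as equally reliable. Values that are missing before any measurement exists stay empty rather than being guessed.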

The risk of incurring several biases is important when conducting studies that collect health data, and multiple approaches are necessary to monitor and mitigate these biases 155 . The risk of these biases is amplified when combining data from multiple sources, as the bias toward individuals more likely to consent to each data modality could be amplified when considering the intersection between these potentially biased populations. This complex and unsolved problem is more important in the setting of multimodal health data (compared to unimodal data) and would warrant its own in-depth review. Medical AI algorithms using demographic features such as race as inputs can learn to perpetuate historical human biases, thereby resulting in harm when deployed 156 . Importantly, recent work has demonstrated that AI models can identify such features solely from imaging data, which highlights the need for deliberate efforts to detect racial bias and equalize racial outcomes during data quality control and model development 157 . In particular, selection bias is a common type of bias in large biobank studies, and has been reported as a problem, for example, in the UK Biobank 158 . This problem has also been pervasive in the scientific literature regarding COVID-19 (ref. 159 ). For example, patients using allergy medications were more likely to be tested for COVID-19, which leads to an artificially lower rate of positive tests, and an apparent protective effect among those tested—probably due to selection bias 160 . Importantly, selection bias can result in AI models trained on a sample that differs considerably from the general population 161 , thus hurting these models at inference time 162 .

Privacy challenges

The successful development of multimodal AI in health requires breadth and depth of data, which entails greater privacy challenges than those of single-modality AI models. For example, previous studies have demonstrated that by utilizing only a little background information about participants, an adversary could re-identify those in large datasets (for example, the Netflix prize dataset), uncovering sensitive information about the individuals 163 .
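Why linking more data increases re-identification risk can be shown with a toy calculation: as more attributes are combined, more records become unique. The records and attribute names below are invented for illustration and do not come from any real dataset.

```python
from collections import Counter

# Invented records with three quasi-identifying attributes.
records = [
    {"zip": "02139", "birth_year": 1980, "sex": "F"},
    {"zip": "02139", "birth_year": 1980, "sex": "M"},
    {"zip": "02139", "birth_year": 1975, "sex": "F"},
    {"zip": "02140", "birth_year": 1980, "sex": "F"},
    {"zip": "02140", "birth_year": 1980, "sex": "F"},
]

def unique_fraction(rows, keys):
    # Fraction of rows whose combination of the given attributes appears only once.
    counts = Counter(tuple(row[k] for k in keys) for row in rows)
    unique = sum(1 for row in rows if counts[tuple(row[k] for k in keys)] == 1)
    return unique / len(rows)

by_zip = unique_fraction(records, ["zip"])
by_zip_year = unique_fraction(records, ["zip", "birth_year"])
by_all = unique_fraction(records, ["zip", "birth_year", "sex"])
```

In this toy table no record is unique by postal code alone, one in five is unique once birth year is added, and three in five are unique with all three attributes, which is the pattern an adversary with a little background information can exploit.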

In the USA, the Health Insurance Portability and Accountability Act (HIPAA) Privacy Rule is the fundamental legislation to protect privacy of health data. However, some types of health data—such as user-generated and de-identified health data—are not covered by this regulation, which poses a risk of reidentification by combining information from multiple sources. In contrast, the more recent General Data Protection Regulation (GDPR) from the European Union has a much broader scope regarding the definition of health data, and even goes beyond data protection to also require the release of information about automated decision-making using these data 164 .

Given the challenges, multiple technical solutions have been proposed and explored to ensure security and privacy while training multimodal AI models, including differential privacy, federated learning, homomorphic encryption and swarm learning 165 , 166 . Differential privacy proposes a systematic random perturbation of the data with the ultimate goal of obscuring individual-level information while maintaining the global distribution of the dataset 167 . As expected, this approach constitutes a trade-off between the level of privacy obtained and the expected performance of the models. Federated learning, on the other hand, allows several individuals or health systems to collectively train a model without transferring raw data. In this approach, a trusted central server distributes a model to each of the individuals/organizations; each individual or organization then trains the model for a certain number of iterations and shares the model updates back to the trusted central server 165 . Finally, the trusted central server aggregates the model updates from all individuals/organizations and starts another round. Federated multimodal learning has been implemented in a multi-institutional collaboration for predicting clinical outcomes in people with COVID-19 (ref. 168 ). Homomorphic encryption is a cryptographic technique that allows mathematical operations on encrypted input data, therefore providing the possibility of sharing model weights without leaking information 169 . Finally, swarm learning is a relatively novel approach that, similarly to federated learning, is also based on several individuals or organizations training a model on local data, but does not require a trusted central server because it replaces it with the use of blockchain smart contracts 170 .
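The federated learning loop described above (distribute the model, train locally, aggregate the updates, repeat) can be sketched with a one-parameter model. This is a minimal sketch under several assumptions: the aggregation weights each site by its sample count, which is one common choice and not something the text specifies, and the sites, data and learning rate are invented.

```python
def local_update(weight, data, learning_rate=0.1, steps=20):
    # Gradient descent on mean squared error for the model y = weight * x,
    # using only the data held at one site.
    for _ in range(steps):
        gradient = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
        weight -= learning_rate * gradient
    return weight

def federated_round(global_weight, sites):
    # Each site trains locally from the shared weight; only weights are returned,
    # never the raw (x, y) records.
    updates = [(local_update(global_weight, data), len(data)) for data in sites]
    total = sum(n for _, n in updates)
    # The server aggregates the updates, weighting each site by its sample count.
    return sum(w * n for w, n in updates) / total

# Two hypothetical sites whose private data follow y = 2x.
sites = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(1.0, 2.0), (3.0, 6.0), (2.0, 4.0)],
]
weight = 0.0
for _ in range(5):
    weight = federated_round(weight, sites)
```

After a few rounds the shared weight approaches 2, the value that fits both sites, even though neither site ever reveals its records to the server or to the other site.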

Importantly, these approaches are often complementary and they can and should be used together. A recent study demonstrated the potential of coupling federated learning with homomorphic encryption to train a model to predict a COVID-19 diagnosis from chest CT scans, with the aggregate model outperforming all of the locally trained models 122 . While these methods are promising, multimodal health data are usually spread across several distinct organizations, ranging from healthcare institutions and academic centers to pharmaceutical companies. Therefore, the development of new methods to incentivize data sharing across sectors while preserving patient privacy is crucial.

An additional layer of safety can be obtained by leveraging novel developments in edge computing 171 . Edge computing, as opposed to cloud computing, refers to the idea of bringing computation closer to the sources of data (for example, close to ambient sensors or wearable devices). In combination with other methods such as federated learning, edge computing provides more security by avoiding the transmission of sensitive data to centralized servers. Furthermore, edge computing provides other benefits, such as reducing storage costs, latency and bandwidth usage. For example, some X-ray systems now run optimized versions of deep learning models directly in their hardware, instead of transferring images to cloud servers for identification of life-threatening conditions 172 .

As a result of the expanding healthcare AI market, biomedical data are increasingly valuable, leading to another challenge pertaining to data ownership. To date, this constitutes an open issue of debate. Some voices advocate for private patient ownership of the data, arguing that this approach would ensure the patients’ right to self-determination, support health data transactions and maximize patients’ benefit from data markets; while others suggest a non-property, regulatory model would better protect secure and transparent data use 173 , 174 . Independent of the framework, appropriate incentives should be put in place to facilitate data sharing while ensuring security and privacy 175 , 176 .

Multimodal medical AI unlocks key applications in healthcare, and many other opportunities exist beyond those described here. The field of drug discovery is a pertinent example, with many tasks that could leverage multidimensional data, including target identification and validation, prediction of drug interactions and prediction of side effects 177. While we addressed many important challenges to the use of multimodal AI, others that were outside the scope of this review are just as important, including the potential for false positives and how clinicians should interpret and explain the risks to patients.

With the ability to capture multidimensional biomedical data, we confront the challenge of deep phenotyping, that is, understanding each individual’s uniqueness. Collaboration across industries and sectors is needed to collect and link large and diverse multimodal health data (Box 1). Yet, at this juncture, we are far better at collating and storing such data than we are at analyzing them. To meaningfully process such high-dimensional data and actualize the many exciting use cases, it will take a concentrated joint effort of the medical community and AI researchers to build and validate new models, and ultimately demonstrate their utility to improve health outcomes.

Box 1 Priorities for future development of multimodal biomedical AI

Discover and formulate key medical AI tasks for which multimodal data will add value over single modalities.

Develop approaches that can pretrain models using large amounts of unlabeled data across modalities and only require fine-tuning on limited labeled data.

Benchmark the effect of model architectures and multimodal approaches when working with previously underexplored high-dimensional data, such as omics data.

Collect paired (for example, image–text) multimodal data that could be used to train and test the generalizability of multimodal medical AI algorithms.

Esteva, A. et al. A guide to deep learning in healthcare. Nat. Med. 25, 24–29 (2019).

Esteva, A. et al. Deep learning-enabled medical computer vision. NPJ Digit. Med. 4, 5 (2021).

Rajpurkar, P., Chen, E., Banerjee, O. & Topol, E. J. AI in health and medicine. Nat. Med. 28, 31–38 (2022).

Karczewski, K. J. & Snyder, M. P. Integrative omics for health and disease. Nat. Rev. Genet. 19, 299–310 (2018).

Sidransky, D. Emerging molecular markers of cancer. Nat. Rev. Cancer 2, 210–219 (2002).

Parsons, D. W. et al. An integrated genomic analysis of human glioblastoma multiforme. Science 321, 1807–1812 (2008).

Food and Drug Administration. List of cleared or approved companion diagnostic devices (in vitro and imaging tools) https://www.fda.gov/medical-devices/in-vitro-diagnostics/list-cleared-or-approved-companion-diagnostic-devices-in-vitro-and-imaging-tools (2021).

Food and Drug Administration. Nucleic acid-based tests https://www.fda.gov/medical-devices/in-vitro-diagnostics/nucleic-acid-based-tests (2020).

Foundation Medicine. Why comprehensive genomic profiling? https://www.foundationmedicine.com/resource/why-comprehensive-genomic-profiling (2018).

Oncotype IQ. Oncotype MAP pan-cancer tissue test https://www.oncotypeiq.com/en-US/pan-cancer/healthcare-professionals/oncotype-map-pan-cancer-tissue-test/about-the-test-oncology (2020).

Heitzer, E., Haque, I. S., Roberts, C. E. S. & Speicher, M. R. Current and future perspectives of liquid biopsies in genomics-driven oncology. Nat. Rev. Genet. 20, 71–88 (2018).

Uffelmann, E. et al. Genome-wide association studies. Nat. Rev. Methods Primers 1, 1–21 (2021).

Watanabe, K. et al. A global overview of pleiotropy and genetic architecture in complex traits. Nat. Genet. 51, 1339–1348 (2019).

Choi, S. W., Mak, T. S.-H. & O’Reilly, P. F. Tutorial: a guide to performing polygenic risk score analyses. Nat. Protoc. 15, 2759–2772 (2020).

Damask, A. et al. Patients with high genome-wide polygenic risk scores for coronary artery disease may receive greater clinical benefit from alirocumab treatment in the ODYSSEY OUTCOMES trial. Circulation 141, 624–636 (2020).

Marston, N. A. et al. Predicting benefit from evolocumab therapy in patients with atherosclerotic disease using a genetic risk score: results from the FOURIER trial. Circulation 141, 616–623 (2020).

Duan, R. et al. Evaluation and comparison of multi-omics data integration methods for cancer subtyping. PLoS Comput. Biol. 17, e1009224 (2021).

Kang, M., Ko, E. & Mersha, T. B. A roadmap for multi-omics data integration using deep learning. Brief. Bioinform. 23, bbab454 (2022).

Wang, T. et al. MOGONET integrates multi-omics data using graph convolutional networks allowing patient classification and biomarker identification. Nat. Commun. 12, 3445 (2021).

Zhang, X.-M., Liang, L., Liu, L. & Tang, M.-J. Graph neural networks and their current applications in bioinformatics. Front. Genet. 12, 690049 (2021).

Moon, K. R. et al. Visualizing structure and transitions in high-dimensional biological data. Nat. Biotechnol. 37, 1482–1492 (2019).

Kuchroo, M. et al. Multiscale PHATE identifies multimodal signatures of COVID-19. Nat. Biotechnol. https://doi.org/10.1038/s41587-021-01186-x (2022).

Boehm, K. M., Khosravi, P., Vanguri, R., Gao, J. & Shah, S. P. Harnessing multimodal data integration to advance precision oncology. Nat. Rev. Cancer 22, 114–126 (2021).

Marx, V. Method of the year: spatially resolved transcriptomics. Nat. Methods 18, 9–14 (2021).

He, B. et al. Integrating spatial gene expression and breast tumour morphology via deep learning. Nat. Biomed. Eng. 4, 827–834 (2020).

Bergenstråhle, L. et al. Super-resolved spatial transcriptomics by deep data fusion. Nat. Biotechnol. https://doi.org/10.1038/s41587-021-01075-3 (2021).

Janssens, A. C. J. W. Validity of polygenic risk scores: are we measuring what we think we are? Hum. Mol. Genet. 28, R143–R150 (2019).

Kellogg, R. A., Dunn, J. & Snyder, M. P. Personal omics for precision health. Circ. Res. 122, 1169–1171 (2018).

Owen, M. J. et al. Rapid sequencing-based diagnosis of thiamine metabolism dysfunction syndrome. N. Engl. J. Med. 384, 2159–2161 (2021).

Moore, T. J., Zhang, H., Anderson, G. & Alexander, G. C. Estimated costs of pivotal trials for novel therapeutic agents approved by the US Food and Drug Administration, 2015–2016. JAMA Intern. Med. 178, 1451–1457 (2018).

Sertkaya, A., Wong, H.-H., Jessup, A. & Beleche, T. Key cost drivers of pharmaceutical clinical trials in the United States. Clin. Trials 13, 117–126 (2016).

Loree, J. M. et al. Disparity of race reporting and representation in clinical trials leading to cancer drug approvals from 2008 to 2018. JAMA Oncol. 5, e191870 (2019).

Steinhubl, S. R., Wolff-Hughes, D. L., Nilsen, W., Iturriaga, E. & Califf, R. M. Digital clinical trials: creating a vision for the future. NPJ Digit. Med. 2, 126 (2019).

Inan, O. T. et al. Digitizing clinical trials. NPJ Digit. Med. 3, 101 (2020).

Dunn, J. et al. Wearable sensors enable personalized predictions of clinical laboratory measurements. Nat. Med. 27, 1105–1112 (2021).

Marra, C., Chen, J. L., Coravos, A. & Stern, A. D. Quantifying the use of connected digital products in clinical research. NPJ Digit. Med. 3, 50 (2020).

Steinhubl, S. R. et al. Effect of a home-based wearable continuous ECG monitoring patch on detection of undiagnosed atrial fibrillation: the mSToPS randomized clinical trial. JAMA 320, 146–155 (2018).

Pandit, J. A., Radin, J. M., Quer, G. & Topol, E. J. Smartphone apps in the COVID-19 pandemic. Nat. Biotechnol. 40, 1013–1022 (2022).

Pallmann, P. et al. Adaptive designs in clinical trials: why use them, and how to run and report them. BMC Med. 16, 29 (2018).

Klarin, D. & Natarajan, P. Clinical utility of polygenic risk scores for coronary artery disease. Nat. Rev. Cardiol. https://doi.org/10.1038/s41569-021-00638-w (2021).

Lim, B., Arık, S. Ö., Loeff, N. & Pfister, T. Temporal fusion transformers for interpretable multi-horizon time series forecasting. Int. J. Forecast. 37, 1748–1764 (2021).

Zhang, X., Zeman, M., Tsiligkaridis, T. & Zitnik, M. Graph-guided network for irregularly sampled multivariate time series. In International Conference on Learning Representation (ICLR, 2022).

Thorlund, K., Dron, L., Park, J. J. H. & Mills, E. J. Synthetic and external controls in clinical trials – a primer for researchers. Clin. Epidemiol. 12, 457–467 (2020).

Food and Drug Administration. FDA approves first treatment for a form of Batten disease https://www.fda.gov/news-events/press-announcements/fda-approves-first-treatment-form-batten-disease#:~:text=The%20U.S.%20Food%20and%20Drug,specific%20form%20of%20Batten%20disease (2017).

Food and Drug Administration. Real-world evidence https://www.fda.gov/science-research/science-and-research-special-topics/real-world-evidence (2022).

AbbVie. Synthetic control arm: the end of placebos? https://stories.abbvie.com/stories/synthetic-control-arm-end-placebos.htm (2019).

Unlearn.AI. Generating synthetic control subjects using machine learning for clinical trials in Alzheimer’s disease (DIA 2019) https://www.unlearn.ai/post/generating-synthetic-control-subjects-alzheimers (2019).

Noah, B. et al. Impact of remote patient monitoring on clinical outcomes: an updated meta-analysis of randomized controlled trials. NPJ Digit. Med. 1, 20172 (2018).

Strain, T. et al. Wearable-device-measured physical activity and future health risk. Nat. Med. 26, 1385–1391 (2020).

Iqbal, S. M. A., Mahgoub, I., Du, E., Leavitt, M. A. & Asghar, W. Advances in healthcare wearable devices. NPJ Flex. Electron. 5, 9 (2021).

Mandel, J. C., Kreda, D. A., Mandl, K. D., Kohane, I. S. & Ramoni, R. B. SMART on FHIR: a standards-based, interoperable apps platform for electronic health records. J. Am. Med. Inform. Assoc. 23, 899–908 (2016).

Haque, A., Milstein, A. & Fei-Fei, L. Illuminating the dark spaces of healthcare with ambient intelligence. Nature 585, 193–202 (2020).

Kwolek, B. & Kepski, M. Human fall detection on embedded platform using depth maps and wireless accelerometer. Comput. Methods Prog. Biomed. 117, 489–501 (2014).

Wang, C. et al. Multimodal gait analysis based on wearable inertial and microphone sensors. In 2017 IEEE SmartWorld, Ubiquitous Intelligence Computing, Advanced Trusted Computed, Scalable Computing Communications, Cloud Big Data Computing, Internet of People and Smart City Innovation (SmartWorld/SCALCOM/UIC/ATC/CBDCom/IOP/SCI) 1–8 (2017).

Luo, Z. et al. Computer vision-based descriptive analytics of seniors’ daily activities for long-term health monitoring. In Proc. Machine Learning Research Vol. 85, 1–18 (PMLR, 2018).

Coffey, J. D. et al. Implementation of a multisite, interdisciplinary remote patient monitoring program for ambulatory management of patients with COVID-19. NPJ Digit. Med. 4, 123 (2021).

Whitelaw, S., Mamas, M. A., Topol, E. & Van Spall, H. G. C. Applications of digital technology in COVID-19 pandemic planning and response. Lancet Digit. Health 2, e435–e440 (2020).

Wu, J. T., Leung, K. & Leung, G. M. Nowcasting and forecasting the potential domestic and international spread of the 2019-nCoV outbreak originating in Wuhan, China: a modelling study. Lancet 395, 689–697 (2020).

Jason Wang, C., Ng, C. Y. & Brook, R. H. Response to COVID-19 in Taiwan: big data analytics, new technology, and proactive testing. JAMA 323, 1341–1342 (2020).

Radin, J. M., Wineinger, N. E., Topol, E. J. & Steinhubl, S. R. Harnessing wearable device data to improve state-level real-time surveillance of influenza-like illness in the USA: a population-based study. Lancet Digit. Health 2, e85–e93 (2020).

Quer, G. et al. Wearable sensor data and self-reported symptoms for COVID-19 detection. Nat. Med. 27, 73–77 (2020).

Syrowatka, A. et al. Leveraging artificial intelligence for pandemic preparedness and response: a scoping review to identify key use cases. NPJ Digit. Med. 4, 96 (2021).

Varghese, E. B. & Thampi, S. M. A multimodal deep fusion graph framework to detect social distancing violations and FCGs in pandemic surveillance. Eng. Appl. Artif. Intell. 103, 104305 (2021).

San, O. The digital twin revolution. Nat. Comput. Sci. 1, 307–308 (2021).

Björnsson, B. et al. Digital twins to personalize medicine. Genome Med. 12, 4 (2019).

Kamel Boulos, M. N. & Zhang, P. Digital twins: from personalised medicine to precision public health. J. Pers. Med. 11, 745 (2021).

Hernandez-Boussard, T. et al. Digital twins for predictive oncology will be a paradigm shift for precision cancer care. Nat. Med. 27, 2065–2066 (2021).

Coorey, G., Figtree, G. A., Fletcher, D. F. & Redfern, J. The health digital twin: advancing precision cardiovascular medicine. Nat. Rev. Cardiol. 18, 803–804 (2021).

Masison, J. et al. A modular computational framework for medical digital twins. Proc. Natl Acad. Sci. USA 118, e2024287118 (2021).

Fisher, C. K., Smith, A. M. & Walsh, J. R. Machine learning for comprehensive forecasting of Alzheimer’s disease progression. Sci. Rep. 9, 13622 (2019).

Walsh, J. R. et al. Generating digital twins with multiple sclerosis using probabilistic neural networks. Preprint at https://arxiv.org/abs/2002.02779 (2020).

Swedish Digital Twin Consortium. https://www.sdtc.se/ (accessed 1 February 2022).

Potter, D. et al. Development of CancerLinQ, a health information learning platform from multiple electronic health record systems to support improved quality of care. JCO Clin. Cancer Inform. 4, 929–937 (2020).