Maël Montévil

A Primer on Mathematical Modeling in the Study of Organisms and Their Parts

Systems Biology

How do mathematical models convey meaning? What is required to build a model? An introduction for biologists and philosophers.

Mathematical modeling is a very powerful tool for understanding natural phenomena. Such a tool carries its own assumptions and should always be used critically. In this chapter, we highlight the key ingredients and steps of modeling and focus on their biological interpretation. In particular, we discuss the role of theoretical principles in writing models. We also highlight the meaning and interpretation of equations. The main aim of this chapter is to facilitate the interaction between biologists and mathematical modelers. We focus on the case of cell proliferation and motility in the context of multicellular organisms.

Keywords: Equations, Mathematical modeling, Parameters, Proliferation, Theory


1 Introduction

Mathematical modeling may serve many purposes, such as performing quantitative predictions or making sense of a situation where reciprocal interactions are beyond informal analyses. For example, describing the properties of the different ionic channels of a neuron individually is not sufficient to understand how their combination entails the formation of action potentials. We need a mathematical analysis such as the one performed with the Hodgkin-Huxley model to gain such an understanding [ 1 ]. In this sense, mathematical modeling is required at some point to understand many biological phenomena. Let us emphasize that the perspective of modelers usually differs from that of many experimentalists, especially in molecular biology. The latter field tends to emphasize the contribution of individual parts, but traditional reductionism [ 2 ] involves both the analysis of parts and the theoretical composition of parts to understand the whole, usually by means of mathematical analysis. Without this second move, it is never clear whether the parts analyzed individually are sufficient to explain how the phenomenon under study comes to be or whether key processes are missing.

We want to emphasize the difference between mathematical models on the one side and theories on the other side. Of course, modeling belongs to the broad category of theoretical work, by contrast with experimental work. However, in this text, we will use “theory” in the precise sense of a broad conceptual framework such as evolutionary theory. Evolutionary theory was initially formulated without explicit mathematics. It has since led to different categories of mathematical analyses, such as population genetics or phylogenetic analysis, which are very different mathematically. Theoretical frameworks typically guide modeling and help to justify mathematical models.

Mathematical modeling raises several difficulties in the study of organisms.

The first one is that most biologists do not have the mathematical or physical background to assess the meaning and the validity of models. The division of labor in interdisciplinary projects is an efficient way to work, but it should at least be complemented by an understanding of the principles at play in every part of the work. Otherwise, the coherence of the knowledge that results from this work is not ensured.

The second difficulty is intrinsic. Living objects have theoretical specificities that make mathematical modeling difficult or at least limit its meaning. These specificities are at least of two kinds.

- Current organisms are the result of an evolutionary and developmental history, which means that many contingent events are deeply inscribed in the organization of living beings. By contrast, the aim of mathematical modeling is usually to make explicit the necessity of an outcome. For more on this issue, see [ 3 ].

- The study of a part X of an organism is not completely meaningful by itself. The inscription of this part in the organism, and in particular the role that this part plays, is a mandatory object of study to assess the biological relevance of the properties of X under study. As such, modeling X per se is insufficient and requires a supplementary discussion [ 4 ].

The third difficulty is that there are no well-established theoretical principles to frame model writing in physiology or developmental biology [ 5 ]. In particular, cells are elementary objects, since cell theory states that there are no living things without cells. However, cells have complex organizations themselves. Modeling their behavior (note 1 ) is therefore challenging and requires appropriate theoretical assumptions to ensure that this modeling has a robust biological meaning.

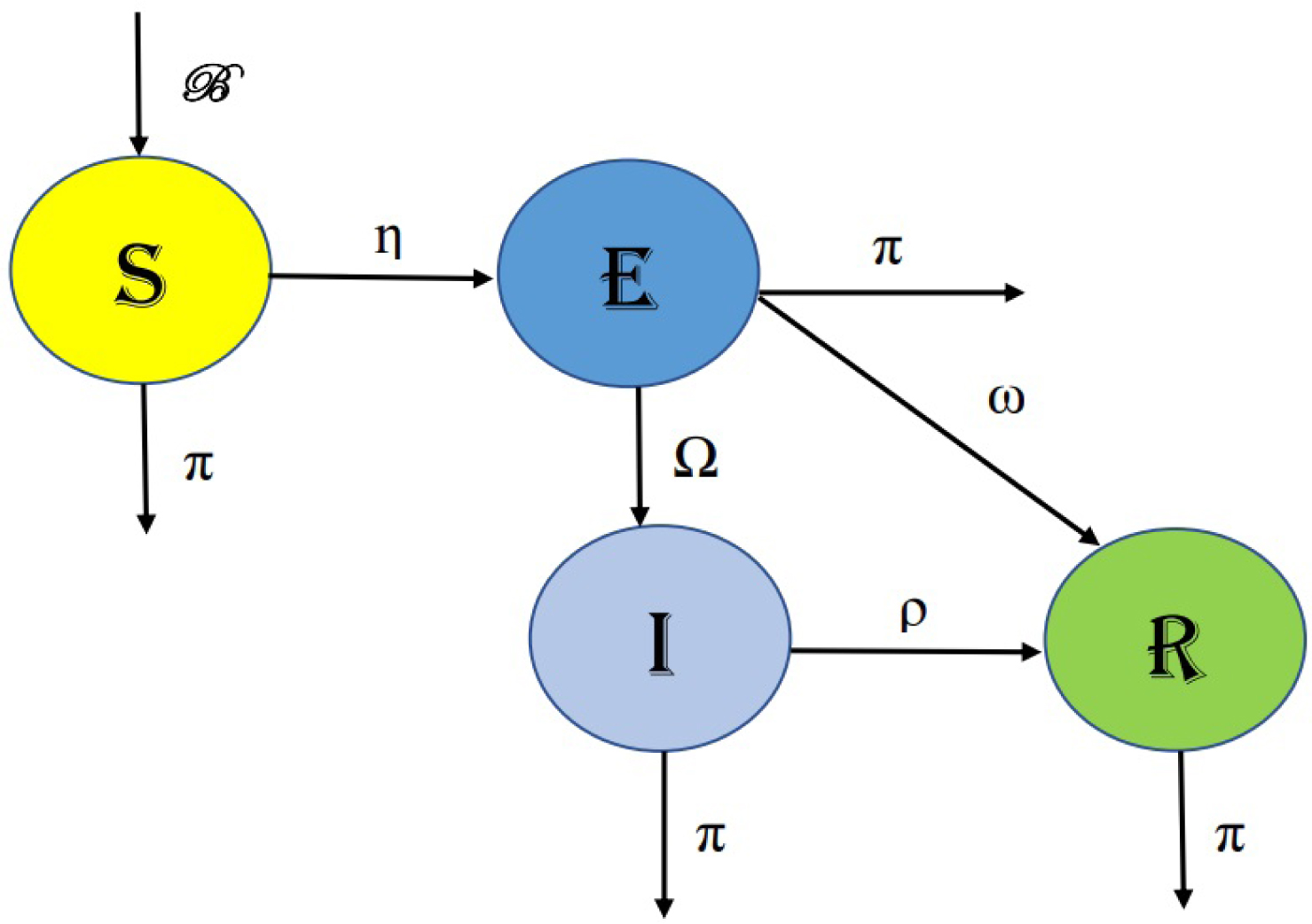

A theoretical way to organize the mathematical modeling of cell behaviors is to propose a default state, that is to say to make explicit a reference state that takes place without the need for particular constraints, inputs or signals. We think that proliferation with variation and motility should be used as the default state [ 6 , 7 ]. Under this assumption, cells spontaneously proliferate. By contrast, quiescence should be explained by constraints explicitly limiting or even preventing cell proliferation. The same reasoning applies mutatis mutandis to motility. This assumption has been used to model mammary gland morphogenesis and helps to systematize the mathematical analysis of cellular populations [ 8 ].

In this chapter, we focus on model writing. Our aim is not to emphasize the technical aspects of mathematical analysis. Instead, this text aims to help biologists understand modeling in order to interact better with modelers. Reciprocally, we also highlight theoretical specificities of biology that may be of help to modelers. Of course, the usual way to divide chapters in this book series is not entirely appropriate for the topic of this chapter. We nevertheless keep this structure and follow it in a metaphorical sense. In Materials, we describe key conceptual and mathematical ingredients of models. In Methods, we focus on the writing and analysis of models per se.

2 Materials

2.1 Parameters and states

2.1.1 Parameters

Parameters are quantities that play a role in the system but are not significantly affected by the system’s behavior at the time scale of the phenomenon under study. From an experimentalist’s point of view, there are two kinds of parameters. Some parameters correspond to a quantity that is explicitly set by the experimenter, such as the temperature, the size of a plate or the concentration of a relevant compound in the medium. Other parameters correspond to properties of the parts under study, such as the speed of a chemical reaction, the elasticity of collagen or the characteristic division time τ of a cell without constraints. Changing the value of these parameters requires changing the part in question, see also note 2 .

Identifying relevant parameters actually has two different meanings:

- Parameters used explicitly in the model are those whose value is required to deduce the behavior of the system. The dynamics of the system depends explicitly on the value of these parameters. A fortiori, parameters that correspond to different treatments leading to a response fall under this category. Note that the importance of some parameters often becomes apparent only at other steps of modeling.

- Theoretical parameters are parameters that we know are relevant, and even mandatory for the process to take place, but that we can keep implicit in our model. For example, the concentration of oxygen in the medium is usually not made explicit in a model of an in vitro experiment, even though it is relevant for the very survival of the cells studied. Of course, there is usually a cornucopia of parameters of this sort, for example the many components of the serum.

2.1.2 State space

The state of an object describes its situation at a given time. The state is composed of one or several quantities, see note 3 . By contrast with parameters, the notion of state is restricted to those aspects of the system which change as a result of explicit causes or of randomness intrinsic to the system described. The usual approach, inherited from physics, is to propose a set of possible states that does not change during the dynamics. The changes of the system are then changes of state within this fixed set of possible states. For example, we can describe a cell population in a very simple manner by the number of cells n(t). Then, the state space is the set of all possible values of n, that is to say the non-negative integers 0, 1, 2, and so on.

Usually, the changes of state depend on the state of the system, which means that the state has a causal power, either direct or indirect. Direct causal power is illustrated by n in the example above: n is the number of cells that are actively proliferating, and these cells trigger the changes in n. Indirect causal power corresponds, for example, to the position of a cell, provided that some positions are too crowded for cells to proliferate.

2.1.3 Parameter versus state

Deciding whether a given quantity should be described as a parameter or as an element of the state space is a theoretical decision that is sometimes difficult, see also note 4 . The heart of the matter is to analyze the role of this quantity but it also depends on the modeling aims.

- Does this quantity change in a quantitatively significant way at the time scale of the phenomenon of interest? If not, it should be a parameter. If yes:

- Are the changes of this quantity required to observe the phenomenon one wants to explain? If yes, it should be part of the state space. If not:

- Do we want to perform precise quantitative predictions? If yes, the quantity should be part of the state space; otherwise, it should be a parameter.
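The three questions above form a small decision procedure. As a sketch only, it can be encoded as a Python function; the function name `classify_quantity` and its boolean arguments are hypothetical, and in practice each answer is itself a theoretical judgment rather than a simple flag.

```python
def classify_quantity(changes_on_timescale: bool,
                      changes_needed_for_phenomenon: bool,
                      precise_quantitative_prediction: bool) -> str:
    """Return "parameter" or "state" following the three questions above.

    Hypothetical helper: each argument is the modeler's answer to one question.
    """
    # Q1: no significant change at the time scale of interest -> parameter
    if not changes_on_timescale:
        return "parameter"
    # Q2: the change is required to observe the phenomenon -> state
    if changes_needed_for_phenomenon:
        return "state"
    # Q3: only the aim of precise quantitative prediction justifies tracking it
    return "state" if precise_quantitative_prediction else "parameter"


# The cell count n in a proliferation experiment changes and drives the phenomenon.
print(classify_quantity(True, True, False))    # state
# An incubator temperature held constant during the experiment.
print(classify_quantity(False, False, False))  # parameter
```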

In the following, we will call “description space” the combination of the state space and parameters.

2.2 Equations

Equations are often seen as intimidating by experimental biologists. Our aim here and in the following subsection is to help demystify them. In the modeling process, equations are the final, explicit statement of how changes occur and how causes act in a model. As a result, understanding them is of paramount importance to understand the assumptions of a model.

The basic rule of modeling is extremely simple. Parameters do not require equations since they are set externally. However, the values of states are not specified in advance. As a result, equations are required to describe how states change. More precisely, modelers require an equation for each quantity describing the state. Quantities of the state space are degrees of freedom, and these degrees of freedom have to be “removed” by equations for the model to perform predictions. These equations need to be independent in the sense that they capture different aspects of the system: writing the same equation twice obviously does not constrain the states any further. Equations typically come in two kinds:

- Equations that relate different quantities of the state space. For example, if n is the total number of cells and there are two possible cell types with cell counts $n_1$ and $n_2$, then we always have $n = n_1 + n_2$. As a result, it is sufficient to describe how two of these variables change in order to obtain the third one.

- Equations that describe a change of state as a function of the state. These equations typically take two different forms, depending on whether time is represented as continuous or discrete, see note 5 . In continuous time, modelers use differential equations, for example $dn/dt = n/\tau$. This equation means that the change of n ($dn$) during a short time ($dt$) is equal to $n\,dt/\tau$. This change follows from cell proliferation, and we expand on this equation in the next section. In discrete time, $n_{t+\Delta t} - n_t$ is the change of state, which relates to the current state by $n_{t+\Delta t} - n_t = n_t \Delta t/\tau$. Alternatively and equivalently, the future state can be written as a function of the current state: $n_{t+\Delta t} = n_t + n_t \Delta t/\tau$. Defining a dynamics requires at least one such equation to bind together the different time points, that is to say to bind causes and their effects.
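To make the two forms concrete, the sketch below iterates the discrete-time rule $n_{t+\Delta t} = n_t + n_t \Delta t/\tau$ and compares it with the solution of $dn/dt = n/\tau$, which is $n(t) = n_0 e^{t/\tau}$. The values of $n_0$, $\tau$ and $\Delta t$ are arbitrary illustrative choices, and the function names are ours.

```python
import math


def discrete_growth(n0: float, tau: float, dt: float, t_end: float) -> float:
    """Iterate n_{t+dt} = n_t + n_t * dt / tau from t = 0 to t_end."""
    steps = round(t_end / dt)
    n = n0
    for _ in range(steps):
        n = n + n * dt / tau  # change of state proportional to the current state
    return n


def continuous_growth(n0: float, tau: float, t: float) -> float:
    """Solution of dn/dt = n / tau with n(0) = n0."""
    return n0 * math.exp(t / tau)


n0, tau, t_end = 100.0, 1.0, 3.0  # illustrative values; time measured in units of tau
exact = continuous_growth(n0, tau, t_end)
for dt in (1.0, 0.1, 0.01):
    approx = discrete_growth(n0, tau, dt, t_end)
    print(f"dt={dt}: discrete={approx:.1f}, continuous={exact:.1f}")
```

With a coarse step, the discrete rule grows more slowly than the continuous solution, and the gap shrinks as $\Delta t$ decreases. Here the discrete rule is used as an approximation of the continuum, which is only one of the two meanings of discrete time discussed in note 5.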

2.3 Invariants and symmetries

We have discussed the role of equations; now let us expand on their structure. Let us start with the equation mentioned above: $dn/dt = n/\tau$. What is the meaning of such an equation? This equation states that the change of n, $dn/dt$, is proportional to n. The reasoning is as follows. 1) In conformity with cell theory, there is no spontaneous generation. There is also no migration from outside the system described, which is an assumption specific to a given situation. The only source of cells is then cell proliferation. 2) Every cell divides independently at a given rate. In conclusion, the appearance of new cells is proportional to the number of cells that are dividing unconstrained, that is to say n. A cell needs a duration τ to generate two cells (that is to say, to increase the cell count by one), which is reflected by the fact that for $n = 1$, $dn/dt = 1/\tau$.

Alternatively, this equation is equivalent to $\frac{1}{n}\frac{dn}{dt} = \frac{1}{\tau}$, and the latter relation shows that the equation is equivalent to the existence of an invariant quantity: $\frac{1}{n}\frac{dn}{dt}$, which is equal to $1/\tau$ for all values of n. Doubling n thus doubles $dn/dt$. In this sense, the joint transformation $dn/dt \to 2\,dn/dt$ and $n \to 2n$ is a symmetry, that is to say a transformation that leaves invariant a key aspect of the system. This transformation leads from one time point to another. Discussing the symmetries of equations is a method to show their meaning. Here, in a sense, the size of the population does not matter. Symmetries can also be multi-scale; for example, fractal analysis is based on a symmetry between different scales that is very fruitful in biology [ 9 , 10 ].
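The invariance of $\frac{1}{n}\frac{dn}{dt}$ can be checked numerically on the exponential solution $n(t) = n_0 e^{t/\tau}$. The sketch below estimates the per-capita rate with a small central difference at several times, and also computes the time needed for the population to double. As a consequence of the symmetry $n \to 2n$, this doubling time does not depend on the starting size and is close to $\tau \ln 2$. The value of `TAU`, the step sizes and the helper names are illustrative assumptions.

```python
import math

TAU = 2.0  # illustrative characteristic division time (arbitrary units)


def n_of_t(t: float, n0: float = 10.0) -> float:
    """Exponential solution of dn/dt = n / TAU."""
    return n0 * math.exp(t / TAU)


def per_capita_rate(t: float, h: float = 1e-6) -> float:
    """Central-difference estimate of (1/n) dn/dt at time t."""
    dn_dt = (n_of_t(t + h) - n_of_t(t - h)) / (2 * h)
    return dn_dt / n_of_t(t)


def doubling_time(n_start: float, dt: float = 1e-3) -> float:
    """Time for n to go from n_start to 2 * n_start, using small Euler steps."""
    n, t = n_start, 0.0
    while n < 2 * n_start:
        n += n / TAU * dt
        t += dt
    return t


for t in (0.0, 5.0, 10.0):
    print(f"t={t}: (1/n) dn/dt = {per_capita_rate(t):.6f}, 1/tau = {1 / TAU}")

# Doubling from 10 cells or from 10,000 cells takes the same time.
print(doubling_time(10.0), doubling_time(10_000.0), TAU * math.log(2))
```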

Probabilities may also be analyzed on the basis of symmetries. Randomness may be defined as unpredictability in a given theoretical frame and is more general than probabilities. To define probabilities, two steps have to be performed. The modeler needs to define a space of possibilities and then to assign probabilities to these possibilities. The most meaningful way to do the latter is to identify possibilities that are equivalent, that is to say symmetric. For example, in a homogeneous environment, all directions are equivalent and are thus assigned the same probabilities. A cell in this situation would have the same chance of choosing any of these directions, assuming that the cell’s organization is not already oriented in space, see also note 6 . In physics, a common assumption is that states which have the same energy have the same probabilities.
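As an illustration of a probability assignment derived from symmetry, the sketch below draws movement directions for a cell in a homogeneous environment, assuming, as in the text, that no direction is privileged, so that the angle is uniform on $[0, 2\pi)$. Equal-width angular sectors then receive roughly equal frequencies, and the mean unit displacement is close to zero. The sample size, seed, number of sectors and helper names are arbitrary choices made for the example.

```python
import math
import random


def sample_directions(count: int, seed: int = 0) -> list[float]:
    """Draw angles uniformly in [0, 2*pi): all directions are assumed equivalent."""
    rng = random.Random(seed)
    return [rng.uniform(0.0, 2 * math.pi) for _ in range(count)]


def sector_frequencies(angles: list[float], sectors: int = 4) -> list[float]:
    """Fraction of angles falling in each of `sectors` equal-width angular sectors."""
    counts = [0] * sectors
    width = 2 * math.pi / sectors
    for a in angles:
        # min() guards against the rare float case a == 2*pi
        counts[min(int(a // width), sectors - 1)] += 1
    return [c / len(angles) for c in counts]


angles = sample_directions(100_000)
print(sector_frequencies(angles))  # each close to 0.25
mean_x = sum(math.cos(a) for a in angles) / len(angles)
mean_y = sum(math.sin(a) for a in angles) / len(angles)
print(mean_x, mean_y)  # both close to 0: no net drift
```

Note that the probabilities are attached to sectors (intervals of angles), not to individual angles, which is the point made in note 6.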

There are several ways to write equations, whether they are deterministic or stochastic:

- Symmetry-based writing is exemplified by the model of exponential growth above. In this case, the equation has a genuine meaning. Of course, the model conveys approximations which are not always valid, but the terms of the equation are biologically meaningful. This also ensures that all mathematical outputs of the model may be interpreted biologically.

$$\frac{dn}{dt} = \frac{n}{\tau} - \frac{n^2}{k\tau} = \frac{n}{\tau}\left(1 - \frac{n}{k}\right)$$

where k is the maximum of the population. Let us remark that we have written the equation in two different forms; we come back to this in note 7 . The solution of this equation is the classical logistic function.

Note, however, that this equation has symmetries which are dubious from a biological viewpoint: the way the population takes off is identical to the way it saturates, because the logistic curve has a center of symmetry (point A in the figure), see also [ 11 ].

- The last way to write equations is called heuristic. The idea is to use functions that mimic, quantitatively and to some extent qualitatively, the phenomenon under study. Of course, this method is less meaningful than the others, but it is often required when knowledge of the underlying phenomenon is insufficient.
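The logistic equation $\frac{dn}{dt} = \frac{n}{\tau}\left(1 - \frac{n}{k}\right)$ has the closed-form solution $n(t) = k / \left(1 + e^{-(t - t_0)/\tau}\right)$, where $t_0$ is the time at which $n = k/2$. The sketch below checks this solution against a step-by-step Runge-Kutta integration, then checks the center of symmetry mentioned above: for any shift $s$, $n(t_0 + s) + n(t_0 - s) = k$, so take-off mirrors saturation around the point $(t_0, k/2)$. The values of `K`, `TAU` and `T0` are illustrative assumptions.

```python
import math

K, TAU, T0 = 1000.0, 1.0, 5.0  # illustrative capacity k, division time tau, midpoint time t0


def logistic_rhs(n: float) -> float:
    """Right-hand side of dn/dt = (n / TAU) * (1 - n / K)."""
    return n / TAU * (1 - n / K)


def logistic_exact(t: float) -> float:
    """Closed-form solution, chosen so that n(T0) = K / 2."""
    return K / (1 + math.exp(-(t - T0) / TAU))


def integrate(n0: float, t_end: float, dt: float = 1e-3) -> float:
    """Classical fourth-order Runge-Kutta integration from t = 0 to t_end."""
    n = n0
    for _ in range(round(t_end / dt)):
        s1 = logistic_rhs(n)
        s2 = logistic_rhs(n + dt * s1 / 2)
        s3 = logistic_rhs(n + dt * s2 / 2)
        s4 = logistic_rhs(n + dt * s3)
        n += dt * (s1 + 2 * s2 + 2 * s3 + s4) / 6
    return n


# The numerical trajectory agrees with the closed-form logistic function.
print(integrate(logistic_exact(0.0), 10.0), logistic_exact(10.0))

# Center of symmetry A = (T0, K/2): take-off mirrors saturation.
for s in (0.5, 1.0, 3.0):
    print(s, logistic_exact(T0 + s) + logistic_exact(T0 - s))  # equals K up to rounding
```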

2.4 Theoretical principles

Theoretical principles are powerful tools to write equations that convey biological meaning. Let us provide a few examples.

- Cell theory implies that cells come from the proliferation of other cells and excludes spontaneous generation.

- Classical mechanics aims to understand movements in space. The acceleration of an object requires that a mechanical force is exerted on this object. Note that the principle of action and reaction states that if A exerts a force on B, then B exerts a force of the same magnitude and opposite direction on A. Therefore, from the point of view of classical mechanics, there is an equivalence between “A exerts a force” and “a force is exerted on A”. The difficulty lies in the forces exerted by cells, as cells can consume free energy to exert many kinds of forces. Cells are neither elastic solids nor bags of water; they possess agency, which leads us to the next point.

- As explained in the introduction, the reference to a default state helps to write equations that pertain to cellular behaviors. There are many aspects that contribute to cellular proliferation and motility. An equation such as the logistic model does not describe all these factors and should not be interpreted as such. Instead, it assumes proliferation on the one side and one or several factors that constrain proliferation on the other side.

3 Methods

3.1 Model writing

Model writing may have different levels of precision and ambition. Models can be a proof of concept, that is to say a genuine proof that some hypotheses explain a given behavior, or even a proof of the theoretical possibility of a behavior. Proofs of concept do not include a complete proof that the natural phenomenon genuinely behaves like the model. At the opposite end of the spectrum, models may aim at quantitative predictions. Usually, it is good practice to start from a crude model and then move to more detailed and quantitative analyses, depending on the experimental possibilities.

We will now provide a short walkthrough for writing an initial model :

- Specify the aims of the model. Models cannot answer all questions at once, and it is crucial to be clear on the aim of a model before attempting to write it. Of course, these aims may be adjusted afterwards. The scope of the model should also depend on the experimental methods that link it to reality.

- Analyze the level of description that is mandatory for the model to explain the target phenomenon. Usually, the simpler the description, the better. When cells do not constrain each other, describing cells by their count n is sufficient. By contrast, if cells constrain each other, for example if they are in organized 3D structures, it can be necessary to take into account the position of each individual cell, which leads to a list of positions $\vec{x}_1, \vec{x}_2, \vec{x}_3, \dots$. Note that in this case the state space is far larger than before, see note 8 . A fortiori, it is necessary to represent space to understand morphogenesis. Note that the notion of level of description is different from the notion of scale. A level of description pertains to qualitative aspects such as the individual cell, the tissue, the organ, the organism, etc. By contrast, a scale is defined by a quantity.

- List the theoretical principles that are relevant to the phenomenon. These principles can be specifically biological and pertain to cell theory, the notion of default state, biological organization or evolution. Physico-chemical principles, such as mechanics or the balance of chemical reactions, may also be useful.

- List the relevant states and parameters. These quantities are the ones that are expected to play a causal role that pertains to the aim of the model. This list will probably not be definitive and will be adjusted in further steps. In all cases, we cannot emphasize enough that aiming for exhaustivity is the modeler’s worst enemy. Biologists need to take many factors into account when designing an experimental protocol; it is a mistake to try to model all of these factors.

- The crucial step is to propose mathematical relations between states and their changes. We have described in sections 2.2 and 2.3 what kinds of relations can be used. Usually, these relations will involve supplementary parameters whose relevance was not obvious initially. Let us emphasize here that the key to robust models is to base them on sufficiently solid grounds. A model where all relations are heuristic will probably not be robust. As such, identifying the robust and meaningful relations that can be used is crucial.

- The last step is to analyze the consequences of the model. We describe this step in more detail below. What matters here is that the model may work as intended, in which case it may be refined by adding further details. The model may also lead to unrealistic consequences or fail to produce the expected results. In these latter cases, the issue may lie in the formulation of the relations above, in the choice of the variables or in oversimplifications. In both cases, the model requires revision.
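One way to keep these steps explicit is to write the description space down before any analysis. The sketch below is a hypothetical Python structure (the class `ModelSpec` and its fields are our own illustration, not an existing library) that records the aim, the states, the parameters and one equation per state. It flags states that still lack an equation, following the rule of section 2.2 that each quantity of the state space needs its own equation while parameters need none. The logistic model serves as the example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical representation: each rule maps (state, parameters) to a rate of change.
Rule = Callable[[Dict[str, float], Dict[str, float]], float]


@dataclass
class ModelSpec:
    aim: str
    states: List[str]
    parameters: Dict[str, float]
    rules: Dict[str, Rule] = field(default_factory=dict)

    def missing_equations(self) -> List[str]:
        """States that still lack an equation (remaining degrees of freedom)."""
        return [s for s in self.states if s not in self.rules]

    def rates(self, state: Dict[str, float]) -> Dict[str, float]:
        """Evaluate d(state)/dt for every state variable."""
        missing = self.missing_equations()
        if missing:
            raise ValueError(f"no equation for: {missing}")
        return {s: self.rules[s](state, self.parameters) for s in self.states}


spec = ModelSpec(
    aim="crude model of cell growth limited by crowding",
    states=["n"],
    parameters={"tau": 1.0, "k": 1000.0},
)
print(spec.missing_equations())  # ['n']: the model cannot predict anything yet
spec.rules["n"] = lambda x, p: x["n"] / p["tau"] * (1 - x["n"] / p["k"])
print(spec.rates({"n": 500.0}))  # {'n': 250.0}
```

Parameters here are plain numbers set from outside, while each state must be bound by a rule; this mirrors the distinction drawn in section 2.1.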

Writing a model is similar to a game of chess in that anticipating all these steps from the beginning helps. The steps we have described are all required, but a central aspect of modeling is to gain a precise intuition of what determines the system’s behavior. Once gained, this intuition guides the specification of the model at every step. Reciprocally, these steps help to gain such an intuition.

3.2 Model analysis

In this section, we will not cover all the main ways to analyze models, since this subject is far too vast and depends on the mathematical structures used in the models. Instead, we focus on the outcomes of model analyses.

3.2.1 Analytic methods

Analytic methods consist of the mathematical analysis of a model. They should always be preferred to simulations when the model is tractable, even at the cost of simplifying hypotheses.

- Asymptotic reasoning is a fundamental method to study models. The underlying idea is that models are always somewhat complicated. To make sense of them, we can look at the dynamics after enough time has elapsed, which simplifies the outcome. For example, starting from a non-zero population, the logistic function discussed above always tends to an equilibrium point where the population is at its maximum k. Mathematically, “enough” time means infinite time, hence the term asymptotic. In practice, “infinite” means “large in comparison with the characteristic times of the dynamics”, which may not be long from a human point of view. For example, a typical culture of bacteria reaches a maximum in less than a day. Asymptotic behaviors may also be more complicated, such as oscillations or strange attractors.

- Steady-state analysis. In fairly complex situations, for example when both space and time are involved, a usual approach is to analyze states that are sustained over time. For example, in the analysis of epithelial morphogenesis, it is possible to consider how the shape of a duct is sustained over time.

For the logistic equation, the equilibria, where $dn/dt = 0$, are $n = 0$ and $n = k$. Near 0, we write $n = 0 + \Delta n$, and $dn/dt \simeq \Delta n/\tau$. The small variation $\Delta n$ leads to a positive $dn/dt$; therefore this variation is amplified, and this equilibrium is not stable. We should not forget the biology here. For a population of cells or animals of a given large size, a small variation is possible. However, a small variation from a population of size 0 is only possible through migration, because spontaneous generation does not happen. Nevertheless, this analysis shows that a small population, close to $n = 0$, should not collapse but will instead expand.

Near k, let us write $n = k + \Delta n$. Then $\frac{dn}{dt} = \frac{k + \Delta n}{\tau}\left(-\frac{\Delta n}{k}\right) \simeq -\frac{\Delta n}{\tau}$: a small variation above k leads to a decrease and a small variation below k to an increase, so the variation is damped and this equilibrium is stable.

- Special cases. In some situations, qualitatively remarkable behaviors appear for specific values of the parameters. Studying these cases is interesting per se, even though the odds of a parameter having a specific value are slim without an explicit reason for this parameter to be set at this value. However, in biology, the values of some parameters result from biological evolution, and specific values can become relevant when the associated qualitative behavior is biologically meaningful [ 13 , 14 ].

- Parameter rewriting. One of the major practical advantages of analytical methods is to establish which parameters are key to understanding the behavior of a system. These “new” parameters are usually combinations of the initial parameters. We have implicitly done this operation in section 2.3. Instead of writing $an + bn^2$, we have written $n/\tau - n^2/(k\tau)$. The point here is to introduce τ, the characteristic time for a cell division, and k, the maximum size of the population. By contrast, a and especially b are less meaningful. These key parameters and their meaning are an outcome of models and, at the same time, should be the target of precise experiments to explore the validity of models.
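Two of these outcomes can be sketched for the logistic equation $\frac{dn}{dt} = f(n) = \frac{n}{\tau}\left(1 - \frac{n}{k}\right)$. Linearizing around an equilibrium $n^*$ gives $\frac{d\Delta n}{dt} \simeq f'(n^*)\,\Delta n$ with $f'(n) = \frac{1}{\tau}\left(1 - \frac{2n}{k}\right)$: a positive slope amplifies small variations and a negative one damps them, which reproduces the analysis near 0 and near k. The parameter rewriting maps the generic coefficients of $an + bn^2$ to $\tau = 1/a$ and $k = -a/b$, assuming $a > 0$ and $b < 0$. Function names and numerical values are illustrative.

```python
def logistic_slope(n: float, tau: float, k: float) -> float:
    """Derivative f'(n) of f(n) = (n / tau) * (1 - n / k)."""
    return (1 - 2 * n / k) / tau


def stability(n_eq: float, tau: float, k: float) -> str:
    """Classify an equilibrium by the sign of the linearized growth rate."""
    slope = logistic_slope(n_eq, tau, k)
    if slope > 0:
        return "unstable"  # small variations are amplified
    if slope < 0:
        return "stable"  # small variations are damped
    return "undetermined at first order"


def rewrite_parameters(a: float, b: float) -> tuple[float, float]:
    """Map dn/dt = a*n + b*n**2 to (tau, k) in dn/dt = (n/tau) * (1 - n/k).

    Assumes a > 0 (unconstrained growth) and b < 0 (crowding limits growth).
    """
    if a <= 0 or b >= 0:
        raise ValueError("expected a > 0 and b < 0")
    return 1 / a, -a / b


tau, k = rewrite_parameters(a=0.5, b=-0.0005)
print(tau, k)  # approximately 2.0 and 1000.0
print(stability(0.0, tau, k), stability(k, tau, k))  # unstable stable
```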

3.2.2 Numerical methods – simulations

Simulations have a major strength and a major weakness. Their strength lies in their ability to handle complicated situations that are not tractable analytically. Their weakness is that each simulation run provides a particular trajectory which cannot a priori be assumed to be representative of the dynamical possibilities of the model.

In this sense, the outcome of simulations may be compared to empirical results, except that simulations are transparent: it is possible to track all variables of interest over time. Of course, the outcome of simulations is artificial and only as good as the initial model.

Last, there is almost always a loss when going from a mathematical model to a computer simulation. Computer simulations always manipulate discrete objects and deterministic functions. Randomness and continua are always approximated in simulations, and mathematical care is required to ensure that the qualitative features of simulations are features of the mathematical model and not artifacts of the transposition of the model into a computer program. A subfield of mathematics, numerical analysis, is devoted to this issue.
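A classic example of such an artifact, given here as an illustration rather than as an analysis from this chapter, is the naive discretization $n_{t+\Delta t} = n_t + \frac{n_t \Delta t}{\tau}\left(1 - \frac{n_t}{k}\right)$ of the logistic equation. In the continuous model, a population starting below k rises monotonically towards k and never exceeds it. With a small step, the discrete version behaves the same way; when $\Delta t$ is larger than $\tau$, the simulated population overshoots k and, for the step used below, keeps oscillating around it, a feature of the program and not of the model. The step sizes and values are illustrative assumptions.

```python
def euler_logistic(n0: float, tau: float, k: float, dt: float, steps: int) -> list[float]:
    """Trajectory of the naive discretization n <- n + dt * (n / tau) * (1 - n / k)."""
    traj = [n0]
    n = n0
    for _ in range(steps):
        n = n + dt * n / tau * (1 - n / k)
        traj.append(n)
    return traj


TAU, K = 1.0, 1000.0  # illustrative values
fine = euler_logistic(100.0, TAU, K, dt=0.1, steps=200)
coarse = euler_logistic(100.0, TAU, K, dt=2.5, steps=200)

# Small step: monotone increase that stays below K, as in the continuous model.
print(max(fine) <= K, all(a <= b for a, b in zip(fine, fine[1:])))  # True True
# Large step: overshoot of K, an artifact of the time discretization.
print(max(coarse) > K)  # True
print(coarse[-4:])  # values keep alternating around K instead of settling
```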

3.2.3 Results

We want to emphasize two points to conclude this section.

First, it is not sufficient for a model to reproduce the expected qualitative or even quantitative behavior for this model to be correct. The validation of a model is based on the validation of a process and of the way this process takes place. As a result, it is necessary to explore the predictions of the model in order to verify them experimentally. All the outcomes described in section 3.2.1 may be used to do so, in addition to a direct verification of the assumptions of the model themselves. Of course, it is never possible to verify everything experimentally; therefore, the focus should be on predictions that are unlikely except in the light of the model.

Second, modeling focuses on a specific part and a specific process. However, this part and this process take place in an organism. Their physiological meaning, or possible lack thereof, should be analyzed. We are developing a framework to perform this kind of analysis [ 15 , 4 ] but it can also be performed informally by looking at the consequences of the part considered for the rest of the organism.

4 Notes

- In biology, “behavior” usually has an ethological meaning and “evolution” refers to the theory of evolution. In the mathematical context, these words have a broader meaning: they both typically refer to the properties of dynamics. For example, the behavior of a population without constraints is exponential growth.

- Parameters that play a role in an equation are defined in two different ways: by their role in the equation and by their biological interpretation. For example, the division time τ corresponds to the division time of the cells without the constraint that is represented by k. τ may also embed constant constraints on cell proliferation, for example chemical constraints from the serum or the temperature. Thus, τ is what physicists call an effective parameter: it implicitly carries constraints beyond the explicit constraints of the model.

- A state may be composed of several quantities, let’s say k, n, m. It is possible to write the state as three separate quantities or to join them in one vector X = (k, n, m). The two viewpoints are of course equivalent, but they lead to different mathematical methods and ways of seeing the problem. The second viewpoint shows that it is always valid to consider the state as a single mathematical object and not just a plurality of quantities.

- The notion of organization in the sense of a specific interdependence between parts [ 4 ] implies that most parameters are a consequence of other parts, at other time scales. As a result, modeling a given quantity as a parameter is only valid for some time scales, and is acceptable when these are the time scales at which the modeled process takes place.

- The choice between a model based on discrete or on continuous time is based on several criteria. For example, if the proliferation of cells is synchronized, the phenomenon has a discrete nature that strongly suggests representing the dynamics in discrete time. In this case, discrete time corresponds to an objective aspect of the phenomenon. On the opposite, when cells divide at all times in the population, a representation in continuous time is more adequate. To perform simulations, time may still be discretized, but the status of the discrete structure is then different from the first case: discretization is then arbitrary and serves the purpose of approximating the continuum. To distinguish the two situations, a simple question should be asked: what is the meaning of the time difference between two time points? In the first case, this time difference has a biological meaning; in the second, it is arbitrary and just small enough for the approximation to be acceptable.

- Probabilities over continuous possibilities are somewhat subtle. Let us show why : let us say that all directions are equivalent, thus all angles in the interval [0,360[ are equivalent. They are equivalent, so their probabilities are all the same value p. However, there is an infinite number of possible angles, so the sum of all the probabilities of all possibilities would be infinite. Over the continuum, probabilities are assigned to sets and in particular to intervals, not individual possibilities.

- There are many equivalent ways to write a mathematical term. The choice of a specific way to write a term conveys meaning and corresponds to an interpretation of this term. For example, in the text, we transformed dn ∕ dt=n ∕ τ − n 2 ∕ k τ because this expression has little biological meaning. By contrast, dn ∕ dt=n 1 − n ∕ k ∕ τ implies that when n/k is very small by comparison with 1, cells are not constraining each other. On the opposite, when n=k there is no proliferation. The consequence of cells constraining each other can be interpreted as a proportion 1-n/k of cells proliferating and a proportion n/k of cells not proliferating. Now, there is another way to write the same term which is : dn ∕ dt=n ∕ τ ∕ 1 − n ∕ k . Here, the division time becomes τ /(1-n/k) and the more cells there are, the longer the division time becomes. This division time becomes infinite when n=k which means that cells are quiescent. These two interpretations are biologically different. In the first interpretation, a proportion of cells are completely constrained while the other proliferate freely. In the second, all cells are impacted equally. Nevertheless, the initial term is compatible with both interpretations and they hhave the same consequences at this level of analysis.

- The number of quantities that form the state space is called its dimension. The dimension of the state space is a crucial matter for its mathematical analysis. Low dimensions (3 or below) are more tractable and easier to represent. High dimensions may also be tractable if many dimensions play equivalent roles (even in infinite dimension). A large number of heterogeneous quantities (10 or 20) is complicated to analyze, even with computer simulations, because it comes with many possibilities for the initial conditions and for the parameters, making it difficult to “probe” the different qualitative possibilities of the model.

- It is very common in modeling to use the words “small” and “large”. A small (resp. large) quantity is a quantity that is assumed to be small (resp. large) enough for a given approximation to be performed. For example, a large time in the context of the logistic equation means a time long enough for the population to be approximately at the maximum $k$. Similarly, infinite and large are very close notions in most practical cases. For example, a very large capacity $k$ leads to $\frac{dn}{dt} = \frac{n}{\tau}\left(1 - \frac{n}{k}\right) \simeq \frac{n}{\tau}$, which describes exponential growth as long as $n$ is far smaller than $k$.
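The note on discrete versus continuous time can be made concrete with a small sketch. It assumes, purely for illustration, that synchronized cells double once per division round, and that asynchronous proliferation follows $dn/dt = n/\tau$; the function names are hypothetical. In the first function, one step is one biological round; in the second, the step `dt` is arbitrary and only controls how closely forward Euler approximates the exact solution $n_0 e^{t/\tau}$.

```python
import math

def synchronized_divisions(n0: float, generations: int) -> float:
    """Discrete time: each step is one synchronized division round (a biological unit)."""
    n = n0
    for _ in range(generations):
        n = 2 * n  # every cell divides once per round
    return n

def euler_exponential(n0: float, tau: float, t_end: float, dt: float) -> float:
    """Continuous time dn/dt = n / tau, approximated by forward Euler with an arbitrary step dt."""
    steps = round(t_end / dt)
    n = n0
    for _ in range(steps):
        n += dt * n / tau  # dt has no biological meaning; it only sets the accuracy
    return n

if __name__ == "__main__":
    print(synchronized_divisions(1.0, 3))  # 8.0
    exact = math.exp(1.0)  # n(t) = n0 * exp(t / tau) with n0 = 1 and t = tau = 1
    for dt in (0.1, 0.01, 0.001):
        approx = euler_exponential(1.0, 1.0, 1.0, dt)
        print(dt, approx, abs(approx - exact))
```

Shrinking `dt` reduces the error, which signals that the discretization only approximates the continuum; changing the number of rounds in the first function, by contrast, changes the biology.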
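For the note on probabilities over continuous possibilities, here is a minimal sketch assuming uniformly distributed directions on [0, 360): the probability of an interval of angles is its length divided by 360, so a single angle has probability zero while intervals carry positive probability. The helper names and the Monte Carlo check are illustrative additions, not part of the original text.

```python
import random

FULL_TURN = 360.0  # directions are angles in [0, 360)

def prob_interval(a: float, b: float) -> float:
    """Probability that a uniformly distributed angle falls in [a, b), with 0 <= a <= b <= 360."""
    if not 0.0 <= a <= b <= FULL_TURN:
        raise ValueError("need 0 <= a <= b <= 360")
    return (b - a) / FULL_TURN  # probability is proportional to the interval length

def empirical_prob(a: float, b: float, samples: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo estimate of the same probability from simulated random directions."""
    rng = random.Random(seed)
    hits = sum(1 for _ in range(samples) if a <= rng.uniform(0.0, FULL_TURN) < b)
    return hits / samples

if __name__ == "__main__":
    print(prob_interval(0.0, 90.0))   # 0.25: a quarter of all directions
    print(prob_interval(45.0, 45.0))  # 0.0: a single angle has probability zero
    print(empirical_prob(0.0, 90.0))  # close to 0.25
```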
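The notes on rewriting the logistic term and on the large-$k$ approximation can be checked numerically. This sketch assumes $0 \le n \le k$ and uses hypothetical function names; `logistic_solution` is the standard closed-form solution of $dn/dt = n(1 - n/k)/\tau$ with $n(0) = n_0$. The three rate functions return the same value, which is why the equation alone cannot decide between the two biological readings.

```python
import math

def rate_difference(n: float, k: float, tau: float) -> float:
    """dn/dt written as n/tau - n**2/(k*tau), the form with little biological meaning."""
    return n / tau - n**2 / (k * tau)

def rate_fraction_proliferating(n: float, k: float, tau: float) -> float:
    """dn/dt = n(1 - n/k)/tau: a fraction 1 - n/k of cells divides freely, n/k does not."""
    return n * (1 - n / k) / tau

def effective_division_time(n: float, k: float, tau: float) -> float:
    """Division time tau/(1 - n/k) shared by all cells; infinite (quiescence) at n = k."""
    if n > k:
        raise ValueError("this reading assumes 0 <= n <= k")
    if n == k:
        return math.inf
    return tau / (1 - n / k)

def rate_slowed_division(n: float, k: float, tau: float) -> float:
    """dn/dt = n / (tau/(1 - n/k)): every cell divides, but more slowly as n grows."""
    return n / effective_division_time(n, k, tau)

def logistic_solution(t: float, n0: float, k: float, tau: float) -> float:
    """Closed-form solution of dn/dt = n(1 - n/k)/tau with n(0) = n0."""
    growth = math.exp(t / tau)
    return k * n0 * growth / (k + n0 * (growth - 1))

if __name__ == "__main__":
    k, tau = 1000.0, 1.0
    for n in (10.0, 500.0, 990.0):
        # The three algebraically equal forms give the same rate, up to rounding.
        print(n, rate_difference(n, k, tau), rate_fraction_proliferating(n, k, tau),
              rate_slowed_division(n, k, tau))
    print(effective_division_time(500.0, k, tau))  # 2.0: at n = k/2 division takes twice as long
    # Very large k: logistic growth stays close to exponential growth while n << k.
    print(logistic_solution(5.0, 1.0, 1e9, tau), math.exp(5.0))
    # Large t: the population is approximately at the maximum k.
    print(logistic_solution(50.0, 1.0, 100.0, tau))
```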
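The note on the dimension of the state space can be illustrated by simple counting. Assuming, only for illustration, that each quantity (initial condition or parameter) is probed at 10 values and that one simulation takes a hypothetical 1 ms, the number of runs needed to try every combination grows as $10^d$ with the number $d$ of heterogeneous quantities.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600

def grid_size(values_per_quantity: int, n_quantities: int) -> int:
    """Number of simulations needed to try every combination of the probed values."""
    return values_per_quantity ** n_quantities

def years_to_simulate(n_runs: int, seconds_per_run: float = 1e-3) -> float:
    """Wall-clock time in years, assuming a hypothetical cost of 1 ms per simulation."""
    return n_runs * seconds_per_run / SECONDS_PER_YEAR

if __name__ == "__main__":
    for d in (3, 10, 20):
        runs = grid_size(10, d)
        print(f"{d} quantities: {runs:.1e} runs, about {years_to_simulate(runs):.1e} years")
```

The count says nothing about the model itself; it only shows why exhaustive probing stops being an option as heterogeneous quantities accumulate.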

- Beeman, D. (2013). Hodgkin-Huxley Model. In Encyclopedia of Computational Neuroscience, pages 1–13. Springer New York, New York, NY. doi:10.1007/978-1-4614-7320-6_127-3

- Descartes, R. (2016). Discours de la méthode. Flammarion.

- Montévil, M., Mossio, M., Pocheville, A., and Longo, G. (2016a). Theoretical principles for biology: Variation. Progress in Biophysics and Molecular Biology, 122(1): 36–50. doi:10.1016/j.pbiomolbio.2016.08.005

- Mossio, M., Montévil, M., and Longo, G. (2016). Theoretical principles for biology: Organization. Progress in Biophysics and Molecular Biology, 122(1): 24–35. doi:10.1016/j.pbiomolbio.2016.07.005

- Noble, D. (2010). Biophysics and systems biology. Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences, 368(1914): 1125. doi:10.1098/rsta.2009.0245

- Sonnenschein, C. and Soto, A. (1999). The society of cells: cancer and control of cell proliferation. Springer Verlag, New York.

- Soto, A. M., Longo, G., Montévil, M., and Sonnenschein, C. (2016). The biological default state of cell proliferation with variation and motility, a fundamental principle for a theory of organisms. Progress in Biophysics and Molecular Biology, 122(1): 16–23. doi:10.1016/j.pbiomolbio.2016.06.006

- Montévil, M., Speroni, L., Sonnenschein, C., and Soto, A. M. (2016b). Modeling mammary organogenesis from biological first principles: Cells and their physical constraints. Progress in Biophysics and Molecular Biology, 122(1): 58–69. doi:10.1016/j.pbiomolbio.2016.08.004

- D’Anselmi, F., Valerio, M., Cucina, A., Galli, L., Proietti, S., Dinicola, S., Pasqualato, A., Manetti, C., Ricci, G., Giuliani, A., and Bizzarri, M. (2011). Metabolism and cell shape in cancer: A fractal analysis. The International Journal of Biochemistry & Cell Biology, 43(7): 1052–1058. Metabolic Pathways in Cancer. doi:10.1016/j.biocel.2010.05.002

- Longo, G. and Montévil, M. (2014). Perspectives on Organisms: Biological time, symmetries and singularities. Lecture Notes in Morphogenesis. Springer, Dordrecht. doi:10.1007/978-3-642-35938-5

- Tjørve, E. (2003). Shapes and functions of species–area curves: a review of possible models. Journal of Biogeography, 30(6): 827–835. doi:10.1046/j.1365-2699.2003.00877.x

- Hoehler, T. M. and Jorgensen, B. B. (2013). Microbial life under extreme energy limitation. Nat Rev Micro, 11(2): 83–94. doi:10.1038/nrmicro2939

- Camalet, S., Duke, T., Julicher, F., and Prost, J. (2000). Auditory sensitivity provided by self-tuned critical oscillations of hair cells. Proceedings of the National Academy of Sciences, 97(7): 3183–3188. doi:10.1073/pnas.97.7.3183

- Lesne, A. and Victor, J.-M. (2006). Chromatin fiber functional organization: Some plausible models. Eur Phys J E Soft Matter, 19(3): 279–290. doi:10.1140/epje/i2005-10050-6

- Montévil, M. and Mossio, M. (2015). Biological organisation as closure of constraints. Journal of Theoretical Biology, 372: 179–191. doi:10.1016/j.jtbi.2015.02.029

∗ Montévil M. (2018) A Primer on Mathematical Modeling in the Study of Organisms and Their Parts. In: Bizzarri M. (eds) Systems Biology. Methods in Molecular Biology, vol 1702. Humana Press, New York, NY. doi:10.1007/978-1-4939-7456-6_4

† Laboratoire "Matière et Systèmes Complexes" (MSC), UMR 7057 CNRS, Université Paris 7 Diderot, 75205 Paris Cedex 13, France and Institut d’Histoire et de Philosophie des Sciences et des Techniques (IHPST) - UMR 8590 Paris, France.


Essays articulate a specific perspective on a topic of broad interest to scientists.


Not Just a Theory—The Utility of Mathematical Models in Evolutionary Biology


Affiliation Department of Biology, University of North Carolina, Chapel Hill, North Carolina, United States of America

Affiliation Department of Plant Biology, University of Minnesota, Twin Cities, St. Paul, Minnesota, United States of America

Affiliation National Evolutionary Synthesis Center (NESCent), Durham, North Carolina, United States of America

Affiliation Department of Ecology, Evolution, and Behavior, University of Minnesota, Twin Cities, St. Paul, Minnesota, United States of America

Affiliations Department of Biology, University of North Carolina, Chapel Hill, North Carolina, United States of America, Santa Fe Institute, Santa Fe, New Mexico, United States of America

Affiliations National Evolutionary Synthesis Center (NESCent), Durham, North Carolina, United States of America, Department of Biology, University of Kentucky, Lexington, Kentucky, United States of America

Maria R. Servedio, Yaniv Brandvain, Sumit Dhole, Courtney L. Fitzpatrick, Emma E. Goldberg, Caitlin A. Stern, Jeremy Van Cleve, D. Justin Yeh

Published: December 9, 2014

- https://doi.org/10.1371/journal.pbio.1002017


Citation: Servedio MR, Brandvain Y, Dhole S, Fitzpatrick CL, Goldberg EE, Stern CA, et al. (2014) Not Just a Theory—The Utility of Mathematical Models in Evolutionary Biology. PLoS Biol 12(12): e1002017. https://doi.org/10.1371/journal.pbio.1002017

Copyright: © 2014 Servedio et al. This is an open-access article distributed under the terms of the Creative Commons Attribution License , which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Funding: MRS was supported by the National Science Foundation (NSF) grants DEB-0919018 and DEB-1255777, and CF and JV were supported by the National Evolutionary Synthesis Center (NESCent), NSF EF-0423641. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Competing interests: The authors have declared that no competing interests exist.

Progress in science often begins with verbal hypotheses meant to explain why certain biological phenomena exist. An important purpose of mathematical models in evolutionary research, as in many other fields, is to act as “proof-of-concept” tests of the logic in verbal explanations, paralleling the way in which empirical data are used to test hypotheses. Because not all subfields of biology use mathematics for this purpose, misunderstandings of the function of proof-of-concept modeling are common. In the hope of facilitating communication, we discuss the role of proof-of-concept modeling in evolutionary biology.

A Conceptual Gap: Models and Misconceptions

Recent advances in many fields of biology have been driven by a synergistic approach involving observation, experiment, and mathematical modeling (see, e.g., [1]). Evolutionary biology has long required this approach, due in part to the complexity of population-level processes and to the long time scales over which evolutionary processes occur. Indeed, the “modern evolutionary synthesis” of the 1930s and 40s, a pivotal moment of intellectual convergence that first reconciled Mendelian genetics and gene frequency change with natural selection, hinged on elegant mathematical work by RA Fisher, Sewall Wright, and JBS Haldane. Formal (i.e., mathematical) evolutionary theory has continued to mature; models can now describe how evolutionary change is shaped by genome-scale properties such as linkage and epistasis [2], [3], complex demographic variability [4], environmental variability [5], and individual and social behavior [6], [7] within and between species.

Despite their integral role in evolutionary biology, the purpose of certain types of mathematical models is often questioned [8]. Some view models as useful only insofar as they generate immediately testable quantitative predictions [9], and others see them as tools to elaborate empirically-derived biological patterns but not to independently make substantial new advances [10]. Doubts about the utility of mathematical models are not limited to present-day studies of evolution; indeed, this is a topic of discussion in many fields including ecology [11], [12], physics [13], and economics [14], and has been debated in evolution previously [15]. We believe that skepticism about the value of mathematical models in the field of evolution stems from a common misunderstanding regarding the goals of particular types of models. While the connection between empiricism and some forms of theory (e.g., the construction of likelihood functions for parameter inference and model choice) is straightforward, the importance of highly abstract models, which might not make immediately testable predictions, can be less evident to empiricists. The lack of a shared understanding of the purpose of these “proof-of-concept” models represents a roadblock for progress and hinders dialogue between scientists studying the same topics but using disparate approaches. This conceptual gap obstructs the stated goals of evolutionary biologists; a recent survey of evolutionary biologists and ecologists reveals that the community wants more interaction between theoretical and empirical research than is currently perceived to occur [16].

To promote this interaction, we clarify the role of mathematical models in evolutionary biology. First, we briefly describe how models fall along a continuum from those designed for quantitative prediction to abstract models of biological processes. Then, we highlight the unique utility of proof-of-concept models, at the far end of this continuum of abstraction, presenting several examples. We stress that the development of rigorous analytical theory with proof-of-concept models is itself a test of verbal hypotheses [11], [17], and can in fact be as strong a test as an elegant experiment.

Degrees of Abstraction in Evolutionary Theory

Good evolutionary theory always derives its motivation from the natural world and relates its conclusions back to biological questions. Building such theory requires different degrees of biological abstraction depending on the specific question. Some questions are best addressed by building models to interface directly with data. For example, DNA substitution models in molecular evolution can be built to take into account the biochemistry of DNA, including variation in guanine and cytosine (GC) content [18] and the structure of the genetic code [19]. These substitution models form the basis of the likelihood functions used to infer phylogenetic relationships from sequence data. Models can also provide baseline expectations against which to compare empirical observations (e.g., coalescent genealogies under simple demographic histories [20] or levels of genetic diversity around selective sweeps [21]).

In contrast, higher degrees of abstraction are required when models are built to qualitatively, as opposed to quantitatively, describe a set of processes and their expected outcomes. Though not mathematical, verbal or pictorial models have long been used in evolutionary biology to form abstract hypotheses about processes that operate among diverse species and across vast time scales. Darwin's [22] theory of natural selection represents one such model, and many others have followed since; for example, Muller proposed that genetic recombination might evolve to prevent the buildup of deleterious mutations (“Muller's ratchet”) [23], and the “Red Queen hypothesis” proposes that coevolution between antagonistically interacting species can proceed without either species achieving a long-term increase in fitness [24]. A clear verbal model lays out explicitly which biological factors and processes it is (and is not) considering and follows a chain of logic from these initial assumptions to conclusions about how these factors interact to produce biological patterns.

However, evolutionary processes and the resulting patterns are often complex, and there is much room for error and oversight in verbal chains of logic. In fact, verbal models often derive their influence by functioning as lightning rods for debate about exactly which biological factors and processes are (or should be) under consideration and how they will interact over time. At this stage, a mathematical framing of the verbal model becomes invaluable. It is this proof-of-concept modeling on which we focus below.

Proof-of-Concept Models: Testing Verbal Logic in Evolutionary Biology

Proof-of-concept models, used in many fields, test the validity of verbal chains of logic by laying out the specific assumptions mathematically. The results that follow from these assumptions emerge through the principles of mathematics, which reduces the possibility of logical errors at this step of the process. The appropriateness of the assumptions is critical, but once they are established, the mathematical analysis provides a precise mapping to their consequences.

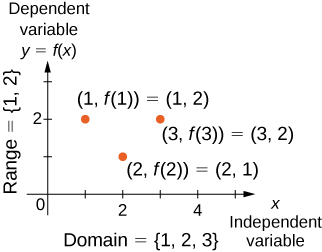

A clear analogy exists between proof-of-concept models and other forms of hypothesis testing. In general, the hypotheses generated by verbal models must ultimately be tested as part of the scientific process (Figure 1A). Empirical research tests a hypothesis by gathering data in order to determine whether those data match predicted outcomes (Figure 1B). Proof-of-concept models function very similarly (Figure 1C): to test the validity of a verbal model, precise predictions from a mathematical analysis of the assumptions are compared against verbal predictions. This important function of mathematical modeling is commonly misunderstood, as theoreticians are often asked how they might test their proof-of-concept models empirically. The models themselves are tests of whether verbal models are sound; if their predictions do not match, the verbal model is flawed, and that form of the hypothesis is disproved.


Figure 1. This flowchart shows the steps in the scientific process, emphasizing the relationship between experimental empirical techniques and proof-of-concept modeling. Other approaches, including ones that combine empirical and mathematical techniques, are not shown. We note that some questions are best addressed by one or the other of these techniques, while others might benefit from both approaches. Proof-of-concept models, for example, are best suited to testing the logical correctness of verbal hypotheses (i.e., whether certain assumptions actually lead to certain predictions), while only empirical approaches can address hypotheses about which assumptions are most commonly met in nature. (A) A general description of the scientific process. (B) Steps in the scientific process as approached by experimental empirical techniques. In this case, statistical techniques are often used to analyze the gathered data. (C) Steps in the scientific process as approached by proof-of-concept modeling. Here, techniques such as invasion and stability analyses, stochastic simulations, and numerical analyses are employed to analyze the expected outcomes of a model. In both cases, the hypothesis can be evaluated by comparing the results of the analyses to the original predictions.

https://doi.org/10.1371/journal.pbio.1002017.g001

That is not to say, however, that proof-of-concept models do not need to interact with natural systems or with empirical work; in fact, quite the contrary is true. There are vital links between theory and natural systems at the assumption stage (Box 1), and there can also be important connections at the predictions stage (Box 2); connections also occur at the discussion stage, where empirical results are synthesized into a broader conceptual framework. Additionally, theoretical models often point to promising new directions for empirical research, even if these models do not provide immediately testable predictions (see below). When empirical results run counter to theoretical expectations, theorists and empiricists have an opportunity to discover unknown or underappreciated phenomena with potentially important consequences.

Box 1. A Critical Connection—Assumptions

Although the steps between assumptions and predictions in proof-of-concept models do not need to be empirically tested, empirical support is essential to ensure that key assumptions of mathematical models are biologically realistic. The process of matching assumptions to data is a two-way street; if a model demonstrates that a certain assumption is very important, it should motivate empirical work to see if it is met. Importantly, however, not all assumptions must be fully realistic for a model to inform our understanding of the natural world.

We can group assumptions into three general categories (with some overlap between them): we name these 1) critical, 2) exploratory, and 3) logistical. Critical assumptions are those that are integral to the hypothesis, analogous to the factors that an empirical scientist varies in an experiment (they would be part of the purple “hypothesis” box of Figure 1). These assumptions are crucial in order to properly test the verbal model; if they do not match the intent of the verbal hypothesis, then the mathematical model is not a true test of the verbal one. To illustrate this category of assumptions (and those below), consider the mathematical model by Rice [35], which tests the verbal model that “antagonistic selection between the sexes can maintain sexual dimorphism.” In this model, assumptions that fall into the critical category are that (i) antagonistic selection at a locus results in higher fitness for alternate alleles in each sex, and (ii) sexual dimorphism results from a polymorphism between these alleles. If critical assumptions cannot be supported by underlying data or observation, and are therefore biologically unrealistic, then the entire modeling exercise is devoid of biological meaning [36].

The second category, exploratory assumptions, may be important to vary and test, but are not at the core of the verbal hypothesis. These assumptions are analogous to factors that an empiricist wishes to control for, but that are not the primary variables. Examining the effects of these assumptions may give new insights and breadth to our understanding of a biological phenomenon. (These assumptions, and those below, might best fit in the blue “assumptions” box of Figure 1C.) Returning to Rice's [35] model of sexual dimorphism, two exploratory assumptions are the dominance relationship between the alleles under antagonistic selection and whether the locus is autosomal or sex linked. Analysis of the model shows that dominance does not affect the conditions for sexual dimorphism when the locus is autosomal, but it does when the locus is sex linked.

Finally, every mathematical modeling exercise requires that logistical assumptions be made. These assumptions are partly necessary for tractability. Additionally, proof-of-concept models in evolutionary biology, as in other fields, are not meant to replicate the real world; their purpose instead is to identify the effects of certain assumptions (critical and exploratory ones) by isolating them and placing them in a simplified and abstract context. A key to creating a meaningful model is to be certain that logistical assumptions made to reduce complexity do not qualitatively alter the model's results. In many cases, theoreticians know enough about the effects of an assumption to be able to make it safely. In Rice's [35] sexual dimorphism example, the logistical assumptions include random mating, infinitely large population size, and nonoverlapping generations. These are common and well-understood assumptions in many population genetic models. In other cases, the robustness of logistical assumptions must be tested in a specific model to understand their effects in that context. Because assumptions in mathematical models are explicit, potential limitations in applicability caused by the remaining assumptions can be identified; it is important that modelers acknowledge the potential effects of relaxing these assumptions to make these issues more transparent. As with the other categories of assumptions above, logistical assumptions have an analogy in empirical work; many experiments are conducted in lab environments, or under altered field conditions, with the same purpose of reducing biological complexity to pinpoint specific effects.

Much of the doubt about the applicability of models may stem from a mistrust of the effects of logistical assumptions. It is the responsibility of the theoretician to make his or her knowledge of the robustness of these assumptions transparent to the reader; it may not always be obvious which assumptions are critical versus logistical, and whether the effects of the latter are known. It is likewise the responsibility of the empirically-minded reader to approach models with the same open mind that he or she would bring to an experiment in an artificial setting, rather than immediately dismiss them because of the presence of logistical assumptions.

Box 2. The Complete Picture—Testing Predictions

The predictions of some proof-of-concept models can be evaluated empirically. These tests are not “tests of the model”; the model is correct in that its predictions follow mathematically from its assumptions. They are, though, tests of the relevance or applicability of the model to empirical systems, and in that sense another way of testing whether the assumptions of the model are met in nature (i.e., an indirect test of the assumptions).

A well-known example of an empirical test of theoretically-derived predictions arises in local mate competition theory, which makes predictions about the sex ratio females should produce in their offspring in order to maximize fitness in structured populations, based on the intensity of local competition for mates [37]. These predictions have been assessed, for example, using experimental evolution in spider mites (Tetranychus urticae) [38]. The predictions of other evolutionary models might be best suited to comparative tests rather than tests in a single system. For example, inclusive fitness models suggest that, all else being equal, cooperation will be most likely to evolve within groups of close kin [6]. In support of this idea, comparative analyses suggest that mating with a single male (monandry), rather than polyandry, was the ancestral state for eusocial hymenoptera, meaning that this extreme form of cooperation arose within groups of full siblings [39].

In other cases, comparative data might be very difficult to collect. Theoretical models, for example, have demonstrated that speciation is greatly facilitated if isolating mechanisms that occur before and after mating are controlled by the same genes (e.g., are pleiotropic) [40]. While this condition is found in an increasing number of case studies [41], each case requires manipulative tests of selection and/or identification of specific genes, so that a rigorous comparative test of how often such pleiotropy is involved in speciation remains far in the future.

Proof-of-concept models can both bring to light hidden assumptions present in verbal models and generate counterintuitive predictions. When a verbal model is converted into a mathematical one, casual or implicit assumptions must be made explicit; in doing so, any unintended assumptions are revealed. Once these hidden assumptions are altered or removed, the predicted outcomes and resulting inferences of the formal model may differ from, or even contradict, those of the verbal model (Box 3). This benefit of mathematical models has brought clarity and transparency to virtually all fields of evolutionary biology. Additionally, in spite of their abstract simplicity, proof-of-concept models, much like simple, elegant experiments, have the capacity to surprise. Even formalizations of seemingly straightforward verbal models can yield outcomes that are unanticipated using a verbal chain of logic (Box 4). Proof-of-concept models thus have the ability both to reinforce the foundations of evolutionary explanations and to advance the field by introducing new predictions.

Box 3. Uncovering Hidden Assumptions

A striking example of the utility of mathematical models comes from the literature on the evolution of indiscriminate altruism (the provision of benefits to others, at a cost to oneself, without discriminating between partners who cooperate and partners who do not). Hamilton [6] proposed that indiscriminate altruism can evolve in a population if individuals are more likely to interact with kin. He also suggested that population viscosity (the limited dispersal of individuals from their birthplace) can increase the probability of interacting with kin. For a long time after Hamilton's original work, it was assumed, often without any explicit justification, that limited dispersal alone could facilitate the evolution of altruism [42]. A simple mathematical model by Taylor [43], however, showed that population viscosity alone cannot facilitate the evolution of altruism, because the benefits of close proximity to kin are exactly balanced by the costs of competition with those kin. Taylor's model revealed the importance of kin competition and clarified that additional assumptions about life history, such as age structure and the timing of dispersal relative to reproduction, are required for population viscosity to promote (or even inhibit) the evolution of altruism.

Box 4. A Proof-of-Concept Model Finds a Flaw and Introduces a New Twist

In stalk-eyed flies, males' exaggerated eyestalks play two roles in sexual selection: they are used in male–male competition and are the object of female choice. Researchers noticed that generations of experimental selection for less exaggerated eyestalks resulted in males that fathered proportionally fewer sons than expected [44]. Both verbal intuition and preliminary evidence led the research group to propose that females preferred males with long eyestalks because this exaggerated trait resided on a Y chromosome that was resistant to an X chromosome driver with biased transmission [45]. However, a proof-of-concept model highlighted the flawed logic of this verbal model; the mathematical model showed that females choosing to mate with males bearing a drive-resistant Y chromosome (as putatively indicated by long eyestalks) would have lower fitness than nonchoosy females, and therefore this preference would not evolve [46]. In contrast, female choice for long eyestalks could be favored if long eyestalks were genetically associated with a nondriving allele at the (X-linked) drive locus [46], so long as the eyestalk-length and drive loci were tightly linked [47]. These proof-of-concept models provided a new direction for empirical work, leading to the collection of new evidence demonstrating that the X-driver is linked to the eyestalk-length locus by an inversion [48], with the nondriver and long eyestalk in coupling phase (i.e., on the same haplotype).

Investigating Evolutionary Puzzles through Proof-of-Concept Modeling

Proof-of-concept models have proven to be an essential tool for investigating some of the classic and most enduring puzzles in the study of evolutionary biology, such as “why is there sex?” and “how do new species originate?” These areas of research remain highly active in part because the relevant time scales are long and the processes are intricate. They represent excellent examples of topics in which mathematical approaches allow investigators to explore the effects of biologically complex factors that are difficult or impossible to manipulate experimentally.

Why Is There Sex?

A century after Darwin [25] published his comprehensive treatment of sexual reproduction, John Maynard Smith [26] used a simple mathematical formalization to identify a biological paradox: why is sexual reproduction ubiquitous, given that asexual organisms can reproduce at a higher rate than sexual ones by not producing males (the “2-fold cost of sex”)? Increased genetic variation resulting from sexual reproduction is widely thought to counteract this cost, but simple proof-of-concept models quickly revealed both a flaw in this verbal logic and an unexpected outcome: sex need not increase variation, and even when it does, the increased variation need not increase fitness [27]. Subsequent theoretical work has illuminated many factors that facilitate the evolution and maintenance of sex. Otto and Nuismer [28], for example, used a population genetic model to examine the effects of antagonistic interactions between species on the evolution of sex. Such interactions were long thought to facilitate the evolution of sex [29], [30]. Otto and Nuismer found, however, that these interactions only select for sex under particular circumstances that are probably relatively rare. Although these predictions might be difficult to test empirically, their implications are important for our conceptual understanding of the evolution of sex.
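The arithmetic behind the “2-fold cost of sex” can be sketched in a few lines. This illustrates the verbal argument only and is not a reconstruction of Maynard Smith's own formalization; it assumes that every female leaves the same number of offspring, that sexual females produce equal numbers of sons and daughters, that asexual females produce only daughters, and that there is no density regulation. The function names are hypothetical.

```python
def next_generation(asexual_females: float, sexual_females: float,
                    offspring_per_female: float = 2.0) -> tuple[float, float]:
    """One generation under the simplifying assumptions stated above."""
    new_asexual = asexual_females * offspring_per_female      # all offspring are daughters
    new_sexual = sexual_females * offspring_per_female / 2.0  # half daughters, half sons
    return new_asexual, new_sexual

def asexual_share(asexual_females: float, sexual_females: float) -> float:
    """Fraction of females that reproduce asexually."""
    return asexual_females / (asexual_females + sexual_females)

if __name__ == "__main__":
    a, s = 1.0, 1000.0  # one asexual mutant female among 1000 sexual females
    for generation in range(1, 16):
        a, s = next_generation(a, s)
        print(generation, asexual_share(a, s))
```

Whatever the number of offspring per female, the ratio of asexual to sexual females doubles each generation; this is the advantage that any benefit of sex would have to counteract.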

How Do New Species Originate?

Speciation is another research area that has benefitted from extensive proof-of-concept modeling. Even under the conditions most unfavorable to speciation (e.g., continuous contact between individuals from diverging types), one can weave plausible-sounding verbal speciation scenarios [22]. Verbal models, however, can easily underestimate the strength of biological factors that maintain species cohesion (e.g., gene flow and genetic constraints). Mathematical models have allowed scientists to explicitly outline the parameter space in which speciation can and cannot occur, highlighting many critical determinants of the speciation process that were previously unrecognized [31]. Felsenstein [32], for example, revolutionized our understanding of the difficulties of speciation with gene flow by using a proof-of-concept model to identify hitherto unconsidered genetic constraints. Speciation models in general have made it clear that the devil is in the details; there are many important biological conditions that combine to determine whether speciation is more or less likely to occur. Because speciation is exceedingly difficult to replicate experimentally, theoretical developments such as these have been particularly valuable.

Pitfalls and Promise

Although mathematical models are potentially enlightening, they share with experimental tests the danger of possible overinterpretation. Mathematical models can clearly outline the parameter space in which an evolutionary phenomenon such as speciation or the evolution of sex can occur under certain assumptions, but is this space “big” or “little”? As with any scientific study, the impression that a model leaves can be misleading, either through faults in the presentation or improper citation in subsequent literature.

Overgeneralization from what a model actually investigates, and claims to investigate, is strikingly common in this age when time for reading is short [33], and this problem is exacerbated when the presentation is not accessible to readers with a more limited background in theoretical analysis [34]. Indeed, these problems, universal to many fields of science, introduce the greatest potential for error in the conclusions that the research community draws from evolutionary theory.

We follow this word of caution with a final positive thought: in addition to the roles of mathematical models in testing verbal logic, the ability of theory to circumvent practical obstructions of experimental tractability in order to tackle virtually any problem is a benefit that should not be underestimated. Science is a quest for knowledge, and if a problem is, at least currently, empirically intractable, it is very unsatisfactory to collectively throw up our hands and accept ignorance. Surely it is far better, in such cases, to use mathematical models to explore how evolution might have proceeded, illuminating the conditions under which certain evolutionary paths are possible.

Acknowledgments

We thank Haven Wiley, Ben Haller, and Mark Peifer for stimulating discussion and insightful comments on the manuscript.


- 7. Frank SA (1998) Foundations of Social Evolution. Princeton: Princeton University Press. 268 pp.

- 9. Peters RH (1991) A Critique for Ecology. Cambridge: Cambridge University Press. 366 pp.

- 17. Kokko H (2007) Modelling for Field Biologists and Other Interesting People. Cambridge: Cambridge University Press. 230 pp.

- 22. Darwin C (1859) The Origin of Species by Means of Natural Selection, or the Preservation of Favoured Races in the Struggle for Life. London: J. Murray. 490 pp.

- 25. Darwin CR (1878) The Effects of Cross and Self-Fertilisation in the Vegetable Kingdom. 2nd edition. London: J. Murray.

- 26. Maynard Smith J (1978) The Evolution of Sex. Cambridge: Cambridge University Press. 236 pp.

- 31. Coyne JA, Orr HA (2004) Speciation. Sunderland: Sinauer Associates. 545 pp.

- 40. Gavrilets S (2004) Fitness Landscapes and the Origin of Species. Princeton: Princeton University Press. 476 pp.

HYPOTHESIS AND THEORY article

Strong inference in mathematical modeling: a method for robust science in the twenty-first century.

- 1 Department of Microbiology, University of Tennessee, Knoxville, TN, USA

- 2 Department of Mathematics, University of Tennessee, Knoxville, TN, USA

- 3 National Institute for Mathematical and Biological Synthesis, University of Tennessee, Knoxville, TN, USA

While there are many opinions on what mathematical modeling in biology is, in essence, modeling is a mathematical tool, like a microscope, which allows consequences to follow logically from a set of assumptions. Only when this tool is applied appropriately, as a microscope is when used to look at small objects, may it help us understand the importance of specific mechanisms or assumptions in biological processes. Mathematical modeling can be less useful or even misleading if used inappropriately, as when a microscope is used to study stars. According to some philosophers (Oreskes et al., 1994), mathematical models are best used not to confirm a hypothesis but to reveal an inconsistency between the model (defined by a specific set of assumptions) and data. Following the principle of strong inference for experimental sciences proposed by Platt (1964), I suggest “strong inference in mathematical modeling” as an effective and robust way of using mathematical modeling to understand the mechanisms driving the dynamics of biological systems. The major steps of strong inference in mathematical modeling are (1) to develop multiple alternative models for the phenomenon in question; (2) to compare the models with available experimental data and determine which of the models are not consistent with the data; (3) to determine why the rejected models failed to explain the data; and (4) to suggest experiments that would discriminate between the remaining alternative models. The use of strong inference is likely to make the predictions of mathematical models more robust, and it should be strongly encouraged in publications based on mathematical modeling in the twenty-first century.

1. The Core of Mathematical Modeling

What is the use of mathematical modeling in biology? The answer likely depends on the background of the responder, as mathematicians or physicists may answer differently than biologists, and it may also depend on the researcher's definition of a “model.” In some cases models are useful for estimating parameters underlying biological processes when such parameters are not directly measurable. For example, by measuring the number of T lymphocytes over time and using a simple model that assumes exponential growth, we can estimate the rate of expansion of T cell populations (De Boer et al., 2001). In other cases, building a model may help one think more carefully about the contributions of multiple players, and of their interactions, to the observed phenomenon. In general, however, mathematical models are most useful when they provide important insights into underlying biological mechanisms. In this opinion article, I would like to provide my personal thoughts on the current state and future of mathematical modeling in biology, with a focus on the dynamics of infectious diseases. As a disclosure, I must admit that I am taking an extreme, provocative view, based on personal experience as a reader and a reviewer. I hope that this work will generate much-needed discussion on the uses and misuses of mathematical models in biology and perhaps will result in quantitative data on this topic.
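As a minimal sketch of the parameter-estimation use described above, assume pure exponential growth, N(t) = N0·exp(r·t). Taking logarithms gives a straight line, ln N = ln N0 + r·t, so r can be estimated by ordinary least squares on log-transformed counts. The function name and the counts in the usage example are hypothetical synthetic values, not data from De Boer et al. (2001), and a real analysis would also need to handle measurement noise and the choice of time window.

```python
import math


def fit_exponential_growth(times, counts):
    """Estimate N0 and r in N(t) = N0 * exp(r * t) by least squares on ln N.

    times  -- measurement times (e.g., days after infection)
    counts -- positive cell counts at those times
    Returns (N0, r).
    """
    if len(times) != len(counts) or len(times) < 2:
        raise ValueError("need at least two paired observations")
    logs = [math.log(c) for c in counts]
    n = len(times)
    t_mean = sum(times) / n
    y_mean = sum(logs) / n
    sxx = sum((t - t_mean) ** 2 for t in times)
    if sxx == 0:
        raise ValueError("measurement times must not all be equal")
    # The slope of the regression line of ln N on t is the growth rate r.
    sxy = sum((t - t_mean) * (y - y_mean) for t, y in zip(times, logs))
    r = sxy / sxx
    log_n0 = y_mean - r * t_mean
    return math.exp(log_n0), r


if __name__ == "__main__":
    # Synthetic, noise-free counts generated with N0 = 100 and r = 1.5 per day.
    days = [0, 1, 2, 3, 4]
    cells = [100 * math.exp(1.5 * d) for d in days]
    n0, rate = fit_exponential_growth(days, cells)
    print(f"N0 ~ {n0:.1f} cells, r ~ {rate:.2f} per day, "
          f"doubling time ~ {24 * math.log(2) / rate:.1f} h")
```

The log transform turns a nonlinear fit into a linear one; it also implicitly assumes multiplicative (log-normal) errors, which is itself a modeling assumption worth stating.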

In my experience, in the area of dynamical systems/models of the within-host and between-host dynamics of infectious diseases, the two most commonly given answers to the question of the “use of mathematical models” are (1) models help us understand biology better; and (2) models help us predict the impact of interventions (e.g., gene knockouts/knockins, cell depletions, vaccines, treatments) on population dynamics. Although there is some truth to these answers, the way mathematical modeling in biology is generally taught and applied rarely allows one to better understand biology. In some cases mathematical models generate predictions that are difficult or impossible to test; the latter makes such models unscientific under the definition of a scientific theory given by Karl Popper, one of the major philosophers of science of the twentieth century (Popper, 2002). Moreover, mathematical modeling may result in questionable recommendations for public health policies. My main thesis is that while, in my experience, much of current research in mathematical biology is aimed at finding the right model for a given biological system, we should pay more attention to understanding which biologically reasonable models do not work, i.e., are not able to describe the biological phenomenon in question. According to Karl Popper, proving a given hypothesis correct is impossible, while rejecting hypotheses is feasible (Oreskes et al., 1994; Popper, 2002).

What is a mathematical model? In essence, a mathematical model is a hypothesis regarding the phenomenon in question. While any specific model always has an underlying hypothesis (or, in some cases, a set of hypotheses), the converse is not true, as multiple mathematical models could be formulated for a given hypothesis. In this essay I will use the words “hypothesis” and “model” interchangeably. The core of a mathematical model is its set of assumptions. These assumptions could be based on experimental observations or simply be logical reasoning based on everyday experience. For example, for an ordinary differential equation (ODE)-based model, the assumptions are the formulated equations, which include the functional terms describing interactions between species in the model, the parameters associated with these functions, and the initial conditions of the model. The utility of mathematics lies in our ability to proceed logically from the assumptions to conclusions about the system's dynamics. Thus, mathematical modeling is a logical path from a set of assumptions to conclusions. Such a logical path from axioms to theorems has been termed by some a mathematical revolution of the twentieth century (Quinn, 2012). However, while in mathematics it is vital to formulate a complete set of axioms/assumptions to establish verifiable, true statements such as theorems (Quinn, 2012), a complete set of assumptions is impossible in any biology-based mathematical model due to the openness of biological systems (or of any other natural system; Oreskes et al., 1994). Therefore, biological conclusions stemming from the analysis of mathematical models are inherently incomplete and in general depend strongly on the assumptions of the model (De Boer, 2012). Such dependency of model conclusions on model assumptions may be viewed as a weakness, but it is instead the most significant strength of mathematical modeling!
By varying model assumptions one can vary model predictions, and by subsequently comparing predictions to experimental observations, one can identify the sets of assumptions that generate predictions consistent and inconsistent with the data. This is the core of mathematical modeling, and it can provide profound insights into biological processes. While it is often possible to provide mechanistic explanations for some biological phenomena from intuition (and many biologists do so), it is often hard to identify the sets of implicit assumptions made during such a verbal process. Mathematical modeling, by requiring one to define the model, makes such assumptions explicit. Inherent to this interpretation of mathematical modeling is the need to consider multiple sets of assumptions (or models) to determine which are consistent and, more importantly, which are not consistent with experimental observations. Rather than being a thorough expedition to test multiple alternative models, many studies utilizing mathematical modeling in biology have, in my experience as a reader and a reviewer, been a quest to find (and analyze) a single “correct” model.
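The practice argued for above, fitting several alternative sets of assumptions to the same data and asking which are inconsistent with it, can be sketched in a few lines. This is a minimal illustration, not the author's procedure: the three candidate hypotheses, their log-scale polynomial forms, the synthetic data, and the use of least squares with the Akaike information criterion (AIC) for normally distributed errors (see Burnham and Anderson, 2002) are all choices made here for illustration.

```python
import math


def polyfit_least_squares(xs, ys, degree):
    """Fit y = c0 + c1*x + ... + cd*x^d by solving the normal equations."""
    m = degree + 1
    # Normal equations (X^T X) c = X^T y for a polynomial design matrix X.
    a = [[sum(x ** (i + j) for x in xs) for j in range(m)] for i in range(m)]
    b = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(m)]
    # Gaussian elimination with partial pivoting.
    for col in range(m):
        pivot = max(range(col, m), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        b[col], b[pivot] = b[pivot], b[col]
        for row in range(col + 1, m):
            factor = a[row][col] / a[col][col]
            for k in range(col, m):
                a[row][k] -= factor * a[col][k]
            b[row] -= factor * b[col]
    # Back substitution.
    coeffs = [0.0] * m
    for i in reversed(range(m)):
        s = b[i] - sum(a[i][k] * coeffs[k] for k in range(i + 1, m))
        coeffs[i] = s / a[i][i]
    return coeffs


def aic_least_squares(ssr, n, k):
    """AIC for least-squares fits with normal errors, up to a constant.

    k counts fitted coefficients; also counting the error variance would
    add 1 to every model and leave the ranking unchanged.
    """
    return n * math.log(ssr / n) + 2 * k


# Hypothetical alternative hypotheses for log-transformed counts y = ln N(t),
# mapped to the polynomial degree that encodes each one.
CANDIDATES = {
    "no net growth (y = c0)": 0,
    "exponential growth (y = c0 + c1 t)": 1,
    "expansion then contraction (y = c0 + c1 t + c2 t^2)": 2,
}


def compare_models(times, log_counts):
    """Return (name, delta AIC, coefficients), best-supported model first."""
    n = len(times)
    results = []
    for name, degree in CANDIDATES.items():
        c = polyfit_least_squares(times, log_counts, degree)
        preds = [sum(ci * t ** i for i, ci in enumerate(c)) for t in times]
        ssr = sum((y - p) ** 2 for y, p in zip(log_counts, preds))
        results.append((aic_least_squares(ssr, n, degree + 1), name, c))
    results.sort()
    best = results[0][0]
    return [(name, aic - best, c) for aic, name, c in results]


if __name__ == "__main__":
    # Synthetic data: a rise and fall in ln N plus small fixed perturbations.
    days = [0, 1, 2, 3, 4, 5, 6, 7, 8]
    noise = [0.05, -0.04, 0.03, -0.05, 0.04, -0.03, 0.05, -0.04, 0.02]
    y = [2.0 + 2.0 * t - 0.25 * t * t + e for t, e in zip(days, noise)]
    for name, delta, _ in compare_models(days, y):
        print(f"{name:55s} delta AIC = {delta:7.1f}")
```

The output of interest is not only which model ranks first but which ones are effectively rejected: a commonly cited rule of thumb from Burnham and Anderson treats a delta AIC above about 10 as essentially no support. Step (3) of strong inference, asking why a rejected model fails, is left to the modeler.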

I would argue that studies in which a single model was considered and was not rigorously tested against experimental data do not provide robust biological insights (see below). Purely mathematical analysis of a model and its behavior (e.g., the steady-state stability analyses often performed for ODE-based models) often provides little insight into the mechanisms driving dynamics in specific biological systems. Failure to consider alternative models often results in biased interpretation of biological observations. Let me give two examples.

Discussion of predator-prey interactions in ecology often starts with the Lotka-Volterra model, which is built on very simple and yet powerful assumptions (Mooney and Swift, 1999; Kot, 2001). The dynamics of the model can be understood analytically, and predictions on the dynamics of predator and prey abundances can be easily generated. The observed dynamics of hares and lynx in Canada have often been presented as evidence that predator-prey interactions drove the dynamics of this biological system (Mooney and Swift, 1999). While it is possible that the dynamics were driven by predator-prey interactions, recent studies suggest that they could also be driven by self-regulating factors and by weather influencing each species independently (Brauer and Castillo-Chávez, 2001; Zhang et al., 2007). A more robust modeling approach would be to start with the observations of lynx and hare dynamics and ask which biological mechanisms could be driving such dynamics, including predator-prey interactions, seasonality, or both (Hilborn and Mangel, 1997). The data can then be used to test which of these sets of assumptions is most consistent with the experimental data using standard model selection tools (Burnham and Anderson, 2002).
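The Lotka-Volterra assumptions mentioned above can be written down and simulated in a few lines. The sketch below uses the classical textbook form of the model, dx/dt = αx − βxy and dy/dt = δxy − γy, integrated with a fourth-order Runge-Kutta scheme; the parameter values, initial abundances, and function names are hypothetical and are not fitted to the hare-lynx data. It illustrates the point made in the text: the model's cycles around the equilibrium (x* = γ/δ, y* = α/β) follow directly from its assumptions, so observing cycles alone does not distinguish it from alternative mechanisms.

```python
import math


def lotka_volterra(state, alpha, beta, delta, gamma):
    """Right-hand side of the classical Lotka-Volterra model.

    Prey x (e.g., hares) grow exponentially without predators and are eaten
    at a rate proportional to encounters x*y; predators y (e.g., lynx) grow
    in proportion to prey eaten and die at a constant per-capita rate.
    """
    x, y = state
    return (alpha * x - beta * x * y, delta * x * y - gamma * y)


def rk4_step(f, state, dt, *params):
    """One classical fourth-order Runge-Kutta step for dstate/dt = f(state)."""
    k1 = f(state, *params)
    k2 = f(tuple(s + 0.5 * dt * k for s, k in zip(state, k1)), *params)
    k3 = f(tuple(s + 0.5 * dt * k for s, k in zip(state, k2)), *params)
    k4 = f(tuple(s + dt * k for s, k in zip(state, k3)), *params)
    return tuple(s + dt / 6.0 * (a + 2 * b + 2 * c + d)
                 for s, a, b, c, d in zip(state, k1, k2, k3, k4))


def simulate(x0, y0, alpha, beta, delta, gamma, t_end, dt=0.01):
    """Return a list of (t, x, y) from t = 0 to t_end."""
    state = (x0, y0)
    out = [(0.0, x0, y0)]
    steps = int(round(t_end / dt))
    for i in range(1, steps + 1):
        state = rk4_step(lotka_volterra, state, dt, alpha, beta, delta, gamma)
        out.append((i * dt, state[0], state[1]))
    return out


def conserved_quantity(x, y, alpha, beta, delta, gamma):
    """V = delta*x - gamma*ln(x) + beta*y - alpha*ln(y).

    V is constant along exact solutions, which is why the model predicts
    closed cycles; its drift in a simulation measures numerical error.
    """
    return delta * x - gamma * math.log(x) + beta * y - alpha * math.log(y)


if __name__ == "__main__":
    # Hypothetical parameter values, chosen only for illustration.
    params = dict(alpha=1.0, beta=0.1, delta=0.075, gamma=1.5)
    traj = simulate(10.0, 5.0, t_end=30.0, **params)
    for t, x, y in traj[::500]:
        print(f"t = {t:5.1f}  prey = {x:6.2f}  predators = {y:6.2f}")
```

Because `rk4_step` accepts any right-hand-side function, a competing hypothesis (for instance, each species tracking a periodic environmental driver with its own self-regulation) could be coded as another function and simulated the same way, so that both sets of assumptions can be confronted with the same data.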

In immunology, viral infections often lead to the generation of a large population of virus-specific effector CD8 T cells, and following clearance of the infection, memory CD8 T cells are formed (Ahmed and Gray, 1996; Kaech and Cui, 2012). However, how memory CD8 T cells are formed during the infection has been a subject of debate (Ahmed and Gray, 1996). One of the earlier models assumed that memory precursors proliferate during the infection and produce terminally differentiated, nondividing effector T cells, which then die following clearance of the infection (Wodarz et al., 2000; Bocharov et al., 2001; Wodarz and Nowak, 2002; Fearon et al., 2006). While this model was used to explain several biological phenomena, later studies showed that it failed to accurately explain experimental data on the dynamics of the CD8 T cell response to lymphocytic choriomeningitis virus (Antia et al., 2005; Ganusov, 2007). More precisely, the model was able to fit the experimental data accurately, but only with an unphysiologically short interdivision time for activated CD8 T cells [e.g., 25 min in Ganusov (2007)], which was inconsistent with other measurements made to date. Constraining the interdivision time to a larger value (e.g., 3 h) resulted in a poor fit of the model to the data. Therefore, the development of adequate mathematical models cannot be based solely on “basic principles” and must include comparison with quantitative experimental data.
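The role of the interdivision time in this argument can be made concrete with back-of-envelope arithmetic. Under the simplifying, hypothetical assumptions that every cell divides every τ hours and no cell dies, an F-fold expansion takes log2(F) divisions, and a time window of T hours allows at most 2^(T/τ)-fold expansion. This is not the model analyzed by Ganusov (2007); the function names and the 10,000-fold example are illustrative only. It shows why pinning τ at a physiological value caps how fast any model population can grow.

```python
import math


def divisions_needed(fold_expansion):
    """Doublings required for an F-fold expansion, assuming synchronous
    division and no cell death (a deliberate simplification)."""
    return math.log2(fold_expansion)


def max_fold_expansion(hours, interdivision_time_h):
    """Largest expansion achievable in `hours` if every cell divides every
    `interdivision_time_h` hours and none die."""
    return 2.0 ** (hours / interdivision_time_h)


def required_interdivision_time(fold_expansion, hours):
    """Longest interdivision time compatible with an F-fold expansion within
    `hours`; any cell death would force cells to divide even faster."""
    return hours / divisions_needed(fold_expansion)


if __name__ == "__main__":
    # The two interdivision times contrasted in the text: 25 min and 3 h.
    for tau_h in (25 / 60, 3.0):
        per_day = 24.0 / tau_h
        print(f"interdivision time {tau_h:.2f} h -> {per_day:.1f} doublings/day, "
              f"up to {max_fold_expansion(24.0, tau_h):.3g}-fold per day")
    # Illustrative only: the slowest division compatible with a
    # 10,000-fold expansion in 24 h under the same assumptions.
    print(f"{required_interdivision_time(1e4, 24.0):.2f} h")
```

With τ = 3 h, one day allows only 8 doublings (256-fold), whereas τ = 25 min allows more than 57. A model that reproduces the data only with the latter is relying on a parameter value that other measurements can refute.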