data analysis

data analysis, the process of systematically collecting, cleaning, transforming, describing, modeling, and interpreting data, generally employing statistical techniques. Data analysis is an important part of both scientific research and business, where demand has grown in recent years for data-driven decision making. Data analysis techniques are used to gain useful insights from datasets, which can then be used to make operational decisions or guide future research. With the rise of “Big Data,” the storage of vast quantities of data in large databases and data warehouses, there is increasing need to apply data analysis techniques to generate insights about volumes of data too large to be manipulated by instruments of low information-processing capacity.

Datasets are collections of information. Generally, data and datasets are themselves collected to help answer questions, make decisions, or otherwise inform reasoning. The rise of information technology has led to the generation of vast amounts of data of many kinds, such as text, pictures, videos, personal information, account data, and metadata, the last of which provide information about other data. It is common for apps and websites to collect data about how their products are used or about the people using their platforms. Consequently, there is vastly more data being collected today than at any other time in human history. A single business may track billions of interactions with millions of consumers at hundreds of locations with thousands of employees and any number of products. Analyzing that volume of data is generally only possible using specialized computational and statistical techniques.

The desire for businesses to make the best use of their data has led to the development of the field of business intelligence, which covers a variety of tools and techniques that allow businesses to perform data analysis on the information they collect.

For data to be analyzed, it must first be collected and stored. Raw data must be processed into a format that can be used for analysis and be cleaned so that errors and inconsistencies are minimized. Data can be stored in many ways, but one of the most useful is in a database. A database is a collection of interrelated data organized so that certain records (collections of data related to a single entity) can be retrieved on the basis of various criteria. The most familiar kind of database is the relational database, which stores data in tables with rows that represent records (tuples) and columns that represent fields (attributes). A query is a command that retrieves a subset of the information in the database according to certain criteria. A query may retrieve only records that meet certain criteria, or it may join fields from records across multiple tables by use of a common field.
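The two kinds of query described above can be sketched with Python's built-in `sqlite3` module. This is a minimal illustration, not a recommended schema: the `customers` and `orders` tables, their fields, and all values are hypothetical.

```python
import sqlite3

# Hypothetical tables and values, invented purely for illustration.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT, city TEXT);
    CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL);
    INSERT INTO customers VALUES (1, 'Ada', 'London'), (2, 'Grace', 'New York');
    INSERT INTO orders VALUES (10, 1, 25.0), (11, 1, 40.0), (12, 2, 15.0);
""")

# A query that retrieves only the records meeting a criterion.
large_orders = conn.execute(
    "SELECT order_id, total FROM orders WHERE total > ? ORDER BY order_id",
    (20.0,),
).fetchall()

# A query that joins fields from two tables through the common field customer_id.
joined = conn.execute(
    """
    SELECT c.name, o.total
    FROM orders AS o
    JOIN customers AS c ON c.customer_id = o.customer_id
    ORDER BY o.order_id
    """
).fetchall()
conn.close()

print(large_orders)
print(joined)
```

Each row of `orders` is a record and each column is a field; the join works because both tables share the `customer_id` field.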

Frequently, data from many sources is collected into large archives of data called data warehouses. The process of moving data from its original sources (such as databases) to a centralized location (generally a data warehouse) is called ETL (which stands for extract, transform, and load).

- The extraction step occurs when you identify and copy or export the desired data from its source, such as by running a database query to retrieve the desired records.

- The transformation step is the process of cleaning the data so that they fit the analytical need for the data and the schema of the data warehouse. This may involve changing formats for certain fields, removing duplicate records, or renaming fields, among other processes.

- Finally, the clean data are loaded into the data warehouse, where they may join vast amounts of historical data and data from other sources.
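The three ETL steps can be sketched in a few lines of Python. The example below is an assumption-laden toy: the source is a small in-memory CSV export, the "warehouse" is an in-memory SQLite database, and the field names and the helper functions `extract`, `transform`, and `load` are hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical source export; a real pipeline would read from a database or file.
SOURCE_CSV = """Order ID,Amount,Date
1, 19.99 ,2024-01-05
2,5.00,2024-01-06
2,5.00,2024-01-06
"""

def extract(text):
    """Extract: copy the raw records out of the source."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: fix field formats and remove duplicate records."""
    seen = set()
    clean = []
    for row in rows:
        record = (
            int(row["Order ID"]),
            float(row["Amount"].strip()),
            row["Date"].strip(),
        )
        if record not in seen:
            seen.add(record)
            clean.append(record)
    return clean

def load(records, conn):
    """Load: append the clean records to the warehouse table (fields renamed)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER, amount REAL, order_date TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()

warehouse = sqlite3.connect(":memory:")  # stand-in for a data warehouse
load(transform(extract(SOURCE_CSV)), warehouse)
loaded = warehouse.execute("SELECT * FROM orders ORDER BY order_id").fetchall()
print(loaded)
```

Keeping the three steps as separate functions mirrors the description above and makes it easy to test the transformation rules on their own.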

After data are effectively collected and cleaned, they can be analyzed with a variety of techniques. Analysis often begins with descriptive and exploratory data analysis. Descriptive data analysis uses statistics to organize and summarize data, making it easier to understand the broad qualities of the dataset. Exploratory data analysis looks for insights into the data that may arise from descriptions of distribution, central tendency, or variability for a single data field. Further relationships between data may become apparent by examining two fields together. Visualizations may be employed during analysis, such as histograms (graphs in which the length of a bar indicates a quantity) or stem-and-leaf plots (which divide data into buckets, or “stems,” with individual data points serving as “leaves” on the stem).
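As a rough illustration of the two visualizations mentioned above, the following sketch builds a text-only stem-and-leaf plot and a simple histogram for a single field. The `scores` values and the bin width are hypothetical, and the helper assumes non-negative integers with the tens digit as the stem.

```python
from collections import defaultdict

def stem_and_leaf(values):
    """Group non-negative integers by tens (stem) with units digits as leaves."""
    stems = defaultdict(list)
    for v in sorted(values):
        stems[v // 10].append(v % 10)
    return dict(stems)

def histogram_counts(values, bin_width):
    """Count how many values fall into each bin, keyed by the bin's lower edge."""
    counts = defaultdict(int)
    for v in values:
        counts[(v // bin_width) * bin_width] += 1
    return dict(sorted(counts.items()))

scores = [12, 15, 21, 23, 23, 34, 38, 41]  # hypothetical data
width = 10

plot = stem_and_leaf(scores)
for stem, leaves in plot.items():
    print(f"{stem} | {' '.join(str(leaf) for leaf in leaves)}")

bins = histogram_counts(scores, width)
for start, count in bins.items():
    # The length of each bar of '#' characters indicates the quantity in the bin.
    print(f"{start}-{start + width - 1}: {'#' * count}")
```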

Data analysis frequently goes beyond descriptive analysis to predictive analysis, making predictions about the future using predictive modeling techniques. Predictive modeling uses machine learning, regression analysis methods (which mathematically calculate the relationship between an independent variable and a dependent variable), and classification techniques to identify trends and relationships among variables. Predictive analysis may involve data mining, which is the process of discovering interesting or useful patterns in large volumes of information. Data mining often involves cluster analysis, which tries to find natural groupings within data, and anomaly detection, which detects instances in data that are unusual and stand out from other patterns. It may also look for rules within datasets, that is, strong relationships among variables in the data.
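Two of the techniques named above can be sketched with the standard library alone. The first function fits a simple least-squares regression line between one independent and one dependent variable; the second flags anomalies with a z-score rule, which is only one of many possible anomaly detection approaches and is chosen here for brevity. All data values and the threshold of 2 are hypothetical.

```python
from statistics import fmean, pstdev

def fit_line(xs, ys):
    """Least-squares slope and intercept for y = slope * x + intercept."""
    x_bar, y_bar = fmean(xs), fmean(ys)
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    s_xx = sum((x - x_bar) ** 2 for x in xs)
    slope = s_xy / s_xx
    return slope, y_bar - slope * x_bar

def z_score_anomalies(values, threshold=2.0):
    """Return values lying more than `threshold` standard deviations from the mean."""
    mu, sigma = fmean(values), pstdev(values)
    return [v for v in values if abs(v - mu) / sigma > threshold]

# Hypothetical data: spend (independent variable) and sales (dependent variable).
spend = [1, 2, 3, 4, 5]
sales = [3, 5, 7, 9, 11]
slope, intercept = fit_line(spend, sales)
prediction = slope * 6 + intercept  # predict sales for an unseen spend value

readings = [10, 11, 9, 10, 12, 10, 50]  # hypothetical sensor readings
outliers = z_score_anomalies(readings)
print(slope, intercept, prediction, outliers)
```

The z-score rule assumes the values are not all identical (otherwise the standard deviation is zero) and can be distorted by the very outliers it is looking for, so more robust methods are often preferred on real data.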

Data Analysis

What is Data Analysis?

According to the federal government, data analysis is "the process of systematically applying statistical and/or logical techniques to describe and illustrate, condense and recap, and evaluate data" (Responsible Conduct in Data Management). Important components of data analysis include searching for patterns, remaining unbiased in drawing inference from data, practicing responsible data management, and maintaining "honest and accurate analysis" (Responsible Conduct in Data Management).

In order to understand data analysis further, it can be helpful to take a step back and ask the question "What is data?" Many of us associate data with spreadsheets of numbers and values; however, data can encompass much more than that. According to the federal government, data is "The recorded factual material commonly accepted in the scientific community as necessary to validate research findings" (OMB Circular 110). This broad definition can include information in many formats.

Some examples of types of data are as follows:

- Photographs

- Hand-written notes from field observation

- Machine learning training data sets

- Ethnographic interview transcripts

- Sheet music

- Scripts for plays and musicals

- Observations from laboratory experiments (CMU Data 101)

Thus, data analysis includes the processing and manipulation of these data sources in order to gain additional insight from data, answer a research question, or confirm a research hypothesis.

Data analysis falls within the larger research data lifecycle.

[Figure: the research data lifecycle (University of Virginia)]

Why Analyze Data?

Through data analysis, a researcher can gain additional insight from data and draw conclusions to address the research question or hypothesis. Use of data analysis tools helps researchers understand and interpret data.

What are the Types of Data Analysis?

Data analysis can be quantitative, qualitative, or mixed methods.

Quantitative research typically involves numbers and "close-ended questions and responses" (Creswell & Creswell, 2018, p. 3). Quantitative research tests objective theories by examining the relationships among variables, which are usually measured on instruments and analyzed using statistical procedures (Creswell & Creswell, 2018, p. 4). Quantitative analysis usually uses deductive reasoning.

Qualitative research typically involves words and "open-ended questions and responses" (Creswell & Creswell, 2018, p. 3). According to Creswell & Creswell, "qualitative research is an approach for exploring and understanding the meaning individuals or groups ascribe to a social or human problem" (2018, p. 4). Thus, qualitative analysis usually uses inductive reasoning.

Mixed methods research uses methods from both quantitative and qualitative research approaches. Mixed methods research works under the "core assumption... that the integration of qualitative and quantitative data yields additional insight beyond the information provided by either the quantitative or qualitative data alone" (Creswell & Creswell, 2018, p. 4).

- Last Updated: Jun 25, 2024 10:23 AM

- URL: https://guides.library.georgetown.edu/data-analysis

Data Analysis – Process, Methods and Types

Data Analysis

Definition:

Data analysis refers to the process of inspecting, cleaning, transforming, and modeling data with the goal of discovering useful information, drawing conclusions, and supporting decision-making. It involves applying various statistical and computational techniques to interpret and derive insights from large datasets. The ultimate aim of data analysis is to convert raw data into actionable insights that can inform business decisions, scientific research, and other endeavors.

Data Analysis Process

The data analysis process typically follows these steps:

Define the Problem

The first step in data analysis is to clearly define the problem or question that needs to be answered. This involves identifying the purpose of the analysis, the data required, and the intended outcome.

Collect the Data

The next step is to collect the relevant data from various sources. This may involve collecting data from surveys, databases, or other sources. It is important to ensure that the data collected is accurate, complete, and relevant to the problem being analyzed.

Clean and Organize the Data

Once the data has been collected, it needs to be cleaned and organized. This involves removing any errors or inconsistencies in the data, filling in missing values, and ensuring that the data is in a format that can be easily analyzed.
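A minimal sketch of this cleaning step is shown below. The records, the valid age range of 0 to 120, and the choice to fill missing ages with the median are all assumptions made for illustration; the right rules depend on the dataset and the question being asked.

```python
from statistics import median

raw = [  # hypothetical survey records
    {"id": 1, "age": "34", "city": " Boston "},
    {"id": 2, "age": "", "city": "boston"},
    {"id": 3, "age": "41", "city": "Chicago"},
    {"id": 3, "age": "41", "city": "Chicago"},  # duplicate record
    {"id": 4, "age": "-5", "city": "chicago"},  # impossible value
]

def clean(records):
    """Drop duplicates, standardize formats, and fill missing or invalid ages."""
    seen, rows = set(), []
    for r in records:
        if r["id"] in seen:
            continue  # remove duplicate records
        seen.add(r["id"])
        age = int(r["age"]) if r["age"].strip() else None
        if age is not None and not 0 <= age <= 120:
            age = None  # treat out-of-range values as missing
        rows.append({"id": r["id"], "age": age, "city": r["city"].strip().title()})
    # Fill missing values with the median of the known ages (one possible strategy).
    fill = median(r["age"] for r in rows if r["age"] is not None)
    for r in rows:
        if r["age"] is None:
            r["age"] = fill
    return rows

cleaned = clean(raw)
for row in cleaned:
    print(row)
```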

Analyze the Data

The next step is to analyze the data using various statistical and analytical techniques. This may involve identifying patterns in the data, conducting statistical tests, or using machine learning algorithms to identify trends and insights.

Interpret the Results

After analyzing the data, the next step is to interpret the results. This involves drawing conclusions based on the analysis and identifying any significant findings or trends.

Communicate the Findings

Once the results have been interpreted, they need to be communicated to stakeholders. This may involve creating reports, visualizations, or presentations to effectively communicate the findings and recommendations.

Take Action

The final step in the data analysis process is to take action based on the findings. This may involve implementing new policies or procedures, making strategic decisions, or taking other actions based on the insights gained from the analysis.

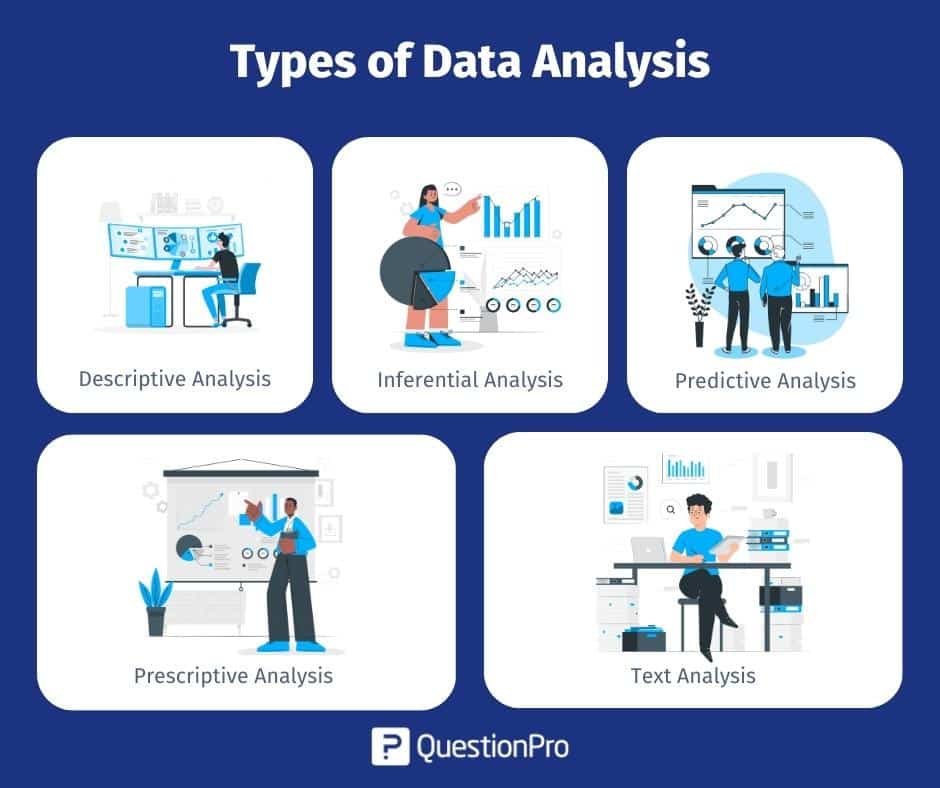

Types of Data Analysis

Types of Data Analysis are as follows:

Descriptive Analysis

This type of analysis involves summarizing and describing the main characteristics of a dataset, such as the mean, median, mode, standard deviation, and range.
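These summary measures are all available in Python's standard `statistics` module. The sketch below uses a small hypothetical dataset and the population standard deviation (`pstdev`); for a sample drawn from a larger population, `stdev` would usually be the appropriate choice.

```python
from statistics import mean, median, mode, pstdev

data = [2, 4, 4, 4, 5, 5, 7, 9]  # hypothetical measurements

summary = {
    "mean": mean(data),
    "median": median(data),
    "mode": mode(data),
    "std_dev": pstdev(data),  # population standard deviation
    "range": max(data) - min(data),
}
print(summary)
```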

Inferential Analysis

This type of analysis involves making inferences about a population based on a sample. Inferential analysis can help determine whether a certain relationship or pattern observed in a sample is likely to be present in the entire population.
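One common inferential tool is a confidence interval for a population mean estimated from a sample. The sketch below uses a normal approximation, which is an assumption on my part; for small samples a t-distribution is generally more appropriate. The sample values and the function name `mean_confidence_interval` are hypothetical.

```python
from math import sqrt
from statistics import NormalDist, fmean, stdev

def mean_confidence_interval(sample, confidence=0.95):
    """Approximate confidence interval for the population mean (normal approximation)."""
    n = len(sample)
    center = fmean(sample)
    standard_error = stdev(sample) / sqrt(n)
    # Two-sided critical value, about 1.96 for 95% confidence.
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return center - z * standard_error, center + z * standard_error

sample = [5.1, 4.9, 5.0, 5.2, 4.8, 5.0, 5.1, 4.9, 5.0, 5.0]  # hypothetical sample
low, high = mean_confidence_interval(sample)
low99, high99 = mean_confidence_interval(sample, confidence=0.99)
print(f"95% CI: ({low:.3f}, {high:.3f})")
print(f"99% CI: ({low99:.3f}, {high99:.3f})")
```

Asking for more confidence widens the interval, which reflects the trade-off between certainty and precision when generalizing from a sample.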

Diagnostic Analysis

This type of analysis involves identifying and diagnosing problems or issues revealed by a dataset and investigating their likely causes. Diagnostic analysis can start from outliers, errors, missing data, or other anomalies in the dataset and ask why they occurred.

Predictive Analysis

This type of analysis involves using statistical models and algorithms to predict future outcomes or trends based on historical data. Predictive analysis can help businesses and organizations make informed decisions about the future.

Prescriptive Analysis

This type of analysis involves recommending a course of action based on the results of previous analyses. Prescriptive analysis can help organizations make data-driven decisions about how to optimize their operations, products, or services.

Exploratory Analysis

This type of analysis involves exploring the relationships and patterns within a dataset to identify new insights and trends. Exploratory analysis is often used in the early stages of research or data analysis to generate hypotheses and identify areas for further investigation.

Data Analysis Methods

Data Analysis Methods are as follows:

Statistical Analysis

This method involves the use of mathematical models and statistical tools to analyze and interpret data. It includes measures of central tendency, correlation analysis, regression analysis, hypothesis testing, and more.

Machine Learning

This method involves the use of algorithms to identify patterns and relationships in data. It includes supervised and unsupervised learning, classification, clustering, and predictive modeling.

Data Mining

This method involves using statistical and machine learning techniques to extract information and insights from large and complex datasets.

Text Analysis

This method involves using natural language processing (NLP) techniques to analyze and interpret text data. It includes sentiment analysis, topic modeling, and entity recognition.

Network Analysis

This method involves analyzing the relationships and connections between entities in a network, such as social networks or computer networks. It includes social network analysis and graph theory.
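As a small example of a network measure, the sketch below computes degree centrality, the share of other nodes each node is directly connected to, for an undirected network. The edge list is hypothetical, and the function assumes the network has at least two nodes.

```python
from collections import defaultdict

def degree_centrality(edges):
    """Degree centrality for an undirected network given as (node, node) pairs."""
    neighbors = defaultdict(set)
    for a, b in edges:
        neighbors[a].add(b)
        neighbors[b].add(a)
    n = len(neighbors)
    # Divide each node's number of neighbors by the maximum possible, n - 1.
    return {node: len(adj) / (n - 1) for node, adj in neighbors.items()}

# Hypothetical social network: who is connected to whom.
friendships = [("A", "B"), ("A", "C"), ("A", "D"), ("B", "C")]
centrality = degree_centrality(friendships)
most_connected = max(centrality, key=centrality.get)
print(centrality, most_connected)
```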

Time Series Analysis

This method involves analyzing data collected over time to identify patterns and trends. It includes forecasting, decomposition, and smoothing techniques.
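A simple moving average is one of the most basic smoothing techniques. The sketch below is illustrative only; the `monthly_sales` values and the window size of 3 are hypothetical.

```python
def moving_average(series, window):
    """Smooth a series by averaging each run of `window` consecutive values."""
    if window < 1 or window > len(series):
        raise ValueError("window must be between 1 and the length of the series")
    return [
        sum(series[i:i + window]) / window
        for i in range(len(series) - window + 1)
    ]

monthly_sales = [10, 12, 14, 13, 15, 17]  # hypothetical time series
smoothed = moving_average(monthly_sales, window=3)
print(smoothed)
```

The smoothed series is shorter than the original by `window - 1` points, because each output value needs a full window of observations.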

Spatial Analysis

This method involves analyzing geographic data to identify spatial patterns and relationships. It includes spatial statistics, spatial regression, and geospatial data visualization.

Data Visualization

This method involves using graphs, charts, and other visual representations to help communicate the findings of the analysis. It includes scatter plots, bar charts, heat maps, and interactive dashboards.

Qualitative Analysis

This method involves analyzing non-numeric data such as interviews, observations, and open-ended survey responses. It includes thematic analysis, content analysis, and grounded theory.

Multi-criteria Decision Analysis

This method involves analyzing multiple criteria and objectives to support decision-making. It includes techniques such as the analytical hierarchy process, TOPSIS, and ELECTRE.
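To make one of these techniques concrete, here is a compact sketch of TOPSIS in its commonly described form: normalize each criterion, apply weights, find the ideal best and worst values, and score each alternative by its relative closeness to the ideal. The alternatives, weights, and criteria below are hypothetical, and the function assumes the alternatives are not all identical (otherwise the final division would fail).

```python
import math

def topsis(matrix, weights, is_benefit):
    """Return a closeness score in [0, 1] for each alternative (row of `matrix`)."""
    n_criteria = len(weights)
    # Vector-normalize each criterion column, then apply the criterion weight.
    norms = [math.sqrt(sum(row[j] ** 2 for row in matrix)) for j in range(n_criteria)]
    weighted = [
        [weights[j] * row[j] / norms[j] for j in range(n_criteria)]
        for row in matrix
    ]
    # Ideal best and worst per criterion depend on whether higher is better.
    columns = list(zip(*weighted))
    best = [max(col) if is_benefit[j] else min(col) for j, col in enumerate(columns)]
    worst = [min(col) if is_benefit[j] else max(col) for j, col in enumerate(columns)]
    scores = []
    for row in weighted:
        d_best = math.dist(row, best)    # distance to the ideal solution
        d_worst = math.dist(row, worst)  # distance to the anti-ideal solution
        scores.append(d_worst / (d_best + d_worst))
    return scores

# Hypothetical choice among three products: (price, quality rating).
alternatives = [[200, 9], [300, 5], [250, 7]]
scores = topsis(alternatives, weights=[0.5, 0.5], is_benefit=[False, True])
ranking = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
print(scores, ranking)
```

Here price is treated as a cost criterion (lower is better) and quality as a benefit criterion, so the first alternative, which is both cheapest and highest rated, receives the top score.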

Data Analysis Tools

There are various data analysis tools available that can help with different aspects of data analysis. Below is a list of some commonly used data analysis tools:

- Microsoft Excel: A widely used spreadsheet program that allows for data organization, analysis, and visualization.

- SQL: A query language used to manage and manipulate data in relational databases.

- R: An open-source programming language and software environment for statistical computing and graphics.

- Python: A general-purpose programming language that is widely used in data analysis and machine learning.

- Tableau: Data visualization software that allows for interactive and dynamic visualizations of data.

- SAS: Statistical analysis software used for data management, analysis, and reporting.

- SPSS: Statistical analysis software used for data analysis, reporting, and modeling.

- MATLAB: Numerical computing software that is widely used in scientific research and engineering.

- RapidMiner: A data science platform that offers a wide range of data analysis and machine learning tools.

Applications of Data Analysis

Data analysis has numerous applications across various fields. Below are some examples of how data analysis is used in different fields:

- Business : Data analysis is used to gain insights into customer behavior, market trends, and financial performance. This includes customer segmentation, sales forecasting, and market research.

- Healthcare : Data analysis is used to identify patterns and trends in patient data, improve patient outcomes, and optimize healthcare operations. This includes clinical decision support, disease surveillance, and healthcare cost analysis.

- Education : Data analysis is used to measure student performance, evaluate teaching effectiveness, and improve educational programs. This includes assessment analytics, learning analytics, and program evaluation.

- Finance : Data analysis is used to monitor and evaluate financial performance, identify risks, and make investment decisions. This includes risk management, portfolio optimization, and fraud detection.

- Government : Data analysis is used to inform policy-making, improve public services, and enhance public safety. This includes crime analysis, disaster response planning, and social welfare program evaluation.

- Sports : Data analysis is used to gain insights into athlete performance, improve team strategy, and enhance fan engagement. This includes player evaluation, scouting analysis, and game strategy optimization.

- Marketing : Data analysis is used to measure the effectiveness of marketing campaigns, understand customer behavior, and develop targeted marketing strategies. This includes customer segmentation, marketing attribution analysis, and social media analytics.

- Environmental science : Data analysis is used to monitor and evaluate environmental conditions, assess the impact of human activities on the environment, and develop environmental policies. This includes climate modeling, ecological forecasting, and pollution monitoring.

When to Use Data Analysis

Data analysis is useful when you need to extract meaningful insights and information from large and complex datasets. It is a crucial step in the decision-making process, as it helps you understand the underlying patterns and relationships within the data, and identify potential areas for improvement or opportunities for growth.

Here are some specific scenarios where data analysis can be particularly helpful:

- Problem-solving : When you encounter a problem or challenge, data analysis can help you identify the root cause and develop effective solutions.

- Optimization : Data analysis can help you optimize processes, products, or services to increase efficiency, reduce costs, and improve overall performance.

- Prediction: Data analysis can help you make predictions about future trends or outcomes, which can inform strategic planning and decision-making.

- Performance evaluation : Data analysis can help you evaluate the performance of a process, product, or service to identify areas for improvement and potential opportunities for growth.

- Risk assessment : Data analysis can help you assess and mitigate risks, whether it is financial, operational, or related to safety.

- Market research : Data analysis can help you understand customer behavior and preferences, identify market trends, and develop effective marketing strategies.

- Quality control: Data analysis can help you ensure product quality and customer satisfaction by identifying and addressing quality issues.

Purpose of Data Analysis

The primary purposes of data analysis can be summarized as follows:

- To gain insights: Data analysis allows you to identify patterns and trends in data, which can provide valuable insights into the underlying factors that influence a particular phenomenon or process.

- To inform decision-making: Data analysis can help you make informed decisions based on the information that is available. By analyzing data, you can identify potential risks, opportunities, and solutions to problems.

- To improve performance: Data analysis can help you optimize processes, products, or services by identifying areas for improvement and potential opportunities for growth.

- To measure progress: Data analysis can help you measure progress towards a specific goal or objective, allowing you to track performance over time and adjust your strategies accordingly.

- To identify new opportunities: Data analysis can help you identify new opportunities for growth and innovation by identifying patterns and trends that may not have been visible before.

Examples of Data Analysis

Some Examples of Data Analysis are as follows:

- Social Media Monitoring: Companies use data analysis to monitor social media activity in real-time to understand their brand reputation, identify potential customer issues, and track competitors. By analyzing social media data, businesses can make informed decisions on product development, marketing strategies, and customer service.

- Financial Trading: Financial traders use data analysis to make real-time decisions about buying and selling stocks, bonds, and other financial instruments. By analyzing real-time market data, traders can identify trends and patterns that help them make informed investment decisions.

- Traffic Monitoring : Cities use data analysis to monitor traffic patterns and make real-time decisions about traffic management. By analyzing data from traffic cameras, sensors, and other sources, cities can identify congestion hotspots and make changes to improve traffic flow.

- Healthcare Monitoring: Healthcare providers use data analysis to monitor patient health in real-time. By analyzing data from wearable devices, electronic health records, and other sources, healthcare providers can identify potential health issues and provide timely interventions.

- Online Advertising: Online advertisers use data analysis to make real-time decisions about advertising campaigns. By analyzing data on user behavior and ad performance, advertisers can make adjustments to their campaigns to improve their effectiveness.

- Sports Analysis : Sports teams use data analysis to make real-time decisions about strategy and player performance. By analyzing data on player movement, ball position, and other variables, coaches can make informed decisions about substitutions, game strategy, and training regimens.

- Energy Management : Energy companies use data analysis to monitor energy consumption in real-time. By analyzing data on energy usage patterns, companies can identify opportunities to reduce energy consumption and improve efficiency.

Characteristics of Data Analysis

Characteristics of Data Analysis are as follows:

- Objective : Data analysis should be objective and based on empirical evidence, rather than subjective assumptions or opinions.

- Systematic : Data analysis should follow a systematic approach, using established methods and procedures for collecting, cleaning, and analyzing data.

- Accurate : Data analysis should produce accurate results, free from errors and bias. Data should be validated and verified to ensure its quality.

- Relevant : Data analysis should be relevant to the research question or problem being addressed. It should focus on the data that is most useful for answering the research question or solving the problem.

- Comprehensive : Data analysis should be comprehensive and consider all relevant factors that may affect the research question or problem.

- Timely : Data analysis should be conducted in a timely manner, so that the results are available when they are needed.

- Reproducible : Data analysis should be reproducible, meaning that other researchers should be able to replicate the analysis using the same data and methods.

- Communicable : Data analysis should be communicated clearly and effectively to stakeholders and other interested parties. The results should be presented in a way that is understandable and useful for decision-making.

Advantages of Data Analysis

Advantages of Data Analysis are as follows:

- Better decision-making: Data analysis helps in making informed decisions based on facts and evidence, rather than intuition or guesswork.

- Improved efficiency: Data analysis can identify inefficiencies and bottlenecks in business processes, allowing organizations to optimize their operations and reduce costs.

- Increased accuracy: Data analysis helps to reduce errors and bias, providing more accurate and reliable information.

- Better customer service: Data analysis can help organizations understand their customers better, allowing them to provide better customer service and improve customer satisfaction.

- Competitive advantage: Data analysis can provide organizations with insights into their competitors, allowing them to identify areas where they can gain a competitive advantage.

- Identification of trends and patterns : Data analysis can identify trends and patterns in data that may not be immediately apparent, helping organizations to make predictions and plan for the future.

- Improved risk management : Data analysis can help organizations identify potential risks and take proactive steps to mitigate them.

- Innovation: Data analysis can inspire innovation and new ideas by revealing new opportunities or previously unknown correlations in data.

Limitations of Data Analysis

- Data quality: The quality of data can impact the accuracy and reliability of analysis results. If data is incomplete, inconsistent, or outdated, the analysis may not provide meaningful insights.

- Limited scope: Data analysis is limited by the scope of the data available. If data is incomplete or does not capture all relevant factors, the analysis may not provide a complete picture.

- Human error: Data analysis is often conducted by humans, and errors can occur in data collection, cleaning, and analysis.

- Cost: Data analysis can be expensive, requiring specialized tools, software, and expertise.

- Time-consuming: Data analysis can be time-consuming, especially when working with large datasets or conducting complex analyses.

- Overreliance on data: Data analysis should be complemented with human intuition and expertise. Overreliance on data can lead to a lack of creativity and innovation.

- Privacy concerns: Data analysis can raise privacy concerns if personal or sensitive information is used without proper consent or security measures.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

What is Data Analysis? (Types, Methods, and Tools)

- Couchbase Product Marketing December 17, 2023

Data analysis is the process of cleaning, transforming, and interpreting data to uncover insights, patterns, and trends. It plays a crucial role in decision making, problem solving, and driving innovation across various domains.

In addition to further exploring the role data analysis plays, this blog post will discuss common data analysis techniques, delve into the distinction between quantitative and qualitative data, explore popular data analysis tools, and discuss the steps involved in the data analysis process.

By the end, you should have a deeper understanding of data analysis and its applications, empowering you to harness the power of data to make informed decisions and gain actionable insights.

Why is Data Analysis Important?

Data analysis is important across various domains and industries. It helps with:

- Decision Making : Data analysis provides valuable insights that support informed decision making, enabling organizations to make data-driven choices for better outcomes.

- Problem Solving : Data analysis helps identify and solve problems by uncovering root causes, detecting anomalies, and optimizing processes for increased efficiency.

- Performance Evaluation : Data analysis allows organizations to evaluate performance, track progress, and measure success by analyzing key performance indicators (KPIs) and other relevant metrics.

- Gathering Insights : Data analysis uncovers valuable insights that drive innovation, enabling businesses to develop new products, services, and strategies aligned with customer needs and market demand.

- Risk Management : Data analysis helps mitigate risks by identifying risk factors and enabling proactive measures to minimize potential negative impacts.

By leveraging data analysis, organizations can gain a competitive advantage, improve operational efficiency, and make smarter decisions that positively impact the bottom line.

Quantitative vs. Qualitative Data

In data analysis, you’ll commonly encounter two types of data: quantitative and qualitative. Understanding the differences between these two types of data is essential for selecting appropriate analysis methods and drawing meaningful insights. Here’s an overview of quantitative and qualitative data:

Quantitative Data

Quantitative data is numerical and represents quantities or measurements. It’s typically collected through surveys, experiments, and direct measurements. This type of data is characterized by its ability to be counted, measured, and subjected to mathematical calculations. Examples of quantitative data include age, height, sales figures, test scores, and the number of website users.

Quantitative data has the following characteristics:

- Numerical: Quantitative data is expressed in numerical values that can be analyzed and manipulated mathematically.

- Objective: Quantitative data is objective and can be measured and verified independently of individual interpretations.

- Statistical Analysis: Quantitative data lends itself well to statistical analysis. It allows for applying various statistical techniques, such as descriptive statistics, correlation analysis, regression analysis, and hypothesis testing.

- Generalizability: Quantitative data often aims to generalize findings to a larger population. It allows for making predictions, estimating probabilities, and drawing statistical inferences.

Qualitative Data

Qualitative data, on the other hand, is non-numerical and is collected through interviews, observations, and open-ended survey questions. It focuses on capturing rich, descriptive, and subjective information to gain insights into people’s opinions, attitudes, experiences, and behaviors. Examples of qualitative data include interview transcripts, field notes, survey responses, and customer feedback.

Qualitative data has the following characteristics:

- Descriptive: Qualitative data provides detailed descriptions, narratives, or interpretations of phenomena, often capturing context, emotions, and nuances.

- Subjective: Qualitative data is subjective and influenced by the individuals’ perspectives, experiences, and interpretations.

- Interpretive Analysis: Qualitative data requires interpretive techniques, such as thematic analysis, content analysis, and discourse analysis, to uncover themes, patterns, and underlying meanings.

- Contextual Understanding: Qualitative data emphasizes understanding the social, cultural, and contextual factors that shape individuals’ experiences and behaviors.

- Rich Insights: Qualitative data enables researchers to gain in-depth insights into complex phenomena and explore research questions in greater depth.

In summary, quantitative data represents numerical quantities and lends itself well to statistical analysis, while qualitative data provides rich, descriptive insights into subjective experiences and requires interpretive analysis techniques. Understanding the differences between quantitative and qualitative data is crucial for selecting appropriate analysis methods and drawing meaningful conclusions in research and data analysis.

Types of Data Analysis

Different types of data analysis techniques serve different purposes. In this section, we’ll explore four types of data analysis: descriptive, diagnostic, predictive, and prescriptive, and go over how you can use them.

Descriptive Analysis

Descriptive analysis involves summarizing and describing the main characteristics of a dataset. It focuses on gaining a comprehensive understanding of the data through measures such as central tendency (mean, median, mode), dispersion (variance, standard deviation), and graphical representations (histograms, bar charts). For example, in a retail business, descriptive analysis may involve analyzing sales data to identify average monthly sales, popular products, or sales distribution across different regions.
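As a minimal sketch of the measures named above, the following uses Python’s standard `statistics` module. The `monthly_sales` figures are made up for illustration, and the population versions of variance and standard deviation are an assumption; for a sample you would typically use `statistics.variance` and `statistics.stdev` instead.

```python
import statistics

# Hypothetical monthly sales figures (units sold); illustrative data only.
monthly_sales = [120, 135, 150, 135, 160, 140]

summary = {
    "mean": statistics.mean(monthly_sales),            # central tendency
    "median": statistics.median(monthly_sales),
    "mode": statistics.mode(monthly_sales),
    "variance": statistics.pvariance(monthly_sales),   # population variance
    "std_dev": statistics.pstdev(monthly_sales),       # population standard deviation
}

for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```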

Diagnostic Analysis

Diagnostic analysis aims to understand the causes or factors influencing specific outcomes or events. It involves investigating relationships between variables and identifying patterns or anomalies in the data. Diagnostic analysis often uses regression analysis, correlation analysis, and hypothesis testing to uncover the underlying reasons behind observed phenomena. For example, in healthcare, diagnostic analysis could help determine factors contributing to patient readmissions and identify potential improvements in the care process.
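One simple starting point for this kind of investigation is a correlation coefficient. The sketch below computes Pearson’s r by hand; the function name `pearson_r` and the two small data series are hypothetical. Keep in mind that a strong correlation suggests a relationship worth investigating but does not by itself establish a cause.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length (at least 2 values)")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical data: days until a follow-up appointment, and
# readmissions per 100 patients for the same group of clinics.
days_to_follow_up = [2, 5, 7, 10, 14]
readmissions_per_100 = [4, 6, 7, 9, 12]

r = pearson_r(days_to_follow_up, readmissions_per_100)
print(f"Pearson r = {r:.3f}")
```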

Predictive Analysis

Predictive analysis focuses on making predictions or forecasts about future outcomes based on historical data. It utilizes statistical models, machine learning algorithms, and time series analysis to identify patterns and trends in the data. By applying predictive analysis, businesses can anticipate customer behavior, market trends, or demand for products and services. For example, an e-commerce company might use predictive analysis to forecast customer churn and take proactive measures to retain customers.
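To illustrate the idea in its simplest form, here is a sketch that fits a straight-line trend to historical values with ordinary least squares and extrapolates one period ahead. The helper names `fit_line` and `forecast` and the order counts are assumptions for illustration; real churn or demand models are usually far richer than a linear trend.

```python
def fit_line(ys):
    """Least-squares line y = intercept + slope * t for t = 0, 1, 2, ..."""
    n = len(ys)
    if n < 2:
        raise ValueError("need at least two observations")
    mean_t = (n - 1) / 2
    mean_y = sum(ys) / n
    numer = sum((t - mean_t) * (y - mean_y) for t, y in enumerate(ys))
    denom = sum((t - mean_t) ** 2 for t in range(n))
    slope = numer / denom
    intercept = mean_y - slope * mean_t
    return intercept, slope


def forecast(ys, steps_ahead=1):
    """Extrapolate the fitted trend line beyond the last observation."""
    intercept, slope = fit_line(ys)
    return intercept + slope * (len(ys) - 1 + steps_ahead)


# Hypothetical monthly order counts; illustrative data only.
monthly_orders = [200, 212, 225, 231, 248]
print(f"Forecast for next month: {forecast(monthly_orders):.1f}")
```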

Prescriptive Analysis

Prescriptive analysis takes predictive analysis a step further by providing recommendations or optimal solutions based on the predicted outcomes. It combines historical and real-time data with optimization techniques, simulation models, and decision-making algorithms to suggest the best course of action. Prescriptive analysis helps organizations make data-driven decisions and optimize their strategies. For example, a logistics company can use prescriptive analysis to determine the most efficient delivery routes, considering factors like traffic conditions, fuel costs, and customer preferences.
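As a toy version of the delivery-route example, the sketch below tries every possible ordering of stops and recommends the cheapest round trip. The `COSTS` table, the stop names, and the helper functions are all hypothetical, and brute force only works for a handful of stops; production systems rely on dedicated optimization solvers.

```python
from itertools import permutations

# Hypothetical travel costs between locations (symmetric); illustrative only.
COSTS = {
    ("depot", "A"): 4, ("depot", "B"): 6, ("depot", "C"): 5,
    ("A", "B"): 3, ("A", "C"): 7, ("B", "C"): 2,
}


def leg_cost(a, b):
    """Look up the cost of travelling between two stops in either direction."""
    return COSTS[(a, b)] if (a, b) in COSTS else COSTS[(b, a)]


def route_cost(stops):
    """Total cost of leaving the depot, visiting stops in order, and returning."""
    path = ["depot", *stops, "depot"]
    return sum(leg_cost(a, b) for a, b in zip(path, path[1:]))


def best_route(stops):
    """Evaluate every ordering of the stops and return the cheapest one."""
    return min(permutations(stops), key=route_cost)


route = best_route(["A", "B", "C"])
print(route, route_cost(route))
```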

In summary, data analysis plays a vital role in extracting insights and enabling informed decision making. Descriptive analysis helps understand the data, diagnostic analysis uncovers the underlying causes, predictive analysis forecasts future outcomes, and prescriptive analysis provides recommendations for optimal actions. These different data analysis techniques are valuable tools for businesses and organizations across various industries.

Data Analysis Methods

In addition to the data analysis types discussed earlier, you can use various methods to analyze data effectively. These methods provide a structured approach to extract insights, detect patterns, and derive meaningful conclusions from the available data. Here are some commonly used data analysis methods:

Statistical Analysis

Statistical analysis involves applying statistical techniques to data to uncover patterns, relationships, and trends. It includes methods such as hypothesis testing, regression analysis, analysis of variance (ANOVA), and chi-square tests. Statistical analysis helps organizations understand the significance of relationships between variables and make inferences about the population based on sample data. For example, a market research company could conduct a survey to analyze the relationship between customer satisfaction and product price. They can use regression analysis to estimate how satisfaction varies with price and to test whether that relationship is statistically significant.
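To make one of these methods concrete, the following sketch computes a chi-square test of independence for a 2x2 table by hand. The survey counts, the grouping into price tiers, and the function names are hypothetical. The p-value shortcut shown is valid only for one degree of freedom (a 2x2 table), and no continuity correction is applied.

```python
import math


def chi_square_statistic(table):
    """Chi-square statistic for a contingency table given as a list of rows."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / total
            stat += (observed - expected) ** 2 / expected
    return stat


def p_value_df1(stat):
    """Upper-tail probability of a chi-square variable with 1 degree of freedom."""
    return math.erfc(math.sqrt(stat / 2))


# Hypothetical survey counts: rows are price tiers (low, high),
# columns are (satisfied, not satisfied).
survey = [[30, 20],
          [20, 30]]

chi2 = chi_square_statistic(survey)
p = p_value_df1(chi2)
print(f"chi-square = {chi2:.2f}, p = {p:.4f}")
```

A small p-value (conventionally below 0.05) would suggest that satisfaction and price tier are not independent in this made-up sample.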

Data Mining

Data mining refers to the process of discovering patterns and relationships in large datasets using techniques such as clustering, classification, association analysis, and anomaly detection. It involves exploring data to identify hidden patterns and gain valuable insights. For example, a telecommunications company could analyze customer call records to identify calling patterns and segment customers into groups based on their calling behavior.
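Clustering, one of the techniques mentioned above, can be sketched with a tiny one-dimensional k-means. The function `kmeans_1d`, the call-minute values, and the choice of starting centroids are assumptions for illustration; real projects would normally use a library implementation and more than one feature.

```python
def kmeans_1d(values, centroids, max_iter=100):
    """Cluster numbers around the given starting centroids (simple k-means)."""
    centroids = list(centroids)
    for _ in range(max_iter):
        # Assignment step: attach each value to its nearest centroid.
        labels = [
            min(range(len(centroids)), key=lambda k: abs(v - centroids[k]))
            for v in values
        ]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for k in range(len(centroids)):
            members = [v for v, label in zip(values, labels) if label == k]
            new_centroids.append(sum(members) / len(members) if members else centroids[k])
        if new_centroids == centroids:
            break  # assignments have stabilized
        centroids = new_centroids
    return labels, centroids


# Hypothetical monthly call minutes per customer; illustrative data only.
call_minutes = [20, 25, 30, 200, 210, 220]
initial_centroids = [min(call_minutes), max(call_minutes)]

labels, centroids = kmeans_1d(call_minutes, initial_centroids)
print(labels, centroids)
```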

Text Mining

Text mining involves analyzing unstructured data , such as customer reviews, social media posts, or emails, to extract valuable information and insights. It utilizes techniques like natural language processing (NLP), sentiment analysis, and topic modeling to analyze and understand textual data. For example, consider how a hotel chain might analyze customer reviews from various online platforms to identify common themes and sentiment patterns to improve customer satisfaction.
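A very rough sketch of the hotel-review idea follows: it counts frequent terms and scores sentiment with a tiny hand-made word list. The reviews, the `POSITIVE`, `NEGATIVE`, and `STOPWORDS` sets, and the function names are all hypothetical; real text mining would typically rely on an NLP library and a much larger lexicon or a trained model.

```python
import re
from collections import Counter

# Tiny hand-made lexicons; assumptions for illustration only.
POSITIVE = {"clean", "friendly", "great", "comfortable"}
NEGATIVE = {"dirty", "noisy", "rude", "slow"}
STOPWORDS = {"the", "a", "and", "was", "were", "but", "very", "is", "to", "in"}


def tokenize(text):
    """Lowercase the text and split it into alphabetic tokens."""
    return re.findall(r"[a-z']+", text.lower())


def sentiment_score(text):
    """Positive-word count minus negative-word count."""
    tokens = tokenize(text)
    return sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)


def top_terms(reviews, n=3):
    """Most frequent non-stopword terms across all reviews."""
    counts = Counter(
        token for review in reviews for token in tokenize(review)
        if token not in STOPWORDS
    )
    return counts.most_common(n)


# Hypothetical customer reviews.
reviews = [
    "The room was clean and the staff were friendly",
    "Great location but the room was noisy",
    "Check-in was slow and the staff were rude",
]

scores = [sentiment_score(review) for review in reviews]
print(scores)
print(top_terms(reviews, 2))
```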

Time Series Analysis

Time series analysis focuses on analyzing data collected over time to identify trends, seasonality, and patterns. It involves techniques such as forecasting, decomposition, and autocorrelation analysis to make predictions and understand the underlying patterns in the data.

For example, an energy company could analyze historical electricity consumption data to forecast future demand and optimize energy generation and distribution.
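Two of the basic building blocks mentioned above can be sketched in a few lines: a moving average to smooth out noise and reveal the trend, and a lag autocorrelation to check for repeating (seasonal) patterns. The function names and the consumption figures are hypothetical.

```python
def moving_average(series, window):
    """Simple moving average; returns one value per full window."""
    if window < 1 or window > len(series):
        raise ValueError("window must be between 1 and len(series)")
    return [
        sum(series[i:i + window]) / window
        for i in range(len(series) - window + 1)
    ]


def autocorrelation(series, lag):
    """Sample autocorrelation of the series with itself shifted by `lag`."""
    n = len(series)
    mean = sum(series) / n
    denom = sum((x - mean) ** 2 for x in series)
    numer = sum((series[i] - mean) * (series[i + lag] - mean) for i in range(n - lag))
    return numer / denom


# Hypothetical electricity consumption with a pattern that repeats every 2 periods.
consumption = [1, 3, 1, 3, 1, 3, 1, 3]

print(moving_average(consumption, 2))
print(autocorrelation(consumption, 1), autocorrelation(consumption, 2))
```

A strongly positive autocorrelation at a given lag hints that the series repeats with that period, which is one way to spot seasonality before choosing a forecasting model.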

Data Visualization

Data visualization is the graphical representation of data to communicate patterns, trends, and insights visually. It uses charts, graphs, maps, and other visual elements to present data in a visually appealing and easily understandable format. For example, a sales team might use a line chart to visualize monthly sales trends and identify seasonal patterns in their sales data.

These are just a few examples of the data analysis methods you can use. Your choice should depend on the nature of the data, the research question or problem, and the desired outcome.

How to Analyze Data

Analyzing data involves following a systematic approach to extract insights and derive meaningful conclusions. Here are some steps to guide you through the process of analyzing data effectively:

Define the Objective : Clearly define the purpose and objective of your data analysis. Identify the specific question or problem you want to address through analysis.

Prepare and Explore the Data : Gather the relevant data and ensure its quality. Clean and preprocess the data by handling missing values, duplicates, and formatting issues. Explore the data using descriptive statistics and visualizations to identify patterns, outliers, and relationships.

Apply Analysis Techniques : Choose the appropriate analysis techniques based on your data and research question. Apply statistical methods, machine learning algorithms, and other analytical tools to derive insights and answer your research question.

Interpret the Results : Analyze the output of your analysis and interpret the findings in the context of your objective. Identify significant patterns, trends, and relationships in the data. Consider the implications and practical relevance of the results.

Communicate and Take Action : Communicate your findings effectively to stakeholders or intended audiences. Present the results clearly and concisely, using visualizations and reports. Use the insights from the analysis to inform decision making.

Remember, data analysis is an iterative process, and you may need to revisit and refine your analysis as you progress. These steps provide a general framework to guide you through the data analysis process and help you derive meaningful insights from your data.
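The data preparation step described above can be sketched as follows. The records in `raw_rows`, the field names, and the choice to fill missing sales with the median are assumptions for illustration; the right way to handle missing values depends on your data and objective.

```python
import statistics

# Hypothetical raw records with a duplicate, a missing value,
# and inconsistent formatting.
raw_rows = [
    {"id": 1, "region": " North ", "sales": "120"},
    {"id": 2, "region": "south", "sales": ""},
    {"id": 2, "region": "south", "sales": ""},      # duplicate of id 2
    {"id": 3, "region": "NORTH", "sales": "150"},
]


def clean(rows):
    """Drop duplicate ids, normalize text, and fill missing sales with the median."""
    seen_ids = set()
    cleaned = []
    for row in rows:
        if row["id"] in seen_ids:
            continue  # skip duplicates
        seen_ids.add(row["id"])
        sales_text = row["sales"].strip()
        cleaned.append({
            "id": row["id"],
            "region": row["region"].strip().lower(),   # standardize formatting
            "sales": float(sales_text) if sales_text else None,
        })
    # Assumes at least one row has a known sales value.
    known = [r["sales"] for r in cleaned if r["sales"] is not None]
    fill_value = statistics.median(known)
    for r in cleaned:
        if r["sales"] is None:
            r["sales"] = fill_value
    return cleaned


cleaned_rows = clean(raw_rows)
for row in cleaned_rows:
    print(row)
```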

Data Analysis Tools

Data analysis tools are software applications and platforms designed to facilitate the process of analyzing and interpreting data . These tools provide a range of functionalities to handle data manipulation, visualization, statistical analysis, and machine learning. Here are some commonly used data analysis tools:

Spreadsheet Software

Tools like Microsoft Excel, Google Sheets, and Apple Numbers are used for basic data analysis tasks. They offer features for data entry, manipulation, basic statistical functions, and simple visualizations.

Business Intelligence (BI) Platforms

BI platforms like Microsoft Power BI, Tableau, and Looker integrate data from multiple sources, providing comprehensive views of business performance through interactive dashboards, reports, and ad hoc queries.

Programming Languages and Libraries

Programming languages like R and Python, along with their associated libraries (e.g., NumPy, SciPy, scikit-learn), offer extensive capabilities for data analysis. They provide flexibility, customizability, and access to a wide range of statistical and machine-learning algorithms.

Cloud-Based Analytics Platforms

Cloud-based platforms like Google Cloud Platform (BigQuery, Looker Studio, formerly Data Studio), Microsoft Azure (Azure Analytics, Power BI), and Amazon Web Services (AWS Analytics, QuickSight) provide scalable and collaborative environments for data storage, processing, and analysis. They have a wide range of analytical capabilities for handling large datasets.

Data Mining and Machine Learning Tools

Tools like RapidMiner, KNIME, and Weka automate the process of data preprocessing, feature selection, model training, and evaluation. They’re designed to extract insights and build predictive models from complex datasets.

Text Analytics Tools

Text analytics tools, such as Natural Language Processing (NLP) libraries in Python (NLTK, spaCy) or platforms like RapidMiner Text Mining Extension, enable the analysis of unstructured text data . They help extract information, sentiment, and themes from sources like customer reviews or social media.

Choosing the right data analysis tool depends on analysis complexity, dataset size, required functionalities, and user expertise. You might need to use a combination of tools to leverage their combined strengths and address specific analysis needs.

By understanding the power of data analysis, you can leverage it to make informed decisions, identify opportunities for improvement, and drive innovation within your organization. Whether you’re working with quantitative data for statistical analysis or qualitative data for in-depth insights, it’s important to select the right analysis techniques and tools for your objectives.


Posted by Couchbase Product Marketing


What is Data Analysis? An Introductory Guide

Contents: The data analysis process; Key data analysis skills; Start your journey into data analysis with the official Enterprise Big Data Analyst certification; Data analysis examples in the enterprise; Frequently asked questions (FAQs).

Data analysis is the process of inspecting, cleaning, transforming, and modeling data to derive meaningful insights and make informed decisions. It involves examining raw data to identify patterns, trends, and relationships that can be used to understand various aspects of a business, organization, or phenomenon. This process often employs statistical methods, machine learning algorithms, and data visualization techniques to extract valuable information from data sets.

At its core, data analysis aims to answer questions, solve problems, and support decision-making processes. It helps uncover hidden patterns or correlations within data that may not be immediately apparent, leading to actionable insights that can drive business strategies and improve performance. Whether it’s analyzing sales figures to identify market trends, evaluating customer feedback to enhance products or services, or studying medical data to improve patient outcomes, data analysis plays a crucial role in numerous domains.

Effective data analysis requires not only technical skills but also domain knowledge and critical thinking. Analysts must understand the context in which the data is generated, choose appropriate analytical tools and methods, and interpret results accurately to draw meaningful conclusions. Moreover, data analysis is an iterative process that may involve refining hypotheses, collecting additional data, and revisiting analytical techniques to ensure the validity and reliability of findings.

Why spend time to learn data analysis?

Learning about data analysis is beneficial for your career because it equips you with the skills to make data-driven decisions, which are highly valued in today’s data-centric business environment. Employers increasingly seek professionals who can gather, analyze, and interpret data to drive innovation, optimize processes, and achieve strategic objectives.

The data analysis process is a systematic approach to extracting valuable insights and making informed decisions from raw data. It begins with defining the problem or question at hand, followed by collecting and cleaning the relevant data. Exploratory data analysis (EDA) helps in understanding the data’s characteristics and uncovering patterns, while data modeling and analysis apply statistical or machine learning techniques to derive meaningful conclusions. In most organizations, data analysis is structured in a number of steps:

- Define the Problem or Question: The first step is to clearly define the problem or question you want to address through data analysis. This could involve understanding business objectives, identifying research questions, or defining hypotheses to be tested.

- Data Collection: Once the problem is defined, gather relevant data from various sources. This could include structured data from databases, spreadsheets, or surveys, as well as unstructured data like text documents or social media posts.

- Data Cleaning and Preprocessing: Clean and preprocess the data to ensure its quality and reliability. This step involves handling missing values, removing duplicates, standardizing formats, and transforming data if needed (e.g., scaling numerical data, encoding categorical variables).

- Exploratory Data Analysis (EDA): Explore the data through descriptive statistics, visualizations (e.g., histograms, scatter plots, heatmaps), and data profiling techniques. EDA helps in understanding the distribution of variables, detecting outliers, and identifying patterns or trends.

- Data Modeling and Analysis: Apply appropriate statistical or machine learning models to analyze the data and answer the research questions or address the problem. This step may involve hypothesis testing, regression analysis, clustering, classification, or other analytical techniques depending on the nature of the data and objectives.

- Interpretation of Results: Interpret the findings from the data analysis in the context of the problem or question. Determine the significance of results, draw conclusions, and communicate insights effectively.

- Decision Making and Action: Use the insights gained from data analysis to make informed decisions, develop strategies, or take actions that drive positive outcomes. Monitor the impact of these decisions and iterate the analysis process as needed.

- Communication and Reporting: Present the findings and insights derived from data analysis in a clear and understandable manner to stakeholders, using visualizations, dashboards, reports, or presentations. Effective communication ensures that the analysis results are actionable and contribute to informed decision-making.

These steps form a cyclical process, where feedback from decision-making may lead to revisiting earlier stages, refining the analysis, and continuously improving outcomes.
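Two of the transformations mentioned in the cleaning and preprocessing step, scaling numerical data and encoding categorical variables, can be sketched as follows. The function names and sample values are hypothetical, and min-max scaling and one-hot encoding are just one common choice for each task.

```python
def min_max_scale(values):
    """Rescale numbers linearly to the range 0..1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # all values identical; nothing to spread
    return [(v - lo) / (hi - lo) for v in values]


def one_hot(categories):
    """Encode each category as a 0/1 vector, one column per distinct level."""
    levels = sorted(set(categories))
    rows = [[1 if c == level else 0 for level in levels] for c in categories]
    return rows, levels


# Hypothetical numerical and categorical columns.
ages = [10, 20, 30]
colors = ["red", "blue", "red"]

print(min_max_scale(ages))
encoded, levels = one_hot(colors)
print(levels, encoded)
```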

Key data analysis skills encompass a blend of technical expertise, critical thinking, and domain knowledge. Some of the essential skills for effective data analysis include:

Statistical Knowledge: Understanding statistical concepts and methods such as hypothesis testing, regression analysis, probability distributions, and statistical inference is fundamental for data analysis.

Data Manipulation and Cleaning: Proficiency in tools like Python, R, SQL, or Excel for data manipulation, cleaning, and transformation tasks, including handling missing values, removing duplicates, and standardizing data formats.

Data Visualization: Creating clear and insightful visualizations using tools like Matplotlib, Seaborn, Tableau, or Power BI to communicate trends, patterns, and relationships within data to non-technical stakeholders.

Machine Learning: Familiarity with machine learning algorithms such as decision trees, random forests, logistic regression, clustering, and neural networks for predictive modeling, classification, clustering, and anomaly detection tasks.

Programming Skills: Competence in programming languages such as Python, R, or SQL for data analysis, scripting, automation, and building data pipelines, along with version control using Git.

Critical Thinking: Ability to think critically, ask relevant questions, formulate hypotheses, and design robust analytical approaches to solve complex problems and extract actionable insights from data.

Domain Knowledge: Understanding the context and domain-specific nuances of the data being analyzed, whether it’s finance, healthcare, marketing, or any other industry, is crucial for meaningful interpretation and decision-making.

Data Ethics and Privacy: Awareness of data ethics principles , privacy regulations (e.g., GDPR, CCPA), and best practices for handling sensitive data responsibly and ensuring data security and confidentiality.

Communication and Storytelling: Effectively communicating analysis results through clear reports, presentations, and data-driven storytelling to convey insights, recommendations, and implications to diverse audiences, including non-technical stakeholders.

These skills are crucial in data analysis because they empower analysts to effectively extract, interpret, and communicate insights from complex datasets across various domains. Statistical knowledge forms the foundation for making data-driven decisions and drawing reliable conclusions. Proficiency in data manipulation and cleaning ensures data accuracy and consistency, essential for meaningful analysis.

The Enterprise Big Data Analyst certification is aimed at data analysts and provides in-depth theory and practical guidance for deriving value from Big Data sets. The curriculum distinguishes between different kinds of Big Data problems and their corresponding solutions. This course will teach participants how to autonomously find valuable insights in large data sets in order to realize business benefits.

Data analysis plays an important role in driving informed decision-making and strategic planning within enterprises across various industries. By harnessing the power of data, organizations can gain valuable insights into market trends, customer behaviors, operational efficiency, and performance metrics. Data analysis enables businesses to identify opportunities for growth, optimize processes, mitigate risks, and enhance overall competitiveness in the market. Examples of data analysis in the enterprise span a wide range of applications, including sales and marketing optimization, customer segmentation, financial forecasting, supply chain management, fraud detection, and healthcare analytics.

- Sales and Marketing Optimization: Enterprises use data analysis to analyze sales trends, customer preferences, and marketing campaign effectiveness. By leveraging techniques like customer segmentation and predictive modeling, businesses can tailor marketing strategies, optimize pricing strategies, and identify cross-selling or upselling opportunities.

- Customer Segmentation: Data analysis helps enterprises segment customers based on demographics, purchasing behavior, and preferences. This segmentation allows for targeted marketing efforts, personalized customer experiences, and improved customer retention and loyalty.

- Financial Forecasting: Data analysis is used in financial forecasting to analyze historical data, identify trends, and predict future financial performance. This helps businesses make informed decisions regarding budgeting, investment strategies, and risk management.

- Supply Chain Management: Enterprises use data analysis to optimize supply chain operations, improve inventory management, reduce lead times, and enhance overall efficiency. Analyzing supply chain data helps identify bottlenecks, forecast demand, and streamline logistics processes.

- Fraud Detection: Data analysis is employed to detect and prevent fraud in financial transactions, insurance claims, and online activities. By analyzing patterns and anomalies in data, enterprises can identify suspicious activities, mitigate risks, and protect against fraudulent behavior.

- Healthcare Analytics: In the healthcare sector, data analysis is used for patient care optimization, disease prediction, treatment effectiveness evaluation, and resource allocation. Analyzing healthcare data helps improve patient outcomes, reduce healthcare costs, and support evidence-based decision-making.

These examples illustrate how data analysis is a vital tool for enterprises to gain actionable insights, improve decision-making processes, and achieve strategic objectives across diverse areas of business operations.

Below are some of the most frequently asked questions about data analysis and their answers:

What role does domain knowledge play in data analysis?

Domain knowledge is crucial as it provides context, understanding of data nuances, insights into relevant variables and metrics, and helps in interpreting results accurately within specific industries or domains.

How do you ensure the quality and accuracy of data for analysis?

Ensuring data quality and accuracy involves data validation, cleaning techniques like handling missing values and outliers, standardizing data formats, performing data integrity checks, and validating results through cross-validation or data audits.
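One common way to flag outliers during these checks is the interquartile range (IQR) rule, sketched below. The function name `iqr_outliers`, the 1.5 multiplier, and the sample values are assumptions for illustration; flagged values should be reviewed rather than deleted automatically.

```python
import statistics


def iqr_outliers(values, k=1.5):
    """Return values lying more than k * IQR outside the first/third quartile."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < lower or v > upper]


# Hypothetical daily order counts with one suspicious entry.
daily_orders = [10, 12, 11, 13, 12, 11, 100]

outliers = iqr_outliers(daily_orders)
print(outliers)
```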

What tools and techniques are commonly used in data analysis?

Commonly used tools and techniques in data analysis include programming languages like Python and R, statistical methods such as regression analysis and hypothesis testing, machine learning algorithms for predictive modeling, data visualization tools like Tableau and Matplotlib, and database querying languages like SQL.

What are the steps involved in the data analysis process?

The data analysis process typically includes defining the problem, collecting data, cleaning and preprocessing the data, conducting exploratory data analysis, applying statistical or machine learning models for analysis, interpreting results, making decisions based on insights, and communicating findings to stakeholders.

What is data analysis, and why is it important?

Data analysis involves examining, cleaning, transforming, and modeling data to derive meaningful insights and make informed decisions. It is crucial because it helps organizations uncover trends, patterns, and relationships within data, leading to improved decision-making, enhanced business strategies, and competitive advantage.

Big Data Framework

Official account of the Enterprise Big Data Framework Alliance.


© Copyright 2021 | Enterprise Big Data Framework© | All Rights Reserved


Data Analysis: Definition, Types and Examples

Nowadays, data is collected at various stages of processes and transactions, and it has the potential to significantly improve the way we work. However, to fully realize its value, this data must be analyzed to gain insights into how to improve products and services.

Data analysis is an essential aspect of making informed decisions in various industries. With the advancement of technology, it has become a dynamic and exciting field. But what is it in simple words?

What is Data Analysis?

Data analysis is the science of examining data to draw conclusions from the information it contains, in order to make decisions or expand knowledge on various subjects. It consists of subjecting data to operations in order to obtain precise conclusions that help us achieve our goals. These operations cannot always be defined in advance, since data collection may reveal specific difficulties.

“A lot of this [data analysis] will help humans work smarter and faster because we have data on everything that happens.” –Daniel Burrus, business consultant and speaker on business and innovation issues.

Why is data analytics important?

Data analytics helps businesses understand their target market faster, increase sales, reduce costs, and solve problems more effectively. Data analysis plays a critical role in many aspects of modern businesses and organizations. Here are some key reasons why data analysis is important:

Informed decision-making

Data analytics helps businesses make more informed and data-driven decisions. By analyzing data, organizations can gain insights into customer behavior, market trends, and operational performance, enabling them to make better choices that are supported by evidence rather than relying on intuition alone.

Identifying opportunities and challenges

Data analytics allows businesses to identify new opportunities for growth, product development, or market expansion. It also helps identify potential challenges and risks, allowing organizations to address them proactively.

Improving efficiency and productivity

Organizations can identify inefficiencies and bottlenecks by analyzing processes and performance data, leading to process optimization and improved productivity. This, in turn, can result in cost savings and better resource allocation.

Customer understanding and personalization

Data analytics enables businesses to understand their customers better, including their preferences, buying behaviors, and pain points. With this understanding, organizations can offer personalized products and services, enhancing customer satisfaction and loyalty.

Competitive advantage

Organizations that leverage data analytics effectively gain a competitive edge in today’s data-driven world. By analyzing data, businesses can identify unique insights and trends that help them better understand the market and their competitors, helping them stay ahead of the competition.

Performance tracking and evaluation

Data analytics allows organizations to track and measure their performance against key performance indicators (KPIs) and goals. This helps in evaluating the success of various strategies and initiatives, enabling continuous improvement.

Predictive analytics

Data analytics can be used for predictive modeling, helping organizations forecast future trends and outcomes. This is valuable for financial planning, demand forecasting, risk management, and proactive decision-making.

Data-driven innovation

Data analytics can fuel innovation by providing insights that lead to the development of new products, services, or business models. Innovations based on data analysis can lead to groundbreaking advancements and disruption in various industries.

Fraud detection and security

Data analytics can be used to detect anomalies and patterns indicative of fraudulent activities. It plays a crucial role in enhancing security and protecting businesses from financial losses and reputational risk .

Regulatory compliance

In many industries, compliance with regulations and laws is mandatory. Data analytics can help organizations ensure that they meet these compliance requirements by tracking and auditing relevant data.

Types of data analysis

There are several types of data analysis, each with a specific purpose and method. Let’s talk about some significant types:

Descriptive Analysis

Descriptive analysis is used to summarize and describe the main features of a dataset. It involves calculating measures of central tendency and dispersion to describe the data. Descriptive analysis provides a comprehensive overview of the data and insights into its properties and structure.

Inferential Analysis

Inferential analysis uses statistical testing on a sample to make inferences about population parameters, such as the mean or a proportion. It involves using models and hypothesis testing to make predictions and draw conclusions about the population.

Predictive Analysis

Predictive analysis is used to predict future events or outcomes based on historical data and other relevant information. It involves using statistical models and machine learning algorithms to identify patterns in the data and make predictions about future outcomes.

Prescriptive Analysis

Prescriptive analysis is a decision-making analysis that uses mathematical modeling, optimization algorithms, and other data-driven techniques to identify the best action for a given problem or situation. It combines mathematical models, data, and business constraints to find that action.

Text Analysis

Text analysis is a process of extracting meaningful information from unstructured text data. It involves a variety of techniques, including natural language processing (NLP), text mining, sentiment analysis, and topic modeling, to uncover insights and patterns in text data.

Diagnostic Analysis

Diagnostic analysis seeks to identify the root causes of specific events or outcomes. It is often used in troubleshooting problems or investigating anomalies in data.

Uses of data analysis

Data analysis is used across many industries. It provides a basis for making decisions or for confirming or rejecting a hypothesis.

A researcher or data analyst mainly performs data analysis to predict consumer behavior and help companies place their products and services in the market accordingly. For instance, sales data analysis can help you identify which product range is less popular in a specific demographic group. It can give you insights into tweaking your current marketing campaign to better connect with the target audience and address their needs.

Human Resources

Organizations can use data analysis tools to offer a great experience to their employees and ensure an excellent work environment. They can also use the data to identify the people whose skill sets best match organizational goals.

Universities and academic institutions can perform the analysis to measure student performance and gather insights on how certain behaviors can further improve education.

Techniques for data analysis

It is essential to analyze raw data to understand it. We must resort to various data analysis techniques that depend on the type of information collected, so it is crucial to define the method before implementing it.

- Qualitative data: Researchers collect qualitative data by observing underlying emotions, body language, and expressions, and by interpreting verbal responses. The most common ways of obtaining this information are open-ended interviews, focus groups, and observation groups, where researchers generally analyze patterns in observations throughout the data collection phase.

- Quantitative data: Quantitative data presents itself in numerical form. It focuses on tangible results.

Data analysis focuses on reaching a conclusion based solely on the researcher’s current knowledge. How you collect your data should relate to how you plan to analyze and use it. You also need to collect accurate and trustworthy information.

Many data collection techniques exist, but the method experts use most often is the online survey. It offers significant benefits, such as saving time and money compared to traditional data collection methods.

Data analysis and data analytics are two interconnected but distinct processes in data science. Data analysis involves examining raw data using various techniques to uncover patterns, correlations, and insights. It’s about understanding historical data to make informed conclusions. On the other hand, data analytics goes a step further by utilizing those insights to predict future trends, prescribe actions, and guide decision-making.

At QuestionPro, we have an accurate tool that will help you professionally make better decisions.

Data Analysis Methods

Professional researchers often use the terms data analysis technique and data analysis method interchangeably, and the methods are frequently confused with the analysis types described earlier. The distinction that matters here is between how and when data analyses are done.

There are many different methods for analyzing data. Here are some of the most common ones:

Descriptive Statistics

Descriptive statistics involves summarizing and describing the main features of a dataset, such as mean, median, mode, standard deviation, range, and percentiles. It provides a basic understanding of the data’s distribution and characteristics.
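
As a minimal sketch, the measures listed above can be computed with Python's standard `statistics` module. The `orders` data is a made-up sample used only for illustration.

```python
import statistics

# Hypothetical sample: daily order counts for one store.
orders = [12, 15, 15, 18, 20, 22, 22, 22, 25, 29]

summary = {
    "mean": statistics.mean(orders),
    "median": statistics.median(orders),
    "mode": statistics.mode(orders),
    "stdev": statistics.stdev(orders),               # sample standard deviation
    "range": max(orders) - min(orders),
    "quartiles": statistics.quantiles(orders, n=4),  # three cut points: Q1, Q2, Q3
}

for name, value in summary.items():
    print(f"{name}: {value}")
```

Note that `statistics.stdev` is the sample standard deviation; `statistics.pstdev` would be the choice if the data covered the whole population.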

Inferential Statistics

Inferential statistics is used to make inferences and draw conclusions about a larger population based on a sample of data. It includes techniques like hypothesis testing, confidence intervals, and regression analysis.
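
One of the techniques named above, the confidence interval, can be sketched as follows. This is an illustration under stated assumptions: the `scores` sample is invented, the helper name `mean_confidence_interval` is hypothetical, and the interval uses the normal (z) approximation rather than a t-distribution, so it will be somewhat too narrow for small samples.

```python
import math
import statistics
from statistics import NormalDist

def mean_confidence_interval(sample, confidence=0.95):
    """Approximate confidence interval for the population mean.

    Uses the normal (z) approximation; a t-based interval would be
    wider for small samples.
    """
    n = len(sample)
    mean = statistics.mean(sample)
    std_err = statistics.stdev(sample) / math.sqrt(n)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # two-sided critical value
    return mean - z * std_err, mean + z * std_err

# Hypothetical sample of survey satisfaction scores (1-10 scale).
scores = [7, 8, 6, 9, 7, 8, 7, 6, 8, 9, 7, 8]
low, high = mean_confidence_interval(scores)
print(f"95% CI for the mean: {low:.2f} to {high:.2f}")
```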

Data Visualization

Data visualization is the graphical representation of data to help analysts and stakeholders understand patterns, trends, and insights. Common visualization techniques include bar charts, line graphs, scatter plots, heat maps, and pie charts.

Exploratory Data Analysis (EDA)

EDA involves analyzing and visualizing data to discover patterns, relationships, and potential outliers. It helps in gaining insights into the data before formal statistical testing.
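
A common EDA step is flagging potential outliers before any formal testing. The sketch below uses Tukey's interquartile-range rule, which is one of several possible rules; the function name `iqr_outliers` and the `response_times` data are assumptions made for illustration.

```python
import statistics

def iqr_outliers(values, k=1.5):
    """Return values outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's rule)."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < lower or v > upper]

# Hypothetical response times in seconds, with one suspicious value.
response_times = [12, 14, 13, 15, 11, 14, 13, 95, 12, 15]
print(iqr_outliers(response_times))
```

A flagged value is a candidate for inspection, not automatically an error; whether to keep or drop it depends on how the data was collected.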

Predictive Modeling

Predictive modeling uses algorithms and statistical techniques to build models that can make predictions about future outcomes based on historical data. Machine learning algorithms, such as decision trees, logistic regression, and neural networks, are commonly used for predictive modeling.

Time Series Analysis

Time series analysis is used to analyze data collected over time, such as stock prices, temperature readings, or sales data. It involves identifying trends and seasonality and forecasting future values.
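
To illustrate trend identification, here is a minimal sketch of a trailing moving average, with the latest average reused as a deliberately naive forecast. The `sales` figures and the helper name `moving_average` are hypothetical, and this approach ignores seasonality entirely.

```python
def moving_average(series, window):
    """Trailing moving average; one value per full window."""
    if window <= 0 or window > len(series):
        raise ValueError("window must be between 1 and len(series)")
    return [
        sum(series[i - window:i]) / window
        for i in range(window, len(series) + 1)
    ]

# Hypothetical monthly sales figures.
sales = [100, 120, 130, 125, 140, 160, 155]
trend = moving_average(sales, window=3)

# Naive forecast: assume next month equals the latest smoothed value.
naive_forecast = trend[-1]
print(trend, naive_forecast)
```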

Cluster Analysis

Cluster analysis is used to group similar data points together based on certain features or characteristics. It helps in identifying patterns and segmenting data into meaningful clusters.
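
The grouping idea can be sketched with a very small k-means on one-dimensional data. This is an illustration, not a production implementation: the function name `kmeans_1d`, the starting centers, and the `spend` data are all assumptions, and real projects would typically use a library and multi-dimensional features.

```python
def kmeans_1d(values, centers, iterations=10):
    """Very small k-means for one-dimensional data.

    `centers` holds the starting guesses; one cluster per guess.
    """
    centers = list(centers)
    clusters = [[] for _ in centers]
    for _ in range(iterations):
        # Assign each value to its nearest center.
        clusters = [[] for _ in centers]
        for v in values:
            nearest = min(range(len(centers)), key=lambda i: abs(v - centers[i]))
            clusters[nearest].append(v)
        # Move each center to the mean of its cluster (keep it if empty).
        centers = [
            sum(c) / len(c) if c else centers[i]
            for i, c in enumerate(clusters)
        ]
    return centers, clusters

# Hypothetical customer spend per visit, with two visible groups.
spend = [8, 9, 10, 11, 12, 48, 50, 52, 55]
centers, clusters = kmeans_1d(spend, centers=[0, 100])
print(centers, clusters)
```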

Factor Analysis and Principal Component Analysis (PCA)

These techniques are used to reduce the dimensionality of data and identify underlying factors or components that explain the variance in the data.

Text Mining and Natural Language Processing (NLP)

Text mining and NLP techniques are used to analyze and extract information from unstructured text data, such as social media posts, customer reviews, or survey responses.
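
As a minimal text-mining sketch, the snippet below counts the most frequent terms in a handful of reviews. The `reviews` data, the tiny `STOP_WORDS` set, and the helper name `top_terms` are assumptions for illustration; fuller NLP work (sentiment analysis, topic modeling) needs dedicated libraries.

```python
import re
from collections import Counter

# Small illustrative stop-word list; real projects use a fuller one.
STOP_WORDS = {"the", "a", "and", "is", "was", "to", "it", "but", "very"}

def top_terms(documents, n=3):
    """Count the most frequent non-stop-word terms across documents."""
    counts = Counter()
    for doc in documents:
        words = re.findall(r"[a-z']+", doc.lower())
        counts.update(w for w in words if w not in STOP_WORDS)
    return counts.most_common(n)

# Hypothetical customer reviews.
reviews = [
    "The delivery was slow but the product is great",
    "Great product and great support",
    "Slow delivery, support was helpful",
]
print(top_terms(reviews))
```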

Qualitative Data Analysis

Qualitative data analysis involves interpreting non-numeric data, such as text, images, audio, or video. Techniques like content analysis, thematic analysis, and grounded theory are used to analyze qualitative data.

Quantitative Data Analysis

Quantitative analysis focuses on analyzing numerical data to discover relationships, trends, and patterns. This analysis often involves statistical methods.

Data Mining

Data mining involves discovering patterns, relationships, or insights from large datasets using various algorithms and techniques.

Regression Analysis

Regression analysis is used to model the relationship between a dependent variable and one or more independent variables. It helps understand how changes in one variable impact the other(s).
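
For the simplest case, one independent variable, the relationship can be fitted by ordinary least squares. The sketch below writes the formulas out by hand; `fit_line`, `ad_spend`, and `units` are hypothetical names and data used only to show the idea.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical data: ad spend (in thousands) vs. units sold.
ad_spend = [1, 2, 3, 4, 5]
units = [12, 14, 17, 19, 23]

slope, intercept = fit_line(ad_spend, units)
predicted = slope * 6 + intercept  # estimate for an unseen spend level
print(f"slope={slope:.2f}, intercept={intercept:.2f}, predicted={predicted:.2f}")
```

The slope estimates how much the dependent variable changes per unit change in the independent variable; it describes association in the sample and does not by itself establish causation.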

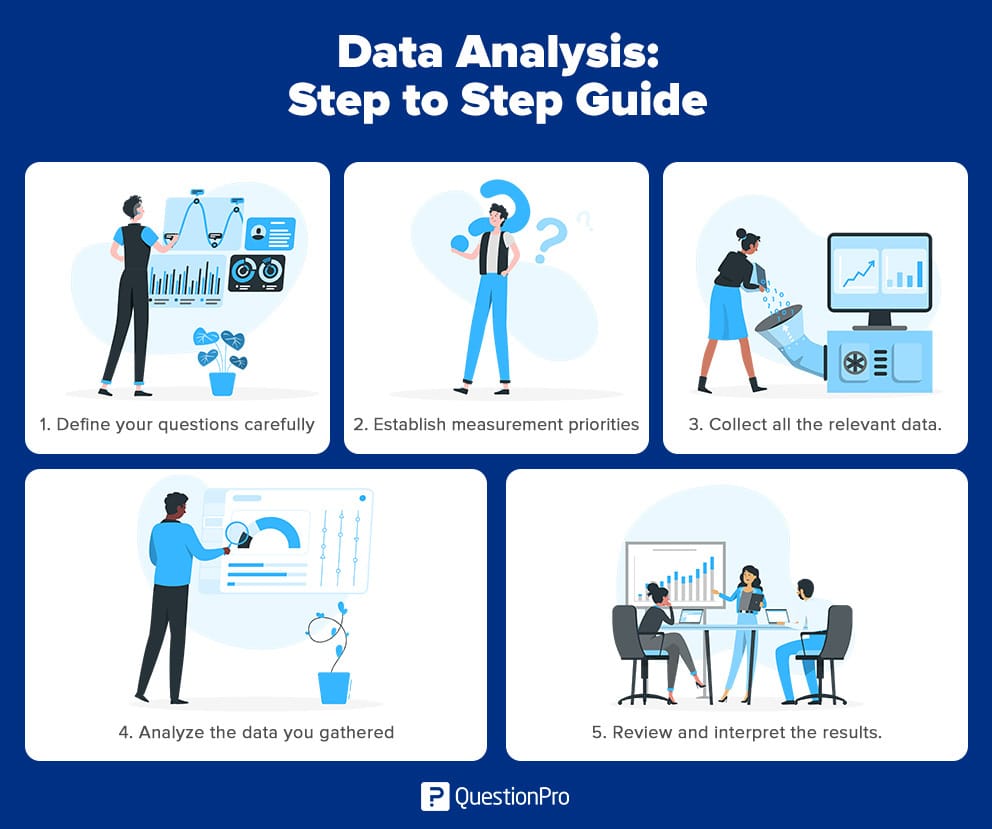

Step-by-step guide to data analysis

With these five steps in your data analysis process, you will make better decisions for your business because your choices will be supported by data that has been well collected and analyzed.

Step 1: Define your questions

Start by selecting the right questions. Questions should be measurable, clear, and concise. Design your questions to qualify or disqualify possible solutions to your specific problem.

Step 2: Establish measurement priorities

This step divides into two sub-steps:

- Decide what to measure: Analyze what kind of data you need.

- Decide how to measure it: Thinking about how to measure your data is just as important, especially before the data collection phase, because your measurement process supports or discredits your analysis later on.

Step 3: Collect data

With the question clearly defined and your measurement priorities established, now it’s time to collect your data. As you manage and organize your data, remember to keep these essential points in mind:

- Before collecting new data, determine what information you could gather from existing databases or sources.

- Determine a storage and file-naming system in advance to help all team members collaborate. This process saves time and prevents team members from collecting the same information twice.

- If you need to collect data through surveys, observation, or interviews, develop a questionnaire in advance to ensure consistency and save time.

- Keep the collected data organized with a log of collection dates, and add any source notes as you go along.

Step 4: Analyze the data

Once you’ve collected the correct data to answer your Step 1 question, it’s time to conduct a deeper statistical analysis. Find relationships, identify trends, and sort and filter your data according to variables. You will find the exact data you need as you analyze the data.
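
The filter, group, and sort operations mentioned in this step might look like the following sketch. The `records` list and its field names are invented for illustration; in practice the data would come from whatever you collected in Step 3.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical survey records collected in Step 3.
records = [
    {"region": "North", "age": 34, "satisfaction": 8},
    {"region": "South", "age": 51, "satisfaction": 6},
    {"region": "North", "age": 27, "satisfaction": 9},
    {"region": "South", "age": 45, "satisfaction": 5},
    {"region": "West", "age": 38, "satisfaction": 7},
]

# Filter: keep respondents under 50.
under_50 = [r for r in records if r["age"] < 50]

# Group by a variable and compare averages.
by_region = defaultdict(list)
for r in under_50:
    by_region[r["region"]].append(r["satisfaction"])
averages = {region: mean(scores) for region, scores in by_region.items()}

# Sort regions from highest to lowest average satisfaction.
ranking = sorted(averages.items(), key=lambda item: item[1], reverse=True)
print(ranking)
```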

Step 5: Interpret the results

After analyzing the data and possibly conducting further research, it is finally time to interpret the results. Ask yourself these key questions:

- Does the data answer your original question? How?

- Does the data help you defend against any objections? How?

- Are there any limitations to the conclusions, any angles you haven’t considered?

If the interpretation of data holds up under these questions and considerations, you have reached a productive conclusion. The only remaining step is to use the process results to decide how you will act.

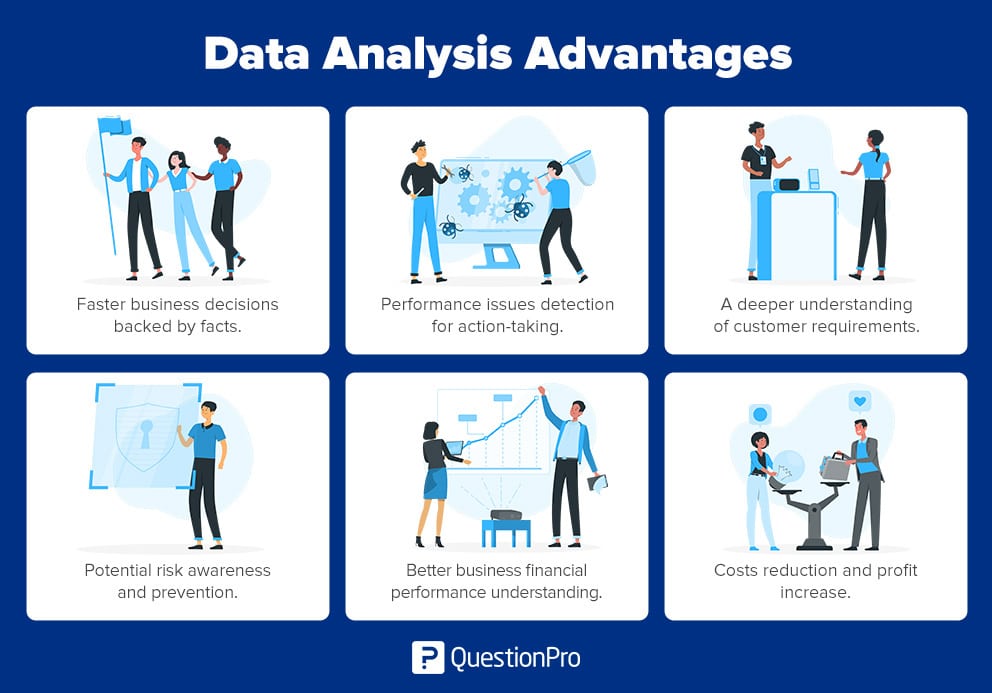

Data analysis advantages

Many industries use data to draw conclusions and decide on actions to implement. It is worth mentioning that science also uses data analysis to test or discard existing theories or models.

There’s more than one advantage to data analysis done right. Here are some examples:

- Make faster and more informed business decisions backed by facts.

- Identify performance issues that require action.

- Gain a deeper understanding of customer requirements, which creates better business relationships.

- Increase awareness of risks to implement preventive measures.

- Visualize different dimensions of the data.

- Gain competitive advantage.

- Better understand the financial performance of the business.

- Identify ways to reduce costs and thus increase profits.

These questions are examples of different types of data analysis. You can include them in your post-event surveys aimed at your customers:

- Qualitative: questions start with Why? How?

Example of qualitative data research analysis: Panels where a discussion is held, and consumers are interviewed about what they like or dislike about the place.

- Quantitative: data is collected by asking questions like How many? Who? How often? Where?

Example of quantitative research analysis: Surveys focused on measuring sales, trends, reports, or perceptions.

Data analysis with QuestionPro

Data analysis helps organizations and individuals make informed decisions by giving them a thorough understanding of their data. If you need a solution that supports a range of data analysis techniques, consider using QuestionPro. Our software allows you to collect data easily, create real-time reports, and analyze data. Practical business intelligence relies on the synergy between analytics and reporting, where analytics uncovers valuable insights and reporting communicates these findings to stakeholders.

Start a free trial or schedule a demo to see the full potential of our powerful tool. We’re here to help you every step of the way!
