- Bipolar Disorder

- Therapy Center

- When To See a Therapist

- Types of Therapy

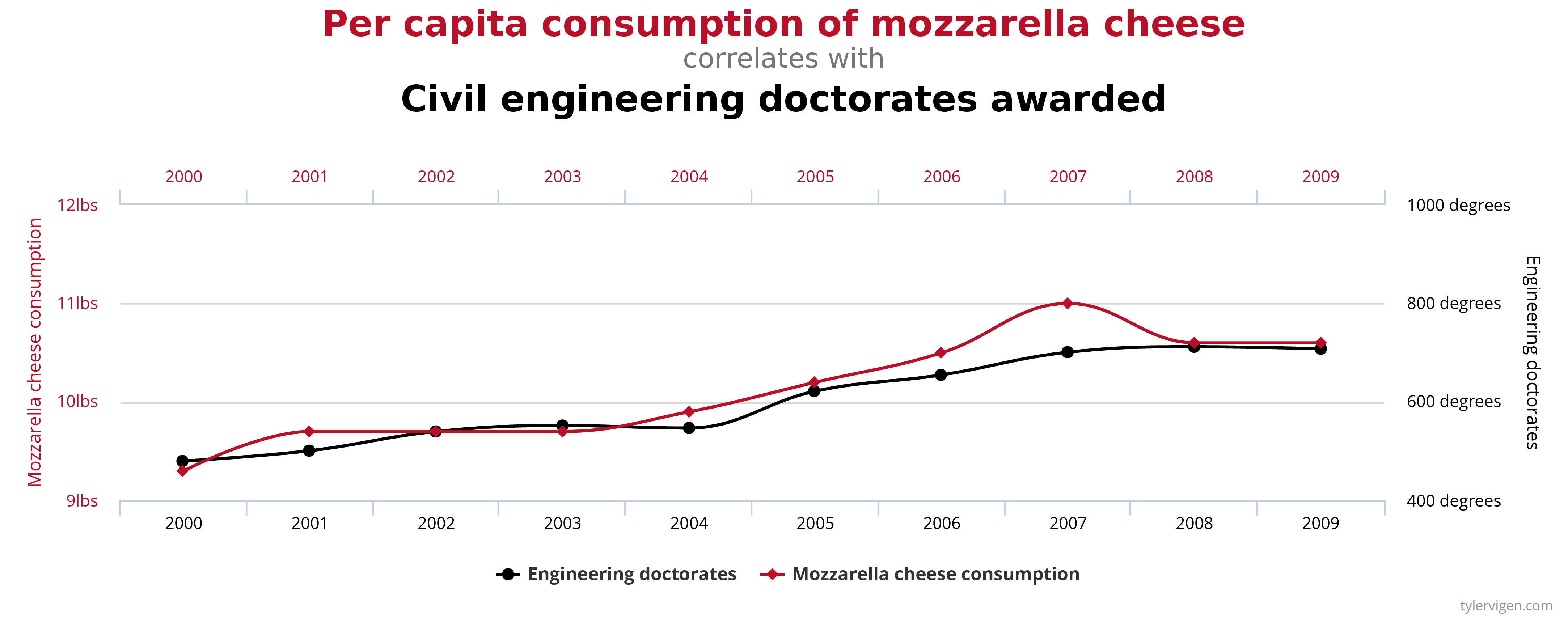

- Best Online Therapy

- Best Couples Therapy

- Best Family Therapy

- Managing Stress

- Sleep and Dreaming

- Understanding Emotions

- Self-Improvement

- Healthy Relationships

- Student Resources

- Personality Types

- Guided Meditations

- Verywell Mind Insights

- 2024 Verywell Mind 25

- Mental Health in the Classroom

- Editorial Process

- Meet Our Review Board

- Crisis Support

How to Write a Great Hypothesis

Hypothesis Definition, Format, Examples, and Tips

Kendra Cherry, MS, is a psychosocial rehabilitation specialist, psychology educator, and author of the "Everything Psychology Book."


Amy Morin, LCSW, is a psychotherapist and international bestselling author. Her books, including "13 Things Mentally Strong People Don't Do," have been translated into more than 40 languages. Her TEDx talk, "The Secret of Becoming Mentally Strong," is one of the most viewed talks of all time.



A hypothesis is a tentative statement about the relationship between two or more variables. It is a specific, testable prediction about what you expect to happen in a study. It is a preliminary answer to your question that helps guide the research process.

Consider a study designed to examine the relationship between sleep deprivation and test performance. The hypothesis might be: "This study is designed to assess the hypothesis that sleep-deprived people will perform worse on a test than individuals who are not sleep-deprived."

At a Glance

A hypothesis is crucial to scientific research because it offers a clear direction for what the researchers are looking to find. This allows them to design experiments to test their predictions and add to our scientific knowledge about the world. This article explores how a hypothesis is used in psychology research, how to write a good hypothesis, and the different types of hypotheses you might use.

The Hypothesis in the Scientific Method

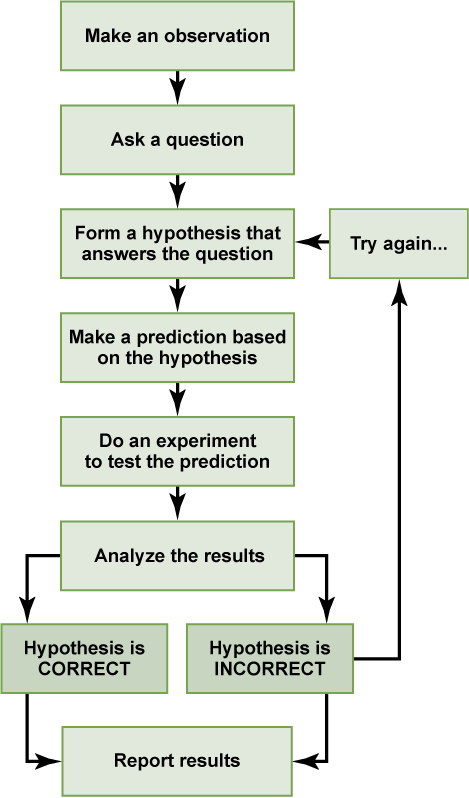

In the scientific method, whether it involves research in psychology, biology, or some other area, a hypothesis represents what the researchers think will happen in an experiment. The scientific method involves the following steps:

- Forming a question

- Performing background research

- Creating a hypothesis

- Designing an experiment

- Collecting data

- Analyzing the results

- Drawing conclusions

- Communicating the results

The hypothesis is a prediction, but it involves more than a guess. Most of the time, the hypothesis begins with a question that is then explored through background research. At this point, researchers begin to develop a testable hypothesis.

Unless you are creating an exploratory study, your hypothesis should always explain what you expect to happen.

In a study exploring the effects of a particular drug, the hypothesis might be that researchers expect the drug to have some type of effect on the symptoms of a specific illness. In psychology, the hypothesis might focus on how a certain aspect of the environment might influence a particular behavior.

Remember, a hypothesis does not have to be correct. While the hypothesis predicts what the researchers expect to see, the goal of the research is to determine whether this guess is right or wrong. When conducting an experiment, researchers might explore numerous factors to determine which ones might contribute to the ultimate outcome.

In many cases, researchers may find that the results of an experiment do not support the original hypothesis. When writing up these results, the researchers might suggest other options that should be explored in future studies.

In many cases, researchers might draw a hypothesis from a specific theory or build on previous research. For example, prior research has shown that stress can impact the immune system. So a researcher might hypothesize: "People with high-stress levels will be more likely to contract a common cold after being exposed to the virus than people who have low-stress levels."

In other instances, researchers might look at commonly held beliefs or folk wisdom. "Birds of a feather flock together" is one example of a folk adage that a psychologist might try to investigate. The researcher might pose a specific hypothesis that "People tend to select romantic partners who are similar to them in interests and educational level."

Elements of a Good Hypothesis

So how do you write a good hypothesis? When trying to come up with a hypothesis for your research or experiments, ask yourself the following questions:

- Is your hypothesis based on your research on a topic?

- Can your hypothesis be tested?

- Does your hypothesis include independent and dependent variables?

Before you come up with a specific hypothesis, spend some time doing background research. Once you have completed a literature review, start thinking about potential questions you still have. Pay attention to the discussion section in the journal articles you read. Many authors will suggest questions that still need to be explored.

How to Formulate a Good Hypothesis

To form a hypothesis, you should take these steps:

- Collect as many observations about a topic or problem as you can.

- Evaluate these observations and look for possible causes of the problem.

- Create a list of possible explanations that you might want to explore.

- After you have developed some possible hypotheses, think of ways that you could confirm or disprove each hypothesis through experimentation. This is known as falsifiability.

In the scientific method, falsifiability is an important part of any valid hypothesis. To test a claim scientifically, it must be possible for the claim to be shown false.

Students sometimes assume that a falsifiable claim is a false one, which is not the case. Falsifiability means that if the claim were false, it would be possible to demonstrate that it is false.

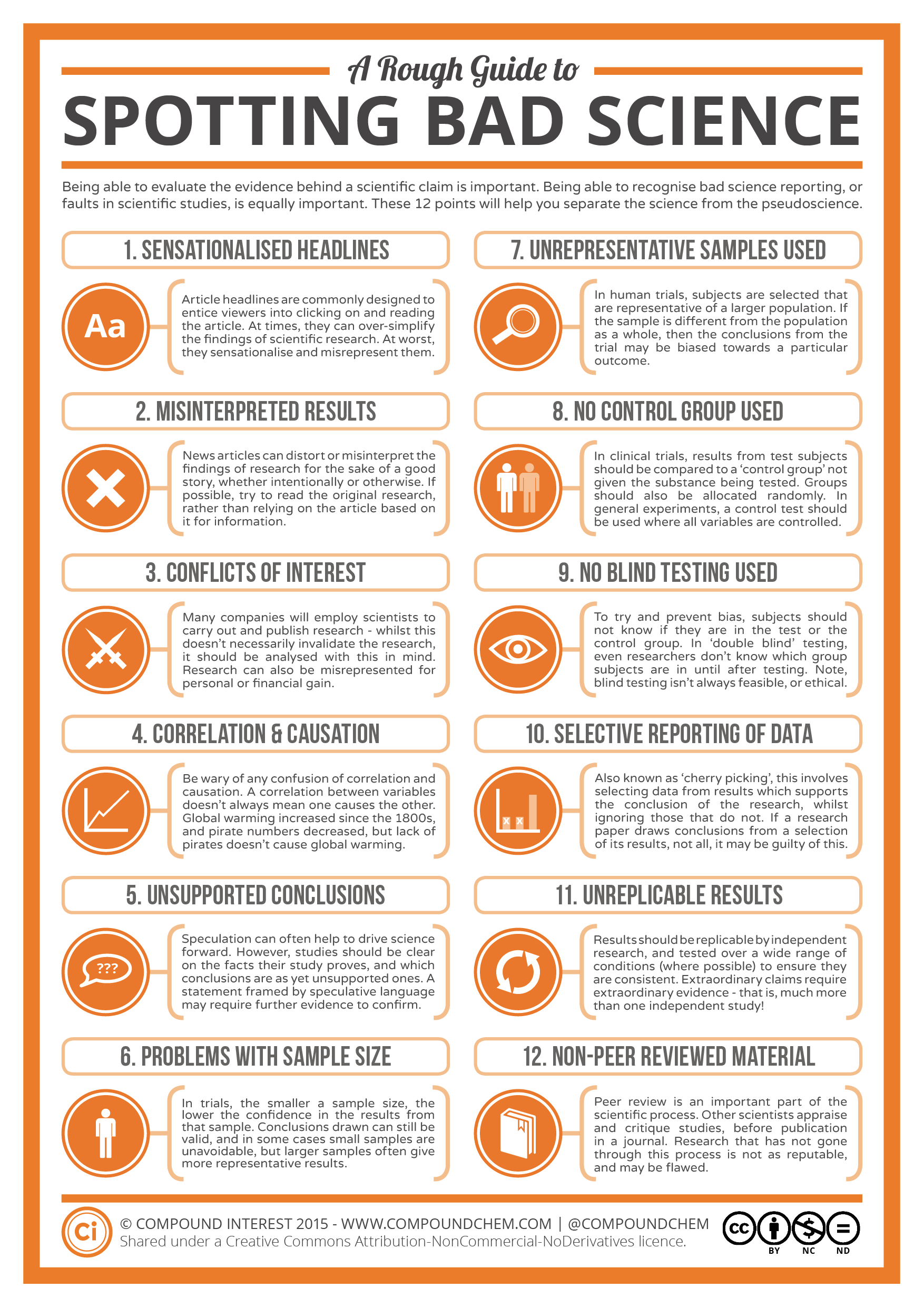

One of the hallmarks of pseudoscience is that it makes claims that cannot be refuted or proven false.

The Importance of Operational Definitions

A variable is a factor or element that can be changed and manipulated in ways that are observable and measurable. However, the researcher must also define how the variable will be manipulated and measured in the study.

Operational definitions are specific definitions for all relevant factors in a study. This process helps make vague or ambiguous concepts detailed and measurable.

For example, a researcher might operationally define the variable "test anxiety" as the results of a self-report measure of anxiety experienced during an exam. A "study habits" variable might be defined by the amount of studying that actually occurs as measured by time.

These precise descriptions are important because many things can be measured in various ways. Clearly defining these variables and how they are measured helps ensure that other researchers can replicate your results.

Replicability

One of the basic principles of any type of scientific research is that the results must be replicable.

Replication means repeating an experiment in the same way to produce the same results. By clearly detailing the specifics of how the variables were measured and manipulated, other researchers can better understand the results and repeat the study if needed.

Some variables are more difficult than others to define. For example, how would you operationally define a variable such as aggression? For obvious ethical reasons, researchers cannot create a situation in which a person behaves aggressively toward others.

To measure this variable, the researcher must devise a measurement that assesses aggressive behavior without harming others. The researcher might utilize a simulated task to measure aggressiveness in this situation.

Hypothesis Checklist

- Does your hypothesis focus on something that you can actually test?

- Does your hypothesis include both an independent and dependent variable?

- Can you manipulate the variables?

- Can your hypothesis be tested without violating ethical standards?

The hypothesis you use will depend on what you are investigating and hoping to find. Some of the main types of hypotheses that you might use include:

- Simple hypothesis: This type of hypothesis suggests there is a relationship between one independent variable and one dependent variable.

- Complex hypothesis: This type suggests a relationship between three or more variables, such as two independent variables and one dependent variable.

- Null hypothesis: This hypothesis suggests no relationship exists between two or more variables.

- Alternative hypothesis: This hypothesis states the opposite of the null hypothesis.

- Statistical hypothesis: This hypothesis uses statistical analysis to evaluate a representative population sample and then generalizes the findings to the larger group.

- Logical hypothesis: This hypothesis assumes a relationship between variables without collecting data or evidence.

A hypothesis often follows a basic format of "If {this happens} then {this will happen}." One way to structure your hypothesis is to describe what will happen to the dependent variable if you change the independent variable.

The basic format might be: "If {these changes are made to a certain independent variable}, then we will observe {a change in a specific dependent variable}."

A few examples of simple hypotheses:

- "Students who eat breakfast will perform better on a math exam than students who do not eat breakfast."

- "Students who experience test anxiety before an English exam will get lower scores than students who do not experience test anxiety."

- "Motorists who talk on the phone while driving will be more likely to make errors on a driving course than those who do not talk on the phone."

- "Children who receive a new reading intervention will have higher reading scores than children who do not receive the intervention."

Examples of a complex hypothesis include:

- "People with high-sugar diets and sedentary activity levels are more likely to develop depression."

- "Younger people who are regularly exposed to green, outdoor areas have better subjective well-being than older adults who have limited exposure to green spaces."

Examples of a null hypothesis include:

- "There is no difference in anxiety levels between people who take St. John's wort supplements and those who do not."

- "There is no difference in scores on a memory recall task between children and adults."

- "There is no difference in aggression levels between children who play first-person shooter games and those who do not."

Examples of an alternative hypothesis:

- "People who take St. John's wort supplements will have less anxiety than those who do not."

- "Adults will perform better on a memory task than children."

- "Children who play first-person shooter games will show higher levels of aggression than children who do not."
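One way to see how a null and an alternative hypothesis are put to work is a permutation test. The following is a minimal sketch, not a method described in this article: the function name `permutation_test`, the exam scores, and the choice of a two-sided comparison of means are all assumptions made for illustration. It asks how often randomly relabeling the pooled scores produces a difference at least as large as the one observed, which should happen often if the null hypothesis of "no difference" were true.

```python
import random
from statistics import mean


def permutation_test(group_a, group_b, n_permutations=10_000, seed=0):
    """Estimate a two-sided p-value for the null hypothesis that the
    two groups do not differ in their mean score."""
    rng = random.Random(seed)
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        # Under the null hypothesis the group labels are interchangeable,
        # so shuffle the pooled scores and split them again.
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if diff >= observed:
            at_least_as_extreme += 1
    return at_least_as_extreme / n_permutations


# Hypothetical math exam scores, invented purely for illustration.
breakfast = [78, 85, 90, 72, 88, 81, 79, 93]
no_breakfast = [70, 75, 68, 80, 66, 74, 71, 77]

p_value = permutation_test(breakfast, no_breakfast)
print(f"Estimated p-value: {p_value:.4f}")
```

A small estimated p-value would count as evidence against the null hypothesis. It would not prove the alternative hypothesis, and a directional hypothesis such as "students who eat breakfast will perform better" might call for a one-sided version of the same comparison.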

Collecting Data on Your Hypothesis

Once a researcher has formed a testable hypothesis, the next step is to select a research design and start collecting data. The research method depends largely on exactly what they are studying. There are two basic types of research methods: descriptive research and experimental research.

Descriptive Research Methods

Descriptive research methods such as case studies, naturalistic observations, and surveys are often used when conducting an experiment is difficult or impossible. These methods are best used to describe different aspects of a behavior or psychological phenomenon.

Once a researcher has collected data using descriptive methods, a correlational study can examine how the variables are related. This research method might be used to investigate a hypothesis that is difficult to test experimentally.

Experimental Research Methods

Experimental methods are used to demonstrate causal relationships between variables. In an experiment, the researcher systematically manipulates a variable of interest (known as the independent variable) and measures the effect on another variable (known as the dependent variable).

Unlike correlational studies, which can only be used to determine if there is a relationship between two variables, experimental methods can be used to determine the actual nature of the relationship—whether changes in one variable actually cause another to change.
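To make the correlational side of this contrast concrete, the sketch below computes a Pearson correlation coefficient by hand. The function name `pearson_r` and the sleep and score values are assumptions chosen for illustration, not data from any study. The coefficient describes how strongly two measured variables move together; on its own it says nothing about whether one causes the other.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Return the Pearson correlation coefficient between two
    equal-length sequences of numbers."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length (at least 2)")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of cross-products and sums of squared deviations from the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return cov / sqrt(var_x * var_y)


# Hypothetical observations, invented purely for illustration.
hours_slept = [4, 5, 6, 7, 8, 9]
test_scores = [62, 70, 68, 75, 83, 80]

r = pearson_r(hours_slept, test_scores)
print(f"r = {r:.2f}")
```

A positive `r` here would only show that sleep and scores tend to rise together in this sample. Establishing that sleep changes scores would require manipulating sleep in an experiment.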

The hypothesis is a critical part of any scientific exploration. It represents what researchers expect to find in a study or experiment. In situations where the hypothesis is unsupported by the research, the research still has value. Such research helps us better understand how different aspects of the natural world relate to one another. It also helps us develop new hypotheses that can then be tested in the future.



1.3: The Scientific Method


The scientific method is a detailed, stepwise process for answering questions. For example, a scientist observes that slugs destroy some cabbages but not those near garlic.

Such observations lead to questions such as, "Could garlic be used to deter slugs from ruining a cabbage patch?" After formulating questions, the scientist can then develop hypotheses, which are potential explanations for the observations that lead to specific, testable predictions.

In this case, a hypothesis could be that garlic repels slugs, which predicts that cabbages surrounded by garlic powder will suffer less damage than the ones without it.

The hypothesis is then tested through a series of experiments designed to eliminate hypotheses.

The experimental setup involves defining variables. An independent variable is an item that is being tested, in this case, garlic addition. The dependent variable describes the measurement used to determine the outcome, such as the number of slugs on the cabbages.

In addition, the slugs must be divided into groups, experimental and control. These groups are identical, except that the experimental group is exposed to garlic powder.

After data are collected and analyzed, conclusions are made, and results are communicated to other scientists.

The scientific method is a detailed, empirical problem-solving process used by biologists and other scientists. This iterative approach involves formulating a question based on observation, developing a testable potential explanation for the observation (called a hypothesis), making and testing predictions based on the hypothesis, and using the findings to create new hypotheses and predictions.

Generally, predictions are tested using carefully-designed experiments. Based on the outcome of these experiments, the original hypothesis may need to be refined, and new hypotheses and questions can be generated. Importantly, this illustrates that the scientific method is not a stepwise recipe. Instead, it is a continuous refinement and testing of ideas based on new observations, which is the crux of scientific inquiry.

Science is mutable and continuously changes as scientists learn more about the world, physical phenomena, and how organisms interact with their environment. For this reason, scientists avoid claiming to ‘prove’ a specific idea. Instead, they gather evidence that either supports or refutes a given hypothesis.

Making Observations and Formulating Hypotheses

A hypothesis is preceded by an initial observation, during which information is gathered by the senses (e.g., vision, hearing) or using scientific tools and instruments. This observation leads to a question that prompts the formation of an initial hypothesis, a (testable) possible answer to the question. For example, the observation that slugs eat some cabbage plants but not cabbage plants located near garlic may prompt the question: why do slugs selectively not eat cabbage plants near garlic? One possible hypothesis, or answer to this question, is that slugs have an aversion to garlic. Based on this hypothesis, one might predict that slugs will not eat cabbage plants surrounded by a ring of garlic powder.

A hypothesis should be falsifiable, meaning that there are ways to disprove it if it is untrue. In other words, a hypothesis should be testable. Scientists often articulate and explicitly test for the opposite of the hypothesis, which is called the null hypothesis. In this case, the null hypothesis is that slugs do not have an aversion to garlic. The null hypothesis would be supported if, contrary to the prediction, slugs eat cabbage plants that are surrounded by garlic powder.

Testing a Hypothesis

When possible, scientists test hypotheses using controlled experiments that include independent and dependent variables, as well as control and experimental groups.

An independent variable is an item expected to have an effect (e.g., the garlic powder used in the slug and cabbage experiment or treatment given in a clinical trial). Dependent variables are the measurements used to determine the outcome of an experiment. In the experiment with slugs, cabbages, and garlic, the number of slugs eating cabbages is the dependent variable. This number is expected to depend on the presence or absence of garlic powder rings around the cabbage plants.

Experiments require experimental and control groups. An experimental group is treated with or exposed to the independent variable (i.e., the manipulation or treatment). For example, in the garlic aversion experiment with slugs, the experimental group is a group of cabbage plants surrounded by a garlic powder ring. A control group is subject to the same conditions as the experimental group, with the exception of the independent variable. Control groups in this experiment might include a group of cabbage plants in the same area that is surrounded by a non-garlic powder ring (to control for powder aversion) and a group that is not surrounded by any particular substance (to control for cabbage aversion). It is essential to include a control group because, without one, it is unclear whether the outcome is the result of the treatment or manipulation.
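The group structure described above can be sketched as a random assignment of plants to one experimental arm and two control arms. This is only an illustration: the function name `assign_to_arms`, the arm labels, and the count of 21 plants are assumptions, not details from the original experiment. Randomizing which plant lands in which arm helps keep the groups alike in everything except the independent variable.

```python
import random


def assign_to_arms(units, arms, seed=None):
    """Randomly assign each unit to one of the named arms,
    keeping the arm sizes as equal as possible."""
    rng = random.Random(seed)
    shuffled = list(units)
    rng.shuffle(shuffled)
    assignment = {arm: [] for arm in arms}
    # Deal the shuffled units out to the arms in turn.
    for index, unit in enumerate(shuffled):
        assignment[arms[index % len(arms)]].append(unit)
    return assignment


# Hypothetical plant labels and arm names, for illustration only.
plants = [f"cabbage_{i}" for i in range(1, 22)]
arms = ["garlic_powder", "non_garlic_powder", "no_powder"]

groups = assign_to_arms(plants, arms, seed=42)
for arm, members in groups.items():
    print(arm, len(members))
```

Passing a fixed `seed` makes the assignment reproducible, which can help when documenting exactly how the groups were formed.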

Refining a Hypothesis

If the results of an experiment support the hypothesis, further experiments may be designed and carried out to provide support for the hypothesis. The hypothesis may also be refined and made more specific. For example, additional experiments could determine whether slugs also have an aversion to other plants of the Allium genus, like onions.

If the results do not support the hypothesis, then the original hypothesis may be modified based on the new observations. It is important to rule out potential problems with the experimental design before modifying the hypothesis. For example, if slugs demonstrate an aversion to both garlic and non-garlic powder, the experiment can be carried out again using fresh garlic instead of powdered garlic. If the slugs still exhibit no aversion to garlic, then the original hypothesis can be modified.

Communication

The results of the experiments should be communicated to other scientists and the public, regardless of whether the data support the original hypothesis. This information can guide the development of new hypotheses and experimental questions.


Module 1: Introduction to Biology

Experiments and Hypotheses

Learning Outcomes

- Form a hypothesis and use it to design a scientific experiment

Now we’ll focus on the methods of scientific inquiry. Science often involves making observations and developing hypotheses. Experiments and further observations are often used to test the hypotheses.

A scientific experiment is a carefully organized procedure in which the scientist intervenes in a system to change something, then observes the result of the change. Scientific inquiry often involves doing experiments, though not always. For example, a scientist studying the mating behaviors of ladybugs might begin with detailed observations of ladybugs mating in their natural habitats. While this research may not be experimental, it is scientific: it involves careful and verifiable observation of the natural world. The same scientist might then treat some of the ladybugs with a hormone hypothesized to trigger mating and observe whether these ladybugs mated sooner or more often than untreated ones. This would qualify as an experiment because the scientist is now making a change in the system and observing the effects.

Forming a Hypothesis

When conducting scientific experiments, researchers develop hypotheses to guide experimental design. A hypothesis is a suggested explanation that is both testable and falsifiable. You must be able to test your hypothesis, and it must be possible to prove your hypothesis true or false.

For example, Michael observes that maple trees lose their leaves in the fall. He might then propose a possible explanation for this observation: “cold weather causes maple trees to lose their leaves in the fall.” This statement is testable. He could grow maple trees in a warm enclosed environment such as a greenhouse and see if their leaves still dropped in the fall. The hypothesis is also falsifiable. If the leaves still dropped in the warm environment, then clearly temperature was not the main factor in causing maple leaves to drop in autumn.

In the practice questions below, you can practice recognizing scientific hypotheses. As you consider each statement, try to think as a scientist would: can I test this hypothesis with observations or experiments? Is the statement falsifiable? If the answer to either of these questions is “no,” the statement is not a valid scientific hypothesis.

Practice Questions

Determine whether each of the following statements is a scientific hypothesis.

Air pollution from automobile exhaust can trigger symptoms in people with asthma.

- No. This statement is not testable or falsifiable.

- No. This statement is not testable.

- No. This statement is not falsifiable.

- Yes. This statement is testable and falsifiable.

Natural disasters, such as tornadoes, are punishments for bad thoughts and behaviors.

a: No. This statement is not testable or falsifiable. “Bad thoughts and behaviors” are excessively vague and subjective variables that would be impossible to measure or agree upon in a reliable way. The statement might be “falsifiable” if you came up with a counterexample: a “wicked” place that was not punished by a natural disaster. But some would question whether the people in that place were really wicked, and others would continue to predict that a natural disaster was bound to strike that place at some point. There is no reason to suspect that people’s immoral behavior affects the weather unless you bring up the intervention of a supernatural being, making this idea even harder to test.

Testing a Vaccine

Let’s examine the scientific process by discussing an actual scientific experiment conducted by researchers at the University of Washington. These researchers investigated whether a vaccine may reduce the incidence of the human papillomavirus (HPV). The experimental process and results were published in an article titled “A controlled trial of a human papillomavirus type 16 vaccine.”

Preliminary observations made by the researchers who conducted the HPV experiment are listed below:

- Human papillomavirus (HPV) is the most common sexually transmitted virus in the United States.

- There are about 40 different types of HPV. A significant number of people who have HPV are unaware of it because many of these viruses cause no symptoms.

- Some types of HPV can cause cervical cancer.

- About 4,000 women a year die of cervical cancer in the United States.

Practice Question

Researchers have developed a potential vaccine against HPV and want to test it. What is the first testable hypothesis that the researchers should study?

- HPV causes cervical cancer.

- People should not have unprotected sex with many partners.

- People who get the vaccine will not get HPV.

- The HPV vaccine will protect people against cancer.

Experimental Design

You’ve successfully identified a hypothesis for the University of Washington’s study on HPV: People who get the HPV vaccine will not get HPV.

The next step is to design an experiment that will test this hypothesis. There are several important factors to consider when designing a scientific experiment. First, scientific experiments must have an experimental group. This is the group that receives the experimental treatment necessary to address the hypothesis.

The experimental group receives the vaccine, but how can we know if the vaccine made a difference? Many things may change HPV infection rates in a group of people over time. To clearly show that the vaccine was effective in helping the experimental group, we need to include in our study an otherwise similar control group that does not get the treatment. We can then compare the two groups and determine if the vaccine made a difference. The control group shows us what happens in the absence of the factor under study.

However, the control group cannot get “nothing.” Instead, the control group often receives a placebo. A placebo is a procedure that has no expected therapeutic effect—such as giving a person a sugar pill or a shot containing only plain saline solution with no drug. Scientific studies have shown that the “placebo effect” can alter experimental results because when individuals are told that they are or are not being treated, this knowledge can alter their actions or their emotions, which can then alter the results of the experiment.

Moreover, if the doctor knows which group a patient is in, this can also influence the results of the experiment. Without saying so directly, the doctor may show—through body language or other subtle cues—their views about whether the patient is likely to get well. These errors can then alter the patient’s experience and change the results of the experiment. Therefore, many clinical studies are “double blind.” In these studies, neither the doctor nor the patient knows which group the patient is in until all experimental results have been collected.

Both placebo treatments and double-blind procedures are designed to prevent bias. Bias is any systematic error that makes a particular experimental outcome more or less likely. Errors can happen in any experiment: people make mistakes in measurement, instruments fail, computer glitches can alter data. But most such errors are random and don’t favor one outcome over another. Patients’ belief in a treatment can make it more likely to appear to “work.” Placebos and double-blind procedures are used to level the playing field so that both groups of study subjects are treated equally and share similar beliefs about their treatment.
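One way to picture a double-blind setup is to separate what clinicians and participants see from the key that records who received which treatment. The sketch below is an assumption-laden illustration, not the procedure used in the HPV trial: the function name `blind_allocation`, the `KIT-` label format, and the participant IDs are all hypothetical.

```python
import random


def blind_allocation(participant_ids, seed=None):
    """Randomly split participants between vaccine and placebo and
    return (blinded_view, unblinding_key).

    blinded_view maps participant -> opaque kit label (safe to share).
    unblinding_key maps kit label -> treatment (kept sealed until the
    results have been collected).
    """
    rng = random.Random(seed)
    ids = list(participant_ids)
    rng.shuffle(ids)
    half = len(ids) // 2
    # Unique four-digit kit numbers, drawn without replacement, so the
    # label itself carries no hint about the treatment.
    kit_numbers = rng.sample(range(1000, 10000), len(ids))
    blinded_view = {}
    unblinding_key = {}
    for position, (pid, number) in enumerate(zip(ids, kit_numbers)):
        label = f"KIT-{number}"
        blinded_view[pid] = label
        unblinding_key[label] = "vaccine" if position < half else "placebo"
    return blinded_view, unblinding_key


# Hypothetical participant IDs, for illustration only.
participants = [f"P{i:03d}" for i in range(1, 11)]
blinded_view, unblinding_key = blind_allocation(participants, seed=7)
print(blinded_view["P001"])
```

In this sketch, only `blinded_view` would be shared with the people giving and receiving the shots, so neither side can tell from a kit label which group a participant is in.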

The scientists who are researching the effectiveness of the HPV vaccine will test their hypothesis by separating 2,392 young women into two groups: the control group and the experimental group. Answer the following questions about these two groups.

- This group is given a placebo.

- This group is deliberately infected with HPV.

- This group is given nothing.

- This group is given the HPV vaccine.

- a: This group is given a placebo. A placebo will be a shot, just like the HPV vaccine, but it will have no active ingredient. It may change people’s thinking or behavior to have such a shot given to them, but it will not stimulate the immune systems of the subjects in the same way as predicted for the vaccine itself.

- d: This group is given the HPV vaccine. The experimental group will receive the HPV vaccine and researchers will then be able to see if it works, when compared to the control group.

Experimental Variables

A variable is a characteristic of a subject (in this case, of a person in the study) that can vary over time or among individuals. Sometimes a variable takes the form of a category, such as male or female; often a variable can be measured precisely, such as body height. Ideally, only one variable is different between the control group and the experimental group in a scientific experiment. Otherwise, the researchers will not be able to determine which variable caused any differences seen in the results. For example, imagine that the people in the control group were, on average, much more sexually active than the people in the experimental group. If, at the end of the experiment, the control group had a higher rate of HPV infection, could you confidently determine why? Maybe the experimental subjects were protected by the vaccine, but maybe they were protected by their low level of sexual contact.

To avoid this situation, experimenters make sure that their subject groups are as similar as possible in all variables except for the variable that is being tested in the experiment. This variable, or factor, will be deliberately changed in the experimental group. The one variable that is different between the two groups is called the independent variable. An independent variable is known or hypothesized to cause some outcome. Imagine an educational researcher investigating the effectiveness of a new teaching strategy in a classroom. The experimental group receives the new teaching strategy, while the control group receives the traditional strategy. It is the teaching strategy that is the independent variable in this scenario. In an experiment, the independent variable is the variable that the scientist deliberately changes or imposes on the subjects.

Dependent variables are known or hypothesized consequences; they are the effects that result from changes or differences in an independent variable. In an experiment, the dependent variables are those that the scientist measures before, during, and particularly at the end of the experiment to see if they have changed as expected. The dependent variable must be stated so that it is clear how it will be observed or measured. Rather than comparing “learning” among students (a vague concept that is difficult to measure), an educational researcher might choose to compare test scores, which are very specific and easy to measure.

In any real-world example, many variables might affect the outcome of an experiment, yet only one or a few independent variables can be tested. Other variables must be kept as similar as possible between the study groups and are called control variables. For our educational research example, if the control group consisted only of people between the ages of 18 and 20 and the experimental group contained people between the ages of 30 and 35, we would not know if it was the teaching strategy or the students’ ages that played a larger role in the results. To avoid this problem, a good study will be set up so that each group contains students with a similar age profile. In a well-designed educational research study, student age will be a control variable, along with other possibly important factors like gender, past educational achievement, and pre-existing knowledge of the subject area.
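The confounding problem described above can be made concrete with a small sketch. The Python below is illustrative only: the `Student` record, the helper names, the one-year tolerance, and all ages and scores are invented for this example and do not come from any study. It checks whether age is balanced between the two groups before the difference in test scores (the dependent variable) is attributed to the teaching strategy (the independent variable).

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Student:
    age: int            # a control variable
    strategy: str       # independent variable: "new" or "traditional"
    test_score: float   # dependent variable


def group_mean(students, strategy, field):
    """Mean of one field (e.g. "age") within one strategy group."""
    return mean(getattr(s, field) for s in students if s.strategy == strategy)


def is_age_controlled(students, tolerance_years=1.0):
    """True when the two groups have similar mean ages (hypothetical criterion)."""
    gap = abs(group_mean(students, "new", "age")
              - group_mean(students, "traditional", "age"))
    return gap <= tolerance_years


def score_difference(students):
    """Mean test score of the new-strategy group minus the traditional group."""
    return (group_mean(students, "new", "test_score")
            - group_mean(students, "traditional", "test_score"))


# Invented data: age differs between groups, so age is a confound.
confounded = [
    Student(19, "traditional", 70), Student(20, "traditional", 72),
    Student(31, "new", 80), Student(34, "new", 82),
]

# Invented data: both groups share the same mean age, so age is controlled.
balanced = [
    Student(19, "traditional", 70), Student(33, "traditional", 72),
    Student(20, "new", 80), Student(32, "new", 82),
]

confounded_ok = is_age_controlled(confounded)
balanced_ok = is_age_controlled(balanced)
balanced_effect = score_difference(balanced)
```

Both invented data sets show the same score difference, but only in the balanced one can that difference be attributed to the teaching strategy rather than to age.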

What is the independent variable in this experiment?

- Sex (all of the subjects will be female)

- Presence or absence of the HPV vaccine

- Presence or absence of HPV (the virus)

List three control variables other than age.

What is the dependent variable in this experiment?

- Sex (male or female)

- Rates of HPV infection

- Age (years)

- Revision and adaptation. Authored by: Shelli Carter and Lumen Learning. Provided by: Lumen Learning. License: CC BY-NC-SA: Attribution-NonCommercial-ShareAlike

- Scientific Inquiry. Provided by: Open Learning Initiative. Located at: https://oli.cmu.edu/jcourse/workbook/activity/page?context=434a5c2680020ca6017c03488572e0f8. Project: Introduction to Biology (Open + Free). License: CC BY-NC-SA: Attribution-NonCommercial-ShareAlike


The scientific method


Introduction

- Make an observation.

- Ask a question.

- Form a hypothesis, or testable explanation.

- Make a prediction based on the hypothesis.

- Test the prediction.

- Iterate: use the results to make new hypotheses or predictions.

Scientific method example: Failure to toast

1. Make an observation. 2. Ask a question. 3. Propose a hypothesis. 4. Make predictions. 5. Test the predictions.

- If the toaster does toast, then the hypothesis is supported—likely correct.

- If the toaster doesn't toast, then the hypothesis is not supported—likely wrong.

Logical possibility

Practical possibility

Building a body of evidence

6. Iterate.

- If the hypothesis was supported, we might do additional tests to confirm it, or revise it to be more specific. For instance, we might investigate why the outlet is broken.

- If the hypothesis was not supported, we would come up with a new hypothesis. For instance, the next hypothesis might be that there's a broken wire in the toaster.
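The test-and-iterate loop in the toaster example can be sketched in a few lines of Python. Everything here is hypothetical: the `WORLD` dictionary stands in for the real state of the kitchen, and the two prediction functions are invented stand-ins for actually plugging the toaster into another outlet or repairing a wire. The sketch only shows the control flow: test a prediction, and if the hypothesis is not supported, move on to the next one.

```python
from typing import Callable, List, Tuple

# Hypothetical state of the world, hidden from the "experimenter".
WORLD = {"outlet_works": True, "toaster_wire_intact": False}


def toasts_in_other_outlet() -> bool:
    """Prediction from "the outlet is broken": the toaster works in another outlet."""
    return WORLD["toaster_wire_intact"]


def toasts_after_wire_repair() -> bool:
    """Prediction from "a wire in the toaster is broken": toasting resumes after
    the repair. It holds only if the wire really was broken and the outlet works."""
    return WORLD["outlet_works"] and not WORLD["toaster_wire_intact"]


def run_cycle(hypotheses: List[Tuple[str, Callable[[], bool]]]) -> List[Tuple[str, str]]:
    """Test each hypothesis in turn; stop at the first one that is supported."""
    log = []
    for statement, prediction_holds in hypotheses:
        outcome = "supported" if prediction_holds() else "not supported"
        log.append((statement, outcome))
        if outcome == "supported":
            break
    return log


log = run_cycle([
    ("The outlet is broken", toasts_in_other_outlet),
    ("A wire in the toaster is broken", toasts_after_wire_repair),
])
```

In this invented world the first hypothesis is not supported, so the loop iterates to the second. A supported hypothesis is still only likely correct, so further tests would normally follow.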


3.5: Developing A Hypothesis


- University of Missouri–St. Louis


Theories and Hypotheses

Before describing how to develop a hypothesis, it is important to distinguish between a theory and a hypothesis. A theory is a coherent explanation or interpretation of one or more phenomena. Although theories can take a variety of forms, one thing they have in common is that they go beyond the phenomena they explain by including variables, structures, processes, functions, or organizing principles that have not been observed directly. Consider, for example, Zajonc’s theory of social facilitation and social inhibition (1965). He proposed that being watched by others while performing a task creates a general state of physiological arousal, which increases the likelihood of the dominant (most likely) response. So for highly practiced tasks, being watched increases the tendency to make correct responses, but for relatively unpracticed tasks, being watched increases the tendency to make incorrect responses. Notice that this theory, which has come to be called drive theory, provides an explanation of both social facilitation and social inhibition that goes beyond the phenomena themselves by including concepts such as “arousal” and “dominant response,” along with processes such as the effect of arousal on the dominant response.

Outside of science, referring to an idea as a theory often implies that it is untested—perhaps no more than a wild guess. In science, however, the term theory has no such implication. A theory is simply an explanation or interpretation of a set of phenomena. It can be untested, but it can also be extensively tested, well supported, and accepted as an accurate description of the world by the scientific community. The theory of evolution by natural selection, for example, is a theory because it is an explanation of the diversity of life on earth—not because it is untested or unsupported by scientific research. On the contrary, the evidence for this theory is overwhelmingly positive and nearly all scientists accept its basic assumptions as accurate. Similarly, the “germ theory” of disease is a theory because it is an explanation of the origin of various diseases, not because there is any doubt that many diseases are caused by microorganisms that infect the body.

A hypothesis, on the other hand, is a specific prediction about a new phenomenon that should be observed if a particular theory is accurate. It is an explanation that relies on just a few key concepts. Hypotheses are often specific predictions about what will happen in a particular study. They are developed by considering existing evidence and using reasoning to infer what will happen in the specific context of interest. Hypotheses are often, but not always, derived from theories. Some hypotheses are atheoretical, and a theory is developed only after a set of observations has been made. This is because theories are broad in nature and explain larger bodies of data. So if our research question is really original, we may need to collect some data and make some observations before we can develop a broader theory.

Theories and hypotheses always have this if-then relationship. “If drive theory is correct, then cockroaches should run through a straight runway faster and through a branching runway more slowly when other cockroaches are present.” Although hypotheses are usually expressed as statements, they can always be rephrased as questions. “Do cockroaches run through a straight runway faster when other cockroaches are present?” Thus, deriving hypotheses from theories is an excellent way of generating interesting research questions.

But how do researchers derive hypotheses from theories? One way is to generate a research question using the techniques discussed in this chapter and then ask whether any theory implies an answer to that question. For example, you might wonder whether expressive writing about positive experiences improves health as much as expressive writing about traumatic experiences. Although this question is an interesting one on its own, you might then ask whether the habituation theory (the idea that expressive writing causes people to habituate to negative thoughts and feelings) implies an answer. In this case, it seems clear that if the habituation theory is correct, then expressive writing about positive experiences should not be effective because it would not cause people to habituate to negative thoughts and feelings. A second way to derive hypotheses from theories is to focus on some component of the theory that has not yet been directly observed. For example, a researcher could focus on the process of habituation, perhaps hypothesizing that people should show fewer signs of emotional distress with each new writing session.

Among the very best hypotheses are those that distinguish between competing theories. For example, Norbert Schwarz and his colleagues considered two theories of how people make judgments about themselves, such as how assertive they are (Schwarz et al., 1991). Both theories held that such judgments are based on relevant examples that people bring to mind. However, one theory was that people base their judgments on the number of examples they bring to mind and the other was that people base their judgments on how easily they bring those examples to mind. To test these theories, the researchers asked people to recall either six times when they were assertive (which is easy for most people) or 12 times (which is difficult for most people). Then they asked them to judge their own assertiveness. Note that the number-of-examples theory implies that people who recalled 12 examples should judge themselves to be more assertive because they recalled more examples, but the ease-of-retrieval theory implies that participants who recalled six examples should judge themselves as more assertive because recalling the examples was easier. Thus the two theories made opposite predictions so that only one of the predictions could be confirmed. The surprising result was that participants who recalled fewer examples judged themselves to be more assertive, providing particularly convincing evidence in favor of the ease-of-retrieval theory over the number-of-examples theory.
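The logic of pitting two theories against each other can be written out as a short Python sketch. This is not the researchers' analysis; the function names are invented, and the ease-of-retrieval theory is modeled with the simplifying assumption that recalling fewer examples is always easier. Each theory is a function that predicts which recall condition should produce higher self-rated assertiveness, and the reported result then picks out the theory whose prediction matched.

```python
# Recall conditions from the study design described above.
EASY_RECALL = 6    # six examples: easy for most people
HARD_RECALL = 12   # twelve examples: difficult for most people
CONDITIONS = (EASY_RECALL, HARD_RECALL)


def number_of_examples_theory(conditions):
    """Predicts higher self-rated assertiveness where more examples are recalled."""
    return max(conditions)


def ease_of_retrieval_theory(conditions):
    """Predicts higher self-rated assertiveness where recall is easier.

    Assumption for this sketch: fewer requested examples means easier recall.
    """
    return min(conditions)


THEORIES = {
    "number_of_examples": number_of_examples_theory,
    "ease_of_retrieval": ease_of_retrieval_theory,
}


def theories_consistent_with(observed_winner, theories, conditions):
    """Names of the theories whose prediction matches the observed result."""
    return [name for name, predict in theories.items()
            if predict(conditions) == observed_winner]


# Reported result: the six-example group judged themselves more assertive.
OBSERVED_WINNER = EASY_RECALL
supported = theories_consistent_with(OBSERVED_WINNER, THEORIES, CONDITIONS)
```

Because the two functions return different conditions, any observed outcome can match at most one of them, which is what makes this kind of hypothesis so informative.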

Theory Testing

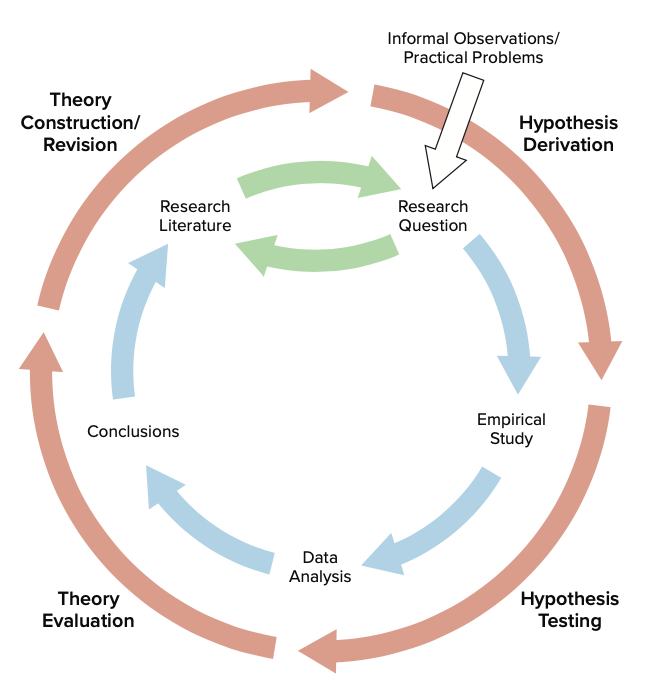

The primary way that scientific researchers use theories is sometimes called the hypothetico-deductive method (although this term is much more likely to be used by philosophers of science than by scientists themselves). Researchers begin with a set of phenomena and either construct a theory to explain or interpret the phenomena or choose an existing theory to work with. They then make a prediction about some new phenomenon that should be observed if the theory is correct. Again, this prediction is called a hypothesis. The researchers then conduct an empirical study to test the hypothesis. Finally, they reevaluate the theory in light of the new results and revise it if necessary. This process is usually conceptualized as a cycle because the researchers can then derive a new hypothesis from the revised theory, conduct a new empirical study to test the hypothesis, and so on. As the figure above shows, this approach meshes nicely with the model of scientific research in psychology presented earlier in the textbook, creating a more detailed model of “theoretically motivated” or “theory-driven” research.

As an example, let us consider Zajonc’s research on social facilitation and inhibition. He started with a somewhat contradictory pattern of results from the research literature. He then constructed his drive theory, according to which being watched by others while performing a task causes physiological arousal, which increases an organism’s tendency to make the dominant response. This theory predicts social facilitation for well-learned tasks and social inhibition for poorly learned tasks. He now had a theory that organized previous results in a meaningful way, but he still needed to test it. He hypothesized that if his theory was correct, he should observe that the presence of others improves performance in a simple laboratory task but inhibits performance in a difficult version of the very same laboratory task. To test this hypothesis, one of the studies he conducted used cockroaches as subjects (Zajonc, Heingartner, & Herman, 1969). The cockroaches ran either down a straight runway (an easy task for a cockroach) or through a cross-shaped maze (a difficult task for a cockroach) to escape into a dark chamber when a light was shined on them. They did this either while alone or in the presence of other cockroaches in clear plastic “audience boxes.” Zajonc found that cockroaches in the straight runway reached their goal more quickly in the presence of other cockroaches, but cockroaches in the cross-shaped maze reached their goal more slowly when they were in the presence of other cockroaches. Thus he confirmed his hypothesis and provided support for his drive theory. (Zajonc also showed that drive theory applied to humans [Zajonc & Sales, 1966] and in many other studies afterward.)

Incorporating Theory into Your Research

When you write your research report or plan your presentation, be aware that there are two basic ways that researchers usually include theory. The first is to raise a research question, answer that question by conducting a new study, and then offer one or more theories (usually more) to explain or interpret the results. This format works well for applied research questions and for research questions that existing theories do not address. The second way is to describe one or more existing theories, derive a hypothesis from one of those theories, test the hypothesis in a new study, and finally reevaluate the theory. This format works well when there is an existing theory that addresses the research question—especially if the resulting hypothesis is surprising or conflicts with a hypothesis derived from a different theory.

Using theories in your research will not only give you guidance in coming up with experiment ideas and possible projects but also lend legitimacy to your work. Psychologists have been interested in a variety of human behaviors and have developed many theories along the way. Using established theories will help you break new ground as a researcher, not limit you from developing your own ideas.

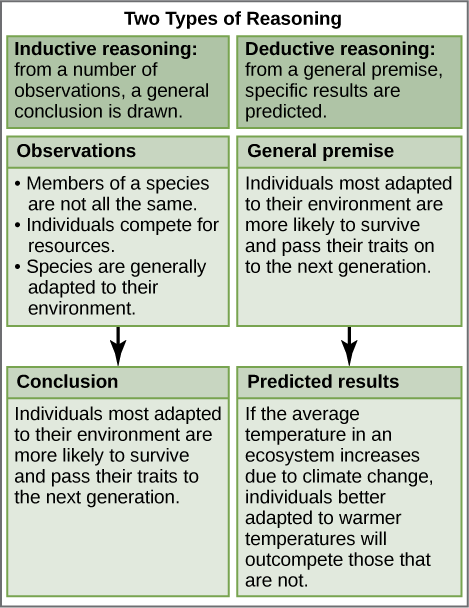

Characteristics of a Good Hypothesis

There are three general characteristics of a good hypothesis. First, a good hypothesis must be testable and falsifiable. We must be able to test the hypothesis using the methods of science, and it must be possible to gather evidence that will disconfirm the hypothesis if it is indeed false. Second, a good hypothesis must be logical. As described above, hypotheses are more than just a random guess. Hypotheses should be informed by previous theories or observations and logical reasoning. Typically, we begin with a broad and general theory and use deductive reasoning to generate a more specific hypothesis to test based on that theory. Occasionally, however, when there is no theory to inform our hypothesis, we use inductive reasoning, which involves using specific observations or research findings to form a more general hypothesis. Finally, the hypothesis should be positive. That is, the hypothesis should make a positive statement about the existence of a relationship or effect, rather than a statement that a relationship or effect does not exist. As scientists, we don’t set out to show that relationships do not exist or that effects do not occur, so our hypotheses should not be worded in a way to suggest that an effect or relationship does not exist. The nature of science is to assume that something does not exist and then seek to find evidence to prove this wrong, to show that it really does exist. That may seem backward to you but that is the nature of the scientific method. The underlying reason for this is beyond the scope of this chapter but it has to do with statistical theory.
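The idea of assuming that an effect does not exist and then looking for evidence against that assumption can be illustrated with a small statistical sketch. The chapter does not name a specific method, so the exact permutation test below is only one illustrative choice, and the scores are invented. The function assumes the group labels are interchangeable (no effect) and asks how often a relabeling of the pooled scores produces a difference in means at least as large as the one observed.

```python
from itertools import combinations
from statistics import mean


def exact_permutation_p_value(treatment, control):
    """One-sided exact permutation test on the difference in group means.

    Assumes "no effect": every way of splitting the pooled scores into groups
    of the original sizes is equally likely. Returns the fraction of splits
    whose mean difference is at least as large as the observed one.
    """
    observed = mean(treatment) - mean(control)
    pooled = list(treatment) + list(control)
    indices = range(len(pooled))
    extreme = 0
    total = 0
    for chosen in combinations(indices, len(treatment)):
        chosen_set = set(chosen)
        group_a = [pooled[i] for i in chosen]
        group_b = [pooled[i] for i in indices if i not in chosen_set]
        if mean(group_a) - mean(group_b) >= observed:
            extreme += 1
        total += 1
    return extreme / total


# Invented test scores for illustration only.
new_strategy = [80, 82, 85, 88]
traditional = [70, 72, 75, 78]

p_value = exact_permutation_p_value(new_strategy, traditional)
```

A small value means the observed difference would be rare if the effect truly did not exist, which counts as evidence against that assumption. With only four scores per group there are just 70 possible splits, so this example is far too small to support real conclusions.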


NCBI Bookshelf. A service of the National Library of Medicine, National Institutes of Health.

National Academy of Sciences (US), National Academy of Engineering (US) and Institute of Medicine (US) Panel on Scientific Responsibility and the Conduct of Research. Responsible Science: Ensuring the Integrity of the Research Process: Volume I. Washington (DC): National Academies Press (US); 1992.

Responsible Science: Ensuring the Integrity of the Research Process: Volume I.


2 Scientific Principles and Research Practices

Until the past decade, scientists, research institutions, and government agencies relied solely on a system of self-regulation based on shared ethical principles and generally accepted research practices to ensure integrity in the research process. Among the very basic principles that guide scientists, as well as many other scholars, are those expressed as respect for the integrity of knowledge, collegiality, honesty, objectivity, and openness. These principles are at work in the fundamental elements of the scientific method, such as formulating a hypothesis, designing an experiment to test the hypothesis, and collecting and interpreting data. In addition, more particular principles characteristic of specific scientific disciplines influence the methods of observation; the acquisition, storage, management, and sharing of data; the communication of scientific knowledge and information; and the training of younger scientists. 1 How these principles are applied varies considerably among the several scientific disciplines, different research organizations, and individual investigators.

The basic and particular principles that guide scientific research practices exist primarily in an unwritten code of ethics. Although some have proposed that these principles should be written down and formalized, 2 the principles and traditions of science are, for the most part, conveyed to successive generations of scientists through example, discussion, and informal education. As was pointed out in an early Academy report on responsible conduct of research in the health sciences, “a variety of informal and formal practices and procedures currently exist in the academic research environment to assure and maintain the high quality of research conduct” (IOM, 1989a, p. 18).

Physicist Richard Feynman invoked the informal approach to communicating the basic principles of science in his 1974 commencement address at the California Institute of Technology (Feynman, 1985):

[There is an] idea that we all hope you have learned in studying science in school—we never explicitly say what this is, but just hope that you catch on by all the examples of scientific investigation. . . . It's a kind of scientific integrity, a principle of scientific thought that corresponds to a kind of utter honesty—a kind of leaning over backwards. For example, if you're doing an experiment, you should report everything that you think might make it invalid—not only what you think is right about it; other causes that could possibly explain your results; and things you thought of that you've eliminated by some other experiment, and how they worked—to make sure the other fellow can tell they have been eliminated.

Details that could throw doubt on your interpretation must be given, if you know them. You must do the best you can—if you know anything at all wrong, or possibly wrong—to explain it. If you make a theory, for example, and advertise it, or put it out, then you must also put down all the facts that disagree with it, as well as those that agree with it. In summary, the idea is to try to give all the information to help others to judge the value of your contribution, not just the information that leads to judgment in one particular direction or another. (pp. 311-312)

Many scholars have noted the implicit nature and informal character of the processes that often guide scientific research practices and inference. 3 Research in well-established fields of scientific knowledge, guided by commonly accepted theoretical paradigms and experimental methods, involves few disagreements about what is recognized as sound scientific evidence. Even in a revolutionary scientific field like molecular biology, students and trainees have learned the basic principles governing judgments made in such standardized procedures as cloning a new gene and determining its sequence.

In evaluating practices that guide research endeavors, it is important to consider the individual character of scientific fields. Research fields that yield highly replicable results, such as ordinary organic chemical structures, are quite different from fields such as cellular immunology, which are in a much earlier stage of development and accumulate much erroneous or uninterpretable material before the pieces fit together coherently. When a research field is too new or too fragmented to support consensual paradigms or established methods, different scientific practices can emerge.

THE NATURE OF SCIENCE

In broadest terms, scientists seek a systematic organization of knowledge about the universe and its parts. This knowledge is based on explanatory principles whose verifiable consequences can be tested by independent observers. Science encompasses a large body of evidence collected by repeated observations and experiments. Although its goal is to approach true explanations as closely as possible, its investigators claim no final or permanent explanatory truths. Science changes. It evolves. Verifiable facts always take precedence. . . .

Scientists operate within a system designed for continuous testing, where corrections and new findings are announced in refereed scientific publications. The task of systematizing and extending the understanding of the universe is advanced by eliminating disproved ideas and by formulating new tests of others until one emerges as the most probable explanation for any given observed phenomenon. This is called the scientific method.

An idea that has not yet been sufficiently tested is called a hypothesis. Different hypotheses are sometimes advanced to explain the same factual evidence. Rigor in the testing of hypotheses is the heart of science. If no verifiable tests can be formulated, the idea is called an ad hoc hypothesis, one that is not fruitful; such hypotheses fail to stimulate research and are unlikely to advance scientific knowledge.

A fruitful hypothesis may develop into a theory after substantial observational or experimental support has accumulated. When a hypothesis has survived repeated opportunities for disproof and when competing hypotheses have been eliminated as a result of failure to produce the predicted consequences, that hypothesis may become the accepted theory explaining the original facts.

Scientific theories are also predictive. They allow us to anticipate yet unknown phenomena and thus to focus research on more narrowly defined areas. If the results of testing agree with predictions from a theory, the theory is provisionally corroborated. If not, it is proved false and must be either abandoned or modified to account for the inconsistency.

Scientific theories, therefore, are accepted only provisionally. It is always possible that a theory that has withstood previous testing may eventually be disproved. But as theories survive more tests, they are regarded with higher levels of confidence. . . .

In science, then, facts are determined by observation or measurement of natural or experimental phenomena. A hypothesis is a proposed explanation of those facts. A theory is a hypothesis that has gained wide acceptance because it has survived rigorous investigation of its predictions. . . .

. . . science accommodates, indeed welcomes, new discoveries: its theories change and its activities broaden as new facts come to light or new potentials are recognized. Examples of events changing scientific thought are legion. . . . Truly scientific understanding cannot be attained or even pursued effectively when explanations not derived from or tested by the scientific method are accepted.

SOURCE: National Academy of Sciences and National Research Council (1984), pp. 8-11.

A well-established discipline can also experience profound changes during periods of new conceptual insights. In these moments, when scientists must cope with shifting concepts, the matter of what counts as scientific evidence can be subject to dispute. Historian Jan Sapp has described the complex interplay between theory and observation that characterizes the operation of scientific judgment in the selection of research data during revolutionary periods of paradigmatic shift (Sapp, 1990, p. 113):

What “liberties” scientists are allowed in selecting positive data and omitting conflicting or “messy” data from their reports is not defined by any timeless method. It is a matter of negotiation. It is learned, acquired socially; scientists make judgments about what fellow scientists might expect in order to be convincing. What counts as good evidence may be more or less well-defined after a new discipline or specialty is formed; however, at revolutionary stages in science, when new theories and techniques are being put forward, when standards have yet to be negotiated, scientists are less certain as to what others may require of them to be deemed competent and convincing.

Explicit statements of the values and traditions that guide research practice have evolved through the disciplines and have been given in textbooks on scientific methodologies. 4 In the past few decades, many scientific and engineering societies representing individual disciplines have also adopted codes of ethics (see Volume II of this report for examples), 5 and more recently, a few research institutions have developed guidelines for the conduct of research (see Chapter 6 ).

But the responsibilities of the research community and research institutions in assuring individual compliance with scientific principles, traditions, and codes of ethics are not well defined. In recent years, the absence of formal statements by research institutions of the principles that should guide research conducted by their members has prompted criticism that scientists and their institutions lack a clearly identifiable means to ensure the integrity of the research process.

FACTORS AFFECTING THE DEVELOPMENT OF RESEARCH PRACTICES

In all of science, but with unequal emphasis in the several disciplines, inquiry proceeds based on observation and experimentation, the exercising of informed judgment, and the development of theory. Research practices are influenced by a variety of factors, including:

- The general norms of science;

- The nature of particular scientific disciplines and the traditions of organizing a specific body of scientific knowledge;

- The example of individual scientists, particularly those who hold positions of authority or respect based on scientific achievements;

- The policies and procedures of research institutions and funding agencies; and

- Socially determined expectations.

The first three factors have been important in the evolution of modern science. The latter two have acquired more importance in recent times.

Norms of Science

As members of a professional group, scientists share a set of common values, aspirations, training, and work experiences. 6 Scientists are distinguished from other groups by their beliefs about the kinds of relationships that should exist among them, about the obligations incurred by members of their profession, and about their role in society. A set of general norms is embedded in the methods and the disciplines of science. These norms guide individual scientists in the organization and performance of their research efforts and also provide a basis for nonscientists to understand and evaluate the performance of scientists.

But there is uncertainty about the extent to which individual scientists adhere to such norms. Most social scientists conclude that all behavior is influenced to some degree by norms that reflect socially or morally supported patterns of preference when alternative courses of action are possible. However, perfect conformity with any relevant set of norms is always lacking for a variety of reasons: the existence of competing norms, constraints, and obstacles in organizational or group settings, and personality factors. The strength of these influences, and the circumstances that may affect them, are not well understood.

In a classic statement of the importance of scientific norms, Robert Merton specified four norms as essential for the effective functioning of science: communism (by which Merton meant the communal sharing of ideas and findings), universalism, disinterestedness, and organized skepticism (Merton, 1973). Neither Merton nor other sociologists of science have provided solid empirical evidence for the degree of influence of these norms in a representative sample of scientists. In opposition to Merton, a British sociologist of science, Michael Mulkay, has argued that these norms are “ideological” covers for self-interested behavior that reflects status and politics (Mulkay, 1975). And the British physicist and sociologist of science John Ziman, in an article synthesizing critiques of Merton's formulation, has specified a set of structural factors in the bureaucratic and corporate research environment that impede the realization of that particular set of norms: the proprietary nature of research, the local importance and funding of research, the authoritarian role of the research manager, commissioned research, and the required expertise in understanding how to use modern instruments (Ziman, 1990).

It is clear that the specific influence of norms on the development of scientific research practices is simply not known and that further study of key determinants is required, both theoretically and empirically. Commonsense views, ideologies, and anecdotes will not support a conclusive appraisal.

Individual Scientific Disciplines

Science comprises individual disciplines that reflect historical developments and the organization of natural and social phenomena for study. Social scientists may have methods for recording research data that differ from the methods of biologists, and scientists who depend on complex instrumentation may have authorship practices different from those of scientists who work in small groups or carry out field studies. Even within a discipline, experimentalists engage in research practices that differ from the procedures followed by theorists.

Disciplines are the “building blocks of science,” and they “designate the theories, problems, procedures, and solutions that are prescribed, proscribed, permitted, and preferred” (Zuckerman, 1988a, p. 520). The disciplines have traditionally provided the vital connections between scientific knowledge and its social organization. Scientific societies and scientific journals, some of which have tens of thousands of members and readers, and the peer review processes used by journals and research sponsors are visible forms of the social organization of the disciplines.

The power of the disciplines to shape research practices and standards is derived from their ability to provide a common frame of reference in evaluating the significance of new discoveries and theories in science. It is the members of a discipline, for example, who determine what is “good biology” or “good physics” by examining the implications of new research results. The disciplines' abilities to influence research standards are affected by the subjective quality of peer review and the extent to which factors other than disciplinary quality may affect judgments about scientific achievements. Disciplinary departments rely primarily on informal social and professional controls to promote responsible behavior and to penalize deviant behavior. These controls, such as social ostracism, the denial of letters of support for future employment, and the withholding of research resources, can deter and penalize unprofessional behavior within research institutions. 7

Many scientific societies representing individual disciplines have adopted explicit standards in the form of codes of ethics or guidelines governing, for example, the editorial practices of their journals and other publications. 8 Many societies have also established procedures for enforcing their standards. In the past decade, the societies' codes of ethics—which historically have been exhortations to uphold high standards of professional behavior—have incorporated specific guidelines relevant to authorship practices, data management, training and mentoring, conflict of interest, reporting research findings, treatment of confidential or proprietary information, and addressing error or misconduct.

The Role of Individual Scientists and Research Teams

The methods by which individual scientists and students are socialized in the principles and traditions of science are poorly understood. The principles of science and the practices of the disciplines are transmitted by scientists in classroom settings and, perhaps more importantly, in research groups and teams. The social setting of the research group is a strong and valuable characteristic of American science and education. The dynamics of research groups can foster—or inhibit—innovation, creativity, education, and collaboration.

One author of a historical study of research groups in the chemical and biochemical sciences has observed that the laboratory director or group leader is the primary determinant of a group's practices (Fruton, 1990). Individuals in positions of authority are visible and are also influential in determining funding and other support for the career paths of their associates and students. Research directors and department chairs, by virtue of personal example, thus can reinforce, or weaken, the power of disciplinary standards and scientific norms to affect research practices.

To the extent that the behavior of senior scientists conforms with general expectations for appropriate scientific and disciplinary practice, the research system is coherent and mutually reinforcing. When the behavior of research directors or department chairs diverges from expectations for good practice, however, the expected norms of science become ambiguous, and their effects are weakened. Personal example and the perceived behavior of role models and leaders in the research community can therefore be powerful stimuli in shaping the research practices of colleagues, associates, and students.

The role of individuals in influencing research practices can vary by research field, institution, or time. The standards and expectations for behavior exemplified by scientists who are highly regarded for their technical competence or creative insight may have greater influence than the standards of others. Individual and group behaviors may also be more influential in times of uncertainty and change in science, especially when new scientific theories, paradigms, or institutional relationships are being established.

Institutional Policies

Universities, independent institutes, and government and industrial research organizations create the environment in which research is done. As the recipients of federal funds and the institutional sponsors of research activities, administrative officers must comply with regulatory and legal requirements that accompany public support. They are required, for example, “to foster a research environment that discourages misconduct in all research and that deals forthrightly with possible misconduct” (DHHS, 1989a, p. 32451).

Academic institutions traditionally have relied on their faculty to ensure that appropriate scientific and disciplinary standards are maintained. A few universities and other research institutions have also adopted policies or guidelines to clarify the principles that their members are expected to observe in the conduct of scientific research. 9 In addition, as a result of several highly publicized incidents of misconduct in science and the subsequent enactment of governmental regulations, most major research institutions have now adopted policies and procedures for handling allegations of misconduct in science.

Institutional policies governing research practices can have a powerful effect on those practices if they are commensurate with the norms that apply to a wide spectrum of research investigators. In particular, the process of adopting and implementing strong institutional policies can sensitize the members of those institutions to the potential for ethical problems in their work. Institutional policies can establish explicit standards that institutional officers then have the power to enforce with sanctions and penalties.

Institutional policies are limited, however, in their ability to specify the details of every problematic situation, and they can weaken or displace individual professional judgment in such situations. Currently, academic institutions have very few formal policies and programs in specific areas such as authorship, communication and publication, and training and supervision.

Government Regulations and Policies

Government agencies have developed specific rules and procedures that directly affect research practices in areas such as laboratory safety, the treatment of human and animal research subjects, and the use of toxic or potentially hazardous substances in research.

But policies and procedures adopted by some government research agencies to address misconduct in science (see Chapter 5) represent a significant new regulatory development in the relationships between research institutions and government sponsors. The standards and criteria used to monitor institutional compliance with an increasing number of government regulations and policies affecting research practices have been a source of significant disagreement and tension within the research community.

In recent years, some government research agencies have also adopted policies and procedures for the treatment of research data and materials in their extramural research programs. For example, the National Science Foundation (NSF) has implemented a data-sharing policy through program management actions, including proposal review and award negotiations and conditions. The NSF policy acknowledges that grantee institutions will “keep principal rights to intellectual property conceived under NSF sponsorship” to encourage appropriate commercialization of the results of research (NSF, 1989b, p. 1). However, the NSF policy emphasizes “that retention of such rights does not reduce the responsibility of researchers and institutions to make results and supporting materials openly accessible” (p. 1).

In seeking to foster data sharing under federal grant awards, the government relies extensively on the scientific traditions of openness and sharing. Research agency officials have observed candidly that if the vast majority of scientists were not so committed to openness and dissemination, government policy might require more aggressive action. But the principles that have traditionally characterized scientific inquiry can be difficult to maintain. For example, NSF staff have commented, “Unless we can arrange real returns or incentives for the original investigator, either in financial support or in professional recognition, another researcher's request for sharing is likely to present itself as ‘hassle’—an unwelcome nuisance and diversion. Therefore, we should hardly be surprised if researchers display some reluctance to share in practice, however much they may declare and genuinely feel devotion to the ideal of open scientific communication” (NSF, 1989a, p. 4).

Social Attitudes and Expectations

Research scientists are part of a larger human society that has recently experienced profound changes in attitudes about ethics, morality, and accountability in business, the professions, and government. These attitudes have included greater skepticism of the authority of experts and broader expectations about the need for visible mechanisms to assure proper research practices, especially in areas that affect the public welfare. Social attitudes are also having a more direct influence on research practices as science achieves a more prominent and public role in society. In particular, concern about waste, fraud, and abuse involving government funds has emerged as a factor that now directly influences the practices of the research community.

Varying historical and conceptual perspectives also can affect expectations about standards of research practice. For example, some journalists have criticized several prominent scientists, such as Mendel, Newton, and Millikan, because they “cut corners in order to make their theories prevail” (Broad and Wade, 1982, p. 35). The criticism suggests that all scientists at all times, in all phases of their work, should be bound by identical standards.

Yet historical studies of the social context in which scientific knowledge has been attained suggest that modern criticism of early scientific work often imposes contemporary standards of objectivity and empiricism that have in fact been developed in an evolutionary manner. 10 Holton has argued, for example, that in selecting data for publication, Millikan exercised creative insight in excluding unreliable data resulting from experimental error. But such practices, by today's standards, would not be acceptable without reporting the justification for omission of recorded data.

In the early stages of pioneering studies, particularly when fundamental hypotheses are subject to change, scientists must be free to use creative judgment in deciding which data are truly significant. In such moments, the standards of proof may be quite different from those that apply at stages when confirmation and consensus are sought from peers. Scientists must consistently guard against self-deception, however, particularly when theoretical prejudices tend to overwhelm the skepticism and objectivity basic to experimental practices.

In discussing “the theory-ladenness of observations,” Sapp (1990) observed the fundamental paradox that can exist in determining the “appropriateness” of data selection in certain experiments done in the past: scientists often craft their experiments so that the scientific problems and research subjects conform closely with the theory that they expect to verify or refute. Thus, in some cases, their observations may come closer to theoretical expectations than what might be statistically proper.