Data Analysis in Research: Types & Methods

Content Index

- What is data analysis in research?

- Why analyze data in research?

- Types of data in research

- Finding patterns in the qualitative data

- Methods used for data analysis in qualitative research

- Preparing data for analysis

- Methods used for data analysis in quantitative research

- Considerations in research data analysis

What is data analysis in research?

According to LeCompte and Schensul, research data analysis is a process researchers use to reduce data to a story and interpret it to derive insights. The process reduces a large chunk of data into smaller fragments that make sense.

Three essential things occur during the data analysis process. The first is data organization. The second is data reduction through summarization and categorization, which helps find patterns and themes in the data for easy identification and linking. The third is the analysis itself, which researchers carry out in both top-down and bottom-up fashion.

On the other hand, Marshall and Rossman describe data analysis as a messy, ambiguous, and time-consuming but creative and fascinating process through which a mass of collected data is brought to order, structure and meaning.

We can say that “the data analysis and data interpretation is a process representing the application of deductive and inductive logic to the research and data analysis.”

Why analyze data in research?

Researchers rely heavily on data because they have a story to tell or research problems to solve. Research starts with a question, and data is nothing but an answer to that question. But what if there is no question to ask? It is possible to explore data even without a problem. We call this ‘data mining’, and it often reveals interesting patterns within the data that are worth exploring.

Regardless of the type of data researchers explore, their mission and their audience’s vision guide them in finding the patterns that shape the story they want to tell. One of the essential things expected of researchers while analyzing data is to stay open and remain unbiased toward unexpected patterns, expressions, and results. Remember that data analysis sometimes tells the most unforeseen yet exciting stories, ones nobody expected when the analysis began. Therefore, rely on the data you have at hand and enjoy the journey of exploratory research.

Types of data in research

Every kind of data describes something once a specific value is assigned to it. For analysis, these values need to be organized, processed, and presented in a given context to be useful. Data can take different forms; here are the primary data types.

- Qualitative data: When the data presented consists of words and descriptions, we call it qualitative data. Although you can observe this data, it is subjective and harder to analyze in research, especially for comparison. Example: anything describing taste, experience, texture, or an opinion is considered qualitative data. This type of data is usually collected through focus groups, personal qualitative interviews, qualitative observation, or open-ended questions in surveys.

- Quantitative data: Any data expressed in numbers or numerical figures is called quantitative data. This type of data can be sorted into categories, grouped, measured, calculated, or ranked. Example: answers to questions about age, rank, cost, length, weight, scores, and so on all come under this type of data. You can present such data in graphs or charts, or apply statistical analysis methods to it. Outcomes Measurement Systems (OMS) questionnaires in surveys are a significant source of numeric data.

- Categorical data: This is data presented in groups. An item included in categorical data cannot belong to more than one group. Example: a person responding to a survey about their lifestyle, marital status, smoking habit, or drinking habit provides categorical data. A chi-square test is a standard method used to analyze this data.
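To make the chi-square idea concrete, here is a minimal sketch in plain Python. The survey responses, the variable names, and the choice of a 0.05 significance level are all assumptions made for illustration; the statistic itself is the usual sum of (observed - expected)² / expected over the cells of a two-way table.

```python
from collections import Counter

# Hypothetical survey responses: (marital_status, smoker) per respondent.
responses = (
    [("married", "yes")] * 20 + [("married", "no")] * 30
    + [("single", "yes")] * 30 + [("single", "no")] * 20
)

def chi_square(pairs):
    """Return (statistic, degrees_of_freedom) for a two-way table of counts."""
    observed = Counter(pairs)
    row_totals = Counter(row for row, _ in pairs)
    col_totals = Counter(col for _, col in pairs)
    n = len(pairs)
    stat = 0.0
    for row in row_totals:
        for col in col_totals:
            # Expected count if the two variables were independent.
            expected = row_totals[row] * col_totals[col] / n
            stat += (observed[(row, col)] - expected) ** 2 / expected
    dof = (len(row_totals) - 1) * (len(col_totals) - 1)
    return stat, dof

stat, dof = chi_square(responses)
CRITICAL_0_05_DF1 = 3.841  # chi-square critical value for df = 1, alpha = 0.05
print(f"chi2 = {stat:.2f}, df = {dof}, significant = {stat > CRITICAL_0_05_DF1}")
```

With these made-up counts every expected cell is 25, so the statistic comes out at 4.0 with one degree of freedom, just above the 3.841 threshold.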

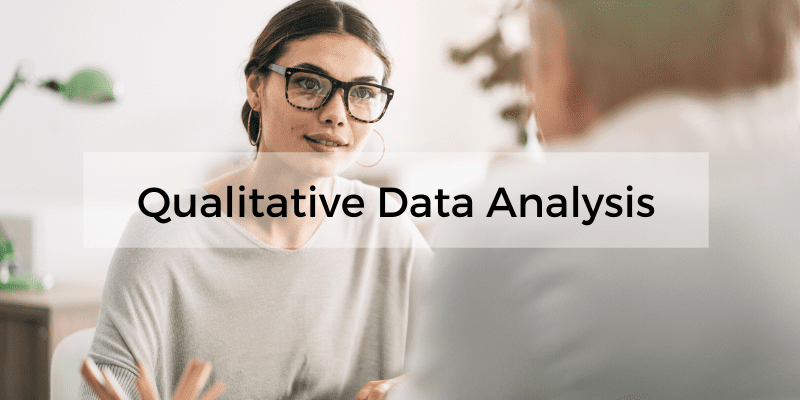

Data analysis in qualitative research

Data analysis in qualitative research works a little differently from the analysis of numerical data, because qualitative data is made up of words, descriptions, images, objects, and sometimes symbols. Getting insight from such complicated information is itself a complicated process, so it is typically used for exploratory research and data analysis.

Finding patterns in the qualitative data

Although there are several ways to find patterns in textual information, the word-based method is the most relied-upon and widely used technique for research and data analysis. Notably, the data analysis process in qualitative research is largely manual: researchers usually read the available data and look for repetitive or commonly used words.

For example, while studying data collected from African countries to understand the most pressing issues people face, researchers might find “food” and “hunger” are the most commonly used words and will highlight them for further analysis.
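The word-based method described above is usually done by reading, but the counting step can be sketched in a few lines of Python. The responses and the tiny stop-word list below are invented for illustration only; a real study would use its own transcripts and a fuller stop-word list.

```python
import re
from collections import Counter

# Hypothetical open-ended responses; real data would come from transcripts.
responses = [
    "Food is scarce and hunger is common in our village",
    "We worry about hunger every dry season",
    "The price of food keeps rising",
]

# A tiny, illustrative stop-word list (an assumption, not a standard one).
STOP_WORDS = {"is", "and", "in", "our", "we", "about", "every", "the", "of"}

def word_counts(texts):
    """Count lowercase words across all texts, skipping stop words."""
    words = []
    for text in texts:
        tokens = re.findall(r"[a-z']+", text.lower())
        words += [w for w in tokens if w not in STOP_WORDS]
    return Counter(words)

counts = word_counts(responses)
print(counts.most_common(2))  # [('food', 2), ('hunger', 2)]
```

The frequent words are only a starting point; the researcher still has to read the surrounding text to decide what they mean.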

Keyword in context is another widely used word-based technique. In this method, the researcher tries to understand a concept by analyzing the context in which participants use a particular keyword.

For example, researchers studying the concept of ‘diabetes’ amongst respondents might analyze when and how each respondent used or referred to the word ‘diabetes.’
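A rough sketch of the keyword-in-context idea follows. The function name `keyword_in_context`, the three-word window, and the sample transcript are all hypothetical; the point is only to show each occurrence of a keyword alongside the words around it.

```python
def keyword_in_context(text, keyword, window=3):
    """Return each occurrence of keyword with `window` words on either side."""
    words = text.split()
    hits = []
    for i, word in enumerate(words):
        # Compare case-insensitively and ignore attached punctuation.
        if word.lower().strip(".,;:!?'\"") == keyword.lower():
            left = " ".join(words[max(0, i - window):i])
            right = " ".join(words[i + 1:i + 1 + window])
            hits.append((left, word, right))
    return hits

# Hypothetical interview excerpt.
transcript = (
    "My mother was diagnosed with diabetes last year. "
    "Since then I think diabetes is mostly about diet."
)
hits = keyword_in_context(transcript, "diabetes")
for left, word, right in hits:
    print(f"{left} [{word}] {right}")
```

Reading the two hits side by side shows one respondent using the word in a medical context and the other in a lifestyle context, which is the kind of distinction this technique is meant to surface.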

The scrutiny-based technique is another highly recommended text analysis method for identifying patterns in qualitative data. Compare and contrast is the most widely used method under this technique; it examines how pieces of text are similar to or different from each other.

For example, to find out the “importance of a resident doctor in a company,” the collected data is divided into people who think it is necessary to hire a resident doctor and those who think it is unnecessary. Compare and contrast works well for analyzing polls with single-answer question types.

Metaphors can be used to reduce the data pile and find patterns in it so that it becomes easier to connect data with theory.

Variable partitioning is another technique, used to split variables so that researchers can find more coherent descriptions and explanations in an enormous amount of data.

Methods used for data analysis in qualitative research

There are several techniques for analyzing data in qualitative research; here are some commonly used methods:

- Content Analysis: This is widely accepted and the most frequently employed technique for data analysis in research methodology. It can be used to analyze documented information from text, images, and sometimes physical items. When and where to use this method depends on the research questions.

- Narrative Analysis: This method is used to analyze content gathered from various sources such as personal interviews, field observation, and surveys. Most of the time, it focuses on the stories or opinions people share to find answers to the research questions.

- Discourse Analysis: Similar to narrative analysis, discourse analysis is used to analyze interactions with people. However, this method considers the social context in which the communication between researcher and respondent takes place. In addition, discourse analysis takes the respondent’s lifestyle and day-to-day environment into account when deriving any conclusion.

- Grounded Theory: When you want to explain why a particular phenomenon happened, grounded theory is the best resort for analyzing qualitative data. It is applied to study data about a host of similar cases occurring in different settings. Researchers using this method might alter explanations or produce new ones until they arrive at a conclusion.

Data analysis in quantitative research

Preparing data for analysis

The first stage in research and data analysis is to prepare the data for analysis so that the nominal data can be converted into something meaningful. Data preparation consists of the phases below.

Phase I: Data Validation

Data validation is done to check whether the collected data sample meets the pre-set standards or is a biased sample. It is divided into four stages:

- Fraud: To ensure an actual human being records each response to the survey or the questionnaire

- Screening: To make sure each participant or respondent is selected or chosen in compliance with the research criteria

- Procedure: To ensure ethical standards were maintained while collecting the data sample

- Completeness: To ensure that the respondent has answered all the questions in an online survey, or that the interviewer has asked all the questions devised in the questionnaire.

Phase II: Data Editing

More often than not, an extensive research data sample comes loaded with errors. Respondents sometimes fill in fields incorrectly or skip them accidentally. Data editing is a process in which researchers confirm that the provided data is free of such errors. They need to conduct basic checks and outlier checks to edit the raw data and make it ready for analysis.
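As one possible illustration of a basic check and an outlier check, the sketch below counts skipped answers and flags values outside 1.5 times the interquartile range. The IQR rule is a common convention chosen here as an assumption; the article does not prescribe a specific rule, and the ages are made up.

```python
import statistics

def flag_outliers(values, k=1.5):
    """Return values lying more than k * IQR outside the quartiles."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]

# Hypothetical raw survey field: None marks a skipped answer, 240 a likely typo.
raw_ages = [23, 25, None, 27, 29, 31, 33, 35, 240]

ages = [a for a in raw_ages if a is not None]   # basic check: drop missing values
missing = len(raw_ages) - len(ages)
outliers = flag_outliers(ages)                  # outlier check
print(f"missing: {missing}, outliers: {outliers}")
```

Flagged values are candidates for review, not automatic deletions; whether 240 is a typo for 24 or 40 is something only the researcher can decide.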

Phase III: Data Coding

Out of all three, this is the most critical phase of data preparation. It involves grouping and assigning values to the survey responses. For example, if a survey is completed with a sample size of 1,000, the researcher might create age brackets to distinguish respondents by age. It then becomes easier to analyze small data buckets than to deal with one massive data pile.
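The age-bracket example can be sketched as follows. The bracket boundaries, the `code_age` helper, and the sample ages are hypothetical; a real study would choose brackets that suit its research questions.

```python
from collections import Counter

# Hypothetical bracket boundaries (inclusive on both ends).
BRACKETS = [(18, 24), (25, 34), (35, 44), (45, 54), (55, 64)]

def code_age(age):
    """Map a raw age to its bracket label."""
    for low, high in BRACKETS:
        if low <= age <= high:
            return f"{low}-{high}"
    return "65+" if age >= 65 else "under 18"

ages = [19, 22, 31, 38, 41, 47, 52, 58, 67, 70]  # made-up respondent ages
coded = Counter(code_age(a) for a in ages)
for bracket, count in sorted(coded.items()):
    print(bracket, count)
```

Once responses are coded this way, each bracket can be analyzed as its own small bucket.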

Methods used for data analysis in quantitative research

After the data is prepared for analysis, researchers can use different research and data analysis methods to derive meaningful insights. Statistical analysis is the most favored way to analyze numerical data. In statistical analysis, distinguishing between categorical data and numerical data is essential, as categorical data involves distinct categories or labels, while numerical data consists of measurable quantities. Statistical methods fall into two groups: ‘descriptive statistics’, used to describe data, and ‘inferential statistics’, which help in comparing data.

Descriptive statistics

This method is used to describe the basic features of various types of data in research. It presents the data in a meaningful way so that patterns in the data start making sense. However, descriptive analysis does not go beyond the data to draw conclusions; any conclusions still rest on the hypotheses researchers have formulated so far. Here are a few major types of descriptive analysis methods.

Measures of Frequency

- Count, Percent, Frequency

- It is used to denote how often a particular event occurs.

- Researchers use it when they want to showcase how often a response is given.

Measures of Central Tendency

- Mean, Median, Mode

- These measures are widely used to summarize a distribution by its central points.

- Researchers use this method when they want to showcase the most common or the average response.

Measures of Dispersion or Variation

- Range, Variance, Standard deviation

- Range = the difference between the highest and lowest points.

- Variance and standard deviation describe how far observed scores fall from the mean.

- They are used to identify the spread of scores by stating intervals.

- Researchers use these measures to show how spread out the data is. They help identify how widely the data is dispersed and how much that spread affects the mean.
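For reference, the dispersion measures above are conventionally written as follows for n observed scores x_1, ..., x_n. The sample form of the variance (dividing by n - 1) is shown here as an assumption; the article does not say whether the sample or population form is meant.

```latex
\begin{align*}
\text{Range} &= x_{\max} - x_{\min} \\
\bar{x} &= \frac{1}{n}\sum_{i=1}^{n} x_i \\
s^2 &= \frac{1}{n-1}\sum_{i=1}^{n} \left(x_i - \bar{x}\right)^2 \\
s &= \sqrt{s^2}
\end{align*}
```

Here \bar{x} is the mean, s^2 the sample variance, and s the standard deviation.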

Measures of Position

- Percentile ranks, Quartile ranks

- It relies on standardized scores, helping researchers identify the relationship between different scores.

- It is often used when researchers want to compare scores with the average.
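The four families of descriptive measures listed above can be computed with Python's standard `statistics` module. This is a minimal sketch on made-up test scores; the `percentile_rank` helper is a hypothetical convenience function using one common definition (the share of values less than or equal to a score).

```python
import statistics
from collections import Counter

scores = [50, 60, 60, 70, 70, 70, 80, 80, 90]  # hypothetical test scores

# Measures of frequency: how often each score occurs.
frequency = Counter(scores)

# Measures of central tendency.
mean = statistics.mean(scores)
median = statistics.median(scores)
mode = statistics.mode(scores)

# Measures of dispersion.
value_range = max(scores) - min(scores)
variance = statistics.variance(scores)  # sample variance (divides by n - 1)
std_dev = statistics.stdev(scores)

# Measures of position.
quartiles = statistics.quantiles(scores, n=4)

def percentile_rank(values, x):
    """Percentage of values less than or equal to x."""
    return 100 * sum(v <= x for v in values) / len(values)

print(f"mean={mean}, median={median}, mode={mode}")
print(f"range={value_range}, variance={variance}, std_dev={std_dev:.2f}")
print(f"quartiles={quartiles}, percentile rank of 80 = {percentile_rank(scores, 80):.1f}")
```

For these scores the mean, median, and mode all equal 70, the range is 40, and the sample variance is 150.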

In quantitative research, descriptive analysis often gives absolute numbers, but those numbers alone are never sufficient to demonstrate the rationale behind them. It is therefore necessary to think about which method of research and data analysis best suits your survey questionnaire and the story researchers want to tell. For example, the mean is the best way to demonstrate students’ average scores in schools. It is better to rely on descriptive statistics when researchers intend to keep the research or outcome limited to the provided sample without generalizing it. For example, when you want to compare the average voting done in two different cities, descriptive statistics are enough.

Descriptive analysis is also called a ‘univariate analysis’ since it is commonly used to analyze a single variable.

Inferential statistics

Inferential statistics are used to make predictions about a larger population after research and data analysis of a sample collected from that population. For example, you can ask about 100 audience members at a movie theater whether they like the movie they are watching. Researchers then use inferential statistics on the collected sample to reason that about 80-90% of people like the movie.

Here are two significant areas of inferential statistics.

- Estimating parameters: It takes statistics from the sample research data and demonstrates something about the population parameter.

- Hypothesis test: It’s about sampling research data to answer the survey research questions. For example, researchers might want to understand whether a recently launched shade of lipstick is good or not, or whether multivitamin capsules help children perform better at games.
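The movie-theater example can be read as a parameter estimate. The sketch below assumes, purely for illustration, that 85 of the 100 people asked said they liked the movie, and uses the normal-approximation (Wald) interval with z = 1.96 for roughly 95% confidence. Both the count and the choice of interval are assumptions, not something the article specifies.

```python
import math

def proportion_ci(successes, n, z=1.96):
    """Normal-approximation (Wald) confidence interval for a proportion."""
    p = successes / n
    margin = z * math.sqrt(p * (1 - p) / n)
    return p - margin, p + margin

# Hypothetical sample: 85 of 100 audience members liked the movie.
low, high = proportion_ci(successes=85, n=100)
print(f"Estimated share of the population who like the movie: {low:.2f} to {high:.2f}")
```

Under these assumptions the interval runs from about 0.78 to 0.92, which is how a sample of 100 can support a statement such as "about 80-90% of people like the movie."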

These are sophisticated analysis methods used to showcase the relationship between different variables instead of describing a single variable. They are often used when researchers want something beyond absolute numbers to understand the relationship between variables.

Here are some of the commonly used methods for data analysis in research.

- Correlation: When researchers are not conducting experimental or quasi-experimental research but want to understand the relationship between two or more variables, they opt for correlational research methods.

- Cross-tabulation: Cross-tabulation, which produces contingency tables, is used to analyze the relationship between multiple variables. Suppose the data has age and gender categories presented in rows and columns. A two-dimensional cross-tabulation makes analysis straightforward by showing the number of males and females in each age category.

- Regression analysis: To understand the strength of the relationship between two variables, researchers commonly turn to regression analysis, which is also a type of predictive analysis. In this method, you have an essential factor called the dependent variable, along with one or more independent variables. You try to find out the impact of the independent variables on the dependent variable. The values of both independent and dependent variables are assumed to have been ascertained in an error-free, random manner.

- Frequency tables: A frequency table lists each distinct value or category of a variable together with the number of times it occurs in the data.

- Analysis of variance: This statistical procedure tests the degree to which two or more groups vary or differ in an experiment. A considerable degree of variation between groups, relative to the variation within them, suggests the research findings are significant. In many contexts, the terms ANOVA testing and variance analysis are used interchangeably.
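Several of the methods in this list can be sketched with nothing more than the Python standard library. Everything below is illustrative: the data is invented, the function names are hypothetical, and the regression shown is the simplest case (one independent variable, ordinary least squares).

```python
import math
from collections import Counter

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

def fit_line(xs, ys):
    """Least-squares slope and intercept for y = slope * x + intercept."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    return slope, my - slope * mx

def crosstab(rows, cols):
    """Two-way contingency table as a Counter keyed by (row, column)."""
    return Counter(zip(rows, cols))

def anova_f(*groups):
    """One-way ANOVA F statistic: between-group over within-group variance."""
    n = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n
    means = [sum(g) / len(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, means) for v in g)
    return (ss_between / (len(groups) - 1)) / (ss_within / (n - len(groups)))

# Hypothetical data: hours studied (independent) vs. exam score (dependent).
hours = [1, 2, 3, 4, 5]
score = [52, 54, 59, 61, 64]
r = pearson_r(hours, score)
slope, intercept = fit_line(hours, score)

# Hypothetical respondents for a gender-by-age-group cross-tabulation.
table = crosstab(["F", "M", "F", "M", "F"],
                 ["18-34", "18-34", "35+", "35+", "35+"])

# Hypothetical outcome scores for three experimental groups.
f_stat = anova_f([1, 2, 3], [2, 3, 4], [5, 6, 7])

print(f"r = {r:.3f}, score = {slope:.1f} * hours + {intercept:.1f}")
print(dict(table))
print(f"F = {f_stat:.1f}")
```

In practice researchers would typically reach for a statistics package rather than hand-written functions; the sketch is only meant to show what each method computes.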

Considerations in research data analysis

- Researchers must have the necessary research skills to analyze and manipulate the data, and should be trained to demonstrate a high standard of research practice. Ideally, researchers should have more than a basic understanding of the rationale for selecting one statistical method over another to obtain better data insights.

- Research and data analytics projects usually differ by scientific discipline; therefore, getting statistical advice at the beginning of analysis helps in designing a survey questionnaire, selecting data collection methods, and choosing samples.

- The primary aim of research data analysis is to derive insights that are unbiased. Any mistake or bias in collecting data, selecting an analysis method, or choosing an audience sample will lead to a biased inference.

- No amount of sophistication in research data analysis is enough to rectify poorly defined objective outcome measurements. Whether the design is at fault or the intentions are unclear, lack of clarity might mislead readers, so avoid the practice.

- The motive behind data analysis in research is to present accurate and reliable data. As far as possible, avoid statistical errors, and find a way to deal with everyday challenges like outliers, missing data, data altering, data mining, or developing graphical representations.

The sheer amount of data generated daily is frightening, especially now that data analysis has taken center stage. In 2018, the total data supply amounted to 2.8 trillion gigabytes. Hence, it is clear that enterprises willing to survive in the hypercompetitive world must possess an excellent capability to analyze complex research data, derive actionable insights, and adapt to new market needs.

QuestionPro is an online survey platform that empowers organizations in data analysis and research and provides them a medium to collect data by creating appealing surveys.

Different Types of Data Analysis; Data Analysis Methods and Techniques in Research Projects

International Journal of Academic Research in Management, 9(1):1-9, 2022 http://elvedit.com/journals/IJARM/wp-content/uploads/Different-Types-of-Data-Analysis-Data-Analysis-Methods-and-Tec

9 Pages Posted: 18 Aug 2022

Hamed Taherdoost

Hamta Group

Date Written: August 1, 2022

This article defines data analysis and the concept of data preparation. Then, the data analysis methods are discussed. To do so, six main categories are first described briefly. Then, the statistical tools of the most commonly used methods, including descriptive, explanatory, and inferential analyses, are investigated in detail. Finally, we focus on qualitative data analysis to become familiar with data preparation and strategies in this context.

Keywords: Data Analysis, Data Preparation, Data Analysis Methods, Data Analysis Types, Descriptive Analysis, Explanatory Analysis, Inferential Analysis, Predictive Analysis, Causal Analysis and Mechanistic Analysis, Statistical Analysis.

Hamed Taherdoost (Contact Author)

Hamta Group, Vancouver, Canada

Exploring Data Analysis and Visualization Techniques for Project Tracking: Insights from the ITC

- Conference paper

- First Online: 13 September 2023

- Authors: André Barrocas, Alberto Rodrigues da Silva & João Saraiva

Part of the book series: Communications in Computer and Information Science ((CCIS,volume 1871))

Included in the following conference series:

- International Conference on the Quality of Information and Communications Technology

Data analysis has emerged as a cornerstone in facilitating informed decision-making across myriad fields, in particular in software development and project management. This integrative practice proves instrumental in enhancing operational efficiency, cutting expenditures, mitigating potential risks, and delivering superior results, all while sustaining structured organization and robust control. This paper presents ITC, a synergistic platform architected to streamline multi-organizational and multi-workspace collaboration for project management and technical documentation. ITC serves as a powerful tool, equipping users with the capability to swiftly establish and manage workspaces and documentation, thereby fostering the derivation of invaluable insights pivotal to both technical and business-oriented decisions. ITC boasts a plethora of features, from support for a diverse range of technologies and languages, synchronization of data, and customizable templates to reusable libraries and task automation, including data extraction, validation, and document automation. This paper also delves into the predictive analytics aspect of the ITC platform. It demonstrates how ITC harnesses predictive data models, such as Random Forest Regression, to anticipate project outcomes and risks, enhancing decision-making in project management. This feature plays a critical role in the strategic allocation of resources, optimizing project timelines, and promoting overall project success. In an effort to substantiate the efficacy and usability of ITC, we have also incorporated the results and feedback garnered from a comprehensive user assessment conducted in 2022. The feedback suggests promising potential for the platform’s application, setting the stage for further development and refinement. 
The insights provided in this paper not only underline the successful implementation of the ITC platform but also shed light on the transformative impact of predictive analytics in information systems.

Author information

Authors and affiliations.

Instituto Superior Técnico, Universidade de Lisboa, Lisbon, Portugal

André Barrocas

INESC-ID, Instituto Superior Técnico, Universidade de Lisboa, Lisbon, Portugal

Alberto Rodrigues da Silva

DefineScope, Setúbal, Portugal

João Saraiva

Corresponding author

Correspondence to Alberto Rodrigues da Silva .

Editor information

Editors and affiliations.

University of Aveiro, Aveiro, Portugal

José Maria Fernandes

Federal University of Rio de Janeiro, Rio de Janeiro, Brazil

Guilherme H. Travassos

University of Oulu, Oulu, Finland

Valentina Lenarduzzi

Xiaozhou Li

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper.

Barrocas, A., da Silva, A.R., Saraiva, J. (2023). Exploring Data Analysis and Visualization Techniques for Project Tracking: Insights from the ITC. In: Fernandes, J.M., Travassos, G.H., Lenarduzzi, V., Li, X. (eds) Quality of Information and Communications Technology. QUATIC 2023. Communications in Computer and Information Science, vol 1871. Springer, Cham. https://doi.org/10.1007/978-3-031-43703-8_11

DOI : https://doi.org/10.1007/978-3-031-43703-8_11

Published : 13 September 2023

Publisher Name : Springer, Cham

Print ISBN : 978-3-031-43702-1

Online ISBN : 978-3-031-43703-8

eBook Packages : Computer Science Computer Science (R0)

Qualitative Data Analysis Methods 101:

The “big 6” methods + examples.

By: Kerryn Warren (PhD) | Reviewed By: Eunice Rautenbach (D.Tech) | May 2020 (Updated April 2023)

Qualitative data analysis methods. Wow, that’s a mouthful.

If you’re new to the world of research, qualitative data analysis can look rather intimidating. So much bulky terminology and so many abstract, fluffy concepts. It certainly can be a minefield!

Don’t worry – in this post, we’ll unpack the most popular analysis methods, one at a time, so that you can approach your analysis with confidence and competence – whether that’s for a dissertation, thesis or really any kind of research project.

What (exactly) is qualitative data analysis?

To understand qualitative data analysis, we need to first understand qualitative data – so let’s step back and ask the question, “what exactly is qualitative data?”.

Qualitative data refers to pretty much any data that’s “not numbers”. In other words, it’s not the stuff you measure using a fixed scale or complex equipment, nor do you analyse it using complex statistics or mathematics.

So, if it’s not numbers, what is it?

Words, you guessed? Well… sometimes, yes. Qualitative data can, and often does, take the form of interview transcripts, documents and open-ended survey responses – but it can also involve the interpretation of images and videos. In other words, qualitative data isn’t limited to text.

So, how’s that different from quantitative data, you ask?

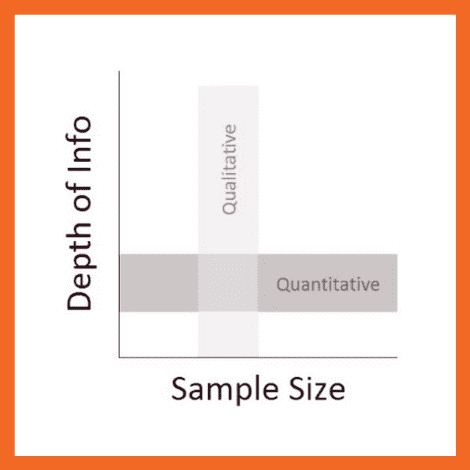

Simply put, qualitative research focuses on words, descriptions, concepts or ideas – while quantitative research focuses on numbers and statistics. Qualitative research investigates the “softer side” of things to explore and describe, while quantitative research focuses on the “hard numbers”, to measure differences between variables and the relationships between them.

So, qualitative analysis is easier than quantitative, right?

Not quite. In many ways, qualitative data can be challenging and time-consuming to analyse and interpret. At the end of your data collection phase (which itself takes a lot of time), you’ll likely have many pages of text-based data or hours upon hours of audio to work through. You might also have subtle nuances of interactions or discussions that have danced around in your mind, or that you scribbled down in messy field notes. All of this needs to work its way into your analysis.

Making sense of all of this is no small task and you shouldn’t underestimate it. Long story short – qualitative analysis can be a lot of work! Of course, quantitative analysis is no piece of cake either, but it’s important to recognise that qualitative analysis still requires a significant investment in terms of time and effort.


In this post, we’ll explore qualitative data analysis by looking at some of the most common analysis methods we encounter. We’re not going to cover every possible qualitative method and we’re not going to go into heavy detail – we’re just going to give you the big picture. That said, we will of course include links to loads of extra resources so that you can learn more about whichever analysis method interests you.

Without further delay, let’s get into it.

The “Big 6” Qualitative Analysis Methods

There are many different types of qualitative data analysis, all of which serve different purposes and have unique strengths and weaknesses. We’ll start by outlining the analysis methods and then we’ll dive into the details for each.

The 6 most popular methods (or at least the ones we see at Grad Coach) are:

- Content analysis

- Narrative analysis

- Discourse analysis

- Thematic analysis

- Grounded theory (GT)

- Interpretive phenomenological analysis (IPA)

Let’s take a look at each of them…

QDA Method #1: Qualitative Content Analysis

Content analysis is possibly the most common and straightforward QDA method. At the simplest level, content analysis is used to evaluate patterns within a piece of content (for example, words, phrases or images) or across multiple pieces of content or sources of communication. For example, a collection of newspaper articles or political speeches.

With content analysis, you could, for instance, identify the frequency with which an idea is shared or spoken about – like the number of times a Kardashian is mentioned on Twitter. Or you could identify patterns of deeper underlying interpretations – for instance, by identifying phrases or words in tourist pamphlets that highlight India as an ancient country.

Because content analysis can be used in such a wide variety of ways, it’s important to go into your analysis with a very specific question and goal, or you’ll get lost in the fog. With content analysis, you’ll group large amounts of text into codes, summarise these into categories, and possibly even tabulate the data to calculate the frequency of certain concepts or variables. Because of this, content analysis provides a small splash of quantitative thinking within a qualitative method.
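The tabulation step described above can be sketched in a few lines of Python. This is only an illustration of the counting, not a substitute for careful manual coding: the excerpts, the code labels and the code-to-category mapping are all hypothetical.

```python
from collections import Counter

# Hypothetical excerpts from tourist pamphlets, each paired with a code
# that a researcher has assigned by hand.
coded_excerpts = [
    ("India, land of ancient temples", "ancient_heritage"),
    ("Explore centuries-old forts", "ancient_heritage"),
    ("Modern metro links the city", "modern_infrastructure"),
    ("Timeless traditions await you", "ancient_heritage"),
    ("Stay in a five-star hotel", "modern_infrastructure"),
    ("Taste street food in the old bazaar", "local_culture"),
]

# Hypothetical mapping that summarises codes into broader categories.
code_to_category = {
    "ancient_heritage": "Past-oriented framing",
    "local_culture": "Past-oriented framing",
    "modern_infrastructure": "Present-oriented framing",
}

# Tabulate how often each code and each category occurs.
code_counts = Counter(code for _, code in coded_excerpts)
category_counts = Counter(code_to_category[code] for _, code in coded_excerpts)

for category, count in category_counts.most_common():
    share = count / len(coded_excerpts)
    print(f"{category}: {count} excerpts ({share:.0%})")
```

The counts only summarise coding decisions that were made by a person. The interpretive work of deciding which code fits which excerpt still happens before any tallying.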

Naturally, while content analysis is widely useful, it’s not without its drawbacks. One of the main issues with content analysis is that it can be very time-consuming, as it requires lots of reading and re-reading of the texts. Also, because of its multidimensional focus on both qualitative and quantitative aspects, it is sometimes accused of losing important nuances in communication.

Content analysis also tends to concentrate on a very specific timeline and doesn’t take into account what happened before or after that timeline. This isn’t necessarily a bad thing though – just something to be aware of. So, keep these factors in mind if you’re considering content analysis. Every analysis method has its limitations, so don’t be put off by these – just be aware of them!

QDA Method #2: Narrative Analysis

As the name suggests, narrative analysis is all about listening to people telling stories and analysing what that means. Since stories serve a functional purpose of helping us make sense of the world, we can gain insights into the ways that people deal with and make sense of reality by analysing their stories and the ways they’re told.

You could, for example, use narrative analysis to explore whether the way something is said matters. For instance, the narrative of a prisoner trying to justify their crime could provide insight into their view of the world and the justice system. Similarly, analysing the ways entrepreneurs talk about the struggles in their careers or cancer patients telling stories of hope could provide powerful insights into their mindsets and perspectives. Simply put, narrative analysis is about paying attention to the stories that people tell – and more importantly, the way they tell them.

Of course, the narrative approach has its weaknesses, too. Sample sizes are generally quite small due to the time-consuming process of capturing narratives. Because of this, along with the multitude of social and lifestyle factors which can influence a subject, narrative analysis can be quite difficult to reproduce in subsequent research. This means that it’s difficult to test the findings of some of this research.

Similarly, researcher bias can have a strong influence on the results here, so you need to be particularly careful about the potential biases you can bring into your analysis when using this method. Nevertheless, narrative analysis is still a very useful qualitative analysis method – just keep these limitations in mind and be careful not to draw broad conclusions.

QDA Method #3: Discourse Analysis

Discourse is simply a fancy word for written or spoken language or debate. So, discourse analysis is all about analysing language within its social context. In other words, analysing language – such as a conversation or a speech – within the culture and society in which it takes place. For example, you could analyse how a janitor speaks to a CEO, or how politicians speak about terrorism.

To truly understand these conversations or speeches, the culture and history of those involved in the communication are important factors to consider. For example, a janitor might speak more casually with a CEO in a company that emphasises equality among workers. Similarly, a politician might speak more about terrorism if there was a recent terrorist incident in the country.

So, as you can see, by using discourse analysis, you can identify how culture, history or power dynamics (to name a few) have an effect on the way concepts are spoken about. So, if your research aims and objectives involve understanding culture or power dynamics, discourse analysis can be a powerful method.

Because there are many social influences in terms of how we speak to each other, the potential use of discourse analysis is vast. Of course, this also means it’s important to have a very specific research question (or questions) in mind when analysing your data and looking for patterns and themes, or you might land up going down a winding rabbit hole.

Discourse analysis can also be very time-consuming as you need to sample the data to the point of saturation – in other words, until no new information and insights emerge. But this is, of course, part of what makes discourse analysis such a powerful technique. So, keep these factors in mind when considering this QDA method.

QDA Method #4: Thematic Analysis

Thematic analysis looks at patterns of meaning in a data set – for example, a set of interviews or focus group transcripts. But what exactly does that… mean? Well, a thematic analysis takes bodies of data (which are often quite large) and groups them according to similarities – in other words, themes. These themes help us make sense of the content and derive meaning from it.

Let’s take a look at an example.

With thematic analysis, you could analyse 100 online reviews of a popular sushi restaurant to find out what patrons think about the place. By reviewing the data, you would then identify the themes that crop up repeatedly within the data – for example, “fresh ingredients” or “friendly wait staff”.

So, as you can see, thematic analysis can be pretty useful for finding out about people’s experiences, views, and opinions. Therefore, if your research aims and objectives involve understanding people’s experience or view of something, thematic analysis can be a great choice.

Since thematic analysis is a bit of an exploratory process, it’s not unusual for your research questions to develop, or even change as you progress through the analysis. While this is somewhat natural in exploratory research, it can also be seen as a disadvantage as it means that data needs to be re-reviewed each time a research question is adjusted. In other words, thematic analysis can be quite time-consuming – but for a good reason. So, keep this in mind if you choose to use thematic analysis for your project and budget extra time for unexpected adjustments.

QDA Method #5: Grounded theory (GT)

Grounded theory is a powerful qualitative analysis method where the intention is to create a new theory (or theories) using the data at hand, through a series of “tests” and “revisions”. Strictly speaking, GT is more a research design type than an analysis method, but we’ve included it here as it’s often referred to as a method.

What’s most important with grounded theory is that you go into the analysis with an open mind and let the data speak for itself – rather than dragging existing hypotheses or theories into your analysis. In other words, your analysis must develop from the ground up (hence the name).

Let’s look at an example of GT in action.

Assume you’re interested in developing a theory about what factors influence students to watch a YouTube video about qualitative analysis. Using grounded theory, you’d start with this general overarching question about the given population (i.e., graduate students). First, you’d approach a small sample – for example, five graduate students in a department at a university. Ideally, this sample would be reasonably representative of the broader population. You’d interview these students to identify what factors lead them to watch the video.

After analysing the interview data, a general pattern could emerge. For example, you might notice that graduate students are more likely to watch a video about qualitative methods if they are just starting on their dissertation journey, or if they have an upcoming test about research methods.

From here, you’ll look for another small sample – for example, five more graduate students in a different department – and see whether this pattern holds true for them. If not, you’ll look for commonalities and adapt your theory accordingly. As this process continues, the theory develops. As we mentioned earlier, what’s important with grounded theory is that the theory develops from the data – not from some preconceived idea.

So, what are the drawbacks of grounded theory? Well, some argue that there’s a tricky circularity to grounded theory. For it to work, in principle, you should know as little as possible regarding the research question and population, so that you reduce the bias in your interpretation. However, in many circumstances, it’s also thought to be unwise to approach a research question without knowledge of the current literature. In other words, it’s a bit of a “chicken or the egg” situation.

Regardless, grounded theory remains a popular (and powerful) option. Naturally, it’s a very useful method when you’re researching a topic that is completely new or has very little existing research about it, as it allows you to start from scratch and work your way from the ground up.

QDA Method #6: Interpretive Phenomenological Analysis (IPA)

Interpretive. Phenomenological. Analysis. IPA. Try saying that three times fast…

Let’s just stick with IPA, okay?

IPA is designed to help you understand the personal experiences of a subject (for example, a person or group of people) concerning a major life event, an experience or a situation. This event or experience is the “phenomenon” that makes up the “P” in IPA. Such phenomena may range from relatively common events – such as motherhood, or being involved in a car accident – to those which are extremely rare – for example, someone’s personal experience in a refugee camp. So, IPA is a great choice if your research involves analysing people’s personal experiences of something that happened to them.

It’s important to remember that IPA is subject-centred. In other words, it’s focused on the experiencer. This means that, while you’ll likely use a coding system to identify commonalities, it’s important not to lose the depth of experience or meaning by trying to reduce everything to codes. Also, keep in mind that since your sample size will generally be very small with IPA, you often won’t be able to draw broad conclusions about the generalisability of your findings. But that’s okay as long as it aligns with your research aims and objectives.

Another thing to be aware of with IPA is personal bias. While researcher bias can creep into all forms of research, self-awareness is critically important with IPA, as it can have a major impact on the results. For example, a researcher who was a victim of a crime himself could insert his own feelings of frustration and anger into the way he interprets the experience of someone who was kidnapped. So, if you’re going to undertake IPA, you need to be very self-aware or you could muddy the analysis.

How to choose the right analysis method

In light of all of the qualitative analysis methods we’ve covered so far, you’re probably asking yourself the question, “How do I choose the right one?”

Much like all the other methodological decisions you’ll need to make, selecting the right qualitative analysis method largely depends on your research aims, objectives and questions. In other words, the best tool for the job depends on what you’re trying to build. For example:

- Perhaps your research aims to analyse the use of words and what they reveal about the intention of the storyteller and the cultural context of the time.

- Perhaps your research aims to develop an understanding of the unique personal experiences of people that have experienced a certain event, or

- Perhaps your research aims to develop insight regarding the influence of a certain culture on its members.

As you can probably see, each of these research aims is distinctly different, and therefore different analysis methods would be suitable for each one. For example, narrative analysis would likely be a good option for the first aim, while grounded theory wouldn’t be as relevant.

It’s also important to remember that each method has its own set of strengths, weaknesses and general limitations. No single analysis method is perfect. So, depending on the nature of your research, it may make sense to adopt more than one method (this is called triangulation). Keep in mind though that this will of course be quite time-consuming.

As we’ve seen, all of the qualitative analysis methods we’ve discussed make use of coding and theme-generating techniques, but the intent and approach of each analysis method differ quite substantially. So, it’s very important to come into your research with a clear intention before you decide which analysis method (or methods) to use.

Start by reviewing your research aims, objectives and research questions to assess what exactly you’re trying to find out – then select a qualitative analysis method that fits. Never pick a method just because you like it or have experience using it – your analysis method (or methods) must align with your broader research aims and objectives.

Let’s recap on QDA methods…

In this post, we looked at six popular qualitative data analysis methods:

- First, we looked at content analysis, a straightforward method that blends a little bit of quant into a primarily qualitative analysis.

- Then we looked at narrative analysis, which is about analysing how stories are told.

- Next up was discourse analysis – which is about analysing conversations and interactions.

- Then we moved on to thematic analysis – which is about identifying themes and patterns.

- From there, we turned to grounded theory – which is about starting from scratch with a specific question and using the data alone to build a theory in response to that question.

- And finally, we looked at IPA – which is about understanding people’s unique experiences of a phenomenon.

Of course, these aren’t the only options when it comes to qualitative data analysis, but they’re a great starting point if you’re dipping your toes into qualitative research for the first time.

If you’re still feeling a bit confused, consider our private coaching service , where we hold your hand through the research process to help you develop your best work.


Comments


Hi, may we use 2 data analysis methods in our qualitative research?

Thanks for your comment. Most commonly, one would use one type of analysis method, but it depends on your research aims and objectives.


Thanks, nicely explained. Can I ask: is qualitative content analysis the same as thematic analysis?

Thanks for your comment. No, QCA and thematic are two different types of analysis. This article might help clarify – https://onlinelibrary.wiley.com/doi/10.1111/nhs.12048


Is there a video the captures the practical process of coding using automated applications?

Thanks for the comment. We don’t recommend using automated applications for coding, as they are not sufficiently accurate in our experience.


Data Analysis Techniques in Research – Methods, Tools & Examples

Varun Saharawat is a seasoned professional in the fields of SEO and content writing. With a profound knowledge of the intricate aspects of these disciplines, Varun has established himself as a valuable asset in the world of digital marketing and online content creation.

Data analysis techniques in research are essential because they allow researchers to derive meaningful insights from data sets to support their hypotheses or research objectives.

Data Analysis Techniques in Research: While various groups, institutions, and professionals may have diverse approaches to data analysis, a universal definition captures its essence. Data analysis involves refining, transforming, and interpreting raw data to derive actionable insights that guide informed decision-making for businesses.

A straightforward illustration of data analysis emerges when we make everyday decisions, basing our choices on past experiences or predictions of potential outcomes.


What is Data Analysis?

Data analysis is the systematic process of inspecting, cleaning, transforming, and interpreting data with the objective of discovering valuable insights and drawing meaningful conclusions. This process involves several steps:

- Inspecting : Initial examination of data to understand its structure, quality, and completeness.

- Cleaning : Removing errors, inconsistencies, or irrelevant information to ensure accurate analysis.

- Transforming : Converting data into a format suitable for analysis, such as normalization or aggregation.

- Interpreting : Analyzing the transformed data to identify patterns, trends, and relationships.

Types of Data Analysis Techniques in Research

Data analysis techniques in research are categorized into qualitative and quantitative methods, each with its specific approaches and tools. These techniques are instrumental in extracting meaningful insights, patterns, and relationships from data to support informed decision-making, validate hypotheses, and derive actionable recommendations. Below is an in-depth exploration of the various types of data analysis techniques commonly employed in research:

1) Qualitative Analysis:

Definition: Qualitative analysis focuses on understanding non-numerical data, such as opinions, concepts, or experiences, to derive insights into human behavior, attitudes, and perceptions.

- Content Analysis: Examines textual data, such as interview transcripts, articles, or open-ended survey responses, to identify themes, patterns, or trends.

- Narrative Analysis: Analyzes personal stories or narratives to understand individuals’ experiences, emotions, or perspectives.

- Ethnographic Studies: Involves observing and analyzing cultural practices, behaviors, and norms within specific communities or settings.

2) Quantitative Analysis:

Quantitative analysis emphasizes numerical data and employs statistical methods to explore relationships, patterns, and trends. It encompasses several approaches:

Descriptive Analysis:

- Frequency Distribution: Represents the number of occurrences of distinct values within a dataset.

- Central Tendency: Measures such as mean, median, and mode provide insights into the central values of a dataset.

- Dispersion: Techniques like variance and standard deviation indicate the spread or variability of data.
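The descriptive measures listed above can be computed with Python's standard library. The following is a minimal sketch; the `scores` list is invented purely for illustration.

```python
import statistics
from collections import Counter

# Hypothetical exam scores, used only for illustration.
scores = [72, 85, 85, 90, 64, 78, 85, 90]

# Frequency distribution: how many times each distinct value occurs.
frequency = Counter(scores)

# Central tendency.
mean = statistics.mean(scores)
median = statistics.median(scores)
mode = statistics.mode(scores)

# Dispersion (sample statistics, i.e. an n - 1 denominator).
variance = statistics.variance(scores)
std_dev = statistics.stdev(scores)

print(f"mean={mean}, median={median}, mode={mode}")
print(f"variance={variance:.2f}, std_dev={std_dev:.2f}")
print("frequency:", dict(sorted(frequency.items())))
```

Note that `statistics.variance` and `statistics.stdev` treat the data as a sample; `statistics.pvariance` and `statistics.pstdev` are the population versions.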

Diagnostic Analysis:

- Regression Analysis: Assesses the relationship between dependent and independent variables, enabling prediction and, where the study design supports it, inferences about cause and effect.

- ANOVA (Analysis of Variance): Examines differences between groups to identify significant variations or effects.
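As an illustration of regression analysis, here is a minimal sketch of simple (one-predictor) linear regression fitted by ordinary least squares. The `fit_line` helper and the study-hours data are hypothetical; real analyses would normally use a statistics package and also report uncertainty.

```python
def fit_line(x, y):
    """Return (slope, intercept) of the least-squares line y = slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of cross-deviations and sum of squared deviations of x.
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data: weekly study hours and the resulting test scores.
hours = [1, 2, 3, 4, 5]
scores = [52, 55, 61, 64, 68]

slope, intercept = fit_line(hours, scores)
predicted_for_6_hours = slope * 6 + intercept
print(f"score = {slope:.2f} * hours + {intercept:.2f}")
print(f"predicted score for 6 hours: {predicted_for_6_hours:.1f}")
```

A positive slope here describes an association in this sample; on its own it does not show that extra hours cause higher scores.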

Predictive Analysis:

- Time Series Forecasting: Uses historical data points to predict future trends or outcomes.

- Machine Learning Algorithms: Techniques like decision trees, random forests, and neural networks predict outcomes based on patterns in data.
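To make time series forecasting concrete, the sketch below uses simple exponential smoothing, one of the most basic forecasting methods. The text above does not name a specific method, so this choice, the smoothing factor `alpha`, and the monthly values are all assumptions made for illustration.

```python
def exponential_smoothing_forecast(series, alpha=0.5):
    """One-step-ahead forecast using simple exponential smoothing.

    alpha close to 1 weights recent observations heavily;
    alpha close to 0 produces a smoother, slower-moving forecast.
    """
    level = series[0]
    for value in series[1:]:
        level = alpha * value + (1 - alpha) * level
    return level


# Hypothetical monthly average scores.
monthly_scores = [70, 72, 71, 75, 78]

forecast = exponential_smoothing_forecast(monthly_scores, alpha=0.5)
print(f"forecast for next month: {forecast}")
```

This method assumes no trend or seasonality. Data with either usually calls for a richer model.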

Prescriptive Analysis:

- Optimization Models: Utilizes linear programming, integer programming, or other optimization techniques to identify the best solutions or strategies.

- Simulation: Mimics real-world scenarios to evaluate various strategies or decisions and determine optimal outcomes.

Specific Techniques:

- Monte Carlo Simulation: Models probabilistic outcomes to assess risk and uncertainty.

- Factor Analysis: Reduces the dimensionality of data by identifying underlying factors or components.

- Cohort Analysis: Studies specific groups or cohorts over time to understand trends, behaviors, or patterns within these groups.

- Cluster Analysis: Classifies objects or individuals into homogeneous groups or clusters based on similarities or attributes.

- Sentiment Analysis: Uses natural language processing and machine learning techniques to determine sentiment, emotions, or opinions from textual data.
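Of the techniques listed above, Monte Carlo simulation is perhaps the easiest to show in a few lines. The sketch below estimates the chance that a small project overruns a deadline. The task durations, the deadline, and the choice of a triangular distribution are all hypothetical assumptions.

```python
import random

# Hypothetical tasks: (optimistic, pessimistic, most likely) durations in days.
TASKS = [(2, 6, 3), (1, 4, 2), (3, 8, 5)]
DEADLINE_DAYS = 12


def simulate_project(n_trials=20_000, seed=42):
    """Return (mean total duration, estimated probability of missing the deadline)."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    totals = []
    for _ in range(n_trials):
        # Draw one random duration per task and add them up.
        total = sum(rng.triangular(low, high, mode) for low, high, mode in TASKS)
        totals.append(total)
    mean_total = sum(totals) / n_trials
    p_late = sum(t > DEADLINE_DAYS for t in totals) / n_trials
    return mean_total, p_late


mean_total, p_late = simulate_project()
print(f"mean duration: {mean_total:.2f} days")
print(f"estimated probability of overrunning: {p_late:.1%}")
```

The estimate becomes more stable as `n_trials` grows, but it is only as good as the assumed input distributions.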


Data Analysis Techniques in Research Examples

To provide a clearer understanding of how data analysis techniques are applied in research, let’s consider a hypothetical research study focused on evaluating the impact of online learning platforms on students’ academic performance.

Research Objective:

Determine if students using online learning platforms achieve higher academic performance compared to those relying solely on traditional classroom instruction.

Data Collection:

- Quantitative Data: Academic scores (grades) of students using online platforms and those using traditional classroom methods.

- Qualitative Data: Feedback from students regarding their learning experiences, challenges faced, and preferences.

Data Analysis Techniques Applied:

1) Descriptive Analysis:

- Calculate the mean, median, and mode of academic scores for both groups.

- Create frequency distributions to represent the distribution of grades in each group.

2) Diagnostic Analysis:

- Conduct an Analysis of Variance (ANOVA) to determine if there’s a statistically significant difference in academic scores between the two groups.

- Perform Regression Analysis to assess the relationship between the time spent on online platforms and academic performance.
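For the ANOVA step, the following sketch computes the one-way F statistic by hand for the two groups in this hypothetical study. The scores are invented, and `one_way_anova_f` is an illustrative helper rather than a library function.

```python
def one_way_anova_f(*groups):
    """Return (F statistic, df_between, df_within) for a one-way ANOVA."""
    values = [v for g in groups for v in g]
    grand_mean = sum(values) / len(values)
    group_means = [sum(g) / len(g) for g in groups]

    # Variation of group means around the grand mean.
    ss_between = sum(
        len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means)
    )
    # Variation of individual scores around their own group mean.
    ss_within = sum(
        (v - m) ** 2 for g, m in zip(groups, group_means) for v in g
    )

    df_between = len(groups) - 1
    df_within = len(values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical academic scores for the two groups.
online = [78, 82, 85, 88, 92]
classroom = [70, 75, 80, 72, 78]

f_stat, df_between, df_within = one_way_anova_f(online, classroom)
print(f"F({df_between}, {df_within}) = {f_stat:.2f}")
```

The F statistic is then compared with a critical value from an F table (or converted to a p-value with a statistics package such as SciPy's `scipy.stats.f_oneway`) to judge significance. With only two groups, one-way ANOVA is equivalent to an independent-samples t-test.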

3) Predictive Analysis:

- Utilize Time Series Forecasting to predict future academic performance trends based on historical data.

- Implement Machine Learning algorithms to develop a predictive model that identifies factors contributing to academic success on online platforms.

4) Prescriptive Analysis:

- Apply Optimization Models to identify the optimal combination of online learning resources (e.g., video lectures, interactive quizzes) that maximize academic performance.

- Use Simulation Techniques to evaluate different scenarios, such as varying student engagement levels with online resources, to determine the most effective strategies for improving learning outcomes.

5) Specific Techniques:

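- Conduct Factor Analysis on numerically coded feedback (for example, Likert-scale survey items) to identify underlying factors influencing students’ perceptions and experiences with online learning. Open-ended text responses would first need to be coded, or analyzed with a qualitative method such as thematic analysis.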

- Perform Cluster Analysis to segment students based on their engagement levels, preferences, or academic outcomes, enabling targeted interventions or personalized learning strategies.

- Apply Sentiment Analysis on textual feedback to categorize students’ sentiments as positive, negative, or neutral regarding online learning experiences.

By applying a combination of qualitative and quantitative data analysis techniques, this research example aims to provide comprehensive insights into the effectiveness of online learning platforms.
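
As a rough illustration of the sentiment analysis step in the example above, the sketch below labels feedback as positive, negative, or neutral by counting words from two small lists. This is a deliberately simple lexicon-based stand-in, not a machine learning model, and the word lists and feedback sentences are hypothetical.

```python
import re
from collections import Counter

# Hypothetical word lists for illustration only; a real study would rely on
# a validated lexicon or a trained model.
POSITIVE = {"helpful", "clear", "flexible", "engaging", "easy"}
NEGATIVE = {"confusing", "boring", "difficult", "slow", "frustrating"}


def classify_sentiment(text):
    """Label text by comparing counts of positive and negative words."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


# Hypothetical student feedback.
feedback = [
    "The video lectures were clear and engaging.",
    "Quizzes felt confusing and the platform was slow.",
    "I used the platform twice a week.",
    "Flexible schedule, but the forum was frustrating and boring.",
]

labels = [classify_sentiment(text) for text in feedback]
summary = Counter(labels)
print(summary)
```

A word-count approach like this misses negation and sarcasm, so it is best treated as a first pass before a more capable method is applied.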


Data Analysis Techniques in Quantitative Research

Quantitative research involves collecting numerical data to examine relationships, test hypotheses, and make predictions. Various data analysis techniques are employed to interpret and draw conclusions from quantitative data. Here are some key data analysis techniques commonly used in quantitative research:

1) Descriptive Statistics:

- Description: Descriptive statistics are used to summarize and describe the main aspects of a dataset, such as central tendency (mean, median, mode), variability (range, variance, standard deviation), and distribution (skewness, kurtosis).

- Applications: Summarizing data, identifying patterns, and providing initial insights into the dataset.
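
The measures named above can be computed with Python's built-in `statistics` module. The following is a minimal sketch; the list of exam scores is hypothetical.

```python
import statistics

# Hypothetical exam scores for one group of students.
scores = [72, 85, 85, 90, 64, 78, 85, 70]

summary = {
    "mean": statistics.mean(scores),
    "median": statistics.median(scores),
    "mode": statistics.mode(scores),
    "range": max(scores) - min(scores),
    "variance": statistics.variance(scores),  # sample variance (divides by n - 1)
    "std_dev": statistics.stdev(scores),      # sample standard deviation
}

for name, value in summary.items():
    print(f"{name}: {value:.3f}")
```

Here the mean (78.625) sits below the median (81.5) because the single low score of 64 pulls the average down, a small example of why it helps to report more than one measure of central tendency.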

2) Inferential Statistics:

- Description: Inferential statistics involve making predictions or inferences about a population based on a sample of data. This technique includes hypothesis testing, confidence intervals, t-tests, chi-square tests, analysis of variance (ANOVA), regression analysis, and correlation analysis.

- Applications: Testing hypotheses, making predictions, and generalizing findings from a sample to a larger population.
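
One of the inferential tools mentioned above, the confidence interval, can be sketched as follows. This version assumes a normal approximation, which is a simplification: for a sample this small, a t-distribution would normally be preferred. The sample values and the function name `mean_confidence_interval` are hypothetical.

```python
import math
import statistics
from statistics import NormalDist


def mean_confidence_interval(sample, confidence=0.95):
    """Normal-approximation confidence interval for a population mean."""
    n = len(sample)
    mean = statistics.mean(sample)
    std_err = statistics.stdev(sample) / math.sqrt(n)  # standard error of the mean
    z = NormalDist().inv_cdf(0.5 + confidence / 2)     # about 1.96 for 95%
    return mean - z * std_err, mean + z * std_err


# Hypothetical sample of exam scores drawn from a larger student population.
sample = [78, 82, 69, 91, 74, 88, 80, 77, 85, 73, 79, 84]

low, high = mean_confidence_interval(sample)
print(f"95% CI for the mean: ({low:.2f}, {high:.2f})")
```

The interval is centered on the sample mean and widens as the requested confidence level rises or the sample becomes smaller or more variable.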

3) Regression Analysis:

- Description: Regression analysis is a statistical technique used to model and examine the relationship between a dependent variable and one or more independent variables. Linear regression, multiple regression, logistic regression, and nonlinear regression are common types of regression analysis.

- Applications: Predicting outcomes, identifying relationships between variables, and understanding the impact of independent variables on the dependent variable.
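
For the simplest case described above, simple linear regression with one independent variable, the least-squares line can be computed directly. This is a minimal sketch with hypothetical data (weekly study hours and exam scores) and a hypothetical helper name, `fit_line`.

```python
def fit_line(x, y):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Slope is the co-variation of x and y divided by the variation of x.
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data: weekly hours on the platform and exam score.
hours = [1, 2, 3, 4, 5]
scores = [52, 57, 61, 68, 72]

slope, intercept = fit_line(hours, scores)
predicted = intercept + slope * 6  # predicted score for 6 hours per week
print(f"score = {intercept:.1f} + {slope:.1f} * hours")
```

With these numbers the fitted slope is 5.1, meaning each additional weekly hour is associated with roughly five more points. That describes an association in this sample, not proof that the hours cause the gain.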

4) Correlation Analysis:

- Description: Correlation analysis is used to measure and assess the strength and direction of the relationship between two or more variables. The Pearson correlation coefficient, Spearman rank correlation coefficient, and Kendall’s tau are commonly used measures of correlation.

- Applications: Identifying associations between variables and assessing the degree and nature of the relationship.
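
The difference between the Pearson and Spearman coefficients mentioned above is easiest to see on data that rise steadily but not in a straight line. The sketch below implements both from scratch, under the assumption that Spearman's coefficient is computed as the Pearson correlation of average ranks; the data are hypothetical.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient: strength of the linear relationship."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (sd_x * sd_y)


def ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = avg_rank
        i = j + 1
    return result


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation applied to the ranks."""
    return pearson(ranks(x), ranks(y))


# Hypothetical data: y rises with x, but along a curve rather than a line.
x = [1, 2, 3, 4, 5]
y = [1, 4, 9, 16, 25]

r_pearson = pearson(x, y)
r_spearman = spearman(x, y)
print(f"Pearson: {r_pearson:.3f}, Spearman: {r_spearman:.3f}")
```

Spearman's coefficient reaches 1 here because the ordering of the two variables matches perfectly, while Pearson's stays slightly below 1 because the relationship is curved.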

5) Factor Analysis:

- Description: Factor analysis is a multivariate statistical technique used to identify and analyze underlying relationships or factors among a set of observed variables. It helps in reducing the dimensionality of data and identifying latent variables or constructs.

- Applications: Identifying underlying factors or constructs, simplifying data structures, and understanding the underlying relationships among variables.

6) Time Series Analysis:

- Description: Time series analysis involves analyzing data collected or recorded over a specific period at regular intervals to identify patterns, trends, and seasonality. Techniques such as moving averages, exponential smoothing, autoregressive integrated moving average (ARIMA), and Fourier analysis are used.

- Applications: Forecasting future trends, analyzing seasonal patterns, and understanding time-dependent relationships in data.
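
Two of the smoothing techniques named above, moving averages and exponential smoothing, are short enough to sketch by hand. The monthly figures, the window size, and the smoothing factor `alpha` below are hypothetical choices for illustration.

```python
def moving_average(series, window):
    """Average of each run of `window` consecutive observations."""
    return [
        sum(series[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(series))
    ]


def exponential_smoothing(series, alpha):
    """Simple exponential smoothing; a higher alpha weights recent values more."""
    smoothed = [series[0]]  # start from the first observation
    for value in series[1:]:
        smoothed.append(alpha * value + (1 - alpha) * smoothed[-1])
    return smoothed


# Hypothetical monthly average scores.
monthly = [10, 12, 14, 13, 15, 17]

ma = moving_average(monthly, window=3)
es = exponential_smoothing(monthly, alpha=0.5)
print("3-month moving average:", ma)
print("Exponentially smoothed:", es)
```

The moving average returns fewer points than the input because the first full window only becomes available at the third observation, whereas exponential smoothing produces one value per observation.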

7) ANOVA (Analysis of Variance):

- Description: Analysis of variance (ANOVA) is a statistical technique used to compare the means of two or more groups or treatments to determine whether at least one differs significantly from the others. One-way ANOVA and two-way ANOVA are the most common forms; MANOVA (Multivariate Analysis of Variance) extends the approach to several dependent variables at once.

- Applications: Comparing group means, testing hypotheses, and determining the effects of categorical independent variables on a continuous dependent variable.
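
The core of a one-way ANOVA is the F statistic, which compares variation between group means with variation inside the groups. The sketch below computes it by hand for three hypothetical groups; turning F into a p-value requires the F distribution, which a statistics library such as SciPy (`scipy.stats.f_oneway`) would normally handle.

```python
def one_way_anova_f(*groups):
    """Return (F statistic, df_between, df_within) for a one-way ANOVA."""
    all_values = [v for group in groups for v in group]
    grand_mean = sum(all_values) / len(all_values)
    group_means = [sum(group) / len(group) for group in groups]

    # Variation of the group means around the grand mean.
    ss_between = sum(
        len(group) * (mean - grand_mean) ** 2
        for group, mean in zip(groups, group_means)
    )
    # Variation of individual values around their own group mean.
    ss_within = sum(
        (v - mean) ** 2
        for group, mean in zip(groups, group_means)
        for v in group
    )

    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical scores under three teaching methods.
classroom = [70, 72, 68]
blended = [80, 82, 78]
online = [90, 92, 88]

f_stat, df_between, df_within = one_way_anova_f(classroom, blended, online)
print(f"F({df_between}, {df_within}) = {f_stat:.1f}")
```

A large F value, as in this contrived example, indicates that the group means differ far more than the scatter within each group would suggest.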

8) Chi-Square Tests:

- Description: Chi-square tests are non-parametric statistical tests that compare observed category counts with the counts expected under a hypothesis. They are most often used to assess the association between categorical variables in a contingency table. The chi-square test of independence, goodness-of-fit test, and test of homogeneity are common chi-square tests.

- Applications: Testing relationships between categorical variables, assessing goodness-of-fit, and evaluating independence.
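
For a test of independence, the chi-square statistic sums the squared gaps between observed and expected counts across the contingency table. The following is a minimal sketch with a hypothetical 2x2 table (learning method by pass/fail) and no continuity correction; libraries such as SciPy (`scipy.stats.chi2_contingency`) also return the p-value.

```python
def chi_square_independence(table):
    """Return (chi-square statistic, degrees of freedom) for a contingency table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)

    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Count expected in this cell if rows and columns were independent.
            expected = row_totals[i] * col_totals[j] / total
            statistic += (observed - expected) ** 2 / expected

    dof = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, dof


# Hypothetical counts: rows are learning method, columns are pass / fail.
table = [
    [30, 10],  # online
    [20, 40],  # classroom
]

statistic, dof = chi_square_independence(table)
print(f"chi-square = {statistic:.2f}, dof = {dof}")
```

With one degree of freedom, the critical value at the 0.05 significance level is about 3.84, so a statistic this large would lead to rejecting independence for these made-up counts.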

These quantitative data analysis techniques provide researchers with valuable tools and methods to analyze, interpret, and derive meaningful insights from numerical data. The selection of a specific technique often depends on the research objectives, the nature of the data, and the underlying assumptions of the statistical methods being used.


Data Analysis Methods

Data analysis methods refer to the techniques and procedures used to analyze, interpret, and draw conclusions from data. These methods are essential for transforming raw data into meaningful insights, facilitating decision-making processes, and driving strategies across various fields. Here are some common data analysis methods:

1) Descriptive Statistics:

- Description: Descriptive statistics summarize and organize data to provide a clear and concise overview of the dataset. Measures such as mean, median, mode, range, variance, and standard deviation are commonly used.

2) Inferential Statistics:

- Description: Inferential statistics involve making predictions or inferences about a population based on a sample of data. Techniques such as hypothesis testing, confidence intervals, and regression analysis are used.

3) Exploratory Data Analysis (EDA):

- Description: EDA techniques involve visually exploring and analyzing data to discover patterns, relationships, anomalies, and insights. Methods such as scatter plots, histograms, box plots, and correlation matrices are utilized.

- Applications: Identifying trends, patterns, outliers, and relationships within the dataset.
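
A box plot flags outliers using the interquartile range (IQR), and the same rule can be applied numerically before any chart is drawn. The sketch below assumes the common 1.5 x IQR convention and uses hypothetical scores; quartile values can differ slightly between tools because several calculation methods exist.

```python
import statistics

# Hypothetical scores; the last value looks like a data-entry error.
scores = [55, 60, 62, 65, 67, 70, 72, 75, 120]

# Quartiles with the statistics module's default ("exclusive") method.
q1, q2, q3 = statistics.quantiles(scores, n=4)
iqr = q3 - q1

# Fences used by the conventional box-plot outlier rule.
lower_fence = q1 - 1.5 * iqr
upper_fence = q3 + 1.5 * iqr

outliers = [s for s in scores if s < lower_fence or s > upper_fence]
print(f"Q1={q1}, median={q2}, Q3={q3}, IQR={iqr}")
print("Outliers:", outliers)
```

Flagged values are candidates for a closer look rather than automatic deletion, since an unusual score may be genuine.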

4) Predictive Analytics:

- Description: Predictive analytics use statistical algorithms and machine learning techniques to analyze historical data and make predictions about future events or outcomes. Techniques such as regression analysis, time series forecasting, and machine learning algorithms (e.g., decision trees, random forests, neural networks) are employed.

- Applications: Forecasting future trends, predicting outcomes, and identifying potential risks or opportunities.

5) Prescriptive Analytics:

- Description: Prescriptive analytics involve analyzing data to recommend actions or strategies that optimize specific objectives or outcomes. Optimization techniques, simulation models, and decision-making algorithms are utilized.

- Applications: Recommending optimal strategies, decision-making support, and resource allocation.

6) Qualitative Data Analysis:

- Description: Qualitative data analysis involves analyzing non-numerical data, such as text, images, videos, or audio, to identify themes, patterns, and insights. Methods such as content analysis, thematic analysis, and narrative analysis are used.

- Applications: Understanding human behavior, attitudes, perceptions, and experiences.

7) Big Data Analytics:

- Description: Big data analytics methods are designed to analyze large volumes of structured and unstructured data to extract valuable insights. Technologies such as Hadoop, Spark, and NoSQL databases are used to process and analyze big data.

- Applications: Analyzing large datasets, identifying trends, patterns, and insights from big data sources.

8) Text Analytics:

- Description: Text analytics methods involve analyzing textual data, such as customer reviews, social media posts, emails, and documents, to extract meaningful information and insights. Techniques such as sentiment analysis, text mining, and natural language processing (NLP) are used.

- Applications: Analyzing customer feedback, monitoring brand reputation, and extracting insights from textual data sources.

These data analysis methods are instrumental in transforming data into actionable insights, informing decision-making processes, and driving organizational success across various sectors, including business, healthcare, finance, marketing, and research. The selection of a specific method often depends on the nature of the data, the research objectives, and the analytical requirements of the project or organization.


Data Analysis Tools

Data analysis tools are essential instruments that facilitate the process of examining, cleaning, transforming, and modeling data to uncover useful information, make informed decisions, and drive strategies. Here are some prominent data analysis tools widely used across various industries:

1) Microsoft Excel:

- Description: A spreadsheet software that offers basic to advanced data analysis features, including pivot tables, data visualization tools, and statistical functions.

- Applications: Data cleaning, basic statistical analysis, visualization, and reporting.

2) R Programming Language:

- Description: An open-source programming language specifically designed for statistical computing and data visualization.

- Applications: Advanced statistical analysis, data manipulation, visualization, and machine learning.

3) Python (with Libraries like Pandas, NumPy, Matplotlib, and Seaborn):

- Description: A versatile programming language with libraries that support data manipulation, analysis, and visualization.

- Applications: Data cleaning, statistical analysis, machine learning, and data visualization.

4) SPSS (Statistical Package for the Social Sciences):

- Description: A comprehensive statistical software suite used for data analysis, data mining, and predictive analytics.

- Applications: Descriptive statistics, hypothesis testing, regression analysis, and advanced analytics.

5) SAS (Statistical Analysis System):

- Description: A software suite used for advanced analytics, multivariate analysis, and predictive modeling.

- Applications: Data management, statistical analysis, predictive modeling, and business intelligence.

6) Tableau:

- Description: A data visualization tool that allows users to create interactive and shareable dashboards and reports.

- Applications: Data visualization, business intelligence, and interactive dashboard creation.

7) Power BI:

- Description: A business analytics tool developed by Microsoft that provides interactive visualizations and business intelligence capabilities.

- Applications: Data visualization, business intelligence, reporting, and dashboard creation.

8) SQL (Structured Query Language) Databases (e.g., MySQL, PostgreSQL, Microsoft SQL Server):

- Description: Database management systems that support data storage, retrieval, and manipulation using SQL queries.

- Applications: Data retrieval, data cleaning, data transformation, and database management.
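
To show what data retrieval with a SQL query looks like in practice, the sketch below uses SQLite through Python's built-in `sqlite3` module. SQLite is swapped in here only because it needs no server; the same `GROUP BY` query pattern applies to the systems listed above. The table name, columns, and rows are hypothetical.

```python
import sqlite3

# In-memory database, so nothing is written to disk.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE scores (student TEXT, method TEXT, score REAL)")

# Hypothetical rows: one exam score per student.
conn.executemany(
    "INSERT INTO scores VALUES (?, ?, ?)",
    [
        ("a", "online", 82),
        ("b", "online", 90),
        ("c", "classroom", 75),
        ("d", "classroom", 85),
    ],
)

# Count students and average their scores for each learning method.
rows = conn.execute(
    "SELECT method, COUNT(*), AVG(score) "
    "FROM scores GROUP BY method ORDER BY method"
).fetchall()
conn.close()

for method, n_students, avg_score in rows:
    print(f"{method}: n={n_students}, average={avg_score:.1f}")
```

Passing values through `?` placeholders rather than building the SQL string by hand keeps the query safe when the data comes from users.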

9) Apache Spark:

- Description: A fast and general-purpose distributed computing system designed for big data processing and analytics.

- Applications: Big data processing, machine learning, data streaming, and real-time analytics.

10) IBM SPSS Modeler:

- Description: A data mining software application used for building predictive models and conducting advanced analytics.

- Applications: Predictive modeling, data mining, statistical analysis, and decision optimization.

These tools serve various purposes and cater to different data analysis needs, from basic statistical analysis and data visualization to advanced analytics, machine learning, and big data processing. The choice of a specific tool often depends on the nature of the data, the complexity of the analysis, and the specific requirements of the project or organization.
