Research Findings – Types Examples and Writing Guide

Research Findings

Definition:

Research findings refer to the results obtained from a study or investigation conducted through a systematic and scientific approach. These findings are the outcomes of the data analysis, interpretation, and evaluation carried out during the research process.

Types of Research Findings

There are two main types of research findings:

Qualitative Findings

Qualitative research is an exploratory research method used to understand the complexities of human behavior and experiences. Qualitative findings are non-numerical, descriptive results that capture the meanings and interpretations drawn from the data collected. Examples of qualitative findings include quotes from participants, themes that emerge from the data, and descriptions of experiences and phenomena.

Quantitative Findings

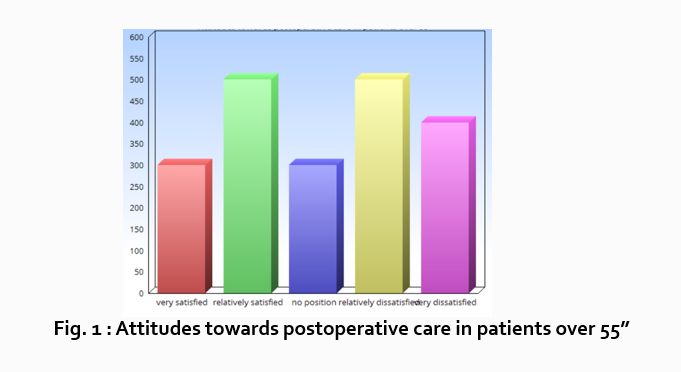

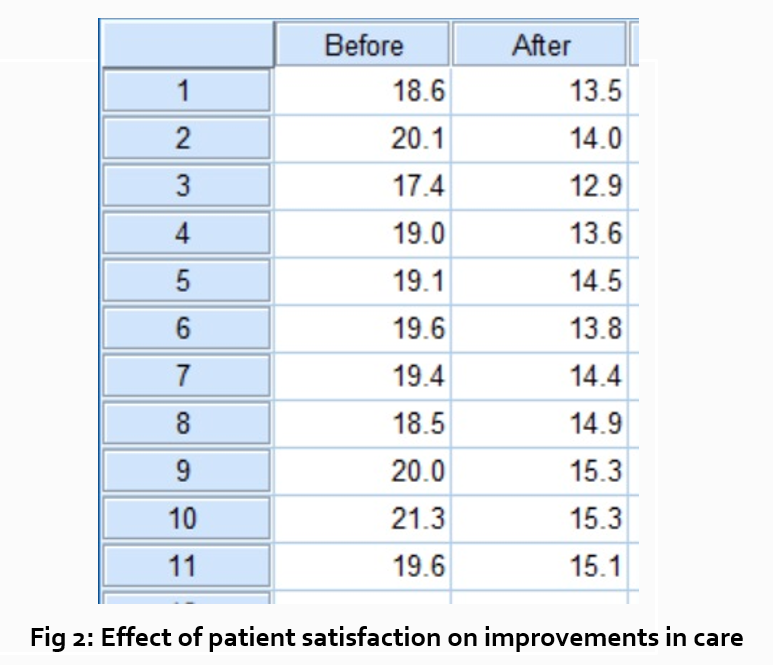

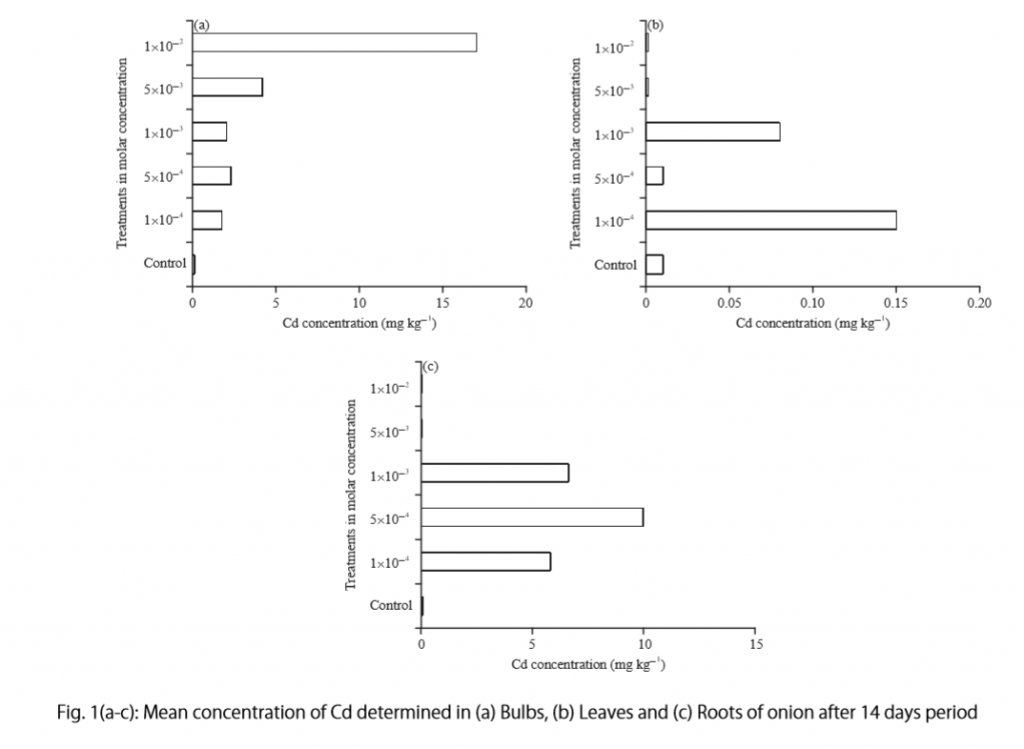

Quantitative research is a research method that uses numerical data and statistical analysis to measure and quantify a phenomenon or behavior. Quantitative findings include numerical data such as mean, median, and mode, as well as statistical analyses such as t-tests, ANOVA, and regression analysis. These findings are often presented in tables, graphs, or charts.
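As a rough illustration of the descriptive statistics mentioned above, the following sketch computes the mean, median, mode, and standard deviation with Python's standard `statistics` module. The `scores` list is made up purely for illustration and does not come from any study.

```python
import statistics

# Hypothetical questionnaire scores (illustrative values only, not real data).
scores = [4, 7, 7, 5, 9, 6, 7, 8]

mean_score = statistics.mean(scores)      # arithmetic average
median_score = statistics.median(scores)  # middle value of the sorted data
mode_score = statistics.mode(scores)      # most frequent value
sd_score = statistics.stdev(scores)       # sample standard deviation

print(
    f"M = {mean_score:.2f}, Mdn = {median_score:.1f}, "
    f"mode = {mode_score}, SD = {sd_score:.2f}"
)
```

Values like these would normally be summarized in a table or reported in parentheses in the text.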

Both qualitative and quantitative findings are important in research and can provide different insights into a research question or problem. Combining both types of findings can provide a more comprehensive understanding of a phenomenon and improve the validity and reliability of research results.

Parts of Research Findings

Research findings typically consist of several parts, including:

- Introduction: This section provides an overview of the research topic and the purpose of the study.

- Literature Review: This section summarizes previous research studies and findings that are relevant to the current study.

- Methodology: This section describes the research design, methods, and procedures used in the study, including details on the sample, data collection, and data analysis.

- Results: This section presents the findings of the study, including statistical analyses and data visualizations.

- Discussion: This section interprets the results and explains what they mean in relation to the research question(s) and hypotheses. It may also compare and contrast the current findings with previous research studies and explore any implications or limitations of the study.

- Conclusion: This section provides a summary of the key findings and the main conclusions of the study.

- Recommendations: This section suggests areas for further research and potential applications or implications of the study’s findings.

How to Write Research Findings

Writing research findings requires careful planning and attention to detail. Here are some general steps to follow when writing research findings:

- Organize your findings: Before you begin writing, it’s essential to organize your findings logically. Consider creating an outline or a flowchart that outlines the main points you want to make and how they relate to one another.

- Use clear and concise language: When presenting your findings, be sure to use clear and concise language that is easy to understand. Avoid using jargon or technical terms unless they are necessary to convey your meaning.

- Use visual aids: Visual aids such as tables, charts, and graphs can be helpful in presenting your findings. Be sure to label and title your visual aids clearly, and make sure they are easy to read.

- Use headings and subheadings: Using headings and subheadings can help organize your findings and make them easier to read. Make sure your headings and subheadings are clear and descriptive.

- Interpret your findings: When presenting your findings, it’s important to provide some interpretation of what the results mean. This can include discussing how your findings relate to the existing literature, identifying any limitations of your study, and suggesting areas for future research.

- Be precise and accurate: When presenting your findings, be sure to use precise and accurate language. Avoid making generalizations or overstatements and be careful not to misrepresent your data.

- Edit and revise: Once you have written your research findings, be sure to edit and revise them carefully. Check for grammar and spelling errors, make sure your formatting is consistent, and ensure that your writing is clear and concise.

Research Findings Example

The following is a sample of research findings for students:

Title: The Effects of Exercise on Mental Health

Sample: 500 participants, both men and women, aged 18 to 45.

Methodology: Participants were divided into two groups. The first group engaged in 30 minutes of moderate-intensity exercise five times a week for eight weeks. The second group did not exercise during the study period. Participants in both groups completed a questionnaire that assessed their mental health before and after the study period.

Findings: The group that engaged in regular exercise reported a significant improvement in mental health compared to the control group. Specifically, they reported lower levels of anxiety and depression, improved mood, and increased self-esteem.

Conclusion: Regular exercise can have a positive impact on mental health and may be an effective intervention for individuals experiencing symptoms of anxiety or depression.

Applications of Research Findings

Research findings can be applied in various fields to improve processes, products, services, and outcomes. Here are some examples:

- Healthcare: Research findings in medicine and healthcare can be applied to improve patient outcomes, reduce morbidity and mortality rates, and develop new treatments for various diseases.

- Education: Research findings in education can be used to develop effective teaching methods, improve learning outcomes, and design new educational programs.

- Technology: Research findings in technology can be applied to develop new products, improve existing products, and enhance user experiences.

- Business: Research findings in business can be applied to develop new strategies, improve operations, and increase profitability.

- Public Policy: Research findings can be used to inform public policy decisions on issues such as environmental protection, social welfare, and economic development.

- Social Sciences: Research findings in social sciences can be used to improve understanding of human behavior and social phenomena, inform public policy decisions, and develop interventions to address social issues.

- Agriculture: Research findings in agriculture can be applied to improve crop yields, develop new farming techniques, and enhance food security.

- Sports: Research findings in sports can be applied to improve athlete performance, reduce injuries, and develop new training programs.

When to Use Research Findings

Research findings can be used in a variety of situations, depending on the context and the purpose. Here are some examples of when research findings may be useful:

- Decision-making: Research findings can be used to inform decisions in various fields, such as business, education, healthcare, and public policy. For example, a business may use market research findings to make decisions about new product development or marketing strategies.

- Problem-solving: Research findings can be used to solve problems or challenges in various fields, such as healthcare, engineering, and social sciences. For example, medical researchers may use findings from clinical trials to develop new treatments for diseases.

- Policy development: Research findings can be used to inform the development of policies in various fields, such as environmental protection, social welfare, and economic development. For example, policymakers may use research findings to develop policies aimed at reducing greenhouse gas emissions.

- Program evaluation: Research findings can be used to evaluate the effectiveness of programs or interventions in various fields, such as education, healthcare, and social services. For example, educational researchers may use findings from evaluations of educational programs to improve teaching and learning outcomes.

- Innovation: Research findings can be used to inspire or guide innovation in various fields, such as technology and engineering. For example, engineers may use research findings on materials science to develop new and innovative products.

Purpose of Research Findings

The purpose of research findings is to contribute to the knowledge and understanding of a particular topic or issue. Research findings are the result of a systematic and rigorous investigation of a research question or hypothesis, using appropriate research methods and techniques.

The main purposes of research findings are:

- To generate new knowledge: Research findings contribute to the body of knowledge on a particular topic by adding new information, insights, and understanding to the existing knowledge base.

- To test hypotheses or theories: Research findings can be used to test hypotheses or theories that have been proposed in a particular field or discipline. This helps to determine the validity and reliability of the hypotheses or theories, and to refine or develop new ones.

- To inform practice: Research findings can be used to inform practice in various fields, such as healthcare, education, and business. By identifying best practices and evidence-based interventions, research findings can help practitioners to make informed decisions and improve outcomes.

- To identify gaps in knowledge: Research findings can help to identify gaps in knowledge and understanding of a particular topic, which can then be addressed by further research.

- To contribute to policy development: Research findings can be used to inform policy development in various fields, such as environmental protection, social welfare, and economic development. By providing evidence-based recommendations, research findings can help policymakers to develop effective policies that address societal challenges.

Characteristics of Research Findings

Research findings have several key characteristics that distinguish them from other types of information or knowledge. Here are some of the main characteristics of research findings:

- Objective: Research findings are based on a systematic and rigorous investigation of a research question or hypothesis, using appropriate research methods and techniques. As such, they are generally considered to be more objective and reliable than other types of information.

- Empirical: Research findings are based on empirical evidence, which means that they are derived from observations or measurements of the real world. This gives them a high degree of credibility and validity.

- Generalizable: Research findings are often intended to be generalizable to a larger population or context beyond the specific study. This means that the findings can be applied to other situations or populations with similar characteristics.

- Transparent: Research findings are typically reported in a transparent manner, with a clear description of the research methods and data analysis techniques used. This allows others to assess the credibility and reliability of the findings.

- Peer-reviewed: Research findings are often subject to a rigorous peer-review process, in which experts in the field review the research methods, data analysis, and conclusions of the study. This helps to ensure the validity and reliability of the findings.

- Reproducible: Research studies are often designed to be reproducible, meaning that other researchers can replicate the study using the same methods and obtain similar results. This helps to ensure the validity and reliability of the findings.

Advantages of Research Findings

Research findings have many advantages, which make them valuable sources of knowledge and information. Here are some of the main advantages of research findings:

- Evidence-based: Research findings are based on empirical evidence, which means that they are grounded in data and observations from the real world. This makes them a reliable and credible source of information.

- Inform decision-making: Research findings can be used to inform decision-making in various fields, such as healthcare, education, and business. By identifying best practices and evidence-based interventions, research findings can help practitioners and policymakers to make informed decisions and improve outcomes.

- Identify gaps in knowledge: Research findings can help to identify gaps in knowledge and understanding of a particular topic, which can then be addressed by further research. This contributes to the ongoing development of knowledge in various fields.

- Improve outcomes: Research findings can be used to develop and implement evidence-based practices and interventions, which have been shown to improve outcomes in various fields, such as healthcare, education, and social services.

- Foster innovation: Research findings can inspire or guide innovation in various fields, such as technology and engineering. By providing new information and understanding of a particular topic, research findings can stimulate new ideas and approaches to problem-solving.

- Enhance credibility: Research findings are generally considered to be more credible and reliable than other types of information, as they are based on rigorous research methods and are subject to peer-review processes.

Limitations of Research Findings

While research findings have many advantages, they also have some limitations. Here are some of the main limitations of research findings:

- Limited scope: Research findings are typically based on a particular study or set of studies, which may have a limited scope or focus. This means that they may not be applicable to other contexts or populations.

- Potential for bias: Research findings can be influenced by various sources of bias, such as researcher bias, selection bias, or measurement bias. This can affect the validity and reliability of the findings.

- Ethical considerations: Research findings can raise ethical considerations, particularly in studies involving human subjects. Researchers must ensure that their studies are conducted in an ethical and responsible manner, with appropriate measures to protect the welfare and privacy of participants.

- Time and resource constraints: Research studies can be time-consuming and require significant resources, which can limit the number and scope of studies that are conducted. This can lead to gaps in knowledge or a lack of research on certain topics.

- Complexity: Some research findings can be complex and difficult to interpret, particularly in fields such as science or medicine. This can make it challenging for practitioners and policymakers to apply the findings to their work.

- Lack of generalizability: While research findings are intended to be generalizable to larger populations or contexts, there may be factors that limit their generalizability. For example, cultural or environmental factors may influence how a particular intervention or treatment works in different populations or contexts.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

A Step-by-Step Guide to Writing a Scientific Review Article

Manisha Bahl, A Step-by-Step Guide to Writing a Scientific Review Article, Journal of Breast Imaging, Volume 5, Issue 4, July/August 2023, Pages 480–485, https://doi.org/10.1093/jbi/wbad028

Scientific review articles are comprehensive, focused reviews of the scientific literature written by subject matter experts. The task of writing a scientific review article can seem overwhelming; however, it can be managed by using an organized approach and devoting sufficient time to the process. The process involves selecting a topic about which the authors are knowledgeable and enthusiastic, conducting a literature search and critical analysis of the literature, and writing the article, which is composed of an abstract, introduction, body, and conclusion, with accompanying tables and figures. This article, which focuses on the narrative or traditional literature review, is intended to serve as a guide with practical steps for new writers. Tips for success are also discussed, including selecting a focused topic, maintaining objectivity and balance while writing, avoiding tedious data presentation in a laundry list format, moving from descriptions of the literature to critical analysis, avoiding simplistic conclusions, and budgeting time for the overall process.

- Online ISSN 2631-6129

- Print ISSN 2631-6110

- Copyright © 2024 Society of Breast Imaging

Oxford University Press is a department of the University of Oxford. It furthers the University's objective of excellence in research, scholarship, and education by publishing worldwide

- Copyright © 2024 Oxford University Press

How to Write a Results Section | Tips & Examples

Published on August 30, 2022 by Tegan George. Revised on July 18, 2023.

A results section is where you report the main findings of the data collection and analysis you conducted for your thesis or dissertation. You should report all relevant results concisely and objectively, in a logical order. Don’t include subjective interpretations of why you found these results or what they mean; any evaluation should be saved for the discussion section.

Table of contents

- How to write a results section

- Reporting quantitative research results

- Reporting qualitative research results

- Results vs. discussion vs. conclusion

- Checklist: Research results

- Other interesting articles

- Frequently asked questions about results sections

When conducting research, it’s important to report the results of your study prior to discussing your interpretations of it. This gives your reader a clear idea of exactly what you found and keeps the data itself separate from your subjective analysis.

Here are a few best practices:

- Your results should always be written in the past tense.

- While the length of this section depends on how much data you collected and analyzed, it should be written as concisely as possible.

- Only include results that are directly relevant to answering your research questions. Avoid speculative or interpretative words like “appears” or “implies.”

- If you have other results you’d like to include, consider adding them to an appendix or footnotes.

- Always start out with your broadest results first, and then flow into your more granular (but still relevant) ones. Think of it like a shoe store: first discuss the shoes as a whole, then the sneakers, boots, sandals, etc.

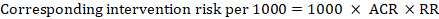

If you conducted quantitative research, you’ll likely be working with the results of some sort of statistical analysis.

Your results section should report the results of any statistical tests you used to compare groups or assess relationships between variables. It should also state whether or not each hypothesis was supported.

The most logical way to structure quantitative results is to frame them around your research questions or hypotheses. For each question or hypothesis, share:

- A reminder of the type of analysis you used (e.g., a two-sample t test or simple linear regression). A more detailed description of your analysis should go in your methodology section.

- A concise summary of each relevant result, both positive and negative. This can include any relevant descriptive statistics (e.g., means and standard deviations) as well as inferential statistics (e.g., t scores, degrees of freedom, and p values). Remember, these numbers are often placed in parentheses.

- A brief statement of how each result relates to the question, or whether the hypothesis was supported. You can briefly mention any results that didn’t fit with your expectations and assumptions, but save any speculation on their meaning or consequences for your discussion and conclusion.
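To make the reporting items above concrete, here is a minimal sketch of a two-sample t test that assumes equal variances in both groups (the pooled-variance form). The function names `pooled_t_test` and `describe` and the two score lists are hypothetical and invented for illustration. The sketch uses only the standard library, so it stops at the t score and degrees of freedom; a p value would additionally need a t distribution, for example from a statistics package such as SciPy.

```python
import math
import statistics


def pooled_t_test(group_a, group_b):
    """Return (t, df) for a two-sample t test that assumes equal variances."""
    n_a, n_b = len(group_a), len(group_b)
    mean_a, mean_b = statistics.mean(group_a), statistics.mean(group_b)
    var_a, var_b = statistics.variance(group_a), statistics.variance(group_b)
    df = n_a + n_b - 2
    # Pool the two sample variances, weighting each by its degrees of freedom.
    pooled_var = ((n_a - 1) * var_a + (n_b - 1) * var_b) / df
    standard_error = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean_a - mean_b) / standard_error, df


def describe(label, values):
    """Format the descriptive statistics for one group."""
    mean, sd = statistics.mean(values), statistics.stdev(values)
    return f"{label}: M = {mean:.2f}, SD = {sd:.2f}"


# Made-up scores for two hypothetical groups (not real data).
low = [5, 6, 4, 7, 5, 6]
high = [6, 7, 5, 8, 6, 7]

t_value, df = pooled_t_test(high, low)
print(describe("High", high))
print(describe("Low", low))
print(f"t({df}) = {t_value:.2f}")
```

The printed lines follow the pattern described above: descriptive statistics for each group first, then the test statistic with its degrees of freedom in parentheses.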

A note on tables and figures

In quantitative research, it’s often helpful to include visual elements such as graphs, charts, and tables, but only if they are directly relevant to your results. Give these elements clear, descriptive titles and labels so that your reader can easily understand what is being shown. If you want to include any other visual elements that are more tangential in nature, consider adding a figure and table list.

As a rule of thumb:

- Tables are used to communicate exact values, giving a concise overview of various results

- Graphs and charts are used to visualize trends and relationships, giving an at-a-glance illustration of key findings

Don’t forget to also mention any tables and figures you used within the text of your results section. Summarize or elaborate on specific aspects you think your reader should know about rather than merely restating the same numbers already shown.
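Since tables are meant to communicate exact values, one possible way to build a small summary table programmatically is sketched below. The `summary_table` helper and the group data are hypothetical. The output is a simple pipe-delimited table, but the same idea would apply to any table format.

```python
import statistics


def summary_table(groups):
    """Build a pipe-delimited table with n, mean, and SD for each group."""
    lines = ["| Group | n | M | SD |", "|---|---|---|---|"]
    for name, values in groups.items():
        mean = statistics.mean(values)
        sd = statistics.stdev(values)
        lines.append(f"| {name} | {len(values)} | {mean:.2f} | {sd:.2f} |")
    return "\n".join(lines)


# Made-up scores for two hypothetical groups (not real data).
groups = {"Low": [5, 6, 4, 7, 5, 6], "High": [6, 7, 5, 8, 6, 7]}

table = summary_table(groups)
print(table)
```

A table like this still needs a descriptive title and a mention in the running text.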

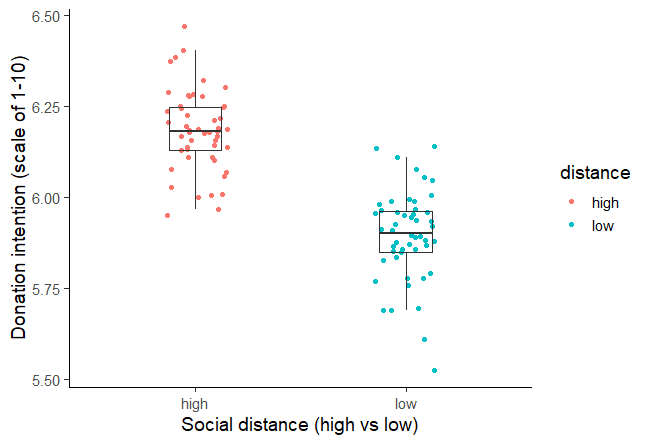

A two-sample t test was used to test the hypothesis that higher social distance from environmental problems would reduce the intent to donate to environmental organizations, with donation intention (recorded as a score from 1 to 10) as the outcome variable and social distance (categorized as either a low or high level of social distance) as the predictor variable. Social distance was found to be positively correlated with donation intention, t(98) = 12.19, p < .001, with the donation intention of the high social distance group 0.28 points higher, on average, than the low social distance group (see Figure 1). This contradicts the initial hypothesis that social distance would decrease donation intention, and in fact suggests a small effect in the opposite direction.

Figure 1: Intention to donate to environmental organizations based on social distance from impact of environmental damage.

In qualitative research, your results might not all be directly related to specific hypotheses. In this case, you can structure your results section around key themes or topics that emerged from your analysis of the data.

For each theme, start with general observations about what the data showed. You can mention:

- Recurring points of agreement or disagreement

- Patterns and trends

- Particularly significant snippets from individual responses

Next, clarify and support these points with direct quotations. Be sure to report any relevant demographic information about participants. Further information (such as full transcripts, if appropriate) can be included in an appendix.

When asked about video games as a form of art, the respondents tended to believe that video games themselves are not an art form, but agreed that creativity is involved in their production. The criteria used to identify artistic video games included design, story, music, and creative teams. One respondent (male, 24) noted a difference in creativity between popular video game genres:

“I think that in role-playing games, there’s more attention to character design, to world design, because the whole story is important and more attention is paid to certain game elements […] so that perhaps you do need bigger teams of creative experts than in an average shooter or something.”

Responses suggest that video game consumers consider some types of games to have more artistic potential than others.
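When responses have already been coded by theme, a quick tally can help identify the recurring points worth reporting. The sketch below is one way to do that. The respondent IDs and their assigned codes are invented for illustration, with theme labels borrowed from the criteria named in the example above.

```python
from collections import Counter

# Hypothetical coding: the themes assigned to each respondent's answers.
coded_responses = {
    "R1": ["design", "story"],
    "R2": ["story", "music"],
    "R3": ["design", "story", "creative teams"],
}

# Count each theme at most once per respondent, so the tally reflects
# how many people raised it rather than how often it was repeated.
theme_counts = Counter(
    theme for themes in coded_responses.values() for theme in set(themes)
)

for theme, count in theme_counts.most_common():
    print(f"{theme}: mentioned by {count} of {len(coded_responses)} respondents")
```

Counts like these only point to patterns; the themes themselves are still best supported with direct quotations.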

Your results section should objectively report your findings, presenting only brief observations in relation to each question, hypothesis, or theme.

It should not speculate about the meaning of the results or attempt to answer your main research question. Detailed interpretation of your results is more suitable for your discussion section, while synthesis of your results into an overall answer to your main research question is best left for your conclusion.

- I have completed my data collection and analyzed the results.

- I have included all results that are relevant to my research questions.

- I have concisely and objectively reported each result, including relevant descriptive statistics and inferential statistics.

- I have stated whether each hypothesis was supported or refuted.

- I have used tables and figures to illustrate my results where appropriate.

- All tables and figures are correctly labelled and referred to in the text.

- There is no subjective interpretation or speculation on the meaning of the results.

The results chapter of a thesis or dissertation presents your research results concisely and objectively.

In quantitative research, for each question or hypothesis, state:

- The type of analysis used

- Relevant results in the form of descriptive and inferential statistics

- Whether or not the alternative hypothesis was supported

In qualitative research, for each question or theme, describe:

- Recurring patterns

- Significant or representative individual responses

- Relevant quotations from the data

Don’t interpret or speculate in the results chapter.
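For the quantitative case, the three items to state (type of analysis, descriptive and inferential statistics, and the hypothesis decision) can be illustrated with a minimal sketch. The scores below are hypothetical, and the choice of Welch's t-test is an assumption made for illustration, not something the guide prescribes. Only the standard library is used, so the p-value itself is not computed here; it would normally come from a statistics package or a t table.

```python
import math
import statistics


def describe(sample):
    """Descriptive statistics for one group: size, mean, sample SD."""
    return {
        "n": len(sample),
        "mean": statistics.mean(sample),
        "sd": statistics.stdev(sample),  # sample standard deviation (n - 1)
    }


def welch_t(a, b):
    """Welch's t statistic and approximate degrees of freedom
    for two independent samples (unequal variances allowed)."""
    va = statistics.variance(a) / len(a)
    vb = statistics.variance(b) / len(b)
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


# Hypothetical scores, invented purely for illustration.
control = [10, 12, 11, 13, 14]
treatment = [14, 15, 13, 16, 17]

t_stat, dof = welch_t(control, treatment)
print("Analysis: Welch's independent-samples t-test")
print("Control:", describe(control))
print("Treatment:", describe(treatment))
print(f"t({dof:.1f}) = {t_stat:.2f}")
```

A results section would report these numbers as they are and state whether the hypothesis was supported, leaving any explanation of why to the discussion.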

Results are usually written in the past tense, because they are describing the outcome of completed actions.

The results chapter or section simply and objectively reports what you found, without speculating on why you found these results. The discussion interprets the meaning of the results, puts them in context, and explains why they matter.

In qualitative research, results and discussion are sometimes combined. But in quantitative research, it’s considered important to separate the objective results from your interpretation of them.

George, T. (2023, July 18). How to Write a Results Section | Tips & Examples. Scribbr. Retrieved September 16, 2024, from https://www.scribbr.com/dissertation/results/

NCBI Bookshelf. A service of the National Library of Medicine, National Institutes of Health.

Lau F, Kuziemsky C, editors. Handbook of eHealth Evaluation: An Evidence-based Approach [Internet]. Victoria (BC): University of Victoria; 2017 Feb 27.

Chapter 9. Methods for Literature Reviews

Guy Paré and Spyros Kitsiou

9.1. Introduction

Literature reviews play a critical role in scholarship because science remains, first and foremost, a cumulative endeavour ( vom Brocke et al., 2009 ). As in any academic discipline, rigorous knowledge syntheses are becoming indispensable in keeping up with an exponentially growing eHealth literature, assisting practitioners, academics, and graduate students in finding, evaluating, and synthesizing the contents of many empirical and conceptual papers. Among other methods, literature reviews are essential for: (a) identifying what has been written on a subject or topic; (b) determining the extent to which a specific research area reveals any interpretable trends or patterns; (c) aggregating empirical findings related to a narrow research question to support evidence-based practice; (d) generating new frameworks and theories; and (e) identifying topics or questions requiring more investigation ( Paré, Trudel, Jaana, & Kitsiou, 2015 ).

Literature reviews can take two major forms. The most prevalent one is the “literature review” or “background” section within a journal paper or a chapter in a graduate thesis. This section synthesizes the extant literature and usually identifies the gaps in knowledge that the empirical study addresses ( Sylvester, Tate, & Johnstone, 2013 ). It may also provide a theoretical foundation for the proposed study, substantiate the presence of the research problem, justify the research as one that contributes something new to the cumulated knowledge, or validate the methods and approaches for the proposed study ( Hart, 1998 ; Levy & Ellis, 2006 ).

The second form of literature review, which is the focus of this chapter, constitutes an original and valuable work of research in and of itself ( Paré et al., 2015 ). Rather than providing a base for a researcher’s own work, it creates a solid starting point for all members of the community interested in a particular area or topic ( Mulrow, 1987 ). The so-called “review article” is a journal-length paper which has an overarching purpose to synthesize the literature in a field, without collecting or analyzing any primary data ( Green, Johnson, & Adams, 2006 ).

When appropriately conducted, review articles represent powerful information sources for practitioners looking for state-of-the-art evidence to guide their decision-making and work practices (Paré et al., 2015). Further, high-quality reviews become frequently cited pieces of work which researchers seek out as a first clear outline of the literature when undertaking empirical studies (Cooper, 1988; Rowe, 2014). Scholars who track and gauge the impact of articles have found that review papers are cited and downloaded more often than any other type of published article (Cronin, Ryan, & Coughlan, 2008; Montori, Wilczynski, Morgan, Haynes, & Hedges, 2003; Patsopoulos, Analatos, & Ioannidis, 2005). The reason for their popularity may be the fact that reading the review enables one to have an overview, if not a detailed knowledge of the area in question, as well as references to the most useful primary sources (Cronin et al., 2008). Although they are not easy to conduct, the commitment to complete a review article provides a tremendous service to one’s academic community (Paré et al., 2015; Petticrew & Roberts, 2006). Most, if not all, peer-reviewed journals in the field of medical informatics publish review articles of some type.

The main objectives of this chapter are fourfold: (a) to provide an overview of the major steps and activities involved in conducting a stand-alone literature review; (b) to describe and contrast the different types of review articles that can contribute to the eHealth knowledge base; (c) to illustrate each review type with one or two examples from the eHealth literature; and (d) to provide a series of recommendations for prospective authors of review articles in this domain.

9.2. Overview of the Literature Review Process and Steps

As explained in Templier and Paré (2015), there are six generic steps involved in conducting a review article:

- formulating the research question(s) and objective(s),

- searching the extant literature,

- screening for inclusion,

- assessing the quality of primary studies,

- extracting data, and

- analyzing data.

Although these steps are presented here in sequential order, one must keep in mind that the review process can be iterative and that many activities can be initiated during the planning stage and later refined during subsequent phases (Finfgeld-Connett & Johnson, 2013; Kitchenham & Charters, 2007).

Formulating the research question(s) and objective(s): As a first step, members of the review team must appropriately justify the need for the review itself (Petticrew & Roberts, 2006), identify the review’s main objective(s) (Okoli & Schabram, 2010), and define the concepts or variables at the heart of their synthesis (Cooper & Hedges, 2009; Webster & Watson, 2002). Importantly, they also need to articulate the research question(s) they propose to investigate (Kitchenham & Charters, 2007). In this regard, we concur with Jesson, Matheson, and Lacey (2011) that clearly articulated research questions are key ingredients that guide the entire review methodology; they underscore the type of information that is needed, inform the search for and selection of relevant literature, and guide or orient the subsequent analysis.

Searching the extant literature: The next step consists of searching the literature and making decisions about the suitability of material to be considered in the review (Cooper, 1988). There exist three main coverage strategies. First, exhaustive coverage means an effort is made to be as comprehensive as possible in order to ensure that all relevant studies, published and unpublished, are included in the review and, thus, conclusions are based on this all-inclusive knowledge base. The second type of coverage consists of presenting materials that are representative of most other works in a given field or area. Often authors who adopt this strategy will search for relevant articles in a small number of top-tier journals in a field (Paré et al., 2015). In the third strategy, the review team concentrates on prior works that have been central or pivotal to a particular topic. This may include empirical studies or conceptual papers that initiated a line of investigation, changed how problems or questions were framed, introduced new methods or concepts, or engendered important debate (Cooper, 1988).

Screening for inclusion: The following step consists of evaluating the applicability of the material identified in the preceding step (Levy & Ellis, 2006; vom Brocke et al., 2009). Once a group of potential studies has been identified, members of the review team must screen them to determine their relevance (Petticrew & Roberts, 2006). A set of predetermined rules provides a basis for including or excluding certain studies. This exercise requires a significant investment on the part of researchers, who must ensure enhanced objectivity and avoid biases or mistakes. As discussed later in this chapter, for certain types of reviews there must be at least two independent reviewers involved in the screening process and a procedure to resolve disagreements must also be in place (Liberati et al., 2009; Shea et al., 2009).

Assessing the quality of primary studies: In addition to screening material for inclusion, members of the review team may need to assess the scientific quality of the selected studies, that is, appraise the rigour of the research design and methods. Such formal assessment, which is usually conducted independently by at least two coders, helps members of the review team refine which studies to include in the final sample, determine whether or not the differences in quality may affect their conclusions, or guide how they analyze the data and interpret the findings (Petticrew & Roberts, 2006). Ascribing quality scores to each primary study or considering through domain-based evaluations which study components have or have not been designed and executed appropriately makes it possible to reflect on the extent to which the selected study addresses possible biases and maximizes validity (Shea et al., 2009).

Extracting data: The following step involves gathering or extracting applicable information from each primary study included in the sample and deciding what is relevant to the problem of interest (Cooper & Hedges, 2009). Indeed, the type of data that should be recorded mainly depends on the initial research questions (Okoli & Schabram, 2010). However, important information may also be gathered about how, when, where and by whom the primary study was conducted, the research design and methods, or qualitative/quantitative results (Cooper & Hedges, 2009).

Analyzing and synthesizing data: As a final step, members of the review team must collate, summarize, aggregate, organize, and compare the evidence extracted from the included studies. The extracted data must be presented in a meaningful way that suggests a new contribution to the extant literature (Jesson et al., 2011). Webster and Watson (2002) warn researchers that literature reviews should be much more than lists of papers and should provide a coherent lens to make sense of extant knowledge on a given topic. There exist several methods and techniques for synthesizing quantitative (e.g., frequency analysis, meta-analysis) and qualitative (e.g., grounded theory, narrative analysis, meta-ethnography) evidence (Dixon-Woods, Agarwal, Jones, Young, & Sutton, 2005; Thomas & Harden, 2008).
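The screening step, with two independent reviewers and a procedure for resolving disagreements, can be sketched in a few lines. This is only an illustration under stated assumptions: the `Decision` record, the study identifiers, and the use of simple percent agreement are hypothetical choices, not a procedure taken from the chapter or from the guidelines it cites.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    """One study's include/exclude votes from two independent reviewers."""
    study_id: str
    reviewer_a: bool  # True means "include"
    reviewer_b: bool


def reconcile(decisions):
    """Split studies into agreed includes, agreed excludes, and conflicts.

    Conflicts are not resolved here; they are returned so that the team
    can apply its own agreed procedure (for example, discussion or a
    third reviewer)."""
    included, excluded, conflicts = [], [], []
    for d in decisions:
        if d.reviewer_a and d.reviewer_b:
            included.append(d.study_id)
        elif not d.reviewer_a and not d.reviewer_b:
            excluded.append(d.study_id)
        else:
            conflicts.append(d.study_id)
    return included, excluded, conflicts


def percent_agreement(decisions):
    """Share of studies on which both reviewers made the same call."""
    agree = sum(d.reviewer_a == d.reviewer_b for d in decisions)
    return agree / len(decisions)


# Hypothetical screening log, invented for illustration.
log = [
    Decision("S1", True, True),
    Decision("S2", False, False),
    Decision("S3", True, False),
    Decision("S4", True, True),
]

included, excluded, conflicts = reconcile(log)
print("Included:", included)
print("Excluded:", excluded)
print("Needs resolution:", conflicts)
print("Agreement:", percent_agreement(log))
```

Keeping conflicts as an explicit third bucket makes the counts at each stage easy to report later, for instance in a study-selection flow diagram.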

9.3. Types of Review Articles and Brief Illustrations

EHealth researchers have at their disposal a number of approaches and methods for making sense out of existing literature, all with the purpose of casting current research findings into historical contexts or explaining contradictions that might exist among a set of primary research studies conducted on a particular topic. Our classification scheme is largely inspired by Paré and colleagues’ (2015) typology. Below we present and illustrate those review types that we feel are central to the growth and development of the eHealth domain.

9.3.1. Narrative Reviews

The narrative review is the “traditional” way of reviewing the extant literature and is skewed towards a qualitative interpretation of prior knowledge ( Sylvester et al., 2013 ). Put simply, a narrative review attempts to summarize or synthesize what has been written on a particular topic but does not seek generalization or cumulative knowledge from what is reviewed ( Davies, 2000 ; Green et al., 2006 ). Instead, the review team often undertakes the task of accumulating and synthesizing the literature to demonstrate the value of a particular point of view ( Baumeister & Leary, 1997 ). As such, reviewers may selectively ignore or limit the attention paid to certain studies in order to make a point. In this rather unsystematic approach, the selection of information from primary articles is subjective, lacks explicit criteria for inclusion and can lead to biased interpretations or inferences ( Green et al., 2006 ). There are several narrative reviews in the particular eHealth domain, as in all fields, which follow such an unstructured approach ( Silva et al., 2015 ; Paul et al., 2015 ).

Despite these criticisms, this type of review can be very useful in gathering together a volume of literature in a specific subject area and synthesizing it. As mentioned above, its primary purpose is to provide the reader with a comprehensive background for understanding current knowledge and highlighting the significance of new research (Cronin et al., 2008). Faculty like to use narrative reviews in the classroom because they are often more up to date than textbooks, provide a single source for students to reference, and expose students to peer-reviewed literature (Green et al., 2006). For researchers, narrative reviews can inspire research ideas by identifying gaps or inconsistencies in a body of knowledge, thus helping researchers to determine research questions or formulate hypotheses. Importantly, narrative reviews can also be used as educational articles to bring practitioners up to date with certain topics or issues (Green et al., 2006).

Recently, there have been several efforts to introduce more rigour in narrative reviews that will elucidate common pitfalls and bring changes into their publication standards. Information systems researchers, among others, have contributed to advancing knowledge on how to structure a “traditional” review. For instance, Levy and Ellis (2006) proposed a generic framework for conducting such reviews. Their model follows the systematic data processing approach comprised of three steps, namely: (a) literature search and screening; (b) data extraction and analysis; and (c) writing the literature review. They provide detailed and very helpful instructions on how to conduct each step of the review process. As another methodological contribution, vom Brocke et al. (2009) offered a series of guidelines for conducting literature reviews, with a particular focus on how to search and extract the relevant body of knowledge. Last, Bandara, Miskon, and Fielt (2011) proposed a structured, predefined and tool-supported method to identify primary studies within a feasible scope, extract relevant content from identified articles, synthesize and analyze the findings, and effectively write and present the results of the literature review. We highly recommend that prospective authors of narrative reviews consult these useful sources before embarking on their work.

Darlow and Wen (2015) provide a good example of a highly structured narrative review in the eHealth field. These authors synthesized published articles that describe the development process of mobile health (m-health) interventions for patients’ cancer care self-management. As in most narrative reviews, the scope of the research questions being investigated is broad: (a) how development of these systems is carried out; (b) which methods are used to investigate these systems; and (c) what conclusions can be drawn as a result of the development of these systems. To provide clear answers to these questions, a literature search was conducted on six electronic databases and Google Scholar. The search was performed using several terms and free text words, combining them in an appropriate manner. Four inclusion and three exclusion criteria were utilized during the screening process. Both authors independently reviewed each of the identified articles to determine eligibility and extract study information. A flow diagram shows the number of studies identified, screened, and included or excluded at each stage of study selection. In terms of contributions, this review provides a series of practical recommendations for m-health intervention development.

9.3.2. Descriptive or Mapping Reviews

The primary goal of a descriptive review is to determine the extent to which a body of knowledge in a particular research topic reveals any interpretable pattern or trend with respect to pre-existing propositions, theories, methodologies or findings ( King & He, 2005 ; Paré et al., 2015 ). In contrast with narrative reviews, descriptive reviews follow a systematic and transparent procedure, including searching, screening and classifying studies ( Petersen, Vakkalanka, & Kuzniarz, 2015 ). Indeed, structured search methods are used to form a representative sample of a larger group of published works ( Paré et al., 2015 ). Further, authors of descriptive reviews extract from each study certain characteristics of interest, such as publication year, research methods, data collection techniques, and direction or strength of research outcomes (e.g., positive, negative, or non-significant) in the form of frequency analysis to produce quantitative results ( Sylvester et al., 2013 ). In essence, each study included in a descriptive review is treated as the unit of analysis and the published literature as a whole provides a database from which the authors attempt to identify any interpretable trends or draw overall conclusions about the merits of existing conceptualizations, propositions, methods or findings ( Paré et al., 2015 ). In doing so, a descriptive review may claim that its findings represent the state of the art in a particular domain ( King & He, 2005 ).
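The frequency analysis described above, where each study is the unit of analysis and coded characteristics are tallied, can be sketched as follows. The study records and field names are hypothetical placeholders; an actual descriptive review would code many more studies against a predefined scheme.

```python
from collections import Counter

# Hypothetical coded studies: one dict per study (the unit of analysis).
studies = [
    {"year": 2012, "method": "survey", "outcome": "positive"},
    {"year": 2013, "method": "survey", "outcome": "positive"},
    {"year": 2013, "method": "rct", "outcome": "non-significant"},
    {"year": 2014, "method": "case study", "outcome": "negative"},
    {"year": 2014, "method": "survey", "outcome": "positive"},
]


def frequency_table(records, field):
    """Count how often each value of `field` occurs across the studies.

    Returns {value: (count, percent of all studies)}, ordered from the
    most to the least frequent value."""
    counts = Counter(record[field] for record in records)
    total = len(records)
    return {
        value: (n, round(100 * n / total, 1))
        for value, n in counts.most_common()
    }


for field in ("year", "method", "outcome"):
    print(field, frequency_table(studies, field))
```

Tables of this kind are what allow a descriptive review to report trends, such as which research methods dominate or how outcomes are distributed, without pooling effect sizes.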

In the fields of health sciences and medical informatics, reviews that focus on examining the range, nature and evolution of a topic area are described by Anderson, Allen, Peckham, and Goodwin (2008) as mapping reviews. Like descriptive reviews, the research questions are generic and usually relate to publication patterns and trends. There is no preconceived plan to systematically review all of the literature although this can be done. Instead, researchers often present studies that are representative of most works published in a particular area and they consider a specific time frame to be mapped.

An example of this approach in the eHealth domain is offered by DeShazo, Lavallie, and Wolf (2009). The purpose of this descriptive or mapping review was to characterize publication trends in the medical informatics literature over a 20-year period (1987 to 2006). To achieve this ambitious objective, the authors performed a bibliometric analysis of medical informatics citations indexed in MEDLINE using publication trends, journal frequencies, impact factors, Medical Subject Headings (MeSH) term frequencies, and characteristics of citations. Findings revealed that there were over 77,000 medical informatics articles published during the covered period in numerous journals and that the average annual growth rate was 12%. The MeSH term analysis also suggested a strong interdisciplinary trend. Finally, average impact scores increased over time with two notable growth periods. Overall, patterns in research outputs that seem to characterize the historic trends and current components of the field of medical informatics suggest it may be a maturing discipline (DeShazo et al., 2009).

9.3.3. Scoping Reviews

Scoping reviews attempt to provide an initial indication of the potential size and nature of the extant literature on an emergent topic (Arksey & O’Malley, 2005; Daudt, van Mossel, & Scott, 2013 ; Levac, Colquhoun, & O’Brien, 2010). A scoping review may be conducted to examine the extent, range and nature of research activities in a particular area, determine the value of undertaking a full systematic review (discussed next), or identify research gaps in the extant literature ( Paré et al., 2015 ). In line with their main objective, scoping reviews usually conclude with the presentation of a detailed research agenda for future works along with potential implications for both practice and research.

Unlike narrative and descriptive reviews, the whole point of scoping the field is to be as comprehensive as possible, including grey literature (Arksey & O’Malley, 2005). Inclusion and exclusion criteria must be established to help researchers eliminate studies that are not aligned with the research questions. It is also recommended that at least two independent coders review abstracts yielded from the search strategy and then the full articles for study selection ( Daudt et al., 2013 ). The synthesized evidence from content or thematic analysis is relatively easy to present in tabular form (Arksey & O’Malley, 2005; Thomas & Harden, 2008 ).

One of the most highly cited scoping reviews in the eHealth domain was published by Archer, Fevrier-Thomas, Lokker, McKibbon, and Straus (2011). These authors reviewed the existing literature on personal health record (PHR) systems including design, functionality, implementation, applications, outcomes, and benefits. Seven databases were searched from 1985 to March 2010. Several search terms relating to PHRs were used during this process. Two authors independently screened titles and abstracts to determine inclusion status. A second screen of full-text articles, again by two independent members of the research team, ensured that the studies described PHRs. All in all, 130 articles met the criteria and their data were extracted manually into a database. The authors concluded that although there is a large amount of survey, observational, cohort/panel, and anecdotal evidence of PHR benefits and satisfaction for patients, more research is needed to evaluate the results of PHR implementations. Their in-depth analysis of the literature signalled that there is little solid evidence from randomized controlled trials or other studies through the use of PHRs. Hence, they suggested that more research is needed that addresses the current lack of understanding of optimal functionality and usability of these systems, and how they can play a beneficial role in supporting patient self-management (Archer et al., 2011).

9.3.4. Forms of Aggregative Reviews

Healthcare providers, practitioners, and policy-makers are nowadays overwhelmed with large volumes of information, including research-based evidence from numerous clinical trials and evaluation studies, assessing the effectiveness of health information technologies and interventions (Ammenwerth & de Keizer, 2004; DeShazo et al., 2009). It is unrealistic to expect that all these disparate actors will have the time, skills, and necessary resources to identify the available evidence in the area of their expertise and consider it when making decisions. Systematic reviews that involve the rigorous application of scientific strategies aimed at limiting subjectivity and bias (i.e., systematic and random errors) can respond to this challenge.

Systematic reviews attempt to aggregate, appraise, and synthesize in a single source all empirical evidence that meets a set of previously specified eligibility criteria in order to answer a clearly formulated and often narrow research question on a particular topic of interest to support evidence-based practice (Liberati et al., 2009). They adhere closely to explicit scientific principles (Liberati et al., 2009) and rigorous methodological guidelines (Higgins & Green, 2008) aimed at reducing random and systematic errors that can lead to deviations from the truth in results or inferences. The use of explicit methods allows systematic reviews to aggregate a large body of research evidence, assess whether effects or relationships are in the same direction and of the same general magnitude, explain possible inconsistencies between study results, and determine the strength of the overall evidence for every outcome of interest based on the quality of included studies and the general consistency among them (Cook, Mulrow, & Haynes, 1997). The main procedures of a systematic review involve:

- Formulating a review question and developing a search strategy based on explicit inclusion criteria for the identification of eligible studies (usually described in the context of a detailed review protocol).

- Searching for eligible studies using multiple databases and information sources, including grey literature sources, without any language restrictions.

- Selecting studies, extracting data, and assessing risk of bias in a duplicate manner using two independent reviewers to avoid random or systematic errors in the process.

- Analyzing data using quantitative or qualitative methods.

- Presenting results in summary of findings tables.

- Interpreting results and drawing conclusions.

Many systematic reviews, but not all, use statistical methods to combine the results of independent studies into a single quantitative estimate or summary effect size. Known as meta-analyses , these reviews use specific data extraction and statistical techniques (e.g., network, frequentist, or Bayesian meta-analyses) to calculate from each study by outcome of interest an effect size along with a confidence interval that reflects the degree of uncertainty behind the point estimate of effect ( Borenstein, Hedges, Higgins, & Rothstein, 2009 ; Deeks, Higgins, & Altman, 2008 ). Subsequently, they use fixed or random-effects analysis models to combine the results of the included studies, assess statistical heterogeneity, and calculate a weighted average of the effect estimates from the different studies, taking into account their sample sizes. The summary effect size is a value that reflects the average magnitude of the intervention effect for a particular outcome of interest or, more generally, the strength of a relationship between two variables across all studies included in the systematic review. By statistically combining data from multiple studies, meta-analyses can create more precise and reliable estimates of intervention effects than those derived from individual studies alone, when these are examined independently as discrete sources of information.
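To make the pooling step concrete, the sketch below combines study-level effect sizes with inverse-variance weights under a fixed-effect model, computes Cochran's Q as a heterogeneity statistic, and then applies the DerSimonian-Laird estimator for a random-effects model. These particular estimators are an assumption chosen for illustration; the chapter does not prescribe them, and the effect sizes and variances shown are hypothetical. Because a study's variance shrinks as its sample size grows, inverse-variance weights are one common way of taking sample size into account.

```python
import math


def pool_fixed(effects, variances):
    """Fixed-effect summary: inverse-variance weighted average and its SE."""
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    estimate = sum(w * y for w, y in zip(weights, effects)) / total
    return estimate, math.sqrt(1.0 / total)


def cochran_q(effects, variances):
    """Cochran's Q: weighted squared deviations from the fixed-effect mean."""
    estimate, _ = pool_fixed(effects, variances)
    return sum((y - estimate) ** 2 / v for y, v in zip(effects, variances))


def pool_random(effects, variances):
    """Random-effects summary using the DerSimonian-Laird tau^2 estimate."""
    k = len(effects)
    weights = [1.0 / v for v in variances]
    q = cochran_q(effects, variances)
    c = sum(weights) - sum(w ** 2 for w in weights) / sum(weights)
    tau2 = max(0.0, (q - (k - 1)) / c)  # between-study variance, floored at 0
    adjusted = [1.0 / (v + tau2) for v in variances]
    total = sum(adjusted)
    estimate = sum(w * y for w, y in zip(adjusted, effects)) / total
    return estimate, math.sqrt(1.0 / total), tau2


def confidence_interval(estimate, se, z=1.96):
    """Approximate 95% confidence interval under a normal assumption."""
    return estimate - z * se, estimate + z * se


# Hypothetical effect sizes and their variances from three studies.
effects = [0.2, 0.4, 0.6]
variances = [0.04, 0.04, 0.04]

fixed_est, fixed_se = pool_fixed(effects, variances)
random_est, random_se, tau2 = pool_random(effects, variances)
print("Fixed effect:", fixed_est, confidence_interval(fixed_est, fixed_se))
print("Cochran's Q:", cochran_q(effects, variances))
print("Random effects:", random_est, "tau^2:", tau2)
```

When the estimated between-study variance is zero, the two models give the same summary; as heterogeneity grows, the random-effects weights become more equal across studies and the confidence interval widens.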

The review by Gurol-Urganci, de Jongh, Vodopivec-Jamsek, Atun, and Car (2013) on the effects of mobile phone messaging reminders for attendance at healthcare appointments is an illustrative example of a high-quality systematic review with meta-analysis. Missed appointments are a major cause of inefficiency in healthcare delivery with substantial monetary costs to health systems. These authors sought to assess whether mobile phone-based appointment reminders delivered through Short Message Service (SMS) or Multimedia Messaging Service (MMS) are effective in improving rates of patient attendance and reducing overall costs. To this end, they conducted a comprehensive search on multiple databases using highly sensitive search strategies without language or publication-type restrictions to identify all RCTs that are eligible for inclusion. In order to minimize the risk of omitting eligible studies not captured by the original search, they supplemented all electronic searches with manual screening of trial registers and references contained in the included studies. Study selection, data extraction, and risk of bias assessments were performed independently by two coders using standardized methods to ensure consistency and to eliminate potential errors. Findings from eight RCTs involving 6,615 participants were pooled into meta-analyses to calculate the magnitude of effects that mobile text message reminders have on the rate of attendance at healthcare appointments compared to no reminders and phone call reminders.

Meta-analyses are regarded as powerful tools for deriving meaningful conclusions. However, there are situations in which it is neither reasonable nor appropriate to pool studies together using meta-analytic methods simply because there is extensive clinical heterogeneity between the included studies or variation in measurement tools, comparisons, or outcomes of interest. In these cases, systematic reviews can use qualitative synthesis methods such as vote counting, content analysis, classification schemes and tabulations, as an alternative approach to narratively synthesize the results of the independent studies included in the review. This form of review is known as qualitative systematic review.
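Of the qualitative synthesis methods listed above, vote counting is the simplest to illustrate: each study contributes one "vote" according to the direction of its result, and the votes are tallied. The sketch below assumes a three-category coding scheme (positive, negative, non-significant), which is a hypothetical choice, and the input list is invented for illustration.

```python
from collections import Counter

# Assumed coding scheme for the direction of each study's result.
ALLOWED = ("positive", "negative", "non-significant")


def vote_count(directions):
    """Tally study-level result directions.

    Raises ValueError if a study was coded with an unknown label, so
    that coding mistakes surface instead of being silently dropped."""
    unknown = set(directions) - set(ALLOWED)
    if unknown:
        raise ValueError(f"Unknown result labels: {sorted(unknown)}")
    tally = Counter(directions)
    return {label: tally[label] for label in ALLOWED}


# Hypothetical coded results from four studies.
coded = ["positive", "positive", "negative", "non-significant"]
print(vote_count(coded))
```

A tally like this ignores effect magnitude and study size, which is one reason it serves as a fallback when pooling is not appropriate rather than as a substitute for meta-analysis.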

A rigorous example of one such review in the eHealth domain is presented by Mickan, Atherton, Roberts, Heneghan, and Tilson (2014) on the use of handheld computers by healthcare professionals and their impact on access to information and clinical decision-making. In line with the methodological guidelines for systematic reviews, these authors: (a) developed and registered with PROSPERO (www.crd.york.ac.uk/prospero/) an a priori review protocol; (b) conducted comprehensive searches for eligible studies using multiple databases and other supplementary strategies (e.g., forward searches); and (c) subsequently carried out study selection, data extraction, and risk of bias assessments in a duplicate manner to eliminate potential errors in the review process. Heterogeneity between the included studies in terms of reported outcomes and measures precluded the use of meta-analytic methods. To this end, the authors resorted to using narrative analysis and synthesis to describe the effectiveness of handheld computers on accessing information for clinical knowledge, adherence to safety and clinical quality guidelines, and diagnostic decision-making.

In recent years, the number of systematic reviews in the field of health informatics has increased considerably. Systematic reviews with discordant findings can cause great confusion and make it difficult for decision-makers to interpret the review-level evidence ( Moher, 2013 ). Therefore, there is a growing need for appraisal and synthesis of prior systematic reviews to ensure that decision-making is constantly informed by the best available accumulated evidence. Umbrella reviews , also known as overviews of systematic reviews, are tertiary types of evidence synthesis that aim to accomplish this; that is, they aim to compare and contrast findings from multiple systematic reviews and meta-analyses ( Becker & Oxman, 2008 ). Umbrella reviews generally adhere to the same principles and rigorous methodological guidelines used in systematic reviews. However, the unit of analysis in umbrella reviews is the systematic review rather than the primary study ( Becker & Oxman, 2008 ). Unlike systematic reviews that have a narrow focus of inquiry, umbrella reviews focus on broader research topics for which there are several potential interventions ( Smith, Devane, Begley, & Clarke, 2011 ). A recent umbrella review on the effects of home telemonitoring interventions for patients with heart failure critically appraised, compared, and synthesized evidence from 15 systematic reviews to investigate which types of home telemonitoring technologies and forms of interventions are more effective in reducing mortality and hospital admissions ( Kitsiou, Paré, & Jaana, 2015 ).

9.3.5. Realist Reviews

Realist reviews are theory-driven interpretative reviews developed to inform, enhance, or supplement conventional systematic reviews by making sense of heterogeneous evidence about complex interventions applied in diverse contexts in a way that informs policy decision-making (Greenhalgh, Wong, Westhorp, & Pawson, 2011). They originated from criticisms of positivist systematic reviews, which centre on their “simplistic” underlying assumptions (Oates, 2011). As explained above, systematic reviews seek to identify causation. Such logic is appropriate for fields like medicine and education, where findings of randomized controlled trials can be aggregated to see whether a new treatment or intervention does improve outcomes. However, many argue that it is not possible to establish such direct causal links between interventions and outcomes in fields such as social policy, management, and information systems, where for any intervention there is unlikely to be a regular or consistent outcome (Oates, 2011; Pawson, 2006; Rousseau, Manning, & Denyer, 2008).

To circumvent these limitations, Pawson, Greenhalgh, Harvey, and Walshe (2005) have proposed a new approach for synthesizing knowledge that seeks to unpack the mechanism of how “complex interventions” work in particular contexts. The basic research question usually associated with systematic reviews (what works?) changes to: what is it about this intervention that works, for whom, in what circumstances, in what respects and why? Realist reviews have no particular preference for either quantitative or qualitative evidence. As a theory-building approach, a realist review usually starts by articulating likely underlying mechanisms and then scrutinizes available evidence to find out whether and where these mechanisms are applicable (Shepperd et al., 2009). Primary studies found in the extant literature are viewed as case studies which can test and modify the initial theories (Rousseau et al., 2008).

The main objective pursued in the realist review conducted by Otte-Trojel, de Bont, Rundall, and van de Klundert (2014) was to examine how patient portals contribute to health service delivery and patient outcomes. The specific goals were to investigate how outcomes are produced and, most importantly, how variations in outcomes can be explained. The research team started with an exploratory review of background documents and research studies to identify ways in which patient portals may contribute to health service delivery and patient outcomes. The authors identified six main ways, which represent “educated guesses” to be tested against the data in the evaluation studies. These studies were identified through a formal and systematic search in four databases between 2003 and 2013. Two members of the research team selected the articles using a pre-established list of inclusion and exclusion criteria and following a two-step procedure. The authors then extracted data from the selected articles and created several tables, one for each outcome category. They organized the information to bring forward the mechanisms through which patient portals contribute to outcomes and the variation in outcomes across different contexts.

9.3.6. Critical Reviews

Lastly, critical reviews aim to provide a critical evaluation and interpretive analysis of existing literature on a particular topic of interest to reveal strengths, weaknesses, contradictions, controversies, inconsistencies, and/or other important issues with respect to theories, hypotheses, research methods or results (Baumeister & Leary, 1997; Kirkevold, 1997). Unlike other review types, critical reviews attempt to take a reflective account of the research that has been done in a particular area of interest, and assess its credibility by using appraisal instruments or critical interpretive methods. In this way, critical reviews attempt to constructively inform other scholars about the weaknesses of prior research and strengthen knowledge development by giving focus and direction to studies for further improvement (Kirkevold, 1997).

Kitsiou, Paré, and Jaana (2013) provide an example of a critical review that assessed the methodological quality of prior systematic reviews of home telemonitoring studies for chronic patients. The authors conducted a comprehensive search on multiple databases to identify eligible reviews and subsequently used a validated instrument to conduct an in-depth quality appraisal. Results indicate that the majority of systematic reviews in this particular area suffer from important methodological flaws and biases that impair their internal validity and limit their usefulness for clinical and decision-making purposes. To this end, they provide a number of recommendations to strengthen knowledge development towards improving the design and execution of future reviews on home telemonitoring.

9.4. Summary

Table 9.1 outlines the main types of literature reviews that were described in the previous sub-sections and summarizes the main characteristics that distinguish one review type from another. It also includes key references to methodological guidelines and useful sources that can be used by eHealth scholars and researchers for planning and developing reviews.

Table 9.1. Typology of Literature Reviews (adapted from Paré et al., 2015).

As shown in Table 9.1, each review type addresses different kinds of research questions or objectives, which subsequently define and dictate the methods and approaches that need to be used to achieve the overarching goal(s) of the review. For example, in the case of narrative reviews, there is greater flexibility in searching and synthesizing articles (Green et al., 2006). Researchers are often relatively free to use a diversity of approaches to search, identify, and select relevant scientific articles, describe their operational characteristics, present how the individual studies fit together, and formulate conclusions. On the other hand, systematic reviews are characterized by their high level of systematicity, rigour, and use of explicit methods, based on an “a priori” review plan that aims to minimize bias in the analysis and synthesis process (Higgins & Green, 2008). Some reviews are exploratory in nature (e.g., scoping/mapping reviews), whereas others may be conducted to discover patterns (e.g., descriptive reviews) or involve a synthesis approach that may include the critical analysis of prior research (Paré et al., 2015). Hence, in order to select the most appropriate type of review, it is critical to know, before embarking on a review project, why the research synthesis is being conducted and which methods are best aligned with the pursued goals.

9.5. Concluding Remarks

In light of the increased use of evidence-based practice and research generating stronger evidence (Grady et al., 2011; Lyden et al., 2013), review articles have become essential tools for summarizing, synthesizing, integrating or critically appraising prior knowledge in the eHealth field. As mentioned earlier, when rigorously conducted, review articles represent powerful information sources for eHealth scholars and practitioners looking for state-of-the-art evidence. The typology of literature reviews we used herein will allow eHealth researchers, graduate students and practitioners to gain a better understanding of the similarities and differences between review types.

We must stress that this classification scheme does not privilege any specific type of review as being of higher quality than another (Paré et al., 2015). As explained above, each type of review has its own strengths and limitations. Having said that, we realize that the methodological rigour of any review, be it qualitative, quantitative or mixed, is a critical aspect that should be considered seriously by prospective authors. In the present context, the notion of rigour refers to the reliability and validity of the review process described in section 9.2. For one thing, reliability is related to the reproducibility of the review process and steps, which is facilitated by a comprehensive documentation of the literature search process, extraction, coding and analysis performed in the review. Whether the search is comprehensive or not, whether it involves a methodical approach for data extraction and synthesis or not, it is important that the review documents in an explicit and transparent manner the steps and approach that were used in the process of its development. Next, validity characterizes the degree to which the review process was conducted appropriately. It goes beyond documentation and reflects decisions related to the selection of the sources, the search terms used, the period of time covered, the articles selected in the search, and the application of backward and forward searches (vom Brocke et al., 2009). In short, the rigour of any review article is reflected by the explicitness of its methods (i.e., transparency) and the soundness of the approach used. We refer those interested in the concepts of rigour and quality to the work of Templier and Paré (2015) which offers a detailed set of methodological guidelines for conducting and evaluating various types of review articles.
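As an illustration of what explicit and transparent documentation might look like in practice, the sketch below records the search decisions listed above (sources, search terms, period covered, backward and forward searches, articles selected) in a structured form that can be published alongside a review. This is only one possible layout under our own assumptions: the `SearchRecord` class, its field names, and all values are hypothetical placeholders, not a reporting standard and not data from any study cited here.

```python
import json
from dataclasses import dataclass, asdict
from typing import List


@dataclass
class SearchRecord:
    """Hypothetical record of the search decisions a review should report."""
    sources: List[str]          # databases or other sources searched
    search_terms: List[str]     # search terms used
    period_start: int           # first publication year covered
    period_end: int             # last publication year covered
    backward_search: bool       # were reference lists of included articles checked?
    forward_search: bool        # were citing articles checked?
    records_retrieved: int      # number of records returned by the search
    records_included: int       # number of articles selected for the review


# Placeholder values for illustration only.
record = SearchRecord(
    sources=["Database A", "Database B"],
    search_terms=["patient portal", "personal health record"],
    period_start=2010,
    period_end=2020,
    backward_search=True,
    forward_search=True,
    records_retrieved=250,
    records_included=32,
)

# Serialize the record so it can be shared as supplementary material.
report = json.dumps(asdict(record), indent=2)
print(report)
```

Keeping these decisions in one structured record makes it easier for readers to judge both the reproducibility of the search and the appropriateness of the choices behind it.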

To conclude, our main objective in this chapter was to demystify the various types of literature reviews that are central to the continuous development of the eHealth field. It is our hope that our descriptive account will serve as a valuable source for those conducting, evaluating or using reviews in this important and growing domain.

- Ammenwerth E., de Keizer N. An inventory of evaluation studies of information technology in health care. Trends in evaluation research, 1982-2002. International Journal of Medical Informatics. 2004; 44(1):44–56. [PubMed: 15778794]

- Anderson S., Allen P., Peckham S., Goodwin N. Asking the right questions: scoping studies in the commissioning of research on the organisation and delivery of health services. Health Research Policy and Systems. 2008; 6(7):1–12. [PMC free article: PMC2500008] [PubMed: 18613961]

- Archer N., Fevrier-Thomas U., Lokker C., McKibbon K. A., Straus S. E. Personal health records: a scoping review. Journal of the American Medical Informatics Association. 2011; 18(4):515–522. [PMC free article: PMC3128401] [PubMed: 21672914]

- Arksey H., O’Malley L. Scoping studies: towards a methodological framework. International Journal of Social Research Methodology. 2005; 8(1):19–32.

- A systematic, tool-supported method for conducting literature reviews in information systems. Paper presented at the Proceedings of the 19th European Conference on Information Systems (ECIS 2011); June 9 to 11; Helsinki, Finland. 2011.

- Baumeister R. F., Leary M. R. Writing narrative literature reviews. Review of General Psychology. 1997; 1(3):311–320.

- Becker L. A., Oxman A. D. In: Cochrane handbook for systematic reviews of interventions. Higgins J. P. T., Green S., editors. Hoboken, NJ: John Wiley & Sons, Ltd; 2008. Overviews of reviews; pp. 607–631.

- Borenstein M., Hedges L., Higgins J., Rothstein H. Introduction to meta-analysis. Hoboken, NJ: John Wiley & Sons Inc; 2009.

- Cook D. J., Mulrow C. D., Haynes B. Systematic reviews: Synthesis of best evidence for clinical decisions. Annals of Internal Medicine. 1997; 126(5):376–380. [PubMed: 9054282]

- Cooper H., Hedges L. V. In: The handbook of research synthesis and meta-analysis. 2nd ed. Cooper H., Hedges L. V., Valentine J. C., editors. New York: Russell Sage Foundation; 2009. Research synthesis as a scientific process; pp. 3–17.

- Cooper H. M. Organizing knowledge syntheses: A taxonomy of literature reviews. Knowledge in Society. 1988; 1(1):104–126.

- Cronin P., Ryan F., Coughlan M. Undertaking a literature review: a step-by-step approach. British Journal of Nursing. 2008; 17(1):38–43. [PubMed: 18399395]

- Darlow S., Wen K. Y. Development testing of mobile health interventions for cancer patient self-management: A review. Health Informatics Journal. 2015 (online before print). [PubMed: 25916831]

- Daudt H. M., van Mossel C., Scott S. J. Enhancing the scoping study methodology: a large, inter-professional team’s experience with Arksey and O’Malley’s framework. BMC Medical Research Methodology. 2013; 13:48. [PMC free article: PMC3614526] [PubMed: 23522333]

- Davies P. The relevance of systematic reviews to educational policy and practice. Oxford Review of Education. 2000; 26(3-4):365–378.

- Deeks J. J., Higgins J. P. T., Altman D. G. In: Cochrane handbook for systematic reviews of interventions. Higgins J. P. T., Green S., editors. Hoboken, NJ: John Wiley & Sons, Ltd; 2008. Analysing data and undertaking meta-analyses; pp. 243–296.

- Deshazo J. P., Lavallie D. L., Wolf F. M. Publication trends in the medical informatics literature: 20 years of “Medical Informatics” in MeSH. BMC Medical Informatics and Decision Making. 2009; 9:7. [PMC free article: PMC2652453] [PubMed: 19159472]

- Dixon-Woods M., Agarwal S., Jones D., Young B., Sutton A. Synthesising qualitative and quantitative evidence: a review of possible methods. Journal of Health Services Research and Policy. 2005; 10(1):45–53. [PubMed: 15667704]

- Finfgeld-Connett D., Johnson E. D. Literature search strategies for conducting knowledge-building and theory-generating qualitative systematic reviews. Journal of Advanced Nursing. 2013; 69(1):194–204. [PMC free article: PMC3424349] [PubMed: 22591030]

- Grady B., Myers K. M., Nelson E. L., Belz N., Bennett L., Carnahan L. … Guidelines Working Group. Evidence-based practice for telemental health. Telemedicine Journal and E Health. 2011; 17(2):131–148. [PubMed: 21385026]