
Hypothesis Testing | A Step-by-Step Guide with Easy Examples

Published on November 8, 2019 by Rebecca Bevans. Revised on June 22, 2023.

Hypothesis testing is a formal procedure for investigating our ideas about the world using statistics. It is most often used by scientists to test specific predictions, called hypotheses, that arise from theories.

There are 5 main steps in hypothesis testing:

- State your research hypothesis as a null hypothesis (H0) and an alternate hypothesis (Ha or H1).

- Collect data in a way designed to test the hypothesis.

- Perform an appropriate statistical test.

- Decide whether to reject or fail to reject your null hypothesis.

- Present the findings in your results and discussion section.

Though the specific details might vary, the procedure you will use when testing a hypothesis will always follow some version of these steps.

Table of contents

- Step 1: State your null and alternate hypothesis
- Step 2: Collect data
- Step 3: Perform a statistical test
- Step 4: Decide whether to reject or fail to reject your null hypothesis
- Step 5: Present your findings
- Other interesting articles
- Frequently asked questions about hypothesis testing

After developing your initial research hypothesis (the prediction that you want to investigate), it is important to restate it as a null (H0) and alternate (Ha) hypothesis so that you can test it mathematically.

The alternate hypothesis is usually your initial hypothesis that predicts a relationship between variables. The null hypothesis is a prediction of no relationship between the variables you are interested in.

- H0: Men are, on average, not taller than women.

- Ha: Men are, on average, taller than women.


For a statistical test to be valid, it is important to perform sampling and collect data in a way that is designed to test your hypothesis. If your data are not representative, then you cannot make statistical inferences about the population you are interested in.

There are a variety of statistical tests available, but they are all based on the comparison of within-group variance (how spread out the data is within a category) versus between-group variance (how different the categories are from one another).

If the between-group variance is large enough that there is little or no overlap between groups, then your statistical test will reflect that by showing a low p-value. This means it is unlikely that the differences between these groups came about by chance.

Alternatively, if there is high within-group variance and low between-group variance, then your statistical test will reflect that with a high p-value. This means it is likely that any difference you measure between groups is due to chance.
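The interplay of between-group difference and within-group spread can be illustrated with a small Python sketch. It computes a t statistic in its unequal-variance (Welch) form, which is one common choice and not necessarily the test this guide has in mind. The height values and the function name `t_statistic` are made up for illustration.

```python
from math import sqrt
from statistics import mean, variance

def t_statistic(group_a, group_b):
    """Difference in group means divided by its standard error (Welch form)."""
    diff = mean(group_a) - mean(group_b)          # between-group difference
    # Within-group spread enters through the two sample variances.
    se = sqrt(variance(group_a) / len(group_a) + variance(group_b) / len(group_b))
    return diff / se

# Hypothetical heights in cm. Both pairs have the same mean difference (14 cm),
# but the second pair has much more spread within each group.
men_tight, women_tight = [178, 180, 179, 181, 177], [165, 166, 164, 167, 163]
men_spread, women_spread = [160, 195, 172, 188, 180], [150, 182, 158, 175, 160]

t_tight = t_statistic(men_tight, women_tight)     # large statistic
t_spread = t_statistic(men_spread, women_spread)  # much smaller statistic
print(t_tight, t_spread)
```

With the same 14 cm difference in means, the low-spread groups give a far larger test statistic than the high-spread groups, which is what translates into a lower p-value.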

Your choice of statistical test will be based on the type of variables and the level of measurement of your collected data.

For the height example, the statistical test will give you:

- an estimate of the difference in average height between the two groups.

- a p-value showing how likely you are to see this difference if the null hypothesis of no difference is true.
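As a rough illustration of how a test produces both outputs, the Python sketch below runs an exact permutation test on made-up height data. A permutation test is a swapped-in technique chosen because it needs only the standard library; it is not necessarily the test the guide used. The data and the name `permutation_test` are assumptions.

```python
from itertools import combinations
from statistics import mean

def permutation_test(group_a, group_b):
    """Return (observed mean difference, one-sided exact permutation p-value)."""
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    observed = mean(group_a) - mean(group_b)
    as_extreme = 0
    total = 0
    # Enumerate every way of relabelling the pooled values into two groups.
    for idx in combinations(range(len(pooled)), n_a):
        chosen = set(idx)
        a = [pooled[i] for i in idx]
        b = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        total += 1
        if mean(a) - mean(b) >= observed - 1e-12:
            as_extreme += 1
    return observed, as_extreme / total

# Hypothetical heights in cm, for illustration only.
men = [178.0, 180.0, 179.0, 181.0, 177.0]
women = [165.0, 166.0, 164.0, 167.0, 163.0]

estimate, p_value = permutation_test(men, women)
print(estimate, p_value)
```

The p-value here is the share of relabellings that give a difference at least as large as the observed one, assuming the group labels do not matter (the null hypothesis).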

Based on the outcome of your statistical test, you will have to decide whether to reject or fail to reject your null hypothesis.

In most cases you will use the p-value generated by your statistical test to guide your decision. And in most cases, your predetermined level of significance for rejecting the null hypothesis will be 0.05 – that is, when there is a less than 5% chance that you would see results at least this extreme if the null hypothesis were true.

In some cases, researchers choose a more conservative level of significance, such as 0.01 (1%). This reduces the risk of incorrectly rejecting the null hypothesis (Type I error).
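The decision rule can be written down in a few lines of Python. This is only a sketch: the function name `decide` and the example p-value are hypothetical.

```python
def decide(p_value, alpha=0.05):
    """Apply a predetermined significance level to a p-value."""
    return "reject H0" if p_value < alpha else "fail to reject H0"

# The same hypothetical p-value leads to different decisions
# under the conventional and the more conservative threshold.
at_5_percent = decide(0.03)              # alpha = 0.05
at_1_percent = decide(0.03, alpha=0.01)  # alpha = 0.01
print(at_5_percent, "|", at_1_percent)
```

The threshold has to be fixed before looking at the data; choosing it afterwards defeats its purpose.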

The results of hypothesis testing will be presented in the results and discussion sections of your research paper, dissertation or thesis.

In the results section you should give a brief summary of the data and a summary of the results of your statistical test (for example, the estimated difference between group means and associated p-value). In the discussion, you can discuss whether your initial hypothesis was supported by your results or not.

In the formal language of hypothesis testing, we talk about rejecting or failing to reject the null hypothesis. You will probably be asked to do this in your statistics assignments.

However, when presenting research results in academic papers we rarely talk this way. Instead, we go back to our alternate hypothesis (in this case, the hypothesis that men are on average taller than women) and state whether the result of our test did or did not support the alternate hypothesis.

If your null hypothesis was rejected, this result is interpreted as “supported the alternate hypothesis.”

These are superficial differences; you can see that they mean the same thing.

You might notice that we don’t say that we reject or fail to reject the alternate hypothesis. This is because hypothesis testing is not designed to prove or disprove anything. It is only designed to test whether a pattern we measure could have arisen spuriously, or by chance.

If we reject the null hypothesis based on our research (i.e., we find that it is unlikely that the pattern arose by chance), then we can say our test lends support to our hypothesis. But if the pattern does not pass our decision rule, meaning that it could have arisen by chance, then we say the test is inconsistent with our hypothesis.


Hypothesis testing is a formal procedure for investigating our ideas about the world using statistics. It is used by scientists to test specific predictions, called hypotheses, by calculating how likely it is that a pattern or relationship between variables could have arisen by chance.

A hypothesis states your predictions about what your research will find. It is a tentative answer to your research question that has not yet been tested. For some research projects, you might have to write several hypotheses that address different aspects of your research question.

A hypothesis is not just a guess — it should be based on existing theories and knowledge. It also has to be testable, which means you can support or refute it through scientific research methods (such as experiments, observations and statistical analysis of data).

Null and alternative hypotheses are used in statistical hypothesis testing. The null hypothesis of a test always predicts no effect or no relationship between variables, while the alternative hypothesis states your research prediction of an effect or relationship.


Bevans, R. (2023, June 22). Hypothesis Testing | A Step-by-Step Guide with Easy Examples. Scribbr. Retrieved June 7, 2024, from https://www.scribbr.com/statistics/hypothesis-testing/


5. METHODOLOGY

5.1 Hypotheses

The research questions presented informally in Chapters 2 and 3 (see pp. 19, 22, 29, 30, 39, & 42) are recast below as formal hypotheses underlying the present research. The order coincides with the earlier discussions.

- H1: Correlation between MT and ID is independent of model (Fitts, Welford, or Shannon) for index of difficulty

- H2: Correlation between MT and ID is the same using ID calculated from the effective target width (in 1-D or 2-D) rather than the specified target width

- H3: MT is independent of approach angle

- H4: Error rate is independent of approach angle

- H5: Correlation between MT and ID is independent of several methods of calculating W in the index of difficulty

- H6: Movement time is independent of device or task

- H7: Error rate is independent of device or task

- H8: Index of performance is independent of device or task

- H9: Point-drag-select tasks can be modeled as two separate Fitts' law tasks

Hypotheses H1, H2, H6, H7, and H8 will be tested in the first experiment, H3, H4, and H5 in the second experiment, and H9 in the third.

If the hypotheses were ranked by importance, H1 and H5 would stand out. The main contributions in this research lie in extending the theoretical and empirical arguments for the Shannon formulation over the Fitts' formulation (H1), and in introducing a useful technique for extending Fitts' law to two dimensional target acquisition tasks (H5). H1 has already been tested in the re-analysis of data from experiments by Fitts and others. Although the analyses provide evidence to reject the hypothesis (with the Shannon formulation out-performing the Fitts formulation), Experiment 1 is an attempt to provide first-hand empirical evidence.

The extension of Fitts' law to dragging tasks (H6 - H9) is important in light of the current genre of direct manipulation interfaces employing a variety of State 1 (pointing) and State 2 (dragging) transactions. Testing for device differences across tasks is important to aid in the design of direct manipulation systems employing pointing and dragging operations.

H9, tested in Experiment 3, is the weakest hypothesis in a statistical sense since rejecting a null hypothesis is not the basis of the analysis. Demonstration is the main objective. When a State 2 action follows a State 1 action (as commonly occurs), it is claimed that the effect is that of two Fitts' law tasks in sequence. Two prediction equations should apply, reflecting the inherent information processing capacities in each task.

5.2 Experiment 1

In Fitts' original experiment, subjects moved a stylus back and forth between two targets and tapped on them as quickly and accurately as possible (see Figure 1). Experiment 1 mimicked Fitts' paradigm except subjects manipulated an input device to move a cursor between two targets displayed on a CRT display.

5.2.1 Subjects

Twelve male students in a computer engineering technology programme at a local college volunteered as subjects and were paid an hourly rate. All subjects used computers on a daily basis. Experience with input devices (other than a keyboard) was minimal.

5.2.2 Apparatus

An Apple Macintosh II microcomputer (running system software version 6.0.4) served as the host computer with input through a mouse (standard equipment), a trackball, and a pressure-sensing tablet-with-stylus. The output display was a 33 cm colour CRT monitor (used in black-and-white mode) with a resolution of 640 by 480 pixels. The trackball was a Turbo Mouse ADB Version 3.0 by Kensington Microware Ltd. Both the mouse and the trackball contained a momentary switch – a "button" – which was pressed and released to select targets.

The tablet, a Model SD42X by Wacom Inc., sensed pressure and absolute x-y coordinates using a stylus as input. The pressure of the stylus was sensed with 6 bits of resolution. Positioning was absolute: If the stylus was raised more than 1/4 inch above the tablet and then repositioned, the cursor jumped to the new x-y position when the stylus was re-engaged. If the stylus remained within 1/4 inch of the tablet, the cursor continued to track as the stylus moved. The control-display gain for the tablet-with-stylus was matched to that for the mouse which in turn was set to .53 (equivalent to the "fast" setting in the Macintosh control panel).

Although the tablet-with-stylus had the potential for pseudo-analogue applications (with 6 bits of resolution), the present experiment used a threshold value set between 0 and 63 to signal a "select" operation. A threshold of 50 was chosen based on the subjective preferences of experienced users.

5.2.3 Procedure

Subjects performed multiple trials on two different tasks using three different devices. The operation of the devices and the requirements of the tasks were explained and demonstrated to each subject before beginning. One warm-up block of trials was given prior to data collection.

The two tasks were "point-select" and "drag-select". For the point-select task, subjects moved the cursor back and forth between the targets and selected each target by pressing and releasing a button on the device (or applying and releasing pressure on the stylus). An arrow pointing up appeared just below the target to be selected. After each selection, the arrow moved to the opposite target, thereby guiding subjects through the block of trials (see Figure 14a). Further feedback was provided by a black rectangle which appeared across the top of the display while in State 2. This feedback was particularly important with the stylus to inform subjects of a state change.

Figure 14. Sample conditions for Experiment 1 showing (a) the point-select task and (b) the drag-select task

Figure 15. Sample condition for Experiment 2

Figure 16. Sample condition for Experiment 3
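The three index-of-difficulty models named in H1 are not written out in this excerpt. As a reminder, a minimal Python sketch of their commonly cited forms follows, with A the movement amplitude and W the target width. The function names and the example values are assumptions, and the chapter's own notation may differ.

```python
from math import log2

def id_fitts(a, w):
    """Fitts' original formulation: log2(2A / W)."""
    return log2(2 * a / w)

def id_welford(a, w):
    """Welford's formulation: log2(A / W + 0.5)."""
    return log2(a / w + 0.5)

def id_shannon(a, w):
    """Shannon formulation: log2(A / W + 1)."""
    return log2(a / w + 1)

# Hypothetical condition: amplitude 64 units, target width 8 units.
amplitude, width = 64, 8
for name, model in [("Fitts", id_fitts), ("Welford", id_welford), ("Shannon", id_shannon)]:
    print(name, round(model(amplitude, width), 3), "bits")
```

Because the three models assign different ID values to the same condition, the correlation between MT and ID can differ by model, which is what H1 tests.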


Hypothesis testing

Hypothesis testing is one of the most widely used approaches of statistical inference.

The idea of hypothesis testing (more formally: null hypothesis significance testing - NHST) is the following: if we have observed some data, and we have a statistical model, we can use this statistical model to specify a fixed hypothesis about how the data arose. For the example with the plants and music, this hypothesis could be: music has no influence on plants, all differences we see are due to random variation between individuals.

The null hypothesis H0 and the alternative hypothesis H1

Such a scenario is called the null hypothesis H0. Although it is very typical to use the assumption of no effect as the null hypothesis, note that it is really your choice, and you could use anything as the null hypothesis, including the assumption: “classical music doubles the growth of plants”. The fact that it’s the analyst’s choice what to fix as the null hypothesis is part of the reason why there are a large number of tests available. We will see a few of them in the following chapter about important hypothesis tests.

The hypothesis that H0 is wrong, or !H0, is usually called the alternative hypothesis, H1.

Given a statistical model, a “normal” or “simple” null hypothesis specifies a single value for the parameter of interest as the “base expectation”. A composite null hypothesis specifies a range of values for the parameter.

If we have a null hypothesis, we calculate the probability that we would see the observed data or data more extreme under this scenario. This is called a hypothesis test, and we call the probability the p-value. If the p-value falls under a certain level (the significance level $\alpha$) we say the null hypothesis was rejected, and there is significant support for the alternative hypothesis. The level of $\alpha$ is a convention; in ecology we typically choose 0.05, so if a p-value falls below 0.05, we can reject the null hypothesis.
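To make "the probability of the observed data or data more extreme" concrete, here is a minimal Python sketch using an exact binomial calculation. The scenario is invented for illustration: of 20 plant pairs, the plant exposed to music grew taller in 16. Under H0 (music has no influence) each pair is a coin flip. The function name `binomial_p_value` is an assumption.

```python
from math import comb

def binomial_p_value(successes, trials, p_null=0.5):
    """One-sided p-value: P(X >= successes) for X ~ Binomial(trials, p_null)."""
    return sum(
        comb(trials, k) * p_null**k * (1 - p_null) ** (trials - k)
        for k in range(successes, trials + 1)
    )

alpha = 0.05
p_value = binomial_p_value(16, 20)   # hypothetical data: 16 of 20 pairs
print(round(p_value, 4), "reject H0" if p_value < alpha else "fail to reject H0")
```

The sum runs over the observed count and every more extreme count, which is what separates a p-value from the probability of the observed outcome alone.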

Test Statistic

Type I and II error

Significance level, Power

Misinterpretations

A problem with hypothesis tests and p-values is that their results are notoriously misinterpreted. The p-value is NOT the probability that the null hypothesis is true, or the probability that the alternative hypothesis is false, although many authors have made the mistake of interpreting it like that (Cohen 1994). Rather, the idea of p-values is to control the rate of false positives (Type I error). When doing hypothesis tests on random data with an $\alpha$ level of 0.05, one will get 5% false positives in the long run, provided the assumptions of the test are met. Not more and not less.
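The false-positive rate can be checked by simulation. The Python sketch below repeatedly draws random data for which H0 is true (mean 0, known standard deviation 1) and applies a two-sided z-test. The choice of a z-test, the sample size, the number of repetitions and the seed are all assumptions made to keep the example within the standard library.

```python
import random
from math import sqrt
from statistics import NormalDist, mean

def false_positive_rate(n_tests=2000, n=20, alpha=0.05, seed=1):
    """Share of tests on pure-noise data that reject H0: mu = 0."""
    rng = random.Random(seed)
    std_normal = NormalDist()
    rejections = 0
    for _ in range(n_tests):
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        z = mean(sample) * sqrt(n)                 # sigma is known to be 1
        p = 2 * (1 - std_normal.cdf(abs(z)))       # two-sided p-value
        if p < alpha:
            rejections += 1
    return rejections / n_tests

rate = false_positive_rate()
print(rate)
```

Any finite simulation fluctuates around the nominal level, so the printed rate will be close to 0.05 but rarely equal to it.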

Further readings

- The Essential Statistics lecture notes

- http://www.stats.gla.ac.uk/steps/glossary/hypothesis_testing.html

Examples in R

Recall that statistical testing, or more formally, null-hypothesis significance testing (NHST), is one of several ways in which you can approach data. The idea is that you define a null hypothesis, and then you look at the probability that the data would occur under the assumption that the null hypothesis is true.

Now, there can be many null hypotheses, so you need many tests. The most widely used tests are given here.

The t -test can be used to test whether one sample is different from a reference value (e.g. 0: one-sample t -test), whether two samples are different (two-sample t -test) or whether two paired samples are different (paired t -test).

The t -test assumes that the data are normally distributed. It can handle samples with same or different variances, but needs to be “told” so.

t-test for 1 sample (PARAMETRIC TEST)

The one-sample t-test compares the MEAN score of a sample to a known value, usually the population MEAN (the average for the outcome of some population of interest).

Our null hypothesis is that the mean of the sample is not less than 2.5 (real example: weight data of 200 lizards collected for a research project, which we want to compare with the known average weight available in the scientific literature).

Wilcoxon signed rank test for 1 sample (NON-PARAMETRIC TEST)

The one-sample Wilcoxon signed rank test is a non-parametric alternative to the one-sample t-test. It is used to test whether the location (MEDIAN) of the measurement is equal to a specified value.

Create fake data log-normally distributed and verify data distribution

Our null hypothesis is that the median of x is not different from 1

Two Independent Samples T-test (PARAMETRIC TEST)

Parametric method for examining the difference in MEANS between two independent populations. The t-test should be preceded by a graphical depiction of the data in order to check for normality within groups and for evidence of heteroscedasticity (= differences in variance), like so:

Reshape the data:

Now plot them as points (not box-n-whiskers):

The points to the right scatter similar to those on the left, although a bit more asymmetrically. Although we know that they are from a log-normal distribution (right), they don’t look problematic.

If data are not normally distributed, we can sometimes make them normal by using transformations, such as square-root, log, or the like (see section on transformations).

While t -tests on transformed data now actually test for differences between these transformed data, that is typically fine. Think of the pH-value, which is only a log-transform of the proton concentration. Do we care whether two treatments are different in pH or in proton concentrations? If so, then we need to choose the right data set. Most likely, we don’t and only choose the log-transform because the data are actually lognormally distributed, not normally.

A non-parametric alternative is the Mann-Whitney-U-test, or, the ANOVA-equivalent, the Kruskal-Wallis test. Both are available in R and explained later, but instead we recommend the following:

Use rank-transformations, which replace the values by their rank (i.e. the lowest value receives a 1, the second lowest a 2 and so forth). A t-test of rank-transformed data is not the same as the Mann-Whitney-U-test, but it is more sensitive and hence preferable (Ruxton 2006) or at least equivalent (Zimmerman 2012).

To use the rank, we need to employ the “formula”-invocation of t.test! In this case, results are the same, indicating that our hunch about acceptable skew and scatter was correct.

(Note that the original t -test is a test for differences between means, while the rank- t -test becomes a test for general differences in values between the two groups, not specifically of the mean.)
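The rank transformation itself is simple to state in code. The following is a language-neutral sketch in Python rather than the R call discussed above. It assigns tied values the average of their ranks, which is an assumption about tie handling, and uses made-up data. The resulting ranks would then be passed to the t-test in place of the raw values.

```python
def rank_transform(values):
    """Replace values by their ranks (lowest = 1); ties share the average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the value at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    return ranks

# Hypothetical measurements for two groups; ranks are computed on the pooled data.
group_a = [1.2, 3.4, 2.2]
group_b = [2.2, 5.0, 9.1]
pooled_ranks = rank_transform(group_a + group_b)
ranks_a = pooled_ranks[:len(group_a)]
ranks_b = pooled_ranks[len(group_a):]
print(ranks_a, ranks_b)
```

Ranking the pooled data, not each group separately, is what lets the subsequent test compare the groups.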

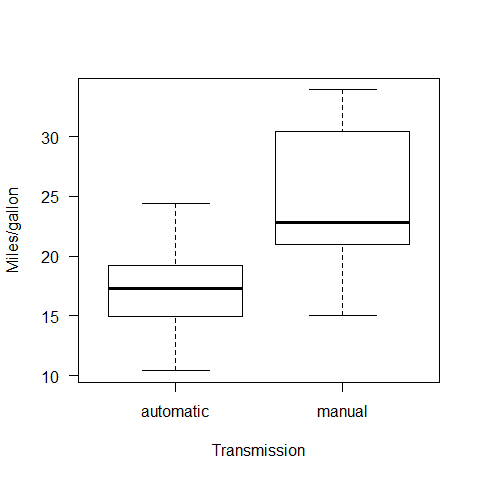

Cars example:

Test the difference in car consumption depending on the transmission type. Check whether the 2 ‘independent populations’ are normally distributed.

Graphic representation

We have two ~normally distributed populations. In order to test for differences in means, we applied a t-test for independent samples.

Any time we work with the t-test, we have to verify whether the variance is equal between the 2 populations or not, then we fit the t-test accordingly. Our Ho or null hypothesis is that the consumption is the same irrespective of transmission. We assume non-equal variances.

From the output: please note that the CIs are the confidence intervals for the differences in means.

Same results if you run the following (meaning that the other commands were all by default)

The alternative could be one-sided (greater, less) as we discussed earlier for one-sample t-tests.

If we assume equal variance, we run the following

Ways to check for equal / not equal variance

1) To examine the boxplot visually

2) To compute the actual variance

There is a 2- to 3-fold difference in variance.

3) Levene’s test

Mann-Whitney U test/Wilcoxon rank-sum test for two independent samples (NON-PARAMETRIC TEST)

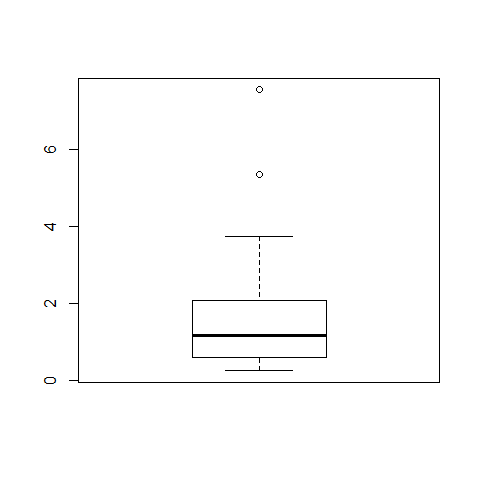

We change the response variable to hp (Gross horsepower)

The ‘population’ of cars with manual transmission has a hp not normally distributed, so we have to use a test for independent samples - non-parametric

We want to test a difference in hp depending on the transmission Using a non-parametric test, we test for differences in MEDIANS between 2 independent populations

Our null hypothesis will be that the medians are equal (two-sided)

Wilcoxon signed rank test for two dependent samples (NON-PARAMETRIC TEST)

This is a non-parametric method appropriate for examining the median difference in observations from 2 populations that are paired or dependent on one another.

This is a dataset about some water measurements taken at different levels of a river: ‘up’ and ‘down’ are water quality measurements of the same river taken before and after a water treatment filter, respectively.

The line you see in the plot corresponds to x=y, that is, the same water measurements before and after the water treatment (it seems to be true in 2 rivers only, 5 and 15).

Our null hypothesis is that the median before and after the treatment are not different

the assumption of normality is certainly not met for the measurements after the treatment

Paired T-test for two dependent samples (PARAMETRIC TEST)

This parametric method examines the difference in means for two populations that are paired or dependent on one another.

This is a dataset about the density of a fish prey species (fish/km2) in 121 lakes before and after removing a non-native predator

changing the order of variables, we have a change in the sign of the t-test estimated mean of differences

low p -> reject Ho (the null hypothesis that the means are equal)

Testing for normality

The normal distribution is the most important and most widely used distribution in statistics. A normal distribution has the following properties:

- It is symmetric around its mean.

- Its mean, median, and mode are equal.

- The area under the normal curve is equal to 1.0.

- It is denser in the center and less dense in the tails.

- It is defined by two parameters, the mean and the standard deviation (sd).

- About 68% of its area is within one standard deviation of the mean.

- Approximately 95% of its area is within two standard deviations of the mean.
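The 68% and 95% figures can be verified numerically. This short Python sketch uses `statistics.NormalDist` from the standard library; the variable names are illustrative only.

```python
from statistics import NormalDist

standard_normal = NormalDist(mu=0, sigma=1)

# Area under the curve within one and within two standard deviations of the mean.
within_1sd = standard_normal.cdf(1) - standard_normal.cdf(-1)
within_2sd = standard_normal.cdf(2) - standard_normal.cdf(-2)

print(round(within_1sd, 4), round(within_2sd, 4))
```

The same areas hold for any mean and standard deviation, because the limits are expressed in units of the standard deviation.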

Normal distribution

Load example data

Visualize example data

Visual Check for Normality: quantile-quantile plot

This one plots the ranked samples from our distribution against a similar number of ranked quantiles taken from a normal distribution. If our sample is normally distributed then the line will be straight. Departures from normality show up as different sorts of non-linearity (e.g. S-shapes or banana shapes).

Normality test: the shapiro.test

As an example we will create fake log-normally distributed data and verify the assumption of normality.

Correlations tests

Correlation tests measure the relationship between variables. The correlation coefficient ranges from +1 to -1, where 0 means no relation. Some of the tests that we can use to estimate this relationship are the following:

-Pearson’s correlation is a parametric measure of the linear association between 2 numeric variables (PARAMETRIC TEST)

-Spearman’s rank correlation is a non-parametric measure of the monotonic association between 2 numeric variables (NON-PARAMETRIC TEST)

-Kendall’s rank correlation is another non-parametric measure of the association, based on concordance or discordance of x-y pairs (NON-PARAMETRIC TEST)
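The difference between the three coefficients shows up clearly on data that are monotonic but not linear. The following Python sketch implements each coefficient from its definition. It assumes no tied values and computes Kendall's tau-a, and the data (y = x cubed) are made up for illustration.

```python
from math import sqrt

def pearson(x, y):
    """Pearson's r: linear association between two numeric variables."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

def ranks(values):
    """Ranks starting at 1; this simple version assumes there are no ties."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for rank, i in enumerate(order, start=1):
        result[i] = rank
    return result

def spearman(x, y):
    """Spearman's rho: Pearson's r computed on the ranks."""
    return pearson(ranks(x), ranks(y))

def kendall(x, y):
    """Kendall's tau-a: (concordant - discordant pairs) / all pairs."""
    n = len(x)
    concordant = discordant = 0
    for i in range(n):
        for j in range(i + 1, n):
            sign = (x[i] - x[j]) * (y[i] - y[j])
            if sign > 0:
                concordant += 1
            elif sign < 0:
                discordant += 1
    return (concordant - discordant) / (n * (n - 1) / 2)

x = [1, 2, 3, 4, 5, 6]
y = [v ** 3 for v in x]          # monotonic, but clearly non-linear
print(pearson(x, y), spearman(x, y), kendall(x, y))
```

Both rank-based coefficients reach 1 because the relationship is perfectly monotonic, while Pearson's r stays below 1 because it is not linear.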

Compute the three correlation coefficients

Test the null hypothesis that the correlation is 0 (there is no correlation)

When we have non-parametric data and we do not know which correlation method to choose, as a rule of thumb, if the correlation looks non-linear, Kendall tau should be better than Spearman Rho.

Further handy functions for correlations

Plot all possible combinations with “pairs”

To make it simpler we select what we are interested in

Building a correlation matrix

- Ruxton, G. D. (2006). The unequal variance t-test is an underused alternative to Student's t-test and the Mann-Whitney U test. Behavioral Ecology, 17, 688-690.

- Zimmerman, D. W. (2012). A note on consistency of non-parametric rank tests and related rank transformations. British Journal of Mathematical and Statistical Psychology, 65, 122-44.

- http://www.uni-kiel.de/psychologie/rexrepos/rerDescriptive.html

Hypothesis Testing

- First Online: 01 October 2020


- Benito Damasceno


This chapter deals with the one-tailed and two-tailed testing of the null (Ho) hypothesis versus the experimental (H1) one. It describes types of errors (I and II) and ways to avoid them; limitations of α significance level in reporting research results as compared to confidence interval and effect size, which does not depend on sample size and is useful in meta-analysis studies; the value of pre-establishing a large enough sample size and sufficient statistical power for avoiding type I and II errors, particularly in clinical trials with new kinds of interventions; calculation of sample size; the problems of dropouts and small samples; and the contribution that can be given by studies with small samples or even single cases (e.g., in rare conditions as autism) using appropriate designs as the ABAB.



Author information

Authors and affiliations.

Department of Neurology, State University of Campinas (UNICAMP), Campinas, São Paulo, Brazil

Benito Damasceno


© 2020 Springer Nature Switzerland AG

About this chapter

Damasceno, B. (2020). Hypothesis Testing. In: Research on Cognition Disorders. Springer, Cham. https://doi.org/10.1007/978-3-030-57267-9_16


DOI : https://doi.org/10.1007/978-3-030-57267-9_16

Published : 01 October 2020

Publisher Name : Springer, Cham

Print ISBN : 978-3-030-57265-5

Online ISBN : 978-3-030-57267-9

eBook Packages : Behavioral Science and Psychology (R0)


Hypothesis testing

- PMID: 8900794

- DOI: 10.1097/00002800-199607000-00009

Hypothesis testing is the process of making a choice between two conflicting hypotheses. The null hypothesis, H0, is a statistical proposition stating that there is no significant difference between a hypothesized value of a population parameter and its value estimated from a sample drawn from that population. The alternative hypothesis, H1 or Ha, is a statistical proposition stating that there is a significant difference between a hypothesized value of a population parameter and its estimated value. When the null hypothesis is tested, a decision is either correct or incorrect. An incorrect decision can be made in two ways: We can reject the null hypothesis when it is true (Type I error) or we can fail to reject the null hypothesis when it is false (Type II error). The probability of making Type I and Type II errors is designated by alpha and beta, respectively. The smallest observed significance level for which the null hypothesis would be rejected is referred to as the p-value. The p-value only has meaning as a measure of confidence when the decision is to reject the null hypothesis. It has no meaning when the decision is that the null hypothesis is true.
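The decision rule described in the abstract can be illustrated with a small sketch. It assumes a two-sided one-sample z-test with a known population standard deviation; the function name `one_sample_z_test` and all of the numbers are hypothetical and are not taken from the article.

```python
from statistics import NormalDist


def one_sample_z_test(sample_mean, mu0, sigma, n, alpha=0.05):
    """Two-sided z-test of H0: population mean == mu0, with sigma assumed known."""
    # Standardized distance between the sample estimate and the hypothesized value.
    z = (sample_mean - mu0) / (sigma / n ** 0.5)
    # p-value: probability, under H0, of a result at least this extreme.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    # Rejecting H0 when p < alpha caps the Type I error rate at alpha.
    return z, p_value, p_value < alpha


# Hypothetical data: 100 observations with mean 103, tested against mu0 = 100.
z, p_value, reject_h0 = one_sample_z_test(sample_mean=103.0, mu0=100.0, sigma=15.0, n=100)
print(f"z = {z:.2f}, p = {p_value:.4f}, reject H0: {reject_h0}")
```

Here alpha is fixed before looking at the data, while beta (the Type II error rate) depends on the true effect size and the sample size, so it cannot be read off this calculation.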

Bayes Optimal Classifier and Naive Bayes Classifier

The Bayes Optimal Classifier is a probabilistic model that predicts the most likely outcome for a new situation. In this blog, we’ll have a look at Bayes optimal classifier and Naive Bayes Classifier.

Bayes’ theorem is a method for calculating the probability of a hypothesis from its prior probability, the probability of observing the data given the hypothesis, and the probability of the observed data itself.

BAYES OPTIMAL CLASSIFIER

The Bayes optimal classifier is described using Bayes’ theorem, which provides a systematic means of computing a conditional probability. It is also closely related to Maximum a Posteriori (MAP) estimation, a probabilistic framework for finding the most probable hypothesis given a training dataset.

Take a hypothesis space that has 3 hypotheses h1, h2, and h3.

The posterior probabilities of the hypotheses are as follows:

h1 -> 0.4

h2 -> 0.3

h3 -> 0.3

Hence, h1 is the MAP hypothesis. (MAP = maximum a posteriori)

Suppose a new instance x is encountered, which is classified negative by h2 and h3 but positive by h1.

Taking all hypotheses into account, the probability that x is positive is 0.4 and the probability that it is negative is therefore 0.6.

In this case the classification produced by the MAP hypothesis (positive) differs from the most probable classification (negative).

The most probable classification of the new instance is obtained by combining the predictions of all hypotheses, weighted by their posterior probabilities.

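If the classification of the new instance can take any value vj from a set V, then the probability P(vj/D) that the correct classification is vj is simply the posterior-weighted sum over all hypotheses hi in the hypothesis space H:

$$P(v_j / D) = \sum_{h_i \in H} P(v_j / h_i)\, P(h_i / D)$$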

The denominator is omitted since we’re only using this for comparison and all the values of P(vj/D) will have the same denominator.

The value vj for which P(vj/D) is maximum is the best classification for the new instance.

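A Bayes optimal classifier is any system that classifies new instances by choosing the value vj that maximizes P(vj/D). This strategy maximizes the probability that the new instance is classified correctly, given the available data, hypothesis space, and prior probabilities over the hypotheses.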

Consider an example of Bayes optimal classification.

Let there be 5 hypotheses, h1 through h5, each of which tells a robot to move forward (F), turn left (L), or turn right (R).

| P(hi/D) | P(F/hi) | P(L/hi) | P(R/hi) |
|---|---|---|---|
| 0.4 | 1 | 0 | 0 |
| 0.2 | 0 | 1 | 0 |
| 0.1 | 0 | 0 | 1 |
| 0.1 | 0 | 1 | 0 |
| 0.2 | 0 | 1 | 0 |

The MAP hypothesis, the one with posterior probability 0.4, therefore says that the robot should proceed forward (F). Let’s see what the Bayes optimal procedure suggests.

Thus, the Bayes optimal procedure recommends the robot turn left.
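The arithmetic behind this conclusion can be sketched in a few lines of Python. The posteriors and the per-hypothesis predictions are copied from the table above; the function name `bayes_optimal` and the assumption that the rows correspond to h1 through h5 in order are illustrative choices, not part of the original post.

```python
def bayes_optimal(posteriors, predictions, values):
    """Return the value v maximizing sum_i P(v/h_i) * P(h_i/D), plus all scores."""
    scores = {v: 0.0 for v in values}
    for p_h, prediction in zip(posteriors, predictions):
        for v in values:
            # Each hypothesis votes for v, weighted by its posterior probability.
            scores[v] += prediction[v] * p_h
    best = max(scores, key=scores.get)
    return best, scores


# Rows of the table, assumed to be h1..h5 in order.
posteriors = [0.4, 0.2, 0.1, 0.1, 0.2]
predictions = [
    {"F": 1, "L": 0, "R": 0},
    {"F": 0, "L": 1, "R": 0},
    {"F": 0, "L": 0, "R": 1},
    {"F": 0, "L": 1, "R": 0},
    {"F": 0, "L": 1, "R": 0},
]

# MAP decision: follow the single most probable hypothesis.
map_index = max(range(len(posteriors)), key=posteriors.__getitem__)
map_choice = max(predictions[map_index], key=predictions[map_index].get)

# Bayes optimal decision: combine all hypotheses.
best, scores = bayes_optimal(posteriors, predictions, ["F", "L", "R"])
print("MAP choice:", map_choice)
print("Bayes optimal choice:", best, scores)
```

Summing the weighted votes gives roughly 0.4 for F, 0.5 for L, and 0.1 for R, so the combined vote favors turning left even though the single most probable hypothesis says forward.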

Naive Bayes Classifier

Naive Bayes classifiers are a family of classification algorithms based on Bayes’ theorem. They are not a single algorithm but a group of algorithms that share one principle: every pair of features is assumed to be independent of each other, given the class.

The naive Bayes classifier is useful for learning tasks in which each instance x is represented by a set of attribute values and the target function f(x) can take any value from a finite set V.

A set of training examples of the target function is provided, as well as a new instance described by the tuple of attribute values (a1, a2, ..., an).

The learner is asked to predict the target value for this new instance. The Bayesian approach is to assign the most probable target value, vMAP, given the attribute values that describe the instance.

Simply count the number of times each target value v j appears in the training data to estimate each P(v j ).

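The naive Bayes classifier assumes that the attribute values are conditionally independent given the target value, so the classification rule becomes

$$v_{NB} = \arg\max_{v_j \in V} P(v_j) \prod_i P(a_i / v_j)$$

where vNB denotes the target value output by the naive Bayes classifier.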

The naive Bayes learning approach includes a learning stage in which the various P(vj) and P(ai/vj) terms are estimated from their frequencies in the training data.

The learned hypothesis is represented by the set of these estimations.

The basic naive Bayes assumption is that each feature makes an independent and equal contribution to the outcome.

Let’s understand the concept of the Naive Bayes classifier better, with the help of an example.

Let’s use the naive Bayes classifier to solve a problem we discussed during our decision tree learning discussion: classifying days based on whether or not someone will play tennis.

Table 3.2 above shows 14 training instances of the target concept PlayTennis, with the attributes Outlook, Temperature, Humidity, and Wind describing each day. To classify the following novel instance, we use the naive Bayes classifier and the training data from this table:

First, the probabilities of the different target values can be estimated directly from their frequencies over the 14 training instances.

We can estimate the conditional probabilities in the same way. Those for Wind = strong, for example, include

Based on the probability estimates learned from the training data, the naive Bayes classifier assigns the target value PlayTennis = no to this new instance. Furthermore, given the observed attribute values, we can compute the conditional probability that the target value is no by normalizing the above quantities to sum to one.

For the current example, this probability is,
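Since the table and the intermediate figures are not reproduced here, the following sketch shows how the calculation could be carried out. It rests on two loudly stated assumptions: that Table 3.2 is the widely used 14-day PlayTennis data set, and that the novel instance is (Outlook = sunny, Temperature = cool, Humidity = high, Wind = strong). The function names are hypothetical, and no smoothing is applied to the frequency estimates.

```python
from collections import Counter, defaultdict

# ASSUMPTION: the standard 14-day PlayTennis data set stands in for "Table 3.2".
# Columns: Outlook, Temperature, Humidity, Wind, PlayTennis
TRAINING = [
    ("sunny", "hot", "high", "weak", "no"),
    ("sunny", "hot", "high", "strong", "no"),
    ("overcast", "hot", "high", "weak", "yes"),
    ("rain", "mild", "high", "weak", "yes"),
    ("rain", "cool", "normal", "weak", "yes"),
    ("rain", "cool", "normal", "strong", "no"),
    ("overcast", "cool", "normal", "strong", "yes"),
    ("sunny", "mild", "high", "weak", "no"),
    ("sunny", "cool", "normal", "weak", "yes"),
    ("rain", "mild", "normal", "weak", "yes"),
    ("sunny", "mild", "normal", "strong", "yes"),
    ("overcast", "mild", "high", "strong", "yes"),
    ("overcast", "hot", "normal", "weak", "yes"),
    ("rain", "mild", "high", "strong", "no"),
]


def train(rows):
    """Count class frequencies and per-class attribute-value frequencies."""
    class_counts = Counter(row[-1] for row in rows)
    value_counts = defaultdict(Counter)  # (attribute position, class) -> value counts
    for *attributes, label in rows:
        for position, value in enumerate(attributes):
            value_counts[(position, label)][value] += 1
    return class_counts, value_counts


def naive_bayes_scores(instance, class_counts, value_counts):
    """Compute P(v_j) * prod_i P(a_i/v_j) for every class v_j (unnormalized)."""
    total = sum(class_counts.values())
    scores = {}
    for label, count in class_counts.items():
        score = count / total  # P(v_j), estimated by relative frequency
        for position, value in enumerate(instance):
            score *= value_counts[(position, label)][value] / count  # P(a_i/v_j)
        scores[label] = score
    return scores


class_counts, value_counts = train(TRAINING)
new_instance = ("sunny", "cool", "high", "strong")  # ASSUMED novel instance
scores = naive_bayes_scores(new_instance, class_counts, value_counts)
prediction = max(scores, key=scores.get)
posterior_no = scores["no"] / sum(scores.values())  # normalize to sum to one
print(prediction, round(posterior_no, 3))
```

Under these assumptions the class frequencies are 9/14 for yes and 5/14 for no, the estimates for Wind = strong are 3/9 given yes and 3/5 given no, and the normalized probability of PlayTennis = no comes out at about 0.795.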

Elektrostal

Elektrostal localisation: Country: Russia; Oblast: Moscow Oblast. Nearby cities and villages: Noginsk, Pavlovsky Posad and Staraya Kupavna.

Elektrostal Demography

Information on the people and the population of Elektrostal.

| Elektrostal Population | 157,409 inhabitants |

|---|---|

| Elektrostal Population Density | 3,179.3 /km² (8,234.4 /sq mi) |

Elektrostal Geography

Geographic information regarding the city of Elektrostal.

| Elektrostal Geographical coordinates | Latitude: 55.8, Longitude: 38.45 (55° 48′ 0″ North, 38° 27′ 0″ East) |

|---|---|

| Elektrostal Area | 4,951 hectares; 49.51 km² (19.12 sq mi) |

| Elektrostal Altitude | 164 m (538 ft) |

| Elektrostal Climate | Humid continental climate (Köppen climate classification: Dfb) |

Elektrostal Sunrise and sunset

Below are the times of sunrise and sunset in Elektrostal, calculated for 7 days.

| Day | Sunrise and sunset | Twilight | Nautical twilight | Astronomical twilight |

|---|---|---|---|---|

| 8 June | 02:43 - 11:25 - 20:07 | 01:43 - 21:07 | 01:00 - 01:00 | 01:00 - 01:00 |

| 9 June | 02:42 - 11:25 - 20:08 | 01:42 - 21:08 | 01:00 - 01:00 | 01:00 - 01:00 |

| 10 June | 02:42 - 11:25 - 20:09 | 01:41 - 21:09 | 01:00 - 01:00 | 01:00 - 01:00 |

| 11 June | 02:41 - 11:25 - 20:10 | 01:41 - 21:10 | 01:00 - 01:00 | 01:00 - 01:00 |

| 12 June | 02:41 - 11:26 - 20:11 | 01:40 - 21:11 | 01:00 - 01:00 | 01:00 - 01:00 |

| 13 June | 02:40 - 11:26 - 20:11 | 01:40 - 21:12 | 01:00 - 01:00 | 01:00 - 01:00 |

| 14 June | 02:40 - 11:26 - 20:12 | 01:39 - 21:13 | 01:00 - 01:00 | 01:00 - 01:00 |

Geographic coordinates of Elektrostal, Moscow Oblast, Russia

Geographic coordinate systems

WGS 84 coordinate reference system is the latest revision of the World Geodetic System, which is used in mapping and navigation, including GPS satellite navigation system (the Global Positioning System).

Geographic coordinates (latitude and longitude) define a position on the Earth’s surface. Coordinates are angular units. The canonical form of latitude and longitude representation uses degrees (°), minutes (′), and seconds (″). GPS systems widely use coordinates in degrees and decimal minutes, or in decimal degrees.

Latitude varies from −90° to 90°. The latitude of the Equator is 0°; the latitude of the South Pole is −90°; the latitude of the North Pole is 90°. Positive latitude values correspond to the geographic locations north of the Equator (abbrev. N). Negative latitude values correspond to the geographic locations south of the Equator (abbrev. S).

Longitude is counted from the prime meridian (IERS Reference Meridian for WGS 84) and varies from −180° to 180°. Positive longitude values correspond to the geographic locations east of the prime meridian (abbrev. E). Negative longitude values correspond to the geographic locations west of the prime meridian (abbrev. W).
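The relationship between the degrees, minutes, and seconds form and decimal degrees, together with the sign conventions above, can be sketched as follows. The helper name `dms_to_decimal` is hypothetical; the input values are the coordinates quoted for Elektrostal.

```python
def dms_to_decimal(degrees, minutes, seconds, hemisphere):
    """Convert degrees/minutes/seconds plus a hemisphere letter to signed decimal degrees."""
    if hemisphere not in ("N", "S", "E", "W"):
        raise ValueError("hemisphere must be one of N, S, E, W")
    value = degrees + minutes / 60 + seconds / 3600
    # South latitudes and west longitudes are negative by convention.
    return -value if hemisphere in ("S", "W") else value


latitude = dms_to_decimal(55, 48, 0, "N")   # 55° 48′ 0″ North
longitude = dms_to_decimal(38, 27, 0, "E")  # 38° 27′ 0″ East
print(round(latitude, 4), round(longitude, 4))
```

This gives a latitude of about 55.8 and a longitude of about 38.45 in decimal degrees.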

UTM or Universal Transverse Mercator coordinate system divides the Earth’s surface into 60 longitudinal zones. The coordinates of a location within each zone are defined as a planar coordinate pair related to the intersection of the equator and the zone’s central meridian, and measured in meters.

Elevation above sea level is a measure of a geographic location’s height. We are using the global digital elevation model GTOPO30.

COMMENTS

The null and alternative hypotheses offer competing answers to your research question. When the research question asks "Does the independent variable affect the dependent variable?": The null hypothesis ( H0) answers "No, there's no effect in the population.". The alternative hypothesis ( Ha) answers "Yes, there is an effect in the ...

Hypothesis testing is formulated in terms of two hypotheses: H0: the null hypothesis; H1: the alternate hypothesis. The hypothesis we want to test is if H1 is "likely" true. So, there are two possible outcomes: Reject H0 and accept H1 because of sufficient evidence in the sample in favor of H1;

H1, H2, and H3 Hypothesis 1, Hypothesis 2, and Hypothesis 3. This document is copyrighted by the American Psychological Association or one of its allied publishers. This article is intended solely ...

Hypotheses H1, H2, H6, H7, and H8 will be tested in the first experiment, H3, H4, and H5 in the second experiment, and H9 in the third. If the hypotheses were ranked by importance, H1 and H5 would stand out. The main contributions in this research lie in extending the theoretical and empirical arguments for the Shannon formulation over the Fitts' formulation (H1), and in introducing a useful ...

The hypothesis that H0 is wrong, or !H0, is usually called the alternative hypothesis, H1. Given a statistical model, a "normal" or "simple" null hypothesis specifies a single value for the parameter of interest as the "base expectation". A composite null hypothesis specifies a range of values for the parameter. p-value

Alternative Hypothesis ("H1", "H2"…) e.g., "There is a difference in typing speed between males and females" Directional Hypothesis ("H1a"): e.g., "Males have a lower typing speed than females" Null hypothesis ("H0") e.g., "There is no difference in typing speed between males and females" Types

They want to test what proportion of the parts do not meet the specifications. Since they claim that the proportion is less than 2%, the symbol for the Alternative Hypothesis will be <. As is the usual practice, an equal symbol is used for the Null Hypothesis. H0: p = 0.02 H1: p < 0.02 (This is the claim). This instructional aid was prepared by ...

Hypotheses. Follow these six steps to set your hypotheses accurately: Structure them as follows: H1, H2, H3. Name the variables in the order in which they occur or will be measured. Assign a relationship among the variables and reference the population. Stick to what will be studied, not implications or your value judgments.

This chapter deals with the one-tailed and two-tailed testing of the null (Ho) hypothesis versus the experimental (H1) one. It describes types of errors (I and II) and ways to avoid them; limitations of α significance level in reporting research results as compared to confidence interval and effect size, which does not depend on sample size and is useful in meta-analysis studies; the value of ...

Download scientific diagram | A Research Model Note: H1 = Hypothesis 1; H2 = Hypothesis 2; H3 = Hypothesis 3; H4 = Hypothesis 4; H5 = Hypothesis 5. from publication: Comparative Analysis of ...

Download scientific diagram | Theoretical model. H1, H2, and H3 Hypothesis 1, Hypothesis 2, and Hypothesis 3. from publication: A Multilevel Investigation of Motivational Cultural Intelligence ...

ON TESTING MORE THAN ONE HYPOTHESIS BY J. N. DARROCH AND S. D. SILVEY University of Manchester 1. Introduction. In two illuminating papers, Lehmann [5], [6] considered ... (H1 A H2 A H3) cannot be small when L(H1), L(H2) and L(H3) are all near 1. This is clearly a requirement which is quite distinct from the require-

Title Page Italicized items are what you should input The topic and page numbers are in the header in word, right justified Topic is 1-2 words Running Head- left justified followed by a shortened title Full title in middle of page, centered Your Name Affiliation Date The title needs to be informative and interesting.

Download scientific diagram | Conceptual model for Hypotheses H1, H2, and H3. from publication: R&D Cooperation and Knowledge Spillover Effects for Sustainable Business Innovation in the Chemical ...

In 1938, it was granted town status. [citation needed]Administrative and municipal status. Within the framework of administrative divisions, it is incorporated as Elektrostal City Under Oblast Jurisdiction—an administrative unit with the status equal to that of the districts. As a municipal division, Elektrostal City Under Oblast Jurisdiction is incorporated as Elektrostal Urban Okrug.

Elektrostal Geography. Geographic Information regarding City of Elektrostal. Elektrostal Geographical coordinates. Latitude: 55.8, Longitude: 38.45. 55° 48′ 0″ North, 38° 27′ 0″ East. Elektrostal Area. 4,951 hectares. 49.51 km² (19.12 sq mi) Elektrostal Altitude.

Cities near Elektrostal. Places of interest. Pavlovskiy Posad Noginsk. Travel guide resource for your visit to Elektrostal. Discover the best of Elektrostal so you can plan your trip right.

Download scientific diagram | | Research model for hypotheses H1, H2, H3, H4, and H5. from publication: Insights Into the Factors Influencing Student Motivation in Augmented Reality Learning ...

Geographic coordinates of Elektrostal, Moscow Oblast, Russia in WGS 84 coordinate system which is a standard in cartography, geodesy, and navigation, including Global Positioning System (GPS). Latitude of Elektrostal, longitude of Elektrostal, elevation above sea level of Elektrostal.

As for H3, the path hypothesized between symbolic capital and personal development is significant (o = 0.36, p < 0.01) and is supported by H3, with 42% of the personal development explained by ...