Jump to navigation

Cochrane Training

Chapter 12: synthesizing and presenting findings using other methods.

Joanne E McKenzie, Sue E Brennan

Key Points:

- Meta-analysis of effect estimates has many advantages, but other synthesis methods may need to be considered in the circumstance where there is incompletely reported data in the primary studies.

- Alternative synthesis methods differ in the completeness of the data they require, the hypotheses they address, and the conclusions and recommendations that can be drawn from their findings.

- These methods provide more limited information for healthcare decision making than meta-analysis, but may be superior to a narrative description where some results are privileged above others without appropriate justification.

- Tabulation and visual display of the results should always be presented alongside any synthesis, and are especially important for transparent reporting in reviews without meta-analysis.

- Alternative synthesis and visual display methods should be planned and specified in the protocol. When writing the review, details of the synthesis methods should be described.

- Synthesis methods that involve vote counting based on statistical significance have serious limitations and are unacceptable.

Cite this chapter as: McKenzie JE, Brennan SE. Chapter 12: Synthesizing and presenting findings using other methods. In: Higgins JPT, Thomas J, Chandler J, Cumpston M, Li T, Page MJ, Welch VA (editors). Cochrane Handbook for Systematic Reviews of Interventions version 6.4 (updated August 2023). Cochrane, 2023. Available from www.training.cochrane.org/handbook .

12.1 Why a meta-analysis of effect estimates may not be possible

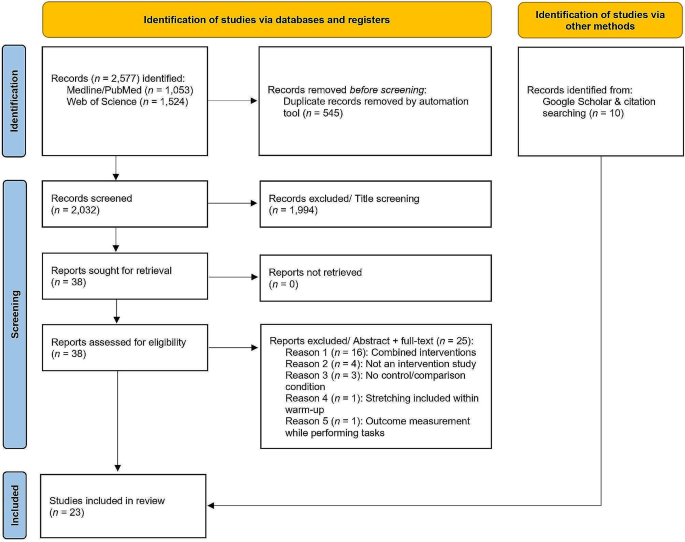

Meta-analysis of effect estimates has many potential advantages (see Chapter 10 and Chapter 11 ). However, there are circumstances where it may not be possible to undertake a meta-analysis and other statistical synthesis methods may be considered (McKenzie and Brennan 2014).

Some common reasons why it may not be possible to undertake a meta-analysis are outlined in Table 12.1.a . Legitimate reasons include limited evidence; incompletely reported outcome/effect estimates, or different effect measures used across studies; and bias in the evidence. Other commonly cited reasons for not using meta-analysis are because of too much clinical or methodological diversity, or statistical heterogeneity (Achana et al 2014). However, meta-analysis methods should be considered in these circumstances, as they may provide important insights if undertaken and interpreted appropriately.

Table 12.1.a Scenarios that may preclude meta-analysis, with possible solutions

12.2 Statistical synthesis when meta-analysis of effect estimates is not possible

A range of statistical synthesis methods are available, and these may be divided into three categories based on their preferability ( Table 12.2.a ). Preferable methods are the meta-analysis methods outlined in Chapter 10 and Chapter 11 , and are not discussed in detail here. This chapter focuses on methods that might be considered when a meta-analysis of effect estimates is not possible due to incompletely reported data in the primary studies. These methods divide into those that are ‘acceptable’ and ‘unacceptable’. The ‘acceptable’ methods differ in the data they require, the hypotheses they address, limitations around their use, and the conclusions and recommendations that can be drawn (see Section 12.2.1 ). The ‘unacceptable’ methods in common use are described (see Section 12.2.2 ), along with the reasons for why they are problematic.

Compared with meta-analysis methods, the ‘acceptable’ synthesis methods provide more limited information for healthcare decision making. However, these ‘acceptable’ methods may be superior to a narrative that describes results study by study, which comes with the risk that some studies or findings are privileged above others without appropriate justification. Further, in reviews with little or no synthesis, readers are left to make sense of the research themselves, which may result in the use of seemingly simple yet problematic synthesis methods such as vote counting based on statistical significance (see Section 12.2.2.1 ).

All methods first involve calculation of a ‘standardized metric’, followed by application of a synthesis method. In applying any of the following synthesis methods, it is important that only one outcome per study (or other independent unit, for example one comparison from a trial with multiple intervention groups) contributes to the synthesis. Chapter 9 outlines approaches for selecting an outcome when multiple have been measured. Similar to meta-analysis, sensitivity analyses can be undertaken to examine if the findings of the synthesis are robust to potentially influential decisions (see Chapter 10, Section 10.14 and Section 12.4 for examples).

Authors should report the specific methods used in lieu of meta-analysis (including approaches used for presentation and visual display), rather than stating that they have conducted a ‘narrative synthesis’ or ‘narrative summary’ without elaboration. The limitations of the chosen methods must be described, and conclusions worded with appropriate caution. The aim of reporting this detail is to make the synthesis process more transparent and reproducible, and help ensure use of appropriate methods and interpretation.

Table 12.2.a Summary of preferable and acceptable synthesis methods

12.2.1 Acceptable synthesis methods

12.2.1.1 summarizing effect estimates.

Description of method Summarizing effect estimates might be considered in the circumstance where estimates of intervention effect are available (or can be calculated), but the variances of the effects are not reported or are incorrect (and cannot be calculated from other statistics, or reasonably imputed) (Grimshaw et al 2003). Incorrect calculation of variances arises more commonly in non-standard study designs that involve clustering or matching ( Chapter 23 ). While missing variances may limit the possibility of meta-analysis, the (standardized) effects can be summarized using descriptive statistics such as the median, interquartile range, and the range. Calculating these statistics addresses the question ‘What is the range and distribution of observed effects?’

Reporting of methods and results The statistics that will be used to summarize the effects (e.g. median, interquartile range) should be reported. Box-and-whisker or bubble plots will complement reporting of the summary statistics by providing a visual display of the distribution of observed effects (Section 12.3.3 ). Tabulation of the available effect estimates will provide transparency for readers by linking the effects to the studies (Section 12.3.1 ). Limitations of the method should be acknowledged ( Table 12.2.a ).

12.2.1.2 Combining P values

Description of method Combining P values can be considered in the circumstance where there is no, or minimal, information reported beyond P values and the direction of effect; the types of outcomes and statistical tests differ across the studies; or results from non-parametric tests are reported (Borenstein et al 2009). Combining P values addresses the question ‘Is there evidence that there is an effect in at least one study?’ There are several methods available (Loughin 2004), with the method proposed by Fisher outlined here (Becker 1994).

Fisher’s method combines the P values from statistical tests across k studies using the formula:

One-sided P values are used, since these contain information about the direction of effect. However, these P values must reflect the same directional hypothesis (e.g. all testing if intervention A is more effective than intervention B). This is analogous to standardizing the direction of effects before undertaking a meta-analysis. Two-sided P values, which do not contain information about the direction, must first be converted to one-sided P values. If the effect is consistent with the directional hypothesis (e.g. intervention A is beneficial compared with B), then the one-sided P value is calculated as

In studies that do not report an exact P value but report a conventional level of significance (e.g. P<0.05), a conservative option is to use the threshold (e.g. 0.05). The P values must have been computed from statistical tests that appropriately account for the features of the design, such as clustering or matching, otherwise they will likely be incorrect.

Reporting of methods and results There are several methods for combining P values (Loughin 2004), so the chosen method should be reported, along with details of sensitivity analyses that examine if the results are sensitive to the choice of method. The results from the test should be reported alongside any available effect estimates (either individual results or meta-analysis results of a subset of studies) using text, tabulation and appropriate visual displays (Section 12.3 ). The albatross plot is likely to complement the analysis (Section 12.3.4 ). Limitations of the method should be acknowledged ( Table 12.2.a ).

12.2.1.3 Vote counting based on the direction of effect

Description of method Vote counting based on the direction of effect might be considered in the circumstance where the direction of effect is reported (with no further information), or there is no consistent effect measure or data reported across studies. The essence of vote counting is to compare the number of effects showing benefit to the number of effects showing harm for a particular outcome. However, there is wide variation in the implementation of the method due to differences in how ‘benefit’ and ‘harm’ are defined. Rules based on subjective decisions or statistical significance are problematic and should be avoided (see Section 12.2.2 ).

To undertake vote counting properly, each effect estimate is first categorized as showing benefit or harm based on the observed direction of effect alone, thereby creating a standardized binary metric. A count of the number of effects showing benefit is then compared with the number showing harm. Neither statistical significance nor the size of the effect are considered in the categorization. A sign test can be used to answer the question ‘is there any evidence of an effect?’ If there is no effect, the study effects will be distributed evenly around the null hypothesis of no difference. This is equivalent to testing if the true proportion of effects favouring the intervention (or comparator) is equal to 0.5 (Bushman and Wang 2009) (see Section 12.4.2.3 for guidance on implementing the sign test). An estimate of the proportion of effects favouring the intervention can be calculated ( p = u / n , where u = number of effects favouring the intervention, and n = number of studies) along with a confidence interval (e.g. using the Wilson or Jeffreys interval methods (Brown et al 2001)). Unless there are many studies contributing effects to the analysis, there will be large uncertainty in this estimated proportion.

Reporting of methods and results The vote counting method should be reported in the ‘Data synthesis’ section of the review. Failure to recognize vote counting as a synthesis method has led to it being applied informally (and perhaps unintentionally) to summarize results (e.g. through the use of wording such as ‘3 of 10 studies showed improvement in the outcome with intervention compared to control’; ‘most studies found’; ‘the majority of studies’; ‘few studies’ etc). In such instances, the method is rarely reported, and it may not be possible to determine whether an unacceptable (invalid) rule has been used to define benefit and harm (Section 12.2.2 ). The results from vote counting should be reported alongside any available effect estimates (either individual results or meta-analysis results of a subset of studies) using text, tabulation and appropriate visual displays (Section 12.3 ). The number of studies contributing to a synthesis based on vote counting may be larger than a meta-analysis, because only minimal statistical information (i.e. direction of effect) is required from each study to vote count. Vote counting results are used to derive the harvest and effect direction plots, although often using unacceptable methods of vote counting (see Section 12.3.5 ). Limitations of the method should be acknowledged ( Table 12.2.a ).

12.2.2 Unacceptable synthesis methods

12.2.2.1 vote counting based on statistical significance.

Conventional forms of vote counting use rules based on statistical significance and direction to categorize effects. For example, effects may be categorized into three groups: those that favour the intervention and are statistically significant (based on some predefined P value), those that favour the comparator and are statistically significant, and those that are statistically non-significant (Hedges and Vevea 1998). In a simpler formulation, effects may be categorized into two groups: those that favour the intervention and are statistically significant, and all others (Friedman 2001). Regardless of the specific formulation, when based on statistical significance, all have serious limitations and can lead to the wrong conclusion.

The conventional vote counting method fails because underpowered studies that do not rule out clinically important effects are counted as not showing benefit. Suppose, for example, the effect sizes estimated in two studies were identical. However, only one of the studies was adequately powered, and the effect in this study was statistically significant. Only this one effect (of the two identical effects) would be counted as showing ‘benefit’. Paradoxically, Hedges and Vevea showed that as the number of studies increases, the power of conventional vote counting tends to zero, except with large studies and at least moderate intervention effects (Hedges and Vevea 1998). Further, conventional vote counting suffers the same disadvantages as vote counting based on direction of effect, namely, that it does not provide information on the magnitude of effects and does not account for differences in the relative sizes of the studies.

12.2.2.2 Vote counting based on subjective rules

Subjective rules, involving a combination of direction, statistical significance and magnitude of effect, are sometimes used to categorize effects. For example, in a review examining the effectiveness of interventions for teaching quality improvement to clinicians, the authors categorized results as ‘beneficial effects’, ‘no effects’ or ‘detrimental effects’ (Boonyasai et al 2007). Categorization was based on direction of effect and statistical significance (using a predefined P value of 0.05) when available. If statistical significance was not reported, effects greater than 10% were categorized as ‘beneficial’ or ‘detrimental’, depending on their direction. These subjective rules often vary in the elements, cut-offs and algorithms used to categorize effects, and while detailed descriptions of the rules may provide a veneer of legitimacy, such rules have poor performance validity (Ioannidis et al 2008).

A further problem occurs when the rules are not described in sufficient detail for the results to be reproduced (e.g. ter Wee et al 2012, Thornicroft et al 2016). This lack of transparency does not allow determination of whether an acceptable or unacceptable vote counting method has been used (Valentine et al 2010).

12.3 Visual display and presentation of the data

Visual display and presentation of data is especially important for transparent reporting in reviews without meta-analysis, and should be considered irrespective of whether synthesis is undertaken (see Table 12.2.a for a summary of plots associated with each synthesis method). Tables and plots structure information to show patterns in the data and convey detailed information more efficiently than text. This aids interpretation and helps readers assess the veracity of the review findings.

12.3.1 Structured tabulation of results across studies

Ordering studies alphabetically by study ID is the simplest approach to tabulation; however, more information can be conveyed when studies are grouped in subpanels or ordered by a characteristic important for interpreting findings. The grouping of studies in tables should generally follow the structure of the synthesis presented in the text, which should closely reflect the review questions. This grouping should help readers identify the data on which findings are based and verify the review authors’ interpretation.

If the purpose of the table is comparative, grouping studies by any of following characteristics might be informative:

- comparisons considered in the review, or outcome domains (according to the structure of the synthesis);

- study characteristics that may reveal patterns in the data, for example potential effect modifiers including population subgroups, settings or intervention components.

If the purpose of the table is complete and transparent reporting of data, then ordering the studies to increase the prominence of the most relevant and trustworthy evidence should be considered. Possibilities include:

- certainty of the evidence (synthesized result or individual studies if no synthesis);

- risk of bias, study size or study design characteristics; and

- characteristics that determine how directly a study addresses the review question, for example relevance and validity of the outcome measures.

One disadvantage of grouping by study characteristics is that it can be harder to locate specific studies than when tables are ordered by study ID alone, for example when cross-referencing between the text and tables. Ordering by study ID within categories may partly address this.

The value of standardizing intervention and outcome labels is discussed in Chapter 3, Section 3.2.2 and Section 3.2.4 ), while the importance and methods for standardizing effect estimates is described in Chapter 6 . These practices can aid readers’ interpretation of tabulated data, especially when the purpose of a table is comparative.

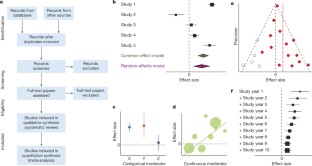

12.3.2 Forest plots

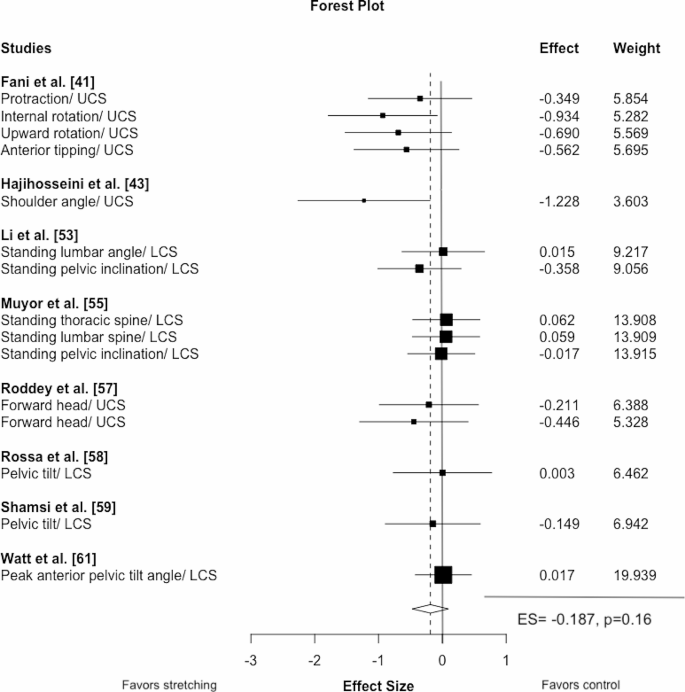

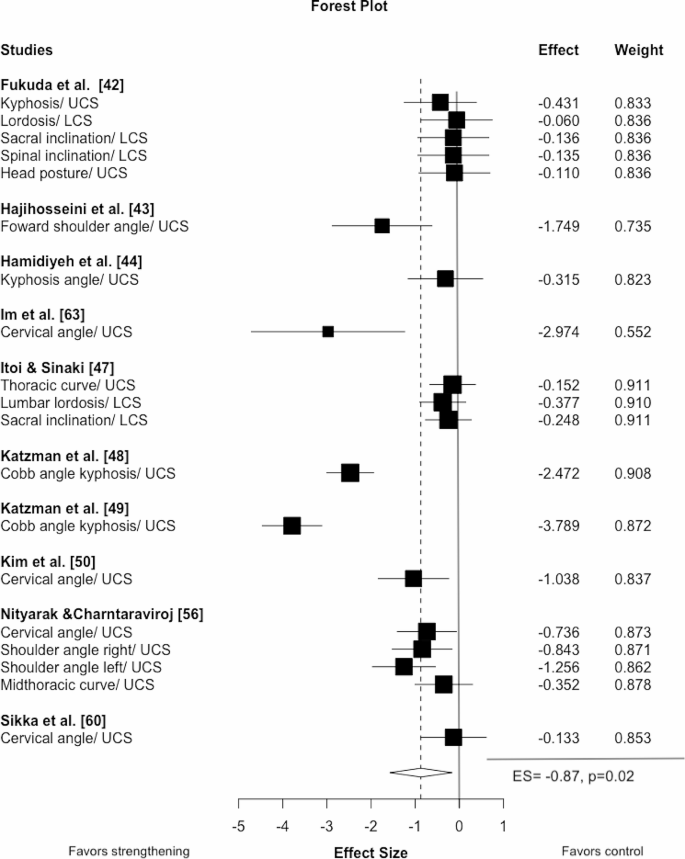

Forest plots and methods for preparing them are described elsewhere ( Chapter 10, Section 10.2 ). Some mention is warranted here of their importance for displaying study results when meta-analysis is not undertaken (i.e. without the summary diamond). Forest plots can aid interpretation of individual study results and convey overall patterns in the data, especially when studies are ordered by a characteristic important for interpreting results (e.g. dose and effect size, sample size). Similarly, grouping studies in subpanels based on characteristics thought to modify effects, such as population subgroups, variants of an intervention, or risk of bias, may help explore and explain differences across studies (Schriger et al 2010). These approaches to ordering provide important techniques for informally exploring heterogeneity in reviews without meta-analysis, and should be considered in preference to alphabetical ordering by study ID alone (Schriger et al 2010).

12.3.3 Box-and-whisker plots and bubble plots

Box-and-whisker plots (see Figure 12.4.a , Panel A) provide a visual display of the distribution of effect estimates (Section 12.2.1.1 ). The plot conventionally depicts five values. The upper and lower limits (or ‘hinges’) of the box, represent the 75th and 25th percentiles, respectively. The line within the box represents the 50th percentile (median), and the whiskers represent the extreme values (McGill et al 1978). Multiple box plots can be juxtaposed, providing a visual comparison of the distributions of effect estimates (Schriger et al 2006). For example, in a review examining the effects of audit and feedback on professional practice, the format of the feedback (verbal, written, both verbal and written) was hypothesized to be an effect modifier (Ivers et al 2012). Box-and-whisker plots of the risk differences were presented separately by the format of feedback, to allow visual comparison of the impact of format on the distribution of effects. When presenting multiple box-and-whisker plots, the width of the box can be varied to indicate the number of studies contributing to each. The plot’s common usage facilitates rapid and correct interpretation by readers (Schriger et al 2010). The individual studies contributing to the plot are not identified (as in a forest plot), however, and the plot is not appropriate when there are few studies (Schriger et al 2006).

A bubble plot (see Figure 12.4.a , Panel B) can also be used to provide a visual display of the distribution of effects, and is more suited than the box-and-whisker plot when there are few studies (Schriger et al 2006). The plot is a scatter plot that can display multiple dimensions through the location, size and colour of the bubbles. In a review examining the effects of educational outreach visits on professional practice, a bubble plot was used to examine visually whether the distribution of effects was modified by the targeted behaviour (O’Brien et al 2007). Each bubble represented the effect size (y-axis) and whether the study targeted a prescribing or other behaviour (x-axis). The size of the bubbles reflected the number of study participants. However, different formulations of the bubble plot can display other characteristics of the data (e.g. precision, risk-of-bias assessments).

12.3.4 Albatross plot

The albatross plot (see Figure 12.4.a , Panel C) allows approximate examination of the underlying intervention effect sizes where there is minimal reporting of results within studies (Harrison et al 2017). The plot only requires a two-sided P value, sample size and direction of effect (or equivalently, a one-sided P value and a sample size) for each result. The plot is a scatter plot of the study sample sizes against two-sided P values, where the results are separated by the direction of effect. Superimposed on the plot are ‘effect size contours’ (inspiring the plot’s name). These contours are specific to the type of data (e.g. continuous, binary) and statistical methods used to calculate the P values. The contours allow interpretation of the approximate effect sizes of the studies, which would otherwise not be possible due to the limited reporting of the results. Characteristics of studies (e.g. type of study design) can be identified using different colours or symbols, allowing informal comparison of subgroups.

The plot is likely to be more inclusive of the available studies than meta-analysis, because of its minimal data requirements. However, the plot should complement the results from a statistical synthesis, ideally a meta-analysis of available effects.

12.3.5 Harvest and effect direction plots

Harvest plots (see Figure 12.4.a , Panel D) provide a visual extension of vote counting results (Ogilvie et al 2008). In the plot, studies based on the categorization of their effects (e.g. ‘beneficial effects’, ‘no effects’ or ‘detrimental effects’) are grouped together. Each study is represented by a bar positioned according to its categorization. The bars can be ‘visually weighted’ (by height or width) and annotated to highlight study and outcome characteristics (e.g. risk-of-bias domains, proximal or distal outcomes, study design, sample size) (Ogilvie et al 2008, Crowther et al 2011). Annotation can also be used to identify the studies. A series of plots may be combined in a matrix that displays, for example, the vote counting results from different interventions or outcome domains.

The methods papers describing harvest plots have employed vote counting based on statistical significance (Ogilvie et al 2008, Crowther et al 2011). For the reasons outlined in Section 12.2.2.1 , this can be misleading. However, an acceptable approach would be to display the results based on direction of effect.

The effect direction plot is similar in concept to the harvest plot in the sense that both display information on the direction of effects (Thomson and Thomas 2013). In the first version of the effect direction plot, the direction of effects for each outcome within a single study are displayed, while the second version displays the direction of the effects for outcome domains across studies . In this second version, an algorithm is first applied to ‘synthesize’ the directions of effect for all outcomes within a domain (e.g. outcomes ‘sleep disturbed by wheeze’, ‘wheeze limits speech’, ‘wheeze during exercise’ in the outcome domain ‘respiratory’). This algorithm is based on the proportion of effects that are in a consistent direction and statistical significance. Arrows are used to indicate the reported direction of effect (for either outcomes or outcome domains). Features such as statistical significance, study design and sample size are denoted using size and colour. While this version of the plot conveys a large amount of information, it requires further development before its use can be recommended since the algorithm underlying the plot is likely to have poor performance validity.

12.4 Worked example

The example that follows uses four scenarios to illustrate methods for presentation and synthesis when meta-analysis is not possible. The first scenario contrasts a common approach to tabulation with alternative presentations that may enhance the transparency of reporting and interpretation of findings. Subsequent scenarios show the application of the synthesis approaches outlined in preceding sections of the chapter. Box 12.4.a summarizes the review comparisons and outcomes, and decisions taken by the review authors in planning their synthesis. While the example is loosely based on an actual review, the review description, scenarios and data are fabricated for illustration.

Box 12.4.a The review

12.4.1 Scenario 1: structured reporting of effects

We first address a scenario in which review authors have decided that the tools used to measure satisfaction measured concepts that were too dissimilar across studies for synthesis to be appropriate. Setting aside three of the 15 studies that reported on the birth partner’s satisfaction with care, a structured summary of effects is sought of the remaining 12 studies. To keep the example table short, only one outcome is shown per study for each of the measurement periods (antenatal, intrapartum or postpartum).

Table 12.4.a depicts a common yet suboptimal approach to presenting results. Note two features.

- Studies are ordered by study ID, rather than grouped by characteristics that might enhance interpretation (e.g. risk of bias, study size, validity of the measures, certainty of the evidence (GRADE)).

- Data reported are as extracted from each study; effect estimates were not calculated by the review authors and, where reported, were not standardized across studies (although data were available to do both).

Table 12.4.b shows an improved presentation of the same results. In line with best practice, here effect estimates have been calculated by the review authors for all outcomes, and a common metric computed to aid interpretation (in this case an odds ratio; see Chapter 6 for guidance on conversion of statistics to the desired format). Redundant information has been removed (‘statistical test’ and ‘P value’ columns). The studies have been re-ordered, first to group outcomes by period of care (intrapartum outcomes are shown here), and then by risk of bias. This re-ordering serves two purposes. Grouping by period of care aligns with the plan to consider outcomes for each period separately and ensures the table structure matches the order in which results are described in the text. Re-ordering by risk of bias increases the prominence of studies at lowest risk of bias, focusing attention on the results that should most influence conclusions. Had the review authors determined that a synthesis would be informative, then ordering to facilitate comparison across studies would be appropriate; for example, ordering by the type of satisfaction outcome (as pre-defined in the protocol, starting with global measures of satisfaction), or the comparisons made in the studies.

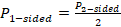

The results may also be presented in a forest plot, as shown in Figure 12.4.b . In both the table and figure, studies are grouped by risk of bias to focus attention on the most trustworthy evidence. The pattern of effects across studies is immediately apparent in Figure 12.4.b and can be described efficiently without having to interpret each estimate (e.g. difference between studies at low and high risk of bias emerge), although these results should be interpreted with caution in the absence of a formal test for subgroup differences (see Chapter 10, Section 10.11 ). Only outcomes measured during the intrapartum period are displayed, although outcomes from other periods could be added, maximizing the information conveyed.

An example description of the results from Scenario 1 is provided in Box 12.4.b . It shows that describing results study by study becomes unwieldy with more than a few studies, highlighting the importance of tables and plots. It also brings into focus the risk of presenting results without any synthesis, since it seems likely that the reader will try to make sense of the results by drawing inferences across studies. Since a synthesis was considered inappropriate, GRADE was applied to individual studies and then used to prioritize the reporting of results, focusing attention on the most relevant and trustworthy evidence. An alternative might be to report results at low risk of bias, an approach analogous to limiting a meta-analysis to studies at low risk of bias. Where possible, these and other approaches to prioritizing (or ordering) results from individual studies in text and tables should be pre-specified at the protocol stage.

Table 12.4.a Scenario 1: table ordered by study ID, data as reported by study authors

* All scales operate in the same direction; higher scores indicate greater satisfaction. CI = confidence interval; MD = mean difference; OR = odds ratio; POR = proportional odds ratio; RD = risk difference; RR = risk ratio.

Table 12.4.b Scenario 1: intrapartum outcome table ordered by risk of bias, standardized effect estimates calculated for all studies

* Outcomes operate in the same direction. A higher score, or an event, indicates greater satisfaction. ** Mean difference calculated for studies reporting continuous outcomes. † For binary outcomes, odds ratios were calculated from the reported summary statistics or were directly extracted from the study. For continuous outcomes, standardized mean differences were calculated and converted to odds ratios (see Chapter 6 ). CI = confidence interval; POR = proportional odds ratio.

Figure 12.4.b Forest plot depicting standardized effect estimates (odds ratios) for satisfaction

Box 12.4.b How to describe the results from this structured summary

12.4.2 Overview of scenarios 2–4: synthesis approaches

We now address three scenarios in which review authors have decided that the outcomes reported in the 15 studies all broadly reflect satisfaction with care. While the measures were quite diverse, a synthesis is sought to help decision makers understand whether women and their birth partners were generally more satisfied with the care received in midwife-led continuity models compared with other models. The three scenarios differ according to the data available (see Table 12.4.c ), with each reflecting progressively less complete reporting of the effect estimates. The data available determine the synthesis method that can be applied.

- Scenario 2: effect estimates available without measures of precision (illustrating synthesis of summary statistics).

- Scenario 3: P values available (illustrating synthesis of P values).

- Scenario 4: directions of effect available (illustrating synthesis using vote-counting based on direction of effect).

For studies that reported multiple satisfaction outcomes, one result is selected for synthesis using the decision rules in Box 12.4.a (point 2).

Table 12.4.c Scenarios 2, 3 and 4: available data for the selected outcome from each study

* All scales operate in the same direction. Higher scores indicate greater satisfaction. ** For a particular scenario, the ‘available data’ column indicates the data that were directly reported, or were calculated from the reported statistics, in terms of: effect estimate, direction of effect, confidence interval, precise P value, or statement regarding statistical significance (either statistically significant, or not). CI = confidence interval; direction = direction of effect reported or can be calculated; MD = mean difference; NS = not statistically significant; OR = odds ratio; RD = risk difference; RoB = risk of bias; RR = risk ratio; sig. = statistically significant; SMD = standardized mean difference; Stand. = standardized.

12.4.2.1 Scenario 2: summarizing effect estimates

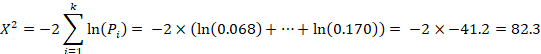

In Scenario 2, effect estimates are available for all outcomes. However, for most studies, a measure of variance is not reported, or cannot be calculated from the available data. We illustrate how the effect estimates may be summarized using descriptive statistics. In this scenario, it is possible to calculate odds ratios for all studies. For the continuous outcomes, this involves first calculating a standardized mean difference, and then converting this to an odds ratio ( Chapter 10, Section 10.6 ). The median odds ratio is 1.32 with an interquartile range of 1.02 to 1.53 (15 studies). Box-and-whisker plots may be used to display these results and examine informally whether the distribution of effects differs by the overall risk-of-bias assessment ( Figure 12.4.a , Panel A). However, because there are relatively few effects, a reasonable alternative would be to present bubble plots ( Figure 12.4.a , Panel B).

An example description of the results from the synthesis is provided in Box 12.4.c .

Box 12.4.c How to describe the results from this synthesis

12.4.2.2 Scenario 3: combining P values

In Scenario 3, there is minimal reporting of the data, and the type of data and statistical methods and tests vary. However, 11 of the 15 studies provide a precise P value and direction of effect, and a further two report a P value less than a threshold (<0.001) and direction. We use this scenario to illustrate a synthesis of P values. Since the reported P values are two-sided ( Table 12.4.c , column 6), they must first be converted to one-sided P values, which incorporate the direction of effect ( Table 12.4.c , column 7).

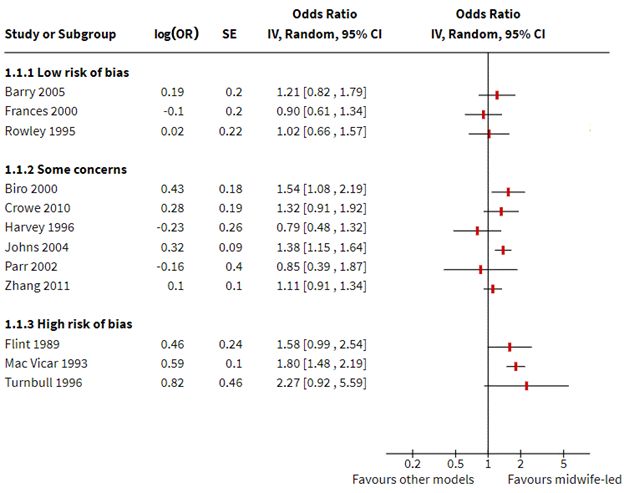

Fisher’s method for combining P values involved calculating the following statistic:

The combination of P values suggests there is strong evidence of benefit of midwife-led models of care in at least one study (P < 0.001 from a Chi 2 test, 13 studies). Restricting this analysis to those studies judged to be at an overall low risk of bias (sensitivity analysis), there is no longer evidence to reject the null hypothesis of no benefit of midwife-led model of care in any studies (P = 0.314, 3 studies). For the five studies reporting continuous satisfaction outcomes, sufficient data (precise P value, direction, total sample size) are reported to construct an albatross plot ( Figure 12.4.a , Panel C). The location of the points relative to the standardized mean difference contours indicate that the likely effects of the intervention in these studies are small.

An example description of the results from the synthesis is provided in Box 12.4.d .

Box 12.4.d How to describe the results from this synthesis

12.4.2.3 Scenario 4: vote counting based on direction of effect

In Scenario 4, there is minimal reporting of the data, and the type of effect measure (when used) varies across the studies (e.g. mean difference, proportional odds ratio). Of the 15 results, only five report data suitable for meta-analysis (effect estimate and measure of precision; Table 12.4.c , column 8), and no studies reported precise P values. We use this scenario to illustrate vote counting based on direction of effect. For each study, the effect is categorized as beneficial or harmful based on the direction of effect (indicated as a binary metric; Table 12.4.c , column 9).

Of the 15 studies, we exclude three because they do not provide information on the direction of effect, leaving 12 studies to contribute to the synthesis. Of these 12, 10 effects favour midwife-led models of care (83%). The probability of observing this result if midwife-led models of care are truly ineffective is 0.039 (from a binomial probability test, or equivalently, the sign test). The 95% confidence interval for the percentage of effects favouring midwife-led care is wide (55% to 95%).

The binomial test can be implemented using standard computer spreadsheet or statistical packages. For example, the two-sided P value from the binomial probability test presented can be obtained from Microsoft Excel by typing =2*BINOM.DIST(2, 12, 0.5, TRUE) into any cell in the spreadsheet. The syntax requires the smaller of the ‘number of effects favouring the intervention’ or ‘the number of effects favouring the control’ (here, the smaller of these counts is 2), the number of effects (here 12), and the null value (true proportion of effects favouring the intervention = 0.5). In Stata, the bitest command could be used (e.g. bitesti 12 10 0.5 ).

A harvest plot can be used to display the results ( Figure 12.4.a , Panel D), with characteristics of the studies represented using different heights and shading. A sensitivity analysis might be considered, restricting the analysis to those studies judged to be at an overall low risk of bias. However, only four studies were judged to be at a low risk of bias (of which, three favoured midwife-led models of care), precluding reasonable interpretation of the count.

An example description of the results from the synthesis is provided in Box 12.4.e .

Box 12.4.e How to describe the results from this synthesis

Figure 12.4.a Possible graphical displays of different types of data. (A) Box-and-whisker plots of odds ratios for all outcomes and separately by overall risk of bias. (B) Bubble plot of odds ratios for all outcomes and separately by the model of care. The colours of the bubbles represent the overall risk of bias judgement (green = low risk of bias; yellow = some concerns; red = high risk of bias). (C) Albatross plot of the study sample size against P values (for the five continuous outcomes in Table 12.4.c , column 6). The effect contours represent standardized mean differences. (D) Harvest plot (height depicts overall risk of bias judgement (tall = low risk of bias; medium = some concerns; short = high risk of bias), shading depicts model of care (light grey = caseload; dark grey = team), alphabet characters represent the studies)

12.5 Chapter information

Authors: Joanne E McKenzie, Sue E Brennan

Acknowledgements: Sections of this chapter build on chapter 9 of version 5.1 of the Handbook , with editors Jonathan J Deeks, Julian PT Higgins and Douglas G Altman.

We are grateful to the following for commenting helpfully on earlier drafts: Miranda Cumpston, Jamie Hartmann-Boyce, Tianjing Li, Rebecca Ryan and Hilary Thomson.

Funding: JEM is supported by an Australian National Health and Medical Research Council (NHMRC) Career Development Fellowship (1143429). SEB’s position is supported by the NHMRC Cochrane Collaboration Funding Program.

12.6 References

Achana F, Hubbard S, Sutton A, Kendrick D, Cooper N. An exploration of synthesis methods in public health evaluations of interventions concludes that the use of modern statistical methods would be beneficial. Journal of Clinical Epidemiology 2014; 67 : 376–390.

Becker BJ. Combining significance levels. In: Cooper H, Hedges LV, editors. A handbook of research synthesis . New York (NY): Russell Sage; 1994. p. 215–235.

Boonyasai RT, Windish DM, Chakraborti C, Feldman LS, Rubin HR, Bass EB. Effectiveness of teaching quality improvement to clinicians: a systematic review. JAMA 2007; 298 : 1023–1037.

Borenstein M, Hedges LV, Higgins JPT, Rothstein HR. Meta-Analysis methods based on direction and p-values. Introduction to Meta-Analysis . Chichester (UK): John Wiley & Sons, Ltd; 2009. pp. 325–330.

Brown LD, Cai TT, DasGupta A. Interval estimation for a binomial proportion. Statistical Science 2001; 16 : 101–117.

Bushman BJ, Wang MC. Vote-counting procedures in meta-analysis. In: Cooper H, Hedges LV, Valentine JC, editors. Handbook of Research Synthesis and Meta-Analysis . 2nd ed. New York (NY): Russell Sage Foundation; 2009. p. 207–220.

Crowther M, Avenell A, MacLennan G, Mowatt G. A further use for the Harvest plot: a novel method for the presentation of data synthesis. Research Synthesis Methods 2011; 2 : 79–83.

Friedman L. Why vote-count reviews don’t count. Biological Psychiatry 2001; 49 : 161–162.

Grimshaw J, McAuley LM, Bero LA, Grilli R, Oxman AD, Ramsay C, Vale L, Zwarenstein M. Systematic reviews of the effectiveness of quality improvement strategies and programmes. Quality and Safety in Health Care 2003; 12 : 298–303.

Harrison S, Jones HE, Martin RM, Lewis SJ, Higgins JPT. The albatross plot: a novel graphical tool for presenting results of diversely reported studies in a systematic review. Research Synthesis Methods 2017; 8 : 281–289.

Hedges L, Vevea J. Fixed- and random-effects models in meta-analysis. Psychological Methods 1998; 3 : 486–504.

Ioannidis JP, Patsopoulos NA, Rothstein HR. Reasons or excuses for avoiding meta-analysis in forest plots. BMJ 2008; 336 : 1413–1415.

Ivers N, Jamtvedt G, Flottorp S, Young JM, Odgaard-Jensen J, French SD, O’Brien MA, Johansen M, Grimshaw J, Oxman AD. Audit and feedback: effects on professional practice and healthcare outcomes. Cochrane Database of Systematic Reviews 2012; 6 : CD000259.

Jones DR. Meta-analysis: weighing the evidence. Statistics in Medicine 1995; 14 : 137–149.

Loughin TM. A systematic comparison of methods for combining p-values from independent tests. Computational Statistics & Data Analysis 2004; 47 : 467–485.

McGill R, Tukey JW, Larsen WA. Variations of box plots. The American Statistician 1978; 32 : 12–16.

McKenzie JE, Brennan SE. Complex reviews: methods and considerations for summarising and synthesising results in systematic reviews with complexity. Report to the Australian National Health and Medical Research Council. 2014.

O’Brien MA, Rogers S, Jamtvedt G, Oxman AD, Odgaard-Jensen J, Kristoffersen DT, Forsetlund L, Bainbridge D, Freemantle N, Davis DA, Haynes RB, Harvey EL. Educational outreach visits: effects on professional practice and health care outcomes. Cochrane Database of Systematic Reviews 2007; 4 : CD000409.

Ogilvie D, Fayter D, Petticrew M, Sowden A, Thomas S, Whitehead M, Worthy G. The harvest plot: a method for synthesising evidence about the differential effects of interventions. BMC Medical Research Methodology 2008; 8 : 8.

Riley RD, Higgins JP, Deeks JJ. Interpretation of random effects meta-analyses. BMJ 2011; 342 : d549.

Schriger DL, Sinha R, Schroter S, Liu PY, Altman DG. From submission to publication: a retrospective review of the tables and figures in a cohort of randomized controlled trials submitted to the British Medical Journal. Annals of Emergency Medicine 2006; 48 : 750–756, 756 e751–721.

Schriger DL, Altman DG, Vetter JA, Heafner T, Moher D. Forest plots in reports of systematic reviews: a cross-sectional study reviewing current practice. International Journal of Epidemiology 2010; 39 : 421–429.

ter Wee MM, Lems WF, Usan H, Gulpen A, Boonen A. The effect of biological agents on work participation in rheumatoid arthritis patients: a systematic review. Annals of the Rheumatic Diseases 2012; 71 : 161–171.

Thomson HJ, Thomas S. The effect direction plot: visual display of non-standardised effects across multiple outcome domains. Research Synthesis Methods 2013; 4 : 95–101.

Thornicroft G, Mehta N, Clement S, Evans-Lacko S, Doherty M, Rose D, Koschorke M, Shidhaye R, O’Reilly C, Henderson C. Evidence for effective interventions to reduce mental-health-related stigma and discrimination. Lancet 2016; 387 : 1123–1132.

Valentine JC, Pigott TD, Rothstein HR. How many studies do you need?: a primer on statistical power for meta-analysis. Journal of Educational and Behavioral Statistics 2010; 35 : 215–247.

For permission to re-use material from the Handbook (either academic or commercial), please see here for full details.

Analysis vs. Synthesis

What's the difference.

Analysis and synthesis are two fundamental processes in problem-solving and decision-making. Analysis involves breaking down a complex problem or situation into its constituent parts, examining each part individually, and understanding their relationships and interactions. It focuses on understanding the components and their characteristics, identifying patterns and trends, and drawing conclusions based on evidence and data. On the other hand, synthesis involves combining different elements or ideas to create a new whole or solution. It involves integrating information from various sources, identifying commonalities and differences, and generating new insights or solutions. While analysis is more focused on understanding and deconstructing a problem, synthesis is about creating something new by combining different elements. Both processes are essential for effective problem-solving and decision-making, as they complement each other and provide a holistic approach to understanding and solving complex problems.

Further Detail

Introduction.

Analysis and synthesis are two fundamental processes in various fields of study, including science, philosophy, and problem-solving. While they are distinct approaches, they are often interconnected and complementary. Analysis involves breaking down complex ideas or systems into smaller components to understand their individual parts and relationships. On the other hand, synthesis involves combining separate elements or ideas to create a new whole or understanding. In this article, we will explore the attributes of analysis and synthesis, highlighting their differences and similarities.

Attributes of Analysis

1. Focus on details: Analysis involves a meticulous examination of individual components, details, or aspects of a subject. It aims to understand the specific characteristics, functions, and relationships of these elements. By breaking down complex ideas into smaller parts, analysis provides a deeper understanding of the subject matter.

2. Objective approach: Analysis is often driven by objectivity and relies on empirical evidence, data, or logical reasoning. It aims to uncover patterns, trends, or underlying principles through systematic observation and investigation. By employing a structured and logical approach, analysis helps in drawing accurate conclusions and making informed decisions.

3. Critical thinking: Analysis requires critical thinking skills to evaluate and interpret information. It involves questioning assumptions, identifying biases, and considering multiple perspectives. Through critical thinking, analysis helps in identifying strengths, weaknesses, opportunities, and threats, enabling a comprehensive understanding of the subject matter.

4. Reductionist approach: Analysis often adopts a reductionist approach, breaking down complex systems into simpler components. This reductionist perspective allows for a detailed examination of each part, facilitating a more in-depth understanding of the subject matter. However, it may sometimes overlook the holistic view or emergent properties of the system.

5. Diagnostic tool: Analysis is commonly used as a diagnostic tool to identify problems, errors, or inefficiencies within a system. By examining individual components and their interactions, analysis helps in pinpointing the root causes of issues, enabling effective problem-solving and optimization.

Attributes of Synthesis

1. Integration of ideas: Synthesis involves combining separate ideas, concepts, or elements to create a new whole or understanding. It aims to generate novel insights, solutions, or perspectives by integrating diverse information or viewpoints. Through synthesis, complex systems or ideas can be approached holistically, considering the interconnections and interdependencies between various components.

2. Creative thinking: Synthesis requires creative thinking skills to generate new ideas, concepts, or solutions. It involves making connections, recognizing patterns, and thinking beyond traditional boundaries. By embracing divergent thinking, synthesis enables innovation and the development of unique perspectives.

3. Systems thinking: Synthesis often adopts a systems thinking approach, considering the interactions and interdependencies between various components. It recognizes that the whole is more than the sum of its parts and aims to understand emergent properties or behaviors that arise from the integration of these parts. Systems thinking allows for a comprehensive understanding of complex phenomena.

4. Constructive approach: Synthesis is a constructive process that builds upon existing knowledge or ideas. It involves organizing, reorganizing, or restructuring information to create a new framework or understanding. By integrating diverse perspectives or concepts, synthesis helps in generating comprehensive and innovative solutions.

5. Design tool: Synthesis is often used as a design tool to create new products, systems, or theories. By combining different elements or ideas, synthesis enables the development of innovative and functional solutions. It allows for the exploration of multiple possibilities and the creation of something new and valuable.

Interplay between Analysis and Synthesis

While analysis and synthesis are distinct processes, they are not mutually exclusive. In fact, they often complement each other and are interconnected in various ways. Analysis provides the foundation for synthesis by breaking down complex ideas or systems into manageable components. It helps in understanding the individual parts and their relationships, which is essential for effective synthesis.

On the other hand, synthesis builds upon the insights gained from analysis by integrating separate elements or ideas to create a new whole. It allows for a holistic understanding of complex phenomena, considering the interconnections and emergent properties that analysis alone may overlook. Synthesis also helps in identifying gaps or limitations in existing knowledge, which can then be further analyzed to gain a deeper understanding.

Furthermore, analysis and synthesis often involve an iterative process. Initial analysis may lead to the identification of patterns or relationships that can inform the synthesis process. Synthesis, in turn, may generate new insights or questions that require further analysis. This iterative cycle allows for continuous refinement and improvement of understanding.

Analysis and synthesis are two essential processes that play a crucial role in various fields of study. While analysis focuses on breaking down complex ideas into smaller components to understand their individual parts and relationships, synthesis involves integrating separate elements or ideas to create a new whole or understanding. Both approaches have their unique attributes and strengths, and they often complement each other in a cyclical and iterative process. By employing analysis and synthesis effectively, we can gain a comprehensive understanding of complex phenomena, generate innovative solutions, and make informed decisions.

Comparisons may contain inaccurate information about people, places, or facts. Please report any issues.

- Open access

- Published: 11 August 2009

Methods for the synthesis of qualitative research: a critical review

- Elaine Barnett-Page 1 &

- James Thomas 1

BMC Medical Research Methodology volume 9 , Article number: 59 ( 2009 ) Cite this article

198k Accesses

1142 Citations

40 Altmetric

Metrics details

In recent years, a growing number of methods for synthesising qualitative research have emerged, particularly in relation to health-related research. There is a need for both researchers and commissioners to be able to distinguish between these methods and to select which method is the most appropriate to their situation.

A number of methodological and conceptual links between these methods were identified and explored, while contrasting epistemological positions explained differences in approaches to issues such as quality assessment and extent of iteration. Methods broadly fall into 'realist' or 'idealist' epistemologies, which partly accounts for these differences.

Methods for qualitative synthesis vary across a range of dimensions. Commissioners of qualitative syntheses might wish to consider the kind of product they want and select their method – or type of method – accordingly.

Peer Review reports

The range of different methods for synthesising qualitative research has been growing over recent years [ 1 , 2 ], alongside an increasing interest in qualitative synthesis to inform health-related policy and practice [ 3 ]. While the terms 'meta-analysis' (a statistical method to combine the results of primary studies), or sometimes 'narrative synthesis', are frequently used to describe how quantitative research is synthesised, far more terms are used to describe the synthesis of qualitative research. This profusion of terms can mask some of the basic similarities in approach that the different methods share, and also lead to some confusion regarding which method is most appropriate in a given situation. This paper does not argue that the various nomenclatures are unnecessary, but rather seeks to draw together and review the full range of methods of synthesis available to assist future reviewers in selecting a method that is fit for their purpose. It also represents an attempt to guide the reader through some of the varied terminology to spring up around qualitative synthesis. Other helpful reviews of synthesis methods have been undertaken in recent years with slightly different foci to this paper. Two recent studies have focused on describing and critiquing methods for the integration of qualitative research with quantitative [ 4 , 5 ] rather than exclusively examining the detail and rationale of methods for the synthesis of qualitative research. Two other significant pieces of work give practical advice for conducting the synthesis of qualitative research, but do not discuss the full range of methods available [ 6 , 7 ]. We begin our Discussion by outlining each method of synthesis in turn, before comparing and contrasting characteristics of these different methods across a range of dimensions. Readers who are more familiar with the synthesis methods described here may prefer to turn straight to the 'dimensions of difference' analysis in the second part of the Discussion.

Overview of synthesis methods

Meta-ethnography.

In their seminal work of 1988, Noblit and Hare proposed meta-ethnography as an alternative to meta-analysis [ 8 ]. They cited Strike and Posner's [ 9 ] definition of synthesis as an activity in which separate parts are brought together to form a 'whole'; this construction of the whole is essentially characterised by some degree of innovation, so that the result is greater than the sum of its parts. They also borrowed from Turner's theory of social explanation [ 10 ], a key tenet of which was building 'comparative understanding' [[ 8 ], p22] rather than aggregating data.

To Noblit and Hare, synthesis provided an answer to the question of 'how to "put together" written interpretive accounts' [[ 8 ], p7], where mere integration would not be appropriate. Noblit and Hare's early work synthesised research from the field of education.

Three different methods of synthesis are used in meta-ethnography. One involves the 'translation' of concepts from individual studies into one another, thereby evolving overarching concepts or metaphors. Noblit and Hare called this process reciprocal translational analysis (RTA). Refutational synthesis involves exploring and explaining contradictions between individual studies. Lines-of-argument (LOA) synthesis involves building up a picture of the whole (i.e. culture, organisation etc) from studies of its parts. The authors conceptualised this latter approach as a type of grounded theorising.

Britten et al [ 11 ] and Campbell et al [ 12 ] have both conducted evaluations of meta-ethnography and claim to have succeeded, by using this method, in producing theories with greater explanatory power than could be achieved in a narrative literature review. While both these evaluations used small numbers of studies, more recently Pound et al [ 13 ] conducted both an RTA and an LOA synthesis using a much larger number of studies (37) on resisting medicines. These studies demonstrate that meta-ethnography has evolved since Noblit and Hare first introduced it. Campbell et al claim to have applied the method successfully to non-ethnographical studies. Based on their reading of Schutz [ 14 ], Britten et al have developed both second and third order constructs in their synthesis (Noblit and Hare briefly allude to the possibility of a 'second level of synthesis' [[ 8 ], p28] but do not demonstrate or further develop the idea).

In a more recent development, Sandelowski & Barroso [ 15 ] write of adapting RTA by using it to ' integrate findings interpretively, as opposed to comparing them interpretively' (p204). The former would involve looking to see whether the same concept, theory etc exists in different studies; the latter would involve the construction of a bigger picture or theory (i.e. LOA synthesis). They also talk about comparing or integrating imported concepts (e.g. from other disciplines) as well as those evolved 'in vivo'.

Grounded theory

Kearney [ 16 ], Eaves [ 17 ] and Finfgeld [ 18 ] have all adapted grounded theory to formulate a method of synthesis. Key methods and assumptions of grounded theory, as originally formulated and subsequently refined by Glaser and Strauss [ 19 ] and Strauss and Corbin [ 20 , 21 ], include: simultaneous phases of data collection and analysis; an inductive approach to analysis, allowing the theory to emerge from the data; the use of the constant comparison method; the use of theoretical sampling to reach theoretical saturation; and the generation of new theory. Eaves cited grounded theorists Charmaz [ 22 ] and Chesler [ 23 ], as well as Strauss and Corbin [ 20 ], as informing her approach to synthesis.

Glaser and Strauss [ 19 ] foresaw a time when a substantive body of grounded research should be pushed towards a higher, more abstract level. As a piece of methodological work, Eaves undertook her own synthesis of the synthesis methods used by these authors to produce her own clear and explicit guide to synthesis in grounded formal theory. Kearney stated that 'grounded formal theory', as she termed this method of synthesis, 'is suited to study of phenomena involving processes of contextualized understanding and action' [[ 24 ], p180] and, as such, is particularly applicable to nurses' research interests.

As Kearney suggested, the examples examined here were largely dominated by research in nursing. Eaves synthesised studies on care-giving in rural African-American families for elderly stroke survivors; Finfgeld on courage among individuals with long-term health problems; Kearney on women's experiences of domestic violence.

Kearney explicitly chose 'grounded formal theory' because it matches 'like' with 'like': that is, it applies the same methods that have been used to generate the original grounded theories included in the synthesis – produced by constant comparison and theoretical sampling – to generate a higher-level grounded theory. The wish to match 'like' with 'like' is also implicit in Eaves' paper. This distinguishes grounded formal theory from more recent applications of meta-ethnography, which have sought to include qualitative research using diverse methodological approaches [ 12 ].

- Thematic Synthesis

Thomas and Harden [ 25 ] have developed an approach to synthesis which they term 'thematic synthesis'. This combines and adapts approaches from both meta-ethnography and grounded theory. The method was developed out of a need to conduct reviews that addressed questions relating to intervention need, appropriateness and acceptability – as well as those relating to effectiveness – without compromising on key principles developed in systematic reviews. They applied thematic synthesis in a review of the barriers to, and facilitators of, healthy eating amongst children.

Free codes of findings are organised into 'descriptive' themes, which are then further interpreted to yield 'analytical' themes. This approach shares characteristics with later adaptations of meta-ethnography, in that the analytical themes are comparable to 'third order interpretations' and that the development of descriptive and analytical themes using coding invoke reciprocal 'translation'. It also shares much with grounded theory, in that the approach is inductive and themes are developed using a 'constant comparison' method. A novel aspect of their approach is the use of computer software to code the results of included studies line-by-line, thus borrowing another technique from methods usually used to analyse primary research.

Textual Narrative Synthesis

Textual narrative synthesis is an approach which arranges studies into more homogenous groups. Lucas et al [ 26 ] comment that it has proved useful in synthesising evidence of different types (qualitative, quantitative, economic etc). Typically, study characteristics, context, quality and findings are reported on according to a standard format and similarities and differences are compared across studies. Structured summaries may also be developed, elaborating on and putting into context the extracted data [ 27 ].

Lucas et al [ 26 ] compared thematic synthesis with textual narrative synthesis. They found that 'thematic synthesis holds most potential for hypothesis generation' whereas textual narrative synthesis is more likely to make transparent heterogeneity between studies (as does meta-ethnography, with refutational synthesis) and issues of quality appraisal. This is possibly because textual narrative synthesis makes clearer the context and characteristics of each study, while the thematic approach organises data according to themes. However, Lucas et al found that textual narrative synthesis is 'less good at identifying commonality' (p2); the authors do not make explicit why this should be, although it may be that organising according to themes, as the thematic approach does, is comparatively more successful in revealing commonality.

Paterson et al [ 28 ] have evolved a multi-faceted approach to synthesis, which they call 'meta-study'. The sociologist Zhao [ 29 ], drawing on Ritzer's work [ 30 ], outlined three components of analysis, which they proposed should be undertaken prior to synthesis. These are meta-data-analysis (the analysis of findings), meta-method (the analysis of methods) and meta-theory (the analysis of theory). Collectively, these three elements of analysis, culminating in synthesis, make up the practice of 'meta-study'. Paterson et al pointed out that the different components of analysis may be conducted concurrently.

Paterson et al argued that primary research is a construction; secondary research is therefore a construction of a construction. There is need for an approach that recognises this, and that also recognises research to be a product of its social, historical and ideological context. Such an approach would be useful in accounting for differences in research findings. For Paterson et al, there is no such thing as 'absolute truth'.

Meta-study was developed to study the experiences of adults living with a chronic illness. Meta-data-analysis was conceived of by Paterson et al in similar terms to Noblit and Hare's meta-ethnography (see above), in that it is essentially interpretive and seeks to reveal similarities and discrepancies among accounts of a particular phenomenon. Meta-method involves the examination of the methodologies of the individual studies under review. Part of the process of meta-method is to consider different aspects of methodology such as sampling, data collection, research design etc, similar to procedures others have called 'critical appraisal' (CASP [ 31 ]). However, Paterson et al take their critique to a deeper level by establishing the underlying assumptions of the methodologies used and the relationship between research outcomes and methods used. Meta-theory involves scrutiny of the philosophical and theoretical assumptions of the included research papers; this includes looking at the wider context in which new theory is generated. Paterson et al described meta-synthesis as a process which creates a new interpretation which accounts for the results of all three elements of analysis. The process of synthesis is iterative and reflexive and the authors were unwilling to oversimplify the process by 'codifying' procedures for bringing all three components of analysis together.

Meta-narrative

Greenhalgh et al [ 32 ]'s meta-narrative approach to synthesis arose out of the need to synthesise evidence to inform complex policy-making questions and was assisted by the formation of a multi-disciplinary team. Their approach to review was informed by Thomas Kuhn's The Structure of Scientific Revolutions [ 33 ], in which he proposed that knowledge is produced within particular paradigms which have their own assumptions about theory, about what is a legitimate object of study, about what are legitimate research questions and about what constitutes a finding. Paradigms also tend to develop through time according to a particular set of stages, central to which is the stage of 'normal science', in which the particular standards of the paradigm are largely unchallenged and seen to be self-evident. As Greenhalgh et al pointed out, Kuhn saw paradigms as largely incommensurable: 'that is, an empirical discovery made using one set of concepts, theories, methods and instruments cannot be satisfactorily explained through a different paradigmatic lens' [[ 32 ], p419].

Greenhalgh et al synthesised research from a wide range of disciplines; their research question related to the diffusion of innovations in health service delivery and organisation. They thus identified a need to synthesise findings from research which contains many different theories arising from many different disciplines and study designs.

Based on Kuhn's work, Greenhalgh et al proposed that, across different paradigms, there were multiple – and potentially mutually contradictory – ways of understanding the concept at the heart of their review, namely the diffusion of innovation. Bearing this in mind, the reviewers deliberately chose to select key papers from a number of different research 'paradigms' or 'traditions', both within and beyond healthcare, guided by their multidisciplinary research team. They took as their unit of analysis the 'unfolding "storyline" of a research tradition over time' [[ 32 ], p417) and sought to understand diffusion of innovation as it was conceptualised in each of these traditions. Key features of each tradition were mapped: historical roots, scope, theoretical basis; research questions asked and methods/instruments used; main empirical findings; historical development of the body of knowledge (how have earlier findings led to later findings); and strengths and limitations of the tradition. The results of this exercise led to maps of 13 'meta-narratives' in total, from which seven key dimensions, or themes, were identified and distilled for the synthesis phase of the review.

Critical Interpretive Synthesis

Dixon-Woods et al [ 34 ] developed their own approach to synthesising multi-disciplinary and multi-method evidence, termed 'critical interpretive synthesis', while researching access to healthcare by vulnerable groups. Critical interpretive synthesis is an adaptation of meta-ethnography, as well as borrowing techniques from grounded theory. The authors stated that they needed to adapt traditional meta-ethnographic methods for synthesis, since these had never been applied to quantitative as well as qualitative data, nor had they been applied to a substantial body of data (in this case, 119 papers).

Dixon-Woods et al presented critical interpretive synthesis as an approach to the whole process of review, rather than to just the synthesis component. It involves an iterative approach to refining the research question and searching and selecting from the literature (using theoretical sampling) and defining and applying codes and categories. It also has a particular approach to appraising quality, using relevance – i.e. likely contribution to theory development – rather than methodological characteristics as a means of determining the 'quality' of individual papers [ 35 ]. The authors also stress, as a defining characteristic, critical interpretive synthesis's critical approach to the literature in terms of deconstructing research traditions or theoretical assumptions as a means of contextualising findings.

Dixon-Woods et al rejected reciprocal translational analysis (RTA) as this produced 'only a summary in terms that have already been used in the literature' [[ 34 ], p5], which was seen as less helpful when dealing with a large and diverse body of literature. Instead, Dixon-Woods et al adopted a lines-of-argument (LOA) synthesis, in which – rejecting the difference between first, second and third order constructs – they instead developed 'synthetic constructs' which were then linked with constructs arising directly from the literature.

The influence of grounded theory can be seen in particular in critical interpretive synthesis's inductive approach to formulating the review question and to developing categories and concepts, rejecting a 'stage' approach to systematic reviewing, and in selecting papers using theoretical sampling. Dixon-Woods et al also claim that critical interpretive synthesis is distinct in its 'explicit orientation towards theory generation' [[ 34 ], p9].

Ecological Triangulation

Jim Banning is the author of 'ecological triangulation' or 'ecological sentence synthesis', applying this method to the evidence for what works for youth with disabilities. He borrows from Webb et al [ 36 ] and Denzin [ 37 ] the concept of triangulation, in which phenomena are studied from a variety of vantage points. His rationale is that building an 'evidence base' of effectiveness requires the synthesis of cumulative, multi-faceted evidence in order to find out 'what intervention works for what kind of outcomes for what kind of persons under what kind of conditions' [[ 38 ], p1].

Ecological triangulation unpicks the mutually interdependent relationships between behaviour, persons and environments. The method requires that, for data extraction and synthesis, 'ecological sentences' are formulated following the pattern: 'With this intervention, these outcomes occur with these population foci and within these grades (ages), with these genders ... and these ethnicities in these settings' [[ 39 ], p1].

Framework Synthesis

Brunton et al [ 40 ] and Oliver et al [ 41 ] have applied a 'framework synthesis' approach in their reviews. Framework synthesis is based on framework analysis, which was outlined by Pope, Ziebland and Mays [ 42 ], and draws upon the work of Ritchie and Spencer [ 43 ] and Miles and Huberman [ 44 ]. Its rationale is that qualitative research produces large amounts of textual data in the form of transcripts, observational fieldnotes etc. The sheer wealth of information poses a challenge for rigorous analysis. Framework synthesis offers a highly structured approach to organising and analysing data (e.g. indexing using numerical codes, rearranging data into charts etc).

Brunton et al applied the approach to a review of children's, young people's and parents' views of walking and cycling; Oliver et al to an analysis of public involvement in health services research. Framework synthesis is distinct from the other methods outlined here in that it utilises an a priori 'framework' – informed by background material and team discussions – to extract and synthesise findings. As such, it is largely a deductive approach although, in addition to topics identified by the framework, new topics may be developed and incorporated as they emerge from the data. The synthetic product can be expressed in the form of a chart for each key dimension identified, which may be used to map the nature and range of the concept under study and find associations between themes and exceptions to these [ 40 ].

'Fledgling' approaches

There are three other approaches to synthesis which have not yet been widely used. One is an approach using content analysis [ 45 , 46 ] in which text is condensed into fewer content-related categories. Another is 'meta-interpretation' [ 47 ], featuring the following: an ideographic rather than pre-determined approach to the development of exclusion criteria; a focus on meaning in context; interpretations as raw data for synthesis (although this feature doesn't distinguish it from other synthesis methods); an iterative approach to the theoretical sampling of studies for synthesis; and a transparent audit trail demonstrating the trustworthiness of the synthesis.

In addition to the synthesis methods discussed above, Sandelowski and Barroso propose a method they call 'qualitative metasummary' [ 15 ]. It is mentioned here as a new and original approach to handling a collection of qualitative studies but is qualitatively different to the other methods described here since it is aggregative; that is, findings are accumulated and summarised rather than 'transformed'. Metasummary is a way of producing a 'map' of the contents of qualitative studies and – according to Sandelowski and Barroso – 'reflect [s] a quantitative logic' [[ 15 ], p151]. The frequency of each finding is determined and the higher the frequency of a particular finding, the greater its validity. The authors even discuss the calculation of 'effect sizes' for qualitative findings. Qualitative metasummaries can be undertaken as an end in themselves or may serve as a basis for a further synthesis.

Dimensions of difference

Having outlined the range of methods identified, we now turn to an examination of how they compare with one another. It is clear that they have come from many different contexts and have different approaches to understanding knowledge, but what do these differences mean in practice? Our framework for this analysis is shown in Additional file 1 : dimensions of difference [ 48 ]. We have examined the epistemology of each of the methods and found that, to some extent, this explains the need for different methods and their various approaches to synthesis.

Epistemology

The first dimension that we will consider is that of the researchers' epistemological assumptions. Spencer et al [ 49 ] outline a range of epistemological positions, which might be organised into a spectrum as follows:

Subjective idealism : there is no shared reality independent of multiple alternative human constructions

Objective idealism : there is a world of collectively shared understandings

Critical realism : knowledge of reality is mediated by our perceptions and beliefs

Scientific realism : it is possible for knowledge to approximate closely an external reality

Naïve realism : reality exists independently of human constructions and can be known directly [ 49 , 45 , 46 ].

Thus, at one end of the spectrum we have a highly constructivist view of knowledge and, at the other, an unproblematized 'direct window onto the world' view.

Nearly all of positions along this spectrum are represented in the range of methodological approaches to synthesis covered in this paper. The originators of meta-narrative synthesis, critical interpretive synthesis and meta-study all articulate what might be termed a 'subjective idealist' approach to knowledge. Paterson et al [ 28 ] state that meta-study shies away from creating 'grand theories' within the health or social sciences and assume that no single objective reality will be found. Primary studies, they argue, are themselves constructions; meta-synthesis, then, 'deals with constructions of constructions' (p7). Greenhalgh et al [ 32 ] also view knowledge as a product of its disciplinary paradigm and use this to explain conflicting findings: again, the authors neither seek, nor expect to find, one final, non-contestable answer to their research question. Critical interpretive synthesis is similar in seeking to place literature within its context, to question its assumptions and to produce a theoretical model of a phenomenon which – because highly interpretive – may not be reproducible by different research teams at alternative points in time [[ 34 ], p11].

Methods used to synthesise grounded theory studies in order to produce a higher level of grounded theory [ 24 ] appear to be informed by 'objective idealism', as does meta-ethnography. Kearney argues for the near-universal applicability of a 'ready-to-wear' theory across contexts and populations. This approach is clearly distinct from one which recognises multiple realities. The emphasis is on examining commonalities amongst, rather than discrepancies between, accounts. This emphasis is similarly apparent in most meta-ethnographies, which are conducted either according to Noblit and Hare's 'reciprocal translational analysis' technique or to their 'lines-of-argument' technique and which seek to provide a 'whole' which has a greater explanatory power. Although Noblit and Hare also propose 'refutational synthesis', in which contradictory findings might be explored, there are few examples of this having been undertaken in practice, and the aim of the method appears to be to explain and explore differences due to context, rather than multiple realities.