An Introduction to Statistics: Understanding Hypothesis Testing and Statistical Errors

Priya Ranganathan

1 Department of Anesthesiology, Critical Care and Pain, Tata Memorial Hospital, Mumbai, Maharashtra, India

2 Department of Surgical Oncology, Tata Memorial Centre, Mumbai, Maharashtra, India

The second article in this series on biostatistics covers the concepts of sample, population, research hypotheses and statistical errors.

How to cite this article

Ranganathan P, Pramesh CS. An Introduction to Statistics: Understanding Hypothesis Testing and Statistical Errors. Indian J Crit Care Med 2019;23(Suppl 3):S230–S231.

Two papers quoted in this issue of the Indian Journal of Critical Care Medicine report the results of studies that aim to prove that a new intervention is better than (superior to) an existing treatment. In the ABLE study, the investigators wanted to show that transfusion of fresh red blood cells would be superior to standard-issue red cells in reducing 90-day mortality in ICU patients (1). The PROPPR study was designed to prove that transfusion of a lower ratio of plasma and platelets to red cells would be superior to a higher ratio in decreasing 24-hour and 30-day mortality in critically ill patients (2). These studies are known as superiority studies (as opposed to noninferiority or equivalence studies, which will be discussed in a subsequent article).

SAMPLE VERSUS POPULATION

A sample represents a group of participants selected from the entire population. Since studies cannot be carried out on entire populations, researchers choose samples, which are representative of the population. This is similar to walking into a grocery store and examining a few grains of rice or wheat before purchasing an entire bag; we assume that the few grains that we select (the sample) are representative of the entire sack of grains (the population).

The results of the study are then extrapolated to generate inferences about the population. We do this using a process known as hypothesis testing. This means that the results of the study may not always be identical to the results we would expect to find in the population; i.e., there is the possibility that the study results may be erroneous.

HYPOTHESIS TESTING

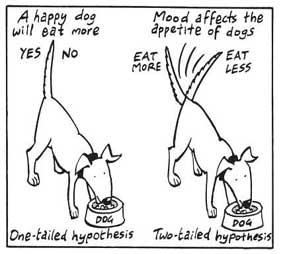

A clinical trial begins with an assumption or belief, and then proceeds to either prove or disprove this assumption. In statistical terms, this belief or assumption is known as a hypothesis. Counterintuitively, what the researcher believes in (or is trying to prove) is called the “alternate” hypothesis, and the opposite is called the “null” hypothesis; every study has a null hypothesis and an alternate hypothesis. For superiority studies, the alternate hypothesis states that one treatment (usually the new or experimental treatment) is superior to the other; the null hypothesis states that there is no difference between the treatments (the treatments are equal).

For example, in the ABLE study, we start by stating the null hypothesis: there is no difference in mortality between groups receiving fresh RBCs and standard-issue RBCs. We then state the alternate hypothesis: there is a difference in mortality between groups receiving fresh RBCs and standard-issue RBCs. It is important to note that we have stated that the groups are different, without specifying which group will be better than the other. This is known as a two-tailed hypothesis, and it allows us to test for superiority on either side (using a two-sided test). We do this because, when we start a study, we are not 100% certain that the new treatment can only be better than the standard treatment; it could be worse, and if so, the study should pick that up as well. One-tailed hypotheses and one-sided statistical tests are used for non-inferiority studies, which will be discussed in a subsequent paper in this series.

STATISTICAL ERRORS

There are two possibilities to consider when interpreting the results of a superiority study. The first possibility is that there is truly no difference between the treatments but the study finds that they are different. This is called a Type-1 error or false-positive error or alpha error. This means falsely rejecting the null hypothesis.

The second possibility is that there is a difference between the treatments and the study does not pick up this difference. This is called a Type 2 error or false-negative error or beta error. This means falsely accepting the null hypothesis.

The power of the study is its ability to detect a difference between groups and is the complement of the beta error; i.e., power = 1 - beta error. Alpha and beta errors are fixed when the protocol is written and form the basis for the sample size calculation for the study. In an ideal world, we would not like any error in the results of our study; however, we would need to do the study in the entire population (infinite sample size) to get a 0% alpha and beta error. Accepting small, prespecified alpha and beta errors enables us to do studies with realistic sample sizes, with the compromise that there is a small possibility that the results may not always reflect the truth. The basis for this will be discussed in a subsequent paper in this series dealing with sample size calculation.

Conventionally, the type 1 or alpha error is set at 5%. This means that, if there is truly no difference between the groups, we accept only a 5% probability that the study will nevertheless show a difference by chance (a false positive). The type 2 or beta error is usually set between 10% and 20%; therefore, the power of the study is 90% or 80%. This means that if there is a true difference between groups, we want to be 80% (or 90%) certain that the study will detect that difference. For example, in the ABLE study, the sample size was calculated with a type 1 error of 5% (two-sided) and a power of 90% (type 2 error of 10%) (1).
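To make the two error rates concrete, here is a small simulation sketch. It is not taken from the ABLE or PROPPR studies: the one-sample two-sided z-test, the known standard deviation of 1, the sample size of 50, and the true shift of 0.4 are all assumptions chosen purely for illustration. When the null hypothesis is true, the fraction of simulated studies that reject it should sit near alpha; when a real difference exists, the fraction that reject is the power, and the remainder is the type 2 error.

```python
import random
from statistics import NormalDist, fmean

def rejects_null(sample, mu0, sigma, alpha=0.05):
    """Two-sided one-sample z-test: True if H0 (mean == mu0) is rejected."""
    n = len(sample)
    z = (fmean(sample) - mu0) / (sigma / n ** 0.5)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    return abs(z) > z_crit

def rejection_rate(true_mean, mu0=0.0, sigma=1.0, n=50, trials=4000, seed=1):
    """Fraction of simulated studies (hypothetical settings) that reject H0."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        sample = [rng.gauss(true_mean, sigma) for _ in range(n)]
        hits += rejects_null(sample, mu0, sigma)
    return hits / trials

# H0 is true here, so every rejection is a false positive (type 1 error).
type1_rate = rejection_rate(true_mean=0.0)
# H0 is false here, so every rejection is a correct detection (power).
power = rejection_rate(true_mean=0.4)
type2_rate = 1 - power

print(f"type 1 rate ~ {type1_rate:.3f}, power ~ {power:.3f}, type 2 rate ~ {type2_rate:.3f}")
```

With these assumed settings the simulated type 1 rate should land close to 5% and the power close to 80%, which mirrors the conventional design targets described above.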

Table 1 gives a summary of the two types of statistical errors with an example

Statistical errors

In the next article in this series, we will look at the meaning and interpretation of the ‘p’ value and confidence intervals for hypothesis testing.

Source of support: Nil

Conflict of interest: None

5.2 - Writing Hypotheses

The first step in conducting a hypothesis test is to write the hypothesis statements that are going to be tested. For each test you will have a null hypothesis (\(H_0\)) and an alternative hypothesis (\(H_a\)).

When writing hypotheses there are three things that we need to know: (1) the parameter that we are testing, (2) the direction of the test (non-directional, right-tailed or left-tailed), and (3) the value of the hypothesized parameter.

- At this point we can write hypotheses for a single mean (\(\mu\)), paired means (\(\mu_d\)), a single proportion (\(p\)), the difference between two independent means (\(\mu_1-\mu_2\)), the difference between two proportions (\(p_1-p_2\)), a simple linear regression slope (\(\beta\)), and a correlation (\(\rho\)).

- The research question will give us the information necessary to determine if the test is two-tailed (e.g., "different from," "not equal to"), right-tailed (e.g., "greater than," "more than"), or left-tailed (e.g., "less than," "fewer than").

- The research question will also give us the hypothesized parameter value. This is the number that goes in the hypothesis statements (i.e., \(\mu_0\) and \(p_0\)). For the difference between two groups, regression, and correlation, this value is typically 0.

Hypotheses are always written in terms of population parameters (e.g., \(p\) and \(\mu\)). The tables below display all of the possible hypotheses for the parameters that we have learned thus far. Note that the null hypothesis always includes the equality (i.e., =).
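As an illustration of the pattern, the three possible hypothesis pairs for a single mean can be sketched as follows, where \(\mu_0\) stands for the hypothesized value taken from the research question. This covers only the single-mean case; the other parameters listed above follow the same layout with their own symbols.

```latex
\[
\begin{array}{lll}
\text{Two-tailed (non-directional):} & H_0: \mu = \mu_0 & H_a: \mu \neq \mu_0 \\
\text{Right-tailed:}                 & H_0: \mu = \mu_0 & H_a: \mu > \mu_0 \\
\text{Left-tailed:}                  & H_0: \mu = \mu_0 & H_a: \mu < \mu_0
\end{array}
\]
```

In every row the null hypothesis carries the equality, and only the alternative changes with the direction of the test.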

What Is a Two-Tailed Test? Definition and Example

Adam Hayes, Ph.D., CFA, is a financial writer with 15+ years Wall Street experience as a derivatives trader. Besides his extensive derivative trading expertise, Adam is an expert in economics and behavioral finance. Adam received his master's in economics from The New School for Social Research and his Ph.D. from the University of Wisconsin-Madison in sociology. He is a CFA charterholder as well as holding FINRA Series 7, 55 & 63 licenses. He currently researches and teaches economic sociology and the social studies of finance at the Hebrew University in Jerusalem.

A two-tailed test, in statistics, is a method in which the critical area of a distribution is two-sided and tests whether a sample statistic is greater than or less than a certain range of values. It is used in null-hypothesis testing and testing for statistical significance. If the test statistic falls into either of the critical areas, the null hypothesis is rejected in favor of the alternative hypothesis.

Key Takeaways

- In statistics, a two-tailed test is a method in which the critical area of a distribution is two-sided and tests whether a sample is greater or less than a range of values.

- It is used in null-hypothesis testing and testing for statistical significance.

- If the sample being tested falls into either of the critical areas, the alternative hypothesis is accepted instead of the null hypothesis.

- By convention two-tailed tests are used to determine significance at the 5% level, meaning each side of the distribution is cut at 2.5%.

A basic concept of inferential statistics is hypothesis testing, which assesses whether a claim about a population parameter is supported by sample data. A hypothesis test that is designed to show whether the mean of a sample is significantly greater than or significantly less than the mean of a population is referred to as a two-tailed test. The two-tailed test gets its name from testing the area under both tails of a normal distribution, although the test can also be used with non-normal distributions.

A two-tailed test is designed to examine both sides of a specified data range as designated by the probability distribution involved. The probability distribution should represent the likelihood of a specified outcome based on predetermined standards. This requires the setting of a limit designating the highest (or upper) and lowest (or lower) accepted variable values included within the range. Any data point that exists above the upper limit or below the lower limit is considered out of the acceptance range and in an area referred to as the rejection range.

There is no inherent standard about the number of data points that must exist within the acceptance range. In instances where precision is required, such as in the creation of pharmaceutical drugs, a rejection rate of 0.001% or less may be instituted. In instances where precision is less critical, such as the number of food items in a product bag, a rejection rate of 5% may be appropriate.

A two-tailed test can also be used practically during certain production activities in a firm, such as with the production and packaging of candy at a particular facility. If the production facility designates 50 candies per bag as its goal, with an acceptable distribution of 45 to 55 candies, any bag found with an amount below 45 or above 55 is considered within the rejection range.

To confirm the packaging mechanisms are properly calibrated to meet the expected output, random sampling may be taken to confirm accuracy. A simple random sample takes a small, random portion of the entire population to represent the entire data set, where each member has an equal probability of being chosen.

For the packaging mechanisms to be considered accurate, an average of 50 candies per bag with an appropriate distribution is desired. Additionally, the number of bags that fall within the rejection range needs to fall within the probability distribution limit considered acceptable as an error rate. Here, the null hypothesis would be that the mean is 50 while the alternate hypothesis would be that it is not 50.

If, after conducting the two-tailed test, the z-score falls in the rejection region, meaning that the deviation is too far from the desired mean, then adjustments to the facility or associated equipment may be required to correct the error. Regular use of two-tailed testing methods can help ensure production stays within limits over the long term.

Be careful to note if a statistical test is one- or two-tailed as this will greatly influence a model's interpretation.

When a hypothesis test is set up to show that the sample mean would be only higher than the population mean, this is referred to as a one-tailed test. A formulation of this hypothesis would be, for example, that "the returns on an investment fund would be at least x%." One-tailed tests could also be set up to show that the sample mean could be only less than the population mean. The key difference from a two-tailed test is that in a two-tailed test, the sample mean could be different from the population mean by being either higher or lower than it.

If the sample being tested falls into the one-sided critical area, the alternative hypothesis will be accepted instead of the null hypothesis. A one-tailed test is also known as a directional hypothesis or directional test.

A two-tailed test, on the other hand, is designed to examine both sides of a specified data range to test whether a sample is greater than or less than the range of values.

Example of a Two-Tailed Test

As a hypothetical example, imagine that a new stockbroker, named XYZ, claims that their brokerage fees are lower than those of your current stockbroker, ABC. Data available from an independent research firm indicates that the mean and standard deviation of the brokerage charges of all ABC broker clients are $18 and $6, respectively.

A sample of 100 clients of ABC is taken, and brokerage charges are calculated with the new rates of XYZ broker. If the mean of the sample is $18.75 and the sample standard deviation is $6, can any inference be made about the difference in the average brokerage bill between ABC and XYZ broker?

- H 0 : Null Hypothesis: mean = 18

- H 1 : Alternative Hypothesis: mean ≠ 18 (This is what we want to prove.)

- Rejection region: Z ≤ -Z 2.5 or Z ≥ Z 2.5 (assuming a 5% significance level, split 2.5% on either side).

- Z = (sample mean - mean) / (std-dev / sqrt(no. of samples)) = (18.75 - 18) / (6 / sqrt(100)) = 1.25

This calculated Z value falls between the two limits defined by -Z 2.5 = -1.96 and Z 2.5 = 1.96.

We conclude that there is insufficient evidence to infer that there is any difference between the rates of your existing broker and the new broker. Therefore, the null hypothesis cannot be rejected. Alternatively, the p-value = P(Z < -1.25) + P(Z > 1.25) = 2 * 0.1056 = 0.2112 = 21.12%, which is greater than 0.05 or 5%, and so leads to the same conclusion.
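The arithmetic of this example can be reproduced with a short script. This is a minimal sketch using only Python's standard library; the function name `two_tailed_z_test` is made up for illustration, and the inputs are the figures quoted in the example (sample mean 18.75, hypothesized mean 18, standard deviation 6, sample size 100).

```python
from math import sqrt
from statistics import NormalDist

def two_tailed_z_test(sample_mean, mu0, sd, n, alpha=0.05):
    """Return (z, p_value, reject) for a two-tailed one-sample z-test."""
    z = (sample_mean - mu0) / (sd / sqrt(n))
    # Two-tailed p-value: probability mass in both tails beyond |z|.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value, p_value < alpha

z, p_value, reject = two_tailed_z_test(sample_mean=18.75, mu0=18, sd=6, n=100)
print(f"z = {z:.2f}, p = {p_value:.4f}, reject H0: {reject}")
```

The script gives a Z value of 1.25 and a p-value of about 0.21, so the null hypothesis is not rejected. The fourth decimal place can differ slightly from the hand calculation, which uses a rounded table value.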

How Is a Two-Tailed Test Designed?

A two-tailed test is designed to determine whether a claim is true or not given a population parameter. It examines both sides of a specified data range as designated by the probability distribution involved. As such, the probability distribution should represent the likelihood of a specified outcome based on predetermined standards.

What Is the Difference Between a Two-Tailed and One-Tailed Test?

A two-tailed hypothesis test is designed to show whether the sample mean is significantly greater than or significantly less than the mean of a population. The two-tailed test gets its name from testing the area under both tails (sides) of a normal distribution. A one-tailed hypothesis test, on the other hand, is set up to show only one test; that the sample mean would be higher than the population mean, or, in a separate test, that the sample mean would be lower than the population mean.

What Is a Z-score?

A Z-score numerically describes a value's relationship to the mean of a group of values and is measured in terms of the number of standard deviations from the mean. If a Z-score is 0, it indicates that the data point's score is identical to the mean score, whereas Z-scores of 1.0 and -1.0 would indicate values one standard deviation above or below the mean. For data that are approximately normally distributed, about 99.7% of values have a Z-score between -3 and 3, meaning they lie within three standard deviations above and below the mean.

Hypothesis Testing for Means & Proportions

Hypothesis Testing: Upper-, Lower-, and Two-Tailed Tests

The procedure for hypothesis testing is based on the ideas described above. Specifically, we set up competing hypotheses, select a random sample from the population of interest and compute summary statistics. We then determine whether the sample data supports the null or alternative hypotheses. The procedure can be broken down into the following five steps.

- Step 1. Set up hypotheses and select the level of significance α.

H 0 : Null hypothesis (no change, no difference);

H 1 : Research hypothesis (investigator's belief); α =0.05

- Step 2. Select the appropriate test statistic.

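The test statistic is a single number that summarizes the sample information. An example of a test statistic is the Z statistic, computed as follows:

Z = (x̄ - μ 0 ) / (s / √n)

where x̄ is the sample mean, μ 0 is the mean specified in H 0 , s is the sample standard deviation, and n is the sample size.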

When the sample size is small, we will use t statistics (just as we did when constructing confidence intervals for small samples). As we present each scenario, alternative test statistics are provided along with conditions for their appropriate use.

- Step 3. Set up decision rule.

The decision rule is a statement that tells under what circumstances to reject the null hypothesis. The decision rule is based on specific values of the test statistic (e.g., reject H 0 if Z > 1.645). The decision rule for a specific test depends on 3 factors: the research or alternative hypothesis, the test statistic and the level of significance. Each is discussed below.

- The decision rule depends on whether an upper-tailed, lower-tailed, or two-tailed test is proposed. In an upper-tailed test the decision rule has investigators reject H 0 if the test statistic is larger than the critical value. In a lower-tailed test the decision rule has investigators reject H 0 if the test statistic is smaller than the critical value. In a two-tailed test the decision rule has investigators reject H 0 if the test statistic is extreme, either larger than an upper critical value or smaller than a lower critical value.

- The exact form of the test statistic is also important in determining the decision rule. If the test statistic follows the standard normal distribution (Z), then the decision rule will be based on the standard normal distribution. If the test statistic follows the t distribution, then the decision rule will be based on the t distribution. The appropriate critical value will be selected from the t distribution again depending on the specific alternative hypothesis and the level of significance.

- The third factor is the level of significance. The level of significance which is selected in Step 1 (e.g., α =0.05) dictates the critical value. For example, in an upper tailed Z test, if α =0.05 then the critical value is Z=1.645.

The following figures illustrate the rejection regions defined by the decision rule for upper-, lower- and two-tailed Z tests with α=0.05. Notice that the rejection regions are in the upper, lower and both tails of the curves, respectively. The decision rules are written below each figure.

Rejection Region for Upper-Tailed Z Test (H 1 : μ > μ 0 ) with α =0.05

The decision rule is: Reject H 0 if Z > 1.645.

Rejection Region for Lower-Tailed Z Test (H 1 : μ < μ 0 ) with α =0.05

The decision rule is: Reject H 0 if Z < -1.645.

Rejection Region for Two-Tailed Z Test (H 1 : μ ≠ μ 0 ) with α =0.05

The decision rule is: Reject H 0 if Z < -1.960 or if Z > 1.960.

The complete table of critical values of Z for upper, lower and two-tailed tests can be found in the table of Z values to the right in "Other Resources."

Critical values of t for upper, lower and two-tailed tests can be found in the table of t values in "Other Resources."
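The Z critical values quoted in the decision rules can also be computed rather than looked up. The sketch below uses `statistics.NormalDist` from the Python standard library; the helper names `z_critical_values` and `reject_h0` are hypothetical and exist only for this illustration. It covers the Z case only, not the t distribution.

```python
from statistics import NormalDist

def z_critical_values(alpha=0.05):
    """Critical values of Z for upper-, lower-, and two-tailed tests."""
    std_normal = NormalDist()  # mean 0, standard deviation 1
    return {
        "upper": std_normal.inv_cdf(1 - alpha),
        "lower": std_normal.inv_cdf(alpha),
        "two_tailed": (std_normal.inv_cdf(alpha / 2),
                       std_normal.inv_cdf(1 - alpha / 2)),
    }

def reject_h0(z, tail, alpha=0.05):
    """Apply the decision rule for the chosen tail to an observed Z."""
    crit = z_critical_values(alpha)
    if tail == "upper":
        return z > crit["upper"]
    if tail == "lower":
        return z < crit["lower"]
    if tail == "two_tailed":
        low, high = crit["two_tailed"]
        return z < low or z > high
    raise ValueError("tail must be 'upper', 'lower', or 'two_tailed'")

crit = z_critical_values(0.05)
print(crit)
print(reject_h0(2.38, "upper"))       # Z above the upper critical value
print(reject_h0(1.25, "two_tailed"))  # Z inside the two-tailed limits
```

For α = 0.05 this yields roughly 1.645 and -1.645 for the one-tailed tests and ±1.960 for the two-tailed test, in line with the rules stated above.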

- Step 4. Compute the test statistic.

Here we compute the test statistic by substituting the observed sample data into the test statistic identified in Step 2.

- Step 5. Conclusion.

The final conclusion is made by comparing the test statistic (which is a summary of the information observed in the sample) to the decision rule. The final conclusion will be either to reject the null hypothesis (because the sample data are very unlikely if the null hypothesis is true) or not to reject the null hypothesis (because the sample data are not very unlikely).

If the null hypothesis is rejected, then an exact significance level is computed to describe the likelihood of observing the sample data assuming that the null hypothesis is true. The exact level of significance is called the p-value and it will be less than the chosen level of significance if we reject H 0 .

Statistical computing packages provide exact p-values as part of their standard output for hypothesis tests. In fact, when using a statistical computing package, the steps outlined above can be abbreviated. The hypotheses (step 1) should always be set up in advance of any analysis and the significance criterion should also be determined (e.g., α =0.05). Statistical computing packages will produce the test statistic (usually reporting the test statistic as t) and a p-value. The investigator can then determine statistical significance using the following: If p < α then reject H 0 .

- Step 1. Set up hypotheses and determine level of significance

H 0 : μ = 191 H 1 : μ > 191 α =0.05

The research hypothesis is that weights have increased, and therefore an upper tailed test is used.

- Step 2. Select the appropriate test statistic.

Because the sample size is large (n > 30) the appropriate test statistic is

Z = (x̄ - μ 0 ) / (s / √n)

- Step 3. Set up decision rule.

In this example, we are performing an upper tailed test (H 1 : μ> 191), with a Z test statistic and selected α =0.05. Reject H 0 if Z > 1.645.

We now substitute the sample data into the formula for the test statistic identified in Step 2.

We reject H 0 because 2.38 > 1.645. We have statistically significant evidence at α =0.05 to show that the mean weight in men in 2006 is more than 191 pounds.

Because we rejected the null hypothesis, we now approximate the p-value, which is the likelihood of observing sample data at least as extreme as ours if the null hypothesis is true. An alternative definition of the p-value is the smallest level of significance where we can still reject H 0 . In this example, we observed Z=2.38 and for α=0.05, the critical value was 1.645. Because 2.38 exceeded 1.645 we rejected H 0 . In our conclusion we reported a statistically significant increase in mean weight at a 5% level of significance.

Using the table of critical values for upper tailed tests, we can approximate the p-value. If we select α=0.025, the critical value is 1.960, and we still reject H 0 because 2.38 > 1.960. If we select α=0.010 the critical value is 2.326, and we still reject H 0 because 2.38 > 2.326. However, if we select α=0.005, the critical value is 2.576, and we cannot reject H 0 because 2.38 < 2.576. Therefore, the smallest tabulated α where we still reject H 0 is 0.010, and we take this as the approximate p-value. A statistical computing package would produce a more precise p-value, which would be in between 0.005 and 0.010. Here we are approximating the p-value and would report p < 0.010.
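The more precise p-value mentioned above can be sketched in a few lines. This is an illustration only, using Python's standard library rather than any particular statistical package; the only input is the observed Z of 2.38 from the example, and the list of α levels is the same set walked through in the text.

```python
from statistics import NormalDist

z_observed = 2.38
std_normal = NormalDist()

# Upper-tailed p-value: area under the standard normal curve above the observed Z.
p_value = 1 - std_normal.cdf(z_observed)

# Repeat the table-based approximation: find the smallest tabulated alpha
# whose critical value is still exceeded by the observed Z.
tabulated_alphas = [0.05, 0.025, 0.010, 0.005]
rejecting = [a for a in tabulated_alphas if z_observed > std_normal.inv_cdf(1 - a)]
smallest_rejecting_alpha = min(rejecting)

print(f"exact p-value: {p_value:.4f}")
print(f"smallest tabulated alpha that still rejects H0: {smallest_rejecting_alpha}")
```

The exact value falls between 0.005 and 0.010, consistent with reporting p < 0.010.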

Type I and Type II Errors

In all tests of hypothesis, there are two types of errors that can be committed. The first is called a Type I error and refers to the situation where we incorrectly reject H 0 when in fact it is true. This is also called a false positive result (as we incorrectly conclude that the research hypothesis is true when in fact it is not). When we run a test of hypothesis and decide to reject H 0 (e.g., because the test statistic exceeds the critical value in an upper tailed test) then either we make a correct decision because the research hypothesis is true or we commit a Type I error. The different conclusions are summarized in the table below. Note that we will never know whether the null hypothesis is really true or false (i.e., we will never know which row of the following table reflects reality).

Table - Conclusions in Test of Hypothesis

In the first step of the hypothesis test, we select a level of significance, α, and α= P(Type I error). Because we purposely select a small value for α, we control the probability of committing a Type I error. For example, if we select α=0.05, then when H 0 is in fact true there is only a 5% probability that our test tells us to reject it, i.e., that we commit a Type I error. Most investigators are very comfortable with this and are confident when rejecting H 0 that the research hypothesis is true (as it is the more likely scenario when we reject H 0 ).

When we run a test of hypothesis and decide not to reject H 0 (e.g., because the test statistic is below the critical value in an upper tailed test) then either we make a correct decision because the null hypothesis is true or we commit a Type II error. Beta (β) represents the probability of a Type II error and is defined as follows: β=P(Type II error) = P(Do not Reject H 0 | H 0 is false). Unfortunately, we cannot choose β to be small (e.g., 0.05) to control the probability of committing a Type II error because β depends on several factors including the sample size, α, and the research hypothesis. When we do not reject H 0 , it may be very likely that we are committing a Type II error (i.e., failing to reject H 0 when in fact it is false). Therefore, when tests are run and the null hypothesis is not rejected we often make a weak concluding statement allowing for the possibility that we might be committing a Type II error. If we do not reject H 0 , we conclude that we do not have significant evidence to show that H 1 is true. We do not conclude that H 0 is true.

The most common reason for a Type II error is a small sample size.
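The dependence of β on sample size can be illustrated with a short calculation. This is a sketch under stated assumptions: an upper-tailed Z test with a known standard deviation, the null value of 191 from the example above, and a true mean of 196 and standard deviation of 25 that are invented here purely for illustration. The function name `type2_error_upper_tailed` is likewise hypothetical.

```python
from math import sqrt
from statistics import NormalDist

def type2_error_upper_tailed(mu0, mu_true, sigma, n, alpha=0.05):
    """beta = P(do not reject H0 | true mean is mu_true) for an upper-tailed Z test."""
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha)
    # Under the true mean, Z is normal with this mean and standard deviation 1.
    shift = (mu_true - mu0) / (sigma / sqrt(n))
    return std_normal.cdf(z_crit - shift)

# mu_true = 196 and sigma = 25 are assumed values, not data from the source.
sample_sizes = [25, 100, 400]
betas = [type2_error_upper_tailed(mu0=191, mu_true=196, sigma=25, n=n)
         for n in sample_sizes]

for n, beta in zip(sample_sizes, betas):
    print(f"n = {n:3d}: beta = {beta:.3f}, power = {1 - beta:.3f}")
```

With these assumed inputs, β is large for the smallest sample and shrinks steadily as n grows, which is why a non-significant result from a small study is only weak evidence for H 0 .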

Content ©2017. All Rights Reserved. Date last modified: November 6, 2017. Wayne W. LaMorte, MD, PhD, MPH

Research Hypothesis In Psychology: Types, & Examples

Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.

A research hypothesis (plural: hypotheses) is a specific, testable prediction about the anticipated results of a study, established at its outset. It is a key component of the scientific method.

Hypotheses connect theory to data and guide the research process towards expanding scientific understanding.

Some key points about hypotheses:

- A hypothesis expresses an expected pattern or relationship. It connects the variables under investigation.

- It is stated in clear, precise terms before any data collection or analysis occurs. This makes the hypothesis testable.

- A hypothesis must be falsifiable. It should be possible, even if unlikely in practice, to collect data that disconfirms rather than supports the hypothesis.

- Hypotheses guide research. Scientists design studies to explicitly evaluate hypotheses about how nature works.

- For a hypothesis to be valid, it must be testable against empirical evidence. The evidence can then confirm or disprove the testable predictions.

- Hypotheses are informed by background knowledge and observation, but go beyond what is already known to propose an explanation of how or why something occurs.

Predictions typically arise from a thorough knowledge of the research literature, curiosity about real-world problems or implications, and integrating this to advance theory. They build on existing literature while providing new insight.

Types of Research Hypotheses

Alternative Hypothesis

The research hypothesis is often called the alternative or experimental hypothesis in experimental research.

It typically suggests a potential relationship between two key variables: the independent variable, which the researcher manipulates, and the dependent variable, which is measured based on those changes.

The alternative hypothesis states a relationship exists between the two variables being studied (one variable affects the other).

A hypothesis is a testable statement or prediction about the relationship between two or more variables. It is a key component of the scientific method. Some key points about hypotheses:

- Important hypotheses lead to predictions that can be tested empirically. The evidence can then confirm or disprove the testable predictions.

In summary, a hypothesis is a precise, testable statement of what researchers expect to happen in a study and why. Hypotheses connect theory to data and guide the research process towards expanding scientific understanding.

An experimental hypothesis predicts what change(s) will occur in the dependent variable when the independent variable is manipulated.

It states that the results are not due to chance and are significant in supporting the theory being investigated.

The alternative hypothesis can be directional, indicating a specific direction of the effect, or non-directional, suggesting a difference without specifying its nature. It’s what researchers aim to support or demonstrate through their study.

Null Hypothesis

The null hypothesis states no relationship exists between the two variables being studied (one variable does not affect the other). There will be no changes in the dependent variable due to manipulating the independent variable.

It states results are due to chance and are not significant in supporting the idea being investigated.

The null hypothesis, positing no effect or relationship, is a foundational contrast to the research hypothesis in scientific inquiry. It establishes a baseline for statistical testing, promoting objectivity by initiating research from a neutral stance.

Many statistical methods are tailored to test the null hypothesis, determining the likelihood of observed results if no true effect exists.

This dual-hypothesis approach provides clarity, ensuring that research intentions are explicit, and fosters consistency across scientific studies, enhancing the standardization and interpretability of research outcomes.

Nondirectional Hypothesis

A non-directional hypothesis, also known as a two-tailed hypothesis, predicts that there is a difference or relationship between two variables but does not specify the direction of this relationship.

It merely indicates that a change or effect will occur without predicting which group will have higher or lower values.

For example, “There is a difference in performance between Group A and Group B” is a non-directional hypothesis.

Directional Hypothesis

A directional (one-tailed) hypothesis predicts the nature of the effect of the independent variable on the dependent variable. It predicts in which direction the change will take place (i.e., greater, smaller, less, more).

It specifies whether one variable is greater or less than another, rather than just indicating that there’s a difference without specifying its nature.

For example, “Exercise increases weight loss” is a directional hypothesis.

Falsifiability

The Falsification Principle, proposed by Karl Popper, is a way of demarcating science from non-science. It suggests that for a theory or hypothesis to be considered scientific, it must be testable and conceivably refutable.

Falsifiability emphasizes that scientific claims shouldn’t just be confirmable but should also have the potential to be proven wrong.

It means that there should exist some potential evidence or experiment that could prove the proposition false.

However many confirming instances exist for a theory, it only takes one counter observation to falsify it. For example, the hypothesis that “all swans are white,” can be falsified by observing a black swan.

For Popper, science should attempt to disprove a theory rather than attempt to continually provide evidence to support a research hypothesis.

Can a Hypothesis be Proven?

Hypotheses make probabilistic predictions. They state the expected outcome if a particular relationship exists. However, a study result supporting a hypothesis does not definitively prove it is true.

All studies have limitations. There may be unknown confounding factors or issues that limit the certainty of conclusions. Additional studies may yield different results.

In science, hypotheses can realistically only be supported with some degree of confidence, not proven. The process of science is to incrementally accumulate evidence for and against hypothesized relationships in an ongoing pursuit of better models and explanations that best fit the empirical data. But hypotheses remain open to revision and rejection if that is where the evidence leads.

- Disproving a hypothesis is definitive. Solid disconfirmatory evidence will falsify a hypothesis and require altering or discarding it based on the evidence.

- However, confirming evidence is always open to revision. Other explanations may account for the same results, and additional or contradictory evidence may emerge over time.

We can never 100% prove the alternative hypothesis. Instead, we see if we can disprove, or reject the null hypothesis.

If we reject the null hypothesis, this doesn’t mean that our alternative hypothesis is correct but does support the alternative/experimental hypothesis.

Upon analysis of the results, an alternative hypothesis can be rejected or supported, but it can never be proven to be correct. We must avoid any reference to results proving a theory as this implies 100% certainty, and there is always a chance that evidence may exist which could refute a theory.

How to Write a Hypothesis

- Identify variables. The researcher manipulates the independent variable and the dependent variable is the measured outcome.

- Operationalize the variables being investigated. Operationalization of a hypothesis refers to the process of making the variables physically measurable or testable, e.g. if you are about to study aggression, you might count the number of punches given by participants.

- Decide on a direction for your prediction. If there is evidence in the literature to support a specific effect of the independent variable on the dependent variable, write a directional (one-tailed) hypothesis. If there are limited or ambiguous findings in the literature regarding the effect of the independent variable on the dependent variable, write a non-directional (two-tailed) hypothesis.

- Make it testable. Ensure your hypothesis can be tested through experimentation or observation. It should be possible to prove it false (principle of falsifiability).

- Clear & concise language. A strong hypothesis is concise (typically one to two sentences long), and formulated using clear and straightforward language, ensuring it’s easily understood and testable.

Consider a hypothesis many teachers might subscribe to: students work better on Monday morning than on Friday afternoon (IV=Day, DV= Standard of work).

Now, if we decide to study this by giving the same group of students a lesson on a Monday morning and a Friday afternoon and then measuring their immediate recall of the material covered in each session, we would end up with the following:

- The alternative hypothesis states that students will recall significantly more information on a Monday morning than on a Friday afternoon.

- The null hypothesis states that there will be no significant difference in the amount recalled on a Monday morning compared to a Friday afternoon. Any difference will be due to chance or confounding factors.

More Examples

- Memory : Participants exposed to classical music during study sessions will recall more items from a list than those who studied in silence.

- Social Psychology : Individuals who frequently engage in social media use will report higher levels of perceived social isolation compared to those who use it infrequently.

- Developmental Psychology : Children who engage in regular imaginative play have better problem-solving skills than those who don’t.

- Clinical Psychology : Cognitive-behavioral therapy will be more effective in reducing symptoms of anxiety over a 6-month period compared to traditional talk therapy.

- Cognitive Psychology : Individuals who multitask between various electronic devices will have shorter attention spans on focused tasks than those who single-task.

- Health Psychology : Patients who practice mindfulness meditation will experience lower levels of chronic pain compared to those who don’t meditate.

- Organizational Psychology : Employees in open-plan offices will report higher levels of stress than those in private offices.

- Behavioral Psychology : Rats rewarded with food after pressing a lever will press it more frequently than rats who receive no reward.

11.4: One- and Two-Tailed Tests

- Rice University

Learning Objectives

- Define Type I and Type II errors

- Interpret significant and non-significant differences

- Explain why the null hypothesis should not be accepted when the effect is not significant

In the James Bond case study, Mr. Bond was given \(16\) trials on which he judged whether a martini had been shaken or stirred. He was correct on \(13\) of the trials. From the binomial distribution, we know that the probability of being correct \(13\) or more times out of \(16\) if one is only guessing is \(0.0106\). Figure 1 shows a graph of the binomial distribution. The red bars show the values greater than or equal to \(13\). As you can see in the figure, the probabilities are calculated for the upper tail of the distribution. A probability calculated in only one tail of the distribution is called a "one-tailed probability."

A slightly different question can be asked of the data: "What is the probability of getting a result as extreme or more extreme than the one observed?" Since the chance expectation is \(8/16\), a result of \(3/16\) is equally as extreme as \(13/16\). Thus, to calculate this probability, we would consider both tails of the distribution. Since the binomial distribution is symmetric when \(\pi =0.5\), this probability is exactly double the probability of \(0.0106\) computed previously. Therefore, \(p = 0.0212\). A probability calculated in both tails of a distribution is called a "two-tailed probability" (see Figure 2).
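As a rough check on the two probabilities above, the binomial tail can be summed directly. This is a minimal sketch in Python using only the standard library; the helper name `upper_tail` is an illustrative choice and does not come from the original text.

```python
from math import comb

def upper_tail(k, n, p=0.5):
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(k, n + 1))

one_tailed = upper_tail(13, 16)  # 13 or more correct out of 16 when guessing
two_tailed = 2 * one_tailed      # doubling is valid because the distribution is symmetric at p = 0.5

print(round(one_tailed, 4))  # 0.0106
print(round(two_tailed, 4))  # 0.0213
```

The value \(0.0212\) quoted in the text comes from doubling the already rounded \(0.0106\); the unrounded two-tailed probability is about \(0.02127\).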

Should the one-tailed or the two-tailed probability be used to assess Mr. Bond's performance? That depends on the way the question is posed. If we are asking whether Mr. Bond can tell the difference between shaken or stirred martinis, then we would conclude he could if he performed either much better than chance or much worse than chance. If he performed much worse than chance, we would conclude that he can tell the difference, but he does not know which is which. Therefore, since we are going to reject the null hypothesis if Mr. Bond does either very well or very poorly, we will use a two-tailed probability.

On the other hand, if our question is whether Mr. Bond is better than chance at determining whether a martini is shaken or stirred, we would use a one-tailed probability. What would the one-tailed probability be if Mr. Bond were correct on only \(3\) of the \(16\) trials? Since the one-tailed probability is the probability of the right-hand tail, it would be the probability of getting \(3\) or more correct out of \(16\). This is a very high probability and the null hypothesis would not be rejected.
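To see how high that right-hand tail probability is, it can be computed in the same way. The sketch below assumes the guessing model (\(\pi = 0.5\)) used throughout this section; the variable names are illustrative.

```python
from math import comb

n = 16
# P(X >= 3) when guessing (pi = 0.5): count the outcomes with 3..16 correct
# and divide by the 2**16 equally likely outcomes.
p_three_or_more = sum(comb(n, k) for k in range(3, n + 1)) / 2**n

print(round(p_three_or_more, 4))  # 0.9979
```

A probability this close to 1 is nowhere near any conventional significance level, which is why the null hypothesis would not be rejected.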

The null hypothesis for the two-tailed test is \(\pi =0.5\). By contrast, the null hypothesis for the one-tailed test is \(\pi \leq 0.5\). Accordingly, we reject the two-tailed hypothesis if the sample proportion deviates greatly from \(0.5\) in either direction. The one-tailed hypothesis is rejected only if the sample proportion is much greater than \(0.5\). The alternative hypothesis in the two-tailed test is \(\pi \neq 0.5\). In the one-tailed test it is \(\pi > 0.5\).
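The difference between the two rejection rules can be made concrete by finding the cutoffs for the 16-trial experiment. This is a sketch only: the significance level of 0.05 is an assumption (the passage does not fix one), and the helper `upper_tail` is a hypothetical name.

```python
from math import comb

def upper_tail(k, n):
    """P(X >= k) for X ~ Binomial(n, 0.5)."""
    return sum(comb(n, i) for i in range(k, n + 1)) / 2**n

n, alpha = 16, 0.05  # alpha is an assumed significance level

# One-tailed: smallest count whose upper-tail probability is at most alpha.
one_tailed_cutoff = next(k for k in range(n + 1) if upper_tail(k, n) <= alpha)

# Two-tailed: double the upper tail (symmetric at pi = 0.5) before comparing with alpha.
two_tailed_cutoff = next(k for k in range(n + 1) if 2 * upper_tail(k, n) <= alpha)

print(one_tailed_cutoff)                          # 12
print(two_tailed_cutoff, n - two_tailed_cutoff)   # 13 3
```

Under these assumptions the one-tailed test rejects at 12 or more correct, while the two-tailed test rejects at 13 or more or at 3 or fewer.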

You should always decide whether you are going to use a one-tailed or a two-tailed probability before looking at the data. Statistical tests that compute one-tailed probabilities are called one-tailed tests; those that compute two-tailed probabilities are called two-tailed tests. Two-tailed tests are much more common than one-tailed tests in scientific research because an outcome signifying that something other than chance is operating is usually worth noting. One-tailed tests are appropriate when it is not important to distinguish between no effect and an effect in the unexpected direction. For example, consider an experiment designed to test the efficacy of a treatment for the common cold. The researcher would only be interested in whether the treatment was better than a placebo control. It would not be worth distinguishing between the case in which the treatment was worse than a placebo and the case in which it was the same because in both cases the drug would be worthless.

Some have argued that a one-tailed test is justified whenever the researcher predicts the direction of an effect. The problem with this argument is that if the effect comes out strongly in the non-predicted direction, the researcher is not justified in concluding that the effect is not zero. Since this is unrealistic, one-tailed tests are usually viewed skeptically if justified on this basis alone.

Two Sample t-test: Definition, Formula, and Example

A two sample t-test is used to determine whether or not two population means are equal.

This tutorial explains the following:

- The motivation for performing a two sample t-test.

- The formula to perform a two sample t-test.

- The assumptions that should be met to perform a two sample t-test.

- An example of how to perform a two sample t-test.

Two Sample t-test: Motivation

Suppose we want to know whether or not the mean weight between two different species of turtles is equal. Since there are thousands of turtles in each population, it would be too time-consuming and costly to go around and weigh each individual turtle.

Instead, we might take a simple random sample of 15 turtles from each population and use the mean weight in each sample to determine if the mean weight is equal between the two populations.

However, it’s virtually guaranteed that the mean weight between the two samples will be at least a little different. The question is whether or not this difference is statistically significant. Fortunately, a two sample t-test allows us to answer this question.

Two Sample t-test: Formula

A two-sample t-test always uses the following null hypothesis:

- \(H_0: \mu_1 = \mu_2\) (the two population means are equal)

The alternative hypothesis can be either two-tailed, left-tailed, or right-tailed:

- \(H_1\) (two-tailed): \(\mu_1 \neq \mu_2\) (the two population means are not equal)

- \(H_1\) (left-tailed): \(\mu_1 < \mu_2\) (population 1 mean is less than population 2 mean)

- \(H_1\) (right-tailed): \(\mu_1 > \mu_2\) (population 1 mean is greater than population 2 mean)

We use the following formula to calculate the test statistic t:

\( t = \dfrac{\bar{x}_1 - \bar{x}_2}{s_p \sqrt{\dfrac{1}{n_1} + \dfrac{1}{n_2}}} \)

where \(\bar{x}_1\) and \(\bar{x}_2\) are the sample means, \(n_1\) and \(n_2\) are the sample sizes, and where \(s_p\) is the pooled standard deviation, calculated as:

\( s_p = \sqrt{\dfrac{(n_1 - 1)s_1^2 + (n_2 - 1)s_2^2}{n_1 + n_2 - 2}} \)

where \(s_1^2\) and \(s_2^2\) are the sample variances.

If the p-value that corresponds to the test statistic t with \(n_1 + n_2 - 2\) degrees of freedom is less than your chosen significance level (common choices are 0.10, 0.05, and 0.01) then you can reject the null hypothesis.
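The two formulas translate into a few lines of code. The following is a minimal sketch, not a replacement for a statistics package: the function name `pooled_t` and the input numbers are made up purely for illustration.

```python
from math import sqrt

def pooled_t(mean1, sd1, n1, mean2, sd2, n2):
    """Return (pooled SD, t statistic, degrees of freedom) from summary statistics."""
    # Pooled standard deviation: variances weighted by their degrees of freedom.
    sp = sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2))
    # Difference in means divided by its estimated standard error.
    t = (mean1 - mean2) / (sp * sqrt(1 / n1 + 1 / n2))
    df = n1 + n2 - 2
    return sp, t, df

# Hypothetical summary statistics, chosen only to make the arithmetic easy to follow.
sp, t, df = pooled_t(10.0, 2.0, 5, 8.0, 2.0, 5)
print(round(sp, 3), round(t, 3), df)  # 2.0 1.581 8
```

Working from summary statistics rather than raw data keeps the sketch short; with raw observations the means and standard deviations would be computed first.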

Two Sample t-test: Assumptions

For the results of a two sample t-test to be valid, the following assumptions should be met:

- The observations in one sample should be independent of the observations in the other sample.

- The data should be approximately normally distributed.

- The two samples should have approximately the same variance. If this assumption is not met, you should instead perform Welch’s t-test.

- The data in both samples was obtained using a random sampling method.

Two Sample t-test: Example

Suppose we want to know whether or not the mean weight between two different species of turtles is equal. To test this, we will perform a two sample t-test at significance level α = 0.05 using the following steps:

Step 1: Gather the sample data.

Suppose we collect a random sample of turtles from each population with the following information:

- Sample size \(n_1 = 40\)

- Sample mean weight \(\bar{x}_1 = 300\)

- Sample standard deviation \(s_1 = 18.5\)

- Sample size \(n_2 = 38\)

- Sample mean weight \(\bar{x}_2 = 305\)

- Sample standard deviation \(s_2 = 16.7\)

Step 2: Define the hypotheses.

We will perform the two sample t-test with the following hypotheses:

- \(H_0: \mu_1 = \mu_2\) (the two population means are equal)

- \(H_1: \mu_1 \neq \mu_2\) (the two population means are not equal)

Step 3: Calculate the test statistic t.

First, we will calculate the pooled standard deviation \(s_p\):

\( s_p = \sqrt{\dfrac{(n_1 - 1)s_1^2 + (n_2 - 1)s_2^2}{n_1 + n_2 - 2}} = \sqrt{\dfrac{(40 - 1)18.5^2 + (38 - 1)16.7^2}{40 + 38 - 2}} = 17.647 \)

Next, we will calculate the test statistic t:

\( t = \dfrac{\bar{x}_1 - \bar{x}_2}{s_p \sqrt{\dfrac{1}{n_1} + \dfrac{1}{n_2}}} = \dfrac{300 - 305}{17.647 \sqrt{\dfrac{1}{40} + \dfrac{1}{38}}} = -1.2508 \)
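The arithmetic in this step can be reproduced with a short script. This is only a sketch using the sample figures from Step 1; the variable names are illustrative.

```python
from math import sqrt

# Summary statistics from Step 1
n1, mean1, sd1 = 40, 300, 18.5
n2, mean2, sd2 = 38, 305, 16.7

# Pooled standard deviation
sp = sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2))

# Test statistic
t = (mean1 - mean2) / (sp * sqrt(1 / n1 + 1 / n2))

print(round(sp, 3), round(t, 4))  # 17.647 -1.2508
```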

Step 4: Calculate the p-value of the test statistic t.

According to the T Score to P Value Calculator, the p-value associated with t = -1.2508 and degrees of freedom = \(n_1 + n_2 - 2\) = 40 + 38 - 2 = 76 is 0.21484.
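If no calculator is at hand, the two-tailed p-value can be approximated by integrating the t density numerically. The sketch below is one possible approach using only the Python standard library and Simpson's rule; it is not how the calculator mentioned above works, and the function names are hypothetical.

```python
from math import exp, lgamma, log, pi

def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_norm = lgamma((df + 1) / 2) - lgamma(df / 2) - 0.5 * log(df * pi)
    return exp(log_norm - (df + 1) / 2 * log(1 + x * x / df))

def two_tailed_p(t, df, steps=2000):
    """Two-tailed p-value: 1 minus the area between -|t| and |t| (Simpson's rule)."""
    b = abs(t)
    h = b / steps  # steps must be even for Simpson's rule
    total = t_pdf(0, df) + t_pdf(b, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area_zero_to_b = total * h / 3
    return 1 - 2 * area_zero_to_b  # the density is symmetric about zero

p = two_tailed_p(-1.2508, 76)
print(round(p, 3))  # 0.215
```

In practice a statistics library would normally be used for this step; the numerical integration here is only meant to show where the p-value comes from.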

Step 5: Draw a conclusion.

Since this p-value is not less than our significance level α = 0.05, we fail to reject the null hypothesis. We do not have sufficient evidence to say that the mean weight of turtles between these two populations is different.

Note: You can also perform this entire two sample t-test by simply using the Two Sample t-test Calculator .

One-tailed hypothesis tests are also known as directional and one-sided tests because you can test for effects in only one direction. When you perform a one-tailed test, the entire significance level percentage goes into the extreme end of one tail of the distribution. In the examples below, I use an alpha of 5%.

H0 (Null Hypothesis): μ = 20 grams. HA (Alternative Hypothesis): μ ≠ 20 grams. This is an example of a two-tailed hypothesis test because the alternative hypothesis contains the not equal "≠" sign. The engineer believes that the new method will influence widget weight, but doesn't specify whether it will cause average weight to ...

A two tailed test tells you that you're finding the area in the middle of a distribution. In other words, your rejection region (the place where you would reject the null hypothesis) is in both tails. For example, let's say you were running a z test with an alpha level of 5% (0.05). In a one tailed test, the entire 5% would be in a single tail.

5.2 - Writing Hypotheses. The first step in conducting a hypothesis test is to write the hypothesis statements that are going to be tested. For each test you will have a null hypothesis ( H 0) and an alternative hypothesis ( H a ). When writing hypotheses there are three things that we need to know: (1) the parameter that we are testing (2) the ...

Two-Tailed Test: A two-tailed test is a statistical test in which the critical area of a distribution is two-sided and tests whether a sample is greater than or less than a certain range of values ...

To perform a two-tailed test at a significance level of 0.05, you need to divide alpha by 2, giving a significance level of 0.025 for each distribution tail (0.05/2 = 0.025). This is done because the two-tailed test is looking for significance in either tail of the distribution. If the calculated test statistic falls in the rejection region of ...
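The effect of splitting alpha can be seen in the critical values. The following sketch assumes the test statistic follows a standard normal distribution (a z test), which the passage above does not state explicitly; the variable names are illustrative.

```python
from statistics import NormalDist

alpha = 0.05
z = NormalDist()  # standard normal distribution (assumed reference distribution)

one_tailed_critical = z.inv_cdf(1 - alpha)      # all of alpha in the upper tail
two_tailed_critical = z.inv_cdf(1 - alpha / 2)  # alpha / 2 = 0.025 in each tail

print(round(one_tailed_critical, 3))  # 1.645
print(round(two_tailed_critical, 3))  # 1.96
```

Under this assumption, a two-tailed test rejects when the statistic is beyond about 1.96 in either direction, a stricter cutoff than the one-tailed 1.645.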

At this point, you might use a statistical test, like unpaired or 2-sample t-test, to see if there's a significant difference between the two groups' means. Typically, an unpaired t-test starts with two hypotheses. The first hypothesis is called the null hypothesis, and it basically says there's no difference in the means of the two groups.

In coin flipping, the null hypothesis is a sequence of Bernoulli trials with probability 0.5, yielding a random variable X which is 1 for heads and 0 for tails, and a common test statistic is the sample mean (of the number of heads) \(\bar{X}\). If testing for whether the coin is biased towards heads, a one-tailed test would be used - only large numbers of heads would be significant.

The decision rule for a specific test depends on 3 factors: the research or alternative hypothesis, the test statistic and the level of significance. Each is discussed below. The decision rule depends on whether an upper-tailed, lower-tailed, or two-tailed test is proposed.

Present the findings in your results and discussion section. Though the specific details might vary, the procedure you will use when testing a hypothesis will always follow some version of these steps. Table of contents. Step 1: State your null and alternate hypothesis. Step 2: Collect data. Step 3: Perform a statistical test.

4 One-tailed vs two-tailed test. To gain a deeper understanding of how to conduct a hypothesis test, this section will delve into the concepts of one-tailed and two-tailed tests. These tests are vital tools in statistical hypothesis testing, and the decision of which test to employ depends on the research question and hypothesis under examination.

To decide if a one-tailed test can be used, one has to have some extra information about the experiment to know the direction from the mean (H1: drug lowers the response time). If the direction of the effect is unknown, a two tailed test has to be used, and the H1 must be stated in a way where the direction of the effect is left uncertain (H1 ...

A two-tailed hypothesis test example: A machine is used to fill bags with coffee, and each bag is 1 kg. ... depending on the research question. A two-tailed significance test is a non-directional ...

The choice of a one- rather than a two-tailed hypothesis testing strategy can influence research outcomes, but information about the type of test conducted is rarely reported in articles appearing in educational and psychological journals.

To test a hypothesis, a test statistic is required, which follows a known distribution. In a test, the probability density curve is divided into two regions: the region of acceptance and the region of rejection. The region of rejection is called the critical region. In the field of research and experiments, it pays to know the difference between one-tailed and two-tailed tests, as they are quite ...

A one-tailed test is appropriate if you only want to test if there is a difference between your groups in a specific direction. You would use a two-tailed test if you want to determine if there is any difference between the two groups you're comparing. As user Mur1lo says in their comment - you should never design your analysis after the data ...

I guess whether to take one-tailed or two-tailed depends completely on the alternative hypothesis. Consider the following example of testing a mean in a t-test: \(H_0: \mu = 0\) versus \(H_1: \mu \neq 0\). Now if you observe a very negative sample mean or a very positive sample mean, your hypothesis is unlikely to be true.