Organizing Your Social Sciences Research Paper

- 7. The Results


The results section is where you report the findings of your study based upon the methodology [or methodologies] you applied to gather information. The results section should state the findings of the research arranged in a logical sequence without bias or interpretation. A section describing results should be particularly detailed if your paper includes data generated from your own research.

Annesley, Thomas M. "Show Your Cards: The Results Section and the Poker Game." Clinical Chemistry 56 (July 2010): 1066-1070.

Importance of a Good Results Section

When formulating the results section, it's important to remember that the results of a study do not prove anything. Findings can only confirm or reject the hypothesis underpinning your study. However, the act of articulating the results helps you to understand the problem from within, to break it into pieces, and to view the research problem from various perspectives.

The page length of this section is set by the amount and types of data to be reported. Be concise. Use non-textual elements appropriately, such as figures and tables, to present findings more effectively. In deciding what data to describe in your results section, you must clearly distinguish information that would normally be included in a research paper from any raw data or other content that could be included as an appendix. In general, raw data that has not been summarized should not be included in the main text of your paper unless your professor requests it.

Avoid providing data that is not critical to answering the research question. The background information you described in the introduction section should provide the reader with any additional context or explanation needed to understand the results. A good strategy is to always re-read the background section of your paper after you have written up your results to ensure that the reader has enough context to understand the results [and, later, how you interpreted the results in the discussion section of your paper that follows].

Bavdekar, Sandeep B. and Sneha Chandak. "Results: Unraveling the Findings." Journal of the Association of Physicians of India 63 (September 2015): 44-46; Brett, Paul. "A Genre Analysis of the Results Section of Sociology Articles." English for Specific Purposes 13 (1994): 47-59; Burton, Neil et al. Doing Your Education Research Project. Los Angeles, CA: SAGE, 2008; Results. The Structure, Format, Content, and Style of a Journal-Style Scientific Paper. Department of Biology. Bates College; Kretchmer, Paul. Twelve Steps to Writing an Effective Results Section. San Francisco Edit; "Reporting Findings." In Making Sense of Social Research. Malcolm Williams, editor. (London: SAGE Publications, 2003) pp. 188-207.

Structure and Writing Style

I. Organization and Approach

For most research papers in the social and behavioral sciences, there are two possible ways of organizing the results. Both approaches are appropriate in how you report your findings, but use only one approach.

- Present a synopsis of the results followed by an explanation of key findings. This approach can be used to highlight important findings. For example, you may have noticed an unusual correlation between two variables during the analysis of your findings. It is appropriate to highlight this finding in the results section. However, speculating as to why this correlation exists and offering a hypothesis about what may be happening belongs in the discussion section of your paper.

- Present a result and then explain it, before presenting the next result then explaining it, and so on, then end with an overall synopsis. This is the preferred approach if you have multiple results of equal significance. It is more common in longer papers because it helps the reader to better understand each finding. In this model, it is helpful to provide a brief conclusion that ties each of the findings together and provides a narrative bridge to the discussion section of your paper.

NOTE: Just as the literature review should be arranged under conceptual categories rather than systematically describing each source, you should also organize your findings under key themes related to addressing the research problem. This can be done under either format noted above [i.e., a thorough explanation of the key results or a sequential, thematic description and explanation of each finding].

II. Content

In general, the content of your results section should include the following:

- Introductory context for understanding the results by restating the research problem underpinning your study. This is useful in re-orienting the reader's focus back to the research problem after having read a review of the literature and your explanation of the methods used for gathering and analyzing information.

- Inclusion of non-textual elements, such as figures, charts, photos, maps, and tables, to further illustrate key findings, if appropriate. Rather than relying entirely on descriptive text, consider how your findings can be presented visually. This is a helpful way of condensing a lot of data into one place that can then be referred to in the text. Consider referring to appendices if there are many non-textual elements.

- A systematic description of your results, highlighting for the reader observations that are most relevant to the topic under investigation. Not all results that emerge from the methodology used to gather information may be related to answering the "So What?" question. Do not confuse observations with interpretations; observations in this context refer to highlighting important findings you discovered through a process of reviewing prior literature and gathering data.

- The page length of your results section is guided by the amount and types of data to be reported. However, focus on findings that are important and related to addressing the research problem. It is not uncommon to have unanticipated results that are not relevant to answering the research question. This is not to say that you should ignore tangential findings; in fact, they can be noted as areas for further research in the conclusion of your paper. However, spending time in the results section describing tangential findings clutters your overall results section and distracts the reader.

- A short paragraph that concludes the results section by synthesizing the key findings of the study. Highlight the most important findings you want readers to remember as they transition into the discussion section. This is particularly important if, for example, there are many results to report, the findings are complicated or unanticipated, or they are impactful or actionable in some way [i.e., they can feasibly be applied to practice].

NOTE: Always use the past tense when referring to your study's findings. Reference to findings should always be described as having already happened because the method used to gather the information has been completed.

III. Problems to Avoid

When writing the results section, avoid doing the following:

- Discussing or interpreting your results. Save this for the discussion section of your paper, although where appropriate, you should compare or contrast specific results to those found in other studies [e.g., "Similar to the work of Smith [1990], one of the findings of this study is the strong correlation between motivation and academic achievement...."].

- Reporting background information or attempting to explain your findings. This should have been done in your introduction section, but don't panic! Often the results of a study point to the need for additional background information or to explain the topic further, so don't think you did something wrong. Writing up research is rarely a linear process. Always revise your introduction as needed.

- Ignoring negative results. A negative result generally refers to a finding that does not support the underlying assumptions of your study. Do not ignore them. Document these findings and then state in your discussion section why you believe a negative result emerged from your study. Note that negative results, and how you handle them, can give you an opportunity to write a more engaging discussion section, so don't be hesitant to highlight them.

- Including raw data or intermediate calculations. Ask your professor if you need to include any raw data generated by your study, such as transcripts from interviews or data files. If raw data is to be included, place it in an appendix or set of appendices that are referred to in the text.

- Using vague or non-specific language. Be as factual and concise as possible in reporting your findings. Do not use phrases such as "appeared to be greater than other variables..." or "demonstrates promising trends that...." Subjective modifiers should be explained in the discussion section of the paper [i.e., why did one variable appear greater? Or, how does the finding demonstrate a promising trend?].

- Presenting the same data or repeating the same information more than once. If you want to highlight a particular finding, it is appropriate to do so in the results section. However, you should emphasize its significance in relation to addressing the research problem in the discussion section. Do not repeat it in your results section because you can do that in the conclusion of your paper.

- Confusing figures with tables. Be sure to properly label any non-textual elements in your paper. Don't call a chart an illustration or a figure a table.

Annesley, Thomas M. "Show Your Cards: The Results Section and the Poker Game." Clinical Chemistry 56 (July 2010): 1066-1070; Bavdekar, Sandeep B. and Sneha Chandak. "Results: Unraveling the Findings." Journal of the Association of Physicians of India 63 (September 2015): 44-46; Burton, Neil et al. Doing Your Education Research Project. Los Angeles, CA: SAGE, 2008; Caprette, David R. Writing Research Papers. Experimental Biosciences Resources. Rice University; Hancock, Dawson R. and Bob Algozzine. Doing Case Study Research: A Practical Guide for Beginning Researchers. 2nd ed. New York: Teachers College Press, 2011; Introduction to Nursing Research: Reporting Research Findings. Nursing Research: Open Access Nursing Research and Review Articles. (January 4, 2012); Kretchmer, Paul. Twelve Steps to Writing an Effective Results Section. San Francisco Edit; Ng, K. H. and W. C. Peh. "Writing the Results." Singapore Medical Journal 49 (2008): 967-968; Reporting Research Findings. Wilder Research, in partnership with the Minnesota Department of Human Services. (February 2009); Results. The Structure, Format, Content, and Style of a Journal-Style Scientific Paper. Department of Biology. Bates College; Schafer, Mickey S. Writing the Results. Thesis Writing in the Sciences. Course Syllabus. University of Florida.

Writing Tip

Why Don't I Just Combine the Results Section with the Discussion Section?

It's not unusual to find articles in scholarly social science journals where the author(s) have combined a description of the findings with a discussion about their significance and implications. You could do this. However, if you are inexperienced in writing research papers, consider keeping the results and the discussion as two distinct sections as a way to better organize your thoughts and, by extension, your paper. Think of the results section as the place where you report what your study found; think of the discussion section as the place where you interpret the information and answer the "So What?" question. As you become more skilled at writing research papers, you can consider melding the results of your study with a discussion of its implications.

Driscoll, Dana Lynn and Aleksandra Kasztalska. Writing the Experimental Report: Methods, Results, and Discussion. The Writing Lab and The OWL. Purdue University.


- Last Updated: Jun 18, 2024 10:45 AM

- URL: https://libguides.usc.edu/writingguide


How to Write the Results/Findings Section in Research

What is the research paper Results section and what does it do?

The Results section of a scientific research paper represents the core findings of a study derived from the methods applied to gather and analyze information. It presents these findings in a logical sequence without bias or interpretation from the author, setting up the reader for later interpretation and evaluation in the Discussion section. A major purpose of the Results section is to break down the data into sentences that show its significance to the research question(s).

The Results section appears third in the section sequence in most scientific papers. It follows the presentation of the Methods and Materials and is presented before the Discussion section, although the Results and Discussion are presented together in many journals. This section answers the basic question “What did you find in your research?”

What is included in the Results section?

The Results section should include the findings of your study and ONLY the findings of your study. The findings include:

- Data presented in tables, charts, graphs, and other figures (may be placed into the text or on separate pages at the end of the manuscript)

- A contextual analysis of this data explaining its meaning in sentence form

- All data that corresponds to the central research question(s)

- All secondary findings (secondary outcomes, subgroup analyses, etc.)

If the scope of the study is broad, or if you studied a variety of variables, or if the methodology used yields a wide range of different results, present only those results that are most relevant to the research question stated in the Introduction section.

As a general rule, any information that does not present the direct findings or outcome of the study should be left out of this section. Unless the journal requests that authors combine the Results and Discussion sections, explanations and interpretations should be omitted from the Results.

How are the results organized?

The best way to organize your Results section is “logically.” One logical and clear method of organizing research results is to provide them alongside the research questions: within each research question, present the type of data that addresses that research question.

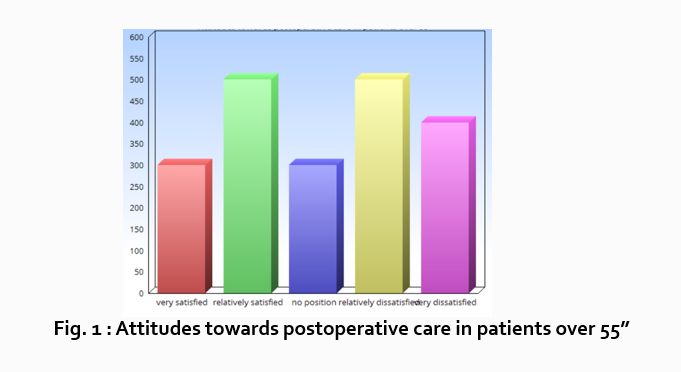

Let’s look at an example. Your research question is based on a survey among patients who were treated at a hospital and received postoperative care. Let’s say your first research question is:

“What do hospital patients over age 55 think about postoperative care?”

This can actually be represented as a heading within your Results section, though it might be presented as a statement rather than a question:

Attitudes towards postoperative care in patients over the age of 55

Now present the results that address this specific research question first. In this case, perhaps a table illustrating data from a survey. Likert items can be included in this example. Tables can also present standard deviations, probabilities, correlation matrices, etc.

Following this, present a content analysis, in words, of one end of the spectrum of the survey or data table. In our example case, start with the POSITIVE survey responses regarding postoperative care, using descriptive phrases. For example:

“Sixty-five percent of patients over 55 responded positively to the question ‘Are you satisfied with your hospital’s postoperative care?’ (Fig. 2).”

Include other results such as subcategory analyses. The amount of textual description used will depend on how much interpretation of tables and figures is necessary and how many examples the reader needs in order to understand the significance of your research findings.

Next, present a content analysis of another part of the spectrum of the same research question, perhaps the NEGATIVE or NEUTRAL responses to the survey. For instance:

“As Figure 1 shows, 15 out of 60 patients in Group A responded negatively to Question 2.”
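Counts and percentages like the ones quoted in these sample sentences can be derived directly from the coded survey responses. The following is a minimal Python sketch, assuming a hypothetical list of 5-point Likert answers (1 = very dissatisfied, 5 = very satisfied). The data, the function name `summarize_likert`, and the cut-offs for "negative" and "positive" are illustrative assumptions, not values from any actual study.

```python
from collections import Counter

# Hypothetical 5-point Likert responses (1 = very dissatisfied ... 5 = very satisfied).
# These values are invented for illustration only.
responses = [5, 4, 4, 2, 3, 5, 1, 4, 2, 5, 4, 3, 4, 5, 2, 4, 5, 4, 1, 4]


def summarize_likert(values):
    """Return (count, percentage) for negative (1-2), neutral (3), and positive (4-5) answers."""
    counts = Counter()
    for v in values:
        if v <= 2:
            counts["negative"] += 1
        elif v == 3:
            counts["neutral"] += 1
        else:
            counts["positive"] += 1
    total = len(values)
    return {
        label: (counts[label], round(100 * counts[label] / total, 1))
        for label in ("negative", "neutral", "positive")
    }


summary = summarize_likert(responses)
for label, (n, pct) in summary.items():
    print(f"{label}: {n} of {len(responses)} ({pct}%)")
```

Reporting both the raw count and the percentage, as the sample sentences do, lets readers judge the size of the group behind each figure.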

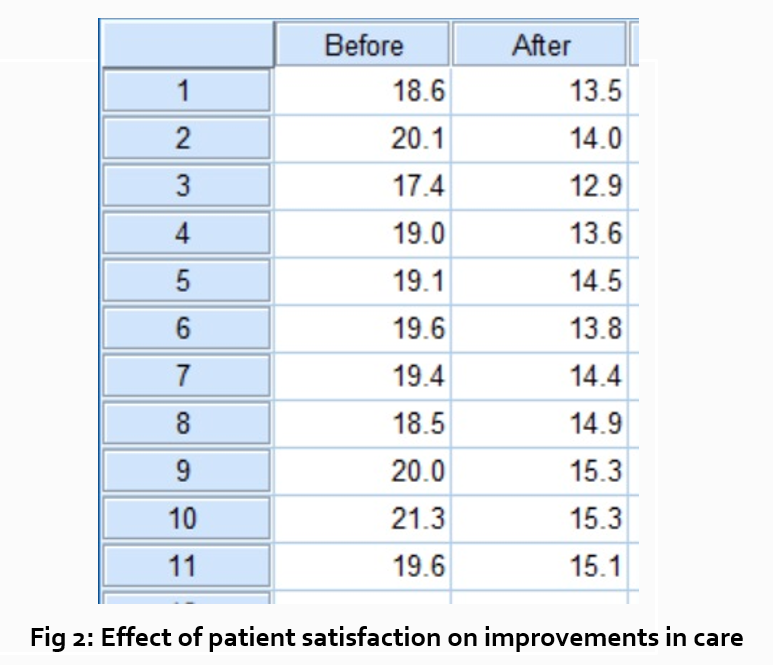

After you have assessed the data in one figure and explained it sufficiently, move on to your next research question. For example:

“How does patient satisfaction correspond to in-hospital improvements made to postoperative care?”

This kind of data may be presented through a figure or set of figures (for instance, a paired t-test table).

Explain the data you present, here in a table, with a concise content analysis:

“The p-value for the comparison between the before and after groups of patients was 0.03 (Fig. 2), indicating that the greater the dissatisfaction among patients, the more frequent the improvements that were made to postoperative care.”
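For readers unfamiliar with where the numbers in a paired t-test table come from, the sketch below computes the test statistic for matched before-and-after scores using only the Python standard library. The scores and the function name `paired_t_statistic` are hypothetical. The sketch stops at the t statistic and degrees of freedom; the p-value reported in a paper would normally be obtained from a statistics package or a t-distribution table.

```python
import math
import statistics

# Hypothetical satisfaction scores for the same six patients before and after
# a change to postoperative care. Invented for illustration only.
before = [3, 4, 2, 5, 3, 4]
after = [4, 5, 3, 5, 4, 6]


def paired_t_statistic(x, y):
    """Return (t, degrees_of_freedom) for a paired t-test on matched samples."""
    if len(x) != len(y):
        raise ValueError("paired samples must have the same length")
    # Work on the per-subject differences (after minus before).
    diffs = [b - a for a, b in zip(x, y)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation (n - 1)
    standard_error = sd_diff / math.sqrt(n)
    return mean_diff / standard_error, n - 1


t_value, df = paired_t_statistic(before, after)
print(f"t({df}) = {t_value:.2f}")
```

In the Results section itself, only the summary values (test statistic, degrees of freedom, p-value) would be reported; the intermediate calculations stay out of the main text.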

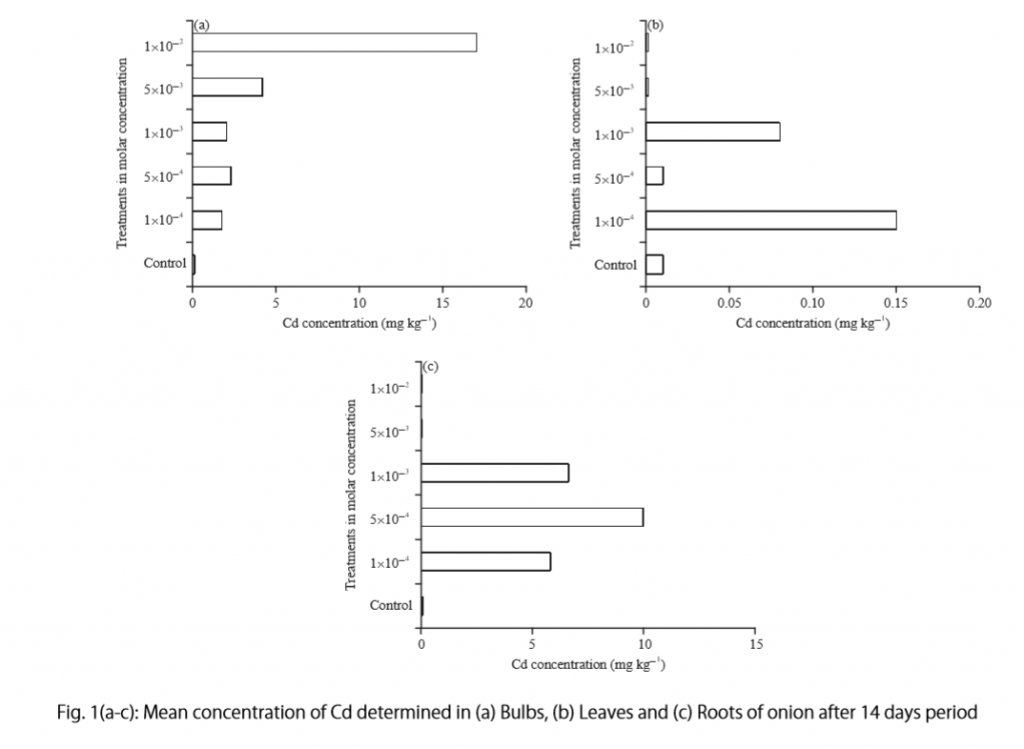

Let’s examine another example of a Results section from a study on plant tolerance to heavy metal stress. In the Introduction section, the aims of the study are presented as “determining the physiological and morphological responses of Allium cepa L. towards increased cadmium toxicity” and “evaluating its potential to accumulate the metal and its associated environmental consequences.” The Results section presents data showing how these aims are achieved in tables alongside a content analysis, beginning with an overview of the findings:

“Cadmium caused inhibition of root and leave elongation, with increasing effects at higher exposure doses (Fig. 1a-c).”

The figure containing this data is cited in parentheses. Note that this author has combined three graphs into one single figure. Separating the data into separate graphs focusing on specific aspects makes it easier for the reader to assess the findings, and consolidating this information into one figure saves space and makes it easy to locate the most relevant results.

Following this overall summary, the relevant data in the tables is broken down into greater detail in text form in the Results section.

- “Results on the bio-accumulation of cadmium were found to be the highest (17.5 mg kg⁻¹) in the bulb, when the concentration of cadmium in the solution was 1×10⁻² M and lowest (0.11 mg kg⁻¹) in the leaves when the concentration was 1×10⁻³ M.”

Captioning and Referencing Tables and Figures

Tables and figures are central components of your Results section and you need to carefully think about the most effective way to use graphs and tables to present your findings. Therefore, it is crucial to know how to write strong figure captions and to refer to them within the text of the Results section.

The most important advice one can give here as well as throughout the paper is to check the requirements and standards of the journal to which you are submitting your work. Every journal has its own design and layout standards, which you can find in the author instructions on the target journal’s website. Perusing a journal’s published articles will also give you an idea of the proper number, size, and complexity of your figures.

Regardless of which format you use, the figures should be placed in the order they are referenced in the Results section and be as clear and easy to understand as possible. If there are multiple variables being considered (within one or more research questions), it can be a good idea to split these up into separate figures. Subsequently, these can be referenced and analyzed under separate headings and paragraphs in the text.

To create a caption, consider the research question being asked and change it into a phrase. For instance, if one question is “Which color did participants choose?”, the caption might be “Color choice by participant group.” Or in our last research paper example, where the question was “What is the concentration of cadmium in different parts of the onion after 14 days?” the caption reads:

“Fig. 1(a-c): Mean concentration of Cd determined in (a) bulbs, (b) leaves, and (c) roots of onions after a 14-day period.”

Steps for Composing the Results Section

Because each study is unique, there is no one-size-fits-all approach when it comes to designing a strategy for structuring and writing the section of a research paper where findings are presented. The content and layout of this section will be determined by the specific area of research, the design of the study and its particular methodologies, and the guidelines of the target journal and its editors. However, the following steps can be used to compose the results of most scientific research studies and are essential for researchers who are new to preparing a manuscript for publication or who need a reminder of how to construct the Results section.

Step 1 : Consult the guidelines or instructions that the target journal or publisher provides authors and read research papers it has published, especially those with similar topics, methods, or results to your study.

- The guidelines will generally outline specific requirements for the results or findings section, and the published articles will provide sound examples of successful approaches.

- Note length limitations and restrictions on content. For instance, while many journals require the Results and Discussion sections to be separate, others do not; qualitative research papers often include results and interpretations in the same section (“Results and Discussion”).

- Reading the aims and scope in the journal’s “guide for authors” section and understanding the interests of its readers will be invaluable in preparing to write the Results section.

Step 2 : Consider your research results in relation to the journal’s requirements and catalogue your results.

- Focus on experimental results and other findings that are especially relevant to your research questions and objectives and include them even if they are unexpected or do not support your ideas and hypotheses.

- Catalogue your findings—use subheadings to streamline and clarify your report. This will help you avoid excessive and peripheral details as you write and also help your reader understand and remember your findings. Create appendices that might interest specialists but prove too long or distracting for other readers.

- Decide how you will structure your results. You might match the order of the research questions and hypotheses to your results, or you could arrange them according to the order presented in the Methods section. A chronological order or even a hierarchy of importance or meaningful grouping of main themes or categories might prove effective. Consider your audience, evidence, and most importantly, the objectives of your research when choosing a structure for presenting your findings.

Step 3 : Design figures and tables to present and illustrate your data.

- Tables and figures should be numbered according to the order in which they are mentioned in the main text of the paper.

- Information in figures should be relatively self-explanatory (with the aid of captions), and their design should include all definitions and other information necessary for readers to understand the findings without reading all of the text.

- Use tables and figures as a focal point to tell a clear and informative story about your research and avoid repeating information. But remember that while figures clarify and enhance the text, they cannot replace it.
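The numbering rule in Step 3 (figures numbered in the order they are first mentioned) is easy to break during revision. One way to check a draft is sketched below in Python. The function names and the sample text are hypothetical, and the pattern only recognizes mentions written as "Fig. N" or "Figure N", so it is a rough aid under those assumptions rather than a complete checker.

```python
import re


def first_mention_order(text):
    """Return figure numbers in the order they are first mentioned in the text."""
    seen = []
    # Matches "Fig. 2", "Fig 2", and "Figure 2"; other styles are not handled.
    for match in re.finditer(r"\bFig(?:ure|\.)?\s*(\d+)", text):
        number = int(match.group(1))
        if number not in seen:
            seen.append(number)
    return seen


def is_numbered_in_order(text):
    """True if first mentions run 1, 2, 3, ... with no gaps or reordering."""
    order = first_mention_order(text)
    return order == list(range(1, len(order) + 1))


# Hypothetical draft text, invented for illustration only.
draft = (
    "Satisfaction was highest in Group A (Fig. 1). "
    "As Figure 2 shows, responses varied by age. "
    "The pattern in Fig. 1 also held for Group B (Figure 3)."
)
print(first_mention_order(draft))
print(is_numbered_in_order(draft))
```

The same approach could be adapted for tables by changing the pattern, since tables and figures are usually numbered in separate sequences.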

Step 4 : Draft your Results section using the findings and figures you have organized.

- The goal is to communicate this complex information as clearly and precisely as possible; precise and compact phrases and sentences are most effective.

- In the opening paragraph of this section, restate your research questions or aims to focus the reader’s attention to what the results are trying to show. It is also a good idea to summarize key findings at the end of this section to create a logical transition to the interpretation and discussion that follows.

- Try to write in the past tense and the active voice to relay the findings since the research has already been done and the agent is usually clear. This will ensure that your explanations are also clear and logical.

- Make sure that any specialized terminology or abbreviation you have used here has been defined and clarified in the Introduction section.

Step 5 : Review your draft; edit and revise until it reports results exactly as you would like to have them reported to your readers.

- Double-check the accuracy and consistency of all the data, as well as all of the visual elements included.

- Read your draft aloud to catch language errors (grammar, spelling, and mechanics), awkward phrases, and missing transitions.

- Ensure that your results are presented in the best order to focus on objectives and prepare readers for interpretations, evaluations, and recommendations in the Discussion section. Look back over the paper’s Introduction and background while anticipating the Discussion and Conclusion sections to ensure that the presentation of your results is consistent and effective.

- Consider seeking additional guidance on your paper. Find additional readers to look over your Results section and see if it can be improved in any way. Peers, professors, or qualified experts can provide valuable insights.


As the representation of your study’s data output, the Results section presents the core information in your research paper. By writing with clarity and conciseness and by highlighting and explaining the crucial findings of their study, authors increase the impact and effectiveness of their research manuscripts.


From Data to Discovery: The Findings Section of a Research Paper


Are you curious about the Findings section of a research paper? Did you know that this is the part where all the juicy results and discoveries are laid out for the world to see? Undoubtedly, the findings section of a research paper plays a critical role in presenting and interpreting the collected data. It serves as a comprehensive account of the study’s results and their implications.

Well, look no further because we’ve got you covered! In this article, we’re diving into the ins and outs of presenting and interpreting data in the findings section. We’ll be sharing tips and tricks on how to effectively present your findings, whether it’s through tables, graphs, or good old descriptive statistics.

Overview of the Findings Section of a Research Paper

The findings section of a research paper presents the results and outcomes of the study or investigation. It is a crucial part of the research paper where researchers interpret and analyze the data collected and draw conclusions based on their findings. This section aims to answer the research questions or hypotheses formulated earlier in the paper and provide evidence to support or refute them.

In the findings section, researchers typically present the data in a clear and organized way. They may use tables, graphs, charts, or other visual aids to illustrate the patterns, trends, or relationships observed in the data. The findings should be presented objectively, without any bias or personal opinions, and should be accompanied by appropriate statistical analyses or methods to ensure the validity and reliability of the results.

Organizing the Findings Section

The findings section of the research paper organizes and presents the results obtained from the study in a clear and logical manner. Here is a suggested structure for organizing the Findings section:

Introduction to the Findings

Start the section by providing a brief overview of the research objectives and the methodology employed. Recapitulate the research questions or hypotheses addressed in the study.


Descriptive Statistics and Data Presentation

Present the collected data using appropriate descriptive statistics. This may involve using tables, graphs, charts, or other visual representations to convey the information effectively.

Data Analysis and Interpretation

Perform a thorough analysis of the data collected and describe the key findings. Present the results of statistical analyses or any other relevant methods used to analyze the data.

Discussion of Findings

Analyze and interpret the findings in the context of existing literature or theoretical frameworks. Discuss any patterns, trends, or relationships observed in the data. Compare and contrast the results with prior studies, highlighting similarities and differences.

Limitations and Constraints

Acknowledge and discuss any limitations or constraints that may have influenced the findings. This could include issues such as sample size, data collection methods, or potential biases.

Conclusion

Summarize the main findings of the study and emphasize their significance. Revisit the research questions or hypotheses and discuss whether they have been supported or refuted by the findings.

Presenting Data in the Findings Section

There are several ways to present data in the findings section of a research paper. Here are some common methods:

- Tables: Tables are commonly used to present organized and structured data. They are particularly useful when presenting numerical data with multiple variables or categories. Tables allow readers to easily compare and interpret the information presented.

- Graphs and Charts: Graphs and charts are effective visual tools for presenting data, especially when illustrating trends, patterns, or relationships. Common types include bar graphs, line graphs, scatter plots, pie charts, and histograms. Graphs and charts provide a visual representation of the data, making it easier for readers to comprehend and interpret.

- Figures and Images: Figures and images can be used to present data that requires visual representation, such as maps, diagrams, or experimental setups. They can enhance the understanding of complex data or provide visual evidence to support the research findings.

- Descriptive Statistics: Descriptive statistics provide summary measures of central tendency (e.g., mean, median, mode) and dispersion (e.g., standard deviation, range) for numerical data. These statistics can be included in the text or presented in tables or graphs to provide a concise summary of the data distribution.
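As a rough illustration of the descriptive statistics mentioned in the list above, the following sketch computes the usual summary measures with Python's standard `statistics` module. The `scores` data are hypothetical and invented only for this example.

```python
import statistics

# Hypothetical survey scores, invented purely for illustration.
scores = [12, 15, 15, 18, 20, 22, 25]

summary = {
    "n": len(scores),
    "mean": statistics.mean(scores),
    "median": statistics.median(scores),
    "mode": statistics.mode(scores),
    "std_dev": statistics.stdev(scores),  # sample standard deviation (divides by n - 1)
    "range": max(scores) - min(scores),
}

# Print each measure; floats are rounded to two decimals for reporting.
for name, value in summary.items():
    if isinstance(value, float):
        print(f"{name}: {value:.2f}")
    else:
        print(f"{name}: {value}")
```

A summary like this can go directly into a sentence or be transferred into a table, depending on how many variables need to be described.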

How to Effectively Interpret Results

Interpreting the results is a crucial aspect of the findings section in a research paper. It involves analyzing the data collected and drawing meaningful conclusions based on the findings. Following are the guidelines on how to effectively interpret the results.

Step 1 – Begin with a Recap

Start by restating the research questions or hypotheses to provide context for the interpretation. Remind readers of the specific objectives of the study to help them understand the relevance of the findings.

Step 2 – Relate Findings to Research Questions

Clearly articulate how the results address the research questions or hypotheses. Discuss each finding in relation to the original objectives and explain how it contributes to answering the research questions or supporting/refuting the hypotheses.

Step 3 – Compare with Existing Literature

Compare and contrast the findings with previous studies or existing literature. Highlight similarities, differences, or discrepancies between your results and those of other researchers. Discuss any consistencies or contradictions and provide possible explanations for the observed variations.

Step 4 – Consider Limitations and Alternative Explanations

Acknowledge the limitations of the study and discuss how they may have influenced the results. Explore alternative explanations or factors that could potentially account for the findings. Evaluate the robustness of the results in light of the limitations and alternative interpretations.

Step 5 – Discuss Implications and Significance

Highlight any potential applications or areas where further research is needed based on the outcomes of the study.

Step 6 – Address Inconsistencies and Contradictions

If there are any inconsistencies or contradictions in the findings, address them directly. Discuss possible reasons for the discrepancies and consider their implications for the overall interpretation. Be transparent about any uncertainties or unresolved issues.

Step 7 – Be Objective and Data-Driven

Present the interpretation objectively, based on the evidence and data collected. Avoid personal biases or subjective opinions. Use logical reasoning and sound arguments to support your interpretations.

Reporting Statistical Significance

When reporting statistical significance in the findings section of a research paper, it is important to accurately convey the results of statistical analyses and their implications. Here are some guidelines on how to report statistical significance effectively:

- Clearly State the Statistical Test: Begin by clearly stating the specific statistical test or analysis used to determine statistical significance. For example, you might mention that a t-test, chi-square test, ANOVA, correlation analysis, or regression analysis was employed.

- Report the Test Statistic: Provide the value of the test statistic obtained from the analysis. This could be the t-value, F-value, chi-square value, correlation coefficient, or any other relevant statistic depending on the test used.

- State the Degrees of Freedom: Indicate the degrees of freedom associated with the statistical test. Degrees of freedom represent the number of independent pieces of information available for estimating a statistic. For example, in a t-test, degrees of freedom would be reported as (df = n1 + n2 – 2) for an independent samples test or (df = n – 1, where n is the number of pairs) for a paired samples test.

- Report the p-value: The p-value indicates the probability of obtaining results as extreme or more extreme than the observed results, assuming the null hypothesis is true. Report the p-value associated with the statistical test. For example, p < 0.05 denotes statistical significance at the conventional level of α = 0.05.

- Provide the Conclusion: Based on the p-value obtained, state whether the results are statistically significant or not. If the p-value is less than the predetermined threshold (e.g., p < 0.05), state that the results are statistically significant. If the p-value is greater than the threshold, state that the results are not statistically significant.

- Discuss the Interpretation: After reporting statistical significance, discuss the practical or theoretical implications of the finding. Explain what the significant result means in the context of your research questions or hypotheses. Address the effect size and practical significance of the findings, if applicable.

- Consider Effect Size Measures: Along with statistical significance, it is often important to report effect size measures. Effect size quantifies the magnitude of the relationship or difference observed in the data. Common effect size measures include Cohen’s d, eta-squared, or Pearson’s r. Reporting effect size provides additional meaningful information about the strength of the observed effects.

- Be Accurate and Transparent: Ensure that the reported statistical significance and associated values are accurate. Avoid misinterpreting or misrepresenting the results. Be transparent about the statistical tests conducted, any assumptions made, and potential limitations or caveats that may impact the interpretation of the significant results.
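The reporting elements listed above (test statistic, degrees of freedom, p-value, conclusion, effect size) can be assembled into one consistent sentence fragment. The sketch below is only one possible approach: the helper names `format_p` and `report_t_test` are hypothetical, the numbers in the usage line are invented, and the no-leading-zero style for p-values is one common convention, so check your own style guide.

```python
def format_p(p: float) -> str:
    """Format a p-value without a leading zero; very small values become 'p < .001'."""
    if p < 0.001:
        return "p < .001"
    return f"p = {p:.3f}".replace("0.", ".", 1)


def report_t_test(t: float, df: int, p: float, d: float, alpha: float = 0.05) -> str:
    """Combine test statistic, degrees of freedom, p-value, and effect size."""
    if p < alpha:
        verdict = "statistically significant"
    else:
        verdict = "not statistically significant"
    return f"t({df}) = {t:.2f}, {format_p(p)}, d = {d:.2f} ({verdict} at alpha = {alpha})"


# Hypothetical values, not taken from any real study.
print(report_t_test(t=2.31, df=48, p=0.025, d=0.65))
```

Keeping the formatting in one helper makes it less likely that a result is reported with a missing element, such as a p-value without its degrees of freedom.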

Conclusion of the Findings Section

The conclusion of the findings section in a research paper serves as a summary and synthesis of the key findings and their implications. It is an opportunity to tie together the results, discuss their significance, and address the research objectives. Here are some guidelines on how to write the conclusion of the Findings section:

Summarize the Key Findings

Begin by summarizing the main findings of the study. Provide a concise overview of the significant results, patterns, or relationships that emerged from the data analysis. Highlight the most important findings that directly address the research questions or hypotheses.

Revisit the Research Objectives

Remind the reader of the research objectives stated at the beginning of the paper. Discuss how the findings contribute to achieving those objectives and whether they support or challenge the initial research questions or hypotheses.

Suggest Future Directions

Identify areas for further research or future directions based on the findings. Discuss any unanswered questions, unresolved issues, or new avenues of inquiry that emerged during the study. Propose potential research opportunities that can build upon the current findings.


How to Write the Dissertation Findings or Results – Steps & Tips

Published by Grace Graffin at August 11th, 2021 , Revised On June 11, 2024

Each part of the dissertation is unique, and some general and specific rules must be followed. The dissertation’s findings section presents the key results of your research without interpreting their meaning.

Theoretically, this is an exciting section of a dissertation because it involves writing what you have observed and found. However, it can be a little tricky if there is so much information that it confuses the readers.

The goal is to include only the essential and relevant findings in this section. The results must be presented in an orderly sequence to provide clarity to the readers.

This section of the dissertation should be easy for the readers to follow, so you should avoid going into a lengthy debate over the interpretation of the results.

It is vitally important to focus only on clear and precise observations. The findings chapter of the dissertation is theoretically the easiest to write.

It includes statistical analysis and a brief write-up about whether or not the results emerging from the analysis are significant. This segment should be written in the past tense, as you are describing what you have done in the past.

This article will provide detailed information about how to write the findings of a dissertation.

When to Write Dissertation Findings Chapter

As soon as you have gathered and analysed your data, you can start to write up the findings chapter of your dissertation paper. Remember that it is your chance to report the most notable findings of your research work and relate them to the research hypothesis or research questions set out in the introduction chapter of the dissertation.

You will be required to separately report your study’s findings before moving on to the discussion chapter if your dissertation is based on the collection of primary data or experimental work.

However, you may not be required to have an independent findings chapter if your dissertation is purely descriptive and focuses on the analysis of case studies or interpretation of texts.

- Always report the findings of your research in the past tense.

- The dissertation findings chapter varies from one project to another, depending on the data collected and analyzed.

- Avoid reporting results that are not relevant to your research questions or research hypothesis.


1. Reporting Quantitative Findings

The best way to present your quantitative findings is to structure them around the research hypothesis or questions you intend to address as part of your dissertation project.

Report the relevant findings for each research question or hypothesis, focusing on how you analyzed them.

Analysis of your findings will help you determine how they relate to the different research questions and whether they support the hypothesis you formulated.

While you must highlight meaningful relationships, variances, and tendencies, it is important not to guess their interpretations and implications because this is something to save for the discussion and conclusion chapters.

Any findings not directly relevant to your research questions or explanations concerning the data collection process should be added to the dissertation paper’s appendix section.

Use of Figures and Tables in Dissertation Findings

Suppose your dissertation is based on quantitative research. In that case, it is important to include charts, graphs, tables, and other visual elements to help your readers understand the emerging trends and relationships in your findings.

Repeating information will give the impression that you are short on ideas. Refer to all charts, illustrations, and tables in your writing, but avoid restating in the text what they already show.

The text should be used only to elaborate and summarize certain parts of your results. On the other hand, illustrations and tables are used to present multifaceted data.

It is recommended to give descriptive labels and captions to all illustrations used so the readers can figure out what each refers to.

How to Report Quantitative Findings

Here is an example of how to report quantitative results in your dissertation findings chapter:

Two hundred seventeen participants completed both the pretest and posttest, and a paired-samples t-test was used for the analysis. The quantitative data analysis reveals a statistically significant difference between the mean scores of the pretest and posttest scales from the Teachers Discovering Computers course. The pretest mean was 29.00 with a standard deviation of 7.65, while the posttest mean was 26.50 with a standard deviation of 9.74 (Table 1). These results are significant at p < .001 (displayed as .000 in Table 3), indicating a strong treatment effect. With the correlation between the scores being .448, little relationship is seen between the pretest and posttest scores (Table 2). This leads the researcher to conclude that the impact of the course on the educators’ perception and integration of technology into the curriculum is dramatic.

Table 1. Paired Samples Statistics

| | Mean | N | Std. Deviation | Std. Error Mean |
|---|---|---|---|---|
| PRESCORE | 29.00 | 217 | 7.65 | .519 |
| PSTSCORE | 26.50 | 217 | 9.74 | .661 |

Table 2. Paired Samples Correlation

| | N | Correlation | Sig. |
|---|---|---|---|
| PRESCORE & PSTSCORE | 217 | .448 | .000 |

Table 3. Paired Samples Test (paired differences)

| | Mean | Std. Deviation | Std. Error Mean | 95% CI of the Difference (Lower) | 95% CI of the Difference (Upper) | t | df | Sig. (2-tailed) |
|---|---|---|---|---|---|---|---|---|
| Pair 1: PRESCORE – PSTSCORE | 2.50 | 9.31 | .632 | 1.26 | 3.75 | 3.967 | 216 | .000 |
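To see how the figures in the paired-samples example above relate to one another, the sketch below recomputes the degrees of freedom, standard error, and t statistic from the rounded summary values. The effect size line is an addition not present in the example: it uses the mean difference divided by the standard deviation of the differences, which is one common choice for paired data.

```python
import math

# Summary values copied from the paired-samples example above.
n = 217            # number of pretest/posttest pairs
mean_diff = 2.50   # mean of the paired differences
sd_diff = 9.31     # standard deviation of the paired differences

df = n - 1                           # degrees of freedom for a paired test
std_error = sd_diff / math.sqrt(n)   # standard error of the mean difference
t_stat = mean_diff / std_error       # t statistic
cohens_d = mean_diff / sd_diff       # effect size for paired data (an assumption, see above)

print(f"df = {df}")
print(f"SE = {std_error:.3f}")
print(f"t  = {t_stat:.3f}")
print(f"d  = {cohens_d:.2f}")
```

Recomputing from rounded inputs gives a t value of roughly 3.96, slightly below the tabulated 3.967; the small gap is presumably due to the table having been produced from unrounded data.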


2. Reporting Qualitative Findings

A notable issue with reporting qualitative findings is that not all results directly relate to your research questions or hypothesis.

The best way to present the results of qualitative research is to frame your findings around the most critical areas or themes you obtained after you examined the data.

In-depth data analysis will help you observe what the data shows for each theme. Any developments, relationships, patterns, and independent responses directly relevant to your research question or hypothesis should be mentioned to the readers.

Additional information not directly relevant to your research can be included in the appendix.

How to Report Qualitative Findings

Here is an example of how to report qualitative results in your dissertation findings chapter:

The last question of the interview focused on the need for improvement in Thai ready-to-eat products and the industry at large, emphasizing the need for enhancement in the current products being offered in the market. When asked if there was any particular need for Thai ready-to-eat meals to be improved and how to improve them in case of ‘yes,’ the males replied mainly by saying that the current products need improvement in terms of the use of healthier raw materials and preservatives or additives. There was an agreement amongst all males concerning the need to improve the industry for ready-to-eat meals and the use of more healthy items to prepare such meals. The females were also of the opinion that the fast-food items needed to be improved in the sense that more healthy raw materials such as vegetable oil and unsaturated fats, including whole-wheat products, to overcome risks associated with trans fat leading to obesity and hypertension should be used for the production of RTE products. The frozen RTE meals and packaged snacks included many preservatives and chemical-based flavouring enhancers that harmed human health and needed to be reduced. The industry is said to be aware of this fact and should try to produce RTE products that benefit the community in terms of healthy consumption.


What to Avoid in Dissertation Findings Chapter

- Avoid using interpretive and subjective phrases and terms such as “confirms,” “reveals,” “suggests,” or “validates.” These terms are more suitable for the discussion chapter, where you will be expected to interpret the results in detail.

- Only briefly explain findings in relation to the key themes, hypothesis, and research questions. You don’t want to write a detailed subjective explanation for any research questions at this stage.

The Do’s of Writing the Findings or Results Section

- Ensure you are not presenting results from other research studies in your findings.

- Observe whether or not your hypothesis is tested or research questions answered.

- Use illustrations and tables to present data, and label them so your readers understand what they relate to.

- Use software such as Excel, STATA, and SPSS to analyse results and important trends.

Essential Guidelines on How to Write Dissertation Findings

The dissertation findings chapter should provide the context for understanding the results. The research problem should be repeated, and the research goals should be stated briefly.

This approach helps to gain the reader’s attention toward the research problem. The first step towards writing the findings is identifying which results will be presented in this section.

The results relevant to the questions must be presented, considering whether the results support the hypothesis. You do not need to include every result in the findings section. The next step is ensuring the data can be appropriately organized and accurate.

You will need to have a basic idea about writing the findings of a dissertation because this will provide you with the knowledge to arrange the data chronologically.

Start by writing about the most important results and conclude the section with the least significant ones.

A short paragraph can conclude the findings section, summarising the findings so that readers remember them as they transition to the next chapter. This is essential if the findings are unexpected or unfamiliar or impact the study.


Be Impartial in your Writing

When crafting your findings, knowing how you will organize the work is important. The findings are the story that needs to be told in response to the research questions that have been answered.

Therefore, the story needs to be organized to make sense to you and the reader. The findings must be compelling and responsive to be linked to the research questions being answered.

Always ensure that the size and direction of any changes, including percentage change, are stated in the section. Details of p values or confidence intervals and their limits should also be included.
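As a small sketch of stating the size and direction of a change together with its percentage change, the hypothetical helper `describe_change` below turns a before and after value into a short phrase. It assumes the starting value is non-zero; the figures in the usage line are the pretest and posttest means from the earlier quantitative example.

```python
def describe_change(before: float, after: float) -> str:
    """Return the size and direction of a change, with percentage change.

    Assumes `before` is non-zero, since percentage change is undefined otherwise.
    """
    diff = after - before
    if diff == 0:
        return "no change"
    direction = "increase" if diff > 0 else "decrease"
    pct = abs(diff) / abs(before) * 100
    return f"{direction} of {abs(diff):.2f} ({pct:.1f}%)"


# Pretest and posttest means borrowed from the earlier example.
print(describe_change(29.00, 26.50))
```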

The findings sections only have the relevant parts of the primary evidence mentioned. Still, it is a good practice to include all the primary evidence in an appendix that can be referred to later.

The results should always be written neutrally without speculation or implication. The statement of the results mustn’t have any form of evaluation or interpretation.

Negative results should be added in the findings section because they validate the results and provide high neutrality levels.

The length of the dissertation findings chapter is an important question that must be addressed. It should be noted that the length of the section is directly related to the total word count of your dissertation paper.

The writer should use their discretion in deciding the length of the findings section or refer to the dissertation handbook or structure guidelines.

It should be neither too long nor too short, but concise and comprehensive enough to highlight the main findings for the reader.

Ethically, you should be confident in the findings and provide counter-evidence. Anything that does not have sufficient evidence should be discarded. The findings should respond to the problem presented and provide a solution to those questions.

Structure of the Findings Chapter

The chapter should use appropriate words and phrases to present the results to the readers. Logical sentences should be used, while paragraphs should be linked to produce cohesive work.

You must ensure all the significant results have been added in the section. Recheck after completing the section to ensure no mistakes have been made.

You need to be sure of the structure of the findings section, primarily because it provides the basis for your research work and ensures that the discussion section can be written clearly and proficiently.

One way to arrange the results is to provide a brief synopsis and then explain the essential findings. However, there should be no speculation or explanation of the results, as this will be done in the discussion section.

Another way to arrange the section is to present and explain a result. This can be done for all the results while the section is concluded with an overall synopsis.

This is the preferred method when you are writing more extended dissertations. It can be helpful when multiple results are equally significant. A brief conclusion should be written to link all the results and transition to the discussion section.

Numerous data analysis dissertation examples are available on the Internet, which will help you improve your understanding of writing the dissertation’s findings.

Problems to Avoid When Writing Dissertation Findings

One of the problems to avoid while writing the dissertation findings is reporting background information or explaining the findings. This should be done in the introduction section.

You can always revise the introduction chapter based on the data you have collected if that seems an appropriate thing to do.

Raw data or intermediate calculations should not be added in the findings section. Always ask your professor if raw data needs to be included.

If the data is to be included, then use an appendix or a set of appendices referred to in the text of the findings chapter.

Do not use vague or non-specific phrases in the findings section. It is important to be factual and concise for the reader’s benefit.

The findings section presents the crucial data collected during the research process. It should be presented concisely and clearly to the reader. There should be no interpretation, speculation, or analysis of the data.

The significant results should be categorized systematically with the text used with charts, figures, and tables. Furthermore, avoiding using vague and non-specific words in this section is essential.

It is essential to label the tables and visual material properly. You should also check and proofread the section to avoid mistakes.


FAQs About Findings of a Dissertation

How do I report quantitative findings?

The best way to present your quantitative findings is to structure them around the research hypothesis or research questions you intended to address as part of your dissertation project. Report the relevant findings for each of the research questions or hypotheses, focusing on how you analyzed them.

How do I report qualitative findings?

The best way to present the qualitative research results is to frame your findings around the most important areas or themes that you obtained after examining the data.

An in-depth analysis of the data will help you observe what the data is showing for each theme. Any developments, relationships, patterns, and independent responses that are directly relevant to your research question or hypothesis should be clearly mentioned for the readers.

Can I use interpretive phrases like ‘it confirms’ in the findings chapter?

No. It is highly advisable to avoid using interpretive and subjective phrases in the findings chapter. These terms are more suitable for the discussion chapter, where you will be expected to provide your interpretation of the results in detail.

Can I report the results from other research papers in my findings chapter?

No, you must not present results from other research studies in your findings chapter.


How To Write The Results/Findings Chapter

For quantitative studies (dissertations & theses).

By: Derek Jansen (MBA) | Expert Reviewed By: Kerryn Warren (PhD) | July 2021

So, you’ve completed your quantitative data analysis and it’s time to report on your findings. But where do you start? In this post, we’ll walk you through the results chapter (also called the findings or analysis chapter), step by step, so that you can craft this section of your dissertation or thesis with confidence. If you’re looking for information regarding the results chapter for qualitative studies, you can find that here .

Overview: Quantitative Results Chapter

- What exactly the results chapter is

- What you need to include in your chapter

- How to structure the chapter

- Tips and tricks for writing a top-notch chapter

- Free results chapter template

What exactly is the results chapter?

The results chapter (also referred to as the findings or analysis chapter) is one of the most important chapters of your dissertation or thesis because it shows the reader what you’ve found in terms of the quantitative data you’ve collected. It presents the data using a clear text narrative, supported by tables, graphs and charts. In doing so, it also highlights any potential issues (such as outliers or unusual findings) you’ve come across.

But how’s that different from the discussion chapter?

Well, in the results chapter, you only present your statistical findings. Only the numbers, so to speak – no more, no less. Contrasted to this, in the discussion chapter, you interpret your findings and link them to prior research (i.e. your literature review), as well as your research objectives and research questions. In other words, the results chapter presents and describes the data, while the discussion chapter interprets the data.

Let’s look at an example.

In your results chapter, you may have a plot that shows how respondents to a survey responded: the numbers of respondents per category, for instance. You may also state whether this supports a hypothesis by using a p-value from a statistical test. But it is only in the discussion chapter where you will say why this is relevant or how it compares with the literature or the broader picture. So, in your results chapter, make sure that you don’t present anything other than the hard facts – this is not the place for subjectivity.

It’s worth mentioning that some universities prefer you to combine the results and discussion chapters. Even so, it is good practice to separate the results and discussion elements within the chapter, as this ensures your findings are fully described. Typically, though, the results and discussion chapters are split up in quantitative studies. If you’re unsure, chat with your research supervisor or chair to find out what their preference is.

What should you include in the results chapter?

Following your analysis, it’s likely you’ll have far more data than are necessary to include in your chapter. In all likelihood, you’ll have a mountain of SPSS or R output data, and it’s your job to decide what’s most relevant. You’ll need to cut through the noise and focus on the data that matters.

This doesn’t mean that those analyses were a waste of time – on the contrary, those analyses ensure that you have a good understanding of your dataset and how to interpret it. However, that doesn’t mean your reader or examiner needs to see the 165 histograms you created! Relevance is key.

How do I decide what’s relevant?

At this point, it can be difficult to strike a balance between what is and isn’t important. But the most important thing is to ensure your results reflect and align with the purpose of your study. So, you need to revisit your research aims, objectives and research questions and use these as a litmus test for relevance. Make sure that you refer back to these constantly when writing up your chapter so that you stay on track.

As a general guide, your results chapter will typically include the following:

- Some demographic data about your sample

- Reliability tests (if you used measurement scales)

- Descriptive statistics

- Inferential statistics (if your research objectives and questions require these)

- Hypothesis tests (again, if your research objectives and questions require these)

We’ll discuss each of these points in more detail in the next section.

Importantly, your results chapter needs to lay the foundation for your discussion chapter. This means that, in your results chapter, you need to include all the data that you will use as the basis for your interpretation in the discussion chapter.

For example, if you plan to highlight the strong relationship between Variable X and Variable Y in your discussion chapter, you need to present the respective analysis in your results chapter – perhaps a correlation or regression analysis.


How do I write the results chapter?

There are multiple steps involved in writing up the results chapter for your quantitative research. The exact number of steps applicable to you will vary from study to study and will depend on the nature of the research aims, objectives and research questions. However, we’ll outline the generic steps below.

Step 1 – Revisit your research questions

The first step in writing your results chapter is to revisit your research objectives and research questions . These will be (or at least, should be!) the driving force behind your results and discussion chapters, so you need to review them and then ask yourself which statistical analyses and tests (from your mountain of data) would specifically help you address these . For each research objective and research question, list the specific piece (or pieces) of analysis that address it.

At this stage, it’s also useful to think about the key points that you want to raise in your discussion chapter and note these down so that you have a clear reminder of which data points and analyses you want to highlight in the results chapter. Again, list your points and then list the specific piece of analysis that addresses each point.

Next, you should draw up a rough outline of how you plan to structure your chapter. Which analyses and statistical tests will you present and in what order? We’ll discuss the “standard structure” in more detail later, but it’s worth mentioning now that it’s always useful to draw up a rough outline before you start writing (this advice applies to any chapter).

Step 2 – Craft an overview introduction

As with all chapters in your dissertation or thesis, you should start your quantitative results chapter by providing a brief overview of what you’ll do in the chapter and why. For example, you’d explain that you will start by presenting demographic data to understand the representativeness of the sample, before moving onto X, Y and Z.

This section shouldn’t be lengthy – a paragraph or two maximum. Also, it’s a good idea to weave the research questions into this section so that there’s a golden thread that runs through the document.

Step 3 – Present the sample demographic data

The first set of data that you’ll present is an overview of the sample demographics – in other words, the demographics of your respondents.

For example:

- What age range are they?

- How is gender distributed?

- How is ethnicity distributed?

- What areas do the participants live in?

The purpose of this is to assess how representative the sample is of the broader population. This is important for the sake of the generalisability of the results. If your sample is not representative of the population, you will not be able to generalise your findings. This is not necessarily the end of the world, but it is a limitation you’ll need to acknowledge.

Of course, to make this representativeness assessment, you’ll need to have a clear view of the demographics of the population. So, make sure that you design your survey to capture the correct demographic information that you will compare your sample to.
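As a rough illustration of this comparison, the sketch below computes a chi-square goodness-of-fit statistic for sample counts against population shares. The age bands, counts and shares are all hypothetical, and the critical value of 7.815 is the standard table value for 3 degrees of freedom at a 0.05 significance level. Treat it as one possible way to make the check, not a required method.

```python
# Hypothetical figures: respondents per age band in the sample, and the
# share of each age band in the wider population (e.g. from census data).
sample_counts = {"18-34": 40, "35-49": 30, "50-64": 20, "65+": 10}
population_share = {"18-34": 0.30, "35-49": 0.25, "50-64": 0.25, "65+": 0.20}


def chi_square_gof(counts, shares):
    """Chi-square goodness-of-fit statistic: sum of (O - E)^2 / E."""
    n = sum(counts.values())
    statistic = 0.0
    for group, observed in counts.items():
        expected = n * shares[group]  # count we would expect if representative
        statistic += (observed - expected) ** 2 / expected
    return statistic


chi2 = chi_square_gof(sample_counts, population_share)

# Table value for df = 4 categories - 1 = 3 at alpha = 0.05.
CRITICAL_DF3_ALPHA_05 = 7.815

print(f"chi-square = {chi2:.2f}")
if chi2 > CRITICAL_DF3_ALPHA_05:
    print("Sample age profile differs from the population; note this as a limitation.")
else:
    print("No evidence that the sample age profile differs from the population.")
```

With these made-up numbers the statistic exceeds the critical value, so you would acknowledge the age imbalance when discussing generalisability.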

But what if I’m not interested in generalisability?

Well, even if your purpose is not necessarily to extrapolate your findings to the broader population, understanding your sample will allow you to interpret your findings appropriately, considering who responded. In other words, it will help you contextualise your findings. For example, if 80% of your sample was aged over 65, this may be a significant contextual factor to consider when interpreting the data. Therefore, it’s important to understand and present the demographic data.

Step 4 – Review composite measures and the data “shape”

Before you undertake any statistical analysis, you’ll need to do some checks to ensure that your data are suitable for the analysis methods and techniques you plan to use. If you try to analyse data that doesn’t meet the assumptions of a specific statistical technique, your results will be largely meaningless. Therefore, you may need to show that the methods and techniques you’ll use are “allowed”.

Most commonly, there are two areas you need to pay attention to:

#1: Composite measures

The first is when you have multiple scale-based measures that combine to capture one construct – this is called a composite measure. For example, you may have four Likert scale-based measures that (should) all measure the same thing, but in different ways. In other words, in a survey, these four scales should all receive similar ratings. This is called “internal consistency”.

Internal consistency is not guaranteed though (especially if you developed the measures yourself), so you need to assess the reliability of each composite measure using a test. Cronbach’s alpha is the most commonly used test of internal consistency – i.e., it shows whether the items you’re combining are more or less saying the same thing. A high alpha score (by convention, roughly 0.7 or above) suggests that your measure is internally consistent. A low alpha score means you may need to consider scrapping one or more of the items.
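To make the calculation concrete, here is a minimal sketch of Cronbach's alpha using the standard formula, alpha = k/(k-1) × (1 − sum of item variances / variance of total scores), where k is the number of items. The four items and five respondents are invented purely for illustration.

```python
from statistics import variance

# Hypothetical Likert ratings (1-5): one list per item, one entry per respondent.
items = [
    [4, 5, 3, 4, 2],  # item 1
    [4, 4, 3, 5, 2],  # item 2
    [5, 5, 2, 4, 1],  # item 3
    [3, 5, 3, 4, 2],  # item 4
]


def cronbach_alpha(item_scores):
    """Cronbach's alpha from a list of per-item score lists."""
    k = len(item_scores)
    # Sample variance of each item across respondents.
    item_variances = [variance(scores) for scores in item_scores]
    # Total score per respondent (sum across the k items).
    totals = [sum(ratings) for ratings in zip(*item_scores)]
    return (k / (k - 1)) * (1 - sum(item_variances) / variance(totals))


alpha = cronbach_alpha(items)
print(f"Cronbach's alpha = {alpha:.3f}")
```

If alpha comes out low, a common next step is to recompute it with each item left out in turn to see which item is dragging the score down.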

#2: Data shape

The second matter that you should address early on in your results chapter is data shape. In other words, you need to assess whether the data in your set are approximately normally distributed (i.e. symmetrical and bell-shaped) or not, as this will directly impact what type of analyses you can use. Many common inferential tests, such as t-tests and ANOVAs (we’ll discuss these a bit later), assume that your data are approximately normally distributed. If they’re not, you’ll need to adjust your strategy and use alternative tests.

To assess the shape of the data, you’ll usually assess a variety of descriptive statistics (such as the mean, median and skewness), which is what we’ll look at next.

Step 5 – Present the descriptive statistics

Now that you’ve laid the foundation by discussing the representativeness of your sample, as well as the reliability of your measures and the shape of your data, you can get started with the actual statistical analysis. The first step is to present the descriptive statistics for your variables.

For scaled data, this usually includes statistics such as:

- The mean – this is simply the mathematical average of a range of numbers.

- The median – this is the midpoint in a range of numbers when the numbers are arranged in order.

- The mode – this is the most commonly repeated number in the data set.

- Standard deviation – this metric indicates how dispersed a range of numbers is. In other words, how close all the numbers are to the mean (the average).

- Skewness – this indicates how symmetrical a range of numbers is. In other words, do they tend to cluster into a smooth bell curve shape in the middle of the graph (as in a normal distribution), or do they lean to the left or right (a skewed, non-normal distribution)?

- Kurtosis – this metric indicates whether the data are heavy-tailed or light-tailed relative to the normal distribution. In other words, how prone the distribution is to producing extreme values.
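The statistics in the list above can be computed with a few lines of code. The sketch below uses Python's standard library and invented scores. Skewness and kurtosis are calculated here with the simple moment-based (population) formulas, so the values may differ slightly from those reported by statistical packages that apply small-sample adjustments.

```python
from statistics import mean, median, mode, stdev

# Hypothetical responses to a single scaled survey item.
scores = [2, 3, 3, 4, 4, 4, 5, 5, 9]


def central_moment(values, order):
    """Average of (x - mean) raised to the given power."""
    m = mean(values)
    return sum((x - m) ** order for x in values) / len(values)


def skewness(values):
    """Moment-based skewness: 0 for symmetric data, > 0 for a right-hand tail."""
    return central_moment(values, 3) / central_moment(values, 2) ** 1.5


def excess_kurtosis(values):
    """Moment-based kurtosis minus 3, so a normal distribution scores about 0."""
    return central_moment(values, 4) / central_moment(values, 2) ** 2 - 3


summary = {
    "mean": mean(scores),
    "median": median(scores),
    "mode": mode(scores),
    "std deviation": stdev(scores),  # sample standard deviation
    "skewness": skewness(scores),
    "excess kurtosis": excess_kurtosis(scores),
}

for name, value in summary.items():
    print(f"{name:>16}: {value:.3f}")
```

In this made-up data set the single high score of 9 pulls the mean above the median and produces positive skewness, which is the kind of pattern you would flag when assessing data shape.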

A large table that indicates all the above for multiple variables can be a very effective way to present your data economically. You can also use colour coding to help make the data more easily digestible.

For categorical data, where you show the percentage of people who chose or fit into a category, for instance, you can either just plain describe the percentages or numbers of people who responded to something or use graphs and charts (such as bar graphs and pie charts) to present your data in this section of the chapter.
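For categorical variables, a simple frequency table is often enough. The following sketch counts hypothetical survey responses and reports each category's count and percentage, which you could then describe in the text or feed into a bar chart.

```python
from collections import Counter

# Hypothetical answers to a single categorical survey question.
responses = [
    "Agree", "Agree", "Neutral", "Disagree", "Agree",
    "Neutral", "Agree", "Disagree", "Agree", "Agree",
]


def frequency_table(values):
    """Return (category, count, percentage) rows, most common category first."""
    counts = Counter(values)
    total = len(values)
    return [
        (category, count, 100 * count / total)
        for category, count in counts.most_common()
    ]


table = frequency_table(responses)
for category, count, percent in table:
    print(f"{category:<10} n={count:<3} {percent:5.1f}%")
```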

When using figures, make sure that you label them simply and clearly, so that your reader can easily understand them. There’s nothing more frustrating than a graph that’s missing axis labels! Keep in mind that although you’ll be presenting charts and graphs, your text content needs to present a clear narrative that can stand on its own. In other words, don’t rely purely on your figures and tables to convey your key points: highlight the crucial trends and values in the text. Figures and tables should complement the writing, not carry it.

Depending on your research aims, objectives and research questions, you may stop your analysis at this point (i.e. descriptive statistics). However, if your study requires inferential statistics, then it’s time to deep dive into those.

Step 6 – Present the inferential statistics

Inferential statistics are used to make generalisations about a population, whereas descriptive statistics focus purely on the sample. Inferential statistical techniques, broadly speaking, can be broken down into two groups.

First, there are those that compare measurements between groups, such as t-tests (which test for a difference in means between two groups) and ANOVAs (which test for differences in means across three or more groups). Second, there are techniques that assess the relationships between variables, such as correlation analysis and regression analysis. Within each of these, some tests (parametric tests) assume normally distributed data, while others (non-parametric tests) are designed for data that do not meet that assumption.
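As a small example of the first family (comparing groups), the sketch below computes the t statistic and degrees of freedom for Welch's version of the two-sample t-test, which does not assume equal variances. The two groups are hypothetical, and the code stops short of the p-value: in practice you would read that from your statistical package or a t-distribution table.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(group_a, group_b):
    """Welch's t statistic and approximate degrees of freedom for two groups."""
    # Squared standard error contributed by each group.
    se2_a = variance(group_a) / len(group_a)
    se2_b = variance(group_b) / len(group_b)
    t = (mean(group_a) - mean(group_b)) / sqrt(se2_a + se2_b)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (len(group_a) - 1) + se2_b ** 2 / (len(group_b) - 1)
    )
    return t, df


# Hypothetical scores for two independent groups of respondents.
group_a = [5, 6, 7, 8, 9]
group_b = [3, 4, 5, 6, 7]

t_stat, dof = welch_t(group_a, group_b)
print(f"t = {t_stat:.2f}, df = {dof:.1f}")
```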

There are a seemingly endless number of tests that you can use to crunch your data, so it’s easy to run down a rabbit hole and end up with piles of test data. Ultimately, the most important thing is to make sure that you adopt the tests and techniques that allow you to achieve your research objectives and answer your research questions.

In this section of the results chapter, you should try to make use of figures and visual components as effectively as possible. For example, if you present a correlation table, use colour coding to highlight the significance of the correlation values, or scatterplots to visually demonstrate what the trend is. The easier you make it for your reader to digest your findings, the more effectively you’ll be able to make your arguments in the next chapter.
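For the second family (relationships between variables), here is a minimal sketch of a Pearson correlation and a simple least-squares regression line, written out by hand so the arithmetic is visible. The variable names and values are hypothetical stand-ins for "Variable X" and "Variable Y".

```python
from math import sqrt
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def least_squares_line(x, y):
    """Slope and intercept of the line that best predicts y from x."""
    mx, my = mean(x), mean(y)
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum(
        (a - mx) ** 2 for a in x
    )
    return slope, my - slope * mx


# Hypothetical paired observations.
variable_x = [1, 2, 3, 4, 5]
variable_y = [2, 4, 5, 4, 5]

r = pearson_r(variable_x, variable_y)
slope, intercept = least_squares_line(variable_x, variable_y)
print(f"r = {r:.3f}")
print(f"predicted y = {intercept:.2f} + {slope:.2f} * x")
```

The same paired values are what you would plot in a scatterplot to show the trend visually.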

Step 7 – Test your hypotheses

If your study requires it, the next stage is hypothesis testing. A hypothesis is a statement, often indicating a difference between groups or relationship between variables, that can be supported or rejected by a statistical test. However, not all studies will involve hypotheses (again, it depends on the research objectives), so don’t feel like you “must” present and test hypotheses just because you’re undertaking quantitative research.

The basic process for hypothesis testing is as follows:

- Specify your null hypothesis (e.g., “The chemical psilocybin has no effect on time perception”)

- Specify your alternative hypothesis (e.g., “The chemical psilocybin has an effect on time perception”)

- Set your significance level (this is usually 0.05)

- Calculate your statistics and find your p-value (e.g., p=0.01)

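- Draw your conclusions (e.g., because p=0.01 is below the 0.05 significance level, you reject the null hypothesis and conclude that “The chemical psilocybin does have an effect on time perception”)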
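The five steps above can be sketched end to end in code. The example below uses an exact permutation test, chosen here only because it needs nothing beyond the standard library; it is not the specific test the steps prescribe, and your study may call for a t-test or another method. The group names and scores are invented and do not come from any real study.

```python
from itertools import combinations
from statistics import mean

# Steps 1 and 2: the null hypothesis is "no difference between groups";
# the alternative is "there is a difference" (two-sided).
# Hypothetical time-estimation scores for two small groups.
placebo = [10, 12, 11, 13]
treatment = [15, 17, 16, 18]

# Step 3: set the significance level.
ALPHA = 0.05


def permutation_p_value(group_a, group_b):
    """Exact two-sided permutation p-value for a difference in means."""
    pooled = group_a + group_b
    observed = abs(mean(group_a) - mean(group_b))
    indices = range(len(pooled))
    extreme = total = 0
    # Try every way of relabelling the pooled scores into two groups.
    for chosen in combinations(indices, len(group_a)):
        chosen_set = set(chosen)
        a = [pooled[i] for i in chosen]
        b = [pooled[i] for i in indices if i not in chosen_set]
        total += 1
        # Small tolerance guards against floating-point rounding.
        if abs(mean(a) - mean(b)) >= observed - 1e-12:
            extreme += 1
    return extreme / total


# Step 4: calculate the statistic and find the p-value.
p_value = permutation_p_value(placebo, treatment)

# Step 5: draw the conclusion.
reject_null = p_value < ALPHA
print(f"p = {p_value:.4f}")
print("Reject the null hypothesis" if reject_null else "Fail to reject the null hypothesis")
```

Whatever test you use, the reporting logic is the same: state the p-value, compare it with the significance level you set in advance, and say whether the null hypothesis is rejected.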

Finally, if the aim of your study is to develop and test a conceptual framework, this is the time to present it, following the testing of your hypotheses. While you don’t need to develop or discuss these findings further in the results chapter, indicating whether the tests (and their p-values) support or reject the hypotheses is crucial.

Step 8 – Provide a chapter summary

To wrap up your results chapter and transition to the discussion chapter, you should provide a brief summary of the key findings. “Brief” is the keyword here – much like the chapter introduction, this shouldn’t be lengthy – a paragraph or two maximum. Highlight the findings most relevant to your research objectives and research questions, and wrap it up.

Some final thoughts, tips and tricks

Now that you’ve got the essentials down, here are a few tips and tricks to make your quantitative results chapter shine:

- When writing your results chapter, report your findings in the past tense. You’re talking about what you’ve found in your data, not what you are currently looking for or trying to find.

- Structure your results chapter systematically and sequentially. If you had two experiments where findings from the one generated inputs into the other, report on them in order.

- Make your own tables and graphs rather than copying and pasting them from statistical analysis programmes like SPSS. Check out the DataIsBeautiful reddit for some inspiration.

- Once you’re done writing, review your work to make sure that you have provided enough information to answer your research questions, but also that you didn’t include superfluous information.

Organizing Academic Research Papers: 7. The Results
