IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2019

Direct Speech-to-Image Translation

Speech-to-image translation without text is an interesting and useful topic due to its potential applications in human-computer interaction, art creation, computer-aided design, etc., not to mention that many languages have no written form. However, as far as we know, it has not been well studied how to translate speech signals into images directly, or how well they can be translated. In this paper, we attempt to translate speech signals into image signals without a transcription stage by leveraging advances in teacher-student learning and generative adversarial models. Specifically, a speech encoder is designed to represent the input speech signals as an embedding feature, and it is trained using teacher-student learning to obtain better generalization ability on new classes. Subsequently, a stacked generative adversarial network is used to synthesize high-quality images conditioned on the embedding feature encoded by the speech encoder. Experimental results on both synthesized and real data show that our proposed method is effective at translating raw speech signals into images without an intermediate text representation. An ablation study gives more insight into our method.

Results on synthesized data

Results on real data

Feature interpolation

Supplementary material

Data and Code

We use 3 datasets in our paper; the data can be downloaded from the following table.

The code can be found on my GitHub. If you have any questions about the code or the paper, feel free to email me: [email protected]

Acknowledgment

We consider the task of reconstructing an image of a person’s face from a short input audio segment of speech. We show several results of our method on the VoxCeleb dataset. Our model takes only an audio waveform as input (the true faces are shown just for reference). Note that our goal is not to reconstruct an accurate image of the person, but rather to recover characteristic physical features that are correlated with the input speech. *The three authors contributed equally to this work.

How much can we infer about a person's looks from the way they speak? In this paper, we study the task of reconstructing a facial image of a person from a short audio recording of that person speaking. We design and train a deep neural network to perform this task using millions of natural videos of people speaking from Internet/Youtube. During training, our model learns audiovisual, voice-face correlations that allow it to produce images that capture various physical attributes of the speakers such as age, gender and ethnicity. This is done in a self-supervised manner, by utilizing the natural co-occurrence of faces and speech in Internet videos, without the need to model attributes explicitly. Our reconstructions, obtained directly from audio, reveal the correlations between faces and voices. We evaluate and numerically quantify how--and in what manner--our Speech2Face reconstructions from audio resemble the true face images of the speakers.

Supplementary Material

Ethical Considerations

Further Reading

Google Research Blog

Speech-to-Image Creation Using Jina

Use Jina, Whisper and Stable Diffusion to build a cloud-native application for generating images using your speech.

We’re all used to our digital assistants. They can schedule alarms, read the weather and reel off a few corny jokes. But how can we take that further? Can we use our voice to interact with the world (and other machines) in newer and more interesting ways?

Right now, most digital assistants operate in a mostly single-modal capacity. Your voice goes in, their voice comes out. Maybe they perform a few other actions along the way, but you get the idea.

This single-modality way of working is kind of like the Iron Man Mark I armor. Sure, it’s the best at what it does, but we want to do more. Especially since we have all these cool new toys today.

Let’s do the metaphorical equivalent of strapping some lasers and rocket boots onto boring old Alexa. We’ll build a speech recognition system of our own with the ability to generate beautiful AI-powered imagery. And we can apply the lessons from that to build even more complex applications further down the line.

Instead of a single-modal assistant like Alexa or Siri, we’re moving into the bright world of multi-modality, where we can use text to generate images, audio to generate video, or basically any modality (i.e. type of media) to create (or search) any other kind of modality.

You don’t need to be a Stark-level genius to make this happen. Hell, you barely need to be a Hulk level intellect. We’ll do it all in under 90 lines of code.

And what’s more, we’ll do all that with a cloud-native microservice architecture and deploy it to Kubernetes.

Preliminary research

AI has been exploding over the past few years, and we’re rapidly moving from primitive single-modality models (e.g. Transformers for text, Big Image Transfer for images) to multi-modal models that can handle different kinds of data at once (e.g. CLIP, which handles both text and images).

But hell, even that’s yesterday’s news. Just this year we’ve seen an explosion in tools that can generate images from text prompts (again with that multi-modality), like DiscoArt, DALL-E 2 and Stable Diffusion. That’s not to mention some of the other models out there, which can generate video from text prompts or generate 3D meshes from images.

Let’s create some images with Stable Diffusion (since we’re using that to build our example):

But it’s not just multi-modal text-to-image generation that’s hot right now. Just a few weeks ago, OpenAI released Whisper, an automatic speech-recognition system robust enough to handle accents, background noise, and technical language.

This post will integrate Whisper and Stable Diffusion, so a user can speak a phrase, Whisper will convert it to text, and Stable Diffusion will use that text to create an image.

Existing works

This isn’t really a new concept. Many people have written papers on it or created examples before:

- S2IGAN: Speech-to-Image Generation via Adversarial Learning

- Direct Speech-to-Image Translation

- Using AI to Generate Art - A Voice-Enabled Art Generation Tool

- Built with AssemblyAI - Real-time Speech-to-Image Generation

The difference is that our example will use cutting edge models, be fully scalable, leverage a microservices architecture, and be simple to deploy to Kubernetes. What’s more, it’ll do all of that in fewer lines of code than the above examples.

The problem

With all these new AI models for multi-modal generation, your imagination is the limit when it comes to thinking of what you can build. But just thinking something isn’t the same as building it. And therein lies some of the key problems:

Dependency Hell

Building fully-integrated monolithic systems is relatively straightforward, but tying together too many cutting edge deep learning models can lead to dependency conflicts. These models were built to showcase cool tech, not to play nice with others.

That’s like if Iron Man’s rocket boots were incompatible with his laser cannons. He’d be flying around shooting down Chitauri, then plummet out of the sky like a rock as his boots crashed.

That’s right everyone. This is no longer about just building a cool demo. We are saving. Iron Man’s. Life.

Choosing a data format

If we’re dealing with multiple modalities, choosing a data format to interoperate between these different models is painful. If we’re just dealing with a single modality like text, we can use plain old strings. If we’re dealing with images we could use image tensors. In our example, we’re dealing with audio and images. How much pain will that be?

Tying it all together

But the biggest challenge of all is mixing different models to create a fully-fledged application. Sure, you can wrap one model in a web API, containerize it, and deploy it on the cloud. But as soon as you need to chain together two models to create a slightly-more-complex application it gets messy. Especially if you want to build a true microservices-based application that can, for instance, replicate some part of your pipeline to avoid downtime. How do you communicate between the different models? And that’s not to mention deploying on a cloud-native platform like Kubernetes or having observability and monitoring for your pipeline.

The solution

Jina AI is building a comprehensive MLOps platform around multimodal AI to help developers and businesses solve challenges like speech-to-image. To integrate Whisper and Stable Diffusion in a clean, scalable, deployable way, we’ll:

- Use Jina to wrap the deep learning models into Executors.

- Combine these Executors into a complex Jina Flow (a cloud-native AI application with replication, sharding, etc.).

- Use DocArray to stream our data (with different modalities) through the Flow, where it will be processed by each Executor in turn.

- Deploy all of that to Kubernetes / JCloud with full observability.

These pipelines and building blocks are not just concepts: A Flow is a cloud-native application, while each Executor is a microservice.

Jina translates the conceptual composition of building blocks at the programming language level (by module/class separation) into a cloud-native composition, with each Executor as its own microservice. These microservices can be seamlessly replicated and sharded. The Flow and the Executors are powered by state-of-the-art networking tools and rely on duplex streaming networking.

This solves the problems we outlined above:

- Dependency Hell - each model will be wrapped into its own microservice, so dependencies don’t interfere with each other.

- Choosing a data format - DocArray handles whatever we throw at it, be it audio, text, image or anything else.

- Tying it all together - A Jina Flow orchestrates the microservices and provides an API for users to interact with them. With Jina’s cloud-native functionality we can easily deploy to Kubernetes or JCloud and get monitoring and observability.

Building Executors

Every kind of multi-modal search or creation task requires several steps, which differ depending on what you’re trying to achieve. In our case, the steps are:

- Take a user’s voice as input in the UI.

- Transcribe that speech into text using Whisper.

- Take that text output and feed it into Stable Diffusion to generate an image.

- Display that image to the user in the UI.

In this example we’re just focusing on backend matters, so we’ll focus on just steps 2 and 3. For each of these we’ll wrap a model into an Executor:

- WhisperExecutor - transcribes a user’s voice input into text.

- StableDiffusionExecutor - generates images based on the text string generated by WhisperExecutor.

In Jina an Executor is a microservice that performs a single task. All Executors use Documents as their native data format. There’s more on that in the “streaming data” section.

We program each of these Executors at the programming language level (with module/class separation). Each can be seamlessly sharded, replicated, and deployed on Kubernetes. They’re even fully observable by default.

Executor code typically looks like the snippet below (taken from WhisperExecutor). Each function that a user can call has its own @requests decorator, specifying a network endpoint. Since we don’t specify a particular endpoint in this snippet, transcribe() gets called when accessing any endpoint:
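The snippet referred to above is not reproduced in this copy. As a rough stand-in, the sketch below models only the routing idea in plain Python: a decorator tags a method with an endpoint, and a method tagged without one catches every endpoint. `MiniExecutor`, `FakeWhisperExecutor`, the `"/default"` name and this `requests` function are all hypothetical, written for illustration; none of it is Jina's code, and the transcription is faked.

```python
from typing import Callable, Dict, List

# Hypothetical stand-ins for illustration only; this is NOT Jina's implementation.
DEFAULT = "/default"  # assumed name for the catch-all endpoint


def requests(func=None, *, on: str = DEFAULT):
    """Tag a method as a request handler, optionally bound to one endpoint.

    Note: this local name has nothing to do with the HTTP library `requests`.
    """
    def mark(f):
        f._endpoint = on
        return f
    # Support both the bare form (@requests) and @requests(on="/path").
    return mark(func) if func is not None else mark


class MiniExecutor:
    """Collects the tagged methods of a subclass into an endpoint table."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Callable] = {}
        for name in dir(self):
            attr = getattr(self, name)
            endpoint = getattr(attr, "_endpoint", None)
            if callable(attr) and endpoint is not None:
                self._handlers[endpoint] = attr

    def handle(self, endpoint: str, docs: List[dict]) -> List[dict]:
        # Fall back to the catch-all handler when no exact match exists.
        handler = self._handlers.get(endpoint, self._handlers.get(DEFAULT))
        if handler is None:
            raise KeyError(f"no handler for {endpoint}")
        handler(docs)
        return docs


class FakeWhisperExecutor(MiniExecutor):
    @requests  # no endpoint given, so this handles every endpoint
    def transcribe(self, docs: List[dict]) -> None:
        for doc in docs:
            # A real Executor would run the speech model on doc["tensor"] here.
            doc["text"] = f"<transcript of {len(doc['tensor'])} samples>"
```

Calling `FakeWhisperExecutor().handle("/anything", docs)` reaches `transcribe()` because no endpoint was named in the decorator.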

Building a Flow

A Flow orchestrates Executors into a processing pipeline to build a multi-modal/cross-modal application. Documents “flow” through the pipeline and are processed by Executors.

You can think of Flow as an interface to configure and launch your microservice architecture, while the heavy lifting is done by the services (i.e. Executors) themselves. In particular, each Flow also launches a Gateway service, which can expose all other services through an API that you define. Its role is to link the Executors together. The Gateway ensures each request passes through the different Executors based on the Flow’s topology (i.e. it goes to Executor A before Executor B).

The Flow in this example has a simple topology, with the WhisperExecutor and StableDiffusionExecutor from above piped together. The code below defines the Flow then opens a port for users to connect and stream data back and forth:

A Flow can be visualized with flow.plot():

Streaming data

Everything that comes into and goes out of our Flow is a Document, which is a class from the DocArray package. DocArray provides a common API for multiple modalities (in our case, audio, text, image), letting us mix concepts and create multi-modal Documents that can be sent over the wire.

That means that no matter what data modality we’re using, a Document can store it. And since all Executors use Documents (and DocumentArrays) as their data format, consistency is ensured.

In this speech-to-image example, the input Document is an audio sample of the user’s voice, captured by the UI. This is stored in a Document as a tensor.

If we regard the input Document as doc:

- Initially the doc is created by the UI and the user’s voice input is stored in doc.tensor.

- The doc is sent over the wire via a gRPC call from the UI to the WhisperExecutor.

- Then the WhisperExecutor takes that tensor and transcribes it to doc.text.

- The doc moves on to the StableDiffusion Executor.

- The StableDiffusion Executor reads in doc.text and generates two images which get stored (as Documents) in doc.matches.

- The UI receives the doc back from the Flow.

- Finally, the UI takes that output Document and renders each of the matches.
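The hand-offs in the list above can be traced with a small plain-Python sketch. `Doc` is a hypothetical stand-in holding only the `tensor`, `text` and `matches` fields mentioned above (plus an assumed `uri` field to name the fake images); the two functions fake the models, so this shows the data flow only, not Jina or DocArray themselves.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Doc:
    """Hypothetical stand-in for a DocArray Document (only the fields used here)."""
    tensor: Optional[List[float]] = None  # raw audio samples from the UI
    text: str = ""                        # transcript written by the speech step
    uri: str = ""                         # assumed field naming a generated image
    matches: List["Doc"] = field(default_factory=list)


def fake_whisper(doc: Doc) -> Doc:
    # Stand-in for speech recognition: reads doc.tensor, writes doc.text.
    doc.text = f"prompt from {len(doc.tensor or [])} audio samples"
    return doc


def fake_stable_diffusion(doc: Doc, num_images: int = 2) -> Doc:
    # Stand-in for image generation: reads doc.text, stores images as matches.
    doc.matches = [
        Doc(text=doc.text, uri=f"generated-{i}.png") for i in range(num_images)
    ]
    return doc


def run_flow(doc: Doc) -> Doc:
    # Topology order: the speech step runs before the image step.
    for step in (fake_whisper, fake_stable_diffusion):
        doc = step(doc)
    return doc


if __name__ == "__main__":
    result = run_flow(Doc(tensor=[0.0, 0.25, -0.25]))
    print(result.text)
    for match in result.matches:
        print(match.uri)
```

The same `Doc` object carries audio in, text in the middle, and images out, which is the point of using one container for every modality.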

Connecting to a Flow

After opening the Flow, users can connect with Jina Client or a third-party client. In this example, the Flow exposes gRPC endpoints but could be easily changed (with one line of code) to implement RESTful, WebSockets or GraphQL endpoints instead.

Deploying a Flow

As a cloud-native framework, Jina shines brightest when coupled with Kubernetes. The documentation explains how to deploy a Flow on Kubernetes, but let’s get some insight into what’s happening under the hood.

As mentioned earlier, an Executor is a containerized microservice. Both Executors in our application are deployed independently on Kubernetes as a Deployment. This means Kubernetes handles their lifecycle, scheduling on the correct machine, etc. In addition, the Gateway is deployed as a Deployment as well, to route requests through the Executors. This translation to Kubernetes concepts is done on the fly by Jina. You simply need to define your Flow in Python.

Alternatively, a Flow can be deployed on JCloud, which handles all of the above, as well as providing an out-of-the-box monitoring dashboard. To do so, the Python Flow needs to be converted to a YAML Flow (with a few JCloud-specific parameters), and then deployed:

Let’s take the app for a spin and give it some test queries:

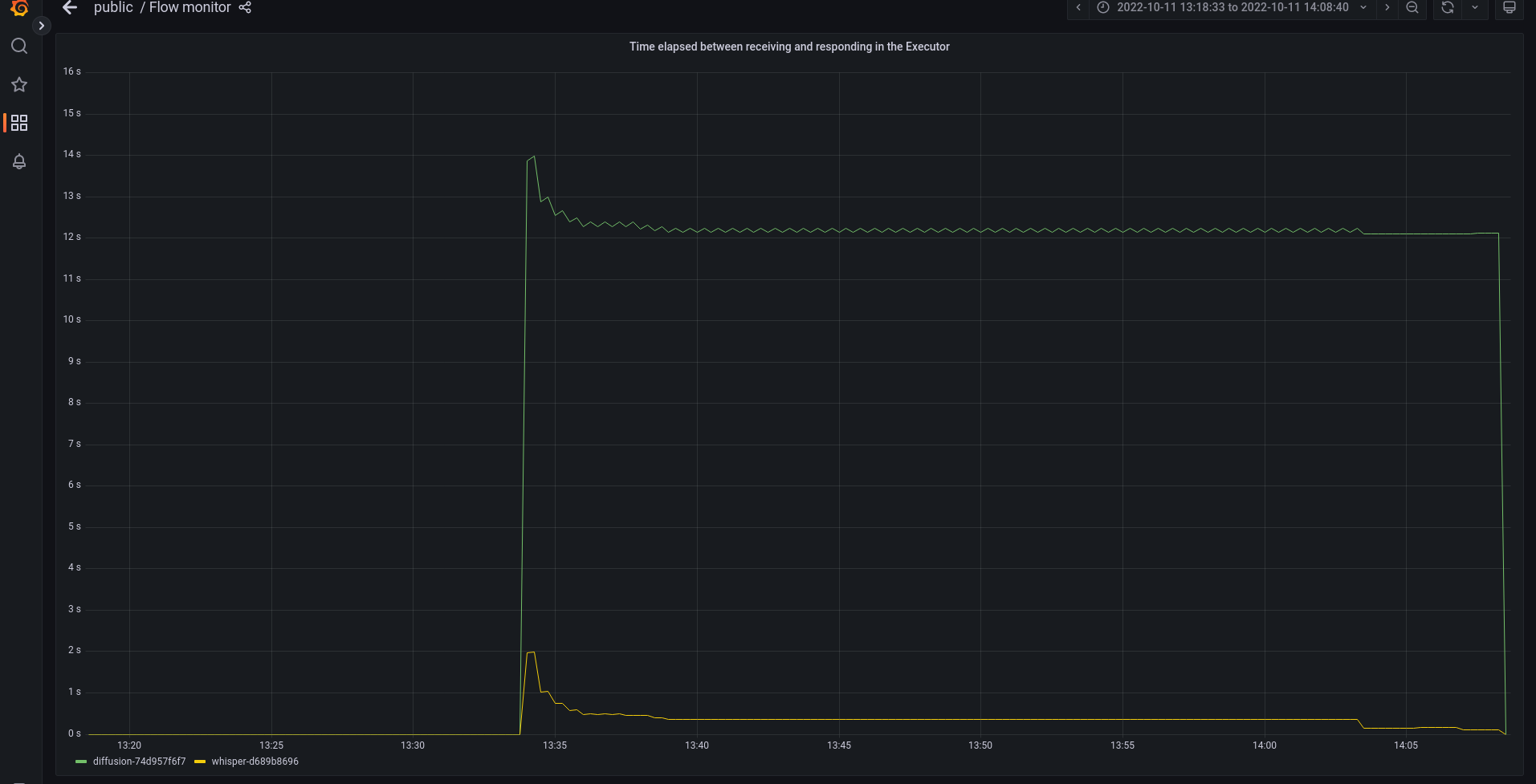

With monitoring enabled (which is the default in JCloud), everything that happens inside the Flow can be monitored on a Grafana dashboard:

One advantage of monitoring is that it can help you optimize your application to make it more resilient in a cost-effective way by detecting bottlenecks. The monitoring shows that the StableDiffusion Executor is a performance bottleneck:

This means that latency would skyrocket under a heavy workload. To get around this, we can replicate the StableDiffusion Executor to split image generation between different machines, thereby increasing throughput. Replication can be configured either in the Python Flow definition or in the YAML file (for JCloud).
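To get a feel for why replicas help, here is a back-of-the-envelope sketch. It assumes requests are handed to identical replicas in round-robin order and that each image takes a fixed time; both are simplifying assumptions, not a description of how Jina schedules work. `makespan` is a hypothetical helper.

```python
from typing import List


def makespan(job_seconds: List[float], replicas: int) -> float:
    """Finish time when jobs are handed round-robin to identical replicas."""
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    busy_until = [0.0] * replicas
    for i, seconds in enumerate(job_seconds):
        # Job i goes to replica i mod N and queues behind its earlier jobs.
        busy_until[i % replicas] += seconds
    return max(busy_until)


if __name__ == "__main__":
    jobs = [4.0] * 6  # six image requests, assumed to take 4 s each
    for n in (1, 2, 3):
        print(n, "replica(s):", makespan(jobs, n), "s")
```

With six 4-second jobs, the finish time drops from 24 s on one replica to 12 s on two and 8 s on three.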

In this post we created a cloud-native audio-to-image generation tool using state-of-the-art AI models and the Jina AI MLOps platform.

With DocArray we used one data format to handle everything, allowing us to easily stream data back and forth. And with Jina we created a complex serving pipeline natively employing microservices, which we could then easily deploy to Kubernetes.

Since Jina provides a modular architecture, it’s straightforward to apply a similar solution to very different use cases, such as:

- Building a multi-modal PDF neural search engine, where a user can search for matching PDFs using strings or images.

- Building a multi-modal fashion neural search engine, where users can find products based on a string or image.

- Generating 3D models for movie scene design using a string or image as input.

- Generating complex blog posts using GPT-3.

All of these can be done in a manner that is cloud-native, scalable, and simple to deploy with Kubernetes.

2021 © Aspose

Convert image to speech online

Read an image or photo aloud with this free online application.


Automatically adjust image contrast to make text clearer

Automatically straighten a skewed or rotated image

Upscale a low-resolution image to increase details

We try to recognize image type if it’s a document or just an image with text.

If your image is small or contains only lines of text without other content or noise.

Use document structure recognition model to extract structure of the document.

Use a text detection algorithm that works well with sparse text, tables, IDs, invoices, complex layouts, small images.

Combination of DSR and Text Detector algorithm if you had a document-like structure and want to detect all text.

Choose the optimal recognition mode

Do not read dim or blurry areas

Try to read all areas of an image


Aspose.OCR image to speech

Aspose OCR technology can go beyond extracting text from scans, photos, screenshots, and other images. With it, you can convert almost any picture or photo with readable characters into a natural human voice that can be played in the background or downloaded. Read aloud scanned contracts, articles, books, and other documents in a car, help dyslexics and anyone with reading difficulties, and create accessible applications for the visually impaired.

This free online application allows you to explore our image-to-speech capabilities without installing any applications or writing a single line of code. It supports any type of file that you can get from a smartphone, scanner, or camera, and can even work directly with content from external websites without downloading images to your computer. Just sit back and listen to the information you need.

This free app is provided by Aspose OCR

How to convert image to speech

Provide an image.

Upload a scan or photo, or simply enter the image's web address.

Start recognition

Click the "Recognize" button to start reading the image.

Wait a few seconds

Wait until the text is extracted from the image.

Read text aloud

Click the "Play" button to read the image aloud or download an audio file.

Does this app support my language?

At the moment, the application can only read English. Support for other languages may be added in the future.

How to get the best result?

Good image quality is the cornerstone for accurate recognition. We recommend using a scanner or high-resolution camera to capture the original image.

I don’t have a scanner. Can I use a smartphone camera?

Yes, Aspose.OCR works equally well with scans and photographs. Just follow the basic rules to get a high-quality image: hold your smartphone parallel to the paper; make sure the paper is well lit; the text should cover the entire area of the photo.

I don’t like the result. What can I do to improve it?

Try enabling automatic image corrections under Options: enhance contrast, straighten and upscale image.

Can I use the app from mobile devices?

Yes, the application works in all popular web browsers on all devices and platforms, including smartphones. No additional software is required.

Is this app free?

Yes, the application provides full capabilities of Aspose.OCR for free, for as long as you need.

The features you will like

Sit back and listen

Listen to content that is not available as a podcast or text while staying focused on the task at hand: while driving, cooking, working out, and more.

Export speech to audio files

Save the image text as an audio file to listen to it later, send it via your favorite messenger or email, and share it in the cloud storage.

No equipment required

Aspose OCR allows for reliable recognition of any image, from scans to smartphone photos. Most of the pre-processing and image correction is done automatically, so you will only have to intervene in difficult cases.

Recognize images from the Internet

There is no need to upload images to the application. Just paste the web address of the image and get the text.

Flexible recognition settings

Built-in image pre-processing filters can straighten rotated and skewed images, automatically enhance contrast, or attempt to restore additional detail in low-resolution images. You can turn them on at any time to further improve recognition accuracy.

Zero system load

Recognition is carried out by high-performance Aspose Cloud. The application has minimum hardware or operating system requirements - you can use it even on entry-level systems and mobile devices without loss of accuracy and performance.



Title: Direct Speech-to-Image Translation

Abstract: Direct speech-to-image translation without text is an interesting and useful topic due to its potential applications in human-computer interaction, art creation, computer-aided design, etc., not to mention that many languages have no written form. However, as far as we know, it has not been well studied how to translate speech signals into images directly, or how well they can be translated. In this paper, we attempt to translate speech signals into image signals without a transcription stage. Specifically, a speech encoder is designed to represent the input speech signals as an embedding feature, and it is trained with a pretrained image encoder using teacher-student learning to obtain better generalization ability on new classes. Subsequently, a stacked generative adversarial network is used to synthesize high-quality images conditioned on the embedding feature. Experimental results on both synthesized and real data show that our proposed method is effective at translating raw speech signals into images without an intermediate text representation. An ablation study gives more insight into our method.
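The abstract does not spell out the training objective, so the following is only a sketch of the general teacher-student idea: a frozen teacher supplies a target embedding and the student is nudged toward it. The linear student, the mean-squared-error loss, and the names `student_embed`, `distill_loss` and `distill_step` are assumptions made for illustration, not the paper's method.

```python
from typing import List

Vector = List[float]
Matrix = List[Vector]


def student_embed(weights: Matrix, speech: Vector) -> Vector:
    """Linear stand-in for a speech encoder: one output per weight row."""
    return [sum(w * x for w, x in zip(row, speech)) for row in weights]


def distill_loss(student: Vector, teacher: Vector) -> float:
    """Mean squared error between the student and the frozen teacher embedding."""
    return sum((s - t) ** 2 for s, t in zip(student, teacher)) / len(teacher)


def distill_step(weights: Matrix, speech: Vector, teacher: Vector, lr: float) -> Matrix:
    """One gradient-descent step on the loss; only the student's weights move."""
    student = student_embed(weights, speech)
    scale = 2.0 / len(teacher)  # from d/dW of the mean squared error
    return [
        [w - lr * scale * (s - t) * x for w, x in zip(row, speech)]
        for row, s, t in zip(weights, student, teacher)
    ]


if __name__ == "__main__":
    weights = [[0.0, 0.0], [0.0, 0.0]]
    speech = [1.0, 2.0]    # toy speech features (assumed)
    teacher = [0.5, -1.0]  # toy target from a frozen image encoder (assumed)
    for _ in range(50):
        weights = distill_step(weights, speech, teacher, lr=0.1)
    print(distill_loss(student_embed(weights, speech), teacher))
```

The teacher vector never changes inside the loop, which is what "frozen" means here: the student alone is fitted to the teacher's embedding space.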



Built with AssemblyAI - Real-time Speech-to-Image Generation

In our Built with AssemblyAI series, we showcase projects built with the AssemblyAI Core Transcription and Audio Intelligence APIs. This Real-time Speech-to-Image Generation project was built by students at ASU HACKML 2022.


In our Built with AssemblyAI series, we showcase developer and hackathon projects, innovative companies, and impressive products created using the AssemblyAI Core Transcription API and/or our Audio Intelligence APIs such as Sentiment Analysis, Auto Chapters, Entity Detection, and more.


Describe what you built with AssemblyAI.

Using the AssemblyAI Core Transcription API, we are able to reproduce elements of the zero-shot capabilities presented in the DALL-E 2 paper, in real-time. To accomplish this, we used a much less complex model that is trained on a summarized version of the training data they refer to as a meta-data set in the paper Less is More: Summary of Long Instructions is Better for Program Synthesis.

This is only possible with the combination of robust features present in the AssemblyAI API that allow for seamless integration with the Machine Learning model and web interface framework, as well as the corrective language modeling they use to repair malformed input and isolate sentences as they are spoken.

What was the inspiration for your product?

This project was inspired by a paper called Zero-Shot Text-to-Image Generation, by OpenAI, that introduces an autoregressive language model called DALL-E, trained on images broken into segments that are given natural language descriptions.

How did you build your project?

There are two major components to this project:

- Real-time audio transcription. For this, we interfaced with the AssemblyAI API. The client-side interface was made with HTML and CSS. The server is hosted by Node.js using Express. The server will open up a connector to the AssemblyAI API after a button press is detected on the client-side.

- Text-to-Image generation. For this, pretrained models are deployed on the local machine and a PyTorch model runs in parallel with the client and server. After an asynchronous connection is established with the AssemblyAI API, Selenium will pass messages back and forth between the client and the pretrained model as the API responds with text transcribed from audio data. Selenium will update the image on the client-side while the model also saves images to the local drive.

What were your biggest takeaways from your project?

Audio transcription tools are getting more impressive all the time! Being able to produce similar results to the State-of-the-Art model with significantly less data also implies that the larger models are trained on some amount of superfluous data. Finally, changing the pretraining paradigm can make a big difference.

What's next for Speech-to-Image generation?

There are three main things on the horizon:

- Using a knowledge graph like ATOMIC, as a basis for commonsense, to check the semantic correctness of the object associations present in the image during the generation phase in order to get better images.

- Associating natural language with a series of images in a vector that can visually describe a sequence of events written in text.

- Generating a video from the resulting image vector.

Learn more about this project here .


Generating Images From Spoken Descriptions


Turn any image to speech with Speechify


Table of contents

- What is OCR technology?

- Benefits of turning images into speech

- How to read images aloud with Speechify’s OCR technology

- Why use Speechify?

- Speechify’s other features

- Speechify - turn any image into speech

- How can I turn a picture into voice?

- Is there an app that turns text into speech?

- What is a speech synthesizer?

- How is speech recognition different than text to speech?

- How can I turn image to audio on Microsoft?

Take a look at how Speechify can turn any image to speech.

In this age of rapid technological growth, turning images into audible content has become a game-changer. With the help of Optical Character Recognition (OCR) technology, image to audio conversion can be accomplished in a few simple steps. Among the tools that excel in this field, Speechify stands out. This article dives into the core of how Speechify utilizes OCR to transform image text into audio files.

OCR, or Optical Character Recognition, is a technology rooted in computer vision and pattern recognition. Its primary function is to extract text from images. Using advanced artificial intelligence algorithms and machine learning, OCR can identify and convert image text into audio files for easy listening.

While images have always been a dominant means of conveying information, catering only to the visual sense may exclude a significant portion of the population, including the visually impaired. Transforming images into speech opens up new avenues of accessibility, comprehension, and interaction. Here is just a small look at the benefits of turning images into speech:

- Accessibility: For individuals with visual impairments, converting image text to speech allows for better comprehension.

- Efficiency: Transforming images to speech allows users to quickly digest content without the need to read, especially when multitasking.

- Convenience: With OCR technology, users can enjoy the convenience of turning a workbook page or web page screenshot into an audio file that can be listened to on the go.

- Language learning: Listening to the text aloud from an image can enhance pronunciation and comprehension for learners.

- Flexibility: With OCR technology, users can convert any image, whether it's a photo of a document, a screenshot of a web page, or even a snap of a handwritten note.

- Storage: Users can convert image text into smaller, high-quality MP3 files for easy storage and sharing.

- Real-time conversion: Instant text to speech conversion ensures no waiting time for users.

Speechify's OCR (Optical Character Recognition) technology offers a seamless way to convert images into spoken words, providing individuals with a practical and empowering tool to engage with text embedded within images. Whether for educational, professional, or personal purposes, this step-by-step guide will walk you through the process of using Speechify's OCR technology to unlock the content concealed within images, making it accessible to a wider audience and enhancing the overall reading experience:

- Launch Speechify: Download the Speechify app from your respective store (Android/iOS), install the Speechify Chrome extension, or launch the Speechify website.

- Choose image: Click upload file and select the image with the text you wish to convert or snap a photo of the text directly.

- Text detection: The app's OCR technology will process the image, detect the text, and transcribe the image to text.

- Text to speech conversion: Once text is extracted, Speechify’s image processing uses speech synthesis to convert the detected text into audible content.

- Play: Listen in real-time or save it as an MP3 file for later use.

Speechify is a TTS app to which users can upload images with text, HTML files, web pages, docs, and more. The app works to extract text and convert it into easy-to-listen-to, natural-sounding audio that can read the text aloud. Whether you’re a busy professional who needs to get your information on the go or a student who is working to cram before a test, Speechify can make your life easier.

Speechify, while celebrated for its cutting-edge OCR (Optical Character Recognition) technology, is more than just an image-to-speech tool. This multifaceted platform boasts an array of features designed to empower its users, fostering a more inclusive, adaptable, and user-friendly reading environment. Here are just a few of the features Speechify users love:

- Text to speech (TTS): Apart from images, Speechify can convert any digital or physical text to a listening experience, including text files (like TXT), webpages, news articles, social media posts, study guides, emails, and so much more.

- API access: For developers, Speechify provides an API, enabling integration into various platforms, including web pages and Python scripts.

- Automatic library synchronization: Speechify automatically syncs your audio files between devices so that you’re able to keep listening where you left off no matter where you are.

- Multiple languages: With 20+ available languages, Speechify users can upload text in a variety of languages. Many people who are learning a new language love that they can create an immersive experience using Speechify.

- Free trial: If you’re not sure whether a Speechify subscription is the right fit for you, no worries. You’ll be able to give the program a try for free to decide whether it’s the right fit for your needs.

- Natural-sounding voices: You’ll be able to choose from a variety of voices to make your Speechify experience perfect for you. When you get to listen to a human-like voice, it’s easier to focus on the information you’re learning, instead of focusing on pronunciation and semantic errors from a robot-like voice.

- Speed changes: With Speechify, you’ll get to choose the speed at which your audio files play. Going through information that you already have a good handle on? Speed it up to boost your productivity and get you moving to the information that you still need to learn.

Speechify stands at the frontier of accessibility tools, transforming the way we engage with written content. Speechify can turn any text into audio files, including text from physical documents or images, thanks to its advanced OCR technology. Whether it's a photographed page from a study guide, a screenshot of an email, or an image from a presentation, Speechify ensures users can listen to the content rather than solely rely on reading. This groundbreaking feature not only democratizes access for the visually impaired but also caters to learners and professionals who benefit from auditory processing. With Speechify, the barriers posed by the written word are effortlessly surmounted, making information universally accessible. Try Speechify for free today and see how it can level up your reading experience.

With the Speechify app, you can effortlessly turn a picture into voice by utilizing its advanced OCR technology to convert captured text into speech.

Yes, Speechify is an app that can turn text into speech, offering a wide range of features for enhanced accessibility and convenience.

A speech synthesizer is a computer-based system that generates spoken language by converting written text into a speech signal.

Text to speech converts written text into spoken language, while speech recognition translates spoken language into written text.

You can turn images into speech with OCR tools like Tesseract or Speechify. Speechify has the most lifelike speech options on the market.

Tyler Weitzman

Tyler Weitzman is the Co-Founder, Head of Artificial Intelligence & President at Speechify, the #1 text-to-speech app in the world, totaling over 100,000 5-star reviews. Weitzman is a graduate of Stanford University, where he received a BS in mathematics and an MS in Computer Science in the Artificial Intelligence track. He has been selected by Inc. Magazine as a Top 50 Entrepreneur, and he has been featured in Business Insider, TechCrunch, LifeHacker, and CBS, among other publications. Weitzman’s master's degree research focused on artificial intelligence and text-to-speech, and his final paper was titled “CloneBot: Personalized Dialogue-Response Predictions.”

Speech-to-image media conversion based on VQ and neural network

Research output : Chapter in Book/Report/Conference proceeding › Conference contribution

Automatic media conversion schemes from speech to a facial image and a construction of a real-time image synthesis system are presented. The purpose of this research is to realize an intelligent human-machine interface or intelligent communication system with synthesized human face images. A human face image is reconstructed on the display of a terminal using a 3-D surface model and texture mapping technique. Facial motion images are synthesized by transformation of the 3-D model. In the motion driving method, based on vector quantization and the neural network, the synthesized head image can appear to speak some given words and phrases naturally, in synchronization with voice signals from a speaker.

ASJC Scopus subject areas

- Signal Processing

- Electrical and Electronic Engineering

T1 - Speech-to-image media conversion based on VQ and neural network

AU - Morishima, Shigeo

AU - Harashima, Hiroshi

PY - 1991/12/1

Y1 - 1991/12/1

UR - http://www.scopus.com/inward/record.url?scp=0026396368&partnerID=8YFLogxK

UR - http://www.scopus.com/inward/citedby.url?scp=0026396368&partnerID=8YFLogxK

M3 - Conference contribution

AN - SCOPUS:0026396368

SN - 078030033

T3 - Proceedings - ICASSP, IEEE International Conference on Acoustics, Speech and Signal Processing

BT - Proceedings - ICASSP, IEEE International Conference on Acoustics, Speech and Signal Processing

A2 - Anon, null

PB - Publ by IEEE

T2 - Proceedings of the 1991 International Conference on Acoustics, Speech, and Signal Processing - ICASSP 91

Y2 - 14 May 1991 through 17 May 1991

Voice Conversion

152 papers with code • 2 benchmarks • 5 datasets

Voice Conversion is a technology that modifies the speech of a source speaker and makes their speech sound like that of another target speaker without changing the linguistic information.

Source: Joint training framework for text-to-speech and voice conversion using multi-source Tacotron and WaveNet

Most implemented papers

StarGAN-VC: Non-parallel Many-to-many Voice Conversion with Star Generative Adversarial Networks

This paper proposes a method that allows non-parallel many-to-many voice conversion (VC) by using a variant of a generative adversarial network (GAN) called StarGAN.

One-shot Voice Conversion by Separating Speaker and Content Representations with Instance Normalization

Recently, voice conversion (VC) without parallel data has been successfully adapted to multi-target scenario in which a single model is trained to convert the input voice to many different speakers.

AUTOVC: Zero-Shot Voice Style Transfer with Only Autoencoder Loss

On the other hand, CVAE training is simple but does not come with the distribution-matching property of a GAN.

Parallel-Data-Free Voice Conversion Using Cycle-Consistent Adversarial Networks

A subjective evaluation showed that the quality of the converted speech was comparable to that obtained with a Gaussian mixture model-based method under advantageous conditions with parallel and twice the amount of data.

CycleGAN-VC2: Improved CycleGAN-based Non-parallel Voice Conversion

Non-parallel voice conversion (VC) is a technique for learning the mapping from source to target speech without relying on parallel data.

MOSNet: Deep Learning based Objective Assessment for Voice Conversion

In this paper, we propose deep learning-based assessment models to predict human ratings of converted speech.

Unsupervised Speech Decomposition via Triple Information Bottleneck

Speech information can be roughly decomposed into four components: language content, timbre, pitch, and rhythm.

Utilizing Self-supervised Representations for MOS Prediction

In this paper, we use self-supervised pre-trained models for MOS prediction.

Defense for Black-box Attacks on Anti-spoofing Models by Self-Supervised Learning

To explore this issue, we proposed to employ Mockingjay, a self-supervised learning based model, to protect anti-spoofing models against adversarial attacks in the black-box scenario.

Voice Conversion from Non-parallel Corpora Using Variational Auto-encoder

We propose a flexible framework for spectral conversion (SC) that facilitates training with unaligned corpora.

How to Use Image to Text Tools for Business Reports

Last Updated: June 6, 2024

Businesses often manage large amounts of information. Some of it is locked in images, and the text has to be extracted before it can be used. An image-to-text converter solves this problem and saves time and effort: upload the image, and the converter extracts the text from it. This article covers how these tools work and how to use them for business reports.

Key Takeaways on Using Image to Text Tools

- Definition of Image-to-Text Tools : These converters use optical character recognition (OCR) technology to extract text from images, making it editable and usable for business reports.

- Time-Saving : Image-to-text tools save time by quickly converting images into text, eliminating the need for manual typing.

- Error Reduction : These tools reduce errors associated with manual data entry, ensuring more accurate text extraction.

- Improved Efficiency : By streamlining the process of converting images to text, these tools enhance overall business productivity.

- Popular Tools : Some widely used image-to-text tools include Google Keep, Imgtotext.net, and Online OCR, each offering different features and benefits.

- Step-by-Step Usage : The process involves choosing a tool, uploading the image, converting it to text, and then copying the text for use in business reports.

- Best Practices : For optimal results, use high-quality images, review the extracted text for accuracy, and ensure the tool used keeps your data secure.

What Are Image-to-Text Tools?

Image-to-text converters help you extract text from images. These tools scan the image and convert the text within it into editable text. You can convert photos, scanned documents, or any picture containing text into editable text. This whole process is performed with the help of optical character recognition (OCR).

Why Use Image to Text Tools in Business Reports?

Using photo to text tools can help you in many ways. Some of the reasons are listed below:

● Save Time : Instead of typing out text from images, you can quickly extract it.

● Reduce Errors : Manual typing can lead to mistakes. OCR tools can reduce these errors.

● Improve Efficiency : Quickly convert images into editable text for reports and documents.

Popular Image-to-Text Tools

There are many picture to text converters available. Some of the popular ones include:

● Google Keep : Allows you to extract text from images using a mobile app.

● Imgtotext.net : A user-friendly tool that converts images to text easily.

● Online OCR : Supports various languages and formats.

How to Use Image-to-Text Tools

The use of these tools is very simple and user-friendly. Here is a step-by-step procedure on how you can do it!

Step 1: Choose the Right Tool

First, decide which image to text converter you want to use. For example, Imgtotext.net is a good choice for its ease of use.

Step 2: Upload the Image

Once you have chosen the tool, upload the image you want to convert. Most tools will have an "Upload" or "Choose File" button. Click this button and select the image from your computer or device.

Step 3: Convert Image to Text

After you upload the image, the converter will start the process. This usually takes a few seconds. Once the process is complete, the extracted text will appear.

Step 4: Copy and Use the Text

Finally, you can copy text from images and paste it into your business report. The text can now be edited and formatted as needed.

Best Practices for Using Image to Text Converters

Use High-Quality Images

For the best results, use clear and high-resolution images. Blurry or low-quality images may not produce accurate text extraction.

Review Extracted Text

Always review the extracted text for any errors. Even the best photo to text converters can make mistakes. So a quick review ensures accuracy in your business reports.

Keep Data Secure

You have to make sure that the tool you are using keeps your data secure. Some tools might store uploaded images, so choose a reputable service that respects your privacy.

Applications in Business Reports

● Extracting Text from Receipts: Businesses often need to keep track of expenses. You can use an image text extractor to extract text from receipts and include them in your reports.

● Converting Handwritten Notes: If you have handwritten notes from meetings or brainstorming sessions, you can convert these notes into text and include them in your business reports.

● Digitising Printed Documents: Old printed documents can also be digitised using these tools. Scan the documents and use an image-to-text converter to extract the text. This way, you can easily update and share old reports.
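As an illustration of the receipts use case above, the following is a minimal sketch of pulling monetary amounts out of text that an image-to-text tool has already extracted. It uses only the Python standard library. The function names `extract_amounts` and `find_total` and the amount pattern are assumptions made for this example and are not part of any tool mentioned in this article; real receipts vary widely, so the extracted values should still be reviewed.

```python
import re
from decimal import Decimal
from typing import List, Optional

# Assumed pattern: amounts with exactly two decimals, optional thousands
# separators and an optional leading currency symbol, e.g. 4.50, $1,250.00.
AMOUNT_RE = re.compile(r"[£$€]?\s?(\d{1,3}(?:,\d{3})*\.\d{2}|\d+\.\d{2})")


def extract_amounts(ocr_text: str) -> List[Decimal]:
    """Return every amount found in the OCR output, in reading order."""
    return [Decimal(m.replace(",", "")) for m in AMOUNT_RE.findall(ocr_text)]


def find_total(ocr_text: str) -> Optional[Decimal]:
    """Return the amount on the first 'total' line (subtotals are skipped)."""
    for line in ocr_text.splitlines():
        lowered = line.lower()
        if "total" in lowered and "subtotal" not in lowered:
            amounts = extract_amounts(line)
            if amounts:
                return amounts[-1]
    return None


if __name__ == "__main__":
    receipt = "Coffee 4.50\nLunch 12.99\nSubtotal 17.49\nTax 1.75\nTotal 19.24\n"
    print(extract_amounts(receipt))
    print(find_total(receipt))
```

Using `Decimal` rather than `float` keeps the amounts exact when they are later summed in a report.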

Finishing Up

Using an image to text converter can greatly benefit your business. It can help you save time, reduce errors, and improve overall productivity. You can easily use these tools for your business reports by following the easy steps we mentioned above. So start using image-to-text converters today and see the difference they make in your workflow.

© 2016 - 2024 Robin Waite. All rights reserved.

image-to-speech

Here are 9 public repositories matching this topic:

ahmedgulabkhan / tei2s

TEI2S is a project intended to help the visually impaired: it takes an image containing embedded text as input, extracts the text from the image, and converts this text to speech, i.e., the output is an audio file containing the text embedded in the provided input image.

- Updated Nov 24, 2019

GURPREETKAURJETHRA / Image-to-Speech-GenAI-Tool-Using-LLM

AI tool that generates an Audio short story based on the context of an uploaded image by prompting a GenAI LLM model, Hugging Face AI models together with OpenAI & LangChain

- Updated Jan 11, 2024

santhalakshminarayana / image-to-pdf-text-speech

Image conversion to PDF document, text document, speech.

- Updated Nov 22, 2022

- Jupyter Notebook

aquatiko / Image-Text-Speech-Synthesizer-Converter

Converts image to speech to text using Python and its GUI feature

- Updated May 23, 2018

harshablaze / Printed-text-to-speech-using-ocr-and-spell-correction

printed text to speech conversion by improving OCR accuracy using spell correction

- Updated Jun 24, 2023

Moataz-Ab / Image-to-Speech-GenAI-tool

A tool that generates an audio short story based on the context of an uploaded image by prompting a GenAI LLM model

- Updated Oct 15, 2023

GURUAKASHSM / IMAGE-TEXT-SPEECH

Can convert English text image to Tamil, English, Hindi Text and Speech

- Updated Oct 25, 2023

TheSirC / PanGlo-TSE

Image-To-Speak Flask application

- Updated Jan 25, 2018

AsifAiub13 / TouchToHear

Touch the image, hear what it is, written in swift

- Updated Apr 15, 2018

img2speech 1.0.13

pip install img2speech

Released: Apr 24, 2023

Integrated Python package for converting Image to speech

License: MIT License

Author: Shreyas P J

Requires: Python >=3.8

Classifiers

- OSI Approved :: MIT License

- OS Independent

- Python :: 3

Project description

✅DESCRIPTION

An ITTTS (image-to-text-to-speech) Python package for integrated conversion of textual images and PDF documents to human speech. This library aims to simplify the internal image preprocessing and the conversion of extracted text to human speech across multiple languages.

✅QUICK START

Dependencies

This pipeline requires the dependencies which can be installed by running:

pip install -r requirements.txt

- image_to_sound(path_to_image, lang, pre_process="NO")

This main function is leveraged to convert the input textual image to human speech in the language present in the text. The function returns the intermediate text extracted and the speech generated. The speech can then be saved to a .mp3 file.

Parameters:

path_to_image : Defines the path to the "image" as .png or .jpg files. PDF files are also supported along with public image URLs from the internet

lang : Defines the language used in the text. The list of languages supported currently include:

["ENGLISH" , "HINDI", "TELUGU", "KANNADA"]

pre_process (optional) : Set to "YES" to use the internal pre-processing pipeline for better results. Defaults to "NO".

For example:

image_to_sound("images/image1.png","ENGLISH",pre_process="YES")

image_to_sound("files/text.pdf","HINDI",pre_process="YES")

image_to_sound("https://www.techsmith.com/blog/wp-content/uploads/2020/11/TechSmith-Blog-ExtractText.png","ENGLISH",pre_process="YES")

- preprocess(path_to_image):

This function defines the internal pre-processing pipeline used in the package. The user could use this function to retrieve the intermediate preprocessed image before conversion to text and speech.

For example:

preprocess("images/image1.jpg")

MODELS USED FOR OCR

Once the input image is of sufficient quality, we use an efficient OCR tool called "EasyOCR" to convert the textual image to human-readable text. We preferred EasyOCR over other tools such as Tesseract because it provides pre-trained models for various languages, and these models also perform well on noisy or low-quality images. It is designed to be fast and can process multiple images in parallel, which makes it suitable for this package.

TEXT TO SPEECH CONVERSION

We use "gTTS (Google Text-to-Speech)" to accomplish this task. gTTS is a popular Python library and command-line tool that interfaces with Google Translate's text-to-speech API to synthesize natural-sounding speech from text input. gTTS exposes a small set of options, namely the language, a regional accent selected through the top-level domain, and a slower speaking rate; it does not provide direct control over voice, pitch, or volume.
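The two stages described above can be sketched as follows. This is a minimal illustration and not the package's actual implementation: the names `LANG_CODES`, `lang_code`, and `image_to_mp3` are hypothetical, and the sketch assumes that the two-letter codes shown are accepted by both EasyOCR and gTTS. The third-party libraries are imported lazily so that the language check can run without them installed.

```python
from typing import Tuple

# Hypothetical mapping from the language names listed above to
# two-letter codes (assumed valid for both EasyOCR and gTTS).
LANG_CODES = {"ENGLISH": "en", "HINDI": "hi", "TELUGU": "te", "KANNADA": "kn"}


def lang_code(lang: str) -> str:
    """Translate a name such as "HINDI" into a two-letter language code."""
    try:
        return LANG_CODES[lang.strip().upper()]
    except KeyError:
        raise ValueError(f"unsupported language: {lang!r}") from None


def image_to_mp3(path_to_image: str, lang: str,
                 out_path: str = "speech.mp3") -> Tuple[str, str]:
    """OCR an image with EasyOCR, synthesize the text with gTTS, save an MP3."""
    code = lang_code(lang)  # validate before loading any heavy dependency

    import easyocr            # third-party: pip install easyocr
    from gtts import gTTS     # third-party: pip install gTTS

    reader = easyocr.Reader([code])
    # detail=0 returns the recognized strings without boxes or confidences.
    lines = reader.readtext(path_to_image, detail=0)
    text = " ".join(lines).strip()
    if not text:
        raise ValueError("no text detected in image")

    gTTS(text=text, lang=code).save(out_path)
    return text, out_path
```

A call such as `image_to_mp3("images/image1.png", "ENGLISH")` would return the extracted text together with the path of the saved audio file, assuming both libraries and their models are available.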

INSTALLATION

Install using pip

For the latest stable release:

pip install img2speech

Copy and translate text from photos on your iPhone or iPad

You can use Live Text to copy text in photos or videos, translate languages, make a call, and more.

Use Live Text to get information from photos, videos, and images

Live Text recognizes information within your photos, videos, and images you find online. That means you can make a call, send an email, or look up directions with a tap. Your iPhone or iPad can also speak aloud Live Text with Speak Selection .

To turn on Live Text for all supported languages:

Open the Settings app.

Tap General.

Tap Language & Region, then turn on Live Text.

To use Live Text, you need an iPhone XS, iPhone XR, or later with iOS 15 or later.

Live Text is also available on iPad Pro (M4) models and iPad Air (M2) models, iPad Pro 12.9-inch (3rd generation) or later, iPad Pro 11-inch (all models), iPad Air (3rd generation) or later, iPad (8th generation) or later, and iPad mini (5th generation) or later with iPadOS 15.1 or later.

To use Live Text for video, you need iOS 16 or later or iPadOS 16 or later.

Copy text in a photo, video, or image

Open the Photos app and select a photo or video, or select an image online.

Touch and hold a word and move the grab points to adjust the selection. If the text is in a video, pause the video first.

Tap Copy. To select all of the text in the photo, tap Select All.

In iOS 16 and later, you can also isolate the subject of a photo and copy or share it through apps like Messages or Mail.

Make a call or send an email

Open a photo or video, or select an image online. If the text is in a video, pause the video first.

Tap the phone number or email address that appears to call or send an email. Depending on the photo, image, or website, you might see the option to Make a FaceTime call or Add to Contacts.

Translate text within a photo, video, or image

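Open a photo or video, or select an image online. If the text is in a video, pause the video first.

Tap the Live Text button.

Tap Translate. You might need to tap Continue, then choose a language to translate in, or tap Change Language.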

You can also translate text from images in the Translate app .

Learn which regions and languages currently support Live Text .

Search with text from your images

Open a photo or video, or select an image online.

Tap Look Up.

If you tap Look Up and select just one word, a dictionary appears. If you select more than one word, Siri Suggested Websites and other resources for the topic appear.

Framework. Our framework for speech-to-image translation is composed of a speech encoder and a stacked generator. The speech encoder contains a multi-layer CNN and an RNN to encode the input time-frequency spectrogram into an embedding feature with 1024 dimensions. The speech encoder is trained using teacher-student learning with the ...

This post will integrate Whisper and Stable Diffusion, so a user can speak a phrase, Whisper will convert it to text, and Stable Diffusion will use that text to create an image. Existing works. ... In this speech-to-image example, the input Document is an audio sample of the user's voice, captured by the UI. This is stored in a Document as a ...

This free online application allows you to explore our image-to-speech capabilities without installing any applications and writing a single line of code. It supports any type of file that you can get from a smartphone, scanner, or camera, and can even work directly with content from external websites without downloading images to your computer.

Speech-to-image applications are systems that convert spoken language into visual representations, typically images or sequences of images. This process involves several key steps and technologies ...

This project uses advanced AI to generate high-quality images from speech inputs. Combining Hugging Face's StableDiffusionXLPipeline for image creation and Whisper for speech recognition, it allows users to record an audio description and transform it into a stunning visual representation through an interactive interface in a Jupyter Notebook ...
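The cascaded approach described in the two snippets above (speech recognition followed by text-to-image generation) can be sketched as below. This is an illustrative outline under stated assumptions, not the code of either project: `speech_to_image`, `whisper_transcriber`, and `sdxl_generator` are hypothetical names, the model identifiers are example choices, and the two factory functions assume the `openai-whisper` and `diffusers` packages are installed. Passing the two stages in as callables keeps the pipeline itself free of heavy dependencies.

```python
from typing import Any, Callable


def speech_to_image(audio_path: str,
                    transcribe: Callable[[str], str],
                    generate: Callable[[str], Any]) -> Any:
    """Transcribe speech to a text prompt, then render the prompt as an image."""
    prompt = transcribe(audio_path).strip()
    if not prompt:
        raise ValueError("transcription is empty; nothing to draw")
    return generate(prompt)


def whisper_transcriber(model_name: str = "base") -> Callable[[str], str]:
    """Build a transcriber backed by Whisper (assumes openai-whisper is installed)."""
    import whisper  # imported lazily

    model = whisper.load_model(model_name)
    return lambda path: model.transcribe(path)["text"]


def sdxl_generator(
    model_id: str = "stabilityai/stable-diffusion-xl-base-1.0",
) -> Callable[[str], Any]:
    """Build an image generator backed by Stable Diffusion XL (assumes diffusers)."""
    from diffusers import StableDiffusionXLPipeline  # imported lazily

    pipe = StableDiffusionXLPipeline.from_pretrained(model_id)
    return lambda prompt: pipe(prompt=prompt).images[0]
```

With the real backends, a call would look like `speech_to_image("clip.wav", whisper_transcriber(), sdxl_generator())`. Unlike the direct method described elsewhere in this document, this pipeline relies on an intermediate text transcription.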

images can be synthesized, which means that the machine has understood the speech signal to some extent and been able to translate the semantic information in the speech signal into the image. Speech and image are in different modalities and the modality gap between these two types of data makes direct speech-to-image translation not trivial.

We use a CNN+RNN-based speech encoder to extract an embedding feature from the input speech description and synthesize semantically consistent images with a stacked generator. This project provides the code for training the model and the method to evaluate the model on synthesized and real data.

Direct Speech-to-image Translation. Jiguo Li, Xinfeng Zhang, Chuanmin Jia, Jizheng Xu, Li Zhang, Yue Wang, Siwei Ma, Wen Gao. Direct speech-to-image translation without text is an interesting and useful topic due to the potential applications in human-computer interaction, art creation, computer-aided design. etc.

CyTex is a new pitch-based speech-to-image transform for converting voice signals to images. Converting speech data to images using the fundamental frequency of the human voice is inspired by the fact that pitch is one of the most informative features of speech signals and conveys valuable information about emotional states. The proposed speech ...

This Real-time Speech-to-Image Generation project was built by students at ASU HACKML 2022. Describe what you built with AssemblyAI. Using the AssemblyAI Core Transcription API, we are able to reproduce elements of the zero-shot capabilities presented in the DALL-E 2 paper, in real-time. To accomplish this, we used a much less complex model ...

Text-based technologies, such as text translation from one language to another, and image captioning, are gaining popularity. However, approximately half of the world's languages are estimated to be lacking a commonly used written form. Consequently, these languages cannot benefit from text-based technologies. This paper presents 1) a new speech technology task, i.e., a speech-to-image ...

Image to plain text to speech reader speaks your picture. * Please upload an image (JPG/JPEG, PNG, Maximum upload file size is 5M) and select the language in the image. TextToSpeech will convert it to text then you can have it speaking. * If you have the content already and want a text to speech online conversion to mp3 files, checkout our text ...

fake images and an image labelling module for creating labels. There are various steps in the image-to-text and speech conversion process using RESNET50. The input image is first preprocessed by being resized to the necessary input size and having the pixel values normalised. Then, to
