Research Paper Statistical Treatment of Data: A Primer

We can all agree that analyzing and presenting data effectively in a research paper is critical, yet often challenging.

This primer on statistical treatment of data will equip you with the key concepts and procedures to accurately analyze and clearly convey research findings.

You'll discover the fundamentals of statistical analysis and data management, the common quantitative and qualitative techniques, how to visually represent data, and best practices for writing the results - all framed specifically for research papers.

If you are curious about how AI can help you with statistical analysis for research, check Hepta AI.

Introduction to Statistical Treatment in Research

Statistical analysis is a crucial component of both quantitative and qualitative research. Properly treating data enables researchers to draw valid conclusions from their studies. This primer provides an introductory guide to fundamental statistical concepts and methods for manuscripts.

Understanding the Importance of Statistical Treatment

Careful statistical treatment demonstrates the reliability of results and ensures findings are grounded in robust quantitative evidence. From determining appropriate sample sizes to selecting accurate analytical tests, statistical rigor adds credibility. Both quantitative and qualitative papers benefit from precise data handling.

Objectives of the Primer

This primer aims to equip researchers with best practices for:

Statistical tools to apply during different research phases

Techniques to manage, analyze, and present data

Methods to demonstrate the validity and reliability of measurements

By covering fundamental concepts ranging from descriptive statistics to measurement validity, it enables both novice and experienced researchers to incorporate proper statistical treatment.

Navigating the Primer: Key Topics and Audience

The primer spans introductory topics including:

Research planning and design

Data collection, management, analysis

Result presentation and interpretation

While useful for researchers at any career stage, earlier-career scientists with limited statistical exposure will find it particularly valuable as they prepare manuscripts.

How do you write a statistical method in a research paper?

Statistical methods are a critical component of research papers, allowing you to analyze, interpret, and draw conclusions from your study data. When writing the statistical methods section, you need to provide enough detail so readers can evaluate the appropriateness of the methods you used.

Here are some key things to include when describing statistical methods in a research paper:

Type of Statistical Tests Used

Specify the types of statistical tests performed on the data, including:

Parametric vs nonparametric tests

Descriptive statistics (means, standard deviations)

Inferential statistics (t-tests, ANOVA, regression, etc.)

Statistical significance level (often p < 0.05)

For example: We used t-tests and one-way ANOVA to compare means across groups, with statistical significance set at p < 0.05.

Analysis of Subgroups

If you examined subgroups or additional variables, describe the methods used for these analyses.

For example: We stratified data by gender and used chi-square tests to analyze differences between subgroups.
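As a minimal sketch of the subgroup comparison mentioned above, the Pearson chi-square statistic for a small table of counts can be computed by hand. The counts and the names `observed` and `chi_square_statistic` are hypothetical and chosen only for illustration; a statistics package would normally also report the p-value.

```python
def chi_square_statistic(observed):
    """Pearson chi-square statistic for a table of counts (list of rows)."""
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(observed):
        for j, count in enumerate(row):
            # Expected count under independence of rows and columns.
            expected = row_totals[i] * col_totals[j] / total
            stat += (count - expected) ** 2 / expected
    return stat

# Hypothetical counts: rows are subgroups, columns are outcome yes / no.
observed = [[30, 20], [20, 30]]
chi2 = chi_square_statistic(observed)
dof = (len(observed) - 1) * (len(observed[0]) - 1)
print(f"chi-square = {chi2:.2f}, df = {dof}")
```

With 1 degree of freedom the critical value at the 0.05 level is about 3.84, so a statistic above that value would be reported as significant.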

Software and Versions

List any statistical software packages used for analysis, including version numbers. Common programs include SPSS, SAS, R, and Stata.

For example: Data were analyzed using SPSS version 25 (IBM Corp, Armonk, NY).

The key is to give readers enough detail to assess the rigor and appropriateness of your statistical methods. The methods should align with your research aims and design. Keep explanations clear and concise using consistent terminology throughout the paper.

What are the 5 statistical treatments in research?

The five most common statistical treatments used in academic research papers include:

Mean

The mean, or average, describes the central tendency of a dataset. It provides a single value that represents the center of a distribution of numbers. Calculating means allows researchers to characterize typical observations within a sample.

Standard Deviation

Standard deviation measures the amount of variability in a dataset. A low standard deviation indicates observations are clustered closely around the mean, while a high standard deviation signifies the data is more spread out. Reporting standard deviations helps readers contextualize means.

Regression Analysis

Regression analysis models the relationship between independent and dependent variables. It generates an equation that predicts changes in the dependent variable based on changes in the independents. Regressions are useful for hypothesizing causal connections between variables.
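To make the idea of a fitted equation concrete, here is a small sketch of simple linear regression with one independent variable, fitted by ordinary least squares. The data values and the helper name `fit_line` are assumptions made for illustration only.

```python
from statistics import mean

def fit_line(x, y):
    """Ordinary least squares fit of y = intercept + slope * x."""
    x_bar, y_bar = mean(x), mean(y)
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    slope = s_xy / s_xx
    intercept = y_bar - slope * x_bar
    return slope, intercept

# Hypothetical observations of an independent (x) and dependent (y) variable.
x = [1, 2, 3, 4, 5]
y = [2.1, 3.9, 6.2, 7.8, 10.1]
slope, intercept = fit_line(x, y)
print(f"y = {intercept:.2f} + {slope:.2f} * x")
```

The slope estimates how much the dependent variable changes per unit change in the independent variable. On its own, a fitted slope describes association and does not establish causation.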

Hypothesis Testing

Hypothesis testing evaluates assumptions about population parameters based on statistics calculated from a sample. Common hypothesis tests include t-tests, ANOVA, and chi-squared tests. These quantify how likely differences at least as large as those observed would be if chance alone were at work, that is, if the null hypothesis were true.
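One way to see what a p-value measures is an exact permutation test, sketched below. This is a different technique from the t-test, ANOVA, and chi-squared tests named above; it is used here only because it needs no distribution tables. The scores and the function name `permutation_p_value` are hypothetical.

```python
from itertools import combinations
from statistics import mean

def permutation_p_value(group_a, group_b):
    """Exact two-sided permutation p-value for a difference in means."""
    pooled = group_a + group_b
    n_a = len(group_a)
    observed = abs(mean(group_a) - mean(group_b))
    extreme = 0
    total = 0
    # Enumerate every way of relabelling the pooled values into two groups.
    for idx in combinations(range(len(pooled)), n_a):
        chosen = set(idx)
        a = [pooled[i] for i in chosen]
        b = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        total += 1
        # Small tolerance guards against floating-point ties.
        if abs(mean(a) - mean(b)) >= observed - 1e-12:
            extreme += 1
    return extreme / total

# Hypothetical scores for an intervention group and a control group.
intervention = [78, 82, 75, 80, 79]
control = [71, 70, 74, 69, 73]
p_value = permutation_p_value(intervention, control)
print(f"p = {p_value:.4f}")
```

The p-value is the share of relabellings that produce a difference at least as large as the one observed, which is what "due to chance" means under the null hypothesis of no group effect.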

Sample Size Determination

Sample size calculations identify the minimum number of observations needed to detect effects of a given size at a desired statistical power. Appropriate sampling ensures studies can uncover true relationships within the constraints of resource limitations.
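As a rough sketch, the sample size per group for a two-sided comparison of two means can be approximated with the normal-approximation formula n = 2 (z_(1-alpha/2) + z_(power))^2 / d^2, where d is the standardized effect size. The function name and default values below are assumptions for illustration; dedicated power-analysis software, which uses the t distribution, may give slightly larger numbers.

```python
from math import ceil
from statistics import NormalDist

def sample_size_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate n per group for a two-sided, two-sample comparison of means."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the test
    z_power = NormalDist().inv_cdf(power)          # quantile for the desired power
    return ceil(2 * (z_alpha + z_power) ** 2 / effect_size ** 2)

# A medium standardized effect (d = 0.5) at alpha = 0.05 and 80% power.
n = sample_size_per_group(0.5)
print(f"about {n} participants per group")
```

Halving the effect size roughly quadruples the required sample, which is why the expected effect should be justified before data collection begins.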

These five statistical analysis methods form the backbone of most quantitative research processes. Correct application allows researchers to characterize data trends, model predictive relationships, and make probabilistic inferences regarding broader populations. Expertise in these techniques is fundamental for producing valid, reliable, and publishable academic studies.

How do you know what statistical treatment to use in research?

The selection of appropriate statistical methods for the treatment of data in a research paper depends on three key factors:

The Aim and Objective of the Study

The aim and objectives that the study seeks to achieve will determine the type of statistical analysis required.

Descriptive research presenting characteristics of the data may only require descriptive statistics like measures of central tendency (mean, median, mode) and dispersion (range, standard deviation).

Studies aiming to establish relationships or differences between variables need inferential statistics like correlation, t-tests, ANOVA, regression etc.

Predictive modeling research requires methods like regression, discriminant analysis, logistic regression etc.

Thus, clearly identifying the research purpose and objectives is the first step in planning appropriate statistical treatment.

Type and Distribution of Data

The type of data (categorical, numerical) and its distribution (normal, skewed) also guide the choice of statistical techniques.

Parametric tests have assumptions related to normality and homogeneity of variance.

Non-parametric methods are distribution-free and better suited for non-normal or categorical data.

Testing data distribution and characteristics is therefore vital.

Nature of Observations

Statistical methods also differ based on whether the observations are paired or unpaired.

Analyzing changes within one group requires paired tests like paired t-test, Wilcoxon signed-rank test etc.

Comparing between two or more independent groups needs unpaired tests like independent t-test, ANOVA, Kruskal-Wallis test etc.

Thus the nature of observations is pivotal in selecting suitable statistical analyses.
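The difference between paired and unpaired analysis can be sketched by computing both test statistics on the same numbers. The before/after values and the helper names `paired_t` and `welch_t` are hypothetical; Welch's unequal-variance form is used for the unpaired statistic as one common choice, and p-values are left to a statistics package.

```python
from math import sqrt
from statistics import mean, stdev, variance

def paired_t(before, after):
    """t statistic for paired observations, based on within-subject differences."""
    diffs = [a - b for a, b in zip(after, before)]
    return mean(diffs) / (stdev(diffs) / sqrt(len(diffs)))

def welch_t(group_a, group_b):
    """t statistic for two independent groups (Welch's unequal-variance form)."""
    se = sqrt(variance(group_a) / len(group_a) + variance(group_b) / len(group_b))
    return (mean(group_b) - mean(group_a)) / se

# Hypothetical measurements on the same five subjects before and after.
before = [10, 12, 9, 11, 13]
after = [12, 14, 10, 13, 16]

t_paired = paired_t(before, after)
t_unpaired = welch_t(before, after)
print(f"paired t = {t_paired:.2f}, unpaired t = {t_unpaired:.2f}")
```

Because the paired statistic removes between-subject variability, it is much larger here than the unpaired one. Treating paired data as independent would discard that information, while treating independent data as paired would be invalid.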

In summary, clearly defining the research objectives, testing the collected data, and understanding the observational units guides proper statistical treatment and interpretation.

What are statistical techniques in a research paper?

Statistical methods are essential tools in scientific research papers. They allow researchers to summarize, analyze, interpret and present data in meaningful ways.

Some key statistical techniques used in research papers include:

Descriptive statistics: These provide simple summaries of the sample and the measures. Common examples include measures of central tendency (mean, median, mode), measures of variability (range, standard deviation) and graphs (histograms, pie charts).

Inferential statistics: These help make inferences and predictions about a population from a sample. Common techniques include estimation of parameters, hypothesis testing, correlation and regression analysis.

Analysis of variance (ANOVA): This technique allows researchers to compare means across multiple groups and determine statistical significance.

Factor analysis: This technique identifies underlying relationships between variables and latent constructs. It allows reducing a large set of variables into fewer factors.

Structural equation modeling: This technique estimates causal relationships using both latent and observed factors. It is widely used for testing theoretical models in social sciences.

Proper statistical treatment and presentation of data are crucial for the integrity of any quantitative research paper. Statistical techniques help establish validity, account for errors, test hypotheses, build models and derive meaningful insights from the research.
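Of the techniques listed in this section, one-way ANOVA is simple enough to sketch directly. The example below computes only the F statistic, the ratio of between-group to within-group variance; the group values and the function name `one_way_anova_f` are assumptions for illustration.

```python
from statistics import mean

def one_way_anova_f(groups):
    """F statistic for a one-way ANOVA across a list of groups."""
    all_values = [v for g in groups for v in g]
    grand_mean = mean(all_values)
    # Variation of group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of observations around their own group mean.
    ss_within = sum((v - mean(g)) ** 2 for g in groups for v in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    return (ss_between / df_between) / (ss_within / df_within)

# Hypothetical scores for three independent groups.
groups = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
f_stat = one_way_anova_f(groups)
print(f"F(2, 6) = {f_stat:.2f}")
```

A large F statistic indicates that the group means differ by more than the spread within groups would suggest; the p-value then comes from the F distribution with the two degrees of freedom shown.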

Fundamental Concepts and Data Management

Exploring basic statistical terms.

Understanding key statistical concepts is essential for effective research design and data analysis. This includes defining key terms like:

Statistics: The science of collecting, organizing, analyzing, and interpreting numerical data to draw conclusions or make predictions.

Variables: Characteristics or attributes of the study participants that can take on different values.

Measurement: The process of assigning numbers to variables based on a set of rules.

Sampling: Selecting a subset of a larger population to estimate characteristics of the whole population.

Data types: Quantitative (numerical) or qualitative (categorical) data.

Descriptive vs. inferential statistics: Descriptive statistics summarize data, while inferential statistics allow conclusions about the larger population to be drawn from the sample.

Ensuring Validity and Reliability in Measurement

When selecting measurement instruments, it is critical they demonstrate:

Validity: The extent to which the instrument measures what it intends to measure.

Reliability: The consistency of measurement over time and across raters.

Researchers should choose instruments aligned to their research questions and study methodology.

Data Management Essentials

Proper data management requires:

Ethical collection procedures respecting autonomy, justice, beneficence and non-maleficence.

Handling missing data through deletion, imputation or modeling procedures.

Data cleaning by identifying and fixing errors, inconsistencies and duplicates.

Data screening via visual inspection and statistical methods to detect anomalies.
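The cleaning and missing-data steps above can be sketched on a tiny, hypothetical set of records. The field names `id` and `score` are assumptions, and mean imputation is shown only as the simplest imputation option, not as a recommendation for any particular study.

```python
from statistics import mean

# Hypothetical records; None marks a missing score.
records = [
    {"id": 1, "score": 72.0},
    {"id": 2, "score": None},
    {"id": 3, "score": 85.0},
    {"id": 3, "score": 85.0},  # exact duplicate of the previous record
    {"id": 4, "score": 91.0},
]

# Data cleaning: drop exact duplicates while keeping the first occurrence.
seen = set()
deduplicated = []
for rec in records:
    key = (rec["id"], rec["score"])
    if key not in seen:
        seen.add(key)
        deduplicated.append(rec)

# Option 1, listwise deletion: discard records with a missing score.
complete_cases = [r for r in deduplicated if r["score"] is not None]

# Option 2, mean imputation: fill missing scores with the observed mean.
observed_mean = mean(r["score"] for r in complete_cases)
imputed = [
    {**r, "score": observed_mean if r["score"] is None else r["score"]}
    for r in deduplicated
]

print(len(deduplicated), len(complete_cases), round(observed_mean, 2))
```

Whichever option is chosen, the paper should state how many records were removed or imputed, because both choices can affect the results.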

Data Management Techniques and Ethical Considerations

Ethical data management includes:

Obtaining informed consent from all participants.

Anonymization and encryption to protect privacy.

Secure data storage and transfer procedures.

Responsible use of statistical tools free from manipulation or misrepresentation.

Adhering to ethical guidelines preserves public trust in the integrity of research.

Statistical Methods and Procedures

This section provides an introduction to key quantitative analysis techniques and guidance on when to apply them to different types of research questions and data.

Descriptive Statistics and Data Summarization

Descriptive statistics summarize and organize data characteristics such as central tendency, variability, and distributions. Common descriptive statistical methods include:

Measures of central tendency (mean, median, mode)

Measures of variability (range, interquartile range, standard deviation)

Graphical representations (histograms, box plots, scatter plots)

Frequency distributions and percentages

These methods help describe and summarize the sample data so researchers can spot patterns and trends.
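A short sketch of these summaries using Python's standard `statistics` module follows. The data values are hypothetical, and the quartiles use the module's default method, which can differ slightly from the conventions of other software.

```python
import statistics as st

# Hypothetical sample of seven observations.
data = [2, 4, 4, 5, 7, 9, 10]

q1, q2, q3 = st.quantiles(data, n=4)  # quartiles, default 'exclusive' method
summary = {
    "mean": st.mean(data),
    "median": st.median(data),
    "mode": st.mode(data),
    "range": max(data) - min(data),
    "iqr": q3 - q1,
    "sample_sd": st.stdev(data),
}

for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```

Reporting a measure of variability next to each measure of central tendency lets readers judge how representative the central value is.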

Inferential Statistics for Generalizing Findings

While descriptive statistics summarize sample data, inferential statistics help generalize findings to the larger population. Common techniques include:

Hypothesis testing with t-tests, ANOVA

Correlation and regression analysis

Nonparametric tests

These methods allow researchers to draw conclusions and make predictions about the broader population based on the sample data.

Selecting the Right Statistical Tools

Choosing the appropriate analyses involves assessing:

The research design and questions asked

Type of data (categorical, continuous)

Data distributions

Statistical assumptions required

Matching the correct statistical tests to these elements helps ensure accurate results.

Statistical Treatment of Data for Quantitative Research

For quantitative research, common statistical data treatments include:

Testing data reliability and validity

Checking assumptions of statistical tests

Transforming non-normal data

Identifying and handling outliers

Applying appropriate analyses for the research questions and data type

Examples and case studies help demonstrate correct application of statistical tests.
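As one example of the outlier step listed above, the sketch below flags values outside Tukey's fences, that is, more than 1.5 interquartile ranges beyond the quartiles. This rule is only one common convention, and the measurements and the function name `tukey_outliers` are hypothetical.

```python
import statistics as st

def tukey_outliers(values, k=1.5):
    """Return values lying more than k * IQR outside the first or third quartile."""
    q1, _, q3 = st.quantiles(values, n=4)
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < lower or v > upper]

# Hypothetical repeated measurements with one suspicious reading.
measurements = [10.1, 10.3, 9.9, 10.2, 10.0, 10.4, 14.8]
flagged = tukey_outliers(measurements)
print(flagged)
```

Flagging is not the same as deleting: a flagged value should be checked against the lab notes or data source, and any exclusion should be reported together with the rule used.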

Approaches to Qualitative Data Analysis

Qualitative data is analyzed through methods like:

Thematic analysis

Content analysis

Discourse analysis

Grounded theory

These help researchers discover concepts and patterns within non-numerical data to derive rich insights.

Data Presentation and Research Method

Crafting effective visuals for data presentation.

When presenting analyzed results and statistics in a research paper, well-designed tables, graphs, and charts are key for clearly showcasing patterns in the data to readers. Adhering to formatting standards like APA helps ensure professional data presentation. Consider these best practices:

Choose the appropriate visual type based on the type of data and relationship being depicted. For example, bar charts for comparing categorical data, line graphs to show trends over time.

Label the x-axis, y-axis, legends clearly. Include informative captions.

Use consistent, readable fonts and sizing. Avoid clutter with unnecessary elements. White space can aid readability.

Order data logically, such as from largest to smallest values or chronologically.

Include clear statistical notations, like error bars, where applicable.

Following academic standards for visuals lends credibility while making interpretation intuitive for readers.

Writing the Results Section with Clarity

When writing the quantitative Results section, aim for clarity by balancing statistical reporting with interpretation of findings. Consider this structure:

Open with an overview of the analysis approach and measurements used.

Break down results by logical subsections for each hypothesis, construct measured etc.

Report exact statistics first, followed by interpretation of their meaning. For example, “Participants exposed to the intervention had significantly higher average scores (M=78, SD=3.2) compared to controls (M=71, SD=4.1), t(115)=3.42, p = 0.001. This suggests the intervention was highly effective for increasing scores.”

Use present verb tense and scientific, formal language.

Include tables/figures where they aid understanding or visualization.

Writing results clearly gives readers deeper context around statistical findings.

Highlighting Research Method and Design

With a results section full of statistics, it's vital to communicate key aspects of the research method and design. Consider including:

Brief overview of study variables, materials, apparatus used. Helps reproducibility.

Descriptions of study sampling techniques, data collection procedures. Supports transparency.

Explanations around approaches to measurement, data analysis performed. Bolsters methodological rigor.

Noting control variables, attempts to limit biases etc. Demonstrates awareness of limitations.

Covering these methodological details shows readers the care taken in designing the study and analyzing the results obtained.

Acknowledging Limitations and Addressing Biases

Honestly recognizing methodological weaknesses and limitations goes a long way in establishing credibility within the published discussion section. Consider transparently noting:

Measurement errors and biases that may have impacted findings.

Limitations around sampling methods that constrain generalizability.

Caveats related to statistical assumptions, analysis techniques applied.

Attempts made to control/account for biases and directions for future research.

Rather than detracting value, acknowledging limitations demonstrates academic integrity regarding the research performed. It also gives readers deeper insight into interpreting the reported results and findings.

Conclusion: Synthesizing Statistical Treatment Insights

Recap of statistical treatment fundamentals.

Statistical treatment of data is a crucial component of high-quality quantitative research. Proper application of statistical methods and analysis principles enables valid interpretations and inferences from study data. Key fundamentals covered include:

Descriptive statistics to summarize and describe the basic features of study data

Inferential statistics to make judgments of the probability and significance based on the data

Using appropriate statistical tools aligned to the research design and objectives

Following established practices for measurement techniques, data collection, and reporting

Adhering to these core tenets ensures research integrity and allows findings to withstand scientific scrutiny.

Key Takeaways for Research Paper Success

When incorporating statistical treatment into a research paper, keep these best practices in mind:

Clearly state the research hypothesis and variables under examination

Select reliable and valid quantitative measures for assessment

Determine appropriate sample size to achieve statistical power

Apply correct analytical methods suited to the data type and distribution

Comprehensively report methodology procedures and statistical outputs

Interpret results in context of the study limitations and scope

Following these guidelines will bolster confidence in the statistical treatment and strengthen the research quality overall.

Encouraging Continued Learning and Application

As statistical techniques continue advancing, it is imperative for researchers to actively further their statistical literacy. Regularly reviewing new methodological developments and learning advanced tools will augment analytical capabilities. Persistently putting enhanced statistical knowledge into practice through research projects and manuscript preparations will cement competencies. Statistical treatment mastery is a journey requiring persistent effort, but one that pays dividends in research proficiency.

Antonio Carlos Filho @acfilho_dev


Statistical Treatment of Data – Explained & Example

- By DiscoverPhDs

- September 8, 2020

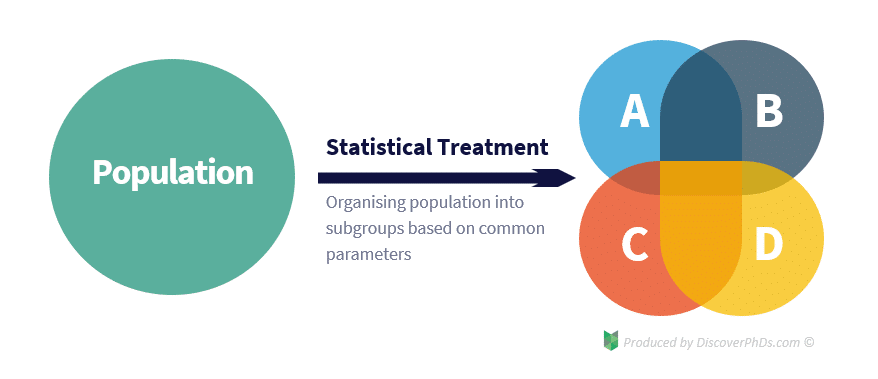

‘Statistical treatment’ is when you apply a statistical method to a data set to draw meaning from it. Statistical treatment can be either descriptive statistics, which describes the relationship between variables in a population, or inferential statistics, which tests a hypothesis by making inferences from the collected data.

Introduction to Statistical Treatment in Research

Every research student, regardless of whether they are a biologist, computer scientist or psychologist, must have a basic understanding of statistical treatment if their study is to be reliable.

This is because designing experiments and collecting data are only a small part of conducting research. The other components, which are often not so well understood by new researchers, are the analysis, interpretation and presentation of the data. This is just as important, if not more important, as this is where meaning is extracted from the study.

What is Statistical Treatment of Data?

Statistical treatment of data is when you apply some form of statistical method to a data set to transform it from a group of meaningless numbers into meaningful output.

Statistical treatment of data involves the use of statistical methods such as:

- regression,

- conditional probability,

- standard deviation and

- distribution range.

These statistical methods allow us to investigate the statistical relationships between the data and identify possible errors in the study.

In addition to being able to identify trends, statistical treatment also allows us to organise and process our data in the first place. This is because when carrying out statistical analysis of our data, it is generally more useful to draw several conclusions for each subgroup within our population than to draw a single, more general conclusion for the whole population. However, to do this, we need to be able to classify the population into different subgroups so that we can later break down our data in the same way before analysing it.

Statistical Treatment Example – Quantitative Research

For a statistical treatment of data example, consider a medical study that is investigating the effect of a drug on the human population. As the drug can affect different people in different ways based on parameters such as gender, age and race, the researchers would want to group the data into different subgroups based on these parameters to determine how each one affects the effectiveness of the drug. Categorising the data in this way is an example of performing basic statistical treatment.
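A minimal sketch of this kind of subgroup breakdown is shown below. The age bands and the outcome values are invented for illustration and do not come from any real study.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical trial records: (age group, change in symptom score).
observations = [
    ("18-39", -4.0), ("18-39", -6.0),
    ("40-64", -2.0), ("40-64", -3.0),
    ("65+", -1.0), ("65+", 0.0),
]

# Classify each observation into its subgroup.
by_group = defaultdict(list)
for group, change in observations:
    by_group[group].append(change)

# Summarize the outcome separately for each subgroup.
group_means = {group: mean(values) for group, values in by_group.items()}
for group, value in group_means.items():
    print(f"{group}: mean change = {value:.1f}")
```

Defining the subgroups before looking at the outcomes helps avoid finding spurious differences by slicing the data in many ways.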

Type of Errors

A fundamental part of statistical treatment is using statistical methods to identify possible outliers and errors. No matter how careful we are, all experiments are subject to inaccuracies resulting from two types of errors: systematic errors and random errors.

Systematic errors are errors associated with either the equipment being used to collect the data or with the method in which they are used. Random errors are errors that occur unknowingly or unpredictably in the experimental configuration, such as internal deformations within specimens or small voltage fluctuations in measurement testing instruments.

These experimental errors, in turn, can lead to two types of conclusion errors: type I errors and type II errors. A type I error is a false positive which occurs when a researcher rejects a true null hypothesis. On the other hand, a type II error is a false negative which occurs when a researcher fails to reject a false null hypothesis.
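The two conclusion errors can be illustrated with a small simulation. The sketch below assumes a two-sided z-test of the null hypothesis that the mean is 0, with a known standard deviation of 1; the sample size, effect size, number of trials, and the function name `rejection_rate` are all arbitrary choices for illustration.

```python
import random
from math import sqrt
from statistics import NormalDist

def rejection_rate(true_mean, n=20, trials=5000, alpha=0.05, seed=0):
    """Share of simulated experiments in which a two-sided z-test rejects H0: mean = 0."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    rejections = 0
    for _ in range(trials):
        sample = [rng.gauss(true_mean, 1.0) for _ in range(n)]
        z = (sum(sample) / n) * sqrt(n)  # standard deviation is known to be 1
        if abs(z) > z_crit:
            rejections += 1
    return rejections / trials

# H0 is true here, so every rejection is a false positive (type I error).
type_1_rate = rejection_rate(true_mean=0.0)
# H0 is false here, so every failure to reject is a false negative (type II error).
type_2_rate = 1 - rejection_rate(true_mean=0.5)

print(f"type I rate ~ {type_1_rate:.3f}, type II rate ~ {type_2_rate:.3f}")
```

The type I rate should sit close to the chosen significance level, while the type II rate depends on the true effect and the sample size, which is why power is considered when planning a study.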


Statistical Treatment


What is Statistical Treatment?

Statistical treatment can mean a few different things:

- In Data Analysis: Applying any statistical method, like regression or calculating a mean, to data.

- In Factor Analysis: Any combination of factor levels is called a treatment.

- In a Thesis or Experiment: A summary of the procedure, including statistical methods used.

1. Statistical Treatment in Data Analysis

The term “statistical treatment” is a catch-all term which means to apply any statistical method to your data. Treatments are divided into two groups: descriptive statistics, which summarize your data as a graph or summary statistic, and inferential statistics, which make predictions and test hypotheses about your data. Treatments could include:

- Finding standard deviations and sample standard errors,

- Finding T-Scores or Z-Scores,

- Calculating correlation coefficients.

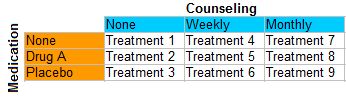

2. Treatments in Factor Analysis

3. Treatments in a Thesis or Experiment

Sometimes you might be asked to include a treatment as part of a thesis. This is asking you to summarize the data and analysis portion of your experiment, including measurements and formulas used. For example, the following experimental summary is from “Statistical Treatment” in Acta Physiologica Scandinavica:

“Each of the test solutions was injected twice in each subject…30-42 values were obtained for the intensity, and a like number for the duration, of the pain induced by the solution. The pain values reported in the following are arithmetical means for these 30-42 injections.”

The author goes on to provide formulas for the mean, the standard deviation and the standard error of the mean.

Vogt, W.P. (2005). Dictionary of Statistics & Methodology: A Nontechnical Guide for the Social Sciences. SAGE.

Wheelan, C. (2014). Naked Statistics. W. W. Norton & Company.

Unknown author (1961). Chapter 3: Statistical Treatment. Acta Physiologica Scandinavica, Volume 51, Issue s179, December, Pages 16–20.


Statistical Treatment Of Data

Statistical treatment of data is essential in order to make use of the data in the right form. Raw data collection is only one aspect of any experiment; the organization of data is equally important so that appropriate conclusions can be drawn. This is what statistical treatment of data is all about.


There are many techniques involved in statistics that treat data in the required manner. Statistical treatment of data is essential in all experiments, whether social, scientific or any other form. Statistical treatment of data greatly depends on the kind of experiment and the desired result from the experiment.

For example, in a survey regarding the election of a Mayor, parameters like age, gender, occupation, etc. would be important in influencing the person's decision to vote for a particular candidate. Therefore the data needs to be treated in these reference frames.

An important aspect of statistical treatment of data is the handling of errors. All experiments invariably produce errors and noise. Both systematic and random errors need to be taken into consideration.

Depending on the type of experiment being performed, Type-I and Type-II errors also need to be handled. These are the cases of false positives and false negatives, which are important to understand and minimize in order to make sense of the result of the experiment.

Treatment of Data and Distribution

Trying to classify data into commonly known patterns is a tremendous help and is intricately related to statistical treatment of data. This is because distributions such as the normal probability distribution occur so commonly in nature that they are the underlying distributions in most medical, social and physical experiments.

Therefore, if a given sample is known to be normally distributed, the statistical treatment of data is made easier for the researcher, who already has a lot of supporting theory to draw on. Care should always be taken, however, not to assume that all data are normally distributed; normality should always be confirmed with appropriate testing.

Statistical treatment of data also involves describing the data. The best way to do this is through measures of central tendency like the mean, median and mode. These help the researcher explain in short how the data are concentrated. Range, uncertainty and standard deviation help to understand the spread of the data. For example, two distributions with the same mean can have wildly different standard deviations, which show how tightly the data points are concentrated around the mean.

Statistical treatment of data is an important aspect of all experimentation today and a thorough understanding is necessary to conduct the right experiments with the right inferences from the data obtained.


Siddharth Kalla (Apr 10, 2009). Statistical Treatment Of Data. Retrieved Jun 11, 2024 from Explorable.com: https://explorable.com/statistical-treatment-of-data

You Are Allowed To Copy The Text

The text in this article is licensed under the Creative Commons-License Attribution 4.0 International (CC BY 4.0) .

This means you're free to copy, share and adapt any parts (or all) of the text in the article, as long as you give appropriate credit and provide a link/reference to this page.

That is it. You don't need our permission to copy the article; just include a link/reference back to this page. You can use it freely (with some kind of link), and we're also okay with people reprinting in publications like books, blogs, newsletters, course-material, papers, wikipedia and presentations (with clear attribution).


Statistical Treatment of Data


- W. R. Fawcett, John Berg, P. B. Kelley, Carlito B. Lebrilla, Gang-yu Liu, Delmar Larsen, Paul Hrvatin, David Goodin, and Brooke McMahon

- University of California, Davis


Many times during the course of the Chemistry 115 laboratory you will be asked to report an average, relative deviation, and a standard deviation. You may also have to analyze multiple trials to decide whether or not a certain piece of data should be discarded. This section describes these procedures.

Average and Standard Deviation

The average or mean of the data set, \(\bar{x}\), is defined by:

\(\bar{x} = \dfrac{\sum_{i=1}^N x_i}{N}\)

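where \(x_i\) is the result of the \(i\)th measurement, \(i = 1, \ldots, N\). The standard deviation, σ, measures how closely values are clustered about the mean. The standard deviation for small samples is defined by:

\( \sigma = \sqrt{\dfrac{\sum_{i=1}^N (x_i-\bar{x})^2}{N-1}} \)

The divisor is \(N-1\) rather than \(N\) because the mean \(\bar{x}\) is itself estimated from the same small set of measurements.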

The smaller the value of σ, the more closely packed the data are about the mean, and we say that the measurements are precise. In contrast, the measurements are accurate if the mean is close to the real result (presuming we know that information). It is easy to tell if your measurements are precise, but it is often difficult to tell if they are accurate.
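As a minimal sketch of the two definitions above, the following Python function computes the average and the standard deviation of a set of trials. The trial values are hypothetical, and the `sample` flag is an assumption added for illustration: it selects the \(N-1\) divisor customary for small samples, or the \(N\) divisor when the data are treated as a whole population.

```python
import math

def mean_and_std(values, sample=True):
    """Return (mean, standard deviation) of a list of measurements.

    sample=True divides by N - 1 (small-sample convention);
    sample=False divides by N (population convention).
    """
    n = len(values)
    mean = sum(values) / n
    squared_dev = sum((x - mean) ** 2 for x in values)
    divisor = n - 1 if sample else n
    return mean, math.sqrt(squared_dev / divisor)

# Hypothetical titration volumes in mL, made up for illustration.
trials = [24.8, 25.0, 25.1, 25.3]
avg, sd = mean_and_std(trials)
print(f"mean = {avg:.2f} mL, standard deviation = {sd:.2f} mL")
```

A small standard deviation here only says the trials agree with each other; it says nothing about whether 25.05 mL is close to the true value.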

Relative Deviation

The relative average deviation, d, like the standard deviation, is useful to determine how data are clustered about a mean. The advantage of a relative deviation is that it incorporates the relative numerical magnitude of the average. The relative average deviation, d, is calculated in the following way.
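The individual steps are not reproduced in this text, so the sketch below assumes the commonly used definition: the mean of the absolute deviations from the average, divided by the average, and expressed in parts per thousand. The function name, the `scale` parameter, and the trial values are illustrative assumptions.

```python
def relative_average_deviation(values, scale=1000):
    """Mean absolute deviation divided by the mean, times `scale`.

    scale=1000 reports parts per thousand; scale=100 reports percent.
    """
    n = len(values)
    mean = sum(values) / n
    average_deviation = sum(abs(x - mean) for x in values) / n
    return average_deviation / mean * scale

# Hypothetical titration volumes in mL, made up for illustration.
trials = [24.8, 25.0, 25.1, 25.3]
d = relative_average_deviation(trials)
print(f"relative average deviation = {d:.1f} ppt")
```

Because the result is divided by the mean, data sets of very different magnitude can be compared on the same scale.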

Analysis of Poor Data

Other important concepts and procedures.

- Normal error curve: a histogram of an infinitely large number of good measurements usually follows a Gaussian distribution

- Confidence limit (95%)

- Linear least squares fit

- Residual sum of squares

- Correlation coefficient


Statistical Treatment of Data for Survey: The Right Approach

Last Updated:

30 May 2024

Statistical treatment of data is a process used to convert raw data into something interpretable. This process is essential because it allows businesses to make better decisions based on customer feedback. This blog post will give a short overview of the statistical treatment of data and how it can be used to improve your business.

What exactly is Statistical Treatment?

In its simplest form, statistical treatment of data is taking raw data and turning it into something that can be interpreted and used to make decisions. This process is important for businesses because it allows them to take customer feedback and turn it into actionable insights.

There are many ways to collect data for statistical treatment, but the most common are surveys and polls. Surveys are a great way to collect large amounts of customer data, but they can be time-consuming and expensive to administer. Polls are a quicker and more efficient way to collect data, but they typically have a smaller sample size.

Statistical methods for surveys

These are some statistical treatments of survey data and how they can help improve your customer feedback program.

Descriptive Statistics

Descriptive statistics are used to describe the overall characteristics of a dataset. This includes measures of central tendency (mean, median, mode) and dispersion (range, variance, standard deviation). Descriptive statistics can be used to generate summary reports of survey data. These reports can be used to understand responses’ distribution and identify outliers.


Inferential Statistics

Inferential statistics are used to make predictions or inferences about a population based on a sample. This is done by using estimation methods (point estimates and confidence intervals) and testing methods (hypothesis testing). Inferential statistics can be used to understand how likely it is that a particular population characteristic is true. For example, inferential statistics can be used to calculate the probability that a customer will be satisfied with a product or service.

Once you have collected your data, the next step is to choose a statistical analysis method. The most common methods are regression, correlation, and factor analysis.

Regression Analysis

Regression analysis is a method used to identify the relationships between different variables. For example, you could use regression analysis to understand how customer satisfaction ratings change based on the number of support tickets they open.

Correlation analysis

Correlation analysis is a method used to measure how strongly two variables move together, without modelling one as the cause of the other. For example, you could use correlation analysis to check whether customer satisfaction ratings tend to fall as the number of support tickets a customer opens rises.

Factor analysis

Factor analysis is a method used to identify a small number of underlying factors that explain the correlations among many observed variables. For example, you could use factor analysis to find which groups of survey questions reflect the same underlying driver of customer satisfaction.

Once you have chosen a method of statistical treatment of data, the next step is to apply it to your dataset. This step can be done using Excel or another similar program. Once you have applied your chosen method, you can interpret the results and use them to make decisions about your business.

Wrapping up…

Statistical treatment of survey data is a necessary process that allows businesses to take customer feedback and turn it into actionable insights. The most common ways to collect that data are surveys and polls, and online survey tools like SurveySparrow can help with both. Once you have collected your data, you must choose a form of statistical analysis and apply it to your dataset. You will then be able to interpret the results and use them to make decisions about your business. Thanks for reading!


The Beginner's Guide to Statistical Analysis | 5 Steps & Examples

Statistical analysis means investigating trends, patterns, and relationships using quantitative data. It is an important research tool used by scientists, governments, businesses, and other organisations.

To draw valid conclusions, statistical analysis requires careful planning from the very start of the research process. You need to specify your hypotheses and make decisions about your research design, sample size, and sampling procedure.

After collecting data from your sample, you can organise and summarise the data using descriptive statistics. Then, you can use inferential statistics to formally test hypotheses and make estimates about the population. Finally, you can interpret and generalise your findings.

This article is a practical introduction to statistical analysis for students and researchers. We’ll walk you through the steps using two research examples. The first investigates a potential cause-and-effect relationship, while the second investigates a potential correlation between variables.

Table of contents

- Step 1: Write your hypotheses and plan your research design

- Step 2: Collect data from a sample

- Step 3: Summarise your data with descriptive statistics

- Step 4: Test hypotheses or make estimates with inferential statistics

- Step 5: Interpret your results

- Frequently asked questions about statistics

To collect valid data for statistical analysis, you first need to specify your hypotheses and plan out your research design.

Writing statistical hypotheses

The goal of research is often to investigate a relationship between variables within a population. You start with a prediction, and use statistical analysis to test that prediction.

A statistical hypothesis is a formal way of writing a prediction about a population. Every research prediction is rephrased into null and alternative hypotheses that can be tested using sample data.

While the null hypothesis always predicts no effect or no relationship between variables, the alternative hypothesis states your research prediction of an effect or relationship.

- Null hypothesis: A 5-minute meditation exercise will have no effect on math test scores in teenagers.

- Alternative hypothesis: A 5-minute meditation exercise will improve math test scores in teenagers.

- Null hypothesis: Parental income and GPA have no relationship with each other in college students.

- Alternative hypothesis: Parental income and GPA are positively correlated in college students.

Planning your research design

A research design is your overall strategy for data collection and analysis. It determines the statistical tests you can use to test your hypothesis later on.

First, decide whether your research will use a descriptive, correlational, or experimental design. Experiments directly influence variables, whereas descriptive and correlational studies only measure variables.

- In an experimental design, you can assess a cause-and-effect relationship (e.g., the effect of meditation on test scores) using statistical tests of comparison or regression.

- In a correlational design, you can explore relationships between variables (e.g., parental income and GPA) without any assumption of causality using correlation coefficients and significance tests.

- In a descriptive design, you can study the characteristics of a population or phenomenon (e.g., the prevalence of anxiety in U.S. college students) using statistical tests to draw inferences from sample data.

Your research design also concerns whether you’ll compare participants at the group level or individual level, or both.

- In a between-subjects design, you compare the group-level outcomes of participants who have been exposed to different treatments (e.g., those who performed a meditation exercise vs those who didn’t).

- In a within-subjects design, you compare repeated measures from participants who have participated in all treatments of a study (e.g., scores from before and after performing a meditation exercise).

- In a mixed (factorial) design, one variable is altered between subjects and another is altered within subjects (e.g., pretest and posttest scores from participants who either did or didn’t do a meditation exercise).

Example: Experimental research design

First, you’ll take baseline test scores from participants. Then, your participants will undergo a 5-minute meditation exercise. Finally, you’ll record participants’ scores from a second math test.

In this experiment, the independent variable is the 5-minute meditation exercise, and the dependent variable is the math test score from before and after the intervention.

Example: Correlational research design

In a correlational study, you test whether there is a relationship between parental income and GPA in graduating college students. To collect your data, you will ask participants to fill in a survey and self-report their parents’ incomes and their own GPA.

Measuring variables

When planning a research design, you should operationalise your variables and decide exactly how you will measure them.

For statistical analysis, it’s important to consider the level of measurement of your variables, which tells you what kind of data they contain:

- Categorical data represents groupings. These may be nominal (e.g., gender) or ordinal (e.g. level of language ability).

- Quantitative data represents amounts. These may be on an interval scale (e.g. test score) or a ratio scale (e.g. age).

Many variables can be measured at different levels of precision. For example, age data can be quantitative (8 years old) or categorical (young). If a variable is coded numerically (e.g., level of agreement from 1–5), it doesn’t automatically mean that it’s quantitative instead of categorical.

Identifying the measurement level is important for choosing appropriate statistics and hypothesis tests. For example, you can calculate a mean score with quantitative data, but not with categorical data.

In a research study, along with measures of your variables of interest, you’ll often collect data on relevant participant characteristics.

| Variable | Type of data |
|---|---|
| Age | Quantitative (ratio) |
| Gender | Categorical (nominal) |
| Race or ethnicity | Categorical (nominal) |
| Baseline test scores | Quantitative (interval) |
| Final test scores | Quantitative (interval) |
| Parental income | Quantitative (ratio) |
| GPA | Quantitative (interval) |

In most cases, it’s too difficult or expensive to collect data from every member of the population you’re interested in studying. Instead, you’ll collect data from a sample.

Statistical analysis allows you to apply your findings beyond your own sample as long as you use appropriate sampling procedures. You should aim for a sample that is representative of the population.

Sampling for statistical analysis

There are two main approaches to selecting a sample.

- Probability sampling: every member of the population has a chance of being selected for the study through random selection.

- Non-probability sampling: some members of the population are more likely than others to be selected for the study because of criteria such as convenience or voluntary self-selection.

In theory, for highly generalisable findings, you should use a probability sampling method. Random selection reduces sampling bias and ensures that data from your sample is actually typical of the population. Parametric tests can be used to make strong statistical inferences when data are collected using probability sampling.

But in practice, it’s rarely possible to gather the ideal sample. While non-probability samples are more likely to be biased, they are much easier to recruit and collect data from. Non-parametric tests are more appropriate for non-probability samples, but they result in weaker inferences about the population.

If you want to use parametric tests for non-probability samples, you have to make the case that:

- your sample is representative of the population you’re generalising your findings to.

- your sample lacks systematic bias.

Keep in mind that external validity means that you can only generalise your conclusions to others who share the characteristics of your sample. For instance, results from Western, Educated, Industrialised, Rich and Democratic samples (e.g., college students in the US) aren’t automatically applicable to all non-WEIRD populations.

If you apply parametric tests to data from non-probability samples, be sure to elaborate on the limitations of how far your results can be generalised in your discussion section.

Create an appropriate sampling procedure

Based on the resources available for your research, decide on how you’ll recruit participants.

- Will you have resources to advertise your study widely, including outside of your university setting?

- Will you have the means to recruit a diverse sample that represents a broad population?

- Do you have time to contact and follow up with members of hard-to-reach groups?

Example: Sampling (experimental study)

Your participants are self-selected by their schools. Although you’re using a non-probability sample, you aim for a diverse and representative sample.

Example: Sampling (correlational study)

Your main population of interest is male college students in the US. Using social media advertising, you recruit senior-year male college students from a smaller subpopulation: seven universities in the Boston area.

Calculate sufficient sample size

Before recruiting participants, decide on your sample size either by looking at other studies in your field or using statistics. A sample that’s too small may be unrepresentative of the population, while a sample that’s too large will be more costly than necessary.

There are many sample size calculators online. Different formulas are used depending on whether you have subgroups or how rigorous your study should be (e.g., in clinical research). As a rule of thumb, at least 30 units per subgroup are needed.

To use these calculators, you have to understand and input these key components:

- Significance level (alpha): the risk of rejecting a true null hypothesis that you are willing to take, usually set at 5%.

- Statistical power: the probability of your study detecting an effect of a certain size if there is one, usually 80% or higher.

- Expected effect size: a standardised indication of how large the expected result of your study will be, usually based on other similar studies.

- Population standard deviation: an estimate of the population parameter based on a previous study or a pilot study of your own.
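To show how these components combine, here is a minimal sketch of one common textbook formula: the normal-approximation sample size for comparing the means of two independent groups. It is not the formula behind any particular online calculator, and the function name and defaults are assumptions for illustration. The effect size is taken to be standardised (the expected difference divided by the population standard deviation).

```python
import math
from statistics import NormalDist

def sample_size_per_group(effect_size, alpha=0.05, power=0.80, two_tailed=True):
    """Approximate participants needed per group for a two-group comparison.

    Uses n = 2 * ((z_alpha + z_power) / effect_size) ** 2, a normal
    approximation; exact t-based calculations give slightly larger values.
    """
    z = NormalDist()  # standard normal distribution
    tail = alpha / 2 if two_tailed else alpha
    z_alpha = z.inv_cdf(1 - tail)   # critical value for the significance level
    z_power = z.inv_cdf(power)      # quantile matching the desired power
    n = 2 * ((z_alpha + z_power) / effect_size) ** 2
    return math.ceil(n)

# A medium standardised effect (0.5) versus a large one (0.8).
print(sample_size_per_group(0.5))
print(sample_size_per_group(0.8))
```

Halving the expected effect size roughly quadruples the required sample, which is why a realistic effect size estimate matters more than any other input.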

Once you’ve collected all of your data, you can inspect them and calculate descriptive statistics that summarise them.

Inspect your data

There are various ways to inspect your data, including the following:

- Organising data from each variable in frequency distribution tables.

- Displaying data from a key variable in a bar chart to view the distribution of responses.

- Visualising the relationship between two variables using a scatter plot.

By visualising your data in tables and graphs, you can assess whether your data follow a skewed or normal distribution and whether there are any outliers or missing data.

A normal distribution means that your data are symmetrically distributed around a center where most values lie, with the values tapering off at the tail ends.

In contrast, a skewed distribution is asymmetric and has more values on one end than the other. The shape of the distribution is important to keep in mind because only some descriptive statistics should be used with skewed distributions.

Extreme outliers can also produce misleading statistics, so you may need a systematic approach to dealing with these values.

Calculate measures of central tendency

Measures of central tendency describe where most of the values in a data set lie. Three main measures of central tendency are often reported:

- Mode: the most popular response or value in the data set.

- Median: the value in the exact middle of the data set when ordered from low to high.

- Mean: the sum of all values divided by the number of values.

However, depending on the shape of the distribution and level of measurement, only one or two of these measures may be appropriate. For example, many demographic characteristics can only be described using the mode or proportions, while a variable like reaction time may not have a mode at all.

Calculate measures of variability

Measures of variability tell you how spread out the values in a data set are. Four main measures of variability are often reported:

- Range: the highest value minus the lowest value of the data set.

- Interquartile range: the range of the middle half of the data set.

- Standard deviation: the average distance between each value in your data set and the mean.

- Variance: the square of the standard deviation.

Once again, the shape of the distribution and level of measurement should guide your choice of variability statistics. The interquartile range is the best measure for skewed distributions, while standard deviation and variance provide the best information for normal distributions.
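The measures of central tendency and variability described above can be computed with Python's standard `statistics` module. This is a minimal sketch on hypothetical test scores; the value 98 is included deliberately as an outlier to show how it pulls the mean and range while leaving the median and interquartile range largely unaffected.

```python
import statistics

# Hypothetical test scores, made up for illustration; 98 is an outlier.
scores = [52, 61, 64, 66, 68, 70, 71, 73, 75, 98]

# Quartiles split the ordered data into four equal parts.
q1, q2, q3 = statistics.quantiles(scores, n=4)

summary = {
    "mean": statistics.mean(scores),
    "median": statistics.median(scores),
    "range": max(scores) - min(scores),
    "iqr": q3 - q1,                            # spread of the middle half
    "stdev": statistics.stdev(scores),         # sample standard deviation
    "variance": statistics.variance(scores),   # square of the stdev
}

for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```

Comparing the mean with the median is a quick check for skew: when they differ noticeably, the median and interquartile range are usually the safer summary.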

Using your table, you should check whether the units of the descriptive statistics are comparable for pretest and posttest scores. For example, are the variance levels similar across the groups? Are there any extreme values? If there are, you may need to identify and remove extreme outliers in your data set or transform your data before performing a statistical test.

| | Pretest scores | Posttest scores |
|---|---|---|
| Mean | 68.44 | 75.25 |
| Standard deviation | 9.43 | 9.88 |
| Variance | 88.96 | 97.96 |
| Range | 36.25 | 45.12 |
| Sample size (n) | 30 | 30 |

From this table, we can see that the mean score increased after the meditation exercise, and the variances of the two scores are comparable. Next, we can perform a statistical test to find out if this improvement in test scores is statistically significant in the population.

Example: Descriptive statistics (correlational study)

After collecting data from 653 students, you tabulate descriptive statistics for annual parental income and GPA.

It’s important to check whether you have a broad range of data points. If you don’t, your data may be skewed towards some groups more than others (e.g., high academic achievers), and only limited inferences can be made about a relationship.

| | Parental income (USD) | GPA |
|---|---|---|
| Mean | 62,100 | 3.12 |
| Standard deviation | 15,000 | 0.45 |
| Variance | 225,000,000 | 0.16 |
| Range | 8,000–378,000 | 2.64–4.00 |
| Sample size (n) | 653 | 653 |

A number that describes a sample is called a statistic, while a number describing a population is called a parameter. Using inferential statistics, you can make conclusions about population parameters based on sample statistics.

Researchers often use two main methods (simultaneously) to make inferences in statistics.

- Estimation: calculating population parameters based on sample statistics.

- Hypothesis testing: a formal process for testing research predictions about the population using samples.

You can make two types of estimates of population parameters from sample statistics:

- A point estimate: a value that represents your best guess of the exact parameter.

- An interval estimate: a range of values that represent your best guess of where the parameter lies.

If your aim is to infer and report population characteristics from sample data, it’s best to use both point and interval estimates in your paper.

You can consider a sample statistic a point estimate for the population parameter when you have a representative sample (e.g., in a wide public opinion poll, the proportion of a sample that supports the current government is taken as the population proportion of government supporters).

There’s always error involved in estimation, so you should also provide a confidence interval as an interval estimate to show the variability around a point estimate.

A confidence interval uses the standard error and the z score from the standard normal distribution to convey where you’d generally expect to find the population parameter most of the time.
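As a minimal sketch of that calculation, the function below builds a z-based confidence interval for a mean from the standard error and the standard normal quantile. It is applied to the posttest figures reported earlier (mean 75.25, standard deviation 9.88, 30 participants); the function name is an assumption, and for a sample this small a t-based interval would be slightly wider.

```python
import math
from statistics import NormalDist

def confidence_interval(mean, sd, n, level=0.95):
    """Return (lower, upper) bounds of a z-based confidence interval for a mean."""
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% level
    standard_error = sd / math.sqrt(n)
    margin = z * standard_error
    return mean - margin, mean + margin

low, high = confidence_interval(75.25, 9.88, 30)
print(f"95% CI: {low:.2f} to {high:.2f}")
```

Reporting the point estimate together with this interval lets readers see both the best guess and how much it could plausibly vary.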

Hypothesis testing

Using data from a sample, you can test hypotheses about relationships between variables in the population. Hypothesis testing starts with the assumption that the null hypothesis is true in the population, and you use statistical tests to assess whether the null hypothesis can be rejected or not.

Statistical tests determine where your sample data would lie on an expected distribution of sample data if the null hypothesis were true. These tests give two main outputs:

- A test statistic tells you how much your data differs from the null hypothesis of the test.

- A p value tells you the probability of obtaining results at least as extreme as yours if the null hypothesis is actually true in the population.

Statistical tests come in three main varieties:

- Comparison tests assess group differences in outcomes.

- Regression tests assess cause-and-effect relationships between variables.

- Correlation tests assess relationships between variables without assuming causation.

Your choice of statistical test depends on your research questions, research design, sampling method, and data characteristics.

Parametric tests

Parametric tests make powerful inferences about the population based on sample data. But to use them, some assumptions must be met, and only some types of variables can be used. If your data violate these assumptions, you can perform appropriate data transformations or use alternative non-parametric tests instead.

A regression models the extent to which changes in a predictor variable result in changes in the outcome variable.

- A simple linear regression includes one predictor variable and one outcome variable.

- A multiple linear regression includes two or more predictor variables and one outcome variable.
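For the simple case of one predictor and one outcome, the fitted line can be found with ordinary least squares. The sketch below is an illustration only: the function name and the data (hours of practice versus a score) are hypothetical and do not come from either research example.

```python
def fit_line(x, y):
    """Ordinary least squares fit of y = intercept + slope * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of squared deviations of x, and of cross-products of x and y.
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical predictor and outcome values, made up for illustration.
hours = [1, 2, 3, 4, 5]
score = [2.0, 4.1, 5.9, 8.2, 9.8]

slope, intercept = fit_line(hours, score)
residuals = [yi - (intercept + slope * xi) for xi, yi in zip(hours, score)]
rss = sum(r ** 2 for r in residuals)  # residual sum of squares
print(f"slope = {slope:.2f}, intercept = {intercept:.2f}, RSS = {rss:.3f}")
```

The slope is the expected change in the outcome for a one-unit change in the predictor; a small residual sum of squares indicates that the line describes the data closely.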

Comparison tests usually compare the means of groups. These may be the means of different groups within a sample (e.g., a treatment and control group), the means of one sample group taken at different times (e.g., pretest and posttest scores), or a sample mean and a population mean.

- A t test is for exactly 1 or 2 groups when the sample is small (30 or less).

- A z test is for exactly 1 or 2 groups when the sample is large.

- An ANOVA is for 3 or more groups.

The z and t tests have subtypes based on the number and types of samples and the hypotheses:

- If you have only one sample that you want to compare to a population mean, use a one-sample test.

- If you have paired measurements (within-subjects design), use a dependent (paired) samples test.

- If you have completely separate measurements from two unmatched groups (between-subjects design), use an independent (unpaired) samples test.

- If you expect a difference between groups in a specific direction, use a one-tailed test.

- If you don’t have any expectations for the direction of a difference between groups, use a two-tailed test.

The only parametric correlation test is Pearson’s r. The correlation coefficient (r) tells you the strength of a linear relationship between two quantitative variables.

However, to test whether the correlation in the sample is strong enough to be important in the population, you also need to perform a significance test of the correlation coefficient, usually a t test, to obtain a p value. This test uses your sample size to calculate how much the correlation coefficient differs from zero in the population.

You use a dependent-samples, one-tailed t test to assess whether the meditation exercise significantly improved math test scores. The test gives you:

- a t value (test statistic) of 3.00

- a p value of 0.0028
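The test statistic of a dependent-samples t test is the mean of the paired differences divided by its standard error. The sketch below shows that calculation on six hypothetical pretest and posttest pairs; these are not the study's data, so the result will not match the t value reported above. Converting t to a p value requires the t distribution with n − 1 degrees of freedom, which is left to a statistical table or package.

```python
import math
import statistics

def paired_t(before, after):
    """Return (t statistic, degrees of freedom) for paired measurements."""
    diffs = [a - b for b, a in zip(before, after)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    # Standard error of the mean difference, using the sample stdev.
    standard_error = statistics.stdev(diffs) / math.sqrt(n)
    return mean_diff / standard_error, n - 1

# Hypothetical scores for six participants, made up for illustration.
pretest = [62, 70, 58, 75, 66, 71]
posttest = [68, 74, 57, 83, 70, 77]

t_value, df = paired_t(pretest, posttest)
print(f"t = {t_value:.2f} with {df} degrees of freedom")
```

Working on the differences is what makes the test "dependent": each participant acts as their own control, so variation between participants drops out.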

Although Pearson’s r is a test statistic, it doesn’t tell you anything about how significant the correlation is in the population. You also need to test whether this sample correlation coefficient is large enough to demonstrate a correlation in the population.

A t test can also determine how significantly a correlation coefficient differs from zero based on sample size. Since you expect a positive correlation between parental income and GPA, you use a one-sample, one-tailed t test. The t test gives you:

- a t value of 3.08

- a p value of 0.001
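The t statistic for a correlation coefficient is computed from r and the sample size as t = r·√((n − 2)/(1 − r²)). The text does not state the value of r, so the sketch below assumes a hypothetical r of 0.12 with the 653 students from the example, which gives a t value close to the one reported. The p value uses a normal approximation, a simplification that is reasonable only because the sample is large.

```python
import math
from statistics import NormalDist

def correlation_t(r, n):
    """t statistic for testing whether a Pearson correlation differs from zero."""
    return r * math.sqrt((n - 2) / (1 - r ** 2))

def one_tailed_p_large_sample(t):
    """Upper-tail p value using the normal approximation (large n only)."""
    return 1 - NormalDist().cdf(t)

r_assumed = 0.12   # hypothetical value, not given in the text
n_students = 653   # sample size from the correlational example

t_value = correlation_t(r_assumed, n_students)
p_value = one_tailed_p_large_sample(t_value)
print(f"t = {t_value:.2f}, one-tailed p = {p_value:.4f}")
```

The same r would give a much smaller t in a small sample, which is why a correlation coefficient alone cannot tell you whether the relationship holds in the population.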

The final step of statistical analysis is interpreting your results.

Statistical significance

In hypothesis testing, statistical significance is the main criterion for forming conclusions. You compare your p value to a set significance level (usually 0.05) to decide whether your results are statistically significant or non-significant.

Statistically significant results are considered unlikely to have arisen solely due to chance. There is only a very low chance of such a result occurring if the null hypothesis is true in the population.

This means that you believe the meditation intervention, rather than random factors, directly caused the increase in test scores.

Example: Interpret your results (correlational study)

You compare your p value of 0.001 to your significance threshold of 0.05. With a p value under this threshold, you can reject the null hypothesis. This indicates a statistically significant correlation between parental income and GPA in male college students.

Note that correlation doesn’t always mean causation, because there are often many underlying factors contributing to a complex variable like GPA. Even if one variable is related to another, this may be because of a third variable influencing both of them, or indirect links between the two variables.

Effect size

A statistically significant result doesn’t necessarily mean that there are important real life applications or clinical outcomes for a finding.

In contrast, the effect size indicates the practical significance of your results. It’s important to report effect sizes along with your inferential statistics for a complete picture of your results. You should also report interval estimates of effect sizes if you’re writing an APA style paper.

With a Cohen’s d of 0.72, there’s medium to high practical significance to your finding that the meditation exercise improved test scores.

Example: Effect size (correlational study)

To determine the effect size of the correlation coefficient, you compare your Pearson’s r value to Cohen’s effect size criteria.
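For the experimental example, a standardised mean difference such as Cohen's d divides the difference in means by a standard deviation. The text does not say which standard deviation was used, so the sketch below is an assumption: dividing by the pretest standard deviation from the descriptive statistics table reproduces the reported 0.72, while a pooled standard deviation would give a slightly smaller value. The labels follow Cohen's conventional benchmarks of 0.2, 0.5, and 0.8.

```python
def standardized_mean_difference(mean_before, mean_after, sd_reference):
    """Difference in means expressed in units of a reference standard deviation."""
    return (mean_after - mean_before) / sd_reference

def describe_effect(d):
    """Rough label using Cohen's conventional benchmarks (0.2, 0.5, 0.8)."""
    size = abs(d)
    if size < 0.2:
        return "negligible"
    if size < 0.5:
        return "small"
    if size < 0.8:
        return "medium"
    return "large"

# Means and pretest standard deviation from the descriptive statistics table.
d = standardized_mean_difference(68.44, 75.25, 9.43)
print(f"d = {d:.2f} ({describe_effect(d)})")
```

Whichever standardiser you choose, state it in the paper, since the same data can yield noticeably different effect sizes under different conventions.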

Decision errors

Type I and Type II errors are mistakes made in research conclusions. A Type I error means rejecting the null hypothesis when it’s actually true, while a Type II error means failing to reject the null hypothesis when it’s false.

You can aim to minimise the risk of these errors by selecting an optimal significance level and ensuring high power. However, there’s a trade-off between the two errors, so a fine balance is necessary.
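The trade-off can be made concrete with a small calculation. The sketch below assumes a two-sided one-sample z test with a known standard deviation, a standardized effect size of 0.5, and a sample of 30; all three are illustrative choices, not values from the text. It shows that lowering the significance level (the Type I error rate) raises the Type II error rate when everything else is held fixed.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal distribution (mean 0, sd 1)

def type_ii_error(alpha, effect_size, n):
    """Type II error rate (beta) of a two-sided one-sample z test.

    effect_size is the true mean shift in standard deviation units.
    """
    z_crit = STD_NORMAL.inv_cdf(1 - alpha / 2)
    shift = effect_size * math.sqrt(n)
    # Power: probability that the test statistic lands in either rejection region.
    power = (1 - STD_NORMAL.cdf(z_crit - shift)) + STD_NORMAL.cdf(-z_crit - shift)
    return 1 - power

for alpha in (0.10, 0.05, 0.01):
    beta = type_ii_error(alpha, effect_size=0.5, n=30)
    print(f"alpha = {alpha:.2f} -> beta = {beta:.3f}, power = {1 - beta:.3f}")
```

Increasing the sample size is the usual way to reduce both error rates at once, since a larger n raises power for any given significance level.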

Frequentist versus Bayesian statistics

Traditionally, frequentist statistics emphasises null hypothesis significance testing and always starts with the assumption of a true null hypothesis.

However, Bayesian statistics has grown in popularity as an alternative approach in the last few decades. In this approach, you use previous research to continually update your hypotheses based on your expectations and observations.

Bayes factor compares the relative strength of evidence for the null versus the alternative hypothesis rather than making a conclusion about rejecting the null hypothesis or not.
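A minimal worked example of a Bayes factor, under assumptions chosen purely for illustration: the data are a count of successes in a fixed number of trials, the null hypothesis fixes the success probability at 0.5, and the alternative places a uniform prior on it. With a uniform prior, the marginal likelihood of any count is exactly 1 / (trials + 1), so the ratio can be computed directly.

```python
from math import comb

def bayes_factor_01(successes, trials, null_p=0.5):
    """Bayes factor BF01 for H0: p = null_p versus H1: p ~ Uniform(0, 1)."""
    likelihood_h0 = (
        comb(trials, successes)
        * null_p ** successes
        * (1 - null_p) ** (trials - successes)
    )
    # Integrating the binomial likelihood over a uniform prior gives 1 / (n + 1).
    marginal_h1 = 1 / (trials + 1)
    return likelihood_h0 / marginal_h1

# Hypothetical outcomes from 100 trials.
for k in (50, 60, 70):
    bf01 = bayes_factor_01(k, 100)
    favoured = "null" if bf01 > 1 else "alternative"
    print(f"{k}/100 successes: BF01 = {bf01:.4g} (evidence favours the {favoured})")
```

A BF01 above 1 means the data are more probable under the null, and a value below 1 means they are more probable under the alternative. The result is a graded measure of evidence rather than a reject or fail-to-reject decision.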

Hypothesis testing is a formal procedure for investigating our ideas about the world using statistics. It is used by scientists to test specific predictions, called hypotheses, by calculating how likely it is that a pattern or relationship between variables could have arisen by chance.

The research methods you use depend on the type of data you need to answer your research question.

- If you want to measure something or test a hypothesis, use quantitative methods. If you want to explore ideas, thoughts, and meanings, use qualitative methods.

- If you want to analyse a large amount of readily available data, use secondary data. If you want data specific to your purposes with control over how they are generated, collect primary data.

- If you want to establish cause-and-effect relationships between variables, use experimental methods. If you want to understand the characteristics of a research subject, use descriptive methods.

Statistical analysis is the main method for analyzing quantitative research data. It uses probabilities and models to test predictions about a population from sample data.


Effective Use of Statistics in Research – Methods and Tools for Data Analysis

Remember that sinking feeling you get when you are asked to analyze your data? Now that you have all the required raw data, you need to test your hypothesis statistically. Presenting your numerical data with sound statistics will also help break the stereotype of the biology student who can’t do math.

Statistical methods are essential for scientific research. In fact, they run through the whole research process: planning, designing, collecting data, analyzing, drawing meaningful interpretations, and reporting findings. Furthermore, the results acquired from a research project are meaningless raw data unless they are analyzed with statistical tools. Therefore, applying statistics in research is necessary to justify research findings. In this article, we will discuss how statistical methods can help draw meaningful conclusions from biological studies.


Role of Statistics in Biological Research

Statistics is a branch of science that deals with the collection, organization, and analysis of data, and with drawing inferences from a sample about the whole population. Moreover, it aids in designing a study more meticulously and gives a logical basis for the conclusion drawn about a hypothesis. Biology focuses on living organisms and their complex pathways, which are highly dynamic and cannot be explained by logical reasoning alone. Statistics, in turn, defines and explains patterns in a study based on the sample sizes used. To be precise, statistics reveals the trend in the conducted study.

Biological researchers often disregard statistics when planning their research and mainly use statistical tools at the end of their experiment. This gives rise to a complicated set of results that are not easily analyzed with statistical tools. Statistics in research can help a researcher approach the study in a stepwise manner, wherein the statistical analysis follows these steps:

1. Establishing a Sample Size

Usually, a biological experiment starts with choosing samples and selecting the right number of replicate experiments. Basic statistical principles such as randomness and the law of large numbers apply here. Drawing a sufficiently large random sample from the population helps extrapolate statistical findings and reduces experimental bias and error.
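One common way to choose the number of samples is an a priori power calculation. The sketch below uses the standard normal-approximation formula for comparing two group means, n = 2 × ((z for 1 − α/2) + (z for power))² / d², where d is the expected standardized effect size. The function name and the default values (α = 0.05, power = 0.80) are illustrative assumptions, and an exact t-based calculation typically gives a slightly larger number.

```python
import math
from statistics import NormalDist

def sample_size_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate per-group n for a two-sided comparison of two means.

    Uses the normal approximation; effect_size is Cohen's d.
    """
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)  # critical value of the test
    z_power = std_normal.inv_cdf(power)          # quantile for the desired power
    return math.ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)

# Smaller expected effects require many more samples per group.
for d in (0.8, 0.5, 0.2):
    print(f"d = {d}: about {sample_size_per_group(d)} samples per group")
```

Because the required n grows with the inverse square of the effect size, halving the expected effect roughly quadruples the sample needed.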

2. Testing of Hypothesis

When conducting a statistical study with a large sample pool, biological researchers must make sure that a conclusion is statistically significant. To achieve this, a researcher must state a hypothesis before examining the distribution of the data. Furthermore, statistics in research helps interpret whether the data cluster near the mean or are spread across the distribution. These trends help analyze the sample and test the hypothesis.

3. Data Interpretation Through Analysis

When dealing with large data sets, statistics in research assists in data analysis. This helps researchers draw sound conclusions from their experiments and observations. Concluding the study manually or from visual observation may give erroneous results; a thorough statistical analysis takes into account the relevant statistical measures and the variance in the sample to provide a detailed interpretation of the data. Researchers can thus produce detailed, meaningful data to support the conclusion.

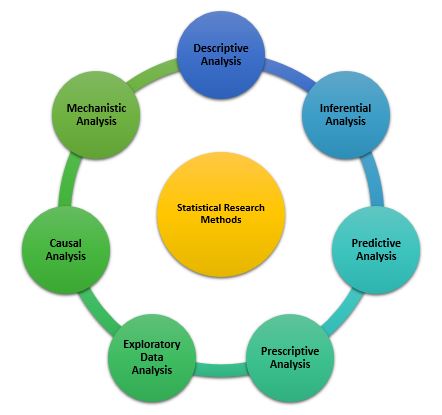

Types of Statistical Research Methods That Aid in Data Analysis

Statistical analysis is the process of examining samples of data for patterns or trends that help researchers anticipate situations and draw appropriate research conclusions. Based on the type of data, statistical analyses are of the following types:

1. Descriptive Analysis

Descriptive statistical analysis organizes and summarizes large data sets into graphs and tables. It involves processes such as tabulation, measures of central tendency, measures of dispersion or variance, and skewness measurements.
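The measures listed above can be computed with Python's standard library alone. This is a minimal sketch on hypothetical plant-height data; the `describe` helper is an illustrative name, and skewness is calculated here as the simple population (Fisher-Pearson) coefficient, which is one of several definitions in use.

```python
from statistics import mean, median, pstdev, stdev

def describe(values):
    """Return common descriptive statistics for a list of numbers."""
    m = mean(values)
    sd_pop = pstdev(values)
    # Population skewness: third central moment divided by sd cubed.
    skew = sum((v - m) ** 3 for v in values) / len(values) / sd_pop ** 3
    return {
        "n": len(values),
        "mean": m,
        "median": median(values),
        "range": max(values) - min(values),
        "sample_sd": stdev(values),
        "skewness": skew,
    }

# Hypothetical plant heights in cm; one tall plant skews the data to the right.
heights = [12.1, 12.8, 13.0, 13.4, 13.9, 14.2, 14.8, 21.5]
for name, value in describe(heights).items():
    print(f"{name}: {value:.2f}")
```

In right-skewed data like this, the mean is pulled above the median, which is one reason to report both.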

2. Inferential Analysis

Inferential statistical analysis extrapolates the data acquired from a small sample to the complete population. This analysis helps draw conclusions and make decisions about the whole population on the basis of sample data. It is a highly recommended statistical method for research projects that work with a smaller sample size and aim to extrapolate conclusions to a larger population.
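A confidence interval is a simple instance of this kind of extrapolation: it uses the sample mean and its standard error to give a plausible range for the population mean. The sketch below uses the normal approximation so that it runs with the standard library only; for small samples a t-based interval would be wider and is usually preferred. The data and the function name are hypothetical.

```python
import math
from statistics import NormalDist, mean, stdev

def mean_confidence_interval(sample, confidence=0.95):
    """Normal-approximation confidence interval for a population mean."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    standard_error = stdev(sample) / math.sqrt(len(sample))
    centre = mean(sample)
    return centre - z * standard_error, centre + z * standard_error

# Hypothetical enzyme activity measurements from 10 samples.
activity = [4.1, 3.8, 4.4, 4.0, 3.9, 4.3, 4.2, 3.7, 4.5, 4.1]
low, high = mean_confidence_interval(activity)
print(f"sample mean = {mean(activity):.2f}, 95% CI = ({low:.2f}, {high:.2f})")
```

Raising the confidence level widens the interval, while collecting more samples narrows it.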

3. Predictive Analysis

Predictive analysis is used to predict future events. It is used by marketing companies, insurance organizations, online service providers, data-driven marketers, and financial corporations.

4. Prescriptive Analysis

Prescriptive analysis examines data to find out what can be done next. It is widely used in business analysis for finding the best possible outcome for a situation. It is closely related to descriptive and predictive analysis. However, prescriptive analysis deals with recommending the most appropriate option among the available choices.

5. Exploratory Data Analysis

Exploratory data analysis (EDA) is generally the first step of the data analysis process and is conducted before any other statistical analysis technique. It focuses on analyzing patterns in the data to recognize potential relationships. EDA is used to discover unknown associations within data, inspect the collected data for missing values, and obtain maximum insight.

6. Causal Analysis

Causal analysis assists in understanding and determining the reasons why things happen the way they do. This analysis helps identify the root cause of failures or simply find the basic reason why something could happen. For example, causal analysis is used to understand what will happen to a given variable if another variable changes.

7. Mechanistic Analysis

This is the least common type of statistical analysis. Mechanistic analysis is used in big data analytics and the biological sciences. It aims to understand exactly how individual changes in some variables cause corresponding changes in other variables, while excluding external influences.

Important Statistical Tools In Research

Researchers in the biological field often find statistical analysis the scariest aspect of completing research. However, statistical tools can help researchers understand what to do with data and how to interpret the results, making this process as easy as possible.

1. Statistical Package for the Social Sciences (SPSS)

It is a widely used software package for human behavior research. SPSS can compile descriptive statistics as well as graphical depictions of results. Moreover, it includes the option to create scripts that automate analysis or carry out more advanced statistical processing.

2. R Foundation for Statistical Computing

This software package is used in human behavior research and other fields. R is a powerful tool, but it has a steep learning curve and requires a certain level of coding. It also comes with an active community that is engaged in building and enhancing the software and its associated packages.

3. MATLAB (The Mathworks)

It is an analytical platform and a programming language. Researchers and engineers use this software to write their own code to help answer their research questions. While MATLAB can be a difficult tool for novices, it offers flexibility in terms of what the researcher needs.

4. Microsoft Excel

MS Excel is not the best solution for statistical analysis in research, but it offers a wide variety of tools for data visualization and simple statistics. It is easy to generate summaries and customizable graphs and figures. MS Excel is the most accessible option for those wanting to start with statistics.

5. Statistical Analysis System (SAS)

It is a statistical platform used in business, healthcare, and human behavior research alike. It can carry out advanced analyses and produce publication-worthy figures, tables, and charts.

6. GraphPad Prism