Linear Mixed Models in Linguistics and Psychology: A Comprehensive Introduction

6.2 The hypothesis matrix illustrated with a three-level factor

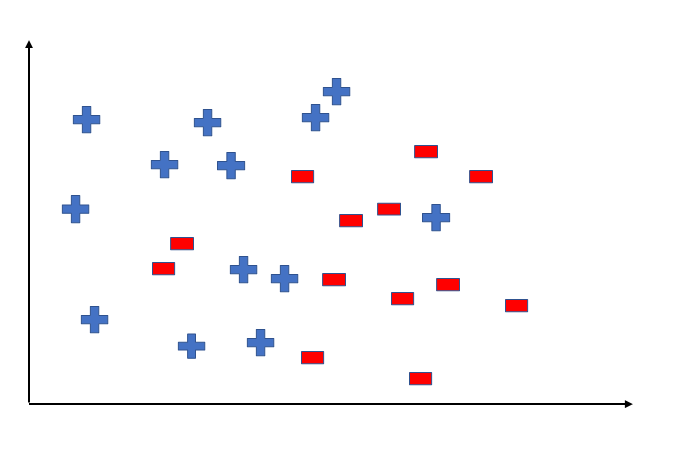

Consider an example with three word classes: nouns, verbs, and adjectives. We load simulated data from a lexical decision task with response times as the dependent variable. The research question is: do response times differ as a function of the between-subject factor word class, with its three levels nouns, verbs, and adjectives? We make the ad hoc assumption that nouns may have longer response times and that adjectives may have shorter response times. We specify word class as a between-subject factor here, although in cognitive science experiments word class will usually vary within subjects and between items. The within- or between-subjects status of an effect is independent of its contrast coding, however, so we assume a between-subjects manipulation for ease of exposition. The concepts presented here extend to repeated measures designs, which are often analyzed using hierarchical Bayesian (linear mixed) models.

| Factor | N data | Est. mean (ms) | Std. dev. (ms) | Std. error (ms) |
|---|---|---|---|---|
| adjectives | 4 | 400.2 | 19.9 | 9.9 |
| nouns | 4 | 500.0 | 20.0 | 10.0 |
| verbs | 4 | 450.2 | 20.0 | 10.0 |

As shown in Table 6.2, the estimated means reflect our assumptions about the true means in the data simulation: response times are longest for nouns and shortest for adjectives. In the following sections, we use this data set to illustrate sum contrasts. Furthermore, we will use an additional data set to illustrate repeated, polynomial, and custom contrasts. In practice, usually only one set of contrasts is selected when the expected pattern of means is formulated during the design of the experiment.

6.2.1 Sum contrasts

For didactic purposes, the next sections describe sum contrasts. Suppose the expectation is that nouns are responded to more slowly, and adjectives more quickly, than the GM (grand mean) response time. Then the research question could be: Do nouns differ from the GM, and do adjectives differ from the GM? And if so, are they above or below the GM? Writing \(\mu_1\) for the mean of nouns, \(\mu_2\) for the mean of adjectives, and \(\mu_3\) for the mean of verbs, we want to estimate the following two quantities:

\[\begin{equation} \beta_1 = \mu_1 - \frac{\mu_1+\mu_2+\mu_3}{3} = \mu_1 - GM \end{equation}\]

\[\begin{equation} \beta_2 = \mu_2 - \frac{\mu_1+\mu_2+\mu_3}{3} = \mu_2 - GM \end{equation}\]

This translates into the following two hypotheses:

\[\begin{equation} H_{0_1}: \mu_1 = \frac{\mu_1+\mu_2+\mu_3}{3} = GM \end{equation}\]

\[\begin{equation} H_{0_2}: \mu_2 = \frac{\mu_1+\mu_2+\mu_3}{3} = GM \end{equation}\]

\(H_{0_1}\) can also be written as:

\[\begin{align} \label{h01} & \mu_1 =\frac{\mu_1+\mu_2+\mu_3}{3}\\ \Leftrightarrow & \mu_1 - \frac{\mu_1+\mu_2+\mu_3}{3} = 0\\ \Leftrightarrow & \frac{2}{3} \mu_1 - \frac{1}{3}\mu_2 - \frac{1}{3}\mu_3 = 0 \end{align}\]

This corresponds to estimating the quantity \(\beta_1 = \frac{2}{3} \mu_1 - \frac{1}{3}\mu_2 - \frac{1}{3}\mu_3\). Here, the weights \(2/3, -1/3, -1/3\) are informative about how to combine the condition means to estimate the linear model coefficient and to define the null hypothesis.

\(H_{0_2}\) is also rewritten as:

\[\begin{align}\label{h02} & \mu_2 = \frac{\mu_1+\mu_2+\mu_3}{3}\\ \Leftrightarrow & \mu_2 - \frac{\mu_1+\mu_2+\mu_3}{3} = 0 \\ \Leftrightarrow & -\frac{1}{3}\mu_1 + \frac{2}{3} \mu_2 - \frac{1}{3} \mu_3 = 0 \end{align}\]

This corresponds to estimating the quantity \(\beta_2 = -\frac{1}{3}\mu_1 + \frac{2}{3} \mu_2 - \frac{1}{3} \mu_3\). Here, the weights are \(-1/3, 2/3, -1/3\), and they again indicate how to combine the condition means for estimating the regression coefficient and for defining the null hypothesis.
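As a quick numerical check, the two sets of weights can be applied to the estimated means from Table 6.2. The following is a minimal sketch in Python (used here only for illustration; the chapter itself works in R). It assumes that \(\mu_1\), \(\mu_2\), and \(\mu_3\) stand for nouns, adjectives, and verbs, following the research question above, and all variable names are our own.

```python
from fractions import Fraction as F

# Estimated condition means (ms) from Table 6.2.
# Assumption: mu_1 = nouns, mu_2 = adjectives, mu_3 = verbs.
means = [F("500.0"), F("400.2"), F("450.2")]

w_h01 = [F(2, 3), F(-1, 3), F(-1, 3)]   # weights for beta_1 = mu_1 - GM
w_h02 = [F(-1, 3), F(2, 3), F(-1, 3)]   # weights for beta_2 = mu_2 - GM

def weighted_sum(weights, values):
    """Combine condition means using one row of weights."""
    return sum(w * v for w, v in zip(weights, values))

grand_mean = sum(means) / len(means)
beta_1 = weighted_sum(w_h01, means)
beta_2 = weighted_sum(w_h02, means)

# Print the grand mean and the two deviations from it as decimals.
print(float(grand_mean), float(beta_1), float(beta_2))
```

Under these assumptions, each weighted sum equals the corresponding condition mean minus the grand mean, which is what the derivations above state.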

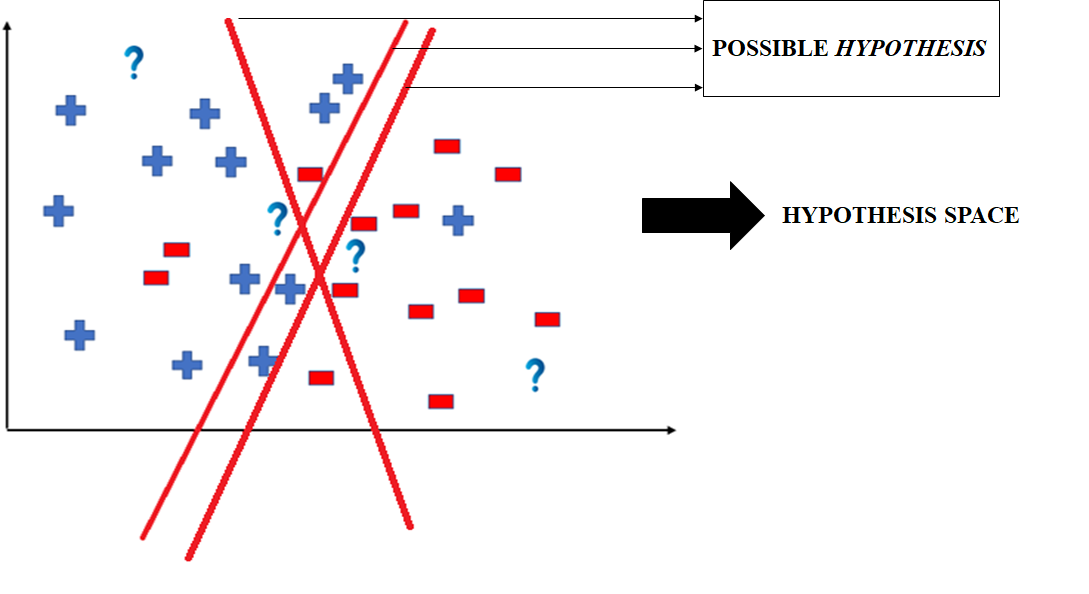

6.2.2 The hypothesis matrix

The weights of the condition means are not only useful for defining parameter estimates and hypotheses. They are also the first step in a powerful method for generating the contrasts needed to test these hypotheses in a linear model. So far, we have explained some of the contrast codings that exist and the hypotheses that they test. Given a data set and a set of comparisons to estimate (and hypotheses to test), the procedure would be to check whether any of the contrasts that we encountered earlier happens to estimate exactly the comparisons of interest. Sometimes one of these existing contrasts suffices. At other times, however, our research questions may not correspond exactly to any of the standard contrasts provided in R. For these cases, or simply for more complex designs, it is very useful to know how contrast matrices are created.

Indeed, a relatively simple procedure exists in which we write our comparisons or hypotheses formally, extract the weights of the condition means from them, and then automatically generate the contrast matrix that we need in order to estimate these comparisons or test these hypotheses in a linear model. With this method, it is not necessary to find a match among the contrast matrices provided by the family of R functions whose names start with the prefix contr. Instead, one can simply define the comparisons to estimate or the hypotheses to test and obtain the correct contrast matrix for them automatically. Here, for pedagogical reasons, we show how to apply this procedure in a case where the comparisons/hypotheses do correspond to one of the existing contrasts.

Defining a custom contrast matrix involves four steps:

- Write down the estimated comparisons or hypotheses

- Extract the weights and write them into what we will call a hypothesis matrix

- Apply the generalized matrix inverse to the hypothesis matrix to create the contrast matrix

- Assign the contrast matrix to the factor and run the linear (mixed) model

Let us apply this four-step procedure to our example of the sum contrast. The first step, writing down the estimated parameters and hypotheses, is shown above. The second step involves writing down the weights that each comparison/hypothesis gives to the condition means. The weights for the first comparison or null hypothesis are wH01=c(+2/3, -1/3, -1/3), and the weights for the second comparison or null hypothesis are wH02=c(-1/3, +2/3, -1/3).

Before writing these into a hypothesis matrix, we also define the estimated quantity and the null hypothesis for the intercept term. The intercept parameter estimates the mean across all conditions:

\[\begin{align} \alpha = \frac{\mu_1 + \mu_2 + \mu_3}{3} \\ \alpha = \frac{1}{3} \mu_1 + \frac{1}{3}\mu_2 + \frac{1}{3}\mu_3 \end{align}\]

This corresponds to the null hypothesis that the mean across all conditions is zero:

\[\begin{align} H_{0_0}: &\frac{\mu_1 + \mu_2 + \mu_3}{3} = 0 \\ H_{0_0}: &\frac{1}{3} \mu_1 + \frac{1}{3}\mu_2 + \frac{1}{3}\mu_3 = 0 \end{align}\]

This estimate and null hypothesis have weights of \(1/3\) for all condition means. The weights from all three model parameters/hypotheses defined so far are now combined and written into a matrix that we refer to as the hypothesis matrix (Hc).

Each set of weights is first entered as a row into the matrix (command rbind()). This has mathematical reasons (see Schad et al. 2020b). However, we then switch rows and columns of the matrix for easier readability using the command t() (this transposes the matrix, i.e., switches rows and columns). The command fractions() turns the decimals into fractions to improve readability.

Now that the condition weights have been written into the hypothesis matrix, the third step of the procedure is implemented: a matrix operation called the ‘generalized matrix inverse’ 2 is used to obtain the contrast matrix that is needed to test these hypotheses in a linear model. In R this next step is done using the function ginv() from the MASS package. Here, we define a function ginv2() for nicer formatting of the output. 3

Applying the generalized inverse to the hypothesis matrix results in the new matrix XcSum. This is the contrast matrix \(X_c\) that estimates exactly those comparisons and tests exactly those hypotheses that were specified earlier.
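Steps two and three can be sketched outside R as well. The example below is an illustrative Python version, not the chapter's own code: it writes the three sets of weights into a hypothesis matrix and inverts it with exact fractions. It relies on the fact that for a square, full-rank matrix the generalized inverse coincides with the ordinary matrix inverse, so a plain Gauss-Jordan routine is enough here; ginv() in R covers the general case. The function name `invert` and the row labels in the comments are assumptions made for this sketch.

```python
from fractions import Fraction as F

def invert(matrix):
    """Invert a square, full-rank matrix of Fractions by Gauss-Jordan elimination."""
    n = len(matrix)
    # Augment with the identity matrix: [A | I]
    aug = [list(row) + [F(int(i == j)) for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        # Find a row with a non-zero pivot (fails if the matrix is singular).
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        # Scale the pivot row so that the pivot equals 1.
        p = aug[col][col]
        aug[col] = [x / p for x in aug[col]]
        # Eliminate this column from all other rows.
        for r in range(n):
            if r != col and aug[r][col] != 0:
                f = aug[r][col]
                aug[r] = [x - f * y for x, y in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

# Hypothesis matrix: one row of weights per parameter/hypothesis.
Hc = [
    [F(1, 3), F(1, 3), F(1, 3)],     # intercept: grand mean
    [F(2, 3), F(-1, 3), F(-1, 3)],   # first comparison: mu_1 - GM
    [F(-1, 3), F(2, 3), F(-1, 3)],   # second comparison: mu_2 - GM
]

# Contrast matrix: rows = factor levels, columns = intercept and two contrasts.
Xc = invert(Hc)
for row in Xc:
    print([str(x) for x in row])
```

Each row of the result describes one factor level: the first two levels are coded \(+1\) in their own contrast column, and the third level is coded \(-1\) in both.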

This contrast matrix corresponds exactly to the sum contrasts described above. In the case of the sum contrast, the contrast matrix looks very different from the hypothesis matrix. The contrast matrix in sum contrasts codes with \(+1\) the condition that is to be compared to the GM. The condition that is never compared to the GM is coded as \(-1\). This is a very different interpretation than the two-condition sum contrast we saw in earlier chapters!

The important point here is that unless we know the relationship between the hypothesis matrix and the contrast matrix, the meaning of the coefficients is completely opaque.

To verify this custom-made contrast matrix, we compare it to the sum contrast matrix generated by the R function contr.sum() in the stats package. Once the intercept term, a column of ones, is added to the contr.sum() matrix, the two matrices are identical.
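The comparison can be mimicked in a few lines of Python. This is only a sketch: `sum_contrasts()` is a hypothetical helper that builds the sum-contrast coding as described in the text (it is not a port of contr.sum()), and `custom` is the matrix obtained by inverting the hypothesis matrix.

```python
def sum_contrasts(k):
    """Sum-contrast coding for k levels: k rows, k - 1 columns.

    Level i (for i < k) is coded +1 in column i and 0 elsewhere;
    the last level is coded -1 in every column.
    """
    rows = [[1 if i == j else 0 for j in range(k - 1)] for i in range(k - 1)]
    rows.append([-1] * (k - 1))
    return rows

def add_intercept(matrix):
    """Prepend a column of ones (the intercept term) to a matrix."""
    return [[1] + row for row in matrix]

# Contrast matrix derived from the hypothesis matrix (intercept column first).
custom = [[1, 1, 0], [1, 0, 1], [1, -1, -1]]

# True if the two matrices agree entry by entry.
print(add_intercept(sum_contrasts(3)) == custom)
```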

In order to estimate model parameters, step four in our procedure involves assigning the sum contrasts to the factor F in our example data and running a linear (mixed) model. This allows us to estimate the regression coefficients associated with each contrast. We compare these to the data shown above (Table 6.2) to check whether the regression coefficients actually correspond to the differences of condition means, as intended. When assigning the contrast matrix to the factor, it is necessary to remove the intercept column, as the intercept is added automatically by the linear model function lm().

The linear model regression coefficients show the GM response time of \(450\) ms in the intercept. Remember that the first regression coefficient FcH01 was designed to estimate the extent to which nouns are responded to more slowly than the GM. The regression coefficient FcH01 (‘Estimate’) of \(50\) reflects the difference between nouns (\(500\) ms) and the GM of \(450\) ms. The second estimate of interest was how response times for adjectives differ from the GM. The fact that the second regression coefficient FcH02 is close to \(-50\) indicates that response times for adjectives (\(400\) ms) are 50 ms faster than the GM of \(450\) ms. Verbs, which are coded \(-1\) in both contrasts, receive no coefficient of their own; their deviation from the GM is implied as \(-(50 + (-50)) = 0\), in line with their estimated mean of \(450\) ms.
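The link between coefficients and condition means can be checked by multiplying the contrast matrix with the coefficients. The Python sketch below does this with the means from Table 6.2 instead of fitting a model: in a one-factor model with a full set of contrasts, the fitted values equal the condition means, so the coefficients can be computed from the means directly. The level ordering (nouns, adjectives, verbs) is an assumption of this sketch.

```python
from fractions import Fraction as F

# Estimated condition means (ms); assumed level order: nouns, adjectives, verbs.
means = {"nouns": F("500.0"), "adjectives": F("400.2"), "verbs": F("450.2")}

gm = sum(means.values()) / 3        # intercept: grand mean
b1 = means["nouns"] - gm            # first slope: nouns minus GM
b2 = means["adjectives"] - gm       # second slope: adjectives minus GM

# Rows of the contrast matrix: (intercept, first contrast, second contrast).
Xc = {"nouns": (1, 1, 0), "adjectives": (1, 0, 1), "verbs": (1, -1, -1)}

# Fitted value for each level = its contrast-matrix row times the coefficients.
fitted = {level: row[0] * gm + row[1] * b1 + row[2] * b2
          for level, row in Xc.items()}

for level, value in fitted.items():
    print(level, float(value))
```

The verbs row shows why the third level needs no coefficient of its own: its deviation from the GM is the negative sum of the two slopes.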

We have now not only derived contrasts, parameter estimates, and hypotheses for the sum contrast, we have also used a powerful and highly general procedure that is used to generate contrasts for many kinds of different hypotheses and experimental designs.

6.2.3 Generating contrasts: The hypr package

To work with the 4-step procedure, i.e., to flexibly design contrasts to estimate specific comparisons, we have developed the R package hypr (Rabe et al. 2020). This package allows the user to specify comparisons as null hypotheses, and based on these null hypotheses, it automatically generates contrast matrices that allow the user to estimate these comparisons and test these hypotheses in linear models. It thus considerably simplifies the implementation of the 4-step procedure outlined above.

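To illustrate the functionality of the hypr package, we will use the two comparisons and associated null hypotheses that we had defined and analyzed in the previous section:

\[\begin{equation} H_{0_1}: \mu_1 = \frac{\mu_1+\mu_2+\mu_3}{3} = GM \end{equation}\]

\[\begin{equation} H_{0_2}: \mu_2 = \frac{\mu_1+\mu_2+\mu_3}{3} = GM \end{equation}\]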

These null hypotheses are effectively comparisons between condition means or between bundles of condition means. That is, \(\mu_1\) is compared to the GM and \(\mu_2\) is compared to the GM. These two comparisons/hypotheses can be entered directly into R using the hypr() function from the hypr package. To do so, we use labels to indicate factor levels. E.g., nouns, adjectives, and verbs can represent factor levels \(\mu_1\), \(\mu_2\), and \(\mu_3\). The first comparison/hypothesis specifies that \(\mu_1 = \frac{\mu_1+\mu_2+\mu_3}{3}\). This can be written as a formula in R: nouns ~ (nouns + adjectives + verbs)/3. The second comparison/hypothesis is that \(\mu_2 = \frac{\mu_1+\mu_2+\mu_3}{3}\), which can be written in R as adjectives ~ (nouns + adjectives + verbs)/3.

The results show that the null hypotheses or comparisons between condition means have been re-written into a form where \(0\) is coded on the left side of the equation, and the condition means together with associated weights are written on the right side of the equation. This presentation makes it easy to see the weights of the condition means to code a certain null hypothesis or comparison. The next part of the results shows the hypothesis matrix, which contains the weights from the condition means. Thus, hypr takes as input comparisons between condition means, which also define null hypotheses, and automatically extracts the corresponding weights and encodes them into the hypothesis matrix. hypr moreover applies the generalized matrix inverse to obtain the contrast matrix from the hypothesis matrix. Note that the different steps correspond exactly to the steps we had carried out manually in the preceding section. hypr automatically performs these steps for us. We can now extract the contrast matrix by a simple function call:

We can assign this contrast to our factor as we did before, and again fit a linear model:

In hypr, the focus lies on the estimation of contrasts that code comparisons between condition means or groups of condition means. Thus, the null hypotheses that one specifies imply the estimation of a difference between condition means or bundles of condition means. The output of the hypr() function (see the first section of the results) makes this clear: it formulates the null hypotheses in a way that also illustrates the estimation of model parameters. I.e., the null hypothesis H0.b1: 0 = 2/3*m1 - 1/3*m2 - 1/3*m3 corresponds to a parameter estimate of b1 = 2/3*m1 - 1/3*m2 - 1/3*m3. The resulting contrasts will then allow us to estimate the specified differences between condition means or bundles of condition means.
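The rewriting of a hypothesis into the form "0 = weighted sum of condition means" is simple bookkeeping: the coefficients on the right-hand side are subtracted from those on the left-hand side. The Python sketch below illustrates that idea only. It is not hypr's implementation or API, and the function `weights()` and the dictionary representation of a hypothesis are assumptions made for this example.

```python
from fractions import Fraction as F

LEVELS = ["m1", "m2", "m3"]

def weights(lhs, rhs):
    """Return weights w such that 'lhs = rhs' reads 0 = sum(w_i * m_i).

    lhs and rhs map level names to their coefficients on each side of
    the hypothesis; moving everything to one side gives lhs - rhs.
    """
    return [F(lhs.get(m, 0)) - F(rhs.get(m, 0)) for m in LEVELS]

# The grand mean weights every condition mean by 1/3.
grand_mean = {m: F(1, 3) for m in LEVELS}

b1 = weights({"m1": 1}, grand_mean)   # m1 = (m1 + m2 + m3) / 3
b2 = weights({"m2": 1}, grand_mean)   # m2 = (m1 + m2 + m3) / 3

print([str(w) for w in b1])
print([str(w) for w in b2])
```

The first list reproduces the weights in H0.b1 quoted above; stacking such rows (plus a row for the intercept) gives the hypothesis matrix.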

At this point, there is no need to understand in detail what this means. We refer the interested reader to Schad et al. (2020b). For a quick overview, we recommend a vignette explaining the generalized inverse in the matlib package (Friendly, Fox, and Chalmers 2020).

The function fractions() from the MASS package is used to make the output more easily readable, and a second function is used to keep row and column names.


- v.19(7); 2019 Jul

Hypothesis tests


- Hypothesis tests are used to assess whether a difference between two samples represents a real difference between the populations from which the samples were taken.

- A null hypothesis of ‘no difference’ is taken as a starting point, and we calculate the probability that both sets of data came from the same population. This probability is expressed as a p-value.

- When the null hypothesis is false, p-values tend to be small. When the null hypothesis is true, any p-value is equally likely.

Learning objectives

By reading this article, you should be able to:

- Explain why hypothesis testing is used.

- Use a table to determine which hypothesis test should be used for a particular situation.

- Interpret a p-value.

A hypothesis test is a procedure used in statistics to assess whether a particular viewpoint is likely to be true. Hypothesis tests follow a strict protocol, and they generate a ‘p-value’, on the basis of which a decision is made about the truth of the hypothesis under investigation. The routine statistical ‘tests’ used in research (t-tests, χ² tests, Mann–Whitney tests, etc.) are all hypothesis tests, and in spite of their differences they are all used in essentially the same way. But why do we use them at all?

Comparing the heights of two individuals is easy: we can measure their height in a standardised way and compare them. When we want to compare the heights of two small well-defined groups (for example two groups of children), we need to use a summary statistic that we can calculate for each group. Such summaries (means, medians, etc.) form the basis of descriptive statistics, and are well described elsewhere. 1 However, a problem arises when we try to compare very large groups or populations: it may be impractical or even impossible to take a measurement from everyone in the population, and by the time you do so, the population itself will have changed. A similar problem arises when we try to describe the effects of drugs—for example by how much on average does a particular vasopressor increase MAP?

To solve this problem, we use random samples to estimate values for populations. By convention, the values we calculate from samples are referred to as statistics and denoted by Latin letters (x̄ for sample mean; SD for sample standard deviation), while the unknown population values are called parameters and denoted by Greek letters (μ for population mean, σ for population standard deviation).

Inferential statistics describes the methods we use to estimate population parameters from random samples; how we can quantify the level of inaccuracy in a sample statistic; and how we can go on to use these estimates to compare populations.

Sampling error

There are many reasons why a sample may give an inaccurate picture of the population it represents: it may be biased, it may not be big enough, and it may not be truly random. However, even if we have been careful to avoid these pitfalls, there is an inherent difference between the sample and the population at large. To illustrate this, let us imagine that the actual average height of males in London is 174 cm. If I were to sample 100 male Londoners and take a mean of their heights, I would be very unlikely to get exactly 174 cm. Furthermore, if somebody else were to perform the same exercise, it would be unlikely that they would get the same answer as I did. The sample mean is different each time it is taken, and the way it differs from the actual mean of the population is described by the standard error of the mean (standard error, or SEM). The standard error is larger if there is a lot of variation in the population, and becomes smaller as the sample size increases. It is calculated thus:

\[ SEM = \frac{SD}{\sqrt{n}} \]

where SD is the sample standard deviation, and n is the sample size.

As errors are normally distributed, we can use this to estimate a 95% confidence interval on our sample mean as follows:

\[ \bar{x} \pm (1.96 \times SEM) \]

We can interpret this as meaning ‘We are 95% confident that the actual mean is within this range.’
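The two calculations can be sketched in a few lines of Python. The sample of heights below is invented for illustration, and the function names are our own; the multiplier 1.96 is the normal-distribution value used in the text, which is an approximation when the sample is small.

```python
import math
import statistics

def sem(sd, n):
    """Standard error of the mean: SD divided by the square root of n."""
    return sd / math.sqrt(n)

def ci95(mean, sd, n):
    """Approximate 95% confidence interval: mean +/- 1.96 * SEM."""
    half_width = 1.96 * sem(sd, n)
    return mean - half_width, mean + half_width

# Hypothetical sample of heights (cm), invented for illustration.
heights = [168.0, 171.5, 174.0, 176.5, 180.0, 172.0, 169.5, 178.5]
m = statistics.mean(heights)
sd = statistics.stdev(heights)          # sample SD (n - 1 denominator)
low, high = ci95(m, sd, len(heights))
print(f"mean={m:.1f} cm, SEM={sem(sd, len(heights)):.2f}, "
      f"95% CI=({low:.1f}, {high:.1f})")
```

Quadrupling the sample size halves the standard error, which is why larger samples give narrower confidence intervals.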

Some confusion arises at this point between the SD and the standard error. The SD is a measure of variation in the sample. The range x̄ ± (1.96 × SD) will normally contain 95% of all your data. It can be used to illustrate the spread of the data and shows what values are likely. In contrast, standard error tells you about the precision of the mean and is used to calculate confidence intervals.

One straightforward way to compare two samples is to use confidence intervals. If we calculate the mean height of two groups and find that the 95% confidence intervals do not overlap, this can be taken as evidence of a difference between the two means. This method of statistical inference is reasonably intuitive and can be used in many situations. 2 Many journals, however, prefer to report inferential statistics using p-values.

Inference testing using a null hypothesis

In 1925, the British statistician R.A. Fisher described a technique for comparing groups using a null hypothesis, a method which has dominated statistical comparison ever since. The technique itself is rather straightforward, but often gets lost in the mechanics of how it is done. To illustrate, imagine we want to compare the HR of two different groups of people. We take a random sample from each group, which we call our data. Then:

- (i) Assume that both samples came from the same group. This is our ‘null hypothesis’.

- (ii) Calculate the probability that an experiment would give us these data, assuming that the null hypothesis is true. We express this probability as a p-value, a number between 0 and 1, where 0 is ‘impossible’ and 1 is ‘certain’.

- (iii) If the probability of the data is low, we reject the null hypothesis and conclude that there must be a difference between the two groups.

Formally, we can define a p-value as ‘the probability of finding the observed result or a more extreme result, if the null hypothesis were true.’ Standard practice is to set a cut-off at p<0.05 (this cut-off is termed the alpha value). If the null hypothesis were true, a result such as this would only occur 5% of the time or less; this in turn would indicate that the null hypothesis itself is unlikely. Fisher described the process as follows: ‘Set a low standard of significance at the 5 per cent point, and ignore entirely all results which fail to reach this level. A scientific fact should be regarded as experimentally established only if a properly designed experiment rarely fails to give this level of significance.’ 3 This probably remains the most succinct description of the procedure.

A question which often arises at this point is ‘Why do we use a null hypothesis?’ The simple answer is that it is easy: we can readily describe what we would expect of our data under a null hypothesis, we know how data would behave, and we can readily work out the probability of getting the result that we did. It therefore makes a very simple starting point for our probability assessment. All probabilities require a set of starting conditions, in much the same way that measuring the distance to London needs a starting point. The null hypothesis can be thought of as an easy place to put the start of your ruler.

If a null hypothesis is rejected, an alternate hypothesis must be adopted in its place. The null and alternate hypotheses must be mutually exclusive, but must also between them describe all situations. If a null hypothesis is ‘no difference exists’ then the alternate should be simply ‘a difference exists’.

Hypothesis testing in practice

The components of a hypothesis test can be readily described using the acronym GOST: identify the Groups you wish to compare; define the Outcome to be measured; collect and Summarise the data; then evaluate the likelihood of the null hypothesis, using a Test statistic.

When considering groups, think first about how many. Is there just one group being compared against an audit standard, or are you comparing one group with another? Some studies may wish to compare more than two groups. Another situation may involve a single group measured at different points in time, for example before or after a particular treatment. In this situation each participant is compared with themselves, and this is often referred to as a ‘paired’ or a ‘repeated measures’ design. It is possible to combine these types of groups—for example a researcher may measure arterial BP on a number of different occasions in five different groups of patients. Such studies can be difficult, both to analyse and interpret.

In other studies we may want to see how a continuous variable (such as age or height) affects the outcomes. These techniques involve regression analysis, and are beyond the scope of this article.

The outcome measures are the data being collected. This may be a continuous measure, such as temperature or BMI, or it may be a categorical measure, such as ASA status or surgical specialty. Often, inexperienced researchers will strive to collect lots of outcome measures in an attempt to find something that differs between the groups of interest; if this is done, a ‘primary outcome measure’ should be identified before the research begins. In addition, the results of any hypothesis tests will need to be corrected for multiple measures.

The summary and the test statistic will be defined by the type of data that have been collected. The test statistic is calculated and then transformed into a p-value using tables or software. It is worth looking at two common tests in a little more detail: the χ² test and the t-test.

Categorical data: the χ² test

The χ² test of independence is a test for comparing categorical outcomes in two or more groups. For example, a number of trials have compared surgical site infections in patients who have been given different concentrations of oxygen perioperatively. In the PROXI trial, 685 patients received oxygen 80% and 701 patients received oxygen 30%. 4 In the 80% group there were 131 infections, while in the 30% group there were 141 infections. In this study, the groups were oxygen 80% and oxygen 30%, and the outcome measure was the presence of a surgical site infection.

The summary is a table (Table 1), and the hypothesis test compares this table (the ‘observed’ table) with the table that would be expected if the proportion of infections in each group was the same (the ‘expected’ table). The test statistic is χ², from which a p-value is calculated. In this instance the p-value is 0.64, which means that results at least this extreme would occur 64% of the time if the null hypothesis were true. We thus have no evidence to reject the null hypothesis; the observed difference is compatible with sampling variation alone and does not indicate an inherent difference between the two groups.
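The calculation for the PROXI counts can be reproduced with a short Python sketch. It computes the Pearson χ² statistic without a continuity correction (an assumption on our part; the text does not say which variant was used) and converts it to a p-value using the fact that, with one degree of freedom, the upper tail probability equals erfc(√(χ²/2)). The function name is our own.

```python
import math

def chi_square_2x2(a, b, c, d):
    """Pearson chi-squared test for the 2x2 table [[a, b], [c, d]].

    Returns (statistic, p_value). No continuity correction is applied.
    With 1 degree of freedom the p-value is erfc(sqrt(statistic / 2)).
    """
    observed = [[a, b], [c, d]]
    row_totals = [a + b, c + d]
    col_totals = [a + c, b + d]
    n = a + b + c + d
    stat = 0.0
    for i in range(2):
        for j in range(2):
            # Expected count if the outcome were independent of the group.
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed[i][j] - expected) ** 2 / expected
    return stat, math.erfc(math.sqrt(stat / 2))

# PROXI counts from the text: rows = infection / no infection,
# columns = oxygen 80% / oxygen 30%.
stat, p = chi_square_2x2(131, 141, 554, 560)
print(f"chi-squared = {stat:.3f}, p = {p:.2f}")
```

Under these assumptions the p-value agrees with the 0.64 reported in the text.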

Table 1

Summary of the results of the PROXI trial. Figures are numbers of patients.

| Outcome | Oxygen 80% | Oxygen 30% |
|---|---|---|
| Infection | 131 | 141 |
| No infection | 554 | 560 |
| Total | 685 | 701 |

Continuous data: the t-test

The t-test is a statistical method for comparing means, and is one of the most widely used hypothesis tests. Imagine a study where we try to see if there is a difference in the onset time of a new neuromuscular blocking agent compared with suxamethonium. We could enlist 100 volunteers, give them a general anaesthetic, and randomise 50 of them to receive the new drug and 50 of them to receive suxamethonium. We then time how long it takes (in seconds) to have ideal intubation conditions, as measured by a quantitative nerve stimulator. Our data are therefore a list of times. In this case, the groups are ‘new drug’ and suxamethonium, and the outcome is time, measured in seconds. This can be summarised by using means; the hypothesis test will compare the means of the two groups, using a p-value calculated from a ‘t statistic’. Hopefully it is becoming obvious at this point that the test statistic is usually identified by a letter, and this letter is often cited in the name of the test.

The t-test comes in a number of guises, depending on the comparison being made. A single sample can be compared with a standard (Is the BMI of school leavers in this town different from the national average?); two samples can be compared with each other, as in the example above; or the same study subjects can be measured at two different times. The latter case is referred to as a paired t-test, because each participant provides a pair of measurements, such as in a pre- or postintervention study.
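For the two-sample case, the t statistic itself is easy to compute by hand. The Python sketch below uses the pooled-variance (Student) form and invented onset times; it stops at the t statistic and its degrees of freedom, because turning t into a p-value requires the t distribution, which in practice comes from tables or statistical software. The function name and the data are assumptions made for illustration.

```python
import math
import statistics

def independent_t(sample_a, sample_b):
    """Student's t statistic for two independent samples (pooled variance).

    Returns (t, degrees_of_freedom).
    """
    n_a, n_b = len(sample_a), len(sample_b)
    var_a = statistics.variance(sample_a)   # sample variances (n - 1)
    var_b = statistics.variance(sample_b)
    df = n_a + n_b - 2
    pooled = ((n_a - 1) * var_a + (n_b - 1) * var_b) / df
    se_diff = math.sqrt(pooled * (1 / n_a + 1 / n_b))
    t = (statistics.mean(sample_a) - statistics.mean(sample_b)) / se_diff
    return t, df

# Hypothetical onset times in seconds, invented for illustration.
new_drug = [55, 60, 65, 70, 75]
suxamethonium = [45, 50, 55, 60, 65]
t, df = independent_t(new_drug, suxamethonium)
print(f"t = {t:.2f} on {df} degrees of freedom")
```

The statistic is simply the difference between the two means divided by the standard error of that difference.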

A large number of methods for testing hypotheses exist; the commonest ones and their uses are described in Table 2. In each case, the test can be described by detailing the groups being compared (Table 2, columns), the outcome measures (rows), the summary, and the test statistic. The decision to use a particular test or method should be made during the planning stages of a trial or experiment. At this stage, an estimate needs to be made of how many test subjects will be needed. Such calculations are described in detail elsewhere. 5

Table 2

The principal types of hypothesis test. Tests comparing more than two samples can indicate that one group differs from the others, but will not identify which. Subsequent ‘post hoc’ testing is required if a difference is found.

| Type of data | 1 group (comparison with a standard) | 1 group (before and after) | 2 groups | More than 2 groups | Measured over a continuous range |
|---|---|---|---|---|---|
| Categorical | Binomial test | McNemar's test | χ² test, or Fisher's exact test (2×2 tables), or comparison of proportions | χ² test | Logistic regression |
| Continuous (normal) | One-sample t-test | Paired t-test | Independent samples t-test | Analysis of variance (ANOVA) | Regression analysis, correlation |
| Continuous (non-parametric) | Sign test (for median) | Sign test, or Wilcoxon matched-pairs test | Mann–Whitney test | Kruskal–Wallis test | Spearman's rank correlation |

Controversies surrounding hypothesis testing

Although hypothesis tests have been the basis of modern science since the middle of the 20th century, they have been plagued by misconceptions from the outset; this has led to what has been described as a crisis in science in the last few years: some journals have gone so far as to ban p-values outright. 6 This is not because of any flaw in the concept of a p-value, but because of a lack of understanding of what p-values mean.

Possibly the most pervasive misunderstanding is the belief that the p-value is the chance that the null hypothesis is true, or that the p-value represents the frequency with which you will be wrong if you reject the null hypothesis (i.e. claim to have found a difference). This interpretation has frequently made it into the literature, and is a very easy trap to fall into when discussing hypothesis tests. To avoid this, it is important to remember that the p-value is telling us something about our sample, not about the null hypothesis. Put in simple terms, we would like to know the probability that the null hypothesis is true, given our data. The p-value tells us the probability of getting these data if the null hypothesis were true, which is not the same thing. This fallacy is referred to as ‘flipping the conditional’; the probability of an outcome under certain conditions is not the same as the probability of those conditions given that the outcome has happened.

A useful example is to imagine a magic trick in which you select a card from a normal deck of 52 cards, and the performer reveals your chosen card in a surprising manner. If the performer were relying purely on chance, this would only happen on average once in every 52 attempts. On the basis of this, we conclude that it is unlikely that the magician is simply relying on chance. Although simple, we have just performed an entire hypothesis test. We have declared a null hypothesis (the performer was relying on chance); we have even calculated a p-value (1 in 52, ≈0.02); and on the basis of this low p-value we have rejected our null hypothesis. We would, however, be wrong to suggest that there is a probability of 0.02 that the performer is relying on chance; that is not what our figure of 0.02 is telling us.
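The difference between the two conditional probabilities can be made concrete with Bayes' rule. In the Python sketch below, the prior belief that the performer is relying on chance and the assumption that a genuine trick always succeeds are both hypothetical inputs, chosen only to show that the same p-value of 1/52 is compatible with very different probabilities that chance was at work.

```python
def prob_chance_given_reveal(prior_chance, p_reveal_if_trick=1.0, deck_size=52):
    """Posterior probability that the performer relied on chance.

    Bayes' rule:
    P(chance | reveal) = P(reveal | chance) * P(chance) / P(reveal)
    """
    p_reveal_if_chance = 1 / deck_size      # the 'p-value' from the text
    prior_trick = 1 - prior_chance
    p_reveal = (p_reveal_if_chance * prior_chance
                + p_reveal_if_trick * prior_trick)
    return p_reveal_if_chance * prior_chance / p_reveal

# Same data, different (hypothetical) prior beliefs about chance.
for prior in (0.01, 0.5, 0.99):
    print(prior, round(prob_chance_given_reveal(prior), 3))
```

Only the first factor, P(reveal | chance), corresponds to the p-value; the answer to "is the performer relying on chance?" also depends on what we believed beforehand.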

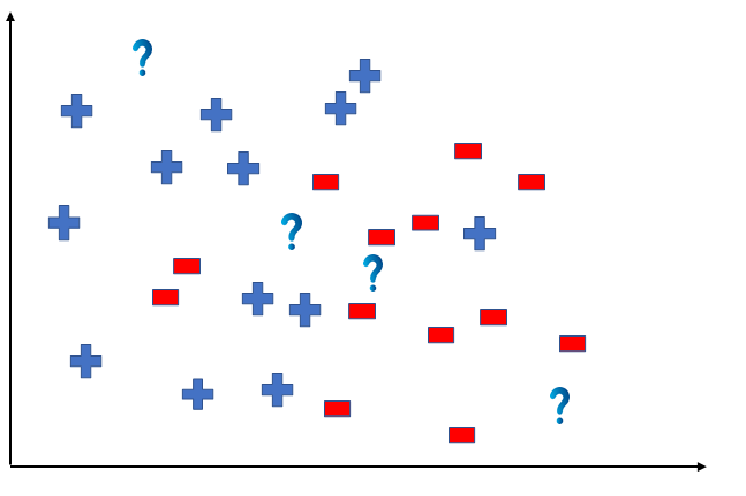

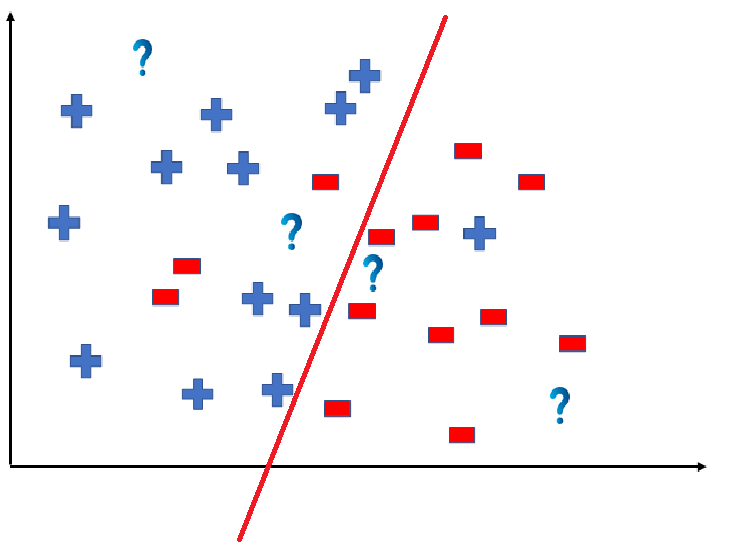

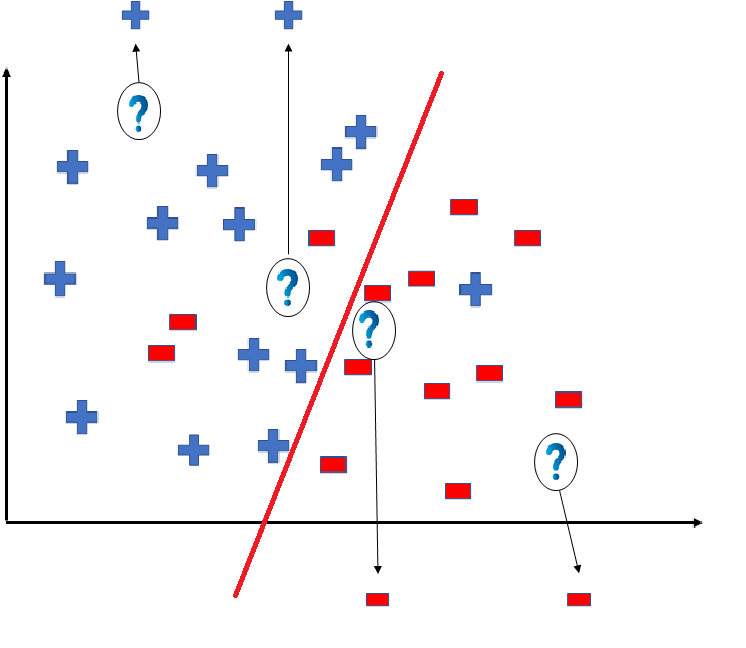

To explore this further we can create two populations, and watch what happens when we use simulation to take repeated samples to compare these populations. Computers allow us to do this repeatedly, and to see what p- value s are generated (see Supplementary online material). 7 Fig 1 illustrates the results of 100,000 simulated t -tests, generated in two set of circumstances. In Fig 1 a , we have a situation in which there is a difference between the two populations. The p- value s cluster below the 0.05 cut-off, although there is a small proportion with p >0.05. Interestingly, the proportion of comparisons where p <0.05 is 0.8 or 80%, which is the power of the study (the sample size was specifically calculated to give a power of 80%).

Fig 1. The p-values generated when 100,000 t-tests are used to compare two samples taken from defined populations. (a) The populations have a difference and the p-values are mostly significant. (b) The samples were taken from the same population (i.e. the null hypothesis is true) and the p-values are distributed uniformly.

Figure 1b depicts the situation where repeated samples are taken from the same parent population (i.e. the null hypothesis is true). Somewhat surprisingly, all p-values occur with equal frequency, with p<0.05 occurring 5% of the time in the long run. Thus, when the null hypothesis is true, a type I error will occur with a frequency equal to the alpha significance cut-off.
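A simulation of this kind can be sketched in a few lines. This is not the code from the supplementary material. To stay within the Python standard library it swaps the t-test for a two-sample z-test with a known standard deviation, and it runs 2,000 comparisons rather than 100,000. The effect size (0.5 standard deviations) and the group size (63 per group) are assumptions chosen so that the power is roughly 80%.

```python
import random
from statistics import NormalDist, fmean

STD_NORMAL = NormalDist()


def z_test_p_value(sample_a, sample_b, sigma=1.0):
    """Two-sided p-value for a difference in means, assuming a known common SD."""
    n_a, n_b = len(sample_a), len(sample_b)
    standard_error = sigma * (1 / n_a + 1 / n_b) ** 0.5
    z = (fmean(sample_a) - fmean(sample_b)) / standard_error
    return 2 * (1 - STD_NORMAL.cdf(abs(z)))


def proportion_significant(true_difference, n_per_group=63, n_sims=2000,
                           alpha=0.05, seed=1):
    """Fraction of simulated comparisons that give p < alpha."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_sims):
        # Draw one sample from each population (both have SD 1)
        a = [rng.gauss(true_difference, 1.0) for _ in range(n_per_group)]
        b = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
        if z_test_p_value(a, b) < alpha:
            hits += 1
    return hits / n_sims


print("populations differ:", proportion_significant(0.5))
print("null hypothesis true:", proportion_significant(0.0))
```

With these assumed settings the first proportion should land near the power of the study and the second near the alpha cut-off, mirroring the two panels of Fig 1.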

Figure 1 highlights the underlying problem: when presented with a p-value <0.05, is it possible, with no further information, to determine whether you are looking at something from Fig 1a or Fig 1b?

Finally, it cannot be stressed enough that although hypothesis testing identifies whether or not a difference is likely, it is up to us as clinicians to decide whether or not a statistically significant difference is also significant clinically.

Hypothesis testing: what next?

As mentioned above, some have suggested moving away from p-values, but it is not entirely clear what we should use instead. Some sources have advocated focussing more on effect size; however, without a measure of significance we have merely returned to our original problem: how do we know that our difference is not just a result of sampling variation?

One solution is to use Bayesian statistics. Up until very recently, these techniques have been considered both too difficult and not sufficiently rigorous. However, recent advances in computing have led to the development of Bayesian equivalents of a number of standard hypothesis tests [8]. These generate a ‘Bayes Factor’ (BF), which tells us how much more (or less) likely the alternative hypothesis is, relative to the null hypothesis, after our experiment. A BF of 1.0 indicates that the odds of the alternative hypothesis have not changed. A BF of 10 indicates that the odds in favour of the alternative hypothesis are 10 times higher than we originally thought. A number of classifications for BF exist; a BF greater than 10 can be considered ‘strong evidence’, while a BF greater than 100 can be classed as ‘decisive’.
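The way a BF updates our belief can be illustrated with a short calculation: the posterior odds are the prior odds multiplied by the BF. The function name `update_probability` and the prior probability of 0.5 are assumptions made for this sketch, which does not compute a BF from data.

```python
def update_probability(prior_probability, bayes_factor):
    """Posterior P(H1), given a prior P(H1) and a Bayes factor in favour of H1."""
    prior_odds = prior_probability / (1 - prior_probability)
    posterior_odds = bayes_factor * prior_odds
    return posterior_odds / (1 + posterior_odds)


# Assumed starting point: the alternative hypothesis is as likely as not (0.5)
for bf in (1, 10, 100):
    print(f"BF = {bf:>3}: posterior P(H1) = {update_probability(0.5, bf):.3f}")
```

A BF of 1 leaves the starting probability untouched, while larger values move it progressively closer to 1.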

Figures such as the BF can be quoted in conjunction with the traditional p-value, but it remains to be seen whether they will become mainstream.

Declaration of interest

The author declares that they have no conflict of interest.

The associated MCQs (to support CME/CPD activity) will be accessible at www.bjaed.org/cme/home by subscribers to BJA Education.

Jason Walker FRCA FRSS BSc (Hons) Math Stat is a consultant anaesthetist at Ysbyty Gwynedd Hospital, Bangor, Wales, and an honorary senior lecturer at Bangor University. He is vice chair of his local research ethics committee, and an examiner for the Primary FRCA.

Matrix codes: 1A03, 2A04, 3J03

Supplementary data to this article can be found online at https://doi.org/10.1016/j.bjae.2019.03.006.

How to Write a Strong Hypothesis | Steps & Examples

Published on May 6, 2022 by Shona McCombes. Revised on November 20, 2023.

A hypothesis is a statement that can be tested by scientific research. If you want to test a relationship between two or more variables, you need to write hypotheses before you start your experiment or data collection.

Example: Hypothesis

Daily apple consumption leads to fewer doctor’s visits.

A hypothesis states your predictions about what your research will find. It is a tentative answer to your research question that has not yet been tested. For some research projects, you might have to write several hypotheses that address different aspects of your research question.

A hypothesis is not just a guess – it should be based on existing theories and knowledge. It also has to be testable, which means you can support or refute it through scientific research methods (such as experiments, observations and statistical analysis of data).

Variables in hypotheses

Hypotheses propose a relationship between two or more types of variables.

- An independent variable is something the researcher changes or controls.

- A dependent variable is something the researcher observes and measures.

If there are any control variables, extraneous variables, or confounding variables, be sure to jot those down as you go to minimize the chances that research bias will affect your results.

For example, in a hypothesis that relates exposure to the sun to levels of happiness, the independent variable is exposure to the sun – the assumed cause. The dependent variable is the level of happiness – the assumed effect.

Step 1. Ask a question

Writing a hypothesis begins with a research question that you want to answer. The question should be focused, specific, and researchable within the constraints of your project.

Step 2. Do some preliminary research

Your initial answer to the question should be based on what is already known about the topic. Look for theories and previous studies to help you form educated assumptions about what your research will find.

At this stage, you might construct a conceptual framework to ensure that you’re embarking on a relevant topic. This can also help you identify which variables you will study and what you think the relationships are between them. Sometimes, you’ll have to operationalize more complex constructs.

Step 3. Formulate your hypothesis

Now you should have some idea of what you expect to find. Write your initial answer to the question in a clear, concise sentence.

Step 4. Refine your hypothesis

You need to make sure your hypothesis is specific and testable. There are various ways of phrasing a hypothesis, but all the terms you use should have clear definitions, and the hypothesis should contain:

- The relevant variables

- The specific group being studied

- The predicted outcome of the experiment or analysis

Step 5. Phrase your hypothesis in three ways

To identify the variables, you can write a simple prediction in if…then form. The first part of the sentence states the independent variable and the second part states the dependent variable.

In academic research, hypotheses are more commonly phrased in terms of correlations or effects, where you directly state the predicted relationship between variables.

If you are comparing two groups, the hypothesis can state what difference you expect to find between them.

Step 6. Write a null hypothesis

If your research involves statistical hypothesis testing, you will also have to write a null hypothesis. The null hypothesis is the default position that there is no association between the variables. The null hypothesis is written as H₀, while the alternative hypothesis is H₁ or Hₐ.

- H₀: The number of lectures attended by first-year students has no effect on their final exam scores.

- H₁: The number of lectures attended by first-year students has a positive effect on their final exam scores.

| Research question | Hypothesis | Null hypothesis |
|---|---|---|
| What are the health benefits of eating an apple a day? | Increasing apple consumption in over-60s will result in decreasing frequency of doctor’s visits. | Increasing apple consumption in over-60s will have no effect on frequency of doctor’s visits. |
| Which airlines have the most delays? | Low-cost airlines are more likely to have delays than premium airlines. | Low-cost and premium airlines are equally likely to have delays. |
| Can flexible work arrangements improve job satisfaction? | Employees who have flexible working hours will report greater job satisfaction than employees who work fixed hours. | There is no relationship between working hour flexibility and job satisfaction. |
| How effective is high school sex education at reducing teen pregnancies? | Teenagers who received sex education lessons throughout high school will have lower rates of unplanned pregnancy than teenagers who did not receive any sex education. | High school sex education has no effect on teen pregnancy rates. |
| What effect does daily use of social media have on the attention span of under-16s? | There is a negative correlation between time spent on social media and attention span in under-16s. | There is no relationship between social media use and attention span in under-16s. |

Null and alternative hypotheses are used in statistical hypothesis testing. The null hypothesis of a test always predicts no effect or no relationship between variables, while the alternative hypothesis states your research prediction of an effect or relationship.

Hypothesis testing is a formal procedure for investigating our ideas about the world using statistics. It is used by scientists to test specific predictions, called hypotheses, by calculating how likely it is that a pattern or relationship between variables could have arisen by chance.

McCombes, S. (2023, November 20). How to Write a Strong Hypothesis | Steps & Examples. Scribbr. Retrieved September 9, 2024, from https://www.scribbr.com/methodology/hypothesis/

What Is A Research (Scientific) Hypothesis? A plain-language explainer + examples

By: Derek Jansen (MBA) | Reviewed By: Dr Eunice Rautenbach | June 2020

If you’re new to the world of research, or it’s your first time writing a dissertation or thesis, you’re probably noticing that the words “research hypothesis” and “scientific hypothesis” are used quite a bit, and you’re wondering what they mean in a research context.

“Hypothesis” is one of those words that people use loosely, thinking they understand what it means. However, it has a very specific meaning within academic research. So, it’s important to understand the exact meaning before you start hypothesizing.

Research Hypothesis 101

- What is a hypothesis?

- What is a research hypothesis (scientific hypothesis)?

- Requirements for a research hypothesis

- Definition of a research hypothesis

- The null hypothesis

What is a hypothesis?

Let’s start with the general definition of a hypothesis (not a research hypothesis or scientific hypothesis), according to the Cambridge Dictionary:

Hypothesis: an idea or explanation for something that is based on known facts but has not yet been proved.

In other words, it’s a statement that provides an explanation for why or how something works, based on facts (or some reasonable assumptions), but that has not yet been specifically tested. For example, a hypothesis might look something like this:

Hypothesis: sleep impacts academic performance.

This statement predicts that academic performance will be influenced by the amount and/or quality of sleep a student engages in – sounds reasonable, right? It’s based on reasonable assumptions, underpinned by what we currently know about sleep and health (from the existing literature). So, loosely speaking, we could call it a hypothesis, at least by the dictionary definition.

But that’s not good enough…

Unfortunately, that’s not quite sophisticated enough to describe a research hypothesis (also sometimes called a scientific hypothesis), and it wouldn’t be acceptable in a dissertation, thesis or research paper. In the world of academic research, a statement needs a few more criteria to constitute a true research hypothesis.

What is a research hypothesis?

A research hypothesis (also called a scientific hypothesis) is a statement about the expected outcome of a study (for example, a dissertation or thesis). To constitute a quality hypothesis, the statement needs to have three attributes – specificity, clarity and testability.

Let’s take a look at these more closely.

Hypothesis Essential #1: Specificity & Clarity

A good research hypothesis needs to be extremely clear and articulate about both what’s being assessed (who or what variables are involved) and the expected outcome (for example, a difference between groups, a relationship between variables, etc.).

Let’s stick with our sleepy students example and look at how this statement could be more specific and clear.

Hypothesis: Students who sleep at least 8 hours per night will, on average, achieve higher grades in standardised tests than students who sleep less than 8 hours a night.

As you can see, the statement is very specific as it identifies the variables involved (sleep hours and test grades), the parties involved (two groups of students), as well as the predicted relationship type (a positive relationship). There’s no ambiguity or uncertainty about who or what is involved in the statement, and the expected outcome is clear.

Contrast that to the original hypothesis we looked at – “Sleep impacts academic performance” – and you can see the difference. “Sleep” and “academic performance” are both comparatively vague, and there’s no indication of what the expected relationship direction is (more sleep or less sleep). As you can see, specificity and clarity are key.

Hypothesis Essential #2: Testability (Provability)

A statement must be testable to qualify as a research hypothesis. In other words, there needs to be a way to prove (or disprove) the statement. If it’s not testable, it’s not a hypothesis – simple as that.

For example, consider the hypothesis we mentioned earlier:

Hypothesis: Students who sleep at least 8 hours per night will, on average, achieve higher grades in standardised tests than students who sleep less than 8 hours a night.

We could test this statement by undertaking a quantitative study involving two groups of students, one that gets 8 or more hours of sleep per night for a fixed period, and one that gets less. We could then compare the standardised test results for both groups to see if there’s a statistically significant difference.
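As a rough sketch of what such a comparison could look like, the snippet below runs a permutation test on the difference in mean grades between the two groups. The grades are invented purely for illustration, the function name `permutation_p_value` is hypothetical, and a permutation test is only one of several reasonable ways to check for a statistically significant difference.

```python
import random
from statistics import fmean


def permutation_p_value(group_a, group_b, n_permutations=10_000, seed=0):
    """Two-sided permutation p-value for a difference in group means."""
    rng = random.Random(seed)
    observed = abs(fmean(group_a) - fmean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_permutations):
        # Reassign the scores to the two groups at random
        rng.shuffle(pooled)
        diff = abs(fmean(pooled[:n_a]) - fmean(pooled[n_a:]))
        if diff >= observed:
            extreme += 1
    # Add one to numerator and denominator so the estimate is never exactly zero
    return (extreme + 1) / (n_permutations + 1)


# Invented standardised test scores, for illustration only
slept_8_plus = [72, 78, 81, 69, 85, 77, 80, 74]
slept_less = [65, 70, 68, 74, 62, 71, 66, 69]

print("p-value:", permutation_p_value(slept_8_plus, slept_less))
```

A small p-value here would mean that a gap this large between the groups rarely arises when the grades are shuffled at random, which is what "statistically significant" is getting at.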

Again, if you compare this to the original hypothesis we looked at – “Sleep impacts academic performance” – you can see that it would be quite difficult to test that statement, primarily because it isn’t specific enough. How much sleep? By who? What type of academic performance?

So, remember the mantra – if you can’t test it, it’s not a hypothesis 🙂

Defining A Research Hypothesis

You’re still with us? Great! Let’s recap and pin down a clear definition of a hypothesis.

A research hypothesis (or scientific hypothesis) is a statement about an expected relationship between variables, or explanation of an occurrence, that is clear, specific and testable.

So, when you write up hypotheses for your dissertation or thesis, make sure that they meet all these criteria. If you do, you’ll not only have rock-solid hypotheses but you’ll also ensure a clear focus for your entire research project.

What about the null hypothesis?

You may have also heard the terms null hypothesis, alternative hypothesis, or H-zero thrown around. At a simple level, the null hypothesis is the counter-proposal to the original hypothesis.

For example, if the hypothesis predicts that there is a relationship between two variables (for example, sleep and academic performance), the null hypothesis would predict that there is no relationship between those variables.

At a more technical level, the null hypothesis proposes that no real effect or relationship exists behind a given set of observations and that any differences observed are due to chance alone.

And there you have it – hypotheses in a nutshell.

What is a Hypothesis – Types, Examples and Writing Guide

Definition:

A hypothesis is an educated guess or proposed explanation for a phenomenon, based on some initial observations or data. It is a tentative statement that can be tested and potentially supported or refuted through further investigation and experimentation.

Hypotheses are often used in scientific research to guide the design of experiments and the collection and analysis of data. They are an essential element of the scientific method, as they allow researchers to make predictions about the outcome of their experiments and to test those predictions to determine their accuracy.

Types of Hypothesis

The main types of hypothesis are as follows:

Research Hypothesis

A research hypothesis is a statement that predicts a relationship between variables. It is usually formulated as a specific statement that can be tested through research, and it is often used in scientific research to guide the design of experiments.

Null Hypothesis

The null hypothesis is a statement that assumes there is no significant difference or relationship between variables. It is often used as a starting point for testing the research hypothesis, and if the results of the study reject the null hypothesis, it suggests that there is a significant difference or relationship between variables.

Alternative Hypothesis

An alternative hypothesis is a statement that assumes there is a significant difference or relationship between variables. It is often used as an alternative to the null hypothesis and is tested against the null hypothesis to determine which statement is more accurate.

Directional Hypothesis

A directional hypothesis is a statement that predicts the direction of the relationship between variables. For example, a researcher might predict that increasing the amount of exercise will result in a decrease in body weight.

Non-directional Hypothesis

A non-directional hypothesis is a statement that predicts the relationship between variables but does not specify the direction. For example, a researcher might predict that there is a relationship between the amount of exercise and body weight, but they do not specify whether increasing or decreasing exercise will affect body weight.
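The distinction matters when a test statistic is turned into a p-value: a directional hypothesis corresponds to a one-sided test, a non-directional hypothesis to a two-sided test. The sketch below assumes a test statistic that follows a standard normal distribution under the null hypothesis. The function name `p_values_from_z` and the example value of z are hypothetical.

```python
from statistics import NormalDist


def p_values_from_z(z):
    """Return (two-sided, upper-tail, lower-tail) p-values for a z statistic."""
    cdf = NormalDist().cdf(z)
    upper = 1 - cdf          # directional: predicts an increase
    lower = cdf              # directional: predicts a decrease
    two_sided = 2 * min(upper, lower)  # non-directional: any difference
    return two_sided, upper, lower


# Hypothetical result: the exercise group weighs less, giving z = -1.8
two_sided, upper, lower = p_values_from_z(-1.8)
print(f"non-directional (two-sided): {two_sided:.3f}")
print(f"directional, predicts decrease: {lower:.3f}")
print(f"directional, predicts increase: {upper:.3f}")
```

When the data fall in the predicted direction, the directional p-value is half the non-directional one; when they fall in the opposite direction, it is large, which is why the direction has to be chosen before looking at the data.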

Statistical Hypothesis

A statistical hypothesis is a statement that assumes a particular statistical model or distribution for the data. It is often used in statistical analysis to test the significance of a particular result.

Composite Hypothesis

A composite hypothesis is a statement that assumes more than one condition or outcome. It can be divided into several sub-hypotheses, each of which represents a different possible outcome.

Empirical Hypothesis

An empirical hypothesis is a statement that is based on observed phenomena or data. It is often used in scientific research to develop theories or models that explain the observed phenomena.

Simple Hypothesis

A simple hypothesis is a statement that assumes only one outcome or condition. It is often used in scientific research to test a single variable or factor.

Complex Hypothesis

A complex hypothesis is a statement that assumes multiple outcomes or conditions. It is often used in scientific research to test the effects of multiple variables or factors on a particular outcome.

Applications of Hypothesis

Hypotheses are used in various fields to guide research and make predictions about the outcomes of experiments or observations. Here are some examples of how hypotheses are applied in different fields:

- Science: In scientific research, hypotheses are used to test the validity of theories and models that explain natural phenomena. For example, a hypothesis might be formulated to test the effects of a particular variable on a natural system, such as the effects of climate change on an ecosystem.

- Medicine: In medical research, hypotheses are used to test the effectiveness of treatments and therapies for specific conditions. For example, a hypothesis might be formulated to test the effects of a new drug on a particular disease.

- Psychology: In psychology, hypotheses are used to test theories and models of human behavior and cognition. For example, a hypothesis might be formulated to test the effects of a particular stimulus on the brain or behavior.

- Sociology: In sociology, hypotheses are used to test theories and models of social phenomena, such as the effects of social structures or institutions on human behavior. For example, a hypothesis might be formulated to test the effects of income inequality on crime rates.

- Business: In business research, hypotheses are used to test the validity of theories and models that explain business phenomena, such as consumer behavior or market trends. For example, a hypothesis might be formulated to test the effects of a new marketing campaign on consumer buying behavior.

- Engineering: In engineering, hypotheses are used to test the effectiveness of new technologies or designs. For example, a hypothesis might be formulated to test the efficiency of a new solar panel design.

How to write a Hypothesis

Here are the steps to follow when writing a hypothesis:

Identify the Research Question

The first step is to identify the research question that you want to answer through your study. This question should be clear, specific, and focused. It should be something that can be investigated empirically and that has some relevance or significance in the field.

Conduct a Literature Review

Before writing your hypothesis, it’s essential to conduct a thorough literature review to understand what is already known about the topic. This will help you to identify the research gap and formulate a hypothesis that builds on existing knowledge.

Determine the Variables

The next step is to identify the variables involved in the research question. A variable is any characteristic or factor that can vary or change. There are two types of variables: independent and dependent. The independent variable is the one that is manipulated or changed by the researcher, while the dependent variable is the one that is measured or observed as a result of the independent variable.

Formulate the Hypothesis

Based on the research question and the variables involved, you can now formulate your hypothesis. A hypothesis should be a clear and concise statement that predicts the relationship between the variables. It should be testable through empirical research and based on existing theory or evidence.

Write the Null Hypothesis

The null hypothesis is the opposite of the alternative hypothesis, which is the hypothesis that you are testing. The null hypothesis states that there is no significant difference or relationship between the variables. It is important to write the null hypothesis because it allows you to compare your results with what would be expected by chance.

Refine the Hypothesis

After formulating the hypothesis, it’s important to refine it and make it more precise. This may involve clarifying the variables, specifying the direction of the relationship, or making the hypothesis more testable.

Examples of Hypothesis

Here are a few examples of hypotheses in different fields:

- Psychology: “Increased exposure to violent video games leads to increased aggressive behavior in adolescents.”

- Biology: “Higher levels of carbon dioxide in the atmosphere will lead to increased plant growth.”

- Sociology: “Individuals who grow up in households with higher socioeconomic status will have higher levels of education and income as adults.”

- Education: “Implementing a new teaching method will result in higher student achievement scores.”

- Marketing: “Customers who receive a personalized email will be more likely to make a purchase than those who receive a generic email.”

- Physics: “An increase in temperature will cause an increase in the volume of a gas, assuming all other variables remain constant.”

- Medicine: “Consuming a diet high in saturated fats will increase the risk of developing heart disease.”

Purpose of Hypothesis

The purpose of a hypothesis is to provide a testable explanation for an observed phenomenon or a prediction of a future outcome based on existing knowledge or theories. A hypothesis is an essential part of the scientific method and helps to guide the research process by providing a clear focus for investigation. It enables scientists to design experiments or studies to gather evidence and data that can support or refute the proposed explanation or prediction.

The formulation of a hypothesis is based on existing knowledge, observations, and theories, and it should be specific, testable, and falsifiable. A specific hypothesis helps to define the research question, which is important in the research process as it guides the selection of an appropriate research design and methodology. Testability of the hypothesis means that it can be proven or disproven through empirical data collection and analysis. Falsifiability means that the hypothesis should be formulated in such a way that it can be proven wrong if it is incorrect.

In addition to guiding the research process, the testing of hypotheses can lead to new discoveries and advancements in scientific knowledge. When a hypothesis is supported by the data, it can be used to develop new theories or models to explain the observed phenomenon. When a hypothesis is not supported by the data, it can help to refine existing theories or prompt the development of new hypotheses to explain the phenomenon.

When to use Hypothesis

Here are some common situations in which hypotheses are used:

- In scientific research, hypotheses are used to guide the design of experiments and to help researchers make predictions about the outcomes of those experiments.

- In social science research, hypotheses are used to test theories about human behavior, social relationships, and other phenomena.

- In business, hypotheses can be used to guide decisions about marketing, product development, and other areas. For example, a hypothesis might be that a new product will sell well in a particular market, and this hypothesis can be tested through market research.

Characteristics of Hypothesis

Here are some common characteristics of a hypothesis:

- Testable: A hypothesis must be able to be tested through observation or experimentation. This means that it must be possible to collect data that will either support or refute the hypothesis.

- Falsifiable: A hypothesis must be able to be proven false if it is not supported by the data. If a hypothesis cannot be falsified, then it is not a scientific hypothesis.

- Clear and concise: A hypothesis should be stated in a clear and concise manner so that it can be easily understood and tested.

- Based on existing knowledge: A hypothesis should be based on existing knowledge and research in the field. It should not be based on personal beliefs or opinions.

- Specific: A hypothesis should be specific in terms of the variables being tested and the predicted outcome. This will help to ensure that the research is focused and well-designed.

- Tentative: A hypothesis is a tentative statement or assumption that requires further testing and evidence to be confirmed or refuted. It is not a final conclusion or assertion.

- Relevant: A hypothesis should be relevant to the research question or problem being studied. It should address a gap in knowledge or provide a new perspective on the issue.

Advantages of Hypothesis

Hypotheses have several advantages in scientific research and experimentation:

- Guides research: A hypothesis provides a clear and specific direction for research. It helps to focus the research question, select appropriate methods and variables, and interpret the results.

- Predictive power: A hypothesis makes predictions about the outcome of research, which can be tested through experimentation. This allows researchers to evaluate the validity of the hypothesis and make new discoveries.

- Facilitates communication: A hypothesis provides a common language and framework for scientists to communicate with one another about their research. This helps to facilitate the exchange of ideas and promotes collaboration.

- Efficient use of resources: A hypothesis helps researchers to use their time, resources, and funding efficiently by directing them towards specific research questions and methods that are most likely to yield results.

- Provides a basis for further research: A hypothesis that is supported by data provides a basis for further research and exploration. It can lead to new hypotheses, theories, and discoveries.

- Increases objectivity: A hypothesis can help to increase objectivity in research by providing a clear and specific framework for testing and interpreting results. This can reduce bias and increase the reliability of research findings.

Limitations of Hypothesis

Some limitations of hypotheses are as follows:

- Limited to observable phenomena: Hypotheses are limited to observable phenomena and cannot account for unobservable or intangible factors. This means that some research questions may not be amenable to hypothesis testing.

- May be inaccurate or incomplete: Hypotheses are based on existing knowledge and research, which may be incomplete or inaccurate. This can lead to flawed hypotheses and erroneous conclusions.

- May be biased: Hypotheses may be biased by the researcher’s own beliefs, values, or assumptions. This can lead to selective interpretation of data and a lack of objectivity in research.

- Cannot prove causation: Data that support a hypothesis may show only an association between variables, which does not by itself prove causation. Establishing a causal link requires further experimentation and analysis.

- Limited to specific contexts: Hypotheses are limited to specific contexts and may not be generalizable to other situations or populations. This means that results may not be applicable in other contexts or may require further testing.

- May be affected by chance: Tests of a hypothesis may be affected by chance or random variation, which can obscure or distort the true relationship between variables.
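
The role of chance in the last point can be illustrated with a small simulation. The sketch below is not part of the original article: it assumes two groups drawn from the same standard normal population, so the true difference is zero, and counts how often their sample means still differ by more than a chosen threshold. The function name `chance_difference_rate`, the group sizes, and the threshold are all illustrative assumptions.

```python
import random


def chance_difference_rate(n_per_group, threshold, n_sims=1000, seed=1):
    """Fraction of simulated studies in which two groups drawn from the
    SAME population differ in mean by more than `threshold`.

    All values are hypothetical; the population is standard normal.
    """
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    exceed = 0
    for _ in range(n_sims):
        group_a = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
        group_b = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
        diff = sum(group_a) / n_per_group - sum(group_b) / n_per_group
        if abs(diff) > threshold:
            exceed += 1
    return exceed / n_sims


# Small samples: chance alone often produces a sizeable difference.
small_sample_rate = chance_difference_rate(n_per_group=10, threshold=0.5)
# Large samples: the same difference almost never arises by chance.
large_sample_rate = chance_difference_rate(n_per_group=500, threshold=0.5)
print(small_sample_rate, large_sample_rate)
```

Under these assumptions, the small-sample rate should come out much higher than the large-sample rate, which is one reason larger samples make it harder for random variation to masquerade as a real effect.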

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

How to Write a Great Hypothesis

Hypothesis Definition, Format, Examples, and Tips

A hypothesis is a tentative statement about the relationship between two or more variables. It is a specific, testable prediction about what you expect to happen in a study. It is a preliminary answer to your question that helps guide the research process.

Consider a study designed to examine the relationship between sleep deprivation and test performance. The hypothesis might be: "This study is designed to assess the hypothesis that sleep-deprived people will perform worse on a test than individuals who are not sleep-deprived."

At a Glance

A hypothesis is crucial to scientific research because it offers a clear direction for what the researchers are looking to find. This allows them to design experiments to test their predictions and add to our scientific knowledge about the world. This article explores how a hypothesis is used in psychology research, how to write a good hypothesis, and the different types of hypotheses you might use.

The Hypothesis in the Scientific Method

In the scientific method, whether it involves research in psychology, biology, or some other area, a hypothesis represents what the researchers think will happen in an experiment. The scientific method involves the following steps:

- Forming a question

- Performing background research

- Creating a hypothesis

- Designing an experiment

- Collecting data

- Analyzing the results

- Drawing conclusions

- Communicating the results

The hypothesis is a prediction, but it involves more than a guess. Most of the time, the hypothesis begins with a question which is then explored through background research. At this point, researchers then begin to develop a testable hypothesis.

Unless you are creating an exploratory study, your hypothesis should always explain what you expect to happen.

In a study exploring the effects of a particular drug, the hypothesis might be that researchers expect the drug to have some type of effect on the symptoms of a specific illness. In psychology, the hypothesis might focus on how a certain aspect of the environment might influence a particular behavior.

Remember, a hypothesis does not have to be correct. While the hypothesis predicts what the researchers expect to see, the goal of the research is to determine whether this guess is right or wrong. When conducting an experiment, researchers might explore numerous factors to determine which ones might contribute to the ultimate outcome.

In many cases, researchers may find that the results of an experiment do not support the original hypothesis. When writing up these results, the researchers might suggest other options that should be explored in future studies.

Researchers often draw a hypothesis from a specific theory or build on previous research. For example, prior research has shown that stress can impact the immune system. So a researcher might hypothesize: "People with high-stress levels will be more likely to contract a common cold after being exposed to the virus than people who have low-stress levels."

In other instances, researchers might look at commonly held beliefs or folk wisdom. "Birds of a feather flock together" is one example of a folk adage that a psychologist might try to investigate. The researcher might pose a specific hypothesis that "People tend to select romantic partners who are similar to them in interests and educational level."

Elements of a Good Hypothesis

So how do you write a good hypothesis? When trying to come up with a hypothesis for your research or experiments, ask yourself the following questions:

- Is your hypothesis based on your research on a topic?

- Can your hypothesis be tested?

- Does your hypothesis include independent and dependent variables?

Before you come up with a specific hypothesis, spend some time doing background research. Once you have completed a literature review, start thinking about potential questions you still have. Pay attention to the discussion section in the journal articles you read. Many authors will suggest questions that still need to be explored.

How to Formulate a Good Hypothesis

To form a hypothesis, you should take these steps:

- Collect as many observations about a topic or problem as you can.

- Evaluate these observations and look for possible causes of the problem.

- Create a list of possible explanations that you might want to explore.

- After you have developed some possible hypotheses, think of ways that you could confirm or disprove each hypothesis through experimentation. This is known as falsifiability.

Falsifiability of a Hypothesis

In the scientific method, falsifiability is an important part of any valid hypothesis. For a claim to be tested scientifically, it must be possible for the claim to be proven false.

Students sometimes confuse falsifiability with the idea that something is false, which is not the case. Falsifiability means that if a claim were false, it would be possible to demonstrate that it is false.

One of the hallmarks of pseudoscience is that it makes claims that cannot be refuted or proven false.

The Importance of Operational Definitions

A variable is a factor or element that can be changed and manipulated in ways that are observable and measurable. However, the researcher must also define how the variable will be manipulated and measured in the study.

Operational definitions are specific definitions for all relevant factors in a study. This process helps make vague or ambiguous concepts detailed and measurable.

For example, a researcher might operationally define the variable "test anxiety" as the results of a self-report measure of anxiety experienced during an exam. A "study habits" variable might be defined by the amount of studying that actually occurs as measured by time.

These precise descriptions are important because many things can be measured in various ways. Clearly defining these variables and how they are measured helps ensure that other researchers can replicate your results.

Replicability

One of the basic principles of any type of scientific research is that the results must be replicable.

Replication means repeating an experiment in the same way to produce the same results. By clearly detailing the specifics of how the variables were measured and manipulated, other researchers can better understand the results and repeat the study if needed.

Some variables are more difficult than others to define. For example, how would you operationally define a variable such as aggression? For obvious ethical reasons, researchers cannot create a situation in which a person behaves aggressively toward others.

To measure this variable, the researcher must devise a measurement that assesses aggressive behavior without harming others. The researcher might utilize a simulated task to measure aggressiveness in this situation.

Hypothesis Checklist

- Does your hypothesis focus on something that you can actually test?

- Does your hypothesis include both an independent and dependent variable?

- Can you manipulate the variables?

- Can your hypothesis be tested without violating ethical standards?

Hypothesis Types

The hypothesis you use will depend on what you are investigating and hoping to find. Some of the main types of hypotheses that you might use include:

- Simple hypothesis: This type of hypothesis suggests there is a relationship between one independent variable and one dependent variable.

- Complex hypothesis: This type suggests a relationship between three or more variables, such as two independent variables and one dependent variable.

- Null hypothesis: This hypothesis suggests no relationship exists between two or more variables.

- Alternative hypothesis: This hypothesis states the opposite of the null hypothesis.

- Statistical hypothesis: This hypothesis uses statistical analysis to evaluate a representative population sample and then generalizes the findings to the larger group.

- Logical hypothesis: This hypothesis assumes a relationship between variables without collecting data or evidence.

Hypothesis Format

A hypothesis often follows a basic format of "If {this happens} then {this will happen}." One way to structure your hypothesis is to describe what will happen to the dependent variable if you change the independent variable.

The basic format might be: "If {these changes are made to a certain independent variable}, then we will observe {a change in a specific dependent variable}."

Hypotheses Examples

A few examples of simple hypotheses:

- "Students who eat breakfast will perform better on a math exam than students who do not eat breakfast."

- "Students who experience test anxiety before an English exam will get lower scores than students who do not experience test anxiety."

- "Motorists who talk on the phone while driving will be more likely to make errors on a driving course than those who do not talk on the phone."

- "Children who receive a new reading intervention will have higher reading scores than students who do not receive the intervention."

Examples of a complex hypothesis include:

- "People with high-sugar diets and sedentary activity levels are more likely to develop depression."

- "Younger people who are regularly exposed to green, outdoor areas have better subjective well-being than older adults who have limited exposure to green spaces."

Examples of a null hypothesis include:

- "There is no difference in anxiety levels between people who take St. John's wort supplements and those who do not."

- "There is no difference in scores on a memory recall task between children and adults."

- "There is no difference in aggression levels between children who play first-person shooter games and those who do not."

Examples of an alternative hypothesis:

- "People who take St. John's wort supplements will have less anxiety than those who do not."

- "Adults will perform better on a memory task than children."

- "Children who play first-person shooter games will show higher levels of aggression than children who do not."
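
To make the contrast between a null hypothesis and a directional alternative hypothesis concrete, the sketch below runs a one-sided permutation test on the breakfast example from the list of simple hypotheses. The permutation test is a technique chosen here for illustration, not a method described in the article, and the exam scores are made-up numbers. The function name `permutation_p_value` is likewise an assumption.

```python
import random


def mean(values):
    return sum(values) / len(values)


def permutation_p_value(group_a, group_b, n_perm=5000, seed=1):
    """One-sided permutation test.

    Null hypothesis: group labels are unrelated to the scores.
    Alternative hypothesis: group_a has a higher mean than group_b.
    Returns the estimated p-value.
    """
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    observed = mean(group_a) - mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    at_least_as_large = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)  # reassign labels at random, as the null implies
        diff = mean(pooled[:n_a]) - mean(pooled[n_a:])
        if diff >= observed - 1e-12:  # small tolerance for float rounding
            at_least_as_large += 1
    # Add 1 to numerator and denominator so the estimate is never exactly 0.
    return (at_least_as_large + 1) / (n_perm + 1)


# Hypothetical math exam scores (invented for illustration only).
breakfast = [82, 85, 88, 90, 79, 91, 87, 84]
no_breakfast = [70, 72, 68, 75, 71, 69, 74, 73]

p_value = permutation_p_value(breakfast, no_breakfast)
print(f"one-sided p-value: {p_value:.4f}")
```

A small p-value means the observed difference would be unusual if the null hypothesis were true. It counts as evidence against the null hypothesis, not as proof of the alternative.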