- Open access

- Published: 06 January 2010

A tutorial on pilot studies: the what, why and how

- Lehana Thabane 1 , 2 ,

- Jinhui Ma 1 , 2 ,

- Rong Chu 1 , 2 ,

- Ji Cheng 1 , 2 ,

- Afisi Ismaila 1 , 3 ,

- Lorena P Rios 1 , 2 ,

- Reid Robson 3 ,

- Marroon Thabane 1 , 4 ,

- Lora Giangregorio 5 &

- Charles H Goldsmith 1 , 2

BMC Medical Research Methodology volume 10 , Article number: 1 ( 2010 ) Cite this article

358k Accesses

1579 Citations

104 Altmetric

Metrics details

A Correction to this article was published on 11 March 2023

This article has been updated

Pilot studies for phase III trials - which are comparative randomized trials designed to provide preliminary evidence on the clinical efficacy of a drug or intervention - are routinely performed in many clinical areas. Also commonly know as "feasibility" or "vanguard" studies, they are designed to assess the safety of treatment or interventions; to assess recruitment potential; to assess the feasibility of international collaboration or coordination for multicentre trials; to increase clinical experience with the study medication or intervention for the phase III trials. They are the best way to assess feasibility of a large, expensive full-scale study, and in fact are an almost essential pre-requisite. Conducting a pilot prior to the main study can enhance the likelihood of success of the main study and potentially help to avoid doomed main studies. The objective of this paper is to provide a detailed examination of the key aspects of pilot studies for phase III trials including: 1) the general reasons for conducting a pilot study; 2) the relationships between pilot studies, proof-of-concept studies, and adaptive designs; 3) the challenges of and misconceptions about pilot studies; 4) the criteria for evaluating the success of a pilot study; 5) frequently asked questions about pilot studies; 7) some ethical aspects related to pilot studies; and 8) some suggestions on how to report the results of pilot investigations using the CONSORT format.

1. Introduction

The Concise Oxford Thesaurus [ 1 ] defines a pilot project or study as an experimental, exploratory, test, preliminary, trial or try out investigation. Epidemiology and statistics dictionaries provide similar definitions of a pilot study as a small scale

" ... test of the methods and procedures to be used on a larger scale if the pilot study demonstrates that the methods and procedures can work" [ 2 ];

"...investigation designed to test the feasibility of methods and procedures for later use on a large scale or to search for possible effects and associations that may be worth following up in a subsequent larger study" [ 3 ].

Table 1 provides a summary of definitions found on the Internet. A closer look at these definitions reveals that they are similar to the ones above in that a pilot study is synonymous with a feasibility study intended to guide the planning of a large-scale investigation. Pilot studies are sometimes referred to as "vanguard trials" (i.e. pre-studies) intended to assess the safety of treatment or interventions; to assess recruitment potential; to assess the feasibility of international collaboration or coordination for multicentre trials; to evaluate surrogate marker data in diverse patient cohorts; to increase clinical experience with the study medication or intervention, and identify the optimal dose of treatments for the phase III trials [ 4 ]. As suggested by an African proverb from the Ashanti people in Ghana " You never test the depth of a river with both feet ", the main goal of pilot studies is to assess feasibility so as to avoid potentially disastrous consequences of embarking on a large study - which could potentially "drown" the whole research effort.

Feasibility studies are routinely performed in many clinical areas. It is fair to say that every major clinical trial had to start with some piloting or a small scale investigation to assess the feasibility of conducting a larger scale study: critical care [ 5 ], diabetes management intervention trials [ 6 ], cardiovascular trials [ 7 ], primary healthcare [ 8 ], to mention a few.

Despite their noted importance, the reality is that pilot studies receive little or no attention in scientific research training. Few epidemiology or research textbooks cover the topic with the necessary detail. In fact, we are not aware of any textbook that dedicates a chapter on this issue - many just mention it in passing or provide a cursory coverage of the topic. The objective of this paper is to provide a detailed examination of the key aspects of pilot studies. In the next section, we narrow the focus of our definition of a pilot to phase III trials. Section 3 covers the general reasons for conducting a pilot study. Section 4 deals with the relationships between pilot studies, proof-of-concept studies, and adaptive designs, while section 5 addresses the challenges of pilot studies. Evaluation of a pilot study (i.e. how to determine if a pilot study was successful) is covered in Section 6. We deal with several frequently asked questions about pilot studies in Section 7 using a "question-and-answer" approach. Section 8 covers some ethical aspects related to pilot studies; and in Section 9, we follow the CONSORT format [ 9 ] to offer some suggestions on how to report the results of pilot investigations.

2. Narrowing the focus: Pilot studies for randomized studies

Pilot studies can be conducted in both quantitative and qualitative studies. Adopting a similar approach to Lancaster et al . [ 10 ], we focus on quantitative pilot studies - particularly those done prior to full-scale phase III trials. Phase I trials are non-randomized studies designed to investigate the pharmacokinetics of a drug (i.e. how a drug is distributed and metabolized in the body) including finding a dose that can be tolerated with minimal toxicity. Phase II trials provide preliminary evidence on the clinical efficacy of a drug or intervention. They may or may not be randomized. Phase III trials are randomized studies comparing two or more drugs or intervention strategies to assess efficacy and safety. Phase IV trials, usually done after registration or marketing of a drug, are non-randomized surveillance studies to document experiences (e.g. side-effects, interactions with other drugs, etc) with using the drug in practice.

For the purposes of this paper, our approach to utilizing pilot studies relies on the model for complex interventions advocated by the British Medical Research Council - which explicitly recommends the use of feasibility studies prior to Phase III clinical trials, but stresses the iterative nature of the processes of development, feasibility and piloting, evaluation and implementation [ 11 ].

3. Reasons for Conducting Pilot Studies

Van Teijlingen et al . [ 12 ] and van Teijlingen and Hundley [ 13 ] provide a summary of the reasons for performing a pilot study. In general, the rationale for a pilot study can be grouped under several broad classifications - process, resources, management and scientific (see also http://www.childrens-mercy.org/stats/plan/pilot.asp for a different classification):

Process: This assesses the feasibility of the steps that need to take place as part of the main study. Examples include determining recruitment rates, retention rates, etc.

Resources: This deals with assessing time and budget problems that can occur during the main study. The idea is to collect some pilot data on such things as the length of time to mail or fill out all the survey forms.

Management: This covers potential human and data optimization problems such as personnel and data management issues at participating centres.

Scientific: This deals with the assessment of treatment safety, determination of dose levels and response, and estimation of treatment effect and its variance.

Table 2 summarizes this classification with specific examples.

4. Relationships between Pilot Studies, Proof-of-Concept Studies, and Adaptive Designs

A proof-of-concept (PoC) study is defined as a clinical trial carried out to determine if a treatment (drug) is biologically active or inactive [ 14 ]. PoC studies usually use surrogate markers as endpoints. In general, they are phase I/II studies - which, as noted above, investigate the safety profile, dose level and response to new drugs [ 15 ]. Thus, although designed to inform the planning of phase III trials for registration or licensing of new drugs, PoC studies may not necessarily fit our restricted definition of pilot studies aimed at assessing feasibility of phase III trials as outlined in Section 2.

An adaptive trial design refers to a design that allows modifications to be made to a trial's design or statistical procedures during its conduct, with the purpose of efficiently identifying clinical benefits/risks of new drugs or to increase the probability of success of clinical development [ 16 ]. The adaptations can be prospective (e.g. stopping a trial early due to safety or futility or efficacy at interim analysis); concurrent (e.g. changes in eligibility criteria, hypotheses or study endpoints) or retrospective (e.g. changes to statistical analysis plan prior to locking database or revealing treatment codes to trial investigators or patients). Piloting is normally built into adaptive trial designs by determining a priori decision rules to guide the adaptations based on cumulative data. For example, data from interim analyses could be used to refine sample size calculations [ 17 , 18 ]. This approach is routinely used in internal pilot studies - which are primarily designed to inform sample size calculation for the main study, with recalculation of the sample size as the key adaptation. Unlike other phase III pilots, an internal pilot investigation does not usually address any other feasibility aspects - because it is essentially part of the main study [ 10 , 19 , 20 ]..

Nonetheless, we need to emphasize that whether or not a study is a pilot, depends on its objectives. An adaptive method is used as a strategy to reach that objective. Both a pilot and a non-pilot could be adaptive.

5. Challenges of and Common Misconceptions about Pilot Studies

Pilot studies can be very informative, not only to the researchers conducting them but also to others doing similar work. However, many of them never get published, often because of the way the results are presented [ 13 ]. Quite often the emphasis is wrongly placed on statistical significance, not on feasibility - which is the main focus of the pilot study. Our experience in reviewing submissions to a research ethics board also shows that most of the pilot projects are not well designed: i.e. there are no clear feasibility objectives; no clear analytic plans; and certainly no clear criteria for success of feasibility.

In many cases, pilot studies are conducted to generate data for sample size calculations. This seems especially sensible in situations where there are no data from previous studies to inform this process. However, it can be dangerous to use pilot studies to estimate treatment effects, as such estimates may be unrealistic/biased because of the limited sample sizes. Therefore if not used cautiously, results of pilot studies can potentially mislead sample size or power calculations [ 21 ] -- particularly if the pilot study was done to see if there is likely to be a treatment effect in the main study. In section 6, we provide guidance on how to proceed with caution in this regard.

There are also several misconceptions about pilot studies. Below are some of the common reasons that researchers have put forth for calling their study a pilot.

The first common reason is that a pilot study is a small single-centre study. For example, researchers often state lack of resources for a large multi-centre study as a reason for doing a pilot. The second common reason is that a pilot investigation is a small study that is similar in size to someone else's published study. In reviewing submissions to a research ethics board, we have come across sentiments such as

So-and-so did a similar study with 6 patients and got statistical significance - ours uses 12 patients (double the size)!

We did a similar pilot before (and it was published!)

The third most common reason is that a pilot is a small study done by a student or an intern - which can be completed quickly and does not require funding. Specific arguments include

I have funding for 10 patients only;

I have limited seed (start-up) funding;

This is just a student project!

My supervisor (boss) told me to do it as a pilot .

None of the above arguments qualifies as sound reasons for calling a study a pilot. A study should only be conducted if the results will be informative; studies conducted for the reasons above may result in findings of limited utility, which would be a waste of the researchers' and participants' efforts. The focus of a pilot study should be on assessment of feasibility, unless it was powered appropriately to assess statistical significance. Further, there is a vast number of poorly designed and reported studies. Assessment of the quality of a published report may be helpful to guide decisions of whether the report should be used to guide planning or designing of new studies. Finally, if a trainee or researcher is assigned a project as a pilot it is important to discuss how the results will inform the planning of the main study. In addition, clearly defined feasibility objectives and rationale to justify piloting should be provided.

Sample Size for Pilot Studies

In general, sample size calculations may not be required for some pilot studies. It is important that the sample for a pilot be representative of the target study population. It should also be based on the same inclusion/exclusion criteria as the main study. As a rule of thumb, a pilot study should be large enough to provide useful information about the aspects that are being assessed for feasibility. Note that PoC studies require sample size estimation based on surrogate markers [ 22 ], but they are usually not powered to detect meaningful differences in clinically important endpoints. The sample used in the pilot may be included in the main study, but caution is needed to ensure the key features of the main study are preserved in the pilot (e.g. blinding in randomized controlled trials). We recommend if any pooling of pilot and main study data is considered, this should be planned beforehand, described clearly in the protocol with clear discussion of the statistical consequences and methods. The goal is to avoid or minimize the potential bias that may occur due to multiple testing issues or any other opportunistic actions by investigators. In general, pooling when done appropriately can increase the efficiency of the main study [ 23 ].

As noted earlier, a carefully designed pilot study may be used to generate information for sample size calculations. Two approaches may be helpful to optimize information from a pilot study in this context: First, consider eliciting qualitative data to supplement the quantitative information obtained in the pilot. For example, consider having some discussions with clinicians using the approach suggested by Lenth [ 24 ] to illicit additional information on possible effect size and variance estimates. Second, consider creating a sample size table for various values of the effect or variance estimates to acknowledge the uncertainty surrounding the pilot estimates.

In some cases, one could use a confidence interval [CI] approach to estimate the sample size required to establish feasibility. For example, suppose we had a pilot trial designed primarily to determine adherence rates to the standardized risk assessment form to enhance venous thromboprophylaxis in hospitalized patients. Suppose it was also decided a priori that the criterion for success would be: the main trial would be ' feasibl e' if the risk assessment form is completed for ≥ 70% of eligible hospitalized patients.

6. How to Interpret the Results of a Pilot Study: Criteria for Success

It is always important to state the criteria for success of a pilot study. The criteria should be based on the primary feasibility objectives. These provide the basis for interpreting the results of the pilot study and determining whether it is feasible to proceed to the main study. In general, the outcome of a pilot study can be one of the following: (i) Stop - main study not feasible; (ii) Continue, but modify protocol - feasible with modifications; (iii) Continue without modifications, but monitor closely - feasible with close monitoring and (iv) Continue without modifications - feasible as is.

For example, the Prophylaxis of Thromboembolism in Critical Care Trial (PROTECT) was designed to assess the feasibility of a large-scale trial with the following criteria for determining success [ 25 ]:

98.5% of patients had to receive study drug within 12 hours of randomization;

91.7% of patients had to receive every scheduled dose of the study drug in a blinded manner;

90% or more of patients had to have lower limb compression ultrasounds performed at the specified times; and

> 90% of necessary dose adjustments had to have been made appropriately in response to pre-defined laboratory criteria .

In a second example, the PeriOperative Epidural Trial (POET) Pilot Study was designed to assess the feasibility of a large, multicentre trial with the following criteria for determining success [ 26 ]:

one subject per centre per week (i.e., 200 subjects from four centres over 50 weeks) can be recruited ;

at least 70% of all eligible patients can be recruited ;

no more than 5% of all recruited subjects crossed over from one modality to the other; and

complete follow-up in at least 95% of all recruited subjects .

7. Frequently asked questions about pilot studies

In this Section, we offer our thoughts on some of the frequently asked questions about pilot studies. These could be helpful to not only clinicians and trainees, but to anyone who is interested in health research.

Can I publish the results of a pilot study?

- Yes, every attempt should be made to publish.

Why is it important to publish the results of pilot studies?

- To provide information about feasibility to the research community to save resources being unnecessarily spent on studies that may not be feasible. Further, having such information can help researchers to avoid duplication of efforts in assessing feasibility.

- Finally, researchers have an ethical and scientific obligation to attempt publishing the results of every research endeavor. However, our focus should be on feasibility goals. Emphasis should not be placed on statistical significance when pilot studies are not powered to detect minimal clinically important differences. Such studies typically do not show statistically significant results - remember that underpowered studies (with no statistically significant results) are inconclusive, not negative since "no evidence of effect" is not "evidence of no effect" [ 27 ].

Can I combine data from a pilot with data from the main study?

- Yes, provided the sampling frame and methodologies are the same. This can increase the efficiency of the main study - see Section 5.

Can I combine the results of a pilot with the results of another study or in a meta-analysis?

- Yes, provided the sampling frame and methodologies are the same.

- No, if the main study is reported and it includes the pilot study.

Can the results of the pilot study be valid on their own, without existence of the main study

- Yes, if the results show that it is not feasible to proceed to the main study or there is insufficient funding.

Can I apply for funding for a pilot study?

- Yes. Like any grant, it is important to justify the need for piloting.

- The pilot has to be placed in the context of the main study.

Can I randomize patients in a pilot study?

- Yes. For a phase III pilot study, one of the goals could be to assess how a randomization procedure might work in the main study or whether the idea of randomization might be acceptable to patients [ 10 ]. In general, it is always best for a pilot to maintain the same design as the main study.

How can I use the information from a pilot to estimate the sample size?

- Use with caution, as results from pilot studies can potentially mislead sample size calculations.

- Consider supplementing the information with qualitative discussions with clinicians - see section 5; and

- Create a sample size table to acknowledge the uncertainty of the pilot information - see section 5.

Can I use the results of a pilot study to treat my patients?

- Not a good idea!

- Pilot studies are primarily for assessing feasibility.

What can I do with a failed or bad pilot study?

- No study is a complete failure; it can always be used as bad example! However, it is worth making clear that a pilot study that shows the main study is not likely to be feasible is not a failed (pilot) study. In fact, it is a success - because you avoided wasting scarce resources on a study destined for failure!

8. Ethical Aspects of Pilot Studies

Halpern et al . [ 28 ] stated that conducting underpowered trials is unethical. However, they proposed that underpowered trials are ethical in two situations: (i) small trials of interventions for rare diseases -- which require documenting explicit plans for including results with those of similar trials in a prospective meta-analysis; (ii) early-phase trials in the development of drugs or devices - provided they are adequately powered for defined purposes other than randomized treatment comparisons. Pilot studies of phase III trials (dealing with common diseases) are not addressed in their proposal. It is therefore prudent to ask: Is it ethical to conduct a study whose feasibility can not be guaranteed (i.e. with a high probability of success)?

It seems unethical to consider running a phase III study without having sufficient data or information about the feasibility. In fact, most granting agencies often require data on feasibility as part of their assessment of the scientific validity for funding decisions.

There is however one important ethical aspect about pilot studies that has received little or no attention from researchers, research ethics boards and ethicists alike. This pertains to the issue of the obligation that researchers have to patients or participants in a trial to disclose the feasibility nature of pilot studies. This is essential given that some pilot studies may not lead to further studies. A review of the commonly cited research ethics guidelines - the Nuremburg Code [ 29 ], Helsinki Declaration [ 30 ], the Belmont Report [ 31 ], ICH Good Clinical Practice [ 32 ], and the International Ethical Guidelines for Biomedical Research Involving Human Subjects [ 33 ] - shows that pilot studies are not addressed in any of these guidelines. Canadian researchers are also encouraged to follow the Tri-Council Policy Statement (TCPS) [ 34 ] - it too does not address how pilot studies need to be approached. It seems to us that given the special nature of feasibility or pilot studies, the disclosure of their purpose to study participants requires special wording - that informs them of the definition of a pilot study, the feasibility objectives of the study, and also clearly defines the criteria for success of feasibility. To fully inform participants, we suggest using the following wording in the consent form:

" The overall purpose of this pilot study is to assess the feasibility of conducting a large study to [state primary objective of the main study]. A feasibility or pilot study is a study that... [state a general definition of a feasibility study]. The specific feasibility objectives of this study are ... [state the specific feasibility objectives of the pilot study]. We will determine that it is feasible to carry on the main study if ... [state the criteria for success of feasibility] ."

9. Recommendation for Reporting the Results of Pilot Studies

Adopted from the CONSORT Statement [ 9 ], Table 3 provides a checklist of items to consider including in a report of a pilot study.

Title and abstract

Item #1: the title or abstract should indicate that the study is a "pilot" or "feasibility".

As a number one summary of the contents of any report, it is important for the title to clearly indicate that the report is for a pilot or feasibility study. This would also be helpful to other researchers during electronic information search about feasibility issues. Our quick search of PUBMED [on July 13, 2009], using the terms "pilot" OR "feasibility" OR "proof-of-concept" for revealed 24423 (16%) hits of studies that had these terms in the title or abstract compared with 149365 hits that had these terms anywhere in the text.

Item #2: Scientific background for the main study and explanation of rationale for assessing feasibility through piloting

The rationale for initiating a pilot should be based on the need to assess feasibility for the main study. Thus, the background of the main study should clearly describe what is known or not known about important feasibility aspects to provide context for piloting.

Item #3: Participants and setting of the study

The description of the inclusion-exclusion or eligibility criteria for participants should be the same as in the main study. The settings and locations where the data were collected should also be clearly described.

Item #4: Interventions

Precise details of the interventions intended for each group and how and when they were actually administered (if applicable) - state clearly if any aspects of the intervention are assessed for feasibility.

Item #5: Objectives

State the specific scientific primary and secondary objectives and hypotheses for the main study and the specific feasibility objectives. It is important to clearly indicate the feasibility objectives as the primary focus for the pilot.

Item #6: Outcomes

Clearly define primary and secondary outcome measures for the main study. Then, clearly define the feasibility outcomes and how they were operationalized - these should include key elements such as recruitment rates, consent rates, completion rates, variance estimates, etc. In some cases, a pilot study may be conducted with the aim to determine a suitable (clinical or surrogate) endpoint for the main study. In such a case, one may not be able to define the primary outcome of the main study until the pilot is finished. However, it is important that determining the primary outcome of the main study be clearly stated as part of feasibility outcomes.

Item #7: Sample Size

Describe how sample size was determined. If the pilot is a proof-of-concept study, is the sample size calculated based on primary/key surrogate marker(s)? In general if the pilot is for a phase III study, there may be no need for a formal sample size calculation. However, the confidence interval approach may be used to calculate and justify the sample size based on key feasibility objective(s).

Item #8: Feasibility criteria

Clearly describe the criteria for assessing success of feasibility - these should be based on the feasibility objectives.

Item #9: Statistical Analysis

Describe the statistical methods for the analysis of primary and secondary feasibility outcomes.

Item #10: Ethical Aspects

State whether the study received research ethics approval. Describe how informed consent was handled - given the feasibility nature of the study.

Item #11: Participant Flow

Describe the flow of participants through each stage of the study (use of a flow-diagram is strongly recommended -- see CONSORT [ 9 ] for a template). Describe protocol deviations from pilot study as planned with reasons for deviations. State the number of exclusions at each stage and corresponding reasons for exclusions.

Item #12: Recruitment

Report the dates defining the periods of recruitment and follow-up.

Item #13: Baseline Data

Report the baseline demographic and clinical characteristics of the participants.

Item #14: Outcomes and Estimation

For each primary and secondary feasibility outcomes, report the point estimate of effect and its precision ( e.g ., 95% CI) - if applicable.

Item # 15: Interpretation

Interpretation of the results should focus on feasibility, taking into account the stated criteria for success of feasibility, study hypotheses, sources of potential bias or imprecision (given the feasibility nature of the study) and the dangers associated with multiplicity - repeated testing on multiple outcomes.

Item #16: Generalizability

Discuss the generalizability (external validity) of the feasibility aspects observed in the study. State clearly what modifications in the design of the main study (if any) would be necessary to make it feasible.

Item #17: Overall evidence of feasibility

Discuss the general results in the context of overall evidence of feasibility. It is important that the focus be on feasibility.

9. Conclusions

Pilot or vanguard studies provide a good opportunity to assess feasibility of large full-scale studies. Pilot studies are the best way to assess feasibility of a large expensive full-scale study, and in fact are an almost essential pre-requisite. Conducting a pilot prior to the main study can enhance the likelihood of success of the main study and potentially help to avoid doomed main studies. Pilot studies should be well designed with clear feasibility objectives, clear analytic plans, and explicit criteria for determining success of feasibility. They should be used cautiously for determining treatment effects and variance estimates for power or sample size calculations. Finally, they should be scrutinized the same way as full scale studies, and every attempt should be taken to publish the results in peer-reviewed journals.

Change history

11 march 2023.

A Correction to this paper has been published: https://doi.org/10.1186/s12874-023-01880-1

Waite M: Concise Oxford Thesaurus. 2002, Oxford, England: Oxford University Press, 2

Google Scholar

Last JM, editor: A Dictionary of Epidemiology. 2001, Oxford University Press, 4

Everitt B: Medical Statistics from A to Z: A Guide for Clinicians and Medical Students. 2006, Cambridge University Press: Cambridge, 2

Book Google Scholar

Tavel JA, Fosdick L, ESPRIT Vanguard Group. ESPRIT Executive Committee: Closeout of four phase II Vanguard trials and patient rollover into a large international phase III HIV clinical endpoint trial. Control Clin Trials. 2001, 22: 42-48. 10.1016/S0197-2456(00)00114-8.

Article CAS PubMed Google Scholar

Arnold DM, Burns KE, Adhikari NK, Kho ME, Meade MO, Cook DJ: The design and interpretation of pilot trials in clinical research in critical care. Crit Care Med. 2009, 37 (Suppl 1): 69-74. 10.1097/CCM.0b013e3181920e33.

Article Google Scholar

Computerization of Medical Practice for the Enhancement of Therapeutic Effectiveness. Last accessed August 8, 2009, [ http://www.compete-study.com/index.htm ]

Heart Outcomes Prevention Evaluation Study. Last accessed August 8, 2009, [ http://www.ccc.mcmaster.ca/hope.htm ]

Cardiovascular Health Awareness Program. Last accessed August 8, 2009, [ http://www.chapprogram.ca/resources.html ]

Moher D, Schulz KF, Altman DG, CONSORT Group (Consolidated Standards of Reporting Trials): The CONSORT statement: revised recommendations for improving the quality of reports of parallel-group randomized trials. J Am Podiatr Med Assoc. 2001, 91: 437-442.

Lancaster GA, Dodd S, Williamson PR: Design and analysis of pilot studies: recommendations for good practice. J Eval Clin Pract. 2004, 10: 307-12. 10.1111/j..2002.384.doc.x.

Article PubMed Google Scholar

Craig N, Dieppe P, Macintyre S, Michie S, Nazareth I, Petticrew M: Developing and evaluating complex interventions: the new Medical Research Council guidance. BMJ. 2008, 337: a1655-10.1136/bmj.a1655.

Article PubMed PubMed Central Google Scholar

Van Teijlingen ER, Rennie AM, Hundley V, Graham W: The importance of conducting and reporting pilot studies: the example of the Scottish Births Survey. J Adv Nurs. 2001, 34: 289-295. 10.1046/j.1365-2648.2001.01757.x.

Van Teijlingen ER, Hundley V: The Importance of Pilot Studies. Social Research Update. 2001, 35-[ http://sru.soc.surrey.ac.uk/SRU35.html ]

Lawrence Gould A: Timing of futility analyses for 'proof of concept' trials. Stat Med. 2005, 24: 1815-1835. 10.1002/sim.2087.

Fardon T, Haggart K, Lee DK, Lipworth BJ: A proof of concept study to evaluate stepping down the dose of fluticasone in combination with salmeterol and tiotropium in severe persistent asthma. Respir Med. 2007, 101: 1218-1228. 10.1016/j.rmed.2006.11.001.

Chow SC, Chang M: Adaptive design methods in clinical trials - a review. Orphanet J Rare Dis. 2008, 3: 11-10.1186/1750-1172-3-11.

Gould AL: Planning and revising the sample size for a trial. Stat Med. 1995, 14: 1039-1051. 10.1002/sim.4780140922.

Coffey CS, Muller KE: Properties of internal pilots with the univariate approach to repeated measures. Stat Med. 2003, 22: 2469-2485. 10.1002/sim.1466.

Zucker DM, Wittes JT, Schabenberger O, Brittain E: Internal pilot studies II: comparison of various procedures. Statistics in Medicine. 1999, 18: 3493-3509. 10.1002/(SICI)1097-0258(19991230)18:24<3493::AID-SIM302>3.0.CO;2-2.

Kieser M, Friede T: Re-calculating the sample size in internal pilot designs with control of the type I error rate. Statistics in Medicine. 2000, 19: 901-911. 10.1002/(SICI)1097-0258(20000415)19:7<901::AID-SIM405>3.0.CO;2-L.

Kraemer HC, Mintz J, Noda A, Tinklenberg J, Yesavage JA: Caution regarding the use of pilot studies to guide power calculations for study proposals. Arch Gen Psychiatry. 2006, 63: 484-489. 10.1001/archpsyc.63.5.484.

Yin Y: Sample size calculation for a proof of concept study. J Biopharm Stat. 2002, 12: 267-276. 10.1081/BIP-120015748.

Wittes J, Brittain E: The role of internal pilot studies in increasing the efficiency of clinical trials. Stat Med. 1990, 9: 65-71. 10.1002/sim.4780090113.

Lenth R: Some Practical Guidelines for Effective Sample Size Determination. The American Statistician. 2001, 55: 187-193. 10.1198/000313001317098149.

Cook DJ, Rocker G, Meade M, Guyatt G, Geerts W, Anderson D, Skrobik Y, Hebert P, Albert M, Cooper J, Bates S, Caco C, Finfer S, Fowler R, Freitag A, Granton J, Jones G, Langevin S, Mehta S, Pagliarello G, Poirier G, Rabbat C, Schiff D, Griffith L, Crowther M, PROTECT Investigators. Canadian Critical Care Trials Group: Prophylaxis of Thromboembolism in Critical Care (PROTECT) Trial: a pilot study. J Crit Care. 2005, 20: 364-372. 10.1016/j.jcrc.2005.09.010.

Choi PT, Beattie WS, Bryson GL, Paul JE, Yang H: Effects of neuraxial blockade may be difficult to study using large randomized controlled trials: the PeriOperative Epidural Trial (POET) Pilot Study. PLoS One. 2009, 4 (2): e4644-10.1371/journal.pone.0004644.

Article CAS PubMed PubMed Central Google Scholar

Altman DG, Bland JM: Absence of evidence is not evidence of absence. BMJ. 1995, 311: 485-

Halpern SD, Karlawish JH, Berlin JA: The continuing unethical conduct of underpowered clinical trials. JAMA. 2002, 288: 358-362. 10.1001/jama.288.3.358.

The Nuremberg Code, Research ethics guideline 2005. Last accessed August 8, 2009, [ http://www.hhs.gov/ohrp/references/nurcode.htm ]

The Declaration of Helsinki, Research ethics guideline. Last accessed December 22, 2009, [ http://www.wma.net/en/30publications/10policies/b3/index.html ]

The Belmont Report, Research ethics guideline. Last accessed August 8, 2009, [ http://ohsr.od.nih.gov/guidelines/belmont.html ]

The ICH Harmonized Tripartite Guideline-Guideline for Good Clinical Practice. Last accessed August 8, 2009, [ http://www.gcppl.org.pl/ma_struktura/docs/ich_gcp.pdf ]

The International Ethical Guidelines for Biomedical Research Involving Human Subjects. Last accessed August 8, 2009, [ http://www.fhi.org/training/fr/Retc/pdf_files/cioms.pdf ]

Tri-Council Policy Statement: Ethical Conduct for Research Involving Humans, Government of Canada. Last accessed August 8, 2009, [ http://www.pre.ethics.gc.ca/english/policystatement/policystatement.cfm ]

Pre-publication history

The pre-publication history for this paper can be accessed here: http://www.biomedcentral.com/1471-2288/10/1/prepub

Download references

Acknowledgements

Dr Lehana Thabane is clinical trials mentor for the Canadian Institutes of Health Research. We thank the reviewers for insightful comments and suggestions which led to improvements in the manuscript.

Author information

Authors and affiliations.

Department of Clinical Epidemiology and Biostatistics, McMaster University, Hamilton, ON, Canada

Lehana Thabane, Jinhui Ma, Rong Chu, Ji Cheng, Afisi Ismaila, Lorena P Rios, Marroon Thabane & Charles H Goldsmith

Biostatistics Unit, St Joseph's Healthcare Hamilton, Hamilton, ON, Canada

Lehana Thabane, Jinhui Ma, Rong Chu, Ji Cheng, Lorena P Rios & Charles H Goldsmith

Department of Medical Affairs, GlaxoSmithKline Inc., Mississauga, ON, Canada

Afisi Ismaila & Reid Robson

Department of Medicine, Division of Gastroenterology, McMaster University, Hamilton, ON, Canada

Marroon Thabane

Department of Kinesiology, University of Waterloo, Waterloo, ON, Canada

Lora Giangregorio

You can also search for this author in PubMed Google Scholar

Corresponding author

Correspondence to Lehana Thabane .

Additional information

Competing interests.

The authors declare that they have no competing interests.

Authors' contributions

LT drafted the manuscript. All authors reviewed several versions of the manuscript, read and approved the final version.

The original online version of this article was revised: the authors would like to correct the number of sample size in the fourth paragraph under the heading Sample Size for Pilot Studies from “75 patients” to “289 patients”.

Rights and permissions

This article is published under license to BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License ( http://creativecommons.org/licenses/by/2.0 ), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Reprints and permissions

About this article

Cite this article.

Thabane, L., Ma, J., Chu, R. et al. A tutorial on pilot studies: the what, why and how. BMC Med Res Methodol 10 , 1 (2010). https://doi.org/10.1186/1471-2288-10-1

Download citation

Received : 09 August 2009

Accepted : 06 January 2010

Published : 06 January 2010

DOI : https://doi.org/10.1186/1471-2288-10-1

Share this article

Anyone you share the following link with will be able to read this content:

Sorry, a shareable link is not currently available for this article.

Provided by the Springer Nature SharedIt content-sharing initiative

- Pilot Study

- Sample Size Calculation

- Research Ethic Board

- Adaptive Design

BMC Medical Research Methodology

ISSN: 1471-2288

- General enquiries: [email protected]

Pilot Study in Research: Definition & Examples

Julia Simkus

Editor at Simply Psychology

BA (Hons) Psychology, Princeton University

Julia Simkus is a graduate of Princeton University with a Bachelor of Arts in Psychology. She is currently studying for a Master's Degree in Counseling for Mental Health and Wellness in September 2023. Julia's research has been published in peer reviewed journals.

Learn about our Editorial Process

Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.

On This Page:

A pilot study, also known as a feasibility study, is a small-scale preliminary study conducted before the main research to check the feasibility or improve the research design.

Pilot studies can be very important before conducting a full-scale research project, helping design the research methods and protocol.

How Does it Work?

Pilot studies are a fundamental stage of the research process. They can help identify design issues and evaluate a study’s feasibility, practicality, resources, time, and cost before the main research is conducted.

It involves selecting a few people and trying out the study on them. It is possible to save time and, in some cases, money by identifying any flaws in the procedures designed by the researcher.

A pilot study can help the researcher spot any ambiguities (i.e., unusual things), confusion in the information given to participants, or problems with the task devised.

Sometimes the task is too hard, and the researcher may get a floor effect because none of the participants can score at all or can complete the task – all performances are low.

The opposite effect is a ceiling effect, when the task is so easy that all achieve virtually full marks or top performances and are “hitting the ceiling.”

This enables researchers to predict an appropriate sample size, budget accordingly, and improve the study design before performing a full-scale project.

Pilot studies also provide researchers with preliminary data to gain insight into the potential results of their proposed experiment.

However, pilot studies should not be used to test hypotheses since the appropriate power and sample size are not calculated. Rather, pilot studies should be used to assess the feasibility of participant recruitment or study design.

By conducting a pilot study, researchers will be better prepared to face the challenges that might arise in the larger study. They will be more confident with the instruments they will use for data collection.

Multiple pilot studies may be needed in some studies, and qualitative and/or quantitative methods may be used.

To avoid bias, pilot studies are usually carried out on individuals who are as similar as possible to the target population but not on those who will be a part of the final sample.

Feedback from participants in the pilot study can be used to improve the experience for participants in the main study. This might include reducing the burden on participants, improving instructions, or identifying potential ethical issues.

Experiment Pilot Study

In a pilot study with an experimental design , you would want to ensure that your measures of these variables are reliable and valid.

You would also want to check that you can effectively manipulate your independent variables and that you can control for potential confounding variables.

A pilot study allows the research team to gain experience and training, which can be particularly beneficial if new experimental techniques or procedures are used.

Questionnaire Pilot Study

It is important to conduct a questionnaire pilot study for the following reasons:

- Check that respondents understand the terminology used in the questionnaire.

- Check that emotive questions are not used, as they make people defensive and could invalidate their answers.

- Check that leading questions have not been used as they could bias the respondent’s answer.

- Ensure that the questionnaire can be completed in a reasonable amount of time. If it’s too long, respondents may lose interest or not have enough time to complete it, which could affect the response rate and the data quality.

By identifying and addressing issues in the pilot study, researchers can reduce errors and risks in the main study. This increases the reliability and validity of the main study’s results.

Assessing the practicality and feasibility of the main study

Testing the efficacy of research instruments

Identifying and addressing any weaknesses or logistical problems

Collecting preliminary data

Estimating the time and costs required for the project

Determining what resources are needed for the study

Identifying the necessity to modify procedures that do not elicit useful data

Adding credibility and dependability to the study

Pretesting the interview format

Enabling researchers to develop consistent practices and familiarize themselves with the procedures in the protocol

Addressing safety issues and management problems

Limitations

Require extra costs, time, and resources.

Do not guarantee the success of the main study.

Contamination (ie: if data from the pilot study or pilot participants are included in the main study results).

Funding bodies may be reluctant to fund a further study if the pilot study results are published.

Do not have the power to assess treatment effects due to small sample size.

- Viscocanalostomy: A Pilot Study (Carassa, Bettin, Fiori, & Brancato, 1998)

- WHO International Pilot Study of Schizophrenia (Sartorius, Shapiro, Kimura, & Barrett, 1972)

- Stephen LaBerge of Stanford University ran a series of experiments in the 80s that investigated lucid dreaming. In 1985, he performed a pilot study that demonstrated that time perception is the same as during wakefulness. Specifically, he had participants go into a state of lucid dreaming and count out ten seconds, signaling the start and end with pre-determined eye movements measured with the EOG.

- Negative Word-of-Mouth by Dissatisfied Consumers: A Pilot Study (Richins, 1983)

- A pilot study and randomized controlled trial of the mindful self‐compassion program (Neff & Germer, 2013)

- Pilot study of secondary prevention of posttraumatic stress disorder with propranolol (Pitman et al., 2002)

- In unstructured observations, the researcher records all relevant behavior without a system. There may be too much to record, and the behaviors recorded may not necessarily be the most important, so the approach is usually used as a pilot study to see what type of behaviors would be recorded.

- Perspectives of the use of smartphones in travel behavior studies: Findings from a literature review and a pilot study (Gadziński, 2018)

Further Information

- Lancaster, G. A., Dodd, S., & Williamson, P. R. (2004). Design and analysis of pilot studies: recommendations for good practice. Journal of evaluation in clinical practice, 10 (2), 307-312.

- Thabane, L., Ma, J., Chu, R., Cheng, J., Ismaila, A., Rios, L. P., … & Goldsmith, C. H. (2010). A tutorial on pilot studies: the what, why and how. BMC Medical Research Methodology, 10 (1), 1-10.

- Moore, C. G., Carter, R. E., Nietert, P. J., & Stewart, P. W. (2011). Recommendations for planning pilot studies in clinical and translational research. Clinical and translational science, 4 (5), 332-337.

Carassa, R. G., Bettin, P., Fiori, M., & Brancato, R. (1998). Viscocanalostomy: a pilot study. European journal of ophthalmology, 8 (2), 57-61.

Gadziński, J. (2018). Perspectives of the use of smartphones in travel behaviour studies: Findings from a literature review and a pilot study. Transportation Research Part C: Emerging Technologies, 88 , 74-86.

In J. (2017). Introduction of a pilot study. Korean Journal of Anesthesiology, 70 (6), 601–605. https://doi.org/10.4097/kjae.2017.70.6.601

LaBerge, S., LaMarca, K., & Baird, B. (2018). Pre-sleep treatment with galantamine stimulates lucid dreaming: A double-blind, placebo-controlled, crossover study. PLoS One, 13 (8), e0201246.

Leon, A. C., Davis, L. L., & Kraemer, H. C. (2011). The role and interpretation of pilot studies in clinical research. Journal of psychiatric research, 45 (5), 626–629. https://doi.org/10.1016/j.jpsychires.2010.10.008

Malmqvist, J., Hellberg, K., Möllås, G., Rose, R., & Shevlin, M. (2019). Conducting the Pilot Study: A Neglected Part of the Research Process? Methodological Findings Supporting the Importance of Piloting in Qualitative Research Studies. International Journal of Qualitative Methods. https://doi.org/10.1177/1609406919878341

Neff, K. D., & Germer, C. K. (2013). A pilot study and randomized controlled trial of the mindful self‐compassion program. Journal of Clinical Psychology, 69 (1), 28-44.

Pitman, R. K., Sanders, K. M., Zusman, R. M., Healy, A. R., Cheema, F., Lasko, N. B., … & Orr, S. P. (2002). Pilot study of secondary prevention of posttraumatic stress disorder with propranolol. Biological psychiatry, 51 (2), 189-192.

Richins, M. L. (1983). Negative word-of-mouth by dissatisfied consumers: A pilot study. Journal of Marketing, 47 (1), 68-78.

Sartorius, N., Shapiro, R., Kimura, M., & Barrett, K. (1972). WHO International Pilot Study of Schizophrenia1. Psychological medicine, 2 (4), 422-425.

Teijlingen, E. R; V. Hundley (2001). The importance of pilot studies, Social research UPDATE, (35)

Related Articles

Research Methodology

Qualitative Data Coding

What Is a Focus Group?

Cross-Cultural Research Methodology In Psychology

What Is Internal Validity In Research?

Research Methodology , Statistics

What Is Face Validity In Research? Importance & How To Measure

Criterion Validity: Definition & Examples

- Open access

- Published: 31 October 2020

Guidance for conducting feasibility and pilot studies for implementation trials

- Nicole Pearson ORCID: orcid.org/0000-0003-2677-2327 1 , 2 ,

- Patti-Jean Naylor 3 ,

- Maureen C. Ashe 5 ,

- Maria Fernandez 4 ,

- Sze Lin Yoong 1 , 2 &

- Luke Wolfenden 1 , 2

Pilot and Feasibility Studies volume 6 , Article number: 167 ( 2020 ) Cite this article

86k Accesses

129 Citations

24 Altmetric

Metrics details

Implementation trials aim to test the effects of implementation strategies on the adoption, integration or uptake of an evidence-based intervention within organisations or settings. Feasibility and pilot studies can assist with building and testing effective implementation strategies by helping to address uncertainties around design and methods, assessing potential implementation strategy effects and identifying potential causal mechanisms. This paper aims to provide broad guidance for the conduct of feasibility and pilot studies for implementation trials.

We convened a group with a mutual interest in the use of feasibility and pilot trials in implementation science including implementation and behavioural science experts and public health researchers. We conducted a literature review to identify existing recommendations for feasibility and pilot studies, as well as publications describing formative processes for implementation trials. In the absence of previous explicit guidance for the conduct of feasibility or pilot implementation trials specifically, we used the effectiveness-implementation hybrid trial design typology proposed by Curran and colleagues as a framework for conceptualising the application of feasibility and pilot testing of implementation interventions. We discuss and offer guidance regarding the aims, methods, design, measures, progression criteria and reporting for implementation feasibility and pilot studies.

Conclusions

This paper provides a resource for those undertaking preliminary work to enrich and inform larger scale implementation trials.

Peer Review reports

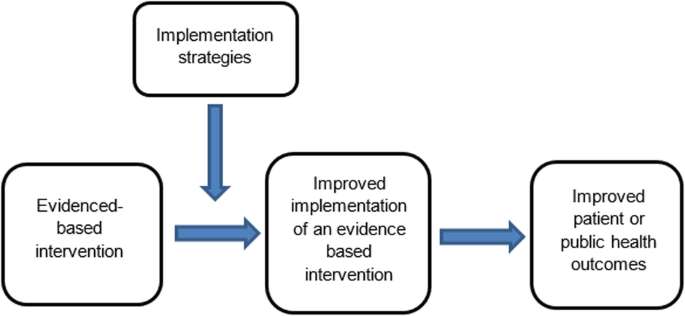

The failure to translate effective interventions for improving population and patient outcomes into policy and routine health service practice denies the community the benefits of investment in such research [ 1 ]. Improving the implementation of effective interventions has therefore been identified as a priority of health systems and research agencies internationally [ 2 , 3 , 4 , 5 , 6 ]. The increased emphasis on research translation has resulted in the rapid emergence of implementation science as a scientific discipline, with the goal of integrating effective medical and public health interventions into health care systems, policies and practice [ 1 ]. Implementation research aims to do this via the generation of new knowledge, including the evaluation of the effectiveness of implementation strategies [ 7 ]. The term “implementation strategies” is used to describe the methods or techniques (e.g. training, performance feedback, communities of practice) used to enhance the adoption, implementation and/or sustainability of evidence-based interventions (Fig. 1 ) [ 8 , 9 ].

| |

Feasibility studies: an umbrella term used to describe any type of study relating to the preparation for a main study | |

: a subset of feasibility studies that specifically look at a design feature proposed for the main trial, whether in part or in full, conducted on a smaller scale [ ] |

Conceptual role of implementation strategies in improving intervention implementation and patient and public health outcomes

While there has been a rapid increase in the number of implementation trials over the past decade, the quality of trials has been criticised, and the effects of the strategies for such trials on implementation, patient or public health outcomes have been modest [ 11 , 12 , 13 ]. To improve the likelihood of impact, factors that may impede intervention implementation should be considered during intervention development and across each phase of the research translation process [ 2 ]. Feasibility and pilot studies play an important role in improving the conduct and quality of a definitive randomised controlled trial (RCT) for both intervention and implementation trials [ 10 ]. For clinical or public health interventions, pilot and feasibility studies may serve to identify potential refinements to the intervention, address uncertainties around the feasibility of intervention trial methods, or test preliminary effects of the intervention [ 10 ]. In implementation research, feasibility and pilot studies perform the same functions as those for intervention trials, however with a focus on developing or refining implementation strategies, refining research methods for an implementation intervention trial, or undertake preliminary testing of implementation strategies [ 14 , 15 ]. Despite this, reviews of implementation studies appear to suggest that few full implementation randomised controlled trials have undertaken feasibility and pilot work in advance of a larger trial [ 16 ].

A range of publications provides guidance for the conduct of feasibility and pilot studies for conventional clinical or public health efficacy trials including Guidance for Exploratory Studies of complex public health interventions [ 17 ] and the Consolidated Standards of Reporting Trials (CONSORT 2010) for Pilot and Feasibility trials [ 18 ]. However, given the differences between implementation trials and conventional clinical or public health efficacy trials, the field of implementation science has identified the need for nuanced guidance [ 14 , 15 , 16 , 19 , 20 ]. Specifically, unlike traditional feasibility and pilot studies that may include the preliminary testing of interventions on individual clinical or public health outcomes, implementation feasibility and pilot studies that explore strategies to improve intervention implementation often require assessing changes across multiple levels including individuals (e.g. service providers or clinicians) and organisational systems [ 21 ]. Due to the complexity of influencing behaviour change, the role of feasibility and pilot studies of implementation may also extend to identifying potential causal mechanisms of change and facilitate an iterative process of refining intervention strategies and optimising their impact [ 16 , 17 ]. In addition, where conventional clinical or public health efficacy trials are typically conducted under controlled conditions and directed mostly by researchers, implementation trials are more pragmatic [ 15 ]. As is the case for well conducted effectiveness trials, implementation trials often require partnerships with end-users and at times, the prioritisation of end-user needs over methods (e.g. random assignment) that seek to maximise internal validity [ 15 , 22 ]. These factors pose additional challenges for implementation researchers and underscore the need for guidance on conducting feasibility and pilot implementation studies.

Given the importance of feasibility and pilot studies in improving implementation strategies and the quality of full-scale trials of those implementation strategies, our aim is to provide practice guidance for those undertaking formative feasibility or pilot studies in the field of implementation science. Specifically, we seek to provide guidance pertaining to the three possible purposes of undertaking pilot and feasibility studies, namely (i) to inform implementation strategy development, (ii) to assess potential implementation strategy effects and (iii) to assess the feasibility of study methods.

A series of three facilitated group discussions were conducted with a group comprising of the 6 members from Canada, the U.S. and Australia (authors of the manuscript) that were mutually interested in the use of feasibility and pilot trials in implementation science. Members included international experts in implementation and behavioural science, public health and trial methods, and had considerable experience in conducting feasibility, pilot and/ or implementation trials. The group was responsible for developing the guidance document, including identification and synthesis of pertinent literature, and approving the final guidance.

To inform guidance development, a literature review was undertaken in electronic bibliographic databases and google, to identify and compile existing recommendations and guidelines for feasibility and pilot studies broadly. Through this process, we identified 30 such guidelines and recommendations relevant to our aim [ 2 , 10 , 14 , 15 , 17 , 18 , 23 , 24 , 25 , 26 , 27 , 28 , 29 , 30 , 31 , 32 , 33 , 34 , 35 , 36 , 37 , 38 , 39 , 40 , 41 , 42 , 43 , 44 , 45 ]. In addition, seminal methods and implementation science texts recommended by the group were examined. These included the CONSORT 2010 Statement: extension to randomised pilot and feasibility trials [ 18 ], the Medical Research Council’s framework for development and evaluation of randomised controlled trials for complex interventions to improve health [ 2 ], the National Institute of Health Research (NIHR) definitions [ 39 ] and the Quality Enhancement Research Initiative (QUERI) Implementation Guide [ 4 ]. A summary of feasibility and pilot study guidelines and recommendations, and that of seminal methods and implementation science texts, was compiled by two authors. This document served as the primary discussion document in meetings of the group. Additional targeted searches of the literature were undertaken in circumstances where the identified literature did not provide sufficient guidance. The manuscript was developed iteratively over 9 months via electronic circulation and comment by the group. Any differences in views between reviewers was discussed and resolved via consensus during scheduled international video-conference calls. All members of the group supported and approved the content of the final document.

The broad guidance provided is intended to be used as supplementary resources to existing seminal feasibility and pilot study resources. We used the definitions of feasibility and pilot studies as proposed by Eldridge and colleagues [ 10 ]. These definitions propose that any type of study relating to the preparation for a main study may be classified as a “feasibility study”, and that the term “pilot” study represents a subset of feasibility studies that specifically look at a design feature proposed for the main trial, whether in part of in full, that is being conducted on a smaller scale [ 10 ]. In addition, when referring to pilot studies, unless explicitly stated otherwise, we will primarily focus on pilot trials using a randomised design. We focus on randomised trials as such designs are the most common trial design in implementation research, and randomised designs may provide the most robust estimates of the potential effect of implementation strategies [ 46 ]. Those undertaking pilot studies that employ non-randomised designs need to interpret the guidance provided in this context. We acknowledge, however, that using randomised designs can prove particularly challenging in the field of implementation science, where research is often undertaken in real-world contexts with pragmatic constraints.

We used the effectiveness-implementation hybrid trial design typology proposed by Curran and colleagues as the framework for conceptualising the application of feasibility testing of implementation interventions [ 47 ]. The typology makes an explicit distinction between the purpose and methods of implementation and conventional clinical (or public health efficacy) trials. Specifically, the first two of the three hybrid designs may be relevant for implementation feasibility or pilot studies. Hybrid Type 1 trials are those designed to test the effectiveness of an intervention on clinical or public health outcomes (primary aim) while conducting a feasibility or pilot study for future implementation via observing and gathering information regarding implementation in a real-world setting/situation (secondary aim) [ 47 ]. Hybrid Type 2 trials involve the simultaneous testing of both the clinical intervention and the testing or feasibility of a formed implementation intervention/strategy as co-primary aims. For this design, “testing” is inclusive of pilot studies with an outcome measure and related hypothesis [ 47 ]. Hybrid Type 3 trials are definitive implementation trials designed to test the effectiveness of an implementation strategy whilst also collecting secondary outcome data on clinical or public health outcomes on a population of interest [ 47 ]. As the implementation aim of the trial is a definitively powered trial, it was not considered relevant to the conduct of feasibility and pilot studies in the field and will not be discussed.

Embedding of feasibility and pilot studies within Type 1 and Type 2 effectiveness-implementation hybrid trials has been recommended as an efficient way to increase the availability of information and evidence to accelerate the field of implementation science and the development and testing of implementation strategies [ 4 ]. However, implementation feasibility and pilot studies are also undertaken as stand-alone exploratory studies and do not include effectiveness measures in terms of the patient or public health outcomes. As such, in addition to discussing feasibility and pilot trials embedded in hybrid trial designs, we will also refer to stand-alone implementation feasibility and pilot studies.

An overview of guidance (aims, design, measures, sample size and power, progression criteria and reporting) for feasibility and pilot implementation studies can be found in Table 1 .

Purpose (aims)

The primary objective of hybrid type 1 trial is to assess the effectiveness of a clinical or public health intervention (rather than an implementation strategy) on the patient or population health outcomes [ 47 ]. Implementation strategies employed in these trials are often designed to maximise the likelihood of an intervention effect [ 51 ], and may not be intended to represent the strategy that would (or could feasibly), be used to support implementation in more “real world” contexts. Specific aims of implementation feasibility or pilot studies undertaken as part of Hybrid Type 1 trials are therefore formative and descriptive as the implementation strategy has not been fully formed nor will be tested. Thus, the purpose of a Hybrid Type 1 feasibility study is generally to inform the development or refinement of the implementation strategy rather than to test potential effects or mechanisms [ 22 , 47 ]. An example of a Hybrid Type 1 trial by Cabassa and colleagues is provided in Additional file 1 [ 52 ].

In Hybrid Type 2 trial designs, there is a dual purpose to test: (i) the clinical or public health effectiveness of the intervention on clinical or public health outcomes (e.g. measure of disease or health behaviour) and (ii) test or measure the impact of the implementation strategy on implementation outcomes (e.g. adoption of health policy in a community setting) [ 53 ]. However, testing the implementation strategy on implementation outcomes may be a secondary aim in these trials and positioned as a pilot [ 22 ]. In Hybrid Type 2 trial designs, the implementation strategy is more developed than in Hybrid Type 1 trials, resembling that intended for future testing in a definitive implementation randomised controlled trial. The dual testing of the evidence-based intervention and implementation interventions or strategies in Hybrid Type 2 trial designs allows for direct assessment of potential effects of an implementation strategy and exploration of components of the strategy to further refine logic models. Additionally, such trials allow for assessments of the feasibility, utility, acceptability or quality of research methods for use in a planned definitive trial. An example of a Hybrid Type 2 trial design by Barnes and colleagues [ 54 ] is included in Additional file 2 .

Non-hybrid pilot implementation studies are undertaken in the absence of a broader effectiveness trial. Such studies typically occur when the effectiveness of a clinical or public health intervention is well established, but robust strategies to promote its broader uptake and integration into clinical or public health services remain untested [ 15 ]. In these situations, implementation pilot studies may test or explore specific trial methods for a future definitive randomised implementation trial. Similarly, a pilot implementation study may also be undertaken in a way that provides a more rigorous formative evaluation of hypothesised implementation strategy mechanisms [ 55 ], or potential impact of implementation strategies [ 56 ], using similar approaches to that employed in Hybrid Type 2 trials. Examples of potential aims for feasibility and pilot studies are outlined in Table 2 .

For implementation feasibility or pilot studies, as is the case for these types of studies in general, the selection of research design should be guided by the specific research question that the study is seeking to address [ 57 ]. Although almost any study design may be used, researchers should review the merits and potential threats to internal and external validity to help guide the selection of research design for feasibility/pilot testing [ 15 ].

As Hybrid Type 1 trials are primarily concerned with testing the effectiveness of an intervention (rather than implementation strategy), the research design will typically employ power calculations and randomisation procedures at the health outcome level to measure the effect on behaviour, symptoms, functional and/or other clinical or public health outcomes. Hybrid Type 1 feasibility studies may employ a variety of designs usually nested within the experimental group (those receiving the intervention and any form of an implementation support strategy) of the broader efficacy trial [ 47 ]. Consistent with the aims of Hybrid Type 1 feasibility and pilot studies, the research designs employed are likely to be non-comparative. Cross-sectional surveys, interviews or document review, qualitative research or mix methods approaches may be used to assess implementation contextual factors, such as barriers and enablers to implementation and/or the acceptability, perceived feasibility or utility of implementation strategies or research methods [ 47 ].

Pilot implementation studies as part of Hybrid Type 2 designs can make use of the comparative design of the broader effectiveness trial to examine the potential effects of the implementation strategy [ 47 ] and more robustly assess the implementation mechanisms, determinants and influence of broader contextual factors [ 53 ]. In this trial type, mixed method and qualitative methods may complement the findings of between group (implementation strategy arm versus comparison) quantitative comparisons, enable triangulation and provide more comprehensive evidence to inform implementation strategy development and assessment. Stand-alone implementation feasibility and pilot implementation studies are free from the constraints and opportunities of research embedded in broader effectiveness trials. As such, research can be designed in a way that best addresses the explicit implementation objectives of the study. Specifically, non-hybrid pilot studies can maximise the applicability of study findings for future definitive trials by employing methods to directly test trial methods such as recruitment or retention strategies [ 17 ], enabling estimates of implementation strategies effects [ 56 ] or capturing data to explicitly test logic models or strategy mechanisms.

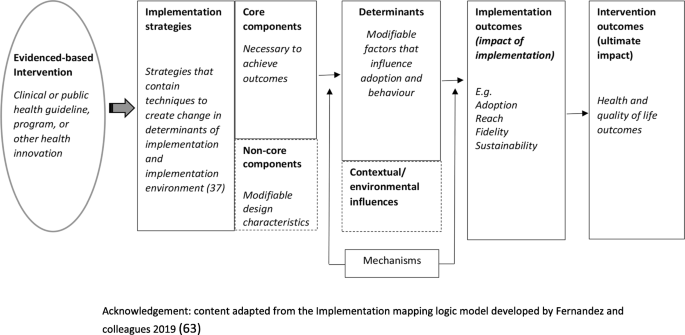

The selection of outcome measures should be linked directly to the objectives of the feasibility or pilot study. Where appropriate, measures should be objective or have suitable psychometric properties, such as evidence of reliability and validity [ 58 , 59 ]. Public health evaluation frameworks often guide the choice of outcome measure in feasibility and pilot implementation work and include RE_AIM [ 60 ], PRECEDE_PROCEED [ 61 ], Proctor and colleagues framework on outcomes for implementation research [ 62 ] and more recently, the “Implementation Mapping” framework [ 63 ]. Recent work by McKay and colleagues suggests a minimum data set of implementation outcomes that includes measures of adoption, reach, dose, fidelity and sustainability [ 46 ]. We discuss selected measures below and provide a summary in Table 3 [ 46 ]. Such measures could be assessed using quantitative or qualitative or mixed methods [ 46 ].

Measures to assess potential implementation strategy effects

In addition to assessing the effects of an intervention on individual clinical or public health outcomes, Hybrid Type 2 trials (and some non-hybrid pilot studies) are interested in measures of the potential effects of an implementation strategy on desired organisational or clinician practice change such as adherence to a guideline, process, clinical standard or delivery of a program [ 62 ]. A range of potential outcomes that could be used to assess implementation strategy effects has been identified, including measures of adoption, reach, fidelity and sustainability [ 46 ]. These outcomes are described in Table 2 , including definitions and examples of how they may be applied to the implementation component of innovation being piloted. Standardised tools to assess these outcomes are often unavailable due to the unique nature of interventions being implemented and the variable (and changing) implementation context in which the research is undertaken [ 64 ]. Researchers may collect outcome data for these measures as part of environmental observations, self-completed checklists or administrative records, audio recording of client sessions or other methods suited to their study and context [ 62 ]. The limitations of such methods, however, need to be considered.

Measures to inform the design or development of the implementation strategy

Measures informing the design or development of the implementation strategy are potentially part of all types of feasibility and pilot implementation studies. An understanding of the determinants of implementation is critical to implementation strategy development. A range of theoretical determinant frameworks have been published which describe factors that may influence intervention implementation [ 65 ], and systematic reviews have been undertaken describing the psychometric properties of many of these measures [ 64 , 66 ]. McKay and colleagues have also identified a priority set of determinants for implementation trials that could be considered for use in implementation feasibility and pilot studies, including measures of context, acceptability, adaptability, feasibility, compatibility, cost, culture, dose, complexity and self-efficacy [ 46 ]. These determinants are described in Table 3 , including definitions and how such measures may be applied to an implementation feasibility or pilot study. Researchers should consider, however, the application of such measures to assess both the intervention that is being implemented (as in a conventional intervention feasibility and pilot study) and the strategy that is being employed to facilitate its implementation, given the importance of the interaction between these factors and implementation success [ 46 ]. Examples of the potential application of measures to both the intervention and its implementation strategies have been outlined elsewhere [ 46 ]. Although a range of quantitative tools could be used to measure such determinants [ 58 , 66 ], qualitative or mixed methods are generally recommended given the capacity of qualitative measures to provide depth to the interpretation of such evaluations [ 40 ].

Measures of potential implementation determinants may be included to build or enhance logic models (Hybrid Type 1 and 2 feasibility and pilot studies) and explore implementation strategy mechanisms (Hybrid Type 2 pilot studies and non-hybrid pilot studies) [ 67 ]. If exploring strategy mechanisms, a hypothesized logic model underpinning the implementation strategy should be articulated including strategy-mechanism linkages, which are required to guide the measurement of key determinants [ 55 , 63 ]. An important determinant which can complicate logic model specification and measurement is the process of adaptation—modifications to the intervention or its delivery (implementation), through the input of service providers or implementers [ 68 ]. Logic models should specify components of implementation strategies thought to be “core” to their effects and those which are thought to be “non-core” where adaptation may occur without adversely impacting on effects. Stirman and colleagues propose a method for assessing adaptations that could be considered for use in pilot and feasibility studies of implementation trials [ 69 ]. Figure 2 provides an example of some of the implementation logic model components that may be developed or refined as part of feasibility or pilot studies of implementation [ 15 , 63 ].

Example of components of an Implementation logic model

Measures to assess the feasibility of study methods

Measures of implementation feasibility and pilot study methods are similar to those of conventional studies for clinical or public health interventions. For example, standard measures of study participation and thresholds for study attrition (e.g. >20%) rates [ 73 ] can be employed in implementation studies [ 67 ]. Previous studies have also surveyed study data collectors to assess the success of blinding strategies [ 74 ]. Researchers may also consider assessing participation or adherence to implementation data collection procedures, the comprehension of survey items, data management strategies or other measures of feasibility of study methods [ 15 ].

Pilot study sample size and power

In effectiveness trials, power calculations and sample size decisions are primarily based on the detection of a clinically meaningful difference in measures of the effects of the intervention on the patient or public health outcomes such as behaviour, disease, symptomatology or functional outcomes [ 24 ]. In this context, the available study sample for implementation measures included in Hybrid Type 1 or 2 feasibility and pilot studies may be constrained by the sample and power calculations of the broader effectiveness trial in which they are embedded [ 47 ]. Nonetheless, a justification for the anticipated sample size for all implementation feasibility or pilot studies (hybrid or stand-alone) is recommended [ 18 ], to ensure that implementation measures and outcomes achieve sufficient estimates of precision to be useful. For Hybrid type 2 and relevant stand-alone implementation pilot studies, sample size calculations for implementation outcomes should seek to achieve adequate estimates of precision deemed sufficient to inform progression to a fully powered trial [ 18 ].

Progression criteria

Stating progression criteria when reporting feasibility and pilot studies is recommended as part of the CONSORT 2010 extension to randomised pilot and feasibility trials guidelines [ 18 ]. Generally, it is recommended that progression criteria should be set a priori and be specific to the feasibility measures, components and/or outcomes assessed in the study [ 18 ]. While little guidance is available, ideas around suitable progression criteria include assessment of uncertainties around feasibility, meeting recruitment targets, cost-effectiveness and refining causal hypotheses to be tested in future trials [ 17 ]. When developing progression criteria, the use of guidelines is suggested rather than strict thresholds [ 18 ], in order to allow for appropriate interpretation and exploration of potential solutions, for example, the use of a traffic light system with varying levels of acceptability [ 17 , 24 ]. For example, Thabane and colleagues recommend that, in general, the outcome of a pilot study can be one of the following: (i) stop—main study not feasible (red); (ii) continue, but modify protocol—feasible with modifications (yellow); (iii) continue without modifications, but monitor closely—feasible with close monitoring and (iv) continue without modifications (green) (44)p5.